Autonomic Semantic-Based Context-Aware Platform for Mobile Applications in Pervasive Environments

Abstract

1. Introduction

2. Related Works

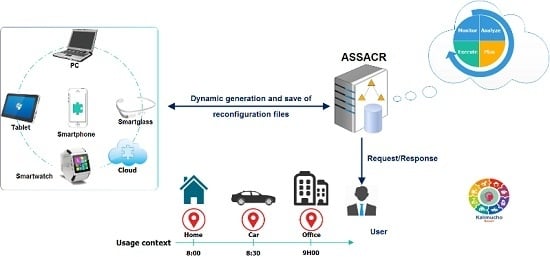

3. Kali-Smart: Autonomic Semantic-Based Context-Aware Adaptation Platform

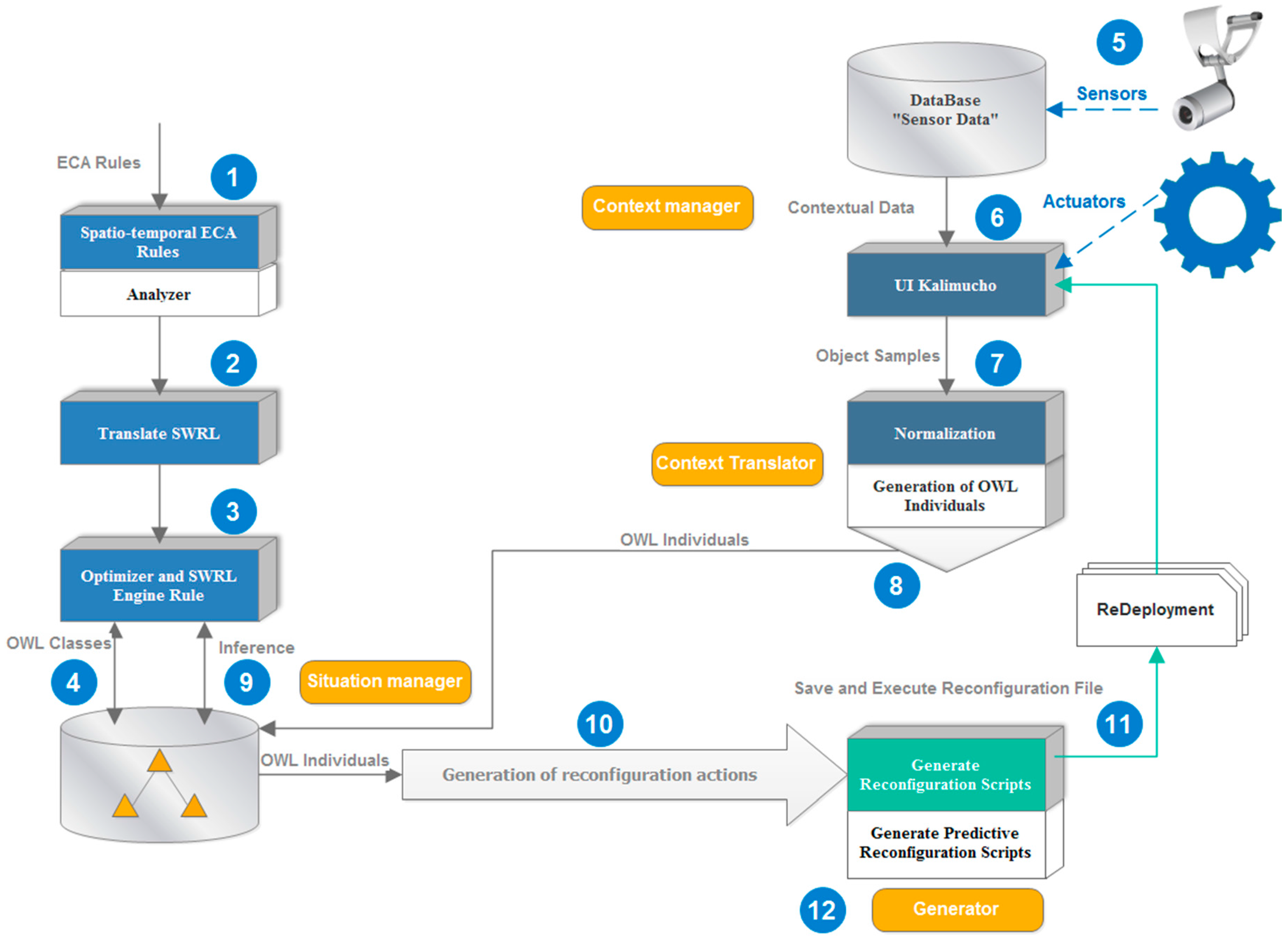

3.1. Platform Architecture

- The Knowledge Base (KB) Management Layer is the part of the platform responsible for representing context information in OWL-DL (Description Logics). In our approach, the KB is the central component: it comprises a knowledge base of user profiles, semantic multimodal services and reconfiguration files, and a knowledge base of semantic service descriptions. We exploit most of the capabilities that OWL provides, such as reusability, sharing and extensibility, in order to offer a broad representation of context in smart-* domains.

- The Semantic Context-aware Services Management Layer is responsible for managing the adaptation process and for providing a continuous response to the user by dynamically adapting its context provisioning paths to the changes that occur during execution. This core layer relies on the following components:

- The User Context Manager is responsible for capturing user context changes and storing them in a KB repository. The user context is enriched with explicit constraints, implicit resource characteristics, services with various modalities and shared multimedia documents. This component includes:

- Semantic Constraint Analyzer: interprets the profile, which expresses the user's preferences (i.e., explicit constraints), context information about the device (memory size, battery level, CPU speed, screen resolution, location, etc.), the supported documents (media format, media type, content size, etc.) and the network characteristics (bandwidth, protocol type, etc.).

- Constraint Translator: converts semantic user constraints specified in qualitative terms into triple patterns in OWL format.
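To make the translation step concrete, the following Python sketch turns a qualitative constraint such as `glucose level = 'very low'` into a triple pattern written in SPARQL basic-graph-pattern syntax. It is a minimal illustration under stated assumptions: the namespace `http://example.org/kalismart#`, the `has<Parameter>` property naming convention and the helper names `to_camel`, `translate_constraint` and `as_pattern` are hypothetical and do not come from the platform.

```python
import re

# Hypothetical namespace; the platform's real ontology IRIs are not given in the text.
KS = "http://example.org/kalismart#"


def to_camel(term: str) -> str:
    """Turn a free-text term such as 'glucose level' into 'GlucoseLevel'."""
    return "".join(word.capitalize() for word in re.split(r"[\s_]+", term.strip()) if word)


def translate_constraint(subject_var: str, parameter: str, value: str) -> tuple:
    """Map one qualitative constraint (parameter = value) to a triple pattern.

    The subject stays a query variable (e.g. '?user'), the parameter becomes a
    hypothetical 'has<Parameter>' property and the qualitative value becomes a
    named individual.
    """
    predicate = f"<{KS}has{to_camel(parameter)}>"
    obj = f"<{KS}{to_camel(value)}>"
    return (subject_var, predicate, obj)


def as_pattern(triple: tuple) -> str:
    """Serialize the triple as one line of a SPARQL basic graph pattern."""
    return " ".join(triple) + " ."


pattern = translate_constraint("?user", "glucose level", "very low")
print(as_pattern(pattern))
```

Keeping the subject as a variable lets the same pattern be matched against any user individual in the knowledge base.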

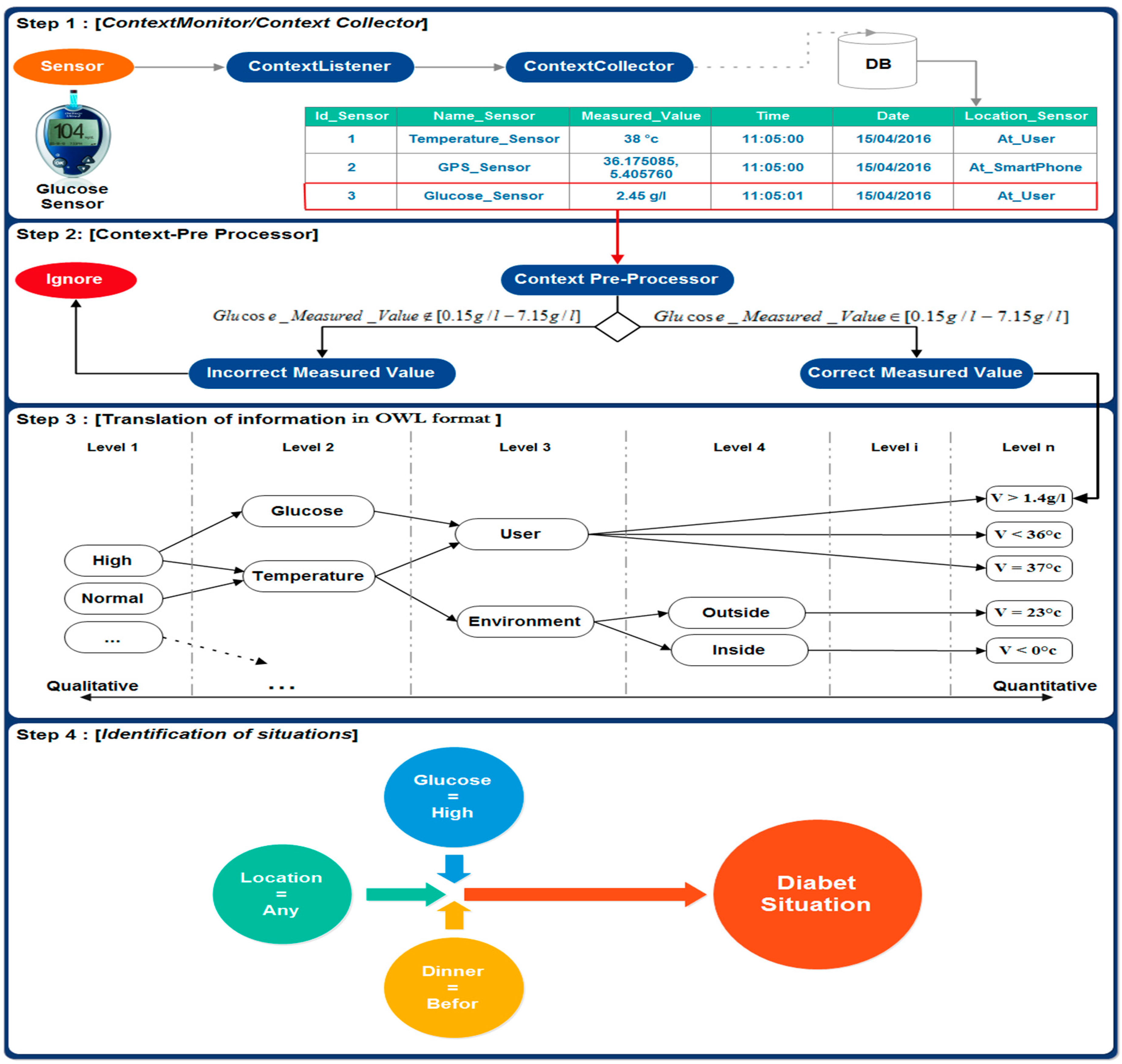

- Context Collector: collects static and dynamic context (user information, device information and sensor data) through different interfaces. The collected information is integrated into low-level context and stored in XML format.

- Context Pre-Processor: is responsible for analyzing sensor data in order to remove duplicated data and to unify sensor data that may use different measurement units.

- Context Translator: is responsible for converting the context data into triple patterns in OWL format.
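The Context Pre-Processor and Context Translator steps can be sketched as follows, assuming readings arrive as (sensor, quantity, value, unit, timestamp) records. The record layout, the use of temperature units as the example of unit unification, the rule that two readings with the same sensor, quantity and timestamp are duplicates, and the property names (`measuredBy`, `hasValueCelsius`, etc.) under the namespace `http://example.org/kalismart#` are illustrative assumptions rather than the platform's actual data model.

```python
from dataclasses import dataclass

# Hypothetical ontology namespace; the platform's real IRIs are not given in the text.
KS = "http://example.org/kalismart#"
XSD = "http://www.w3.org/2001/XMLSchema#"


@dataclass(frozen=True)
class Reading:
    sensor: str      # sensor identifier, e.g. "bio1"
    quantity: str    # measured quantity, e.g. "BodyTemperature"
    value: float
    unit: str        # "C", "F" or "K"
    timestamp: int   # seconds since the epoch


# Converters that unify every temperature reading to degrees Celsius.
TO_CELSIUS = {
    "C": lambda v: v,
    "F": lambda v: (v - 32.0) * 5.0 / 9.0,
    "K": lambda v: v - 273.15,
}


def preprocess(readings):
    """Convert each reading to Celsius and drop duplicates (same sensor, quantity and timestamp)."""
    seen = set()
    cleaned = []
    for r in readings:
        celsius = round(TO_CELSIUS[r.unit](r.value), 2)
        key = (r.sensor, r.quantity, r.timestamp)
        if key in seen:
            continue  # the same event was already reported, possibly in another unit
        seen.add(key)
        cleaned.append(Reading(r.sensor, r.quantity, celsius, "C", r.timestamp))
    return cleaned


def to_triples(r):
    """Describe one cleaned reading as (subject, predicate, object) terms in N-Triples syntax."""
    node = f"<{KS}{r.sensor}_{r.quantity}_{r.timestamp}>"
    return [
        (node, f"<{KS}measuredBy>", f"<{KS}{r.sensor}>"),
        (node, f"<{KS}hasQuantity>", f"<{KS}{r.quantity}>"),
        (node, f"<{KS}hasValueCelsius>", f'"{r.value}"^^<{XSD}double>'),
        (node, f"<{KS}hasTimestamp>", f'"{r.timestamp}"^^<{XSD}integer>'),
    ]


raw = [
    Reading("bio1", "BodyTemperature", 98.6, "F", 1000),
    Reading("bio1", "BodyTemperature", 37.0, "C", 1000),  # duplicate in another unit
    Reading("env1", "HomeTemperature", 295.15, "K", 1000),
]
for reading in preprocess(raw):
    for s, p, o in to_triples(reading):
        print(s, p, o, ".")
```

Because duplicates are keyed on sensor, quantity and timestamp, the second report of the same body-temperature event is dropped even though it arrived in a different unit.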

- The Services Context Manager is responsible for extracting multimodal service descriptions (gesture, voice, pen click and mouse click), together with their semantic context constraints, and storing them in a service repository. These descriptions are enriched with various QoS parameters (media quality, execution time, etc.).

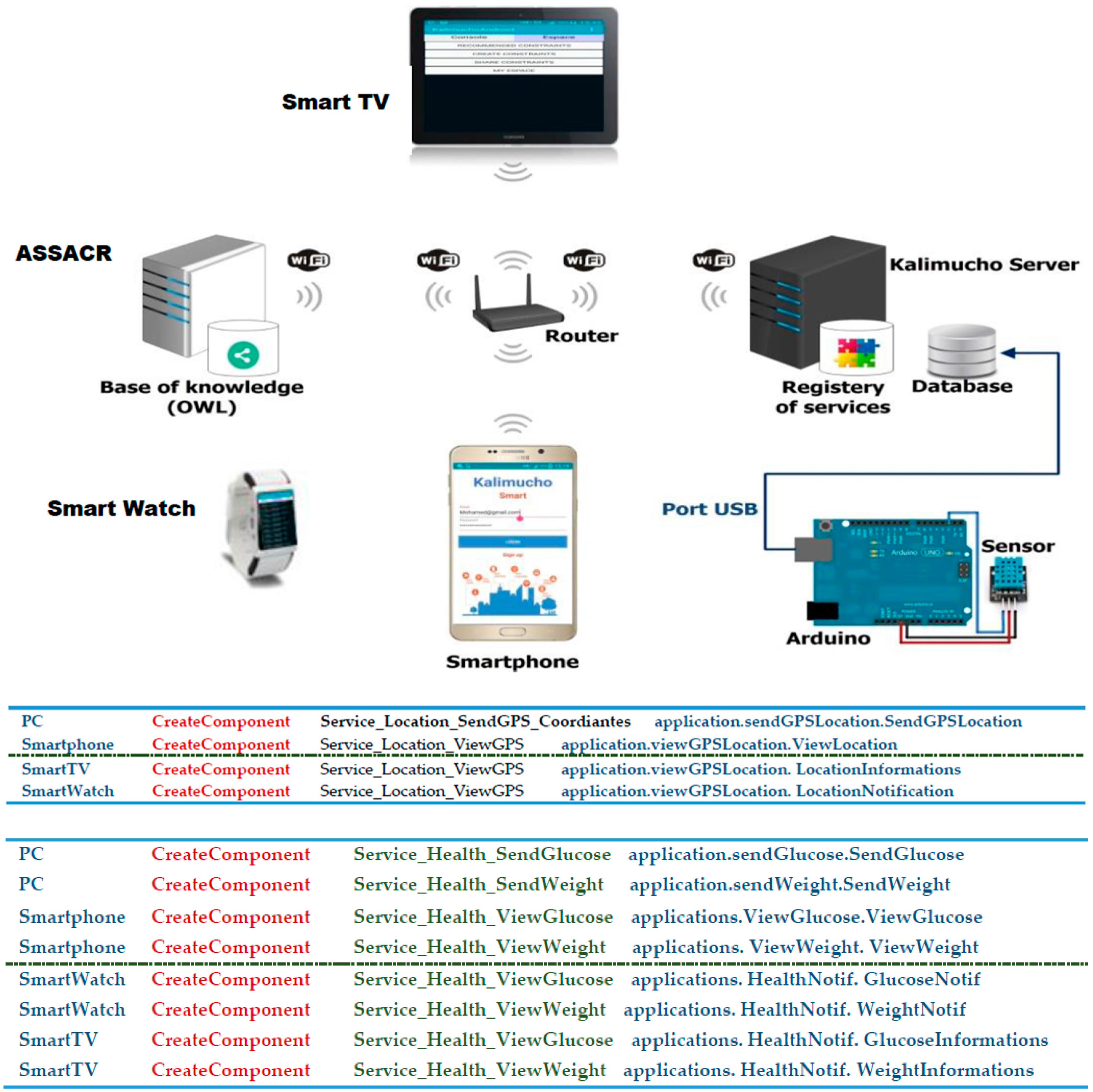

- The Autonomic Semantic Service Adaptation Controller and Reconfiguration (ASSACR) Layer supports building personalized, adapted processes from the available adaptation services. It follows an incremental, dynamic strategy for provisioning services as the context changes during execution. It takes as input the user's current context (battery level, CPU load, bandwidth, user location) and all of his or her surrounding resources (local and remote). Solving a single user's constraints within a small service space (local device and neighbors) is easier and less time-consuming.

- Context Monitor: is responsible for detecting changes in the user's context by querying the User Context Manager component.

- Context Reasoner: is responsible for reasoning over the ontology information, determining the appropriate services according to the deduced situations and deploying them. This component includes:

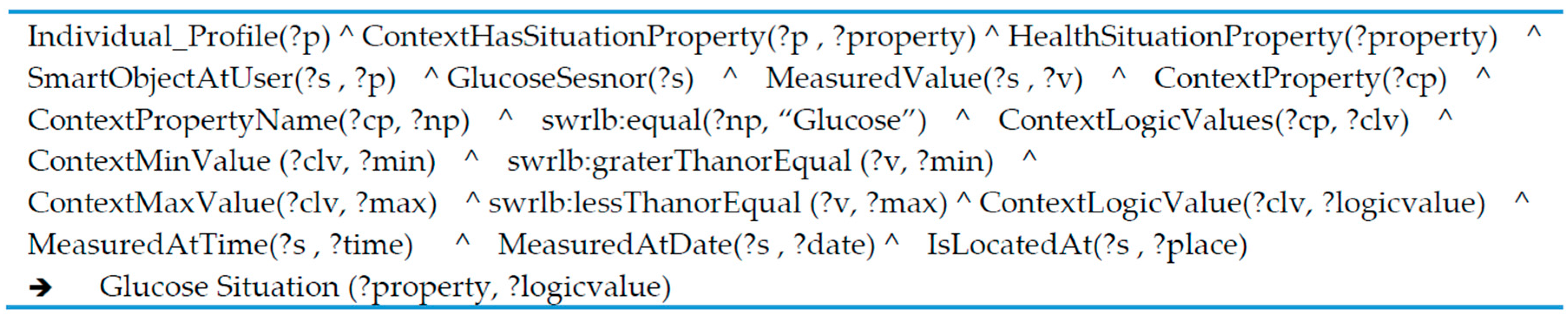

- Situation Reasoner: based on the JESS (Java Expert System Shell) inference engine [14], it executes the SWRL (Semantic Web Rule Language) situation rules and infers the current situations. The SWRL situation rules are user-defined rules, executed on the available context information in order to infer additional knowledge and conclusions about it. We chose a rule-based approach mainly because a user can reconfigure the behavior of the environment simply by defining new rules, without changing the source code.
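Kali-Smart executes SWRL rules with JESS; the Python sketch below uses neither. It is a plain forward-chaining loop, included only to illustrate how situation rules keep firing over a fact base until no new fact is derived, which is what lets one rule build on a situation inferred by another. Facts are (subject, predicate, object) tuples; the bounds `GLUCOSE_MIN` and `GLUCOSE_MAX` stand in for the unspecified "Min Value" and "Max Value" of the diabetes situation rule, and the chained `needs DiabetesGuide` rule is a hypothetical example.

```python
from typing import Callable, Iterable

Fact = tuple  # (subject, predicate, object)
Rule = Callable[[set], Iterable[Fact]]

# Hypothetical thresholds (mg/dL); the text only names "Min Value" and "Max Value".
GLUCOSE_MIN, GLUCOSE_MAX = 180.0, 400.0


def diabetes_rule(facts: set) -> Iterable[Fact]:
    """IF user has biosensor data AND Min < glucose < Max THEN user HAS situation DiabetType1."""
    for s, p, o in facts:
        if p == "hasGlucoseLevel" and (s, "hasBiosensorData", True) in facts:
            if GLUCOSE_MIN < o < GLUCOSE_MAX:
                yield (s, "hasSituation", "DiabetType1")


def guide_rule(facts: set) -> Iterable[Fact]:
    """Hypothetical chained rule: a user in situation DiabetType1 needs the diabetes guide."""
    for s, p, o in facts:
        if p == "hasSituation" and o == "DiabetType1":
            yield (s, "needs", "DiabetesGuide")


def forward_chain(facts: set, rules: list) -> set:
    """Apply every rule repeatedly until a fixpoint is reached (no rule adds a new fact)."""
    facts = set(facts)
    while True:
        new = {fact for rule in rules for fact in rule(facts)} - facts
        if not new:
            return facts
        facts |= new


facts = {
    ("userA", "hasBiosensorData", True),
    ("userA", "hasGlucoseLevel", 250.0),
    ("userB", "hasGlucoseLevel", 250.0),  # no biosensor fact, so no situation is inferred
}
result = forward_chain(facts, [diabetes_rule, guide_rule])
print(sorted(f for f in result if f[1] in ("hasSituation", "needs")))
```

Adding a behavior then amounts to appending a rule to the list, which mirrors the motivation given above for the rule-based approach.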

- Action Reasoner: also based on the JESS inference engine [15], it executes the SWRL service rules and determines the appropriate actions according to the deduced situations.
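A minimal sketch of the matching performed by a service rule of the form "IF a health-care service has type T AND the user has a situation of type T THEN the situation triggers that service for the user" is shown below. The `Service` and `Situation` classes, the `triggered_services` helper and the service names are assumptions made for illustration; in the platform this matching is expressed as SWRL rules evaluated by JESS, not as Python code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Service:
    name: str
    category: str      # e.g. "Health-Care"
    service_type: str  # e.g. "Diabetes"


@dataclass(frozen=True)
class Situation:
    name: str
    situation_type: str


def triggered_services(user, situations, services):
    """Return (situation, service, user) actions: a health-care service is triggered
    when its type equals the type of a situation the user is in."""
    actions = []
    for sit in situations:
        for svc in services:
            if svc.category == "Health-Care" and svc.service_type == sit.situation_type:
                actions.append((sit.name, svc.name, user))  # service is provided to the user
    return actions


services = [
    Service("AdjustingInsulin", "Health-Care", "Diabetes"),
    Service("DisplayGuide1Video", "Health-Care", "Diabetes"),
    Service("WeatherNews", "Information", "Diabetes"),  # wrong category, never triggered
]
situations = [Situation("DiabetType1", "Diabetes")]
print(triggered_services("userA", situations, services))
```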

- Prediction Reasoner: is responsible for generating predictions about the activities and situations occurring in the environment.

- The Service Controller is responsible for re-routing data transfers to another path in case of poor QoS, connection failure, low battery, etc., in order to ensure service continuity, and for performing reconfiguration changes on the Kalimucho server.

- Automatic Semantic Service Discovery: all gateways and services in the same local network are discovered automatically by broadcasting an SNMP (Simple Network Management Protocol) request; SNMP exposes management information that describes the state of network devices such as routers. Only mobile devices that host the Kalimucho platform and support SNMP respond to the request broadcast by ASSACR. ASSACR processes the SNMP responses to create the neighbor and service individuals of the OWL Host and Services classes in the ontology repository.

- Semantic Service Analyzer: analyzes semantically equivalent services, based on location, time and activity, that have different qualities (execution time, input/output modality). Two cases are possible: (1) semantically equivalent services with different qualities on the same Kalimucho gateway, in which case redundancy is supported by the Kalimucho gateway; and (2) semantically equivalent services with different qualities selected from two (or more) gateways, in which case redundancy is supported by the Kalimucho server.
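The two redundancy cases can be illustrated with a short Python sketch that groups equivalent service variants by the function they provide and checks whether they sit on one gateway or on several. The `Offer` record, its fields, the `redundancy_plan` helper and the gateway names are hypothetical, and equivalence is reduced here to equality of a function label, which is much simpler than the semantic analysis described above.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    function: str  # semantic function the service provides, e.g. "DisplayGuide"
    variant: str   # quality variant, e.g. "video" or "image"
    gateway: str   # Kalimucho gateway hosting this variant


def redundancy_plan(offers):
    """Map each function that has several equivalent variants to the component
    that supports redundancy: a single gateway (case 1) or the server (case 2)."""
    by_function = defaultdict(set)
    for offer in offers:
        by_function[offer.function].add(offer)
    plan = {}
    for function, variants in by_function.items():
        if len(variants) < 2:
            continue  # no equivalent alternative, so nothing to make redundant
        gateways = {v.gateway for v in variants}
        if len(gateways) == 1:
            plan[function] = "gateway:" + next(iter(gateways))  # case (1)
        else:
            plan[function] = "server"  # case (2)
    return plan


offers = [
    Offer("DisplayGuide", "video", "gw1"),
    Offer("DisplayGuide", "image", "gw1"),
    Offer("AdjustInsulin", "fast", "gw1"),
    Offer("AdjustInsulin", "slow", "gw2"),
    Offer("EmergencyCall", "voice", "gw2"),
]
print(redundancy_plan(offers))
```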

- Reconfiguration Generator: since SNMP is a simple protocol that supports dynamic service reconfiguration, ASSACR can automatically create configuration files to be saved in a Kalimucho repository.

- Service Deployer: the configuration file created in the previous phase is copied to the Kalimucho cloud server, and the mobile devices affected by the equivalent path are saved together with the new service configuration.

- The Kalimucho Platform Layer offers service-level functions: physical (re-)deployment and a dynamic, incremental reconfiguration strategy adapted to the underlying system (Android, laptop) with QoS requirements, dynamic supervision of adaptation components, and communication protocols between mobile nodes.

3.2. Functional Model of the Smart Semantic-Based Context-Aware Adaptation Platform

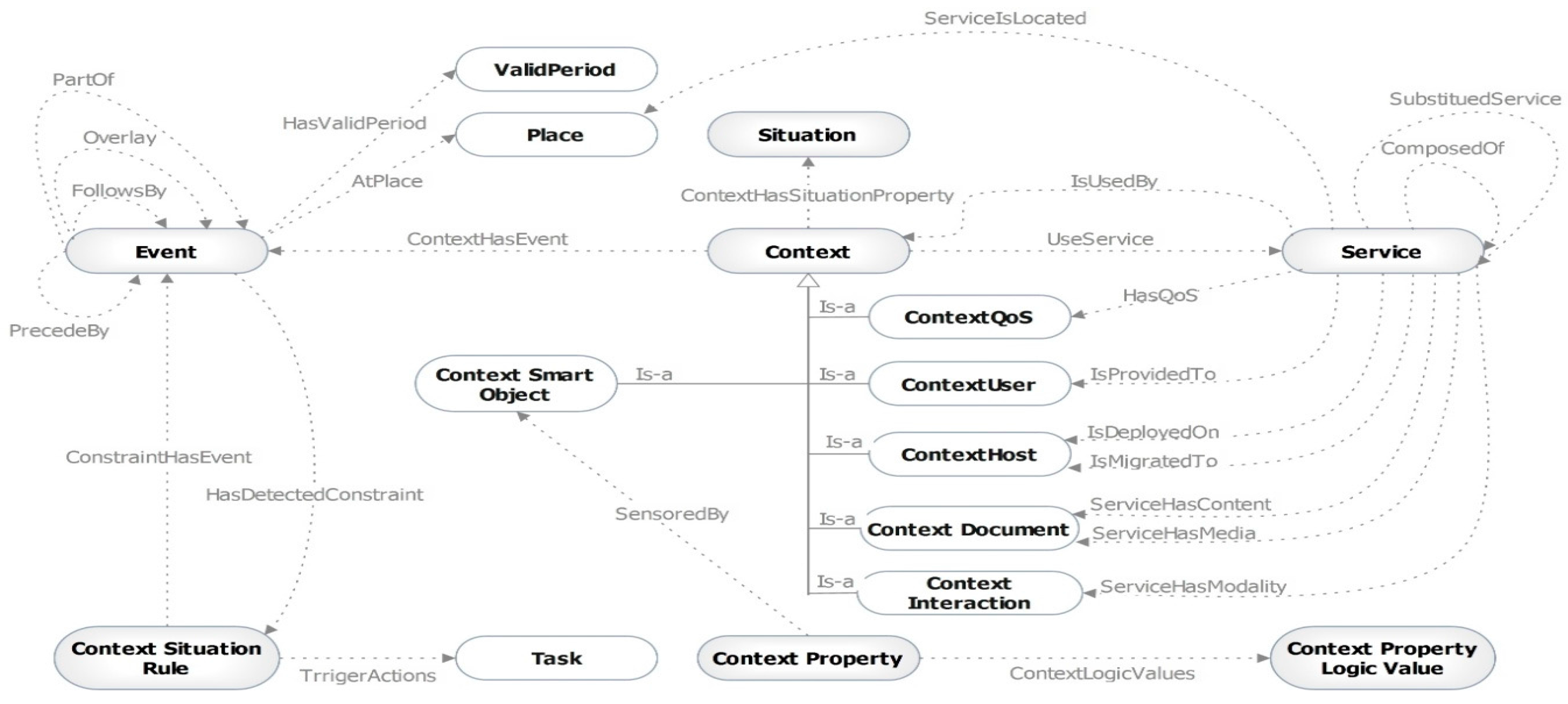

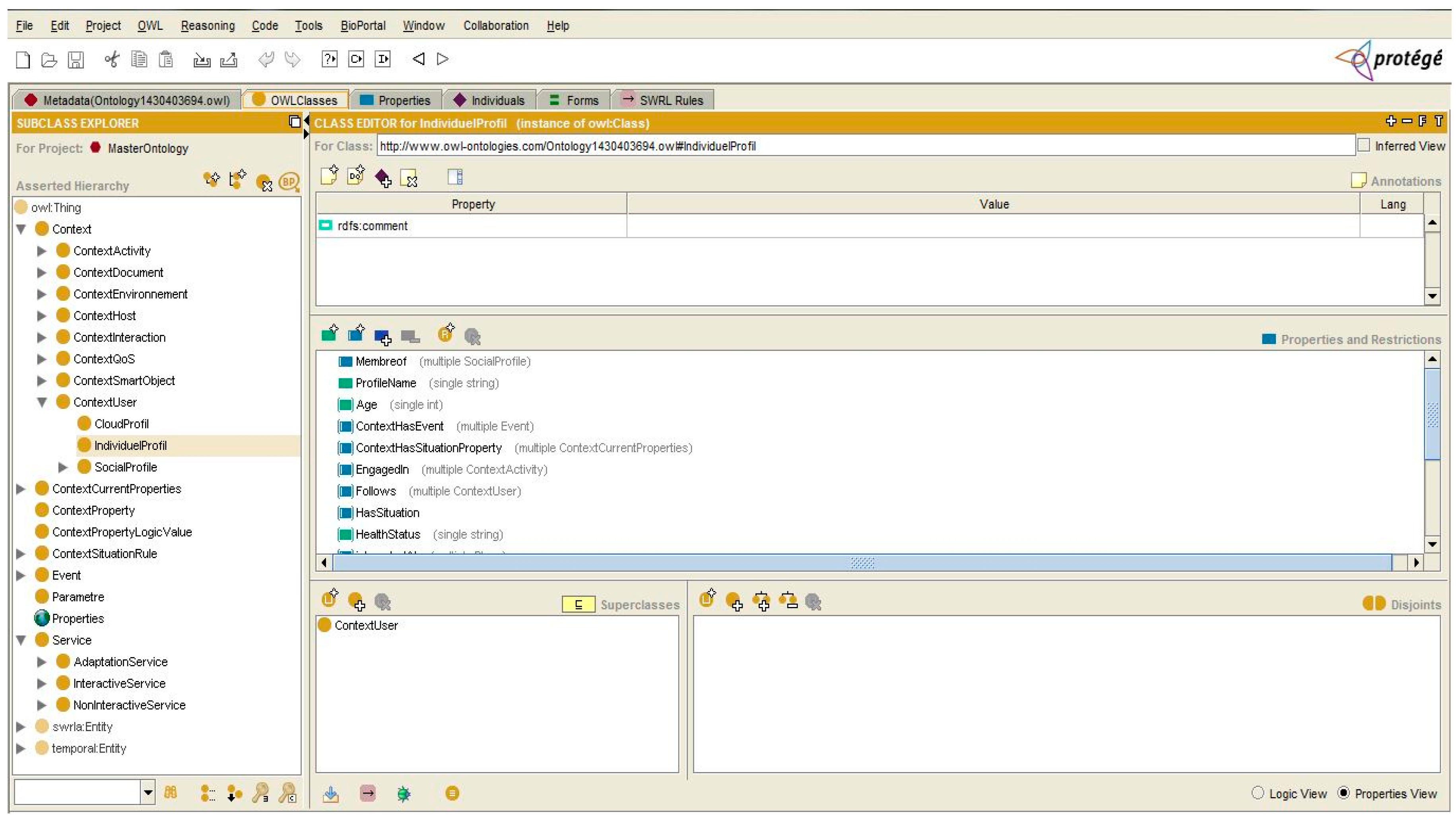

4. Ontology and Rules-Based Context Model

4.1. Context Modeling

4.1.1. Context Sub-Ontology

- The RessourceContext describes the current state of hardware and software resources (memory size, CPU speed, battery energy, etc.).

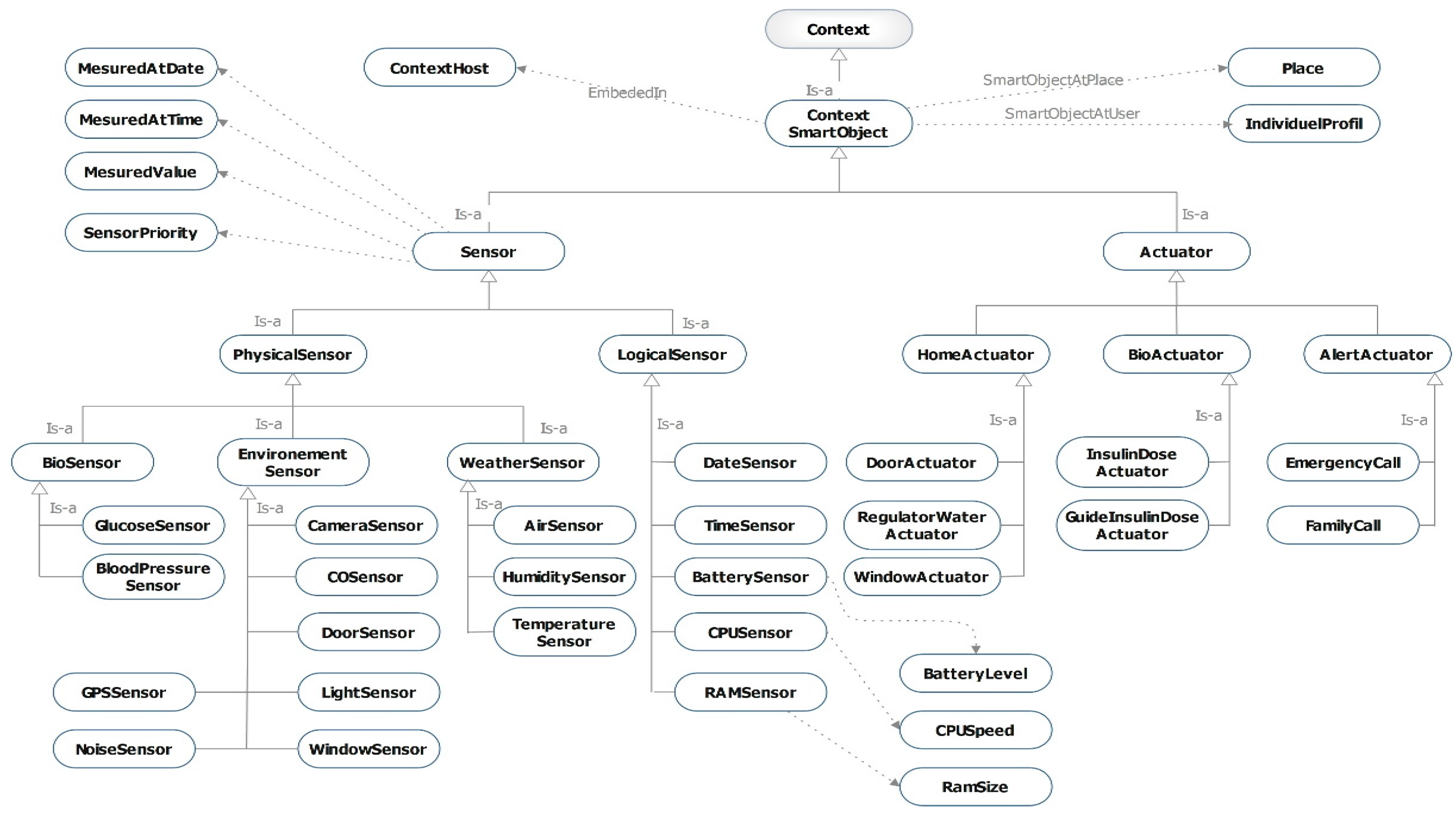

- The User Context describes information about the user that can influence service adaptation. User preferences include the user's age, preferred languages and preferred modalities (voice, gesture, pen click, mouse click, etc.). A user can select which multimedia objects can be adapted (image, text, video or audio). For example, if he or she would rather receive text than audio while at work, an adaptation service is needed to convert the audio into text. The User Context also describes the user's health situation: the user can be healthy or handicapped (Figure 4).
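The audio-to-text example can be expressed as a small preference lookup. The sketch assumes that preferences are stored as a mapping from (location, received media type) to preferred media type and that adaptation services are named `<Source>To<Target>`; the `PREFERENCES` table, the `adaptation_service` helper and the naming scheme are all hypothetical.

```python
from typing import Optional

# Hypothetical preference table: (user location, received media type) -> preferred media type.
PREFERENCES = {
    ("work", "audio"): "text",
    ("car", "text"): "audio",
}


def adaptation_service(location: str, media: str, prefs: dict = PREFERENCES) -> Optional[str]:
    """Name the conversion service to apply, or None when no adaptation is needed."""
    target = prefs.get((location, media), media)  # default: keep the received media type
    if target == media:
        return None
    return f"{media.capitalize()}To{target.capitalize()}"


print(adaptation_service("work", "audio"))  # AudioToText
print(adaptation_service("home", "audio"))  # None
```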

- The Smart-Object Context describes data gathered from different sensors and the orchestrated actions of a variety of actuators in smart environments (Figure 5). We consider three types of sensor data: (1) bio-sensor data, captured by bio-sensors, such as blood pressure, blood sugar and body temperature; (2) environmental sensor data, captured by environmental sensors, such as home temperature, humidity, etc.; and (3) device sensor data, captured by device sensors, such as CPU speed, battery energy, etc.

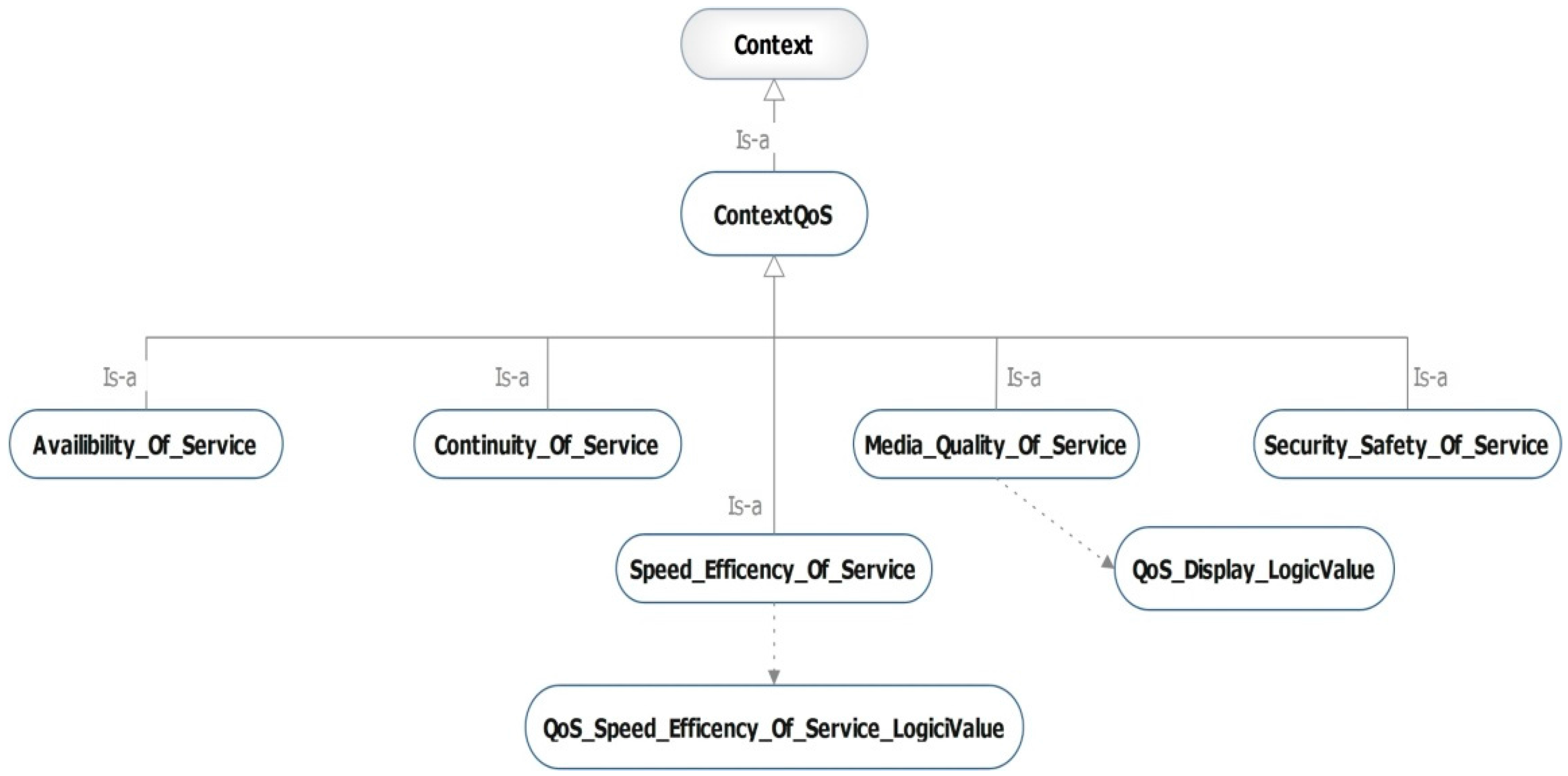

- The QoS Context describes the quality of any mobile application; in our ontology, it is represented as a set of metadata parameters. These QoS parameters are: (1) continuity of service; (2) durability of service; (3) speed and efficiency of service; and (4) safety and security (see Figure 6).

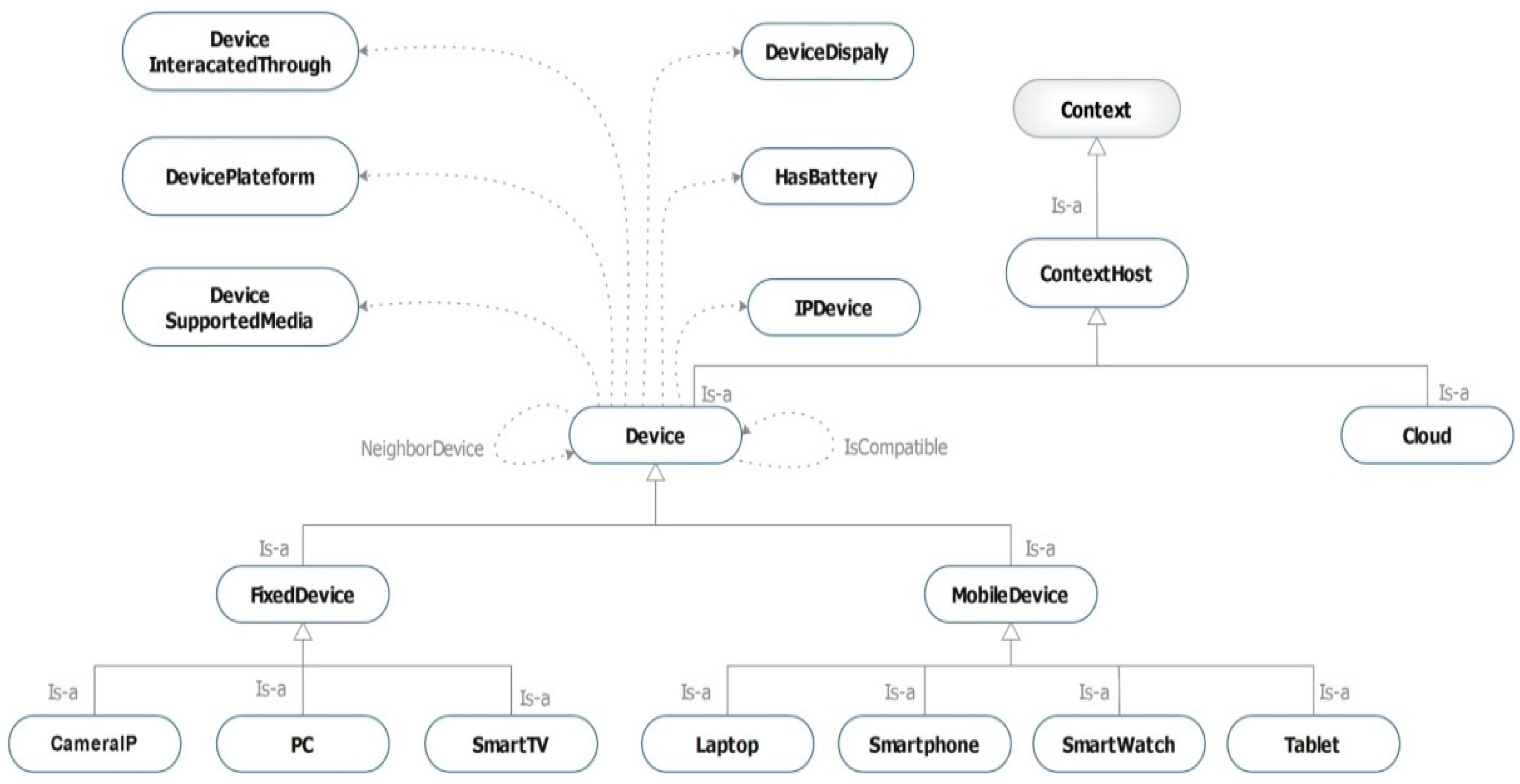

- The Host Context represents the different hosts of the services proposed by providers. For example, services can be hosted on local devices or in the cloud. The local device class contains information about fixed or mobile devices. Mobile devices have limited resources, such as battery, memory and CPU. The Cloud class contains information about the cloud server (e.g., Google Cloud) that can be used for hosting services. Services are deployed on, and migrated between, hosts; because services have constraints, the service location may need to be substituted (e.g., when the battery level is low, the service should be migrated to the cloud, so that data can be stored separately and energy consumption minimized). Since limited mobile resources can break mobile services, we rely on the cloud or on resources in proximity to ensure service continuity on mobile devices (see Figure 7).
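One possible decision procedure for the low-battery scenario is sketched below. The 20% threshold, the `Host` fields, the `choose_host` helper and the policy of trying a reachable nearby host with sufficient battery before falling back to the cloud are assumptions chosen for illustration; the text only states that the cloud or resources in proximity are used to keep the service running.

```python
from dataclasses import dataclass

LOW_BATTERY = 0.20  # hypothetical threshold (20%)


@dataclass
class Host:
    name: str
    kind: str              # "mobile", "fixed" or "cloud"
    battery: float         # 0.0 to 1.0; fixed and cloud hosts use 1.0
    reachable: bool = True


def choose_host(current: Host, nearby: list, cloud: Host) -> Host:
    """Keep the service where it is unless its mobile host is low on battery; then
    prefer the reachable nearby host with the most battery, else the cloud."""
    if current.kind != "mobile" or current.battery >= LOW_BATTERY:
        return current
    candidates = [h for h in nearby if h.reachable and h.battery >= LOW_BATTERY]
    if candidates:
        return max(candidates, key=lambda h: h.battery)
    return cloud


phone = Host("phoneA", "mobile", 0.10)
watch = Host("watchA", "mobile", 0.05)
tv = Host("smartTV", "fixed", 1.0)
cloud = Host("cloud", "cloud", 1.0)
print(choose_host(phone, [watch, tv], cloud).name)  # smartTV
print(choose_host(phone, [watch], cloud).name)      # cloud
```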

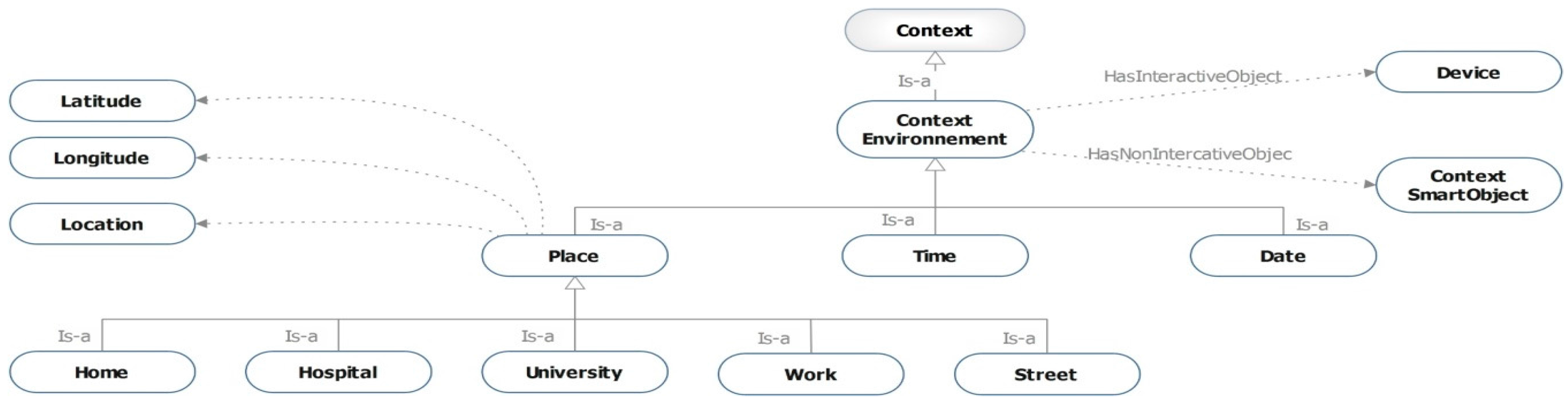

- The Environment Context describes spatial and temporal information (Figure 8):

- Temporal information can be a date or time used as a timestamp. Time is one aspect of durability, so it is important to date information as soon as it is produced.

- The Place describes information related to the user's location {longitude, latitude and altitude}. A given location may contain available mobile resources: mobile devices, such as tablets, smartphones and laptops, and smart objects, such as bio-sensors, environment sensors, actuators, etc. These resources are accessible to users.

- The ActivityContext describes the activities a user can engage in according to his or her schedule.

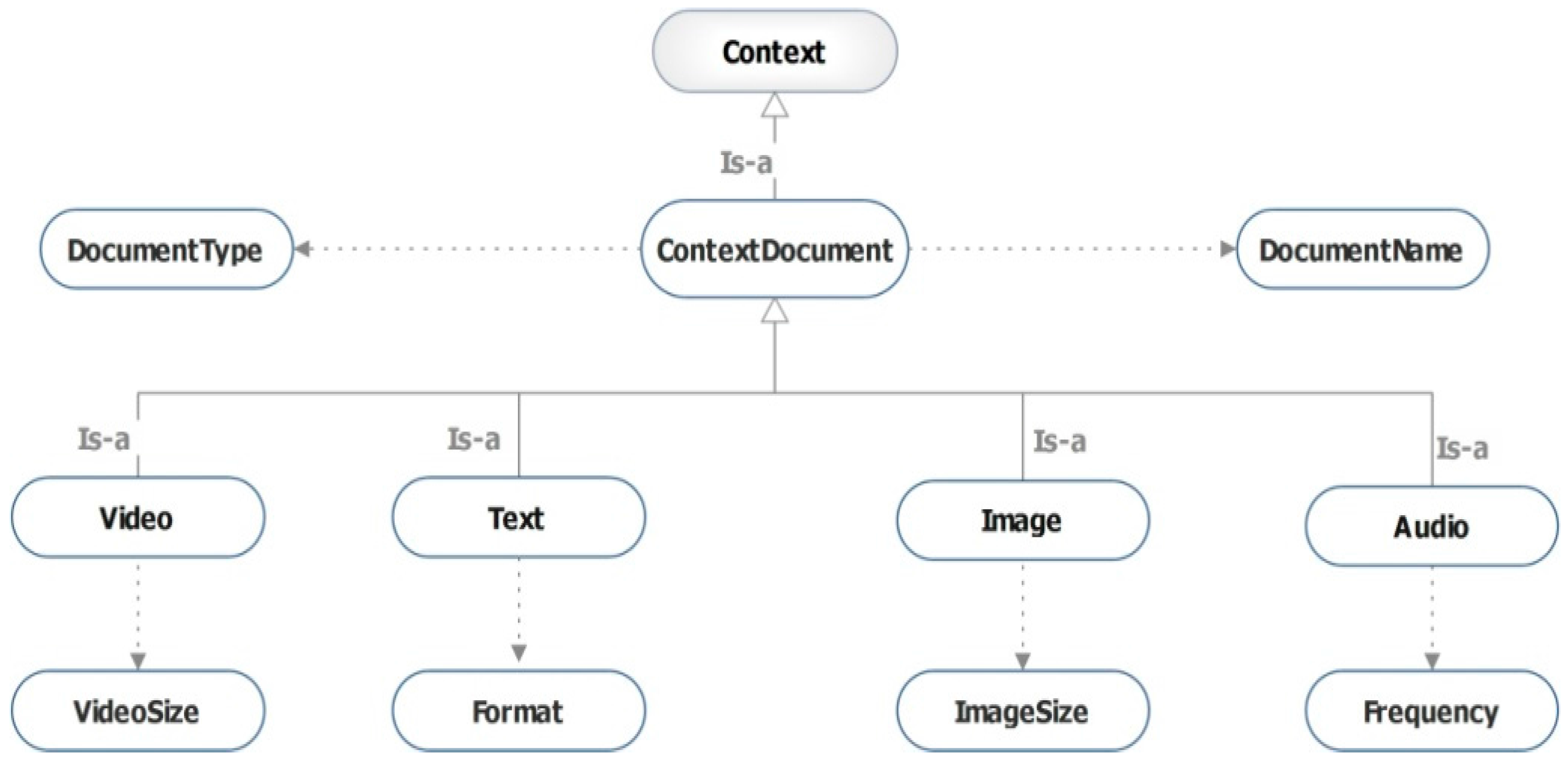

- The Document Context describes the nature of the documents (text, image, video, audio). It specifies a set of properties related to each media type: (1) text: alignment, font, color, format, etc.; (2) image: height, width, resolution, size, format, etc.; (3) video: title, color, resolution, size, codec, etc.; and (4) sound: frequency, size, resolution and format (Figure 9).

4.1.2. Context Constraint Ontology

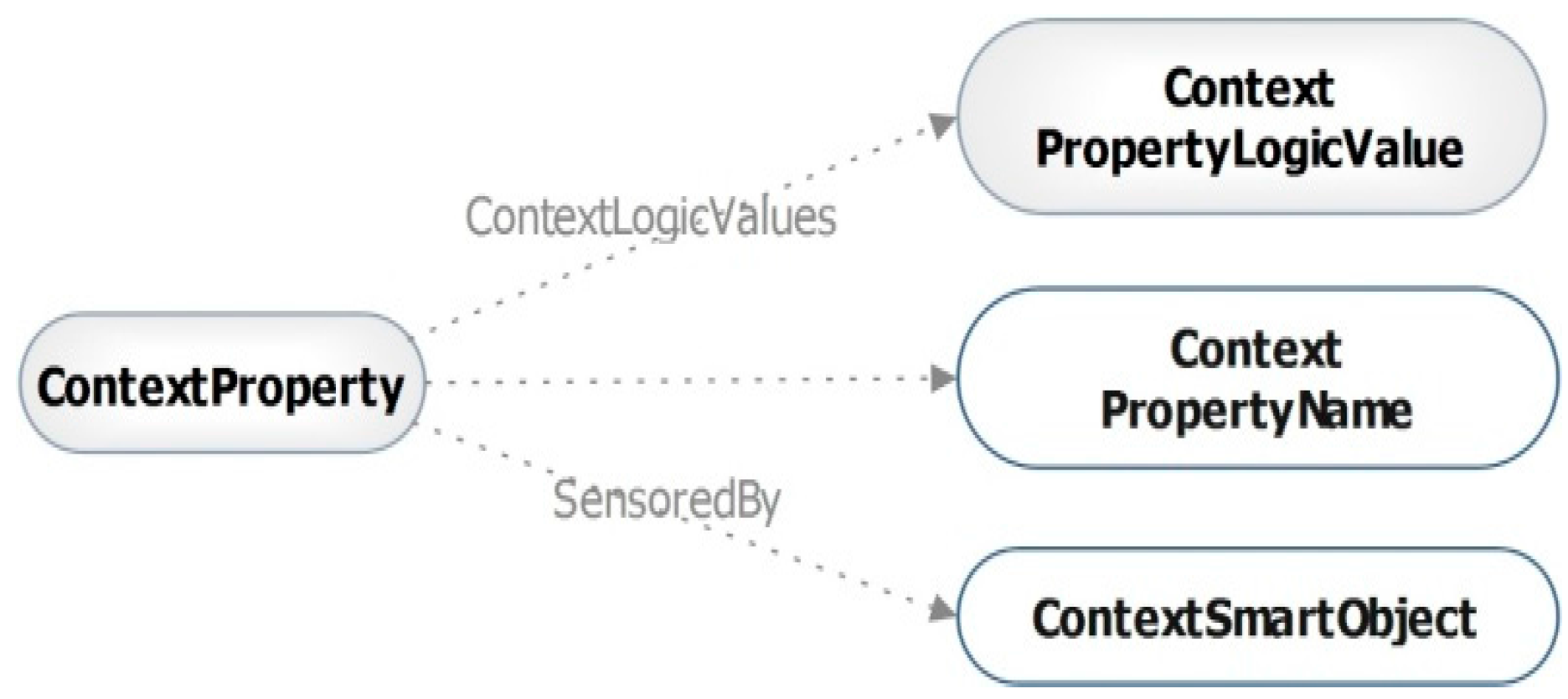

- Context_property: each context category has specific context properties. For example, the device-related context is a collection of parameters (memory size, CPU power, bandwidth, battery lifecycle, etc.). Some context parameters may be named with semantically related terms, e.g., CPU power and CPU speed.

- Context_expression: denotes an expression that consists of a context parameter, a logical operator and a value. For instance: glucose level = 'very low'.

- Context_constraint: consists of a simple or composite context expression. For example, a context constraint can be: IF glucose level = 'very high' AND Time = 'Before Dinner' AND Location = 'Any' THEN Situation = Diabet_Type_1_Situation.
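The three notions above (property, expression, constraint) map naturally onto small data types. The following Python sketch evaluates the glucose example, treating the value `Any` as a wildcard and a composite constraint as a conjunction of expressions. The class names, the restriction to `=` and `!=` operators and the treatment of missing parameters as unsatisfied are assumptions, not the ontology's definitions.

```python
import operator
from dataclasses import dataclass

# Logical operators allowed in a context expression (equality tests on qualitative values).
OPS = {"=": operator.eq, "!=": operator.ne}


@dataclass(frozen=True)
class ContextExpression:
    parameter: str  # context property, e.g. "glucose level"
    op: str         # key into OPS
    value: str      # qualitative value; "Any" matches whatever is observed

    def holds(self, context: dict) -> bool:
        if self.value == "Any":
            return True
        if self.parameter not in context:
            return False  # unknown parameter: the expression cannot be satisfied
        return OPS[self.op](context[self.parameter], self.value)


@dataclass(frozen=True)
class ContextConstraint:
    expressions: tuple  # conjunction (AND) of ContextExpression objects
    situation: str      # situation inferred when all expressions hold

    def infer(self, context: dict):
        return self.situation if all(e.holds(context) for e in self.expressions) else None


diabetes = ContextConstraint(
    (
        ContextExpression("glucose level", "=", "very high"),
        ContextExpression("Time", "=", "Before Dinner"),
        ContextExpression("Location", "=", "Any"),
    ),
    "Diabet_Type_1_Situation",
)
print(diabetes.infer({"glucose level": "very high", "Time": "Before Dinner", "Location": "Home"}))
```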

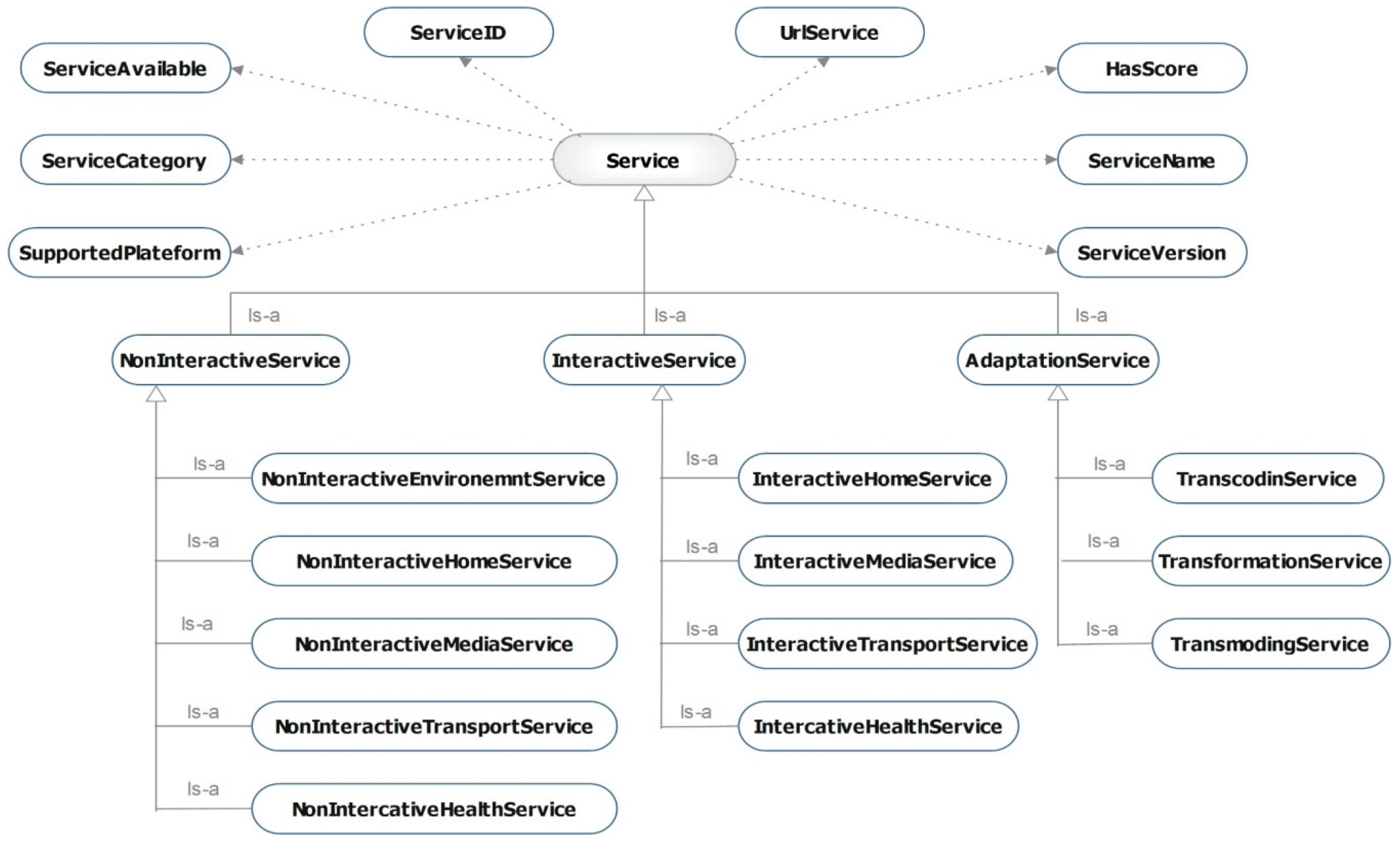

4.1.3. Service Ontology

4.1.4. Context Property Sub-Ontology

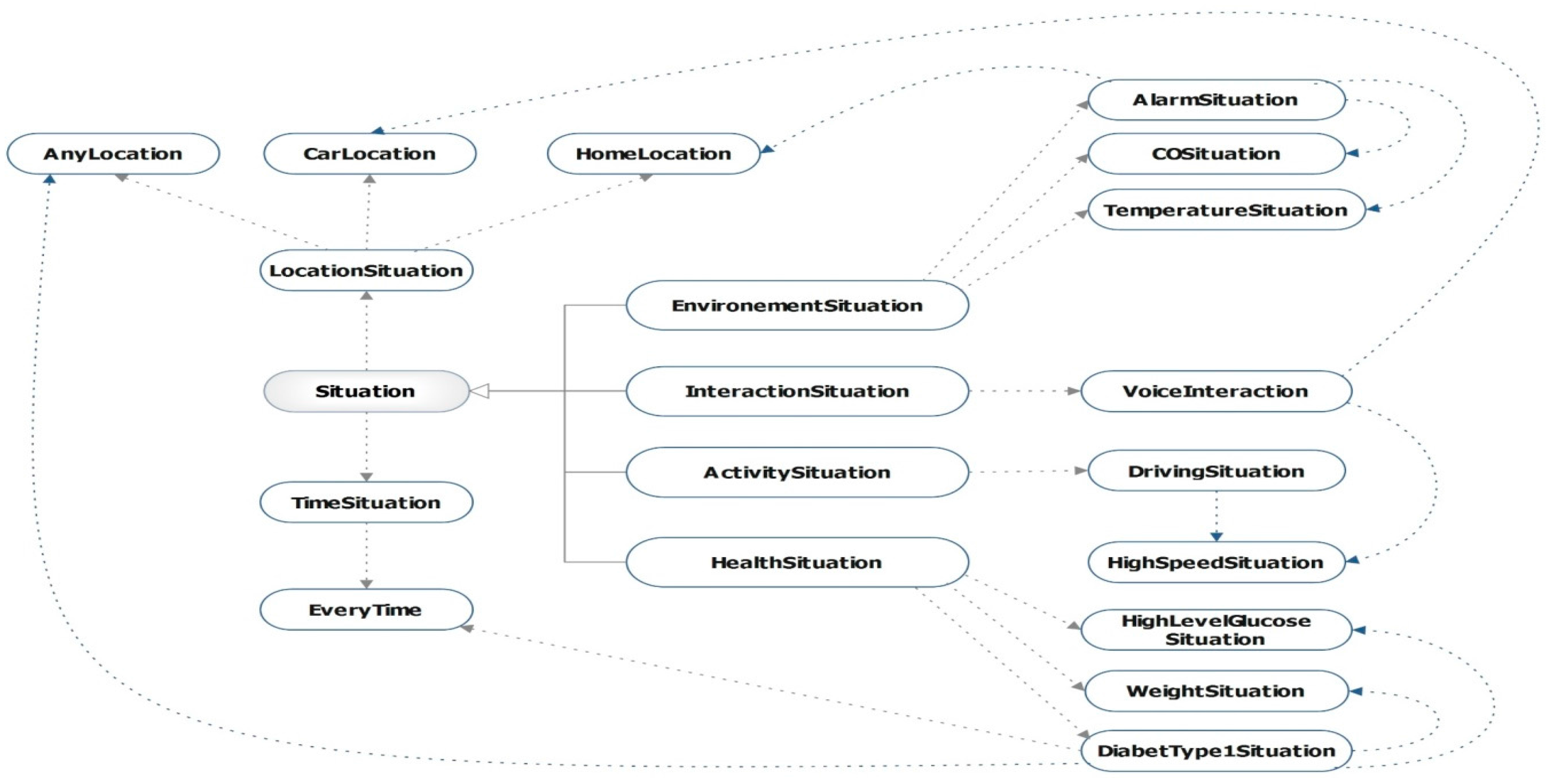

4.1.5. Situation Ontology

4.2. Dynamic Situation Searching

| Algorithm 1: Situation Matching and Dynamic Service Selection |
|---|
| Input: Profile[], profile Status[], two contexts C1 and C2/C1S and C2 Q // set of profiles and profile status |
| Output: Overall score Sim(Q,S) // best semantically equivalent services |
| Matched_Relation // EXACT, SUBSUMES, NEAREST-NEIGHBOUR, FAIL |
| MatchedService_List // a set of services that meet the user's context preferences |
| 1. Match preferences with local services and get each constraint's similarity value, as defined in [1]: MatchedService_List = MatchedService_List ∪ SLocal; |
| 2. Match preferences with nearby services and get an overall similarity for each constraint, as defined in [1]: MatchedService_List = MatchedService_List ∪ SNearBy; |
| 3. Select quality-equivalent semantic services, as defined in [11]. |
| 4. Generate a reconfiguration file with the k best services. |
| 5. Save the new reconfiguration file on the Kalimucho server. |
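The following Python sketch illustrates the overall flow of Algorithm 1 under strong simplifying assumptions. The similarity measure of [1] is not reproduced: instead, each constraint is classified as EXACT, SUBSUMES, NEAREST-NEIGHBOUR or FAIL against a tiny hypothetical concept hierarchy (`PARENT`) and scored with hypothetical weights (`SCORE`), and Sim(Q,S) is taken as the mean of the per-constraint scores. Step 3 (quality-based selection) and the writing of the reconfiguration file are omitted, and all service names are invented.

```python
# Hypothetical concept hierarchy (child -> parent) used in place of the ontology.
PARENT = {
    "VideoGuide": "Guide",
    "ImageGuide": "Guide",
    "Guide": "HealthService",
    "InsulinAdjustment": "HealthService",
}
# Hypothetical score attached to each matched relation.
SCORE = {"EXACT": 1.0, "SUBSUMES": 0.8, "NEAREST-NEIGHBOUR": 0.5, "FAIL": 0.0}


def ancestors(concept):
    """Yield every ancestor of a concept, nearest first."""
    while concept in PARENT:
        concept = PARENT[concept]
        yield concept


def matched_relation(requested, offered):
    if requested == offered:
        return "EXACT"
    if offered in ancestors(requested):
        return "SUBSUMES"  # the offered concept is more general than the requested one
    if requested in PARENT and PARENT[requested] == PARENT.get(offered):
        return "NEAREST-NEIGHBOUR"  # siblings under the same parent concept
    return "FAIL"


def sim(query, service):
    """Sim(Q,S): mean score over the constraints of Q (constraint name -> concept)."""
    scores = [SCORE[matched_relation(c, service.get(name, ""))] for name, c in query.items()]
    return sum(scores) / len(scores) if scores else 0.0


def select_services(query, local, nearby, k):
    """Steps 1-2: score local, then nearby services and merge them; keep the k best."""
    matched_list = []
    for space in (local, nearby):  # the small local space is searched first
        for name, description in space.items():
            score = sim(query, description)
            if score > 0.0:
                matched_list.append((name, score))
    matched_list.sort(key=lambda item: item[1], reverse=True)
    return matched_list[:k]


query = {"function": "VideoGuide"}
local = {"GuideImage@UserA": {"function": "ImageGuide"}}
nearby = {
    "GuideVideo@UserE": {"function": "VideoGuide"},
    "Guide@UserB": {"function": "Guide"},
    "Insulin@UserE": {"function": "InsulinAdjustment"},
}
print(select_services(query, local, nearby, k=2))
```

Services classified as FAIL on every constraint score zero and are never added to the matched list, which plays the role of MatchedService_List in the algorithm.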

4.3. Context Provisioning

- Provision the next substitute service from the list of services that offer the same functionality, preferring services that require fewer resources, with the available services sorted by QoS.

- Add new services to the list of found services. Each new service is matched against the newly provisioned changes in the profile constraints (e.g., the user context).
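Both provisioning actions can be sketched in a few lines of Python. The `Candidate` record, its `battery_cost` and `qos` fields, the helpers `next_substitute` and `add_new_services`, and the rule "strictly cheaper than the failed service and affordable with the remaining battery" are hypothetical stand-ins for the platform's resource and QoS descriptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    function: str        # functionality offered, shared by substitutable services
    battery_cost: float  # hypothetical resource need (fraction of battery per hour)
    qos: float           # hypothetical aggregate QoS score, higher is better


def next_substitute(failed: Candidate, pool: List[Candidate], battery_left: float) -> Optional[Candidate]:
    """Best-QoS service with the same function that needs fewer resources than the
    failed one and still fits the remaining battery."""
    options = [
        c for c in pool
        if c.function == failed.function
        and c.name != failed.name
        and c.battery_cost < failed.battery_cost
        and c.battery_cost <= battery_left
    ]
    options.sort(key=lambda c: c.qos, reverse=True)
    return options[0] if options else None


def add_new_services(found: List[Candidate], pool: List[Candidate], new_function: str) -> List[Candidate]:
    """Append services matching a newly provisioned constraint, skipping ones already found."""
    known = {c.name for c in found}
    return found + [c for c in pool if c.function == new_function and c.name not in known]


video = Candidate("GuideVideo", "DisplayGuide", 0.30, 0.9)
image = Candidate("GuideImage", "DisplayGuide", 0.10, 0.7)
text = Candidate("GuideText", "DisplayGuide", 0.05, 0.5)
insulin = Candidate("AdjustInsulin", "InsulinAdjustment", 0.02, 0.8)
pool = [video, image, text, insulin]
print(next_substitute(video, pool, battery_left=0.15).name)  # GuideImage
```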

| Algorithm 2: Incremental Context Changes Prediction |
|---|
| Input: Predicted user context changes |
| Output: Reconfiguration file of the provisioned services |

4.4. Context Reasoning

5. Potential Scenarios and Validation

5.1. Implementation of Kali-Smart

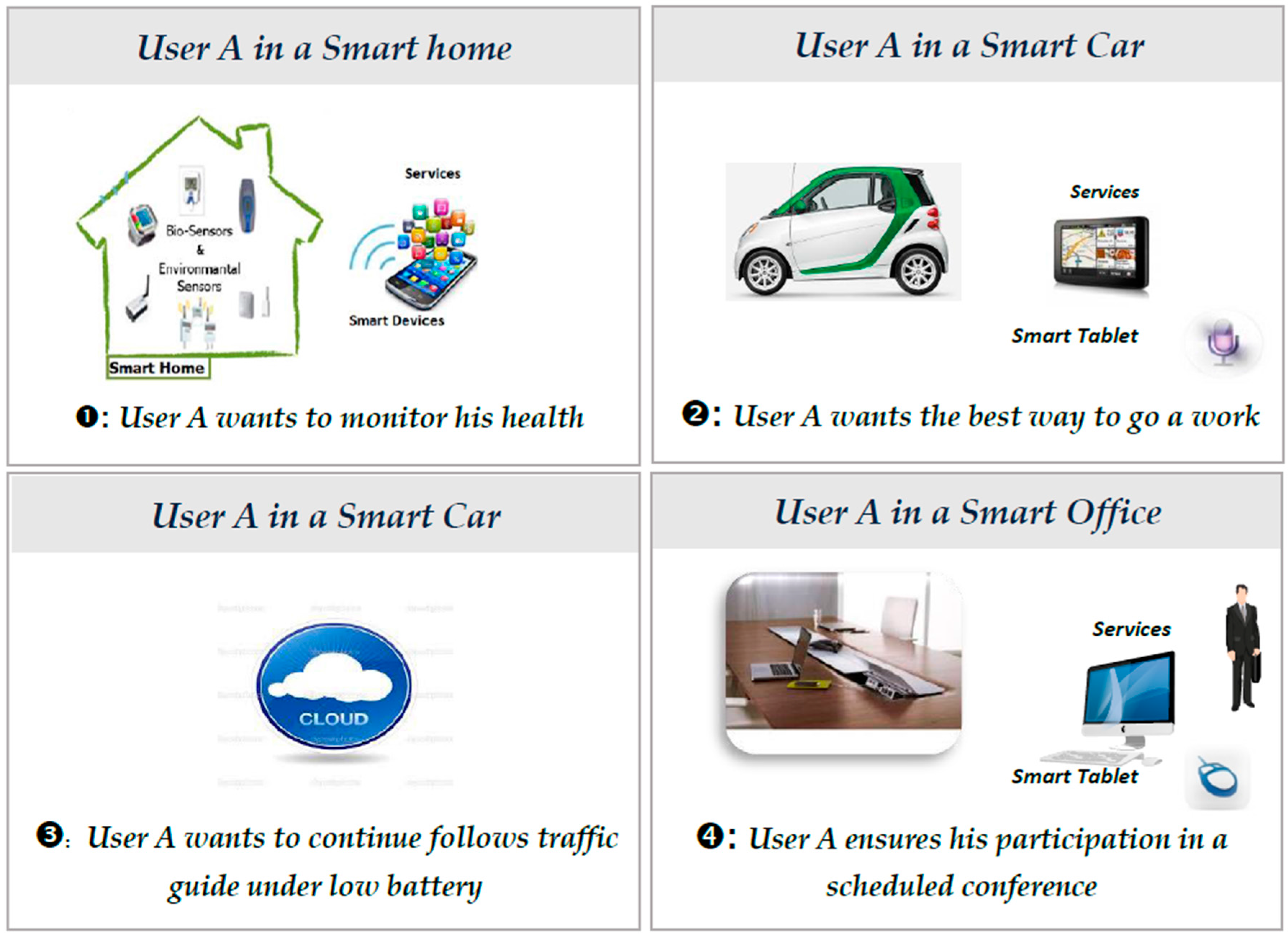

5.2. Real-Life Scenarios

5.2.1. Scenario I: User A at Home Using a Smartphone (or Smart TV or Smart Watch)

5.2.2. Scenario II: User A in a Car Using a Car Tablet

5.2.3. Scenario III: Low Battery Level

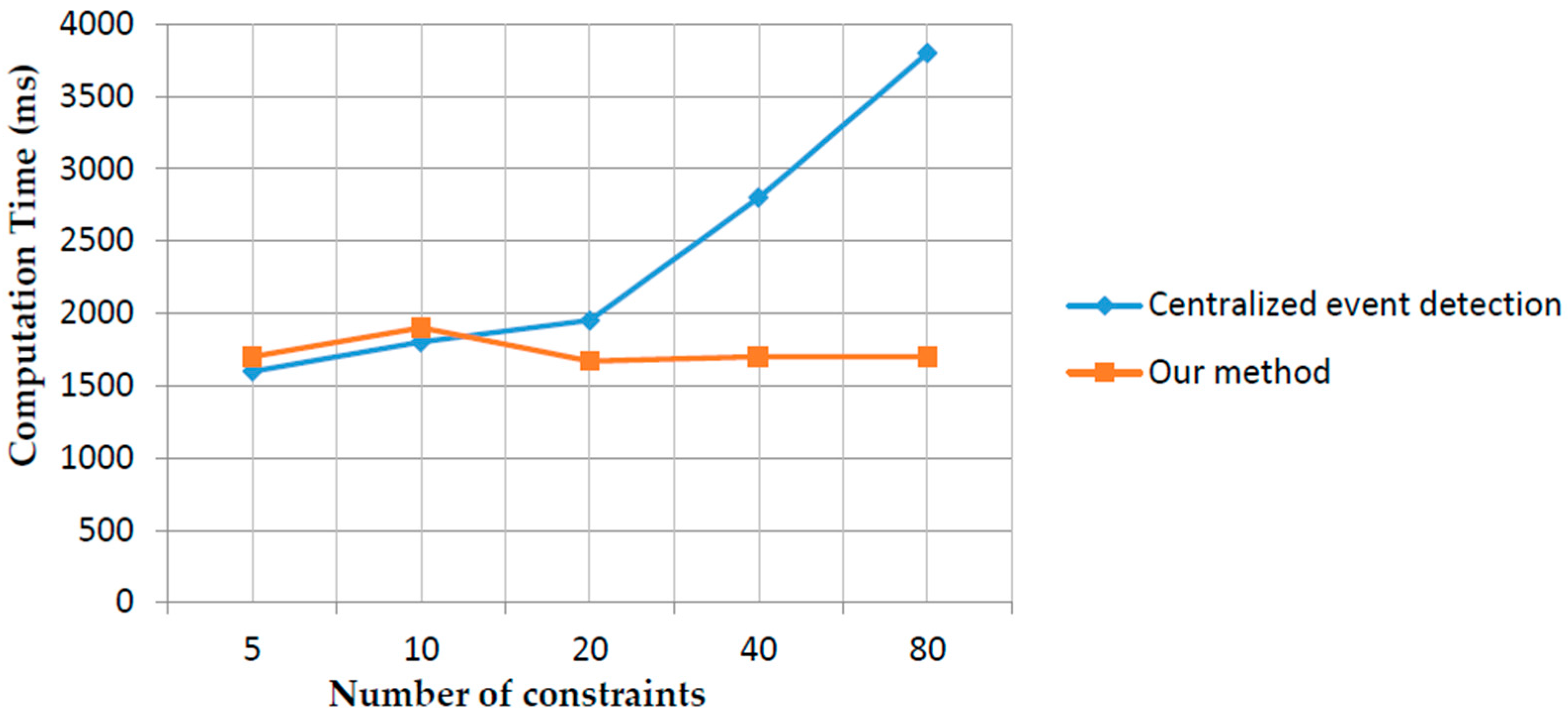

5.3. Performance Comparison and Discussion

5.4. Advantages and Limitations of Kali-Smart Platform

- Flexibility: our platform allows a user to describe his or her requirements in the profile file, which makes them easy to modify. Our platform can easily and quickly add, remove or modify services in the user's environment in a transparent and uniform way, according to the user's needs and usage context. Hence, the user can execute a service corresponding to any context on any device. The distributed action mechanism introduces much flexibility and many opportunities for further extensions regarding how context entities should behave.

- Semantic intelligence and autonomic optimization: the proposed platform is a complete solution that minimizes the response time of situation matching according to prioritized criteria (location, time, category); this facilitates inference in the ontology and maximizes relay redundancy and mobile service switching.

- Interactive service experience: our platform uses semantic technologies and multi-device context data representation to facilitate seamless and interactive media services in common contextual mobile environments.

- High flexibility: the proposed platform depends on the users' constraints and on the service repository. If no available service can satisfy the user's constraints, he or she cannot obtain the content.

- More dynamicity: our platform still needs to change the execution environment dynamically, in the same way as other context-aware mobile applications.

- Scalability: our architecture does not yet address several aspects (timing, scalability, etc.) related to use in realistic smart environments.

6. Conclusions

Author Contributions

Conflicts of Interest

References

- Cassagnes, C.; Roose, P.; Dalmau, M. Kalimucho: software architecture for limited mobile devices. ACM SIGBED Rev. 2009, 6, 1–6.

- Alti, A.; Laborie, S.; Roose, P. Cloud semantic-based dynamic multimodal platform for building mHealth context-aware services. In Proceedings of the IEEE 11th International Conference on Wireless and Mobile Computing, Networking and Communications (WiMob), Abu Dhabi, UAE, 19–21 October 2015.

- Alti, A.; Laborie, S.; Roose, P. Dynamic semantic-based adaptation of multimedia documents. Trans. Emerg. Telecommun. Technol. 2014, 25, 239–258.

- Nicolas, F.; Vincent, H.; Stéphane, L.; Gaëtan, R.; Jean-Yves, T. WComp: Middleware for Ubiquitous Computing and System Focused Adaptation. In Computer Science and Ambient Intelligence; ISTE Ltd: London, UK; John Wiley and Sons: Hoboken, NJ, USA, 2013.

- Rouvoy, R.; Barone, P.; Ding, Y.; Eliassen, F.; Hallsteinsen, S.; Lorenzo, J.; Mamelli, A.; Scholz, U. MUSIC: Middleware Support for Self-Adaptation in Ubiquitous and Service-Oriented Environments. In Software Engineering for Self-Adaptive Systems; Springer: Berlin/Heidelberg, Germany, 2009.

- OSGi Platforms. Available online: http://www.osgi.org/Main/HomePage (accessed on 27 May 2007).

- Da, K.; Roose, P.; Dalmau, M.; Novado, J. Kali2Much: An autonomic middleware for adaptation-driven platform. In Proceedings of the International Workshop on Middleware for Context-Aware Applications in the IoT (M4IOT 2014) @ MIDDLEWARE'2014, Bordeaux, France, 25–30 December 2014.

- Mehrotra, A.; Pejovic, V.; Musolesi, M. SenSocial: A Middleware for Integrating Online Social Networks and Mobile Sensing Data Streams. In Proceedings of the 15th International Middleware Conference (MIDDLEWARE 2014); ACM Press: Bordeaux, France, 2014.

- Taing, T.; Wutzler, M.; Springer, T.; Cardozo, T.; Alexander, S. Consistent Unanticipated Adaptation for Context-Dependent Applications. In Proceedings of the 8th International Workshop on Context Oriented Programming (COP'2016); ACM Press: Rome, Italy, 2016.

- Yus, R.; Mena, E.; Ilarri, S.; Illarramendi, A. SHERLOCK: Semantic management of Location-Based Services in wireless environments. Pervasive Mob. Comput. 2014, 15, 87–99.

- Etcheverry, P.; Marquesuzaà, C.; Nodenot, T. A visual programming language for designing interactions embedded in web-based geographic applications. In Proceedings of the 2012 ACM International Conference on Intelligent User Interfaces, Haifa, Israel, 14–17 February 2012.

- Pappachan, P.; Yus, R.; Joshi, A.; Finin, T. Rafiki: A semantic and collaborative approach to community health-care in underserved areas. In Proceedings of 10th IEEE International Conference on Collaborative Computing: Networking, Applications and Worksharing (CollaborateCom), Miami, FL, USA, 22–25 October 2014.

- Chai, J.; Pan, S.; Zhou, M.X. MIND: A Semantics-based Multimodal Interpretation Framework for Conversational Systems. In Proceedings of the International Workshop on Natural, Intelligent and Effective Interaction in Multimodal Dialogue Systems, Copenhagen, Denmark, 28–29 June 2002.

- Clark, K.; Parsia, B. JESS: OWL 2 Reasoner for Java. Available online: http://clarkparsia.com/Jess (accessed on 17 July 2014).

- Kim, J.; Chung, K.-Y. Ontology-based healthcare context information model to implement ubiquitous environment. Multimed. Tools Appl. 2014, 71, 873–888.

- Khallouki, H.; Bahaj, M.; Roose, P.; Laborie, S. SMPMA: Semantic multimodal Profile for Multimedia documents adaptation. In Proceedings of the 5th IEEE International Workshop on Codes, Cryptography and Communication Systems (IWCCCS'14), El Jadida, Morocco, 27–28 November 2014.

- Bahaj, M.; Khallouki, H. UPOMA: User Profile Ontology for Multimedia documents Adaptation. J. Technol. 2014, 6, 448–457.

- Hai, Q.P.; Laborie, S.; Roose, P. On-the-fly Multimedia Document Adaptation Architecture. Procedia Comput. Sci. 2012, 10, 1188–1193.

- Anagnostopoulos, C.; Hadjiefthymiades, S. Enhancing situation aware systems through imprecise reasoning. IEEE Trans. Mob. Comput. 2008, 7, 1153–1168.

- Protégé 2007. Why Protégé. Available online: http://protege.stanford.edu/ (accessed on 27 August 2007).

| Related Works | Context Monitor and Event Detection | Smart Multimodality Event Detection | Situation Reasoner | Action Mechanism and Prediction |
|---|---|---|---|---|
| [13] | Centralized | None | Centralized | None |
| [1,7,9,12] | Distributed | None | Centralized | Centralized |
| [8] | Centralized | Social mechanisms | None | Centralized |
| [4,5,12] | Distributed | Multimodal | Centralized | Centralized |

| Situation Rules | EVERY TIME |
|---|---|
| IF User Has Biosensor Data AND | |
| Glucose Level > Min Value AND | |
| Glucose Level < Max Value | |
| THEN Person HAS Situation “DiabetType1” | |

| Services Rules | EVERY TIME |
|---|---|
| IF Service IS Health-Care AND | |
| Service Has Type “Service Type” AND | |
| User HAS Situation Situation AND | |
| Situation Has Type “Situation Type” WHERE | |
| “Situation Type” IS EQUAL TO “Service Type” | |
| THEN | |
| Situation Trigger Service Service WHERE | |
| Service isProvided To User | |

| Smart Services Rules | EVERY TIME |
|---|---|
| IF Service IS Health-Care AND | |
| Service is Deployed On Device “D1” AND | |
| User HAS Situation Situation AND | |
| Situation Is Detected By Detection_Function AND | |
| Situation Depends On Device “D2” AND | |
| Detection_Function Has Modality “Modality Type” | |
| THEN | |
| Situation Migrate Service Service WHERE | |
| Service is Deployed On Device “D2” | |

| Location | Situation | Constraint Description |
|---|---|---|
| Any | High Blood Sugar | Every time IF blood sugar level is high THEN adjust insulin dose and activate diabetes guide |
| Any | Danger Blood Sugar | Every time IF blood sugar level is dangerous THEN activate emergency call |
| Any | Low Blood Sugar | Every time IF blood sugar level is low THEN activate diabetes guide |

| Location | Situation | Constraint Description |
|---|---|---|
| Any | Low battery level | Every time IF battery level is low THEN migrate service |
| Any | High battery level | Every time IF battery level is high THEN replace service |
| Any | Schedule Time | IF activity scheduled time has come THEN activate service |
| Any | Schedule Time | IF activity scheduled time is over THEN remove service |

| Location | Situation | Constraint Description |
|---|---|---|
| Car | Driving situation | Every time IF driving speed is high THEN activate text to speech |
| Home | Shower situation | IF morning time AND bathroom faucet is activated AND temperature level is high THEN adjust water temperature |
| Home | Drink situation | IF morning time AND breakfast is detected THEN activate email |
| Office | Work situation | Every time IF temperature level is high AND CO level is low THEN call emergency |

| Services Composition Paths | Score |
|---|---|
| Adjusting Insulin Service (User E) + Display Guide1 Video (User E) | 0.83 |
| Adjusting Insulin Service (User E) + Display Guide1 Image (User A) | 0.62 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Alti, A.; Lakehal, A.; Laborie, S.; Roose, P. Autonomic Semantic-Based Context-Aware Platform for Mobile Applications in Pervasive Environments. Future Internet 2016, 8, 48. https://doi.org/10.3390/fi8040048
