Abstract

The increasing demand for accessible and efficient machine learning solutions has led to the development of the Adaptive Learning Framework (ALF) for multi-class, single-label image classification. Unlike existing low-code tools, ALF integrates multiple transfer learning backbones with a guided, adaptive workflow that empowers non-technical users to create custom classification models without specialized expertise. It employs pre-trained models from TensorFlow Hub to significantly reduce computational costs and training times while maintaining high accuracy. The platform’s User Interface (UI), built using Streamlit, enables intuitive operations, such as dataset upload, class definition, and model training, without coding requirements. This research focuses on small-scale image datasets to demonstrate ALF’s accessibility and ease of use. Evaluation metrics highlight the superior performance of transfer learning approaches, with the InceptionV2 model architecture achieving the highest accuracy. By bridging the gap between complex deep learning methods and real-world usability, ALF addresses practical needs across fields like education and industry.

1. Introduction

Image classification is one of the most prominent applications of computer vision. Developing custom models traditionally requires programming expertise and a strong understanding of neural networks, which are pivotal in the field of computer vision [1]. Transfer learning and tools like TensorFlow Hub have made this process much easier: by reusing pre-trained models, even small datasets can power new classification tasks efficiently. Still, many non-technical users find these tools inaccessible. This project tackles exactly that problem by presenting a teachable machine, that is, a user-friendly Graphical User Interface (GUI) tool for creating machine learning classification models without any specialized technical expertise [2,3,4].

Although other low-code machine learning platforms support a small number of vision models, they offer little freedom in selecting a model, comparing its performance, or directing its training. The existing Teachable Machine supports only one backbone and exposes little information about training and performance. Conversely, model-diverse technical frameworks like TensorFlow and PyTorch require considerably more background knowledge and coding experience, which poses a significant barrier to teachers, small firms, and amateur designers. Our proposed solution addresses this gap with an integrated, GUI-based system that combines multiple transfer learning models, automatic evaluation, and downloadable trained models, none of which require coding expertise.

The motivation for this project is to bring high-level machine learning within the reach of everyone. Experts may easily develop complex models, but other users, such as educators, small businesses, and hobbyists, do not have the technical wherewithal to use image classification, even though they might have good ideas and lots of data. Most tools have been designed to require knowledge of programming, experience with deep learning libraries, and complex configurations. This project eliminates such obstacles by providing a solution wherein training a model would be as easy as uploading images and customizing a few settings. Using transfer learning, it allows for fast, accurate training—even with small datasets—making it accessible to a greater population of users, who will be able to utilize the efficiency of machine learning [4,5].

The exponential growth of interest in user-friendly machine learning platforms has increased the demand for the development of accessible tools and frameworks. The reviewed literature shows growth in transfer learning, accessible machine learning tools, and data-driven optimization, thus setting a base for developing platforms such as ALF that simplify image classification with high accuracy and efficiency. This section discusses the existing research in the concerned domains, emphasizing their contributions to transfer learning techniques and image classification.

- (a) Key features of ALF:

- (i) Transfer Learning Integration: Leveraging TensorFlow Hub, ALF utilizes advanced pre-trained models like MobileNet, ResNet, and Inception, enabling high accuracy with minimal data and computation [5,6].

- (ii) User-Friendly Interface: Built with Streamlit, it offers a simple web interface where users can upload images, define classes, and train models without coding [7].

- (iii) Automation of Model Training and Deployment: The system handles the full pipeline, from preprocessing user data to training and providing model downloads for local usage.

- (iv) Training Efficiency: Transfer learning and data optimization reduce resource requirements [8,9], so the system works effectively even with small datasets and limited resources.

- (v) Multi-Model Flexibility: Users can train and compare various transfer learning backbones (e.g., MobileNet, ResNet, Inception, EfficientNet) within the same GUI framework.

- (vi) Transparency: Internal callbacks, such as early stopping and learning-rate schedulers, are accompanied by transparent reporting of evaluation metrics, learning curves, and other visualizations.

- (vii) Versatile Applications: Suitable for various fields such as healthcare, education, and retail, it enables users to tackle specific classification challenges with ease.

- (b) Literature review:

- (i) Transfer Learning in Image Classification: Transfer learning has transformed image classification by making it possible to fine-tune pre-trained models and reach state-of-the-art accuracy with minimal computational cost and few images [8,9,10]. For example, the MobileNetV1 architecture, examined by Sheng et al. (2018), showed the power of depth-wise separable convolutions in reducing parameter count and computational overhead [11]. Sandler et al. (2018) enhanced this architecture with inverted residuals and linear bottlenecks [12], which made MobileNetV2 particularly well suited to resource-constrained devices. Furthermore, EfficientNetV2, introduced by Mingxing Tan and Quoc V. Le in 2021, achieved state-of-the-art results through compound scaling, which balances depth, width, and resolution to yield models that are both faster and more accurate [13]. Various studies have also used pre-trained models such as ResNet and Inception. ResNet introduced residual learning, which mitigates the vanishing gradient problem and allows deeper networks to be trained effectively [14]. More recent image classification backbones, such as Vision Transformer (ViT), Swin Transformer, and ConvNeXt, have shown strong results on large-scale datasets. Although these architectures are not used in the current implementation of ALF, they are expected to be added in future releases, extending the adaptability and performance of the framework.

- (ii) Machine Learning with Accessibility: Various research works have sought to simplify machine learning for non-technical users. Google’s Teachable Machine, described by Michelle Carney et al. (2020), illustrates how user-friendly tools can make AI more accessible. The tool enables users to train classification models for their specific needs without prior coding experience, thereby promoting fairness in machine learning applications [3]. Kacorri examined the place of teachable machines in accessibility, especially in the design of assistive technologies for people with disabilities [4]. These works underscore the importance of intuitive interfaces in bridging the gap between AI technologies and their end-users.

- (iii) Data-Driven Optimization for Image Classification: Data preprocessing and optimization techniques are effective in the creation of robust classification models [15]. Various works using MobileNet architectures cite image resizing and normalization as necessary preprocessing to match model requirements. EfficientNetV2’s progressive learning strategy underlines the dynamic scaling of data dimensions toward improving the performance of a model. Transfer learning models have been applied in diverse fields due to their versatility. For example, Mijwil et al. used MobileNetV1 for brain tumor classification, showing an accuracy of over 97% and high sensitivity and specificity [16]. Similarly, the use of ResNet and Inception models in medical imaging and object recognition highlights their utility in real-world challenges.

Several machine learning platforms have simplified model training for non-technical users by providing an interactive and user-friendly web-based interface [3]. However, these platforms do not let the user choose the model. ALF addresses this issue and offers users a more flexible, efficient, and customizable approach. It enables users to select from multiple transfer learning models, including various versions of MobileNet, ResNet, EfficientNet, and Inception. The training process of ALF adapts by using early stopping, activation function, and automatic learning rate modification to reduce overfitting.

ALF offers a user-guided, multi-backbone environment that remains transparent and approachable for non-technical users. This makes ALF a convenient, teachable, and highly customizable option among usable machine learning tools.

2. Methodology

This section describes the approach taken in this study, including the preparation of the dataset, the preprocessing methods applied, the models used, and the evaluation techniques implemented to ensure accurate image classification.

- (a) Dataset Overview

The image dataset used in this study consists of 1200 cat images, 1500 dog images, and 400 human images, a non-uniform class distribution on which the Adaptive Learning Framework (ALF) was evaluated. The cat and dog images were taken from the Microsoft Cat vs. Dog Dataset, and the human images were obtained from the Human Faces Dataset on Kaggle.

To artificially expand the number of training samples, data augmentation was applied using Keras’ ImageDataGenerator. The transformations included rotating images by up to 20 degrees, shifting them horizontally and vertically by up to 15%, applying a shear factor of 0.1, zooming in or out by up to 20%, and flipping the images horizontally. Nearest-neighbor filling was used to fill any gaps created during the transformations. These augmentations introduced a variety of image modifications, allowing the model to generalize better and become more resilient to variations in real-world data.
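The augmentation settings listed above map naturally onto keyword arguments of Keras' `ImageDataGenerator`. The sketch below is one plausible configuration rather than the authors' actual code: the names `AUGMENTATION_KWARGS` and `build_augmenter` are hypothetical, and passing the stated shear factor of 0.1 directly as `shear_range` is an assumption (Keras interprets that argument as a shear angle in degrees).

```python
# Augmentation settings from the text, expressed as ImageDataGenerator kwargs.
# The mapping of "shear factor 0.1" to shear_range is an assumption.
AUGMENTATION_KWARGS = {
    "rotation_range": 20,        # rotate by up to 20 degrees
    "width_shift_range": 0.15,   # horizontal shift, as a fraction of width
    "height_shift_range": 0.15,  # vertical shift, as a fraction of height
    "shear_range": 0.1,
    "zoom_range": 0.2,           # zoom in or out by up to 20%
    "horizontal_flip": True,
    "fill_mode": "nearest",      # nearest-neighbor filling of gaps
}


def build_augmenter():
    """Create the generator (hypothetical helper).

    TensorFlow is imported lazily so that the settings above can be
    inspected on a machine where it is not installed.
    """
    from tensorflow.keras.preprocessing.image import ImageDataGenerator

    return ImageDataGenerator(**AUGMENTATION_KWARGS)
```

Keeping the settings in a plain dictionary makes them easy to display in a UI or to log alongside the training results.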

The dataset was split into 80% training and 20% validation from the Photos directory, with class proportions preserved using stratified sampling. A separate TestPhotos directory was used as the final test set, ensuring that there was no overlap with training or validation data. This setup allowed for performance evaluations on data that closely reflects real-world class distributions and imbalance.
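A stratified 80/20 split of this kind can be sketched in a few lines of plain Python. The function name `stratified_split`, the fixed seed, and the use of the class counts from the Dataset Overview as input are illustrative assumptions; the source does not state how the split was implemented.

```python
import random
from collections import defaultdict


def stratified_split(labels, val_fraction=0.2, seed=0):
    """Return (train_idx, val_idx) with class proportions preserved."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    train_idx, val_idx = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # Take the same fraction from every class for validation.
        n_val = round(len(indices) * val_fraction)
        val_idx.extend(indices[:n_val])
        train_idx.extend(indices[n_val:])
    return sorted(train_idx), sorted(val_idx)


# Class counts reported in the Dataset Overview, used here for illustration.
labels = ["cat"] * 1200 + ["dog"] * 1500 + ["human"] * 400
train_idx, val_idx = stratified_split(labels)
print(len(train_idx), len(val_idx))  # 2480 620
```

Because the validation fraction is applied per class, the minority class keeps the same share in both subsets.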

- (b) Flow of Methodology

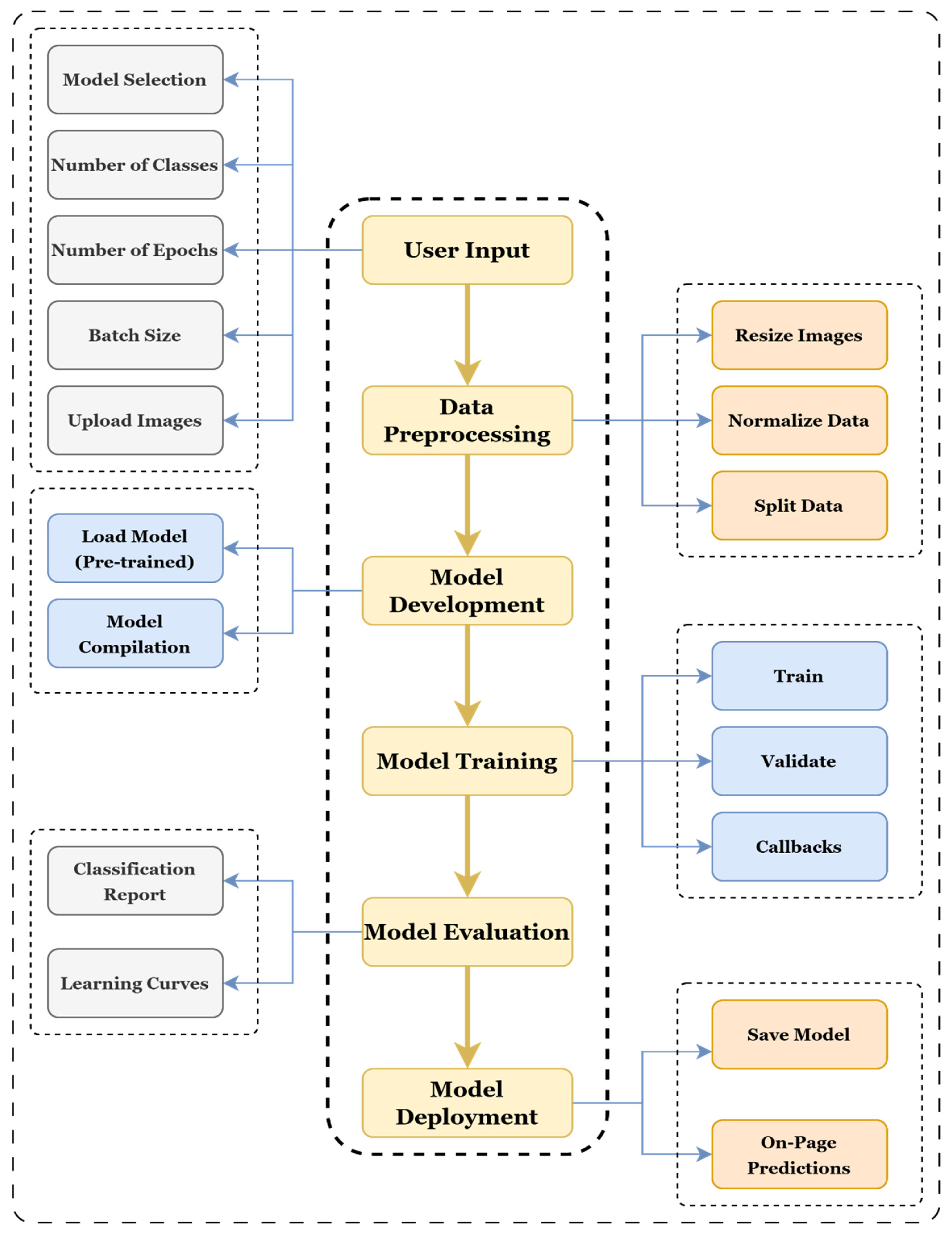

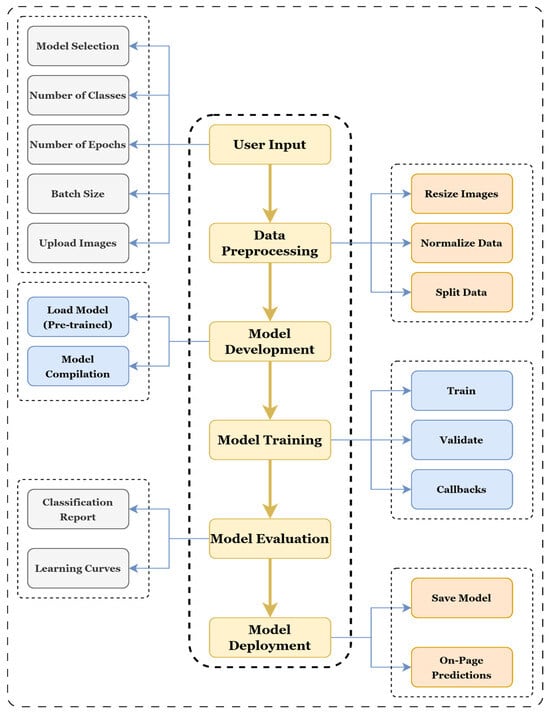

Figure 1 shows a systematic machine learning workflow. It starts with the user input and includes essential steps such as data preprocessing, model development, and training, with early stopping and learning rate adjustments added to reduce overfitting and improve performance [17,18]. After evaluation, the model is deployed, allowing the user to run real-time predictions and save the model for reuse. This keeps the machine learning pipeline streamlined and efficient.

Figure 1. Methodology flow diagram.
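The interplay of early stopping and learning rate adjustment in this workflow can be illustrated by replaying a validation-loss history. The sketch below is a simplified, framework-free approximation of what the Keras `EarlyStopping` and `ReduceLROnPlateau` callbacks do; the function name and all patience and factor values are assumptions, not settings reported by the authors.

```python
def run_training_schedule(val_losses, lr=1e-3, lr_patience=2,
                          lr_factor=0.5, stop_patience=4):
    """Replay a validation-loss history and apply both rules.

    Returns (stopped_epoch, best_epoch, final_lr); epochs are 0-indexed.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_since_best = 0        # drives early stopping
    epochs_since_lr_change = 0   # drives learning-rate reduction
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_since_best = 0
            epochs_since_lr_change = 0
        else:
            epochs_since_best += 1
            epochs_since_lr_change += 1
        if epochs_since_lr_change >= lr_patience:
            lr *= lr_factor      # plateau detected: shrink the step size
            epochs_since_lr_change = 0
        if epochs_since_best >= stop_patience:
            return epoch, best_epoch, lr   # stop; best_epoch holds the weights to keep
    return len(val_losses) - 1, best_epoch, lr


history = [0.90, 0.60, 0.45, 0.47, 0.46, 0.48, 0.50, 0.49]
stopped, best, final_lr = run_training_schedule(history)
print(stopped, best, final_lr)  # 6 2 0.00025
```

In this example the loss stops improving after epoch 2, the learning rate is halved twice, and training halts at epoch 6 before the last recorded epoch is reached.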

- (i) Data Preprocessing: Preprocessing ensures that the data is well prepared for use in machine learning models [15,19]. This involves resizing, normalizing, and splitting the data. Images are resized to the input shape each backbone expects: MobileNetV2 expects an input of shape 224 × 224 × 3, and InceptionV3 expects 299 × 299 × 3. Resizing is performed so that the aspect ratio of the images is preserved and they are not distorted [15,16]. Normalization scales pixel values to the range [0, 1] by dividing each pixel value by 255 [15,20]. This standardization step helps stabilize computations and speeds up the model’s learning process. Splitting divides the dataset into three parts. The training set forms the majority (60%) and is used to teach the model the relationships between input images and their labels; techniques like flipping, cropping, or rotating images are applied to enhance its diversity. The validation set (20%) acts as a checkpoint during training, allowing for adjustments to the model parameters and the early detection of overfitting. The test set (20%) is used to evaluate the model on new data.
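The resizing and normalization steps can be sketched without any imaging library. The input shapes below are those stated in the text; the helper names are hypothetical, and the letterbox approach (scale to fit, then pad) is an assumption about how the aspect ratio might be preserved, since the source does not describe the mechanism.

```python
# Input shapes stated in the text; other backbones would need their own entries.
INPUT_SHAPES = {
    "MobileNetV2": (224, 224, 3),
    "InceptionV3": (299, 299, 3),
}


def normalize_pixels(pixels):
    """Scale 8-bit pixel values (0-255) to the [0, 1] range."""
    return [value / 255 for value in pixels]


def letterbox_size(width, height, target):
    """Largest size that fits in a target x target square while keeping
    the original aspect ratio; the remainder would be filled with padding."""
    scale = target / max(width, height)
    return round(width * scale), round(height * scale)


target = INPUT_SHAPES["MobileNetV2"][0]
print(letterbox_size(640, 480, target))  # (224, 168)
print(normalize_pixels([0, 51, 255]))    # [0.0, 0.2, 1.0]
```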

- (ii) Models: The choice of model plays an important role in achieving accurate results in image classification tasks. By using transfer learning, it is possible to achieve higher accuracy with reduced training time. Each transfer learning model has unique strengths, as shown in Table 1.

Table 1. Transfer learning models with unique strengths.


- (iii) Model Performance Evaluation: Evaluating how well a model performs is crucial for understanding its ability to generalize to unseen data. Several approaches are used. Metrics like accuracy, precision, recall, and F1-score are calculated to compare the strengths and weaknesses of different models [23,24]. Learning curves visually track the accuracy and loss across training and validation phases. These curves help detect problems such as overfitting [25].
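For reference, the four metrics can be computed directly from true and predicted labels. The sketch below uses macro averaging across classes, which is an assumption: the source does not say which averaging scheme was used. The function name and the toy labels are illustrative only.

```python
def classification_metrics(y_true, y_pred):
    """Return accuracy and macro-averaged (precision, recall, F1)."""
    classes = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    per_class = []
    for c in classes:
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        total = precision + recall
        f1 = 2 * precision * recall / total if total else 0.0
        per_class.append((precision, recall, f1))
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Macro average: every class contributes equally, regardless of size.
    macro = tuple(sum(m[i] for m in per_class) / len(classes) for i in range(3))
    return accuracy, macro


y_true = ["cat", "cat", "dog", "dog", "human", "human"]
y_pred = ["cat", "dog", "dog", "dog", "human", "human"]
accuracy, (precision, recall, f1) = classification_metrics(y_true, y_pred)
print(round(accuracy, 4), round(precision, 4), round(recall, 4), round(f1, 4))
```

Macro averaging treats a small class such as the human images the same as the larger classes, which is one reasonable choice for an imbalanced dataset.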

- (iv) Model Deployment: Once trained, the models are released for use in real-world applications. Users can interact with them in two ways: through a web interface, where they upload images and obtain predictions directly with confidence scores; or by downloading the trained model file (.h5), which can be deployed offline or integrated into other systems.
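A prediction with a confidence score can be derived from a model's raw class scores as sketched below. This assumes the model outputs unnormalized scores (logits); if the final layer already applies softmax, the probabilities can be used directly. The function name and example values are hypothetical.

```python
import math


def predict_with_confidence(logits, class_names):
    """Apply softmax to raw scores and return (label, confidence)."""
    # Subtract the maximum for numerical stability before exponentiating.
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best], probs[best]


label, confidence = predict_with_confidence([2.0, 0.5, -1.0],
                                            ["cat", "dog", "human"])
print(label, round(confidence, 3))  # cat 0.786
```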

- (v)

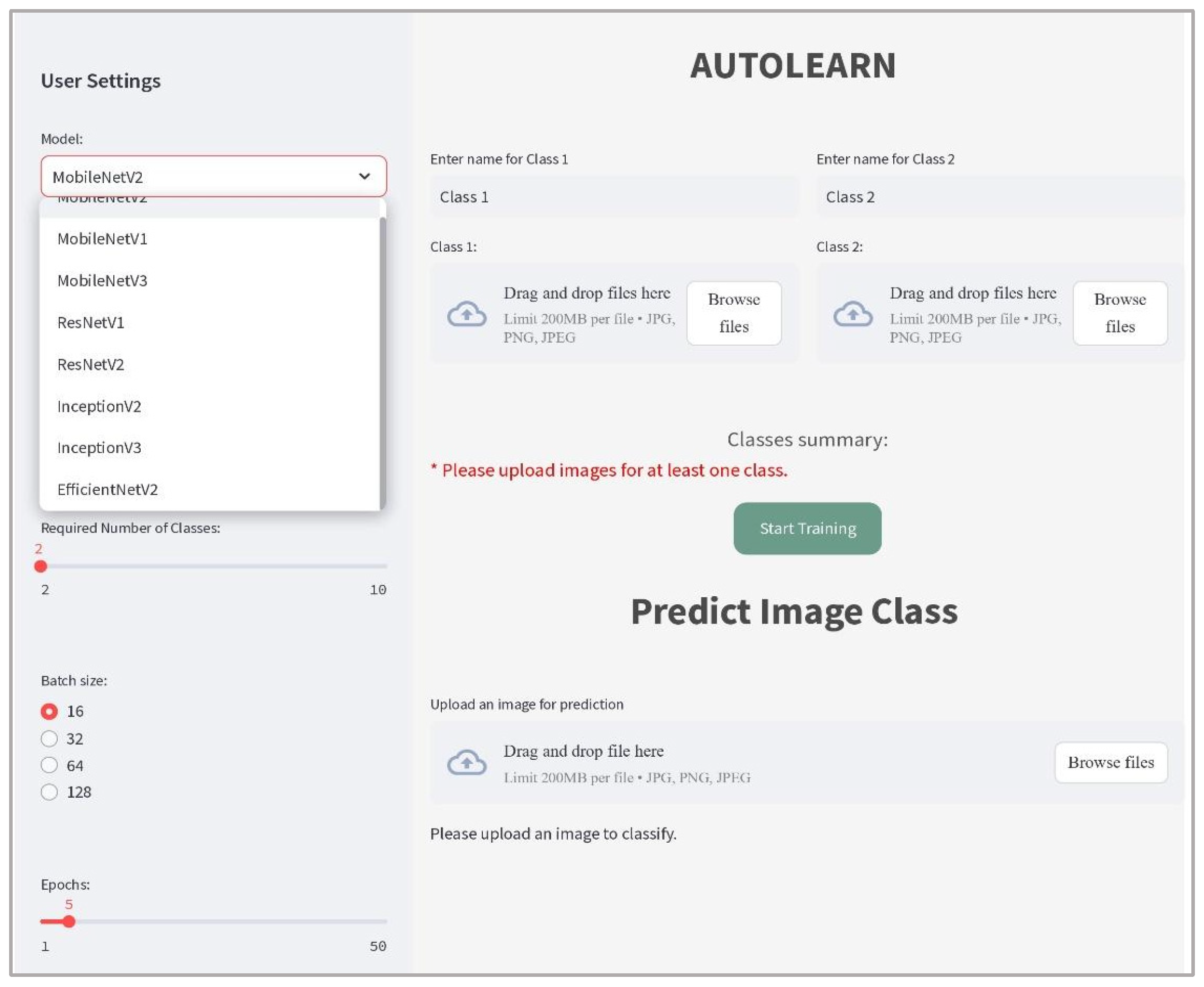

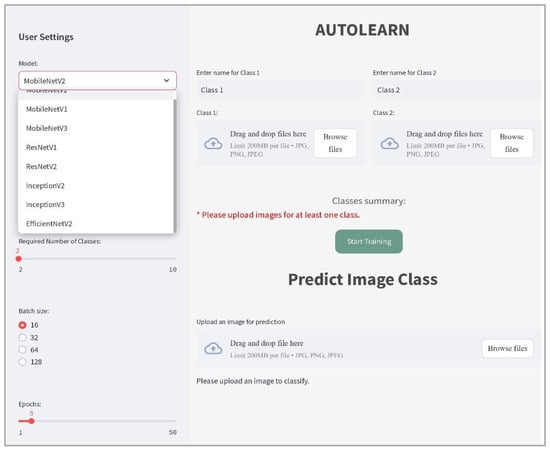

- User Interface Development: Figure 2 shows the User Interface for the ALF application that was designed using Streamlit 1.35.0, an open-source Python framework. The UI of the machine learning application was developed to make interactions with it simple and easy to use, even for people who are not technical. The interface allows for users to select models, adjust parameters, and train transfer learning models with minimal effort. It has been designed such that the entire process is seamless, accessible, and efficient.

Figure 2. ALF User Interface.


The following steps have been followed in creating the UI:

- Interface Layout: To declutter the main interface, a sidebar keeps track of the available user settings. The UI is divided into sections for model selection, dataset upload, parameter selection and customization, and result display.

- Model Selection and Configuration: ALF offers users a drop-down menu to select from various transfer learning models. The customizable parameters, such as the number of classes, batch size, and number of epochs, are offered as selectable options to maintain model training integrity.

- Dataset Management: ALF allows users to upload image datasets for classification tasks.

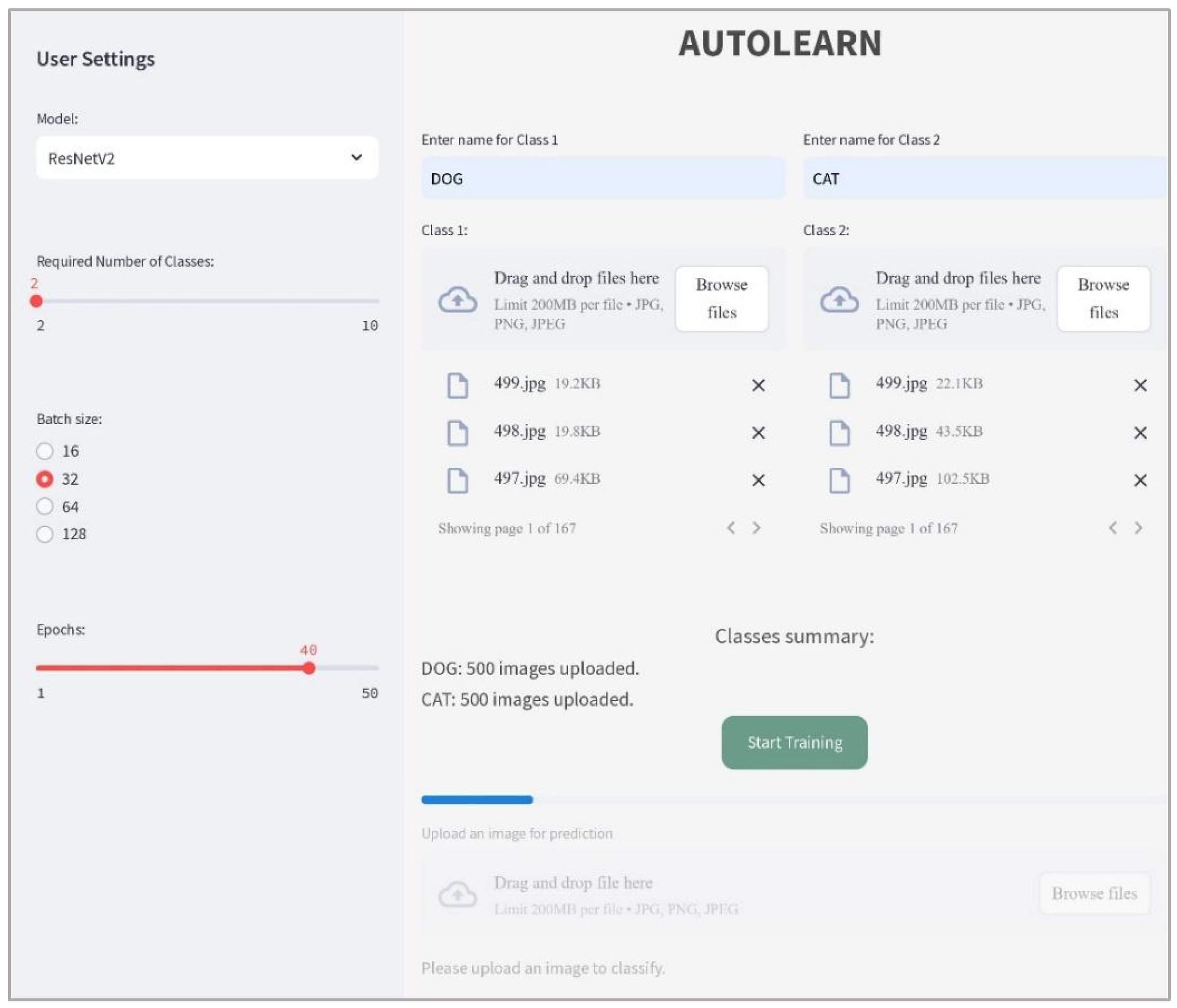

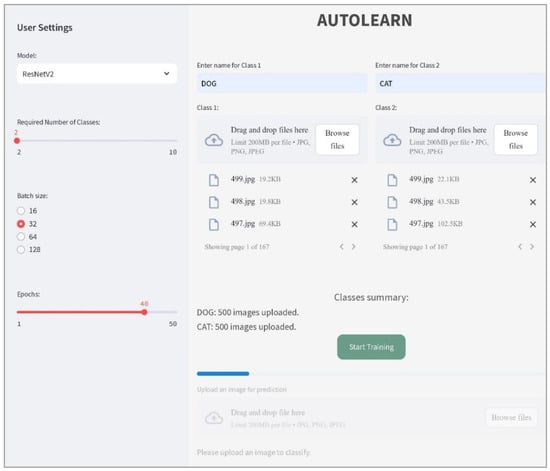

- User Feedback: Real-time progress bars were integrated to keep users informed of the training progress. Error messages and class summary sections update constantly based on user input, as shown in Figure 3.

Figure 3. Classes summary and real-time progress bar.

- Graph Visualization: Upon completion of the training process, learning curves (accuracy and loss plots per epoch) are displayed for user transparency.

- Download Options and Model Evaluation: A tabular summary of evaluation metrics such as training accuracy, precision, recall, F1-score, validation accuracy, and time taken is presented. A download button allows users to retrieve trained models, which can be used for deployment in offline applications.

- On-Screen Prediction: Users can upload images for prediction of unlabeled data using the trained model.

Hence, ALF’s user-friendly UI design helps technical and non-technical users to effectively exploit the potential of transfer learning for image classification.
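The sidebar settings and class summary described in the steps above could be wired up roughly as follows. This is a hypothetical sketch, not ALF's source code: the model list, default values, minimum image count, and function names are all assumptions. Streamlit is imported lazily so the validation helper can be used and tested on its own.

```python
# Hypothetical subset of backbones offered in the drop-down menu.
MODEL_CHOICES = ["MobileNetV2", "ResNetV2", "InceptionV3", "EfficientNetV2"]


def summarize_classes(files_per_class, min_images=2):
    """Build the class summary rows and any error messages to display."""
    rows, errors = [], []
    if len(files_per_class) < 2:
        errors.append("Define at least two classes.")
    for name, files in files_per_class.items():
        rows.append((name, len(files)))
        if len(files) < min_images:
            errors.append(f"Class '{name}' needs at least {min_images} images.")
    return rows, errors


def render_sidebar():
    """Draw the settings sidebar and return the chosen values."""
    import streamlit as st

    model = st.sidebar.selectbox("Model", MODEL_CHOICES)
    epochs = st.sidebar.number_input("Epochs", min_value=1, value=10)
    batch_size = st.sidebar.number_input("Batch size", min_value=1, value=32)
    return model, int(epochs), int(batch_size)


rows, errors = summarize_classes({"cat": ["a.jpg", "b.jpg"], "dog": ["c.jpg"]})
print(rows)    # [('cat', 2), ('dog', 1)]
print(errors)  # ["Class 'dog' needs at least 2 images."]
```

Separating validation from rendering keeps the feedback logic independent of the UI framework.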

3. Results

Model Performance: Table 2 gives a brief overview of the evaluation metrics for the different deep learning and transfer learning models. It reports key performance metrics, including accuracy, precision, recall, F1-score, time taken, and epochs taken, allowing for a clear comparison between models. This analysis helps determine the most appropriate model for the task and supports reliable predictions.

Table 2. Model performance metrics.

- Learning Curves:

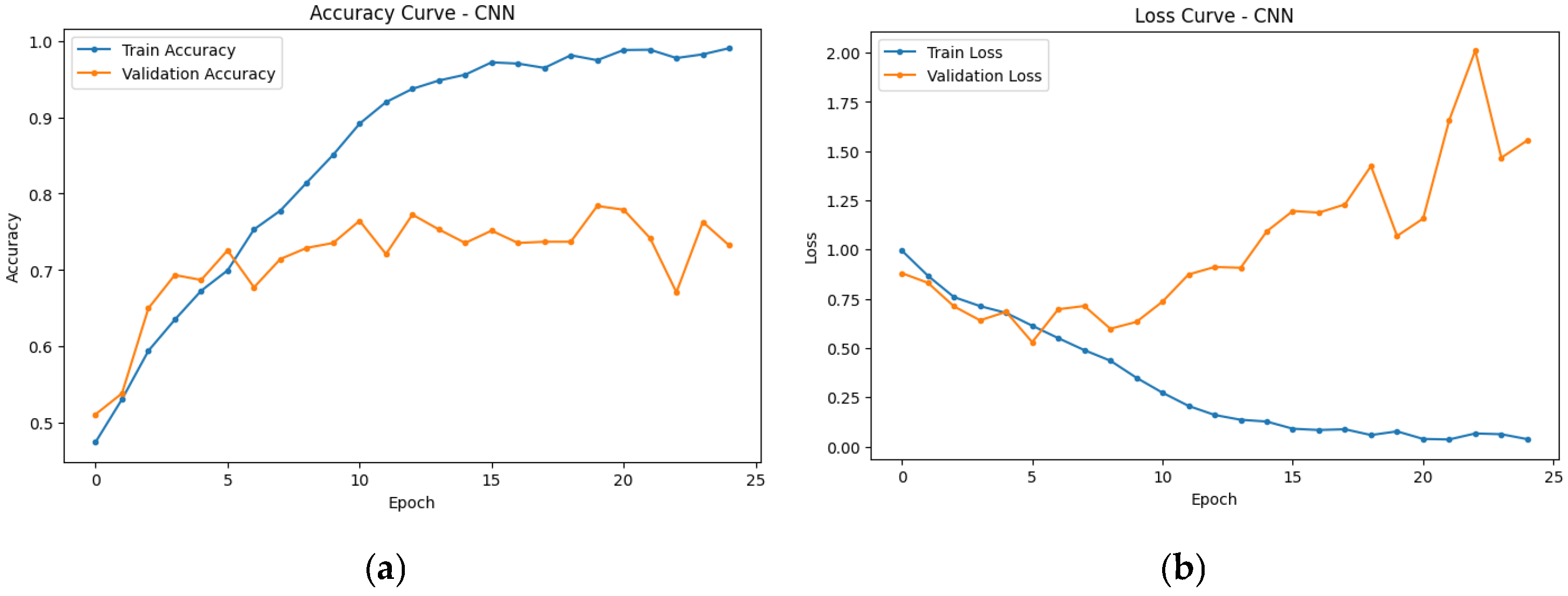

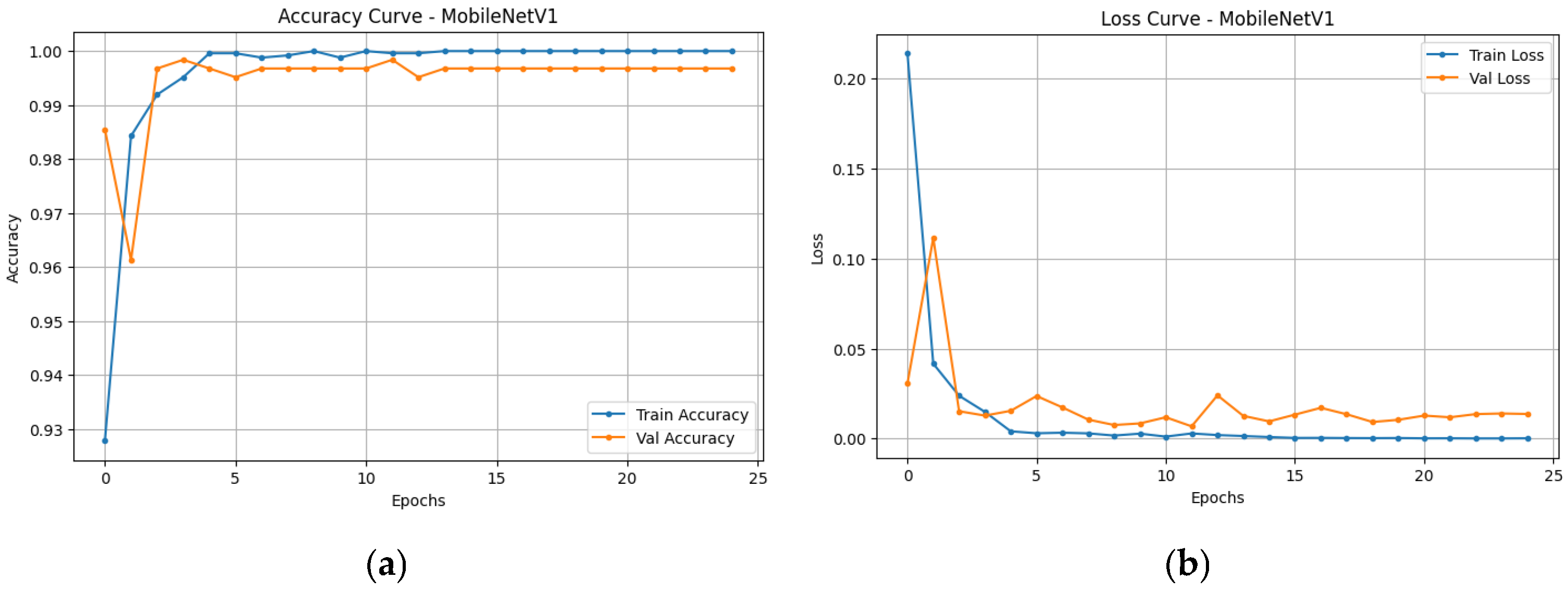

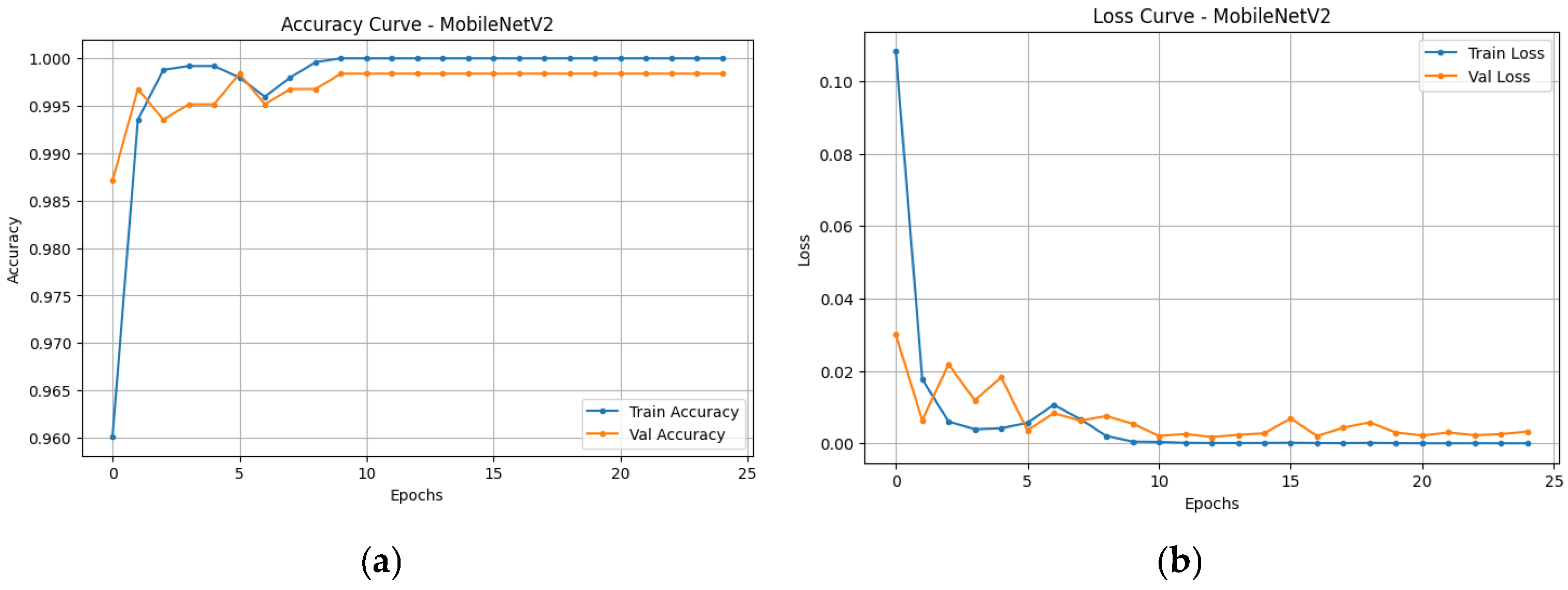

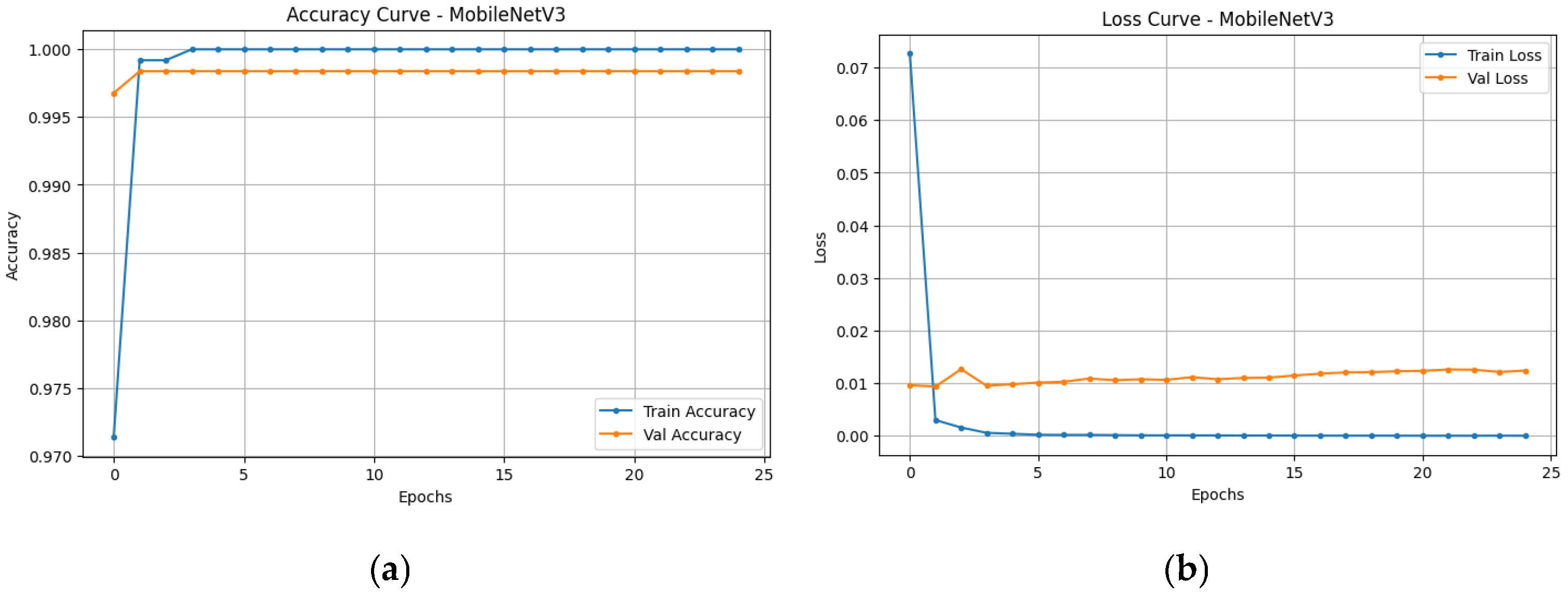

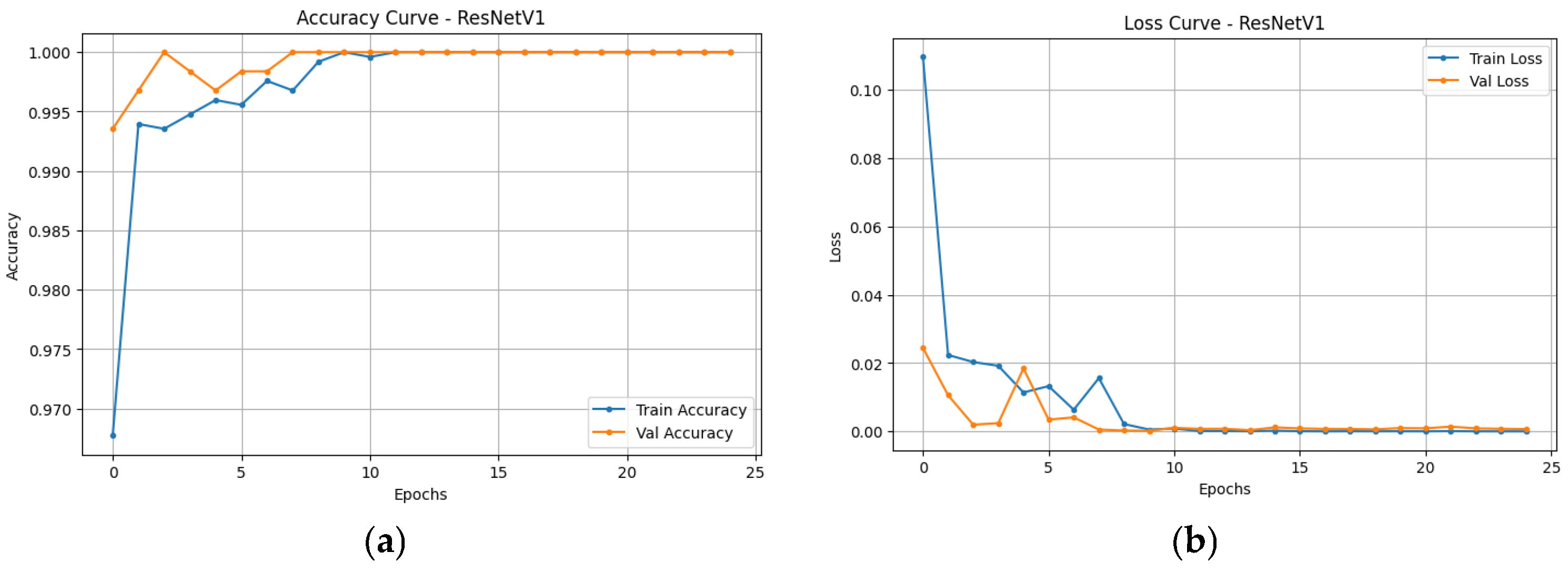

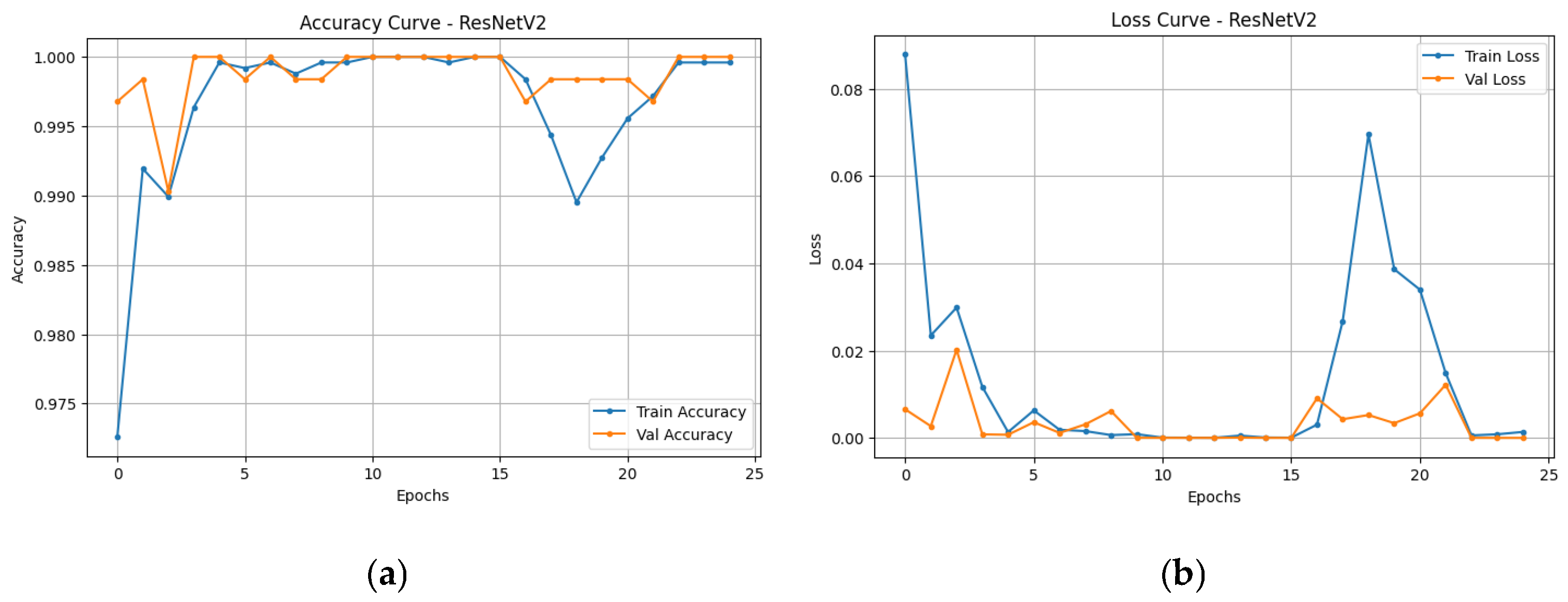

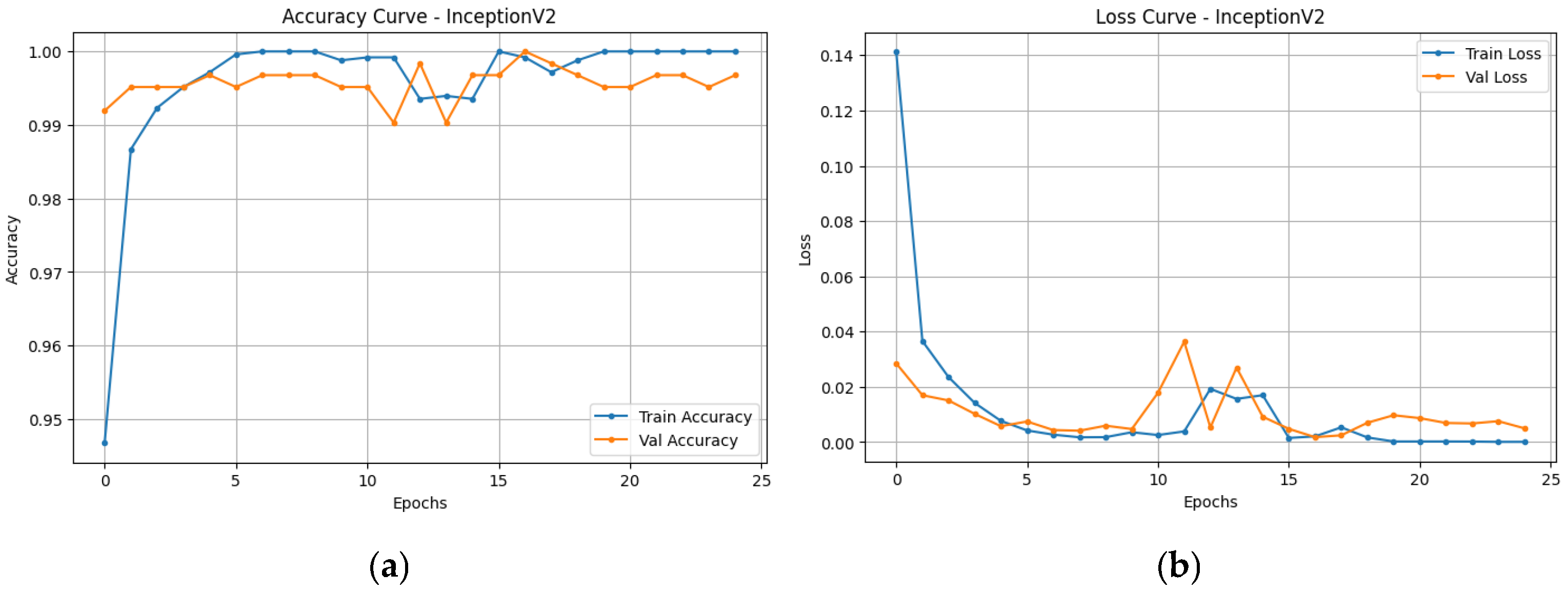

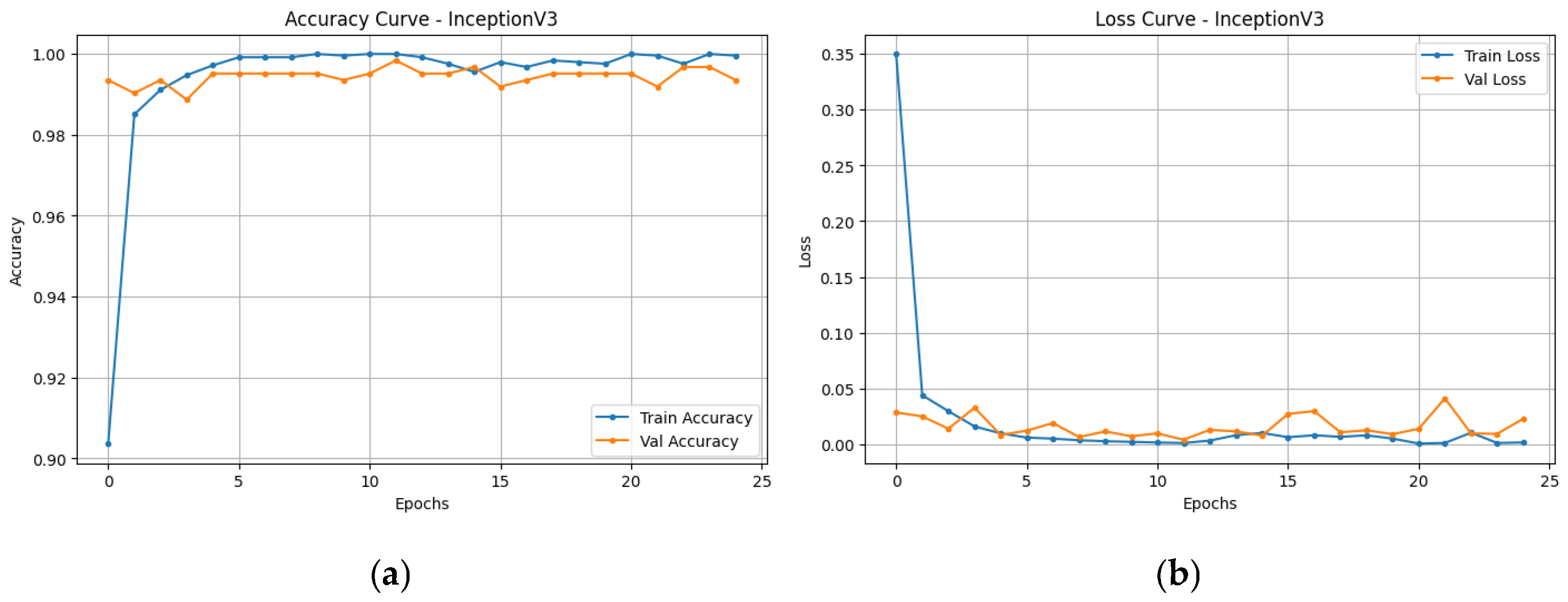

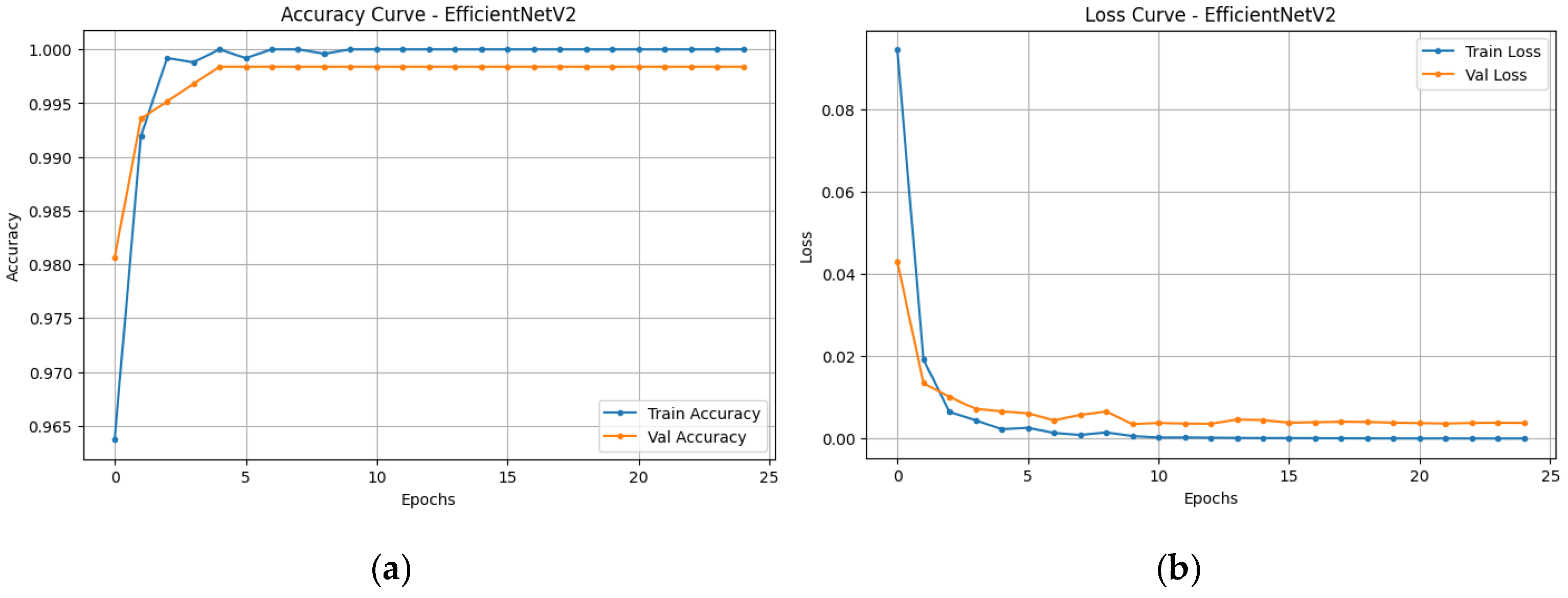

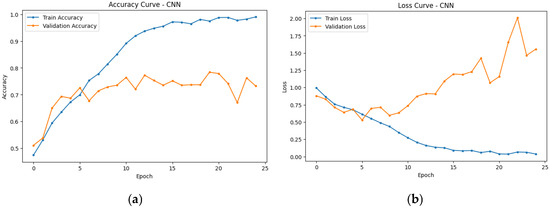

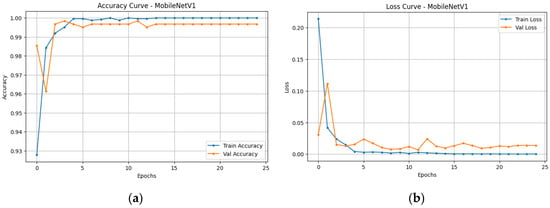

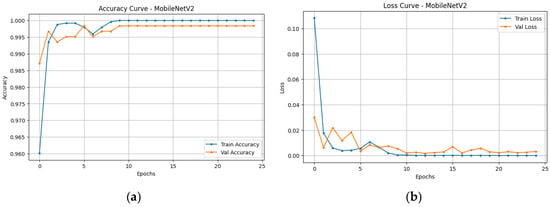

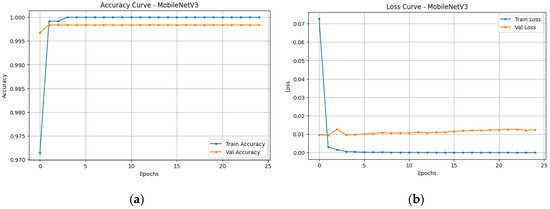

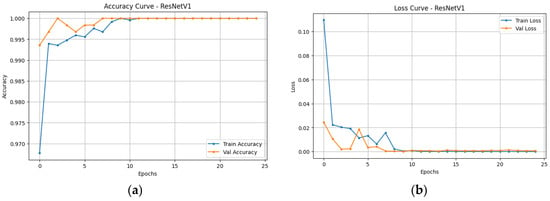

Figures 4–12 show the learning curves for each of the models, giving a more detailed view of their training and validation performance over epochs. These curves are important for analyzing model behavior, as they help identify overfitting, underfitting, or balanced learning. By observing these trends, one can judge how the model converges and make informed adjustments to improve overall performance.

Figure 4.

(a) Accuracy vs. epoch graph for custom CNN. (b) Loss vs. epoch graph for custom CNN.

Figure 5.

(a) Accuracy vs. epoch graph for MobileNetV1. (b) Loss vs. epoch graph for MobileNetV1.

Figure 6.

(a) Accuracy vs. epoch graph for MobileNetV2. (b) Loss vs. epoch graph for MobileNetV2.

Figure 7.

(a) Accuracy vs. epoch graph for MobileNetV3. (b) Loss vs. epoch graph for MobileNetV3.

Figure 8.

(a) Accuracy vs. epoch graph for ResNetV1. (b) Loss vs. epoch graph for ResNetV1.

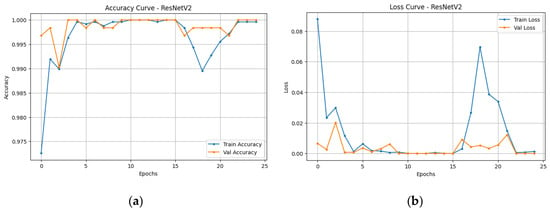

Figure 9.

(a) Accuracy vs. epoch graph for ResNetV2. (b) Loss vs. epoch graph for ResNetV2.

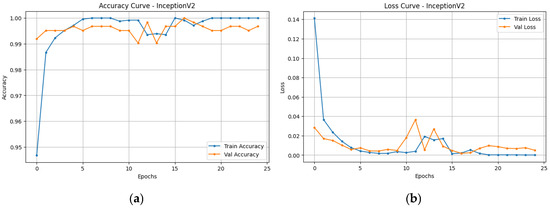

Figure 10.

(a) Accuracy vs. epoch graph for InceptionV2. (b) Loss vs. epoch graph for InceptionV2.

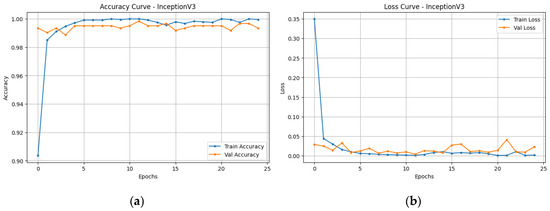

Figure 11.

(a) Accuracy vs. epoch graph for InceptionV3. (b) Loss vs. epoch graph for InceptionV3.

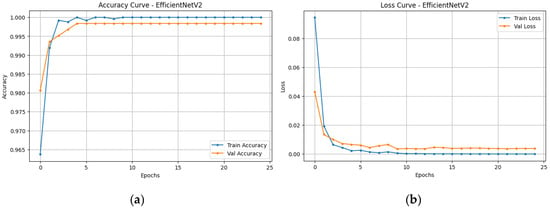

Figure 12.

(a) Accuracy vs. epoch graph for EfficientNetV2. (b) Loss vs. epoch graph for EfficientNetV2.

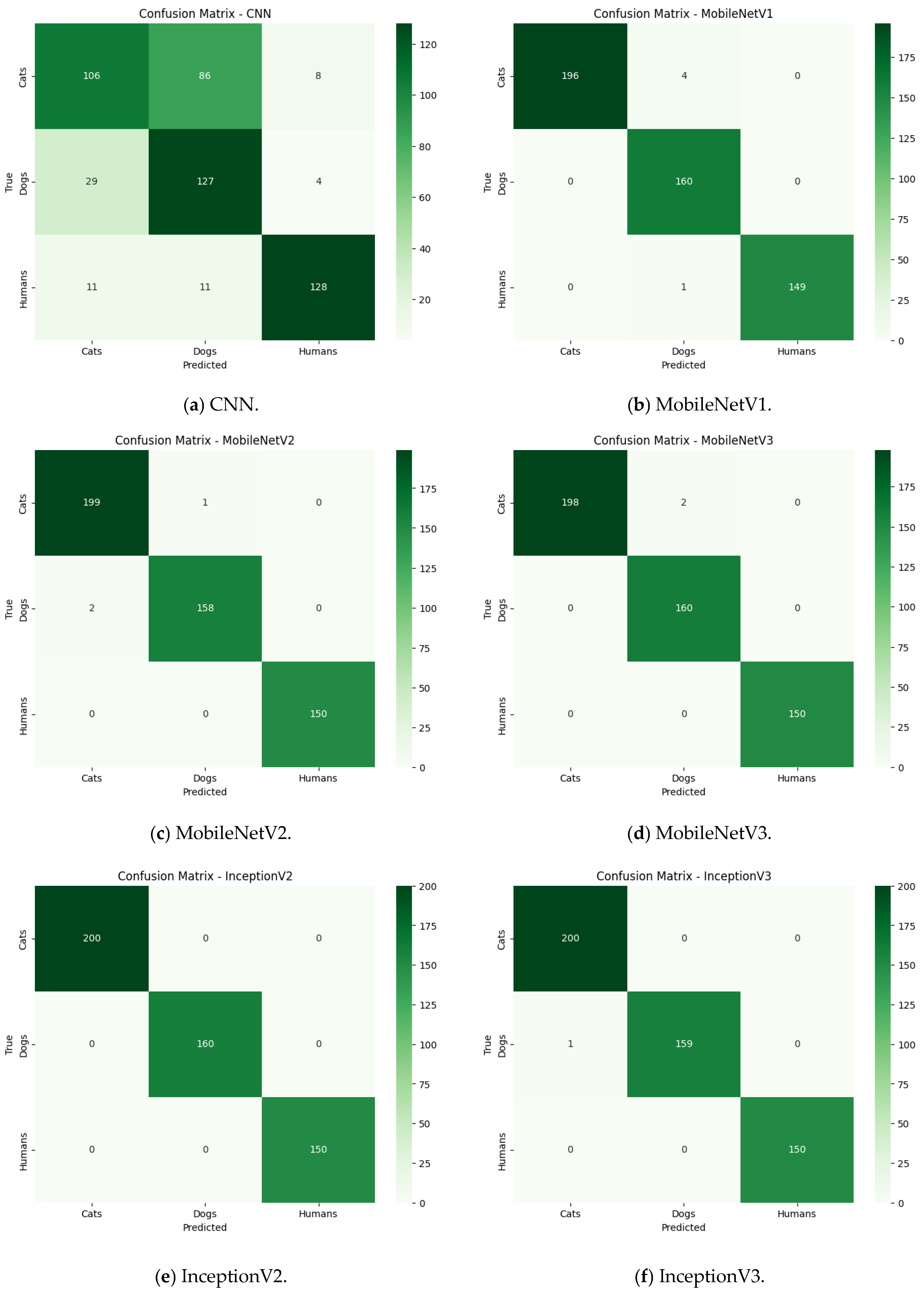

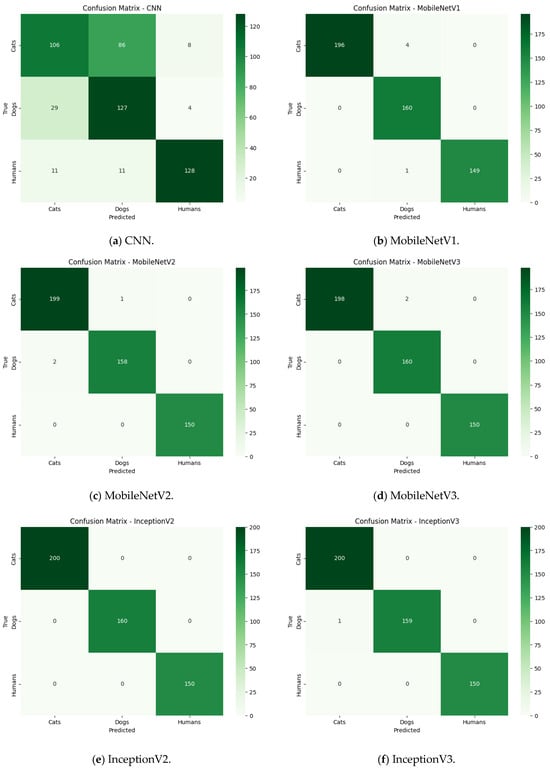

- Confusion Matrix:

Figure 13 shows the confusion matrices, an essential tool for evaluating the classification performance of the models. A confusion matrix gives a detailed breakdown of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN), providing insight into the model’s predictive accuracy. This analysis helps identify specific areas where the model excels or struggles, allowing for targeted improvements to raise overall reliability.

Figure 13. Confusion matrix for different models.
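In a multi-class setting, TP, FP, FN, and TN are defined per class by treating that class as positive and all others as negative. The sketch below shows the bookkeeping; the matrix values are invented for illustration and are not results from this study, and the function name is hypothetical.

```python
def per_class_counts(matrix, class_names):
    """matrix[i][j] = number of samples of true class i predicted as class j."""
    total = sum(sum(row) for row in matrix)
    counts = {}
    for k, name in enumerate(class_names):
        tp = matrix[k][k]
        fn = sum(matrix[k]) - tp                 # rest of the true-class row
        fp = sum(row[k] for row in matrix) - tp  # rest of the predicted column
        tn = total - tp - fn - fp
        counts[name] = {"TP": tp, "FP": fp, "FN": fn, "TN": tn}
    return counts


# Illustrative matrix only (rows: true class, columns: predicted class).
example = [
    [48, 2, 0],
    [3, 57, 0],
    [0, 0, 20],
]
counts = per_class_counts(example, ["cat", "dog", "human"])
print(counts["cat"])  # {'TP': 48, 'FP': 3, 'FN': 2, 'TN': 77}
```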

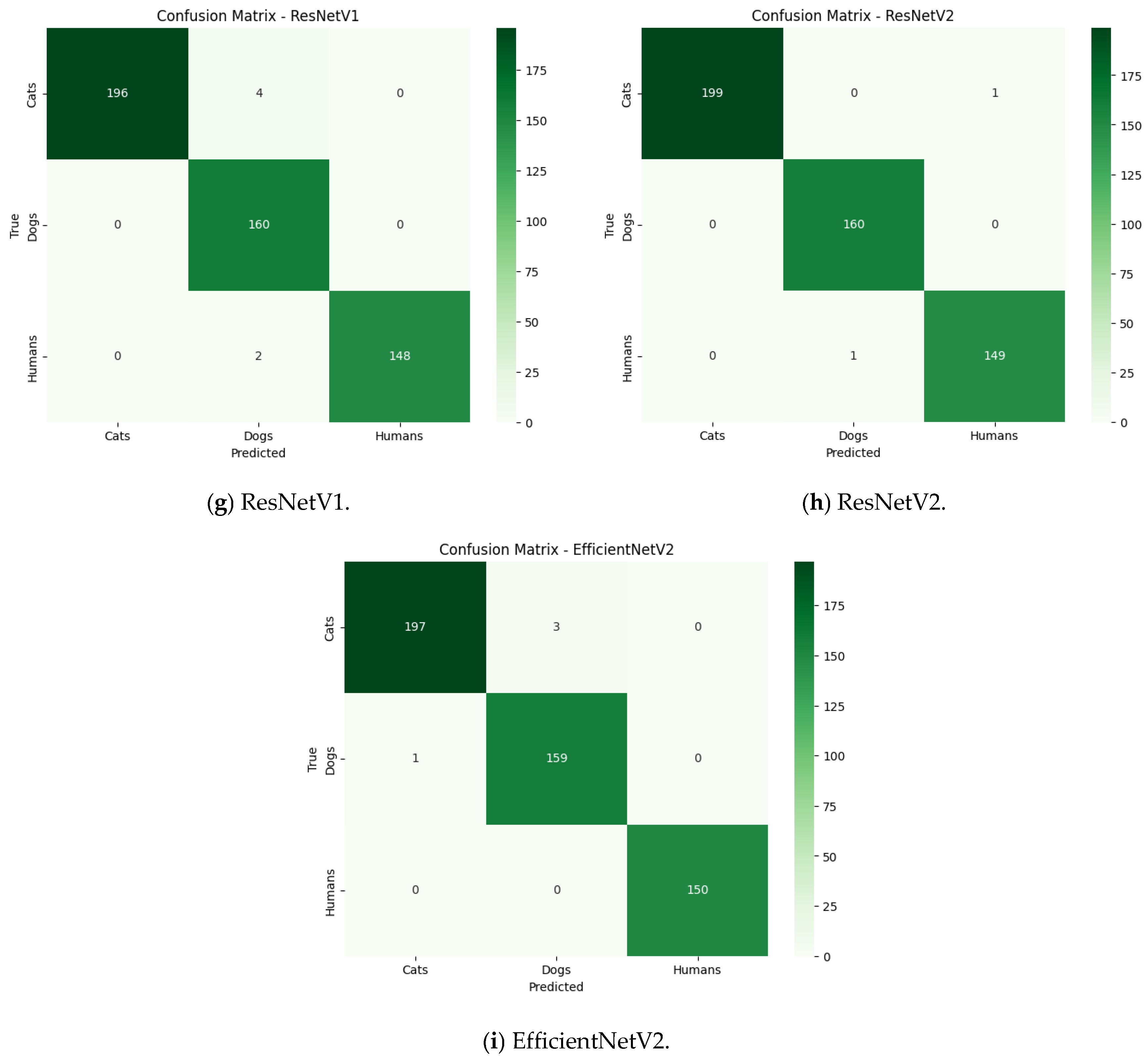

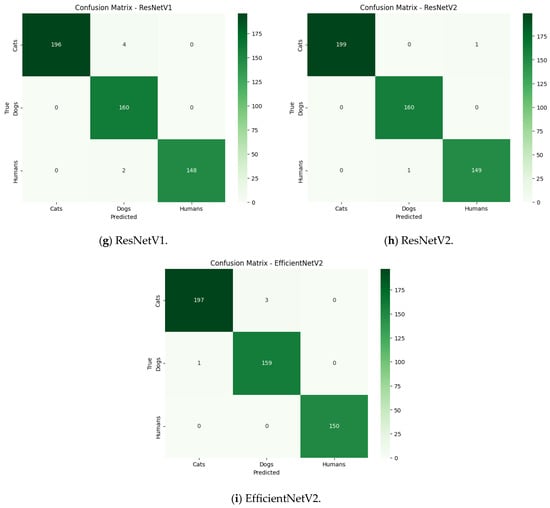

Figure 14 shows the results section of the application, which presents model performance in a structured and visually clear UI. The design presents complex metrics in a comprehensive and interactive manner, helping users interpret and act upon the information effectively.

Figure 14. Summary table (top left). Confusion matrix (top right). Graphical analysis and download button.

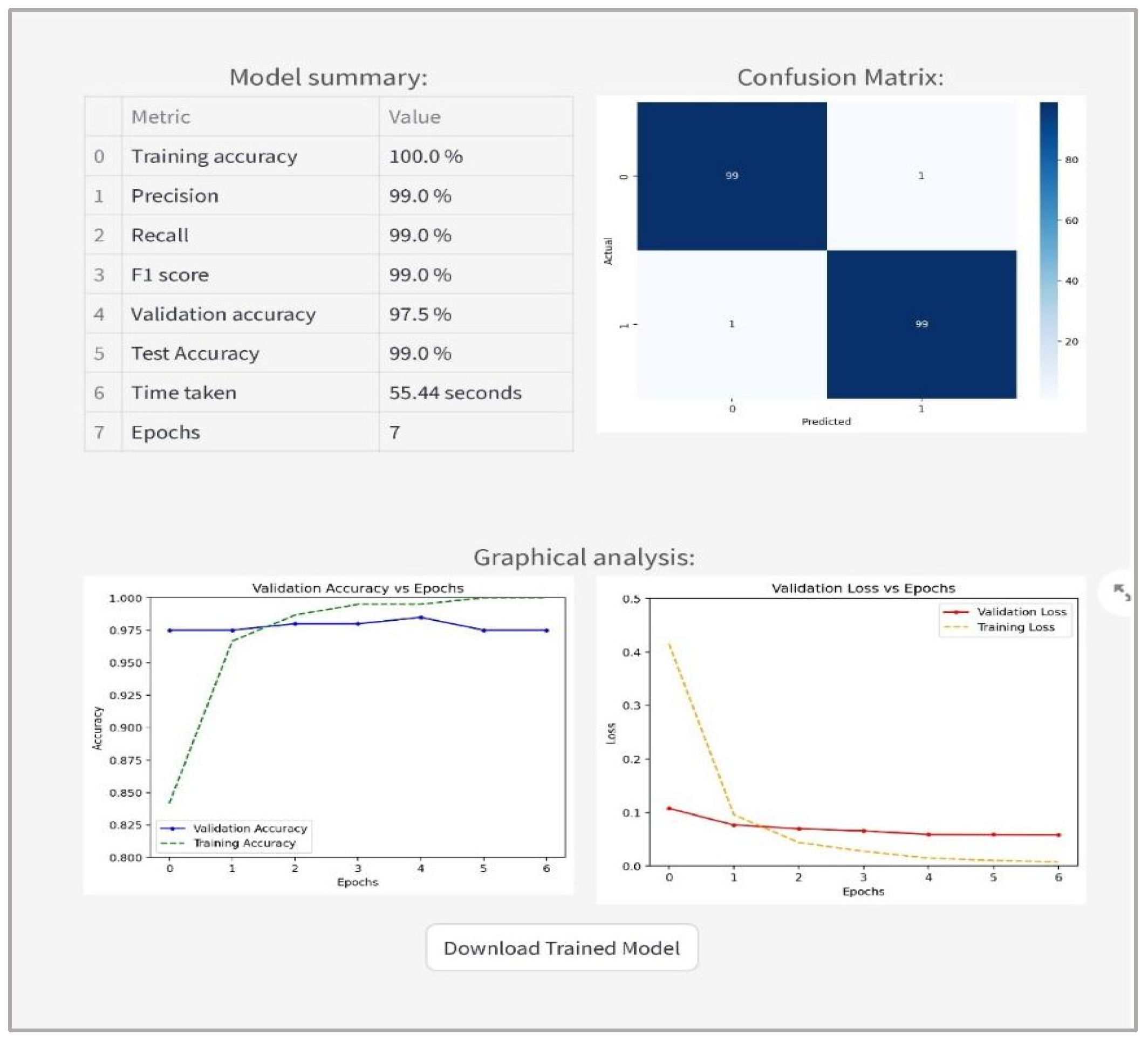

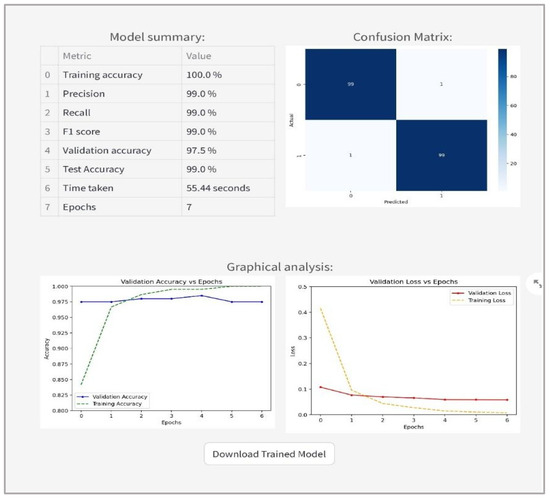

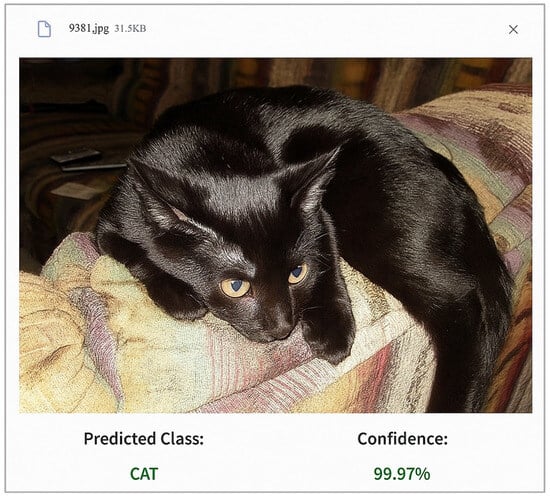

The confusion matrix is displayed as a heatmap for clarity, highlighting correct and incorrect predictions. This helps users tune parameters to improve prediction accuracy. The ‘Validation Accuracy vs. Epochs’ graph illustrates how validation accuracy improves over the training epochs, helping users assess the learning trend. The ‘Validation Loss vs. Epochs’ plot helps users analyze the decrease in loss, indicating model convergence and stability. A download button, as shown in Figure 14, allows users to download the model (in .h5 format), which can be deployed on other systems for additional testing across a wide range of applications. The User Interface (UI) displays the predicted class and confidence score, as shown in Figure 15.

Figure 15. On-page predictions with confidence level.

4. Discussion

The comparative evaluation of models in this study provides a clear view of how different deep learning architectures perform in terms of classification accuracy, precision, recall, and computational efficiency. Each architecture demonstrates distinct strengths, making them suitable for varied deployment scenarios depending on the trade-off between performance and resource constraints.

The baseline CNN recorded the lowest overall performance, with a testing accuracy of 70.78%, precision of 73.57%, and recall of 72.56%. Although its results were modest, it served as a useful benchmark for assessing the advantage gained by using more advanced architectures. The relatively high training time (644.28 s), despite its simple structure, suggests that the architecture may not be optimal for this dataset, especially when speed and accuracy are both priorities.

The MobileNet family, designed for lightweight deployment, demonstrated a significant leap in performance compared to CNN, while maintaining lower computational demands. MobileNetV1 achieved a validation accuracy of 99.68% and testing accuracy of 99.02% in just 361.18 s, highlighting its efficiency. MobileNetV2 improved slightly, with precision, recall, and F1-scores all around 99.44–99.46%, likely due to its inverted residuals and linear bottlenecks enhancing feature extraction. MobileNetV3 further refined this balance, achieving the second highest precision (99.59%) and recall (99.67%) in the MobileNet series, while also being the fastest among all tested models (339.33 s). These results confirm that MobileNet variants are highly competitive when deployment speed and hardware constraints are critical.

The ResNet series delivered consistently high accuracy, leveraging deep residual connections to mitigate vanishing gradient issues and enable the effective training of very deep networks. ResNetV1 reached a perfect 100% validation accuracy, but showed a slightly lower testing accuracy (98.82%) compared to the MobileNet and Inception families, and required over 1126 s of computation time. ResNetV2 addressed some of these limitations, achieving 99.61% testing accuracy with enhanced residual structures and batch normalization placement, but at the cost of the highest computation time (1184.17 s) among all models. These findings suggest that, while ResNet variants excel in extracting deep hierarchical features, they may be overkill for resource-sensitive applications.

The Inception family, known for its multi-scale convolutional approach, performed exceptionally well. InceptionV2 was the only model to achieve perfect precision, recall, and F1-score (100%) alongside a 99.68% validation accuracy, all within 555.51 s. InceptionV3 maintained this high level of accuracy (99.80% testing accuracy), while slightly reducing computational efficiency due to its deeper and more complex architecture (749.95 s). This consistency indicates strong generalization capabilities, especially for datasets with varied spatial patterns.

EfficientNetV2 demonstrated an outstanding balance between accuracy and computational efficiency. With 99.84% validation accuracy, 99.22% testing accuracy, and a moderate computation time (397.38 s), it leveraged compound scaling to optimize depth, width, and resolution simultaneously. This efficiency makes it particularly attractive for scenarios where high accuracy is necessary, but inference speed remains a concern.

Overall, the results show that no single architecture dominates across all metrics. For maximum performance, InceptionV2 stands out with perfect precision, recall, and F1-score. For a balance between high accuracy and speed, MobileNetV3 and EfficientNetV2 are optimal choices. ResNet architectures, while powerful, may be more suitable for applications where accuracy outweighs computation time constraints. This study reinforces the importance of aligning model selection with the operational requirements of the target environment—whether prioritizing speed, accuracy, or resource efficiency.
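The trade-off described above can be made concrete with a small selection rule: pick the fastest model that meets a required accuracy. The figures below are the testing accuracies and computation times quoted in this Discussion, restricted to models for which both values are stated in the text; the function name and the thresholds are illustrative assumptions.

```python
# (testing accuracy in %, time in seconds) as quoted in the Discussion.
# Only models with both figures stated in the text are listed.
REPORTED = {
    "Baseline CNN": (70.78, 644.28),
    "MobileNetV1": (99.02, 361.18),
    "ResNetV2": (99.61, 1184.17),
    "InceptionV3": (99.80, 749.95),
    "EfficientNetV2": (99.22, 397.38),
}


def fastest_model(min_accuracy, results=REPORTED):
    """Return the quickest model whose accuracy meets the threshold, or None."""
    eligible = {name: v for name, v in results.items() if v[0] >= min_accuracy}
    if not eligible:
        return None
    return min(eligible, key=lambda name: eligible[name][1])


print(fastest_model(99.0))  # MobileNetV1
print(fastest_model(99.5))  # InceptionV3
print(fastest_model(99.9))  # None
```

Raising the accuracy requirement shifts the choice from a lightweight backbone to a slower but more accurate one, which mirrors the conclusion that model selection depends on operational requirements.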

5. Limitations and Future Scope

The current study focuses on the integration of classical CNN-based backbones for transfer learning within the ALF. This choice was made to ensure computational efficiency and accessibility for non-expert users; however, it also means that more recent architectures, such as Vision Transformers (ViT), Swin Transformers, and ConvNeXt, have not yet been explored. Incorporating these architectures could potentially improve performance and expand the applicability of the framework to a wider range of image classification tasks. Although ALF has been designed with usability as a core objective, no formal usability validation has yet been conducted. Structured user studies or surveys involving non-technical participants would provide empirical evidence to substantiate the accessibility claims and identify areas for interface or workflow improvement.

Future work will address these limitations in several directions. First, the evaluation will be extended to larger, more diverse datasets, including those that are imbalanced and noisy, to better assess the framework’s robustness in realistic, heterogeneous data environments. Second, modern architectures, such as ViT and Swin Transformers, will be integrated into ALF to enable comparative analysis with the current CNN-based backbones and to leverage their potential advantages in feature representation. Third, formal usability testing and user studies will be undertaken to validate the framework’s suitability for non-expert users and to refine its interface design. Finally, explainable AI (XAI) components, such as saliency maps and Grad-CAM, will be incorporated to enhance interpretability, allowing users to better understand model predictions, thereby increasing transparency and trust in the system.

6. Conclusions

This work demonstrates that the Adaptive Learning Framework (ALF) effectively bridges the gap between advanced deep learning techniques and practical usability for non-technical users. By integrating multiple pre-trained models from TensorFlow Hub into a guided, user-friendly interface, ALF enables efficient, high-accuracy image classification without requiring coding expertise. The results confirm that transfer learning architectures such as MobileNetV3, EfficientNetV2, and InceptionV2 deliver high accuracy while maintaining competitive training times, making them suitable for both high-performance and resource-constrained environments. The main findings and future directions are as follows:

- InceptionV2 is preferable when maximum precision and recall are required, whereas MobileNetV3 and EfficientNetV2 provide a balanced trade-off between speed and accuracy, making them more suitable for real-time or edge deployments.

- The framework performs well on small and imbalanced datasets, showing strong generalization, even without early stopping or advanced regularization techniques.

- Future work will focus on integrating modern architectures such as Vision Transformers, applying optimization strategies such as pruning and quantization, and incorporating explainable AI (XAI) components to improve interpretability.

Author Contributions

Conceptualization, M.K., M.S. and S.S.; methodology, M.K. and M.S.; validation, J.S.; formal analysis, S.S. and J.S.; investigation, J.S.; resources, S.S. and J.S.; data curation, M.K.; writing—original draft preparation, M.K. and M.S.; writing—review and editing, S.S. and J.S.; supervision, S.S. and J.S.; project administration, S.S. and J.S.; funding acquisition, S.S. and J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Symbiosis Research Fund (RSF) of Symbiosis International (Deemed University), Pune, Maharashtra, India.

Data Availability Statement

The supporting data of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ALF | Adaptive Learning Framework |
| CNN | Convolutional Neural Network |
| F1-score | Harmonic Mean of Precision and Recall |
| FN | False Negative |
| FP | False Positive |
| GUI | Graphical User Interface |
| h5 | HDF5 (Hierarchical Data Format version 5) |
| NAS | Neural Architecture Search |
| SE | Squeeze-and-Excitation |
| TN | True Negative |
| TP | True Positive |
| UI | User Interface |
| ViT | Vision Transformer |
| XAI | Explainable Artificial Intelligence |

References

- McNeely-White, D.; Beveridge, J.R.; Draper, B.A. Inception and ResNet Features are (Almost) Equivalent. Cogn. Syst. Res. 2020, 59, 312–318. [Google Scholar] [CrossRef]

- Mathew, M.P.; Mahesh, T.Y. Object Detection Based on Teachable Machine. J. VLSI Des. Signal Process. 2021, 7, 20–26. [Google Scholar] [CrossRef]

- Carney, M.; Webster, B.; Alvarado, I.; Phillips, K.; Howell, N.; Griffith, J.; Jongejan, J.; Pitaru, A.; Chen, A. Teachable machine: Approachable web-based tool for exploring machine learning classification. In Proceedings of the Conference on Human Factors in Computing Systems—Proceedings, Association for Computing Machinery, Honolulu, HI, USA, 25–30 April 2020. [Google Scholar] [CrossRef]

- Kacorri, H. Teachable machines for accessibility. ACM SIGACCESS Access. Comput. 2017, 119, 10–18. [Google Scholar] [CrossRef]

- Salehi, A.W.; Khan, S.; Gupta, G.; Alabduallah, B.I.; Almjally, A.; Alsolai, H.; Siddiqui, T.; Mellit, A. A Study of CNN and Transfer Learning in Medical Imaging: Advantages, Challenges, Future Scope. Sustainability 2023, 15, 5930. [Google Scholar] [CrossRef]

- Kim, H.E.; Cosa-Linan, A.; Santhanam, N.; Jannesari, M.; Maros, M.E.; Ganslandt, T. Transfer learning for medical image classification: A literature review. BMC Med. Imaging 2022, 22, 69. [Google Scholar] [CrossRef] [PubMed]

- Kannan, M.K.J.; Sengar, A.; Bhardwaj, A.; Singh, A.; Jain, H.; Shrivastava, V. Simplifying Machine Learning: A Streamlit-Powered Interface for Rapid Model Development with PyCaret. Int. J. Innov. Res. Comput. Commun. Eng. 2024, 12, 5857–5871. [Google Scholar]

- Desai, C. Image Classification Using Transfer Learning and Deep Learning. Int. J. Eng. Comput. Sci. 2021, 10, 25394–25398. [Google Scholar] [CrossRef]

- Ariefwan, M.R.M.; Diyasa, I.G.S.M.; Hindrayani, K.M. InceptionV3, ResNet50, ResNet18 and MobileNetV2 Performance Comparison on Face Recognition Classification. Literasi Nusant. 2021, 4, 1–10. [Google Scholar]

- Hussain, M.; Bird, J.J.; Faria, D.R. A study on CNN transfer learning for image classification. In Advances in Intelligent Systems and Computing; Springer: Berlin/Heidelberg, Germany, 2019; pp. 191–202. [Google Scholar] [CrossRef]

- Sheng, T.; Feng, C.; Zhuo, S.; Zhang, X.; Shen, L.; Aleksic, M. A Quantization-Friendly Separable Convolution for MobileNets. In Proceedings of the 2018 1st Workshop on Energy Efficient Machine Learning and Cognitive Computing for Embedded Applications (EMC2), Williamsburg, VA, USA, 25 March 2018.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Tan, M.; Le, Q.V. EfficientNetV2: Smaller Models and Faster Training. 2021. Available online: https://github.com/google/ (accessed on 20 May 2025).

- Deep Learning and Transfer Learning Approaches for Image Classification. Available online: https://www.researchgate.net/publication/333666150 (accessed on 20 May 2025).

- Dharavath, K.; Amarnath, G.; Talukdar, F.A.; Laskar, R.H. Impact of image preprocessing on face recognition: A comparative analysis. In Proceedings of the 2014 International Conference on Communication and Signal Processing, Melmaruvathur, India, 3–5 April 2014; pp. 631–635.

- Mijwil, M.M.; Doshi, R.; Hiran, K.K.; Unogwu, O.J.; Bala, I. MobileNetV1-Based Deep Learning Model for Accurate Brain Tumor Classification. Mesopotamian J. Comput. Sci. 2023, 2023, 32–41.

- Jeong, H. Feasibility Study of Google’s Teachable Machine in Diagnosis of Tooth-Marked Tongue. J. Dent. Hyg. Sci. 2020, 20, 206–212.

- Mahesh, T.R.; Thakur, A.; Gupta, M.; Sinha, D.K.; Mishra, K.K.; Venkatesan, V.K.; Guluwadi, S. Transformative Breast Cancer Diagnosis using CNNs with Optimized ReduceLROnPlateau and Early Stopping Enhancements. Int. J. Comput. Intell. Syst. 2024, 17, 14.

- Ciresan, D.C.; Meier, U.; Masci, J.; Maria Gambardella, L.; Schmidhuber, J. Flexible, High Performance Convolutional Neural Networks for Image Classification. In Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI), Barcelona, Spain, 16–22 July 2011.

- Alruwaili, M.; Shehab, A.; Abd El-Ghany, S. COVID-19 Diagnosis Using an Enhanced Inception-ResNetV2 Deep Learning Model in CXR Images. J. Healthc. Eng. 2021, 2021, 6658058.

- Dong, K.; Zhou, C.; Ruan, Y.; Li, Y. MobileNetV2 Model for Image Classification. In Proceedings of the 2020 2nd International Conference on Information Technology and Computer Application (ITCA 2020), Guangzhou, China, 18–20 December 2020; IEEE: Piscataway, NJ, USA; pp. 476–480.

- Hindarto, D. Revolutionizing Automotive Parts Classification Using InceptionV3 Transfer Learning. Int. J. Softw. Eng. Comput. Sci. (IJSECS) 2023, 3, 324–333.

- Krishnapriya, S.; Karuna, Y. Pre-trained deep learning models for brain MRI image classification. Front. Hum. Neurosci. 2023, 17, 1150120.

- Hossin, M.; Sulaiman, M.N. A Review on Evaluation Metrics for Data Classification Evaluations. Int. J. Data Min. Knowl. Manag. Process 2015, 5, 1–11.

- Didyk, L.; Yarish, B.; Beck, M.A.; Bidinosti, C.P.; Henry, C.J. Strategies and Impact of Learning Curve Estimation for CNN-Based Image Classification. 2023. Available online: http://arxiv.org/abs/2310.08470 (accessed on 25 June 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).