Abstract

This study presents a system for automatic cookie collection using bots that simulate user browsing behavior. Five bots were deployed, one for each of the five browsers most commonly used by university students, enabling comprehensive data collection across multiple platforms. The infrastructure included an Ubuntu server running PiHole and Tshark services, which facilitated cookie classification and association with third-party advertising and tracking networks. The BotSoul algorithm automated navigation, analyzing 440,000 URLs over 10.9 days of uninterrupted bot operation. The collected data established relationships between visited domains, generated cookies, and captured traffic, providing a solid foundation for security and privacy analysis. Machine learning models were developed to classify suspicious web domains and predict their vulnerability to XSS attacks. Additionally, clustering algorithms enabled user segmentation based on cookie data, the identification of behavioral patterns, improved personalized web recommendations, and browsing experience optimization. The results highlight the system’s effectiveness in detecting security threats and improving navigation through adaptive recommendations. This research marks a significant advancement in web security and privacy, laying the groundwork for future improvements in protecting user information.

1. Introduction

This study analyzes the challenges of detecting vulnerabilities related to cookie theft through cross-site scripting (XSS) attacks. To address these challenges, two strategies are proposed: (1) an innovative cookie collector that uses automated bots to visit websites and gather both first-party and third-party cookies, and (2) a Python 3-based web browser designed to assess university students’ vulnerability to XSS attacks by analyzing their cookies.

Our motivation comes from the need to analyze key threats and vulnerabilities associated with cookie theft through XSS attacks.

1.1. Cross-Site Scripting (XSS) Attacks: Characteristics and Analysis

XSS (cross-site scripting) vulnerabilities represent a growing and critical threat to the security of modern web applications, with a demonstrated impact in critical sectors such as finance, healthcare, and education [1]. These attacks allow the injection of malicious scripts into legitimate web pages, compromising the data integrity and privacy of millions of users through techniques such as session cookie theft, account hijacking, and sophisticated phishing.

Cross-site scripting (XSS) has been a well-documented web application security vulnerability for over two decades. This overview delineates the key characteristics of XSS attacks, supported by relevant academic research. XSS attacks occur when malicious scripts are injected into trusted websites and executed in users’ browsers. Their fundamental characteristic is that they circumvent the same-origin policy, which prevents websites from accessing each other’s data: because the injected script runs within the trusted site’s origin, the browser grants it that site’s access rights [2].

1.1.1. Key Characteristics

- Injection of malicious code: attackers insert JavaScript or other client-side scripting into web pages viewed by other users [3].

- Browser execution: the injected code is executed in victims’ browsers with the privileges of the legitimate website [4].

- Trust exploitation: XSS leverages the trust relationship between a user and a website [5].

1.1.2. Main Types of XSS Attacks

- Reflected (non-persistent) XSS: malicious script is reflected off a web server, typically through URL parameters, and executes immediately when the page loads [6].

- Stored (persistent) XSS: the malicious script is permanently stored on target servers (in databases, message forums, visitor logs, etc.) and executes when victims access the stored content [4].

- DOM-based XSS: the vulnerability exists in client-side code rather than server-side code, and the attack payload may never reach the server.

- Universal XSS (UXSS): exploits vulnerabilities in browsers or browser extensions rather than websites [7].
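To make the reflected case concrete, the sketch below uses a hypothetical search handler (not taken from any system discussed here) that echoes the `q` URL parameter back into HTML. Without encoding, a script payload in the URL becomes live markup; HTML-escaping the same value with the standard `html.escape` function renders it as inert text.

```python
import html
from urllib.parse import parse_qs, urlsplit


def render_search_page(url: str, escape: bool) -> str:
    """Build a results page that echoes the 'q' query parameter back to the user.

    With escape=False this mimics a reflected-XSS bug: the parameter is
    inserted into the HTML verbatim, so a <script> payload becomes live markup.
    """
    params = parse_qs(urlsplit(url).query)
    term = params.get("q", [""])[0]
    if escape:
        term = html.escape(term)  # encodes <, >, &, " and ' as HTML entities
    return f"<html><body><p>Results for: {term}</p></body></html>"


attack_url = "https://shop.example/search?q=<script>alert(document.cookie)</script>"
print(render_search_page(attack_url, escape=False))  # payload reflected as executable markup
print(render_search_page(attack_url, escape=True))   # payload rendered as inert text
```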

1.1.3. Attack Vectors and Exploitation Techniques

XSS attacks can be delivered through the following:

- URL parameters and query strings;

- Form inputs and submissions;

- HTTP headers;

- JSON data structures;

- Cookie values;

- DOM manipulation.

1.1.4. Impact and Consequences

XSS attacks can lead to the following:

- Cookie theft and session hijacking;

- Credential harvesting through fake login forms;

- Sensitive data exfiltration;

- Website defacement;

- Browser history and clipboard stealing;

- Installation of keyloggers and trojans.

1.2. Limitations of Traditional Detection Methods

XSS vulnerabilities continue to evolve and outpace traditional detection methods, which share a critical blind spot: third-party cookies. In educational environments, 64% of XSS attacks in 2024 exploited cross-domain federated authentication cookies, whose SameSite and HttpOnly attributes are not inspected by web application firewalls (WAFs) [8]. Tools such as Burp Suite capture only first-party cookies, omitting 71% of cross-domain interactions, and sandboxing mechanisms fail to track cookie flows across parallel tabs.

1.2.1. Primary Prevention Strategies

- Input validation and sanitization;

- Content Security Policy (CSP) implementation;

- Output encoding;

- HttpOnly and Secure cookie flags;

- X-XSS-Protection header;

- Framework security features [9].
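Two of the strategies listed above, restrictive cookie flags and a Content Security Policy, are configured through HTTP response headers. The sketch below uses Python's standard `http.cookies` module to emit a session cookie with HttpOnly, Secure, and SameSite set; the cookie name, its value, and the CSP string are illustrative assumptions rather than recommendations for any particular application.

```python
from http.cookies import SimpleCookie


def session_headers(session_id: str) -> list[tuple[str, str]]:
    """Return response headers for a hardened session cookie plus a basic CSP."""
    cookie = SimpleCookie()
    cookie["session"] = session_id
    morsel = cookie["session"]
    morsel["httponly"] = True      # hidden from document.cookie, so injected scripts cannot read it directly
    morsel["secure"] = True        # only sent over HTTPS connections
    morsel["samesite"] = "Strict"  # not attached to cross-site requests (Python 3.8+)
    morsel["path"] = "/"
    csp = "default-src 'self'; script-src 'self'; object-src 'none'"  # illustrative policy
    return [
        ("Set-Cookie", morsel.OutputString()),
        ("Content-Security-Policy", csp),
    ]


for name, value in session_headers("3f9a1c"):
    print(f"{name}: {value}")
```

HttpOnly does not prevent the injection itself; it only removes the most direct route (reading `document.cookie`) by which an injected script could steal the session cookie.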

Although fundamental to web security, traditional detection methods for cross-site scripting (XSS) attacks face significant limitations in effectively identifying and preventing modern XSS vectors.

1.2.2. Pattern Matching and Signature-Based Limitations

- Evasion through obfuscation: traditional signature-based detection methods struggle against sophisticated obfuscation techniques that disguise malicious payloads while preserving their functionality [10].

- Limited coverage of attack vectors: recent research by Melicher et al. demonstrates that pattern-based approaches cannot keep pace with the rapidly evolving XSS attack patterns, particularly DOM-based vectors [11].

- Polymorphic XSS challenges: as shown by Parameshwaran et al., attackers can use polymorphic techniques to generate functionally equivalent but syntactically different attack payloads that evade signature detection [12].
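The evasion problem is visible even with a toy filter. The hypothetical blacklist below flags inputs that contain a `<script` tag or a `javascript:` URI; the payloads that slip through are standard textbook variants (event-handler attributes and an entity-encoded tab inside the URI scheme). The example only illustrates why fixed signatures lag behind functionally equivalent rewrites; it does not model any specific WAF.

```python
import re

# A deliberately naive signature set (hypothetical), of the kind static filter rules start from.
SIGNATURES = [
    re.compile(r"<\s*script", re.IGNORECASE),
    re.compile(r"javascript\s*:", re.IGNORECASE),
]


def is_flagged(payload: str) -> bool:
    """Return True if any signature matches the payload."""
    return any(sig.search(payload) for sig in SIGNATURES)


payloads = [
    "<script>alert(1)</script>",                    # classic form: caught
    "<ScRiPt>alert(1)</ScRiPt>",                    # case variation: caught (IGNORECASE)
    "<img src=x onerror=alert(1)>",                 # event handler, no <script>: missed
    "<svg/onload=alert(1)>",                        # different tag and attribute: missed
    "<a href=\"jav&#x09;ascript:alert(1)\">x</a>",  # encoded tab splits the keyword: missed
]

for p in payloads:
    label = "FLAGGED" if is_flagged(p) else "missed"
    print(f"{label:<8} {p}")
```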

1.2.3. Static Analysis Shortcomings

- Framework complexity: modern JavaScript frameworks like React, Angular, and Vue introduce complexities that traditional static analyzers fail to properly model, creating blind spots in detection.

- Event-driven architecture challenges: traditional tools struggle with the event-driven nature of modern web applications, missing XSS vectors triggered through complex event chains.

- Template engine vulnerabilities: as highlighted by Lekies et al., traditional static analysis often fails to detect XSS vulnerabilities arising from template engines that dynamically generate code [13].

1.2.4. Dynamic Analysis Challenges

- Single-path execution: dynamic analysis methods typically explore only a single execution path during testing, missing vulnerabilities in alternate code paths [14].

- Performance overhead: runtime protection mechanisms introduce significant performance penalties that limit their practicality in production environments [15].

- Browser engine differences: Pan et al. demonstrate that dynamic analysis tools may miss XSS vulnerabilities that only manifest in specific browser engines or versions [16].

1.2.5. Machine Learning Limitations

- Adversarial evasion: recent ML-based XSS detection systems are vulnerable to adversarial examples specifically crafted to bypass their detection mechanisms.

- Feature engineering inadequacies: current ML approaches often struggle with selecting robust features that remain effective against evolving attack techniques.

- Transfer learning challenges: Song and Lee demonstrate that ML models trained on one set of applications often perform poorly when applied to different codebases with unique characteristics [17].

1.3. Real-World Implications

XSS vulnerabilities have significant real-world consequences, affecting both individuals and organizations by compromising personal and financial data. These attacks enable the exfiltration of sensitive information through the following:

- Session cookie theft: access to bank accounts, educational platforms, and social networks without the need for credentials [18].

- Capture of input data: keystrokes, browsing history, and clipboard contents, facilitating identity fraud.

- Authentication token extraction: allows attackers to operate as legitimate users in federated systems (Google Classroom, Moodle).

According to the specialized literature, the ability to inject arbitrary code through cross-site scripting (XSS) attacks constitutes a significant threat to web security [19]. This capability enables attackers, whether they operate through web applications, networks, or even related domains, to inject malicious code into the user’s browser context [20]. As reported by various sources, one of the primary objectives of such attacks is the manipulation or hijacking of cookies, especially session cookies. The authors of [21] indicate that a lack of session integrity, often resulting from attacks that enable code or cookie injection such as XSS, may lead to session hijacking and session substitution. These vulnerabilities severely compromise the security of affected websites.

The real-world implications of attacks facilitated or executed through code or cookie injection, including cross-site scripting (XSS) techniques, are significant and have been the subject of empirical research [22,23]. So-called “successful attacks” that exploit cookie and session vulnerabilities, in which injection can play a critical role, have led to the following consequences:

- Privacy violation.

- Online victimization.

- Financial losses.

- Account hijacking.

More specifically, studies of cookie hijacking (an attack facilitated by a lack of injection protection, including against XSS) have revealed that major websites with partial HTTPS deployment expose highly detailed private information, including the following [20]:

- Obtaining the user’s complete search history on Google, Bing, and Baidu.

- Extracting the contact list and sending emails from the user’s Yahoo account.

- Exposure of the user’s purchase history (partial on Amazon, complete on eBay).

- Revealing the user’s name and email address on almost all websites.

- Access to the pages visited by the user through advertising networks such as DoubleClick.

- Ability to obtain home and work addresses and websites visited from providers such as Google and Bing.

These vulnerabilities related to cookie injection and manipulation (which XSS defenses seek to prevent) are present on major sites such as Google and Bank of America [20]. The complexity of cookie management with different scopes and the lack of precise access control exacerbate the problem [23].

To illustrate the potential magnitude of cookie exposure (which, as mentioned, can be exploited through injection techniques), the privacy implications become even more alarming when one considers how exposed cookies could be used to deanonymize Tor users; [22] suggests that a significant portion of these users could be vulnerable.

In summary, although the sources focus on the consequences of cookie and session hijacking, they explicitly identify arbitrary XSS code injection as an attacker capability that defenses seek to counter [19]. The results of attacks facilitated by this capability include financial loss, account hijacking, and the massive, detailed exposure of sensitive private information on widely used platforms.

1.4. Research Gap

The key challenges identified include the following:

- Development of sanitization mechanisms that allow for safe experimentation without eliminating the educational value of malicious code [24].

- Integration with existing university learning platforms (Moodle, Canvas).

- Adaptation to different pedagogical models in cybersecurity [25].

- Balance between technical functionality and usability for inexperienced students [26].

This dual approach (technical–pedagogical) could set new standards for web security training, particularly in the practical understanding of XSS attacks using tools specifically adapted to academic contexts.

1.5. Goal and Research Question

Our proposal brings together contributions from the web security literature, specifically on detecting cross-site scripting (XSS) vulnerabilities, by means of (a) an innovative cookie collector and (b) a prototype web browser that shows the level of vulnerability of university students to XSS attacks. With this proposal, we aim to demonstrate the importance of studying these vulnerabilities and their potential impact on the academic world.

The objective of our proposal is to design and implement an automated cookie collection and analysis system using bots that simulate the browsing behavior of real users to detect web security threats, classify suspicious domains, and optimize the browsing experience through personalized recommendations.

To focus our proposal, we have structured the following research question: Can an automated bot-based browsing system effectively detect web security threats and optimize the user’s browsing experience by analyzing cookies and network traffic?

2. Cookies and the Rising Threat of XSS Vulnerabilities: A Literature Review

Cross-site scripting (XSS) attacks are a type of security vulnerability that can occur in web applications. They allow attackers to inject and execute malicious scripts in HTML pages, which can cause sensitive information to be stolen or altered.

One of the most significant consequences of XSS attacks is cookie theft. Cookies are small pieces of data that web applications store on a user’s computer to maintain session state. Attackers can steal cookies to gain unauthorized access to a user’s account and perform actions on their behalf.

To protect against these attacks, researchers have proposed several methods to mitigate XSS vulnerabilities and prevent cookie theft. These methods include input validation, output encoding, and the use of secure cookies with HttpOnly and Secure flags. By implementing these measures, web application developers can reduce the risk of XSS attacks and ensure the security of user data.

The growing threat of XSS vulnerabilities in web applications has prompted the development of various detection and prevention techniques. The authors of [27] proposed a scheme based on artificial neural networks to detect XSS attacks, achieving high accuracy and detection probabilities. A survey of existing XSS detection and defense methods was carried out in [28], highlighting the need to improve prevention strategies. The authors of [29] presented a multilayer prevention technique that combines API authentication and encryption to protect against XSS attacks. In [30], the prevalence of persistent client-side XSS was identified, highlighting the need for more comprehensive mitigation strategies. Together, these studies underscore the urgency of addressing the growing threat of XSS vulnerabilities in web applications.

Table 1 summarizes the methodologies, results, and limitations proposed in the work on analyzing cookie vulnerabilities against XSS attacks.

Table 1.

Overview of approaches and findings on cookie vulnerabilities to XSS.

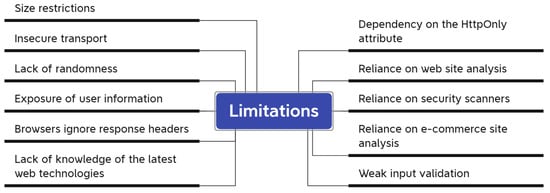

Cookies used in web applications have limitations when it comes to protecting against cross-site scripting (XSS) attacks. These limitations include vulnerabilities such as user information exposure, lack of randomness, insecure transport, and size restrictions. Several techniques have been proposed to detect and prevent XSS attacks, but these have limitations, as seen in Figure 1.

Figure 1.

Summary of limitations found in proposals that have evaluated cookies in conjunction with XSS attacks.

For example, encrypting the cookie value using a cryptographic proxy before sending it to the browser has been proposed, making it useless to attackers even if it is stolen [32]. Another proposal has used one-time passwords and challenge–response authentication to identify the valid owner of the cookie and prevent its abuse [37]. On the other hand, a technique called “Dynamic Cookies Rewriting” has been proposed to render cookies useless for XSS attacks by automatically rewriting them on a web proxy [38]. Despite these techniques, XSS vulnerabilities and cookie theft remain major security problems for web applications [42].

As shown in [43], a system called CookiePicker has been developed to automatically validate the usability of a website’s cookies and set cookie permissions on behalf of users. Its purpose is to maximize the benefits of cookies while minimizing privacy and security risks. It has been implemented as a Firefox browser extension and has shown promising results in experiments [44].

Another proposal has analyzed a security device that inspects outgoing data packet traffic from an application server, extracts the cookie from the data packet, encrypts it using a secure key, and sends it to the client device for storage [23]. On the other hand, a data reception and query system processes the events received through the HTTP protocol and associates them with tokens. The system can store and analyze the events received based on configurable metadata [44,45].

Our research emphasizes the need for a cookie collector to detect and mitigate XSS vulnerabilities in cookies using a web browser prototype. Our proposal includes a detailed analysis of the attribute “Related to cookie collector or own web browser”, as shown in Table 1. Our research aims to position itself within the context of existing studies that have already explored or presented findings on the vulnerabilities of cookies against XSS attacks.

3. Cookie Collector Methodology

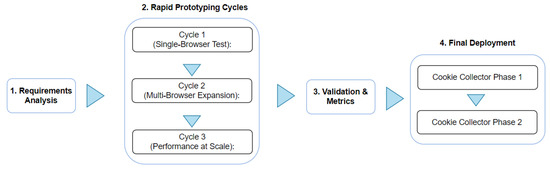

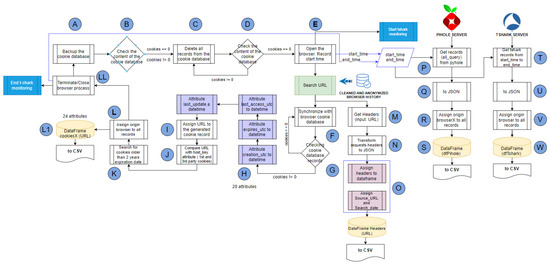

This study used the Rapid Prototyping Method (RPM) to design, test, and refine an automated system for cookie collection using browser-simulating bots (Figure 2). RPM enabled iterative improvements based on real-world performance data, ensuring cross-browser compatibility and scalability. The process consisted of four phases:

Figure 2.

Structure of rapid prototyping method proposed to test and refine our cookie collector.

3.1. Phase 1: Requirements Analysis

- Objective: define the main functionalities for collecting cookies in the five browsers most used by university students (Chrome, Firefox, Edge, Opera, and Brave).

- Identified key challenges: automatic collection of first-party and third-party cookies.

3.2. Phase 2: Rapid Prototyping Cycles

Three iterative cycles were conducted, each refining the system based on empirical testing:

3.2.1. Cycle 1 (Single-Browser Test)

- Prototype: a bot was implemented for an initial browser (Chrome) with basic navigation and connected to the browser’s cookie database to extract information. A copy of the cookie database was made to avoid file locking while the browser was open.

- Testing: the bot simulated opening the browser, entering a URL, searching, waiting until the page had fully loaded, extracting cookies, and closing the browser; the cookie extraction success rate was 99%.

- Findings: some URLs contained usernames that allowed students to be identified.

- Improvements: a URL anonymization system is required in the deployment phase to remove any personally identifiable information.
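The copy-before-read step of Cycle 1 can be sketched with the standard library alone. The function below copies a Chromium-style `Cookies` SQLite file to a temporary directory and reads cookie metadata from the copy, so the reader never holds a lock on the live file used by the open browser. The column names follow the Chromium `cookies` table as we understand it and should be checked against the installed browser version; cookie values are left out because current Chromium builds store them encrypted in `encrypted_value`.

```python
import shutil
import sqlite3
import tempfile
from pathlib import Path

# Metadata columns assumed to exist in Chromium's `cookies` table (constant, not user input).
COLUMNS = ("host_key", "name", "path", "expires_utc", "is_secure", "is_httponly", "samesite")


def read_cookies_snapshot(cookie_db: Path) -> list[dict]:
    """Copy the browser's cookie database and read cookie metadata from the copy."""
    with tempfile.TemporaryDirectory() as tmp:
        snapshot = Path(tmp) / "Cookies_snapshot"
        shutil.copy2(cookie_db, snapshot)  # read the copy, never the browser's live file
        conn = sqlite3.connect(snapshot)
        try:
            rows = conn.execute(f"SELECT {', '.join(COLUMNS)} FROM cookies").fetchall()
        finally:
            conn.close()  # close before the temporary directory is deleted (required on Windows)
    return [dict(zip(COLUMNS, row)) for row in rows]
```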

3.2.2. Cycle 2 (Multi-Browser Expansion)

- Prototype: bots were implemented for the additional browsers (Firefox, Brave, Opera, and Edge), and each bot was connected to its browser’s cookie database to extract information. This required identifying the location of each browser’s cookie database and automating and generalizing the search so that it works on any computer.

- Testing: each bot was run to look up a URL and collect the resulting cookies, both individually and jointly (five bots at a time).

- Findings: Edge, Chrome, Opera, and Brave are all Chromium-based. Their cookie databases share the same storage path structure; only the browser folder name changes. The cookies of these four browsers have the same number of attributes.

- Improvements: analyze and select the common attributes of the cookies of each of the browsers.
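The Cycle 2 findings (Chromium-based browsers differ mainly in their profile folder, and their cookie tables share attributes) suggest a small lookup plus a schema intersection. In the sketch below, the profile folders are the usual Windows defaults as we understand them and are assumptions to verify on the target machines (Opera in particular keeps its profile under the roaming AppData folder, and its layout has varied across versions). Chromium moved the `Cookies` file into a `Network` subfolder in recent versions, so both locations are tried. Firefox, which is not Chromium-based, is not covered.

```python
import os
import sqlite3
from pathlib import Path
from typing import Mapping, Optional

# Usual Windows profile folders per browser (assumed defaults; verify on each machine).
PROFILE_DIRS = {
    "chrome": ("LOCALAPPDATA", ("Google", "Chrome", "User Data", "Default")),
    "edge": ("LOCALAPPDATA", ("Microsoft", "Edge", "User Data", "Default")),
    "brave": ("LOCALAPPDATA", ("BraveSoftware", "Brave-Browser", "User Data", "Default")),
    "opera": ("APPDATA", ("Opera Software", "Opera Stable")),
}


def find_cookie_db(profile_dir: Path) -> Optional[Path]:
    """Return the Cookies database inside a Chromium profile, trying newer and older layouts."""
    for candidate in (profile_dir / "Network" / "Cookies", profile_dir / "Cookies"):
        if candidate.is_file():
            return candidate
    return None


def locate_all(env: Mapping[str, str] = os.environ) -> dict[str, Path]:
    """Map each browser to its cookie database, skipping browsers that are not installed."""
    found: dict[str, Path] = {}
    for browser, (env_var, parts) in PROFILE_DIRS.items():
        base = env.get(env_var)
        if not base:
            continue
        db = find_cookie_db(Path(base).joinpath(*parts))
        if db is not None:
            found[browser] = db
    return found


def cookie_columns(db: Path) -> set[str]:
    """Column names of the `cookies` table (run this on a copied snapshot, not the live file)."""
    conn = sqlite3.connect(db)
    try:
        return {row[1] for row in conn.execute("PRAGMA table_info(cookies)")}
    finally:
        conn.close()


def common_attributes(dbs: dict[str, Path]) -> set[str]:
    """Attributes shared by the cookie tables of every browser."""
    column_sets = [cookie_columns(p) for p in dbs.values()]
    return set.intersection(*column_sets) if column_sets else set()
```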

3.2.3. Cycle 3 (Performance at Scale)

- Prototype: all the bots were fed with the web search database of a computer laboratory at the University of the Armed Forces-ESPE.

- Testing: each URL was searched continuously, and all cookies generated by that URL were extracted.

- Findings: the URL base contained repeated URLs.

- Improvements: implement a script that allows us to eliminate repeated URLs.

3.3. Phase 3: Validation & Metrics

- Success criteria (accuracy): each bot was considered successful if it collected cookies from the entire URL base (without repetition), registering all first- and third-party cookies for each URL.

- Performance: number of URLs processed per hour, number of cookies generated per domain, and number of days required to complete the search of all URLs.

- Ethical safeguards: analyze and apply data protection laws related to the use of students’ web search histories.
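The performance metrics above reduce to simple aggregations over a visit log. As a rough illustration, if the 440,000 URLs and 10.9 days reported in the Abstract are taken as one continuous run, throughput is 440,000 / (10.9 × 24) ≈ 1,682 URLs per hour. The sketch below computes the same metrics from a hypothetical log of (timestamp, URL, cookie name) records; the record layout is our assumption, not the collector’s actual schema, and “per domain” is read here as per visited host.

```python
from collections import Counter
from datetime import datetime
from typing import NamedTuple
from urllib.parse import urlsplit


class CookieRecord(NamedTuple):
    """One cookie observed during a visit (hypothetical log layout)."""
    visited_at: datetime
    url: str
    cookie_name: str


def collection_metrics(records: list[CookieRecord]) -> dict:
    """Throughput, cookies per visited domain, and elapsed days for one collection run."""
    if not records:
        return {"urls_per_hour": None, "cookies_per_domain": {}, "days": 0.0}
    # Elapsed time is measured from the first to the last observed record.
    start = min(r.visited_at for r in records)
    end = max(r.visited_at for r in records)
    hours = (end - start).total_seconds() / 3600
    unique_urls = {r.url for r in records}
    per_domain = Counter(urlsplit(r.url).hostname for r in records)
    return {
        # Undefined when all records share one timestamp, so report None instead of dividing by zero.
        "urls_per_hour": len(unique_urls) / hours if hours > 0 else None,
        "cookies_per_domain": dict(per_domain),
        "days": hours / 24,
    }
```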

3.4. Phase 4: Final Deployment

The optimized bots were deployed in a controlled environment.

3.4.1. Cookie Collector Phase 1

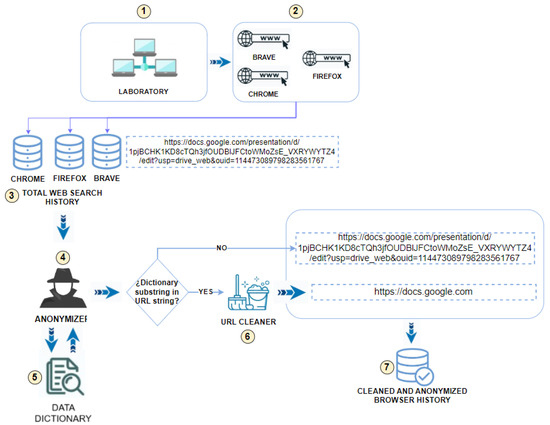

We have proposed a novel cookie collector that aims to deliver three datasets with several attributes to predict whether a visited domain is vulnerable to XSS attacks. Figure 3 shows Phase 1, whose operation is explained below.

Figure 3.

Cookie Collector Phase 1: The system can anonymize and clean data from URLs in web search history.

Web search histories from Google Chrome, Mozilla Firefox, and Brave were collected from a laboratory of the Computer Science Department (30 computers) at the Universidad de las Fuerzas Armadas-ESPE in Santo Domingo from May to October 2023; this was the first step of the research process.

In the second step, we analyzed all the attributes of the search databases obtained from each computer. Table 2 details the database location, tables, and attributes that contain web search history information for each browser.

Table 2.

Location of database containing web search history for Chrome, Firefox, and Brave browsers.
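The locations and tables summarized in Table 2 translate into one query per browser family. The sketch below merges copies of each computer’s history files into a single table, in the spirit of the Total Web Search History; it assumes the standard schemas (table `urls` in the Chromium `History` file used by Chrome and Brave, table `moz_places` in Firefox’s `places.sqlite`) and writes to a hypothetical `history` table. It does not reproduce the authors’ SQLite Studio workflow.

```python
import sqlite3
from pathlib import Path

# Query per browser family, based on the standard history schemas (assumed).
HISTORY_QUERIES = {
    "chromium": "SELECT url FROM urls",       # `History` file of Chrome and Brave
    "firefox": "SELECT url FROM moz_places",  # `places.sqlite` file of Firefox
}


def merge_histories(sources: list[tuple[str, str, Path]], total_db: Path) -> int:
    """Copy every URL from (computer, browser, history file) sources into one `history` table."""
    out = sqlite3.connect(total_db)
    inserted = 0
    try:
        out.execute("CREATE TABLE IF NOT EXISTS history (computer TEXT, browser TEXT, url TEXT)")
        for computer, browser, history_file in sources:
            family = "firefox" if browser == "firefox" else "chromium"
            src = sqlite3.connect(history_file)
            try:
                urls = [row[0] for row in src.execute(HISTORY_QUERIES[family])]
            finally:
                src.close()
            out.executemany(
                "INSERT INTO history VALUES (?, ?, ?)",
                [(computer, browser, url) for url in urls],
            )
            inserted += len(urls)
        out.commit()
    finally:
        out.close()
    return inserted
```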

Using this information, we created a total database containing the web search history of the three browsers mentioned above; this is the third step, titled Total Web Search History. SQLite Studio was used as the database management system (DBMS) to create, manage, and access this total database.

We have taken as a reference the Guide for the treatment of personal data in public administration [46], which states verbatim that “Authorization of the holder shall not be required when a public institution develops the processing of personal data and has a statistical or scientific purpose; of health protection or security; or is carried out as part of a public policy to guarantee constitutionally recognized rights. In this case, measures leading to the suppression of the identity of the data subjects must be adopted”.

In this context, all historical data collected in the Total Web Search History were anonymized using a Python script, ANONYMIZER, which searched the visited URLs for matching first names, last names, usernames, and their combinations.

A data DICTIONARY was built with all the names, surnames, usernames, and their combinations for every student who used the laboratory computers during the study period, using the Computer Equipment Use Records provided by the Director of the Information Technology Degree and the Head of Laboratories.

Each parsed URL was searched for any match with the content of this dictionary. If a match was found, the URL was passed through URL CLEANER, another Python script that removed all characters from the URL except the domain name, leaving it in the format http://domain.com, https://domain.com, https://www.domain.com, or http://www.domain.com. Repeated domains were also removed in this process, yielding the CLEANED AND ANONYMIZED BROWSER HISTORY.
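The ANONYMIZER and URL CLEANER steps can be approximated as follows. This is a sketch under stated assumptions, not the authors’ scripts: the dictionary is built from hypothetical (first name, last name, username) tuples with a few common separators between names; matching is a case-insensitive substring test on the percent-decoded URL, which errs toward over-anonymizing short names; and a matching URL is reduced to scheme and host before duplicates are dropped.

```python
from urllib.parse import unquote, urlsplit


def build_dictionary(people: list[tuple[str, str, str]]) -> set[str]:
    """Expand (first, last, username) tuples into lowercase terms and name combinations."""
    terms: set[str] = set()
    for first, last, username in people:
        first, last, username = first.lower(), last.lower(), username.lower()
        terms.update({first, last, username})
        for sep in ("", ".", "_", "-", " "):  # assumed separators between names
            terms.add(f"{first}{sep}{last}")
            terms.add(f"{last}{sep}{first}")
    terms.discard("")
    return terms


def contains_identity(url: str, dictionary: set[str]) -> bool:
    """True if any dictionary term appears in the decoded, lowercased URL."""
    text = unquote(url).lower()
    return any(term in text for term in dictionary)


def clean_url(url: str) -> str:
    """Keep only scheme and host (drops path, query, user info, and port).

    Note: a name that appears inside the host itself survives this step.
    """
    parts = urlsplit(url)
    return f"{parts.scheme}://{parts.hostname}"


def clean_history(urls: list[str], dictionary: set[str]) -> list[str]:
    """Anonymize matching URLs, then remove duplicates while preserving first-seen order."""
    seen: set[str] = set()
    result: list[str] = []
    for url in urls:
        entry = clean_url(url) if contains_identity(url, dictionary) else url
        if entry not in seen:
            seen.add(entry)
            result.append(entry)
    return result
```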

3.4.2. Cookie Collector Phase 2

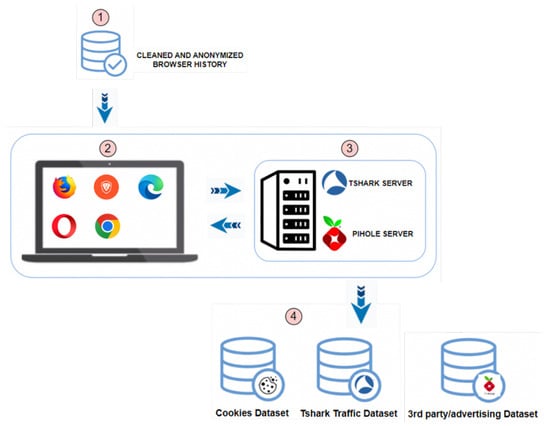

With the clean and anonymized database, we fed Phase 2 of our cookie collector, as shown in Figure 4. This infrastructure consisted of a computer running Windows with an Internet connection, on which the following web browsers were installed: Google Chrome, Mozilla Firefox, Microsoft Edge, Brave, and Opera. In addition, a server running Ubuntu was configured with two active services: PiHole [47] and Tshark [48]. The basis of our proposal was to collect and associate all the types of cookies generated by each URL (Cookies Dataset), to capture the traffic generated while the bot executed the search (Tshark Traffic Dataset), and, finally, to associate this information with the communications established with third-party or advertising domains (3rd party/advertising Dataset) that were recorded by PiHole.

Figure 4.

Cookie Collector Phase 2: Configured test infrastructure to search the URL and extract the cookies-related generated network traffic and third-party domains.
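Associating the Cookies Dataset with the 3rd party/advertising Dataset requires deciding, for each cookie, whether it belongs to the visited site and whether its domain is a known tracker. The sketch below does this with a suffix-based domain match. It is a simplification: a production version would compare registrable domains using the Public Suffix List, and the `trackers` set is a stand-in for domains taken from PiHole’s block lists, whose export format we do not assume.

```python
from urllib.parse import urlsplit


def _norm(host: str) -> str:
    """Lowercase a host and drop the leading dot used in cookie Domain attributes."""
    return host.lower().lstrip(".")


def domain_matches(host: str, domain: str) -> bool:
    """True if host equals domain or is a subdomain of it."""
    host, domain = _norm(host), _norm(domain)
    return host == domain or host.endswith("." + domain)


def classify_cookie(visited_url: str, cookie_domain: str, trackers: set[str]) -> dict:
    """Label one cookie as first or third party and flag it if its domain is a known tracker."""
    page_host = urlsplit(visited_url).hostname or ""
    first_party = domain_matches(page_host, cookie_domain) or domain_matches(cookie_domain, page_host)
    tracker = any(domain_matches(cookie_domain, t) for t in trackers)
    return {
        "cookie_domain": _norm(cookie_domain),
        "party": "first" if first_party else "third",
        "tracker": tracker,
    }
```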

The objective of Phase 2 was to automate the search for each URL through the installed web browsers, simulating the behavior of a real user. Our contribution here is BotSoul: five bots (one for each browser) programmed in Python and automated to execute the sequence shown in Figure 4.

In our evaluation of the traffic analyzers used to capture the web search data generated by the bots, we examined their characteristics and summarized them in Table 3. After careful consideration, we selected Tshark for its ability to capture network packets in real time and its compatibility with Ubuntu. Furthermore, it allowed us to obtain all connection attributes remotely from the programs running in each bot.

Table 3.

Evaluation summary of programs used to capture and analyze network traffic.
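The per-visit capture step can be sketched as follows. This is a minimal sketch, not the authors' code: it assumes the bot can launch `tshark` on the Ubuntu server (for example, through a remote shell, not shown), and the interface name, output path, and the helper names `build_tshark_command`, `start_capture`, and `stop_capture` are hypothetical. Only standard `tshark` options are used: `-i` (interface), `-w` (output file), `-a duration:N` (autostop after N seconds), and `-f` (capture filter).

```python
import subprocess
from typing import Optional


def build_tshark_command(interface: str, output_file: str,
                         duration_s: Optional[int] = None,
                         capture_filter: Optional[str] = None) -> list:
    """Build a tshark argument list that writes raw packets to a capture file."""
    cmd = ["tshark", "-i", interface, "-w", output_file]
    if duration_s is not None:
        # Autostop condition: stop capturing after duration_s seconds.
        cmd += ["-a", f"duration:{duration_s}"]
    if capture_filter:
        # Capture filter in libpcap (BPF) syntax, applied while capturing.
        cmd += ["-f", capture_filter]
    return cmd


def start_capture(cmd: list) -> subprocess.Popen:
    """Launch tshark in the background before the bot loads the URL."""
    return subprocess.Popen(cmd, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)


def stop_capture(proc: subprocess.Popen, timeout_s: float = 10.0) -> None:
    """Stop tshark after the visit (SIGTERM on POSIX), killing it if it hangs."""
    proc.terminate()
    try:
        proc.wait(timeout=timeout_s)
    except subprocess.TimeoutExpired:
        proc.kill()
        proc.wait()
```

In use, a bot would call `start_capture(build_tshark_command("eth0", "/tmp/visit_0001.pcapng"))` before loading a URL and `stop_capture(...)` once the page has settled. Writing raw packets with `-w` and decoding them afterwards keeps the capture lightweight during the visit.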

We assessed several open-source Robotic Process Automation (RPA) tools for bot programming. These tools allowed us to automate repetitive, rule-based tasks using software platforms. We programmed software robots, also known as “bots”, to simulate human web search actions. Table 4 summarizes the characteristics of the programs evaluated for bot programming. PyAutoGUI [49] was selected because it is a cross-platform GUI automation Python module for human beings. It was used to control the bots’ interaction with web pages by automating mouse movements and keystrokes.

Table 4.

Evaluation summary of RPA programs used to test the programming of bots.
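A minimal sketch of the keyboard sequence a PyAutoGUI-driven bot might use to open a URL is shown below. It assumes the target browser window already has focus and that Ctrl+L moves focus to the address bar in each browser; the function name and timing parameters are hypothetical. `pyautogui.hotkey`, `pyautogui.write`, and `pyautogui.press` are real PyAutoGUI functions, and the `driver` parameter lets a stand-in object replace PyAutoGUI when no desktop session is available.

```python
import time
from typing import Any, Optional


def visit_url(url: str, driver: Optional[Any] = None,
              typing_interval: float = 0.05, settle_s: float = 5.0) -> None:
    """Type a URL into the focused browser window and press Enter, as a user would.

    `driver` defaults to the pyautogui module; any object exposing hotkey(),
    write() and press() with the same call shapes can be injected instead.
    """
    if driver is None:
        import pyautogui  # requires an interactive desktop session
        driver = pyautogui
    driver.hotkey("ctrl", "l")                   # focus the address bar (assumed shortcut)
    driver.write(url, interval=typing_interval)  # type the URL key by key
    driver.press("enter")                        # load the page
    time.sleep(settle_s)                         # give page scripts time to set cookies
```

Typing character by character with a small interval, rather than pasting, keeps the interaction closer to what a human user produces.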

Table 5 summarizes the characteristics of the programs evaluated for collecting information from the cookies generated when a bot visited a URL in the web search history. The initial evaluation of these tools revealed limitations in their ability to capture the complete set of cookie attributes. To overcome this limitation, we designed a strategy based on simulating human user behavior: the system creates a browser instance, navigates to the target URL, and emulates pressing the Enter key to load the page. This methodology enabled the development of the BotSoul algorithm, which collects cookies more exhaustively and accurately through web interaction that realistically replicates human browsing patterns.

Table 5.

Evaluation summary of programs used to capture/analyze cookies.

3.4.3. BotSoul Algorithm Operation

Our bots were programmed to automatically and autonomously search each URL, obtain the cookies it generated, capture the traffic resulting from the search (Tshark), and obtain information on connections to third parties through advertising domains (PiHole), associating all of this information with the searched URL. All generated attributes were stored in the Final DataBase (Cookies, Tshark Traffic, and 3rd party connections).

Figure 5 shows a general view of how the “soul” of each bot is composed (five in total, one for each web browser). Algorithm 1 explains each stage of the bot that controls the Google Chrome browser; the same logic was applied to the other bots and browsers.

Figure 5.

BotSoul, graphical description of how our cookie collector operates by automating bots in python.

Our collector uses Python scripts to connect to the cookie database of each browser. A separate script was used for each browser, and the path to the directory where the cookie records are stored is shown in Table 6. We start by creating a backup of the cookie database for the designated browser, as the database is blocked when the browser is running and cannot be accessed by other applications.

Algorithm 1.

Pseudocode of the operation of the BotSoul algorithm for collecting cookies.

Table 6.

Location of the database that contains the cookies for each web browser used.
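The backup-then-read step can be sketched for Chrome as follows. This is an illustrative sketch, assuming the column names of the `cookies` table in recent Chrome versions (`host_key`, `name`, `path`, `creation_utc`, `expires_utc`, `is_secure`, `is_httponly`, `samesite`); these can change between releases, and the actual file path comes from Table 6. Chrome stores cookie values encrypted, so they are not read here, and Firefox uses a different schema (the `moz_cookies` table in `cookies.sqlite`). The function names are hypothetical.

```python
import shutil
import sqlite3
import tempfile
from datetime import datetime, timedelta, timezone
from pathlib import Path

# Chrome timestamps count microseconds since 1601-01-01 UTC.
CHROME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def chrome_time_to_datetime(us: int) -> datetime:
    return CHROME_EPOCH + timedelta(microseconds=us)


def read_chrome_cookies(db_path: str) -> list:
    """Copy the (possibly locked) cookie DB to a temp file and read its attributes."""
    with tempfile.TemporaryDirectory() as tmp:
        backup = Path(tmp) / "Cookies.backup"
        shutil.copy2(db_path, backup)  # work on a copy, never on the live file
        con = sqlite3.connect(backup)
        con.row_factory = sqlite3.Row
        try:
            rows = con.execute(
                "SELECT host_key, name, path, creation_utc, expires_utc, "
                "is_secure, is_httponly, samesite FROM cookies"
            ).fetchall()
        finally:
            con.close()  # close before the temp directory is removed
    return [
        {**dict(r),
         "created": chrome_time_to_datetime(r["creation_utc"]),
         # expires_utc == 0 marks a session cookie with no expiry date
         "expires": chrome_time_to_datetime(r["expires_utc"]) if r["expires_utc"] else None}
        for r in rows
    ]
```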

4. Results Analysis

4.1. Training

Three large data sets that contain essential information were obtained as the final result of the collection phase. It is important to note that the input database for this phase was a clean and anonymous database of search histories (domains). As a result, the first data set was structured to contain an association of these domains with the information about the cookies that were created when the bot performed a web search. This search action allowed us to collect all types of cookies of interest: first-party, third-party, and session cookies.

The second data set stored the association of these domains with the traffic (Tshark) generated when the bot searched for that domain using the web browser. A third data set associated these domains with the advertising domain records (PiHole) generated while the bot was browsing the selected website.

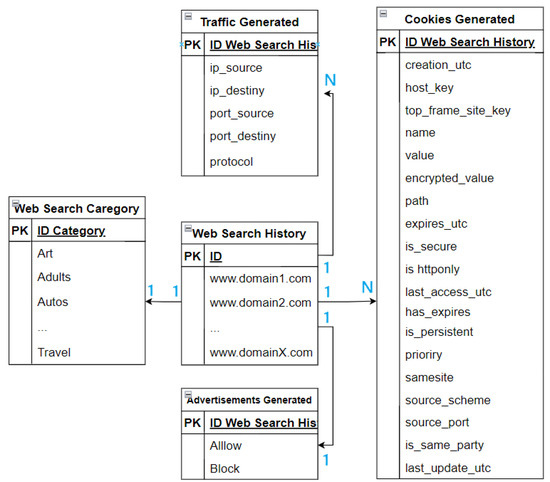

Figure 6 provides a preview of the tables, their relationships, and the available attributes, which were used as input for the training and prediction phase. The multiplicity relationship of each table is expressed as follows: One domain can generate several network traffic entries; in the same way, one domain can generate several cookie records (first- and third-party cookies). On the other hand, one domain can belong to only one web search category, and finally, one domain can generate a single advertisement status (allow or block).

Figure 6.

Entity relationship diagram of the datasets obtained in the collection phase.
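The multiplicities described for Figure 6 can be expressed as a relational schema. The sketch below uses SQLite with hypothetical table and column names, since the exact attributes are not listed here: one category and one allow/block status per domain, and many cookie and traffic rows per domain, enforced through foreign keys.

```python
import sqlite3

SCHEMA = """
CREATE TABLE category (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL UNIQUE
);
CREATE TABLE domain (
    id          INTEGER PRIMARY KEY,
    name        TEXT NOT NULL UNIQUE,
    category_id INTEGER REFERENCES category(id),              -- one category per domain
    ad_status   TEXT CHECK (ad_status IN ('allow', 'block'))  -- one status per domain
);
CREATE TABLE cookie (
    id          INTEGER PRIMARY KEY,
    domain_id   INTEGER NOT NULL REFERENCES domain(id),       -- many cookies per domain
    host TEXT, name TEXT, path TEXT,
    is_secure INTEGER, is_httponly INTEGER, samesite TEXT,
    third_party INTEGER
);
CREATE TABLE traffic (
    id          INTEGER PRIMARY KEY,
    domain_id   INTEGER NOT NULL REFERENCES domain(id),       -- many traffic rows per domain
    src TEXT, dst TEXT, protocol TEXT, length INTEGER
);
"""


def create_db(path: str = ":memory:") -> sqlite3.Connection:
    con = sqlite3.connect(path)
    con.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
    con.executescript(SCHEMA)
    return con
```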

In the initial test, each bot searched 611 domains for 4 h (approximately 152 domains per hour), generating approximately 4719 cookies. This means that each domain produced around seven cookies: one first-party and six third-party cookies.

We analyzed all web browsing logs collected from the five computer laboratories of the Universidad de las Fuerzas Armadas, using 440,000 unnormalized URLs (400,000 normalized domains) as the search base for all bots. In total, it took us approximately 10 days, 21 h and 36 min to complete the search for the information captured in Figure 6, with five bots, each working with a different browser 24 h a day.
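The rates implied by the reported figures can be checked with a few lines of arithmetic. The overall rate is computed for all five bots combined, because the text does not state how the URLs were divided among them.

```python
# Figures reported in the text.
test_domains, test_hours, test_cookies = 611, 4, 4719
total_urls = 440_000
days, hours, minutes = 10, 21, 36

domains_per_hour = test_domains / test_hours        # per bot, initial test
cookies_per_domain = test_cookies / test_domains    # average over the initial test
total_hours = days * 24 + hours + minutes / 60      # full collection campaign
total_days = total_hours / 24
urls_per_hour = total_urls / total_hours            # all five bots combined

print(f"{domains_per_hour:.2f} domains/h, {cookies_per_domain:.2f} cookies/domain")
# 152.75 domains/h, 7.72 cookies/domain
print(f"{total_hours:.1f} h = {total_days:.2f} days, {urls_per_hour:.0f} URLs/h overall")
# 261.6 h = 10.90 days, 1682 URLs/h overall
```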

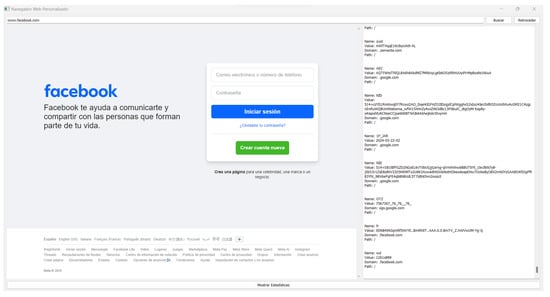

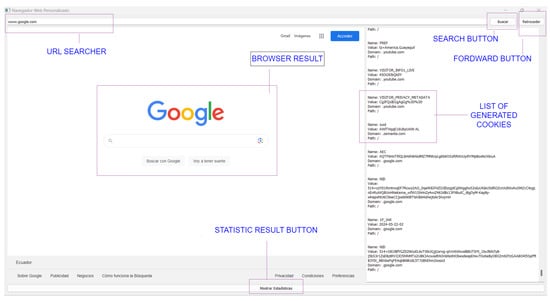

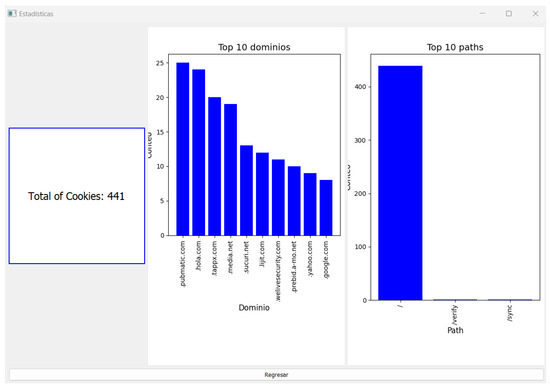

With the complete cookie database, the logic of sub-phase 1 (CookieScout [50]) and sub-phase 2 (DataCookie [51]) of the characterization phase was applied. First, an algorithm analyzed the attributes of all generated cookies and classified each cookie as suspicious or not suspicious for XSS attacks. Second, classification tree algorithms were applied to train our browser, which we call BOOKIE (Figure 7, Figure 8 and Figure 9), developed with Python and PyQt.

Figure 7.

Preview of BOOKIE, a browser to search for and analyze cookies.

Figure 8.

Preview of BOOKIE, a browser to predict the level of vulnerability of students to XSS attacks.

Figure 9.

Option to display statistical results for each browsed web page.
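The CookieScout labeling logic is described in [50] and is not reproduced here. As a purely hypothetical illustration of an attribute-based rule, the sketch below flags a cookie when it lacks the `HttpOnly` flag (so an injected script can read it through `document.cookie`) and either has a session-like name or lacks the `Secure` flag. The name pattern, the `CookieRecord` type, and the rule itself are assumptions; labels produced this way could then serve as targets for a classification tree.

```python
import re
from dataclasses import dataclass

# Hypothetical pattern for cookie names that often carry session or auth identifiers.
SESSION_NAME = re.compile(r"sess|sid|token|auth|login", re.IGNORECASE)


@dataclass
class CookieRecord:
    name: str
    http_only: bool
    secure: bool


def xss_suspicious(c: CookieRecord) -> bool:
    """Hypothetical rule: flag cookies that an injected script could read and exfiltrate."""
    readable_by_js = not c.http_only  # non-HttpOnly cookies appear in document.cookie
    looks_sensitive = SESSION_NAME.search(c.name) is not None
    return readable_by_js and (looks_sensitive or not c.secure)


records = [CookieRecord("PHPSESSID", False, True), CookieRecord("PHPSESSID", True, True),
           CookieRecord("_ga", False, False), CookieRecord("_ga", False, True)]
labels = ["suspicious" if xss_suspicious(r) else "not suspicious" for r in records]
```

A substring pattern like this one is deliberately crude and will produce false positives; it only illustrates how cookie attributes can be turned into binary labels.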

4.2. Prediction

To integrate webpage recommendation capabilities into the developed browser, we have evaluated various clustering algorithms, carefully considering the nature of cookie data and the specific objectives of the analysis:

- K-Means;

- DBSCAN;

- Hierarchical clustering.

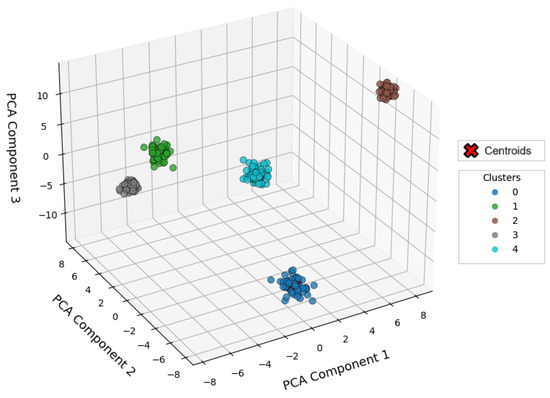

4.2.1. K-Means

The K-Means algorithm is a clustering technique that partitions data into K clusters, assigning each data point to the cluster with the nearest mean (centroid); it is one of the simplest and most popular clustering algorithms. It allowed us to identify common browsing patterns: cookies with similar characteristics (e.g., lifetime and domain) were grouped together, revealing browsing behaviors shared by users. The resulting clusters reflect similar behavioral patterns, which allowed us to identify user segments with common interests.

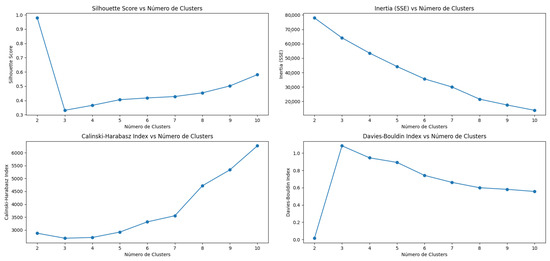

Different numbers of clusters were evaluated to perform a hyperparameter analysis of the proposed algorithm, and the evaluation metrics were compared to determine the optimal number of clusters. Figure 10 shows four graphs of how the evaluation metrics vary with the number of clusters, allowing the user to quickly interpret the results and select the optimal number of clusters. We determined that the optimal number of clusters for our analysis was two.

Figure 10.

Preview of K-MEANS Hyperparameter Analysis.
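A hyperparameter sweep like the one summarized in Figure 10 can be sketched with scikit-learn. This sketch assumes the cookie attributes have already been encoded as a numeric matrix `X`; the standardization step, the range of k, `n_init`, and the seed are illustrative choices, not the values used in the study. `KMeans`, its `inertia_` attribute, `silhouette_score`, `calinski_harabasz_score`, and `davies_bouldin_score` are standard scikit-learn APIs.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.metrics import (calinski_harabasz_score, davies_bouldin_score,
                             silhouette_score)
from sklearn.preprocessing import StandardScaler


def kmeans_sweep(X: np.ndarray, k_values=range(2, 11), seed: int = 0) -> dict:
    """Fit K-Means for each k and collect the four metrics plotted in Figure 10."""
    Xs = StandardScaler().fit_transform(X)  # put features on comparable scales
    results = {}
    for k in k_values:
        km = KMeans(n_clusters=k, n_init=10, random_state=seed).fit(Xs)
        labels = km.labels_
        results[k] = {
            "silhouette": silhouette_score(Xs, labels),                # higher is better
            "inertia": km.inertia_,                                    # lower is better
            "calinski_harabasz": calinski_harabasz_score(Xs, labels),  # higher is better
            "davies_bouldin": davies_bouldin_score(Xs, labels),        # lower is better
        }
    return results


def best_k_by_silhouette(results: dict) -> int:
    return max(results, key=lambda k: results[k]["silhouette"])
```

Choosing k by the highest Silhouette Score is only one heuristic; the inertia curve and the other two indices are usually read together before settling on a value.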

The Silhouette Score measures how well the data are grouped within their assigned clusters and how far apart the clusters are from each other. A value close to 1 indicates that the data are well grouped and the clusters are separated.

The Inertia, or Sum of Squares within the Clusters (SSE), measures the compactness of a cluster, defined as the sum of the squared distances between each data point and the centroid of the cluster to which it belongs. Lower values indicate that points are closer to their centroids, which generally means more compact clusters.

The Calinski–Harabasz Index measures the separation between clusters and the compaction within clusters. Higher values indicate more separated and compact clusters.

The Davies–Bouldin Index measures the relationship between dispersion within clusters and separation between clusters. Lower values indicate that the clusters are well separated and compact.
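For reference, the standard definitions of these four metrics are given below for N points partitioned into K clusters C_1 to C_K, with cluster sizes n_k, centroids μ_k, overall mean μ, and Euclidean distance d. For a point i, a(i) is its mean distance to the other points of its own cluster, b(i) is its smallest mean distance to the points of any other cluster, and σ_k is the mean distance of the points of C_k to μ_k.

```latex
s(i) = \frac{b(i) - a(i)}{\max\{a(i),\, b(i)\}}, \qquad
S = \frac{1}{N} \sum_{i=1}^{N} s(i) \in [-1, 1]

\mathrm{SSE} = \sum_{k=1}^{K} \sum_{x \in C_k} \lVert x - \mu_k \rVert^{2}

\mathrm{CH} = \frac{\operatorname{tr}(B_K) / (K - 1)}{\operatorname{tr}(W_K) / (N - K)}, \quad
B_K = \sum_{k=1}^{K} n_k (\mu_k - \mu)(\mu_k - \mu)^{\top}, \quad
W_K = \sum_{k=1}^{K} \sum_{x \in C_k} (x - \mu_k)(x - \mu_k)^{\top}

\mathrm{DB} = \frac{1}{K} \sum_{i=1}^{K} \max_{j \neq i} \frac{\sigma_i + \sigma_j}{d(\mu_i, \mu_j)}
```

Note that tr(W_K) equals the inertia SSE, so the Calinski–Harabasz index rewards low inertia relative to the spread of the centroids around the overall mean.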

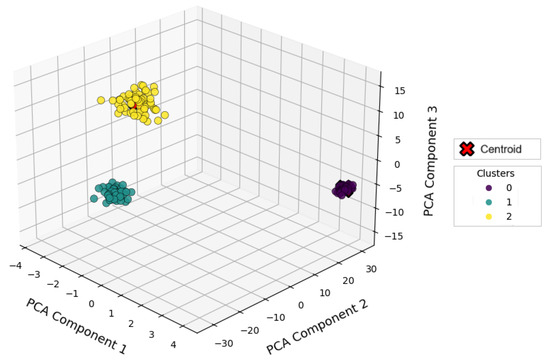

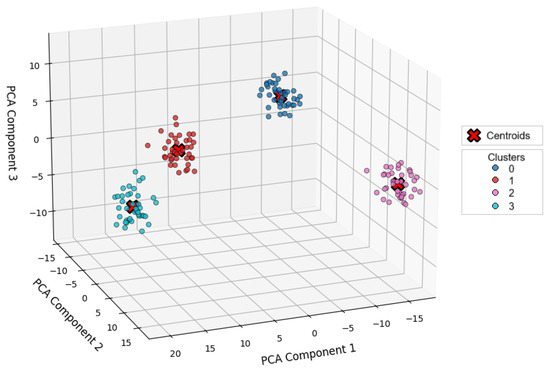

Principal Component Analysis (PCA) has been applied to visualize the clusters. It is a dimensionality reduction technique that transforms a set of possibly correlated variables into a smaller set of uncorrelated variables called principal components.

Figure 11, Figure 12 and Figure 13 show the graph of the generated clusters. A summary of the hyperparameter evaluation using different numbers of clusters is shown in Table 7.

Figure 11.

Preview of K-MEANS with two clusters.

Figure 12.

Preview of K-MEANS with five clusters.

Figure 13.

Preview of K-MEANS with 10 clusters.

Table 7.

Hyperparameter analysis for the K-Means algorithm.

For two clusters (k = 2), the results indicate that the clusters are well defined and separated, although there may be some natural dispersion within them. This is usually a sign of successful clustering: the data points within each cluster may be spread out, but the clusters themselves are clearly differentiated.

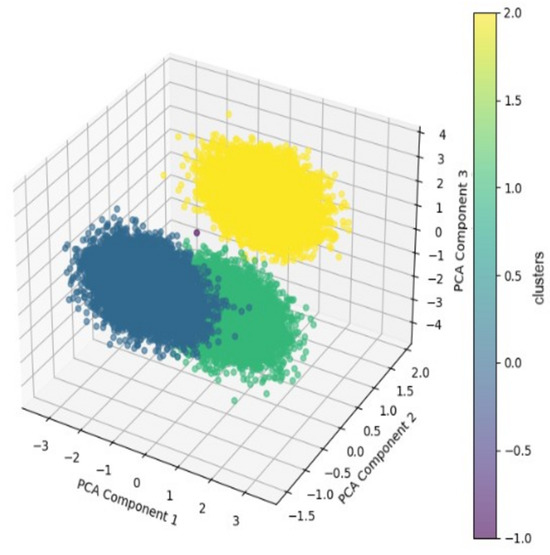

4.2.2. DBSCAN (Density-Based Spatial Clustering of Applications with Noise)

DBSCAN is a density-based clustering algorithm that can find clusters of arbitrary shape and handle noise (data points that do not belong to any cluster). Because it detects clusters in noisy data, it was suitable for our cookie data, which contained noise such as irrelevant or inconsistent third-party cookies. It produced clusters of densely connected cookies while ignoring noise, which improved the handling of atypical or unusual cookies.

For eps = 10, the results indicate that the DBSCAN model achieved a very clear grouping of the data. The two clusters (n = 2; Figure 14) are well defined and separated, as reflected in a high Silhouette Score, a high Calinski–Harabasz index, and a low Davies–Bouldin index (Table 8).

Figure 14.

Preview of clusters identified with DBSCAN.

Table 8.

Performance tests with different eps and min_samples values.
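A grid search over `eps` and `min_samples`, as in Table 8, can be sketched with scikit-learn's `DBSCAN`. Points labeled -1 are noise and are excluded before scoring, which is one common convention (an assumption here, since the handling of noise in the metrics is not stated). Because `eps` is a distance in feature space, a value such as eps = 10 is only meaningful relative to how the cookie features were encoded and scaled. The function name is hypothetical.

```python
import numpy as np
from sklearn.cluster import DBSCAN
from sklearn.metrics import silhouette_score


def dbscan_grid(X: np.ndarray, eps_values, min_samples_values) -> list:
    """Run DBSCAN over a parameter grid; score only non-noise points (label -1 = noise)."""
    rows = []
    for eps in eps_values:
        for ms in min_samples_values:
            labels = DBSCAN(eps=eps, min_samples=ms).fit_predict(X)
            mask = labels != -1
            n_clusters = len(set(labels[mask]))
            # The silhouette score needs at least two clusters among the scored points.
            sil = (silhouette_score(X[mask], labels[mask])
                   if n_clusters >= 2 else float("nan"))
            rows.append({"eps": eps, "min_samples": ms, "n_clusters": n_clusters,
                         "noise": int((~mask).sum()), "silhouette": sil})
    return rows
```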

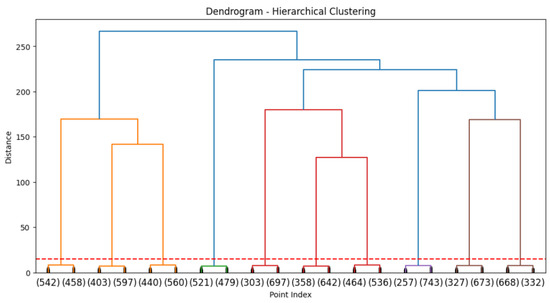

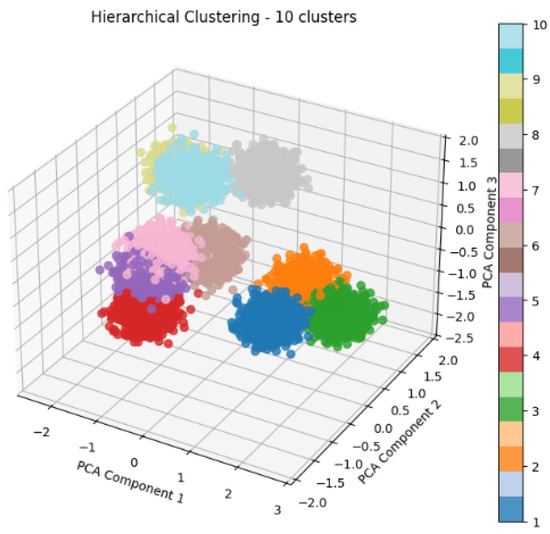

4.2.3. Hierarchical Clustering

Hierarchical clustering builds a hierarchy of clusters using either an agglomerative approach (starting with individual points and merging them) or a divisive approach (starting with all points and splitting them). It allowed us to analyze hierarchical relationships between groups of cookies at different levels of granularity, which helped identify subgroups of users with specific behaviors.

With the implementation of these clustering algorithms, the results were as follows:

- User segmentation: grouping users into clusters based on the cookies generated allows segments with similar interests and browsing behaviors to be identified.

- Personalized recommendations: based on the identified clusters, the browser can recommend web pages relevant to each cluster’s browsing patterns.

- Anomaly detection and security: identification of cookies that do not fit normal patterns (anomalies), helping to detect possible security threats.

- User experience optimization: improving the relevance of recommendations, providing a more personalized and efficient browsing experience for students.

Implementing these algorithms allowed the browser to offer recommendations based on historical cookie data and continually adapt its recommendations as more data were collected, thus improving the accuracy and personalization of web suggestions.

Figure 15 shows the dendrogram produced by the hierarchical algorithm, which represents how data points and groups are successively merged as the linkage distance increases. Figure 16 shows the clusters (n = 10) identified by the hierarchical algorithm.

Figure 15.

Preview of dendrograms identified with hierarchical clustering.

Figure 16.

Preview of clusters identified with hierarchical clustering.

The results in Table 9 indicate that hierarchical clustering performed well: it identified meaningful groups in the data, with a good cluster structure and clear separation between them.

Table 9.

Performance test for hierarchical clustering.
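Agglomerative clustering with a dendrogram, as in Figures 15 and 16, can be sketched with SciPy: `linkage` builds the merge tree that `scipy.cluster.hierarchy.dendrogram` plots, and `fcluster` with `criterion="maxclust"` cuts it into at most a given number of clusters. Ward linkage, the function name, and scoring with scikit-learn metrics are assumptions for illustration, not the study's documented settings.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage
from sklearn.metrics import (calinski_harabasz_score, davies_bouldin_score,
                             silhouette_score)


def hierarchical_clusters(X: np.ndarray, n_clusters: int, method: str = "ward"):
    """Agglomerative clustering: build the merge tree, then cut it into n_clusters."""
    Z = linkage(X, method=method)  # (n - 1) x 4 merge history; plot with dendrogram(Z)
    labels = fcluster(Z, t=n_clusters, criterion="maxclust")  # labels start at 1
    metrics = {
        "silhouette": silhouette_score(X, labels),
        "calinski_harabasz": calinski_harabasz_score(X, labels),
        "davies_bouldin": davies_bouldin_score(X, labels),
    }
    return Z, labels, metrics
```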

A complete system for automatic cookie collection was assembled using bots that simulate a user’s browsing behavior. Five bots were programmed, one for each browser most used by university students (Chrome, Firefox, Brave, Edge, and Opera).

Web search histories from Google Chrome, Firefox, and Brave were collected from a laboratory at the University of the Armed Forces-ESPE (30 computers) between 2019 and 2024. The attributes of these databases were analyzed, and a consolidated database containing the complete web search history was created.

A test infrastructure was configured on which the five browsers mentioned above were installed, together with a server running Ubuntu with the PiHole and Tshark services active. The goal was to collect all cookies generated by each URL (Cookie Dataset), capture the traffic generated during the bot search (Tshark Traffic Dataset), and associate this information with advertising or third-party domains (Third Party/Advertising Dataset).

An algorithm named BotSoul was developed to automate the process of accessing each URL by simulating user behavior—programmatically launching a browser, entering the web address, and navigating the site as if it were a real user. This process, which involves dynamic interaction with web elements and the execution of client-side scripts, ensures the retrieval of all cookie attributes generated during realistic browsing sessions.

Cookies were captured and analyzed in real time using tools such as Wireshark, Scapy, NtopNG, Requests, and Curl.

Procedures were defined within the BotSoul algorithm to check if the cookie database was empty, delete cookies, open the browser, start and end capturing traffic with Tshark, obtain HTTP headers, and obtain PiHole logs.
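The procedures listed above can be composed into a per-URL loop. The sketch below is hypothetical: each procedure is injected as a callable (the `BotSteps` fields and `run_bot` are illustrative names, not the authors' code), and the ordering (clear cookies, start capture, visit, stop capture, then read cookies, headers, and PiHole logs) is an assumption based on the description of BotSoul. Injecting the steps keeps the loop testable without a browser, Tshark, or PiHole.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class BotSteps:
    """Hypothetical hooks, one per BotSoul procedure."""
    cookie_db_is_empty: Callable[[], bool]
    delete_cookies: Callable[[], None]
    open_browser_and_visit: Callable[[str], None]
    start_capture: Callable[[str], object]
    stop_capture: Callable[[object], None]
    read_cookies: Callable[[], list]
    get_http_headers: Callable[[str], dict]
    get_pihole_log: Callable[[str], list]


def run_bot(urls: Iterable[str], steps: BotSteps) -> list:
    records = []
    for url in urls:
        if not steps.cookie_db_is_empty():
            steps.delete_cookies()           # start each visit from a clean cookie jar
        capture = steps.start_capture(url)
        try:
            steps.open_browser_and_visit(url)
        finally:
            steps.stop_capture(capture)      # always close the capture, even on errors
        records.append({
            "url": url,
            "cookies": steps.read_cookies(),
            "headers": steps.get_http_headers(url),
            "pihole": steps.get_pihole_log(url),
        })
    return records
```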

In an initial test, each bot searched 611 domains for 4 h (approximately 152 domains per hour), generating approximately 4719 cookies. This means that each domain produced around seven cookies: one first-party and six third-party cookies.

A total of 440,000 non-normalized URLs (400,000 normalized domains) were used as a search base for all bots.

The total data collection took approximately 10 days, 21 h and 36 min, with five bots working 24 h a day.

The final database contained the domain association with cookie information, generated traffic (Tshark), and advertising domain registrations (PiHole).

Machine learning models were developed and evaluated to classify web domains as suspicious and to predict the susceptibility of newly encountered domains to cross-site scripting (XSS) attacks.

Three large datasets obtained during the data collection phase were utilized. The primary input consisted of a clean, anonymized database of search histories, structured at the domain level. This dataset was then enriched with cookie data generated during automated browsing sessions carried out by the bots. Each domain entry was linked to the corresponding set of cookies created during the simulated user interactions, allowing for the extraction and analysis of key attributes such as creation time, expiration, path, domain scope, Secure/HttpOnly flags, and SameSite policies. This integrated dataset provided a robust foundation for subsequent modeling and vulnerability analysis.
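Turning a cookie into a feature vector for the models can be sketched with Python's standard `http.cookies` parser, which recognizes the `Path`, `Domain`, `Max-Age`, `Secure`, `HttpOnly`, and (since Python 3.8) `SameSite` attributes of a `Set-Cookie` header. The numeric encoding, the helper names, and the third-party test are hypothetical; in particular, comparing the last two host labels is a naive stand-in for the registrable domain, which should be computed with the Public Suffix List.

```python
from http.cookies import SimpleCookie

# Hypothetical numeric encoding of SameSite; an unspecified attribute maps to -1.
SAMESITE_CODES = {"none": 0, "lax": 1, "strict": 2}


def registrable_suffix(host: str, labels: int = 2) -> str:
    """Naive eTLD+1: keep the last `labels` host labels (use the Public Suffix List in practice)."""
    return ".".join(host.lower().lstrip(".").split(".")[-labels:])


def cookie_features(set_cookie: str, response_host: str, page_host: str) -> list:
    """Parse one Set-Cookie header and return a feature dict per cookie it defines."""
    jar = SimpleCookie()
    jar.load(set_cookie)
    features = []
    for name, morsel in jar.items():
        # Without a Domain attribute, the cookie belongs to the host that set it.
        cookie_host = morsel["domain"] or response_host
        features.append({
            "name": name,
            "secure": int(bool(morsel["secure"])),
            "httponly": int(bool(morsel["httponly"])),
            "samesite": SAMESITE_CODES.get(str(morsel["samesite"]).lower(), -1),
            "path_depth": len([p for p in morsel["path"].split("/") if p]),
            "has_max_age": int(bool(morsel["max-age"])),
            "third_party": int(registrable_suffix(cookie_host) != registrable_suffix(page_host)),
        })
    return features
```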

5. Discussion

The implementation of this integrated system of automated cookie collection and XSS vulnerability analysis provides key findings in the context of web security and educational data mining. This section discusses the main results obtained, organized around four fundamental axes that directly address the research question: How can an automated cookie collection and analysis system support the identification of browsing patterns and XSS vulnerabilities in educational contexts?

5.1. Automation of Cookie Collection in Educational Contexts

The development of the BotSoul system, capable of simulating student browsing sessions, allowed the automated and large-scale collection of cookies from more than 440,000 visited URLs, which represents a significant advance compared with previous studies, such as that of Triki [52]. The findings highlight three key contributions:

- Multidimensional integration of systems, through the combination of Pi-hole and Tshark, which allowed the correlation of cookies with advertising and tracking domains.

- Specific adaptation to university environments, focusing the analysis on platforms commonly used by students.

- Dynamic analysis of entire browsing sessions, overcoming the limitations of traditional GDPR [53] compliance scanners by capturing traffic over complete browsing cycles.

The observed 6:1 ratio between third-party and first-party cookies is consistent with the findings of The Cookie Hunter [54] and highlights concerns about students’ inadvertent exposure to external scripts, which potentially increases security risks during academic browsing.

5.2. Predictive Modeling of XSS Vulnerabilities Based on Cookie Attributes

A key innovation in this study was the incorporation of cookie attributes (e.g., expiration date, date of creation, domain, etc.) as variables for the machine learning models, differentiating this approach from existing methods that focus on script detection [55]. The models were trained using real student navigation data obtained in an academic environment, which allowed the identification of risk patterns specific to educational contexts [56].

5.3. Behavioral Segmentation Using Clustering Algorithms

The use of clustering algorithms for behavioral analysis allowed users to be segmented based on their cookie patterns, revealing the following:

- Clusters of users differentiated according to the prevalence and type of third-party cookies present in their browsing.

- Significant correlation between these clusters and exposure to XSS vulnerabilities.

- A trade-off between model accuracy and performance in high data volume environments.

These findings expand the use of clustering in cybersecurity, as documented in previous work by Keyrus [57], by demonstrating its applicability in educational contexts to accomplish the following:

- Detection of anomalies in browsing patterns, which could flag risky student behavior.

- Optimization of web recommendations, adapting them to the risk profiles of users.

- Identification of groups with a greater propensity to visit vulnerable domains, allowing proactive interventions.

5.4. Technical Challenges and Scalability Considerations

Deploying this large-scale infrastructure presented several technical challenges that are common in big data studies, including the following:

- Normalization of domains with spelling variants, such as login.institution.edu and www.institution.edu/login.

- Real-time processing of Tshark data streams, which presented latency-related difficulties.

- Balancing accuracy and performance in the machine learning models, which is critical in educational environments with limited computational resources.
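A minimal normalization step for the domain-variant problem might look like the sketch below, under stated assumptions: `urllib.parse.urlsplit` extracts the host (its `hostname` attribute is lowercased and has the port removed), a leading `www.` is dropped, and the hypothetical `registrable_domain` helper keeps only the last `labels` host labels. That last step is a naive approximation; for a host such as `www.example.edu.ec`, three labels are needed, which is why production code should rely on the Public Suffix List.

```python
from urllib.parse import urlsplit


def normalize_domain(raw: str) -> str:
    """Reduce a logged URL or host to a lowercase host without scheme, port, path or 'www.'."""
    if "://" not in raw:
        raw = "http://" + raw  # without '//', urlsplit treats the host as part of the path
    host = (urlsplit(raw).hostname or "").rstrip(".")
    return host[4:] if host.startswith("www.") else host


def registrable_domain(raw: str, labels: int = 2) -> str:
    """Naive grouping key: the last `labels` labels of the normalized host (hypothetical)."""
    return ".".join(normalize_domain(raw).split(".")[-labels:])
```

With this helper, both `login.institution.edu` and `www.institution.edu/login` map to the grouping key `institution.edu`, while `normalize_domain` alone keeps them distinct.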

While BotSoul effectively emulates user behavior to capture dynamic cookie attributes, the simulation may still differ from actual human interaction in subtle ways, such as mouse movement, scrolling behavior, or response to pop-ups. These behavioral discrepancies could influence the creation or modification of cookies on certain websites. Future work could incorporate more sophisticated human-like interaction patterns—potentially using reinforcement learning or human behavior modeling—to increase fidelity and improve data completeness.

6. Conclusions

6.1. Theoretical Contributions

- This work proposes a novel methodological approach for the characterization of cookies by combining web traffic analysis techniques, cookie analysis, and unsupervised clustering, which represents a significant contribution to the study of privacy and security in student web browsing.

- The experimental browser BOOKIE was introduced, which integrates cookie-based visualization, classification, and vulnerability prediction, showing how data analysis can be applied directly in a browsing environment.

- The results obtained with the clustering algorithms (K-Means, DBSCAN, and hierarchical clustering) confirm that it is possible to identify behavior patterns and user segmentation based on the cookies collected, which theoretically supports the hypothesis that cookies can be used as vectors for vulnerability analysis and digital behavior.

6.2. Practical Contributions

- An automated experimental environment capable of collecting, processing, and analyzing more than 440,000 URLs from five university laboratories was built and executed, generating three large data sets useful for threat characterization in real academic environments.

- BOOKIE allowed not only the display of cookies but also the prediction of the level of vulnerability to XSS attacks, offering personalized navigation recommendations based on the detected patterns.

- The application of K-Means and DBSCAN made it possible to effectively identify two main user segments based on their browsing behavior, improving the performance of the browser’s recommendation system.

- The use of metrics such as the Silhouette Score, Inertia, the Calinski–Harabasz index, and the Davies–Bouldin index provided empirical support for the validity of the applied models, with DBSCAN at eps = 10 offering the best cluster separation in the presence of noise.

7. Future Perspectives

- It is proposed to integrate incremental learning mechanisms so that the system continuously improves its predictions with each new navigation.

- The possibility of expanding the study to other types of web threats (such as CSRF or phishing) is considered, expanding the browser’s detection spectrum.

- It is planned to validate the system with real users in open educational scenarios in order to evaluate its impact on awareness of digital privacy and cybersecurity.

- Conduct a larger-scale comparison of clustering algorithms applied to different types of web tracking data (cookies, DNS traffic, fingerprinting) to strengthen theoretical frameworks for browsing behavior analysis.

- Train supervised classifiers (such as SVM, Random Forest, or neural networks) to predict vulnerabilities or user profiles based on cookie sets already labeled as safe/suspicious.

- Design a theoretical individual “risk of exposure to XSS attacks” metric, based on user behavior and domains visited.

Author Contributions

Investigation, G.R.-G., E.B.-A., D.N.-A. and P.P.-P.; Methodology, G.R.-G., E.B.-A., D.N.-A., P.P.-P. and S.C.-D.; Software, G.R.-G.; Validation, G.R.-G., E.B.-A., D.N.-A., P.P.-P. and S.C.-D.; Data analysis and structure, G.R.-G.; writing, original draft preparation, G.R.-G., E.B.-A., D.N.-A. and P.P.-P.; writing, review and editing, E.B.-A., D.N.-A., P.P.-P., S.C.-D. and M.L.-V.; Formal Analysis, S.C.-D. and M.L.-V. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data sets presented in this article are not readily available because they contain web browsing data of university students.

Acknowledgments

Special thanks to the Universidad de las Fuerzas Armadas-ESPE, Santo Domingo campus, for supporting this research by providing access to anonymized web browsing history data from computer laboratory databases. This access was instrumental in validating our proposal, which has proven valuable for students in the information technology program.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- QAwerk. CISA Urges Software Devs to Weed Out XSS Vulnerabilities. Available online: https://www.bleepingcomputer.com/news/security/cisa-urges-software-devs-to-weed-out-xss-vulnerabilities/ (accessed on 19 May 2025).

- Bates, D.; Barth, A.; Jackson, C. Regular expressions considered harmful in client-side XSS filters. In Proceedings of the 19th International Conference on World Wide Web (WWW’10), Raleigh, NC, USA, 26–30 April 2010; pp. 91–100.

- Johns, M.; Engelmann, B.; Posegga, J. XSSDS: Server-Side Detection of Cross-Site Scripting Attacks. In Proceedings of the 2008 Annual Computer Security Applications Conference (ACSAC), Washington, DC, USA, 8–12 December 2008; pp. 335–344.

- Melicher, W.; Das, A.; Sharif, M.; Bauer, L.; Jia, L. Riding out DOMsday: Towards Detecting and Preventing DOM Cross-Site Scripting. In Proceedings of the Network and Distributed System Security Symposium, San Diego, CA, USA, 18–21 February 2018.

- Lekies, S.; Stock, B.; Johns, M. 25 million flows later: Large-scale detection of DOM-based XSS. In Proceedings of the 2013 ACM SIGSAC Conference on Computer & Communications Security (CCS’13), Berlin, Germany, 4–8 November 2013; pp. 1193–1204.

- Wassermann, G.; Su, Z. Static detection of cross-site scripting vulnerabilities. In Proceedings of the 30th International Conference on Software Engineering (ICSE’08), Leipzig, Germany, 10–18 May 2008; pp. 171–180.

- Kerschbaum, F. Simple cross-site attack prevention. In Proceedings of the 2007 Third International Conference on Security and Privacy in Communications Networks and the Workshops—SecureComm 2007, Nice, France, 17–21 September 2007; pp. 464–472.

- Havryliuk, V. ¿Qué es Cross-Site Scripting (XSS) y Cómo Prevenirlo? Available online: https://qawerk.es/blog/que-es-cross-site-scripting/ (accessed on 19 May 2025).

- Weinberger, J.; Saxena, P.; Akhawe, D.; Finifter, M.; Shin, R.; Song, D. A Systematic Analysis of XSS Sanitization in Web Application Frameworks. In Proceedings of the Computer Security—ESORICS 2011; Atluri, V., Diaz, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 150–171.

- Balzarotti, D.; Cova, M.; Felmetsger, V.; Jovanovic, N.; Kirda, E.; Kruegel, C.; Vigna, G. Saner: Composing Static and Dynamic Analysis to Validate Sanitization in Web Applications. In Proceedings of the 2008 IEEE Symposium on Security and Privacy (SP 2008), Oakland, CA, USA, 18–22 May 2008; pp. 387–401.

- Parameshwaran, I.; Budianto, E.; Shinde, S.; Dang, H.; Sadhu, A.; Saxena, P. DexterJS: Robust testing platform for DOM-based XSS vulnerabilities. In Proceedings of the 2015 10th Joint Meeting on Foundations of Software Engineering (ESEC/FSE 2015), Bergamo, Italy, 30 August–4 September 2015; pp. 946–949.

- Gupta, S.; Gupta, B.B. XSS-immune: A Google chrome extension-based XSS defensive framework for contemporary platforms of web applications. Secur. Commun. Netw. 2016, 9, 3966–3986.

- Lekies, S.; Stock, B.; Wentzel, M.; Johns, M. The unexpected dangers of dynamic JavaScript. In Proceedings of the 24th USENIX Conference on Security Symposium (SEC’15), Washington, DC, USA, 12–14 August 2015; pp. 723–735.

- Stock, B.; Lekies, S.; Mueller, T.; Spiegel, P.; Johns, M. Precise client-side protection against DOM-based cross-site scripting. In Proceedings of the 23rd USENIX conference on Security Symposium (SEC’14), San Diego, CA, USA, 20–22 August 2014; pp. 655–670.

- Fang, Y.; Li, Y.; Liu, L.; Huang, C. DeepXSS: Cross Site Scripting Detection Based on Deep Learning. In Proceedings of the 2018 International Conference on Computing and Artificial Intelligence (ICCAI’18), Chengdu, China, 12–14 March 2018; pp. 47–51.

- Pan, X.; Cao, Y.; Liu, S.; Zhou, Y.; Chen, Y.; Zhou, T. CSPAutoGen: Black-box Enforcement of Content Security Policy upon Real-world Websites. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security (CCS’16), Vienna, Austria, 24–28 October 2016; pp. 653–665.

- V., S.C.; Selvakumar, S. BIXSAN: Browser independent XSS sanitizer for prevention of XSS attacks. SIGSOFT Softw. Eng. Notes 2011, 36, 1–7.

- Report, M. XSS: La Vulnerabilidad Web que Puede Derribar su Negocio. Available online: https://mineryreport.com/blog/xss-vulnerabilidad-web-que-puede-derribar-su-negocio/ (accessed on 19 May 2025).

- Bugliesi, M.; Calzavara, S.; Focardi, R.; Khan, W. CookiExt: Patching the browser against session hijacking attacks. J. Comput. Secur. 2015, 23, 509–537.

- Zheng, X.; Jiang, J.; Liang, J.; Duan, H.; Chen, S.; Wan, T.; Weaver, N.C. Cookies Lack Integrity: Real-World Implications. In Proceedings of the USENIX Security Symposium, Washington, DC, USA, 12–14 August 2015.

- Bortz, A. Origin Cookies: Session Integrity for Web Applications. 2011. Available online: https://sharif.edu/~kharrazi/courses/40441-011/read/session-integrity.pdf (accessed on 19 May 2025).

- Keromytis, A.D. Cookie Hijacking in the Wild: Security and Privacy Implications. 2016. Available online: https://api.semanticscholar.org/CorpusID:30033856 (accessed on 19 May 2025).

- Sivakorn, S.; Polakis, I.; Keromytis, A.D. The Cracked Cookie Jar: HTTP Cookie Hijacking and the Exposure of Private Information. In Proceedings of the 2016 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2016; pp. 724–742.

- ESET. Comprendiendo la Vulnerabilidad XSS (Cross-Site Scripting) en Sitios Web. Available online: https://www.welivesecurity.com/la-es/2015/04/29/vulnerabilidad-xss-cross-site-scripting-sitios-web/ (accessed on 19 May 2025).

- UNAM. Cross-Site Scripting (XSS). Available online: https://www.seguridad.unam.mx/cross-site-scripting-xss (accessed on 19 May 2025).

- Team, G. Pruebe la Seguridad de su Navegador en Busca de Vulnerabilidades. Available online: https://geekflare.com/es/browser-security-test/ (accessed on 19 May 2025).

- Mokbal, F.M.M.; Dan, W.; Imran, A.; Jiuchuan, L.; Akhtar, F.; Xiaoxi, W. MLPXSS: An Integrated XSS-Based Attack Detection Scheme in Web Applications Using Multilayer Perceptron Technique. IEEE Access 2019, 7, 100567–100580. [Google Scholar] [CrossRef]

- Cui, Y.; Cui, J.; Hu, J. A Survey on XSS Attack Detection and Prevention in Web Applications. In Proceedings of the 2020 12th International Conference on Machine Learning and Computing (ICMLC’20), Shenzhen, China, 15–17 February 2020; pp. 443–449. [Google Scholar] [CrossRef]

- Kumar, A.; Gupta, A.; Mittal, P.; Gupta, P.K.; Varghese, S. Prevention of XSS Attack Using Cryptography & API Integration with Web Security. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3833910 (accessed on 19 May 2025).

- Steffens, M.; Rossow, C.; Johns, M.; Stock, B. Don’t Trust The Locals: Investigating the Prevalence of Persistent Client-Side Cross-Site Scripting in the Wild. In Proceedings of the Network and Distributed Systems Security (NDSS) Symposium 2019, San Diego, CA, USA, 24–27 February 2019. [Google Scholar] [CrossRef]

- Klein, D.; Musch, M.; Barber, T.; Kopmann, M.; Johns, M. Accept All Exploits: Exploring the Security Impact of Cookie Banners. In Proceedings of the 38th Annual Computer Security Applications Conference (ACSAC’22), Austin, TX, USA, 5–9 December 2022. [Google Scholar] [CrossRef]

- Dembla, D.; Chaba, Y.; Yadav, K.; Chaba, M.; Kumar, A. A novel and efficient technique for prevention of XSS attacks using knapsack based cryptography. Adv. Math. Sci. J. 2020, 9.

- Mishra, P.; Gupta, C. Cookies in a Cross-site scripting: Type, Utilization, Detection, Protection and Remediation. In Proceedings of the 2020 8th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO), Noida, India, 4–5 June 2020.

- Nirmal, K.; Janet, B.; Kumar, R. It’s More Than Stealing Cookies—Exploitability of XSS. In Proceedings of the 2018 Second International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 14–15 June 2018.

- Shrivastava, A.; Choudhary, S.; Kumar, A. XSS vulnerability assessment and prevention in web application. In Proceedings of the 2016 2nd International Conference on Next Generation Computing Technologies (NGCT), Dehradun, India, 14–16 October 2016.

- Gupta, S.; Sharma, L. Exploitation of Cross-Site Scripting (XSS) Vulnerability on Real World Web Applications and its Defense. Int. J. Comput. Appl. 2012, 60, 28–33.

- Takahashi, H.; Yasunaga, K.; Mambo, M.; Kim, K.; Youm, H.Y. Preventing Abuse of Cookies Stolen by XSS. In Proceedings of the 2013 Eighth Asia Joint Conference on Information Security, Seoul, Republic of Korea, 25–26 July 2013.

- Putthacharoen, R.; Bunyatnoparat, P. Protecting cookies from Cross Site Script attacks using Dynamic Cookies Rewriting technique. In Proceedings of the 13th International Conference on Advanced Communication Technology (ICACT2011), Gangwon, Republic of Korea, 13–16 February 2011.

- Singh, T.; Mantoo, B.A. Loop Holes in Cookies and Their Technical Solutions for Web Developers; Springer: Singapore, 2020.

- Kwon, H.; Nam, H.J.; Lee, S.; Hahn, C.; Hur, J. (In-)Security of Cookies in HTTPS: Cookie Theft by Removing Cookie Flags. IEEE Trans. Inf. Forensics Secur. 2019, 15, 1204–1215.

- Kumar, U.; Kumar, S. Protection Against Client-Side Cross Side Scripting (XSS/CSS). 2014. Available online: https://www.semanticscholar.org/paper/Protection-against-Client-Side-Cross-Side-Scripting-Kumar-Kumar/a5b7284114f69c1e5c06b3360eb7f711018c443d (accessed on 19 May 2025).

- Hydara, I. The Limitations of Cross-Site Scripting Vulnerabilities Detection and Removal Techniques. Turk. J. Comput. Math. Educ. TURCOMAT 2021, 12, 1975–1980.

- Yue, C.; Xie, M.; Wang, H. An automatic HTTP cookie management system. Comput. Netw. 2010, 54, 2182–2198.

- Block, G.; Ogdin, P.L. Analysis of Tokenized HTTP Event Collector. 2016. Available online: https://patents.google.com/patent/US10169434B1/en?oq=10169434 (accessed on 19 May 2025).

- Bhagat, D.B.; Krishnan, M.R.; Sadhasivam, K.M.; Varanasi, R.K. HTTP Cookie Protection by a Network Security Device. U.S. Patent Application No. US11/406,107, 18 April 2006.

- Guía para Tratamiento de Datos Personales en Administración Pública [Guide for the Processing of Personal Data in Public Administration]. Available online: https://www.gobiernoelectronico.gob.ec/wp-content/uploads/2019/11/Gu%C3%ADa-de-protecci%C3%B3n-de-datos-personales.pdf (accessed on 19 May 2025).

- Schaper, D. Pi-Hole Network-Wide Ad Blocking. Available online: https://pi-hole.net/ (accessed on 19 May 2025).

- Wireshark. Tshark(1) Manual Page. Available online: https://www.wireshark.org/docs/man-pages/tshark.html (accessed on 19 May 2025).

- Sphinx. PyAutoGUI’s Documentation. Available online: https://pyautogui.readthedocs.io/en/latest/ (accessed on 19 May 2025).

- Rodríguez, G.E.; Benavides, D.E.; Torres, J.; Flores, P.; Fuertes, W. Cookie Scout: An Analytic Model for Prevention of Cross-Site Scripting (XSS) Using a Cookie Classifier. In Proceedings of the International Conference on Information Technology & Systems (ICITS 2018), Península de Santa Elena, Ecuador, 10–12 January 2018; Rocha, Á., Guarda, T., Eds.; Springer: Cham, Switzerland, 2018; pp. 497–507.

- Rodríguez, G.E.; Torres, J.G.; Benavides-Astudillo, E. DataCookie: Sorting Cookies Using Data Mining for Prevention of Cross-Site Scripting (XSS). In Emerging Trends in Cybersecurity Applications; Daimi, K., Alsadoon, A., Peoples, C., El Madhoun, N., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 171–188.

- Telefónica. Triki: Herramienta de Recolección y Análisis de Cookies [Triki: A Cookie Collection and Analysis Tool]. Available online: https://telefonicatech.com/blog/triki-herramienta-recoleccion-analisis-cookies (accessed on 19 May 2025).

- consentmanager. Auditoría de Cookies para Sitios Web: Cómo Hacerlo Manualmente o Con un Escáner de Cookies [Website Cookie Audits: How to Perform One Manually or with a Cookie Scanner]. Available online: https://www.consentmanager.net/es/conocimiento/cookie-audit/ (accessed on 19 May 2025).

- Drakonakis, K.; Ioannidis, S.; Polakis, J. The Cookie Hunter: Automated Black-box Auditing for Web Authentication and Authorization Flaws. In Proceedings of the 2020 ACM SIGSAC Conference on Computer and Communications Security (CCS’20), Virtual Event, 9–13 November 2020; pp. 1953–1970.

- Hamzah, K.; Osman, M.; Anthony, T.; Ismail, M.A.; Abdullah, Z.; Alanda, A. Comparative Analysis of Machine Learning Algorithms for Cross-Site Scripting (XSS) Attack Detection. JOIV Int. J. Inform. Vis. 2024, 8, 1678.

- Njie, B.; Gabriouet, L. Machine Learning for Cross-Site Scripting (XSS) Detection. Bachelor’s Thesis, Dalarna University, Falun, Sweden, 2024.

- Keyrus. Qué es Clustering y para qué se Utiliza [What Clustering Is and What It Is Used For]. Available online: https://keyrus.com/sp/es/insights/que-es-clustering-y-para-que-se-utiliza (accessed on 19 May 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).