Abstract

Stakeholders in Environmental Education (EE) often face difficulties identifying and selecting the programs that best suit their needs. This is due, in part, to a lack of expertise in evaluation knowledge and practice, as well as to the absence of a unified database of Environmental Education Programs (EEPs) with a defined structure. This article presents the design and development of a web application for evaluating and selecting EEPs. Certified users of the application can insert, view, and evaluate the registered EEPs. At the same time, the application creates and maintains an individual, dynamic user model for each user that reflects their personal preferences. Finally, using all the above information and a combination of Multi-Criteria Decision-Making (MCDM) methods, the application provides a comparative and adaptive evaluation to help each user select the EEPs that best suit his/her needs. The personalized recommendations are based on the information about the user stored in the user model and on the results of the EEP evaluations submitted by users who have applied them. As a case study, we used the EEPs of the Greek Educational System.

1. Introduction

Environmental education undoubtedly has a key role in developing human strategies that facilitate the solution of many environmental problems [1]. The role of environmental education is strongly transformative, since it contributes to changing people’s attitudes, behavior, and values toward the environment and society [2]. However, to ensure that an EEP promotes these objectives, its success should be evaluated [3,4]. Many studies point out the vital role of evaluation in the success and improvement of EEPs [5,6].

Despite the significant role of EE and the importance of evaluating EEPs, the support provided to educators who want to apply EEPs is incomplete. The absence of a unified database of EEPs and of support tools for managing them, as well as the lack of experience and knowledge in evaluating EEPs, are among the main obstacles to their implementation. As a result, educators face difficulties in identifying and selecting the EEPs that best suit their needs and expectations, and the re-use of EEPs is discouraged [7,8,9].

Taking into account the above-mentioned problems, this paper presents the design and development of a web application, AESEEP (Application for the Evaluation and Selection of Environmental Education Programs). AESEEP was designed to support Environmental Education and to act as a useful tool for those implementing EEPs (Environmental Education Centers (EECs), Environmental Education Coordinators (EEC), Educators, etc.) in the registration, evaluation, search, and selection of EEPs. As a case study, we used the EEPs of the Greek Educational System, but the approach can be applied in any country.

More specifically, the application enables users to enter EEPs with a defined structure, thus creating a unified database. Users can search the registered EEPs using search criteria and/or evaluate EEPs, since the application provides all the necessary information about each registered program.

The main objective of the application is to provide the user with a useful tool for the search and selection of EEPs based on personalized recommendations. For this purpose, the system runs a comparative and adaptive evaluation of EEPs. More specifically, the data from the evaluations are collected and used to produce a Comparative Evaluation ranking of the EEPs. Each program is given an index, the Comparative Evaluation Index, that identifies its ranking position relative to the other programs included in the evaluation. The comparative evaluation ranking is provided to the user in each search to help him/her make the most appropriate choice of EEPs.

In addition, to provide a personalized recommendation to each user, the application takes advantage of information about the user that is stored in the user model. The user model is dynamically updated as the user interacts with the system. Based on the data from the user model, each program is assigned a second index, the Adaptive Evaluation Index, that determines its ranking position according to the user’s preferences. Under the adaptive evaluation ranking, each program has a different score per user, and thus each user receives a different proposal for selecting an EEP based on his/her specific needs and preferences.

Finally, the Comparative Evaluation Index (CEI) and the Adaptive Evaluation Index (AEI) are combined to produce the Unified Evaluation Index (UEI) for the final selection proposal. The use of the UEI ensures that the final ranking takes into account both the quality of the programs’ features and the preferences of the individual user. For the Comparative and Adaptive evaluations, the Multi-Criteria Decision-Making (MCDM) methods AHP, TOPSIS, and SAW were used, as discussed later in this paper.

The significance of such a system is fourfold. First, it assists the re-use of EEPs. Second, automation facilitates the evaluation process and makes it accessible to all. Third, it creates a database of EEPs and EEP evaluation data. Fourth, the data analysis methods, by exploiting the evaluation data and the user model, provide the user with personalized recommendations and thus help him/her choose the EEPs that best fit his/her needs and achieve better results.

The rest of the paper is organized as follows: Section 2 presents related work. Section 3 presents the design and implementation of the system. Section 4 describes in detail the steps for applying the Comparative Evaluation using AHP and fuzzy TOPSIS. Section 5 describes the steps for applying the Adaptive Evaluation using AHP and SAW. In Section 6, these evaluations are combined to rank the EEPs and provide a Personalized Proposal Selection to the end user; the first evaluation results are also discussed in this section. Finally, Section 7 presents the conclusions of this study.

2. Related Work

Quality environmental education involves many partners and stakeholders who collaborate in a research-implementation space where science, decision making, and local culture and environment intersect [10]. However, selecting the right EE program is not an easy task. The problem is twofold: (a) the absence of a unified database and (b) the lack of evaluation data on these projects.

The great need for evaluation data on EE programs has been highlighted by many researchers [6,7,11,12,13,14,15,16,17,18]. Most of them attribute this shortage mainly to the difficulty of carrying out such evaluations. Indeed, this is confirmed by reviews of EE projects [15,19,21], EE activities [20], and EE Centers [22].

An automated system could help users carry out the difficult task of EE project evaluation. For this purpose, the web application “MEERA” (My Environmental Education Evaluation Resource Assistant) has been developed. MEERA provides program evaluation capabilities and gives users access to existing evaluations to support them in the evaluation process [7]. Another application for the automated evaluation of EEPs is the web-based Applied Environmental Education Program Evaluation (AEEPE), which provides learners with an understanding of how evaluation can be used to improve EEPs and equips them with the skills needed to design and evaluate EEPs [23]. However, none of these applications provides a comparative and adaptive evaluation of EEPs or uses Multi-Criteria Analysis to achieve it.

Multi-Criteria Analysis was used in the approach of Kabassi et al. [24], who described an automated system for evaluating EE programs based on a multi-criteria decision-making (MCDM) approach. In that approach, the combination of MCDM models proved rather effective for evaluating EE projects. However, it differs considerably from the approach proposed in this paper. First, Kabassi et al. [24] used a model for evaluating EEPs before their implementation, whereas our approach uses the evaluation data of other people after they have implemented the programs. Second, they did not provide personalized recommendations, as their system did not adapt the interaction to each user. The novelty of the proposed evaluation is twofold: (a) the combination of evaluation data using multi-criteria decision making and (b) the provision of individualized recommendations based on a combination of MCDM models.

The MCDM methods used in the proposed approach are AHP (Analytic Hierarchy Process) [4], TOPSIS (Technique for Order of Preference by Similarity to Ideal Solution) [25], in particular fuzzy TOPSIS [26], and SAW (Simple Additive Weighting) [25,27]. AHP was selected among these models for calculating the weights of the criteria because it has a well-defined procedure for this calculation. Furthermore, it can quantify qualitative criteria and, in this way, reduces the subjectivity of the result. Its main drawback, however, stems from its rationale: AHP relies on pairwise comparisons, so when the number of alternatives is high, the process becomes impractical and time-consuming. AHP was therefore combined with a method that has a better procedure for scoring the alternatives on the criteria, such as TOPSIS, fuzzy TOPSIS, PROMETHEE, VIKOR, SAW, or WPM.
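To make the scalability concern concrete, the sketch below counts the pairwise judgments a complete AHP hierarchy would need if AHP were also used to rank the EEPs: n(n − 1)/2 judgments for n items, repeated for every criterion. The criterion count (10) and the numbers of EEPs are illustrative assumptions, not figures from the study.

```python
def pairwise_comparisons(n: int) -> int:
    """Judgments needed to fill one n x n AHP reciprocal matrix: n(n-1)/2."""
    return n * (n - 1) // 2


def full_ahp_judgments(num_criteria: int, num_alternatives: int) -> int:
    """Judgments for weighting the criteria plus comparing every pair of
    alternatives under each criterion (a complete AHP hierarchy)."""
    criteria = pairwise_comparisons(num_criteria)
    alternatives = num_criteria * pairwise_comparisons(num_alternatives)
    return criteria + alternatives


if __name__ == "__main__":
    # Illustrative sizes: 10 criteria and a growing list of candidate EEPs.
    for num_eeps in (5, 20, 100):
        print(num_eeps, full_ahp_judgments(10, num_eeps))
```

With 5 EEPs this gives 145 judgments, but with 100 EEPs it reaches 49,545, whereas methods such as TOPSIS or SAW only need one rating vector per alternative.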

These methods have been compared in the past [8]. SAW is considered very easy to apply, but the values of the criteria must be positive for it to provide valid results. TOPSIS is also considered very easy, and its ability to maintain the same number of steps regardless of problem size has allowed it to be used quickly to review other methods or to stand on its own as a decision-making tool [28]. The comparison of these methods [8] revealed that SAW is very robust and TOPSIS is very sensitive. We decided to combine both of them with AHP: AHP with SAW for personalizing the interaction, and AHP with fuzzy TOPSIS for comparing EE projects based on the users’ evaluations. The fuzzy approach seems more appropriate here because the evaluations are expressed in linguistic terms.

3. Design and Implementation of the System

AESEEP aims to support the re-use of EEPs, to help educators select the EEPs that best fit their goals and plans, and to help them evaluate those projects. To this end, certified users are authorized to enter EEPs with a defined structure, thus creating a unified database. Users can also view, evaluate, and search EEPs based on specific criteria. The system maintains an individual and dynamic user model for each user interacting with the system. When the user searches for projects, the system takes into account the existing evaluations and the user model and provides personalized recommendations for EEPs.

More specifically, the system provides two types of evaluations.

- The Comparative Evaluation (CE) applies the multi-criteria decision-making methods AHP and TOPSIS to comparatively evaluate user-selected programs using the existing evaluations registered in the database. For a given set of programs, the results of this evaluation are the same for all users, but the list of alternative EEPs changes because users’ search criteria differ. As a result, both the list of EEPs involved and the result of this evaluation are calculated dynamically.

- The Adaptive Evaluation (AE) uses the multi-criteria decision-making methods AHP and SAW to evaluate the user-selected programs based on the information in the dynamic, individual user model, thus providing an evaluation that differs according to each user’s preferences.

The function “Personalized Search—Proposal” initiates both evaluations described above, presenting a search form with specific criteria for selecting programs. This function is also used to dynamically configure the user model by recording the user’s preferences.

The final ranking of programs displayed to the user is sorted in descending order, first by the score of the Adaptive Evaluation (Adaptive Evaluation Index, AEI) and then by the score of the Comparative Evaluation (Comparative Evaluation Index, CEI).

In this proposal, priority is given to the Adaptive Evaluation score, since programs are ranked first by the AEI and only then by the CEI.
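A minimal sketch of this two-level ordering, assuming each candidate program already carries its AEI and CEI (the `RankedEEP` class and its field names are hypothetical, not AESEEP's code): Python's `sorted` with a tuple key compares the AEI first and falls back to the CEI only when two AEIs are equal.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RankedEEP:
    name: str
    aei: float  # Adaptive Evaluation Index (specific to the current user)
    cei: float  # Comparative Evaluation Index (shared by all users)


def proposal_ranking(eeps: list[RankedEEP]) -> list[RankedEEP]:
    """Sort by AEI descending; the CEI only breaks ties between equal AEIs."""
    return sorted(eeps, key=lambda e: (e.aei, e.cei), reverse=True)


if __name__ == "__main__":
    # Hypothetical index values for three programs.
    candidates = [
        RankedEEP("EEP-A", aei=4.0, cei=0.41),
        RankedEEP("EEP-B", aei=6.5, cei=0.38),
        RankedEEP("EEP-C", aei=4.0, cei=0.72),
    ]
    print([e.name for e in proposal_ranking(candidates)])
```

Here EEP-B comes first on its AEI alone, and EEP-C outranks EEP-A only because their AEIs tie. A much higher CEI never lifts a program above one with a larger AEI, which is the imbalance the Unified Evaluation Index is meant to address.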

For this reason, it was considered necessary to create an alternative final ranking proposal in which the two scores participate equally. Thus, the function “Personalized Search—Final Proposal” was implemented for the selection of EEPs. This function takes into account the Comparative Evaluation Index (CEI) and the Adaptive Evaluation Index (AEI) and produces the Unified Evaluation Index (UEI) for EEPs. How the “Personalized Search—Final Proposal” works and combines the different indexes is described in Section 6.

Summarizing, the application includes the following basic functions:

- Create—Modify—Login—Logout/User

- Insert—View—Modify/EEPs

- EEPs Evaluation

- Comparative Evaluation

- Adaptive Evaluation

- Personalized Search—Proposal of EEPs

- Personalized Search Final Proposal of EEPs

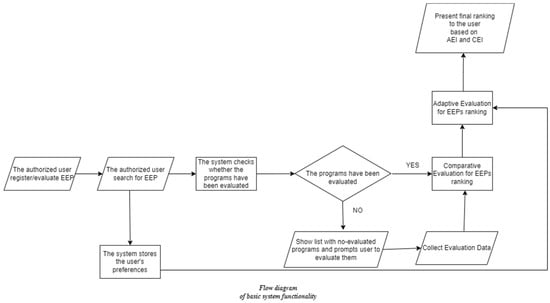

A flow diagram of the basic functionality of the system is shown in Figure 1.

Figure 1.

Flow diagram of basic system functionality.

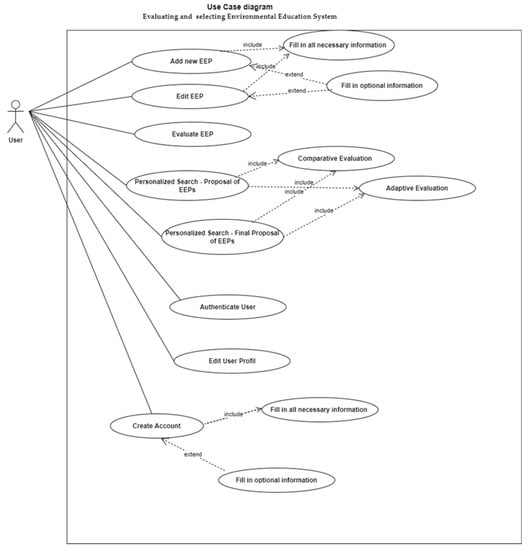

The Use Cases of the application and the interaction of the users with the Use Cases are shown in the UML diagram in Figure 2.

Figure 2.

Use Case Diagram.

4. Multi-Criteria Decision Making for the Evaluation of Environmental Education Programs

The main aim of the comparative evaluation process is for each program participating in this evaluation to acquire an index (Comparative Evaluation Index—CEI), which will determine its relative position in the ranking of the evaluated programs. For the comparative evaluation of EEPs, the combination of the multi-criteria decision-making theories AHP and Fuzzy TOPSIS was used according to the design previously proposed by [8,29].

The questions of the evaluation questionnaire correspond to the criteria used by the multi-criteria decision-making model. The criteria were selected by a group of experts, as described in detail in [8], and are used for the comparative evaluation. Each user can evaluate each EEP once by answering the questionnaire [29] presented in detail in Table 1.

Table 1. The questionnaire for comparative evaluation [29].

The user’s answers are stored in the database and used in the comparative evaluation. Each answer is mapped to a fuzzy number, and each question corresponds to a criterion, as described below:

Question 1 corresponds to uc1—Adaptivity: This criterion reveals how flexible the program is and how adaptable it is to each age group of participants.

Question 2 corresponds to uc2—Completeness: This criterion shows if the available description of the program covers the topic and to what extent.

Question 3 corresponds to uc3—Pedagogy: This criterion shows whether the EE program is based on a pedagogical theory or if it uses a particular pedagogical method.

Question 4 corresponds to uc4—Clarity: This criterion represents whether the objectives of the program are explicitly stated.

Question 5 corresponds to uc5—Effectiveness: This criterion shows the overall impact, given the program’s planning and the available support material.

Question 6 corresponds to uc6—Knowledge: This criterion reflects the quantity and quality of the cognitive content offered to students.

Question 7 corresponds to uc7—Skills: This criterion reveals whether skills are cultivated through activities involving active student participation.

Question 8 corresponds to uc8—Behaviors: This criterion reveals the change in the students’ intentions and behavior brought about by the program.

Question 9 corresponds to uc9—Enjoyment: This criterion shows the enjoyment of the trainees throughout the EE project.

Question 10 corresponds to uc10—Multimodality: This criterion represents whether the EE project provides many different kinds of activities, interventions, and methods.

For the comparative evaluation of EEPs, AHP is combined with fuzzy TOPSIS: AHP is used for weight calculation, while fuzzy TOPSIS is used for ranking the alternatives. AHP was used for the first phase because it has a well-defined way of calculating weights. In this system, however, the list of alternative EEPs is formed dynamically from each search, so pairwise comparisons of the alternatives would have to be repeated every time the list changes. A method such as TOPSIS, which rates each alternative directly on the criteria rather than through pairwise comparisons, is therefore considered more appropriate for ranking the alternative EEPs.

The criteria weights were calculated with AHP in [29] and are presented in Table 2. The experts considered ‘Skills’ to be the most important criterion for evaluating EE projects. ‘Effectiveness’, ‘Clarity’, and ‘Knowledge’ were also considered rather important, while ‘Pedagogy’ and ‘Multimodality’ were considered less important.

Table 2. The estimated weights of the comparative evaluation criteria [29].

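After the weights were calculated, we applied TOPSIS. More specifically, we used Fuzzy TOPSIS, as the answers to the questions were given in linguistic terms. Following the rationale of fuzzy TOPSIS, each linguistic term is assigned a triangular fuzzy number, that is, a vector of the form $\tilde{a} = (a_1, a_2, a_3)$.

Then, the Fuzzy Positive-Ideal Solution (FPIS) $A^*$ and the Fuzzy Negative-Ideal Solution (FNIS) $A^-$ are determined as follows:

$$A^* = (\tilde{v}_1^*, \tilde{v}_2^*, \ldots, \tilde{v}_n^*), \qquad A^- = (\tilde{v}_1^-, \tilde{v}_2^-, \ldots, \tilde{v}_n^-)$$

where $\tilde{v}_j^*$ and $\tilde{v}_j^-$ are the best and worst (ideal) weighted fuzzy values on criterion $j$, and $n$ is the number of criteria.

The distances of each alternative from the FPIS and the FNIS are combined into the closeness coefficient, $CC_i = \dfrac{d_i^-}{d_i^* + d_i^-}$, which increases as the alternative moves closer to the ideal best solution and further from the ideal worst solution. The distances $d_i^*$ and $d_i^-$ of each weighted alternative $i$ from $A^*$ and $A^-$ are calculated as follows:

$$d_i^* = \sum_{j=1}^{n} d(\tilde{v}_{ij}, \tilde{v}_j^*), \qquad d_i^- = \sum_{j=1}^{n} d(\tilde{v}_{ij}, \tilde{v}_j^-)$$

where $\tilde{v}_{ij}$ is the weighted fuzzy rating of alternative $i$ on criterion $j$, and $d(\cdot,\cdot)$ is the distance between two fuzzy numbers. The distance between two triangular fuzzy numbers $\tilde{a} = (a_1, a_2, a_3)$ and $\tilde{b} = (b_1, b_2, b_3)$ is calculated with the vertex method:

$$d(\tilde{a}, \tilde{b}) = \sqrt{\frac{1}{3}\left[(a_1 - b_1)^2 + (a_2 - b_2)^2 + (a_3 - b_3)^2\right]}$$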

The closeness coefficient is the Comparative Evaluation Index (CEI) for each program participating in the comparative evaluation and is used for the final ranking of the EEPs. For a more detailed implementation of the steps of the Fuzzy TOPSIS application in evaluating EEPs, one can refer to [29].
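The fuzzy TOPSIS steps above can be sketched as follows. This is an illustration under stated assumptions, not AESEEP’s code. The five-term linguistic scale, the crisp weights, and the answers are hypothetical. The ratings of several users are averaged component-wise. The FPIS and FNIS are fixed at (1, 1, 1) and (0, 0, 0), as in a commonly used variant of fuzzy TOPSIS for ratings normalized to [0, 1].

```python
import math
from typing import Dict, List, Tuple

TFN = Tuple[float, float, float]  # triangular fuzzy number (a1, a2, a3)

# Hypothetical five-term linguistic scale; the terms AESEEP actually uses are
# those of its questionnaire, not necessarily these.
LINGUISTIC_SCALE: Dict[str, TFN] = {
    "very poor": (0.0, 0.0, 0.25),
    "poor": (0.0, 0.25, 0.5),
    "fair": (0.25, 0.5, 0.75),
    "good": (0.5, 0.75, 1.0),
    "very good": (0.75, 1.0, 1.0),
}


def aggregate(terms: List[str]) -> TFN:
    """Component-wise mean of the fuzzy ratings given by several users."""
    nums = [LINGUISTIC_SCALE[t] for t in terms]
    a1, a2, a3 = (sum(n[k] for n in nums) / len(nums) for k in range(3))
    return (a1, a2, a3)


def vertex_distance(a: TFN, b: TFN) -> float:
    """Vertex-method distance between two triangular fuzzy numbers."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / 3.0)


def fuzzy_topsis(ratings: Dict[str, List[TFN]], weights: List[float]) -> Dict[str, float]:
    """Closeness coefficient CC_i (used as the CEI) for every alternative.

    ratings[name][j] is the aggregated rating of alternative `name` on
    criterion j (already within [0, 1]); weights are crisp AHP weights.
    """
    fpis: TFN = (1.0, 1.0, 1.0)  # assumed positive-ideal value on each criterion
    fnis: TFN = (0.0, 0.0, 0.0)  # assumed negative-ideal value on each criterion
    closeness = {}
    for name, row in ratings.items():
        # Weighted fuzzy rating: scale every vertex by the criterion weight.
        weighted = [(w * r[0], w * r[1], w * r[2]) for r, w in zip(row, weights)]
        d_plus = sum(vertex_distance(v, fpis) for v in weighted)
        d_minus = sum(vertex_distance(v, fnis) for v in weighted)
        closeness[name] = d_minus / (d_plus + d_minus)
    return closeness


if __name__ == "__main__":
    # Hypothetical weights for three criteria (e.g., Skills, Effectiveness, Pedagogy).
    weights = [0.5, 0.3, 0.2]
    # Two users' linguistic answers per criterion for each candidate EEP.
    answers = {
        "EEP-A": [["very good", "good"], ["good", "good"], ["fair", "poor"]],
        "EEP-B": [["fair", "fair"], ["good", "very good"], ["very good", "good"]],
    }
    ratings = {name: [aggregate(terms) for terms in per_criterion]
               for name, per_criterion in answers.items()}
    cei = fuzzy_topsis(ratings, weights)
    for name, score in sorted(cei.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{name}: CEI = {score:.3f}")
```

Because the ideal points are fixed rather than taken from the current candidate list, each program’s CEI in this variant does not change when other programs enter or leave the search results. This suits a list of alternatives that is formed dynamically.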

5. Adaptive Recommendations

Comparative evaluation provides an objective ranking of EEPs, but it does not reflect the preferences and requirements of each user. To achieve an Adaptive Evaluation, we used the information from the individual, dynamic user model. The user model stores information about the user’s preferences, interests, and needs as these are identified during his/her interaction with the system, and it is therefore dynamically updated through this interaction. More specifically, each time the user selects “Personalized Search—Proposal of EEPs” or “Personalized Search—Final Proposal of EEPs”, his/her preferences are collected and stored in the database. The stored information is used to generate for each EEP an index that corresponds only to the specific user and reflects his/her individual preferences: the Adaptive Evaluation Index (AEI). The AEI is calculated with MCDM; as in the comparative evaluation, AHP is used to weight the criteria.

As the first step of AHP, the criteria indicating the user’s preferences for the EEPs had to be determined. In our case, a different group of experts, consisting of two software engineers and two EE experts, was formed to select and evaluate the criteria for the adaptive evaluation. The criteria selected by the experts are:

- Uc1—Educational Region

- Uc2—Environmental Education Centre

- Uc3—Education Grade

- Uc4—Activity Area

- Uc5—Duration in days

- Uc6—Maximum number of pupils

- Uc7—Pedagogical theory

- Uc8—Method of implementation

The second step of the AHP application is setting up a matrix for the pairwise comparison of the criteria. The pairwise comparisons were again carried out by the group of experts, and the results are presented in Table 3.

Table 3. Matrix for the pairwise comparison of the adaptive evaluation criteria using the arithmetic means of the values assigned by evaluators.

Finally, as the last step of the AHP application, the final weights of the criteria were calculated using the arithmetic mean, and Table 4 was constructed. The criterion of Education Grade was considered by the experts as the most important, while the criteria of Activity Area, Pedagogical Theory, and Implementation Method were considered quite important.
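The weight calculation can be sketched with a common approximation of AHP priorities: normalize each column of the pairwise comparison matrix, then take the arithmetic mean of each row. As the caption of Table 3 describes, the sketch first averages several experts’ matrices element by element. It also computes Saaty’s consistency ratio as a sanity check, which is an addition not described in the text. The expert matrices below are hypothetical and are not the values of Table 3.

```python
from typing import List

Matrix = List[List[float]]

# Saaty's random consistency index RI for matrices of size n = 1..10.
RANDOM_INDEX = [0.0, 0.0, 0.58, 0.90, 1.12, 1.24, 1.32, 1.41, 1.45, 1.49]


def mean_matrix(matrices: List[Matrix]) -> Matrix:
    """Element-wise arithmetic mean of several experts' comparison matrices."""
    n = len(matrices[0])
    k = len(matrices)
    return [[sum(m[i][j] for m in matrices) / k for j in range(n)] for i in range(n)]


def ahp_weights(a: Matrix) -> List[float]:
    """Approximate AHP priorities: normalize each column, then average each row."""
    n = len(a)
    col_sums = [sum(a[i][j] for i in range(n)) for j in range(n)]
    return [sum(a[i][j] / col_sums[j] for j in range(n)) / n for i in range(n)]


def consistency_ratio(a: Matrix, w: List[float]) -> float:
    """Saaty's CR = CI / RI, CI = (lambda_max - n) / (n - 1); CR < 0.1 is usually accepted."""
    n = len(a)
    if n < 3:
        return 0.0  # 1x1 and 2x2 reciprocal matrices are always consistent
    aw = [sum(a[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    return (lambda_max - n) / (n - 1) / RANDOM_INDEX[n - 1]


if __name__ == "__main__":
    # Two hypothetical experts comparing Education Grade, Activity Area, Duration.
    expert_1 = [[1, 3, 5], [1 / 3, 1, 3], [1 / 5, 1 / 3, 1]]
    expert_2 = [[1, 5, 7], [1 / 5, 1, 3], [1 / 7, 1 / 3, 1]]
    group = mean_matrix([expert_1, expert_2])
    w = ahp_weights(group)
    print([round(x, 3) for x in w], round(consistency_ratio(group, w), 3))
```

Arithmetic averaging follows the Table 3 caption. In general, it does not preserve the reciprocal property $a_{ji} = 1/a_{ij}$, which is why the geometric mean is often preferred when aggregating group judgments in AHP.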

Table 4. The estimated weights of the Adaptive Evaluation.

Once the weights of the criteria had been calculated, we had to decide how to exploit the information from the user model to implement the new evaluation of the EEPs.

It was therefore decided to create a mapping per program and per user based on the information from the user model and the characteristics of the programs. Each criterion participates in the calculation with its own weight.

To calculate the values of the criteria, the following rules are applied:

- Each time the user selects a value for a criterion during a search, the value of this criterion is increased by 1 for every program that has this value.

- If the criterion value is selected in the user’s profile, the total score of that criterion value in the program is doubled for that user.

- If the criterion value is selected in the profile but has not been selected in any search by the user (so that it has a value of 0 in the table of values), its value is incremented by the highest criterion value of the program for this user.
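The three rules can be sketched per user and per program as below. The representation is a hypothetical choice: a program is a mapping from criterion to its value, the search history is a `Counter` of (criterion, value) selections, and the profile holds one preferred value per criterion. Two ambiguities are resolved by assumption. The “highest criterion value” of the third rule is taken from the search counts before any doubling, and the second and third rules are applied as alternatives.

```python
from collections import Counter
from typing import Dict

Program = Dict[str, str]  # hypothetical: criterion -> the program's value


def record_search(history: Counter, selections: Dict[str, str]) -> None:
    """Store in the user model every criterion value selected in one search."""
    for criterion, value in selections.items():
        history[(criterion, value)] += 1


def criterion_values(program: Program, history: Counter,
                     profile: Dict[str, str]) -> Dict[str, float]:
    """Criterion values of one program for one user, following the three rules."""
    # First rule: +1 for every search in which the user chose the program's value.
    values = {c: float(history[(c, v)]) for c, v in program.items()}
    # Assumption: the "highest criterion value" is taken before any doubling.
    highest = max(values.values(), default=0.0)
    for c, v in program.items():
        if profile.get(c) != v:
            continue  # value not in the user's profile: keep the search count
        if values[c] > 0:
            values[c] *= 2  # second rule: a profile value doubles the score
        else:
            values[c] += highest  # third rule: never searched (0), add the highest value
    return values


if __name__ == "__main__":
    history: Counter = Counter()
    record_search(history, {"grade": "primary", "area": "water"})
    record_search(history, {"grade": "primary", "area": "water"})
    record_search(history, {"grade": "primary"})
    profile = {"grade": "primary", "duration": "1"}
    program = {"grade": "primary", "area": "water", "duration": "1"}
    print(criterion_values(program, history, profile))
```

In this example, the grade value (searched three times and in the profile) becomes 6, the area value (searched twice, not in the profile) stays 2, and the one-day duration (in the profile but never searched) is raised from 0 to the program’s highest value, 3.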

As mentioned earlier, AHP proved very successful in calculating the weights of the criteria but is very time-consuming for evaluating the EEPs because of the pairwise comparisons it requires.

Furthermore, the TOPSIS method used in the Comparative Evaluation was not considered appropriate for the Adaptive Evaluation, for the following reasons:

- The criterion values differ for each user and program. Moreover, these values are shaped exclusively by the user concerned, so a simple way of incorporating them per user and per EEP is needed.

- There is no ideal or negative-ideal solution; the optimal solution is simply the one with the highest value at any given time.

Given the above reasons, the use of Simple Additive Weighting (SAW) was considered more appropriate in the case of Adaptive Evaluation. As a result, the system uses SAW to incorporate the information of users and provide personalized recommendations to the users regarding the EEPs. SAW is used for calculating the Adaptive Evaluation Index (AEI) per program and per user.

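Having calculated the weight of each criterion with AHP and the value of each criterion with the rules presented above, we apply the Simple Additive Weighting method according to the formula:

$$U(EEp_j) = \sum_{i=1}^{n} w_i \, x_{ij}$$

where $U(EEp_j)$ is the AEI of program $EEp_j$, $x_{ij}$ is the value of criterion $i$ for program $EEp_j$ for the current user, $w_i$ is the estimated weight of criterion $i$, and $n$ is the number of adaptive evaluation criteria.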

Finally, we rank the alternatives in descending order, a ranking that expresses the order of preference of each user according to his or her user model.
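A minimal SAW sketch for one user, assuming the criterion values have already been computed with the rules above and the weights come from AHP. The criterion names, weights, and values are hypothetical. The sketch multiplies the raw values by the weights without further normalization, although many SAW formulations normalize each criterion first.

```python
from typing import Dict, List


def saw_scores(values: Dict[str, Dict[str, float]],
               weights: Dict[str, float]) -> Dict[str, float]:
    """AEI for one user: weighted sum of each program's criterion values."""
    return {eep: sum(weights[c] * x for c, x in crit.items())
            for eep, crit in values.items()}


def rank_descending(scores: Dict[str, float]) -> List[str]:
    """Order of preference for this user: highest AEI first."""
    return sorted(scores, key=lambda eep: scores[eep], reverse=True)


if __name__ == "__main__":
    # Hypothetical AHP weights and per-user criterion values for two programs.
    weights = {"grade": 0.5, "area": 0.3, "duration": 0.2}
    values = {
        "EEP-A": {"grade": 6.0, "area": 2.0, "duration": 3.0},
        "EEP-B": {"grade": 0.0, "area": 4.0, "duration": 1.0},
    }
    aei = saw_scores(values, weights)
    print(rank_descending(aei), {k: round(v, 2) for k, v in aei.items()})
```

EEP-A scores 4.2 against 1.4 for EEP-B, so it heads this user’s list; another user with a different search history would obtain different values and possibly a different order.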

6. Final Recommendations Using a Combination of MCDM

As presented in more detail in Sections 4 and 5, the CEI compares the programs with each other, taking into account their characteristics as assessed by the users, while the AEI ranks the programs based on the user’s preferences.

The “Personalized Search—Proposal of EEPs” function provides a proposal to the educator by ranking the EEPs first by the AEI and then by the CEI. In this case, therefore, the user’s preferences are considered more important than the quality of the program’s features.

It was considered important to provide the user with an alternative proposal in which both indexes have the same importance, so we created the Unified Evaluation Index (UEI), which is calculated by the formula:

$$UEI(EEp_j) = U(EEp_j) \times CC_j$$

where $U(EEp_j)$ is the AEI and $CC_j$ is the CEI of program $EEp_j$.

$U(EEp_j)$ represents how important $EEp_j$ is for the particular user and therefore acts as a weight of importance, while the value being weighted is the CEI ($CC_j$), which is given by the general evaluation. Because the UEI is the product of the two indexes, each one scales the other: the Comparative Evaluation Index acts as a weighting factor for the Adaptive Evaluation Index and vice versa, producing a Unified Evaluation Index by which the programs are finally ranked.

The function “Personalized Search—Final Proposal of EEPs” initiates the calculation of the Unified Evaluation Index, which is used for the final ranking of EEPs. We consider that this final ranking is the best available proposal since it manages to combine the evaluation based on the features of the program with the evaluation based on the user’s preferences.
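A sketch of the final proposal, assuming (from the description above) that the UEI multiplies each program’s AEI by its CEI. The AEI and CEI values are hypothetical. For contrast, the example also shows the AEI-first ordering used by the first proposal.

```python
from typing import Dict, List


def unified_index(aei: Dict[str, float], cei: Dict[str, float]) -> Dict[str, float]:
    """UEI of each program: its AEI multiplied by its CEI."""
    return {eep: aei[eep] * cei[eep] for eep in aei if eep in cei}


def final_proposal(aei: Dict[str, float], cei: Dict[str, float]) -> List[str]:
    """Programs ordered by descending UEI."""
    uei = unified_index(aei, cei)
    return sorted(uei, key=lambda eep: uei[eep], reverse=True)


def aei_first_proposal(aei: Dict[str, float], cei: Dict[str, float]) -> List[str]:
    """The first proposal: AEI first, CEI only as a tie-breaker."""
    return sorted(aei, key=lambda eep: (aei[eep], cei[eep]), reverse=True)


if __name__ == "__main__":
    aei = {"EEP-A": 4.2, "EEP-B": 4.0}    # hypothetical adaptive indexes
    cei = {"EEP-A": 0.30, "EEP-B": 0.60}  # hypothetical closeness coefficients
    print(aei_first_proposal(aei, cei))  # EEP-A leads on the AEI alone
    print(final_proposal(aei, cei))      # EEP-B leads once the CEI is factored in
```

The two orderings disagree here: EEP-A’s slightly higher AEI wins the first proposal, but EEP-B’s much better comparative evaluation (UEI 2.4 against 1.26) puts it first in the final proposal.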

To test whether the system can successfully reproduce the reasoning of human experts when proposing EE projects, we ran a short evaluation experiment. Three expert users were asked to assess how successful the system’s proposals were, across 85 different proposal cases. In 52 cases (61.2% of the total), the system’s first proposal was identical to the experts’ proposal. In another 24 cases (28.2% of the total), the experts’ proposal was in the second or third place. Thus, in 76 cases, the experts’ proposals were among the system’s first three proposals, which corresponds to a top-three success rate of 89.4%.
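The reported rates follow directly from the case counts. The short calculation below reproduces them and also gives the share of cases (9 of 85) in which the experts’ choice was not among the system’s top three proposals.

```python
def percentage(hits: int, total: int) -> float:
    """Share of cases as a percentage rounded to one decimal place."""
    return round(100 * hits / total, 1)


TOTAL_CASES = 85
TOP_1 = 52         # system's first proposal matched the experts' choice
SECOND_THIRD = 24  # experts' choice ranked second or third by the system

top_3 = TOP_1 + SECOND_THIRD
print(percentage(TOP_1, TOTAL_CASES))                # 61.2
print(percentage(SECOND_THIRD, TOTAL_CASES))         # 28.2
print(percentage(top_3, TOTAL_CASES))                # 89.4
print(percentage(TOTAL_CASES - top_3, TOTAL_CASES))  # 10.6 (outside the top three)
```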

7. Conclusions

The contribution of EE to building and implementing human development strategies is crucial. However, those involved in EE implementation (Educators, Environmental Education Coordinators, and Environmental Education Centers) face many difficulties, which indicate the importance of developing tools to support EE.

This paper presents the design and development of a web-based application for the management of EEPs that aims to support stakeholders in the evaluation, selection, and re-use of EEPs. For this purpose, the application enables users to register EEPs with a defined structure, thus creating a unified database. In addition, it provides the means to evaluate the registered programs based on their characteristics according to a specific set of criteria.

In this way, a unified database of evaluated EEPs will contribute to their re-use and gradual improvement. Stakeholders in EE can access a wide range of EEPs and/or improve their own EEPs by identifying their weaknesses from users’ evaluations. Taking advantage of MCDM and user evaluations, the system provides each user with a comparative evaluation of the programs that may interest him/her. The comparative evaluation is implemented with a combination of the MCDM theories AHP and fuzzy TOPSIS.

AHP’s main advantage is that it uses pairwise comparisons of criteria to estimate their weights. However, pairwise comparisons increase the complexity of applying the MCDM model as the number of alternatives grows. AHP therefore does not seem appropriate when the number of alternatives is large, and in the case of EE projects, the alternatives may be many. Fuzzy TOPSIS addresses this problem, since its complexity does not increase as dramatically with the number of alternatives. Furthermore, Fuzzy TOPSIS uses linguistic terms and seems ideal for an experiment involving real users without prior experience in applying multi-criteria decision-making theories, as is the case in the evaluation of EE projects.

In addition, the system provides an adaptive evaluation of the EEPs based on information about the users. For this case, a simple MCDM method was considered more efficient. SAW is considered simple and quite effective [8]; however, it has no predefined way of calculating weights. Therefore, AHP is used to calculate the criteria weights, and SAW combines them with data from the characteristics of the EEPs and from the dynamic, individual user model.

Finally, the results obtained from the comparative and adaptive evaluation are combined in order to make personalized recommendations and propose to the user the program that best suits his/her personal preferences and achieves the best score compared to the other programs that meet the criteria requested each time.

In this way, the recommendations are provided to the users as a list of EEPs, with the first project in the list being the highest-ranked. The application can be very useful to all stakeholders in EE and can support the re-use of EEPs without users being overwhelmed as the number of EEPs in the unified database increases, because the recommendations and search results are personalized based on the users’ preferences, interests, and needs. Furthermore, the best-evaluated projects are promoted. Because the recommendations draw on this double evaluation of EEPs, the application mainly proposes the best programs that fit the user’s characteristics. The first evaluation results suggest that the system can successfully capture the reasoning of human experts when proposing EE projects.

Author Contributions

Conceptualization, K.K., A.P. and A.B.; methodology, K.K., A.P. and A.B.; validation, A.P.; formal analysis, K.K., A.P. and A.B.; investigation, A.P.; data curation, A.P.; writing—original draft preparation, A.P.; writing—review and editing, K.K. and A.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are available on request due to restrictions (e.g., privacy or ethical).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Pauw, J.B.-D. Moving Environmental Education Forward through Evaluation. Stud. Educ. Eval. 2014, 41, 1–3.

- Carleton-Hug, A.; Hug, J.W. Challenges and opportunities for evaluating environmental education programs. Eval. Program Plan. 2010, 33, 159–164.

- Zhu, Y.; Buchman, A. Evaluating and selecting web sources as external information resources of a data warehouse. In Proceedings of the Third International Conference on Web Information Systems Engineering, WISE 2002, Singapore, 14 December 2002; pp. 149–160.

- Saaty, T.L. The Analytic Hierarchy Process; McGraw-Hill: New York, NY, USA, 1980.

- Salvatierra da Silva, D.; Jacobson, S.; Monroe, M.; Israel, G. Using evaluability assessment to improve program evaluation for the Bluethroated Macaw Environmental Education Project in Bolivia. Appl. Environ. Educ. Commun. 2016, 15, 312–324.

- Zint, M.T.; Dowd, P.F.; Covitt, B.A. Enhancing environmental educators’ evaluation competencies: Insights from an examination of the effectiveness of the My Environmental Education Evaluation Resource Assistant (MEERA) website. Environ. Educ. Res. 2011, 17, 471–497.

- Zint, M. An introduction to My Environmental Education Evaluation Resource Assistant (MEERA), a web-based resource for self-directed learning about environmental education program evaluation. Eval. Program Plan. 2010, 33, 178–179.

- Kabassi, K. Comparing Multi-Criteria Decision Making Models for Evaluating Environmental Education Programs. Sustainability 2021, 13, 11220.

- Chenery, L.; Hammerman, W. Current practices in the evaluation of resident outdoor education programs: Report of a national survey. J. Environ. Educ. 1984, 16, 35–42.

- Toomey, A.H.; Knight, A.T.; Barlow, J. Navigating the space between research and implementation in conservation. Conserv. Lett. 2017, 10, 619–625.

- Romero-Gutierrez, M.; Jimenez-Liso, M.R.; Martinez-Chico, M. SWOT analysis to evaluate the programme of a joint online/onsite master’s degree in environmental education through the students’ perceptions. Eval. Program Plan. 2016, 54, 41–49.

- Ardoin, N.M.; Biedenweg, K.; O’Connor, K. Evaluation in Residential Environmental Education: An Applied Literature Review of Intermediary Outcomes. Appl. Environ. Educ. Commun. 2015, 14, 43–56.

- Ardoin, N.M.; Bowers, A.W.; Gaillard, E. Environmental education outcomes for conservation: A systematic review. Biol. Conserv. 2020, 241, 108224.

- Ardoin, N.M.; Bowers, A.W.; Roth, N.W.; Holthuis, N. Environmental education and K-12 student outcomes: A review and analysis of research. J. Environ. Educ. 2018, 49, 1–17.

- Thomas, R.E.W.; Teel, T.; Bruyere, B.; Laurence, S. Metrics and outcomes of conservation education: A quarter century of lessons learned. Environ. Educ. Res. 2018, 25, 172–192.

- Norris, K.; Jacobson, S.K. A content analysis of tropical conservation education programs: Elements of Success. J. Environ. Educ. 1998, 30, 38–44.

- Fien, J.; Scott, W.; Tilbury, D. Education and conservation: Lessons from an evaluation. Environ. Educ. Res. 2001, 7, 379–395.

- Linder, D.; Cardamoneb, C.; Cash, S.B.; Castellot, J.; Kochevar, D.; Dhadwal, S.; Patterson, E. Development, implementation, and evaluation of a novel multidisciplinary one health course for university undergraduates. One Health 2020, 9, 100121. [Google Scholar] [CrossRef]

- O’Neil, E. Conservation Audits: Auditing the Conservation Process—Lessons Learned, 2003–2007; Conservation Measures Partnership: Bethesda, MD, USA, 2007. [Google Scholar]

- Silva, R.L.F.; Ghilard-Lopes, N.P.; Raimundo, S.G.; Ursi, S. Evaluation of Environmental Education Activities. In Coastal and Marine Environmental Education; Brazilian Marine Biodiversity; Ghilardi-Lopes, N., Berchez, F., Eds.; Springer: Cham, Switzerland, 2019; Available online: https://link.springer.com/chapter/10.1007%2F978-3-030-05138-9_5 (accessed on 1 August 2021).

- Stern, M.J.; Powell, R.B.; Hill, D. Environmental education program evaluation in the new millennium: What do we measure and what have we learned? Environ. Educ. Res. 2013, 20, 581–611. [Google Scholar] [CrossRef]

- Chao, Y.-L. A Performance Evaluation of Environmental Education Regional Centers: Positioning of Roles and Reflections on Expertise Development. Sustainability 2020, 12, 2501. [Google Scholar] [CrossRef]

- Fleming, L.; Easton, J. Building environmental educators’ evaluation capacity through distance education. Eval. Program Plan. 2010, 33, 172–177. [Google Scholar] [CrossRef]

- Kabassi, K.; Martinis, A.; Charizanos, P. Designing a Tool for Evaluating Programs for Environmental Education. Appl. Environ. Educ. Commun. 2020, 20, 270–287. [Google Scholar] [CrossRef]

- Hwang, C.L.; Yoon, K. Multiple Attribute Decision Making: Methods and Applications a State-of-the-Art Survey; Lecture Notes in Economics and Mathematical Systems; Springer: Berlin, Germany, 1981; p. 186. [Google Scholar]

- Chen, C.T. Extensions of the TOPSIS for group decision-making under fuzzy environment. Fuzzy Sets Syst. 2000, 114, 1–9. [Google Scholar] [CrossRef]

- Fishburn, P.C. Additive Utilities with Incomplete Product Set: Applications to Priorities and Assignments. Oper. Res. 1967, 15, 537–542. [Google Scholar] [CrossRef]

- Velasquez, M.; Hester, P.T. An Analysis of Multi-Criteria Decision Making Methods. Int. J. Oper. Res. 2013, 10, 56–66. [Google Scholar]

- Kabassi, K.; Martinis, A.; Botonis, A. Using Evaluation Data Analytics in Environmental Education Projects, Advances in Signal Processing and Intelligent Recognition Systems. In Proceedings of the 5th International Symposium, SIRS 2019, Trivandrum, India, 18–21 December 2019. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).