Abstract

In this paper, we propose an incremental-type subgradient scheme for solving a nonsmooth convex–concave minimax optimization problem in the setting of Euclidean spaces. We investigate convergence results by deriving an upper bound for the absolute value of the difference between the function value of the averaged iterates and the saddle value, provided that the step size is a constant. By assuming that the step-size sequence is diminishing, we prove the convergence of both the averaged sequence of function values and the sequence of function values of averaged iterates to the saddle value. Finally, we present numerical examples illustrating the obtained theoretical results.

1. Introduction

In this work, we focus on developing an iterative method for solving a finite-sum nonsmooth convex–concave minimax optimization problem in a decentralized setting:

$$\min_{x \in X} \max_{y \in Y} F(x, y) := \sum_{i=1}^{m} F_i(x, y), \tag{1}$$

where the component coupling function $F_i : \mathbb{R}^n \times \mathbb{R}^p \to \mathbb{R}$ for each $i = 1, \ldots, m$ is a convex–concave function and the constraint sets $X \subset \mathbb{R}^n$ and $Y \subset \mathbb{R}^p$ are compact convex sets. The convex–concave optimization problem (1) has been extensively studied, with numerous methods proposed in the literature. It arises in various areas of convex optimization. In addition, it has been widely applied in multiple fields, namely, generative adversarial networks (GANs) [1,2], adversarial robust learning [3], classification [4,5,6], and image processing [7,8].

When $m = 1$, the gradient descent–ascent method, a well-known approach introduced by Arrow, Hurwicz, and Uzawa [9], is commonly used to solve the problem in the smooth case. Furthermore, for cases where the coupling function is not necessarily smooth, Nedić and Ozdaglar [10] proposed the subgradient method, which uses subgradient computation in place of gradient computation. Since the coupling objective function is a convex–concave real-valued function, the existence of subgradients for both variables is guaranteed. A common difficulty with gradient or subgradient methods for convex optimization is that while subgradients exist, they are often hard to compute. To address this issue, the concept of delay techniques for calculating subgradients was introduced [11,12,13,14]. Specifically, the next iterate can be updated using information from stale iterates rather than computing the subgradient at the current iterate, which saves computational runtime. Recently, Arunrat and Nimana [15] combined the subgradient method with the delay technique, which allows more flexibility in computing subgradients, for solving problem (1) when $m = 1$. In this approach, the iterates are updated simultaneously using delayed subgradients at stale iterates of their respective variables, combined with the current iterates of the other variable. The method is referred to as the delayed subgradient method and can be explicitly formulated as follows: For a current iterate $(x_k, y_k)$, compute

$$x_{k+1} = P_X\big(x_k - \gamma_k\, g_x(x_{k-\tau_k}, y_k)\big)$$

and

$$y_{k+1} = P_Y\big(y_k + \gamma_k\, g_y(x_k, y_{k-\mu_k})\big),$$

where $g_x(x_{k-\tau_k}, y_k)$ is a subgradient of $F$ with respect to $x$ at a stale iterate $x_{k-\tau_k}$; $g_y(x_k, y_{k-\mu_k})$ is a subgradient of $F$ with respect to $y$ at a stale iterate $y_{k-\mu_k}$; $\gamma_k > 0$ is a step size; and $P_X$ and $P_Y$ are metric projections on $X$ and $Y$, respectively. In some situations, the objective functions can be expressed as a finite sum or within a stochastic setting. Focusing on each coordinate individually rather than on the entire function can often yield better results. Several methods were also developed to address this type of convex–concave optimization problem [16,17,18,19,20].
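As a rough illustration of the delayed update described above, the following sketch runs it on a toy scalar problem. Everything specific here is an assumption made for the example and is not taken from [15]: the function $F(x, y) = |x| + xy - |y|$ on $[-1, 1]^2$ (whose saddle point is $(0, 0)$), the fixed delay of two iterations shared by both variables, and the step size $0.1/\sqrt{k+1}$.

```python
import math


def sign(v):
    """Sign of a scalar (0 at v == 0), a valid subgradient of |.| at v."""
    return (v > 0) - (v < 0)


def clip(v, lo=-1.0, hi=1.0):
    """Metric projection of a scalar onto the interval [lo, hi]."""
    return min(max(v, lo), hi)


def delayed_subgradient_method(x0, y0, num_iters, delay, step):
    """Delayed subgradient descent-ascent for the toy function
    F(x, y) = |x| + x*y - |y| on [-1, 1]^2 (illustrative assumption)."""
    xs, ys = [x0], [y0]
    for k in range(num_iters):
        gamma = step(k)
        stale = max(k - delay, 0)  # index of the stale iterate
        # Subgradient in x at the stale x, paired with the current y.
        g_x = sign(xs[stale]) + ys[k]
        # Subgradient (of the concave map) in y at the stale y,
        # paired with the current x.
        g_y = xs[k] - sign(ys[stale])
        xs.append(clip(xs[k] - gamma * g_x))  # projected descent step in x
        ys.append(clip(ys[k] + gamma * g_y))  # projected ascent step in y
    return xs, ys


xs, ys = delayed_subgradient_method(
    x0=1.0, y0=1.0, num_iters=5000, delay=2,
    step=lambda k: 0.1 / math.sqrt(k + 1),
)
print(xs[-1], ys[-1])  # both should be close to the saddle point (0, 0)
```

Keeping the full iterate history is the simplest way to look up stale iterates; a bounded delay would allow a fixed-size buffer instead.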

On the other hand, many authors are interested in studying convex minimization problems where the objective function is expressed as a finite sum. This problem is known as the additive convex optimization problem. Specifically, let $m$ be a positive integer; for all $i = 1, \ldots, m$, let $f_i : \mathbb{R}^n \to \mathbb{R}$ be a convex function and let $X \subset \mathbb{R}^n$ be a nonempty closed and convex set. The additive convex optimization problem is defined as follows:

$$\min_{x \in X} f(x) := \sum_{i=1}^{m} f_i(x).$$

In 2001, Nedić and Bertsekas proposed the so-called incremental subgradient methods [21,22] to address this problem. At each iteration, the solution is updated based on the subgradient of a single component of the objective function. The method is essentially structured as follows: $x_k$ is the vector obtained after $k$ cycles, and the vector $x_{k+1}$ is updated by initializing with $\psi_{0,k} = x_k$, where we compute

$$\psi_{i,k} = P_X\big(\psi_{i-1,k} - \gamma_k\, g_{i,k}\big), \quad i = 1, \ldots, m,$$

and generate $x_{k+1}$ after one more cycle of $m$ steps as

$$x_{k+1} = \psi_{m,k},$$

where $g_{i,k}$ is a subgradient of $f_i$ at $\psi_{i-1,k}$, and $\gamma_k$ is a positive step size. These components can be selected either cyclically or randomly at each step. These methods are well suited for large-scale problems, as they reduce the memory and computational requirements compared with batch methods. Furthermore, this approach eliminates the need for a central user to collect all the data from each component. We note that the delay technique has not yet been applied to the incremental subgradient method for solving problem (1).
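The cyclic variant of this scheme can be sketched on a one-dimensional example. The choices below are assumptions made only for illustration: the components $f_i(x) = |x - a_i|$, the interval $[0, 10]$ as constraint set, and the step size $1/(k+1)$. A minimizer of the sum is a median of the $a_i$, which gives an easy reference value.

```python
def incremental_subgradient(a, x0, lo, hi, step, num_cycles):
    """Cyclic incremental subgradient method for
    min over [lo, hi] of sum_i |x - a_i| (illustrative example)."""
    x = x0
    for k in range(num_cycles):
        gamma = step(k)
        psi = x  # start the cycle at the current outer iterate
        for a_i in a:  # one pass over the m components
            # A subgradient of |. - a_i| at psi (0 is chosen when psi == a_i).
            g = (psi > a_i) - (psi < a_i)
            # Projected step using only this single component.
            psi = min(max(psi - gamma * g, lo), hi)
        x = psi  # the outer iterate after one full cycle of m steps
    return x


a = [1.0, 2.0, 7.0]
x_final = incremental_subgradient(
    a, x0=10.0, lo=0.0, hi=10.0,
    step=lambda k: 1.0 / (k + 1), num_cycles=2000,
)
print(x_final)  # should be close to the median of a, which is 2.0
```

Each inner step touches one component only, so no step requires a subgradient of the full sum.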

In this work, we propose the incremental delayed subgradient method for solving the decentralized nonsmooth convex–concave minimax problem (1). This approach updates the next iterates incrementally by incorporating delayed subgradients from past iterates of their respective variables while using the current iterates of the other variable. We provide a detailed characterization of the fundamental relations required to analyze the behavior of the generated sequences. Furthermore, we establish convergence results for both constant and diminishing step-size sequences. Finally, we present numerical examples to demonstrate the effectiveness of the proposed method.

The remainder of this paper is structured as follows: In Section 2, we review essential concepts and important results related to convex–concave coupling functions and the min–max optimization problem. Section 3 introduces the incremental delayed subgradient method, outlines the assumptions, and provides relevant discussions. In addition, this section includes an analysis of the convergence properties of the proposed method. Section 4 presents numerical experiments on simple convex–concave functions to demonstrate the effectiveness of the proposed method. Finally, the concluding section summarizes our findings and provides closing remarks.

2. Preliminaries

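In this section, we recall some definitions and useful facts used in this work. We denote by $\mathbb{R}$ the set of real numbers and by $\mathbb{N}_0$ the set of nonnegative integers. Let $x = (x_1, \ldots, x_n)$ and $y = (y_1, \ldots, y_n)$ be in $\mathbb{R}^n$; the inner product of $x$ and $y$, denoted by $\langle x, y \rangle$, is defined by $\langle x, y \rangle := \sum_{i=1}^{n} x_i y_i$. Moreover, the norm of $x$, denoted by $\|x\|$, is defined by $\|x\| := \sqrt{\langle x, x \rangle}$. If $X$ is a bounded set in $\mathbb{R}^n$, then we denote the diameter of $X$ by $\operatorname{diam}(X) := \sup\{\|x - z\| : x, z \in X\}$.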

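Let $X \subset \mathbb{R}^n$ and $Y \subset \mathbb{R}^p$ be nonempty convex sets and $F : X \times Y \to \mathbb{R}$ be a function. We say that $F$ is a convex–concave function if the function $F(\cdot, y)$ is a convex function for each fixed $y \in Y$, that is,

$$F(\lambda x_1 + (1 - \lambda) x_2, y) \le \lambda F(x_1, y) + (1 - \lambda) F(x_2, y) \quad \text{for all } x_1, x_2 \in X \text{ and } \lambda \in [0, 1],$$

and $F(x, \cdot)$ is a concave function for each fixed $x \in X$, that is,

$$F(x, \lambda y_1 + (1 - \lambda) y_2) \ge \lambda F(x, y_1) + (1 - \lambda) F(x, y_2) \quad \text{for all } y_1, y_2 \in Y \text{ and } \lambda \in [0, 1].$$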

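A vector pair $(x^*, y^*) \in X \times Y$ is said to be a saddle point of $F$ on $X \times Y$ (with respect to minimizing in $x$ and maximizing in $y$) if

$$F(x^*, y) \le F(x^*, y^*) \le F(x, y^*) \quad \text{for all } x \in X \text{ and } y \in Y.$$

We call the value $F(x^*, y^*)$ the saddle value. Note that if $X$ and $Y$ are nonempty closed bounded convex sets and $F$ is a convex–concave function, then $F$ has a saddle point on $X \times Y$ (see [23], Corollary 37.6.2).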
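The two-sided saddle-point inequality can be checked numerically on a small example. In the sketch below, the function $F(x, y) = x^2 + xy - y^2$ on $[-1, 1]^2$ and the finite grid are illustrative assumptions; a grid check can only refute a candidate point, and passing it is not a proof.

```python
def F(x, y):
    """A simple convex-concave function: convex in x, concave in y."""
    return x * x + x * y - y * y


def is_saddle_point(F, x_star, y_star, xs, ys, tol=1e-12):
    """Check F(x*, y) <= F(x*, y*) <= F(x, y*) on finite grids xs and ys."""
    value = F(x_star, y_star)
    left = all(F(x_star, y) <= value + tol for y in ys)
    right = all(value <= F(x, y_star) + tol for x in xs)
    return left and right


grid = [i / 50.0 - 1.0 for i in range(101)]  # uniform grid on [-1, 1]

print(is_saddle_point(F, 0.0, 0.0, grid, grid))  # (0, 0) passes the check
print(is_saddle_point(F, 0.5, 0.5, grid, grid))  # (0.5, 0.5) fails it
```

Here $F(0, y) = -y^2 \le 0 \le x^2 = F(x, 0)$, so $(0, 0)$ is a saddle point with saddle value $0$.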

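Let $f : \mathbb{R}^n \to \mathbb{R}$ be a function and let $x \in \mathbb{R}^n$. A vector $g \in \mathbb{R}^n$ is called a subgradient of $f$ at $x$ if

$$f(z) \ge f(x) + \langle g, z - x \rangle \quad \text{for all } z \in \mathbb{R}^n.$$

We denote the set of all subgradients of $f$ at $x$ by $\partial f(x)$ and refer to it as the subdifferential of $f$. The function $f$ is called subdifferentiable at $x$ if $\partial f(x) \neq \emptyset$. Note that if $f : \mathbb{R}^n \to \mathbb{R}$ is a convex function and $x \in \mathbb{R}^n$, then $f$ is subdifferentiable at $x$. If $f : \mathbb{R}^n \to \mathbb{R}$ is a concave function, a vector $g \in \mathbb{R}^n$ is called a supergradient of $f$ at $x$ if

$$f(z) \le f(x) + \langle g, z - x \rangle \quad \text{for all } z \in \mathbb{R}^n.$$

The set of all supergradients of $f$ at $x$ is denoted by $\partial f(x)$ and is called the superdifferential of $f$. For simplicity, we also refer to them as the subgradient and subdifferential sets. Furthermore, we note that $\partial f(x) = -\partial(-f)(x)$. Moreover, since $-f$ is a convex function, we also have that $f$ is subdifferentiable at $x$.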
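As a concrete instance of the subgradient inequality, the sketch below uses the $\ell_1$ norm $f(x) = \sum_i |x_i|$, which is convex and nonsmooth; the componentwise sign vector is one of its subgradients. The function, the test point, and the random sampling are illustrative assumptions rather than anything used in the paper.

```python
import random


def l1_norm(x):
    """The l1 norm, a convex function that is nonsmooth where a coordinate is 0."""
    return sum(abs(v) for v in x)


def l1_subgradient(x):
    """One subgradient of the l1 norm at x: sign(x_i), with 0 chosen where x_i == 0."""
    return [(v > 0) - (v < 0) for v in x]


def satisfies_subgradient_inequality(f, g, x, z, tol=1e-12):
    """Check f(z) >= f(x) + <g, z - x> for a single test point z."""
    inner = sum(gi * (zi - xi) for gi, xi, zi in zip(g, x, z))
    return f(z) >= f(x) + inner - tol


random.seed(0)
x = [1.5, 0.0, -2.0]
g = l1_subgradient(x)
all_ok = all(
    satisfies_subgradient_inequality(
        l1_norm, g, x, [random.uniform(-5.0, 5.0) for _ in x]
    )
    for _ in range(1000)
)
print(g, all_ok)
```

At the coordinate where $x_i = 0$, any value in $[-1, 1]$ would also be valid, which is why the subdifferential is a set rather than a single vector.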

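Let $F : \mathbb{R}^n \times \mathbb{R}^p \to \mathbb{R}$ be a convex–concave function. Following the above definition of a subgradient for a convex function, we extend this concept to define the subgradients of a convex–concave function as follows. For a fixed $\bar{y} \in \mathbb{R}^p$ and $\bar{x} \in \mathbb{R}^n$, we call a vector $g_x(\bar{x}, \bar{y})$ a subgradient of the convex function $F(\cdot, \bar{y})$ with respect to $x$ at the point $\bar{x}$ if

$$F(x, \bar{y}) \ge F(\bar{x}, \bar{y}) + \langle g_x(\bar{x}, \bar{y}), x - \bar{x} \rangle \quad \text{for all } x \in \mathbb{R}^n.$$

And for a fixed $\bar{x} \in \mathbb{R}^n$ and $\bar{y} \in \mathbb{R}^p$, we call a vector $g_y(\bar{x}, \bar{y})$ a subgradient of the concave function $F(\bar{x}, \cdot)$ with respect to $y$ at the point $\bar{y}$ if

$$F(\bar{x}, y) \le F(\bar{x}, \bar{y}) + \langle g_y(\bar{x}, \bar{y}), y - \bar{y} \rangle \quad \text{for all } y \in \mathbb{R}^p.$$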

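Let $X \subset \mathbb{R}^n$ be a nonempty subset and $x \in \mathbb{R}^n$. If there is a point $\bar{x} \in X$ such that

$$\|x - \bar{x}\| \le \|x - z\|$$

for all $z \in X$, then $\bar{x}$ is said to be a metric projection of $x$ onto $X$ and we usually symbolize it by writing $\bar{x} = P_X(x)$. Note that if $X$ is a nonempty closed convex set, then for each $x \in \mathbb{R}^n$, there exists a unique metric projection $P_X(x)$.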
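For two constraint sets that appear often in practice, a box and the standard simplex, the metric projection has a simple closed form or a short exact procedure. The sketch below is a plain-Python illustration; the function names are hypothetical, and the simplex routine is the usual sort-and-threshold method rather than anything specified in this paper.

```python
def project_box(x, lo, hi):
    """Metric projection of x onto the box [lo, hi]^n (coordinate-wise clipping)."""
    return [min(max(v, lo), hi) for v in x]


def project_simplex(x):
    """Metric projection of x onto the standard simplex {z >= 0, sum(z) = 1}.

    Sort-based method: find the threshold theta such that
    sum(max(x_i - theta, 0)) = 1, then shift and clip at zero.
    """
    u = sorted(x, reverse=True)
    cumsum = 0.0
    theta = 0.0
    for j, u_j in enumerate(u, start=1):
        cumsum += u_j
        t = (cumsum - 1.0) / j
        if u_j - t > 0:
            theta = t  # the last index satisfying the condition defines theta
    return [max(v - theta, 0.0) for v in x]


print(project_box([-2.0, 0.5, 3.0], 0.0, 1.0))
print(project_simplex([2.0, 0.0]))
print(project_simplex([1.0, 1.0]))
```

A point already in the set is returned unchanged, which matches the definition: its distance to itself is zero.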

3. Incremental Delayed Subgradient Method

In this section, we present the incremental delayed subgradient method (in short, IDSM), which is the main algorithm of this work. After that, we state the technical lemmata that are used in the convergence results.

To begin with, we need the following delay bound assumption.

Assumption 1.

The delay sequences are bounded, that is, there exists a non-negative integer such that

We are in a position to present the incremental delayed subgradient method in Algorithm 1 as follows.

| Algorithm 1 Incremental Delayed Subgradient Method (in short, IDSM) |

| Initialization: Given the step-size sequence of real numbers, choose initial points and arbitrarily. |

| Iterative Step: For an iterate (), starting with |

| For each , compute |

| Compute |

| Update . |
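The following sketch shows one way an incremental delayed update of this kind might look in code. It is not a transcription of Algorithm 1, whose formulas are not reproduced here, and several choices are assumptions: each component subgradient is evaluated at a stale outer iterate of its own variable and at the current outer iterate of the other variable, the delay is a fixed constant shared by all components, and the toy components are $F_i(x, y) = |x - a_i| + xy/m - |y - b_i|$ on $[-1, 1]^2$. With the symmetric data below, $(0, 0)$ is a saddle point of the sum.

```python
import math


def sign(v):
    """Sign of a scalar (0 at v == 0), a valid subgradient of |.| at v."""
    return (v > 0) - (v < 0)


def clip(v, lo=-1.0, hi=1.0):
    """Metric projection of a scalar onto the interval [lo, hi]."""
    return min(max(v, lo), hi)


def idsm_sketch(a, b, x0, y0, num_cycles, delay, step):
    """Incremental delayed subgradient sketch for the toy components
    F_i(x, y) = |x - a_i| + x*y/m - |y - b_i| (assumed form, see lead-in)."""
    m = len(a)
    xs, ys = [x0], [y0]
    for k in range(num_cycles):
        gamma = step(k)
        stale = max(k - delay, 0)  # index of the stale outer iterate
        phi, psi = xs[k], ys[k]    # inner iterates start at the outer iterate
        for i in range(m):         # one incremental pass over the components
            # Subgradient of F_i in x: stale x, current y.
            g_x = sign(xs[stale] - a[i]) + ys[k] / m
            # Subgradient of F_i in y (concave part): current x, stale y.
            g_y = xs[k] / m - sign(ys[stale] - b[i])
            phi = clip(phi - gamma * g_x)  # projected descent step
            psi = clip(psi + gamma * g_y)  # projected ascent step
        xs.append(phi)
        ys.append(psi)
    return xs, ys


a = [-0.5, 0.0, 0.5]
b = [-0.5, 0.0, 0.5]
xs, ys = idsm_sketch(
    a, b, x0=1.0, y0=1.0, num_cycles=5000, delay=2,
    step=lambda k: 0.1 / math.sqrt(k + 1),
)
print(xs[-1], ys[-1])  # both should be close to the saddle point (0, 0)
```

Setting `delay=0` and using a single component recovers a plain projected subgradient descent-ascent step, which is a convenient sanity check for an implementation.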

To make the convergence analysis of Algorithm 1 more straightforward, we assume throughout this work that

Remark 1.

If the number of component functions is $m = 1$, the proposed IDSM reduces to the method proposed by Arunrat and Nimana [15]. If, additionally, the delays vanish, that is, all delays are equal to zero, the IDSM reduces to the subgradient method proposed by Nedić and Ozdaglar [10].

3.1. Technical Lemmata

The boundedness of the constraint sets and , along with the fact that the subgradients of a real-valued convex function are uniformly bounded over any bounded subset, leads to the boundedness property stated in the following lemma.

Lemma 1.

There is a real constant such that for all and for all ,

Next, we characterize the fundamental recursive relations of the iterative sequences and and points and , respectively, which are used in the subsequent convergence analysis. For simplicity of notation, we denote the following:

Lemma 2.

Let and be sequences generated by the IDSM, and be a real number. The following statements are true:

- (i)

- For all and for all integers ,

- (ii)

- For all and for all integers ,

Proof.

We present only the proof of (i). Since the proof of (ii) trivially follows the line of reasoning of the proof of (i) by replacing the convexity by the concavity of for all , we omit it here.

Let and be given. For every to m, by using the definition of and the property of metric projection, we note that

Let us arrange the terms on the right-hand side of the inequality above. Now, the first term can be arranged as

Since is the subgradient of a convex function at , we obtain that the second term can be bounded as

Regarding the last term in the inequality above, we begin by noting that

By using the facts that the constant and the number , we note that

which implies that

Using the same technique for deriving (6), we also have

Thus, by applying the obtained inequalities (6) and (7), inequality (5) becomes

By applying inequalities (3), (4) and (8) in inequality (2), we obtain

Now, we apply the subgradient inequality for a convex function at and to obtain

Using the same technique to derive inequality (6), we can obtain the following inequalities:

and

From inequalities (11) and (12), along with (10), it follows that inequality (9) becomes

and it is organized as

where the constant B is the bound of the subgradients given in Lemma 1. We observe that the term in inequality (13) above can be written as

Thus, using this inequality in (13), we have

Now, we investigate the terms and in inequality (14) as follows. By using the convexity of , we note that

With the same technique as above, the term is bounded as

Therefore, by applying inequalities (15) and (16) in inequality (14), we obtain that

By adding inequality (17) over , we obtain

and then

implying that

as desired. □

3.2. Bounds for Constant Step Size

Before proposing the lemma below, we define the notations in the case of a constant step size, where we write the averaged iterates and as

The proof of the following lemma can be carried out in the same manner as the proof of Lemma 4.2 in [15] by using Lemma 2 with .

Lemma 3.

Let and be sequences generated by the IDSM, and be a real number. Suppose that the step-size constant . The following statements are true:

- (i)

- For all and for all integers ,

- (ii)

- For all and for all integers ,

Next, we determine the upper bound for the minimax gap of the averaged iterates and by using the obtained results in Lemma 3 as follows:

Theorem 2.

Let and be sequences generated by the IDSM, and be a real number. Suppose that the step-size constant . Then, for all and any saddle point , we have

Proof.

Furthermore, we can derive the upper bound for the difference in the function values of the averaged iterates and the saddle value, as stated in the following theorem.

Theorem 3.

Let and be sequences generated by the IDSM, and be a real number. Suppose that the step-size constant . Then, for all and any saddle point , we have

Proof.

For any , we set and in (18) and (19), respectively, where we have that

and

Since and for all , the saddle-point relation implies that

and

We invoke these two results with inequalities (21) and (22), respectively, which are

and

Now, by combining inequalities (23) and (24), we obtain

In addition, by using and in (18) and (19), respectively, we derive that

and

Next, we combine the two obtained relations and multiply by on both sides of the inequality to obtain that

Finally, we sum up inequalities (25) and (26) to obtain

which completes the proof. □

By inspecting the proof of Theorem 3, we can derive the complexity upper bound of the IDSM, as stated in the following remark.

Remark 2.

Let be a saddle point of problem (1) and . According to (25), we have

which yields the upper bound on the average of function values for the saddle value as

Notice that choosing a small step size γ so that the term is small allows for a small error level, as required. To obtain an ε-optimal solution to problem (1), we now ask how many iterations N are required. Now, let be so that

Note that finding the nonnegative integer N for which

is achieved in the same manner as

Let us fix a length . By putting the positive real number and committing over this length, we may choose the constant step size for some constant so that

Since we know that and , we have . Now, by particularly putting , we obtain the above constant bound:

Therefore, we obtain the complexity upper bound within a fixed length and a constant as

3.3. Convergences of Function Values

For notational simplicity, we write the averaged iterates and as

Lemma 4.

Let and be sequences generated by the IDSM, and be a real number. Suppose that the step-size sequence is non-increasing.

- (i)

- For all and for all integers ,

- (ii)

- For all and for all integers ,

Proof.

(i) Let be given. Taking into account Lemma 2 (i), we note that for all ,

For a fixed integer , by summing this relation over k from 0 to N, we obtain

For the last term of the above inequality, the non-increasing property of the step-size sequence implies that

By applying this obtained inequality in inequality (29), we have

Now, the condition of the step-size sequence implies that for all , and so

implying that

As a result of the concavity of the function , it follows that

which means that the above inequality becomes

(ii) Let be given. By utilizing Lemma 2 (ii), we have that for all ,

We sum up the above inequality from to a fixed number and we obtain that

In a similar technique to inequality (30), we also obtain

Invoking this relation in inequality (31), we have

Again, by applying the condition of the step-size sequence , we obtain

and then

Furthermore, since we know that is a convex function for a fixed we also have

Finally, we can conclude that

as desired. □

Theorem 4.

Let and be sequences generated by the IDSM, and be a real number. Suppose that the step-size sequence is non-increasing and satisfies and . Then, the averaged sequence of function values and the sequence of function values of averaged iterates both converge to .

Proof.

Let and in (27) and (28), respectively. We obtain that for all ,

and

For all , since the sets and are convex, we have that and . This, together with the saddle-point relation of , implies that

Now, applying inequalities (32) and (33) with the saddle-point relation (34), we obtain that for all ,

and

Therefore, we have that for all ,

Next, by the condition that , we obtain

Since , we have

By using these two results with inequality (35), we arrive at the conclusion that

Again, by setting and in (27) and (28), respectively, we have that for all ,

Combining this with inequalities (36)–(38), it becomes

as desired. □

4. Numerical Examples

In this section, we consider the numerical examples of the proposed method (IDSM) for solving the distributed matrix game problem of the following form:

where the constrained sets and are standard simplices in and , respectively, and the component coupling function for each is given by

where is the vector in , all of whose components are 1. We generated matrices for each randomly, whose entries were independently and uniformly distributed in the interval . We compared the IDSM with two existing methods: NO-09, the subgradient method of Nedić and Ozdaglar [10], and AN-23, the delayed subgradient method of Arunrat and Nimana [15]. We set the delay sequences with the cyclic order delays for all and , with the delay bounds , and 10 for both the IDSM and AN-23. We set the step-size constant as for the IDSM and for AN-23 and NO-09. We performed 100 independent random tests and terminated all tested methods when the number of iterations reached 100. We examined the considered problem in various problem sizes , as detailed below.
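To make this kind of setup concrete, the sketch below generates random component matrices and evaluates a plain finite-sum matrix game $F(x, y) = \sum_i x^\top A_i y$ over standard simplices. This is a simplification under stated assumptions: the component functions used in the experiments contain terms that are not reproduced here, the interval $[-1, 1]$ and the sizes are placeholders, and all function names are hypothetical. For a bilinear game on simplices, the duality gap $\max_j (A^\top x)_j - \min_r (A y)_r$ is nonnegative and vanishes exactly at saddle points, so it can serve as an accuracy measure.

```python
import random


def random_matrices(m, n, p, low=-1.0, high=1.0, seed=0):
    """Generate m random n-by-p matrices with i.i.d. uniform entries."""
    rng = random.Random(seed)
    return [[[rng.uniform(low, high) for _ in range(p)] for _ in range(n)]
            for _ in range(m)]


def sum_matrices(mats):
    """Entrywise sum of a list of equally sized matrices."""
    n, p = len(mats[0]), len(mats[0][0])
    return [[sum(A[r][c] for A in mats) for c in range(p)] for r in range(n)]


def bilinear_value(A, x, y):
    """Value of the bilinear form x^T A y."""
    return sum(x[r] * A[r][c] * y[c]
               for r in range(len(x)) for c in range(len(y)))


def duality_gap(A, x, y):
    """max_j (A^T x)_j - min_r (A y)_r for x, y in the standard simplices."""
    n, p = len(A), len(A[0])
    best_for_y = max(sum(A[r][c] * x[r] for r in range(n)) for c in range(p))
    best_for_x = min(sum(A[r][c] * y[c] for c in range(p)) for r in range(n))
    return best_for_y - best_for_x


mats = random_matrices(m=3, n=4, p=5)
A = sum_matrices(mats)
x = [1.0 / 4] * 4  # uniform point of the simplex in R^4
y = [1.0 / 5] * 5  # uniform point of the simplex in R^5
print(bilinear_value(A, x, y), duality_gap(A, x, y))
```

Because the game is bilinear, the finite sum collapses to a single matrix, so the gap only needs the summed matrix `A`.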

4.1. The Case

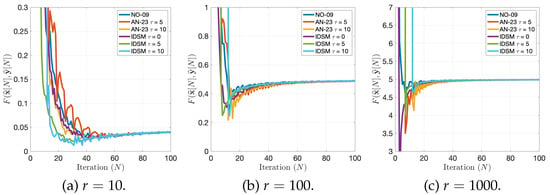

In Figure 1a–c, we consider the behavior of the average of 100 independently sampled function values with a fixed . We observed that for all values r, all methods tended to converge to the saddle values.

Figure 1.

Behaviors of the average of 100 independent values performed by NO-09, AN-23, and the IDSM for the cases and , and 1000.

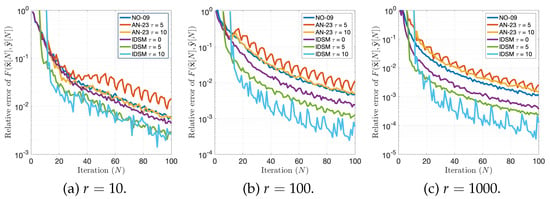

According to Figure 2, which shows the behaviors of the relative errors of the function value for each problem size, we noticed that the IDSM with an appropriate delay bound selection, particularly for a fixed delay bound of , provided the most accurate results with the lowest relative error across all sizes. Additionally, for fixed delay bounds of and , it still exhibited lower relative error values when compared with the AN-23 and NO-09 methods.

Figure 2.

Behaviors of the average of 100 independent relative errors of values performed by NO-09, AN-23, and the IDSM for the cases and , and 1000.

4.2. The Case

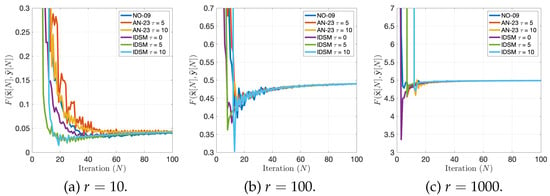

It can be observed from Figure 3 that for all values r, all methods tended to converge to the saddle values.

Figure 3.

Behaviors of the average of 100 independent values performed by NO-09, AN-23, and the IDSM for the cases and , and 1000.

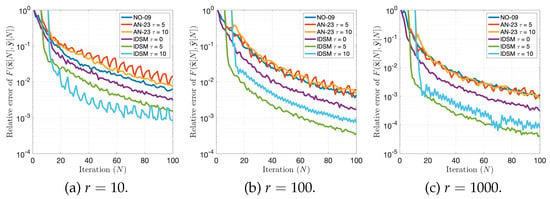

In Figure 4, we observed that when , the IDSM with a fixed delay bound of exhibited the lowest relative error values. In contrast, for and , the IDSM with a fixed delay bound of provided the most accurate results, showing the lowest relative error. Moreover, we found that the IDSM, in the case of fixed delay bounds, gave lower relative errors than both the AN-23 and NO-09 methods under the same fixed delay bounds.

Figure 4.

Behaviors of the average of 100 independent relative errors of values performed by NO-09, AN-23, and the IDSM for the cases and , and 1000.

5. Conclusions

In this work, we introduced the incremental delayed subgradient method (in short, IDSM) for solving the saddle-point problem. We characterized the relations associated with the IDSM that underpin the main theorems. We then proved convergence results in two parts. For a constant step size, we provided an upper bound for the absolute value of the difference between the function value of the averaged iterates and the saddle value, and we derived the complexity upper bound within a fixed time length. For a diminishing step-size sequence, we proved the convergence of both the averaged sequence of function values and the sequence of function values of averaged iterates to the saddle value. Finally, we presented numerical examples for matrix game problems. Although the IDSM with delay effects yielded better convergence behavior than NO-09 and AN-23 in these experiments, the selection of appropriate delay bounds for particular problems remains an interesting research direction.

Author Contributions

Conceptualization, T.F., T.A. and N.N.; methodology, T.F., T.A. and N.N.; software, T.A. and N.N.; validation, T.F., T.A. and N.N.; formal analysis, T.F., T.A. and N.N.; investigation, T.F., T.A. and N.N.; writing—original draft preparation, T.F.; writing—review and editing, T.F., T.A. and N.N.; visualization, N.N.; supervision, N.N.; project administration, N.N.; funding acquisition, N.N. All authors read and agreed to the published version of this manuscript.

Funding

This research was supported by the Fundamental Fund of Khon Kaen University. This research received funding support from the National Science, Research and Innovation Fund (NSRF).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Dataset available upon request from the authors.

Acknowledgments

The authors are thankful to the editor and two anonymous referees for comments and remarks that improved the quality and presentation of this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Goodfellow, I.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680.

2. Boţ, R.I.; Sedlmayer, M.; Vuong, P.T. A relaxed inertial forward-backward-forward algorithm for solving monotone inclusions with application to GANs. J. Mach. Learn. Res. 2023, 24, 1–37.

3. Boţ, R.I.; Böhm, A. Alternating proximal-gradient steps for (stochastic) nonconvex-concave minimax problems. SIAM J. Optim. 2023, 33, 1884–1913.

4. Boţ, R.I.; Csetnek, E.R.; Sedlmayer, M. An accelerated minimax algorithm for convex-concave saddle point problems with nonsmooth coupling function. Comput. Optim. Appl. 2023, 86, 925–966.

5. Fan, Y.; Lyu, S.; Ying, Y.; Hu, B. Learning with average top-k loss. Adv. Neural Inf. Process. Syst. 2017, 497–505.

6. Ying, Y.; Wen, L.; Lyu, S. Stochastic online AUC maximization. Adv. Neural Inf. Process. Syst. 2016, 451–459.

7. He, B.S.; Yuan, X.M. Convergence analysis of primal–dual algorithms for a saddle point problem: From contraction perspective. SIAM J. Imaging Sci. 2012, 5, 119–149.

8. Cai, X.; Han, D.; Xu, L. An improved first-order primal-dual algorithm with a new correction step. J. Glob. Optim. 2013, 57, 1419–1428.

9. Arrow, K.J.; Hurwicz, L.; Uzawa, H. Studies in Linear and Non-Linear Programming; Stanford University Press: Stanford, CA, USA, 1958.

10. Nedić, A.; Ozdaglar, A. Subgradient methods for saddle-point problems. J. Optim. Theory Appl. 2009, 142, 205–228.

11. Aytekin, A. Asynchronous First-Order Algorithms for Large-Scale Optimization: Analysis and Implementation. Ph.D. Dissertation, KTH Royal Institute of Technology, Stockholm, Sweden, 2019.

12. Feyzmahdavian, H.R.; Aytekin, A.; Johansson, M. An asynchronous mini-batch algorithm for regularized stochastic optimization. IEEE Trans. Autom. Control 2016, 61, 3740–3754.

13. Gurbuzbalaban, M.; Ozdaglar, A.; Parrilo, P.A. On the convergence rate of incremental aggregated gradient algorithms. SIAM J. Optim. 2017, 27, 1035–1048.

14. Vanli, N.D.; Gurbuzbalaban, M.; Ozdaglar, A. Global convergence rate of proximal incremental aggregated gradient methods. SIAM J. Optim. 2018, 28, 1282–1300.

15. Arunrat, T.; Nimana, N. A delayed subgradient method for nonsmooth convex-concave min-max optimization problems. Results Control Optim. 2023, 12, 100266.

16. Beznosikov, A.; Scutari, G.; Rogozin, A.; Gasnikov, A. Distributed saddle-point problems under data similarity. Adv. Neural Inf. Process. Syst. 2021, 34, 8172–8184.

17. Dai, Y.H.; Wang, J.; Zhang, L. Stochastic approximation proximal subgradient method for stochastic convex-concave minimax optimization. arXiv 2024, arXiv:2403.20205.

18. Rafique, H.; Liu, M.; Lin, Q.; Yang, T. Weakly-convex–concave min–max optimization: Provable algorithms and applications in machine learning. Optim. Methods Softw. 2021, 37, 1087–1121.

19. Jiang, F.; Zhang, Z.; He, H. Solving saddle point problems: A landscape of primal-dual algorithm with larger stepsizes. J. Glob. Optim. 2023, 85, 821–846.

20. Luo, L.; Xie, G.; Zhang, T.; Zhang, Z. Near optimal stochastic algorithms for finite-sum unbalanced convex-concave minimax optimization. arXiv 2021, arXiv:2106.01761.

21. Nedić, A.; Bertsekas, D.P. Incremental subgradient methods for nondifferentiable optimization. SIAM J. Optim. 2001, 12, 109–138.

22. Nedić, A.; Bertsekas, D.P.; Borkar, V.S. Distributed asynchronous incremental subgradient methods. Stud. Comput. Math. 2001, 8, 381–407.

23. Rockafellar, R.T. Convex Analysis; Princeton University Press: Princeton, NJ, USA, 1970.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).