Abstract

Software testing is fundamental to ensuring the quality, reliability, and security of software systems. Over the past decade, artificial intelligence (AI) algorithms have been increasingly applied to automate testing processes, predict and detect defects, and optimize evaluation strategies. This systematic review examines studies published between 2014 and 2024, focusing on the taxonomy and evolution of algorithms across problems, variables, and metrics in software testing. A taxonomy of testing problems is proposed by categorizing issues identified in the literature and mapping the AI algorithms applied to them. In parallel, the review analyzes the input variables and evaluation metrics used by these algorithms, organizing them into established categories and exploring their evolution over time. The findings reveal three complementary trajectories: (1) the evolution of problem categories, from defect prediction toward automation, collaboration, and evaluation; (2) the evolution of input variables, highlighting the increasing importance of semantic, dynamic, and interface-driven data sources beyond structural metrics; and (3) the evolution of evaluation metrics, from classical performance indicators to advanced, testing-specific, and coverage-oriented measures. Finally, the study integrates these dimensions, showing how interdependencies among problems, variables, and metrics have shaped the maturity of AI in software testing. This review contributes a novel taxonomy of problems, a synthesis of variables and metrics, and a future research agenda emphasizing scalability, interpretability, and industrial adoption.

1. Introduction

In the digital era, software is a fundamental engine driving modern technology. Its relevance is manifested in its ability to transform data into useful information, to automate processes, and to foster efficiency and innovation across various industrial sectors. As the core of digital transformation, software not only facilitates digitization but also creates unprecedented business opportunities. According to a study by McKinsey & Company, firms that adopt advanced digital technologies, including software, can achieve significantly increased productivity and competitiveness [1]. Moreover, software plays a key role in developing new applications that are transforming sectors such as healthcare, education, and transportation, thereby reshaping the economic and social landscape [2]. The software industry also contributes significantly to the global economy by improving productivity and efficiency across other sectors [3]. In terms of security, it protects personal and corporate data against cyber threats [4] and has revolutionized teaching and learning methods through the development of interactive and accessible platforms that enhance educational effectiveness [5].

Software testing (ST) is a critical phase in the development cycle that ensures the quality and functionality of the final product [6]. Since 57% of the world’s population uses internet-connected applications, it is imperative to develop secure, high-quality software to avoid the risk of significant harm, including major financial losses [7]. The inherent complexity and defects in software require that approximately 50% of development time be devoted to testing, which is essential to ensure the delivery of high-quality products [8].

The introduction of artificial intelligence (AI) algorithms is revolutionizing ST, making it more intelligent, efficient, and accurate. These algorithms enhance testing processes by reducing the time and costs involved [9]. Techniques such as machine learning (ML) analyze source code or expected application behavior so that more exhaustive tests can be generated and potential errors identified. They are also used in data mining and clustering to prioritize critical areas of the code and to enable automatic test case generation (TCG) [10,11,12]. Moreover, genetic and search-based algorithms are employed in automated interface validation and in the generation of software defect prediction (SDP) models, which identify the parts of the code that are more prone to failure based on factors such as code complexity and defect history [13,14,15,16]. This enables testing efforts to focus on critical areas, thereby increasing efficiency and reducing test time.

In recent years, advances in AI algorithms have significantly transformed the domain of ST, with notable impacts across various key areas. For example, the authors of [17] applied deep learning (DL) techniques using object detection algorithms such as EfficientDet and Detection Transformer, along with text generation models like GPT-2 and T5, achieving outstanding accuracy rates of 93.82% and 98.08% in TCG. In another study [18], researchers used ML methods for software defect detection, achieving an impressive accuracy of 98%. Similarly, the authors of [19] explored the use of neural networks (NNs) with natural language processing (NLP) models for e-commerce applications, reporting excellent results of 98.6% and 98.8% in correct test case generation. In [9], NNs were applied to calculate the failure-proneness score, giving high-level metrics that supported their effectiveness. Finally, the study in [20] highlighted the potential of DL in software fault prediction, with a confidence level of 95%.

The growing number of studies on the use of AI in ST has prompted researchers to conduct systematic literature reviews. The authors of [21] highlighted ML-based defect prediction methods, although they noted a lack of practical applications in industrial contexts. In [22], the increasing use of AI was confirmed, whereas in [23], NLP-based approaches were investigated for requirements analysis and TCG, with challenges such as the generalization of algorithms across domains being identified. The researchers in [24] classified ML methods applied to testing, while those in [25] observed a decline in traditional methods in favor of innovations such as automatic program repair. In [26], the current lack of theoretical knowledge in anticipatory systems testing was emphasized. In [27], the development of generalized metamorphic rules for testing AI-based applications was promoted. Finally, the authors of [28,29] analyzed improvements in test case prioritization and generation using ML techniques and highlighted the urgent need for further research to align academic work with industrial demand.

AI algorithms can have a significant impact on ST by improving the testing time, accuracy, and overall quality. It is therefore essential to address the question of how AI algorithms have evolved in ST; this will help to highlight the advances in these algorithms and their growing importance in ST, since AI enables the automation and optimization of tests, reduces human error and development time, and facilitates the early detection of complex defects.

The purpose of this study is to analyze and explore the evolution in the use of AI algorithms for ST from 2014 to 2024, with the aim of helping quality engineers and software developers identify the relevant AI algorithms and their applications in ST, while also supporting researchers in the development of new approaches. To achieve this, a systematic literature review will be conducted on AI algorithms in ST.

This paper makes the following contributions to the field of AI-based software testing:

- (1) A taxonomy of problems in software testing, proposed by the authors by creating categories according to the issues identified in the reviewed literature.

- (2) A systematization of input variables used to train AI models, organized into thematic categories, with special emphasis on structural source code metrics and complexity/quality metrics as drivers of algorithmic focus.

- (3) A synthesis of performance metrics applied to assess the effectiveness and robustness of AI models, distinguishing between classical performance indicators and advanced classification measures.

- (4) An integrative and evolutionary perspective that highlights the interplay between problems, input variables, and performance metrics, and traces the maturation and diversification of AI in software testing.

- (5) A future research agenda that outlines open challenges related to scalability, interpretability, and industrial adoption, while drawing attention to the role of hybrid and explainable AI approaches.

This article is organized into six sections. Section 2 reviews ST. Section 3 presents a systematic literature review of the use of AI algorithms in ST, while their evolution, including variables and metrics, is described in Section 4. Finally, a discussion and some conclusions are presented in Section 5 and Section 6, respectively.

2. Software Testing (ST)

2.1. Concept and Advantages

ST originated in the 1950s, when software development began to be consolidated as a structured, systematic activity. In its early days, ST was considered an extension of debugging, with a primary focus on identifying and correcting code errors. During the 1950s and 1960s, testing was mostly ad hoc and informal and was done with the aim of correcting failures after their detection during execution. However, in the 1970s, a more systematic approach emerged with the introduction of formal testing techniques, which contributed to distinguishing ST from debugging. Glenford J. Myers was one of the pioneers in establishing ST as an independent discipline through his seminal work The Art of Software Testing [30].

ST is a systematic process carried out to evaluate and verify whether a program or system meets specified requirements and functions as intended. It involves the controlled execution of applications with the goal of detecting errors. According to the ISO/IEC/IEEE 29119 Software Testing Standard [31], ST is defined as “a process of analyzing a component or system to detect the differences between existing and required conditions (i.e., defects) and to evaluate the characteristics of the component or system”. Accordingly, ST has several objectives: verifying functionality, identifying defects, validating user requirement compliance, and improving the overall quality of the final product [32].

The systematic application of ST ensures that the final product meets the required quality standards. By detecting and correcting defects prior to release, software reliability and functionality are enhanced. In [33], it was asserted that systematic testing is essential to ensure proper performance under all intended scenarios. Moreover, ST contributes to long-term cost reductions, as the cost of correcting a defect increases exponentially the later it is discovered in the software life cycle [34]. In addition, security testing plays a key role in preventing fraud and protecting sensitive information, making it an essential component of secure software development [35]. Finally, ST delivers the promised value to the user [36] and facilitates future maintenance, provided that the software is free from significant defects [37].

2.2. Forms of Software Testing

For a better understanding, software testing can be classified into four main dimensions: testing level, testing type, testing approach, and degree of automation, as described below.

By testing level:

- Unit Testing (UTE): This focuses on validating small units of code, such as individual functions or methods, as these are the closest to the source code and the fastest to execute [38].

- Integration Testing (INT): This evaluates the interaction between different modules or components to ensure that they work together correctly [39].

- System Testing (End-to-End): This simulates complete system usage to verify that all components function properly from the user’s perspective [40].

- Acceptance Testing (ACT): This is conducted to validate that the software meets the client’s requirements or acceptance criteria before release [41].

- Stress and Load Testing (SLT): In this approach, the system’s behavior is analyzed under extreme or high-demand conditions.

By test type:

- Functional Testing (FUT): This ensures that the software fulfills the specified functionalities [42].

- Non-functional Testing (NFT): This is conducted to evaluate attributes that are related to performance and external quality rather than directly to internal functionality. It includes:

- Performance Testing (PET): This analyzes response times, load handling, and capacity under different conditions.

- Security Testing (SET): This is done to verify protection against attacks or unauthorized access.

- Usability Testing (UST): This assesses the user experience. Although usually conducted manually, some aspects such as accessibility may be partially automated [43].

By testing approach:

- Test-Driven Development (TDD): Tests are written before the code, guiding the development process. The input data and expected results are stored externally to support repeated execution [44].

- Behavior-Driven Development (BDD): Tests are formulated in natural language and aligned with business requirements [44].

- Keyword-Driven Testing (KDT): Predefined keywords representing actions are used, which separate the test logic from the code and allow non-programmers to create tests [45].

By degree of automation:

- Automated Testing (AUT): This involves the use of tools such as Selenium or Cypress to interact with the graphical user interface [46], JUnit for unit testing in Java, or Appium in mobile environments for Android/iOS. Backend or API tests are typically conducted using Postman, REST-assured, or SoapUI.

- Fully Automated Testing (FAT): The entire testing cycle (execution and reporting) is carried out without human intervention [47].

- Semi-Automated Testing (SAT): In this approach, part of the process is automated, but human involvement is required in certain phases, such as result analysis or environment setup [47].

2.3. Standards

ST is governed by a set of internationally recognized standards that define best practices, processes, and requirements to ensure quality and consistency throughout the testing life cycle. The primary framework is established by the ISO/IEC/IEEE 29119:2013 standard [31], which provides a comprehensive foundation for software testing concepts, processes, documentation, and evaluation techniques. Complementary standards, such as ISO/IEC 25010 (Software Product Quality Model), IEEE 1028 (Software Reviews and Audits), and ISO/IEC/IEEE 12207 (Software Life Cycle Processes), extend this framework by addressing aspects of product quality, review procedures, and integration of testing activities into the broader software development process. Together, these standards ensure alignment with international software quality assurance practices and provide a structured basis for the systematic application of ST.

2.4. Aspects of Software Testing

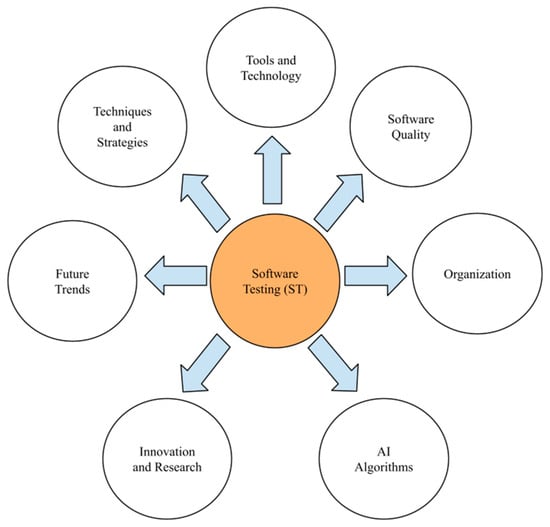

There are several aspects of ST that contribute to ensuring the quality of the final product. These are illustrated in Figure 1 and described below:

Figure 1.

Aspects of software testing.

- Techniques and Strategies: These refer to the methods and approaches used to design, execute, and optimize software tests, such as test case design, automation, and risk-based testing. The aim of these is to maximize the efficiency and coverage of the testing process [48].

- Tools and Technology: These involve the collection of systems, platforms, and tools employed to support testing activities, from test case management to automation and performance analysis, thereby facilitating integration within modern development environments such as CI/CD [48].

- Software Quality: This encompasses a set of attributes such as functionality, maintainability, performance, and security, which determine the level of software excellence, supported by metrics and evaluation techniques throughout the testing cycle [49].

- Organization: This refers to the planning and management of the testing process, including role assignments, team integration, and the adoption of agile or DevOps methodologies, to ensure alignment with project goals [50].

- AI Algorithms in ST: The use of AI involves the application of techniques such as ML, data mining, and optimization to enhance the efficiency, effectiveness, and coverage of the testing process. These tools enable intelligent TCG, defect prediction, critical area prioritization, and automated result analysis, thereby significantly reducing the manual effort required [51].

- Innovation and Research: These include the exploration of advanced trends such as the use of AI, explainability in testing, and validation of autonomous systems, which contribute to the development of new techniques and approaches to address challenges in ST [52].

- Future Trends: These refer to emerging and high-potential areas such as IoT system validation, testing in the metaverse, immersive systems, and testing of ML models, which reflect technological advances and new demands in software development [52].

3. Systematic Literature Review on AI Algorithms in Software Testing

In view of the relevance of the use of AI in ST and its impact on software quality, it is essential to conduct a comprehensive literature review to identify and analyze recent advancements and contributions in this field. To achieve this, it is necessary to adopt a structured methodology that allows for the efficient organization of information.

3.1. Methodology

This systematic literature review was conducted following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA 2020) guidelines. The PRISMA 2020 checklist and flow diagram have been included as Supplementary Materials to ensure methodological transparency and reproducibility.

The methodology for this state-of-the-art study is based on a guideline that was initially proposed in [53], and which has been adapted for systematic literature reviews in software engineering. This approach has been widely applied in related research, including the use of model-based ST tools [54], general studies of ST [55], investigations of software quality [56], and software defect prediction using AI techniques [57]. The review process consists of four stages: planning, execution, results, and analysis.

3.2. Planning

To explore the evolution of AI algorithms in ST, the following research questions were formulated:

RQ1: Which AI algorithms have been used in ST, and for what purposes?

RQ2: Which variables are used by AI algorithms in ST?

RQ3: Which metrics are used to evaluate the results of AI algorithms in ST?

To answer these questions, a journal article search strategy was developed based on a specific search string, including Boolean operators and applied filters, as detailed in Table 1, ensuring transparency and reproducibility according to PRISMA 2020 guidelines. The selection of keywords reflected the relevant aspects and context of the study, and the search was carried out using the Scopus and Web of Science (WoS) databases. These databases were chosen due to their extensive peer-reviewed coverage, continuous inclusion of new journals, frequent updates, and relevance in terms of providing up-to-date impact metrics, stable citation structures, and interoperability with bibliometric tools, which are crucial for automated data curation and large-scale analysis. Inclusion and exclusion criteria were established to filter and select relevant studies, as specified in Table 2.

Table 1.

Search strings used for each database.

Table 2.

Inclusion and exclusion criteria.

We acknowledge that the rapid impact of emerging technologies in Software Engineering may reshape any existing taxonomy and its evolution over time. Some of these developments are often first introduced at leading international conferences (e.g., ICSE, FSE, ISSTA), reflecting the continuous adaptation of the field to new requirements—particularly those driven by advances in Artificial Intelligence models. While this dynamism is inevitable, the taxonomy proposed in this study remains valuable as a foundational scientific framework that can guide future refinements and inspire further research addressing contemporary challenges in software testing. Furthermore, future extensions of this systematic review may incorporate peer-reviewed conference proceedings from these venues to broaden the scope and capture cutting-edge contributions that often precede journal publications.

The final search string was iteratively refined to balance inclusiveness and precision, ensuring the retrieval of relevant studies without excessive noise. During the filtering process, when searching for software testing methods, the databases consistently returned studies addressing software defect prediction, test case prioritization, fuzzing, and other key topics that directly contributed to defining the proposed taxonomy.

This empirical verification supports that, although more specialized keywords could have been included, the applied search string effectively captured the main families of studies relevant to the research questions. In addition to general methodological terms (“method,” “procedure,” “guide”), domain-specific terminology was already embedded within the retrieved dataset through metadata and indexing structures in Scopus and WoS.

Furthermore, the validity of the search strategy was implicitly supported through the PRISMA-based screening and deduplication process, which acted as a quality control mechanism comparable to a “gold standard” verification. This ensured that the taxonomy and trend analysis reflected a comprehensive and representative overview of AI-driven software testing research.

3.3. Execution

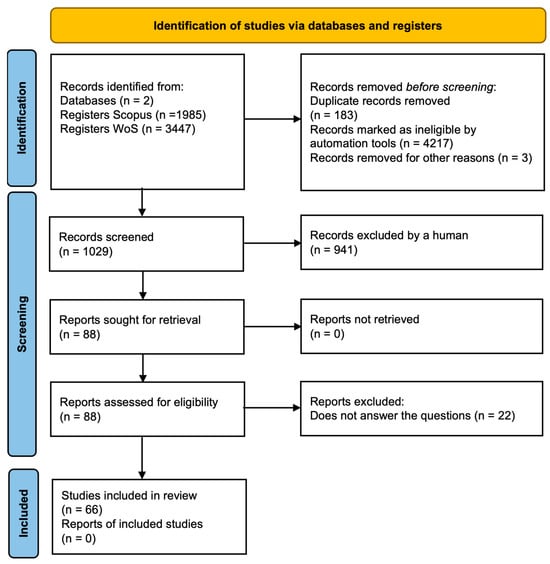

According to the previously defined planning strategy, the initial search yielded 1985 articles from Scopus and 3447 from WoS, resulting in a total of 5432 articles. Using a filtering tool based on predefined exclusion criteria, this number was significantly reduced by eliminating 4217 articles, leaving a total of 1215.

Subsequently, 183 duplicate articles were removed (182 from WoS and one from Scopus). In addition, three retracted articles were excluded, including two from WoS and one from Scopus. As a result, 1029 articles remained for further detailed screening using additional filters.

The filters that were applied were as follows:

- Title: 676 articles were excluded (173 from Scopus and 503 from WoS)

- Abstract and Keywords: 246 articles were removed (134 from Scopus and 112 from WoS)

- Introduction and Conclusion: Nine articles were excluded (seven from Scopus and two from WoS)

- Full Document Review: 10 articles were rejected (eight from Scopus and two from WoS)

This process excluded 941 articles, leaving a total of 88 for in-depth review. Of these, 22 were excluded as they did not directly address the proposed research questions, resulting in 66 articles which were selected as relevant in answering the research questions.
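The screening arithmetic above can be checked mechanically. The following is a minimal sketch that replays only the counts reported in this section; the variable names are illustrative and are not part of the review's own tooling.

```python
# Counts reported in Section 3.3; all names here are illustrative only.
initial = {"Scopus": 1985, "WoS": 3447}
total = sum(initial.values())

after_criteria = total - 4217             # predefined exclusion criteria
after_cleanup = after_criteria - 183 - 3  # duplicates and retracted articles

# Articles excluded at each manual screening stage, as (Scopus, WoS).
stages = {
    "title": (173, 503),
    "abstract_keywords": (134, 112),
    "introduction_conclusion": (7, 2),
    "full_text": (8, 2),
}
excluded = sum(scopus + wos for scopus, wos in stages.values())

in_depth = after_cleanup - excluded
selected = in_depth - 22                  # did not address the research questions

print(total, after_criteria, after_cleanup, excluded, in_depth, selected)
# prints: 5432 1215 1029 941 88 66
```

Each intermediate value matches the figure stated in the text, so the reported flow from 5432 retrieved records to 66 selected studies is internally consistent.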

The literature search covered 2014–2024 and was last updated on 30 September 2024 across Scopus and Web of Science; search strings were adapted per database (Table 1). The inclusion and exclusion criteria used to filter studies are detailed in Figure 2, following PRISMA 2020 recommendations [58] based on selection parameters in Table 2.

Figure 2.

PRISMA 2020 flow diagram of the systematic review process. Adapted from Page et al. (2021) [58], PRISMA 2020 guideline.

Data Screening and Extraction Process

The selection and data extraction processes were carried out by two independent reviewers (A.E., D.M.) who applied predefined inclusion and exclusion criteria across four sequential stages: title screening, abstract and keyword review, introduction and conclusion assessment, and full-text analysis. Each reviewer performed the screening independently, and any discrepancies were resolved through discussion and consensus. The process was supported using Microsoft Excel to ensure traceability and consistency across all stages. For each selected study, information was extracted regarding the publication year, algorithm type, testing problem category, input variables, evaluation metrics, and datasets used. The extracted information was cross-checked with the original articles to ensure completeness and accuracy, and the consolidated dataset served as the basis for the analytical synthesis presented in the following sections. The overall workflow is summarized in Figure 2, following the PRISMA 2020 flow diagram.

To further ensure methodological rigor and minimize bias, the screening and data extraction stages were conducted independently by both reviewers, with all decisions cross-verified and reconciled through consensus. Although a formal inter-rater reliability coefficient (e.g., Cohen’s κ) was not computed, the dual-review approach followed established SLR practices in software engineering, ensuring transparency, traceability, and reproducibility throughout the process.
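For readers who wish to quantify agreement in a replication, Cohen’s κ can be computed directly from the two reviewers' screening decisions. The sketch below is an illustration only: the function name and the sample decisions are hypothetical and do not come from the review's data.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items with identical decisions.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (p_o - p_e) / (1 - p_e)

# Hypothetical decisions, not taken from the review's data.
a = ["include", "exclude", "exclude", "include", "exclude", "exclude"]
b = ["include", "exclude", "include", "include", "exclude", "exclude"]
print(round(cohens_kappa(a, b), 3))  # prints: 0.667
```

In this toy example the raters agree on five of six items (observed agreement 0.833) while chance agreement is 0.5, giving κ ≈ 0.667.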

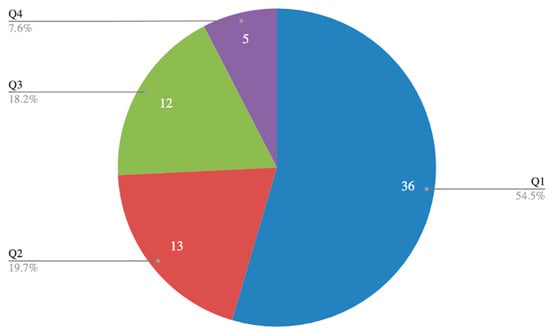

In terms of study quality, all included papers were peer-reviewed journal publications indexed in Scopus and WoS, guaranteeing a baseline of methodological soundness. As illustrated in the results in Section 3.4, 54.5% of the selected studies were published in Q1 journals, reflecting the high scientific quality and credibility of the dataset. Consequently, an additional numerical quality scoring was deemed unnecessary. Nevertheless, we recognize that future reviews could be strengthened by incorporating a formal quality assessment checklist (e.g., Kitchenham & Charters, 2007 [53]) and quantitative reliability metrics to further enhance objectivity and consistency.

To strengthen transparency and reproducibility, all key artifacts from the systematic review have been made publicly available in the supplementary repository (https://github.com/escalasoft/ai-software-testing-review-data, accessed on 31 October 2025). The repository includes:

- (1) filtering_articles_marked.xlsx, documenting the screening stages across title, abstract/keywords, and introduction/conclusion, along with complementary filters such as duplicates, retracted papers, and studies not responding to the research question.

- (2) raw_data_extracted.xlsx, containing the raw data extracted from each selected study, including problem codes (e.g., SDP, TCM, ATE), dataset identifiers, algorithm names, number of instances, and evaluation metrics (e.g., Accuracy, Precision, Recall, F1-score, ROC-AUC).

- (3) coding_book_taxonomy.xlsx, defining the operational rules applied to classify studies into taxonomy categories.

- (4) PRISMA_2020_Checklist.docx, presenting the full checklist followed during the review.

Additional details on algorithms, variables, and metrics are included in Appendix B, Appendix C, and Appendix D. Together, these materials ensure full traceability and compliance with PRISMA 2020 guidelines.

3.4. Results

3.4.1. Potentially Eligible and Selected Articles

Our systematic literature review resulted in the selection of 66 articles that met the established criteria and were relevant to addressing the research questions. These articles are denoted using references in the format [n]. The complete list of selected studies is provided in Appendix A. Table 3 presents a summary of the potentially eligible articles and those ultimately selected after the review process.

Table 3.

Potential and selected articles.

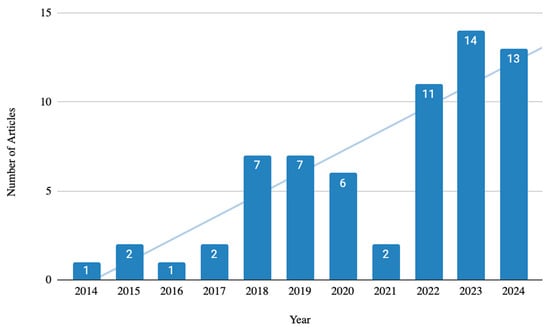

3.4.2. Publication Trends

Figure 3 reveals a trend towards greater numbers of publications on AI algorithms in ST over the past decade. From 2014 to 2024, there is a consistent increase in related studies, with 66 selected articles, thus highlighting the rising interest in this topic and the importance that researchers and software engineering professionals have placed on this field.

Figure 3.

Numbers of publications over time.

Although the temporal evolution in Figure 3 was analyzed descriptively through frequency counts and visual trends, the purpose of this analysis was to illustrate the progressive growth of AI-related research in software testing rather than to perform inferential validation. The counts were normalized per year to ensure comparability, and the trend line reflects a consistent increase across the decade. Formal trend tests (e.g., Mann–Kendall or Spearman rank correlation) were not applied, since the aim of this review was exploratory and descriptive. Nevertheless, future studies could complement this analysis with statistical trend testing and confidence intervals to quantify uncertainty in the reported proportions and reinforce the robustness of temporal interpretations.
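As an indication of what such a formal test would involve, the sketch below implements the Mann–Kendall statistic with the usual tie correction and a normal approximation for the two-sided p-value. The function name is illustrative, and the input series is a placeholder rather than the yearly counts behind Figure 3.

```python
import math
from collections import Counter

def mann_kendall(series):
    """Return (S, Z, p) for the Mann-Kendall trend test with tie correction."""
    n = len(series)
    # S sums the sign of (later - earlier) over all ordered pairs.
    s = sum(
        (series[j] > series[i]) - (series[j] < series[i])
        for i in range(n - 1)
        for j in range(i + 1, n)
    )
    # Variance of S under the no-trend null, corrected for tied values.
    ties = sum(t * (t - 1) * (2 * t + 5) for t in Counter(series).values())
    var_s = (n * (n - 1) * (2 * n + 5) - ties) / 18
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)   # continuity correction
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided, normal approximation
    return s, z, p

# Placeholder series, NOT the publication counts from this review.
placeholder_counts = [1, 2, 3, 4, 5]
s, z, p = mann_kendall(placeholder_counts)
print(s, round(z, 3), round(p, 3))
```

A positive S with a small p-value would indicate a monotonic upward trend; for short series such as an eleven-year window, the normal approximation should be read with caution.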

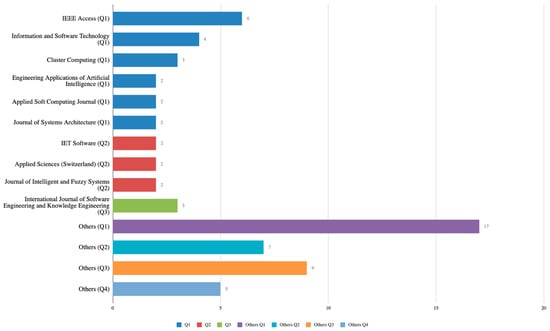

Figure 4 shows the journals in which the selected articles were published, classified by quartile and accompanied by the corresponding number of publications. In total, 28 articles were published in 10 journals with two or more publications. The journals contributing the most to the topic were IEEE Access and Information and Software Technology, both of which are ranked in Q1, with six and four articles, respectively. The category Others includes 38 articles distributed across 17 journals in Q1, seven in Q2, nine in Q3, and five in Q4, each contributing a single article. In total, 48 journals were examined, of which 36 were classified as Q1, reflecting the high quality and relevance of the sources considered in this study.

Figure 4.

Journals reviewed by quartile.

Figure 5 illustrates the number of selected studies by quartile for this analysis. Notably, 54.5% of these correspond to articles published in Q1-ranked journals, illustrating the high quality of the data. This distribution highlights the robustness and relevance of the findings obtained in this research.

Figure 5.

Articles selected by quartile.

The predominance of Q1 and Q2 journals among the selected studies indirectly reflects the high methodological rigor, peer-review standards, and overall credibility of the evidence base considered in this systematic review.

3.5. Analysis

3.5.1. RQ1: Which AI Algorithms Have Been Used in ST, and for What Purposes?

To ensure methodological consistency and avoid double-counting, the identification and classification of algorithms followed a structured coding process. Each algorithm mentioned across the selected studies was first normalized by its canonical name (e.g., “Random Forest” = RF, “Support Vector Machine” = SVM), and algorithmic variants (e.g., “Improved RF,” “Hybrid RF–SVM”) were mapped to their base algorithm family unless they introduced a new methodological contribution described by the authors as proposed.

Duplicates were resolved by cross-checking algorithm names within and across studies using the consolidated list in coding_book_taxonomy.xlsx. When the same algorithm appeared in multiple problem contexts (e.g., SDP and ATE), it was counted once for its family but associated with multiple application categories. Of the 66 selected studies, a total of 332 unique algorithmic implementations were thus identified, of which 96 were novel proposals and 236 were previously existing algorithms reused for comparison. This classification ensures reproducibility and consistency across the dataset and Supplementary Materials.
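The coding rules described above can be pictured as a small normalization step followed by a tally per algorithm family. The sketch below is a hypothetical illustration: the alias table, the variant prefixes other than "Improved", and the sample records are assumptions, and the actual rules are those recorded in coding_book_taxonomy.xlsx. Hybrid names such as “Hybrid RF–SVM” are deliberately left unhandled because the text does not state which base family they map to.

```python
import re

# Illustrative alias table (assumption); not the review's coding book.
CANONICAL = {
    "random forest": "RF",
    "rf": "RF",
    "support vector machine": "SVM",
    "svm": "SVM",
}
# "improved" comes from the text; the other prefixes are assumptions.
VARIANT_PREFIXES = ("improved ", "enhanced ", "modified ")

def normalize(name, proposed=False):
    """Map a reported algorithm name to its base family code."""
    if proposed:
        return name.strip()  # novel contributions keep their own identity
    key = re.sub(r"\s+", " ", name.strip().lower())
    for prefix in VARIANT_PREFIXES:
        if key.startswith(prefix):
            key = key[len(prefix):]
            break
    return CANONICAL.get(key, name.strip())

def tally(records):
    """records: iterable of (study_id, reported_name, problem_code, proposed)."""
    families = {}
    for _study, name, problem, proposed in records:
        families.setdefault(normalize(name, proposed), set()).add(problem)
    return families

# Hypothetical records, not drawn from raw_data_extracted.xlsx.
records = [
    ("S1", "Random Forest", "SDP", False),
    ("S2", "Improved RF", "ATE", False),
    ("S3", "SVM", "SDP", False),
]
families = tally(records)
print(len(families), sorted(families["RF"]))  # prints: 2 ['ATE', 'SDP']
```

Each family is counted once (the dictionary key) while retaining every problem category in which it appeared, which mirrors the counting rule stated above.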

To better understand these algorithms, classification is necessary. It is worth noting that 14 algorithms appeared in both the novel and existing categories.

However, no study was found that proposed a specific taxonomy for these, and a classification based on forms of ST is not applicable, since some categories overlap. For example, a fully automated ST process (classified by the degree of automation) may also be functional (classified by test type). This indicates that the conventional forms of ST are not a suitable criterion for classifying AI algorithms and highlights the need for a new taxonomy.

After reviewing the identified algorithms, we observed that each was designed to solve specific problems within ST. This suggested that a classification based on the testing problems addressed by these algorithms would be more appropriate. In view of this, Table 4 presents the main problems identified in ST, which may serve as the foundation for a new taxonomy of AI algorithms applied to ST. This classification provides a precise and useful framework for analyzing and applying these algorithms in specific testing contexts, enabling optimization of their selection and use according to the needs of the system under evaluation.

Table 4.

Taxonomy of AI Algorithms based on Software Testing.

To strengthen the transparency and reproducibility of the proposed taxonomy, each category (e.g., TCM, ATE, STR, DEM, VIM) was defined through explicit operational criteria derived from the problem–variable–metric relationships identified during the data extraction stage. Ambiguities or overlaps between categories were resolved by consensus between the two reviewers, following a structured coding guide that prioritized the dominant research objective of each study. The “Other” category included a limited number of interdisciplinary studies that did not fully fit within the main taxonomy dimensions but were retained to preserve representativeness. Although a formal inter-rater reliability coefficient (e.g., Cohen’s κ) was not computed, complete agreement was achieved after iterative verification and validation in Microsoft Excel, ensuring traceability and methodological rigor throughout the classification process.

3.5.2. AI Algorithms in Software Defect Prediction

In this category, a total of 229 AI algorithms were identified as being applied to software defect prediction (SDP). Of these, 40 distinct algorithms were proposed in the papers, while 146 distinct algorithms were not novel. In addition, 25 novel hybrid algorithms and 18 existing hybrid algorithms were identified, with 11 algorithms appearing in both categories.

Hybrid algorithms combine two or more individual algorithms and are identified using the “+” symbol. For example, C4.5 + ADB represents a combination of the individual algorithms C4.5 and ADB. Singular algorithms are represented independently, such as SVM, or with variants indicated using hyphens, such as KMeans-QT. In some cases, they may include combinations enclosed in parentheses, such as 2M-GWO (SVM, RF, GB, AB, KNN), indicating an ensemble or multi-model approach.
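As an illustration of this notation, the following sketch splits a label into its parts. The function name `parse_label` and the returned keys are assumptions made for this example; hyphenated variants are deliberately left intact because the hyphen is part of the algorithm name.

```python
import re


def parse_label(label: str) -> dict:
    """Split an algorithm label according to the '+' and parenthesis notation."""
    members = []
    match = re.match(r"^(.*?)\s*\((.*)\)\s*$", label)
    if match:
        # A parenthesised list denotes an ensemble or multi-model approach.
        label = match.group(1)
        members = [m.strip() for m in match.group(2).split(",")]
    # '+' separates the individual algorithms of a hybrid.
    components = [c.strip() for c in label.split("+")]
    return {
        "hybrid": len(components) > 1,
        "components": components,
        "members": members,
    }


hybrid = parse_label("C4.5 + ADB")
variant = parse_label("KMeans-QT")
ensemble = parse_label("2M-GWO (SVM, RF, GB, AB, KNN)")
```

Here `hybrid` is flagged as a hybrid with two components, `variant` remains a single component, and `ensemble` keeps 2M-GWO as its component with five listed members.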

Table 5 summarizes the AI algorithms proposed or applied in each study, as well as the existing algorithms used for comparative evaluation.

Table 5.

Algorithms in SDP.

3.5.3. AI Algorithms in SDD, TCM, ATE, CST, STC, STE and Others

In these categories, a total of 103 AI algorithms were identified, which were distributed as follows:

- In the SDD category, eight algorithms were found, of which two were novel (one singular and one hybrid), six were existing (all singular), and one was repeated.

- In the TCM category, 28 algorithms were identified, including 10 novel singular algorithms, 18 existing (15 singular and three hybrid), and one repeated.

- The ATE category comprised 21 algorithms, of which six were novel (four singular and two hybrid), 14 existing (all singular), and one repeated.

- In the CST category, four algorithms were identified: one novel and three existing, with no hybrids or repetitions.

- The STC category included 18 algorithms: four novel (three singular and one hybrid), 14 existing (all singular), and no repetitions.

- For the STE category, seven algorithms were found: three novel (two singular and one hybrid), one existing (singular), and no repetitions.

- In the OTH category, 17 algorithms were identified: five novel (all singular), and 12 existing (all singular), with no repetitions.
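The reported totals can be cross-checked with simple arithmetic. The sketch below uses only the category totals stated in Sections 3.5.2 and 3.5.3; the variable names are illustrative.

```python
# Category totals as reported in Section 3.5.3.
other_categories = {
    "SDD": 8, "TCM": 28, "ATE": 21, "CST": 4, "STC": 18, "STE": 7, "OTH": 17,
}

# SDP breakdown as reported in Section 3.5.2:
# novel singular, existing singular, novel hybrid, existing hybrid.
sdp_breakdown = [40, 146, 25, 18]

sdp_total = sum(sdp_breakdown)
non_sdp_total = sum(other_categories.values())
grand_total = sdp_total + non_sdp_total
```

The sums reproduce the 229 SDP algorithms, the 103 algorithms in the remaining categories, and the overall total of 332.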

Table 6 provides a consolidated summary of the novel and existing algorithms identified in each category.

Table 6.

Algorithms in SDD, TCM, ATE, CST, STC, STE, and OTH.

3.5.4. RQ2: Which Input Variables Are Used by AI Algorithms in ST?

In the context of this systematic review, the term variable refers exclusively to the input data that are used to feed AI algorithms in ST tasks. These variables originate from the datasets used in the studies reviewed here and represent the observable features that define the problem to be solved. They should not be confused with the internal parameters of the algorithms (such as learning rate, number of neurons, or trees), nor with the evaluation metrics used to assess the model performance (e.g., precision, recall, or F1-score), which are addressed in RQ3.

These input variables are important, as they determine how the problem is represented, and hence directly influence the model training process (see Figure 6), its generalization capability, and the quality of the predictions. For instance, in the case of software defect prediction, it is common to use metrics extracted from the source code, such as the cyclomatic complexity or the number of public methods.
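To make the notion of an input variable concrete, the following sketch derives two such variables from a small Python class using the standard `ast` module. It is an approximation under stated assumptions: complexity is counted as one plus the number of decision points over the whole module rather than per function, and the sample class, the function name `extract_features`, and the feature names are hypothetical. The reviewed studies typically rely on precomputed metrics from their datasets rather than on code like this.

```python
import ast

# Node types counted as decision points in this simplified approximation.
DECISION_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def extract_features(source: str) -> dict:
    """Return input variables (features) for one module of source code."""
    tree = ast.parse(source)
    decisions = 0
    public_methods = 0
    for node in ast.walk(tree):
        if isinstance(node, DECISION_NODES):
            decisions += 1
        elif isinstance(node, ast.BoolOp):
            # Each extra operand of and/or adds one branch.
            decisions += len(node.values) - 1
        elif isinstance(node, ast.ClassDef):
            for item in node.body:
                if isinstance(item, ast.FunctionDef) and not item.name.startswith("_"):
                    public_methods += 1
    return {"cyclomatic_complexity": decisions + 1, "public_methods": public_methods}


SAMPLE = '''
class Account:
    def deposit(self, amount):
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.balance += amount

    def withdraw(self, amount):
        if amount > 0 and amount <= self.balance:
            self.balance -= amount
            return True
        return False

    def _audit(self):
        for entry in self.log:
            print(entry)
'''

# These values are the model's inputs; hyperparameters and evaluation
# metrics are configured and computed elsewhere.
features = extract_features(SAMPLE)
```

For the sample class, the sketch counts four decision points (two `if` statements, one `and`, one `for` loop) and two public methods.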

Figure 6.

Data algorithm and models used in software testing.

Based on an analysis of the selected studies, a total of 181 unique variables were identified, which were organized into a taxonomy of ten thematic categories. This classification provided a clearer understanding of the different types of variables used, their nature, and their source. Table 7 presents a consolidated summary: for each category, it shows the identified subcategories, the total number of variables, the number of associated studies, and the corresponding reference codes. A detailed list of these variables can be found in Appendix C.

Table 7.

AI input Variables used in ST.

3.5.5. RQ3: Which Metrics Are Used to Evaluate the Performance of AI Algorithms in ST?

Table 8 summarizes the metrics employed in the primary studies to evaluate the performance of AI algorithms when applied to ST. These metrics have been organized into six evaluation disciplines to enable a better understanding not only of their frequency of use but also of their functional purpose across different evaluation contexts. A total of 62 distinct metrics were identified. A detailed list, including definitions and the studies that used them, is available in Appendix D.

Table 8.

AI Algorithm Metrics for evaluating ST.

For transparency and reusability, the proposed taxonomies of algorithms, input variables, and evaluation metrics are formally defined and documented. The detailed operational definitions, coding rules, and representative examples for each category are provided in the Supplementary Material file coding_book_taxonomy.xlsx.

4. Evolution of AI Algorithms in ST

This section examines the evolution of AI algorithms applied to ST. The process used to explore this evolution was structured into three key stages, reflecting the methodology employed, the development of the investigation, and the main results. Each of these stages is described in detail below.

4.1. Method

To analyze the evolution of AI algorithms in ST, the following methodological phases were implemented:

- Phase 1—Algorithm Inventory

The AI algorithms that have been applied to ST are collected and cataloged based on the specialized literature.

- Phase 2—Aspects

The aspects to be analyzed are identified to explore the evolution of the algorithms listed in Phase 1.

- Phase 3—Chronological Behavior

The AI algorithms are organized chronologically, according to the aspects defined in Phase 2.

- Phase 4—Evolution Analysis

The changes and trends in the use of AI algorithms in ST are examined over time, based on each identified aspect.

- Phase 5—Discussion

The findings are discussed with their implications in terms of the observed evolutionary patterns.

4.2. Development

Phase 1. As detailed in Section 3, an exhaustive review of the specialized literature on AI algorithms in ST was conducted, in which we identified 332 algorithms across 66 selected studies. These were classified into 21 problems, which were further organized into eight categories: software defect prediction (SDP), software defect detection (SDD), test case management (TCM), test automation and execution (ATE), collaboration (CST), test coverage (STC), test evaluation (STE), and others (OTH) (see Table 4).

Phase 2. Three key aspects were identified for analysis:

- ST Problems: This refers to the categories of algorithms oriented toward specific testing problems.

- ST Variables: This represents the input variables related to the datasets used in the studies.

- ST Metrics: These are the evaluation metrics used by the algorithms to assess their performance.

An inventory was compiled from the summary data presented in Table 5, Table 6, Table 7 and Table 8. This inventory identified:

- 66 studies in which AI algorithms were applied to ST problems.

- 108 instances involving the use of input variables across the 66 selected studies. Since a single study may contribute to multiple categories, the total number of instances exceeds the number of unique studies.

- 106 instances in which evaluation metrics were employed across the same set of studies. Again, the difference reflects overlaps where one study reported results in more than one metric category.
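The difference between studies and instances can be shown with a toy example. The study codes and category assignments below are hypothetical and serve only to illustrate the counting rule.

```python
# Hypothetical extraction records: study code -> variable categories it uses.
study_variables = {
    "S01": {"SCM", "CQM"},
    "S02": {"SCM"},
    "S03": {"DEM", "STR", "EHM"},
}

# Each study is counted once, regardless of how many categories it touches.
n_studies = len(study_variables)

# Each (study, category) pair is one instance.
n_instances = sum(len(categories) for categories in study_variables.values())
```

With three studies contributing six study-category pairs, the instance count exceeds the study count in the same way that 108 and 106 exceed 66 in the inventory.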

Table 9 provides a consolidated overview of the relationships among AI algorithms, problem categories, input variables, and evaluation metrics in software testing. Unlike the preceding tables, which present these dimensions separately, this table integrates them into a unified framework, allowing the identification of consistent research patterns and cross-dimensional connections. Each entry lists the corresponding literature codes [n], which facilitates traceability to the original studies while avoiding redundancy in naming all algorithms explicitly. This representation not only highlights the predominant associations, such as defect prediction with structural and complexity metrics evaluated through classical performance measures, but also captures emerging and exploratory combinations across less frequent categories. By mapping algorithms to problems, variables, and metrics simultaneously, Table 9 serves as the foundation for the integrative analysis presented in Section 5.4. The acronyms used in this table correspond to the categories described in Table 4, Table 7 and Table 8.

Table 9.

Relationships between Problems, Variables and Metrics.

Descriptions of the algorithms used, together with information on the variables and evaluation metrics, are provided in Appendix B, Appendix C and Appendix D. In addition, the dataset, the evaluated instances, and the performance results for each algorithm are available at https://github.com/escalasoft/ai-software-testing-review-data (accessed on 3 November 2025).

Phase 3. The algorithms were classified according to the three aspects under analysis, and their changes and trends over time were examined. The results are presented in Section 4.3.

Phase 4. The results obtained in Phase 3 were analyzed and interpreted, and a discussion is provided in Section 5.

4.3. Evolution of AI Algorithms and Their Application Categories in Software Testing

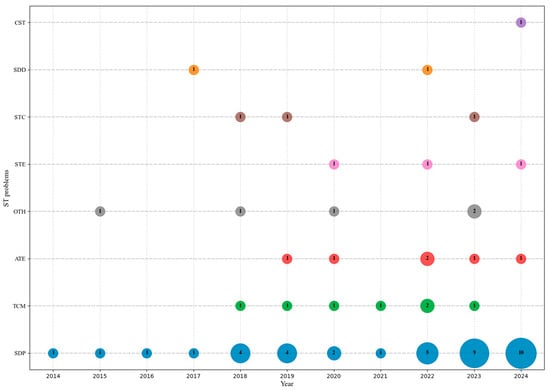

Figure 7 illustrates how the different problem categories in ST evolved from 2014 to 2024. The vertical axis shows the seven identified problem categories in ST, along with an additional category labeled Other (OTH) to represent miscellaneous problems. The horizontal axis displays the year of publication.

Figure 7.

Evolution of AI algorithms in software testing problem domains. Each bubble represents the number of studies associated with a specific algorithm category, where the bubble size is proportional to the total count of studies. The color of each bubble denotes the problem domain: blue = Software Defect Prediction (SDP), green = Test Case Management (TCM), red = Automation and Execution of Testing (ATE), gray = Other (OTH), pink = Software Test Evaluation (STE), brown = Software Test Coverage (STC), orange = Software Defect Detection (SDD), and purple = Collaboration Software Testing (CST).

These studies reveal a clear research trend in the application of AI algorithms to various ST problems. For instance, the software defect prediction (SDP) category stands out as the most extensively addressed, while the automation and execution of testing (ATE) and test case management (TCM) categories show a promising upward trend in recent years.

This visualization highlights the relative research intensity and prevalence of each algorithm within the software testing domain.
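The aggregation behind a bubble chart of this kind can be sketched as a count of studies per year and category. The records below are hypothetical and do not reproduce the data behind Figure 7.

```python
from collections import Counter

# Hypothetical (publication year, problem category) pairs, one per study.
records = [
    (2016, "SDP"), (2016, "SDP"), (2019, "TCM"),
    (2022, "ATE"), (2022, "ATE"), (2022, "SDP"),
]

# Bubble size for each (year, category) cell of the chart.
bubble_sizes = Counter(records)

# Overall prevalence of each category across all years.
by_category = Counter(category for _year, category in records)
```

Each key of `bubble_sizes` corresponds to one bubble position, and its value determines the bubble size.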

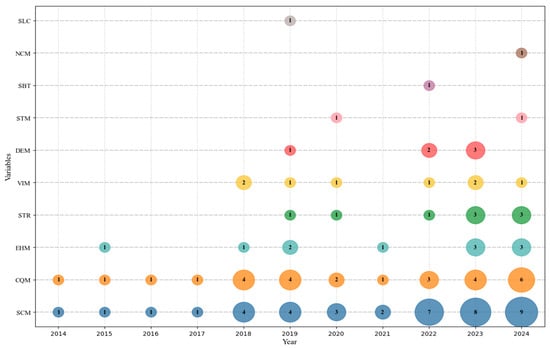

The vertical axis of Figure 8 shows the distribution of 10 categories of software testing input variables, which are grouped based on their structural, semantic, dynamic, and functional characteristics. These categories reveal a significant evolution over the past decade. The horizontal axis represents the year of publication of the studies.

Figure 8.

Evolution of AI algorithms in relation to software testing variables. Each bubble represents the number of studies within a given variable category, where bubble size corresponds to the total number of studies, and color indicates the related metric domain: blue = Structural Code Metrics (SCM), orange = Complexity Quality Metrics (CQM), sky blue = Evolutionary Historical Metrics (EHM), green = Semantic Textual Representation (STR), yellow = Visual Interface Metrics (VIM), red = Dynamic Execution Metrics (DEM), pink = Sequential Temporal Models (STM), purple = Search Based Testing (SBT), brown = Network Connectivity Metrics (NCM), and gray = Supervised Labeling Classification (SLC).

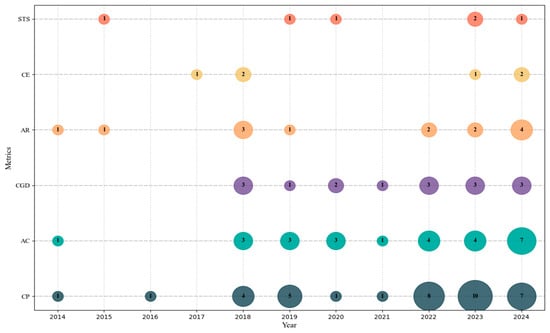

To illustrate the evolution in the usage of evaluation metrics, the vertical axis of Figure 9 displays the six metric disciplines applied in AI-based ST, while the horizontal axis represents the year of publication. Most studies employ classical performance (CP) metrics, such as accuracy, precision, recall, and F1-score, as well as those within the advanced classification (AC) discipline, which includes indicators such as MCC, ROC-AUC, balanced accuracy, and G-mean.

Figure 9.

Evolution of AI algorithms with respect to software testing metrics. Each bubble represents the number of studies using a particular evaluation metric, where bubble size reflects the total count of studies and color differentiates the metric groups: gray = Classical Performance (CP), green = Advanced Classification (AC), purple = Coverage GUI Deep Learning (CGD), orange = Alarms and Risk (AR), yellow = Cost Error (CE), and dark orange = Software Testing Specific (STS).

Limitations and Validity Considerations

Although the evolution of AI algorithms in software testing has been systematically analyzed, this study is not exempt from potential limitations. Regarding construct validity, the taxonomy and classification trends were derived from existing studies and may not fully represent emerging paradigms. Concerning internal validity, independent screening and consensus-based extraction aimed to reduce bias, though subjective interpretation during categorization may have influenced some patterns.

In terms of external validity, the analysis was restricted to peer-reviewed journal publications indexed in Scopus and Web of Science, which may exclude newer conference papers that could reflect recent industrial practices. Finally, conclusion validity may be affected by dataset heterogeneity and publication bias. These issues were mitigated through rigorous inclusion criteria, adherence to PRISMA 2020 recommendations, and transparent reporting to ensure reproducibility and reliability of the synthesis.

5. Discussion

AI algorithms play a crucial role in ST, a key component of the software development lifecycle that directly affects the quality of the final product. In view of their importance, it is essential to analyze and discuss how these algorithms have evolved and their contributions to ST over time.

5.1. Evolution of Algorithms in Software Testing Problems

Our analysis of the evolution of AI algorithms applied to software testing (ST) problems reveals a growing emphasis on automation, optimization, and process enhancement across different stages of the ST lifecycle. From our classification of these problems into eight main categories, a progressive maturation of research approaches in this field is evident.

First, software defect prediction (SDP) has historically been the most dominant category. This research stream has focused on estimating the likelihood of defects occurring prior to deployment, as well as predicting the severity of test reports to enable more effective prioritization. Its persistent use over time underscores the continued relevance of this approach in contexts where software quality and reliability are critical.

Software defect detection (SDD) has recently gained more attention, targeting not only the prediction of unstable failures but also the direct identification of defects at the source code level. This reflects the growing need for intelligent systems capable of detecting issues before they reach production, thereby strengthening quality assurance.

A particularly noteworthy trend is the expansion of the test case management (TCM) category, which includes problems related to the prioritization, generation, classification, execution, and optimization of test cases. Its sustained growth in recent years reflects increasing interest in leveraging AI solutions to scale, automate, and streamline validation activities, particularly within agile and continuous integration environments.

Progress has also been observed in the automation and execution of tests (ATE) category, which ranges from UI automation to the automatic generation of test data and code. This category has become more prominent with the rise of generation techniques such as code synthesis and test data creation, which reduce manual effort and accelerate testing cycles.

The collaborative software testing (CST) category, which focuses on the collective and coordinated management of testing activities, has emerged as an incipient yet promising area. Supported by collaborative platforms and shared tools, this approach suggests an evolution toward more distributed and cooperative testing practices.

Test coverage (STC) remains a less frequent but relevant dimension, especially in evaluating the effectiveness of tests over source code or graphical interfaces. Its integration with AI has enabled the identification of uncovered areas and improvements in the design of automated test strategies.

Finally, the test evaluation (STE) category, which encompasses mutation testing and security analysis, has also advanced significantly in the past five years. These methodologies facilitate the assessment of the robustness of generated test suites and their ability to detect changes or vulnerabilities in the system.

Other problems (OTH) group heterogeneous tasks that do not neatly fit the previous families but are relevant to the evolution of AI in ST. Examples include integration test ordering, mutation-specific defect prediction, automated end-to-end testing workflows (e.g., for game environments), software process automation, and combinatorial test design. Although less frequent, this category captures emerging or domain-specific applications and preserves completeness without forcing weak assignments to other families.

In summary, the evolution of ST problem categories shows a transition from classical defect-centric approaches (SDP, SDD) toward more sophisticated strategies that span the entire testing value chain (TCM, ATE), while also incorporating collaborative (CST), coverage-oriented (STC), and evaluation-oriented (STE) dimensions, together with a residual OTH group that reflects emergent and domain-specific tasks. This diversification indicates that the application of AI in ST has not only intensified but also matured to embrace multidisciplinary approaches and adapt to increasingly complex operational contexts.

5.2. Evolution of Algorithms Regarding Software Testing Variables

The analysis of input variables used to train AI algorithms in software testing reveals a progressive diversification over the last decade. A total of 10 categories of variables were identified, each contributing distinct perspectives on how testing problems are represented and addressed. These categories are: Structural Code Metrics (SCM), Complexity/Quality Metrics (CQM), Evolutionary/Historical Metrics (EHM), Dynamic/Execution Metrics (DEM), Semantic/Textual Representation (STR), Visual/Interface Metrics (VIM), Search-Based Testing/Fuzzing (SBT), Sequential and Temporal Models (STM), Network/Connectivity Metrics (NCM), and Supervised Labeling/Classification (SLC).

A closer look at their evolution allows us to distinguish three stages:

2014–2017: Foundation on SCM and CQM.

Research in this initial stage was largely dominated by structural code metrics (e.g., size, complexity, cohesion, coupling) and complexity/quality metrics (e.g., Halstead or McCabe indicators). These variables were critical for early AI-based models, providing a static view of the software structure and code quality.

2018–2020: Expansion toward EHM, DEM, and STR.

As testing scenarios became more dynamic, the field incorporated evolutionary/historical metrics (e.g., change history, defect history), dynamic/execution metrics (e.g., traces, execution time, call frequency), and semantic/textual representations (e.g., bug reports, documentation, natural language descriptions). This transition reflects an interest in contextual and behavioral features that move beyond static code.

2021–2024: Diversification into emerging categories.

In the most recent stage, less explored but innovative categories gained relevance: visual/interface metrics (e.g., GUI features, graphical models), search-based testing and fuzzing, sequential and temporal models (e.g., recurrent patterns, autoencoders), network/connectivity metrics, and supervised labeling/classification. Although these categories appear with lower frequency, their emergence highlights novel approaches aligned with the complexity of modern software ecosystems, including mobile, distributed, and intelligent systems.

In summary, the evolution of input variables illustrates a transition from traditional static code-centric approaches (SCM and CQM) toward a multidimensional perspective that integrates historical, dynamic, semantic, and even network-oriented features. This shift demonstrates how AI in software testing has matured, not only broadening the range of variables but also adapting to the complexity of contemporary testing environments.

More recently, the emergence of categories such as VIM, STM, and NCM—reported only in a small number of studies between 2019 and 2024 (see Figure 8 and Table 7)—illustrates the diversification of input variables in AI-based software testing. These categories point to novel perspectives, such as visual interactions, temporal modeling, and network connectivity, which had not been addressed in earlier work. Their introduction has driven initial experimentation with hybrid and explainable AI approaches documented in the reviewed literature, particularly in contexts where capturing sequential dependencies, user interfaces, or connectivity is essential. Consequently, these studies often require more advanced performance metrics to evaluate robustness and generalization. Taken together, the findings indicate that the evolution of variables, algorithms, and metrics has been interdependent, with progress in one dimension enabling advances in the others.

5.3. Evolution of Algorithms in Software Testing Metrics

The evolution of AI algorithms in software testing also reflects a progressive refinement of the metrics employed to evaluate their performance, robustness, and practical applicability. Based on the reviewed studies, six main categories of metrics were identified, ranging from classical evaluation to testing-specific measures (Table 8, Figure 9). Initially, research was dominated by classical performance (CP) metrics, such as accuracy, precision, recall, and F1-score. These measures, particularly linked to prediction tasks, provided the most accessible foundation for assessing algorithmic capacity and comparability, although they often fall short in capturing robustness or scalability in complex contexts.

From 2018 onward, studies began incorporating advanced classification (AC) metrics, including MCC, ROC-AUC, and balanced accuracy. These measures offered greater robustness in handling imbalanced datasets, a frequent issue when predicting software defects. Their adoption illustrates a methodological shift toward richer and more nuanced evaluation strategies, which became more prevalent as algorithms diversified in scope.
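The motivation for AC metrics can be seen in a small worked example. The sketch below computes CP and AC metrics from a confusion matrix using their standard definitions; the confusion-matrix values are hypothetical and describe a test set with 10 defective modules out of 100, where the model flags almost nothing as defective.

```python
import math


def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Compute classical (CP) and advanced (AC) metrics from a confusion matrix."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0          # sensitivity
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "balanced_accuracy": (recall + specificity) / 2,
        "g_mean": math.sqrt(recall * specificity),
        "mcc": (tp * tn - fp * fn) / denom if denom else 0.0,
    }


# Hypothetical imbalanced case: 1 of 10 defective modules is detected.
m = classification_metrics(tp=1, fp=1, fn=9, tn=89)
```

In this case accuracy is 0.90 even though recall is only 0.10; balanced accuracy (about 0.54) and MCC (about 0.19) expose the weak performance on the minority class that accuracy alone hides.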

A further development was the introduction of cost/error (CE) metrics, alarms and risk (AR) indicators, and coverage/execution/GUI-driven (CGD) metrics. These reflected the community’s growing interest in evaluating algorithms not only on accuracy but also on their operational impact, error sensitivity, and ability to capture the completeness of testing processes. Similarly, software testing-specific (STS) measures were adopted to directly benchmark AI methods against domain-grounded baselines, ensuring fairer assessments across heterogeneous testing scenarios.

Periodization of metric evolution reveals three distinct phases.

2014–2017: Early studies relied almost exclusively on classical performance (CP) metrics, focusing on accuracy and recall as the standard for validating predictive models.

2018–2020: The field expanded to advanced classification (AC) and cost/error (CE) metrics, reflecting the need to handle imbalanced datasets and quantify error propagation more precisely.

2021–2024: There is a clear transition toward coverage-oriented (CGD) and testing-specific (STS) measures, alongside alarms and risk (AR) metrics. This diversification indicates the community’s growing emphasis on robustness, scalability, and the operational reliability of AI-based testing in industrial contexts.

In summary, the evolution of metrics reveals a clear transition from general-purpose evaluation (CP) toward more robust, domain-specific, and context-aware approaches (STS, CGD). This trend underscores the growing need to align evaluation strategies with the complexity of AI models and the operational realities of modern software testing.

5.4. Integrative Analysis

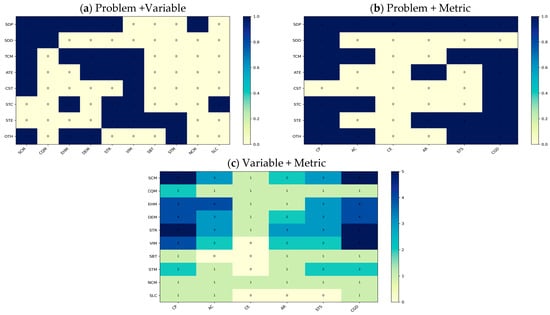

As shown in Table 9, the relationships between algorithms, problem categories, input variables, and evaluation metrics reveal a complex interplay that goes beyond examining these dimensions in isolation. This integrative view enables the identification of consolidated research patterns as well as emerging directions in AI-based software testing. Figure 10 visualizes these relationships through three complementary heatmaps that illustrate the co-occurrence frequencies between problems, variables, and metrics across the 66 studies analyzed.

Figure 10.

Integrative heatmaps of AI algorithms in software testing. The color intensity represents the frequency of co-occurrence across the 66 studies analyzed.
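The co-occurrence frequencies underlying heatmaps of this kind can be computed by counting, for every study, each pair of categories it combines. The following is a minimal sketch under that assumption; the coded studies and the function name `cooccurrence` are hypothetical and do not reproduce the data behind Figure 10.

```python
from collections import Counter
from itertools import product

# Hypothetical coded studies: categories assigned along each dimension.
studies = [
    {"problems": {"SDP"}, "variables": {"SCM", "CQM"}, "metrics": {"CP"}},
    {"problems": {"SDP"}, "variables": {"SCM"}, "metrics": {"CP", "AC"}},
    {"problems": {"ATE"}, "variables": {"VIM"}, "metrics": {"CGD"}},
]


def cooccurrence(coded_studies, dim_a: str, dim_b: str) -> Counter:
    """Count how many studies combine each category of dim_a with each of dim_b."""
    counts = Counter()
    for study in coded_studies:
        for pair in product(sorted(study[dim_a]), sorted(study[dim_b])):
            counts[pair] += 1
    return counts


# One matrix per pair of dimensions, matching the three complementary heatmaps.
problem_variable = cooccurrence(studies, "problems", "variables")
problem_metric = cooccurrence(studies, "problems", "metrics")
variable_metric = cooccurrence(studies, "variables", "metrics")
```

Each counter holds the cell values of one heatmap; higher counts correspond to darker cells.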

The heatmaps also reflect the strength and nature of interdependencies among testing problems, input variables, and evaluation metrics. High-frequency associations, such as “SDP–SCM–CP,” indicate mature research intersections where defect prediction models are trained on structural code metrics and evaluated with classical performance measures. In contrast, low-frequency patterns like “VIM–CGD” suggest emerging or underexplored connections, potentially representing novel directions that pair visual interface metrics with coverage- and GUI-oriented evaluation measures.

First, defect prediction (SDP) continues to dominate the landscape, consistently associated with structural code metrics (SCM) and complexity/quality metrics (CQM). These studies are primarily evaluated through classical performance indicators (CP), such as accuracy, recall, and F1-score, reinforcing their maturity and long-standing presence in the field. The concentration of high-intensity cells in the heatmap confirms this consistent alignment between SDP, SCM/CQM, and CP, reflecting the community’s confidence in leveraging structural code features for predictive purposes and highlighting the foundational role of SDP in establishing AI as a viable tool for software testing. This matters in practice because the combination is well established and can continue to be applied to SDP-related cases within the testing cycle. Companies that recognize this pattern may incorporate similar features into their own testing models, thereby strengthening SDP-related practices and enhancing overall productivity across the software development and quality assurance cycle.

Second, the categories of automation and execution of tests (ATE) and test case management (TCM) show significant expansion. ATE demonstrates a broader combination of input variables, particularly dynamic execution metrics (DEM) and semantic/textual representations (STR). Their evaluation increasingly relies on advanced classification (AC) and coverage-oriented (CGD) metrics, evidencing a shift toward more sophisticated and realistic testing environments. However, the datasets maintained by companies may contain noise depending on their specific business domain, which could create uncertainty about how reliably these approaches can be implemented. Moreover, hasty decisions based on metrics that recur only rarely in the literature could negatively impact short-term return on investment. Meanwhile, TCM is frequently linked with evolutionary/historical variables (EHM) and semantic/textual features (STR). Its evaluation integrates both classical and advanced metrics, underscoring its evolution toward scalable solutions for prioritization, optimization, and automation in agile and continuous integration contexts. These tendencies are clearly visible in the heatmaps, where clusters combining DEM/STR with AC/CGD emphasize the strong methodological coupling that supports the expansion of ATE and TCM research. This is particularly relevant for industrial development teams that are continuously exploring ways to maximize the efficiency of their models. An effective combination of metrics is essential for organizations to sustain key performance indicators and mitigate productivity risks throughout the software testing lifecycle.

Third, emerging categories such as collaborative testing (CST), test evaluation (STE), and the integration of sequential/temporal models (STM), network connectivity metrics (NCM), and visual/interface metrics (VIM) appear less frequently but add methodological diversity. These approaches often combine heterogeneous variables and metrics, addressing challenges such as distributed systems, time-dependent fault detection, security validation, and usability assessment. Although less consolidated, they represent innovative directions that could expand the scope of AI-based software testing in the near future. This trend is also supported by the heatmaps, which display lighter but distinct links between VIM and CGD as well as STM and STS, suggesting emerging but still underexplored lines of investigation. These tendencies provide valuable input for academia–industry collaborations, which could leverage the findings to design high-impact research initiatives and foster the creation of innovative products that contribute to the virtuous cycle of scientific and technological advancement.

Finally, categories grouped under “Other” (OTH) illustrate exploratory lines of research where algorithms are tested across varied and heterogeneous combinations of problems, variables, and metrics. While not yet mature, these contributions enrich the methodological landscape and open opportunities for cross-domain applications, particularly when combined with advances in explainability and hybrid AI approaches. The low but widespread co-occurrence patterns in the heatmaps visually confirm this experimental nature and highlight how these studies are paving new interdisciplinary bridges for future AI-driven testing frameworks.

Overall, this integrative analysis confirms that the evolution of AI algorithms in software testing cannot be fully understood without considering the interdependencies between the problems addressed, the nature of the input variables, and the evaluation strategies employed. Advances in one dimension—such as the refinement of variables or the design of new metrics—have consistently enabled progress in the others. This interdependence underscores the need for holistic frameworks that explicitly connect problems, variables, and metrics, thereby guiding the design, benchmarking, and industrial adoption of AI-based testing solutions.

However, the gap between academic research and industrial adoption remains one of the main challenges in applying AI-driven testing solutions. In industrial environments, models are often constrained by excessive noise in historical test data, incomplete labeling, and high operational costs associated with model deployment and maintenance. These factors limit the reproducibility of experimental results reported in academic studies. Furthermore, the lack of standard test environments and privacy restrictions on industrial data often prevent large-scale validation, making the transfer of research prototypes into production environments difficult.

Industrial Applicability and Maturity of AI Testing Approaches

While most of the reviewed studies emphasize academic contributions and their challenges in industry, several AI testing approaches have reached a level of maturity that enables industrial adoption. SDP and ATE techniques are highly deployable thanks to their integration with continuous integration pipelines, historical code metrics, and model-based testing tools. These approaches demonstrate reproducible performance and scalability across diverse projects, making them viable candidates for adoption in DevOps environments.

Conversely, categories such as Collaborative Software Testing (CST) and Test Evaluation (STE) still face significant practical barriers. Challenges arise from the lack of explainability in complex AI models, a gap that explainable AI (XAI) aims to close, as well as from limited interoperability with legacy testing infrastructures and the absence of standard evaluation benchmarks for cross-organizational collaboration. Addressing these limitations requires closer collaboration between academia and industry, focusing on interpretability, scalability, and sustainable automation pipelines that can operate within real-world software ecosystems.

5.5. Future Research Directions

The findings of this review highlight several avenues for future research at the intersection of artificial intelligence and software testing. First, there is a pressing need for systematic empirical comparisons of AI algorithms applied to testing tasks. Although numerous studies report improvements in defect prediction, test case management, and automation, the lack of standardized datasets and evaluation protocols makes it difficult to assess progress consistently. Establishing benchmarks and open repositories with shared data would enable reproducibility and facilitate meaningful comparative studies.

Second, the review shows that the interplay between problems, variables, and metrics remains fragmented. Future work should focus on integrated frameworks that jointly consider these three dimensions, since advances in one often act as enablers for the others. For example, the adoption of richer input variables has demanded new evaluation metrics, while the emergence of hybrid algorithms has shifted the way problems are addressed. Developing methodologies that explicitly link these dimensions could provide more coherent strategies for designing and assessing AI-based testing solutions.

Third, the growing application of AI in testing raises questions of interpretability, transparency, and ethical use. As models become more complex, particularly in safety-critical domains, ensuring explainability will be essential to foster trust and industrial adoption. Research should explore explainable AI techniques tailored to testing contexts, balancing predictive performance with the need for human understanding of algorithmic decisions.

Another promising line involves addressing the challenges of data scale and quality. Many of the advances reported rely on datasets of limited size or scope, which constrains the generalizability of results. Future studies should investigate mechanisms to curate high-quality, representative datasets, while also developing strategies to handle noisy, imbalanced, or incomplete data—issues that increasingly characterize industrial testing environments.
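The effect of class imbalance on evaluation can be illustrated with a small sketch. It assumes a hypothetical set of 100 modules, of which 5 are defective, and compares a trivial model that labels every module as clean with a model that detects some of the defects. The Matthews correlation coefficient (MCC) is used here only as one example of an imbalance-aware metric; this choice, the data, and the helper names are assumptions made for illustration.

```python
import math


def confusion(y_true, y_pred):
    """Return (tp, tn, fp, fn) for binary labels, where 1 means defective."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    return tp, tn, fp, fn


def accuracy(tp, tn, fp, fn):
    """Fraction of modules labeled correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


def mcc(tp, tn, fp, fn):
    """Matthews correlation coefficient; defined as 0.0 when the denominator is zero."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom


# Hypothetical imbalanced data: 5 defective modules followed by 95 clean ones.
y_true = [1] * 5 + [0] * 95

# Model A: predicts "clean" for every module.
pred_all_clean = [0] * 100

# Model B: finds 3 of the 5 defects and raises 2 false alarms.
pred_partial = [1, 1, 1, 0, 0] + [1, 1] + [0] * 93

acc_a = accuracy(*confusion(y_true, pred_all_clean))
mcc_a = mcc(*confusion(y_true, pred_all_clean))
acc_b = accuracy(*confusion(y_true, pred_partial))
mcc_b = mcc(*confusion(y_true, pred_partial))

print(f"Model A: accuracy={acc_a:.2f}, MCC={mcc_a:.2f}")
print(f"Model B: accuracy={acc_b:.2f}, MCC={mcc_b:.2f}")
```

In this example the all-clean model reaches an accuracy of 0.95 although it detects no defects, and its MCC is 0. The second model is only marginally more accurate, yet its MCC is clearly higher, which suggests why studies on imbalanced data tend to report more than classical accuracy.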

Finally, there is an opportunity to expand research toward collaborative and cross-disciplinary approaches. The integration of AI-driven testing with continuous integration pipelines, DevOps practices, and human-in-the-loop strategies could accelerate adoption in practice. Likewise, stronger collaboration between academia and industry will be critical to validate the scalability and cost-effectiveness of proposed methods.

In summary, advancing the field will require moving beyond isolated studies toward comparative, reproducible, and ethically grounded research programs. By addressing these challenges, future work can consolidate the role of AI as a transformative force in software testing, enabling more reliable, efficient, and explainable solutions for increasingly complex systems and bridging the gap between academic innovation and industrial practice.

6. Conclusions

This study proposed a comprehensive taxonomy and evolutionary analysis of AI algorithms applied to software testing, identifying the main trajectories that have shaped the field between 2014 and 2024. Beyond summarizing the classification system and evolutionary trends, this work also highlights several avenues for improvement. Future research should focus on refining the classification criteria and operational definitions of variable indicators to ensure consistency and comparability across studies. Greater emphasis should be placed on defining the semantic boundaries of categories such as test prediction, optimization, and evaluation, which remain partially overlapping in the current literature.

Additionally, the applicability of the proposed taxonomy should be extended and validated across diverse testing environments, including embedded systems, real-time software, and cloud-based testing frameworks. These contexts present different performance constraints and data characteristics, offering opportunities to assess the robustness and generalizability of AI-driven testing models.

Finally, the study encourages stronger collaboration between academia and industry to address the gap between theoretical model design and industrial implementation. By promoting reproducible frameworks and well-defined evaluation indicators, future studies can strengthen the reliability, interpretability, and sustainability of AI-based testing research.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/a18110717/s1, coding_book_taxonomy.xlsx: Taxonomy of AI algorithms based on software testing; PRISMA_2020_Checklist.docx: Full PRISMA 2020 checklist followed during the review.

Author Contributions

Conceptualization, A.E.-V. and D.M.; methodology, A.E.-V.; validation, A.E.-V. and D.M.; formal analysis, A.E.-V.; investigation, A.E.-V.; writing—original draft preparation, A.E.-V.; writing—review and editing, D.M.; supervision, D.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The datasets generated and analyzed during the current study are publicly available in the GitHub repository: https://github.com/escalasoft/ai-software-testing-review-data (accessed on 3 November 2025). This repository contains the filtering_articles_marked.xlsx file (article selection and screening data) and the raw_data_extraction.xlsx file (complete extracted dataset used for synthesis).

Acknowledgments

The authors would like to thank the Universidad Nacional Mayor de San Marcos (UNMSM) for supporting this research and providing access to academic resources that made this study possible.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Note: The identifiers [Rxx] denote the internal codes of the 66 studies included in this review. Detailed information about the algorithms, input variables, and evaluation metrics can be found in Appendix B, Appendix C and Appendix D. These materials, together with the complete dataset used for synthesis, are also available in the open repository: https://github.com/escalasoft/ai-software-testing-review-data.

Table A1.

Selected Articles.

| ID | Reference(s) | ID | Reference(s) |
|---|---|---|---|
| [R01] | R. Malhotra and K. Khan, 2024 [59] | [R02] | Z. Zulkifli et al., 2023 [60] |
| [R03] | F. Yang et al., 2024 [61] | [R04] | L. Rosenbauer et al., 2022 [62] |
| [R05] | A. Ghaemi and B. Arasteh, 2020 [63] | [R06] | S. Zhang et al., 2024 [64] |
| [R07] | M. Ali et al., 2024 [65] | [R08] | T. Rostami and S. Jalili, 2023 [66] |
| [R09] | M. Ali et al., 2024 [67] | [R10] | A. K. Gangwar and S. Kumar, 2024 [68] |
| [R11] | H. Wang et al., 2024 [69] | [R12] | G. Abaei and A. Selamat, 2015 [70] |
| [R13] | S. Qiu et al., 2024 [71] | [R14] | R. Sharma and A. Saha, 2018 [72] |
| [R15] | R. Jayanthi and M. L. Florence, 2019 [73] | [R16] | N. Nikravesh and M. R. Keyvanpour, 2024 [74] |
| [R17] | I. Mehmood et al., 2023 [75] | [R18] | L. Chen et al., 2018 [76] |
| [R19] | K. Rajnish and V. Bhattacharjee, 2022 [77] | [R20] | A. Rauf and M. Ramzan, 2018 [114] |
| [R21] | S. Abbas et al., 2023 [78] | [R22] | C. Shyamala et al., 2024 [115] |
| [R23] | M. Bagherzadeh et al., 2022 [116] | [R24] | N. A. Al-Johany et al., 2023 [79] |
| [R25] | Y. Lu et al., 2024 [80] | [R26] | L. Zhang and W.-T. Tsai, 2024 [81] |
| [R27] | W. Sun et al., 2023 [82] | [R28] | K. Pandey et al., 2020 [83] |
| [R29] | Z. Li et al., 2021 [84] | [R30] | P. Singh and S. Verma, 2020 [85] |
| [R31] | D. Manikkannan and S. Babu, 2023 [86] | [R32] | F. Tsimpourlas et al., 2022 [87] |
| [R33] | Y. Tang et al., 2022 [117] | [R34] | E. Sreedevi et al., 2022 [18] |
| [R35] | Z. Khaliq et al., 2023 [19] | [R36] | G. Kumar and V. Chopra, 2022 [88] |
| [R37] | M. Ma et al., 2022 [89] | [R38] | M. Sangeetha and S. Malathi, 2022 [90] |
| [R39] | Z. Khaliq et al., 2022 [17] | [R40] | I. Zada et al., 2024 [91] |
| [R41] | L. Šikić et al., 2022 [92] | [R42] | T. Hai et al., 2022 [93] |
| [R43] | A. P. Widodo et al., 2023 [94] | [R44] | E. Borandag, 2023 [20] |
| [R45] | S. Fatima et al., 2023 [95] | [R46] | E. Borandag et al., 2019 [96] |
| [R47] | D. Mesquita et al., 2016 [97] | [R48] | S. Tahvili et al., 2020 [98] |
| [R49] | K. K. Kant Sharma et al., 2022 [99] | [R50] | B. Wójcicki and R. Dąbrowski, 2018 [100] |
| [R51] | F. Matloob et al., 2019 [101] | [R52] | M. Yan et al., 2020 [102] |
| [R53] | C. W. Yohannese et al., 2018 [103] | [R54] | L.-K. Chen et al., 2020 [104] |
| [R55] | B. Ma et al., 2014 [105] | [R56] | P. Singh et al., 2017 [106] |
| [R57] | D.-L. Miholca et al., 2018 [107] | [R58] | S. Guo et al., 2017 [108] |
| [R59] | L. Gonzalez-Hernandez, 2015 [109] | [R60] | M. M. Sharma et al., 2019 [110] |
| [R61] | G. Czibula et al., 2018 [111] | [R62] | M. Kacmajor and J. D. Kelleher, 2019 [112] |
| [R63] | X. Song et al., 2019 [113] | [R64] | Y. Xing et al., 2021 [118] |
| [R65] | A. Omer et al., 2024 [119] | [R66] | T. Shippey et al., 2019 [120] |

Appendix B

Description of Algorithms.

Table A2.

Selected Articles.

| ID | Novel Algorithm(s) | Description | Existing Algorithm(s) | Description |
|---|---|---|---|---|
| [R01] | 2M-GWO (SVM, RF, GB, AB, KNN) | Two-Phase Modified Grey Wolf Optimizer combined with SVM (Support Vector Machine); RF (Random Forest); GB (Gradient Boosting); AB (AdaBoost); KNN (K-Nearest Neighbors) classifiers for optimization and classification | HHO, SSO, WO, JO, SCO | HHO: Harris Hawks Optimization, a metaheuristic inspired by the cooperative behavior of hawks to solve optimization problems; SSO: Social Spider Optimization, an optimization algorithm based on the communication and cooperation of social spiders; WO: Whale Optimization, an algorithm bioinspired by the hunting strategy of humpback whales; JO: Jellyfish Optimization, an optimization technique based on the movement patterns of jellyfish; SCO: Sand Cat Optimization, an algorithm inspired by the hunting strategy of desert cats to find optimal solutions. |
| [R02] | ANN, SVM | ANN: Artificial Neural Network, a basic neural network used for classification or regression; SVM: Support Vector Machine, a robust supervised classifier for binary classification problems | n/a | n/a |