1. Introduction

Recently, research on the analysis and control of complex networks has advanced rapidly [1]. The presence of complex networks in nature and society has sparked significant interest in this field [2], as they are integral to various systems in our daily lives [3]. For instance, the organization and function of a cell result from complex interactions between genes, proteins, and other molecules [4]; the brain comprises a vast network of interconnected neurons [5]; social systems can be represented by graphs illustrating interactions among individuals [6]; ecosystems consist of species whose interdependencies can be mapped into food webs [7]; and large networked infrastructures, such as power grids and transportation networks, can be represented by graphs describing interactions among components [8,9].

In complex networks, a key challenge is ensuring control and stability while minimizing resource consumption. This requires selecting a subset of nodes, known as pinning nodes, to control the entire network. Pinning control achieves global stabilization by intervening only in these selected nodes. The choice of these nodes is crucial, and various centrality metrics, such as Degree Centrality [10], Betweenness Centrality [11], and Closeness Centrality [12], have been proposed in the literature to guide it. These metrics offer different advantages depending on the network’s structure and dynamics [3,13,14].

Recent advances in complex network control have led to more sophisticated algorithms designed to handle the growing complexity of modern networks. Techniques such as adaptive control [15], robust control [16], and sliding mode control [17] have been developed to improve stability and robustness under various uncertainties. However, these methods often struggle with the optimal selection of pinning nodes, especially in large networks where the number of possible node combinations is vast.

Existing methods are limited by their reliance on fixed-dimension approaches and their difficulty adapting to dynamic network environments. These algorithms often require prior knowledge of the network’s structure, which may not always be available, and can become computationally expensive as the network grows, making them impractical for real-time use.

The PHA-MD algorithm proposed in this work addresses these limitations by offering a novel approach to pinning control. Inspired by the parasitic relationship between the Phymastichus coffea wasp and the Hypothenemus hampei coffee borer, PHA-MD prioritizes the network’s asymptotic stability while optimizing pinning node selection. Unlike its predecessor [18,19], PHA-MD autonomously determines the number of pinning nodes, avoiding the issues associated with fixed-dimension algorithms. This innovation allows PHA-MD to dynamically adapt to network changes, ensuring stability even in complex scenarios.

PHA-MD also leverages the V-stability tool [20] to ensure stability during optimization. Comparative simulations demonstrate PHA-MD’s superior performance compared to several heuristic optimization algorithms, particularly in achieving network stability with fewer pinned nodes and efficient energy use.

The performance of PHA-MD is compared with other heuristic optimization algorithms, such as Ant Lion Optimizer (ALO) [21], Teaching–Learning-Based Optimization (TLBO) [22], Grey Wolf Optimizer (GWO) [23], Animal Migration Optimization (AMO) [24], Particle Swarm Optimization (PSO) [25], Artificial Bee Colony (ABC) [26], Gaining–Sharing Knowledge Based Algorithm (GSK) [27], Biogeography-Based Optimization (BBO) [28], Whale Optimization Algorithm (WOA) [29], Ant Colony Optimization (ACO) [30], Osprey Optimization Algorithm (OOA) [31], Mayfly Algorithm (MA) [32], Archimedes Optimization Algorithm (AOA) [33], Coronavirus Herd Immunity Optimizer (CHIO) [34], and Driving Training-Based Optimization (DTBO) [35].

These algorithms were selected for several reasons. They represent a wide range of optimization techniques, from bio-inspired methods like ALO [21] and PSO [25] to recent innovations such as CHIO [34] and OOA [31]. This diversity allows for a comprehensive comparison across different strategies. Many of these algorithms have shown high performance in solving complex optimization problems, making them robust benchmarks for evaluating PHA-MD. Their popularity in the research community ensures that the comparison results are relevant and easily interpretable.

The selected algorithms also cover different optimization mechanisms, including swarm intelligence (PSO, WOA [29]), natural evolution (Genetic Algorithm (GA) [36]), intensive local search (GWO [23]), and reinforcement learning (Q-Learning based algorithms [37]). Comparing PHA-MD with these approaches helps assess its competitiveness with the latest developments in heuristic optimization.

In summary, the selection of these algorithms provides a rigorous benchmarking framework, allowing for a thorough assessment of PHA-MD’s capabilities, particularly in solving the permutation problem in node selection and ensuring the network’s asymptotic stability. Additionally, PHA-MD’s ability to handle multi-constraint optimization problems makes it versatile and effective in various applications.

The rest of this paper is organized as follows. Section 2 provides an overview of complex network pinning control, detailing the V-stability tool and other essential mathematical preliminaries. This section also defines the optimization problem, focusing on minimizing the energy consumed by control actions at the pinning nodes and the number of pinning nodes required for network stability. In Section 3, the biological foundation of the PHA-MD algorithm is described, highlighting its unique characteristics and mechanisms. Section 4 presents a comprehensive simulation study of the proposed PHA-MD algorithm across various complex network topologies, including a detailed comparison with other heuristic optimization algorithms. Finally, Section 5 discusses the conclusions and potential future directions of the research.

2. Problem Statement

The optimization problem in complex networks is formulated to achieve two primary objectives:

- (i) Minimizing the energy consumed by the control actions at the pinning nodes: this objective focuses on reducing the overall energy required to stabilize the network through targeted interventions at selected nodes.

- (ii) Minimizing the number of pinning nodes required to achieve network stability: this objective aims to reduce the number of nodes that need to be pinned to maintain the stability of the entire network, thereby optimizing resource usage.

These objectives define the optimization problem as follows. Let

denote the closed-loop matrix built from the node passivity degrees

, the coupling matrix

G, and the diagonal gain matrix

K; let

denote the spectrum (set of eigenvalues); and let

denote the real-part operator applied to those eigenvalues. Then

where

is the index set of pinning nodes and

is its cardinality. The control law for node

is

, with

and

the

i-th diagonal entry of

K, defined as

The inequality

expresses exponential stability.

Specifically, the optimization problem involves constructing the control gain matrix K so as to find the optimal set of pinning nodes for efficient network control. The matrix K determines the effectiveness of the pinning control strategy and directly affects the stability and efficiency of the network, so an optimal configuration of K is essential for achieving network stability with minimal resource consumption. The following describes the role of the matrix K in the pinning control of complex networks and its impact on the stability of the network.

Consider the following Network (

2) of

nodes with linear diffusive couplings and an

-dimensional dynamical system [

38]:

where

denotes the

self-dynamics of node

, i.e., the intrinsic vector field that governs its evolution in isolation (all couplings and controls set to zero). The constants

are the coupling strengths between

and

,

specifies how state components are coupled for each connected pair

, and the connection matrix

encodes the network topology: if there is a connection between

and

for

, then

; otherwise

for

. The diagonal elements are defined by

, where

is the degree of node

. A small subset of nodes in the network is subjected to local feedback as part of the pinning control, and these are called

pinning nodes [

39]. Assuming the diffusive condition

Network (

2) can be rewritten in a compact controlled form as

where

is the

input (actuation) matrix that maps the control vector

into the state derivatives of node

. In the context of pinning control,

specifies which state components are directly actuated (full or partial channels). Typical choices are

(full–state actuation) or

with

(selected coordinates), where

denotes the

identity matrix (ones on the diagonal and zeros elsewhere). Unless otherwise stated, we adopt

in the simulations.

The control input is defined nodewise as

where

is the set of pinning nodes with

[

40]. Hence, the self-dynamics of the controlled nodes becomes

To demonstrate network stability and to calculate the lower bound

for the number of pinned nodes, consider a continuously differentiable Lyapunov function

, satisfying

with

, such that for the self-dynamics (

6), there is a scalar

and

guaranteeing

where

and

is the passivity degree of

. Then, to determine whether Network (

4) is synchronized at the equilibrium point

, such that

let us consider

for the controlled Network (

4); then

or, using the Kronecker product,

where

are the entries of

,

,

, …,

,

, and

. Then, according to [

20], Network (

2) is locally asymptotically stable around its equilibrium point if the closed-loop characteristic matrix

is negative definite; then, the number of pinned nodes cannot be less than the number of positive eigenvalues

with

, such that,

. Thus, satisfying the above conditions, Network (

2) is V-stable [

20], demonstrating the importance of the K matrix for ensuring stability in pinning control.
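As a hedged illustration of the stability test described above, the following sketch checks negative definiteness of a candidate closed-loop matrix without an eigenvalue solver: a symmetric matrix A is negative definite exactly when a Cholesky factorization of −A succeeds. The assumed form A = −Θ + G − K (with Θ the diagonal matrix of passivity degrees, a symmetric coupling matrix G, and a diagonal gain matrix K), the helper names `closed_loop_matrix` and `is_negative_definite`, and the three-node values are assumptions made for this example only; they are not taken from the simulations.

```python
import math


def closed_loop_matrix(theta, G, k):
    """Assumed form -Theta + G - K, with Theta and K given by their diagonals."""
    n = len(theta)
    return [
        [G[i][j] - (theta[i] + k[i] if i == j else 0.0) for j in range(n)]
        for i in range(n)
    ]


def is_negative_definite(A, tol=1e-12):
    """True if the symmetric matrix A is negative definite.

    Attempts a Cholesky factorization of -A; it succeeds only when every
    pivot is strictly positive (here: larger than tol).
    """
    n = len(A)
    B = [[-A[i][j] for j in range(n)] for i in range(n)]
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = B[i][j] - sum(L[i][m] * L[j][m] for m in range(j))
            if i == j:
                if s <= tol:
                    return False  # non-positive pivot: -A is not positive definite
                L[i][j] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return True


# Toy path graph with three nodes (illustrative values only).
theta = [-0.5, -0.5, -0.5]
G = [[-1.0, 1.0, 0.0], [1.0, -2.0, 1.0], [0.0, 1.0, -1.0]]

unpinned = is_negative_definite(closed_loop_matrix(theta, G, [0.0, 0.0, 0.0]))
pinned = is_negative_definite(closed_loop_matrix(theta, G, [0.0, 5.0, 0.0]))
```

In this toy case the uncontrolled matrix has one positive eigenvalue, so at least one node must be pinned; pinning the middle node with a gain of 5 is enough to pass the test.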

In (11), the importance of the K matrix for ensuring stability in the pinning control is evident. However, the energy consumed by the pinning nodes during the control process is a significant factor that impacts the overall efficiency of the network. Therefore, optimizing the energy consumption is essential to ensure that the network operates effectively without unnecessary expenditure of resources.

The objective function

in (

1) focuses on minimizing the energy consumed by the pinning nodes at a specific time

. This approach not only helps in achieving the desired control with minimal energy, but also ensures that the network remains stable and efficient. By concentrating on energy optimization, the control strategy can be made more sustainable and cost-effective, addressing both performance and resource utilization concerns in complex network systems.

The selected objective function for this problem is the energy consumed by the pinning nodes at time

,

, defined as

where

is the set of pinned nodes (only those that contribute to the sum; equivalently, summing over all

i with

for

yields the same value).

Remark 1. Considering V-stability and that energy is consumed only by the pinning nodes, the maximum energy occurs at time [20]. This allows the optimization to jointly minimize and , ensuring efficient and stable control of the complex network.

3. PHA-MD Algorithm

The PHA-MD algorithm iteratively constructs the control gain matrix K, leveraging the adaptive nature of its agents. Each agent presents a unique and variable candidate solution in each iteration, inheriting information and discarding non-optimal nodes to improve the solution in successive generations. This approach enables the optimization of the pinning node set configuration , achieving a network controlled efficiently with the minimum number of pinning nodes and the lowest possible energy consumption. In summary, PHA-MD simultaneously minimizes the energy consumed and the cardinality of the pinning-node set through its construction of the control gain matrix K.

3.1. Biological Basis

The proposed PHA-MD algorithm is inspired by the parasitic symbiotic relationship between the parasitoid wasp (Phymastichus coffea, LaSalle, Hymenoptera: Eulophidae, Figure 1a) and the coffee borer (Hypothenemus hampei, Ferrari, Coleoptera: Curculionidae: Scolytinae, Figure 1b). This relationship is used as a biological control mechanism because the borer is considered the most harmful pest of the coffee crop: its attack on the berry causes weight loss, depreciation of the grain, and loss of quality due to the presence of impurities in the infected beans [41].

The life cycle of the wasp begins when P. coffea parasitizes H. hampei, depositing up to two eggs per host, typically one male and one female. From this point, the incubation process begins. Upon hatching, the larvae feed on the abdominal tissues of the host, the coffee borer, until they complete their metamorphosis. Once this stage is finished, the adult wasps emerge from the host [42].

The

i-th symbiotic relationship

between the

i-th agents

P. coffea and

H. hampei at iteration

t is represented as

where

and

are the

i-th agents

P. coffea and

H. hampei respectively, whose populations are defined as

where

is the number of agents for both populations.

is a penalty function, defined as

where

and

are penalty parameters, with the difference between them defining the importance between the second and third terms in (

15);

is the cardinality of the agent

; and

is the maximum value between zero and the maximum real eigenvalue of matrix (

11), defined as follows:

where the elements

of the main diagonal of the

i-th control gains matrix

are determined by the relationship of the agents

and

, such that

The symbiotic relationship

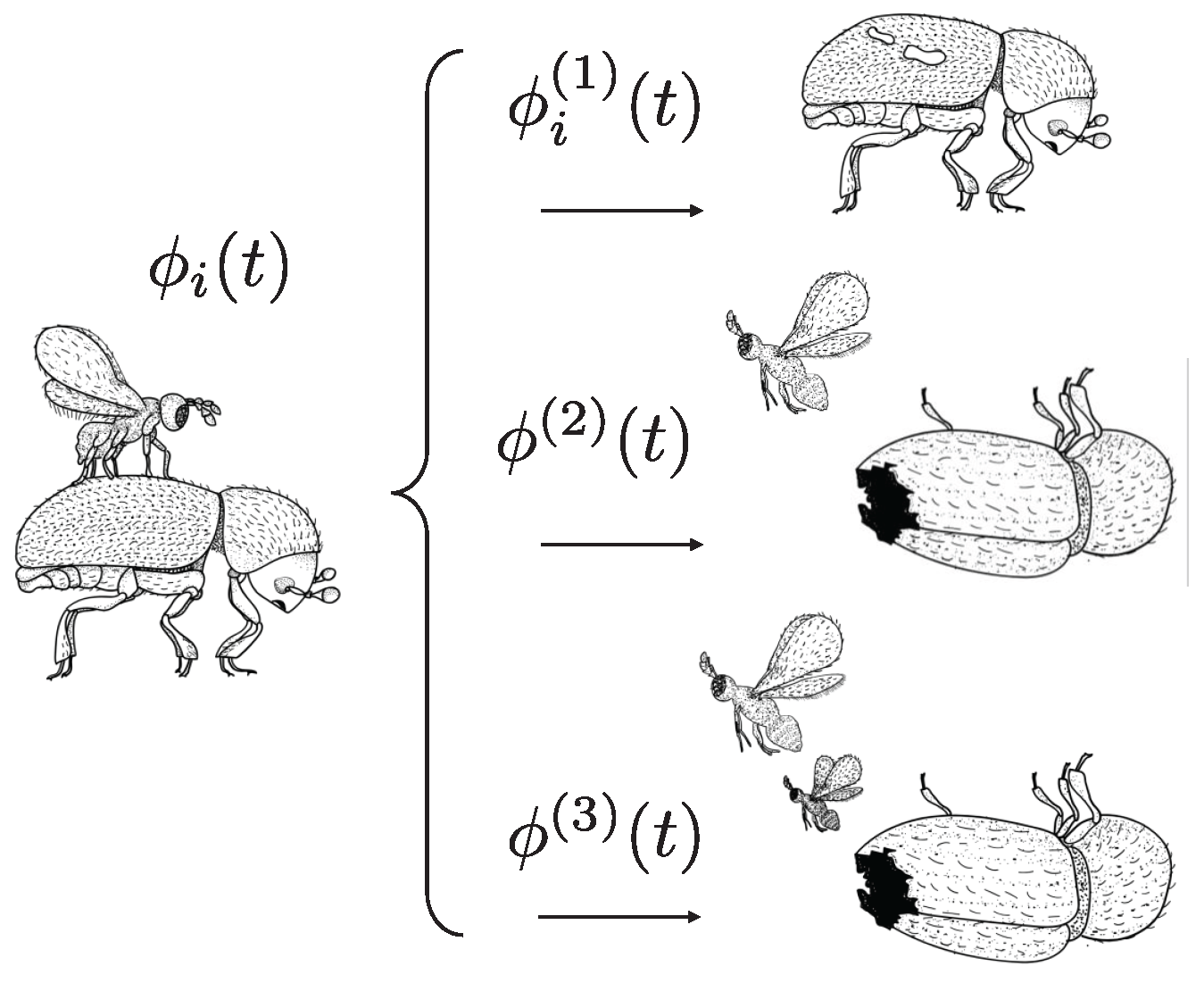

is classified into three possible favorable events for the

P. coffea species (see

Figure 2), which are as follows:

where

is the favorable parasitization event, when the wasp parasitizes the beetle;

implies the birth of only a female; and

corresponds to the birth of a female and a male. Note that types 2 and 3 affect the entire population; however, type 1 is particular for each agent, and this is reflected in the subscript

i for this type of symbiotic relationship. A single male fertilizes numerous females; however, it requires a female to bore the host abdomen in order to emerge, and therefore, the birth of a female and a male is the ideal scenario for the

P. coffea species [

42].

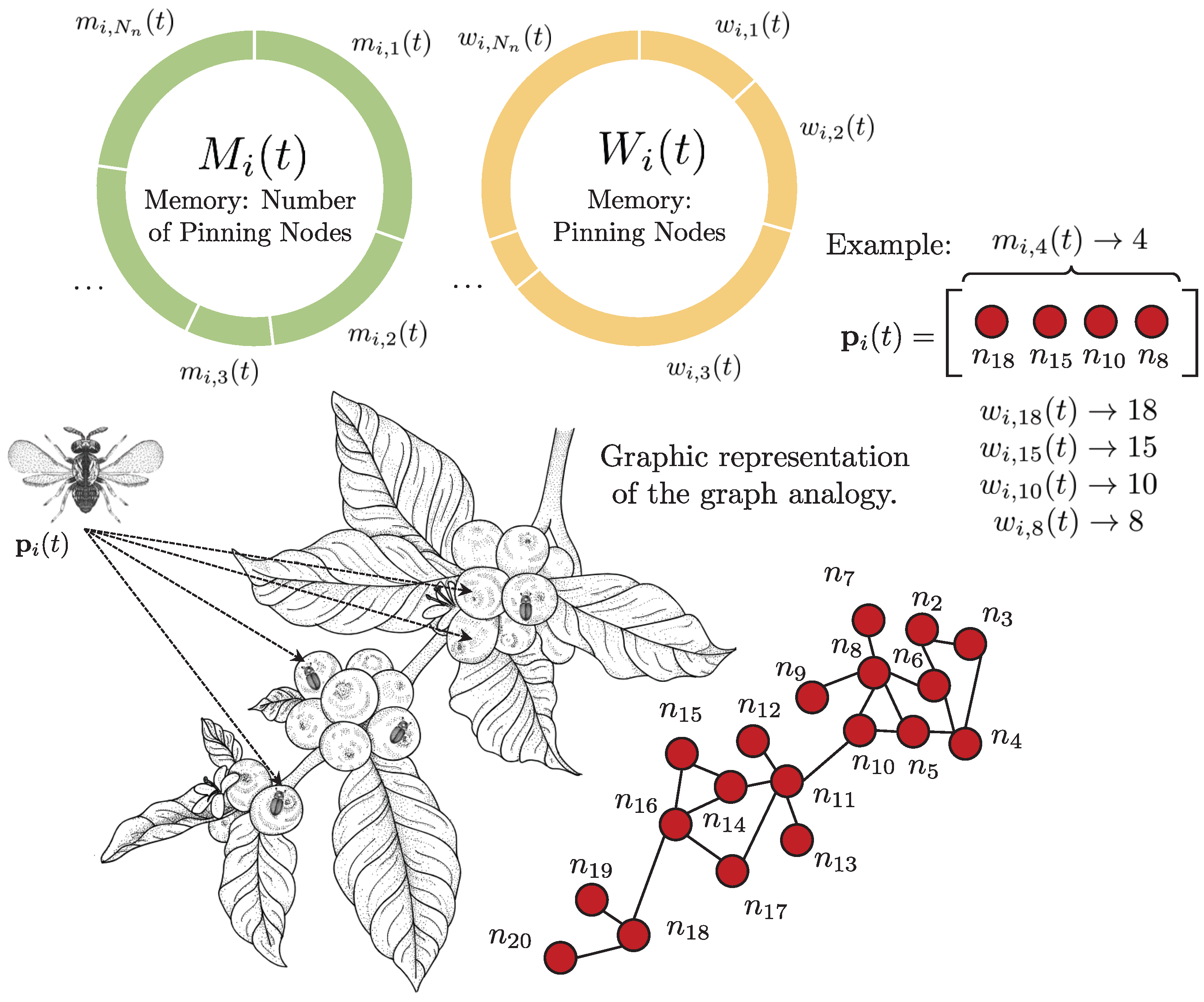

The concept of Memory Inheritance justifies the relevance of these events. This attribute implies that each i-th wasp can pass down two types of memories, and , to its future generations. Since the coffee tree can be modeled as a graph, whose berries are equivalent to nodes, and are, respectively, “how many nodes to select” and “which nodes to select”.

The example in

Figure 3 shows the decision of the

i-th agent

to visit four nodes, selecting

,

,

, and

. The elements

and

of each memory are selected by the roulette method. Note that in case of

or

, if the element is selected, it returns the index it represents, and not its occurrence value. In the following sections, the process of modifying the frequency of occurrence is explained.
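The roulette method mentioned above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `roulette_index`, the argument names, and the sample memory values are hypothetical. The sketch returns the index that an element represents rather than its occurrence value, as described in the text, and entries with zero occurrence are never selected.

```python
import random


def roulette_index(weights, draw):
    """Return the index chosen by roulette-wheel selection.

    weights: non-negative occurrence values stored in a memory vector.
    draw:    a uniform random number in [0, 1).
    """
    total = sum(weights)
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    cumulative = 0.0
    last_positive = None
    for index, weight in enumerate(weights):
        if weight <= 0:
            continue  # zero-occurrence entries cannot be selected
        last_positive = index
        cumulative += weight / total
        if draw < cumulative:
            return index
    return last_positive  # guards against round-off in the cumulative sum


# Hypothetical memory with three entries; index 1 has never succeeded.
memory = [1, 0, 3]
rng = random.Random(2024)
picked = roulette_index(memory, rng.random())
```

Passing the uniform draw as an argument keeps the selection deterministic for a given draw, which makes the rule easy to check in isolation.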

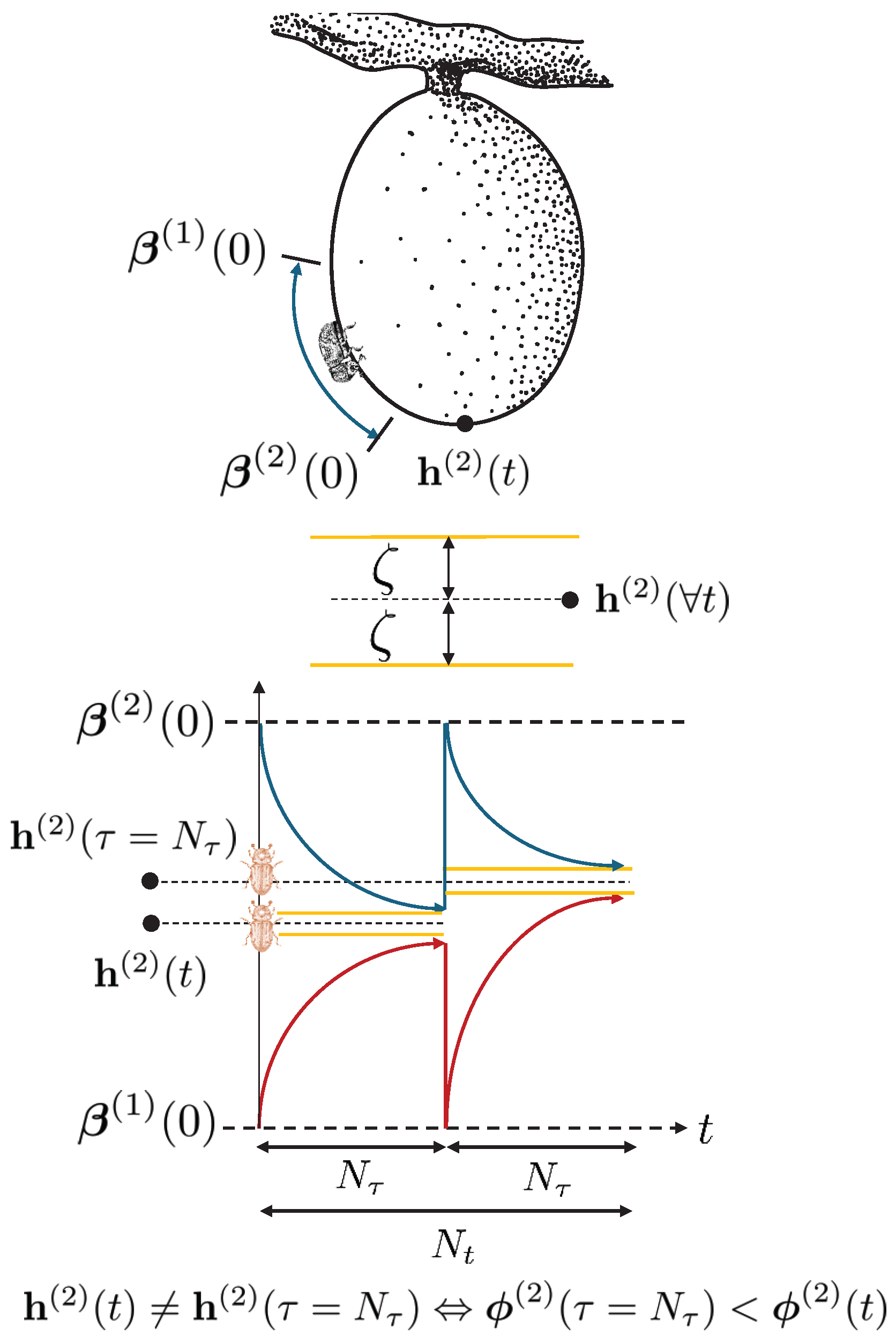

Another attribute of biological inspiration is the behavior of

H. hampei. In nature, the beetle drills into coffee berries at or near the apex, which is the softest area of the coffee bean [

43] (

Figure 4). Similar to how the gravity, shape, relief, or orientation of the berry influence its movement, the

borer functions guide

H. hampei towards the optimal regions identified by the group of beetles, without direct interference. These functions reduce the search space in favor of the symbiotic relationship

, because the beetles that manage to bore into the fruit will be able to feed, thereby increasing the probability that at least one

P. coffea individual will be born. The

borer functions are defined as

where

and

are the lower and upper borer functions, respectively;

,

denotes the absolute value;

is the compression parameter, which prevents the limits

and

from converging to

;

is the maximum iteration number per epoch;

is an iteration coefficient; and

and

are, respectively, the initial upper and lower bounds. It is important to mention that the

borer functions limit the values of each dimension

j; however, they can be specific for each

i-th agent

H. hampei. It should be understood that with the notation of (

21) and (

22), the entire population

H is delimited equally.

3.2. Attribute Updating

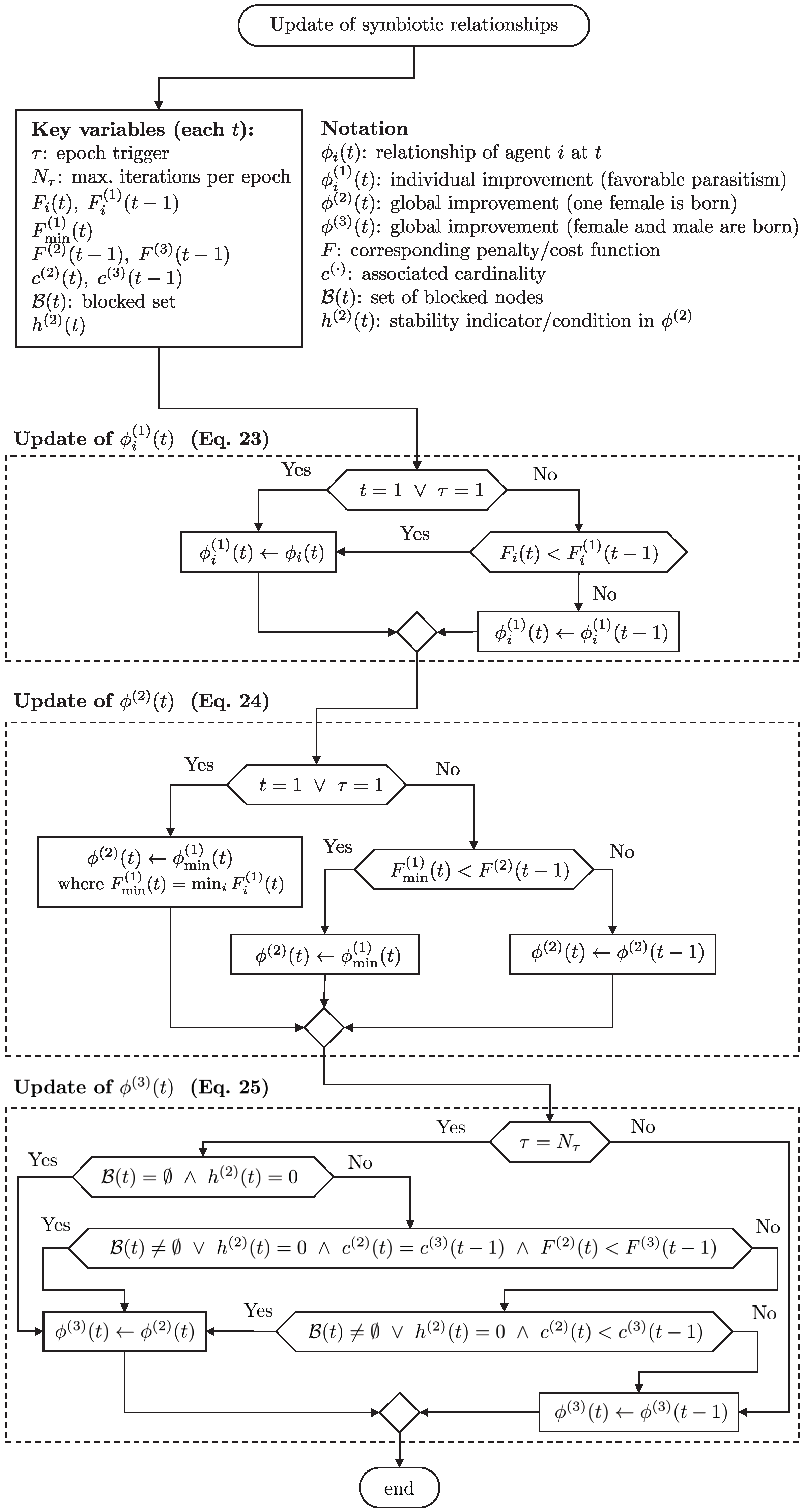

This section describes the creation of new generations for each type of agent based on updating parameters such as symbiotic relationships, updating subtypes, and optimizing inheritance memories. The subtypes of symbiotic relationships are updated as follows:

where

is the epoch trigger, which increases by one with each iteration

t. When

, a new epoch starts, whose first generation has its initial conditions reset, including the epoch trigger (

Figure 4),

.

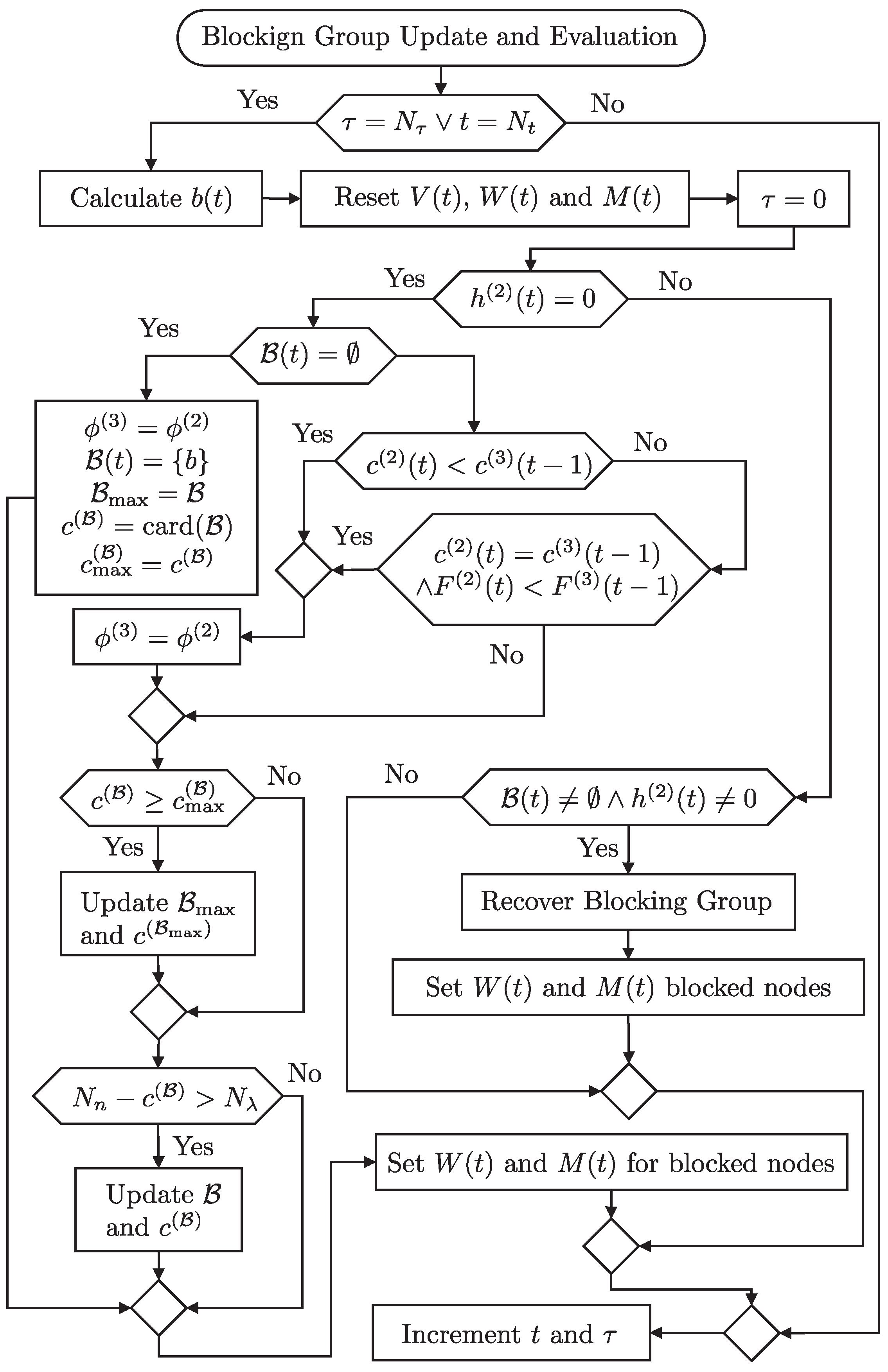

Figure 5 details how PHA-MD updates the three symbiotic subtypes at each iteration and at the end of every epoch. First, each agent evaluates its candidate and updates the per-agent best

whenever its current cost

improves (Equation (

23)). Next, the algorithm promotes the population-wide best

using the minimum among

(Equation (

24)). When the epoch boundary is reached (

), the blocking stage is invoked; based on stability (

), cardinality, and cost,

may be adopted as the epoch-level best

(Equation (

25)). If the stated conditions are not met, the corresponding records are preserved (“else” branches in Equations (

23)–(

25)). While the flow focuses on

-updates, it is coordinated with the blocking-set dynamics

(Equations (26) and (27)) that are executed at epoch end.

In the PHA-MD algorithm, a new feature has been introduced compared to its previous version, a node-blocking stage. This stage involves the identification and blocking of nodes, with the set of blocked nodes denoted as

. During each epoch, if and only if

, a node will be selected for blocking. This action increases the cardinality of the set

by one. The set

captures the nodes that are currently blocked at time

t, reflecting the evolving state of the algorithm as it progresses through each epoch. The set of blocked nodes is defined as

where

is a blocked node, and

is the best set of blocked nodes, such that

with

and

. The

i-th node will be blocked when its number of visits implies the minimum number of successes achieved, thus

where

represents the number of visits made by the

i-th agent of

P. coffea at the

j-th node. A visit by this agent is considered successful if it leads to performance improvement. The memory vector

increases its

j-th dimension by 1 to signify successful visits.

In this context,

accounts for both successful and unsuccessful visits, while

specifically tracks successful visits by incrementing its

j-th dimension when the

i-th agent enhances its performance after visiting the

j-th node. As shown in

Figure 3, the first type of memory that

P. coffea agents pass down to their offspring is

. This memory represents “how many nodes to select”, that is, the cardinality of each agent, and its elements

are updated in the following way:

where

. The second type of memory that

P. coffea agents pass down to their offspring is

. This memory represents “which nodes to select”, and its elements

are updated in the following way:

where

is a set of uniformly distributed random nodes (without repetition) with random cardinality.

3.3. Saturated Sigmoid Switch Functions

Saturated sigmoids are employed as compact binary comparators (switches) with explicit tie handling through a small

. The parameter

is taken as a fixed tolerance (machine epsilon,

eps) and is used to disambiguate equalities [

44]. Let

denote the comparison residual. The ideal steep-slope limit is indicated by “

” (in code, a large gain

is used).

Non-constancy of the switches in the proposed rules. The value of x depends on random draws and evolving state/memory variables, so both activation and deactivation are produced:

In the cardinality rule (Equation (

35)),

compares a uniform draw

with cumulative weights

; across

j, both cases

and

are obtained, so

toggles. The quantity

counts strictly positive success weights

, and

is activated only when

.

In node selection (Equation (

36)),

operates analogously with a fresh

and cumulative weights derived from

, yielding both

and

.

In the clipping function (Equation (

39)),

and

compare the current value

x with the moving upper and lower bounds

and

; depending on the outcome,

x is passed through or snapped to the nearest bound.

The offsets enforce a consistent tie policy: equality is included in (≤) and (≥) and excluded in and . This policy is applied consistently in all the referenced rules.
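The tie policy can be illustrated with a small sketch. It uses the ideal steep-slope limit (a hard step) instead of a large finite gain, and takes the tolerance to be machine epsilon as stated above. The function names `step`, `switch_ge`, `switch_gt`, `switch_le`, and `switch_lt` are hypothetical labels chosen for this example.

```python
import sys

EPS = sys.float_info.epsilon  # machine epsilon, used as the tie tolerance


def step(x):
    """Ideal steep-slope limit of a saturated sigmoid: 1 for x > 0, else 0."""
    return 1.0 if x > 0.0 else 0.0


def switch_ge(a, b):
    """1 when a >= b; the +EPS offset makes equality activate the switch."""
    return step(a - b + EPS)


def switch_gt(a, b):
    """1 when a > b; the -EPS offset keeps equality inactive."""
    return step(a - b - EPS)


def switch_le(a, b):
    """1 when a <= b (equality included)."""
    return step(b - a + EPS)


def switch_lt(a, b):
    """1 when a < b (equality excluded)."""
    return step(b - a - EPS)
```

Because the offset is an absolute tolerance, this sketch resolves ties reliably only for operands of moderate magnitude (around 1); a scaled tolerance would be needed for much larger values.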

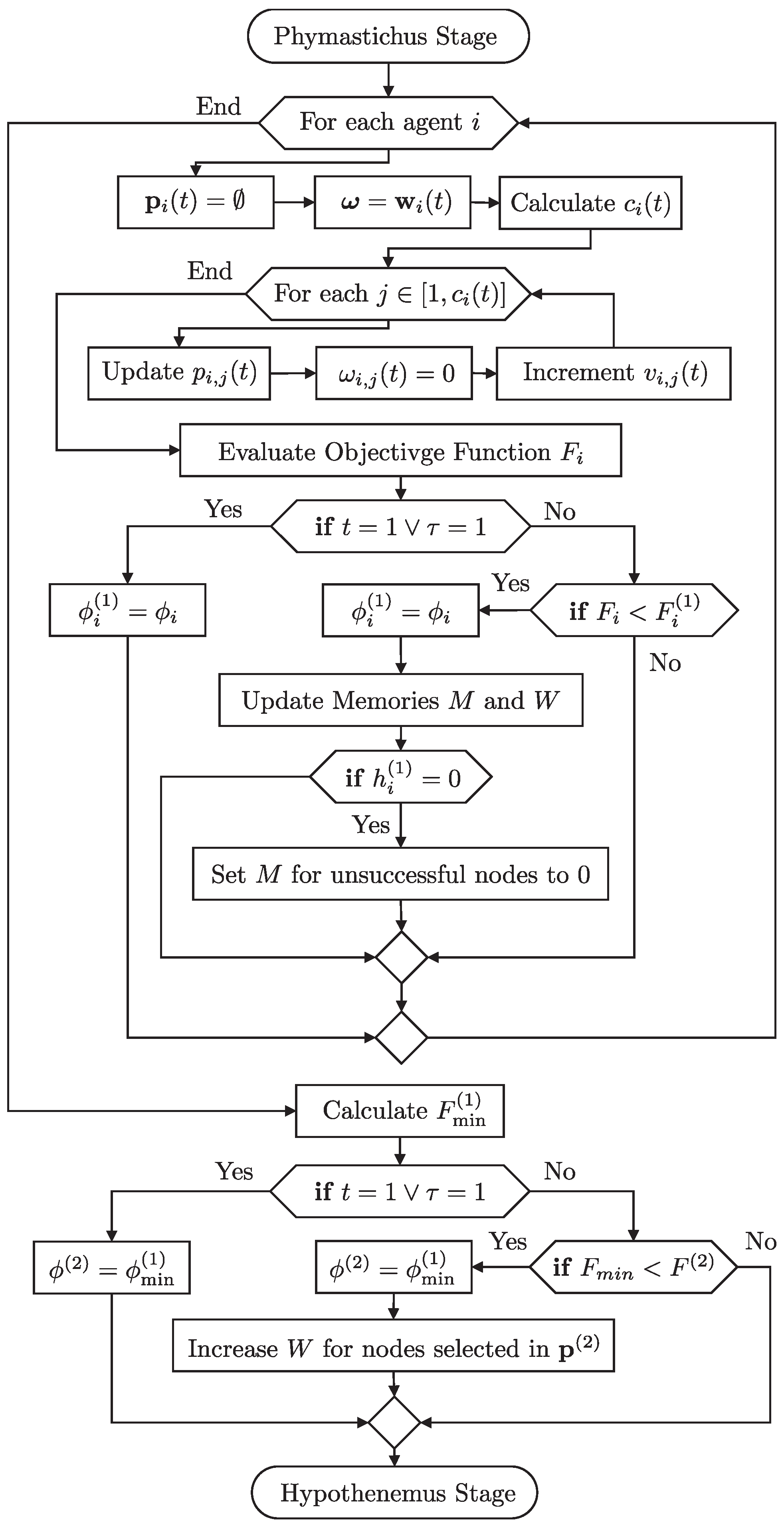

3.4. Offspring: Phymastichus

This subsection delves into the process governing the generation of new offspring of

P. coffea agents. As previously discussed, PHA-MD introduces the capability to block nodes, reducing the pool of available nodes. This attribute materially influences the decision-making of each

P. coffea agent through the memory

M. Situations may arise where the number of available nodes is fewer than the destinations desired by an agent. To avoid this condition, the cardinality of each agent

is defined as

where

and

are the saturated sigmoid switch functions defined in

Section 3.3, and

is a uniformly distributed random number. Once

is selected, the set of pinning nodes is defined (

Figure 3) so that the

j-th node visited by agent

is

where

is defined in

Section 3.3 and

is uniformly distributed. If node

is selected, its corresponding

during iteration

t is set to 0 so that

is not re-selected by the same agent. The auxiliary vector

is used for this purpose, allowing temporary modification without altering the original

.
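The two safeguards described in this subsection, limiting the cardinality to the nodes that remain available and using an auxiliary copy to prevent re-selection, can be sketched as below. This is a simplified illustration under stated assumptions: the cardinality is capped with a plain minimum rather than with the switch-based rule, blocked nodes are represented by a zero weight, and the name `select_pinning_nodes` and its arguments are hypothetical.

```python
import random


def select_pinning_nodes(weights, desired, rng):
    """Pick distinct node indices by repeated roulette draws.

    weights: occurrence values per node (0 marks a blocked/unavailable node).
    desired: cardinality requested by the agent.
    rng:     a random.Random instance supplying uniform draws.
    """
    aux = list(weights)                       # temporary copy; the memory itself is untouched
    available = sum(1 for w in aux if w > 0)
    cardinality = min(desired, available)     # never request more nodes than remain
    chosen = []
    for _ in range(cardinality):
        draw = rng.random() * sum(aux)
        cumulative = 0.0
        pick = None
        for index, weight in enumerate(aux):
            if weight <= 0:
                continue
            cumulative += weight
            pick = index                      # falls back to the last positive entry on round-off
            if draw < cumulative:
                break
        chosen.append(pick)
        aux[pick] = 0                         # prevents re-selection by the same agent
    return chosen


weights = [2, 0, 1, 5]                        # node 1 is blocked in this toy example
nodes = select_pinning_nodes(weights, 5, random.Random(0))
```

Here the agent asks for five nodes but only three are available, so three distinct nodes are returned and the original weights are left unchanged.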

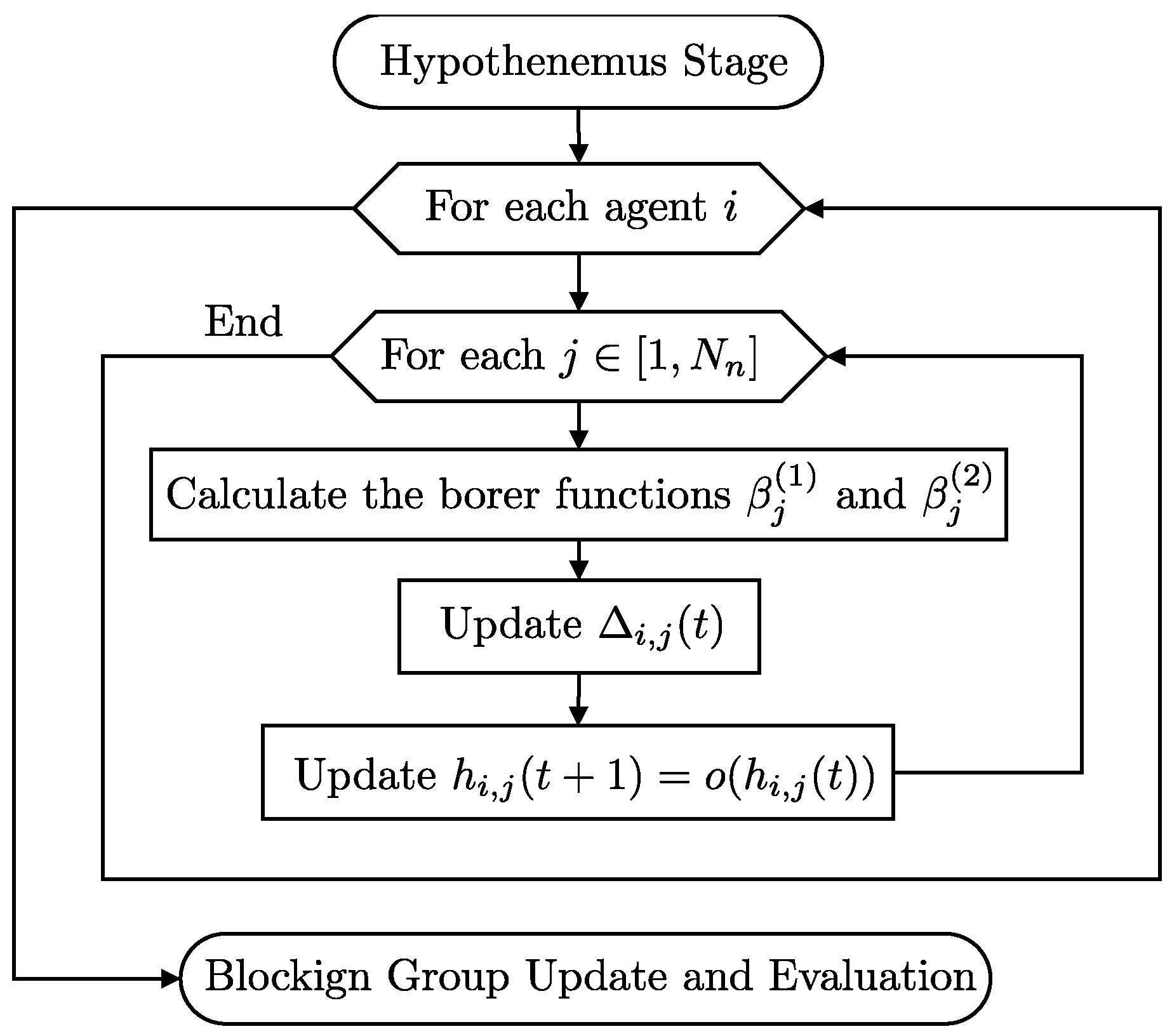

3.5. Offspring: Hypothenemus

In this subsection, the generation process of the offspring in the population

is described. The population

evolves under random perturbations and position increments, and is given by

where

is uniformly distributed. The position increment of the

i-th individual is

where

is the local-influence constant (two-female births),

is the global-influence constant (female–male births),

is an inertial constant, and

is uniformly distributed.

The clipping function

prevents

H. hampei agents from exceeding the search space bounds:

where

and

are the saturated sigmoid switch functions defined in

Section 3.3. In this way, values inside the interval

are preserved, whereas values attempting to cross a bound are snapped to the closest limit. This mechanism ensures that the population remains within the feasible search space while adapting to the evolving influence cues.
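The pass-through-or-snap behavior of the clipping function can be written as a sum of switched terms. The sketch below is illustrative only: it uses hard comparisons in place of the saturated sigmoid switches, and the name `clip_with_switches` is hypothetical.

```python
def clip_with_switches(x, lower, upper):
    """Keep x when it lies in [lower, upper]; otherwise snap to the nearest bound.

    Assumes a finite x and lower <= upper.
    """
    above = 1.0 if x > upper else 0.0         # switch: x exceeds the upper bound
    below = 1.0 if x < lower else 0.0         # switch: x falls under the lower bound
    inside = (1.0 - above) * (1.0 - below)    # active only when neither bound is crossed
    return inside * x + above * upper + below * lower
```

For example, with bounds 0 and 1, the value 0.5 passes through unchanged, 1.5 is snapped to 1, and −2 is snapped to 0.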

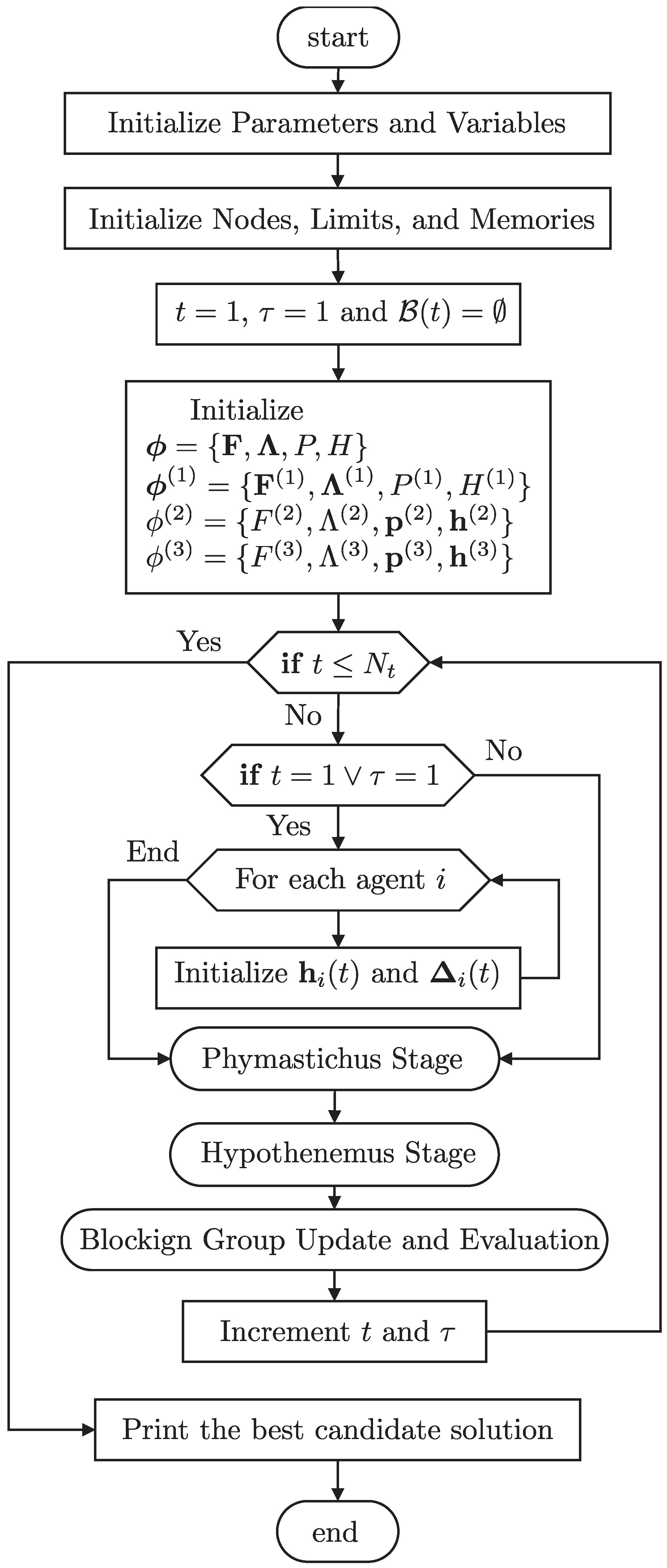

3.6. Flowchart Analysis of the PHA-MD Algorithm

Figure 6 sketches the overall control flow of PHA-MD. It highlights, at a high level, the cycle that repeats across iterations and epochs: (i) initialization at the start of each epoch; (ii) agent-driven updates, in which Phymastichus constructs the candidate pinning set via the inheritance memories while Hypothenemus refines the continuous gains through the borer and clipping functions; and (iii) the epoch-end blocking step, where visits and successes are evaluated to update the blocking set. The diagram intentionally omits low-level details to emphasize data flow and stage interactions. For completeness, Algorithm 1 provides the corresponding pseudocode, aligned with Section 3, which can be read in parallel with the equations to recover the full procedural detail.

The steps that describe the Phymastichus Agent Behavior stage are as follows. “Initialization”: Each agent initializes with a random set of potential pinning nodes. “Node Selection”: Using cumulative probabilities, the agent selects nodes to visit based on the success memories (

) and counts (

). “Objective Function Evaluation”: The selected nodes are evaluated using the objective function to determine their effectiveness in controlling the network. “Memory Update”: The agent updates its success memories and counts based on the evaluation results (

Figure 7).

The steps that describe the Hypothenemus Agent Behavior stage are as follows. “Parameter Adjustment”: The agents adjust their parameters (

and

) using the sigmoid functions based on the iteration count. “Delta Update”: Each agent updates its delta values, incorporating local and global influences. “Node Evaluation”: Nodes are evaluated using the clipping function to ensure they remain within the bounds of

and

. “Update Influence”: The agents update the network influence values based on their evaluations (

Figure 8).

The steps that describe the Update and Evaluation of the Blocking Group stage are as follows. “Epoch Check”: The algorithm checks if the current epoch or iteration limit has been reached. “Node Blocking”: Nodes are blocked based on their visitation and success rates. “Reset Counters”: Visitation and success memories are reset for the next iteration. “Stability Check”: The algorithm checks the stability of the network and updates the best blocking group if stability is achieved. In summary, these flowcharts provide a visual representation of the PHA-MD algorithm’s structure and operations, offering insights into the systematic and iterative processes that underlie its effectiveness in optimizing pinning control for complex networks (

Figure 9).

| Algorithm 1 PHA-MD |

- Data: , bounds , , ,
- Result: (global best), (best under blocking), ,
- 1: Init:
- 2: , with ,
- 3: Records: (current), (per-agent best), (global best), (blocked best)
- 4: while do
- 5: if or then ▹ epoch start
- 6: Initialize H within as in Equation (37); set
- 7: end if
- 8: for to do ▹ Phymastichus: node selection via memories
- 9: Compute
- 10: CardinalityBy Equation (35) using , ,
- 11: ▹ temporary copy to avoid reselection
- 12:
- 13: for to do
- 14:
- 15: Select SelectNodeBy Equation (36) using , ; set ▹ no reselection
- 16: ;
- 17: end for
- 18: Evaluate by Equation (15); form (Equation (13))
- 19: Update by Equation (23); if improved then ,
- 20: if then ▹ stable at current size
- 21: ▹ prune larger cardinalities
- 22: end if
- 23: end for
- 24: Update by Equation (24) using and argmin k
- 25: Broadcast replicated to rows
- 26: if improved this step then ▹ as in code: only if improves
- 27: Draw random subset ; set
- 28: end if
- 29: Hypothenemus: borer & clipping
- 30: Update lower/upper bounds by Equations (21) (lower), (22) (upper) using
- 31: ▹ Equation (38)
- 32: ▹ Equation (39) with
- 33: if or then ▹ blocking stage
- 34: ▹ Equation (28)
- 35: Reset epoch counters:
- 36: if then ▹ stability at epoch end
- 37: if then
- 38: ; ;
- 39: else
- 40: If or (tie & ):
- 41: If :
- 42: if then
- 43:
- 44: end if
- 45: end if
- 46: ;
- 47: else if and then ▹ recover best blocking if no stability
- 48: ; ;
- 49: end if
- 50: end if
- 51: ;
- 52: end while
- 53: return

3.7. Spatial Complexity Analysis

The spatial complexity of the proposed algorithm is determined by analyzing the memory usage in relation to the input parameters, specifically the number of agents and the number of nodes in the complex network. The key data structures contributing to memory consumption include matrices and cell arrays that are dynamically allocated during the algorithm’s execution.

The primary matrices W, M, V, and , as well as the matrices and , each have a size of . Each of these matrices contributes to the spatial complexity. Since there are six such matrices, their combined complexity is .

The cell array

, where each cell contains a vector of selected nodes, also contributes to the spatial complexity. In the worst case, the memory required is

. Additional vectors, such as

F,

h, and their counterparts in

,

, and

, have a size of

, contributing

in total. When combined, the total spatial complexity is

Given that the term dominates, the spatial complexity can be simplified to . This indicates that the memory usage of the algorithm increases linearly with both the number of agents and the number of nodes in the complex network, demonstrating the algorithm’s spatial efficiency.

4. Simulation Study

It is important to note that the proposed PHA-MD algorithm features variable dimensions across its agents, with each agent having a different number of dimensions. This characteristic initially precluded a direct comparison with other algorithms, such as ALO [21], TLBO [22], GWO [23], AMO [24], PSO [25], ABC [26], GSK [27], BBO [28], WOA [29], ACO [30], OOA [31], MA [32], AOA [33], CHIO [34], and DTBO [35]. These algorithms assume a fixed number of dimensions throughout the optimization process, which is not compatible with the dynamic dimensionality of PHA-MD.

The optimization problem addressed by PHA-MD involves both combinatorial and continuous elements, further complicating the use of traditional algorithms. PHA-MD was specifically designed to handle the dynamic dimensionality needed to identify the minimum number of pinning nodes, a task that requires agents to propose different sets of nodes. This flexibility in dimension handling is not supported by the aforementioned algorithms, which rely on a fixed dimension setup. For instance, initializing agents with a predefined number of dimensions for both gain constants and potential pinning nodes imposes assumptions that may not be accurate.

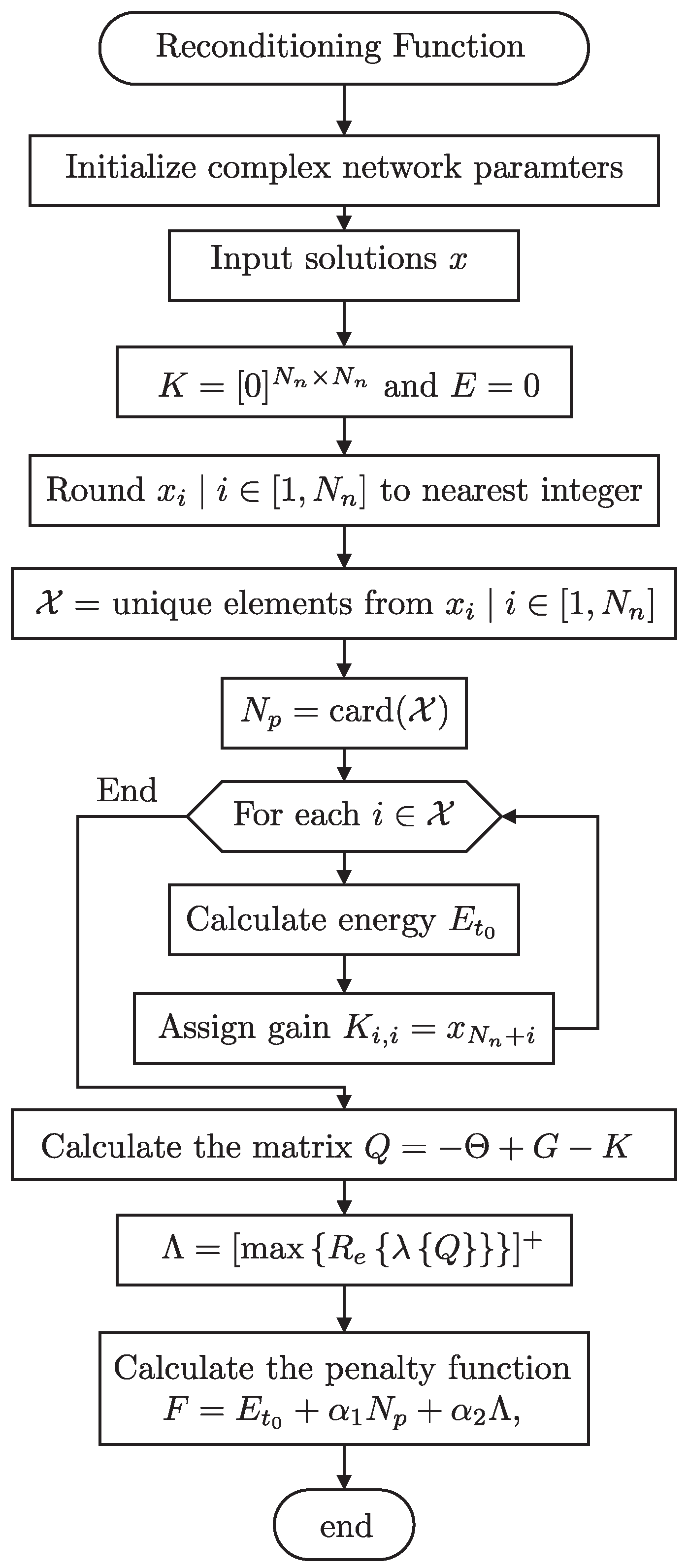

To allow a fair comparison, a reconditioning function was introduced. This function adjusts the dimensions by rounding them to the nearest integer corresponding to node indices in the matrix K and selecting unique elements. This approach standardizes the input, enabling fixed-dimension algorithms to adapt to the variable dimensionality required by PHA-MD.
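A reconditioning step of this kind can be sketched as follows. The layout of the candidate (one list of real-valued node proposals paired with one list of gains), the zero-based node indices, the clamping of out-of-range proposals, and the name `recondition` are assumptions made for illustration; the sketch shows only the rounding and unique-selection steps described above.

```python
import math


def recondition(raw_indices, gains, n_nodes):
    """Map a fixed-dimension candidate onto a diagonal gain vector.

    raw_indices: real-valued proposals for node indices (zero-based).
    gains:       control gain proposed for each entry of raw_indices.
    n_nodes:     number of nodes, i.e. the size of the diagonal of K.
    Returns the list of pinned nodes and the diagonal of K.
    """
    diagonal = [0.0] * n_nodes
    pinned = []
    for raw, gain in zip(raw_indices, gains):
        node = int(math.floor(raw + 0.5))          # round to the nearest integer index
        node = max(0, min(n_nodes - 1, node))      # keep the index inside the network
        if node in pinned:
            continue                               # duplicates are dropped (first occurrence wins)
        pinned.append(node)
        diagonal[node] = gain
    return pinned, diagonal


pinned, diagonal = recondition([1.2, 0.9, 3.0], [5.0, 6.0, 7.0], 4)
```

In this example the first two proposals both round to node 1, so only the first is kept and the resulting candidate pins two nodes instead of three, which is how a fixed-dimension vector can represent a variable-cardinality pinning set.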

All runs were performed on a single workstation under Ubuntu 22.04.3 LTS (64-bit) using MATLAB R2023b in double precision. Default multithreaded BLAS (Intel oneMKL) was enabled; GPU acceleration was not used. Randomness was controlled with MATLAB’s rng('twister',2024). The population size, iteration budget, and epoch length followed the values stated in Section 3 (PHA-MD Algorithm) and in the figure set “Convergence curves for the Networks”. The hardware and software stack is summarized in Table 1.

Remark 2. The number of runs required to obtain a representative solution cannot be specified a priori in a universal manner, since the selection of pinning nodes constitutes a combinatorial NP-hard problem. Consequently, the evaluation protocol is grounded in a theoretical feasibility criterion: under the V-stability of Xiang and Chen, the number of pinned nodes cannot be less than the number of non-negative eigenvalues of the characteristic matrix; thus, is a natural lower bound. Operationally, each method was executed up to the point at which the baseline algorithms exhibited instability, whereas PHA-MD preserved feasible solutions. Accordingly, a finite iteration budget was adopted as the stopping criterion; its value was selected experimentally to reach a regime in which baseline methods typically displayed instability in their solutions, while PHA-MD continued to yield feasible trajectories. Stability therefore informs the choice of iteration budget, although simulations are not terminated upon the detection of instability itself.

Figure 10 illustrates the reconditioning function used in the simulation study. This function is essential for aligning fixed-dimension algorithms with the variable dimensionality necessary for pinning node selection in complex networks. The properties of the six complex networks analyzed are presented in Table 2. These properties include the number of edges, the number of positive eigenvalues, the average connection degree, the average coupling strength, the average passivity degree, the average initial conditions, and their respective distribution intervals. Additionally, the search space defines the feasible region for selecting control gains during the optimization process.

The six synthetic networks were sampled uniformly within the intervals reported in Table 2 under two design criteria: (i) passivity degrees and coupling strengths chosen to yield a nonzero count of positive eigenvalues in the V-stability analysis, and (ii) a relatively large count of such eigenvalues, which increases the theoretical lower bound on the number of required pinning nodes. This produces challenging instances where stability is nontrivial and energy–cardinality trade-offs are visible. Although synthetic, these topologies are representative of interaction patterns commonly found in signed social influence graphs (trust/distrust) [45], neuronal microcircuits with mixed excitatory/inhibitory synapses [46], gene-regulatory subnetworks with activation/repression [47], distribution grids with grid-connected power-electronic converters exhibiting impedance interactions [48,49], and antagonistic ecological webs (predator–prey) [50]. Thus, “Network 1–6” should be read as topology classes that capture these motifs, rather than as single specific datasets.

The parameter settings used in the simulations for the various algorithms are summarized in Table 3. For each baseline, hyperparameters were taken from the authors’ recommended operating conditions in their original sources; no per-benchmark retuning was performed to avoid overfitting to the synthetic networks. The population size and number of iterations were kept constant across all methods to enforce a common computational budget. A reconditioning wrapper was employed to standardize candidate representations so fixed-dimension heuristics could accept the variable-cardinality solutions produced by PHA-MD. Because the control objective was stability-first, candidates that failed the stability test are reported as unstable and are not considered acceptable low-energy optima. This protocol separates algorithmic design differences (fixed versus variable dimensionality with stability feedback) from hyperparameter tweaks and supports a fair comparison.

As mentioned, the performance of the proposed PHA-MD algorithm is compared with several other heuristic optimization algorithms across six different complex network topologies. These topologies were generated uniformly at random within the intervals specified in Table 2. The networks were designed to challenge current optimization algorithms and to reveal scenarios where stability is difficult to achieve. The aim was to create increasingly complex networks that expose the differences in capability between PHA-MD and the baselines. If a network were encountered where even PHA-MD could not find stable solutions, the algorithm would need to be reconfigured to address these new challenges.

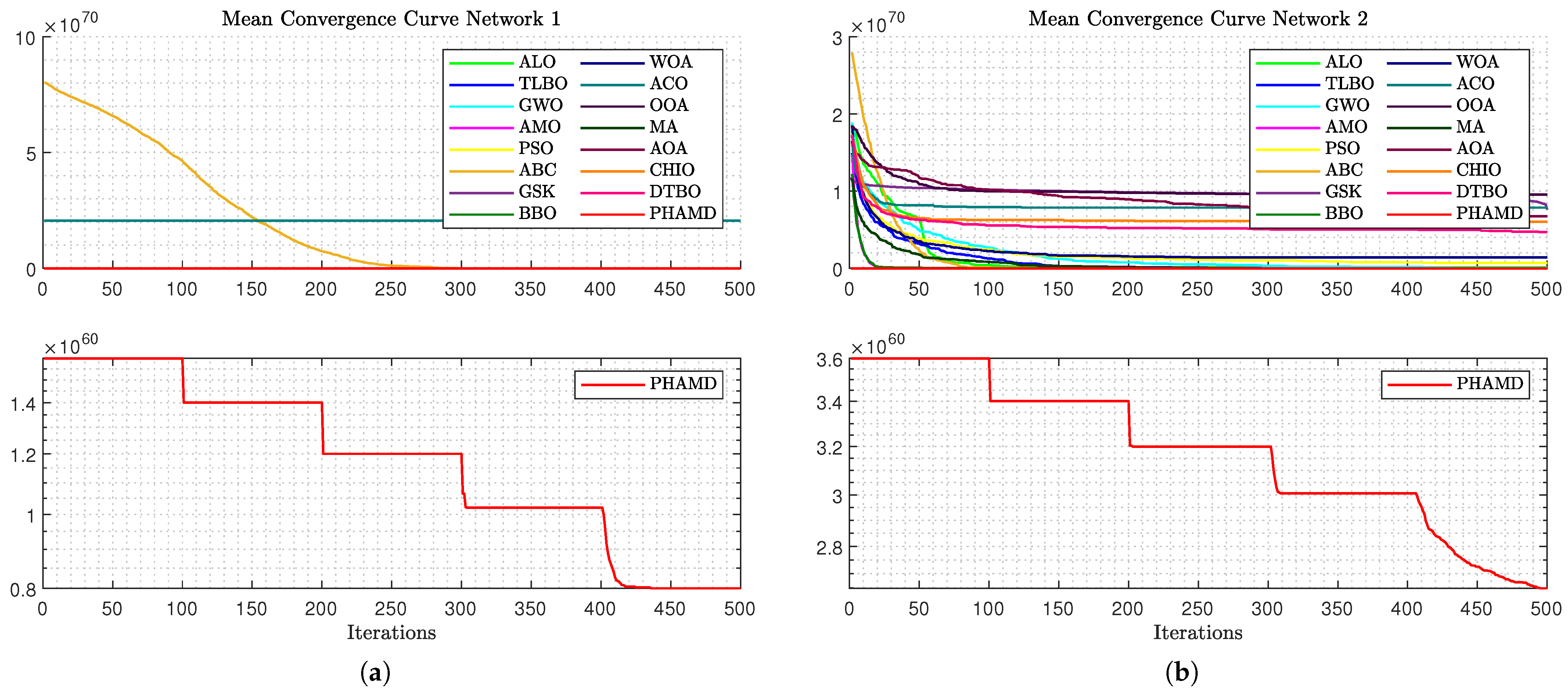

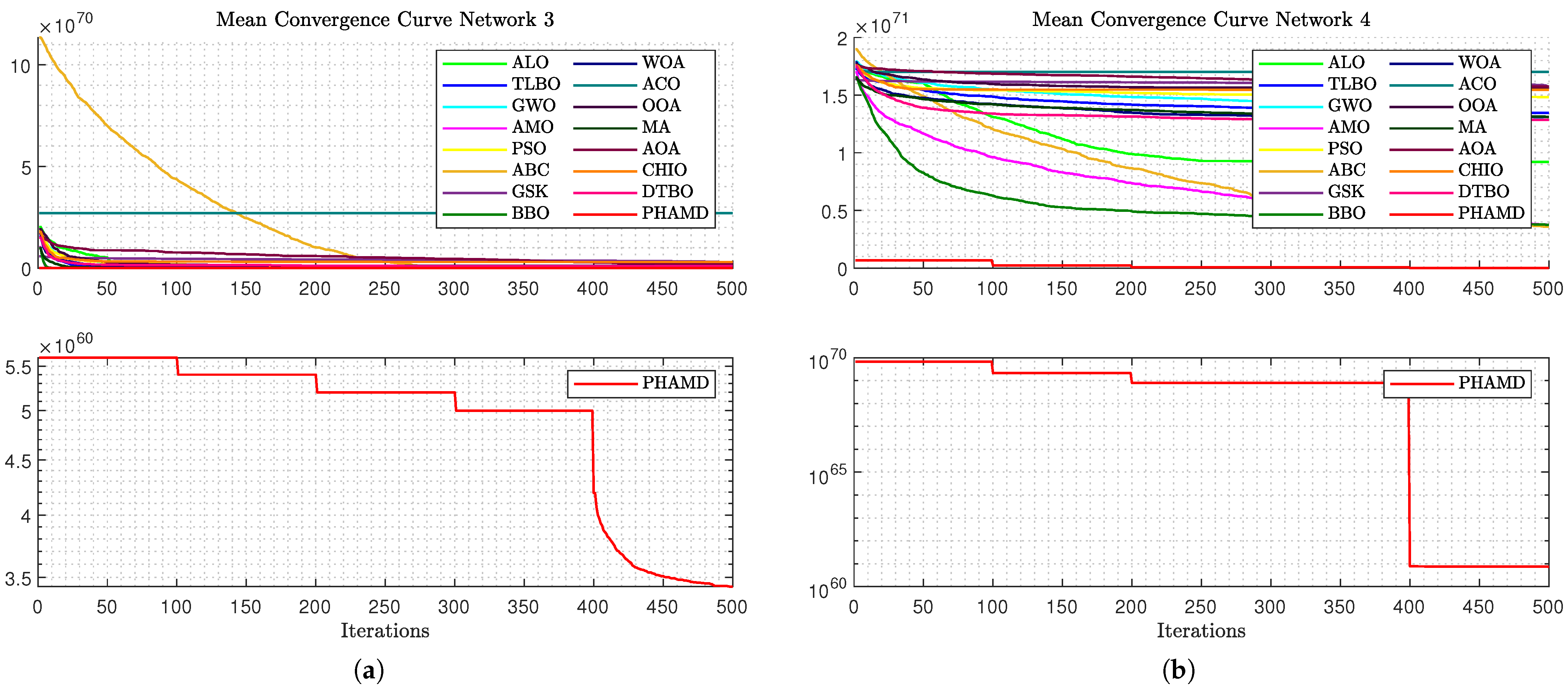

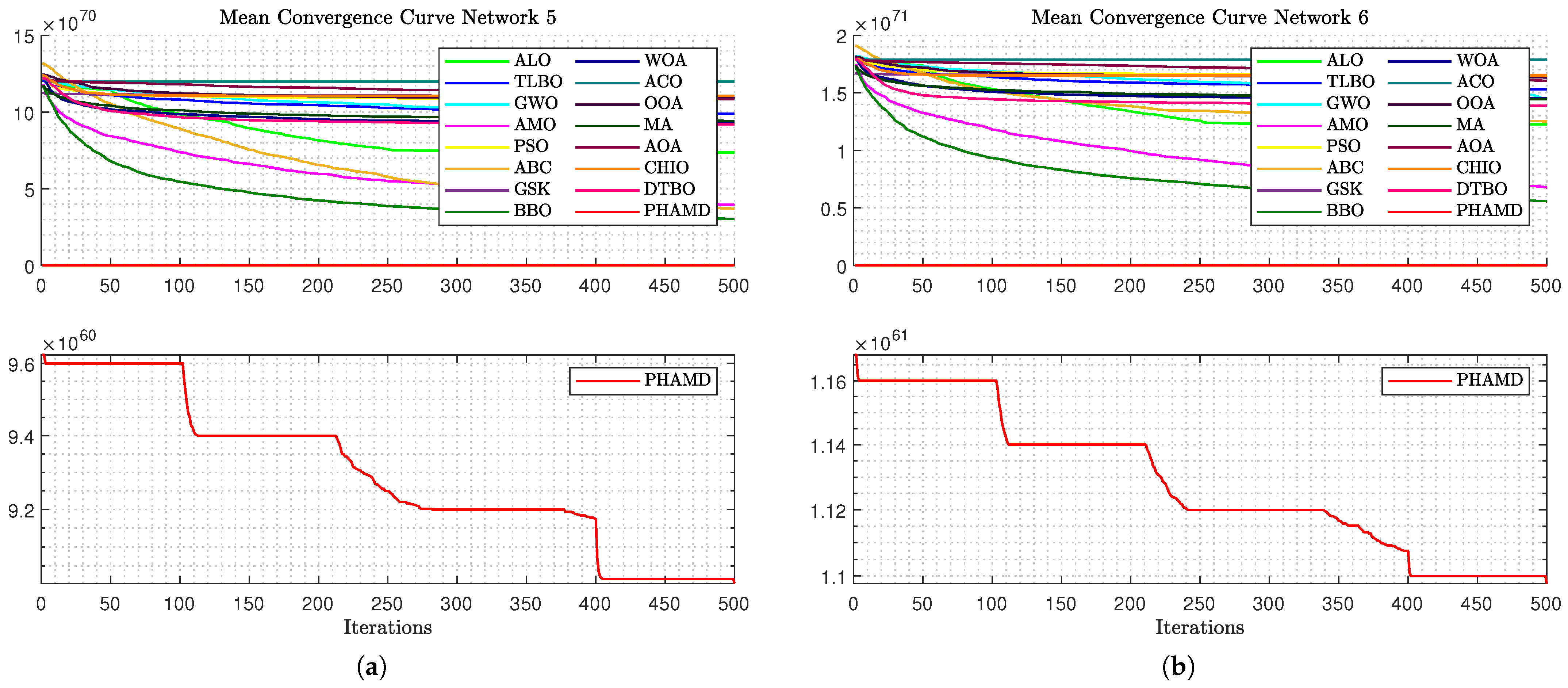

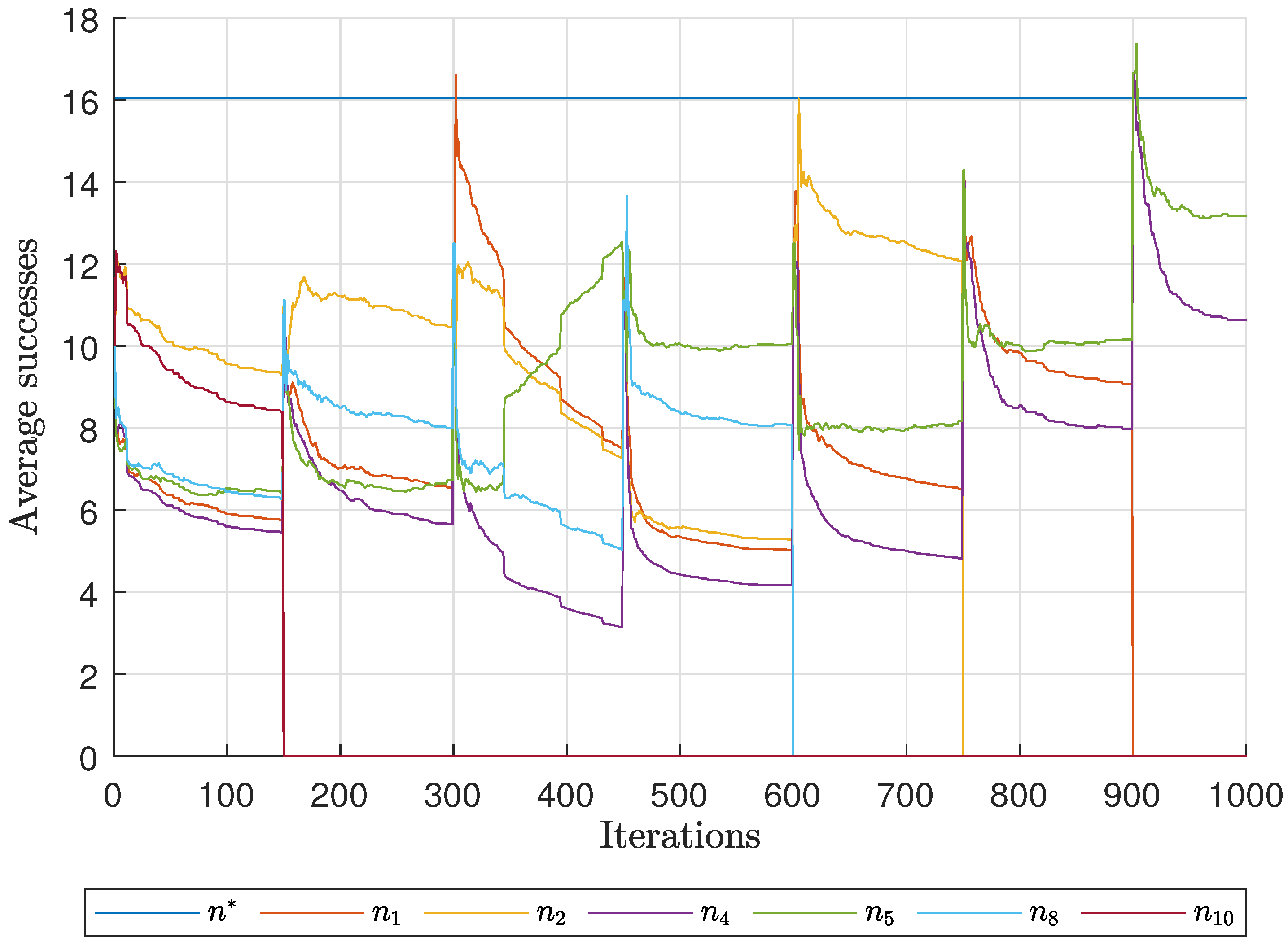

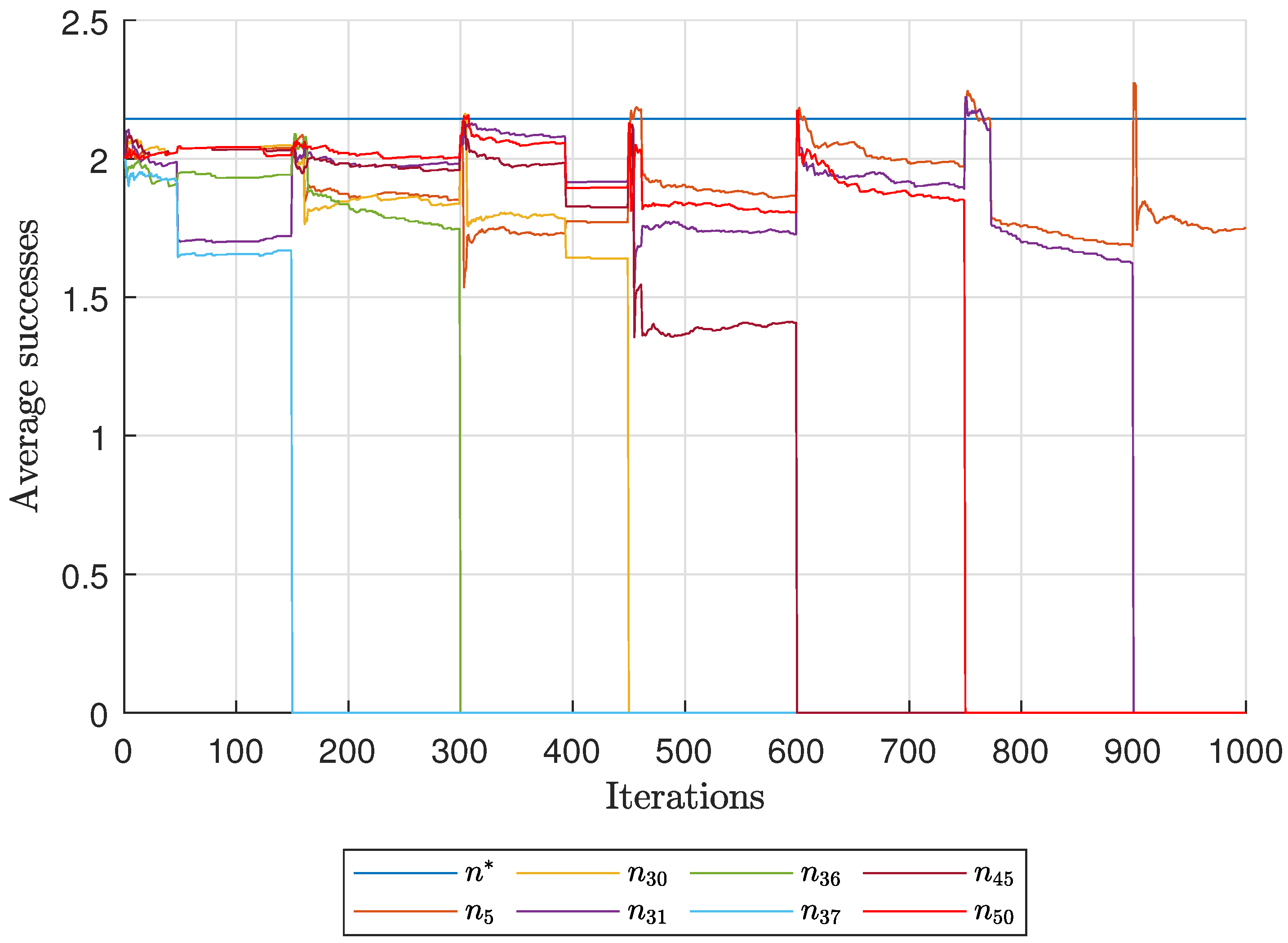

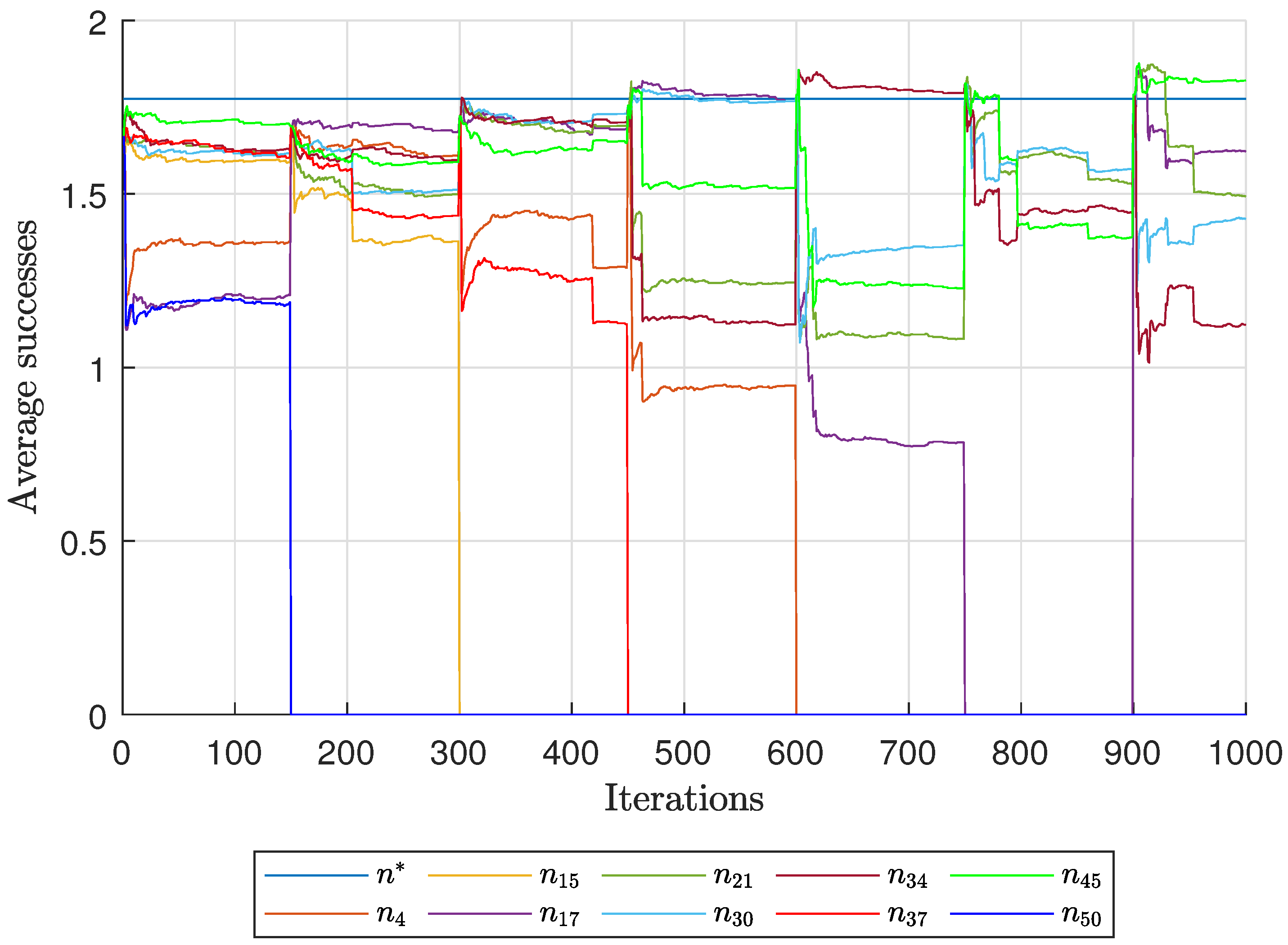

The simulation results include two sets of graphs. The first set (Figure 11, Figure 12 and Figure 13) presents the convergence curves for each network’s optimization, comparing the performance of the PHA-MD algorithm with that of other algorithms. The second set (Figure 14, Figure 15 and Figure 16) shows the optimization of energy against the percentage of unstable solutions, emphasizing solution stability.

In Figure 11a, the convergence behavior for Network 1 is depicted. Because of the vertical scale, only the ABC curve is clearly visible, and the other curves appear unchanged. Figure 11b allows a comparison across all algorithms, showing similar convergence except for GSK and ACO. The PHA-MD algorithm reaches its minimum value earlier than the others, demonstrating its efficiency.

In Figure 12a, the scale of the ABC curve again makes comparison difficult, yet most algorithms show early convergence, with PHA-MD continuing to converge rapidly. As shown in Figure 12b, the optimization process becomes more challenging due to Network 4’s size, with a clear gap between PHA-MD and the others; only four out of five epochs are observed because the candidate solutions remained unchanged.

Figure 13a displays similar behavior, with drilling functions proving effective, and BBO and AMO following PHA-MD in performance. Finally, Figure 13b shows an even greater gap between PHA-MD and the other algorithms, highlighting PHA-MD’s superior performance in minimizing the cost function F.

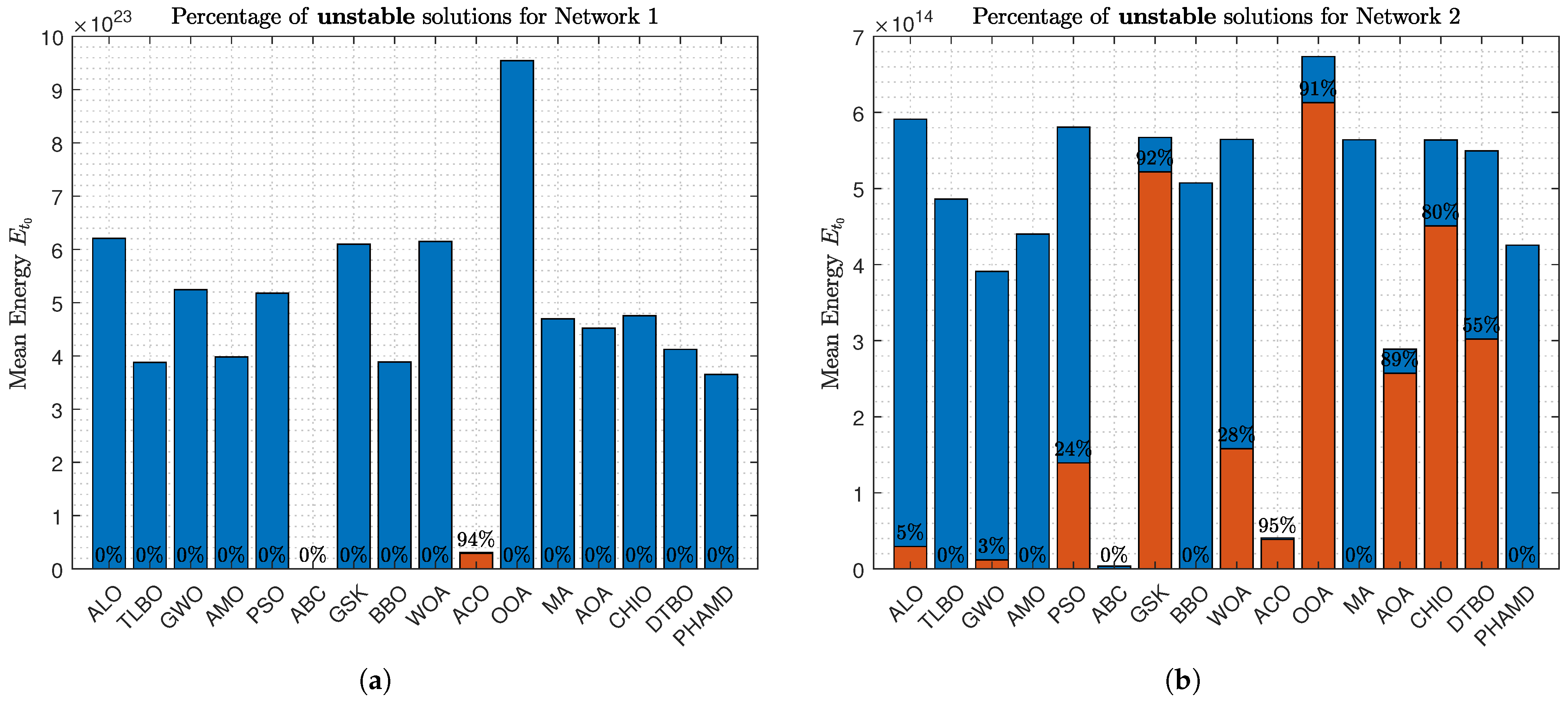

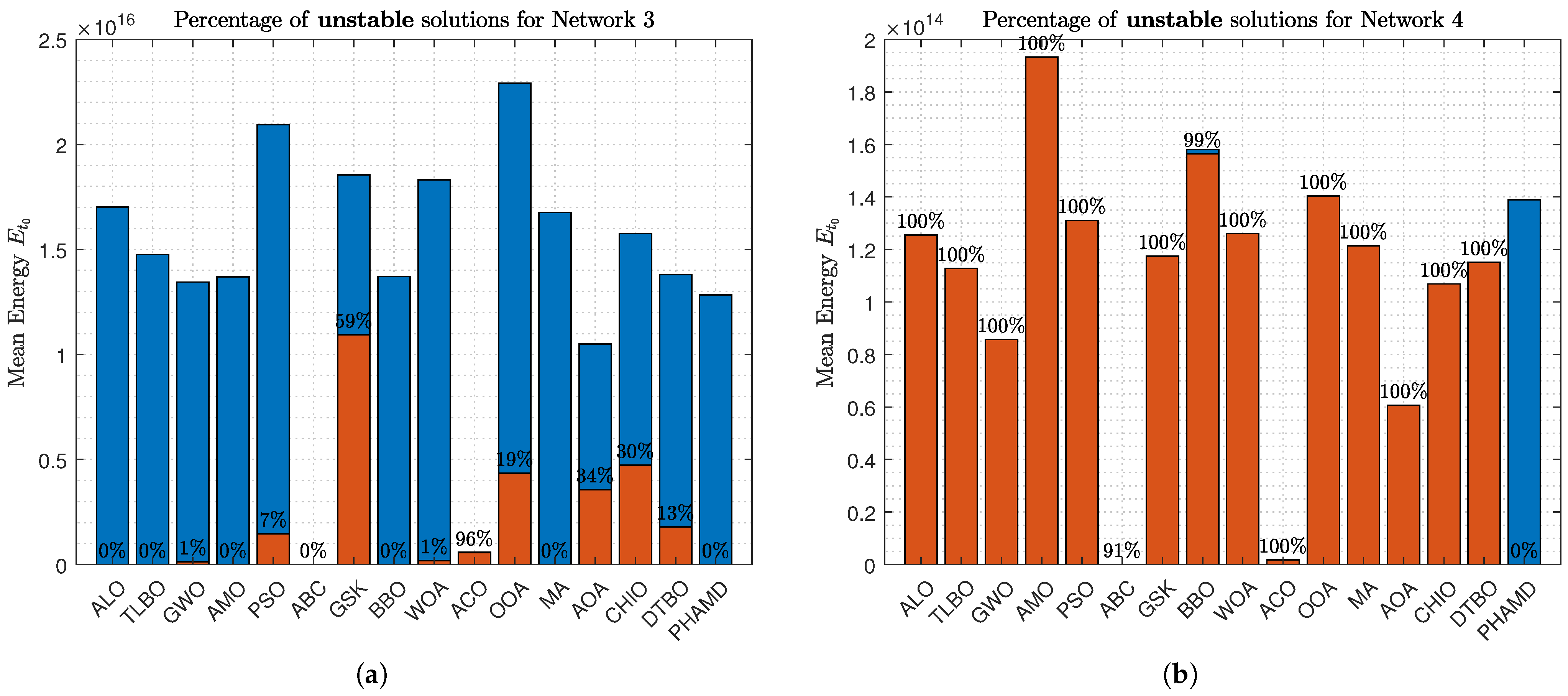

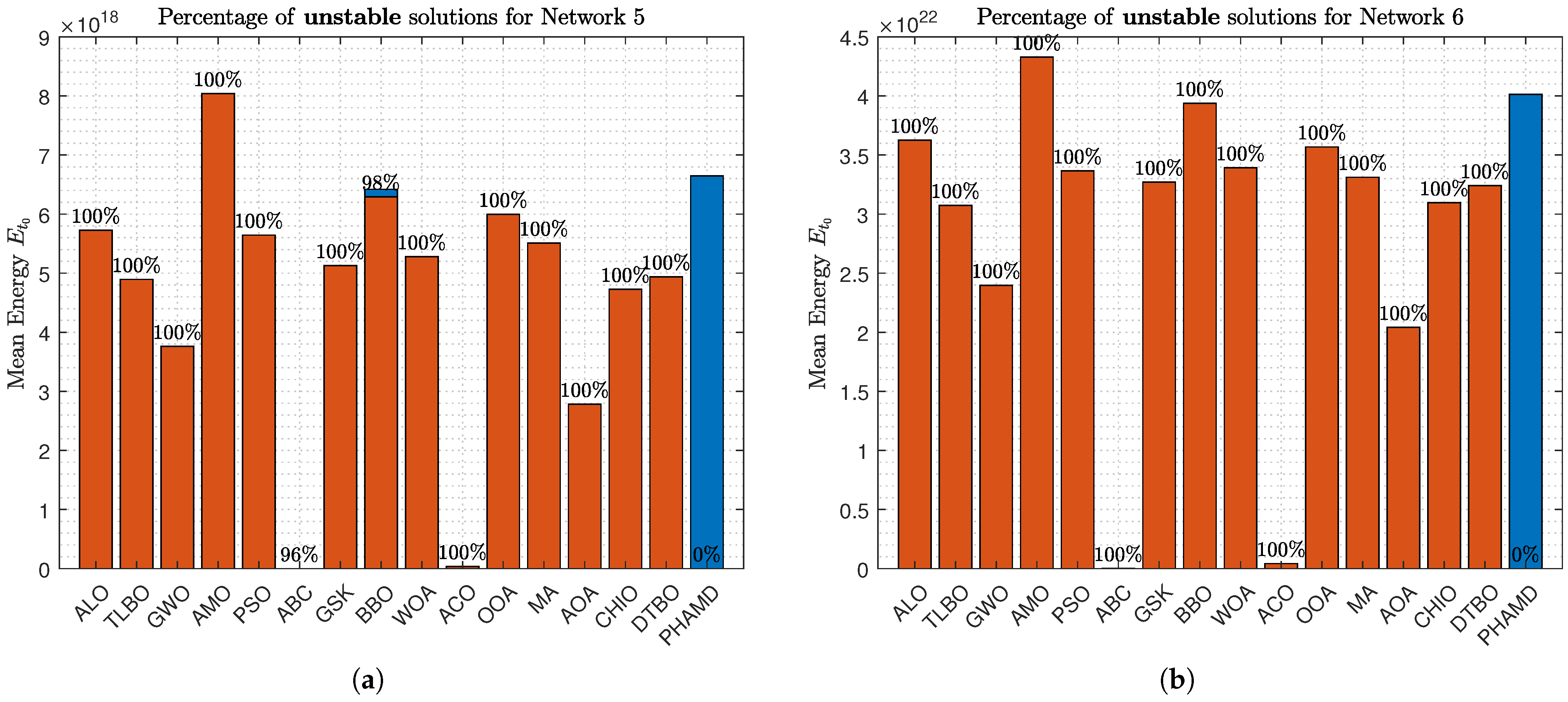

In addition to the convergence curves, Figure 14, Figure 15 and Figure 16 present the optimization of energy against the percentage of unstable solutions. Blue bars represent the average energy consumption of each algorithm’s solutions, with percentages indicating the proportion of unstable solutions.

Figure 14a shows that the ABC algorithm achieves the lowest energy consumption with 0% unstable solutions, while ACO, despite being energy-efficient, has 94% unstable solutions, highlighting the trade-off between energy efficiency and stability. In Figure 14b, the ABC algorithm remains the most energy-efficient, but several algorithms, including ALO, GWO, PSO, WOA, GSK, and ACO, present unstable solutions, with ACO having the highest instability rate at 95%.

Figure 15a shows a reduction in unstable solutions due to Network 3 having fewer states. In Figure 15b, nearly all algorithms exhibit 100% unstable solutions, despite Network 4 having the same number of states, indicating that complexity depends on more than just the number of states. Figure 16a shows some algorithms achieving stable solutions, while Figure 16b shows that only PHA-MD provides stable solutions, underscoring its prioritization of stability over energy efficiency.

These results demonstrate the PHA-MD algorithm’s effectiveness in achieving rapid convergence and superior optimization compared to other algorithms. Its ability to handle variable dimensionality and combinatorial optimization makes it particularly suitable for pinning control in complex networks. The simulation results are summarized in Table 4, which provides a comprehensive overview of each algorithm’s performance across the six networks.

The Average Cost Function (Avg. CF) represents the mean value of the cost function, indicating overall performance efficiency. The Best Cost Function Result (Best CF) shows the lowest cost function value achieved, reflecting the algorithm’s capability to find optimal solutions. The Best Number of Pinning Nodes (Best NP) and the Average Number of Pinning Nodes (Avg. NP) provide insight into the algorithm’s effectiveness in minimizing control nodes. Energy consumption is also evaluated, with the Best Energy Consumption (Best E) indicating the lowest energy usage, and the Average Energy Consumption (Avg. E) representing mean energy consumption.
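The summary statistics defined above can be computed from per-run records as in the following sketch. The record layout (`RunResult`) and the function `summarize` are hypothetical names introduced for illustration, and the statistics are taken over all runs of one algorithm on one network; whether unstable runs enter the averages reported in Table 4 is not stated here and is therefore an assumption. The share of unstable runs is included because it is the quantity annotated on the energy bar charts.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class RunResult:
    cost: float     # final cost function value of the run
    n_pinned: int   # number of pinning nodes in the returned solution
    energy: float   # energy consumption of the returned solution
    stable: bool    # outcome of the stability test

def summarize(runs):
    """Aggregate one algorithm's runs on one network (hypothetical helper)."""
    return {
        "avg_cf": mean(r.cost for r in runs),
        "best_cf": min(r.cost for r in runs),
        "avg_np": mean(r.n_pinned for r in runs),
        "best_np": min(r.n_pinned for r in runs),
        "avg_e": mean(r.energy for r in runs),
        "best_e": min(r.energy for r in runs),
        # Percentage of runs whose solution failed the stability test.
        "unstable_pct": 100.0 * sum(not r.stable for r in runs) / len(runs),
    }

# Made-up records, used only to exercise the function.
runs = [
    RunResult(10.0, 3, 5.0, True),
    RunResult(12.0, 4, 4.0, False),
    RunResult(11.0, 3, 6.0, True),
    RunResult(15.0, 5, 3.0, False),
]
summary = summarize(runs)
print(summary["avg_cf"], summary["best_np"], summary["unstable_pct"])  # 12.0 3 50.0
```

In this toy data the lowest energy (3.0) belongs to an unstable run, which illustrates why a stability-first reading of the table flags such values instead of treating them as optima.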

To emphasize stability, values corresponding to unstable solutions are marked in red, indicating networks that did not achieve stability, while stable solutions are marked in black. This color-coding facilitates quick assessment of which algorithms balance energy efficiency with network stability. Overall, Table 4 underscores the PHA-MD algorithm’s superior performance, particularly in achieving stable solutions with efficient energy use and minimal pinning nodes.

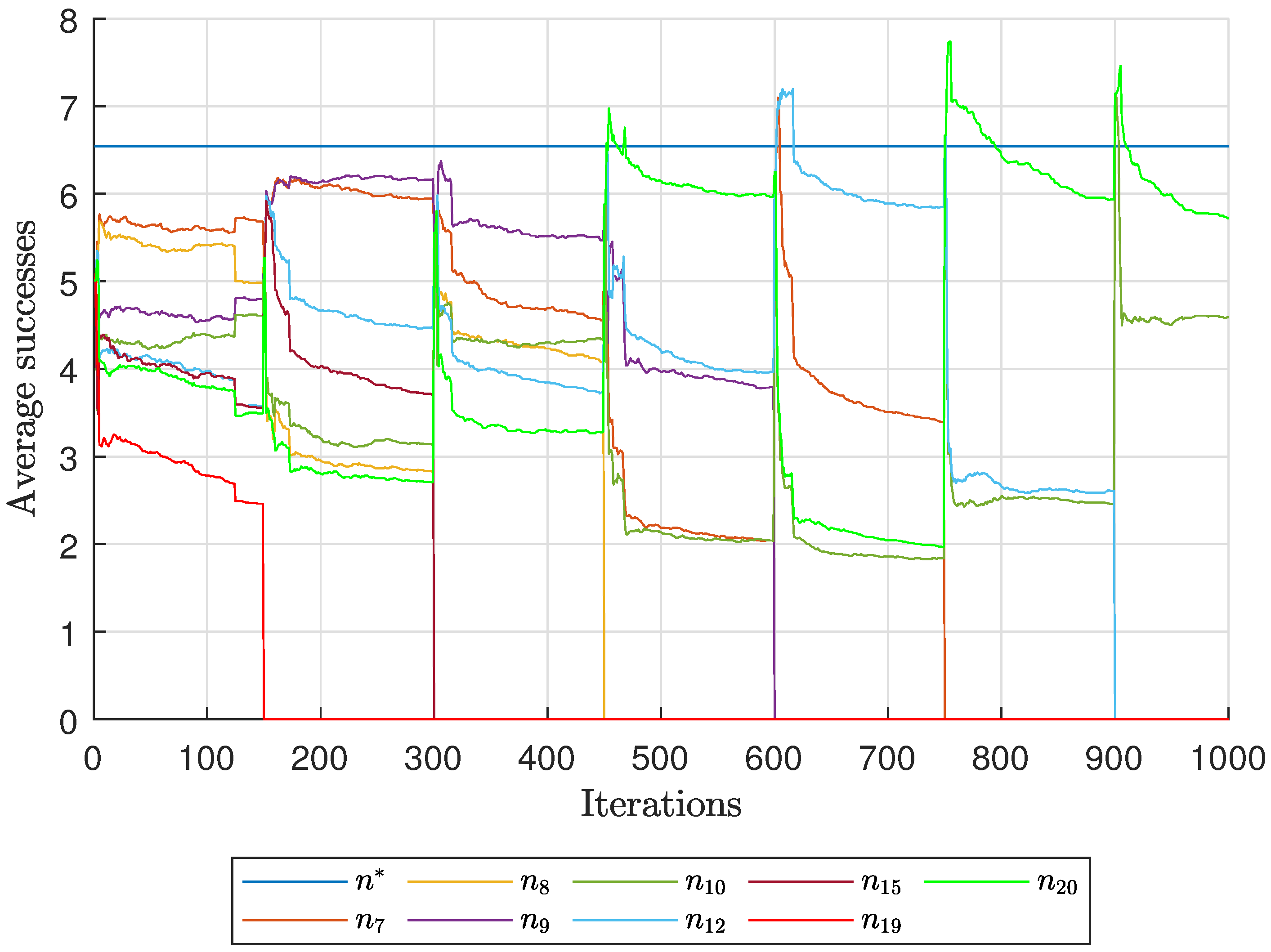

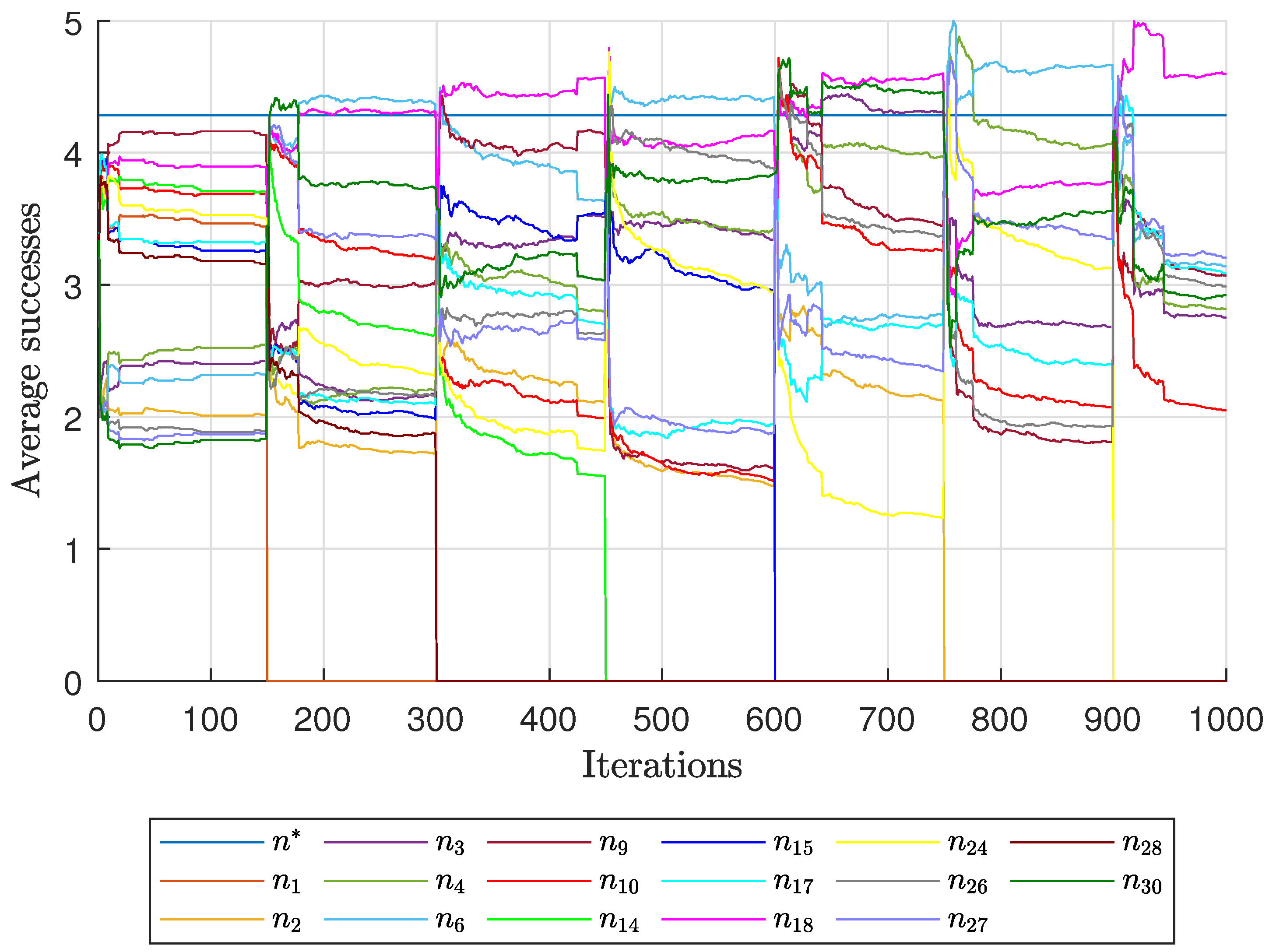

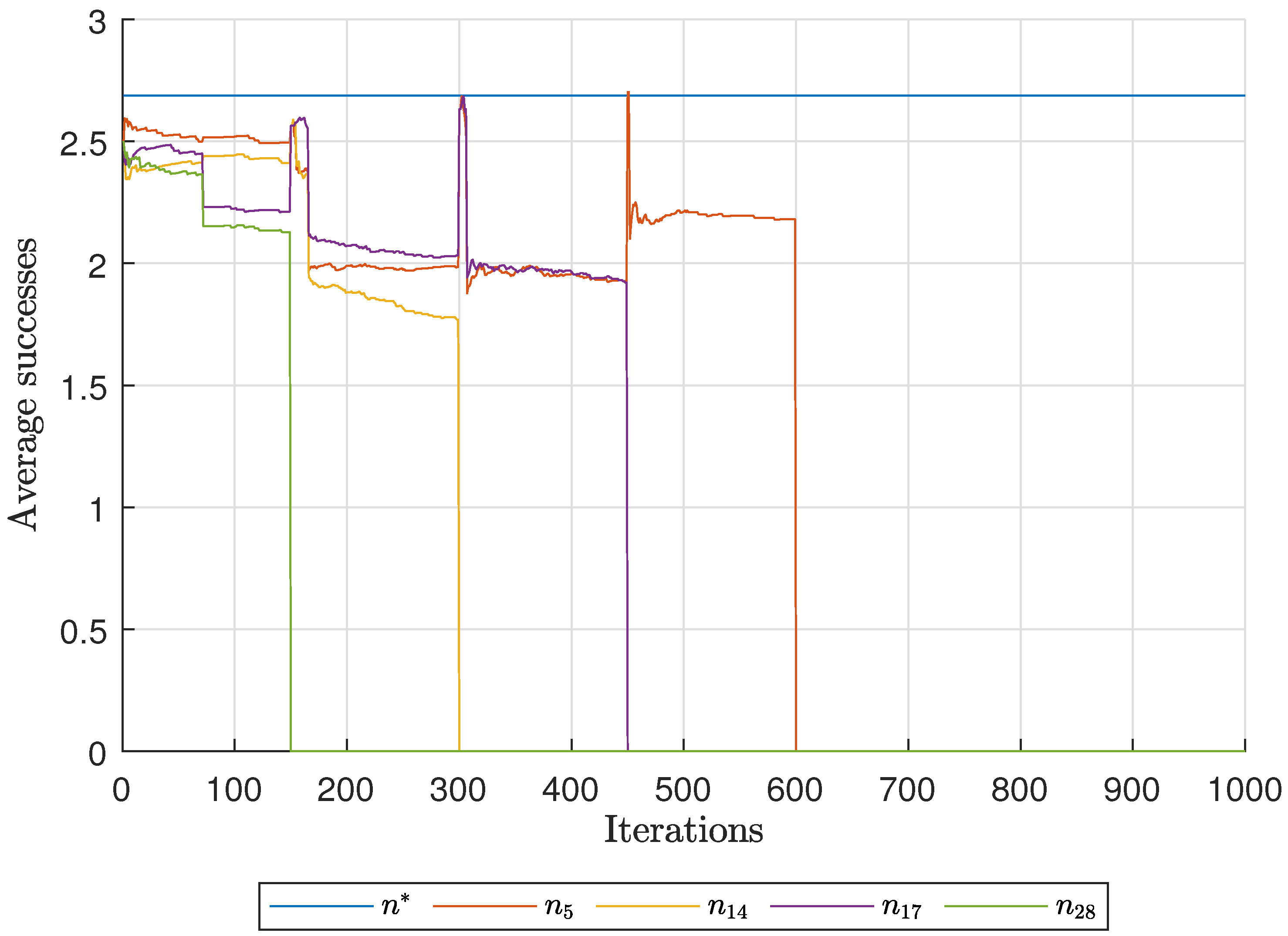

Diagnostic Visualization of the Node-Blocking Stage

To illustrate the internal mechanics of the proposed blocking stage (biologically, how P. coffea wasps cease to visit unpromising nodes), we include the following diagnostic plots (Figure 17, Figure 18, Figure 19, Figure 20, Figure 21 and Figure 22). These figures are intended to explain how nodes are progressively blocked or excluded from the pinning set, rather than to provide additional performance benchmarks. For clarity, the running threshold is the current average number of successful visits across the remaining (non-blocked) nodes; nodes below this threshold are provisionally excluded from the pinning set, while blocked nodes become members of the blocked set. If stability is still not achieved after blocking or excluding a node, that node can be returned to the candidate pool, consistent with the update rules in Section 3.2.
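The thresholding rule used in these diagnostic plots can be sketched as follows. This is a minimal illustration of the running-average criterion only, assuming that visit counts and blocked flags are stored per node; the function name `provisional_exclusions` is hypothetical, and the full update rules of Section 3.2 (including the return of nodes to the candidate pool) are not reproduced.

```python
import numpy as np

def provisional_exclusions(successful_visits, blocked):
    """Return the running threshold and a mask of provisionally excluded nodes.

    Hypothetical helper illustrating the average-visit threshold only.
    """
    visits = np.asarray(successful_visits, dtype=float)
    blocked = np.asarray(blocked, dtype=bool)
    active = ~blocked
    if not active.any():
        # No non-blocked nodes remain, so no threshold can be formed.
        return float("nan"), np.zeros_like(blocked)
    # Threshold: average successful visits over the non-blocked nodes.
    threshold = visits[active].mean()
    # Non-blocked nodes strictly below the threshold are provisionally excluded.
    below = active & (visits < threshold)
    return threshold, below

visits = [8, 1, 5, 0, 6]
blocked = [False, False, False, True, False]  # node 3 is already blocked
threshold, below = provisional_exclusions(visits, blocked)
print(threshold)              # 5.0
print(np.flatnonzero(below))  # [1]
```

Because the average is recomputed over the remaining nodes only, the threshold shifts as nodes are blocked, which is the progressive behavior the figures are meant to show.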

As shown in Figure 17, the first network begins without any blocked nodes, but several nodes lie below the threshold. Figure 18 presents the corresponding behavior for Network 2, while Figure 19 illustrates a topology that requires fewer pinning nodes. Figure 20, Figure 21 and Figure 22 summarize the results for the remaining networks.

5. Conclusions

The proposed PHA-MD algorithm is an effective method for pinning control of complex networks, eliminating the need for prior information on the minimal number of pinned nodes. It is designed to solve multi-constraint optimization problems, focusing on optimal node selection with the minimal number of pinned nodes, while ensuring network stabilization through the V-stability tool.

A stability-first evaluation has been adopted. In the pinning control setting, feasibility (stability) is the primary objective; therefore, candidates that are energy-efficient but unstable are not considered valid solutions. Cross-method comparisons have been reported under equal population and iteration budgets and with explicit instability flags, so as not to conflate algorithmic merit with implementation details or with external enumeration required by fixed-dimension heuristics. Within this framing, PHA-MD consistently identified smaller, stable pinning sets and competitive energy levels across increasingly challenging network topologies.

A key advantage of PHA-MD is its ability to handle variable dimensions for its agents, unlike current algorithms that operate with fixed dimensions. This adaptability allows PHA-MD to address challenges related to permutations in pinning node selection, making it a robust and versatile solution for complex network control. However, the hybrid nature of the optimization problem tackled by PHA-MD, which combines combinatorial and continuous elements, complicates direct comparisons with other collaborative behavior algorithms.

Future research could explore various weighting methodologies in penalty functions, analyze convergence times in border functions, and investigate more complex decision vector dynamics. These studies could further enhance the algorithm’s efficiency and applicability in more challenging scenarios, reinforcing its role as a leading solution in complex network control.