Abstract

This review surveys the past five years of research on energy-aware path optimization for both solar-powered and battery-only UAVs. First, the energy constraints of these two platforms are contrasted. Next, advanced computational frameworks—including model predictive control, deep reinforcement learning, and bio-inspired metaheuristics—are examined along with their hardware implementations. Recent studies show that hybrid methods combining neural networks with bio-inspired search can boost net energy efficiency by 15–25% while maintaining real-time feasibility on embedded GPUs or FPGAs. Among the remaining challenges are federated learning at the edge, multi-UAV coordination under partial observability, and field trials on ultra-long-endurance platforms like the Airbus Zephyr HAPS. Addressing these issues will accelerate the deployment of truly persistent and green aerial services.

1. Introduction

Unmanned Aerial Vehicles (UAVs) enable rapid, flexible sensing and communications in domains such as agriculture, disaster relief, reconnaissance and construction [1]. Purely battery-powered UAVs, however, are limited by finite onboard energy. Integrating high-efficiency photovoltaic (PV) cells into a UAV’s wings and fuselage can extend its endurance by harvesting solar energy during flight. Nevertheless, a solar-powered UAV must coordinate three coupled processes—energy harvesting, storage, and expenditure—in real time, adapting to moving clouds, wind gusts, and payload demands. This tight coupling of path planning with power management requires predictive models of solar flux [2], weather uncertainty, and battery state-of-charge to inform flight decisions.

Many studies have surveyed general UAV path planning techniques and challenges [3,4]. For instance, Aggarwal and Kumar [3] provide a comprehensive review of path planning algorithms and their limitations. More recent work has started to address energy constraints in UAV planning—Faizah et al. [5] discuss optimization techniques and future directions that account for factors like battery endurance. However, solar-powered platforms introduce unique complexities. For battery-only UAVs, path optimization typically focuses on minimizing distance or time given a fixed energy budget. In contrast, a solar-powered UAV may deliberately fly longer or detour to sunnier areas to maximize net energy gain, banking energy for night-time flight. Path planning for solar UAVs thus involves choosing headings and altitudes that balance progress toward the mission goal with exposure to sunlight, sometimes sacrificing the shortest path in favour of sustained energy intake. These solar-specific considerations require new algorithms and models beyond conventional battery-UAV planning [6].

Recent breakthroughs in deep learning [7], edge computing, onboard vision or classification tasks [8] and nature-inspired optimization [9,10] now enable real-time, energy-aware path planning on resource-limited avionics. In light of these advances, this review is organized as follows. Section 2 details solar-UAV energy-management hardware and control strategies. Section 3 covers neural-network-based path planning and reinforcement learning methods. Section 4 discusses classical, evolutionary, and bio-inspired optimization techniques. Section 5 addresses practical challenges, including onboard computing limits, regulatory/safety issues, and swarm coordination [11], and outlines future directions. This structure highlights where solar-specific constraints alter otherwise generic UAV algorithms, clarifying the open research needed for truly persistent flight.

2. Solar-Powered UAVs: Design and Engineering Innovations

2.1. Overview of Solar-Powered UAVs

Solar-powered UAVs represent a significant advancement in aerospace engineering, using solar energy harvested by high-efficiency PV cells integrated into the airframe. This design allows for prolonged flight, a key advantage for missions requiring extensive operation time (e.g., environmental monitoring, agricultural surveys, border surveillance, communications relays). Such UAVs offer a reduced carbon footprint and greater range than fuel-powered models. A recent state-of-the-art review comprehensively categorizes UAV power systems, underlining the growing relevance of solar and hybrid solutions [12]. The prospect of near-continuous flight has drawn considerable interest from both military and civilian sectors, with growing demand for platforms that operate continuously without frequent refuelling or recharging.

Case Example (Airbus Zephyr HAPS): A prominent demonstration of solar-powered UAV capabilities is the Airbus Zephyr, a high-altitude pseudo-satellite (HAPS) designed for multi-month stratospheric flight [13]. In 2022, the Zephyr flew continuously for 64 days, effectively doubling the previous UAV endurance record. With an ultra-light 25 m (82 ft) wingspan airframe weighing only ~75 kg, it operates around 20 km (70,000 ft) altitude to remain above weather and maximize solar exposure. The Zephyr uses solar panels to charge secondary batteries during the day, then draws on those batteries to power overnight flight, achieving carbon-neutral operations. This engineering feat demonstrated the feasibility of multi-month UAV missions, but also underscored challenges. Maintaining high-altitude station-keeping and managing battery health over repeated day–night cycles required precise energy budgeting and adaptive control. Seasonal and latitude changes in solar irradiance can force the aircraft to adjust altitude or course to meet power needs. The Zephyr case highlights the need for intelligent path-planning algorithms that dynamically adjust altitude and heading to maximize solar gain while conserving enough energy for night. Ongoing Zephyr trials are providing invaluable data on endurance, efficiency, and control in the stratosphere, guiding the development of robust strategies for future high-altitude, long-endurance solar UAVs.

2.2. Energy Management Strategies

Efficient operation of a solar-powered UAV is a two-level problem: harvest as much energy as possible, and distribute it among propulsion, avionics, and storage in real time [14]. Early work relied on static optimization (e.g., linear programming), which breaks down when moving shadows or wind gusts shift the energy budget. Recent studies have proposed intelligent control frameworks for solar-powered UAVs that jointly optimize energy harvesting, trajectory planning, and communication through reinforcement learning, enabling adaptive responses to environmental uncertainty and energy constraints [15]. Machine-learning predictors extend the planning horizon by forecasting energy availability from on-board sky imagers and weather feeds [16]. Bio-inspired methods such as the Slime Mould Algorithm (SMA) emulate decentralised nutrient transport, allowing the controller to reroute power between propulsion and payload as environmental conditions drift [17]. Hybrid battery–super-capacitor packs further smooth supply–demand mismatch, cutting transient energy loss by up to 10% [18].
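As a minimal sketch of the harvest-and-distribute balance described above, the following function advances the stored battery energy by one time step. The function name, the efficiency values, and the watt-hour bookkeeping are illustrative assumptions and are not taken from the cited studies.

```python
def step_battery(stored_wh, capacity_wh, solar_w, load_w, dt_s,
                 charge_eff=0.95, discharge_eff=0.95):
    """Advance stored battery energy (Wh) by one time step of dt_s seconds.

    solar_w: power harvested by the PV cells (W)
    load_w:  combined propulsion, avionics, and payload demand (W)
    Efficiencies are hypothetical placeholder values.
    """
    net_w = solar_w - load_w          # positive: surplus, negative: deficit
    dt_h = dt_s / 3600.0              # seconds to hours
    if net_w >= 0:
        # Surplus power charges the battery, with charging losses.
        stored_wh += net_w * dt_h * charge_eff
    else:
        # A deficit drains more energy than is delivered to the load.
        stored_wh += net_w * dt_h / discharge_eff
    # Stored energy cannot leave the physical range [0, capacity].
    return min(max(stored_wh, 0.0), capacity_wh)
```

Calling this function in a loop over a forecast of `solar_w` and a planned `load_w` profile gives a simple way to check whether a candidate flight plan keeps the battery above empty through the night.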

A key challenge in energy management is maintaining the balance between energy intake and consumption, particularly during periods of low solar irradiance, such as cloudy conditions or nighttime operations. To address this, predictive algorithms and machine learning models are implemented to forecast energy availability and optimize energy consumption in real-time. These models dynamically allocate power between propulsion and other onboard systems, utilizing input from solar sensors and power meters to make informed adjustments. Recent research using deep neural networks (DNN) has shown promise in predicting battery energy consumption and degradation patterns in UAVs, enhancing power management strategies and extending operational lifespan [19]. Selecting an appropriate energy source is vital, with recent systematic reviews comparing the efficiency of mobile robot power systems providing key insights for UAV applications [20].

While traditional optimization techniques have proven effective, exploring bio-inspired algorithms such as the Slime Mould Algorithm (SMA) could offer alternative approaches to adaptive energy allocation. SMA and similar bio-inspired strategies simulate decentralized, self-organizing distribution patterns observed in nature, which might provide UAVs with enhanced adaptability in varying environmental conditions. However, it is essential to investigate these algorithms further to determine their practical advantages or potential drawbacks in UAV energy management compared to established methods.

Table 1 provides a detailed overview of recent advancements in energy management strategies for solar-powered UAVs, showcasing diverse approaches to optimize energy allocation and storage. Each study highlights unique strategies, from model predictive control (MPC) and machine learning-based forecasting to bio-inspired techniques like the Slime Mould Algorithm (SMA), offering potential solutions for adaptive energy management in varying environmental conditions. These strategies have demonstrated improvements in energy efficiency, UAV endurance, and system scalability, often using sophisticated tools like MATLAB/Simulink for simulation. Studies leveraging advanced power electronics, supercapacitor integration, and IoT-based control systems reveal significant gains in operational efficiency and response time under real-time conditions. By examining these approaches, Table 1 emphasizes the critical role of innovative energy management techniques and the potential of emerging bio-inspired and predictive models to enhance UAV performance across different mission scenarios.

Table 1.

Overview of Energy Management Strategies for Solar-Powered UAVs.

Traditional optimization algorithms, such as Genetic Algorithms (GA) and Linear Programming (LP), often rely on static assumptions and cannot rapidly adjust to volatile conditions like changing solar irradiance or wind. As a result, they frequently fail to maintain optimal energy efficiency in real-time applications. Despite their fundamental effectiveness, the rigidity of methods like GA or LP under dynamic conditions limits their utility for solar-powered UAVs. Environmental disturbances such as shifting sunlight, wind gusts, or sudden shading violate the stable operating assumptions of these models, so they cannot continuously optimize energy distribution when unexpected variations arise.

In contrast, bio-inspired approaches like the Slime Mould Algorithm (SMA) capitalize on adaptive, decentralized decision-making principles derived from natural foraging. SMA’s inherent responsiveness allows it to continuously update its solutions as the environment changes, redistributing power between propulsion, avionics, and storage systems with minimal delay. This adaptive capacity ensures that even under erratic weather patterns, UAVs employing SMA-based strategies can maintain closer-to-optimal energy efficiency. Ultimately, such adaptability promises more robust performance over long-duration missions where conditions remain unpredictable. These strategies are increasingly supported by computational modelling tools, simulation-based optimization, and robust numerical frameworks that enable engineers to evaluate trade-offs and refine control algorithms for enhanced energy efficiency.

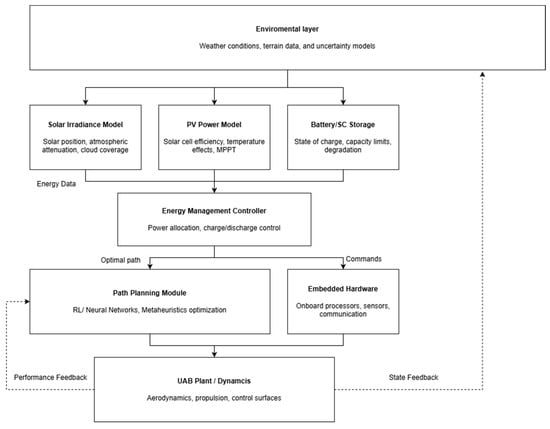

To contextualize these strategies, Figure 1 illustrates the integrated energy-aware path-planning architecture for a solar-powered UAV.

Figure 1.

System architecture diagram for solar-powered UAVs with path planning.

3. Neural Networks in UAV Path Planning

3.1. Neural Networks & Hybrid Metaheuristic Integration for Energy-Aware Path Planning

Classical UAV autopilot and path-planning methods often assume fixed models and static energy budgets. By contrast, solar-powered UAVs are inherently nonlinear and time-varying systems, and their operation is further influenced by fluctuations in energy input. Deep neural networks (DNNs) can learn complex mappings from sensor inputs, such as irradiance, wind, and battery state, to control actions, enabling the UAV to adapt its flight path within milliseconds.

Neural networks have significantly advanced UAV control by modeling dynamics that challenge traditional controllers. They can process high-dimensional sensor data (LiDAR, radar, cameras) to make real-time navigation decisions in uncertain environments. Modern frameworks (TensorFlow, PyTorch, MATLAB) and training techniques (backpropagation with optimizers like Adam and regularization) allow these networks to be trained efficiently and deployed onboard. Studies demonstrate that well-trained networks can even replace conventional controllers, achieving trajectory tracking errors comparable to PID controllers while maintaining stable flight in previously untrained scenarios.

Simpler network models offer lower latency but reduced accuracy, highlighting a trade-off between computational efficiency and precision. Beyond standalone neural strategies, integrating neural networks with evolutionary and bio-inspired optimization algorithms has shown further performance gains. Hybrid approaches combine the pattern recognition and fast decision-making of neural networks with the global search and optimization capabilities of algorithms like Genetic Algorithms (GA) or Particle Swarm Optimization (PSO).

For example, a neural network may quickly estimate the energy cost of a trajectory, while an outer GA or PSO loop uses that estimate as a fitness function to evolve better trajectories. Such frameworks improve obstacle avoidance, multi-UAV coordination, and real-time energy management. Representative studies are summarized in Table 2.
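The surrogate-in-the-loop idea can be sketched as follows. Here a hand-written `surrogate_energy` function stands in for a trained neural network, and a simple (1+λ) mutation-and-selection loop stands in for the GA or PSO outer loop. Both are illustrative assumptions, as are the cost weights and the `(x, y, z)` waypoint format.

```python
import random

def surrogate_energy(path):
    """Stand-in for a trained network: path length plus a climb penalty."""
    cost = 0.0
    for (x0, y0, z0), (x1, y1, z1) in zip(path, path[1:]):
        dist = ((x1 - x0) ** 2 + (y1 - y0) ** 2 + (z1 - z0) ** 2) ** 0.5
        cost += dist + 2.0 * max(z1 - z0, 0.0)  # climbing costs extra
    return cost

def mutate(path, rng, sigma=5.0):
    """Perturb one interior waypoint; start and goal stay fixed."""
    new_path = list(path)
    i = rng.randrange(1, len(path) - 1)
    x, y, z = new_path[i]
    new_path[i] = (x + rng.gauss(0, sigma),
                   y + rng.gauss(0, sigma),
                   z + rng.gauss(0, sigma))
    return new_path

def evolve(path, generations=200, offspring=20, seed=0):
    """(1+lambda) loop: keep the parent unless an offspring scores lower."""
    rng = random.Random(seed)
    best, best_cost = path, surrogate_energy(path)
    for _ in range(generations):
        candidates = [mutate(best, rng) for _ in range(offspring)]
        challenger = min(candidates, key=surrogate_energy)
        challenger_cost = surrogate_energy(challenger)
        if challenger_cost < best_cost:
            best, best_cost = challenger, challenger_cost
    return best, best_cost
```

The design point is that the optimizer only ever calls the fitness function, so a learned energy model can replace `surrogate_energy` without changing the search loop.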

Table 2.

Meta-Heuristic and Hybrid Algorithms in UAV Path Planning.

3.2. Neural Network Architectures for Path Planning

Different neural network architectures have been explored for UAV path planning, each leveraging unique data-processing capabilities. Convolutional Neural Networks (CNNs), originally developed for image recognition, can interpret high-resolution aerial images or terrain maps to assist in route planning and obstacle avoidance. Recurrent Neural Networks (RNNs) and especially Long Short-Term Memory (LSTM) networks excel at time-series prediction, allowing a UAV to anticipate future states (e.g., wind or energy availability) based on historical data. Battery performance forecasting using deep neural networks has proven effective in extending UAV operational lifespan [28].

Table 3 provides an overview of key studies that have applied these architectures to UAV path planning and control, listing for each the neural network approach used and the target application.

Convolutional Neural Networks (CNNs): Originally used for computer vision, CNNs are now applied to UAV path planning to process high-resolution aerial imagery and sensor data, including 2D object detection tasks [29,30,31]. This architecture captures spatial hierarchies, aiding in tasks like terrain mapping and obstacle detection [32,33].

Recurrent Neural Networks (RNNs) and Long Short-Term Memory Networks (LSTMs): RNNs and LSTMs are ideal for time-series data, allowing UAVs to predict future states based on historical information.

(LSTMs improve upon standard RNNs by including gating mechanisms that manage information flow, addressing the vanishing gradient problem.)

Frameworks like Keras, PyTorch, and TensorFlow provide pre-built modules for RNNs and LSTMs, enabling quick prototyping. These architectures are often combined with tools like OpenCV and SLAM (Simultaneous Localization and Mapping) for integrated visual navigation systems.

Table 3.

Representative neural network-based approaches in UAV path planning and control.

| Title | Authors | Year | Neural Network Approach | Application |
|---|---|---|---|---|
| Energy-Optimal Trajectory Planning for Near-Space Solar-Powered UAV Based on Hierarchical Reinforcement Learning [33] | Tichao Xu; Di Wu; Wenyue Meng; Wenjun Ni; Zijian Zhang | 2024 | Hierarchical Deep RL (option-based RL algorithm) | Energy-optimal trajectory planning for high-altitude solar-powered UAV (maximize net energy gain for near-space flight) |
| Energy-Optimal Flight Strategy for Solar-Powered Aircraft Using Reinforcement Learning with Discrete Actions [34] | Wenjun Ni; Di Wu; Xiaoping Ma | 2021 | Deep Reinforcement Learning (discrete action space controller) | Energy management for HALE solar-powered UAV (extended endurance via optimized 24-h flight trajectory) |
| Deep Reinforcement Learning-Driven UAV Data Collection Path Planning: A Study on Minimizing AoI [23] | Hesong Huang; Yang Li; Ge Song; Wendong Gai | 2024 | Multi-Agent Deep RL (Twin Delayed DDPG variant, MATD3 with PSO) | Multi-UAV collaborative path planning to minimize Age of Information (freshness of IoT sensor data) |
| Path Planning of Multi-UAVs Based on Deep Q-Network for Energy-Efficient Data Collection in UAVs-Assisted IoT [35] | Xiumin Zhu; Lingling Wang; Yumei Li; Shudian Song; et al. | 2022 | Multi-Agent Deep Q-Network (HAS-DQN algorithm combining hexagonal search + DQN) | Energy-efficient multi-UAV data collection (maximize wireless sensor data gathered while avoiding coverage overlap) |
| Multi-UAV Autonomous Path Planning in Reconnaissance Missions Considering Incomplete Information: A Reinforcement Learning Method [36] | Yu Chen; Qi Dong; Xiaozhou Shang; Zhenyu Wu; Jinyu Wang | 2023 | Multi-Agent RL (PPO), centralized training & decentralized execution (with recurrent neural network state) | Coordinated multi-UAV path planning for reconnaissance in dynamic, partially observed environments (robust to incomplete info) |
| A Novel Reinforcement Learning Based Grey Wolf Optimizer Algorithm for Unmanned Aerial Vehicles (UAVs) Path Planning [37] | Qu; Gai; Zhong; Zhang | 2020 | Hybrid RL + Metaheuristic (RLGWO: RL-guided Grey Wolf Optimizer) | Global 3D path optimization with obstacle avoidance (computes collision-free and shortest routes in complex terrain) |
| 6-DOF UAV Path Planning and Tracking Control for Obstacle Avoidance: A Deep Learning-Based Integrated Approach [38] | Yanxiang Wang; Honglun Wang; Yiheng Liu; Jianfa Wu; Yuebin Lun | 2024 | LSTM Network (offline-trained LSTM for fast online path generation) | Real-time 3D path planning with obstacle avoidance for fixed-wing UAV (integrated path planning & trajectory tracking control) |

3.3. Reinforcement Learning in Path Optimization

In the context of solar-powered UAVs, reinforcement learning is used primarily to adapt flight decisions to real-time energy availability and environmental fluctuations. Unlike conventional neural-network controllers, which generate actions from learned mappings, RL explicitly optimizes long-horizon energy objectives such as maximizing net energy gain or minimizing total consumption over an entire mission. This section focuses on the practical differences between major RL algorithms (DQN, PPO, and multi-agent variants) and their suitability for solar-powered UAV path planning.

Hybrid neural-metaheuristic frameworks, however, introduce additional complexity. Coupling a learning module with an optimizer increases computational overhead and requires careful tuning to ensure stable convergence. Functional overlaps, where both the neural policy and the evolutionary planner adjust the route, must be managed to avoid redundancy.

Despite these challenges, hybrid RL approaches yield adaptive and efficient routing solutions, particularly in dynamic scenarios such as solar-powered UAVs navigating fluctuating energy conditions. Neural networks provide real-time predictive intelligence, while metaheuristics ensure globally optimized paths. Together, they achieve solutions that neither method could reach alone, making this combination a compelling direction for advanced UAV path planning.

3.3.1. Deep Q-Networks (DQN)

DQN is widely utilized in path optimization for tasks requiring discrete action spaces. The core of DQN lies in the Bellman equation, as shown in Equation (1):

$$Q(s, a) = r + \gamma \max_{a'} Q(s', a') \tag{1}$$

where $Q(s, a)$ is the action-value function representing the expected reward of taking action $a$ in state $s$, $r$ is the immediate reward, $\gamma$ is the discount factor that balances immediate and future rewards, $s'$ is the next state, and $a'$ is the next action. This formulation makes DQN suitable for discretized flight decisions (e.g., turn left/right, climb/descend), which matches many fixed-wing solar UAV motion primitives.
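The Bellman update is easiest to see in tabular form, where the network is replaced by a lookup table. The sketch below moves a stored value toward the target "immediate reward plus discounted best next value". The action names, learning rate, and dictionary-based table are illustrative assumptions; a real DQN would regress a neural network onto the same target.

```python
from collections import defaultdict

# Hypothetical discrete motion primitives for a fixed-wing UAV.
ACTIONS = ("left", "right", "climb", "descend")

def td_target(q, reward, next_state, gamma, done):
    """Bellman target: r + gamma * max over a' of Q(s', a')."""
    if done:
        return reward  # no future value after a terminal state
    return reward + gamma * max(q[(next_state, a)] for a in ACTIONS)

def q_update(q, state, action, reward, next_state,
             alpha=0.1, gamma=0.99, done=False):
    """Move Q(s, a) a fraction alpha toward the Bellman target."""
    target = td_target(q, reward, next_state, gamma, done)
    q[(state, action)] += alpha * (target - q[(state, action)])
    return q[(state, action)]

# Unseen (state, action) pairs default to a value of 0.0.
q_table = defaultdict(float)
q_update(q_table, "low_soc", "descend", reward=1.0, next_state="mid_soc")
```

In an energy-aware setting the reward would typically encode net energy gained or spent during the step, which is how the discount factor ends up trading present harvesting against future reserves.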

Several studies illustrate the effectiveness of DQN in UAV path optimization:

- “Deep Reinforcement Learning-Based Adaptive Real-Time Path Planning for UAV” by Jiankang Li and Yang Liu (2021) [39]: This research presents an adaptive real-time path planning method for fixed-wing UAVs using Deep Q-Networks (DQN). The approach considers kinematic constraints and emphasizes path smoothness, achieving efficient obstacle avoidance and energy conservation in unknown environments.

- “Deep Reinforcement Learning-Based UAV Path Planning Algorithm in Agricultural Time-Constrained Data Collection” by M. Cai, S. Fan, G. Xiao, and K. Hu (2023) [31]: This study introduces a UAV path planning algorithm based on Deep Reinforcement Learning (DRL) for agricultural data collection. The algorithm optimizes location, energy, and time constraints to maximize data collection efficiency, demonstrating the adaptability of DRL in dynamic environments.

3.3.2. Proximal Policy Optimization (PPO)

For continuous control tasks, Proximal Policy Optimization (PPO) is often preferred. PPO, a policy gradient algorithm, stabilizes training through a clipped objective function, as shown in Equation (2):

$$L^{\mathrm{CLIP}}(\theta) = \hat{\mathbb{E}}_t\left[\min\left(r_t(\theta)\,\hat{A}_t,\ \operatorname{clip}\left(r_t(\theta),\, 1-\epsilon,\, 1+\epsilon\right)\hat{A}_t\right)\right] \tag{2}$$

where $r_t(\theta)$ represents the probability ratio between the new and old policies, $\hat{A}_t$ is the advantage estimate at time $t$, and $\epsilon$ is a hyperparameter that controls the range of updates. The clipping prevents extreme policy updates, which stabilizes training in continuous action spaces. This directly improves stability when a solar-powered UAV must continuously adjust pitch, bank, or altitude based on solar input or thermal conditions.
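The effect of clipping can be checked numerically with a few lines of plain Python. This is a sketch of the standard PPO clipped surrogate, computed as the batch mean of min(r·A, clip(r, 1−ε, 1+ε)·A); the function name and the default ε = 0.2 are assumptions made for illustration.

```python
def clipped_surrogate(ratios, advantages, eps=0.2):
    """Batch mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A).

    ratios:     new-policy / old-policy action probabilities
    advantages: advantage estimates for the same samples
    """
    total = 0.0
    for r, adv in zip(ratios, advantages):
        clipped_r = min(max(r, 1.0 - eps), 1.0 + eps)
        # Taking the minimum keeps the more pessimistic of the two terms,
        # so the objective never rewards moving the ratio beyond the clip range.
        total += min(r * adv, clipped_r * adv)
    return total / len(ratios)
```

For a positive advantage, a ratio of 1.5 is credited only as 1.2, so the gradient gives no incentive to move further. For a negative advantage the unclipped term is kept when it is worse, which preserves the penalty for a bad update.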

One study that exemplifies PPO in UAV path optimization is “Proximal Policy Optimization for Multi-Rotor UAV Autonomous Guidance, Tracking and Obstacle Avoidance” by Hu et al. (2022) [22]. This study applied PPO to enable energy-efficient, autonomous guidance and real-time obstacle avoidance in multi-rotor UAVs. By integrating LSTM modules into the PPO framework, the authors enhanced stability and convergence during flight in complex environments. The approach achieved smooth tracking and reduced control effort, underscoring PPO’s potential for responsive and energy-aware UAV navigation under dynamic conditions.

3.3.3. Multi-Agent and Cooperative RL Approaches

In scenarios involving multiple UAVs working together, Multi-Agent Reinforcement Learning (MARL) enables collaborative path optimization. In “Multi-UAV Adaptive Path Planning Using Deep Reinforcement Learning”, Westheider et al. (2023) [40] employed a Multi-Agent Deep Deterministic Policy Gradient (MADDPG) approach for cooperative path planning in large-scale monitoring tasks. UAVs shared data to prevent redundant pathing and optimized terrain mapping across team members, resulting in a 30% improvement in task efficiency. This MARL approach offers promising applications in coordinated search and rescue missions, where multiple UAVs must navigate collectively to maximize coverage.

These studies collectively demonstrate how RL enables UAVs to develop advanced path optimization strategies. Implementations frequently leverage frameworks such as Gym and Stable Baselines3 for simulation, while tools like ROS (Robot Operating System) and OpenCV support real-world navigation and visual processing. Through RL, UAVs not only improve route efficiency and energy use but also gain the ability to adapt to complex and variable environments, making RL a powerful paradigm in UAV path planning.

Across the RL spectrum, DQN remains attractive for its low computational cost and suitability to discretized flight actions, whereas PPO provides smoother and more energy-aware control in continuous domains. Multi-agent extensions such as MADDPG or MATD3 further enable cooperative energy management among UAV teams, improving coverage efficiency and reducing redundant flight segments under shared solar constraints. These distinctions are more critical in solar-powered UAVs than in conventional platforms because energy availability varies with time, altitude, and weather conditions, making long-horizon adaptability a core requirement.

3.3.4. Case Studies and Applications

The integration of hybrid and bio-inspired algorithms in UAV path planning has demonstrated significant potential in enhancing energy efficiency and adaptability. Various studies have explored these innovative approaches to address the complex challenges associated with UAV navigation and energy management.

One notable approach combines traditional control methods with machine learning techniques. For instance, integrating Model Predictive Control (MPC) with Reinforcement Learning (RL) has been effective for quadrotor guidance. MPC provides real-time predictive control based on the UAV’s dynamic model, ensuring stability and responsiveness. Simultaneously, RL refines control policies through continuous environmental feedback, enabling the system to adapt to dynamic conditions. This hybrid architecture enhances trajectory tracking and obstacle avoidance, crucial for efficient and safe UAV operations [41,42].

Deep Reinforcement Learning (DRL) frameworks, such as those presented by Lee et al. [43], using actor–critic models, have also been employed to enable UAVs to learn optimal navigation policies through environmental interactions. Implemented on platforms like TensorFlow, these methods enhance adaptability to changing conditions and unforeseen obstacles. The actor–critic architecture facilitates continuous learning and decision-making, leading to more energy-efficient flight paths and extended mission durations.

Bio-inspired algorithms, which mimic natural processes, have been particularly impactful in UAV path planning. Ant Colony Optimization (ACO), as explored by Konatowski et al., 2018 [44], simulates the foraging behaviour of ants to efficiently explore the search space. By finding the shortest and most energy-efficient paths, ACO reduces energy consumption and improves mission efficiency. Similarly, Particle Swarm Optimization (PSO) leverages the collective behaviour of particle swarms to search for optimal paths, accounting for energy constraints and environmental factors. These methods effectively balance exploration and exploitation, leading to optimized routes that conserve energy.
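The particle-swarm search described above can be sketched in a few dozen lines. This is a generic textbook PSO minimizing an arbitrary cost function over a box; the parameter values (`w`, `c1`, `c2`), the function name, and the idea of passing an energy proxy as `cost` are illustrative assumptions rather than the setup of any cited study.

```python
import random

def pso_minimize(cost, dim, bounds, n_particles=20, iters=100,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize cost(position) over the box [lo, hi]^dim with basic PSO."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                 # each particle's best position
    pbest_cost = [cost(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_cost[i])
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]   # swarm-wide best
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + pull toward personal best + pull toward global best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            c = cost(pos[i])
            if c < pbest_cost[i]:
                pbest[i], pbest_cost[i] = pos[i][:], c
                if c < gbest_cost:
                    gbest, gbest_cost = pos[i][:], c
    return gbest, gbest_cost
```

The pull toward each particle's own best position supplies exploration, while the pull toward the swarm-wide best supplies exploitation; the inertia weight `w` sets the balance between them.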

Genetic Algorithms (GA) have also been integrated with traditional path planning methods like the A* algorithm to enhance efficiency. Taken together, the studies above blend traditional control methods with machine learning and bio-inspired algorithms, and their outcomes show improvements in three key performance metrics:

- Response Time: Quick response times enable UAVs to react promptly to dynamic environments, essential for real-time obstacle avoidance and decision-making. Enhanced response times contribute to safer and more reliable operations.

- Energy Consumption: Minimizing energy consumption directly impacts flight endurance, especially for solar-powered UAVs with limited energy resources. Efficient energy use allows for longer missions and reduces reliance on energy-harvesting intervals.

- Adaptability Under Changing Conditions: The ability to adapt to environmental changes, such as wind variations or unexpected obstacles, ensures consistent UAV performance. Adaptive algorithms enhance reliability and increase mission success rates.

In the context of solar-powered UAVs, efficient energy management is particularly vital. By optimizing energy consumption through advanced path planning algorithms, these UAVs can maximize flight duration and mission success, making them more viable for prolonged operations [42].

The current body of research, while promising, indicates that there is ample room for further exploration in hybrid and bio-inspired methods. The limited number of case studies underscores the need for continued research to fully realize the potential of these approaches in UAV path planning. By contributing to this area, our work addresses both the scarcity of research and the demand for specialized solutions that improve energy efficiency and adaptability in UAV operations.

Artificial intelligence, including advanced neural networks and machine learning algorithms, greatly refines energy management and route optimization in solar-powered UAVs. By fusing environmental predictions with onboard energy profiles, AI-driven approaches dynamically tailor flight paths and power distribution to current conditions. This intelligence enables UAVs to exploit solar peaks, conserve energy during low-irradiance periods, and reroute around obstacles or adverse weather. Yet, these methods may increase computational demands and complexity, necessitating careful hardware selection and robust model validation. Compared to simpler, rule-based control systems, AI-driven strategies offer significantly enhanced adaptability and efficiency, but decision-makers must balance these benefits against potential trade-offs in cost, interpretability, and the need for reliable, high-quality training data.

4. Optimization Algorithms in UAV Applications

4.1. Importance of Optimization in UAVs

Optimization algorithms are fundamental to the operational efficiency and effectiveness of UAVs. They enable UAVs to perform complex tasks such as path planning, energy management, sensor allocation, and multi-agent coordination with high precision and minimal resource consumption. Without proper optimization, UAVs may experience increased energy consumption, reduced mission duration, and even mission failure due to collisions or an inability to navigate complex environments. This paper focuses on the computational complexity, numerical stability, and algorithmic efficiency of these optimization techniques, examining how cutting-edge methods such as parallel computing and GPU acceleration can meet the real-time demands of UAV operations.

For instance, unoptimized path planning can lead UAVs to take longer routes, consuming more energy and reducing operational time. This inefficiency not only shortens mission duration but also increases the risk of mission failure due to depleted power reserves. Studies in engineering optimization have demonstrated the role of evolutionary and meta-heuristic algorithms in improving solution quality and computational performance [45]. When applied to UAVs, these techniques have been shown to reduce energy consumption, improve trajectory smoothness, and extend mission duration, as demonstrated in UAV-specific path-planning research such as Aggarwal & Kumar (2020) [3], Gómez Arnaldo et al. (2024) [6], and Faizah et al. (2021) [5].

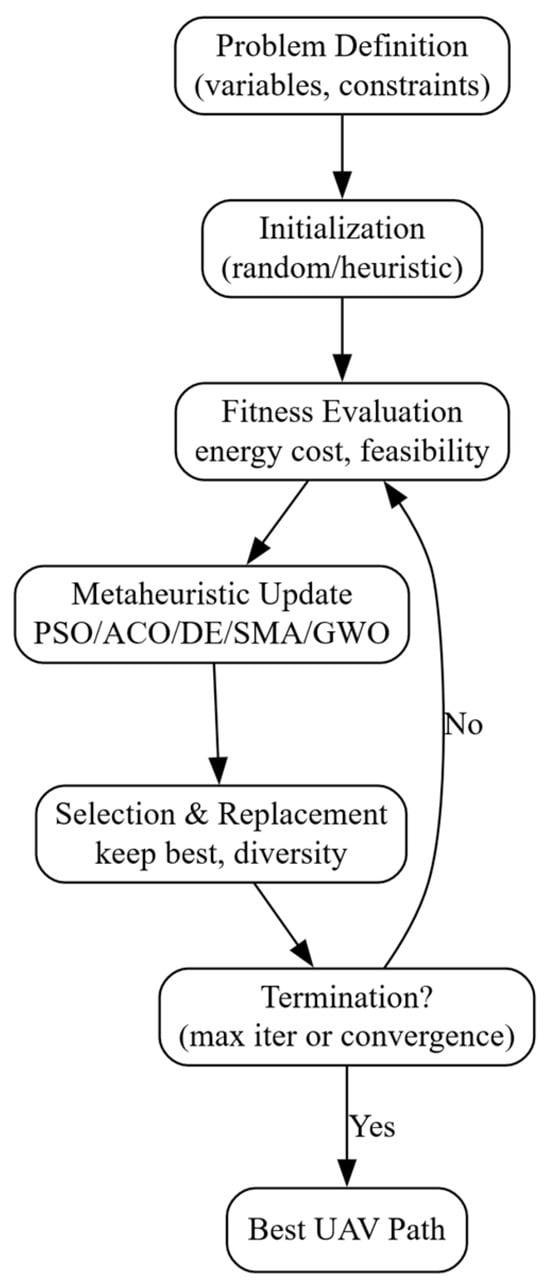

This is consistent with the findings of Cao et al. (2024) [11], whose comprehensive survey on CI-based UAV swarm networking and collaboration highlights that optimization-driven approaches substantially improve cooperative task assignment, reduce redundancy in coverage, and enhance the stability of multi-UAV missions across diverse operational scenarios. This optimization is crucial in applications such as surveillance, search and rescue, and agricultural monitoring, where coordination among multiple UAVs determines mission success. To illustrate the overall process, the optimization workflow for UAVs can be represented in Figure 2:

Figure 2.

System architecture diagram for solar-powered UAVs with path planning (created by the authors).

Figure 2 highlights the flow from mission objectives and environmental inputs, through mathematical formulation and modeling, to the optimization engine and UAV execution, with real-time feedback closing the loop.

Therefore, optimization in UAVs is crucial for maximizing performance, ensuring safety, and achieving mission objectives efficiently. The mathematical representation of these optimization problems often involves multiple objectives and constraints, requiring specialized algorithms to solve due to their non-linear and non-convex nature.

For example, Wang et al. (2024) developed an ACO-based path-planning algorithm specifically for UAV navigation in dense urban airspace and demonstrated significant reductions in collision risk and travel distance under real-world constraints [46]. The optimization problems in UAV applications are often multi-objective and can be expressed mathematically as shown in Equation (3):

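Objective Function:

$$\min_{x} \; F(x) = \left[ f_1(x), f_2(x), \ldots, f_k(x) \right] \tag{3}$$

Subject to Equations (4) and (5):

$$g_i(x) \le 0, \quad i = 1, \ldots, m \tag{4}$$

$$h_j(x) = 0, \quad j = 1, \ldots, p \tag{5}$$

where $F(x)$ represents the set of objective functions, and $g_i(x)$ and $h_j(x)$ are inequality and equality constraints, respectively. Solving these problems often requires specialized algorithms due to their non-linear and non-convex nature.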
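As a minimal sketch of how such a constrained multi-objective problem is often handed to a metaheuristic, the objectives can be collapsed into a weighted sum and constraint violations added as quadratic penalties. The function name `penalized_cost`, the weights, the penalty factor, and the toy energy model below are illustrative assumptions, not taken from the cited studies; inequality constraints are assumed to be written as g(x) ≤ 0 and equality constraints as h(x) = 0.

```python
from typing import Callable, Sequence

Vector = Sequence[float]


def penalized_cost(
    x: Vector,
    objectives: Sequence[Callable[[Vector], float]],
    weights: Sequence[float],
    inequalities: Sequence[Callable[[Vector], float]] = (),
    equalities: Sequence[Callable[[Vector], float]] = (),
    penalty: float = 1e3,
) -> float:
    """Weighted sum of objectives plus quadratic penalties for violated constraints."""
    cost = sum(w * f(x) for w, f in zip(weights, objectives))
    # g(x) <= 0 is feasible, so only positive values are penalized.
    cost += penalty * sum(max(0.0, g(x)) ** 2 for g in inequalities)
    # h(x) = 0 is feasible, so any deviation is penalized.
    cost += penalty * sum(h(x) ** 2 for h in equalities)
    return cost


# Hypothetical decision vector: x = (path_length_km, climb_m).
def path_length(x: Vector) -> float:
    return x[0]


def energy_used(x: Vector) -> float:
    return 2.0 * x[0] + 0.05 * x[1]  # toy energy model in Wh (assumption)


def battery_limit(x: Vector) -> float:
    return energy_used(x) - 50.0  # feasible when energy_used <= 50 Wh


feasible = penalized_cost(
    (10.0, 100.0), [path_length, energy_used], [0.5, 0.5], [battery_limit]
)
infeasible = penalized_cost(
    (30.0, 100.0), [path_length, energy_used], [0.5, 0.5], [battery_limit]
)
```

A weighted sum is only one scalarization choice; Pareto-based methods keep the objectives separate, at the price of a more involved selection step.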

4.2. Classical Optimization Techniques

Traditional optimization methods such as Linear Programming (LP), Nonlinear Programming (NLP), and Dynamic Programming (DP) have long been applied in UAV systems [47]. LP is typically used for problems that involve linear objectives and constraints, making it suitable for tasks such as static resource allocation or simplified routing under fixed energy budgets. NLP extends this capability to nonlinear relationships and is frequently employed when aerodynamic effects, nonlinear energy consumption models, or terrain-dependent constraints must be incorporated. Dynamic Programming, in contrast, is used for sequential decision-making problems where each flight action depends on the outcomes of previous steps. It is particularly useful for discretized time-of-day energy scheduling or multi-stage path planning, where the UAV must evaluate the long-term impact of current decisions on future energy availability. While these classical methods provide strong theoretical guarantees, their applicability to real-time solar-powered UAV operations is often limited by dimensionality and computational complexity, especially when dealing with highly dynamic environments, stochastic solar input, or large multi-UAV action spaces.
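The discretized energy-scheduling use of Dynamic Programming can be illustrated with a small sketch. It assumes integer battery units, one action per stage, and a known harvest forecast per stage; the action set and all numbers are hypothetical and chosen only to keep the example easy to trace.

```python
from functools import lru_cache


def max_distance(harvest, actions, capacity, start_charge):
    """Best total distance over all stages, found by recursive DP with memoization.

    harvest[t] -- energy units harvested during stage t
    actions    -- (distance, energy_cost) options available at every stage
    """
    horizon = len(harvest)

    @lru_cache(maxsize=None)
    def value(t, charge):
        if t == horizon:
            return 0
        best = float("-inf")  # stays -inf if no action keeps the battery non-negative
        for distance, cost in actions:
            next_charge = min(capacity, charge + harvest[t] - cost)
            if next_charge >= 0:  # the battery must never go negative
                best = max(best, distance + value(t + 1, next_charge))
        return best

    return value(0, start_charge)


harvest = [0, 3, 3, 0]              # e.g. dawn, midday, midday, dusk
actions = [(0, 1), (2, 2), (5, 4)]  # loiter, cruise, dash
best = max_distance(harvest, actions, capacity=5, start_charge=2)
```

The state space here is only `horizon × (capacity + 1)`; adding position, altitude, or multiple vehicles multiplies it quickly, which is the dimensionality limit noted above.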

4.3. Evolutionary Algorithms

Evolutionary algorithms (EAs), inspired by natural selection, are widely used for nonlinear and multi-modal optimization in UAV systems [48]. Their population-based nature enables exploration of complex search spaces, making them suitable for path planning, cooperative task allocation, and control parameter tuning.

- Genetic Algorithms (GA)

GA evolve candidate solutions through selection, crossover, and mutation. In UAV path planning, GAs are used to optimize trajectory smoothness, energy consumption, and collision avoidance. Zhang et al. (2019) [49] applied a GA-driven receding horizon controller for multi-UAV formation reconfiguration, demonstrating reduced energy consumption and improved maneuver stability. Similarly, Sonmez et al. (2015) [48] used GA to generate obstacle-aware UAV trajectories, achieving shorter paths and higher computational efficiency compared to classical graph-search techniques. GA optimization can be expressed using a fitness function such as:

- Differential Evolution (DE)

DE optimizes candidate solutions by combining population vectors through scaled differences. Kok and Rajendran (2016) [50] demonstrated DE for UAV path planning, significantly improving trajectory quality while reducing computational load. DE mutation is defined as:

$$v_i^{(t+1)} = x_{r_1}^{(t)} + F \left( x_{r_2}^{(t)} - x_{r_3}^{(t)} \right) \tag{7}$$

where $v_i^{(t+1)}$ represents the next-generation candidate vector for the i-th individual, $x_{r_1}^{(t)}$, $x_{r_2}^{(t)}$, and $x_{r_3}^{(t)}$ are vectors from the current generation (with r1, r2, and r3 being distinct random indices of individuals in the population), and F is a scaling factor used to control the amplification of the difference.
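The mutation step described above can be sketched in a few lines. This is a minimal illustration of the common DE/rand/1 scheme; excluding the target index i from the three random indices is the usual convention and is an assumption here, as are the function name and the population values.

```python
import random


def de_mutation(population, i, scale, rng):
    """DE/rand/1 mutant for individual i: x_r1 + F * (x_r2 - x_r3)."""
    # Draw three distinct indices, none equal to the target index i.
    candidates = [k for k in range(len(population)) if k != i]
    r1, r2, r3 = rng.sample(candidates, 3)
    x1, x2, x3 = population[r1], population[r2], population[r3]
    return [a + scale * (b - c) for a, b, c in zip(x1, x2, x3)]


rng = random.Random(0)
# Hypothetical population of six 2-D waypoint offsets.
population = [[rng.uniform(-5, 5) for _ in range(2)] for _ in range(6)]
mutant = de_mutation(population, i=0, scale=0.5, rng=rng)
```

A full DE iteration would follow this with crossover between the mutant and the target vector and a greedy selection step.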

DE has also been used to tune control parameters, as in Ramirez-Atencia et al. (2017) [51], who applied multi-objective GA/DE strategies for complex multi-UAV mission planning with balanced workload distribution and reduced mission time.

These examples underscore the versatility and effectiveness of evolutionary algorithms in addressing various optimization challenges in UAV applications, from path planning to control parameter optimization. The iterative and adaptive nature of EAs makes them well-suited for the dynamic and complex environments in which UAVs operate.

Additional work using DE for neural network parameter optimization in UAV obstacle avoidance [39] illustrates its versatility for control and sensing tasks.

- Hybrid GA-A* Approaches

Hybrid GA-A* planners combine GA’s global exploration with A*’s deterministic refinement. Dewangan et al. (2019) [52] applied enhanced evolutionary techniques for 3D UAV path planning, showing that hybrid planners outperform standalone GA under difficult terrain and obstacle conditions. Such hybrids are especially suitable for disaster response missions, where dynamic terrain and multi-agent coordination require continuous replanning.

Overall, evolutionary algorithms provide robust and flexible optimization capabilities across diverse UAV applications. Their iterative population-based structure makes them effective in dynamic environments and suitable for both offline mission design and onboard trajectory refinement.

4.4. Swarm Intelligence Algorithms

Swarm intelligence algorithms mimic collective behaviors found in nature and are widely used in multi-UAV coordination, distributed sensing, and dynamic path planning.

- Particle Swarm Optimization (PSO):

PSO simulates flocking behavior by updating particle velocities based on personal and global best positions. Phung and Ha (2021) [47] proposed a spherical-vector PSO formulation for UAV path planning in threat-rich environments, demonstrating improved safety and trajectory efficiency. The velocity update is given in Equation (8):

$$v_i^{t+1} = \omega v_i^{t} + c_1 r_1 \left( p_i - x_i^{t} \right) + c_2 r_2 \left( g - x_i^{t} \right) \tag{8}$$

In PSO, $v_i$ is the velocity of particle i, $\omega$ is the inertia weight, $c_1$ and $c_2$ are cognitive and social coefficients, $r_1$ and $r_2$ are random numbers, $p_i$ is the particle’s best position, and g is the global best position. The position update is given in Equation (9):

$$x_i^{t+1} = x_i^{t} + v_i^{t+1} \tag{9}$$
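A minimal sketch of the velocity and position updates follows, assuming random numbers drawn uniformly from [0, 1) per dimension and typical but arbitrary coefficient values (inertia 0.7, cognitive and social coefficients 1.5). The helper names and the sphere test function are illustrative only.

```python
import random


def pso_step(positions, velocities, personal_best, global_best,
             inertia=0.7, c1=1.5, c2=1.5, rng=random):
    """One in-place PSO update of every particle's velocity and position."""
    for i, (x, v) in enumerate(zip(positions, velocities)):
        for d in range(len(x)):
            r1, r2 = rng.random(), rng.random()
            v[d] = (inertia * v[d]
                    + c1 * r1 * (personal_best[i][d] - x[d])   # cognitive pull
                    + c2 * r2 * (global_best[d] - x[d]))       # social pull
            x[d] += v[d]
    return positions, velocities


def minimize(cost, dim, n_particles=20, iterations=100, seed=0):
    """Run PSO on a cost function and return the best position found."""
    rng = random.Random(seed)
    pos = [[rng.uniform(-10, 10) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    gbest = min(pbest, key=cost)[:]
    for _ in range(iterations):
        pso_step(pos, vel, pbest, gbest, rng=rng)
        for i, p in enumerate(pos):
            if cost(p) < cost(pbest[i]):
                pbest[i] = p[:]
        gbest = min(pbest, key=cost)[:]
    return gbest


def sphere(p):
    return sum(c * c for c in p)


best = minimize(sphere, dim=2)
```

For path planning, each particle would typically encode a sequence of waypoints and `cost` would combine path length, energy use, and threat exposure.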

- Ant Colony Optimization (ACO):

ACO uses pheromone trails to guide UAVs through complex environments. Bui et al. (2024) [53] used ACO for cooperative inspection path planning with multiple UAVs, achieving improved coverage efficiency and reduced total path length. Wang et al. (2024) [46] also implemented ACO for urban UAV navigation, showing reduced collision rates and improved adaptability to dynamic obstacles. ACO uses a pheromone update rule (Equation (10)) to mimic ant foraging behaviour:

$$\tau_{ij} \leftarrow (1 - \rho)\, \tau_{ij} + \sum_{k} \Delta \tau_{ij}^{k} \tag{10}$$

In ACO, $\tau_{ij}$ is the pheromone level on path ij, $\rho$ is the evaporation rate, and $\Delta \tau_{ij}^{k}$ is the additional pheromone laid by the k-th ant.
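The pheromone update can be sketched as evaporation followed by deposition. The deposit amount of `deposit / cost` per traversed edge is a common Ant System convention and is an assumption here; the graph, paths, and costs are hypothetical.

```python
def update_pheromone(tau, ant_paths, ant_costs, evaporation=0.5, deposit=1.0):
    """Evaporate all trails, then let each ant add deposit/cost to its edges."""
    updated = {edge: (1.0 - evaporation) * level for edge, level in tau.items()}
    for path, cost in zip(ant_paths, ant_costs):
        for edge in zip(path, path[1:]):  # consecutive waypoint pairs
            updated[edge] = updated.get(edge, 0.0) + deposit / cost
    return updated


# Hypothetical three-node graph with uniform initial pheromone.
tau = {("A", "B"): 1.0, ("B", "C"): 1.0, ("A", "C"): 1.0}
tau = update_pheromone(
    tau,
    ant_paths=[["A", "B", "C"], ["A", "C"]],
    ant_costs=[4.0, 2.0],
)
```

After one update the direct edge A to C holds more pheromone than the detour, so later ants are more likely to choose the cheaper route.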

SwarmOps, PSO Toolbox, and ACO libraries facilitate the use of these algorithms. The ACO-based planner of Wang et al. (2024) [46] accounted for obstacles and changing conditions, enabling UAVs to adapt their paths in real time and thereby improving navigation safety and reliability. A comprehensive review by Hooshyar and Huang (2023) [25] analysed the application of meta-heuristic algorithms in UAV path planning, providing insights into the effectiveness of different algorithms and guiding future research in UAV path optimization [24].

Particle Swarm Optimization (PSO) excels at quickly converging to workable solutions, making it suitable for environments where conditions remain relatively stable and computational speed is a priority. The Slime Mould Algorithm (SMA), however, offers continuous adaptability and decentralized decision-making, enabling UAV swarms to reorganize routes as conditions evolve. For multi-UAV cooperative navigation in dynamic, obstacle-rich environments, SMA’s capacity to continuously adjust makes it more effective than PSO, ultimately ensuring safer navigation through unpredictable terrains.

These examples demonstrate the versatility and effectiveness of swarm intelligence algorithms in UAV applications, particularly in scenarios requiring dynamic adaptation and coordination among multiple agents.

4.5. Bio-Inspired Optimization

Bio-inspired algorithms draw from biological systems to provide adaptable, distributed solutions to UAV optimization challenges.

- Slime Mould Algorithm (SMA):

Xiong et al. (2023) [54] introduced an improved SMA variant for cooperative multi-UAV path planning, significantly enhancing collision avoidance and real-time coordination. SMA simulates the adaptive behaviour of slime moulds as Equation (11):

In Equation (11), the update is driven by the position of the best solution found so far; b and c are coefficients that adjust with the iteration count, and D is a distance factor.

A comprehensive review by Chen et al. (2023) [55] discusses SMA variants and their suitability for complex UAV path-planning problems.

- Grey Wolf Optimizer (GWO):

GWO models the hierarchical social structure and hunting strategies of grey wolves. In UAV optimization, GWO has been applied successfully in path planning and resource allocation. Studies [27,52] introduced a GWO-based approach for UAVs, focusing on energy efficiency and obstacle avoidance in 3D path planning. Their findings demonstrated that GWO outperformed traditional path-planning algorithms, providing optimal path solutions in complex mission scenarios. The hierarchical leadership model can be expressed as in Equation (12):

$$\vec{X}(t+1) = \frac{\vec{X}_{\alpha} + \vec{X}_{\beta} + \vec{X}_{\delta}}{3} \tag{12}$$

In the Grey Wolf Optimizer, $\vec{X}_{\alpha}$, $\vec{X}_{\beta}$, and $\vec{X}_{\delta}$ represent the positions of the alpha, beta, and delta wolves.
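In the commonly used GWO formulation, each wolf moves to the average of three candidate positions, one guided by each leader, with random coefficients that shrink as a control parameter `a` decays from 2 to 0 over the iterations. The sketch below follows that formulation; the function name and the example coordinates are assumptions for illustration.

```python
import random


def gwo_update(wolf, alpha, beta, delta, a, rng):
    """Move one wolf toward the average of three leader-guided positions."""
    new_position = []
    for d in range(len(wolf)):
        guided = []
        for leader in (alpha, beta, delta):
            A = 2.0 * a * rng.random() - a  # in [-a, a): large |A| favours exploration
            C = 2.0 * rng.random()          # random emphasis on the leader
            distance = abs(C * leader[d] - wolf[d])
            guided.append(leader[d] - A * distance)
        new_position.append(sum(guided) / 3.0)
    return new_position


rng = random.Random(0)
# Hypothetical 3-D waypoints for the three leaders and one follower.
alpha, beta, delta = [1.0, 2.0, 50.0], [2.0, 4.0, 60.0], [3.0, 6.0, 70.0]
moved = gwo_update([9.0, 9.0, 9.0], alpha, beta, delta, a=1.0, rng=rng)
```

When `a` reaches 0 the random perturbation vanishes and the wolf lands exactly on the mean of the three leaders.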

These algorithms can be explored to determine their effectiveness under real-time constraints and varying environments, but further research is needed to verify their applicability. For example, GWO has been applied in UAV 3D path planning by considering real-world factors such as obstacle avoidance and flight constraints.

A comprehensive review [56] covers recent variants and applications of the Slime Mould Algorithm (SMA), highlighting its potential in solving complex optimization problems, including those in UAV path planning.

Researchers have enhanced the application of the Slime Mould Algorithm (SMA) by integrating it with deep learning techniques for resource allocation in UAV-enabled wireless networks. Studies [57,58] developed an Enhanced Slime Mould Optimization with Deep-Learning-Based Resource Allocation Approach (ESMOML-RAA). This approach employs a highly parallelized long short-term memory (HP-LSTM) model optimized by an enhanced SMA, leading to improved network coverage and energy efficiency. The study demonstrates the potential of combining bio-inspired optimization with advanced machine learning for UAV tasks.

In Ant Colony Optimization (ACO), the pheromone evaporation rate (ρ) governs how quickly past solutions fade, encouraging the algorithm to seek out fresh routes rather than clinging to paths that once seemed optimal. By tuning ρ, ACO can rapidly adapt to dynamic obstacles and shifting conditions, ensuring UAVs continually discover viable, energy-efficient trajectories. Meanwhile, the Grey Wolf Optimizer (GWO) employs a hierarchical leadership model that mimics social hunting. As alpha, beta, and delta wolves guide the search, the algorithm adapts routes to avoid obstacles, conserve energy, and exploit favourable air currents. This hierarchical approach, combined with GWO’s capacity to navigate complex three-dimensional spaces, enables UAVs to achieve energy-efficient 3D path planning under strict real-time constraints, improving mission reliability and endurance.

4.6. Hybrid Optimization Techniques

Combining algorithms can harness their respective strengths:

- Neural Network with Evolutionary Algorithms:

Integrating neural networks with evolutionary algorithms enables the approximation of complex solution landscapes, facilitating efficient optimization in UAV operations. For instance, a study [59] proposed a hybrid framework combining reinforcement learning and convex optimization for UAV-based autonomous data collection in the Metaverse. This approach utilized neural networks to model the environment and evolutionary strategies to optimize UAV trajectories, resulting in improved data collection efficiency and reduced mission completion time. A neural network can approximate the solution landscape; this relationship is formalized in Equation (13).

- PSO and GA Integration:

Combining Particle Swarm Optimization (PSO) and Genetic Algorithms (GA) leverages PSO’s rapid convergence capabilities and GA’s robust search mechanisms. In UAV applications, this integration has been applied to optimize path planning and energy consumption. For example, a study [60] introduced a hybrid algorithm that integrates PSO and Differential Evolution (DE) for UAV inspection path planning in urban pipe corridors. The hybrid approach effectively optimized the cost function, resulting in superior path planning performance compared to individual algorithms. A typical scheme uses PSO for global search followed by GA for refinement, as seen in Equation (14):

Off-the-shelf libraries (e.g., DEAP, Scikit-Optimize, MATLAB/Simulink) facilitate the implementation of these hybrid algorithms. For instance, Si et al. [59] developed a combined reinforcement learning and convex optimization framework for UAV-based data collection in a metaverse scenario, which reduced mission completion time and improved data collection efficiency compared to traditional methods.

Wang et al. (2023) [42] proposed a cooperative game-based hybrid optimization algorithm that combines spherical vector particle swarm optimization (SPSO) and differential evolution (DE) for UAV inspection path planning. The hybrid algorithm outperformed traditional methods in planning optimal paths in complex urban environments. These examples illustrate the effectiveness of hybrid optimization techniques in enhancing UAV performance across various applications. By combining the strengths of different algorithms, hybrid approaches offer robust solutions to the multifaceted challenges encountered in UAV operations.

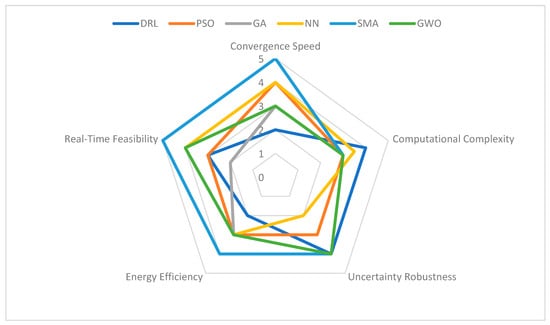

Combining algorithms can harness their respective strengths, with various optimization methods proving useful across UAV applications. Each algorithm offers unique benefits in terms of convergence speed, computational complexity, suitability for real-time applications, and adaptability to dynamic environments. Table 4 provides a comparative summary of commonly used optimization algorithms, highlighting these key characteristics to aid in selecting an appropriate approach based on mission requirements.

Table 4.

Comparison of Optimization Algorithms for UAV Applications.

Figure 3 provides a radar-chart comparison of the algorithms, rated on a scale from 0 (unusable) to 4 (reliably used).

Figure 3.

Comparative Performance of Computational Strategies for UAV Path Planning.

Blending Particle Swarm Optimization (PSO) and Differential Evolution (DE) can yield more robust solutions for UAV inspection path planning. PSO’s rapid convergence accelerates the initial search, while DE’s variation operators help the algorithm escape local optima, resulting in more thorough exploration. Together, these methods identify efficient inspection routes that minimize travel time, reduce energy usage, and improve overall mission outcomes.

In parallel, hybrid frameworks combining neural networks with evolutionary algorithms enable UAVs to adapt resource allocation in wireless networks dynamically. Neural models predict traffic patterns, interference, and user demands, while evolutionary algorithms adjust the UAV’s flight patterns or antenna orientations to optimize coverage and bandwidth distribution. This integrated approach merges predictive intelligence with adaptive optimization, fostering energy efficiency, enhanced connectivity, and robust performance in complex operational settings.

Despite the successes of these optimization techniques, several practical challenges remain before solar-powered UAVs can fully realize near-perpetual operation.

4.7. Comparative Analysis of Algorithm Categories

A rigorous comparison of neural networks, deep reinforcement learning (DRL), evolutionary algorithms, and swarm intelligence methods is essential for evaluating their suitability for solar-powered UAV missions. Unlike the preceding sections, which described each class independently, this subsection synthesizes findings across multiple studies to identify patterns, operational trade-offs, and context-specific strengths. The comparison also includes metaheuristic techniques not covered in Table 4, such as Differential Evolution (DE) [49,50], Ant Colony Optimization (ACO) [44,46,53], Spherical Vector PSO [47], improved Slime Mould Algorithms (SMA) [54,55], enhanced Grey Wolf Optimizers (GWO) [52], and hybrid PSO-GA or PSO-DE approaches [10,57]. These variants have become essential components of modern UAV optimization pipelines.

Neural networks (NNs) play a central role in modeling nonlinear solar-energy dynamics, irradiance fluctuations, and high-dimensional sensor inputs. Foundational work by LeCun et al. [7] and compression studies such as Jacob et al. [61] and Han et al. [28] demonstrate that NNs can be adapted to low-power embedded systems when pruned or quantized. UAV-specific implementations, including drone perception and classification systems [29,30,32] and energy-prediction networks for UAV batteries [19], confirm their suitability for solar-powered UAVs operating under varying environmental conditions. Their main limitations are sensitivity to out-of-distribution inputs and compute overhead on micro-controller-class platforms.

Deep reinforcement learning (DRL) provides advanced adaptability in long-duration missions where solar irradiance, thermal conditions, and wind patterns are nonstationary. DRL has been applied to energy-optimal solar-UAV trajectories [33], long-endurance flight strategies [34], agricultural data collection [31], reconnaissance under incomplete information [36], and multi-UAV cooperative behaviors [40]. Policy-gradient and actor–critic variants, including PPO implementations for UAV guidance and obstacle avoidance [22], demonstrate stable continuous-control behavior. DRL’s major disadvantage remains computational intensity, though recent embedded neural computing platforms [62,63] and quantization methods [61] enable increasingly efficient onboard deployment.

Evolutionary algorithms, including GA [48,51], DE [49,50], and hybrid PSO-GA methods [10], remain attractive for offline optimization due to their global search characteristics, robustness, and limited computational requirements. GA has been effectively applied to path planning [48] and multi-objective UAV mission design [51], while DE-based receding-horizon controllers have shown strong performance in multi-UAV formation reconfiguration [49]. However, evolutionary algorithms generally lack fast adaptation capabilities for real-time flight in rapidly changing solar environments.

Swarm intelligence techniques such as PSO, ACO, SMA, and GWO exhibit strong performance in both single- and multi-UAV path planning scenarios. PSO extensions, including hybrid PSO frameworks [10] and spherical-vector PSO [47], improve turbulence robustness and obstacle-avoidance stability. ACO has been successfully deployed for cooperative path planning [53] and urban airspace navigation [46]. SMA and its enhanced variants have shown effectiveness in dynamic multi-UAV environments [54,55], while GWO has demonstrated reliable 3D path planning performance [52]. Despite these strengths, swarm algorithms rely heavily on parameter tuning and may still experience premature convergence under highly variable solar conditions.

These distinctions lead to important mission-specific trade-offs. In platforms with strict power or processing limitations, such as long-endurance micro-UAVs, lightweight metaheuristics (GA, DE, PSO, GWO, SMA) are preferred due to their modest memory footprint. Missions characterized by rapid, unpredictable irradiance changes benefit from DRL and LSTM-augmented policies [33,34], which can learn temporal patterns in solar energy conditions. Multi-UAV cooperative missions can leverage multi-agent RL architectures such as MADDPG or MATD3 when hardware permits [40], or adopt classical swarm approaches (PSO, ACO, SMA) when power constraints are severe. Hybrid techniques combining metaheuristics with learning methods, such as RL-enhanced GWO [37], SMA-DL resource allocation [58], or PSO-refined planners [10], consistently achieve superior robustness and convergence efficiency, making them promising candidates for solar-powered UAV systems.

To better illustrate the typical workflow of metaheuristic algorithms in UAV optimization, Figure 4 shows the step-by-step process from problem definition to output:

Figure 4.

Metaheuristic Algorithm Flow.

Overall, comparative evidence across the literature indicates that no algorithm class offers universal superiority. Optimal performance depends on the interplay among computational resources, environmental variability, the need for real-time adaptation, and mission duration. Hybrid algorithms, combinations of metaheuristics, neural models, and DRL policies, represent one of the most promising directions for future solar-powered UAV optimization due to their complementary strengths in exploration, robustness, and adaptability.

5. Challenges and Future Directions

From a computational engineering perspective, future research must address scalability, real-time responsiveness, and the integration of high-performance computing platforms. Developing streamlined algorithms, employing distributed optimization frameworks, and validating these methods through simulation and hardware-in-the-loop testing will be paramount.

5.1. Challenges in Neural Network Implementation

Implementing neural networks in UAVs presents multiple challenges, largely due to the computational and energy limitations of onboard hardware. Neural networks, especially deep architectures, demand significant processing power and memory, which are scarce in UAVs that rely on lightweight embedded systems for flight-critical functions. One primary approach to addressing these constraints is through model compression techniques, such as network pruning and quantization [61]. Pruning reduces the model size by removing less significant weights as shown in Equation (15):

$$w_i' = \begin{cases} w_i, & |w_i| \ge \text{threshold} \\ 0, & |w_i| < \text{threshold} \end{cases} \tag{15}$$

Here, $|w_i|$ represents the absolute value of weight $w_i$, and threshold is a predefined value. If the absolute weight is below this threshold, it is set to zero, effectively pruning it from the network. Studies like Han et al., 2015 [28] showed that pruning can decrease network size by up to 90% without major accuracy loss, while Jacob et al., 2018 [61] demonstrated that quantization maintains accuracy levels near those of full-precision models with half the memory usage.
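The thresholding rule can be sketched directly. The weights and threshold below are hypothetical, and a practical pipeline would apply the rule per layer to framework tensors and then fine-tune; this example only shows the rule itself and the resulting sparsity.

```python
def prune_weights(weights, threshold):
    """Zero every weight whose magnitude is below the threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def sparsity(weights):
    """Fraction of weights that are exactly zero."""
    return sum(1 for w in weights if w == 0.0) / len(weights)


# Hypothetical weights of one small layer.
layer = [0.8, -0.02, 0.05, -0.6, 0.01, 0.3, -0.04, 0.09]
pruned = prune_weights(layer, threshold=0.1)
pruned_fraction = sparsity(pruned)
```

Zeroed weights only save memory and computation when the storage format or the hardware exploits sparsity, which is one reason reported compression ratios do not translate directly into energy savings.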

Model compression techniques like pruning and quantization reduce the computational load but risk weakening the accuracy essential for tasks like obstacle detection. Striking the right balance is crucial: too much compression can produce a lightweight model that reacts inaccurately to hazards, while insufficient compression may preserve accuracy at the cost of excessive energy consumption. Fine-tuning pruned models restores a portion of the lost accuracy, and mixed-precision training, which assigns higher precision to critical layers, helps keep responsiveness intact. These refinements allow UAVs to maintain safe navigation and real-time decision-making even within strict resource limits.

However, these methods can impact model accuracy, which is crucial for UAV applications like obstacle detection. Research by Ullrich et al., 2017 [64] highlighted how fine-tuning pruned networks can recover up to 95% of lost accuracy, and mixed-precision training, with higher precision in key layers, helps retain functionality in real-time tasks.

Energy efficiency is another critical issue, as UAVs rely on limited battery power, making it challenging to run complex neural networks without draining energy reserves. Specialized hardware, such as NVIDIA Jetson Nano and Field-Programmable Gate Arrays (FPGAs), enhances energy efficiency for such tasks. For instance, [62] found that GPU support optimized for inference tasks could improve energy efficiency in UAVs. Similarly, Liu et al., 2020 [63] showed that FPGAs, configured specifically for neural network operations, could reduce power consumption by up to 60%, making them suitable for repetitive tasks like obstacle detection.

Real-time data processing is vital for UAVs, as delayed responses could result in missed navigation cues or even collisions. To meet these real-time requirements, parallel processing and pipelined architectures are commonly used. For example, Zhang et al., 2020 [49] demonstrated that partitioning neural networks across multiple GPUs improved processing speed by 30%, allowing UAVs to handle complex environments in real-time. Likewise, Wang et al., 2022 [65] found that pipelining convolutional network layers on FPGAs increased inference speed by 40%, allowing multiple operations to be processed simultaneously, meeting stringent timing demands.

Integrating these methods addresses the primary challenges of implementing neural networks in UAVs, offering a balance between efficient model size, energy use, and real-time capability. Studies highlight that by combining model compression, hardware acceleration, accuracy recovery techniques, and dynamic processing strategies, UAVs can leverage neural networks for advanced capabilities even within their limited resources. Future advances could include adaptive model scaling for mission phases, bio-inspired algorithms that adjust to resource constraints, and hybrid cloud-edge architectures for offloaded computation, all potentially driving further efficiency and reliability in UAV neural network applications.

NVIDIA Jetson Nano boards and Field-Programmable Gate Arrays (FPGAs) can help UAVs overcome onboard computational bottlenecks and energy constraints. By accelerating neural network inference and offloading intensive computations, these platforms maintain real-time responsiveness while reducing the energy overhead of complex processing tasks. For instance, CNN-based obstacle detection can run efficiently on a Jetson Nano, and LSTM-driven trajectory updates can be handled by FPGA pipelines, ensuring consistent performance without overwhelming the UAV’s limited power supply.

5.2. Environmental Uncertainties

Environmental uncertainty, particularly variable cloud cover, fluctuating irradiance, and wind turbulence, directly affects the net energy balance of a solar-powered UAV. Studies show that a 15–30% drop in irradiance can reduce available propulsion energy by up to 22% during critical mission segments [2,12,17,21]. Yet most current research assumes simplified irradiance models or ideal weather conditions [2,12,21,33]. The lack of high-fidelity uncertainty models leads to overly optimistic performance claims. Future work should integrate probabilistic weather models, Monte Carlo irradiance forecasting, and uncertainty-aware reinforcement learning to quantify and mitigate performance degradation [2,21,33].
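A Monte Carlo treatment of irradiance uncertainty can be sketched as follows. Everything numeric here is a hypothetical scenario (hourly clear-sky profile, 2 m² panel, 20% efficiency, 100 W constant load, independent hourly cloud events with 30% attenuation); real studies would use measured or forecast irradiance and correlated cloud models.

```python
import random
import statistics


def simulate_net_energy(clear_sky_w, load_w, panel_area_m2, efficiency,
                        cloud_prob, cloud_attenuation, rng):
    """Net energy (Wh) over one day of hourly steps under random cloud cover."""
    net_wh = 0.0
    for irradiance in clear_sky_w:  # W/m^2, one value per hour
        if rng.random() < cloud_prob:
            irradiance *= 1.0 - cloud_attenuation
        harvested_w = irradiance * panel_area_m2 * efficiency
        net_wh += harvested_w - load_w  # 1-hour step, so W maps to Wh
    return net_wh


def monte_carlo(n_runs=2000, seed=0, **scenario):
    """Return mean, standard deviation, and shortfall probability of net energy."""
    rng = random.Random(seed)
    samples = [simulate_net_energy(rng=rng, **scenario) for _ in range(n_runs)]
    shortfall = sum(1 for s in samples if s < 0) / n_runs
    return statistics.mean(samples), statistics.stdev(samples), shortfall


daylight = [200, 400, 600, 800, 900, 1000, 1000, 900, 800, 600, 400, 200]
profile = [0] * 6 + daylight + [0] * 6  # 24 hourly values

mean_wh, spread_wh, shortfall = monte_carlo(
    clear_sky_w=profile, load_w=100.0, panel_area_m2=2.0,
    efficiency=0.2, cloud_prob=0.3, cloud_attenuation=0.3,
)
```

Reporting the spread and the shortfall probability alongside the mean is what distinguishes an uncertainty-aware evaluation from a single clear-sky run.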

Despite frequent mention of environmental uncertainty, few studies quantify its impact on computational strategy performance. For example, stochastic irradiance can cause deep reinforcement learning (DRL) agents to exhibit up to 20% higher variance in reward convergence, while genetic algorithms (GA) and particle swarm optimization (PSO) may require up to 50% more iterations to maintain feasible paths [5,25,33,34,45]. Slime Mould Algorithm (SMA)-based controllers diverge under rapid irradiance shifts if parameter tuning is static [54,55]. Providing quantitative benchmarks under controlled variability would enable more realistic cross-algorithm comparison and strengthen future review studies.

5.3. Computational Limitations

The deployment of complex neural networks and optimization algorithms on UAVs is often restricted by onboard computational resources, which need to balance power consumption, weight, and processing capability. Current onboard processors (e.g., Jetson Nano, STM32, Pixhawk) exhibit strict power and thermal limits. A full-size DRL model may require 5–20 W additional power, reducing endurance by 6–12% in typical solar UAVs [62,63]. Algorithms must therefore be evaluated not only by accuracy but by their energy overhead. Compression techniques such as pruning, 8-bit quantization, and weight-sharing-based compression can reduce model size by up to 90% while preserving more than 95% accuracy [28,61,64], but their impact on energy-aware decision latency must be rigorously benchmarked [62,63]. Most existing studies do not report these hardware-dependent trade-offs.

Utilizing hardware accelerators like field-programmable gate arrays (FPGAs) and application-specific integrated circuits (ASICs) can significantly enhance computational efficiency. These devices are optimized for matrix operations and deep learning inference, reducing computation time and power use. Techniques such as pruning, quantization, and model compression reduce memory usage and computation demands, making advanced algorithms viable for UAV applications.
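As a minimal sketch of what 8-bit quantization does to a set of weights, the following uses affine (asymmetric) quantization with a scale and zero point derived from the observed value range. It is a generic illustration, not the exact scheme of any cited work, and the weight values are hypothetical.

```python
def quantize_uint8(values):
    """Affine 8-bit quantization: returns integer codes, scale, and zero point."""
    lo, hi = min(values), max(values)
    lo, hi = min(lo, 0.0), max(hi, 0.0)   # keep 0.0 exactly representable
    scale = (hi - lo) / 255.0 or 1.0      # fall back to 1.0 for all-zero input
    zero_point = round(-lo / scale)
    codes = [min(255, max(0, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize(codes, scale, zero_point):
    """Map integer codes back to approximate real values."""
    return [scale * (q - zero_point) for q in codes]


weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
codes, scale, zero_point = quantize_uint8(weights)
restored = dequantize(codes, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each code fits in one byte, and the reconstruction error stays on the order of one quantization step, which is why accuracy often survives quantization when the weight range is well behaved.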

5.4. Regulatory and Safety Concerns

Ensuring safety and regulatory compliance is vital as UAV technology continues to evolve. The current regulatory frameworks often lag behind technological advancements, leading to challenges in maintaining safety and privacy standards.

Developing reliable autonomous collision avoidance systems is essential. These systems should integrate sensor fusion, using data from LiDAR, radar, cameras, and GPS to create an accurate real-time map for pathfinding and obstacle detection. Algorithms like Rapidly-exploring Random Trees (RRTs) and A* search help UAVs make immediate, safe navigation decisions.
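For reference, a compact A* search on an occupancy grid is sketched below. It assumes unit-cost moves in four directions and a Manhattan-distance heuristic; the grid layout is hypothetical, and an onboard planner would work on a fused 3D map rather than a small 2D array.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid where 1 marks an obstacle."""
    def h(cell):  # Manhattan distance: admissible for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]
    came_from = {start: None}
    cost_so_far = {start: 0}
    while frontier:
        _, cost, current = heapq.heappop(frontier)
        if current == goal:
            path = []
            while current is not None:  # walk parents back to the start
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if cost > cost_so_far[current]:
            continue  # stale queue entry
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nxt[0] < len(grid) and 0 <= nxt[1] < len(grid[0])):
                continue
            if grid[nxt[0]][nxt[1]] == 1:
                continue
            new_cost = cost + 1
            if new_cost < cost_so_far.get(nxt, float("inf")):
                cost_so_far[nxt] = new_cost
                came_from[nxt] = current
                heapq.heappush(frontier, (new_cost + h(nxt), new_cost, nxt))
    return None  # goal unreachable


grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
path = astar(grid, (0, 0), (2, 0))
```

The planner returns `None` when the goal is walled off, which a flight stack would need to treat as a trigger for a fallback behaviour such as hold or return.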

UAV systems must adapt to regional regulations, which include geo-fencing technology, altitude limits, and automatic dependent surveillance-broadcast (ADS-B) systems for real-time location tracking. Engineers need to consider these evolving global standards to ensure UAV compliance.

5.5. Energy-Harvesting Efficiency

Improving energy harvesting is crucial for extending the flight duration of solar-powered UAVs. Although traditional silicon-based photovoltaic cells are common, their efficiency remains limited.

Research into perovskite solar cells, which can achieve higher efficiency levels (up to 30%) and offer flexibility, shows promise for UAV applications [66,67]. However, their environmental stability needs improvement, which can be achieved through hybrid structures and protective coatings.

Integrating thermoelectric generators (TEGs) to convert temperature differences into electrical energy can complement solar energy during low sunlight periods. Lightweight nano-materials like graphene and carbon nanotubes are being studied to improve TEG conversion efficiency and reduce UAV weight.

5.6. Swarm Coordination

Swarm operations offer opportunities for UAVs to perform wide-area tasks and complex missions, such as search-and-rescue operations. However, coordinating multiple UAVs effectively presents challenges in communication and control.

Algorithms inspired by natural behaviours, like Boids and ant colony optimization (ACO), can enable UAVs to maintain formation and adapt to environmental changes autonomously. These systems must be designed to handle communication latency and packet loss without compromising swarm performance.
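The three Boids rules (cohesion, alignment, and separation) can be sketched in a few lines for a planar swarm. The weights, neighbourhood radius, and minimum distance below are arbitrary assumed values, and the model ignores vehicle dynamics and communication effects.

```python
import math


def boids_step(positions, velocities, radius=10.0, min_dist=2.0,
               w_coh=0.01, w_ali=0.05, w_sep=0.1, dt=1.0):
    """Advance a 2-D swarm by one step using cohesion, alignment, and separation."""
    new_velocities = []
    for i, (p, v) in enumerate(zip(positions, velocities)):
        neighbours = [j for j, q in enumerate(positions)
                      if j != i and math.dist(p, q) < radius]
        ax = ay = 0.0
        if neighbours:
            n = len(neighbours)
            # Cohesion: steer toward the neighbours' centre of mass.
            cx = sum(positions[j][0] for j in neighbours) / n
            cy = sum(positions[j][1] for j in neighbours) / n
            ax += w_coh * (cx - p[0])
            ay += w_coh * (cy - p[1])
            # Alignment: match the neighbours' mean velocity.
            mvx = sum(velocities[j][0] for j in neighbours) / n
            mvy = sum(velocities[j][1] for j in neighbours) / n
            ax += w_ali * (mvx - v[0])
            ay += w_ali * (mvy - v[1])
            # Separation: push away from neighbours that are too close.
            for j in neighbours:
                if math.dist(p, positions[j]) < min_dist:
                    ax += w_sep * (p[0] - positions[j][0])
                    ay += w_sep * (p[1] - positions[j][1])
        new_velocities.append((v[0] + ax * dt, v[1] + ay * dt))
    new_positions = [(p[0] + v[0] * dt, p[1] + v[1] * dt)
                     for p, v in zip(positions, new_velocities)]
    return new_positions, new_velocities


if __name__ == "__main__":
    pos, vel = boids_step([(0.0, 0.0), (4.0, 0.0)], [(0.0, 0.0), (0.0, 0.0)])
    print(pos, vel)
```

Because each agent uses only the states of nearby neighbours, a delayed or lost message degrades one local estimate rather than halting the whole swarm.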

Robust consensus algorithms, such as the Distributed Consensus-Based Algorithm (DCBA), help UAVs agree on shared tasks and flight paths. This is crucial for cooperative missions like perimeter surveillance, where data from multiple UAVs contribute to a single mission goal.

Multi-UAV coordination introduces compounded uncertainty, communication delays, bandwidth fluctuation, and partial observability. Simulations often assume ideal communication links, while real-world tests show packet loss of 5–15% under moderate wind or urban obstruction, causing swarm divergence or premature landing [9,14,39]. Future research must evaluate coordination strategies under realistic communication constraints and develop consensus algorithms that maintain stability even under intermittent connectivity [52,53,55].
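The effect of lossy links on agreement can be explored with a plain average-consensus iteration, sketched below. This is a generic textbook scheme and not the DCBA cited above; the ring topology, step size, 15% loss probability, and the assumption that a dropped link fails in both directions at once are all choices made for this illustration.

```python
import random


def consensus_round(values, edges, step, loss_prob, rng):
    """One synchronous round of average consensus over undirected links.

    Each link is dropped with probability loss_prob; a surviving link moves
    both endpoints toward each other, so the swarm-wide sum is preserved.
    """
    updated = list(values)
    for i, j in edges:
        if rng.random() < loss_prob:
            continue  # packet lost on this link in this round
        delta = step * (values[j] - values[i])
        updated[i] += delta
        updated[j] -= delta
    return updated


if __name__ == "__main__":
    rng = random.Random(0)                     # fixed seed for repeatability
    ring = [(0, 1), (1, 2), (2, 3), (3, 0)]    # four UAVs in a ring
    altitudes = [100.0, 120.0, 80.0, 140.0]    # initial proposals in metres
    for _ in range(200):
        altitudes = consensus_round(altitudes, ring, 0.25, 0.15, rng)
    print(altitudes)
```

With symmetric losses the agents still agree on the mean of the initial proposals, only more slowly; asymmetric losses would break this invariant, which is one reason intermittent connectivity needs dedicated treatment.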

5.7. Integration of Emerging Technologies

The future of UAV path planning will also hinge on edge computing and federated learning, especially for fleets of networked UAVs. In traditional setups, computationally heavy tasks (like retraining a path planning model or processing high-volume sensor data) either run onboard with strict resource limits or offload entirely to ground stations or the cloud. Edge computing offers a middle ground: processing is distributed across nearby edge servers or among the UAVs themselves, reducing latency and dependence on constant connectivity. For example, a solar-powered UAV could offload portions of neural network inference or optimization calculations to a proximal edge node (such as a ground control station or another UAV with free capacity) to save its own battery and computation cycles. This hybrid computation model has been shown to improve real-time responsiveness without overtaxing any single platform. Recent case studies demonstrate that using lightweight onboard AI together with edge-offloading can maintain high-frequency path re-planning (for obstacle avoidance and energy optimization) while keeping UAV energy consumption low. In one instance, an obstacle detection CNN running partly on an NVIDIA Jetson Nano onboard and partly on an edge server achieved a 30% faster inference rate than an onboard-only setup, with negligible loss in accuracy.
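The offloading trade-off described above can be expressed as a simple decision rule: offload only when doing so is both faster and cheaper in onboard energy than computing locally. The sketch below is a hypothetical first-order model; every parameter name and every value in the demo is an assumption for illustration, not a measurement from the studies discussed.

```python
def should_offload(payload_bits, link_rate_bps, rtt_s,
                   local_time_s, edge_time_s,
                   tx_power_w, compute_power_w):
    """Return True when offloading beats local execution in time and onboard energy."""
    if link_rate_bps <= 0:
        return False  # no usable link, so the task must run onboard
    transfer_time_s = payload_bits / link_rate_bps
    offload_time_s = transfer_time_s + rtt_s + edge_time_s
    offload_energy_j = tx_power_w * transfer_time_s   # radio energy spent by the UAV
    local_energy_j = compute_power_w * local_time_s   # processor energy spent by the UAV
    return offload_time_s < local_time_s and offload_energy_j < local_energy_j


if __name__ == "__main__":
    # Assumed: 1 Mbit frame, 20 Mbit/s link, 10 ms round trip,
    # 200 ms onboard inference vs 30 ms on the edge node.
    print(should_offload(1e6, 20e6, 0.01, 0.2, 0.03, 1.0, 5.0))
    # The same task over a 2 Mbit/s link is better kept onboard.
    print(should_offload(1e6, 2e6, 0.01, 0.2, 0.03, 1.0, 5.0))
```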

In parallel, federated learning (FL) is emerging as a way to leverage data from multiple UAVs to improve path planning models collaboratively. In a federated learning paradigm, each UAV (or edge node) trains a local neural network on its own flight data (e.g., experienced wind patterns, solar conditions, obstacles encountered) and periodically shares only the model updates, not the raw data, with a central server or among peers. This allows the global model to improve using knowledge from the entire fleet, without requiring UAVs to transmit large datasets or violate privacy/security by revealing sensitive observations. In the context of multi-UAV path planning, FL can enable the fleet to learn a common policy or value function for energy-efficient routing in diverse environments. For example, a group of solar UAVs surveying different regions can collectively train a neural network that predicts the best altitude to maximize solar gain given weather forecasts, by aggregating their learned parameters. This approach was noted as a key future challenge in making UAV AI more robust, since it can significantly accelerate learning while being bandwidth-efficient and resilient to intermittent connectivity.
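The aggregation step at the heart of this scheme can be sketched as a sample-weighted average of client parameters, in the style of federated averaging. The two-parameter linear model, the learning rate, and the function names below are assumptions made to keep the example self-contained; they stand in for the neural networks discussed above.

```python
def local_update(weights, data, lr=0.01, epochs=5):
    """A few gradient-descent steps on a local linear model y = w0 + w1 * x."""
    w0, w1 = weights
    for _ in range(epochs):
        g0 = g1 = 0.0
        for x, y in data:
            err = (w0 + w1 * x) - y
            g0 += err
            g1 += err * x
        w0 -= lr * g0 / len(data)
        w1 -= lr * g1 / len(data)
    return [w0, w1]


def federated_average(client_weights, client_sizes):
    """Sample-weighted average of client parameter vectors."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [sum(w[k] * n for w, n in zip(client_weights, client_sizes)) / total
            for k in range(n_params)]


if __name__ == "__main__":
    # Hypothetical local datasets held by two UAVs; only weights leave each vehicle.
    uav_data = [[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]]
    global_w = [0.0, 0.0]
    for _ in range(50):
        local = [local_update(global_w, d) for d in uav_data]
        global_w = federated_average(local, [len(d) for d in uav_data])
    print(global_w)
```

Only the two-element weight vectors cross the radio link in each round, which is the source of the bandwidth saving relative to sharing raw flight logs.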

Initial studies on federated deep reinforcement learning for UAVs show promising results, with UAVs benefiting from each other’s experiences to speed up convergence to optimal paths. One recent work reported that federated training of a UAV path planning DQN across four drones led to a 15% increase in cumulative reward (energy efficiency) compared to training each drone’s model independently, while reducing the communication load by transmitting only model weights. Moreover, FL naturally accommodates edge-of-network computation: each UAV’s updates can be computed at the edge (onboard or at a nearby base station), and only minimal information is sent over the air. This synergy between federated learning and edge computing aligns well with the distributed nature of UAV networks.

Overall, incorporating edge computing and federated learning can address the scalability and privacy challenges of deploying advanced AI in UAV swarms. By processing data locally (or on nearby infrastructure) and sharing insights rather than raw data, these methods improve real-time performance and robustness. A solar-powered UAV network can thus continuously refine its path optimization policies as a whole, learning from collective experience while each UAV remains self-reliant and energy-efficient. Embracing these technologies will be crucial for the next generation of persistent UAV operations, where swarms of solar-powered drones collaboratively map, monitor, and navigate vast areas without centralized control.

5.8. Research Gaps

Despite significant advancements, notable research gaps remain in the field of solar-powered UAVs. Current studies often do not integrate real-time environmental data effectively into flight path optimization, and adaptive energy management systems capable of reacting to sudden energy changes are lacking.

While bio-inspired algorithms, such as those based on slime mould behaviour, show promise for optimization, their practical application in UAVs requires further exploration. Research should investigate how biomimicry can be used to create more adaptive, resilient control systems that respond to dynamic conditions.

Emphasis should be placed on validating bio-inspired approaches through experimental flight tests and real-time simulations. This includes developing systems that can adapt their parameters based on live data, bridging the gap between theoretical models and practical use.

Table 5 summarizes these gaps across various areas, including flight path optimization, neural network optimization techniques, integration of biomimicry principles, energy management systems, real-time environmental data integration, aerodynamic design, and solar energy capture efficiency.

Table 5. Research gaps.

Several promising research avenues can address current limitations and enhance energy efficiency in solar-powered UAVs. First, developing hybrid control frameworks that integrate reinforcement learning with bio-inspired optimization could yield systems capable of continuously adapting to fluctuating conditions. These frameworks would manage energy flow, obstacle avoidance, and flight stability more cohesively, improving resilience against unpredictable environmental changes.

Second, exploring specialized low-power hardware accelerators, such as application-specific integrated circuits or next-generation FPGAs, could support real-time inference from deep neural models without draining critical energy reserves. By synergizing advanced architectures with lightweight computation, researchers can unlock more powerful onboard AI capabilities.

Third, investigating cooperative multi-UAV missions where platforms share data, coordinate tasks, and collectively respond to environmental cues can significantly boost operational efficiency. Distributed decision-making strategies, supported by robust communication protocols, would allow swarms of UAVs to adapt flight patterns and resource allocation dynamically. These three areas (hybrid intelligence, hardware acceleration, and cooperative strategies) are paramount to extending flight times, improving mission success rates, and ensuring that solar-powered UAVs meet the evolving demands of future aerial applications.

6. Conclusions

This review systematically examined optimization techniques for UAV path planning—with a particular focus on energy-aware routing, dynamic-environment adaptability, and real-time feasibility. By comparing evolutionary algorithms, swarm-based methods, bio-inspired metaheuristics, neural-network-based models, and deep reinforcement learning (DRL), several cross-study patterns emerge.

First, swarm-based optimizers such as PSO and GWO consistently achieve faster convergence and lower computational load, making them suitable for real-time trajectory adjustments in dynamic environments. In contrast, GA and other evolutionary strategies show stronger global search performance but generally require higher computational resources and longer tuning times, limiting their practicality for onboard execution.

Second, bio-inspired algorithms such as SMA demonstrate strong robustness under environmental uncertainty, including variable solar irradiance, wind disturbances, and long-duration operations. Studies that integrate energy-harvesting models report that these methods maintain stable performance even when the energy budget fluctuates significantly.

Third, learning-based methods diverge in capability. Traditional neural networks excel in low-dimensional tasks such as collision-avoidance prediction, but DRL provides the strongest adaptability in highly dynamic and partially observable environments, especially when paired with model-based or hierarchical variants. However, the training cost and sample inefficiency of DRL currently limit its deployment in fully onboard embedded systems.

Across the reviewed literature, two additional trends are evident.

(1) Hybrid approaches outperform single-method algorithms, especially combinations such as PSO-GA, DRL-PSO, or NN-GWO, which leverage complementary strengths in exploration, prediction, and policy adaptation.

(2) Successful real-world UAV software implementations almost always integrate perception, control, and optimization jointly rather than treating path planning as an isolated module.

Overall, no algorithm is universally optimal; instead, the most effective method depends on mission constraints. For energy-constrained solar-powered UAVs, SMA and hybrid swarm-based methods are most promising. For high-speed dynamic missions, PSO and GWO remain strong candidates due to their computational economy. For complex multi-agent or uncertain environments, DRL-based frameworks offer the greatest adaptability provided training resources are available.

Future work should therefore prioritize:

(i) Hybrid algorithm architectures that combine global and local search capabilities;

(ii) Energy-aware DRL models that incorporate full UAV power-budget dynamics;

(iii) Unified benchmark environments to allow performance comparison across studies.

This synthesis highlights not only the current capabilities of optimization strategies for UAV path planning but also the practical considerations needed to transition these methods toward robust, real-world autonomous flight systems.

Author Contributions

Conceptualization, G.H., M.T.H.S., and A.Ł.; methodology, G.H., F.S.S., and M.N.; software, G.H.; validation, M.T.H.S., A.Ł., M.N. and F.S.S.; formal analysis, G.H., M.T.H.S., M.N. and A.Ł.; investigation, G.H. and F.S.S.; resources, G.H.; data curation, G.H.; writing, original draft preparation, G.H.; writing, review and editing, M.T.H.S., A.Ł., M.N. and F.S.S.; visualization, G.H.; supervision, M.T.H.S., A.Ł., M.N. and F.S.S.; project administration, F.S.S.; funding acquisition, M.T.H.S., M.N., and A.Ł. All authors have read and agreed to the published version of the manuscript.

Funding

The authors are grateful for the financial support given by The Ministry of Higher Education Malaysia (MOHE) under the Higher Institution Centre of Excellence (HICOE2.0/5210004) at the Institute of Tropical Forestry and Forest Products. This research was partially financed by the Ministry of Science and Higher Education of Poland with allocation to the Faculty of Mechanical Engineering, Bialystok University of Technology, for the WZ/WM-IIM/5/2023 academic projects in the mechanical engineering discipline and by research 159/2025 PR at Military Institute of Armoured and Automotive Technology.

Data Availability Statement

No new data were created or analyzed in this study.

Acknowledgments

The authors would also like to express their gratitude to the Department of Aerospace Engineering, Faculty of Engineering, Universiti Putra Malaysia for their close collaboration in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| ACO | Ant Colony Optimization |

| ADS-B | Automatic Dependent Surveillance-Broadcast |

| AI | Artificial Intelligence |

| APF | Artificial Potential Field |

| ASIC | Application-Specific Integrated Circuit |

| BINN | Bio-Inspired Neural Network |

| B-APFDQN | An algorithm combining DQN and APF |

| CNN | Convolutional Neural Network |

| DCBA | Distributed Consensus-Based Algorithm |

| DE | Differential Evolution |

| DEAP | Distributed Evolutionary Algorithms in Python |

| DNN | Deep Neural Network |

| DQN | Deep Q-Network |

| DRL | Deep Reinforcement Learning |

| EA | Evolutionary Algorithm |

| ESMOML-RAA | Enhanced Slime Mould Optimization with Deep-Learning-Based Resource Allocation Approach |

| FL | Federated Learning |

| FPGA | Field-Programmable Gate Array |

| GA | Genetic Algorithm |

| GPS | Global Positioning System |

| GPU | Graphics Processing Unit |

| GWO | Grey Wolf Optimizer |

| HALE | High-Altitude Long-Endurance |

| HAPS | High-Altitude Pseudo-Satellite |

| HAS-DQN | Hexagonal Search and Deep Q-Network algorithm |

| HDGWO | Hybrid Discrete Grey Wolf Optimizer |

| HHO | Harris Hawks Optimization |

| HP-LSTM | Highly Parallelized Long Short-Term Memory |

| IoT | Internet of Things |

| LiDAR | Light Detection and Ranging |

| LP | Linear Programming |

| LSTM | Long Short-Term Memory |

| MADDPG | Multi-Agent Deep Deterministic Policy Gradient |

| MATD3 | Multi-Agent Twin Delayed Deep Deterministic Policy Gradient |

| MPC | Model Predictive Control |

| MPPT | Maximum Power Point Tracking |

| NLP | Nonlinear Programming |

| PID | Proportional-Integral-Derivative (controller) |