Electric Vehicle Energy Management Under Unknown Disturbances from Undefined Power Demand: Online Co-State Estimation via Reinforcement Learning

Abstract

1. Introduction

- Unlike conventional reinforcement learning schemes [21,22,23], which typically rely on iterative learning and extensive offline training, the proposed approach employs a co-state network that is trained solely using online data in real time. This design enables the formulation of an optimal energy management controller without requiring a comprehensive dataset or prior knowledge of the entire operational domain. Additionally, the framework inherently avoids the curse of dimensionality, making it well-suited for practical deployment in embedded systems.

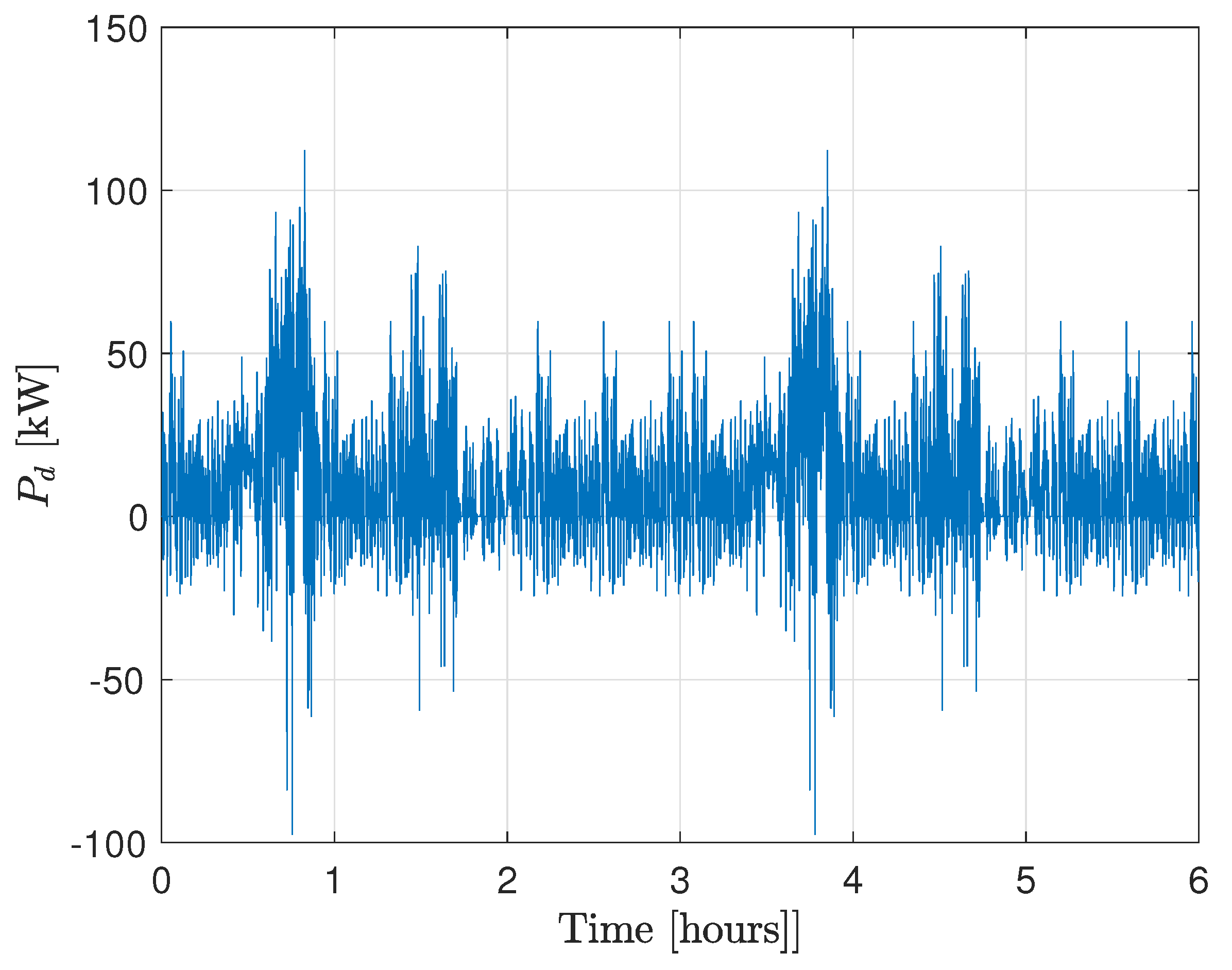

- Power consumed by air-conditioning systems, time-varying road slopes and conditions, passenger support systems, and other onboard loads is treated as an unknown disturbance, and the robustness of the proposed scheme against this disturbance is demonstrated both in practical scenarios and through theoretical analysis.

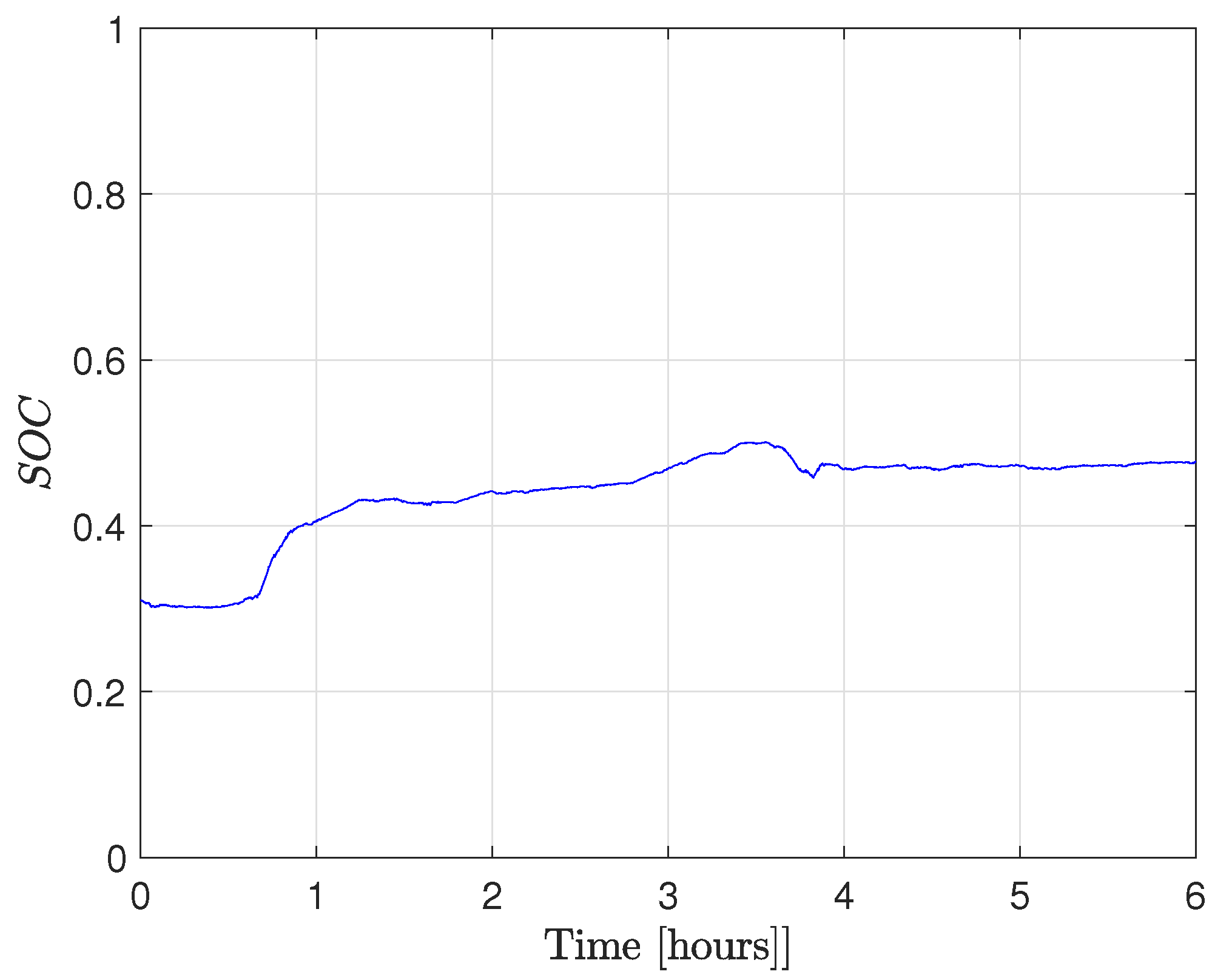

- From the perspective of energy management as a control system, the desired state of charge (SOC) is formulated as the reference trajectory, while the optimal control input is computed using the proposed control law under full operational constraints.

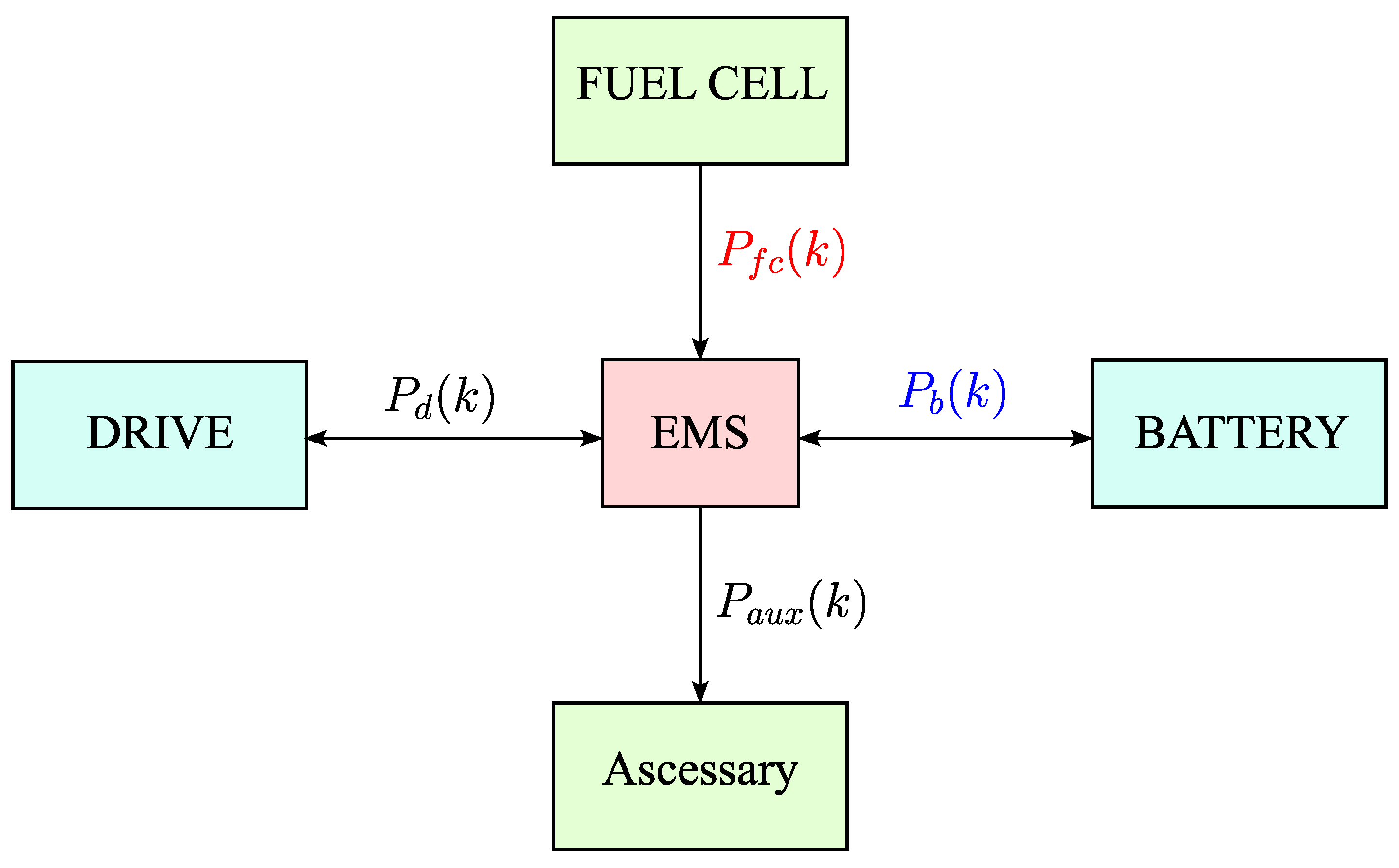
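The three contributions above (online co-state learning, unknown power demand treated as a disturbance, and the desired SOC as a reference trajectory) can be illustrated with a small sketch. It is not the authors' MiFREN-based estimator: it swaps in a generic dual-heuristic-programming style update with a one-parameter linear critic, λ̂(e) = w·e, on a hypothetical scalar SOC model x(k+1) = x(k) + b·u(k) + d(k). The constants `b`, `q`, `rho`, `gamma`, `alpha`, the input bound and the disturbance range are all illustrative assumptions. The controller never sees `d`; it only observes the next SOC and uses it to build the co-state target λ(k) = 2q·e(k) + γ·λ̂(k+1).

```python
import random


def run_online_costate(steps=2000, x0=0.3, x_ref=0.6, seed=0):
    """Online DHP-style co-state learning for a scalar SOC tracking loop.

    Hypothetical model (not from the article): x(k+1) = x(k) + b*u(k) + d(k),
    where d(k) is an unmeasured disturbance. Stage cost r = q*e^2 + rho*u^2,
    with tracking error e = x - x_ref (the desired SOC is the reference).
    """
    b, q, rho, gamma = 0.05, 1.0, 0.1, 0.95   # illustrative constants
    alpha, u_max = 1.0, 1.0                    # learning rate, input bound
    rng = random.Random(seed)
    w = 2.0 * q                                # critic: lambda_hat(e) = w * e
    x = x0
    history = []
    for _ in range(steps):
        e = x - x_ref
        # Stationarity 2*rho*u + gamma*b*lambda(k+1) = 0 with
        # lambda(k+1) ~ w*(e + b*u); solved for u, ignoring the unknown d(k).
        u = -gamma * b * w * e / (2.0 * rho + gamma * b * b * w)
        u = max(-u_max, min(u_max, u))         # actuator constraint
        d = rng.uniform(-0.002, 0.002)         # unmeasured disturbance (illustrative)
        x_next = x + b * u + d                 # plant step: only x_next is observed
        e_next = x_next - x_ref
        # Discrete co-state equation (df/dx = 1): lambda(k) = 2*q*e(k) + gamma*lambda(k+1)
        target = 2.0 * q * e + gamma * w * e_next
        # Gradient step on the squared co-state error, using online data only
        w += alpha * (target - w * e) * e
        w = max(w, 0.0)                        # keep the critic slope non-negative
        x = x_next
        history.append(x)
    return w, history


w, soc = run_online_costate()
print(f"learned critic slope w = {w:.2f}, final SOC = {soc[-1]:.3f}")
```

No offline dataset is used: each update relies only on the current and next measured SOC, which is the property the first bullet emphasizes. A richer critic (for example a fuzzy-rule network) would replace the single weight `w`.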

2. Problem Formulation with EV-EMS Framework

2.1. A Class of Control Systems Based on Model-Free EV-EMS

2.2. Characterization of the Optimal Solution

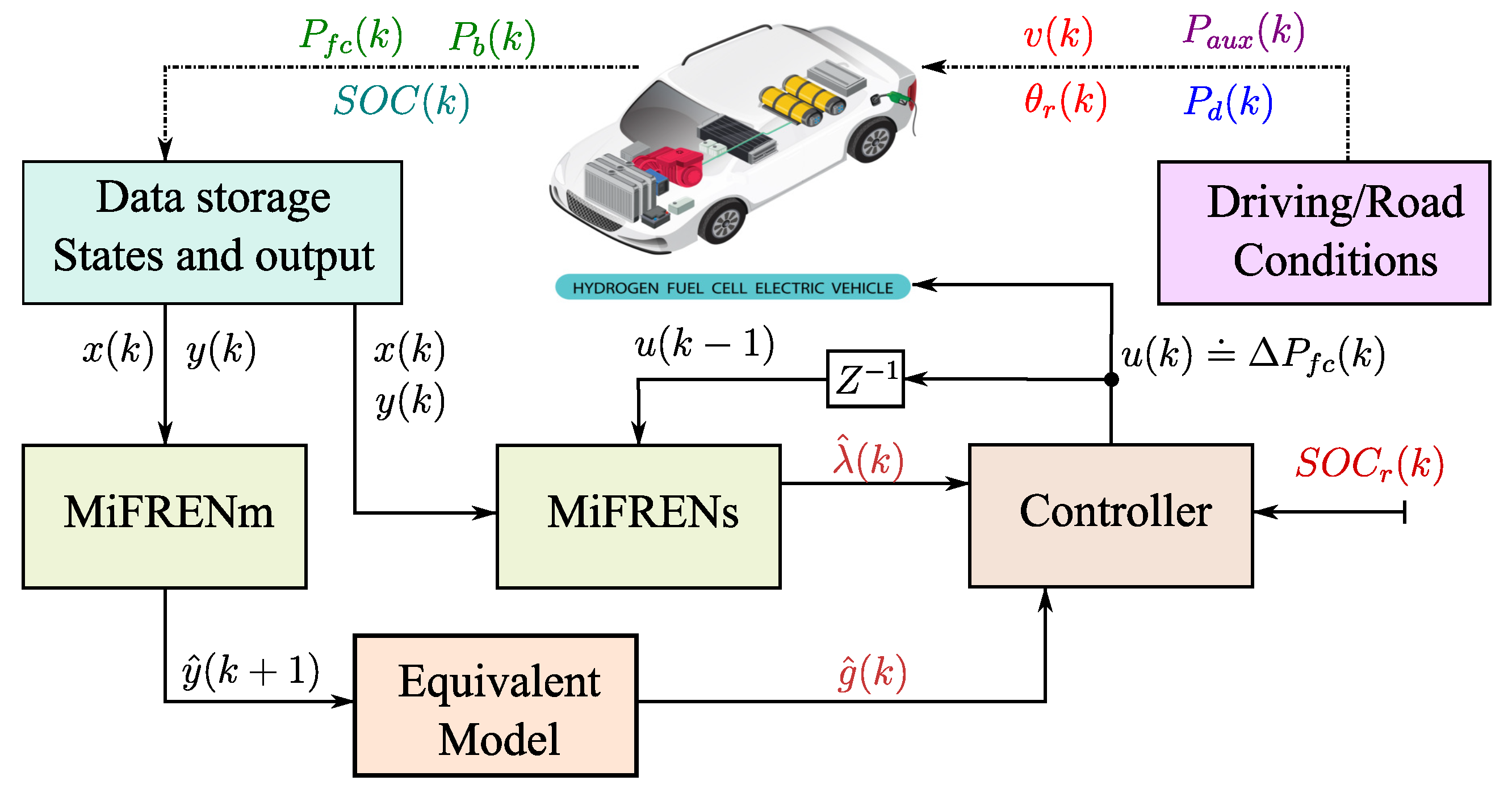

3. Controller as EMS with MiFREN-Estimators

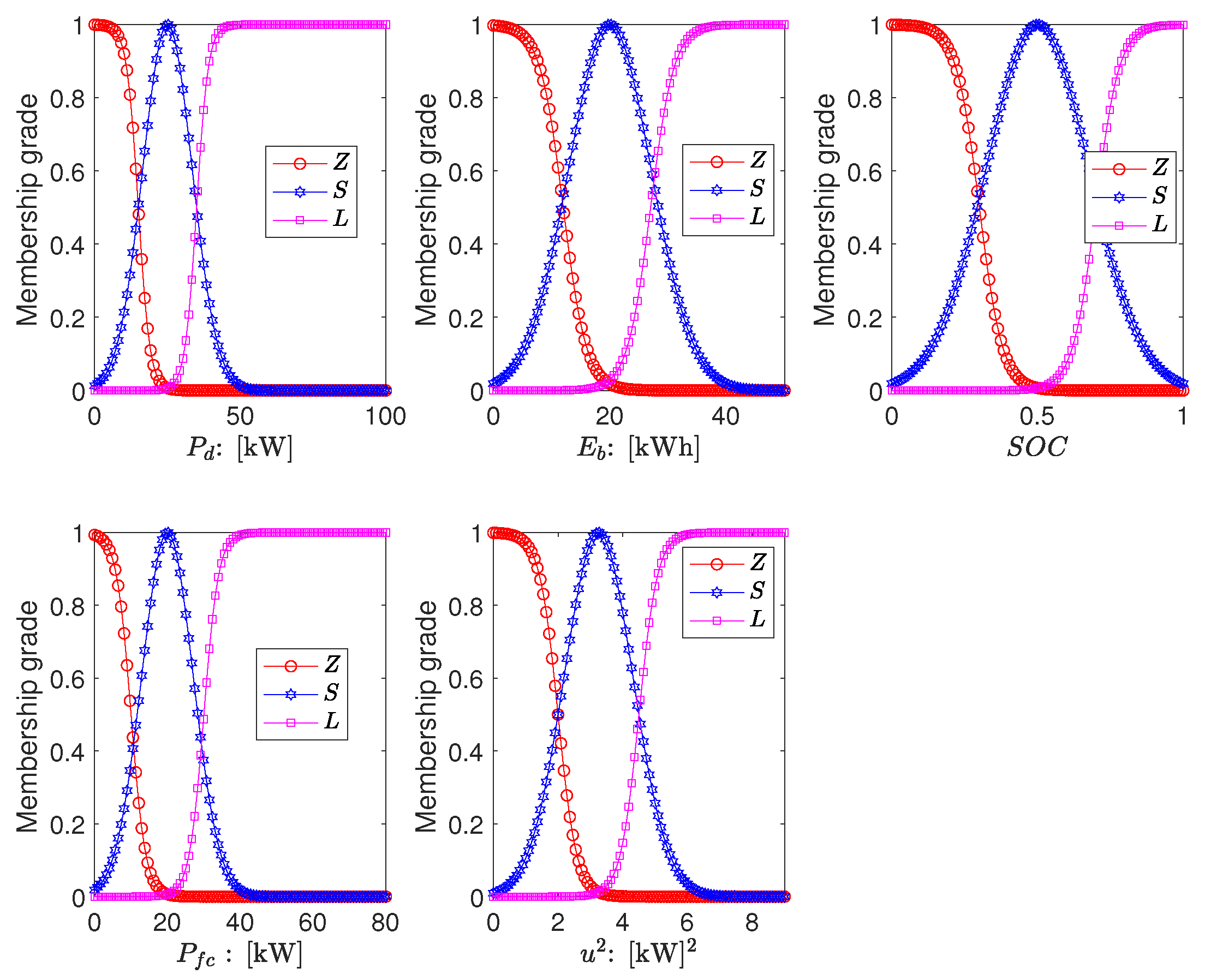

3.1. Dynamic Equivalent Model

3.2. Co-State Estimation

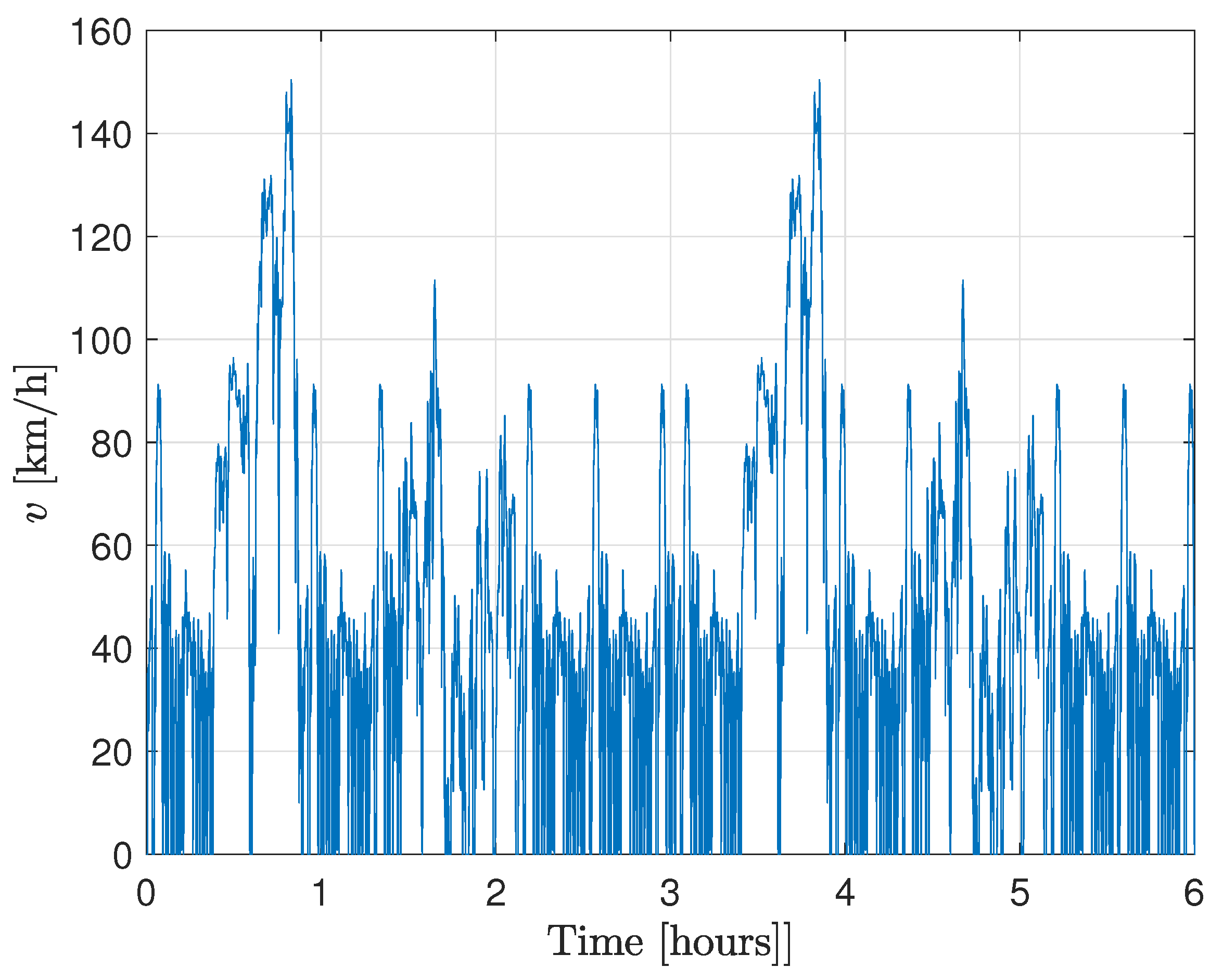

4. Validation and Comparative Results

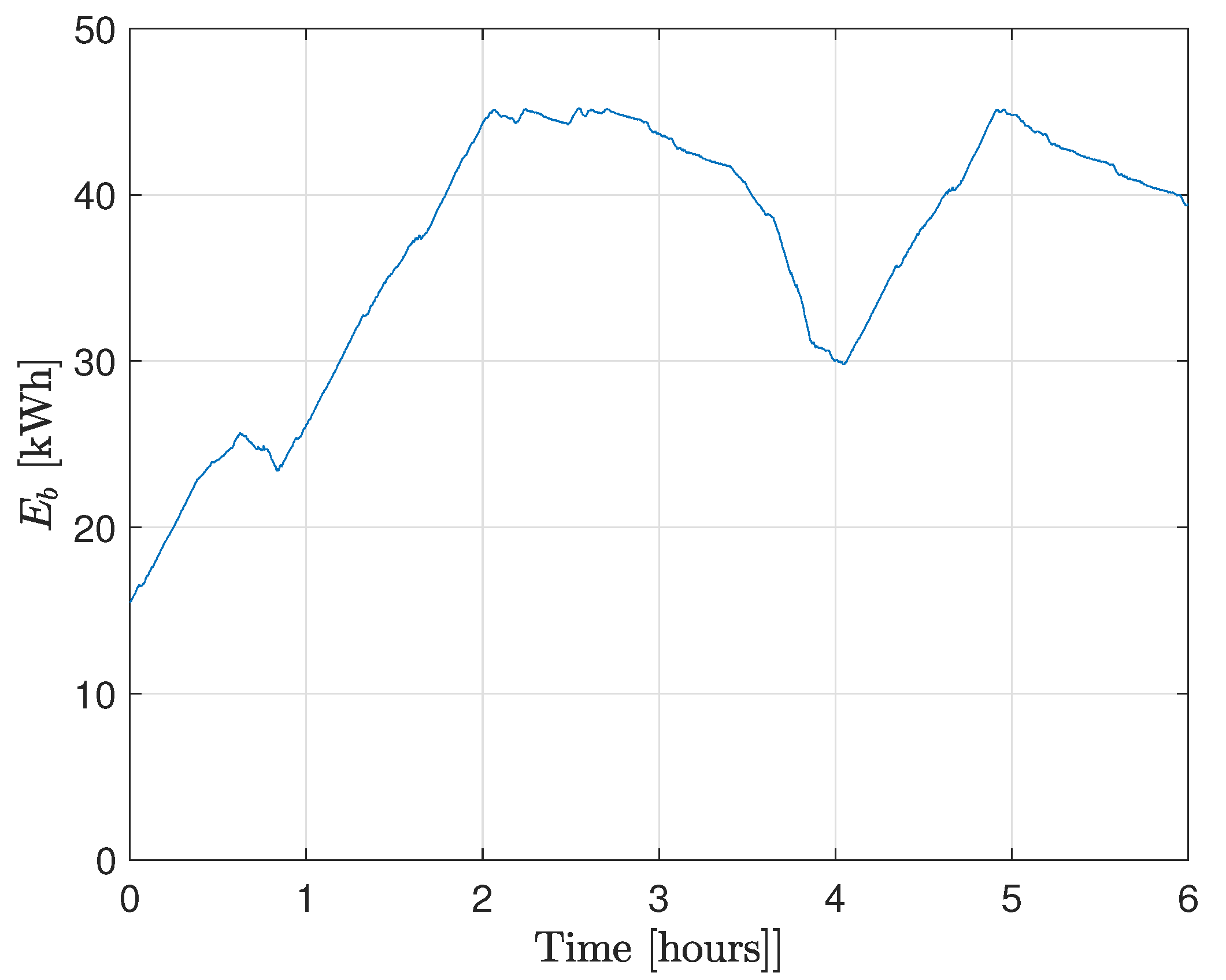

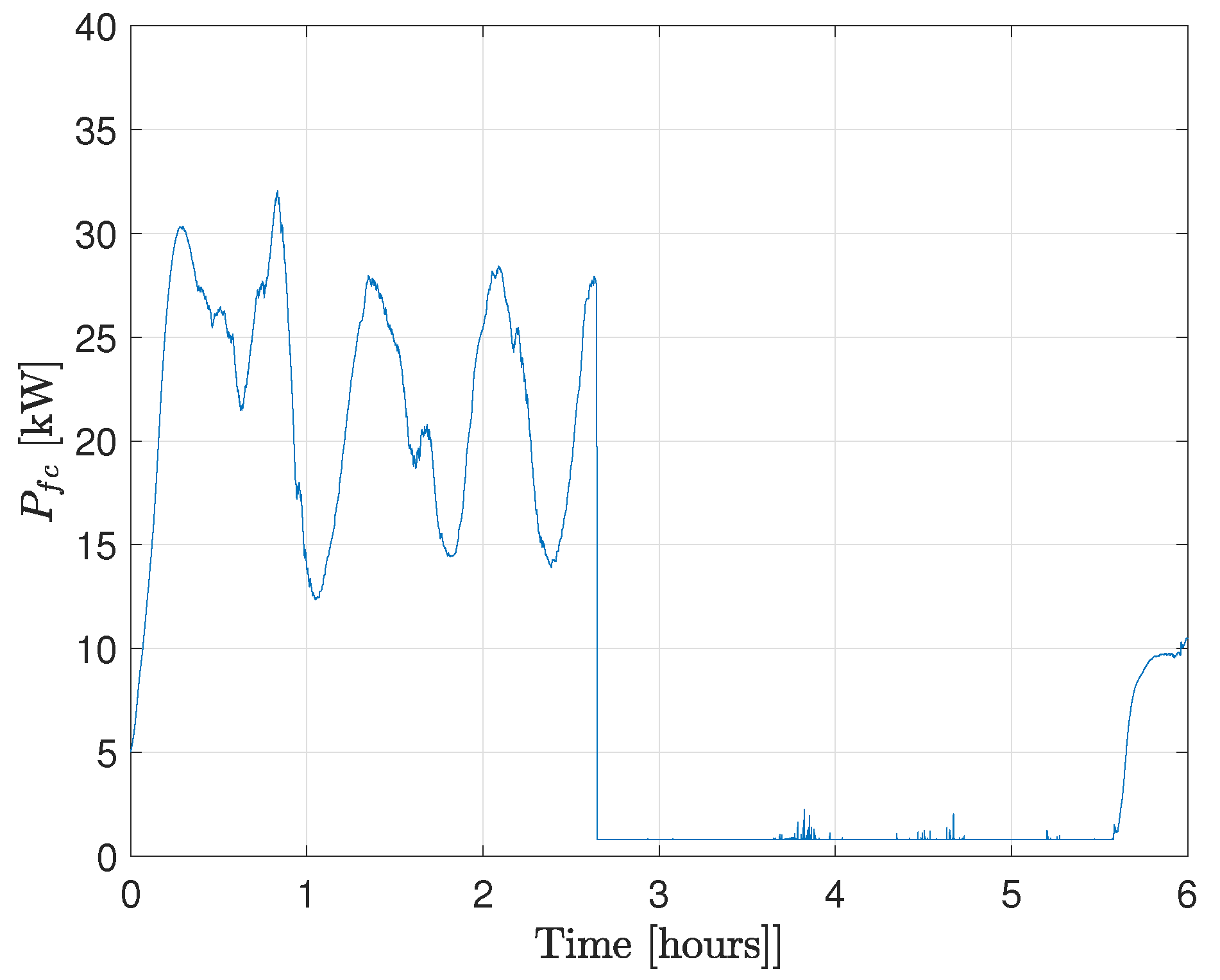

4.1. Validation Results

4.2. Comparative Results

4.2.1. Comparative Controller A

4.2.2. Comparative Controller B

5. Conclusions

- Stable battery operation with SOC maintained within a practical range;

- A significant reduction in high-frequency fluctuations of fuel cell power output compared to benchmark controllers;

- Improved overall energy efficiency relative to constant SOC and soft actor–critic methods.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Sidharthan, V.P.; Kashyap, Y.; Kosmopoulos, P. Adaptive-Energy-Sharing-Based Energy Management Strategy of Hybrid Sources in Electric Vehicles. Energies 2023, 16, 1214.

2. Deng, L.; Li, S.; Tang, X.; Yang, K.; Lin, X. Battery thermal- and cabin comfort-aware collaborative energy management for plug-in fuel cell electric vehicles based on the soft actor–critic algorithm. Energy Convers. Manag. 2023, 283, 116889.

3. Chan, C.C. The State of the Art of Electric, Hybrid, and Fuel Cell Vehicles. Proc. IEEE 2007, 95, 704–718.

4. Gioffrè, D.; Manzolini, G.; Leva, S.; Jaboeuf, R.; Tosco, P.; Martelli, E. Quantifying the Economic Advantages of Energy Management Systems for Domestic Prosumers with Electric Vehicles. Energies 2025, 18, 1774.

5. Wang, C.; Liu, Y.; Zhang, Y.; Xi, L.; Yang, N.; Zhao, Z.; Lai, C.S.; Lai, L.L. Strategy for optimizing the bidirectional time-of-use electricity price in multi-microgrids coupled with multilevel games. Energy 2025, 323, 135731.

6. Maroufi, S.M.; Karrari, S.; Rajashekaraiah, K.; De Carne, G. Power Management of Hybrid Flywheel-Battery Energy Storage Systems Considering the State of Charge and Power Ramp Rate. IEEE Trans. Power Electron. 2025, 40, 9944–9956.

7. Nawaz, M.; Ahmed, J.; Abbas, G. Energy-efficient battery management system for healthcare devices. J. Energy Storage 2022, 51, 104358.

8. Uralde, J.; Barambones, O.; del Rio, A.; Calvo, I.; Artetxe, E. Rule-Based Operation Mode Control Strategy for the Energy Management of a Fuel Cell Electric Vehicle. Batteries 2024, 10, 214.

9. Li, Y.; Pu, Z.; Liu, P.; Qian, T.; Hu, Q.; Zhang, J.; Wang, Y. Efficient predictive control strategy for mitigating the overlap of EV charging demand and residential load based on distributed renewable energy. Renew. Energy 2025, 240, 122154.

10. Kim, D.J.; Kim, B.; Yoon, C.; Nguyen, N.D.; Lee, Y.I. Disturbance Observer-Based Model Predictive Voltage Control for Electric-Vehicle Charging Station in Distribution Networks. IEEE Trans. Smart Grid 2023, 14, 545–558.

11. Khan, B.; Ullah, Z.; Gruosso, G. Enhancing Grid Stability Through Physics-Informed Machine Learning Integrated-Model Predictive Control for Electric Vehicle Disturbance Management. World Electr. Veh. J. 2025, 16, 292.

12. Khan, K.; Samuilik, I.; Ali, A. A Mathematical Model for Dynamic Electric Vehicles: Analysis and Optimization. Mathematics 2024, 12, 224.

13. Previti, U.; Brusca, S.; Galvagno, A.; Famoso, F. Influence of Energy Management System Control Strategies on the Battery State of Health in Hybrid Electric Vehicles. Sustainability 2022, 14, 12411.

14. Meteab, W.K.; Alsultani, S.A.H.; Jurado, F. Energy Management of Microgrids with a Smart Charging Strategy for Electric Vehicles Using an Improved RUN Optimizer. Energies 2023, 16, 6038.

15. Shen, Y.; Li, Y.; Liu, D.; Wang, Y.; Sun, J.; Sun, S. Energy Management Strategy for Hybrid Energy Storage System based on Model Predictive Control. J. Electr. Eng. Technol. 2023, 18, 3265–3275.

16. Oksuztepe, E.; Yildirim, M. PEM fuel cell and supercapacitor hybrid power system for four in-wheel switched reluctance motors drive EV using geographic information system. Int. J. Hydrogen Energy 2024, 75, 74–87.

17. Gao, H.; Yin, B.; Pei, Y.; Gu, H.; Xu, S.; Dong, F. An energy management strategy for fuel cell hybrid electric vehicle based on a real-time model predictive control and pontryagin’s maximum principle. Int. J. Green Energy 2024, 21, 2640–2652.

18. Liu, W.; Yao, P.; Wu, Y.; Duan, L.; Li, H.; Peng, J. Imitation reinforcement learning energy management for electric vehicles with hybrid energy storage system. Appl. Energy 2025, 378, 124832.

19. Han, R.; He, H.; Wang, Y.; Wang, Y. Reinforcement Learning Based Energy Management Strategy for Fuel Cell Hybrid Electric Vehicles. Chin. J. Mech. Eng. 2025, 38, 66.

20. Guo, D.; Lei, G.; Zhao, H.; Yang, F.; Zhang, Q. The A3C Algorithm with Eligibility Traces of Energy Management for Plug-In Hybrid Electric Vehicles. IEEE Access 2025, 13, 92507–92518.

21. Liu, H.; You, C.; Han, L.; Yang, N.; Liu, B. Off-road hybrid electric vehicle energy management strategy using multi-agent soft actor–critic with collaborative-independent algorithm. Energy 2025, 328, 136463.

22. Wang, J.; Du, C.; Yan, F.; Duan, X.; Hua, M.; Xu, H.; Zhou, Q. Energy Management of a Plug-In Hybrid Electric Vehicle Using Bayesian Optimization and Soft Actor–Critic Algorithm. IEEE Trans. Transp. Electrif. 2025, 11, 912–921.

23. Sun, Z.; Guo, R.; Luo, M. Integrated energy-thermal management strategy for range extended electric vehicles based on soft actor–critic under low environment temperature. Energy 2025, 330, 136868.

24. Wang, C.; Zhang, J.; Wang, A.; Wang, Z.; Yang, N.; Zhao, Z.; Lai, C.S.; Lai, L.L. Prioritized sum-tree experience replay TD3 DRL-based online energy management of a residential microgrid. Appl. Energy 2024, 368, 123471.

25. Jia, C.; He, H.; Zhou, J.; Li, J.; Wei, Z.; Li, K. Learning-based model predictive energy management for fuel cell hybrid electric bus with health-aware control. Appl. Energy 2024, 355, 122228.

26. Cavus, M.; Dissanayake, D.; Bell, M. Next Generation of Electric Vehicles: AI-Driven Approaches for Predictive Maintenance and Battery Management. Energies 2025, 18, 1041.

27. Omakor, J.; Alzayed, M.; Chaoui, H. Particle Swarm-Optimized Fuzzy Logic Energy Management of Hybrid Energy Storage in Electric Vehicles. Energies 2024, 17, 2163.

28. Treesatayapun, C. Prescribed performance of discrete-time controller based on the dynamic equivalent data model. Appl. Math. Model. 2020, 78, 366–382.

| Parameter | Description | Value | Unit |
|---|---|---|---|
| | Aerodynamic drag coefficient | 0.3 | |
| | Frontal area | 2.2508 | [m²] |
| | Air density | 1.293 | [kg/m³] |
| | Curb weight | 2024 | [kg] |
| | Rotational inertia coefficient | 1 | |
| | Rolling resistance coefficient | 0.013 | |
| g | Gravity acceleration | 9.81 | [m/s²] |
| | Motor efficiency | 0.9 | |
| | Mechanical drive efficiency | 0.9 | |
| | Inverter efficiency | 0.95 | |
| | Converter efficiency | 0.95 | |
| | Coulombic efficiency | 0.98 | |
| | Battery capacity | 50 | [kWh] |
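The parameters above are the usual inputs of a longitudinal road-load model. As a minimal sketch, assuming the standard form F = m·g·f·cos θ + m·g·sin θ + ½·ρ·C_d·A·v² + δ·m·dv/dt, with the curb weight used as the vehicle mass (no payload) and with the motor, mechanical drive and inverter efficiencies applied in series between the wheels and the DC bus, the electrical power demand can be computed as follows. How the article itself chains the efficiencies (and where the converter efficiency applies) is not stated here, so that part is an assumption; the function names are hypothetical.

```python
import math

# Values taken from the vehicle parameter table
C_D = 0.3            # aerodynamic drag coefficient
AREA = 2.2508        # frontal area [m^2]
RHO_AIR = 1.293      # air density [kg/m^3]
MASS = 2024.0        # curb weight used as vehicle mass [kg] (assumption: no payload)
DELTA = 1.0          # rotational inertia coefficient
F_ROLL = 0.013       # rolling resistance coefficient
G = 9.81             # gravity acceleration [m/s^2]
ETA_MOTOR, ETA_DRIVE, ETA_INV = 0.9, 0.9, 0.95


def traction_force(v, a, theta=0.0):
    """Road-load force [N] at speed v [m/s], acceleration a [m/s^2], slope theta [rad]."""
    rolling = MASS * G * F_ROLL * math.cos(theta)
    grade = MASS * G * math.sin(theta)
    aero = 0.5 * RHO_AIR * C_D * AREA * v * v
    inertia = DELTA * MASS * a
    return rolling + grade + aero + inertia


def dc_bus_power_kw(v, a, theta=0.0):
    """DC-bus power [kW]: positive for traction, negative for regenerative braking."""
    p_wheel = traction_force(v, a, theta) * v            # [W]
    eta = ETA_MOTOR * ETA_DRIVE * ETA_INV                # series efficiency (assumption)
    # Losses increase the demand when driving and reduce recovered power when braking
    p_bus = p_wheel / eta if p_wheel >= 0 else p_wheel * eta
    return p_bus / 1000.0


print(f"{dc_bus_power_kw(20.0, 0.0):.2f} kW at 72 km/h on a flat road")
```

At a steady 20 m/s on level ground this gives roughly 11.25 kW. Slope and auxiliary loads such as air conditioning would enter the article's formulation as the unknown disturbance rather than through this model.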

| Limit | Value | Unit | Limit | Value | Unit |
|---|---|---|---|---|---|
| | 0.25 | [kW] | | 80 | [kW] |
| | 9 | [kW] | | 50 | [kW] |
| | 0.2 | Per Unit | | 0.9 | Per Unit |
| | 80 | [kW] | | 100 | [kW] |
| | 0.5 | [kW] | | 20 | [kW] |
| | 50 | [A] | | | |
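One way the per-unit bounds of 0.2 and 0.9 in the limits table can be enforced, assuming they are the SOC limits, is to clip the battery power command so that the next SOC stays inside the admissible band. The sketch below uses an energy-counting SOC update with the 50 kWh capacity and the 0.98 Coulombic efficiency from the parameter table; applying the efficiency only while charging is a simplifying assumption, and the power limits in the other rows are not modeled because the variables they bound are not identified here. Function names are hypothetical.

```python
E_CAP_KWH = 50.0          # battery capacity [kWh]
ETA_COUL = 0.98           # Coulombic efficiency, applied on charging only (assumption)
SOC_MIN, SOC_MAX = 0.2, 0.9   # assumed SOC bounds [per unit]


def soc_next(soc, p_bat_kw, dt_s=1.0):
    """Energy-counting SOC update; p_bat_kw > 0 discharges, p_bat_kw < 0 charges."""
    energy_kwh = p_bat_kw * dt_s / 3600.0
    if energy_kwh < 0:    # charging: only a fraction of the input energy is stored
        energy_kwh *= ETA_COUL
    return soc - energy_kwh / E_CAP_KWH


def admissible_battery_power(soc, p_request_kw, dt_s=1.0):
    """Clip a requested battery power so the next SOC stays in [SOC_MIN, SOC_MAX]."""
    # Largest discharge power that keeps soc_next >= SOC_MIN
    p_dis_max = (soc - SOC_MIN) * E_CAP_KWH * 3600.0 / dt_s
    # Largest charging power magnitude that keeps soc_next <= SOC_MAX
    p_chg_max = (SOC_MAX - soc) * E_CAP_KWH * 3600.0 / (dt_s * ETA_COUL)
    return max(-p_chg_max, min(p_dis_max, p_request_kw))


soc = 0.2001
p = admissible_battery_power(soc, 100.0)
print(f"request 100 kW -> allowed {p:.1f} kW, next SOC {soc_next(soc, p):.4f}")
```

Near the lower bound a 100 kW discharge request is reduced to about 18 kW for a 1 s step, so the SOC lands on 0.2 instead of crossing it.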

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Treesatayapun, C.; Munoz-Vazquez, A.J.; Korkua, S.K.; Srikarun, B.; Pochaiya, C. Electric Vehicle Energy Management Under Unknown Disturbances from Undefined Power Demand: Online Co-State Estimation via Reinforcement Learning. Energies 2025, 18, 4062. https://doi.org/10.3390/en18154062
