Abstract

Fault prediction for hydropower stations is crucial for the stable operation of generator set equipment, but traditional methods struggle to deal with imbalanced and untrustworthy data. This paper proposes a fault detection method based on a convolutional neural network (CNN) and a long short-term memory (LSTM) network combined with a generative adversarial network (GAN). Firstly, a reliability mechanism based on principal component analysis (PCA) is designed to solve the problem of data bias caused by multiple monitoring devices. Then, the CNN-LSTM network is used to predict time series data, and the GAN is used to expand fault data samples to solve the problem of an unbalanced data distribution. Meanwhile, a multi-scale feature extraction network with time–frequency information is designed to improve the accuracy of fault detection. Finally, a dynamic multi-task training algorithm is proposed to ensure the convergence and training efficiency of the deep models. Experimental results show that compared with RNN, GRU, SVM, and threshold detection algorithms, the proposed fault prediction method improves the accuracy performance by , , , and , with at least a improvement in the fault recall rate.

1. Introduction

Hydroelectric power is an environmentally friendly renewable energy source, so hydropower stations are of great significance for solving the energy shortage problem in economic development, improving the ecological environment and promoting the coordination and sustainable development of regional economies [1]. Globally, hydropower accounts for approximately of total electricity generation, and the installed capacity of hydropower accounts for more than of the global installed capacity of renewable energy. As of 2024, the cumulative installed capacity of hydropower worldwide reached 427 million kilowatts, and the annual electricity generation reached trillion kilowatt-hours. The normal operation of hydropower stations’ equipment is of great significance for ensuring power supply and preventing safety accidents [2,3]. Fault detection is an indispensable step in ensuring the safe and reliable operation of industrial systems [4], such as power transmission, manufacturing, transportation, and telecommunication systems. In particular, due to the complexity of hydropower station systems, such as the large number of devices and the variable operating environment, equipment failures are difficult to avoid completely. Such failures not only lead to significant economic losses but may also cause serious production safety accidents, with adverse effects on society and the environment.

With the development of intelligence and information technologies, the monitoring systems of hydropower stations are now capable of collecting a large amount of real-time operation data from equipment. These data contain rich information [5,6], providing the possibility for fault prediction. By analyzing these data, potential anomalies and fault signs of equipment can be detected in a timely manner [7], so as to take corresponding preventive measures and avoid the occurrence of accidents.

Traditional fault detection technologies mainly rely on signal processing and statistical methods. The former extracts the characteristics of fault data through time–frequency conversion methods such as wavelet transform [8], the empirical mode decomposition method [9], short-time Fourier transform (STFT) [10], and spectral analysis. The latter mainly uses some multivariate statistical analysis methods, such as partial least squares [11], to calculate the fault thresholds for identifying abnormal data. Although these methods can identify, to a certain extent, the potential faults of equipment, their applicability is mainly limited to scenarios with a limited data scale and a relatively simple system structure. Facing the complex data bias problems commonly existing in the operation environment of hydropower stations, such as large data scale and severely imbalanced sample class distribution, traditional methods face significant technical bottlenecks in terms of the robustness of feature extraction, the stability of model training, and the accuracy of fault diagnosis. Firstly, the extracted data features may not fully reflect the real state of the equipment. Secondly, the calculated fault thresholds may no longer be applicable under new operating conditions, and continuous updates are required to maintain the effectiveness of detection. In addition, the above methods only analyze the fault data, and it is impossible to conduct the same analysis and prediction for the huge amount of normal detection data. That is to say, it is difficult to deal with the nonlinear relationships in the data and the dynamic non-stationary changes in time series, and there are also high requirements for the professional knowledge and experience of operators.

At present, some studies have researched intelligent detection technologies for the increasingly complex faults of hydropower stations. This is mainly based on the breakthroughs and developments of artificial intelligence technologies, among which machine learning is the most representative. A fault detection method is proposed based on support vector machine (SVM) in [12], which is optimized based on the particle swarm algorithm [13], thereby improving the judgment accuracy of the model. An improved random forest algorithm [14] is proposed to achieve accurate fault detection and enhance the processing ability of the detection model through optimized parallel processing methods.

The above-mentioned machine learning models usually have a relatively simple structure, and may not fully capture the complex data features. In contrast, deep learning, which uses neural network models for analysis and prediction, can extract more in-depth feature information from the data, and has significant advantages in handling complex data structures and performing accurate modeling and prediction [15]. Through its multi-layer structure, the neural network automatically learns nonlinear relationships. This enables it to more effectively identify potential fault patterns when facing systems with high complexity and dynamic change characteristics such as hydropower stations. In addition, deep learning models can also adapt to changes in the data and enhance the accuracy and robustness of prediction through learning. For example, a fault diagnosis network based on the convolutional neural network (CNN) architecture was designed [16], and its performance has been greatly improved compared with traditional machine learning methods. A residual CNN algorithm based on Bayesian optimization was studied [17]. This algorithm achieved a significant improvement in model performance by embedding residual connections and automatically optimizing hyperparameters. Stacked attention autoencoder fault monitoring methods [18,19] have been put forward to pretrain the model and derive deep fault features for improving the classification accuracy.

Compared with the CNN and SAE models, the recurrent neural network (RNN) model that can acquire the correlation of time series is more applicable to time series prediction tasks. For example, a power grid fault diagnosis scheme based on feature clustering and RNN [20] has significantly improved the utilization rate of unlabeled samples and the diagnostic accuracy. However, RNN often encounters the issues of gradient vanishing or explosion in deep network training, which limits its ability to learn long-distance temporal dependencies. To overcome this obstacle, the long short-term memory (LSTM) network solves the problem of gradient vanishing by introducing a gating mechanism, learning long-distance temporal dependencies. Then, fault detection models for industrial machines and large-scale multi-machine power systems were developed based on the LSTM architectures [21,22,23]. The advantages of LSTM are mainly the following: Firstly, it has excellent memory ability and can store long-term dependency information in the data. Secondly, the gating design of LSTM ensures the training stability and significantly reduces the risk of gradient vanishing and explosion. Finally, LSTM shows good adaptability to processing sequence data of different lengths. In addition, hybrid ensemble learning methods can improve prediction accuracy generally by combining multiple weak learners [24]; however, their structural homogeneity limits comprehensive feature extraction and introduces computational inefficiencies. Meanwhile, due to the high sensitivity of ensemble learning to the selection of base learners and the quality of data, it is difficult to always ensure a robust and reliable performance in the scenario of hydropower stations. The Transformer model, a deep learning framework built on the self-attention mechanism, excels at capturing long-distance relationships within sequences by leveraging global attention [25,26]. 
This unique capability enables it to deliver outstanding results in natural language processing (NLP) tasks, with sentiment analysis serving as a notable example. However, its direct application to time series forecasting faces limitations, as the strong local correlations inherent in temporal data fundamentally differ from the global semantic requirements of NLP, causing standard attention mechanisms to potentially overlook local temporal patterns.

On the other hand, the equipment of hydropower stations is usually designed with a large safety margin, which leads to a severely imbalanced distribution of actual fault data compared with normal data. In this situation, it is particularly difficult to extract fault features and conduct effective detection. Although meta-learning [27] and contrastive learning [28] are capable of improving the model’s generalization performance for new tasks, their high complexity may lead to overfitting in new tasks, thus affecting the expected performance of the model. In addition, this type of method has limitations in generalization ability. Even after training, the model may demand a large volume of new task data to attain the desired performance. In this situation, the generative adversarial network (GAN) [29], which was originally used for image processing, has gradually been discovered to have the potential to expand samples. It does not have to consider the adaptability of the model to small sample data. Instead, by simulating the data generation process, it directly creates new samples that are extremely similar to the real fault data in statistical features, thus enriching the fault sample library and providing more data support for the training of the fault detection model.

In response to the challenges faced by the fault detection technology of hydropower stations, this paper proposes a fault detection method for data prediction and enhancement based on CNN-LSTM-GAN to enhance the accuracy of fault detection in hydropower stations. The specific challenges addressed are data credibility, imbalanced data distribution, prediction accuracy, and missed fault detection. The main contributions of the paper are as follows.

- We propose a fault prediction framework for hydropower stations with unbalanced and unreliable data. First, we propose a controllable credibility detection mechanism based on principal component analysis (PCA). This mechanism calculates the credibility of the data by analyzing the main change directions of the monitoring data to reduce the impact of unreliable data. Then, we utilize the GAN to expand the training fault data of the hydropower station, thereby addressing the issues of insufficient fault data volume and imbalanced data distribution. In addition, the CNN-LSTM network is designed to extract detailed data features and the long-term dependencies in the time series data for fault prediction.

- We propose a multi-scale feature extraction network architecture based on joint time–frequency information for fault detection. First, the model extracts the local and global time-domain features of the data by distributing the sample to multiple independent network branches with different depths. Then, we analyze the frequency-domain parameters of the sample sequence using discrete STFT, and the frequency-domain information is used to assist in the time-domain feature for improving fault detection accuracy.

- We propose a dynamic multi-task training algorithm for our deep network framework to ensure the convergence and training efficiency. In this paper, both time series prediction tasks and fault detection tasks are considered, but it is difficult to jointly train the entire network model. Therefore, we dynamically adjust the proportion between the prediction sub-network loss and the global detection network loss during each training iteration. This allows the model to focus more on fine-grained subtask errors in the early stages, and gradually shift attention to the global fault detection objective as training progresses.

- The proposed method improves the detection accuracy compared to the existing schemes within a manageable time cost. In particular, our method has a significant improvement in recall performance, which is of great significance in scenarios with low fault tolerance and imbalanced data such as hydropower stations.

2. Fault Detection Method Based on CNN-LSTM-GAN

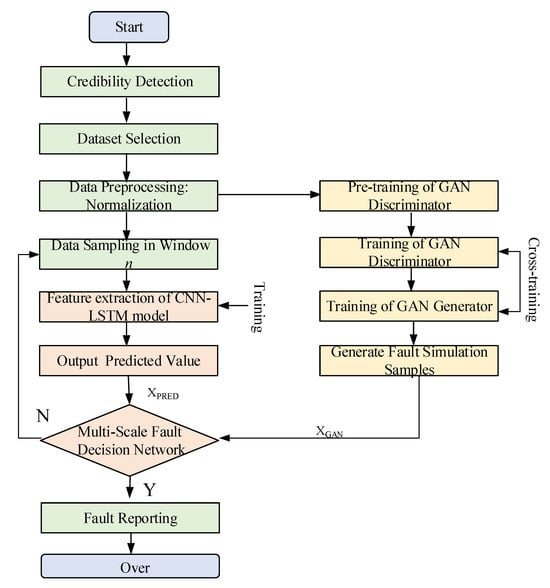

The process of the proposed fault detection method for hydropower stations is illustrated in Figure 1. Firstly, a controllable credibility detection mechanism is designed to analyze the mainstream data trends of the same monitoring data, calculate the credibility of different monitoring data, and screen out the dataset with the highest credibility to ensure the accuracy of the sample data and the efficiency of network training. Then, the dataset is normalized, and the normal monitoring data are sampled according to a time window with a length of n to capture the time series characteristics of the data.

Figure 1.

Fault prediction process of hydropower station.

The sampled data is subsequently input into the CNN-LSTM network for accurate prediction, and the GAN is utilized to generate new samples with statistical characteristics similar to real fault data for data augmentation. Finally, in order to grasp the global features of the detection data and make fault evaluation more accurate, a multi-scale deep neural network (DNN) model is designed to extract and classify the features of the data predicted by the CNN-LSTM network, so as to determine whether there is fault data in the predicted data and output the fault identification results accordingly. If there is no fault, the sampling window is returned to continue predicting and inspecting the next batch of data.

2.1. Data Prediction Model Based on PCA and CNN-LSTM

2.1.1. Data Credibility Detection Based on PCA

Before formal data prediction, data screening and preprocessing are required. In this study, a controllable credibility mechanism is adopted for data screening, which mainly includes two key steps: data analysis and feature selection. Firstly, principal component analysis is utilized to identify the main change directions of the monitoring data, due to its key feature extraction capability [30] and the statistical distribution of data samples. By calculating the component matrix P and the proportion of explained variance , the data are projected into a new coordinate system, so as to find the direction with the largest variance, that is, the principal component. These principal components represent the main change patterns of the data and help to identify the structure of the data. Then, we calculate the contribution degree of each group of monitoring data to the PCA results to evaluate the credibility of the data as

where is the contribution degree of the i-th group of monitoring data to the PCA result. J is the number of principal components, is the load of the j-th component for the corresponding variable of the i-th group of data, and is the variance of the j-th component. Then, sort each group of monitoring data according to these contribution degrees and assign selection probabilities. The higher the contribution degree of the data, the greater the probability of being selected. In the PCA, normal data, that is, data with the main change pattern, have a greater weight when calculating the contribution degree. By screening out the dataset with the highest contribution degree, those data that best conform to the main change pattern of the data are actually selected, thus ensuring the accuracy and reliability of the subsequent network training.
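The ranking and selection step described above can be sketched in a few lines. This is a minimal illustration, not the paper's exact expression: it assumes the contribution degree of a group is the sum over components of the absolute load multiplied by the component variance, and that selection probabilities are obtained by normalizing the contributions. The function names and the numeric loads are hypothetical.

```python
def contribution_degrees(loadings, variances):
    """loadings[i][j]: load of component j on the variable of group i.
    variances[j]: variance of component j.
    Assumed form: sum_j |load_ij| * variance_j (an illustrative choice)."""
    return [sum(abs(p) * v for p, v in zip(row, variances)) for row in loadings]


def selection_probabilities(contributions):
    """Normalize contributions so that larger contributions are selected more often."""
    total = sum(contributions)
    return [c / total for c in contributions]


# Hypothetical loads for three monitoring groups and two principal components.
loadings = [[0.7, 0.1], [0.6, 0.2], [0.1, 0.9]]
variances = [4.0, 1.0]

contrib = contribution_degrees(loadings, variances)
probs = selection_probabilities(contrib)
# Groups sorted from highest to lowest contribution degree.
ranking = sorted(range(len(contrib)), key=lambda i: contrib[i], reverse=True)
```

Groups that load heavily on high-variance components rank first, which matches the idea that data following the main change pattern receive a higher credibility.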

2.1.2. CNN-LSTM Data Prediction

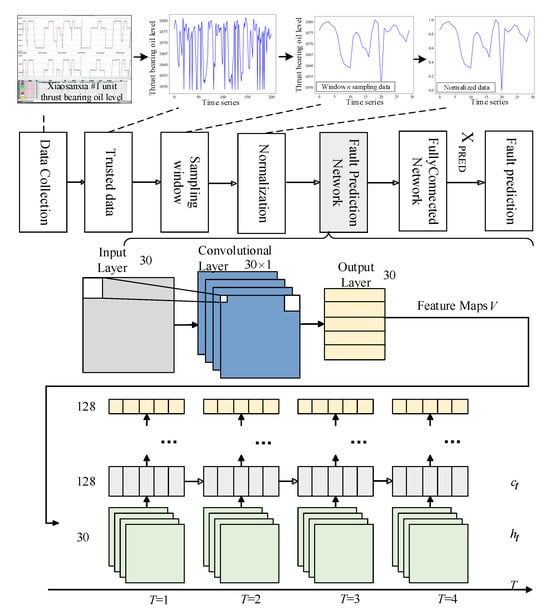

The data are normalized as a preprocessing step. The structure of this prediction model is provided in Figure 2. This model possesses the advantages of both a CNN and LSTM, extracting features from the monitoring data and using a gating mechanism to control the transmission state, thereby maintaining long-term memory of certain features. The network structure mainly consists of CNN layers, LSTM layers, and fully connected layers. The network’s input dimension, represented by the window size n, is configured as 30. This indicates that the model utilizes historical data from the past 30 time steps to predict the value at the subsequent (n + 1)-th time step. Given the 2 min sampling interval, this window size corresponds to 1 hour of temporal coverage. This parameter selection balances capturing meaningful temporal patterns against computational efficiency by preventing oversampling-induced redundancy [31].
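The windowed sampling can be illustrated with a short sketch. It assumes a one-dimensional series and a stride of one step between consecutive windows; the helper name `sliding_windows` and the synthetic series are hypothetical.

```python
def sliding_windows(series, n=30):
    """Return (window, target) pairs: n past values are used to predict the next one."""
    return [(series[i:i + n], series[i + n]) for i in range(len(series) - n)]


# Synthetic stand-in for a normalized monitoring series.
series = [float(v) for v in range(100)]
pairs = sliding_windows(series, n=30)
window, target = pairs[0]

# 30 steps at a 2 min sampling interval cover 60 min, i.e., 1 hour.
coverage_minutes = 30 * 2
```

A series of 100 points yields 70 training pairs, each pairing 30 consecutive values with the value that immediately follows them.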

Figure 2.

Fault prediction module of hydropower station based on PCA and LSTM.

The input data shape of the network is [BatchSize, Channels, SequenceLength], where BatchSize refers to the batch size, which is 32. Channels refers to the number of input data channels, i.e., the feature dimension. SequenceLength is the sequence length, which is 30. Since the input sequence contains data from 30 time steps, with each time step corresponding to one channel, the total number of input channels is also 30. The kernel size is set to 1, indicating that this CNN layer performs cross-channel weighted fusion of data at each time step (with weights shared across all time steps). This design integrates multi-channel information through linear transformation to provide fused features for the subsequent LSTM layer, rather than extracting local temporal patterns. By performing convolution operations using the predefined kernels over the input sequence, the network generates output features that maintain the same dimensionality as the input data. These feature maps can be represented as

where and represent the convolution kernel weights and the input matrix, respectively. z is the activation function, b is the bias parameter, and i is the element index. After several layers of convolution and pooling operations, the resulting feature maps are sequentially flattened into a vector, which is then fed into the fully connected layer as the final output.
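To make the role of the kernel size of 1 concrete, the following sketch applies a pointwise convolution to a small input laid out as [Channels][SequenceLength]. It is an illustration only: the activation function is omitted, and the function name and all numeric values are hypothetical.

```python
def pointwise_conv1d(x, weight, bias):
    """Kernel-size-1 convolution.
    x: [channels][steps], weight: [out_channels][channels], bias: [out_channels].
    Every time step is mixed across channels with the same shared weights."""
    steps = len(x[0])
    return [
        [sum(w_c * x[c][t] for c, w_c in enumerate(w_row)) + b for t in range(steps)]
        for w_row, b in zip(weight, bias)
    ]


x = [[1.0, 2.0, 3.0], [10.0, 20.0, 30.0]]  # 2 channels, 3 time steps
weight = [[0.5, 0.1], [1.0, -1.0]]          # 2 output channels
bias = [0.0, 1.0]
y = pointwise_conv1d(x, weight, bias)
```

Each output value depends only on the inputs at the same time step, so the layer fuses channels without looking at neighboring time steps, and the sequence length is preserved.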

Next, the LSTM layer receives the output from the convolutional layer as its input. The hidden state and cell state are then initialized with the shape [NumLayers, BatchSize, HiddenSize]. In this model, there are three hidden layers, each containing 128 neurons. This design ensures the model has sufficient learning capacity while also being able to capture complex patterns within the sequence. Compared to a standard RNN, which only has a single hidden state $h_t$, the LSTM introduces a distinctive cell state $c_t$. This cell state evolves slowly throughout the sequence, allowing important temporal features to be retained over long periods or forgotten when necessary. To manage this cell state effectively, the LSTM includes three key gating units: the forget gate, the input gate, and the output gate. They work together to determine how the cell state is updated and how the final hidden state is produced. During computation in this prediction network, each gate multiplies the input vector by a weight matrix, and the result is processed by an activation function. The outcome is a value between 0 and 1, which serves as the gate activation controlling the information flow. This architecture allows the LSTM to flexibly and effectively capture key features in time series data, especially when dealing with long sequences.

The forget gate selectively removes information from the prior cell state that is no longer relevant, based on the current input. In this way, the model can focus on retaining critical information while freeing up memory capacity to incorporate new data. The gating mechanism of the forget gate is expressed as

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right)$$

where $f_t$ is the current value of the forget gate, $\sigma$ is the sigmoid activation function, $W_f$ is the weight matrix of the forget gate, and $x_t$ is the input vector at time $t$. $h_{t-1}$ is the hidden state vector from time $t-1$, and $b_f$ is the bias vector of the forget gate.

The input gate plays a filtering role. It selects useful information from the current input that is relevant to the prediction and incorporates it into the new candidate cell state. This process ensures that the cell states are continuously updated with the most recent sequence information. The first step involves using the sigmoid function to determine the weight of information being accepted as

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right)$$

Next, the candidate cell state that contains the new information is

$$\tilde{c}_t = \tanh\left(W_c \cdot [h_{t-1}, x_t] + b_c\right)$$

The output gate is responsible for determining which information needs to be transmitted to the hidden state, or to serve as the current model output. These updated pieces of information are passed to the next layer or directly used as the output of the model as

$$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right)$$

The updates of the cell state and the hidden state are

$$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t$$

$$h_t = o_t \odot \tanh(c_t)$$

where ⊙ represents element-wise multiplication, $f_t$, $i_t$, and $o_t$ are the forget, input, and output gate activations, $\tilde{c}_t$ is the candidate cell state, $c_t$ is the cell state at time $t$, and $c_{t-1}$ is the cell state at the previous moment $t-1$. Through this gating mechanism, the LSTM can not only effectively capture the long-term dependencies but also mitigate gradient vanishing. Consequently, it can model the long-distance dependencies in the data more accurately.
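The gate computations can be traced with a minimal sketch of one standard LSTM step. For readability it assumes a single hidden unit and a scalar input, and it splits each gate's weight into a hidden-state part and an input part, which is equivalent to one weight applied to the concatenation of the two. The parameter values are hypothetical and untrained.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM step for a single hidden unit and a scalar input.
    params[name] = (w_h, w_x, b) for the gates 'f', 'i', 'o' and the candidate 'c'."""
    def gate(name, activation):
        w_h, w_x, b = params[name]
        return activation(w_h * h_prev + w_x * x_t + b)

    f_t = gate("f", sigmoid)       # forget gate: how much of c_prev to keep
    i_t = gate("i", sigmoid)       # input gate: how much new information to accept
    c_hat = gate("c", math.tanh)   # candidate cell state
    o_t = gate("o", sigmoid)       # output gate: how much of the cell state to expose
    c_t = f_t * c_prev + i_t * c_hat
    h_t = o_t * math.tanh(c_t)
    return h_t, c_t


# Hypothetical parameters shared by all gates, applied to a short input sequence.
params = {name: (0.5, 0.5, 0.0) for name in "fico"}
h, c = 0.0, 0.0
for x in [0.1, 0.4, -0.2]:
    h, c = lstm_step(x, h, c, params)

# With all-zero parameters every gate equals 0.5 and the candidate is 0,
# so the cell state is simply halved.
zero_params = {name: (0.0, 0.0, 0.0) for name in "fico"}
h_zero, c_zero = lstm_step(1.0, 0.0, 2.0, zero_params)
```

Because the cell state is updated additively and scaled by the forget gate, information can persist across many steps when the forget gate stays close to 1.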

Finally, the network includes a fully connected layer with a shape of [BatchSize, HiddenSize]. The hidden layer has 128 neurons, and its function is to map the output of the LSTM layer to a single neuron for predicting future data values.

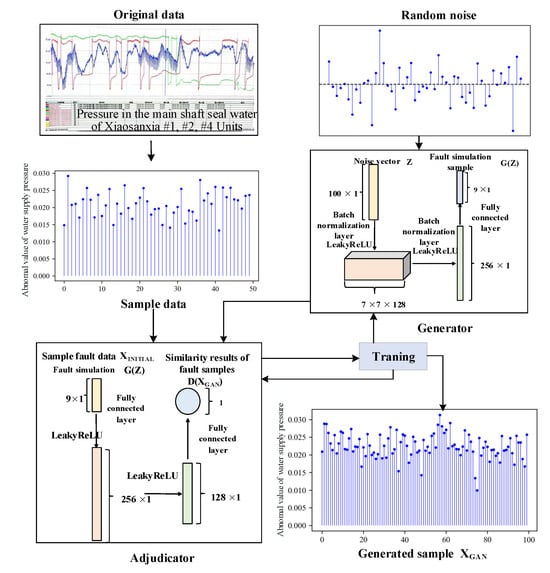

2.2. Fault Data Augmentation Model Based on GAN

The hydropower fault data enhancement model based on GAN is shown in Figure 3. It mainly consists of two modules: the generator $G$ and the discriminator $D$ [32]. During the training process of the model, a dynamic adversarial relationship is formed between $G$ and $D$. The responsibility of $G$ is to create new data whose goal is to mimic the real-world data and deceive the discriminator $D$. $G(z)$ represents the data generated by $G$, where z is a random noise vector. This noise vector is first mapped to a hidden vector through a fully connected layer. Subsequently, the batch normalization (BN) layer normalizes the output of the previous layer.

Figure 3.

Fault data enhancement model of hydropower station based on GAN.

Immediately afterwards, the leaky rectified linear unit (Leaky-ReLU) activation function provides a non-zero gradient for negative input values. This characteristic helps mitigate gradient vanishing and maintains the nonlinearity during training. To further improve the performance of the generator, a second fully connected layer with 256 units is incorporated into the model. Finally, the output layer uses the tanh activation function to activate the generated data, restricting the output values to the range of $[-1, 1]$. Such a restriction helps match or limit the range of the generated data, making it closer to the distribution of the real fault data. In the discriminator $D$, the input layer receives data samples from the generator or the dataset and utilizes a fully connected layer to derive features from the input data. The Leaky-ReLU layer and the Dropout layer are used to introduce nonlinearity and regularization, respectively, reducing the risk of model overfitting. In the output layer, which generates a scalar value, the sigmoid function is used to compress the output within the range of $[0, 1]$. A value close to 1 indicates that the model considers the input to be real, while a value close to 0 indicates that the input is generated. The objective function is

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]$$

where x represents the actual data, $p_{\mathrm{data}}(x)$ describes the distribution of this data, and $\mathbb{E}$ denotes the expectation. Variable z refers to Gaussian random noise, with $p_z(z)$ as its distribution. $D$ evaluates the generated data, yielding $D(G(z))$, and similarly assesses real data, producing $D(x)$. The discriminator’s outputs are fed back to the generator, guiding it to create more precise and lifelike data. Through this adversarial training mechanism, the generator and discriminator iteratively improve in a competitive process. Eventually, when the GAN objective function stabilizes, the generator produces data that the discriminator can no longer reliably distinguish from real data, which corresponds to the theoretical optimum of the objective.

As the training progresses, $G(z)$ becomes closer and closer to the real data, and the discriminative ability of $D$ is also constantly improving. In this mutually adversarial process, the capabilities of both $G$ and $D$ are enhanced. Under the ideal training state, $G$ can eventually create fake data that is almost indistinguishable from the real data. For the discriminator $D$, since the data given by $G$ are already very realistic, $D$ will be hesitant when judging these data; that is, $D(G(z))$ is close to 0.5, indicating that the discriminator cannot determine whether these data are real or fake. Finally, a batch of generated fault values that are close to the real fault values is obtained.
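The behavior of the standard GAN value function near this equilibrium can be checked numerically. The sketch below estimates the value from batches of discriminator outputs; the function name and the sample outputs are hypothetical, and no network is trained here.

```python
import math


def gan_value(d_real, d_fake):
    """Monte Carlo estimate of the GAN value function.
    d_real: discriminator outputs D(x) on real samples, each in (0, 1).
    d_fake: discriminator outputs D(G(z)) on generated samples, each in (0, 1)."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# A discriminator that separates real from generated data well.
confident = gan_value([0.9, 0.95], [0.1, 0.05])
# A discriminator that outputs 0.5 everywhere, as in the ideal training state.
undecided = gan_value([0.5, 0.5], [0.5, 0.5])
```

When every discriminator output equals 0.5, the value is exactly $-\log 4$, which is lower than the value obtained by a confident discriminator; the generator's training pushes the value toward this level.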

2.3. Fault Detection Model Based on Multi-Scale Feature Network

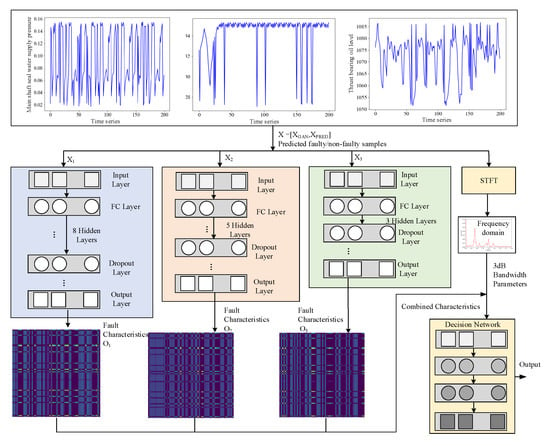

After the above process is completed, the batch of generated fault data is mixed with the predicted data of the CNN-LSTM, and then fed into the multi-scale feature extraction fault detection network for training, so as to enhance the recognition accuracy of the fault detection network. The trained multi-scale feature extraction network is used to conduct fault detection on the predicted values output by the CNN-LSTM network, and the detection results are output. The structure of this network is illustrated in Figure 4. The same input data enters the network through three branches. Each sub-network independently processes the received data and extracts fault features. These features form a collection of all sub-network features through a merging layer. Subsequently, this collection is processed by the hidden layer again, and finally, the fault detection results are output.

Figure 4.

Fault detection network with multi-scale feature extraction.

The hidden layer of each sub-network is composed of several fully connected layers and Dropout layers. The former are responsible for learning the nonlinear relationships among the input data. As the learning progresses, the output dimensions of these layers will vary. In this network, from left to right, the sub-networks have three, five, and eight hidden layers, respectively, which reflects the extraction of features at different levels and depths, and increases the diversity of the model. When the features of these sub-networks are fused, since each sub-network focuses on different parts of the dataset, the fused features can provide a more comprehensive perspective. This fusion can also enhance the generalization ability, enabling the model to have better detection performance on unseen data, thereby enhancing the robustness.

In addition to the mixed time-domain features, we also use the frequency-domain parameters of the sample data, such as frequency peak position and 3 dB bandwidth, as auxiliary information to assist the model in making its diagnosis. Incorporating spectrograms as auxiliary inputs into the classification network can improve both the accuracy and robustness of fault detection. We employ the STFT,

$$\mathrm{STFT}\{x(t)\}(m, \omega) = \int_{-\infty}^{\infty} x(t)\, w(t - m)\, e^{-j \omega t}\, dt$$

to extract frequency-domain features from time series signals, where $x(t)$ denotes the time series signal, $w(t)$ is the sliding window function, m represents the time shift, and $\omega$ is the frequency variable. In practical applications, the discrete form is typically used as

$$X(m, k) = \sum_{n=0}^{N-1} x[n]\, w[n - m]\, e^{-j 2 \pi k n / N}$$

where $N$ is the number of samples and $k$ is the discrete frequency index. By applying the Fourier transform to each time window, a time–frequency representation is obtained, which is then used to enhance the discriminative performance of the multi-scale network.
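A direct, unoptimized sketch of the discrete STFT shows how a frequency peak position can be read from each time window. The Hann window, the window length of 32, the hop of 16, and the synthetic sinusoid are assumptions made for illustration; a practical implementation would use an FFT routine instead of the explicit sum.

```python
import cmath
import math


def stft(x, window_len, hop):
    """Discrete STFT with a Hann window; returns one complex spectrum per frame."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / window_len) for n in range(window_len)]
    frames = []
    for start in range(0, len(x) - window_len + 1, hop):
        segment = [x[start + n] * window[n] for n in range(window_len)]
        spectrum = [
            sum(segment[n] * cmath.exp(-2j * math.pi * k * n / window_len)
                for n in range(window_len))
            for k in range(window_len // 2 + 1)  # non-negative frequencies only
        ]
        frames.append(spectrum)
    return frames


def peak_bin(spectrum):
    """Index of the frequency bin with the largest magnitude."""
    magnitudes = [abs(value) for value in spectrum]
    return max(range(len(magnitudes)), key=magnitudes.__getitem__)


# Synthetic signal with exactly 4 cycles per 32 samples.
signal = [math.sin(2 * math.pi * 4 * n / 32) for n in range(128)]
frames = stft(signal, window_len=32, hop=16)
peaks = [peak_bin(frame) for frame in frames]
```

For this stationary test signal the peak stays in the same bin in every frame; a shift of the peak across frames is the kind of time–frequency change that the auxiliary input is meant to expose.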

2.4. Dynamic Multi-Task Training Algorithm

To ensure the convergence and training efficiency, we propose a dynamic multi-task training algorithm, as shown in Algorithm 1. We define the loss functions of the prediction network based on the CNN-LSTM for the regression task and the detection network based on multi-scale DNN for the classification task as in SubNet and in GlobalNet, respectively. We jointly optimize the parameters of the SubNet and GlobalNet. The algorithm initializes the following inputs: training set , validation set , maximum training steps N, early stopping patience P, and minimum improvement threshold . Finally, it outputs the optimal parameters . After initializing network parameters, the dynamic weight coefficients are generated at each training iteration step n as

where decays with training steps to prioritize the subnet task, while increases to amplify the global task influence, enabling a progressive shift in learning focus from local features to global semantics.

Algorithm 1. Dynamic Multi-Task Training Algorithm

During training, each batch undergoes forward propagation to obtain the subnet regression predictions and global network classification predictions , with loss functions computed as

The weighted total loss can be set as

for parameter updating. After each iteration, the validation set is evaluated by

When is satisfied, is updated and the early stopping counter is reset. Otherwise, the counter increments. Training terminates when reaching N steps or when no significant improvement is observed for P consecutive iterations, returning the optimized parameters .
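The weighting and stopping logic can be sketched as follows. The linear schedule is an assumption chosen for illustration, since any schedule in which the subnet weight decays and the global weight grows fits the description; the function names and the validation losses are hypothetical, and the network updates themselves are omitted.

```python
def dynamic_weights(step, max_steps):
    """Assumed linear schedule: the subnet weight decays while the global weight grows."""
    global_weight = step / max_steps
    return 1.0 - global_weight, global_weight


def total_loss(loss_sub, loss_global, step, max_steps):
    """Weighted sum of the prediction (SubNet) and detection (GlobalNet) losses."""
    sub_weight, global_weight = dynamic_weights(step, max_steps)
    return sub_weight * loss_sub + global_weight * loss_global


def early_stopping_trace(val_losses, max_steps, patience, min_delta):
    """Replay validation losses and return (best loss, step at which it was reached).
    The best value is updated only when the improvement exceeds min_delta;
    otherwise a counter grows, and the loop stops once it reaches the patience."""
    best, best_step, counter = float("inf"), -1, 0
    for step, val in enumerate(val_losses[:max_steps]):
        if best - val > min_delta:
            best, best_step, counter = val, step, 0
        else:
            counter += 1
            if counter >= patience:
                break
    return best, best_step


# Hypothetical validation losses: the late drop to 0.5 is never reached.
losses = [1.0, 0.8, 0.79, 0.795, 0.79, 0.5]
best, best_step = early_stopping_trace(losses, max_steps=100, patience=3, min_delta=0.05)
```

In this replay, training stops after three iterations without a sufficient improvement, so the parameters saved at step 1 would be returned. The total loss equals the subnet loss at step 0 and the global loss at the final step, which reproduces the intended shift of focus.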

3. Experiments and Result Analysis

3.1. Dataset and Parameter Description

To evaluate the applicability and efficacy of the proposed scheme in detecting fault data of hydropower stations, experiments were conducted using the data recorded by the computer monitoring system of the control center of a hydropower station in 2024. There were four types of faults: high oil level anomaly in the thrust oil tank of the generator thrust bearing system, low oil level anomaly in the thrust oil tank of the generator thrust bearing system, oil temperature anomaly in the thrust oil tank of the generator thrust bearing system, and abnormal pressure in the main shaft seal water of the turbine unit. These collectively constitute the comprehensive abnormalities of the unit. The dataset comprises 4000 data groups, each containing 3000 data points. The sensors used to measure the above parameters include ultrasonic and hydrostatic level sensors for oil level, RTD and thermocouple sensors for oil temperature, and strain gauge pressure sensors for shaft seal water pressure. Typical noise levels range from °C to °C for temperature sensors, and from to in full scale for level and pressure sensors, depending on the specific sensor type and installation conditions. Of these data, 75 percent are used as the training set for model training, and the rest is used as the test set to verify the detection accuracy. Through the data processing and model training process, we aimed to verify the practicality and effectiveness of the proposed scheme in the actual fault detection task of hydropower stations. The parameter settings of the CNN-LSTM model, GAN model and DNN model adopted are shown in Table 1, Table 2 and Table 3.

Table 1.

Parameter settings of the CNN-LSTM model for data prediction.

Table 2.

Parameter settings of the GAN model for data enhancement.

Table 3.

Parameter settings of the multi-scale feature extraction network model.

The model was executed on a computer equipped with a Core i7 4.0 GHz processor and 16 GB of RAM. Testing the model on data points took 5219 s, with an average processing time of s per data point. Table 4 compares the runtime of all methods. Although the proposed approach requires more time than the RNN, GRU, CNN-GRU, and SVM, it still meets the practical requirement of hour-level timing precision in real-world applications. In addition, our method requires shorter training and testing times than the Transformer method.

Table 4.

Comparison of training time and testing time.

3.2. Fault Detection Results

3.2.1. Comparison of Data Prediction Results

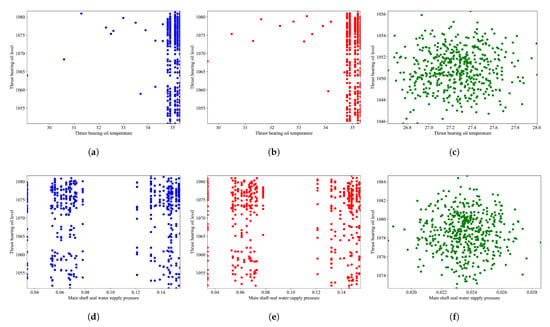

It is essential to check the credibility of the data before data selection. Owing to environmental factors and the limited stability of monitoring equipment, some data may contain errors and deviate from the range of most normal data. Figure 5 presents scatter plots of the monitoring data for oil temperature in the oil tank, water pressure, and oil level in the tank. Figure 5a,b,d,e show the scatter distribution of normal monitoring data, while Figure 5c,f show that of erroneous data. The concentration trend of the abnormal data clearly differs from that of the normal data.

Figure 5.

Scatter plot of monitoring data for (a–c) oil temperature and oil level deviation in the oil tank and (d–f) water pressure and oil level deviation in the oil tank.

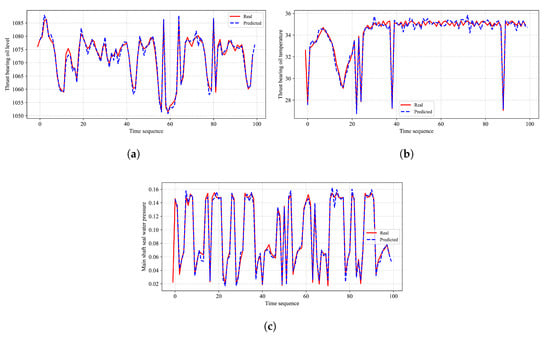

Figure 6 shows an example comparison between the CNN-LSTM predictions of this scheme and the true values after the abnormal data are supplemented and enhanced by the GAN. The abnormal values in the sub-graphs are, respectively, the abnormal oil level in the thrust bearing oil sump of the unit, the abnormal oil temperature in the thrust oil sump of the unit, and the abnormal water supply pressure of the main shaft seal of the unit. The predicted results are highly consistent with the actual data, and the data fluctuations are also well predicted.

Figure 6.

Prediction results of the CNN-LSTM model. (a) Thrust bearing oil level. (b) Thrust bearing oil temperature. (c) Main shaft seal water pressure.

To evaluate the fault prediction performance of the models, the coefficient of determination ($R^2$), an important metric for the goodness of fit of a regression model, was calculated for the proposed scheme and for several baseline methods. These baselines included the support vector machine (SVM) method, the traditional RNN method, the improved Gated Recurrent Unit (GRU) method based on it, and the Transformer method. Table 5 reflects the differences in prediction accuracy among the schemes by comparing the $R^2$ values. The results show that the proposed scheme fits the data best, and its average prediction accuracy relative to the RNN, GRU, SVM, and Transformer schemes is, respectively, increased by , , , and , demonstrating the strong performance of this scheme in the prediction task. Although the Transformer excels in parallel computing and large-scale pretraining, the LSTM still performs better at temporal modeling in this task. Its gating mechanism captures long-range dependencies more effectively, whereas the Transformer, even with positional encoding, suffers from homogenized deep-layer outputs. Moreover, the Transformer is highly sensitive to hyperparameters such as network depth and the number of attention heads, incurring substantial tuning costs. In contrast, the simpler and more stable architecture of the LSTM proves more practical in scenarios with limited data or computational resources.
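For reference, the coefficient of determination is one minus the ratio of the residual sum of squares to the total sum of squares. The following sketch computes it from scratch; the function name `r2_score` and the sample values are illustrative assumptions and are not taken from the experiments.

```python
from typing import Sequence


def r2_score(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)          # total sum of squares
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when the true values are constant")
    return 1.0 - ss_res / ss_tot


# Illustrative values only (not data from the hydropower station).
y_true = [1.0, 2.0, 3.0, 4.0]
close_fit = r2_score(y_true, [1.1, 1.9, 3.2, 3.8])  # approximately 0.98
mean_only = r2_score(y_true, [2.5, 2.5, 2.5, 2.5])  # predicting the mean gives 0
```

A value close to 1 means the predictions explain most of the variance in the measured series, while a model that always predicts the mean scores 0.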

Table 5.

Comparison of prediction performance in terms of $R^2$.

3.2.2. Comparison of Fault Detection Results

The effectiveness of the proposed fault detection method was tested against the RNN, GRU, and SVM algorithms, as well as the threshold-based detection method. Fault data enhancement was also applied to the RNN, GRU, and SVM algorithms to fully verify the model effectiveness.

Table 6 shows that the proposed scheme is the most robust and adapts effectively to complex, variable environments and diverse data distributions. Its detection performance improves by , , , and , respectively, compared with the RNN, GRU, SVM, and threshold detection algorithms. Although the RNN scheme can capture time series dependencies in some cases, its training is slow and prone to vanishing and exploding gradients, which affects the stability and accuracy of the model. The GRU scheme alleviates the vanishing gradient problem of the RNN to some extent and improves training efficiency, but its ability to model long-term time series data remains insufficient, resulting in poor performance on complex tasks. The SVM scheme struggles to capture temporal dependence and long-term patterns and is not directly applicable to time series tasks. Moreover, its decision boundary is fixed after training, so it cannot adjust dynamically to new data and has poor flexibility. Traditional fault detection methods based on threshold setting and weighting often rely on expert experience and may fail to meet practical requirements for detection performance and efficiency when multiple abnormal patterns are present.

Table 6.

Fault detection performance of the generator sets in hydropower stations.

Table 6 also shows that the recall rate of the proposed scheme is much higher than that of the RNN, GRU, SVM, and threshold detection algorithms, with an increase of at least . For most traditional methods, accuracy and recall are seriously imbalanced. This is also clearly reflected in the F1 score and exposes the inherent defects of existing fault detection models on data with an imbalanced distribution. The proposed method improves detection performance while keeping the recall rate much higher than that of traditional methods. This demonstrates that the proposed scheme has good application prospects in scenarios such as hydropower stations, which are highly sensitive to faults and have an extremely low fault tolerance.

In particular, we see from the results in Table 4 and Table 6 that although the CNN-GRU-GAN scheme has a shorter training time, its test time overhead is almost the same as that of the proposed scheme. More importantly, although the proposed scheme only has a slightly higher accuracy than the CNN-GRU-GAN scheme, its recall rate index is much higher than that of the latter. The recall rate is a more effective indicator for measuring classification performance under imbalanced fault data.
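The gap between accuracy and recall under class imbalance can be made concrete with a small example. The sketch below uses synthetic labels (95 normal samples and 5 faults), not the experimental data, and the function name `classification_metrics` is an illustrative assumption.

```python
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 for binary labels (1 = fault, 0 = normal)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Synthetic imbalanced labels: 5 faults followed by 95 normal samples.
y_true = [1] * 5 + [0] * 95
always_normal = [0] * 100                      # never raises an alarm
sensitive = [1, 1, 1, 1, 0] + [1] * 5 + [0] * 90  # finds 4 of 5 faults, 5 false alarms

m_lazy = classification_metrics(y_true, always_normal)
m_sensitive = classification_metrics(y_true, sensitive)
```

The detector that never raises an alarm reaches 95% accuracy with zero recall, whereas the second detector has slightly lower accuracy (94%) but finds 80% of the faults. This is why recall and the F1 score are more informative than accuracy when faults are rare.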

3.2.3. Ablation Study

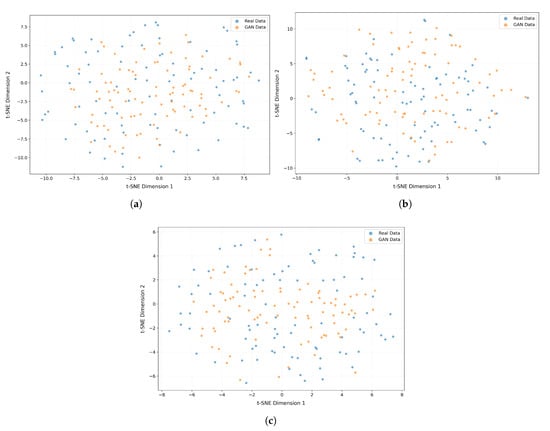

Figure 7 shows a t-SNE visualization of the real and GAN-generated data after dimensionality reduction to two features (t-SNE dimension 1 and t-SNE dimension 2). The highly mixed distribution of real (blue) and generated (yellow) data points demonstrates that the GAN successfully captured the essential distribution characteristics of the real data. The absence of clearly separated clusters in t-SNE space indicates strong global similarity between the generated and real data distributions.

Figure 7.

t-SNE Visualization of Real vs GAN Data. (a) Thrust bearing oil level. (b) Thrust bearing oil temperature. (c) Main shaft seal water pressure.

In Table 7, the complete model combining all components outperforms every ablated variant, confirming the complementary roles of the modules within the overall framework. Using a CNN-LSTM structure instead of a standalone LSTM enables more effective extraction of both spatial features and temporal dynamics from the input data, enhancing the quality of the feature representation. We name the standalone LSTM method without a CNN LSTM-GAN-MSN. The incorporation of the GAN module increases the diversity of the training data, which helps improve the robustness of the model to imbalanced samples or unseen fault types. We name the method without a GAN CNN-LSTM-MSN. Moreover, the multi-scale DNN architecture captures feature patterns at different levels of granularity, further enhancing the ability of the model to represent complex data structures. We name the single-scale DNN method CNN-LSTM-GAN-SSN. Table 7 shows that the recall rate of the proposed method is significantly enhanced along with the improvement in accuracy.

Table 7.

Ablation study of the generator sets in the hydropower station.

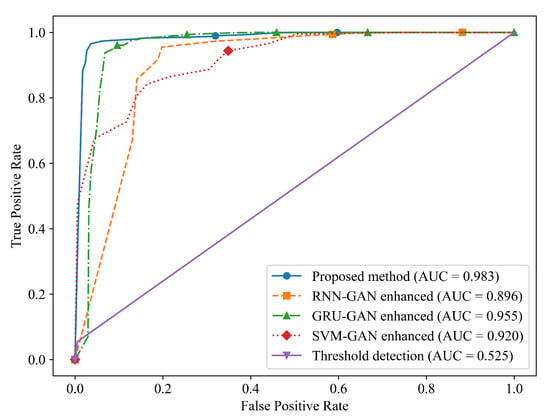

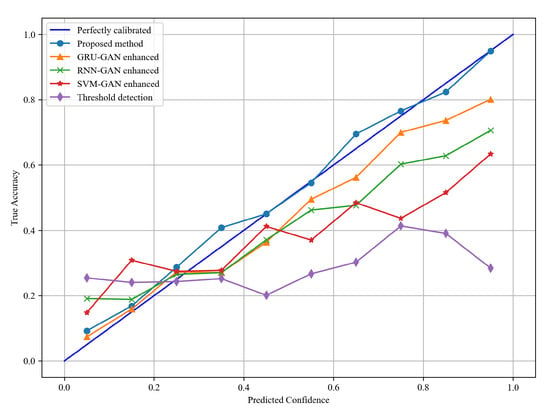

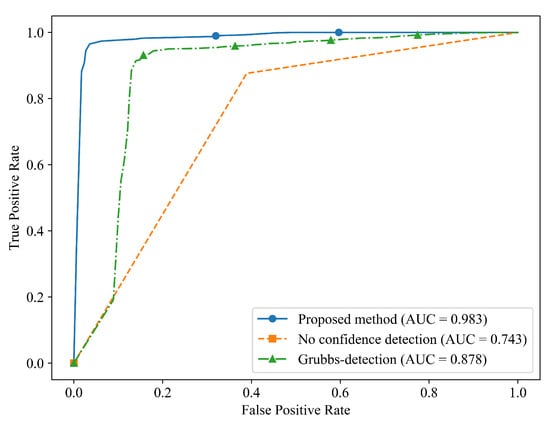

3.2.4. Comparison of ROC Results

To evaluate the overall performance of the proposed detection method, the average receiver operating characteristic (ROC) curve of each scheme is shown in Figure 8. The curve of the proposed scheme lies above those of the other schemes over most false positive rate intervals, indicating a stronger ability to distinguish positive cases from negative cases. Figure 9 presents the reliability diagrams of the different methods. The curve of the proposed method lies closest to the diagonal, indicating better-calibrated predictions than the other methods.

Figure 8.

Average ROC curve for fault detection performance.

Figure 9.

Reliability diagram for fault detection performance.
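For readers unfamiliar with how an ROC curve and its area are obtained, the following sketch sweeps the decision threshold over a set of scores and integrates the resulting curve with the trapezoidal rule. The labels and scores are made up for illustration, the function names are assumptions, and the averaging over runs used for Figure 8 is not reproduced.

```python
from typing import List, Sequence, Tuple


def roc_curve(y_true: Sequence[int], scores: Sequence[float]) -> List[Tuple[float, float]]:
    """Return (false positive rate, true positive rate) points for decreasing thresholds."""
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("both classes must be present")
    pairs = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Process all samples sharing the same score together (handles ties).
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc(points: Sequence[Tuple[float, float]]) -> float:
    """Area under the curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Made-up labels (1 = fault) and detector scores.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
curve = roc_curve(labels, scores)
area = auc(curve)  # 8 of the 9 fault/normal pairs are ranked correctly
```

The AUC equals the fraction of fault and normal sample pairs in which the fault receives the higher score, which is why a curve lying above the others over most false positive rates indicates better separation.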

Figure 10 presents a comparison of ROC curves between PCA-based methods with and without the reliability detection mechanism, as well as a comparison with the Grubbs’ test for outlier detection. The Grubbs’ test method can identify anomalies to some extent but performs less effectively than PCA-based anomaly detection. The Grubbs’ test analyzes each variable independently for outlier detection in a multivariate context. Its advantages lie in computational efficiency, so it is particularly suitable for data with weakly correlated features. However, this method has significant limitations: it fails to account for interdependencies among variables, potentially missing complex anomaly patterns that require multivariate joint determination (e.g., combined anomalies where multiple features exhibit slight deviations simultaneously), and it suffers from computational redundancy in high-dimensional data. In contrast, PCA-based anomaly detection enables multivariate collaborative evaluation through principal component contribution analysis. It automatically captures intrinsic correlations among variables, and its contribution-based ranking mechanism prioritizes high-quality samples that align with the primary data patterns. This approach is particularly effective for high-dimensional industrial monitoring data with strong correlations, as its mathematical framework inherently supports the detection of multivariate joint anomalies.

Figure 10.

Average ROC curve for detection with and without the reliability mechanism.

With the reliability mechanism, the interference of untrusted data with model training and fault detection can be effectively avoided by screening the training data. The mechanism assesses the confidence of each data point and filters out data that contain more noise or do not fit the actual failure mode, making the training data more reliable. After this treatment, the ROC curve of the model improved significantly, showing more accurate classification ability. The area under the curve (AUC) increased by , and the false positive rate and false negative rate were effectively controlled. Therefore, the reliability mechanism enhances the fault detection accuracy of the model and improves its adaptability to the complex hydropower station environment.
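The difference between per-variable testing and joint evaluation can be illustrated with two strongly correlated variables. The sketch below is a simplified stand-in and not the mechanism used in this work: a plain z-score threshold replaces the Grubbs' test critical value, the score along the minor principal component replaces the contribution-based ranking, and the data, thresholds, and function names are all assumptions. The two-variable case keeps the eigen-decomposition analytic, so only the standard library is needed.

```python
import math
from statistics import mean, pstdev
from typing import List, Tuple

Point = Tuple[float, float]


def fit_pca_2d(points: List[Point]):
    """Return the mean, the unit minor-component direction, and its variance."""
    mx, my = mean(p[0] for p in points), mean(p[1] for p in points)
    n = len(points)
    a = sum((p[0] - mx) ** 2 for p in points) / n             # var(x1)
    c = sum((p[1] - my) ** 2 for p in points) / n             # var(x2)
    b = sum((p[0] - mx) * (p[1] - my) for p in points) / n    # cov(x1, x2)
    lam_minor = (a + c) / 2 - math.sqrt(((a - c) / 2) ** 2 + b ** 2)
    vx, vy = b, lam_minor - a                                 # eigenvector for lam_minor
    norm = math.hypot(vx, vy)
    if norm == 0 or lam_minor <= 0:
        raise ValueError("sketch assumes correlated, non-degenerate variables")
    return (mx, my), (vx / norm, vy / norm), lam_minor


def minor_component_score(point: Point, model) -> float:
    """Squared projection on the minor component, scaled by its variance."""
    (mx, my), (vx, vy), lam = model
    proj = (point[0] - mx) * vx + (point[1] - my) * vy
    return proj * proj / lam


def max_abs_zscore(point: Point, points: List[Point]) -> float:
    """Largest per-variable z-score (each variable checked independently)."""
    zs = []
    for d in range(2):
        column = [p[d] for p in points]
        zs.append(abs(point[d] - mean(column)) / pstdev(column))
    return max(zs)


# Synthetic reference data: x2 follows x1 with small alternating noise.
normal = [(-2 + 0.1 * k, -2 + 0.1 * k + 0.05 * (-1) ** k) for k in range(41)]
suspect = (1.0, -1.0)  # each value is ordinary on its own, but the pair breaks the correlation

model = fit_pca_2d(normal)
univariate_flag = max_abs_zscore(suspect, normal) > 3.0     # assumed 3-sigma rule
joint_flag = minor_component_score(suspect, model) > 9.0    # assumed threshold
```

In this example the per-variable check does not flag the suspect point, because each coordinate lies within one standard deviation of its mean, while the joint score flags it clearly. This mirrors the combined anomalies that independent per-variable tests can miss.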

4. Conclusions

This paper presents a fault prediction scheme for hydropower stations based on CNN-LSTM-GAN that handles biased data and effectively enhances the accuracy and robustness of fault detection. To address the imbalanced distribution of fault data in hydropower stations, the GAN method was used to expand the training dataset. A reliability detection mechanism based on the PCA algorithm was designed to reduce the influence of the environment and equipment on data accuracy. A deep model based on multi-scale feature extraction was constructed to improve the classification accuracy. Furthermore, a multi-task training method was designed to enhance the convergence and training efficiency of the model. The experimental results show that the proposed scheme is superior to traditional methods in accuracy, recall, F1 score, AUC, and other indicators. It also achieves better fitting and detection accuracy for data prediction and thus has good application prospects in hydropower station fault prediction. Future research will focus on model structure optimization, reducing training time, and exploring lightweight model architectures while maintaining high prediction accuracy.

Author Contributions

Conceptualization, B.L. and X.W. (Xiaoming Wang); methodology, B.L. and Z.Z. (Zhenjie Zhao); software, B.L. and Z.Z. (Zhenjie Zhao); validation, Z.Z. (Zhaoxin Zhang) and X.W. (Xiao Wang); formal analysis, X.W. (Xiao Wang) and X.W. (Xiaoming Wang); investigation, Z.Z. (Zhenjie Zhao); resources, Z.Z. (Zhaoxin Zhang) and X.W. (Xiaoming Wang); data curation, X.W. (Xiao Wang); writing—original draft preparation, B.L.; writing—review and editing, X.W. (Xiaoming Wang); visualization, Z.Z. (Zhenjie Zhao); supervision, X.W. (Xiaoming Wang) and T.L.; project administration, Z.Z. (Zhaoxin Zhang) and X.W. (Xiaoming Wang); funding acquisition, X.W. (Xiaoming Wang). All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Jiangsu Province, Grant Number BK20241885.

Data Availability Statement

Due to privacy and confidentiality agreements related to engineering design, detailed data supporting the results reported in this article, including schematics and related technical documents, cannot be made publicly available. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

Authors Bei Liu, Xiao Wang and Zhaoxin Zhang were employed by the company SDIC Gansu Xiaosanxia Power Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Cunningham, J.; Paserba, J. Superpower: Regional coordination a century ago [history]. IEEE Power Energy Mag. 2025, 23, 99–105.

- Bechara, H.; Ibrahim, R.; Tahan, A.; Zemouri, R.; Merkhouf, A.; Kedjar, B. Unleashing artificial intelligence: Monitoring and diagnosing large hydrogenerators. IEEE Power Energy Mag. 2024, 22, 89–99.

- Selak, L.; Butala, P.; Sluga, A. Condition monitoring and fault diagnostics for hydropower plants. Comput. Ind. 2014, 65, 924–936.

- Zhou, G.; Han, M.; Filizadeh, S.; Geng, Z.; Zhang, X. A fault detection scheme in MTDC systems using a superconducting fault current limiter. IEEE Syst. J. 2022, 16, 3867–3877.

- Peng, Y.; Yi, H.; Dan, Y.; Kuang, D. Simulation analysis and treatment of main transformer fault in a hydropower station. In Proceedings of the IEEE International Conference on Power and Energy Systems, Chengdu, China, 13–16 December 2024.

- Chen, J.; Zheng, Y.; Deng, X.; Wang, Y.; Hu, W.; Xiao, Z. Design of a progressive fault diagnosis system for hydropower units considering unknown faults. Meas. Sci. Technol. 2023, 35, 015904.

- Cai, X.; Wai, J. Intelligent DC arc-fault detection of solar PV power generation system via optimized VMD-based signal processing and PSO–SVM classifier. IEEE J. Photovoltaics 2022, 12, 1058–1077.

- Liam, Y.; Huang, Y.; Zheng, X.; Cheng, J. Seismic time-frequency analysis based on entropy-optimized paul wavelet transform. Geosci. Remote Sens. Lett. 2020, 17, 342–346.

- Samal, P.; Hashmi, F. Ensemble median empirical mode decomposition for emotion recognition using EEG signal. IEEE Sens. Lett. 2023, 7, 7001704.

- Gao, W.; Li, B. Octonion short-time Fourier transform for time-frequency representation and its applications. IEEE Trans. Signal Process. 2021, 69, 6386–6398.

- Hu, C.; Luo, J.; Kong, X.; Xu, Z. Orthogonal multi-block dynamic PLS for quality-related process monitoring. IEEE Trans. Autom. Sci. Eng. 2024, 21, 3421–3434.

- Jeong, K.; Choi, S. Fuzzy Observer-based magneto rheological damper fault diagnosis using a support vector machine. IEEE Trans. Control. Syst. Technol. 2022, 30, 1723–1735.

- Zhang, H.; Guo, X.; Zhang, P. Improved PSO-SVM-based fault diagnosis algorithm for wind power converter. IEEE Trans. Ind. Appl. 2024, 60, 3492–3501.

- Roy, S.; Dey, S.; Chatterjee, S. Autocorrelation aided random forest classifier-based bearing fault detection framework. IEEE Sens. J. 2020, 20, 10792–10800.

- Guo, M.; Gao, J.; Shao, X.; Chen, D. Location of single-line-to-ground fault using 1-D convolutional neural network and waveform concatenation in resonant grounding distribution systems. IEEE Trans. Instrum. Meas. 2021, 70, 1–9.

- Thomas, J.; Chaudhari, S.; Verma, N. CNN-based transformer model for fault detection in power system networks. IEEE Trans. Instrum. Meas. 2023, 72, 2504210.

- Song, Q.; Wang, M.; Lai, W.; Zhao, S. On Bayesian optimization-based residual CNN for estimation of inter-turn short circuit fault in PMSM. IEEE Trans. Power Electron. 2023, 38, 2456–2468.

- Wu, Q.; Yan, X. Interval-valued-based stacked attention autoencoder model for process monitoring and fault diagnosis of nonlinear uncertain systems. IEEE Trans. Instrum. Meas. 2023, 72, 3503010.

- Wang, Y.; Yang, H.; Yuan, X.; Shardt, Y.; Yang, C.; Gui, W. Deep learning for fault-relevant feature extraction and fault classification with stacked supervised auto-encoder. J. Process Control. 2020, 92, 79–89.

- Mansouri, M.; Dhibi, K.; Hajji, M.; Bouzara, K.; Nounou, H.; Nounou, M. Interval-valued reduced RNN for fault detection and diagnosis for wind energy conversion systems. IEEE Sens. J. 2022, 22, 13581–13588.

- Lu, W.; Li, Y.; Cheng, Y.; Meng, D.; Liang, B.; Zhou, P. Early fault detection approach with deep architectures. IEEE Trans. Instrum. Meas. 2018, 67, 1679–1689.

- Park, D.; Kim, S.; An, Y.; Jung, J. LiReD: A light-weight real-time fault detection system for edge computing using LSTM recurrent neural networks. Sensors 2018, 18, 2110.

- Belagoune, S.; Bali, N.; Bakdi, A.; Baadji, B.; Atif, K. Deep learning through LSTM classification and regression for transmission line fault detection, diagnosis and location in large-scale multi-machine power systems. Measurement 2022, 177, 109330.

- Wei, W.; Jiang, F.; Yu, X.; Du, J. An ensemble learning algorithm based on resampling and hybrid feature selection, with an application to software defect prediction. In Proceedings of the 7th International Conference on Information and Network Technologies (ICINT), Okinawa, Japan, 21–23 May 2022.

- Catelli, R.; Pelosi, S.; Esposito, M. Lexicon based vs. BERT-based sentiment analysis: A comparative study in Italian. Electronics 2022, 11, 374.

- Chinatalapudi, N.; Battineni, G.; Amenta, F. Sentiment analysis of COVID-19 tweets using deep learning models. Infect. Dis. Rep. 2021, 13, 329–339.

- Hospedales, T.; Antoniou, A.; Micaelli, P.; Storkey, A. Meta-learning in neural networks: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 5149–5169.

- Khosla, P.; Teterwak, P.; Wang, C.; Sarna, A.; Tian, Y.; Isola, P.; Krishnan, D. Supervised contrastive learning. Adv. Neural Inf. Process. Syst. 2020, 33, 18661–18673.

- Creswell, A.; White, T.; Dumoulin, V.; Arulkumaran, K.; Sengupta, B.; Bharath, A. Generative adversarial networks: An overview. IEEE Signal Process. Mag. 2018, 35, 53–65.

- Xu, Y.; Shen, S.; He, Y.; Zhu, Q. A novel hybrid method integrating ICA-PCA with relevant vector machine for multivariate process monitoring. IEEE Trans. Control. Syst. Technol. 2019, 27, 1780–1787.

- Peng, G.; Shi, C.; Zhong, Y.; Ai, X. U-Shape spatial-temporal prediction network based on 3D convolution and BDLSTM. In Proceedings of the 2024 IEEE 4th International Conference on Software Engineering and Artificial Intelligence (SEAI), Xiamen, China, 21–23 June 2024.

- Silva, J.C.F.; Silva, M.C.; Amorim, V.J.P.; Lazaroni, P.S.O.; Oliveira, R.A.R. Wearable Sensors: Improving AI for walking activities through GAN-Based data augmentation. In Proceedings of the 2024 XIV Brazilian Symposium on Computing Systems Engineering (SBESC), Recife, Brazil, 26–29 November 2024.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).