Review on Security Range Perception Methods and Path-Planning Techniques for Substation Mobile Robots

Abstract

1. Introduction

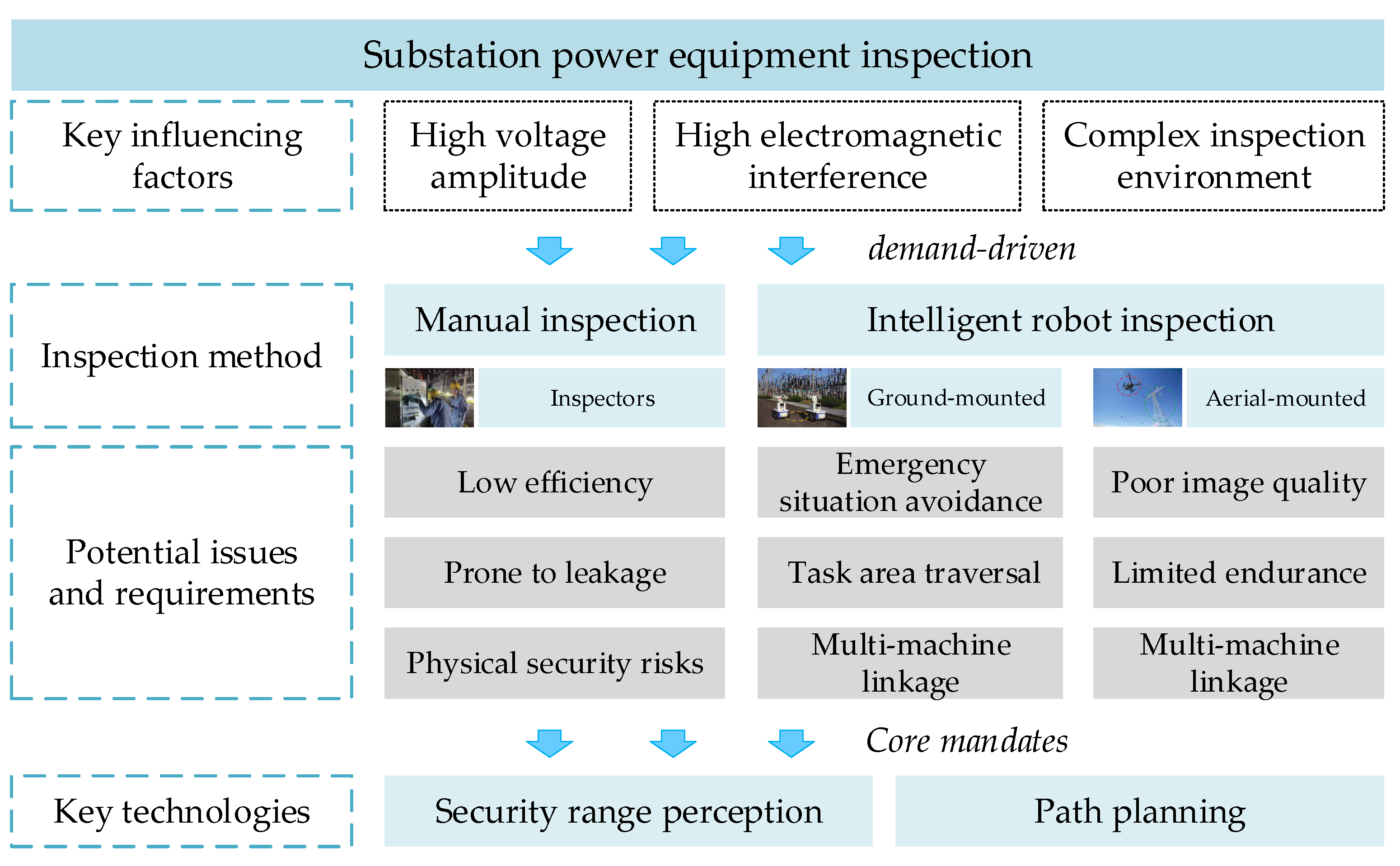

2. Robot Inspection Scenarios in Substations

2.1. Inspection Scenarios in Substations

- Monitoring of remote equipment in substations

- Infrared monitoring of thermal defects in equipment

- Identification and monitoring of equipment status

- Equipment anomaly monitoring

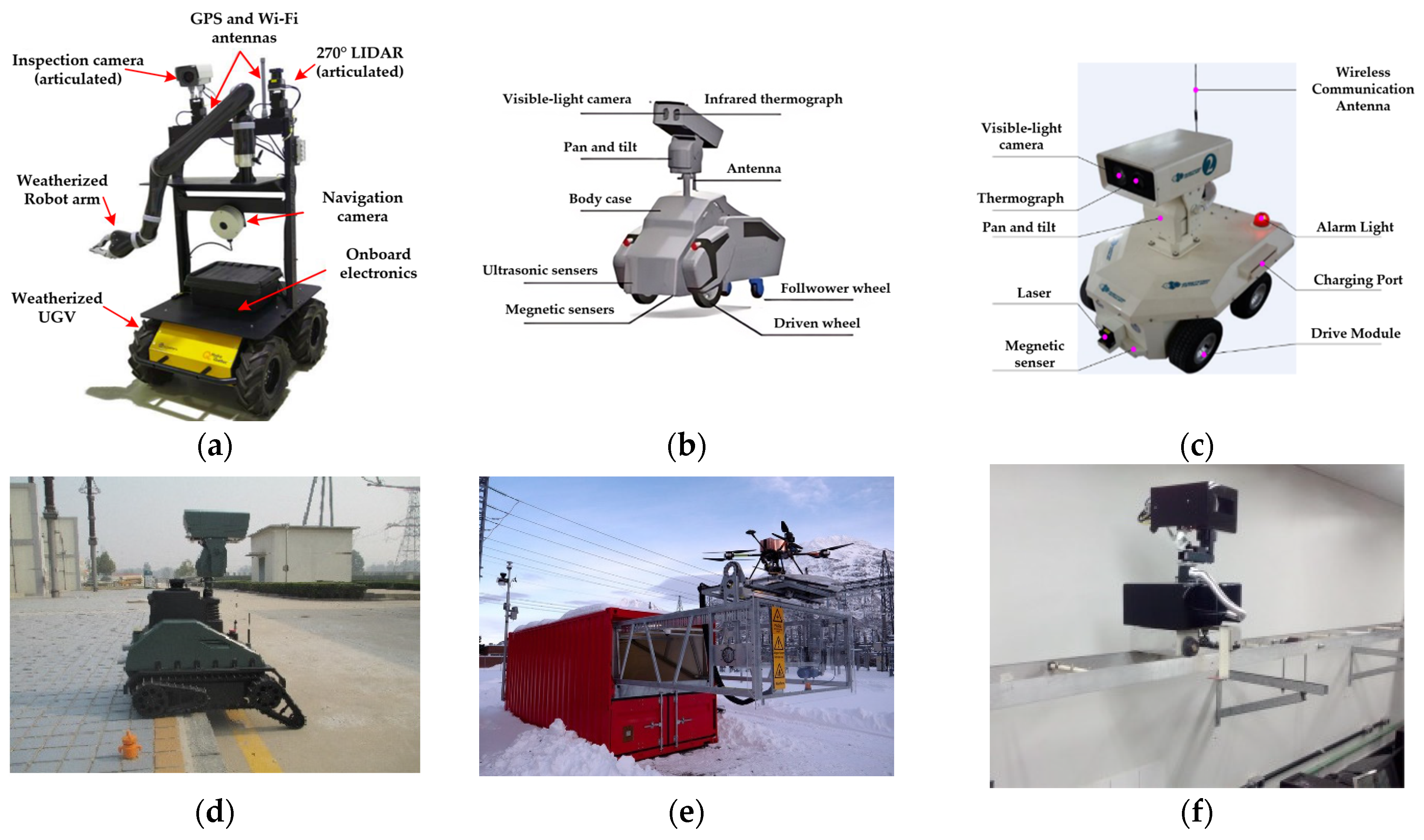

2.2. Mobile Robots in Substations

3. Security Range Perception Methods

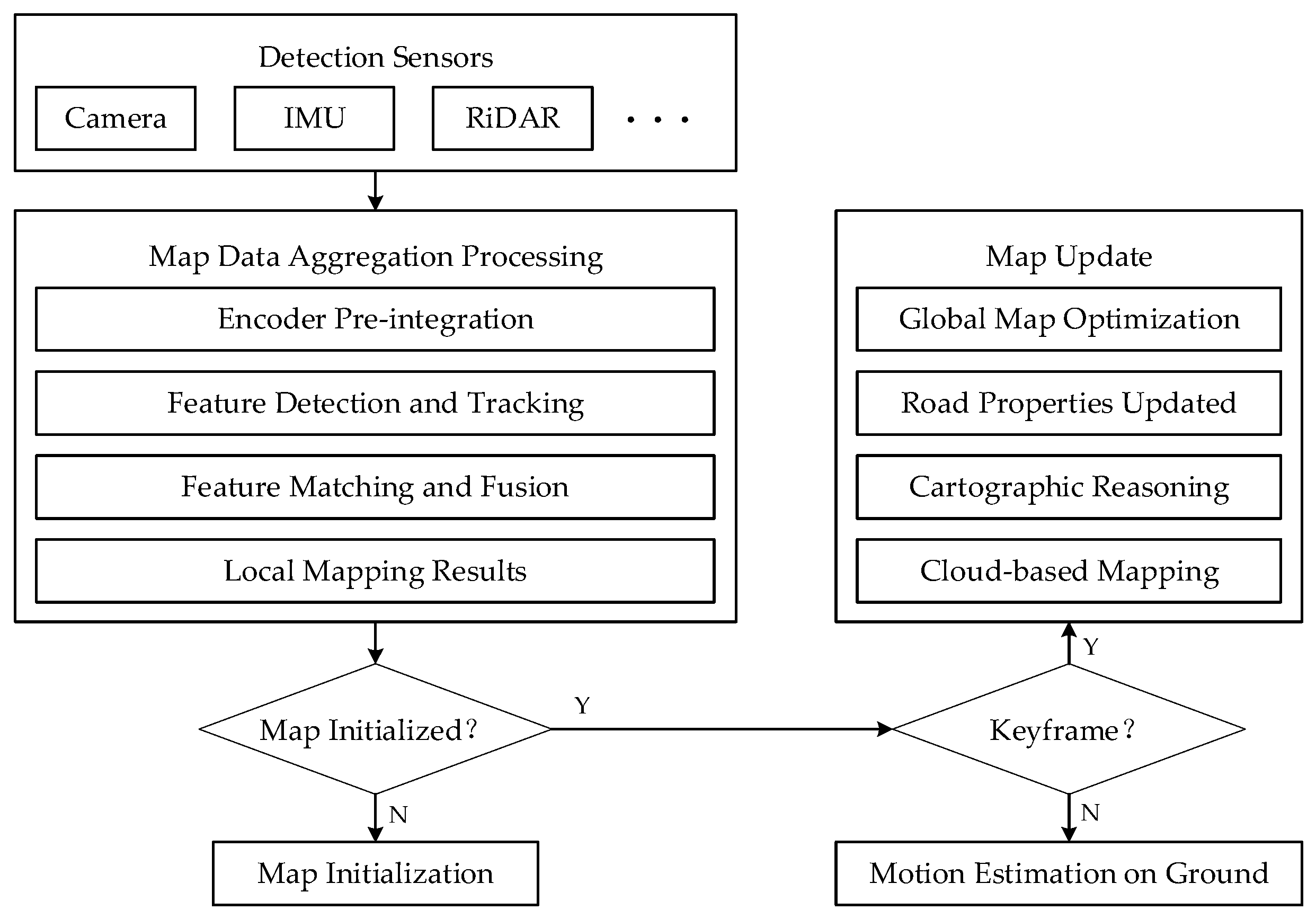

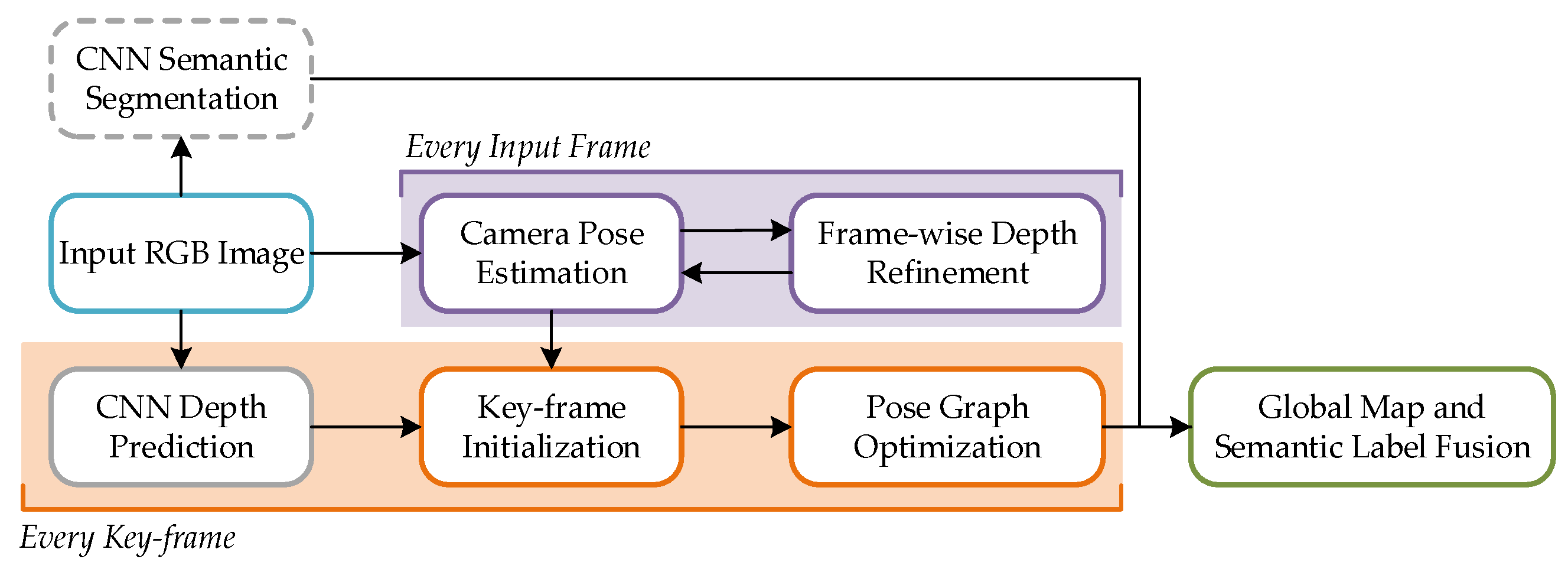

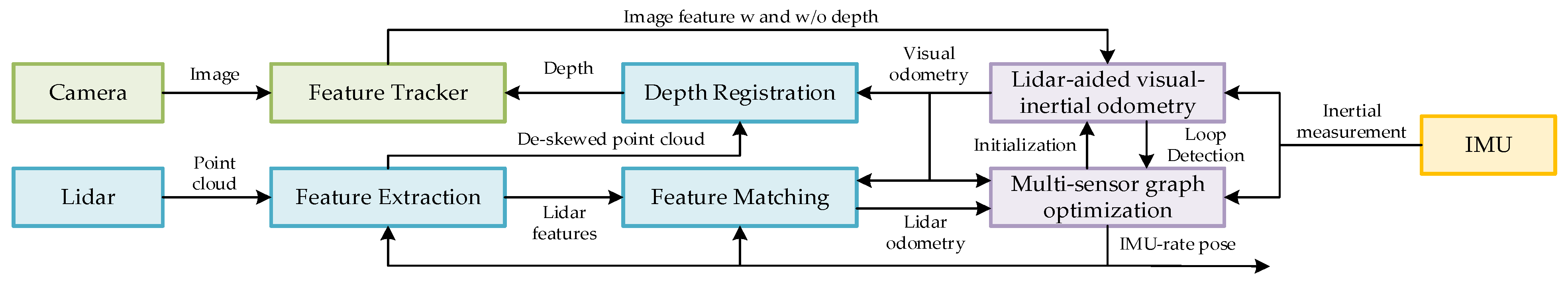

3.1. Global-Mapping-Based Range Perception Method

3.1.1. Local Environment Matching Method

- (1) Visual Matching

- Feature point method

- Direct method

- (2) LiDAR

- Two-dimensional lasers

- Three-dimensional lasers

3.1.2. Pre-Information-Based Mapping Method

3.2. Regional-Sensing-Based Range Perception Method

3.2.1. Pre-Information-Based Localization Method

- (1) RFID

- (2) UWB

3.2.2. Real-Time Electrothermal Sensing Method

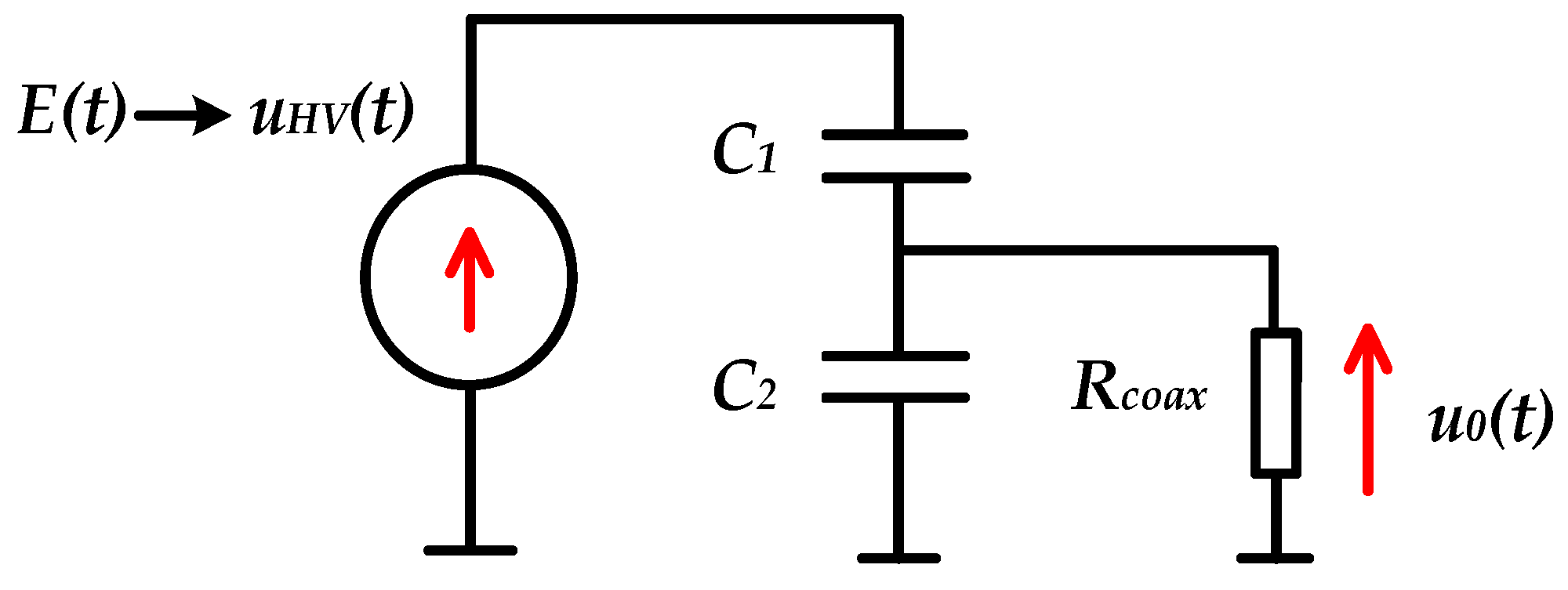

- (1) Electric Field and Voltage Sensors

- (2) Infrared Image Technology and Thermocouple Technology

3.3. Comparison and Analysis

4. Path-Planning Techniques

4.1. Global Path Planning Based on Prior Information

- Dijkstra algorithm

- Rapidly exploring random tree algorithm

- A* algorithm

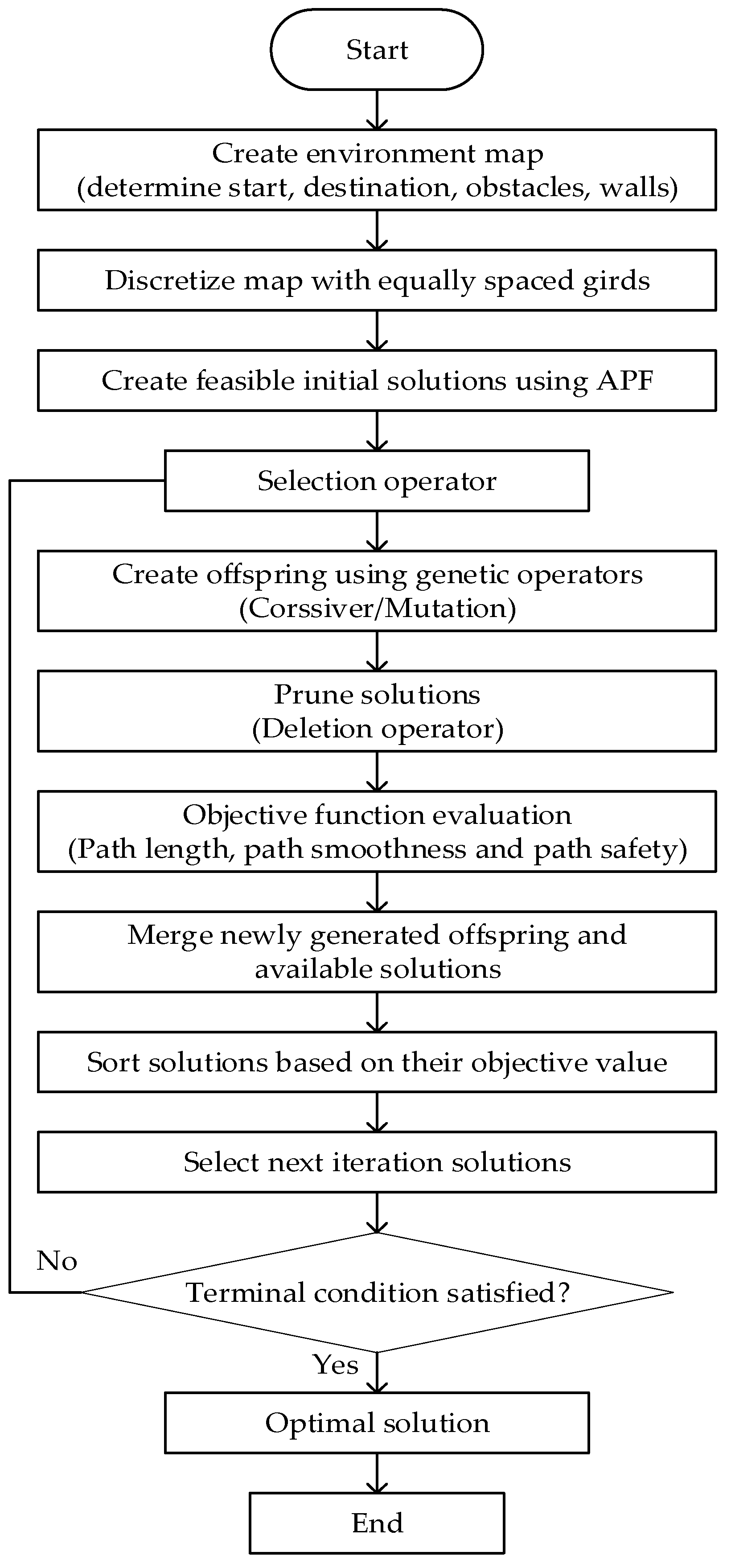

- Genetic algorithm

4.2. Regional Path Planning Based on Sensing Information

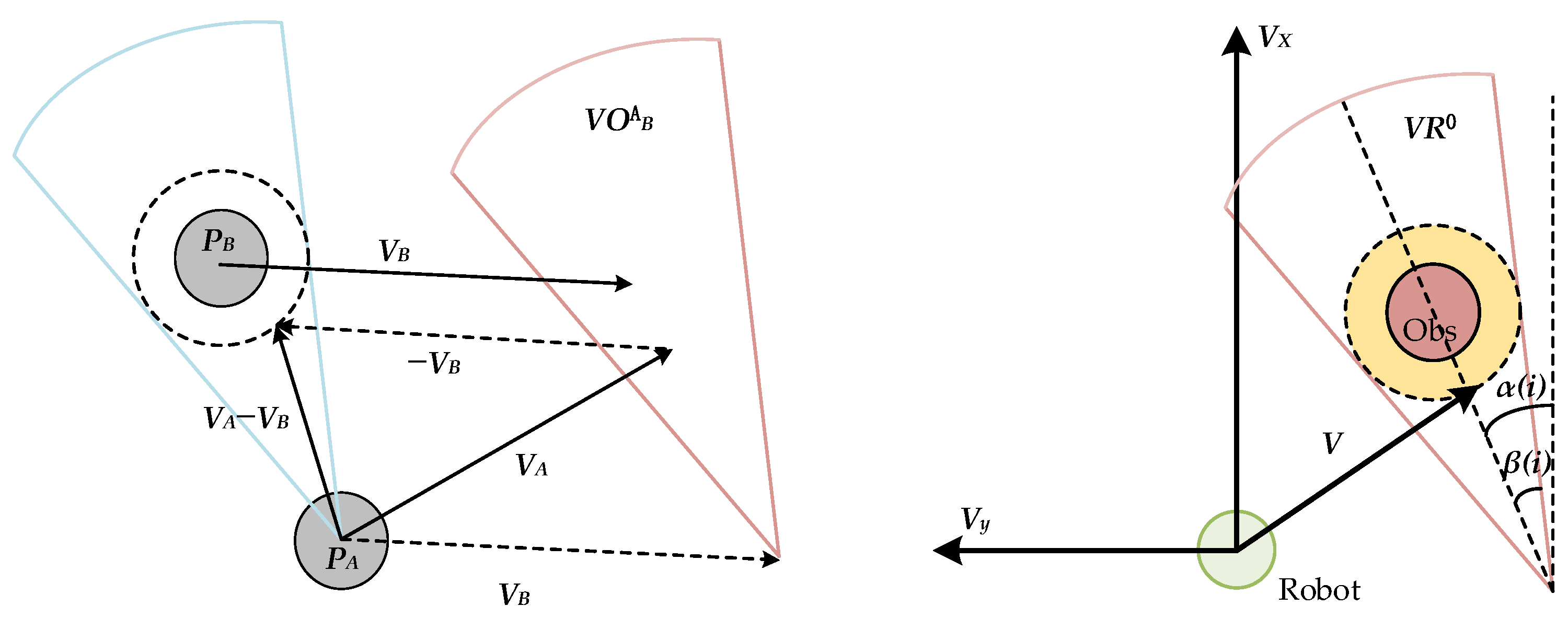

- Artificial potential field method

- D* algorithm

- Ant colony optimization

- Reinforcement learning

- Reinforcement-learning-based path planning lets an agent learn a path by interacting with its environment: in each state the agent selects an action, receives a reward, and updates its policy to maximize the cumulative reward. Representative techniques include Q-learning, deep Q-networks (DQN), and policy gradient methods. They are widely applied in robotic navigation, autonomous driving, and game AI, where they address path-planning problems in complex environments. As algorithms and computational power continue to improve, reinforcement learning is being applied to path planning more widely.

- Song et al. [138] proposed an improved Q-learning algorithm to address the slow convergence of Q-learning to the optimal solution, using the pollination algorithm to initialize the Q-values. Their experimental results show that proper Q-value initialization accelerates convergence. Zhao et al. [139] proposed an experience-memory Q-learning (EMQL) algorithm based on continuously updating the shortest distance from the current state node to the start point; it outperforms the traditional Q-learning algorithm in planning time, number of iterations, and path length. Wang et al. [140] proposed a reinforcement learning approach with an improved exploration mechanism (IEM), based on prioritized experience replay (PER) and curiosity-driven exploration (CDE), for time-constrained path planning of UAVs operating in complex unknown environments. Compared with the original off-policy RL algorithm, the algorithm incorporating IEM reduces the planning time of the rescue path and achieves the goal of rescuing all trapped individuals. Bai et al. [141] used the double deep Q-network (DDQN) to obtain an adaptive optimal path-planning solution. They designed a comprehensive reward function integrated with a heuristic function to navigate a robot into a target area, and used a greedy strategy and an optimized DNN to improve the global search capability and convergence speed of the DDQN algorithm.
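To make the state, action, and reward loop concrete, the following is a minimal sketch of plain tabular Q-learning on a small occupancy grid. It is not the method of any of the cited works: the grid layout, the reward values (+100 at the goal, -1 per step, -5 for a blocked move), the hyperparameters, and the function names `step`, `train_q_table`, and `greedy_path` are all assumptions chosen for illustration.

```python
import random

# Hypothetical 4x4 occupancy grid: 0 = free cell, 1 = obstacle.
GRID = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
START, GOAL = (0, 0), (3, 3)
MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def step(grid, goal, state, action):
    """Apply one move; return (next_state, reward). Reward values are assumed."""
    r, c = state[0] + MOVES[action][0], state[1] + MOVES[action][1]
    if not (0 <= r < len(grid) and 0 <= c < len(grid[0])) or grid[r][c] == 1:
        return state, -5.0  # blocked move: stay in place and pay a penalty
    if (r, c) == goal:
        return (r, c), 100.0
    return (r, c), -1.0


def train_q_table(grid, start, goal, episodes=2000, alpha=0.5, gamma=0.9,
                  epsilon=0.2, seed=0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    rows, cols = len(grid), len(grid[0])
    q = {(r, c): [0.0] * 4 for r in range(rows) for c in range(cols)}
    for _ in range(episodes):
        state = start
        for _ in range(4 * rows * cols):  # cap on the episode length
            if rng.random() < epsilon:
                action = rng.randrange(4)  # explore
            else:
                best = max(q[state])  # exploit, breaking ties at random
                action = rng.choice([a for a in range(4) if q[state][a] == best])
            nxt, reward = step(grid, goal, state, action)
            # Q-learning target: the goal is terminal, so nothing is bootstrapped there.
            target = reward if nxt == goal else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if state == goal:
                break
    return q


def greedy_path(q, grid, start, goal, max_steps=100):
    """Follow the highest-valued action from each state until the goal or a step cap."""
    path, state = [start], start
    while state != goal and len(path) <= max_steps:
        action = max(range(4), key=lambda a: q[state][a])
        state, _ = step(grid, goal, state, action)
        path.append(state)
    return path


q_table = train_q_table(GRID, START, GOAL)
print(greedy_path(q_table, GRID, START, GOAL))
```

The Q-table here starts at zero. Replacing that starting table with better initial estimates is the kind of change the initialization-based improvement described above targets.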

4.3. Path Planning for Multi-Robot Systems

- Tran et al. [142] proposed a swarm-based control algorithm for multi-robot exploration and repeated coverage in unknown environments with dynamic obstacles, and verified its effectiveness through a series of comparative experiments. Xie et al. [143] proposed a system architecture for autonomous multi-robot navigation and collaborative SLAM, comprising multi-robot planning and local navigation, which achieves collaborative environment detection and path planning without a prior navigation map. Zhang et al. [144] applied particle swarm optimization (PSO) to robot swarms and proposed a moving-distance-minimized PSO for mobile robot swarms (MPSO), which uses the principle of PSO to derive the moving distances of the robots so that the total moving distance is minimized. On this basis, Zhang et al. [145] proposed a PSO algorithm based on virtual sources and virtual groups (VVPSO), which divides the search area evenly into multiple cells with one virtual source at the center of each cell. A new particle swarm variant, real–virtual mapping PSO (RMPSO), searches the corresponding cells to locate the real source; it maps each robot asymmetrically to a particle swarm with multiple virtual particles. This approach advances multi-source localization with mobile robot swarms. Because the environment in which a robot moves changes in real time, the ability to handle both bounded and unbounded environments is essential. Inspired by the group foraging behavior of animals, Zhang et al. [146] proposed a dual-environment herd-foraging-based coverage path-planning algorithm (DH-CPP), which enables swarm robots to handle bounded and unbounded environments without prior knowledge of the environment.
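As background for the PSO variants above, the sketch below shows the standard global-best PSO update that they build on, used here to locate a single source in a 2D field. It does not implement MPSO, VVPSO, or RMPSO. The signal model `signal_strength`, the source position `SOURCE`, the search bounds, and the coefficients `w`, `c1`, and `c2` are assumptions for illustration only.

```python
import random

SOURCE = (7.0, 3.0)                   # hypothetical source position
BOUNDS = ((0.0, 10.0), (0.0, 10.0))   # hypothetical search area (x range, y range)


def signal_strength(x, y):
    """Assumed signal model: strongest at the source, decaying with squared distance."""
    return -((x - SOURCE[0]) ** 2 + (y - SOURCE[1]) ** 2)


def pso_locate(signal, bounds, n_particles=20, iters=100,
               w=0.7, c1=1.5, c2=1.5, seed=0):
    """Global-best PSO that maximizes `signal` over a rectangular area."""
    rng = random.Random(seed)
    lo = (bounds[0][0], bounds[1][0])
    hi = (bounds[0][1], bounds[1][1])
    pos = [[rng.uniform(lo[d], hi[d]) for d in range(2)] for _ in range(n_particles)]
    vel = [[0.0, 0.0] for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                       # best position seen by each particle
    pbest_val = [signal(p[0], p[1]) for p in pos]
    g = max(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]      # best position seen by the swarm
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(2):
                r1, r2 = rng.random(), rng.random()
                # Velocity = inertia + pull toward personal best + pull toward swarm best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Move the particle and keep it inside the search area.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo[d]), hi[d])
            val = signal(pos[i][0], pos[i][1])
            if val > pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val > gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


best_position, best_value = pso_locate(signal_strength, BOUNDS)
print(best_position, best_value)
```

In this sketch each particle is an abstract search point. Swarm-robot variants such as those described above additionally account for the fact that particles correspond to physical robots that must travel between positions.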

4.4. Comparison of Path-Planning Algorithms

5. Case Study

5.1. Practical Application Cases

5.1.1. Practical Application of Security Range Perception

5.1.2. Practical Application of Path Planning

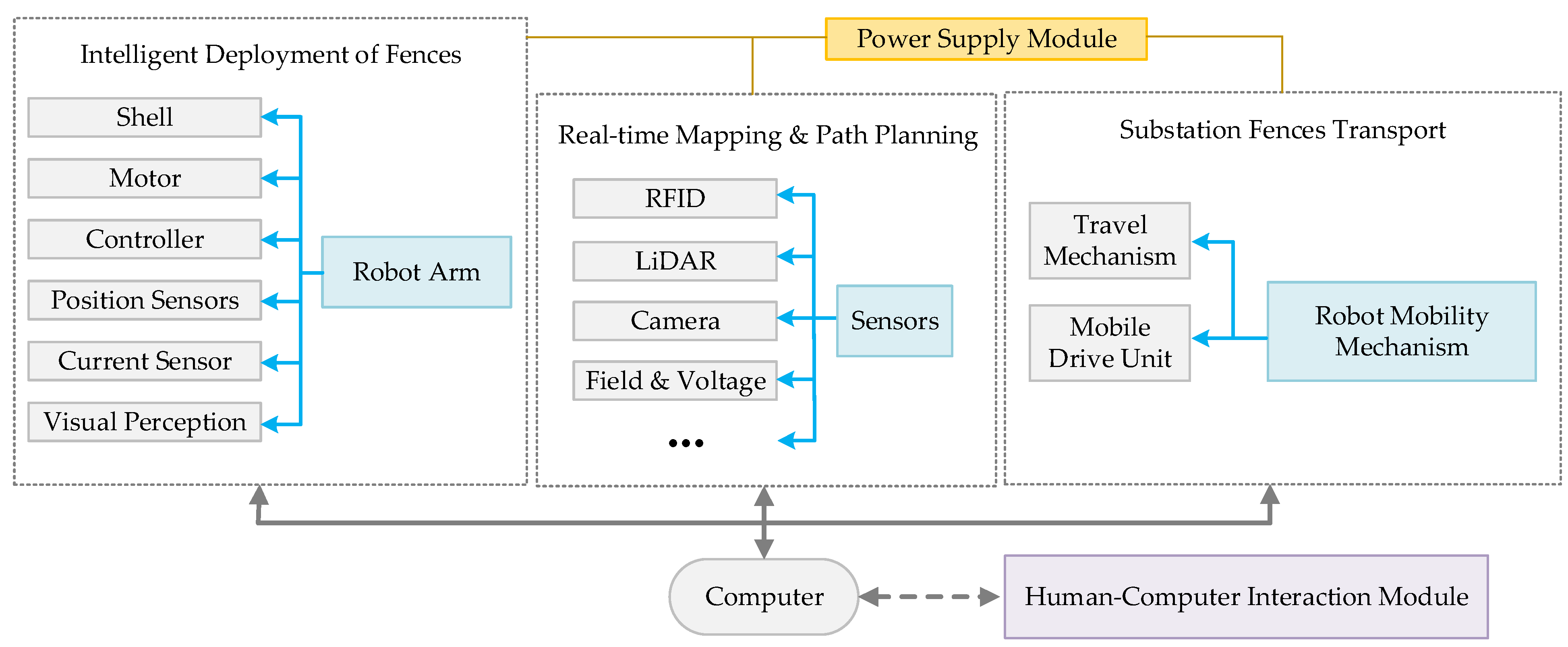

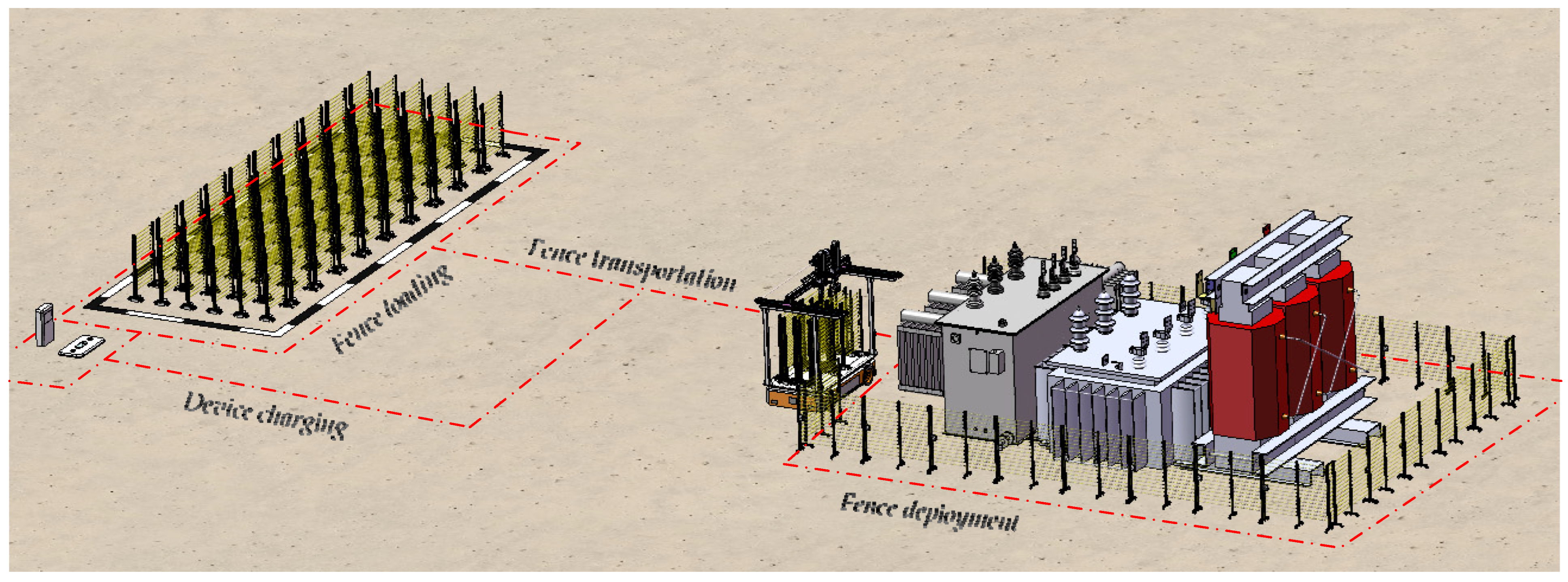

5.2. Security Range Control and Path Planning

6. Conclusions

- (1) Real-time sensing technology for the state of substation equipment operations

- Integration at the technical level

- Integration at the method level

- (2) Three-dimensional panoramic visualization of substations

- Three-dimensional modeling technology of substations

- Equipment abnormal state identification and localization technology

- Risk-area control strategy

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zhou, M.K.; Yan, J.F.; Zhou, X.X. Real-time online analysis of power grid. CSEE J. Power Energy Syst. 2020, 6, 236–238. [Google Scholar]

- Wen, M.; Li, Y.; Xie, X.T.; Cao, Y.J.; Wu, Y.W.; Wang, W.Y.; He, L.; Cao, Y.; Xu, B.K.; Huang, L.M. Key factors for efficient consumption of renewable energy in a provincial power grid in southern China. CSEE J. Power Energy Syst. 2020, 6, 554–562. [Google Scholar]

- Feng, D.; Lin, S.; He, Z.Y.; Sun, X.J.; Wang, Z. Failure risk interval estimation of traction power supply equipment considering the impact of multiple factors. IEEE Trans. Transp. Electrif. 2018, 4, 389–398. [Google Scholar] [CrossRef]

- Leo Kumar, S.P. State of the art-intense review on artificial intelligence systems application in process planning and manufacturing. Eng. Appl. Artif. Intell. 2017, 65, 294–329. [Google Scholar] [CrossRef]

- Chen, D.Q.; Guo, X.H.; Huang, P.; Li, F.H. Safety distance analysis of 500 kV transmission line tower UAV patrol inspection. IEEE Lett. Electromagn. Compat. Pract. Appl. 2020, 2, 124–128. [Google Scholar] [CrossRef]

- Li, S.F.; Hou, X.Z. Research on the AGV based robot system used in substation inspection. In Proceedings of the International Conference on Power System Technology, Chongqing, China, 22–26 October 2006; pp. 1–4. [Google Scholar]

- Katrasnik, J.; Pernus, F.; Likar, B. A survey of mobile robots for distribution power line inspection. IEEE Trans. Power Deliv. 2010, 25, 485–493. [Google Scholar] [CrossRef]

- Zhang, H.J.; Su, B.; Song, H.P.; Xiong, W. Development and implement of an inspection robot for power substation. In Proceedings of the 2015 IEEE Intelligent Vehicles Symposium, Seoul, Republic of Korea, 28 June–1 July 2015; pp. 121–125. [Google Scholar]

- Allan, J.F.; Beaudry, J. Robotic systems applied to power substations—A state-of-the-art survey. In Proceedings of the 2014 3rd International Conference on Applied Robotics for the Power Industry, Foz do Iguacu, Brazil, 14–16 October 2014; pp. 1–6. [Google Scholar]

- Lu, S.Y.; Zhang, Y.; Su, J.J. Mobile robot for power substation inspection: A survey. IEEE/CAA J. Autom. Sin. 2017, 4, 830–847. [Google Scholar] [CrossRef]

- Tang, J.; Zhou, Q.; Tang, M.; Xie, Y. Study on Mathematical model for VHF partial discharge of typical insulated defects in GIS. IEEE Trans. Dielect. Electr. Insul. 2007, 14, 30–38. [Google Scholar] [CrossRef]

- Xu, Z.R.; Tang, J.; Sun, C.X. Application of complex wavelet transform to suppress white noise in GIS UHF PD signals. IEEE Trans. Power Deliv. 2007, 22, 1498–1504. [Google Scholar]

- Li, J.; Sun, C.X.; Grzybowski, S. Partial discharge image recognition influenced by fractal image compression. IEEE Trans. Dielect. Electr. Insul. 2008, 15, 496–504. [Google Scholar]

- Li, J.; He, Z.M.; Bao, L.W.; Wang, Y.Y.; Du, L. Condition monitoring of high voltage equipment in smart grid. In Proceedings of the 2012 International Conference on High Voltage Engineering and Application, Shanghai, China, 17–20 September 2012; pp. 608–612. [Google Scholar]

- Yan, H.; Gao, G.; Huang, J.; Cao, Y. Study on the condition monitoring of equipment power system based on improved control chart. In Proceedings of the 2012 International Conference on Quality, Reliability, Risk, Maintenance, and Safety Engineering, Chengdu, China, 15–18 June 2012; pp. 735–739. [Google Scholar]

- Chaumette, F.; Hutchinson, S. Visual servo control. I. basic approaches. IEEE Robot. Automat. Mag. 2006, 13, 82–90. [Google Scholar] [CrossRef]

- Guo, R.; Li, B.; Sun, Y.; Han, L. A patrol robot for electric power substation. In Proceedings of the 2009 IEEE International Conference on Mechatronics and Automation, New York, NY, USA, 9–12 August 2009; pp. 55–59. [Google Scholar]

- Guo, L.; Liu, S.; Lv, M.; Ma, J.; Xie, L.; Yang, Q. Analysis on internal defects of electrical equipments in substation using heating simulation for infrared diagnose. In Proceedings of the 2014 China International Conference on Electricity Distribution (CICED), Shenzhen, China, 23–26 September 2014; pp. 39–42. [Google Scholar]

- Xu, Y.; Chen, H. Study on UV detection of high-voltage discharge based on the optical fiber sensor. In Electrical Power Systems and Computers; Lecture Notes in Electrical Engineering; Springer: Berlin/Heidelberg, Germany, 2011; pp. 15–22. [Google Scholar]

- Wong, C.Y.; Lin, S.C.F.; Ren, T.R.; Kwok, N.M. A survey on ellipse detection methods. In Proceedings of the 2012 IEEE International Symposium on Industrial Electronics, Hangzhou, China, 28–31 May 2012; pp. 1105–1110. [Google Scholar]

- Taib, S.; Shawal, M.; Kabir, S. Thermal imaging for enhancing inspection reliability: Detection and characterization. Infrared Thermogr. Mar. 2012, 10, 209–236. [Google Scholar]

- Wang, B.; Guo, R.; Li, B.; Han, L.; Sun, Y.; Wang, M. SmartGuard: An autonomous robotic system for inspecting substation equipment. J. Field Robot. 2012, 29, 123–137. [Google Scholar] [CrossRef]

- Zhang, H.; Su, B.; Meng, H. Development and implementation of a robotic inspection system for power substations. Ind. Robot. Int. J. 2017, 44, 333–342. [Google Scholar] [CrossRef]

- Li, J.; Hao, Y.; Xu, W.; Zhou, D.; Huang, R.; Lv, J.; Wang, H. Substation tire-track combined mobile robot. In Proceedings of the International Conference on Optics, Electronics and Communications Technology (OECT); Destech Publications, Inc.: Lancaster, UK, 2017; pp. 168–173. [Google Scholar]

- Langåker, H.A.; Kjerkreit, H.; Syversen, C.L.; Moore, R.J.; Holhjem, Ø.H.; Jensen, I.; Morrison, A.; Transeth, A.A.; Kvien, O.; Berg, G.; et al. An autonomous drone-based system for inspection of electrical substations. Int. J. Adv. Robot. Syst. 2021, 18, 17298814211002973. [Google Scholar] [CrossRef]

- Silva, B.P.A.; Ferreira, R.A.M.; Gomes, S.C.; Calado, F.A.R.; Andrade, R.M.; Porto, M.P. On-rail solution for autonomous inspections in electrical substations. Infrared Phys. Technol. 2018, 90, 53–58. [Google Scholar] [CrossRef]

- Smith, R.C.; Cheeseman, P. On the representation and estimation of spatial uncertainty. Int. J. Robot. Res. 1986, 5, 56–68. [Google Scholar] [CrossRef]

- Leonard, J.J.; Durrant-Whyte, H.F. Mobile robot localization by tracking geometric beacons. IEEE Trans. Robot. Automat. 1991, 7, 376–382. [Google Scholar] [CrossRef]

- Dhond, U.R.; Aggarwal, J.K. Structure from stereo-a review. IEEE Trans. Syst. Man Cybern. 1989, 19, 1489–1510. [Google Scholar] [CrossRef]

- Bailey, T.; Durrant-Whyte, H. Simultaneous Localization and Mapping (SLAM): Part II. IEEE Robot. Automat. Mag. 2006, 13, 108–117. [Google Scholar] [CrossRef]

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age. IEEE Trans. Robot. 2016, 32, 1309–1332. [Google Scholar] [CrossRef]

- Nister, D.; Naroditsky, O.; Bergen, J. Visual odometry. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; pp. 652–659. [Google Scholar]

- Davison, A.J.; Reid, I.D.; Molton, N.D.; Stasse, O. MonoSLAM: Real-time single camera SLAM. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1052–1067. [Google Scholar] [CrossRef] [PubMed]

- Klein, G.; Murray, D. Parallel tracking and mapping for small AR workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 1–10. [Google Scholar]

- Quan, M.; Piao, S.; He, Y.; Liu, X.; Qadir, M.Z. Monocular visual SLAM with points and lines for ground robots in particular scenes: Parameterization for lines on ground. J. Intell. Robot. Syst. 2021, 101, 72. [Google Scholar] [CrossRef]

- Newcombe, R.A.; Lovegrove, S.J.; Davison, A.J. DTAM: Dense tracking and mapping in real-time. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2320–2327. [Google Scholar]

- Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast semi-direct monocular visual odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 15–22. [Google Scholar]

- Engel, J.; Schoeps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In Proceedings of the Computer Vision—ECCV 2014, PT II, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 834–849. [Google Scholar]

- Engel, J.; Koltun, V.; Cremers, D. Direct sparse odometry. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 611–625. [Google Scholar] [CrossRef]

- Liang, Z.; Wang, C. A Semi-Direct Monocular Visual SLAM Algorithm in Complex Environments. J. Intell. Robot. Syst. 2021, 101, 25. [Google Scholar] [CrossRef]

- Tang, F.; Li, H.; Wu, Y. FMD Stereo SLAM: Fusing MVG and direct formulation towards accurate and fast stereo SLAM. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 133–139. [Google Scholar]

- Tateno, K.; Tombari, F.; Laina, I.; Navab, N. CNN-SLAM: Real-time dense monocular SLAM with learned depth prediction. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6565–6574. [Google Scholar]

- Bloesch, M.; Czarnowski, J.; Clark, R.; Leutenegger, S.; Davison, A.J. CodeSLAM—Learning a compact, optimisable representation for dense visual SLAM. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2560–2568. [Google Scholar]

- Loo, S.Y.; Amiri, A.J.; Mashohor, S.; Tang, S.H.; Zhang, H. CNN-SVO: Improving the mapping in semi-direct visual odometry using single-image depth prediction. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 5218–5223. [Google Scholar]

- Zhan, H.; Weerasekera, C.S.; Bian, J.-W.; Reid, I. Visual odometry revisited: What should be learnt? In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 4203–4210. [Google Scholar]

- Li, A.; Wang, J.; Xu, M.; Chen, Z. DP-SLAM: A Visual SLAM with Moving Probability towards Dynamic Environments. Inf. Sci. 2021, 556, 128–142. [Google Scholar] [CrossRef]

- Montemerlo, M.; Thrun, S.; Koller, D.; Wegbreit, B. FastSLAM: A Factored Solution to the Simultaneous Localization and Mapping Problem; Association for the Advancement of Artificial Intelligence (AAAI): Washington, DC, USA, 2003; pp. 593–598. [Google Scholar]

- Grisetti, G.; Stachniss, C.; Burgard, W. Improved techniques for grid mapping with rao-blackwellized particle filters. IEEE Trans. Robot. 2007, 23, 34–46. [Google Scholar] [CrossRef]

- Konolige, K.; Grisetti, G.; Kümmerle, R.; Burgard, W.; Limketkai, B.; Vincent, R. Efficient sparse pose adjustment for 2D mapping. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 22–29. [Google Scholar]

- Olson, E.B. Real-time correlative scan matching. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 4387–4393. [Google Scholar]

- Kohlbrecher, S.; Von Stryk, O.; Meyer, J.; Klingauf, U. A flexible and scalable SLAM system with full 3D motion estimation. In Proceedings of the 2011 IEEE International Symposium on Safety, Security, and Rescue Robotics, Kyoto, Japan, 1–5 November 2011; pp. 155–160. [Google Scholar]

- Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-time loop closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1271–1278. [Google Scholar]

- Bekrar, A.; Kacem, I.; Chu, C.; Sadfi, C. A branch and bound algorithm for solving the 2D strip packing problem. In Proceedings of the 2006 International Conference on Service Systems and Service Management, Troyes, France, 25–27 October 2006; pp. 940–946. [Google Scholar]

- Zhang, J.; Singh, S. LOAM: Lidar odometry and mapping in real-time. In Proceedings of the Robotics: Science and Systems X, Berkeley, CA, USA, 12–16 July 2014. [Google Scholar]

- Shan, T.; Englot, B. LeGO-LOAM: Lightweight and ground-optimized lidar odometry and mapping on variable terrain. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4758–4765. [Google Scholar]

- Chen, K.; Lopez, B.T.; Agha-mohammadi, A.; Mehta, A. Direct LiDAR odometry: Fast localization with dense point clouds. IEEE Robot. Autom. Lett. 2022, 7, 2000–2007. [Google Scholar] [CrossRef]

- Du, W.; Beltrame, G. Real-time simultaneous localization and mapping with LiDAR intensity. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 4164–4170. [Google Scholar]

- Chen, X.; Läbe, T.; Milioto, A.; Röhling, T.; Vysotska, O.; Haag, A.; Behley, J.; Stachniss, C. OverlapNet: Loop closing for LiDAR-based SLAM. In Proceedings of the Robotics: Science and Systems XVI, Corvallis, OR, USA, 12–16 July 2020. [Google Scholar]

- Ma, J.; Zhang, J.; Xu, J.; Ai, R.; Gu, W.; Chen, X. OverlapTransformer: An efficient and yaw-angle-invariant transformer network for LiDAR-based place recognition. IEEE Robot. Autom. Lett. 2022, 7, 6958–6965. [Google Scholar] [CrossRef]

- Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Rus, D. LIO-SAM: Tightly-coupled Lidar inertial odometry via smoothing and mapping. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 5135–5142. [Google Scholar]

- Xu, W.; Zhang, F. FAST-LIO: A fast, robust LiDAR-inertial odometry package by tightly-coupled iterated Kalman filter. IEEE Robot. Autom. Lett. 2021, 6, 3317–3324. [Google Scholar] [CrossRef]

- Xu, W.; Cai, Y.; He, D.; Lin, J.; Zhang, F. FAST-LIO2: Fast direct LiDAR-inertial odometry. IEEE Trans. Robot. 2022, 38, 2053–2073. [Google Scholar] [CrossRef]

- Bloesch, M.; Omari, S.; Hutter, M.; Siegwart, R. Robust visual inertial odometry using a direct EKF-based approach. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 298–304. [Google Scholar]

- Leutenegger, S.; Furgale, P.; Rabaud, V.; Chli, M.; Konolige, K.; Siegwart, R. Keyframe-based stereo visual-inertial SLAM using nonlinear optimization. In Proceedings of Robotics: Science and Systems, Berlin, Germany, 24–28 June 2013; pp. 1–8. [Google Scholar]

- Sun, K.; Mohta, K.; Pfrommer, B.; Watterson, M.; Liu, S.; Mulgaonkar, Y.; Taylor, C.J.; Kumar, V. Robust stereo visual inertial odometry for fast autonomous flight. IEEE Robot. Autom. Lett. 2018, 3, 965–972. [Google Scholar] [CrossRef]

- Qin, T.; Li, P.; Shen, S. VINS-Mono: A robust and versatile monocular visual-inertial state estimator. IEEE Trans. Robot. 2018, 34, 1004–1020. [Google Scholar] [CrossRef]

- Wisth, D.; Camurri, M.; Das, S.; Fallon, M. Unified multi-modal landmark tracking for tightly coupled lidar-visual-inertial odometry. IEEE Robot. Autom. Lett. 2021, 6, 1004–1011. [Google Scholar] [CrossRef]

- Shan, T.; Englot, B.; Ratti, C.; Rus, D. LVI-SAM: Tightly-coupled lidar-visual-inertial odometry via smoothing and mapping. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 5692–5698. [Google Scholar]

- Lin, J.; Zheng, C.; Xu, W.; Zhang, F. R2LIVE: A robust, real-time, LiDAR-Inertial-Visual tightly-coupled state estimator and mapping. IEEE Robot. Autom. Lett. 2021, 6, 7469–7476. [Google Scholar] [CrossRef]

- Lin, J.; Zhang, F. R3LIVE: A robust, real-time, RGB-colored, LiDAR-Inertial-Visual tightly-coupled state estimation and mapping package. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 10672–10678. [Google Scholar]

- Chen, X.; Xie, L.; Wang, C.; Lu, S. Adaptive accurate indoor-localization using passive RFID. In Proceedings of the 2013 International Conference on Parallel and Distributed Systems, Seoul, Republic of Korea, 15–18 December 2013; pp. 249–256. [Google Scholar]

- Zhao, Y.; Liu, Y.; Ni, L.M. VIRE: Active RFID-based localization using virtual reference elimination. In Proceedings of the 2007 International Conference on Parallel Processing (ICPP 2007), Xi’an, China, 10–14 September 2007; p. 56. [Google Scholar]

- Shangguan, L.F.; Jamieson, K. The design and implementation of a mobile RFID tag sorting robot. In Proceedings of the 14th Annual International Conference on Mobile Systems, Applications, and Services, Singapore, 26–30 June 2016; pp. 31–42. [Google Scholar]

- Liu, Y.; Zhao, Y.; Chen, L.; Pei, J.; Han, J. Mining frequent trajectory patterns for activity monitoring using radio frequency tag arrays. IEEE Trans. Parallel Distrib. Syst. 2012, 23, 2138–2149. [Google Scholar] [CrossRef]

- Han, J.; Qian, C.; Wang, X.; Ma, D.; Zhao, J.; Xi, W.; Jiang, Z.; Wang, Z. Twins: Device-free object tracking using passive tags. IEEE/ACM Trans. Netw. 2016, 24, 1605–1617. [Google Scholar] [CrossRef]

- Yang, L.; Chen, Y.; Li, X.Y.; Xiao, C.; Li, M.; Liu, Y. Tagoram: Real-time tracking of mobile RFID tags to high precision using COTS devices. In Proceedings of the 20th Annual International Conference on Mobile Computing and Networking, Maui, HI, USA, 7–11 September 2014; pp. 237–248. [Google Scholar]

- Wang, J.; Vasisht, D.; Katabi, D. RF-IDraw: Virtual touch screen in the air using RF signals. In Proceedings of the 2014 ACM Conference on SIGCOMM, Chicago, IL, USA, 17–22 August 2014; pp. 235–246. [Google Scholar]

- Shangguan, L.F.; Zhou, Z.; Jamieson, K. Enabling gesture-based interactions with objects. In Proceedings of the 15th Annual International Conference on Mobile Systems, Applications, and Services, Niagara Falls, NY, USA, 19–23 June 2017; pp. 239–251. [Google Scholar]

- Shangguan, L.; Jamieson, K. Leveraging electromagnetic polarization in a two-antenna whiteboard in the air. In Proceedings of the 12th International Conference on Emerging Networking Experiments and Technologies, Irvine, CA, USA, 12–15 December 2016; pp. 443–456. [Google Scholar]

- Wei, T.; Zhang, X. Gyro in the air: Tracking 3D orientation of batteryless internet-of-things. In Proceedings of the 22nd Annual International Conference on Mobile Computing and Networking, New York, NY, USA, 3–7 October 2016; pp. 55–68. [Google Scholar]

- Ding, H.; Qian, C.; Han, J.; Wang, G.; Xi, W.; Zhao, K.; Zhao, J. RFIPad: Enabling cost-efficient and device-free in-air handwriting using passive tags. In Proceedings of the 2017 IEEE 37th International Conference on Distributed Computing Systems (ICDCS), Atlanta, GA, USA, 5–8 June 2017; pp. 447–457. [Google Scholar]

- Wang, C.; Liu, J.; Chen, Y.; Liu, H.; Xie, L.; Wang, W.; He, B.; Lu, S. Multi-touch in the air: Device-free finger tracking and gesture recognition via COTS RFID. In Proceedings of the 2018 IEEE Conference on Computer Communications, Honolulu, HI, USA, 16–19 April 2018; pp. 1691–1699. [Google Scholar]

- Bu, Y.; Xie, L.; Gong, Y.; Wang, C.; Yang, L.; Liu, J.; Lu, S. RF-Dial: An RFID-based 2D human-computer interaction via tag array. In Proceedings of the 2018 IEEE Conference on Computer Communications, Honolulu, HI, USA, 16–19 April 2018; pp. 837–845. [Google Scholar]

- Lin, Q.; Yang, L.; Sun, Y.; Liu, T.; Li, X.Y.; Liu, Y. Beyond one-dollar mouse: A battery-free device for 3D human-computer interaction via RFID tags. In Proceedings of the 2015 IEEE Conference on Computer Communications, Kowloon, Hong Kong, 26 April–1 May 2015; pp. 1661–1669. [Google Scholar]

- Jiang, C.; He, Y.; Yang, S.; Guo, J.; Liu, Y. 3D-OmniTrack: 3D tracking with COTS RFID systems. In Proceedings of the 18th International Conference on Information Processing in Sensor Networks, Montreal, QC, Canada, 16–18 April 2019; pp. 25–36. [Google Scholar]

- Wang, C.; Xie, L.; Zhang, K.; Wang, W.; Bu, Y.; Lu, S. Spin-antenna: 3D motion tracking for tag array labeled objects via spinning antenna. In Proceedings of the 2019 IEEE Conference on Computer Communications, Paris, France, 29 April–2 May 2019; pp. 1–9. [Google Scholar]

- Wang, C.; Liu, J.; Chen, Y.; Xie, L.; Liu, H.B.; Lu, S. RF-Kinect: A wearable RFID-based approach towards 3D body movement tracking. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 1–28. [Google Scholar] [CrossRef]

- Jin, H.; Yang, Z.; Kumar, S.; Hong, J.I. Towards wearable everyday body-frame tracking using passive RFIDs. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 1, 1–23. [Google Scholar] [CrossRef]

- Nguyen, T.H.; Nguyen, T.M.; Xie, L. Tightly-coupled ultra-wideband-aided monocular visual SLAM with degenerate anchor configurations. Auton. Robot. 2020, 44, 1519–1534. [Google Scholar] [CrossRef]

- Nguyen, T.H.; Nguyen, T.M.; Xie, L. Tightly-coupled single-anchor ultra-wideband-aided monocular visual odometry system. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation, Paris, France, 31 May–31 August 2020; pp. 665–671. [Google Scholar]

- Shi, Q.; Zhao, S.; Cui, X.; Lu, M.; Jia, M. Anchor self-localization algorithm based on UWB ranging and inertial measurements. Tsinghua Sci. Technol. 2019, 24, 728–737. [Google Scholar] [CrossRef]

- Kang, J.; Park, K.; Arjmandi, Z.; Sohn, G.; Shahbazi, M.; Menard, P. Ultra-wideband aided UAV positioning using incremental smoothing with ranges and multilateration. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 4529–4536. [Google Scholar]

- Lawrence, D.; Donnal, J.S.; Leeb, S.; He, Y. Non-contact measurement of line voltage. IEEE Sens. J. 2016, 16, 8990–8997. [Google Scholar] [CrossRef]

- Jakubowski, J.; Kuchta, M.; Kubacki, R. D-dot sensor response improvement in the evaluation of high-power microwave pulses. Electronics 2021, 10, 123. [Google Scholar] [CrossRef]

- Zhao, P.; Wang, J.; Wang, Q.; Xiao, Q.; Zhang, R.; Ou, S.; Tao, Y. Simulation, design, and test of a dual-differential D-dot overvoltage sensor based on the field-circuit coupling method. Sensors 2019, 19, 3413. [Google Scholar] [CrossRef]

- Zhang, R.H.; Xu, L.X.; Yu, Z.Y.; Shi, Y.; Mu, C.P.; Xu, M. Deep-IRTarget: An automatic target detector in infrared imagery using dual-domain feature extraction and allocation. IEEE Trans. Multimed. 2022, 24, 1735–1749. [Google Scholar] [CrossRef]

- Zhao, Z.X.; Xu, S.; Zhang, J.S.; Liang, C.Y.; Zhang, C.X.; Liu, J.M. Efficient and model-based infrared and visible image fusion via algorithm unrolling. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 1186–1196. [Google Scholar] [CrossRef]

- Kim, K.; Song, B.; Fernández-Hurtado, V.; Lee, W.; Jeong, W.; Cui, L.J.; Thompson, D.; Feist, J.; Reid, M.T.H.; García-Vidal, F.J.; et al. Radiative heat transfer in the extreme near field. Nature 2015, 528, 387–391. [Google Scholar] [CrossRef]

- Kou, Z.H.; Wang, Q.Y.; Li, G.C.; Zhang, W. Application of high temperature wall thermocouple temperature measurement technology in aircraft engine. J. Eng. Therm. Energy Power 2023, 38, 202–210. [Google Scholar]

- Yang, S.M.; Huang, Y.J. On the performance of thermoelectric energy generators by stacked thermocouples design in CMOS process. IEEE Sens. J. 2022, 22, 18318–18325. [Google Scholar] [CrossRef]

- Gonzalez, D.; Perez, J.; Milanes, V.; Nashashibi, F. A review of motion planning techniques for automated vehicles. IEEE Trans. Intell. Transp. Syst. 2016, 17, 1135–1145. [Google Scholar] [CrossRef]

- Dijkstra, E.W. A note on two problems in connexion with graphs. In Edsger Wybe Dijkstra: His Life, Work, and Legacy; Association for Computing Machinery: New York, NY, USA, 2022; pp. 287–290. ISBN 978-1-4503-9773-5. [Google Scholar]

- Ming, Y.; Li, Y.; Zhang, Z.; Yan, W. A survey of path planning algorithms for autonomous vehicles. SAE Int. J. Commer. Veh. 2021, 14, 97–109. [Google Scholar] [CrossRef]

- Wai, R.-J.; Liu, C.-M.; Lin, Y.-W. Design of switching path-planning control for obstacle avoidance of mobile robot. J. Frankl. Inst.-Eng. Appl. Math. 2011, 348, 718–737. [Google Scholar] [CrossRef]

- Alshammrei, S.; Boubaker, S.; Kolsi, L. Improved Dijkstra algorithm for mobile robot path planning and obstacle avoidance. CMC-Comput. Mater. Contin. 2022, 72, 5939–5954. [Google Scholar] [CrossRef]

- Duraklı, Z.; Nabiyev, V. A new approach based on Bezier curves to solve path planning problems for mobile robots. J. Comput. Sci. 2022, 58, 101540. [Google Scholar] [CrossRef]

- Lavalle, S. Rapidly-Exploring Random Trees: A New Tool for Path Planning; Research Report; Department of Computer Science, Iowa State University: Ames, IA, USA, 1998. [Google Scholar]

- Karaman, S.; Frazzoli, E. Sampling-based algorithms for optimal motion planning. Int. J. Robot. Res. 2011, 30, 846–894.

- Zhang, Z.; Wu, D.; Gu, J.; Li, F. A path-planning strategy for unmanned surface vehicles based on an adaptive hybrid dynamic stepsize and target attractive force-RRT algorithm. J. Mar. Sci. Eng. 2019, 7, 132.

- Li, Y.; Wei, W.; Gao, Y.; Wang, D.; Fan, Z. PQ-RRT*: An improved path planning algorithm for mobile robots. Expert Syst. Appl. 2020, 152, 113425.

- Wang, J.; Li, B.; Meng, M.Q.H. Kinematic constrained bi-directional RRT with efficient branch pruning for robot path planning. Expert Syst. Appl. 2021, 170, 114541.

- Hu, B.; Cao, Z.; Zhou, M. An efficient RRT-Based framework for planning short and smooth wheeled robot motion under kinodynamic constraints. IEEE Trans. Ind. Electron. 2021, 68, 3292–3302.

- Li, B.; Chen, B. An adaptive rapidly-exploring random tree. IEEE/CAA J. Autom. Sin. 2022, 9, 283–294.

- Hart, P.E.; Nilsson, N.J.; Raphael, B. A formal basis for the heuristic determination of minimum cost paths. IEEE Trans. Syst. Sci. Cybern. 1968, 4, 100–107.

- Wang, X.; Zhang, H.; Liu, S.; Wang, J.; Wang, Y.; Shangguan, D. Path planning of scenic spots based on improved A* algorithm. Sci. Rep. 2022, 12, 1320.

- Li, C.; Huang, X.; Ding, J.; Song, K.; Lu, S. Global path planning based on a bidirectional alternating search A* algorithm for mobile robots. Comput. Ind. Eng. 2022, 168, 108123.

- Erke, S.; Bin, D.; Yiming, N.; Qi, Z.; Liang, X.; Dawei, Z. An improved A-star based path planning algorithm for autonomous land vehicles. Int. J. Adv. Robot. Syst. 2020, 17, 1729881420962263.

- Likhachev, M.; Gordon, G.J.; Thrun, S. ARA*: Anytime A* with provable bounds on sub-optimality. In Proceedings of the 16th International Conference on Neural Information Processing Systems, Cambridge, MA, USA, 9–11 December 2003; pp. 767–774.

- Holland, J. Outline for a logical theory of adaptive systems. J. ACM 1962, 9, 297–314.

- Pehlivanoglu, Y.V.; Pehlivanoglu, P. An enhanced genetic algorithm for path planning of autonomous UAV in target coverage problems. Appl. Soft Comput. 2021, 112, 107796.

- Lee, J.; Kim, D.-W. An effective initialization method for genetic algorithm-based robot path planning using a directed acyclic graph. Inf. Sci. 2016, 332, 1–18.

- Chen, W.-J.; Jhong, B.-G.; Chen, M.-Y. Design of path planning and obstacle avoidance for a wheeled mobile robot. Int. J. Fuzzy Syst. 2016, 18, 1080–1091.

- Nazarahari, M.; Khanmirza, E.; Doostie, S. Multi-objective multi-robot path planning in continuous environment using an enhanced genetic algorithm. Expert Syst. Appl. 2019, 115, 106–120.

- Khatib, O. Real-time obstacle avoidance for manipulators and mobile robots. In Proceedings of the 1985 IEEE International Conference on Robotics and Automation Proceedings, St. Louis, MO, USA, 25–28 March 1985; pp. 500–505.

- Abdalla, T.Y.; Abed, A.A.; Ahmed, A.A. Mobile robot navigation using PSO-optimized fuzzy artificial potential field with fuzzy control. J. Intell. Fuzzy Syst. 2017, 32, 3893–3908.

- Duhe, J.-F.; Victor, S.; Melchior, P. Contributions on artificial potential field method for effective obstacle avoidance. Fract. Calc. Appl. Anal. 2021, 24, 421–446.

- Jayaweera, H.M.P.C.; Hanoun, S. A dynamic artificial potential field (D-APF) UAV path planning technique for following ground moving targets. IEEE Access 2020, 8, 192760–192776.

- Liu, Z.; Yuan, X.; Huang, G.; Wang, Y.; Zhang, X. Two potential fields fused adaptive path planning system for autonomous vehicle under different velocities. ISA Trans. 2021, 112, 176–185.

- Stentz, A. The focused D* algorithm for realtime replanning. In Proceedings of the 14th International Joint Conference on Artificial Intelligence, Montreal, QC, Canada, 20–25 August 1995; pp. 1–8.

- Yu, J.; Yang, M.; Zhao, Z.; Wang, X.; Bai, Y.; Wu, J.; Xu, J. Path planning of unmanned surface vessel in an unknown environment based on improved D* Lite algorithm. Ocean Eng. 2022, 266, 112873.

- Ren, Z.; Rathinam, S.; Likhachev, M.; Choset, H. Multi-objective path-based D* lite. IEEE Robot. Autom. Lett. 2022, 7, 3318–3325.

- Sun, B.; Zhang, W.; Li, S.; Zhu, X. Energy optimised D* AUV path planning with obstacle avoidance and ocean current environment. J. Navig. 2022, 75, 685–703.

- Dorigo, M.; Maniezzo, V.; Colorni, A. Ant system: An autocatalytic optimizing process. Clustering 1991, 3, 340.

- Luo, Q.; Wang, H.; Zheng, Y.; He, J. Research on path planning of mobile robot based on improved ant colony algorithm. Neural Comput. Appl. 2020, 32, 1555–1566.

- Wang, L.; Wang, H.; Yang, X.; Gao, Y.; Cui, X.; Wang, B. Research on smooth path planning method based on improved ant colony algorithm optimized by Floyd algorithm. Front. Neurorobot. 2022, 16, 955179.

- Miao, C.; Chen, G.; Yan, C.; Wu, Y. Path planning optimization of indoor mobile robot based on adaptive ant colony algorithm. Comput. Ind. Eng. 2021, 156, 107230.

- Dai, X.; Long, S.; Zhang, Z.; Gong, D. Mobile robot path planning based on ant colony algorithm with A* heuristic method. Front. Neurorobot. 2019, 13, 15.

- Low, E.S.; Song, P.; Cheah, K.C. Solving the optimal path planning of a mobile robot using improved Q-learning. Robot. Auton. Syst. 2019, 115, 143–161.

- Zhao, M.; Lu, H.; Yang, S.; Guo, F. The experience-memory Q-learning algorithm for robot path planning in unknown environment. IEEE Access 2020, 8, 47824–47844.

- Wang, Z.; Gao, W.; Li, G.; Wang, Z.; Gong, M. Path Planning for Unmanned Aerial Vehicle via Off-Policy Reinforcement Learning With Enhanced Exploration. IEEE Trans. Emerg. Top. Comput. Intell. 2024, 8, 2625–2639.

- Bai, Z.; Pang, H.; He, Z.; Zhao, B.; Wang, T. Path Planning of Autonomous Mobile Robot in Comprehensive Unknown Environment Using Deep Reinforcement Learning. IEEE Internet Things J. 2024, 11, 22153–22166.

- Tran, V.P.; Garratt, M.A.; Kasmarik, K.; Anavatti, S.G. Dynamic frontier-led swarming: Multi-robot repeated coverage in dynamic environments. IEEE/CAA J. Autom. Sin. 2023, 10, 646–661.

- Xie, H.; Zhang, D.; Hu, X.; Zhou, M.; Cao, Z. Autonomous multirobot navigation and cooperative mapping in partially unknown environments. IEEE Trans. Instrum. Meas. 2023, 72, 4508712.

- Zhang, J.; Lu, Y.; Che, L.; Zhou, M. Moving-distance-minimized PSO for mobile robot swarm. IEEE Trans. Cybern. 2022, 52, 9871–9881.

- Zhang, J.; Lin, Y.; Zhou, M. Virtual-source and virtual-swarm-based particle swarm optimizer for large-scale multi-source location via robot swarm. IEEE Trans. Evol. Comput. 2024.

- Zhang, J.; Zu, P.; Liu, K.; Zhou, M. A herd-foraging-based approach to adaptive coverage path planning in dual environments. IEEE Trans. Cybern. 2024, 54, 1882–1893.

- Li, J.H.; Shuang, F.; Huang, J.J.; Wang, T.; Hu, S.J.; Hu, J.H.; Zheng, H.B. Safe distance monitoring of live equipment based upon instance segmentation and pseudo-LiDAR. IEEE Trans. Power Deliv. 2023, 38, 2953–2964.

- Cai, H.Q.; Fu, J.; Yang, N.; Shao, G.W.; Wen, Z.K.; Tan, J.Y. Safe distance of inspection operation by unmanned aerial vehicles in 500 kV substation. High Volt. Eng. 2023, 50, 3199–3208.

- Wu, Y.; Low, K.H. An adaptive path replanning method for coordinated operations of drone in dynamic urban environments. IEEE Syst. J. 2021, 15, 4600–4611.

- Sun, Z. Analysis of the optimization strategy based on the cooperation of drones and intelligent robots for the inspection of substations. Acad. J. Eng. Technol. Sci. 2022, 5, 44–50.

| Refs. | Research Method | Type | Characteristics |
|---|---|---|---|
| [34] | Two-threaded structure | Visual matching | Obtains camera trajectories and globally consistent environment maps |
| [35] | Point–line fusion | Visual matching | Improves estimation accuracy and reduces the impact of redundant parameters |
| [40] | Monocular vision SLAM algorithm | Visual matching | Resolves problems of low texture, moving targets, and perceptual aliasing in complex environments |
| [41] | FMD-SLAM | Visual matching | Combines multi-view geometry and the direct method to estimate position information |
| [46] | DP-SLAM | Visual matching | Combines the moving probabilistic propagation model for dynamic keypoint detection |
| [48] | RBPF | 2D LiDAR | Adopts particle filtering, which can handle nonlinear systems |
| [51] | Gauss–Newton | 2D LiDAR | Requires the robot to move at a lower speed to obtain better map-building results |
| [55] | LeGO-LOAM | 3D LiDAR | Extracts features by projecting a 3D point cloud onto a 2D image to separate the ground and nonground points and remove noise |
| [56] | Direct laser odometry for fast localization | 3D LiDAR | Reduces the repetitive information in submaps based on keyframe composition |
| [58] | OverlapNet | 3D LiDAR | Uses a depth map, an intensity map, a normal vector map, and a semantic map as model inputs and outputs predictions of image overlap rate and yaw angle |
| [60] | LIO-SAM | Multi-sensor fusion | Extracts feature points and uses IMU data to correct point cloud distortion and to provide the initial value of the pose transformation between data frames |
| [66] | VINS-Fusion | Multi-sensor fusion | Supports multiple types of visual and inertial sensors |
| [68] | LVI-SAM | Multi-sensor fusion | Works even when the VIS or the LIS fails |

| Refs. | Research Method | Type | Characteristics |
|---|---|---|---|
| [74] | - | RFID | Effectively avoids production hazards caused by shortcuts taken by workers |
| [76,77] | - | RFID | Deploys tags on fingers or small objects and tracks the 2D motion trajectories of the tags through their phase signals |
| [83,84,85,86] | - | RFID | Extends simple 2D trajectory tracking to synthesize the displacement and rotation of a target in 3D space |
| [89,90] | - | UWB | Fuses the localization results of monocular ORB-SLAM with the position information solved by UWB through an EKF |
| [91] | - | UWB | Adds the UWB anchor positions to the system state variables and estimates them through a data-fusion filter, reducing the dependence on prior anchor information |
| [92] | - | UWB | Integrates the UWB-based weak constraints on distance and strong constraints on position results with IMU measurements into a unified factor graph framework |
| [93] | Coupled capacitive voltage sensors | Electric field and voltage sensors | Avoids ferromagnetic resonance in the system and also serves as a carrier-communication channel |
| [96] | Deep-IRTarget | Infrared image technology | Utilizes the hypercomplex infrared Fourier transform approach to design a hypercomplex representation in the frequency domain to compute the significance of infrared intensity |
| [97] | AUIF | Infrared image technology | Preserves the texture details of the visible image while preserving the thermal radiation information of the infrared image; this is the current state-of-the-art method |
| [100] | - | Thermocouple technology | Improves the performance of electrothermal coupling |

| Types | Method | Characteristics | Applicable Scenarios |
|---|---|---|---|
| Global-mapping-based range perception method | Visual matching | Provides richer environmental details and depth information. Efficient feature matching and tracking enabled by the extraction of feature points in the image, focusing on real-time tracking | Excellent performance in environments with large structural and textural variations; however, it needs more computational resources in the information processing of large-scale scenes |
| | LiDAR | Able to detect any object that can reflect light and obtain a map of the surrounding area based on the time and angle of the reflected laser light | Senses the surrounding environment in real time and realizes autonomous navigation, obstacle avoidance, target recognition, and other functions |
| Regional-sensing-based range perception method | RFID | Transfers information through electromagnetic coupling; supports rapid tracking and data exchange | Indoor positioning |
| | UWB | High multi-path resistance, interference immunity, time resolution, and energy efficiency | Large-scale manufacturing scenarios, such as factories, parks, etc., with at least four UWB base stations installed around the area |
| | Field and voltage sensors | Small size, wide bandwidth, and good transient response | Can be used in many substations; however, in most cases, it still needs to be close to the electrical equipment to be effective |
| | Infrared imagery | Effectively responds to the characteristics of thermal targets, but it is not sensitive to brightness | Needs to be applied in scenarios with large temperature changes, such as transformer-aging-produced heat |
| | Thermocouple | Simple structure, slow thermal response, low cost, and there is no need to provide additional excitation for it to work | Scenarios with large temperature changes, but it needs to be close to the device in question to effectively recognize the temperature change |
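The UWB row above notes that at least four base stations are installed around the area. As a rough illustration of why several anchors are needed, the following minimal sketch estimates a 2D position from range measurements by linearized least squares. The function name `locate_2d`, the anchor layout, and the noise-free ranges are assumptions made for this example and are not taken from the cited works; a real deployment would also have to handle measurement noise and, for 3D positioning, a third coordinate.

```python
import math


def locate_2d(anchors, ranges):
    """Estimate (x, y) from distances to known anchors (hypothetical helper).

    Subtracting the first range equation from each of the others removes the
    quadratic terms, leaving a linear system that is solved in the
    least-squares sense through its 2x2 normal equations.
    """
    (x0, y0), d0 = anchors[0], ranges[0]
    s_aa = s_ab = s_bb = s_ac = s_bc = 0.0
    for (xi, yi), di in zip(anchors[1:], ranges[1:]):
        a = 2.0 * (xi - x0)
        b = 2.0 * (yi - y0)
        c = d0 ** 2 - di ** 2 + xi ** 2 + yi ** 2 - x0 ** 2 - y0 ** 2
        s_aa += a * a
        s_ab += a * b
        s_bb += b * b
        s_ac += a * c
        s_bc += b * c
    det = s_aa * s_bb - s_ab ** 2
    if abs(det) < 1e-12:
        raise ValueError("anchor geometry is degenerate (e.g., collinear)")
    x = (s_ac * s_bb - s_ab * s_bc) / det
    y = (s_aa * s_bc - s_ab * s_ac) / det
    return x, y


# Assumed layout: four anchors at the corners of a 10 m x 10 m yard.
anchors = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
robot = (3.0, 4.0)
ranges = [math.hypot(robot[0] - ax, robot[1] - ay) for ax, ay in anchors]
print(locate_2d(anchors, ranges))
```

With exact ranges the estimate coincides with the true position up to floating-point error; the fourth anchor makes the planar problem overdetermined, which is what lets a least-squares fit average out noisy ranges.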

| Path-Planning Algorithm | Characteristics | Reference | Improvements | Advantages |
|---|---|---|---|---|
| Dijkstra | Simple and can obtain optimal solutions | [105] | Detects obstacles in real time and modifies the Dijkstra node diagram | Simple, reliable, and cost-effective, with low calculation costs |
| RRT | Probabilistic sampling, fully exploring a space through random searches | [109] | Adaptive hybrid dynamic step size and target attractive force | Fast speed through narrow areas |
| | | [110] | Introduces a potential function to accelerate the exploration, and the rewiring process is improved by using the triangle inequality | Better initial solution and faster convergence rate |
| | | [111] | Bidirectional and kinematic constraints are introduced | Avoids unnecessary growth of the tree and has an efficient branch-pruning strategy |
| | | [112] | An RRT-based motion-planning framework for wheeled robots under kinodynamic constraints, which completes path planning without collisions | Less computation, with smoother and shorter trajectories |
| | | [113] | An adaptive RRT-connected planning method that combines RRT and RRV | More efficient through narrow-channel environments |
| A* | Heuristic search, direct | [115] | The heuristic function is weighted by exponential decay; the impact factors of the road conditions are introduced into the evaluation function | Effectively reduces road costs |
| | | [116] | Uses a bidirectional alternate search strategy; a node-filtering function is introduced to reduce redundant nodes | Low calculation time and smooth turning angle |
| | | [117] | Employs a guideline generated by humans or global planning to develop the heuristic function; introduces keypoints around the obstacle | Strong obstacle-avoidance ability, good robustness and stability |
| GA | Based on the principles of natural selection and genetics; good global search ability | [120] | Uses the ACO algorithm and the anti-collision method to enhance the initial population | Fast convergence process; a decrease of at least 70% in the required number of objective function evaluations |
| | | [123] | Introduces a collision removal operator; the algorithm is extended to multi-mobile robot path planning | Can better balance the path length, safety, and smoothness and generate a collision-free path for multiple moving bodies |
| APF | Simple structure, small computation cost, and strong real-time performance | [125] | Combined with fuzzy logic control | Overcomes the local-minimum problem and has better navigation ability in a complex and narrow environment |
| | | [127] | Dynamic tracking in three-dimensional space is realized by setting the attraction of exponential change to the tracking target | Can track a ground-moving target in real time while avoiding obstacles |
| | | [128] | Based on APF, the velocity potential field is introduced, and the adaptive weight assignment unit is used to adjust the weights of the two potential fields | Strong ability to deal with obstacles of different types and different speeds |
| D* | Dynamic planning; quickly adapts to environmental changes | [130] | Improves the path cost function to reduce the expansion range of nodes; introduces the Dubins algorithm | Avoids repeated calculation; has a high calculation efficiency and smooth paths |
| | | [131] | Uses a path-based expansion strategy to prune dominated solutions; has a multi-objective optimization capability | Strong multi-objective optimization ability, taking into account path risk, arrival time, and other factors |
| | | [132] | Introduces an obstacle cost term and a steering-angle cost term | Optimal energy consumption path |
| ACO | Pheromone and heuristic function; strong robustness and parallelism | [135] | Introduces the Floyd algorithm to generate a guiding path; applies a fallback strategy | More efficient initial path and shorter path length |
| | | [136] | Introduces the angle guidance factor and the obstacle exclusion factor | Good path security and algorithm stability while ensuring real-time performance |
| | | [137] | Introduces the evaluation function and bending suppression operator of the A* algorithm | Fast convergence, smooth path, and does not converge prematurely |
| Reinforcement learning | Interaction with the environment; no modeling required | [138] | Partially guided Q-learning flower pollination algorithm | Fast convergence |
| | | [140] | Prioritized experience replays and curiosity-driven exploration | Focus on time constraints; fast path planning |
| | | [141] | Designs a comprehensive reward function integrated with a heuristic function and an optimized deep neural network with an adaptive greedy action selection policy | Safer and shorter global paths in comprehensive unknown environments; adaptability and robustness in multi-target scenarios |
| Multi-robot path planning | A variety of robots cooperating with each other to complete more complex tasks | [143] | An autonomous multi-robot navigation and cooperative SLAM system, including multi-robot planning and local navigation | No map required |
| | | [144,145] | Treats the robot swarm as the particle swarm of a PSO algorithm | The ability to use mobile robots for multi-source positioning |
| | | [146] | Proposes a dual-environmental herd-foraging-based coverage path-planning algorithm | The ability to handle both bounded and unbounded environments |
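To make the Dijkstra and A* rows above more concrete, the following is a minimal sketch of heuristic grid search, not the method of any cited work. The occupancy grid, the function name `astar`, and the `heuristic_weight` parameter are assumptions introduced for illustration. With `heuristic_weight=1.0` the search is plain A* with a Manhattan-distance heuristic; with `heuristic_weight=0.0` the heuristic vanishes and the same loop behaves like Dijkstra's algorithm.

```python
import heapq


def astar(grid, start, goal, heuristic_weight=1.0):
    """Shortest 4-connected path on a grid of 0 (free) and 1 (obstacle) cells.

    Returns the list of cells from start to goal, or None if unreachable.
    Intended for heuristic_weight of 0.0 (Dijkstra-like) or 1.0 (A*).
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance never overestimates unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic_weight * h(start), 0, start)]
    g_cost = {start: 0}
    parent = {start: None}
    closed = set()
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell in closed:
            continue  # stale heap entry for an already expanded cell
        closed.add(cell)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = new_g
                    parent[(nr, nc)] = cell
                    f = new_g + heuristic_weight * h((nr, nc))
                    heapq.heappush(open_heap, (f, new_g, (nr, nc)))
    return None


# Assumed toy map: a wall in row 1 with a single gap at column 2.
grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
print(astar(grid, (0, 0), (3, 0)))
```

On this toy map the only route passes through the gap, so both settings return the same 8-cell path; on larger maps the heuristic mainly reduces how many cells are expanded before the goal is reached.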

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zheng, J.; Chen, T.; He, J.; Wang, Z.; Gao, B. Review on Security Range Perception Methods and Path-Planning Techniques for Substation Mobile Robots. Energies 2024, 17, 4106. https://doi.org/10.3390/en17164106
