Abstract

Background. We investigated whether individuals with high levels of autistic traits integrate relevant communicative signals, such as facial expression, when decoding eye-gaze direction. Methods. Students with high vs. low scores on the Autism Spectrum Quotient (AQ) performed a task in which they responded to the gaze direction of faces, presented on the left or the right side of a screen, portraying different emotional expressions. Results. In both groups, the identification of gaze direction was faster when the eyes were directed towards the center of the scene. However, in the low AQ group, this effect was larger for happy faces than for neutral faces or faces showing other emotional expressions, whereas participants from the high AQ group were not affected by emotional expressions. Conclusions. These results suggest that individuals with more autistic traits may not integrate multiple communicative signals based on their emotional value.

1. Introduction

Faces are among the most important visual stimuli, conveying complex information of considerable importance in the context of social interactions, including identity, race, sex, attractiveness, and emotions [1,2,3]. Humans show a marked preference for the visual features of real faces and for face-like configurations from very early in ontogeny. Infants, and even fetuses, show a visual preference for basic face-like configurations [4,5,6]. This preference is highly functional for the newborn, facilitating connection with the caregiver and evoking a response [7]. Infants use a wide variety of social signals to respond contingently to their social partner, for instance, coupling their facial movements and vocalizations with the facial expression of their caregivers [8,9]. The preference for facial visual features has also been observed in school-aged children [10,11,12] and in adults [13,14,15]. For example, masked faces are detected more quickly and accurately than masked objects [16], and facial changes are better detected than changes in non-facial objects [17].

Among the changeable aspects of the face, gaze shifts and facial expressions are crucial, as they provide humans with powerful social signals that allow us to infer internal states and intentions. Eye-gaze direction signals another person’s focus of interest and can orient our attention to potentially relevant locations or objects in the surrounding space [18,19]. When interpreting eye-gaze direction, people consider information from different sources, such as the iris/sclera ratio [20], the head posture [21], the presence of an object near another person’s fixation point [22] and, of relevance for the present study, the emotional facial expression.

Facial expressions of other people can help to determine their emotional state or motivational intentions, and several pieces of evidence indicate that the processing of gaze direction and the processing of emotional expression interact.

On the one hand, some studies have observed that gaze direction can modulate the time to judge facial expressions. For example, faces expressing anger or joy are recognized more quickly when presented with gaze directed at the viewer than when presented with gaze averted. Contrastingly, sadness and fear are recognized faster with averted gaze [23,24]. Adams and Kleck interpreted these findings in terms of a shared signal hypothesis, in which happiness and anger are considered ‘approach-oriented’ emotions and sadness and fear ‘avoidance-oriented’.

On the other hand, the perception of gaze direction is modulated by emotional expressions. For example, Lobmaier and Perrett (2011) [25] asked participants to judge whether faces presented in different orientations and with different facial expressions were looking towards them. They found that smiling faces are more likely to be interpreted as directed towards the observer than fearful, angry, and neutral faces. These findings are not consistent with the shared signal hypothesis, and they have been explained by the “self-referential positivity bias” hypothesis [26], according to which people are more likely to believe that they are the source of someone else’s happiness, so as to improve self-esteem.

Reduced interest in the human face and malfunctioning of the above-described face-related attentional processes represent some of the most pronounced social deficits associated with autism spectrum disorder (ASD) [27,28,29]. A large amount of empirical evidence has highlighted the presence of an atypical imbalance in attention to social versus non-social stimuli in ASD [30]. Children and adults with ASD exhibit poorer recognition memory for faces and reduced visual attention to facial stimuli than typically developing (TD) individuals [31,32]. Neurophysiological evidence also corroborated the presence of abnormal facial processing in ASD. For example, recent studies combining EEG and eye-tracking measures have observed a reduction in social bias and an abnormal orientation to faces in individuals with ASD [33,34]. Eye-tracking research has also shown that a reduced fixation to the eye area in ASD [35] can result in significant differences in brain activation. For instance, people with ASD showed greater activation in the social neural network to averted than to direct gaze, this pattern being the opposite of that observed in TD [36]. Nevertheless, those differences do not seem to affect the interpretation of gaze direction and object detection but rather the ability to infer gaze intentionality [37].

Indeed, some studies have shown that people with ASD are equally adept at correctly identifying the direction of a gaze as TD individuals [38,39,40,41]. However, they seem to present difficulties in integrating gaze direction with communicative and social contexts [42,43]. In particular, of relevance for the present study, Akechi et al. [44] observed that autistic children had a deficit in integrating the information of facial expressions with eye-gaze direction.

Additionally, several research studies have suggested that ASD represents the upper extreme of a pattern of social–emotional and communicative traits continuously distributed in the general population [45,46,47]. Initial support comes from studies demonstrating that the degree of autistic traits measured by the Autism Spectrum Quotient (AQ) [48] in a typical population is related to performance on behavioral tasks that show impairments in ASD, such as the ability to draw mentalistic inferences from the eyes [49], the identification of emotional facial expressions [50], and the attentional cueing from eye gaze [51,52].

The main aim of the present study was to investigate whether individuals with high levels of autistic traits integrate facial expression when decoding eye-gaze direction. As mentioned above, the combination of expression and gaze direction provides essential information for understanding another individual’s intentions, and difficulties in their encoding and integration have been observed in ASD. However, it is not clear whether this impairment is directly related to autistic traits per se or rather depends on different social communication patterns formed by years of altered social experience. Testing individuals who function normally in their everyday lives and who do not usually avoid social contact makes it possible to assess the specific contribution of autistic traits while minimizing the influence of experience, such as the amount of social involvement.

To achieve this aim, we used the gaze discrimination task developed by Cañadas and Lupiáñez (2012) [53] to explore the importance of eye-gaze direction in spatial interference paradigms. These authors demonstrated that gaze direction discrimination of a lateralized face (i.e., presented to the left or right of the fixation point) is faster and more accurate when the gaze is oriented inwards, towards the center of the scene (e.g., right averted gaze presented on the left) than when it is directed outwards (e.g., right averted gaze presented on the right). This effect was opposite to the classical results generally observed with non-social stimuli, such as arrows (e.g., faster reaction time for arrows pointing outwards) [54], and it was interpreted in terms of eye contact (e.g., a speeding up of responses when the target face seems to look directly at the participants). A further investigation revealed that the emotional expression of the face modulated the inward effect [55] according to the “shared signal hypothesis” [23,24]: the effect was larger when it was coupled with approach-oriented emotions such as happiness and anger, while it was smaller for avoidance-oriented emotions such as fear.

The predictions of the present study were straightforward. If the degree of autistic traits in the typical population is related to the difficulties in integrating gaze direction with communicative and social contexts generally observed in ASD, then these difficulties should be observed only in participants with high levels of autistic traits but not in participants with low autistic traits. In other words, the identification of gaze direction should not be affected by the emotional expression of facial stimuli in participants with high levels of autistic traits, while the inward effect from gaze direction should be modulated by emotional expression in participants with low levels of autistic traits.

Moreover, there are two possible scenarios regarding how facial expressions modulate the identification of gaze direction in participants with low autistic traits. If the emotional expression modulates the identification of gaze direction according to the shared signal hypothesis [23,24], then a larger inward effect should be observed with both happy and angry faces (approach-oriented emotions) as compared with sad and fearful faces (avoidance-oriented emotions). In contrast, if the identification of gaze direction is modulated by emotional expression according to the “self-referential-positivity bias hypothesis” [25,56], then the inward effect should be larger for happy faces than for faces showing other emotional expressions or a neutral expression.

2. Methods

2.1. Participants

Initially, 459 students completed the AQ (mean (M) score = 16.29; standard deviation (SD) = 5.88). Next, 36 students from the lower and upper quartiles of the AQ distribution (i.e., with scores equal to or lower than 11, or equal to or higher than 22) were invited to complete further testing. Based on previous research [57,58], we decided to use permissive cutoff scores for reasons related to sample size, since only 1.74% of the initial group would have met the clinical AQ cutoff score of 32 [48]. All participants from the initial group were undergraduate psychology students. Women were overrepresented in the initial group (80%) and in the sample selected for this study (86.11%). The characteristics of the high and low AQ groups are outlined in Table 1. The groups did not differ significantly in age distribution, F(1,34) < 1. Numerically, there was a higher proportion of females in the high AQ group than in the low AQ group, but this difference was not statistically significant, χ2(1) = 2.09, p = 0.148. There were no significant socio-demographic (e.g., education, ethnic origin, native language) differences between these two groups.

Table 1.

Male–female ratio and means (and SDs) for AQ score and age.
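The group assignment described in Section 2.1 can be illustrated with a minimal Python sketch. The cutoffs (11, 22, and 32) come from the text; the function names and the example scores are hypothetical and are not study data, and treating the clinical cutoff as "at or above 32" is an assumption.

```python
LOW_CUTOFF = 11       # scores <= 11 qualify for the low AQ group (Section 2.1)
HIGH_CUTOFF = 22      # scores >= 22 qualify for the high AQ group (Section 2.1)
CLINICAL_CUTOFF = 32  # clinical AQ cutoff mentioned in the text (assumed inclusive)


def assign_group(aq_score):
    """Return 'low', 'high', or None for scores between the two cutoffs."""
    if aq_score <= LOW_CUTOFF:
        return "low"
    if aq_score >= HIGH_CUTOFF:
        return "high"
    return None


def summarize(scores):
    """Split scores into candidate groups and report the share at or above the clinical cutoff."""
    groups = {"low": [], "high": []}
    for score in scores:
        group = assign_group(score)
        if group is not None:
            groups[group].append(score)
    n_clinical = sum(score >= CLINICAL_CUTOFF for score in scores)
    share_clinical = 100 * n_clinical / len(scores)
    return groups, share_clinical


# Hypothetical AQ scores, for illustration only (not the study sample).
example_scores = [5, 11, 12, 16, 21, 22, 30, 33]
groups, share_clinical = summarize(example_scores)
print(groups)          # candidates for each group
print(share_clinical)  # percentage of scores at or above the clinical cutoff
```

For orientation, 1.74% of the 459 screened students corresponds to about 8 individuals, which is why a stricter cutoff would have left too few participants for a group comparison.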

2.2. The Autism Spectrum Quotient (AQ)

The AQ is a 50-item self-report questionnaire designed for measuring autistic traits in the general population [48]. In particular, it assesses five different domains relevant for autistic traits: social skills, attention to detail, attention switching, communication, and imagination. This instrument (retrieved from https://www.autismresearchcentre.com/arc_tests; accessed on 8 April 2018) has been used specifically for quantifying where participants are situated on the continuum from autism to normality. The AQ score has been shown to have good test–retest reliability, good internal consistency, and acceptably high sensitivity and specificity [48].

2.3. Apparatus and Stimuli

The E-Prime 2.0 software (Psychology Software Tools Inc, Pittsburgh, PA, USA) was used to control stimuli presentation, timing, and data collection. Stimuli were presented on a 17″ screen running at a 1024 × 768 pixel resolution. They consisted of 40 full-color photographs of four males and four females (dimensions = 180 pixels × 200 pixels or 6.67° × 5.72°) displaying either a neutral, angry, sad, fearful, or happy emotional expression. Faces were selected from the Karolinska Directed Emotional Faces [59] and were manipulated with Adobe Photoshop CS6 to change gaze directions to the left and right sides.

2.4. Procedure

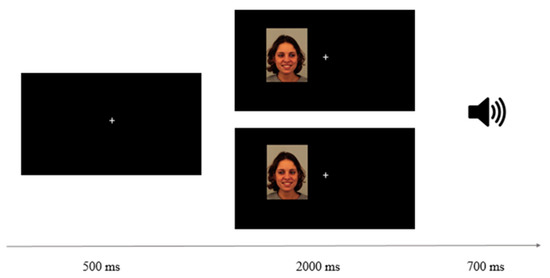

Gaze Discrimination Task. Participants were required to discriminate, as fast and accurately as possible, the direction (left or right) of the eye gaze of faces presented to the right or the left of a fixation point. They were tested while seated approximately 60 cm from the monitor in a faintly lit room. Each trial started with the onset of a white fixation cross (0.5° × 0.5°) centered on a black computer screen for 500 ms. Then, a face displaying one of the five emotional expressions was presented either to the right or left of the fixation cross, gazing either to the right or left (see Figure 1). The distance from the inner edge of the face to the central fixation was approximately 3.02°. Importantly, this design produced inward trials, in which the eyes were directed towards the central fixation location (i.e., a right-averted gaze of faces presented on the left, and a left-averted gaze of faces presented on the right), and outward trials (i.e., a left-averted gaze presented on the left and a right-averted gaze presented on the right). Participants had to discriminate the gaze direction of the face by pressing the “Z” or “M” key of the computer keyboard when the correct answer was left or right, respectively. Feedback on no-response or incorrect-response trials was provided via a 220 Hz tone for 700 ms. All possible combinations of stimuli, 8 (face identity) × 5 (emotional expression) × 2 (presentation side) × 2 (gaze direction), formed a total of 160 trials. Two blocks of trials with all combinations were presented for a total of 320 trials. Participants completed a practice block of 16 randomly selected trials to familiarize themselves with the task, followed by eight experimental subblocks of 40 randomly selected trials each, with a rest period between subblocks.

Figure 1.

Schematic view of a trial sequence from left to right. The examples illustrate, from top to bottom, an inward and an outward trial of a woman with an emotional expression of happiness. The speaker icon represents the auditory feedback given on incorrect answers.
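The factorial structure of the gaze discrimination task can be sketched in Python as follows. The factor levels and the response keys come from the procedure described above; the identity labels, the function names, and the simple shuffle are illustrative assumptions (the task itself was run in E-Prime 2.0, and its exact randomization scheme is not reproduced here).

```python
import random
from itertools import product

IDENTITIES = [f"id{i}" for i in range(1, 9)]  # placeholder labels for the 8 face identities
EXPRESSIONS = ["neutral", "angry", "sad", "fearful", "happy"]
SIDES = ["left", "right"]    # presentation side relative to fixation
GAZES = ["left", "right"]    # gaze direction of the face
RESPONSE_KEYS = {"left": "Z", "right": "M"}


def gaze_type(side, gaze):
    """Inward trials: the gaze points towards fixation, i.e. opposite to the face's side."""
    return "inward" if side != gaze else "outward"


def build_block(seed=None):
    """Build one block containing every identity x expression x side x gaze combination."""
    trials = [
        {
            "identity": identity,
            "expression": expression,
            "side": side,
            "gaze": gaze,
            "type": gaze_type(side, gaze),
            "correct_key": RESPONSE_KEYS[gaze],
        }
        for identity, expression, side, gaze in product(IDENTITIES, EXPRESSIONS, SIDES, GAZES)
    ]
    random.Random(seed).shuffle(trials)  # assumed randomization, for illustration only
    return trials


block = build_block(seed=0)
n_inward = sum(trial["type"] == "inward" for trial in block)
print(len(block), n_inward)
```

Each block holds 160 trials, half inward and half outward, so two blocks give the 320 experimental trials and 32 inward plus 32 outward trials per emotional expression.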

Emotional Expression Categorization Task. After completing the gaze discrimination task, participants were prompted to pay attention to the screen for one last activity, in which they had to identify the emotional expressions of the same faces presented in the previous task. Faces with a direct gaze displaying either angry, happy, sad, neutral, or fearful emotional expression appeared at the center of the screen for an unlimited time. Participants were required to identify the expression of faces by typing the answer on a computer keyboard.

2.5. Design

A 2 (Group: high AQ vs. low AQ) × 5 (Emotional Expression: happy, angry, fearful, neutral, or sad) × 2 (Gaze: inward trials vs. outward trials) mixed design was used to analyze the data. The extent of autistic traits (AQ group) was treated as a between-participants variable, and emotional expression and gaze direction represented within-participants factors. Two univariate analyses of variance (ANOVAs) separately considered mean correct RTs and percentage of errors as dependent variables. When the relevant higher-order interactions were significant, the inward effect was also calculated (RT on outward trials minus RT on inward trials) and used as the dependent variable in Bonferroni-corrected post hoc tests. As in the Cañadas and Lupiáñez study (2012) [53], trials with reaction times (RTs) faster than 200 ms (0.2% of the trials) or slower than 1300 ms (0.8% of the trials) were considered anticipations and lapses, respectively, and were excluded from the RT analysis, together with incorrect responses (5.5% of the trials). Mean RTs were computed for each experimental condition using the remaining observations (see Table 2).

Table 2.

Mean correct reaction times (RTs, in milliseconds), standard deviations (SDs), and percentages of incorrect responses (%IR) as a function of Emotional Expression, Gaze, and Group (high and low scores on AQ).
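The trial exclusion rule and the inward effect described in Section 2.5 can be expressed as a short Python sketch. The 200 ms and 1300 ms limits and the exclusion of incorrect responses follow the text; the dictionary layout, the function names, and the example trials are hypothetical, and the inward effect is computed as outward minus inward RT, as in the Results.

```python
from statistics import mean

RT_MIN_MS = 200    # faster responses are treated as anticipations
RT_MAX_MS = 1300   # slower responses are treated as lapses


def keep_for_rt_analysis(trial):
    """Keep only correct responses with RTs inside the accepted window."""
    return trial["correct"] and RT_MIN_MS <= trial["rt"] <= RT_MAX_MS


def inward_effect(trials):
    """Mean RT on outward trials minus mean RT on inward trials (positive = inward advantage)."""
    valid = [t for t in trials if keep_for_rt_analysis(t)]
    inward = [t["rt"] for t in valid if t["gaze"] == "inward"]
    outward = [t["rt"] for t in valid if t["gaze"] == "outward"]
    return mean(outward) - mean(inward)


# Hypothetical trials from one participant and one expression (not study data).
example_trials = [
    {"rt": 600, "correct": True, "gaze": "inward"},
    {"rt": 620, "correct": True, "gaze": "inward"},
    {"rt": 650, "correct": True, "gaze": "outward"},
    {"rt": 670, "correct": True, "gaze": "outward"},
    {"rt": 150, "correct": True, "gaze": "inward"},     # anticipation, excluded
    {"rt": 1500, "correct": True, "gaze": "outward"},   # lapse, excluded
    {"rt": 640, "correct": False, "gaze": "outward"},   # incorrect response, excluded
]
effect = inward_effect(example_trials)
print(effect)  # 660 - 610 = 50 ms for these made-up values
```

In the actual analysis this difference would be computed per participant and per emotional expression before entering the post hoc comparisons.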

3. Results

3.1. Gaze Discrimination Task

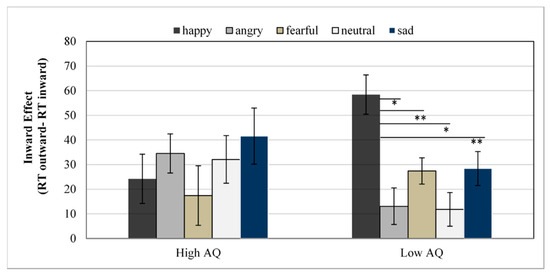

Reaction Times. The ANOVA revealed a significant main effect of Emotional Expression, F(4,136) = 13.68, p < 0.001, η2p = 0.29, with the lowest reaction times for fearful faces (617 ms) and the highest for angry faces (645 ms). The main effect of Group was not significant, F(1,34) = 2.16, p = 0.150. A main effect of Gaze was also found, F(1,34) = 48.85, p < 0.001, η2p = 0.59, with faster RTs for inward (616 ms) than for outward trials (645 ms). Furthermore, significant interactions were observed between Emotional Expression and Gaze, F(4,136) = 3.14, p = 0.016, η2p = 0.08, and between Group, Emotional Expression, and Gaze, F(4,136) = 5.84, p < 0.001, η2p = 0.15 (see Figure 2). Emotional Expression × Gaze ANOVAs were conducted separately for each Group, showing that the interaction was only significant in the low AQ group, F(4,68) = 7.12, p < 0.001, η2p = 0.29. Post hoc tests using the Bonferroni correction revealed an inward gaze effect (RT on outward trials minus RT on inward trials) that was significantly larger for happy faces than for neutral (mean difference = 46.61, p < 0.001), angry (mean difference = 45.33, p < 0.001), fearful (mean difference = 31.00, p = 0.03), or sad (mean difference = 29.99, p = 0.04) faces, as shown in Figure 2. No other significant difference between emotions was found. In the high AQ group, the Emotional Expression × Gaze interaction was not significant, F(4,68) = 1.81, p = 0.137, η2p = 0.09. Post hoc analysis revealed no difference between any emotions (all p > 0.05).

Figure 2.

Mean reaction times for the inward effect (RTs differences between outward and inward trials) as a function of emotional expressions and group. Error bars represent the standard error of the mean for each condition. * = p < 0.05; ** = p < 0.001.

Errors. The ANOVA revealed a significant main effect of Emotional Expression, F(4,136) = 5.56, p < 0.001, η2p = 0.14, with the lowest percentage of errors for fearful faces (4.1%) and the highest for angry faces (6.9%). A main effect of Gaze was also found, F(1,34) = 7.40, p = 0.01, η2p = 0.18, with a lower percentage of errors for inward trials (4.2%) than for outward trials (6.3%). The main effect of Group was not significant, F(1,34) = 1.53, p = 0.224, η2p = 0.04. However, the Emotional Expression × Group interaction was significant, F(4,136) = 2.80, p = 0.028, η2p = 0.08. Post hoc tests using the Bonferroni correction showed that the low AQ group committed more errors responding to angry faces than to happy (mean difference = 3.28, p = 0.05) and neutral ones (mean difference = 3.33, p = 0.04). In contrast, the high AQ group committed more errors responding to happy faces than to fearful ones (mean difference = 3.47, p = 0.027). No other differences between groups or emotions were found. No other interaction reached significance.
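As a reading aid for the effect sizes reported in this section, partial eta squared can be recovered from an F ratio and its degrees of freedom using the standard identity η2p = (F × df_effect) / (F × df_effect + df_error). The Python sketch below applies it to four of the reported RT effects; the function name and the list layout are illustrative choices, and small deviations from the reported values can arise because the F ratios are themselves rounded.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error) taken from the reaction time results above.
reported_effects = [
    ("Emotional Expression", 13.68, 4, 136),
    ("Gaze", 48.85, 1, 34),
    ("Emotional Expression x Gaze", 3.14, 4, 136),
    ("Group x Emotional Expression x Gaze", 5.84, 4, 136),
]

for label, f_value, df_effect, df_error in reported_effects:
    eta = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{label}: {eta:.2f}")
```

For these four effects the identity gives values that round to 0.29, 0.59, 0.08, and 0.15, matching the reported effect sizes.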

3.2. Emotional Expression Identification Task

For the analysis of responses in the emotional expression task, a 2 (Group: high AQ vs. low AQ) × 5 (Emotional Expression: happy, angry, fearful, neutral, or sad) mixed-design ANOVA was conducted with the accuracy of responses as the dependent variable. The analysis of accuracy data indicated a main effect of emotional expression, F(4,136) = 2.80, p < 0.001, η2p = 0.48. The highest accuracy was observed for faces displaying happiness (97.9%) and the lowest for neutral faces (45.1%). Importantly, the results show that Group did not have any effect, F < 1, and did not modulate the effects of emotional expression, F < 1.

4. Discussion

The present study examined whether autistic traits modulate the discrimination of the gaze direction of faces expressing different emotions. In particular, we aimed to investigate whether the ability to integrate facial expression when decoding eye-gaze direction depended on the extent of autistic-like traits measured with the AQ. In both the low and high AQ groups, the identification of gaze direction was faster when the eyes were directed towards the center of the scene (inward effect). It is interesting that participants from the high AQ group also showed this effect, as it has been recently shown that it is not observed until late childhood, with 4-year-old children showing instead a similar effect for gaze and arrows in this task [60].

However, this effect was modulated by facial expression only in the group with low autistic traits, in which the inward effect was larger for happy faces than for neutral faces or faces showing other emotional expressions. This finding contrasts with the pattern of results observed by Jones [55] in the general population.

Consistent with the shared signal hypothesis, Jones showed larger inward effects when faces displayed approach-oriented emotions and smaller effects with avoidance-oriented emotions. In contrast, our findings revealed a distinction between negative and positive emotions and are more coherent with the self-referential-positivity bias hypothesis, according to which people prefer to interpret positive emotions as being directed towards them and negative facial expressions as directed away, in order to enhance self-esteem (cf. [25,56]). However, it is important to note that the shared signal hypothesis has been generally used to explain how the recognition of emotional expressions is affected by eye-gaze direction [23,24], while the self-referential-positivity bias hypothesis has been generally used to explain how the identification of gaze direction is affected by emotional expression [25,56]. In our study, participants were required to identify the gaze direction (left or right) of faces with different emotional expressions. In line with the self-referential-positivity bias predictions, we observed that the inward effect was larger for happy faces than for neutral faces or faces showing other emotional expressions in the low AQ group.

There are some differences between Jones’s study and ours. First, Jones (2009) [55] used four facial expressions (happy, angry, fearful, and neutral), while our faces showed happy, angry, fearful, sad, and neutral expressions. Second, our participants were selected according to their autistic trait scores (i.e., students from the upper and lower quartiles of the AQ distribution), while in Jones’s study, participants were recruited from the general population and were not selected based on their autistic traits. Therefore, the different results of the two studies might be traced back to the participants’ level of autistic traits (high or low). However, future studies are needed to fully elucidate the reasons for these different findings.

Of relevance, data from the present study further show that participants with high levels of autistic traits were not affected by the facial expression of the stimuli when decoding eye-gaze direction. Note that the current finding cannot be explained by a general difficulty of individuals with high levels of autistic traits in decoding eye-gaze direction because in the gaze discrimination task we did not find any group differences in overall accuracy or RTs. At the same time, we found no group differences in overall accuracy of the facial emotion identification task, which suggests that the absence of interaction between emotional expression and gaze direction in participants with high levels of autistic traits cannot be attributed to a general difficulty in decoding facial emotional expressions. Our findings are in line with studies showing that autistic individuals have difficulties integrating communicative signals present in the eyes with social and communicative contexts [42,43] and with their emotional value [44]. A possible explanation for why participants with high levels of autistic traits do not show a greater inward effect for happy faces may be related to a weaker self-referential-positivity bias of this group as compared with the low AQ group of participants. This is in line with previous research suggesting reduced positive self-evaluations in ASD [61,62], and it is coherent with previous research showing that judgments of persons with ASD appear to be less strongly modulated by the emotional value of the available information [63]. Another explanation may also be that these results are related to a decreased reward in the social interaction of individuals with high AQ. For this reason, they would appear to be less influenced by happy faces than people with low AQ. This corroborates previous evidence of a reduced reward value of smiling faces in autistic people compared with typical individuals [64]. 
Finally, the lack of integration between eye-gaze direction and emotional expressions may be based on a perceptual or cognitive style, such as weak central coherence [65], which is not specific to the social domain. Individuals with ASD exhibit weak central coherence [66], that is, an impaired ability to integrate individual features into a coherent percept. If this impairment extends to those with high autistic traits, it could have impeded the integration of gaze direction and facial expression in the high AQ group of our study.

To summarize, findings from the present study suggest that the integration between facial expression and gaze direction may be absent or reduced in individuals with a high level of autistic traits. Emotional expression is a key factor when interpreting eye-gaze direction. For instance, smiling faces are more likely to be interpreted as being directed towards the observer than fearful, angry, and neutral faces [25,26]. Our results indicate that this is not the case for individuals with high levels of ASD traits. Previous data using different emotional tasks suggested that ASD participants were not affected by gaze direction when recognizing facial expressions [44]. We report for the first time that individuals with high ASD traits display a similar absence of integration between these two types of social cues. In particular, in our study, the inward effect elicited by the gaze direction was not affected by the face’s emotional expression. This extends the cognitive socio-emotional similarities between ASD and individuals with high autistic traits and contributes to the dimensional view of ASD as the pathological extreme of a phenotype continuously distributed in the general population. Further studies are needed to investigate the ability of individuals with ASD to encode and integrate non-verbal social cues, which could reveal the source of communication and social interaction difficulties in ASD.

Author Contributions

Conceptualization, A.M., B.A.-M., M.Á.B.-D. and J.L.; methodology, J.L. and B.A.-M.; formal analysis, A.M., M.D.C. and B.A.-M.; investigation, A.M., M.D.C. and B.A.-M.; resources, J.L. and A.M.; data curation, A.M., M.D.C. and B.A.-M.; writing—original draft preparation, A.M. and B.A.-M.; writing—review and editing, A.M., B.A.-M., M.Á.B.-D., M.C. and J.L.; supervision, M.C., A.M., M.Á.B.-D. and J.L.; project administration, J.L.; funding acquisition, A.M. and J.L. This work is part of the doctoral dissertation by B.A.-M. under the supervision of M.Á.B.-D. and J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Spanish Ministry of Economy, Industry, and Competitiveness, through research project PID2020-114790GB-I00 to J.L., and by the Andalusian Council and European Regional Development Fund, through research project B-SEJ-572-UGR20 to A.M. B.A.-M. was supported by a predoctoral fellowship from the FPU program from the Spanish Ministry of Science and Education (FPU16/07124).

Institutional Review Board Statement

The experiment was conducted following the ethical standards of the 1964 Declaration of Helsinki (last update: Seoul, 2008), integrated into a larger research project, approved by the Ethical Committee of the University of Granada (code: 536/CEIH/2018).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data that support the findings of this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Röder, B.; Ley, P.; Shenoy, B.H.; Kekunnaya, R.; Bottari, D. Sensitive periods for the functional specialization of the neural system for human face processing. Proc. Natl. Acad. Sci. USA 2013, 4, 16760–16765. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Bar-Haim, Y.; Ziv, T.; Lamy, D.; Hodes, R.M. Nature and nurture in own-race face processing. Psychol. Sci. 2006, 4, 159–163. [Google Scholar] [CrossRef] [PubMed]

- Yankouskaya, A.; Booth, D.A.; Humphreys, G. Interactions between facial emotion and identity in face processing: Evidence based on redundancy gains. Atten. Percept. Psychophys. 2012, 4, 1692–1711. [Google Scholar] [CrossRef] [Green Version]

- De Heering, A.; Rossion, B. Rapid categorization of natural face images in the infant right hemisphere. Elife 2015, 4, e06564. [Google Scholar] [CrossRef]

- Buiatti, M.; Di Giorgio, E.; Piazza, M.; Polloni, C.; Menna, G.; Taddei, F.; Baldo, E.; Vallortigara, G. Cortical route for facelike pattern processing in human newborns. Proc. Natl. Acad. Sci. USA 2019, 4, 4625–4630. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Reid, V.M.; Dunn, K.; Young, R.J.; Amu, J.; Donovan, T.; Reissland, N. The human fetus preferentially engages with face-like visual stimuli. Curr. Biol. 2017, 4, 1825–1828. [Google Scholar] [CrossRef] [Green Version]

- Shultz, S.; Klin, A.; Jones, W. Neonatal Transitions in Social Behavior and Their Implications for Autism. Trends Cogn. Sci. 2018, 4, 452–469. [Google Scholar] [CrossRef]

- Bigelow, A.E.; Power, M. Effect of Maternal Responsiveness on Young Infants’ Social Bidding-Like Behavior during the Still Face Task. Infant Child Dev. 2016, 4, 256–276. [Google Scholar] [CrossRef]

- Smith, L.B. Cognition as a dynamic system: Principles from embodiment. Dev. Rev. 2005, 4, 278–298. [Google Scholar] [CrossRef]

- Fischer, J.; Smith, H.; Martinez-Pedraza, F.; Carter, A.S.; Kanwisher, N.; Kaldy, Z. Unimpaired attentional disengagement in toddlers with autism spectrum disorder. Dev. Sci. 2016, 4, 1095–1103. [Google Scholar] [CrossRef] [Green Version]

- Shaffer, R.C.; Pedapati, E.V.; Shic, F.; Gaietto, K.; Bowers, K.; Wink, L.K.; Erickson, C.A. Brief report: Diminished gaze preference for dynamic social interaction scenes in youth with autism spectrum disorders. J. Autism Dev. Disord. 2017, 4, 506–513. [Google Scholar] [CrossRef]

- Wilson, C.E.; Brock, J.; Palermo, R. Attention to social stimuli and facial identity recognition skills in autism spectrum disorder. J. Intellect. Disabil. Res. 2010, 4, 1104–1115. [Google Scholar] [CrossRef] [PubMed]

- Crouzet, S.M.; Kirchner, H.; Thorpe, S.J. Fast saccades toward faces: Face detection in just 100 ms. J. Vis. 2010, 4, 16. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Fletcher-Watson, S.; Findlay, J.M.; Leekam, S.R.; Benson, V. Rapid detection of person information in a naturalistic scene. Perception 2008, 4, 571–583. [Google Scholar] [CrossRef] [PubMed]

- Shah, P.; Gaule, A.; Bird, G.; Cook, R. Robust orienting to protofacial stimuli in autism. Curr. Biol. 2013, 4, R1087–R1088.

- Purcell, D.G.; Stewart, A.L. The face-detection effect: Configuration enhances detection. Percept. Psychophys. 1988, 4, 355–366.

- Kikuchi, Y.; Senju, A.; Tojo, Y.; Osanai, H.; Hasegawa, T. Faces do not capture special attention in children with autism spectrum disorder: A change blindness study. Child Dev. 2009, 4, 1421–1433.

- Friesen, C.K.; Kingstone, A. The eyes have it! Reflexive orienting is triggered by nonpredictive gaze. Psychon. Bull. Rev. 1998, 4, 490–495.

- Marotta, A.; Casagrande, M.; Lupiáñez, J. Object-based attentional effects in response to eye-gaze and arrow cues. Acta Psychol. 2013, 4, 317–321.

- Ando, S. Perception of gaze direction based on luminance ratio. Perception 2004, 4, 1173–1184.

- Langton, S.R. The mutual influence of gaze and head orientation in the analysis of social attention direction. Q. J. Exp. Psychol. Sect. A 2000, 4, 825–845.

- Lobmaier, J.S.; Fischer, M.H.; Schwaninger, A. Objects capture perceived gaze direction. Exp. Psychol. 2006, 4, 117–122.

- Adams, R.B., Jr.; Kleck, R.E. Perceived gaze direction and the processing of facial displays of emotion. Psychol. Sci. 2003, 4, 644–647.

- Adams, R.B., Jr.; Kleck, R.E. Effects of direct and averted gaze on the perception of facially communicated emotion. Emotion 2005, 5, 3.

- Lobmaier, J.S.; Perrett, D.I. The world smiles at me: Self-referential positivity bias when interpreting direction of attention. Cogn. Emot. 2011, 4, 334–341.

- Pahl, S.; Eiser, J.R. Valence, comparison focus and self-positivity biases: Does it matter whether people judge positive or negative traits? Exp. Psychol. 2005, 52, 303–310.

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders (DSM-5®); American Psychiatric Pub: Washington, DC, USA, 2013.

- Bird, G.; Catmur, C.; Silani, G.; Frith, C.; Frith, U. Attention does not modulate neural responses to social stimuli in autism spectrum disorders. Neuroimage 2006, 4, 1614–1624.

- Pelphrey, K.A.; Sasson, N.J.; Reznick, J.S.; Paul, G.; Goldman, B.D.; Piven, J. Visual scanning of faces in autism. J. Autism Dev. Disord. 2002, 4, 249–261.

- Volkmar, F.R. Understanding the social brain in autism. Dev. Psychobiol. 2011, 4, 428–434.

- Guillon, Q.; Hadjikhani, N.; Baduel, S.; Rogé, B. Visual social attention in autism spectrum disorder: Insights from eye tracking studies. Neurosci. Biobehav. Rev. 2014, 4, 279–297.

- Kirchner, J.C.; Hatri, A.; Heekeren, H.R.; Dziobek, I. Autistic symptomatology, face processing abilities, and eye fixation patterns. J. Autism Dev. Disord. 2011, 4, 158–167.

- Vettori, S.; Dzhelyova, M.; Van der Donck, S.; Jacques, C.; Van Wesemael, T.; Steyaert, J.; Rossion, B.; Boets, B. Combined frequency-tagging EEG and eye tracking reveal reduced social bias in boys with autism spectrum disorder. Cortex 2020, 125, 135–148.

- Vettori, S.; Van der Donck, S.; Nys, J.; Moors, P.; Van Wesemael, T.; Steyaert, J.; Rossion, B.; Boets, B. Combined frequency-tagging EEG and eye-tracking measures provide no support for the “excess mouth/diminished eye attention” hypothesis in autism. Mol. Autism 2020, 11, 94.

- Chita-Tegmark, M. Attention Allocation in ASD: A Review and Meta-analysis of Eye-Tracking Studies. Rev. J. Autism Dev. Disord. 2016, 4, 209–223.

- Georgescu, A.L.; Kuzmanovic, B.; Schilbach, L.; Tepest, R.; Kulbida, R.; Bente, G.; Vogeley, K. Neural correlates of “social gaze” processing in high-functioning autism under systematic variation of gaze duration. NeuroImage Clin. 2013, 4, 340–351.

- Nomi, J.S.; Uddin, L.Q. Face processing in autism spectrum disorders: From brain regions to brain networks. Neuropsychologia 2015, 71, 201–216.

- Baron-Cohen, S.; Campbell, R.; Karmiloff-Smith, A.; Grant, J.; Walker, J. Are children with autism blind to the mentalistic significance of the eyes? Br. J. Dev. Psychol. 1995, 4, 379–398.

- Leekam, S.; Baron-Cohen, S.; Perrett, D.; Milders, M.; Brown, S. Eye-direction detection: A dissociation between geometric and joint attention skills in autism. Br. J. Dev. Psychol. 1997, 4, 77–95.

- Wallace, S.; Coleman, M.; Pascalis, O.; Bailey, A.J. A study of impaired judgment of eye-gaze direction and related face-processing deficits in autism spectrum disorders. Perception 2006, 4, 1651–1664.

- Macinska, S.T. Implicit Social Cognition in Autism Spectrum Disorder. Ph.D. Thesis, University of Hull, Kingston Upon Hull, UK, 2019.

- Pelphrey, K.A.; Morris, J.P.; McCarthy, G. Neural basis of eye gaze processing deficits in autism. Brain 2005, 4, 1038–1048.

- Baron-Cohen, S.; Baldwin, D.A.; Crowson, M. Do children with autism use the speaker’s direction of gaze strategy to crack the code of language? Child Dev. 1997, 4, 48–57.

- Akechi, H.; Senju, A.; Kikuchi, Y.; Tojo, Y.; Osanai, H.; Hasegawa, T. Does gaze direction modulate facial expression processing in children with autism spectrum disorder? Child Dev. 2009, 80, 1134–1146.

- Piven, J.; Palmer, P.; Jacobi, D.; Childress, D.; Arndt, S. Broader autism phenotype: Evidence from a family history study of multiple-incidence autism families. Am. J. Psychiatry 1997, 154, 185–190.

- Spiker, D.; Lotspeich, L.J.; Dimiceli, S.; Myers, R.M.; Risch, N. Behavioral phenotypic variation in autism multiplex families: Evidence for a continuous severity gradient. Am. J. Med. Genet. 2002, 114, 129–136.

- Constantino, J.N.; Todd, R.D. Autistic traits in the general population: A twin study. Arch. Gen. Psychiatry 2003, 60, 524–530.

- Baron-Cohen, S.; Wheelwright, S.; Skinner, R.; Martin, J.; Clubley, E. The autism-spectrum quotient (AQ): Evidence from asperger syndrome/high functioning autism, males and females, scientists and mathematicians. J. Autism Dev. Disord. 2001, 4, 5–17.

- Baron-Cohen, S. Theory of mind in normal development and autism. Prisme 2001, 34, 74–183.

- Poljac, E.; de-Wit, L.; Wagemans, J. Perceptual wholes can reduce the conscious accessibility of their parts. Cognition 2012, 4, 308–312.

- Bayliss, A.P.; Di Pellegrino, G.; Tipper, S.P. Sex differences in eye gaze and symbolic cueing of attention. Q. J. Exp. Psychol. Sect. A 2005, 4, 631–650.

- Bayliss, A.P.; Tipper, S.P. Gaze and arrow cueing of attention reveals individual differences along the autism spectrum as a function of target context. Br. J. Psychol. 2005, 96, 95–114.

- Cañadas, E.; Lupiáñez, J. Spatial interference between gaze direction and gaze location: A study on the eye contact effect. Q. J. Exp. Psychol. 2012, 4, 1586–1598.

- Marotta, A.; Román-Caballero, R.; Lupiáñez, J. Arrows don’t look at you: Qualitatively different attentional mechanisms triggered by gaze and arrows. Psychon. Bull. Rev. 2018, 4, 2254–2259.

- Jones, S. The mediating effects of facial expression on spatial interference between gaze direction and gaze location. J. Gen. Psychol. 2015, 4, 106–117.

- Lobmaier, J.S.; Tiddeman, B.P.; Perrett, D.I. Emotional expression modulates perceived gaze direction. Emotion 2008, 4, 573.

- Hudson, M.; Nijboer, T.C.; Jellema, T. Implicit social learning in relation to autistic-like traits. J. Autism Dev. Disord. 2012, 4, 2534–2545.

- Zhao, S.; Uono, S.; Yoshimura, S.; Toichi, M. Is impaired joint attention present in non-clinical individuals with high autistic traits? Mol. Autism 2015, 4, 67.

- Lundqvist, D.; Flykt, A.; Öhman, A. The Karolinska directed emotional faces (KDEF). CD ROM from Department of Clinical Neuroscience, Psychology Section. Karolinska Inst. 1998, 4, 630.

- Aranda-Martín, B.; Ballesteros-Duperón, M.Á.; Lupiáñez, J. What gaze adds to arrows: Changes in attentional response to gaze versus arrows in childhood and adolescence. Br. J. Psychol. 2022; advance online publication.

- Mezulis, A.H.; Abramson, L.Y.; Hyde, J.S.; Hankin, B.L. Is there a universal positivity bias in attributions? A meta-analytic review of individual, developmental, and cultural differences in the self-serving attributional bias. Psychol. Bull. 2004, 4, 711.

- Williamson, S.; Craig, J.; Slinger, R. Exploring the relationship between measures of self-esteem and psychological adjustment among adolescents with Asperger syndrome. Autism 2008, 4, 391–402.

- Kuzmanovic, B.; Rigoux, L.; Vogeley, K. Brief report: Reduced optimism bias in self-referential belief updating in high-functioning autism. J. Autism Dev. Disord. 2019, 4, 2990–2998.

- Sepeta, L.; Tsuchiya, N.; Davies, M.S.; Sigman, M.; Bookheimer, S.Y.; Dapretto, M. Abnormal social reward processing in autism as indexed by pupillary responses to happy faces. J. Neurodev. Disord. 2012, 4, 17.

- Happé, F. Autism: Cognitive deficit or cognitive style? Trends Cogn. Sci. 1999, 4, 216–222.

- Happé, F.; Frith, U. The weak coherence account: Detail-focused cognitive style in autism spectrum disorders. J. Autism Dev. Disord. 2006, 4, 5–25.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).