Abstract

Assessment of heart sounds, which are generated by the beating heart and the resulting blood flow through it, provides a valuable tool for cardiovascular disease (CVD) diagnostics. Cardiac auscultation with a classical stethoscope is the best-known examination method for detecting heart anomalies. This examination requires a qualified cardiologist, who relies on the sounds of the cardiac cycle (heart muscle contractions and valve closures) to detect abnormalities in the heart during the pumping action. A phonocardiogram (PCG) signal is a recording of the sounds and murmurs heard during heart auscultation, typically with a stethoscope, as part of a medical diagnosis. To support physicians in a clinical environment, a range of artificial intelligence methods has been proposed to automatically analyze PCG signals and help in the preliminary diagnosis of different heart diseases. The aim of this paper is to provide an accurate CVD recognition model based on unsupervised and supervised machine learning methods relying on convolutional neural networks (CNNs). The proposed approach is evaluated on heart sound signals from the well-known, publicly available PASCAL and PhysioNet datasets. Experimental results show that the heart cycle segmentation and segment selection processes have a direct impact on the validation accuracy, sensitivity (TPR), precision (PPV), and specificity (TNR). On the PASCAL dataset, we obtained encouraging classification results, with an overall accuracy of 0.87, an overall precision of 0.81, and an overall sensitivity of 0.83. For the micro-averaged classification results, we obtained a micro accuracy of 0.91, micro sensitivity of 0.83, micro precision of 0.84, and micro specificity of 0.92. On the PhysioNet dataset, we achieved very good results: 0.97 accuracy, 0.946 sensitivity, 0.944 precision, and 0.946 specificity.

Keywords:

CVD; heart sounds; PCG; denoising; segmentation; deep learning; convolutional neural network

1. Introduction

Sudden heart failure caused by cardiovascular diseases (CVDs) is one of the leading causes of death globally. CVDs cause about 17.3 million deaths per year, a figure estimated to rise to more than 23.6 million by 2030 according to the latest WHO report [1]. They account for 45% of deaths in Europe [2], 34.3% in America [3], and more than 75% in developing countries [4]. Because of unhealthy lifestyles, lack of access, financial constraints, or simple carelessness, many people neglect regular heart screening, which allows CVDs to progress undetected. Cardiovascular problems are considered a potential medical emergency and must be detected without delay [5]. Earlier diagnosis of CVDs helps patients considerably reduce the risk of heart failure [6].

CVDs can be diagnosed with widely known methods such as stethoscope auscultation, phonocardiography, or echocardiography. A cardiologist can use a phonocardiogram (PCG) to visualize the heart sound recorded during a cardiac cycle with a phonocardiograph device [7,8]. They can also use an echocardiogram (at an average cost of 1500 [9]) to visualize the heart beating and blood pumping. Using a stethoscope, the cardiologist listens to the patient's heart sound and looks for clues of unusual heart sounds (murmurs), which are symptomatic of cardiac abnormalities. Normal and abnormal heart sounds differ audibly because their PCG signals differ significantly in time, amplitude, intensity, homogeneity, spectral content, etc. [10].

Roughly speaking, all of these heart screening procedures are expensive and require considerable experience. As stated previously, auscultation requires an experienced cardiologist to obtain an accurate diagnosis [3]. According to some studies, medical students and primary care physicians reach only 20 to 40% accuracy in heart screening [11,12,13], whereas expert cardiologists reach roughly 80% [11,13]. Hence, a reliable solution for the early diagnosis of CVDs is still lacking.

Developing an accurate, accessible, and easy-to-use solution would democratize early heart screening, which can significantly help patients stabilize or even recover from cardiovascular disease. PCG-based heart screening is therefore considered a high-potential research topic that will expand and develop in the near future [11,13]. Most existing research focuses on automatic cardiac auscultation based on classical machine learning methods [14,15] and deep learning models [16,17].

Building on these observations, this research aims to propose reliable CVD screening based on PCG signal classification, in particular an automatic method for PCG heart sound analysis and classification that is useful for detecting heart pathology in clinical applications. The main contribution of our work is a new and powerful preprocessing approach based on: an infinite impulse response (IIR) filter for automatic noise removal; an automatic heart cycle segmentation (HCS) method based on envelope detection using Daubechies wavelet decomposition; and a new HCS segment selection approach based on PCG feature clustering relying on a Gaussian mixture model (GMM). This preprocessing approach is evaluated on both the PASCAL and PhysioNet datasets through an extensive experimental study based on 17 pretrained and fine-tuned convolutional neural network (CNN) models for automatic PCG disease classification.

This paper is laid out as follows: Section 2 presents related work of existing methods, then Section 3 introduces the proposed model. The experiment setting and implementation are described in Section 4. Section 5 discusses the experimental results. Section 6 concludes the paper and indicates future and related research directions.

Contributions

This research focuses on the e-health field and aims to provide a PCG classification approach that may help detect heart abnormalities early. Our aim is to design and optimize an accurate algorithm that recognizes the signatures of normal, murmur, and extrasystole heart rhythms using available experimental datasets. In this contribution, we focus on supervised machine learning techniques with the aim of extracting the signatures that identify normal, murmur, and extrasystole PCG signals. Our main contribution is a new and powerful preprocessing approach that involves: an IIR filter for automatic noise removal; an automatic Heart Cycle Segmentation (HCS) method based on envelope detection using Daubechies wavelet decomposition; a new HCS segment selection approach based on PCG feature clustering relying on a Gaussian mixture model (GMM); and an extensive experimental study based on 17 pretrained and fine-tuned CNN models for automatic PCG disease classification.

2. Related Work

A substantial body of research has addressed the identification and classification of PCG signals, i.e., digital heart sound signals recorded with an electronic stethoscope. Fully automatic heart sound identification and classification requires solving three main challenges.

The first is preprocessing and denoising the PCG signal to suppress noncardiac sounds. In this step, additive noise is removed or reduced and the heart sounds are enhanced. This is usually achieved by removing undesired frequencies or frequency bands from the signal, a process known as filtering.

The second challenge is heart sound segmentation, which localizes the main heart sound components. In this step, each heart cycle of the signal is split into the following components: the first heart sound (S1), the systolic period (siSys), the second heart sound (S2), and the diastolic period (siDias). Several approaches to segmenting PCG signals exist in the literature. One approach identifies the time instant and duration of the S1 and S2 heart sounds with some form of peak-picking algorithm. More advanced approaches apply temporal statistical models to search for the most likely hidden state sequence given a set of observations.
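To make the idea of searching for the most likely hidden state sequence concrete, the following is a minimal log-domain Viterbi decoder in Python/NumPy. It is a generic sketch, not the decoder of any cited work; the four-state cyclic topology (S1, systole, S2, diastole), the binary loud/quiet observations, and every probability in the example are hypothetical values chosen for illustration.

```python
import numpy as np

def viterbi(log_start, log_trans, log_emit):
    """Most likely hidden-state path of an HMM, computed in the log domain.

    log_start: (S,) log initial-state probabilities
    log_trans: (S, S) log transition probabilities, log_trans[i, j] = log P(j | i)
    log_emit:  (T, S) log-likelihood of each observation under each state
    """
    T, S = log_emit.shape
    score = log_start + log_emit[0]            # best log-prob of any path ending in each state
    back = np.zeros((T, S), dtype=int)         # back-pointers to the best previous state
    for t in range(1, T):
        cand = score[:, None] + log_trans      # cand[i, j]: best path through i, then i -> j
        back[t] = np.argmax(cand, axis=0)
        score = cand[back[t], np.arange(S)] + log_emit[t]
    path = [int(np.argmax(score))]
    for t in range(T - 1, 0, -1):              # follow the back-pointers from the end
        path.append(int(back[t, path[-1]]))
    return path[::-1]

# Hypothetical 4-state cycle: 0 = S1, 1 = systole, 2 = S2, 3 = diastole.
S = 4
trans = np.zeros((S, S))
for s in range(S):
    trans[s, s] = 0.5                          # stay in the current state
    trans[s, (s + 1) % S] = 0.5                # or advance to the next one
# Columns: P(quiet), P(loud). S1 and S2 are loud; systole and diastole are quiet.
emit = np.array([[0.1, 0.9], [0.9, 0.1], [0.1, 0.9], [0.9, 0.1]])
obs = [1, 1, 0, 0, 1, 1, 0, 0]                 # 1 = loud envelope frame, 0 = quiet
with np.errstate(divide="ignore"):             # log(0) = -inf marks forbidden moves
    path = viterbi(np.log([1.0, 0.0, 0.0, 0.0]), np.log(trans), np.log(emit[:, obs].T))
print(path)  # [0, 0, 1, 1, 2, 2, 3, 3]
```

Real segmenters such as the HSMM approaches discussed in Section 2.2 additionally model state durations and use learned emission models instead of a fixed loud/quiet table.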

The third challenge is feature extraction and classification of the PCG signal into normal and abnormal heart sound classes. In this step, researchers usually apply a standard procedure: (1) extracting features from the PCG signal, (2) feeding the extracted features to the selected classifier, and (3) letting the classifier infer whether abnormal heart sounds are present.

Several survey papers have discussed the challenges of PCG signal analysis. A survey by Meziani et al. discussed the analysis of PCG signals using wavelet transform (WT)-based methods only [18]. Another review, by Chakrabarti et al., compared different methodologies used in PCG signal analysis. Based on their comparative study, the authors suggested that empirical mode decomposition (EMD) is better suited for noisy PCG signals. In addition, they suggested the use of hybrid machine learning classifiers to improve classification results [19].

Nabih et al. [20] reviewed research papers published between 2004 and 2016 that cover intelligent computer-aided diagnosis (CAD) systems based on PCG signal analysis. They concluded that large databases are needed to train different machine learning classifiers and improve heart sound classification accuracy. They also suggested investigating more effective methods for reducing noise in heart sound signals.

2.1. PCG Signal Preprocessing, Denoising, and Enhancing

Heart sound recordings are often disturbed by external and internal noise sources such as chest movements, respiration sounds, muscle contraction, and noise from the surrounding environment. These noises may change the characteristics of the recorded PCG signal and make the analysis more difficult. Therefore, an appropriate denoising algorithm should be applied to the PCG signal before any further analysis. The simplest and most common approach is to apply a suitable filter, usually an infinite impulse response (IIR) or finite impulse response (FIR) filter, to separate the PCG signal from the noise [21].

Kwak and Kwon [5] applied a Wiener filter to reduce background noise, while Dewangan [22] developed an adaptive filter that removes noise from the signal using the least mean squares (LMS) algorithm. In [23], PCG signals were denoised using a maximally flat magnitude (Butterworth) filter. The authors of [24,25,26,27] applied the wavelet transform (WT), a well-known denoising technique, to identify the true PCG signal components. PCG denoising can also be achieved via EMD, which decomposes complicated data into a small, finite number of components [28]. A method combining multilevel singular value decomposition (SVD) and compressed sensing was proposed in [29] for PCG noise removal. Moreover, in [30], a PCG denoising technique was proposed based on non-negative matrix factorization (NMF) and adaptive contour representation computation (ACRC).

2.2. PCG Signal Segmentation

Heart sound segmentation is a fundamental step in PCG signal analysis. In this step, the locations of the S1 (beginning of systole) and S2 (end of systole) heart sounds in a PCG signal are identified. Heart sounds are created by blood flow and tissue vibrations during the cardiac cycle, and transient heart sounds can be classified into four types (S1, S2, S3, and S4). In general, only S1 and S2 are considered the primary heart sounds, and the cardiac cycle can then be estimated from their locations. Variations in S1 and S2 properties, such as their duration or intensity, can be early signs of cardiac anomalies.

Prior research has proposed various techniques for PCG signal segmentation. The envelope-based method is one of the most popular approaches. Choi and Jiang compared the most widely used envelope-based methods: Shannon energy, the Hilbert transform, and the cardiac sound characteristic waveform (CSCW) [31]. Shannon energy and entropy envelopes were used by [25,26,32,33,34,35,36]. Other techniques extract the envelope with the WT to capture the frequency characteristics of the S1 and S2 sound components [15]. Other studies used different envelope extraction methods for segmentation, including the Hilbert phase envelope [33], ensemble empirical mode decomposition (EEMD) [37], the Hilbert transform [38,39,40], and autocorrelation [41,42].
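As an illustration of the envelope-based family, the sketch below computes a Hilbert-transform envelope through the FFT-based analytic signal (the same construction used by `scipy.signal.hilbert`). The function name `hilbert_envelope` and the amplitude-modulated test tone are illustrative assumptions, not part of any cited method.

```python
import numpy as np

def hilbert_envelope(x):
    """Envelope |x + j*H{x}| from the FFT-based analytic signal (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    h = np.zeros(n)                  # spectral weights: keep DC (and Nyquist), double positive freqs
    if n % 2 == 0:
        h[0] = h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[0] = 1.0
        h[1:(n + 1) // 2] = 2.0
    analytic = np.fft.ifft(np.fft.fft(x) * h)   # negative frequencies are zeroed
    return np.abs(analytic)

fs = 2000
t = np.arange(fs) / fs
true_env = 1.0 + 0.5 * np.sin(2 * np.pi * 2 * t)       # slow amplitude modulation
x = true_env * np.sin(2 * np.pi * 100 * t)              # 100 Hz carrier
env = hilbert_envelope(x)
print(np.max(np.abs(env - true_env)) < 1e-6)  # True
```

Because every frequency in this test tone falls on an exact FFT bin, the recovered envelope matches the modulation almost to machine precision; on real recordings, edge effects and broadband noise make the Hilbert envelope noisier than a Shannon-energy envelope.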

More recently, hidden Markov models (HMMs) and hidden semi-Markov models (HSMMs) have been used for PCG segmentation [43,44]. Gamero and Watrous [44] suggested using an HMM to identify the S1 and S2 sounds. Their topology combined two separate HMMs that model the Mel-Frequency Cepstral Coefficients (MFCCs) of the systolic and diastolic intervals, respectively. The method achieved a sensitivity of 95% and a positive predictivity of 97%. Schmidt et al. [43] proposed a method that extracts a range of features that are then used to train a duration-dependent HSMM to segment PCG signals. Moreover, the logistic regression HSMM-based algorithm [45] is considered one of the most advanced methods and achieves good results in heart sound segmentation. Springer et al. [45] used the HSMM with a modified Viterbi algorithm to identify the start and end states of the PCG heart sound signal. Their method achieved an average F1 score of 95.63% on the test dataset.

2.3. PCG Signal Feature Extraction and Classification

Feature extraction is a key step in PCG signal analysis, as extracting the right features is the basis of successful heart sound classification. Most features extracted from PCG signals are computed from time-domain, frequency-domain, and statistical measures. The most widely used features are: heart rate; duration of S1, S2, systole, or diastole; total power of the PCG signal; zero-crossing rate; MFCCs; WT coefficients; Linear Predictive Coding (LPC) coefficients; and Shannon entropy. After extracting the PCG features, the next step is to select a suitable classifier. Researchers have proposed various machine learning algorithms for PCG classification, such as artificial neural networks (ANNs), support vector machines (SVMs), K-nearest neighbors (KNN), and other hybrid classification methods.

ANN is one of the most widely used machine learning approaches for classification. However, relatively little work has applied it to heart signal identification. Eslamizadeh and Barati [46] used an ANN for heart disease classification. A continuous wavelet transform (CWT) with a Morlet wavelet function was used to extract the primary heart sounds S1 and S2 from the PCG signal. Features such as maximum amplitude were first normalized and then used by the ANN classifier to detect murmurs in the heart sound signals.

SVM is another successful machine learning algorithm widely used for heart sound classification. Zheng et al. [15] used an SVM to automatically identify coronary heart disease. Wavelet decomposition was first applied to the PCG signal, and the total energy and sample entropy of each sublevel were then used as input features for the SVM classifier. A classification accuracy of 97.17%, with a specificity of 98.55% and a sensitivity of 93.48%, was reported.

Kang et al. [47] also used SVM and ANN classifiers to detect Still's murmur in children. They used time-domain features, including the average Shannon energy and envelope detection, together with frequency-domain features, specifically the spectral width and peak frequency of the main heart sounds S1 and S2. They achieved 84–93% sensitivity and 91–99% specificity. On the other hand, Deng and Han [48] reached an accuracy of 91% using an SVM classifier with autocorrelation features such as the sub-band autocorrelation function. The discrete wavelet transform (DWT) was used to derive sub-band envelopes from the sub-band coefficients of the PCG signal, which were then used to extract autocorrelation features. These features were fused using diffusion maps to obtain unified features, which were fed to the SVM classifier. To extract discriminative features, Zhang et al. [32] used Partial Least Squares Regression (PLSR) to reduce the dimension of scaled spectrograms. An SVM was then applied to the extracted features for classification. Their method differentiated heart murmur from extrasystole with a precision of 91% on two public datasets offered by the PASCAL Classifying Heart Sounds Challenge. In another study, the same authors (Zhang et al. [49]) proposed a method to analyze heart signals based on scaled spectrograms and tensor decomposition. Their method consists of (1) scaling the heart signal spectrograms to a defined size; (2) reducing the dimension of the scaled spectrograms; (3) extracting the intrinsic structure of the scaled spectrograms using tensor decomposition; and finally (4) classifying the heart signals using an SVM on the extracted features. The method was evaluated on the PASCAL and 2016 PhysioNet challenge datasets, and the highest normal precision was 96%.

Redlarski et al. [50] combined an SVM and a modified cuckoo search algorithm with linear predictive coding (LPC) coefficients as input features to build a heart sound diagnostic system. The system achieved an accuracy of 93% in separating innocent murmurs (S1, S2, S3, and S4) from organic murmurs. Güraksin and Uguz [51] proposed using a least-squares SVM (LS-SVM) for heart sound classification. Wavelet Shannon entropy feature vectors were extracted and fed to the classifier, and a classification accuracy of 96.6% was obtained. Patidar and Pachori [52] reported a method for extracting cardiac sound features using a constrained tunable-Q wavelet transform (TQWT). An LS-SVM with various kernel functions was then used for classification, and a classification accuracy of 94.01% was registered.

Other studies proposed KNN algorithms to classify abnormal heart sounds. Oliveira et al. [53] used KNN algorithms to detect cardiac murmurs from a combination of time-frequency domain, perceptual, and fractal analysis. Hamidi et al. [54] suggested two techniques to distinguish normal from abnormal heart sound signals. In the first technique, the power spectrum of the fitted signal curve was calculated and used as the first feature. In the second technique, the cardiac signal was divided into segments, the fractal dimension of each segment was calculated, and the resulting signal was used as another feature. Both features were fed to a KNN classifier, and overall accuracies of 92%, 81%, and 98% were achieved on the three datasets used, respectively.

Potes et al. [55] used both AdaBoost and Convolutional Neural Network (CNN) classifiers to classify heart sounds as normal or abnormal for the PhysioNet/CinC Challenge 2016. A set of time-frequency features was used for PCG classification, and the reported accuracy was 86%. Bozkurt et al. [56] suggested using MFCC, Mel-spectrogram, and sub-band envelope features to automatically detect heart abnormality from the PCG signal. They reported 81.5% accuracy, 78.5% specificity, and 84.5% sensitivity after feeding these features to a CNN.

Messner et al. [57] detected the positions of S1 and S2 in heart sound signals using a deep recurrent neural network (D-RNN) with spectral and envelope features. They used virtual adversarial training (VAT), dropout, and data augmentation for regularization, and achieved an average F1 score of around 96% on an independent test set. Yaseen et al. [58] proposed automatic heart sound classification based on several extracted features. MFCC and DWT were used to extract the features of heart sound signals, and a deep neural network (DNN), an SVM, and a centroid displacement-based KNN were used for classification. They reported 97% accuracy in diagnosing heart disorders.

Chen et al. [59] used a regression tree-based classification scheme with a CWT to differentiate organic from functional murmurs, and reported 90% classification accuracy. For feature extraction, SVD and QR factorization were applied to the time-frequency matrix obtained with the CWT. In addition, features based on the Gini index and the Shannon entropy were computed from the decomposition. To reduce computational complexity, only a subset of the features was selected for classification using the Sequential Forward Floating Selection (SFFS) algorithm.

Safara et al. [60] used a BayesNet classifier to identify cardiac valve disorders and reached 96% classification accuracy. They introduced a new wavelet packet entropy feature to classify five types of heart sounds and murmurs: the wavelet packet transform was employed for heart sound analysis, and the entropy was calculated to derive the feature vectors.

Guillermo et al. [61] proposed a Radial Wavelet Neural Network (RWNN) model with an Extended Kalman Filter (EKF) for heart disease classification. The CWT was used to segment the PCG signal and identify the primary heart sounds S1 and S2. The dimensional features extracted from the cardiac cycles were then used as inputs to the model, which reached a classification accuracy of 98.04%.

Safara et al. [62] considered a multilevel basis selection (MLBS) method for signals with a narrow frequency range. Their method preserves only the most useful bases of a wavelet packet decomposition tree by applying three elimination criteria: frequency range, noise frequency, and energy threshold. An accuracy of 97.56% was achieved in classifying heart sounds with the MLBS method.

Thiyagaraja et al. [63] presented a patient-centered device system that can monitor a patient's cardiac status. The system supports recording, processing, and classifying heart sound signals. It uses MFCCs and an HMM to classify heart signals as normal or murmur, with an accuracy of 92.68%.

Choi et al. [64] proposed segmenting the cardiac spectrum using a multi-Gaussian (MG) fitting technique to detect abnormal heart sounds. The following measurements of the Gaussian peaks were examined to segment the cardiac spectral curves of different heart sounds: spectral profile, maximum frequency, amplitude, half-width, area portion, and loss of area.

In another work, by Varghees and K.I. [65], the PCG signal was first decomposed with the empirical wavelet transform (EWT). The boundaries of the heart sounds were detected using the Shannon entropy and instantaneous phase. The reported accuracy of the system was 91.92%.

Choi et al. [66] proposed using the wavelet packet (WP) technique for heart sound analysis. They used the upper-limit peak frequency, the position of the WP coefficient related to the upper-limit peak frequency, and wavelet energy fraction and entropy features to detect heart murmurs. Their murmur detection method achieved 99.78% specificity and 99.43% sensitivity.

In 2012, Xiefeng et al. [67] used a family of wavelets to develop their model and then extracted heart sound features using heart sound linear band frequency cepstral (HS-LBFC) coefficients. For heart sound identification, they used a similarity distance method.

Abo-Zahhad et al. [68] introduced an approach for human recognition using heart sounds. Their method adopts wavelet packet cepstral coefficients (WPCCs) as features for heart sound signal identification. These features rely on nonlinear wavelet packet filter banks constructed to match the acoustic nature of heart sounds. Evaluated on the open HSCT-11 dataset, the method reported a classification accuracy of 91.05%.

3. The Proposed Model

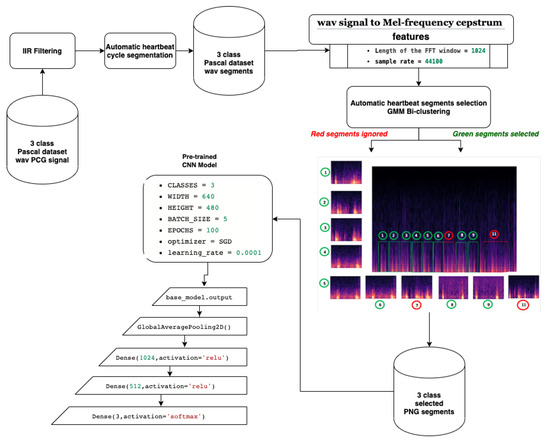

In this paper, we develop a method that combines supervised and unsupervised learning approaches. The proposed model implements a classification approach that recognizes both normal and abnormal heartbeat rhythms. Figure 1 gives a general overview of the proposed model; the following subsections explain each step in more detail.

Figure 1.

Proposed heart sound detection model.

3.1. Preprocessing

In this paper, the preprocessing step comprises four parts: denoising, automatic heart cycle segmentation, Mel-frequency spectrum images, and segment selection by clustering.

3.1.1. Noise Filtering

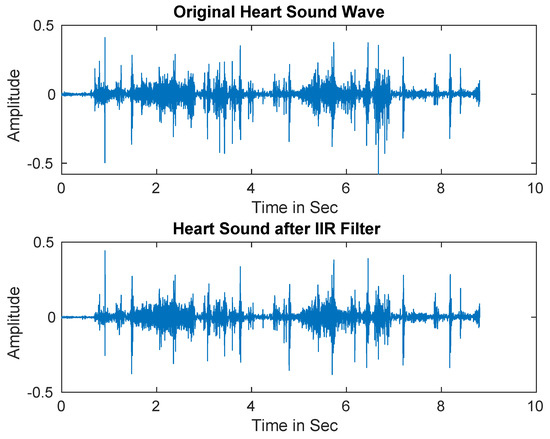

In practice, PCG signals are often corrupted by different types of noise that may decrease detection accuracy. Therefore, an IIR filter is first applied to separate the noise from the signals [69]. Figure 2 shows the original heart sound signal versus the denoised signal.

Figure 2.

Heart sound signals after applying Infinite Impulse Response (IIR) filter.
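The paper does not specify the IIR filter design. The following is a minimal sketch assuming a second-order Butterworth-style high-pass at 25 Hz cascaded with a second-order low-pass at 400 Hz (both cutoffs are assumptions, not values from this work), using biquad coefficients from the RBJ Audio EQ Cookbook, where Q = 1/√2 yields a Butterworth response. It depends only on NumPy so that every step of the difference equation is visible; all function names are hypothetical.

```python
import numpy as np

def biquad_coeffs(kind, fc, fs, q=1 / np.sqrt(2)):
    """Second-order low/high-pass coefficients (RBJ cookbook); q = 1/sqrt(2) is Butterworth."""
    w0 = 2 * np.pi * fc / fs
    alpha = np.sin(w0) / (2 * q)
    cw = np.cos(w0)
    if kind == "lowpass":
        b = np.array([(1 - cw) / 2, 1 - cw, (1 - cw) / 2])
    elif kind == "highpass":
        b = np.array([(1 + cw) / 2, -(1 + cw), (1 + cw) / 2])
    else:
        raise ValueError(f"unknown filter kind: {kind}")
    a = np.array([1 + alpha, -2 * cw, 1 - alpha])
    return b / a[0], a / a[0]          # normalize so that a[0] == 1

def iir_filter(b, a, x):
    """Direct form I: y[n] = b0 x[n] + b1 x[n-1] + b2 x[n-2] - a1 y[n-1] - a2 y[n-2]."""
    y = np.zeros(len(x))
    x1 = x2 = y1 = y2 = 0.0
    for n, xn in enumerate(x):
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y[n] = yn
    return y

def bandpass_iir(x, fs, low=25.0, high=400.0):
    """Assumed cutoffs: high-pass removes drift/very low-frequency noise, low-pass removes hiss."""
    x = np.asarray(x, dtype=float)
    x = iir_filter(*biquad_coeffs("highpass", low, fs), x)
    return iir_filter(*biquad_coeffs("lowpass", high, fs), x)

# Usage: measure the steady-state gain for a few pure tones.
fs = 4000
t = np.arange(2 * fs) / fs

def tone_gain(f):
    x = np.sin(2 * np.pi * f * t)
    y = bandpass_iir(x, fs)
    half = t.size // 2                 # skip the start-up transient
    return np.sqrt(np.mean(y[half:] ** 2) / np.mean(x[half:] ** 2))

gains = {f: round(float(tone_gain(f)), 3) for f in (5, 100, 800)}
print(gains)
```

In practice, `scipy.signal.butter(..., btype="bandpass", output="sos")` combined with `scipy.signal.sosfiltfilt` gives a higher-order, zero-phase alternative; the causal cascade above introduces some phase delay.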

3.1.2. Automatic Heart Cycle Segmentation

After IIR filtering, we proceed with heart cycle segmentation. First, the signals were downsampled to 2 kHz, since the dominant low-frequency content of heart sounds lies roughly in the 25–120 Hz band and the original sampling frequency of our signals was higher. The signals were then normalized according to Equation (1).

$$x_{norm}(t) = \frac{x(t)}{\max_t |x(t)|} \qquad (1)$$

where $x_{norm}$ and $x$ denote the normalized heart signal and the original heart signal, respectively.
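A minimal sketch of the downsampling and normalization steps, assuming an integer decimation factor and the max-absolute form of Equation (1). Keeping every `factor`-th sample is only safe under the assumption that the preceding IIR stage has already removed content above the new Nyquist frequency (1 kHz); the function name is hypothetical.

```python
import numpy as np

def downsample_and_normalize(x, fs_in, fs_out=2000):
    """Integer-factor decimation, then x_norm = x / max|x| (assumed form of Equation (1))."""
    if fs_in % fs_out != 0:
        raise ValueError("this sketch only handles integer decimation factors")
    factor = fs_in // fs_out
    # Keeping every `factor`-th sample assumes the signal was already low-pass
    # filtered below fs_out / 2 by the preceding IIR stage (no extra anti-aliasing here).
    y = np.asarray(x, dtype=float)[::factor]
    peak = np.max(np.abs(y))
    return y / peak if peak > 0 else y

fs_in = 8000
t = np.arange(fs_in) / fs_in
x = 0.3 * np.sin(2 * np.pi * 50 * t)                  # 1 s test tone
y = downsample_and_normalize(x, fs_in)
print(y.size, float(np.max(np.abs(y))))  # 2000 1.0
```

For non-integer ratios, such as 44.1 kHz to 2 kHz, a polyphase resampler such as `scipy.signal.resample_poly(x, 20, 441)` would be needed instead of plain decimation.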

Next, we performed envelope detection using Daubechies wavelet decomposition. To obtain the low-frequency signal, we computed an adaptive threshold from the wavelet decomposition coefficients C. The coefficients were then set to zero according to this threshold, as given in Equation (2).

where C denotes a wavelet decomposition coefficient.
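The paper does not give the Daubechies order, the decomposition depth, or the exact threshold rule. The sketch below therefore makes explicit assumptions: it uses db1 (Haar, the simplest Daubechies wavelet), four decomposition levels, and hard thresholding of the detail coefficients with Donoho's universal threshold σ√(2 ln n), where σ is estimated from the finest detail level as median(|d|)/0.6745. All function names are hypothetical.

```python
import numpy as np

def haar_dwt(x):
    """One level of the orthonormal Haar (db1) transform; len(x) must be even."""
    even, odd = x[0::2], x[1::2]
    return (even + odd) / np.sqrt(2), (even - odd) / np.sqrt(2)

def haar_idwt(approx, detail):
    """Exact inverse of haar_dwt."""
    x = np.empty(2 * approx.size)
    x[0::2] = (approx + detail) / np.sqrt(2)
    x[1::2] = (approx - detail) / np.sqrt(2)
    return x

def wavelet_denoise(x, levels=4):
    """Multilevel Haar decomposition, hard thresholding of details, reconstruction."""
    x = np.asarray(x, dtype=float)
    if x.size % 2 ** levels:
        raise ValueError("length must be a multiple of 2**levels in this sketch")
    approx, details = x, []
    for _ in range(levels):
        approx, d = haar_dwt(approx)
        details.append(d)                                  # details[0] is the finest level
    sigma = np.median(np.abs(details[0])) / 0.6745         # robust noise estimate
    thr = sigma * np.sqrt(2 * np.log(x.size))              # universal threshold (assumed rule)
    details = [np.where(np.abs(d) > thr, d, 0.0) for d in details]
    for d in reversed(details):                            # rebuild from the coarsest level
        approx = haar_idwt(approx, d)
    return approx

rng = np.random.default_rng(0)
clean = np.repeat(rng.normal(size=16), 64)                 # piecewise-constant 1024-sample signal
noisy = clean + rng.normal(0.0, 0.1, clean.size)
denoised = wavelet_denoise(noisy)
print(np.mean((noisy - clean) ** 2), np.mean((denoised - clean) ** 2))
```

With PyWavelets, the same pipeline is usually written with `pywt.wavedec`, `pywt.threshold(..., mode="hard")`, and `pywt.waverec`, which also support higher-order Daubechies wavelets such as `"db4"`.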

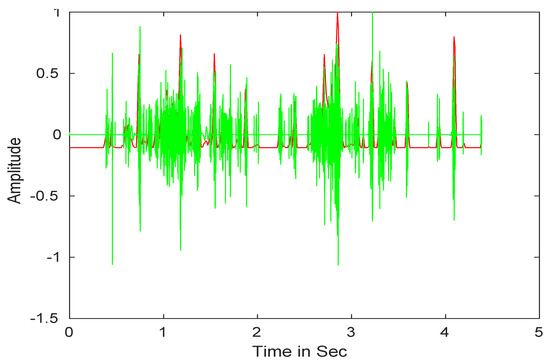

We then performed wavelet reconstruction to extract the low-frequency heart sound. Finally, we computed the average Shannon energy (Equation (3)) and standardized it as in Equation (4) [70]. The envelope of the input signals is shown in Figure 3.

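$$E_s = -\frac{1}{N}\sum_{i=1}^{N} x_{low}^{2}(i)\,\log x_{low}^{2}(i) \qquad (3)$$

where $x_{low}$, $N$, and $E_s$ denote the low-frequency heart sound segment, the number of signal samples per segment, and the average Shannon energy, respectively.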

$$P_a(t) = \frac{E_s(t) - M(E_s(t))}{S(E_s(t))} \qquad (4)$$

where $P_a(t)$ is the normalized Shannon energy, $M(E_s(t))$ is the mean of the energy of the signal over $t$, and $S(E_s(t))$ is its standard deviation.

Figure 3.

Heart sound signals envelope detection.
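Equations (3) and (4) can be sketched as follows, assuming 20 ms frames with a 10 ms hop at 2 kHz (40 and 20 samples). The frame sizes, the small `eps` guarding log(0), and the function name are assumptions rather than values stated in the paper; the input is meant to be the low-frequency signal after wavelet reconstruction.

```python
import numpy as np

def shannon_energy_envelope(x_low, frame=40, hop=20, eps=1e-12):
    """Average Shannon energy per frame (Eq. (3)), standardized over frames (Eq. (4))."""
    x = np.asarray(x_low, dtype=float)
    x = x / np.max(np.abs(x))              # |x| <= 1, so -x^2 log x^2 >= 0 (up to eps)
    starts = range(0, x.size - frame + 1, hop)
    e_s = np.array([-np.mean(x[s:s + frame] ** 2 * np.log(x[s:s + frame] ** 2 + eps))
                    for s in starts])
    return (e_s - e_s.mean()) / e_s.std()  # P_a = (E_s - M(E_s)) / S(E_s)

fs = 2000
t = np.arange(fs) / fs
x = np.zeros(fs)
for start in (0.1, 0.5):                   # two synthetic "heart sound" bursts of 100 ms
    idx = (t >= start) & (t < start + 0.1)
    x[idx] = np.sin(2 * np.pi * 60 * t[idx])
env = shannon_energy_envelope(x)           # one value per 10 ms hop
print(env.shape)  # (99,)
```

The Shannon energy −x² log x² emphasizes medium-intensity samples and attenuates both very low-amplitude noise and isolated high-amplitude spikes, which is why it is a popular envelope for locating S1 and S2.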

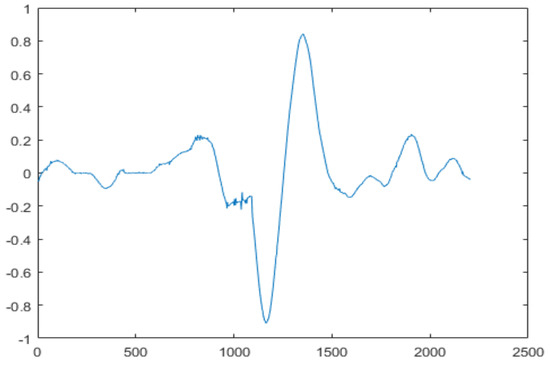

The final step is to identify the heart sound segments. Given the quasi-periodic nature of heart sounds, this step can be accomplished more efficiently once the cardiac cycle duration is known. In this study, we estimated the cardiac cycle using the unbiased autocorrelation function (UACF) [70,71]. Once the cardiac cycle is defined, the heart sound components can be identified and segmented. A single heart cycle segment is shown in Figure 4.

Figure 4.

A single heart cycle segment.
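The UACF-based cycle estimate can be sketched as below, assuming the standardized envelope is sampled at the frame rate (100 Hz for a 10 ms hop) and that the heart rate lies between 40 and 180 beats per minute; both the search range and the function names are assumptions. The unbiased estimator divides each lag's sum by the number of overlapping samples, N − k, rather than by N.

```python
import numpy as np

def unbiased_autocorr(x):
    """R[k] = sum_n x[n] x[n+k] / (N - k), for lags k = 0..N-1 (mean removed first)."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    n = x.size
    full = np.correlate(x, x, mode="full")[n - 1:]   # keep the non-negative lags
    return full / (n - np.arange(n))

def cardiac_cycle_seconds(envelope, fs, min_bpm=40, max_bpm=180):
    """Cycle length = lag of the UACF maximum within an assumed heart-rate range."""
    r = unbiased_autocorr(envelope)
    lo = int(fs * 60 / max_bpm)                        # shortest plausible cycle (samples)
    hi = min(int(fs * 60 / min_bpm), r.size - 1)       # longest plausible cycle (samples)
    lag = lo + int(np.argmax(r[lo:hi + 1]))
    return lag / fs

fs = 100                                               # envelope frame rate (Hz)
t = np.arange(10 * fs) / fs

def bump(center):
    return np.exp(-0.5 * ((t - center) / 0.02) ** 2)

# Synthetic envelope: S1 every 0.8 s (75 bpm) and S2 0.3 s after each S1.
env = sum(bump(b) + bump(b + 0.3) for b in np.arange(0.0, 10.0, 0.8))
print(cardiac_cycle_seconds(env, fs))  # 0.8
```

Restricting the search to a plausible heart-rate range matters: without it, the S1-to-S2 lag (0.3 s here) or multiples of the true cycle could be picked instead of the cycle itself.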

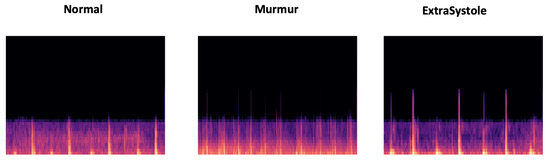

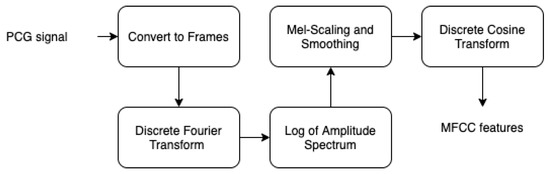

3.1.3. Mel-Frequency Spectrum Images

MFCCs are a powerful acoustic feature representation that captures essential information from audio signals. The technique has proven robust especially in speech recognition (Dave [72], Han et al. [73], Al Marzuqi et al. [74]) thanks to its ability to represent the signal amplitude spectrum in a compact form. In our case, we used MFCCs to extract PCG spectrum features and stored them as PNG images (see Figure 5). Figure 6 shows the MFCC processing steps:

Figure 5.

Overview of extrasystole, murmur, and normal MFCC features represented as PNG images.

Figure 6.

MFCC steps.

- Hamming windowing with a fixed window length of 1024 samples (in our case) divides the PCG signal into short acoustic frames. The pipeline ultimately yields one vector of cepstral features per frame.

- A discrete Fourier transform (DFT) is applied to each windowed frame.

- For each DFT frame, only the logarithm of the amplitude spectrum is retained, because perceived loudness is approximately logarithmic.

- To retain the essential frequency information, the spectrum is smoothed with a bank of Mel-scale filters.

- Applying the discrete cosine transform to the output of the fourth step yields the MFCC features of the PCG signal.
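The five steps above can be sketched in NumPy as follows. This is an illustrative implementation, not the authors' code: the 1024-sample Hamming window follows the text, while the 512-sample hop, 26 Mel filters, 13 coefficients, and the HTK-style Mel formula are assumptions. It applies the logarithm after the Mel filter bank, which is the conventional MFCC ordering; the list above places the logarithm before the smoothing step.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_mels, n_fft, fs):
    """Triangular filters equally spaced on the Mel scale, shape (n_mels, n_fft // 2 + 1)."""
    mel_pts = np.linspace(hz_to_mel(0.0), hz_to_mel(fs / 2), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_pts) / fs).astype(int)
    fb = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(1, n_mels + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):                  # rising edge of the triangle
            fb[m - 1, k] = (k - left) / (center - left)
        for k in range(center, right):                 # falling edge of the triangle
            fb[m - 1, k] = (right - k) / (right - center)
    return fb

def dct2_ortho(x, n_out):
    """Orthonormal DCT-II along the last axis, keeping the first n_out coefficients."""
    n = x.shape[-1]
    k = np.arange(n_out)[:, None]
    basis = np.cos(np.pi * k * (2 * np.arange(n) + 1) / (2 * n))
    scale = np.full(n_out, np.sqrt(2.0 / n))
    scale[0] = np.sqrt(1.0 / n)
    return (x @ basis.T) * scale

def mfcc(x, fs, n_fft=1024, hop=512, n_mels=26, n_mfcc=13):
    x = np.asarray(x, dtype=float)
    window = np.hamming(n_fft)                                      # step 1: windowing
    starts = range(0, x.size - n_fft + 1, hop)
    frames = np.stack([x[s:s + n_fft] * window for s in starts])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2 / n_fft        # step 2: DFT
    mel_energy = power @ mel_filterbank(n_mels, n_fft, fs).T        # step 4: Mel smoothing
    log_mel = np.log(mel_energy + 1e-10)                            # step 3: logarithm
    return dct2_ortho(log_mel, n_mfcc)                              # step 5: DCT

rng = np.random.default_rng(0)
fs = 2000
t = np.arange(3 * fs) / fs
x = np.sin(2 * np.pi * 50 * t) + 0.1 * rng.normal(size=t.size)
coeffs = mfcc(x, fs)
print(coeffs.shape)  # (10, 13)
```

The resulting (frames × coefficients) matrix is what would be rendered as an image for the CNN; `scipy.fft.dct(..., type=2, norm="ortho")` is a library equivalent of `dct2_ortho`.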

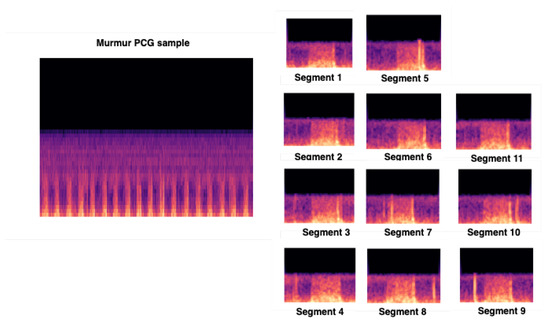

3.1.4. Segment Selection by Clustering

The main objective of our heartbeat segmentation method is to divide the PCG signal into heartbeat cycles in order to improve CVD recognition. However, PCG signals are known to be very noisy, so one or more heart cycle segments may themselves contain noise. Such segments disturb the CVD training process and can cause CVD signature extraction to fail. The idea behind our segment selection method is to apply clustering to eliminate the undesired segments, i.e., those that degrade the recognition result. We start from the hypothesis that the majority of the obtained heart cycle segments are correlated and contain less noise, and can therefore be used for CVD signature extraction. First, we partition the segments into two clusters with a parametric clustering method. Then, we discard the cluster containing the smaller number of segments (the noisy segments). In other words, the segment selection keeps only the segments belonging to the larger cluster.

We chose the Gaussian mixture model (GMM) [75], a parametric unsupervised clustering method. It partitions data into groups according to the probability of belonging to each Gaussian component, by learning the probability laws that generated the observed data (see Equation (5)):

$$p(x \mid \theta) = \sum_{k=1}^{M} \pi_k \, \mathcal{N}(x \mid \mu_k, \Sigma_k) \qquad (5)$$

where $\pi_k$ is the probability of belonging to Gaussian $k$ (with $0 \le \pi_k \le 1$ and $\sum_{k=1}^{M} \pi_k = 1$), $\mu = \{\mu_1, \dots, \mu_M\}$ is the set of the $M$ Gaussian means, $\Sigma = \{\Sigma_1, \dots, \Sigma_M\}$ is the set of covariance matrices, and $\theta = \{\pi, \mu, \Sigma\}$. The multidimensional Gaussian density in dimension $D$ is

$$\mathcal{N}(x \mid \mu_k, \Sigma_k) = \frac{1}{(2\pi)^{D/2} |\Sigma_k|^{1/2}} \exp\left(-\frac{1}{2}(x - \mu_k)^{\top} \Sigma_k^{-1} (x - \mu_k)\right).$$

The best-known method for estimating the GMM parameters ($\pi$, $\mu$, and $\Sigma$) is iterative maximum likelihood estimation with the expectation-maximization (EM) algorithm [76], which consists of three steps:

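- Step 1: Parameter initialization.

- Step 2: Repeat until convergence:

  - Estimation step: compute the conditional probability that sample $i$ comes from Gaussian $k$, where the sum in the denominator runs over the set of Gaussians:
    $$\gamma_{ik} = \frac{\pi_k \, \mathcal{N}(x_i \mid \mu_k, \Sigma_k)}{\sum_{j=1}^{M} \pi_j \, \mathcal{N}(x_i \mid \mu_j, \Sigma_j)}.$$

  - Maximization step: update the parameters $\pi_k$, $\mu_k$, and $\Sigma_k$, where $X$ is the number of samples:
    $$N_k = \sum_{i=1}^{X} \gamma_{ik}, \quad \pi_k = \frac{N_k}{X}, \quad \mu_k = \frac{1}{N_k} \sum_{i=1}^{X} \gamma_{ik} x_i, \quad \Sigma_k = \frac{1}{N_k} \sum_{i=1}^{X} \gamma_{ik} (x_i - \mu_k)(x_i - \mu_k)^{\top}.$$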

The time complexity of the EM algorithm for GMM parameter estimation (McLachlan and Peel [75], McLachlan and Krishnan [76], Bishop [77], Hastie et al. [78]) is as follows, where X is the dataset size, M the number of Gaussians, and D the data dimension:

EM Estimation step .

EM Maximization step .
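The EM steps and the majority-cluster selection rule can be combined into a short NumPy sketch. For brevity it assumes diagonal covariance matrices (a simplification of the full $\Sigma_k$ above) and farthest-point initialization of the means; the per-segment feature vectors, the function names, and the regularization constant are hypothetical. With scikit-learn, `sklearn.mixture.GaussianMixture(n_components=2).fit_predict(features)` provides a full-covariance equivalent of the clustering step.

```python
import numpy as np

def gmm_em(X, k=2, iters=200, tol=1e-6, seed=0, reg=1e-6):
    """EM for a Gaussian mixture with diagonal covariances (simplifying assumption)."""
    X = np.asarray(X, dtype=float)
    n, d = X.shape
    rng = np.random.default_rng(seed)
    # Step 1: initialization (farthest-point means, global variances, uniform weights).
    idx = [int(rng.integers(n))]
    for _ in range(1, k):
        dist = ((X[:, None, :] - X[idx]) ** 2).sum(axis=2).min(axis=1)
        idx.append(int(np.argmax(dist)))
    mu = X[idx].copy()
    var = np.tile(X.var(axis=0) + reg, (k, 1))
    pi = np.full(k, 1.0 / k)
    prev_ll = -np.inf
    # Step 2: alternate E and M steps until the log-likelihood stops improving.
    for _ in range(iters):
        # E-step: log(pi_k * N(x_i | mu_k, diag(var_k))), shape (n, k).
        log_p = (np.log(pi)
                 - 0.5 * np.log(2 * np.pi * var).sum(axis=1)
                 - 0.5 * (((X[:, None, :] - mu) ** 2) / var).sum(axis=2))
        log_norm = np.logaddexp.reduce(log_p, axis=1, keepdims=True)
        gamma = np.exp(log_p - log_norm)                 # responsibilities gamma_ik
        # M-step: closed-form updates of pi_k, mu_k and the diagonal of Sigma_k.
        nk = gamma.sum(axis=0) + 1e-12
        pi = nk / n
        mu = (gamma.T @ X) / nk[:, None]
        var = np.maximum((gamma.T @ X ** 2) / nk[:, None] - mu ** 2, 0.0) + reg
        ll = float(log_norm.sum())
        if ll - prev_ll < tol:
            break
        prev_ll = ll
    return pi, mu, var, gamma

def select_majority_segments(features):
    """Indices of segments in the larger of two GMM clusters (the minority is treated as noise)."""
    *_, gamma = gmm_em(features, k=2)
    labels = gamma.argmax(axis=1)
    majority = np.argmax(np.bincount(labels, minlength=2))
    return np.flatnonzero(labels == majority)

rng = np.random.default_rng(42)
clean = rng.normal(0.0, 0.3, size=(40, 2))    # 40 mutually similar segment feature vectors
noisy = rng.normal(5.0, 0.3, size=(8, 2))     # 8 outlying (noisy) segments
kept = select_majority_segments(np.vstack([clean, noisy]))
print(kept.size)  # 40
```

Each EM iteration here costs O(XMD) because the covariances are diagonal; full covariance matrices raise the per-iteration cost, which is one reason diagonal models are a common first choice for small per-segment feature vectors.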

3.2. CNN Classification

Advances in deep learning have paved the way for broader use of computer vision, especially through CNNs. Much research has used them to recognize objects [79], speech emotion [80], and gestures [81], and even for visual speech recognition [82]. CNN transfer learning techniques in particular have been widely exploited [83,84,85,86], especially when only a small training set is available. Because no large, publicly available training set of labeled PCG signals exists, we chose to adopt CNN transfer learning [87]. By fine-tuning existing CNN models pretrained on ImageNet, we only need to train the newly added classification layers. After applying the preprocessing steps presented in Figure 1 to the PASCAL PCG dataset, we obtain a set of PNG images containing visual representations of MFCC features, which are used to train our fine-tuned CNN model.

We set the CNN input shape to (480, 640, 3) and kept the pretrained convolutional layers for feature extraction. We then fine-tuned the models by adding 4 layers. For a compact feature vector representation, we added a GlobalAveragePooling2D layer, which averages each feature map over all of its spatial positions, yielding one value per channel. Next, we added two dense layers of 1024 and 512 units, respectively, to allow the model to learn more complex functions and thus improve classification. Finally, we added a dense output layer with a Softmax activation function to produce class probabilities. Figure 7 gives an overview of the input training image segments.
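To make the shapes of the added layers concrete, the sketch below runs a forward pass of the 4-layer head in plain NumPy (in Keras, these layers correspond to `GlobalAveragePooling2D` followed by three `Dense` layers). The ReLU activations of the two hidden dense layers and the random weights are assumptions for illustration; the text only specifies the layer sizes and the final Softmax. For a (480, 640, 3) input, the convolutional base of VGG16 or VGG19 downsamples by a factor of 32 and outputs a 15 × 20 × 512 feature map.

```python
import numpy as np

def global_average_pooling_2d(feature_maps):
    """(H, W, C) -> (C,): average each channel over all spatial positions."""
    return feature_maps.mean(axis=(0, 1))

def relu(x):
    return np.maximum(x, 0.0)

def softmax(z):
    z = z - z.max()                               # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def init_head(n_channels, n_classes, seed=0):
    """Random (He-style) weights for Dense(1024) -> Dense(512) -> Dense(n_classes)."""
    rng = np.random.default_rng(seed)
    sizes = [n_channels, 1024, 512, n_classes]
    params = {}
    for i, (fan_in, fan_out) in enumerate(zip(sizes[:-1], sizes[1:]), start=1):
        params[f"W{i}"] = rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out))
        params[f"b{i}"] = np.zeros(fan_out)
    return params

def head_forward(feature_maps, params):
    """GAP -> Dense(1024) -> Dense(512) -> Dense(n_classes, softmax)."""
    h = global_average_pooling_2d(feature_maps)
    h = relu(h @ params["W1"] + params["b1"])     # assumed ReLU activation
    h = relu(h @ params["W2"] + params["b2"])     # assumed ReLU activation
    return softmax(h @ params["W3"] + params["b3"])

# Stand-in for the VGG convolutional output of one (480, 640, 3) image.
features = np.random.default_rng(1).normal(size=(15, 20, 512))
probs = head_forward(features, init_head(512, n_classes=3))
```

The same head with `n_classes=2` covers the two-class PhysioNet setting; only the last layer changes.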

Figure 7.

Overview of our CNN input training images resulting from the preprocessing steps.

4. Performance Evaluation

In this section, we first present the experimental setup and then describe the datasets used.

4.1. Experimental Setup

In our experimental setup, we preserved all the convolutional layers of each Keras pretrained model and added the 4 layers described in Section 3.2 (CNN Classification). We used the stochastic gradient descent (SGD) optimizer for weight updates with learning rate = 0.0001, the Keras default momentum (0.0), batch size = 5, and 100 epochs.
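Since the Keras default momentum for SGD is 0.0, this optimizer reduces to plain mini-batch gradient descent. The sketch below writes out the Keras-style update rule and the number of weight updates per epoch for batch size 5; the function names are hypothetical, and in practice the gradient comes from backpropagation through the network.

```python
import math
import numpy as np

def sgd_step(w, grad, velocity, learning_rate=1e-4, momentum=0.0):
    """One Keras-style SGD update: v <- momentum * v - lr * grad, then w <- w + v."""
    velocity = momentum * velocity - learning_rate * grad
    return w + velocity, velocity

def updates_per_epoch(n_train, batch_size=5):
    """Number of mini-batch weight updates in one epoch (the last batch may be smaller)."""
    return math.ceil(n_train / batch_size)

# Toy check on f(w) = 0.5 * ||w||^2, whose gradient is w itself.
w = np.array([1.0, -2.0])
v = np.zeros_like(w)
for _ in range(3):
    w, v = sgd_step(w, grad=w, velocity=v)
```

With momentum 0.0 each step simply scales this toy w by (1 - 0.0001); over 100 epochs, a fold with n training images receives 100 × ceil(n / 5) weight updates.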

CNN training was performed on the Google Colab platform using a dedicated GPU: 1x Tesla K80 with 2496 CUDA cores, compute capability 3.7, and 12 GB (11.439 GB usable) of GDDR5 VRAM. Table 1 presents details of the different Keras pretrained CNN models used in this work.

Table 1.

Keras pretrained CNN models.

4.2. Dataset

Our work is based on the publicly available PASCAL (Bentley et al. [88]) and PhysioNet [89] datasets. Table 2 summarizes the structure of the PASCAL dataset. For the Normal class, we used 231 samples obtained by merging the Normal samples of training set A and training set B, excluding the Btraining_noisynormal samples. For the Murmur class, we merged 34 samples from training set A with 95 samples obtained by merging 66 samples from training set B and 29 samples from the noisy_murmur folder. For the Extrasystole class, we relied on 65 samples obtained by merging 19 samples from training set A and 46 samples from training set B. The PhysioNet [89] dataset contains 2575 normal and 665 abnormal samples in WAV format; for both classes, most PCG samples last between 8 and 40 s.

Table 2.

Overview of the PASCAL dataset structure.
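The per-class totals of the PASCAL samples described above can be checked with a few lines of arithmetic (counts taken from the text; the source labels are descriptive only):

```python
# Per-class PASCAL sample counts as listed in the text.
pascal_sources = {
    "Normal": {"training sets A + B (without Btraining_noisynormal)": 231},
    "Murmur": {"training set A": 34, "training set B": 66, "noisy_murmur": 29},
    "Extrasystole": {"training set A": 19, "training set B": 46},
}

class_totals = {cls: sum(parts.values()) for cls, parts in pascal_sources.items()}
total = sum(class_totals.values())

print(class_totals)   # {'Normal': 231, 'Murmur': 129, 'Extrasystole': 65}
print(total)          # 425
```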

After the preprocessing step, we obtained a set of PCG segments, each corresponding to a selected heart cycle. These heart cycle segments are then transformed into PNG images used to train our CNN models. As shown in Table 3, our segment selection process keeps only the segments with close MFCC features and ignores the others. For example, 323 of the 356 Normal PCG segments are selected and 33 are ignored. Because each recording contains several heart cycles, the training set size of the Normal and Murmur classes increases: Normal goes from 231 to 323 samples and Murmur from 129 to 317 samples, whereas Extrasystole decreases slightly from 65 to 62 samples. Note that the CNN model is trained only on heart cycle segments, not on the overall PCG signal.

Table 3.

Overview of selected PCG segments according to each class.
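The selection criterion is only described qualitatively here (segments with close MFCC features are kept), so the following is a hypothetical sketch of one way to implement it, not the authors' algorithm: each segment is summarized by a fixed-length MFCC vector, its mean Pearson correlation with the other segments of the same recording is computed, and the most correlated fraction `keep_ratio` is kept. Both the summary vector and `keep_ratio` are assumptions; for reference, 323 of 356 Normal segments (about 0.91) were kept in Table 3.

```python
import numpy as np

def select_segments(segment_features, keep_ratio=0.9):
    """Hypothetical selection: keep the segments whose MFCC summary vectors are most
    correlated (on average) with the other segments of the same recording.

    segment_features: array of shape (n_segments, n_features), one row per heart cycle.
    Returns the sorted indices of the kept segments.
    """
    F = np.asarray(segment_features, dtype=float)
    n = F.shape[0]
    if n < 3:                                        # too few segments to compare: keep all
        return np.arange(n)
    corr = np.corrcoef(F)                            # (n, n) Pearson correlations between rows
    mean_corr = (corr.sum(axis=1) - 1.0) / (n - 1)   # drop the self-correlation of 1
    n_keep = max(1, int(round(keep_ratio * n)))
    keep = np.argsort(mean_corr)[::-1][:n_keep]      # most correlated segments first
    return np.sort(keep)

# Toy example: nine similar segments and one inverted (outlier) segment.
rng = np.random.default_rng(0)
base = np.sin(np.linspace(0.0, 3.0, 20))
segments = np.vstack([base + 0.05 * rng.normal(size=20) for _ in range(9)] + [-base])
kept = select_segments(segments)
```

A fixed ratio keeps the number of discarded cycles predictable; a correlation threshold would instead discard a variable number of segments per recording.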

5. Results and Discussion

In this section, we present and discuss our experimental results. The main objective of this experimental study is to analyze the effect of the segment selection process on the classification results. After performing our preprocessing steps, we evaluated 17 Keras pretrained CNN models with and without our segment selection process.

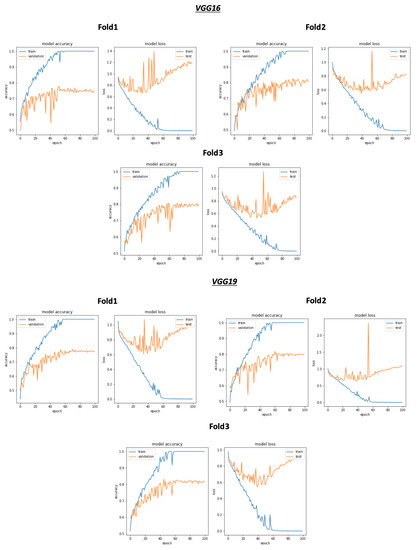

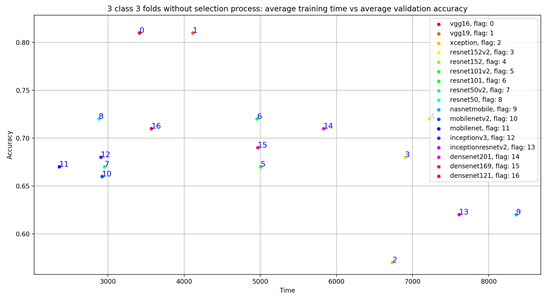

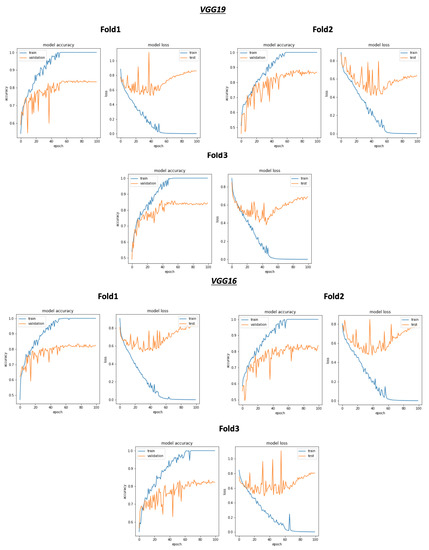

As shown in Figure 8 and Table 4, the best average validation accuracy over the 3 cross-validation folds (0.81) is obtained by VGG16 and VGG19. In the training time plot of Figure 9, VGG16 and VGG19 rank 6th and 9th, respectively. On Fold1, VGG16 and VGG19 reached their best validation accuracy at epochs 55 and 58, respectively; on Fold2, at epochs 80 and 62; and on Fold3, at epochs 60 and 48. Regarding TPR, VGG19 reached the best average TPR (0.73), as seen in Table 5.

Figure 8.

Overview of CNN VGG16-VGG19 validation accuracy curve without selection process.

Table 4.

Validation accuracy of the CNN models using 3 classes and 3 folds without segment selection.

Figure 9.

Overview of the CNN models' average training time vs. average validation accuracy without the selection process.

Table 5.

Validation true positive rate (TPR) of the CNN models using 3 classes (E: Extrasystole; M: Murmur; N: Normal) and 3 folds without the selection process.
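The fold-wise numbers reported above (best epoch per fold, average validation accuracy over 3 folds) can be produced as in the sketch below. The text does not state how the folds were drawn or whether the average is taken over each fold's best epoch, so the stratified split and best-epoch averaging here are assumptions, and the function names are hypothetical.

```python
import numpy as np

def stratified_k_folds(labels, k=3, seed=0):
    """Return k disjoint index arrays with roughly equal class proportions."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    folds = [[] for _ in range(k)]
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))   # shuffle this class
        for f, chunk in enumerate(np.array_split(idx, k)):     # deal it out to the folds
            folds[f].extend(chunk.tolist())
    return [np.sort(np.array(f, dtype=int)) for f in folds]

def summarize_folds(val_acc_curves):
    """val_acc_curves[f][e] = validation accuracy of fold f after epoch e + 1.

    Returns the 1-based best epoch of each fold and the mean of the per-fold best accuracies.
    """
    best_epochs = [int(np.argmax(c)) + 1 for c in val_acc_curves]
    best_accs = [float(np.max(c)) for c in val_acc_curves]
    return best_epochs, float(np.mean(best_accs))
```

Stratification keeps the small Extrasystole class represented in every validation fold, which matters for the per-class TPR values.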

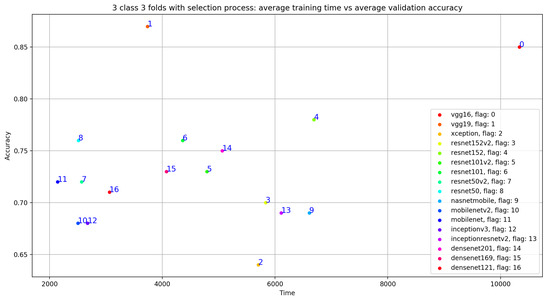

With the selection process, both the average validation accuracy and the average TPR improve clearly. As seen in Figure 10, Table 6 and Table 7, the best average validation accuracy and average TPR are obtained with VGG19, whose average validation accuracy rises from 0.81 to 0.87 and average TPR from 0.73 to 0.83. The three additional convolutional layers of VGG19 (depth = 26, see Table 1) compared with VGG16 (depth = 23) may contribute to its higher validation accuracy in this configuration. However, despite its much deeper architecture (201 layers), DenseNet201 reaches a validation accuracy of only 0.75 (Table 6), lower than that of VGG16 and VGG19, which suggests that depth alone does not determine validation accuracy.

Figure 10.

Overview of CNN VGG16-VGG19 validation accuracy curve with selection process.

Table 6.

Validation accuracy of the CNN models using 3 classes and 3 folds after segment selection.

Table 7.

Validation TPR of the CNN models using 3 classes (E: Extrasystole; M: Murmur; N: Normal) and 3 folds with the selection process.

As shown in Figure 9, without the selection process VGG16 and VGG19 reach the same validation accuracy, but VGG16 requires less training time than VGG19. In contrast, Figure 11 shows that with the selection process, VGG19 trains considerably faster than VGG16, whose training time is the longest among all the models used.

Figure 11.

Overview of the CNN models' average training time vs. average validation accuracy with the selection process.

We also compared our classification results with those of recent related works based on the PASCAL 2011 dataset. As seen in Table 8, except for the work of Zhang et al. [32], most of these works do not use all of the PASCAL dataset samples. For example, Malik et al. [99] used 31 signals; Chakir et al. [100] relied on 52 signals; Chakir et al. [101] used 14 signals from dataset A and 127 from dataset B; Pedrosa et al. [41] used 111 signals; and Sidra et al. [102] relied on 24 signals for the normal class and 31 for the abnormal class. This selection strategy can be explained by the fact that the PASCAL dataset contains many noisy signals (with background noise), which degrade classification results. Excluding the noisy signals tends to improve the classification results immediately, which likely explains the good results obtained by Malik et al. [99] (overall accuracy = 0.89, overall precision = 0.91, and overall TPR = 0.98). In contrast, we apply our methodology to all the signals of the PASCAL dataset: within each sample, we select only the useful heart cycle segments and ignore the noisy ones, without discarding the sample as a whole. Thanks to our segmentation and selection process, we obtained more accurate classification results than Zhang et al. [32] and Balili et al. [103]. Also, as seen in Table 9, we obtained encouraging results in terms of micro_accuracy = 0.91, micro_sensitivity = 0.84, micro_precision = 0.84, and micro_specificity = 0.92.

Table 8.

An overview of our model results compared to that of some related works.

Table 9.

Detailed average results of our model (VGG19) in terms of micro accuracy, micro TPR, micro precision, and micro specificity.
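Micro-averaged metrics pool the one-vs-rest counts of all classes before computing each ratio. The sketch below assumes this common definition (the exact definition used for Table 9 is not stated) and takes a C × C confusion matrix with true classes as rows and predicted classes as columns.

```python
import numpy as np

def micro_metrics(cm):
    """Micro-averaged one-vs-rest metrics from a C x C confusion matrix (rows: true, cols: predicted)."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp        # predicted as class c but belonging to another class
    fn = cm.sum(axis=1) - tp        # belonging to class c but predicted as another class
    tn = total - tp - fp - fn       # everything else, per class
    TP, FP, FN, TN = tp.sum(), fp.sum(), fn.sum(), tn.sum()
    return {
        "accuracy": (TP + TN) / (TP + TN + FP + FN),
        "sensitivity": TP / (TP + FN),
        "precision": TP / (TP + FP),
        "specificity": TN / (TN + FP),
    }

# Hypothetical 3-class confusion matrix (illustrative counts, not results from this work).
m = micro_metrics([[8, 1, 1],
                   [2, 7, 1],
                   [0, 1, 9]])
```

Because every misclassified sample counts once as a false positive (for the predicted class) and once as a false negative (for the true class), the pooled FP and FN totals are equal in single-label classification, so micro precision and micro sensitivity coincide under this definition.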

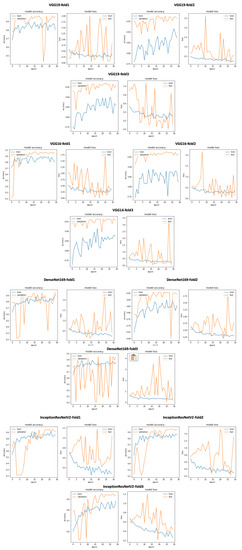

We also evaluated our approach on the PhysioNet dataset (a two-class dataset). We adapted the classification layer of all 17 CNN models to recognize 2 classes (Normal and Abnormal). Figure 12 gives an overview of the training and validation accuracy and of the model loss for VGG19, VGG16, DenseNet169 and InceptionResNetV2. As seen in Table 10, VGG19 outperforms the other 16 Keras models, with accuracy = 0.97, TPR = 0.946, precision = 0.944 and specificity = 0.946. We also compared these results with relevant state-of-the-art approaches, as summarized in Table 11.

Figure 12.

An overview of our approach using VGG19, VGG16, DenseNet169 and InceptionResNetV2 training and validation curves on PhysioNet dataset.

Table 10.

Average CNN test results over 3 folds on the PhysioNet dataset.

Table 11.

Comparative analysis of our method with state-of-the-art methods using PhysioNet 2016.

6. Conclusions and Future Work

In this work, we presented an AI-based approach for automatic phonocardiogram (PCG) signal analysis to help in the preliminary diagnosis of different heart diseases. The proposed cardiovascular disease recognition approach was evaluated on two PCG datasets: PASCAL and PhysioNet. First, we performed preprocessing using infinite impulse response (IIR) filtering followed by a robust heart cycle segmentation technique. Second, we presented our segment selection process, which automatically selects the most correlated segments. Finally, we fine-tuned pretrained CNN models on the heart cycle MFCC spectrogram images. We obtained encouraging classification results on both datasets: overall accuracy 0.87, overall precision 0.81, and overall sensitivity 0.83 on PASCAL, and accuracy 0.97, sensitivity 0.946, precision 0.944, and specificity 0.946 on PhysioNet. To our knowledge, these results are better than those of most previous works that relied on all the signals of the PhysioNet and PASCAL datasets. In future work, we plan to combine Mask R-CNN for object detection with CNN models to improve the classification results through model voting.

Author Contributions

Conceptualization, M.B. and A.B.; methodology, M.B.; software, M.B., R.A. and A.A.; validation, M.B.; formal analysis, M.B.; investigation, A.B.; resources, R.A. and A.A.; data curation, M.B.; writing—original draft preparation, M.B., R.A. and A.A.; writing—review and editing, M.B.; visualization, M.B.; supervision, M.B. and A.B.; project administration, M.B.; funding acquisition, A.B. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Deanship of Scientific Research (DSR), King Abdulaziz University, Jeddah, under grant No. (RG-23-611-38).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The study did not report any data.

Acknowledgments

The authors, therefore, gratefully acknowledge DSR technical and financial support.

Conflicts of Interest

The authors declare no conflict of interest.

References

- WHO. World Health Ranking; WHO: Geneva, Switzerland, 2020.

- Wilkins, E.; Wilson, L.; Wickramasinghe, K.; Bhatnagar, P.; Leal, J.; Luengo-Fernandez, R.; Burns, R.; Rayner, M.; Townsend, N. European Cardiovascular Disease Statistics 2017; European Heart Network: Brussel, Belgium, 2017.

- Lloyd-Jones, D.; Adams, R.; Brown, T.; Carnethon, M.; Dai, S.; De Simone, G.; Ferguson, T.; Ford, E.; Furie, K.; Gillespie, C.; et al. Heart disease and stroke statistics—2010 update: A report from the American Heart Association. Circulation 2010, 121, e46.

- Latif, S.; Khan, M.Y.; Qayyum, A.; Qadir, J.; Usman, M.; Ali, S.M.; Abbasi, Q.H.; Imran, M. Mobile technologies for managing non-communicable-diseases in developing countries. In Mobile Applications and Solutions for Social Inclusion; Paiva, S., Ed.; IGI Global: Hershey, PA, USA, 2018; pp. 261–287.

- Kwak, C.; Kwon, O. Cardiac disorder classification by heart sound signals using murmur likelihood and hidden markov model state likelihood. IET Signal Process. 2012, 6, 326–334.

- Yang, Z.J.; Liu, J.; Ge, J.P.; Chen, L.; Zhao, Z.G.; Yang, W.Y. Prevalence of Cardiovascular Disease Risk Factor in the Chinese Population: the 2007–2008 China National Diabetes and Metabolic Disorders Study. Eur. Heart J. 2011, 33, 213–220.

- Tang, H.; Zhang, J.; Sun, J.; Qiu, T.; Park, Y. Phonocardiogram signal compression using sound repetition and vector quantization. Comput. Biol. Med. 2016, 71, 24–34.

- Silverman, M.; Fleming, P.; Hollman, A.; Julian, D.; Krikler, D. British Cardiology in the 20th Century; Springer: London, UK, 2000.

- Care, A.A.H. How Much Does an EKG Cost? 2020. Available online: https://health.costhelper.com/ecg.html (accessed on 15 February 2020).

- Mondal, A.; Kumar, K.; Bhattacharya, P.; Saha, G. Boundary Estimation of Cardiac Events S1 and S2 Based on Hilbert Transform and Adaptive Thresholding Approach. In Proceedings of the 2013 Indian Conference on Medical Informatics and Telemedicine (ICMIT), Kharagpur, India, 28–30 March 2013.

- Mangione, S.; Nieman, L.Z. Cardiac Auscultatory Skills of Internal Medicine and Family Practice Trainees: A Comparison of Diagnostic Proficiency. JAMA 1997, 278, 717–722.

- Lam, M.; Lee, T.; Boey, P.; Ng, W.; Hey, H.; Ho, K.; Cheong, P. Factors influencing cardiac auscultation proficiency in physician trainees. Singap. Med. J. 2005, 46, 11–14.

- Roelandt, J. The decline of our physical examination skills: Is echocardiography to blame? Eur. Heart J. Cardiovasc. Imaging 2013, 15, 249–252.

- Wang, P.; Lim, C.; Chauhan, S.; Foo, J.Y.A.; Venkataraman, A. Phonocardiographic Signal Analysis Method Using a Modified Hidden Markov Model. Ann. Biomed. Eng. 2007, 35, 367–374.

- Zheng, Y.; Guo, X.; Ding, X. A novel hybrid energy fraction and entropy-based approach for systolic heart murmurs identification. Expert Syst. Appl. 2015, 42, 2710–2721.

- Uguz, H. A Biomedical System Based on Artificial Neural Network and Principal Component Analysis for Diagnosis of the Heart Valve Diseases. J. Med. Syst. 2010, 36, 61–72.

- Mishra, M.; Singh, A.; Dutta, M.K.; Burget, R.; Masek, J. Classification of normal and abnormal heart sounds for automatic diagnosis. In Proceedings of the 2017 40th International Conference on Telecommunications and Signal Processing (TSP), Barcelona, Spain, 5–7 July 2017; pp. 753–757.

- Meziani, F.; Debbal, S.; Atbi, A. Analysis of phonocardiogram signals using wavelet transform. J. Med. Eng. Technol. 2012, 36, 283–302.

- Chakrabarti, T.; Saha, S.; Roy, S.S.; Chel, I. Phonocardiogram signal analysis - practices, trends and challenges: A critical review. In Proceedings of the 2015 International Conference and Workshop on Computing and Communication (IEMCON), Vancouver, BC, Canada, 15–17 October 2015; pp. 1–4.

- Nabih, M.; El-Dahshan, E.S.; Yahia, A.S. A review of intelligent systems for heart sound signal analysis. J. Med. Eng. Technol. 2017, 41, 1–11.

- Patel, S.B.; Callahan, T.F.; Callahan, M.G.; Jones, J.T.; Graber, G.P.; Foster, K.S.; Glifort, K.; Wodicka, G.R. An adaptive noise reduction stethoscope for auscultation in high noise environments. J. Acoust. Soc. Am. 1998, 103, 2483–2491.

- Dewangan, N. Noise Cancellation Using Adaptive Filter for PCG Signal. Blood 2014, 3, 38–43.

- Papadaniil, C.; Hadjileontiadis, L. Efficient Heart Sound Segmentation and Extraction Using Ensemble Empirical Mode Decomposition and Kurtosis Features. IEEE J. Biomed. Health Inform. 2014, 18, 1138–1152.

- Ali, M.N.; El-Dahshan, E.S.A.; Yahia, A.H. Denoising of Heart Sound Signals Using Discrete Wavelet Transform. Circuits Syst. Signal Process. 2017, 36, 4482–4497.

- Kang, S.; Doroshow, R.; McConnaughey, J.; Khandoker, A.; Shekhar, R. Heart Sound Segmentation toward Automated Heart Murmur Classification in Pediatric Patents. In Proceedings of the 2015 8th International Conference on Signal Processing, Image Processing and Pattern Recognition (SIP), Jeju, Korea, 25–28 November 2015; pp. 9–12.

- Ahmad, M.; Khan, A.; Khattak, J.; Khattak, S. A Signal Processing Technique for Heart Murmur Extraction and Classification Using Fuzzy Logic Controller. Res. J. Appl. Sci. Eng. Technol. 2014, 8, 1–8.

- Naseri, H.; Homaeinezhad, M.R. Detection and Boundary Identification of Phonocardiogram Sounds Using an Expert Frequency-Energy Based Metric. Ann. Biomed. Eng. 2012, 41, 279–292.

- Salman, A.; Ahmadi, N.; Mengko, R.; Langi, A.Z.R.; Mengko, T. Empirical Mode Decomposition (EMD) Based Denoising Method for Heart Sound Signal and Its Performance Analysis. Int. J. Electr. Comput. Eng. (IJECE) 2016, 6, 2197.

- Zheng, Y.; Guo, X.; Jiang, H.; Zhou, B. An innovative multi-level singular value decomposition and compressed sensing based framework for noise removal from heart sounds. Biomed. Signal Process. Control 2017, 38, 34–43.

- Pham, D.H.; Meignen, S.; Dia, N.; Fontecave-Jallon, J.; Rivet, B. Phonocardiogram Signal Denoising Based on Non-negative Matrix Factorization and Adaptive Contour Representation Computation. IEEE Signal Process. Lett. 2018.

- Choi, S.; Jiang, Z. Comparison of Envelope Extraction Algorithms for Cardiac Sound Signal Segmentation. Expert Syst. Appl. 2008, 34, 1056–1069.

- Zhang, W.; Han, J.; Deng, S. Heart sound classification based on scaled spectrogram and partial least squares regression. Biomed. Signal Process. Control 2017, 32, 20–28.

- Varghees, N.; Ramachandran, K.I. Heart murmur detection and classification using wavelet transform and Hilbert phase envelope. In Proceedings of the 2015 Twenty First National Conference on Communications (NCC), Mumbai, India, 27 February–1 March 2015.

- Hamidah, A.; Saputra, R.; Mengko, T.; Mengko, R.; Anggoro, B. Effective heart sounds detection method based on signal’s characteristics. In Proceedings of the 2016 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), Phuket, Thailand, 24–27 October 2016; pp. 1–4.

- Moukadem, A.; Dieterlen, A.; Hueber, N.; Brandt, C. A robust heart sounds segmentation module based on S-transform. Biomed. Signal Process. Control 2013, 8, 273–281.

- Gupta, C.N.; Palaniappan, R.; Swaminathan, S.; Krishnan, S.M. Neural Network Classification of Homomorphic Segmented Heart Sounds. Appl. Soft Comput. 2007, 7, 286–297.

- Jimenez, J.A.; Becerra, M.A.; Delgado-Trejos, E. Heart murmur detection using Ensemble Empirical Mode Decomposition and derivations of the Mel-Frequency Cepstral Coefficients on 4-area phonocardiographic signals. In Proceedings of the Computing in Cardiology 2014, Cambridge, MA, USA, 7–10 September 2014; pp. 493–496.

- Dominguez-Morales, J.P.; Jimenez-Fernandez, A.F.; Dominguez-Morales, M.J.; Jimenez-Moreno, G. Deep Neural Networks for the Recognition and Classification of Heart Murmurs Using Neuromorphic Auditory Sensors. IEEE Trans. Biomed. Circuits Syst. 2018, 12, 24–34.

- Sun, S.; Wang, H.; Jiang, Z.; Fang, Y.; Ting, T. Segmentation-based heart sound feature extraction combined with classifier models for a VSD diagnosis system. Expert Syst. Appl. Int. J. 2014, 41, 1769–1780.

- He, J.; Jiang, Y.; Du, M. Analysis and classification of heart sounds with mechanical prosthetic heart valves based on Hilbert-Huang transform. Int. J. Cardiol. 2011, 151, 126–127.

- Pedrosa, J.; Castro, A.; Vinhoza, T.T. Automatic heart sound segmentation and murmur detection in pediatric phonocardiograms. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; pp. 2294–2297.

- Kao, W.C.; Wei, C.C. Automatic Phonocardiograph Signal Analysis for Detecting Heart Valve Disorders. Expert Syst. Appl. 2011, 38, 6458–6468.

- Schmidt, S.; Egon, T.; Holst-Hansen, C.; Graff, C.; Struijk, J. Segmentation of Heart Sound Recordings from an Electronic Stethoscope by a Duration Dependent Hidden Markov Model. In Proceedings of the 2008 Computers in Cardiology, Bologna, Italy, 14–17 September 2008; Volume 35, pp. 345–348.

- Gamero, L.G.; Watrous, R. Detection of the First and Second Heart Sound Using Probabilistic Models. In Proceedings of the 25th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (IEEE Cat. No.03CH37439), Cancun, Mexico, 17–21 September 2003; Volume 25, pp. 2877–2880.

- Springer, D.; Tarassenko, L.; Clifford, G. Logistic Regression-HSMM-based Heart Sound Segmentation. IEEE Trans. Biomed. Eng. 2015, 63.

- Eslamizadeh, G.; Barati, R. Heart murmur detection based on Wavelet Transformation and a synergy between Artificial Neural Network and modified Neighbor Annealing methods. Artif. Intell. Med. 2017, 78.

- Kang, S.; Doroshow, R.; McConnaughey, J.; Shekhar, R. Automated Identification of Innocent Still’s Murmur in Children. IEEE Trans. Biomed. Eng. 2017, 64, 1326–1334.

- Deng, S.W.; Han, J. Towards heart sound classification without segmentation via autocorrelation feature and diffusion maps. Future Gener. Comput. Syst. 2016, 60.

- Zhang, W.; Han, J.; Deng, S.W. Heart sound classification based on scaled spectrogram and tensor decomposition. Expert Syst. Appl. 2017, 84.

- Redlarski, G.; Gradolewski, D.; Palkowski, A. A System for Heart Sounds Classification. PLoS ONE 2014, 9, e112673.

- Güraksin, G.E.; Uguz, H. Classification of heart sounds based on the least squares support vector machine. Int. J. Innov. Comput. Inf. Control IJICIC 2011, 7, 7131–7144.

- Patidar, S.; Pachori, R. Classification of cardiac sound signals using constrained tunable-Q wavelet transform. Expert Syst. Appl. 2014, 41, 7161–7170.

- Oliveira, J.; Oliveira, C.; Cardoso, B.; Sultan, M.S.; Coimbra, M.T. A multi-spot exploration of the topological structures of the reconstructed phase-space for the detection of cardiac murmurs. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015.

- Hamidi, M.; Ghassemian, H.; Imani, M. Classification of Heart Sound Signal Using Curve Fitting and Fractal Dimension. Biomed. Signal Process. Control 2018, 39, 351–359.

- Potes, C.; Parvaneh, S.; Rahman, A.; Conroy, B. Ensemble of Feature-based and Deep learning-based Classifiers for Detection of Abnormal Heart Sounds. In Proceedings of the 2016 Computing in Cardiology Conference (CinC), Vancouver, BC, Canada, 11–14 September 2016.

- Bozkurt, B.; Germanakis, I.; Stylianou, Y. A study of time-frequency features for CNN-based automatic heart sound classification for pathology detection. Comput. Biol. Med. 2018, 100.

- Messner, E.; Zöhrer, M.; Pernkopf, F. Heart Sound Segmentation-An Event Detection Approach Using Deep Recurrent Neural Networks. IEEE Trans. Biomed. Eng. 2018, 65, 1964–1974.

- Yaseen; Son, G.Y.; Kwon, S. Classification of Heart Sound Signal Using Multiple Features. Appl. Sci. 2018, 8, 2344.

- Chen, Y.; Wang, S.; Shen, C.H.; Choy, F. Matrix decomposition based feature extraction for murmur classification. Med. Eng. Phys. 2011, 34, 756–761.

- Safara, F.; Doraisamy, S.; Azman, A.; Jantan, A.; Ranga, A. Multi-level basis selection of wavelet packet decomposition tree for heart sound classification. Comput. Biol. Med. 2013, 43, 1407–1414.

- Guillermo, J.; Ricalde, L.J.; Sanchez, E.; Alanis, A. Detection of Heart Murmurs Based on Radial wavelet Neural Network with Kalman Learning. Neurocomputing 2015, 164.

- Safara, F.; Doraisamy, S.; Azman, A.; Jantan, A.; Ranga, A. Wavelet Packet Entropy for Heart Murmurs Classification. Adv. Bioinform. 2012, 2012, 327269.

- Thiyagaraja, S.; Dantu, R.; Shrestha, P.; Chitnis, A.; Thompson, M.; Anumandla, P.T.; Sarma, T.; Dantu, S. A novel heart-mobile interface for detection and classification of heart sounds. Biomed. Signal Process. Control 2018, 45, 313–324.

- Choi, S.; Jung, G.; Park, H.K. A novel cardiac spectral segmentation based on a multi-Gaussian fitting method for regurgitation murmur identification. Signal Process. 2014, 104, 339–345.

- Varghees, V.N.; Ramachandran, K.I. Effective Heart Sound Segmentation and Murmur Classification Using Empirical Wavelet Transform and Instantaneous Phase for Electronic Stethoscope. IEEE Sens. J. 2017.

- Choi, S.; Shin, Y.; Park, H.K. Selection of wavelet packet measures for insufficiency murmur identification. Expert Syst. Appl. 2011, 38, 4264–4271.

- Xiefeng, C.; Ma, Y.; Liu, C.; Zhang, X.; Guo, Y. Research on heart sound identification technology. Sci. China Inf. Sci. 2012, 55, 281–292.

- Abo-Zahhad, M.; Ahmed, S.; Seha, S.N. Biometrics from heart sounds: Evaluation of a new approach based on wavelet packet cepstral features using HSCT-11 database. Comput. Electr. Eng. 2016, 53.

- Chandrakar, B.; Yadav, O.; Chandra, V. A survey of noise removal techniques for ecg signals. Int. J. Adv. Res. Comput. Commun. Eng. 2013, 2, 1354–1357.

- Liu, Q.; Wu, X.; Ma, X. An automatic segmentation method for heart sounds. BioMed Eng. Online 2018, 17.

- Tang, H.; Li, T.; Qiu, T. Segmentation of heart sounds based on dynamic clustering. Biomed. Signal Process. Control 2012, 7.

- Dave, N. Feature extraction methods LPC, PLP and MFCC in speech recognition. Int. J. Adv. Res. Eng. Technol. 2013, 1, 1–4.

- Han, W.; Chan, C.F.; Choy, C.S.; Pun, K.P. An efficient MFCC extraction method in speech recognition. In Proceedings of the 2006 IEEE International Symposium on Circuits and Systems, Kos, Greece, 21–24 May 2006.

- Al Marzuqi, H.M.O.; Hussain, S.M.; Frank, A. Device Activation based on Voice Recognition using Mel Frequency Cepstral Coefficients (MFCC’s) Algorithm. Int. Res. J. Eng. Technol. 2019, 6, 4297–4301.

- McLachlan, G.; Peel, D. Finite Mixture Models; John Wiley & Sons: Hoboken, NJ, USA, 2004.

- McLachlan, G.; Krishnan, T. The EM Algorithm and Extensions; John Wiley & Sons: Hoboken, NJ, USA, 2007; Volume 382. [Google Scholar]

- Bishop, C.M. Pattern Recognition and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2006. [Google Scholar]

- Hastie, T.; Tibshirani, R.; Friedman, J.; Franklin, J. The elements of statistical learning: Data mining, inference and prediction. Math. Intell. 2005, 27, 83–85. [Google Scholar]

- Gandarias, J.M.; Garcia-Cerezo, A.J.; Gomez-de Gabriel, J.M. CNN-based methods for object recognition with high-resolution tactile sensors. IEEE Sens. J. 2019, 19, 6872–6882. [Google Scholar] [CrossRef]

- Zhao, J.; Mao, X.; Chen, L. Speech emotion recognition using deep 1D & 2D CNN LSTM networks. Biomed. Signal Process. Control 2019, 47, 312–323. [Google Scholar]

- Cheng, W.; Sun, Y.; Li, G.; Jiang, G.; Liu, H. Jointly network: A network based on CNN and RBM for gesture recognition. Neural Comput. Appl. 2019, 31, 309–323. [Google Scholar] [CrossRef] [Green Version]

- Saitoh, T.; Zhou, Z.; Zhao, G.; Pietikäinen, M. Concatenated frame image based cnn for visual speech recognition. In Asian Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 277–289. [Google Scholar]

- Alexandre, L.A. 3D object recognition using convolutional neural networks with transfer learning between input channels. In Intelligent Autonomous Systems 13; Springer: Berlin/Heidelberg, Germany, 2016; pp. 889–898. [Google Scholar]

- Gao, Y.; Mosalam, K.M. Deep transfer learning for image-based structural damage recognition. Comput.-Aided Civ. Infrastruct. Eng. 2018, 33, 748–768. [Google Scholar] [CrossRef]

- Pandey, G.; Baranwal, A.; Semenov, A. Identifying Images with Ladders Using Deep CNN Transfer Learning. In Intelligent Decision Technologies 2019; Springer: Berlin/Heidelberg, Germany, 2020; pp. 143–153. [Google Scholar]

- Yang, Z.; Yu, W.; Liang, P.; Guo, H.; Xia, L.; Zhang, F.; Ma, Y.; Ma, J. Deep transfer learning for military object recognition under small training set condition. Neural Comput. Appl. 2019, 31, 6469–6478. [Google Scholar] [CrossRef]

- Tan, C.; Sun, F.; Kong, T.; Zhang, W.; Yang, C.; Liu, C. A survey on deep transfer learning. In International Conference on Artificial Neural Networks; Springer: Berlin/Heidelberg, Germany, 2018; pp. 270–279. [Google Scholar]

- Bentley, P.; Nordehn, G.; Coimbra, M.; Mannor, S. The PASCAL Classifying Heart Sounds Challenge 2011 (CHSC2011) Results. 2011. Available online: http://www.peterjbentley.com/heartchallenge/index.html (accessed on 15 January 2020).

- Clifford, G.D.; Liu, C.; Moody, B.; Springer, D.; Silva, I.; Li, Q.; Mark, R.G. Classification of normal/abnormal heart sound recordings: The PhysioNet/Computing in Cardiology Challenge 2016. In Proceedings of the 2016 Computing in Cardiology Conference (CinC), Vancouver, BC, Canada, 11–14 September 2016; pp. 609–612. [Google Scholar]

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8697–8710.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, Inception-ResNet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Malik, S.I.; Akram, M.U.; Siddiqi, I. Localization and classification of heartbeats using robust adaptive algorithm. Biomed. Signal Process. Control 2019, 49, 57–77.

- Chakir, F.; Jilbab, A.; Nacir, C.; Hammouch, A. Phonocardiogram signals processing approach for PASCAL classifying heart sounds challenge. Signal Image Video Process. 2018, 12, 1149–1155.

- Chakir, F.; Jilbab, A.; Nacir, C.; Hammouch, A. Phonocardiogram signals classification into normal heart sounds and heart murmur sounds. In Proceedings of the 11th International Conference on Intelligent Systems: Theories and Applications (SITA), Mohammedia, Morocco, 19–20 October 2016; pp. 1–4.

- Sidra, G.; Ammara, N.; Taimur, H.; Bilal, H.; Ramsha, A. Fully Automated Identification of Heart Sounds for the Analysis of Cardiovascular Pathology. In Applications of Intelligent Technologies in Healthcare; Springer: Berlin/Heidelberg, Germany, 2019; pp. 117–129.

- Balili, C.C.; Sobrepena, M.C.C.; Naval, P.C. Classification of heart sounds using discrete and continuous wavelet transform and random forests. In Proceedings of the 2015 3rd IAPR Asian Conference on Pattern Recognition (ACPR), Kuala Lumpur, Malaysia, 3–6 November 2015; pp. 655–659.

- Nogueira, D.M.; Ferreira, C.A.; Jorge, A.M. Classifying heart sounds using images of MFCC and temporal features. In EPIA Conference on Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2017; pp. 186–203.

- Ortiz, J.J.G.; Phoo, C.P.; Wiens, J. Heart sound classification based on temporal alignment techniques. In Proceedings of the 2016 Computing in Cardiology Conference (CinC), Vancouver, BC, Canada, 11–14 September 2016; pp. 589–592.

- Tang, H.; Chen, H.; Li, T.; Zhong, M. Classification of normal/abnormal heart sound recordings based on multi-domain features and back propagation neural network. In Proceedings of the 2016 Computing in Cardiology Conference (CinC), Vancouver, BC, Canada, 11–14 September 2016; pp. 593–596.

- Rubin, J.; Abreu, R.; Ganguli, A.; Nelaturi, S.; Matei, I.; Sricharan, K. Recognizing abnormal heart sounds using deep learning. arXiv 2017, arXiv:1707.04642.

- Kay, E.; Agarwal, A. DropConnected neural networks trained on time-frequency and inter-beat features for classifying heart sounds. Physiol. Meas. 2017, 38, 1645.

- Abdollahpur, M.; Ghiasi, S.; Mollakazemi, M.J.; Ghaffari, A. Cycle selection and neuro-voting system for classifying heart sound recordings. In Proceedings of the 2016 Computing in Cardiology Conference (CinC), Vancouver, BC, Canada, 11–14 September 2016; pp. 1–4.

- Singh, S.A.; Majumder, S. Short unsegmented PCG classification based on ensemble classifier. Turk. J. Electr. Eng. Comput. Sci. 2020, 28, 875–889.

- Han, W.; Yang, Z.; Lu, J.; Xie, S. Supervised threshold-based heart sound classification algorithm. Physiol. Meas. 2018, 39, 115011.

- Whitaker, B.M.; Suresha, P.B.; Liu, C.; Clifford, G.D.; Anderson, D.V. Combining sparse coding and time-domain features for heart sound classification. Physiol. Meas. 2017, 38, 1701.

- Tang, H.; Dai, Z.; Jiang, Y.; Li, T.; Liu, C. PCG classification using multidomain features and SVM classifier. BioMed Res. Int. 2018, 2018, 4205027.

- Plesinger, F.; Viscor, I.; Halamek, J.; Jurco, J.; Jurak, P. Heart sounds analysis using probability assessment. Physiol. Meas. 2017, 38, 1685.

- Abdollahpur, M.; Ghaffari, A.; Ghiasi, S.; Mollakazemi, M.J. Detection of pathological heart sounds. Physiol. Meas. 2017, 38, 1616.

- Homsi, M.N.; Warrick, P. Ensemble methods with outliers for phonocardiogram classification. Physiol. Meas. 2017, 38, 1631.

- Singh, S.A.; Majumder, S. Classification of unsegmented heart sound recording using KNN classifier. J. Mech. Med. Biol. 2019, 19, 1950025.

- Langley, P.; Murray, A. Heart sound classification from unsegmented phonocardiograms. Physiol. Meas. 2017, 38, 1658.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).