Abstract

Event-based cameras, inspired by biological vision, asynchronously capture per-pixel brightness changes, producing event streams with higher temporal resolution, higher dynamic range, and lower latency than conventional cameras. These advantages make event cameras promising for human pose estimation in challenging scenarios such as motion blur and low light. However, event-based human pose estimation is still at an early stage of research. A major challenge is the loss of information from stationary body parts, which trigger no events at those instants. Because this issue is inherent to event data, it cannot be resolved from a short-range event stream alone, so incorporating motion cues from a longer temporal range is an intuitive solution. This paper proposes a joint global and local temporal modeling network (JGLTM) that extracts essential cues from a longer temporal range to complement and refine local features for more accurate prediction of the current pose. Unlike existing methods that rely only on short-range temporal correspondence, this approach expands the temporal perception field to provide crucial context for the information lost from stationary body parts at the current time instance. Extensive experiments on public datasets and on the dataset proposed in this paper demonstrate the effectiveness and superiority of the proposed approach for event-based human pose estimation across diverse scenarios.

1. Introduction

In computer vision, human pose estimation aims to detect the positions of human keypoints in images or videos. As a challenging research area, human pose estimation provides geometric and motion information about the human body for many downstream tasks, such as human–computer interaction, action recognition, and virtual reality, so improving its accuracy and efficiency is essential. Although existing works have achieved significant performance [,,,], human pose estimation with conventional RGB cameras still struggles in challenging scenarios such as motion blur and poor lighting.

Unlike a conventional camera, which outputs dense frames at a fixed frame rate, an event camera asynchronously generates an event at a pixel only when the brightness change at that pixel exceeds a set threshold. An event consists of its location (x, y), timestamp (t), and polarity (p). Owing to this shift in imaging paradigm, event cameras have competitive advantages over conventional cameras, including high temporal resolution, high dynamic range, low latency, and low power consumption. These properties make event cameras well suited to the challenging scenarios that are problematic for conventional cameras [,,,,]. However, event cameras also introduce a new issue: few events are generated when a body part remains stationary over a period of time, so information about that body part is missed.

To address this issue, video-based methods [,,,] offer a potential solution by using adjacent frames to provide complementary information for the current frame. However, for event data, body parts missing at a specific time instance may not always be recoverable from nearby frames within a short temporal range; they may only be identifiable in frames captured over a longer temporal span, as illustrated in Figure 1. Therefore, this paper proposes JGLTM, which leverages both long-range temporal information distant from the current time instance and short-range adjacent information to provide motion cues for the human pose while preserving the motion consistency of the event stream. The framework consists of three components. (1) For long-range temporal modeling, JGLTM builds a Global Memory Network (GMN) that iteratively abstracts long-range memory with a cross-attention block, in which learnable latent vectors serve as the queries and are continuously updated in an online, recurrent manner. (2) JGLTM models the local spatial and temporal consistency of neighboring frames over a short range with a local spatial–temporal transformer (LST), which allows the visual tokens of the current frame to interact not only with each other but also with those of adjacent frames. (3) Finally, pose prediction is performed by a Mid-frame Prediction Refining (MPR) module, in which the global memory serves as context to refine the features of the middle frame from the LST module through a cross-attention block.

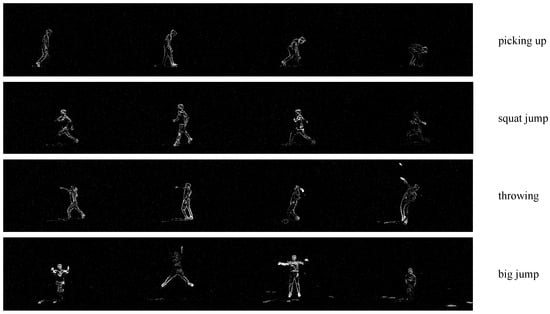

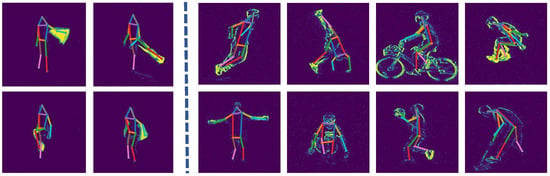

Figure 1.

Event frames mapped from an asynchronous event stream. Because some body parts remain stationary and generate no events, information about those parts is missing.

With this joint global and local temporal modeling, JGLTM takes advantage of both short- and long-range information to enhance the features of the current frame. Specifically, the features are refined with a cross-attention mechanism that injects the global memory into the current frame, supplying contextual motion cues and alleviating the effect of missing events. The contributions of this paper can be summarized as follows:

- This paper proposes a novel framework, JGLTM, for event-based human pose estimation. It explicitly captures both long-range contextual motion cues through the GMN module and short-range spatial–temporal consistency via the LST module, enhancing robustness to event sparsity. Furthermore, the MPR module is introduced, where global context from the GMN is injected into the middle frame’s features in LST using a cross-attention mechanism to improve prediction quality.

- This paper extends a previous dataset, CDEHP, to a larger benchmark, CDEHP-E (CDEHP-E dataset is available at: https://cdehp-dataset.github.io/ accessed on 1 March 2025), which includes both indoor and outdoor scenarios and provides a more comprehensive evaluation platform.

- Extensive experiments on CDEHP, CDEHP-E, MMHPSD, and DHP19 demonstrate that the method proposed in this paper outperforms existing CNN-based and attention-based baselines, showing strong generalization across diverse conditions.

2. Related Work

2.1. Video-Based Human Pose Estimation

In image-based human pose estimation, torso occlusion remains a significant challenge. Similarly, because event cameras generate events only when brightness changes exceed a certain threshold, the accumulated grayscale images suffer from information loss not only in occluded torso regions but also in stationary body parts. Consequently, the primary challenge is how to retrieve the missing information from the neighboring frames of the target frame and use it effectively to enhance the target frame.

Early works [,,] use additional optical flow information to supplement the data and constrain the predictions for the current frame. Specifically, Flowing ConvNets [] aligns the heatmaps of neighboring frames with the current frame using optical flow estimation, producing warped heatmaps, and then fuses the target frame's heatmap with the warped heatmaps through a 1 × 1 convolution kernel.

Similarly, Thin-Slicing Networks [] extracts image features from neighboring frames using optical flow estimation to generate a structured model. This model provides prior knowledge, regulates skeletal structures, and ensures spatiotemporal consistency, thereby reducing the likelihood of significant joint displacement. RPM [] enhances geometric consistency between frames by using LSTM memory units to store and update information from preceding and succeeding frames, building on the CPM framework []. The authors of [] propose a 3D extension of Mask R-CNN [] to incorporate temporal information from neighboring frames into the current frame.

In general, adjacent frames should maintain consistency in both temporal and spatial dimensions. Addressing these aspects, ClipTrackingNetwork [] transforms the problem of predicting the optimal position of each joint in every frame into a shortest path problem, solving it using Dijkstra’s algorithm. They generate clusters at hypothesized joint positions using the mean shift algorithm and enforce temporal consistency of clusters through a self-adaptive similarity function. DCPose [] extracts temporal and spatial information through two parallel modules and inputs these data into a pose correction network, refining pose estimation with a narrowed search range and pose residual information. DiffPose [] redefines the pose estimation task as a conditional heatmap generation task, using the visual features of the target and neighboring frames as conditions while applying a denoising diffusion probabilistic model for noise reduction. SLT-Pose [] leverages self-attention to extract and refine features from both local sequence frames and the target frame. They input the refined features into a cross-attention module to establish associations between the target and neighboring frames, thereby supplementing the target frame with additional information. Meanwhile, FAMI-Pose [] extracts additional relevant and complementary information from neighboring frames using feature alignment methods.

2.2. Event-Based Human Pose Estimation

Unlike dense data such as images, event information is sparsely distributed in space, making it challenging to use directly as input for neural networks. Consequently, various representations of event information have been proposed in prior works, raising the critical issue of selecting appropriate network structures for different representations. For event stream representation, the temporal information of events is preserved, making RNNs or Transformers suitable for capturing dynamic temporal features. For event point cloud representation, events are treated as spatiotemporal points, which can be effectively modeled using PointNet or GNNs to extract both local and global features. In this work, we adopt the event frame representation, where discrete events are accumulated into fixed-interval images, making CNNs a suitable choice for processing.

DHP19 [] maps event information onto a 2D plane to generate event frames and employs a CNN to produce heatmaps. By setting a confidence threshold, they determine whether to update the joint positions in the current frame, thus mitigating information loss caused by stationary body parts. For each boundary pixel of the event frame, EventCap [] searches for the closest event and solves a nonlinear least squares optimization problem to obtain boundary information, refining the pose accordingly. The authors of [] input the sequence frames generated from the event stream into a CNN for optical flow estimation. They iteratively utilize the optical flow estimation and sequence frame information to generate pose estimation results. EventHPE [] proposes a rasterized event point cloud that retains the 3D characteristics of event information, enhancing processing speed without compromising accuracy compared to directly projecting event information onto a 2D plane. MoveEnet [] introduces an online, high-frequency, and lightweight network structure that uses the event stream directly as input. The event stream is converted into EROS representation and processed by a pre-trained network. Lastly, tDenseRNN [] proposes a recurrent architecture featuring a novel temporal dense connection mechanism that integrates connections between the current frame and all preceding frames into a Long Short-Term Memory (LSTM) network, enabling comprehensive temporal modeling beyond simple sequential connections.

2.3. Vision Transformer for HPE

The application of transformer models, originally designed for translation tasks [], to the image domain in ViT [] has opened up a wider range of options beyond CNNs in many fields of computer vision, including human pose estimation. In TransPose [], image features extracted by a CNN are fed into the transformer encoder, which reveals image-specific and joint-specific dependencies through the derived dependency areas.

In TokenPose [], visual tokens are extracted directly from images, and additional keypoint tokens are introduced to learn features and predict joint positions; the learned keypoint tokens show high similarity between keypoints and their neighboring or symmetric counterparts. HRFormer [] integrates the multi-resolution parallel design of HRNet [] and applies self-attention within small non-overlapping image windows to learn high-resolution representations. In addition, a 3 × 3 depthwise convolution between the two point-wise MLPs of the feed-forward network facilitates information exchange between windows.

As the first transformer-based human pose estimation framework, TFPose [] inherently reveals the structured dependencies among keypoints without the need for heuristic design. Building on TokenPose [], PPT [] introduces Human Token Identification (HTI) to locate a rough human body region, performing self-attention only within the selected tokens. In POT [], a pose-oriented self-attention mechanism is proposed to explicitly model the topological interactions among body joints, while distance-related positional embeddings encode the distances from each joint to the root joint, effectively differentiating joint groups based on varying regression difficulties. Leveraging the sparse nature of event information, EventTransformer [] presents a patch-based event data representation to reduce computational resource requirements. This approach introduces latent memory vectors to learn features and generate heatmaps, updating the latent memory vectors with each new frame when multiple frames are processed. In [], a transformer is utilized to provide global spatial information, dynamically adjusting the spiking threshold of the SNN module.

3. Method

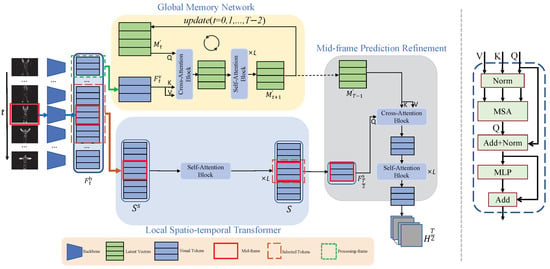

In this section, we introduce the JGLTM method, which consists of three major components, as shown in Figure 2 (left). The following subsections describe feature extraction, the local spatio-temporal transformer, the global memory network, and finally mid-frame prediction refinement, which uses the abstracted long-range memory to refine the local features.

Figure 2.

(left) An overview of the network proposed in this paper. Event frames are fed into the backbone to obtain visual tokens, which are then processed by the GMN module (highlighted with an orange background) to extract information from the frame currently being processed. After all input frames have been processed, the GMN acquires global pose information M. Concurrently, the LST feeds the visual tokens of the middle frame and its neighboring frames into self-attention blocks to exchange spatio-temporal information. Next, the MPR combines the visual tokens of the middle frame with M and feeds them into a Cross-Attention Module to complete the information of the middle frame and generate the final heatmap. (right) Architecture shared by the cross- and self-attention blocks.

3.1. Preliminary Details

In this paper, a self-attention block is used to further extract features from the input, and a cross-attention block is used to acquire global memory by continuously extracting global information from the input features. The two blocks share the same structure and differ only in their inputs, as shown in Figure 2 (right). This structure mainly consists of multi-head self-attention (MHSA), MLP, layer normalization (LN), and skip-connection operations. MHSA and the MLP are explained in detail below.

3.1.1. Multi-Head Self-Attention

The self-attention mechanism is expressed as in Equation (1):

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V, \tag{1}$$

where $Q$ is the query matrix, $K$ is the key matrix, $V$ is the value matrix, and $d_k$ is the dimension of $Q$ and $K$.

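The multi-head self-attention (MHSA) module combines several self-attention heads, as given by Equation (2):

$$\mathrm{MHSA}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\,W^{O}, \qquad \mathrm{head}_i = \mathrm{Attention}(QW_i^{Q}, KW_i^{K}, VW_i^{V}), \tag{2}$$

where $W_i^{Q}$, $W_i^{K}$, and $W_i^{V}$ are the projection parameter matrices of the $i$-th head and $W^{O}$ is the output projection. Projection refers to using a linear transformation to map a vector from one space to another. In neural networks, it is typically implemented through matrix multiplication to adjust the dimensionality and feature representation of the input data.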
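To make Equations (1) and (2) concrete, the following NumPy sketch implements scaled dot-product attention and multi-head attention. It is an illustration under simplifying assumptions (no masking, no dropout, randomly initialized weights) rather than the authors' implementation, and all function and variable names are hypothetical. Passing the same tensor as the query input and the key/value input gives self-attention; passing different tensors gives the cross-attention used between latent vectors and visual tokens.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the maximum along the axis for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(q, k, v):
    """Scaled dot-product attention, Equation (1)."""
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)      # (n_q, n_kv)
    return softmax(scores, axis=-1) @ v  # (n_q, d_v)


def multi_head_attention(x_q, x_kv, w_q, w_k, w_v, w_o):
    """Multi-head attention, Equation (2).

    x_q:  (n_q, D) inputs that produce the queries.
    x_kv: (n_kv, D) inputs that produce the keys and values
          (x_kv is x_q for self-attention, another tensor for cross-attention).
    w_q, w_k, w_v: (h, D, d_k) per-head projections W_i^Q, W_i^K, W_i^V.
    w_o: (h * d_k, D) output projection W^O.
    """
    heads = [attention(x_q @ w_q[i], x_kv @ w_k[i], x_kv @ w_v[i])
             for i in range(w_q.shape[0])]
    return np.concatenate(heads, axis=-1) @ w_o


# Usage: 8 latent vectors (queries) attend to 64 visual tokens (keys/values).
rng = np.random.default_rng(0)
D, h = 16, 4
d_k = D // h
w_q, w_k, w_v = (rng.standard_normal((h, D, d_k)) * 0.1 for _ in range(3))
w_o = rng.standard_normal((h * d_k, D)) * 0.1
latents = rng.standard_normal((8, D))
tokens = rng.standard_normal((64, D))
out = multi_head_attention(latents, tokens, w_q, w_k, w_v, w_o)
print(out.shape)  # (8, 16)
```

The output always has one row per query, which is why the number of latent vectors, not the number of visual tokens, fixes the size of the memory in a cross-attention block.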

3.1.2. MLP

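The MLP is mainly composed of two fully connected layers with a nonlinear activation layer between them, as given by Equation (3):

$$\mathrm{MLP}(x) = \sigma(xW_1 + b_1)\,W_2 + b_2, \tag{3}$$

where $W_1$, $b_1$ and $W_2$, $b_2$ are the weights and biases of the two fully connected layers, respectively, and $\sigma(\cdot)$ is the activation function.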

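In addition, a Layer Normalization (LN) layer is applied before each module, and a skip connection is employed after each module. Thus, each transformer layer can be expressed as in Equation (4):

$$z' = \mathrm{MHSA}(\mathrm{LN}(z)) + z, \qquad z_{\mathrm{out}} = \mathrm{MLP}(\mathrm{LN}(z')) + z', \tag{4}$$

where $z$ is the input token sequence of the layer and MHSA denotes multi-head self-attention. In a cross-attention block, the keys and values are computed from the second input rather than from $z$.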
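The pre-LN layer structure of Equation (4), shared by the self- and cross-attention blocks, can be sketched as follows. This is a minimal NumPy illustration assuming a single attention head, GELU as the MLP activation, and layer normalization without learnable scale and shift; these choices, and normalizing the context input of a cross-attention block, are assumptions rather than details given in the text.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    # Normalize each token over its features (learnable scale and shift omitted).
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def gelu(x):
    # tanh approximation of GELU; using GELU as sigma is an assumption.
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def mlp(x, w1, b1, w2, b2):
    # Equation (3): two fully connected layers with a nonlinearity in between.
    return gelu(x @ w1 + b1) @ w2 + b2


def single_head_attention(q, k, v):
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)
    probs = np.exp(scores)
    probs = probs / probs.sum(axis=-1, keepdims=True)
    return probs @ v


def transformer_layer(z, p, context=None):
    """Equation (4): pre-LN attention and MLP sub-layers, each with a skip connection.

    context=None gives a self-attention block; otherwise the (normalized)
    context supplies keys and values, giving a cross-attention block.
    """
    zn = layer_norm(z)
    kv = zn if context is None else layer_norm(context)
    z = z + single_head_attention(zn @ p["wq"], kv @ p["wk"], kv @ p["wv"]) @ p["wo"]
    z = z + mlp(layer_norm(z), p["w1"], p["b1"], p["w2"], p["b2"])
    return z


rng = np.random.default_rng(0)
D, hidden = 32, 64
shapes = {"wq": (D, D), "wk": (D, D), "wv": (D, D), "wo": (D, D),
          "w1": (D, hidden), "b1": (hidden,), "w2": (hidden, D), "b2": (D,)}
params = {name: rng.standard_normal(s) * 0.05 for name, s in shapes.items()}
tokens = rng.standard_normal((10, D))
latents = rng.standard_normal((4, D))
out_self = transformer_layer(tokens, params)             # self-attention block: (10, 32)
out_cross = transformer_layer(latents, params, tokens)   # cross-attention block: (4, 32)
```

Setting every weight to zero makes both sub-layers output zero, so the layer reduces to the identity through its skip connections; this is a quick sanity check of the residual structure.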

3.2. Feature Extraction

As light intensity in the scene varies, an event camera outputs events $e = (x, y, t, p)$, where $(x, y)$ is the pixel location at which the event is triggered, $t$ is the timestamp, and $p$ indicates the direction of the intensity change ($1$ for an increase, $-1$ for a decrease). In this paper, each event stream is segmented along the temporal dimension into a sequence of event packets $\{\mathcal{E}_i\}_{i=1}^{T}$, where the events in each packet occur within a fixed time interval $\Delta t$. Each event packet is then accumulated onto a 2D plane using the method in [] to generate a 2D grayscale frame $I_i$. JGLTM adopts the CNN backbone HRNet-w32, pretrained on ImageNet, to extract frame features:

$$F_i = \mathrm{HRNet}(I_i), \quad i = 1, \dots, T.$$

Here, $T$ represents the number of event frames input into the network. After feature extraction, JGLTM divides each feature map $F_i \in \mathbb{R}^{H' \times W' \times C}$ into patches of shape $(P, P, C)$ and flattens each patch into a 1D vector, obtaining visual tokens $X_i \in \mathbb{R}^{N \times P^2 C}$, where $N = H'W'/P^2$ is the number of patches. To perform cross-attention with the latent vectors $Z \in \mathbb{R}^{B \times D}$, where $B$ is the number of latent vectors, we feed the visual tokens through an MLP that aligns their dimension with that of the latent vectors. The processed visual tokens are denoted $\hat{X}_i \in \mathbb{R}^{N \times D}$.
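The feature-extraction pipeline (event packets to frames to patch tokens) can be sketched in NumPy as below. Several parts are assumptions: the accumulation simply counts events per pixel regardless of polarity and rescales each frame to [0, 1] (a stand-in for the cited accumulation method), packets are equal-length time bins, the backbone output is replaced by random numbers with the shape a stride-4, 32-channel HRNet-w32 feature map would have for a 256 × 256 input, and the projection to D = 512 is a single random linear map. Function names are hypothetical.

```python
import numpy as np


def events_to_frames(events, num_frames, height, width):
    """Split an event stream into equal time packets and accumulate each into a 2D frame.

    events: (M, 4) array with columns (x, y, t, p). Polarity is ignored here:
    each pixel counts its events, and each frame is rescaled to [0, 1].
    """
    x = events[:, 0].astype(int)
    y = events[:, 1].astype(int)
    t = events[:, 2]
    edges = np.linspace(t.min(), t.max(), num_frames + 1)
    # Packet index of every event; the final edge is treated as inclusive.
    idx = np.clip(np.searchsorted(edges, t, side="right") - 1, 0, num_frames - 1)
    frames = np.zeros((num_frames, height, width), dtype=np.float32)
    np.add.at(frames, (idx, y, x), 1.0)          # unbuffered per-pixel counting
    peak = frames.max(axis=(1, 2), keepdims=True)
    return frames / np.maximum(peak, 1.0)        # empty frames stay all zeros


def patchify(feat, patch):
    """Split an (H', W', C) feature map into N = H'W'/P^2 tokens of length P*P*C."""
    h, w, c = feat.shape
    assert h % patch == 0 and w % patch == 0
    tokens = feat.reshape(h // patch, patch, w // patch, patch, c)
    tokens = tokens.transpose(0, 2, 1, 3, 4)       # (H'/P, W'/P, P, P, C)
    return tokens.reshape(-1, patch * patch * c)   # (N, P*P*C), row-major patch order


rng = np.random.default_rng(0)
M = 5000
events = np.column_stack([
    rng.integers(0, 256, M), rng.integers(0, 256, M),
    np.sort(rng.uniform(0.0, 0.3, M)), rng.choice([-1, 1], M)])
frames = events_to_frames(events, num_frames=9, height=256, width=256)
feat = rng.standard_normal((64, 64, 32))   # placeholder for one backbone feature map
tokens = patchify(feat, patch=8)
w_proj = rng.standard_normal((tokens.shape[1], 512)) * 0.01   # hypothetical projection to D = 512
x_hat = tokens @ w_proj
print(frames.shape, tokens.shape, x_hat.shape)  # (9, 256, 256) (64, 2048) (64, 512)
```

`np.add.at` is used instead of `frames[idx, y, x] += 1` because the latter would count repeated events at the same pixel only once.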

3.3. Local Spatio-Temporal Transformer

Alongside extracting the global feature matrix M, JGLTM also obtains the visual tokens of all event frames. Since nearby frames strongly influence the prediction for the current frame, it is crucial to extract both spatio-temporal information from neighboring frames and spatial information from the current frame. To achieve this, JGLTM feeds the visual tokens of the target frame together with those of its neighboring frames into self-attention blocks, allowing spatio-temporal information to be exchanged so that local details are captured more effectively.

Subsequently, JGLTM extracts the visual tokens of the mid-frame from the output of the self-attention blocks; each of these tokens has exchanged information both with the visual tokens of nearby frames and with the other tokens of its own frame.
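A minimal sketch of the LST step is shown below. It assumes, hypothetically, that w neighbouring frames are taken on each side of the mid-frame and that each block is a residual self-attention layer without LN or MLP. All tokens in the window are flattened into one sequence, so every mid-frame token can attend both to tokens of its own frame and to those of its neighbours; only the mid-frame slice is returned.

```python
import numpy as np


def self_attention(x, wq, wk, wv):
    q, k, v = x @ wq, x @ wk, x @ wv
    s = q @ k.T / np.sqrt(q.shape[-1])
    s = s - s.max(axis=-1, keepdims=True)
    a = np.exp(s)
    a = a / a.sum(axis=-1, keepdims=True)
    return a @ v


def local_spatio_temporal(tokens, mid, w, blocks):
    """Joint self-attention over the mid-frame and its neighbours; returns mid-frame tokens.

    tokens: (T, N, D) processed visual tokens of all T frames.
    mid:    index of the middle (target) frame.
    w:      hypothetical number of neighbouring frames taken on each side of mid.
    blocks: list of (wq, wk, wv) tuples, one per simplified self-attention block.
    """
    T, N, D = tokens.shape
    lo, hi = max(0, mid - w), min(T, mid + w + 1)
    x = tokens[lo:hi].reshape(-1, D)           # all window tokens form one sequence
    for wq, wk, wv in blocks:
        x = x + self_attention(x, wq, wk, wv)  # residual self-attention (LN/MLP omitted)
    return x.reshape(hi - lo, N, D)[mid - lo]  # (N, D)


rng = np.random.default_rng(0)
T, N, D = 9, 64, 32
tokens = rng.standard_normal((T, N, D))
blocks = [tuple(rng.standard_normal((D, D)) * 0.1 for _ in range(3)) for _ in range(2)]
mid_tokens = local_spatio_temporal(tokens, mid=T // 2, w=1, blocks=blocks)
print(mid_tokens.shape)  # (64, 32)
```

With w = 0 the output depends only on the mid-frame, which mirrors the JGLTM-w/o-local setting in the ablation study.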

3.4. Global Memory Network

Similar to [], JGLTM initializes latent vectors as memory to extract and store event-frame information. By continuously processing the features of each incoming frame, the memory is gradually refined and updated, yielding global information that encompasses the key details of all input frames. The refinement and update steps are explained in detail below.

3.4.1. Memory Refinement

To refine the memory accumulated from previous frames with the current frame, JGLTM feeds the current frame's visual tokens together with the latent vectors into a Cross-Attention Module, which consists of one cross-attention block followed by L self-attention blocks. In this setup, the visual tokens serve as keys (K) and values (V), while the latent vectors act as queries (Q). A position embedding is added to each visual token to preserve its relative position in the original frame.

3.4.2. Memory Update

To supplement and enhance the memory, JGLTM adds the refined memory to the previous memory with a simple sum. The updated memory is then used to extract features from the next frame. After all input frames have been processed, the final latent vectors form the global memory M.
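The two steps above amount to a recurrence of the form $Z_t = Z_{t-1} + \mathrm{CA}(Z_{t-1}, \hat{X}_t + \mathrm{PE})$ with $M = Z_T$, where CA is cross-attention with the latents as queries. The NumPy sketch below assumes exactly that form, replaces the cross-attention block plus L self-attention blocks with a single cross-attention block, and uses hypothetical names throughout; it is only meant to show the online, recurrent data flow.

```python
import numpy as np


def cross_attention(queries, context, wq, wk, wv):
    # Latent vectors are the queries; visual tokens provide keys and values.
    q, k, v = queries @ wq, context @ wk, context @ wv
    s = q @ k.T / np.sqrt(q.shape[-1])
    s = s - s.max(axis=-1, keepdims=True)
    a = np.exp(s)
    a = a / a.sum(axis=-1, keepdims=True)
    return a @ v


def global_memory(frame_tokens, z0, pos_emb, weights):
    """Online recurrent update of the latent memory over the input frames.

    frame_tokens: (T, N, D) processed visual tokens, consumed one frame at a time.
    z0:           (B, D) learnable initial latent vectors.
    pos_emb:      (N, D) position embedding added to every frame's tokens.
    weights:      (wq, wk, wv) of a single simplified cross-attention block.
    Returns the final global memory M with shape (B, D).
    """
    z = z0
    for x in frame_tokens:
        refined = cross_attention(z, x + pos_emb, *weights)  # memory refinement
        z = z + refined                                      # memory update (sum)
    return z


rng = np.random.default_rng(0)
T, N, B, D = 9, 64, 64, 32
frame_tokens = rng.standard_normal((T, N, D))
z0 = rng.standard_normal((B, D)) * 0.02
pos_emb = rng.standard_normal((N, D)) * 0.02
weights = tuple(rng.standard_normal((D, D)) * 0.1 for _ in range(3))
M = global_memory(frame_tokens, z0, pos_emb, weights)
print(M.shape)  # (64, 32)
```

Because the memory size is fixed at B vectors regardless of T, the cost of each update does not grow with the number of frames already seen.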

3.5. Mid-Frame Prediction Refinement

To supply the current frame with global information and mitigate the effects of locally stationary body parts and torso occlusion, JGLTM injects the latent vectors representing global information, obtained from the GMN module, into the refined middle-frame tokens produced by the LST module. Specifically, JGLTM feeds these visual tokens together with the final GMN output M into a Cross-Attention Module with the same structure as the one in the GMN. Here, the visual tokens serve as the queries (Q) and M serves as the keys (K) and values (V), which reverses the role assignment used when the GMN extracts information from the event frames.

Finally, to better predict the pose, JGLTM concatenates the coarse features extracted by the backbone with the refined features to obtain more comprehensive feature information. The result is passed through a convolution kernel that maps the channel dimension to the number of keypoints $J$, yielding the final heatmap $\hat{H} \in \mathbb{R}^{J \times h \times w}$, where $h$ and $w$ denote the heatmap size.
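The MPR step can be sketched as follows. The sketch assumes a single residual cross-attention block with the mid-frame tokens as queries and M as keys and values, nearest-neighbour upsampling to turn the 8 × 8 token grid back into a 64 × 64 map before concatenation with the backbone features, and a 1 × 1 convolution as the final kernel. The text does not specify these details, and J = 13 is only a placeholder joint count.

```python
import numpy as np


def cross_attention(queries, context, wq, wk, wv):
    q, k, v = queries @ wq, context @ wk, context @ wv
    s = q @ k.T / np.sqrt(q.shape[-1])
    s = s - s.max(axis=-1, keepdims=True)
    a = np.exp(s)
    a = a / a.sum(axis=-1, keepdims=True)
    return a @ v


def tokens_to_map(tokens, grid, patch):
    # (N, D) -> (grid*patch, grid*patch, D) by nearest-neighbour upsampling (assumption).
    fmap = tokens.reshape(grid, grid, -1)
    return fmap.repeat(patch, axis=0).repeat(patch, axis=1)


def conv1x1(fmap, weight, bias):
    # A 1x1 convolution is a per-pixel linear map over channels: (H, W, Cin) -> (J, H, W).
    return np.einsum("hwc,cj->jhw", fmap, weight) + bias[:, None, None]


def mid_frame_refine(mid_tokens, memory, attn_w, backbone_feat, head_w, head_b, grid, patch):
    """mid_tokens (N, D) are the queries; the global memory M (B, D) supplies keys and values."""
    refined = mid_tokens + cross_attention(mid_tokens, memory, *attn_w)   # (N, D)
    fused = np.concatenate([backbone_feat, tokens_to_map(refined, grid, patch)], axis=-1)
    return conv1x1(fused, head_w, head_b)                                # (J, h, w)


rng = np.random.default_rng(0)
N, B, D, C, J = 64, 64, 32, 32, 13      # J = 13 is a placeholder joint count
grid, patch = 8, 8                      # 8 x 8 tokens, each covering an 8 x 8 region
mid_tokens = rng.standard_normal((N, D))
memory = rng.standard_normal((B, D))
attn_w = tuple(rng.standard_normal((D, D)) * 0.1 for _ in range(3))
backbone_feat = rng.standard_normal((grid * patch, grid * patch, C))
head_w = rng.standard_normal((C + D, J)) * 0.05
head_b = np.zeros(J)
heatmaps = mid_frame_refine(mid_tokens, memory, attn_w, backbone_feat, head_w, head_b, grid, patch)
print(heatmaps.shape)  # (13, 64, 64)
```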

3.6. Training of the Network

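For each input sequence of event frames, the network outputs a heatmap only for the mid frame. We use the mean squared error (MSE) loss to compare the predicted heatmaps with the ground-truth heatmaps:

$$\mathcal{L} = \frac{1}{J}\sum_{k=1}^{J} \left\lVert \hat{H}_k - H_k \right\rVert_2^2,$$

where $J$ is the number of joints, and $\hat{H}_k$ and $H_k$ are the predicted and ground-truth heatmaps for the $k$-th joint in the mid event frame, respectively.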
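The training objective can be illustrated with the sketch below. It assumes Gaussian ground-truth heatmaps with σ = 2 pixels (a common choice in heatmap-based pose estimation, not stated in the text) and computes the squared error summed over pixels and averaged over the J joints of the mid frame. If the actual loss also averages over pixels, it differs only by the constant factor 1/(hw).

```python
import numpy as np


def gaussian_heatmap(center_xy, h, w, sigma=2.0):
    # Ground-truth heatmap: a 2D Gaussian centred on the joint (sigma is an assumption).
    xs = np.arange(w)[None, :]
    ys = np.arange(h)[:, None]
    cx, cy = center_xy
    return np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / (2.0 * sigma ** 2))


def heatmap_mse(pred, gt):
    """(1/J) * sum_k ||pred_k - gt_k||_2^2 for (J, h, w) heatmaps of the mid frame."""
    return float(((pred - gt) ** 2).sum(axis=(1, 2)).mean())


joints = [(10, 20), (32, 32), (50, 8)]                        # hypothetical (x, y) positions
gt = np.stack([gaussian_heatmap(j, 64, 64) for j in joints])  # (3, 64, 64)
pred = np.zeros_like(gt)
print(heatmap_mse(gt, gt))    # 0.0
print(heatmap_mse(pred, gt))  # penalty for predicting empty heatmaps
```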

3.7. CDEHP-E Dataset

CDEHP is a multimodal human pose dataset captured in outdoor scenes and is currently the most challenging event camera-based human pose dataset. To better leverage the high dynamic range of event cameras, we followed the data collection and annotation methods of the CDEHP dataset to create an indoor dataset. This indoor dataset comprises samples from 10 participants, as shown in Table 1, each performing 13 different actions at varying speeds (slow, medium, and fast) for 3 to 4 repetitions. In total, we collected 130 video samples, each consisting of an RGB video sequence, a depth video sequence, and an event stream, resulting in approximately 45,000 frames of RGB-D data. We then combined the collected indoor dataset with the outdoor dataset from CDEHP to form a new dataset named CDEHP-E, as shown in Table 2.

Table 1.

Lists of recorded human actions performed at slow, medium, and fast speeds.

Table 2.

Existing event-based human pose datasets are compared in terms of the number of subjects (Sub#), the number of actions per subject (Act#), the number of frames (Frame#), the total duration, the resolution of the event camera, the number of event cameras used, and multi-modality (MM). The recording scenes are also listed for comparison.

4. Experiment

4.1. Experiment Setup

4.1.1. Dataset

We evaluate the proposed method on four datasets: DHP19 [], MMHPSD [], CDEHP [], and CDEHP-E. The DHP19 dataset comprises 33 movements recorded from 17 subjects (12 females and 5 males) aged between 20 and 29. The movements are categorized into upper-limb, lower-limb, and whole-body movements and are distributed across 5 sessions. MMHPSD is the largest event-based 3D human pose and shape dataset, featuring recordings of 15 subjects (11 males and 4 females). Each subject performs 3 groups of actions (21 distinct actions in total) four times, with each group including actions executed at fast, medium, and slow speeds. This results in 180 videos, each approximately 1.5 min long, and a total of 240,000 grayscale images. The CDEHP dataset is a multi-modal human pose dataset captured outdoors, including samples from 20 subjects (15 males and 5 females) recorded in four different outdoor environments. Each subject performs 25 distinct actions at varying speeds (slow, medium, and fast) 3 to 4 times, resulting in a total of 101,000 frames and 300 event streams.

4.1.2. Implementation

The proposed method and all comparison approaches use event frames as network inputs. Since DHP19 contains only event data, we accumulate the events in each event stream to generate event frames. To focus on pose estimation with event cameras, we assume that the detection stage has been completed and human regions have been detected, and we crop event frames to a fixed size of 256 × 256 with the human body at the center. The backbone of our network is HRNet-w32, a specific variant of HRNet []. The number of self-attention blocks L in the Cross-Attention Module is set to 3. The temporal length of input frames T is set to 9, and the temporal length of nearby frames w is set to 1. The number of latent vectors (B) and the number of visual tokens (N) are both set to 64. The patch size P used to split the feature map is set to 8, and the dimension D of the latent vectors and visual tokens is set to 512. The Adam optimizer is initialized with a learning rate of 1e-4, which is adjusted using a cosine annealing schedule with a cycle of 32. Model convergence is judged by the stability, or only slight fluctuation, of the AP metric on the validation set. Based on experimental observations, the total number of training epochs is set to 32 for the DHP19 and MMHPSD datasets and 64 for the CDEHP and CDEHP-E datasets, by which point performance plateaus. The training batch size is 32, and data augmentation includes rotation, random scaling, and random flipping. The model is trained on four NVIDIA 2080Ti GPUs.
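For reference, the hyperparameters listed above are collected in a configuration dictionary below, together with a closed-form cosine annealing schedule. The dictionary keys are illustrative names, and the schedule assumes a minimum learning rate of 0 and the standard closed form $\eta_t = \eta_{\min} + \tfrac{1}{2}(\eta_0 - \eta_{\min})(1 + \cos(\pi t / T_{\text{cycle}}))$. The text does not say whether the 64-epoch runs restart after 32 epochs or continue along the cosine curve, which is what this closed form does.

```python
import math

# Hyperparameters as stated in the text (key names are illustrative).
CONFIG = {
    "input_size": 256, "backbone": "HRNet-w32",
    "num_sa_blocks_L": 3, "global_len_T": 9, "nearby_len_w": 1,
    "num_latents_B": 64, "num_tokens_N": 64, "patch_P": 8, "dim_D": 512,
    "base_lr": 1e-4, "cosine_cycle": 32, "batch_size": 32,
    "epochs": {"DHP19": 32, "MMHPSD": 32, "CDEHP": 64, "CDEHP-E": 64},
}


def cosine_annealing_lr(epoch, base_lr=1e-4, cycle=32, min_lr=0.0):
    """Closed-form cosine annealing: min_lr + 0.5 * (base_lr - min_lr) * (1 + cos(pi * epoch / cycle))."""
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * epoch / cycle))


for epoch in (0, 8, 16, 24, 32):
    lr = cosine_annealing_lr(epoch, CONFIG["base_lr"], CONFIG["cosine_cycle"])
    print(f"epoch {epoch:2d}: lr = {lr:.2e}")
```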

4.1.3. Evaluation Metric

We use average precision (AP) and percentage of correct keypoints (PCK) as evaluation metrics on the CDEHP and MMHPSD datasets. AP is computed from Object Keypoint Similarity (OKS) [], which quantifies the similarity between two sets of keypoints. Specifically, we report $\mathrm{AP}_{50}$ (AP at OKS = 0.50), $\mathrm{AP}_{75}$ (AP at OKS = 0.75), and AP (the mean over OKS thresholds from 0.50 to 0.95 with a step of 0.05). For PCK, a predicted joint is considered correct if the Euclidean distance between the predicted and ground-truth locations falls within a threshold. We use PCK@0.5, under which a joint is deemed correct if it lies within 0.5 × head_bone_length of the ground truth. Since AP and PCK tend to saturate on DHP19 even with a simple network, making them unsuitable for distinguishing our model's performance, we additionally use the mean per joint position error (MPJPE) as an evaluation metric for DHP19. MPJPE measures the mean L2 distance between predicted and ground-truth keypoints, defined as $\mathrm{MPJPE} = \frac{1}{J}\sum_{k=1}^{J}\lVert p_k - \hat{p}_k \rVert_2$, where $J$ is the number of joints and $p_k$ and $\hat{p}_k$ are the ground-truth and predicted positions of the $k$-th joint in image space, respectively, providing a more precise evaluation of keypoint localization.
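The three keypoint metrics can be sketched in NumPy as follows. OKS uses the standard COCO form, averaging $\exp(-d_k^2 / (2 s^2 \kappa_k^2))$ over labelled joints, where the per-keypoint constants $\kappa_k$ are dataset-specific and assumed to be given. Turning OKS into AP additionally requires ranking predictions by confidence and integrating the precision–recall curve at each of the ten thresholds, which is usually delegated to an existing evaluator such as the COCO API (pycocotools); the sketch stops at OKS. Function names are hypothetical.

```python
import numpy as np


def oks(pred, gt, visible, area, kappa):
    """Object Keypoint Similarity for one predicted and one ground-truth pose.

    pred, gt: (J, 2) pixel coordinates; visible: (J,) boolean mask of labelled joints;
    area: object scale s^2; kappa: (J,) per-keypoint falloff constants.
    """
    d2 = ((pred - gt) ** 2).sum(axis=-1)
    sim = np.exp(-d2 / (2.0 * area * kappa ** 2))
    return float(sim[visible].mean())


def pck(pred, gt, head_len, alpha=0.5):
    """Fraction of joints within alpha * head_bone_length of the ground truth (PCK@alpha)."""
    dist = np.linalg.norm(pred - gt, axis=-1)
    return float((dist <= alpha * np.asarray(head_len, dtype=float)[..., None]).mean())


def mpjpe(pred, gt):
    """Mean per joint position error: mean L2 distance in image space."""
    return float(np.linalg.norm(pred - gt, axis=-1).mean())


gt = np.array([[10.0, 10.0], [20.0, 20.0], [30.0, 30.0]])
pred = gt + np.array([[3.0, 4.0], [0.0, 0.0], [6.0, 8.0]])   # errors of 5, 0 and 10 pixels
print(mpjpe(pred, gt))               # 5.0
print(pck(pred, gt, head_len=12.0))  # threshold 6 px: 2 of 3 joints are correct
print(oks(pred, gt, np.ones(3, dtype=bool), area=100.0, kappa=np.full(3, 0.1)))
# OKS thresholds averaged for the reported AP: 0.50, 0.55, ..., 0.95
thresholds = np.round(np.arange(0.50, 0.96, 0.05), 2)
```

The example also shows why PCK saturates more easily than MPJPE: once an error falls under the threshold, PCK no longer distinguishes a 5-pixel error from a perfect prediction, whereas MPJPE still does.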

4.2. Experimental Analysis

4.2.1. The Influence of Input Resolution

To analyze the impact of input event frame resolution on model accuracy, we conducted experiments at resolutions of 224 × 224, 256 × 256, and 384 × 384. To eliminate the influence of the varying numbers of visual tokens, we set the patch sizes to 7 × 7, 8 × 8, and 12 × 12, respectively. This ensures that the model segments the feature maps generated by the convolutional network into 64 visual tokens and uses 64 as the input for latent vectors.
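The token counts in this study can be checked with a short calculation. It assumes that the HRNet-w32 feature map has a stride of 4 and 32 channels (its highest-resolution branch), which is typical for HRNet pose models but is not stated in the text. Under that assumption every setting yields N = 64 tokens, while the raw length of each token before projection (P·P·C) grows with the patch size.

```python
def token_grid(resolution, patch, stride=4, channels=32):
    """Return (feature-map side, number of tokens N, raw token length P*P*C).

    stride=4 and channels=32 are assumed properties of the HRNet-w32 output.
    """
    side = resolution // stride
    assert side % patch == 0, "patch size must tile the feature map"
    return side, (side // patch) ** 2, patch * patch * channels


for res, patch in [(224, 7), (256, 8), (384, 12)]:
    side, n_tokens, token_len = token_grid(res, patch)
    print(f"{res}x{res}: {side}x{side} features, P={patch} -> N={n_tokens}, token length {token_len}")
```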

As shown in Table 3, AP does not increase consistently with input resolution. When the resolution increases from 224 × 224 to 256 × 256, AP improves by 1.62, suggesting that the larger patches at higher resolution carry richer feature information and thereby improve prediction accuracy. However, further increasing the resolution to 384 × 384 reduces AP by 2.37. Although higher-resolution patches contain denser information, they may also introduce more background noise or redundant detail, which can weaken the model's discriminative ability; the model may also struggle to exploit the added fine-grained information effectively. Overall, among the tested settings, 256 × 256 strikes the best balance between informative content and irrelevant noise, making it the most suitable input resolution and patch granularity for the current task.

Table 3.

The performance of JGLTM with different resolutions on CDEHP val set.

4.2.2. Effect of the Global Temporal Length

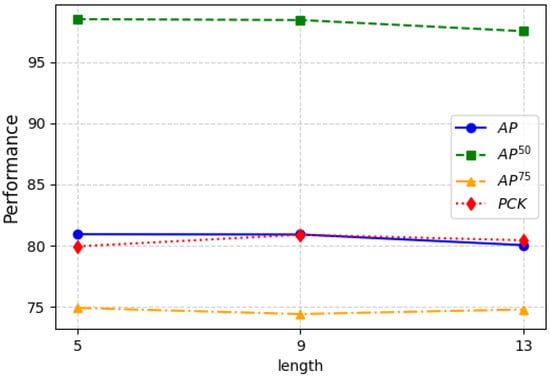

Global temporal length refers to the length of the event frame sequence fed into the network. By extracting features from the entire input sequence and updating the latent vectors, we ultimately obtain global pose information. A short sequence may provide insufficient temporal information, making it difficult for the model to capture motion patterns, whereas an excessively long sequence may introduce redundant information and reduce computational efficiency. Therefore, this paper evaluates frame lengths of 5, 9, and 13, covering short, medium, and long spans of event information, to analyze the effect of the time window size on model performance. As shown in Figure 3, although the metric slightly decreases when the length is 9, there is a significant improvement in . When the length increases to 13, both and decrease to varying degrees. These results indicate that a longer global temporal length is not necessarily better for generating global pose information, and an overly long input sequence can interfere with its generation. Therefore, even though the metric is not optimal at a length of 9, considering all factors, we set the global temporal length to 9 in our experiments.

Figure 3.

Ablation studies of different temporal lengths of input frames on CDEHP dataset.

4.2.3. Effect of the Local Temporal Length

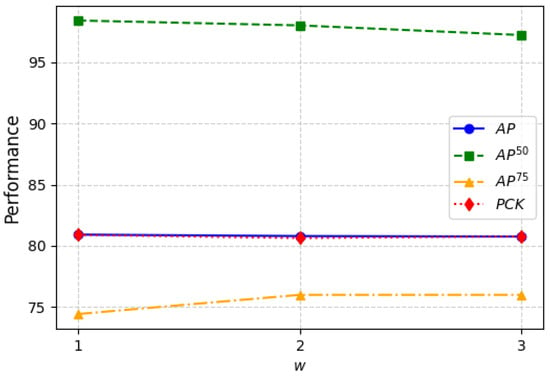

The nearby temporal length w is a hyperparameter of our model that specifies the number of neighboring frames used when predicting the mid-frame. As shown in Figure 4, as w increases, most evaluation metrics, such as , , and , tend to decrease, while shows a slight improvement. This indicates that as more frames participate in local information exchange, the overall prediction accuracy of our model decreases slightly, although improves to above 75% for some inputs. More local temporal information therefore does not always lead to better predictions: an excessively long window may introduce redundant information, cause the prediction for the mid-frame to drift from the correct trajectory, and increase computational complexity. Choosing an appropriate w thus balances capturing local temporal information against maintaining prediction accuracy. Based on these findings, we set w to 1 in the experiments of this paper.

Figure 4.

Ablation studies of different lengths (w) of nearby frames on the CDEHP dataset. Here, each value of w determines the total number of frames fed to the LST module, including the mid-frame.

Additionally, we found that PCK and AP exhibit very similar performance. PCK evaluates whether keypoint predictions fall within a certain error threshold, while AP measures the precision and recall of keypoint detection. This similarity may be attributed to the stable confidence scores of JGLTM on the CDEHP dataset and the relatively uniform error distribution, leading to comparable trends in AP and PCK results.

4.2.4. Contribution of Each Component

Our model is primarily composed of three parts: extraction of global information, extraction of local information, and infilling of the local prediction frame with global information. To validate the effectiveness of these modules, we conducted ablation experiments. First, we removed the GMN module from the network and replaced the key (K) and value (V) inputs of the MPR with its query (Q) input, so that no global information is injected. We denote this configuration as JGLTM-w/o-global. Next, we set the number of neighboring frames in the LST module to 0 so that the middle frame cannot obtain information from adjacent frames; we refer to this configuration as JGLTM-w/o-local.

The results in Table 4 show that removing either global or local information degrades the model's performance. Compared with JGLTM, the model without global information shows a 0.65 decrease in and a 1.71 decrease in , while the model without local information shows a 1.11 decrease in and a 1.5 decrease in . Thus, removing global information has a larger effect on , whereas removing local information affects more strongly.

Table 4.

Ablation studies on contributions of different components on CDEHP dataset. ↑ indicates better performance with higher values. The best results are highlighted in bold.

4.3. Comparisons with State of the Art

4.3.1. Results on DHP19

Table 5 summarizes the results of various methods on the DHP19 dataset. Compared with tDenseRNN [], our proposed method shows decreases of 0.17 and 0.43. Moreover, the differences between the other methods and ours are minimal. This is likely because the poses collected in DHP19 are relatively simple, so the dataset cannot effectively discriminate between methods or fully evaluate the performance of our method. Partial prediction results on DHP19 are shown in Figure 5.

Table 5.

Comparison with state-of-the-art methods on the CDEHP, MMHPSD, DHP19 and CDEHP-E datasets. ↑ indicates better performance with higher values, ↓ signifies better performance with lower values. The best results are highlighted in bold, second-best underlined. The input size for all methods is 256 × 256.

Figure 5.

Visual results of our JGLTM on DHP19 (left) and CDEHP-E (right). Challenging scenarios including fast motion and mutual occlusion are involved.

4.3.2. Results on CDEHP

In Table 5, we compare our model with other state-of-the-art models in terms of the evaluation metrics and . Since GNNs operating on point-based representations still lag significantly behind event frame-based methods, we do not include them in this comparison. Among the compared models, Hourglass [], SimpleBaseline [], HigherHRNet [], TokenPose [], and VitPose [] are representative image-based human pose estimation algorithms, achieving scores of 75.87, 77.51, 75.60, 79.68, and 80.01, respectively. LSTM-CPM [], DKD [], DCPose [], FAMI-Pose [], and tDenseRNN [] are representative video-based algorithms, achieving scores of 59.37, 78.97, 77.56, 79.33, and 80.18, respectively. Compared with tDenseRNN, which achieves the best results among the video-based methods, our model improves the two metrics by 1.29 and 0.73, respectively. This comparison demonstrates that our model performs strongly in event-based human pose estimation. We attribute this to the modules of our model, which extract both global and local pose information, and to the infusion of global information into local details, which refines the pose estimates.

4.3.3. Results on CDEHP-E

We observe that all methods improve significantly on the CDEHP-E dataset compared with their results on CDEHP. Our proposed JGLTM likewise performs better on CDEHP-E, with improvements of 2.81 and 3.15 over its results on CDEHP. To investigate the reason behind this improvement, we conducted experiments based on the CDEHP training set, as shown in Table 6. We added the additional indoor data to the training set and evaluated the results on the CDEHP validation set. Compared with the original training set without indoor data, AP and PCK dropped by 1.28 and 1.86, respectively, suggesting that adding indoor data does not benefit training for outdoor validation. The gains on CDEHP-E therefore do not appear to come from the extra training data; instead, they may be attributed to the fact that indoor data tend to be less noisy and more structured than outdoor data, making it easier for the model to learn and make accurate predictions. Partial prediction results on CDEHP-E are shown in Figure 5.

Table 6.

Comparison of results trained on different training sets.

4.3.4. Results on MMHPSD

We obtain results on the MMHPSD dataset similar to those on the CDEHP dataset. Compared with tDenseRNN, our model shows improvements of 3.08 in and 1.94 in . These improvements are even larger than those on CDEHP (1.29 and 0.73), suggesting that our model performs consistently well across datasets of varying complexity.

4.3.5. Action-Wise Result Comparison on CDEHP Dataset

To analyze the performance of our method on different actions, we report action-wise results on the CDEHP dataset. As shown in Table 7, the actions in the CDEHP dataset are categorized into slow, medium, and fast movements. Our method achieves the best results for the majority of actions and performs particularly well on slow and medium movements. This suggests that our approach effectively uses global pose information to fill in body part information that is missing because those parts remain stationary during slow movements. The results of FAMI-Pose and tDenseRNN are relatively close to ours. In our understanding, tDenseRNN achieves this by using dense connections and attention maps, while FAMI-Pose leverages feature alignment to extract additional relevant and complementary information from neighboring frames. Among the other compared methods, Hourglass performs best on jumping jacks, SimpleBaseline excels at spinning and jumps in various directions, and TokenPose achieves the best results on big jumps and crotch high five. Furthermore, in actions such as crawling, cartwheeling, spinning, and long jumps, severe body part occlusion prevents all methods from achieving satisfactory performance.

Table 7.

Action-wise result comparison on the CDEHP dataset in terms of the AP metric. The table is divided into three parts by action speed: slow, medium, and fast actions appear in the top, middle, and bottom parts, respectively. Best in bold, second-best underlined.

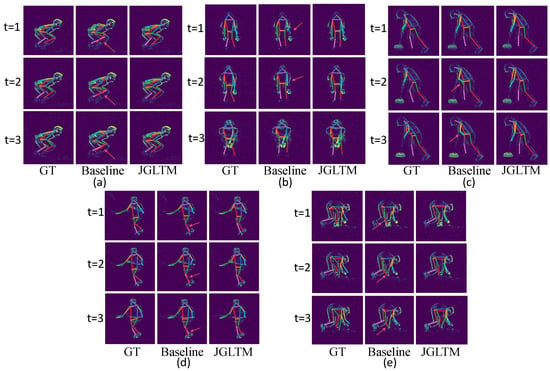

4.4. Result Visualization

In Figure 6, we visualize some prediction results of our model and of the baseline JGLTM-w/o-global on the test set. These include frontal (b,d) and side (a,c,e) poses, as well as cases with body part occlusion (a,d,e) and partial loss of body information (b,c). When body parts are occluded, the baseline's predictions often deviate significantly for certain joints (e.g., the left foot and right knee in (a), both feet in (d), and the right knee and right foot in (e)), partially distorting the pose. When body parts remain stationary and generate fewer events, the baseline's predictions are inconsistent over time (e.g., the left hand in (b) is correctly predicted at but incorrectly at and ; the hands in (c) are correctly predicted at but are incorrect at and ).

Figure 6.

Visual results on the CDEHP dataset of our model JGLTM and the baseline model JGLTM-w/o-global. (a–e) show five different actions: (a) frog jumping, (b) crotch high five, (c) sweeping, (d) throwing, and (e) picking up.

5. Conclusions

This paper presents JGLTM for event-based human pose estimation. JGLTM introduces a local attention mechanism that exchanges information between the middle frame and its nearby frames, thereby supplementing information for the target frame. Furthermore, to address the difficulty event cameras have in capturing stationary or occluded body parts, JGLTM incorporates a global memory network that extracts global pose information and uses it to fill in the middle frame, improving pose prediction accuracy. Additionally, to demonstrate the generality of our method, this paper collects a substantial amount of indoor human pose data to supplement the CDEHP dataset. Experiments on four datasets validate the effectiveness of our method for event-based human pose estimation, achieving the best results on most of them, with results on DHP19 close to those of tDenseRNN. Although frame-based event representations have demonstrated strong performance in human pose estimation, they do not fully exploit the asynchronous, low-latency nature of event cameras and require an extra accumulation preprocessing step. Moreover, such representations overlook the inherent sparsity of event data and do not fully leverage its temporal information. Future research can focus on improving computational efficiency and exploiting the unique properties of event data to facilitate the deployment of event cameras in low-power, real-time computing scenarios.
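The accumulation preprocessing mentioned above can be illustrated with one common frame-based representation: counting events per pixel and polarity within a time window. This is a generic sketch, not necessarily the representation used by JGLTM; the function name `events_to_frame` and the argument layout (separate `t`, `x`, `y`, `p` arrays and a half-open window `[t0, t1)`) are assumptions for illustration.

```python
import numpy as np

def events_to_frame(t, x, y, p, t0, t1, height, width):
    """Accumulate events with timestamps in [t0, t1) into a (2, H, W) count image.

    t: timestamps; x, y: integer pixel coordinates; p: polarity, where values > 0
    are treated as positive events and all others as negative events.
    """
    t, x, y, p = map(np.asarray, (t, x, y, p))
    in_window = (t >= t0) & (t < t1)               # keep only events in the time slice
    channel = (p[in_window] > 0).astype(np.intp)   # 0 = negative, 1 = positive
    frame = np.zeros((2, height, width), dtype=np.float32)
    # np.add.at accumulates correctly when several events hit the same pixel
    np.add.at(frame, (channel, y[in_window], x[in_window]), 1.0)
    return frame
```

Pixels that see no brightness change within the window stay at zero, which is how stationary body parts disappear from a single accumulated event frame, and the per-window binning discards the fine timing that asynchronous processing could otherwise exploit.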

Author Contributions

Conceptualization, X.W. and J.D.; Methodology, Z.S.; Writing—original draft, F.D.; Writing—review & editing, Z.S. and J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by the National Natural Science Foundation of China under Grant 61976191 and Grant 62203168, and in part by the Hunan Provincial Natural Science Foundation of China under Grant 2025JJ50340.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used and analysed during the current study are available online. All data generated or analysed during this study are included in this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Xiao, B.; Wu, H.; Wei, Y. Simple baselines for human pose estimation and tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 466–481.

- Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep high-resolution representation learning for human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5693–5703.

- Li, Y.; Zhang, S.; Wang, Z.; Yang, S.; Yang, W.; Xia, S.T.; Zhou, E. TokenPose: Learning keypoint tokens for human pose estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 11313–11322.

- Ma, H.; Wang, Z.; Chen, Y.; Kong, D.; Chen, L.; Liu, X.; Yan, X.; Tang, H.; Xie, X. PPT: Token-pruned pose transformer for monocular and multi-view human pose estimation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 424–442.

- Luo, Y.; Ren, J.; Wang, Z.; Sun, W.; Pan, J.; Liu, J.; Pang, J.; Lin, L. LSTM pose machines. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5207–5215.

- Liu, Z.; Chen, H.; Feng, R.; Wu, S.; Ji, S.; Yang, B.; Wang, X. Deep dual consecutive network for human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 525–534.

- Liu, Z.; Feng, R.; Chen, H.; Wu, S.; Gao, Y.; Gao, Y.; Wang, X. Temporal feature alignment and mutual information maximization for video-based human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11006–11016.

- Gai, D.; Feng, R.; Min, W.; Yang, X.; Su, P.; Wang, Q.; Han, Q. Spatiotemporal learning transformer for video-based human pose estimation. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 4564–4576.

- Pfister, T.; Charles, J.; Zisserman, A. Flowing convnets for human pose estimation in videos. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1913–1921.

- Song, J.; Wang, L.; Van Gool, L.; Hilliges, O. Thin-slicing network: A deep structured model for pose estimation in videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4220–4229.

- Wei, S.E.; Ramakrishna, V.; Kanade, T.; Sheikh, Y. Convolutional pose machines. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4724–4732.

- Girdhar, R.; Gkioxari, G.; Torresani, L.; Paluri, M.; Tran, D. Detect-and-track: Efficient pose estimation in videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 350–359.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Wang, M.; Tighe, J.; Modolo, D. Combining detection and tracking for human pose estimation in videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11088–11096.

- Feng, R.; Gao, Y.; Tse, T.H.E.; Ma, X.; Chang, H.J. DiffPose: Spatiotemporal diffusion model for video-based human pose estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 14861–14872.

- Calabrese, E.; Taverni, G.; Awai Easthope, C.; Skriabine, S.; Corradi, F.; Longinotti, L.; Eng, K.; Delbruck, T. DHP19: Dynamic vision sensor 3D human pose dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

- Xu, L.; Xu, W.; Golyanik, V.; Habermann, M.; Fang, L.; Theobalt, C. EventCap: Monocular 3D capture of high-speed human motions using an event camera. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4968–4978.

- Scarpellini, G.; Morerio, P.; Del Bue, A. Lifting monocular events to 3D human poses. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1358–1368.

- Zou, S.; Guo, C.; Zuo, X.; Wang, S.; Wang, P.; Hu, X.; Chen, S.; Gong, M.; Cheng, L. EventHPE: Event-based 3D human pose and shape estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10996–11005.

- Goyal, G.; Di Pietro, F.; Carissimi, N.; Glover, A.; Bartolozzi, C. MoveEnet: Online high-frequency human pose estimation with an event camera. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 4024–4033.

- Shao, Z.; Wang, X.; Zhou, W.; Wang, W.; Yang, J.; Li, Y. A temporal densely connected recurrent network for event-based human pose estimation. Pattern Recognit. 2024, 147, 110048.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Yang, S.; Quan, Z.; Nie, M.; Yang, W. TransPose: Keypoint localization via transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 11802–11812.

- Yuan, Y.; Fu, R.; Huang, L.; Lin, W.; Zhang, C.; Chen, X.; Wang, J. HRFormer: High-resolution vision transformer for dense predict. Adv. Neural Inf. Process. Syst. 2021, 34, 7281–7293.

- Mao, W.; Ge, Y.; Shen, C.; Tian, Z.; Wang, X.; Wang, Z. TFPose: Direct human pose estimation with transformers. arXiv 2021, arXiv:2103.15320.

- Li, H.; Shi, B.; Dai, W.; Zheng, H.; Wang, B.; Sun, Y.; Guo, M.; Li, C.; Zou, J.; Xiong, H. Pose-oriented transformer with uncertainty-guided refinement for 2D-to-3D human pose estimation. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 1296–1304.

- Sabater, A.; Montesano, L.; Murillo, A.C. Event Transformer. A sparse-aware solution for efficient event data processing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2677–2686.

- Zhang, J.; Dong, B.; Zhang, H.; Ding, J.; Heide, F.; Yin, B.; Yang, X. Spiking transformers for event-based single object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8801–8810.

- Cheng, B.; Xiao, B.; Wang, J.; Shi, H.; Huang, T.S.; Zhang, L. HigherHRNet: Scale-aware representation learning for bottom-up human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 5386–5395.

- Hua, G.; Li, L.; Liu, S. Multipath affinage stacked-hourglass networks for human pose estimation. Front. Comput. Sci. 2020, 14, 1–12.

- Nie, X.; Li, Y.; Luo, L.; Zhang, N.; Feng, J. Dynamic kernel distillation for efficient pose estimation in videos. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6942–6950.

- Xu, Y.; Zhang, J.; Zhang, Q.; Tao, D. ViTPose++: Vision transformer for generic body pose estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 1212–1230.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).