Abstract

Noise and outliers often degrade prediction performance in practical data processing. Multi-view learning, which integrates complementary information across heterogeneous modalities, has become one of the core techniques in machine learning. However, existing methods rely on explicit-view clustering and stringent alignment assumptions, which limits their effectiveness under inter-view inconsistency, noise interference, and misalignment across views. To alleviate these issues, we present a latent multi-view representation learning model based on low-rank embedding that implicitly uncovers the latent consistency structure of the data and thereby achieves robust and efficient multi-view feature fusion. In particular, we use low-rank constraints to construct a unified latent subspace representation and introduce an adaptive noise suppression mechanism that enhances robustness against outliers and noise interference. Moreover, the Augmented Lagrangian Multiplier Alternating Direction Minimization (ALM-ADM) framework enables efficient optimization of the proposed method. Experimental results on multiple benchmark datasets demonstrate that the proposed approach outperforms existing state-of-the-art methods in both clustering performance and robustness.

1. Introduction

With large amounts of data continuously emerging from various sources, data collected from different viewpoints often exhibit a high degree of complementarity [1]. Because each view captures only part of the underlying information, learning from a single view is insufficient to capture the full information in the data [2,3,4]. This is particularly critical in cross-view learning, which integrates heterogeneous data such as images, text, and audio to promote mutual understanding and collaborative learning across views [5]. To address this limitation, multi-view learning integrates data from various perspectives to uncover latent patterns and to enhance the performance and generalization ability of algorithms. By treating the subspace representations of different views as a tensor and imposing a low-rank constraint, higher-order correlations in multi-view data can be captured effectively, which reduces redundancy and improves clustering accuracy [6,7]. In multi-view learning, low-rank embedding methods facilitate the processing of high-dimensional data, reduce the impact of noise, and improve the robustness and generalization capabilities of the model. In addition, shared representations and kernel methods have been integrated to further enhance cross-modal clustering performance and to address nonlinearity and noise in the data [8,9].

In recent years, multi-view learning has encompassed a variety of methods for integrating information from different perspectives. In the early stages, traditional approaches such as co-training significantly improved learning performance by leveraging the complementarity between multiple views [10,11]. Locality-preserving projection (LPP) is a linear dimensionality reduction technique that preserves the local structure of the data by keeping neighboring data points close in the projected space, which captures the local manifold structure and improves the robustness of the model to noise and incomplete labels [12]. Low-rank representation (LRR), on the other hand, improves the accuracy of data representation in a low-dimensional space by capturing the global structure of the data. Recently, improved variants of LRR, such as graph-based LRR and weighted LRR, have been proposed to enhance robustness when dealing with high-dimensional, noisy, or incomplete data [13]. The latent space is a core concept in representation learning: it maps high-dimensional data to low-dimensional latent representations that capture the most essential features of the data [14,15]. Moreover, generative models such as Variational Autoencoders (VAEs) and Conditional Generative Adversarial Networks (cGANs) have been proposed to enhance the expressiveness of the latent space.

Although the LRR model handles sparse noise well, its sensitivity to non-sparse and structured noise limits its robustness in real-world scenarios [16]. Moreover, traditional LRR methods primarily focus on capturing the global structure of data while overlooking the preservation of local geometric features [17]. Despite these advancements, existing methods still face the following key challenges: (1) Each view in multi-view data may contain noise and outliers, which can negatively impact the learning process and reduce the accuracy and robustness of the model; (2) Different views often exhibit distinct data distributions, which makes it difficult to identify and leverage the shared latent structure across views; (3) Multi-view learning typically involves processing high-dimensional data, and the computational complexity of the model tends to increase with the number of views and the data dimensions. Addressing these limitations requires more robust frameworks with strong theoretical foundations that simultaneously tackle noise adaptability, local-global structure preservation, and computational efficiency.

Multi-view learning often faces challenges from inherent data redundancy and noise contamination, which propagate through affinity matrices and degrade representation learning performance. To alleviate this issue, we assume that multiple heterogeneous views originate from a shared latent representation. Based on this assumption, we propose a novel Low-Rank Embedded Latent Multi-View Subspace Clustering (LRE-LAMVSC) framework. First, because the low-rank embedding method [18] retains the global discriminative structure in the reduced space, we integrate it into latent space learning by decomposing the global subspace through a projection matrix, which captures cross-view consistency while suppressing noise propagation. Then, we apply the latent representation learning mechanism to unify different feature modalities (e.g., visual and textual data) into a common subspace and to mitigate feature mismatches caused by inter-view differences while preserving complementary information. Finally, our experimental results demonstrate that LRE-LAMVSC achieves superior clustering accuracy and robustness compared with state-of-the-art methods while maintaining computational efficiency for practical deployment.

The main contributions of this paper are as follows:

- (1) By leveraging low-rank embedding to model the shared subspace structure of multi-view data, our approach effectively suppresses noise and redundant information, which enhances robustness in handling outliers and noisy environments;

- (2) We map information from different views into a unified semantic space through a latent multi-view learning fusion mechanism. This process preserves the unique characteristics of each view while strengthening inter-view consistency;

- (3) We design a cross-view projection mapping mechanism that transforms the original high-dimensional heterogeneous data into a low-rank latent space. This allows the separation of shared subspace and view-specific information, enabling a more precise capture of the consistent structure across multiple views.

The remainder of this paper is organized as follows. Section 2 provides a brief review of the key algorithms related to low-rank embedding and latent spaces. Section 3 introduces the proposed LRE-LAMVSC algorithm along with the optimization strategy. In Section 4, we conduct experiments on real-world datasets to demonstrate the superiority of the proposed algorithm. Finally, Section 5 concludes the paper with a summary of the research findings.

2. Related Works

Recent advancements in multi-view learning have led to the development of various approaches that have been effectively applied across different practical scenarios. In the field of multi-task learning, Cai et al. proposed the Guided Multi-Task Learning (GMT) framework by balancing task detection and Re-ID sub-tasks conflicts using the GMHL module and BMOF method, which enabled them to enhance the efficiency and accuracy of end-to-end person search [19]. Fu et al. have proposed a robust modeling framework that integrates manifold regularization by learning a low-rank coefficient matrix to capture the global structure of the original data, aiming to reduce the negative impact of outliers and to enhance the ability to interpret the global structure of industrial data containing anomalies [20,21]. Nikita et al. have proposed a novel gradient-based optimization method (MAMGD) using exponential decay by incorporating mechanisms that include exponential decay, adaptive learning rate, and discrete second-order derivatives, which improved convergence speed and stability across multiple function optimization tasks and neural network training scenarios [22]. Zheng et al. have introduced a multi-view subspace clustering method with feature concatenation and graph regularization. This method improved the model performance by exploiting consistency information across multiple views, while enhancing the robustness of the algorithm by leveraging both the consistency and complementarity of the data from different views [23]. Maria et al. have proposed a multi-view low-rank sparse subspace clustering method that learns a joint subspace to represent the information from all views. This method jointly learned an affinity matrix constrained by sparsity and low-rank assumptions to capture the shared information across views. Zhang et al. 
have addressed the computational cost associated with low-rank structure learning in multiple views by proposing a fast multi-view subspace clustering method. This approach decomposes the data into two smaller factor matrices, which reduces the computational cost of solving the SVD problem while preserving the underlying low-rank structure of the views [24]. Low-rank linear embedding for robust clustering employs low-rank representation, showing excellent performance in single-view clustering. Similar to SCBSV, it applies a low-rank representation to each view to achieve optimal clustering results [25]. Wang et al. proposed a popular method based on dual consistency to guide incomplete multi-view clustering to achieve consensus representation through reverse regularization by recovering incomplete view data and, thus, to balance the importance between tasks and improve the clustering accuracy [26]. Multi-view learning methods have made significant advancements and have been widely applied across various practical domains. Numerous approaches have significantly enhanced the robustness and computational efficiency of multiview data modeling by integrating techniques such as low-rank representation, graph regularization, and sparsity constraints.

Traditional multi-view learning methods enhanced modeling performance by integrating same-type information from different perspectives within the same dataset (e.g., images and text), but they were constrained by explicit feature fusion and homogeneous data representations [27]. Unlike traditional multi-modal learning, the binary multi-view clustering method presented in [28] is capable of simultaneously processing heterogeneous data from different modalities (e.g., text, speech, etc.) and, by modeling the complex nonlinear relationships between these modalities, it achieves deeper semantic understanding and improved generalization capabilities [29]. Recently, research on the latent multi-view subspace clustering has mainly focused on integrating features from different perspectives to uncover low-dimensional representations of the data, with the aim of improving clustering accuracy and addressing issues of heterogeneity and data inconsistency across views. Zhang et al. proposed a new multi-view subspace clustering model based on latent representation [29]. Compared to the existing methods, this model simultaneously searches for latent representations while exploiting the complementary information across multiple views. This enables the model to represent data in a more comprehensive manner than a single view, making the subspace representation more precise and robust. Xue et al. introduced an unsupervised multi-view dimensionality reduction method based on a dual-layer latent space learning approach. By learning in layers, this method addresses the differences between views and utilizes both shared and private information to preserve the features of each view. The second layer employs joint matrix factorization to fuse the different views into a low-dimensional representation, enhancing both flexibility and robustness [30]. Furthermore, Yao et al. 
proposed a novel tensor-based incomplete multi-view clustering method that improves missing-view completion with dual tensor constraints and addresses the feature degeneration problem with an adaptive fusion graph learning strategy [31]. Huang et al. proposed a multi-view joint learning framework that integrates multiple similarity matrices and applies nuclear norm regularization to learn reliable similarity matrices and low-dimensional latent representations, aiming to improve robustness to noise and outliers [32]. The robust multi-view spectral clustering (RMSC) method constructs a shared transition probability matrix through low-rank and sparse decomposition to reduce the impact of noise in multi-view data and improve multi-view clustering performance [33]. Unlike the RMSC method, the diversity-induced multi-view subspace clustering method reduces data redundancy by integrating subspace representations from different views into a single affinity matrix [34]. The Multi-view Intact Space Learning (MISL) algorithm focuses on integrating multi-view information and learning a latent intact representation, while the multi-instance clustering algorithm focuses on the multi-view analysis and anomaly detection of sensor data in smart building systems [1,27]. Overall, these methods leverage multi-view information to reduce noise interference, enhance model robustness, and improve clustering performance and stability.

The latent rank embedding multi-view learning has been effectively integrated with information from different views by using low-rank representations, which helps to reduce the negative impact of noise and outliers, towards improving the robustness of dimensionality reduction and clustering on multi-view data. Zhao et al. proposed the cross-lingual font style transfer with full-domain convolutional attention (FCAGAN) model, which enabled cross-lingual font style transfer with a small number of samples. Unlike traditional methods, FCAGAN can transfer the font style of one language to another [35]. Zhou et al. proposed an end-to-end robust clustering method (RCLR) that combines low-rank linear embedding with k-means clustering, capable of simultaneously learning sparse coefficients and spatial projection matrices while capturing both global and local structures [36]. This method integrates clustering, dimensionality reduction, low-rank representation, and local property preservation into a unified model, effectively avoiding the error accumulation issue encountered in traditional two-stage frameworks. Li et al. have introduced a low-rank embedding-based method for single-view and multi-view learning, which integrates multiple views from drug and protein data to improve the accuracy of interaction prediction [37]. Meng et al. have proposed a multi-view low-rank preserving embedding method, which effectively integrates multi-view information by minimizing the inconsistency between each view and a central view while preserving the low-rank reconstruction relationships. Unlike existing methods, this approach automatically assigns appropriate weights to each view, eliminating the need for manual parameter tuning [8]. The low-rank tensor constrained multi-view subspace clustering method employs the low-rank tensor decomposition techniques to enforce consistency in high-order data [38]. 
Additionally, it integrates complementary information from multiple views to enhance the efficiency of data processing. Similarly, the multi-modal sparse and low-rank subspace clustering method constructs a unified model of data from different views to obtain a shared representation [39], thereby enhancing the efficiency of multi-view learning. In summary, latent rank embedding multi-view learning integrates information from different views and reduces the impact of noise and outliers, which improves the robustness of dimensionality reduction and clustering. Related methods include low-rank embedding-based linear dimensionality reduction and multi-view low-rank preserving embedding, which improve data integration by automatically optimizing the view weights and reducing inconsistency between views, eliminating the need for manual parameter tuning.

3. Proposed Approach

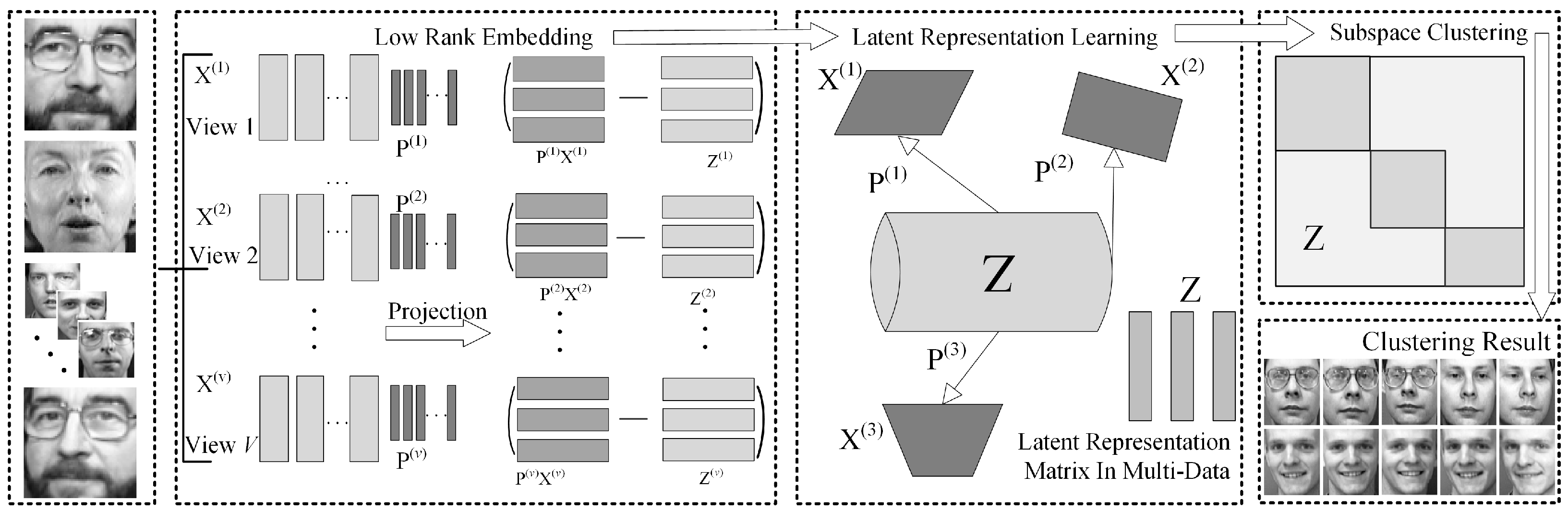

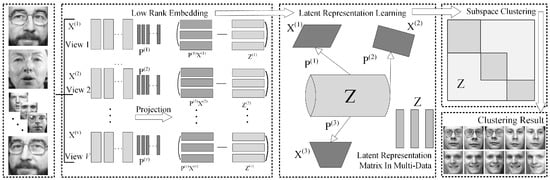

In this section, we first present a novel representation method, i.e., the Low-Rank Embedding (LRE), which preserves the low-rank reconstruction relationships among samples in various ways. Then, by integrating with the latent space learning, we further propose a Low-Rank Embedding-based Latent Multi-View Representation Learning (LRE-LAMVSC) method. This approach models the shared subspace of multi-view data using the structure of low-rank embedding and then utilizes a fusion mechanism in latent multi-view learning to map information from different views into a unified semantic space. Finally, we design a cross-view projection mapping that maps high-dimensional data into the low-rank latent space to effectively capture the consistency structure across multi-view data. Figure 1 illustrates the structure of the LRE-LAMVSC algorithm and Table 1 shows the explanations of the key notations throughout this work.

Figure 1.

The framework of the proposed Low-Rank Embedded Latent Multi-View Subspace Clustering (LRE-LAMVSC). We first pre-process the dataset by dividing it into matrices of v different views. Then, we reconstruct the data using a low-rank embedding approach, which robustly reveals the underlying relationships between images. Next, we map the data from each view to a low-rank space, ensuring that the representations in this space have similar structures and shared latent information. Furthermore, these data representations are embedded into a shared latent space using the corresponding mapping matrix. Finally, we employ an iterative strategy based on the Augmented Lagrangian Multiplier method (ALM-ADM) to compute the optimal solution for LRE-LAMVSC.

Table 1.

Key notations in this work.

3.1. Low-Rank Embedding

The main objective of low-rank embedding is to improve the robustness of linear dimensionality reduction against feature corruption and outliers. To achieve this, low-rank embedding uses the norm as the fundamental measure of the reconstruction error. The objective function of the LRE can be expressed as follows [40]:

In Equation (1), contains the input raw data, is the low-rank matrix, and is the orthogonal subspace. is the regularization parameter.

The objective of Equation (1) is to find the optimal low-rank reconstruction and projection, with the primary goal of modeling feature corruption and outliers in the samples. Because minimizing the rank function directly is intractable in general, the rank function is replaced by its convex surrogate, the nuclear norm, which gives the following minimization problem:

In Equation (2), denotes the nuclear norm of a matrix. The norm used for the reconstruction error is more robust than the Frobenius norm, making it a more stable choice when dealing with outliers or corrupted sample data.
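To make the two kinds of norm in this subsection concrete, the following minimal sketch computes a nuclear norm and a column-wise $\ell_{2,1}$ norm with NumPy. The name of the error norm was lost from the text above; the $\ell_{2,1}$ norm is assumed here only because it is a common choice for robust reconstruction errors, and the function names `nuclear_norm` and `l21_norm` are illustrative, not taken from the paper.

```python
import numpy as np

def nuclear_norm(M):
    """Sum of the singular values of M (convex surrogate of the rank)."""
    return np.linalg.svd(M, compute_uv=False).sum()

def l21_norm(E):
    """Sum of the Euclidean norms of the columns of E.

    Assumption: each column of E is the reconstruction error of one sample.
    """
    return np.linalg.norm(E, axis=0).sum()

# One corrupted sample (column 0) in an otherwise zero error matrix.
E = np.zeros((3, 4))
E[:, 0] = [3.0, 4.0, 0.0]

# The l2,1 norm grows linearly with the size of the outlier column,
# whereas the squared Frobenius norm grows quadratically.
l21_value = l21_norm(E)
fro_squared = np.linalg.norm(E) ** 2
```

Doubling the corrupted column doubles `l21_value` but quadruples `fro_squared`, which is the usual argument for why a column-wise norm is less dominated by a few outlying samples.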

3.2. The Latent Multi-View Representation Based on Low-Rank Embedding

In real-world data, each view often carries specific physical significance but is susceptible to noise. To address this issue, we propose a novel low-rank error reconstruction relationship to improve the embedding of the given views. This approach not only preserves the low-rank error reconstruction relationships between samples in the original space, but also ensures their validity in a latent space. By minimizing the spatial reconstruction function, we maintain the low-rank relationships between samples in a stable manner:

In Equation (3), denotes the data in the v-th view. Let represent the dimensionality of the -th view and the projection matrix for the -th view, which maps to . The matrix serves as the shared low-dimensional embedding across different views. This model effectively reduces the dimensionality and denoises the data, while maintaining strong structural consistency and significantly enhancing robustness and cross-view correlation. In Equation (1), there exists a trivial solution for and that leads to a lower bound of zero. To resolve this, an additional constraint is introduced, along with a regularization term involving the Frobenius norm. The resulting formulation is as follows:

Low-rank embedding is highly sensitive to noise and missing values, which often results in outputs deviating from the true underlying structure. Furthermore, the limited interpretability and poor adaptability to real-world tasks hinder the ability to produce embeddings that meet the specific demands of these tasks. To overcome these limitations, combining low-rank embedding with latent space learning can significantly enhance the model generalization ability. This enables the model to capture complex patterns in the data while preserving both local and global structural information.

Low-rank embedding can effectively reduce dimensionality, denoise, and uncover the underlying structure of the data, while latent multi-view learning integrates information from multiple views to enhance the model’s expressiveness, robustness, and generalization capability. By combining low-rank embedding with latent multi-view representation learning, the strengths of both approaches can be leveraged, resulting in a more efficient low-rank embedding latent multi-view representation learning model. The final objective function can be expressed as follows:

In Equation (5), is the input matrix of the raw data, is the projection matrix, is the representation learned by the model, and is the error matrix obtained after error reconstruction; and are regularization parameters. This model can improve both the robustness and adaptability of the embedding process, while also demonstrating greater tolerance to noise and missing values. Furthermore, the relevant low-dimensional representations generated through latent space learning can further enhance the robustness and generalization ability of the embedding.

3.3. ALM-ADM Optimization

Based on the final objective function derived in Section 3.2, our goal is to identify the low-rank projection space and effective latent representations across different views. Since the objective function is not jointly convex in all variables, we decompose it into sub-problems that can be solved efficiently. To tackle these sub-problems, we employ the Augmented Lagrangian Multiplier Alternating Direction Minimization (ALM-ADM) framework, which incorporates the constraints into the objective function. By introducing augmented Lagrange multipliers and penalty terms, the method progressively brings the solution closer to satisfying the constraints during optimization. To ensure that the objective function is separable during optimization, we introduce an auxiliary variable, , to transform the problem into the following objective function:

Thus, the augmented Lagrangian function for the above problem takes the following form:

In Equation (7), is the penalty parameter and are the Lagrange multipliers. Therefore, the above objective function can be redefined as

The resulting problem is unconstrained and can be optimized by updating each variable in turn while keeping the others fixed, using the following steps:

Step 1, Update , fix , , :

In Equation (9), can be computed using Singular Value Thresholding (SVT).

In Equation (10), represents the scaling factor in Singular Value Thresholding (SVT).
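The singular value thresholding operation referred to in Step 1 can be sketched as follows. This is the generic SVT operator, not the paper's exact Equations (9) and (10), which are not reproduced here; the function name `svt` and the threshold name `tau` are illustrative assumptions (in ALM schemes the threshold is typically the reciprocal of the penalty parameter).

```python
import numpy as np

def svt(M, tau):
    """Singular value thresholding: shrink every singular value of M by tau.

    Singular values below tau are set to zero, which lowers the rank.
    """
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    s_shrunk = np.maximum(s - tau, 0.0)
    # Scale the columns of U by the shrunk singular values, then recombine.
    return (U * s_shrunk) @ Vt

# Toy example: a rank-2 matrix corrupted by small dense noise.
rng = np.random.default_rng(0)
low_rank = rng.standard_normal((20, 2)) @ rng.standard_normal((2, 15))
noisy = low_rank + 0.01 * rng.standard_normal(low_rank.shape)

Z = svt(noisy, tau=0.5)
recovered_rank = np.linalg.matrix_rank(Z)
```

In this toy setting the noise contributes only small singular values, so thresholding removes them and leaves the two dominant directions.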

Step 2, Update , fix , , and as follows:

Equation (11) can be further rewritten in trace form and then the derivative can be computed as follows:

By setting the derivative to zero, the final solution of is

Step 3, Update and fix , , as follows:

This function can be expanded into trace form and the gradient with respect to can be further computed as follows:

During the gradient descent process, the objective function can be minimized by updating as follows:

In Equation (16), represents the learning rate in the gradient descent process and k is the iteration count.

Then, the iteration stops when the change in the objective function is smaller than a threshold as follows:
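As an illustration of the gradient update and the stopping rule described in Step 3, the sketch below runs gradient descent on a generic least-squares objective and stops once the change in the objective falls below a threshold. The objective $\tfrac{1}{2}\lVert AW - B\rVert_F^2$ is a stand-in chosen for this example, not the sub-problem of the paper, and the names `eta` (learning rate), `eps` (threshold), and `max_iter` are assumptions.

```python
import numpy as np

def gradient_descent(A, B, eta=None, eps=1e-12, max_iter=10000):
    """Minimize 0.5 * ||A W - B||_F^2 over W by plain gradient descent."""
    W = np.zeros((A.shape[1], B.shape[1]))
    if eta is None:
        # Step size 1/L, where L is the Lipschitz constant of the gradient.
        eta = 1.0 / np.linalg.norm(A, 2) ** 2
    f_prev = 0.5 * np.linalg.norm(A @ W - B) ** 2
    n_iter = 0
    for _ in range(max_iter):
        grad = A.T @ (A @ W - B)          # gradient of the objective at W
        W = W - eta * grad                # W_{k+1} = W_k - eta * grad
        f_curr = 0.5 * np.linalg.norm(A @ W - B) ** 2
        n_iter += 1
        if abs(f_prev - f_curr) < eps:    # stop when the objective stalls
            break
        f_prev = f_curr
    return W, n_iter

rng = np.random.default_rng(1)
A = rng.standard_normal((30, 5))
B = rng.standard_normal((30, 3))
W_gd, n_iter = gradient_descent(A, B)
W_ls = np.linalg.lstsq(A, B, rcond=None)[0]   # closed-form reference
```

Comparing `W_gd` with the closed-form least-squares solution `W_ls` is a quick check that the stopping threshold is tight enough for the problem at hand.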

Step 4, Update and fix , , as follows:

Let ; this problem can be reformulated as follows:

The optimal solution to the above objective function can be further expressed as

Then, the optimization can be performed through matrix multiplication as follows:

In Equation (21), .

Update Lagrange multipliers and the penalty parameter :

In Equation (25), is a user-defined balancing parameter. Algorithm 1 outlines the complete optimization process of LRE-LAMVSC. In practical applications, the representation matrix can be randomly initialized to prevent convergence to an all-zero solution. Furthermore, the complexity of LRE-LAMVSC is determined by the dimension of the views. Specifically, the computational complexity of the proposed method is and in the matrix update process, where k represents the latent dimension and d represents the original data dimension. Since the dimension of the latent representation is significantly smaller than that of the original data, the computational complexity can be noted as .
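For readers unfamiliar with ALM schemes, the multiplier and penalty update usually takes the form sketched below. This is the common inexact-ALM pattern and is only assumed to resemble Equations (22) to (25), which are not reproduced here; the names `residual`, `rho`, and `mu_max` are hypothetical.

```python
import numpy as np

def update_multiplier_and_penalty(Y, residual, mu, rho=1.1, mu_max=1e6):
    """One dual step of an inexact ALM scheme.

    Y        : current Lagrange multiplier matrix
    residual : current violation of the equality constraint (same shape as Y)
    mu       : current penalty parameter
    rho      : growth factor for the penalty (assumed > 1)
    mu_max   : cap that keeps the penalty from growing without bound
    """
    Y_new = Y + mu * residual          # dual ascent on the multiplier
    mu_new = min(rho * mu, mu_max)     # geometric growth, capped
    return Y_new, mu_new

Y0 = np.zeros((2, 2))
r0 = np.array([[0.5, -0.5], [0.0, 1.0]])
Y1, mu1 = update_multiplier_and_penalty(Y0, r0, mu=2.0)
```

Increasing the penalty gradually forces the constraint residual toward zero, while the cap avoids the ill-conditioning that a very large penalty would cause.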

Algorithm 1: Optimization for the LRE-LAMVSC.

Set

Repeat:

- update according to Problem (9)
- update according to Problem (11)
- update according to Problem (14)
- update according to Problem (18)
- update , and according to Problems (22–25)

Until:

Output:

4. Results and Discussion

In this section, we evaluate the performance of LRE-LAMVSC by comparing it with several classic multi-view learning methods on four benchmark datasets. First, Section 4.1 provides parameter settings and evaluation indicators. Next, Section 4.2 provides a detailed description of the datasets and comparison methods. Then, comparative studies are conducted in Section 4.3, and comparisons with five state-of-the-art methods are summarized in Section 4.4.

4.1. Parameter Settings and Evaluation Indicators

In the experiment, we used four parameters to evaluate the performance of our model. The parameters were set as follows for the four datasets MSRCV1, Reuters, ORL, and BBCSport: , , , , which are chosen to achieve optimal experimental results.

Moreover, we used four metrics: NMI, ACC, F-measure, and RI, to evaluate the proposed model.

Normalized Mutual Information (NMI):

NMI measures the similarity between the true labels and the predicted labels in the dataset. Based on our model, the NMI calculation method for this experiment is as follows:

In this function, represents the intersection between the true labels and predicted labels in the dataset, is the number of experimental samples, is the number of samples in the j-th class, and N is the total number of experimental samples. Then, the entropy for each class is calculated:

Finally, we obtain the calculation formula of NMI for this experiment:
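A minimal sketch of an NMI computation is given below for illustration. It assumes the geometric-mean normalization $I(Y;C)/\sqrt{H(Y)H(C)}$, which is one of several conventions in use and may differ from the normalization in the paper's formula; the function names are illustrative.

```python
from collections import Counter
from math import log, sqrt

def entropy(labels):
    """Shannon entropy (natural log) of a label assignment."""
    n = len(labels)
    return -sum((c / n) * log(c / n) for c in Counter(labels).values())

def nmi(y_true, y_pred):
    """Normalized mutual information with geometric-mean normalization."""
    n = len(y_true)
    joint = Counter(zip(y_true, y_pred))   # counts of (true, predicted) pairs
    count_true = Counter(y_true)
    count_pred = Counter(y_pred)
    mi = 0.0
    for (t, p), n_tp in joint.items():
        mi += (n_tp / n) * log(n * n_tp / (count_true[t] * count_pred[p]))
    h_true, h_pred = entropy(y_true), entropy(y_pred)
    if h_true == 0.0 or h_pred == 0.0:
        # Degenerate case: at least one labeling has a single cluster.
        return 1.0 if h_true == h_pred else 0.0
    return mi / sqrt(h_true * h_pred)

score_perfect = nmi([0, 0, 1, 1], [1, 1, 0, 0])       # same partition, renamed
score_independent = nmi([0, 0, 1, 1], [0, 1, 0, 1])   # unrelated partition
```

Because NMI depends only on how samples are grouped, relabeling the clusters leaves the score unchanged.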

Accuracy:

ACC can be obtained by using the Hungarian [41] algorithm to solve the problem of label mismatch in the data set. The specific calculation is as follows:

In this function, is computed by calculating the confusion matrix between the true labels, while and are the best matching values obtained after using the Hungarian algorithm.
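The label-matching step can be sketched with SciPy's `linear_sum_assignment`, which solves the same assignment problem as the Hungarian algorithm. The function name `clustering_accuracy` and the layout of the contingency table are choices made for this example rather than the paper's implementation.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

def clustering_accuracy(y_true, y_pred):
    """Accuracy under the best one-to-one mapping of clusters to classes."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    true_ids, true_idx = np.unique(y_true, return_inverse=True)
    pred_ids, pred_idx = np.unique(y_pred, return_inverse=True)
    # contingency[i, j] = number of samples in predicted cluster i and true class j
    contingency = np.zeros((pred_ids.size, true_ids.size), dtype=np.int64)
    np.add.at(contingency, (pred_idx, true_idx), 1)
    # Choose the cluster-to-class matching that maximizes matched samples.
    rows, cols = linear_sum_assignment(contingency, maximize=True)
    return contingency[rows, cols].sum() / y_true.size

acc_permuted = clustering_accuracy([0, 0, 1, 1, 2, 2], [1, 1, 2, 2, 0, 0])
acc_partial = clustering_accuracy([0, 0, 1, 1], [0, 0, 0, 1])
```

The matching is needed because cluster indices produced by an unsupervised method are arbitrary and generally do not coincide with the class indices.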

F-measure:

Here, we denote as the number of samples between the predicted label and the true label, as the number of samples matching it in the predicted label, and as the number of samples matching it in the true label. Finally, we know that the calculation method of F-measure is

Rand Index (RI):

In this experiment, the RI is calculated as follows:

In this function, A is the number of sample pairs on which the predicted and true labelings agree (both place the pair in the same cluster, or both place it in different clusters), and M is the number of all possible sample pairs.
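The pair-counting definition of the Rand Index can be sketched directly, as below. The quadratic loop over pairs is chosen for clarity and is only practical for small datasets; the function name is illustrative.

```python
from itertools import combinations

def rand_index(y_true, y_pred):
    """Fraction of sample pairs on which the two labelings agree."""
    agree = 0
    total = 0
    for i, j in combinations(range(len(y_true)), 2):
        same_true = y_true[i] == y_true[j]
        same_pred = y_pred[i] == y_pred[j]
        # A pair agrees if it is grouped together in both labelings
        # or separated in both labelings.
        if same_true == same_pred:
            agree += 1
        total += 1
    return agree / total

ri_same = rand_index([0, 0, 1, 1], [1, 1, 0, 0])
ri_mixed = rand_index([0, 0, 1, 1], [0, 1, 0, 1])
```

Here `agree` plays the role of A and `total` the role of M in the formula above.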

4.2. Datasets and Comparative Algorithms

In many applications, data such as text, images, and videos can often be represented through multi-view features. In this study, we conduct experiments on four datasets of different modalities (see Table 2). Experiments are implemented in Python 3.8 on a machine with 32 GB RAM and a 2.5 GHz Intel i5 processor. The code was developed in Visual Studio Code (version: 1.99.2) released by Microsoft Corporation (Redmond, WA, USA).

Table 2.

Detailed information of the experimental datasets.

In this experiment, we use four real-world datasets to quantitatively evaluate the effectiveness of our model. Specifically, the ORL [42] dataset is widely used for face recognition and contains 400 images of 40 individuals, organized into 40 directories with 10 images per person. The Reuters [43] dataset consists of documents written in five different languages, together with their translations, covering six common categories. In this dataset, English documents are treated as one view, and translations into four other languages provide the remaining views. In our experiment, 210 documents are randomly selected, with 42 documents per class. The MSRCV1 [44] dataset consists of 210 images from 7 categories, each described by 5 different types of features. The BBCSport [45] dataset consists of sports news articles corresponding to five themes, with each document associated with two different types of features.

We apply multiple metrics, including Normalized Mutual Information (NMI) [46], Accuracy (ACC) [47], F-measure [48], and Rand Index (RI) [49], to evaluate the performance of the proposed algorithm. Higher values indicate better performance.

We have conducted experiments on multiple datasets by comparing with five state-of-the-art models:

MSSC [50]: A multi-modal extension of sparse subspace clustering and low-rank representation algorithms is proposed, which achieves the robust clustering of multi-modal data by leveraging the self-expression property of each modality and enforcing shared representations across modalities, while also handling nonlinear data through kernelization.

DiMSC [34]: This method explores the complementary information between multiple views by introducing the Hilbert-Schmidt Independence Criterion (HSIC) as a diversity term, reducing redundancy and improving clustering accuracy.

LT-MSC [38]: A Low-Rank Tensor Constrained Multi-view Subspace Clustering (LT-MSC) method is proposed, which views the subspace representation matrices of different views as a tensor and introduces a low-rank constraint to effectively reduce redundancy and improve clustering accuracy.

LMVSC [51]: A large-scale multi-view subspace clustering method with linear time complexity, designed to handle large multi-view datasets.

MCLES [52]: A unified optimization method is proposed to achieve multi-view clustering in the latent embedding space.

4.3. Comparative Studies

Our method first projects all samples to a consistent dimensionality using low-rank embedding, followed by a latent multi-view learning fusion mechanism that maps information from different views into a unified semantic space. In contrast, the other methods fix the dimensionality of the embeddings.

Based on the results in Table 3, we analyze the comparison for each of the four metrics in turn.

Table 3.

Comparisons with the MSSC [50], DiMSC [34], LT-MSC [38], LMVSC [51], and MCLES [52] methods. Higher values indicate better performance.

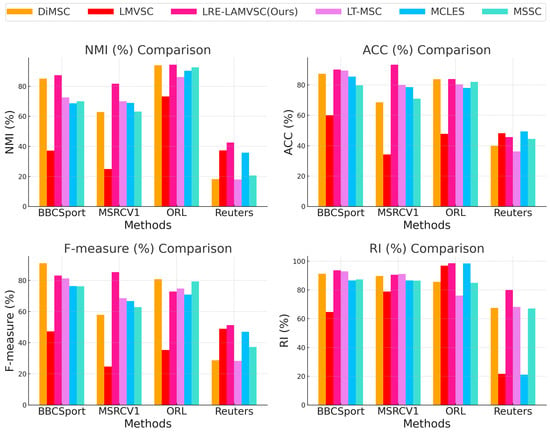

The NMI metric: The proposed LRE-LAMVSC method achieves strong results across all four datasets. On the ORL dataset in particular, its NMI significantly exceeds that of the second-best method, DiMSC (see Table 3). On the Reuters dataset, its NMI is relatively low but still higher than those of LMVSC and MCLES.

The ACC metric: LRE-LAMVSC performs exceptionally well on the ORL and MSRCV1 datasets, significantly surpassing the other comparison methods. On the Reuters dataset, its ACC is lower than those of LMVSC and MCLES, but it still outperforms MSSC and DiMSC (see Table 3).

The F-measure metric: LRE-LAMVSC consistently maintains high performance, particularly on the ORL and MSRCV1 datasets, where it is clearly superior to the other methods. On the Reuters dataset, its F-measure shows a certain improvement over those of LMVSC and MCLES (see Table 3).

The RI metric: LRE-LAMVSC also performs well, particularly on the ORL and MSRCV1 datasets, where it significantly outperforms the other methods. Although its RI on the Reuters dataset is relatively low, it still exceeds those of MSSC and DiMSC (see Table 3).

Overall, the proposed LRE-LAMVSC method demonstrates excellent performance across the four metrics. The advantage of the proposed method lies in its ability to efficiently integrate information from different perspectives while demonstrating high robustness in terms of clustering accuracy, quality, and consistency.

In comparison, the proposed method, which combines low-rank embedding with a latent space, is more robust to outliers and noisy environments than the state-of-the-art methods. In particular, it shows better consistency among different views, with improved generalization ability.

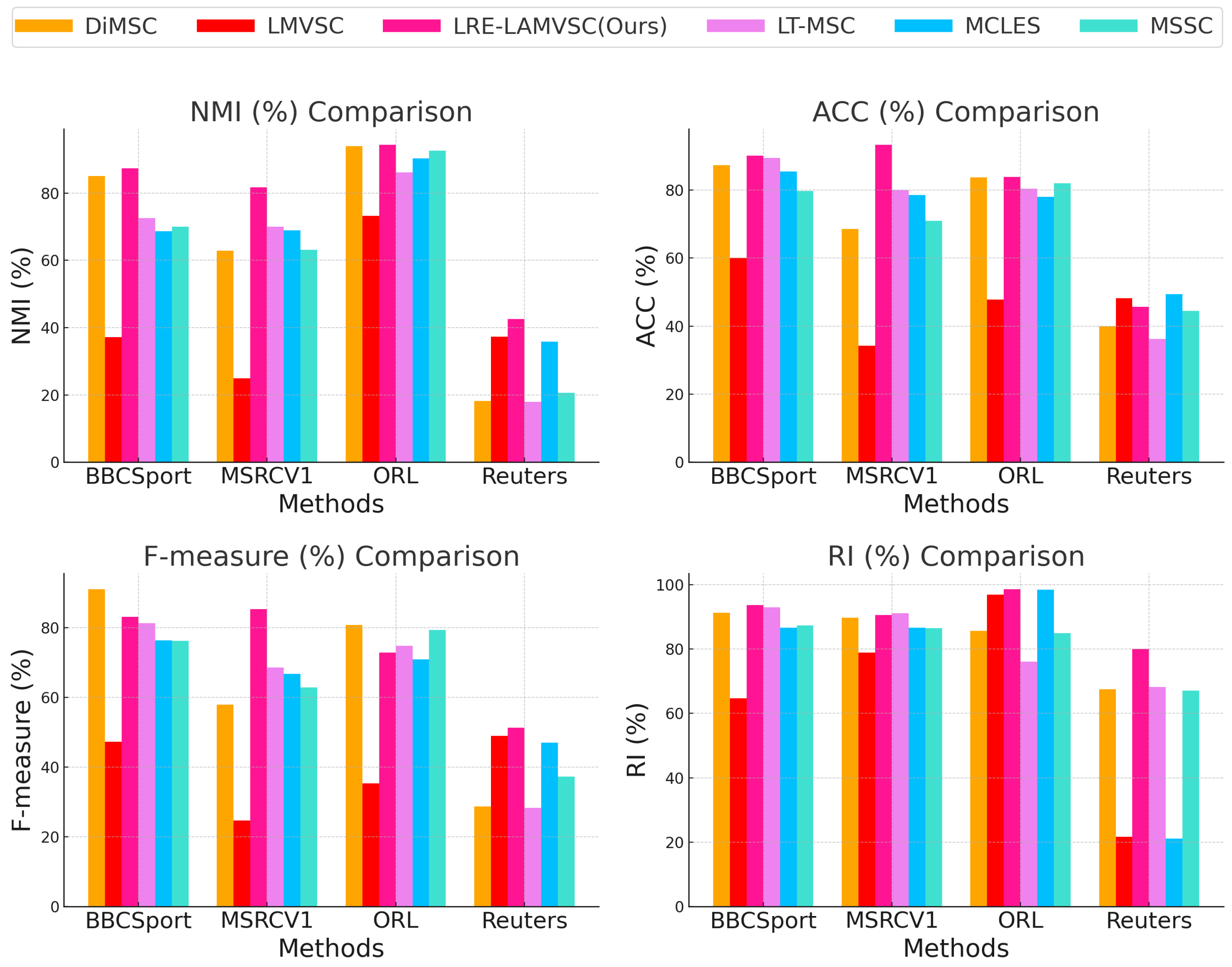

Figure 2 compares the LRE-LAMVSC method with the other state-of-the-art methods on four evaluation metrics (NMI, ACC, F-measure, and RI) and four datasets (ORL, Reuters, MSRCV1, and BBCSport). The proposed LRE-LAMVSC method demonstrates better performance on all datasets, particularly in terms of NMI and RI, and achieves the best performance on the ORL and MSRCV1 datasets, indicating a significant advantage in clustering consistency and information sharing. Overall, the LRE-LAMVSC method surpasses the other comparison methods across multiple datasets, which validates its effectiveness and its potential in multi-view clustering tasks.

Figure 2.

Comparison with the MSSC [50], DiMSC [34], LT-MSC [38], LMVSC [51], and MCLES [52] methods on four datasets, which are evaluated with four metrics: NMI, ACC, F-measure, and RI.

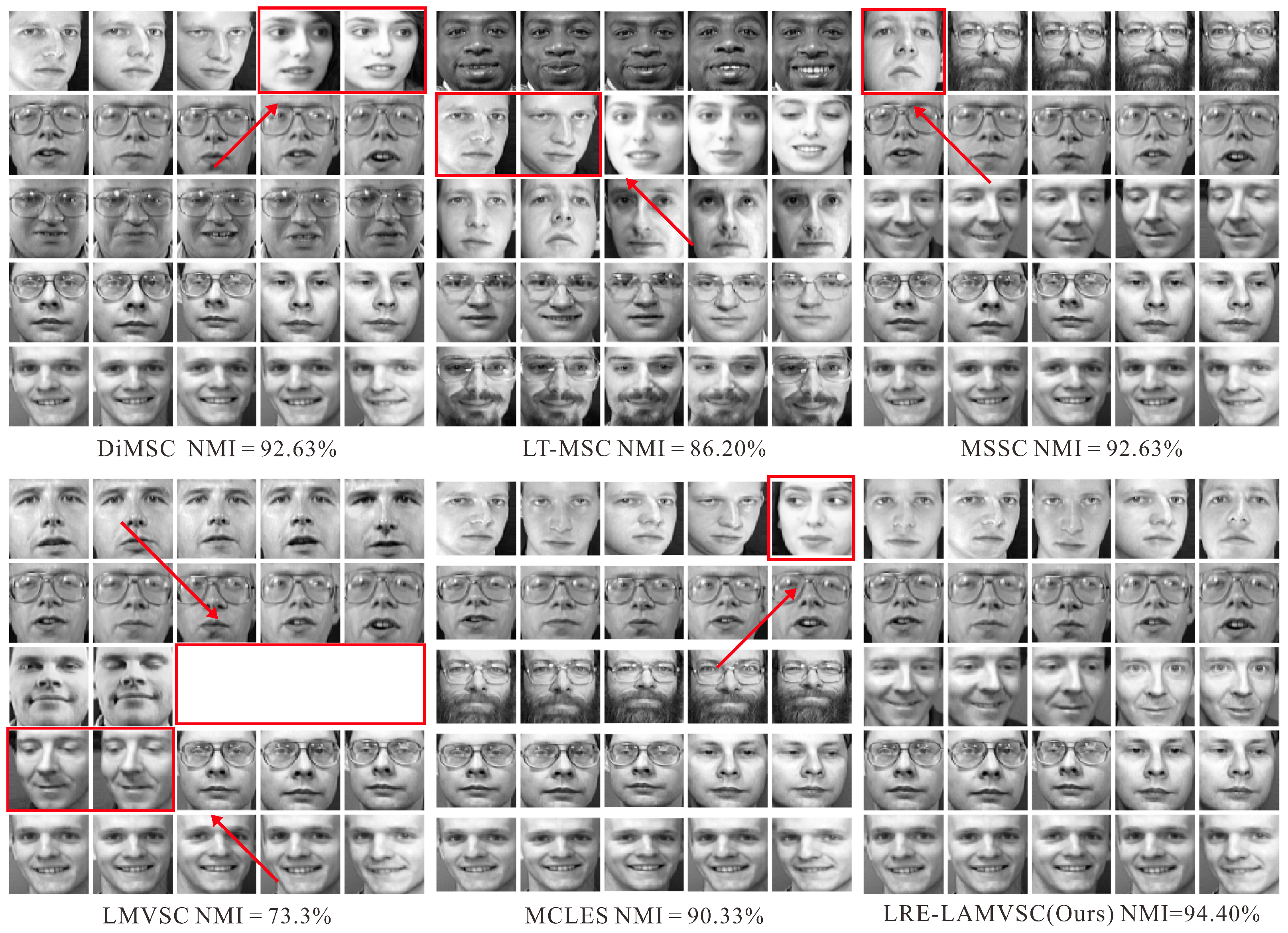

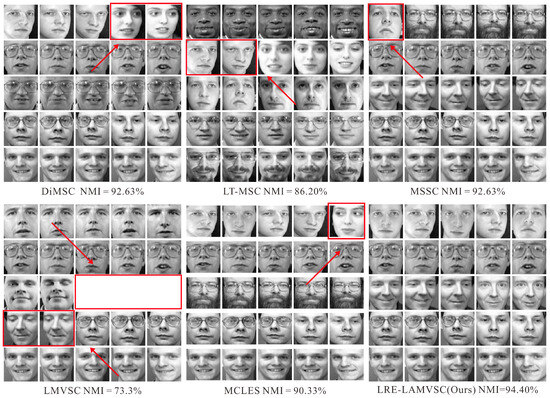

Figure 3 visualizes the experimental results on the real dataset for both the proposed method and the compared methods. As can be seen, our method produces fewer clustering errors than the compared methods. Especially when compared with the MSSC method, the LRE-LAMVSC method shows a significant improvement in clustering accuracy, indicating that it can effectively capture the underlying structure of the data and thus enhance the overall performance.

Figure 3.

The output results of the proposed method and the state-of-the-art methods, including DiMSC [34], LT-MSC [38], MSSC [50], LMVSC [51], and MCLES [52]. The red arrows and boxes indicate the clustering errors.

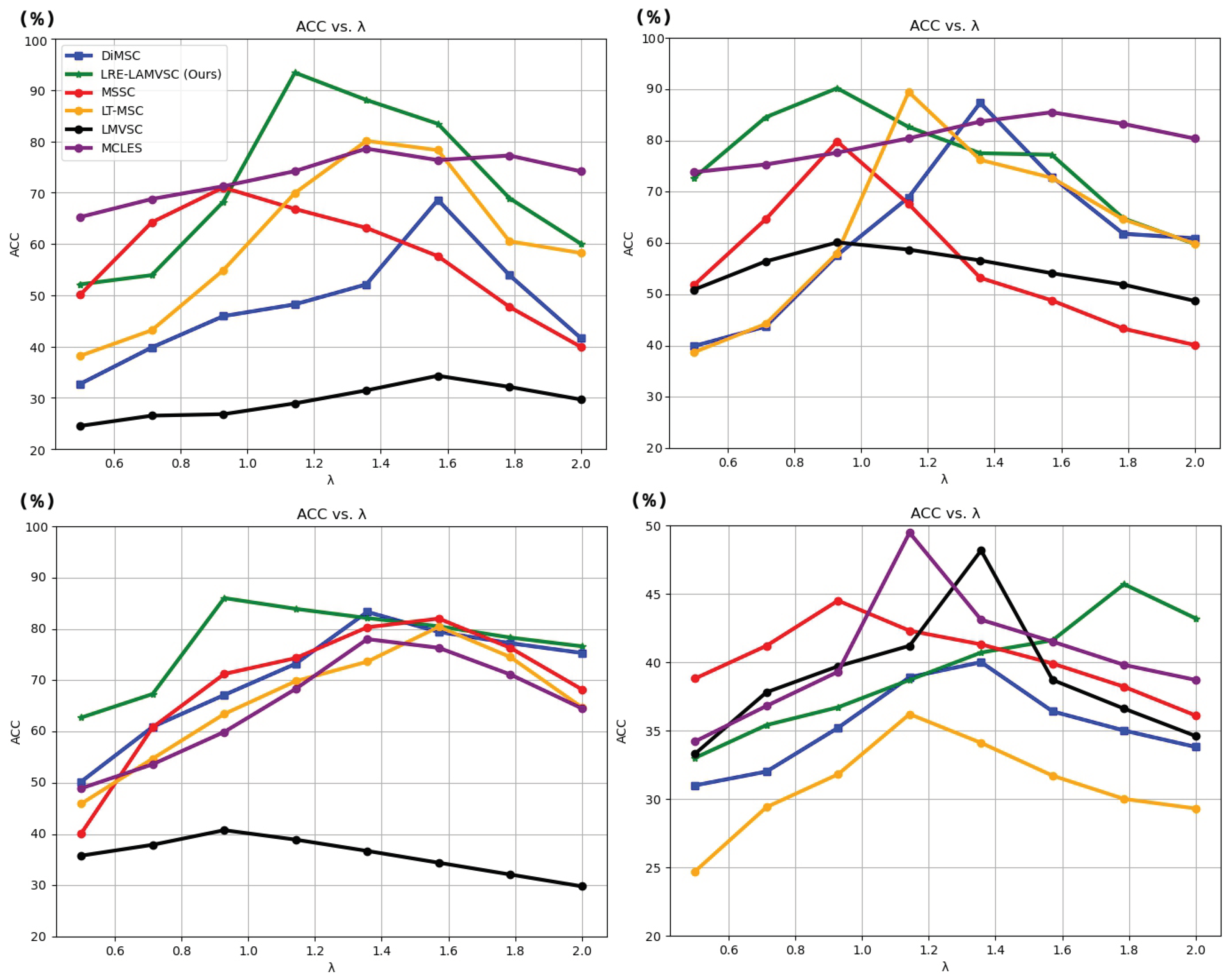

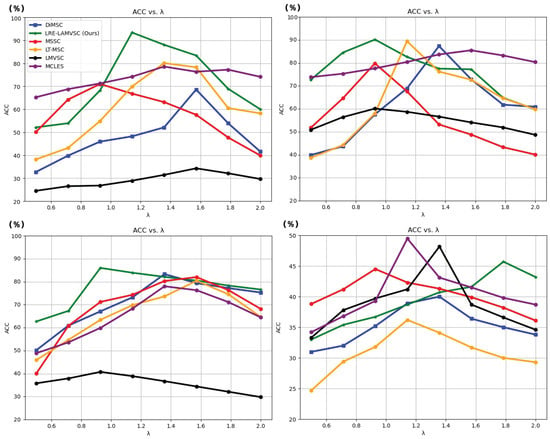

Figure 4 shows a detailed analysis of the ACC metric of the LRE-LAMVSC method under a varying parameter, together with comparisons against the DiMSC, MSSC, LT-MSC, LMVSC, and MCLES methods. On the ORL dataset (bottom left), the proposed method demonstrates a certain advantage. As the parameter moves to a moderate value, the ACC of LRE-LAMVSC increases steadily relative to the other models until it reaches its peak, indicating the stability and effectiveness of the method on the ORL dataset. LMVSC, MCLES, DiMSC, MSSC, and LT-MSC outperform LRE-LAMVSC in the parameter range from 0.50 to 1.25, but do not surpass it overall. This indicates that our method exhibits high generalization ability on the ORL dataset. On the Reuters dataset (top right), the proposed method also maintains superior performance, with ACC values higher than those of the other methods around its peak. On the MSRCV1 dataset (top left), the proposed method also performs well, improving the ACC over the second-best model. On the BBCSport dataset (bottom right), the proposed method differs only minimally from the other methods, although it performs better than DiMSC.

Figure 4.

Comparison of the proposed LRE-LAMVSC approach with the state-of-the-art methods, including DiMSC [34], LT-MSC [38], MSSC [50], LMVSC [51], and MCLES [52], in terms of the ACC metric on the MSRCV1 dataset (top left), the Reuters dataset (top right), the ORL dataset (bottom left), and the BBCSport dataset (bottom right).
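A parameter study of the kind shown in Figure 4 amounts to evaluating ACC over a grid of parameter values and recording the peak and the spread. The sketch below is purely illustrative: `sweep_parameter` and `toy_acc` are hypothetical names, and `toy_acc` is a made-up stand-in for a full clustering run, not data from the experiments.

```python
def sweep_parameter(evaluate, grid):
    """Evaluate a score on each grid value; return (best value, peak, spread).

    `evaluate` is any callable mapping a parameter value to a score such as
    ACC. The spread (max minus min) is a crude indicator of sensitivity.
    """
    scores = {value: evaluate(value) for value in grid}
    best_value = max(scores, key=scores.get)
    spread = max(scores.values()) - min(scores.values())
    return best_value, scores[best_value], spread


def toy_acc(value):
    """Made-up, smooth stand-in for the ACC obtained at one parameter value."""
    return 0.9 - 0.2 * (value - 0.75) ** 2


grid = [0.25, 0.50, 0.75, 1.00, 1.25]
best_value, peak, spread = sweep_parameter(toy_acc, grid)
print(best_value, peak, spread)
```

A small spread over the grid suggests that the method is not very sensitive to the swept parameter, which is the property the stability discussion relies on.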

Overall, the LRE-LAMVSC method outperforms the other comparison methods on multiple datasets, especially on the ORL and Reuters datasets, where the ACC metric is improved significantly. It maintains high scores on the four metrics across the experimental datasets, demonstrating competitive generalization ability and robustness. The method also performs stably across multiple datasets and different parameter settings, which shows its potential to perform effectively in various application scenarios. In the proposed LRE-LAMVSC method, a and b are two key parameters that control the low-rank constraint and the noise matrix regularization, respectively. These parameters play a crucial role in balancing the model's data-fitting ability against the prevention of overfitting.

4.4. Discussions on the Comparisons with the Existing Methods

The core of the proposed LRE-LAMVSC method is to unify the latent features of multiple views through low-rank embedding, which significantly improves the consistency and noise resistance of cross-view learning. As can be seen in Table 3, on the ORL dataset the NMI and ACC of the LRE-LAMVSC method are better than those of MSSC, DiMSC, and LMVSC. Therefore, our method shows more stability and consistency in noisy environments.

Moreover, previous works, such as the MCLES method, can fall into a local optimum during the alternating optimization of the objective function, while the proposed LRE-LAMVSC method avoids this issue by using the ALM-ADM optimization framework. On the Reuters dataset, our method outperforms LMVSC and MCLES in NMI and F-measure, although its ACC is lower than theirs (see Table 3). The RI of our method is dominant among the compared methods, which shows that our model has certain advantages in cross-view consistency.
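For readers less familiar with ALM-ADM schemes, the closed-form updates that such solvers typically alternate between are recalled below. This is a generic sketch built on the standard low-rank representation problem [25], not the exact objective or update order of LRE-LAMVSC: the symbols X, Z, E, Y, R, the trade-off weight, the penalty parameters, and the choice of the column-wise norm for the noise term are all assumptions made for illustration.

```latex
% Generic low-rank representation problem: X is the data matrix,
% Z the representation, E the noise term, \lambda > 0 a trade-off weight.
\min_{Z,E}\ \|Z\|_* + \lambda \|E\|_{2,1}
\quad \text{s.t.} \quad X = XZ + E

% Singular value thresholding: proximal operator of the nuclear norm,
% where A = U \,\mathrm{diag}(\sigma_1,\dots,\sigma_r)\, V^{\top} is the SVD of A.
\mathcal{D}_{\tau}(A)
= \arg\min_{Z}\ \tau \|Z\|_* + \tfrac{1}{2}\|Z - A\|_F^2
= U \,\mathrm{diag}\big(\max(\sigma_i - \tau,\, 0)\big)\, V^{\top}

% Column-wise shrinkage: proximal operator of the \ell_{2,1} norm,
% applied to each column b_j of B (a zero column stays zero).
\big[\mathcal{S}_{\tau}(B)\big]_{:,j}
= \max\!\Big(1 - \frac{\tau}{\|b_j\|_2},\, 0\Big)\, b_j

% Multiplier and penalty updates after each sweep,
% with constraint residual R = X - XZ - E and growth factor \rho > 1.
Y \leftarrow Y + \mu R, \qquad \mu \leftarrow \min(\rho\,\mu,\ \mu_{\max})
```

Each subproblem is solved exactly while the other variables are held fixed, and the growing penalty gradually enforces the constraint.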

Furthermore, the proposed LRE-LAMVSC method not only retains the ability of traditional latent multi-view representation learning methods to model complex data structures, but also fuses the views into a unified latent representation during low-rank embedding. On the MSRCV1 dataset, as shown in Table 3, the ACC and F-measure of LRE-LAMVSC are better than those of the other comparison methods, indicating that our model is more stable. Therefore, our method shows higher accuracy and consistency on the noisy and heterogeneous views of the MSRCV1 dataset.

5. Conclusions

This paper proposes a latent multi-view representation learning algorithm based on low-rank embedding to address the challenges of high-dimensional heterogeneity and cross-view association modeling in multi-view data analysis. Unlike traditional methods that rely on explicit view partitions, the proposed approach integrates low-rank matrix factorization with latent space mapping, creating a noise-robust association learning framework. The contributions include (1) the design of a latent space representation model that effectively captures cross-view structural consistency through low-rank constraints, and (2) the introduction of a collaborative iterative optimization mechanism for the projection matrix and adaptive view weights, which employs an alternating direction optimization algorithm to jointly solve the feature embedding and weight assignment problems. Experimental results on the ORL and Reuters benchmark datasets demonstrate that the proposed method significantly outperforms existing state-of-the-art algorithms in terms of clustering, with improvements in both RI and NMI, alongside notably better stability in noisy scenarios. Future work will focus on exploring nonlinear kernel space extensions and deep neural network fusion strategies to enhance the modeling capability for complex nonlinear view relationships.

Author Contributions

S.W.: Methodology, Investigation and Analysis, Writing—Preparation of Original Draft. L.C.: Numerical Computation, Methodology, Experiments, Writing—Review and Editing. Z.L.: Experiments, Data Curation, Code Verification, Writing—Review and Editing. Q.L.: Supervision, Data Curation, Writing—Review, Editing, Grammar Checking and Revision. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the Natural Science Foundation of Jiangxi Province (20224BAB202018).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data and experimental code of this article are available from the corresponding author upon request. For privacy reasons, the experimental code is not made public. During the writing process of this article, the author reviewed and edited the content as needed and takes full responsibility for the content of the publication.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Devagiri, V.M.; Boeva, V.; Abghari, S.; Basiri, F.; Lavesson, N. Multi-View Data Analysis Techniques for Monitoring Smart Building Systems. Sensors 2021, 21, 6775.

- Hao, C.-Y.; Chen, Y.-C.; Ning, F.-S.; Chou, T.-Y.; Chen, M.-H. Using Sparse Parts in Fused Information to Enhance Performance in Latent Low-Rank Representation-Based Fusion of Visible and Infrared Images. Sensors 2024, 24, 1514.

- Zhang, Y.; Huang, Q.; Zhang, B.; He, S.; Dan, T.; Peng, H. Deep Multiview Clustering via Iteratively Self-Supervised Universal and Specific Space Learning. IEEE Trans. Cybern. 2021, 52, 11734–11746.

- Huang, Z.; Zhou, J.T.; Zhu, H.; Zhang, C.; Lv, J.; Peng, X. Deep Spectral Representation Learning from Multi-view Data. IEEE Trans. Image Process. 2021, 30, 5352–5362.

- Wang, S.; Chen, Z.; Du, S.; Lin, Z. Learning deep sparse regularizers with applications to multi-view clustering and semi-supervised classification. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 5042–5055.

- Li, Z.; Tang, C.; Liu, X.; Zheng, X.; Zhang, W.; Zhu, E. Consensus Graph Learning for Multi-view Clustering. IEEE Trans. Multimed. 2021, 24, 2461–2472.

- Fang, U.; Li, M.; Li, J.; Gao, L.; Jia, T.; Zhang, Y. A comprehensive survey on multi-view clustering. IEEE Trans. Knowl. Data Eng. 2023, 35, 12350–12368.

- Meng, X.; Feng, L.; Wang, H. Multi-view low-rank preserving embedding: A novel method for multi-view representation. Eng. Appl. Artif. Intell. 2021, 99, 104140.

- Chen, J. Multiview subspace clustering using low-rank representation. IEEE Trans. Cybern. 2021, 52, 12364–12378.

- Zheng, Q.; Zhu, J.; Li, Z. Collaborative unsupervised multi-view representation learning. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 4202–4210.

- He, C.; Wei, Y.; Guo, K.; Han, H. Removal of Mixed Noise in Hyperspectral Images Based on Subspace Representation and Nonlocal Low-Rank Tensor Decomposition. Sensors 2024, 24, 327.

- Sun, S.; Dong, W.; Liu, Q. Multi-view representation learning with deep Gaussian processes. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 4453–4468.

- Dang, P.; Zhu, H.; Guo, T.; Wan, C.; Zhao, T.; Salama, P.; Wang, Y.; Cao, S.; Zhang, C. Generalized Matrix Local Low Rank Representation by Random Projection and Submatrix Propagation. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Long Beach, CA, USA, 6–10 August 2023; pp. 390–401.

- Xie, D.; Zhang, X.; Gao, Q.; Han, J.; Xiao, S.; Gao, X. Multiview clustering by joint latent representation and similarity learning. IEEE Trans. Cybern. 2019, 50, 4848–4854.

- Fu, Z.; Zhao, Y.; Chang, D.; Wang, Y.; Wen, J. Latent low-rank representation with weighted distance penalty for clustering. IEEE Trans. Cybern. 2022, 53, 6870–6882.

- Chen, Y.; Wang, S.; Peng, C.; Hua, Z.; Zhou, Y. Generalized Nonconvex Low-Rank Tensor Approximation for Multi-View Subspace Clustering. IEEE Trans. Image Process. 2021, 30, 4022–4035.

- Chen, M.; Lin, J.; Li, X.; Liu, B.; Wang, C.; Huang, D.; Lai, J. Representation learning in multi-view clustering: A literature review. Data Sci. Eng. 2022, 7, 225–241.

- Zhang, Y.; Xiang, M.; Yang, B. Low-rank preserving embedding. Pattern Recognit. 2017, 70, 112–125.

- Cai, B.; Wang, H.; Yao, M.; Fu, X. Focus More on What? Guiding Multi-Task Training for End-to-End Person Search. IEEE Trans. Circuits Syst. Video Technol. 2025.

- Fu, Y.; Luo, C.; Bi, Z. Low-rank joint embedding and its application for robust process monitoring. IEEE Trans. Instrum. Meas. 2021, 70, 1–13.

- Li, S.; Li, X. Fully Incomplete Information for Multiview Clustering in Postoperative Liver Tumor Diagnoses. Sensors 2025, 25, 1215.

- Sakovich, N.; Aksenov, D.; Pleshakova, E.; Gataullin, S. MAMGD: Gradient-based optimization method using exponential decay. Technologies 2024, 12, 154.

- Zheng, Q.; Zhu, J.; Li, Z.; Pang, S.; Wang, J.; Li, Y. Feature concatenation multi-view subspace clustering. Neurocomputing 2020, 379, 89–102.

- Zhang, G.; Huang, D.; Wang, C. Facilitated low-rank multi-view subspace clustering. Knowl.-Based Syst. 2023, 260, 110141.

- Liu, G.; Lin, Z.; Yan, S.; Sun, J.; Yu, Y.; Ma, Y. Robust recovery of subspace structures by low-rank representation. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 171–184.

- Wang, H.; Yao, M.; Chen, Y.; Xu, Y.; Liu, H.; Jia, W.; Fu, X.; Wang, Y. Manifold-based incomplete multi-view clustering via bi-consistency guidance. IEEE Trans. Multimed. 2024, 26, 10001–10014.

- Xu, C.; Tao, D.; Xu, C. Multi-view intact space learning. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 2531–2544.

- Zhang, Z.; Liu, L.; Shen, F.; Shen, H.T.; Shao, L. Binary Multi-View Clustering. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 1774–1782.

- Zhang, C.; Fu, H.; Hu, Q.; Cao, X.; Xie, Y.; Tao, D. Generalized Latent Multi-View Subspace Clustering. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 42, 86–99.

- Xue, Z.; Li, G.; Wang, S.; Zhang, W.; Huang, Q. Bilevel multiview latent space learning. IEEE Trans. Circuits Syst. Video Technol. 2016, 28, 327–341.

- Yao, M.; Wang, H.; Chen, Y.; Fu, X. Between/within view information completing for tensorial incomplete multi-view clustering. IEEE Trans. Multimed. 2024, 27, 1538–1550.

- Huang, A.; Chen, W.; Zhao, T.; Chen, C. Joint learning of latent similarity and local embedding for multi-view clustering. IEEE Trans. Image Process. 2021, 30, 6772–6784.

- Xia, R.; Pan, Y.; Du, L.; Yin, J. Robust multi-view spectral clustering via low-rank and sparse decomposition. In Proceedings of the AAAI Conference on Artificial Intelligence, Québec City, QC, Canada, 27–31 July 2014; Volume 28.

- Cao, X.; Zhang, C.; Fu, H.; Liu, S.; Zhang, H. Diversity-induced multi-view subspace clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 586–594. Available online: https://openaccess.thecvf.com/content_cvpr_2015/papers/Cao_Diversity-Induced_Multi-View_Subspace_2015_CVPR_paper.pdf (accessed on 6 March 2025).

- Zhao, H.-h.; Ji, T.-l.; Rosin, P.L.; Lai, Y.-K.; Meng, W.-l.; Wang, Y.-n. Cross-lingual font style transfer with full-domain convolutional attention. Pattern Recognit. 2024, 155, 110709.

- Zhou, J.; Pedrycz, W.; Wan, J.; Gao, C.; Lai, Z.; Yue, X. Low-rank linear embedding for robust clustering. IEEE Trans. Knowl. Data Eng. 2022, 35, 5060–5075.

- Li, L.; Cai, M. Drug target prediction by multi-view low rank embedding. IEEE/ACM Trans. Comput. Biol. Bioinform. 2017, 16, 1712–1721.

- Zhang, C.; Fu, H.; Liu, S.; Liu, G.; Cao, X. Low-rank tensor constrained multiview subspace clustering. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1582–1590. Available online: https://openaccess.thecvf.com/content_iccv_2015/papers/Zhang_Low-Rank_Tensor_Constrained_ICCV_2015_paper.pdf (accessed on 6 March 2025).

- Abavisani, M.; Patel, V. Multimodal sparse and low-rank subspace clustering. Inf. Fusion 2018, 39, 168–177.

- Wong, W.; Lai, Z.; Wen, J.; Fang, X.; Lu, Y. Low-rank embedding for robust image feature extraction. IEEE Trans. Image Process. 2017, 26, 2905–2917.

- Kuhn, H.W. The Hungarian method for the assignment problem. Nav. Res. Logist. Q. 1955, 2, 83–97.

- Samaria, F.S.; Harter, A.C. Parameterisation of a stochastic model for human face identification. In Proceedings of the 1994 IEEE Workshop on Applications of Computer Vision, Sarasota, FL, USA, 5–7 December 1994; pp. 138–142.

- Amini, M.R.; Usunier, N.; Goutte, C. Learning from multiple partially observed views—An application to multilingual text categorization. Adv. Neural Inf. Process. Syst. 2009, 22, 28–36. Available online: https://proceedings.neurips.cc/paper/2009/hash/f79921bbae40a577928b76d2fc3edc2a-Abstract.html (accessed on 6 March 2025).

- Greene, D.; Cunningham, P. Practical solutions to the problem of diagonal dominance in kernel document clustering. In Proceedings of the 23rd International Conference on Machine Learning (ICML’06), Pittsburgh, PA, USA, 25–29 June 2006; pp. 377–384.

- He, X.; Yan, S.; Hu, Y.; Niyogi, P.; Zhang, H.-J. Face recognition using Laplacianfaces. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 328–340.

- Estévez, P.A.; Tesmer, M.; Perez, C.A.; Zurada, J.M. Normalized mutual information feature selection. IEEE Trans. Neural Netw. 2009, 20, 189–201.

- Kohavi, R. A Study of Cross-Validation and Bootstrap for Accuracy Estimation and Model Selection. Morgan Kaufman Publishing. 1995. Available online: https://www.researchgate.net/profile/Ron-Kohavi/publication/2352264_A_Study_of_Cross-Validation_and_Bootstrap_for_Accuracy_Estimation_and_Model_Selection/links/02e7e51bcc14c5e91c000000/A-Study-of-Cross-Validation-and-Bootstrap-for-Accuracy-Estimation-and-Model-Selection.pdf (accessed on 6 March 2025).

- Sokolova, M.; Lapalme, G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009, 45, 427–437.

- Sundqvist, M.; Chiquet, J.; Rigaill, G. Adjusting the adjusted Rand Index—A multinomial story. arXiv 2020, arXiv:2011.08708.

- Brbić, M.; Kopriva, I. Multi-view low-rank sparse subspace clustering. Pattern Recognit. 2018, 73, 247–258.

- Kang, Z.; Zhou, W.; Zhao, Z.; Shao, J.; Han, M.; Xu, Z. Large-scale multi-view subspace clustering in linear time. AAAI Conf. Artif. Intell. 2020, 34, 4412–4419.

- Chen, M.-S.; Huang, L.; Wang, C.-D.; Huang, D. Multi-view clustering in latent embedding space. AAAI Conf. Artif. Intell. 2020, 34, 3513–3520.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).