Abstract

Most visual localization (VL) methods assume that keypoints in the query image are detected with the same algorithm as those stored in the reference map. This is a serious limitation: new and better detectors keep appearing, and cameras with heterogeneous detectors should be able to interoperate and coexist in a single map representation. While rebuilding the map with new detectors might seem a solution, it is often impractical, as original images may be unavailable or restricted due to data privacy constraints. In this paper, we address this challenge with two main contributions. First, we introduce and formalize the problem of cross-detector VL, in which the inherent spatial discrepancies between keypoints from different detectors hinder the establishment of correct correspondences when matching relies strictly on descriptor similarity. Second, we propose CoplaMatch, the first approach to solve this problem by relaxing strict descriptor similarity and imposing geometric coplanarity constraints. The latter is achieved by leveraging 2D homographies between groups of query and map keypoints. This process involves segmenting planar patches, which is performed once offline for the map and online for each query image. The online segmentation adds computational overhead to the VL process, although our experiments show that it does not hinder online applicability. We extensively validate our proposal through experiments in indoor environments using real-world datasets, comparing it against two state-of-the-art methods and demonstrating accurate localization in cross-detector scenarios. Additionally, our work validates the feasibility of cross-detector VL and opens a new direction for the long-term usability of feature-based maps.

1. Introduction

In this work, we consider the problem of visual localization (VL) [1], in which the pose of a camera is estimated from a query image, given a previously built map of 3D points. This task heavily relies on establishing reliable correspondences between the keypoints observed in the query image and the projections of the map points. The discrepancy or error of each correspondence pair is used to construct the cost function whose minimization yields the estimated camera pose. The fundamental assumption that supports this process is that each pair of matched points represents the same physical entity in the scene [2]. Crucially, standard VL pipelines assume that the keypoint detector used on the query image is identical to the one applied when the map was built. Clearly, this is not guaranteed in heterogeneous sensor setups, where query and map keypoints may come from different detectors.

To date, this situation, which we coin cross-detector visual localization, has not been addressed in the literature, despite its practical importance. The problem is motivated by the constant emergence of new and better keypoint detectors: it would be of great interest to reuse an existing visual map with any kind of feature. This is particularly relevant for heterogeneous robotic fleets, in which legacy robots and newer models with different sensors must share a common map. Furthermore, in the context of long-term SLAM, cross-detector interoperability allows for hardware upgrades without the computationally expensive need to re-map entire environments, preserving the utility of historical map data. At first glance, rebuilding the map with new detectors could be seen as a solution, but in many cases this is impractical: the original images may no longer be available, or data privacy constraints may prevent devices from sharing images, leaving only the map features accessible. Cross-detector VL addresses this research gap and avoids the need to rebuild and maintain a separate world representation for each keypoint detector.

Although, to the best of the authors’ knowledge, this problem has not been explored yet, it parallels the translation of descriptors from different algorithms into a common representation where they can be compared. This is known as the cross-descriptor problem, which has recently been addressed in [3]. Yet, it is important to stress that this approach assumes that the features were identified using the same detector and focuses only on translating descriptors.

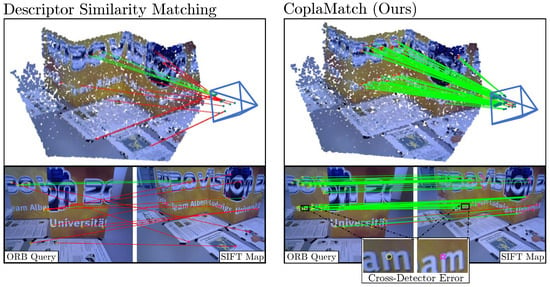

Figure 1 depicts an illustrative example in which a camera using a corner detector (concretely, ORB) attempts to localize itself against an existing map built using a SIFT detector (blob-based). Note that, to ensure that these keypoints are comparable, we employed the same feature descriptor, BRIEF, for both the query image and the map. As is evident from the top scenario in Figure 1, relying solely on descriptor information for feature matching results in sparse matches, with the majority being incorrect. We can also observe in the figure that most keypoints represent different physical entities that are spatially close in 3D space (see the zoomed-in area in the bottom-right corner). Intuitively, these keypoints could be used to establish correspondences between keypoints of a different nature if some extra information were provided to ease the matching.

Figure 1.

Example scenario of a camera using a corner detector (ORB) and trying to localize itself against a map built with SIFT keypoints (i.e., blobs). Note that, in order to ensure the comparability of both sets of keypoints, the same descriptor (i.e., BRIEF) was employed. Additionally, a map image is also depicted to illustrate the concept of the cross-detector error.

This paper addresses this research gap through two contributions. First, we analyze whether minimizing reprojection errors is suitable for the cross-detector scenario, which is not intuitive at first. Once this is validated, we introduce and formalize cross-detector VL, in which the inherent spatial discrepancies of keypoints representing close but not identical physical entities diminish the distinctiveness of descriptor-based correspondences and hinder feature matching. Second, to tackle this problem, we propose CoplaMatch, a novel method that imposes additional coplanarity constraints to establish more suitable correspondences between keypoints extracted with different detectors. Unlike traditional descriptor-based matching or recent learning-based methods such as SuperGlue [4], which struggle with fundamental differences in keypoint types or require specific retraining for each detector pair, CoplaMatch provides a detector-agnostic solution. This is achieved by leveraging homography transformations between keypoints from the map and the query image to impose coplanarity constraints. Unlike standard geometric methods (e.g., epipolar constraints [5]), which rely on verifying the identity of specific points across views, our approach uses set-to-set geometric verification. This aids in identifying correspondences among cross-detected keypoints that may not represent the exact same 3D point but are close enough (refer to Figure 1).

To enforce these constraints, keypoints must be annotated beforehand with their coplanar group indices, indicating which groups of keypoints are locally coplanar (i.e., within the query image or the map). This annotation process is conducted offline for the map and online for each query image, incurring a specific computational overhead that is later analyzed in detail. However, it is important to note that a limitation of this approach is its reliance on the presence of structural planes (e.g., walls), which restricts the method’s applicability to indoor environments or structured urban scenes.

Our approach is validated through extensive experiments using real-world data, in which different keypoint detectors are set for the map and the query image. However, to ensure comparability between their descriptors, the same feature descriptor is applied to both the map and the query image in each experiment. For the evaluation of CoplaMatch in particular, we only considered keypoints lying on structural planes in indoor environments (i.e., walls and planar objects attached to them, such as pictures). The choice of structural planes is motivated not only because it reduces computational demands but also because structural planes are known to capture environmental features that rarely change over time, which helps in addressing long-term challenges [6]. The results demonstrate that CoplaMatch achieves precise localization results in this scenario. Although applying the coplanarity constraints to guide the feature matching incurs an extra computational overhead, this does not preclude its use in online applications (∼10–25 Hz, depending on the choice of the plane segmentation technique). Note that, while our experiments focus on indoor environments, our proposal could also be applied outdoors in similar settings, such as urban areas with visible facades. In summary, our work provides the following contributions:

- Formalizing the novel problem of cross-detector visual localization and analyzing its key challenge, the cross-detector error.

- Proposing CoplaMatch, the first approach to solve cross-detector VL by leveraging geometric coplanarity constraints over descriptor similarity.

- Providing an open-source implementation of our method, publicly available at https://github.com/EricssonResearch/copla-match (accessed on 11 November 2025).

2. Related Work

This section covers three different aspects related to the proposal in this work. First, it reviews methods for keypoint detection to then elaborate on current approaches to performing feature matching. Finally, a review of computer vision works employing coplanar constraints is presented.

2.1. Local Keypoint Detectors

Traditionally, local keypoint extraction from images has been performed through handcrafted algorithms, which are designed to detect certain types of interest points (e.g., corners, blobs, and ridges) that are repeatable and robust to changes (e.g., lighting conditions or different viewpoints). One of the most widely adopted is the Scale-Invariant Feature Transform (SIFT) [7], which computes the Difference of Gaussians to detect blobs. Later, FAST [8] was presented as the first high-speed method for corner detection based on machine learning techniques. FAST was extended via ORB [9], which modifies the original algorithm to provide rotation invariance using the keypoint orientation. BRISK [10] was proposed as a binary corner detector built upon FAST that adds a scale-space to achieve scale invariance.

Given the superior performance of neural network-based methods for many computer vision tasks, multiple learning-based keypoint detectors have been proposed. LIFT [11] is one of the first of these approaches. It is inspired by the traditional SIFT and demonstrates competitive results against handcrafted detectors. Similarly, MagicPoint [12] is a corner detection neural network that presented domain adaptation difficulties that its successor, SuperPoint [13], overcame, introducing homographic adaptation to boost performance. More recently, DeDoDe [2] introduced a novel approach by decoupling the detection and description processes, learning keypoints from 3D consistency. It should be noted that the choice of the keypoint detection algorithm depends on the application and its requirements, since each detector operates at a different rate, based on its computational complexity.

2.2. Local Feature Matching

Given two sets of local features, data association is typically performed under the assumption that corresponding keypoints represent the same physical entity in the scene. On this premise, the classical setting employs a nearest neighbor (NN) search to extract matches that are later filtered using techniques such as Lowe’s ratio test [7], cross-checking, and other heuristics [14,15]. Recently, leveraging the potential of neural networks, multiple learning-based methods have been proposed to improve feature matching. For instance, SuperGlue [4] relies on graph neural networks and attention mechanisms to learn priors about scene geometry and feature assignments, showing excellent performance but being hard to train. LightGlue [16] enhances the latter by reducing the training complexity and improving its performance. Another remarkable work is LoFTR [17], a detector-free approach that performs dense matching, which demonstrates impressive performance even in low-textured regions but at the cost of greater computational complexity. Building upon advancements in dense matching, RoMa [18] further improves robustness by combining strong pre-trained features from foundation models with a novel transformer-based decoder for robust dense feature matching. In the field of VL, [19] addresses VL through a learnable pipeline that relies on geometric descriptors instead of the traditional visual descriptors, reducing the storage requirements while relieving privacy concerns. Further addressing the challenges of VL, particularly in dynamic scenarios with significant appearance variations, other relevant works include InLoc [20], which leverages dense feature matching and view synthesis for robust large-scale indoor localization. Similarly, for long-term scenarios, methods like [6] employ retrieval-based strategies and robust correspondence verification to handle scene changes.

However, to the best of our knowledge, the crucial problem of cross-detector matching has not been previously addressed. This scenario fundamentally breaks the common assumption that keypoints from different images are extracted using the same detector. Consequently, traditional methods relying on descriptor similarity become unreliable or are entirely precluded. Furthermore, existing learning-based matching approaches are typically tailored to specific feature types, which inherently limits their generalization ability across different detectors and often demands complex and expensive re-training. Therefore, cross-detector matching represents a significant gap in this field, a challenge that this work formalizes and addresses for the first time in the literature.

2.3. Coplanarity Constraints in Computer Vision Tasks

The utilization of planar surfaces or, alternatively, the coplanarity of points lying on the same plane, is widely adopted to solve computer vision tasks. For example, in 3D scene reconstruction, recent works have demonstrated the benefit of exploiting coplanarity, improving the accuracy of the structure estimation while providing a more reliable data association [21,22,23]. By extension, these primitives have also been applied to 3D pose estimation in relevant works [24,25,26,27], which highlight planes as a compact representation that allows for efficient and robust pose estimation in large-scale environments. Not only the primitives but also their properties (e.g., homography transformations) are leveraged for tasks such as camera calibration [28,29] and image alignment [30,31], among others.

3. Analyzing Keypoint Cross-Detection in Visual Localization

Performing VL with heterogeneous keypoint detectors poses a major problem, as the keypoints in the query image ($\mathbf{k}^{D'}$) extracted with a keypoint detector, $D'$, represent different physical entities than those keypoints in the map ($\mathbf{p}^{D}$) extracted with a keypoint detector, $D$. As illustrated in Figure 2, those keypoints may be proximate, but they are not in the same location. This significantly hinders the traditional feature matching process, making it more difficult to discern the correct correspondences. Moreover, it exacerbates the overall localization problem by adding an extra error term (see Section 3.2) in the cross-detected correspondences.

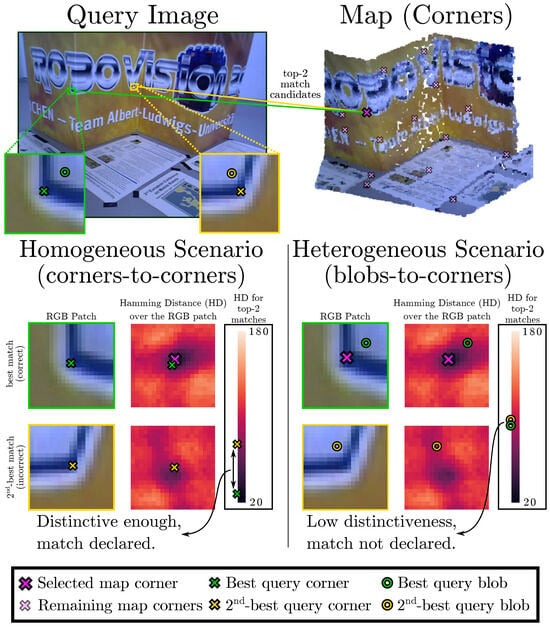

Figure 2.

Simple example illustrating the lack of distinctiveness between match candidates in cross-detector scenarios. The map is created using corner keypoints, so the homogeneous scenario uses corners (×) in the query image, while the heterogeneous one employs blobs (○). We depict the zoomed-in RGB patch around the best and the second-best query matches for one map keypoint for both scenarios. Comparing the Hamming distances of the first and second matches reveals that the best match in the homogeneous case is substantially more distinctive than in the heterogeneous case. Consequently, typical feature matching processes relying on this distinctiveness will suffer in the cross-detector case.

3.1. Cross-Detector Feature Matching

In the scope of camera localization, feature matching methods assume that the same physical entity is represented in both sets of keypoints (query image and map) with almost identical visual descriptors. Thus, to declare a corresponding pair, we expect a query keypoint to have strong descriptor similarity to the first-best candidate in the map while also having enough distance to the second-best one [7]. However, when different detectors are employed, this assumption does not hold.

For a better understanding, we illustrate this problem in Figure 2, for which the evaluation of feature matching is performed for the homogeneous case, where both map and query image employ a corner detector, as well as for the heterogeneous case, where a blob detector is used on the query image. In both scenarios, the matching of a corner feature in the map is evaluated against two candidates in the query image by measuring the difference between the descriptor distances (e.g., Hamming distance) of the first-best and second-best matches. It can be seen how, for the homogeneous case, the distinctiveness between the two candidates is high enough to declare with confidence the correct match. For the heterogeneous case (blob-to-corner), the distance between the best and second-best matches is minimal, making the declaration of the right match unreliable.
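To make the distinctiveness criterion concrete, the following minimal Python sketch applies a Lowe-style ratio test to binary descriptors compared with the Hamming distance. The descriptor values, the function names, and the 0.8 ratio are illustrative assumptions, not values taken from the experiments.

```python
from typing import List, Optional


def hamming(a: int, b: int) -> int:
    """Hamming distance between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def ratio_test_match(map_desc: int, query_descs: List[int],
                     ratio: float = 0.8) -> Optional[int]:
    """Return the index of the best query candidate, or None if ambiguous.

    A match is accepted only when the best distance is clearly smaller
    than the second-best one (best < ratio * second_best).
    """
    if len(query_descs) < 2:
        return None
    order = sorted(range(len(query_descs)),
                   key=lambda i: hamming(map_desc, query_descs[i]))
    best, second = order[0], order[1]
    d1 = hamming(map_desc, query_descs[best])
    d2 = hamming(map_desc, query_descs[second])
    return best if d1 < ratio * d2 else None


# Toy 16-bit descriptors (hypothetical values).
map_corner = 0b1111000011110000

# Homogeneous case: one candidate is nearly identical, the other is far.
homogeneous = [0b1111000011110001, 0b0000111100001111]
# Heterogeneous case: both candidates are equally (dis)similar.
heterogeneous = [0b1111000000001111, 0b1111111111111111]

print(ratio_test_match(map_corner, homogeneous))    # accepted candidate index
print(ratio_test_match(map_corner, heterogeneous))  # None: match is ambiguous
```

In the heterogeneous toy case both candidates lie at the same Hamming distance from the map descriptor, so the ratio test cannot declare a match, which mirrors the loss of distinctiveness discussed above.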

3.2. Cross-Detector Error

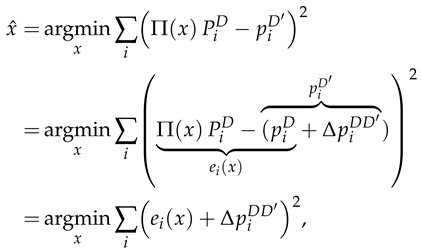

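Let $\mathcal{C} = \{c_i\}$ be the set of established correspondences, where each $c_i = (\mathbf{k}_i, \mathbf{p}_i)$ is a match between a keypoint $\mathbf{k}_i$ in the query image and a 3D keypoint $\mathbf{p}_i$ in the map. Commonly, given a set of matches, VL is formulated as a Least Squares Estimation (LSE) problem:

$$\mathbf{T}^{*} = \underset{\mathbf{T} \in SE(3)}{\arg\min} \sum_{(\mathbf{k}_i, \mathbf{p}_i) \in \mathcal{C}} \left\| \mathbf{k}_i - \pi(\mathbf{T}\,\mathbf{p}_i) \right\|^{2} \tag{1}$$

where $\mathbf{T} \in SE(3)$ refers to the relative transformation of the camera w.r.t. the map frame and $\pi(\cdot)$ is the projection function.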
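As an illustration of the cost minimized in Equation (1), the sketch below evaluates the sum of squared reprojection errors for a candidate pose, assuming a pinhole camera. The pose is given as a rotation matrix and a translation that move points from the map frame into the camera frame; the intrinsics (`fx`, `fy`, `cx`, `cy`), the function names, and the sample values are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Vec2 = Tuple[float, float]


def project(R: Sequence[Sequence[float]], t: Vec3, p: Vec3,
            fx: float, fy: float, cx: float, cy: float) -> Vec2:
    """Pinhole projection of map point p after moving it to the camera frame."""
    x, y, z = (sum(R[r][c] * p[c] for c in range(3)) + t[r] for r in range(3))
    return (fx * x / z + cx, fy * y / z + cy)


def reprojection_cost(R: Sequence[Sequence[float]], t: Vec3,
                      matches: List[Tuple[Vec2, Vec3]],
                      fx: float, fy: float, cx: float, cy: float) -> float:
    """Sum of squared reprojection errors over all (keypoint, point) matches."""
    cost = 0.0
    for (u, v), p in matches:
        pu, pv = project(R, t, p, fx, fy, cx, cy)
        cost += (u - pu) ** 2 + (v - pv) ** 2
    return cost


R_identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
intrinsics = dict(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
# (query keypoint in pixels, 3D map point in meters); made-up values.
matches = [((320.0, 240.0), (0.0, 0.0, 2.0)),
           ((573.0, 244.0), (1.0, 0.0, 2.0))]

# Cost at the reference pose and at a slightly displaced pose.
print(reprojection_cost(R_identity, (0.0, 0.0, 0.0), matches, **intrinsics))
print(reprojection_cost(R_identity, (0.1, 0.0, 0.0), matches, **intrinsics))
```

A pose estimator would search over the rotation and translation for the minimum of this cost; the sketch only evaluates it at two fixed poses.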

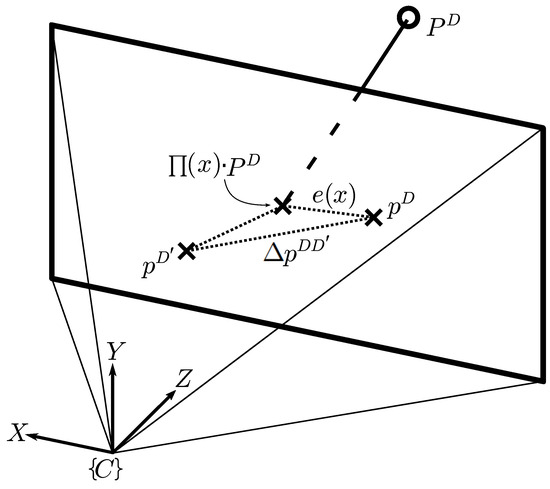

Particularizing to the cross-detector scenario, the correspondences are pairs between a keypoint ($\mathbf{k}_i^{D'}$) in the query image extracted with a keypoint detector, $D'$, and a 3D point ($\mathbf{p}_i^{D}$) in the map extracted with a different detector $D$ (see Figure 3). Then, Equation (1) can be reformulated as follows:

$$\mathbf{T}^{*} = \underset{\mathbf{T} \in SE(3)}{\arg\min} \sum_{i} \left\| \mathbf{e}_{r,i} + \mathbf{e}_{cd,i} \right\|^{2} \tag{2}$$

where $\mathbf{e}_{r,i} = \mathbf{k}_i^{D} - \pi(\mathbf{T}\,\mathbf{p}_i^{D})$ refers to the standard reprojection error, and $\mathbf{e}_{cd,i} = \mathbf{k}_i^{D'} - \mathbf{k}_i^{D}$ stands for the cross-detector error, representing the error due to the use of different detectors in the feature match. Here, $\mathbf{k}_i^{D}$ denotes the query-image keypoint that corresponds to $\mathbf{p}_i^{D}$ under detector $D$.

Figure 3.

Overview of reprojection error in cross-detector scenario. A 3D map point ($\mathbf{p}^{D}$) is projected in the query image, with corresponding keypoints $\mathbf{k}^{D}$ and $\mathbf{k}^{D'}$ for detectors $D$ and $D'$. Both standard reprojection error ($\mathbf{e}_{r}$) and cross-detector error ($\mathbf{e}_{cd}$) are depicted. Errors are exaggerated for clarity.

Provided that $\mathbf{e}_{cd}$ acts as an additive term in the LSE problem, we can argue that the solution of the cross-detector VL will remain the same as in the homogeneous scenario, as long as the cross-detector error distribution is zero-mean [32], i.e., $\mathbb{E}[\mathbf{e}_{cd}] = \mathbf{0}$, which is experimentally validated in Section 5.
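The zero-mean argument can be illustrated with a deliberately simplified toy problem: estimating a pure 2D translation, whose least-squares solution is the mean of the residual vectors. This is a simplification of the SE(3) problem above, and all numbers are made up; it only shows that an additive error with zero mean leaves the minimizer unchanged, while a biased error shifts it.

```python
from typing import List, Tuple

Vec2 = Tuple[float, float]


def lse_translation(src: List[Vec2], dst: List[Vec2]) -> Vec2:
    """Least-squares 2D translation aligning src to dst.

    Minimizing sum ||dst_i - (src_i + t)||^2 gives t = mean(dst_i - src_i).
    """
    n = len(src)
    tx = sum(d[0] - s[0] for s, d in zip(src, dst)) / n
    ty = sum(d[1] - s[1] for s, d in zip(src, dst)) / n
    return (tx, ty)


def add_offsets(points: List[Vec2], offsets: List[Vec2]) -> List[Vec2]:
    """Perturb each point with its own additive offset."""
    return [(p[0] + o[0], p[1] + o[1]) for p, o in zip(points, offsets)]


# Projected map points and noise-free observations shifted by t = (5, -3).
src = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
dst = [(x + 5.0, y - 3.0) for x, y in src]

# Offsets mimicking a cross-detector error with exactly zero mean.
zero_mean = [(2.0, 0.0), (-2.0, 0.0), (0.0, 1.0), (0.0, -1.0)]
# Offsets with a systematic bias of (1, 0).
biased = [(3.0, 0.0), (-1.0, 0.0), (1.0, 1.0), (1.0, -1.0)]

print(lse_translation(src, dst))                          # reference estimate
print(lse_translation(src, add_offsets(dst, zero_mean)))  # same as reference
print(lse_translation(src, add_offsets(dst, biased)))     # shifted by the bias
```

With real data the sample mean of the cross-detector error is only approximately zero, so the estimate is expected to converge to the homogeneous solution as the number of correspondences grows rather than to match it exactly.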

4. CoplaMatch: Coplanarity-Constrained Feature Matching

The analysis presented in the previous section stresses the difficulty of declaring reliable matches in cross-detector scenarios. This observation motivates our main contribution: CoplaMatch, a novel approach based on geometric constraints, which allows the establishment of reliable matches in cross-detector scenarios. As discussed in Section 3.1, conventional descriptor similarity approaches face great difficulties because keypoints extracted with different detectors tend to represent different physical entities, subsequently leading to low descriptor distinctiveness. To enhance the matching of cross-detected keypoints, we define the Coplanar Feature Groups (CFGs), which are sets of coplanar keypoints annotated with their respective feature descriptors.

Given the set of CFGs in the map, namely $\mathcal{G}^{M} = \{G^{M}_{j}\}$, and the query set $\mathcal{G}^{Q} = \{G^{Q}_{i}\}$, our goal is to make reliable matches between keypoints from the map (i.e., landmarks) and keypoints from the query image, by forcing them to fulfill a geometric restriction, i.e., features belonging to a $G^{Q}_{i}$ must be matched to features of a $G^{M}_{j}$. This constraint lies at the core of this work, and it allows us to replace the one-to-one descriptor-based distinctiveness verification of current matching methods with a set-to-set geometric verification, which is applicable to both homogeneous and heterogeneous settings of keypoint detectors. Concretely, our proposal exploits the projective transformation (i.e., homography) that applies between a set of observed coplanar features in the query image and coplanar features in the map. It should be noted that, to establish correspondence pairs of cross-detected keypoints, we assume that the keypoints of both map and query image are described in a common domain, either by using the same feature descriptor or by projecting them to a common space where their similarity can be measured with a given metric, e.g., the Euclidean norm [3].

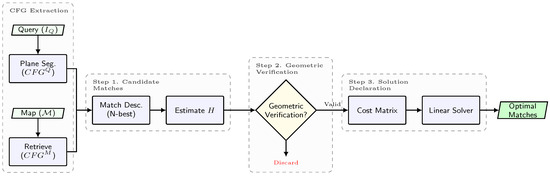

Imposing coplanarity constraints comes at a price. While annotating the coplanarity information in the map has to be performed just once in an offline procedure, the query image requires an extra process that demands either the depth of the detected keypoints (e.g., from an RGB-D camera) or plane segmentation of the RGB image (e.g., using Plane R-CNN [33]), which can be time-consuming. Specifically, we generate CFGs by projecting the segmentation masks onto the image and assigning each keypoint to the plane index of the mask pixel it occupies. This assumes that keypoints falling within a mask belong to that physical plane. While segmentation noise at boundaries can occur, our RANSAC-based homography estimation (Step 1) naturally filters out keypoints that are spatially inconsistent with the dominant plane. Nonetheless, in practice, this time-consuming process is a manageable limitation since we are interested here in a feasible solution for global camera localization or for resetting the accumulated odometry drift in a pose-tracking task. In both cases, real-time performance is not strictly demanded, and indeed, current state-of-the-art SLAM architectures, such as Hydra [34], rely on such segmentation processing. With that being said, the CoplaMatch algorithm can be decomposed into the following three steps (illustrated in Figure 4 and summarized in Algorithm 1).
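The assignment of keypoints to CFGs from a segmentation mask can be sketched as a simple per-pixel lookup. The mask layout (a 2D grid of plane indices, with -1 for non-planar pixels) and the function name are assumptions made for illustration; descriptors are omitted for brevity.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Keypoint = Tuple[float, float]  # (u, v) pixel coordinates

NO_PLANE = -1  # assumed label for pixels that belong to no segmented plane


def build_cfgs(keypoints: Sequence[Keypoint],
               plane_mask: Sequence[Sequence[int]]) -> Dict[int, List[int]]:
    """Group keypoint indices by the plane index of the pixel they fall on."""
    height, width = len(plane_mask), len(plane_mask[0])
    groups: Dict[int, List[int]] = defaultdict(list)
    for idx, (u, v) in enumerate(keypoints):
        col, row = int(round(u)), int(round(v))
        if not (0 <= row < height and 0 <= col < width):
            continue  # keypoint outside the mask
        plane_id = plane_mask[row][col]
        if plane_id != NO_PLANE:
            groups[plane_id].append(idx)
    return dict(groups)


# 4x6 toy mask: plane 0 on the left, plane 1 on the right, one unlabeled column.
mask = [
    [0, 0, -1, 1, 1, 1],
    [0, 0, -1, 1, 1, 1],
    [0, 0, -1, 1, 1, 1],
    [0, 0, -1, 1, 1, 1],
]
kps = [(0.2, 0.9), (1.0, 3.0), (2.0, 1.0), (4.6, 2.2), (9.0, 1.0)]
print(build_cfgs(kps, mask))
```

Keypoints on unlabeled pixels or outside the image are simply left out of every group, so they never take part in the coplanarity-constrained matching.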

Figure 4.

Overview of the CoplaMatch algorithm for cross-detector visual localization. The method takes Coplanar Feature Groups (CFGs) from the query and map as input. It establishes correspondences by relaxing descriptor similarity (Step 1) and enforcing geometric consistency via homography estimation (Step 2). Finally, a linear assignment solver determines the optimal set of matches based on inlier consensus (Step 3).

Step 1. Candidate matches between CFGs: For each $G^{Q}_{i}$, we establish putative matches with all $G^{M}_{j}$ (lines 3–5 in Algorithm 1). First, for each pair $\langle G^{Q}_{i}, G^{M}_{j} \rangle$, we establish matches between their features based on N-best descriptor similarity, without forcing a minimum similarity between them, to accommodate the relaxed matches that cross-detected keypoints require. The value of $N$ was verified empirically as a good trade-off between limiting the number of matches and not missing plausible candidates. Then, within a RANSAC framework, we solve for the homography with the maximum number of supporting keypoint pairs (inliers). An example is illustrated in Figure 5, where, from a set of 6 possible $\langle G^{Q}_{i}, G^{M}_{j} \rangle$ pairs, only 3 are considered as correspondence candidates, that is, a homography is established between them. It is worth noting that segmentation errors are naturally handled in this step: over-segmented regions simply result in valid partial matches against the map plane, while under-segmentation (merged planes) is mitigated via this RANSAC process, which rejects features belonging to the non-dominant plane as outliers.
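A minimal sketch of the relaxed N-best candidate generation between the features of one CFG pair is shown below. Binary descriptors are stored as Python integers and compared with the Hamming distance; the function name, the toy descriptors, and the value `n_best=2` are assumptions for illustration only. The subsequent RANSAC homography fit is not shown.

```python
from typing import List, Tuple


def n_best_matches(query_descs: List[int], map_descs: List[int],
                   n_best: int) -> List[Tuple[int, int, int]]:
    """Pair every query feature with its n_best closest map features.

    No minimum similarity or ratio test is applied, so ambiguous
    cross-detector candidates are kept for later geometric verification.
    Returns (query_index, map_index, hamming_distance) triplets.
    """
    matches = []
    for qi, qd in enumerate(query_descs):
        ranked = sorted((bin(qd ^ md).count("1"), mi)
                        for mi, md in enumerate(map_descs))
        matches.extend((qi, mi, dist) for dist, mi in ranked[:n_best])
    return matches


# Toy 8-bit descriptors for one query CFG and one map CFG (made-up values).
query_cfg = [0b11110000, 0b00001111]
map_cfg = [0b11110001, 0b11000000, 0b00000111]

for match in n_best_matches(query_cfg, map_cfg, n_best=2):
    print(match)
```

Keeping several candidates per feature increases the number of outliers handed to RANSAC, which is the price paid for not discarding the correct but weakly distinctive cross-detector matches.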

Algorithm 1: CoplaMatch feature matching

Figure 5.

CoplaMatch is presented here in a simple example between a query image and a map, but only considering structural planes (i.e., walls) for clarity. Given the CFGs from the query and the map, potential correspondences between them are established using a descriptor-based method. For each $\langle G^{Q}_{i}, G^{M}_{j} \rangle$ pair, if enough feature matches are available, a homography, $H$, is estimated and used to verify geometrically whether the matches are consistent. Finally, the optimal $\langle G^{Q}_{i}, G^{M}_{j} \rangle$ correspondences are selected by solving a linear assignment problem between the candidates. Note that, in the illustration, discarded pairs either lack enough matches to estimate a homography or have a homography that did not pass geometric verification.

Step 2. Geometric Verification: Each obtained candidate CFG match $\langle G^{Q}_{i}, G^{M}_{j} \rangle$ is represented with a homography, $H$. We can now exploit its decomposition [35] to filter out spatially inconsistent pairs:

- Rotation Verification. The estimated homography must be orientation-preserving; that is, the keypoints of any $G^{Q}_{i}$ must keep the spatial ordering of their counterparts in $G^{M}_{j}$. Otherwise, these CFGs belong to different physical planes. This property is verified through the determinant of the rotational submatrix of the estimated homography $H$ ($2 \times 2$ upper-left submatrix):

  $$\det\left(H_{1:2,\,1:2}\right) > 0$$

- Translation Verification. The decomposition of a homography, $H$ [35], gives an up-to-scale translation vector, $\mathbf{t}$, between the plane in the map and the camera image plane. Yet, this scale can be approximated from the depth information contained in the reference map, therefore obtaining an estimation of $\mathbf{t}$. This step is critical for filtering degenerate homographies caused by the spatial noise inherent to cross-detector keypoints. Since such noise often results in ill-conditioned homographies that yield physically implausible (over-scaled) translations, we set a threshold, $\tau_{t}$, for the maximum acceptable translation.
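The two checks above can be sketched as follows. The orientation test uses the determinant of the upper-left 2×2 block of the homography; the translation test assumes that the up-to-scale translation and a scale factor derived from the map depth are already available (the homography decomposition itself is not shown). The function names and sample matrices are hypothetical, and the 5 m default mirrors the threshold reported in the experimental setup.

```python
import math
from typing import Sequence

Matrix3 = Sequence[Sequence[float]]


def is_orientation_preserving(H: Matrix3) -> bool:
    """True if the upper-left 2x2 block of H has a positive determinant."""
    det = H[0][0] * H[1][1] - H[0][1] * H[1][0]
    return det > 0.0


def translation_is_plausible(t_up_to_scale: Sequence[float], scale: float,
                             max_translation_m: float = 5.0) -> bool:
    """True if the metric translation norm stays within the threshold."""
    norm = math.sqrt(sum((scale * c) ** 2 for c in t_up_to_scale))
    return norm <= max_translation_m


# Mild rotation plus shift: keeps the spatial ordering of the keypoints.
H_valid = [[0.98, -0.17, 12.0],
           [0.17, 0.98, -4.0],
           [0.0, 0.0, 1.0]]
# Mirrored x axis: flips the ordering, so the CFG pair is rejected.
H_flipped = [[-1.0, 0.0, 640.0],
             [0.0, 1.0, 0.0],
             [0.0, 0.0, 1.0]]

print(is_orientation_preserving(H_valid))
print(is_orientation_preserving(H_flipped))
print(translation_is_plausible((0.6, 0.0, 0.8), scale=2.0))   # about 2 m
print(translation_is_plausible((0.6, 0.0, 0.8), scale=20.0))  # about 20 m
```

A candidate CFG pair would be kept only if both functions return true for its homography.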

Step 3. Solution Declaration: Notice that, in certain cases, enough keypoints of a $\langle G^{Q}_{i}, G^{M}_{j} \rangle$ pair that do not represent the same physical plane could share a similar spatial distribution, leading to an acceptable homography, as illustrated in Figure 5. In this context, it becomes necessary to solve the data association while ensuring two requirements: (i) uniqueness, that is, a $G^{Q}_{i}$ can only be matched with one $G^{M}_{j}$, and (ii) $\langle G^{Q}_{i}, G^{M}_{j} \rangle$ pairs with greater consensus should be prioritized. To this end, linear assignment solvers (e.g., Hungarian or Sinkhorn algorithms [36,37]) are appropriate to find a globally optimal solution over the set of verified $\langle G^{Q}_{i}, G^{M}_{j} \rangle$ correspondences. These solvers require a feasibility score for each candidate pair that quantifies its degree of fitness, so that the optimal pairs can be selected taking into account the trade-off between the number of pairs and their fitness. In our case, to promote pairs with high consensus, we set the feasibility score as the number of inliers in the homography estimation, but other alternatives, such as the ratio between inliers and the total number of feature matches, are also applicable. In the example shown in Figure 5, when two candidate pairs compete, the one with the greater consensus is prioritized for the optimal solution. As this scoring is computed per CFG pair and depends on geometric consensus rather than on the total number of detected keypoints, detectors producing denser keypoint sets do not necessarily have an implicit advantage; instead, CFGs with stronger geometric consensus are naturally favored.
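The solution declaration can be sketched as a small linear assignment problem that maximizes the total number of inliers under the uniqueness requirement. For clarity, the sketch enumerates all assignments by brute force, which is only practical for a handful of CFGs; a Hungarian solver such as SciPy's `linear_sum_assignment` would typically take its place. The inlier counts are made up, and a score of 0 marks a pair that was discarded in the previous steps.

```python
from itertools import permutations
from typing import Dict, List, Optional, Tuple


def assign_cfgs(inliers: List[List[int]]) -> Dict[int, int]:
    """Match each query CFG (row) to at most one map CFG (column).

    inliers[i][j] is the RANSAC inlier count of the verified pair (i, j),
    or 0 if the pair was discarded. Returns the assignment with the
    highest total inlier count, ignoring zero-score pairs.
    """
    n_query, n_map = len(inliers), len(inliers[0])
    # Pad columns with "unmatched" slots (None) so rows may stay unassigned.
    columns: List[Optional[int]] = list(range(n_map)) + [None] * n_query
    best_score, best_assignment = -1, {}
    for perm in permutations(columns, n_query):
        pairs: List[Tuple[int, int]] = [
            (i, j) for i, j in enumerate(perm)
            if j is not None and inliers[i][j] > 0
        ]
        score = sum(inliers[i][j] for i, j in pairs)
        if score > best_score:
            best_score, best_assignment = score, dict(pairs)
    return best_assignment


# 2 query CFGs x 3 map CFGs: 6 possible pairs, 3 verified candidates.
scores = [
    [40, 0, 0],   # query CFG 0 is only compatible with map CFG 0
    [15, 22, 0],  # query CFG 1 has two competing candidates
]
print(assign_cfgs(scores))
```

In this toy case, query CFG 1 also has a weak candidate with map CFG 0, but uniqueness and the higher consensus of the other pairs lead the solver to select the pairs (0, 0) and (1, 1).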

5. Experimental Validation

5.1. Experimental Setup

This section details the experimental setup employed to evaluate CoplaMatch in cross-detector scenarios. All experiments were conducted on the same workstation, equipped with an Intel Core i7-4790K CPU and an NVIDIA GeForce RTX 2060 GPU with 6 GB of dedicated memory.

We performed two distinct sets of experiments. Firstly, a preliminary study was conducted to analyze the cross-detector error (presented in Section 3), in order to validate the suitability of the cross-detected correspondences generated via CoplaMatch for cross-detector VL tasks. Secondly, we assessed the practical applicability and performance of our proposed CoplaMatch within a comprehensive cross-detector VL pipeline, specifically utilizing HLoc [38]. We first built the maps through COLMAP [39] triangulation, using the known camera poses of the mapping sequences and the camera intrinsics. For localization, HLoc was set to use the data from the map available in the top-10 map images retrieved according to NetVLAD [40]. This choice of limiting map images to the top-10 is a common practice in VL to balance localization accuracy with computational efficiency, focusing on the most geometrically coherent and visually similar map views.

A critical aspect of CoplaMatch is its reliance on coplanar feature sets. For all experiments involving CoplaMatch, we exclusively considered feature sets lying on the structural planes (e.g., walls and planar objects attached to them, such as pictures or posters). These structural planes were extracted using Sigma-FP (https://github.com/MAPIRlab/Sigma-FP/tree/plane_segmentation (accessed on 12 November 2025)) [22], a depth-based plane segmentation method. Sigma-FP was selected for its efficiency in processing images (demonstrating lower average processing times compared to RGB-based methods like PlaneRecNet [41]) when depth information is available. The focus on structural planes is motivated by two key factors: it significantly reduces the computational burden by limiting the search space for coplanar groups, and structural planes are inherently robust features in indoor environments [42,43], remaining consistent over time and thus beneficial for long-term SLAM challenges. These identified coplanar groups were then integrated into HLoc to construct planar maps.

To ensure the statistical robustness of our results, our experiments were conducted across sequences from two different real-world datasets, including changes in camera types and environmental conditions: concretely, the structure_texture sequences from TUM RGB-D dataset [44], and the Aria Digital Twin dataset [45] using two Apartment_release sequences. The Aria Digital Twin dataset is particularly valuable, as it represents a cross-device scenario, where localization is performed using a different camera (front-facing RGB camera) than those used for mapping (grayscale stereo cameras) (https://www.projectaria.com/glasses/ (accessed on 12 November 2025)). The Apartment_release sequences are captured in an environment with dynamic changes, further challenging the localization. In this context, our specific reliance on structural planes allows the method to ignore moving objects and anchor to the stable parts of the scene. Both datasets feature distinct trajectories for mapping and localization, allowing for a thorough evaluation of generalization capabilities.

Furthermore, for all experiments, we use five well-known local keypoint detectors, both handcrafted (ORB [9], SIFT [7], FAST [8], and BRISK [10]) and learning-based (SuperPoint [13]), ensuring that our work is not tied to specific algorithm choices and demonstrating consistent performance across heterogeneous setups. This selection provides a representative range of detector types (e.g., corner-based like ORB/FAST/BRISK, blob-based like SIFT, and learned features from SuperPoint), enabling a robust analysis of our method’s performance across different keypoint characteristics. Also, we consider two independent feature descriptors: the handcrafted BRIEF [46] and the learning-based SuperPoint [13], with both being able to describe the five types of keypoints previously mentioned. In this manner, we also evaluate the impact of the feature description on the performance of the matching. Note that, for each experiment, the same feature descriptor is used for the map and the query to ensure the comparability of their descriptors in cross-detector scenarios.

Beyond the general setup, specific algorithmic parameters were carefully chosen and tuned. For instance, when identifying candidate matches, the N best candidates by descriptor similarity are retained, with N empirically selected to limit the number of potential correspondences without missing plausible candidates. Homography estimation relies on a RANSAC framework, employing standard parameters to ensure robust outlier rejection. Furthermore, for geometric verification, a translation threshold of 5 m was empirically set to filter spatially inconsistent matches derived from degenerate homographies, avoiding the need for specific parameter tuning across different sequences. However, if per-scene tuning is required to maximize robustness, reinforcement learning techniques (e.g., DDPG or TD3) [47] could be employed to automatically adjust these settings based on scene characteristics. Finally, the linear assignment solver’s feasibility score was defined as the number of inliers, promoting solutions with a high geometric consensus.
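The geometric verification and the inlier-based assignment can be illustrated with a small sketch. It assumes that each candidate pair of coplanar feature groups already carries its RANSAC inlier count and the translation magnitude implied by its homography. The `Candidate` record, the `best_assignment` function, and the brute-force search are hypothetical stand-ins for illustration, not the solver used in our implementation; only the 5 m threshold and the inlier-count score come from the text.

```python
from dataclasses import dataclass
from itertools import permutations
from typing import Dict, List, Optional, Tuple

MAX_TRANSLATION_M = 5.0  # translation threshold quoted in the text


@dataclass(frozen=True)
class Candidate:
    """Hypothetical record for one query-group / map-group homography."""
    query_cfg: int
    map_cfg: int
    inliers: int          # RANSAC inlier count, used as the score
    translation_m: float  # translation magnitude implied by the homography


def best_assignment(cands: List[Candidate]) -> Dict[int, int]:
    """Return the one-to-one query->map pairing with the most total inliers.

    Brute force over permutations: acceptable for a handful of groups, and
    only a stand-in for a proper linear assignment solver.
    """
    # Geometric verification: drop spatially inconsistent candidates.
    score: Dict[Tuple[int, int], int] = {
        (c.query_cfg, c.map_cfg): c.inliers
        for c in cands
        if c.translation_m <= MAX_TRANSLATION_M
    }
    queries = sorted({q for q, _ in score})
    maps = sorted({m for _, m in score})
    # Pad with None so that a query group may remain unmatched.
    slots: List[Optional[int]] = maps + [None] * len(queries)
    best: Dict[int, int] = {}
    best_total = -1
    for perm in permutations(slots, len(queries)):
        pairs = [(q, m) for q, m in zip(queries, perm) if (q, m) in score]
        total = sum(score[p] for p in pairs)
        if total > best_total:
            best, best_total = dict(pairs), total
    return best
```

For example, with candidates (0→0: 40 inliers), (0→1: 55), (1→1: 50), (1→0: 10), and a fifth candidate (2→0: 90 inliers) whose implied translation is 12 m, the fifth is discarded by the threshold and the selected pairing is `{0: 0, 1: 1}` with 90 inliers in total.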

We compare our proposal with two state-of-the-art feature-matching approaches: descriptor similarity matching with Lowe’s criterion [7] and SuperGlue [4]. These comparisons provide strong baselines against both conventional and advanced matching techniques, highlighting the benefits of our coplanarity-constrained approach in challenging cross-detector environments.
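The difference between the ratio-test baseline and an N-best candidate selection can be sketched as follows. This is a minimal illustration that assumes binary descriptors (such as BRIEF) packed into Python integers and compared by Hamming distance; the function names and the ratio value of 0.8 are assumptions made for the example, not the settings of our experiments.

```python
from typing import List, Optional, Sequence


def hamming(a: int, b: int) -> int:
    """Hamming distance between two binary descriptors packed as ints."""
    return bin(a ^ b).count("1")


def ratio_test_match(query: int, refs: Sequence[int],
                     ratio: float = 0.8) -> Optional[int]:
    """Ratio test: accept the nearest reference only if it is clearly
    closer than the second nearest. `ratio` is an assumed value."""
    ranked = sorted(range(len(refs)), key=lambda i: hamming(query, refs[i]))
    if len(ranked) < 2:
        return ranked[0] if ranked else None
    d1 = hamming(query, refs[ranked[0]])
    d2 = hamming(query, refs[ranked[1]])
    return ranked[0] if d1 < ratio * d2 else None


def n_best_candidates(query: int, refs: Sequence[int], n: int) -> List[int]:
    """Keep the n most similar references as candidates instead of one."""
    ranked = sorted(range(len(refs)), key=lambda i: hamming(query, refs[i]))
    return ranked[:n]
```

When two references are equally close to the query, the ratio test returns `None` and the keypoint is discarded, whereas `n_best_candidates` keeps both as candidates, so a later geometric check can decide between them.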

5.2. Cross-Detector Error in CoplaMatch Correspondences

As discussed in Section 3, the cross-detector VL problem is equivalent to the standard VL when the expected value of the cross-detector error is zero. On this premise, it is possible to use common VL techniques based on minimizing the reprojection error to solve cross-detector VL.

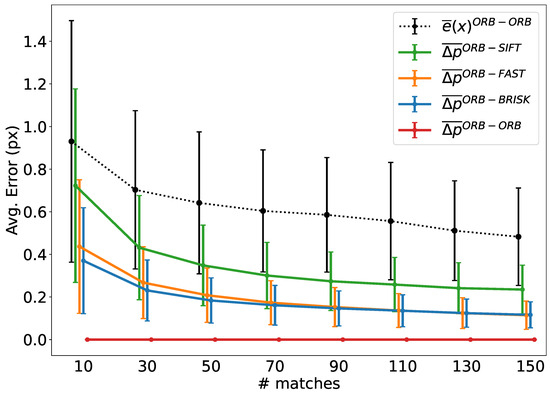

In this section, we experimentally analyze the individual impact of the two terms comprising the reprojection error for cross-detector correspondences obtained using CoplaMatch (see Equation (2)): the standard reprojection error and the cross-detector error. Concretely, we focus on the expected value of these errors across a large number of images. By the linearity of expectation, the expected value of the overall reprojection error decomposes into the sum of the expected values of the two terms. Consequently, this decomposition allows each error to be examined separately.

Given an image pair, we analyze the standard reprojection error for correspondences computed using the same detector algorithm on both images. In contrast, we characterize the cross-detector error on individual images, so that it is decoupled from the standard reprojection error. To do so, we apply different detection algorithms to the same image and then compute correspondences between the resulting sets of keypoints. For this experiment, we use a large number of image pairs from the TUM RGB-D dataset and calculate the correspondences through CoplaMatch. In particular, we employ ORB as the detector for analyzing the standard reprojection error; to analyze the cross-detector error term, we match ORB keypoints with those obtained with three other detectors (SIFT, FAST, and BRISK). Note that, to ensure the comparability of different types of keypoints, all of them are described with the BRIEF descriptor.

Figure 6 compares the average L2-norm of these errors, in terms of the number of matches of the image pairs. It should be noted that to properly characterize and isolate the cross-detector error, only correct matches are selected. The average error is computed as follows:

where M is the number of correct matches considered, and the two keypoint terms stand for the keypoints extracted with the detector D and their correspondences obtained with the other detector. It should be noted that the spatial error of the keypoints is separated and computed along the x- and y-axes of the image.
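One reading of this computation can be sketched in a few lines. The sketch assumes that the signed offsets between matched keypoints are averaged per axis over the M correct matches and that the L2-norm of the resulting mean vector is reported; the function name and this interpretation are assumptions for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def average_cross_detector_error(
    pts_d: List[Point], pts_d_prime: List[Point]
) -> Tuple[float, float, float]:
    """Return (mean dx, mean dy, L2-norm of the mean error vector) in pixels.

    pts_d[i] and pts_d_prime[i] are assumed to be a correct match between
    keypoints produced by two different detectors.
    """
    if len(pts_d) != len(pts_d_prime) or not pts_d:
        raise ValueError("need two non-empty lists of equal length")
    m = len(pts_d)
    # Signed per-axis offsets, so opposite displacements can cancel out.
    mean_dx = sum(q[0] - p[0] for p, q in zip(pts_d, pts_d_prime)) / m
    mean_dy = sum(q[1] - p[1] for p, q in zip(pts_d, pts_d_prime)) / m
    return mean_dx, mean_dy, math.hypot(mean_dx, mean_dy)
```

With four matches whose offsets are (1, 0), (−1, 0), (0, 2), and (0, −2) pixels, every individual match is displaced, yet the function returns `(0.0, 0.0, 0.0)` because the signed offsets cancel.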

Figure 6. Contrasting standard reprojection error (for homogeneous scenarios) and cross-detector error (in heterogeneous cases) with reference to the number of matches per image pair, with ORB serving as the reference keypoint detector. Note that the total cross-detector reprojection error is the L2-norm of the sum of the two error vectors (see Equation (2)).

The results demonstrate that the expected values of both errors exhibit larger magnitudes when only a limited number of matches (≤30) is considered. However, both errors diminish as the number of matches increases. This empirical convergence supports the statistical assumption that the cross-detector spatial discrepancies are uncorrelated and zero-mean, effectively canceling out the cross-terms in the error expansion (Equation (2)). This behavior is visually corroborated by the standard deviation bars in Figure 6, which demonstrate that, while individual cross-detector errors vary, they stochastically cancel each other out when aggregated. We should note that, while the errors decrease simultaneously, they are measured in isolation, and thus, there is no direct interaction between the two depicted errors.
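The cancellation of the cross-term can be made explicit with a short derivation. The symbols below are assumed notation for this illustration: $\mathbf{e}_r$ denotes the standard reprojection error vector and $\mathbf{e}_{cd}$ the cross-detector error vector of a correspondence.

```latex
\begin{aligned}
\mathbb{E}\big[\lVert \mathbf{e}_r + \mathbf{e}_{cd} \rVert^2\big]
  &= \mathbb{E}\big[\lVert \mathbf{e}_r \rVert^2\big]
   + 2\,\mathbb{E}\big[\mathbf{e}_r^{\top}\mathbf{e}_{cd}\big]
   + \mathbb{E}\big[\lVert \mathbf{e}_{cd} \rVert^2\big], \\
\mathbb{E}\big[\mathbf{e}_r^{\top}\mathbf{e}_{cd}\big]
  &= \mathbb{E}[\mathbf{e}_r]^{\top}\,\mathbb{E}[\mathbf{e}_{cd}] = 0
  \quad \text{if } \mathbf{e}_r,\ \mathbf{e}_{cd} \text{ are uncorrelated and }
  \mathbb{E}[\mathbf{e}_{cd}] = \mathbf{0}.
\end{aligned}
```

Under these assumptions, the expected squared error is the sum of the standard term and a term that depends only on the cross-detector offsets, which is the sense in which the cross-detector error acts as an additive contribution.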

It should be stressed that, in the cross-detector scenario, the total error results from the vector addition of both errors (see Figure 3). As a final note, when the cross-detector errors of the individual detector pairs are compared, the error for the ORB-SIFT pair is, as expected, greater than the others, given that the salient points are of a different nature (corners vs. blobs).

5.3. Analyzing the Computational Cost of CoplaMatch

Generally, introducing constraints incurs an inevitable computational overhead. Particularly, in our proposal, this overhead predominantly comes from the following: (i) plane segmentation within the query image to identify coplanar feature groups, and (ii) applying coplanar constraints during feature matching, which requires the estimation of a homography for each pair of coplanar feature groups between the query image and the map. Thus, it becomes necessary to quantify the additional burden to provide insights into the trade-off between performance and computational cost.

Table 1 presents the average processing times of two plane segmentation methods, PlaneRecNet [41] and Sigma-FP [22]. PlaneRecNet is a learning-based method that identifies planes directly from RGB images. Approaches of this kind often estimate depth implicitly, which in turn results in longer processing times. In contrast, depth-based methods such as Sigma-FP exhibit lower processing times at the cost of requiring depth sensors. The choice of the plane segmentation approach is usually coupled with the available data and the requirements of the application, considering that plane segmentation is performed once per query image. Moreover, since CoplaMatch is agnostic to the segmentation method, more efficient alternatives can be adopted as they become available. Furthermore, as discussed in Section 4, for the intended applications of global localization and drift reset, the achieved throughput is sufficient.

Table 1. Average times for plane segmentation of images from the TUM RGB-D dataset using different methods: PlaneRecNet [41] and Sigma-FP [22].

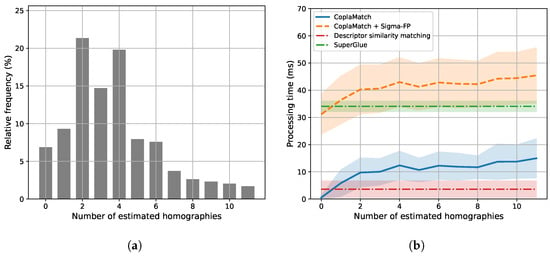

Once the CFGs of the query are determined, CoplaMatch tries to establish correct correspondences between query and map features. The latter involves the estimation of a homography between the features of each CFG pair, provided that there are sufficient features available in both groups. Figure 7 illustrates the computational time of CoplaMatch relative to the number of estimated homographies between a query image and the map. As expected, the required time increases proportionally with the number of estimated homographies. However, since different CFG pairs can be processed concurrently (lines 3–11 of Algorithm 1), the overall increase in the throughput time is alleviated, although a notable variance is appreciable. In contrast to SuperGlue and the descriptor similarity approach, which exhibit low variance, as they depend solely on the number of matches, CoplaMatch’s variance primarily stems from the variability in the time required to perform RANSAC-based homography estimation. This variability ranges from early convergence, which results in shorter times, to prolonged times in those cases where a solution is not found (i.e., timeout). Additionally, it can be seen that, for >8 homography estimations, the variance increases further, reflecting the parallelization of the algorithm, which was executed concurrently for this experiment on an eight-core processor. Finally, the histogram shown at the top of Figure 7 indicates that, for most query images, the number of homographies to be estimated falls between 1 and 6, with the corresponding processing times shown in panel (b).
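The concurrent processing of CFG pairs can be sketched as follows. This is an illustrative outline only: `process_pairs` and the `estimate` callback are hypothetical names, and `estimate` stands in for a RANSAC-based homography routine that returns an inlier count, or `None` when no model is found. The minimum of four correspondences is the standard requirement for a homography; the default of eight workers mirrors the eight-core processor mentioned above.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Optional, Sequence, Tuple

MIN_POINTS = 4  # a homography needs at least four point correspondences

Pair = Tuple[Sequence, Sequence]  # (query CFG features, map CFG features)


def process_pairs(
    pairs: List[Pair],
    estimate: Callable[[Sequence, Sequence], Optional[int]],
    workers: int = 8,
) -> Dict[int, int]:
    """Run `estimate` on every eligible CFG pair concurrently.

    Returns {pair index: inlier count} for the pairs where `estimate`
    succeeded. Pairs with too few features in either group are skipped.
    """
    eligible = [
        i for i, (q, m) in enumerate(pairs)
        if len(q) >= MIN_POINTS and len(m) >= MIN_POINTS
    ]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(lambda i: estimate(*pairs[i]), eligible))
    return {i: r for i, r in zip(eligible, results) if r is not None}
```

Threads are used here for brevity; in Python they only yield a real speed-up when the estimator releases the interpreter lock, as native numerical routines typically do. With more pairs than workers, the remaining pairs wait for a free worker, which is consistent with the increased variance observed beyond eight homographies.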

Figure 7. Time analysis based on the number of estimated homographies. (a) Distribution of the number of estimated homographies per image, shown as the percentage of images from which a specific number of homographies were estimated. (b) Comparison of the processing times of the evaluated approaches. The x-axis indicates the number of homographies estimated, which affects the processing time of CoplaMatch and CoplaMatch + Sigma-FP. The processing times for descriptor similarity matching and SuperGlue are independent of the number of estimated homographies.

In Figure 7, as a reference, we also report the computational times for the joint scenario of CoplaMatch with Sigma-FP for plane segmentation. As anticipated, our proposal entails a certain overhead compared to state-of-the-art methods. Yet, this overhead is the cost of guiding the feature matching through the imposition of coplanar constraints to operate effectively in cross-detector scenarios. Although this overhead may limit operations strictly defined as real-time, the pipeline still runs at ∼20–25 Hz for the Sigma-FP + CoplaMatch case and at ∼10 Hz for PlaneRecNet + CoplaMatch. These rates are sufficient for tasks such as global localization or for reducing the accumulated odometry drift.

5.4. Visual Localization Experiments

We assess the pose recall at different position and orientation thresholds for VL (as in [38]), as well as the median number of matches employed for localization.
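As an illustration of the metric, pose recall can be computed as the fraction of query images whose position and orientation errors both fall within a given threshold pair. The sketch below is a minimal version of that idea; the function name and the threshold values in the example are assumptions, not the thresholds reported in our tables.

```python
import math
from typing import List, Sequence, Tuple

# (position error in metres, orientation error in degrees) per query image
PoseError = Tuple[float, float]


def pose_recall(errors: Sequence[PoseError],
                thresholds: Sequence[Tuple[float, float]]) -> List[float]:
    """Fraction of queries whose position AND orientation errors are both
    within each (metres, degrees) threshold. Failed localizations can be
    passed as (math.inf, math.inf) so that they never count as a success."""
    if not errors:
        return [0.0 for _ in thresholds]
    return [
        sum(1 for pos, rot in errors if pos <= t_pos and rot <= t_rot)
        / len(errors)
        for t_pos, t_rot in thresholds
    ]
```

For four queries with errors (0.05 m, 1°), (0.20 m, 4°), (0.60 m, 3°), and one failed localization, the hypothetical thresholds (0.1 m, 2°), (0.25 m, 5°), and (1.0 m, 10°) give recalls of 0.25, 0.5, and 0.75, respectively.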

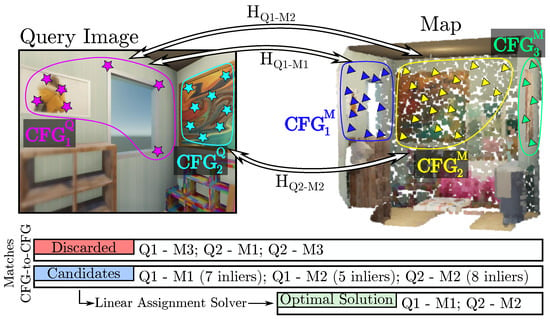

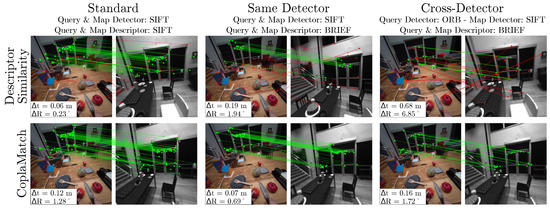

For a comprehensive evaluation, we consider two different cases: (i) Standard, referring to the classic setting using a feature detector and descriptor from the same algorithm (e.g., ORB detector with ORB descriptor); and (ii) Cross-Detector, which represents the scenario where a fixed description algorithm is used in combination with different keypoint detectors. Table 2 compares both cases for the TUM RGB-D dataset using BRIEF as the fixed descriptor. As expected, the Standard setting is preferable, since the description algorithm is designed and optimized for that particular detector and is hence optimal for establishing keypoint matches by descriptor distance (descriptor similarity matching). However, when moving to the challenging Cross-Detector scenario, a significant drop in the number of matches can be observed for descriptor similarity matching (see Figure 8), which has a detrimental impact on the localization performance. This is even clearer in the case of an ORB map, where the number of matches is too low and makes localization unfeasible. Additionally, this challenge is more accentuated in blob-to-corner combinations (e.g., SIFT-ORB), which prove more difficult than corner-to-corner combinations (e.g., ORB-BRISK) due to the fundamental difference in the nature of the features. In contrast, CoplaMatch substantially improves feature matching, increasing the number of pairs and consequently enabling accurate localization with keypoint pairs of a completely different nature. This demonstrates that complementing descriptor similarity with geometry awareness is necessary for cross-detector scenarios.

Table 2. Report on VL results of different methods (descriptor similarity matching [7] and CoplaMatch) in the TUM RGB-D dataset for Standard and Cross-Detector scenarios. Standard: same detector and descriptor algorithm. Cross-Detector: BRIEF as a common descriptor but different detectors. The rightmost column shows the median inlier matches for localization. Best results are marked in bold.

Figure 8. Qualitative results on the Aria Digital Twin dataset comparing feature matching methods for the same query image. For each method, the map image containing the greatest number of correct matches is shown. Standard VL (left): Both approaches are able to obtain successful localization. Same Detector (center): In this case, as the detector and descriptor use different algorithms, the descriptor similarity approach exhibits certain performance degradation. Cross-Detector (right): Finally, for the most challenging scenario, the descriptor similarity approach is not able to establish correct correspondences, whereas CoplaMatch successfully estimates the localization.

Table 3 reports the results for Cross-Detector settings. We include the Same Detector configuration as a baseline, where the query and map detectors are the same but, unlike in the Standard setting, the descriptor algorithm is one that can be used with any detector and therefore differs from the detector algorithm employed (e.g., ORB detector with BRIEF descriptor). This experiment is performed using two independent feature descriptors (BRIEF and SuperPoint). The map is created with the SIFT detector, while ORB, BRISK, FAST, and SuperPoint are the detectors used for the query. First, it can be seen that, for the Same Detector setting, in which corresponding keypoints refer to the same physical entity, SuperGlue shows impressive performance, while both descriptor similarity matching and CoplaMatch still exhibit competitive results. Yet, in the Cross-Detector case, CoplaMatch is the only alternative that presents consistent performance, whereas the performance of the descriptor similarity approach highly depends on the descriptor distinctiveness, and SuperGlue shows particularly poor performance, demonstrating low generalization ability for the cross-detector problem. It should be noted that, as CoplaMatch still relies on descriptors for establishing CFG correspondence candidates, the median number of correct matches differs notably between the two descriptors.

Table 3. Evaluation of localization performance for different feature-matching methods across two datasets and two common descriptors (BRIEF [46] and SuperPoint [13]). Results are reported for two query configurations: Same Detector, where the query uses the same SIFT detector as the map, and Cross-Detector, which reports the average performance when the query uses ORB, FAST, BRISK, or SuperPoint detectors against the SIFT map. Localization success is reported at different accuracy thresholds (position, orientation). Best results are marked in bold. Please note that SuperGlue requires specific training for each descriptor, and a BRIEF-compatible version of SuperGlue was not available for evaluation.

Finally, we must highlight that the performance of our proposal is compromised when few planar structures are observed or when they lack texture. This is noticeable in the results of the Aria dataset in Table 3 and is illustrated in Figure 8, since in this dataset, at times, only one plane is observed and some walls lack texture. This is consistent with the lower number of validated matches seen in Table 3, since the planar regions in Aria are often smoother and yield smaller CFGs than those in TUM RGB-D, directly limiting homography stability and reducing pose recall. In addition, while our experiments on the Aria dataset demonstrate robustness to significant motion, extreme viewpoint changes (e.g., grazing angles) remain a failure case where the underlying descriptor invariance breaks down, rendering geometric verification impossible.

6. Conclusions and Discussion

This paper has formally defined and addressed the problem of cross-detector VL, a scenario where distinct keypoint detector algorithms are employed. Such heterogeneity leads to spatial discrepancies among keypoints, reducing descriptor distinctiveness and consequently hindering the typical feature matching based on descriptors’ similarity.

First, we validated, with a wide range of experiments, that the mainstream approach to solving VL, which consists of minimizing the reprojection error, is also applicable to cross-detector scenarios, provided that a significant number of matches are found. In particular, it has been demonstrated that cross-detector correspondences inherently include a cross-detector error, but it acts as an additive term in the LSE formulation, which does not affect the VL solution as long as it exhibits a zero-mean distribution.

Once we validated the feasibility of addressing this problem, we proposed CoplaMatch as the first alternative to find feature correspondences in cross-detector scenarios. To do so, CoplaMatch relaxes the dependence on descriptors’ similarity by imposing geometric coplanarity constraints to guide the matching process. These coplanarity constraints are imposed through 2D homographies between sets of coplanar keypoints, which are denoted as Coplanar Feature Groups (CFGs). In this sense, we perform a geometric verification to discern whether two CFGs (one from the query image and another from the map) represent the same physical plane. This enables the establishment of cross-detector correspondences without heavily relying on descriptors’ similarity. Through a comprehensive set of experiments, we have validated that the correspondences generated via CoplaMatch meet the requirement for the reprojection error minimization approach: the inherent cross-detector error, though present, has a mean close to zero. This confirms that, despite the spatial discrepancies introduced by heterogeneous detectors, CoplaMatch effectively produces a set of matches in which the cumulative effect of these errors averages out, thus enabling accurate cross-detector VL.

However, it is imperative to acknowledge the limitations of our proposal. CoplaMatch fundamentally relies on the observation and detection of planar structures within the scene. Consequently, if the environment lacks discernible structural planes (e.g., outdoors), our method cannot be employed effectively. In such texture-less or non-planar scenarios, the only alternative would be to rely exclusively on descriptor-based matching, which, as shown in our experimental results, suffers from severe performance degradation due to the lack of distinctiveness in cross-detector features. In addition, for extreme scenarios such as observing only parallel planes or those that are similarly textured, the underlying geometry becomes inherently ambiguous, and CoplaMatch may fail to discern the correct solution. Moreover, CoplaMatch introduces an unavoidable computational overhead due to the need for plane segmentation, although such overhead is not excessively large, allowing the operation in the range of approximately 10 to 25 Hz, depending on the plane segmentation technique used.

In conclusion, this work has validated the viability of cross-detector VL, which opens a promising new research direction for the computer vision field. While the method presented in this work serves as a first alternative, there is considerable room for improvement. Future work should explore the cross-detector problem further, investigating alternative approaches that remove the reliance of CoplaMatch on planar observations and enable cross-detector VL outdoors or in unstructured environments. Furthermore, a crucial direction is to extend this work to the cross-descriptor problem to fully enable interoperable visual localization between heterogeneous devices. Additional promising directions include exploiting multi-plane constraints to reinforce geometric consistency and integrating learning-based models for robust CFG identification.

Author Contributions

Conceptualization, J.-L.M.-B., A.J., J.A. and J.G.-J.; methodology, J.-L.M.-B. and A.J.; software, J.-L.M.-B. and A.J.; validation, J.-L.M.-B. and A.J.; investigation, J.-L.M.-B. and A.J.; data curation, J.-L.M.-B. and A.J.; writing—original draft preparation, J.-L.M.-B.; writing—review and editing, A.J., C.G., A.C.H., J.M., J.A. and J.G.-J.; supervision, C.G., A.C.H., J.M., J.A. and J.G.-J.; funding acquisition, J.A. and J.G.-J. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been supported by the grant program FPU19/00704 and the research projects MINDMAPS (PID2023-148191NB-I00) and ARPEGGIO (PID2020-117057GB-I00), all funded by the Spanish Government.

Data Availability Statement

All datasets employed in this work are publicly available.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Piasco, N.; Sidibé, D.; Demonceaux, C.; Gouet-Brunet, V. A survey on Visual-Based Localization: On the benefit of heterogeneous data. Pattern Recognit. 2018, 74, 90–109.

2. Edstedt, J.; Bökman, G.; Wadenbäck, M.; Felsberg, M. DeDoDe: Detect, don’t describe—Describe, don’t detect for local feature matching. In Proceedings of the 2024 International Conference on 3D Vision (3DV), Davos, Switzerland, 18–21 March 2024; IEEE: New York, NY, USA, 2024; pp. 148–157.

3. Dusmanu, M.; Miksik, O.; Schönberger, J.L.; Pollefeys, M. Cross-Descriptor Visual Localization and Mapping. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 6038–6047.

4. Sarlin, P.E.; DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperGlue: Learning Feature Matching with Graph Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 4937–4946.

5. Tan, X.; Sun, C.; Sirault, X.; Furbank, R.; Pham, T.D. Feature matching in stereo images encouraging uniform spatial distribution. Pattern Recognit. 2015, 48, 2530–2542.

6. Kabalar, J.; Wu, S.C.; Wald, J.; Tateno, K.; Navab, N.; Tombari, F. Towards long-term retrieval-based visual localization in indoor environments with changes. IEEE Robot. Autom. Lett. 2023, 8, 1975–1982.

7. Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

8. Rosten, E.; Drummond, T. Machine Learning for High-Speed Corner Detection. In Proceedings of the Computer Vision—ECCV 2006, Graz, Austria, 7–13 May 2006; pp. 430–443.

9. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

10. Leutenegger, S.; Chli, M.; Siegwart, R.Y. BRISK: Binary Robust invariant scalable keypoints. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2548–2555.

11. Yi, K.M.; Trulls, E.; Lepetit, V.; Fua, P. LIFT: Learned Invariant Feature Transform. In Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; pp. 467–483.

12. DeTone, D.; Malisiewicz, T.; Rabinovich, A. Toward Geometric Deep SLAM. arXiv 2017, arXiv:1707.07410.

13. DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-Supervised Interest Point Detection and Description. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 337–33712.

14. Čech, J.; Matas, J.; Perdoch, M. Efficient Sequential Correspondence Selection by Cosegmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1568–1581.

15. Bian, J.; Lin, W.Y.; Matsushita, Y.; Yeung, S.K.; Nguyen, T.D.; Cheng, M.M. GMS: Grid-Based Motion Statistics for Fast, Ultra-Robust Feature Correspondence. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2828–2837.

16. Lindenberger, P.; Sarlin, P.E.; Pollefeys, M. LightGlue: Local Feature Matching at Light Speed. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 17581–17592.

17. Sun, J.; Shen, Z.; Wang, Y.; Bao, H.; Zhou, X. LoFTR: Detector-Free Local Feature Matching with Transformers. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 8918–8927.

18. Edstedt, J.; Sun, Q.; Bökman, G.; Wadenbäck, M.; Felsberg, M. RoMa: Robust dense feature matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 19790–19800.

19. Zhou, Q.; Agostinho, S.; Ošep, A.; Leal-Taixé, L. Is Geometry Enough for Matching in Visual Localization? In Proceedings of the Computer Vision—ECCV 2022, Tel Aviv, Israel, 23–27 October 2022; pp. 407–425.

20. Taira, H.; Okutomi, M.; Sattler, T.; Cimpoi, M.; Pollefeys, M.; Sivic, J.; Pajdla, T.; Torii, A. InLoc: Indoor visual localization with dense matching and view synthesis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7199–7209.

21. Shi, Y.; Xu, K.; Nießner, M.; Rusinkiewicz, S.; Funkhouser, T. PlaneMatch: Patch Coplanarity Prediction for Robust RGB-D Reconstruction. In Proceedings of the Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; pp. 767–784.

22. Matez-Bandera, J.L.; Monroy, J.; Gonzalez-Jimenez, J. Sigma-FP: Robot Mapping of 3D Floor Plans with an RGB-D Camera Under Uncertainty. IEEE Robot. Autom. Lett. 2022, 7, 12539–12546.

23. Arndt, C.; Sabzevari, R.; Civera, J. Do Planar Constraints Improve Camera Pose Estimation in Monocular SLAM? In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Paris, France, 2–6 October 2023; pp. 2213–2222.

24. Kaess, M. Simultaneous localization and mapping with infinite planes. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 4605–4611.

25. Hou, Z.; Ding, Y.; Wang, Y.; Yang, H.; Kong, H. Visual Odometry for Indoor Mobile Robot by Recognizing Local Manhattan Structures. In Proceedings of the Computer Vision—ACCV 2018, Perth, Australia, 2–6 December 2018; pp. 168–182.

26. Frohlich, R.; Tamas, L.; Kato, Z. Absolute Pose Estimation of Central Cameras Using Planar Regions. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 377–391.

27. Reyes-Aviles, F.; Fleck, P.; Schmalstieg, D.; Arth, C. Bag of World Anchors for Instant Large-Scale Localization. IEEE Trans. Vis. Comput. Graph. 2023, 29, 4730–4739.

28. Chuan, Z.; Long, T.D.; Feng, Z.; Li, D.Z. A planar homography estimation method for camera calibration. In Proceedings of the 2003 IEEE International Symposium on Computational Intelligence in Robotics and Automation. Computational Intelligence in Robotics and Automation for the New Millennium (Cat. No.03EX694), Kobe, Japan, 16–20 July 2003; Volume 1, pp. 424–429.

29. Knorr, M.; Niehsen, W.; Stiller, C. Online extrinsic multi-camera calibration using ground plane induced homographies. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium (IV), Gold Coast, Australia, 23–26 June 2013; pp. 236–241.

30. Zhao, Y.; Huang, X.; Zhang, Z. Deep Lucas-Kanade Homography for Multimodal Image Alignment. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 15945–15954.

31. Hong, M.; Lu, Y.; Ye, N.; Lin, C.; Zhao, Q.; Liu, S. Unsupervised Homography Estimation with Coplanarity-Aware GAN. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 17642–17651.

32. Freund, R.; Wilson, W.; Sa, P. Regression Analysis; Elsevier: Amsterdam, The Netherlands, 2006.

33. Liu, C.; Kim, K.; Gu, J.; Furukawa, Y.; Kautz, J. PlaneRCNN: 3D Plane Detection and Reconstruction From a Single Image. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 15–20 June 2019; pp. 4445–4454.

34. Hughes, N.; Chang, Y.; Carlone, L. Hydra: A Real-time Spatial Perception System for 3D Scene Graph Construction and Optimization. arXiv 2022, arXiv:2201.13360.

35. Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2004.

36. Cuturi, M. Sinkhorn Distances: Lightspeed Computation of Optimal Transportation Distances. arXiv 2013, arXiv:1306.0895.

37. Crouse, D.F. On implementing 2D rectangular assignment algorithms. IEEE Trans. Aerosp. Electron. Syst. 2016, 52, 1679–1696.

38. Sarlin, P.; Cadena, C.; Siegwart, R.; Dymczyk, M. From Coarse to Fine: Robust Hierarchical Localization at Large Scale. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 15–20 June 2019; pp. 12708–12717.

39. Schönberger, J.L.; Frahm, J.M. Structure-from-Motion Revisited. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113.

40. Arandjelović, R.; Gronat, P.; Torii, A.; Pajdla, T.; Sivic, J. NetVLAD: CNN Architecture for Weakly Supervised Place Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 1437–1451.

41. Xie, Y.; Shu, F.; Rambach, J.; Pagani, A.; Stricker, D. PlaneRecNet: Multi-Task Learning with Cross-Task Consistency for Piece-Wise Plane Detection and Reconstruction from a Single RGB Image. arXiv 2022, arXiv:2110.11219.

42. Alqobali, R.; Alshmrani, M.; Alnasser, R.; Rashidi, A.; Alhmiedat, T.; Alia, O.M. A survey on robot semantic navigation systems for indoor environments. Appl. Sci. 2023, 14, 89.

43. Alqobali, R.; Alnasser, R.; Rashidi, A.; Alshmrani, M.; Alhmiedat, T. A Real-Time Semantic Map Production System for Indoor Robot Navigation. Sensors 2024, 24, 6691.

44. Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A benchmark for the evaluation of RGB-D SLAM systems. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 573–580.

45. Pan, X.; Charron, N.; Yang, Y.; Peters, S.; Whelan, T.; Kong, C.; Parkhi, O.; Newcombe, R.; Ren, Y. Aria Digital Twin: A New Benchmark Dataset for Egocentric 3D Machine Perception. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 20076–20086.

46. Calonder, M.; Lepetit, V.; Strecha, C.; Fua, P. BRIEF: Binary Robust Independent Elementary Features. In Proceedings of the Computer Vision—ECCV 2010, Crete, Greece, 5–11 September 2010; pp. 778–792.

47. Hazem, Z.B.; Saidi, F.; Guler, N.; Altaif, A.H. Reinforcement learning-based intelligent trajectory tracking for a 5-DOF Mitsubishi robotic arm: Comparative evaluation of DDPG, LC-DDPG, and TD3-ADX. Int. J. Intell. Robot. Appl. 2025, 9, 1982–2002.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).