Abstract

Surface defects are inevitable in steel production, and traditional inspection methods struggle to detect complex defects. We therefore propose LCED-YOLO, a steel defect detection model based on YOLOv11. First, an edge information enhancement module, C3K2-MSE, is designed to strengthen the extraction of edge information. Second, LDConv is introduced to make the neck structure lightweight and reduce parameters. Third, a lightweight decoupled head designed for the detection tasks is proposed, which further reduces the model size. Finally, a learnable attention factor is introduced to optimize the CIoU loss so that training focuses on samples that are difficult to localize, enhancing the detection capability. Extensive experiments were conducted on the NEU-DET and GC10-DET datasets. Compared with YOLOv11, the mAP50 of the proposed model improves by 2.6% and 3.3%, reaching 79.8% and 70.3%, respectively. The model also has 19% fewer parameters and 23% fewer floating-point operations, meeting the requirements for both a lightweight design and detection precision.

1. Introduction

Deformation damage is inevitable in the actual production and manufacturing of steel, and it causes defects such as crazing, pitted surfaces, and inclusions [1]. These defects significantly impact the quality of steel products, causing safety concerns and economic losses for companies. During steel production, product defects must therefore be found and eliminated in time to improve product safety and avoid economic losses [2]. The traditional approach is mainly manual visual inspection [3], which suffers from low detection efficiency and limited accuracy. The limitations of the inspectors themselves can also lead to false detections and missed detections.

With the advancements in deep learning and computer vision, deep learning-based defect detection methods are increasingly being applied to various detection tasks. Deep learning methods have great advantages in detection speed and accuracy. Researchers have started employing these methods to solve different defect detection tasks in production.

Currently, deep learning-based detection methods are divided into one-stage methods and two-stage methods. Among one-stage methods, Deng et al. [4] introduced the LFD-YOLO model, which is designed to detect surface defects on engine turbine blades. To detect printed circuit board (PCB) defects, Jiang et al. [5] introduced dilated convolution and coordinate attention into the Single-Shot MultiBox Detector (SSD). Li et al. [6] embedded an Efficient Channel Attention (ECA) mechanism into the YOLOX model to realize wood surface defect detection. Xing et al. [7] constructed different scale convolution layers in the backbone network to detect the defects of railway train wheels. Xiang et al. [8] used the HookNet model to detect fabric defects. Chen et al. [9] designed a lightweight network, YOLOv8-FSD, for detecting surface defects on photovoltaic cells. Gao et al. [10] introduced LGR-Net, a method for detecting defects in elevator guide rail clamps. This method employs a small object detection layer to build a multi-scale feature fusion network, thereby attaining greater detection accuracy. In two-stage methods, Chen et al. [11] proposed an MANet network model applied to road defects detection based on MobileNet. Xiao et al. [12] proposed a model for tiny target detection. In the model, the context enhancement module (CEM) is responsible for strengthening target feature information, and the feature purification module (FPM) is responsible for eliminating conflicting feature information. Tan et al. [13] introduced an image processing method that they integrated into their proposed segmentation algorithm, thereby enabling the automatic identification of tunnel water leakage. Cui et al. [14] proposed a CAB-Net, which uses dilated convolution to enhance target context information and improves ability to detect tiny targets. Urbonas et al. [15] used Faster R-CNN to identify defects on the surface of wood panels.

Although deep learning techniques offer advantages in various defect detection tasks, they still face significant challenges and limitations in practical industrial applications. Firstly, the complexity of surface defects brings some challenges to detection. Secondly, the accuracy, parameter quantity and inference speed of defect detection models are also very important in practical applications [16].

In view of the issues present in existing detection methods, we propose a detection model named LCED-YOLO. The model aims to enhance detection performance while maintaining a lightweight structure. First, we design a module to enhance the model's feature extraction capability. Second, LDConv is incorporated into the neck network, which decreases the model's parameters. Third, we develop a lightweight decoupled head specifically for detection tasks, further lowering the model's parameters. Finally, a learnable factor is introduced to optimize the CIoU loss function, which addresses the sample imbalance issue and strengthens detection performance. The main contributions are as follows:

1. A multi-scale enhancement module (MSE module) was designed in conjunction with the C3K2 module to create the C3K2-MSE module, which effectively enhances the feature information processing capability by accentuating edge information within the features.

2. A lightweight neck network and detection head were designed. In comparison to the original network, this approach reduces both the parameter count and FLOPs, while simultaneously enhancing detection accuracy.

3. Focaler-CIoU is employed as the model's loss function. Its learnable factors mitigate the sample imbalance problem in detection tasks, thereby enhancing the model's detection performance.

2. Related Works

2.1. Object Detection

With the development of target detection, a large number of excellent target detection algorithms have emerged. Most of them are high-efficiency, high-precision, and general-purpose target detection frameworks, which can solve most practical target detection problems. In general, deep learning-based object detection methods are categorized into two main frameworks: one-stage methods and two-stage methods.

The one-stage method directly obtains the target's class and location coordinates; representative algorithms include SSD [17] and the You Only Look Once (YOLO) models [18,19,20]. The two-stage method generates candidate boxes and then classifies the candidate box areas; representative algorithms include Region-based Convolutional Neural Network (RCNN) [21], Fast RCNN [22], and Mask RCNN [23]. Because the two-stage algorithm first generates the candidate boxes and then classifies them, the one-stage algorithm has the advantage in detection speed.

In the two-stage detection method, Ma et al. [24] designed a method to improve robotic picking accuracy based on the Mask RCNN object detection algorithm. Han et al. [25] employed an enhanced Faster-RCNN model to detect traffic signs. Qi et al. [26] used an enhanced Fast-RCNN for accurate target detection. In the one-stage detection method, Chen et al. [27] employed an enhanced SSD model to accurately detect small vehicles; Liu et al. [28] proposed the lightweight network YOLO-BFRV, designed to enhance the detection of PCB defect by expanding the receptive field. Chen et al. [29] used the improved YOLOv3 to complete face detection. By changing the regression loss function, the model detection results are more accurate. Yi et al. [30] applied the YOLOv5 model to detect pedestrian helmets.

2.2. Defect Detection

As deep learning technology continues to develop and advance, a growing number of researchers are applying it to defect detection tasks. The manual feature extraction of traditional methods relies heavily on subjective experience, resulting in a low recognition rate for various defects. The deep learning method extracts strong representative features through neural network, locates and classifies defects, and achieves excellent results in defect detection.

Many defect detection studies are based on classic object detection frameworks, such as the YOLO series and Fast R-CNN. For example, Xie et al. [31] detected steel surface defects based on Fast R-CNN. Yu et al. [32] proposed an efficient detection network based on YOLO. Zhao et al. [33] proposed a detection model RDD-YOLO. Liu et al. [34] realized the detection of surface defects on aero-engine blades by improving Faster R-CNN. Chen et al. [35] designed a lightweight CenterNet model that enhances defect features by leveraging contextual information, resulting in improved detection performance. Zhao et al. [36] obtained a lightweight network model by fusing ShuffleNet and SSD models to detect turbine blade defects.

Two-stage detection methods offer high accuracy, but their large model size and slower detection speed make them less suitable for industrial production. One-stage methods directly acquire target category and location information, enabling faster detection. Therefore, this paper proposes a one-stage algorithm specifically designed for steel surface defect detection.

3. Methods

3.1. YOLOv11 Network Architecture

Depending on network depth, width, and the number of model parameters, YOLOv11 is currently available in five versions: YOLOv11n, YOLOv11s, YOLOv11m, YOLOv11l, and YOLOv11x. The C3K2 module is a residual module that integrates the C2f and C3 modules by applying the C3K structure inside the C2f module structure. The SPPF module replaces a pooling layer that uses a single large pooling kernel with several pooling layers that use smaller kernels, thereby reducing the computational load while retaining the ability to integrate multi-scale features. The C2PSA module is an extension of the C2f module that incorporates the Pointwise Spatial Attention (PSA) mechanism [37], retaining the global information of the features. The neck uses the PAN-FPN structure [38] to fuse feature information. As the prediction part of the model, the head outputs the target position and category. YOLOv11 employs depthwise separable convolution (DWConv) [39] within its head branch for feature fusion, thereby reducing redundant calculations. The model in this paper builds on YOLOv11n to increase the precision of steel surface defect detection and to ease model deployment.

3.2. The Proposed LCED-YOLO

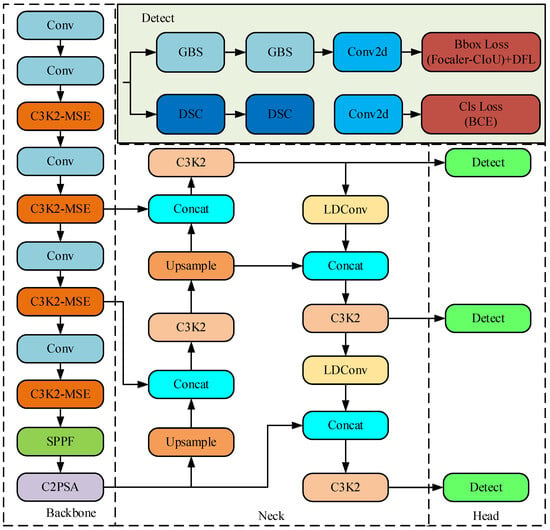

This section introduces the proposed LCED-YOLO, a one-stage detection network, as shown in Figure 1.

Figure 1. LCED-YOLO network architecture.

In the backbone network, an edge extraction module was designed to accurately extract information about edge defects on the steel surface. By constructing the correlation between local and global feature information, the information expression is effectively enhanced. For the neck network, we introduced the LDConv lightweight module to reduce the model’s parameters and computational complexity. In the head part, the task is decoupled so that the learned features are orientated to the corresponding tasks. Next, the proposed LCED-YOLO network model structure will be introduced in detail.

3.2.1. Backbone

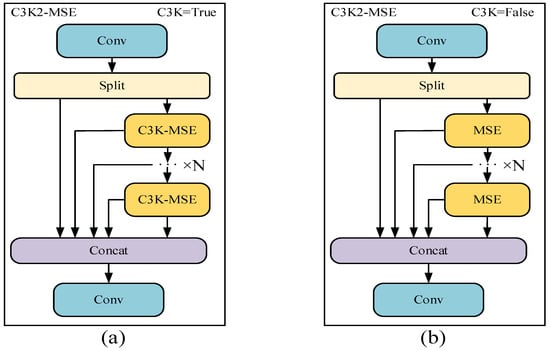

Steel surface defects vary greatly in position and shape, while CNNs have limitations in effectively modeling long-range dependencies, which restricts the ability to capture features. Therefore, in the face of changeable defect detection tasks, the model often fails to achieve the expected results. To improve the ability to extract complex defects in steel, the C3K2-MSE module is designed by introducing a multi-scale edge information enhancement module into C3K2, as shown in Figure 2.

Figure 2. C3K2-MSE module structure. (a) The C3K-MSE module is utilized when C3K is set to True; (b) the MSE module is utilized when C3K is set to False.

Inspired by the fusion of features at different scales in the RFB structure [40], this paper proposes a multi-scale edge information enhancement module. First, multi-scale pooling is applied to the input features: adaptive average pooling with kernel sizes of 3, 6, 9, and 12 extracts features at several scales and captures the image's multi-level feature information. Second, each pooled feature map is adjusted by two successive convolutions with 1 × 1 and 3 × 3 kernels, and an upsampling operation then aligns the features from different scales to the same spatial resolution. Third, the edge enhancement module (EEM) increases the model's sensitivity to edge features. Finally, the processed multi-scale features and the local features are concatenated and fused into a unified feature by a convolution, as shown in Figure 3.

Figure 3. MSE module structure.
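The pooling and alignment steps can be illustrated on a one-dimensional signal. This is only a sketch under stated assumptions: it treats 3, 6, 9, and 12 as the output sizes of adaptive average pooling, uses nearest-neighbor upsampling, and omits the 1 × 1 and 3 × 3 convolutions and the EEM. The function names are hypothetical and are not taken from the authors' implementation.

```python
def adaptive_avg_pool_1d(values, out_size):
    """Average `values` into `out_size` bins that together cover the whole input."""
    n = len(values)
    pooled = []
    for i in range(out_size):
        start = (i * n) // out_size                      # floor(i * n / out_size)
        end = ((i + 1) * n + out_size - 1) // out_size   # ceil((i + 1) * n / out_size)
        window = values[start:end]
        pooled.append(sum(window) / len(window))
    return pooled


def upsample_nearest_1d(values, out_size):
    """Stretch `values` back to `out_size` samples by repeating the nearest bin."""
    n = len(values)
    return [values[(j * n) // out_size] for j in range(out_size)]


def multi_scale_branches(signal, bins=(3, 6, 9, 12)):
    """One branch per scale: pool to `b` bins, then align to the input length."""
    return {
        b: upsample_nearest_1d(adaptive_avg_pool_1d(signal, b), len(signal))
        for b in bins
    }


signal = [float(v) for v in range(24)]
branches = multi_scale_branches(signal)
for b, branch in branches.items():
    print(b, branch[:6])
```

Coarse branches (few bins) summarize large regions, while fine branches stay close to the input, which is the multi-level information the module concatenates before fusion.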

The EEM retains the effective feature information while enhancing the edge information. By strengthening the edge information, the defect edge is clearer, and the edge feature enhancement effect is achieved. Firstly, the pooling operation is performed on the input features to remove noise and detail information in the image and retain its low-frequency information. Secondly, the difference between original and smoothed features is calculated to obtain the edge feature information. These extracted features are further processed by the convolution operation, thereby highlighting defect edge details. Finally, the edge-enhanced features are obtained by integrating the refined edge details with the original information. This process can be formalized as shown in Equations (1) and (2).

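$$F_{edge} = \mathrm{Conv}\left(F_{in} - \mathrm{AvgPool}(F_{in})\right) \tag{1}$$

$$F_{out} = F_{in} + F_{edge} \tag{2}$$

where $F_{in}$ denotes the input feature, $F_{edge}$ denotes the edge feature, and $F_{out}$ denotes the edge-enhanced feature.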
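The smoothing, differencing, and recombination steps of the EEM can be sketched on a small single-channel image. The sketch makes several assumptions that the text does not confirm: a 3 × 3 average pool with replicated borders, a scalar `gain` standing in for the learned convolution, and element-wise addition for the final integration. All names are hypothetical.

```python
def avg_pool_3x3(img):
    """3x3 mean filter with replicated borders, so the output keeps the input size."""
    h, w = len(img), len(img[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            total = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr = min(max(r + dr, 0), h - 1)  # clamp row index to the image
                    cc = min(max(c + dc, 0), w - 1)  # clamp column index to the image
                    total += img[rr][cc]
            row.append(total / 9.0)
        out.append(row)
    return out


def edge_enhance(img, gain=1.0):
    """Return (edge, enhanced): edge = gain * (img - smooth), enhanced = img + edge."""
    smooth = avg_pool_3x3(img)
    edge = [[gain * (x - s) for x, s in zip(xr, sr)] for xr, sr in zip(img, smooth)]
    enhanced = [[x + e for x, e in zip(xr, er)] for xr, er in zip(img, edge)]
    return edge, enhanced


# A vertical step edge: dark on the left, bright on the right.
step = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
edge, enhanced = edge_enhance(step)
print(edge[1])
print(enhanced[1])
```

On the step image the edge response is zero in the flat regions and non-zero only next to the boundary, so adding it back increases the contrast across the defect edge.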

In summary, the MSE module can effectively integrate the extracted multi-scale edge information, improve the flexibility of the model, and provide an efficient solution.

3.2.2. Neck

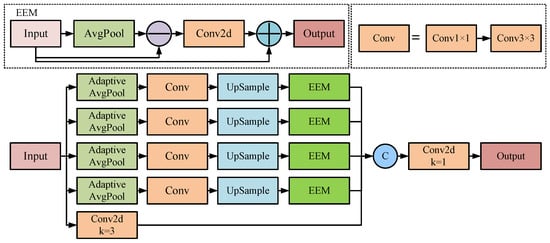

In the neck network, standard convolution is used for downsampling. Standard convolution extracts features at fixed sampling positions, so it is limited to local windows and cannot effectively capture global information. To overcome this, we introduce Linear Deformable Convolution (LDConv) [41], which dynamically adjusts the positions of its sampling points so that it can adapt to geometric shape and texture changes in the input features and improve the precision of feature extraction.

LDConv is shown in Figure 4. Firstly, according to the convolution kernel size, the initial sampling coordinate is generated. Regular or irregular coordinates are then produced by calculating the quantity of convolution kernels. The corresponding convolution operation at the position is shown in Equation (3).

$$\mathrm{Conv}(P_{0}) = \sum_{P_{n} \in R} w \times (P_{0} + P_{n}) \tag{3}$$

where $R$, $w$, and $P_{0}$ denote the generated sampling grid, the convolution parameters, and the pixel values at their respective spatial locations.

Figure 4. LDConv structure diagram.

Secondly, the offset of the corresponding convolution kernel is obtained according to the convolution operation, and these offsets dynamically adjust the sampling positions of the kernel. Specifically, these offsets are learned from the input features through convolution operations, and the offsets are added to the original coordinates to generate new sampling coordinates to achieve accurate modeling of the geometric features of the target. Finally, according to the adjusted sampling position, the features are resampled, and the resampled feature map is shaped. After convolution, normalization, and the SiLU activation function, a new output feature map is obtained.

LDConv can dynamically generate the sampling shape according to the change in the target shape, enabling convolutional kernels to adapt based on feature content and effectively enhance the ability to capture irregular targets. Compared with the traditional convolution kernel fixed-sampling-point method, LDConv makes the sampling point distribution more flexible by learning the offset, so as to better deal with targets with complex boundaries or irregular shapes. In addition, LDConv avoids the computational burden of quadratic growth of parameters in traditional deformable convolution by designing parameters with linear growth, so that it has better computational efficiency while maintaining high flexibility. Therefore, the model’s PAFPN structure was redesigned by introducing LDConv (kernel size 3, stride 2) to replace the neck downsampling module, which reduced the number of model parameters.
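The idea of generating initial sampling coordinates for an arbitrary number of points and then shifting them by learned offsets can be sketched as follows. This is an illustration only: the row-major fill of a near-square grid is an assumption about one way to lay out the points, the offsets are made-up numbers rather than learned values, and the function names are hypothetical.

```python
import math


def initial_sampling_coords(num_points):
    """Lay out `num_points` sampling positions row by row on a near-square grid."""
    base = round(math.sqrt(num_points))       # grid width
    full_rows, remainder = divmod(num_points, base)
    coords = [(r, c) for r in range(full_rows) for c in range(base)]
    coords += [(full_rows, c) for c in range(remainder)]  # partially filled last row
    return coords


def apply_offsets(coords, offsets):
    """Shift every initial coordinate by its (d_row, d_col) offset."""
    return [(r + dr, c + dc) for (r, c), (dr, dc) in zip(coords, offsets)]


regular = initial_sampling_coords(9)     # matches a 3 x 3 kernel
irregular = initial_sampling_coords(5)   # a shape a square kernel cannot express
shifted = apply_offsets(irregular, [(0.5, -0.25)] * 5)
print(regular)
print(irregular)
print(shifted)
```

Because the number of sampling points can be any integer rather than a square number, the count of kernel parameters can grow by one point at a time, which is the linear growth contrasted above with the quadratic growth of k × k kernels.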

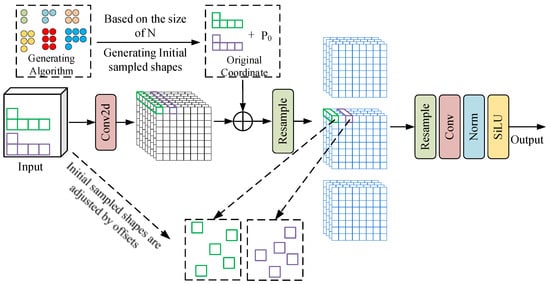

3.2.3. Head

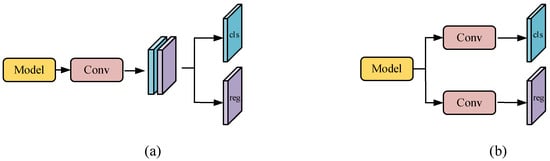

In most network models, the head predicts the target location and class, and heads are generally divided into the coupled head [18,42] and the decoupled head [43], as shown in Figure 5. The two designs handle the classification and regression tasks differently. The coupled head uses a single network layer to predict both the target position and category, whereas the decoupled head predicts them through two separate branches. Because the coupled head shares features between classification and regression, the two tasks interfere with each other, and its prediction accuracy suffers on complex defects. Therefore, we use a decoupled head for prediction.

Figure 5. Illustration of two types of detection heads: (a) coupled head and (b) decoupled head.

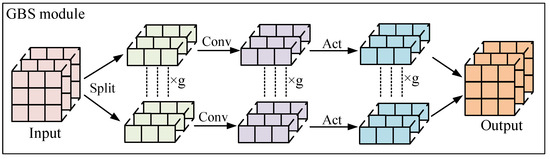

We design a lightweight detection head. This head handles classification and regression tasks with two separate modules. For regression tasks, we propose a GBS module, and for the classification task, we use the DSC module.

As shown in Figure 6, the GBS module first splits the input features into g uniform segments along the channel axis. Each segment is then convolved with its correspondingly partitioned convolution kernels. The processed features subsequently pass through a BN layer and an activation function layer before being concatenated channel-wise to generate the new feature representation. Because the GBS module divides the features into groups, each group can be computed independently, which improves the parallelism of the computation. Each group is convolved with only a part of the input channels, so the model's parameters and computation are reduced. The parameters and computational complexity of the original head module and the GBS module are shown in Equations (4)–(7). The GBS module reduces both the parameters and the computational complexity by a factor of g, which makes the model head lightweight.

$$Params_{conv} = K_{h} \times K_{w} \times C_{in} \times C_{out} \tag{4}$$

$$FLOPs_{conv} = H \times W \times K_{h} \times K_{w} \times C_{in} \times C_{out} \tag{5}$$

$$Params_{GBS} = K_{h} \times K_{w} \times \frac{C_{in}}{g} \times C_{out} \tag{6}$$

$$FLOPs_{GBS} = H \times W \times K_{h} \times K_{w} \times \frac{C_{in}}{g} \times C_{out} \tag{7}$$

where $H$ denotes the height of the feature map, $W$ denotes the width of the feature map, $K_{h}$ denotes the height of the convolution kernel, $K_{w}$ denotes the width of the convolution kernel, $C_{in}$ denotes the number of input channels, $C_{out}$ denotes the number of output channels, and $g$ denotes the number of groups.
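The factor-of-g saving can be checked with a few lines of arithmetic. The sketch below counts convolution weights and multiply-accumulate operations only, ignoring bias terms and the BN layer; the feature map size and channel counts are illustrative values, not figures from the paper, and the function name is hypothetical.

```python
def conv_cost(h, w, k_h, k_w, c_in, c_out, groups=1):
    """Return (parameters, multiply-accumulates) of a convolution on an h x w output map."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by the number of groups")
    # Each output channel only sees c_in / groups input channels.
    params = k_h * k_w * (c_in // groups) * c_out
    flops = h * w * params
    return params, flops


# Illustrative sizes: an 80 x 80 map, 3 x 3 kernels, 64 -> 64 channels, g = 4.
standard = conv_cost(80, 80, 3, 3, 64, 64)
grouped = conv_cost(80, 80, 3, 3, 64, 64, groups=4)
print(standard, grouped)
print(standard[0] / grouped[0], standard[1] / grouped[1])
```

Both ratios equal the number of groups, so the saving scales directly with g as long as the channel counts remain divisible by it.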

Figure 6. GBS module structure.

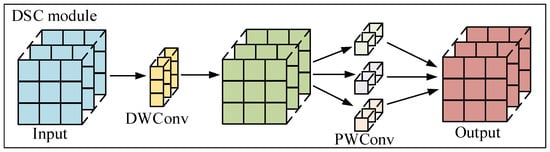

As shown in Figure 7, the DSC module is composed of DWConv and PWConv and is responsible for the classification task. The DSC module first applies a 3 × 3 depthwise convolution (DWConv) to process each channel of the input feature map independently for spatial feature extraction. Although this operation reduces the model's computational complexity, it does not exploit cross-channel feature correlations at identical spatial positions. Therefore, we add a 1 × 1 pointwise convolution (PWConv) to fuse the resulting feature maps across channels. The DSC module is formalized in Equation (8).

$$F_{out} = \mathrm{PWConv}\left(\mathrm{DWConv}(F_{in})\right) \tag{8}$$

where $F_{in}$ denotes the input feature, and $F_{out}$ denotes the output feature. In this way, the DSC module can significantly reduce the model's parameters and computational complexity while maintaining its feature extraction ability.
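The parameter saving of a depthwise convolution followed by a pointwise convolution can be estimated directly. This sketch counts weights only, without biases or normalization layers, and uses illustrative channel counts that are not taken from the paper.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a dense k x k convolution."""
    return k * k * c_in * c_out


def dsc_params(k, c_in, c_out):
    """Weights of a k x k depthwise convolution plus a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 mixing across channels
    return depthwise + pointwise


k, c_in, c_out = 3, 64, 64
ratio = dsc_params(k, c_in, c_out) / standard_conv_params(k, c_in, c_out)
print(dsc_params(k, c_in, c_out), standard_conv_params(k, c_in, c_out), round(ratio, 4))
```

The ratio simplifies to 1/C_out + 1/k², so for 3 × 3 kernels the separable form needs a little over one ninth of the dense weights once the channel count is large.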

Figure 7. DSC module structure.

3.2.4. Loss Function

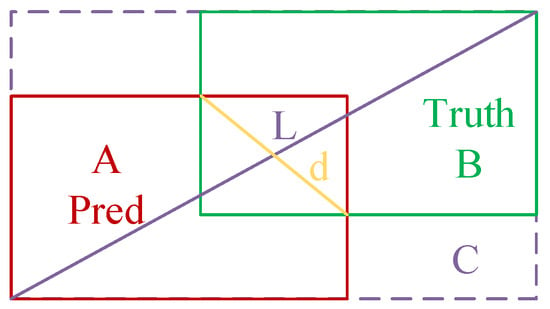

The CIoU [44] loss function used in the original YOLOv11 model was proposed in 2020 and is illustrated in Figure 8. It improves the accuracy of the regression box by exploiting the difference in width-to-height ratios between the predicted and target boxes. CIoU first computes the Intersection over Union (IoU) between the two boxes and then adds penalty terms that account for the differences between them.

Figure 8. CIoU schematic.

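In the figure, $C$ represents the minimum rectangular area containing the true box and the prediction box, $B$ denotes the prediction box, and $B^{gt}$ denotes the true box. $c$ denotes the diagonal length of the minimum rectangular region $C$, and $\rho$ denotes the Euclidean distance between the centroids of the two boxes. The calculation is given in Equations (9)–(11).

$$L_{CIoU} = 1 - IoU + \frac{\rho^{2}}{c^{2}} + \alpha v \tag{9}$$

$$v = \frac{4}{\pi^{2}}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^{2} \tag{10}$$

$$\alpha = \frac{v}{(1 - IoU) + v} \tag{11}$$

where $IoU = |B \cap B^{gt}| / |B \cup B^{gt}|$, $B$ and $B^{gt}$ denote the prediction box and the true box, $w$ and $w^{gt}$ denote their respective widths, and $h$ and $h^{gt}$ denote their corresponding heights.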
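For reference, the standard CIoU loss can be computed for a pair of axis-aligned boxes as sketched below. Boxes are assumed to be given as (x1, y1, x2, y2) corner coordinates, the small `eps` term is added only to avoid division by zero, and the function names are hypothetical rather than taken from the authors' code.

```python
import math


def iou(box_a, box_b):
    """Intersection over Union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union


def ciou_loss(pred, gt, eps=1e-9):
    """CIoU loss: 1 - IoU + center-distance penalty + aspect-ratio penalty."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    i = iou(pred, gt)
    # Squared distance between the two box centers.
    rho2 = ((px1 + px2) / 2 - (gx1 + gx2) / 2) ** 2 + ((py1 + py2) / 2 - (gy1 + gy2) / 2) ** 2
    # Squared diagonal of the smallest box enclosing both boxes.
    c2 = (max(px2, gx2) - min(px1, gx1)) ** 2 + (max(py2, gy2) - min(py1, gy1)) ** 2 + eps
    # Aspect-ratio consistency term and its trade-off weight.
    v = (4 / math.pi ** 2) * (
        math.atan((gx2 - gx1) / (gy2 - gy1)) - math.atan((px2 - px1) / (py2 - py1))
    ) ** 2
    alpha = v / ((1 - i) + v + eps)
    return 1 - i + rho2 / c2 + alpha * v


pred_box = (1.0, 1.0, 3.0, 3.0)
true_box = (0.0, 0.0, 2.0, 2.0)
print(iou(pred_box, true_box), ciou_loss(pred_box, true_box))
```

In this example the two boxes have the same aspect ratio, so the loss is driven only by the overlap and the center-distance penalty.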

While CIoU accounts for the aspect ratios of both bounding boxes, its reliance on relative difference metrics may occasionally impede the model's ability to optimize similarity measures, particularly when handling objects with substantial scale and aspect ratio variations. To solve this problem, we optimize CIoU by introducing a learnable attention factor that dynamically weights different samples, thereby prioritizing those that significantly influence localization precision. The IoU is reformulated through linear interval mapping, as expressed in Equation (12).

$$IoU^{focaler} = \begin{cases} 0, & IoU < d \\ \dfrac{IoU - d}{u - d}, & d \le IoU \le u \\ 1, & IoU > u \end{cases} \tag{12}$$

where $IoU^{focaler}$ is the reconstructed Focaler-IoU, and $d, u \in [0, 1]$. The parameters $d$ and $u$ are adjustable. By adjusting the values of $d$ and $u$, $IoU^{focaler}$ can focus on different regression samples. The definition of the loss is shown in Equation (13).

$$L_{Focaler\text{-}IoU} = 1 - IoU^{focaler} \tag{13}$$

The Focaler-IoU loss is then applied to the CIoU loss function, and the definition of $L_{Focaler\text{-}CIoU}$ is shown in Equation (14).

$$L_{Focaler\text{-}CIoU} = L_{CIoU} + IoU - IoU^{focaler} \tag{14}$$
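The linear interval mapping and its combination with a CIoU loss value can be sketched as follows. The sketch assumes the usual Focaler-IoU form, in which the IoU is clipped to 0 below a lower bound d, to 1 above an upper bound u, and rescaled linearly in between. The values of d and u used here are placeholders, not settings reported in the paper, and the function names are hypothetical.

```python
def focaler_iou(iou, d=0.0, u=0.95):
    """Map IoU linearly from the interval [d, u] onto [0, 1], clipping outside it."""
    if not 0.0 <= d < u <= 1.0:
        raise ValueError("expected 0 <= d < u <= 1")
    return min(max((iou - d) / (u - d), 0.0), 1.0)


def focaler_ciou_loss(ciou_loss_value, iou, d=0.0, u=0.95):
    """Replace the plain IoU inside a CIoU loss value by its Focaler-IoU version."""
    return ciou_loss_value + iou - focaler_iou(iou, d, u)


# With d = 0.3 and u = 0.8, boxes below 0.3 IoU count as complete misses
# and boxes above 0.8 IoU count as perfect matches.
for value in (0.2, 0.55, 0.9):
    print(value, focaler_iou(value, d=0.3, u=0.8))
```

Moving the interval toward low IoU values emphasizes hard samples, while moving it toward high values emphasizes easy ones, which is how the two parameters steer the regression.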

To make Focaler-CIoU focus dynamically on different regression samples, the loss is weighted as defined in Equation (15), where $\lambda > 0$ serves as a balancing factor controlling the magnitude of the weights, and $\gamma \geq 0$ acts as a focusing factor that dynamically adjusts the weights of easy versus hard samples. For the weight term $\lambda \cdot IoU^{\gamma}$, if the focusing factor $\gamma > 0$, this term's value increases monotonically as the IoU value increases, as demonstrated in Equation (16).

$$L = \lambda \cdot IoU^{\gamma} \cdot L_{Focaler\text{-}CIoU} \tag{15}$$

$$\frac{\partial \left(\lambda \cdot IoU^{\gamma}\right)}{\partial IoU} = \lambda \gamma \cdot IoU^{\gamma - 1} > 0 \tag{16}$$

This indicates that the weight function can dynamically adjust the processing intensity for different samples based on the IoU. When the overlap between the predicted box and the ground truth box is low (IoU → 0+), the weight approaches zero, which enhances the gradient response of the loss function for difficult samples. Conversely, when the overlap is high (IoU → 1−), the weight stabilizes at the constant $\lambda$, preventing the gradients of easy samples from dominating overall. This method makes the model focus more on difficult-to-classify bounding boxes by reducing the weights of high-confidence samples.

The incorporation of Focaler-IoU loss optimizes the model, enabling it to mitigate class imbalance challenges in detection tasks while boosting bounding box regression accuracy, ultimately elevating overall performance.

4. Experiments

4.1. Datasets

To verify the effectiveness of the proposed LCED-YOLO network, we conduct experiments on the NEU-DET [45] and GC10-DET [46] datasets. The NEU-DET dataset includes six categories of steel surface defects: crazing (Crz), rolled-in scale (Rs), inclusions (In), patches (Pa), scratches (Sc), and pitted surface (Ps). The dataset is partitioned at an 8:1:1 ratio into 1440 training images, 180 validation images, and 180 test images. The GC10-DET dataset includes ten categories of steel surface defects: oil spots (Os), creases (Crs), water spots (Ws), crescent gaps (Cg), silk spots (Ss), inclusions (In), weld lines (Wl), punching (Pu), waist folding (Wf), and rolled pits (Rp). It is also partitioned at an 8:1:1 ratio, giving 1834 training images, 230 validation images, and 230 test images. The defects in these datasets are diverse and vary randomly in distribution, shape, and size, which greatly increases the difficulty of extracting complex defect features.

4.2. Experimental Setup

The experiments were run on the Windows 10 operating system, with the model implemented in PyTorch 1.12.1 and Python 3.8. The system used an NVIDIA GeForce RTX 3060 GPU with 12 GB of memory and an Intel Core i5-13400F CPU, with CUDA 11.6. All input images are resized to a resolution of 640 × 640, the model is trained for 300 epochs with a batch size of 8, and the SGD optimizer is used. The initial learning rate is 0.01, the weight decay coefficient is 0.0005, and the momentum is 0.937. Mosaic data augmentation is used and is disabled for the last 10 epochs. To ensure the fairness and comparability of the experiments, no pre-trained weights are used in any experiment in this paper. All comparison methods and our proposed model were trained from scratch under identical training conditions.
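The settings above can be collected in a single configuration for reproducibility. The sketch below is only a convenience summary: the key names follow Ultralytics-style training arguments as an assumption, since the text does not state which training toolkit was used, and the helper `mosaic_enabled` is hypothetical.

```python
# Hyperparameters as reported in the experimental setup.
# Key names are an assumed, Ultralytics-style naming and not confirmed by the paper.
train_config = {
    "imgsz": 640,            # input resolution (640 x 640)
    "epochs": 300,           # number of training epochs
    "batch": 8,              # batch size
    "optimizer": "SGD",
    "lr0": 0.01,             # initial learning rate
    "weight_decay": 0.0005,
    "momentum": 0.937,
    "close_mosaic": 10,      # Mosaic is switched off for the last 10 epochs
    "pretrained": False,     # all models are trained from scratch
}


def mosaic_enabled(epoch, config=train_config):
    """Return True while Mosaic augmentation is still active (epochs are 0-indexed)."""
    return epoch < config["epochs"] - config["close_mosaic"]


print(mosaic_enabled(0), mosaic_enabled(289), mosaic_enabled(290))
```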

4.3. Evaluation Basis

To evaluate the model's performance and the effectiveness of the proposed method from different aspects, we select average precision (AP), mean average precision (mAP), the number of model parameters, computational cost (FLOPs), and frames per second (FPS) as evaluation metrics. The metrics are calculated as in Equations (17)–(20).

$$P = \frac{TP}{TP + FP} \tag{17}$$

$$R = \frac{TP}{TP + FN} \tag{18}$$

$$AP = \int_{0}^{1} P(R)\,dR \tag{19}$$

$$mAP = \frac{1}{N}\sum_{i=1}^{N} AP_{i} \tag{20}$$

where $P$ represents the precision, which is the proportion of correctly predicted positive samples among all predicted positive samples, and $R$ represents the recall, which is the proportion of correctly predicted positive samples among all actual positive samples. $TP$ represents the number of positive samples predicted correctly, $FP$ represents the number of negative samples incorrectly predicted as positive, $FN$ represents the number of positive samples predicted as negative, and $N$ is the number of defect categories.
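These metrics can be computed with a few small functions. The sketch approximates the area under the precision-recall curve with all-point interpolation, which is a common numerical choice but an assumption here, since the text does not say how the integral is evaluated. The counts and curve points are illustrative and the function names are hypothetical.

```python
def precision(tp, fp):
    """Share of predicted positives that are correct."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    """Share of actual positives that are found."""
    return tp / (tp + fn) if tp + fn else 0.0


def average_precision(recalls, precisions):
    """Area under the precision-recall curve (recalls must be sorted ascending)."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Make precision non-increasing as recall grows (the precision envelope).
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_class):
    """Mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


print(precision(8, 2), recall(8, 8))
print(average_precision([0.5, 1.0], [1.0, 0.5]))
print(mean_average_precision([0.5, 1.0]))
```

For mAP50, a prediction would be counted as a true positive when its IoU with a ground truth box of the same class is at least 0.5.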

4.4. Comparison Experiments

To validate the advantages of LCED-YOLO, it was compared against several popular algorithms. The main comparison algorithms include SSD [17], YOLOv5s, YOLOv8n, YOLOv11n, Faster R-CNN [22], RT-DETR [47], and the advanced defect detection models WSS-YOLO [48] and RDD-YOLO [33]. Comparative experiments were performed on both the NEU-DET and GC10-DET datasets to demonstrate the efficacy and advantages of the proposed approach. The reported results are averaged over multiple repeated runs to ensure a fair comparison.

4.4.1. Comparisons with Other Methods on NEU-DET

According to Table 1 and Table 2, LCED-YOLO performs strongly compared with the other methods. Compared with YOLOv11n, LCED-YOLO increases mAP50 by 2.6%. Although its FPS decreases slightly, it achieves a 19.2% reduction in parameters and a 23.1% decrease in computational complexity, giving better overall performance that meets industrial lightweight requirements. LCED-YOLO reaches 79.8% mAP50 and achieves the best results among all compared models on three defect types: In, Ps, and Pa.

Table 1. Results on the NEU-DET dataset.

Table 2. Comparison of results on the NEU-DET dataset.

Compared with SSD, Faster R-CNN, and RT-DETR, LCED-YOLO increases mAP50 by 7.1%, 4.9%, and 5.8%, respectively. Compared with YOLOv5s and YOLOv8n, it increases mAP50 by 4.5% and 2.8%, respectively. Compared with RDD-YOLO, the proposed method improves mAP50 by 2.8% while consuming fewer resources. Although its mAP50 is 0.1% lower than that of WSS-YOLO, LCED-YOLO has clear advantages in parameter count and computational complexity: its parameter count is 53.3% lower and its computational complexity is 47.9% lower, which makes it more suitable for lightweight industrial deployment.

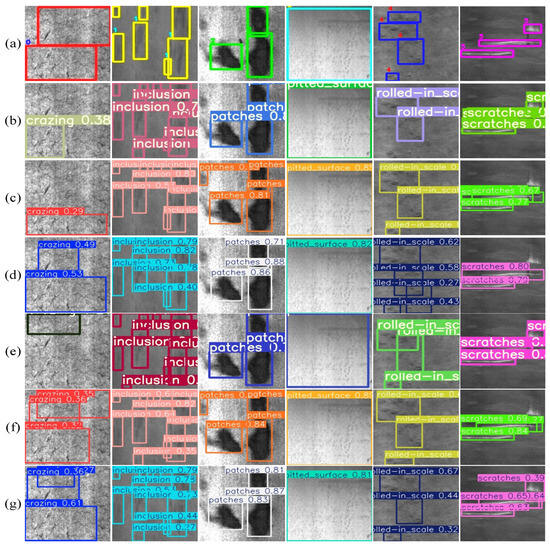

To validate the detection ability of the LCED-YOLO, we conducted a visual experiment of the detection effect. In the experiment, the LCED-YOLO model was compared with advanced detection models such as YOLOv5s, YOLOv8n, YOLOv11n, RDD-YOLO, and WSS-YOLO. As shown in Figure 9, in the experiment, a total of 6 different types of defects were selected; the prediction results were compared with ground truth boxes. Compared with other methods, LCED-YOLO shows excellent detection ability and can complete the detection task efficiently and accurately.

Figure 9. The prediction results for defects. (a) Ground truth. (b) YOLOv5s. (c) YOLOv8n. (d) YOLOv11n. (e) RDD-YOLO. (f) WSS-YOLO. (g) LCED-YOLO.

4.4.2. Comparisons with Other Methods on GC10-DET

To further verify the effectiveness and generalization ability of LCED-YOLO, the model parameters are kept unchanged, and the verification is performed on the GC10-DET dataset. The specific results are shown in Table 3 and Table 4.

Table 3. Results on the GC10-DET dataset.

Table 4. Comparison of results on the GC10-DET dataset.

Compared with the other advanced methods, LCED-YOLO shows the best performance, reaching 70.3% mAP50. SSD, Faster R-CNN, RT-DETR, and YOLOv5s show relatively low performance on the GC10-DET dataset. Models such as YOLOv8n, YOLOv11n, RDD-YOLO, and WSS-YOLO achieve higher mAP50 than those four, but their model sizes are relatively large, which makes them less suitable for actual industrial environments. LCED-YOLO combines higher detection accuracy with fewer parameters and lower computational complexity. These results validate the model's performance in practical industrial defect detection and provide a sound basis for quality assurance in manufacturing workflows.

4.5. Ablation Experiments

This section performs ablation experiments on the proposed enhanced approach using the NEU-DET dataset to validate its efficacy. Furthermore, experimental comparisons were made regarding the combination of multi-scale pooling kernels within the C3K2-MSE module and the choice of the model’s loss function. For clarity in presenting the ablation results, seven experimental ablation schemes are abbreviated as follows:

The Baseline using the C3K2-MSE module is called E-YOLO.

The Baseline using the LDConv module is called D-YOLO.

The Baseline using the lightweight detection head is called L-YOLO.

The Baseline using Focaler-CIoU is called C-YOLO.

The Baseline using C3K2-MSE and LDConv modules is called ED-YOLO.

The Baseline using C3K2-MSE, LDConv, and lightweight detection head modules is called LED-YOLO.

The Baseline using C3K2-MSE, LDConv, a lightweight detection head, and Focaler-CIoU is called LCED-YOLO.

According to Table 5, the experimental results confirm the effectiveness of the proposed method. The LCED-YOLO model improves mAP50 by 2.6% compared to the Baseline. E-YOLO uses the C3K2-MSE module and increases mAP50 by 2.1%, which highlights the effectiveness of C3K2-MSE in capturing edge details of defects. D-YOLO introduces the LDConv module: detection accuracy declines marginally, but the model achieves a reduction of roughly 7.7% in parameters and 3.1% in computational complexity. By using the lightweight decoupled head, L-YOLO improves mAP50 from 77.2% to 79.0%, reduces parameters by 15.3%, and reduces FLOPs to 5.2 G, so the head improves accuracy while decreasing both parameters and computational complexity. By applying Focaler-CIoU as the bounding box regression loss, C-YOLO improves mAP50 by 1.1% with the same parameters and computational load, which indicates that Focaler-CIoU strengthens the model's ability to handle imbalanced samples. ED-YOLO uses both the C3K2-MSE and LDConv modules and achieves 77.3% mAP50 with fewer parameters. LDConv reduces the cost of learning positional offsets at each location through linearization and replaces the downsampling operations in the neck network, lowering the model's parameters and computational complexity. It handles targets with significant feature variations robustly, adapting flexibly to deformations and local changes. For targets that are less sensitive to feature variations, however, LDConv's capacity for capturing global feature information is comparatively limited, which may compromise the model's detection performance. LED-YOLO uses C3K2-MSE, LDConv, and the lightweight decoupled head to achieve 78.2% mAP50 and 158 FPS, while further reducing the parameters and computational complexity of the model.
Finally, all proposed components are integrated into LCED-YOLO, which reaches an mAP50 of 79.8%. The model has 2.1 M parameters in total, a 19.2% reduction from the baseline. Its computational complexity is 5.0 GFLOPs, a 23.1% decrease from the baseline, and the model achieves an inference speed of 151 FPS. In summary, this experiment demonstrates the detection performance of the LCED-YOLO model.

Table 5. Ablation experiment results.
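The headline figures quoted for LCED-YOLO mix two kinds of change: relative reductions (parameters, FLOPS) and absolute gains in percentage points (mAP50). The following sketch reproduces that arithmetic. The Baseline cost values of 2.6 M parameters and 6.5 G FLOPS are assumptions back-computed from the quoted reductions, not values read from Table 5; the Baseline mAP50 of 77.2% is the figure quoted in the text.

```python
def relative_reduction(baseline: float, value: float) -> float:
    """Percentage by which `value` is lower than `baseline`."""
    return (baseline - value) / baseline * 100.0


def point_gain(baseline_pct: float, value_pct: float) -> float:
    """Absolute difference between two percentages, in percentage points."""
    return value_pct - baseline_pct


# Assumed Baseline costs (back-computed from the quoted 19.2% and 23.1%).
BASELINE_PARAMS_M = 2.6
BASELINE_FLOPS_G = 6.5

params_drop = relative_reduction(BASELINE_PARAMS_M, 2.1)  # LCED-YOLO: 2.1 M
flops_drop = relative_reduction(BASELINE_FLOPS_G, 5.0)    # LCED-YOLO: 5.0 G
map_gain = point_gain(77.2, 79.8)                         # mAP50 in percent

print(f"parameters: -{params_drop:.1f}%")
print(f"FLOPS:      -{flops_drop:.1f}%")
print(f"mAP50:      +{map_gain:.1f} points")
```

Under these assumed Baseline values, the computed reductions round to the 19.2% and 23.1% stated in the text, and the mAP50 gain to 2.6 points.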

The MSE module combines pooling kernels of several scales, so the best combination of sizes must be determined experimentally. To ensure that each convolutional layer outputs a sufficient and reasonable number of feature channels, we tested three combinations: [3], [3, 6], and [3, 6, 9, 12]. The best-performing combination was selected, and the results are presented in Table 6.

Table 6. Comparison of multi-scale pooling kernel combinations in C3K2-MSE.

As shown in Table 6, the model performs optimally with the size combination [3, 6, 9, 12]. Conversely, the combinations [3] and [3, 6] result in a diminished capacity for capturing global feature information due to a higher number of channels, thereby compromising the model’s detection performance. A larger channel count also leads to an increase in the number of model parameters. Consequently, the [3, 6, 9, 12] combination is selected for use in the MSE module.
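One plausible reading of the channel argument is sketched below. It assumes, which this section does not state explicitly, that the module's feature channels are divided evenly among the pooling branches, so fewer pooling sizes mean more channels per branch. The function name `channels_per_branch` and the channel count of 128 are hypothetical and serve only as an illustration.

```python
def channels_per_branch(total_channels: int, pool_sizes: list[int]) -> int:
    """Channels given to each pooling branch under an even split (assumed)."""
    if not pool_sizes:
        raise ValueError("at least one pooling size is required")
    return total_channels // len(pool_sizes)


# The three combinations compared in Table 6, with a hypothetical
# input width of 128 channels.
for sizes in ([3], [3, 6], [3, 6, 9, 12]):
    print(sizes, "->", channels_per_branch(128, sizes), "channels per branch")
```

Under this assumption, the [3, 6, 9, 12] combination gives the narrowest branches while covering the widest range of scales.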

The choice of loss function affects model performance, so the best option must be determined experimentally. This paper compares Focal-CIoU with other mainstream loss functions while keeping all other conditions constant. As Table 7 shows, Focal-CIoU reaches an mAP50 of 79.8%, which is 1.7, 1.0, 0.5, 0.7, 1.2, and 0.2 percentage points higher than the other loss functions, respectively. Focal-CIoU therefore gives the model the best overall performance and is the most suitable loss function for it.

Table 7. Comparison of different loss functions.
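For reference, the standard CIoU measure that the compared variants build on can be sketched for a single pair of axis-aligned boxes as follows. This is a minimal pure-Python illustration of the base term only: the focal modification evaluated in Table 7 is not reproduced here, and the function names and the (x1, y1, x2, y2) box format are assumptions made for the example.

```python
import math


def ciou(box_a, box_b, eps=1e-9):
    """Complete IoU between two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    wa, ha = ax2 - ax1, ay2 - ay1
    wb, hb = bx2 - bx1, by2 - by1

    # Plain IoU: intersection area over union area.
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    iou = inter / (wa * ha + wb * hb - inter + eps)

    # Squared centre distance over squared diagonal of the enclosing box.
    rho2 = ((ax1 + ax2 - bx1 - bx2) ** 2 + (ay1 + ay2 - by1 - by2) ** 2) / 4.0
    cw = max(ax2, bx2) - min(ax1, bx1)
    ch = max(ay2, by2) - min(ay1, by1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency term and its trade-off weight.
    v = (4.0 / math.pi ** 2) * (
        math.atan(wb / (hb + eps)) - math.atan(wa / (ha + eps))
    ) ** 2
    alpha = v / (1.0 - iou + v + eps)

    return iou - rho2 / c2 - alpha * v


def ciou_loss(box_a, box_b):
    """CIoU regression loss: zero for a perfect match, larger otherwise."""
    return 1.0 - ciou(box_a, box_b)


print(ciou_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # identical boxes, loss near 0
print(ciou_loss((0, 0, 1, 1), (2, 2, 3, 3)))  # disjoint boxes, loss above 1
```

Unlike plain IoU, the centre-distance term keeps the loss informative even when the boxes do not overlap.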

5. Conclusions

This paper introduces the LCED-YOLO model for detecting defects on steel surfaces. Firstly, the C3K2-MSE module is designed to enhance the model’s capacity to extract edge information, which in turn improves its detection accuracy for complex objects. Secondly, LDConv is introduced to lighten the neck structure of the model, which effectively reduces the model’s parameter count and computational complexity. Thirdly, a lightweight decoupling head is designed. By grouping and refining feature information, it significantly improves detection performance while keeping the architecture lightweight. Finally, the CIoU loss is optimized through the introduction of learnable attention factors, which improves the model’s adaptability to imbalanced samples and thereby boosts its overall performance. On NEU-DET, LCED-YOLO achieves 79.8% mAP50 with 2.1 M parameters, 5.0 G FLOPS, and 151 FPS; on GC10-DET, it reaches 70.3% mAP50 at 188 FPS. Compared with other strong models, it demonstrates superior overall performance. In summary, the model performs well in detection accuracy, parameter count, and computational complexity, though opportunities for enhancement remain. Future research should focus on maintaining detection ability while further optimizing the network structure, so that the model becomes more lightweight and easier to apply in actual industrial settings.

Author Contributions

Conceptualization: A.Z. and X.J.; Methodology: X.J. and W.L.; Software: W.L. and X.J.; Formal analysis: A.Z.; Writing—original draft preparation: A.Z. and X.J.; Writing—review and editing: A.Z. and W.L.; Supervision: A.Z. and W.L.; Project administration: W.L.; Funding acquisition: W.L., A.Z. and X.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the S&T Program of Hebei (22282203Z) and the Natural Science Foundation of Hebei Province (E2022209086).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Xu, Y.Y.; Li, D.W.; Xie, Q.; Wu, Q.Y.; Wang, J. Automatic defect detection and segmentation of tunnel surface using modified Mask R-CNN. Measurement 2021, 178, 109316.

- Zhou, X.F.; Fang, H.; Liu, Z.; Zheng, B.L.; Sun, Y.Q.; Zhang, J.Y.; Yan, C.G. Dense Attention-Guided Cascaded Network for Salient Object Detection of Strip Steel Surface Defects. IEEE Trans. Instrum. Meas. 2022, 71, 5004914.

- Mordia, R.; Verma, A.K. Visual techniques for defects detection in steel products: A comparative study. Eng. Fail. Anal. 2022, 134, 106047.

- Deng, W.; Liu, G.X.; Meng, J. Study on Novel Surface Defect Detection Methods for Aeroengine Turbine Blades Based on the LFD-YOLO Framework. Sensors 2025, 25, 2219.

- Jiang, W.J.; Li, T.F.; Zhang, S.L.; Chen, W.B.; Yang, J. PCB defects target detection combining multi-scale and attention mechanism. Eng. Appl. Artif. Intell. 2023, 123, 106359.

- Li, D.J.; Zhang, Z.L.; Wang, B.G.; Yang, C.M.; Deng, L.W. Detection method of timber defects based on target detection algorithm. Measurement 2022, 203, 111937.

- Xing, Z.Y.; Zhang, Z.Y.; Yao, X.W.; Qin, Y.; Jia, L.M. Rail wheel tread defect detection using improved YOLOv3. Measurement 2022, 203, 111959.

- Xiang, Z.; Shen, Y.J.; Ma, M.; Qian, M. HookNet: Efficient Multiscale Context Aggregation for High-Accuracy Detection of Fabric Defects. IEEE Trans. Instrum. Meas. 2023, 72, 5016311.

- Chen, C.; Chen, Z.; Li, H.; Wang, Y.W.; Lei, G.Z.; Wu, L.L. Research on Defect Detection in Lightweight Photovoltaic Cells Using YOLOv8-FSD. Sensors 2025, 25, 843.

- Gao, R.Z.; Chen, M.; Pan, Y.; Zhang, J.X.; Zhang, H.P.; Zhao, Z.Y. LGR-Net: A Lightweight Defect Detection Network Aimed at Elevator Guide Rail Pressure Plates. Sensors 2025, 25, 1702.

- Chen, J.D.; Wen, Y.X.; Nanehkaran, Y.A.; Zhang, D.F.; Zeb, A. Multiscale Attention Networks for Pavement Defect Detection. IEEE Trans. Instrum. Meas. 2023, 72, 1–12.

- Xiao, J.S.; Guo, H.W.; Zhou, J.; Zhao, T.; Yu, Q.Z.; Chen, Y.H.; Wang, Z.Y. Tiny object detection with context enhancement and feature purification. Expert Syst. Appl. 2023, 211, 118665.

- Tan, L.; Hu, X.X.; Tang, T.; Yuan, D.J. A lightweight metro tunnel water leakage identification algorithm via machine vision. Eng. Fail. Anal. 2023, 150, 107327.

- Cui, L.S.; Lv, P.; Jiang, X.H.; Gao, Z.M.; Zhou, B.; Zhang, L.M.; Shao, L.; Xu, M.L. Context-Aware Block Net for Small Object Detection. IEEE Trans. Cybern. 2022, 52, 2300–2313.

- Urbonas, A.; Raudonis, V.; Maskeliunas, R.; Damasevicius, R. Automated Identification of Wood Veneer Surface Defects Using Faster Region-Based Convolutional Neural Network with Data Augmentation and Transfer Learning. Appl. Sci. 2019, 9, 4898.

- Zhang, D.H.; Hao, X.Y.; Wang, D.C.; Qin, C.B.; Zhao, B.; Liang, L.L.; Liu, W. An efficient lightweight convolutional neural network for industrial surface defect detection. Artif. Intell. Rev. 2023, 56, 10651–10677.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the 14th European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 27th IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Ren, S.Q.; He, K.M.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- He, K.M.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 16th IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Ma, S.H.; Shi, M.M.; Hu, C.F. Objects detection and location based on mask RCNN and stereo vision. In Proceedings of the 14th IEEE International Conference on Electronic Measurement and Instruments (ICEMI), Changsha, China, 1–3 November 2019; pp. 369–373.

- Han, C.; Gao, G.Y.; Zhang, Y. Real-time small traffic sign detection with revised faster-RCNN. Multimed. Tools Appl. 2019, 78, 13263–13278.

- Qi, Q.; Zhang, K.; Tan, W.X.; Huang, M.X. Object Detection with Multi-RCNN Detectors. In Proceedings of the 10th International Conference on Machine Learning and Computing (ICMLC), Univ Macau, Zhuhai, China, 26–28 February 2018; pp. 193–197.

- Chen, W.P.; Qiao, Y.T.; Li, Y.J. Inception-SSD: An improved single shot detector for vehicle detection. J. Ambient Intell. Hum. Comput. 2022, 13, 5047–5053.

- Liu, J.X.; Kang, B.Y.; Liu, C.; Peng, X.H.; Bai, Y. YOLO-BFRV: An Efficient Model for Detecting Printed Circuit Board Defects. Sensors 2024, 24, 6055.

- Chen, W.J.; Huang, H.B.; Peng, S.; Zhou, C.S.; Zhang, C.P. YOLO-face: A real-time face detector. Visual Comput. 2021, 37, 805–813.

- Yi, Z.T.; Wu, G.; Pan, X.L.; Tao, J. Research on Helmet Wearing Detection in Multiple Scenarios Based on YOLOv5. In Proceedings of the 33rd Chinese Control and Decision Conference (CCDC), Kunming, China, 22–24 May 2021; pp. 769–773.

- Xie, Q.Y.; Zhou, W.Q.; Tan, H.; Wang, X.L. Surface Defect Recognition in Steel Plates Based on Impoved Faster R-CNN. In Proceedings of the 41st Chinese Control Conference (CCC), Hefei, China, 25–27 July 2022; pp. 6759–6764.

- Yu, X.Y.; Lyu, W.T.; Zhou, D.; Wang, C.Q.; Xu, W.Q. ES-Net: Efficient Scale-Aware Network for Tiny Defect Detection. IEEE Trans. Instrum. Meas. 2022, 71, 3511314.

- Zhao, C.; Shu, X.; Yan, X.; Zuo, X.; Zhu, F. RDD-YOLO: A modified YOLO for detection of steel surface defects. Measurement 2023, 214, 112776.

- Liu, Y.X.; Wu, D.B.; Liang, J.W.; Wang, H. Aeroengine Blade Surface Defect Detection System Based on Improved Faster RCNN. Int. J. Intell. Syst. 2023, 2023, 1992415.

- Chen, W.X.; Meng, S.M.; Wang, X.P. Local and Global Context-Enhanced Lightweight CenterNet for PCB Surface Defect Detection. Sensors 2024, 24, 4729.

- Zhao, H.M.; Gao, Y.S.; Deng, W. Defect Detection Using Shuffle Net-CA-SSD Lightweight Network for Turbine Blades in IoT. IEEE Internet Things J. 2024, 11, 32804–32812.

- Zhao, H.S.; Zhang, Y.; Liu, S.; Shi, J.P.; Loy, C.C.; Lin, D.H.; Jia, J.Y. PSANet: Point-wise Spatial Attention Network for Scene Parsing. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 270–286.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 30th IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.

- Liu, S.; Huang, D.; Wang, Y. Receptive Field Block Net for Accurate and Fast Object Detection. In Proceedings of the European Conference on Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 404–419.

- Zhang, X.; Song, Y.Z.; Song, T.T.; Yang, D.G.; Ye, Y.C.; Zhou, J.; Zhang, L.M. LDConv: Linear deformable convolution for improving convolutional neural networks. Image Vision Comput. 2024, 149, 105190.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430.

- Zheng, Z.H.; Wang, P.; Liu, W.; Li, J.Z.; Ye, R.G.; Ren, D.W. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. In Proceedings of the 34th AAAI Conference on Artificial Intelligence/32nd Innovative Applications of Artificial Intelligence Conference/10th AAAI Symposium on Educational Advances in Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12993–13000.

- He, Y.; Song, K.C.; Meng, Q.G.; Yan, Y.H. An End-to-End Steel Surface Defect Detection Approach via Fusing Multiple Hierarchical Features. IEEE Trans. Instrum. Meas. 2020, 69, 1493–1504.

- Lv, X.; Duan, F.; Jiang, J.J.; Fu, X.; Gan, L. Deep Metallic Surface Defect Detection: The New Benchmark and Detection Network. Sensors 2020, 20, 1562.

- Zhao, Y.; Lv, W.Y.; Xu, S.L.; Wei, J.M.; Wang, G.Z.; Dan, Q.Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

- Lu, M.; Sheng, W.Q.; Zou, Y.; Chen, Y.T.; Chen, Z.G. WSS-YOLO: An improved industrial defect detection network for steel surface defects. Measurement 2024, 236, 115060.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).