Abstract

To address the challenge of accurately predicting carbon price fluctuations, which are influenced by multiple factors, a multisource spatiotemporal federated learning framework with cross-modal feature fusion is proposed. First, a three-level hierarchical federated learning network is designed, consisting of perception clients, regional (edge) nodes, and a central server. The server incrementally aggregates the parameters that each perception client's local large model produces through incremental training, which improves the efficiency of parameter aggregation in federated learning and avoids exposing the underlying local data. Second, a cross-modal spatiotemporal enhanced attention model is proposed. Spatiotemporal feature encoding is adopted to extract the joint features of carbon price time series and their spatial correlations, and cross-modal alignment is adopted to map market factors and carbon emission data into a shared semantic space in the embedding layer. Finally, experimental results demonstrate that the proposed framework can effectively predict carbon prices.

1. Introduction

With the increasing urgency of global climate change, carbon emissions and carbon trading have become critical environmental and economic concerns [1]. The development of carbon emission trading markets has steadily progressed, transforming carbon into a financial asset and providing an effective means to curb excessive emissions. Accurate prediction of carbon trading prices has become essential for informed decision making by governments, enterprises, and investors. However, carbon trading prices are characterized by strong volatility and instability and are significantly influenced by a variety of market factors. Reliable forecasting of carbon prices plays a crucial role in supporting evidence-based policymaking [2].

Existing studies have demonstrated that market factors affecting carbon prices include both economic variables and energy prices [3]. Economic variables encompass GDP growth rates, exchange rates, and capital market indicators, while energy prices include oil, coal, natural gas, and electricity prices [4,5]. Macroeconomic development shapes societal demand, thereby influencing carbon pricing dynamics [6,7]. For instance, to examine economic impacts, a spatial panel econometric model (SPEM) optimized with an ant colony algorithm was proposed to analyze how urban morphology and socioeconomic variables affect CO2 emissions [8]. Results indicated a 151% increase in emissions, exhibiting spatial clustering, underscoring the significant influence of urban form and economic development on emissions. Moreover, the effects of four types of economic policy uncertainty on carbon trading prices were analyzed using a nonlinear ARDL (autoregressive distributed lag) model and asymmetric causality tests [9,10].

To explore the combined effects of economic indicators and energy prices, the dynamic relationships between them and carbon prices were examined using a VAR-VEC (vector autoregressive–vector error correction) model [11], revealing long-term equilibrium relationships. Additionally, models such as ARMA-GARCH and grey correlation analysis were employed to explore the fluctuations in carbon prices and their potential links with energy and economic conditions [12]. Linear econometric methods, such as Granger causality analysis, have also been used to study the relationships between carbon prices, stock markets, and macroeconomic variables [4,10]. However, such methods fail to capture the inherent nonlinearities and lag effects present in real-world multimodal market influences. Since different factors influence carbon prices to varying degrees, using irrelevant or weakly related variables may impair model performance. To address this, XGBoost (Extreme Gradient Boosting) has been introduced to capture complex nonlinear relationships more effectively [13,14], while simultaneously performing feature selection and dimensionality reduction to enhance model generalization and accuracy.

The centralized training methods described above share a further limitation: Internet of Things (IoT) devices generate heterogeneous data from multiple sources, which leads to data silos and to privacy and security concerns. Using IoT data effectively while keeping it private and secure has become an urgent need, and federated learning emerged in response. To protect enterprise data privacy, a hierarchical federated learning framework is adopted here to train a cross-institutional carbon price prediction model. Multisource data fusion sensing, which combines IoT data acquisition with real-time edge computing, provides minute-level acquisition of carbon market factors. Lightweight edge nodes are deployed to process real-time data streams, while the central node is responsible for global model aggregation and communication delay control.

To investigate the nonlinear causality and hysteresis between market factors and carbon prices, it is necessary to examine price dynamics across different temporal scales (daily, weekly, monthly, quarterly, and yearly). Decomposing raw carbon price data into homogeneous subsequences has become a standard approach to handling highly complex time series. Given the uncertainty and nonlinearity of carbon price data, ensemble empirical mode decomposition (EEMD) has been adopted to break down the original signal into multiple stable components and residuals, effectively suppressing high-frequency noise [15]. To capture long-term trends, complementary EEMD (CEEMD) has also been applied for a more refined decomposition of complex patterns [16]. Furthermore, CEEMDAN (Complete Ensemble Empirical Mode Decomposition with Adaptive Noise) has been used to smooth carbon price and volume data, improving data stationarity [17,18]. Nevertheless, due to the strong volatility and non-stationarity of carbon price signals, traditional models often face difficulties in parameter estimation and risk of overfitting. To overcome these issues, the adaptive chirp mode decomposition (ACMD) algorithm has shown advantages in extracting key features from multimodal signals with strong time-varying characteristics [19].

To better capture the temporal logic, regularity, and responsiveness of carbon price changes and their influencing factors, various prediction models have been proposed. For example, GARCH-based models have been used to analyze price volatility [20], while combinations of EMD and GARCH have been explored for enhanced forecasting [21]. However, these machine learning approaches often fail to fully resolve the issue of mode mixing during decomposition. In contrast, neural networks are capable of extracting useful features from large, fuzzy, and noisy datasets. Deep learning methods combined with wavelet transforms have been adopted to improve carbon price feature extraction and forecasting accuracy [22]. Hybrid models based on unstructured combinations have also been proposed to enhance predictive robustness [23]. For rapidly rising carbon prices, models that integrate GARCH with long short-term memory (LSTM) networks have been developed [24]. Other hybrid approaches combining LSTM with Light Gradient Boosting Machine (LGBM) have also demonstrated improved prediction performance [25,26,27,28]. Despite these advances, the nonlinear and unstable nature of carbon pricing continues to pose challenges. Temporal convolutional networks (TCNs), with their ability to extract features from complex time series, have recently emerged as promising alternatives [29].

To address the difficulty of accurately predicting carbon price changes driven by multiple factors, this paper proposes a method for perceiving and predicting carbon price influencing factors based on hierarchical federated learning and cross-modal spatiotemporal enhanced attention. First, hierarchical data aggregation is achieved through multi-level nodes (edge devices, regional servers, and a cloud center) to improve global training efficiency while protecting local data privacy: perception terminal devices (e.g., IoT sensors) perform local model training, edge nodes (e.g., regional servers) aggregate the parameters of multiple perception devices, and the cloud center coordinates global model updates. Second, a cross-modal spatiotemporal enhanced attention network is proposed. Spatiotemporal feature encoding is adopted to extract the joint features of carbon price time series and their spatial correlations, and cross-modal alignment is adopted to map market factors and carbon emission data into a shared semantic space in the embedding layer. Third, a dynamic attention mechanism is designed. Temporal attention adaptively weighs the impact of key time points, while spatial attention constructs a region correlation matrix through graph neural networks, highlighting the contribution of highly correlated nodes. Federated learning enables collaborative training while protecting the privacy of multisource data, and it is combined with time-delay dynamic weighting and multimodal fusion to improve carbon price prediction accuracy. Finally, experimental results confirm that the proposed method significantly improves the accuracy and robustness of carbon price forecasting.

2. Hierarchical Federated Learning Cross-Modal Spatiotemporal Enhanced Attention Construction

2.1. Hierarchical Federated Learning Framework

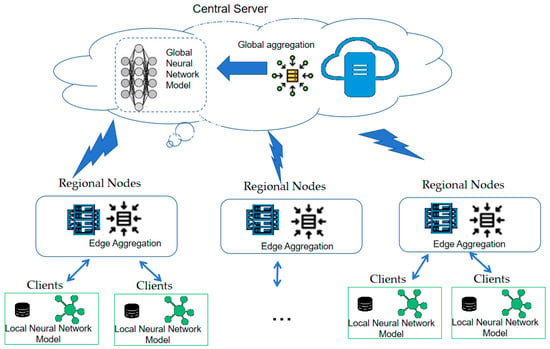

To clarify the conceptual hierarchy, the proposed model adopts a three-level federated architecture consisting of perception clients, regional (edge) nodes, and a central server. Each perception client (e.g., an enterprise or institution) conducts local training using its own carbon trading and energy consumption data. The edge nodes aggregate parameters from multiple local clients, while the central server performs global aggregation and updates. This process preserves data locality and emulates hierarchical federated aggregation rather than centralized training. In our experiments, this communication structure is simulated in a controlled environment, with separate processes representing local, regional, and global updates.

Hierarchical federated learning reduces the central server’s workload through multi-level aggregation, making it well suited to environments with a large number of devices. In contrast, FedAvg follows a simple client–server architecture in which all clients communicate directly with the central server and model updates are combined by weighted averaging, without specific mechanisms to handle data heterogeneity or communication efficiency. FedProx adds a proximal term to FedAvg to improve stability but retains the same architecture. Hierarchical federated learning, on the other hand, offers enhanced privacy protection and energy efficiency [30,31]. The hierarchical framework is motivated by two main limitations of conventional federated learning: heterogeneous client resources and reliance on labeled data. It is also used to integrate heterogeneous data sources, such as macroeconomic indicators, energy trading data, and policy texts, through a dynamic feature alignment mechanism. A hierarchical aggregation mechanism optimizes communication efficiency and model performance. The framework is shown in Figure 1.

Figure 1. Hierarchical federated learning framework.

A three-tier architecture consisting of a central server, regional nodes, and local perception clients is adopted. Assume there are $N$ clients in the federated learning network, indexed by $i = 1, 2, 3, …, N$.

(1) Perception terminal device layer: Local training is performed on terminal devices (such as IoT devices) to generate model parameters. Multisource data fusion sensing, which combines IoT data acquisition with real-time edge computing, provides minute-level acquisition of carbon price market factors.

The real-time data acquisition module integrates multimodal sensors, edge computing, and adaptive communication technology to dynamically monitor and analyze key parameters affecting market fluctuations.

(2) Edge node layer: The parameters of multiple perception terminal devices are aggregated to generate an edge model. Lightweight edge nodes are deployed to process real-time data streams, while the central node is responsible for global model aggregation and communication delay control.

(3) Cloud server layer: All edge node parameters are aggregated to generate a global model. A horizontal–vertical hybrid federated architecture is adopted: horizontal federation aggregates samples of homogeneous market data (such as carbon prices on various exchanges), and vertical federation aligns the feature space of heterogeneous influencing-factor data.

The goal of hierarchical federated learning is expressed through its objective function, the core optimization problem of federated learning, which achieves efficient distributed training through hierarchical aggregation. The global objective minimizes the local losses together with regularization terms while ensuring that the original data remain on local devices. In each training round, local clients train models on their own data and send the updated parameters to regional servers. The regional models are then aggregated at the cloud center to form the global model; this is how the system operates in the small-scale experimental setting. To handle differences among clients, weighted aggregation is used when data sizes vary, and regularization is added to reduce the impact of data that are not independent and identically distributed (non-IID). These strategies help maintain stable convergence and efficient communication across heterogeneous clients. This study uses public carbon market data to test the proposed hierarchical federated learning framework as a prototype of a privacy-preserving system. In real applications, the same architecture can be applied to enterprises holding private data, where each client trains locally and shares only encrypted parameters through the hierarchical network. This ensures data privacy while enabling joint model learning across regions.
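The two-stage weighted aggregation described above can be illustrated with a minimal sketch. It assumes that each client reports a flat parameter vector together with its local sample count; the function names `weighted_average` and `hierarchical_aggregate` are hypothetical and are not taken from the experiments reported here.

```python
import numpy as np

def weighted_average(params, sizes):
    """Average parameter vectors, weighting each by its sample count."""
    sizes = np.asarray(sizes, dtype=float)
    stacked = np.stack(params)
    return (sizes[:, None] * stacked).sum(axis=0) / sizes.sum()

def hierarchical_aggregate(regions):
    """regions: list of regions, each a list of (params, n_samples) pairs, one per client."""
    edge_models, edge_sizes = [], []
    for clients in regions:
        params = [p for p, _ in clients]
        sizes = [n for _, n in clients]
        edge_models.append(weighted_average(params, sizes))  # edge-level aggregation
        edge_sizes.append(sum(sizes))
    return weighted_average(edge_models, edge_sizes)          # cloud-level aggregation

# Toy example (made-up numbers): two regions, three clients in total
regions = [
    [(np.array([1.0, 2.0]), 10), (np.array([3.0, 4.0]), 30)],
    [(np.array([5.0, 6.0]), 60)],
]
global_model = hierarchical_aggregate(regions)
```

Because each edge model is weighted by the total sample count of its region, the result equals a single sample-weighted average over all clients, while only aggregated parameters leave each region.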

2.2. Cross-Modal Spatiotemporal Enhanced Attention Model

Enterprises or institutions hold private carbon trading data (such as historical carbon prices and energy consumption). Each trains the model locally on these data and uploads only encrypted model parameters to the regional nodes. Local data include time series of carbon prices, energy consumption, production data, and market impact factors that are dynamically injected into regional nodes through IoT. The federated spatiotemporal prediction network captures the interregional transmission effects of carbon prices. A multi-task learning architecture is adopted: short-term volatility prediction and long-term trend analysis are trained jointly, and market factors are dynamically weighted through an attention mechanism.

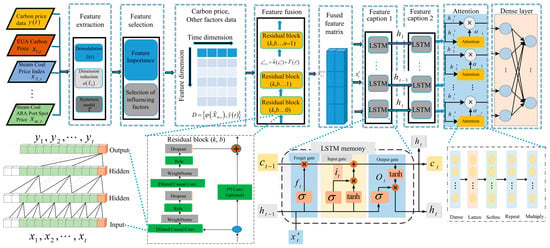

To effectively capture the high-dimensional and dynamic interactions between carbon prices and multiple market influencing factors, a time–frequency lagged effect model is proposed. This model aims to fuse time-domain and frequency-domain features from both carbon price and its influencing variables, enabling a more nuanced understanding of temporal dependencies and market responses. The model architecture is designed to capture both short-term and long-term hysteresis effects and is capable of learning complex nonlinear relationships. In particular, an attention mechanism is incorporated to assign dynamic weights to different time steps, alleviating the issue of feature vanishing caused by increasing sequence lengths. This mechanism ensures that critical information is retained and emphasized in the modeling process. The overall architecture of the proposed model is illustrated in Figure 2.

Figure 2. Cross-modal spatiotemporal enhanced attention mechanism.

The architecture comprises four key layers, each with distinct functionality:

- Horizontal Federation Aggregation Carbon Price Data: This layer accepts raw carbon prices and influencing factor time series. It utilizes time–frequency decomposition techniques for denoising and trend extraction. The goal is to perform quantitative analysis and eliminate the noise component of carbon price fluctuations, enabling the model to focus on stable trends and critical dynamics. Unified data descriptors are established during the feature engineering phase to ensure consistency across regional feature spaces.

- Vertical Federation Alignment Feature Space: To reduce dimensionality and model complexity, this layer computes feature importance scores for each time–frequency component using mutual information, correlation metrics, or model-based ranking. Only the top-ranked features are passed on to subsequent layers, so that only the most relevant lagged features are retained for training. Privacy protection technology enables cross-institutional data collaboration. In the gradient aggregation stage, weights are dynamically adjusted according to each feature's contribution to the model loss in order to optimize the fusion of market-related features.

- Spatiotemporal Enhanced Attention Mechanism: The time attention mechanism is used to adaptively weigh the impacts of key time points. The spatial attention constructs a region correlation matrix through graph neural networks, highlighting the contribution of highly correlated nodes. The horizontal federated learning achieves collaborative training under multisource data privacy protection and combines time-delay dynamic weighting and multimodal fusion to improve carbon price prediction accuracy.

- Output Layer: The perception terminal sends the locally updated model parameters to the aggregation server. The server aggregates parameters such as weight matrix and bias vector.
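As an illustration of the temporal attention step in the list above, the following is a minimal sketch of softmax attention over time steps. The scoring vector `w` stands in for a learned parameter and the random inputs are placeholders; both are assumptions for illustration and do not reproduce the trained network.

```python
import numpy as np

def temporal_attention(H, w):
    """H: (T, d) hidden states over T time steps; w: (d,) scoring vector (hypothetical)."""
    scores = H @ w                        # one relevance score per time step
    scores = scores - scores.max()        # shift for a numerically stable softmax
    weights = np.exp(scores) / np.exp(scores).sum()
    context = weights @ H                 # attention-weighted summary, shape (d,)
    return context, weights

rng = np.random.default_rng(0)
H = rng.normal(size=(6, 4))               # placeholder hidden states
w = rng.normal(size=4)                    # placeholder scoring vector
context, weights = temporal_attention(H, w)
```

The weights are non-negative and sum to one, so time steps with larger scores contribute more to the summary vector regardless of sequence length.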

3. Cross-Modal Horizontal–Vertical Hybrid Federation

3.1. Horizontal Federation Aggregation Carbon Price Data

Horizontal federation aggregates samples of homogeneous market data (such as carbon prices on various exchanges). Because carbon prices are highly volatile and unstable, modeling the raw price data directly tends to cause overfitting. A model is therefore needed to analyze carbon prices quantitatively and reveal their patterns, timeliness, and inherent logic. To improve prediction accuracy, an econometric approach is adopted to capture the impact of market factors such as macroeconomic conditions, energy price fluctuations, market speculation, and alternative pricing mechanisms. Additionally, to address frequency inconsistencies across time scales (daily, weekly, monthly, quarterly, and yearly), a decomposition technique is applied to the carbon price data. This enables the identification of underlying trends, including seasonal effects, cyclical behaviors, and other periodic features embedded in the complex carbon price signal. The procedure first decomposes the price series and then solves an optimization problem to refine component separation.

3.1.1. Construction of a Time–Frequency Matrix for Carbon Price Series

The input carbon price series $s(t)$ undergoes time–frequency analysis, transforming the data from the time domain into the frequency domain to obtain its time–frequency representation. This process yields the distribution of signal energy across both time and frequency, laying the foundation for subsequent decomposition. Initially, background noise and outliers in $s(t)$ are removed before analysis. During prediction, the weights are adaptively learned to enhance both robustness and accuracy.

Unstable signals often consist of multiple sub-signals $s_i(t)$, each of which can be modeled by the following chirp-mode representation:

$$s(t) = \sum_{i=1}^{K} s_i(t) = \sum_{i=1}^{K} a_i(t)\cos\left(2\pi\int_0^t f_i(\xi)\,d\xi + \varphi_i\right) \tag{1}$$

where $s(t)$ is the observed carbon price data, $K$ is the number of sub-signals, $a_i(t)$, $f_i(t)$, and $\varphi_i$ represent the instantaneous amplitude, frequency, and initial phase of the sub-signals, respectively, and $i = 1, 2, …, K$.

The resulting time–frequency matrix derived from $s(t)$ captures the primary spectral and temporal features of the signal. From this matrix, eigenvalues and eigenvectors are extracted to reveal the underlying structural patterns in the time–frequency domain. By leveraging these features and physical characteristics, modal components corresponding to various chirp patterns are identified and grouped.

To further isolate individual modal components from the original signal $s(t)$, a logarithmic transformation is applied, and Equation (1) is restructured as:

$$s(t) = \sum_{i=1}^{K}\left[\alpha_i(t)\cos\left(2\pi\int_0^t \tilde f_i(\xi)\,d\xi\right) + \beta_i(t)\sin\left(2\pi\int_0^t \tilde f_i(\xi)\,d\xi\right)\right] \tag{2}$$

Here, $\alpha_i(t)$ and $\beta_i(t)$ are demodulation operators, and $\tilde f_i(t)$ denotes the chirp frequency functions. These operators are further defined as:

$$\alpha_i(t) = a_i(t)\cos\left(2\pi\int_0^t \big(f_i(\xi) - \tilde f_i(\xi)\big)\,d\xi + \varphi_i\right), \qquad \beta_i(t) = -a_i(t)\sin\left(2\pi\int_0^t \big(f_i(\xi) - \tilde f_i(\xi)\big)\,d\xi + \varphi_i\right) \tag{3}$$

The instantaneous amplitude is given by $a_i(t) = \sqrt{\alpha_i^2(t) + \beta_i^2(t)}$. When $\tilde f_i(t) = f_i(t)$, the frequency deviation vanishes, yielding periodic signals from which the subcomponents of the carbon price data can be estimated. The time–frequency matrix based on $s(t)$ then reveals periodic patterns and embedded regularities at different frequencies and time instances.

3.1.2. Modal Feature Extraction and Iterative Decomposition Analysis

Based on the extracted chirp-mode features, the signal is decomposed iteratively into several chirped modal components using an adaptive algorithm. The decomposition parameters are dynamically tuned in accordance with the inherent characteristics of the data to ensure accurate separation of each modal component. The quality of decomposition is assessed by minimizing the mean square error, and the associated parameters are updated accordingly.

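To solve the decomposition optimization problem under the assumption $\tilde f_i(t) = f_i(t)$, the optimization task for the $i$-th sub-signal is defined as:

$$\min_{\alpha_i,\,\beta_i,\,\tilde f_i}\left\{\|\alpha_i''(t)\|_2^2 + \|\beta_i''(t)\|_2^2 + \tau\,\|s(t) - s_i(t)\|_2^2\right\} \tag{4}$$

where $s_i(t) = \alpha_i(t)\cos\theta_i(t) + \beta_i(t)\sin\theta_i(t)$ is the $i$-th sub-signal with phase $\theta_i(t) = 2\pi\int_0^t \tilde f_i(\xi)\,d\xi$, $\alpha_i''(t)$ is the second derivative of $\alpha_i(t)$, $\beta_i''(t)$ is the second derivative of $\beta_i(t)$, $\|\cdot\|_2$ denotes the $\ell_2$-norm, and $\tau$ is the penalty factor. Let $T$ denote the number of time samples; the discrete form of Equation (4) becomes:

$$\min_{\mathbf{u}_i,\,\tilde{\mathbf{f}}_i}\left\{\|\Theta\,\mathbf{u}_i\|_2^2 + \tau\,\|\mathbf{s} - G_i\mathbf{u}_i\|_2^2\right\} \tag{5}$$

Here, $\mathbf{s} = [s(t_1), …, s(t_T)]^{\top}$ and $\mathbf{u}_i = [\boldsymbol{\alpha}_i^{\top}, \boldsymbol{\beta}_i^{\top}]^{\top}$ collect the samples of the signal and of the demodulated components. $\Theta$ is a block-diagonal matrix constructed from the second-order finite difference operator $D_2$, which enforces smoothness: $\Theta = \mathrm{diag}(D_2, D_2)$; $G_i$ is the design matrix defined as: $G_i = [C_i, S_i]$, with $C_i = \mathrm{diag}[\cos\theta_i(t_1), …, \cos\theta_i(t_T)]$ and $S_i = \mathrm{diag}[\sin\theta_i(t_1), …, \sin\theta_i(t_T)]$.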

During the iterative decomposition, each extracted modal component is subtracted from the original signal before proceeding to extract the next one. This ensures that each chirped mode contains only one dominant physical pattern. The iteration terminates when the residual of the signal satisfies a predefined threshold.

The reconstructed signal then consists of the sum of all modal components and the final residual:

$$s(t) = \sum_{n=1}^{K}\hat s_n(t) + r(t) \tag{6}$$

where $\hat s_n(t)$ is the estimated $n$-th modal subcomponent, and $r(t)$ is the residual. Based on the decomposition model of Equation (6), the temporal variation laws of amplitude, frequency, and phase of the carbon price signal can be quantitatively characterized.
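To make one extraction step concrete, the sketch below solves a smoothness-penalized least-squares problem for a single mode, then subtracts that mode to obtain the residual. It is a simplified illustration under stated assumptions: the frequency track `freq` is taken as known, whereas the adaptive algorithm also updates it iteratively, and the names `second_difference`, `extract_mode`, and `tau` are hypothetical. The test signal is synthetic.

```python
import numpy as np

def second_difference(n):
    """(n-2) x n second-order finite difference operator."""
    D = np.zeros((n - 2, n))
    for r in range(n - 2):
        D[r, r:r + 3] = (1.0, -2.0, 1.0)
    return D

def extract_mode(s, freq, fs, tau=1e-3):
    """Estimate one chirp mode of s, given an assumed frequency track freq (Hz)."""
    n = len(s)
    phase = 2.0 * np.pi * np.cumsum(freq) / fs        # discrete integral of the frequency
    G = np.hstack([np.diag(np.cos(phase)), np.diag(np.sin(phase))])
    D = second_difference(n)
    Z = np.zeros_like(D)
    Theta = np.block([[D, Z], [Z, D]])                 # smoothness penalty on both components
    # Closed-form minimiser of ||Theta u||^2 + tau * ||s - G u||^2
    u = np.linalg.solve(Theta.T @ Theta / tau + G.T @ G, G.T @ s)
    return G @ u

# Synthetic two-component signal (not carbon price data)
fs = 200
t = np.arange(200) / fs
slow = np.cos(2 * np.pi * 5 * t)
signal = slow + 0.5 * np.cos(2 * np.pi * 20 * t)
mode = extract_mode(signal, np.full(200, 5.0), fs)
residual = signal - mode                               # input to the next extraction step
```

A small `tau` weights the smoothness term heavily, so the demodulated components vary slowly and the faster component is left in the residual for the next iteration.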

3.2. Vertical Federation Alignment Feature Space

A vertical federated alignment feature space is used to handle heterogeneous influencing factor data. Spatiotemporal alignment and feature aggregation of multisource market influencing factors are implemented. Because market factors exhibit multisource heterogeneity, high correlation, and hysteresis, predicting carbon prices is inherently challenging. It is therefore necessary to analyze how these market factors influence carbon price fluctuations and reduce the complexity of input data through dimensionality reduction.

First, the data for $M$ market influencing factors over $T$ time steps are organized into a matrix $X \in \mathbb{R}^{T \times M}$, where each column corresponds to one influencing factor:

$$X = [x_1, x_2, …, x_M] = \begin{bmatrix} x_{1,1} & \cdots & x_{1,M} \\ \vdots & \ddots & \vdots \\ x_{T,1} & \cdots & x_{T,M} \end{bmatrix} \tag{7}$$

Let $x_{t,m}$ represent the value of the $m$-th influencing factor at time $t$. Each column $x_m$ is mapped to a high-dimensional feature space using a nonlinear transformation $\phi(\cdot)$. The mean of the transformed feature is:

$$\mu_m = \frac{1}{T}\sum_{t=1}^{T}\phi(x_{t,m}) \tag{8}$$

Each feature is then normalized:

$$\tilde\phi(x_{t,m}) = \frac{\phi(x_{t,m}) - \mu_m}{\sigma_m} \tag{9}$$

where $\sigma_m$ is the standard deviation of $\phi(x_m)$, i.e.,

$$\sigma_m = \sqrt{\frac{1}{T}\sum_{t=1}^{T}\big(\phi(x_{t,m}) - \mu_m\big)^2} \tag{10}$$

The kernel matrix $K$ is constructed using a Gaussian kernel function. The elements of $K$ are computed as:

$$K_{ij} = \exp\left(-\frac{\|x_i - x_j\|^2}{2\sigma_K^2}\right) \tag{11}$$

where $\sigma_K$ is the bandwidth of the Gaussian kernel, $i, j = 1, …, M$.

To center the kernel matrix, the normalized (standard) kernel matrix is computed as:

$$\tilde K = K - \mathbf{1}_M K - K\,\mathbf{1}_M + \mathbf{1}_M K\,\mathbf{1}_M \tag{12}$$

where $\mathbf{1}_M$ is an $M \times M$ matrix with all elements equal to $1/M$.

To address the multicollinearity problem caused by strong correlations among market influencing factors, principal component analysis (PCA) is employed. By extracting the principal components, redundant information is effectively removed, allowing for the identification of the most relevant features.

The eigenvalues and eigenvectors of the covariance matrix of the market influencing factors are computed to quantify the correlation structure between variables. The covariance matrix of the centered kernel matrix is defined as:

$$C = \frac{1}{M}\sum_{j=1}^{M}\tilde k_j\,\tilde k_j^{\top} \tag{13}$$

where $\tilde k_j$ is the $j$-th column of the centered kernel matrix $\tilde K$, and $j = 1, …, M$.

To perform PCA, the singular value decomposition (SVD) of the covariance matrix is calculated:

$$C = U \Lambda V^{H} \tag{14}$$

where $\Lambda$ is a diagonal matrix containing the eigenvalues of $C$, $U$ is the matrix of left singular vectors (eigenvectors), and $V^{H}$ is the conjugate transpose of the right singular vector matrix.

The eigenvectors in $U$ are ordered according to descending eigenvalues:

$$U = [u_1, u_2, …, u_M], \qquad \lambda_1 \ge \lambda_2 \ge \cdots \ge \lambda_M \tag{15}$$

where $u_j$ is the eigenvector corresponding to the $j$-th largest eigenvalue $\lambda_j$ of the covariance matrix $C$. These eigenvectors define the directions of maximum variance in the transformed feature space.

The eigenvectors are sorted in descending order of their corresponding eigenvalues, and the top $p$ principal components are selected. The larger the eigenvalue, the greater the variance of the data along the associated eigenvector direction, and thus the more information it retains.

The original data are projected onto the selected principal components, effectively transforming them into a new low-dimensional feature space. This feature space is defined by the selected eigenvectors. The transformed data are obtained by matrix multiplication, projecting the standardized feature data onto the reduced eigenspace.

With $\tilde X$ denoting the $T \times M$ matrix of standardized features and $U_p = [u_1, …, u_p]$, the reduced feature matrix is calculated as:

$$Y = \tilde X\,U_p \tag{16}$$

This dimensionality reduction process helps eliminate multicollinearity present in the original market influencing factor dataset. As a result, only the most strongly correlated and informative features are retained for subsequent modeling.
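The reduction steps above can be traced in a short sketch: standardize each factor, build and center a Gaussian kernel matrix between factors, decompose its covariance, and project onto the leading directions. Several choices here are assumptions made for illustration only: the nonlinear map is taken to be the identity, the default bandwidth is set to the square root of the number of time steps, and `reduce_factors` is a hypothetical name. The input data are random placeholders.

```python
import numpy as np

def reduce_factors(X, p, bandwidth=None):
    """Project T x M factor data onto p directions obtained from a centred kernel matrix."""
    T, M = X.shape
    if bandwidth is None:
        bandwidth = np.sqrt(T)                           # assumed default scale
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)            # standardise each factor column
    cols = Xs.T                                          # one row per factor
    sq_dist = ((cols[:, None, :] - cols[None, :, :]) ** 2).sum(axis=2)
    K = np.exp(-sq_dist / (2.0 * bandwidth ** 2))        # Gaussian kernel between factors
    one = np.full((M, M), 1.0 / M)
    Kc = K - one @ K - K @ one + one @ K @ one           # centred kernel matrix
    C = Kc @ Kc.T / M                                    # covariance of its columns
    U, eigvals, _ = np.linalg.svd(C)                     # singular values in descending order
    return Xs @ U[:, :p], eigvals

# Placeholder data: two nearly collinear factors and one independent factor
rng = np.random.default_rng(0)
base = rng.normal(size=50)
X = np.column_stack([base, base + 0.01 * rng.normal(size=50), rng.normal(size=50)])
Y, eigvals = reduce_factors(X, p=2)
```

Inspecting `eigvals` gives a quick view of how much structure each retained direction carries before the reduced matrix is passed to later stages.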

3.3. Modeling of Hysteresis Effects and Automated Feature Selection

3.3.1. Hysteresis Effect Identification and Modeling

To model the hysteresis effects in market influencing factors, lagged versions of each variable are treated as candidate features. Each variable is analyzed individually to evaluate its time-delayed impact on carbon price fluctuations. Specifically, the hysteresis period (i.e., time lag) at which a given variable exhibits the strongest correlation with carbon price is identified.

For each factor, correlation coefficients between its lagged versions (from 0 to 12 months) and the carbon price are calculated. The lag order with the highest correlation is selected and further verified using Granger causality testing to assess statistical significance. Three approaches are adopted: time-shifted cross-correlation analysis, Granger causality test, and lagged regression modeling.

Time-Shifted Cross-Correlation Analysis

The objective of this method is to determine the correlation coefficient between different hysteresis orders (e.g., 0 to 12 months) of market influencing factors and the carbon price. The time lag corresponding to the maximum correlation is then identified.

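Given the standardized time series $x_m(t)$ for the $m$-th influencing factor and the logarithmic carbon price series $z(t)$, exponential restoration is performed as $y(t) = \exp(z(t))$. The time-shifted cross-correlation function is defined as:

$$\rho_m(d) = \frac{\sum_{t=d+1}^{T}\big(x_m(t-d) - \bar x_m\big)\big(y(t) - \bar y\big)}{\sqrt{\sum_{t=d+1}^{T}\big(x_m(t-d) - \bar x_m\big)^2}\;\sqrt{\sum_{t=d+1}^{T}\big(y(t) - \bar y\big)^2}} \tag{17}$$

where $d$ denotes the hysteresis order (in months), $x_m(t-d)$ is the lagged time series of the $m$-th influencing factor, $y(t)$ is the carbon price at time $t$, and $\bar x_m$ and $\bar y$ are the means over the overlapping samples. For high-frequency data (e.g., daily), a rolling window analysis is used to dynamically assess the variation in the optimal lag order $d^*$.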
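The lag search can be sketched in a few lines: for each candidate delay, correlate the shifted factor series with the price series and keep the delay with the largest absolute correlation. The function name `best_lag` and the synthetic series below are illustrative assumptions, not the study's data.

```python
import numpy as np

def best_lag(x, y, max_lag=12):
    """Return (lag, correlation) maximising |corr(x[t - lag], y[t])| for lag in 0..max_lag."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    corrs = []
    for lag in range(max_lag + 1):
        x_shifted = x[: len(x) - lag]      # x(t - lag)
        y_aligned = y[lag:]                # y(t)
        corrs.append(np.corrcoef(x_shifted, y_aligned)[0, 1])
    corrs = np.array(corrs)
    lag_star = int(np.argmax(np.abs(corrs)))
    return lag_star, corrs[lag_star]

# Synthetic example: y follows x with a delay of 3 steps plus small noise
rng = np.random.default_rng(1)
x = rng.normal(size=200)
y = np.roll(x, 3) + 0.1 * rng.normal(size=200)
lag_star, corr_star = best_lag(x, y)
```

The sign of the returned correlation indicates the direction of the delayed effect; for a rolling-window analysis the same function can be applied to successive slices of the two series.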

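To evaluate nonlinear correlations, the maximal information coefficient (MIC) is computed between the lagged feature $x_m(t-d)$ and the carbon price $y(t)$ across different delay orders $d$. The dataset $D = \{(x_m(t-d),\, y(t))\}$ is used to compute mutual information:

$$I(D, a, b) = \sum_{i=1}^{a}\sum_{j=1}^{b} p(i,j)\,\log\frac{p(i,j)}{p(i)\,p(j)} \tag{18}$$

Here, $I(D, a, b)$ denotes the mutual information between $x_m(t-d)$ and $y(t)$ over a grid of $a$ rows and $b$ columns, where $p(i,j)$ is the fraction of points of $D$ falling in grid cell $(i,j)$ and $p(i)$, $p(j)$ are the corresponding row and column marginals.

A grid search is then performed over all possible 2D grids to identify the configuration that maximizes the standardized mutual information. The MIC(D) of the dataset is assigned to the highest point on the resulting feature surface:

$$\mathrm{MIC}(D) = \max_{a * b < B}\frac{I(D, a, b)}{\log\min(a, b)} \tag{19}$$

where * indicates the grid product and $B$ is a threshold for the product.

If $x_m(t-d)$ and $y(t)$ are statistically independent under all hysteresis orders, the mutual information is zero. Conversely, maximum MIC implies strong nonlinear dependence.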

The algorithm is then used to rank the influence of each lagged variable based on its correlation with carbon price under different hysteresis orders. The direction of hysteresis is inferred from the sign of the correlation coefficient. The variable and lag with the highest MIC or correlation are retained for prediction.

Granger Causality Test

To verify whether the lagged variable $x_m(t-d)$ provides predictive power for $y(t)$, the Granger causality test statistic is calculated as:

$$GC = \frac{(RSS_r - RSS_u)/q}{RSS_u/(T - p - q - 1)} \tag{20}$$

where $p$ is the lag order of $y$, $q$ is the lag order of $x_m$, $T$ is the number of observations used in the regression, and $RSS_r$ and $RSS_u$ are the residual sums of squares of the restricted and unrestricted models, respectively. If GC exceeds the critical value, the lagged variable is considered to have a significant predictive effect.
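A minimal sketch of this statistic using ordinary least squares is given below. It assumes the standard construction in which the restricted model regresses the price on its own lags and the unrestricted model adds lags of the factor; `granger_f` is a hypothetical name and the series are synthetic.

```python
import numpy as np

def granger_f(y, x, p=2, q=2):
    """F-type statistic testing whether q lags of x improve a model of y with p own lags."""
    y = np.asarray(y, dtype=float)
    x = np.asarray(x, dtype=float)
    m = max(p, q)
    target = y[m:]
    n = len(target)
    y_lags = np.column_stack([y[m - i: len(y) - i] for i in range(1, p + 1)])
    x_lags = np.column_stack([x[m - j: len(x) - j] for j in range(1, q + 1)])
    const = np.ones((n, 1))

    def rss(design):
        beta, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ beta
        return float(resid @ resid)

    rss_r = rss(np.hstack([const, y_lags]))             # restricted: own lags only
    rss_u = rss(np.hstack([const, y_lags, x_lags]))     # unrestricted: adds lags of x
    return ((rss_r - rss_u) / q) / (rss_u / (n - p - q - 1))

# Synthetic example: x leads y by one step
rng = np.random.default_rng(2)
x = rng.normal(size=300)
y = np.zeros(300)
y[1:] = 0.8 * x[:-1] + 0.1 * rng.normal(size=299)
f_x_to_y = granger_f(y, x)
f_y_to_x = granger_f(x, y)
```

In this example the statistic for the direction from `x` to `y` is far larger than for the reverse direction; in practice it would be compared against the critical value of the F distribution with $q$ and $T - p - q - 1$ degrees of freedom.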

Lagged Variable Regression Modeling

Once the optimal hysteresis order for each market influencing factor is determined through time-shifted cross-correlation, mutual information, or Granger causality analysis, the corresponding lagged features are incorporated into the predictive regression model.

These lagged variables serve as nonlinear explanatory factors that reflect the delayed impact of market signals on carbon price dynamics. Due to the complexity and potential multicollinearity of such lagged variables, traditional linear regression models may not fully capture their effects. Therefore, tree-based ensemble learning methods, particularly gradient boosting regression models, are adopted to model these nonlinear dependencies.

3.3.2. Automated Selection of Influential Features

Since the influence of different market factors on the carbon price varies in intensity and temporal delay, building an accurate prediction model requires the elimination of redundant and irrelevant features. In this study, we adopt an automatic feature selection mechanism to identify the most significant variables for carbon price prediction. These selected features not only provide relevant information but also encapsulate hysteresis effects embedded in the complex temporal dynamics of the original dataset.

To enhance the generalization capability and predictive accuracy of the model, we calculate the correlation coefficient between each influencing factor and the carbon price across different hysteresis orders. The maximum correlation value for each variable is then extracted and used to determine the optimal lag configuration.

Once the significant features are identified, an ensemble learning framework is applied. In this framework, weak learners (decision trees) are iteratively added to minimize the prediction error. At each stage, the residuals from the previous iteration are used to train the next learner. After a sufficient number of iterations, the outputs of all weak learners are aggregated to produce the final prediction result.
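The residual-fitting loop described above can be illustrated with a deliberately small example that uses one-split regression trees (stumps) on a single feature and squared-error loss. This is a simplified stand-in for the full ensemble, with no second-order terms or regularization; the names `fit_stump` and `boost`, the learning rate, and the toy data are assumptions for illustration.

```python
import numpy as np

def fit_stump(x, r):
    """Best single-split regression stump on a 1-D feature x for residuals r."""
    best = None
    for thr in np.unique(x)[:-1]:              # exclude the maximum so both sides are non-empty
        left = x <= thr
        left_val, right_val = r[left].mean(), r[~left].mean()
        err = ((r - np.where(left, left_val, right_val)) ** 2).sum()
        if best is None or err < best[0]:
            best = (err, thr, left_val, right_val)
    return best[1:]

def boost(x, y, n_trees=50, lr=0.1):
    """Add stumps one at a time, each fitted to the residuals of the current ensemble."""
    pred = np.full_like(y, y.mean(), dtype=float)
    trees = []
    for _ in range(n_trees):
        thr, left_val, right_val = fit_stump(x, y - pred)    # fit the current residuals
        pred += lr * np.where(x <= thr, left_val, right_val)  # add the new weak learner
        trees.append((thr, left_val, right_val))
    return pred, trees

# Toy data (not carbon prices)
x = np.linspace(0.0, 1.0, 100)
y = np.sin(2 * np.pi * x)
pred, trees = boost(x, y)
mse_start = np.mean((y - y.mean()) ** 2)
mse_end = np.mean((y - pred) ** 2)
```

Each added stump leaves the earlier ones unchanged, so the final prediction is the initial mean plus the scaled sum of all stump outputs.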

The prediction function of the ensemble model is expressed as:

where is the predicted carbon price at time , denotes the -th regression tree, is the number of trees in the ensemble, and is the function space of all possible CART sets.

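The objective function for the model training process is defined as:

$$Obj = \sum_{t=1}^{n} l(y_t, \hat{y}_t) + \sum_{k=1}^{K} \Omega(f_k) + C$$

where $l$ is the loss function between the observed price $y_t$ and the prediction $\hat{y}_t$ over $n$ samples, $\Omega$ is the regularization term that penalizes model complexity, and $C$ is a constant.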

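The regularization term is defined as:

$$\Omega(f_k) = \gamma T_k + \frac{1}{2} \lambda \lVert w_k \rVert^2$$

where $\gamma$ is the regularization coefficient, $T_k$ is the number of leaves in the $k$-th tree, $w_k$ is the weight vector of the leaves, and $\lambda$ is the weight penalty parameter.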

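To simplify the objective function, a second-order Taylor expansion is applied around the prediction of the previous $k-1$ trees:

$$Obj^{(k)} \approx \sum_{t=1}^{n} \left[ l\left(y_t, \hat{y}_t^{(k-1)}\right) + g_t f_k(x_t) + \frac{1}{2} h_t f_k^2(x_t) \right] + \Omega(f_k) + C$$

where $g_t$ and $h_t$ are the first and second derivatives of the loss function:

$$g_t = \frac{\partial\, l\left(y_t, \hat{y}_t^{(k-1)}\right)}{\partial\, \hat{y}_t^{(k-1)}}, \qquad h_t = \frac{\partial^2 l\left(y_t, \hat{y}_t^{(k-1)}\right)}{\partial \left(\hat{y}_t^{(k-1)}\right)^2}$$

The additive model assumption implies that each newly trained function is added to the existing ensemble without altering previous trees, so every term that does not depend on the new tree $f_k$ is constant at step $k$ and can be dropped. The simplified objective function becomes:

$$Obj^{(k)} \approx \sum_{t=1}^{n} \left[ g_t f_k(x_t) + \frac{1}{2} h_t f_k^2(x_t) \right] + \Omega(f_k)$$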

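Given the complexity of tuning the regularization coefficient $\gamma$ and the weight penalty parameter $\lambda$ in Equation (27), Bayesian optimization is used to adaptively select optimal hyperparameters before training. This supports better generalization and improved learning efficiency. The hyperparameter optimization problem is:

$$\theta^{*} = \arg\min_{\theta \in \Theta} f(\theta)$$

where $\theta$ is a hyperparameter combination, $\Theta$ is the search space, and $f(\theta)$ is the objective value obtained with $\theta$. Let $\theta^{*}$ be the optimal solution. The steps for Bayesian optimization are as follows:

- Step 1: Initialize candidate set $\{\theta_1, \ldots, \theta_m\}$.

- Step 2: Evaluate function value $f(\theta_i)$, and compute initial evaluation set $D = \{(\theta_i, f(\theta_i))\}_{i=1}^{m}$.

- Step 3: Build the surrogate model $M$ from $D$.

- Step 4: Choose the next evaluation point by minimizing the acquisition function:

$$\theta_{new} = \arg\min_{\theta \in \Theta} a(\theta \mid M)$$

- Step 5: Add the new evaluation to the evaluation set:

$$D \leftarrow D \cup \{(\theta_{new}, f(\theta_{new}))\}$$

- Step 6: Repeat until convergence and return the best hyperparameter combination $\theta^{*}$.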
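The six steps above can be sketched as a sequential model-based optimization loop. To keep the example dependency-free, the surrogate here is a deliberately simple stand-in (a kernel-weighted mean with a distance-based uncertainty) rather than the Gaussian process normally used in Bayesian optimization, and the acquisition function is a lower confidence bound. The function names, the one-dimensional search space, and the toy loss are all assumptions made for illustration.

```python
import math
import random

def surrogate(x, evaluated, bandwidth=0.5):
    """Kernel-weighted mean of observed values plus a distance-based uncertainty."""
    weights = [math.exp(-((x - xi) / bandwidth) ** 2) for xi, _ in evaluated]
    total = sum(weights)
    if total < 1e-12:
        mean = sum(fi for _, fi in evaluated) / len(evaluated)
    else:
        mean = sum(w * fi for w, (_, fi) in zip(weights, evaluated)) / total
    uncertainty = min(abs(x - xi) for xi, _ in evaluated)
    return mean, uncertainty

def bayes_opt_sketch(objective, low, high, n_init=4, n_iter=25, kappa=0.5, seed=0):
    rng = random.Random(seed)
    # Steps 1-2: draw initial candidates and evaluate the objective on them.
    evaluated = [(x, objective(x)) for x in (rng.uniform(low, high) for _ in range(n_init))]
    for _ in range(n_iter):
        # Steps 3-4: score random candidates with the surrogate and pick the
        # minimizer of a lower-confidence-bound acquisition function.
        candidates = [rng.uniform(low, high) for _ in range(200)]

        def acquisition(x):
            mean, unc = surrogate(x, evaluated)
            return mean - kappa * unc

        x_next = min(candidates, key=acquisition)
        # Step 5: evaluate the true objective and extend the evaluation set.
        evaluated.append((x_next, objective(x_next)))
    # Step 6: return the best hyperparameter value found so far.
    return min(evaluated, key=lambda pair: pair[1])

# Hypothetical validation loss with its minimum at a learning rate of 0.3.
loss = lambda lr: (lr - 0.3) ** 2
best_x, best_loss = bayes_opt_sketch(loss, 0.0, 1.0)
print(round(best_x, 3), round(best_loss, 5))
```

A production setup would replace the stand-in surrogate with a proper probabilistic model and search over several hyperparameters jointly; the loop structure stays the same.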

It is important to clarify the implementation scope of the proposed framework. In the current study, we focus on vertical feature federation and time series modeling within a unified prediction network. While a hierarchical federated architecture (client ⟶ edge ⟶ cloud) is introduced conceptually to illustrate the potential deployment framework, the present experiments do not implement parameter-level hierarchical model aggregation or heterogeneous small-model fusion across nodes. Instead, the experiments simulate the communication structure in a controlled environment to evaluate the model’s prediction performance using public carbon market datasets. The exploration of hierarchical model weight aggregation and small model–large model fusion is identified as an important direction for future work, particularly for real-world deployments involving privacy-sensitive enterprise data.

4. Spatiotemporal Enhanced Attention Mechanism

The hierarchical federated learning network adopts a multi-task learning architecture to capture the transmission of carbon prices between regions. Short-term volatility prediction and long-term trend analysis are trained jointly, and market factors are dynamically weighted through an attention mechanism.

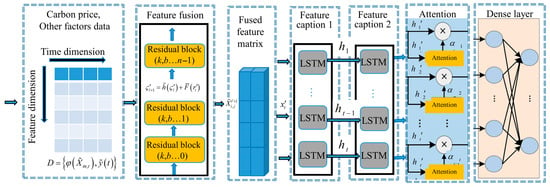

4.1. Multiscale Spatiotemporal Modeling

The feature fusion network layer is designed to effectively capture time-varying patterns of carbon price and multiple market influencing factors across different temporal scales. To achieve this, the model incorporates stacked dilated convolution layers, pooling layers, and fully connected layers, enabling the fusion of both short- and long-term dependencies in multivariate time series data.

In this architecture, the dilated convolution (also known as hole convolution) plays a key role in extracting features across various time scales without increasing computational cost. By adjusting the dilation (void) rate, the receptive field of the convolutional filters is expanded, allowing the network to capture patterns from wider temporal contexts. The multiscale spatiotemporal model is illustrated in Figure 3.

Figure 3.

Multiscale spatiotemporal modeling framework.

The model receives inputs and processes them via causal convolutions that respect the time order. To capture different time-scale dependencies, dilated convolutions are applied. The hole (dilated) convolution operation is formulated as:

where denotes the extracted multiscale feature representation, is the -th element of the convolution kernel, and d’ is the dilation factor. represents the input sequence of the -th modality, and is the time index. denotes the activation function, and is the bias term. represents the cross-attention fusion mechanism, which receives the feature maps from dilated convolutions with different dilation rates. These multiscale tensors are concatenated along the specified dimension to connect features across multiple temporal resolutions. After splicing and fusion, the resulting feature tensor has rows and columns.
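A dilated causal convolution and the multiscale concatenation it feeds can be illustrated as follows. This is a minimal single-channel sketch: the two-tap kernel, the dilation rates (1, 2, 4), and the use of plain concatenation in place of the cross-attention fusion mechanism are assumptions chosen for brevity.

```python
def dilated_causal_conv(x, kernel, dilation, bias=0.0):
    """y[t] = bias + sum_k kernel[k] * x[t - k * dilation], zero-padded on the left."""
    out = []
    for t in range(len(x)):
        acc = bias
        for k, w in enumerate(kernel):
            idx = t - k * dilation
            if idx >= 0:  # causal: never look at future samples
                acc += w * x[idx]
        out.append(acc)
    return out

def receptive_field(kernel_size, dilations):
    """Receptive field of stacked dilated causal convolutions."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)

relu = lambda v: max(0.0, v)

x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
# The same kernel is applied at three dilation rates; the per-step outputs are
# then concatenated so each time step carries features from several time scales.
scales = [[relu(v) for v in dilated_causal_conv(x, [0.5, 0.5], d)] for d in (1, 2, 4)]
fused = [list(row) for row in zip(*scales)]
print(fused[-1])                       # [7.5, 7.0, 6.0]
print(receptive_field(2, [1, 2, 4]))   # 8
```

Doubling the dilation at each layer widens the receptive field from 2 to 8 time steps while the number of kernel weights per layer stays fixed, which is the property the text relies on.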

Residual connections are introduced to form deeper architectures and mitigate gradient vanishing. Each residual block consists of a skip connection and a convolutional transformation:

where represents a 1 × 1 convolution-based direct mapping.

To optimize feature fusion network hyperparameters (e.g., filter size, depth, learning rate), we use the Sparrow Search Algorithm (SSA), a bio-inspired metaheuristic optimization technique. Each sparrow represents a candidate hyperparameter vector, encoded in a position matrix:

$$X = \begin{bmatrix} x_{1,1} & x_{1,2} & \cdots & x_{1,d} \\ x_{2,1} & x_{2,2} & \cdots & x_{2,d} \\ \vdots & \vdots & \ddots & \vdots \\ x_{n',1} & x_{n',2} & \cdots & x_{n',d} \end{bmatrix}$$

where $x_{n',d}$ denotes the $d$-th dimension of the $n'$-th sparrow, $n'$ represents the number of sparrows, and $d$ represents the dimension of the variable to be optimized.

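The fitness of each individual is calculated based on model performance:

$$F_X = \begin{bmatrix} f([x_{1,1}, x_{1,2}, \ldots, x_{1,d}]) \\ f([x_{2,1}, x_{2,2}, \ldots, x_{2,d}]) \\ \vdots \\ f([x_{n',1}, x_{n',2}, \ldots, x_{n',d}]) \end{bmatrix}$$

where the value of each row in $F_X$ indicates the fitness value of the individual. SSA includes distinct update rules for producers, scroungers, and sentinels. Producers update their positions as:

$$x_{i,j}^{t+1} = \begin{cases} x_{i,j}^{t} \cdot \exp\left(\dfrac{-i}{\alpha \cdot iter_{\max}}\right), & R_2 < ST \\ x_{i,j}^{t} + Q \cdot L, & R_2 \ge ST \end{cases}$$

where $t$ here denotes the current iteration, $j = 1, 2, \ldots, d$, and $x_{i,j}^{t}$ denotes the value of the $j$-th dimension of the $i$-th sparrow at iteration $t$. $iter_{\max}$ is the maximum number of iterations, and $\alpha \in (0, 1]$ is a random number. $R_2 \in [0, 1]$ and $ST \in [0.5, 1]$ stand for the alarm value and the safety threshold, respectively. $Q$ is a random number obeying the normal distribution, and $L$ denotes a $1 \times d$ matrix in which each element is 1. When $R_2 < ST$, the producer enters the wide search mode.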

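The position update rule for scroungers is:

$$x_{i,j}^{t+1} = \begin{cases} Q \cdot \exp\left(\dfrac{x_{worst}^{t} - x_{i,j}^{t}}{i^2}\right), & i > n'/2 \\ x_{P}^{t+1} + \left| x_{i,j}^{t} - x_{P}^{t+1} \right| \cdot A^{+} \cdot L, & \text{otherwise} \end{cases}$$

where $x_{P}$ is the best position occupied by the producer, $x_{worst}$ denotes the current global worst position, and $A$ denotes a $1 \times d$ matrix in which each element is randomly assigned a value of 1 or −1, with $A^{+} = A^{\top}(AA^{\top})^{-1}$. When $i > n'/2$, the $i$-th scrounger, which has a worse fitness value, is most likely to starve. Danger-aware sparrows (10–20% of the population) have specialized update rules:

$$x_{i,j}^{t+1} = \begin{cases} x_{best}^{t} + \beta \cdot \left| x_{i,j}^{t} - x_{best}^{t} \right|, & f_i > f_g \\ x_{i,j}^{t} + K \cdot \left( \dfrac{\left| x_{i,j}^{t} - x_{worst}^{t} \right|}{(f_i - f_w) + \varepsilon} \right), & f_i = f_g \end{cases}$$

where $x_{best}$ is the current global optimal position. The step control parameter $\beta$ is drawn from a normal distribution with mean 0 and variance 1, and $K \in [-1, 1]$ is a random number. $f_i$ is the current fitness value of the sparrow, and $f_g$ and $f_w$ are the current global optimal and worst fitness values, respectively. $\varepsilon$ is a small constant that avoids division by zero. The case $f_i > f_g$ indicates that the sparrow is at the edge of the flock, and $x_{best}$ represents the position of the center of the population, around which it is safe.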

Through SSA, the feature fusion network model achieves a more effective configuration of its architecture and learning dynamics, leading to enhanced accuracy in capturing complex patterns in carbon price fluctuations.
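The three SSA roles can be sketched on a toy objective as follows. This is an illustrative sketch rather than the configuration used in the experiments: the population size, role fractions, safety threshold, bounds, and the sphere function standing in for validation error are all assumptions, and the best position is tracked separately so the returned solution never gets worse.

```python
import math
import random

def ssa_minimize(fitness, dim, bounds, n=20, iters=60, producer_frac=0.2,
                 scout_frac=0.15, safety=0.8, seed=0):
    rng = random.Random(seed)
    low, high = bounds
    clip = lambda v: min(high, max(low, v))
    pop = [[rng.uniform(low, high) for _ in range(dim)] for _ in range(n)]
    best = min(pop, key=fitness)[:]
    n_prod = max(1, int(n * producer_frac))
    for _ in range(iters):
        pop.sort(key=fitness)                     # best sparrows first
        worst = pop[-1][:]
        alarm = rng.random()
        # Producers: wide search while the alarm value is below the safety threshold.
        for i in range(n_prod):
            if alarm < safety:
                scale = math.exp(-(i + 1) / (rng.uniform(1e-3, 1.0) * iters))
                pop[i] = [clip(x * scale) for x in pop[i]]
            else:
                q = rng.gauss(0.0, 1.0)
                pop[i] = [clip(x + q) for x in pop[i]]
        leader = min(pop[:n_prod], key=fitness)[:]
        # Scroungers: the worse half relocates, the rest move around the best producer.
        for i in range(n_prod, n):
            if i + 1 > n / 2:
                q = rng.gauss(0.0, 1.0)
                pop[i] = [clip(q * math.exp((w - x) / (i + 1) ** 2))
                          for x, w in zip(pop[i], worst)]
            else:
                # |x - x_P| . A+ . L reduces to one scalar step shared by all dimensions.
                step = sum(abs(x - p) * rng.choice((-1, 1))
                           for x, p in zip(pop[i], leader)) / dim
                pop[i] = [clip(p + step) for p in leader]
        # Danger-aware sparrows: a random subset reacts to the current best and worst.
        fits = [fitness(s) for s in pop]
        f_best, f_worst = min(fits), max(fits)
        g_best = pop[fits.index(f_best)][:]
        g_worst = pop[fits.index(f_worst)][:]
        for i in rng.sample(range(n), max(1, int(n * scout_frac))):
            if fits[i] > f_best:
                beta = rng.gauss(0.0, 1.0)
                pop[i] = [clip(b + beta * abs(x - b)) for x, b in zip(pop[i], g_best)]
            else:
                k = rng.uniform(-1.0, 1.0)
                pop[i] = [clip(x + k * abs(x - w) / (fits[i] - f_worst + 1e-12))
                          for x, w in zip(pop[i], g_worst)]
        candidate = min(pop, key=fitness)
        if fitness(candidate) < fitness(best):
            best = candidate[:]
    return best, fitness(best)

# Hypothetical objective: a sphere function standing in for validation error.
sphere = lambda v: sum(x * x for x in v)
best, value = ssa_minimize(sphere, dim=3, bounds=(-5.0, 5.0))
print(value)
```

When tuning a network, each position vector would be decoded into hyperparameters (for example filter size, depth, and learning rate) and `fitness` would train and score the model, which is far more expensive than the toy function used here.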

After the multiscale temporal features are extracted, we employ a gated recurrent structure to capture long-range dependencies across the time series. This recurrent mechanism is specifically designed to mitigate issues such as vanishing gradients, thereby enabling the model to effectively learn long-term patterns in carbon price dynamics. The internal operations of the recurrent unit at time step $t$ are defined by the following recursive update equations:

$$\begin{bmatrix} i_t \\ o_t \\ f_t \\ \tilde{c}_t \end{bmatrix} = \begin{bmatrix} \sigma \\ \sigma \\ \sigma \\ \tanh \end{bmatrix} \left( W \begin{bmatrix} h_{t-1} \\ x_t \end{bmatrix} + b \right)$$

where $W$ is the merged weight matrix, $b$ is the merged bias vector, $x_t$ is the input at time $t$, and $h_{t-1}$ is the previous hidden state. $i_t$, $o_t$, $f_t$, and $\tilde{c}_t$ are the input gate, output gate, forget gate, and candidate cell state, respectively, $\sigma$ is the sigmoid activation function, and $\tanh$ denotes the hyperbolic tangent function. The memory unit $c_t$ and hidden state $h_t$ are then updated through two independent equations:

$$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t$$

$$h_t = o_t \odot \tanh(c_t)$$

4.2. Cross-Modal Attention Fusion Hierarchical Federated Learning

Time attention mechanism: To enhance the interpretability and focus of the model, we introduce a time attention mechanism on top of the LSTM outputs. The time attention mechanism allows the model to dynamically assign importance scores to different time steps, effectively emphasizing the most relevant historical features. For each time step $t$, an intermediate attention score is computed as:

where and are trainable parameter vectors and is the bias term.

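These scores are then normalized using the softmax function to obtain the time attention weights:

$$\alpha_t = \mathrm{softmax}(e_t) = \frac{\exp(e_t)}{\sum_{s=1}^{T} \exp(e_s)}$$

where $e_t$ is the attention score at time step $t$, $T$ is the number of time steps, and $\mathrm{softmax}(\cdot)$ is the normalized exponential function. Finally, the attention-weighted output is computed as a weighted sum of all LSTM hidden states $h_t$:

$$c = \sum_{t=1}^{T} \alpha_t h_t$$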

This aggregated context vector is passed to the output layer for final carbon price prediction.
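The scoring, softmax normalization, and weighted sum can be sketched in plain Python. The scoring function used here (a tanh of a dot product with a parameter vector `v` plus a bias) is an assumption chosen for illustration, as are the hidden-state values; only the softmax and the weighted sum follow directly from the description above.

```python
import math

def softmax(scores):
    """Numerically stable normalized exponential."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def temporal_attention(hidden_states, v, bias=0.0):
    """Score each hidden state, normalize the scores, and return weights and context."""
    scores = [math.tanh(sum(vi * hi for vi, hi in zip(v, h)) + bias)
              for h in hidden_states]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    # Context vector: weighted sum of the hidden states over all time steps.
    context = [sum(w * h[j] for w, h in zip(weights, hidden_states)) for j in range(dim)]
    return weights, context

hidden = [[0.1, 0.0], [0.5, 0.2], [0.9, 0.4]]   # three time steps, two hidden units
weights, context = temporal_attention(hidden, v=[1.0, 1.0])
print([round(w, 3) for w in weights], [round(c, 3) for c in context])
```

Because the weights sum to one, the context vector stays on the same scale as the hidden states, and inspecting `weights` shows which time steps the model emphasized.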

Spatial attention mechanism: A region association matrix is constructed through a graph neural network to highlight the contributions of highly correlated nodes, following the core idea of the graph attention network (GAT). A multi-head attention mechanism calculates dynamic correlation weights between nodes, neighbor information is aggregated by weighted summation, and the multiple heads improve stability. The spatial attention coefficient of client $i$ to client $j$ is:

$$\alpha_{ij} = \mathrm{softmax}_j\left( \mathrm{LeakyReLU}\left( a^{\top} \left[ W h_i \,\Vert\, W h_j \right] \right) \right)$$

where $\mathrm{softmax}$ is the normalized exponential function, $h_i$ and $h_j$ are the original feature vectors of clients $i$ and $j$, $W$ is the learnable weight matrix used for feature transformation, and $a$ is the parameter vector of the spatial attention mechanism. $\mathrm{LeakyReLU}$ (Leaky Rectified Linear Unit) is an improved version of the ReLU activation function, and $\Vert$ is the concatenation operation, which joins multiple vectors along a specific dimension to form a longer vector.
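A single-head version of the graph attention coefficient can be sketched as follows. The client feature vectors, the identity weight matrix, the attention vector, and the LeakyReLU slope of 0.2 are hypothetical values chosen so the arithmetic is easy to follow; a multi-head variant would repeat this computation with separate parameters per head.

```python
import math

def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x

def matvec(W, h):
    return [sum(w * x for w, x in zip(row, h)) for row in W]

def gat_coefficients(i, neighbors, features, W, a):
    """Softmax over neighbors j of LeakyReLU(a . [W h_i || W h_j])."""
    zi = matvec(W, features[i])
    logits = []
    for j in neighbors:
        zj = matvec(W, features[j])
        concat = zi + zj                      # list concatenation = vector concatenation
        logits.append(leaky_relu(sum(ak * ck for ak, ck in zip(a, concat))))
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return {j: e / total for j, e in zip(neighbors, exps)}

features = {0: [1.0, 0.0], 1: [0.9, 0.1], 2: [0.0, 1.0]}   # hypothetical client features
W = [[1.0, 0.0], [0.0, 1.0]]                                 # identity transform for clarity
a = [0.5, 0.5, 1.0, -1.0]                                    # hypothetical attention vector
alpha = gat_coefficients(0, [1, 2], features, W, a)
print({j: round(w, 3) for j, w in alpha.items()})
```

With these values, client 1 (whose features resemble those of client 0) receives the larger coefficient, which is the behavior the region association matrix is meant to capture.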

The objective function of federated learning: The global model parameter is , the local model parameter of the client is . The objective function of federated learning is:

where, is the total loss function of the federated learning system, is the total number of clients participating in federated learning, is the client index , is the weight coefficient of the -th client, used to balance the importance of different clients, is the loss function of the -th client on the local dataset , is the local model parameter of the -th client, is the local training dataset of the -th client, is the regularization coefficient, which controls the strength of model aggregation, is the globally shared model parameter, and is the Euclidean norm (L2 norm). The steps for hierarchical federated learning are as follows:

- Step 1: The cloud server initializes the global model and regularization coefficient .

- Step 2: The cloud server selects edge nodes to participate in this round of training according to the data distribution strategy. The total number of edge nodes is denoted as .

- Step 3: Each edge node downloads the initial model parameters from the cloud server, including global model weights , hyperparameter configurations, and training control parameters.

- Step 4: Each edge node synchronously connects to a group of clients.

- Step 5: Each client uses its local data to train the received model.

- Step 6: After local training, each client updates its local model parameters .

- Step 7: Upon completing training, each client uploads the updated parameters to its corresponding edge node.

- Step 8: Each edge node aggregates all uploaded client parameters (e.g., , , ) to form an intermediate edge-level model using incremental aggregation.

- Step 9: Each edge node uploads the aggregated parameters to the cloud server, reducing communication frequency through the edge-level computation.

- Step 10: The cloud server aggregates all model parameters uploaded by the edge nodes (i.e., , , across all clients and nodes) to update the global parameters .

- Step 11: The cloud server executes the optimization objective (Equation (45)) and broadcasts the updated global model parameters to all edge nodes to start the next training iteration.
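Steps 8 and 10 above can be sketched as two levels of weighted averaging. The example assumes that clients are weighted by their local sample counts and that "incremental aggregation" means folding updates into a running mean one at a time; both are assumptions for illustration, and the regularized objective of Step 11 is not modeled.

```python
def incremental_average(updates):
    """Running weighted mean of (params, n_samples) pairs, folded in one at a time."""
    agg, total = None, 0
    for params, n in updates:
        if agg is None:
            agg, total = list(params), n
            continue
        total += n
        # Move the running mean toward the new update in proportion to its weight.
        agg = [a + (p - a) * n / total for a, p in zip(agg, params)]
    return agg, total

def hierarchical_round(edges):
    """edges: list of edge nodes, each a list of (client_params, n_samples) pairs."""
    edge_models = [incremental_average(clients) for clients in edges]   # Step 8
    global_params, _ = incremental_average(edge_models)                 # Step 10
    return global_params

edges = [
    [([1.0, 2.0], 10), ([3.0, 4.0], 30)],   # edge node A with two clients
    [([5.0, 6.0], 60)],                      # edge node B with one client
]
print(hierarchical_round(edges))             # [4.0, 5.0]
```

Because each edge node forwards its aggregated parameters together with its total sample count, the two-level result equals the flat sample-weighted average over all clients, while the cloud server only receives one message per edge node.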

5. Results and Discussion

This section presents the experimental setup and evaluation results of the proposed model. To simulate a realistic network environment, Mininet (a process-virtualization network simulation tool) is used to construct the network topology. Each perception client consists mainly of a local model, IoT sensors, a web server, and a data storage unit, and is responsible for data collection and local training. All perception-client experiments were conducted on Windows 10 with an Intel Core i7-10750H processor, and the implementation used MATLAB (2024b) and Python (version 3.7). It should be noted that the current work demonstrates the conceptual feasibility of a privacy-preserving federated learning framework under a small-scale simulated environment, rather than a real-world deployment involving millions of clients. The scalability and privacy-preserving mechanisms were implemented conceptually within the simulation framework, and full-scale deployment is considered future work. The process of hierarchical federated learning is as follows:

- Initialize global model: The server generates initial parameters for the deep learning model.

- Client local training: Each client trains a deep learning model on its local data and calculates gradients or updated parameters.

- Server aggregation update: The server collects model updates from clients and aggregates them through strategies such as weighted averaging to generate a new global model.

5.1. Evaluation Metrics

To assess the model’s effectiveness, standard evaluation metrics are employed. To enhance prediction accuracy, the sequence data are normalized as follows:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

where $x'$ denotes the normalized data, $x$ is the original data, $x_{\max}$ is the maximum value, and $x_{\min}$ is the minimum value in the original dataset.
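A minimal sketch of this min-max scaling, together with the inverse transform needed to report errors in original price units, is given below. The function names and the example prices are hypothetical.

```python
def minmax_fit(series):
    """Return the (min, max) pair that defines the scaling."""
    return min(series), max(series)

def minmax_transform(series, lo, hi):
    return [(x - lo) / (hi - lo) for x in series]

def minmax_inverse(scaled, lo, hi):
    return [s * (hi - lo) + lo for s in scaled]

prices = [20.0, 35.0, 50.0, 80.0]      # hypothetical carbon prices
lo, hi = minmax_fit(prices)
scaled = minmax_transform(prices, lo, hi)
restored = minmax_inverse(scaled, lo, hi)
print(scaled)      # [0.0, 0.25, 0.5, 1.0]
print(restored)    # [20.0, 35.0, 50.0, 80.0]
```

To avoid leaking information from the test period, the minimum and maximum would normally be fitted on the training split only and then reused for the test split.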

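The following four metrics are used to quantify the prediction accuracy of the model, where $y_i$ is the observed value, $\hat{y}_i$ is the predicted value, and $n$ is the number of test samples. The Mean Absolute Error (MAE) is defined as:

$$\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right|$$

which measures the average magnitude of the prediction errors. The Mean Absolute Percentage Error (MAPE) is given by:

$$\mathrm{MAPE} = \frac{1}{n} \sum_{i=1}^{n} \left| \frac{y_i - \hat{y}_i}{y_i} \right|$$

and expresses the error relative to the observed value. The Root Mean Square Error (RMSE) is calculated as:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2}$$

which penalizes larger errors more heavily and reflects the standard deviation of the prediction residuals. Finally, the Coefficient of Determination ($R^2$) is used to evaluate the goodness of fit:

$$R^2 = 1 - \frac{\sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2}{\sum_{i=1}^{n} \left( y_i - \bar{y} \right)^2}$$

where $\bar{y}$ is the mean of the observed values. An $R^2$ value close to 1 indicates strong predictive performance. Together, these metrics provide a comprehensive assessment of the model’s prediction accuracy, robustness, and generalization ability.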
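The four metrics can be computed directly from their definitions, as in the sketch below. MAPE is returned as a fraction (multiply by 100 for a percentage), and the observed and predicted values are made-up numbers for illustration.

```python
import math

def mae(y, yhat):
    return sum(abs(a - b) for a, b in zip(y, yhat)) / len(y)

def mape(y, yhat):
    # Returned as a fraction; assumes no observed value is zero.
    return sum(abs((a - b) / a) for a, b in zip(y, yhat)) / len(y)

def rmse(y, yhat):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y, yhat)) / len(y))

def r2(y, yhat):
    mean = sum(y) / len(y)
    ss_res = sum((a - b) ** 2 for a, b in zip(y, yhat))
    ss_tot = sum((a - mean) ** 2 for a in y)
    return 1.0 - ss_res / ss_tot

y = [50.0, 60.0, 70.0, 80.0]        # hypothetical observed prices
yhat = [52.0, 58.0, 71.0, 79.0]     # hypothetical predictions
print(mae(y, yhat))                 # 1.5
print(round(rmse(y, yhat), 4))      # 1.5811
print(round(mape(y, yhat), 4))      # 0.025
print(round(r2(y, yhat), 4))        # 0.98
```

When the model is trained on normalized data, the predictions should be mapped back to price units before these functions are called, so that MAE and RMSE are expressed in the currency of the market.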

5.2. Effectiveness of Carbon Price Decomposition

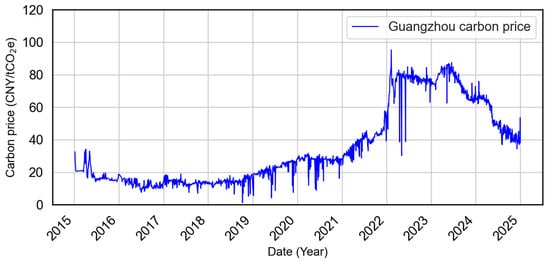

The carbon price data were obtained from the Guangzhou Carbon Emissions Exchange, one of China’s pilot carbon trading markets established to test mechanisms ahead of the national ETS launched in 2021. As a major economic center in southern China, Guangzhou provides an important case for examining how regional carbon pricing interacts with national ETS policies. The dataset was partitioned such that approximately 90% of the data was used for training (2015–2023), and the remaining approximately 10% (2024) was reserved for model testing to ensure chronological integrity.

In addition to the target carbon price series, a set of external influencing variables was incorporated to improve forecasting accuracy. These include macroeconomic indices, commodity prices, exchange rates, and international carbon prices. A complete list and discussion of their economic rationale is provided in Section 3.3.

Before model training, the dataset underwent a series of preprocessing steps. Missing and abnormal values were handled through data cleaning routines. For time series with high-frequency noise, such as the EURO STOXX 50 Index and CSI 300 Index, a five-day sliding mean was applied. For volatile commodity prices (e.g., Brent crude oil, NYMEX natural gas), outliers were truncated based on confidence intervals to mitigate extreme fluctuations. All continuous variables, including carbon prices and influencing factors, were standardized using Z-score normalization to ensure numerical comparability and model stability.
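The smoothing, truncation, and standardization steps can be sketched as below. Several details are assumptions, since the text does not fix them: the moving average is taken as a trailing window, the confidence interval is approximated as the mean plus or minus 1.96 standard deviations, and population (not sample) standard deviation is used.

```python
import math

def moving_average(series, window=5):
    """Trailing mean over up to `window` most recent values (shorter at the start)."""
    out = []
    for t in range(len(series)):
        chunk = series[max(0, t - window + 1): t + 1]
        out.append(sum(chunk) / len(chunk))
    return out

def clip_outliers(series, z=1.96):
    """Truncate values outside mean +/- z * std (a normal-approximation interval)."""
    n = len(series)
    mean = sum(series) / n
    std = math.sqrt(sum((x - mean) ** 2 for x in series) / n)
    lo, hi = mean - z * std, mean + z * std
    return [min(hi, max(lo, x)) for x in series]

def zscore(series):
    """Standardize to zero mean and unit variance."""
    n = len(series)
    mean = sum(series) / n
    std = math.sqrt(sum((x - mean) ** 2 for x in series) / n)
    return [(x - mean) / std for x in series]

index = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]            # hypothetical equity index
print(moving_average(index))                      # [1.0, 1.5, 2.0, 2.5, 3.0, 4.0]
commodity = [10.0] * 9 + [100.0]                  # hypothetical series with one spike
clipped = clip_outliers(commodity)
print(round(max(clipped), 2))                     # 71.92
standardized = zscore(clipped)
print(round(sum(standardized), 6))
```

As with min-max scaling, the statistics used for clipping and standardization would typically be estimated on the training period only.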

As shown in Figure 4, Guangzhou carbon prices exhibit significant temporal dynamics. From 2015 to 2020, prices remained low and stable. Beginning in 2021, they rose steadily, peaking in 2023, followed by a soft decline. This nonstationary behavior, marked by abrupt structural changes, necessitates robust decomposition techniques capable of isolating meaningful patterns across temporal scales.

Figure 4.

Guangzhou carbon price time series (2015–2024). The market experienced a prolonged low-price phase from 2015 to 2020, a rapid surge in mid-2022 to mid-2023, and a gradual decline thereafter.

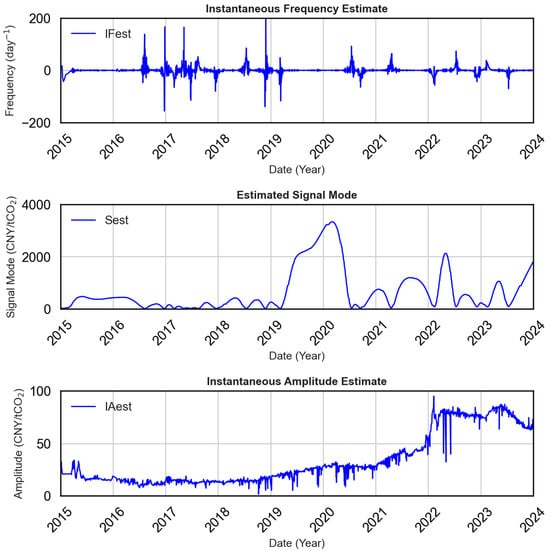

To address this, the signal is decomposed into subcomponents with distinct instantaneous frequencies and amplitudes, facilitating improved learning from nonstationary data. The decomposition results are shown in Figure 5.

Figure 5.

Decomposition results of carbon prices in the Guangzhou market. Top: estimated instantaneous frequency; Middle: decomposed mode components; Bottom: instantaneous amplitude, indicating smoothed price trends.

The top panel of Figure 5 shows the instantaneous frequency, capturing periods of sharp fluctuation. The middle panel displays the decomposed mode components, adaptively segmented into broadband or narrowband regions. The framework employs adaptive bandwidth adjustment and spectrum sensing to distinguish meaningful signal components from noise. This enhances the signal-to-noise ratio and reduces the impact of nonstationarity.

In summary, the temporal structure and stability of the carbon price input is improved, laying a stronger foundation for accurate downstream modeling with the model framework.

5.3. Impact of Feature Selection and Lag Modeling

5.3.1. Selection and Analysis of Influencing Variables

To ensure a comprehensive and interpretable forecasting model, we first curated a set of market influencing variables based on economic theory and empirical relevance to carbon price fluctuations. These variables reflect macroeconomic activity, energy market dynamics, and financial sentiment. Below is a brief description of the candidate variables:

- EUA Carbon Price: The allowance price in the EU Emissions Trading System, representing international carbon market trends. Data are sourced from www.carbonmonitor.org.cn.

- Steam Coal Price Index: A domestic index reflecting the average cost of thermal coal in China, indicative of power generation costs. Data are sourced from the China Coal Transportation and Distribution (CCTD) website.

- Brent Crude Oil Futures: A benchmark for global crude oil prices, affecting fuel substitution and overall energy market sentiment. Data are sourced from investing.com.

- Europe Stoxx 50 Index: A major European equity index used as a proxy for economic growth and investment sentiment in developed economies. Data are sourced from investing.com.

- CSI 300 Index: Represents the performance of the top 300 A-share stocks in Shanghai and Shenzhen, reflecting domestic financial market conditions. Data are sourced from the China Financial Futures Exchange.

- USD/RMB Exchange Rate: Captures fluctuations in the value of the Chinese yuan relative to the US dollar, impacting import energy prices. Data are sourced from investing.com.

- Euro/RMB Exchange Rate: Represents the currency linkage with the Eurozone, affecting import/export cost structures. Data are sourced from xe.com.

- NYMEX Natural Gas Futures: Reflects international gas price trends, providing insight into fuel substitution and emissions displacement. Data are sourced from investing.com.

- Steam Coal ARA Port Spot Price: The spot price of coal at Amsterdam-Rotterdam-Antwerp, serving as a global benchmark for seaborne thermal coal. Data are sourced from Platts Energy Information.

To quantify the time-lagged influence of market factors on carbon prices, we conduct a lag detection analysis using Granger causality tests, the maximal information coefficient (MIC), and cross-correlation. These methods help identify the most relevant features not only in terms of magnitude but also in terms of the timing of their impact. Such modeling is crucial for carbon markets, where price dynamics are not only volatile but also reactive to delayed economic signals.

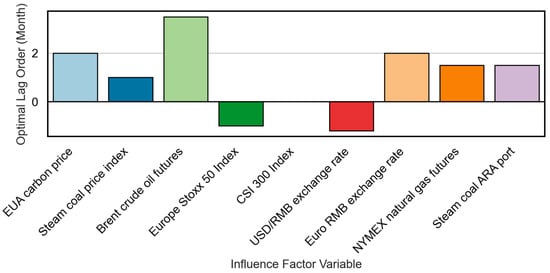

Figure 6 shows the optimal lag order (in months) for each core influencing factor, determined by maximizing the correlation under a significance threshold of $p < 0.05$ from the Granger causality test. This lag structure is used to build a dynamic lag feature matrix that captures the temporal dependency and predictive lag pattern for each variable.

Figure 6.

Optimal lag order (Month) of major influencing factor variables in the Guangzhou market.

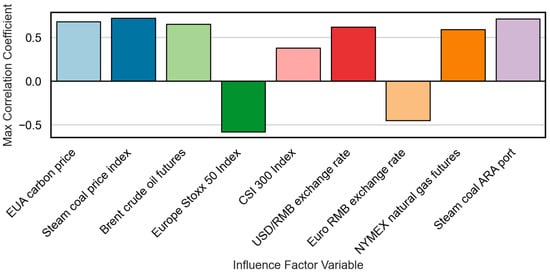

Figure 7 presents the maximum MIC values obtained from the cross-correlation tests. When the lagged feature and the target are completely independent, the MIC is close to zero. Higher values indicate stronger nonlinear dependencies, highlighting the ability of some variables to explain hidden or complex relationships in the pricing process.

Figure 7.

Maximum MIC correlation coefficients between lagged influencing factors and carbon prices.

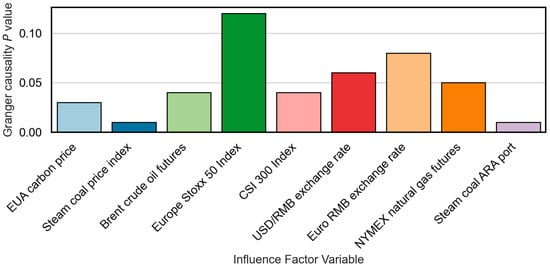

Granger causality $p$-values of each factor are shown in Figure 8. A $p$-value less than 0.05 indicates statistically significant Granger causality. This not only confirms the predictive utility of certain features but also supports their temporal precedence in influencing carbon price changes.

Figure 8.

Granger causality $p$-values of influencing factor variables.

The combined results demonstrate that steam coal prices at ARA Port (, ), steam coal index (, ), Brent crude oil (, ), and EU EUA carbon prices (, ) have strong predictive power for Guangzhou carbon prices, with optimal lags ranging from one to two months. Conversely, variables such as the Euro Stoxx 50 Index (, ) and the euro exchange rate (, ) exhibit weak or no statistical causality, suggesting indirect or delayed effects through broader macroeconomic channels.

5.3.2. Feature Importance Ranking and Final Selection

Following lag detection, we conducted feature selection and importance evaluation. Hyperparameters, including maximum tree depth, learning rate, subsampling rate, and column sampling rate, are tuned using Bayesian optimization. Table 1 summarizes the optimization results. This optimization tailors the model configuration to the underlying feature structure and helps avoid overfitting.

Table 1.

Parameter configuration and evaluation results of feature selection model.

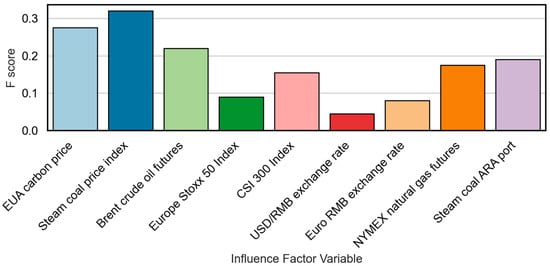

Figure 9 illustrates the feature importance scores (F-score) of input variables. These scores provide a quantitative basis for selecting the most relevant features. The F-score reflects how frequently a feature is used in the model’s decision trees and with what discriminative power.

Figure 9.

F-score importance of influencing factor features.

The top features, including EU EUA prices, thermal coal indices, Brent crude oil, and NYMEX natural gas, exhibit both high F-scores and statistically significant Granger causality, supporting their inclusion in the final prediction model. A one- to three-month lag feature matrix is constructed to dynamically capture market shifts and short-term policy responses. Core inputs include the EU EUA carbon price (two-month lag), steam coal price (one-month lag), and CSI 300 index (current value), each capturing a different dimension of supply, demand, and investment climate.
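Building the lag feature matrix amounts to aligning each factor, shifted by its own lag, with the carbon price at the same target time. The sketch below uses the three lags quoted above; the series values, the dictionary keys, and the function name are hypothetical.

```python
def lag_feature_matrix(series_by_name, lags, target):
    """Align each factor at its own lag with the target at time t."""
    max_lag = max(lags.values())
    names = sorted(series_by_name)
    rows, targets = [], []
    # The first max_lag time steps are dropped because lagged values are unavailable.
    for t in range(max_lag, len(target)):
        rows.append([series_by_name[name][t - lags[name]] for name in names])
        targets.append(target[t])
    return names, rows, targets

# Hypothetical monthly values, for illustration only.
factors = {
    "eua": [80, 81, 83, 82, 85, 86],
    "steam_coal": [700, 710, 705, 720, 730, 725],
    "csi300": [3900, 3950, 4000, 3980, 4020, 4050],
}
lags = {"eua": 2, "steam_coal": 1, "csi300": 0}   # lags in months, as quoted in the text
carbon = [60, 61, 63, 64, 66, 67]
names, X, y = lag_feature_matrix(factors, lags, carbon)
print(names)        # ['csi300', 'eua', 'steam_coal']
print(X[0], y[0])   # [4000, 80, 710] 63
```

Each row of `X` then contains only information that was available at or before the month being predicted, which keeps the evaluation free of look-ahead bias.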

Less informative variables such as the Euro Stoxx 50 and euro exchange rate (with ) are excluded to reduce noise and the risk of overfitting. However, these variables are still monitored externally to detect possible structural breaks or regime shifts, particularly during currency volatility or global economic shocks. This dynamic selection mechanism enhances model interpretability and robustness in forecasting carbon prices in a rapidly evolving market landscape.

Before model training, the dataset underwent standardized preprocessing. Missing values were imputed using linear interpolation for short gaps and mean substitution for longer gaps. Outliers exceeding three standard deviations were capped using a local smoothing filter. The remaining steps (five-day moving-average smoothing of the EURO STOXX 50 and CSI 300 indices, confidence-interval truncation of volatile commodity series such as Brent crude oil and NYMEX natural gas, and Z-score normalization of all continuous variables) follow the procedure described in Section 5.2.

5.4. Prediction Accuracy Comparison

5.4.1. Ablation Study: Effect of Model Components

To examine the contribution of each component in the proposed prediction framework, we performed an ablation study by progressively enabling three key modules: (i) feature selection via XGBoost, (ii) temporal modeling using TCN and LSTM, and (iii) an attention mechanism. Specifically: Model-1 is a baseline TCN model trained using the full set of original features, without feature selection or attention. Model-2 incorporates XGBoost-based feature selection and adopts a hybrid TCN-LSTM architecture for time series modeling. The proposed model builds upon Model-2 by inserting an attention layer after the LSTM module to enhance feature weighting and capture long-range temporal dependencies.

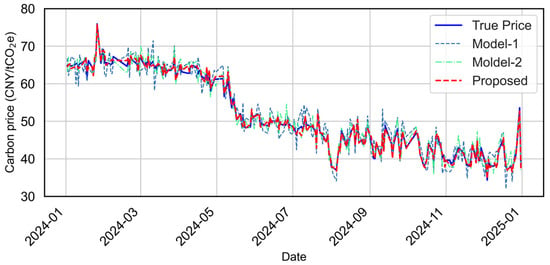

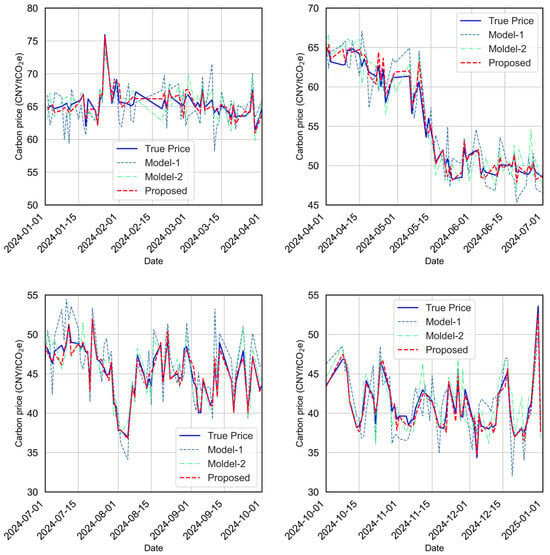

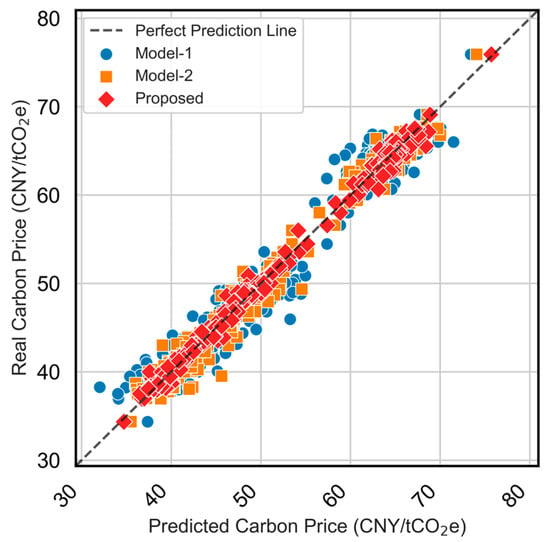

Model performance is evaluated using RMSE, MAE, MAPE, and $R^2$. Overall results are shown in Figure 10, while Figure 11 provides zoomed-in quarterly comparisons to highlight behavior under different market regimes (volatile, stable, rising, and falling periods). Figure 12 presents the predicted-versus-observed scatter plots, and detailed numerical results are summarized in Table 2. The evaluation metrics (RMSE, MAE, and MAPE) are all calculated on the original scale of the carbon price data after reversing the normalization. It should be noted that normalization was applied only during model training to improve numerical stability, and all reported results are converted back to the actual price units for interpretability.

Figure 10.

Overall prediction performance of different model configurations for the testing period (2024/01–2024/12), including the TCN (Model-1), feature-enhanced TCN-LSTM (Model-2), and the proposed XGBoost-TCN-LSTM with attention.

Figure 11.

Quarter-wise prediction accuracy comparison for the testing period (2024/01–2024/12) of different model configurations.

Figure 12.

Scatter plot of predicted vs. actual carbon prices for different model configurations for the testing period (2024/01–2024/12).

Table 2.

Quantitative performance comparison of different model configurations using MAE, MAPE, RMSE, and $R^2$ metrics.

The ablation results reveal several observations. First, XGBoost-based feature selection offers clear benefits, reducing noise in the input space and improving stability across different market phases. Second, combining TCN and LSTM enhances the ability to capture multiscale temporal dependencies, resulting in more accurate and smoother predictions than the baseline. Third, adding attention yields further gains, particularly during periods of sharp market movements, by adaptively emphasizing informative temporal patterns. As visualized in Figure 11, the baseline model exhibits the largest deviation from observations, especially during high-volatility intervals, indicating its sensitivity to redundant features and limited long-term dependency modeling. Model-2 improves markedly in both accuracy and stability, while the proposed model achieves the tightest alignment with ground truth in all quarters and effectively suppresses large-magnitude prediction errors.

Quantitatively, the proposed model attains the best scores across all metrics (MAE = 0.576, RMSE = 0.772, MAPE = 0.011, $R^2$ = 0.994). The scatter plot in Figure 12 further confirms the strong agreement between predicted and observed values, with the proposed model demonstrating the most compact distribution along the identity line. In summary, the ablation study shows that (i) feature selection is essential for robust carbon price prediction, (ii) the TCN-LSTM combination effectively captures complex temporal dynamics, and (iii) the attention mechanism delivers additional improvements by refining dynamic feature contributions, particularly under volatile market conditions.

5.4.2. Comparison with Baseline Models

To validate the effectiveness of the proposed model framework, we compare it against two representative baseline models: ARMA-GARCH and LSTM-LGBM. ARMA-GARCH is a classical econometric model suitable for modeling volatility in financial time series, while LSTM-LGBM is a hybrid model combining deep sequence learning and gradient boosting trees.

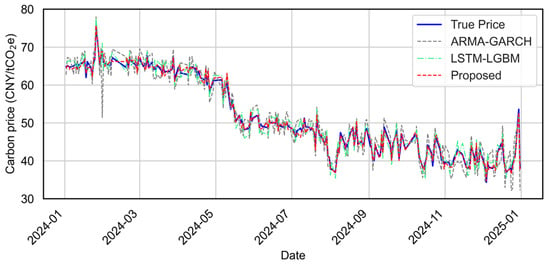

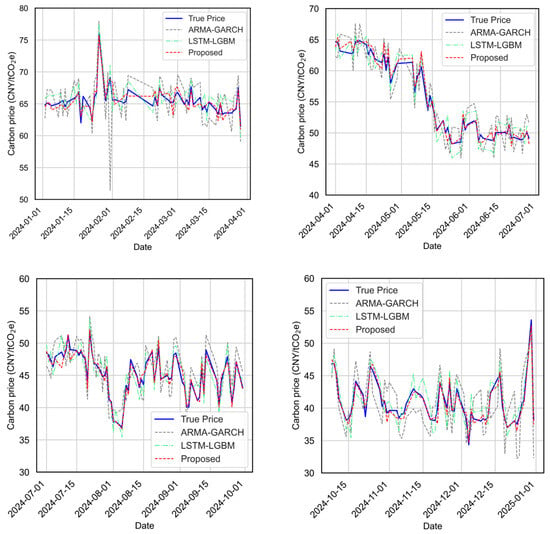

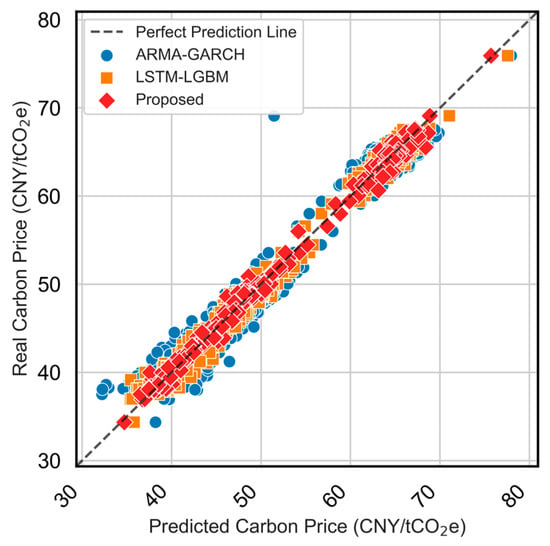

Figure 13 presents the full-year prediction performance of the three models. Figure 14 further presents results by quarter to illustrate how each model responds to different price dynamics, such as trend shifts and volatility spikes. Figure 15 compares the predicted values versus actual carbon prices for all models. Quantitative evaluation metrics (MAE, MAPE, RMSE, $R^2$) are reported in Table 3.

Figure 13.

Overall prediction performance comparison among ARMA-GARCH, LSTM-LGBM, and the proposed models for the testing period (2024/01–2024/12).

Figure 14.

Quarter-wise prediction accuracy comparison across ARMA-GARCH, LSTM-LGBM, and the proposed models for the testing period (2024/01–2024/12).

Figure 15.

Scatter plot of predicted vs. actual carbon prices for ARMA-GARCH, LSTM-LGBM, and the proposed models for the testing period (2024/01–2024/12).

Table 3.

Quantitative performance comparison of ARMA-GARCH, LSTM-LGBM, and the proposed models using MAE, MAPE, RMSE, and $R^2$ metrics.

From both the visual and quantitative perspectives, the proposed model framework consistently outperforms the baseline models. As shown in Figure 13, the proposed model predictions closely track the true carbon price trend throughout the year, exhibiting minimal deviation in both smooth and turbulent phases. In quarterly views (Figure 14), the proposed model adapts better to local fluctuations, maintaining higher fidelity across varying temporal patterns.

The scatter plot in Figure 15 further confirms the effectiveness of the proposed model, with its predicted points tightly clustered around the perfect prediction line. Compared to ARMA-GARCH’s underestimation during volatile periods and LSTM-LGBM’s relatively larger amplitude deviations, the proposed model demonstrates more robust performance.

Table 3 shows that the proposed model reduces the MAE and RMSE by more than 50% compared to ARMA-GARCH, and also significantly outperforms the hybrid LSTM-LGBM. The $R^2$ of 0.994 indicates a very close fit on the test period, highlighting the advantages of the proposed model in learning complex temporal dependencies and capturing nonlinear relationships in carbon market dynamics.

After presenting the experimental results, this section further discusses the practical implications of the proposed model. In particular, we highlight how it can support both policy formulation and trading decisions in carbon markets. For policy decisions, the model quantifies how policy changes (such as quota adjustments or market expansion) affect carbon prices by tracking real-time correlations between policy variables and market responses. This enables simulation of policy scenarios and evaluation of phased policy impacts. The attention mechanism also helps detect unusual market movements, offering insights into refining regulatory tools like quota reserves. For trading decisions, the model integrates data from multiple regional markets through hierarchical federated learning to generate inter-regional carbon price patterns. These results help identify arbitrage opportunities and volatility risks, while the spatiotemporal attention structure provides early warnings of price shifts, supporting both short-term trading and long-term portfolio planning in the carbon market.

6. Conclusions

To address the difficulty of accurately predicting carbon price changes driven by multiple factors, this study proposes a carbon price prediction model, based on hierarchical federated learning and cross-modal spatiotemporal enhanced attention, that comprehensively considers the influence of multiple market-driving factors. By applying time–frequency decomposition techniques, the model denoises the carbon price series and enables a more precise analysis of its fluctuation patterns. Dimensionality reduction is employed to extract the most relevant features from the high-dimensional influencing factors, while temporal correlation and hysteresis effects are explicitly captured through lagged modeling strategies. The prediction framework integrates these processed features to forecast carbon prices. Experimental results confirm that the proposed multi-model fusion approach achieves superior predictive performance compared to traditional methods, demonstrating its effectiveness and robustness in modeling the complex dynamics of carbon trading markets.

Future work will focus on implementing and experimentally validating hierarchical parameter aggregation mechanisms and secure model fusion strategies for heterogeneous client models within the federated framework. In addition, although the proposed framework simulates a hierarchical federated structure, the present experiments do not involve real confidential data and therefore do not address model weight distillation attacks. Integrating secure aggregation and differential privacy techniques into the hierarchical framework will be an important direction for future work, especially for real industrial deployments with sensitive information.

Author Contributions

Conceptualization, P.W. and X.Z.; methodology, P.W. and X.Z.; software, P.W. and X.Z.; validation, P.W. and X.Z.; formal analysis, P.W.; investigation, P.W. and X.Z.; resources, P.W.; data curation, P.W.; writing—original draft preparation, P.W.; writing—review and editing, P.W. and X.Z.; visualization, P.W.; supervision, P.W. and X.Z.; project administration, P.W. and X.Z.; funding acquisition, P.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Shanghai Open University Center for Research on Digital Management and Service Innovation (Grant No. YJZX2403) and by the Shanghai Local Universities Capacity Building Project (Grant No. 19070502900).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data is available from the corresponding authors upon request.

Acknowledgments

During the preparation of this work, the authors used ChatGPT 4.0 in order to improve language expression and grammar checking. After using this tool, the authors reviewed and edited the content as needed and take full responsibility for the content of the publication.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ACMD | Adaptive Chirp Mode Decomposition |
| ARMA | Autoregressive Moving Average |
| BO | Bayesian Optimization |
| EEMD | Ensemble Empirical Mode Decomposition |
| GARCH | Generalized Autoregressive Conditional Heteroskedasticity |
| KPCA | Kernel Principal Component Analysis |
| LGBM | Light Gradient Boosting Machine |
| LSTM | Long Short-Term Memory |
| MAE | Mean Absolute Error |
| MAPE | Mean Absolute Percentage Error |
| RMSE | Root Mean Square Error |
| RBF | Radial Basis Function |
| SSA | Sparrow Search Algorithm |
| TCN | Temporal Convolutional Network |
| VAR-VEC | Vector Autoregression–Vector Error Correction |
| VMD | Variational Mode Decomposition |
| XGBoost | Extreme Gradient Boosting |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).