Non-Destructive Detection and Grading of Plum Quality Based on Multimodal Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Material Selection

2.2. Data Collection

2.2.1. Image Acquisition

2.2.2. Near-Infrared Spectral Information Acquisition

2.2.3. Soluble Solids Content Data Acquisition

2.3. Grading Standards

2.3.1. Comprehensive Index Formula

- Core quality indicator: SSC (weight 0.5). Global fruit grading standards (e.g., EU No. 543/2011) treat SSC as one of the core grading parameters. In line with the GH/T 1358-2021 plum grading standard [28], SSC directly represents plum sweetness and was therefore assigned a weight of 0.5.

- Maturity and visual appeal indicators: peel red color ratio (weight 0.3) and circularity (weight 0.2). For the March plums and Sanhua plums studied here, the red hue is related to anthocyanin accumulation (reflecting antioxidant activity) and also indicates fruit maturity [29]. As the fruit ripens, the peel color deepens, which corresponds to improved taste. Fruits with high circularity are better suited to automated pitting and slicing equipment, which improves processing efficiency [30]. The color indicator was therefore assigned a weight of 0.3 and the circularity indicator a weight of 0.2.
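The weighting described above can be illustrated with a minimal Python sketch. Only the three weights (0.5, 0.3, 0.2) come from the text. The assumption that each indicator is scaled to [0, 1] before weighting, the `min_max` helper, and the SSC bounds in the usage lines are hypothetical and serve only to make the example concrete.

```python
# Weights stated in the text: SSC 0.5, peel red color ratio 0.3, circularity 0.2.
W_SSC, W_RED, W_CIRC = 0.5, 0.3, 0.2


def min_max(value: float, low: float, high: float) -> float:
    """Scale a raw measurement into [0, 1].

    `low` and `high` are hypothetical dataset bounds (an assumption,
    not values given in the paper). Results are clipped to [0, 1].
    """
    if high <= low:
        raise ValueError("high must be greater than low")
    return min(1.0, max(0.0, (value - low) / (high - low)))


def comprehensive_score(ssc_norm: float, red_ratio: float, circularity: float) -> float:
    """Weighted sum of the three indicators, each assumed to lie in [0, 1]."""
    for name, v in (("ssc_norm", ssc_norm),
                    ("red_ratio", red_ratio),
                    ("circularity", circularity)):
        if not 0.0 <= v <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {v}")
    return W_SSC * ssc_norm + W_RED * red_ratio + W_CIRC * circularity


# Usage with made-up values: SSC of 12 on an assumed 8-16 scale,
# 80% red peel area, circularity 0.9.
score = comprehensive_score(min_max(12.0, 8.0, 16.0), 0.8, 0.9)
print(round(score, 2))
```

Because the weights sum to 1, a score built this way stays in [0, 1] whenever the inputs do, which keeps it comparable with fixed grade thresholds.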

2.3.2. Grade Classification Standards

- Premium grade: Comprehensive score ≥ 0.7, representing excellent quality meeting high-end market demands;

- Standard grade: 0.3 ≤ comprehensive score < 0.7, corresponding to mainstream market circulation quality;

- Processing grade: Comprehensive score < 0.3, designated for processing or secondary markets.
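The three thresholds above map directly onto a small lookup. The following Python sketch uses the cut-offs from the text (0.7 and 0.3); the function name and the lowercase grade strings are illustrative choices, not taken from the paper.

```python
def grade_from_score(score: float) -> str:
    """Map a comprehensive score to a grade using the thresholds in the text."""
    if score >= 0.7:
        return "premium"      # score >= 0.7
    if score >= 0.3:
        return "standard"     # 0.3 <= score < 0.7
    return "processing"       # score < 0.3


for s in (0.85, 0.70, 0.45, 0.30, 0.10):
    print(s, grade_from_score(s))
```

Checking the upper threshold first makes the boundary handling explicit: a score of exactly 0.7 is premium and a score of exactly 0.3 is standard.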

2.4. Data Preprocessing

2.5. Multimodal Fusion Grading Model

2.5.1. Feature Extraction

- (1)

- Manual Feature Extraction

- (2)

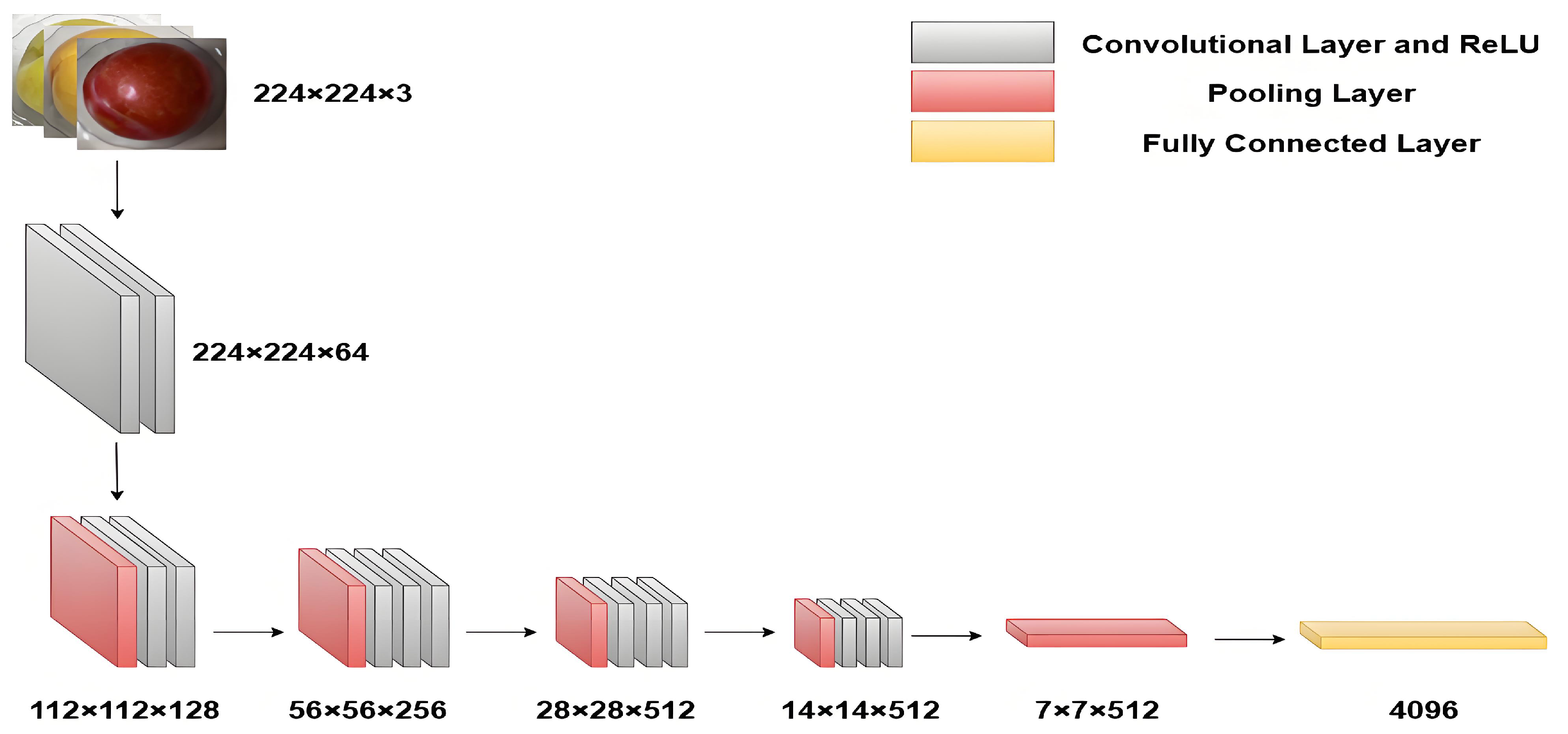

- Automatic Feature Extraction

2.5.2. Feature Fusion

2.5.3. Classification Training

2.5.4. Model Evaluation

3. Results and Discussion

3.1. Analysis of Plum Soluble Solids Content

3.2. Spectral Data Analysis

3.3. Comparison of Multimodal Fusion Grading Model and Single-Modal Grading Models

3.3.1. Single-Modal Grading Models

3.3.2. Multimodal Fusion Grading Model

3.4. Adversarial Label Noise Testing and Model Robustness Analysis

- 257 samples (33% of the training set) were randomly selected for label flipping.

- Labels were changed from the original class (e.g., “processing”) to the opposite class (e.g., “premium”).

- The model was retrained with identical hyperparameters.

- The test set remained clean (no noise) for fair evaluation.
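The noise-injection procedure listed above can be sketched in Python with NumPy. The integer class encoding is an assumption. The rule for the middle class is also an assumption: the text gives only the processing-to-premium example, so here a "standard" label is flipped to one of the two extreme grades at random. Only the training labels are modified; the test labels are never passed to the function.

```python
import numpy as np

# Hypothetical integer encoding of the three grades.
PROCESSING, STANDARD, PREMIUM = 0, 1, 2


def flip_labels(labels, fraction=0.33, seed=0):
    """Return (noisy_labels, flipped_indices) for a training label array.

    A random `fraction` of samples is selected without replacement and each
    selected label is replaced by its "opposite" class:
      processing -> premium, premium -> processing,
      standard   -> processing or premium at random (assumption).
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    noisy = labels.copy()
    n_flip = int(round(fraction * labels.size))
    idx = rng.choice(labels.size, size=n_flip, replace=False)
    for i in idx:
        if labels[i] == PROCESSING:
            noisy[i] = PREMIUM
        elif labels[i] == PREMIUM:
            noisy[i] = PROCESSING
        else:
            noisy[i] = rng.choice([PROCESSING, PREMIUM])
    return noisy, idx


# Usage on synthetic labels (not the paper's data).
y_train = np.array([PROCESSING, STANDARD, PREMIUM] * 100)
y_noisy, flipped = flip_labels(y_train, fraction=0.33, seed=1)
print(len(flipped), int((y_noisy != y_train).sum()))
```

With a hypothetical training set of 779 samples, `round(0.33 * 779)` gives 257, which matches the count reported above. Retraining would then use `y_noisy` with unchanged hyperparameters.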

- (1) Performance under noise: The model achieved 79.06% test accuracy with 33% label noise (compared to 100% without noise), a decrease of 20.94 percentage points.

- (2) Baseline comparison: Under identical noise conditions, single-modal models showed accuracy decreases exceeding 35 percentage points (e.g., spectroscopy model decreased from 83.33% to approximately 54%), indicating that multimodal fusion substantially improved noise tolerance.

- (3) Overfitting verification: The training loss and validation loss curves converged synchronously without obvious separation, indicating no evident overfitting.

- (4) Data leakage exclusion: The test set was strictly isolated from noise injection, and predictions on noisy samples showed no significant correlation with original labels (Pearson r = 0.12, p > 0.05).
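The correlation check in item (4) can be reproduced in outline with a few lines of NumPy. This is a sketch of the Pearson coefficient only, and the arrays in the usage lines are made up. The encoding of class labels as integers is an assumption, and a p-value would additionally require a statistics routine such as `scipy.stats.pearsonr`.

```python
import numpy as np


def pearson_r(x, y) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.shape != y.shape or x.size < 2:
        raise ValueError("inputs must have the same length (at least 2)")
    xc = x - x.mean()
    yc = y - y.mean()
    denom = np.sqrt((xc ** 2).sum() * (yc ** 2).sum())
    if denom == 0:
        raise ValueError("correlation is undefined for constant input")
    return float((xc * yc).sum() / denom)


# Usage with hypothetical integer-encoded labels:
# predictions on the flipped samples vs. their original (pre-flip) labels.
predicted = [0, 2, 1, 2, 0, 1, 2, 0]
original = [2, 0, 1, 0, 1, 2, 2, 0]
print(pearson_r(predicted, original))
```

A coefficient near zero on the flipped samples would be consistent with the model not having access to the original labels of those samples.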

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Abasi, S.; Minaei, S.; Jamshidi, B.; Fathi, D. Dedicated non-destructive devices for food quality measurement: A review. Trends Food Sci. Technol. 2018, 78, 197–205.

2. Tian, Y.H.; Wu, W.; Lu, S.Q.; Deng, H. Application of deep learning in fruit quality detection and grading classification. Food Sci. 2021, 42, 260–269.

3. Gao, Y.W.; Geng, J.F.; Rao, X.Q. Research progress in nondestructive detection of internal damage in postharvest fruits and vegetables. Food Sci. 2017, 38, 277–287.

4. Goh, G.L.; Goh, G.D.; Pan, J.W.; Teng, P.S.P.; Kong, P.W. Automated Service Height Fault Detection Using Computer Vision and Machine Learning for Badminton Matches. Sensors 2023, 23, 9759.

5. Zhao, H.; Tang, Z.; Li, Z.; Dong, Y.; Si, Y.; Lu, M.; Panoutsos, G. Real-time object detection and robotic manipulation for agriculture using a YOLO-based learning approach. In Proceedings of the 2024 IEEE International Conference on Industrial Technology (ICIT), Bristol, UK, 25–27 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

6. Zhou, A.M.; Qu, B.Y.; Li, H.; Zhao, S.Z.; Suganthan, P.N.; Zhang, Q. Multiobjective evolutionary algorithms: A survey of the state of the art. Swarm Evol. Comput. 2011, 1, 32–49.

7. Coello, C.C.; Brambila, S.G.; Gamboa, J.F.; Tapia, M.G.C. Multi-objective evolutionary algorithms: Past, present, and future. In Springer Optimization and Its Applications; Springer: Cham, Switzerland, 2021.

8. Tao, Y. Spherical transform of fruit images for on-line defect extraction of mass objects. Opt. Eng. 1996, 35, 344–350.

9. Bai, F.; Meng, C.Y. Research progress and commercialization of the automatic classification technology. Food Sci. 2005, 26, 145–148.

10. Tsuchikawa, S.; Ma, T.; Inagaki, T. Application of near-infrared spectroscopy to agriculture and forestry. Anal. Sci. 2022, 38, 635–642.

11. Beč, K.B.; Grabska, J.; Huck, C.W. Miniaturized NIR spectroscopy in food analysis and quality control: Promises, challenges, and perspectives. Foods 2022, 11, 1465.

12. Li, T.L.; Chen, W.X.; Chen, X.J.; Chen, X.; Yuan, L.M.; Shi, W.; Huang, G.Z. Mango variety recognition based on near-infrared spectroscopy and deep domain adaptation. Spectrosc. Spect. Anal. 2025, 45, 1251–1256.

13. Khodabakhshian, R.; Emadi, B.; Khojastehpour, M.; Golzarian, M.R.; Sazgarnia, A. Effect of repeated infrared radiation on the quality of pistachio nuts and its shell. Int. J. Food Prop. 2017, 20, 41–53.

14. Rong, D.; Wang, H.; Ying, Y.B.; Zhang, Z.Y. Pear detection using regression neural networks based on attention features. Comput. Electron. Agric. 2020, 175, 105553.

15. Pu, S.S.; Zheng, E.R.; Chen, B. Research on A Classification Algorithm of Near-Infrared Spectroscopy Based on 1D-CNN. Spectrosc. Spect. Anal. 2023, 43, 2446–2452.

16. Chen, B.; Jiang, S.Y.; Zheng, E.R. Research on the Wavelength Attention 1DCNN Algorithm for Quantitative Analysis of Near-Infrared Spectroscopy. Spectrosc. Spect. Anal. 2025, 45, 1598–1604.

17. Wang, R.; Zheng, E.R.; Chen, B. Detection method for main components of grain crops using near-infrared spectroscopy based on PCA-1DCNN. J. Chin. Cereals Oils Assoc. 2023, 38, 141–148.

18. Khonina, S.N.; Kazanskiy, N.L.; Oseledets, I.V.; Nikonorov, A.V.; Butt, M.A. Synergy between Artificial Intelligence and Hyperspectral Imagining—A Review. Technologies 2024, 12, 163.

19. Kazanskiy, N.; Khabibullin, R.; Nikonorov, A.; Khonina, S. A Comprehensive Review of Remote Sensing and Artificial Intelligence Integration: Advances, Applications, and Challenges. Sensors 2025, 25, 5965.

20. Xia, F.; Lou, Z.; Sun, D.; Li, H.; Zeng, L. Weed resistance assessment through airborne multimodal data fusion and deep learning: A novel approach towards sustainable agriculture. Int. J. Appl. Earth Obs. Geoinf. 2023, 120, 103352.

21. Li, W.; Liu, Z.; Hu, Z. Effects of nitrogen and potassium fertilizers on potato growth and quality under multimodal sensor data fusion. Mob. Inf. Syst. 2022, 2022, 6726204.

22. Lan, Y.; Guo, Y.; Chen, Q.; Lin, X. Visual question answering model for fruit tree disease decision-making based on multimodal deep learning. Front. Plant Sci. 2023, 13, 1064399.

23. Garillos-Manliguez, C.A.; Chiang, J.Y. Multimodal deep learning and visible-light and hyperspectral imaging for fruit maturity estimation. Sensors 2021, 21, 1288.

24. Lu, Z.H.; Zhao, M.F.; Luo, J.; Wang, G.H.; Wang, D.C. Design of a winter-jujube grading robot based on machine vision. Comput. Electron. Agric. 2021, 186, 106290.

25. Raghavendra, S.; Ganguli, S.; Selvan, P.; Bhat, A. Deep learning based dual channel banana grading system using convolution neural network. J. Food Qual. 2022, 2022, 7532806.

26. Mazen, F.M.A.; Nashat, A.A. Ripeness classification of bananas using an artificial neural network. Arab. J. Sci. Eng. 2019, 44, 6901–6910.

27. Cortés-Montaña, D.; Serradilla, M.J.; Bernalte-García, M.J.; Velardo-Micharet, B. Postharvest melatonin treatments reduce chilling injury incidence in the ‘Angeleno’ plum by enhancing the enzymatic antioxidant system and endogenous melatonin accumulation. Sci. Hortic. 2025, 339, 113719.

28. GH/T 1413-2021; Li (Plum) Grade Specifications. Standards Press of China: Beijing, China, 2021.

29. Zhang, X.Y. Study on Fruit Coloration and Anthocyanin Biosynthesis Mechanism in Plum (Prunus salicina Lindl.). Ph.D. Thesis, Zhejiang University, Hangzhou, China, 2008.

30. Ruiz-Altisent, M.; Ruiz-Garcia, L.; Moreda, G.P.; Lu, R.; Hernandez-Sanchez, N.; Correa, E.C.; Diezma, B.; Nicolai, B.; García-Ramos, J. Sensors for product characterization and quality of specialty crops—A review. Comput. Electron. Agric. 2010, 74, 176–194.

31. Sun, T. Study on Static and Online Detection of Soluble Solids Content and Acidity in Pear by Visible/Near-Infrared Spectroscopy. Ph.D. Thesis, Zhejiang University, Hangzhou, China, 2011.

32. Garillos-Manliguez, C.A.; Chiang, J.Y. Multimodal deep learning via late fusion for non-destructive papaya fruit maturity classification. In Proceedings of the 2021 18th International Conference on Electrical Engineering, Computing Science and Automatic Control (CCE), Mexico City, Mexico, 10–12 November 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–6.

33. Zhang, H.C.; Li, L.X.; Liu, D.J. A survey on multimodal data fusion research. J. Comput. Sci. Explor. 2024, 18, 2501–2520.

34. Nicolai, B.M.; Beullens, K.; Bobelyn, E.; Peirs, A.; Saeys, W.; Theron, K.I.; Lammertyn, J. Nondestructive measurement of fruit and vegetable quality by means of NIR spectroscopy: A review. Postharvest Biol. Technol. 2007, 46, 99–118.

| Models | Accuracy (Training Set) | Accuracy (Validation Set) | Accuracy (Test Set) | Precision (Training Set) | Precision (Validation Set) | Precision (Test Set) | Recall (Training Set) | Recall (Validation Set) | Recall (Test Set) |
|---|---|---|---|---|---|---|---|---|---|
| VGG16 | 97.13% | 83.42% | 85.71% | 94.40% | 61.87% | 67.28% | 95.65% | 61.97% | 65% |
| 1D-CNN | 86.77% | 84.62% | 83.33% | 58.04% | 56.77% | 58.18% | 61.35% | 60.70% | 60.12% |
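For readers who want to compute the three metrics in the table for a three-class grading task, the following Python sketch derives accuracy, precision, and recall from a confusion matrix. The source does not state how precision and recall were averaged across classes; macro averaging (an unweighted mean over classes) is an assumption here, as are the integer label encoding and the toy data.

```python
import numpy as np


def macro_metrics(y_true, y_pred, n_classes=3):
    """Return (accuracy, macro_precision, macro_recall).

    Macro averaging is an assumption; the paper does not specify it.
    Classes with no predicted (or no true) samples contribute 0.
    """
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1                    # rows: true class, cols: predicted
    tp = np.diag(cm).astype(float)
    pred_totals = cm.sum(axis=0)         # samples predicted as each class
    true_totals = cm.sum(axis=1)         # samples belonging to each class
    precision = np.divide(tp, pred_totals,
                          out=np.zeros_like(tp), where=pred_totals > 0)
    recall = np.divide(tp, true_totals,
                       out=np.zeros_like(tp), where=true_totals > 0)
    accuracy = tp.sum() / cm.sum()
    return float(accuracy), float(precision.mean()), float(recall.mean())


# Toy labels (0 = processing, 1 = standard, 2 = premium), not the paper's data.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
acc, prec, rec = macro_metrics(y_true, y_pred)
print(f"accuracy={acc:.4f} precision={prec:.4f} recall={rec:.4f}")
```

Under macro averaging, a class with few samples weighs as much as a large one, so precision and recall can sit well below accuracy when the grades are imbalanced.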

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, X.; Tong, W.; Di, B.; Zhang, L.; Lin, J. Non-Destructive Detection and Grading of Plum Quality Based on Multimodal Data. Sensors 2025, 25, 6962. https://doi.org/10.3390/s25226962