Highlights

What are the main findings?

- A novel label refinement framework is proposed for compound fault diagnosis under source-scarce conditions, which iteratively evolves soft labels to enhance domain generalization.

- A KL-divergence-based stability coefficient autonomously guides the iterative label refinement process.

What are the implications of the main findings?

- ALRN achieves an accuracy gain of over 22% compared with a conventional CNN baseline, setting a new state-of-the-art under source-scarce conditions.

- It offers a practical solution for industrial diagnosis where collecting diverse labeled data is prohibitive.

Abstract

Domain generalization (DG) aims to develop models that perform robustly on unseen target domains, a critical but challenging objective for real-world fault diagnosis. The challenge is further complicated in compound fault diagnosis, where the rigidity of hard labels and the simplicity of label smoothing under-represent inter-class relations and compositional structures, degrading cross-domain robustness. While current domain generalization methods can alleviate these issues, they typically rely on multi-source domain data. However, considering the limitations of equipment operational conditions and data acquisition costs in industrial applications, only one or two independently distributed source datasets are typically available. In this work, an adaptive label refinement network (ALRN) was designed for learning with imperfect labels under source-scarce conditions. Compared to hard labels and label smoothing, ALRN learns richer, more robust soft labels that encode the semantic similarities between fault classes. The model first trains a convolutional neural network (CNN) to obtain initial class probabilities. It then iteratively refines the training labels by computing a weighted average of predictions within each class, using the sample-wise cross-entropy loss as an adaptive weighting factor. Furthermore, a label refinement stability coefficient based on the max-min Kullback–Leibler (KL) divergence ratio across classes is proposed to evaluate label quality and determine when to terminate the refinement iterations. With only one or two source domains for training, ALRN achieves accuracy gains exceeding 22% under unseen operating conditions compared with a conventional CNN baseline. These results validate that the proposed label refinement algorithm can effectively enhance the cross-domain diagnostic performance, providing a novel and practical solution for learning with imperfect supervision in cross-domain compound fault diagnosis.

1. Introduction

Condition monitoring and fault diagnosis of mechanical equipment are crucial for ensuring the safety and reliability of modern industrial systems [1,2,3]. In this field, vibration analysis utilizing accelerometer signals has been established as the most prevalent and mature methodology. The high signal-to-noise ratio of vibration signals and their direct relationship to mechanical dynamics make them particularly suitable for fault diagnosis [4,5,6]. However, the requirement for physical contact and precise sensor mounting can be a limitation in many scenarios. In comparison, the non-contact nature of acoustic analysis has garnered significant attention as a complementary technique, presenting unique advantages in scenarios where sensor installation is impractical or where faults generate distinct acoustic signatures [7,8,9]. Nevertheless, accurate and robust fault identification using acoustic signals remains challenging because of their inherent non-stationarity and high susceptibility to environmental noise [10,11,12].

To address these challenges, deep learning models have been widely adopted to automatically learn discriminative features from complex signals. Various advanced architectures have been explored in the literature. Notably, graph neural networks (GNNs) have shown great promise in modeling the structural dependencies within mechanical systems for fault diagnosis, as evidenced by recent works on self-supervised graph feature enhancement (EG-SAGCN) [13], multi-sensor graph attention (MMHGAT) [14], and multiscale channel attention with graph fusion [15]. However, these methods typically require data from multiple sensors to construct meaningful graphs and capture the topological interactions among them.

In single-sensor scenarios, convolutional neural networks (CNNs) [16,17,18,19] can effectively capture local time-frequency features and global contextual information within signals by leveraging local connectivity and weight sharing, combined with multi-layer convolution and pooling operations. This has led to their increased application in fault diagnosis. Recently, attention mechanisms have also been integrated into CNNs, guiding the models to prioritize salient fault-related information and thereby enhancing feature discrimination capabilities [20,21]. The core strength of CNNs lies in their ability to hierarchically learn from structured data, effectively capturing essential patterns for accurate diagnosis. However, when distributional discrepancies exist between source and target domains due to variations in operating conditions, the non-stationary nature of acoustic signals exacerbates the problem, causing severe feature distribution mismatches [22,23]. This degrades the model generalization performance on unseen target domains and hinders the learning of cross-domain invariant features.

Domain adaptation (DA) has emerged as a critical methodology for resolving domain shift issues. Its fundamental objective is to learn invariant feature representations across source and target domains, thereby eliminating the adverse effects induced by distribution discrepancies [24,25,26]. Numerous methods have been developed to achieve this cross-domain alignment, including the Gaussian mixture variational-based transformer (GMVTDA) [27] and domain adversarial training (DAT) [28]. Furthermore, building upon maximum mean discrepancy (MMD), a novel discrepancy metric termed maximum mean square discrepancy (MMSD) was introduced to comprehensively capture both mean and variance discrepancies in the reproducing kernel Hilbert space [29,30]. Despite these advancements, an inherent limitation of DA methods is their requirement for target domain data during training, regardless of label availability [31]. This poses a significant challenge for industrial applications, where obtaining target domain data is often difficult or expensive.

In contrast, domain generalization (DG) aims to generalize models trained on multiple source datasets to unseen target domains [32,33,34]. It achieves this by learning domain-invariant representations from the source domains alone, thereby eliminating the need for target data and offering broader applicability [35,36,37]. For example, domain-invariant features can be extracted through a multi-domain fusion generation module that incorporates cross-domain multivariate linearization [38]. DG methods can also align distributions more comprehensively by matching both joint distributions and domain-relevant distributions, rather than only marginal statistics [39]. Furthermore, data augmentation techniques such as CycleGAN have been leveraged for domain generalization by synthesizing novel source domains to learn domain-invariant features [40,41,42].

While DG methods perform well in mechanical fault diagnosis, their performance in compound fault scenarios is hindered by the inherent limitations of hard labels, and they often require multiple source domains for accurate diagnosis. In industrial scenarios, however, only one or two source domains are typically available, which severely limits the applicability of existing methods. To overcome this challenge, techniques like label smoothing [43,44] have been employed. However, label smoothing assumes a uniform distribution over the non-target classes, which often leads to suboptimal performance in complex industrial environments [45,46]. To directly learn richer, more robust supervisory signals from scarce source domains, this paper proposes an adaptive label refinement network (ALRN). The primary objective is to develop a framework that autonomously evolves and enhances label supervision. This approach moves beyond static one-hot encodings to capture the rich inter-class semantic relationships essential for generalizing to unseen domains. The core idea is implemented through an iterative process in which a new soft label for each class is generated by a weighted aggregation of the model output probability distributions across all samples within that class. The weight assigned to each sample is determined by its training loss, ensuring that samples that are more challenging for the current model contribute more to the refined label definition. This process is autonomously guided by a KL-divergence-based stability coefficient, which is employed as the convergence criterion to ensure refinement quality. The main contributions of this study are as follows:

- This study reveals imperfect label supervision as a critical factor undermining cross-domain generalization performance under the challenging yet practical conditions of scarce source domains and prevalent compound faults.

- A novel adaptive label refinement algorithm is proposed, through which soft labels are dynamically calibrated by an intra-class weighting mechanism. This process is autonomously guided by a KL-divergence-based stability coefficient, which is utilized to quantitatively monitor the refinement process and determine its convergence, thereby eliminating the need for a pre-defined iteration count.

- Extensive experiments on a planetary gearbox compound fault dataset demonstrate that the proposed ALRN framework establishes a new state-of-the-art for cross-domain fault diagnosis, achieving a significant accuracy improvement against conventional supervised baselines in both single-source and dual-source settings.

2. Preliminaries

2.1. Domain Generalization Problem Statement

DG learns robust models from known source domains that can be directly transferred to operate effectively on unseen target domains. The learning process in the source domain can be described as finding a mapping function $f$ that operates on data pairs drawn from the source distribution:

$$f: \mathcal{X}_S \rightarrow \mathcal{Y}_S, \quad (x_S, y_S) \sim P_S(x, y) \tag{1}$$

where $x_S$ and $y_S$ denote a set of observed samples and their corresponding class labels drawn from the source domain input space $\mathcal{X}_S$ and output space $\mathcal{Y}_S$, and $P_S(x, y)$ is the joint probability distribution of the source domain.

The goal of domain generalization is to leverage $f$ to make accurate predictions on an unseen target domain:

$$\hat{y}_T = f(x_T), \quad (x_T, y_T) \sim P_T(x, y) \tag{2}$$

where $x_T$ denotes an input sample from the target domain (with true label $y_T$), $\hat{y}_T$ is its predicted label, and $P_T(x, y)$ is the joint probability distribution of the target domain. This entails learning invariant patterns from limited source observations that preserve discriminative power under distributional shifts.

The establishment of transferable mappings from source to target domains is primarily governed by three critical factors: (1) the observational data $x_S$ in the source domain space, (2) the methodology for constructing the mapping function $f$, and (3) the quality of source-domain labels $y_S$. The precision of the data is inherently constrained by the data acquisition equipment. Prevailing DG methods aim to enhance cross-domain fault diagnosis by learning domain-invariant features through an optimal mapping function $f$, but they typically rely on the availability of multiple source domains. However, in the context of compound faults under source-scarce conditions, the effectiveness of these methods is fundamentally limited. The presence of imperfect labels hinders the learning of sufficient domain-invariant knowledge from limited data, as the poor supervisory signal cannot adequately guide the feature learning process across domains.

2.2. Limitation of Hard Labels and Label Smoothing

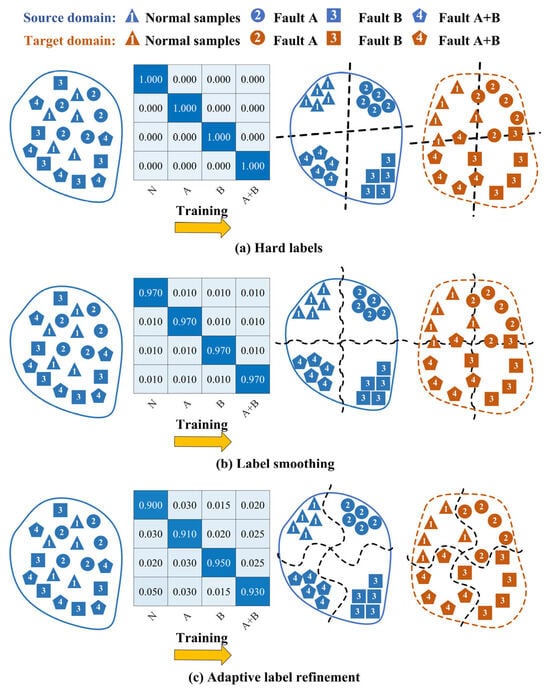

Hard labels, typically represented as one-hot encoded vectors, compel models to learn discrete and often overly confident decision boundaries [47]. For single faults, high diagnostic accuracy can still be achieved even with a limited number of source domains, owing to their distinct inter-class characteristics and relatively clear decision boundaries. For compound faults, abundant source domains can facilitate high-accuracy diagnosis even with hard labels, as sufficient data enables the learning of transferable domain-invariant features. However, diagnosing compound faults under limited source domains remains a formidable challenge, as it confronts the dual obstacles of extracting sufficient domain-invariant features from scarce source domains and distinguishing classes with inherently ambiguous boundaries, as depicted in Figure 1a. Label smoothing has been adopted to soften the categorical boundaries by introducing a uniform smoothing factor to the hard labels. However, this method applies a constant smoothing value uniformly across all classes and samples, failing to capture the specific and often asymmetric semantic relationships between different fault types, as illustrated in Figure 1b. While it may slightly improve calibration, it remains ineffective at modeling the nuanced inter-class correlations essential for accurate compound fault diagnosis under domain shift. Consequently, this paper proposes an adaptive label refinement network (ALRN) to achieve more precise and adaptive label softening through a sample-wise weighting algorithm, as shown in Figure 1c.

Figure 1.

Domain generalization performance of different labeling strategies in compound fault diagnosis.
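The contrast between hard labels and label smoothing discussed in this subsection can be illustrated with a short sketch. It assumes the standard uniform smoothing rule (1 − α)·y + α/C with α = 0.1; the class ordering and the function names `one_hot` and `smooth` are chosen here purely for illustration.

```python
def one_hot(true_class, num_classes):
    """Hard label: 1.0 at the true class, 0.0 elsewhere."""
    return [1.0 if c == true_class else 0.0 for c in range(num_classes)]


def smooth(label, alpha):
    """Uniform label smoothing: (1 - alpha) * y + alpha / C for every class."""
    num_classes = len(label)
    return [(1.0 - alpha) * y + alpha / num_classes for y in label]


# Illustrative ordering of the eight health states (an assumption).
CLASSES = ["N", "O", "R", "P", "OR", "OP", "RP", "ORP"]
hard = one_hot(CLASSES.index("OR"), len(CLASSES))
soft = smooth(hard, alpha=0.1)

# Every wrong class receives the same mass, whether or not it shares a
# faulty component with the true class "OR".
for name, value in zip(CLASSES, soft):
    print(f"{name:>3}: {value:.4f}")
```

Under this rule, classes O, R, and ORP, which share components with OR, receive exactly the same probability mass as N or P, which is the uniformity that the refinement approach aims to replace.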

3. Methodology

3.1. Adaptive Label Refinement Network (ALRN)

The adaptive label refinement network utilizes intra-class loss weighting to enhance label representation. By iteratively optimizing the labels multiple times and dynamically fusing fault features with cross-condition common features, it achieves adaptive refinement of the fault classification model.

3.1.1. Feature Extraction

The feature extraction module is trained using a preconstructed dataset through a designed convolutional neural network (CNN), whose detailed architecture is shown in Table 1. The network primarily consists of three convolutional layers and three pooling layers for feature extraction, followed by fully connected layers and a Softmax function for pattern recognition. For the discrepancy between the predicted class probability distribution $p$ generated by the CNN and the label distribution $y$, cross-entropy is employed as the quantitative metric, which is defined as follows:

$$L_{CE}(p, y) = -\sum_{c=1}^{C} y_c \log p_c \tag{3}$$

where $C$ denotes the number of classes, and $p_c$ and $y_c$ are the $c$-th entries of $p$ and $y$.
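As a brief illustration of the cross-entropy metric, the sketch below evaluates it for a hard label and for a soft label against the same prediction. The probability values are hypothetical, and the small `eps` guard against log(0) is an implementation assumption.

```python
import math


def cross_entropy(probs, label, eps=1e-12):
    """Cross-entropy -sum_c y_c * log(p_c) between a label and a prediction."""
    return -sum(y * math.log(max(p, eps)) for p, y in zip(probs, label))


probs = [0.70, 0.20, 0.10]          # hypothetical Softmax output, C = 3
hard_label = [1.0, 0.0, 0.0]        # one-hot label
soft_label = [0.80, 0.15, 0.05]     # hypothetical refined soft label

print(cross_entropy(probs, hard_label))
print(cross_entropy(probs, soft_label))
```

With a one-hot label the expression reduces to the negative log-probability of the true class; with a soft label every class contributes in proportion to its label mass.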

Table 1.

Structure of feature extraction module.

3.1.2. Label Refinement Algorithm

The label refinement employs an intra-class loss weighting algorithm to redefine the label. Samples in the training set from the same class are individually fed into the classification model to obtain the probability distribution outputs for all samples within each class. After the $k$-th label refinement iteration, for the $j$-th sample $x_{i,j}$ among all $N_i$ samples of the $i$-th class in the training set, its probabilistic output after forward propagation through the network $f^{(k)}$ is obtained:

$$p_{i,j}^{(k)} = f^{(k)}(x_{i,j}) \tag{4}$$

The optimized label is defined as:

$$Y^{(k)} = \left[ y_1^{(k)}, y_2^{(k)}, \ldots, y_C^{(k)} \right]^{\mathsf{T}} \tag{5}$$

When $k = 0$, $Y^{(0)}$ denotes an identity matrix, which corresponds to the conventional hard-label assignment.

For training samples propagated through the neural network, the loss demonstrates a strong positive correlation with the degree of deviation from ideal label assignments. Therefore, samples with larger loss values are assigned greater weights in the probabilistic output. The cross-entropy loss is preferred over the Kullback–Leibler divergence in our weighting scheme due to its balanced weighting behavior. While KL divergence could potentially accelerate convergence by focusing more aggressively on high-loss samples, it approaches zero when predictions match labels and thus risks excluding well-classified samples from contributing to label refinement. In contrast, cross-entropy maintains a finite positive value that preserves the participation of all samples. This design effectively prevents a few potentially erroneous samples from dominating the label refinement process, thereby significantly improving the robustness of the aggregation. Consequently, from Equation (3), the weight $w_{i,j}^{(k)}$ for the probabilistic output $p_{i,j}^{(k)}$ is expressed as:

$$w_{i,j}^{(k)} = \frac{L_{CE}\left(p_{i,j}^{(k)}, y_i^{(k)}\right)}{\sum_{m=1}^{N_i} L_{CE}\left(p_{i,m}^{(k)}, y_i^{(k)}\right)} \tag{6}$$

where $L_{CE}$ denotes the cross-entropy loss of Equation (3). Then the label after the $(k+1)$-th refinement is represented as:

$$y_i^{(k+1)} = \sum_{j=1}^{N_i} w_{i,j}^{(k)} \, p_{i,j}^{(k)} \tag{7}$$

where $y_i^{(k+1)}$ denotes the label for the $i$-th class after the $(k+1)$-th label refinement.

The proposed label refinement strategy mitigates the impact of imperfect supervision by adaptively weighting the intra-class probabilistic outputs from a trained CNN to generate calibrated soft labels. Finally, the network undergoes iterative training using the updated labels $Y^{(k+1)}$.
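The intra-class weighting and aggregation step can be sketched as follows. This is a minimal illustration, assuming that each sample's weight is its cross-entropy loss normalised by the sum of losses within the class; the function names, the fallback for an all-zero loss, and the toy probabilities are hypothetical and not taken from the original implementation.

```python
import math


def cross_entropy(probs, label, eps=1e-12):
    """Cross-entropy between a (possibly soft) label and a prediction."""
    return -sum(y * math.log(max(p, eps)) for p, y in zip(probs, label))


def refine_class_label(outputs, label):
    """Return the refined soft label of one class.

    outputs: Softmax vectors of all training samples of this class.
    label:   current label of the class (one-hot at iteration 0).
    """
    losses = [cross_entropy(p, label) for p in outputs]
    total = sum(losses)
    if total == 0.0:
        # Degenerate case (every loss is zero): fall back to a plain average.
        weights = [1.0 / len(outputs)] * len(outputs)
    else:
        # Assumed weighting: proportional to the loss, normalised in-class.
        weights = [loss / total for loss in losses]
    num_classes = len(label)
    return [sum(w * p[c] for w, p in zip(weights, outputs))
            for c in range(num_classes)]


# Hypothetical outputs for three samples of class 0 in a 3-class problem.
outputs = [[0.90, 0.05, 0.05],
           [0.60, 0.30, 0.10],
           [0.50, 0.40, 0.10]]
refined = refine_class_label(outputs, label=[1.0, 0.0, 0.0])
print([round(v, 4) for v in refined])
```

Because the weights sum to one and every output is a probability vector, the refined label is again a valid distribution. In this toy case the harder samples pull mass from class 0 toward class 1, more strongly than a plain average of the three outputs would.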

3.1.3. Label Refinement Stability Coefficient

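For the $j$-th sample among all $N_i$ samples of the $i$-th class in the training set, the average KL-divergence loss after forward propagation through the CNN model is defined as follows:

$$\bar{D}_i^{(k)} = \frac{1}{N_i} \sum_{j=1}^{N_i} D_{KL}\left( y_i^{(k)} \,\middle\|\, p_{i,j}^{(k)} \right) \tag{8}$$

where $y_i^{(k)}$ is the current label of the $i$-th class and $p_{i,j}^{(k)}$ is the network output for its $j$-th sample.

Under the assumption of ideal model convergence with near-perfect label assignments, the average KL-divergence values across different classes should be approximately equal or at least of comparable magnitude. Hence, a label refinement stability coefficient $\eta^{(k)}$ is introduced to quantify the deviation from ideal label assignments, defined as:

$$\eta^{(k)} = \frac{\max_i \bar{D}_i^{(k)}}{\min_i \bar{D}_i^{(k)}} \tag{9}$$

The iteration stops when the change in the coefficient, $\Delta\eta^{(k)}$, falls below the threshold $\varepsilon$, namely:

$$\Delta\eta^{(k)} = \left| \eta^{(k)} - \eta^{(k-1)} \right| < \varepsilon \tag{10}$$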
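The stability coefficient and the stopping rule can be sketched as below. The sketch assumes that the coefficient is the ratio of the largest to the smallest class-average KL divergence, and that refinement stops once the coefficient changes by less than the threshold between consecutive iterations; the function names and the toy outputs are hypothetical.

```python
import math


def kl_divergence(label, probs, eps=1e-12):
    """KL(label || probs); terms with zero label mass contribute nothing."""
    return sum(y * math.log(y / max(p, eps))
               for y, p in zip(label, probs) if y > 0.0)


def stability_coefficient(outputs_by_class, labels):
    """Ratio of the largest to the smallest class-average KL divergence."""
    class_means = []
    for label, outputs in zip(labels, outputs_by_class):
        divergences = [kl_divergence(label, p) for p in outputs]
        class_means.append(sum(divergences) / len(divergences))
    # Note: undefined if some class has a zero average divergence.
    return max(class_means) / min(class_means)


def should_stop(eta_now, eta_prev, threshold=0.1):
    """Stop once the coefficient changes by less than the threshold."""
    return abs(eta_now - eta_prev) < threshold


# Hypothetical two-class example with two samples per class.
labels = [[1.0, 0.0], [0.0, 1.0]]
outputs_by_class = [[[0.9, 0.1], [0.8, 0.2]],
                    [[0.4, 0.6], [0.5, 0.5]]]
eta = stability_coefficient(outputs_by_class, labels)
print(round(eta, 3))
```

A coefficient close to 1 indicates that all classes are fitted about equally well by their current labels, while a large value indicates that at least one class is fitted much worse than the others.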

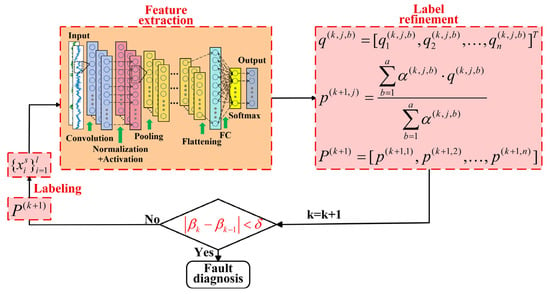

The intra-class loss weighting algorithm is shown in Figure 2, and the pseudocode describing the training process of ALRN is given in Algorithm 1.

| Algorithm 1 Training and test procedures for ALRN. |

| ① Training: |

| Input: Labeled source domain dataset $\mathcal{D}_S = \{(x_S, y_S)\}$. |

| Set the hyperparameters, including the learning rate, convolutional layers, pooling layers, activation functions, epochs, and batch size. Set the labels $Y^{(0)}$ as the identity matrix and the threshold $\varepsilon$. |

| 1: For the $k$-th label refinement iteration ($k = 0, 1, 2, \ldots$) do |

| 2: Let the training labels be $Y^{(k)}$. |

| 3: For each epoch do |

| 4: Calculate the output of the model. |

| 5: Solve loss based on Equation (3). |

| 6: Calculate the gradients and update the model parameters. |

| 7: End for |

| 8: Calculate weights for the probability distribution outputs based on Equation (6). |

| 9: Solve $Y^{(k+1)}$ based on Equation (7). |

| 10: If $k = 0$ do |

| 11: Calculate $\eta^{(0)}$ based on Equation (9). |

| 12: Else do |

| 13: Calculate $\eta^{(k)}$ based on Equation (9). |

| 14: If $\lvert \eta^{(k)} - \eta^{(k-1)} \rvert < \varepsilon$ do |

| 15: Break out |

| 16: End if |

| 17: End if |

| 18: End for |

| 19: Save trained model. |

| Output: The trained ALRN diagnostic model. |

| ② Testing: |

| Feed the target domain samples $x_T$ into the trained model for fault diagnosis. |
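The control flow of Algorithm 1 can be sketched in a few lines. This is only an outline under stated assumptions: the three callables stand in for CNN training, label aggregation, and the stability coefficient, the `max_iters` safety cap is not part of the original algorithm, and the coefficient sequence in the toy run is made up to exercise the stopping rule.

```python
def run_alrn(train, refine, stability, init_labels, threshold=0.1, max_iters=50):
    """Control flow of the refinement loop; the three callables are placeholders.

    train(labels) -> trains (or keeps training) the model on the labels
    refine()      -> returns the refined labels from the current model
    stability()   -> returns the stability coefficient of the current model
    """
    labels = init_labels
    eta_prev = None
    history = []
    for k in range(max_iters):
        train(labels)                 # steps 3-7: epochs on the current labels
        labels = refine()             # steps 8-9: weighted aggregation
        eta = stability()             # steps 10-13: coefficient for this round
        history.append(eta)
        if eta_prev is not None and abs(eta - eta_prev) < threshold:
            break                     # steps 14-15: coefficient has settled
        eta_prev = eta
    return labels, history


# Toy run with stand-in callables and a made-up coefficient sequence.
etas = iter([239.7, 50.0, 10.0, 3.0, 2.62, 2.54, 2.50])
labels, history = run_alrn(train=lambda labels: None,
                           refine=lambda: "refined labels",
                           stability=lambda: next(etas),
                           init_labels="identity matrix")
print(len(history), history[-1])
```

In the toy run the loop stops as soon as two consecutive coefficients differ by less than 0.1, so the last value in the sequence is never requested.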

Figure 2.

Structure of the ALRN.

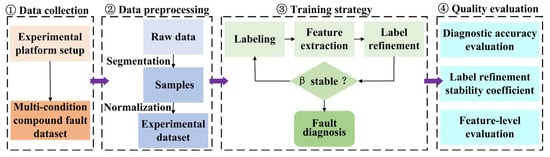

3.2. Overall Structure

Based on the proposed model, the research framework is shown in Figure 3. The procedure begins with establishing an experimental platform for planetary gearbox fault diagnosis to collect acoustic signals under multiple operating conditions and for different fault types. The collected data are then segmented into fixed-length samples and normalized to construct the experimental dataset. Subsequently, a CNN-based feature extraction module is built, and the adaptive label refinement algorithm is executed. This algorithm, guided by the stability coefficient, autonomously determines when the refinement process is sufficient. Finally, the method’s effectiveness is evaluated using both diagnostic accuracy and the proposed stability coefficient.

Figure 3.

Overall structure of the proposed framework.
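The segmentation and normalization step of the framework can be sketched as follows. The source states only that signals are cut into fixed-length samples and normalized; the non-overlapping windows, the per-sample z-score normalization, and the synthetic test tone used here are assumptions for illustration.

```python
import math


def segment(signal, length=4096):
    """Split a 1-D signal into non-overlapping windows, dropping the remainder."""
    count = len(signal) // length
    return [signal[i * length:(i + 1) * length] for i in range(count)]


def z_score(sample):
    """Normalise one sample to zero mean and unit variance (assumed scheme)."""
    mean = sum(sample) / len(sample)
    var = sum((v - mean) ** 2 for v in sample) / len(sample)
    std = math.sqrt(var) or 1.0   # guard against a constant segment
    return [(v - mean) / std for v in sample]


# One second of a synthetic 50 Hz tone at a 10 kHz sampling rate.
fs = 10_000
signal = [math.sin(2 * math.pi * 50 * n / fs) for n in range(fs)]
samples = [z_score(s) for s in segment(signal)]
print(len(samples), len(samples[0]))
```

One second of data yields two complete 4096-point samples, and the trailing 1808 points are discarded under the non-overlapping assumption.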

4. Experiments and Results

4.1. Data Collection and Description

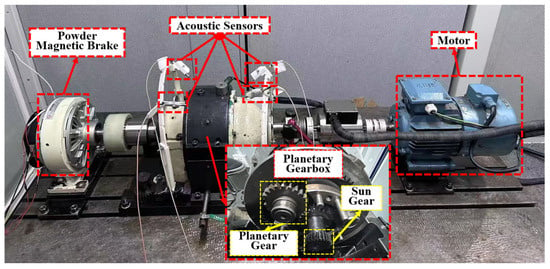

The fault acoustic signals were collected from the planetary gearbox within a semi-anechoic chamber. The experimental setup, illustrated in Figure 4, consisted of a planetary gearbox and an acoustic testing system. By adjusting the variable-frequency motor and powder magnetic brake, the reducer could operate under different conditions including various rotational speeds and torque loads. The acoustic testing system was equipped with four detachable acoustic sensors (Type 46AE, GRAS Sound & Vibration, Holte, Denmark) with a sampling frequency of 10 kHz and a data acquisition device (Model NI 9234, National Instruments, Austin, TX, USA) [48,49].

Figure 4.

Acoustic signal acquisition platform.

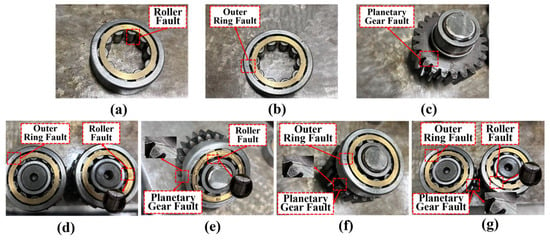

The planetary gearbox was seeded with seven distinct crack-related fault types, as depicted in Figure 5. These comprised three single-component faults: bearing outer ring (O), bearing roller (R), and planetary gear (P). Four compound faults were also introduced: combined failures of the outer ring and roller (OR), outer ring and planetary gear (OP), roller and planetary gear (RP), and a tri-component failure involving all three elements (ORP). A normal condition (N) without any faults was also included. Data samples under six distinct operating conditions were collected, with each sample containing 4096 data points, as shown in Table 2.

Figure 5.

The fault types of planetary gearboxes: (a–c) Single-component faults; (d–g) Compound faults.

Table 2.

Settings of different operating conditions.

Stochastic Gradient Descent with Momentum (SGDM) was used with an initial learning rate of 0.001. During each refinement phase, single-source domain training was conducted with a batch size of 32 for 200 epochs. The learning rate was reduced from 0.001 to 0.0001 after the first 150 epochs. Similarly, dual-source training was performed with a batch size of 64 for 300 epochs, with the learning rate reduced from 0.001 to 0.0001 after the first 250 epochs. It is noted that the model was continuously trained on the refined labels without parameter reinitialization, thus preserving knowledge from previous stages. Moreover, all algorithms were implemented on a computing platform with an Intel(R) Core(TM) i7-8700 CPU @ 3.20 GHz, 16.0 GB RAM, and an NVIDIA GeForce GTX 1070 GPU.
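The two training configurations can be summarised as a simple step schedule. The sketch below assumes zero-based epoch indexing and that the schedule restarts in every refinement phase, which the description suggests but does not state explicitly; `SCHEDULES` and `learning_rate` are illustrative names.

```python
SCHEDULES = {
    # setting: (batch size, total epochs, epoch at which the rate drops)
    "single": (32, 200, 150),
    "dual": (64, 300, 250),
}


def learning_rate(epoch, setting, high=1e-3, low=1e-4):
    """Step schedule: `high` for the first epochs, `low` afterwards.

    `epoch` is zero-based, so epochs 0..149 count as the first 150.
    """
    _, total, drop_at = SCHEDULES[setting]
    if not 0 <= epoch < total:
        raise ValueError("epoch outside the training phase")
    return high if epoch < drop_at else low


for setting in SCHEDULES:
    batch, total, drop_at = SCHEDULES[setting]
    print(setting, batch, learning_rate(drop_at - 1, setting),
          learning_rate(drop_at, setting))
```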

4.2. Performance of Adaptive Label Refinement Network

The domain generalization capability of the proposed Adaptive Label Refinement Network (ALRN) was evaluated through cross-domain fault diagnosis tasks under limited source domain conditions. The experimental framework incorporated two representative scenarios: (1) single-source domain generalization and (2) dual-source domain generalization. Particular attention was devoted to examining the model robustness against significant distribution shifts between source and target domains, especially under substantial rotational speed variations. Table 3 provides the detailed experimental configuration for both scenarios.

Table 3.

Fault diagnosis experimental configurations.

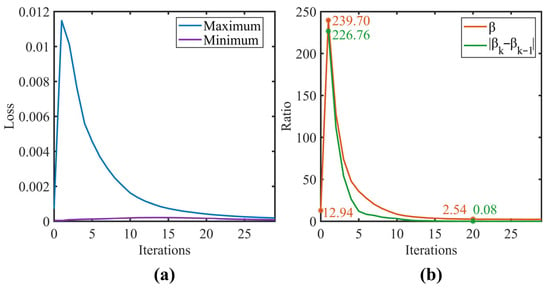

The variation of diagnostic accuracy with refinement iterations is shown in Figure 6. The model diagnostic performance reached its optimum at around 18 to 22 iterations in both scenarios. Compared to the baseline CNN model, the average accuracy after 20 refinement iterations improved significantly from 68.75% to 91.73% for the single-source domain and from 61.01% to 87.50% for the dual-source domain. A slight initial accuracy decrease was observed in task G4 (single-source scenario), but it gradually increased and eventually stabilized as the refinement iterations progressed. Figure 7a illustrates the variation of the maximum and minimum KL-divergence values, while Figure 7b shows the resulting label refinement stability coefficient $\eta$ for tasks G1 and G2. As depicted in Figure 7b, $\eta$ underwent a sharp transition from step 0 to 1. This sharp transition was attributed to the initial instability during the hard-to-soft label transition. However, this perturbation did not compromise the overall convergence. After the first iteration, $\eta$ monotonically decreased from 239.70 and eventually converged to 2.54 at the 20th iteration, coinciding with the peak diagnostic accuracy. The convergence threshold $\varepsilon$ was set to 0.1. This value was empirically validated by the convergence behavior observed in our experiments, where the absolute difference in $\eta$ between consecutive iterations decreased to 0.08 at the 20th iteration, falling below the predefined threshold. These results demonstrate that the proposed coefficient effectively quantifies the fidelity of label assignments and that its convergence behavior can serve as a reliable criterion for terminating the refinement process.
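For reference, the average accuracy gains implied by the figures quoted above work out as follows, expressed as absolute differences in percentage points:

```latex
\begin{aligned}
\Delta_{\text{single}} &= 91.73\% - 68.75\% = 22.98 \text{ percentage points},\\
\Delta_{\text{dual}}   &= 87.50\% - 61.01\% = 26.49 \text{ percentage points}.
\end{aligned}
```

Both differences exceed 22 percentage points, consistent with the gain stated in the abstract.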

Figure 6.

Diagnostic accuracy over refinement iterations: (a) Single-source domain; (b) Dual-source domain.

Figure 7.

Label refinement stability coefficient for tasks G1 and G2: (a) Bounds of KL-divergence; (b) Maximum/minimum ratio.

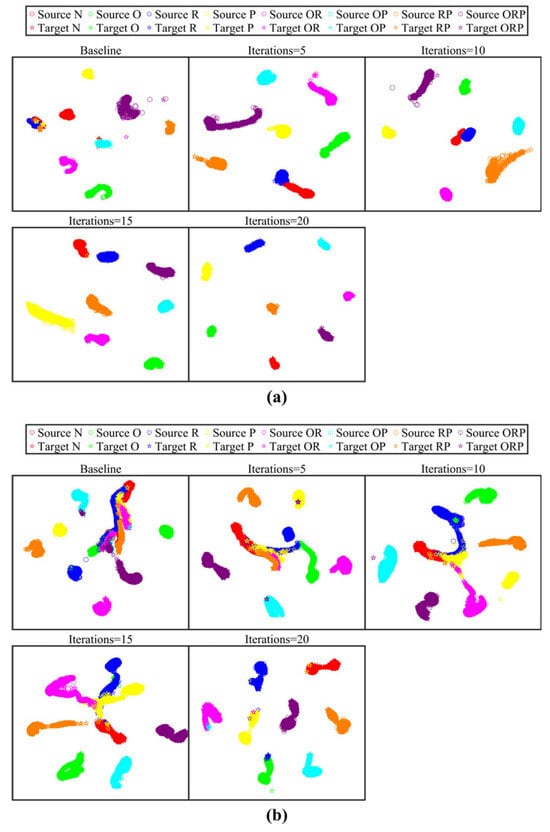

To provide an intuitive evaluation, t-SNE [50] was employed to visualize the evolution of feature distributions for single-source (G1) and dual-source (Q3) tasks. Figure 8 shows a comparative analysis between the baseline model (0th iteration) and optimized iterations (5th, 10th, 15th, and 20th), revealing a progressive improvement in class separability. In task G1, the initial iteration exhibited significant cluster overlap between classes N and R. In task Q3, the initial distributions of classes N, O, R, P, and OR were highly entangled. Through successive refinement iterations, the inter-class boundaries became increasingly distinct, achieving nearly linear separability across all fault categories by the 20th iteration. These visual results empirically confirm that the ALRN method effectively mitigates the adverse effects of imperfect label supervision in cross-domain scenarios.

Figure 8.

Feature visualization results across label refinement iterations: (a) Task G1; (b) Task Q3.

4.3. Ablation Studies

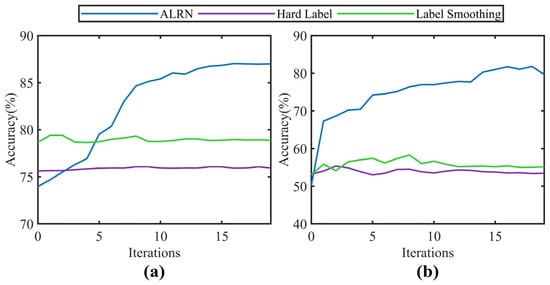

An ablation study was conducted to further evaluate the role of the label weighting strategy in the Adaptive Label Refinement Network (ALRN). Figure 9 displays the accuracy trends for ALRN, hard labels, and label smoothing (with a smoothing factor of 0.1) under the same number of iterations on tasks G4 and Q2. The results indicate that while hard labels and label smoothing show no significant change in performance with increasing iterations, only ALRN demonstrates a marked rise in diagnostic accuracy. This outcome substantiates not only the effectiveness of the adaptive label weighting strategy but also the rationality of the selected training cycle, as it ensures that the model is sufficiently trained to converge after each label refinement.

Figure 9.

Accuracy of different label weighting strategies across iterations: (a) Task G4; (b) Task Q2.

4.4. Comparative Experiments

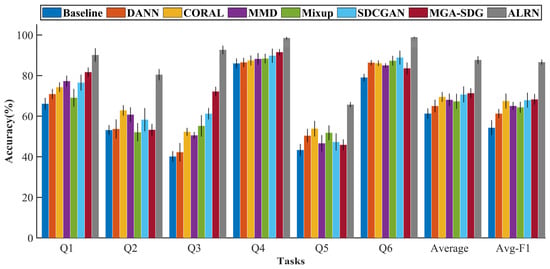

To systematically evaluate the enhancement effect of adaptive label refinement on model cross-domain generalization capability under compound fault conditions and validate the performance superiority of the proposed ALRN, this study conducted comparative experiments with several representative domain generalization approaches, including the classic DANN [28], CORAL [51], MMD [29], and Mixup [52], as well as the recent SDCGAN [41] method, which augments the source domain by generating divergent domains via CycleGAN to learn domain-invariant features through adversarial training, and the MGA-SDG [53] method, which leverages a multi-Gaussian attention mechanism to project multi-scale features into Gaussian spaces for obtaining more consistent and robust feature representations.

As shown in Table 4 and Figure 10, the proposed ALRN framework demonstrated statistically significant superiority over all benchmark methods across multiple cross-domain tasks. These results were validated through ten independent experimental trials. Each trial was initiated with a different random seed for parameter initialization to ensure the robustness and reliability of our findings. Notably, ALRN achieved near-optimal classification performance in tasks Q4 and Q6, with accuracies of 98.47 ± 0.55% and 98.85 ± 0.31%, respectively. In the challenging scenario of task Q3, it attained a substantially higher accuracy of 92.64 ± 2.11% compared to alternative approaches. A comparative analysis reveals that the classification accuracies in tasks Q2 and Q5 were lower for all models investigated. This performance degradation is primarily attributable to the substantial discrepancy in rotational speed between the source and target domains within these specific tasks: a transfer from 900 rpm to 2700 rpm in task Q2, and the reverse from 2700 rpm to 900 rpm in task Q5. Such a significant domain shift exacerbates the difficulty of learning domain-invariant features, thereby presenting a considerable challenge for any domain generalization method. Crucially, despite these adverse conditions, the proposed ALRN model consistently surpassed all baseline methods, which underscores its superior robustness and enhanced generalization capability in the presence of large domain gaps.

Table 4.

Diagnosis results of different domain generalization methods.

Figure 10.

Diagnostic performance comparison of different domain generalization methods.

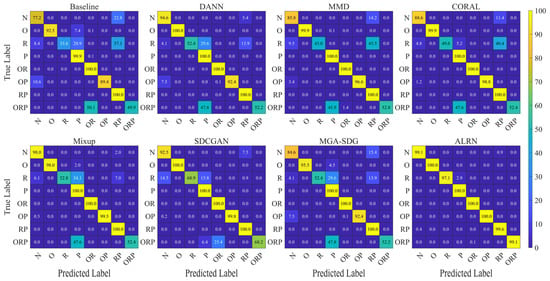

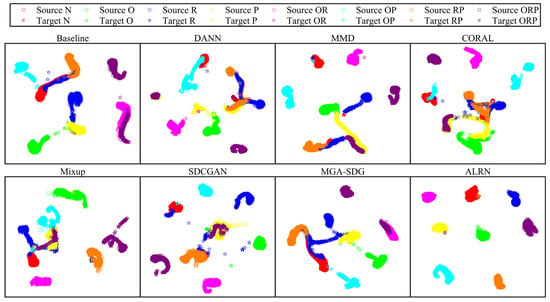

The confusion matrix analysis for task Q6, presented in Figure 11, provides further insight into model performance across fault categories. While existing methods, particularly Mixup, showed notable improvement in detecting normal conditions (class N, increasing from 77.2% to 98.0%), their performance remained limited for fault categories R and ORP. In contrast, the ALRN method demonstrated consistent superiority across all fault categories, achieving near-perfect accuracy approaching 100% for classes N, R, and ORP. Feature space visualizations for task Q6, presented in Figure 12, provide complementary structural insight into the model performance. Comparative approaches exhibit substantial feature space overlap among the challenging categories N, R, and ORP. In contrast, the proposed ALRN method establishes clearly separable decision boundaries for all these categories. These visual manifestations corroborate the quantitative results, demonstrating ALRN’s ability to learn discriminative representations that remain invariant under domain shifts. Together, the consistent advantage of ALRN across both quantitative metrics and qualitative visualizations underscores the effectiveness of the adaptive label refinement mechanism in enhancing model generalization under complex compound fault scenarios.

Figure 11.

Confusion matrices of different domain generalization methods for task Q6.

Figure 12.

Feature visualization results of different domain generalization methods for task Q6.

5. Discussion

The efficacy of the proposed Adaptive Label Refinement Network (ALRN) has been systematically validated through iterative refinement analysis and comprehensive comparative experiments. The concurrent improvement in diagnostic accuracy and the label refinement stability coefficient across training iterations demonstrates that the adaptive label refinement mechanism effectively mitigates the adverse effects of imperfect supervisory signals, thereby enhancing the model’s generalization robustness under domain shift. Notably, the stability coefficient serves as a novel quantitative metric for assessing label boundary clarity. It achieves this by quantifying inter-class confusion through the disparity in KL-divergence losses across categories under the current label distribution.

Feature space visualizations offer complementary structural evidence for the model’s classification performance. These visualizations reveal that ALRN successfully reduces feature entanglement among easily confusable categories by progressively calibrating the soft label assignments of ambiguous samples. This process leads to more discriminative feature representations and clearer decision boundaries, which are critical for accurate fault diagnosis under distribution discrepancies.

Ablation studies provide further validation of the adaptive weighting mechanism. The observed performance plateau under static label strategies confirms their limitation in refining feature representations, while the progressive accuracy gain achieved by ALRN underscores the critical role of iterative label refinement. This evidence substantiates that the proposed method effectively overcomes the limitations of imperfect labels, enabling the learning of more robust domain-invariant features.

The comparative experiments reveal a notable limitation of existing domain generalization methods such as DANN [28], CORAL [51], and MGA-SDG [53], as their performance is substantially constrained in compound fault diagnosis tasks with scarce source domains. This performance constraint underscores that the imperfection of supervisory signals, which these methods do not explicitly address, becomes a primary bottleneck for generalization in such challenging scenarios. In contrast, ALRN directly addresses the imperfect label issue through its label refinement process, thereby achieving significantly enhanced accuracy and robustness.

Despite its demonstrated advantages, the proposed method is not without limitations. The primary drawback lies in its computational overhead during the training phase. Since ALRN requires multiple iterative refinement cycles, and each cycle involves a full training pass of the backbone network, the total training time is approximately the number of refinement cycles multiplied by the time required to train a standard CNN baseline once. This represents a linear increase in cost compared to non-iterative methods. However, this limitation must be evaluated within the context of practical application. Firstly, the training phase is typically offline, where increased computation is an acceptable trade-off for superior performance. Secondly, the inference complexity remains identical to that of a standard CNN. As the iterative refinement is confined to training and does not alter the final model’s architecture, it introduces no additional latency during deployment. This preserves the diagnostic system’s real-time capability, which is crucial for industrial applications.
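The linear cost can be made concrete with a minimal sketch of the outer refinement loop. Here `run_cycle` is a hypothetical callable that performs one full training pass plus label refinement and returns the resulting stability coefficient; the stopping rule (coefficient at or above a threshold) and the `max_cycles` cap are assumptions for illustration.

```python
from typing import Callable, Tuple


def refine_until_stable(
    run_cycle: Callable[[int], float],
    threshold: float,
    max_cycles: int,
) -> Tuple[int, float]:
    """Repeat refinement cycles until the coefficient reaches the threshold."""
    if max_cycles < 1:
        raise ValueError("max_cycles must be at least 1")
    coefficient = 0.0
    cycles_run = 0
    for cycle in range(1, max_cycles + 1):
        # One cycle = one full backbone training pass + label refinement.
        coefficient = run_cycle(cycle)
        cycles_run = cycle
        if coefficient >= threshold:
            break
    return cycles_run, coefficient


def estimated_training_time(cycles_run: int, baseline_time: float) -> float:
    """Total cost grows linearly with the number of cycles actually run."""
    return cycles_run * baseline_time
```

For example, if the loop stops after three cycles and one baseline training run takes ten minutes, the estimated training time is thirty minutes, while inference cost is unchanged because only the final trained network is deployed.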

Furthermore, the current work has three additional aspects that warrant further investigation. Firstly, the convergence threshold, while effective in our experiments, was determined empirically. Its generalizability and optimal setting across a wider range of fault types and operational conditions should be explored in future studies. Secondly, the proposed weighting strategy, which is central to the label refinement process, is designed for and validated on high-quality datasets. Its performance may degrade in the presence of significant noise. Therefore, a critical future direction is to investigate the integration of robust data pre-processing techniques, such as data denoising or sample screening, with the ALRN framework to ensure its reliability in more diverse and challenging industrial environments. Finally, the experimental validation in this study is primarily based on data from planetary gearboxes. While the results are promising, the generalizability of ALRN to other critical rotating machinery (such as bearings and motors) under compound fault conditions remains to be further verified. Expanding its scope of application is another key objective for our future research.

6. Conclusions

This article proposes a novel Adaptive Label Refinement Network (ALRN) for compound fault diagnosis. The method tackles the limited domain generalization capability arising from the inherent limitations of hard labels and label smoothing. Its core is a progressive label refinement algorithm that mitigates the impact of imperfect supervision, thereby enhancing the model’s ability to learn transferable and discriminative features in cross-domain scenarios. A key innovation is the KL-divergence-based refinement stability coefficient, which objectively measures label assignment quality and provides a principled criterion for terminating the refinement process.

Experimental results demonstrate ALRN’s superior diagnostic performance under data-scarce conditions, outperforming conventional domain generalization approaches that typically require abundant source domains. This advance is particularly significant for industrial applications where source domains are severely limited. Although ALRN incurs greater computational overhead during training, it maintains the high inference efficiency of conventional methods, fully meeting the real-time requirements of industrial fault diagnosis systems.

Future work will focus on three key directions to further enhance the practical applicability of the method. Firstly, we aim to optimize training efficiency, facilitating potential online learning applications. Secondly, the generalizability of the ALRN will be systematically evaluated on compound fault datasets from other critical components of rotating machinery, such as bearings and motors. Lastly, we will rigorously assess the robustness of the algorithm in real-world industrial settings, characterized by significant acoustic noise and interference, which is essential for transitioning ALRN from a laboratory solution to a reliable industrial diagnostic tool.

Author Contributions

Writing—original draft preparation and methodology, Q.D.; conceptualization, J.Y. (Jiajia Yao); data collection, F.T.; writing—review and editing, J.Y. (Jingyuan Yang); supervision, S.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 52275538.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data will be made available on request.

Acknowledgments

The authors would like to thank the anonymous reviewers for their valuable comments and suggestions, which have significantly improved the quality of this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Gao, Z.; Cecati, C.; Ding, S. A survey of fault diagnosis and fault-tolerant techniques part II: Fault diagnosis with knowledge-based and hybrid/active approaches. IEEE Trans. Ind. Electron. 2015, 62, 3768–3774.

- Cai, B.; Huang, L.; Xie, M. Bayesian networks in fault diagnosis. IEEE Trans. Ind. Inf. 2017, 13, 2227–2240.

- Xiao, Y.; Shao, H.; Wang, J.; Yan, S.; Liu, B. Bayesian variational transformer: A generalizable model for rotating machinery fault diagnosis. Mech. Syst. Signal Process. 2024, 207, 110936.

- Spirto, M.; Nicolella, A.; Melluso, F.; Malfi, P.; Cosenza, C.; Savino, S.; Niola, V. Enhancing SDP-CNN for Gear Fault Detection Under Variable Working Conditions via Multi-Order Tracking Filtering. J. Dyn. Monit. Diagn. 2025. early access.

- Hou, B.; Wang, Y.; Wang, D. Investigations on Multiclass Classification Model-Based Optimized Weights Spectrum for Rotating Machinery Condition Monitoring. J. Dyn. Monit. Diagn. 2025, 4, 194–202.

- Zuo, J.; Lin, J.; Miao, Y. Planetary Gearbox Fault Detection under Speed-varying Conditions with Angular Domain Cyclostationary Feature Mode Decomposition. IEEE Sens. J. 2025, 25, 36725–36734.

- Liu, T.; Mao, Y.; Dou, H.; Zhang, W.; Yang, J.; Wu, P.; Li, D.; Mu, X. Emerging wearable acoustic sensing technologies. Adv. Sci. 2025, 12, 2408653.

- Choudhary, A.; Mishra, R.K.; Fatima, S.; Panigrahi, B.K. Multi-input CNN-based vibro-acoustic fusion for accurate fault diagnosis of induction motor. Eng. Appl. Artif. Intell. 2023, 120, 105872.

- Yang, H.; Lai, M.; Li, G. Novel underwater acoustic signal denoising: Combined optimization secondary decomposition coupled with original component processing algorithms. Chaos Solitons Fractals 2025, 193, 116098.

- Kundu, P. Review of rotating machinery elements condition monitoring using acoustic emission signal. Expert Syst. Appl. 2024, 252, 124169.

- Tang, L.; Wu, X.; Wang, C.; Liu, X. Single-sensor defect localization for low-speed bearing based on acoustic emission signal dispersion characteristics. IEEE Trans. Instrum. Meas. 2025, 74, 3521111.

- Zhu, Y.; Li, G.; Tang, S.; Wang, R.; Su, H.; Wang, C. Acoustic signal-based fault detection of hydraulic piston pump using a particle swarm optimization enhancement CNN. Appl. Acoust. 2022, 192, 108718.

- Zhang, X.; Liu, J.; Zhang, X.; Lu, Y. Self-supervised graph feature enhancement and scale attention for mechanical signal node-level representation and diagnosis. Adv. Eng. Inform. 2025, 65, 103197.

- Zhang, X.; Zhang, X.; Liu, J.; Wu, B.; Hu, Y. Graph features dynamic fusion learning driven by multi-head attention for large rotating machinery fault diagnosis with multi-sensor data. Eng. Appl. Artif. Intell. 2023, 125, 106601.

- Zhang, X.; Liu, J.; Zhang, X.; Lu, Y. Multiscale channel attention-driven graph dynamic fusion learning method for robust fault diagnosis. IEEE Trans. Ind. Inform. 2024, 20, 11002–11013.

- Jia, L.; Chow, T.W.S.; Yuan, Y. GTFE-Net: A Gramian time frequency enhancement CNN for bearing fault diagnosis. Eng. Appl. Artif. Intell. 2023, 119, 105794.

- Peng, D.; Wang, H.; Liu, Z.; Zhang, W.; Zuo, M.J.; Chen, J. Multibranch and multiscale CNN for fault diagnosis of wheelset bearings under strong noise and variable load condition. IEEE Trans. Ind. Inf. 2020, 16, 4949–4960.

- Tang, S.; Zhu, Y.; Yuan, S. An improved convolutional neural network with an adaptable learning rate towards multi-signal fault diagnosis of hydraulic piston pump. Adv. Eng. Inform. 2021, 50, 101406.

- Yang, J.; Duan, H.; Li, L.; Stewart, E.; Huang, J.; Dixon, R. 1D CNN based detection and localisation of defective droppers in railway catenary. Appl. Sci. 2023, 13, 6819.

- Siddique, M.F.; Saleem, F.; Umar, M.; Kim, C.H.; Kim, J.M. A hybrid deep learning approach for bearing fault diagnosis using continuous wavelet transform and attention-enhanced spatiotemporal feature extraction. Sensors 2025, 25, 2712.

- Dong, Z.; Jiang, Y.; Jiao, W.; Zhang, F.; Wang, Z.; Huang, J.; Wang, X.; Zhang, K. Double attention-guided tree-inspired grade decision network: A method for bearing fault diagnosis of unbalanced samples under strong noise conditions. Adv. Eng. Inform. 2025, 64, 103004.

- Lu, B.; Zhang, Y.; Sun, Q.; Li, M.; Li, P. A novel multidomain contrastive-coding-based open-set domain generalization framework for machinery fault diagnosis. IEEE Trans. Ind. Inf. 2024, 20, 6369–6381.

- Qian, Q.; Qin, Y.; Luo, J.; Wang, Y.; Wu, F. Deep discriminative transfer learning network for cross-machine fault diagnosis. Mech. Syst. Signal Process. 2023, 186, 109884.

- Gholami, B.; Sahu, P.; Rudovic, O.; Bousmalis, K.; Pavlovic, V. Unsupervised multi-target domain adaptation: An information theoretic approach. IEEE Trans. Image Process. 2020, 29, 3993–4002.

- Shermin, T.; Lu, G.; Teng, S.W.; Murshed, M.; Sohel, F. Adversarial network with multiple classifiers for open set domain adaptation. IEEE Trans. Multimed. 2021, 23, 2732–2744.

- Wang, R.; Huang, W.; Wang, J.; Shen, C.; Zhu, Z. Multisource domain feature adaptation network for bearing fault diagnosis under time-varying working conditions. IEEE Trans. Instrum. Meas. 2022, 71, 3511010.

- An, Y.; Zhang, K.; Chai, Y.; Zhu, Z.; Liu, Q. Gaussian mixture variational-based transformer domain adaptation fault diagnosis method and its application in bearing fault diagnosis. IEEE Trans. Ind. Inf. 2024, 20, 615–625.

- Wang, Q.; Gao, J.; Li, X. Weakly supervised adversarial domain adaptation for semantic segmentation in urban scenes. IEEE Trans. Image Process. 2019, 28, 4376–4386.

- Borgwardt, K.M.; Gretton, A.; Rasch, M.J.; Kriegel, H.; Schölkopf, B.; Smola, A.J. Integrating structured biological data by kernel maximum mean discrepancy. Bioinformatics 2006, 22, e49–e57.

- Qian, Q.; Wang, Y.; Zhang, T.; Qin, Y. Maximum mean square discrepancy: A new discrepancy representation metric for mechanical fault transfer diagnosis. Knowl.-Based Syst. 2023, 276, 110748.

- Song, Q.; Jiang, X.; Liu, J.; Shi, J.; Zhu, Z. Contrast-assisted domain-specificity-removal network for semi-supervised generalization fault diagnosis. IEEE Trans. Neural Netw. Learn. Syst. 2025, 36, 5403–5416.

- Korevaar, S.; Tennakoon, R.; Bab-Hadiashar, A. Failure to achieve domain invariance with domain generalization algorithms: An analysis in medical imaging. IEEE Access 2023, 11, 39351–39372.

- Li, S.; Zhao, Q.; Kivaisi, A.R.; Wang, L.; Liu, W.; Wang, X. Causality-aware single-source domain generalization for transferring knowledge to multiple, unknown target domain. Tsinghua Sci. Technol. 2026, 31, 518–529.

- Mai, J.; Gao, C.; Bao, J. Domain generalization through data augmentation: A survey of methods, applications, and challenges. Mathematics 2025, 13, 824.

- Lei, X.; Shao, H.; Tang, Z.; Xu, S.; Zhong, D. Cross-domain remaining useful life prediction under unseen condition via mixed data and domain generalization. Measurement 2025, 244, 116451.

- Gao, Z.; Guo, S.; Xu, C.; Zhang, J.; Gong, M.; Del Ser, J.; Li, S. Multi-domain adversarial variational Bayesian inference for domain generalization. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 3081–3093.

- Xiao, Y.; Shao, H.; Yan, S.; Wang, J.; Peng, Y.; Liu, B. Domain generalization for rotating machinery fault diagnosis: A survey. Adv. Eng. Inform. 2025, 64, 103063.

- Guan, W.; Wang, S.; Chen, Z.; Wang, G.; Liu, Z.; Cui, D.; Mao, Y. Domain generalization network based on inter-domain multivariate linearization for intelligent fault diagnosis. Reliab. Eng. Syst. Saf. 2025, 261, 111055.

- Pu, H.; Teng, S.; Xiao, D.; Xu, L.; Luo, J.; Qin, Y. Domain generalization for machine compound fault diagnosis by domain-relevant joint distribution alignment. Adv. Eng. Inform. 2024, 62, 102771.

- Fan, C.; Wang, P.; Ma, H.; Zhang, Y.; Ma, Z.; Yin, X.; Zhang, X.; Zhao, S. Performance degradation assessment of rolling bearing cage failure based on enhanced CycleGAN. Expert Syst. Appl. 2024, 255, 124697.

- Guo, Y.; Li, X.; Zhang, J.; Cheng, Z. SDCGAN: A CycleGAN-based single-domain generalization method for mechanical fault diagnosis. Reliab. Eng. Syst. Saf. 2025, 258, 110854.

- Sandfort, V.; Yan, K.; Pickhardt, P.J.; Summers, R.M. Data augmentation using generative adversarial networks (CycleGAN) to improve generalizability in CT segmentation tasks. Sci. Rep. 2019, 9, 16884.

- Chao, K.; Shih, Y.; Lee, C. A novel sensor-based label-smoothing technique for machine state degradation. IEEE Sens. J. 2023, 23, 10879–10888.

- Wang, P.; Song, Y.; Wang, X.; Xiang, Q. MD-BiMamba: An aero-engine inter-shaft bearing fault diagnosis method based on Mamba with modal decomposition and bidirectional features fusion strategy. Measurement 2025, 242, 115870.

- Guo, Z.; Yu, K.; Jolfaei, A.; Ding, F.; Zhang, N. Fuz-Spam: Label smoothing-based fuzzy detection of spammers in Internet of Things. IEEE Trans. Fuzzy Syst. 2022, 30, 4543–4554.

- Ren, H.; Zhao, Y.; Zhang, Y.; Sun, W. Learning label smoothing for text classification. PeerJ Comput. Sci. 2024, 10, e2005.

- Liu, Z.; Xiong, X.; Li, Y.; Yu, Y.; Lu, J.; Zhang, S.; Xiong, F. HyGloadAttack: Hard-label black-box textual adversarial attacks via hybrid optimization. Neural Netw. 2024, 178, 106461.

- Tu, F.; Zhang, T.; Liu, T.; Zhang, D.; Yang, S. A novel acoustic-based framework for compound fault diagnosis in rotating machinery with limited samples. IEEE Trans. Instrum. Meas. 2025, 74, 3521415.

- Tu, F.; Yang, S.; Yang, J. Fault diagnosis for rotating machine based on Mel spectrogram and residual neural network. In Proceedings of the International Conference on Condition Monitoring and Asset Management, Oxford, UK, 17–20 June 2024; pp. 1–12.

- Wang, D.; Zhang, M.; Xu, Y.; Lu, W.; Yang, J.; Zhang, T. Metric-based meta-learning model for few-shot fault diagnosis under multiple limited data conditions. Mech. Syst. Signal Process. 2021, 155, 107510.

- Zhong, X.; Wang, Q.; Liu, D.; Liao, J.; Yang, R.; Duan, S.; Ding, G.; Sun, J. A deep domain adaptation framework with correlation alignment for EEG-based motor imagery classification. Comput. Biol. Med. 2023, 163, 107235.

- Liu, M.; Cheng, Z.; Yang, Y.; Hu, N.; Yang, Y. Multi-target domain adaptation intelligent diagnosis method for rotating machinery based on multi-source attention mechanism and mixup feature augmentation. Reliab. Eng. Syst. Saf. 2024, 250, 110298.

- Song, Y.; Zhuang, Y.; Wang, D.; Li, Y.; Zhang, Y. Fault diagnosis in rolling bearings using multi-gaussian attention and covariance loss for single domain generalization. IEEE Trans. Instrum. Meas. 2025, 74, 3516910.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).