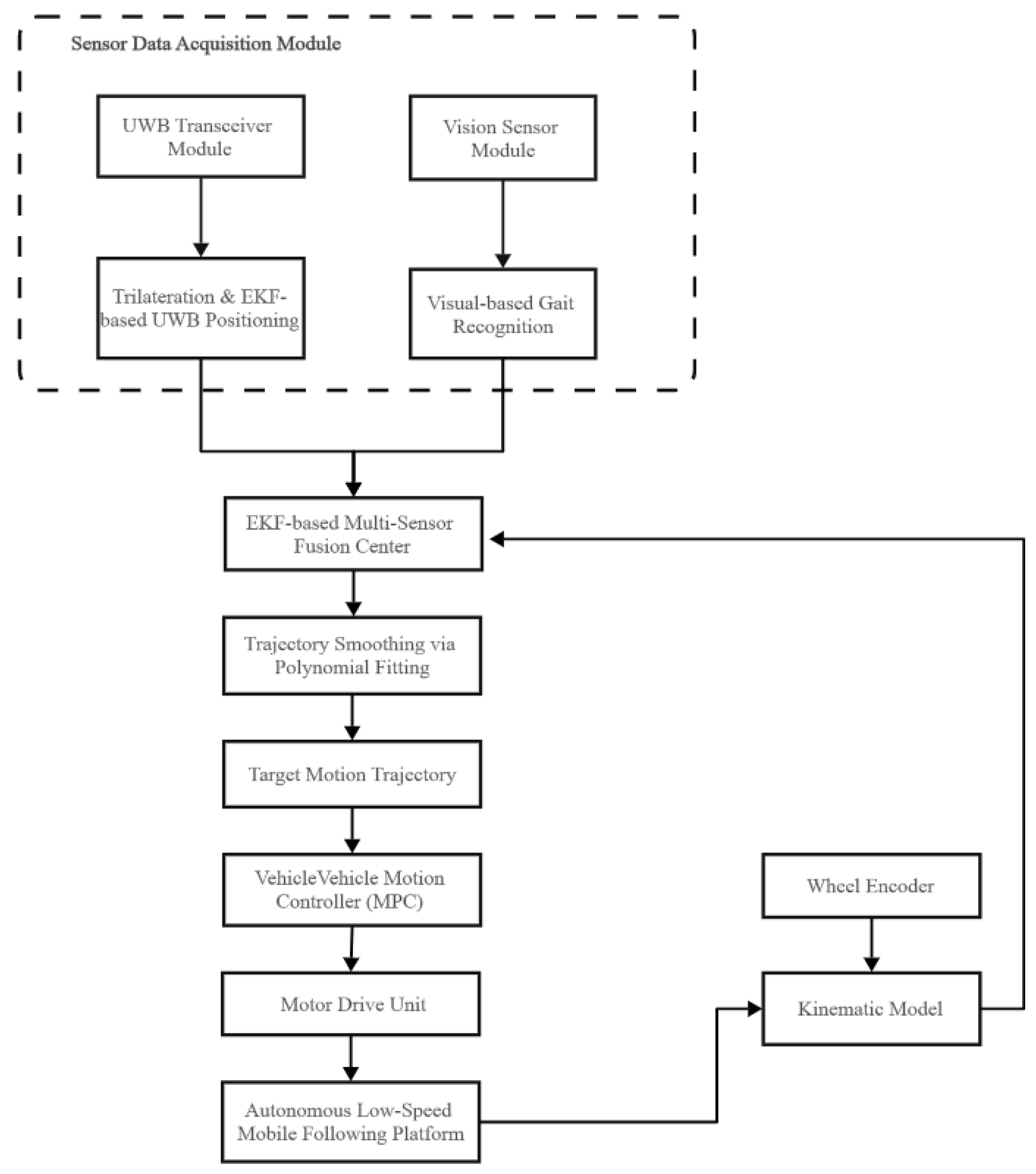

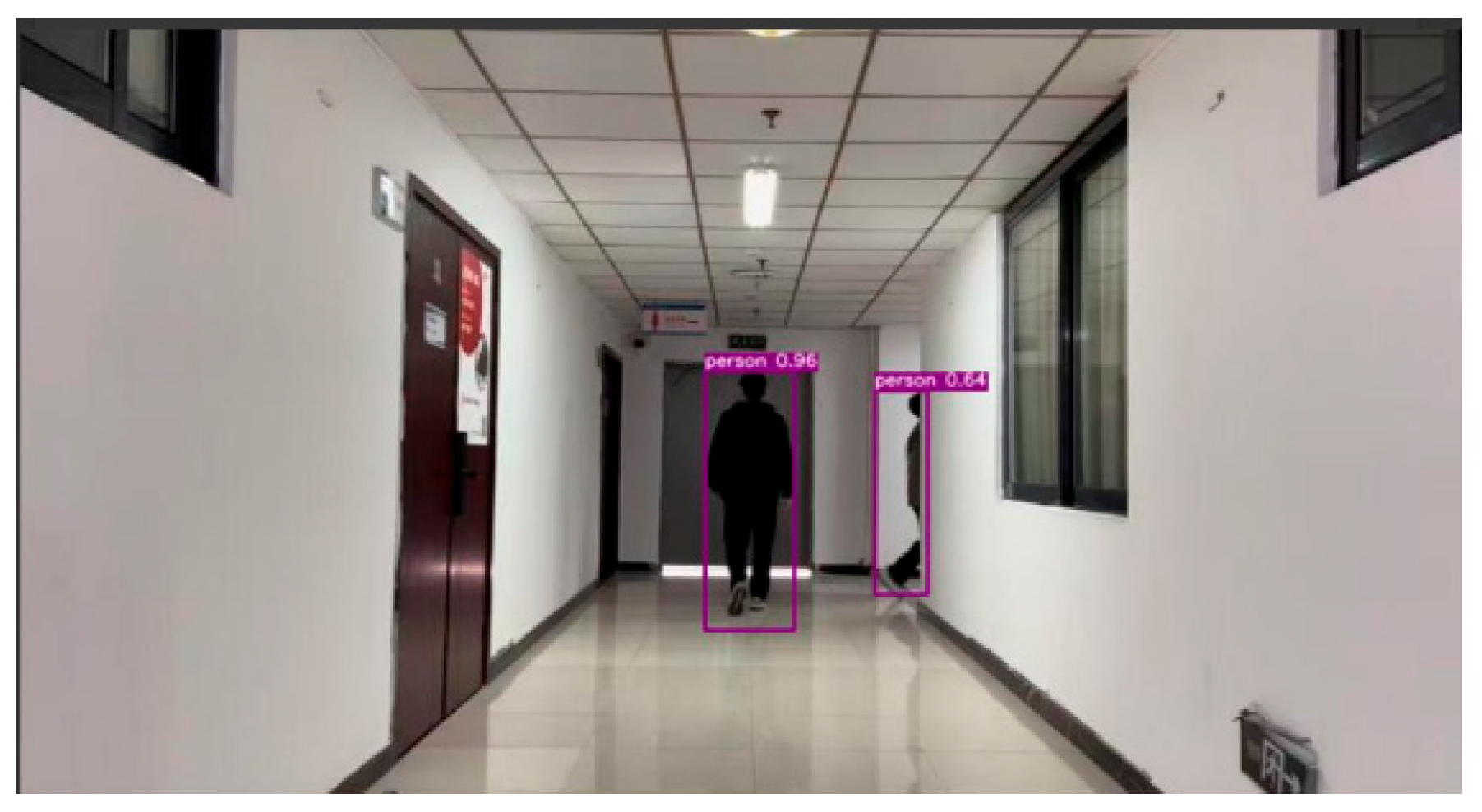

To maintain the tracking capability of the system in scenarios where UWB performance degrades, such as visual occlusion, this section introduces vision-based gait recognition as a complementary perception module.

4.3. Gait Feature Extraction Based on GaitPart

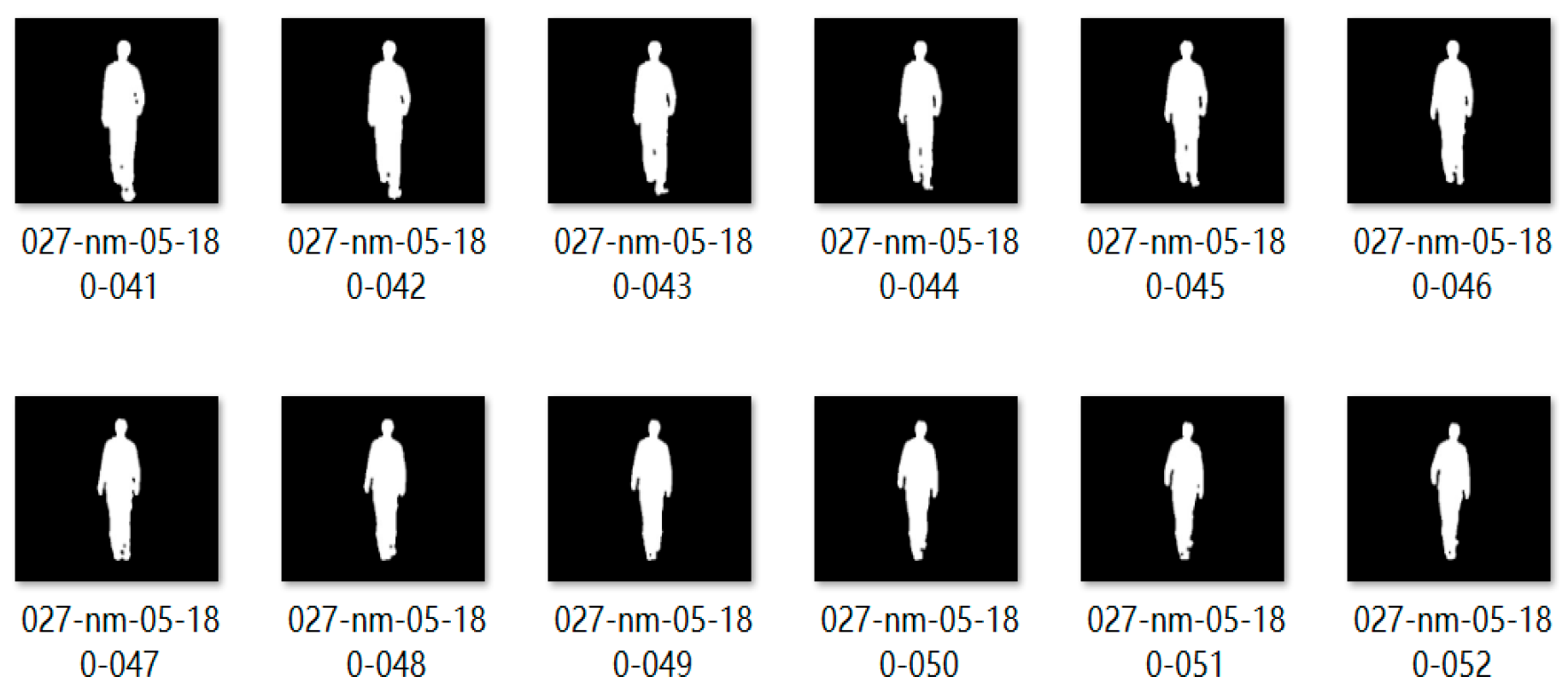

Based on the standardized gait contour sequences extracted previously, this study employs the GaitPart network [21] for gait feature extraction. Let the input be a preprocessed gait contour image sequence $I = \{I_1, I_2, \ldots, I_T\}$, where $I_t \in \mathbb{R}^{64 \times 44}$ represents the binarized contour image of the $t$-th frame, and $T$ is the sequence length. The specific implementation includes the following steps:

Each contour image $I_t$ is uniformly divided into $P$ local regions $\{R_t^1, R_t^2, \ldots, R_t^P\}$. This paper adopts a division strategy of $P = 8$, where each region corresponds to a key motion part of the human body to capture local motion patterns.
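The partition step can be illustrated with a minimal NumPy sketch. It assumes the regions are equal-height horizontal strips (the text only says the division is uniform), and the function name `split_into_parts` is hypothetical.

```python
import numpy as np

def split_into_parts(silhouette: np.ndarray, num_parts: int = 8) -> list:
    """Split an (H, W) silhouette into `num_parts` equal-height horizontal strips.

    ASSUMPTION: "uniform division" is interpreted as horizontal strips.
    """
    height = silhouette.shape[0]
    if height % num_parts != 0:
        raise ValueError("image height must be divisible by num_parts")
    # np.split along axis 0 yields num_parts arrays of shape (H / num_parts, W).
    return np.split(silhouette, num_parts, axis=0)

# Toy 64 x 44 binary silhouette (a filled rectangle standing in for a person).
frame = np.zeros((64, 44), dtype=np.uint8)
frame[8:60, 12:32] = 1

parts = split_into_parts(frame, num_parts=8)
print(len(parts), parts[0].shape)
```

With a 64 × 44 input and $P = 8$, each strip is 8 × 44 pixels.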

For each local region $R_t^p$, features are extracted via a Frame-level Part Feature Extractor (FPFE):

$$f_t^p = \mathrm{FPFE}\left(R_t^p;\ \theta_{\mathrm{FPFE}}\right)$$

where $f_t^p \in \mathbb{R}^C$ is the $C$-dimensional feature vector, and $\theta_{\mathrm{FPFE}}$ denotes the network parameters of the FPFE. This paper employs ResNet-18 as the backbone network, with the feature dimension $C$ set to 256.

To effectively integrate temporal information, the temporal feature sequence $\{f_1^p, f_2^p, \ldots, f_T^p\}$ of each local part is aggregated using Temporal Pyramid Aggregation (TPA) to obtain its corresponding sequence-level feature $g^p$. The features from all local parts are then concatenated to form the global gait feature vector:

$$g = \left[g^1;\ g^2;\ \ldots;\ g^P\right]$$

Based on the aforementioned parameter configuration ($P = 8$, $C = 256$), the final global feature vector $g$ has dimension $d = P \times C = 2048$.
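The dimension bookkeeping above can be checked with a short sketch. The temporal aggregation here is a simple mean-plus-max pooling over time, used only as a stand-in (an assumption) for the TPA module, whose internals are not given in this section. The input features are random placeholders rather than real FPFE outputs.

```python
import numpy as np

def aggregate_and_concatenate(part_features: np.ndarray) -> np.ndarray:
    """Map per-frame part features of shape (T, P, C) to one vector of length P * C.

    ASSUMPTION: mean + max pooling over time stands in for TPA.
    """
    if part_features.ndim != 3:
        raise ValueError("expected an array of shape (T, P, C)")
    # Collapse the time axis: one C-dimensional vector per part, shape (P, C).
    per_part = part_features.mean(axis=0) + part_features.max(axis=0)
    # Row-major flattening concatenates the part vectors in order g^1, ..., g^P.
    return per_part.reshape(-1)

rng = np.random.default_rng(0)
feats = rng.standard_normal((30, 8, 256))  # T = 30 frames, P = 8, C = 256
g = aggregate_and_concatenate(feats)
print(g.shape)
```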

The GaitPart network was implemented in PyTorch (version 1.8.1), initialized with weights pre-trained on the CASIA-B dataset, and given lightweight modifications tailored for deployment on mobile platforms. The model was trained for 50 epochs to ensure convergence of the loss function. The Adam optimizer was employed with a learning rate of $1 \times 10^{-4}$, momentum parameters $\beta_1 = 0.9$ and $\beta_2 = 0.999$, and a step decay schedule. The ArcFace loss function, with a scale factor of 32 and a margin parameter of 0.1, was adopted to enhance feature discriminability.

To validate the advantages of the proposed method, comparative experiments were conducted on the CASIA-B dataset against traditional methods, including GEI + SVM [22] and HOG + RNN [23].
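For readers unfamiliar with the ArcFace objective used in training, the following NumPy sketch shows the additive angular margin with the scale (32) and margin (0.1) quoted above. It is an illustration rather than the training code: the embeddings, class weights, and labels are random placeholders, and the special handling that practical implementations apply when the angle plus margin exceeds π is omitted.

```python
import numpy as np

def arcface_loss(embeddings, class_weights, labels, scale=32.0, margin=0.1):
    """Cross-entropy over scaled cosine logits with an angular margin on the true class."""
    # L2-normalise embeddings (N, D) and class weights (K, D) so dot products are cosines.
    emb = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    w = class_weights / np.linalg.norm(class_weights, axis=1, keepdims=True)
    cos = np.clip(emb @ w.T, -1.0, 1.0)            # (N, K)
    rows = np.arange(len(labels))
    logits = cos.copy()
    # Add the margin to the angle of the ground-truth class only.
    logits[rows, labels] = np.cos(np.arccos(cos[rows, labels]) + margin)
    logits *= scale
    # Numerically stable log-softmax.
    logits -= logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-log_prob[rows, labels].mean())

rng = np.random.default_rng(0)
emb = rng.standard_normal((4, 16))      # placeholder embeddings
weights = rng.standard_normal((3, 16))  # placeholder class centres
labels = np.array([0, 1, 2, 0])
loss = arcface_loss(emb, weights, labels)
print(loss)
```

The margin lowers the true-class logit, so the network must separate classes by a larger angle to reach the same loss.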

Table 3 presents the performance comparison of these methods under three different testing conditions:

To test robustness, different levels of Gaussian noise were added to the contour images (as shown in Table 4).

The proposed method achieved the highest recognition rates under all three conditions—normal walking (NM), carrying a bag (BG), and wearing a coat (CL)—with an average accuracy of 90.5%. Furthermore, it exhibited the smallest performance degradation in robustness tests with added Gaussian noise, demonstrating its superiority.

4.4. Following Path Generation by Fusing Localization and Recognition

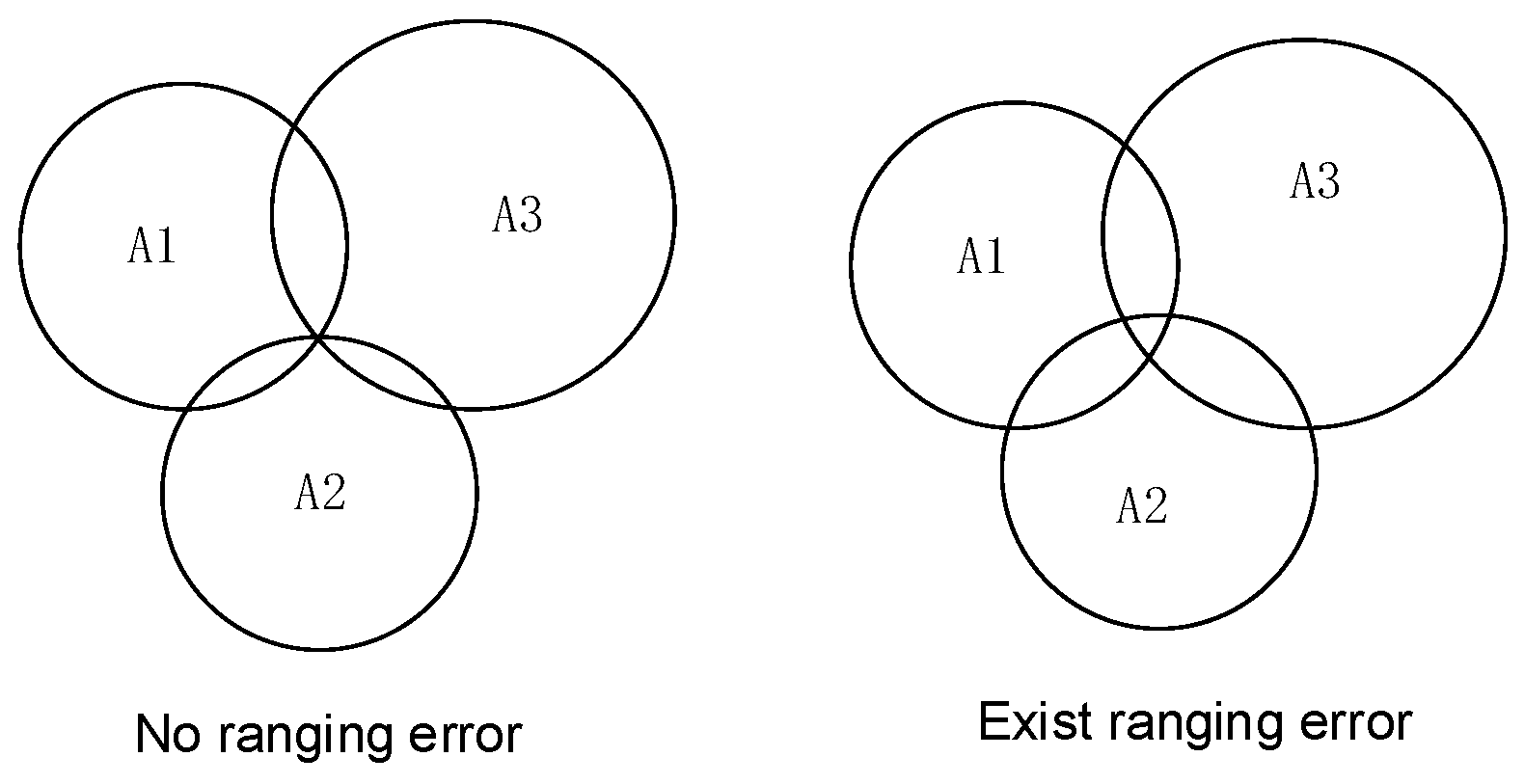

The aforementioned UWB positioning and visual gait recognition each possess distinct advantages and limitations: UWB provides absolute positioning but is susceptible to NLOS conditions, while gait offers continuous relative motion but suffers from cumulative error. To overcome these limitations inherent to individual sensors, this section designs a fusion algorithm based on the Extended Kalman Filter (EKF) to generate smooth, continuous, and accurate trajectory estimates of the leader.

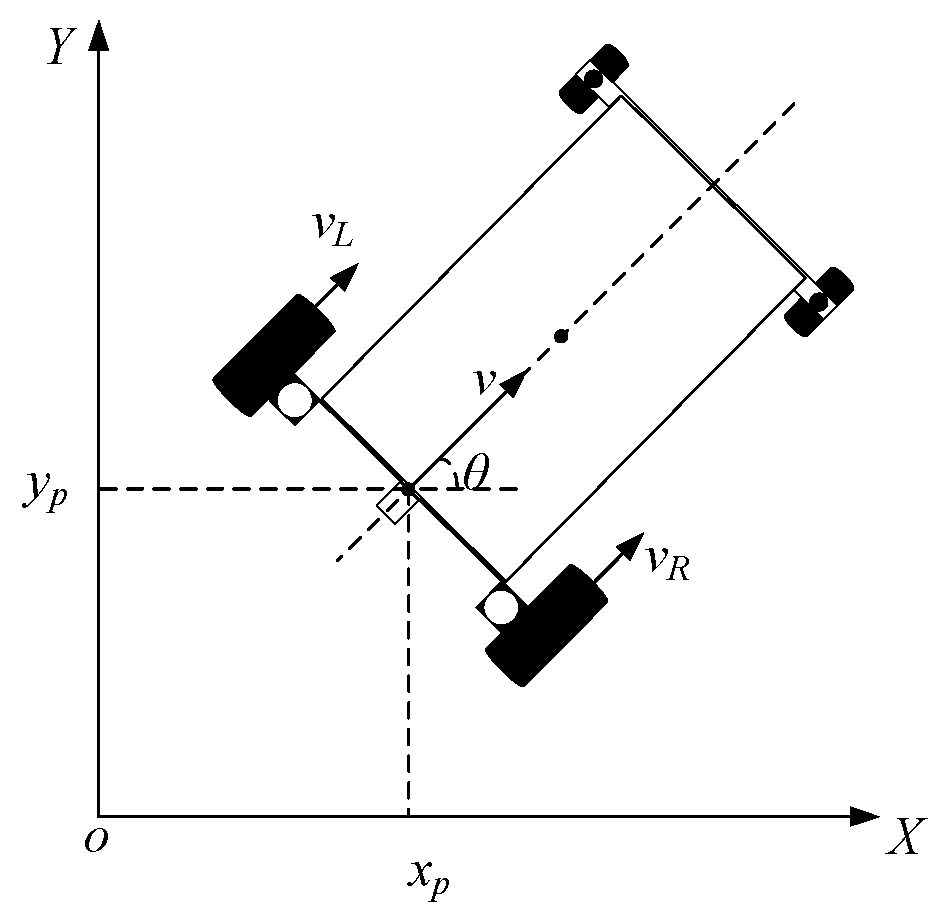

To accurately characterize the relative motion relationship between the leader and the mobile platform, a world coordinate system is defined with its origin at the initial position of the mobile platform upon system activation. A mobile platform coordinate system is also established, which moves along with the platform and has its x-axis aligned with the platform’s forward direction.

The state vector is defined in this study as the motion state of the leader within the mobile platform coordinate system:

$$\mathbf{x} = \left[p_x,\ p_y,\ v_x,\ v_y\right]^{T}$$

where $p_x$ and $p_y$ represent the two-dimensional position coordinates of the leader, and $v_x$ and $v_y$ denote its translational velocities along the $x$ and $y$ directions, respectively.

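Considering the continuity of human walking motion, the leader is assumed to move with constant velocity between adjacent sampling instants ($\Delta t = 0.1$ s). The corresponding linear state-space model is formulated as follows:

$$\mathbf{x}_k = F\,\mathbf{x}_{k-1} + \mathbf{w}_k$$

where the state transition matrix is:

$$F = \begin{bmatrix} 1 & 0 & \Delta t & 0 \\ 0 & 1 & 0 & \Delta t \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix}$$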

The process noise $\mathbf{w}_k \sim \mathcal{N}(0, Q)$ characterizes the discrepancy between the constant velocity model and actual motion (acceleration/deceleration). With reference to the continuous-time white noise acceleration model, the process noise covariance matrix $Q$ is derived through discretization, based on biomechanical studies in which the standard deviation of normal human walking acceleration is approximately 0.2 m/s² [24]:

$$Q = q \begin{bmatrix} \Delta t^3/3 & 0 & \Delta t^2/2 & 0 \\ 0 & \Delta t^3/3 & 0 & \Delta t^2/2 \\ \Delta t^2/2 & 0 & \Delta t & 0 \\ 0 & \Delta t^2/2 & 0 & \Delta t \end{bmatrix}$$

Here, $q$ is the process noise intensity parameter.
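The constant-velocity model and its discretized white-noise-acceleration covariance can be assembled as in the sketch below. The value passed for `q` is purely illustrative (an assumption); the text does not state the value used. The helper name `cv_model` is hypothetical.

```python
import numpy as np

def cv_model(dt: float, q: float):
    """Return (F, Q) for a 2-D constant-velocity model with state [px, py, vx, vy]."""
    F = np.array([
        [1.0, 0.0, dt,  0.0],
        [0.0, 1.0, 0.0, dt ],
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ])
    # Discretised continuous-time white-noise acceleration model, intensity q.
    Q = q * np.array([
        [dt**3 / 3, 0.0,       dt**2 / 2, 0.0      ],
        [0.0,       dt**3 / 3, 0.0,       dt**2 / 2],
        [dt**2 / 2, 0.0,       dt,        0.0      ],
        [0.0,       dt**2 / 2, 0.0,       dt       ],
    ])
    return F, Q

# dt = 0.1 s comes from the text; q = 0.04 is an illustrative placeholder only.
F, Q = cv_model(dt=0.1, q=0.04)
print(F @ np.array([1.0, 2.0, 0.5, -0.5]))
```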

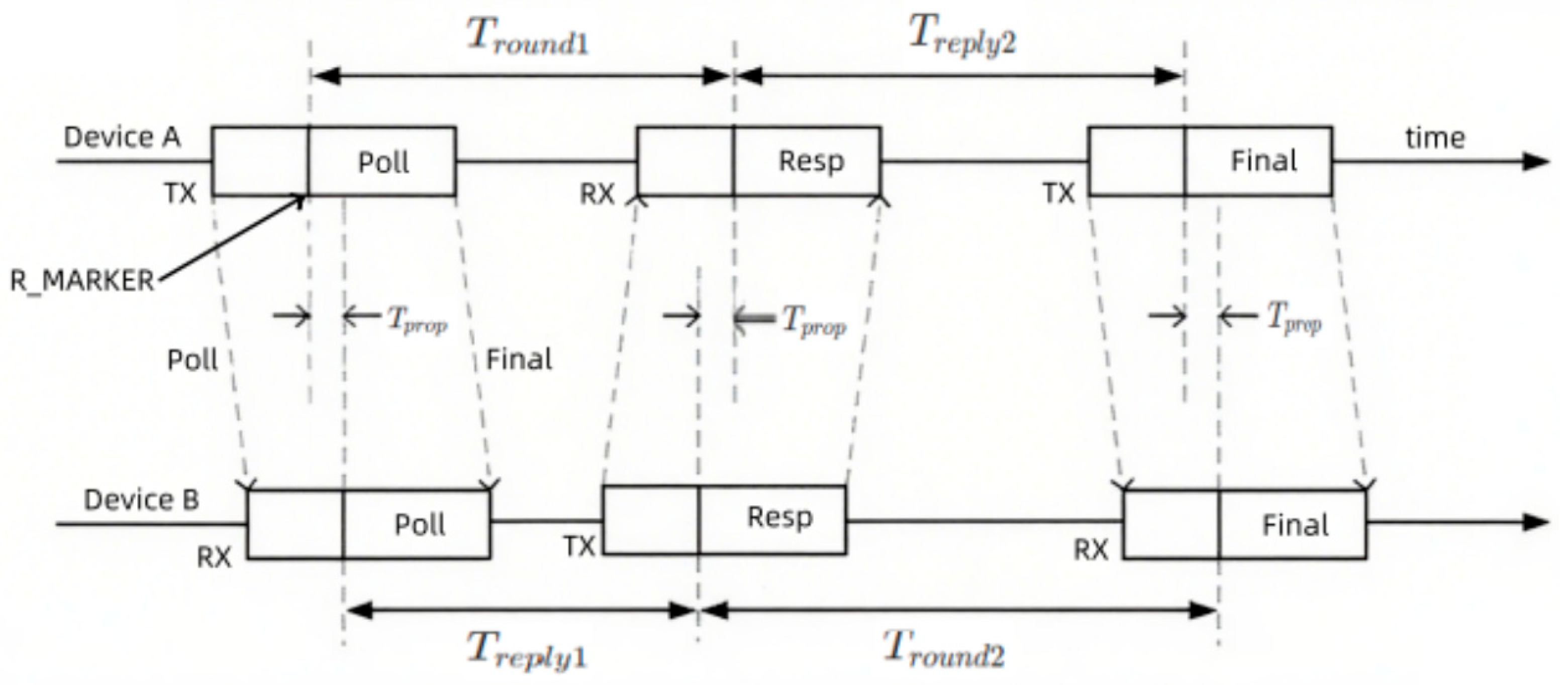

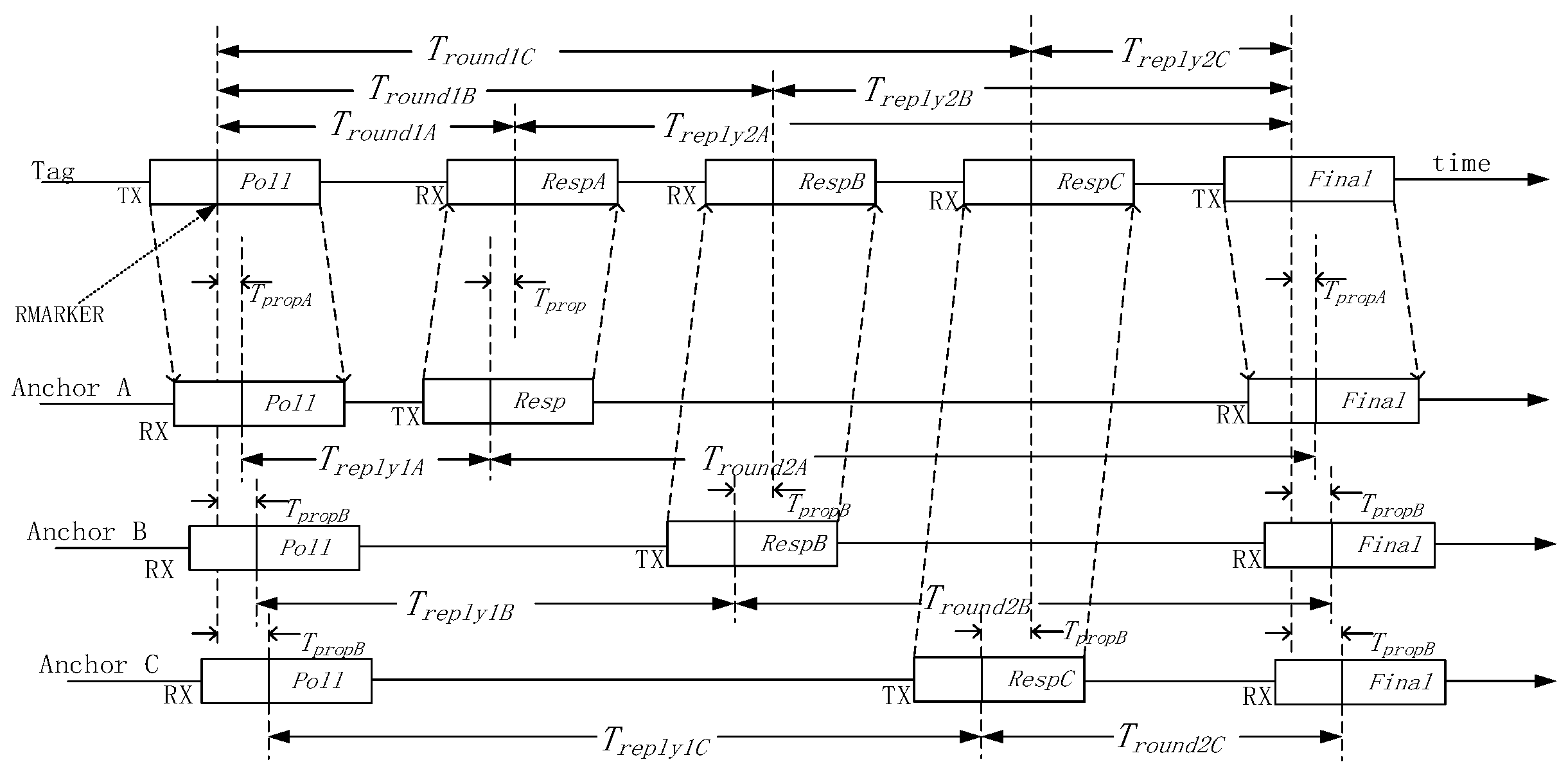

The system is equipped with two observational sensors.

UWB Observation Model: The UWB sensor provides polar coordinate observations of the leader relative to the mobile platform, namely the absolute distance $d$ and the azimuth angle $\theta$:

$$\mathbf{z}_{UWB} = \begin{bmatrix} d \\ \theta \end{bmatrix} = h(\mathbf{x}) + \mathbf{v}_{UWB} = \begin{bmatrix} \sqrt{p_x^2 + p_y^2} \\ \operatorname{atan2}(p_y,\ p_x) \end{bmatrix} + \mathbf{v}_{UWB}$$

where $h(\cdot)$ is the nonlinear observation function and $\mathbf{v}_{UWB}$ is the UWB observation noise.

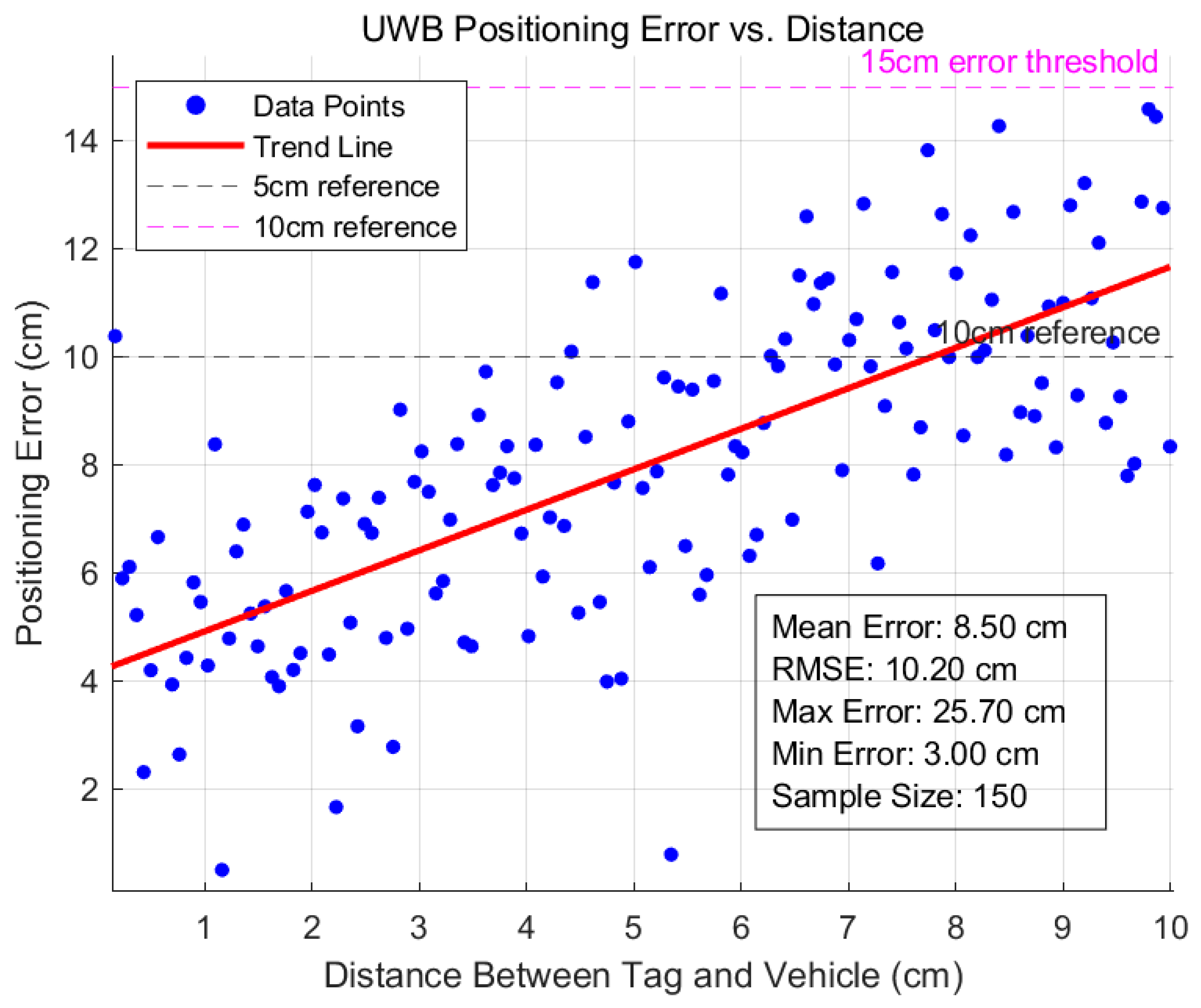

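UWB Observation Noise $\mathbf{v}_{UWB} \sim \mathcal{N}(0, R_{UWB})$: Based on the technical manual of the adopted DW1000 UWB module, the standard deviations of the observation noise were set to $\sigma_d = 0.1$ m and $\sigma_\theta = 5°$ (0.087 rad), giving $R_{UWB} = \mathrm{diag}(\sigma_d^2,\ \sigma_\theta^2)$.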
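A minimal sketch of the UWB observation function and its noise covariance follows. It assumes the azimuth is measured from the platform's forward (x) axis and that range and azimuth noise are independent. The names `h_uwb` and `simulate_uwb` are hypothetical.

```python
import numpy as np

SIGMA_D = 0.1                      # range standard deviation in metres (from the text)
SIGMA_THETA = np.deg2rad(5.0)      # azimuth standard deviation in radians (from the text)
# ASSUMPTION: independent range and azimuth noise, hence a diagonal covariance.
R_UWB = np.diag([SIGMA_D**2, SIGMA_THETA**2])

def h_uwb(state: np.ndarray) -> np.ndarray:
    """Map the state [px, py, vx, vy] to the ideal measurement [distance, azimuth]."""
    px, py = state[0], state[1]
    return np.array([np.hypot(px, py), np.arctan2(py, px)])

def simulate_uwb(state: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Draw one noisy UWB measurement around the ideal value."""
    return h_uwb(state) + rng.multivariate_normal(np.zeros(2), R_UWB)

z_ideal = h_uwb(np.array([3.0, 4.0, 0.0, 0.0]))
z_noisy = simulate_uwb(np.array([3.0, 4.0, 0.0, 0.0]), np.random.default_rng(0))
print(z_ideal, z_noisy)
```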

Gait Observation Model: Based on the gait recognition results, the relative position increment of the leader in the platform coordinate system can be directly obtained, and its observation equation is linear:

$$\mathbf{z}_{Gait} = H_{Gait}\,\mathbf{x} + \mathbf{v}_{Gait}$$

Here, $\mathbf{v}_{UWB}$ and $\mathbf{v}_{Gait}$ represent the observation noises, which follow zero-mean Gaussian distributions with covariance matrices $R_{UWB}$ and $R_{Gait}$, respectively. Since the gait observation model is linear, its observation matrix $H_{Gait}$ is constant and requires no linearization.

The gait observation noise $\mathbf{v}_{Gait} \sim \mathcal{N}(0, R_{Gait})$ is modeled based on the error propagation law and the Cramér–Rao Lower Bound analysis, incorporating the representational capacity of the GaitPart network. Accordingly, a diagonal covariance matrix is constructed as $R_{Gait} = \mathrm{diag}(0.0025,\ 0.0025)$, which corresponds to a standard deviation of 0.05 on each axis.

The EKF recursively fuses data through prediction and update steps. The prediction step projects the prior state and covariance based on the motion model. The update step is then performed depending on the availability of valid sensor data.

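Prediction Step:

$$\hat{\mathbf{x}}_{k|k-1} = F\,\hat{\mathbf{x}}_{k-1|k-1}$$

$$P_{k|k-1} = F\,P_{k-1|k-1}\,F^{T} + Q$$

Here, $\hat{\mathbf{x}}_{k|k-1}$ denotes the predicted state estimate, and $P_{k|k-1}$ represents the predicted state covariance matrix.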
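The prediction step amounts to two matrix products, as the sketch below shows. The process noise matrix in the example is a small placeholder (an assumption), not the paper's $Q$.

```python
import numpy as np

def ekf_predict(x, P, F, Q):
    """Propagate the state estimate and covariance one step through the linear motion model."""
    x_pred = F @ x
    P_pred = F @ P @ F.T + Q
    return x_pred, P_pred

dt = 0.1
F = np.array([
    [1.0, 0.0, dt,  0.0],
    [0.0, 1.0, 0.0, dt ],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
])
Q = 1e-3 * np.eye(4)                 # placeholder process noise, for illustration only
x = np.array([1.0, 0.0, 1.0, 0.0])   # leader at (1, 0) moving at 1 m/s along x
P = np.eye(4)

x_pred, P_pred = ekf_predict(x, P, F, Q)
print(x_pred)
```

After one 0.1 s step the predicted position advances by 0.1 m along x, and the position variance grows because velocity uncertainty feeds into it.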

Update Step:

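When UWB data is available, the Jacobian matrix $H_k$ of the UWB observation model is computed at the predicted state as follows:

$$H_k = \left.\frac{\partial h}{\partial \mathbf{x}}\right|_{\hat{\mathbf{x}}_{k|k-1}} = \begin{bmatrix} p_x/d & p_y/d & 0 & 0 \\ -p_y/d^2 & p_x/d^2 & 0 & 0 \end{bmatrix}, \qquad d = \sqrt{p_x^2 + p_y^2}$$

Subsequently, the Kalman gain $K_k$ is computed, and the state and covariance are updated as follows:

$$K_k = P_{k|k-1} H_k^{T} \left(H_k P_{k|k-1} H_k^{T} + R_{UWB}\right)^{-1}$$

$$\hat{\mathbf{x}}_{k|k} = \hat{\mathbf{x}}_{k|k-1} + K_k\left(\mathbf{z}_{UWB,k} - h(\hat{\mathbf{x}}_{k|k-1})\right)$$

$$P_{k|k} = \left(I - K_k H_k\right) P_{k|k-1}$$

where $h(\cdot)$ maps the state to the distance and azimuth measured by the UWB sensor.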
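A compact sketch of the UWB update is given below. The wrapping of the azimuth innovation to $[-\pi, \pi)$ is a common practical safeguard added here as an assumption; it is not mentioned in the text. The prior covariance in the example is an arbitrary placeholder.

```python
import numpy as np

def wrap_angle(angle: float) -> float:
    """Wrap an angle to [-pi, pi)."""
    return (angle + np.pi) % (2.0 * np.pi) - np.pi

def uwb_update(x_pred, P_pred, z, R):
    """EKF update with a [distance, azimuth] measurement of the state [px, py, vx, vy]."""
    px, py = x_pred[0], x_pred[1]
    d = np.hypot(px, py)
    if d < 1e-9:
        raise ValueError("Jacobian is undefined at zero range")
    # Jacobian of h(x) = [sqrt(px^2 + py^2), atan2(py, px)] at the predicted state.
    H = np.array([
        [px / d,      py / d,     0.0, 0.0],
        [-py / d**2,  px / d**2,  0.0, 0.0],
    ])
    innovation = np.asarray(z, dtype=float) - np.array([d, np.arctan2(py, px)])
    innovation[1] = wrap_angle(innovation[1])   # ASSUMPTION: keep the angle residual small
    S = H @ P_pred @ H.T + R
    K = P_pred @ H.T @ np.linalg.inv(S)
    x_new = x_pred + K @ innovation
    P_new = (np.eye(4) - K @ H) @ P_pred
    return x_new, P_new

x_pred = np.array([2.0, 1.0, 0.0, 0.0])
P_pred = 0.5 * np.eye(4)                                 # placeholder prior covariance
R_UWB = np.diag([0.1**2, np.deg2rad(5.0)**2])            # values from the text
z = np.array([np.hypot(2.2, 1.0), np.arctan2(1.0, 2.2)]) # noise-free reading for a leader at (2.2, 1.0)

x_new, P_new = uwb_update(x_pred, P_pred, z, R_UWB)
print(x_new)
```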

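When UWB data is unavailable, the state and covariance are updated using only the gait observation:

$$K_k = P_{k|k-1} H_{Gait}^{T} \left(H_{Gait} P_{k|k-1} H_{Gait}^{T} + R_{Gait}\right)^{-1}$$

$$\hat{\mathbf{x}}_{k|k} = \hat{\mathbf{x}}_{k|k-1} + K_k\left(\mathbf{z}_{Gait,k} - H_{Gait}\,\hat{\mathbf{x}}_{k|k-1}\right)$$

$$P_{k|k} = \left(I - K_k H_{Gait}\right) P_{k|k-1}$$

where $H_{Gait}$ is the observation matrix of the linear gait model and $R_{Gait}$ is its noise covariance.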
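The gait-only update is a standard linear Kalman update. In the sketch below, the observation matrix simply selects the two position components of the state. That choice is an assumption made for illustration, since the matrix itself is not recoverable from the text. The prior covariance is likewise a placeholder.

```python
import numpy as np

# ASSUMPTION: the gait observation is taken to measure the position components directly.
H_GAIT = np.array([
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
])
R_GAIT = np.diag([0.0025, 0.0025])   # value from the text

def gait_update(x_pred, P_pred, z):
    """Linear Kalman update of the state [px, py, vx, vy] with a 2-D gait observation."""
    S = H_GAIT @ P_pred @ H_GAIT.T + R_GAIT
    K = P_pred @ H_GAIT.T @ np.linalg.inv(S)
    x_new = x_pred + K @ (np.asarray(z, dtype=float) - H_GAIT @ x_pred)
    P_new = (np.eye(4) - K @ H_GAIT) @ P_pred
    return x_new, P_new

x_pred = np.array([1.0, 1.0, 0.0, 0.0])
P_pred = 0.01 * np.eye(4)            # placeholder prior covariance
x_new, P_new = gait_update(x_pred, P_pred, z=[1.1, 0.9])
print(x_new)
```

With a prior variance of 0.01 and a measurement variance of 0.0025, the gain on position is 0.8, so the estimate moves 80% of the way toward the observation.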

To demonstrate the advantages of the proposed EKF fusion method, comparisons were conducted in a simulation environment against UWB-only positioning, gait-only positioning, and a complementary filter fusion method. Fifty Monte Carlo simulation runs were performed in a typical indoor environment measuring 20 m × 15 m; the results are presented in Table 5.

The results demonstrate that the proposed EKF fusion method outperforms the other approaches in terms of positioning accuracy, achieving an RMSE of 0.078 m.
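The exact RMSE definition is not spelled out here. A common choice, assumed in the sketch below, is the root of the mean squared Euclidean position error over all trajectory samples. The sample coordinates are made up for illustration and are unrelated to the reported results.

```python
import numpy as np

def position_rmse(estimated, reference) -> float:
    """Root-mean-square Euclidean error between two (N, 2) position sequences."""
    estimated = np.asarray(estimated, dtype=float)
    reference = np.asarray(reference, dtype=float)
    if estimated.shape != reference.shape:
        raise ValueError("trajectories must have the same shape")
    squared_error = np.sum((estimated - reference) ** 2, axis=1)
    return float(np.sqrt(squared_error.mean()))

# Made-up toy trajectories.
est = [[0.0, 0.0], [3.0, 4.0]]
ref = [[0.0, 0.0], [0.0, 0.0]]
rmse = position_rmse(est, ref)
print(rmse)
```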

To clearly demonstrate the novelty and comprehensive advantages of the proposed method, Table 6 compares the UWB-Gait fusion scheme presented in this work with two mainstream schemes from the literature (UWB + IMU and UWB + RGBD-VO) across key performance dimensions:

In summary, the proposed scheme demonstrates unique advantages in target-specific identification and adaptability to complex scenarios while maintaining high positioning accuracy.

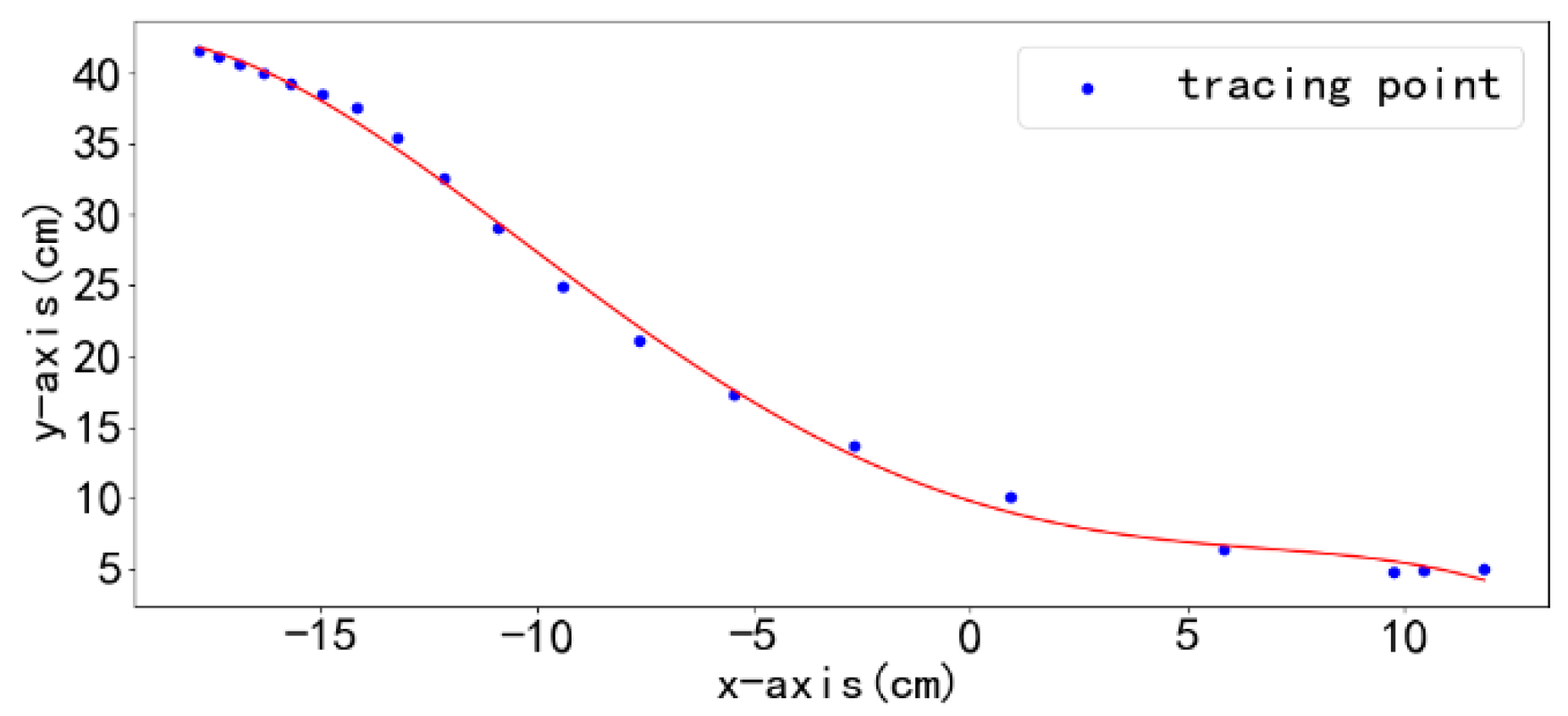

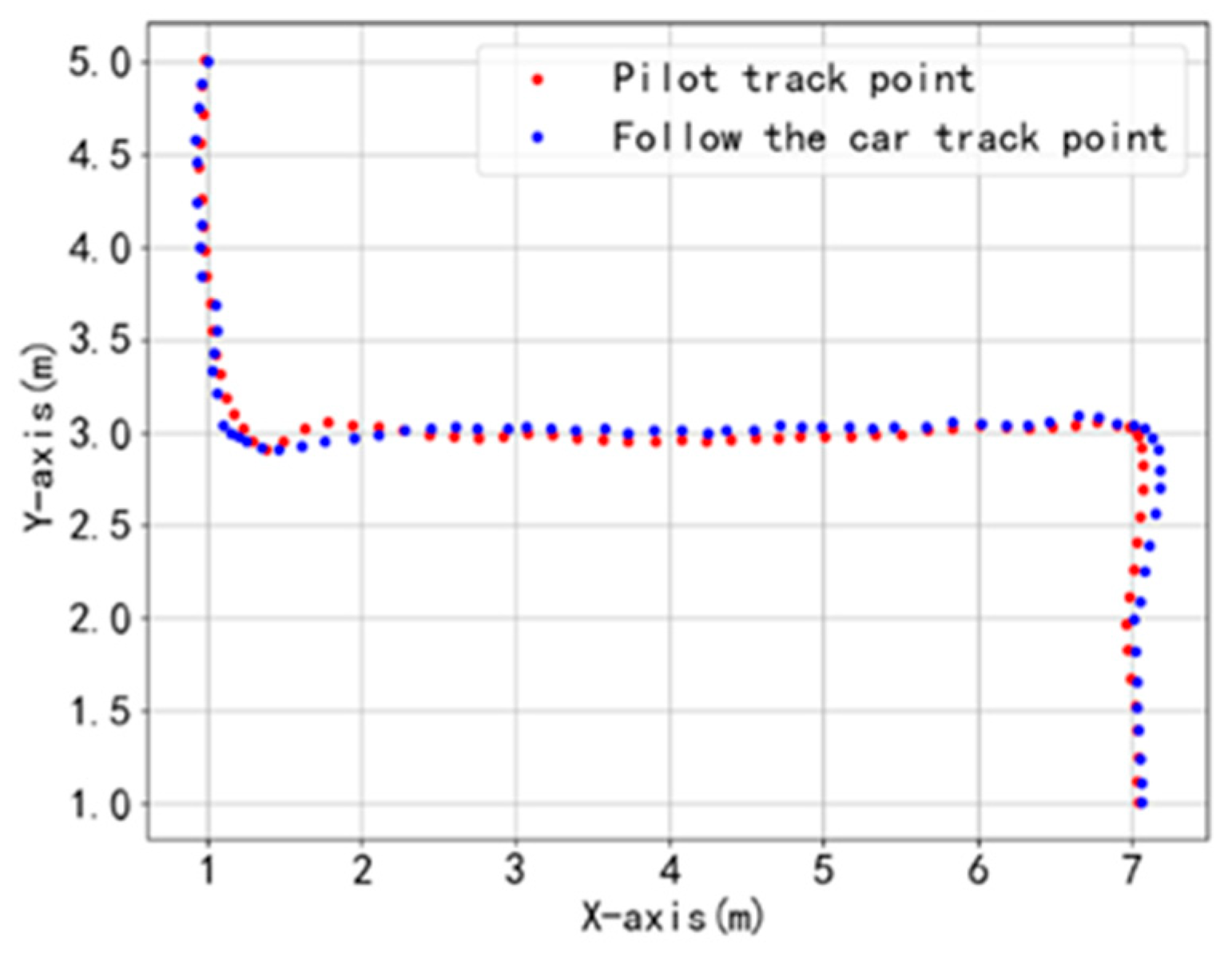

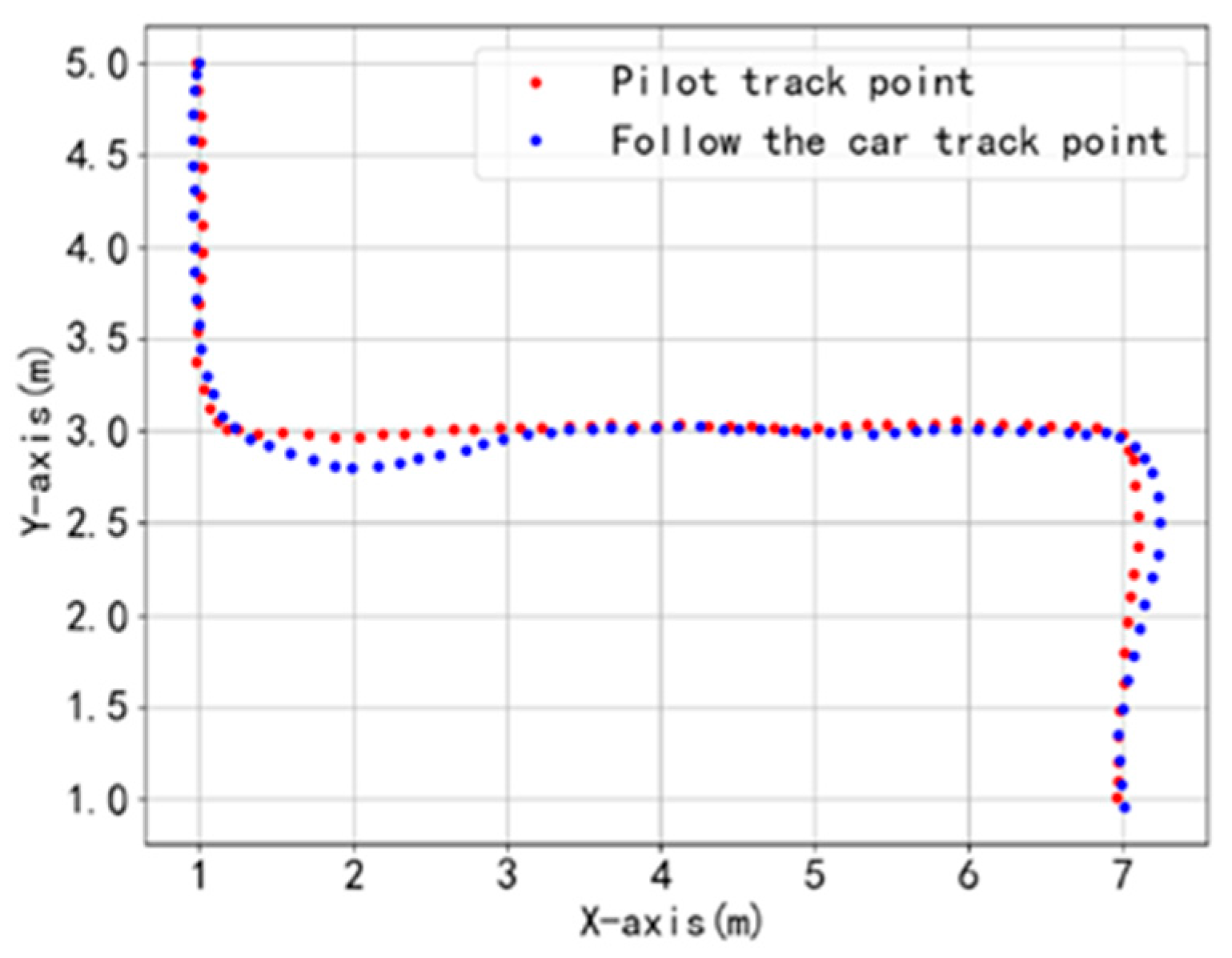

The EKF outputs the optimal state estimate at each filtering cycle. The position information (px, py) is then stored in the trajectory sequence.

After obtaining the motion trajectory points of the leader, a fourth-order polynomial is employed to fit these points, generating a smooth path as shown in Figure 8. To mitigate cumulative errors, the system resets the origin of the generalized coordinate system every 15 s.
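A fourth-order fit of the stored trajectory points can be sketched with `numpy.polyfit`. The parametrization is an assumption: here $x$ and $y$ are each fitted as a quartic in time, whereas the text does not say whether the fit is parametric or $y$ as a function of $x$. The trajectory samples are synthetic.

```python
import numpy as np

def fit_quartic_path(t, xs, ys, num_samples: int = 50) -> np.ndarray:
    """Fit x(t) and y(t) with fourth-order polynomials and resample a smooth path.

    ASSUMPTION: a time-parametric fit; needs at least five trajectory points.
    """
    t = np.asarray(t, dtype=float)
    coeff_x = np.polyfit(t, xs, deg=4)
    coeff_y = np.polyfit(t, ys, deg=4)
    t_dense = np.linspace(t[0], t[-1], num_samples)
    return np.column_stack([np.polyval(coeff_x, t_dense),
                            np.polyval(coeff_y, t_dense)])

# Synthetic EKF output sampled every 0.1 s over 3 s.
t = np.linspace(0.0, 3.0, 31)
xs = 0.8 * t
ys = 0.1 * t**2 - 0.02 * t**4

path = fit_quartic_path(t, xs, ys)
print(path.shape)
```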