Highlights

This study employs a simulated driving experiment to systematically compare the effects of three visualization conditions—black-box, standard, and enhanced—on drivers’ trust, cognitive load, and behavioral responses, integrating multimodal data from eye-tracking, manual interventions, and questionnaire assessments.

What are the main findings?

- Enhanced visualization significantly improves users’ functional trust and perceived usefulness in autonomous driving systems.

- The black-box system does not reduce physiological stress, but instead leads to an “out-of-the-loop” state, characterized by cognitive withdrawal and monitoring complacency.

What is the implication of the main finding?

- Demonstrates that “meaningful transparency” is key to establishing appropriate human–machine trust, outperforming designs that are either completely opaque or involve information overload.

- Provides a trust assessment basis and optimization direction for autonomous driving human–machine interface design, grounded in eye-tracking and behavioral data.

Abstract

Autonomous systems’ “black-box” nature impedes user trust and adoption. To investigate explainable visualizations’ impact on trust and cognitive states, we conducted a within-subjects study with 29 participants performing high-fidelity driving tasks across three transparency conditions: black-box, standard, and enhanced visualization. Multimodal data analysis revealed that enhanced visualization significantly increased perceived usefulness by 28.5% (p < 0.001), improved functional trust, and decreased average pupil diameter by 15.3% (p < 0.05), indicating lower cognitive load. The black-box condition elicited minimal visual exploration, lowest subjective ratings, and “out-of-the-loop” behaviors. Fixation duration showed no significant difference between standard and enhanced conditions. These findings demonstrate that well-designed visualizations enable balanced trust calibration and cognitive efficiency, advocating “meaningful transparency” as a core design principle for effective human–machine collaboration in autonomous vehicle interfaces. This study provides empirical evidence that transparency enhances user experience and system performance.

1. Introduction

Advances in electronic and information technologies have accelerated the development of automotive automation. The Society of Automotive Engineers (SAE) International defines a maturity scale for autonomous driving (Levels L0–L5), spanning from fully manual to fully automated operation [1]. Currently, the majority of passenger vehicles worldwide operate at Level 2 (partial automation, requiring continuous driver supervision), while select high-end models have achieved Level 3 (conditional automation, wherein the system assumes control under predefined conditions). Level 4 systems (high automation) are undergoing commercial pilot deployments in select regions—such as Waymo’s operations in Phoenix and Baidu Apollo’s trials in Chinese cities—while Level 5 (full automation) remains confined to laboratory environments [2,3,4,5,6].

In practice, end-to-end deep learning models—while efficient at processing complex environments—are inherently black-box, rendering their decision-making logic difficult to trace. However, eXplainable Artificial Intelligence (XAI) tools enable systems to generate local explanations for drivers or regulators, thereby enhancing comprehension of critical decisions [7,8,9].

The complexity of autonomous driving systems stems from their heavy reliance on fusing multi-source sensor data. Early, mid-, and late-stage fusion strategies collectively support environmental perception, path planning, and decision execution [10,11]. Nevertheless, this complexity renders system decision logic “opaque” to users—even when supplemented with local explanations—triggering a trust crisis [12]. Consequently, the trust gap between autonomous systems and human operators has emerged as a core bottleneck to large-scale commercialization, exacerbated by opaque and redundant information displays in human–machine interfaces that further elevate cognitive load and perceived uncertainty [13]. Establishing and calibrating appropriate user trust in black-box systems now constitutes a pivotal technical challenge and a central concern in human factors engineering for autonomous driving.

1.1. Background

Some scholars argue that users can establish trust through social consensus and safety assurances without needing to understand the inner workings of black-box systems [14]. Heitor’s study found that users exhibit high trust in black-box systems, and explainability elements do not significantly influence trust behaviors [15]. Domain experts place greater value on high-quality explanations, whereas lay users exhibit lower demand for such detail. Concise, task-relevant explanations tend to enhance trust more effectively, while overly complex or inconsistent explanations may erode it [16,17]. In certain domains, XAI does not significantly improve participants’ self-reported trust in AI systems [18]. Trust cultivated through communication, education, and training proves more robust and enduring than trust derived solely from system explainability [19].

In contrast, other studies emphasize that users prioritize safety and controllability above all else [20,21]. Trust grounded solely in social consensus or system performance often falls short of users’ expectations for reliability. Transparency and explainability are still considered essential prerequisites for earning user trust in AI systems—particularly in safety-critical domains [22,23,24]. Enhancing the transparency and explainability of autonomous driving systems thus remains a key challenge in trust building. Researchers commonly employ semantic text explanations: Dong et al. [25] frame driving decisions as image captioning tasks, using Transformer models to generate meaningful sentences describing driving scenes, thereby explaining the rationale behind driving behaviors. Zhang et al. [26] leverage attention-based modules to capture interactions between the ego vehicle and surrounding traffic objects, generating semantic textual explanations.

Beyond textual approaches, visualization techniques such as augmented reality (AR) and high-fidelity 3D rendering also enhance users’ understanding of system states and decision logic, effectively reducing anxiety toward “black-box” systems and improving user experience and trust [27,28,29,30]. For instance, automakers like Tesla and academic research teams have employed Unreal Engine to optimize visualization systems, enhancing environmental realism and enabling users to intuitively perceive how the system interprets its surroundings [31,32]. Tobias et al. [33] overlaid color-coded blocks and lane indicators onto driving simulators via AR, providing explanations during or after driving; results showed such interventions could transform negative experiences into neutral ones. High-fidelity, interactive visual feedback not only improves situational awareness and perceived safety, but also enables better trust calibration by visually conveying system uncertainty and decision rationale in real time [34]. However, information presentation must balance richness with cognitive load, avoiding user confusion or over-trust due to information overload [35].

Current research widely employs multimodal physiological signals, behavioral metrics, and self-report questionnaires to assess users’ cognitive states. Lu et al. [36] successfully used eye-tracking to infer trust levels in real time. Wang et al. [37] collected drivers’ eye movement data via the ETG-2w portable eye-tracking glasses and measured takeover reaction time by recording when drivers pressed the “takeover” button. J. Choi and Y. Ji [38] constructed a survey based on an extended Technology Acceptance Model (TAM) to explore how perceived usefulness, ease of use, trust, perceived risk, and system transparency influence behavioral intention to use autonomous driving systems.

1.2. Research Motivation

In the context of trust-building for high-risk AI systems such as autonomous driving, the academic community remains divided between two seemingly opposing perspectives—reflecting a fundamental debate over whether users need to understand system behavior to trust it. Automotive manufacturers face a strategic dilemma: should R&D resources be prioritized toward improving the underlying system safety, or allocated to developing costly, complex visualization-based explanation systems [39]? Is explainable visualization a functional necessity for user trust, or merely a value-added feature for marketing appeal? Moreover, when users self-report increased trust, does this subjective perception genuinely align with their cognitive states and behavioral responses?

Much of the existing literature relies heavily on self-report scales, which are vulnerable to cognitive biases and social desirability effects. For instance, users may verbally express confidence in a system while instinctively intervening during critical moments—a behavioral contradiction that reveals a potential disconnect between stated trust and actual reliance. Objective measures—such as physiological responses and behavioral interventions—may offer more reliable indicators of users’ true cognitive and affective states when interacting with “black-box” systems. These metrics are critical for empirically evaluating the competing paradigms of “black-box acceptability” versus “transparency necessity.” For example: Under a no-visualization condition (Level 0), do users exhibit higher intervention rates or physiological arousal in response to system decisions? Conversely, does enhanced visualization (Level 2) lead to measurable reductions in stress-related physiological indicators? If physiological stress remains unchanged despite the absence of explanations, this would lend empirical support to the “black-box acceptability” hypothesis—suggesting users may tolerate opacity without sustained cognitive or physiological strain.

To address these questions, this study employs a within-subjects, single-factor experimental design with three levels of visualization transparency. The independent variable, visualization level, comprises: Level 0 (Black-box): No system state information provided; Level 1 (Standard): Basic system status feedback; Level 2 (Enhanced): Rich, explainable visualizations of system intent and reasoning. During simulated autonomous driving tasks, we collected multimodal data including eye-tracking metrics, behavioral interventions, and validated scales measuring technology acceptance and trust. This design aims to answer two core research questions: (1) Compared to a black-box system, does explainable visualization significantly enhance users’ self-reported trust? (2) In the absence of explanations, can users maintain low physiological stress levels—suggesting passive acceptance rather than active distrust?

The main contributions of this study are as follows:

- Through a high-fidelity simulated driving experiment, we systematically compare the effects of multiple levels of system transparency on users’ self-reported perceptions and behavioral responses;

- We employ a multimodal measurement framework—including visual attention patterns (eye-tracking), intervention behaviors, and subjective trust scales—to uncover how different levels of visualization influence trust formation in autonomous driving contexts;

- By integrating quantitative experimental data with qualitative insights from post-task interviews, we identify the distinct strengths and limitations of each visualization level, offering actionable guidance for the design of human-centered explainable interfaces in autonomous systems.

2. Materials and Methods

2.1. Participants

To ensure statistical reliability, an adequate sample size of qualified participants was determined a priori using the GPower 3.1.9.7 software. Power analysis indicated that with an effect size of f = 0.25, α = 0.05, and a target power of 0.80, a minimum sample size of 28 participants was required. The achieved power with N = 28 was 0.812.

To account for potential data attrition and further safeguard result robustness, we recruited 30 participants. During data preprocessing, eye-tracking data quality was evaluated, and one participant was excluded due to poor signal accuracy. The final analytical sample thus comprised 29 participants (16 female, 13 male), reflecting intentional recruitment to ensure gender diversity. Participants ranged in age from 18 to 60 years (M = 26.31, SD = 7.42).

Eligibility criteria required all participants to: Hold a valid driver’s license; Have normal or corrected-to-normal visual acuity; Possess normal hearing; Exhibit no color blindness or color vision deficiency.

All procedures were reviewed and approved by the Institutional Review Board of Nanjing University of Science and Technology. Written informed consent was obtained from each participant prior to the experiment. Upon completion, participants received a compensation of CNY 15.

2.2. Experiment Environment

This study employs a high-fidelity autonomous driving simulation system built upon the open-source CARLA platform (0.9.13 software), utilizing the Town10HD high-definition urban map as the foundational virtual environment. Dynamic scenario generation is implemented via CARLA’s Python API, enabling parametric control over environmental and behavioral variables. Experimental tasks are categorized into two conditions: Basic Task: Path-following under normal traffic flow; Complex Task: Reactive obstacle avoidance triggered by sudden events.

Scenario parameters—including obstacle placement and weather conditions—are dynamically configured using probabilistic distribution-based randomization algorithms to ensure ecological validity and inter-trial variability.

Leveraging the Unreal Engine 4.2 rendering backend, the system synchronously simulates dynamic environmental elements (e.g., time-of-day lighting, precipitation) and static infrastructure (e.g., road network topology). This provides a standardized, reproducible experimental environment for studying human trust in autonomous driving systems.

Each simulated driving trial begins with the vehicle starting from a non-motorized lane and autonomously navigating from Point A to Point B along a pre-defined high-precision GPS route. The ego-vehicle strictly adheres to virtual traffic regulations while dynamically encountering pedestrians, bicycles, and unexpected obstacles to evaluate system decision-making under complex urban conditions. Task completion is marked by successful autonomous parking at a designated residential zone. Prior to the experiment, participants were instructed to familiarize themselves with the simulated environment by manually driving the vehicle freely within the map for 10 min. Each trial spans approximately 2 km in distance and is constrained to under 3 min in duration. The total duration of the three experimental trials, including eye tracker calibration, was approximately 15 min. This duration was determined based on a pilot study (N = 7), which confirmed that it was sufficient to elicit meaningful trust assessments and intervention behaviors while minimizing eye fatigue (mean subjective fatigue rating <2 on a 5-point scale).

Participants interacted with the simulation via an immersive virtual driving setup. A Logitech G923 force-feedback steering wheel was employed to realistically replicate the tactile and kinesthetic feedback of steering during autonomous operation. Visual stimuli were presented across a multi-display setup: Two 4K UHD (3840 × 2160, 60 Hz, U270Q, ZLAU Corp., Guangzhou, China) monitors arranged to provide a 120° horizontal field of view, closely approximating natural human peripheral vision during driving; One 2K touchscreen simulating the vehicle’s center console interface, enabling real-time status monitoring and interactive control (e.g., mode switching, visualization toggling).

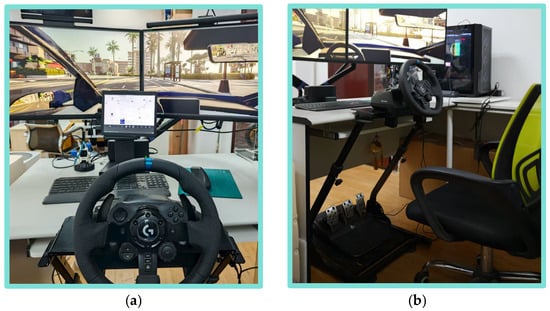

Visual attention was recorded using the Tobii Pro Glasses 3 mobile (Tobii AB, Stockholm, Sweden) eye-tracking device. Equipped with a binocular infrared tracking system (50 Hz sampling rate, spatial accuracy <0.5°), the device continuously captured gaze coordinates, saccadic trajectories, and pupil diameter dynamics. Participants viewed the simulated environment through the device’s integrated head-mounted display (FOV: 46° × 34°), enabling precise spatiotemporal alignment between gaze behavior and environmental context (see Figure 1a).

Figure 1.

Experimental environment and approach: (a) view; (b) equipment.

Manual intervention behaviors—specifically braking actions—were captured via a Logitech G920 pedal (Logitech, Lausanne, Switzerland) unit with nonlinear response characteristics (see Figure 1b). Integrated with a high-resolution displacement sensor (0.1 mm resolution) and haptic feedback module, the pedal quantified brake depression depth (0–100 mm) in real time. Data were streamed via USB 2.0 to the host computer, where a custom Python 3.10.11 software script synchronized pedal inputs with the CARLA simulation engine, mapping user interventions to longitudinal vehicle control commands. This architecture enabled fine-grained monitoring of the dynamic interplay between human override and autonomous system behavior.

In CARLA, synchronous mode was enabled to ensure that all sensor data were strictly aligned to the same simulation timestamp. Additionally, data streams from CARLA, the Logitech G923 throttle/brake pedals, and the eye tracker were all timestamped using a monotonic system clock. A software-based synchronization marker was inserted at the beginning of each trial, enabling post hoc alignment of eye-tracking, vehicle control, and simulation events with sub-50-millisecond precision.

2.3. Scenario

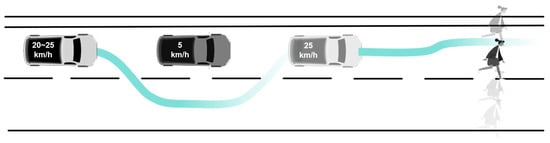

This experiment is based on typical urban driving scenarios from the i-VISTA Intelligent and Connected Vehicle Challenge, implemented within the Town10HD high-definition urban map of the CARLA platform, constructing an autonomous driving task from Point A to Point B. The experimental route spans 1 km and passes through 3 traffic lights and 6 intersections. During autonomous driving, the vehicle performs the following maneuvers: starting from standstill, merging into the main road, proceeding through an intersection under a flashing yellow light, decelerating to yield at pedestrian crosswalks, executing consecutive lane changes, exiting the main road, navigating narrow road segments, and performing curb-side parking (see Figure 2).

Figure 2.

Schematic diagram of task process.

In addition, two non-routine events are incorporated. Based on typical accident patterns associated with automated driving assistance functions, the following two non-routine events are included to simulate unexpected disturbances in real-world driving: an overtaking event and a pedestrian avoidance event.

The overtaking event is triggered when the lead vehicle suddenly decelerates (speed drops to 5 km/h), testing the system’s real-time perception of dynamic obstacles and its path planning capability. The technical requirement specifies that, on a road with a 20 km/h speed limit, the test vehicle must overtake at 25 km/h, with lateral acceleration satisfying a <0.13 m/s2 to ensure ride comfort [40].

The pedestrian avoidance event is triggered when a pedestrian suddenly crosses from the roadside into the crosswalk (at a speed of 1.2 m/s), evaluating the system’s ability to detect vulnerable road users in unstructured scenarios. The technical requirement specifies that the braking response time must be <1.5 s, and the minimum safety distance must exceed 1.5 m [41].

2.4. Measurement

To systematically measure and compare the effects of different multi-level visualization methods on user experience—including both self-reported perceptions and behavioral responses—this study designed a Likert-scale questionnaire grounded in the Technology Acceptance Model (TAM). The questionnaire captures users’ self-reported trust perceptions toward the visualization system, as well as their subjective evaluations of its functional effectiveness (perceived usefulness) and operational convenience (perceived ease of use).

In parallel, users’ actual intervention behaviors during task execution were logged, and eye-tracking metrics were collected to objectively quantify cognitive processes and interaction patterns. By integrating self-reported questionnaire data with objective behavioral measures, this study enables a comprehensive assessment of how different visualization designs influence trust formation and user interaction behavior.

2.4.1. Technology Acceptance Questionnaire Survey

TAM, introduced by Davis [42], serves as a foundational theoretical framework for understanding user adoption of information technology. According to TAM, an individual’s behavioral intention to use a technology is primarily shaped by Perceived Usefulness (PU) and Perceived Ease of Use (PEOU), with PEOU also positively influencing PU. This behavioral intention, in turn, directly predicts actual system usage.

In high-risk, highly interactive intelligent systems such as autonomous driving, perceived usefulness and trust are widely recognized as key determinants of users’ willingness to adopt autonomous vehicles [38]. The degree of trust users place in the system directly shapes their assessment of its functional effectiveness (i.e., PU) and their perception of operational complexity (i.e., PEOU). Higher levels of trust enhance users’ confidence in the system’s capabilities, thereby increasing perceived usefulness and alleviating concerns about usability. Conversely, diminished trust substantially undermines adoption intention. Thus, trust not only acts as an antecedent within the TAM framework but also indirectly influences behavioral intention via PU and PEOU, functioning as a crucial moderating or mediating variable in modeling user acceptance of autonomous driving technology [43]. Incorporating trust into the TAM framework offers a robust theoretical foundation for evaluating user experience and adoption intention in autonomous driving systems.

Guided by the Technology Acceptance Model, this study developed questionnaire items to assess users’ perceptions. Specifically, Q1 (“This system helps me complete driving tasks”) and Q2 (“The functions provided by this system are useful”) were designed to measure Perceived Usefulness (PU)—reflecting users’ subjective assessment of the technology’s ability to enhance task performance. Q3 (“I find this system difficult to use”) and Q4 (“I need to invest considerable effort to understand the system’s prompts”) were formulated to evaluate Perceived Ease of Use (PEOU)—capturing users’ perceived cognitive effort in operating the system. Notably, Q3 is reverse-scored. All items utilize a five-point Likert scale to systematically quantify subjective attitudes, facilitating a structured analysis of user acceptance toward multi-level visual driving assistance systems. To verify semantic appropriateness, we invited human factors (N = 7), engineering experts to conduct a content validity index (CVI) assessment, yielding a CVI score of 0.88. Additionally, a pre-test was carried out, which demonstrated a Cronbach’s α coefficient of 0.82.

This study integrates subjective and objective data through Spearman’s rank-order correlation analysis: each item score from the TAM questionnaire (Q1–Q4) is pairwise correlated with eye-tracking metrics (fixation entropy, mean pupil diameter) and behavioral measures (intervention frequency, reaction time) to assess the consistency between users’ self-reported perceptions and their implicit cognitive–behavioral responses.

2.4.2. Intervention Behavior Metrics

This study developed a data acquisition module using Python to capture operational input signals from the autonomous driving simulation system in real time, with a focus on recording activation timestamps and pedal depression sequences of the brake pedal in the driving simulator. By analyzing the temporal sequence of these signals, intervention behaviors are identified through cycle detection: a complete “press–hold–release” process is defined as one intervention cycle. An effective intervention cycle is recognized when the system detects the pedal signal rising from zero (or near-zero) to exceed a preset activation threshold, and subsequently returning to a near-zero level. Based on this, the following key behavioral metrics are calculated: (1) Number of Intervention Instances —the total number of times users actively take over control during the experimental period; (2) Intervention Duration —the time span from the start to the end of each intervention, used to evaluate the persistence of interventions; (3) Average Intervention Intensity—quantifies the severity of user operations by computing the time-weighted average brake intensity during each intervention. The calculation formulas are as follows:

In Equation (1), denotes the time-weighted average brake intensity across all intervention events; is the instantaneous or mean brake intensity observed during the i-th intervention cycle; represents the temporal duration of that cycle; and n is the total count of distinct intervention intervals recorded during the experiment.

2.4.3. Visual Behavior Metrics

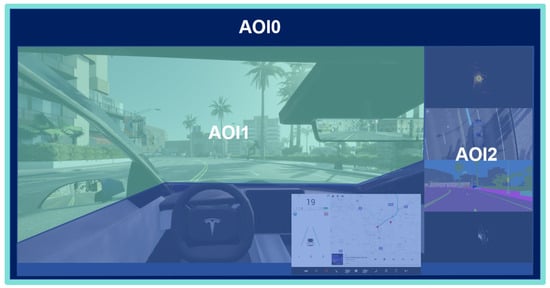

In this study, we designed three visualization conditions with varying levels of system transparency to systematically investigate how information presentation influences user trust and cognitive load. Level 0 (Black-box) displayed only the basic road driving scene, providing no information about the autonomous system’s status or intent. Level 1 (Standard) presented conventional driving information—such as vehicle speed and navigation cues—on the center console display located to the right of the steering wheel. Level 2 (Enhanced) extended the standard condition by overlaying an augmented reality (AR) semi-transparent blue dashed trajectory (extending 10 m ahead) onto the windshield and adding multimodal sensor visualizations—including LiDAR point clouds, 360° camera views, and semantic segmentation outputs—on the right-side console (see Table 1). All interface elements were positioned and sized to ensure readability and comply with the human–computer interaction efficiency principles described by Fitts’ Law.

Table 1.

Interface Visualization Conditions.

Considering the impact of visualization on the interactive characteristics of the entire autonomous driving system, the eye-tracking Area of Interest (AOI) was divided into three primary regions, as illustrated in Figure 3: AOI0 encompasses the entire cockpit view, including both the external driving scene and the interior cabin; AOI1 focuses on the external view, covering the roadway and side/rearview mirrors; and AOI2 focuses on the interior cabin, specifically the steering wheel and the in-vehicle visual interface (see Figure 3).

Figure 3.

AOI definition.

Drawing on prior studies on eye-tracking in autonomous driving, we selected six visual behavior metrics: Number of Fixations, Fixation Duration (or Average Fixation Duration), Number of Glances, Number of Saccades within AOI, and Average Pupil Diameter. These metrics are detailed in the Table 2 below.

Table 2.

Key eye movement indicators that reflect cognitive state and trust.

2.4.4. Procedure

Each participant was required to complete one experimental trial with each autonomous driving system, resulting in a total of three driving sessions per participant. To control for potential learning effects, the order in which participants used the different systems was randomized across individuals. Each experimental trial lasted approximately 3 min, and the entire experimental procedure—including system interaction, questionnaire completion, and post-task interviews—took about 20 min in total, thereby minimizing the risk of fatigue effects [49]. The experimental procedure was conducted as follows.

Information Collection: Upon arrival at the laboratory, participants were provided with an informed consent form. They then completed a demographic questionnaire, reporting their age range, gender, and years of driving experience. Additionally, they filled out a pre-experiment questionnaire to assess their initial level of trust in autonomous driving systems.

Training: Participants received a brief training session to ensure familiarity with the simulated driving platform, including manual vehicle control and the operational tasks required during the experiment. A short driving test was administered to confirm that participants could successfully navigate to a destination using manual control.

Eye-Tracker Calibration: Prior to the experimental trials, participants were assisted in calibrating the eye-tracking system using a standard calibration card.

Experimental Trials: Each participant completed three driving trials. During each trial, they encountered two unexpected (non-routine) events. The order of the autonomous driving systems and the timing of the non-routine events were randomized across trials to mitigate memory or order effects. Participants were not informed in advance about the number or frequency of these events.

Post-Experiment Interview: After completing all three trials, participants completed TAM-based questionnaire. This was followed by a semi-structured interview to collect qualitative feedback and self-reported evaluations regarding their experience with the systems.

3. Results

All data were first subjected to validity screening. The internal consistency of the questionnaire was assessed using Cronbach’s alpha, and visual behavior data were retained for analysis only when eye-tracking accuracy exceeded 90%. One participant (3.3%) was excluded from the analysis due to having less than 85% valid data. No imputation was performed for missing values; instead, the listwise deletion method was employed. Subsequently, statistical analyses were conducted using IBM SPSS Statistics (Version 26.0; IBM Corp., Armonk, NY, USA) to examine the relationships between the independent variable (three levels of visualization transparency) and the dependent variables (questionnaire responses, behavioral measures, and eye-tracking metrics).

The Shapiro–Wilk test was used to assess the normality of the dependent variables. Given that each participant experienced all three transparency conditions, a one-way repeated-measures analysis of variance (ANOVA)was performed when the normality assumption was satisfied, followed by Bonferroni-corrected pairwise comparisons. For Mauchly’s test of sphericity, the significance value under the assumption of sphericity was used when the assumption was met. If the sphericity assumption was violated, the Greenhouse–Geisser correction was applied to adjust the degrees of freedom and associated p values.

When the normality assumption was not met, the non-parametric Friedman test was used as an alternative. In cases where the Friedman test indicated significant differences, post hoc pairwise comparisons were conducted using the Wilcoxon signed-rank test with Bonferroni correction for multiple comparisons. All statistical tests were evaluated at a significance level of p < 0.05.

Some figures in this paper were generated using ChiPlot (https://www.chiplot.online/) (accessed on 10 August 2025), including the correlation heatmap, violin plot, and box plot.

3.1. Self-Reported Evaluation

The questionnaire data did not meet the assumption of normality; therefore, the Friedman test was employed to assess whether there were significant differences in participants’ self-reported ratings of perceived usefulness and perceived ease of use across the three levels of information presentation (Level 0, Level 1, and Level 2). The results are summarized in Table 3.

Table 3.

Friedman Test Results for the Technology Acceptance Questionnaire.

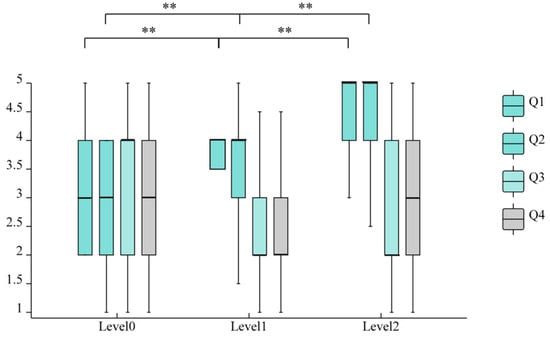

As shown in Table 3, significant differences were observed for Q1 (χ2 = 35.489, p < 0.01), Q2 (χ2 = 25.371, p < 0.01), and Q3 (χ2 = 10.299, p < 0.01). In contrast, Q4 did not reach statistical significance (χ2 = 2.605, p > 0.05), indicating that participants’ responses to the statement “I need to expend considerable mental effort to understand the system’s prompts” did not differ significantly across the three information presentation levels.

To explore significant omnibus effects, post hoc pairwise comparisons were conducted using the Wilcoxon signed-rank test (see Figure 4), with p-values adjusted via the Holm–Bonferroni procedure (family-wise α = 0.05; number of comparisons = 6:3 questions × 2 pairwise contrasts each). For perceived usefulness (Q1 and Q2), significant differences were observed between Level 1 and Level 0 (adjusted p-values, padj < 0.001) and between Level 1 and Level 2 (padj < 0.001), indicating that users’ evaluations of functional effectiveness varied significantly across information load levels. Specifically, increased visual information density correlated with higher perceived system effectiveness in supporting driving tasks (MLevel 1 = 4.3 vs. MLevel 0 = 3.1; MLevel 2 = 3.6), reflecting enhanced functional utility.

Figure 4.

Boxplot of Self-Reported Technology Acceptance Evaluation Scale for Three-Level Visualization (** p < 0.01).

In contrast, perceived ease of use Q3 showed no significant differences between Level 1 and Level 0 (p = 0.288) or between Level 1 and Level 2 (p = 0.172). Q4 did not reach statistical significance (χ2 = 2.605, p > 0.05), suggesting that information density had no significant effect on perceived mental effort. These differences remained non-significant before and after correction (padj = 0.34). Although the median score for Q3 was numerically higher under Level 2 (median = 3.0) than Level 1 (median = 2.0) on the 5-point Likert scale (with higher values indicating greater difficulty), this difference did not reach statistical significance. Therefore, we cannot conclude that increased information density meaningfully affected perceived ease of use.

However, a significant increase in cognitive effort was observed for Q4 when comparing Level 2 to Level 1 (p < 0.001), indicating that participants found the system prompts more mentally demanding under enhanced visualization. This suggests a potential trade-off: while functional usefulness improved (as shown in Q1–Q2), the added information may have imposed a higher cognitive cost, even if overall ease-of-use ratings did not change significantly.

3.2. Intervention Behavior

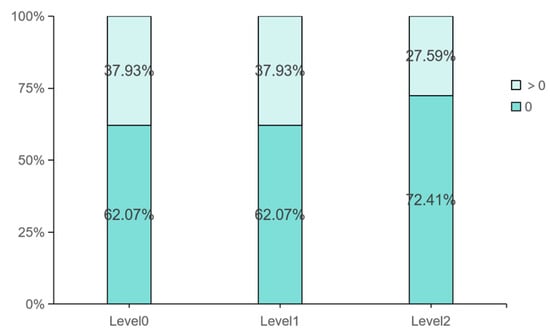

Over 50% of participants made no interventions across all tasks, yielding a right-skewed distribution of intervention frequency (see Figure 5). While approximately 38% of participants intervened at least once under Level 0 and Level 1, this proportion dropped significantly to 27.59% under Level 2. Given the non-normal distribution, nonparametric tests were used: a Friedman test to assess overall differences in intervention frequency across the three visualization levels, followed by Wilcoxon signed-rank post hoc pairwise comparisons.

Figure 5.

Distribution of Participants’ Intervention Behaviors.

The Friedman test results revealed that no statistically significant differences were observed in intervention frequency (χ2 = 2.085, p > 0.05), intervention duration (χ2 = 0.792, p > 0.05), or average intervention intensity (χ2 = 2.528, p > 0.05) across the three information presentation levels, as shown in Table 4.

Table 4.

Friedman test results for intervention behavior across visualization levels.

Further analysis using the Wilcoxon signed-rank test for paired samples was conducted to pairwise comparisons between visualization levels on intervention frequency, intervention duration, and average intervention intensity. As shown in Table 5, no statistically significant differences were observed between Level 1–Level 0 across all three metrics (p = 0.856, p = 0.397, p = 0.510). For Level 1–Level 2 comparisons, non-significant differences were found in intervention frequency (p = 0.101) and intervention duration (p = 0.084). Notably, the uncorrected p-value for average intervention intensity (Level 1 vs. Level 2) was 0.031, but this did not survive multiple comparison correction (padj = 0.186) and should not be interpreted as evidence of a true effect, especially in the absence of a significant global test.

Table 5.

Post hoc Wilcoxon signed-rank test results for pairwise comparisons of intervention metrics.

3.3. Visual Behavior

We employed eye-tracking heatmaps to visualize drivers’ attention distribution across different levels of information visualization systems. The heatmaps utilized transparency gradients to indicate visual focus intensity (high transparency reflecting high attention, low transparency/black representing low attention). As illustrated in Figure 6, participants’ attention was predominantly concentrated on road areas within the driving simulation environment. With increased visualization levels, attention distribution transitions from focused to dispersed patterns.

Figure 6.

Visual behavior of drivers under different levels of information during autonomous driving tasks: (a) Level 0; (b) Level 1; (c) Level 2.

Shapiro–Wilk normality tests were conducted on the collected visual behavior data. As shown in Table 6, significant deviations from normality (p < 0.05) were observed for total fixation duration, average fixation duration, average pupil diameter, and saccade frequency, necessitating nonparametric analyses for these metrics. However, saccade frequency and fixation count within AOIs across Level 0, Level 1, and Level 2 conditions did not show significant deviations (p > 0.05), indicating normal distribution and satisfying parametric test assumptions.

Table 6.

Friedman Test Results for Visual Behavior Across Information Presentation Levels.

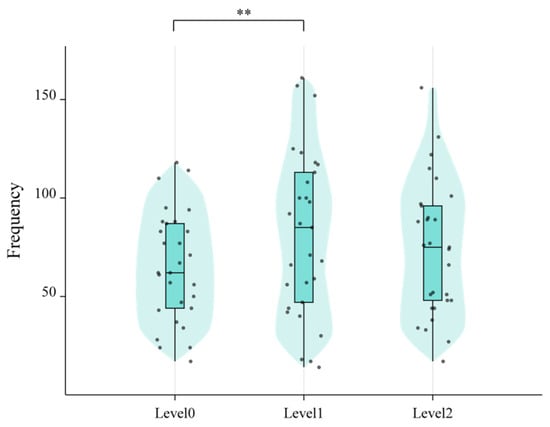

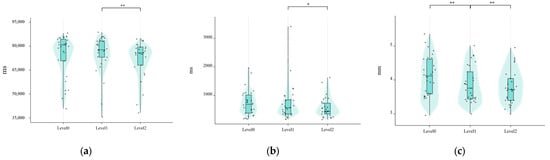

Paired-samples t-tests were conducted to examine differences between visualization levels in saccade frequency and fixation count within AOIs (see Figure 7). Results revealed that saccade frequency in Level 0 was significantly lower than in Level 1 (p < 0.05), as illustrated in Figure 7. However, no significant differences were observed between Level 1 and Level 2 for either metric.

Figure 7.

Paired Comparisons of Saccade Frequency in AOIs Across Three-Level Visualization Conditions (** p < 0.01).

The Friedman test revealed statistically significant differences across information presentation levels for total fixation duration (χ2 = 16.828, p < 0.001), average fixation duration (χ2 = 7.103, p = 0.029), and average pupil diameter (χ2 = 38.345, p < 0.001), as presented in Table 6. No significant effect was observed for number of glances (χ2 = 0.538, p = 0.764); thus, it was excluded from post hoc analysis.

For the three significant metrics, post hoc pairwise comparisons were conducted using Wilcoxon signed-rank tests (see Figure 8), with p-values adjusted via the Holm–Bonferroni procedure (family-wise α = 0.05; 6 comparisons: Level 1 vs. Level 0 and Level 1 vs. Level 2 for each metric). Additionally, for the normally distributed metric saccade frequency within AOIs, paired t-tests with Holm–Bonferroni correction (2 comparisons) were performed. After correction, the following differences remained statistically significant (see Table 7)

Figure 8.

Paired Comparisons of Visual Behavior Across Three-Level Visualization Conditions: (a) Total duration of fixations; (b) Average duration of fixations; (c) Average pupil diameter (* p < 0.05; ** p < 0.01).

Table 7.

Post hoc pairwise comparisons with Holm–Bonferroni-adjusted p-values.

Notably, the uncorrected difference in average fixation duration between Level 1 and Level 2 (p = 0.048) did not survive Holm–Bonferroni correction (padj = 0.096) and is therefore not considered statistically reliable. Similarly, no significant differences were observed in any metric for the Level 1 vs. Level 0 contrast except for pupil diameter and saccade frequency.

Collectively, these results demonstrate that Level 1 uniquely modulates visual behavior: it elicits the highest pupil dilation (a proxy for cognitive effort), prolongs total visual engagement relative to Level 2, and increases saccadic activity relative to Level 0. In contrast, Level 2 does not confer additional visual processing benefits over Level 1, and may even reduce sustained attention (as reflected in shorter total fixation duration). The absence of significant effects for average fixation duration and number of glances further underscores the metric-specific nature of visualization impacts on eye movement behavior.

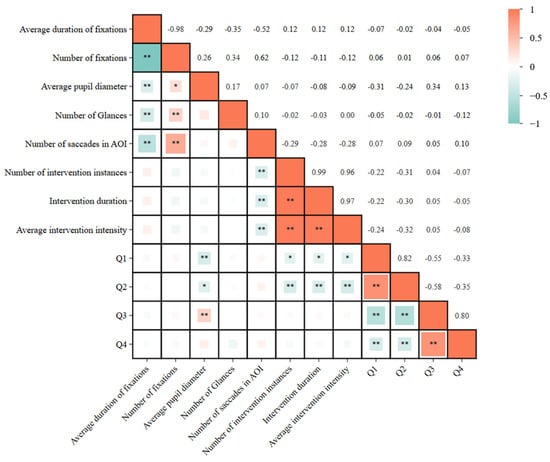

3.4. Correlations Between Subjective and Objective Measures

We further examined the association between subjective trust ratings and objective behavioral measures. Spearman’s correlations revealed associations between self-reported ratings (Q1–Q4) and physiological response metrics, accommodating non-normal or ordinal data distributions, as shown in Figure 9. Results indicated significant negative correlations between Q1/Q2 and both average pupil diameter (a cognitive load indicator) and intervention behavior metrics. Conversely, Q3 demonstrated a significant positive correlation with average pupil diameter. These findings suggest that as perceived system usefulness increases, cognitive workload or physiological arousal decreases, accompanied by reduced intervention intentions. Notably, perceived ease of use exhibited a significant positive correlation with average pupil diameter, though the underlying mechanisms remain to be explored.

Figure 9.

Spearman’s Correlation Matrix of Self-Reported Ratings and Physiological Response Metrics (* p < 0.05; ** p < 0.01).

4. Discussion

In this experiment, by collecting participants’ eye-tracking data, behavioral responses, and results from the Technology Acceptance Questionnaire during the autonomous driving task, we obtained the following findings and systematically investigated two core research questions.

Self-reported Evaluation: Participants rated the system significantly more favorably under Level 2 than under Level 0 or Level 1 on Q1 (“The system helps me complete driving tasks”) and Q2 (“The system’s functions are highly practical”), with Holm–Bonferroni-corrected p-values < 0.01 for both comparisons. Q3 (“I find this system difficult to use”) showed the lowest (most positive) scores in Level 2, indicating improved perceived usability. In contrast, Q4 (“I need to expend significant effort to understand system notifications”) did not differ significantly across transparency levels (padj > 0.05), although the median response was highest (least favorable) under Level 2.

Intervention behavior: Overall intervention frequency was low across all conditions. No statistically significant differences were observed in intervention frequency (Friedman χ2 = 2.528, p = 0.282) or total intervention duration across the three transparency levels. The comparison between Level 1 and Level 2 yielded an uncorrected p-value of 0.031 for average intervention intensity, but this effect did not remain significant after Holm–Bonferroni correction (padj = 0.186).

Visual Behavior: Eye-tracking metrics revealed significant omnibus effects of transparency level on total fixation duration, average fixation duration, and average pupil diameter (all p < 0.05). Post hoc pairwise comparisons showed that total fixation duration was significantly lower in Level 2 than in Level 1 (padj = 0.030), and average pupil diameter was also significantly lower in Level 2 compared to Level 1 (padj = 0.018). Saccade frequency within regions of interest was significantly higher in Level 1 than in Level 0 (padj = 0.006), with no significant difference between Level 1 and Level 2.

4.1. Information Visualization Enhances User Trust Compared to Black-Box Systems

The significantly higher Q1 and Q2 scores under Level 2 suggest that providing sensor data and decision-making pathways enhances users’ perception of the system’s practical utility and reliability. This aligns with Lee and See’s [50] notion that process transparency fosters capability-based trust. Similarly, the improved Q3 scores indicate that well-designed visualizations can enhance subjective usability.

However, the lack of significant improvement in Q4—coupled with a median increase in perceived effort under Level 2—suggests that explanatory richness may introduce complexity that offsets usability gains. This pattern resonates with the concept of extraneous cognitive load [51,52] and Körber et al.’s [53] warning about “explanation fatigue,” where excessive detail may overwhelm rather than inform.

At the behavioral level, the absence of significant differences in intervention metrics—even after correcting for multiple comparisons—indicates that increased transparency did not translate into measurable changes in takeover behavior in this experimental context. This underscores a potential dissociation between subjective trust and actual behavioral reliance.

Eye-tracking results reveal a more nuanced picture. The reduced pupil diameter and total fixation duration under Level 2, despite higher information density, suggest a decrease in objective cognitive load. This contrasts with the subjective perception of increased complexity (Q4), pointing to a subjective–objective dissociation in cognitive load assessment. This pattern aligns with Hergeth et al. [54], who observed that higher trust in automation is associated with reduced visual monitoring during non-critical phases, reflecting greater reliance and lower vigilance demands. Concurrently, the efficiency of information processing under Level 2 may support the formation of a more accurate and stable mental model of system behavior, as suggested by Bauer et al. [55]. One possible explanation is that predictive visualizations (e.g., AR trajectories) enable more efficient information integration, allowing users to extract greater meaning with fewer cognitive resources—a principle consistent with cognitive load theory [56].

The elevated saccade frequency in Level 1 (vs. Level 0) implies that minimal transparency prompts active visual scanning, possibly due to ambiguity in system cues. In contrast, Level 2’s richer explanations may support information-per-saccade efficiency, reducing the need for compensatory scanning. Together, these findings suggest a nonlinear relationship between transparency level and cognitive demand: moderate-to-high transparency, when well-structured, can reduce load despite greater information volume.

These results collectively highlight that trust, usability, and cognitive load are multidimensional constructs that may diverge across measurement modalities. Future work should investigate individual difference factors (e.g., expertise, cognitive style) and dynamic task contexts that modulate these effects.

4.2. The Low Physiological Stress Caused by the Lack of Explanations Does Not Fully Support Trust

The findings demonstrate that the lack of system explanations in the black-box condition (Level 0) did not reduce physiological arousal—as indicated by elevated average pupil diameter (Level 0 > Levels 1/2, p < 0.05). While behavioral and eye-tracking metrics (e.g., saccade frequency, attention distribution) showed no statistically significant differences, their median values trended upward compared to Levels 1 and 2. Combined with self-reported data (lower Q1–Q3 scores), this suggests participants experienced heightened cognitive demand.

Although Level 0 showed a lower mean saccade frequency in AOIs compared to Level 1—an indicator often associated with reduced cognitive load—the integration of self-report evaluations and behavioral data suggests this low arousal state was not due to psychological relaxation, but rather reflected a passive, disengaged monitoring strategy [57]. Specifically, Q1–Q3 scores in Level 0 were significantly lower, indicating reduced user confidence in system functionality and operational convenience. Concurrently, eye-tracking data revealed the fewest saccades within regions of interest and the most restricted attention distribution under Level 0, reflecting minimal proactive exploration. This aligns with Endsley and Kiris’ [58] concept of “out-of-the-loop” states: due to the system’s lack of explainability, users failed to comprehend its behavioral logic, leading to reduced information seeking and cognitive investment, and ultimately adopting passive following strategies [59,60].

Crucially, the lowest pupil diameter was observed in Level 2—not Level 0—indicating that true cognitive ease arises from predictability and understanding, not from information absence. The slightly elevated pupil diameter in Level 1 (relative to both Level 0 and Level 2) further suggests that moderate but non-explanatory information may impose the highest cognitive demand, as users attempt to infer system intent without sufficient cues.

In summary, the absence of explanations does not maintain a healthy low-stress state. Instead, it risks undermining trust and triggering monitoring complacency, thereby weakening human–machine collaborative efficiency. A genuinely effective low-stress state should be built on predictability, controllability, and shared mental models—principles aligned with human-centered design [61,62]—which represent the core value provided by moderate, meaningful visual explanations.

5. Conclusions

This study reveals that the impact of information visualization on user experience in autonomous driving is nonlinear and context-dependent. Enhanced information visualization (Level 2) produces divergent effects across dimensions: it significantly improves perceived usefulness, user trust, and engagement in proactive monitoring—key enablers of safe and efficient human–machine collaboration. However, these benefits come at a cognitive cost, as Level 2 also leads to substantially higher self-reported cognitive load despite lower physiological indicators of cognitive load (e.g., decreased average pupil diameter). Critically, this added cognitive demand is not offset by improvements in perceived ease of use, suggesting that simply increasing information density does not yield uniformly positive outcomes.

In contrast, both the black-box condition (Level 0) and the standard visualization (Level 1) show limited differentiation across most measures, underscoring that not all increases in transparency are functionally meaningful. The findings therefore advocate for a shift from “more information” to “Meaningful Transparency”—a design paradigm that prioritizes contextually relevant, interpretable, and actionable explanations tailored to users’ situational needs and cognitive capacities, rather than maximal disclosure.

This study has several limitations. The participant sample was skewed toward younger female adults, which may limit generalizability. The simulated driving environment, while enabling controlled measurement, lacks real-world complexities such as unpredictable traffic dynamics, emergency events, or diverse interface layouts. Additionally, eye-tracking metrics like fixation count and saccade frequency are sensitive to variations in the size and placement of visual elements, potentially affecting their validity as cognitive state indicators. Future research should validate these findings in real-world or high-fidelity driving contexts, explore adaptive visualization strategies that modulate information presentation based on workload and task criticality, and examine how individual differences (e.g., age, expertise, cognitive style) influence responses to varying levels of system transparency. Although enhanced visualization significantly improved subjective trust and certain eye-tracking metrics, behavioral indicators did not show the expected reduction in intervention frequency or reaction time. Moreover, while Spearman’s rank-order correlations were computed between TAM item scores (Q1–Q4) and objective measures (fixation entropy, mean pupil diameter, intervention frequency, and reaction time), these analyses were based on the full set of valid participant observations (N = 29) and did not control for potential covariates such as age, driving experience, or prior exposure to automated systems. This limits our ability to isolate the unique contribution of visualization design from individual differences. A dissociation also emerged between self-reported assessments and eye-tracking metrics in the cognitive load dimension. Future research should explore behavioral threshold ranges, incorporate covariate-adjusted models (e.g., partial correlations or regression-based approaches), and further unravel the mechanisms underlying this subjective–objective dissociation.

Author Contributions

Conceptualization, M.L. and Y.L.; methodology, M.L. and Y.L.; software, M.L.; validation, M.L., Y.L. and M.Z.; formal analysis, M.L.; investigation, M.L. and T.Z.; resources, Y.L. and X.X.; data curation, M.L.; writing—original draft preparation, M.L.; writing—review and editing, Y.L., M.L., L.R., W.W., N.Z. and X.X.; visualization, M.L. and J.L.; supervision, Y.L. and R.X.; project administration, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Social Science Foundation of the Department of Education of Jiangsu Province of China, grant number 2021SJZDA016.

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board (Ethics Committee) of Nanjing University of Science and Technology (Protocol Code: 2025062701; Approval Date: 27 June 2025).

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study.

Data Availability Statement

In accordance with privacy and ethical considerations, as well as specific requests from a subset of participants, the data generated or analyzed in this study contain sensitive information and therefore cannot be made publicly available.

Acknowledgments

During the preparation of this manuscript, the author(s) used ChiPlot (https://www.chiplot.online/, accessed on 10 August 2025) for the purposes of generating statistical visualizations, including correlation heatmaps, violin plots, and box plots. The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| XAI | eXplainable Artificial Intelligence |

| AI | Artificial Intelligence |

| AR | Augmented Reality |

| IBM Corp. | International Business Machines Corporation |

| USA | United States of America |

| NY | New York |

| AOI | Area of Interest |

| ANOVA | Analysis of Variance |

| TAM | Technology Acceptance Model |

| PU | Perceived Usefulness |

| PEOU | Perceived Ease of Use |

References

- Shi, E.; Gasser, T.M.; Seeck, A.; Auerswald, R. The Principles of Operation Framework: A Comprehensive Classification Concept for Automated Driving Functions. SAE Int. J. Connect. Autom. Veh. 2020, 3, 27–37. [Google Scholar] [CrossRef]

- Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A Survey of Autonomous Driving: Common. Practices and Emerging Technologies. IEEE Access 2020, 8, 58443–58469. [Google Scholar] [CrossRef]

- Hussain, R.; Zeadally, S. Autonomous Cars: Research Results, Issues, and Future Challenges. IEEE Commun. Surv. Tutor. 2019, 21, 1275–1313. [Google Scholar] [CrossRef]

- Yaqoob, I.; Khan, L.U.; Kazmi, S.M.A.; Imran, M.; Guizani, N.; Hong, C.S. Autonomous Driving Cars in Smart Cities: Recent Advances, Requirements, and Challenges. IEEE Netw. 2020, 34, 174–181. [Google Scholar] [CrossRef]

- Pereira, A.R.; Carneiro, P.S.; Da Silva, M.M. Evaluating a Reference Model for Shared Autonomous Vehicles Using Phoenix. In Proceedings of the 2023 3rd International Conference on Electrical, Computer, Communications and Mechatronics Engineering (ICECCME), Tenerife, Canary Islands, Spain, 19–21 July 2023; pp. 1–10. [Google Scholar]

- Wang, S.; Zhao, Z.; Xie, Y.; Ma, M.; Chen, Z.; Wang, Z.; Su, B.; Xu, W.; Li, T. Recent Surge in Public Interest in Transportation: Sentiment Analysis of Baidu Apollo Go Using Weibo Data. arXiv 2024, arXiv:2408.10088. [Google Scholar] [CrossRef]

- Taherdoost, H. Deep Learning and Neural Networks: Decision-Making Implications. Symmetry 2023, 15, 1723. [Google Scholar] [CrossRef]

- Hassija, V.; Chamola, V.; Mahapatra, A.; Singal, A.; Goel, D.; Huang, K.; Scardapane, S.; Spinelli, I.; Mahmud, M.; Hussain, A. Interpreting Black-Box Models: A Review on Explainable Artificial Intelligence. Cogn. Comput. 2024, 16, 45–74. [Google Scholar] [CrossRef]

- Huang, Z.; Zhang, J.; Tian, R.; Zhang, Y. End-to-End Autonomous Driving Decision Based on Deep Reinforcement Learning. In Proceedings of the 2019 5th International Conference on Control, Automation and Robotics (ICCAR), Beijing, China, 19–22 April 2019; pp. 658–662. [Google Scholar]

- Yang, B.; Li, J.; Zeng, T. A Review of Environmental Perception Technology Based on Multi-Sensor Information Fusion in Autonomous Driving. World Electr. Veh. J. 2025, 16, 20. [Google Scholar] [CrossRef]

- Wang, Z.; Wu, Y.; Niu, Q. Multi-Sensor Fusion in Automated Driving: A Survey. IEEE Access 2020, 8, 2847–2868. [Google Scholar] [CrossRef]

- Von Eschenbach, W.J. Transparency and the Black Box Problem: Why We Do Not Trust AI. Philos. Technol. 2021, 34, 1607–1622. [Google Scholar] [CrossRef]

- Trypke, M.; Stebner, F.; Wirth, J. The More, the Better? Exploring the Effects of Modal and Codal Redundancy on Learning and Cognitive Load: An Experimental Study. Educ. Sci. 2024, 14, 872. [Google Scholar] [CrossRef]

- Cahill, R.A.; Duffourc, M.; Gerke, S. The AI-Enhanced Surgeon—Integrating Black-Box Artificial Intelligence in the Operating Room. Int. J. Surg. 2025, 111, 2823–2826. [Google Scholar] [CrossRef] [PubMed]

- Nakashima, H.H.; Mantovani, D.; Machado Junior, C. Users’ Trust in Black-Box Machine Learning Algorithms. Rev. Gest. 2024, 31, 237–250. [Google Scholar] [CrossRef]

- Bayer, S.; Gimpel, H.; Markgraf, M. The Role of Domain Expertise in Trusting and Following Explainable AI Decision Support Systems. J. Decis. Syst. 2022, 32, 110–138. [Google Scholar] [CrossRef]

- Rosenbacke, R.; Melhus, Å.; McKee, M.; Stuckler, D. How Explainable Artificial Intelligence Can Increase or Decrease Clinicians’ Trust in AI Applications in Health Care: Systematic Review. JMIR AI 2024, 3, e53207. [Google Scholar] [CrossRef]

- Wang, P.; Ding, H. The Rationality of Explanation or Human Capacity? Understanding the Impact of Explainable Artificial Intelligence on Human-AI Trust and Decision Performance. Inf. Process. Manag. 2024, 61, 103732. [Google Scholar] [CrossRef]

- Li, Y.; Wu, B.; Huang, Y.; Luan, S. Developing Trustworthy Artificial Intelligence: Insights from Research on Interpersonal, Human-Automation, and Human-AI Trust. Front. Psychol. 2024, 15, 1382693. [Google Scholar] [CrossRef]

- Ma, R.H.Y.; Morris, A.; Herriotts, P.; Birrell, S. Investigating What Level of Visual Information Inspires Trust in a User of a Highly Automated Vehicle. Appl. Ergon. 2021, 90, 103272. [Google Scholar] [CrossRef]

- Häuslschmid, R.; von Bülow, M.; Pfleging, B.; Butz, A. SupportingTrust in Autonomous Driving. In Proceedings of the 22nd International Conference on Intelligent User Interfaces, Limassol, Cyprus, 13–16 March 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 319–329. [Google Scholar]

- Poon, A.I.F.; Sung, J.J.Y. Opening the Black Box of AI-medicine. J. Gastroenterol. Hepatol. 2021, 36, 581–584. [Google Scholar] [CrossRef]

- Chuang, J.; Ramage, D.; Manning, C.; Heer, J. Interpretation and Trust: Designing Model-Driven Visualizations for Text Analysis. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Montréal, QC, Canada, 22–27 April 2012; Association for Computing Machinery: New York, NY, USA, 2012; pp. 443–452. [Google Scholar]

- Pedreschi, D.; Giannotti, F.; Guidotti, R.; Monreale, A.; Ruggieri, S.; Turini, F. Meaningful Explanations of Black Box AI Decision Systems. Proc. AAAI Conf. Artif. Intell. 2019, 33, 9780–9784. [Google Scholar] [CrossRef]

- Dong, J.; Chen, S.; Miralinaghi, M.; Chen, T.; Li, P.; Labi, S. Why Did the AI Make That Decision? Towards an Explainable Artificial Intelligence (XAI) for Autonomous Driving Systems-Consensus. Transp. Res. Part C Emerg. Technol. 2023, 156, 104358. [Google Scholar] [CrossRef]

- Zhang, Z.; Tian, R.; Sherony, R.; Domeyer, J.; Ding, Z. Attention-Based Interrelation Modeling for Explainable Automated Driving. IEEE Trans. Intell. Veh. 2023, 8, 1564–1573. [Google Scholar] [CrossRef]

- Tran, T.T.H.; Peillard, E.; Walsh, J.; Moreau, G.; Thomas, B.H. Impact of Adding, Removing and Modifying Driving and Non-Driving Related Information on Trust in Autonomous Vehicles. In Proceedings of the 2025 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Saint Malo, France, 8–12 March 2025; pp. 1262–1263. [Google Scholar]

- Müller, T.; Colley, M.; Dogru, G.; Rukzio, E. AR4CAD: Creation and Exploration of a Taxonomy of Augmented Reality Visualization for Connected Automated Driving. Proc. ACM Hum.-Comput. Interact. 2022, 6, 1–27. [Google Scholar] [CrossRef]

- Colley, M.; Speidel, O.; Strohbeck, J.; Rixen, J.O.; Belz, J.H.; Rukzio, E. Effects of Uncertain Trajectory Prediction Visualization in Highly Automated Vehicles on Trust, Situation Awareness, and Cognitive Load. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2023, 7, 1–23. [Google Scholar] [CrossRef]

- Colley, M.; Eder, B.; Rixen, J.O.; Rukzio, E. Effects of Semantic Segmentation Visualization on Trust, Situation Awareness, and Cognitive Load in Highly Automated Vehicles. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama Japan, 8–13 May 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 1–11. [Google Scholar]

- Rahi, A.; Cardoza, C.O.; Vemulapalli, S.S.T.; Wasfy, T.; Anwar, S. Modeling of Automotive Radar Sensor in Unreal Engine for Autonomous Vehicle Simulation. In Proceedings of the Volume 6: Dynamics, Vibration, and Control; American Society of Mechanical Engineers, New Orleans, LA, USA, 29 October–2 November 2023; p. V006T07A070. [Google Scholar]

- Endsley, M.R. Autonomous Driving Systems: A Preliminary Naturalistic Study of the Tesla Model S. J. Cogn. Eng. Decis. Mak. 2017, 11, 225–238. [Google Scholar] [CrossRef]

- Schneider, T.; Hois, J.; Rosenstein, A.; Ghellal, S.; Theofanou-Fülbier, D.; Gerlicher, A.R.S. Explain Yourself! Transparency for Positive UX in Autonomous Driving. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; ACM: New York, NY, USA, 2021; pp. 1–12. [Google Scholar]

- Helldin, T.; Falkman, G.; Riveiro, M.; Davidsson, S. Presenting System Uncertainty in Automotive UIs for Supporting Trust Calibration in Autonomous Driving. In Proceedings of the 5th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Eindhoven, The Netherlands, 28–30 October 2013; Association for Computing Machinery: New York, NY, USA, 2013; pp. 210–217. [Google Scholar]

- Ma, J.; Zuo, Y.; Du, H.; Wang, Y.; Tan, M.; Li, J. Interactive Output Modalities Design for Enhancement of User Trust Experience in Highly Autonomous Driving. Int. J. Hum. Comput. Interact. 2025, 41, 6172–6190. [Google Scholar] [CrossRef]

- Lu, Y.; Sarter, N. Modeling and Inferring Human Trust in Automation Based on Real- Time Eye Tracking Data. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2020, 64, 344–348. [Google Scholar] [CrossRef]

- Wang, Q.; Chen, H.; Gong, J.; Zhao, X.; Li, Z. Studying Driver’s Perception Arousal and Takeover Performance in Autonomous Driving. Sustainability 2022, 15, 445. [Google Scholar] [CrossRef]

- Choi, J.K.; Ji, Y.G. Investigating the Importance of Trust on Adopting an Autonomous Vehicle. Int. J. Hum.-Comput. Interact. 2015, 31, 692–702. [Google Scholar] [CrossRef]

- Chen, L.; Wu, P.; Chitta, K.; Jaeger, B.; Geiger, A.; Li, H. End-to-End Autonomous Driving: Challenges and Frontiers. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 10164–10183. [Google Scholar] [CrossRef]

- Ma, Q.; Li, M.; Huang, G.; Ullah, S. Overtaking Path Planning for CAV Based on Improved Artificial Potential Field. IEEE Trans. Veh. Technol. 2024, 73, 1611–1622. [Google Scholar] [CrossRef]

- Harada, R.; Yoshitake, H.; Shino, M. Pedestrian’s Avoidance Behavior Characteristics Against Autonomous Personal Mobility Vehicles for Smooth Avoidance. J. Robot. Mechatron. 2024, 36, 918–927. [Google Scholar] [CrossRef]

- Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340. [Google Scholar] [CrossRef]

- Li, Z.; Li, X.; Jiang, B. How People Perceive the Safety of Self-Driving Buses: A Quantitative Analysis Model of Perceived Safety. Transp. Res. Rec. J. Transp. Res. Board. 2023, 2677, 1356–1366. [Google Scholar] [CrossRef]

- Chacón Quesada, R.; Estévez Casado, F.; Demiris, Y. An Integrated 3D Eye-Gaze Tracking Framework for Assessing Trust in Human–Robot Interaction. ACM Trans. Hum.-Robot. Interact. 2025, 14, 1–28. [Google Scholar] [CrossRef]

- Zhang, Y.; Hopko, S.; Mehta, R.K. Capturing Dynamic Trust Metrics during Shared Space Human Robot Collaboration: An Eye-Tracking Approach. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2022, 66, 536. [Google Scholar] [CrossRef]

- Wang, K.; Hou, W.; Ma, H.; Hong, L. Eye-Tracking Characteristics: Unveiling Trust Calibration States in Automated Supervisory Control Tasks. Sensors 2024, 24, 7946. [Google Scholar] [CrossRef] [PubMed]

- Krejtz, K.; Duchowski, A.T.; Niedzielska, A.; Biele, C.; Krejtz, I. Eye Tracking Cognitive Load Using Pupil Diameter and Microsaccades with Fixed Gaze. PLoS ONE 2018, 13, e0203629. [Google Scholar] [CrossRef]

- Wei, W.; Xue, Q.; Yang, X.; Du, H.; Wang, Y.; Tang, Q. Assessing the Cognitive Load Arising from In-Vehicle Infotainment Systems Using Pupil Diameter. In Cross-Cultural Design; Rau, P.-L.P., Ed.; Lecture Notes in Computer Science; Springer Nature: Cham, Switzerland, 2023; Volume 14023, pp. 440–450. ISBN 978-3-031-35938-5. [Google Scholar]

- Vogelpohl, T.; Kühn, M.; Hummel, T.; Vollrath, M. Asleep at the Automated Wheel—Sleepiness and Fatigue during Highly Automated Driving. Accid. Anal. Prev. 2019, 126, 70–84. [Google Scholar] [CrossRef]

- Lee, J.D.; See, K.A. Trust in Automation: Designing for Appropriate Reliance. Hum. Factors 2004, 46, 50–80. [Google Scholar] [CrossRef]

- Sweller, J. Cognitive Load Theory. In Psychology of Learning and Motivation; Elsevier: Amsterdam, The Netherlands, 2011; Volume 55, pp. 37–76. ISBN 978-0-12-387691-1. [Google Scholar]

- Karr-Wisniewski, P.; Lu, Y. When More Is Too Much: Operationalizing Technology Overload and Exploring Its Impact on Knowledge Worker Productivity. Comput. Hum. Behav. 2010, 26, 1061–1072. [Google Scholar] [CrossRef]

- Körber, M. Theoretical Considerations and Development of a Questionnaire to Measure Trust in Automation. In Proceedings of the 20th Congress of the International Ergonomics Association (IEA 2018); Bagnara, S., Tartaglia, R., Albolino, S., Alexander, T., Fujita, Y., Eds.; Advances in Intelligent Systems and Computing; Springer International Publishing: Cham, Switzerland, 2019; Volume 823, pp. 13–30. ISBN 978-3-319-96073-9. [Google Scholar]

- Hergeth, S.; Lorenz, L.; Vilimek, R.; Krems, J.F. Keep Your Scanners Peeled: Gaze Behavior as a Measure of Automation Trust during Highly Automated Driving. Hum. Factors J. Hum. Factors Ergon. Soc. 2016, 58, 509–519. [Google Scholar] [CrossRef]

- Bauer, R.; Jost, L.; Günther, B.; Jansen, P. Pupillometry as a Measure of Cognitive Load in Mental Rotation Tasks with Abstract and Embodied Figures. Psychol. Res. 2022, 86, 1382–1396. [Google Scholar] [CrossRef]

- Chen, O.; Paas, F.; Sweller, J. A Cognitive Load Theory Approach to Defining and Measuring Task Complexity through Element Interactivity. Educ. Psychol. Rev. 2023, 35, 63. [Google Scholar] [CrossRef]

- Parasuraman, R.; Riley, V. Humans and Automation: Use, Misuse, Disuse, Abuse. Hum. Factors J. Hum. Factors Ergon. Soc. 1997, 39, 230–253. [Google Scholar] [CrossRef]

- Endsley, M.R.; Kiris, E.O. The Out-of-the-Loop Performance Problem and Level of Control in Automation. Hum. Factors J. Hum. Factors Ergon. Soc. 1995, 37, 381–394. [Google Scholar] [CrossRef]

- Körber, M.; Cingel, A.; Zimmermann, M.; Bengler, K. Vigilance Decrement and Passive Fatigue Caused by Monotony in Automated Driving. Procedia Manuf. 2015, 3, 2403–2409. [Google Scholar] [CrossRef]

- Neigel, A.R.; Claypoole, V.L.; Smith, S.L.; Waldfogle, G.E.; Fraulini, N.W.; Hancock, G.M.; Helton, W.S.; Szalma, J.L. Engaging the Human Operator: A Review of the Theoretical Support for the Vigilance Decrement and a Discussion of Practical Applications. Theor. Issues Ergon. Sci. 2020, 21, 239–258. [Google Scholar] [CrossRef]

- Chen, H.; Gomez, C.; Huang, C.-M.; Unberath, M. Explainable Medical Imaging AI Needs Human-Centered Design: Guidelines and Evidence from a Systematic Review. Npj Digit. Med. 2022, 5, 156. [Google Scholar] [CrossRef] [PubMed]

- Saghafian, M.; Vatn, D.M.K.; Moltubakk, S.T.; Bertheussen, L.E.; Petermann, F.M.; Johnsen, S.O.; Alsos, O.A. Understanding Automation Transparency and Its Adaptive Design Implications in Safety–Critical Systems. Saf. Sci. 2025, 184, 106730. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).