Abstract

The increasing complexity of the Edge–Cloud Continuum (ECC), driven by the rapid expansion of the Internet of Things (IoT) and data-intensive applications, calls for innovative methods of automated and efficient system management. In this context, recent studies have focused on self-* capabilities that enhance system autonomy and increase operational proactiveness. Separately, anomaly detection and adaptive sampling techniques have been explored to optimize data transmission and improve system reliability. The integration of these techniques within a single, lightweight, and extensible self-optimization module is the main subject of this contribution. The module was designed for distributed systems composed of highly resource-constrained operational devices (e.g., wearable health monitors, IoT sensors in vehicles), where it can be used to self-adjust data monitoring and enhance the resilience of critical processes. The focus is on the implementation of two core mechanisms derived from the current state of the art: (1) density-based anomaly detection in real-time resource utilization data streams, and (2) a dynamic adaptive sampling technique that employs the Probabilistic Exponentially Weighted Moving Average (PEWMA). The performance of the proposed module was validated using both synthetic and real-world datasets, including a sample collected from the target infrastructure. The main goal of the experiments was to demonstrate the effectiveness of the implemented techniques in different, close-to-real-life scenarios. Moreover, the results were compared with those of other state-of-the-art algorithms in order to examine their advantages and inherent limitations. With an emphasis on frugality and real-time operation, this contribution offers a novel perspective on resource-aware autonomic optimization for the next-generation ECC.

1. Introduction

With the ongoing expansion of the Internet of Things (IoT) and the growing demand for data-intensive applications that capture, analyze, and exchange large amounts of data for decision-making, the concept of the Edge–Cloud Continuum (ECC) has gained significant popularity [1]. Its use cases span several domains in which heterogeneous resources are integrated to provide complex solutions. These include, among others, smart homes and cities [2,3], autonomous vehicles [4], smart manufacturing [5], energy management [6,7], and agriculture [8]. As outlined in [9], ECC can be understood as a complex distributed infrastructure that encompasses a wide variety of technologies and interconnected devices, seamlessly integrating the edge (where data sources and actuators are located) and the cloud (infrastructure with computational resources). Through such integration, ECC aims to harness the combined benefits of both paradigms while addressing their inherent limitations [10]. In particular, edge devices located in close proximity to data sensors and actuators are often used for time-critical or privacy-sensitive applications, which require minimal latency [11]. On the other hand, cloud resources make it possible to perform computationally intensive tasks that exceed the capabilities of resource-restricted devices. With dynamic resource provisioning and scalability, cloud solutions can also better accommodate long-running workflows, or those executed over complex interconnected architectures [12,13].

However, despite the aforementioned benefits, ECC also faces a number of challenges [1,14]. (1) The heterogeneity of computational resources, system topologies, and network connectivity schemes hinders the application of unified orchestration methods, making system management particularly difficult [15,16]. This encompasses not only resource provisioning, task offloading, and scheduling, but also (2) data management mechanisms [17]. Achieving data consensus in ECC with distributed data sources requires advanced mechanisms for synchronization, transmission policies, and control over access rights. Here, an additional level of complexity arises from (3) the need to perform all these operations on real-time streaming data. Moreover, (4) processing data on often resource-limited edge devices requires algorithms with low computational overhead and a small storage footprint [18]. Developing such frugal algorithms typically involves finding a reasonable compromise between efficiency and effectiveness. Finally, (5) the dynamic and distributed nature of the environment impedes the detection of potential errors, which can undermine the system’s stability, resulting in temporary service interruptions, performance deterioration, and breaches of Service Level Agreements (SLAs) [19,20].

Therefore, attaining reliability in ECC-based systems requires smart mechanisms that provide fault detection and enable the system to adapt to ever-changing conditions. To achieve these objectives in an automated and feasible manner, self-* mechanisms [21] have become the focus of recent research. These mechanisms aim to enhance the system’s autonomy, allowing it to initiate decisions and adaptations with little to no human intervention. In the survey [22], the authors presented a taxonomy that distinguished nine types of self-*, of which the primary four are: (1) self-healing, (2) self-protection, (3) self-configuration, and (4) self-optimization. Among these, self-optimization is the focus of this contribution.

Typically, self-optimization is understood as a property of a system that not only recognizes the need for adaptation when its high-level objectives are violated, but also attempts to improve the system’s performance in its stable state. This makes it particularly important for ECC infrastructures, which require the automation of complex processes. Interestingly, as identified in [22], self-optimization was among the least frequently mentioned self-* modules in the existing literature. This can be attributed to several factors, including (1) difficulty in specifying the necessary key performance indicators, (2) a vague definition of the self-optimization scope, and (3) the need to incorporate domain-specific expert knowledge. Moreover, existing works mostly target cloud applications and rely on non-frugal algorithms that require either substantial computational power (e.g., those based on deep neural networks) or substantial storage (e.g., making decisions based on historical data). As a result, they cannot be considered suitable for highly resource-constrained edge nodes. Even though this is not reflected in the literature, self-optimization close to the edge appears to be a very attractive solution, since deployment in the proximity of data sources facilitates fast reactions and adaptations.

In this context, research on edge self-optimization still exhibits several unresolved gaps. In terms of application domains, the majority of studies discuss self-optimization in the context of adaptable resource management, such as resource allocation, auto-scaling, replica control, and task migration. Apart from adaptable workload prediction [23], self-optimization does not appear directly in the context of data dissemination, which is a significant concern in ECC, since frequent transmission of large data volumes involves considerable energy consumption. Additionally, current studies mainly focus on how to execute an adaptation rather than when it should be initiated (e.g., when a potential abnormality appears in the system). Consequently, the timely identification of opportunities for adaptation remains insufficiently explored. Moreover, even though some of these works address edge self-optimization, they still require resource-intensive operations to be offloaded to the cloud [24], making them reliant on external high-performance hardware.

The aforementioned gaps in the existing self-optimization literature have been partially addressed in separate studies devoted to anomaly detection [25] and adaptive sampling [26,27]. Consequently, this work will examine how to employ both of these techniques within the self-optimization module.

In particular, this contribution presents a frugal self-optimization module in which statistical techniques are used to identify potential adaptation opportunities. The article describes two principal roles of the module:

- Anomaly detection: detection of potential resource utilization abnormalities, achieved through density-based analysis of real-time data streams collected from computational nodes

- Adaptive sampling: estimation of the optimal sampling period for monitoring resource utilization and power consumption, obtained by analyzing changes in the data distribution with the Probabilistic Exponentially Weighted Moving Average (PEWMA)

In the context of ECC, such a module is particularly important, since it enables automated decision-making directly on resource-constrained edge devices, achieving optimizations in (soft) real-time. By adjusting sampling rates autonomously, the module ensures that data are monitored and (in many cases) transmitted only when necessary. This, in turn, can reduce energy consumption and lessen network congestion. Moreover, anomaly detection can not only support fault prevention and the security of ECC, but also serve as a trigger for autonomous adaptations that help preserve computational resources.

For example, consider a remote health monitoring system in which patients wear electrocardiogram (ECG) smart watches that measure their vital signs (e.g., heart rate). These watches serve as sensors that send the collected data to patients’ smartphones (edge devices), which are responsible for making immediate, critical decisions locally and forwarding relevant information to the healthcare provider’s cloud for more extensive, long-term analysis. In this scenario, the proposed self-optimization module could be used to automate the collection of patients’ vital signs and recognize potential critical health events. Specifically, by lowering the data collection frequency during long periods of physiological stability (e.g., sleeping or resting), adaptive sampling could prolong the battery life of wearable ECG devices. Simultaneously, the anomaly detection mechanism could streamline the detection of irregularities (e.g., in heart rhythm) that trigger alerts requiring immediate action (e.g., increasing the ECG sampling rate and notifying healthcare professionals). From the perspective of system resilience, anomaly detection could also be used to monitor the condition of the sensors themselves (e.g., their temperature) in order to detect malfunctions (e.g., overheating) and initiate autonomous adaptations (e.g., switching off non-critical processing).

However, to make the self-optimization module deployable at the edge, it was necessary to propose an approach that addresses the limitations of resource-constrained edge devices. Specifically, the selection of algorithms was guided by (1) computational efficiency and (2) frugality, understood in terms of both the utilized resources and the processed data. Therefore, this contribution also provides a structured perspective on real-time optimization in the context of edge computing. Moreover, the novelty of the proposed solution lies in its modular architecture, which (1) enables the dynamic extension of models to accommodate new data metrics (e.g., network throughput, sensor battery level) and (2) facilitates Human-In-The-Loop (HiTL) interaction for model parameter adjustment (e.g., acceptable estimation imprecision).

The conceptualized self-optimization module was implemented as part of the Horizon 2020 European research project aerOS (https://aeros-project.eu/, access date: 24 October 2025). aerOS is a platform-agnostic, intelligent meta-operating system for the edge-IoT-Cloud continuum. It is composed of a wide variety of heterogeneous computational nodes called Infrastructure Elements (IEs), which run on different types of devices, ranging from embedded microcontrollers to cloud servers, and can potentially be distributed across different client domains. In this contribution, aerOS was used as the primary testbed for validating the self-optimization module. Nevertheless, the module is also suitable for other ECC applications.

The performance of the developed module has been evaluated using synthetic and real-world complex datasets. In particular, the anomaly detection model was assessed using (1) the Numenta Anomaly Benchmark (NAB) [28] on the synthetic time-series data and (2) a snapshot of CPU utilization monitored in the aerOS continuum. On the other hand, the effectiveness of sampling period estimation was evaluated on both of the aforementioned datasets and, additionally, on RainMon CPU utilization traces (https://github.com/mrcaps/rainmon/blob/master/data/README.md, access date: 24 October 2025). Finally, the achieved results were compared with selected frugal state-of-the-art approaches, including Adaptive Window-Based Sampling (AWBS) [29] and User-Driven Adaptive Sampling Algorithm (UDASA) [30].

The remainder of the article is structured as follows. Section 2 contextualizes the implemented approach by reviewing existing studies on self-optimization, adaptive sampling, and real-time anomaly detection. Next, Section 3 describes the developed self-optimization module, discussing its main assumptions and requirements and explaining the details of the proposed design. It is followed by Section 4, which presents the selected test cases and discusses the achieved results. Finally, Section 6 summarizes the findings of the study and indicates future research directions.

2. Related Works

Self-optimization has been addressed in both cloud and edge–cloud contexts. However, it is difficult to find recent works that mention this topic directly. Those that do mostly target resource optimization, where the concept of a “resource” is often perceived from the cloud perspective rather than from the edge (or from both jointly).

This can be observed, for instance, in a recent survey on self-* capabilities in ECC [22]. Out of the 77 papers selected by the authors, only seven address self-optimization. Furthermore, the vast majority of these articles were published before 2020. This observation does not necessarily imply a decline in interest in autonomous optimization for the edge and the cloud. Instead, it points to a broader issue: the vague specification of the scope of different self-* modules.

In particular, there is no uniform definition that establishes the range of impact and the subject areas addressed by the different self-* modules. This is of particular concern for non-principal modules (i.e., those outside of the original IBM autonomic computing manifesto [21]). For example, the distinction between self-adaptation, self-optimization, self-scaling, self-configuration, and self-learning often depends on the approach and background of individual researchers and/or the particular application context. As a result, in existing research, these terms are used to describe overlapping mechanisms. Moreover, the lack of clarity regarding the definition of self-optimization may also result from the broad understanding of the concept of optimization within systems. In the works identified throughout this section, self-optimization referred both to (1) a separate module overseeing the infrastructure state and suggesting adaptations to its components, and to (2) an inherent part of one of the internal algorithms, facilitating its autonomy.

For example, in [31], the authors considered self-optimization from the perspective of energy efficiency. In particular, they introduced an energy-efficient mechanism for cloud resource allocation in virtual data centers. The design of the proposed approach followed a commonly adopted MAPE-K model [21]. The information about energy consumption was collected by the monitoring module and then passed to the analysis component, responsible for triggering adaptation alerts and constructing corresponding plans. The authors considered two types of adaptations: (1) resource re-allocation, initiated whenever the estimated value of energy consumption fell outside of the specified threshold, and (2) allocation of new resources from the reserve resource pool, when the execution requirements were violated. Here, it should be noted that the energy consumption of cloud resources was recursively computed using past statistics stored in the database after each resource provisioning. Consequently, this solution may not be suitable for edge devices since it may require substantial storage capacity.

In the context of resource management, the authors of [32] proposed an autonomic resource management system focused mainly on energy saving and Quality of Service (QoS). The system operated in three modes: (1) prioritizing energy saving, (2) prioritizing QoS, and (3) balancing energy saving and QoS. The authors described self-optimization as the ability of the system to switch between these modes autonomously. However, as in the previous work, the appropriate mode was selected by applying a set of threshold-based rules to the predicted application workload, which was derived from the past resource utilization history.

A different approach to self-optimization was introduced in [23], where generic workload prediction was applied. The authors used Long Short-Term Memory (LSTM) networks to identify workload patterns. In this case, self-optimization was understood as the fine-tuning of model parameters using Bayesian Optimization. Nevertheless, the reliance on computationally intensive models and the limited scalability of the optimization approach hinder the applicability of this method in edge scenarios.

In contrast to the previous examples, a perspective on self-optimization dedicated directly to edge applications was outlined in [33]. There, the infrastructure was composed of a federation of edge mini-clouds (EMCs) supervising devices with limited resources. The goal of self-optimization was to limit the number of redundant instances of running applications and, in this way, optimize energy utilization. This was achieved by comparing the applications shared between neighboring EMCs and identifying users who could be transferred to a single one. Although this approach is computationally efficient and, therefore, well suited for edge applications, it is highly domain-specific, since it assumes a very distinct continuum structure composed of mini-clouds. Accordingly, adjusting it to the more general case of ECC may be non-trivial.

When evaluating self-optimization solutions, one must also take into account studies in related sub-domains that do not explicitly use self-* terminology. In the context of this work, two such domains were analyzed: (1) adaptive sampling and (2) anomaly detection. These domains were selected because they target two important concerns of ECC: (1) excessive energy consumption associated with the frequent dissemination of large data volumes, and (2) the prevention of malfunctions in ECC components.

In light of the specificity of edge devices, the selection of literature reviewed in the next sub-sections was guided by the following criteria: (1) frugality of the algorithms, (2) their time efficiency and ability to operate in (soft) real-time, as well as (3) suitability to work on streaming data. Let us begin the overview with frugal adaptive sampling techniques.

2.1. Frugal Algorithms for Adaptive Sampling

Adaptive sampling is a technique that dynamically adjusts the sampling rate to the evolution of the current metric stream [34]. It aims to (1) reduce the monitoring frequency when the differences between subsequent observations are negligible, or (2) increase it when the observations begin to fluctuate. In this manner, it helps reduce the resources and energy wasted on redundant data dissemination, while ensuring that monitoring components are alerted to unexpected abrupt changes.

In the context of edge applications, which require real-time processing and are subject to computational constraints, the most suitable adaptive sampling algorithms employ simple statistical models.

In [29], the authors introduced AWBS, a window-based adaptive sampling technique that uses simple moving averages. Specifically, the moving averages were computed over subsequent windows of incoming time-series data, while their percentage difference was used as a measure of the metric stream evolution. The window size increased if the difference remained within the threshold and decreased otherwise. Although the proposed algorithm is lightweight, it may oversimplify complex data dynamics. For instance, simple moving averages assign equal weights to all considered observations; thus, they may overlook intermediate changes in the metric stream distribution.
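The window adjustment described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation from [29]: the function name, the threshold, the step, and the window bounds are hypothetical, and the exact growth and shrink rule of AWBS may differ.

```python
from statistics import fmean


def awbs_next_window(prev_window, curr_window, size, threshold=0.05,
                     step=1, min_size=2, max_size=32):
    """Return the next window size in an AWBS-like scheme (hypothetical parameters).

    The percentage difference between the simple moving averages of two
    consecutive windows is used as a measure of metric stream evolution.
    """
    prev_avg = fmean(prev_window)
    curr_avg = fmean(curr_window)
    # Guard against division by zero when the previous average is 0.
    denom = abs(prev_avg) if prev_avg != 0 else 1e-9
    pct_diff = abs(curr_avg - prev_avg) / denom
    if pct_diff <= threshold:
        return min(size + step, max_size)  # stable stream: widen the window
    return max(size - step, min_size)      # evolving stream: shrink the window
```

Because `fmean` weights every observation in a window equally, a short spike inside an otherwise stable window may shift the average too little to cross the threshold, which is the limitation noted above.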

A similar approach based on dynamic sliding windows was proposed in ApproxECIoT [35], where stratified sampling was considered. Here, the size of a dynamic sliding window (stratum) was defined by an adaptable parameter, controlled by the data stream rate and the availability of resources. The adjustment of the stratum size was computed using Neyman allocation, based on the standard deviation of the current sample. Even though the proposed method appears feasible, it is highly specialized for stratified sampling. Specifically, it requires a prior division of the data stream into distinct types. This is achieved using the Apache Flume framework, which combines data streams from different devices. As such, it may not be applicable when, for instance, data are collected from a single device only (i.e., there is no need for division into different types) or when the data are so heterogeneous that coherent strata cannot be formed.
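Neyman allocation distributes a sample budget n across strata in proportion to N_h S_h, the size of stratum h times its standard deviation. The sketch below shows only this generic formula, assuming the strata are already formed; how [35] maps the result onto sliding-window sizes is not reproduced, and the function name is hypothetical.

```python
from statistics import pstdev


def neyman_allocation(strata, total_sample_size):
    """Split a sample budget across strata using Neyman allocation.

    strata maps a stratum name to the observations currently in it.
    Stratum h receives n * N_h * S_h / sum_j(N_j * S_j), where N_h is the
    stratum size and S_h its (population) standard deviation.
    """
    weights = {name: len(obs) * pstdev(obs) for name, obs in strata.items()}
    total = sum(weights.values())
    if total == 0:
        # Every stratum is constant: fall back to proportional allocation.
        n_all = sum(len(obs) for obs in strata.values())
        return {name: total_sample_size * len(obs) / n_all
                for name, obs in strata.items()}
    return {name: total_sample_size * w / total for name, w in weights.items()}
```

A stratum whose values do not vary receives no share of the budget, since sampling it more often would add no information about its distribution.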

The authors of UDASA [30] proposed a more general approach, in which the subject of adaptation was the sampling frequency rather than the sampling window. In particular, the method used an enhanced sigmoid function that modified the sampling frequency based on the Median Absolute Deviation (MAD) of the most recently sensed data. The amount of collected data was specified by a sliding window, while the range of frequency adaptation was controlled through a user-defined saving level parameter. Although MAD is robust to outliers, the same property makes it less sensitive to sudden fluctuations. Hence, it may omit individual data spikes or handle them with significant delays. Consequently, such an approach may not be well suited to time-critical applications.
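A minimal sketch of a MAD-driven sigmoid mapping is given below. The exact form of UDASA's enhanced sigmoid is not reproduced here; the mapping, the `steepness` and `midpoint` parameters, and the way `saving_level` sets the lowest frequency are hypothetical choices that only illustrate a user-bounded, MAD-controlled frequency.

```python
import math
from statistics import median


def mad(window):
    """Median Absolute Deviation of the most recent observations."""
    m = median(window)
    return median(abs(x - m) for x in window)


def sigmoid_frequency(window, f_max, saving_level, steepness=1.0, midpoint=1.0):
    """Map the MAD of a sliding window to a sampling frequency (hypothetical form).

    saving_level in [0, 1) lowers the minimum frequency to f_max * (1 - saving_level);
    a larger MAD (more variability) pushes the frequency toward f_max.
    """
    f_min = f_max * (1.0 - saving_level)
    spread = mad(window)
    s = 1.0 / (1.0 + math.exp(-steepness * (spread - midpoint)))
    return f_min + (f_max - f_min) * s
```

For the window [1, 2, 3, 4, 100], the MAD is 1: the single spike barely changes the statistic, which illustrates why MAD-based adaptation can react slowly to isolated fluctuations.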

As an alternative, an algorithm that performs computations on individual observations was introduced in the Adaptive Monitoring Framework (AdaM) [34]. In this study, the current evolution of the metric stream was estimated using PEWMA, assuming a Gaussian signal distribution. The sampling period was derived from the difference between the estimated and observed stream deviations, compared against a target confidence parameter. This algorithm is indeed lightweight and extremely memory-efficient. However, despite the authors’ claim of a small number of parameters, six of them need to be set by a system operator, which can be considered one of the drawbacks of this approach.
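The PEWMA recursion can be sketched as below, assuming the commonly used formulation in which the smoothing factor becomes α_t = α(1 − βP_t), where P_t is the standard normal density of the current z-score, with a plain running mean during an initial warm-up. The `next_period` function is a simplified, hypothetical stand-in for AdaM's sampling period formula: it lengthens the period while the estimated standard deviation changes by less than γ and otherwise falls back to the minimum period. All parameter defaults are illustrative.

```python
import math


class PEWMA:
    """Probabilistic exponentially weighted moving average of a scalar stream.

    alpha: base smoothing factor; beta: weight of the probability term;
    warmup: number of initial samples folded in with a plain running mean.
    """

    def __init__(self, alpha=0.97, beta=1.0, warmup=10):
        self.alpha, self.beta, self.warmup = alpha, beta, warmup
        self.t = 0
        self.s1 = 0.0  # running estimate of E[x]
        self.s2 = 0.0  # running estimate of E[x^2]

    @property
    def mean(self):
        return self.s1

    @property
    def std(self):
        # Clamp tiny negative values caused by floating-point round-off.
        return math.sqrt(max(self.s2 - self.s1 ** 2, 0.0))

    def update(self, x):
        """Fold x into the estimates; return P_t (None for the first sample)."""
        self.t += 1
        if self.t == 1:
            self.s1, self.s2 = x, x * x
            return None
        sd = self.std
        z = (x - self.s1) / sd if sd > 0 else 0.0
        p = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
        if self.t <= self.warmup:
            a = 1.0 - 1.0 / self.t  # plain running mean during warm-up
        else:
            # Improbable x (small p) keeps a close to alpha, so x gets little weight.
            a = self.alpha * (1.0 - self.beta * p)
        self.s1 = a * self.s1 + (1.0 - a) * x
        self.s2 = a * self.s2 + (1.0 - a) * x * x
        return p


def next_period(pewma, x, period, gamma=0.1, step=1.0, t_min=1.0, t_max=60.0):
    """Hypothetical AdaM-style rule (simplified, not the exact formula of [34])."""
    prev_std = pewma.std
    pewma.update(x)
    if prev_std == 0:
        return period  # no spread estimate yet: keep the current period
    confidence = 1.0 - abs(pewma.std - prev_std) / prev_std
    if confidence >= 1.0 - gamma:
        return min(period + step, t_max)  # stable stream: sample less often
    return t_min  # stream is changing: fall back to the shortest period
```

Only three floats and a counter are kept per metric, which is what makes this family of estimators attractive for memory-constrained edge nodes.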

There are also adaptive sampling studies that apply more advanced statistical techniques, e.g., linear regression. In [36], the authors proposed a method consisting of three steps: (1) acquisition, (2) linear fitting, and (3) an adaptive sampling strategy. As part of this approach, a linear regression model was constructed using time-series sampling data collected from the sensors. The model was trained on a specified number of samples, and the median jitter sum was then used to represent the changing trend of the data stream. One significant limitation of this work is the assumption that short-term data are linear, which may not hold in the majority of real-world applications. Furthermore, periodically storing relevant samples in a database consumes extra storage and computational resources. This, in turn, may impede the scalability of the solution (e.g., not all restricted devices can store large quantities of data samples). Data could, of course, be sent to a “central repository”, but this defeats the purpose of performing analysis at the edge and reintroduces the problems of using this approach in time-critical applications.
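The linear fitting step can be illustrated with an ordinary least-squares line over the last n samples. The sketch below is hypothetical: the median jitter sum used in [36] is replaced by a simpler one-step extrapolation error, and the doubling and reset rule for the interval, as well as all parameter names, are illustrative assumptions.

```python
from statistics import fmean


def fit_line(values):
    """Ordinary least-squares fit y = a + b * t over t = 0..n-1 (needs n >= 2)."""
    n = len(values)
    ts = range(n)
    t_mean, y_mean = (n - 1) / 2, fmean(values)
    sxx = sum((t - t_mean) ** 2 for t in ts)
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, values))
    slope = sxy / sxx
    return y_mean - slope * t_mean, slope


def adjust_interval(window, new_value, interval, tolerance=1.0,
                    min_interval=1, max_interval=16):
    """Hypothetical rule: lengthen the interval while the stream stays linear."""
    intercept, slope = fit_line(window)
    predicted = intercept + slope * len(window)  # extrapolate one step ahead
    if abs(new_value - predicted) <= tolerance:
        return min(interval * 2, max_interval)
    return min_interval
```

If the short-term data are not linear, the extrapolation error grows even for normal behavior and the interval collapses to its minimum, which reflects the linearity limitation discussed above.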

Separately, in [37], the adaptation of the sampling period was addressed using a Kalman filter. In particular, the authors proposed a methodology for an adjustable sampling interval in water burst detection. Water bursts were detected by calculating flow residuals and applying low-pass filtering. The sampling period was adapted whenever the computed values violated predefined thresholds. A significant limitation of this work is its assumption of periodic similarity between past and present data patterns. Specifically, the authors argued that the data patterns of the current week are equivalent to those of the previous week. Moreover, they argued that missing historical data could be sufficiently estimated using Lagrange interpolation. Such an assumption neglects the possibility of unpredictable or abnormal events, potentially degrading the quality of Kalman predictions.
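For reference, a scalar Kalman filter with a random-walk state model, paired with a residual threshold that shortens the sampling interval, can be sketched as follows. The state model, noise variances, threshold, interval rule, and names are assumptions for illustration; [37] additionally uses flow residuals, low-pass filtering, and weekly historical patterns, none of which are reproduced.

```python
class ScalarKalman:
    """1-D Kalman filter with a random-walk state model (an assumption)."""

    def __init__(self, q=1e-3, r=1.0, x0=0.0, p0=1.0):
        self.q, self.r = q, r    # process and measurement noise variances
        self.x, self.p = x0, p0  # state estimate and its variance

    def step(self, z):
        """Predict, then correct with measurement z; return the innovation."""
        self.p += self.q                # predict: state unchanged, variance grows
        innovation = z - self.x         # residual before the correction
        k = self.p / (self.p + self.r)  # Kalman gain
        self.x += k * innovation
        self.p *= 1.0 - k
        return innovation


def next_interval(kf, z, interval, threshold=3.0, min_interval=1, max_interval=60):
    """Hypothetical rule: reset the interval when the residual breaches the threshold."""
    residual = kf.step(z)
    if abs(residual) > threshold:
        return min_interval
    return min(interval + 1, max_interval)
```

After a sudden jump, the filtered estimate moves only part of the way toward the new measurement, which is the smoothing effect that can mask abrupt changes.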

All the analyzed adaptive sampling approaches have their individual benefits and limitations. The summary of reviewed algorithms is presented in Table 1.

Table 1. Summary of different adaptive sampling algorithms.

As can be observed, the majority of adaptive sampling algorithms target the sampling period while using simple statistical measures (e.g., moving average, median, and standard deviation). Most of them are general-purpose and can, hence, be applied to a wide variety of ECC applications. Moreover, almost all algorithms perform computations over batches of data rather than individual observations. Such algorithms may not be the most suitable for highly critical applications, as they may fail to detect sudden data spikes (e.g., with the Kalman filter, abrupt changes can potentially be lost due to smoothing). Additionally, some of these algorithms require supplementary storage of historical information to estimate the evolution of the metric stream.

Among the analyzed works, the AdaM adaptive sampling technique [34] was determined to be the most suitable for the self-optimization module. In contrast to the remaining methods, this algorithm relies only on statistics related to the previous observation, hence requiring a minimal amount of storage. Furthermore, by employing a probabilistic approach, the technique can rapidly accommodate spikes, which are likely to occur in edge computing scenarios. These spikes, however, do not dominate future updates, since observations that are improbable under the current estimate receive a lower weight in the PEWMA update. Hence, AdaM can be suitable for rapidly fluctuating streams. A notable limitation of the method is its number of model parameters. However, this concern was mitigated in the design of the self-optimization module by integrating HiTL interfaces (as described in Section 3.4).

The presented adaptive sampling algorithms enhance data monitoring across various ECC components while reducing the energy used for data transmission. Although such online monitoring helps maintain awareness of component states, it does not provide direct means of detecting sudden abnormal behaviors. This requires dedicated anomaly detection techniques, which are analyzed in the following subsection.

2.2. Frugal Algorithms for Real-Time Anomaly Detection

According to [38], an anomaly is an observation that deviates so much from other observations as to arouse suspicions that it was generated by a different mechanism. Consequently, anomaly detection is a data mining technique that aims to identify such abnormalities within data. Two main types of anomaly detection approaches can be distinguished: contextual and point-based. To illustrate them, consider an example of network traffic monitoring. In point-based anomaly detection, any single abrupt increase in network traffic may be considered anomalous, potentially indicating a security breach such as a DDoS attack. However, in contextual anomaly detection, which often incorporates prior knowledge (e.g., user behavior patterns), the same observation may be classified as normal if it appears within a recurring period of increased user activity.
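The difference between the two types can be illustrated by applying the same deviation test to two different reference sets. In the sketch below, the traffic values are made up for illustration, and the k-sigma test (`deviates`, a hypothetical helper) is a simple stand-in rather than a specific method from the literature: compared with the last few minutes, the new value is an abrupt jump (point-based view), whereas compared with the same hour on previous days it is a usual peak (contextual view).

```python
from statistics import fmean, pstdev


def deviates(value, reference, k=3.0):
    """True if value lies more than k population std devs from the reference mean."""
    mu, sigma = fmean(reference), pstdev(reference)
    return abs(value - mu) > k * sigma


# Illustrative (made-up) network traffic, in requests per second.
recent_traffic = [100, 102, 98, 101, 99]       # the last few minutes
same_hour_previous_days = [500, 510, 490, 505]  # e.g., a recurring evening peak
new_value = 520

point_based = deviates(new_value, recent_traffic)          # abrupt jump: flagged
contextual = deviates(new_value, same_hour_previous_days)  # usual peak: not flagged
```

The test itself is identical in both cases; what makes the second check contextual is the choice of reference data, which encodes prior knowledge about recurring user activity.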

Both of these approaches have been extensively studied in recent years. For example, for contextual anomaly detection, the authors of LightESD [39] introduced an approach based on the extraction of periodograms and residuals. In particular, seasonality is identified by computing periodograms using Welch’s method. Then, the technique uses decomposition to extract and remove the trend and seasonality components in order to obtain the residual. Finally, anomalies are detected by performing a modified version of the Extreme Studentized Deviate (ESD) test. As indicated by the authors, the major limitation of the proposed approach is that it requires a batch of data to learn the underlying patterns, which may not be available in all applications.

Another approach to contextual anomaly detection was proposed in [40], where the authors used binary classification and hypothesis testing. In the first, offline stage, normal data were divided into a predefined number of subsets, each of which was subjected to k-means clustering. The union of all clusters was regarded as the region of normal data. Hence, a new observation was considered anomalous whenever it did not fit into any of the clusters. This anomaly classification was used to obtain a binary data stream. In the last step, the authors employed one of two methods for anomaly pattern detection: (1) APD-HT, with hypothesis testing, or (2) APD-CC, with control charts. Although this technique is lightweight when working on streaming data, it relies on an offline model training step. The assumption that the training dataset contains only normal observations is also a significant limitation, especially in systems that lack prior domain data. Moreover, relying on an offline pre-trained model does not accommodate the evolution of the data stream.
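The online part of this scheme can be sketched as below: each observation is mapped to 1 if it falls outside every cluster of the normal region and to 0 otherwise, and a window of these bits is checked against a control limit. The offline k-means training is not shown; representing each cluster as a ball with a given radius, using a standard p-chart upper limit with an assumed baseline rate `p_bar`, and the function names are all assumptions, not necessarily the APD-CC formulation of [40].

```python
import math


def outside_normal_region(x, centers, radii):
    """Return 1 if x lies outside every cluster ball of the normal region, else 0."""
    for center, radius in zip(centers, radii):
        if math.dist(x, center) <= radius:
            return 0
    return 1


def p_chart_alarm(bits, p_bar):
    """True if the share of 1s in the window exceeds the p-chart upper control limit."""
    n = len(bits)
    ucl = p_bar + 3.0 * math.sqrt(p_bar * (1.0 - p_bar) / n)
    return sum(bits) / n > ucl
```

Treating a window of bits, rather than each bit, as the unit of decision is what lets such a scheme react to anomaly patterns instead of isolated misclassifications.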

The latter problem was addressed in [41], where another Contextual Anomaly Detection (CAD) framework was proposed. Here, the authors described a semi-online method for detecting contextual anomalies in energy consumption data streams. To predict short-term energy consumption, the framework used an LSTM. Anomalies were identified by comparing the predicted and actual consumption values, with errors modeled using a rolling normal distribution. Moreover, the authors employed the CAD-D3 method for concept drift detection, which triggered the retraining of the prediction model. Although the authors mentioned IoT applications, the method is arguably too computationally intensive to be deployed on edge devices. Moreover, it relies on several hyperparameters whose tuning may require domain expertise and considerable trial and error (for each individual deployment).
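The error-modeling step can be sketched independently of the predictor: residuals between actual and predicted values are kept in a fixed-size window, fitted with a normal distribution, and a new residual is flagged when it lies more than k standard deviations from the window mean. The LSTM predictor and the CAD-D3 drift detector are not reproduced; the class name, window length, k, and the choice to keep anomalous errors in the window are illustrative assumptions.

```python
from collections import deque
from statistics import fmean, pstdev


class RollingErrorModel:
    """Flags prediction errors that are unlikely under a rolling normal fit."""

    def __init__(self, window=50, k=3.0):
        self.errors = deque(maxlen=window)  # oldest errors drop out automatically
        self.k = k

    def check(self, actual, predicted):
        """Return True if the new error is anomalous, then add it to the window."""
        error = actual - predicted
        anomalous = False
        if len(self.errors) >= 2:
            mu, sigma = fmean(self.errors), pstdev(self.errors)
            anomalous = sigma > 0 and abs(error - mu) > self.k * sigma
        self.errors.append(error)
        return anomalous
```

Keeping anomalous errors in the window lets the model adapt to a persistent shift, at the cost of temporarily widening the normal band after a spike.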

As an alternative, in [42], the authors introduced PiForest, which modifies a well-known anomaly detection technique, iForest [43], by introducing data pre-processing. In particular, the objective was to overcome iForest’s need for substantial storage capacity when processing high-dimensional data. To this end, the authors utilized principal component analysis (PCA) for dimensionality reduction. Moreover, to handle streaming data, a sliding window was used. Unlike the previously described methods, PiForest is not specifically designed for contextual anomaly detection. However, tests conducted by the authors suggest its efficacy in such scenarios. Moreover, the technique is suited for deployment on resource-constrained devices. Its main disadvantage is hyperparameter sensitivity, as the performance of the algorithm relies on an appropriate setting of the number of trees.

Separately, for point-based anomaly detection, a density-based technique was proposed in [44]. It builds on the concept of collecting data samples into a cluster. Upon each new observation, a recursive density formula is employed to update the information about the compactness of the samples. Whenever the current density decreases below the mean density, the observation is considered anomalous. The authors employed a non-parametric Cauchy function as the density kernel to decrease the sensitivity to outliers. Moreover, in order to control the transition between normal and anomalous states, additional parameters, including anomaly/normality windows and transition thresholds, were introduced. In contrast to offline and semi-online approaches, this algorithm is extremely lightweight and does not need to store historical data. However, it may be less effective at detecting contextual anomalies, as it does not learn complex data patterns.

Another online anomaly detection approach, GAHT, was proposed in [45]. The authors modified the Extremely Fast Decision Tree (EFDT) method [46] to improve its energy efficiency. In particular, tree growth was optimized through dynamic hyperparameters: the split criteria were adjusted dynamically, based on the distribution of instances at each node. Although the algorithm is computationally efficient, it does not perform well on high-dimensional data, since its time and space complexity depend on the number of attributes. Moreover, unlike PiForest, GAHT was not evaluated for contextual anomaly detection.

In order to outline the differences between all summarized methods, their selected characteristics are presented in Table 2.

Table 2.

Summary of different anomaly detection algorithms.

It can be observed that the techniques dedicated to the detection of contextual anomalies mostly rely on offline or semi-online learning methods. Moreover, they often require additional storage capacity. On the other hand, online methods are more often employed for point-based analysis and are better at accommodating concept drift.

Interestingly, these observations are consistent with the findings of the survey [47], where the authors reviewed the anomaly detection methods for the streaming data. Here, it was noted that the offline methods are the least suitable for real-time applications, due to their computational complexity and a lack of concept drift accommodation. The semi-online methods were deemed to perform better, but not all of them can be utilized on resource-limited devices, as some require additional storage for historical data. Therefore, among those categories, online methods were considered the most appropriate for ECC.

All of the aforementioned conclusions were taken into account when selecting the anomaly detection approach for the self-optimization module. In particular, it was decided that the module would implement the density-based approach [44]. Among all point-based methods, this approach was considered the most computationally efficient and generalizable. It does not require extensive parameter tuning and is the most explainable, which makes it easy to engage HiTL. Moreover, the method itself is memoryless, since its calculations are based solely on the recursively updated mean and scalar product, making it suitable for deployment on resource-restricted devices.

The selected adaptive sampling and anomaly detection methods served as the core components of the implemented self-optimization module, which is described in detail in the following section.

3. Implemented Approach

The self-optimization module belongs to the collection of nine self-* modules available for deployment in aerOS. Its primary role is to analyze the current state of the computational resources and to suggest potential adaptations that could lead to broadly understood efficiency improvements. To achieve this objective, self-optimization employs two mechanisms: (1) adaptive sampling, which is used to autonomously control the frequency of data monitoring, and (2) anomaly detection, which aims to identify and alert about potential abnormalities in resource utilization. Before proceeding to an in-depth description of each of these mechanisms, let us first discuss the requirements that guided their design. It should be emphasized that although these requirements were formulated for the particular infrastructure (i.e., aerOS) in which the module was tested, they generalize to other scenarios as well, making the proposed design versatile across different applications.

3.1. Requirements

The requirements were derived based on the specificity of the environment in which the self-optimization module is intended to operate. Therefore, prior to listing them, it is necessary to outline the main characteristics of aerOS.

Let us recall that aerOS is an intelligent meta-operating system for the edge-IoT-Cloud continuum. The operations in aerOS are performed on computational nodes called IEs. As these may differ in terms of available resources or internal architecture, no assumptions can (or should) be made regarding their computational capabilities. Moreover, tasks executed on each IE are treated as “black boxes”, meaning that the only attributes known to the system are their execution requirements.

In order to improve the management and performance of the entire infrastructure, aerOS provides a set of mandatory and auxiliary services deployable on individual IEs. Among these services are nine self-* modules [22], which aim to enrich IEs with autonomous capabilities. In the context of self-optimization, the most important self-* modules are (1) self-orchestration, which invokes rule-based mechanisms that autonomously manage IE resources, and (2) self-awareness, which continuously monitors the IE state, providing insights into its current resource utilization and power consumption. From the self-optimization perspective, these modules act as points of interaction with the external environment. However, they can be substituted with any components that provide monitoring data and accept the output of the module under discussion.

Taking into account all the aforementioned features of aerOS, the self-optimization module was designed according to the following requirements:

- Tailored to operate on data streams: since the self-optimization module receives data from the self-awareness module, its internal mechanisms must be tailored to work on real-time data streams. Therefore, at this stage, methods that utilize large data batches or process entire datasets were excluded from consideration.

- Employ only frugal techniques: all the implemented algorithms should be computationally efficient and require a minimal amount of storage. This requirement was established to enable deploying self-optimization on a wide variety of IEs, including those operating on small edge devices. Consequently, no additional storage for historical data was considered, which eliminated the possibility of implementing some of the more advanced analytical algorithms. However, it should be stressed that this was a design decision rooted in in-depth analysis of pilots, guiding scenarios, and use cases of aerOS and other real-life-anchored projects dealing with ECC.

- Facilitate modular design: all internal parts of the self-optimization module should be seamlessly extendable, to accommodate new requirements or analyze new types of monitoring data. In addition, self-optimization should expose external interfaces that facilitate interaction with human operators or other components of the aerOS continuum. Fulfilling this requirement makes the module generically adaptable, which is necessary because the data and metrics to be analyzed may be deployment-specific.

These requirements dictated the overall architectural design of the self-optimization module, as well as influenced the selection of its underlying algorithms, which are described throughout the following sub-sections.

3.2. Self-Optimization Architecture

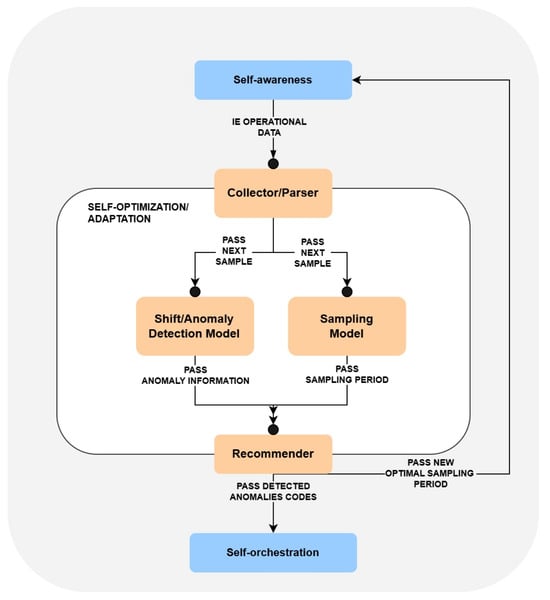

The high-level architecture of the self-optimization is depicted in Figure 1. It illustrates the core parts of the module, which are: (1) Collector/Parser, (2) Shift/Anomaly Detection Model, (3) Sampling Model and (4) Recommender. Let us now describe the responsibilities of each of these components and their role in processing the received monitoring data.

Figure 1.

Architecture of self-optimization module.

The Collector/Parser is the component that receives the hardware state of the IE, sent periodically by the self-awareness module. It can be thought of as a gateway that collects raw input and maps it into a format suitable for further analysis. In particular, the data sent by the self-awareness module consist of a set of different metrics, not all of which are relevant to the implemented analytical models (e.g., IE location, CPU architecture, or trust score).

Therefore, it is the responsibility of Collector/Parser to extract the required metrics. Currently, these include the CPU cores, RAM, and disk utilization, as well as the power consumption. Since these metrics are stored as key-value pairs, their scope can easily be extended in the future.
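To make the extraction step concrete, the following Python sketch filters a self-awareness report down to the analyzed metrics. It assumes the report arrives as a flat dictionary; the payload keys (`cpu_usage`, `ram_usage`, `disk_usage`, `power_consumption`) and the function name `parse_ie_state` are hypothetical and do not reflect the actual aerOS message schema.

```python
from typing import Any, Dict

# Hypothetical payload keys; the real self-awareness schema may differ.
# Extending the set of analyzed metrics only requires a new entry here.
METRIC_KEYS: Dict[str, str] = {
    "cpu": "cpu_usage",
    "ram": "ram_usage",
    "disk": "disk_usage",
    "power": "power_consumption",
}


def parse_ie_state(payload: Dict[str, Any]) -> Dict[str, float]:
    """Keep only the metrics needed by the analytical models, converted to floats."""
    parsed: Dict[str, float] = {}
    for metric, key in METRIC_KEYS.items():
        value = payload.get(key)
        if value is not None:  # skip metrics missing from this report
            parsed[metric] = float(value)
    return parsed


raw = {
    "cpu_usage": "42.5", "ram_usage": 61, "disk_usage": 12.0,
    "power_consumption": 7.3, "location": "edge-01", "trust_score": 0.9,
}
print(parse_ie_state(raw))  # {'cpu': 42.5, 'ram': 61.0, 'disk': 12.0, 'power': 7.3}
```

Keeping the mapping in a single dictionary mirrors the key-value design: adding a metric touches only `METRIC_KEYS`, while irrelevant fields such as location or trust score are simply ignored.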

After formatting the IE data, Collector/Parser forwards them to two analytical components, Shift/Anomaly Detection Model and Sampling Model, which are responsible for identifying potential adaptation possibilities.

In particular, the role of the Shift/Anomaly Detection Model is to determine whether the value of any of the considered utilization metrics should be classified as anomalous. Separately, Sampling Model estimates the most appropriate frequency at which self-awareness should monitor the IE state. The process of detecting anomalies is further described in Section 3.3, while the applied adaptive sampling approach is discussed in Section 3.4. The results of both analyses are passed to the last self-optimization component, the Recommender.

Recommender is responsible for forwarding the suggested adaptations to the associated self-* modules. Specifically, its functionality is organized into two distinct flows. (1) Upon receiving the proposed optimal sampling period from Sampling Model, Recommender forwards that information to self-awareness so that it can adjust the monitoring frequency. (2) After obtaining the details about the detected anomalies from Shift/Anomaly Detection Model, it maps each anomaly type into a corresponding unique code and sends the codes to the self-orchestrator. In this context, a unique code acts as a particular type of alert (e.g., a sudden increase in CPU usage in a particular infrastructure device can be mapped into the single code 001). The self-orchestrator maps each type of alert into a corresponding mitigation/recovery action, represented by a serverless function, and triggers its execution on the common serverless platform OpenFaaS [48]. In aerOS, such functions are predefined by the developers; hence, the type of applied mitigation action is specific to the given application domain (e.g., it may involve re-allocation of deployed services). Let us also note that the communication between self-optimization and the self-orchestrator is highly generic, relying on a single code only. Hence, the proposed self-optimization module can be plugged in as a standalone component into any other type of system that defines its own mechanisms for performing adaptation actions.
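The anomaly-to-code flow of the Recommender can be sketched as a simple lookup table. Apart from the CPU-increase example (code 001) taken from the text, the codes, metric names, and the `Anomaly`/`to_alert_codes` names are hypothetical; the transport of the codes to the self-orchestrator is not shown.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Anomaly:
    metric: str     # e.g. "cpu", "ram", "disk"
    direction: str  # "INCREASE" or "DECREASE"


# Hypothetical code table: only ("cpu", "INCREASE") -> "001" mirrors the example
# in the text; the remaining codes are invented for illustration.
ALERT_CODES: Dict[Tuple[str, str], str] = {
    ("cpu", "INCREASE"): "001",
    ("cpu", "DECREASE"): "002",
    ("ram", "INCREASE"): "003",
    ("ram", "DECREASE"): "004",
    ("disk", "INCREASE"): "005",
    ("disk", "DECREASE"): "006",
}


def to_alert_codes(anomalies: List[Anomaly]) -> List[str]:
    """Translate detected anomalies into the codes sent to the self-orchestrator."""
    codes: List[str] = []
    for anomaly in anomalies:
        code = ALERT_CODES.get((anomaly.metric, anomaly.direction))
        if code is not None:  # unknown anomaly types are not forwarded
            codes.append(code)
    return codes


print(to_alert_codes([Anomaly("cpu", "INCREASE"), Anomaly("ram", "DECREASE")]))  # ['001', '004']
```

Because the receiver only sees opaque codes, swapping the self-orchestrator for another adaptation engine requires nothing more than agreeing on the table.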

Let us observe that the architecture of the self-optimization module facilitates the separation of concerns. In fact, it resembles a simplified version of the MAPE-K model. In particular, the data collection and pre-processing in Collector/Parser can be viewed as the MAPE-K Monitoring component. The MAPE-K Analysis component is reflected by Sampling Model and Shift/Anomaly Detection Model, since these identify potential adaptation possibilities. Finally, the Recommender corresponds to MAPE-K Planning and Execution. Such a design supports a high level of modularity, enabling further extensions of the system.

Since the core components of the analysis are Shift/Anomaly Detection Model and Sampling Model, let us describe them in further detail.

3.3. Shift/Anomaly Detection Model

The primary responsibility of the Shift/Anomaly Detection Model is to identify anomalies in the current IE state. Since the representation of the IE state is composed of multiple metrics, there are two main approaches that can be applied in terms of anomaly detection.

The first approach considers the IE state as a whole, meaning that the detected anomalies represent a potential degradation of overall IE performance but are not associated with any particular (individual) resource utilization metric. Applying such an approach requires either scalarizing the IE state to a single value or implementing anomaly detection techniques that operate on multivariate data. While this may simplify anomaly detection, requiring only one model to be implemented, it also sacrifices metric-level interpretability. In particular, since no specific anomalous metrics are identified, the root cause of the problem cannot be easily diagnosed. Therefore, determining appropriate mitigation measures may also be very challenging. For example, an anomaly may be detected due to either a memory leak or excessive CPU utilization; these are two separate issues that require completely different handling.

The second approach addresses such cases by detecting anomalies for separate metrics. In particular, the metrics are divided into scalar values based on their type and fed into separate anomaly detection models. Each of these models requires individual parameter tuning, which may be viewed as both a disadvantage and an advantage. On the one hand, such an approach requires adjusting a large number of parameters, which may not always be straightforward. On the other hand, it may also improve the performance of the models, since it allows their behavior to be adjusted to the evolution and distribution of individual metric streams. It is also possible to perform anomaly detection on subgroups of parameters, but exploring such possibilities further is beyond the scope of this contribution. Suffice it to say that the proposed approach can support anomaly detection based on any subgroup of parameters. All that is needed is to: (a) adjust the Collector/Parser to extract the needed parameters, and (b) insert the corresponding Shift/Anomaly Detection Model.

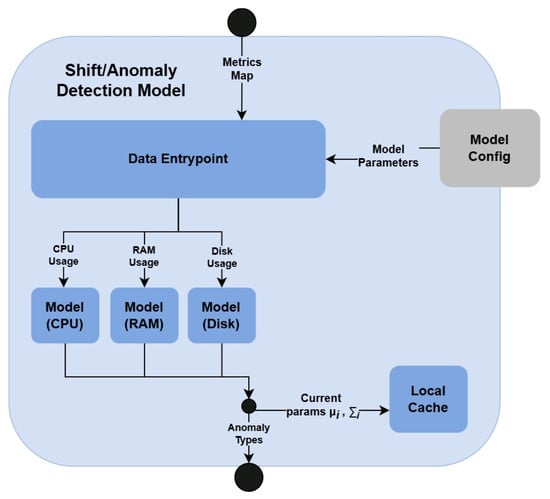

The Shift/Anomaly Detection Model considered in what follows employs the second approach, as illustrated by the high-level architecture of the component in Figure 2.

Figure 2.

Architecture of Shift/Anomaly Detection Model.

The overall flow of the Shift/Anomaly Detection Model is as follows. The IE data are initially sent to the Data Entrypoint, which is responsible for extracting the observed value corresponding to each metric type m. Currently, Shift/Anomaly Detection Model takes into account only three types of metrics: (1) CPU utilization, (2) RAM utilization, and (3) disk utilization. However, as noted, this scope may be extended in the future without affecting the remaining parts of the system. For each metric m, Data Entrypoint fetches the parameter set of the model that handles m from the Model Config component. Each pair consisting of the observed value and its parameter set is subsequently fed into the corresponding model, which applies a density-based anomaly detection algorithm [44]. After the detection process finishes, the anomalies returned by individual models are aggregated and passed to the Recommender (see Section 3.2). Moreover, the statistics characterizing the temporal evolution of each metric stream (i.e., the sample mean and the scalar product of metric m at time step i) are updated and stored in a lightweight Local Cache to support incremental computations.
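A minimal Python sketch of this per-metric flow follows, assuming each sub-model is a callable that receives the observed value, its parameter set, and its cached statistics. The class and function names are hypothetical, and `placeholder_sub_model` is a deliberately simple stand-in (a running-mean margin check), not the density-based algorithm of [44].

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

# A sub-model receives (value, params, cached_state) and returns
# (is_anomalous, updated_state); only small statistics are cached.
SubModel = Callable[[float, Dict[str, Any], Dict[str, float]], Tuple[bool, Dict[str, float]]]


@dataclass
class ShiftAnomalyDetection:
    sub_models: Dict[str, SubModel]          # one sub-model per metric type
    model_config: Dict[str, Dict[str, Any]]  # parameter set per metric type
    local_cache: Dict[str, Dict[str, float]] = field(default_factory=dict)

    def process(self, ie_state: Dict[str, float]) -> List[str]:
        """Data Entrypoint: split the IE state per metric, run each sub-model, aggregate."""
        anomalous: List[str] = []
        for metric, sub_model in self.sub_models.items():
            if metric not in ie_state:
                continue  # metric absent from this report
            params = self.model_config[metric]
            state = self.local_cache.get(metric, {})
            is_anomalous, new_state = sub_model(ie_state[metric], params, state)
            self.local_cache[metric] = new_state  # incremental statistics only
            if is_anomalous:
                anomalous.append(metric)
        return anomalous  # aggregated result handed to the Recommender


def placeholder_sub_model(value: float, params: Dict[str, Any],
                          state: Dict[str, float]) -> Tuple[bool, Dict[str, float]]:
    # Stand-in for the density-based detector: flags values far from the running mean.
    k = state.get("k", 0.0) + 1.0
    mean = state.get("mean", value)
    mean += (value - mean) / k
    return abs(value - mean) > params["margin"], {"k": k, "mean": mean}


model = ShiftAnomalyDetection(
    sub_models={m: placeholder_sub_model for m in ("cpu", "ram", "disk")},
    model_config={m: {"margin": 20.0} for m in ("cpu", "ram", "disk")},
)
print(model.process({"cpu": 10.0, "ram": 40.0, "disk": 5.0, "power": 7.0}))  # []
print(model.process({"cpu": 95.0, "ram": 41.0, "disk": 5.0, "power": 7.0}))  # ['cpu']
```

Since sub-models are looked up by metric name, adding a new metric amounts to registering one more sub-model and its parameter set; the power reading is ignored here because no sub-model is registered for it.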

Taking this into account, in what follows, a detailed description of the algorithm responsible for anomaly detection is presented.

3.3.1. Density-Based Anomaly Detection

As indicated in Section 2, Shift/Anomaly Detection Model implements a density-based point anomaly detection algorithm described in [44]. Specifically, the algorithm represents the evolution of the metric stream through the computation of consecutive sample densities. The notation used in the subsequent algorithm’s description is summarized in Table 3.

Table 3.

Notation used in the density-based anomaly detection algorithm.

The computations begin upon receiving the next observation from the Collector/Parser component. In the first step, the sample mean and the scalar product are calculated using the recursive formulas presented in Equations (1) and (2). Both formulas rely on no data other than the current observation and the previously computed statistics (i.e., the previous sample mean and scalar product). Hence, the anomaly detection method does not require any storage beyond a lightweight local cache, which is important in the case of resource-constrained devices.
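Equations (1) and (2) are not reproduced in this text. Assuming they take the standard recursive density estimation (RDE) form, in which the scalar product is the running mean of the squared norm (for a scalar metric, simply x squared), the update can be sketched as follows; `update_stats` is a hypothetical name.

```python
from typing import Tuple


def update_stats(k: int, mean_prev: float, scalar_prev: float, x: float) -> Tuple[float, float]:
    """Recursively update the sample mean and the scalar product (mean of x**2)
    at time step k (1-based), using only the previously stored statistics."""
    if k == 1:
        return x, x * x  # the first observation initializes both statistics
    w = (k - 1) / k
    mean = w * mean_prev + x / k
    scalar = w * scalar_prev + (x * x) / k
    return mean, scalar


mean, scalar = 0.0, 0.0
for k, x in enumerate([3.0, 5.0, 10.0], start=1):
    mean, scalar = update_stats(k, mean, scalar, x)
print(round(mean, 6), round(scalar, 6))  # 6.0 44.666667
```

Only two floats per metric need to be kept between samples, which is what the Local Cache holds.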

After the sample mean and the scalar product are updated, the obtained values are used to compute the sample density. The density formula is inspired by the concept of data clouds (i.e., fuzzy representations of data clusters) introduced in [49]. Here, the Cauchy kernel was applied to the data cloud density in order to control the contribution of individual sample points to the estimation, mitigating the impact of outliers. In comparison to other kernels (e.g., Gaussian), the Cauchy kernel exhibits heavier tails and decays more slowly. As such, it anticipates the occurrence of extreme deviations rather than treating them as nearly impossible, which makes it less sensitive to them and limits their impact on the final estimation. Thus, Cauchy kernels provide greater robustness in scenarios with highly noisy or irregular data. To accommodate the requirements of incremental, stream-based computation, the recursive formula presented in Equation (3) is applied.
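Under the same RDE assumption, the Cauchy-kernel density of the current observation can be expressed through the two cached statistics only. The sketch below, with hypothetical function names, checks this recursive form against a brute-force Cauchy kernel applied to the mean squared distance from all samples seen so far, which is exactly the quantity the recursion avoids recomputing. It is an illustration, not a transcription of Equation (3).

```python
from typing import List


def cauchy_density(x: float, mean: float, scalar: float) -> float:
    """RDE-style Cauchy density of x; mean and scalar must already include x."""
    spread = scalar - mean * mean  # running variance of the stream
    return 1.0 / (1.0 + (x - mean) ** 2 + spread)


def brute_force_density(history: List[float]) -> float:
    """Same quantity from the full history (used only to check the recursion)."""
    x, k = history[-1], len(history)
    return 1.0 / (1.0 + sum((x - xj) ** 2 for xj in history) / k)


stream = [0.20, 0.25, 0.22, 0.90]  # the last value is an abrupt shift
mean = scalar = 0.0
densities = []
for k, x in enumerate(stream, start=1):
    mean += (x - mean) / k            # recursive sample mean
    scalar += (x * x - scalar) / k    # recursive mean of squares
    densities.append(cauchy_density(x, mean, scalar))
    assert abs(densities[-1] - brute_force_density(stream[:k])) < 1e-12
print([round(d, 3) for d in densities])  # [1.0, 0.999, 1.0, 0.744]
```

The abrupt shift in the last value produces a clearly lower density, which is what the subsequent comparison with the mean density exploits.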

The updated density is then used to calculate the mean density, as represented in Equation (4), where the density difference is computed, as formulated in Equation (5).

In this equation, the absolute density difference serves as a weighting factor that adjusts the relative impact of the previous and current densities on the estimation. In particular, when the changes in density are minor (i.e., for similar observations), the updated mean remains close to its previous value. On the other hand, significant variations increase the contribution of the newly computed density. This allows the model to adapt more effectively to large changes, while smoothing out negligible fluctuations.
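Equation (4) is not reproduced here either. The function below is one form consistent with the description above, assumed for illustration (it resembles mean-density updates used in RDE-based fault detection): the absolute density difference blends the incremental mean of past densities with the current density. It should not be read as the exact formula of [44]; `update_mean_density` is a hypothetical name.

```python
def update_mean_density(k: int, mean_prev: float, d_prev: float, d: float) -> float:
    """Assumed form of the mean-density update: the absolute density difference
    blends the incremental mean with the current density."""
    if k == 1:
        return d  # the first density initializes the mean
    delta = abs(d - d_prev)  # density difference; Cauchy densities lie in (0, 1]
    incremental = ((k - 1) * mean_prev + d) / k
    return (1.0 - delta) * incremental + delta * d


# Small change: the result stays close to the incremental mean (0.948).
print(round(update_mean_density(5, 0.95, 0.95, 0.94), 4))  # 0.9479
# Large drop: the result is pulled toward the current density 0.30.
print(round(update_mean_density(5, 0.95, 0.95, 0.30), 4))  # 0.482
```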

The mean density is used to control the transition between the normal and the anomalous states. In particular, if the model is currently in the normal state, it may switch to the anomalous state according to Equation (6).

Two conditions must be satisfied for the anomalous state to occur: (1) the current density must be lower than the weighted mean density, and (2) the number K of consecutive observations that remained in the same (in this case, potentially anomalous) state must be above a specified threshold. Note that if the first condition is fulfilled, K is incremented, but the state does not change to anomalous until K reaches a sufficient size. Therefore, by means of the weighting and threshold parameters, the sensitivity of the model can be easily adjusted to specific use cases.
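The normal-to-anomalous transition can be sketched as a small state update. The parameter names `weight` and `window` are hypothetical labels for the weighting and threshold parameters; treating the threshold comparison as inclusive and resetting K when the density condition fails are assumptions, and the reverse transition from [44] is deliberately omitted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectorState:
    anomalous: bool = False
    counter: int = 0  # K: consecutive observations satisfying the anomaly condition


def step_from_normal(state: DetectorState, density: float, mean_density: float,
                     weight: float, window: int) -> DetectorState:
    """Normal -> anomalous transition only; the reverse transition of [44] is omitted."""
    if state.anomalous:
        return state  # left to the (omitted) anomalous -> normal rule
    if density < weight * mean_density:  # condition (1)
        k = state.counter + 1
        return DetectorState(anomalous=k >= window, counter=k)  # condition (2), inclusive
    return DetectorState()  # streak broken: reset K (assumption)


state = DetectorState()
for d in [0.5, 0.5, 0.95, 0.5, 0.5, 0.5]:
    state = step_from_normal(state, d, mean_density=0.9, weight=0.8, window=3)
print(state)  # DetectorState(anomalous=True, counter=3)
```

The single high-density sample in the middle breaks the first streak, so the switch happens only after three consecutive low-density observations; a larger `window` therefore makes the model react only to sustained deviations.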

A similar transition also occurs from the anomalous to the normal state. However, since it is not the main subject of this study, the details of this mechanism are omitted here; they can be found in [44].

Upon recognizing the anomalous state, the category of the anomaly must be specified. However, since the algorithm does not rely on historical or labeled data, it cannot learn different types of complex anomalies. Therefore, in Shift/Anomaly Detection Model, two anomaly classes were specified by default for each type of metric: (1) INCREASE (i.e., when the observed value lies above the sample mean) and (2) DECREASE (i.e., when it lies below the sample mean). These categories aim to characterize the direction of abrupt shifts in resource utilization. Owing to the modular architecture of the module, the anomaly classes can be easily extended or modified, depending on the particular application context.

The quality of anomaly detection is influenced significantly by the parameters of the individual models. In this context, the next subsection describes which parameters were considered and how the human operator can be involved in their dynamic modification.

3.3.2. Model Configuration

The Shift/Anomaly Detection Model is composed of several sub-models, each of which requires adjustment of its individual parameters. The density-based anomaly detection parameters encompass two weighting factors, each with a value in the interval [0, 1], and two window sizes, each an integer greater than or equal to 1.

All of them are defined in Table 3. In general, adjusting these parameters is a non-trivial task that depends on the specific application context. For instance, in time-sensitive applications, such as critical healthcare systems, anomaly detection may require a high level of granularity to promptly alert about singular deviations. In such cases, the window sizes should be minimized so that even transient fluctuations are reported. Conversely, in systems characterized by frequent data fluctuations (e.g., high-traffic web services), the window sizes should be set to larger values so that the model reacts only to sustained abnormalities.

In this context, it should be noted that the module is not domain-specific and can be utilized in a variety of applications. However, this adds to the complexity of parameter fine-tuning, as no universal method exists (or can be applied) to determine their optimal combination. Furthermore, since self-optimization was designed with modularity in mind, it was anticipated that the Shift/Anomaly Detection Model may be extended in the future to support new sub-models. Consequently, it was determined that the parameter configuration must be: (1) adjustable, to fit the specific application context, and (2) extendable, to support future modifications.

To accommodate these requirements, each sub-model was identified by a unique name (e.g., CPU_MODEL), allowing it to be associated with an individual set of parameters. These parameters were stored in an adaptable configuration component, Model Config. Moreover, in order to facilitate the integration of the Shift/Anomaly Detection Model into different systems, dedicated interfaces were introduced to support dynamic parameter adjustment by HiTL.

In particular, the endpoint /anomaly/parameters/{type} was implemented to provide on-the-fly parameter modification, e.g., by an external human operator. In addition, to ensure continuity of system operation, newly introduced parameters are applied only once the next sample is received, whereas the ongoing analysis proceeds with the previous parameters.
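The deferred application of new parameters can be sketched with a small configuration store keyed by sub-model name. The class, its methods, and the parameter names (`anomaly_weight`, `anomaly_window`) are hypothetical, and the HTTP handling of /anomaly/parameters/{type} is not shown; the point is only that updates are staged and merged between samples.

```python
import copy
from typing import Any, Dict


class ModelConfig:
    """Parameters keyed by sub-model name (e.g. CPU_MODEL). Updates are staged
    and only take effect when the next sample is processed."""

    def __init__(self, initial: Dict[str, Dict[str, Any]]) -> None:
        self._active = copy.deepcopy(initial)
        self._pending: Dict[str, Dict[str, Any]] = {}

    def request_update(self, model_name: str, params: Dict[str, Any]) -> None:
        # What a handler behind the parameter endpoint might call (hypothetical wiring).
        if model_name not in self._active:
            raise KeyError(f"unknown sub-model: {model_name}")
        self._pending.setdefault(model_name, {}).update(params)

    def params_for_next_sample(self, model_name: str) -> Dict[str, Any]:
        # Staged changes are merged here, i.e. between samples, never mid-analysis.
        if model_name in self._pending:
            self._active[model_name].update(self._pending.pop(model_name))
        return dict(self._active[model_name])  # copy: callers cannot mutate the store


cfg = ModelConfig({"CPU_MODEL": {"anomaly_weight": 0.8, "anomaly_window": 3}})
current = cfg.params_for_next_sample("CPU_MODEL")  # used by the ongoing analysis
cfg.request_update("CPU_MODEL", {"anomaly_window": 1})
print(current["anomaly_window"], cfg.params_for_next_sample("CPU_MODEL")["anomaly_window"])  # 3 1
```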

Apart from the Shift/Anomaly Detection Model, the second model employed in the self-optimization is the Sampling Model. Its architecture, along with the utilized method, is described in the subsequent section.

3.4. Sampling Model

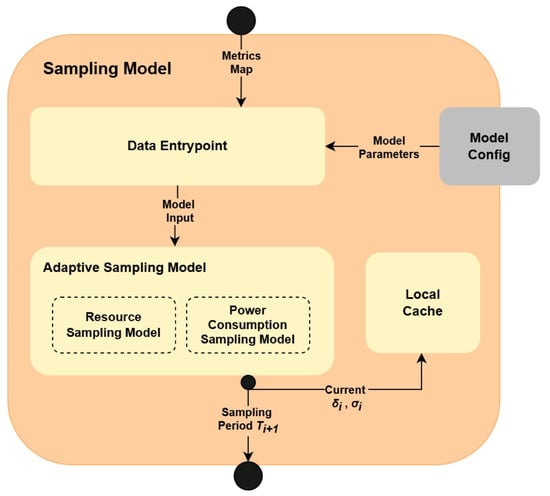

The Sampling Model follows an architectural approach similar to that of the Shift/Anomaly Detection Model. In particular, as illustrated in Figure 3, it is also composed of (1) Data Entrypoint, (2) Model Config, and (3) Local Cache. However, instead of utilizing separate analytical models for each metric type, it contains a single Adaptive Sampling Model. This model accepts the entire IE data and processes them internally using two sub-models: Resource Sampling Model and Power Consumption Sampling Model.

Figure 3.

Architecture of Sampling Model.

The process of handling the IE data in Sampling Model is as follows. As with the Shift/Anomaly Detection Model, Sampling Model receives information about the current IE state in Data Entrypoint. The Data Entrypoint can operate in two modes: (1) processing resource utilization data, and (2) processing power consumption data. These modes are identified by the unique names RESOURCE and POWER, respectively. The mode to be adopted by Data Entrypoint is specified along with the data passed by the Collector/Parser component (Section 3.2). The resource utilization metrics are represented as a vector, whereas the current power consumption is a scalar. Instead of splitting the data into different metric types, the main responsibility of Data Entrypoint is to select the parameters of the corresponding model, which are obtained from Model Config based on the mode. Data Entrypoint then creates the corresponding pairs: (1) the resource utilization vector with the parameter set of Resource Sampling Model, for the RESOURCE mode, and (2) the power consumption value with the parameter set of Power Consumption Sampling Model, for the POWER mode. These pairs are then passed to the Adaptive Sampling Model along with the mode type, so that the component can recognize which internal model should be called. Each of these internal models applies the same AdaM algorithm [34], which recommends the optimal sampling frequency as its output. Similarly to the algorithm utilized in Shift/Anomaly Detection Model, AdaM is memory efficient; it only requires storing the updated statistics of the metric stream evolution in the lightweight Local Cache, which happens as the last step. The next subsection describes the details of the AdaM adaptive sampling algorithm.

3.4.1. AdaM Adaptive Sampling

The original AdaM framework [34] introduces two algorithms: (1) adaptive sampling, and (2) adaptive filtering. In this work, the emphasis is placed only on adaptive sampling. The core of the algorithm is the estimation of metric stream evolution using PEWMA. Table 4 outlines the notation used in this section. It should be noted that this section provides only an overview of the applied formulas; for the underlying rationale of the selected statistics, the reader is referred to the original article [34].

Table 4.

Notation used in the AdaM adaptive sampling algorithm.

In order to calculate the PEWMA, it is first necessary to compute the distance between two consecutive observations. In the implemented algorithm, the Manhattan distance is used, as presented in Equation (7).

The distance is utilized in the computation of the probability, which is a core component of the PEWMA estimation. The probability is computed under the Gaussian distribution in order to accommodate sudden spikes in the data, while simultaneously controlling their long-term influence. In particular, it prevents singular fluctuations from dominating the overall estimation. The formula for the probability is outlined in Equation (8). It should be noted that the probability is computed only once a previous estimate is available; otherwise, the default value of the PEWMA estimation is used.

Here, the z-score at time i is obtained using the current distance, the estimated distance (i.e., the previous PEWMA estimation), and the estimated moving standard deviation, as indicated in Equation (9). It represents the deviation of the current distance relative to the expected data distribution.

In this formula, the moving standard deviation can be calculated using the previous PEWMA estimation and the smoothed second moment (i.e., the PEWMA of squared deviations). Specifically, it is derived using Equation (10). Since all of the formulas are recursive and depend only on previous values, they are well aligned with the needs of incremental computation.

Finally, the computed probability is substituted in the recursive PEWMA formula, presented in Equation (11).

In Equations (10) and (11), the two weighting parameters (see Table 4) control the influence of the previously observed values and of the probability, respectively.
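Since Equations (7) to (11) are not reproduced here, the following sketch implements the distance tracking with the standard PEWMA recursion, in which the smoothing weight becomes alpha * (1 - beta * P). It rests on stated assumptions: scalar observations (so the Manhattan distance reduces to an absolute difference), the first distance used as the default estimate, and a plain EWMA step when the estimated spread is zero. The names `PewmaDistance`, `alpha`, and `beta` are hypothetical stand-ins for the notation of Table 4.

```python
import math
from typing import Optional, Tuple


class PewmaDistance:
    """PEWMA estimate of the distance between consecutive scalar observations."""

    def __init__(self, alpha: float, beta: float) -> None:
        self.alpha = alpha  # weighting factor of the previous estimate, in [0, 1]
        self.beta = beta    # weighting factor of the probability term, in [0, 1]
        self.prev_x: Optional[float] = None
        self.mu = 0.0       # PEWMA of the distance (estimated distance)
        self.v = 0.0        # PEWMA of the squared distance (smoothed second moment)
        self.steps = 0      # number of distances processed so far

    def std(self) -> float:
        # Moving standard deviation; max() guards against tiny negative rounding errors.
        return math.sqrt(max(self.v - self.mu ** 2, 0.0))

    def update(self, x: float) -> Optional[Tuple[float, float]]:
        """Return (estimated distance, moving std) after observing x."""
        if self.prev_x is None:  # first observation: no distance yet
            self.prev_x = x
            return None
        d = abs(x - self.prev_x)  # Manhattan distance of two scalars
        self.prev_x = x
        self.steps += 1
        if self.steps == 1:  # default estimate: no probability can be computed yet
            self.mu, self.v = d, d * d
            return self.mu, self.std()
        sigma_prev = self.std()
        if sigma_prev > 0.0:
            z = (d - self.mu) / sigma_prev  # z-score w.r.t. the previous estimate
            p = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)  # standard normal density
        else:
            p = 0.0  # assumption: plain EWMA step when the estimated spread is zero
        a = self.alpha * (1.0 - self.beta * p)  # probabilistic smoothing weight
        self.mu = a * self.mu + (1.0 - a) * d
        self.v = a * self.v + (1.0 - a) * d * d
        return self.mu, self.std()


pe = PewmaDistance(alpha=0.5, beta=0.5)
result = None
for x in [0.0, 1.0, 2.0, 3.0]:
    result = pe.update(x)
print(result)  # (1.0, 0.0)
```

The design choice is visible in the weight: a typical distance has a high probability P, which lowers the weight of the history and lets the new distance enter more strongly, whereas an improbable spike (P close to 0) enters only with weight 1 - alpha, so singular fluctuations cannot dominate the estimate.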

The confidence of the estimation is determined based on the difference between the actual observed standard deviation and the standard deviation estimated using PEWMA. In particular, Equation (12) presents the confidence formula, which specifies the degree of certainty of the estimation. It should be noted that the standard deviation may be equal to zero when consecutive observations are equal. In such a case, the evolution of the metric stream is stable (the distance is equal to zero). Consequently, the confidence should be maximized in order to increase the sampling period as much as possible, which explains the second condition in Equation (12).

When the confidence is sufficiently high with respect to the acceptable imprecision, a new sampling period is proposed according to Equation (13).

The formula uses a scaling parameter (see Table 4), which allows the estimated sampling period to be scaled. This can be particularly useful when applying the AdaM technique in scenarios that express the sampling period in different units (e.g., milliseconds, seconds).

The last step before returning the estimated sampling period is to verify whether it falls within the configured minimum and maximum sampling periods. If it does not, it is clamped to the nearest boundary.
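The confidence check, the period update, and the clamping step can be sketched as follows. Both formulas are assumptions modeled on the AdaM paper [34] rather than transcriptions of Equations (12) and (13): the confidence is taken as one minus the relative gap between the observed and estimated standard deviations, and a confident estimate lengthens the period by lam * (1 + (c - gamma) / c). Falling back to the minimum period when the confidence is too low is also assumed, and all names are hypothetical.

```python
def confidence(observed_std: float, estimated_std: float) -> float:
    """Assumed form of Equation (12): one minus the relative gap between the two
    standard deviations; a zero estimate (stable stream) gives maximal confidence."""
    if estimated_std == 0.0:
        return 1.0
    return 1.0 - abs(observed_std - estimated_std) / estimated_std


def next_sampling_period(period: float, c: float, gamma: float, lam: float,
                         t_min: float, t_max: float) -> float:
    """Assumed form of Equation (13) followed by clamping to [t_min, t_max]."""
    if c > 0.0 and c >= 1.0 - gamma:
        proposed = period + lam * (1.0 + (c - gamma) / c)  # lengthen when confident
    else:
        proposed = t_min  # assumption: fall back to the densest sampling
    return min(max(proposed, t_min), t_max)


c = confidence(observed_std=0.95, estimated_std=1.0)  # about 0.95
print(round(next_sampling_period(10.0, c, gamma=0.1, lam=1.0, t_min=1.0, t_max=60.0), 3))  # 11.895
```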

As can be observed, the AdaM algorithm operates on scalar observations. This perfectly accommodates estimating the sampling period in the case of power consumption. However, applying this technique in the case of the RESOURCE mode, which uses a vector of values, requires introducing additional modifications.

In particular, the proposed approach is based on the minimization of the sampling period. First, the sampling period is computed separately for each resource utilization metric, using a common set of model parameters. Afterwards, the minimal estimated sampling period is selected in order to account for frequent fluctuations. Adopting the longer sampling periods of relatively stable metrics would not be appropriate, since substantial changes in rapidly shifting metrics could then be missed between subsequent monitoring cycles. This approach was adopted because of the architecture of the system in which the self-optimization module was validated. In particular, in self-awareness, all resource utilization metrics are monitored within a single iteration cycle; consequently, it was not possible to adjust their sampling periods individually. Nevertheless, deploying the self-optimization module within architectures that support adjusting the sampling period of each metric may not require this unification step.
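The unification step for the RESOURCE mode, together with the mode dispatch that precedes it, reduces to taking the minimum of the per-metric proposals. The function names and the `power` key below are hypothetical; the per-metric proposals are assumed to come from separate AdaM estimations that share one parameter set.

```python
from typing import Dict, Tuple


def unify_resource_period(per_metric: Dict[str, float]) -> Tuple[str, float]:
    """RESOURCE mode: keep the shortest proposed period so that the fastest-changing
    metric is still captured when all metrics share one monitoring cycle."""
    if not per_metric:
        raise ValueError("no resource metrics were sampled")
    metric = min(per_metric, key=lambda name: per_metric[name])
    return metric, per_metric[metric]


def recommend_period(mode: str, proposals: Dict[str, float]) -> float:
    """Select the recommended period according to the Data Entrypoint mode."""
    if mode == "RESOURCE":
        return unify_resource_period(proposals)[1]
    if mode == "POWER":
        return proposals["power"]  # scalar stream: no unification needed
    raise ValueError(f"unknown mode: {mode}")


print(unify_resource_period({"cpu": 5.0, "ram": 30.0, "disk": 60.0}))  # ('cpu', 5.0)
```

Returning the metric name alongside the period is an illustrative extra: it shows which resource currently dictates the monitoring frequency.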

As with the Shift/Anomaly Detection Model, the performance of the Sampling Model depends heavily on the selection of appropriate parameters, as described in the following subsection.

3.4.2. Model Configuration

The parameters used in the AdaM computation are: (1) , (2) , (3) , (4) , with a value between 0 and 1, (5) , with a value between 0 and 1, and (6) , with a value between 0 and 1. All of these parameters are described in Table 4. They are configured separately for each sub-model, i.e., the Resource Sampling Model and the Power Consumption Sampling Model. Given the relatively large number of parameters, relying on a fixed specification that does not incorporate domain knowledge would not be feasible.

Therefore, an approach similar to that of the Shift/Anomaly Detection Model was employed: an external interface was implemented that allows all parameters to be modified on the fly. Through the endpoint sampling/parameters/type, a human operator or another system component can dynamically adjust the selected model settings. This mechanism works in the same manner as the one introduced for the Shift/Anomaly Detection Model, described in Section 3.3.2.
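A minimal sketch of how such an on-the-fly update could be validated before it is applied is given below. The payload format and all field names (`t_min`, `t_max`, `multiplicity`, `alpha`, `beta`, `imprecision`) are hypothetical; only the fact that three of the parameters lie between 0 and 1 comes from the text, and assigning that constraint to these particular fields, as well as requiring the remaining ones to be positive, are assumptions.

```python
from typing import Any, Dict

# Hypothetical field names; the value ranges are assumptions of this sketch.
UNIT_INTERVAL_FIELDS = {"alpha", "beta", "imprecision"}
POSITIVE_FIELDS = {"t_min", "t_max", "multiplicity"}


def apply_parameter_update(current: Dict[str, float], update: Dict[str, Any]) -> Dict[str, float]:
    """Validate a partial update (e.g., the body of a request sent through the
    sampling/parameters/type endpoint) and return the merged settings.

    The current settings are never mutated, so a rejected update leaves the
    running model configuration untouched.
    """
    merged = dict(current)
    for key, value in update.items():
        if key not in UNIT_INTERVAL_FIELDS | POSITIVE_FIELDS:
            raise KeyError(f"unknown parameter: {key}")
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise TypeError(f"{key} must be numeric")
        if key in UNIT_INTERVAL_FIELDS and not 0 <= value <= 1:
            raise ValueError(f"{key} must lie between 0 and 1")
        if key in POSITIVE_FIELDS and value <= 0:
            raise ValueError(f"{key} must be positive")
        merged[key] = float(value)
    # Cross-field check: the sampling-period bounds must remain consistent.
    if merged["t_min"] > merged["t_max"]:
        raise ValueError("t_min must not exceed t_max")
    return merged
```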

Evidently, the performance of the entire self-optimization module depends primarily on its analytical components. Therefore, these components were evaluated in different experimental settings, as described in the following section.

4. Experimental Validation

The main objective of the conducted experiments was to evaluate the effectiveness of the implemented algorithms on the selected benchmark datasets and to compare them with other state-of-the-art approaches. It should be noted that, although frugality was a key design consideration, the current evaluation did not explicitly assess the performance of either model on resource-constrained devices. Such an assessment is recognized as a critical next step and is planned for future research.

All of the experiments were conducted on a computer with an Intel(R) Core(TM) i5-10210U CPU @ 1.60 GHz (2.11 GHz), 4 cores, and 16 GB of RAM. For the assessment, four different datasets (both real-world and synthetic) were selected:

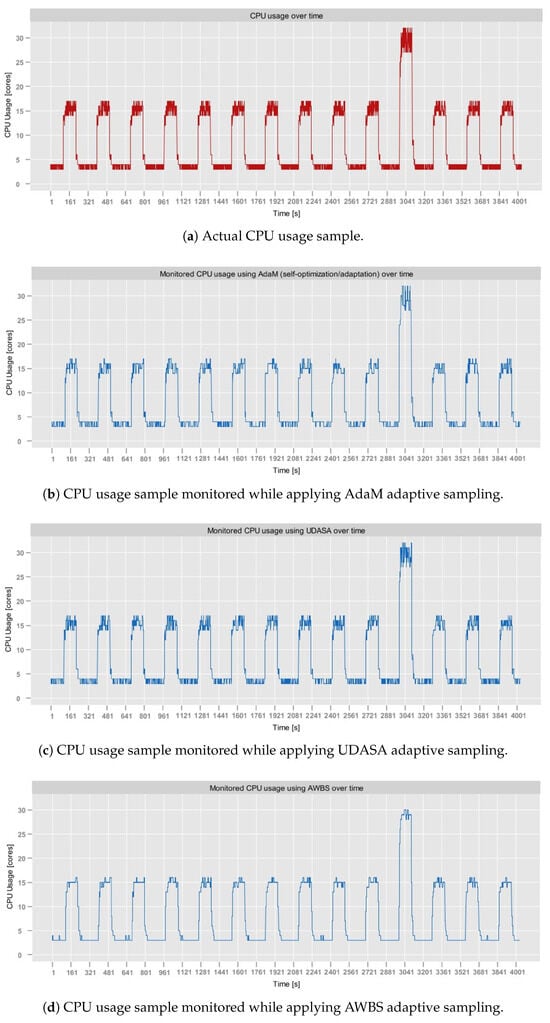

- RainMon monitoring dataset (https://github.com/mrcaps/rainmon/blob/master/data/README.md, access date: 24 October 2025): an unlabeled collection of real-world CPU utilization data that consists of 800 observations. It was obtained from the publicly available data corpus of the RainMon research project [50]. The CPU utilization traces of this dataset exhibit non-stationary, highly dynamic behavior, including multiple abrupt spikes. Therefore, it provides an excellent basis for validating the effectiveness of adaptive sampling in capturing critical variations in monitoring data.

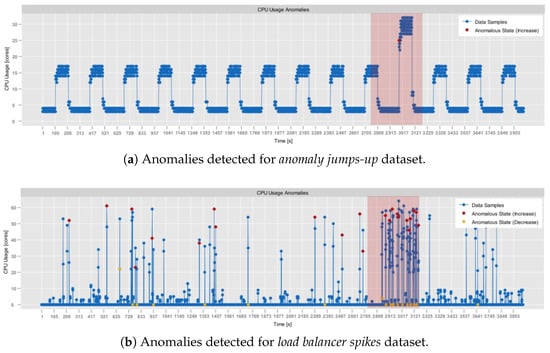

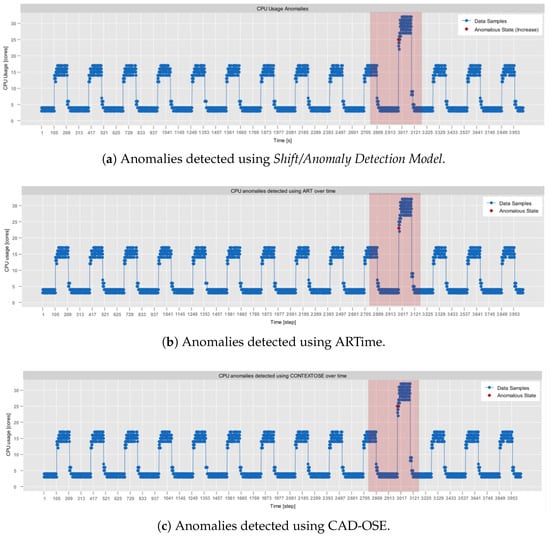

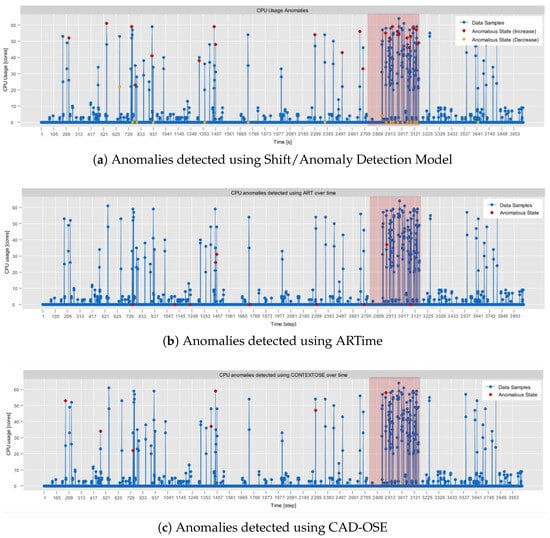

- NAB, synthetic anomaly dataset (anomalies jumps-up) (https://github.com/numenta/NAB/blob/master/data/README.md, access date: 24 October 2025): a labeled time-series dataset composed of 4032 observations, obtained from the NAB data corpus [28]. It features artificially generated anomalies that form a periodic pattern. In particular, in this dataset, CPU usage exhibits regular, continuous bursts of high activity, followed by sharp declines. Consequently, it provides a testbed for assessing contextual anomaly detection capabilities.

- NAB, synthetic anomaly dataset (load balancer spikes) (https://github.com/numenta/NAB/blob/master/data/README.md, access date: 24 October 2025): similarly to the previous one, a labeled time-series dataset composed of 4032 observations, obtained from the NAB data corpus [28]. It also features artificially generated anomalies, but with different traits than those in the anomalies jumps-up dataset. In particular, these anomalies are abrupt individual spikes, which provide a basis for evaluating both point-based and contextual anomaly detection.

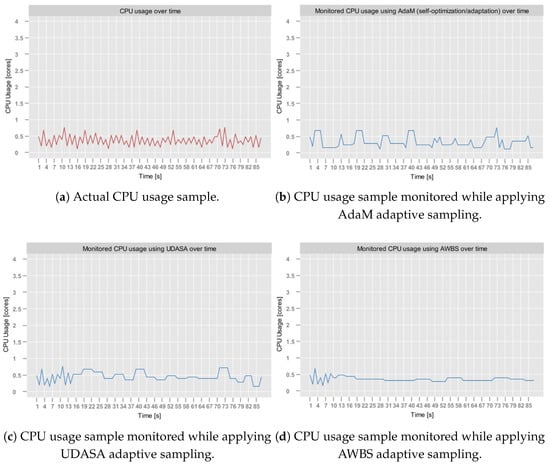

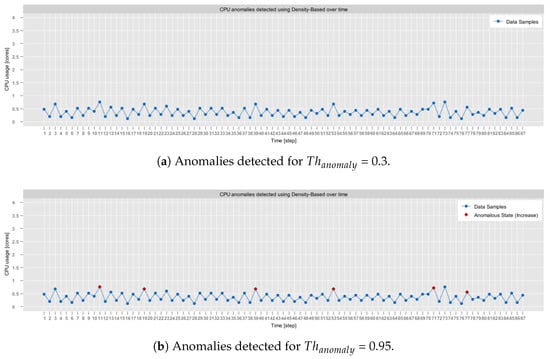

- aerOS cluster IE traces: resource utilization traces of a single IE, collected using the self-awareness component in the aerOS continuum. They comprise 87 observations obtained over one hour. Although these traces do not exhibit substantially abrupt behavior, they were selected for the analysis because they closely resemble the conditions under which the self-optimization algorithms are to operate.

Since the external datasets (i.e., RainMon and NAB) use data formats that differ from the representation of the IE state in the aerOS continuum, they had to be pre-processed. In particular, to emulate the results retrieved from self-awareness, individual scalar values had to be mapped to the resource utilization metrics of the IE. This required first predefining a test configuration of the IE. Accordingly, it was assumed that the IE monitored in all conducted tests had 20 CPU cores, 15615 MB of RAM, and 68172 MB of disk capacity.

For the RainMon dataset, individual percentage CPU utilization values were converted into a number of utilized CPU cores. The same operation was applied to the NAB datasets, whose synthetic traces were assumed to also represent percentage CPU utilization. In both cases, all remaining monitoring metrics (i.e., RAM and disk utilization) were set to 0, since they were not relevant to the experiments.
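The conversion described above can be sketched as follows, using the assumed IE test configuration. The record layout and its key names are hypothetical stand-ins for the IE state representation in aerOS, and values outside the 0 to 100% range are passed through without special handling, since the text does not say how such values would be treated.

```python
from typing import Dict, List

# Test configuration of the monitored IE, as assumed in the experiments.
IE_CPU_CORES = 20
IE_RAM_MB = 15615
IE_DISK_MB = 68172


def to_ie_observation(cpu_percent: float) -> Dict[str, float]:
    """Map one scalar CPU-utilization percentage onto a (hypothetical) IE record.

    The percentage is converted into a number of utilized cores; RAM and disk
    utilization are set to 0, as they are not relevant to these experiments.
    """
    return {
        "cpu_cores": float(IE_CPU_CORES),
        "cpu_used_cores": cpu_percent / 100.0 * IE_CPU_CORES,
        "ram_mb": float(IE_RAM_MB),
        "ram_used_mb": 0.0,
        "disk_mb": float(IE_DISK_MB),
        "disk_used_mb": 0.0,
    }


def convert_trace(cpu_percentages: List[float]) -> List[Dict[str, float]]:
    """Convert a whole RainMon- or NAB-style trace of percentages."""
    return [to_ie_observation(v) for v in cpu_percentages]
```

For example, a reading of 50% maps to 10 utilized cores on the assumed 20-core IE.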

All of the experiments were executed using a simple, dedicated test framework developed as part of the self-optimization module. The framework emulates the monitoring behavior of self-awareness by transforming the continuous, time-based process into a discrete one. This is achieved by representing fixed temporal intervals (e.g., five seconds) as steps between successive observations in a dataset (e.g., a gap of 5). The framework allows the configuration of each test scenario to be specified, including: (1) the name of the scenario, (2) a description of the scenario, (3) a specification of the IE data, (4) a reference to the JSON file containing the monitoring data, (5) a list of evaluation metrics, and (6) a list of the names and parameters of additional algorithms to be used for results comparison. As such, it enables repeatable experiments across different scenarios. The experiments conducted for the Sampling Model and the Shift/Anomaly Detection Model are described next.
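The discretization performed by the test framework can be illustrated with the following sketch, which is not the framework's actual code. It assumes that each dataset step corresponds to a fixed duration (here one second by default, so a five-second period becomes a gap of 5), that the sampling policy is supplied as a callable returning the gap to the next observation, and that skipped observations repeat the last monitored value, matching the prediction convention used for MAPE in Section 4.1. The names `period_to_gap` and `replay` are hypothetical.

```python
from typing import Callable, List, Tuple


def period_to_gap(period_ms: float, step_ms: float = 1000.0) -> int:
    """Translate a sampling period into dataset steps, assuming each step
    corresponds to step_ms milliseconds (an assumption of this sketch)."""
    return max(1, round(period_ms / step_ms))


def replay(
    values: List[float],
    next_gap: Callable[[List[float]], int],
) -> Tuple[List[int], List[float]]:
    """Emulate periodic monitoring over a discrete trace.

    next_gap receives the values monitored so far and returns the number of
    dataset steps to advance before the next observation is taken.
    Returns the indices of the monitored observations and a full-length
    stream in which skipped observations repeat the last monitored value.
    """
    monitored_idx: List[int] = []
    monitored_vals: List[float] = []
    i = 0
    while i < len(values):
        monitored_idx.append(i)
        monitored_vals.append(values[i])
        gap = next_gap(monitored_vals)
        if gap < 1:
            raise ValueError("the gap must be at least one step")
        i += gap

    held: List[float] = []
    j = 0  # position in monitored_idx of the latest observation taken so far
    for k in range(len(values)):
        while j + 1 < len(monitored_idx) and monitored_idx[j + 1] <= k:
            j += 1
        held.append(monitored_vals[j])
    return monitored_idx, held
```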

4.1. Sampling Model Verification

The Sampling Model was tested on Dataset 1 (RainMon), Dataset 2 (NAB, anomalies jumps-up), and Dataset 4 (aerOS cluster IE traces). It was not tested on Dataset 3 (NAB, load balancer spikes), since, apart from abrupt spikes in the metric stream (already present in Dataset 1), this dataset does not exhibit characteristics that would be relevant from the perspective of adaptive sampling.

In all conducted tests, the values of the parameter and of the parameter were assumed to be static, i.e., and . This assumption was based on the results presented in [34], which indicated that the and do not have a significant impact on the performance of the model. Similarly, the minimal and maximal sampling periods were fixed to values determined in consultation with the creators of the self-awareness module, i.e., (ms) and (ms), which ensured that they closely reflect real-world conditions.

The only parameters that were not statically defined were the multiplicity and the imprecision . Therefore, to select their best combination, comparative tests were conducted on each dataset. First, the focus was on establishing the best . For this purpose, was fixed to 1000, which is a reasonable baseline, as it represents a multiplicity of 1 (i.e., no multiplicity). The selected value of was then used in the tests aimed at selecting the best .
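The two-stage selection procedure can be sketched as a coordinate-wise search. The scoring callable is hypothetical (in the experiments it would correspond to the JPM obtained by replaying a dataset with the given parameters), and the candidate imprecision values used in testing such a function are placeholders; only the baseline multiplicity of 1000 and the multiplicity candidates 500, 1000, 2000, and 3000 come from the text.

```python
from typing import Callable, Sequence, Tuple


def two_stage_selection(
    score: Callable[[float, float], float],
    imprecisions: Sequence[float],
    multiplicities: Sequence[float] = (500.0, 1000.0, 2000.0, 3000.0),
    baseline_multiplicity: float = 1000.0,
) -> Tuple[float, float]:
    """Select the imprecision first (multiplicity fixed at the baseline),
    then the multiplicity (imprecision fixed at the value just selected).

    score(imprecision, multiplicity) must return a value to maximize,
    e.g., a JPM-like quality measure.
    """
    best_imprecision = max(imprecisions, key=lambda g: score(g, baseline_multiplicity))
    best_multiplicity = max(multiplicities, key=lambda m: score(best_imprecision, m))
    return best_imprecision, best_multiplicity
```

Fixing one parameter while tuning the other keeps the number of test runs linear in the number of candidates but, unlike an exhaustive grid search, it is not guaranteed to find the best joint combination.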

The assessment was based on two quality measures. The first (following [34]) was the Mean Absolute Percentage Error (MAPE), which quantifies the error between the predicted stream values and the actual ones. MAPE is given by Equation (14), where is an actual value, is a predicted value (set to the last monitored value if the observation is skipped), and n is the size of the sample.

The second measure was the Sample Ratio (SR), which quantifies the percentage of samples that were monitored and thus provides insight into the reduction in the data dissemination volume. It is computed using Equation (15).
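Both measures can be sketched in Python as follows, with the predicted stream built by holding the last monitored value, as described above. Equations (14) and (15) are not reproduced here: the code follows the standard definition of MAPE and the verbal definition of SR, and skipping zero-valued observations in `mape` (to avoid division by zero, e.g., for metrics set to 0) is an assumption of this sketch, not something stated in the text.

```python
from typing import List, Sequence


def hold_last(values: Sequence[float], monitored: Sequence[int]) -> List[float]:
    """Predicted stream: each skipped observation repeats the last monitored value."""
    monitored_set = set(monitored)
    if len(values) > 0 and 0 not in monitored_set:
        raise ValueError("the first observation must be monitored")
    predicted: List[float] = []
    last = values[0] if len(values) > 0 else 0.0
    for k, v in enumerate(values):
        if k in monitored_set:
            last = v
        predicted.append(last)
    return predicted


def mape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean Absolute Percentage Error, in percent.

    Observations whose actual value is 0 are skipped (assumption of this sketch).
    """
    if len(actual) != len(predicted):
        raise ValueError("sequences must have equal length")
    terms = [abs((a - p) / a) for a, p in zip(actual, predicted) if a != 0]
    if not terms:
        raise ValueError("MAPE is undefined when all actual values are 0")
    return 100.0 * sum(terms) / len(terms)


def sample_ratio(monitored: int, total: int) -> float:
    """Percentage of observations that were actually monitored."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * monitored / total
```

For a trace of 10, 12, 8, 10 with observations 0 and 2 monitored, the predicted stream is 10, 10, 8, 8, giving an SR of 50% and a MAPE of about 9.17%.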

These two quality measures were used to compute the Joint-Performance Metric (JPM), formulated in Equation (16). Since both MAPE and SR are to be minimized to achieve the best performance, JPM serves as an aggregate that evaluates the overall trade-off between these goals; a higher JPM indicates better effectiveness.

Table 5 presents the results of the tests executed for different values on all three datasets. For the aerOS dataset, MAPE was calculated by averaging the MAPE values of all metrics (i.e., CPU, RAM, and disk utilization).

Table 5.

Results of the tests of different values obtained for all three datasets.

As can be observed, the best results were achieved for . In the majority of cases, SR decreased as increased, which is consistent with the expected behavior: a higher tolerance of estimation errors, expressed through , leads to longer sampling intervals and, consequently, to fewer monitored samples. Interestingly, for , SR unexpectedly increased in all conducted tests, resulting in slightly degraded overall performance (JPM). Additionally, the experiments conducted on the aerOS dataset yielded the highest SR values (between 80 and 100%), i.e., the smallest reduction in monitored samples, compared with the NAB and RainMon datasets. This difference may be attributed to the fact that, for the aerOS dataset, the sampling period was computed on multivariate observations (i.e., it also accounted for RAM and disk utilization). Since the final sampling period is the minimum of the per-metric estimates, the resulting sampling frequency depends on the distribution of all three utilization metrics.

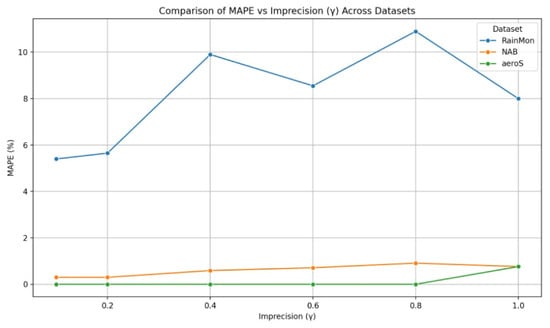

Moreover, the MAPE values remain well below the acceptable imprecision in all cases. However, the behavior of this metric differs significantly across datasets, as illustrated in Figure 4.

Figure 4.

The comparison of achieved MAPE values for different imprecisions and datasets.

For the RainMon dataset, the MAPE values were fairly regular and oscillated between 5 and 10%. For the NAB dataset, on the other hand, the MAPE values were the lowest, with almost all of them below 1%. Finally, for the aerOS dataset, the average MAPE values were the least regular, making it difficult to identify any trends. These outcomes emphasize the importance of adjusting the model parameters to each application scenario.

Based on the observed results, the value of was fixed at . Next, it was used in the selection of the multiplicity parameter (). For , four different values were considered: 500, 1000, 2000, and 3000. Table 6 summarizes the outcomes of the tests.

Table 6.

Results of the tests of different values for all three datasets.

The obtained results show that MAPE is positively correlated with , whereas SR is inversely related to it. Since the changes in MAPE are relatively small (remaining well below the considered imprecision), JPM also increases clearly. Therefore, in non-critical applications, where a moderate estimation error is tolerable, selecting a higher value of may be beneficial. However, where precision is critical, maintaining a low MAPE may take precedence over the overall JPM value.

Taking into account the aforementioned findings, the combination of and was considered optimal. It was therefore used in the subsequent experiments comparing the implemented adaptive sampling approach with other existing algorithms, namely the (1) UDASA [30] and (2) AWBS [29] adaptive sampling techniques. Nevertheless, it should be stressed again that these values have to be established separately for each application of the proposed approach.

For UDASA, the following parameters were applied: (1) window size , (2) saving level (which controls the level of precision) , and (3) baseline sampling period . These parameters were determined by analyzing the results of the experiments presented in [29] and adjusting them to the characteristics of the test datasets (e.g., the window size was decreased due to the smaller number of observations).

Similarly, for AWBS, the parameters included: (1) maximal window size , (2) initial window size , and (3) threshold (used to determine anomalies) . Note that in the original version of AWBS, the threshold is not a parameter but is derived from the percentage difference of the first two moving averages. However, this approach does not handle edge cases (e.g., when this percentage difference is 0) that occur in the considered test datasets. Since the threshold is static, it does not adapt to the evolution of the metric stream (i.e., it remains 0), and consequently almost no sampling adjustment takes place. To mitigate this, the threshold was predefined and set to 40%, so that it corresponds to the imprecision parameter . The results of all experiments are summarized in Table 7.

Table 7.

Comparison of the performance of all selected algorithms run against all three datasets.

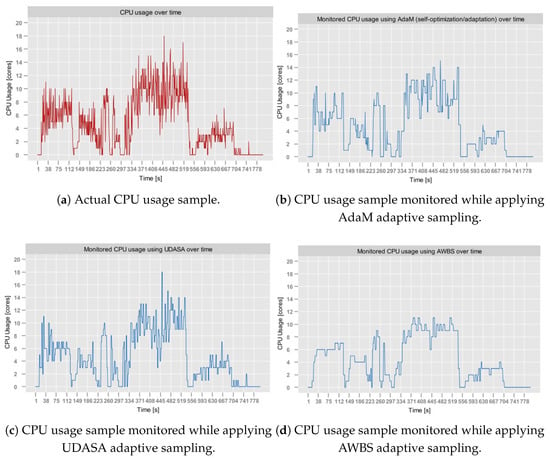

In terms of JPM, the AdaM algorithm implemented in the Sampling Model yields results comparable to those of the other state-of-the-art approaches. It outperformed UDASA in all executed tests, while achieving slightly worse outcomes (by around 2%) than AWBS. Among the evaluated algorithms, UDASA achieved the least effective data volume reduction, combined with a relatively high prediction error. This is clearly visible in Figure 5, which depicts the monitoring of the RainMon dataset under various adaptive sampling strategies. While UDASA captures spikes of larger magnitude, it smooths out smaller fluctuations, which contributes to its prediction error.

Figure 5.

Results of adaptive sampling on the RainMon dataset.