Abstract

Accurate and rapid acquisition of soil texture information is crucial for evaluating soil quality, formulating soil and water conservation strategies, and guiding agricultural resource management. Compared with traditional machine learning methods, convolutional neural networks (CNNs) achieve higher accuracy in soil texture prediction. To overcome the limitations of existing lightweight models in spectral modeling, namely insufficient single-scale feature representation, limited channel utilization, and branch redundancy, while meeting the demand for lightweight architectures, we propose a novel dynamic feature modeling approach: the Multi-scale Routing Attention Network (MSRA-Net). MSRA-Net integrates grouped multi-scale convolutions with an intra-group Efficient Channel Attention (gECA) mechanism and weights the multi-scale branches using a Branch Routing Attention (BRA) mechanism, thereby enhancing inter-channel feature interaction and improving the model’s ability to capture complex spectral patterns. Furthermore, we introduce a multi-task learning variant, MSRA-MT, which uses uncertainty-based dynamic weighting to balance gradient magnitudes across tasks, improving both stability and predictive accuracy. Experimental results on the LUCAS and ICRAF datasets demonstrate that MSRA-MT consistently outperforms the baseline models in both accuracy and robustness ( = 9.190 and = 8.189 for ICRAF and LUCAS, respectively). We also find that, in models with weaker base performance, soft constraints derived from prior knowledge may hinder optimization by amplifying intrinsic noise rather than improving learning.

1. Introduction

Soil texture is one of the key indicators characterizing the physical (mechanical) properties of soil [1]. To a certain extent, it also affects soil chemical and biological properties [2,3]. The ability to obtain soil texture information rapidly and accurately is of great practical significance for evaluating soil quality, formulating soil and water conservation strategies, and guiding agricultural resource management [4]. Moreover, soil texture is a critical indicator for assessing land degradation and susceptibility to wind erosion [5,6], and it provides an important reference for identifying potentially degraded areas and developing differentiated management measures [7]. Against the backdrop of challenges to agricultural sustainability, a series of large-scale soil data platforms [8,9] have incorporated high-resolution soil texture monitoring into their research scope [10], providing the data needed to validate spectral modeling methods.

Soil texture (clay, silt, and sand) is typically determined using laser particle analyzers or sedimentation methods [11,12]. Although these conventional approaches offer high accuracy, they are unsuitable for the large-scale analyses required in precision agriculture [13]. In contrast, visible/near-infrared (Vis–NIR) diffuse reflectance spectroscopy offers advantages such as rapid analysis, non-destructive measurement, low cost, and suitability for large-scale applications. However, several challenges remain in practical applications. For instance, the spectral response to soil texture is indirect and relatively weak [14]. Conventional methods struggle to capture the complex nonlinear relationships between soil texture and high-dimensional spectral data, resulting in limited predictive accuracy [15].

Over the past two decades, researchers have enhanced and filtered spectral band features using preprocessing methods such as Multiplicative Scatter Correction (MSC) [16,17] and Derivative Transformations (DTs) [18], or feature selection methods such as Competitive Adaptive Reweighted Sampling (CARS) [19,20]. Combined with statistical and machine learning methods such as PLSR, RF, and ensemble methods like XGBoost [21], these techniques achieve stable predictive performance [22]. However, their predictive capability remains limited when confronted with complex, high-dimensional, nonlinear relationships [23]. In addition, the limited transferability of such models hinders their application at large spatial scales.

In contrast, deep learning can automatically learn multi-level and multi-scale spectral features, demonstrating strong capability in modeling large datasets and complex nonlinear relationships [24,25]. Some researchers have adopted Inception-like multi-branch convolutional architectures to extract multi-scale features in parallel [26]. Others have employed Temporal Convolutional Networks (TCNs), which use causal and dilated convolutions to expand the receptive field while preserving sequential dependencies [27]. Similarly, for sequence-dependent modeling, Recurrent Neural Networks (RNNs) and their variants, such as Long Short-Term Memory (LSTM), rely on gating mechanisms to model long-term dependencies [28,29]. In addition, owing to its global self-attention mechanism, the Transformer architecture has demonstrated outstanding performance in modeling long-range dependencies and full-spectrum contextual relationships [30]. These methods have achieved higher accuracy in soil texture prediction than traditional machine learning approaches.

However, most studies have adopted a single-task modeling strategy, in which the clay, silt, and sand contents are predicted independently. Such approaches fail to exploit the intrinsic constraints and correlations among the three components, which often leads to inconsistent predictions [31]. In contrast, multi-task learning (MTL) uses a compact shared model to learn multiple related tasks simultaneously [32,33], facilitating knowledge transfer and improving generalization [34,35]. This makes MTL better suited to capturing the coupled relationships among soil texture components. However, multi-task learning methods are prone to the “negative transfer” problem [36,37], and without an effective mechanism for balancing task weights, the model struggles to achieve satisfactory accuracy across all tasks [38].

To address the limitations of traditional convolutional neural networks in spectral modeling, including insufficient single-scale feature representation, limited channel utilization, and branch redundancy, and to meet the need for lightweight models, we propose a novel dynamic feature modeling method for predicting soil texture that combines multi-scale convolution with Efficient Channel Attention (ECA) [39].

The main contributions of this paper are as follows: (1) A dynamic routing mechanism is introduced into the network structure to adaptively adjust the contributions of multi-scale branches, enhancing the model’s capacity to capture complex spectral patterns. (2) Lightweight grouped multi-scale convolutions combined with an Efficient Channel Attention mechanism significantly reduce the model’s parameter count. (3) To address gradient conflicts among tasks, uncertainty-based dynamic weighting is employed to modulate the magnitude of inter-task gradients, further improving model stability and predictive accuracy. (4) A soft constraint requiring the clay, silt, and sand contents to sum to 100% is introduced into the loss function. The experimental results show that the effectiveness of this soft constraint depends on the base performance of the model: in models with strong base performance, it promotes inter-task information sharing and significantly enhances robustness; in models with weaker base performance, it may amplify intrinsic noise, disrupting training and degrading performance.

Two datasets, the large-scale LUCAS (Land Use/Cover Area Frame Survey) dataset and the medium-sized ICRAF (International Center for Research in Agroforestry) dataset, were used to evaluate our approach against 11 baseline models. We also evaluated the soft-constrained loss term based on prior knowledge and demonstrated the effectiveness of the proposed mechanism for balancing weights across tasks.

2. Materials and Methods

2.1. Soil Dataset Description

Two independent soil datasets were used for modeling: the ICRAF soil dataset and the LUCAS topsoil dataset. Both datasets contain soil physicochemical properties and Vis–NIR spectral data.

The ICRAF (International Center for Research in Agroforestry) soil dataset comprises 4438 soil samples from 58 countries across five continents. The samples were subjected to standardized laboratory processing, including air-drying and passing through a 2 mm sieve. The percentage contents of clay, silt, and sand were determined according to standardized procedures, with values ranging from 0% to 100%. Spectral data were acquired using a FieldSpec FR spectroradiometer, covering a range of 350–2500 nm with a spectral resolution of 1 nm. The LUCAS (Land Use/Cover Area Frame Survey) topsoil database, initiated in 2009, covers 19,036 standardized topsoil samples from 23 EU member states. These samples also underwent rigorous standardization procedures to determine the percentage contents of clay, silt, and sand. Spectral data were obtained in diffuse reflectance mode by using a FieldSpecXDS hyperspectroradiometer. The acquired raw spectra were subsequently interpolated and normalized, resulting in a spectral range of 400–2500 nm with a spectral resolution of 0.5 nm.

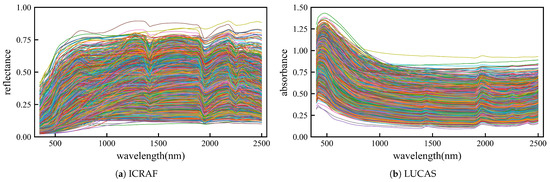

Figure 1 illustrates the reflectance and absorbance curves of the raw spectral sequences for the ICRAF and LUCAS datasets used in the analysis.

Figure 1.

Original spectral reflectance curves: (a) ICRAF-ISRIC dataset (3501 samples); (b) LUCAS-ESDAC dataset (15,762 samples).

2.2. Preprocessing and Dataset Partitioning

2.2.1. Preprocessing

Data preprocessing was performed to ensure data quality and dimensional consistency. We first performed quality control and outlier removal on the raw data. Specifically, we removed all samples with missing values and then excluded samples in which the sum of the soil texture fractions (clay, silt, and sand) was less than 99% or greater than 101%. Next, we applied the Mahalanobis distance ($D_M$) method [40,41] to filter multivariate outliers. This technique accounts for the covariance structure among variables: $D_M$ is calculated for each sample, the samples are sorted in descending order of $D_M$, and the most distant samples are removed. The Mahalanobis distance threshold () for the ICRAF dataset was set to , resulting in the removal of approximately of the samples. For the LUCAS dataset, the threshold was set to , which removed approximately of the samples. After this outlier removal, 3501 samples were retained from the ICRAF dataset and 15,762 from the LUCAS dataset.
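For reference, the Mahalanobis distance of a sample $\mathbf{x}$ from the sample mean $\boldsymbol{\mu}$ under covariance $\boldsymbol{\Sigma}$ is $D_M(\mathbf{x}) = \sqrt{(\mathbf{x}-\boldsymbol{\mu})^{\top}\boldsymbol{\Sigma}^{-1}(\mathbf{x}-\boldsymbol{\mu})}$. The NumPy sketch below illustrates the two filtering steps; it is not the authors' code. Which variables enter the distance calculation is not stated in the text, so the `features` matrix is an assumption (for example, the texture fractions or a reduced spectral representation), `threshold` is a placeholder rather than the study's value, and all function names are hypothetical.

```python
import numpy as np


def texture_sum_mask(texture: np.ndarray, low: float = 99.0, high: float = 101.0) -> np.ndarray:
    """Keep rows whose clay + silt + sand percentages sum to a value in [low, high]."""
    total = texture.sum(axis=1)
    return (total >= low) & (total <= high)


def mahalanobis_distance(features: np.ndarray) -> np.ndarray:
    """Mahalanobis distance of every row of `features` from the column means."""
    centered = features - features.mean(axis=0)
    # np.cov returns a 0-d array for a single feature, so force a 2-D matrix.
    cov = np.atleast_2d(np.cov(features, rowvar=False))
    # The pseudo-inverse tolerates a singular covariance (e.g. fractions summing to 100).
    cov_inv = np.linalg.pinv(cov)
    # d_i^2 = (x_i - mu)^T Sigma^{-1} (x_i - mu), evaluated for all rows at once.
    d2 = np.einsum("ij,jk,ik->i", centered, cov_inv, centered)
    return np.sqrt(np.maximum(d2, 0.0))


def mahalanobis_mask(features: np.ndarray, threshold: float) -> np.ndarray:
    """Keep samples whose distance does not exceed the (hypothetical) threshold."""
    return mahalanobis_distance(features) <= threshold
```

Applying `texture_sum_mask` first and `mahalanobis_mask` to the surviving rows mirrors the order described above. Because clay, silt, and sand are nearly collinear, the covariance of the raw fractions is close to singular, which is why the sketch uses a pseudo-inverse.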

Next, we performed noise reduction and dimensionality compression on the spectral data. Because noise is typically strongest at the edges of the spectral range, we trimmed 50 nm from each end of the spectra [42]. A Savitzky–Golay (SG) filter [43] with a window size of 19 and a polynomial order of 2 was then applied for smoothing. To obtain a compressed spectral representation, the remaining bands were divided into 128 equally sized intervals, and the average spectral value within each interval was calculated.
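A minimal sketch of the trimming, Savitzky–Golay smoothing, and interval-averaging steps, using SciPy's `savgol_filter`. It assumes each row of `spectra` is one sample measured at the wavelengths in `wavelengths` (in nm). Because 2051 bands (the ICRAF range after trimming, at 1 nm spacing) do not divide evenly into 128 intervals, `np.array_split` is used so that interval sizes differ by at most one band; this is an assumption about how the remainder was handled, and the function name is hypothetical.

```python
import numpy as np
from scipy.signal import savgol_filter


def preprocess_spectra(spectra, wavelengths, trim_nm=50.0, window=19, polyorder=2, n_bins=128):
    """Trim noisy spectral edges, smooth with an SG filter, and average into n_bins intervals."""
    spectra = np.asarray(spectra, dtype=float)
    wavelengths = np.asarray(wavelengths, dtype=float)
    # 1) Drop trim_nm nanometres from each end of the spectral range.
    keep = (wavelengths >= wavelengths.min() + trim_nm) & (wavelengths <= wavelengths.max() - trim_nm)
    trimmed = spectra[:, keep]
    # 2) Savitzky-Golay smoothing along the band axis (window 19, quadratic polynomial).
    smoothed = savgol_filter(trimmed, window_length=window, polyorder=polyorder, axis=1)
    # 3) Split the bands into n_bins contiguous, nearly equal intervals and average each one.
    intervals = np.array_split(np.arange(smoothed.shape[1]), n_bins)
    return np.stack([smoothed[:, idx].mean(axis=1) for idx in intervals], axis=1)


if __name__ == "__main__":
    wl = np.arange(350.0, 2501.0)  # ICRAF-like grid: 350-2500 nm at 1 nm
    X = np.random.default_rng(0).random((4, wl.size))
    print(preprocess_spectra(X, wl).shape)  # (4, 128)
```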

2.2.2. Dataset Partition

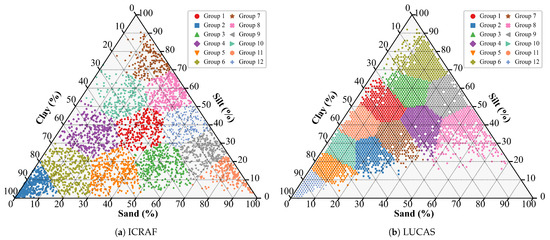

To evaluate model performance, this study employed 3-fold cross-validation, and the final results are reported as the average metrics over the 3 folds. To keep the texture distributions of the data subsets similar, the K-Means [44] clustering algorithm was applied to the three soil texture properties (clay, silt, and sand), partitioning all samples into 12 clusters, as shown in Figure 2. Samples were then allocated to the training and test sets cluster by cluster.

Figure 2.

Soil texture triangle with K-Means clustering results (12 clusters).

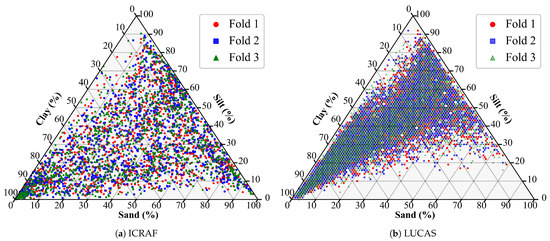

For each cross-validation fold, the dataset was partitioned into three parts: one part served as the test set (33.33% of the total samples), while the remaining two parts served as the modeling set (66.67% of the total samples). Within the modeling set, 20% of the samples were again allocated as the validation set on a cluster-by-cluster basis, with the remaining 80% of the modeling set samples being used as the training set. A visualization of one partition is shown in Figure 3.

Figure 3.

Soil texture triangle of one training/test set partition.
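One way to implement cluster-by-cluster allocation is to treat the K-Means cluster labels as strata, as sketched below with scikit-learn. This is a sketch under that assumption, not the authors' code: `StratifiedKFold` produces the three test folds, and `train_test_split(..., stratify=...)` carves the 20% validation subset out of each modeling set. The function name and seed handling are hypothetical.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.model_selection import StratifiedKFold, train_test_split


def cluster_stratified_splits(texture, n_clusters=12, n_folds=3, val_fraction=0.2, seed=0):
    """Return K-Means labels and (train, val, test) index triples stratified by cluster."""
    # Cluster samples in (clay, silt, sand) space; the labels act as strata.
    labels = KMeans(n_clusters=n_clusters, n_init=10, random_state=seed).fit_predict(texture)
    skf = StratifiedKFold(n_splits=n_folds, shuffle=True, random_state=seed)
    splits = []
    for model_idx, test_idx in skf.split(texture, labels):
        # Within the modeling set, hold out val_fraction of each cluster for validation.
        train_idx, val_idx = train_test_split(
            model_idx, test_size=val_fraction, stratify=labels[model_idx], random_state=seed
        )
        splits.append((train_idx, val_idx, test_idx))
    return labels, splits
```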

2.3. Baseline Models

In this study, we selected 11 representative baseline models, spanning classic machine learning algorithms and state-of-the-art deep learning architectures, to provide a comprehensive evaluation of the strengths and limitations of different methodological approaches.

For machine learning, we chose Partial Least Squares Regression (PLSR), Support Vector Regression (SVR), Random Forest (RF), Ridge Regression, XGBoost, and LightGBM, which are widely used for hyperspectral regression tasks owing to their efficiency and robustness. All machine learning models were implemented using the scikit-learn 1.6.1 library. For deep learning, we selected several architectures common in hyperspectral data analysis as baselines: ResNet34, VGG11, the Temporal Convolutional Network (TCN) [45], CNN-LSTM [46], and CNN-Transformer [47]. VGG11 and ResNet34, in particular, are often used as backbone networks for hyperspectral data processing because of their strong feature extraction capabilities, and they have been widely applied and adapted in studies of spectral data inversion.

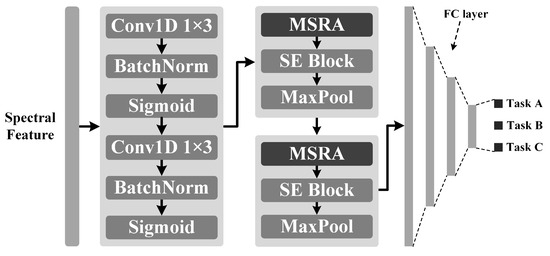

2.4. MSRA-Net

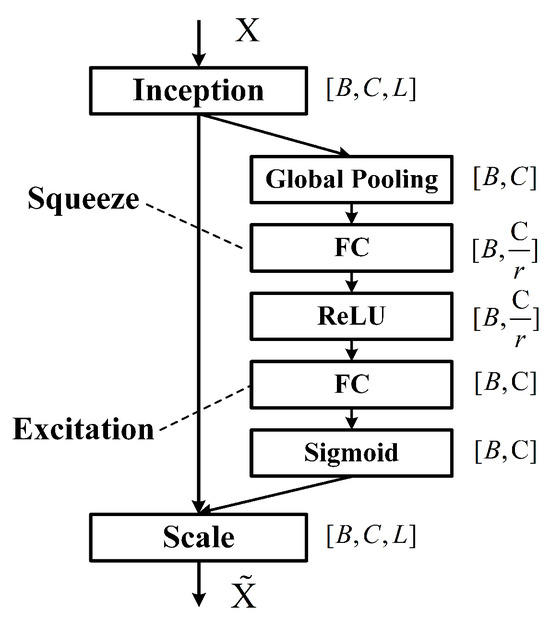

To address the limitations of existing lightweight models in multi-scale feature fusion and inter-channel information interaction, this study proposes a novel network architecture, the Multi-scale Routing Attention Network (MSRA-Net). MSRA-Net uses the Multi-scale Routing Attention (MSRA) module as its core building block. This module integrates the Squeeze-and-Excitation (SE) [48] attention mechanism to enhance inter-channel feature interaction, and stacking multiple modules enables efficient extraction and fusion of multi-scale spectral features. Max pooling shortens the feature sequences and thereby reduces computational cost, as shown in Figure 4.

Figure 4.

The overall architecture of Multi-Scale Routing Attention Network (MSRA-Net).

The model takes a spectral sequence with 128 features as input. The input is first processed by two consecutive 1D convolutional layers (), which expand the number of channels to 32 while extracting preliminary features and performing initial noise suppression. Each convolutional layer is followed by a Batch Normalization (BN) layer and a Sigmoid activation layer, enabling the progressive extraction of more abstract features and enhancing the model’s nonlinear representational capability.

The network ends with a Multi-Layer Perceptron (MLP) head with three hidden layers, which maps the features extracted by the backbone network to the final predictions. The input features are flattened into a vector of length 4096. The number of nodes in each hidden layer of the MLP is reduced by 1/8 from the previous layer, and a Dropout layer (dropout rate p = 0.35) is inserted after each hidden layer to prevent overfitting. The output layer is a fully connected layer with three nodes, which predicts the clay, silt, and sand percentages.
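The flattened length of 4096 follows from the channel and sequence-length bookkeeping given for the backbone in this section, assuming the stem convolutions preserve the sequence length (for example, through same padding). Each term below is channels × sequence length:

$$
\underbrace{1 \times 128}_{\text{input}} \xrightarrow{\text{stem}} 32 \times 128 \xrightarrow{\text{MSRA}_1,\ \text{max pool}} 64 \times 64 \xrightarrow{\text{MSRA}_2,\ \text{max pool}} 128 \times 32 \xrightarrow{\text{flatten}} 128 \times 32 = 4096
$$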

The remainder of this section explains the design philosophy and core modules of MSRA-Net and describes the loss function with uncertainty weighting and prior-knowledge soft constraints.

2.4.1. Module Structure

In the design of deep neural networks, a key direction for architectural innovation has been to balance strong expressive ability against unnecessary computational redundancy. The Selective Kernel Network (SKNet) [49] introduced a soft selection mechanism that adaptively weights features from convolutional kernels of different sizes. However, because this approach generates the weights from the branch convolution features themselves, it can introduce a circular dependency that affects training stability. Subsequently, SkipNet [50] introduced a dynamic skipping mechanism into branch selection, allowing the network to selectively skip layers during inference to reduce computational overhead. However, the soft gates used during training are inconsistent with the hard gates used during inference. In addition, Dynamic Routing Networks (DRNs) [51] introduced a more refined dynamic routing strategy that lets the model automatically determine the optimal information flow path based on the input, significantly enhancing structural adaptability and inference efficiency. Inspired by these works, MSRA-Net aims to strike a new balance among multi-scale feature extraction, redundant path compression, and dynamic feature selection.

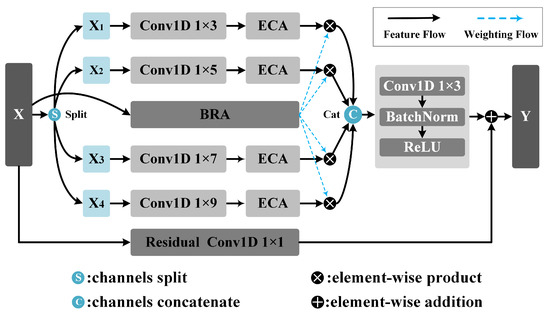

As shown in Figure 5, the MSRA module is designed to enhance both the representation of multi-scale spectral features and the efficiency of inter-channel information interaction while remaining lightweight.

Figure 5.

The structure of the Multi-Scale Routing Attention (MSRA) module.

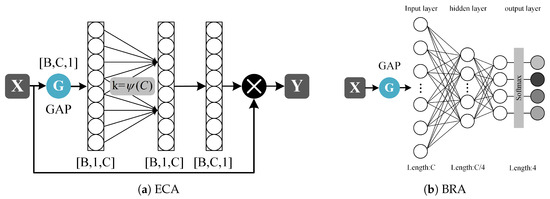

To achieve this goal, the MSRA module introduces grouped multi-scale convolutions and an intra-group Efficient Channel Attention (gECA; shown in Figure 6a) mechanism, combined with a multi-scale weighting strategy based on a Branch Routing Attention (BRA; illustrated in Figure 6b) mechanism, which enables the adaptive selection and fusion of features at different scales.

Figure 6.

The structure of ECA and BRA modules. (a) the Efficient Channel Attention (ECA) modules. (b) the Branch Routing Attention (BRA) modules.

Each of the two MSRA modules divides the channels of the input feature X into four equal-sized groups, with 8 and 16 channels per group, respectively. Each group is processed by a convolutional branch with a different kernel length (1 × 3, 1 × 5, 1 × 7, or 1 × 9), which captures features from a receptive field of a different scale and doubles the group's channels to 16 and 32, respectively; concatenating the branch outputs along the channel dimension yields 64 and 128 channels. This design enhances the model’s multi-scale representation capability. Each convolutional branch is followed by an Efficient Channel Attention (ECA) [39] module.
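A minimal PyTorch sketch of the grouped multi-scale convolution, under stated assumptions: the input channels are split into four equal groups with `torch.split`, each group has its own `Conv1d` with kernel length 3, 5, 7, or 9, and "same" padding (`k // 2`) keeps the sequence length unchanged, which the text does not specify. The per-branch ECA is omitted for brevity, and the class name is hypothetical.

```python
import torch
import torch.nn as nn


class GroupedMultiScaleConv(nn.Module):
    """Split channels into equal groups and convolve each with a different kernel length."""

    def __init__(self, in_channels: int, kernel_sizes=(3, 5, 7, 9)):
        super().__init__()
        if in_channels % len(kernel_sizes) != 0:
            raise ValueError("in_channels must be divisible by the number of branches")
        self.group_size = in_channels // len(kernel_sizes)
        g = self.group_size
        # Each branch doubles its group's channels: g -> 2g; odd k with padding k // 2 keeps length.
        self.branches = nn.ModuleList(
            nn.Conv1d(g, 2 * g, kernel_size=k, padding=k // 2) for k in kernel_sizes
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        groups = torch.split(x, self.group_size, dim=1)  # 4 tensors of shape (B, g, L)
        outs = [branch(grp) for branch, grp in zip(self.branches, groups)]
        return torch.cat(outs, dim=1)  # (B, 2 * in_channels, L)
```

With 32 input channels (groups of 8) the output has 64 channels, and with 64 input channels (groups of 16) it has 128, matching the channel counts stated above.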

Channel attention mechanisms adaptively recalibrate channel weights to enhance a model’s feature representation. The ECA module [39] efficiently captures local cross-channel interactions through a 1D convolution without dimensionality reduction, which keeps it lightweight while avoiding information loss. The size k of its convolutional kernel is determined adaptively by

$$
k = \psi(C) = \left| \frac{\log_2 C}{\gamma} + \frac{b}{\gamma} \right|_{\text{odd}} \tag{1}
$$

where C is the number of channels of the input feature map; $|t|_{\text{odd}}$ denotes the odd number nearest to t; and γ and b are two hyperparameters, typically set to 2 and 1, respectively. The convolution kernel size of each ECA convolution in the 2-layer MSRA module is .
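The adaptive kernel-size rule, $k = |(\log_2 C + b)/\gamma|_{\text{odd}}$ with γ = 2 and b = 1, and the ECA operation can be sketched in PyTorch as follows. The rounding follows the common ECA reference implementation (truncate, then add 1 if the result is even), which is an assumption about how the "nearest odd number" is resolved here; `ECA1d` and `eca_kernel_size` are hypothetical names for a 1D adaptation of the module.

```python
import math

import torch
import torch.nn as nn


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive ECA kernel size: truncate (log2(C) + b) / gamma, then round up to an odd number."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1


class ECA1d(nn.Module):
    """Efficient Channel Attention for (B, C, L) sequences, without dimensionality reduction."""

    def __init__(self, channels: int, gamma: int = 2, b: int = 1):
        super().__init__()
        k = eca_kernel_size(channels, gamma, b)
        self.pool = nn.AdaptiveAvgPool1d(1)
        # A single 1D convolution slides across the channel axis (local cross-channel interaction).
        self.conv = nn.Conv1d(1, 1, kernel_size=k, padding=k // 2, bias=False)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        y = self.pool(x).transpose(1, 2)                 # (B, 1, C): channel descriptors as a sequence
        w = torch.sigmoid(self.conv(y)).transpose(1, 2)  # (B, C, 1): per-channel weights in (0, 1)
        return x * w
```

For example, the rule gives k = 3 for C = 64 and k = 5 for C = 128, so the kernel grows only slowly with the channel count.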

The BRA module receives the input feature X. It extracts global contextual information through a compression–expansion operation implemented by two linear layers and then generates a set of routing weights. The compression ratio r is 8; that is, the middle layer has 1/8 as many nodes as the input layer, a structure commonly employed in multi-branch and dynamic routing networks. The routing weights adaptively adjust the relative importance of each branch and thus its contribution to the final prediction.

To prevent the branch weights from collapsing prematurely during training, a high-temperature Softmax is used in the initial stage so that all branches remain active. This pre-relaxation allows the branches to be explored freely. As training progresses into the middle and later stages, the temperature is gradually decreased with a linear annealing strategy [52], sharpening the weight distribution and enabling more deterministic branch selection. The normalization is defined as

$$
w_i = \frac{\exp(z_i / T)}{\sum_{j=1}^{4} \exp(z_j / T)}, \quad i = 1, \dots, 4 \tag{2}
$$

where $z_i$ is the routing logit produced by the BRA module for the i-th branch, $w_i$ is the corresponding routing weight, and T is the temperature coefficient, which decreases linearly from 5.0 to 0.5 during training.
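A minimal PyTorch sketch of the routing weights and the temperature schedule. Assumptions not stated in the text: global average pooling supplies the context vector fed to the two linear layers, a ReLU separates them, and an optional `warmup_epochs` holds the temperature at 5.0 before the linear decay to 0.5 begins (the text says only that annealing starts in the middle stage of training). `BranchRouting` and `linear_temperature` are hypothetical names.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def linear_temperature(epoch, total_epochs, t_start=5.0, t_end=0.5, warmup_epochs=0):
    """Hold t_start during warm-up, then decay linearly to t_end at the last epoch."""
    if epoch < warmup_epochs:
        return t_start
    span = max(total_epochs - 1 - warmup_epochs, 1)
    frac = min((epoch - warmup_epochs) / span, 1.0)
    return t_start + (t_end - t_start) * frac


class BranchRouting(nn.Module):
    """One softmax weight per branch from a compression (C -> C / r) MLP with temperature."""

    def __init__(self, in_channels, n_branches=4, reduction=8):
        super().__init__()
        hidden = max(in_channels // reduction, 1)
        self.mlp = nn.Sequential(
            nn.Linear(in_channels, hidden),  # compression: C -> C / r
            nn.ReLU(inplace=True),
            nn.Linear(hidden, n_branches),   # output: one routing logit per branch
        )
        self.temperature = 5.0  # updated externally once per epoch

    def forward(self, x):
        context = x.mean(dim=2)                # global average pooling: (B, C)
        logits = self.mlp(context)             # (B, n_branches)
        return F.softmax(logits / self.temperature, dim=1)
```

In a training loop, one would set `router.temperature = linear_temperature(epoch, 500)` at the start of each epoch; higher temperatures flatten the weights toward 1/4 per branch, lower ones concentrate them on the highest-scoring branch.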

After the grouped convolutions, the feature sequences from all groups are concatenated along the channel dimension, doubling the number of channels relative to the input. The concatenated features are then processed by a convolution followed by BN and a ReLU activation layer, enhancing local spectral feature representation while maintaining training stability. To enable residual learning, a convolution is applied to the input to match the channel dimension, and a residual connection is added. The features are then fed into a Squeeze-and-Excitation (SE) block (shown in Figure 7), which recalibrates channel-wise importance to emphasize informative spectral channels.

Figure 7.

Squeeze-and-Excitation (SE) block structure for channel-wise feature recalibration. The reduction ratio is set to .

Finally, a max-pooling operation is applied to shorten the feature sequence and aggregate dominant features. In this network, the two MSRA modules expand the number of channels to 64 and 128, and max pooling reduces the sequence lengths to 64 and 32, respectively.
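Putting the pieces together, the sketch below assembles one MSRA module in PyTorch. It is an illustrative reading of the description in this section, not the released implementation. It assumes that the routing weights scale each branch output before concatenation and that the residual path uses a 1 × 1 convolution; because the fusion-convolution kernel length and the SE reduction ratio are not given here, both are required arguments (`fuse_kernel` should be odd to preserve the sequence length). All class names are hypothetical.

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F


class ECA1d(nn.Module):
    """Compact ECA: 1D convolution across channel descriptors, then sigmoid gating."""

    def __init__(self, channels, gamma=2, b=1):
        super().__init__()
        t = int(abs((math.log2(channels) + b) / gamma))
        k = t if t % 2 else t + 1
        self.conv = nn.Conv1d(1, 1, k, padding=k // 2, bias=False)

    def forward(self, x):
        w = self.conv(x.mean(dim=2, keepdim=True).transpose(1, 2))  # (B, 1, C)
        return x * torch.sigmoid(w).transpose(1, 2)


class SE1d(nn.Module):
    """Squeeze-and-Excitation for (B, C, L) tensors."""

    def __init__(self, channels, reduction):
        super().__init__()
        hidden = max(channels // reduction, 1)
        self.fc = nn.Sequential(
            nn.Linear(channels, hidden), nn.ReLU(inplace=True),
            nn.Linear(hidden, channels), nn.Sigmoid(),
        )

    def forward(self, x):
        return x * self.fc(x.mean(dim=2)).unsqueeze(-1)


class MSRABlock(nn.Module):
    """Grouped multi-scale convs + gECA, weighted by BRA, fused, residual, SE, max pool."""

    def __init__(self, in_channels, fuse_kernel, se_reduction,
                 kernel_sizes=(3, 5, 7, 9), route_reduction=8):
        super().__init__()
        n = len(kernel_sizes)
        if in_channels % n:
            raise ValueError("in_channels must be divisible by the number of branches")
        g = in_channels // n
        out_channels = 2 * in_channels
        self.group_size = g
        self.branches = nn.ModuleList(
            nn.Sequential(nn.Conv1d(g, 2 * g, k, padding=k // 2), ECA1d(2 * g))
            for k in kernel_sizes
        )
        hidden = max(in_channels // route_reduction, 1)
        self.router = nn.Sequential(
            nn.Linear(in_channels, hidden), nn.ReLU(inplace=True), nn.Linear(hidden, n)
        )
        self.temperature = 5.0  # annealed from 5.0 to 0.5 by the training loop
        self.fuse = nn.Sequential(
            nn.Conv1d(out_channels, out_channels, fuse_kernel, padding=fuse_kernel // 2),
            nn.BatchNorm1d(out_channels),
            nn.ReLU(inplace=True),
        )
        self.shortcut = nn.Conv1d(in_channels, out_channels, kernel_size=1)  # assumed 1x1
        self.se = SE1d(out_channels, se_reduction)
        self.pool = nn.MaxPool1d(kernel_size=2)

    def forward(self, x):
        weights = F.softmax(self.router(x.mean(dim=2)) / self.temperature, dim=1)  # (B, n)
        groups = torch.split(x, self.group_size, dim=1)
        outs = [branch(grp) * weights[:, i].view(-1, 1, 1)
                for i, (branch, grp) in enumerate(zip(self.branches, groups))]
        y = self.fuse(torch.cat(outs, dim=1)) + self.shortcut(x)  # residual connection
        return self.pool(self.se(y))
```

Stacking two such blocks on a 32 × 128 stem output gives 64 × 64 and then 128 × 32 feature maps, i.e. the 4096-dimensional vector fed to the MLP head.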

2.4.2. Loss Function

In multi-task learning (MTL), differences in the magnitudes of task losses often lead to conflicting gradient directions or uneven gradient scales, which impair model convergence and generalization. To address this challenge, we adopt a dynamic loss weighting method based on homoscedastic uncertainty [53], in which each task is treated as a regression problem with its own observation noise variance, and its weight in the total loss is adjusted dynamically according to this variance. Tasks with larger noise variance receive lower weights, which balances the optimization across tasks and automatically adapts to their relative importance.

Let $\sigma_i^2$ denote the observation noise variance of the i-th task. The dynamically weighted total loss based on homoscedastic uncertainty can then be written as

$$
\mathcal{L}_{\text{unc}} = \sum_{i=1}^{3} \left( \frac{1}{2\sigma_i^2} \mathcal{L}_i + \log \sigma_i \right) \tag{3}
$$

where $\mathcal{L}_i$ is the Mean Squared Error (MSE) loss for the i-th task.

When a task exhibits high inherent error or noise, its learned noise variance $\sigma_i^2$ increases automatically, preventing that task from dominating the training process. The term $\log \sigma_i$ acts as a regularizer that keeps $\sigma_i$ from growing without bound, which would otherwise drive the weight of $\mathcal{L}_i$ to zero. Before training, $\sigma_i$ is typically initialized to 1.0.

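In addition, to leverage prior knowledge of soil texture, namely that the clay, silt, and sand contents should sum to 100%, we introduce a soft constraint penalty term:

$$
\mathcal{L}_{\text{prior}} = \lambda \cdot \frac{1}{n} \sum_{k=1}^{n} \left| \hat{y}_{k}^{\text{clay}} + \hat{y}_{k}^{\text{silt}} + \hat{y}_{k}^{\text{sand}} - 100 \right| \tag{4}
$$

where $\hat{y}_{k}^{\text{clay}}$, $\hat{y}_{k}^{\text{silt}}$, and $\hat{y}_{k}^{\text{sand}}$ are the predicted percentages for the k-th of n samples, and λ is a weighting coefficient that controls the influence of the prior knowledge constraint on the overall loss. The term takes the form of an L1 penalty.

Finally, the overall loss function is defined as

$$
\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{unc}} + \mathcal{L}_{\text{prior}} \tag{5}
$$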
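A minimal PyTorch sketch of the combined loss, written for a batch of three-column predictions (clay, silt, sand). Two choices are assumptions rather than details from the text: the module stores the logarithm of each task's noise standard deviation, so that the standard deviation stays positive during optimization (a log value of 0 corresponds to the stated initialization of 1.0), and the L1 penalty is averaged over the samples in the batch. The class name and `prior_weight` (the weighting coefficient of the soft constraint) are hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class UncertaintyPriorLoss(nn.Module):
    """Homoscedastic-uncertainty weighted MSE plus an L1 sum-to-100 soft constraint."""

    def __init__(self, n_tasks=3, prior_weight=0.0, target_sum=100.0):
        super().__init__()
        # log(sigma_i) = 0  <=>  sigma_i = 1.0 at initialization.
        self.log_sigma = nn.Parameter(torch.zeros(n_tasks))
        self.prior_weight = prior_weight
        self.target_sum = target_sum

    def forward(self, pred, target):
        # Per-task MSE over the batch: shape (n_tasks,).
        task_mse = F.mse_loss(pred, target, reduction="none").mean(dim=0)
        inv_two_var = 0.5 * torch.exp(-2.0 * self.log_sigma)  # 1 / (2 sigma_i^2)
        uncertainty_term = (inv_two_var * task_mse + self.log_sigma).sum()
        # Soft constraint: predicted clay + silt + sand should be close to 100 %.
        prior_term = (pred.sum(dim=1) - self.target_sum).abs().mean()
        return uncertainty_term + self.prior_weight * prior_term
```

Setting `prior_weight=0.0` recovers the uncertainty-only configuration. If the noise parameters use their own learning rate, as described in the training setup, `log_sigma` would go into a separate optimizer parameter group.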

2.5. Experimental Design and Evaluation Metrics

2.5.1. Experimental Design

In this study, MSRA-Net was adopted as the base model, and two versions were developed: a single-task version (denoted by the suffix -ST) and a multi-task version (denoted by the suffix -MT). The two variants differ in the number of nodes in the final fully connected layer and in their loss functions. Specifically, the single-task model’s output layer contains a single node, whereas the multi-task model has one node per prediction task; because the prediction targets here are the clay, silt, and sand percentages, the multi-task output layer has three nodes. Furthermore, the multi-task version is optimized using uncertainty weighting and a prior-knowledge soft constraint loss, whereas these components are omitted in the single-task version. To ensure a fair comparison, the other baseline models (e.g., VGG11 and ResNet34) were configured with the same task heads and training procedures as MSRA-Net in both variants.

Three-fold cross-validation is employed for evaluating model performance. In each fold, models are trained on the training set, and the best-performing model is saved based on the average RMSE across all tasks on the validation set. After training, the models are evaluated on the test set, and we report the mean performance over the three folds for both individual tasks and the overall average performance of the three tasks.

For the traditional machine learning models, a slightly different procedure was followed. We used a grid search to identify the best hyperparameters on the validation set. Afterward, the models were retrained on the combined training and validation sets (the modeling set), and their average results on the test set were reported.
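The validation-based grid search followed by refitting on the modeling set maps naturally onto scikit-learn's `GridSearchCV` with a `PredefinedSplit`: samples marked −1 always stay in training, samples marked 0 form the single validation fold, and `refit=True` retrains the best configuration on all samples passed to `fit` (training plus validation). This sketch shows one way to do it and is not necessarily the authors' procedure; the helper name and the RMSE-based scoring are assumptions.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import GridSearchCV, PredefinedSplit


def tune_then_refit(estimator, param_grid, X_train, y_train, X_val, y_val):
    """Select hyperparameters on a fixed validation set, then refit on train + validation."""
    X = np.concatenate([X_train, X_val])
    y = np.concatenate([y_train, y_val])
    # -1: never used for validation; 0: member of the single validation fold.
    test_fold = np.concatenate([np.full(len(X_train), -1), np.zeros(len(X_val), dtype=int)])
    search = GridSearchCV(
        estimator,
        param_grid,
        cv=PredefinedSplit(test_fold),
        scoring="neg_root_mean_squared_error",
        refit=True,  # best model is retrained on the full modeling set (X, y)
    )
    search.fit(X, y)
    return search


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(120, 5))
    y = X @ np.array([1.0, -2.0, 0.5, 0.0, 3.0]) + 0.1 * rng.normal(size=120)
    search = tune_then_refit(Ridge(), {"alpha": [0.01, 1.0, 100.0]}, X[:90], y[:90], X[90:], y[90:])
    print(search.best_params_)
```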

The MSRA-Net models were trained using the Adam optimizer with a learning rate of . We used a cosine annealing learning rate scheduler (CosineAnnealingLR) with T_max set to 500 and eta_min to . To ensure optimal performance of the proposed model, key hyperparameters were determined automatically with the Optuna hyperparameter optimization framework [54]. Optuna’s Tree-structured Parzen Estimator (TPE) sampler, a Bayesian optimization algorithm, efficiently explores a predefined search space to converge on the best-performing parameter set. The boundaries of this search space were set with comprehensive consideration of training efficiency and computational cost, and the optimization objective was to minimize the Mean Squared Error (MSE) on the validation set. The batch size was set to 96 for the ICRAF dataset and 128 for the LUCAS dataset, and all models were trained for 500 epochs. The learning rate for the noise standard deviation parameters in the loss function was set to , and the regularization coefficient for the loss term was also set to . The source code is available at https://github.com/surpriseac/MRSE-Net (accessed on 20 October 2025).
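The optimizer setup can be sketched with two Adam parameter groups, so that the noise standard deviation parameters of the loss receive their own learning rate, combined with a `CosineAnnealingLR` schedule with `T_max=500`. Because the learning rates and `eta_min` are not given in this text, the function takes them as required arguments instead of guessing defaults. Note that the scheduler anneals every parameter group; whether the original code does the same for the loss parameters is an assumption. The function name is hypothetical.

```python
import torch
import torch.nn as nn


def build_optimizer(model: nn.Module, loss_module: nn.Module, model_lr: float,
                    sigma_lr: float, eta_min: float, t_max: int = 500):
    """Adam with separate learning rates for network weights and loss (noise) parameters."""
    optimizer = torch.optim.Adam([
        {"params": model.parameters(), "lr": model_lr},
        {"params": loss_module.parameters(), "lr": sigma_lr},
    ])
    # Cosine decay of every group's learning rate towards eta_min over t_max epochs.
    scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=t_max, eta_min=eta_min)
    return optimizer, scheduler
```

In use, `scheduler.step()` is called once per epoch after the optimizer steps for that epoch.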

We fixed the global random seeds for Python, NumPy, and PyTorch and set torch.backends.cudnn.deterministic to True so that cuDNN uses only deterministic convolution algorithms. This makes the training process reproducible in the same hardware and software environment. All experiments were conducted on an Ubuntu 22.04 system equipped with an Intel Xeon E5-2696 v3 processor and an NVIDIA Titan Xp GPU. The models were developed using Python 3.10.18 and PyTorch 2.4.1.
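A minimal sketch of the seeding described above. The `benchmark = False` line is an addition for completeness: it is already PyTorch's default and stops cuDNN from auto-tuning algorithms per input shape. The function name is hypothetical.

```python
import random

import numpy as np
import torch


def seed_everything(seed: int) -> None:
    """Fix Python, NumPy, and PyTorch RNGs and request deterministic cuDNN kernels."""
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)           # seeds the CPU generator and all CUDA devices
    torch.cuda.manual_seed_all(seed)  # explicit; safe to call without a GPU
    torch.backends.cudnn.deterministic = True  # only deterministic convolution algorithms
    torch.backends.cudnn.benchmark = False     # no per-shape algorithm auto-tuning
```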

2.5.2. Evaluation Metrics

This study employs four standard metrics to evaluate overall model performance. The Root Mean Square Error (RMSE) quantifies the average deviation between predicted and actual values. The coefficient of determination (R²) represents the proportion of the variance in the dependent variable that is explained by the model; it is at most 1, with values closer to 1 indicating a better fit, and it can become negative on test data when the model performs worse than simply predicting the mean. The Relative Prediction Deviation (RPD) is the ratio of the standard deviation (SD) of the actual values to the RMSE and is commonly used to assess the robustness of model predictions. The Mean Absolute Error (MAE) is the average of the absolute differences between predicted and actual values; compared with the RMSE, it is less sensitive to outliers. These metrics are defined by Equations (6)–(9), respectively:

$$
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2} \tag{6}
$$

$$
R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2} \tag{7}
$$

$$
\mathrm{RPD} = \frac{\mathrm{SD}}{\mathrm{RMSE}} \tag{8}
$$

$$
\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right| \tag{9}
$$

where n is the total number of samples; $y_i$ and $\hat{y}_i$ are the actual and predicted values for the i-th sample, respectively; $\bar{y}$ is the mean of all actual values; and SD is the standard deviation of the actual values.
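The four metrics can be computed with a few lines of NumPy. One detail is an assumption: the standard deviation in RPD is computed with `ddof=1` (sample standard deviation), since the text does not specify the estimator. The function name is hypothetical.

```python
import numpy as np


def regression_metrics(y_true, y_pred, ddof=1):
    """RMSE, R^2, RPD (= SD / RMSE), and MAE for one prediction task."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    resid = y_true - y_pred
    rmse = np.sqrt(np.mean(resid ** 2))
    r2 = 1.0 - np.sum(resid ** 2) / np.sum((y_true - y_true.mean()) ** 2)
    rpd = np.std(y_true, ddof=ddof) / rmse
    mae = np.mean(np.abs(resid))
    return {"RMSE": rmse, "R2": r2, "RPD": rpd, "MAE": mae}
```

For example, `regression_metrics([1, 2, 3, 4], [1, 2, 3, 5])` gives RMSE = 0.5, R² = 0.8, and MAE = 0.25.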

3. Results

3.1. Model Performance

Table 1 shows the average performance of the multi-task (MSRA-MT) and single-task (MSRA-ST) versions of MSRA-Net in predicting soil texture properties (clay, silt, and sand) on the ICRAF-ISRIC and LUCAS-ESDAC datasets. The reported values (RMSE, R², RPD, and MAE) are averaged over 10 independent runs with different random seeds; for each of the three tasks, the means and standard deviations of the four evaluation metrics are given.

Table 1.

Performance of the MSRA-MT-Net multi-task regression model in soil texture prediction.

The multi-task version (MSRA-MT) outperforms the single-task version (MSRA-ST) in both accuracy and stability, demonstrating its ability to exploit the intrinsic correlations among tasks effectively. Experimental results on both the ICRAF and LUCAS datasets support this conclusion. On the ICRAF dataset, MSRA-MT reduced the Root Mean Squared Error (RMSE) for the clay, silt, and sand prediction tasks by 2.8%, 3.9%, and 7.8%, respectively; on the LUCAS dataset, the corresponding reductions were 2%, 7.2%, and 3.2%. The RMSE averaged over the three tasks was reduced by 5.22% on the ICRAF dataset and 4.44% on the LUCAS dataset. In addition, the standard deviation of the average metrics was markedly lower for MSRA-MT than for MSRA-ST, indicating that the proposed model produces more stable predictions. To test the performance difference between MSRA-MT and MSRA-ST, we performed a paired t-test on the results of the 10 repeated experiments for the two models. For the ICRAF and LUCAS datasets, the t-statistics for the mean difference in RMSE across the three tasks were −9.223 (p < 0.001) and −27.537 (p < 0.001), respectively, and the t-statistics for the mean difference in R² were 11.005 (p < 0.01) and 19.846 (p < 0.001), respectively.
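The relative RMSE reduction and the paired t-test can be computed from per-run results with SciPy's `scipy.stats.ttest_rel`. In this sketch, the inputs are per-run scores obtained with the same seeds for both models, with the MT scores passed first, so a negative t indicates a lower RMSE for MSRA-MT, matching the sign convention above. The function names are hypothetical, and the values in the usage line are illustrative placeholders, not results from this study.

```python
import numpy as np
from scipy.stats import ttest_rel


def relative_reduction(baseline: float, improved: float) -> float:
    """Percentage by which `improved` is lower than `baseline`."""
    return 100.0 * (baseline - improved) / baseline


def paired_comparison(mt_scores, st_scores):
    """Paired t-test on per-run scores from matched seeds; returns (t, p)."""
    mt = np.asarray(mt_scores, dtype=float)
    st = np.asarray(st_scores, dtype=float)
    result = ttest_rel(mt, st)  # tests whether mean(mt - st) == 0
    return float(result.statistic), float(result.pvalue)


if __name__ == "__main__":
    # Illustrative placeholder values only.
    print(paired_comparison([9.0, 9.1, 9.2, 9.0, 9.1], [9.5, 9.7, 9.6, 9.4, 9.8]))
```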

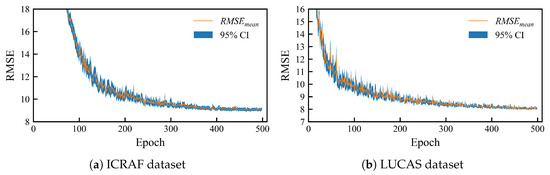

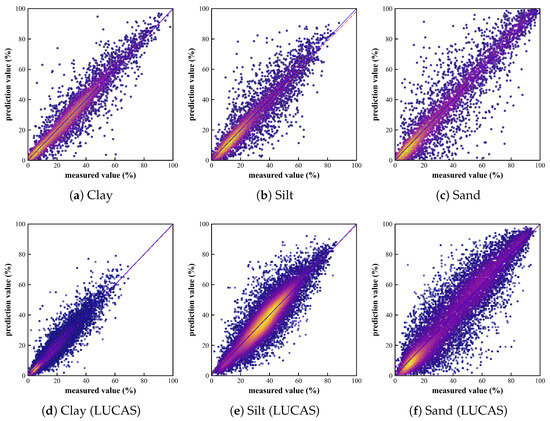

Figure 8 shows the results of repeated experiments with the MSRA-MT model trained with the uncertainty-weighted, prior-knowledge soft-constrained loss function; the curves represent the average validation RMSE across the three tasks. Figure 9 presents scatter plots of predicted versus observed values for the run with median performance among the repeated experiments, superimposing the results from all three cross-validation folds.

Figure 8.

Training convergence curves of the MSRA-MT model. (a) from the ICRAF-ISRIC dataset and (b) from the LUCAS-ESDAC dataset. The solid lines represent the average RMSEs of the three tasks, and the semi-transparent intervals are the 95% confidence intervals.

Figure 9.

Scatter plots of predicted vs. observed values for three tasks by MSRA-MT-Net. (a–c) ICRAF-ISRIC dataset, showing results for: (a) clay; (b) silt; and (c) sand. (d–f) LUCAS-ESDAC dataset, showing results for: (d) clay; (e) silt; and (f) sand. The plot superimposes the prediction results from all three folds of the cross-validation. The X-axis and Y-axis correspond to the measured value and the predicted value, respectively, ranging from 0% to 100%. The blue lines represent the 1:1 lines, and the red lines represent the regression lines.

The training curves indicate that the MSRA-MT model’s performance improved rapidly after approximately 100 epochs. Subsequently, the performance curves stabilized around 350 epochs, eventually converging to a steady level. Furthermore, the 95% confidence interval was wider during the early training stages, reflecting the volatility in the model’s performance. However, during the convergence phase, the interval narrowed significantly, indicating that the model’s performance had become robust and stable.

This improvement can be attributed to the model's intrinsic mechanism. Through parameter sharing and joint training, MSRA-MT can capture the commonalities underlying the spectral responses of the different soil texture fractions. This facilitates positive knowledge transfer among tasks and yields a synergistic gain, which improves prediction accuracy and generalization and makes the results more robust and reliable.

3.2. Comparative Experiment

3.2.1. Comparison of Multi-Task and Single-Task Learning Models

To comprehensively validate the effectiveness of the two loss function optimization methods we proposed, we designed a series of comparative experiments. We integrated these methods with our MSRA-MT model and five other multi-task baseline models. Each model was then evaluated under three distinct loss function configurations:

- (1) Static Weighting: the average of the Mean Squared Error (MSE) losses of the three prediction tasks.

- (2) Uncertainty Dynamic Weighting: defined as shown in Equation (3).

- (3) Static Weighting with Soft Constraints: the soft constraint loss term L_prior, defined in Equation (4), is added to the static weighting loss.
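The three loss configurations can be sketched in plain Python as follows. This is illustrative only: Equations (3) and (4) are not reproduced here, so the sketch assumes the widely used homoscedastic-uncertainty form of Kendall et al. (2018) for the dynamic weighting, with learnable log-variances `log_vars`, and a hypothetical L_prior defined as the mean absolute closure residual |clay + silt + sand − 100|. The weight `lam` and all helper names are hypothetical.

```python
import math


def mse(pred, target):
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def static_loss(task_losses):
    """(1) Static weighting: plain average of the per-task MSE losses."""
    return sum(task_losses) / len(task_losses)


def uncertainty_loss(task_losses, log_vars):
    """(2) Assumed Kendall-style form: sum_i 0.5*exp(-s_i)*L_i + 0.5*s_i, s_i = log(sigma_i^2)."""
    return sum(0.5 * math.exp(-s) * l + 0.5 * s for l, s in zip(task_losses, log_vars))


def prior_loss(clay, silt, sand):
    """Hypothetical L_prior: mean |clay + silt + sand - 100| over samples (values in %)."""
    return sum(abs(c + si + sa - 100.0) for c, si, sa in zip(clay, silt, sand)) / len(clay)


def static_with_prior(task_losses, clay, silt, sand, lam=0.1):
    """(3) Static weighting plus the soft-constraint term; lam is a hypothetical weight."""
    return static_loss(task_losses) + lam * prior_loss(clay, silt, sand)


# Hypothetical predictions (%) and observations for two samples.
pred = {"clay": [20.0, 35.0], "silt": [40.0, 30.0], "sand": [42.0, 33.0]}
obs = {"clay": [22.0, 33.0], "silt": [38.0, 31.0], "sand": [40.0, 36.0]}
losses = [mse(pred[k], obs[k]) for k in ("clay", "silt", "sand")]

print(static_loss(losses))
print(uncertainty_loss(losses, log_vars=[0.0, 0.0, 0.0]))
print(static_with_prior(losses, pred["clay"], pred["silt"], pred["sand"]))
```

In a deep learning framework the same expressions would operate on tensors, with `log_vars` registered as trainable parameters and optimized jointly with the network weights.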

All experiments report the mean Root Mean Squared Error (RMSE) and coefficient of determination (R²) to evaluate model performance comprehensively. The detailed experimental results are presented in Table 2.
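For reference, the evaluation metrics used in the tables can be computed as below. This sketch assumes the common definitions R² = 1 − SS_res/SS_tot and RPD = SD(observed)/RMSE with the sample standard deviation; other studies sometimes use the population SD or the squared Pearson correlation, so values may differ slightly from the authors' implementation. The function name and example data are hypothetical.

```python
import math
import statistics


def regression_metrics(obs, pred):
    """RMSE, R², RPD and MAE for one task (e.g., clay content in %)."""
    residuals = [p - o for o, p in zip(obs, pred)]
    n = len(residuals)
    ss_res = sum(r * r for r in residuals)
    mean_obs = statistics.mean(obs)
    ss_tot = sum((o - mean_obs) ** 2 for o in obs)
    rmse = math.sqrt(ss_res / n)
    return {
        "RMSE": rmse,
        "R2": 1.0 - ss_res / ss_tot,          # coefficient of determination
        "RPD": statistics.stdev(obs) / rmse,   # ratio of performance to deviation
        "MAE": sum(abs(r) for r in residuals) / n,
    }


print(regression_metrics([10.0, 20.0, 30.0, 40.0], [12.0, 18.0, 33.0, 37.0]))
```

Because RPD divides the spread of the observations by the RMSE, an RPD below 2.0 means the prediction error is more than half the natural variability of the property.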

Table 2.

Multi-task model performance with different loss functions and weighting methods.

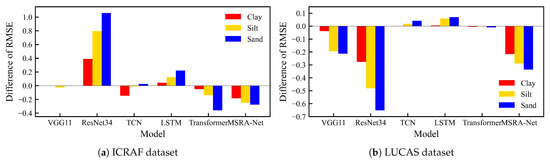

Experimental results on both datasets reveal a clear commonality: almost all models trained with the uncertainty-based dynamic weighting loss outperformed those trained with conventional static average weighting, and this difference was particularly evident for MSRA-MT. However, not all models benefit from the soft constraint loss term. Notably, models with inherently poorer performance (such as ResNet34-MT and LSTM-MT) showed a further decline in performance after the soft constraint loss term was incorporated. To analyze in detail how the soft constraint loss term (L_prior) affects the various models and individual tasks, we plotted the change in RMSE over repeated experiments for the Clay, Silt, and Sand tasks for six models (the five multi-task baseline models and MSRA-MT) after L_prior was introduced. The RMSE variation (ΔRMSE) is defined as the RMSE with L_prior minus the RMSE without it, so that ΔRMSE > 0 indicates an increase in the model's prediction error after the soft constraint loss term is added. Figure 10 illustrates ΔRMSE for the six models across the three tasks on the two datasets.
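The ΔRMSE analysis, and the ranking of tasks by effect strength used in the discussion of Figure 10, can be sketched as follows. It assumes paired runs (same seed and fold) with and without L_prior and measures strength as the mean |ΔRMSE| over runs; the helper names and RMSE values are hypothetical.

```python
import statistics


def delta_rmse(rmse_with_prior, rmse_without_prior):
    """Per-run ΔRMSE = RMSE with L_prior minus RMSE without it (> 0 means degradation)."""
    return [w - wo for w, wo in zip(rmse_with_prior, rmse_without_prior)]


def rank_by_effect(deltas_by_task):
    """Order tasks by mean |ΔRMSE| over repeated runs, strongest effect first."""
    strength = {task: statistics.mean(abs(d) for d in ds) for task, ds in deltas_by_task.items()}
    return sorted(strength, key=strength.get, reverse=True)


# Hypothetical RMSE values from three paired runs of one model (illustrative only).
deltas = {
    "Clay": delta_rmse([7.70, 7.66, 7.69], [7.68, 7.65, 7.70]),
    "Silt": delta_rmse([9.10, 9.02, 9.08], [9.05, 9.00, 9.03]),
    "Sand": delta_rmse([11.62, 11.55, 11.60], [11.50, 11.48, 11.52]),
}
print(rank_by_effect(deltas))
```

Using the absolute value makes the ranking independent of whether L_prior helps or hurts a given task.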

Figure 10.

Impact of the soft constraint loss term (L_prior) on the RMSE for the three prediction tasks across different models. On the vertical axis, a positive value (ΔRMSE > 0) indicates an increase in RMSE (performance degradation) after adding the soft constraint term.

Analysis of Figure 10 reveals a critical phenomenon: the impact of the prior knowledge soft constraint loss term (L_prior) on individual tasks follows a consistent hierarchy of strength. Across nearly all evaluated models, regardless of whether L_prior produces a performance gain (ΔRMSE < 0) or a degradation (ΔRMSE > 0), the magnitude of the effect, quantified by |ΔRMSE|, follows the same order: Sand > Silt > Clay. The Sand task is consistently the most strongly affected, whereas the Clay task changes the least.

This consistent hierarchy suggests that the intrinsic uncertainty and noise level of each task govern how the correction is distributed. Specifically, sand prediction is inherently the most uncertain (or noise-prone) task, owing to its weaker spectral characteristics. Consequently, to minimize the overall closure error penalized by L_prior, the multi-task model preferentially allocates the largest corrective gradient updates to the most volatile task (Sand), where a correction yields the largest reduction in the soft constraint loss. Although this strategy minimizes the overall error most efficiently, it also lets the noisy task dominate the optimization, which can lead to optimization conflicts among tasks. This finding highlights the need for an inter-task conflict mitigation strategy to ensure that the constrained optimization does not disproportionately sacrifice the performance of one task for the benefit of the constraint.

These results suggest that the soft constraint is not universally beneficial. We hypothesize that for models with weak initial performance, the structural residuals of their predictions (i.e., |clay + silt + sand − 100|) contain more noise and systematic bias. In this case, introducing the L_prior soft constraint not only fails to correct the model but may also amplify this noise as an additional loss term, thereby interfering with optimization. Therefore, when prior knowledge-based soft constraints are used to assist training, the model's initial performance and intrinsic robustness are crucial.

A core challenge in multi-task learning (MTL) is task conflict. Although shared parameters can in principle promote knowledge sharing, in practice, when task objectives are contradictory, the optimization process may lead to negative transfer and thereby degrade overall performance. To verify this and to provide a benchmark for subsequent conflict mitigation strategies, we conducted experiments with MSRA-ST and the single-task versions of five baseline models (VGG11-ST, ResNet34-ST, TCN-ST, LSTM-ST, and Transformer-ST). Each model was trained independently for the Clay, Silt, and Sand tasks. We also evaluated six commonly used traditional machine learning models; the average performance of all models is reported in Table 3.

Table 3.

Single-task model performance.

Among the 12 models evaluated, the deep learning models consistently outperformed the traditional machine learning models on both datasets. Among the baseline models, Transformer-ST and TCN-ST performed best, as both can capture the long-range dependencies within complex spectral data that traditional models struggle with. In contrast, the traditional machine learning methods showed mediocre performance on the soil texture prediction task, with RPD values below 2.0.

The generally poor performance of these conventional machine learning models (RPD < 2.0) is primarily due to their inherent limitations when dealing with high-dimensional, highly collinear, and non-linear hyperspectral data. They typically rely on linear or shallow feature engineering (e.g., principal component analysis or feature selection) to process thousands of bands, which makes it difficult for them to learn complex, abstract feature representations automatically. Consequently, they struggle to capture the complex non-linear mapping between soil constituents and spectral reflectance. In contrast, deep learning models achieve progressive feature abstraction and representation learning through their multi-layer non-linear structures, which is key to their superior performance in hyperspectral prediction tasks.

We then compared the single-task deep learning models, including MSRA-ST, with the multi-task models trained with static weighting (Table 2). Without any conflict mitigation strategy, the multi-task models did not show the expected advantage on the three tasks; their average performance was even slightly inferior to that of the corresponding single-task models. This outcome indicates the presence of task conflict within the models, which negatively affected their overall performance.

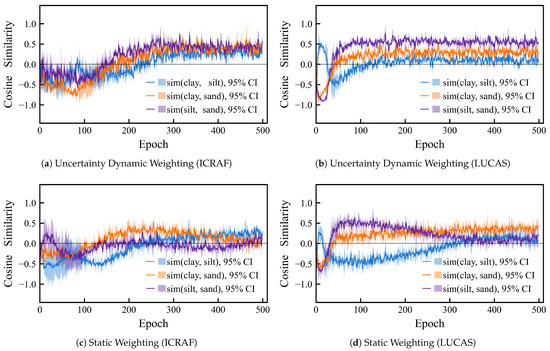

3.2.2. Uncertainty Dynamic Weighting or Static Weighting?

We adopt uncertainty dynamic weighting as the optimization strategy for the loss function. The core idea of this strategy is to use the predictive uncertainty of each task to weight its loss term, allowing the model to adaptively adjust its focus on different tasks during training and thereby mitigate inter-task conflicts. To validate the effectiveness of this approach, we compared the gradient cosine similarity among the three tasks during MSRA-MT training under the static and dynamic weighting schemes. We computed the gradients of each task's loss with respect to the parameters of the last MSRA module and the SE module, flattened these high-dimensional gradient tensors into one-dimensional vectors, and calculated the pairwise cosine similarity among the three gradient vectors. In Figure 11, the curves represent the mean values from 3-fold cross-validation, and the translucent shaded regions indicate the 95% confidence intervals.
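Why this weighting tends to balance the tasks can be seen from a short derivation. It assumes, as an illustration, that Equation (3) follows the homoscedastic-uncertainty form of Kendall et al. (2018) with learnable log-variances $s_i = \log \sigma_i^2$; the actual Equation (3) may differ in its constants or parameterization.

$$
\begin{aligned}
\mathcal{L}_{\text{total}} &= \sum_{i \in \{\text{clay},\,\text{silt},\,\text{sand}\}} \left( \tfrac{1}{2} e^{-s_i}\,\mathcal{L}_i + \tfrac{1}{2} s_i \right), \\
\frac{\partial \mathcal{L}_{\text{total}}}{\partial s_i} &= -\tfrac{1}{2} e^{-s_i}\,\mathcal{L}_i + \tfrac{1}{2} = 0
\;\Longrightarrow\; e^{s_i} = \mathcal{L}_i, \\
w_i &= \tfrac{1}{2} e^{-s_i} = \frac{1}{2\,\mathcal{L}_i},
\qquad
\frac{\partial \mathcal{L}_{\text{total}}}{\partial \theta} = \sum_i w_i \,\frac{\partial \mathcal{L}_i}{\partial \theta}.
\end{aligned}
$$

At the stationary point of each $s_i$, every weighted loss $w_i \mathcal{L}_i$ equals $1/2$, so a task with a large raw loss (such as Sand) receives a proportionally smaller weight, and its gradient with respect to the shared parameters $\theta$ is rescaled by $1/(2\mathcal{L}_i)$. In practice, $s_i$ is updated by gradient descent together with $\theta$ rather than solved in closed form, so the weights track this balance only approximately.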

Figure 11.

Comparison of inter-task gradient cosine similarity training curves under different weighting strategies, uncertainty dynamic weighting vs. static weighting. (a) Uncertainty dynamic weighting on the ICRAF-ISRIC dataset; (b) Uncertainty dynamic weighting on the LUCAS-ESDAC dataset; (c) Static weighting on the ICRAF-ISRIC dataset; (d) Static weighting on the LUCAS-ESDAC dataset. The solid lines represent the means, and the semi-transparent intervals are the 95% confidence intervals.

The cosine similarity reflects the similarity of two tasks’ gradient directions, with a range from −1.0 to 1.0:

- Cosine = 1.0 indicates that the gradient directions are identical, showing high synergy between tasks.

- Cosine = 0.0 indicates that the gradients are orthogonal, meaning that the updates do not affect each other.

- Cosine = −1.0 indicates that the gradient directions are opposite and that the updates cancel each other out.
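The gradient-similarity measurement described above can be sketched in plain Python. The sketch assumes the per-task gradients for the selected shared layers have already been extracted (in PyTorch, for example, with `torch.autograd.grad(task_loss, shared_params, retain_graph=True)` for each task) and converted to nested lists; the helper names and gradient values are hypothetical.

```python
import itertools
import math


def flatten(tensor):
    """Flatten an arbitrarily nested list of numbers into a flat list of floats."""
    if isinstance(tensor, (int, float)):
        return [float(tensor)]
    return [x for item in tensor for x in flatten(item)]


def cosine(u, v):
    """Cosine similarity of two equal-length vectors; 0.0 if either has zero norm."""
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return sum(a * b for a, b in zip(u, v)) / (norm_u * norm_v)


def pairwise_gradient_cosine(task_grads):
    """task_grads maps each task to its gradient tensors for the selected shared layers.

    Each task's tensors are flattened and concatenated into one vector, and the
    cosine similarity is computed for every pair of tasks."""
    vectors = {task: flatten(grads) for task, grads in task_grads.items()}
    return {(a, b): cosine(vectors[a], vectors[b])
            for a, b in itertools.combinations(vectors, 2)}


# Hypothetical gradients w.r.t. two shared parameter tensors (illustrative only).
grads = {
    "Clay": [[0.4, -0.1], [0.2]],
    "Silt": [[-0.3, 0.2], [-0.1]],
    "Sand": [[0.5, -0.2], [0.1]],
}
for pair, sim in pairwise_gradient_cosine(grads).items():
    print(pair, round(sim, 3))
```

Concatenating all selected tensors before computing the cosine gives one similarity per task pair per step, which is what a training curve of inter-task similarity would plot.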

Analysis of MSRA-MT on the ICRAF and LUCAS datasets revealed a common issue in multi-task learning: under static weighting, that is, without inter-task optimization, negative transfer occurred between the Silt task and both the Clay and Sand tasks, whose gradients pulled in competing directions. In contrast, under both weighting schemes, the Clay and Sand tasks showed no significant conflict with each other. This suggests that without an effective coordination mechanism, multi-task learning can suffer from considerable conflicts among some of its tasks.

Furthermore, after uncertainty-based weighting was introduced, the gradient cosine similarity for all task pairs consistently converged to a positive value during training. Although the similarity values were not high, this indicates that the strategy is effective: it transformed negative transfer into cooperative optimization and ultimately led to a significant performance improvement for MSRA-MT on all three tasks. Details are presented in Table 4 and Table A1.

Table 4.

Mean performance of MSRA-MT model under two task-weighting strategies.

3.3. Ablation Experiments

To evaluate the contribution of the three key components of the MSRA model (ECA, SE, and BRA), we removed each module in turn from the complete model (referred to as the “Standard” model) and ran ten repeated experiments for each configuration to observe the resulting performance degradation. The experiments were conducted on both the ICRAF and LUCAS datasets, and the results are presented in Table 5 and Table A2.

Table 5.

Mean performance of the model after removing different modules.

The experimental results indicate that removing the BRA module caused the largest performance degradation on both datasets, as evidenced by the highest mean RMSE and MAE values; the model without BRA performed worse than the models without ECA or SE. Furthermore, the standard deviation of its mean RMSE across repeated experiments was 47% and 34% higher than that of the complete model on the two datasets, highlighting the critical role of the BRA module in the model's robust performance. Removing the ECA or SE module also degraded performance, but less severely, suggesting that these modules provide complementary rather than fundamental enhancements to the model's feature extraction capability.
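The relative degradation of each ablated model can be checked directly from the RMSE values in Table A2. The sketch below averages the three task RMSEs per configuration and reports the percentage increase over the Standard model; it uses only the tabulated means and therefore says nothing about the run-to-run standard deviations discussed above. The helper name `relative_increase` is hypothetical.

```python
# RMSE values (Clay, Silt, Sand) transcribed from Table A2.
RMSE = {
    "ICRAF": {
        "Standard": (7.581, 8.897, 11.091),
        "Drop ECA": (7.791, 9.009, 11.525),
        "Drop SE": (7.796, 9.039, 11.524),
        "Drop BRA": (8.104, 9.120, 11.926),
    },
    "LUCAS": {
        "Standard": (4.630, 8.769, 11.169),
        "Drop ECA": (4.620, 8.977, 11.467),
        "Drop SE": (4.709, 9.070, 11.510),
        "Drop BRA": (4.801, 9.273, 11.852),
    },
}


def relative_increase(dataset):
    """Percentage increase of the task-averaged RMSE over the Standard model."""
    means = {cfg: sum(v) / len(v) for cfg, v in RMSE[dataset].items()}
    base = means["Standard"]
    return {cfg: 100.0 * (m - base) / base for cfg, m in means.items() if cfg != "Standard"}


for ds in RMSE:
    print(ds, {cfg: round(pct, 2) for cfg, pct in relative_increase(ds).items()})
```

With these values, removing BRA raises the task-averaged RMSE by roughly 5.7% on ICRAF and 5.5% on LUCAS, compared with about 2% to 3% for removing ECA or SE.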

4. Discussion

This paper proposed a lightweight multi-scale convolutional model, MSRA-Net, and its two variants, single-task (ST) and multi-task (MT), for individually and simultaneously predicting multiple soil texture properties (clay, silt, and sand). We employ uncertainty dynamic weighting to balance the optimization of different tasks and utilize prior knowledge as a soft constraint to guide the model in learning inter-task relationships.

4.1. Multi-Task Learning or Single-Task Learning?

Repeated three-fold cross-validation experiments on two public datasets of different scales, ICRAF and LUCAS, demonstrate that MSRA-MT outperforms its single-task counterpart, the other baseline models, and their multi-task variants. Specifically, on the ICRAF dataset, the R² for clay, silt, and sand prediction reaches 0.875, 0.780, and 0.831, respectively; on the LUCAS dataset, the values are 0.863, 0.735, and 0.787. Compared with the single-task variant, the average RMSE of MSRA-MT is reduced by 5.22% and 4.44% on the ICRAF and LUCAS datasets, respectively. Compared with the traditional single-task learning (STL) architecture, the multi-task learning (MTL) framework adopted by MSRA-MT offers methodological and practical advantages, particularly for processing large-scale and heterogeneous remote sensing data. The core benefit of MTL lies in enforced feature sharing and enhanced generalization: because the model must share its underlying feature representation across all related tasks (sand, silt, and clay contents), it is compelled to learn more generic and robust soil spectral patterns rather than local noise or biases specific to a single particle-size fraction. This helps address two critical bottlenecks faced by conventional STL models on remote sensing spectral data, namely, the scarcity of labeled samples and poor generalization (i.e., overfitting) in heterogeneous environments.

By training the five baseline models with uncertainty dynamic weighting and with the prior knowledge soft constraint loss term, we found that multi-task learning models face significant conflicts between specific tasks in the absence of an effective coordination mechanism. Uncertainty dynamic weighting effectively mitigates this issue and markedly improves the stability of performance across repeated experiments. Furthermore, the prior knowledge soft constraint has a dual effect on model performance: it is beneficial for models with strong initial performance but can be detrimental to models with weaker initial performance. Ablation experiments show that the Branch Routing Attention (BRA) module contributes most to MSRA-MT, validating its effectiveness in integrating multi-scale information and enhancing feature representation. The intra-group Efficient Channel Attention (gECA) and Squeeze-and-Excitation (SE) modules also provide consistent, though smaller, performance gains.

4.2. Prospects and Limitations

The proposed model, MSRA-Net, demonstrates superior performance on two datasets of different scales, providing an efficient and reliable solution for the accurate prediction of soil texture properties. Its lightweight design and multi-task architecture reduce computational demands, which should help meet the low-latency and low-power requirements of on-board edge computing devices such as UAVs or handheld spectrometers and thus support the fast, real-time acquisition and processing of soil texture information.

By integrating the three tasks into a single optimization space, the MTL architecture yields predictions with greater physical plausibility than independent STL models. This robustness is important for processing multispectral satellite imagery (such as Sentinel-2 and Landsat) and hyperspectral imagery. Once trained, the model could be applied to remote sensing data from large regions to extract soil texture information efficiently, which is valuable for addressing large-scale environmental issues such as regional soil weathering, land degradation, and desertification. It could help identify soil texture trends quickly and accurately, providing data support for macro-level soil and water conservation strategies.

The uncertainty dynamic weighting (UDW) mechanism introduced in MSRA-MT is key to mitigating inter-task conflict. It dynamically adjusts the weight of each task's loss according to the task's inherent uncertainty, essentially balancing the magnitudes of conflicting gradients. Because this mechanism is task-agnostic, it offers a viable route to extending the approach to other soil property prediction tasks and could ultimately enable a unified model for the high-precision prediction of properties such as pH, organic carbon, nitrogen, phosphorus, potassium, salinity, and heavy metals.

However, because this method was developed on large-scale spectral libraries collected under laboratory conditions, its deployment in field environments will confront complex spectral interferences. Furthermore, the model may encounter domain shift when deployed across different times and geographical regions. Future work will therefore focus on integrating the model with advanced data preprocessing techniques or domain adaptation strategies so that MSRA-Net can maintain, or even surpass, its current performance on highly variable, large-scale UAV-collected data. In addition, soil texture is one of the main covariates influencing soil spectral properties: it indirectly affects the spectral signals of soil nutrients by influencing soil moisture, porosity, specific surface area, and aggregate structure. Inaccurate texture information is therefore a significant factor in the poor cross-regional transfer performance of soil nutrient models. By accurately and interpretably extracting soil texture information with MSRA-MT, the predicted texture parameters or the model's internal texture representation could be used as auxiliary inputs or constraints for domain adaptation. This could alleviate the poor transferability of soil nutrient models caused by differences in soil texture and improve the robustness and accuracy of cross-regional soil nutrient estimation from remote sensing data.

In conclusion, this study provides a valuable reference for identifying potentially degraded areas and formulating differentiated governance measures, and it lays a solid foundation for applying this method at field and remote sensing scales.

Author Contributions

Writing—original draft, Y.D. and Y.X.; Conceptualization, Y.D., Y.X., and Y.S.; Methodology, Y.D., Y.X., and Y.S.; Modeling and software, Y.D. and Y.X.; Data deployment, Y.X.; Visualization, Y.D. and Y.X.; Project administration, Y.D. and Y.X.; Editing and revision, Y.D., Y.X., and Y.S.; Supervision, Y.D. and Y.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research study was funded by the Guangxi Key Research and Development Program (GuikeAB24010338 and GuikeAB25069340), the National Natural Science Foundation of China (32360374), and the Innovation Project of Guangxi Graduate Education (YCSW2024383).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this study are available from the Open Soil Spectral Library (OSSL) [55,56], which compiles several heterogeneous and independent datasets into a common standardized source. The OSSL is available as a digital asset and a web service and contains standardized data from the ICRAF and LUCAS datasets.

Acknowledgments

The authors would like to express their sincere gratitude to Jian Tang and Junyu Zhao for their guidance and assistance during data acquisition and field investigation, and to Zhijie Guo for her assistance with text proofreading, data organization, and figure preparation. Finally, the authors sincerely thank all the reviewers for their valuable comments on this article.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1.

Performance of MSRA-MT model under two task-weighting strategies.

| Weighting | Task | ICRAF RMSE | ICRAF R² | ICRAF RPD | ICRAF MAE | LUCAS RMSE | LUCAS R² | LUCAS RPD | LUCAS MAE |
|---|---|---|---|---|---|---|---|---|---|
| Static | Clay | 7.853 | 0.875 | 2.828 | 5.354 | 4.749 | 0.863 | 2.701 | 3.328 |
|  | Silt | 9.524 | 0.780 | 2.131 | 6.050 | 9.460 | 0.735 | 1.943 | 6.945 |
|  | Sand | 11.955 | 0.831 | 2.431 | 7.594 | 12.081 | 0.787 | 2.168 | 8.874 |
| Uncertainty | Clay | 7.672 | 0.880 | 2.895 | 5.215 | 4.752 | 0.866 | 2.729 | 2.729 |
|  | Silt | 9.049 | 0.801 | 2.247 | 6.009 | 8.853 | 0.768 | 2.074 | 2.074 |
|  | Sand | 11.400 | 0.846 | 2.554 | 7.504 | 11.454 | 0.810 | 2.293 | 2.293 |

Table A2.

Performance of the model after removing different modules.

| Dropped Module | Task | ICRAF RMSE | ICRAF R² | ICRAF RPD | ICRAF MAE | LUCAS RMSE | LUCAS R² | LUCAS RPD | LUCAS MAE |
|---|---|---|---|---|---|---|---|---|---|
| Standard | Clay | 7.581 | 0.883 | 2.928 | 5.087 | 4.630 | 0.872 | 2.792 | 3.225 |
|  | Silt | 8.897 | 0.808 | 2.282 | 5.932 | 8.769 | 0.772 | 2.094 | 6.512 |
|  | Sand | 11.091 | 0.854 | 2.624 | 7.335 | 11.169 | 0.818 | 2.343 | 8.329 |
| Drop ECA | Clay | 7.791 | 0.877 | 2.857 | 5.226 | 4.620 | 0.868 | 2.757 | 3.175 |
|  | Silt | 9.009 | 0.803 | 2.257 | 6.030 | 8.977 | 0.760 | 2.040 | 6.644 |
|  | Sand | 11.525 | 0.843 | 2.526 | 7.664 | 11.467 | 0.808 | 2.280 | 8.440 |
| Drop SE | Clay | 7.796 | 0.876 | 2.849 | 5.239 | 4.709 | 0.867 | 2.741 | 3.300 |
|  | Silt | 9.039 | 0.801 | 2.202 | 5.997 | 9.070 | 0.753 | 2.013 | 6.784 |
|  | Sand | 11.524 | 0.843 | 2.526 | 7.650 | 11.510 | 0.807 | 2.278 | 8.594 |
| Drop BRA | Clay | 8.104 | 0.870 | 2.777 | 5.425 | 4.801 | 0.859 | 2.661 | 3.346 |
|  | Silt | 9.120 | 0.794 | 2.202 | 6.080 | 9.273 | 0.745 | 1.980 | 6.824 |
|  | Sand | 11.926 | 0.832 | 2.437 | 7.797 | 11.852 | 0.795 | 2.209 | 8.772 |

References

- Williams, J.; Prebble, R.; Williams, W.; Hignett, C. The influence of texture, structure and clay mineralogy on the soil moisture characteristic. Soil Res. 1983, 21, 15–32.

- Xia, Q.; Rufty, T.; Shi, W. Soil microbial diversity and composition: Links to soil texture and associated properties. Soil Biol. Biochem. 2020, 149, 107953.

- Regelink, I.; Stoof, C.; Rousseva, S.; Weng, L.; Lair, G.; Krám, P.; Kercheva, M.; Banwart, S.; Comans, R. Linkages between aggregate formation, porosity and soil chemical properties. Geoderma 2015, 247, 24–37.

- Hengl, T.; de Jesus, J.M.; Heuvelink, G.; González, M.R.; Kilibarda, M.; Blagotić, A.; Wei, S.; Wright, M.N.; Geng, X.; Bauer-Marschallinger, B.; et al. SoilGrids250m: Global gridded soil information based on machine learning. PLoS ONE 2017, 12, e0169748.

- Fenta, A.; Tsunekawa, A.; Haregeweyn, N.; Poesen, J.; Tsubo, M.; Borrelli, P.; Panagos, P.; Vanmaercke, M.; Broeckx, J.; Yasuda, H.; et al. Land susceptibility to water and wind erosion risks in the East Africa region. Sci. Total Environ. 2019, 703, 135016.

- Borrelli, P.; Panagos, P.; Ballabio, C.; Lugato, E.; Weynants, M.; Montanarella, L. Towards a Pan-European Assessment of Land Susceptibility to Wind Erosion. Land Degrad. Dev. 2016, 27, 1093–1105.

- Badapalli, P.K.; Nakkala, A.B.; Gugulothu, S.; Kottala, R.B. Dynamic Land Degradation Assessment: Integrating Machine Learning with Landsat 8 OLI/TIRS for Enhanced Spectral, Terrain, and Land Cover Indices. Earth Syst. Environ. 2024, 9, 315–335.

- Tziolas, N.V.; Tsakiridis, N.; Ogen, Y.; Kalopesa, E.; Ben-Dor, E.; Theocharis, J.; Zalidis, G. An integrated methodology using open soil spectral libraries and Earth Observation data for soil organic carbon estimations in support of soil-related SDGs. Remote Sens. Environ. 2020, 244, 111793.

- Rossel, R.V.; Behrens, T.; Ben-Dor, E.; Brown, D.J.; Demattê, J.; Shepherd, K.; Shi, Z.; Stenberg, B.; Stevens, A.; Adamchuk, V.; et al. A global spectral library to characterize the world’s soil. Earth-Sci. Rev. 2016, 155, 198–230.

- Gouda, M.Z.; Nagihi, E.M.; Khiari, L.; Gallichand, J.; Ismail, M. Artificial Intelligence-Based Prediction of Key Textural Properties from LUCAS and ICRAF Spectral Libraries. Agronomy 2021, 11, 1550.

- Yang, X.; Zhang, Q.; Li, X.; Jia, X.; Wei, X.; Shao, M. Determination of Soil Texture by Laser Diffraction Method. Soil Sci. Soc. Am. J. 2015, 79, 1556–1566.

- Kettler, T.; Doran, J.; Gilbert, T. Simplified Method for Soil Particle-Size Determination to Accompany Soil-Quality Analyses. Soil Sci. Soc. Am. J. 2001, 65, 849–852.

- Benedet, L.; Faria, W.M.; Silva, S.H.; Mancini, M.; Demattê, J.; Guilherme, L.; Curi, N. Soil texture prediction using portable X-ray fluorescence spectrometry and visible near-infrared diffuse reflectance spectroscopy. Geoderma 2020, 376, 114553.

- Zhong, L.; Guo, X.; Xu, Z.; Ding, M. Soil properties: Their prediction and feature extraction from the LUCAS spectral library using deep convolutional neural networks. Geoderma 2021, 402, 115366.

- Gholizadeh, A.; Žížala, D.; Saberioon, M.; Borůvka, L. Soil organic carbon and texture retrieving and mapping using proximal, airborne and Sentinel-2 spectral imaging. Remote Sens. Environ. 2018, 218, 89–103.

- Helland, I.; Næs, T.; Isaksson, T. Related versions of the multiplicative scatter correction method for preprocessing spectroscopic data. Chemom. Intell. Lab. Syst. 1995, 29, 233–241.

- Sonobe, R.; Yamashita, H.; Mihara, H.; Morita, A.; Ikka, T. Estimation of Leaf Chlorophyll a, b and Carotenoid Contents and Their Ratios Using Hyperspectral Reflectance. Remote Sens. 2020, 12, 3265.

- Tafintseva, V.; Lintvedt, T.; Solheim, J.; Zimmermann, B.; Rehman, H.; Virtanen, V.; Shaikh, R.; Nippolainen, E.; Afara, I.; Saarakkala, S.; et al. Preprocessing Strategies for Sparse Infrared Spectroscopy: A Case Study on Cartilage Diagnostics. Molecules 2022, 27, 873.

- Liu, C.; Yu, H.; Liu, Y.; Zhang, L.; Li, D.; Zhang, J.; Li, X.; Sui, Y. Prediction of Anthocyanin Content in Purple-Leaf Lettuce Based on Spectral Features and Optimized Extreme Learning Machine Algorithm. Agronomy 2024, 14, 2915.

- Kumar, C.; Chatterjee, S.; Oommen, T.; Guha, A. Automated lithological mapping by integrating spectral enhancement techniques and machine learning algorithms using AVIRIS-NG hyperspectral data in Gold-bearing granite-greenstone rocks in Hutti, India. Int. J. Appl. Earth Obs. Geoinf. 2020, 86, 102006.

- Ge, X.; Wang, J.; Ding, J.; Cao, X.; Zhang, Z.; Liu, J.; Li, X. Combining UAV-based hyperspectral imagery and machine learning algorithms for soil moisture content monitoring. PeerJ 2019, 7, e6926.

- Kodikara, G.; McHenry, L. Machine learning approaches for classifying lunar soils. Icarus 2020, 345, 113719.

- Nouri, M.; Gomez, C.; Gorretta, N.; Roger, J. Clay content mapping from airborne hyperspectral Vis-NIR data by transferring a laboratory regression model. Geoderma 2017, 298, 54–66.

- Chen, Y.; Jiang, H.; Li, C.; Jia, X.; Ghamisi, P. Deep Feature Extraction and Classification of Hyperspectral Images Based on Convolutional Neural Networks. IEEE T. Geosci. Remote 2016, 54, 6232–6251.

- Zhao, W.; Du, S. Spectral–Spatial Feature Extraction for Hyperspectral Image Classification: A Dimension Reduction and Deep Learning Approach. IEEE T. Geosci. Remote 2016, 54, 4544–4554.

- Li, C.; Wang, Y.; Fang, Z.; Li, P. Hyperspectral Image Classification Based on Multibranch Adaptive Feature Fusion Network. IEEE T. Geosci. Remote 2024, 62, 1–18.

- Pelletier, C.; Webb, G.I.; Petitjean, F. Temporal Convolutional Neural Network for the Classification of Satellite Image Time Series. Remote Sens. 2018, 11, 523.

- Zhang, N.; Shen, S.; Zhou, A.; Jin, Y. Application of LSTM approach for modelling stress-strain behaviour of soil. Appl. Soft Comput. 2021, 100, 106959.

- Wang, S.; Guan, K.; Zhang, C.; Lee, D.; Margenot, A.J.; Ge, Y.; Peng, J.; Zhou, W.; Zhou, Q.; Huang, Y. Using soil library hyperspectral reflectance and machine learning to predict soil organic carbon: Assessing potential of airborne and spaceborne optical soil sensing. Remote Sens. Environ. 2022, 271, 112914.

- Kühnlein, L.; Keller, S. Evaluation Of Transformers And Convolutional Neural Networks For High-Dimensional Hyperspectral Soil Texture Classification. In Proceedings of the Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Rome, Italy, 13–16 September 2022; pp. 1–5.

- Zhang, Y.; Yang, Q. A Survey on Multi-Task Learning. IEEE T. Knowl. Data Eng. 2017, 34, 5586–5609.

- Ruder, S.; Bingel, J.; Augenstein, I.; Søgaard, A. Latent Multi-Task Architecture Learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 4822–4829.

- Meng, Z.; Yao, X.; Sun, L. Multi-Task Distillation: Towards Mitigating the Negative Transfer in Multi-Task Learning. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 389–393.

- Thung, K.H.; Wee, C.Y. A brief review on multi-task learning. Multimed. Tools Appl. 2018, 77, 29705–29725.

- Zhai, Z.; Chen, F.; Yu, H.; Hu, J.; Zhou, X.; Xu, H. PS-MTL-LUCAS: A partially shared multi-task learning model for simultaneously predicting multiple soil properties. Ecol. Inform. 2024, 82, 102784.

- Liu, S.; Liang, Y.; Gitter, A. Loss-Balanced Task Weighting to Reduce Negative Transfer in Multi-Task Learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 9977–9978.

- Zhang, W.; Deng, L.; Zhang, L.; Wu, D. A Survey on Negative Transfer. IEEE/CAA J. Autom. Sin. 2020, 10, 305–329.

- Bai, L.; Ong, Y.; He, T.; Gupta, A. Multi-task gradient descent for multi-task learning. Memet. Comput. 2020, 12, 355–369.

- Wang, Q.; Wu, B.; Zhu, P.F.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 11531–11539.

- De Maesschalck, R.; Jouan-Rimbaud, D.; Massart, D. The Mahalanobis distance. Chemometr. Intell. Lab. Syst. 2000, 50, 1–18.

- Kamoi, R.; Kobayashi, K. Why is the Mahalanobis Distance Effective for Anomaly Detection? arXiv 2020, arXiv:2003.00402.

- Aggarwal, H.; Majumdar, A. Hyperspectral Image Denoising Using Spatio-Spectral Total Variation. IEEE Geosci. Remote Sens. Lett. 2016, 13, 442–446.

- Schafer, R.W. What Is a Savitzky–Golay Filter? [Lecture Notes]. IEEE Signal Process. Mag. 2011, 28, 111–117.

- Ikotun, A.M.; Ezugwu, A.E.; Abualigah, L.; Abuhaija, B.; Jia, H. K-means clustering algorithms: A comprehensive review, variants analysis, and advances in the era of big data. Inf. Sci. 2022, 622, 178–210.

- Li, R.; Yin, B.; Cong, Y.; Du, Z. Simultaneous Prediction of Soil Properties Using Multi CNN Model. Sensors 2020, 20, 6271.

- Wang, Y.; Chen, S.; Hong, Y.; Hu, B.; Peng, J.; Shi, Z. A comparison of multiple deep learning methods for predicting soil organic carbon in Southern Xinjiang, China. Comput. Electron. Agric. 2023, 212, 108067.

- Cao, L.; Sun, M.; Yang, Z.; Jiang, D.; Yin, D.; Duan, Y. A Novel Transformer-CNN Approach for Predicting Soil Properties from LUCAS Vis-NIR Spectral Data. Agronomy 2024, 14, 1998.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

- Li, X.; Wang, W.; Hu, X.; Yang, J. Selective Kernel Networks. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 510–519.

- Wang, X.; Yu, F.; Dou, Z.Y.; Gonzalez, J.E. SkipNet: Learning Dynamic Routing in Convolutional Networks. arXiv 2017, arXiv:1711.09485.

- Cai, S.; Shu, Y. Dynamic Routing Networks. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Virtual, 5–9 January 2021; pp. 3587–3596.

- Ben-Ameur, W. Computing the Initial Temperature of Simulated Annealing. Comput. Optim. Appl. 2004, 29, 369–385.

- Kendall, A.; Gal, Y.; Cipolla, R. Multi-task Learning Using Uncertainty to Weigh Losses for Scene Geometry and Semantics. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7482–7491.

- Akiba, T.; Sano, S.; Yanase, T.; Ohta, T.; Koyama, M. Optuna: A Next-Generation Hyperparameter Optimization Framework. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2623–2631.

- Safanelli, J.L.; Hengl, T.; Sanderman, J.; Parente, L. Open Soil Spectral Library (Training Data and Calibration Models), 2021. Available online: https://data.niaid.nih.gov/resources?id=zenodo_5759693 (accessed on 19 October 2025).

- Safanelli, J.L.; Hengl, T.; Parente, L.L.; Minarik, R.; Bloom, D.E.; Todd-Brown, K.; Gholizadeh, A.; Mendes, W.d.S.; Sanderman, J. Open Soil Spectral Library (OSSL): Building reproducible soil calibration models through open development and community engagement. PLoS ONE 2025, 20, 1–35.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).