Abstract

Drones offer a promising solution for automating distribution tower inspection, but real-time defect detection remains challenging due to limited computational resources and the small size of critical defects. This paper proposes TDD-YOLO, an optimized model based on YOLOv11n, which enhances small defect detection through four key improvements: (1) SPD-Conv preserves fine-grained details, (2) CBAM amplifies defect salience, (3) BiFPN enables efficient multi-scale fusion, and (4) a dedicated high-resolution detection head improves localization precision. Evaluated on a custom dataset, TDD-YOLO achieves an mAP@0.5 of 0.873, outperforming the baseline by 3.9%. When deployed on a Jetson Orin Nano at 640 × 640 resolution, the system achieves an average frame rate of 28 FPS, demonstrating its practical viability for real-time autonomous inspection.

1. Introduction

Power distribution systems are the energy arteries that sustain societal operations, and their safe and stable functioning is critical to societal development [1,2]. Within this infrastructure, distribution towers and their components are essential for reliable power delivery [3,4,5], chiefly insulators, which provide electrical insulation, and tension clamps, which secure the conductors. However, these components are persistently threatened by defects induced by environmental stressors and operational wear. In addition to non-standard insulator binding and missing tension clamp shells, common critical failures include insulator cracking or explosion caused by flashovers, corrosion-induced weakening of metal fittings, and the loosening of vital fasteners such as bolts and split pins [6]. Although visually minor, these defects can have catastrophic consequences, including localized power outages, large-scale cascading grid failures, and even wildfires, posing significant risks to public safety and economic activity. Consequently, proactively identifying these defects is not merely a maintenance task but a crucial requirement for the security and efficiency of the entire power system. This study focuses specifically on the automated detection of non-standard insulator binding and missing protective shells on tension clamps, two prevalent defects that are exceptionally difficult to detect because of their small size and low contrast against complex backgrounds.

In recent years, intelligent inspection technologies such as robots [7], helicopters [8], and unmanned aerial vehicles (UAVs) [9,10,11] have been introduced to overcome the limitations of manual inspection. Among them, UAVs are widely adopted for their flexibility and ability to operate over complex terrain [12]. However, the acquired images are still analyzed manually, which is labor-intensive, inefficient, and error-prone, especially for small defects such as non-standard insulator bindings and missing tension clamp shells [13,14].

To address this problem, researchers have applied machine learning to tower component recognition. Early approaches relied on hand-crafted features, such as Haar-like features and Histograms of Oriented Gradients (HOG), combined with traditional classifiers [15,16,17,18]. However, these approaches suffer from low accuracy, limited efficiency, and poor generalization, making them unsuitable for detecting small defects in large-scale image datasets. With the advance of deep learning, methods such as Region-based Convolutional Neural Networks (R-CNNs) and their improved versions have raised accuracy [19,20,21].

However, their two-stage design increases model complexity, which makes deployment on edge devices with limited computational resources difficult. To accelerate detection, Redmon et al. [22] introduced the YOLO algorithm, which significantly improved detection speed while maintaining high accuracy. Subsequent versions of YOLO [23,24,25] have continually enhanced both precision and efficiency.

In recent years, numerous research teams have improved YOLO-based algorithms for insulator detection on large high-voltage transmission towers, achieving significant advances in both recognition speed and accuracy. Zhiqiang Xing et al. developed MobileNet-YOLOv4, enhancing the dataset through Gaussian filtering, K-means clustering, and Mosaic augmentation, and achieved a recognition accuracy of 97.78% [26]. Nan Zhang et al. introduced Insulator-YOLO, an improved YOLOv5 framework that reached a mean average precision (mAP) of 89.65% [27]. Meng Wang et al. built on the YOLOv7 architecture by integrating Transformer modules, triple attention, and a smooth intersection-over-union (IoU) loss, achieving an accuracy of 92% [28]. Guoguang Tan et al. developed Lightweight-YOLOv8n by incorporating the SimAM attention mechanism and modifying the backbone network, achieving an mAP of 90.6% [29]. Junmei Zhao et al. proposed an improved YOLOv11n model with ODConv convolution and Slimneck, maintaining low computational complexity while achieving an mAP of 91% [30].

Another group of studies has focused on detecting small defects in the power fittings of transmission towers using YOLO-based frameworks, achieving notable improvements in detection precision and speed. Lincong Peng et al. proposed YOLOv7-CWFD for bolt defect detection, achieving an mAP of 92.9% [31]. Peng Wang et al. introduced PMW-YOLOv8, an improved YOLOv8-based algorithm designed specifically for detecting loose split pins [32]; even when target defects occupied only 0.25% of the total image area, the model attained an mAP of 66.3%. Siyu Xiang et al. developed a modified YOLOv8 architecture for grading ring defect detection, a task complicated by the structural similarity between defective and normal components [33]. Their method improved accuracy by 6.8% over the baseline model while minimizing the loss of critical defect information during feature fusion. Additionally, Zhiwei Jia et al. [34] proposed a live-line detection system for tension clamps in transmission lines based on dual-UAV collaboration. Although the dual-algorithm system achieved a mean precision of 82.7%, it requires two UAVs and two recognition models, which makes it complex and wasteful of hardware resources, and the accuracy of its improved YOLOv8-TR algorithm does not exceed 60.9%.

Recent research has also demonstrated the potential of structurally modifying YOLO architectures for small-object detection. For instance, Benjumea et al. [35] introduced YOLO-Z, a series of models obtained by altering components and connections in YOLOv5, achieving a significant mAP gain for small objects at minimal latency cost. In a complementary, drone-focused approach, Chen et al. [36] proposed SL-YOLO, which incorporates a dedicated cross-scale fusion network (HEPAN) and lightweight modules to improve accuracy while reducing computational complexity on the VisDrone dataset.

A comprehensive analysis of existing YOLO-based improvements for power equipment inspection reveals that their strategies, while effective in their own contexts, fail to address the interconnected challenges of distribution tower defect detection with a holistic and systematic design. The core of the problem lies in a cascade of model limitations when facing minute, low-contrast defects:

- Early-Stage Feature Destruction: Standard convolutional and down-sampling operations irreversibly discard the fine-grained spatial details of tiny defects, leading to an insurmountable information loss at the very beginning of the network.

- Lack of Discriminative Focus: In complex aerial backgrounds, the model’s receptive field is cluttered with irrelevant information, lacking a mechanism to actively suppress background noise and amplify the subtle, defining features of the target defects.

- Inefficient Information Flow: The pathways for fusing shallow, high-resolution features (which contain location details) with deep, semantic features (which provide context) are suboptimal, creating a bottleneck that hinders the construction of robust, multi-scale representations.

- Inadequate Output Resolution for Small Targets: The standard detection heads are often insufficiently sensitive to the sparse and weak feature responses of the smallest defects, limiting the final detection performance.

While prior works have touched upon isolated aspects, such as adding attention modules or modifying feature pyramids, none have proposed a coherent architectural solution that sequentially resolves this entire cascade of challenges. Our work introduces TDD-YOLO as such a solution, with each component meticulously engineered to address a specific link in this problem chain.

To bridge this gap, we propose a tower defect detection framework built upon YOLOv11n (TDD-YOLO). Its architecture is founded on a systematic, four-stage enhancement strategy that guides the visual information from input to output with maximal fidelity and discriminative power:

- Feature Preservation with SPD-Conv: In the backbone network, we replace standard down-sampling layers with Space-to-Depth Convolution (SPD-Conv) [37]. This is the foundational step for solving the problem of early-stage feature loss. By converting spatial maps into depth channels without discarding any pixels, SPD-Conv ensures that the fine-grained details of tiny defects are preserved and carried forward into the deeper layers of the network.

- Salience Enhancement with CBAM: Following the feature-preserving convolutions, we integrate the Convolutional Block Attention Module (CBAM) [38]. This module directly addresses the issue of complex backgrounds and insufficient focus. It sequentially applies channel and spatial attention to dynamically weight the feature maps, effectively teaching the model to ’look at’ the defect regions while ignoring irrelevant clutter.

- Efficient Information Fusion with BiFPN: To tackle the inefficiency in multi-scale information propagation, we redesign the neck network using a Bidirectional Feature Pyramid Network (BiFPN) [39]. BiFPN establishes fast, bidirectional cross-scale connections, enabling the high-resolution, detail-rich features and the semantically strong contextual features to be continuously and efficiently fused. This process ensures that the final feature pyramids are rich in both detail and context.

- Precision Output with a High-Resolution Detection Head: Finally, we introduce a dedicated detection head that operates on the highest-resolution feature map. This head is specifically designed to enhance the model’s capability to capture the features of small targets, providing a direct and optimized pathway for locating the minute defects that are easily missed by standard heads.

In essence, TDD-YOLO is not a mere assembly of advanced modules, but a coherent pipeline where each innovation tackles a critical bottleneck, and their sequential integration creates a synergistic effect that is greater than the sum of its parts.

The main contributions of this paper are as follows:

- We propose a novel, four-stage architectural improvement strategy for small defect detection. This strategy systematically addresses the core challenges of feature loss (via SPD-Conv), lack of focus (via CBAM), inefficient fusion (via BiFPN), and insufficient output sensitivity (via a high-resolution head).

- We instantiate this strategy in TDD-YOLO, a task-specific model that demonstrates the synergistic effectiveness of the proposed cohesive pipeline on the challenging task of distribution tower defect inspection.

- We validate the generality of our improvement strategy by successfully applying it to YOLOv8n, demonstrating its consistent performance boost across different base frameworks.

- We implement a fully autonomous UAV-based inspection system, proving the end-to-end practicality of our model in real-world scenarios.

2. Materials and Methods

2.1. Model Architecture and Modifications

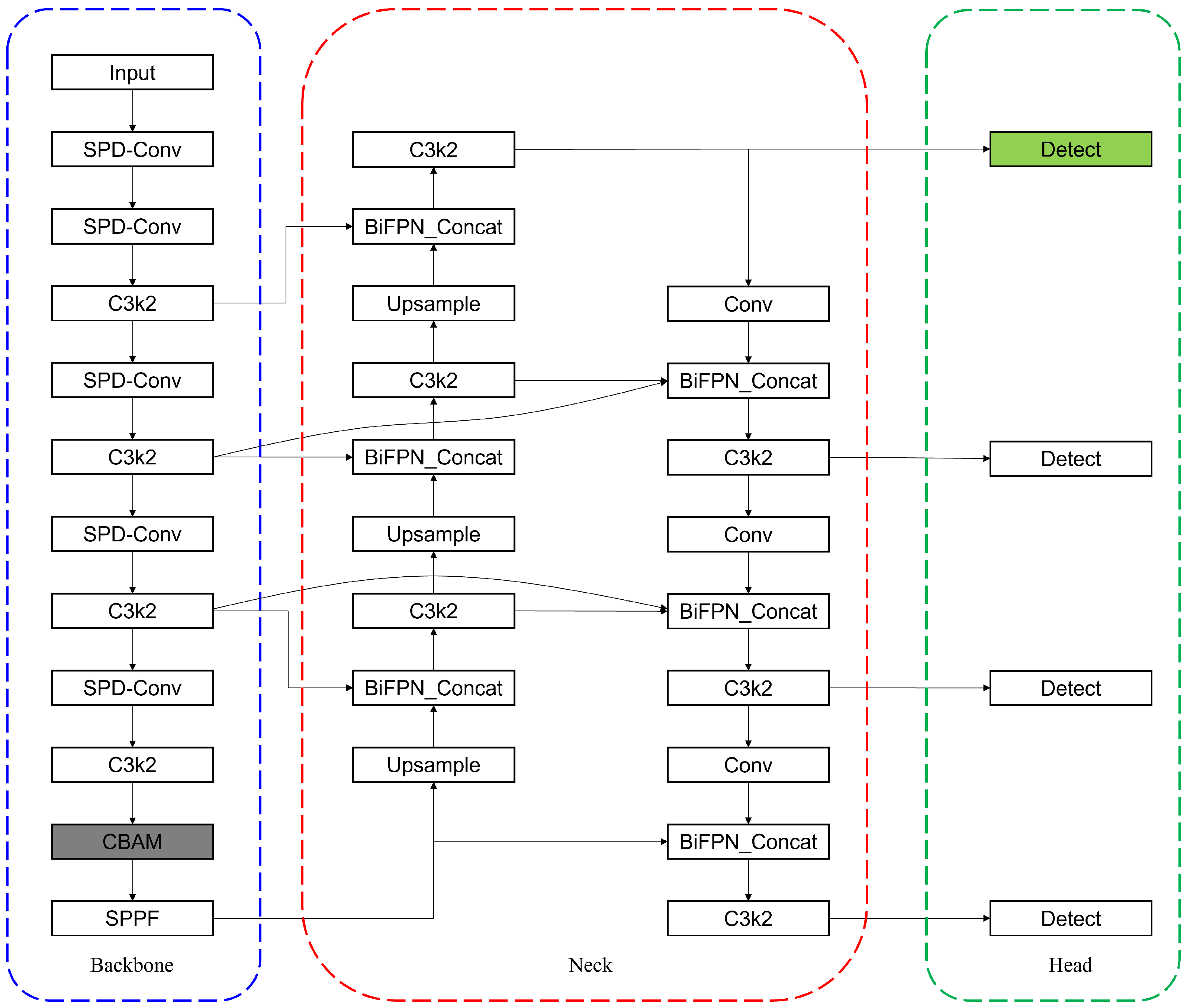

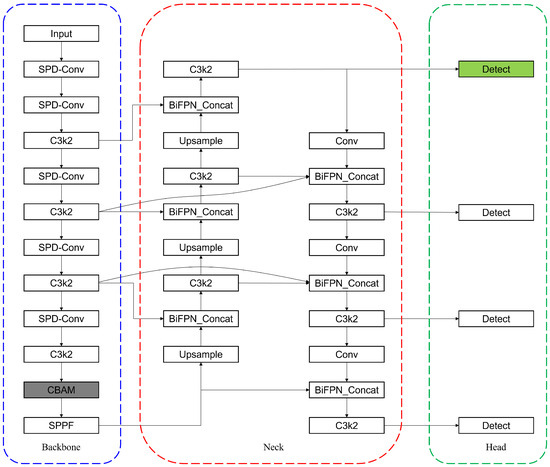

Building upon the YOLOv11n architecture, which balances efficiency and performance for edge deployment, we constructed our task-specific model, TDD-YOLO. The overall architecture is designed to implement the synergistic improvement strategy outlined in the introduction, and its complete structure is depicted in Figure 1.

Figure 1.

The architecture diagram marks the key enhancements to the YOLOv11n model with colored dashed boxes and color-highlighted components: the SPD-Conv module (blue), a redesigned BiFPN network (red), a CBAM attention module (gray), and a specialized detection head (green).

Based on YOLOv11n, the convolution strategy in the backbone was enhanced by replacing standard Conv layers with SPD-Conv, as visually indicated by the blue box in the diagram. The feature extraction network in the Neck section was re-engineered to strengthen multi-scale feature fusion using a BiFPN structure, as marked by the red box in the diagram. The model integrates a CBAM attention module, which is placed before the SPPF module, with the corresponding components marked by gray-shaded modules in the architecture diagram. Additionally, a specialized detection head was incorporated into the Head structure, with the corresponding components highlighted in green in the architecture diagram.

Detailed specifications of the model architecture, including input/output channels and spatial dimensions at each layer, are provided in Table A1.

2.1.1. SPD-Conv Convolution Module

Detecting small objects remains challenging for several reasons. The primary limitation is the feature degradation caused by downsampling operations such as strided convolution and pooling. Large objects retain sufficient feature representation during downsampling owing to their broad spatial coverage and rich texture. In contrast, small objects, with their limited pixel area and inherently low resolution, suffer significant information loss during downsampling, which increases the likelihood of missed or erroneous detections.

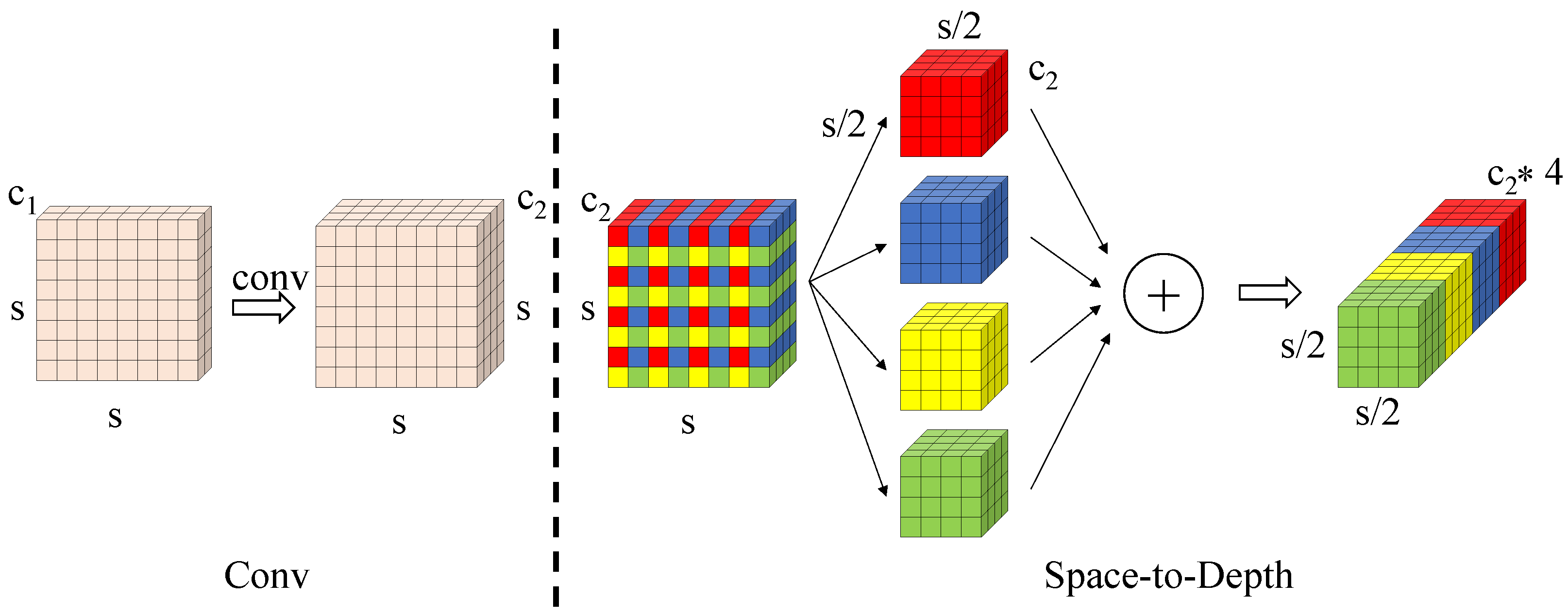

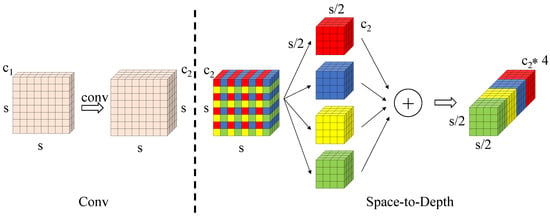

To tackle these challenges, this study replaces the traditional strided convolutions in YOLOv11n with SPD-Conv, a component that combines a space-to-depth (SPD) transformation with standard convolution. Its key innovation is the space-to-depth transformation, which moves the spatial dimensions of the input feature map into the channel dimension and thereby preserves spatial information during downsampling.

Given an input feature map of size $S \times S$ with $C_1$ channels, the transformation begins with non-strided convolution layers that expand the channel dimension to $C_2$ while maintaining the original spatial resolution $S \times S$. Subsequently, the core space-to-depth operation applies a scale factor $s$ (typically $s = 2$). It splits the feature map into $s^2$ sub-maps $f_{i,j}$ ($0 \le i, j < s$), where $f_{i,j}$ collects every $s$-th pixel starting from offset $(i, j)$, so that each sub-map has size $\frac{S}{s} \times \frac{S}{s}$. These sub-maps are then concatenated along the channel dimension, producing an intermediate representation of size $\frac{S}{s} \times \frac{S}{s} \times s^2 C_2$.

This transformation addresses the limitations of traditional downsampling by converting spatial information into channel-wise representations without loss. The resulting $s^2$-fold channel expansion preserves the discrete spatial patterns of small targets, enabling deeper network layers to capture their geometric characteristics more reliably. Consequently, the SPD-Conv mechanism mitigates feature degradation throughout the network and improves small object detection performance (see Figure 2).
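The space-to-depth step can be illustrated with a minimal NumPy sketch. It assumes a single (C, H, W) feature map rather than a batched tensor, uses the name `space_to_depth` purely for illustration, and omits the surrounding non-strided convolution; it is not the authors' implementation.

```python
import numpy as np


def space_to_depth(x: np.ndarray, s: int = 2) -> np.ndarray:
    """Rearrange a (C, H, W) map into (s*s*C, H/s, W/s) without discarding any value.

    Sub-map f_{i,j} collects every s-th pixel starting at offset (i, j);
    the s*s sub-maps are concatenated along the channel axis.
    """
    _, h, w = x.shape
    if h % s or w % s:
        raise ValueError("H and W must be divisible by the scale factor s")
    sub_maps = [x[:, i::s, j::s] for i in range(s) for j in range(s)]
    return np.concatenate(sub_maps, axis=0)


x = np.arange(2 * 4 * 4, dtype=np.float32).reshape(2, 4, 4)
y = space_to_depth(x, s=2)
print(y.shape)  # (8, 2, 2)

# All 32 input values survive; plain stride-2 subsampling keeps only a quarter of them.
print(np.array_equal(np.sort(x.ravel()), np.sort(y.ravel())))  # True
print(x[:, ::2, ::2].size)  # 8
```

With s = 2, the channel count grows fourfold while each spatial side halves, so the subsequent layers see every original activation at the lower resolution.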

Figure 2.

SPD-Conv convolutional structure diagram. The different colors are used solely for visual discrimination and do not represent any specific meaning.

The parameters of the SPD-Conv module used in this study are listed in Table 1.

Table 1.

SPD-Conv details.

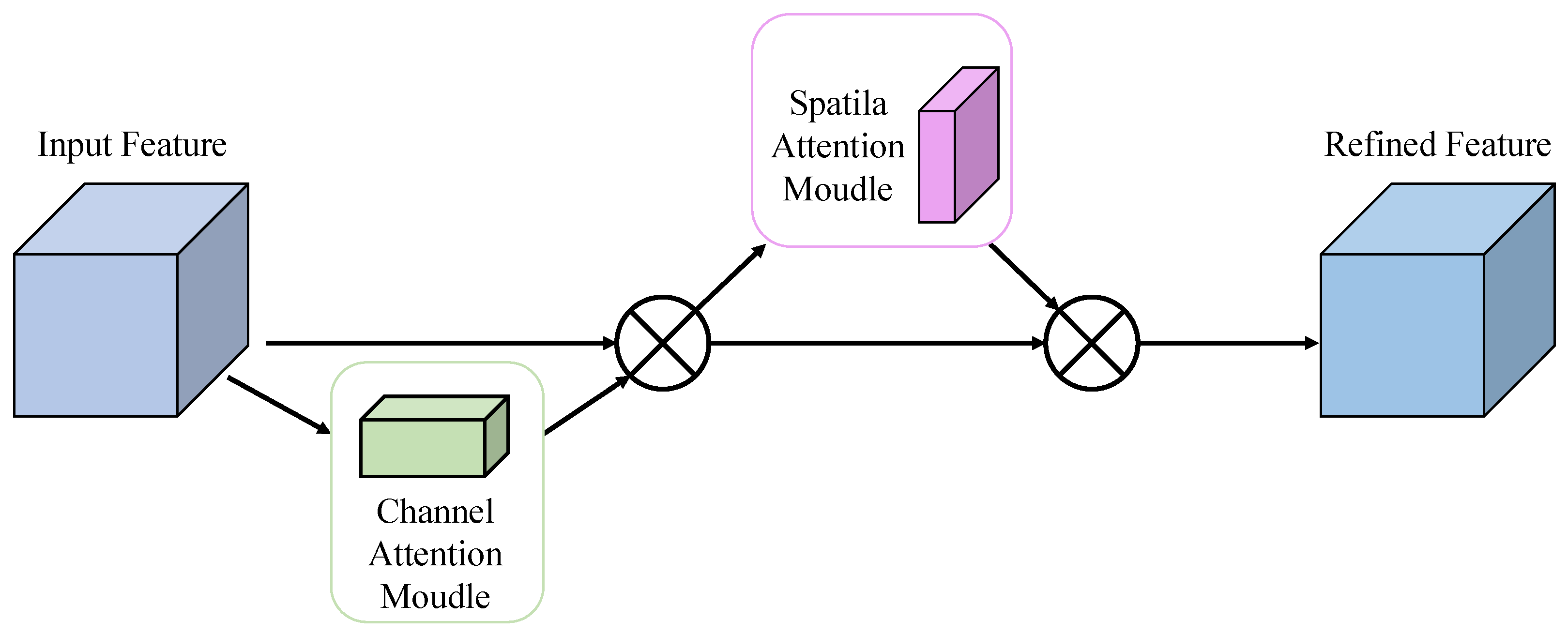

2.1.2. CBAM Attention Mechanism

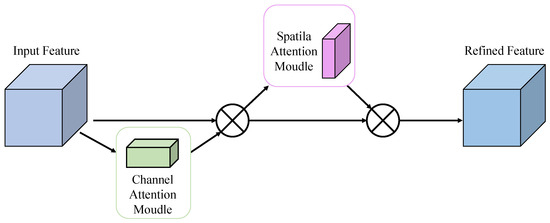

The Convolutional Block Attention Module (CBAM) is a lightweight feature enhancement module that combines channel and spatial attention, enabling adaptive feature refinement in convolutional neural networks. Its architecture, illustrated in Figure 3, computes channel and spatial attention maps sequentially, helping the network focus on important regions while keeping computational overhead minimal. The channel attention submodule exploits inter-channel relationships by applying both global average pooling and global max pooling, followed by a shared multi-layer perceptron (MLP), to produce attention weights. This mechanism lets the model adaptively emphasize the feature channels most relevant to the target. The spatial attention submodule applies pooling along the channel axis, followed by a convolutional layer, to generate a spatial attention map that directs the network's focus toward semantically significant areas. In small object detection tasks, CBAM offers notable benefits: channel attention strengthens weak signals, spatial attention suppresses background noise, and their combined effect improves detection accuracy for small and subtle targets.

Figure 3.

CBAM Attention Module Diagram.

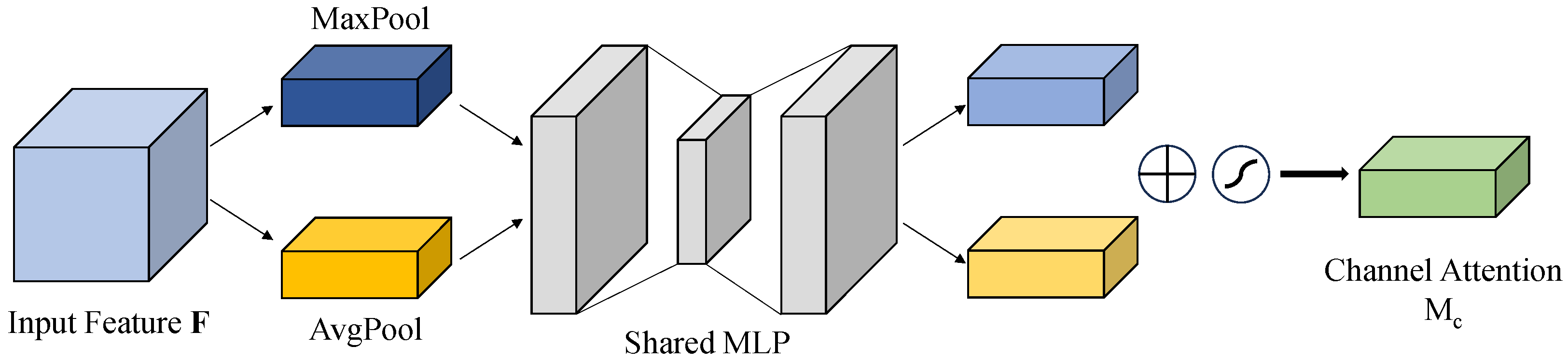

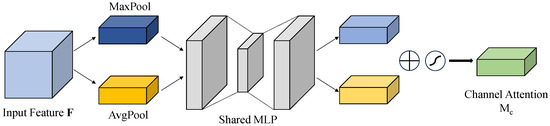

Details of the channel attention module are shown in Figure 4. This module applies global average pooling and global max pooling independently over the spatial dimensions of each channel, producing two complementary channel descriptors that capture average statistics and extreme responses, respectively. Both descriptors pass through a shared two-layer fully connected network with a nonlinear activation, which models dependencies across channels; the two outputs are summed and passed through a sigmoid to obtain per-channel weights. Finally, these weights are applied to the original feature map by element-wise multiplication, adaptively strengthening informative channels while suppressing less relevant or noisy ones. This data-driven mechanism, which combines pooled statistics with learned parameters, improves the model's channel-wise feature selection, especially when signals are weak or noisy.
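The channel-attention computation can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions: a single (C, H, W) feature map, a ReLU hidden layer, biases omitted, and randomly initialized weights standing in for trained ones. The channel count and reduction ratio `r` are hypothetical and do not come from Table 2.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def shared_mlp(v: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Two-layer MLP with a ReLU hidden layer (biases omitted for brevity)."""
    return w2 @ np.maximum(w1 @ v, 0.0)


def channel_attention(x: np.ndarray, w1: np.ndarray, w2: np.ndarray):
    """CBAM-style channel attention on a (C, H, W) feature map.

    w1 has shape (C // r, C) and w2 has shape (C, C // r), where r is the reduction ratio.
    Returns the reweighted map and the per-channel weights.
    """
    avg_desc = x.mean(axis=(1, 2))  # (C,): average over all spatial positions
    max_desc = x.max(axis=(1, 2))   # (C,): maximum over all spatial positions
    weights = sigmoid(shared_mlp(avg_desc, w1, w2) + shared_mlp(max_desc, w1, w2))
    return x * weights[:, None, None], weights


rng = np.random.default_rng(0)
C, r = 8, 2  # hypothetical channel count and reduction ratio
x = rng.standard_normal((C, 5, 5))
w1 = 0.1 * rng.standard_normal((C // r, C))
w2 = 0.1 * rng.standard_normal((C, C // r))
out, weights = channel_attention(x, w1, w2)
print(out.shape, weights.shape)  # (8, 5, 5) (8,)
```

Sharing one MLP between the two descriptors keeps the parameter count at roughly 2C²/r, which is why the module stays lightweight.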

Figure 4.

Channel Attention Module.

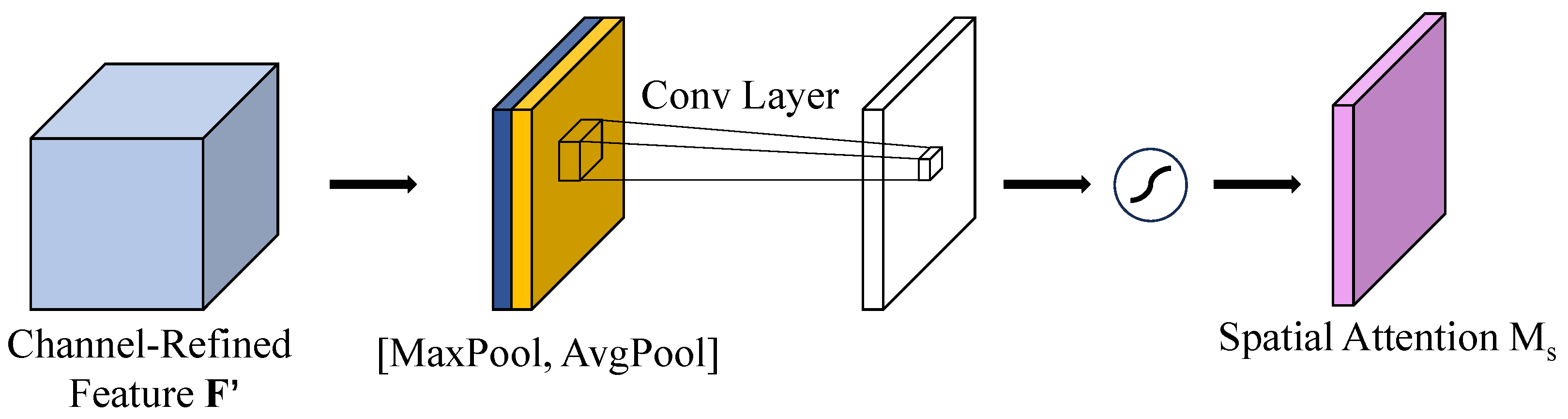

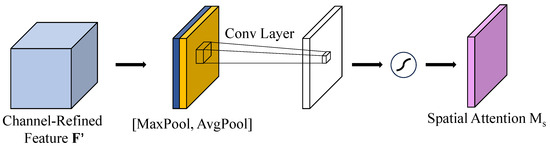

The working principle of the spatial attention module is shown in Figure 5. The input feature map is first processed by parallel max pooling and average pooling along the channel axis, which extract the most prominent responses and the average statistics at each spatial position, respectively, yielding two complementary spatial descriptors. These descriptors are concatenated, and a convolution (a single 7 × 7 convolution in the original CBAM design) derives a spatial attention map from them. A sigmoid function then constrains the weights to the range [0, 1], and the weights are applied to the input feature map by element-wise multiplication, adaptively enhancing salient spatial regions. By combining extreme and statistical spatial features, this approach improves the model's ability to discern tiny defects against complex backgrounds and thus its precision on diminutive targets.
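The spatial-attention step can likewise be sketched in NumPy. The sketch assumes a single (C, H, W) feature map, zero "same" padding, and a random 7 × 7 kernel in place of learned weights; the naive loop convolution is written for clarity, not speed, and is not the authors' implementation. In CBAM this step follows channel attention.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def conv2d_same(inp: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Naive zero-padded 'same' convolution (cross-correlation, as in CNN layers).

    inp: (2, H, W) stacked descriptors; kernel: (2, k, k) with odd k. Returns (H, W).
    """
    _, h, w = inp.shape
    k = kernel.shape[-1]
    p = k // 2
    padded = np.pad(inp, ((0, 0), (p, p), (p, p)))
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return out


def spatial_attention(x: np.ndarray, kernel: np.ndarray):
    """CBAM-style spatial attention on a (C, H, W) feature map."""
    avg_map = x.mean(axis=0)  # (H, W): average over channels
    max_map = x.max(axis=0)   # (H, W): maximum over channels
    descriptors = np.stack([avg_map, max_map])        # concatenated maps: (2, H, W)
    attn = sigmoid(conv2d_same(descriptors, kernel))  # (H, W) weights in (0, 1)
    return x * attn[None, :, :], attn


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 6, 6))
kernel = 0.1 * rng.standard_normal((2, 7, 7))  # hypothetical 7x7 kernel, as in the original CBAM
out, attn = spatial_attention(x, kernel)
print(out.shape, attn.shape)  # (4, 6, 6) (6, 6)
```

Because the same (H, W) map multiplies every channel, a position judged to be background is attenuated across all features at once.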

Figure 5.

Spatial Attention Module.

The parameters of the CBAM module used in this study are listed in Table 2.

Table 2.

CBAM Details.

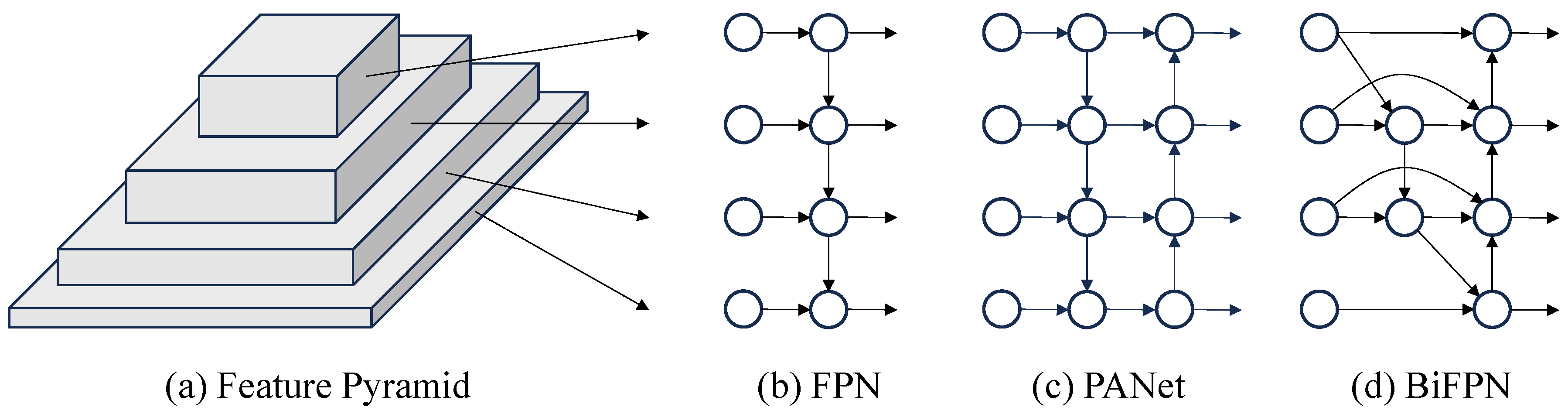

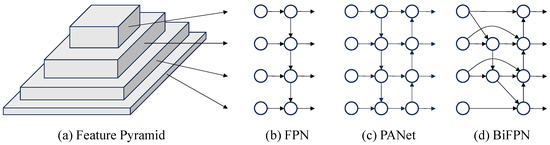

2.1.3. BiFPN Feature Extraction Network

As a critical step in object recognition, feature extraction determines how well a model captures discriminative patterns, and the design of the extraction mechanism plays a key role in both perceptual accuracy and robustness across diverse scenarios. As research has progressed, the paradigm has evolved from single-path deep abstraction to multi-scale feature fusion, as shown in Figure 6. Traditional convolutional neural networks adopt a bottom–up architecture in which high-level semantic features are progressively extracted through stacked convolution and pooling layers, as shown in Figure 6a. However, this unidirectional propagation introduces a significant semantic–spatial gap: shallow, high-resolution features retain spatial detail but carry little semantic information, while deep, low-resolution features are semantically rich but, after repeated downsampling, lack the geometric detail needed for precise object localization. This gap degrades small-object detection.

Figure 6.

Structure of Feature Extraction Network.

To address this limitation, researchers have introduced structures such as the Feature Pyramid Network (FPN) and the Path Aggregation Network (PANet), depicted in Figure 6b,c, respectively. These architectures add top–down pathways and lateral connections for multi-scale feature fusion, narrowing the semantic gap between deep and shallow layers. FPN retains the bottom–up backbone and introduces a top–down pathway that merges low-level spatial detail with high-level semantic information through lateral connections, allowing the network to maintain rich semantic understanding while localizing objects accurately. Building on FPN, PANet adds a bottom–up path, forming a bidirectional enhancement strategy. By feeding low-level geometric details back into higher layers, PANet improves the semantic consistency of shallow features and the localization accuracy of deep layers. This dual-path design balances semantic abstraction and spatial precision.

Building on PANet, BiFPN further improves feature fusion efficiency through architectural refinement and parameter optimization. First, it removes nodes that have only a single input edge, since they contribute little to feature fusion, yielding a lighter, more streamlined topology. Second, it adds an extra edge from the original input node to the output node at the same resolution, fusing more features at little additional cost and improving feature reuse. Finally, a learnable weighted fusion mechanism assigns adaptive weights to features from different scales, calibrating their contributions dynamically. Together, these structural and parametric optimizations reduce computational cost and improve semantic consistency across scales, which benefits dense small object detection. The structure is illustrated in Figure 6d.
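The learnable weighted fusion can be sketched with the fast normalized fusion rule from the original BiFPN (EfficientDet) work, $O = \sum_i \frac{w_i}{\epsilon + \sum_j w_j} I_i$, where a ReLU keeps each $w_i \ge 0$. The NumPy sketch below shows one top-down node fusing a level-4 feature with an upsampled level-5 feature; the raw weight values, the nearest-neighbor upsampling, and the function name are illustrative assumptions, and the convolution BiFPN applies after each fusion is omitted.

```python
import numpy as np


def fast_normalized_fusion(features, raw_weights, eps=1e-4):
    """Fuse same-shaped feature maps with non-negative, normalized learnable weights."""
    w = np.maximum(np.asarray(raw_weights, dtype=float), 0.0)  # ReLU keeps each weight >= 0
    w = w / (eps + w.sum())                                     # normalize without a softmax
    fused = sum(wi * f for wi, f in zip(w, features))
    return fused, w


p4 = np.ones((3, 4, 4))                         # hypothetical level-4 input (C, H, W)
p5 = np.full((3, 2, 2), 3.0)                    # hypothetical level-5 input at half resolution
p5_up = p5.repeat(2, axis=1).repeat(2, axis=2)  # nearest-neighbor 2x upsampling

fused, w = fast_normalized_fusion([p4, p5_up], raw_weights=[1.0, 1.0])
print(w.round(3), fused.shape)  # [0.5 0.5] (3, 4, 4)

# A negative raw weight is clipped to zero, so that input is ignored.
_, w_clipped = fast_normalized_fusion([p4, p5_up], raw_weights=[2.0, -1.0])
print(w_clipped.round(3))  # [1. 0.]
```

Normalizing by the sum instead of a softmax avoids exponentials, which is the reason the EfficientDet authors give for preferring this rule on accelerators.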

The parameters of the BiFPN module used in this study are listed in Table 3.

Table 3.

BiFPN Details.

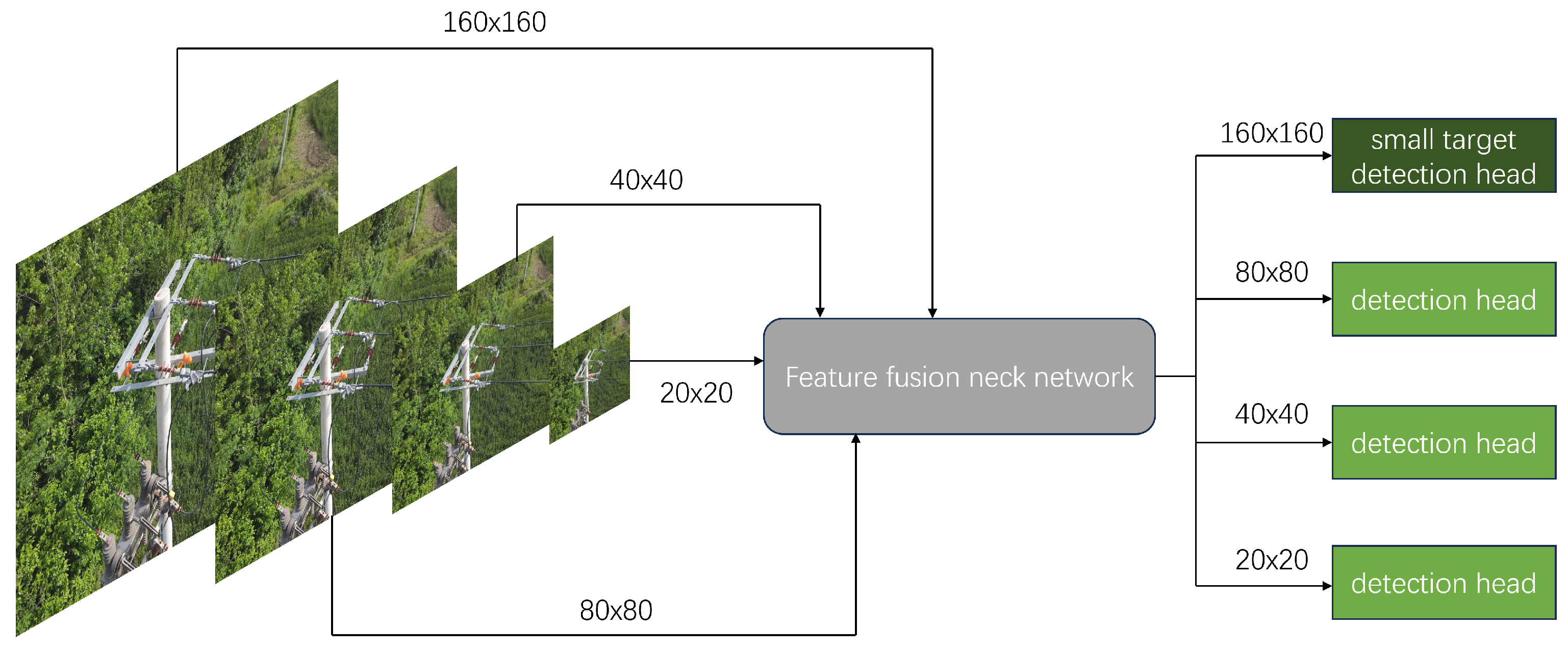

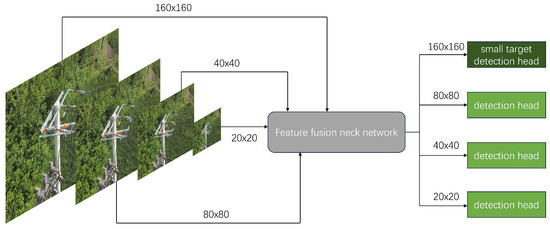

2.1.4. Detection Head for Small Defects

The main difficulty in distribution line defect recognition is that defects occupy only a very small fraction of the original image, which can lead to severe feature loss. YOLOv11n uses three detection heads at $80 \times 80$, $40 \times 40$, and $20 \times 20$ (for a $640 \times 640$ input). Information from the original image reaches these heads through the feature extraction network, and smaller head resolutions correspond to deeper semantic information. The model then integrates the outputs of the three heads to obtain the final recognition result. Because features are lost during this extraction process, the model can lose information about small defects.

Therefore, for small defects, we add a high-resolution detection head and the corresponding hierarchical structure, as shown in Figure 7. TDD-YOLO uses BiFPN to fuse the shallow detail information from the fourth layer of the backbone with the upsampled deep semantic information from the last layer of the Neck, producing a detection head dedicated to small defects. This structure combines the rich detail of shallow features with the abstraction of deep semantic information; it marginally increases model complexity but greatly improves recognition performance on tiny defects.
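The benefit of a finer head can be seen from simple grid arithmetic. In the sketch below, strides 8, 16, and 32 correspond to the standard YOLOv11n heads at a 640 × 640 input; the stride-4 entry for the added head and the 16-pixel defect are assumptions for illustration (a P2-level head is a common choice), not values confirmed by this paper.

```python
def head_grid_sizes(img_size: int = 640, strides=(4, 8, 16, 32)) -> dict:
    """Side length of the prediction grid for each head stride."""
    return {s: img_size // s for s in strides}


def cells_spanned(defect_px: float, stride: int) -> float:
    """Approximate number of grid cells a defect of `defect_px` pixels spans along one side."""
    return defect_px / stride


print(head_grid_sizes())  # {4: 160, 8: 80, 16: 40, 32: 20}
for s in (4, 8, 16, 32):
    # A hypothetical 16-px defect spans 4.0, 2.0, 1.0 and 0.5 cells, respectively.
    print(s, cells_spanned(16, s))
```

At stride 32 such a defect covers only half a cell, so its features are mixed with surrounding background, whereas a stride-4 grid assigns it several cells of its own.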

Figure 7.

Small Target Detection Head.

2.2. Fully Autonomous Defect Detection System

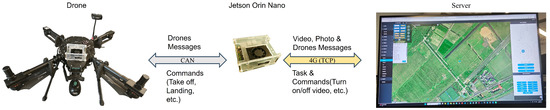

2.2.1. Drones and Embedded Platform

To validate the defect detection performance of the proposed model in real-world application scenarios, this study conducted flight experiments with a quadrotor platform independently developed in our laboratory, shown in Figure 8. The platform is equipped with a six-axis inertial sensor, a magnetometer, a GPS module, a data logging module, aircraft status indicator lights, and a battery unit. It also integrates an NVIDIA Jetson Orin Nano (4 GB RAM) edge computing unit (up to 20 TOPS) to run the proposed defect detection model on board.

Figure 8.

UAV Platform.

We chose the NVIDIA Jetson Orin Nano as the airborne edge computing device because of its small size, low power consumption, and strong CUDA support. Its computing power of 20 tera operations per second (TOPS) is sufficient to run tower detection in real time on a 25 frames-per-second (FPS) video stream, supporting reliable real-time operation.

The specifications of the equipment are listed in Table 4.

Table 4.

Platform Details.

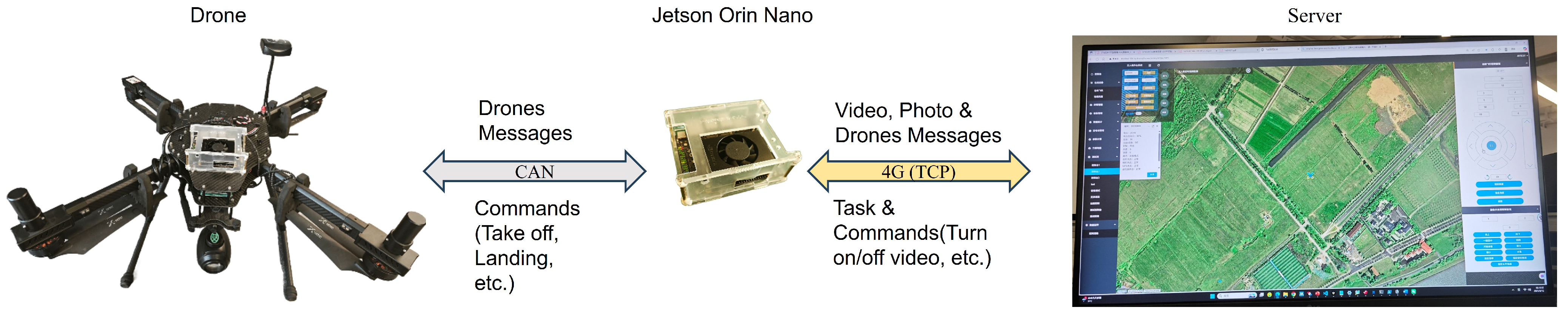

2.2.2. System Framework

The fully autonomous tower defect detection system adopts the communication framework shown in Figure 9. The drone communicates with the Jetson Orin Nano (NVIDIA Corporation, headquartered in Santa Clara, CA, USA) via the CAN protocol, and the Jetson Orin Nano communicates with the server through its onboard 4G module. Drone status information, tasks, and instructions are transmitted over TCP to ensure reliable delivery of important data, while the video stream is transmitted to the server over UDP to preserve real-time performance. This communication structure is designed to maximize both the reliability and the real-time performance of fully autonomous tower defect detection.
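One practical consequence of the TCP/UDP split is that TCP delivers a byte stream, so control messages need explicit framing, whereas UDP delivers independent datagrams that may be lost or reordered, so video chunks need a sequence header. The sketch below illustrates both with Python's `struct` and `json` modules; the message fields, the 4-byte length prefix, and the 8-byte video header are hypothetical formats chosen for illustration, not the protocol actually used in this system.

```python
import json
import struct

VIDEO_HEADER = struct.Struct("!IHH")  # frame id, chunk index, chunk count (hypothetical layout)


def frame_control_message(msg: dict) -> bytes:
    """Encode a command/telemetry dict as a length-prefixed JSON frame for the TCP channel."""
    payload = json.dumps(msg, separators=(",", ":")).encode("utf-8")
    return struct.pack("!I", len(payload)) + payload


def parse_control_frames(buffer: bytes):
    """Extract complete frames from a TCP receive buffer; return (messages, leftover bytes)."""
    messages = []
    while len(buffer) >= 4:
        (length,) = struct.unpack("!I", buffer[:4])
        if len(buffer) < 4 + length:
            break  # frame not fully received yet; keep the bytes for the next read
        messages.append(json.loads(buffer[4:4 + length].decode("utf-8")))
        buffer = buffer[4 + length:]
    return messages, buffer


def make_video_datagram(frame_id: int, chunk_idx: int, n_chunks: int, data: bytes) -> bytes:
    """Prefix an encoded video chunk with a header so the receiver can detect lost chunks."""
    return VIDEO_HEADER.pack(frame_id, chunk_idx, n_chunks) + data


def parse_video_datagram(datagram: bytes):
    frame_id, chunk_idx, n_chunks = VIDEO_HEADER.unpack_from(datagram)
    return frame_id, chunk_idx, n_chunks, datagram[VIDEO_HEADER.size:]


stream = frame_control_message({"cmd": "takeoff"}) + frame_control_message({"cmd": "hover"})[:5]
msgs, rest = parse_control_frames(stream)
print(msgs, len(rest))  # [{'cmd': 'takeoff'}] 5

print(parse_video_datagram(make_video_datagram(42, 0, 3, b"\x00\x01")))  # (42, 0, 3, b'\x00\x01')
```

A receiver that sees a gap in chunk indices can simply drop that video frame, which is usually preferable to waiting for a retransmission in a live stream.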

Figure 9.

System framework.

We developed a Graphical User Interface (GUI) for the server that displays the system status clearly, making the system easier for non-professionals to operate and maintain. Its main functions are as follows:

- Real-time display of status information from drones, video streams from cameras, and recognition results of fully autonomous tower defect detection.

- Integrated graphical operation commands (such as turning on/off video streams, take-off, landing, remote control of drones, etc.).

- Storage of the videos and photos taken during the flight for later analysis.

The autonomous system uses a 4G LTE link for command and control and for transmitting critical data, such as detected defects and telemetry, to the ground station. To meet the latency and reliability requirements of real-time operation, the following measures were implemented:

- Telemetry and control signals are given the highest priority. The transmission of all processed images is initiated only once the connection is sufficiently stable.

- A continuous heartbeat signal is used to monitor link integrity. Upon a timeout, the system triggers a pre-programmed fail-safe maneuver, which can be set to automatic return-to-home or hover.

- The performance of the 4G link was rigorously evaluated during field tests. Key metrics, including end-to-end latency, packet loss rate, and average uplink/downlink bandwidth, were logged for analysis. These quantitative results are presented in Section 3.5 to validate the suitability of the communication system for the intended application.
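The heartbeat-and-timeout measure can be sketched as a small watchdog. The class name, the 2-second timeout, and the injectable clock are assumptions for illustration; the sketch only shows the control logic (fire the configured fail-safe once when heartbeats stop, re-arm when they resume), not the flight-controller interface.

```python
import time


class HeartbeatMonitor:
    """Fire a fail-safe callback when no heartbeat arrives within `timeout_s` seconds."""

    def __init__(self, timeout_s, on_timeout, failsafe="return_to_home", clock=time.monotonic):
        if failsafe not in ("return_to_home", "hover"):
            raise ValueError("failsafe must be 'return_to_home' or 'hover'")
        self.timeout_s = timeout_s
        self.on_timeout = on_timeout
        self.failsafe = failsafe
        self.clock = clock
        self.last_beat = clock()
        self.triggered = False

    def beat(self):
        """Record a heartbeat received over the link and re-arm the monitor."""
        self.last_beat = self.clock()
        self.triggered = False

    def check(self):
        """Call periodically; fires the fail-safe at most once per link loss."""
        if not self.triggered and self.clock() - self.last_beat > self.timeout_s:
            self.triggered = True
            self.on_timeout(self.failsafe)
        return self.triggered


# Simulated clock so the example runs instantly.
now = [0.0]
actions = []
monitor = HeartbeatMonitor(2.0, actions.append, failsafe="hover", clock=lambda: now[0])
now[0] = 1.5
print(monitor.check())  # False: still within the timeout
monitor.beat()
now[0] = 4.0
print(monitor.check(), actions)  # True ['hover']
now[0] = 5.0
monitor.check()
print(actions)  # ['hover'] (the fail-safe is not issued twice)
```

Using a monotonic clock avoids spurious timeouts if the system's wall-clock time is adjusted in flight.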

The model's actual end-to-end processing latency is critical for the fully autonomous defect inspection system. In our deployment on the Jetson Orin Nano platform, we reduced this latency by converting the model into a TensorRT engine (.engine format) with FP16 precision.
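Latency claims of this kind are usually checked with a simple timing harness. The sketch below is a generic, hypothetical benchmark for any single-frame inference callable; it does not reproduce the authors' measurement procedure. If the Ultralytics toolchain was used (the paper does not say), an FP16 TensorRT engine is typically produced with `model.export(format="engine", half=True)`, and `infer` would wrap the loaded engine's prediction call.

```python
import statistics
import time


def benchmark(infer, frame, warmup: int = 10, runs: int = 100):
    """Time a single-frame inference callable.

    Returns (mean latency in ms, 95th-percentile latency in ms, throughput in FPS).
    """
    if runs < 2:
        raise ValueError("need at least two timed runs for a percentile")
    for _ in range(warmup):
        infer(frame)  # let lazy initialization and clock ramp-up finish before timing
    latencies_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(frame)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    mean_ms = statistics.fmean(latencies_ms)
    p95_ms = statistics.quantiles(latencies_ms, n=20)[-1]
    return mean_ms, p95_ms, 1000.0 / mean_ms


# Stand-in workload; in deployment `infer` would call the exported engine.
dummy_frame = list(range(100_000))
mean_ms, p95_ms, fps = benchmark(sum, dummy_frame, warmup=2, runs=20)
print(f"mean {mean_ms:.2f} ms, p95 {p95_ms:.2f} ms, {fps:.1f} FPS")
```

For reference, keeping up with a 25 FPS stream leaves a budget of 1000 / 25 = 40 ms per frame. This harness times only the model call; capture, preprocessing, and transmission add to the end-to-end figure.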

The autonomous inspection system operates through a structured four-stage process. After initialization and a vertical ascent during which the tower height is calculated, the drone navigates to each tower, hovers directly above it to capture images of the tower head, and runs our defect detection model in real time. The system then proceeds cyclically to subsequent towers along the power line until all specified towers are inspected, and finally returns autonomously. Manual operator intervention is supported to handle unexpected situations, ensuring operational robustness.
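The nominal stage sequence can be summarized as a small state machine. This is a simplified sketch: the stage names mirror the text, the tower IDs are hypothetical, and exception handling and operator takeover are deliberately omitted.

```python
from enum import Enum, auto


class Stage(Enum):
    PREPARATION = auto()
    TAKEOFF = auto()
    DETECTION = auto()
    CYCLE = auto()
    RETURN = auto()
    DONE = auto()


def next_stage(stage: Stage, towers_remaining: int) -> Stage:
    """Nominal transition rule; exception handling and operator takeover are omitted."""
    if stage is Stage.PREPARATION:
        return Stage.TAKEOFF
    if stage is Stage.TAKEOFF:
        return Stage.DETECTION
    if stage is Stage.DETECTION:
        return Stage.CYCLE if towers_remaining > 0 else Stage.RETURN
    if stage is Stage.CYCLE:
        return Stage.DETECTION  # next tower found along the cable direction
    return Stage.DONE  # RETURN (and DONE) end the mission


def run_mission(tower_ids):
    """Walk the nominal stage sequence for a list of tower IDs and log each stage."""
    remaining = list(tower_ids)
    if not remaining:
        raise ValueError("the task must specify at least one tower")
    stage, log = Stage.PREPARATION, []
    while stage is not Stage.DONE:
        log.append(stage.name)
        if stage is Stage.DETECTION:
            remaining.pop(0)  # tower inspected: hover, capture, run the defect model
        stage = next_stage(stage, len(remaining))
    return log


print(run_mission(["T01", "T02"]))
# ['PREPARATION', 'TAKEOFF', 'DETECTION', 'CYCLE', 'DETECTION', 'RETURN']
```

Keeping the transition rule in one pure function makes it straightforward to add the exception branches (hover, return-to-home, operator alert) as extra states later.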

2.2.3. Autonomous Flight Process Details

The visual input for tower detection is a 1080p video stream at 25 FPS. For this purpose, we use a YOLOv8n model that is separate from the core defect detection model introduced in this paper. Because this research emphasizes defect detection, the tower detection model, a prerequisite component, is outside the scope of detailed discussion in this study. The task process is as follows:

- Preparation stage: This stage initializes the drone, the onboard Jetson Orin Nano computer, and the communication link with the ground station server. Upon successful initialization, the system interface displays real-time drone status and the camera video feed. The operator can then adjust the drone’s position and, by visually confirming that the target tower route is within the video stream, manually activate the tower recognition module. Once these preparatory checks are satisfactory, the operator issues the command to commence the autonomous inspection task.

- Take-off phase: At the mission outset, the drone ascends vertically while continuously monitoring the tower in real time. It performs two hovering maneuvers at positions where the complete tower is within the recognition frame and the tower head is centered. The tower’s height is calculated through photogrammetric analysis of these two images in conjunction with camera parameters. Subsequently, the drone ascends an additional 10 m beyond the calculated height to attain a safe operational altitude.

- Detection stage: The drone navigates toward the target tower, dynamically adjusting its camera gimbal based on real-time tower detection feedback to maintain continuous tracking. Upon reaching a position directly above the tower, the drone enters a stable hover to capture high-resolution images of the tower head. These images are then automatically processed by the defect detection model proposed in this work to identify and localize critical defects.

- Cycle stage: After completing detection at the target tower, the system searches for the next tower along the line based on the cable direction and repeats the actions of the detection stage.

- Tower detection: Tower detection covers three targets: the tower head, the tower body, and the entire tower. During the preparation and take-off phases, the tower body and the entire tower are detected to calculate a safe flight distance and the tower's orientation. Detection of the tower head, in turn, determines whether the drone is correctly positioned above the tower. Finally, during the cycle stage, the drone uses detections of the entire tower to determine the azimuth toward the next tower.

- Exception handling: Exceptions fall into two types. The first comprises system-level issues affecting the communication link, the AI model, or the UAV itself. When these occur, the UAV executes a predefined contingency procedure, typically hovering or performing an automated return-to-home. The second comprises logic anomalies, such as detecting a corner tower, finding no tower in the video feed, or identifying multiple towers, in which the system cannot decide autonomously how to proceed. These anomalies trigger an alert in the GUI, and the operator must make the decision to resume the task.
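The take-off stage states only that the tower height is computed photogrammetrically from two hover images and the camera parameters. One way this could work is sketched below under explicit assumptions: a pinhole camera with a level optical axis, a purely vertical ascent between the two hovers, and a detector that reports the image row of the tower top. With tower height H, horizontal distance D, and hover altitudes z1 and z2, each image gives tan(theta_i) = (H − z_i) / D, so the two observations determine both unknowns. The names `HoverObservation`, `estimate_tower_height`, and `safe_altitude` are hypothetical; only the 10 m margin comes from the text.

```python
import math
from dataclasses import dataclass


@dataclass
class HoverObservation:
    altitude_m: float   # camera altitude above the tower base at this hover
    top_row_px: float   # image row (v) of the detected tower top


def tan_elevation(top_row_px: float, cy_px: float, fy_px: float) -> float:
    """Tangent of the elevation angle to the tower top.

    Assumes a pinhole camera with a level optical axis; image rows grow
    downward, so a point above the principal point (v < cy) has a positive angle.
    """
    return (cy_px - top_row_px) / fy_px


def estimate_tower_height(obs1: HoverObservation, obs2: HoverObservation,
                          cy_px: float, fy_px: float) -> tuple[float, float]:
    """Return (tower height, horizontal distance) from two vertically stacked hovers."""
    t1 = tan_elevation(obs1.top_row_px, cy_px, fy_px)
    t2 = tan_elevation(obs2.top_row_px, cy_px, fy_px)
    if math.isclose(t1, t2):
        raise ValueError("hover points too similar to triangulate")
    # For each hover i: tan(theta_i) = (H - z_i) / D. Subtracting the two
    # equations eliminates H and gives D; back-substituting gives H.
    distance = (obs2.altitude_m - obs1.altitude_m) / (t1 - t2)
    height = obs1.altitude_m + distance * t1
    return height, distance


def safe_altitude(tower_height_m: float, margin_m: float = 10.0) -> float:
    """Operating altitude: calculated tower height plus the 10 m margin from the text."""
    return tower_height_m + margin_m
```

For example, with fy = 500 px and cy = 540 px, hovers at 5 m and 10 m that see the tower top at rows 290 and 415 give H = 15 m and D = 20 m, hence a 25 m operating altitude. A real system would also have to correct for gimbal pitch and GPS altitude error, which this sketch ignores.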

The task parameters specify the number and identification numbers of the towers to be inspected. Once all towers have been inspected for defects, the UAV autonomously returns to the take-off point. The hover above each tower, during which photos are captured, also records the tower's actual geographic coordinates and associates them with its tower ID, which streamlines future maintenance operations.
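The stage sequence and the two exception classes can be summarized as a control loop over the tower IDs in the task parameters. The sketch below is a simplification under stated assumptions: `inspect_tower` is a hypothetical callback standing in for the detection and cycle stages, a system-level fault always triggers return-to-home here (the text also allows hovering), and a logic anomaly pauses the mission for the operator.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Optional, Tuple


class Event(Enum):
    OK = "ok"
    SYSTEM_FAULT = "system_fault"    # link, AI model, or UAV status problem
    LOGIC_ANOMALY = "logic_anomaly"  # corner tower, no tower, or multiple towers


Position = Tuple[float, float]  # (latitude, longitude) recorded while hovering


@dataclass
class MissionLog:
    inspected: list = field(default_factory=list)  # (tower_id, lat, lon) tuples
    outcome: str = ""


def run_mission(tower_ids: list[str],
                inspect_tower: Callable[[str], Tuple[Event, Optional[Position]]]) -> MissionLog:
    """Visit towers in order; stop early on faults or anomalies."""
    log = MissionLog()
    for tower_id in tower_ids:
        event, position = inspect_tower(tower_id)
        if event is Event.SYSTEM_FAULT:
            log.outcome = "return_to_home"              # predefined contingency
            return log
        if event is Event.LOGIC_ANOMALY:
            log.outcome = f"await_operator:{tower_id}"  # human-in-the-loop decision
            return log
        # Associate the hover coordinates with the tower ID for later maintenance.
        log.inspected.append((tower_id, *position))
    log.outcome = "return_to_takeoff_point"             # all towers inspected
    return log
```

Keeping the anomaly branch separate from the fault branch mirrors the text's distinction: faults are handled automatically, while anomalies need an operator decision before the loop can continue.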

2.3. Dataset Description

Because no suitable public dataset exists, we built a custom dataset from inspection flight imagery supplied by a power grid company, supplemented with images gathered from online repositories. The dataset covers two critical defect types: missing tension clamp shells and improperly tied insulators. Images were annotated with LabelImg. The 6869 collected images were split 8:1:1 into training, validation, and test sets, giving 5495 training images and 687 images each for validation and testing, as summarized in Table 5. The annotations contain 11,472 instances of improper tying and 13,039 instances of missing protective shells, captured against a wide range of backgrounds and from varied UAV viewpoints. This diversity is intended to help the model adapt to real-world power distribution line inspection scenarios.
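The text gives the 8:1:1 ratio and the resulting counts but not how images were assigned. A minimal sketch, assuming a seeded random shuffle, reproduces the stated 5495/687/687 counts; `split_8_1_1` is a hypothetical helper, not the authors' script.

```python
import random


def split_8_1_1(items, seed=0):
    """Shuffle and split items into train/val/test at roughly 8:1:1."""
    items = list(items)
    random.Random(seed).shuffle(items)   # reproducible shuffle
    n = len(items)
    n_train = n * 8 // 10                # integer arithmetic avoids float rounding
    n_val = (n - n_train) // 2           # remainder shared between val and test
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test
```

For 6869 images this yields 5495, 687, and 687. If several images come from the same flight or tower, grouping by flight before shuffling would keep near-duplicates out of the test set; whether that was done here is not stated.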

Table 5.

Dataset details.

The defect size distribution is a direct consequence of the UAV inspection scenario. To keep a safe distance from the utility tower, the UAV captures images in which defects almost always appear as small targets. As shown in Table 6, most defects (66.86%, or 16,387 instances) have an object-to-image area ratio between 0.1% and 1%. Another 31.02% (7603 instances) are even smaller, with area ratios below 0.1%. Medium-sized defects (1–10% area ratio) make up only 2.13% of the dataset (521 instances), and there are no large defects (10–100% area ratio). The dataset is therefore dominated by the challenging problem of small object detection.
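Table 6 bins defects by object-to-image area ratio. Assuming YOLO-format labels (the usual LabelImg export for YOLO training), the normalized box width times height is exactly that ratio, so the binning can be sketched as follows; the bin labels are illustrative names for the ranges in the text.

```python
def area_ratio_bin(w_norm: float, h_norm: float) -> str:
    """Bin one box by area ratio, using normalized (0-1) YOLO width and height."""
    ratio = w_norm * h_norm          # box area / image area
    if ratio < 0.001:
        return "<0.1%"
    if ratio < 0.01:
        return "0.1-1%"
    if ratio < 0.1:
        return "1-10%"
    return "10-100%"


def bin_shares(counts: dict) -> dict:
    """Percentage of instances per bin, rounded to two decimals."""
    total = sum(counts.values())
    return {k: round(100 * v / total, 2) for k, v in counts.items()}
```

Feeding the counts from the text (7603, 16,387, and 521) into `bin_shares` gives 31.02%, 66.86%, and 2.13%, and the three counts sum to 24,511, which equals the 11,472 + 13,039 annotated instances.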

Table 6.

Defect distribution details.

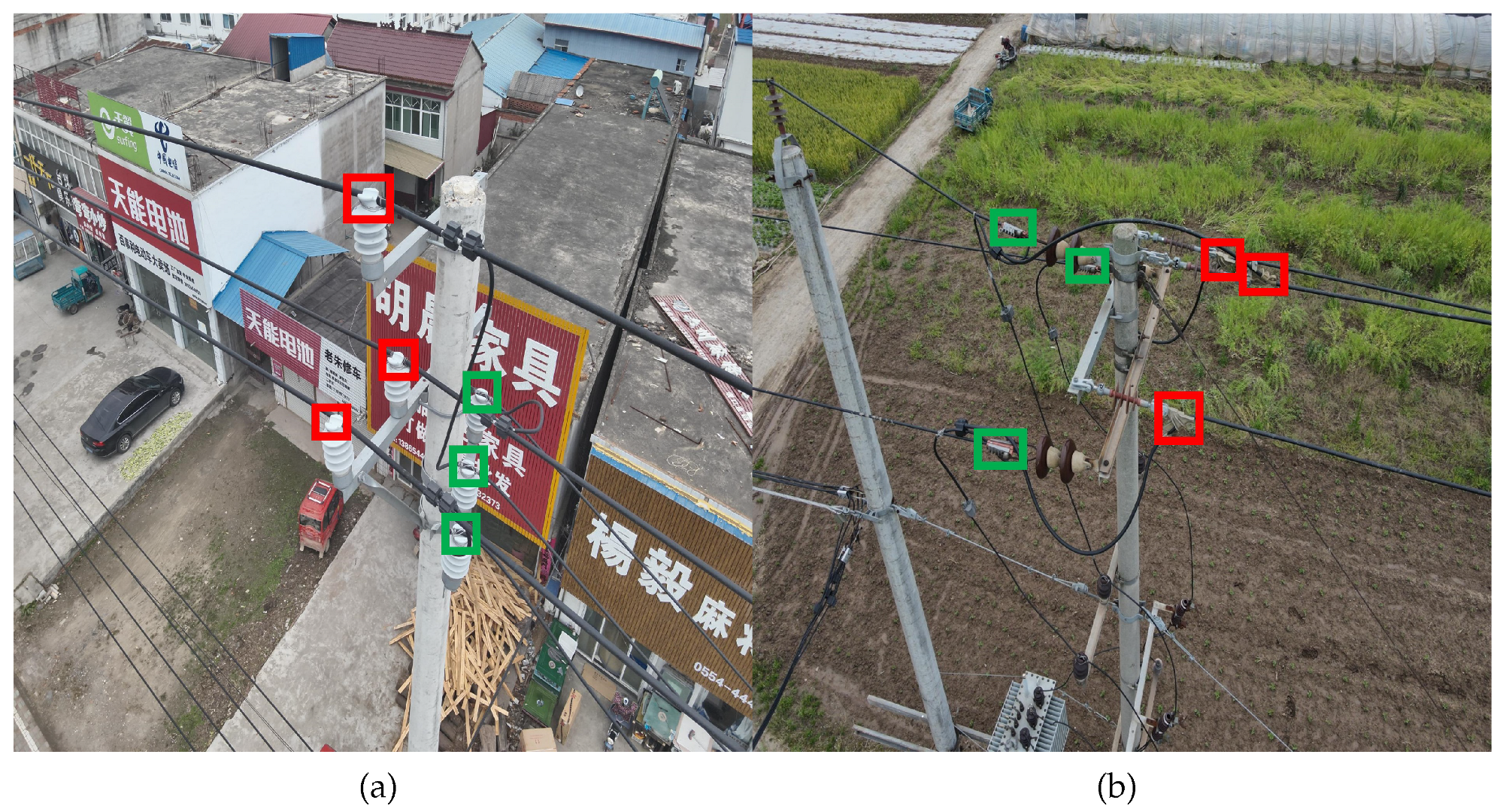

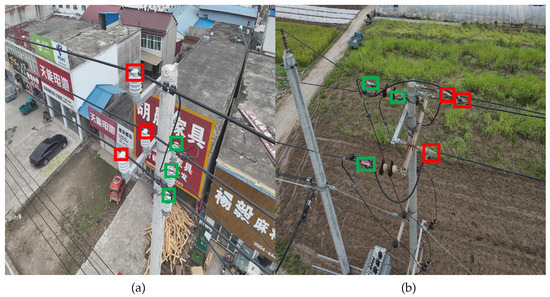

To illustrate the two defect types, representative examples are shown in Figure 10. In Figure 10a, red boxes indicate ‘non-standard binding’ defects, while green boxes denote ‘standardized binding’. Normally, distribution cables should be seated and bound in the grooves of the insulator so that the insulator can support the cable and provide electrical insulation. When the binding is non-standard, the insulator can provide neither support nor electrical protection for the cable, and reliable operation of the distribution line cannot be guaranteed. In Figure 10b, red boxes highlight ‘missing tension clamp protective shells’, and green boxes correspond to intact ones. Tension clamps are critical for securing power distribution cables. Without its protective cover, the tension clamp is fully exposed and vulnerable to environmental corrosion; once corrosion or aging sets in, there is a risk that the distribution cable will fall off. The protective cover isolates and protects the tension clamp, effectively extending its service life.

Figure 10.

Schematic diagram of target defects in power distribution. (a) Non-standard insulator binding and (b) missing tension clamp shell. The red boxes in the picture indicate the defective parts, and the green boxes indicate the normal parts.

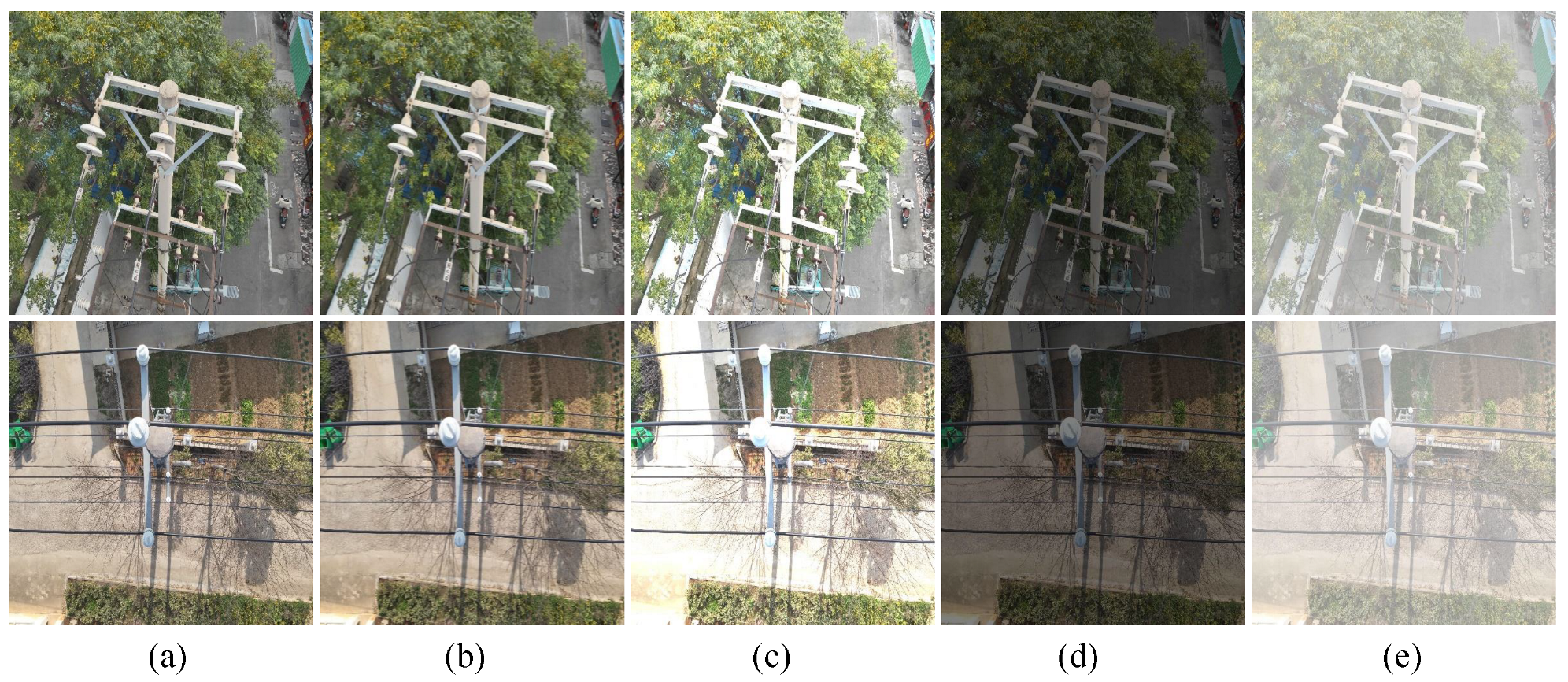

To further examine the effectiveness of TDD-YOLO, we evaluated its adaptability and performance stability across a range of challenging scenarios. The defect detection test set was processed with four representative image enhancement approaches:

- motion blur, to simulate dynamic scenarios caused by UAV movement.

- increased brightness, to mimic direct sunlight exposure.

- decreased brightness, to represent overcast conditions.

- haze addition, to emulate foggy weather conditions.

These environmental perturbations enable a systematic evaluation of TDD-YOLO under conditions typical of practical distribution line inspection. Details of the image enhancement approaches are given in Table 7.
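Table 7's parameters are not reproduced in this text, so the sketch below only illustrates the four perturbation types on H × W × 3 uint8 images; the kernel length, brightness factors, transmission, and airlight are placeholders, not the values used in the paper. Haze follows the standard atmospheric scattering model I = J·t + A·(1 − t), simplified here to a uniform transmission t.

```python
import numpy as np


def motion_blur(img: np.ndarray, length: int = 9) -> np.ndarray:
    """Horizontal motion blur: average of `length` horizontally shifted copies."""
    left = length // 2
    right = length - 1 - left
    padded = np.pad(img.astype(np.float32), ((0, 0), (left, right), (0, 0)), mode="edge")
    width = img.shape[1]
    out = np.zeros(img.shape, dtype=np.float32)
    for k in range(length):
        out += padded[:, k:k + width, :]
    return np.clip(out / length, 0, 255).astype(np.uint8)


def scale_brightness(img: np.ndarray, factor: float) -> np.ndarray:
    """factor > 1 mimics direct sunlight; factor < 1 mimics overcast light."""
    return np.clip(img.astype(np.float32) * factor, 0, 255).astype(np.uint8)


def add_haze(img: np.ndarray, transmission: float = 0.6, airlight: float = 230.0) -> np.ndarray:
    """Atmospheric scattering model I = J * t + A * (1 - t) with a uniform t."""
    hazy = img.astype(np.float32) * transmission + airlight * (1.0 - transmission)
    return np.clip(hazy, 0, 255).astype(np.uint8)
```

Blending every pixel toward a bright airlight lowers contrast everywhere. That matches the observation later in the paper that haze removes the fine texture and edges small defects depend on.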

Table 7.

Details of image enhancement approaches.

2.4. Training Details

Model training was conducted on an Intel Core i5-13400 processor and an NVIDIA RTX 4070Ti GPU. The training environment and parameter settings are listed in Table 8 and Table 9, respectively. Preliminary training runs showed that the proposed model began to converge around epoch 200, so we set the number of epochs to 300. Due to hardware constraints, the batch size was limited to 16.

Table 8.

Training environment.

Table 9.

Training parameters.

The detailed training parameters are presented in Table 9. The model was trained for 300 epochs with a batch size of 16. We used the AdamW optimizer, which decouples weight decay from the gradient-based update and generally improves generalization, with an initial learning rate of 0.001. All input images were resized to 640 × 640 pixels to keep feature extraction consistent. The same configuration was used for every model variant in the ablation study to ensure a fair comparison while maintaining training stability and convergence.
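For reproducibility, the settings stated in this subsection can be collected into one configuration. The sketch assumes training used the Ultralytics toolchain that ships YOLOv11 (the paper does not say so); the keys mirror that framework's `train()` argument names, and the dataset file name is a hypothetical placeholder.

```python
def training_config(seed: int = 0, data_yaml: str = "tdd_defects.yaml") -> dict:
    """Hyperparameters stated in Section 2.4, as keyword arguments.

    Keys follow Ultralytics `train()` argument names (assumed toolchain);
    `data_yaml` is a hypothetical dataset description file.
    """
    return {
        "data": data_yaml,
        "epochs": 300,        # convergence observed around epoch 200 in preliminary runs
        "batch": 16,          # limited by the hardware, per the text
        "imgsz": 640,         # 640 x 640 input, matching Table A1
        "optimizer": "AdamW",
        "lr0": 0.001,         # initial learning rate
        "seed": seed,         # varied across the independent runs
    }
```

With Ultralytics installed, such a dictionary could be passed as `YOLO("tdd-yolo.yaml").train(**training_config(seed=s))`, where the model YAML name is likewise hypothetical. Setting `optimizer` explicitly matters, because the framework's automatic optimizer choice may otherwise override `lr0`.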

2.5. Evaluation Metrics

Standardized evaluation metrics are essential for the objective assessment of recognition algorithms. We evaluate model performance using mean average precision (mAP), precision (P), recall (R), number of parameters, frames per second (FPS), giga floating-point operations (GFLOPs), and model size.

Among the evaluation metrics, mAP is regarded as the primary indicator, providing a balanced assessment of precision and recall across multiple object categories. It is computed by first determining the average precision (AP) for each individual class, followed by calculating the average of all class-specific AP values, as defined in Equation (1). This hierarchical averaging approach ensures fairness across categories and offers a holistic view of the model’s detection ability in complex scenarios.

Two variants of mAP are commonly reported: mAP@0.5 and mAP@0.5:0.95. mAP@0.5 uses a fixed IoU threshold of 0.5: a predicted bounding box counts as correct when its IoU with the corresponding ground-truth box meets or exceeds this threshold. The average precision is computed separately for each category, and the final metric is the mean over all classes. mAP@0.5:0.95 instead computes the average precision at ten IoU thresholds from 0.50 to 0.95 in steps of 0.05 and averages the results, which rewards tighter localization.
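A minimal sketch of how a prediction is judged correct at a given IoU threshold, and of the ten thresholds averaged by mAP@0.5:0.95. The greedy, confidence-ordered matching follows the usual PASCAL VOC and COCO convention; the function names are illustrative and not taken from the authors' code.

```python
def iou(a, b):
    """IoU of two boxes in (x1, y1, x2, y2) pixel coordinates."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


# The ten thresholds averaged by mAP@0.5:0.95: 0.50, 0.55, ..., 0.95
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def match_predictions(preds, gts, thr):
    """Greedy matching: highest-confidence predictions claim ground truths first.

    preds: list of (confidence, box); gts: list of boxes of the same class.
    Returns (confidence, is_true_positive) pairs in descending-confidence order.
    """
    used = set()
    results = []
    for conf, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gts):
            if j in used:
                continue
            v = iou(box, gt)
            if v >= best_iou:            # "meets or exceeds" the threshold
                best_j, best_iou = j, v
        if best_j >= 0:
            used.add(best_j)             # each ground truth is matched at most once
        results.append((conf, best_j >= 0))
    return results
```

A box offset by one pixel from a 10 × 10 ground truth has IoU ≈ 0.82, so it is a true positive at 0.5 but a false positive at 0.95. This is why mAP@0.5:0.95 is much stricter for small defects, where a one-pixel error is a large fraction of the box.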

In the computation process, the AP for each class is derived from the precision-recall curve, where recall is plotted on the x-axis and precision on the y-axis. The mathematical formulation for this calculation is presented in Equation (2).
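Equations (1) and (2) are not reproduced in this text. The sketch below assumes the common all-point interpolated AP of PASCAL VOC: precision is first made monotonically non-increasing in recall, and then the area under the resulting step curve is summed. COCO-style evaluators sample the same envelope at 101 recall points instead, so values can differ slightly. The `matches` input is assumed to come from matching predictions of one class to ground truth at a fixed IoU threshold.

```python
def average_precision(matches, num_gt):
    """Area under the precision-recall curve (all-point interpolation).

    matches: (confidence, is_tp) pairs for one class over the whole dataset.
    num_gt: number of ground-truth boxes of that class.
    """
    if num_gt == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [0.0], [1.0]
    for _, is_tp in sorted(matches, key=lambda m: m[0], reverse=True):
        tp += is_tp
        fp += not is_tp
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((recalls[i] - recalls[i - 1]) * precisions[i]
               for i in range(1, len(recalls)))


def mean_average_precision(ap_per_class):
    """mAP: unweighted mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)
```

For instance, the ranked sequence TP, FP, TP against two ground truths gives AP = 0.5 × 1 + 0.5 × 2/3 = 5/6. For this paper, `mean_average_precision` would average two values, one per defect class.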

Precision is the fraction of predicted positives that are actually positive, so it reflects how well the model avoids false positives. Recall is the fraction of actual positives that the model detects, so it reflects how well the model avoids missed detections (false negatives). The mathematical expressions for R and P are presented in Equations (3) and (4), respectively.

Here, a True Positive (TP) is a positive instance correctly recognized by the model, a False Positive (FP) is a negative instance incorrectly recognized as positive, and a False Negative (FN) is a positive instance mistakenly classified as negative. These outcomes form the confusion matrix shown in Table 10, from which the evaluation metrics follow directly.
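Equations (3) and (4) are not reproduced in this text. The standard definitions described above can be sketched as follows; true negatives do not appear because, in object detection, the number of correctly ignored background regions is not a countable quantity.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Precision = TP / (TP + FP); recall = TP / (TP + FN). Zero denominators give 0."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

For example, 8 correct detections, 2 spurious ones, and 4 missed defects give P = 0.8 and R ≈ 0.667.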

Table 10.

Confusion matrix.

We measure computational complexity in GFLOPs for a single forward pass with a batch size of 1 and an input resolution of 640 × 640.
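GFLOPs here counts operations (not operations per second). As an illustration of what the count includes, the sketch below computes the FLOPs of a single 2-D convolution under the common convention that one multiply-accumulate (MAC) equals two FLOPs, ignoring bias and activation. Profilers differ on this convention (some report MACs directly), so the sketch is an assumption about how the reported figure was obtained, and the example layer is hypothetical.

```python
def conv2d_flops(c_in: int, c_out: int, k: int, h_out: int, w_out: int, groups: int = 1) -> int:
    """FLOPs of one k x k convolution producing a [c_out, h_out, w_out] map (bias ignored)."""
    if c_in % groups or c_out % groups:
        raise ValueError("channels must be divisible by groups")
    macs = (c_in // groups) * c_out * k * k * h_out * w_out   # multiply-accumulates
    return 2 * macs                                           # 1 MAC = 2 FLOPs


def total_gflops(layers) -> float:
    """Sum over (c_in, c_out, k, h_out, w_out) tuples, in GFLOPs."""
    return sum(conv2d_flops(*layer) for layer in layers) / 1e9
```

A 3 × 3 convolution from 3 to 16 channels with a 320 × 320 output, for example, costs 88,473,600 FLOPs (about 0.088 GFLOPs). Because the count scales with output area, adding a 160 × 160 detection head raises GFLOPs noticeably even when few parameters are added.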

This study establishes a multidimensional model evaluation system by integrating mean Average Precision (mAP), Parameters, GFLOPs and Size. The mAP reflects detection accuracy, Parameters indicates the total number of learnable parameters, and Size measures the overall model size. This analysis of the trade-off between precision and efficiency offers a robust basis for assessing the model’s effectiveness in this study.

To ensure statistical reliability, we performed five independent experiments with different random seeds for each model configuration. The precision, recall, and mAP metrics reported in this paper are the averages across these 5 runs. All values are presented with three decimal places.
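The averaging over five seeds and the three-decimal reporting can be sketched as below. Reporting the sample standard deviation next to the mean is an optional addition, not something the paper states, and the metric names are illustrative.

```python
from statistics import mean, stdev


def summarize_runs(runs: list[dict]) -> dict:
    """Per-metric (mean, sample std) across seeded runs, rounded to three decimals."""
    summary = {}
    for key in runs[0]:
        values = [run[key] for run in runs]
        spread = stdev(values) if len(values) > 1 else 0.0
        summary[key] = (round(mean(values), 3), round(spread, 3))
    return summary
```

With a spread available, differences smaller than about one standard deviation between two configurations can be flagged as within seed-to-seed noise.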

3. Results

3.1. Ablation Experiment

To evaluate the effectiveness of the proposed enhancements for defect detection, this study adopts YOLOv11n as the baseline model and conducts ablation experiments by applying each improvement strategy to it. This comparison verifies whether each modification improves the detection metrics, which are central to assessing object detection performance. Table 11 presents the experimental results, with checkmarks indicating which enhancements are applied to the original YOLOv11n architecture.

Table 11.

Results of ablation experiment.

First, replacing the convolution modules with SPD-Conv raised mAP@0.5 to 0.842 and mAP@0.5:0.95 to 0.481. This indicates that SPD-Conv preserves local features by converting spatial information into depth (channel) information instead of discarding it through strided downsampling. Second, replacing the C2PSA (Convolutional block with Parallel Spatial Attention) module with the CBAM attention module raised mAP@0.5 to 0.841 and mAP@0.5:0.95 to 0.469, while slightly reducing both model size and computational complexity. This suggests that, for small target defects, CBAM’s combined channel and spatial attention enhances local details more directly than C2PSA’s multi-scale grouped convolution, which is particularly important for low-resolution small targets.
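The space-to-depth step at the core of SPD-Conv can be sketched in NumPy as follows. Every pixel is kept: each 2 × 2 neighbourhood is moved into the channel axis rather than skipped, as a stride-2 convolution would do. The channel ordering shown is the one commonly used in SPD-Conv code for scale 2; it is a convention, and the authors' exact implementation is not given.

```python
import numpy as np


def space_to_depth(x: np.ndarray, scale: int = 2) -> np.ndarray:
    """Rearrange a [C, H, W] map into [C * scale**2, H / scale, W / scale] losslessly."""
    c, h, w = x.shape
    if h % scale or w % scale:
        raise ValueError("H and W must be divisible by scale")
    # For scale 2 the (row, col) offsets are taken in the order
    # (0, 0), (1, 0), (0, 1), (1, 1) and stacked along the channel axis.
    slices = [x[:, i::scale, j::scale] for j in range(scale) for i in range(scale)]
    return np.concatenate(slices, axis=0)
```

Applied to layer 1 of Table A1, this turns a [64, 640, 640] map into [256, 320, 320]; the following non-strided Conv (layer 2) then mixes the stacked channels. Resolution is halved without discarding any input values, which is the property the ablation credits for the gain on small defects.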

Note that in the original YOLOv11n framework, SPPF is applied before C2PSA. When replacing C2PSA with CBAM, we instead applied CBAM first and SPPF second, which gave better results. The results of the two configurations are shown in Table 12.

Table 12.

Comparison of models.

Placing CBAM before SPPF, rather than after it, increased mAP@0.5 by 0.6 percentage points. We attribute this to SPPF's multi-scale pooling, which blurs local details and directly affects feature extraction for small targets. If CBAM follows SPPF, its spatial attention operates on features whose fine detail has already been smoothed and becomes less effective; placed first, CBAM can emphasize the details of key regions before pooling. We therefore adjusted the framework order when replacing the C2PSA module, which improved recognition performance.
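CBAM applies channel attention first and spatial attention second. The NumPy sketch below follows the original CBAM formulation (a shared MLP over average- and max-pooled channel descriptors, then a 7 × 7 convolution over channel-wise average and max maps), for shape-level illustration only. The weights, reduction ratio, and function names are assumptions, and some YOLO code bases simplify the channel branch.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(x, w1, w2):
    """x: [C, H, W]; w1: [C//r, C]; w2: [C, C//r]. Returns weights of shape [C, 1, 1]."""
    def mlp(v):                                   # shared two-layer MLP with ReLU
        return w2 @ np.maximum(w1 @ v, 0.0)
    avg = x.mean(axis=(1, 2))
    mx = x.max(axis=(1, 2))
    return sigmoid(mlp(avg) + mlp(mx))[:, None, None]


def spatial_attention(x, kernel):
    """kernel: [2, k, k] over stacked channel-mean and channel-max maps. Returns [1, H, W]."""
    k = kernel.shape[-1]
    pad = k // 2
    maps = np.stack([x.mean(axis=0), x.max(axis=0)])            # [2, H, W]
    padded = np.pad(maps, ((0, 0), (pad, pad), (pad, pad)))     # zero padding keeps H, W
    windows = sliding_window_view(padded, (k, k), axis=(1, 2))  # [2, H, W, k, k]
    response = np.einsum("chwij,cij->hw", windows, kernel)      # 2-channel cross-correlation
    return sigmoid(response)[None]


def cbam(x, w1, w2, kernel):
    """Channel attention, then spatial attention; output has the input's shape."""
    x = x * channel_attention(x, w1, w2)
    return x * spatial_attention(x, kernel)
```

With all-zero weights both attention maps equal 0.5, so the output is exactly 0.25 times the input. This is a quick sanity check that the module only reweights features and preserves their spatial layout, which is why it can sit before SPPF without changing tensor shapes.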

Finally, the feature fusion network was rebuilt with BiFPN and a 160 × 160 detection head was added, achieving mAP@0.5 = 0.856 and mAP@0.5:0.95 = 0.489. BiFPN links high-level semantic information and low-level spatial information through bidirectional fusion, enriching low-level features with semantics and high-level features with spatial detail, which improves the model's ability to identify small target defects. The added 160 × 160 detection head retains more local detail, which is crucial for detecting small objects.
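In EfficientDet, BiFPN fuses inputs with learnable, non-negative weights using fast normalized fusion: w_i = ReLU(w_i) / (ε + Σ_j ReLU(w_j)). YOLO ports with a `BiFPN_Concat` layer, as listed in Table A1, commonly scale each input by its normalized weight and then concatenate along channels. The sketch assumes that variant; the authors' exact layer is not given, and a subsequent convolution (not shown) would map the concatenated channels to the output width.

```python
import numpy as np


def fast_normalized_weights(raw: np.ndarray, eps: float = 1e-4) -> np.ndarray:
    """BiFPN fast normalized fusion: relu(raw_i) / (eps + sum_j relu(raw_j))."""
    w = np.maximum(raw, 0.0)
    return w / (eps + w.sum())


def bifpn_concat(inputs: list, raw: np.ndarray, eps: float = 1e-4) -> np.ndarray:
    """Scale each [C_i, H, W] input by its normalized weight, then concatenate channels."""
    w = fast_normalized_weights(raw, eps)
    return np.concatenate([wi * x for wi, x in zip(w, inputs)], axis=0)
```

Normalizing with a plain sum instead of a softmax keeps the weights in [0, 1] at lower cost, which is the reason EfficientDet gives for this form. The learned weights let the network decide how much each resolution contributes at every fusion node.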

The ablation results show that every proposed module contributes positively; with all of them combined, mAP@0.5 rises to 0.873 and mAP@0.5:0.95 to 0.508.

3.2. Comparison Experiment

This section benchmarks TDD-YOLO against existing models. TDD-YOLO was compared with several state-of-the-art lightweight object detectors, namely YOLOv7-tiny, YOLOv8n, YOLOv9-tiny, YOLOv10n, and YOLOv11n, under identical experimental conditions. As summarized in Table 13, TDD-YOLO outperformed all other lightweight YOLO variants on the core detection metrics, achieving mAP@0.5 = 0.872 and mAP@0.5:0.95 = 0.508 while running at 29 FPS on the Jetson Orin Nano.

Table 13.

Results of comparison experiment.

While YOLOv9-tiny and YOLOv10n exhibited competitive detection accuracy, their inference speeds were considerably lower than that of TDD-YOLO on the same hardware. Notably, YOLOv9-tiny, despite having the closest recognition performance to our model, attained only 12 FPS. System profiling revealed that YOLOv9-tiny failed to fully utilize the available GPU resources, operating at approximately 60% utilization, which indicates potential architectural or software-level incompatibilities with the Jetson platform. Other models with faster frame rates, such as YOLOv8n, exhibited significantly inferior detection accuracy compared to TDD-YOLO.

Overall, TDD-YOLO achieved the most effective balance between recognition performance and inference speed among the evaluated models, thereby enhancing the reliability of the fully autonomous, real-time distribution tower defect detection system.

Post-processing time (e.g., non-maximum suppression) grows with the number of detected objects, so including it would penalize a more accurate model that recognizes more objects and would not accurately reflect recognition speed. FPS therefore covers only the preprocessing and inference stages.
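The timing rule above can be sketched as a loop that times preprocessing and inference together and runs post-processing outside the timed region. The callables are hypothetical stand-ins for the real pipeline. If inference runs asynchronously on a GPU, `infer` must synchronize before returning (for PyTorch, for example, via `torch.cuda.synchronize()`), otherwise the timer under-counts.

```python
import time
from typing import Callable, Iterable


def measure_fps(frames: Iterable, preprocess: Callable, infer: Callable,
                postprocess: Callable, warmup: int = 5) -> float:
    """Frames per second counting only preprocessing + inference."""
    frames = list(frames)
    for frame in frames[:warmup]:              # warm-up runs are excluded from timing
        postprocess(infer(preprocess(frame)))
    timed = frames[warmup:]
    if not timed:
        raise ValueError("need more frames than warm-up iterations")
    elapsed = 0.0
    for frame in timed:
        start = time.perf_counter()
        raw = infer(preprocess(frame))
        elapsed += time.perf_counter() - start
        postprocess(raw)                       # e.g. NMS; deliberately not timed
    return len(timed) / elapsed
```

Warm-up iterations are discarded because the first inferences on an accelerator typically include one-off initialization costs.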

3.3. Robustness Experiment

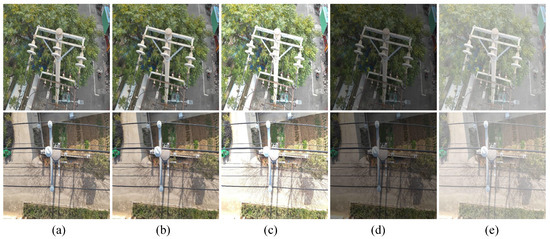

To rigorously assess model robustness, we subjected both the baseline and the proposed TDD-YOLO to the simulated degraded conditions outlined in Section 2.3, including motion blur, extreme brightness, and haze. The results, compared in Figure 11, reveal that TDD-YOLO achieves consistently higher detection accuracy. This demonstrates its superior capability in mitigating the effects of key photometric variations and atmospheric disturbances, a crucial advancement for dependable UAV inspection systems.

Figure 11.

Image Comparison under Different Image Enhancement Operations. (a) Original. (b) Motion Blur. (c) Increased Lightness. (d) Decreased Lightness. (e) Haze Addition.

This experiment used the processed validation set, and the results are shown in Table 14. TDD-YOLO consistently outperforms the baseline model (YOLOv11n) on both the original images and all degraded variants. Without perturbation, TDD-YOLO reaches an mAP@0.5 of 87.3%, 3.9% higher than the baseline, and its mAP@0.5:0.95 is 4.1% higher. Under motion blur, increased brightness, decreased brightness, and haze, its mAP@0.5 remains at 83.2%, 82.9%, 84.7%, and 74.4%, respectively, 3.5–4.7 percentage points higher than the baseline. These results indicate stronger robustness to environmental conditions.

Table 14.

Recognition performance under different image enhancement operations.

This experiment confirms the reliability of TDD-YOLO in complex distribution tower detection scenarios, supporting dependable UAV-based power distribution inspection.

3.4. Portability Experiment

To verify the generality of the proposed improvements for small object detection, we also applied them to YOLOv8n, which is currently one of the most representative and most widely used YOLO versions on edge devices. The results of this portability experiment are shown in Table 15.

Table 15.

Results of portability experiment.

The baseline YOLOv8n achieved mAP@0.5 = 0.687 and mAP@0.5:0.95 = 0.343. Adding the CBAM attention module improved performance slightly, to mAP@0.5 = 0.698 with mAP@0.5:0.95 at 0.343. Replacing the convolution modules with SPD-Conv improved performance markedly, to mAP@0.5 = 0.748 and mAP@0.5:0.95 = 0.384, but also increased the number of parameters the most. Rebuilding the feature fusion network with BiFPN and adding a detection head also gave a clear gain, reaching mAP@0.5 = 0.733 and mAP@0.5:0.95 = 0.382 with only a slight increase in size, balancing model complexity and performance. With all improvements applied to YOLOv8n simultaneously, mAP@0.5 increased by 8.8% and mAP@0.5:0.95 by 7.2%, at the cost of only 0.8 M additional parameters, a good balance between detection performance and efficiency.

These results show that the proposed method generalizes across YOLO frameworks and improves the detection accuracy of small target objects.

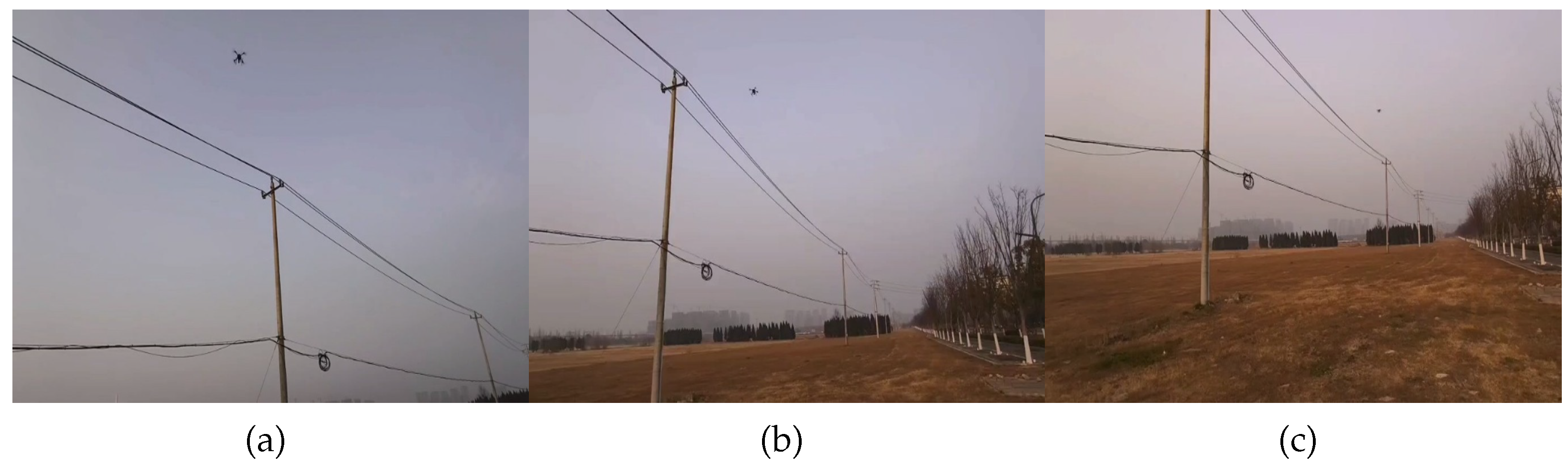

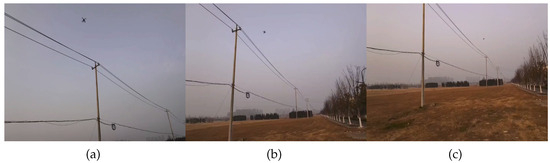

3.5. Flight Experiment

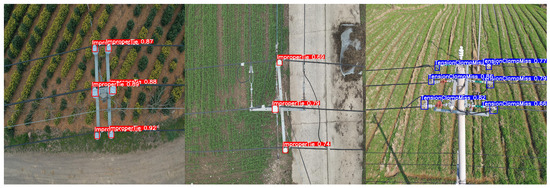

The proposed system was applied to defect detection on distribution tower lines. Figure 12a shows tower body detection in ground preparation mode, where the aircraft identifies the tower from the video stream. Once the tower is identified and the operator sends the command to start the inspection task, the drone operates autonomously. Figure 12b and Figure 13a show tower head detection as the drone flies toward and inspects the target tower. Figure 13b,c show the drone flying to the next tower and performing defect detection on it.

Figure 12.

Tower detection. (a) Real-time identification of the tower body and (b) the tower head, with red bounding boxes indicating the detected regions.

Figure 13.

Autonomous flight process. (a) UAV inspecting the current transmission tower, (b) autonomously navigating to the next tower, and (c) inspecting the subsequent tower.

Figure 14.

Flight experiment result.

The defect detection results for small targets obtained with the proposed model in real-world field runs are shown in Figure 14.

As outlined in Section 2.2.2, the reliability of the 4G link is critical for safe operation. Link performance was evaluated over five separate flight missions in a typical operational environment. The actual speed of the TDD-YOLO model was also benchmarked under in-flight conditions. For deployment, the model was converted to a TensorRT engine (.engine format) with FP16 precision to improve computational efficiency. The results are summarized in Table 16.
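Table 16's values are not reproduced in this text. As an illustration of how latency and packet loss could be derived from a per-mission log, the sketch below assumes that every message carries a sequence number and that send and arrival times are recorded; this log format is hypothetical. One-way latency also assumes synchronized clocks on the drone and the server.

```python
import math
from statistics import mean


def link_metrics(sent: dict, received: dict) -> dict:
    """Packet loss and latency for one mission.

    sent: {sequence_number: send_time_s}; received: {sequence_number: arrival_time_s}
    for the packets that arrived.
    """
    if not sent:
        raise ValueError("no packets sent")
    delivered = [seq for seq in sent if seq in received]
    latencies_ms = sorted((received[s] - sent[s]) * 1000.0 for s in delivered)
    summary = {"packet_loss_pct": 100.0 * (1.0 - len(delivered) / len(sent))}
    if latencies_ms:
        rank = math.ceil(0.95 * len(latencies_ms)) - 1   # nearest-rank 95th percentile
        summary["mean_latency_ms"] = mean(latencies_ms)
        summary["p95_latency_ms"] = latencies_ms[rank]
    return summary
```

Reporting a high percentile alongside the mean matters for drone control, because occasional latency spikes, not the average, determine whether a command arrives in time.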

Table 16.

The 4G communication link performance metrics.

The data confirm that the 4G link consistently met the predefined thresholds for real-time control and data transmission. The observed latency and packet loss are sufficiently low to ensure stable drone command and timely reception of defect alerts. The occasional fluctuations in bandwidth did not impact the mission, as the data prioritization scheme ensured that critical messages were always transmitted.
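The data prioritization scheme is not specified in the text. A minimal sketch, assuming strict priority among four hypothetical traffic classes, shows the idea: when bandwidth drops, control commands and defect alerts leave the send queue before telemetry and video frames, and messages of equal priority keep their arrival order.

```python
import heapq
import itertools

# Lower number = higher priority (assumed classes and ranking).
PRIORITY = {"control": 0, "defect_alert": 1, "telemetry": 2, "video": 3}


class PriorityOutbox:
    """Send queue that always drains critical messages before bulk traffic."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()   # FIFO tie-breaker within a priority level

    def put(self, kind: str, payload) -> None:
        heapq.heappush(self._heap, (PRIORITY[kind], next(self._counter), kind, payload))

    def get(self):
        _, _, kind, payload = heapq.heappop(self._heap)
        return kind, payload

    def __len__(self) -> int:
        return len(self._heap)
```

The counter in each heap entry guarantees that payloads are never compared, so arbitrary message objects can be queued.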

The flight experiments show that the proposed system and model perform well in practical scenarios. During autonomous flight, the system accurately detects both target defect types, improper insulator binding and missing tension clamp shells, which improves the safety of conventional tower line inspection and substantially reduces the manpower required for tower defect detection.

4. Discussions

4.1. FP/FN Analysis and Future Directions

The majority of false positives occur when complex background structures are mistaken for defects. For instance, shadows cast by cross-arms or textured patterns on rusted tower metal were occasionally misclassified as “non-standard binding”. The most challenging cases for our model are defects with extremely low pixel area and contrast. Heavy occlusion is another significant factor leading to false negatives. When defects are partially hidden by other wires or components, the model often fails to recognize them.

Based on this error analysis, we identify several promising directions for future work:

- Hard Negatives and Background Diversity: To mitigate false positives, future datasets could be augmented with a wider variety of background challenges (e.g., more shadow patterns, complex textures) and hard negatives (non-defective components that are visually similar to defects).

- Advanced Attention Mechanisms: To reduce false negatives, especially for low-contrast defects, exploring more powerful attention mechanisms that can better focus on subtle, local discrepancies within a cluttered scene could be beneficial.

- Higher-Resolution and Patch-Based Detection: For the smallest defects, experimenting with higher-resolution input images or adaptive patch-based detection could provide the necessary pixel-level details to improve recall, albeit at a potential computational cost.

4.2. Device Support

In the portability experiment, we validated the proposed method on YOLOv8n and demonstrated its generality across architectures, which provides a basis for choosing different models for different devices. Combined with the results of the comparison experiments, we recommend the improved YOLOv8n as the defect detection model if an edge computing device with lower computational power is used. Future work should also evaluate deployment on other edge computing devices.

4.3. Limitations in Environmental Robustness and Future Data Enhancement

Because different weather conditions may be encountered in actual operations, we applied motion blur, increased brightness, decreased brightness, and haze addition to the dataset to validate the robustness of the proposed model. Haze has the greatest impact on model accuracy. Haze addition strengthens low-frequency components and attenuates high-frequency details in the image, causing partial or complete loss of the key features the model relies on (such as texture, edges, and shape), which particularly hinders the recognition of small target defects. Although the model still outperforms the baseline under haze, its detection accuracy is not ideal, because the dataset only contains images captured in normal weather. In addition, most targets are small defects, and large-scale targets are scarce. Future work should therefore enrich the weather conditions covered by the dataset and increase the proportion of large-scale targets to enhance the model’s multi-scale detection ability and robustness.

4.4. Addressing Class Imbalance

We observed a notable performance gap between the “improper tie” and “missing clamp” defect classes, with the mAP of the latter approximately 10% lower in our initial experiments. We attribute this gap primarily to the smaller physical size of “missing clamp” defects, which makes them more susceptible to feature loss during network down-sampling and thus harder to detect. To mitigate this bias, we increased the weighting factor of the classification loss, which pushes the model to pay more attention to these challenging, small examples during training.

The results in Table 17 demonstrate the efficacy of this strategy. The revised training objective not only produced a more balanced improvement across the two defect classes, narrowing the performance gap, but also further increased the overall mean Average Precision (mAP). This outcome indicates that mitigating class imbalance is important for the robustness and fairness of our defect detection system. Future work will explore more sophisticated strategies, such as Focal Loss, to achieve better class-balanced performance.
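The exact loss weighting is not stated. The paper raises the weight of the classification loss term as a whole. A class-specific weight is one way to target the “missing clamp” class more directly, and the Focal Loss named as future work adds a modulating factor (1 − p_t)^γ that down-weights easy examples. The single-score sketch below combines both as an assumption, not the authors' setting; γ = 2 and α = 0.25 are the defaults from the original Focal Loss paper, not values from this study.

```python
import math


def focal_bce(p: float, y: int, gamma: float = 2.0, alpha: float = 0.25,
              class_weight: float = 1.0, eps: float = 1e-7) -> float:
    """Binary focal loss for one class score, optionally scaled by a class weight.

    With gamma = 0 and alpha = 0.5 it reduces to 0.5 * binary cross-entropy.
    """
    p = min(max(p, eps), 1.0 - eps)        # keep log() finite
    p_t = p if y == 1 else 1.0 - p         # probability assigned to the true label
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -class_weight * alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
```

A confidently correct score (p = 0.9 on a positive) contributes far less loss than an uncertain one (p = 0.6). Training effort therefore shifts toward hard examples such as small, low-contrast clamps without hand-picking them.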

Table 17.

Class imbalance.

5. Conclusions

This study presents and validates a complete, edge-deployed system for the fully autonomous and real-time inspection of distribution towers, marking a significant step toward the intelligent and automated maintenance of power grid infrastructure. The core of this system, the TDD-YOLO model, successfully bridges the critical gap between high-accuracy defect detection and practical deployment on resource-constrained platforms, as demonstrated by its robust performance on the NVIDIA Jetson Orin Nano.

The primary significance of this work is three-fold. First, from a methodological perspective, it moves beyond isolated model improvements by offering a principled, synergistic framework for small-target defect detection. The integrated pipeline of SPD-Conv, CBAM, BiFPN, and a high-resolution head provides a reusable blueprint for tackling the compounded challenges of information loss, low contrast, and inefficient feature fusion in other industrial inspection domains. Second, in terms of practical application, this research delivers a tangible solution that transitions defect inspection from a manual, labor-intensive process to a seamless autonomous operation. This has the direct potential to enhance grid safety, reduce operational costs, and prevent catastrophic failures by enabling proactive and frequent inspections. Third, its engineering value is proven through a real-world, edge-computing implementation, confirming that complex deep learning models can be effectively leveraged for real-time analysis in challenging field environments.

While the current system demonstrates considerable promise, future work will focus on three key areas to broaden its impact: (1) enhancing operational efficiency through model compression techniques like pruning to democratize its use across an even wider array of edge devices; (2) architecturally integrating the separate tower recognition and defect detection models into a single, streamlined network to simplify the deployment pipeline; and (3) expanding the diversity and scale of the defect dataset to improve the model’s generalizability and robustness across various geographical and environmental conditions.

Author Contributions

Conceptualization, J.Z.; methodology, J.Z. and Q.W.; software, J.Z.; validation, J.Z., S.C. and W.W.; formal analysis, J.Z.; investigation, J.Z.; resources, J.Z. and W.W.; data curation, J.Z.; writing—original draft preparation, J.Z.; writing—review and editing, J.Z. and Q.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used during the current study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Module Input and Output Details

Table A1.

Model architecture input and output channels.

| Layer | Module | Input [C,H,W] | Output [C,H,W] |
|---|---|---|---|
| 0 | Conv | [3, 640, 640] | [64, 640, 640] |
| 1 | space_to_depth | [64, 640, 640] | [256, 320, 320] |
| 2 | Conv | [256, 320, 320] | [128, 320, 320] |
| 3 | space_to_depth | [128, 320, 320] | [512, 160, 160] |
| 4 | C3k2 | [512, 160, 160] | [256, 160, 160] |
| 5 | Conv | [256, 160, 160] | [256, 160, 160] |
| 6 | space_to_depth | [256, 160, 160] | [1024, 80, 80] |
| 7 | C3k2 | [1024, 80, 80] | [512, 80, 80] |
| 8 | Conv | [512, 80, 80] | [512, 80, 80] |
| 9 | space_to_depth | [512, 80, 80] | [2048, 40, 40] |
| 10 | C3k2 | [2048, 40, 40] | [512, 40, 40] |
| 11 | Conv | [512, 40, 40] | [1024, 40, 40] |
| 12 | space_to_depth | [1024, 40, 40] | [4096, 20, 20] |
| 13 | C3k2 | [4096, 20, 20] | [1024, 20, 20] |
| 14 | CBAM | [1024, 20, 20] | [1024, 20, 20] |
| 15 | SPPF | [1024, 20, 20] | [1024, 20, 20] |
| 16 | Upsample | [1024, 20, 20] | [1024, 40, 40] |
| 17 | BiFPN_Concat | [1024, 40, 40] + [512, 40, 40] | [512, 40, 40] |
| 18 | C3k2 | [512, 40, 40] | [512, 40, 40] |
| 19 | Upsample | [512, 40, 40] | [512, 80, 80] |
| 20 | BiFPN_Concat | [512, 80, 80] + [512, 80, 80] | [512, 80, 80] |
| 21 | C3k2 | [512, 80, 80] | [256, 80, 80] |
| 22 | Upsample | [256, 80, 80] | [256, 160, 160] |
| 23 | BiFPN_Concat | [256, 160, 160] + [256, 160, 160] | [256, 160, 160] |
| 24 | C3k2 | [256, 160, 160] | [128, 160, 160] |
| 25 | Conv | [128, 160, 160] | [256, 80, 80] |
| 26 | BiFPN_Concat | [256, 80, 80] + [256, 80, 80] + [256, 80, 80] | [256, 80, 80] |
| 27 | C3k2 | [256, 80, 80] | [256, 80, 80] |
| 28 | Conv | [256, 80, 80] | [512, 40, 40] |
| 29 | BiFPN_Concat | [512, 40, 40] + [512, 40, 40] + [512, 40, 40] | [512, 40, 40] |
| 30 | C3k2 | [512, 40, 40] | [512, 40, 40] |
| 31 | Conv | [512, 40, 40] | [512, 20, 20] |
| 32 | BiFPN_Concat | [512, 20, 20] + [1024, 20, 20] | [512, 20, 20] |
| 33 | C3k2 | [512, 20, 20] | [1024, 20, 20] |
| 34 | Detect | [128, 160, 160] + [256, 80, 80] + [512, 40, 40] + [1024, 20, 20] | - |

References

1. Jun, D.; Yanpeng, H.; Licheng, L. An improved method to calculate the radio interference of a transmission line based on the flux-corrected transport and upstream finite element method. J. Electrost. 2015, 75, 1–4.

2. Zhang, T.; Zheng, W.; Xie, Y.; Yuan, J.; Xu, T.; Wang, P.; Liu, G.; Guo, D.; Zhang, G.; Liang, Y. A case study of rupture in 110 kV overhead conductor repaired by full-tension splice. Eng. Fail. Anal. 2020, 108, 104349.

3. Xie, Y.; Zhao, Y.; Bao, S.; Wang, P.; Huang, J.; Wang, P.; Liu, G.; Hao, Y.; Li, L. Investigation on Cable Rejuvenation by Simulating Cable Operation. IEEE Access 2020, 8, 6295–6303.

4. Lai, Q.; Chen, J.; Hu, L.; Cao, J.; Xie, Y.; Guo, D.; Liu, G.; Wang, P.; Zhu, N. Investigation of tail pipe breakdown incident for 110 kV cable termination and proposal of fault prevention. Eng. Fail. Anal. 2020, 108, 104353.

5. Zhao, Y.; Han, Z.; Xie, Y.; Fan, X.; Nie, Y.; Wang, P.; Liu, G.; Hao, Y.; Huang, J.; Zhu, W. Correlation Between Thermal Parameters and Morphology of Cross-Linked Polyethylene. IEEE Access 2020, 8, 19726–19736.

6. Sun, M.; Tan, C.; Zhang, C.; Yang, C.; Li, H. Analysis of Strain Clamp Failure on 500 kV Transmission Line. J. Mater. Sci. Chem. Eng. 2018, 6, 47–56.

7. Alhassan, A.B.; Zhang, X.; Shen, H.; Xu, H. Power transmission line inspection robots: A review, trends and challenges for future research. Int. J. Electr. Power Energy Syst. 2020, 118, 105862.

8. Xie, X.; Liu, Z.; Xu, C.; Zhang, Y. A Multiple Sensors Platform Method for Power Line Inspection Based on a Large Unmanned Helicopter. Sensors 2017, 17, 1222.

9. Li, L. The UAV intelligent inspection of transmission lines. In Proceedings of the International Conference on Advances in Mechanical Engineering and Industrial Informatics, Zhengzhou, China, 11–12 April 2015; Atlantis Press: Dordrecht, The Netherlands, 2015; pp. 1542–1545.

10. Hui, X.; Bian, J.; Zhao, X.; Tan, M. Vision-based autonomous navigation approach for unmanned aerial vehicle transmission-line inspection. Int. J. Adv. Robot. Syst. 2018, 15, 172988141775282.

11. Liu, Z.; Xie, Q. Drone path planning for 500KV transmission tower inspection. In Proceedings of the 5th International Conference on Information Science, Electrical, and Automation Engineering (ISEAE 2023), Wuhan, China, 24–26 March 2023; Lei, T., Ed.; International Society for Optics and Photonics (SPIE): Bellingham, WA, USA, 2023; Volume 12748, p. 1274847.

12. Guan, H.; Sun, X.; Su, Y.; Hu, T.; Wang, H.; Wang, H.; Peng, C.; Guo, Q. UAV-lidar aids automatic intelligent powerline inspection. Int. J. Electr. Power Energy Syst. 2021, 130, 106987.

13. Han, J.; Yang, Z.; Zhang, Q.; Chen, C.; Li, H.; Lai, S.; Hu, G.; Xu, C.; Xu, H.; Wang, D.; et al. A Method of Insulator Faults Detection in Aerial Images for High-Voltage Transmission Lines Inspection. Appl. Sci. 2019, 9, 2009.

14. Zhong, J.; Liu, Z.; Han, Z.; Han, Y.; Zhang, W. A CNN-Based Defect Inspection Method for Catenary Split Pins in High-Speed Railway. IEEE Trans. Instrum. Meas. 2019, 68, 2849–2860.

15. Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001, Kauai, HI, USA, 8–14 December 2001; Volume 1, p. I.

16. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

17. Platt, J. Sequential Minimal Optimization: A Fast Algorithm for Training Support Vector Machines. Adv. Kernel-Methods-Support Vector Learn. 1998, 208, MSR-TR-98-14.

18. Jia, K.; Xuan, Z.; Lin, Y.; Wei, H.; Li, G. An Islanding Detection Method for Grid-Connected Photovoltaic Power System Based on Adaboost Algorithm. Trans. China Electrotech. Soc. 2018, 33, 1106.

19. Wu, J.; Cheng, S.; Pan, S.; Xin, W.; Bai, L.; Fan, L.; Dong, X. Detection method based on improved faster RCNN for pin defect in transmission lines. In Proceedings of the 2021 2nd International Conference on Energy, Power and Environmental System Engineering (ICEPESE2021), Shanghai, China, 4–5 July 2021; pp. 103–108.

20. Yan, X.; Wang, W.; Lu, F.; Fan, H.; Wu, B.; Yu, J. GFRF R-CNN: Object Detection Algorithm for Transmission Lines. Comput. Mater. Contin. 2025, 82, 1439–1458.

21. Zhimin, G.; Yangyang, T.; Wandeng, M. A Robust Faster R-CNN Model with Feature Enhancement for Rust Detection of Transmission Line Fitting. Sensors 2022, 22, 7961.

22. Redmon, J.; Divvala, S.K.; Girshick, R.B.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2016, arXiv:1506.02640.

23. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. arXiv 2016, arXiv:1612.08242.

24. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

25. Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

26. Ouyang, D.; He, S.; Zhang, G.; Luo, M.; Guo, H.; Zhan, J.; Huang, Z. Efficient Multi-Scale Attention Module with Cross-Spatial Learning. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–9 June 2023; IEEE: New York, NY, USA, 2023; pp. 1–5.

27. Zhang, N.; Su, J.; Zhao, Y.; Chen, H. Insulator-YOLO: Transmission Line Insulator Risk Identification Based on Improved YOLOv5. Processes 2024, 12, 2552.

28. Wang, M.; Sun, L.; Jiang, J.; Yang, J.; Zhang, X. Enhancing hyperspectral power transmission line defect and hazard identification with an improved YOLO-based model. Optoelectron. Lett. 2024, 20, 681–688.

29. Tan, G.; Ye, Y.; Chu, J.; Liu, Q.; Xu, L.; Wen, B.; Li, L. Real-time detection method of intelligent classification and defect of transmission line insulator based on LightWeight-YOLOv8n network. J. Real-Time Image Process. 2025, 22, 53.

30. Zhao, J.; Miao, S.; Kang, R.; Cao, L.; Zhang, L.; Ren, Y. Insulator Defect Detection Algorithm Based on Improved YOLOv11n. Sensors 2025, 25, 1327.

31. Peng, L.; Wang, K.; Zhou, H.; Ma, Y.; Yu, P. YOLOv7-CWFD for real time detection of bolt defects on transmission lines. Sci. Rep. 2025, 15, 1635.

32. Wang, P.; Yuan, G.; Zhang, Z.; Rao, J.; Ma, Y.; Zhou, H. Detection of Cotter Pin Defects in Transmission Lines Based on Improved YOLOv8. Electronics 2025, 14, 1360.

33. Xiang, S.; Zhang, L.; Chen, Y.; Du, P.; Wang, Y.; Xi, Y.; Li, B.; Zhao, Z. A Defect Detection Method for Grading Rings of Transmission Lines Based on Improved YOLOv8. Energies 2024, 17, 4767.

34. Jia, Z.; Ouyang, Y.; Feng, C.; Fan, S.; Liu, Z.; Sun, C. A Live Detecting System for Strain Clamps of Transmission Lines Based on Dual UAVs’ Cooperation. Drones 2024, 8, 333.

35. Benjumea, A.; Teeti, I.; Cuzzolin, F.; Bradley, A. YOLO-Z: Improving small object detection in YOLOv5 for autonomous vehicles. arXiv 2023, arXiv:2112.11798.

36. Chen, D.; Zhang, L. SL-YOLO: A Stronger and Lighter Drone Target Detection Model. arXiv 2025, arXiv:2411.11477.

37. Sunkara, R.; Luo, T. No More Strided Convolutions or Pooling: A New CNN Building Block for Low-Resolution Images and Small Objects. arXiv 2022, arXiv:2208.03641.

38. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. arXiv 2018, arXiv:1807.06521.

39. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. arXiv 2020, arXiv:1911.09070.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).