Abstract

This paper introduces an autonomous vision-based tracking system for a quadrotor unmanned aerial vehicle (UAV) equipped with an onboard camera, designed to track a maneuvering target without external localization sensors or GPS. Accurate capture of dynamic aerial targets is essential for real-time tracking and effective management. The system employs a robust and computationally efficient visual tracking method that combines HSV filter detection with a shape detection algorithm. Target states are estimated using an enhanced extended Kalman filter (EKF), providing precise state predictions. Furthermore, a closed-loop Proportional-Integral-Derivative (PID) controller, based on the estimated states, is implemented to enable the UAV to autonomously follow the moving target. Extensive simulation and experimental results validate the system’s ability to efficiently and reliably track a dynamic target, demonstrating robustness against noise, light reflections, and illumination interference, and ensuring stable and rapid tracking using low-cost components.

1. Introduction

Interest in Unmanned Aerial Vehicles (UAVs) has been growing steadily in both industry and academia, and they are finding increasing applications in military contexts. Autonomous drones offer diverse applications, ranging from room cleaning and assisting disabled individuals to automating factory operations, enhancing security, facilitating transportation, and enabling planetary exploration, surveillance, and traffic control [1,2,3,4]. Numerous research studies on unmanned vehicles have documented advancements in autonomous systems that operate independently of human interaction [2,5]. Due to their small size, UAVs can be deployed to navigate confined spaces and track assets, even in scenarios where GPS signals are weak or unavailable [6]; such conditions present significant challenges for autonomous navigation [7] and for visual tracking systems [8].

In recent years, numerous advancements have emerged in visual detection, tracking, and autonomous navigation, spanning both indoor and outdoor settings. These innovations utilize technologies such as laser range finders (LIDARs) [9], RGB-D sensors, and stereo vision to map unknown environments in three dimensions. Classical methods in visual geometry, such as Simultaneous Localization and Mapping (SLAM) [10] and Structure from Motion (SfM) [11], rely on data from various sensors, including Kinect [12], LIDAR, SONAR, optical flow, stereo cameras [8], and monocular cameras. These algorithms integrate measurements from a single sensor or a combination of sensors [13], continually refining and updating a map of the UAV’s surroundings while concurrently estimating its position. Visual tracking [11] constitutes an essential sub-area of computer vision and has been utilized and advanced in various applications, such as autonomous driving and autonomous navigation and tracking systems for unmanned aerial vehicles [3]. The challenge lies in localizing the object, extracting accurate information, and tracking a moving object in unknown and highly variable environments, which can be influenced by factors such as noise, light reflections, or illumination interference [14], and where targets may experience rapid motion, significant occlusion, or even move out of view.

Currently, deep learning-based detection and tracking algorithms have demonstrated high accuracy in detection and strong tracking performance [15,16,17]. Bertinetto et al. [18] introduced an end-to-end fully convolutional Siamese network for visual tracking. Li et al. [19] proposed a Siamese Region Proposal Network–based tracker trained offline on large-scale image pairs, and [20] presents a ResNet-driven Siamese tracker. For drone footage, DB-Tracker [16] is a detection-based multi-object tracker that fuses RFS-based position modeling (Box-MeMBer) with hierarchical OSNet appearance features and a joint position–appearance cost matrix, yielding robust performance in complex, occluded scenes. Although these models offer high accuracy, they typically require high-end hardware, which makes them impractical for real-time applications given the space and weight limitations of UAVs, the limited computational capacity of airborne computers, and the complexity of the models’ computations.

Within the framework of Dynamic Autonomous Tracking and Monitoring Operations (DATMO) [21], mobile entities are identified using optical flow methods and then monitored with a Kalman filter. This system was implemented on the AscTec Pelican quadrotor, which was equipped with a Firefly imaging sensor. Nevertheless, this work did not advance object surveillance further, since it was only able to conduct a basic estimation of an object’s spatial coordinates within visual frames.

Kang and Cha [22] propose an autonomous UAV that replaces GPS with ultrasonic beacons and uses a deep CNN for damage detection and geo-tag detection for inspection, demonstrating high detection performance in GPS-denied zones. Ali et al. [23] integrate a modified Faster R-CNN with an autonomous UAV to map multiple damages under GPS-denied conditions, reducing false positives via streaming/multiprocessing. Waqas et al. [24] combine fiducial marker-based localization (ArUco) with deep learning and an obstacle-avoidance method to achieve autonomy in GPS-denied settings. However, methods based on beacons or visual markers (UWB/ultrasonic anchors; ArUco fields) require external infrastructure and site preparation.

In [25], the authors investigate an autonomous vision-based tracking system to monitor a maneuvering target by using a rotorcraft UAV equipped with an onboard gimbaled camera. In that work, a Kernelized Correlation Filter (KCF) tracker is combined with a redetection method, and an IMM-EKF-based estimator is used to estimate the states of the target. The reported experimental results indicate robust and reliable real-time tracking performance, and the framework runs in real time on an NVIDIA TK1 onboard computing platform with a monocular gimbal camera. However, when an object exhibits rapid motion or when a jump cut occurs, this vision-based tracking system encounters significant difficulties in re-establishing tracking. While the KCF methodology is underpinned by a robust theoretical framework and yields commendable outcomes in experimental settings, practical applications may still experience tracking inaccuracies and a failure to recognize tracking disruptions. In instances of total occlusion, the traditional KCF framework cannot accurately monitor targets [26].

In [27], a computer vision-based target tracking method is proposed for locating targets, such as pedestrians and vehicles, in UAV video, utilizing sparse representation theory. To effectively handle partial occlusion in UAV video footage, the method integrates a Markov Random Field (MRF)-based binary support vector with contiguous occlusion constraints.
The results indicate that the proposed tracker delivers enhanced precision and higher success rates. Nevertheless, the efficacy of the system may be compromised under adverse environmental circumstances, including dim illumination or densely populated backgrounds, which could adversely influence both detection and tracking precision. Furthermore, the system is explicitly engineered for the identification of pedestrians and vehicles. In instances of occlusion, the operational limitations may necessitate considerable computational resources, thereby potentially constraining real-time performance, particularly on UAVs with limited processing capabilities. Vision-based methodologies [28,29,30] predominantly concentrate on subjects situated within a specific locale or rely on periodic re-identification, thereby reducing their efficacy in rapidly evolving contexts. Furthermore, these tracking mechanisms exhibit restricted applicability, for instance, certain systems are capable of monitoring exclusively stationary ground targets [31]. This limitation arises from inadequate precision in estimating the target’s state [32]. In [33], Sean proposed a system for detecting and tracking a static spherical object and landing on a platform using a MAV equipped with a monocular camera. The spherical object was identified using a combination of HSV filtering and the Circle Hough Transform (CHT) algorithm. Notwithstanding the elevated precision and favorable success rate observed in the experimental procedures, several limitations warrant consideration: all experiments were executed within an indoor environment, and there exists a potential for the Micro Aerial Vehicle (MAV) to collide with the stationary spherical object during the search process, given the object’s immobility. 
Reference [34] proposes a physics-guided learning framework that embeds physical constraints into the loss to steer a quadratic neural network toward interpretable, reliable bearing-fault diagnosis when no fault samples are available, reporting high accuracy across load conditions and improved credibility versus purely data driven models. Even so, balancing physics and data losses plus GA-based weighting introduces extra hyperparameters and training overhead. Paper [35] introduces RailFOD23 (a dataset synthesized to mitigate fault-data scarcity) and EPRepSADet, a compact detector using a re-parameterizable bottleneck and lightweight self-attention; it achieves strong mAP with very low FLOPs, outperforming several state-of-the-art (SOTA) baselines on the new dataset. Still, the synthetic-to-real domain gap remains a concern—heavy reliance on AI-generated images can limit real-world transfer unless adaptation or fine-tuning is applied. In [8], a target tracking algorithm that employs correlation filters was formulated, facilitating the proficient tracking of targets that undergo considerable scale fluctuations and rapid movements. Furthermore, a redetection algorithm grounded in support vector machine (SVM) methodology has been developed to adeptly address target occlusions and losses. The estimation of target states is accomplished through the utilization of visual data, integrating an optimized Lucas–Kanade (LK) optical flow technique with an extended Kalman filter (EKF) to enhance precision. Moreover, the algorithm was investigated for tracking the target in outdoor environments. The UAV system is incapable of effectively sensing the surrounding environment to facilitate autonomous obstacle avoidance throughout the tracking procedure.

Nevertheless, limitations persist in research on quadrotor visual tracking systems. We have identified the following challenges for moving-target tracking by UAVs:

- The precision of the majority of drone systems is constrained by space limitations and financial considerations, with the result that most micro-UAVs are outfitted with a single visible-light camera.

- It is imperative to acquire a precise state estimation of the target, and the UAV must be engineered to reliably monitor the maneuvering target without any preliminary information regarding it, thereby facilitating the realization of sensitive and robust tracking applications in various scenarios.

- Consequently, the implementation of highly efficient algorithms for the detection, tracking, and estimation of pertinent states is imperative within the UAV tracking system, ensuring real-time performance despite limited onboard computation capacities. This will subsequently enable autonomous tracking in both outdoor and indoor settings.

For these reasons, we propose a robust algorithm for object detection, tracking, and control, in which a UAV autonomously tracks a ball without any human intervention, relying only on its onboard camera. This algorithm is designed to adapt efficiently and quickly to changes in the environment. The key contributions of the present work are summarized as follows:

- We present a new algorithm that effectively handles noise, shadows, light reflections, and illumination interference by separating brightness from color information in the HSV color model. The algorithm operates autonomously without any user intervention or prior knowledge about the object and quickly adapts to changes in the surrounding environment.

- An Extended Kalman Filter (EKF)-based target state estimator is presented to estimate the states of the maneuvering target at each instant. It continuously provides an estimate of the target’s position and re-establishes tracking when the target reappears in any region of the frame.

- A flight control algorithm is introduced to ensure stable tracking. This technique, based on visual tracking results, was formulated to enable the UAV to track a rapidly moving sphere while ensuring minimal power usage and enhanced real-time operational efficacy. The result is a comprehensive framework for UAV tracking of a moving target, implemented both in simulation and on a physical drone (DJI Tello).

The rest of this paper is organized as follows: Section 2 presents the system framework and methodology of the vision-based system. Section 3 presents an explanation of the object detection and target tracking algorithms, together with the control strategy. In Section 4, the simulation results are presented and analyzed. The validation of the developed vision-based tracking system through various experiments is covered in Section 5. The final conclusions and plans for future work are presented in Section 6.

2. System Overview

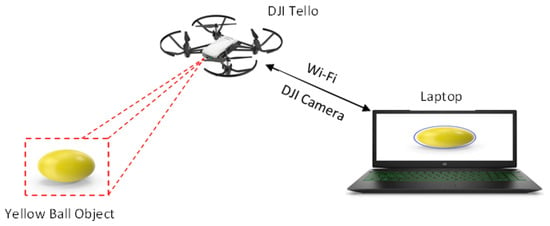

The vision-based target tracking system utilizes a DJI Tello drone as the UAV platform, which communicates with a laptop via a Wi-Fi network connection. An overview of the system is shown in Figure 1.

Figure 1. Proposed overview of the system.

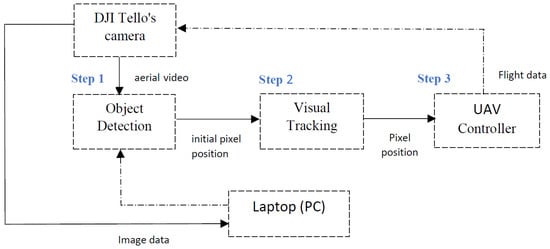

The workflow of the object detection and tracking system is shown in Figure 2. The computer vision algorithm and the UAV flight controller run in real time on a laptop.

Figure 2. Structure of the vision and control system.

- Step 1: The DJI Tello’s camera captures an image, which is transmitted to the laptop for detection. The position of the yellow spherical object is estimated and sent to the next module as the initial pixel position for visual tracking.

- Step 2: A switching tracking strategy is used based on the estimated states of Step 1. The visual tracking method then calculates the current pixel position of the yellow spherical object in each video frame.

- Step 3: After determining the current pixel position in Step 2, the UAV’s attitude angles and velocities for the next moment are computed by the controller module. This adjusts the UAV’s flight control parameters to ensure that it follows the yellow spherical object, keeping it within the UAV’s field of view. This process enhances the accuracy of visual tracking in Step 2.

The methods used in these modules are explained in detail in Section 3.

3. Methods

3.1. Object Detection

Object detection remains one of the most interesting and complex challenges in computer vision and is a basic part of robotic perception. First, the ball’s position in the image plane is determined via HSV segmentation, which defines the area in which the ball occurs with a 2D bounding box.

The HSV color space is defined by H (hue), S (saturation), and V (value), and it corresponds closely to human visual perception of colors. Image segmentation is often more effective in the HSV color space than in the RGB color space [14]. One key benefit of the HSV color space is that its hue component is largely separated from brightness, which enhances the algorithm’s robustness to lighting variations.

HSV represents color by a combination of three components: hue, saturation, and value [36]:

- Hue is the color attribute (red, blue, yellow, etc.), expressed as an angle from 0 to 360 degrees.

- Saturation is the level of color intensity, ranging from 0 to 100.

- Value represents the lightness of the color, also ranging from 0 to 100.

The transformation of color images from RGB to the HSV color space is performed by using Equations (1)–(3) below [14].
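A minimal sketch of the standard RGB-to-HSV conversion is given below, using the ranges listed above (hue in degrees, saturation and value on a 0–100 scale). It is assumed, not confirmed, that this textbook form matches the conversion used in the paper; the function names and the yellow thresholds are hypothetical values chosen only for illustration.

```python
def rgb_to_hsv(r, g, b):
    """Convert 8-bit RGB to (H in degrees [0, 360), S in [0, 100], V in [0, 100])."""
    rn, gn, bn = r / 255.0, g / 255.0, b / 255.0
    c_max = max(rn, gn, bn)
    c_min = min(rn, gn, bn)
    delta = c_max - c_min

    # Hue: the 60-degree sector depends on which channel is dominant.
    if delta == 0:
        h = 0.0  # achromatic (gray): hue is undefined, 0 by convention
    elif c_max == rn:
        h = 60.0 * (((gn - bn) / delta) % 6)
    elif c_max == gn:
        h = 60.0 * ((bn - rn) / delta + 2)
    else:
        h = 60.0 * ((rn - gn) / delta + 4)

    # Saturation: chroma relative to the brightest channel.
    s = 0.0 if c_max == 0 else 100.0 * delta / c_max
    # Value: the brightest channel.
    v = 100.0 * c_max
    return h, s, v


# Hypothetical thresholds for a yellow target (illustrative, not from the paper).
YELLOW_HUE = (40.0, 70.0)
MIN_SATURATION = 40.0
MIN_VALUE = 30.0


def is_yellow(r, g, b):
    """Return True when the pixel falls inside the assumed yellow HSV range."""
    h, s, v = rgb_to_hsv(r, g, b)
    return YELLOW_HUE[0] <= h <= YELLOW_HUE[1] and s >= MIN_SATURATION and v >= MIN_VALUE
```

In this sketch, a bright yellow pixel `(255, 255, 0)` and a dim one `(128, 128, 0)` share the same hue and differ only in value, which is the property that makes hue-based thresholds tolerant of brightness changes.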

After identifying the largest contour in the image, we then calculate the smallest enclosing circle, where we also determine its center coordinates and radius. The following equations have been used in implementing the calculation:

This determines the center coordinates (x,y) and the radius r of the target ball. This initial position is required for the visual tracking algorithm.
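The contour and circle step can be sketched on a binary mask as follows. This is a simplified stand-in, not the paper's implementation: the largest contour is approximated by the largest 4-connected region, and the circle is centered on the region's centroid with a radius reaching its farthest pixel, so it encloses the region but is not guaranteed to be the smallest enclosing circle. All names are hypothetical.

```python
from collections import deque
from math import hypot


def largest_blob(mask):
    """Return the pixels [(x, y), ...] of the largest 4-connected region of 1s."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    best = []
    for y0 in range(height):
        for x0 in range(width):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            # Breadth-first flood fill from this unvisited foreground pixel.
            blob, queue = [], deque([(x0, y0)])
            seen[y0][x0] = True
            while queue:
                x, y = queue.popleft()
                blob.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    inside = 0 <= nx < width and 0 <= ny < height
                    if inside and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if len(blob) > len(best):
                best = blob
    return best


def enclosing_circle(pixels):
    """Centroid-centered circle containing every pixel, or None if there are none."""
    if not pixels:
        return None
    cx = sum(x for x, _ in pixels) / len(pixels)
    cy = sum(y for _, y in pixels) / len(pixels)
    radius = max(hypot(x - cx, y - cy) for x, y in pixels)
    return cx, cy, radius


# Example mask: a plus-shaped blob and one isolated noise pixel (top right).
MASK = [
    [0, 0, 0, 0, 0, 0, 1],
    [0, 0, 1, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 0, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
```

Selecting the largest region before fitting the circle is what lets the isolated noise pixel in `MASK` be ignored.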

3.2. Visual Tracking

3.2.1. System Observations

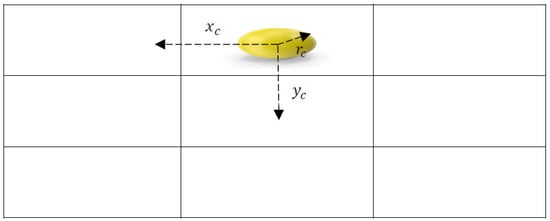

The radius and centroid of the ball detected in the current image frame are calculated by the object detection algorithm [37]. These measurements form the observations that are fed into the Extended Kalman Filter. The observation vector at time t is defined as $z_t = [x_t, y_t, r_t]^T$, where $x_t$ and $y_t$ are the centroid coordinates of the ball and $r_t$ denotes the radius of the ball; see Figure 3.

Figure 3. The system’s observations are represented by a vector based on the centroid position and the radius of the ball in the current image frame.

3.2.2. Visual Tracking by EKF

The Extended Kalman Filter is an estimation technique that infers hidden states from indirect, noisy, and uncertain observations. It does so by linearizing nonlinear models around the current estimate through a first-order Taylor series expansion at every frame. Thus, it can handle the noisy observations of the detection module and continuously provide an estimate of the position of the template at every instant [30]. Consider the following nonlinear system, defined by the state transition and the observation model:

where $f$ is the nonlinear state transition function and $h$ denotes the nonlinear observation function. The terms $w_t$ and $v_t$ are the process and observation noise vectors, respectively, both assumed to be zero-mean multivariate Gaussian noise. The control input vector $u_t$ is set to zero in this model, assuming no external acceleration input. With the functions $f$ and $h$ defined, the pose estimation process of the ball target can be formulated by first computing the predicted state from the previous estimate, and then calculating the corrected state by updating the predicted state with the measurement.

The prediction step is as follows:

The correction step is as follows:

Equations (8)–(13) describe the Extended Kalman Filter (EKF) process. Here, $Q_t$ and $R_t$ represent the process and measurement noise covariance matrices, respectively; $P_{t|t}$ is the a posteriori estimate covariance matrix, $K_t$ denotes the Kalman gain, and $S_t$ is the residual covariance matrix. In these equations, the nonlinear functions $f$ and $h$ are linearized, and their corresponding Jacobian matrices are denoted $F_t$ and $H_t$, respectively [38].
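In standard EKF notation, the model and the prediction and correction steps described above take the following form. The symbol names and subscript conventions are assumptions of this sketch, chosen to match the quantities described in the text; the grouping into two prediction equations and four correction equations is likewise assumed.

```latex
% System model (state transition and observation)
\begin{aligned}
x_t &= f(x_{t-1}, u_t) + w_t, \qquad & w_t &\sim \mathcal{N}(0, Q_t) \\
z_t &= h(x_t) + v_t, \qquad & v_t &\sim \mathcal{N}(0, R_t)
\end{aligned}

% Prediction step
\begin{aligned}
\hat{x}_{t|t-1} &= f(\hat{x}_{t-1|t-1}, u_t) \\
P_{t|t-1} &= F_t \, P_{t-1|t-1} \, F_t^{T} + Q_t
\end{aligned}

% Correction step
\begin{aligned}
S_t &= H_t \, P_{t|t-1} \, H_t^{T} + R_t \\
K_t &= P_{t|t-1} \, H_t^{T} \, S_t^{-1} \\
\hat{x}_{t|t} &= \hat{x}_{t|t-1} + K_t \left( z_t - h(\hat{x}_{t|t-1}) \right) \\
P_{t|t} &= (I - K_t H_t) \, P_{t|t-1}
\end{aligned}

% Jacobians used for the linearization
F_t = \left. \frac{\partial f}{\partial x} \right|_{\hat{x}_{t-1|t-1}}, \qquad
H_t = \left. \frac{\partial h}{\partial x} \right|_{\hat{x}_{t|t-1}}
```

When a detection is missing, only the prediction step is run, which is what allows the estimate to carry the track through short occlusions.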

By adopting the ball’s position in the image plane and its global velocity as the state variables, we define the ball motion model’s state vector as follows:
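As an illustration only, the sketch below assumes a four-element constant-velocity state, $[x, y, v_x, v_y]^T$ in pixel units, with the ball centroid as the measurement. This layout, the noise values, and every function and class name are assumptions of the sketch rather than the paper's implementation. Because this assumed model is linear, the Jacobians $F$ and $H$ are constant and the EKF equations reduce to the standard Kalman filter.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def add(a, b):
    return [[p + q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


def sub(a, b):
    return [[p - q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


def diag(values):
    n = len(values)
    return [[values[i] if i == j else 0.0 for j in range(n)] for i in range(n)]


def inv2(m):
    """Inverse of a 2x2 matrix (the residual covariance S is 2x2 here)."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


class ConstantVelocityEKF:
    """EKF with an assumed linear constant-velocity model in the image plane."""

    def __init__(self, x0, y0, dt=1.0 / 30.0, q=0.01, r=4.0):
        self.x = [[x0], [y0], [0.0], [0.0]]   # state [x, y, vx, vy]^T
        self.P = diag([100.0] * 4)            # large initial uncertainty
        self.F = [[1.0, 0.0, dt, 0.0],        # Jacobian of f (constant here)
                  [0.0, 1.0, 0.0, dt],
                  [0.0, 0.0, 1.0, 0.0],
                  [0.0, 0.0, 0.0, 1.0]]
        self.H = [[1.0, 0.0, 0.0, 0.0],       # Jacobian of h: observe x and y
                  [0.0, 1.0, 0.0, 0.0]]
        self.Q = diag([q] * 4)                # process noise covariance
        self.R = diag([r] * 2)                # measurement noise covariance

    def predict(self):
        """Prediction step: propagate the state and its covariance."""
        self.x = matmul(self.F, self.x)
        self.P = add(matmul(matmul(self.F, self.P), transpose(self.F)), self.Q)
        return self.x[0][0], self.x[1][0]

    def correct(self, zx, zy):
        """Correction step: blend the prediction with a measured centroid."""
        h_t = transpose(self.H)
        s = add(matmul(matmul(self.H, self.P), h_t), self.R)
        k = matmul(matmul(self.P, h_t), inv2(s))
        residual = sub([[zx], [zy]], matmul(self.H, self.x))
        self.x = add(self.x, matmul(k, residual))
        self.P = matmul(sub(diag([1.0] * 4), matmul(k, self.H)), self.P)
        return self.x[0][0], self.x[1][0]
```

A typical loop would call `predict()` once per frame and `correct()` only when the detector returns a centroid, so that frames without a detection fall back on the predicted position.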

3.3. Controller

The developed vision-based control system has been designed for a quadrotor UAV equipped with a 640 × 480 pixel onboard camera, facilitating accurate real-time tracking of a moving airborne object. Maintaining the target at the center of the image plane, a PID controller is employed to regulate the UAV’s velocities and yaw angle [39]. The inputs to the PID-based controllers are the image errors at time t, defined as

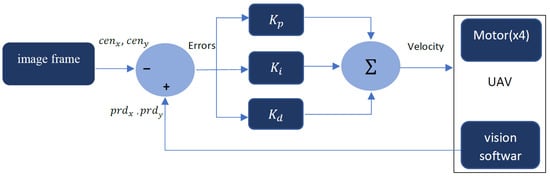

and are the predicted state estimates of the moving object from the Extended Kalman Filter after the measurement update, where and represent the center coordinates of the current image frame, see Figure 4.

Figure 4. A UAV Proportional-Integral-Derivative (PID) control loop system.

This category of controller is widely used across various applications due to its simplicity and effectiveness, with parameters that are relatively easy to tune [40,41]. The controller’s output determines the motor velocity, with the gains $K_p$, $K_i$, and $K_d$ adjusted for optimal performance. Consequently, the final velocity command is calculated as follows:

The corresponding transfer function is shown in Equation (18):
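For reference, the textbook parallel form of a PID controller is written out below. It is assumed here that the paper's velocity command and transfer function follow this standard form; $e(t)$ stands for either image error, $u(t)$ for the resulting command, and $K_p$, $K_i$, $K_d$ for the three controller gains.

```latex
% Time-domain control law
u(t) = K_p \, e(t) + K_i \int_{0}^{t} e(\tau) \, d\tau + K_d \, \frac{d e(t)}{d t}

% Laplace-domain transfer function of the controller
C(s) = \frac{U(s)}{E(s)} = K_p + \frac{K_i}{s} + K_d \, s
```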

Algorithm 1 presents the execution of the PID control pipeline for the quadrotor.

Algorithm 1. PID Control
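A minimal discrete-time PID loop, written as a sketch under stated assumptions, is shown below. The class name, the rectangular integration, the backward-difference derivative, and the symmetric output clamp are illustrative choices and are not taken from the paper.

```python
class PID:
    """Discrete PID controller with a symmetric output limit."""

    def __init__(self, kp, ki, kd, out_limit):
        self.kp = kp
        self.ki = ki
        self.kd = kd
        self.out_limit = out_limit
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the first sample

    def update(self, error, dt):
        """Return the clamped command for the current error sample."""
        # Integral term: accumulate error over time (rectangular rule).
        self.integral += error * dt
        # Derivative term: backward difference between consecutive samples.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error

        u = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Clamp so the command stays within the actuator's accepted range.
        return max(-self.out_limit, min(self.out_limit, u))
```

One controller instance per axis (for example yaw, vertical, and forward motion) keeps the integral and derivative histories separate. An anti-windup scheme is omitted for brevity.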

Every second, the object’s positions from the current and previous video frames are extracted to calculate its speed. The calculated flight control parameters are then sent to the flight controller, enabling the UAV to follow the yellow ball accurately. To keep the ball centered in the drone’s visual frame, the controller uses the ball’s image coordinates. The Y-coordinate controls the vertical movement of the drone, allowing it to ascend or descend to align the ball vertically within the frame. The X-coordinate governs the drone’s yaw, enabling it to rotate left or right around its Z-axis to center the ball horizontally; when the ball shifts to the left or right within the frame, the drone adjusts its yaw accordingly to maintain alignment. To approach the ball, the drone moves forward (pitch) based on the magnitude of the positional error, allowing it to close the gap smoothly and accurately [42]. The tracking procedure is summarized as pseudo-code in Algorithm 2.

Algorithm 2. Tracking Controller
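The mapping from image errors to flight commands described above can be sketched as follows. Several assumptions are made for illustration: proportional-only gains are used to keep the example short, the apparent ball radius is used as a stand-in for distance when computing the forward command, and the output is formatted as the Tello SDK text command `rc a b c d` (left/right, forward/backward, up/down, yaw, each from -100 to 100). The gain values, the target radius, and the function names are hypothetical.

```python
FRAME_W, FRAME_H = 640, 480  # camera resolution stated in the text


def clamp(value, lo=-100, hi=100):
    """Round to an integer and limit it to the range accepted by `rc`."""
    return max(lo, min(hi, int(round(value))))


def tracking_command(ball, target_radius=40.0, k_yaw=0.25, k_ud=0.3, k_fb=1.5):
    """Build an `rc` command string from an estimated (x, y, r) in pixels.

    `ball` is None when no estimate is available; the drone then hovers.
    """
    if ball is None:
        return "rc 0 0 0 0"
    x, y, r = ball

    ex = x - FRAME_W / 2.0   # > 0: ball is right of center
    ey = y - FRAME_H / 2.0   # > 0: ball is below center (image y grows downward)
    er = target_radius - r   # > 0: ball looks small, so it is assumed too far

    yaw = clamp(k_yaw * ex)          # rotate toward the ball
    up_down = clamp(-k_ud * ey)      # climb when the ball is above center
    forward = clamp(k_fb * er)       # approach when the ball appears small
    return f"rc 0 {forward} {up_down} {yaw}"
```

For example, a ball estimated at `(420, 140, 20)` lies right of and above the image center and appears smaller than the target radius, so the sketch commands a right yaw, a climb, and forward motion.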

4. Simulation Results

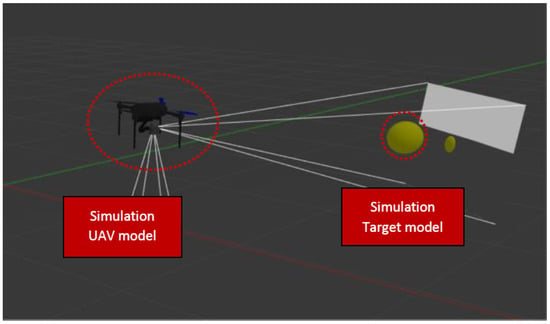

This section details our tracking system, initially validated through a simulation developed using Gazebo and ROS. The tracking of the ball target within the Gazebo simulation environment is depicted in Figure 5.

Figure 5. Object tracking model in a simulated environment.

In this simulation, a drone with an onboard camera actively tracks the ball in the environment. The drone, located in the middle of the image, constantly monitors the scene through its camera sensor to orient and fix its position relative to the ball, ensuring that it stays on track. The simulation demonstrates how visual perception algorithms can be used for real-time object tracking: the drone continually adjusts its flight path to maintain alignment with the ball’s movements, even when the ball follows a circular trajectory.

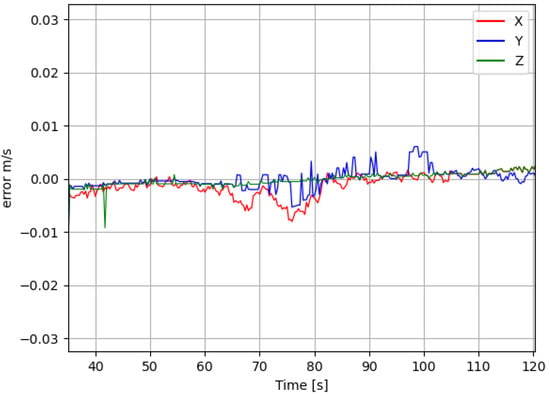

The estimated velocity error of the aerial target is shown in Figure 6. Between 40 and 60 s, the target remains stationary, resulting in minimal velocity error. At , the target begins to move at a constant speed of , causing a slight increase in the error. Despite this increase, the velocity error never exceeds , demonstrating that the proposed state estimation algorithm remains accurate and reliable.

Figure 6. Estimated velocity error.

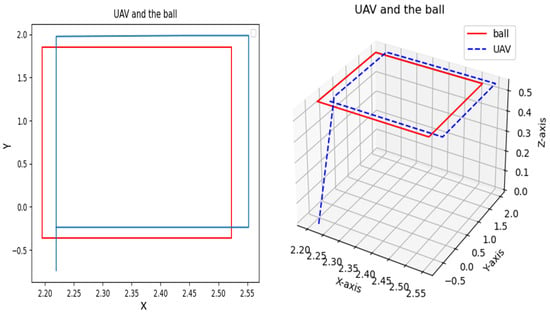

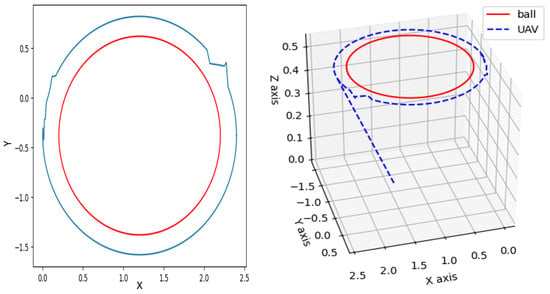

Figure 7 and Figure 8 present the 3D trajectories of both the moving object and the quadrotor, demonstrating the UAV’s ability to navigate and accurately track the target. Within the simulation environment developed using Gazebo and ROS, a ball follows two distinct trajectories, a square path (Figure 7) and a circular loop (Figure 8), while the drone autonomously tracks its motion. Using its onboard perception algorithms, the UAV detects the ball’s position and dynamically adjusts its flight path to maintain tracking. During the square trajectory (Figure 7), the UAV continuously updates its state estimate to track the ball along the entire path. As the ball transitions to the circular trajectory (Figure 8), the drone further refines its control commands to minimize tracking errors. This simulation demonstrates the UAV’s adaptability to different movement patterns and the robustness of integrating vision-based algorithms within the Gazebo–ROS framework for real-time object tracking.

Figure 7. Tracking a moving target that follows a square path.

Figure 8. Tracking a moving target that follows a circular path.

5. Experimental Results

In this section, we present results from real-world experiments using a DJI Tello Drone performing an automated tracking sequence. Our ground station utilizes an HP Pavilion Gaming 15 computer, which connects to the DJI Tello drone via a Wi-Fi network with a maximum effective range of 100 meters. In our system, the computer receives aerial video footage for further processing using a vision-based algorithm and sends commands to the DJI Tello by interfacing with its flight controller. The DJI Tello is equipped with an RGB camera sensor. During our experiment, the video was captured at a frame rate of 30 frames per second (fps), each frame having a resolution of 640 × 480 pixels.

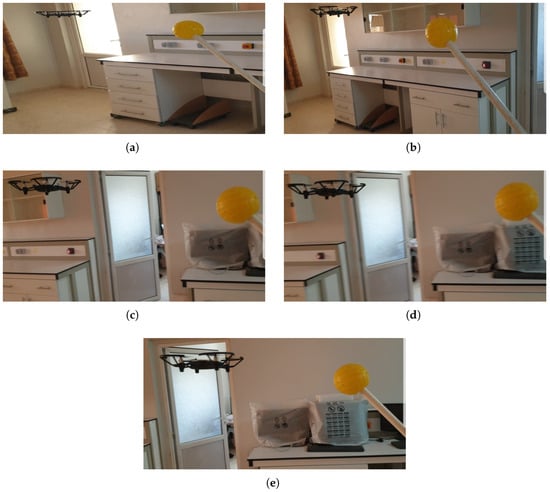

Initially, a yellow ball was chosen as the aerial target. The UAV took off to an altitude of 1 m, positioning itself accordingly, and then detected the yellow ball in the environment while maintaining an expected separation of 5 m. The yellow ball was subsequently moved along a straight-line trajectory. In this setup, the UAV effectively detected and accurately tracked the moving target, showcasing the robust performance of our tracking system. Figure 9 depicts the UAV following the yellow ball as it travels at a constant speed along the straight line.

Figure 9. UAV tracking a moving target along a straight-line trajectory. (a) The UAV takes off from the ground, (b) approaches the ball, and (c) follows the ball’s motion; (d) continuous tracking as the ball moves; (e) completes the mission with successful and stable tracking of the ball.

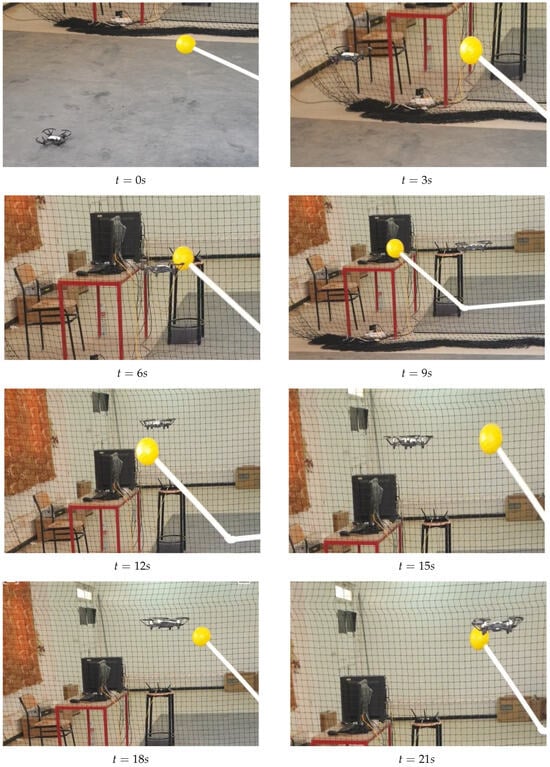

In Figure 10, we demonstrate the detection and tracking performance by moving the yellow ball along a circular trajectory. The DJI Tello drone, programmed to follow and lock onto the yellow ball, provides a controlled scenario to evaluate our algorithms. The results showcase not only the effectiveness and robustness of our detection and tracking system, but also the reliability of our target state estimation methods under varying illumination conditions. This experimental setup validates the stability of our approach and its applicability in dynamic environments.

Figure 10. UAV tracking a moving target along the circular path.

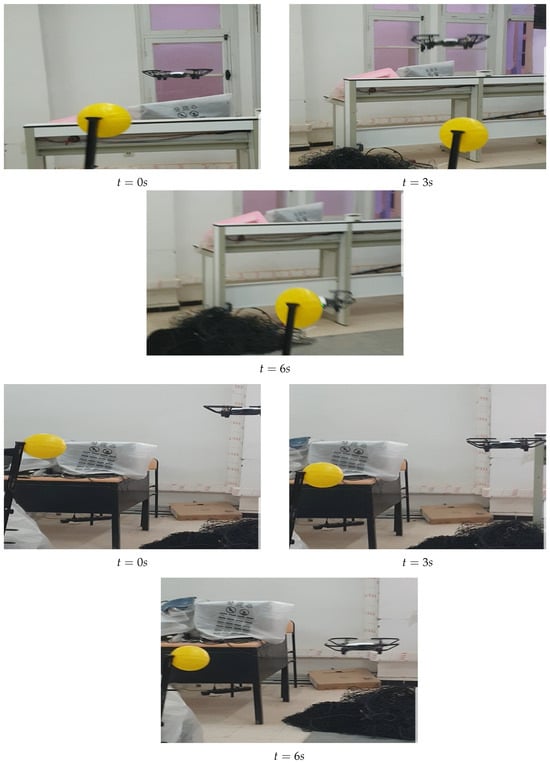

Despite the abundance of objects in the environment, our method remains robust and effective even when environmental conditions change, consistently ensuring accurate tracking of the yellow ball. Figure 11 illustrates the UAV and the target following random trajectories, with the ball varying its speed throughout the sequence. In this scenario, the DJI Tello drone successfully tracks the target, although a slight delay is observed during periods of rapid ball movement.

Figure 11. UAV tracking a moving target along random trajectories.

A total of ten flight tests were conducted to evaluate the performance and robustness of the visual tracking system. Overall, the system achieved a 90% success rate in tracking the yellow ball; in the remaining 10% of trials, tracking failed because the ball moved outside the DJI Tello drone’s camera field of view. This study focuses on guiding an autonomous drone toward a yellow ball using visual data alone, addressing the challenges posed by GPS inaccuracies and GPS-denied environments. Experimental results demonstrate that the proposed detection algorithm reliably identifies the yellow ball under varying lighting conditions and quickly adapts to changes in the surrounding environment.

6. Conclusions

In this paper, we presented a comprehensive framework for autonomous vision-based target tracking in UAVs. The proposed system utilizes an onboard RGB camera to detect and track a target using an HSV-based color segmentation approach. An Extended Kalman Filter (EKF) was employed to estimate the target’s state, ensuring continuous tracking even in the presence of temporary occlusions. Additionally, a PID controller was implemented to regulate the UAV’s motion based on visual feedback, enabling stable and efficient tracking.

Simulation and real-world experiments demonstrated the system’s effectiveness, achieving a 90% success rate in tracking the target. However, certain limitations remain. The current detection algorithm is designed specifically for yellow-colored objects, which restricts its applicability to a broader range of targets. Future improvements will focus on developing a more generalized detection algorithm capable of identifying objects of varying colors.

Another limitation is the lack of obstacle avoidance mechanisms in the current system. The UAV operates in an environment without barriers, which does not reflect real-world scenarios where obstacles may obstruct its path. Future work will integrate obstacle detection and avoidance capabilities to enhance the UAV’s autonomous navigation and tracking performance.

The proposed framework can be applied in various fields, including autonomous fruit harvesting, defense systems, and robotic applications that require precise object tracking.

Future developments will also explore optimizing computational efficiency to enable real-time performance on UAVs with limited processing power. Additionally, improving robustness to environmental variations, such as dynamic lighting conditions, will further enhance the system’s reliability in diverse scenarios.

Author Contributions

Conceptualization, M.B.; methodology, O.G.; software, O.G.; validation, O.G. and M.M.T.; formal analysis, O.G.; investigation, O.G.; resources, O.G. and N.A.; data curation, O.G.; writing—original draft preparation, O.G.; writing—review and editing, M.B., M.M.T., and N.A.; visualization, O.G.; supervision, M.M.T.; project administration, M.B.; funding acquisition, N.A. and O.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Valavanis, K.P.; Vachtsevanos, G.J. Handbook of Unmanned Aerial Vehicles; Springer Publishing Company, Incorporated: Princeton, NJ, USA, 2014. [Google Scholar]

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned aerial vehicles (UAVs): A survey on civil applications and key research challenges. IEEE Access 2019, 7, 48572–48634. [Google Scholar] [CrossRef]

- Konrad, T.; Gehrt, J.J.; Lin, J.; Zweigel, R.; Abel, D. Advanced state estimation for navigation of automated vehicles. Annu. Rev. Control 2018, 46, 181–195. [Google Scholar] [CrossRef]

- Eskandaripour, H.; Boldsaikhan, E. Last-mile drone delivery: Past, present, and future. Drones 2023, 7, 77. [Google Scholar] [CrossRef]

- Telli, K.; Kraa, O.; Himeur, Y.; Ouamane, A.; Boumehraz, M.; Atalla, S.; Mansoor, W. A comprehensive review of recent research trends on unmanned aerial vehicles (uavs). Systems 2023, 11, 400. [Google Scholar] [CrossRef]

- Singla, A.; Padakandla, S.; Bhatnagar, S. Memory-based deep reinforcement learning for obstacle avoidance in UAV with limited environment knowledge. IEEE Trans. Intell. Transp. Syst. 2019, 22, 107–118. [Google Scholar] [CrossRef]

- Padhy, R.P.; Verma, S.; Ahmad, S.; Choudhury, S.K.; Sa, P.K. Deep neural network for autonomous UAV navigation in indoor corridor environments. Procedia Comput. Sci. 2018, 133, 643–650. [Google Scholar] [CrossRef]

- Wu, S.; Li, R.; Shi, Y.; Liu, Q. Vision-based target detection and tracking system for a quadcopter. IEEE Access 2021, 9, 62043–62054.

- Patel, H. Designing Autonomous Drone for Food Delivery in Gazebo/ROS Based Environments; Technical Report; Binghamton University: Binghamton, NY, USA, 2022.

- Blösch, M.; Weiss, S.; Scaramuzza, D.; Siegwart, R. Vision based MAV navigation in unknown and unstructured environments. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 21–28.

- Jiang, S.; Jiang, C.; Jiang, W. Efficient structure from motion for large-scale UAV images: A review and a comparison of SfM tools. ISPRS J. Photogramm. Remote Sens. 2020, 167, 230–251.

- Sun, Y.; Weng, Y.; Luo, B.; Li, G.; Tao, B.; Jiang, D.; Chen, D. Gesture recognition algorithm based on multi-scale feature fusion in RGB-D images. IET Image Process. 2023, 17, 1280–1290.

- Ding, S.; Guo, X.; Peng, T.; Huang, X.; Hong, X. Drone detection and tracking system based on fused acoustical and optical approaches. Adv. Intell. Syst. 2023, 5, 2300251.

- Kang, H.C.; Han, H.N.; Bae, H.C.; Kim, M.G.; Son, J.Y.; Kim, Y.K. HSV color-space-based automated object localization for robot grasping without prior knowledge. Appl. Sci. 2021, 11, 7593.

- Zhao, X.; Pu, F.; Wang, Z.; Chen, H.; Xu, Z. Detection, tracking, and geolocation of moving vehicle from UAV using monocular camera. IEEE Access 2019, 7, 101160–101170.

- Yuan, Y.; Wu, Y.; Zhao, L.; Chen, J.; Zhao, Q. DB-Tracker: Multi-Object Tracking for Drone Aerial Video Based on Box-MeMBer and MB-OSNet. Drones 2023, 7, 607.

- Liang, J.; Yi, P.; Li, W.; Zuo, J.; Zhu, B.; Wang, Y. Real-time visual tracking design for an unmanned aerial vehicle in cluttered environments. Optim. Control Appl. Methods 2025, 46, 476–492.

- Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H. Fully-convolutional siamese networks for object tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 850–865.

- Li, B.; Yan, J.; Wu, W.; Zhu, Z.; Hu, X. High performance visual tracking with siamese region proposal network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8971–8980.

- Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of siamese visual tracking with very deep networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4282–4291.

- Rodríguez-Canosa, G.R.; Thomas, S.; Del Cerro, J.; Barrientos, A.; MacDonald, B. A real-time method to detect and track moving objects (DATMO) from unmanned aerial vehicles (UAVs) using a single camera. Remote Sens. 2012, 4, 1090–1111.

- Kang, D.; Cha, Y.J. Autonomous UAVs for structural health monitoring using deep learning and an ultrasonic beacon system with geo-tagging. Comput. Aided Civ. Infrastruct. Eng. 2018, 33, 885–902.

- Ali, R.; Kang, D.; Suh, G.; Cha, Y.J. Real-time multiple damage mapping using autonomous UAV and deep faster region-based neural networks for GPS-denied structures. Autom. Constr. 2021, 130, 103831.

- Waqas, A.; Kang, D.; Cha, Y.J. Deep learning-based obstacle-avoiding autonomous UAVs with fiducial marker-based localization for structural health monitoring. Struct. Health Monit. 2024, 23, 971–990.

- Cheng, H.; Lin, L.; Zheng, Z.; Guan, Y.; Liu, Z. An autonomous vision-based target tracking system for rotorcraft unmanned aerial vehicles. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1732–1738.

- Kumar, M.; Mondal, S. Recent developments on target tracking problems: A review. Ocean Eng. 2021, 236, 109558.

- Wan, M.; Gu, G.; Qian, W.; Ren, K.; Maldague, X.; Chen, Q. Unmanned aerial vehicle video-based target tracking algorithm using sparse representation. IEEE Internet Things J. 2019, 6, 9689–9706.

- Li, Z.; Hovakimyan, N.; Dobrokhodov, V.; Kaminer, I. Vision-based target tracking and motion estimation using a small UAV. In Proceedings of the 49th IEEE Conference on Decision and Control (CDC), Atlanta, GA, USA, 15–17 December 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 2505–2510.

- Falanga, D.; Zanchettin, A.; Simovic, A.; Delmerico, J.; Scaramuzza, D. Vision-based autonomous quadrotor landing on a moving platform. In Proceedings of the 2017 IEEE International Symposium on Safety, Security and Rescue Robotics (SSRR), Shanghai, China, 11–13 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 200–207.

- Saavedra-Ruiz, M.; Pinto-Vargas, A.M.; Romero-Cano, V. Monocular Visual Autonomous Landing System for Quadcopter Drones Using Software in the Loop. IEEE Aerosp. Electron. Syst. Mag. 2021, 37, 2–16.

- Cabrera-Ponce, A.A.; Martinez-Carranza, J. A vision-based approach for autonomous landing. In Proceedings of the 2017 Workshop on Research, Education and Development of Unmanned Aerial Systems (RED-UAS), Linköping, Sweden, 3–5 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 126–131.

- Coşkun, M.; Ünal, S. Implementation of tracking of a moving object based on camshift approach with a UAV. Procedia Technol. 2016, 22, 556–561.

- Lee, D.; Park, W.; Nam, W. Autonomous landing of micro unmanned aerial vehicles with landing-assistive platform and robust spherical object detection. Appl. Sci. 2021, 11, 8555.

- Keshun, Y.; Yingkui, G.; Yanghui, L.; Yajun, W. A novel physical constraint-guided quadratic neural networks for interpretable bearing fault diagnosis under zero-fault sample. Nondestruct. Test. Eval. 2025, 40, 1–31.

- Chen, Z.; Yang, J.; Li, F.; Feng, Z.; Chen, L.; Jia, L.; Li, P. Foreign Object Detection Method for Railway Catenary Based on a Scarce Image Generation Model and Lightweight Perception Architecture. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 1–1.

- Malik, M.H.; Zhang, T.; Li, H.; Zhang, M.; Shabbir, S.; Saeed, A. Mature tomato fruit detection algorithm based on improved HSV and watershed algorithm. IFAC-PapersOnLine 2018, 51, 431–436.

- Boumehraz, M.; Habba, Z.; Hassani, R. Vision based tracking and interception of moving target by mobile robot using fuzzy control. J. Appl. Eng. Sci. Technol. 2018, 4, 159–165.

- Foehn, P.; Brescianini, D.; Kaufmann, E.; Cieslewski, T.; Gehrig, M.; Muglikar, M.; Scaramuzza, D. AlphaPilot: Autonomous drone racing. Auton. Robot. 2022, 46, 307–320.

- Lei, B.; Liu, B.; Wang, C. Robust geometric control for a quadrotor UAV with extended Kalman filter estimation. Actuators 2024, 13, 205.

- Ayala, A.; Portela, L.; Buarque, F.; Fernandes, B.J.; Cruz, F. UAV control in autonomous object-goal navigation: A systematic literature review. Artif. Intell. Rev. 2024, 57, 125.

- Hulens, D.; Van Ranst, W.; Cao, Y.; Goedemé, T. Autonomous visual navigation for a flower pollination drone. Machines 2022, 10, 364.

- Schnipke, E.; Reidling, S.; Meiring, J.; Jeffers, W.; Hashemi, M.; Tan, R.; Nemati, A.; Kumar, M. Autonomous navigation of UAV through GPS-denied indoor environment with obstacles. In Proceedings of the AIAA Infotech@Aerospace, Kissimmee, FL, USA, 5–9 January 2015; p. 0715.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).