Behavioral Biometrics in VR: Changing Sensor Signal Modalities

Abstract

Highlights

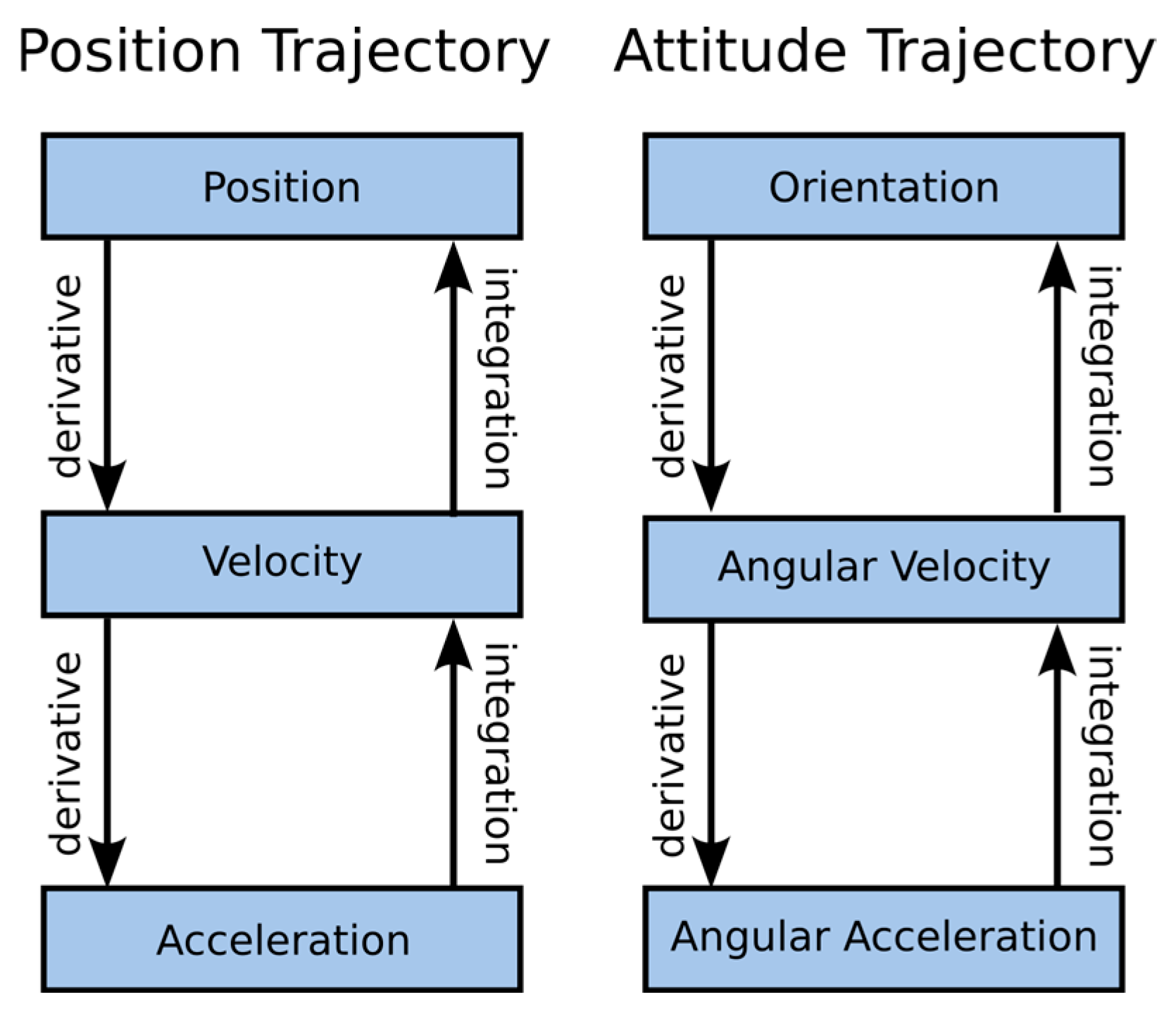

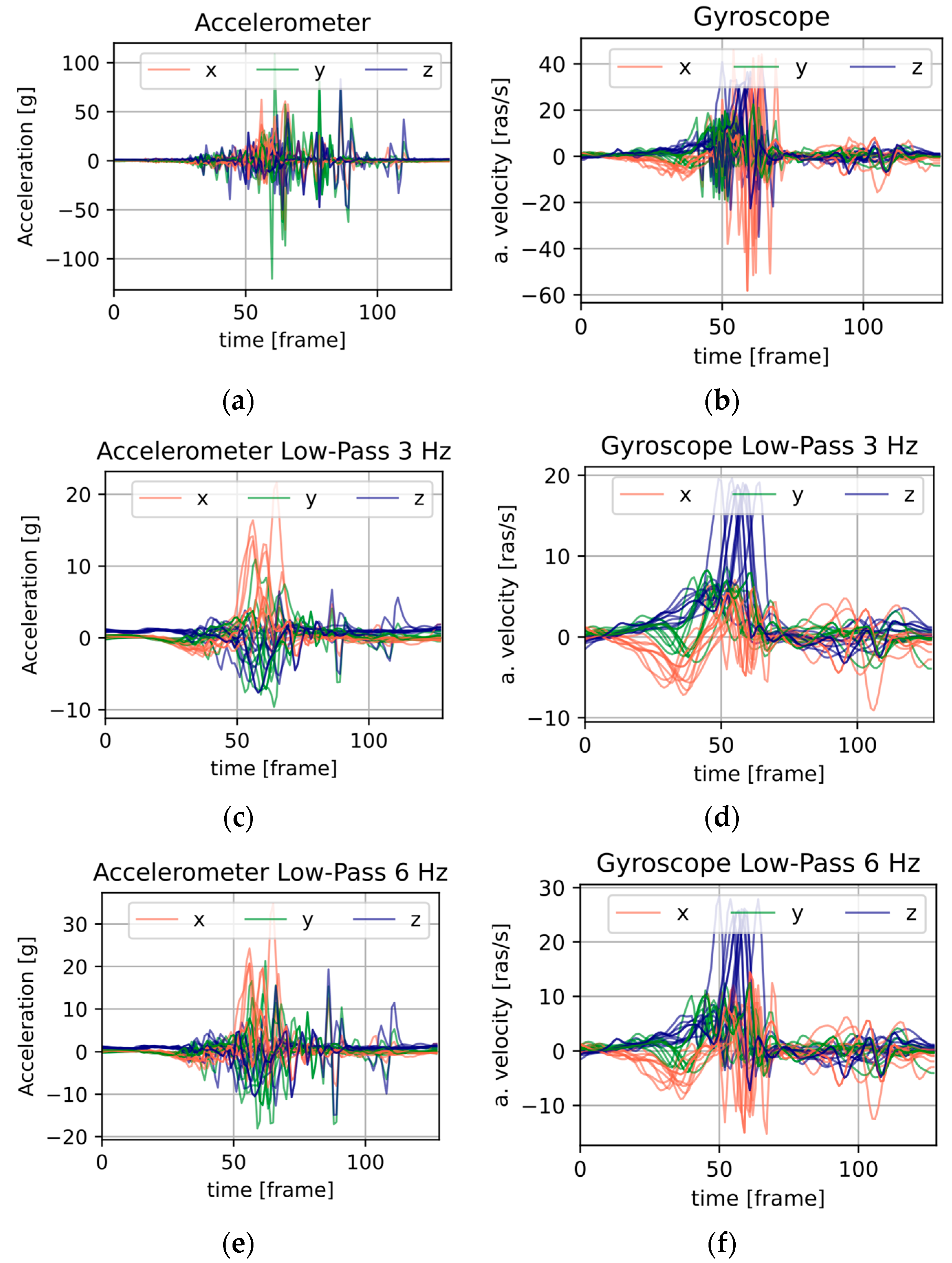

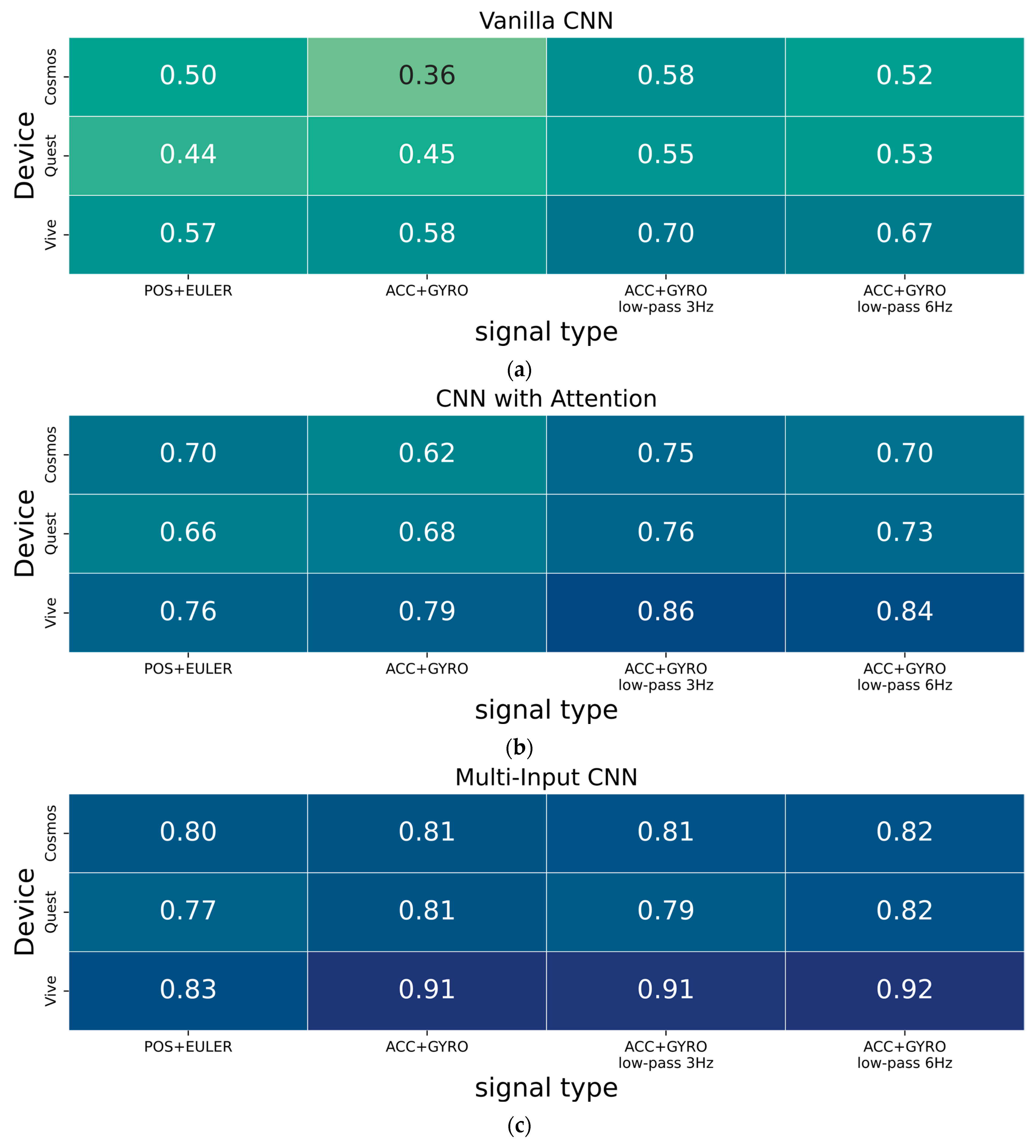

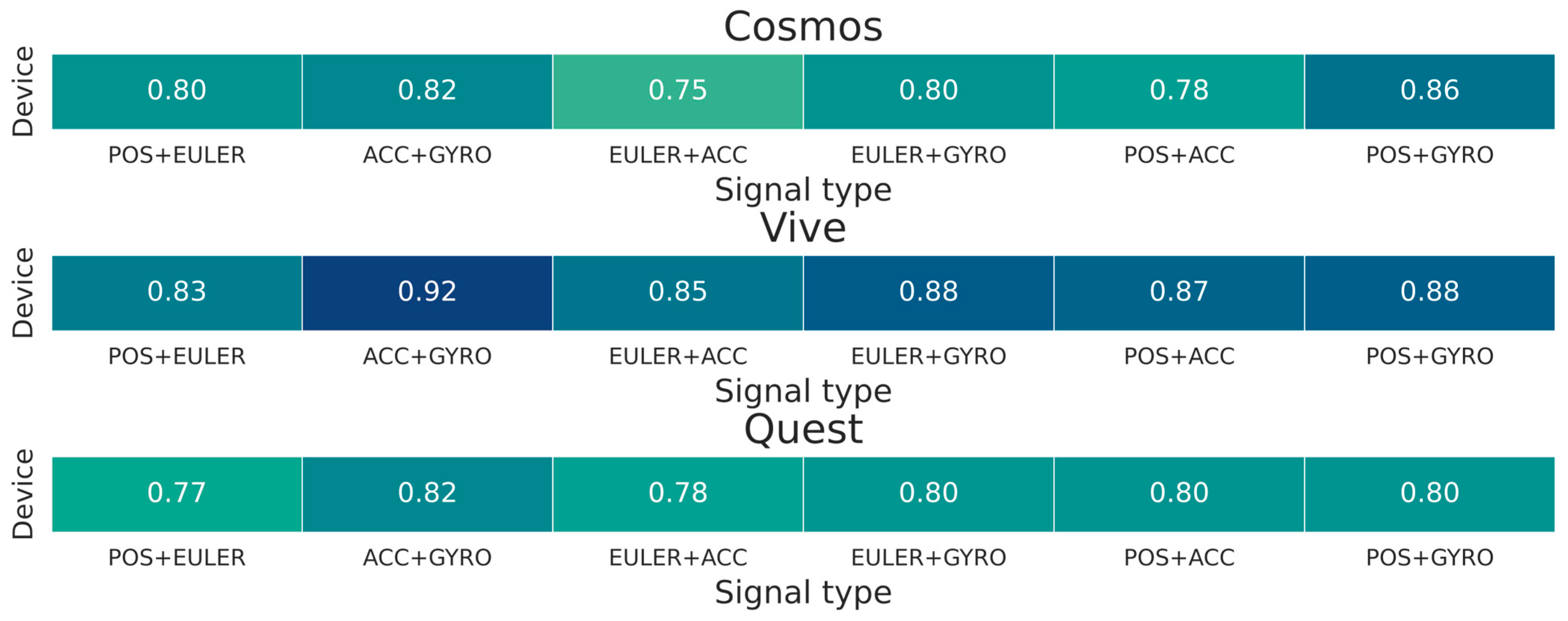

- Processing orientation signals represented as Euler angles, which exhibit discontinuities, is disadvantageous for CNNs. Converting the trajectory and orientation time series into algebraically derived accelerometer and gyroscope signals improves the effectiveness of the biometric system.
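The conversion described in the highlight above can be illustrated with a short sketch. It is not the authors' pipeline. It assumes positions in metres, orientations given as Z-Y-X (yaw, pitch, roll) Euler angles in radians, and a uniform sampling interval `dt`. All function names are hypothetical. Acceleration is obtained by a second-order central difference of position. Angular velocity is obtained from the axis-angle form of the relative rotation between consecutive samples, so the ±180° wrap-around that makes raw Euler angles discontinuous disappears. A physical accelerometer would also sense gravity in the sensor frame; that term is deliberately omitted here. The first-order low-pass filter is only one possible reading of the "L-P 3 Hz" and "L-P 6 Hz" variants in the results tables.

```python
import math

def euler_zyx_to_matrix(yaw, pitch, roll):
    """Rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll); angles in radians (assumed convention)."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def relative_rotation(r_a, r_b):
    """Return R_a^T @ R_b, i.e. the rotation from sample a to sample b in a's body frame."""
    return [[sum(r_a[k][i] * r_b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def rotation_log(r):
    """Axis-angle vector theta * axis of a rotation matrix (assumes theta < pi)."""
    v = (r[2][1] - r[1][2], r[0][2] - r[2][0], r[1][0] - r[0][1])  # = 2 sin(theta) * axis
    sin_theta = 0.5 * math.hypot(*v)
    cos_theta = 0.5 * (r[0][0] + r[1][1] + r[2][2] - 1.0)
    theta = math.atan2(sin_theta, cos_theta)
    scale = 0.5 if sin_theta < 1e-12 else theta / (2.0 * sin_theta)
    return tuple(scale * c for c in v)

def synthetic_gyro(euler_angles, dt):
    """Body-frame angular velocity (rad/s) between consecutive orientation samples."""
    mats = [euler_zyx_to_matrix(*e) for e in euler_angles]
    return [tuple(c / dt for c in rotation_log(relative_rotation(a, b)))
            for a, b in zip(mats, mats[1:])]

def synthetic_accel(positions, dt):
    """World-frame linear acceleration (m/s^2) via a second-order central difference."""
    return [tuple((p_next[i] - 2.0 * p[i] + p_prev[i]) / dt ** 2 for i in range(3))
            for p_prev, p, p_next in zip(positions, positions[1:], positions[2:])]

def low_pass(signal, cutoff_hz, dt):
    """First-order IIR low-pass filter applied independently to each axis."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)
    out = [signal[0]]
    for x in signal[1:]:
        out.append(tuple(y + alpha * (xi - y) for xi, y in zip(x, out[-1])))
    return out

if __name__ == "__main__":
    dt = 1.0 / 90.0  # hypothetical 90 Hz sampling rate
    # Yaw turns steadily from 170 deg to 190 deg; wrapped to (-180, 180] it jumps by ~360 deg.
    yaws = [math.radians(170 + 2 * k) for k in range(11)]
    wrapped = [math.atan2(math.sin(y), math.cos(y)) for y in yaws]
    print("largest Euler step (rad):", max(abs(b - a) for a, b in zip(wrapped, wrapped[1:])))
    # The derived gyroscope z-component stays close to pi rad/s (2 deg per sample at 90 Hz).
    gyro = synthetic_gyro([(y, 0.0, 0.0) for y in wrapped], dt)
    print("gyro z (rad/s):", [round(g[2], 3) for g in gyro])
```

The design choice is to differentiate rotations rather than angles. Subtracting consecutive Euler angles would reproduce the wrap-around jump, while the relative-rotation approach yields a smooth angular-velocity signal.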

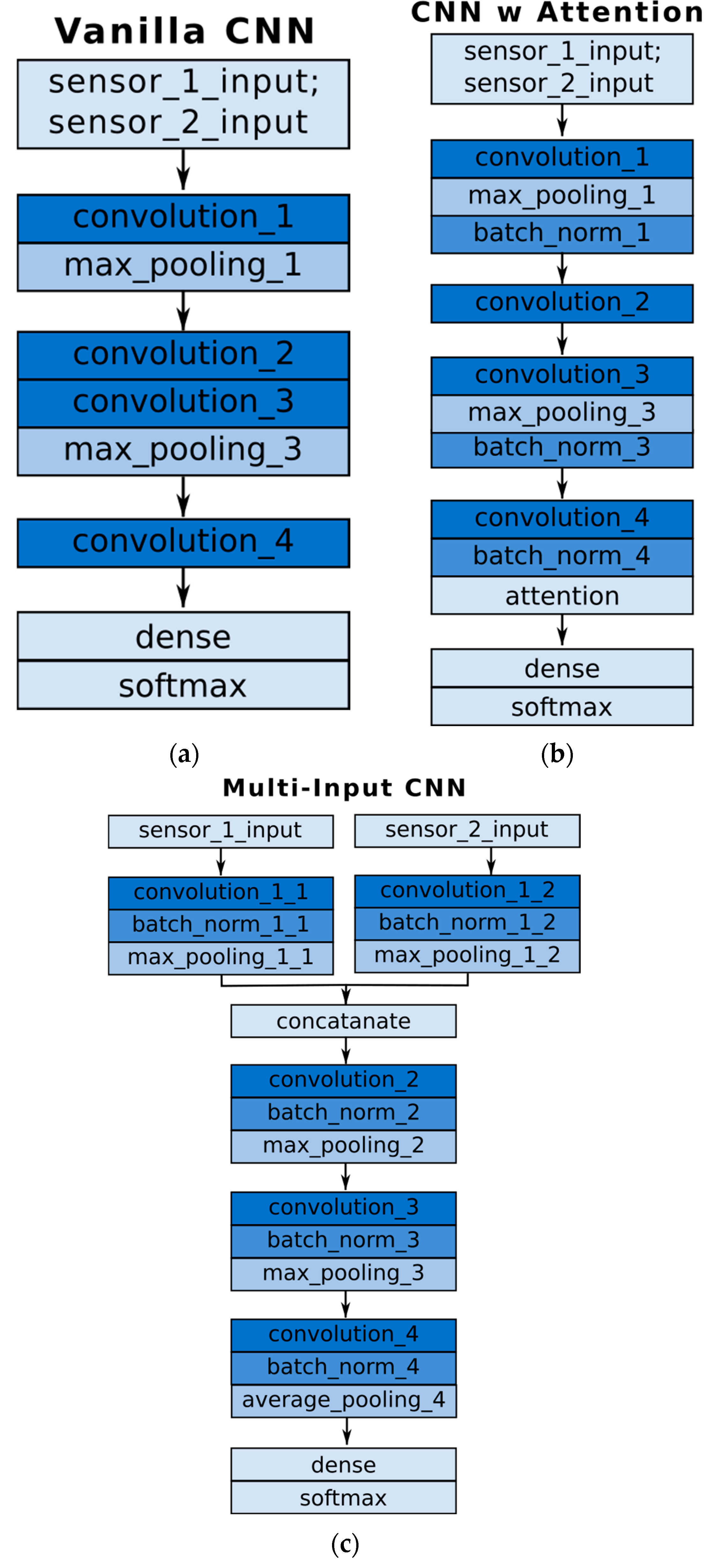

- The choice of neural network architecture has a significant impact on the identification metrics achieved. Processing samples with a Multi-Input CNN yields high classification accuracy. In this architecture, acceleration and angular velocity signals are processed by separate branches, each with a fixed number of kernels. This makes it possible to set the number of feature-extraction filters per modality and, consequently, to give the selected modalities equal weight at the decision stage.

- Person recognition performance can be increased significantly by changing only the data representation. The sample transformation can be applied to existing data corpora and does not require collecting additional data (and therefore incurs no additional cost).

- The paper summarizes experiments with several CNN architectures. Based on this summary, the Multi-Input CNN is recommended for processing data sets that combine different modalities, because it performs filter-based feature extraction separately for the data processed within each branch.
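The Multi-Input CNN idea from the highlights above can be sketched as a forward pass in plain Python. Each modality (accelerometer, gyroscope) has its own branch with its own convolution kernels. Every branch reduces its feature maps to one value per kernel by global average pooling, and the concatenated feature vector feeds a dense softmax layer. Giving both branches the same number of kernels makes both modalities contribute equally many features at the decision stage. This is an illustrative assumption, not the authors' architecture: the layer count, kernel width, number of filters and the random, untrained weights are all hypothetical, and a real model would be built and trained in a deep-learning framework.

```python
import math
import random

def conv1d_relu(signal, kernels):
    """Valid 1-D convolution of a multichannel signal with each kernel, followed by ReLU.

    signal:  list of channels, each a list of floats of equal length.
    kernels: list of kernels, each shaped [channels][width].
    Returns one feature map per kernel.
    """
    width = len(kernels[0][0])
    length = len(signal[0]) - width + 1
    maps = []
    for kernel in kernels:
        fmap = []
        for t in range(length):
            s = sum(kernel[c][w] * signal[c][t + w]
                    for c in range(len(signal)) for w in range(width))
            fmap.append(max(0.0, s))
        maps.append(fmap)
    return maps

def branch_features(signal, kernels):
    """One branch: convolution + ReLU + global average pooling -> one value per kernel."""
    return [sum(m) / len(m) for m in conv1d_relu(signal, kernels)]

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def multi_input_forward(acc, gyro, acc_kernels, gyro_kernels, dense_w, dense_b):
    """Separate branch per modality; branch features are concatenated before the classifier."""
    features = branch_features(acc, acc_kernels) + branch_features(gyro, gyro_kernels)
    logits = [sum(w * f for w, f in zip(row, features)) + b
              for row, b in zip(dense_w, dense_b)]
    return features, softmax(logits)

def random_kernels(n_kernels, channels, width, rng):
    """Hypothetical random initialisation; training is not shown."""
    return [[[rng.uniform(-1, 1) for _ in range(width)] for _ in range(channels)]
            for _ in range(n_kernels)]

if __name__ == "__main__":
    rng = random.Random(0)
    n_filters, n_classes, length = 8, 41, 64  # hypothetical sizes
    acc = [[rng.gauss(0, 1) for _ in range(length)] for _ in range(3)]
    gyro = [[rng.gauss(0, 1) for _ in range(length)] for _ in range(3)]
    acc_k = random_kernels(n_filters, 3, 5, rng)
    gyro_k = random_kernels(n_filters, 3, 5, rng)
    dense_w = [[rng.uniform(-0.1, 0.1) for _ in range(2 * n_filters)] for _ in range(n_classes)]
    features, probs = multi_input_forward(acc, gyro, acc_k, gyro_k, dense_w, [0.0] * n_classes)
    print(len(features), len(probs))  # 16 41
```

With 8 kernels per branch, the classifier always receives 8 accelerometer features and 8 gyroscope features. This is the equal-consideration property described in the highlights.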

1. Introduction

2. Materials and Methods

2.1. Methods

2.2. Classifiers

3. Results

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Miller, R.; Banerjee, N.K.; Banerjee, S. Using Siamese Neural Networks to Perform Cross-System Behavioral Authentication in Virtual Reality. In Proceedings of the 2021 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Christchurch, New Zealand, 27 March–1 April 2021; pp. 140–149.

2. Liebers, J.; Abdelaziz, M.; Mecke, L. Understanding User Identification in Virtual Reality through Behavioral Biometrics and the Effect of Body Normalization. In Proceedings of the Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021.

3. Ajit, A.; Banerjee, N.K.; Banerjee, S. Combining Pairwise Feature Matches from Device Trajectories for Biometric Authentication in Virtual Reality Environments. In Proceedings of the 2019 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), San Diego, CA, USA, 2–4 December 2019; pp. 9–16.

4. Heruatmadja, C.H.; Meyliana; Hidayanto, A.N.; Prabowo, H. Biometric as Secure Authentication for Virtual Reality Environment: A Systematic Literature Review. In Proceedings of the 2023 International Conference for Advancement in Technology (ICONAT), Malang, Indonesia, 21–22 June 2023.

5. Garrido, G.M.; Nair, V.; Song, D. SoK: Data Privacy in Virtual Reality. Proc. Priv. Enhancing Technol. 2024, 2024, 21–40.

6. Shiftall Inc. HaritoraX 1.1/1.1B Online Manual (English). Available online: https://docs.google.com/document/d/14hY7mkejXRbMWGzry_tp7Xw7sEDwiQMw02C53XnVJe4/edit?tab=t.0#heading=h.21vh7twr76m1 (accessed on 5 August 2025).

7. Kim, D.; Kwon, J.; Han, S.; Park, Y.L.; Jo, S. Deep Full-Body Motion Network for a Soft Wearable Motion Sensing Suit. IEEE/ASME Trans. Mechatron. 2019, 24, 56–66.

8. Milosevic, B.; Leardini, A.; Farella, E. Kinect and Wearable Inertial Sensors for Motor Rehabilitation Programs at Home: State of the Art and an Experimental Comparison. Biomed. Eng. Online 2020, 19, 1–26.

9. Shen, Y.; Wen, H.; Luo, C.; Xu, W.; Zhang, T.; Hu, W.; Rus, D. GaitLock: Protect Virtual and Augmented Reality Headsets Using Gait. IEEE Trans. Depend. Sec. Comput. 2019, 16, 484–497.

10. Olade, I.; Fleming, C.; Liang, H.N. BioMove: Biometric User Identification from Human Kinesiological Movements for Virtual Reality Systems. Sensors 2020, 20, 2944.

11. Pfeuffer, K.; Geiger, M.J.; Prange, S.; Mecke, L.; Buschek, D.; Alt, F. Behavioural Biometrics in VR: Identifying People from Body Motion and Relations in Virtual Reality. In Proceedings of the Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019.

12. Kupin, A.; Moeller, B.; Jiang, Y.; Banerjee, N.K.; Banerjee, S. Task-Driven Biometric Authentication of Users in Virtual Reality (VR) Environments; Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2019; Volume 11295 LNCS, pp. 55–67.

13. Mathis, F.; Fawaz, H.I.; Khamis, M. Knowledge-Driven Biometric Authentication in Virtual Reality. In Proceedings of the Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020.

14. Wright State University TARS Open “VR-Biometric-Authentication” Repository. Available online: https://github.com/weibo053/VR-Biometric-Authentication/tree/4bbc555e6183327747cc29fd4463a71ab7894e09 (accessed on 5 September 2025).

15. Miller, R.; Banerjee, N.K.; Banerjee, S. Combining Real-World Constraints on User Behavior with Deep Neural Networks for Virtual Reality (VR) Biometrics. In Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Christchurch, New Zealand, 19–23 March 2022; pp. 409–418.

16. Miller, R.; Banerjee, N.K.; Banerjee, S. Temporal Effects in Motion Behavior for Virtual Reality (VR) Biometrics. In Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Christchurch, New Zealand, 19–23 March 2022; pp. 563–572.

17. Baños, O.; Calatroni, A.; Damas, M.; Pomares, H.; Rojas, I.; Sagha, H.; Millán, J.D.R.; Tröster, G.; Chavarriaga, R.; Roggen, D. Kinect=IMU? Learning MIMO Signal Mappings to Automatically Translate Activity Recognition Systems across Sensor Modalities. In Proceedings of the International Symposium on Wearable Computers (ISWC), Newcastle, UK, 9–13 September 2012; pp. 92–99.

18. Rey, V.F.; Hevesi, P.; Kovalenko, O.; Lukowicz, P. Let There Be IMU Data: Generating Training Data for Wearable, Motion Sensor Based Activity Recognition from Monocular RGB Videos. In Proceedings of the 2019 ACM International Joint Conference on Pervasive and Ubiquitous Computing and the 2019 ACM International Symposium on Wearable Computers (UbiComp/ISWC), London, UK, 9–13 September 2019; pp. 699–708.

19. Young, A.D.; Ling, M.J.; Arvind, D.K. IMUSim: A Simulation Environment for Inertial Sensing Algorithm Design and Evaluation. In Proceedings of the 2011 10th International Conference on Information Processing in Sensor Networks (IPSN), Chicago, IL, USA, 11–14 April 2011; pp. 199–210.

20. Stanley, M. Open Source IMU Simulator, Trajectory & Sensor Simulation Toolkit. Freescale Semiconductor & MEMS Industry Group Accelerated Innovation Community. Available online: https://github.com/memsindustrygroup/TSim (accessed on 5 August 2025).

21. Kwon, H.; Tong, C.; Gao, Y.; Abowd, G.D.; Lane, N.D.; Plötz, T.; Haresamudram, H. IMUTube: Automatic Extraction of Virtual on-Body Accelerometry from Video for Human Activity Recognition. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2020, 4, 87.

22. Gadaleta, M.; Rossi, M. IDNet: Smartphone-Based Gait Recognition with Convolutional Neural Networks. Pattern Recognit. 2018, 74, 25–37.

23. Zou, Q.; Wang, Y.; Wang, Q.; Zhao, Y.; Li, Q. Deep Learning-Based Gait Recognition Using Smartphones in the Wild. IEEE Trans. Inf. Forensics Secur. 2018, 15, 3197–3212.

24. Huang, H.; Zhou, P.; Li, Y.; Sun, F. A Lightweight Attention-Based CNN Model for Efficient Gait Recognition with Wearable IMU Sensors. Sensors 2021, 21, 2866.

25. Mahmood Hussein, M.; Hussein Mutlag, A.; Shareef, H.; Pu, J.; Zhang, L.; Zhang, J.; Teoh, K.; Ismail, R.; Naziri, S.; Hussin, R.; et al. Face Recognition and Identification Using Deep Learning Approach. J. Phys. Conf. Ser. 2021, 1755, 012006.

26. Matovski, D.S.; Nixon, M.S.; Mahmoodi, S.; Carter, J.N. The Effect of Time on Gait Recognition Performance. IEEE Trans. Inf. Forensics Secur. 2012, 7, 543–552.

27. Topham, L.K.; Khan, W.; Al-Jumeily, D.; Hussain, A. Human Body Pose Estimation for Gait Identification: A Comprehensive Survey of Datasets and Models. arXiv 2023, arXiv:2305.13765.

| Corpus | Acquisition Device | Number of People | Type of Movement | Description of Movement | Decision Model |
|---|---|---|---|---|---|
| GaitLock: Protect Virtual and Augmented Reality Headsets Using Gait [9] | Google Glass (HMD) | 20 | Gait | Indoor and outdoor gait. Data acquired over two days, with two walking sessions per day (clockwise and counterclockwise direction of motion), for a total of 4 gait sessions | Hybrid decision model Dynamic-SRC (DTW + Sparse Representation Classifier) |
| VR-Biometric-Authentication [1] | Oculus Quest/HTC Vive/HTC Cosmos | 41 | Throwing a virtual basketball | Each participant performs 10 basketball throws. There are two sessions, each held on a different day, and the procedure is repeated for 3 VR systems | Siamese network with a CNN-based feature extractor |
| BioMove: Biometric User Identification from Human Kinesiological Movements for Virtual Reality Systems [10] | HTC Vive (HMD, hand-held controllers); Glass (eye-tracking device) | 15 | Placing objects (spheres/cubes) in containers | Placing red balls into cylindrical containers and green/blue cubes into containers. Each participant completed 10 sessions per day over two days | k-Nearest Neighbors (kNN) and Support Vector Machine (SVM) |
| Understanding User Identification in Virtual Reality Through Behavioral Biometrics and the Effect of Body Normalization [2] | Oculus Quest | 16 | Two tasks: bowling and archery | Two task scenarios in a dedicated virtual scene: bowling (task one) and target archery (task two). Data collected over two days | Deep learning classifier |
| Task-Driven Biometric Authentication of Users in Virtual Reality (VR) Environments [12] | HTC Vive | 10 | Basketball throw | Each action consists of four parts: picking up the ball, bringing the ball over the shoulder, throwing toward the target, and returning the controller/hand to the neutral position. In each session, 10 attempts were recorded | Nearest-neighbor trajectory matching (similar to k-NN with k = 1) |
| Knowledge-Driven Biometric Authentication in Virtual Reality [13] | HTC Vive | 23 | RubikBiom, the authors' own solution: selecting a 4-digit PIN on a board in virtual reality | Selecting digits on a specially prepared three-color cube modeled in virtual reality (hence the reference to Rubik's cube). The cube carries digits, and participants were asked to select a sequence of 4 digits with specific colors, e.g., 1 (green), 2 (white), 1 (red), 8 (white). Each participant entered 12 code sequences, assuming 4 repetitions | MLP, FCN, ResNet, Encoder, MCDCNN, Time-CNN |
| Behavioural Biometrics in VR: Identifying People from Body Motion and Relations in Virtual Reality [11] | HTC Vive with a Pupil Labs eye-tracking add-on | 22 | Four typical tasks in 3D environments: pointing, grasping, walking, and typing on a virtual keyboard | The tasks were carried out in two separate sessions on two days, with an interval of 3 days between acquisitions | Random Forest and Support Vector Machine (SVM) |
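Several corpora in the table above rely on matching motion trajectories. For example, [12] uses nearest-neighbor trajectory matching (k = 1), and [9] combines DTW with a sparse-representation classifier. The sketch below shows only the simplest combination of these ideas: a 1-NN classifier that uses an unconstrained dynamic time warping (DTW) distance over multichannel trajectories. It is an illustrative assumption and not the procedure of either cited work. The function names, the Euclidean per-sample cost and the toy gallery are hypothetical.

```python
import math

def dtw_distance(a, b):
    """Classic DTW distance between two multichannel sequences.

    a, b: lists of samples, each sample a tuple of floats (e.g. x, y, z).
    The per-sample cost is the Euclidean distance; runtime is O(len(a) * len(b)).
    """
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]

def nearest_neighbor_identity(query, gallery):
    """Return (label, distance) of the enrolled trajectory closest to the query (k = 1)."""
    best_label, best_dist = None, float("inf")
    for label, template in gallery:
        dist = dtw_distance(query, template)
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label, best_dist

if __name__ == "__main__":
    # Hypothetical enrolled controller trajectories (metres).
    gallery = [
        ("user_a", [(0.0, 0.0, 0.0), (0.1, 0.2, 0.0), (0.3, 0.5, 0.1)]),
        ("user_b", [(0.0, 0.0, 0.0), (-0.2, 0.1, 0.0), (-0.4, 0.1, 0.2)]),
    ]
    # A slower repetition of user_a's movement: DTW absorbs the extra sample.
    query = [(0.0, 0.0, 0.0), (0.05, 0.1, 0.0), (0.1, 0.2, 0.0), (0.3, 0.5, 0.1)]
    print(nearest_neighbor_identity(query, gallery)[0])  # user_a
```

DTW lets trajectories of different lengths and speeds be compared. This matters for repeated throws or gestures that are never performed at exactly the same pace.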

| Area Under the Curve | Cosmos: POS + EULER | Cosmos: ACC + GYRO | Cosmos: ACC + GYRO L-P 3 Hz | Cosmos: ACC + GYRO L-P 6 Hz | Vive: POS + EULER | Vive: ACC + GYRO | Vive: ACC + GYRO L-P 3 Hz | Vive: ACC + GYRO L-P 6 Hz | Quest: POS + EULER | Quest: ACC + GYRO | Quest: ACC + GYRO L-P 3 Hz | Quest: ACC + GYRO L-P 6 Hz |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CNN | 0.949 | 0.896 | 0.954 | 0.944 | 0.965 | 0.952 | 0.974 | 0.970 | 0.926 | 0.898 | 0.939 | 0.929 |
| CNN with Attention | 0.979 | 0.972 | 0.986 | 0.982 | 0.986 | 0.978 | 0.986 | 0.985 | 0.970 | 0.962 | 0.975 | 0.971 |
| M-I CNN | 0.986 | 0.989 | 0.988 | 0.989 | 0.991 | 0.997 | 0.998 | 0.998 | 0.979 | 0.986 | 0.981 | 0.985 |

POS + EULER: position and Euler-angle orientation time series; ACC + GYRO: accelerometer and gyroscope signals derived algebraically from them; L-P 3 Hz / L-P 6 Hz: low-pass filtered with a 3 Hz / 6 Hz cutoff; M-I CNN: Multi-Input CNN. Cosmos, Vive and Quest denote the HTC Cosmos, HTC Vive and Oculus Quest subsets.

| False Acceptance Rate | Cosmos: POS + EULER | Cosmos: ACC + GYRO | Cosmos: ACC + GYRO L-P 3 Hz | Cosmos: ACC + GYRO L-P 6 Hz | Vive: POS + EULER | Vive: ACC + GYRO | Vive: ACC + GYRO L-P 3 Hz | Vive: ACC + GYRO L-P 6 Hz | Quest: POS + EULER | Quest: ACC + GYRO | Quest: ACC + GYRO L-P 3 Hz | Quest: ACC + GYRO L-P 6 Hz |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CNN | 0.0111 | 0.0141 | 0.0090 | 0.0102 | 0.0093 | 0.0087 | 0.0065 | 0.0067 | 0.0125 | 0.0116 | 0.0096 | 0.0102 |
| CNN with Attention | 0.0071 | 0.0087 | 0.0060 | 0.0070 | 0.0052 | 0.0051 | 0.0034 | 0.0038 | 0.0083 | 0.0077 | 0.0057 | 0.0064 |
| M-I CNN | 0.0047 | 0.0045 | 0.0049 | 0.0046 | 0.0038 | 0.0021 | 0.0022 | 0.0019 | 0.0054 | 0.0043 | 0.0049 | 0.0045 |

| False Rejection Rate | Cosmos: POS + EULER | Cosmos: ACC + GYRO | Cosmos: ACC + GYRO L-P 3 Hz | Cosmos: ACC + GYRO L-P 6 Hz | Vive: POS + EULER | Vive: ACC + GYRO | Vive: ACC + GYRO L-P 3 Hz | Vive: ACC + GYRO L-P 6 Hz | Quest: POS + EULER | Quest: ACC + GYRO | Quest: ACC + GYRO L-P 3 Hz | Quest: ACC + GYRO L-P 6 Hz |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CNN | 0.4432 | 0.5641 | 0.3617 | 0.4073 | 0.3709 | 0.3491 | 0.2611 | 0.2698 | 0.5010 | 0.4622 | 0.3844 | 0.4074 |
| CNN with Attention | 0.2823 | 0.3489 | 0.2387 | 0.2785 | 0.2078 | 0.2034 | 0.1341 | 0.1516 | 0.3330 | 0.3067 | 0.2279 | 0.2566 |
| M-I CNN | 0.1898 | 0.1817 | 0.1951 | 0.1838 | 0.1515 | 0.0843 | 0.0894 | 0.0768 | 0.2149 | 0.1739 | 0.1949 | 0.1795 |
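The FAR and FRR values in the tables above fit one interpretation. They match macro-averaged one-vs-rest rates computed from a closed-set confusion matrix with balanced classes. With 41 participants, every pair of corresponding cells satisfies FAR ≈ FRR / 40. For example, the M-I CNN on the Vive subset with ACC + GYRO input gives 0.0843 / 40 ≈ 0.0021, and the CNN on the Cosmos subset with POS + EULER input gives 0.4432 / 40 ≈ 0.0111. The sketch below computes the rates under that assumption. It is an interpretation of the reported numbers, not the authors' evaluation code, and the function names are hypothetical. The AUC function uses the Mann-Whitney formulation for a single genuine-versus-impostor score split; how the reported multi-class AUC was aggregated is not stated in this section.

```python
def confusion_matrix(true_labels, predicted_labels, n_classes):
    """cm[i][j] counts samples of true class i predicted as class j."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(true_labels, predicted_labels):
        cm[t][p] += 1
    return cm

def macro_far_frr(cm):
    """Macro-averaged one-vs-rest FAR and FRR from a closed-set confusion matrix.

    For class c: FAR_c = FP_c / (FP_c + TN_c)  (impostor samples accepted as c),
                 FRR_c = FN_c / (FN_c + TP_c)  (genuine samples of c rejected).
    Assumes every class has at least one test sample.
    """
    n = len(cm)
    total = sum(map(sum, cm))
    far, frr = [], []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp
        fp = sum(cm[r][c] for r in range(n)) - tp
        tn = total - tp - fn - fp
        far.append(fp / (fp + tn))
        frr.append(fn / (fn + tp))
    return sum(far) / n, sum(frr) / n

def roc_auc(genuine_scores, impostor_scores):
    """AUC as P(genuine score > impostor score), with ties counted as 1/2."""
    wins = 0.0
    for g in genuine_scores:
        for i in impostor_scores:
            wins += 1.0 if g > i else 0.5 if g == i else 0.0
    return wins / (len(genuine_scores) * len(impostor_scores))

if __name__ == "__main__":
    # Three balanced classes (10 samples each) with three misclassifications.
    cm = [[8, 2, 0], [0, 9, 1], [0, 0, 10]]
    far, frr = macro_far_frr(cm)
    print(round(far, 4), round(frr, 4))  # 0.05 0.1
```

With balanced classes, each misclassification is one false negative for its true class and one false positive for the predicted class. The negatives for each class number (N - 1) times the class size, so macro FAR equals macro FRR divided by N - 1. In the toy example above, 0.1 / 2 = 0.05.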

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sawicki, A.; Saeed, K.; Walendziuk, W. Behavioral Biometrics in VR: Changing Sensor Signal Modalities. Sensors 2025, 25, 5899. https://doi.org/10.3390/s25185899
