1. Introduction

In Japan, the construction industry has faced a labor shortage due to an aging population and a declining number of skilled workers. Specifically, the number of construction technicians has been generally decreasing over the past ten years [1]. According to the 2024 statistical survey of the working population in the construction industry [1], those aged 60 and above account for over 25% of the total, while those under 30 account for about 12%. Against this social background, the automation of construction tasks has become necessary. One of the major unsolved issues is the automation of soil loading onto dump trucks with wheel loaders.

Figure 1a illustrates the component technologies and the pipeline for soil loading. The automation consists of several processes, such as bucket filling [2,3], autonomous locomotion [4,5], and soil loading onto dump trucks [6,7]. Among these processes, we focus on the recognition of dump trucks for soil loading based on point cloud data. More precisely, the wheel loader requires pose estimation and size classification of the dump truck to determine the appropriate loading position and capacity.

Figure 1b illustrates the assumed layout of the wheel loader, dump truck, and soil. In this setup, the automatic loading system needs to estimate the pose and classify the size of the dump truck located in front of the wheel loader for automatic soil loading.

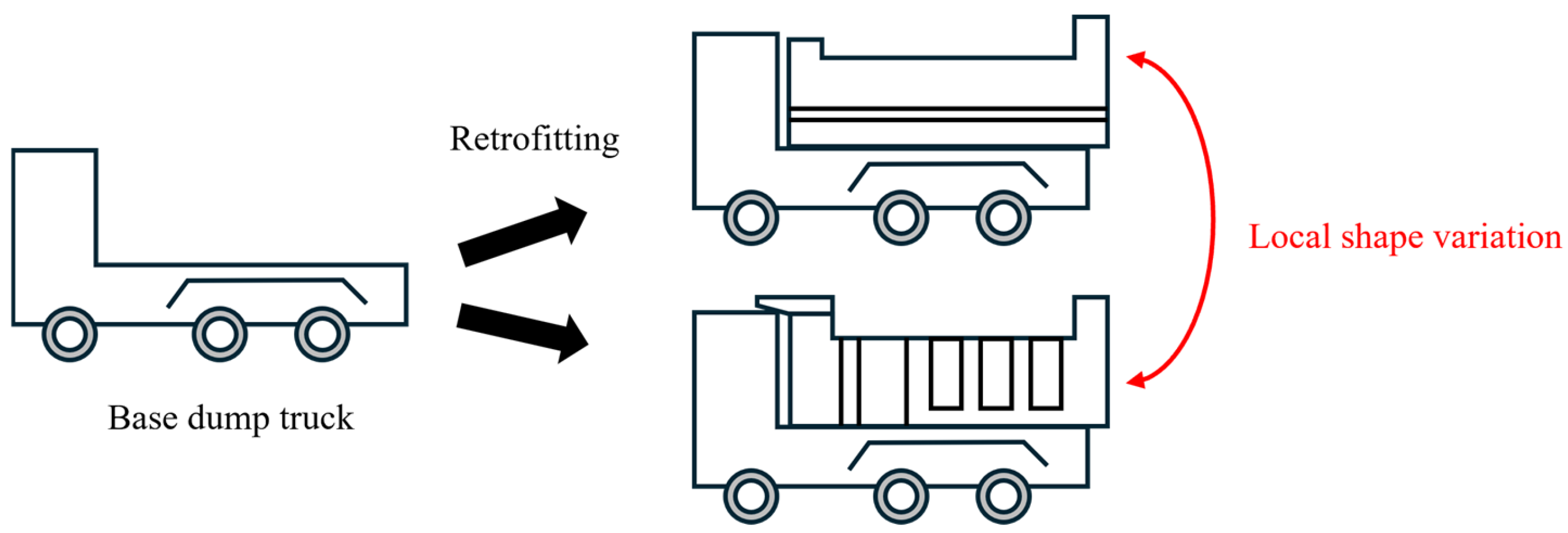

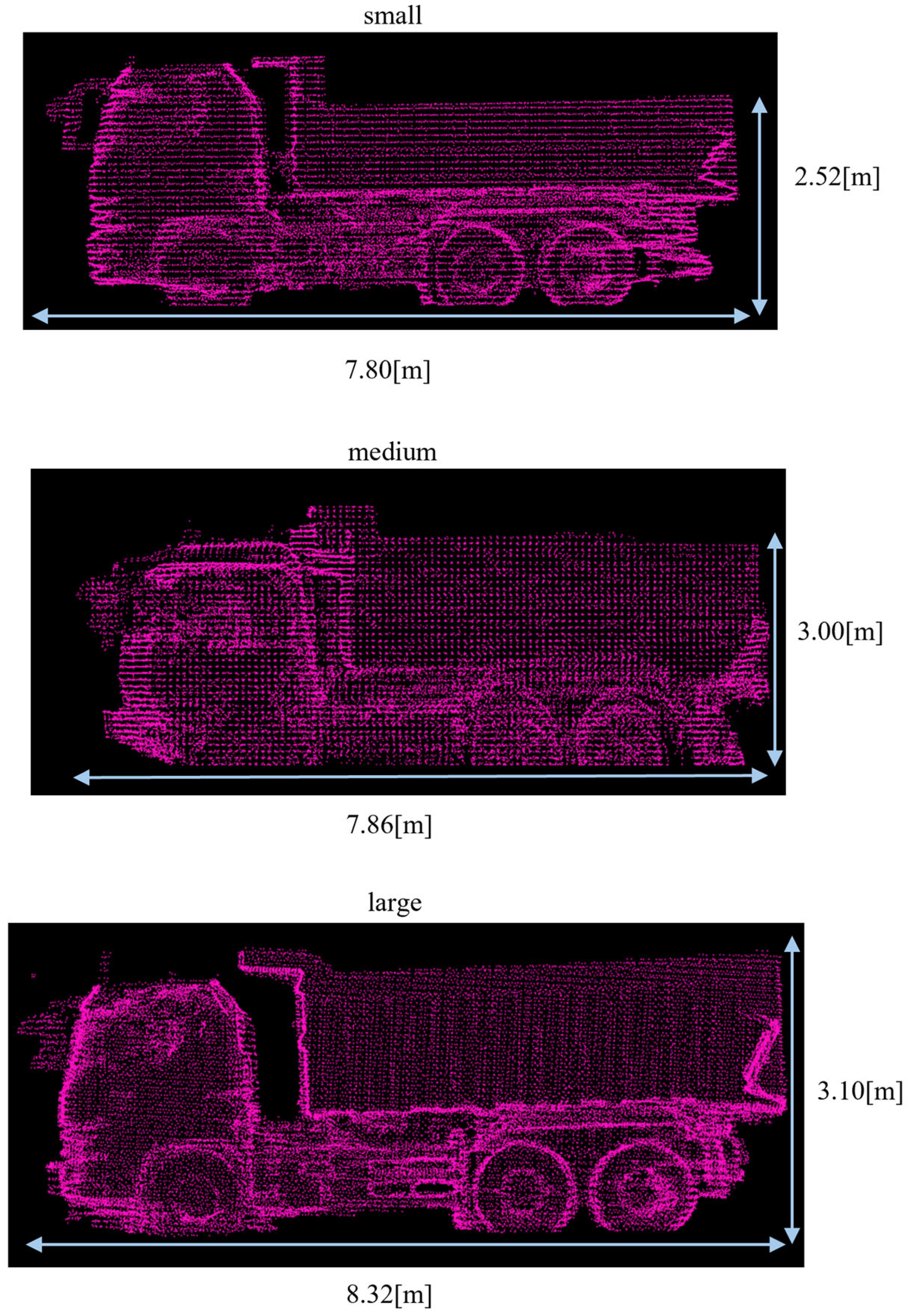

One of the key challenges at actual construction sites is that the specifications of dump trucks are not always known in advance. Because dump trucks from various contractors enter and leave construction sites, it is difficult for the automatic loading system to fully manage their specifications. This situation raises two issues. First, size classification is required to estimate the loading capacity of each truck. Second, pose estimation becomes more difficult due to the increased variability in truck shape within each size category.

Regarding the second issue, Figure 2 illustrates the local shape variations among unknown dump trucks caused by differences in vessel design. Because various contractors retrofit base vehicles with their own vessels, local shape variations occur even within the same size category. Therefore, it is necessary to develop a method that performs both pose estimation and size classification for dump trucks with unknown specifications. To address this challenge, the method must distinguish global differences among size categories for size classification while absorbing local shape variations within each category for pose estimation. In addition, for practical implementation on construction machinery, the method should run fast with limited computational resources.

Based on the above considerations, this study proposes a two-stage method based on the Normal Distributions Transform (NDT) [8], a point cloud registration method. In existing research on the recognition of dump trucks using point clouds, truck poses have often been estimated through point cloud registration between an observed point cloud and a reference point cloud. Among such approaches, Iterative Closest Point (ICP) [9] has been widely used [10,11]. ICP aligns a pair of point clouds by minimizing the distance between each point in the observed point cloud and its nearest neighbor in the reference point cloud. This approach works well when the specifications of the observed dump truck are known in advance and a corresponding reference point cloud can be prepared. However, as discussed above, such assumptions are not always met at actual construction sites. In addition, although deep-learning-based methods have also been investigated for dump trucks [12], collecting sufficient training data for them remains costly. NDT, on the other hand, represents a reference point cloud by a set of normal distributions and minimizes the distance between the observed point cloud and these distributions. Because NDT approximates local shapes with probability distributions, it is expected to be more robust against shape variations between the observed dump truck and the reference point cloud; we therefore use it for pose estimation in the first step. For size classification, NDT alone does not provide a direct solution. Accordingly, in the second step, we extend it to a parallel comparison, exploiting the fact that NDT determines the transformation by optimizing a matching score. Specifically, we prepare multiple reference point clouds that represent different size categories and conduct NDT-based pose estimations in parallel. At least in Japan, dump truck sizes can be roughly grouped into a limited number of predefined categories, so it is practical to prepare the corresponding reference point clouds. Size classification is then performed by selecting the size category that provides the highest optimized score.

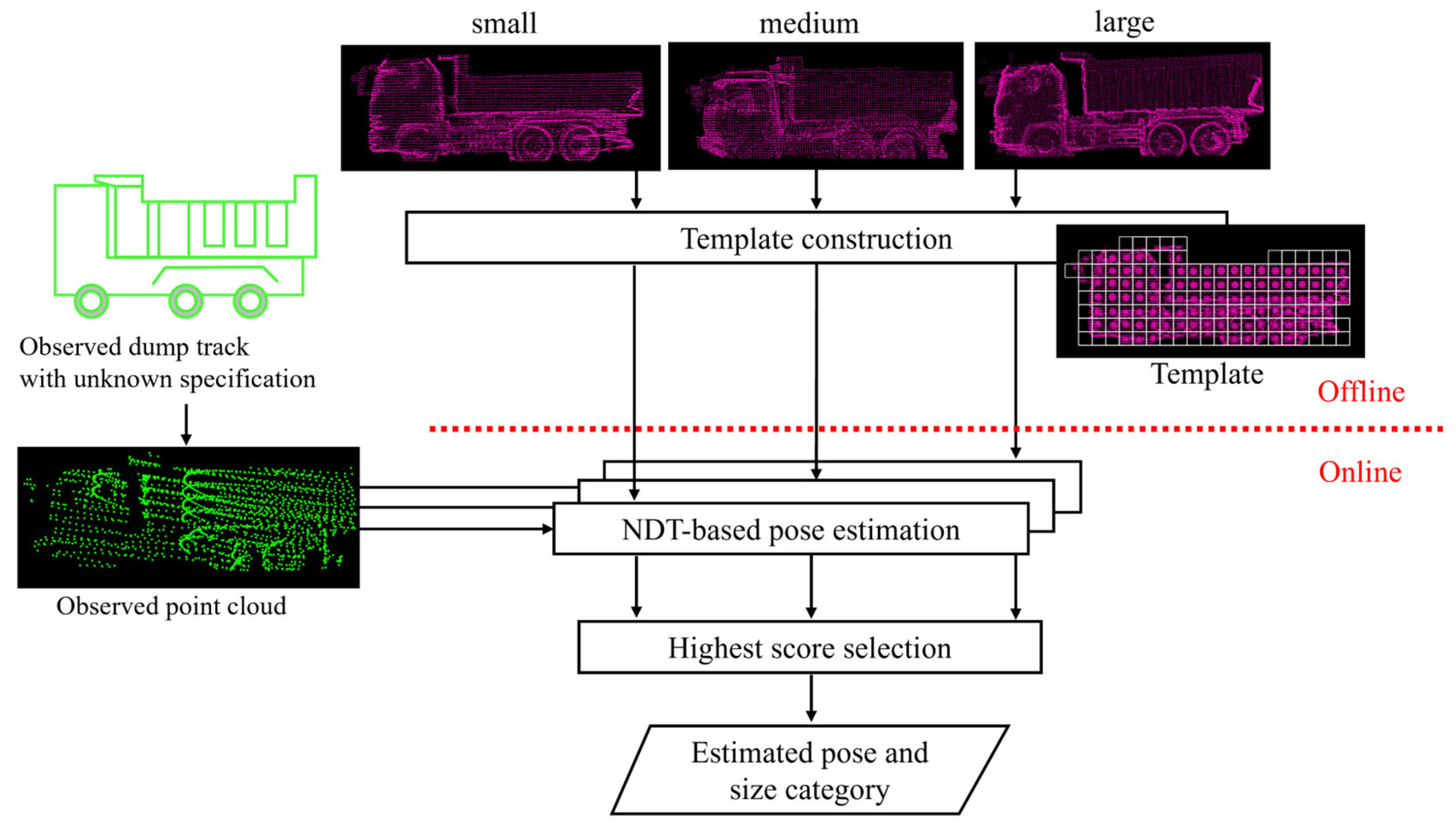

Figure 3 shows the conceptual diagram of the proposed method. First, an NDT template is constructed for each size category of dump trucks. These templates are constructed to distinguish global differences across size categories while absorbing local shape variations within each size category. Then, the pose estimation is performed by matching the input point cloud with the template of each size category by NDT. After that, the size classification is achieved by comparing the optimized scores across size categories and selecting the one with the highest score. In this way, the proposed method achieves robust pose estimation and size classification for unknown dump trucks.

The main contributions of this study are as follows:

Proposal of a two-stage method for pose estimation and size classification of dump trucks with unknown specifications using NDT templates;

Experimental validation of the proposed method using real-world data under various settings of different positions and size categories.

The remainder of this paper is organized as follows. Section 2 reviews existing research on the recognition of dump trucks using point cloud data. Section 3 explains the details of the proposed method. In Sections 4 and 5, the proposed method is evaluated on real-world data for pose estimation and size classification, respectively. Section 6 discusses the findings and limitations of this study. Finally, Section 7 presents the conclusions.

2. Related Work

This study focuses on pose estimation and size classification of dump trucks using point cloud data. Although the proposed framework uses point cloud registration, Section 2.1 first reviews other approaches to pose and shape estimation and explains the motivation for using point cloud registration. Then, Section 2.2 summarizes existing registration methods and describes the advantages of using NDT. Finally, Section 2.3 reviews recognition methods for dump trucks in construction automation and emphasizes that pose and size estimation for dump trucks with unknown specifications has not been sufficiently investigated.

2.1. Pose and Shape Estimation Using Point Cloud

In the broader field of automobile recognition, several methods have been proposed to estimate the pose of automobiles by finding the 2D bounding rectangle that best fits a point cloud. These methods aim to extract pose information from partially observed point clouds of surrounding vehicles captured by onboard LiDAR, using simplified rectangular representations and iterative optimization. Zhang et al. [13] proposed a fitting method that searches for the optimal orientation angle. Liu et al. [14] proposed a method that generates candidate attitude angles from a convex hull and fits a rectangle to the point cloud. Baeg et al. [15] developed a rectangle fitting method that encodes the point cloud into 2D grids and uses the number of points in each cell and the center coordinates. Although such rectangle fitting is fast and robust for partially observed point clouds, it is overly simplified for the accurate pose estimation required in soil loading. In addition to such 2D rectangle fitting approaches, deep-learning-based methods for detecting 3D bounding boxes have also advanced. Whereas the former iteratively fit rectangles to the observed point cloud through optimization, the latter are trained on labeled datasets and directly output bounding boxes for the observed point cloud. For example, Yan et al. [16] proposed SECOND, which applies sparse convolutions to voxel features. Lang et al. [17] proposed PointPillars, which encodes point clouds into vertical pillars and then processes them with 2D convolutions. Shi et al. [18] proposed PV-RCNN, which integrates voxel-based and keypoint-based features in a two-stage framework. Although these methods achieve high detection accuracy, 3D boxes remain a simplified approximation that does not fully capture the detailed geometry of automobiles and is insufficient for soil loading. More precisely, automation tasks such as soil loading require recognizing not only the whole dump truck but also its detailed parts, such as the vessel. In contrast, because point cloud registration matches the observed point cloud with a reference point cloud or template, it helps to recognize these detailed parts of the dump truck.

Apart from the above simplified rectangle or box detection approaches, some studies have aimed to recognize more detailed automobile size and geometry. Zhang et al. [19] classified automobiles using aerial LiDAR data based on differences in height and overall shape. Kraemer et al. [20] developed a method that estimates vehicle shape using polylines constrained by free-space information. In addition, Kraemer et al. [21] employed multi-layer laser scanners and used ICP-based registration to estimate both motion and shape through point cloud accumulation. Monica et al. [22] introduced a recurrent-neural-network-based approach that estimates shape and pose from sequential LiDAR data. Other approaches, such as that of Ding et al. [23], use multiple monocular cameras and convolutional neural networks to detect vehicle keypoints and estimate 3D geometry. Monica et al. [24] further extended shape estimation by converting stereo depth maps into point clouds using Pseudo-LiDAR++. Although these methods achieve detailed shape reconstruction with various representations, such as polylines, keypoints, and accumulated point clouds, they typically assume dense observations of the vehicle. In contrast, in the setup assumed in this study, only a partial point cloud of a dump truck is observed by LiDAR. Moreover, computational efficiency is critical for practical operation.

In summary, methods that detect vehicles as 2D rectangles or 3D bounding boxes are too simplified, whereas those that aim at more detailed shape estimation incur high computational cost. In contrast, because this study focuses on dump trucks, representative templates can be prepared in advance. Therefore, the proposed method employs point cloud registration to match the observed point cloud with such templates. More precisely, the proposed method performs NDT-based pose estimation in parallel with multiple normal distribution templates that represent different dump truck size categories. By comparing the NDT-based scores, our method classifies the size of the dump truck in a computationally efficient way.

2.2. Methods of Point Cloud Registration

As described in Section 2.1, point cloud registration is used for pose estimation of dump trucks in this study. Point cloud registration methods estimate the pose by searching for the coordinate transformation that aligns the observed point cloud with a reference point cloud. Among them, one of the most representative and traditional approaches is ICP, which aligns an observed point cloud with a reference point cloud by iteratively searching for nearest-neighbor points [9]. In NDT, on the other hand, the reference point cloud is divided into voxels at uniform intervals, and the distribution of the reference points within each voxel is modeled as a normal distribution. Matching is then performed by minimizing the Mahalanobis distance between each transformed observed point and the corresponding normal distribution [8]. Magnusson et al. [25] compared the performance of ICP and NDT in terms of robustness to the initial pose and computation time. In their experiments, NDT converged from a larger range of initial poses and ran faster than ICP. In addition, an extension of ICP called Generalized-ICP (GICP) [26] has been proposed. In GICP, each point in both the reference and observed point clouds is assumed to be drawn from its own normal distribution. Although GICP has been reported to achieve higher accuracy than both ICP and NDT, it requires more computation time than NDT.

In the assumed setup in this study, the specifications of the observed dump trucks are unknown, and it is impossible to prepare a reference point cloud of the same vehicle. Therefore, local shape variations within the same size category become a challenge in the case of ICP, which minimizes the distance between individual points. In contrast, GICP and NDT can handle this issue by modeling the reference point cloud not as discrete points but as spatial distributions. Due to its computational efficiency, this study employs NDT and utilizes it for both pose estimation and size classification.

In addition to the above traditional methods, several learning-based registration methods have been proposed with the development of deep learning. Aoki et al. [27] proposed PointNetLK, which integrates PointNet [28] with a modified Lucas–Kanade algorithm for point cloud registration. Wang and Solomon [29] proposed Deep Closest Point, which employs PointNet or DGCNN [30] to extract point features and applies attention-based soft matching to estimate rigid transformations. Although these deep-learning-based methods achieve higher accuracy than traditional methods, they are difficult to train: collecting sufficient labeled data requires considerable cost, and their generalization to out-of-domain data, such as point clouds captured by different LiDAR sensors, is limited. In contrast, an NDT template can be constructed as long as one representative dump truck point cloud is available for each size category, which makes the implementation more practical.

2.3. Recognition Methods of Dump Trucks in Construction Automation

To determine the appropriate loading position, the automatic loading system requires pose estimation of the dump truck. One possible approach is to equip the dump truck with sensors or markers in advance and use this information for pose estimation [31,32]. However, as mentioned earlier, because dump trucks from various contractors operate at actual construction sites, such information is not always available. Apart from this approach, various studies have investigated methods based on point cloud data. Stentz et al. [33] estimated the truck pose by fitting planar regions extracted from the point cloud to a six-plane dump truck model. Phillips et al. [34] generated multiple pose hypotheses of a dump truck in advance and then performed pose estimation by evaluating their likelihood against the acquired point cloud data. Lee et al. [10] proposed a two-stage pose estimation method that consists of an initial transformation using a hexahedral truck model and a refinement using ICP. Sugasawa et al. [11] estimated the poses of dump trucks using ICP with LiDAR mounted on an excavator. An et al. [12] developed a deep point cloud registration network that fuses local and global features and applied it to pose estimation of a dump truck. All of these methods estimate poses by preparing a template model or reference point cloud in advance and matching the observed point cloud to it. As a result, the vessel area of the dump truck can be identified and utilized for subsequent soil loading.

However, the above methods assume that the dump truck's specifications are known in advance. Therefore, they are not robust to the local shape variations found at actual construction sites, where the specifications of the dump trucks are not always known. Although learning-based registration approaches may be able to address the issue of shape variation, their applicability is limited by the cost of data collection and by the available computational resources. To overcome these limitations, we utilize an NDT-based pose estimation method. The key point is that NDT represents reference dump trucks as normal distribution templates, which absorb local shape variations in the dump trucks and can be evaluated fast enough for practical operation.

3. Method

3.1. Overview

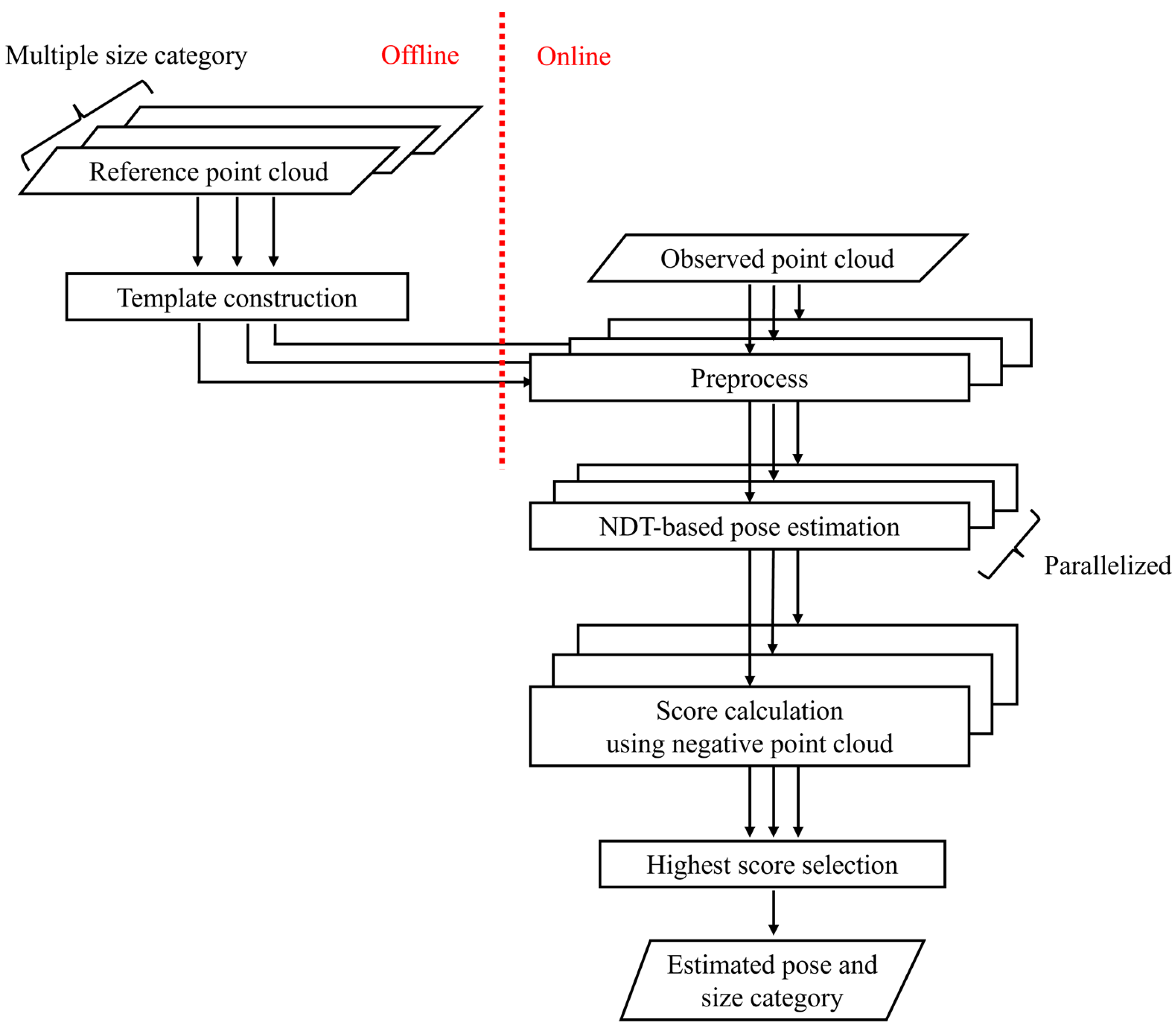

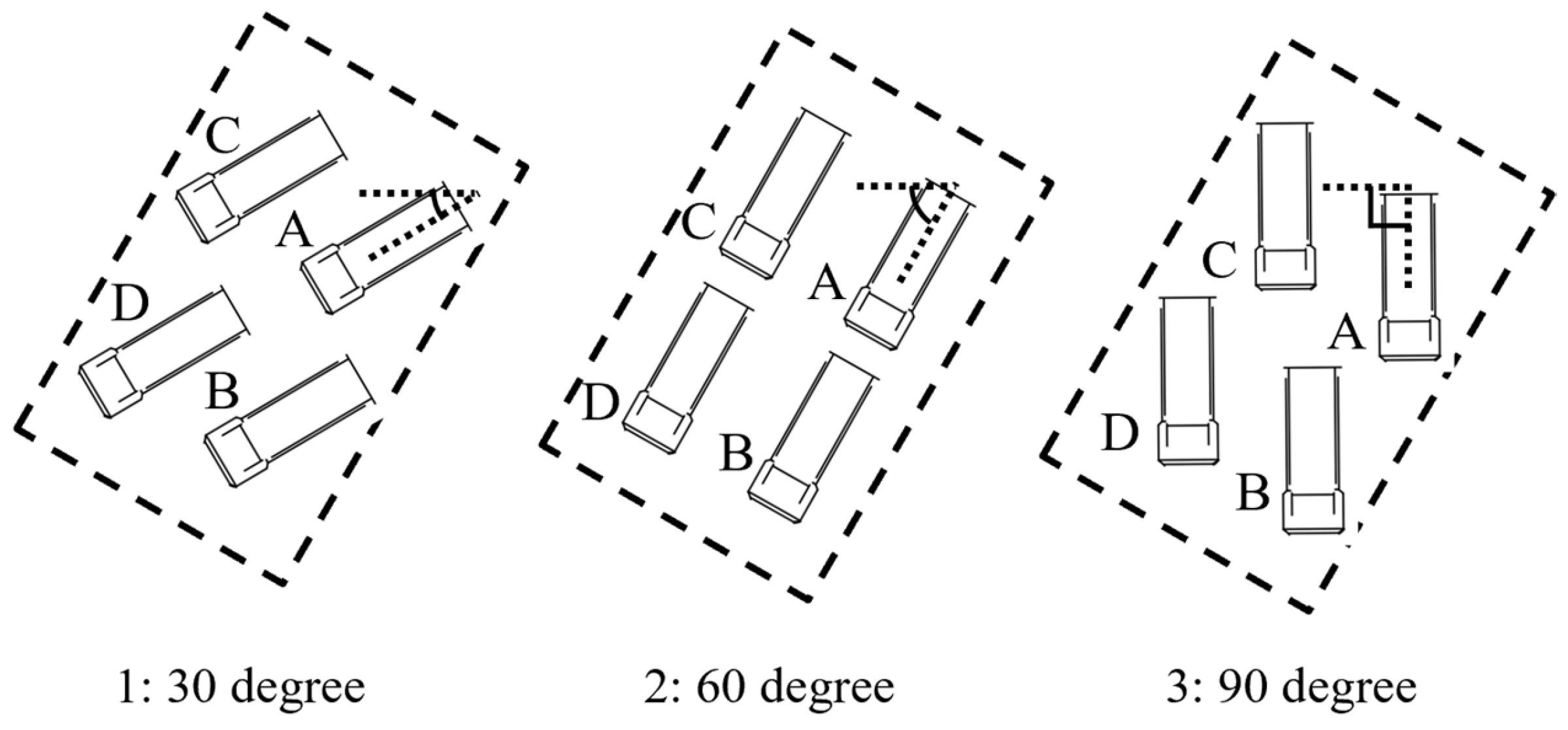

Figure 4 shows the pipeline of the proposed method. In advance, a set of templates is constructed from reference point clouds, one for each size category (Section 3.2). During online operation, the point cloud of the dump truck is first observed by the LiDAR sensors mounted on the wheel loader. The observed point cloud is then preprocessed with a rectangle fitting method to obtain an initial transformation for the subsequent NDT process (Section 3.3). Next, NDT iteratively updates the transformation parameters by matching the observed point cloud with the pre-constructed templates (Section 3.4). The preprocessing and NDT-based pose estimation are performed in parallel for multiple templates. After the pose estimation for each size category, the method compares scores designed for size classification and selects the category with the highest value (Section 3.5). More precisely, we introduce negative point clouds into the conventional NDT score to correctly distinguish between different size categories.

In this study, pose estimation is formulated as the problem of estimating a coordinate transformation that aligns the observed point cloud with a template whose pose is already known. Because both the wheel loader and the dump truck are positioned horizontally on flat ground at the construction site, we consider the 2-D transformation parameter $\mathbf{p}$ defined as follows:

$$\mathbf{p} = \begin{bmatrix} t_x & t_y & \theta \end{bmatrix}^\top \tag{1}$$

where $t_x$ and $t_y$ represent translational parameters along the x-axis and y-axis, respectively, and $\theta$ denotes the yaw angle. When a point $(x, y, z)$ in the observed point cloud is transformed by this transformation parameter $\mathbf{p}$, the position of the point after the transformation $(x', y', z')$ is expressed as follows:

$$\begin{bmatrix} x' \\ y' \\ z' \end{bmatrix} = \begin{bmatrix} \cos\theta & -\sin\theta & 0 \\ \sin\theta & \cos\theta & 0 \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} x + t_x - c_x \\ y + t_y - c_y \\ z \end{bmatrix} + \begin{bmatrix} c_x \\ c_y \\ 0 \end{bmatrix} \tag{2}$$

where $(c_x, c_y)$ indicates the center of the template. In other words, the transformation is defined as a translation in the xy-plane and a rotation around the z-axis that passes through the center of the template.
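As an illustration, the transformation can be sketched in NumPy. This is a minimal sketch that assumes the translate-then-rotate-about-the-template-center form (translation applied first, then a yaw rotation about the vertical axis through $(c_x, c_y)$); the function name `transform_points` is hypothetical.

```python
import numpy as np


def transform_points(points, p, center):
    """Apply p = (t_x, t_y, theta) to an (N, 3) point array.

    Assumed form: translate in the xy-plane, then rotate by theta about the
    vertical axis passing through the template center (c_x, c_y).
    The z-coordinate is left unchanged.
    """
    t_x, t_y, theta = p
    c = np.array([center[0], center[1], 0.0])
    rot = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                    [np.sin(theta), np.cos(theta), 0.0],
                    [0.0, 0.0, 1.0]])
    # Translate, move the rotation axis to the origin, rotate, move back.
    shifted = np.asarray(points, dtype=float) + np.array([t_x, t_y, 0.0]) - c
    return shifted @ rot.T + c
```

Because only three parameters are involved, the same function can be reused for every template by passing that template's center.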

3.2. Construction of Normal Distribution Template

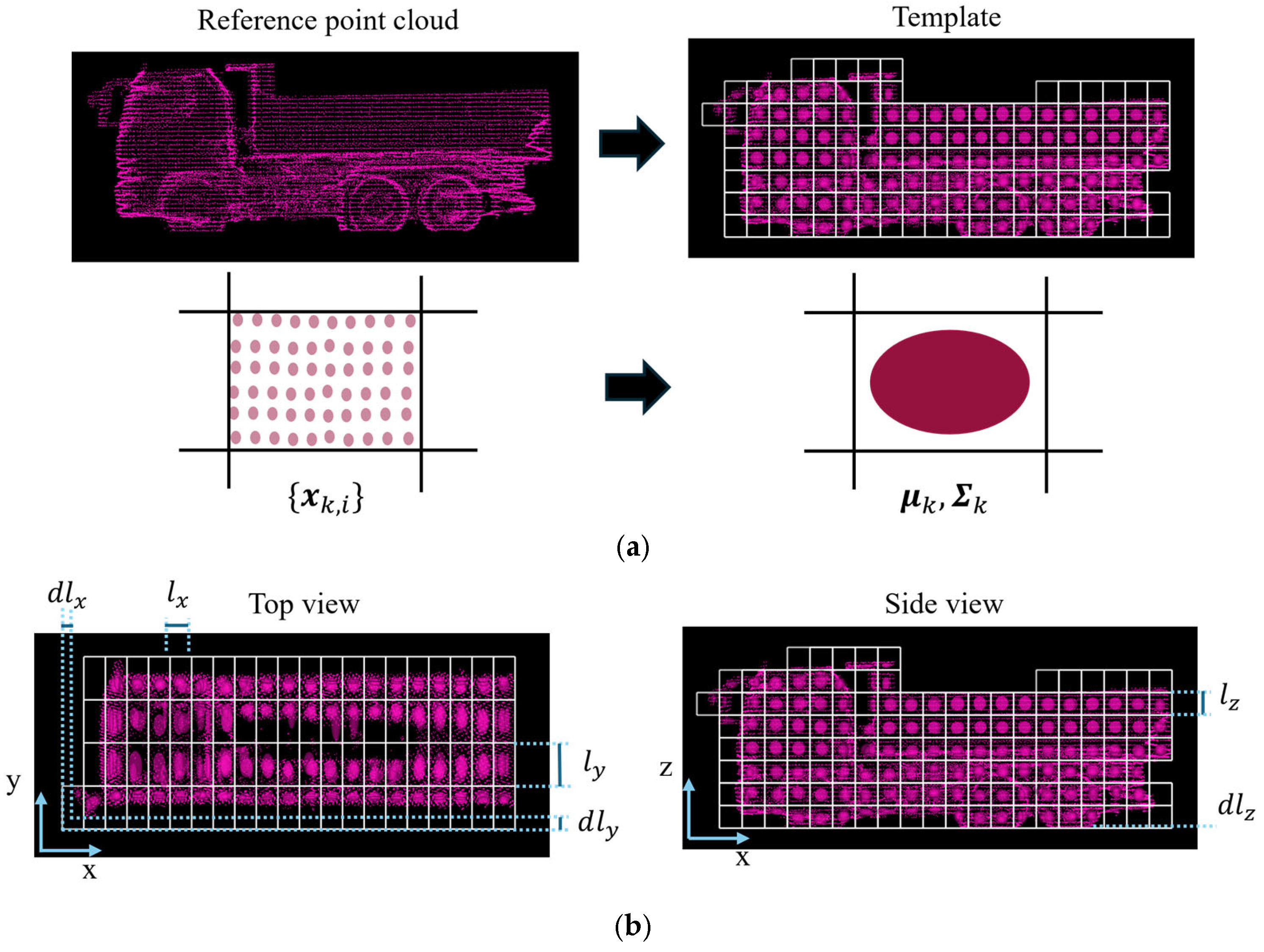

Before the online operation, normal distribution templates are constructed for the different size categories using reference point clouds. These reference point clouds represent the typical shape of dump trucks in each category and are prepared in advance. Specifically, they are created by merging point clouds of a dump truck captured from all directions. We emphasize that the dump trucks represented by the reference point clouds are not necessarily identical to those observed during actual operation. Local shape variations may exist between the reference and observed dump trucks, even within the same size category.

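The following part describes the process of constructing a single template from a reference point cloud. First, the reference point cloud is divided into a grid of voxels. Then, the mean vector $\boldsymbol{\mu}_k$ and covariance matrix $\boldsymbol{\Sigma}_k$ are computed for the subset of points within voxel $k$ as follows:

$$\boldsymbol{\mu}_k = \frac{1}{m_k}\sum_{j=1}^{m_k} \mathbf{y}_j, \qquad \boldsymbol{\Sigma}_k = \frac{1}{m_k - 1}\sum_{j=1}^{m_k} (\mathbf{y}_j - \boldsymbol{\mu}_k)(\mathbf{y}_j - \boldsymbol{\mu}_k)^\top$$

where $m_k$ represents the number of points within voxel $k$, and $\mathbf{y}_j$ ($j = 1, \ldots, m_k$) represents the $j$-th point within voxel $k$.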
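A minimal sketch of the per-voxel statistics, assuming the unbiased $1/(m_k - 1)$ normalization for the covariance; the helper name `voxel_statistics` is hypothetical.

```python
import numpy as np


def voxel_statistics(voxel_points):
    """Mean vector and covariance matrix of the points in one voxel.

    voxel_points: (m, 3) array with m >= 2. np.cov with rowvar=False treats
    each row as one observation; its default ddof=1 gives the 1/(m - 1)
    normalization (an assumption about the exact estimator).
    """
    y = np.asarray(voxel_points, dtype=float)
    mu = y.mean(axis=0)
    sigma = np.cov(y, rowvar=False)
    return mu, sigma
```

Voxels with very few points, or points lying on a plane, yield near-singular covariances. Practical NDT implementations typically skip such voxels or inflate the smallest eigenvalues before inverting $\boldsymbol{\Sigma}_k$.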

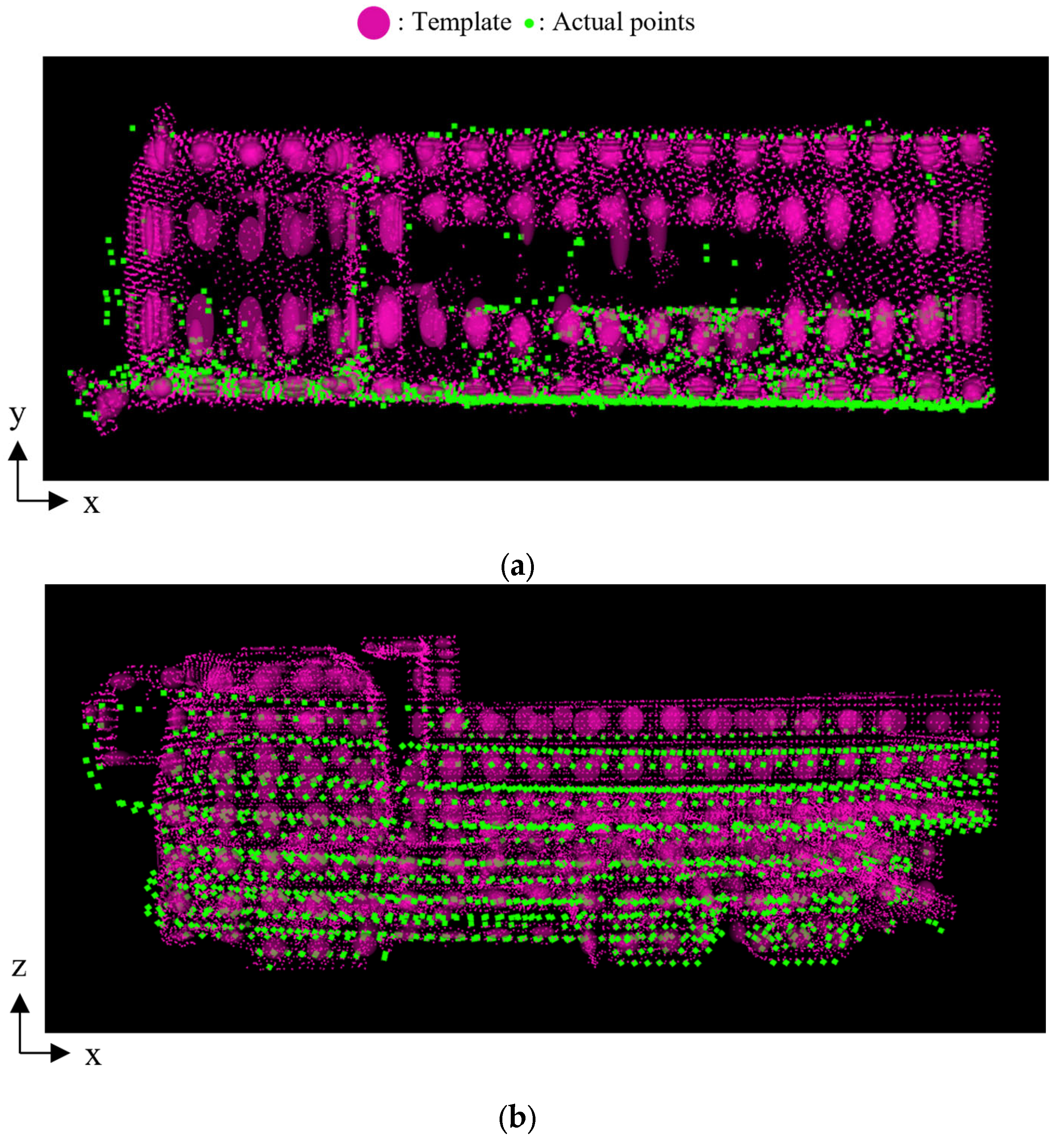

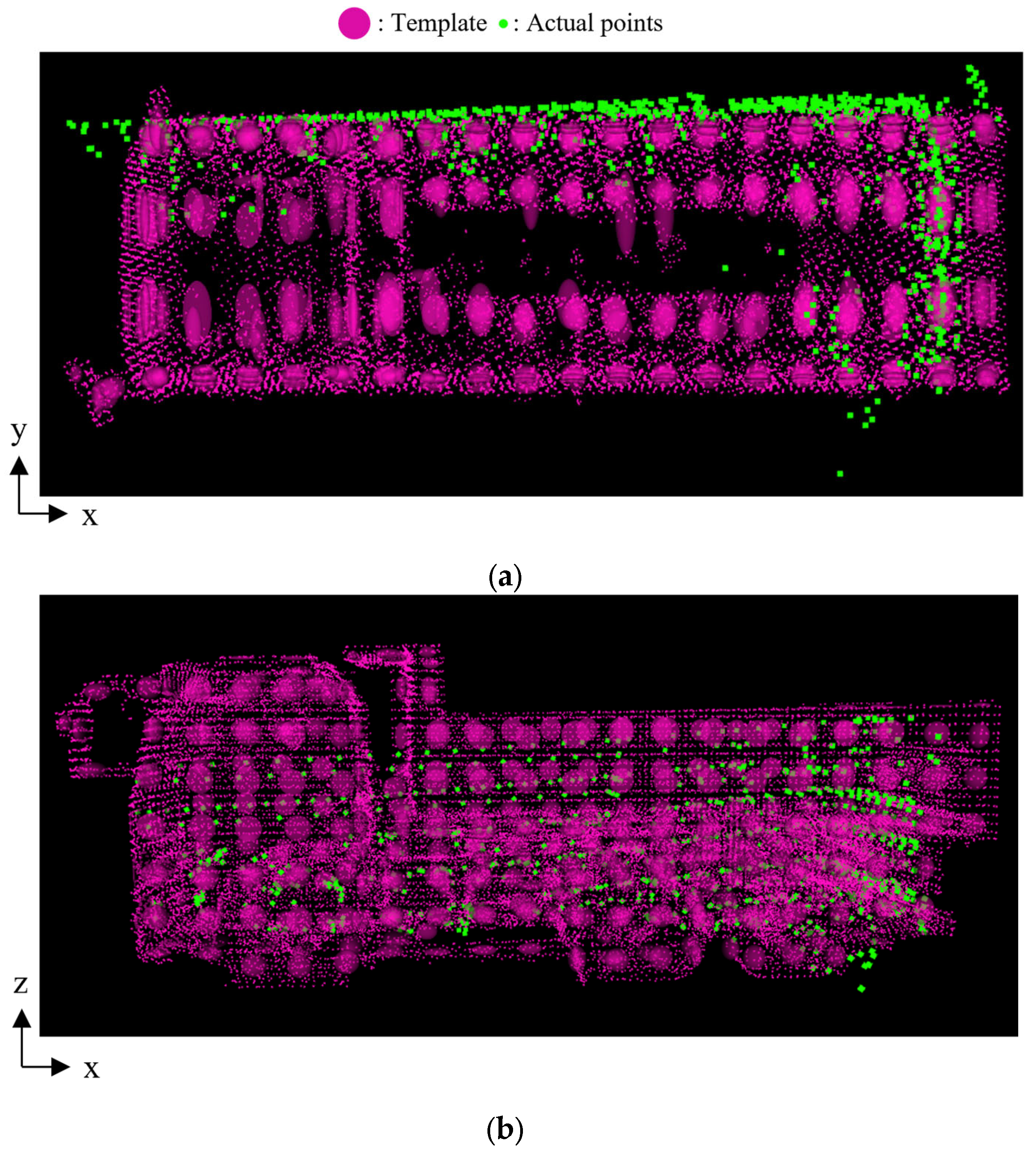

Figure 5a illustrates the reference point cloud and the constructed template. As shown in Figure 5a, each ellipsoid represents the 95% confidence region of the normal distribution in a voxel. In addition, Figure 5b illustrates how the template is divided into a voxel grid. The voxel grid is defined by six parameters: the voxel sizes ($l_x$, $l_y$, $l_z$) and the offsets ($o_x$, $o_y$, $o_z$). As an example, the process of voxel placement along the x-axis is as follows:

The point with the smallest x-coordinate is extracted from the reference point cloud.

The starting line of the grid is then determined by subtracting the offset $o_x$ from the x-coordinate of the extracted point.

From this starting point, the space is divided along the x-axis at intervals of $l_x$.

Figure 5. Visualization of the template. In this case, ($l_x$, $l_y$, $l_z$) is (0.4, 0.8, 0.4) [m] and ($o_x$, $o_y$, $o_z$) is (0.2, 0.2, 0.0) [m]. (a) Reference point cloud and constructed template. (b) Top view and side view of the template with associated parameters.


The same process is applied to the y-axis and z-axis using the voxel sizes $l_y$ and $l_z$ and the offsets $o_y$ and $o_z$, respectively.
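The voxel placement steps above can be sketched as a per-axis index computation: each axis starts at its minimum coordinate minus the offset and is split at intervals of the voxel size. This is a minimal illustration; the function name `assign_voxels` and its dict-of-arrays output are hypothetical choices.

```python
import numpy as np
from collections import defaultdict


def assign_voxels(points, sizes, offsets):
    """Group reference points into voxels defined by sizes and offsets.

    sizes = (l_x, l_y, l_z), offsets = (o_x, o_y, o_z). For each axis the
    grid starts at (minimum coordinate - offset) and is divided at intervals
    of the voxel size. Returns {(i, j, k): (m, 3) array of points}.
    """
    pts = np.asarray(points, dtype=float)
    start = pts.min(axis=0) - np.asarray(offsets, dtype=float)
    idx = np.floor((pts - start) / np.asarray(sizes, dtype=float)).astype(int)
    voxels = defaultdict(list)
    for row, pt in zip(idx, pts):
        voxels[tuple(int(v) for v in row)].append(pt)
    return {key: np.array(group) for key, group in voxels.items()}
```

For example, with the Figure 5 settings ($l_x$, $l_y$, $l_z$) = (0.4, 0.8, 0.4) m and ($o_x$, $o_y$, $o_z$) = (0.2, 0.2, 0.0) m, each group returned here would then be passed to the per-voxel mean and covariance computation.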

3.3. Preprocess

To avoid convergence to an unfavorable local optimum in the NDT-based pose estimation, a preprocessing step roughly estimates the truck's pose before NDT. Although applications that handle large-scale point clouds often require downsampling or compression [35,36] to improve data transfer speed or execution efficiency, this study uses only a single frame and therefore does not include such processing. The preprocessing consists of the following two steps:

Filtering of the observed point cloud based on the predefined parking area and height threshold.

Rectangle fitting to approximate the truck’s shape with a rectangle for estimation of an initial transformation.

The details of each step are explained in the following parts.

3.3.1. Filtering Based on Parking Area and Height

First, the observed point cloud is filtered based on point positions. Specifically, points outside the predetermined parking area are removed. In addition, to remove noise and ground points, only points above a certain height threshold are retained. This process ensures that the filtered point cloud primarily represents the dump truck.
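A minimal sketch of this filtering step, assuming the parking area is an axis-aligned rectangle in the sensor frame; the function name `filter_points` and its parameters are hypothetical.

```python
import numpy as np


def filter_points(points, area_min, area_max, z_min):
    """Keep points inside an xy parking area and above a height threshold.

    area_min, area_max: (x, y) corners of the parking area, assumed to be
    axis-aligned in the sensor frame. z_min: height threshold in meters,
    used to drop ground points and low noise.
    """
    pts = np.asarray(points, dtype=float)
    lo = np.asarray(area_min, dtype=float)
    hi = np.asarray(area_max, dtype=float)
    in_area = np.all((pts[:, :2] >= lo) & (pts[:, :2] <= hi), axis=1)
    above = pts[:, 2] > z_min
    return pts[in_area & above]
```

A parking area that is not axis-aligned could be handled by first transforming the points into the area's own frame.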

3.3.2. Initial Transformation Using Rectangle Fitting

After the filtering, the rectangle fitting method [13] is applied to the filtered point cloud to obtain a 2-D bounding rectangle. This rectangle approximates the horizontal projection of the filtered points.
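For intuition, a search-based rectangle fit can be sketched as follows. This is a simplified stand-in that scores each candidate orientation with a bounding-area criterion. It is not necessarily the exact criterion or search used by the cited method, and the name `fit_rectangle` is hypothetical.

```python
import numpy as np


def fit_rectangle(points_xy, angle_step_deg=1.0):
    """Search-based rectangle fitting with a minimum-area criterion.

    For each candidate angle in [0, 90) degrees, the points are projected
    onto the rotated axes, and the extents define a rectangle; the angle with
    the smallest rectangle area is kept.
    Returns (center_xy, yaw of the long side [rad], length, width).
    """
    pts = np.asarray(points_xy, dtype=float)
    best = None
    for deg in np.arange(0.0, 90.0, angle_step_deg):
        a = np.deg2rad(deg)
        e1 = np.array([np.cos(a), np.sin(a)])
        e2 = np.array([-np.sin(a), np.cos(a)])
        c1, c2 = pts @ e1, pts @ e2
        area = (c1.max() - c1.min()) * (c2.max() - c2.min())
        if best is None or area < best[0]:
            best = (area, a, e1, e2, c1.min(), c1.max(), c2.min(), c2.max())
    _, a, e1, e2, lo1, hi1, lo2, hi2 = best
    # Center expressed back in the original frame (e1, e2 are orthonormal).
    center = e1 * (lo1 + hi1) / 2 + e2 * (lo2 + hi2) / 2
    len1, len2 = hi1 - lo1, hi2 - lo2
    if len1 >= len2:
        return center, a, len1, len2
    return center, a + np.pi / 2, len2, len1
```

The returned yaw is only defined modulo 180 degrees, which is exactly the forward-backward ambiguity that the next step has to resolve.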

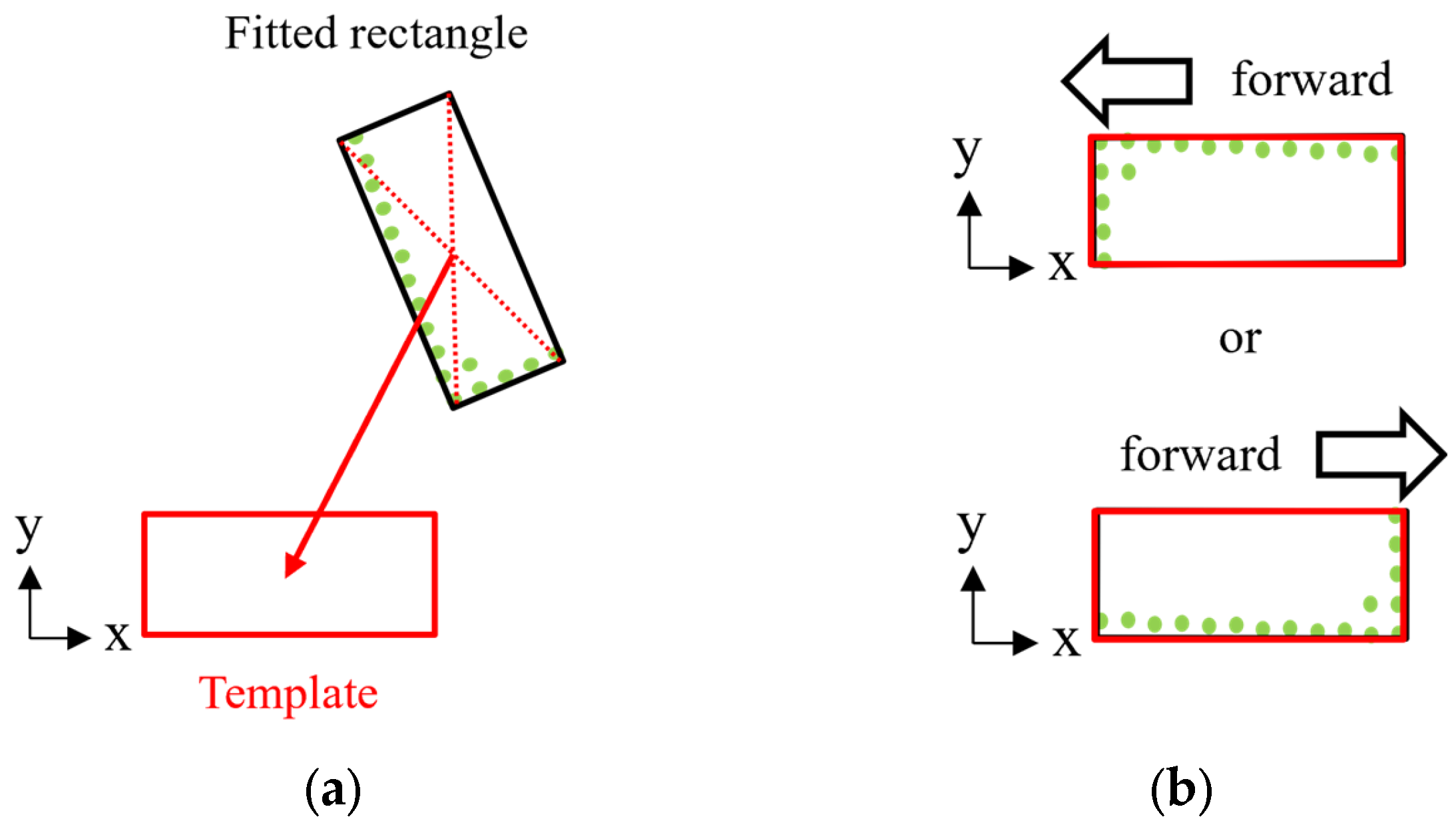

Figure 6 illustrates the process for deriving the initial transformation. Here, the coordinate system is defined such that the long side of the template is aligned with the x-axis. First, the center of the bounding rectangle is computed from its four vertices. A translational transformation is then applied to align this center with the center of the template, as shown in Figure 6a. Next, a rotational transformation is performed to align the orientation of the bounding rectangle with that of the template. However, because this initial transformation is based only on the geometric shape of the rectangle, it cannot distinguish between the forward and backward orientations of the dump truck, as shown in Figure 6b. To address this ambiguity, two initial transformations are used in the subsequent NDT-based pose estimation, one being the other rotated by 180 degrees. In other words, one corresponds to the correct orientation and the other to the reversed orientation.
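The two initial transformations can be sketched as follows. This assumes that the transformation translates first and then rotates about the template center, and that the template's long side lies on the x-axis. Under those assumptions the translation is simply the offset between the two centers, and the yaw undoes the rectangle's orientation. The function names are hypothetical.

```python
import numpy as np


def wrap_angle(a):
    """Wrap an angle to the interval [-pi, pi)."""
    return (a + np.pi) % (2 * np.pi) - np.pi


def initial_transforms(rect_center, rect_yaw, template_center):
    """Two candidate initial parameters p = (t_x, t_y, theta).

    The translation moves the fitted rectangle's center onto the template
    center; the rotation aligns the rectangle's long side with the x-axis.
    The second candidate is the first rotated by 180 degrees, covering the
    forward/backward ambiguity of the rectangle.
    """
    t_x, t_y = np.asarray(template_center, float) - np.asarray(rect_center, float)
    theta = wrap_angle(-rect_yaw)
    return [(t_x, t_y, theta), (t_x, t_y, wrap_angle(theta + np.pi))]
```

Because each size category has its own template center, these candidates would be computed once per category from the same fitted rectangle.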

3.4. NDT-Based Pose Estimation

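In this study, we assume that the yaw angle is estimated with sufficiently high accuracy in the preprocessing, and only the translational parameters are updated in the NDT-based pose estimation to reduce computational cost. First, the observed point cloud is transformed by a parameter $\mathbf{p}$ according to Equation (2). The probability density function is then evaluated for all transformed points $\mathbf{x}'_i$ ($i = 1, \ldots, N$) and the voxels in the template. Here, $N$ denotes the total number of points in the point cloud. For each point $\mathbf{x}'_i$, let $k_i$ denote the nearest voxel in the template. Then, the likelihood of $\mathbf{x}'_i$ is expressed as follows:

$$p(\mathbf{x}'_i) = \frac{1}{(2\pi)^{D/2}\sqrt{|\boldsymbol{\Sigma}_{k_i}|}} \exp\left(-\frac{(\mathbf{x}'_i - \boldsymbol{\mu}_{k_i})^\top \boldsymbol{\Sigma}_{k_i}^{-1} (\mathbf{x}'_i - \boldsymbol{\mu}_{k_i})}{2}\right)$$

where $\boldsymbol{\mu}_{k_i}$ and $\boldsymbol{\Sigma}_{k_i}$ denote the mean vector and covariance matrix of voxel $k_i$, and $D$ denotes the dimension of $\mathbf{x}'_i$, which is 3 in this study.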
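The likelihood above is the standard multivariate normal density; a direct NumPy sketch (hypothetical name `point_likelihood`) is shown below. It uses a linear solve rather than an explicit matrix inverse for the Mahalanobis term.

```python
import numpy as np


def point_likelihood(x, mu, sigma):
    """Multivariate normal density of point x under one voxel's N(mu, sigma)."""
    x, mu, sigma = (np.asarray(a, dtype=float) for a in (x, mu, sigma))
    d = x.shape[0]  # dimension D (3 for 3-D points)
    diff = x - mu
    maha = diff @ np.linalg.solve(sigma, diff)  # squared Mahalanobis distance
    norm = (2 * np.pi) ** (d / 2) * np.sqrt(np.linalg.det(sigma))
    return np.exp(-0.5 * maha) / norm
```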

This study employs the NDT formulation proposed by Biber et al. [37], who introduced a mixture of a normal distribution and a uniform distribution. By incorporating this mixture model and applying further approximations, their method improves both robustness and computational efficiency. Following this formulation, the evaluation function (score) for parameter $\mathbf{p}$ can be computed as a sum of exponential functions [38] as follows:

$$s(\mathbf{p}) = -\sum_{i=1}^{N} d_1 \exp\left(-\frac{d_2}{2} (\mathbf{x}'_i - \boldsymbol{\mu}_{k_i})^\top \boldsymbol{\Sigma}_{k_i}^{-1} (\mathbf{x}'_i - \boldsymbol{\mu}_{k_i})\right)$$

where $d_1$ and $d_2$ are constants.
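A minimal sketch of this score, assuming the constants $d_1$ and $d_2$ are derived from the normal/uniform mixture in the way Magnusson's formulation defines them (the same expressions appear, for example, in PCL's NDT implementation). The outlier ratio and voxel resolution passed in are assumptions, nearest voxels are chosen by distance to the voxel mean, and the function names are hypothetical.

```python
import numpy as np


def gaussian_constants(resolution, outlier_ratio=0.55):
    """d1 (< 0) and d2 (> 0) of the Gaussian fit to the mixture's log-likelihood.

    c1 and c2 weight the normal and uniform parts of the mixture.
    """
    c1 = 10.0 * (1.0 - outlier_ratio)
    c2 = outlier_ratio / resolution ** 3
    d3 = -np.log(c2)
    d1 = -np.log(c1 + c2) - d3
    d2 = -2.0 * np.log((-np.log(c1 * np.exp(-0.5) + c2) - d3) / d1)
    return d1, d2


def ndt_score(points, means, covs, d1, d2):
    """s = -sum_i d1 * exp(-d2/2 * e_i^T Sigma^-1 e_i) with nearest-mean voxels."""
    pts = np.asarray(points, dtype=float)
    means = np.asarray(means, dtype=float)
    inv_covs = np.linalg.inv(np.asarray(covs, dtype=float))
    score = 0.0
    for x in pts:
        k = np.argmin(np.linalg.norm(means - x, axis=1))  # nearest voxel
        e = x - means[k]
        q = e @ inv_covs[k] @ e  # squared Mahalanobis distance
        score += -d1 * np.exp(-0.5 * d2 * q)
    return score
```

Since $d_1 < 0$, every term is positive and the score peaks when transformed points sit at voxel means. Unlike the likelihood, each point's contribution is bounded, which limits the influence of outliers.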

To optimize $\mathbf{p}$, the Newton method has generally been used in existing research. In this study, however, we employ the Euler method instead, for two reasons. First, we found that the Newton method exhibits unstable behavior near inflection points, which can lead to divergence in the update of the transformation parameters. Second, the Newton method requires the computation of the Hessian matrix, which results in a high computational cost. Based on these considerations, we employ the Euler method to improve stability and efficiency in NDT-based pose estimation for dump trucks.

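In the Euler method, which updates the parameters by a step along the gradient of the score, the increment $\Delta\mathbf{p}$ of the transformation parameter is given as follows:

$$\Delta\mathbf{p} = \alpha\,\mathbf{g}$$

where $\alpha$ represents the step size and $\mathbf{g}$ is the gradient vector of $s(\mathbf{p})$. Because the preprocessing provides a rough initial transformation and NDT only aims to refine it, we limit the magnitude of $\Delta\mathbf{p}$ by setting $\alpha$ such that

$$\|\Delta\mathbf{p}\| = \alpha\,\|\mathbf{g}\| \le 0.01\ \mathrm{m}$$

This ensures a maximum movement of 0.01 m within one iteration.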
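The translation-only Euler update can be sketched as gradient ascent on the score with a capped step. Several details here are assumptions: the transformation translates first and then rotates about the template center, so $\partial\mathbf{x}'/\partial(t_x, t_y)$ is the first two columns of the rotation matrix; the base step size `alpha0` is hypothetical; and the cap is realized as $\alpha = \min(\alpha_0, 0.01/\|\mathbf{g}\|)$. The function name `euler_refine` is also hypothetical.

```python
import numpy as np


def euler_refine(points, means, covs, d1, d2, p0, center,
                 alpha0=0.01, max_step=0.01, iters=100):
    """Refine (t_x, t_y) by capped gradient ascent on the NDT score; yaw fixed.

    Each step is dp = alpha * g with alpha = min(alpha0, max_step / |g|), so
    the translation moves at most max_step meters per iteration.
    """
    pts = np.asarray(points, dtype=float)
    means = np.asarray(means, dtype=float)
    inv_covs = np.linalg.inv(np.asarray(covs, dtype=float))
    t = np.array(p0[:2], dtype=float)
    theta = p0[2]
    c = np.array([center[0], center[1], 0.0])
    rot = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                    [np.sin(theta), np.cos(theta), 0.0],
                    [0.0, 0.0, 1.0]])
    jac = rot[:, :2]  # d x' / d (t_x, t_y) under the assumed transform
    for _ in range(iters):
        xs = (pts + np.array([t[0], t[1], 0.0]) - c) @ rot.T + c
        g = np.zeros(2)
        for x in xs:
            k = np.argmin(np.linalg.norm(means - x, axis=1))  # nearest voxel
            e = x - means[k]
            w = np.exp(-0.5 * d2 * (e @ inv_covs[k] @ e))
            # ds/dx' = d1 * d2 * w * Sigma^-1 e; chain rule through jac.
            g += jac.T @ (d1 * d2 * w * (inv_covs[k] @ e))
        norm = np.linalg.norm(g)
        if norm < 1e-12:
            break
        t += min(alpha0, max_step / norm) * g
    return np.array([t[0], t[1], theta])
```

No Hessian is formed: each iteration needs only the per-point gradient terms, which is the efficiency argument made for the Euler method above.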

As described in Section 3.1, the preprocessing and the NDT-based pose estimation are performed with multiple templates corresponding to different size categories. In addition, as described in Section 3.3, two different initial transformations are input to the NDT-based pose estimation to determine the correct forward-backward orientation. Therefore, for each observed point cloud, the system conducts NDT-based pose estimation in parallel for all combinations of size categories and forward-backward orientations. In other words, if $M$ represents the number of size categories, the system performs $2M$ NDT-based evaluations in parallel, considering both possible orientations for each category.
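The $2M$ parallel evaluations can be organized as one job per (size category, orientation) pair. In this sketch, `estimate` is a hypothetical placeholder for the per-template NDT routine, and the dictionary layout is an illustrative assumption.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def run_all_hypotheses(estimate, templates, initial_params):
    """Run one NDT estimate per (size category, orientation) pair in parallel.

    estimate(template, p0) -> (p, score) stands in for the NDT routine.
    templates: {category: template}; initial_params: {category: [p_fwd, p_rev]}.
    Returns {(category, orientation_index): (p, score)} with 2M entries.
    """
    jobs = list(product(templates, (0, 1)))
    with ThreadPoolExecutor() as pool:
        futures = {
            (cat, o): pool.submit(estimate, templates[cat], initial_params[cat][o])
            for cat, o in jobs
        }
        return {job: fut.result() for job, fut in futures.items()}
```

Threads are used here for simplicity. If the estimator is pure Python rather than NumPy-heavy, a `ProcessPoolExecutor` (or a compiled implementation) would avoid contention on the interpreter lock.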

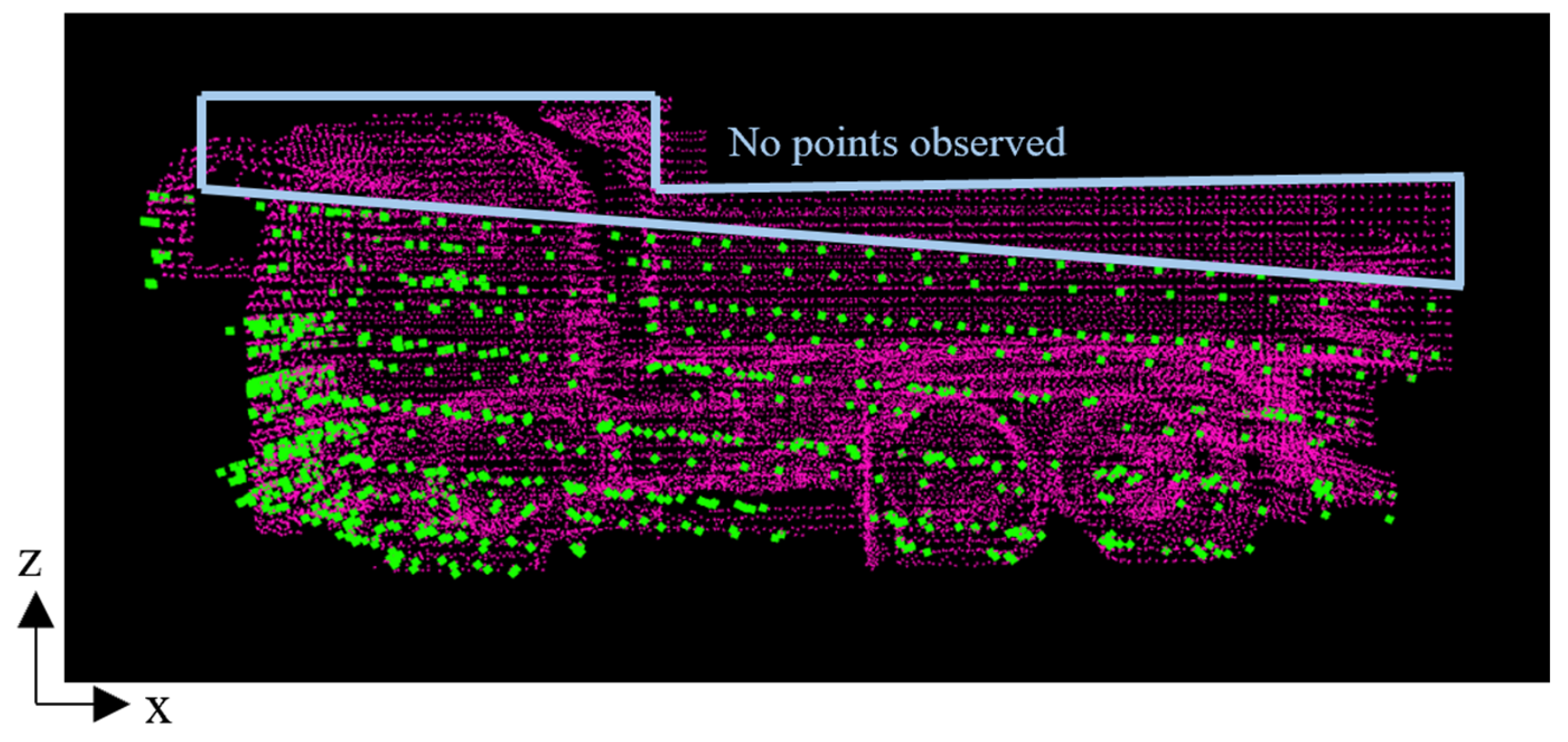

3.5. Size Classification with Negative Point Cloud

For the NDT-based pose estimations conducted in parallel, the system performs a two-step score selection. First, for each size category, it compares the two NDT scores obtained with different initial orientations and selects the higher one. This first step determines the correct forward-backward orientation within each size category. Next, among the NDT results for the different size categories, the system selects the highest score. This second step determines the correct size category. Through this two-step process, the system determines both the forward-backward orientation and the size category of the observed dump truck.
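The two-step selection can be sketched over a dictionary of results keyed by (category, orientation). The function name and data layout are hypothetical; the scores may be conventional NDT scores or scores that include negative points.

```python
def select_pose_and_size(results):
    """Two-step score selection over {(category, orientation): (p, score)}.

    Step 1: within each category, keep the orientation with the higher score.
    Step 2: across categories, keep the category with the highest score.
    """
    best_per_category = {}
    for (cat, orient), (p, score) in results.items():
        if cat not in best_per_category or score > best_per_category[cat][2]:
            best_per_category[cat] = (orient, p, score)
    cat = max(best_per_category, key=lambda c: best_per_category[c][2])
    orient, p, score = best_per_category[cat]
    return cat, orient, p, score
```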

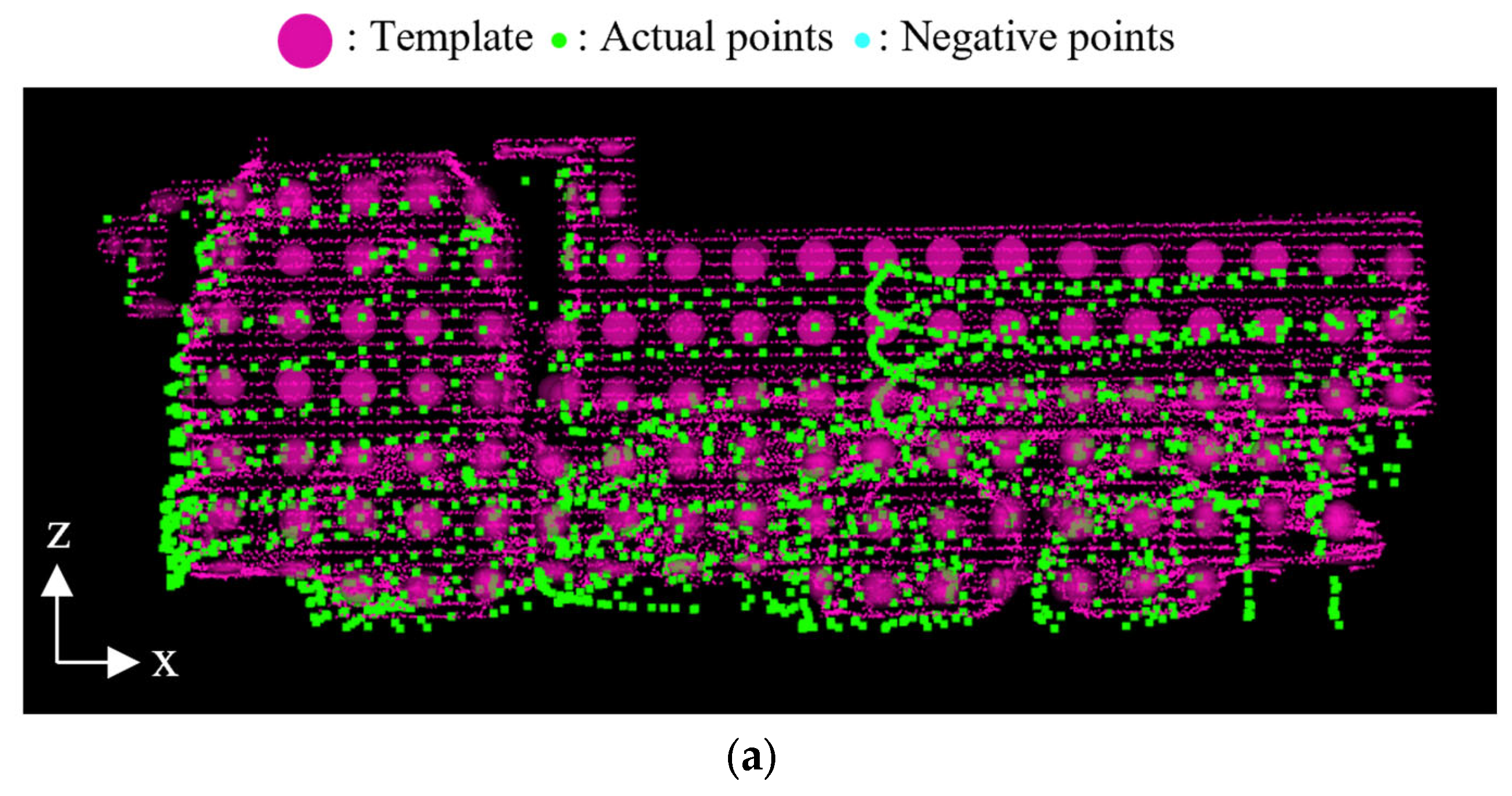

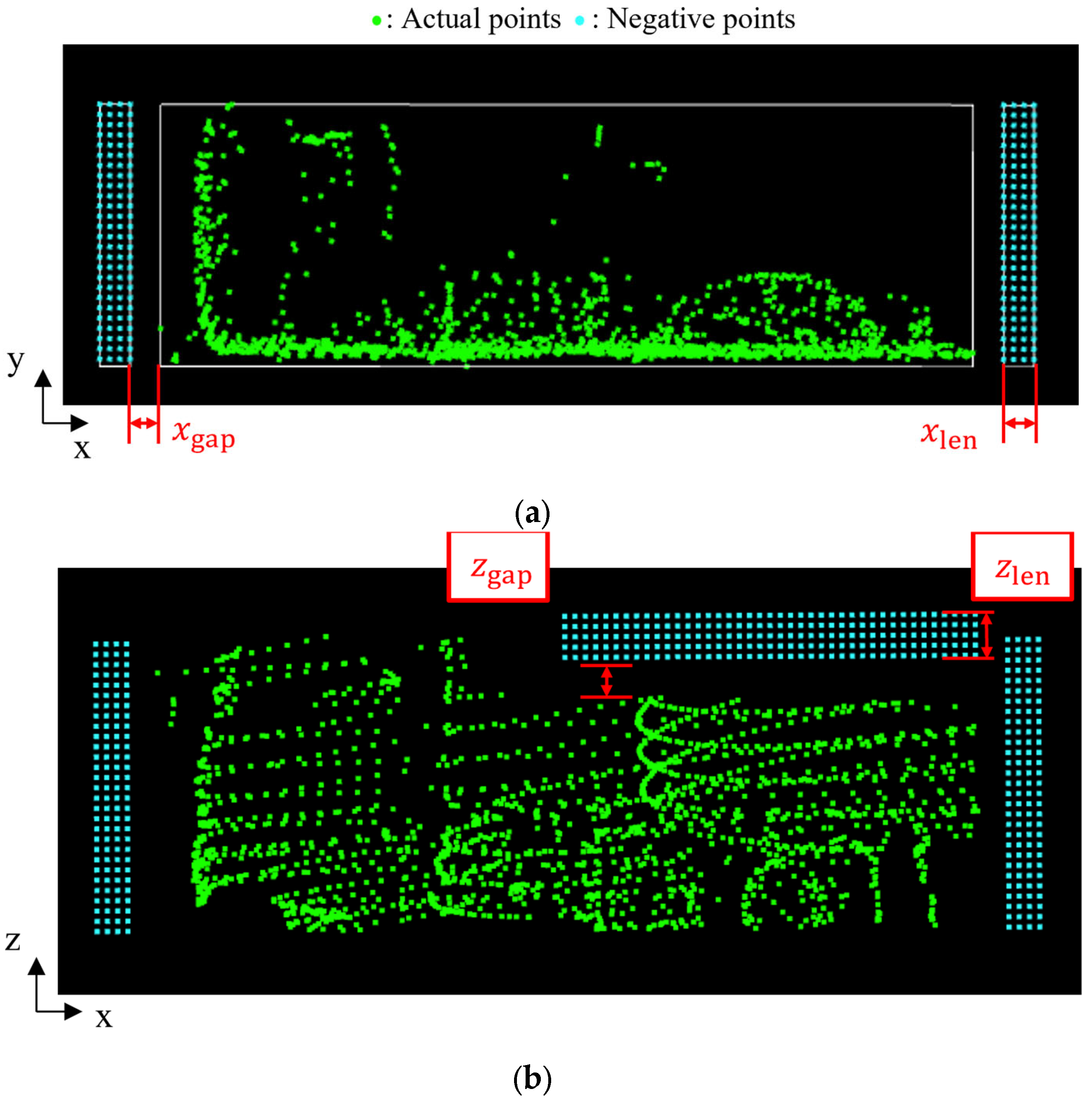

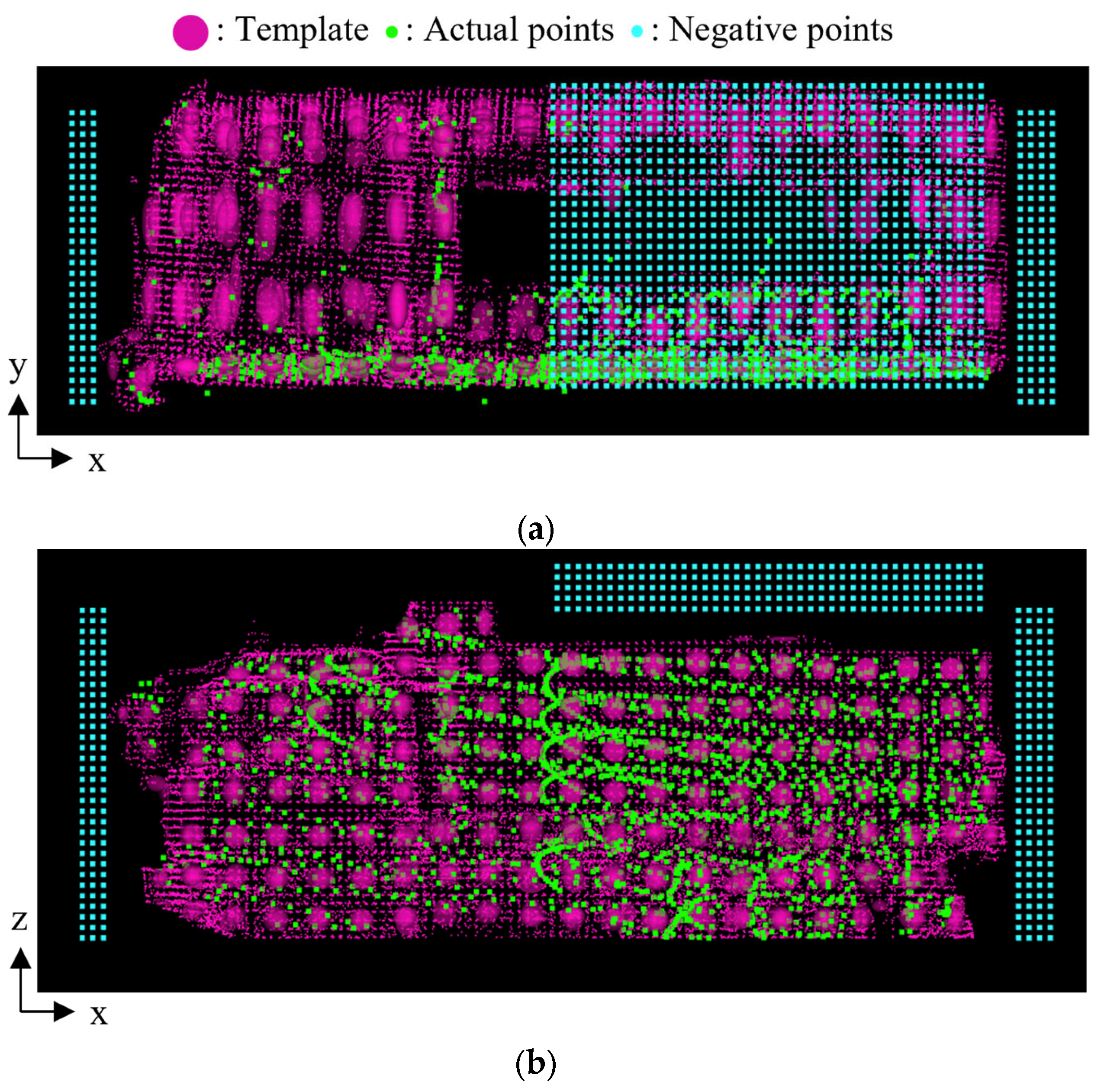

However, in the second step of the score selection, size classification cannot be performed reliably by comparing only the conventional NDT scores. Because NDT maximizes the overlap between the template and the transformed point cloud, it may yield an invalidly high score when the template corresponds to a dump truck larger than the observed one, since all points of the smaller truck can still fall within the distributions of the larger template.

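Figure 7a,b illustrate valid and invalid overlaps between the point cloud and the template, respectively. In the figures, the green points represent the observed point cloud, and the red ellipsoids represent the template. To mitigate this invalid overlap, we propose a method that reduces the score when the template is larger than the actual dump truck. Specifically, in the second step of the score selection, we introduce a virtual "negative" point cloud around the observed point cloud, which overlaps with larger templates, as shown in Figure 7c. In the figure, the blue points represent the negative point cloud. The negative point cloud contributes a negative term when the NDT score is calculated. The NDT score incorporating the negative point cloud is expressed as

$$s_{\mathrm{neg}}(\mathbf{p}) = -\sum_{i=1}^{N} d_1 \exp\left(-\frac{d_2}{2}\,\mathbf{e}_i^\top \boldsymbol{\Sigma}_{k_i}^{-1}\mathbf{e}_i\right) + \sum_{j=1}^{N_{\mathrm{n}}} d_1 \exp\left(-\frac{d_2}{2}\,\tilde{\mathbf{e}}_j^\top \boldsymbol{\Sigma}_{\tilde{k}_j}^{-1}\tilde{\mathbf{e}}_j\right)$$

where $\mathbf{e}_i = \mathbf{x}'_i - \boldsymbol{\mu}_{k_i}$, $\tilde{\mathbf{e}}_j$ is defined likewise for the $j$-th negative point and its nearest voxel $\tilde{k}_j$, and $N$ and $N_{\mathrm{n}}$ represent the number of points in the actual and negative point clouds, respectively. Here, the negative term is incorporated into the score by reversing the sign of the $d_1$ term for the negative points, so that negative points falling inside the template's distributions lower the score.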
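A minimal sketch of the score with negative points, assuming that observed points add their usual NDT terms and negative points subtract terms of the same form; nearest voxels are chosen by distance to the voxel mean, and all names are hypothetical.

```python
import numpy as np


def ndt_terms(points, means, covs, d1, d2):
    """Per-point terms -d1 * exp(-d2/2 * e^T Sigma^-1 e), nearest voxel by mean."""
    means = np.asarray(means, dtype=float)
    inv_covs = np.linalg.inv(np.asarray(covs, dtype=float))
    terms = []
    for x in np.asarray(points, dtype=float):
        k = np.argmin(np.linalg.norm(means - x, axis=1))
        e = x - means[k]
        terms.append(-d1 * np.exp(-0.5 * d2 * (e @ inv_covs[k] @ e)))
    return np.array(terms)


def score_with_negative(points, negative_points, means, covs, d1, d2):
    """Observed points raise the score; negative points inside the template lower it."""
    pos = ndt_terms(points, means, covs, d1, d2).sum()
    if len(negative_points) == 0:
        return pos
    return pos - ndt_terms(negative_points, means, covs, d1, d2).sum()
```

In a toy case where a small truck is observed, a larger template covering the same points scores the same on observed points alone. It loses once a negative point placed beyond the truck lands in one of its extra voxels.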

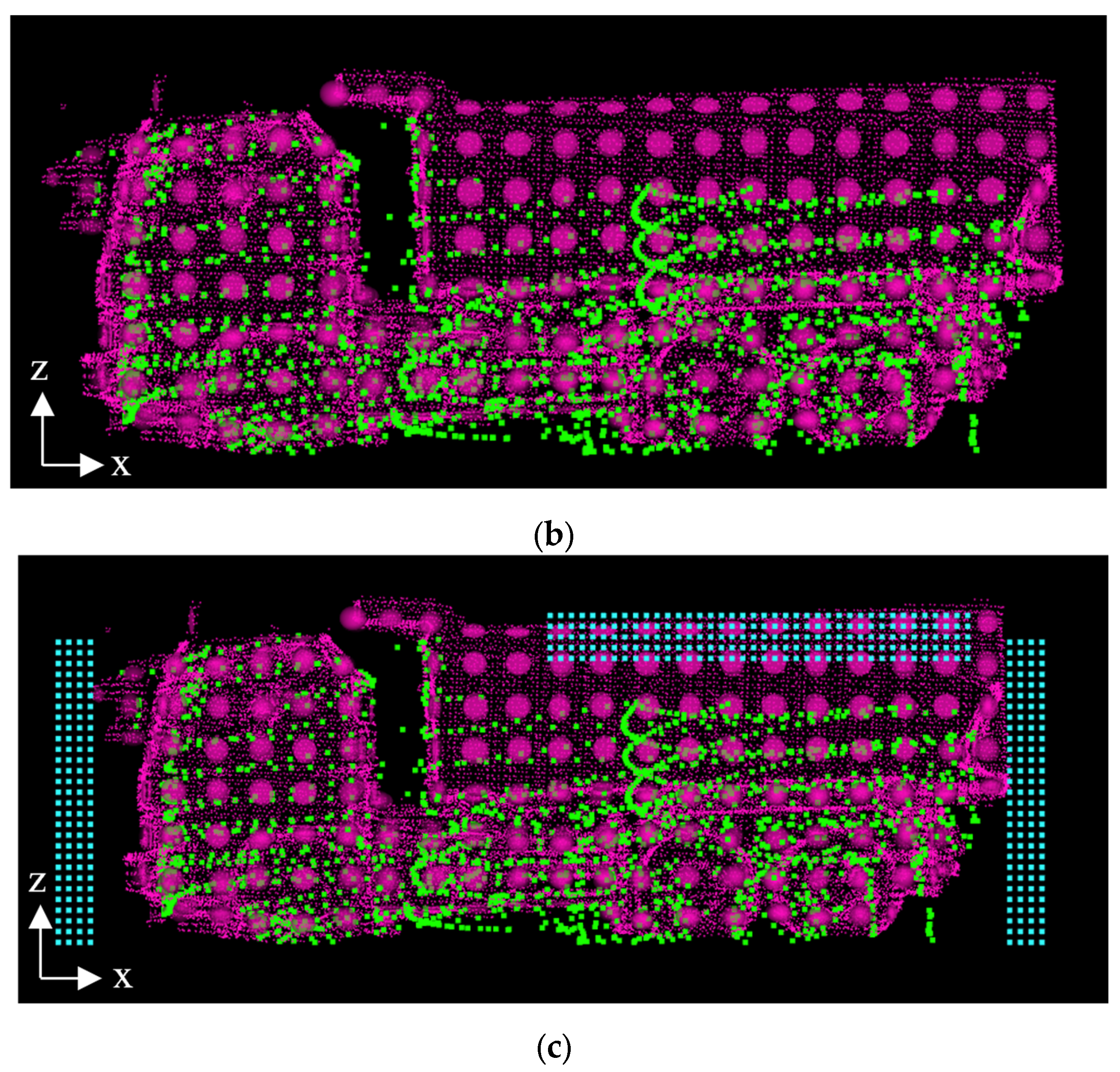

Figure 8 illustrates the placement of the negative point cloud. In the figure, the green points represent the observed point cloud, while the blue points represent the negative point cloud. The negative point cloud is placed in the areas in front of and behind the observed point cloud, as well as above the vessel. The spatial range of the negative point cloud in front of and behind the dump truck is defined by the fitted rectangle obtained in the preprocessing and the parameters $g_{\mathrm{fb}}$ and $l_{\mathrm{fb}}$, as shown in Figure 8a. Here, $g_{\mathrm{fb}}$ represents the gap distance between the negative point cloud and the fitted rectangle, and $l_{\mathrm{fb}}$ represents the length of the negative point cloud range. The range in the y-direction is defined by the minimum and maximum y-coordinates of the fitted rectangle. Similarly, the range of the negative point cloud above the vessel is defined by $g_{\mathrm{top}}$ and $l_{\mathrm{top}}$, as shown in Figure 8b, and its range in the x-direction is bounded by the x-coordinate of the center of the fitted rectangle and the maximum x-coordinate. Furthermore, the interval $\delta$ between negative points is also a parameter of the placement process. These five parameters, $g_{\mathrm{fb}}$, $l_{\mathrm{fb}}$, $g_{\mathrm{top}}$, $l_{\mathrm{top}}$, and $\delta$, are designed based on the requirements for size classification, and their numerical values are given in Section 5.1.
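The placement can be sketched as regular grids inside three boxes. The geometry follows the description above, but several choices are assumptions: the rectangle frame has its x-axis along the truck's long side with the vessel on the +x half; `z_top` (for example, the highest observed point) anchors the box above the vessel; and `z_range` gives the vertical extent of the front and rear boxes. All function and parameter names are hypothetical, and the numbers in the usage are illustrative only, not the values used in the experiments.

```python
import numpy as np


def grid_box(lo, hi, step):
    """Regular grid of points with spacing `step` filling the box [lo, hi]."""
    # The small epsilon keeps the upper bound when it is a multiple of step.
    axes = [np.arange(a, b + 1e-9, step) for a, b in zip(lo, hi)]
    mesh = np.meshgrid(*axes, indexing="ij")
    return np.stack([m.ravel() for m in mesh], axis=1)


def negative_points(rect_min, rect_max, z_top, g_fb, l_fb, g_top, l_top,
                    delta, z_range=(0.5, 2.0)):
    """Negative points beyond both ends of the fitted rectangle and above the vessel.

    rect_min/rect_max: (x, y) corners of the fitted rectangle in a frame whose
    x-axis is the truck's long side, vessel on the +x half (assumed).
    z_top and z_range are hypothetical choices for the vertical extents.
    """
    (x0, y0), (x1, y1) = rect_min, rect_max
    z_lo, z_hi = z_range
    plus_x = grid_box((x1 + g_fb, y0, z_lo), (x1 + g_fb + l_fb, y1, z_hi), delta)
    minus_x = grid_box((x0 - g_fb - l_fb, y0, z_lo), (x0 - g_fb, y1, z_hi), delta)
    xc = 0.5 * (x0 + x1)
    top = grid_box((xc, y0, z_top + g_top), (x1, y1, z_top + g_top + l_top), delta)
    return np.vstack([plus_x, minus_x, top])
```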

6. Discussion and Limitations

This study proposed an NDT-based two-stage framework that addresses pose estimation and size classification of dump trucks with unknown specifications. The method utilizes the probabilistic representation of point clouds to handle local shape variations and extends NDT to parallel comparisons across multiple templates for size classification. The experimental results demonstrated that the method could estimate truck poses with sufficient accuracy while correctly classifying trucks into three predefined size categories. The computational time is approximately 0.13 s per estimation, which is suitable for practical operation under limited computational resources. These results support the feasibility of applying the proposed framework to construction machinery in practice.

Among existing studies, two main approaches have been explored for pose estimation of dump trucks using point cloud registration: ICP-based methods and deep-learning-based methods. The former generally assume that the observed dump truck and the reference point cloud correspond to the same vehicle. In contrast, this study verified that pose estimation can be achieved even when the observed dump truck and the template come from different vehicles. On the other hand, although deep-learning-based approaches have the potential to achieve high accuracy and robustness, they require large amounts of training data, which are costly to collect, and they are also challenging to run on construction machinery with limited computational resources. In contrast, the proposed method requires only representative reference point clouds as templates, which is more practical for construction machinery. Furthermore, by extending NDT to parallel comparisons, the proposed method also achieves size classification of dump trucks. To the best of our knowledge, this function has not been addressed in existing studies.

Despite these contributions, several limitations remain. First, the experiments were limited to three size categories, which does not fully reflect the diversity of dump truck sizes in practice. Although the number of dump truck size categories is finite and small, the three categories considered in the experiments are somewhat fewer than those encountered in practice. Therefore, further validation with additional size categories is necessary. Second, the robustness of the method under adverse environmental conditions, such as rain, fog, or dust, was not evaluated. Accordingly, further experiments under real or simulated adverse environments are needed. Third, the proposed method depends on the coverage of the LiDAR sensors, and pose errors may occur if parts of the truck lie outside this coverage. Although this issue could be mitigated by carefully selecting the mounting positions of the LiDAR sensors, in actual applications the sensor placement is determined not only by dump truck recognition but also by other functions, such as recognition of piled soil or autonomous locomotion. For example, one possible way to mitigate this problem is to orient the LiDAR upward so that the top part of the dump truck can be observed. However, this creates a trade-off, because the blind area on the ground becomes larger and surrounding objects become harder to detect. Therefore, a comprehensive design discussion regarding sensor placement is required. Finally, the parameters related to negative point clouds were tuned for specific scenarios, and their adaptability to other cases was not validated. In future work, we intend to develop an automatic parameter search approach to improve adaptability across datasets.

7. Conclusions

In this study, we proposed a two-stage method for pose estimation and size classification of dump trucks with unknown specifications. The proposed method performs pose estimation and size classification by running NDT in parallel with multiple templates that represent different size categories. For appropriate size classification, we incorporated negative point clouds into the conventional NDT score. The performance of the proposed method was evaluated using data acquired in a real-world environment. The results demonstrated that the proposed method could estimate the pose of dump trucks robustly and classify their size by incorporating negative point clouds. Based on these results, the proposed method is expected to contribute not only to wheel loader operation but also to the overall automation of soil loading onto dump trucks at construction sites.

However, as described in Section 6, several limitations remain to be addressed, such as the limited number of size categories, the lack of evaluation under adverse environments, the investigation of LiDAR placement, and the need for more adaptable parameter settings. Addressing these issues in future work is necessary to enhance the robustness and applicability of the proposed method in real construction environments.