Abstract

Accurate and scalable gait assessment is essential for clinical and research applications, including fall risk evaluation, rehabilitation monitoring, and early detection of neurodegenerative diseases. While electronic walkways remain the clinical gold standard, their high cost and limited portability restrict widespread use. Wearable inertial measurement units (IMUs) and markerless depth cameras have emerged as promising alternatives; however, prior studies have typically assessed these systems under tightly controlled conditions, with a single participant in view, limited marker sets, and no direct cross-technology comparisons. This study addresses these gaps by simultaneously evaluating three sensing configurations (APDM wearable IMUs in two placements, foot-mounted and lumbar-mounted, and the Azure Kinect depth camera) against the ProtoKinetics Zeno™ Walkway Gait Analysis System in a realistic clinical environment where multiple individuals were present in the camera’s field of view. Gait data from 20 older adults (mean age years) performing Single-Task and Dual-Task walking trials were captured synchronously using custom hardware for precise temporal alignment. Eleven gait markers spanning the macro, micro-temporal, micro-spatial, and spatiotemporal domains were compared using mean absolute error (MAE), Pearson correlation (r), and Bland–Altman analysis. Foot-mounted IMUs demonstrated the highest accuracy (MAE –, –), followed closely by the Azure Kinect (MAE –, –0.98). Lumbar-mounted IMUs showed consistently lower agreement with the reference system. These findings provide the first comprehensive comparison of wearable and depth-sensing technologies with a clinical gold standard under real-world conditions and across an extensive set of gait markers.
The results establish a foundation for deploying scalable, low-cost gait assessment systems in diverse healthcare contexts, supporting early detection, mobility monitoring, and rehabilitation outcomes across multiple patient populations.

1. Introduction

Gait is a fundamental indicator of overall health and functional mobility, and its quantitative assessment plays an increasingly critical role across a wide range of clinical applications. These include fall risk prediction, post-stroke rehabilitation, musculoskeletal disorders, and early detection of neurological diseases such as Alzheimer’s and Parkinson’s disease [1,2,3]. Traditionally, gait evaluations have relied heavily on clinician observation or standardized tests conducted in controlled settings. While informative, these methods are inherently subjective, time-consuming, and dependent on expert availability, posing barriers to widespread, routine use, particularly in resource-limited or remote environments [6,7]. Moreover, studies have shown that gait parameters may vary significantly between structured clinical assessments and real-world environments, including outdoor or crowded spaces, underscoring the importance of conducting gait analysis in more naturalistic contexts [4,5].

To overcome these limitations, the field has witnessed a surge in the development of sensing technologies that offer objective, precise, and scalable gait assessment. These systems range from optoelectronic motion capture (e.g., Vicon) and pressure-sensitive walkways (e.g., GAITRite or Zeno™ Walkways) to wearable inertial measurement units (IMUs) and markerless depth cameras (e.g., Kinect v2, Azure Kinect) [8,9,10]. Such technologies are increasingly used not only in research but also in clinical environments, enabling fine-grained analysis of spatiotemporal gait features that can support diagnosis, monitor disease progression, and evaluate therapeutic outcomes across diverse patient populations [11,12,13,14].

A growing body of literature has explored the validity and reliability of these technologies by comparing them to reference systems. For example, several studies have benchmarked wearable sensors against pressure-sensitive walkways [15,16,17,18,19,20,21], or compared depth cameras with motion capture systems and clinical standards to evaluate gait parameters in controlled environments [22,23,24,25,26,27]. However, many of these studies lack precise temporal synchronization across sensing modalities, which compromises the accuracy of multimodal comparisons. Moreover, prior studies have focused on a narrow set of gait metrics, typically reporting averages of only a handful of spatiotemporal parameters such as stride length, step time, or velocity. While informative, such limited analyses may overlook subtler but clinically relevant aspects of gait, particularly in applications requiring early or differential diagnosis, such as mild cognitive impairment, atypical Parkinsonian syndromes, or neuropathies like those associated with diabetes [28,29,30,31]. Most of these studies also collected data under highly controlled laboratory conditions with only the subject in the camera’s view. Such conditions fail to reflect the complexity of real-world clinical environments, where caregivers, clinical staff, or assistive devices are often present [22,23,24,25,32], and this discrepancy limits the generalizability and clinical readiness of such systems. In parallel, recent advances in sensor technologies, including soft, flexible, and high-resolution wearable devices, are helping to overcome prior limitations in the cost, scalability, and long-term reliability of gait monitoring systems [33,34,35]. These innovations promise broader clinical and home-based applications by improving accuracy and usability.
While our study focuses on widely adopted tools such as the Azure Kinect and foot/lumbar IMUs, future integration of next-generation sensors may further enhance precision and deployment flexibility.

In response to these limitations, this study makes several novel contributions aimed at advancing the clinical utility of sensor-based gait analysis systems. First, we developed and used a custom hardware-based system for automated, millisecond-level synchronization across three sensing platforms: the Zeno™ Walkway (a pressure-sensitive walkway serving as the gold standard), APDM wearable IMUs, and the Azure Kinect depth camera. This synchronization ensures accurate multimodal alignment for reliable validation. Second, we expanded the analytic scope by extracting a rich set of gait markers (two macro-level and nine micro-level parameters) that together provide a detailed profile of gait dynamics. This high-resolution marker set enables a more nuanced assessment of gait alterations associated with neurodegenerative disease risk and supports future work on digital biomarker discovery. Third, we conducted gait recordings in real-world clinical environments where multiple individuals, such as caregivers or staff, may be present within the depth camera’s field of view. This design introduces realistic visual complexity and tests the robustness of tracking algorithms under practical conditions. Collectively, this work advances the state of the art by demonstrating the feasibility, accuracy, and clinical relevance of depth cameras and wearable sensors for detailed gait analysis in realistic healthcare settings. The resulting multimodal, synchronized dataset and comprehensive marker set lay the groundwork for scalable, objective, and versatile tools that can support diverse clinical needs, from diagnostics to long-term monitoring.

2. Materials and Methods

2.1. Participants and Recruitment

This study initially recruited 20 participants (12 females, 8 males) aged between 52 and 82 years. Participants were either enrolled through the Healthy Brain Initiative (HBI) [36] at the University of Miami’s Comprehensive Center for Brain Health (CCBH) or were members of the general public who expressed interest in the study. The inclusion criteria were: (1) age 21 years or older; (2) ability to walk independently, with or without a walking aid (e.g., cane, walker); (3) capacity to provide informed consent; and (4) willingness to be video recorded during walking and movement assessments. The exclusion criteria were: (1) severe mobility impairments preventing safe ambulation even with assistive devices; and (2) unstable medical conditions that could pose safety risks during participation (e.g., uncontrolled hypertension, recent surgery, or acute illness).

Each participant underwent a physical evaluation, including InBody body composition analysis and anthropometric measurements, as well as a cognitive assessment using the Montreal Cognitive Assessment (MoCA). Data from two participants were excluded because of technical issues, specifically poor signal quality caused by sensor displacement during the walking trials. As a result, data from 18 participants were retained for analysis. Table 1 summarizes the demographic characteristics of the participants whose data were successfully recorded and included in the final analysis. All procedures adhered to the principles of the Declaration of Helsinki and were approved by the Institutional Review Boards (IRBs) of Florida Atlantic University and the University of Miami. Written informed consent was obtained from all participants before data collection, after they had received a full explanation of the study.

Table 1.

Participant demographics for all recruited participants and those included in the final analysis. Values are presented as numbers or Mean ± SD.

2.2. Study Design

Participants wore three APDM IMUs, secured with straps and placed on the dorsal surface of the left and right feet and on the lower back at the level of the fifth lumbar vertebra (see Figure 1B). Each recording session included three tasks: a brief practice trial, followed by Single-Task and Dual-Task conditions. The practice trial, which followed the same walking pattern as the Single- and Dual-Task conditions, was conducted to familiarize participants with the procedure. In the Single-Task condition, participants performed straight, back-and-forth walking over approximately seven meters on the Zeno™ Walkway, a mat with integrated pressure sensors. In the Dual-Task condition, participants repeated the same walking route while performing a cognitive task (counting backward from 80 in steps of seven), a widely used paradigm in neurodegenerative research for imposing additional cognitive load [37]. Dual-tasking typically results in slower walking speeds and altered gait dynamics, allowing sensor performance to be evaluated across two distinct gait patterns within the same individuals. Two blue lines were marked outside the Zeno™ Walkway, each one meter from the respective end. The line at the starting end indicated both the start and end points, while the line at the opposite end marked the turning point. Participants began at the start line, walked to the turning line, turned around, and returned to the start line. The Zeno™ Walkway has a flat, non-slip, industrial-grade vinyl surface with integrated pressure sensors. Before task execution, the Azure Kinect system was activated to record continuously throughout all tasks. The recording setups are described in detail in Sections 2.3–2.5.

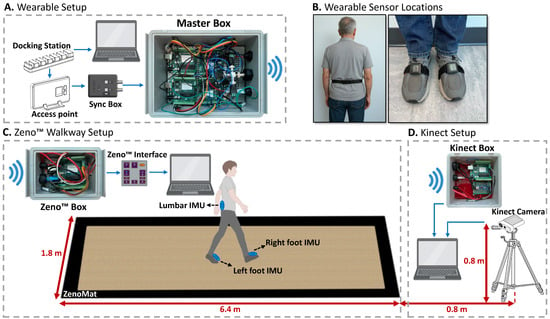

Figure 1.

Designed wireless synchronization setup for APDM, Zeno™ Walkway, and Kinect systems. (A) The APDM system acts as the master, generating trigger signals via a Sync Box. (B) Sensor placement on lumbar (L5) and feet. (C) Signals are wirelessly sent to the Zeno™ Box to synchronize with the Zeno Interface. (D) Simultaneously, signals reach the Kinect Box to align camera recordings with the APDM timeline.

2.3. Recording Tools

Participants performed walking tasks while their gait was simultaneously recorded using three synchronized systems: a pressure-sensitive gait analysis mat, a wearable sensor system with IMUs, and a depth camera–based system. Among these, the Zeno™ Walkway—previously validated for accurate spatiotemporal gait assessment in similar applications [38]—served as the gold standard for evaluating and comparing gait measures derived from the other two systems.

2.3.1. Pressure-Sensitive Gait Mat

The Zeno Electronic Walkway (Zeno™ Walkway; ProtoKinetics Inc., Havertown, PA, USA) contains a matrix of pressure-activated sensors. The system used in this study was 1.8 m wide and 6.4 m long (active sensing area 1.2 m × 6 m) and had spatial and temporal resolutions of 1.27 cm and 120 Hz, respectively. The raw pressure data were analyzed using PKMAS (ProtoKinetics Movement Analysis Software; ProtoKinetics Inc.), which automatically identifies gait events, such as heel strike (HS) and toe-off (TO), and computes spatiotemporal gait parameters such as step length, stride length, cadence, and gait speed [38]. To ensure accurate comparisons, only gait cycles fully captured within the Zeno™ Walkway’s active sensing area were included in the analysis. The PKMAS software automatically excluded partial or off-mat steps, helping to eliminate boundary effects and ensure high-fidelity spatiotemporal data. This approach, consistent with prior validation studies [16,17,19,20], supports the use of the Zeno™ Walkway as a reliable reference for gait analysis.

2.3.2. Wearable Sensor Systems

Three inertial measurement units (APDM Opal, Portland, OR, USA) were used to capture motion data at a sampling frequency of 128 Hz. Sensors were positioned on both feet and the lumbar region. Two sensor configurations were evaluated: (1) a dual-sensor setup with foot-mounted sensors, and (2) a single-sensor setup with only the lumbar-mounted unit. To ensure consistent analysis and comparison across wearable sensors, a unified coordinate system was established. The Y-axis was oriented outward from the skin, the X-axis was defined by the right-hand rule, and the Z-axis, aligned with the sensor’s side buttons, pointed toward the ground, as detailed in [11]. Data acquisition was managed through APDM’s Motion Studio software, which facilitated sensor calibration, configuration, and real-time signal visualization. However, no proprietary APDM algorithms or software packages were used for gait parameter extraction. Instead, the raw IMU data were exported and analyzed offline using our custom algorithms, specifically designed to extract spatiotemporal gait features relevant to this study.

2.3.3. Depth Camera

The Azure Kinect depth-sensing camera (Microsoft) was used to capture gait during the walking trials. Introduced in 2020 as the successor to Kinect v2, the Azure Kinect uses time-of-flight technology to estimate per-pixel depth and offers improved spatial resolution and enhanced body tracking capabilities [39]. The device outputs three data streams (RGB, depth, and skeletal data) and can track up to 32 body joints [39]. The Azure Kinect was set to record at up to 30 frames per second.

2.4. Synchronization Setup

To ensure precise temporal alignment across all recording systems—Zeno™ Walkway, APDM wearable sensors, and Azure Kinect—a custom hardware-based synchronization solution was developed. This system relied on a Bluetooth-enabled protocol to wirelessly transmit trigger signals to each device, coordinating data acquisition across the platforms. In this setup, the APDM system functioned as the master controller, as it supports the integration of a dedicated Sync Box into its wearable sensor configuration (Figure 1A). When the APDM system initiates data recording, the Sync Box outputs a 5-volt, TTL-level trigger signal via its output port. This signal serves as the master synchronization pulse and is transmitted wirelessly to the receiving systems—Zeno™ Walkway and Azure Kinect—using a custom-built Master Box.

The Zeno™ Walkway system receives the synchronization signal through a dedicated Zeno™ Box, which routes the signal to the SYNC INPUT port of the Zeno Interface (Figure 1C), thereby aligning its internal recording timeline with the APDM system. In parallel, the Kinect system receives the same signal via the Kinect Box (Figure 1D), which relays it to the Kinect’s computing interface, enabling synchronization of the depth camera data with the wearable sensor recordings. The APDM system offers the flexibility to output both edge-triggered and TTL-level signals. Since the Zeno™ Walkway’s Zeno Interface requires TTL-level signals and interprets signal states such that a low signal (0 V) denotes active recording and a high signal (5 V) indicates non-recording periods, the APDM Sync Box was configured accordingly. Specifically, it was set to emit a constant high-level signal during idle periods and to drop to a low-level signal during active task performance, ensuring compatibility with Zeno™ Walkway’s synchronization requirements.
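Because the Zeno Interface treats the sync line as active-low, each receiving system has to interpret a 0 V level as "recording". A minimal Python sketch of that decoding, and of re-expressing one device's samples on a timeline anchored at the shared high-to-low transition, is shown below. The 2.5 V decision threshold, the function names, and the assumption that the sync line is available as a sampled voltage array on each device are illustrative choices, not details of the custom hardware; on each device the transition can only be located to within one of that device's sample periods.

```python
import numpy as np


def is_recording(sync_volts, threshold=2.5):
    """Decode an active-low TTL sync line: 0 V means recording, 5 V means idle."""
    return np.asarray(sync_volts, dtype=float) < threshold


def first_start_time(sync_volts, fs, threshold=2.5):
    """Time in seconds of the first idle-to-recording (high-to-low) transition."""
    active = is_recording(sync_volts, threshold)
    # A start is a sample that is active while the previous sample was idle.
    starts = np.flatnonzero(active[1:] & ~active[:-1]) + 1
    if starts.size == 0:
        raise ValueError("no high-to-low transition found")
    return starts[0] / fs


def aligned_times(n_samples, fs, sync_start_s):
    """Device sample times re-expressed relative to the shared sync transition."""
    return np.arange(n_samples) / fs - sync_start_s


# Example: the same transition seen by a 120 Hz walkway and a 30 fps camera.
zeno_sync = np.r_[np.full(240, 5.0), np.zeros(360)]
kinect_sync = np.r_[np.full(60, 5.0), np.zeros(90)]
t0_zeno = first_start_time(zeno_sync, fs=120)     # 2.0 s on the walkway clock
t0_kinect = first_start_time(kinect_sync, fs=30)  # 2.0 s on the camera clock
zeno_t = aligned_times(zeno_sync.size, 120, t0_zeno)
```

After alignment, sample 240 of the walkway stream and frame 60 of the camera stream both sit at time zero on the common timeline.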

2.5. Setup and Procedure

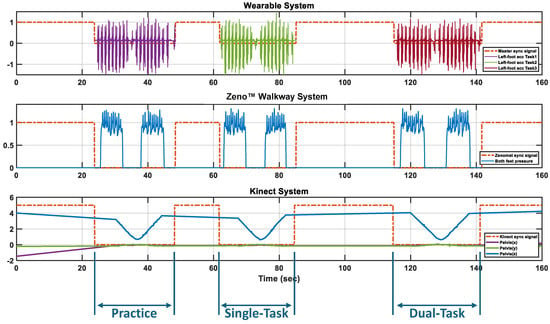

Figure 1 illustrates the complete setup used to collect synchronized gait data from wearable IMUs, the Zeno™ Walkway, and the Azure Kinect depth camera. Prior to task execution, the Zeno™ Walkway and Kinect systems were activated to record continuously throughout the practice, Single-Task, and Dual-Task walking conditions. Synchronization was controlled by sending trigger signals from the wearable sensor setup to the Zeno™ Walkway and Kinect at the beginning and end of each task. Figure 2 presents an example of the raw gait signals and synchronized trigger signals across all three systems for a single subject, demonstrating how the signal transitions from a high level during non-task periods to a low level during active walking. For the Zeno™ Walkway and Kinect systems, which recorded continuously, these triggers served as temporal markers to segment task-specific data, whereas the APDM system inherently aligned recordings with the active walking periods defined by the master synchronization signal.

Figure 2.

Synchronized trigger signals across APDM, Zeno™ Walkway, and Kinect systems during multiple tasks. The sync signal remains high when no task is active and drops low during task execution. APDM records only during active task periods, while Zeno™ Walkway and Kinect record continuously, using the sync signal as a temporal reference to identify the practice trial, Single-Task, and Dual-Task.
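The segmentation described above can be sketched as follows for a continuously recorded stream (Zeno™ Walkway or Kinect). This is a hypothetical illustration that assumes the sync level is available per sample, that a 2.5 V threshold separates the two TTL states, and that the three active-low windows occur in the order practice, Single-Task, Dual-Task; the function and label names are our own.

```python
import numpy as np

TASK_LABELS = ("practice", "single_task", "dual_task")


def task_windows(sync_volts, fs, threshold=2.5, labels=TASK_LABELS):
    """Return {label: (start_s, stop_s)} for each active-low window, in order."""
    active = np.asarray(sync_volts, dtype=float) < threshold
    # Pad with idle samples so windows touching either end are still closed.
    padded = np.concatenate(([False], active, [False])).astype(np.int8)
    change = np.diff(padded)
    starts = np.flatnonzero(change == 1)   # idle -> active (voltage drops)
    stops = np.flatnonzero(change == -1)   # active -> idle (voltage rises)
    if starts.size != len(labels):
        raise ValueError(f"expected {len(labels)} task windows, found {starts.size}")
    return {label: (start / fs, stop / fs)  # stop is exclusive
            for label, start, stop in zip(labels, starts, stops)}


# Example: idle, practice, idle, Single-Task, idle, Dual-Task, idle at 10 Hz.
fs = 10
sync = np.r_[np.full(10, 5.0), np.zeros(20), np.full(5, 5.0), np.zeros(30),
             np.full(5, 5.0), np.zeros(40), np.full(10, 5.0)]
windows = task_windows(sync, fs)
frames = np.arange(sync.size)  # stand-in for per-frame data
start, stop = windows["single_task"]
single_task_frames = frames[int(round(start * fs)):int(round(stop * fs))]
```

Raising an error when the number of windows does not match the expected task list is a deliberate choice: a missed or spurious transition would otherwise silently shift every task label.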

2.6. Gait Marker Extraction

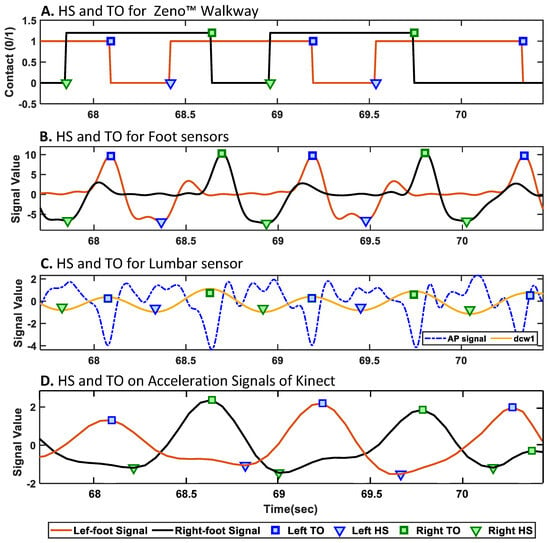

In this section, we describe the methodology used to extract temporal and spatial gait markers from the three technologies. The first step is to detect gait events, heel strike (HS) and toe-off (TO), in the recorded data of each system. For the Zeno™ Walkway, gait events and markers were derived using the PKMAS software. Figure 3A illustrates the HS and TO events on the foot contact data for a representative subject recorded with the Zeno™ Walkway. All gait events for the wearable IMUs (lumbar and bilateral foot-mounted) and the Kinect video recordings were extracted independently using custom algorithms tailored to each modality, as explained in Sections 2.6.1–2.6.3. Section 2.6.4 then explains how temporal and spatial gait markers were calculated from the detected events.

Figure 3.

Detected gait events (heel strike [HS] and toe-off [TO]) for a representative subject across multiple modalities. (A) Zeno™ Walkway; (B) foot-mounted IMUs; (C) lumbar-mounted IMU; (D) acceleration signals extracted from heel joints by the Azure Kinect camera. Each subplot shows the automatically extracted HS and TO events using sensor-specific algorithms.

2.6.1. HS/TO Detection with APDM Foot IMUs

HS and TO events were detected using the medial–lateral angular velocity (Y-axis gyroscope signal) from the foot-mounted inertial sensors [40]. The raw signal was first processed using a two-stage filtering pipeline. A 12th-order, high-pass Butterworth filter, implemented in second-order sections, was applied to remove low-frequency drift. This was followed by a custom FIR low-pass filter with 216 coefficients to attenuate high-frequency noise. The filtered signal was then mean-centered to obtain a clean oscillatory waveform suitable for event detection. HS events were identified as the negative peaks (minima), and TO events as the positive peaks (maxima) of the processed signal. Events were detected using MATLAB’s findpeaks function, with parameters set for minimum peak height, prominence, and inter-peak distance to ensure reliable identification. All analyses were conducted using MATLAB R2024a. This approach is entirely signal-based and does not involve strapdown integration. Figure 3B illustrates the detected HS and TO events for the left and right foot sensors for the representative subject.
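The two-stage filtering and peak picking described above can be approximated in Python with SciPy; this is a sketch, not the authors' MATLAB code. The cutoff frequencies (0.3 Hz high-pass, 8 Hz low-pass), the windowed-FIR design, zero-phase (forward-backward) filtering, and the peak thresholds are assumptions, since the section does not report them; `scipy.signal.find_peaks` plays the role of MATLAB's `findpeaks`.

```python
import numpy as np
from scipy.signal import butter, filtfilt, find_peaks, firwin, sosfiltfilt


def detect_foot_events(gyro_ml, fs=128.0, hp_cutoff=0.3, lp_cutoff=8.0,
                       min_interval_s=0.5, prominence=0.5):
    """HS/TO sample indices from the medial-lateral foot gyroscope signal."""
    # Stage 1: 12th-order Butterworth high-pass as second-order sections (drift removal).
    sos = butter(12, hp_cutoff, btype="highpass", fs=fs, output="sos")
    x = sosfiltfilt(sos, np.asarray(gyro_ml, dtype=float))
    # Stage 2: 216-coefficient windowed-FIR low-pass (noise attenuation).
    taps = firwin(216, lp_cutoff, fs=fs)
    x = filtfilt(taps, [1.0], x)
    x = x - x.mean()  # mean-centre the oscillatory waveform
    distance = int(round(min_interval_s * fs))
    hs, _ = find_peaks(-x, prominence=prominence, distance=distance)  # minima -> HS
    to, _ = find_peaks(x, prominence=prominence, distance=distance)   # maxima -> TO
    return hs, to, x


# Synthetic check: a 1 Hz oscillation with slow drift and high-frequency noise.
fs = 128.0
t = np.arange(0, 20, 1 / fs)
gyro = np.sin(2 * np.pi * t) + 0.02 * t + 0.05 * np.sin(2 * np.pi * 30 * t)
hs, to, filtered = detect_foot_events(gyro, fs)
```

The minimum inter-peak distance prevents two detections within one gait cycle, and the prominence threshold rejects small ripples that survive filtering; both would need tuning on real gyroscope data.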

2.6.2. HS/TO Detection with APDM Lumbar IMU

The gait event detection approach was based on the method described in [41], with a modification to improve TO detection. The anterior–posterior acceleration signal (Z-axis of the accelerometer) was first preprocessed by linear detrending and low-pass filtering at 10 Hz using a second-order Butterworth filter. The filtered signal was then integrated using the cumtrapz function and subsequently differentiated using the continuous wavelet transform (CWT) in MATLAB, with a first-order Gaussian wavelet applied at an estimated optimal scale. Local minima and maxima of the differentiated signal were identified as HS and TO events, respectively. All analyses for the lumbar sensor were performed in MATLAB R2014a. Spatiotemporal gait parameters were then calculated from the timing of HS and TO events, with step length estimated using an inverted pendulum model, as described in [42]. Figure 3C shows the HS and TO events for the left and right foot estimated using the lumbar-mounted sensor.
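A Python sketch of this lumbar pipeline follows. The CWT step is replaced here by derivative-of-Gaussian filtering (`scipy.ndimage.gaussian_filter1d` with `order=1`), which matches a CWT with a first-order Gaussian wavelet at a single scale up to a scale factor and a sign that depends on the CWT convention. The scale of 10 samples, the peak spacing and prominence thresholds, and zero-phase filtering are assumptions rather than reported settings.

```python
import numpy as np
from scipy.integrate import cumulative_trapezoid
from scipy.ndimage import gaussian_filter1d
from scipy.signal import butter, detrend, filtfilt, find_peaks


def detect_lumbar_events(acc, fs=128.0, scale_samples=10.0,
                         min_interval_s=0.3, rel_prominence=0.5):
    """HS/TO sample indices from a lumbar acceleration signal."""
    x = detrend(np.asarray(acc, dtype=float), type="linear")
    b, a = butter(2, 10.0, btype="lowpass", fs=fs)  # 2nd-order, 10 Hz
    x = filtfilt(b, a, x)
    v = cumulative_trapezoid(x, dx=1.0 / fs, initial=0)  # like MATLAB cumtrapz
    # Derivative-of-Gaussian filtering: a stand-in for a gaus1 CWT at one scale.
    d = gaussian_filter1d(v, sigma=scale_samples, order=1) * fs
    prominence = rel_prominence * np.std(d)
    distance = int(round(min_interval_s * fs))
    hs, _ = find_peaks(-d, prominence=prominence, distance=distance)  # minima -> HS
    to, _ = find_peaks(d, prominence=prominence, distance=distance)   # maxima -> TO
    return hs, to, d


fs = 128.0
t = np.arange(0, 10, 1 / fs)
acc = np.sin(2 * np.pi * 2.0 * t) + 0.1 * t  # 2 steps/s plus a linear trend
hs, to, d = detect_lumbar_events(acc, fs)
```

Integrating and then differentiating with a smoothing kernel acts as a band-limited derivative, which is why the scale parameter controls how much step-to-step detail survives.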

2.6.3. HS/TO Detection with Azure Kinect

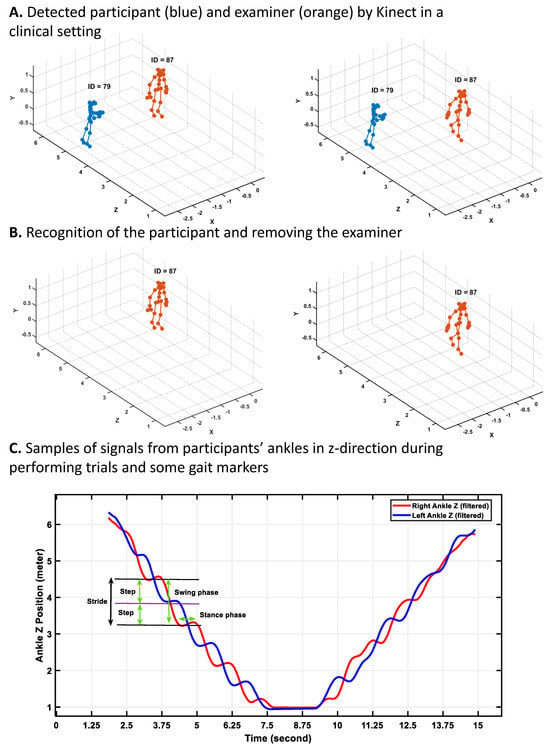

In this study, Azure Kinect data were recorded in a real clinical setting where other people were present. Consequently, more than one person was often within the Azure Kinect’s field of view, and these people were also detected and tracked (see Figure 4A). In addition, because recording was continuous and synchronized with the other technologies, different IDs were assigned to people as they entered and left the camera’s view. Therefore, before the participant’s skeletal data could be used to extract gait markers, several successive preprocessing steps were applied to the clinical recordings.

Figure 4.

Processing Azure Kinect data in a real clinical setting with attention to other people for gait marker extraction. (A) Detected participant (blue) and examiner (orange) by Kinect in a clinical setting; (B) Recognition of the participant and removing the examiner; (C) Samples of signals from participants’ ankles in z-direction (depth) during performing trials and some gait markers. When the participant walks toward the camera, the z-values of the ankles decrease, and after turning and coming back toward the start point, the z-values increase.

First, the different recorded tasks were separated using the annotation signals. Then, for each task, the skeletal data of all people tracked by the Kinect were visualized. This inspection showed that the ID assigned to the participant by the Azure Kinect did not change within a trial, because the participant remained in view and was tracked continuously (see Figure 4A), whereas the IDs assigned to other people changed as they entered and left the camera’s view. Based on these findings and the participant’s location during the trials, we separated the participant’s data from those of other people detected by the Kinect. Figure 4 shows samples of the skeletal data visualized in MATLAB R2024a, the recognition of the participant, and the right and left ankle signals of the participant during walking, which were used to detect gait cycles, strides, steps, and gait subphases.
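One way to automate the participant/bystander separation described above is to keep, within each task window, the body ID that is tracked in the largest number of frames, since the participant's ID stayed constant while bystanders' IDs changed. The sketch below assumes a hypothetical per-frame representation (a dict mapping body ID to joint data) rather than the Azure Kinect Body Tracking SDK's own types, and it omits the location check the authors also used.

```python
from collections import Counter


def participant_id(frames):
    """Most persistently tracked body ID in a task window.

    frames: sequence of per-frame dicts mapping body ID -> joint data (hypothetical format).
    """
    counts = Counter()
    for bodies in frames:
        counts.update(set(bodies))  # count each ID at most once per frame
    if not counts:
        raise ValueError("no bodies were tracked in this window")
    return counts.most_common(1)[0][0]


def participant_track(frames, body_id):
    """Joint data for the chosen ID, with None where it was not tracked."""
    return [bodies.get(body_id) for bodies in frames]


# Example: body 1 is present throughout; bystanders 2 and 3 come and go.
frames = [{1: "j0", 2: "x"}, {1: "j1"}, {1: "j2", 3: "y"}, {1: "j3", 3: "z"}]
pid = participant_id(frames)
track = participant_track(frames, pid)
```

A frame-count heuristic can fail if a staff member stays in view for the whole trial, which is why a spatial check (e.g., position over the walkway) is a sensible complement.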

After the participant’s skeletal data were separated from those of other people tracked by the Kinect (see Figure 4B), a sixth-order Butterworth low-pass filter with a 3 Hz cutoff frequency was applied to the joint position signals to remove noise [43,44]. To detect gait cycles and their related markers, such as stance and swing phases, step and stride times, and step and stride lengths, HS and TO events must first be identified, as with the other technologies (e.g., electronic walkways or IMU sensors). We computed the second gradient of the right and left ankle position signals in the y-direction to convert them into acceleration signals, and identified the local maxima and minima of the ankle acceleration signals as heel strikes and toe-offs, respectively (see Figure 3D). Various gait markers were then calculated from the heel strike and toe-off events, as described in the following section.
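A Python sketch of this Kinect event-detection step is shown below. It assumes zero-phase filtering, a 30 Hz frame rate, and hypothetical peak-picking thresholds (minimum spacing of 0.5 s and a prominence of half the acceleration signal's standard deviation); the synthetic sinusoidal ankle trajectory only checks the mechanics, not realism.

```python
import numpy as np
from scipy.signal import butter, find_peaks, sosfiltfilt


def kinect_events(ankle_y, fs=30.0, cutoff=3.0, min_interval_s=0.5, rel_prominence=0.5):
    """HS/TO frame indices from one ankle's y-position signal."""
    sos = butter(6, cutoff, btype="lowpass", fs=fs, output="sos")  # 6th order, 3 Hz
    y = sosfiltfilt(sos, np.asarray(ankle_y, dtype=float))
    dt = 1.0 / fs
    acc = np.gradient(np.gradient(y, dt), dt)  # second gradient: position -> acceleration
    prominence = rel_prominence * np.std(acc)
    distance = int(round(min_interval_s * fs))
    hs, _ = find_peaks(acc, prominence=prominence, distance=distance)   # maxima -> HS
    to, _ = find_peaks(-acc, prominence=prominence, distance=distance)  # minima -> TO
    return hs, to, acc


fs = 30.0
t = np.arange(0, 20, 1 / fs)
ankle_y = 0.05 * np.sin(2 * np.pi * 1.0 * t) + 0.002 * np.sin(2 * np.pi * 10 * t)
hs, to, acc = kinect_events(ankle_y, fs)
```

Filtering before differentiating matters here: each gradient amplifies high-frequency noise, so the 3 Hz low-pass is what keeps the second derivative usable at 30 fps.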

2.6.4. Calculation of Gait Markers

Temporal and spatial gait parameters were calculated after HS and TO detection. The temporal gait markers (e.g., step time, stride time, stance time) were computed from the timing of HS and TO events and are defined identically across all sensing technologies. These formulas are presented in Table 2 for the right foot, assuming that the left foot initiates the gait cycle; analogous formulas were applied to the left foot by adjusting the event sequence accordingly. In contrast, the spatial gait markers (e.g., step length, stride length) were derived using technology-specific methods that do not rely directly on HS and TO timing, and are therefore shown separately for each sensing technology in Table 2. In the formulas, the index i denotes the current event (i.e., the current HS or TO), and i + 1 denotes the next event.

Table 2.

Formulas used to calculate temporal and spatial gait markers.
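Table 2 is not reproduced here, so the following is a sketch using conventional event-based definitions for one right-foot cycle with the left foot initiating; the exact expressions in Table 2 may differ, and the function and argument names are hypothetical. With these definitions, stance time equals single plus double support time, and stride time equals stance plus swing time, which gives a quick consistency check on detected events.

```python
def right_cycle_markers(hs_l, to_r, hs_r, to_l, hs_l_next, to_r_next, hs_r_next):
    """Temporal markers (seconds) for one right-foot gait cycle, left foot initiating.

    Expected order: hs_l < to_r < hs_r < to_l < hs_l_next < to_r_next < hs_r_next.
    """
    events = [hs_l, to_r, hs_r, to_l, hs_l_next, to_r_next, hs_r_next]
    if any(a >= b for a, b in zip(events, events[1:])):
        raise ValueError("gait events are not in the expected order")
    return {
        "step_time": hs_r - hs_l,                 # left HS -> right HS
        "stride_time": hs_r_next - hs_r,          # right HS -> next right HS
        "stance_time": to_r_next - hs_r,          # right foot on the ground
        "swing_time": hs_r_next - to_r_next,      # right foot in the air
        "single_support_time": hs_l_next - to_l,  # only the right foot on the ground
        "double_support_time": (to_l - hs_r) + (to_r_next - hs_l_next),
    }


m = right_cycle_markers(0.00, 0.12, 0.55, 0.67, 1.10, 1.22, 1.65)
```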

Step length from the foot-mounted sensors was estimated using the empirical equation proposed by Weinberg [45]. The calculation requires the vertical acceleration component a_v, derived from the foot’s local acceleration signals using the pitch angle θ, estimated from gyroscope data, and the gravitational acceleration g [46]. The parameters a_max and a_min represent the maximum and minimum values of a_v within a single step, and K is a calibration constant used for subject-specific adjustment. Stride length was calculated using the same formula, but with a_max and a_min extracted over the duration of a full stride instead of a single step.
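Weinberg's empirical estimator is commonly written as SL = K · (a_max − a_min)^(1/4). The sketch below applies it to a vertical-acceleration array that is assumed to be already computed (the pitch-based projection from [46] is not reproduced), using HS sample indices as window boundaries; the function names and the choice to pass boundaries explicitly are illustrative.

```python
import numpy as np


def weinberg_length(vertical_acc, k):
    """Weinberg estimate K * (a_max - a_min) ** (1/4) over one window."""
    a = np.asarray(vertical_acc, dtype=float)
    return k * (a.max() - a.min()) ** 0.25


def weinberg_lengths(vertical_acc, boundaries, k):
    """One estimate per window between consecutive boundary samples (inclusive).

    Consecutive HS of alternating feet give steps; consecutive HS of one foot give strides.
    """
    a = np.asarray(vertical_acc, dtype=float)
    return [weinberg_length(a[s:e + 1], k) for s, e in zip(boundaries[:-1], boundaries[1:])]


acc_v = [0.0, 4.0, -12.0, 0.0, 1.0, -1.0]
lengths = weinberg_lengths(acc_v, [0, 2, 5], k=0.5)
```

The fourth root compresses the acceleration range, so K carries most of the subject-specific scaling and must be calibrated per participant.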

Step length from the lumbar-mounted sensor was estimated using the inverted pendulum model, as described in [42]. In this model, h denotes the vertical displacement of the center of mass, obtained by double integration of the vertical acceleration using the cumulative trapezoidal method. The parameter l represents the pendulum length, which was approximated by the subject’s foot length.
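The inverted pendulum relation is often written as step length = 2·√(2lh − h²); since Table 2 is not reproduced here, that form is assumed in the sketch below. It double-integrates vertical acceleration with SciPy's `cumulative_trapezoid` (the counterpart of MATLAB's `cumtrapz`) and takes h as the peak-to-peak vertical excursion within one step. Real lumbar data usually need drift control (for example, high-pass filtering) before double integration; that step is omitted here, and the 0.9 m pendulum length in the example is hypothetical.

```python
import numpy as np
from scipy.integrate import cumulative_trapezoid


def com_vertical_excursion(vertical_acc, fs):
    """h: peak-to-peak vertical CoM displacement within one step window."""
    dt = 1.0 / fs
    velocity = cumulative_trapezoid(vertical_acc, dx=dt, initial=0)
    displacement = cumulative_trapezoid(velocity, dx=dt, initial=0)
    return float(displacement.max() - displacement.min())


def pendulum_step_length(h, pendulum_length):
    """Assumed inverted-pendulum relation: 2 * sqrt(2*l*h - h**2)."""
    if not 0.0 <= h <= 2.0 * pendulum_length:
        raise ValueError("h must lie between 0 and 2 * pendulum_length")
    return 2.0 * np.sqrt(2.0 * pendulum_length * h - h ** 2)


# Synthetic step: sinusoidal CoM motion with a 3 cm peak-to-peak excursion.
fs = 128.0
t = np.arange(0, 0.5 + 0.5 / fs, 1 / fs)
omega = 2 * np.pi * 2.0
acc_v = -0.015 * omega ** 2 * np.cos(omega * t)
h = com_vertical_excursion(acc_v, fs)
step = pendulum_step_length(h, pendulum_length=0.9)  # l = 0.9 m is hypothetical
```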

Step length from the Kinect was calculated by subtracting the z-direction (depth) position of one foot at its heel strike from the z-direction position of the opposite foot at the time of its toe-off. Stride length was calculated as the difference between the positions of the same foot at two successive heel strikes (see Table 2 for the step and stride length formulas used with the Azure Kinect data).
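The Kinect length computations reduce to differences of depth (z) coordinates at paired events. In this sketch the pairing of each heel strike with the opposite foot's toe-off is assumed to be supplied by the caller, and absolute values are taken so that walking toward and away from the camera both yield positive lengths; both are our assumptions rather than details stated for Table 2.

```python
import numpy as np


def kinect_step_lengths(z_foot, z_opposite, hs_idx, opposite_to_idx):
    """|z of a foot at its HS - z of the opposite foot at its paired TO|, per step."""
    z_foot = np.asarray(z_foot, dtype=float)
    z_opposite = np.asarray(z_opposite, dtype=float)
    return np.abs(z_foot[np.asarray(hs_idx)] - z_opposite[np.asarray(opposite_to_idx)])


def kinect_stride_lengths(z_foot, hs_idx):
    """|z of the same foot at successive HS|, per stride."""
    z_foot = np.asarray(z_foot, dtype=float)
    return np.abs(np.diff(z_foot[np.asarray(hs_idx)]))


# Walking toward the camera: depth (m) decreases over frames.
z_right = [3.0, 2.9, 2.8, 2.6, 2.4, 2.3]
z_left = [2.9, 2.8, 2.6, 2.5, 2.4, 2.2]
steps = kinect_step_lengths(z_right, z_left, hs_idx=[0, 4], opposite_to_idx=[2, 5])
strides = kinect_stride_lengths(z_right, hs_idx=[0, 4])
```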

2.7. Evaluation and Statistical Analysis

All statistical analyses were performed using MATLAB R2024a. Agreement between the gait markers derived from the Zeno™ Walkway and those obtained from the APDM foot-mounted sensors, the APDM lumbar-mounted sensor, and the Azure Kinect was evaluated using the Pearson correlation coefficient (r), the mean absolute error (MAE) [11], and Bland–Altman analysis. Pearson’s r assessed the linear association between systems, while the MAE quantified the average magnitude of error. Bland–Altman plots were used to visualize the mean differences and 95% limits of agreement (LoA) between measurement methods, providing insight into systematic bias and variability. Statistical significance was defined as [47].
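The three agreement measures can be computed per gait marker as sketched below with NumPy and SciPy. The sign convention for the Bland–Altman differences (other system minus Zeno™ Walkway), the sample standard deviation (ddof = 1), and the 1.96 multiplier for the 95% limits of agreement are conventional choices assumed here; the function name is our own.

```python
import numpy as np
from scipy.stats import pearsonr


def agreement(reference, other):
    """MAE, Pearson r, and Bland-Altman bias/LoA of `other` against `reference`."""
    ref = np.asarray(reference, dtype=float)
    oth = np.asarray(other, dtype=float)
    diff = oth - ref  # sign convention: other system minus reference
    bias = diff.mean()
    sd = diff.std(ddof=1)
    r, p = pearsonr(ref, oth)
    return {
        "mae": float(np.mean(np.abs(diff))),
        "r": float(r),
        "p": float(p),
        "bias": float(bias),
        "loa_low": float(bias - 1.96 * sd),
        "loa_high": float(bias + 1.96 * sd),
    }


stats = agreement([1.0, 1.1, 1.2, 1.3], [1.05, 1.12, 1.26, 1.33])
```

Because every difference in this toy example is positive, MAE and bias coincide; with real data, MAE reflects error magnitude while the bias reveals whether a system systematically over- or underestimates.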

3. Results

The analysis was conducted across a range of gait markers categorized into macro- and micro-level parameters, where macro-level gait markers capture overall performance and include average gait velocity and cadence, and micro-level gait markers reflect more detailed aspects of the gait cycle. The micro-level markers were further classified into the following: Temporal markers (e.g., stride time, step time, stance time, swing time, single and double support time), Spatial markers (e.g., stride length, step length), and Spatiotemporal markers (e.g., stride velocity). These categories allowed for a comprehensive evaluation of each sensing technology’s ability to measure both high-level and fine-grained gait characteristics under both single-task and dual-task walking conditions.

Table 3 presents the comparison results for the 11 extracted gait markers obtained using three different sensor technologies: the Zeno™ Walkway, APDM IMUs mounted on the feet and the lumbar region, and the Azure Kinect depth camera. For each gait marker, the mean ± standard deviation is reported along with the MAE, which was computed by comparing each technology against the Zeno™ Walkway as the reference system. Results are presented separately for the Single-Task and Dual-Task walking trials. Table 4 presents the ability of each sensing technology to capture trends in gait markers relative to the Zeno™ Walkway, assessed by correlation analysis for both Single-Task and Dual-Task trials.

Table 3.

Comparison of gait markers in Single-Task and Dual-Task conditions, including mean and mean absolute error (MAE) between Zeno™ Walkway and other sensors. Macro gait markers are separated from micro gait markers by a line.

Table 4.

Pearson Correlation (r) and significance (p-value) between Zeno™ Walkway and other sensor-based measurements across gait markers in Single-Task and Dual-Task conditions.

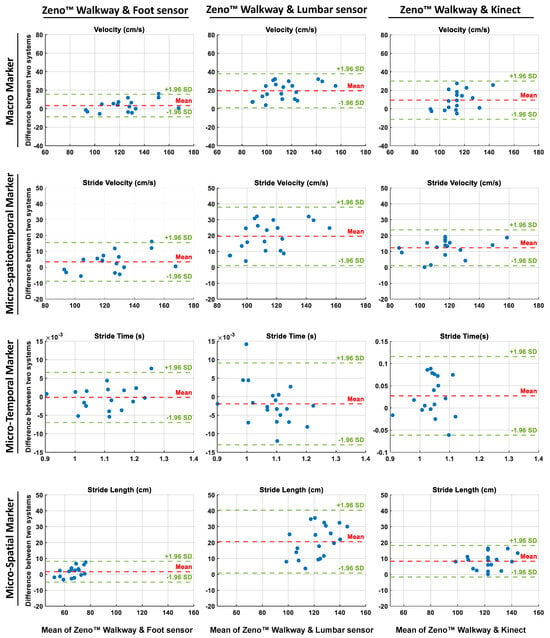

Moreover, to assess the agreement between the gait markers extracted from the Zeno™ Walkway and those derived from alternative sensor technologies, Bland–Altman analyses were conducted. These analyses evaluated the mean differences and LoA between the Zeno™ Walkway and foot-mounted sensors, lumbar-mounted sensors, and Azure Kinect, across macro and micro-temporal, micro-spatial, and micro-spatiotemporal gait marker categories. Figure 5 illustrates the Bland–Altman plots, where the first, second, and third columns correspond to the agreement between Zeno™ Walkway and foot-mounted sensors, lumbar-mounted sensors, and Azure Kinect, respectively. For each sensor comparison, the plots display the Bland–Altman analysis of gait markers selected as the best representatives in their respective categories: velocity for macro markers, stride velocity for micro-spatiotemporal markers, stride time for temporal markers, and stride length for spatial markers.

Figure 5.

Bland–Altman plots comparing gait markers derived from the Zeno™ Walkway with those obtained from foot-mounted sensors (left column), lumbar-mounted sensors (middle column), and Azure Kinect (right column). Each row represents a specific category of gait markers: macro (velocity), micro-spatiotemporal (stride velocity), temporal (stride time), and spatial (stride length). The x-axes show the mean of the two measurement systems, and the y-axes show their difference. Red dashed lines represent the mean bias, and green dashed lines indicate the 95% limits of agreement (mean bias ± 1.96 SD). These plots illustrate the level of agreement and systematic bias across sensor modalities for representative gait markers.

3.1. Overall Agreement Between Sensing Technologies and Zeno™ Walkway

In the Single-Task condition, the MAE for gait markers derived from foot-mounted IMU sensors ranged from to (), with the lowest errors observed in temporal parameters such as step time and stride time and the highest error found in stride length. For the lumbar-mounted sensors, MAEs ranged from for step time to for average velocity, indicating markedly lower accuracy compared to foot-mounted sensors. The Azure Kinect demonstrated MAEs between and , with the lowest errors for step and stride times and the highest for stride velocity. Under the Dual-Task condition, similar trends were observed, although errors were generally slightly higher. This decrease in accuracy may be partly explained by slower walking speeds during dual-tasking, which reduce the number of gait cycles captured within a given time window. At lower speeds, maintaining the same level of precision would likely require a higher sampling frequency to preserve the temporal resolution observed in the Single-Task condition. Foot-mounted sensors exhibited MAEs from for step time to for stride length, lumbar-mounted sensors ranged from to (again highest in average velocity), and Azure Kinect ranged from for stride time to for stride length.
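To illustrate how per-marker MAE values such as those in Table 3 can be obtained, the following sketch computes the mean ± SD of each system and the MAE ± SD of each device against the reference for a single marker. It assumes one value per trial, paired across systems in the same trial order. The helper names, sensor labels, and numbers are hypothetical.

```python
from statistics import mean, stdev


def mae_with_sd(reference, device):
    """Mean and sample SD of the absolute error |device - reference|."""
    if len(reference) != len(device) or len(reference) < 2:
        raise ValueError("need at least two paired trials")
    abs_err = [abs(d - r) for r, d in zip(reference, device)]
    return mean(abs_err), stdev(abs_err)


def summarize_marker(reference, devices):
    """Return {system: (mean, sd, mae, mae_sd)} for one gait marker."""
    rows = {"reference": (mean(reference), stdev(reference), 0.0, 0.0)}
    for name, values in devices.items():
        err, err_sd = mae_with_sd(reference, values)
        rows[name] = (mean(values), stdev(values), err, err_sd)
    return rows


# Hypothetical stride times (s) for three trials
zeno = [1.02, 1.08, 1.15]
table = summarize_marker(zeno, {"foot": [1.02, 1.09, 1.15], "lumbar": [1.01, 1.08, 1.13]})
for system, (m, sd, err, err_sd) in table.items():
    print(f"{system}: {m:.2f} ± {sd:.2f} (MAE {err:.2f} ± {err_sd:.2f})")
```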

Correlation analyses revealed moderate-to-strong agreement between the three sensor modalities and Zeno™ Walkway, with the highest correlations observed for foot-mounted sensors. In Single-Task trials, foot-mounted sensors achieved correlations ranging from () for step and stride length to () for step and stride time and cadence. Lumbar-mounted sensors exhibited perfect correlations for step and stride time (, ) but substantially weaker correlations for other temporal markers, including swing and single support time (, ). Azure Kinect correlations ranged from () for single support time to () for stride velocity, with all markers significantly correlated. In Dual-Task trials, foot-mounted sensors continued to demonstrate strong correlations across all markers, while lumbar-mounted sensors yielded significant correlations for 8 out of 11 markers, ranging from () for stance time to () for step and stride time; however, swing, single support, and double support times remained weak (, for swing and single support, and , for double support). Azure Kinect correlations in the Dual-Task condition ranged from () for swing and single support times to () for stride velocity, with all correlations statistically significant. Overall, foot-mounted IMU sensors and the Azure Kinect consistently demonstrated high agreement with Zeno™ Walkway across both conditions, while the lumbar-mounted sensor performed well for select markers (step and stride time) but showed reduced reliability for more detailed temporal and phase-specific gait parameters.
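For reference, the Pearson coefficient and the test statistic behind its p-value can be computed as shown below. This is a minimal standard-library sketch: the p-value itself comes from Student's t distribution with n − 2 degrees of freedom. `scipy.stats.pearsonr` returns r and the two-sided p-value directly, but which tool the authors used is not stated in this section.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two paired sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least three paired values")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """t = r * sqrt(n - 2) / sqrt(1 - r^2), referred to a t distribution with n - 2 df."""
    if abs(r) >= 1.0:
        return math.copysign(math.inf, r)  # perfect correlation: p-value is zero
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


r = pearson_r([1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0])
print(r, t_statistic(0.5, 11))
```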

3.2. Macro-Level Gait Marker Agreement Across Technologies

The comparison of macro-level gait markers, specifically average velocity and cadence, between wearable sensors and the Azure Kinect relative to Zeno™ Walkway revealed clear performance differences across sensing modalities. In the Single-Task condition, the mean ± SD of average velocity and cadence measured by Zeno™ Walkway were and , respectively. Corresponding values were and for foot-mounted wearable sensors, and for the lumbar-mounted sensor, and and for the Azure Kinect. Analysis of the MAE for average velocity demonstrated that foot-mounted sensors () and Azure Kinect () closely approximated the Zeno™ Walkway reference, whereas the lumbar-mounted sensor exhibited a markedly higher MAE of , reflecting poor agreement.
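For readers less familiar with the two macro markers, the sketch below shows one common way to derive them from a sequence of footfalls. It assumes heel-strike times and forward positions from both feet, listed in chronological order. The Zeno™ Walkway, APDM, and Azure Kinect software may define the walking bout and its endpoints differently, and the helper and its inputs are hypothetical.

```python
def average_velocity_and_cadence(strike_times_s, strike_positions_cm):
    """Hypothetical helper: footfalls from both feet in chronological order.

    Average velocity = forward distance covered / elapsed time (cm/s).
    Cadence = number of steps / elapsed time, expressed per minute.
    """
    if len(strike_times_s) != len(strike_positions_cm) or len(strike_times_s) < 2:
        raise ValueError("need at least two paired footfalls")
    elapsed = strike_times_s[-1] - strike_times_s[0]
    if elapsed <= 0:
        raise ValueError("footfall times must increase")
    n_steps = len(strike_times_s) - 1  # each consecutive pair of footfalls is one step
    distance = strike_positions_cm[-1] - strike_positions_cm[0]
    return distance / elapsed, n_steps / elapsed * 60.0


velocity, cadence = average_velocity_and_cadence(
    [0.0, 0.5, 1.0, 1.5, 2.0], [0.0, 60.0, 120.0, 180.0, 240.0]
)
print(velocity, cadence)  # cm/s and steps/min for this synthetic bout
```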

Dual-Task trials showed similar patterns but with expected reductions in overall gait performance; Zeno™ Walkway-measured average velocity decreased to 108.68 ± 20.43 cm/s, with corresponding values of (MAE ) for foot-mounted sensors, (MAE ) for lumbar-mounted sensors, and (MAE ) for Azure Kinect. Correlation analyses reinforced these findings: in Single-Task trials, foot-mounted sensors exhibited higher correlation with Zeno™ Walkway for average velocity and cadence (, and , ) than Azure Kinect (, and , ) and lumbar-mounted sensors (, and , ). In the Dual-Task condition, foot-mounted sensors achieved the strongest correlations for both markers ( and , ), followed by Azure Kinect ( and , ) and lumbar-mounted sensors ( and , ).

As Figure 5 shows, Bland–Altman analysis further supported these results: foot-mounted sensors displayed a bias profile similar to Azure Kinect, with minimal mean differences, narrow limits of agreement, and randomly distributed differences, indicating consistent agreement with Zeno™ Walkway. In contrast, the lumbar-mounted sensor showed substantial mean bias and broader limits of agreement, highlighting systematic underestimation and reduced reliability in measuring macro-level gait velocity.
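The distinction drawn here between randomly scattered differences and systematic underestimation is judged visually from Figure 5. Two numerical checks that are commonly used alongside Bland–Altman plots can make it explicit: a fixed bias is suggested when the 95% confidence interval of the mean difference excludes zero, and a proportional bias is suggested when the slope of the differences regressed on the means is far from zero. The sketch below is offered as an illustration only and is not presented as part of the study's analysis. The caller must supply the two-sided critical t value for n − 1 degrees of freedom, for example from `scipy.stats.t.ppf(0.975, n - 1)`. The helper names and data are hypothetical.

```python
import math
from statistics import mean, stdev


def bias_ci(reference, device, t_crit):
    """95% CI of the mean difference (device - reference); t_crit is for n - 1 df."""
    diffs = [d - r for r, d in zip(reference, device)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two paired measurements")
    b = mean(diffs)
    half_width = t_crit * stdev(diffs) / math.sqrt(n)
    return b - half_width, b + half_width


def proportional_bias_slope(reference, device):
    """OLS slope of differences (y) on pairwise means (x); ~0 means no proportional bias."""
    xs = [(r + d) / 2 for r, d in zip(reference, device)]
    ys = [d - r for r, d in zip(reference, device)]
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("means must vary to estimate a slope")
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx


# Hypothetical velocities (cm/s): the device under-reads more at higher speeds
zeno = [100.0, 110.0, 120.0, 130.0]
lumbar = [90.0, 99.0, 108.0, 117.0]
print(bias_ci(zeno, lumbar, t_crit=3.182))  # 3.182 = t(0.975, df = 3)
print(proportional_bias_slope(zeno, lumbar))
```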

3.3. Micro-Temporal Gait Marker Agreement Across Technologies

For gait cycle duration (stride time), the mean ± SD was 1.08 ± 0.08 s (MAE = 0.00 ± 0.00) for the Zeno™ Walkway, (MAE ) for the foot-mounted wearable sensors, (MAE = 0.00 ± 0.00) for the lumbar-mounted sensor, and (MAE ) for the Azure Kinect. For subphases of the gait cycle, including step time, stance time, and swing time, both the foot-mounted sensors and Azure Kinect demonstrated very low errors—often or near zero—indicating high accuracy in the Single-Task condition. In contrast, the lumbar-mounted sensor showed higher MAEs for most of these markers, with the exception of step time, which remained accurate at . A similar trend was observed in the Dual-Task condition, where foot-mounted sensors and Azure Kinect maintained low MAEs for stride time, step time, stance, and swing times, while lumbar-mounted sensors exhibited consistently higher errors for all except step time. The high standard deviation in lumbar stance time reflects consistent underestimation relative to Zeno™ Walkway values rather than isolated outliers. Further investigation indicated that this variability arises from limitations in estimating gait subphases with lumbar IMUs, which rely on indirect approximations of foot contact events. In contrast, foot-mounted IMUs offer more precise detection of contact events, leading to lower variance and higher agreement. For additional micro-temporal markers, such as single and double support times, foot-mounted sensors again achieved the lowest MAEs ( and , respectively), followed by Azure Kinect ( and ), whereas the lumbar-mounted sensor showed markedly higher errors ( and ) in the Single-Task condition; these differences persisted in the Dual-Task condition.
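The dependence of the subphase markers on accurate contact events becomes clearer when they are written out explicitly. The sketch below maps five gait events of one left stride to the six micro-temporal markers, using standard gait-cycle definitions. The event names and input format are hypothetical, and the event detection algorithms of the Zeno™ Walkway, APDM, and Azure Kinect pipelines are not reproduced here. Because stance, swing, and support times are differences of contact events, any error in detecting initial or final contact propagates directly into them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StrideEvents:
    """Event times (s) for one left stride; a hypothetical input format."""
    left_ic: float       # initial contact (heel strike) of the left foot
    right_fc: float      # final contact (toe-off) of the right foot
    right_ic: float      # initial contact of the right foot
    left_fc: float       # final contact of the left foot
    next_left_ic: float  # next initial contact of the left foot


def temporal_markers(ev: StrideEvents) -> dict:
    """Standard gait-cycle definitions of the micro-temporal markers."""
    times = [ev.left_ic, ev.right_fc, ev.right_ic, ev.left_fc, ev.next_left_ic]
    if any(b <= a for a, b in zip(times, times[1:])):
        raise ValueError("events must be strictly increasing")
    initial_double_support = ev.right_fc - ev.left_ic
    terminal_double_support = ev.left_fc - ev.right_ic
    return {
        "stride_time": ev.next_left_ic - ev.left_ic,
        "step_time": ev.right_ic - ev.left_ic,
        "stance_time": ev.left_fc - ev.left_ic,
        "swing_time": ev.next_left_ic - ev.left_fc,
        # Left single support = the period when the right foot is swinging
        "single_support_time": ev.right_ic - ev.right_fc,
        "double_support_time": initial_double_support + terminal_double_support,
    }


print(temporal_markers(StrideEvents(0.0, 0.12, 0.54, 0.66, 1.08)))
```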

Correlation analyses further confirmed these patterns: for stride time, both foot-mounted and lumbar-mounted sensors achieved perfect correlation with Zeno™ Walkway (, ), while Azure Kinect showed slightly lower but still strong correlation (, ) in Single-Task trials. For step time, correlations were similarly perfect for foot- and lumbar-mounted sensors (, ) and slightly lower for Azure Kinect (, ). For other temporal markers—stance, swing, single support, and double support times—foot-mounted sensors showed the highest correlations (, 0.96, 0.96, and 0.98, for all), followed by Azure Kinect (, ; , ; , ; and , , respectively). The lumbar-mounted sensor showed substantially weaker performance, with correlations of () for stance time, () for both swing and single support times, and () for double support time. In the Dual-Task condition, stride and step time correlations remained perfect (, ) for foot-mounted and lumbar sensors and slightly lower for Azure Kinect (, for stride time; , for step time), while other temporal markers continued to show superior correlations for foot-mounted and Kinect sensors compared to lumbar-mounted sensors.
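Reporting MAE and Bland–Altman results alongside correlation matters because a correlation of r = 1 does not by itself mean that two systems agree. Pearson's r is unchanged by a constant offset or a rescaling of one series, so a device with a fixed or proportional bias can still correlate perfectly with the reference. The synthetic example below illustrates this point and uses no study data.

```python
import math
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two paired sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def mae(x, y):
    """Mean absolute error between two paired sequences."""
    return mean(abs(a - b) for a, b in zip(x, y))


zeno = [1.02, 1.08, 1.15, 1.21]   # synthetic stride times (s)
offset = [t + 0.05 for t in zeno]  # constant +50 ms bias
scaled = [1.5 * t for t in zeno]   # proportional (scale) bias

for label, device in (("offset", offset), ("scaled", scaled)):
    # r stays at 1 in both cases, while MAE reveals the disagreement
    print(label, round(pearson_r(zeno, device), 6), round(mae(zeno, device), 4))
```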

Bland–Altman analysis supported these findings: stride time exhibited strong agreement across all technologies, with foot-mounted and lumbar-mounted sensors displaying minimal dispersion (on the order of s), while Azure Kinect showed slightly wider variability (on the order of s), indicating a small but notable reduction in agreement with the reference system.

3.4. Micro-Spatial Gait Marker Agreement Across Technologies

For spatial gait markers, specifically step and stride length, all three sensing technologies exhibited higher MAEs compared to their corresponding temporal markers (step and stride time). In the Single-Task condition, the mean ± SD of step length and stride length were cm (MAE ) and cm (MAE ) for foot-mounted wearable sensors, cm (MAE ) and cm (MAE ) for lumbar-mounted wearable sensors, and cm (MAE ) and cm (MAE ) for Azure Kinect. In comparison, Zeno™ Walkway recorded cm for step length and cm for stride length. In the Dual-Task condition, similar patterns were observed, with spatial errors remaining larger than temporal errors. Specifically, step and stride lengths were cm (MAE ) and cm (MAE ) for foot-mounted sensors, cm (MAE ) and cm (MAE ) for lumbar-mounted sensors, and cm (MAE ) and cm (MAE ) for Azure Kinect, while Zeno™ Walkway measured cm and cm for step and stride length, respectively.

Correlation analyses showed that, in Single-Task trials, step and stride lengths measured via foot-mounted sensors and Azure Kinect were strongly correlated with Zeno™ Walkway (, for both step and stride length with foot-mounted sensors; , and , with Azure Kinect). Lumbar-mounted sensors showed lower correlations (, for step length and , for stride length). In the Dual-Task condition, Azure Kinect demonstrated the highest correlations for both step and stride length ( and , ), followed by foot-mounted sensors ( and , ) and lumbar-mounted sensors ( and , ).

Bland–Altman analysis further confirmed these findings: stride length measured via lumbar-mounted sensors showed wider limits of agreement and evident systematic bias compared to Zeno™ Walkway, while foot-mounted sensors and Azure Kinect demonstrated narrower limits of agreement and minimal bias, with data points scattered randomly around the mean difference line (Figure 5).

3.5. Micro-Spatiotemporal Gait Marker Agreement Across Technologies

For the spatiotemporal marker of stride velocity, all three alternative technologies underestimated the values reported by the Zeno™ Walkway system. In the Single-Task condition, Zeno™ Walkway reported a stride velocity of cm/s, whereas this marker was cm/s (5.50 ± 4.31), cm/s (), and cm/s () when measured with the foot-mounted wearable sensors, the lumbar-mounted wearable sensors, and the Azure Kinect, respectively. Similarly, in the Dual-Task condition, all three technologies reported lower stride velocity than the Zeno™ Walkway reference system ( cm/s): cm/s () for the foot-mounted wearable sensors, cm/s () for the lumbar-mounted wearable sensors, and cm/s () for the Azure Kinect. These results show that the foot-mounted wearable sensors and the Azure Kinect measured stride velocity with high accuracy relative to the Zeno™ Walkway, whereas the lumbar-mounted wearable sensor was considerably less accurate.

The correlation analysis likewise confirmed the stronger performance of the foot-mounted wearable sensors and the Azure Kinect for stride velocity, with () and (), respectively, in the Single-Task condition, compared with () for the lumbar-mounted wearable sensors in the same condition. In the Dual-Task condition, the correlations were (), (), and () for the foot-mounted wearable sensors, the Azure Kinect, and the lumbar-mounted wearable sensors, respectively.

The Bland–Altman analysis also showed the lowest bias and narrowest limits of agreement for the foot-mounted wearable sensors, followed by the Azure Kinect (Figure 5). In contrast, stride velocity measured by the lumbar-mounted wearable sensors showed a higher bias and wider limits of agreement, indicating that this configuration is less capable than the foot-mounted wearable sensors and the Azure Kinect of measuring stride velocity relative to the Zeno™ Walkway reference system.
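Stride velocity combines a spatial marker (stride length) and a temporal marker (stride time), so errors in either one propagate into it. The sketch below assumes that stride velocity is computed per stride as stride length divided by stride time and then averaged. The exact definition used by each system's software is not stated here, and the helper names and numbers are hypothetical. It also shows that the mean of per-stride ratios generally differs from the ratio of mean length to mean time, so comparisons across systems should use the same aggregation. The `signed_bias` helper reports the direction of disagreement, where a negative value corresponds to the underestimation described above.

```python
from statistics import mean


def stride_velocities(stride_lengths_cm, stride_times_s):
    """Per-stride velocity (cm/s) = stride length / stride time."""
    if len(stride_lengths_cm) != len(stride_times_s):
        raise ValueError("lengths and times must be paired")
    if any(t <= 0 for t in stride_times_s):
        raise ValueError("stride times must be positive")
    return [length / time for length, time in zip(stride_lengths_cm, stride_times_s)]


def signed_bias(reference, device):
    """Mean of device - reference; negative values indicate underestimation."""
    return mean(d - r for r, d in zip(reference, device))


lengths = [120.0, 130.0, 125.0]  # hypothetical stride lengths (cm)
times = [1.0, 1.3, 1.0]          # hypothetical stride times (s)
per_stride = stride_velocities(lengths, times)
print(mean(per_stride))             # mean of per-stride ratios (about 115)
print(mean(lengths) / mean(times))  # ratio of means (about 113.6)
```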

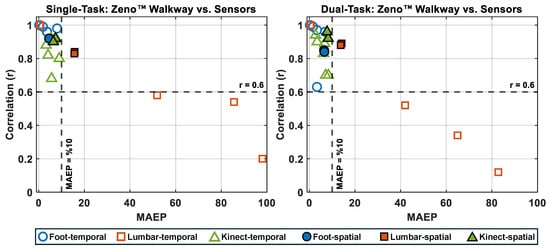

3.6. Correlation vs. Mean Absolute Error Percentage Analysis

To further evaluate micro gait markers, the relationship between correlation and mean absolute error percentage (MAEP) was visualized for each technology relative to Zeno™ Walkway (Figure 6). In both Single-Task and Dual-Task conditions, foot-mounted wearable sensors consistently demonstrated lower MAEP (below ) and higher correlation coefficients (greater than ) across all micro-temporal and micro-spatial gait markers. Azure Kinect exhibited comparable performance, with similarly low MAEP and high correlation, albeit slightly lower than foot-mounted sensors for some markers. In contrast, lumbar-mounted sensors displayed reduced performance, with several markers—particularly stance time, swing time, single support time, and double support time—showing MAEP values exceeding 10% and correlation coefficients below . This performance gap remained evident in Dual-Task conditions, highlighting the superior reliability of foot-mounted sensors and Azure Kinect for capturing fine-grained gait characteristics across varying cognitive loads.
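The quadrant view in Figure 6 reduces each marker to one (r, MAEP) pair and compares it against the two thresholds. The sketch below assumes that MAEP is the mean of the per-trial absolute errors expressed as a percentage of the reference value. Another common variant divides the MAE by the mean reference value instead. The sketch also assumes that a marker on a threshold counts as acceptable. The correlation threshold is left as a required argument and should be set to the value shown in Figure 6, and the function names are hypothetical.

```python
from statistics import mean


def maep(reference, device):
    """Mean absolute error percentage, assumed as mean(|d - r| / |r|) * 100."""
    if len(reference) != len(device) or not reference:
        raise ValueError("need paired, non-empty measurements")
    if any(r == 0 for r in reference):
        raise ValueError("reference values must be non-zero")
    return 100.0 * mean(abs(d - r) / abs(r) for r, d in zip(reference, device))


def in_good_quadrant(r, maep_pct, r_threshold, maep_threshold=10.0):
    """True when a marker sits in the high-r, low-MAEP (top-left) quadrant.

    Treating values exactly on a threshold as acceptable is an assumption.
    """
    return r >= r_threshold and maep_pct <= maep_threshold
```

In use, each (marker, technology, condition) combination would contribute one point, and `in_good_quadrant` would be called with the study's correlation threshold to reproduce the top-left versus elsewhere grouping described above.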

Figure 6.

Correlation (r) versus Mean Absolute Error Percentage (MAEP) for temporal and spatial micro gait markers measured by foot-mounted, lumbar-mounted, and Kinect sensors compared to the Zeno™ Walkway reference. Unfilled symbols represent temporal markers and filled symbols represent spatial markers. The left panel shows Single-Task walking results, and the right panel shows Dual-Task walking results. Markers positioned toward the top-left quadrant (high r, low MAEP) indicate better agreement with Zeno™ Walkway. Horizontal and vertical dashed lines represent thresholds of and MAEP = 10%, respectively.

4. Discussion

Gait is increasingly recognized as a valuable clinical marker across a broad range of applications, from fall risk assessment and rehabilitation monitoring to early detection of neurodegenerative diseases such as Alzheimer’s and Parkinson’s disease [2,28,48]. Advances in sensing technologies—particularly wearable IMUs and markerless depth cameras—offer promising alternatives to traditional electronic walkways like Zeno™ Walkway, which remain the clinical gold standard for gait analysis. These emerging tools have the potential to enable scalable, cost-effective, and remote assessments, particularly in real-world settings where controlled laboratory conditions are impractical. This study directly compared three sensing technologies—APDM wearable sensors (foot- and lumbar-mounted) and the Azure Kinect depth camera—against Zeno™ Walkway across Single- and Dual-Task walking conditions in a real-world clinical environment. A key methodological strength was the development of a custom hardware system enabling precise temporal synchronization across all devices. We analyzed 11 gait markers, including macro-level metrics such as average velocity and cadence and micro-level temporal, spatial, and spatiotemporal parameters, to assess each technology’s agreement with Zeno™ Walkway using correlation, mean absolute error, and Bland–Altman analyses.

4.1. Main Findings

The findings consistently showed that foot-mounted wearable sensors and the Azure Kinect outperformed lumbar-mounted sensors across both Single- and Dual-Task conditions. Foot-mounted sensors achieved near-perfect agreement with Zeno™ Walkway for macro and temporal markers and high accuracy for spatial and spatiotemporal markers. Azure Kinect performed comparably, showing strong correlations and low error for most markers despite operating in a complex clinical environment with multiple people in view. In contrast, lumbar-mounted sensors exhibited greater variability and underperformed, particularly for phase-specific temporal markers like swing time and support phases. These trends were further confirmed by visualization of correlation versus mean absolute error percentage, where foot-mounted sensors and Azure Kinect clustered in the high-correlation, low-error quadrant, while lumbar-mounted sensors frequently exceeded the 10% error threshold and fell below the acceptable correlation threshold (Figure 6).

4.2. Comparison with Previous Studies

Our study extends prior research on gait analysis by directly comparing three distinct sensing technologies—APDM wearable sensors mounted on the feet and lumbar, the Azure Kinect depth camera, and the Zeno™ Walkway electronic walkway—within the same experimental framework. Previous studies have typically examined only two technologies at a time, such as depth cameras versus infrared motion capture systems [24,25,26], depth cameras versus electronic walkways like GAITRite [22,23,27], or wearable sensors versus GAITRite [15,16,17,18,19,20,21] (see Table 5). To our knowledge, no prior work has concurrently evaluated all three modalities against a clinical gold standard, allowing our findings to provide a more comprehensive perspective on the strengths and limitations of each technology.

Table 5.

Comparison of our study with previous studies.

A major methodological advancement of this study is the real-world clinical setting used for data collection. Unlike earlier studies that ensured a controlled environment with only one individual visible to the depth camera [22,23,24,25,26,27], our recordings included additional personnel such as caregivers and staff, reflecting typical clinical conditions. This introduces realistic challenges for markerless tracking but enhances ecological validity and supports future deployment in routine clinical or home settings. Additionally, we developed custom hardware to achieve precise temporal synchronization across all devices, an improvement over earlier studies that relied on manual alignment or approximate step-count matching [17,20].

In terms of test conditions, most prior studies conducted evaluations exclusively under Single-Task walking scenarios, typically assessing participants at their preferred, self-selected walking speed. Some investigations extended this by incorporating variations within the Single-Task paradigm, such as treadmill-based assessments [26] or trials requiring participants to walk at slow, normal, and fast speeds [18,27]. In contrast, only one previous study [22], alongside our work, evaluated both Single-Task and Dual-Task conditions, with the latter incorporating a cognitive challenge—normal-speed walking combined with backward counting. Including a Dual-Task condition allows for assessment of each system’s robustness under increased cognitive load, which is critical for understanding their applicability in real-world clinical and home settings where multitasking is common [37].

Another distinguishing feature is the breadth of gait markers evaluated: we analyzed 11 markers spanning macro (e.g., cadence, average velocity) and micro domains (temporal, spatial, and spatiotemporal markers), whereas previous studies generally focused on a limited set of two to nine markers [18]. This comprehensive analysis enables deeper insights into marker-specific performance, particularly for markers like stride velocity that have rarely been studied [28].

When comparing our results with previous findings, we observed several consistencies as well as important differences. For macro-level markers, such as average velocity and cadence, our foot-mounted sensors and Azure Kinect produced mean absolute errors and correlations similar to or better than those previously reported. For example, Lanotte et al. [18] reported an MAE of cm/s for average velocity using combined foot and lumbar sensors, while our foot-mounted configuration achieved lower errors, highlighting the importance of sensor placement. Similarly, prior evaluations of Azure Kinect in controlled settings demonstrated high correlations for average velocity and cadence [23]; our results confirmed comparable accuracy in a less controlled clinical environment, underscoring its robustness under real-world conditions.

For micro-temporal markers, previous studies using APDM sensors reported high accuracy for stride and step time, with broader variability in stance, swing, and support phases [18,20]. Our results align with these patterns but further demonstrate that foot-mounted sensors maintain high accuracy across all temporal phases, while lumbar-mounted sensors show notable reductions in agreement, particularly for swing and support times. Importantly, no prior studies have benchmarked Azure Kinect for micro-temporal markers against an electronic walkway, making our findings a novel contribution that expands understanding of this technology’s capabilities. Spatial markers, including step and stride length, exhibited similar trends. Prior work reported correlations of 0.93–0.98 for foot-mounted sensors and lower values around – for lumbar-mounted sensors [18,20]. Our results confirmed this performance gradient and showed that Azure Kinect achieved strong correlations (≈0.92) despite being evaluated with a single camera in an unconstrained clinical environment, compared to prior studies using multiple cameras in laboratory settings [23]. These differences suggest that Azure Kinect remains viable for clinical applications even under more realistic constraints, though additional work may optimize tracking algorithms for multi-person scenes.

Collectively, these comparisons highlight both the strengths of our approach and the limitations of earlier studies. By incorporating multiple technologies, broader marker coverage, and realistic data collection conditions, we provide a more nuanced assessment of each sensor’s clinical applicability. Our findings reinforce the suitability of foot-mounted sensors and Azure Kinect for accurate and scalable gait analysis, while also demonstrating the limitations of lumbar-mounted sensors for fine-grained markers. This evidence supports their use in future clinical trials and informs decisions about technology adoption in routine gait assessments, especially for early detection and monitoring of neurodegenerative disorders.

4.3. Limitations and Future Directions

Although the sample size in this study was modest, it is comparable to that used in many prior validation studies of gait technologies (e.g., [22,24,25]) and was sufficient to demonstrate clear performance trends across the evaluated systems. Importantly, our cohort included both individuals with mild cognitive impairment and cognitively healthy controls, enabling us to capture variability across different cognitive states. While longer walking distances offer more comprehensive gait analysis, our study used a 7 m forward path (15 m total including return) due to clinical space limitations. This setup aligns with common clinical protocols, where 6 and 10 m walk tests are standard and have shown reliable accuracy for gait assessment [49,50,51]. Thus, our design reflects real-world clinical applicability. Also, while Azure Kinect demonstrated strong performance, its practical limitations—such as fixed camera height and angle, sensitivity to lighting, and a need for >6 m of unobstructed space—may restrict its use in some home or clinic settings. These factors can hinder generalizability without careful setup. In contrast, wearable IMUs are more portable and environment-flexible, making them suitable alternatives or complements in diverse real-world applications. Another area for growth is the range of gait tasks examined. This study focused on straight-line walking, which is widely used and directly comparable to prior work, but additional tasks such as curved walking, Timed Up and Go, or dual-task paradigms with higher cognitive demands could provide richer insights into functional mobility and better reflect real-world conditions. Building on these encouraging results, future work will expand to larger and more diverse populations and incorporate complex walking paths and functional mobility tasks.

5. Conclusions

This study compared three sensing technologies—APDM wearable sensors (feet and lumbar), Azure Kinect, and a computerized electronic walkway (Zeno™ Walkway)—for gait analysis in 18 older adults (mean age: 70.06 ± 9.45 years). Unlike prior work, this study simultaneously evaluated all three technologies under real-world clinical conditions where multiple individuals were present in the camera’s field of view, employed a custom-designed hardware synchronization system for precise temporal alignment, and analyzed an extensive set of detailed gait markers spanning macro, micro-temporal, micro-spatial, and micro-spatiotemporal domains. Results showed that APDM foot-mounted sensors offered the lowest MAE and highest correlation with Zeno™ Walkway (MAE = 0.00 ± 0.00, r = 1), while Azure Kinect demonstrated promising performance with greater ease of use and lower cost (MAE = 0.01 ± 0.01, r = 0.98). These findings highlight the feasibility of both foot-mounted wearable sensors and Azure Kinect as scalable alternatives to electronic walkways for accurate gait assessment. Beyond their value in research, these technologies have broad clinical relevance, offering potential for fall risk assessment, rehabilitation monitoring, and early detection of various conditions, including, but not limited to, neurodegenerative diseases.

Author Contributions

M.N.: Conceptualization, Formal analysis, Methodology, Writing—original draft, Data curation, Investigation, Software, Validation, Visualization. M.S.: Conceptualization, Formal analysis, Methodology, Writing—original draft, Data curation, Investigation, Software, Validation, Visualization. A.R.: Conceptualization, Investigation, Methodology. M.I.T.: Conceptualization, Investigation, Methodology. J.E.G.: Validation, Writing—review and editing, Conceptualization, Investigation, Methodology, Funding acquisition. B.G.: Conceptualization, Formal analysis, Funding acquisition, Methodology, Supervision, Writing—review and editing, Investigation, Project administration, Resources, Validation. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Science Foundation under grant number 1942669 and the National Institutes of Health under grant number R01 AG071514.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of Florida Atlantic University and University of Miami, Protocol ID IRB2402041.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

We would like to acknowledge the participants of this study and the staff at CCBH for their valuable support during the data collection process.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Better, M.A. Alzheimer’s disease facts and figures. Alzheimers Dement. 2023, 19, 1598–1695. [Google Scholar]

- Del Din, S.; Godfrey, A.; Galna, B.; Lord, S.; Rochester, L. Free-living gait characteristics in ageing and Parkinson’s disease: Impact of environment and ambulatory bout length. J. Neuroeng. Rehabil. 2016, 13, 1–12. [Google Scholar] [CrossRef] [PubMed]

- Sharafian, M.E.; Ellis, C.; Sidaway, B.; Hayes, M.; Hejrati, B. The effects of real-time haptic feedback on gait and cognitive load in older adults. IEEE Trans. Neural Syst. Rehabil. Eng. 2025, 33, 2335–2344. [Google Scholar] [CrossRef]

- Richardson, J.K.; Thies, S.B.; DeMott, T.K.; Ashton-Miller, J.A. Gait analysis in a challenging environment differentiates between fallers and nonfallers among older patients with peripheral neuropathy. Arch. Phys. Med. Rehabil. 2005, 86, 1539–1544. [Google Scholar] [CrossRef]

- Hulleck, A.A.; Menoth Mohan, D.; Abdallah, N.; El Rich, M.; Khalaf, K. Present and future of gait assessment in clinical practice: Towards the application of novel trends and technologies. Front. Med Technol. 2022, 4, 901331. [Google Scholar] [CrossRef]

- Lord, S.; Halligan, P.; Wade, D. Visual gait analysis: The development of a clinical assessment and scale. Clin. Rehabil. 1998, 12, 107–119. [Google Scholar] [CrossRef]

- Ghoreishi, S.G.A.; Boateng, C.; Moshfeghi, S.; Jan, M.T.; Conniff, J.; Yang, K.; Jang, J.; Furht, B.; Newman, D.; Tappen, R.; et al. Quad-tree Based Driver Classification using Deep Learning for Mild Cognitive Impairment Detection. IEEE Access 2025, 13, 63129–63142. [Google Scholar] [CrossRef] [PubMed]

- Kvist, A.; Tinmark, F.; Bezuidenhout, L.; Reimeringer, M.; Conradsson, D.M.; Franzén, E. Validation of algorithms for calculating spatiotemporal gait parameters during continuous turning using lumbar and foot mounted inertial measurement units. J. Biomech. 2024, 162, 111907. [Google Scholar] [CrossRef]

- Bilney, B.; Morris, M.; Webster, K. Concurrent related validity of the GAITRite® walkway system for quantification of the spatial and temporal parameters of gait. Gait Posture 2003, 17, 68–74. [Google Scholar] [CrossRef]

- Seifallahi, M.; Soltanizadeh, H.; Hassani Mehraban, A.; Khamseh, F. Alzheimer’s disease detection using skeleton data recorded with Kinect camera. Clust. Comput. 2020, 23, 1469–1481. [Google Scholar] [CrossRef]

- Nassajpour, M.; Shuqair, M.; Rosenfeld, A.; Tolea, M.I.; Galvin, J.E.; Ghoraani, B. Objective estimation of m-CTSIB balance test scores using wearable sensors and machine learning. Front. Digit. Health 2024, 6, 1366176. [Google Scholar] [CrossRef] [PubMed]

- Nassajpour, M.; Shuqair, M.; Rosenfeld, D.A.; Tolea, M.I.; Galvin, J.E.; Ghoraani, B. Integrating Wearable Sensor Technology and Machine Learning for Objective m-CTSIB Balance Score Estimation. In Proceedings of the 2024 46th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 15–19 July 2024; pp. 1–4. [Google Scholar]

- Nassajpour, M.; Shuqair, M.; Rosenfeld, A.; Tolea, M.I.; Galvin, J.E.; Ghoraani, B. Smartphone-Based Balance Assessment Using Machine Learning. In Proceedings of the 2024 46th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 15–19 July 2024; pp. 1–4. [Google Scholar]

- Khaniki, M.; Mirzaeibonehkhater, M.; Fard, S. Class Imbalance-Aware Active Learning with Vision Transformers in Federated Histopathological Imaging. J. Med. Med. Stud. 2025, 1, 65–73. [Google Scholar]

- Moore, S.A.; Hickey, A.; Lord, S.; Del Din, S.; Godfrey, A.; Rochester, L. Comprehensive measurement of stroke gait characteristics with a single accelerometer in the laboratory and community: A feasibility, validity and reliability study. J. Neuroeng. Rehabil. 2017, 14, 130. [Google Scholar] [CrossRef]

- Buckley, C.; Micó-Amigo, M.E.; Dunne-Willows, M.; Godfrey, A.; Hickey, A.; Lord, S.; Rochester, L.; Del Din, S.; Moore, S.A. Gait asymmetry post-stroke: Determining valid and reliable methods using a single accelerometer located on the trunk. Sensors 2019, 20, 37. [Google Scholar] [CrossRef] [PubMed]

- Muthukrishnan, N.; Abbas, J.J.; Krishnamurthi, N. A wearable sensor system to measure step-based gait parameters for parkinson’s disease rehabilitation. Sensors 2020, 20, 6417. [Google Scholar] [CrossRef]

- Lanotte, F.; Shin, S.Y.; O’Brien, M.K.; Jayaraman, A. Validity and reliability of a commercial wearable sensor system for measuring spatiotemporal gait parameters in a post-stroke population: The effects of walking speed and asymmetry. Physiol. Meas. 2023, 44, 085005. [Google Scholar] [CrossRef]

- Jacobs, D.; Farid, L.; Ferré, S.; Herraez, K.; Gracies, J.M.; Hutin, E. Evaluation of the validity and reliability of connected insoles to measure gait parameters in healthy adults. Sensors 2021, 21, 6543. [Google Scholar] [CrossRef]

- Rudisch, J.; Jöllenbeck, T.; Vogt, L.; Cordes, T.; Klotzbier, T.J.; Vogel, O.; Wollesen, B. Agreement and consistency of five different clinical gait analysis systems in the assessment of spatiotemporal gait parameters. Gait Posture 2021, 85, 55–64. [Google Scholar] [CrossRef]

- Psaltos, D.; Chappie, K.; Karahanoglu, F.I.; Chasse, R.; Demanuele, C.; Kelekar, A.; Zhang, H.; Marquez, V.; Kangarloo, T.; Patel, S.; et al. Multimodal wearable sensors to measure gait and voice. Digit. Biomarkers 2020, 3, 133–144.

- Dolatabadi, E.; Taati, B.; Mihailidis, A. Concurrent validity of the Microsoft Kinect for Windows v2 for measuring spatiotemporal gait parameters. Med. Eng. Phys. 2016, 38, 952–958.

- Arizpe-Gómez, P.; Harms, K.; Janitzky, K.; Witt, K.; Hein, A. Towards automated self-administered motor status assessment: Validation of a depth camera system for gait feature analysis. Biomed. Signal Process. Control 2024, 87, 105352.

- Clark, R.A.; Bower, K.J.; Mentiplay, B.F.; Paterson, K.; Pua, Y.H. Concurrent validity of the Microsoft Kinect for assessment of spatiotemporal gait variables. J. Biomech. 2013, 46, 2722–2725.

- Guess, T.M.; Bliss, R.; Hall, J.B.; Kiselica, A.M. Comparison of Azure Kinect overground gait spatiotemporal parameters to marker based optical motion capture. Gait Posture 2022, 96, 130–136.

- Albert, J.A.; Owolabi, V.; Gebel, A.; Brahms, C.M.; Granacher, U.; Arnrich, B. Evaluation of the pose tracking performance of the Azure Kinect and Kinect v2 for gait analysis in comparison with a gold standard: A pilot study. Sensors 2020, 20, 5104.

- Steinert, A.; Sattler, I.; Otte, K.; Röhling, H.; Mansow-Model, S.; Müller-Werdan, U. Using new camera-based technologies for gait analysis in older adults in comparison to the established GAITRite system. Sensors 2019, 20, 125.

- Seifallahi, M.; Galvin, J.E.; Ghoraani, B. Curve walking reveals more gait impairments in older adults with mild cognitive impairment than straight walking: A Kinect camera-based study. J. Alzheimer’s Dis. Rep. 2024, 8, 423–435.

- Bang, C.; Bogdanovic, N.; Deutsch, G.; Marques, O. Machine learning for the diagnosis of Parkinson’s disease using speech analysis: A systematic review. Int. J. Speech Technol. 2023, 26, 991–998.

- Wang, Z.; Peng, S.; Zhang, H.; Sun, H.; Hu, J. Gait parameters and peripheral neuropathy in patients with diabetes: A meta-analysis. Front. Endocrinol. 2022, 13, 891356.

- Esser, P.; Collett, J.; Maynard, K.; Steins, D.; Hillier, A.; Buckingham, J.; Tan, G.D.; King, L.; Dawes, H. Single sensor gait analysis to detect diabetic peripheral neuropathy: A proof of principle study. Diabetes Metab. J. 2018, 42, 82–86.

- Brognara, L.; Arceri, A.; Zironi, M.; Traina, F.; Faldini, C.; Mazzotti, A. Gait Spatio-Temporal Parameters Vary Significantly Between Indoor, Outdoor and Different Surfaces. Sensors 2025, 25, 1314.

- Cheng, Z.; Wen, Y.; Xie, Z.; Zhang, M.; Feng, Q.; Wang, Y.; Liu, D.; Cao, Y.; Mao, Y. A multi-sensor coupled supramolecular elastomer empowers intelligent monitoring of human gait and arch health. Chem. Eng. J. 2025, 504, 158760.

- Zhang, Q.; Jin, T.; Cai, J.; Xu, L.; He, T.; Wang, T.; Tian, Y.; Li, L.; Peng, Y.; Lee, C. Wearable triboelectric sensors enabled gait analysis and waist motion capture for IoT-based smart healthcare applications. Adv. Sci. 2022, 9, 2103694.

- Zhang, Z.; He, T.; Zhu, M.; Sun, Z.; Shi, Q.; Zhu, J.; Dong, B.; Yuce, M.R.; Lee, C. Deep learning-enabled triboelectric smart socks for IoT-based gait analysis and VR applications. npj Flex. Electron. 2020, 4, 29.

- Besser, L.M.; Chrisphonte, S.; Kleiman, M.J.; O’Shea, D.; Rosenfeld, A.; Tolea, M.; Galvin, J.E. The Healthy Brain Initiative (HBI): A prospective cohort study protocol. PLoS ONE 2023, 18, e0293634.

- Ghoraani, B.; Boettcher, L.N.; Hssayeni, M.D.; Rosenfeld, A.; Tolea, M.I.; Galvin, J.E. Detection of mild cognitive impairment and Alzheimer’s disease using dual-task gait assessments and machine learning. Biomed. Signal Process. Control 2021, 64, 102249.

- Greenfield, J.; Guichard, R.; Kubiak, R.; Blandeau, M. Concurrent validity of ProtoKinetics movement analysis software for estimated centre of mass displacement and velocity during walking. Gait Posture 2025, 115, 34–40.

- Romeo, L.; Marani, R.; Malosio, M.; Perri, A.G.; D’Orazio, T. Performance analysis of body tracking with the Microsoft Azure Kinect. In Proceedings of the 2021 29th Mediterranean Conference on Control and Automation (MED), Puglia, Italy, 22–25 June 2021; pp. 572–577.

- Fraccaro, P.; Coyle, L.; Doyle, J.; O’Sullivan, D. Real-world gyroscope-based gait event detection and gait feature extraction. In Proceedings of the Sixth International Conference on eHealth, Telemedicine, and Social Medicine, Barcelona, Spain, 24–27 March 2014.

- Pham, M.H.; Elshehabi, M.; Haertner, L.; Del Din, S.; Srulijes, K.; Heger, T.; Synofzik, M.; Hobert, M.A.; Faber, G.S.; Hansen, C.; et al. Validation of a step detection algorithm during straight walking and turning in patients with Parkinson’s disease and older adults using an inertial measurement unit at the lower back. Front. Neurol. 2017, 8, 457.

- Del Din, S.; Godfrey, A.; Rochester, L. Validation of an accelerometer to quantify a comprehensive battery of gait characteristics in healthy older adults and Parkinson’s disease: Toward clinical and at home use. IEEE J. Biomed. Health Inform. 2015, 20, 838–847.

- Seifallahi, M.; Galvin, J.E.; Ghoraani, B. Detection of mild cognitive impairment using various types of gait tests and machine learning. Front. Neurol. 2024, 15, 1354092.

- Ma, M.; Proffitt, R.; Skubic, M. Validation of a Kinect V2 based rehabilitation game. PLoS ONE 2018, 13, e0202338.

- Weinberg, H. Using the ADXL202 in Pedometer and Personal Navigation Applications; Analog Devices AN-602 Application Note; Technical Report; Analog Devices, Inc.: Norwood, MA, USA, 2002; Volume 2, pp. 1–6.

- Sabatini, A.M.; Martelloni, C.; Scapellato, S.; Cavallo, F. Assessment of walking features from foot inertial sensing. IEEE Trans. Biomed. Eng. 2005, 52, 486–494.

- Ceyhan, B.; LaMar, J.; Nategh, P.; Neghabi, M.; Konjalwar, S.; Rodriguez, P.; Hahn, M.K.; Blakely, R.D.; Ranji, M. Optical imaging reveals liver metabolic perturbations in Mblac1 knockout mice. In Proceedings of the 2023 45th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Sydney, Australia, 24–27 July 2023; pp. 1–4.

- Horak, F.B.; Mancini, M. Objective biomarkers of balance and gait for Parkinson’s disease using body-worn sensors. Mov. Disord. 2013, 28, 1544–1551.

- Chan, W.L.; Pin, T.W. Reliability, validity and minimal detectable change of 2-minute walk test, 6-minute walk test and 10-meter walk test in frail older adults with dementia. Exp. Gerontol. 2019, 115, 9–18.

- Cheng, F.Y.; Chang, Y.; Cheng, S.J.; Shaw, J.S.; Lee, C.Y.; Chen, P.H. Do cognitive performance and physical function differ between individuals with motoric cognitive risk syndrome and those with mild cognitive impairment? BMC Geriatr. 2021, 21, 36.

- Windham, B.G.; Parker, S.B.; Zhu, X.; Gabriel, K.P.; Palta, P.; Sullivan, K.J.; Parker, K.G.; Knopman, D.S.; Gottesman, R.F.; Griswold, M.E.; et al. Endurance and gait speed relationships with mild cognitive impairment and dementia. Alzheimer’s Dement. Diagn. Assess. Dis. Monit. 2022, 14, e12281.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).