A Dynamic Multi-Scale Feature Fusion Network for Enhanced SAR Ship Detection

Abstract

1. Introduction

- The improvements proposed in the studies above mainly target the false positives and false negatives caused by the multi-scale nature of ships in SAR images. They give less attention to scenes containing a large number of small targets, where detection accuracy may therefore still suffer.

- Most of these studies focus on improving accuracy without giving sufficient consideration to the number of parameters. Although Wu et al. [16] took parameter count into account, their model still has 5.855 M parameters.

- To address the false positives and false negatives in SAR ship detection caused by multi-scale variation in ship size and by the large number of small targets, this paper proposes the DRGD-YOLO detection algorithm, which achieves higher detection accuracy while keeping the parameter count balanced.

- A hybrid structure, CSP_DTB, was designed to replace the last two C3k2 layers in the backbone. It expands the receptive field of the model, reduces the loss of small-target dependency information caused by an insufficient receptive field, and enhances the feature extraction ability of the model. Compared with existing transformer-based methods, our main innovation is the dynamic transformer block (DTB), which dynamically allocates feature channels between a convolutional neural network (CNN) branch and a transformer branch through a learnable channel ratio parameter (tcr), so that the two branches process the features in a complementary way. The DTB also replaces the standard feedforward network with a convolutional gated linear unit (CGLU), reducing the computational cost of the transformer while maintaining its global modeling capability.
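The channel-allocation idea behind the DTB can be illustrated with a minimal NumPy sketch. Everything in it is an assumption for illustration, not the authors' implementation: the function names, a fixed `tcr` argument (the sensitivity analysis in Section 3.5.3 varies it per layer), single-head self-attention over all spatial positions for the transformer branch, a 3 × 3 depthwise convolution for the CNN branch, and a CGLU modeled on the convolutional GLU of TransNeXt [21] (1 × 1 expansion, GELU-activated depthwise-convolution gate, elementwise product, 1 × 1 projection). Normalization layers are omitted and random weights stand in for learned ones.

```python
import numpy as np


def split_channels(num_channels: int, tcr: float) -> tuple[int, int]:
    """Split channels into (transformer, CNN) groups according to the ratio tcr."""
    t = int(round(num_channels * tcr))
    if not 0 < t < num_channels:
        raise ValueError("tcr must leave at least one channel in each branch")
    return t, num_channels - t


def depthwise_conv3x3(x: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """Zero-padded 3x3 depthwise convolution; x: (C, H, W), kernels: (C, 3, 3)."""
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros_like(x, dtype=float)
    for i in range(3):
        for j in range(3):
            out += kernels[:, i, j][:, None, None] * padded[:, i:i + h, j:j + w]
    return out


def gelu(x: np.ndarray) -> np.ndarray:
    """Tanh approximation of GELU."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))


def self_attention(x, wq, wk, wv):
    """Single-head self-attention over all H*W positions; x: (C, H, W)."""
    c, h, w = x.shape
    tokens = x.reshape(c, h * w).T                     # (N, C)
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    scores = q @ k.T / np.sqrt(c)
    scores -= scores.max(axis=1, keepdims=True)        # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)            # softmax over keys
    return (attn @ v).T.reshape(c, h, w)


def cglu(x, w_in, dw_kernels, w_out):
    """Convolutional GLU: 1x1 expand, gate = GELU(depthwise 3x3), product, 1x1 project."""
    proj = np.einsum("oc,chw->ohw", w_in, x)
    value, gate = np.split(proj, 2, axis=0)
    gate = gelu(depthwise_conv3x3(gate, dw_kernels))
    return np.einsum("oc,chw->ohw", w_out, value * gate)


def dtb_forward(x, tcr, rng):
    """One hypothetical DTB pass with random weights standing in for learned ones."""
    c = x.shape[0]
    ct, cc = split_channels(c, tcr)
    xt, xc = x[:ct], x[ct:]
    wq, wk, wv = (rng.standard_normal((ct, ct)) * 0.1 for _ in range(3))
    yt = xt + self_attention(xt, wq, wk, wv)                                 # global branch
    yc = xc + depthwise_conv3x3(xc, rng.standard_normal((cc, 3, 3)) * 0.1)  # local branch
    y = np.concatenate([yt, yc], axis=0)
    hidden = 2 * c
    w_in = rng.standard_normal((2 * hidden, c)) * 0.1
    dw = rng.standard_normal((hidden, 3, 3)) * 0.1
    w_out = rng.standard_normal((c, hidden)) * 0.1
    return y + cglu(y, w_in, dw, w_out)                                      # CGLU replaces the FFN


rng = np.random.default_rng(0)
x = rng.standard_normal((16, 8, 8))
print(split_channels(16, 0.25))          # (4, 12)
print(dtb_forward(x, 0.25, rng).shape)   # (16, 8, 8)
```

The design point the sketch makes visible is that attention cost scales with the transformer channel count `ct`, so a small `tcr` keeps most channels in the cheaper convolutional branch.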

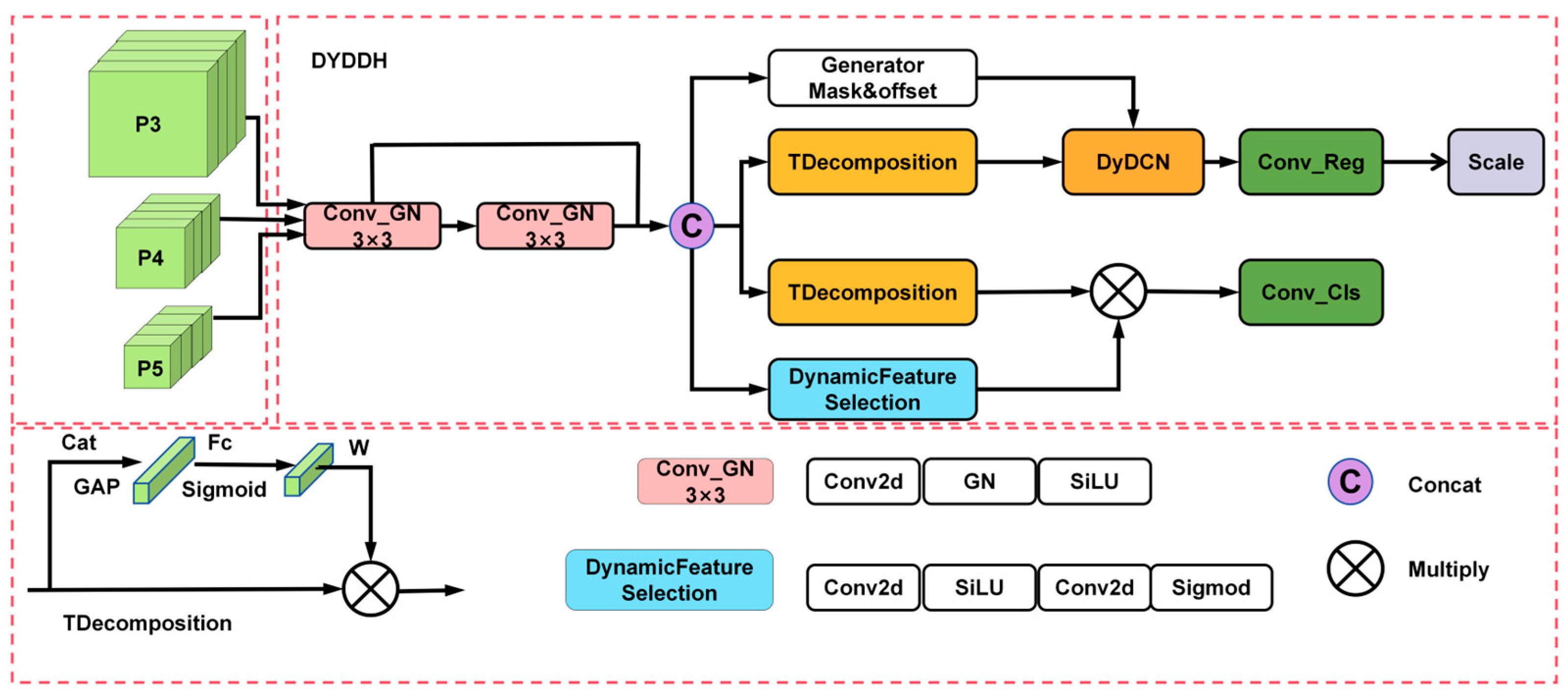

- A Dynamic Decoupled Dual-Alignment Head (DYDDH) was designed to replace the YOLOv11 detection head. It strengthens the information interaction between the decoupled classification and localization branches, improving both localization and classification. The main novelty of DYDDH is a task decomposition mechanism guided by layer attention: weights generated by adaptive global average pooling dynamically reconstruct the convolution kernel parameters, decoupling the features used for the classification and regression tasks. DYDDH also combines a spatially adaptive alignment mechanism with classification probability attention to perform task-specific feature alignment. Whereas traditional channel or spatial attention mechanisms reweight feature maps, our method modulates the convolution weights themselves, which allows it to handle more precisely both the differing feature requirements of classification and localization and the spatial misalignment between the two tasks in SAR target detection.
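The layer-attention task decomposition described above resembles the mechanism of TOOD [25], in which weights computed from globally average-pooled features rescale a shared 1 × 1 reduction convolution separately for each task. The NumPy sketch below illustrates only that step under stated assumptions: the function names, the number of stacked layers, the ReLU/sigmoid two-layer MLP, and folding the attention into the kernel weights are illustrative choices; normalization, activation, spatial alignment, and classification probability attention are omitted.

```python
import numpy as np


def layer_attention(stacked, w1, w2):
    """stacked: (N, C, H, W) outputs of N stacked convolution layers.
    Returns N weights in (0, 1) computed from globally average-pooled features."""
    n, c, h, w = stacked.shape
    pooled = stacked.reshape(n * c, h * w).mean(axis=1)  # adaptive global average pooling
    hidden = np.maximum(w1 @ pooled, 0.0)                 # ReLU
    return 1.0 / (1.0 + np.exp(-(w2 @ hidden)))           # sigmoid, shape (N,)


def task_decompose(stacked, reduce_w, w1, w2):
    """Scale each layer's slice of a 1x1 reduction kernel by its attention weight,
    then apply the re-weighted (dynamic) kernel. reduce_w: (C_out, N*C)."""
    n, c, h, w = stacked.shape
    attn = layer_attention(stacked, w1, w2)
    dyn_w = (reduce_w.reshape(-1, n, c) * attn[None, :, None]).reshape(-1, n * c)
    feats = np.einsum("oc,chw->ohw", dyn_w, stacked.reshape(n * c, h, w))
    return feats, attn


rng = np.random.default_rng(0)
n, c = 4, 8
stacked = rng.standard_normal((n, c, 10, 10))
# Separate (hypothetical) parameters per task yield task-specific features
# from the same shared stack.
cls_feat, cls_attn = task_decompose(stacked, rng.standard_normal((c, n * c)),
                                    rng.standard_normal((16, n * c)),
                                    rng.standard_normal((n, 16)))
reg_feat, reg_attn = task_decompose(stacked, rng.standard_normal((c, n * c)),
                                    rng.standard_normal((16, n * c)),
                                    rng.standard_normal((n, 16)))
print(cls_feat.shape, reg_feat.shape)  # (8, 10, 10) (8, 10, 10)
```

Scaling the kernel slices is mathematically equivalent to scaling each layer's features before the convolution; modulating the weights simply avoids materializing the rescaled feature stack.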

2. Methods

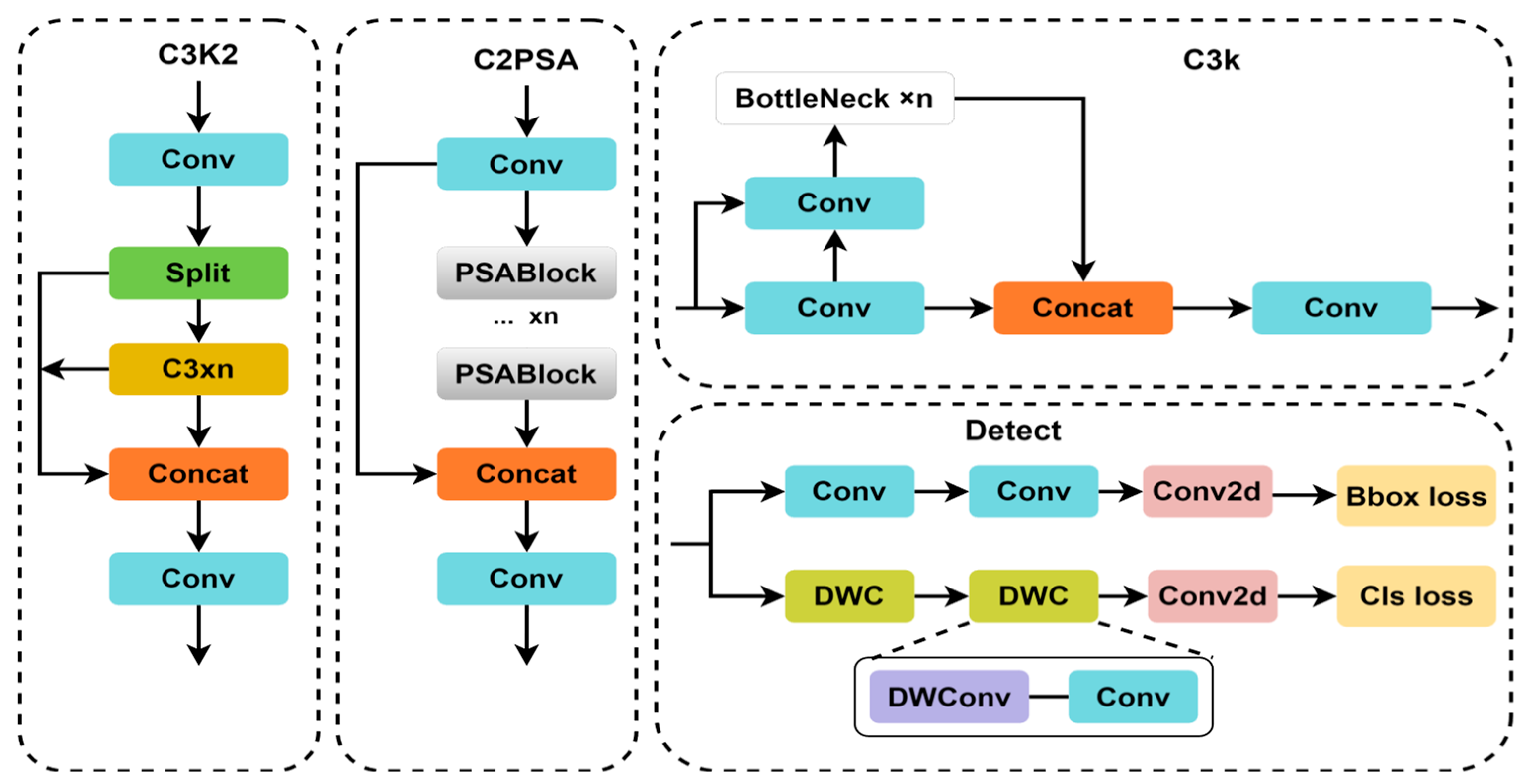

2.1. Introduction to the YOLOv11 Algorithm

2.2. Improved DRGD-YOLO

2.3. Cross-Level Partial Dynamic Transformer Block

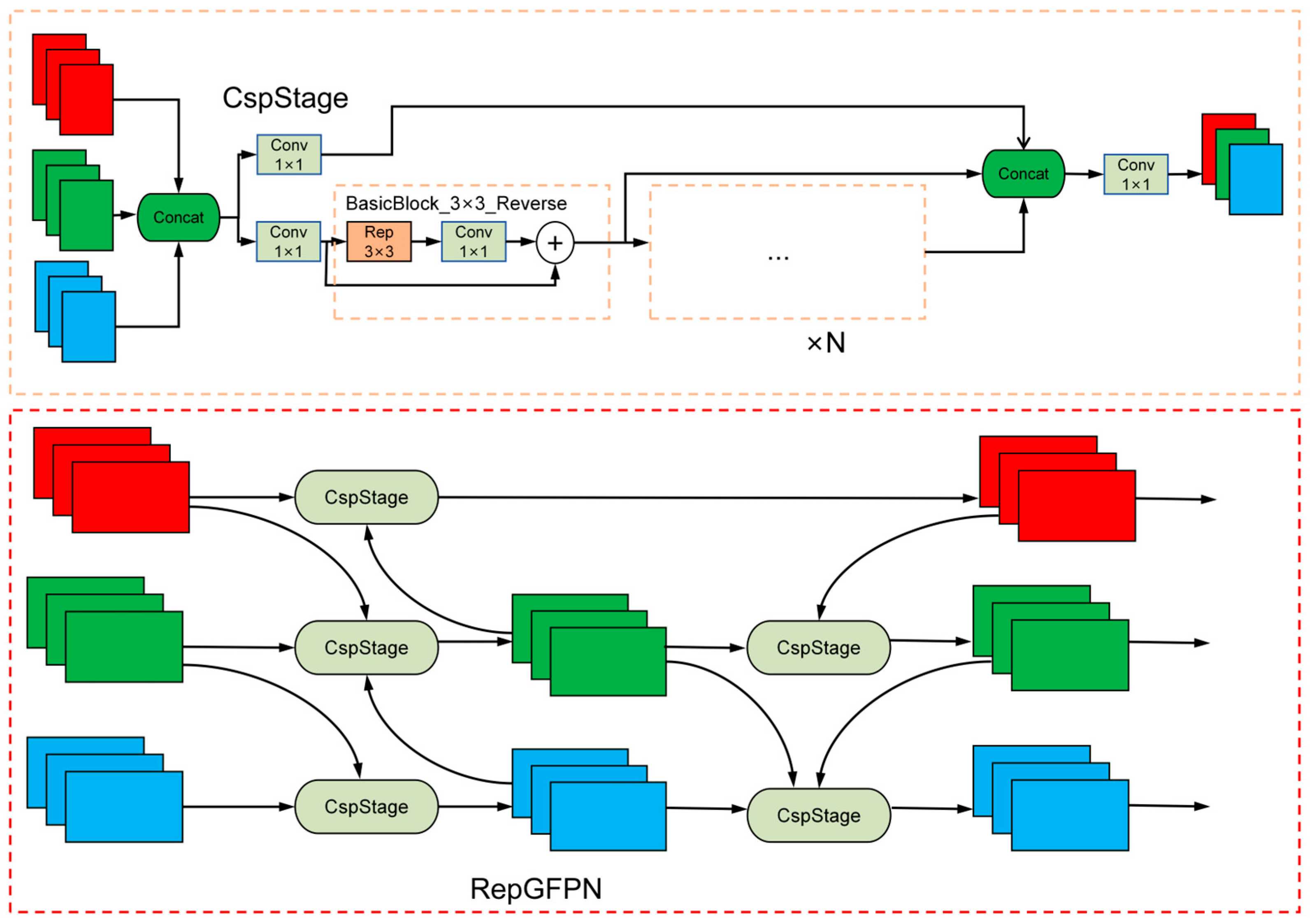

2.4. Utilizing RepGFPN to Replace the Neck

2.5. Dynamic Decoupled Dual-Alignment Head

3. Experiments and Discussions

3.1. Experimental Environment and Configuration

3.2. Introduction to the Dataset

3.3. Evaluation Metrics

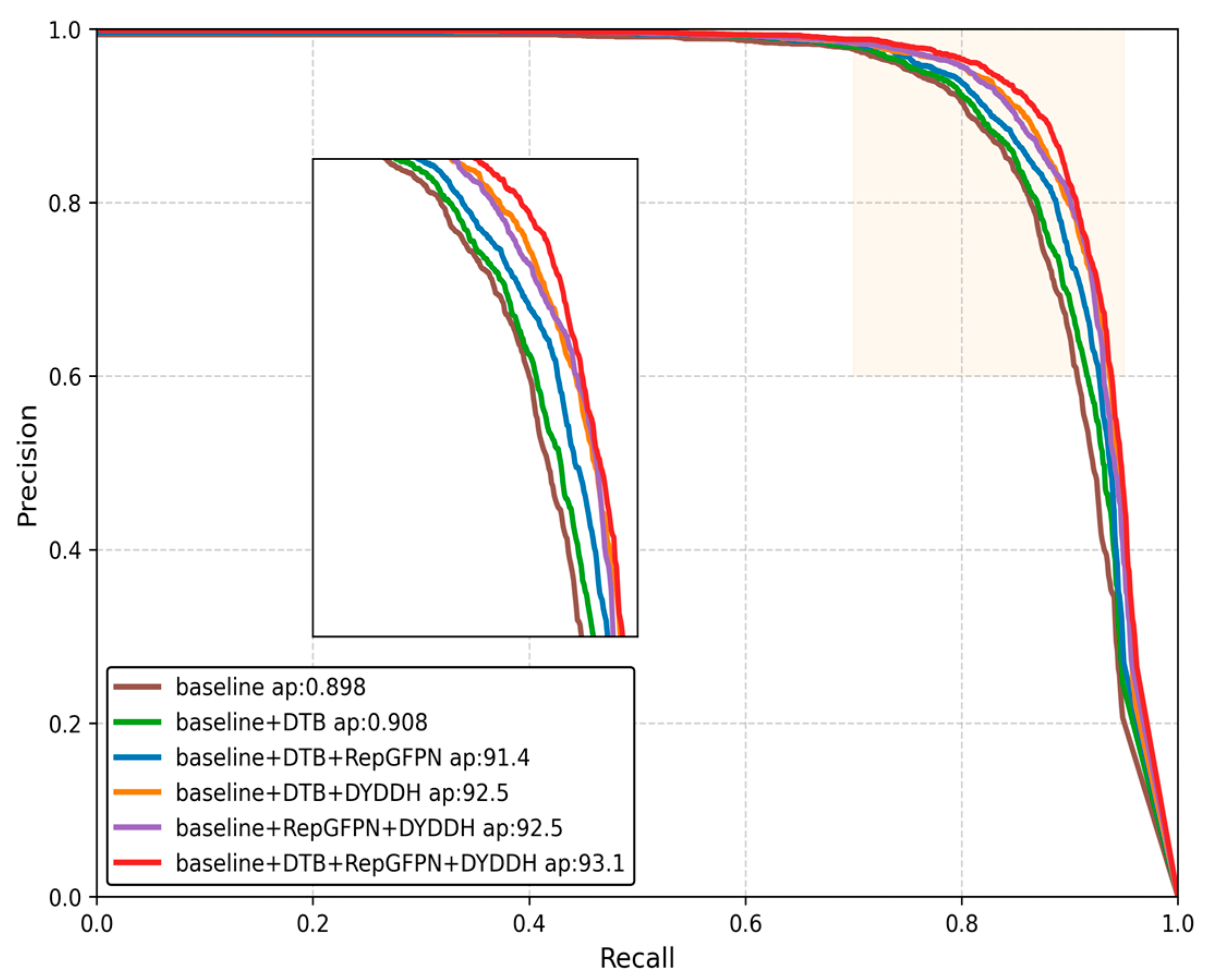

3.4. Ablation Experiment

3.5. Comparative Experiments

3.5.1. Comparison of Various Classical Pyramid Network Structures

3.5.2. Comparison of the Effects of Different Detection Heads

3.5.3. TCR Sensitivity Analysis in CSP_DTB

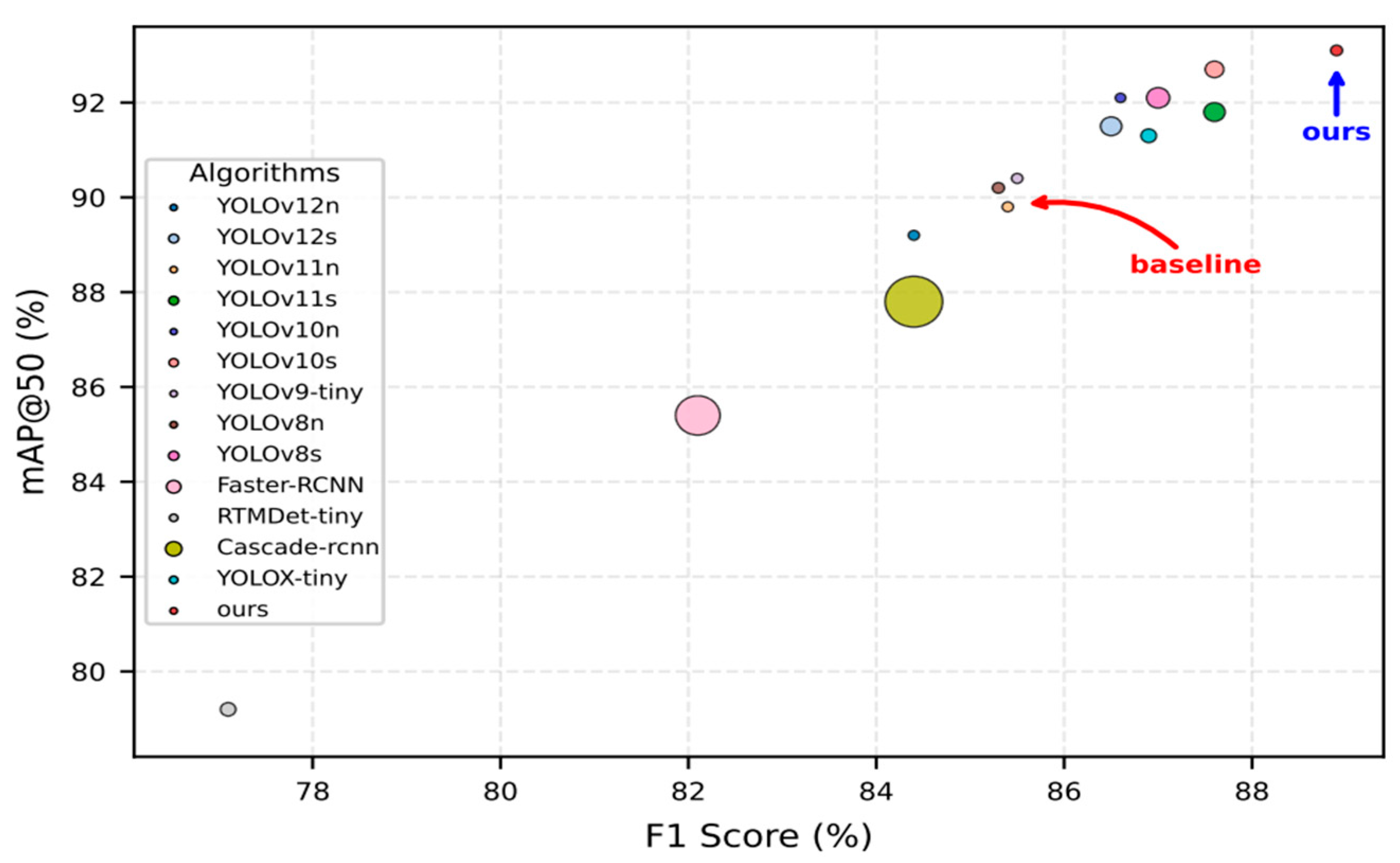

3.5.4. Model Generalization, Validation, and Comparison Test

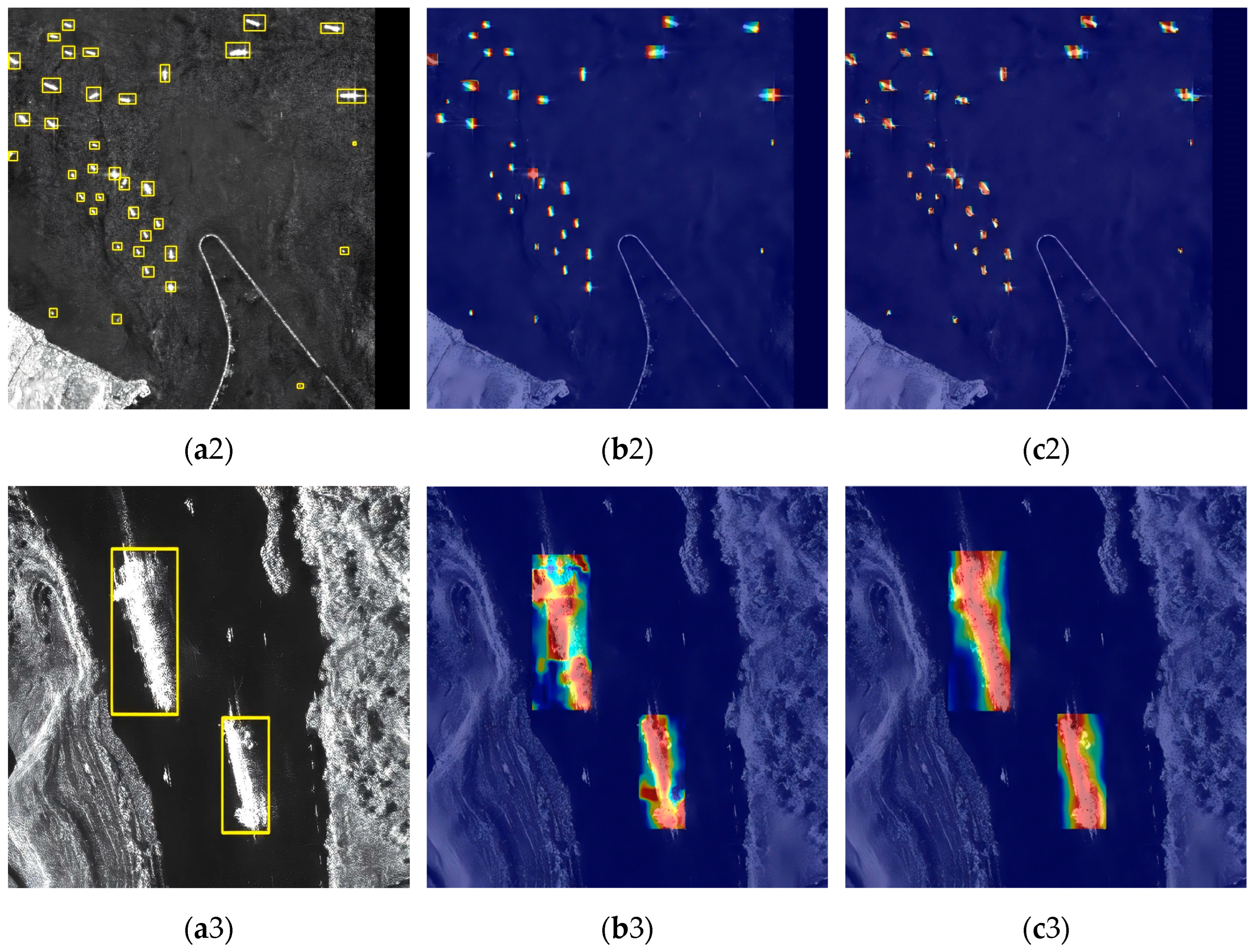

4. Visualization of the Effects of Model Improvement

4.1. Comparison of Inference Result Visualization

4.2. Heat Map Visualization Comparison

- (1) Enhanced Feature Focus Capability: DRGD-YOLO concentrates more strongly on features that are directly relevant to the target. For instance, in the selected HRSID samples, DRGD-YOLO directs greater attention to the ship itself, whereas the attention of YOLOv11 is more dispersed.

- (2) Excellent Detection of Small Targets: in the selected LS-SSDD samples, the heat maps generated by DRGD-YOLO cover a substantial portion of the target area, indicating that the improved model is better at capturing target-relevant information.

- (3) Superior Multi-Scale Detection Performance: even on the selected large-target samples from the SSDD dataset, DRGD-YOLO focuses on the ship targets themselves rather than on irrelevant details. This indicates that DRGD-YOLO is better suited to the multi-scale challenges of SAR ship detection.
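The reference list includes Grad-CAM [40]; assuming the heat maps above were produced with it, each map is the ReLU of a layer's feature maps weighted by their spatially averaged gradients with respect to the target score. The NumPy sketch below shows only that combination step. The function name is hypothetical, and extracting `activations` and `gradients` from a detector layer, choosing the target score, and upsampling the map onto the image are framework-specific and not shown.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM map for one image at one layer.

    activations, gradients: (K, H, W) feature maps A_k and dScore/dA_k.
    Returns an (H, W) map scaled to [0, 1].
    """
    alpha = gradients.mean(axis=(1, 2))                               # channel weights alpha_k
    cam = np.maximum(np.tensordot(alpha, activations, axes=1), 0.0)   # ReLU(sum_k alpha_k A_k)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


acts = np.zeros((2, 3, 3))
acts[0, 1, 1] = 2.0   # channel 0 responds at the centre
acts[1, 0, 0] = 5.0   # channel 1 responds at a corner
grads = np.stack([np.ones((3, 3)), -np.ones((3, 3))])  # score rises with ch 0, falls with ch 1
print(grad_cam(acts, grads))  # 1.0 at the centre, 0 elsewhere
```

The ReLU keeps only regions that increase the target score, which is why a well-focused model produces maps concentrated on the ship rather than on clutter.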

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Xu, G.; Zhang, B.; Yu, H.; Chen, J.; Hong, W. Sparse Synthetic Aperture Radar Imaging from Compressed Sensing and Machine Learning: Theories, Applications, and Trends. IEEE Geosci. Remote Sens. Mag. 2022, 10, 32–69.

- Zhang, R.; Cao, Z.; Huang, Y.; Yang, S.; Xu, L.; Xu, M. Visible-infrared person re-identification with real-world label noise. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 4857–4869.

- Guo, Y.; Chen, S.; Zhan, R.; Wang, W.; Zhang, J. Deformable Feature Fusion and Accurate Anchors Prediction for Lightweight SAR Ship Detector Based on Dynamic Hierarchical Model Pruning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 15019–15036.

- Zhang, J.; Zhang, R.; Xu, L.; Lu, X.; Yu, Y.; Xu, M.; Zhao, H. FasterSal: Robust and Real-Time Single-Stream Architecture for RGB-D Salient Object Detection. IEEE Trans. Multimed. 2025, 27, 2477–2488.

- Zhou, S.; Zhang, M.; Wu, L.; Yu, D.; Li, J.; Fan, F.; Liu, Y.; Zhang, L. SAR ship detection network based on global context and multi-scale feature enhancement. Signal Image Video Process. 2024, 18, 2951–2964.

- Chen, S.; Li, X. A new CFAR algorithm based on variable window for ship target detection in SAR images. Signal Image Video Process. 2019, 13, 779–786.

- Wei, W.; Shi, Y.; Wang, Y.; Liu, S. RDFCNet: RGB-guided depth feature calibration network for RGB-D Salient Object Detection. Neurocomputing 2025, 652, 131127.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 27–30 June 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Proceedings, Part I, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Li, C.; Xi, L.; Hei, Y.; Li, W.; Xiao, Z. Efficient Feature Focus Enhanced Network for Small and Dense Object Detection in SAR Images. IEEE Signal Process. Lett. 2025, 32, 1306–1310.

- Tang, H.; Gao, S.; Li, S.; Wang, P.; Liu, J.; Wang, S.; Qian, J. A Lightweight SAR Image Ship Detection Method Based on Improved Convolution and YOLOv7. Remote Sens. 2024, 16, 486.

- Tang, X.; Zhang, J.; Xia, Y.; Xiao, H. DBW-YOLO: A High-Precision SAR Ship Detection Method for Complex Environments. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 7029–7039.

- Wu, K.; Zhang, Z.; Chen, Z.; Liu, G. Object-Enhanced YOLO Networks for Synthetic Aperture Radar Ship Detection. Remote Sens. 2024, 16, 1001.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Wang, Q.; Qian, Y.; Hu, Y.; Wang, C.; Ye, X.; Wang, H. M2YOLOF: Based on effective receptive fields and multiple-in-single-out encoder for object detection. Expert Syst. Appl. 2023, 213, 118928.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

- Shi, D. TransNeXt: Robust Foveal Visual Perception for Vision Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 17773–17783.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Xu, X.; Jiang, Y.; Chen, W.; Huang, Y.; Zhang, Y.; Sun, X. DAMO-YOLO: A Report on Real-Time Object Detection Design. arXiv 2022, arXiv:2211.15444.

- Tan, Z.; Wang, J.; Sun, X.; Lin, M.; Li, H. GiraffeDet: A heavy-neck paradigm for object detection. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 4 May 2021.

- Feng, C.; Zhong, Y.; Gao, Y.; Scott, M.R.; Huang, W. TOOD: Task-aligned one-stage object detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, BC, Canada, 11–17 October 2021; pp. 3490–3499.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning, PMLR, Lille, France, 7–9 July 2015; pp. 448–456.

- Wu, Y.; He, K. Group normalization. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636.

- Zhu, X.; Hu, H.; Lin, S.; Dai, J. Deformable ConvNets v2: More deformable, better results. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9308–9316.

- Wei, S.; Zeng, X.; Qu, Q.; Wang, M.; Su, H.; Shi, J. HRSID: A high-resolution SAR images dataset for ship detection and instance segmentation. IEEE Access 2020, 8, 120234–120254.

- Zhang, T.; Zhang, X.; Li, J.; Xu, X.; Wang, B.; Zhan, X.; Xu, Y.; Ke, X.; Zeng, T.; Su, H. SAR Ship Detection Dataset (SSDD): Official Release and Comprehensive Data Analysis. Remote Sens. 2021, 13, 3690.

- Zhang, T.; Zhang, X.; Ke, X.; Zhan, X.; Shi, J.; Wei, S.; Pan, D.; Li, J.; Su, H.; Zhou, Y.; et al. LS-SSDD-v1.0: A Deep Learning Dataset Dedicated to Small Ship Detection from Large-Scale Sentinel-1 SAR Images. Remote Sens. 2020, 12, 2997.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Zheng, Z.; Yu, W. RG-YOLO: Multi-scale feature learning for underwater target detection. Multimed. Syst. 2025, 31, 26.

- Chen, Y.; Zhang, C.; Chen, B.; Huang, Y.; Sun, Y.; Wang, C.; Fu, X.; Dai, Y.; Qin, F.; Peng, Y. Accurate Leukocyte Detection Based on Deformable-DETR and Multi-Level Feature Fusion for Aiding Diagnosis of Blood Diseases. Comput. Biol. Med. 2024, 170, 107917.

- Fu, Z.; Ling, J.; Yuan, X.; Li, H.; Li, H.; Li, Y. Yolov8n-FADS: A Study for Enhancing Miners’ Helmet Detection Accuracy in Complex Underground Environments. Sensors 2024, 24, 3767.

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

- Tang, H.; Jiang, Y. An Improved YOLOv8n Algorithm for Object Detection with CARAFE, MultiSEAMHead, and TripleAttention Mechanisms. In Proceedings of the 2024 7th International Conference on Computer Information Science and Application Technology (CISAT), Hangzhou, China, 12–14 July 2024; pp. 119–122.

- Yi, X.; Chen, H.; Wu, P.; Wang, G.; Mo, L.; Wu, B.; Yi, Y.; Fu, X.; Qian, P. Light-FC-YOLO: A Lightweight Method for Flower Counting Based on Enhanced Feature Fusion with a New Efficient Detection Head. Agronomy 2024, 14, 1285.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

| Hyperparameter | Value |
|---|---|
| Initial learning rate | 0.01 |
| Momentum | 0.937 |
| Training epochs | 400 |
| Batch size | 32 |
| Input image size | 640 × 640 |
| Optimizer | SGD |
| Weight decay | 0.0005 |
| Worker threads | 6 |
| Early-stopping rounds | 50 |
| Pre-trained weights | Not loaded |
| Mixed precision | Used |
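For reproduction, the table maps naturally onto the training arguments of the Ultralytics framework in which YOLOv11 is distributed. The dictionary below is a sketch under that assumption only: the training code actually used is not specified in this section, and the dataset file `sar_ships.yaml` in the commented usage is hypothetical.

```python
# Hyperparameters from the table, expressed with Ultralytics `train()` argument names.
train_args = {
    "epochs": 400,          # total training rounds
    "batch": 32,
    "imgsz": 640,           # 640 x 640 input
    "optimizer": "SGD",
    "lr0": 0.01,            # initial learning rate
    "momentum": 0.937,
    "weight_decay": 0.0005,
    "workers": 6,           # data-loading worker threads
    "patience": 50,         # early-stopping rounds (epochs without improvement)
    "pretrained": False,    # no pre-training weights loaded
    "amp": True,            # mixed-precision training enabled
}

# Possible usage, assuming the `ultralytics` package is installed and a
# hypothetical dataset file "sar_ships.yaml" exists:
#   from ultralytics import YOLO
#   YOLO("yolo11n.yaml").train(data="sar_ships.yaml", **train_args)
```

Building the model from a `.yaml` definition rather than a `.pt` checkpoint matches the "Not loaded" entry for pre-trained weights.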

| Metric | Meaning |
|---|---|
| AP50:95 | AP averaged over IoU thresholds from 0.50 to 0.95 in steps of 0.05 |
| AP50 | AP at an IoU threshold of 0.50 |
| AP75 | AP at an IoU threshold of 0.75 |
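The metrics can be made concrete with a short NumPy sketch. AP50:95 is the mean of the APs at the ten IoU thresholds 0.50, 0.55, up to 0.95, and the F1 values in the result tables are consistent with the harmonic mean of P and R (for the baseline, 2 × 91.5 × 80.1 / (91.5 + 80.1) ≈ 85.4). The function names are illustrative, and the AP routine uses all-point interpolation; COCO-style evaluators use 101-point interpolation, so their values can differ slightly.

```python
import numpy as np


def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def average_precision(recall, precision):
    """All-point interpolated AP from a PR curve sorted by descending confidence."""
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([1.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]   # precision envelope (non-increasing)
    step = np.flatnonzero(r[1:] != r[:-1])      # points where recall changes
    return float(np.sum((r[step + 1] - r[step]) * p[step + 1]))


IOU_THRESHOLDS = np.linspace(0.50, 0.95, 10)   # 0.50:0.05:0.95


def ap50_95(ap_per_threshold):
    """AP50:95 is the mean of the APs computed at each of the ten thresholds."""
    assert len(ap_per_threshold) == len(IOU_THRESHOLDS)
    return float(np.mean(ap_per_threshold))


def f1_score(p, r):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * p * r / (p + r)


print(round(box_iou((0, 0, 2, 2), (1, 1, 3, 3)), 4))  # 0.1429
print(round(f1_score(91.5, 80.1), 1))                  # 85.4 (baseline row)
```

A detection counts as a true positive at a given threshold only if its IoU with an unmatched ground-truth box reaches that threshold, which is why AP75 and AP50:95 penalize loose boxes far more than AP50 does.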

| RepGFPN | DYDDH | CSP_DTB | P (%) | R (%) | mAP50 (%) | mAP75 (%) | mAP50:95 (%) | F1 (%) | FLOPs (G) | Params (M) |
|---|---|---|---|---|---|---|---|---|---|---|
| | | | 91.5 | 80.1 | 89.8 | 74.3 | 65.2 | 85.4 | 6.3 | 2.6 |
| √ | | | 90.6 | 83.7 | 91.6 | 76.9 | 68.5 | 86.2 | 8.2 | 3.6 |
| | √ | | 90.6 | 83.9 | 91.9 | 76.0 | 67.2 | 87.1 | 7.9 | 2.2 |
| | | √ | 92.2 | 80.1 | 90.8 | 74.7 | 66.5 | 85.7 | 6.2 | 2.5 |
| √ | √ | | 91.7 | 83.9 | 92.5 | 76.3 | 67.9 | 87.6 | 10.6 | 3.1 |
| √ | | √ | 92.3 | 81.2 | 91.4 | 75.2 | 67.0 | 86.4 | 8.0 | 3.5 |
| | √ | √ | 92.1 | 84.0 | 92.5 | 77.2 | 68.2 | 87.9 | 7.7 | 2.1 |
| √ | √ | √ | 92.4 | 85.6 | 93.1 | 78.5 | 69.8 | 88.9 | 10.4 | 3.0 |

| Method | mAP50 (%) | mAP75 (%) | mAP50:95 (%) | F1 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|
| Baseline | 89.8 | 74.3 | 65.2 | 85.4 | 2.6 | 6.3 |
| RepGFPN [23] | 91.3 | 77.3 | 67.6 | 86.7 | 3.6 | 8.4 |
| BIFPN [33] | 90.2 | 75.0 | 65.9 | 85.5 | 1.9 | 6.4 |
| GDFPN [34] | 90.4 | 75.1 | 65.9 | 86.0 | 3.7 | 8.4 |
| HSFPN [35] | 89.9 | 73.1 | 64.9 | 85.1 | 1.8 | 5.7 |

| Method | mAP50 (%) | mAP75 (%) | mAP50:95 (%) | F1 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|
| Baseline | 89.8 | 74.3 | 65.2 | 85.4 | 2.6 | 6.3 |
| SEAMHead [36] | 91.5 | 75.0 | 66.4 | 86.3 | 2.5 | 6.0 |
| PGI [37] | 91.4 | 76.9 | 67.7 | 86.8 | 3.6 | 8.8 |
| MultiSEAMHead [38] | 91.3 | 76.9 | 67.8 | 86.3 | 4.6 | 6.3 |
| EfficientHead [39] | 90.4 | 74.1 | 65.7 | 85.7 | 2.3 | 5.2 |
| DYDDH (ours) | 91.9 | 76.0 | 67.2 | 87.1 | 2.2 | 7.9 |

| Model | TCR1 (Layer 6) | TCR2 (Layer 8) | mAP50 (%) | mAP75 (%) | mAP50:95 (%) | F1 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|
| Ours | 0.25 | 0.25 | 92.8 | 79.4 | 69.6 | 88.5 | 2.98 | 10.4 |
| Ours | 0.25 | 0.5 | 93.1 | 78.5 | 69.8 | 88.9 | 2.95 | 10.4 |
| Ours | 0.25 | 0.75 | 92.1 | 77.5 | 68.5 | 88.2 | 2.94 | 10.4 |
| Ours | 0.5 | 0.5 | 92.4 | 78.4 | 69.3 | 88.3 | 2.94 | 10.4 |
| Ours | 0.75 | 0.5 | 92.4 | 78.1 | 69.0 | 88.0 | 2.94 | 10.4 |

| Hyperparameter | Value |
|---|---|
| Initial learning rate | 0.01 |
| Momentum | 0.937 |
| Training epochs | 400 |
| Batch size | 32 |
| Input image size | 640 × 640 |
| Optimizer | SGD |
| Weight decay | 0.0005 |
| Worker threads | 6 |
| Early-stopping rounds | 0 |
| Pre-trained weights | Not loaded |
| Mixed precision | Not used |

| Method | Params (M) | FLOPs (G) | P (%) | R (%) | mAP50 (%) | mAP75 (%) | mAP50:95 (%) | F1 (%) |
|---|---|---|---|---|---|---|---|---|
| YOLO12n | 2.55 | 6.0 | 90.0 | 79.5 | 89.2 | 72.9 | 64.4 | 84.4 |
| YOLO12s | 9.41 | 19.4 | 92.3 | 81.5 | 91.5 | 78.2 | 69.0 | 86.5 |
| YOLO11n | 2.58 | 6.3 | 91.5 | 80.1 | 89.8 | 74.3 | 65.2 | 85.4 |
| YOLO11s | 9.41 | 21.5 | 91.9 | 83.6 | 91.8 | 77.8 | 70.5 | 87.6 |
| YOLOv10n | 2.27 | 6.7 | 90.3 | 83.2 | 92.1 | 77.9 | 68.1 | 86.6 |
| YOLOv10s | 7.22 | 21.6 | 91.2 | 84.4 | 92.7 | 79.8 | 69.5 | 87.6 |
| YOLOv9-tiny | 2.62 | 10.6 | 90.2 | 81.3 | 90.4 | 73.2 | 64.9 | 85.5 |
| YOLOv8n | 3.01 | 8.7 | 91.7 | 79.7 | 90.2 | 74.4 | 65.4 | 85.3 |
| YOLOv8s | 11.13 | 28.6 | 91.0 | 83.4 | 92.1 | 76.7 | 67.8 | 87.0 |
| Faster-RCNN | 41.35 | 208 | 89.9 | 75.5 | 85.4 | 75.5 | 65.4 | 82.1 |
| RTMDet-tiny | 4.87 | 8.03 | 86.8 | 69.4 | 79.2 | 61.7 | 55.6 | 77.1 |
| Cascade-RCNN | 69.15 | 236 | 90.8 | 78.9 | 87.8 | 78.7 | 68.4 | 84.4 |
| YOLOX-tiny | 5.03 | 7.58 | 89.4 | 84.5 | 91.3 | 74.5 | 66.1 | 86.9 |
| Ours | 2.95 | 10.4 | 92.4 | 85.6 | 93.1 | 78.5 | 69.8 | 88.9 |

| Dataset | Method | mAP50 (%) | mAP75 (%) | mAP50:95 (%) | F1 (%) | FLOPs (G) | Params (M) |
|---|---|---|---|---|---|---|---|
| LS-SSDD-v1.0 | YOLOv12n | 74.5 | 14.9 | 29.8 | 74.3 | 6.0 | 2.55 |
| | YOLOv12s | 75.3 | 15.2 | 30.2 | 75.3 | 19.4 | 9.41 |
| | YOLOv11n | 74.1 | 14.5 | 29.7 | 73.8 | 6.3 | 2.58 |
| | YOLOv11s | 74.1 | 15.1 | 29.8 | 74.4 | 21.5 | 9.41 |
| | YOLOv10n | 71.5 | 15.7 | 29.2 | 70.1 | 6.7 | 2.27 |
| | YOLOv10s | 73.0 | 16.0 | 30.4 | 71.5 | 21.6 | 7.22 |
| | YOLOv9-tiny | 74.5 | 15.9 | 30.3 | 74.5 | 10.6 | 2.62 |
| | YOLOv8n | 74.6 | 15.6 | 29.9 | 74.4 | 8.7 | 3.01 |
| | YOLOv8s | 75.9 | 15.5 | 30.2 | 75.7 | 28.6 | 11.13 |
| | Faster-RCNN | 73.0 | 16.5 | 29.9 | 72.1 | 208 | 41.35 |
| | RTMDet-tiny | 64.5 | 12.2 | 25.7 | 61.7 | 8.03 | 4.87 |
| | Cascade-RCNN | 76.4 | 17.8 | 32.0 | 75.2 | 236 | 69.15 |
| | YOLOX-tiny | 72.4 | 11.8 | 28.0 | 72.6 | 7.58 | 5.03 |
| | Ours | 75.6 | 15.6 | 30.1 | 75.2 | 10.4 | 2.95 |
| SSDD | YOLOv12n | 97.1 | 87.2 | 71.6 | 93.9 | 6.0 | 2.55 |
| | YOLOv12s | 97.5 | 90.2 | 74.8 | 95.6 | 19.4 | 9.41 |
| | YOLOv11n | 97.5 | 90.2 | 73.2 | 94.7 | 6.3 | 2.58 |
| | YOLOv11s | 98.0 | 91.2 | 74.4 | 95.1 | 21.5 | 9.41 |
| | YOLOv10n | 97.3 | 88.5 | 72.8 | 94.2 | 6.7 | 2.27 |
| | YOLOv10s | 94.7 | 71.6 | 61.7 | 91.6 | 21.6 | 7.22 |
| | YOLOv9-tiny | 97.3 | 88.9 | 72.2 | 94.0 | 10.6 | 2.62 |
| | YOLOv8n | 97.1 | 91.4 | 74.0 | 94.9 | 8.7 | 3.01 |
| | YOLOv8s | 97.6 | 91.7 | 75.4 | 95.4 | 28.6 | 11.13 |
| | Faster-RCNN | 96.4 | 86.1 | 71.3 | 91.9 | 208 | 41.35 |
| | RTMDet-tiny | 95.8 | 85.1 | 70.3 | 91.5 | 8.03 | 4.87 |
| | Cascade-RCNN | 96.4 | 87.4 | 72.7 | 91.8 | 236 | 69.15 |
| | YOLOX-tiny | 93.7 | 59.6 | 55.1 | 90.6 | 7.58 | 5.03 |
| | Ours | 98.2 | 91.9 | 76.8 | 95.9 | 10.4 | 2.95 |

| Method | Params (M) | FLOPs (G) | FPS |
|---|---|---|---|
| Baseline | 2.55 | 6.3 | 88.7 |
| Ours | 2.95 | 10.4 | 66.7 |
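Throughput in frames per second converts directly to average per-image latency (1000 / FPS milliseconds), which makes the runtime cost of the added modules easier to read. The short sketch below applies that conversion to the two rows of the table; the hardware on which these FPS values were measured is not stated in the table.

```python
def latency_ms(fps: float) -> float:
    """Average per-image latency in milliseconds for a given throughput."""
    return 1000.0 / fps


baseline_fps, ours_fps = 88.7, 66.7
extra_ms = latency_ms(ours_fps) - latency_ms(baseline_fps)
throughput_drop = (baseline_fps - ours_fps) / baseline_fps

print(f"baseline: {latency_ms(baseline_fps):.2f} ms, ours: {latency_ms(ours_fps):.2f} ms")
# baseline: 11.27 ms, ours: 14.99 ms
print(f"extra latency: {extra_ms:.2f} ms, throughput drop: {throughput_drop:.1%}")
# extra latency: 3.72 ms, throughput drop: 24.8%
```

In other words, the accuracy gains in the tables above cost roughly 3.7 ms per image on the measurement hardware, while the model remains well above typical real-time rates.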

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cao, R.; Sui, J. A Dynamic Multi-Scale Feature Fusion Network for Enhanced SAR Ship Detection. Sensors 2025, 25, 5194. https://doi.org/10.3390/s25165194
