Abstract

This paper proposes a novel virtual-fixture-based shared control concept for eye surgery systems, focusing on cataract procedures, which are among the most common ophthalmic surgeries. Current research on haptic force feedback aims to enhance manipulation capabilities by integrating teleoperated medical robots. Our concept uses teleoperated medical robots to improve the training of young surgeons by providing haptic feedback during cataract operations based on geometrical virtual fixtures. The core novelty of our concept is the active guidance to the incision point, which is generated directly from the geometrical representation of the virtual fixtures and is therefore computationally efficient. Furthermore, novel virtual fixtures are introduced to protect the posterior corneal surface of the eye during the cataract operation. The concept is tested in a human-in-the-loop pilot study with non-medical engineering students as participants. The results indicate that the proposed shared control system is helpful for the test subjects. Therefore, the proposed concept can be beneficial for the training of inexperienced surgeons.

1. Introduction

In the field of human–machine systems, creating seamless interactions between humans and technology is crucial, particularly as automation becomes increasingly prevalent in daily life; see, e.g., [1,2]. Adaptive human–machine systems are designed to provide intuitive and responsive control, improving user experience and precision in complex tasks [3,4]. Surgical applications are a field in which these systems are particularly valuable [5,6]. Achieving full automation in surgery is challenging because surgical tasks are intricate and require exceptional precision and adaptability, as highlighted in [7,8]. Moreover, the approval and registration of such systems can be difficult, since stringent safety, performance, and regulatory standards must be met to ensure patient safety.

However, teleoperated medical robots have the potential to increase surgical precision. (Teleoperated surgery and robot-assisted surgery are overlapping research areas; in this paper, robot assistance is implemented through teleoperation, so both terms are used interchangeably.) Robot-assisted surgical systems help surgeons perform their tasks more efficiently and safely. Features such as virtual fixtures impose constraints on tool positioning to prevent damage to sensitive tissues [9].

Medical robots are utilized for various surgeries in clinical practice, with ongoing research focused on their integration into ophthalmic surgery. Robotic assistance offers several advantages, including greater precision, fewer complications, and faster healing times. This technology is particularly beneficial in surgical procedures requiring high accuracy in areas with limited visibility and space, such as cataract surgery [10]. In developing countries, where access to highly experienced surgeons may be limited, robotic assistance can empower less experienced surgeons to perform complex surgeries effectively and can help to increase the cataract surgery rate [9].

Cataract, a common clinical condition that is primarily treated surgically, is a major focus among surgical procedures. Its most common type, senile cataract, is an age-related opacity of the lens. In Germany, lenticular opacity affects 50% of individuals aged 60 and above, rising to 90% for those over 75 [11]. If left untreated, cataracts can ultimately result in blindness. Globally, cataracts account for one-third of the 35 million blind individuals [9], and approximately 20 million cataract surgeries are performed yearly [12]. The rising life expectancy in industrialized nations is leading to an increased need for primary and secondary cataract surgeries [11]. Most cataract surgeries are still performed manually, without any robotic assistance.

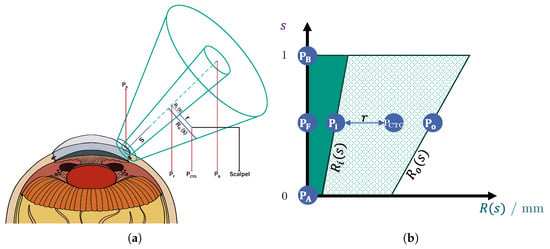

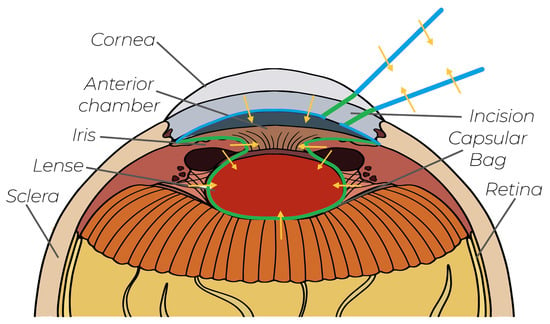

Cataract removal typically demands several hours of training for young surgeons to achieve proficiency [13]. As an enhancement to existing simulation-based training setups, a haptic human–machine interface could accelerate the learning process for novice surgeons, reducing the time required to reach competency [5]. To address this need, we propose a shared control system that incorporates virtual fixtures to guide the surgeon’s actions. The contributions of this work are summarized graphically in Figure 1: (1) virtual-fixture-based shared control for the incision and (2) virtual-fixture-based protection of the posterior corneal surface. Furthermore, a usability study is conducted in which the benefits of our virtual-fixture-based shared control are evaluated.

Figure 1.

Existing concepts in cataract surgery: the green lines are the conventional virtual fixtures from the literature. The blue lines are our novel virtual fixtures, which are implemented in this work. The orange arrows indicate the force feedback (FF) directions.

The remainder of the paper is organized as follows: in Section 2, the state of the art and the research gap are discussed. Section 3 presents the technical system used for the development and validation of our shared control concepts. This is followed by the presentation of our novel virtual-fixture-based shared control guidance in Section 4. Then, our pilot study is presented in Section 5, and its results are discussed in Section 6. Finally, Section 7 concludes our work.

2. State of the Art and Research Gap

This overview first covers teleoperation and shared control medical assistance systems in general, followed by a discussion of cataract surgery training and safety systems. Note that learning-based and black-box models or concepts are not included, since their formal approval by the FDA or EMA (FDA: Food and Drug Administration; EMA: European Medicines Agency) remains challenging and open. Nevertheless, they offer interesting and promising approaches.

As an add-on to a simulation-based training system such as [14], an adaptive human–machine interface is a promising way to accelerate the training of young surgeons, reducing the time needed before they can perform cataract operations independently. To address this, we propose a shared control system that incorporates virtual fixtures to guide the surgeon’s actions, combining robotic assistance with human-in-the-loop characteristics; see [15]. Our proposed cooperative assistance system aims to improve surgical outcomes and to enable faster, more effective training of new surgeons, making it a promising approach for the future of surgical education and practice.

2.1. Teleoperation and Shared Control Medical Assistance Systems

In the literature, there are various shared control and teleoperation systems for robot-assisted surgeries for different types of operations; see, e.g., robot-assisted vitreoretinal surgery [16]. One of the most commonly used teleoperation systems is the Da Vinci, which has been used in clinical practice for minimally invasive surgeries and research worldwide since 2000 ([17] (Chapter 9)), ([18] (Chapter 3)). The integration of haptic feedback for teleoperating surgeons into the Da Vinci system was only realized in 2024 due to the challenges in designing intuitive feedback that meaningfully improves the system’s safety and efficiency; see [19,20].

The importance of haptic force feedback (FF) for surgical teleoperation systems is highlighted in [21], where the fundamental challenges of providing haptic feedback are discussed. Furthermore, many current robot-assisted surgical systems are at level 1 according to LASR (Levels of Autonomy in Surgical Robotics), as stated in [8,22]. Since surgeons will therefore not be replaced by automation in the next few years, haptic support can also be beneficial for their training in the near future.

Such haptic support is realized by means of shared control systems [23], which involve “sensory awareness and motor accuracy of the surgeon, thereby leading to improved surgical procedures and outcomes for patients”. Examples are presented in [24,25], where shared control systems are proposed for robot-assisted craniotomy drilling and robot-assisted mandibular angle split osteotomy. Both studies indicated that surgical procedures with haptic feedback had better outcomes than manually conducted ones.

In [26], dynamic virtual fixtures are generated from intra-operative 3D images and used to generate haptic feedback for the operating surgeon. The proposed method targets thoracic surgery, with the main focus on generating forbidden-region virtual fixtures; see [26] for more details. In [27], virtual fixtures are generated from a reference path and used as an attractive potential. In [28], a shared control system is presented for medical applications. Although the authors describe their system as shared control, there is no simultaneous haptic interaction between automation and the human surgeon: specific subtasks of the bimanual peg transfer task are automated by trained, data-based control algorithms to optimally support the surgeon.

2.2. Cataract Surgery Training and Safety Systems

Unlike systems aimed at full automation, virtual fixtures are designed to enhance the operator’s medical proficiency, which is an advantageous characteristic for medical training systems. Since shorter cataract surgeries are associated with improved patient outcomes [29], it is crucial that young surgeons quickly achieve proficiency, as surgical experience significantly reduces operation duration [30].

Current state-of-the-art training systems include approaches that help surgeons reach the necessary experience level. An adaptive, skill-oriented training system is presented in [31], in which the benefits of a systematic training curriculum are validated in a feasibility study. Such systems could be extended in the future with our proposed haptic shared control concept. In [32], haptic guidance is proposed for surgical training; however, it is based on a Transformer model, which requires a predefined reference trajectory. In our work, no reference trajectory is needed: the FF is generated directly from the virtual fixtures.

To optimally challenge inexperienced surgeons, an optimal-challenge-point controller based on a skill assessment of the trainee is proposed in [33]. In [34], a Unity-based simulator is proposed for the virtual training of young surgeons. However, that system does not provide haptic feedback, which could help reduce their training time. A recent thesis [35] investigated haptic cues and suggests that guidance-based and error-amplifying haptic feedback training could improve performance in robot-assisted telesurgery, especially among participants with initially lower skill levels.

In the context of cataract operations, a few studies in the literature have explored the use of virtual fixtures for generating FF. In [9], a serial robot was employed to implement various types of virtual fixtures for teleoperated cataract surgery. These virtual fixtures included a remote center-of-motion control algorithm to protect the corneal incision site, as well as constraints to facilitate the safe removal of the lens. The virtual fixtures were designed to automatically adapt to eye rotations, providing protection to the posterior side of the iris and the capsular bag. This approach relied on geometric primitives derived from a mathematical eye model. Another method for incision protection is detailed in [36], where a cooperative robotic system was used to measure interaction forces between the surgical tool and the scleral incision. Various control algorithms were tested on a dynamic physical eye model to limit interaction forces, including a virtual fixture that constrained motion when the interaction force surpassed a predefined threshold. In [37], a torus-shaped virtual guidance force field was proposed to assist in capsulorhexis during cataract surgery, effectively preventing ruptures of the anterior capsular bag and damage to the interior of the iris.

2.3. Research Gap

In summary, virtual fixtures can effectively protect certain eye regions that are vulnerable to complications during cataract surgery; see Table 1. To further reduce the risk of complications in future cataract surgery, complementary virtual fixtures are introduced in this paper. By integrating the proposed algorithms, simulation-based training systems can provide enhanced support for trainee surgeons. Thus, the contributions of this paper are as follows:

- Guidance to Incisions: This paper uses virtual-fixture-based shared control to generate FF for the guidance of surgeons along a predefined axis to the incision point. The novel guidance concept incorporates an efficient geometrical representation of the virtual fixtures, which can be directly taken from the soft tissue geometry, making our concept generalizable.

- Protection of the Posterior Corneal Surface: The posterior corneal surface is fragile and therefore must not be touched during manipulation inside the anterior chamber. A hemisphere-shaped virtual fixture is used to generate FF toward the center of the anterior chamber, guiding the operating tool away from the cornea.
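To make the hemisphere-shaped fixture more concrete, the following is a minimal sketch under explicit assumptions; it is not the authors' formulation. It assumes that the posterior corneal surface is approximated by a hemisphere with a hypothetical center `center`, radius `radius`, and dome direction `axis`. It also assumes a force-free core, with a linear spring of hypothetical stiffness `k` acting only inside a warning band of width `margin` below the surface.

```python
import math

def hemisphere_fixture_force(p, center, radius, margin, k, axis=(0.0, 0.0, 1.0)):
    """Hypothetical hemisphere fixture pushing the tool tip back toward the chamber center.

    p, center: 3D positions in m; radius: hemisphere radius approximating the
    posterior corneal surface; margin: width (m) of the warning band below that
    surface; k: stiffness in N/m; axis: direction of the dome (assumption).
    Returns a 3D force in N.
    """
    offset = [pi - ci for pi, ci in zip(p, center)]
    # Only the dome side of the hemisphere is guarded by this fixture
    if sum(o * a for o, a in zip(offset, axis)) < 0.0:
        return (0.0, 0.0, 0.0)
    dist = math.hypot(*offset)
    inner = radius - margin  # boundary of the force-free core region
    if dist == 0.0 or dist <= inner:
        return (0.0, 0.0, 0.0)
    # Penetration into the warning band, directed back toward the center
    penetration = dist - inner
    scale = -k * penetration / dist
    return tuple(scale * o for o in offset)
```

For example, with a 3 mm radius, a 0.5 mm band, and k = 1000 N/m, a tool tip 2.8 mm above the center penetrates the band by 0.3 mm and receives about 0.3 N toward the center. Other regions, such as the iris, would be handled by separate fixtures.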

Table 1.

Literature on virtual fixtures in ophthalmic surgery. A checkmark (✓) indicates that a work addresses the challenge, while a cross (✗) indicates that it does not.


| Work | Positioning Support for Incision | Protection of Incision | Protection of Posterior Cornea | Protection of Iris | Guidance for Capsulorhexis | Protection of Capsular Bag |
|---|---|---|---|---|---|---|
| [9] | ✗ | ✓ | ✗ | ✓ back side | ✗ | ✓ |
| [38] | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ |
| [37] | ✗ | ✗ | ✗ | ✓ inner side | ✓ | ✗ |
| [39] | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ |

To visually summarize the contributions of this work, the two novel protection areas are marked in purple in Figure 1. Additionally, an experimental setup was established, and a pilot study was conducted to validate and analyze the proposed incision-point-finding shared control method, since, to the best of our knowledge, no such study has been reported in the literature.

3. System Overview

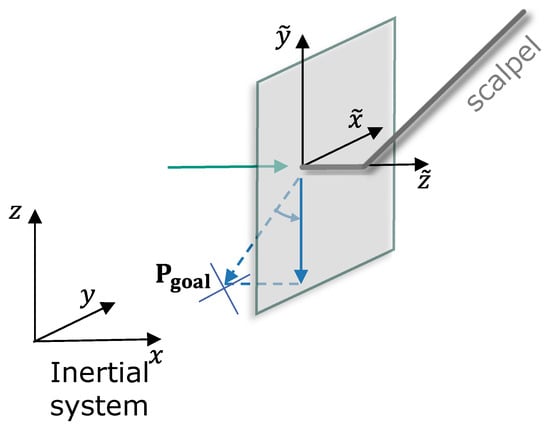

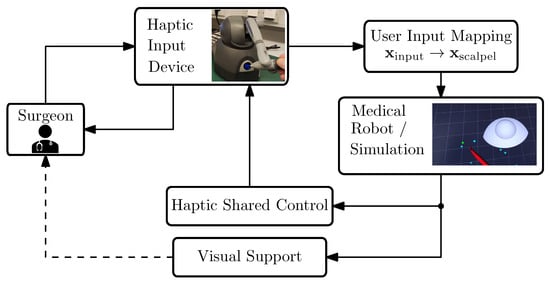

This section introduces the technical system used to develop and validate our virtual-fixture-based shared control concept. Figure 2 shows the structure of the teleoperated surgery system. Furthermore, the requirements of the shared control system are discussed.

Figure 2.

System overview of our robot-assisted surgical system. The arrows indicate the communication flow within the system.

3.1. Technical System

To save resources, this study uses a simulated environment for the medical robot. This choice does not negatively influence the validation or verification of our shared control concepts, because robot motion is tracked with high accuracy in the simulation environment.

In robot-assisted telesurgery, surgeons rely exclusively on visual and haptic interfaces to interact indirectly with patients, since the robot handles physical contact remotely. This separation results in the absence of direct feedback to surgeons, creating additional challenges; see, e.g., ([21], pp. 341–342). Our system works with the ROS2 middleware and includes the following components and functionalities:

- A haptic input device, a 3D Systems Touch (in the literature, this device is also referred to as the master robot, haptic input device, etc. For brevity, in this work, we will refer to it simply as the input device. More information can be found on the manufacturer’s website and in e.g., [40]).

- Mapping of the user inputs to the medical robot’s motion.

- Simulation of the scalpel’s motion. In our framework, we used SOFA for soft tissue interaction while we employed RViz for rapid deployment in situations that did not demand detailed modeling of tissue–scalpel interactions.

- Haptic shared control function. In general, this function can be virtual-fixture-based, model-based, or model-free. In this work, we propose a virtual-fixture-based haptic support.

- Visual cues for guidance. Visual cues have been shown to be helpful for training inexperienced surgeons [41]; therefore, our setup includes visual guidance as well.

Users hold the input device like a pen and move it freely within its workspace to control the teleoperated medical robot. The device has a serial kinematic structure resembling a robotic arm, with six revolute joints. Positional FF is provided by actuating the first three joints, whereas the underactuated structure prevents orientation FF (a limitation shared by many widely used teleoperation input devices). The device provides FF of up to 3.3 N in any direction and has two buttons at its end effector for extra functionality.
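The device can render at most 3.3 N, so any larger commanded force has to be limited before it is sent to the device. The sketch below assumes saturation by rescaling the force magnitude while keeping its direction. Clipping each axis independently would instead distort the direction of the guidance. The function name is hypothetical, and the sketch does not use any vendor API.

```python
import math

MAX_FORCE_N = 3.3  # maximum force of the input device, as stated above

def saturate_force(force, max_norm=MAX_FORCE_N):
    """Scale a 3D force vector (N) down to max_norm while preserving its direction."""
    norm = math.hypot(*force)
    if norm <= max_norm:
        return tuple(force)
    scale = max_norm / norm
    return tuple(scale * f for f in force)
```

For example, a commanded force of (3, 4, 0) N, whose magnitude is 5 N, would be scaled to approximately (1.98, 2.64, 0) N.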

3.2. Technical Requirements

The core requirements of the system were derived from discussions with our industrial partners and potential future users of the proposed shared control concept. According to these discussions, the following points should be respected when implementing virtual fixtures.

- It must be safe to release the input device at any time. When operators relax their grasp, the generated FF must not cause any dangerous motion.

- The system should have a modular architecture. Different types of virtual fixtures should have the same interfaces to be easily exchangeable. This is necessary for the generalization of the shared control concept for various applications.

- The system should have a low latency. The time delay between measuring a new pose and setting the corresponding force field should not be perceptible by the user. The latency of the system needs to be tested.
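The modularity and latency requirements can be illustrated with the following sketch. It assumes a common abstract interface through which every virtual fixture maps a tool position to a force, so that fixtures can be exchanged or summed without changing the control loop. It then times one force update with `time.perf_counter`. The class and function names are hypothetical and are not the authors' implementation.

```python
import time
from abc import ABC, abstractmethod

class VirtualFixture(ABC):
    """Common interface (assumption): every fixture maps a tool position (m) to a force (N)."""

    @abstractmethod
    def force(self, position):
        ...

class PointAttractor(VirtualFixture):
    """Hypothetical example fixture: linear spring toward a target point."""

    def __init__(self, target, stiffness):
        self.target = target
        self.stiffness = stiffness

    def force(self, position):
        return tuple(self.stiffness * (t - p) for t, p in zip(self.target, position))

def combined_force(fixtures, position):
    """Sum the contributions of all active fixtures; the loop does not depend on their type."""
    total = [0.0, 0.0, 0.0]
    for fixture in fixtures:
        for i, f in enumerate(fixture.force(position)):
            total[i] += f
    return tuple(total)

def timed_update(fixtures, position):
    """Return the combined force and the time (s) spent computing it."""
    start = time.perf_counter()
    force = combined_force(fixtures, position)
    return force, time.perf_counter() - start
```

The measured time covers only the force computation. End-to-end latency, including middleware communication and device input/output, would have to be measured separately.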

In the following sections, the proposed shared control concept is presented in detail.

5. Initial Validation

To validate the usability of our novel virtual-fixture-based shared control concept, it is tested in a simulator setup.

Validation Setup

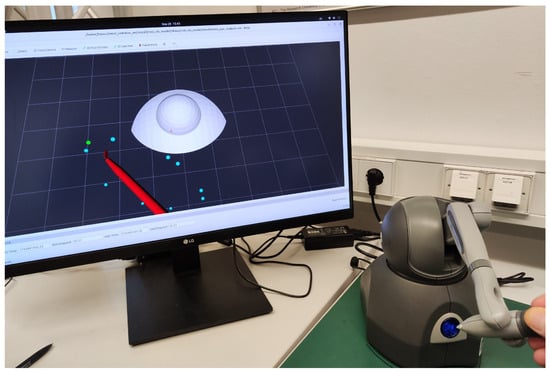

Figure 7 provides a visual illustration of the simulator configuration previously shown schematically in Figure 2. To streamline validation and concentrate solely on functional aspects, the simulation incorporates only the visual models of the eye and scalpel, thereby limiting external interference. It should be emphasized that achieving an accurate biomechanical simulation of corneal tissue interactions was not the objective, as the dynamics of soft tissue interactions were beyond this study’s scope.

Figure 7.

Illustration of the experimental setup featuring a haptic device used to control a virtual scalpel in our eye surgery simulation environment.

The participant controlled the scalpel with the input device and was assisted by visual cues. The incision start points were distributed over a circular area and initially highlighted in red. When the tool reached a point, its color changed to cyan, and completed tasks were marked in green. The task emphasized both speed and positional stability at the target.
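The color coding of the start points can be sketched as a small state machine. The source does not state when a task counts as completed, so the reach radius and the required dwell time below are hypothetical assumptions. The caller is assumed to accumulate the dwell time while the tool stays within the reach radius.

```python
from enum import Enum

class TargetState(Enum):
    PENDING = "red"      # incision start point not yet reached
    REACHED = "cyan"     # tool tip currently at the point
    COMPLETED = "green"  # task finished

def update_state(state, distance, dwell_time, reach_radius=0.0005, required_dwell=1.0):
    """Advance the visual-cue state of one start point.

    distance: tool-tip distance to the point (m); dwell_time: time (s) the tool has
    stayed within reach_radius. reach_radius and required_dwell are hypothetical values.
    """
    if state is TargetState.COMPLETED:
        return state  # completed points stay green
    if distance <= reach_radius:
        if dwell_time >= required_dwell:
            return TargetState.COMPLETED
        return TargetState.REACHED
    return TargetState.PENDING  # tool left the point before completion
```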

Only one participant performed the validation, because we were unable to recruit enough qualified, active surgeons for the experiment. The participant, who was not involved in the development, performed 12 incisions, each from a different, randomly chosen starting point. Three metrics were defined for performance evaluation:

1. Average completion time over all 12 incisions. This metric evaluates the participant’s speed in completing the task.

2. Time spent near the incision point, i.e., within the critical proximity region. This metric assesses performance during the most critical phase with respect to patient safety.

3. Average positional error within the critical proximity region, where two threshold parameters define the safety-critical proximity.
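The three metrics above can be computed from a sampled tool-tip trajectory. The following is a minimal sketch under several assumptions. It uses a single hypothetical radius `r_crit` for the critical proximity region instead of the two thresholds used in the study. It takes the positional error to be the Euclidean distance to the incision point, and it measures completion time from the first to the last sample.

```python
import math

def incision_metrics(times, positions, target, r_crit):
    """Compute the metrics for one incision (hypothetical sketch).

    times: ascending sample timestamps in s; positions: 3D tool-tip positions in m;
    target: incision point; r_crit: assumed radius of the critical proximity region.
    Returns (completion_time, time_in_region, mean_error_in_region).
    """
    completion_time = times[-1] - times[0]
    dists = [math.dist(p, target) for p in positions]
    time_in_region = 0.0
    errors = []
    for k in range(len(times) - 1):
        if dists[k] <= r_crit:
            # Zero-order hold: the interval [t_k, t_{k+1}) is attributed to sample k
            time_in_region += times[k + 1] - times[k]
            errors.append(dists[k])
    mean_error = sum(errors) / len(errors) if errors else float("nan")
    return completion_time, time_in_region, mean_error

def average_completion_time(completion_times):
    """Mean completion time over all incisions (12 in the experiment above)."""
    return sum(completion_times) / len(completion_times)
```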

We compared our virtual-fixture-based shared control concept with a classical potential field method based on (6), in which the axial gain is constant and does not depend on s. With this constant gain, the feedback is proportional only to the distance from the center of the constraint. As a baseline, the task was also performed without haptic support.
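The difference between a constant gain and an s-dependent gain can be sketched as follows. The sketch assumes a lateral spring force that pulls the tool tip onto the incision axis, where s is the distance along the axis from the incision point. The decaying schedule `axial_gain` is a hypothetical stand-in for an s-dependent gain and is not the authors' Equation (6). All numeric values are illustrative.

```python
import math

def axis_guidance_force(p, incision_point, direction, gain):
    """Lateral spring force pulling the tool tip onto the incision axis.

    direction: unit vector of the axis, pointing from the incision point outward;
    s is the coordinate along the axis; gain: callable s -> stiffness in N/m.
    """
    rel = [a - b for a, b in zip(p, incision_point)]
    s = sum(r * d for r, d in zip(rel, direction))
    radial = [r - s * d for r, d in zip(rel, direction)]  # offset perpendicular to the axis
    k = gain(s)
    return tuple(-k * r for r in radial)

def constant_gain(s, k0=200.0):
    """Classical potential field: stiffness independent of s."""
    return k0

def axial_gain(s, k_max=200.0, s0=0.01):
    """Hypothetical schedule: full stiffness near the incision point, decaying with |s|."""
    return k_max / (1.0 + (s / s0) ** 2)
```

For a tool tip that is 10 mm off the axis and 20 mm away from the incision point, the constant gain yields 2.0 N, whereas the decaying gain yields 0.4 N. This illustrates how a constant gain produces large forces when the initial distance is large.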

6. Results and Discussion of the Initial Experiment

6.1. Results

The outcomes of the initial experiment are presented in Table 2. Our newly proposed virtual-fixture-based shared control method achieves an average completion time of 8.18 s, outperforming the standard potential field technique with a constant feedback gain, which averages 10.27 s. Without haptic support, the participant required 11.49 s on average.
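Expressed as relative reductions of the average completion time, the values above correspond to the following. This is simple arithmetic on the reported averages for a single participant.

```latex
\begin{aligned}
\frac{10.27\,\mathrm{s} - 8.18\,\mathrm{s}}{10.27\,\mathrm{s}} &\approx 0.204 \quad (\text{vs. constant-gain potential field}),\\
\frac{11.49\,\mathrm{s} - 8.18\,\mathrm{s}}{11.49\,\mathrm{s}} &\approx 0.288 \quad (\text{vs. no haptic support}).
\end{aligned}
```

That is, the completion times are roughly 20% and 29% shorter, respectively.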

Table 2.

Results of the initial validation.

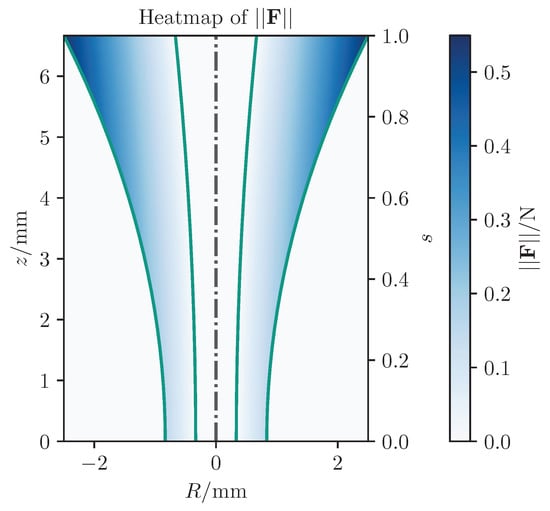

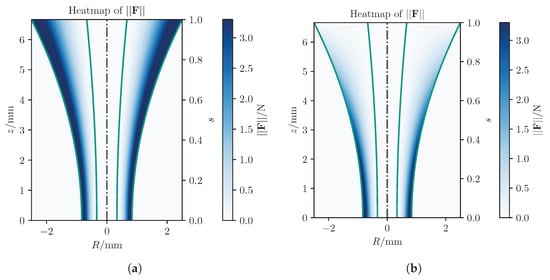

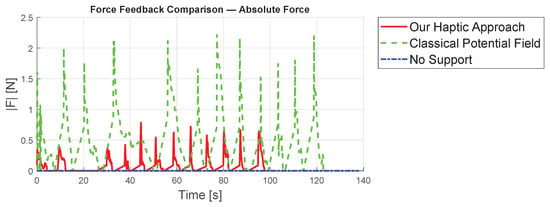

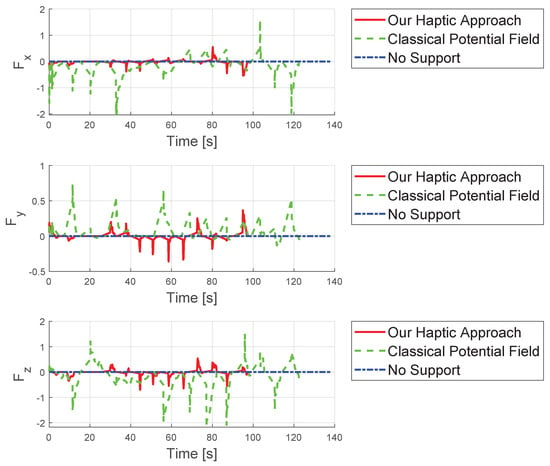

As shown in Figure 8, the classical potential-field method exerts significantly larger maximum forces than our approach. This is largely because, with a constant gain, the force grows with the relatively large initial distance to the constraint. Figure 9 presents the forces along the individual axes. In summary, in this initial experiment, the proposed concept outperforms the standard potential-field technique.

Figure 8.

Comparison of the absolute value of the forces from the three different concepts.

Figure 9.

Comparison of the forces from the three different concepts evaluated in the three spatial directions.

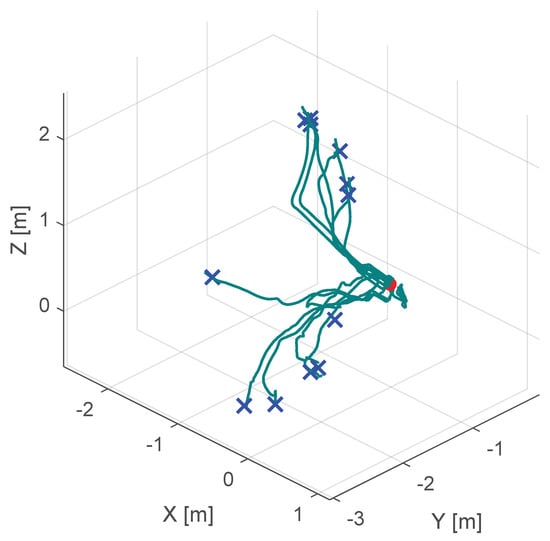

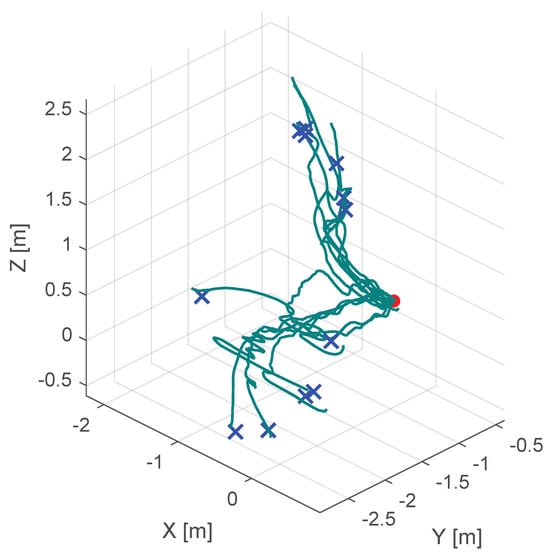

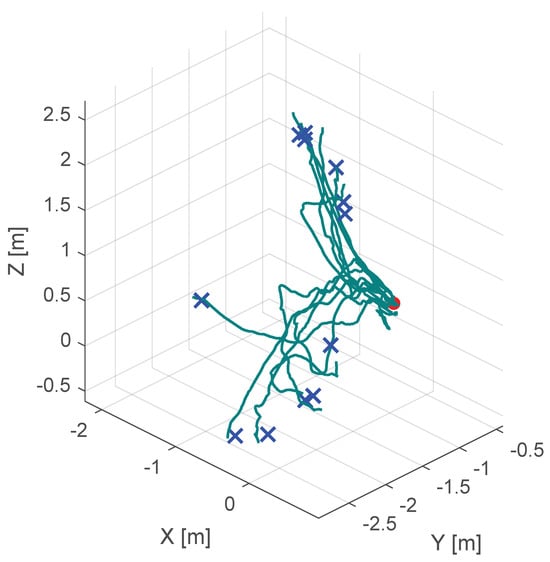

Additionally, Figure 10, Figure 11 and Figure 12 show the corresponding trajectories. As depicted in the center of Figure 11, the classical potential-field approach produces oscillations caused by its constant feedback gain. In contrast, the trajectories obtained with the proposed method are noticeably smoother, indicating that the operator remains closer to the primary cutting axis; see Figure 10. When no supportive feedback is applied (center of Figure 12), the movement toward the target point no longer follows a straight line.
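The differences between Figures 10–12 are assessed visually. One possible way to quantify how direct a movement is, which is not a metric used in this study, is the ratio of the traveled path length to the straight-line distance between start and end. A value of 1 indicates a straight path, and larger values indicate detours or oscillations. The following is a minimal sketch with a hypothetical function name.

```python
import math

def path_straightness(positions):
    """Ratio of traveled path length to the straight start-to-end distance (>= 1)."""
    if len(positions) < 2:
        raise ValueError("need at least two samples")
    length = sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))
    direct = math.dist(positions[0], positions[-1])
    if direct == 0.0:
        raise ValueError("start and end points coincide")
    return length / direct
```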

Figure 10.

Course of the trajectory of our novel virtual-fixture-based shared control concept. The goal point is the red dot, the blue crosses are the various starting points and the green lines are the resulting trajectories.

Figure 11.

Course of the trajectory of the classical potential field haptic support. The goal point is the red dot, the blue crosses are the various starting points and the green lines are the resulting trajectories.

Figure 12.

Course of the trajectory of the concept without haptic support. The goal point is the red dot, the blue crosses are the various starting points and the green lines are the resulting trajectories.

Thus, the proposed assistance system appears particularly beneficial for training purposes, as younger physicians can learn which movements best align with the desired main cutting axis. Consequently, the method is well suited for integration into surgical simulators for training less experienced practitioners.

6.2. Limitation of the Current Experimental Setup

Despite promising initial outcomes, some limitations inherent in the experimental setup necessitate careful interpretation of our findings. Firstly, the experiment involved only a single participant who was not medically trained, which significantly restricts the generalizability and external validity of the results. Moreover, the simulation environment employed in this study was simplified, excluding realistic soft-tissue interactions such as deformation and realistic tactile responses, potentially reducing the relevance of the findings to real surgical scenarios. Additionally, the short-term nature of the validation prevents analysis of learning effects or long-term skill acquisition. Finally, the experimental setup did not include typical stressors present during actual surgeries, such as time pressure or patient-critical scenarios. Nevertheless, these initial findings show strong potential, and addressing these limitations thoroughly forms a central aspect of our planned future research activities.

7. Conclusions and Outlook

In this work, we propose a novel virtual-fixture-based method specifically designed to train young surgeons in performing cataract operations according to established standards. By implementing non-linear scaling of force feedback, our method effectively prevents large and potentially disruptive feedback forces, enhancing both safety and comfort for the trainee surgeon. The novelty of our approach lies in its geometric representation, which enables adaptability to various shapes and leads to a generalized formulation applicable to different surgical operations, thus facilitating broader training for surgeons throughout their professional careers. This generalization could significantly accelerate surgical training across various medical disciplines, addressing the global shortage of skilled surgeons, particularly in developing regions where advanced surgical expertise remains limited.

In the future, we plan to extend our framework to cover additional phases of cataract surgery, such as capsular bag tearing. Assisting with these challenging tasks within a shared control system promises to shorten the learning curve significantly, allowing novice surgeons to rapidly achieve proficiency, thereby increasing surgical precision, patient safety, and overall procedural success. Identifying novice surgeons [45] and implementing shared control systems that account for human variability (see, e.g., [46]) are essential for our future work to ensure both adaptability and safety.

Author Contributions

Conceptualization, B.V. and M.P.; methodology, B.V. and M.P.; software, M.P.; validation, B.V. and M.P.; formal analysis, B.V. and M.P.; investigation, B.V. and M.P.; resources, B.V.; data curation, B.V. and M.P.; writing—original draft preparation, B.V.; writing—review and editing, M.P.; visualization, B.V. and M.P.; supervision, B.V.; project administration, B.V.; funding acquisition, B.V. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study because no personal or pseudonymized data were recorded.

Data Availability Statement

The data presented in this study are available on request from the authors.

Acknowledgments

We would like to acknowledge Jonas Züfle for technical support, Rebekka Peter for constructive discussions, and Sören Hohmann for the opportunity to use the lab infrastructure at KIT.

Conflicts of Interest

The authors declare no conflicts of interest.

Correction Statement

This article has been republished with a minor correction to the Data Availability Statement. This change does not affect the scientific content of the article.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| FF | Force Feedback |
| FDA | Food and Drug Administration |
| EMA | European Medicines Agency |
| CTG | Constrained Tool Geometry |

References

- Chen, J.Y.C.; Flemisch, F.O.; Lyons, J.B.; Neerincx, M.A. Guest Editorial: Agent and System Transparency. IEEE Trans. Hum.-Mach. Syst. 2020, 50, 189–193. [Google Scholar] [CrossRef]

- Guo, X.; McFall, F.; Jiang, P.; Liu, J.; Lepora, N.; Zhang, D. A Lightweight and Affordable Wearable Haptic Controller for Robot-Assisted Microsurgery. Sensors 2024, 24, 2676. [Google Scholar] [CrossRef] [PubMed]

- Wu, H.N. Online Learning Human Behavior for a Class of Human-in-the-Loop Systems via Adaptive Inverse Optimal Control. IEEE Trans. Hum.-Mach. Syst. 2022, 52, 1004–1014. [Google Scholar] [CrossRef]

- Varga, B. Toward Adaptive Cooperation: Model-Based Shared Control Using LQ-Differential Games. Acta Polytech. Hung. 2024, 21, 439–456. [Google Scholar] [CrossRef]

- Wang, T.; Li, H.; Pu, T.; Yang, L. Microsurgery Robots: Applications, Design, and Development. Sensors 2023, 23, 8503. [Google Scholar] [CrossRef] [PubMed]

- Christou, A.S.; Amalou, A.; Lee, H.; Rivera, J.; Li, R.; Kassin, M.T.; Varble, N.; Tse, Z.T.H.; Xu, S.; Wood, B.J. Image-Guided Robotics for Standardized and Automated Biopsy and Ablation. Semin. Interv. Radiol. 2021, 38, 565–575. [Google Scholar] [CrossRef]

- Muñoz, V.F. Sensors Technology for Medical Robotics. Sensors 2022, 22, 9290. [Google Scholar] [CrossRef]

- Lee, A.; Baker, T.S.; Bederson, J.B.; Rapoport, B.I. Levels of Autonomy in FDA-cleared Surgical Robots: A Systematic Review. Npj Digit. Med. 2024, 7, 103. [Google Scholar] [CrossRef]

- Yang, Y.; Jiang, Z.; Yang, Y.; Qi, X.; Hu, Y.; Du, J.; Han, B.; Liu, G. Safety Control Method of Robot-Assisted Cataract Surgery with Virtual Fixture and Virtual Force Feedback. J. Intell. Robot. Syst. Theory Appl. 2020, 97, 17–32. [Google Scholar] [CrossRef]

- Gerber, M.J.; Pettenkofer, M.; Hubschman, J.P. Advanced robotic surgical systems in ophthalmology. Eye 2020, 34, 1554–1562. [Google Scholar] [CrossRef]

- Shajari, M.; Priglinger, S.; Kohnen, T.; Kreutzer, T.C.; Mayer, W.J. Katarakt- und Linsenchirurgie; Springer: Berlin/Heidelberg, Germany, 2023. [Google Scholar]

- Rossi, T.; Romano, M.R.; Iannetta, D.; Romano, V.; Gualdi, L.; D’Agostino, I.; Ripandelli, G. Cataract surgery practice patterns worldwide: A survey. BMJ Open Ophthalmol. 2021, 6, e000464. [Google Scholar] [CrossRef]

- Dormegny, L.; Lansingh, V.C.; Lejay, A.; Chakfe, N.; Yaici, R.; Sauer, A.; Gaucher, D.; Henderson, B.A.; Thomsen, A.S.S.; Bourcier, T. Virtual Reality Simulation and Real-Life Training Programs for Cataract Surgery: A Scoping Review of the Literature. BMC Med. Educ. 2024, 24, 1245. [Google Scholar] [CrossRef]

- Hutter, D.E.; Wingsted, L.; Cejvanovic, S.; Jacobsen, M.F.; Ochoa, L.; González Daher, K.P.; La Cour, M.; Konge, L.; Thomsen, A.S.S. A Validated Test Has Been Developed for Assessment of Manual Small Incision Cataract Surgery Skills Using Virtual Reality Simulation. Sci. Rep. 2023, 13, 10655. [Google Scholar] [CrossRef]

- Varga, B.; Flemisch, F.; Hohmann, S. Human in the Loop. Automatisierungstechnik 2024, 72, 1109–1111. [Google Scholar] [CrossRef]

- Koyama, Y.; Marinho, M.M.; Mitsuishi, M.; Harada, K. Autonomous Coordinated Control of the Light Guide for Positioning in Vitreoretinal Surgery. IEEE Trans. Med. Robot. Bionics 2022, 4, 156–171. [Google Scholar] [CrossRef]

- Rosen, J.; Hannaford, B.; Satava, R.M. (Eds.) Surgical Robotics: Systems Applications and Visions; Springer: Boston, MA, USA, 2011. [Google Scholar] [CrossRef]

- Abedin-Nasab, M.H. (Ed.) Handbook of Robotic and Image-Guided Surgery; Elsevier: Amsterdam, The Netherlands, 2020. [Google Scholar]

- Intuitive Surgical Operations, Inc. Da Vinci 5 Has Force Feedback Surgeons Can Now Feel Instrument Force to Aid in Gentler Surgery; Intuitive Surgical Operations, Inc.: Sunnyvale, CA, USA, 2024. [Google Scholar]

- Intuitive. Da Vinci 5 Has Force Feedback, 2024. Available online: https://www.intuitive.com/en-us/about-us/newsroom/Force%20Feedback (accessed on 19 June 2025).

- Patel, R.V.; Atashzar, S.F.; Tavakoli, M. Haptic Feedback and Force-Based Teleoperation in Surgical Robotics. Proc. IEEE 2022, 110, 1012–1027.

- Haidegger, T. Autonomy for Surgical Robots: Concepts and Paradigms. IEEE Trans. Med. Robot. Bionics 2019, 1, 65–76.

- Flemisch, F.; Abbink, D.; Itoh, M.; Pacaux-Lemoine, M.P.; Weßel, G. Shared Control Is the Sharp End of Cooperation: Towards a Common Framework of Joint Action, Shared Control and Human Machine Cooperation. IFAC-PapersOnLine 2016, 49, 72–77.

- Duan, X.; Tian, H.; Li, C.; Han, Z.; Cui, T.; Shi, Q.; Wen, H.; Wang, J. Virtual-Fixture Based Drilling Control for Robot-Assisted Craniotomy: Learning From Demonstration. IEEE Robot. Autom. Lett. 2021, 6, 2327–2334.

- Tian, H.; Duan, X.; Han, Z.; Cui, T.; He, R.; Wen, H.; Li, C. Virtual-Fixtures Based Shared Control Method for Curve-Cutting With a Reciprocating Saw in Robot-Assisted Osteotomy. IEEE Trans. Automat. Sci. Eng. 2024, 21, 1899–1910.

- Shi, Y.; Zhu, P.; Wang, T.; Mai, H.; Yeh, X.; Yang, L.; Wang, J. Dynamic Virtual Fixture Generation Based on Intra-Operative 3D Image Feedback in Robot-Assisted Minimally Invasive Thoracic Surgery. Sensors 2024, 24, 492.

- Marinho, M.M.; Ishida, H.; Harada, K.; Deie, K.; Mitsuishi, M. Virtual Fixture Assistance for Suturing in Robot-Aided Pediatric Endoscopic Surgery. IEEE Robot. Autom. Lett. 2020, 5, 524–531.

- Hu, Z.J.; Wang, Z.; Huang, Y.; Sena, A.; Rodriguez y Baena, F.; Burdet, E. Towards Human-Robot Collaborative Surgery: Trajectory and Strategy Learning in Bimanual Peg Transfer. IEEE Robot. Autom. Lett. 2023, 8, 4553–4560.

- Kong, C.F.; Lee, B.W.; George, A.; Ling, M.L.; Jain, N.S.; Francis, I.C. Cataract Surgery Operating Times: Relevance to Surgical and Visual Outcomes. J. Cataract Refract. Surg. 2019, 45, 1849.

- Nderitu, P.; Ursell, P. Factors Affecting Cataract Surgery Operating Time among Trainees and Consultants. J. Cataract Refract. Surg. 2019, 45, 816–822.

- Mariani, A.; Pellegrini, E.; De Momi, E. Skill-Oriented and Performance-Driven Adaptive Curricula for Training in Robot-Assisted Surgery Using Simulators: A Feasibility Study. IEEE Trans. Biomed. Eng. 2021, 68, 685–694.

- Shi, C.; Madera, J.; Boyea, H.; Fey, A.M. Haptic Guidance Using a Transformer-Based Surgeon-Side Trajectory Prediction Algorithm for Robot-Assisted Surgical Training. In Proceedings of the 2023 32nd IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Busan, Republic of Korea, 28–31 August 2023; pp. 1942–1949.

- Rota, A.; Sun, X.F.; De Momi, E. Performance-Driven Tasks with Adaptive Difficulty for Enhanced Surgical Robotics Training. In Proceedings of the 2024 10th IEEE RAS/EMBS International Conference for Biomedical Robotics and Biomechatronics (BioRob), Heidelberg, Germany, 1–4 September 2024; pp. 465–470.

- Fan, K.; Marzullo, A.; Pasini, N.; Rota, A.; Pecorella, M.; Ferrigno, G.; De Momi, E. A Unity-based Da Vinci Robot Simulator for Surgical Training. In Proceedings of the 2022 9th IEEE RAS/EMBS International Conference for Biomedical Robotics and Biomechatronics (BioRob), Seoul, Republic of Korea, 21–24 August 2022; pp. 1–6.

- Zheng, Y. Toward Augmenting Surgical Performance Using Motion Analysis and Haptic Guidance. Ph.D. Thesis, The University of Texas at Austin, Austin, TX, USA, 2024.

- Ebrahimi, A.; Alambeigi, F.; Zimmer-Galler, I.E.; Gehlbach, P.; Taylor, R.H.; Iordachita, I. Toward Improving Patient Safety and Surgeon Comfort in a Synergic Robot-Assisted Eye Surgery: A Comparative Study. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 7075–7082.

- Liu, W.; Su, Y.; Wu, W.; Xin, C.; Hou, Z.G.; Bian, G.B. An operating smooth man–machine collaboration method for cataract capsulorhexis using virtual fixture. Future Gener. Comput. Syst. 2019, 98, 522–529.

- Nasseri, M.A.; Gschirr, P.; Eder, M.; Nair, S.; Kobuch, K.; Maier, M.; Zapp, D.; Lohmann, C.; Knoll, A. Virtual fixture control of a hybrid parallel-serial robot for assisting ophthalmic surgery: An experimental study. In Proceedings of the 5th IEEE RAS and EMBS International Conference on Biomedical Robotics and Biomechatronics, Sao Paulo, Brazil, 12–15 August 2014; pp. 732–738.

- Ebrahimi, A.; Urias, M.; Patel, N.; He, C.; Taylor, R.H.; Gehlbach, P.; Iordachita, I. Towards securing the sclera against patient involuntary head movement in robotic retinal surgery. In Proceedings of the 2019 28th IEEE International Conference on Robot and Human Interactive Communication, RO-MAN 2019, New Delhi, India, 14–18 October 2019.

- Gudiño-Lau, J.; Chávez-Montejano, F.; Alcalá, J.; Charre-Ibarra, S. Direct and Inverse Kinematic Model of the OMNI PHANToM. ECORFAN Boliv. J. 2018, 5, 25–32.

- Al-Gailani, M.Y.; Grantner, J.L.; Shebrain, S.; Sawyer, R.G.; Abdel-Qader, I. Force Measurement Methods for Intelligent Box-Trainer System – Artificial Bowel Suturing Procedure. Acta Polytech. Hung. 2022, 19, 43–64.

- Bowyer, S.A.; Davies, B.L.; Rodriguez y Baena, F. Active Constraints/Virtual Fixtures: A Survey. IEEE Trans. Robot. 2014, 30, 138–157.

- Bettini, A.; Marayong, P.; Lang, S.; Hager, G.D. Vision-assisted control for manipulation using virtual fixtures. IEEE Trans. Robot. 2004, 20, 953–966.

- Mieling, R.; Stapper, C.; Gerlach, S.; Neidhardt, M. Proximity-Based Haptic Feedback for Collaborative Robotic Needle Insertion. In Haptics: Science, Technology, Applications; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2022; Volume 13316, pp. 301–309.

- Karg, P.; Kienzle, A.; Kaub, J.; Varga, B.; Hohmann, S. Trustworthiness of Optimality Condition Violation in Inverse Dynamic Game Methods Based on the Minimum Principle. In Proceedings of the 2024 IEEE Conference on Control Technology and Applications (CCTA), Newcastle upon Tyne, UK, 21–23 August 2024; pp. 824–831.

- Kille, S.; Leibold, P.; Karg, P.; Varga, B.; Hohmann, S. Human-Variability-Respecting Optimal Control for Physical Human-Machine Interaction. In Proceedings of the 2024 33rd IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Pasadena, CA, USA, 26–30 August 2024; pp. 1595–1602.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).