Abstract

Bragg gratings are fundamental components in a wide range of sensing applications due to their high sensitivity and tunability. In this work, we present an augmented Bayesian approach for efficiently acquiring limited but highly informative training data for machine learning models in the design and simulation of Bragg grating sensors. Our method integrates a distance-based diversity criterion with Bayesian optimization to identify and prioritize the most informative design points. Specifically, when multiple candidates exhibit similar acquisition values, the algorithm selects the point that is farthest from the existing dataset to enhance diversity and coverage. We apply this strategy to the Bragg grating design space, where various analytical functions are fitted to the optical response. To assess the influence of output complexity on model performance, we compare different fit functions, including polynomial models of varying orders and Gaussian functions. Results demonstrate that emphasizing output diversity during the initial stages of data acquisition significantly improves performance, especially for complex optical responses. This approach offers a scalable and efficient framework for generating high-quality simulation data in data-scarce scenarios, with direct implications for the design and optimization of next-generation Bragg grating-based sensors.

1. Introduction

Bragg gratings are periodic structures that enable precise control of light propagation in optical systems [,,,,]. They function by selectively reflecting specific wavelengths while allowing others to pass through, making them fundamental components in optical fiber communication [], sensing [,], and laser stabilization []. Their ability to manipulate spectral properties with high resolution has led to widespread use in fields such as telecommunications [], biomedical sensing [,,,], and industrial monitoring [,,,,,]. However, optimizing the design and spectral response of Bragg gratings remains a computationally intensive challenge, requiring advanced modeling techniques to predict and tailor their behavior effectively [,,,].

Despite their versatility, predicting the spectral response of Bragg gratings is difficult because of the interplay of multiple design parameters and multi-mode interactions. Traditional approaches, such as the transfer matrix method and coupled mode theory [,,,,,,,,], are computationally efficient but less accurate. The challenge is intensified by the need to balance accuracy and computational efficiency, making it difficult to explore a diverse range of grating structures without excessive computational costs.

Inverse design [,,,,] and machine learning (ML) for modeling and predicting the spectral response of Bragg gratings [,,] are promising alternatives. By training models on precomputed datasets, ML techniques can rapidly approximate spectral characteristics, significantly reducing computational overhead [,,]. Deep learning and regression-based models have shown potential in capturing the nonlinear relationships between design parameters and spectral outputs, providing a data-driven approach to spectrum prediction and evaluation [,,,]. However, the effectiveness of these models heavily depends on the quality and diversity of the training data, highlighting the importance of selecting representative samples that accurately span the parameter space [].

Traditional data selection methods, such as uniform sampling or random selection, often fail to provide an optimal dataset for training ML models, especially in high-dimensional parameter spaces. These approaches may yield redundant data points, increasing computational costs [,]. Moreover, they may overlook critical spectral variations that arise from complex interactions between grating parameters. As a result, there is a need for more intelligent data selection strategies that ensure comprehensive coverage of the design space while minimizing computational costs.

To address these limitations, this study proposes integrating Bayesian Optimization (BO) with distance-based criteria for selecting training data more effectively for Bragg grating spectrum prediction. BO employs probabilistic surrogate models to efficiently explore the design space, prioritizing data points that are expected to yield the greatest improvements in model accuracy [,,]. When combined with distance-based sampling, this approach ensures a more diverse and representative dataset, leading to more robust and generalizable machine learning (ML) models.

BO has previously been applied to Bragg grating design, leveraging surrogate models—typically Gaussian Processes—to navigate high-dimensional parameter spaces while reducing the number of costly simulations []. Prior studies have demonstrated its effectiveness in optimizing grating parameters such as chirp profiles, apodization, and segmentation for various photonic applications [,,].

Building on these developments, this work introduces a systematic framework for optimizing training data selection in ML-based Bragg grating models. By combining BO with distance-based sampling, the method aims to improve prediction accuracy while minimizing the training dataset. Comparative evaluations against conventional data selection strategies highlight the efficiency and effectiveness of the proposed approach, contributing to the advancement of data-driven optical modeling for integrated photonics.

2. Materials and Methods

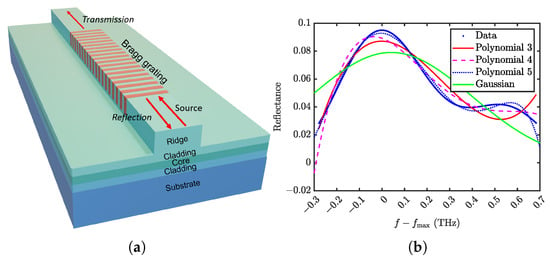

To evaluate our approach, we start by generating an initial dataset in the Bragg grating design space using a uniform mesh grid to ensure a comprehensive representation of the design variables. For the investigation, we choose a ridge waveguide with surface Bragg gratings (Figure 1a). The structure was designed in a GaAs material platform, known for its excellent electro-optical properties. However, this type of structure is used in other material platforms as well.

Figure 1.

(a) Ridge waveguide with the surface Bragg grating. (b) Example reflection spectrum fitted with various fit functions.

To generate a comprehensive dataset for analyzing the optical performance of GaAs ridge waveguides with integrated Bragg gratings, a specific device geometry was designed and simulated. The waveguide features a ridge width and height of 5 μm and 1.4 μm, respectively, tailored for single-mode operation. On top of this ridge lies a higher-order rectangular Bragg grating of varying length. These features allow precise tuning of the structure’s optical reflectance, which is centered near $2.8 \times 10^{14}$ Hz (wavelength ≈ 1064 nm), making it highly suitable for specific laser applications. We generated a database of reflectance spectra by varying six parameters: the grating length (25–75 μm); the depth (1.1–1.6 μm), width (0.025–0.12 μm), and refractive index (1.95–2.9) of the grating grooves; the chirp value (relative period variation along the grating, ±0.001); and the grating order (5–9). Each parameter was sampled at 5 linearly spaced points.

To accurately model and simulate the interaction of light with this complex structure, the Lumerical FDTD solver was integrated with MATLAB R2022a for automated simulation control (see [,,] for more details). A uniform mesh size of 20 nm was employed across all dimensions, providing high spatial resolution with approximately one billion mesh points per simulation. A total of 15,625 simulations were conducted, resulting in a dataset that spans a technologically feasible range of parameters.
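The simulation count follows from the sampling scheme: six parameters with 5 values each give $5^6 = 15{,}625$ combinations. The sketch below builds such a full-factorial grid, assuming every parameter is sampled independently over the ranges quoted above. The dictionary keys are hypothetical names chosen for illustration, not identifiers from the original MATLAB/Lumerical workflow.

```python
import itertools

import numpy as np

# Ranges quoted in the text; the key names are illustrative assumptions.
param_ranges = {
    "length_um": (25.0, 75.0),
    "depth_um": (1.1, 1.6),
    "width_um": (0.025, 0.12),
    "groove_index": (1.95, 2.9),
    "chirp": (-0.001, 0.001),
    "order": (5.0, 9.0),
}

# Five linearly spaced values per parameter.
axes = [np.linspace(lo, hi, 5) for lo, hi in param_ranges.values()]

# Full-factorial combination of all axes: one row per simulated design.
design_grid = np.array(list(itertools.product(*axes)))

print(design_grid.shape)  # (15625, 6)
```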

After completing the simulations, mode projection was used to compute reflectance values, and the central lobe of the reflectance spectra was analyzed using Gaussian fitting techniques. Due to the shallow groove depths and high duty cycles used, radiation losses were minimal, and the central reflection lobe remained symmetric. This allowed for an accurate Gaussian approximation of the central lobe using data points exceeding one-third of the peak amplitude. The fitting achieved over 99% accuracy, validating the use of this method for analyzing the amplitude and bandwidth dependence on the Bragg grating’s physical parameters. This dataset provides a reliable foundation for optimizing ridge waveguide Bragg gratings in high-performance photonic devices.
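One simple way to fit a Gaussian to the upper part of the central lobe is sketched below. The text does not state which fitting routine was used, so this is an assumption: the sketch keeps the samples above one-third of the peak and fits a parabola to the logarithm of the reflectance, which is exact for a noise-free Gaussian. A nonlinear least-squares fit would be an equally valid choice. The function name `fit_gaussian_top` is hypothetical.

```python
import numpy as np


def fit_gaussian_top(freq, refl, rel_threshold=1.0 / 3.0):
    """Fit A * exp(-(f - f0)^2 / (2 * sigma^2)) to samples above rel_threshold * peak.

    Uses a log-parabola fit: ln(R) is quadratic in f for a Gaussian.
    Assumes only the central lobe exceeds the threshold.
    """
    mask = refl > rel_threshold * refl.max()
    a2, a1, a0 = np.polyfit(freq[mask], np.log(refl[mask]), 2)
    sigma = np.sqrt(-1.0 / (2.0 * a2))
    center = -a1 / (2.0 * a2)
    amplitude = np.exp(a0 - a1**2 / (4.0 * a2))
    return amplitude, center, sigma


# Synthetic central lobe (illustrative values, not simulation data).
freq = np.linspace(-1.0, 1.0, 401)
refl = 0.8 * np.exp(-((freq - 0.1) ** 2) / (2.0 * 0.2**2))
amplitude, center, sigma = fit_gaussian_top(freq, refl)
print(amplitude, center, sigma)
```

The amplitude and width returned by such a fit correspond to the amplitude and bandwidth of the central lobe discussed above.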

3. XGBoost-Based Prediction of Bragg Spectra

3.1. Data Generation Fit Comparison: Gaussian vs. Polynomial

To reduce the dimensionality of the output data, we fit the reflectance spectra with various functions and use the fit parameters as the output features in our database (Figure 1b). As a benchmark, we use a simple fit of the top two-thirds of the central lobe in reflectance spectra with a Gaussian function. It was previously demonstrated that such an approach is efficient for describing the amplitude and bandwidth of the central lobe of the Bragg spectrum [].

Due to the length variation of the Bragg gratings in the database, the bandwidth of the Bragg reflectivity peak varies significantly. As a result, describing every spectrum within the same bandwidth might cause low precision for narrow spectra (longer structures) or neglect of spectral features for broad spectra (shorter structures). To avoid this, the bandwidth can be recalculated for each spectrum in two ways: (i) using a fixed bandwidth for each length, or (ii) automatically recalculating the bandwidth depending on the shape of the central lobe. The bandwidth of the Bragg reflectance of a weak grating is inversely proportional to its length. Thus, one can identify the base bandwidth for a fixed length and rescale it for other lengths. Such an approach would be efficient for mostly symmetric spectra. The second approach requires an automatic determination of the bandwidth from the points above a threshold reflectivity, but it is more flexible and applicable to complex spectra. We applied the second approach in this work to adapt to the asymmetry of some of the spectra. Such asymmetric spectra are observed for chirped gratings and for structures with deep grooves, which cause high radiation losses.
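The two bandwidth strategies can be sketched as follows. This is a minimal illustration under stated assumptions: the function names are hypothetical, and the relative threshold of one-third is borrowed from the Gaussian benchmark fit because the threshold used for the floating bandwidth is not specified in the text.

```python
import numpy as np


def scaled_bandwidth(base_bandwidth, base_length, length):
    """Approach (i): weak-grating bandwidth scales inversely with grating length."""
    return base_bandwidth * base_length / length


def floating_window(freq, refl, rel_threshold=1.0 / 3.0):
    """Approach (ii): contiguous window around the peak that stays above the threshold."""
    peak_idx = int(np.argmax(refl))
    level = rel_threshold * refl[peak_idx]
    lo = peak_idx
    while lo > 0 and refl[lo - 1] > level:
        lo -= 1
    hi = peak_idx
    while hi < len(refl) - 1 and refl[hi + 1] > level:
        hi += 1
    return freq[lo], freq[hi]


# Doubling the length halves the bandwidth in approach (i).
print(scaled_bandwidth(2.0, 25.0, 50.0))  # 1.0
```

Because the window in approach (ii) grows outward from the peak sample by sample, it follows an asymmetric lobe without assuming that the two edges are equidistant from the maximum.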

The results of the comparison of the Gaussian and polynomial fitting methods are presented in Table 1. Each fit function was applied to every spectrum in the database. To evaluate the accuracy of the fit, we use the coefficient of determination ($R^2$) and root mean squared error (RMSE) metrics. As expected, a polynomial function of the 5th order demonstrates the highest precision. In particular, the presence of additional peaks in some of the Bragg spectra (Figure 1b) requires higher-order polynomials to efficiently fit the top of the spectra and their asymmetrical features. The produced dataset of coefficients for various simulations is available here: Ref. [].
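The fit-quality comparison can be illustrated with a short sketch that fits polynomials of several orders to one spectrum and reports $R^2$ and RMSE. The spectrum below is synthetic and purely illustrative, and the helper names are hypothetical. Only the metric definitions are standard.

```python
import numpy as np


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - np.mean(y_true)) ** 2)
    return 1.0 - ss_res / ss_tot


def rmse(y_true, y_pred):
    """Root mean squared error."""
    return np.sqrt(np.mean((y_true - y_pred) ** 2))


def fit_polynomial(freq, refl, order):
    """Least-squares polynomial fit; returns coefficients, R^2, and RMSE."""
    coeffs = np.polyfit(freq, refl, order)
    fitted = np.polyval(coeffs, freq)
    return coeffs, r2_score(refl, fitted), rmse(refl, fitted)


# Synthetic, slightly asymmetric lobe (not simulation data).
freq = np.linspace(-1.0, 1.0, 101)
refl = np.exp(-(freq**2) / 0.5) * (1.0 + 0.3 * freq)
for order in (3, 4, 5):
    _, r2, err = fit_polynomial(freq, refl, order)
    print(order, r2, err)
```

Since a polynomial of order $n$ contains all polynomials of lower order as special cases, the least-squares RMSE on the fitted points cannot increase with $n$, which is consistent with the 5th-order polynomial giving the highest fit precision.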

Table 1.

Precision of the fit to the Bragg reflectance for different functions. The minimum and the average values are calculated for the full database of available simulations. In the table n corresponds to the order of the polynomials.

3.2. ML Performance: Parameter Prediction vs. Curve Prediction

Once the fit coefficients for every fit function are calculated, we consider the problem of predicting the spectrum. By reframing the problem as a regression task, we use machine learning to train and predict the fit parameters of the spectra and evaluate the performance using two approaches. The first approach, parameter prediction, directly compares the predicted fit parameters with the true fit parameters. The second approach, curve prediction, assesses the accuracy of the entire reconstructed spectra. After predicting the fit parameters with machine learning, we regenerate the reflectance spectra using both the predicted and true parameters. These reconstructed spectra are then compared, providing an evaluation of how well the model captures the shape and features of the spectra.
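The difference between the two evaluation modes can be made concrete with a small sketch for polynomial fits. The helper names are hypothetical, and RMSE is used here only as an example metric: parameter prediction compares coefficient vectors directly, whereas curve prediction first reconstructs both spectra and compares them point by point.

```python
import numpy as np


def parameter_error(true_coeffs, pred_coeffs):
    """RMSE between true and predicted fit parameters."""
    diff = np.asarray(true_coeffs) - np.asarray(pred_coeffs)
    return np.sqrt(np.mean(diff**2))


def curve_error(true_coeffs, pred_coeffs, freq):
    """RMSE between spectra reconstructed from true and predicted parameters."""
    true_curve = np.polyval(true_coeffs, freq)
    pred_curve = np.polyval(pred_coeffs, freq)
    return np.sqrt(np.mean((true_curve - pred_curve) ** 2))


freq = np.linspace(-1.0, 1.0, 51)
true_coeffs = np.array([-0.5, 0.0, 0.9])  # illustrative parabola, highest order first
pred_coeffs = np.array([-0.5, 0.0, 1.0])  # constant term off by 0.1
print(parameter_error(true_coeffs, pred_coeffs))
print(curve_error(true_coeffs, pred_coeffs, freq))
```

The same coefficient error can translate into very different curve errors depending on which coefficient is affected, which is why the two evaluations are reported separately.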

To solve the regression problem with limited data, we apply the extreme gradient boosting (XGBoost) technique, a data-efficient machine learning algorithm, to predict the parameters of interest []. XGBoost is a highly efficient implementation of gradient-boosted decision trees, which performs well in regression and classification tasks. Its core principle involves iteratively constructing a series of decision trees, where each subsequent tree reduces the errors of its predecessors by fitting their residuals. Unlike standard gradient boosting, XGBoost incorporates several enhancements such as regularization to reduce over-fitting, a sparsity-aware algorithm for better handling of missing data, and parallelized tree construction for improved scalability [,,]. In this study, XGBoost was used to predict the fit parameters, achieving high precision due to its ability to model non-linear relationships and adapt to varying complexities of spectral features. The chosen configuration uses the 'dart' booster, which introduces dropout into the boosting process to improve generalization. The learning rate is set to 0.08 to balance training speed and convergence stability. A maximum tree depth of 8 allows the model to capture moderately complex interactions in the data, while the number of estimators is fixed at 170 to control the model’s capacity.
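The quoted configuration maps onto the scikit-learn style interface of the XGBoost library roughly as follows. This is a sketch: only the four hyperparameters named in the text are set, all other settings are left at library defaults, and how the multiple fit parameters were handled (for example, one regressor per coefficient) is not stated in the text. The names `XGB_PARAMS` and `make_model` are hypothetical.

```python
# Hyperparameters quoted in the text, collected as keyword arguments.
XGB_PARAMS = {
    "booster": "dart",       # dropout-style boosting
    "learning_rate": 0.08,
    "max_depth": 8,
    "n_estimators": 170,
}


def make_model():
    """Build the regressor; requires the xgboost package to be installed."""
    from xgboost import XGBRegressor

    return XGBRegressor(**XGB_PARAMS)
```

Calling `make_model().fit(X_train, y_train)` would then train on a matrix of design parameters `X_train` and a target `y_train`. Both array names are placeholders.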

The selection of XGBoost is motivated by the limited availability of data. While neural network-based techniques offer high efficiency and flexibility, they typically require large amounts of training data, which is not feasible when simulations are performed with high precision [,]. On the other hand, simpler methods such as k-NN or linear regression require less data but fail to achieve the necessary accuracy []. As a result, XGBoost provides a well-balanced compromise for analyzing limited simulation data obtained from highly accurate FDTD methods.

We apply XGBoost to our databases of fit coefficients (Table 2). To evaluate the accuracy of the prediction, we use the coefficient of determination ($R^2$), root mean squared error (RMSE), and mean absolute percentage error (MAPE). The dataset Ref. [] for each fit method was randomly split into 90% training and 10% test subsets. This process was repeated 100 times to ensure statistical robustness, and the averages and standard deviations of the evaluation metrics are reported. For all evaluation metrics, the prediction precision decreases overall as the polynomial order increases.
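The repeated hold-out protocol can be sketched as below. To keep the example self-contained, a plain linear least-squares model stands in for XGBoost, which is an assumption for illustration only. The function names and the toy data are hypothetical, and MAPE is computed as a fraction rather than a percentage.

```python
import numpy as np


def regression_metrics(y_true, y_pred):
    """R^2, RMSE, and MAPE (as a fraction) for one test split."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return {
        "r2": 1.0 - ss_res / ss_tot,
        "rmse": np.sqrt(np.mean((y_true - y_pred) ** 2)),
        "mape": np.mean(np.abs((y_true - y_pred) / y_true)),
    }


def repeated_holdout(X, y, fit_predict, n_repeats=100, test_fraction=0.1, seed=0):
    """Repeat a random train/test split and return (mean, std) for each metric."""
    rng = np.random.default_rng(seed)
    n_test = int(round(test_fraction * len(X)))
    records = []
    for _ in range(n_repeats):
        perm = rng.permutation(len(X))
        test, train = perm[:n_test], perm[n_test:]
        y_pred = fit_predict(X[train], y[train], X[test])
        records.append(regression_metrics(y[test], y_pred))
    return {
        key: (np.mean([r[key] for r in records]), np.std([r[key] for r in records]))
        for key in records[0]
    }


def linear_fit_predict(X_train, y_train, X_test):
    """Stand-in model: ordinary least squares with an intercept."""
    A = np.column_stack([X_train, np.ones(len(X_train))])
    w, *_ = np.linalg.lstsq(A, y_train, rcond=None)
    return np.column_stack([X_test, np.ones(len(X_test))]) @ w


rng = np.random.default_rng(1)
X = rng.random((200, 2))
y = 2.0 * X[:, 0] - X[:, 1] + 3.0  # toy target, strictly positive
print(repeated_holdout(X, y, linear_fit_predict, n_repeats=5))
```

Any model exposing the same `fit_predict(X_train, y_train, X_test)` signature can be substituted for the stand-in.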

Table 2.

Precision of the fit to the Bragg reflectance for different functions based on floating frequency range. The minimum and the average values are calculated for the full database of available simulations. In the table n corresponds to the order of the polynomials.

In Table 3, we compare the precision of the predicted coefficients for the fit functions. We also compare the accuracy of curve prediction, which involves comparing the reconstructed spectra to the true spectra obtained from the FDTD simulation method. Predicting the Gaussian fit parameters yielded higher precision, owing to the simplicity of the function and its small number of parameters (see Table 3 for the average mean squared error). However, the oversimplified Gaussian representation, which cannot capture spectral features such as asymmetry and the effects of radiation losses, resulted in lower accuracy for curve prediction.

Table 3.

Precision of parameters and curve prediction with XGBoost using the databases obtained by different fitting methods with floating bandwidth.

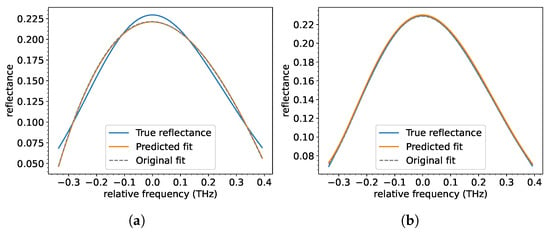

In contrast, polynomial fits, especially of higher orders (e.g., $n = 5$), provided a more detailed representation of the spectra, capturing critical features like side peaks and asymmetries (Figure 2b). This resulted in superior curve prediction accuracy despite slightly reduced precision for parameter prediction. These results underscore the trade-off between computational simplicity and spectral-prediction accuracy. The analysis highlights XGBoost’s suitability for tasks requiring detailed spectral reconstruction.

Figure 2.

True and predicted fit for a spectrum performed with a polynomial function of the (a) 3rd and (b) 5th order. Relative frequency here is defined as the difference between the actual and the reference frequency (Bragg resonance frequency).

In summary, XGBoost effectively balances parameter prediction accuracy and curve reconstruction precision, demonstrating adaptability to complex spectral features. Its efficiency highlights its potential for optimizing machine learning workflows in optical system simulations. These insights lay the foundation for applying advanced data selection strategies, explored further in Section 4, to enhance data acquisition efficiency and model performance.

4. Data Acquisition

4.1. Bayesian Optimization for Database Generation

Similar to the previous analysis, we implement a Bayesian-based sampling (BBS) approach [] to select the most informative data points for the best curve prediction. In this approach, Gaussian process regression identifies data points with maximal uncertainty, building minimal yet highly informative datasets. The approach was applied to Bragg grating designs, where the Bayesian-based database required significantly fewer data points compared to traditional uniform and random sampling methods while maintaining the same accuracy. BBS has been shown to reduce data requirements by an order of magnitude, highlighting its efficiency, particularly in resource-intensive simulations like FDTD. This methodology not only ensures high accuracy in predictions performed by ML but also demonstrates its broader potential for data-driven modeling across scientific disciplines, enabling cost-effective and precise database generation for ML applications.

To avoid dependence on the initial conditions in the BBS method, we averaged the results over five different initial conditions. For random sampling, we averaged the outcomes over 10 independent random selections of data points. The efficiency was calculated for each fit method: we took the maximum prediction precision achieved with the random database and determined the number of data points needed to reach the same precision with BBS.
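The efficiency measure described here can be sketched as a small helper that averages learning curves over repeated runs and finds the first dataset size at which the BBS curve reaches the best value of the random-sampling curve. The function name is hypothetical and the numbers below are made up purely to exercise the code. They are not results from this study.

```python
import numpy as np


def points_to_match(n_points, score_bbs, score_random):
    """Smallest dataset size at which BBS reaches the best random-sampling score.

    Returns None if the BBS curve never reaches that score.
    """
    target = np.max(score_random)
    reached = np.flatnonzero(np.asarray(score_bbs) >= target)
    if reached.size == 0:
        return None
    return int(np.asarray(n_points)[reached[0]])


# Made-up learning curves for illustration only.
n_points = np.array([200, 400, 600, 800, 1000, 1200])
random_runs = np.array([
    [0.48, 0.61, 0.69, 0.74, 0.80, 0.81],
    [0.52, 0.59, 0.71, 0.76, 0.80, 0.83],
])
bbs_runs = np.array([
    [0.59, 0.74, 0.82, 0.88, 0.90, 0.91],
    [0.61, 0.76, 0.84, 0.88, 0.90, 0.91],
])

# Average over repeated runs before comparing the curves.
mean_random = random_runs.mean(axis=0)
mean_bbs = bbs_runs.mean(axis=0)
print(points_to_match(n_points, mean_bbs, mean_random))
```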

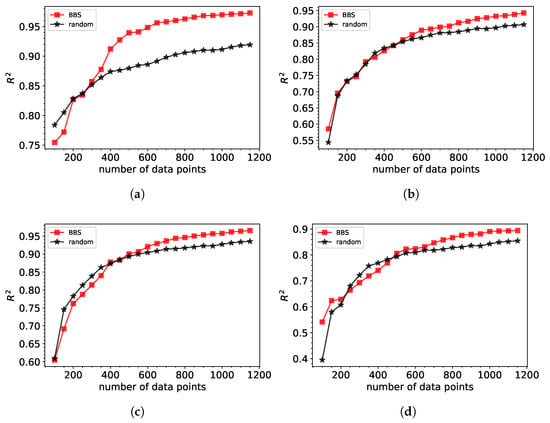

Figure 3 compares the performance of machine learning models trained on datasets generated using BBS with those created via random sampling. The results demonstrate that the BBS method significantly outperforms random sampling in terms of predictive accuracy, as measured by the coefficient of determination ($R^2$), for both Gaussian and polynomial fits. Databases constructed through BBS achieve similar $R^2$ values with far fewer data points, emphasizing the efficiency of the BBS-selected data.

Figure 3.

Performance of XGBoost machine learning model trained on databases generated by BBS versus random selection of points for (a) Gaussian fit (b) polynomial of 3rd order (c) polynomial of 4th order (d) polynomial 5th order.

For Gaussian fits with three parameters, the BBS approach reaches an $R^2$ of approximately 0.95 using about 600 data points, whereas random sampling requires considerably more data to attain comparable accuracy. Similarly, for polynomial fits of the 3rd and 4th orders, BBS demonstrates its ability to handle increasing spectral complexity, consistently achieving better predictions with reduced dataset sizes. In the case of the 4th-order polynomial fit, BBS excels in managing the more intricate structure of the spectra, achieving high accuracy with far fewer points compared to random sampling.

In this study, we intentionally limited the number of data points to 1200 to reflect realistic constraints in simulation-based workflows, where generating additional data through full-wave simulations like FDTD can be computationally prohibitive. The proposed augmented BBS method is specifically designed to be most effective during the early stages of data acquisition, providing improved prediction accuracy with fewer samples. Moreover, in practical Bragg grating applications, the achievable precision is often bounded by fabrication tolerances, meaning further increases in $R^2$ beyond 0.95 may not translate into meaningful design improvements. Therefore, our focus remains on maximizing efficiency and performance within a limited data regime, which is more representative of real-world design constraints.

As the number of data points increases, the gap between the performance of BBS and random sampling narrows, reflecting the diminishing returns of additional data when the dataset nears saturation. However, BBS maintains its advantage by prioritizing the selection of the most informative data points. These findings highlight the capability of BBS to optimize data acquisition in high-dimensional parameter spaces, reducing the required training dataset while maintaining or improving prediction accuracy.

4.2. Augmented BBS for Improved Database Generation

In the Bayesian approach, data points are selected with the upper confidence bound (UCB) acquisition function, which is evaluated with the Gaussian process regression (GPR) model on a dense mesh of candidate points []. In UCB, the mean term promotes exploitation (sampling where predictions are high), while the standard deviation term promotes exploration (sampling where the model is uncertain). In our implementation, we set the weight of the mean term to zero and the weight of the standard deviation term to one, so that the acquisition function reduces to the predicted standard deviation and the selection is purely exploratory. By emphasizing the predictive uncertainty of the surrogate model, the acquisition function drives sampling toward underexplored areas: the candidate at which the model is most uncertain is taken as the most informative data point for further training of the GPR model.
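With a zero mean weight and unit standard-deviation weight, the UCB score is simply the predictive standard deviation. The sketch below illustrates this with a minimal Gaussian process written directly in NumPy, assuming a unit-variance RBF kernel with a fixed length scale. It is a stand-in for a full GPR library, not the implementation used in this work, and all function names and numerical settings are hypothetical.

```python
import numpy as np


def rbf_kernel(A, B, length_scale=0.3):
    """Unit-variance RBF kernel between row-wise point sets A and B."""
    d2 = np.sum((A[:, None, :] - B[None, :, :]) ** 2, axis=-1)
    return np.exp(-0.5 * d2 / length_scale**2)


def gp_posterior(X_train, y_train, X_cand, noise=1e-6, length_scale=0.3):
    """Posterior mean and standard deviation of a zero-mean GP at X_cand."""
    K = rbf_kernel(X_train, X_train, length_scale) + noise * np.eye(len(X_train))
    K_s = rbf_kernel(X_train, X_cand, length_scale)
    mean = K_s.T @ np.linalg.solve(K, y_train)
    var = 1.0 - np.sum(K_s * np.linalg.solve(K, K_s), axis=0)
    return mean, np.sqrt(np.clip(var, 0.0, None))


def ucb(mean, std, mean_weight=0.0, std_weight=1.0):
    """Weighted UCB; the default weights give pure exploration."""
    return mean_weight * mean + std_weight * std


# Candidates on a 1D mesh; two points are already known near the left edge.
X_cand = np.linspace(0.0, 1.0, 11)[:, None]
X_train = np.array([[0.0], [0.2]])
y_train = np.array([0.3, 0.5])

mean, std = gp_posterior(X_train, y_train, X_cand)
next_idx = int(np.argmax(ucb(mean, std)))
print(X_cand[next_idx])  # the candidate farthest from the known points
```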

However, due to the multi-dimensionality of the output space, the UCB acquisition function could have equal or almost equal values for several data points. This is more pronounced at the beginning of the data selection, when only a few data points are known. To improve the data selection procedure and avoid the limitations of having several data points with similar values for the acquisition function, we add an additional condition to the data selection procedure. This modified approach, augmented BBS, is as follows.

We first identify the data points with the maximum value of the acquisition function, using the predictive standard deviation for all the unknown data points. We use a small threshold epsilon ($10^{-6}$) to account for numerical precision, capturing all values close to the maximum of the UCB acquisition function. If multiple candidates lie within the epsilon range of the maximum acquisition value, we take all of them for the secondary screening. We then compute their Euclidean distances to all the points that have already been selected (selected data), which returns a matrix of distances. For each candidate, we take the minimum distance to the selected data. Among the candidates with the maximum acquisition value, we choose the one with the largest of these minimum distances, ensuring maximal separation. This guarantees that the selection process favors points that not only have a high acquisition value but are also as diverse as possible, in other words, spread out within the design space. We used the Euclidean distance to assess point separation due to its simplicity and efficiency. All input parameters were normalized to the same range, making the Euclidean distance an appropriate choice in the absence of strong inter-parameter correlations. While alternative distance metrics may be explored in future work, the Euclidean distance provided a good balance between interpretability and computational cost in our setting.
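The selection rule described above can be sketched in a few lines. This is an illustration under stated assumptions rather than the original implementation: the function name is hypothetical, inputs are assumed to be normalized already, and the fallback for an empty set of selected points is an addition made so that the function is well defined.

```python
import numpy as np


def select_next_point(acquisition, candidates, selected, eps=1e-6):
    """Pick the candidate index by acquisition value, breaking near-ties by distance.

    acquisition: (n_cand,) acquisition values (here: predictive standard deviation)
    candidates:  (n_cand, d) normalized candidate designs
    selected:    (n_sel, d) normalized designs already in the dataset
    """
    # Step 1: all candidates within eps of the maximum acquisition value.
    tied = np.flatnonzero(acquisition >= acquisition.max() - eps)
    if len(tied) == 1 or len(selected) == 0:
        return int(tied[0])

    # Step 2: Euclidean distance matrix, tied candidates x selected points.
    diffs = candidates[tied][:, None, :] - selected[None, :, :]
    distances = np.linalg.norm(diffs, axis=-1)

    # Step 3: nearest selected point for each candidate, then the largest of those.
    min_dist = distances.min(axis=1)
    return int(tied[np.argmax(min_dist)])


candidates = np.array([[0.1, 0.1], [0.9, 0.9], [0.5, 0.5]])
acquisition = np.array([1.0, 1.0 - 1e-7, 0.5])
selected = np.array([[0.0, 0.0]])
print(select_next_point(acquisition, candidates, selected))  # 1
```

In this toy case a plain `argmax` would return candidate 0, which lies next to the already selected point. The tie-breaking step instead picks candidate 1, whose acquisition value is equal within epsilon but which is much farther from the existing data.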

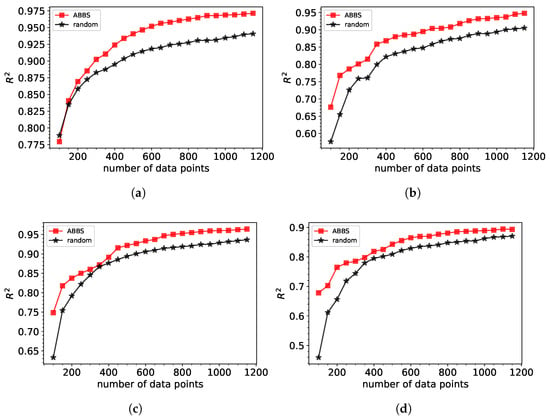

The augmented BBS method’s primary advantage lies in its ability to adaptively focus on regions of the parameter space that provide maximum informational gain, even as the dataset grows. By incorporating mechanisms to avoid redundancy and refine data selection based on prior iterations, the augmented BBS reduces the required training dataset. This is especially evident in Figure 4, where, in contrast to the standard BBS, the augmented BBS significantly outperforms random sampling from the very beginning of the database generation.

Figure 4.

Performance of XGBoost machine learning model trained on database generated by augmented BBS (ABBS) approach and by a random selection of points for (a) Gaussian fit (b) polynomial of 3rd order (c) polynomial of 4th order (d) polynomial 5th order.

In addition to improving model accuracy with fewer training points, the augmented BBS approach also reduces the time needed to acquire the training dataset. Assuming all simulations require comparable computational effort, the smaller, more informative datasets generated by ABBS resulted in up to a 35% reduction in dataset acquisition time compared to random sampling (for 4th- and 5th-order polynomial fits). This computational advantage is particularly relevant for iterative design workflows, where both simulation and training time are critical bottlenecks.

This early advantage of the augmented BBS method is attributed to the diversity criterion introduced via the epsilon threshold. At the start of database generation, the UCB acquisition function yields several candidates with nearly identical values due to the limited training data. The epsilon filter identifies such points, and the algorithm then selects the one farthest from existing data points. This ensures that the initial selections are not only informative in terms of uncertainty but also well-distributed across the parameter space, enabling faster learning and better generalization even with small datasets.

Overall, the augmented BBS method enhances the efficiency of Bayesian optimization by improving data selection strategies, making it particularly valuable for applications involving high-dimensional parameter spaces and resource-intensive simulations. It offers a practical pathway for achieving high prediction accuracy with minimal data, thereby reducing computational costs and enabling faster convergence in machine learning workflows.

To apply machine learning methods discussed in this work, we use two distinct models tailored to different stages of the methodology. For Bayesian data selection, we employ a Gaussian process regressor. This model provides both predictions and uncertainty estimates, which are essential for guiding the acquisition function. For the machine learning modeling of the Bragg grating response, we use XGBRegressor from the XGBoost library, chosen for its strong performance in structured regression tasks. Evaluation of model accuracy and generalization is carried out using standard regression metrics from the scikit-learn library.

5. Conclusions

In this study, we explored a Bayesian-based sampling (BBS) approach for the efficient generation of simulation data for Bragg grating structures—critical components in modern optical sensing technologies. The constructed database consisted of reflectance spectra corresponding to various geometrical configurations of the gratings. We analyzed the performance of different fitting functions applied to the central lobe of the reflectance spectrum, including polynomials of varying orders and Gaussian fits. Our results showed that polynomial fitting outperforms Gaussian fitting due to inherent asymmetries in the spectral shape, and we identified an optimal polynomial order that balances accuracy and complexity.

The BBS method has been shown to enhance data efficiency by selecting diverse and informative points within the design space. Notably, our findings highlight the importance of incorporating distance-based diversity in the early stages of database generation, particularly when dealing with complex and highly nonlinear spectral responses, conditions often encountered in real-world sensing applications.

Overall, this work provides practical insights into data-efficient modeling of Bragg gratings, offering a robust framework for the development of advanced machine learning-driven sensor design and optimization. These findings contribute to the broader effort to accelerate simulation-based design in photonic sensors, especially under constraints of limited computational resources or experimental data.

Author Contributions

I.N., M.R.M. and A.W. conceived and planned the research. I.N., Y.R. and M.R.M. carried out the method development. I.N. performed the FDTD Bragg grating simulations, generated the database and carried out optimizations and ML simulations. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the VDI Technologiezentrum GmbH with funds provided by the Federal Ministry of Education and Research under grant no. 13N14906 and the DLR Space Administration with funds provided by the Federal Ministry for Economic Affairs and Climate Action (BMWK) under Grants Nos. 50WM2152 and 50WK2272.

Data Availability Statement

Data available in a publicly accessible repository https://github.com/NechepurenkoIgor/Data-Files-for-Augmented-Bayesian-Data-Selection (accessed on 3 August 2025).

Conflicts of Interest

The authors declare no conflicts of interest regarding this article.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ABBS | Augmented Bayesian-based sampling |
| BBS | Bayesian-based sampling |
| BO | Bayesian Optimization |
| CMT | Coupled mode theory |
| GPR | Gaussian process regression |
| ML | Machine learning |
| RMSE | Root mean squared error |
| UCB | Upper confidence bound |
| XGBoost | Extreme gradient boosting |

References

- Zhang, W.; Yao, J. A fully reconfigurable waveguide Bragg grating for programmable photonic signal processing. Nat. Commun. 2018, 9, 1396. [Google Scholar] [CrossRef] [PubMed]

- Knall, J.M.; Engholm, M.; Boilard, T.; Bernier, M.; Digonnet, M.J. Radiation-balanced silica fiber amplifier. Phys. Rev. Lett. 2021, 127, 013903. [Google Scholar] [CrossRef]

- Butt, M.A.; Kazanskiy, N.L.; Khonina, S.N. Advances in waveguide Bragg grating structures, platforms, and applications: An up-to-date appraisal. Biosensors 2022, 12, 497. [Google Scholar] [CrossRef] [PubMed]

- La, T.A.; Ülgen, O.; Shnaiderman, R.; Ntziachristos, V. Bragg grating etalon-based optical fiber for ultrasound and optoacoustic detection. Nat. Commun. 2024, 15, 7521. [Google Scholar] [CrossRef] [PubMed]

- Alhussein, A.N.; Qaid, M.R.; Agliullin, T.; Valeev, B.; Morozov, O.; Sakhabutdinov, A. Fiber Bragg Grating Sensors: Design, Applications, and Comparison with Other Sensing Technologies. Sensors 2025, 25, 2289. [Google Scholar] [CrossRef]

- Kumar, S.; Rathee, S.; Arora, P.; Sharma, D. A comprehensive review on fiber bragg grating and photodetector in optical communication networks. J. Opt. Commun. 2022, 43, 585–592. [Google Scholar] [CrossRef]

- Choi, B.K.; Kim, J.S.; Ahn, S.; Cho, S.Y.; Kim, M.S.; Yoo, J.; Jeon, M.Y. Simultaneous temperature and strain measurement in fiber Bragg grating via wavelength-swept laser and machine learning. IEEE Sens. J. 2024, 24, 27516–27524. [Google Scholar] [CrossRef]

- Theodosiou, A. Recent advances in fiber Bragg grating sensing. Sensors 2024, 24, 532. [Google Scholar] [CrossRef]

- Zhang, X.; Wang, Y.; Xu, L.; Yang, L.; Dai, S. Frequency-stabilized and high-efficiency Brillouin random fiber laser based on a fiber Bragg grating ring. Infrared Phys. Technol. 2025, 147, 105799. [Google Scholar] [CrossRef]

- Bazakutsa, A.; Rybaltovsky, A.; Belkin, M.; Lipatov, D.; Lobanov, A.; Abramov, A.; Butov, O. Highly-photosensitive Er/Yb-codoped fiber for single-frequency continuous-wave fiber lasers with a short cavity for telecom applications. Opt. Mater. 2023, 138, 113669. [Google Scholar] [CrossRef]

- Chubchev, E.D.; Tomyshev, K.A.; Nechepurenko, I.A.; Dorofeenko, A.V.; Butov, O.V. Machine learning approach to data processing of TFBG-assisted SPR sensors. J. Light. Technol. 2022, 40, 3046–3054. [Google Scholar] [CrossRef]

- Huang, Z.; Yang, N.; Xie, J.; Han, X.; Yan, X.; You, D.; Li, K.; Vai, M.I.; Tam, K.W.; Guo, T.; et al. Improving accuracy and sensitivity of a tilted fiber Bragg grating refractometer using cladding mode envelope derivative. J. Light. Technol. 2023, 41, 4123–4129.

- Fasseaux, H.; Loyez, M.; Caucheteur, C. Machine Learning approach for fiber-based biosensor. In Optical Fiber Sensors; Optica Publishing Group: Washington, DC, USA, 2023; p. W4-4.

- Leal-Junior, A.; Avellar, L.; Frizera, A.; Caucheteur, C.; Marques, C. Machine learning approach for automated data analysis in tilted FBGs. Opt. Fiber Technol. 2024, 84, 103756.

- Shahriari, B.; Swersky, K.; Wang, Z.; Adams, R.P.; De Freitas, N. Taking the human out of the loop: A review of Bayesian optimization. Proc. IEEE 2015, 104, 148–175.

- Frazier, P.I. Bayesian optimization. In Recent Advances in Optimization and Modeling of Contemporary Problems; INFORMS: Catonsville, MD, USA, 2018; pp. 255–278.

- Garnett, R. Bayesian Optimization; Cambridge University Press: Cambridge, UK, 2023.

- Lin, W.; Liu, Y.; Yu, F.; Zhao, F.; Liu, S.; Liu, Y.; Chen, J.; Vai, M.I.; Shum, P.P.; Shao, L.Y. Temperature fiber sensor based on 1-D CNN incorporated time-stretch method for accurate detection. IEEE Sens. J. 2023, 23, 5773–5779.

- Adibnia, E.; Ghadrdan, M.; Mansouri-Birjandi, M.A. Chirped apodized fiber Bragg gratings inverse design via deep learning. Opt. Laser Technol. 2025, 181, 111766.

- Hu, X.; Liu, Y.; Wei, H.; Teng, C.; Yu, Q.; Luo, Z.; Lian, Z.; Qu, H.; Caucheteur, C. Tilted Bragg grating in a glycerol-infiltrated specialty optical fiber for temperature and strain measurements. Opt. Lett. 2024, 49, 2869–2872.

- Ngo, N.Q.; Zheng, R.T.; Ng, J.; Tjin, S.; Binh, L.N. Optimization of fiber Bragg gratings using a hybrid optimization algorithm. J. Light. Technol. 2007, 25, 799–802.

- Nechepurenko, I.; Rahimof, Y.; Mahani, M.; Wenzel, S.; Wicht, A. Finite-difference time-domain simulations of surface Bragg gratings. In Proceedings of the 2023 International Conference on Numerical Simulation of Optoelectronic Devices (NUSOD), Turin, Italy, 18–21 September 2023; pp. 3–4.

- Rahimof, Y.; Nechepurenko, I.; Mahani, M.R.; Tsarapkin, A.; Wicht, A. The study of 3D FDTD modelling of large-scale Bragg gratings validated by experimental measurements. J. Phys. Photonics 2024, 6, 045024.

- Brückerhoff-Plückelmann, F.; Buskasper, T.; Römer, J.; Krämer, L.; Malik, B.; McRae, L.; Kürpick, L.; Palitza, S.; Schuck, C.; Pernice, W. General design flow for waveguide Bragg gratings. Nanophotonics 2025, 14, 297–304.

- Agrawal, G.P. Nonlinear fiber optics. In Nonlinear Science at the Dawn of the 21st Century; Springer: Berlin/Heidelberg, Germany, 2000; pp. 195–211.

- Kashyap, R. Fiber Bragg Gratings; Academic Press: Cambridge, MA, USA, 2009.

- Kogelnik, H.; Shank, C.V. Coupled-wave theory of distributed feedback lasers. J. Appl. Phys. 1972, 43, 2327–2335.

- Yariv, A. Coupled-mode theory for guided-wave optics. IEEE J. Quantum Electron. 1973, 9, 919–933.

- Coldren, L.A.; Corzine, S.W.; Mashanovitch, M.L. Diode Lasers and Photonic Integrated Circuits; John Wiley & Sons: Hoboken, NJ, USA, 2012; Volume 218.

- Siegman, A.E. Lasers; University Science Books: Melville, NY, USA, 1986.

- Butov, O.V.; Tomyshev, K.; Nechepurenko, I.; Dorofeenko, A.V.; Nikitov, S.A. Tilted fiber Bragg gratings and their sensing applications. Uspekhi Fiz. Nauk. 2022, 192, 1385–1398.

- Rahimof, Y.; Nechepurenko, I.A.; Mahani, M.R.; Wicht, A. Coupled Mode Theory Fitting of Bragg Grating Spectra Obtained with 3D FDTD Method. In CLEO: Applications and Technology; Optica Publishing Group: Washington, DC, USA, 2024; p. JTh2A-208.

- Saleh, B.E.; Teich, M.C. Fundamentals of Photonics; John Wiley & Sons: Hoboken, NJ, USA, 2019.

- Tang, Y.; Kojima, K.; Koike-Akino, T.; Wang, Y.; Wu, P.; Xie, Y.; Tahersima, M.H.; Jha, D.K.; Parsons, K.; Qi, M. Generative deep learning model for inverse design of integrated nanophotonic devices. Laser Photonics Rev. 2020, 14, 2000287.

- Mengu, D.; Sakib Rahman, M.S.; Luo, Y.; Li, J.; Kulce, O.; Ozcan, A. At the intersection of optics and deep learning: Statistical inference, computing, and inverse design. Adv. Opt. Photonics 2022, 14, 209–290.

- Mahani, M.; Nechepurenko, I.A.; Rahimof, Y.; Wicht, A. Frequency shift detection mechanism in GaAs waveguides with mode-coupled Bragg gratings. Opt. Express 2025, 33, 13519–13529.

- Li, S.; Luo, M.; Lee, S.S. Cycle cGAN-driven inverse design of 1 × 2 MMI couplers with arbitrary power-splitting ratios and high efficiency. Opt. Express 2025, 33, 18556–18572.

- MacLellan, B.; Roztocki, P.; Belleville, J.; Romero Cortés, L.; Ruscitti, K.; Fischer, B.; Azaña, J.; Morandotti, R. Inverse Design of Photonic Systems. Laser Photonics Rev. 2024, 18, 2300500.

- Hammond, A.M.; Camacho, R.M. Designing integrated photonic devices using artificial neural networks. Opt. Express 2019, 27, 29620–29638.

- Dey, K.; Nikhil, V.; Chaudhuri, P.R.; Roy, S. Demonstration of a fast-training feed-forward machine learning algorithm for studying key optical properties of FBG and predicting precisely the output spectrum. Opt. Quantum Electron. 2023, 55, 16.

- Mahani, M.; Nechepurenko, I.; Rahimof, Y.; Wicht, A. Designing rectangular surface Bragg gratings using machine learning models. In Proceedings of the 2023 International Conference on Numerical Simulation of Optoelectronic Devices (NUSOD), Turin, Italy, 18–21 September 2023; pp. 69–70.

- Liu, Z.; Zhu, D.; Raju, L.; Cai, W. Tackling photonic inverse design with machine learning. Adv. Sci. 2021, 8, 2002923.

- Mahani, M.; Rahimof, Y.; Wenzel, S.; Nechepurenko, I.; Wicht, A. Data-Efficient Machine Learning Algorithms for the Design of Surface Bragg Gratings. ACS Appl. Opt. Mater. 2023, 1, 1474–1484.

- Adibnia, E.; Mansouri-Birjandi, M.A.; Ghadrdan, M.; Jafari, P. A deep learning method for empirical spectral prediction and inverse design of all-optical nonlinear plasmonic ring resonator switches. Sci. Rep. 2024, 14, 5787.

- Jiang, J.; Chen, M.; Fan, J.A. Deep neural networks for the evaluation and design of photonic devices. Nat. Rev. Mater. 2021, 6, 679–700.

- Head, S.; Keshavarz Hedayati, M. Inverse design of distributed Bragg reflectors using deep learning. Appl. Sci. 2022, 12, 4877.

- Yu, Q.; Norris, B.R.; Edvell, G.; Luo, L.; Bland-Hawthorn, J.; Leon-Saval, S.G. Inverse design and optimization of an aperiodic multi-notch fiber Bragg grating using neural networks. Appl. Opt. 2024, 63, D50–D58.

- Gayathri, M.; Porkodi, V. Computational Design of Strip Waveguide Bragg Gratings Using Neural Networks for Hexagonal Shaped Refractive Index Biosensors Based Blood Sample Detection. ECS J. Solid State Sci. Technol. 2025, 14, 037004.

- Mahani, M.; Nechepurenko, I.A.; Rahimof, Y.; Wicht, A. Optimizing data acquisition: A Bayesian approach for efficient machine learning model training. Mach. Learn. Sci. Technol. 2024, 5, 035013.

- Chen, R.C.; Dewi, C.; Huang, S.W.; Caraka, R.E. Selecting critical features for data classification based on machine learning methods. J. Big Data 2020, 7, 52.

- Theng, D.; Bhoyar, K.K. Feature selection techniques for machine learning: A survey of more than two decades of research. Knowl. Inf. Syst. 2024, 66, 1575–1637.

- Mahani, M.; Nechepurenko, I.A.; Flisgen, T.; Wicht, A. Combining Bayesian Optimization, Singular Value Decomposition, and Machine Learning for Advanced Optical Design. ACS Photonics 2025, 12, 1812–1821.

- Li, Z.; Xue, Y. Health assessment and health trend prediction of wind turbine bearing based on BO-BiLSTM model. Sci. Rep. 2025, 15, 9169.

- Zhang, D.; Qin, F.; Zhang, Q.; Liu, Z.; Wei, G.; Xiao, J.J. Segmented Bayesian optimization of meta-gratings for sub-wavelength light focusing. J. Opt. Soc. Am. B 2019, 37, 181–187.

- Rickert, L.; Betz, F.; Plock, M.; Burger, S.; Heindel, T. High-performance designs for fiber-pigtailed quantum-light sources based on quantum dots in electrically-controlled circular Bragg gratings. Opt. Express 2023, 31, 14750–14770.

- Grebot, J.; Helleboid, R.; Mugny, G.; Nicholson, I.; Mouron, L.H.F.; Lanteri, S.; Rideau, D. Bayesian Optimization of Light Grating for High Performance Single-Photon Avalanche Diodes. In Proceedings of the 2023 International Conference on Simulation of Semiconductor Processes and Devices (SISPAD), Kobe, Japan, 27–29 September 2023; pp. 365–368.

- Nechepurenko, I.; Mahani, M.R.; Rahimof, Y.; Wicht, A. Simulation Dataset for Bragg Grating ML Modeling. 2025. Available online: https://github.com/NechepurenkoIgor/Data-Files-for-Augmented-Bayesian-Data-Selection (accessed on 29 July 2025).

- Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A highly efficient gradient boosting decision tree. Adv. Neural Inf. Process. Syst. 2017, 30.

- Zhang, X.; Yan, C.; Gao, C.; Malin, B.A.; Chen, Y. Predicting missing values in medical data via XGBoost regression. J. Healthc. Informatics Res. 2020, 4, 383–394.

- Rana, J.; Sharma, A.K.; Prajapati, Y.K. Intervention of machine learning and explainable artificial intelligence in fiber optic sensor device data for systematic and comprehensive performance-optimization. IEEE Sens. Lett. 2024, 8, 1–4.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).