1. Introduction

Tactile sensing is an essential capability for both animals and robots, enabling tasks such as object manipulation, navigation, texture classification, and force estimation [1,2,3]. Nature offers numerous forms of tactile sensing. For instance, some nocturnal animals, such as whip spiders, use specialised low-resolution tactile sensors (antennae or antenniform legs) to scan their environments and navigate without relying on vision [4]. Other species, including insects and small mammals, use hairs to detect vibrations, which assist in navigation and in surface manipulation and recognition [5]. In contrast, humans rely primarily on high-resolution tactile sensing through a very large number of receptors distributed throughout the skin, enabling sophisticated object manipulation and texture classification [6]. This diversity of tactile sensing mechanisms is mirrored in robotics, where tactile sensors are commonly divided into four main categories: thermal, inductive, capacitive/electrical, and optical [7,8]. In nature, we may assume that natural selection has favoured the most appropriate sensor given constraints on brain size, energy, and task demands; in robotics, however, the relative strengths and weaknesses of different sensor types for a given task can only be established by comparing them directly. To this end, this paper compares sensors for tactile texture classification and friction prediction, focusing on optical and capacitive/electrical sensors because they are the most prominent approaches [3,9,10,11,12].

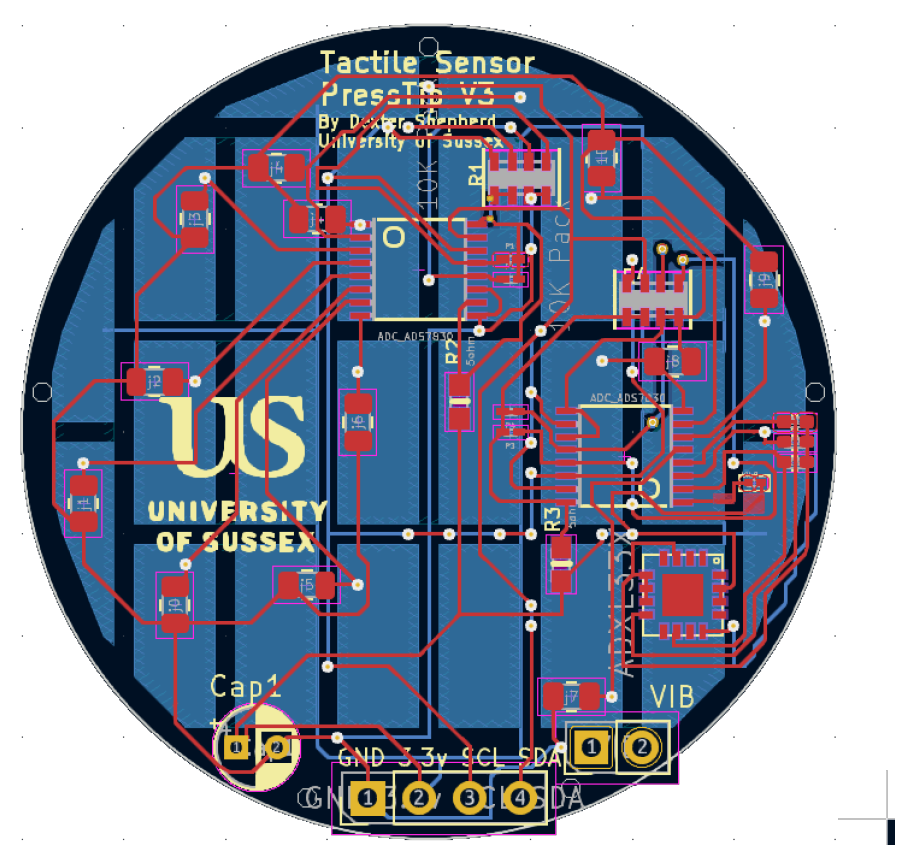

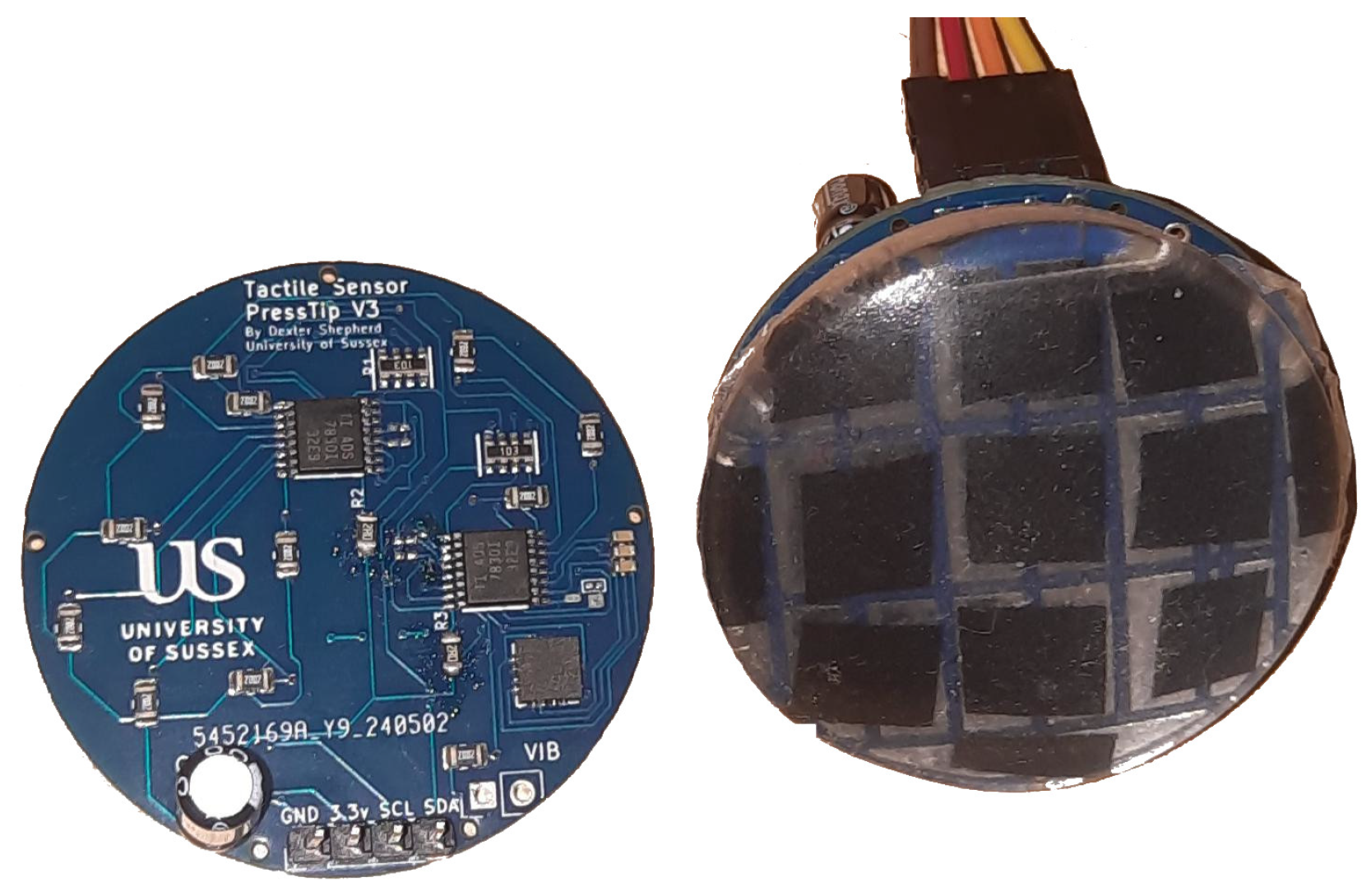

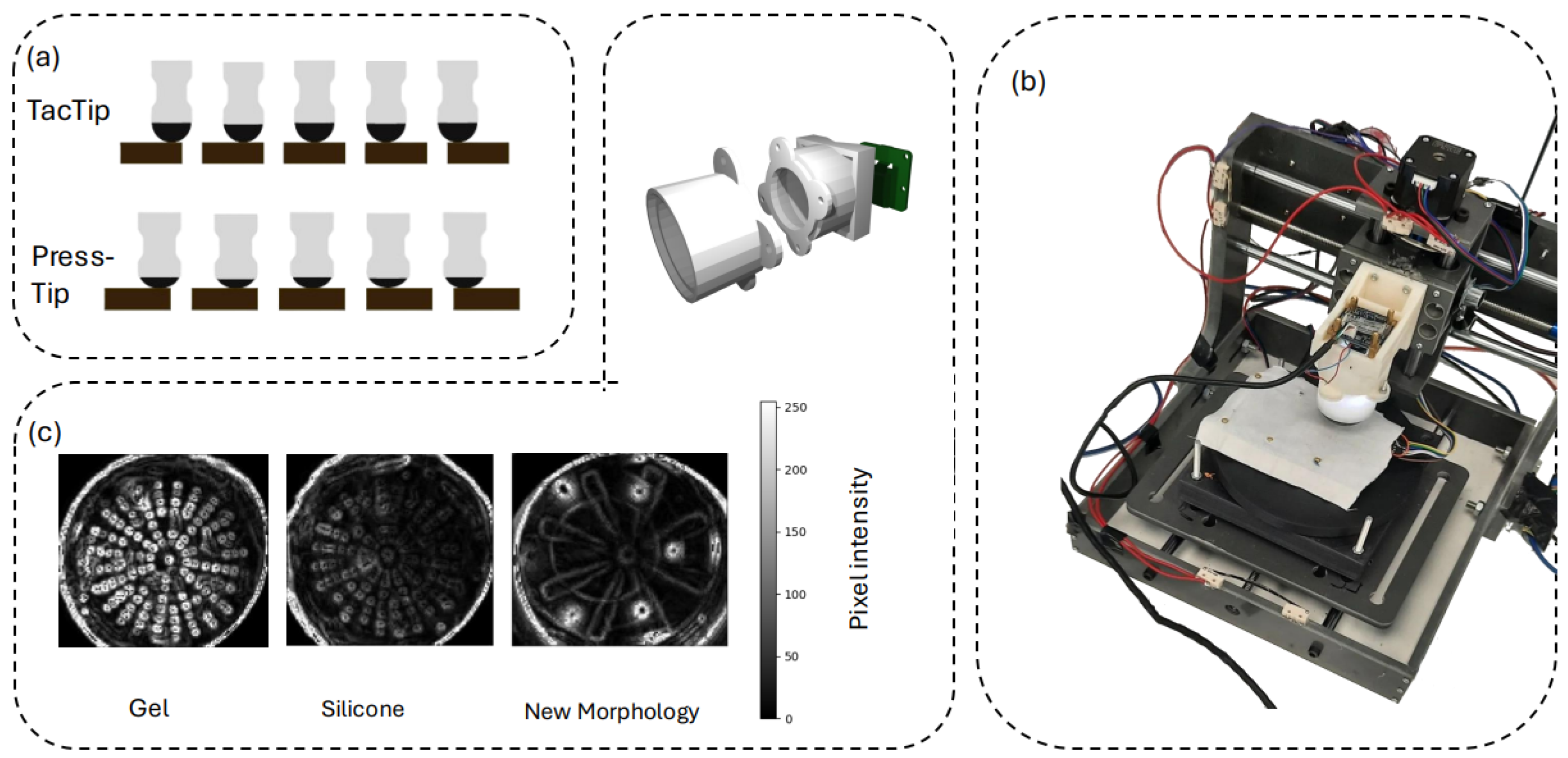

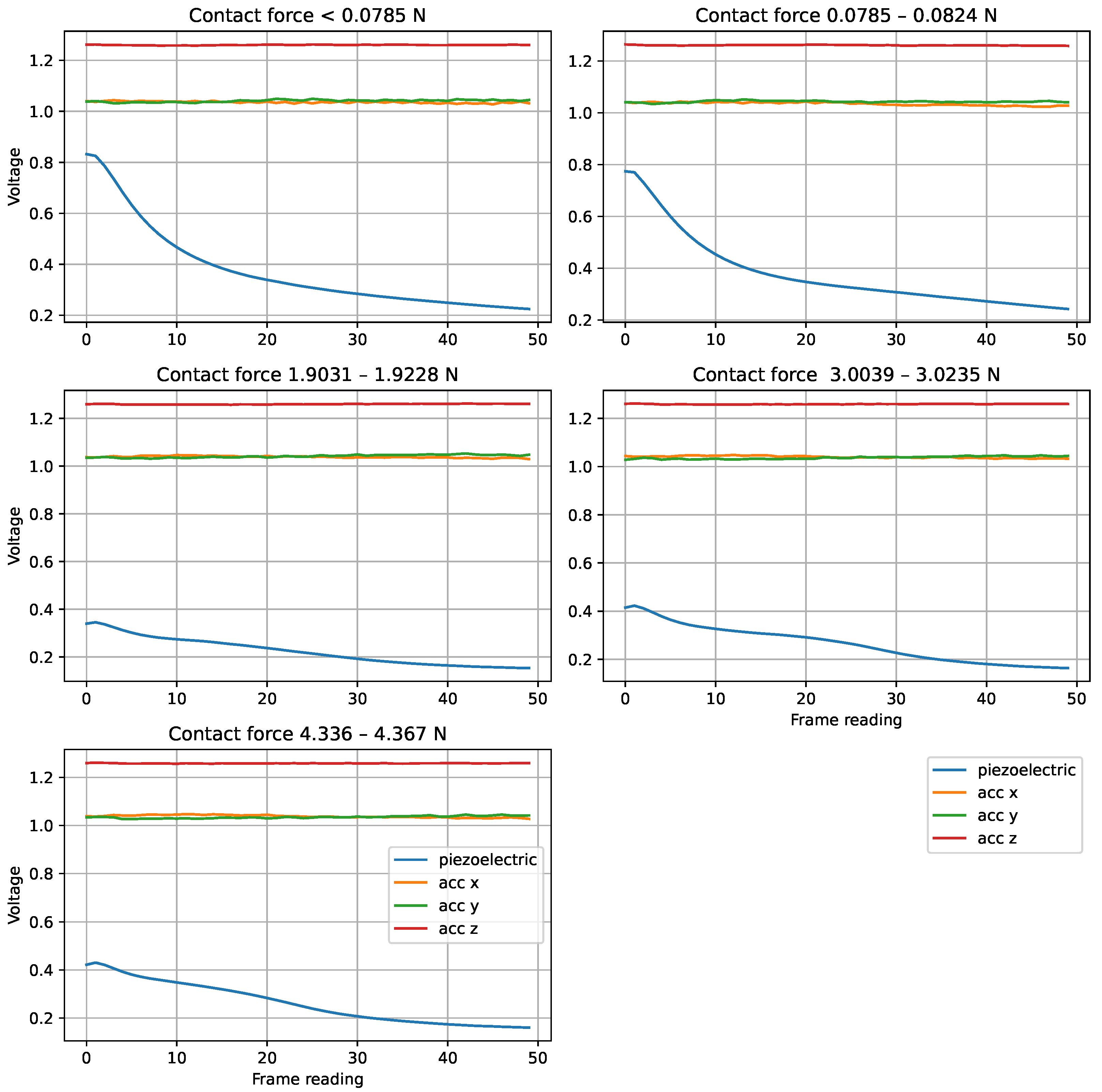

In recent years, optical tactile sensors such as the TacTip have gained significant attention for their high-resolution, contact-based sensing capabilities [1]. These sensors use a camera to track the deformation of markers embedded in a soft material such as silicone gel, translating mechanical interactions into changes in visual features that can be processed with computer vision techniques for a variety of applications, including texture classification [13,14]. In contrast, electrical sensors typically have a small number of input channels: often one for piezoelectric sensors and three for three-axis accelerometers. Such low-resolution electrical sensors, which have been shown to be highly versatile [2,15], tend to require less processing and to be much more cost-effective than optical tactile sensors, whose main expenses are the camera and manufacturing. The two sensor types therefore have contrasting characteristics. However, to date there has been no extensive comparison of optical tactile sensors and low-resolution electrical tactile sensors on the texture classification problem. Although comparisons exist among optical sensors [1] and among electrical sensors [2], a rigorous comparison between capacitive/electrical and optical sensors is missing. Even in comprehensive papers such as [2], which define the physical properties and typical energy consumption of different sensor types, the dataset and task used to measure accuracy differed between sensor types. Reviews that survey multiple studies inevitably analyse each sensor type separately, on different datasets, which makes direct comparison difficult [16]. This paper provides a detailed comparison of these two sensor types on the texture classification task.

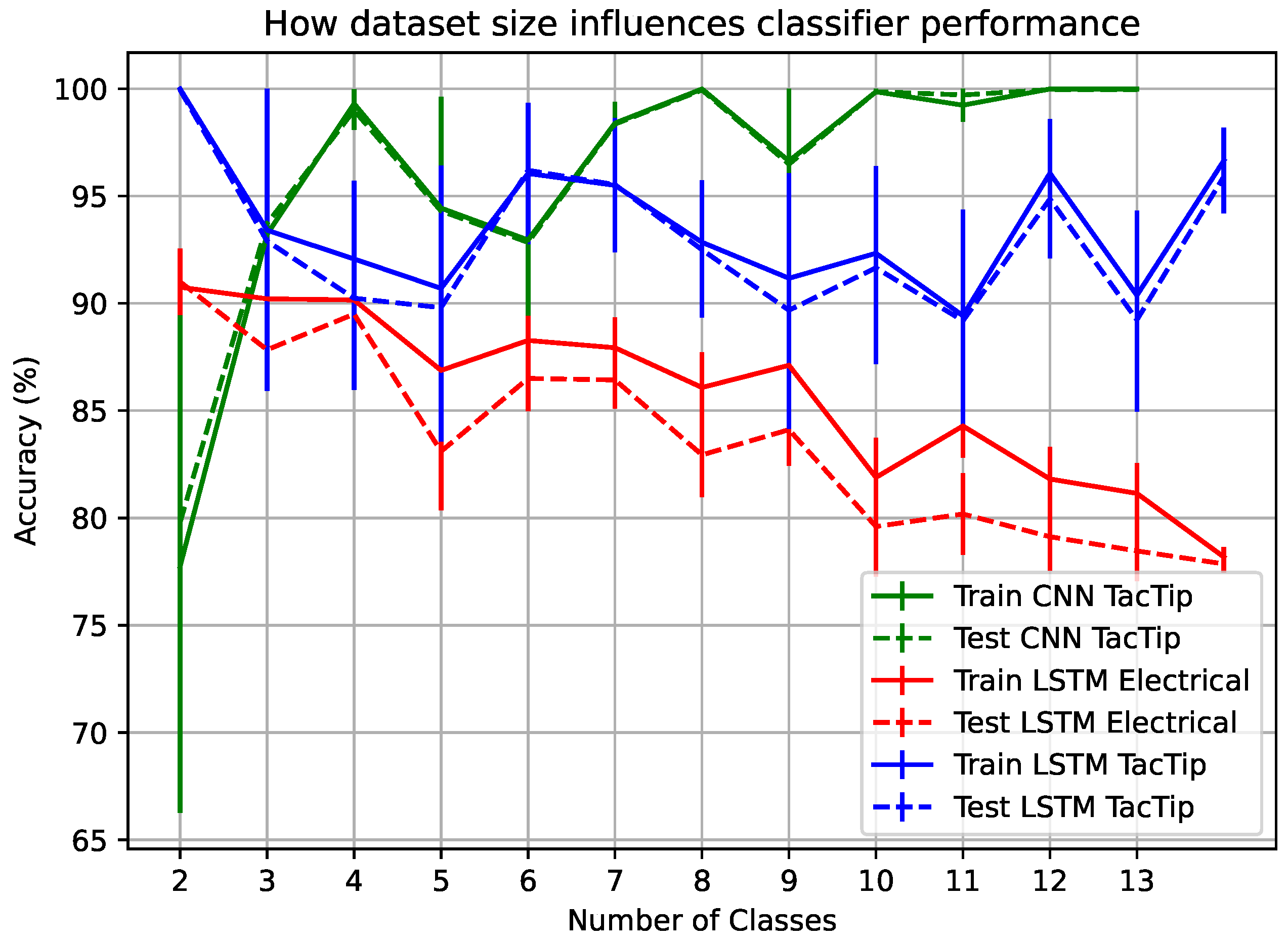

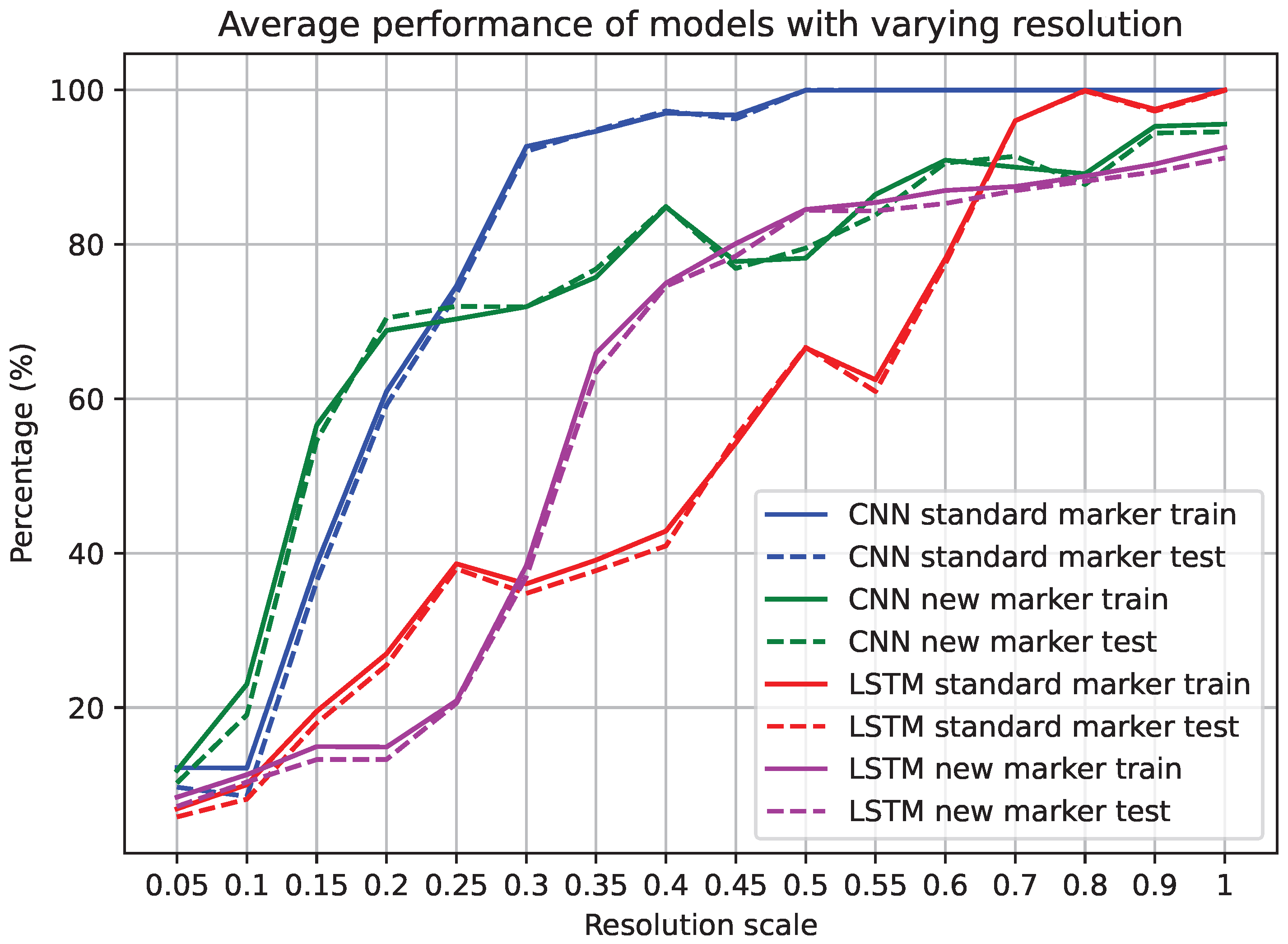

Another related question, which has also been neglected until now, concerns the resolution of the optical sensor when it is used for texture classification. It is usually assumed that the sensor should operate at the highest resolution it supports, yet no study has examined whether image resolution can be reduced without significantly affecting classification accuracy. Comparing optical and electrical sensors across a range of resolutions is essential to establish whether, and when, optical sensing provides a tangible advantage. This paper addresses this question directly.
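To make the resolution question concrete, one common way to emulate a lower-resolution camera is to average non-overlapping blocks of pixels. The sketch below assumes greyscale frames stored as 2-D NumPy arrays; the function name `downsample_block_mean` is hypothetical, and block averaging is offered only as an illustration, not necessarily the down-scaling method used in this study.

```python
import numpy as np


def downsample_block_mean(image: np.ndarray, factor: int) -> np.ndarray:
    """Reduce a 2-D greyscale image by averaging non-overlapping factor x factor blocks.

    Hypothetical helper for illustration. Rows and columns that do not
    fill a complete block are cropped before averaging.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    h, w = image.shape
    h_crop, w_crop = h - h % factor, w - w % factor
    cropped = image[:h_crop, :w_crop].astype(np.float64)
    # Give each block its own pair of axes (1 and 3), then average over them.
    blocks = cropped.reshape(h_crop // factor, factor, w_crop // factor, factor)
    return blocks.mean(axis=(1, 3))


if __name__ == "__main__":
    frame = np.arange(16).reshape(4, 4)
    print(downsample_block_mean(frame, 2))  # [[ 2.5  4.5] [10.5 12.5]]
```

Applying the function with increasing factors (for example 1, 2, 4, 8) to the same recorded frames would yield a family of progressively coarser inputs on which a classifier's accuracy could be compared; averaging, rather than simply skipping pixels, reduces aliasing of fine marker detail.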

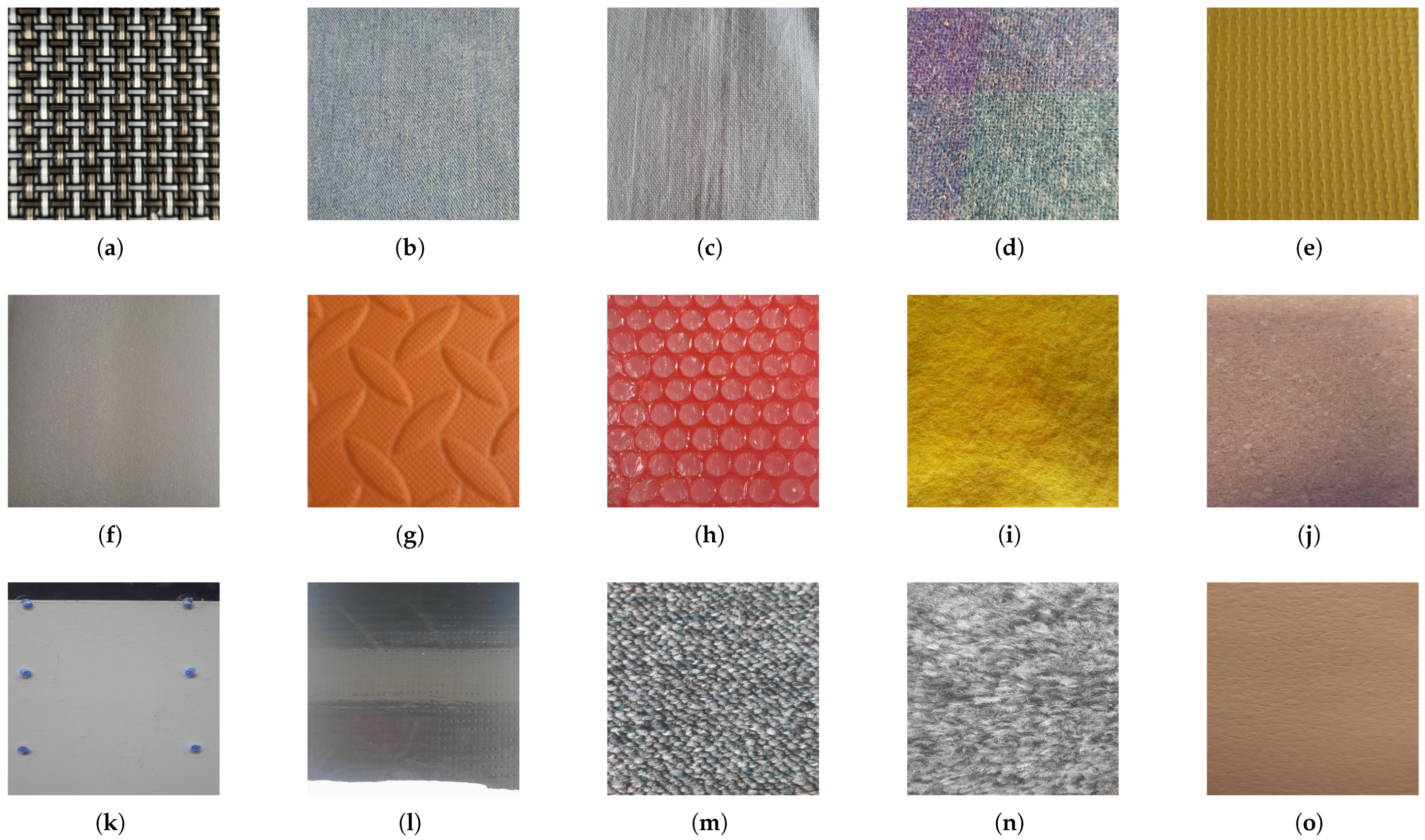

Although texture classification is an established benchmark task in tactile sensing, a standardised set of textures for comparison remains elusive. Much of the existing literature focuses on optimising individual tactile sensors to perform well on particular tactile datasets [10,11,12,17], which vary greatly between studies. These datasets often include diverse textures selected for convenience rather than consistency. We therefore created a new set of textures based on the commonalities between previous datasets, in order to improve comparability.

While rigorous comparative studies between optical and electrical tactile sensors on texture classification are lacking, individual sensor types have been tested extensively. Accelerometers, for example, have been shown to classify multiple textures well under varying accelerations applied to the sensor [18]. Accelerometers and piezoelectric sensors have also been compared, with the suggestion that accelerometers may be more effective for certain texture classification tasks [19]. However, these studies are often specific to particular applications; for instance, piezoelectric sensors [20] and capacitive sensors [21] have been explored for texture classification in dynamic environments, with accuracy varying according to the type of surface interaction and the area of surface tested.

Our main interest in tactile sensors relates to robot locomotion. Such applications demand sensors that can handle continuous surface contact rather than discrete insertions (such as inserting a sensor into a fruit for classification [19]). Hence, our sensors are dragged across the surface to be classified, mimicking the way an animal might stroke a surface with its foot to detect ground texture. This is also consistent with the technique most commonly used for tactile texture classification with artificial fingers [9].

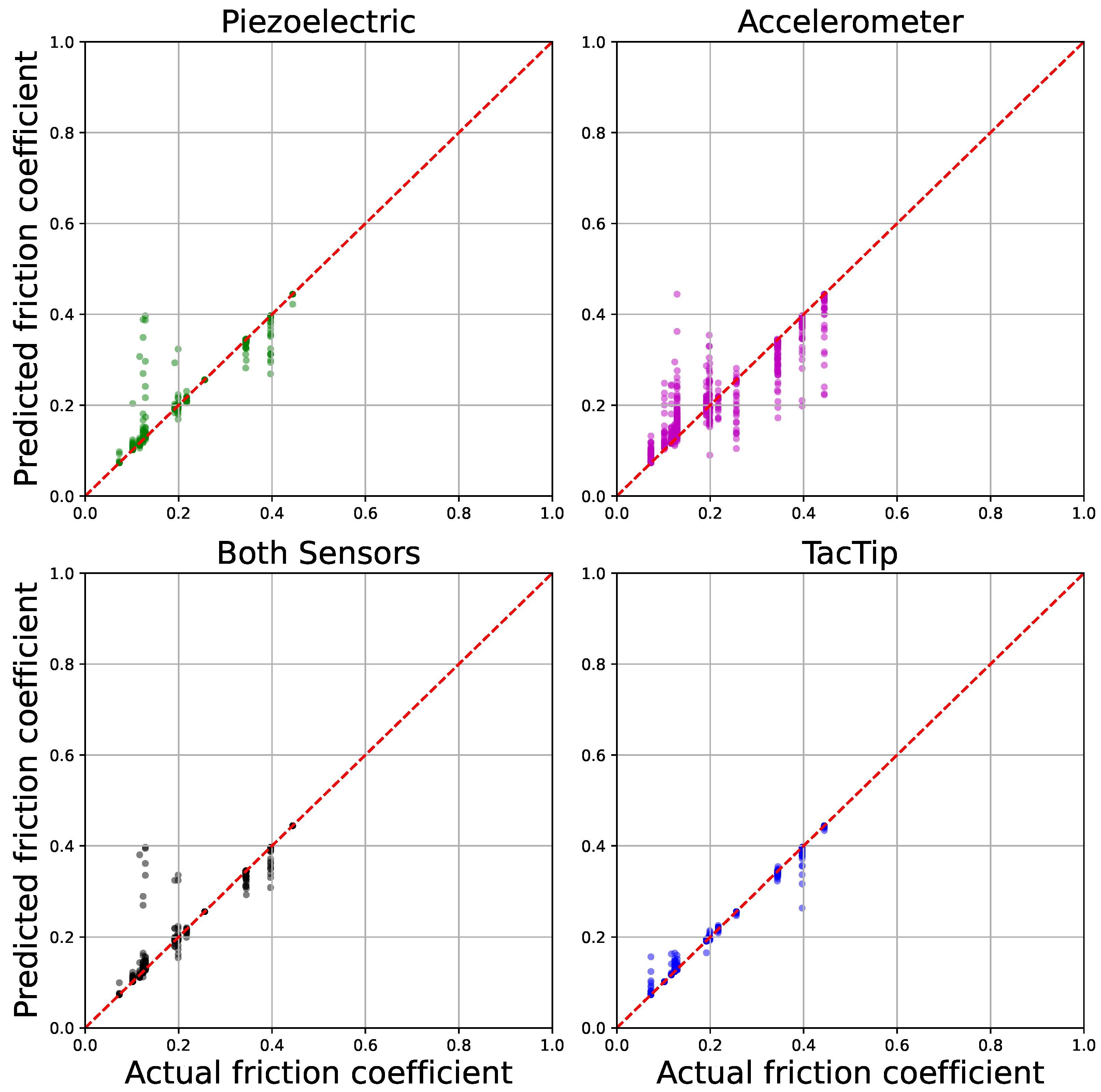

This paper aims to fill the gaps identified above by rigorously comparing optical (modified TacTips at varying resolutions) and electrical (piezoelectric and accelerometer-based) tactile sensing methods on a dataset designed to encompass a diverse range of textures. In keeping with previous studies [9], we also assessed these sensors on a coefficient-of-friction identification task to evaluate their performance in broader applications relevant to robotic locomotion. In addition, we evaluated the physical resolution of both types of sensing. Our findings indicate that, while both methods perform strongly, optical sensors significantly outperform their electrical counterparts overall.