Abstract

Efficient autonomous exploration in unknown obstacle cluttered environments with interior obstacles remains a challenging task for mobile robots. In this work, we present a novel exploration process for a non-holonomic agent exploring 2D spaces using onboard LiDAR sensing. The proposed method generates velocity commands based on the calculation of the solution of an elliptic Partial Differential Equation with Dirichlet boundary conditions. While solving Laplace’s equation yields collision-free motion towards the free space boundary, the agent may become trapped in regions distant from free frontiers, where the potential field becomes almost flat, and consequently the agent’s velocity nullifies as the gradient vanishes. To address this, we solve a Poisson equation, introducing a source point on the free explored boundary which is located at the closest point from the agent and attracts it towards unexplored regions. The source values are determined by an exponential function based on the shortest path of a Hybrid Visibility Graph, a graph that models the explored space and connects obstacle regions via minimum-length edges. The computational process we apply is based on the Walking on Sphere algorithm, a method that employs Brownian motion and Monte Carlo Integration and ensures efficient calculation. We validate the approach using a real-world platform; an AmigoBot equipped with a LiDAR sensor, controlled via a ROS-MATLAB interface. Experimental results demonstrate that the proposed method provides smooth and deadlock-free navigation in complex, cluttered environments, highlighting its potential for robust autonomous exploration in unknown indoor spaces.

1. Introduction

Mobile autonomous exploration, also known as Active Simultaneous Localization and Mapping (A-SLAM) [1], in indoor environments, refers to the capability of robots to navigate and gather information in unknown environments with minimal or no human guidance. It is a challenging topic in the robotics field, as it seeks to address three interconnected challenges simultaneously: trajectory planning, localization, and mapping. Essentially, the primary objective for a mobile robot in such scenarios is to build a reliable map of an unknown region while navigating safely in it without any prior information.

This process relies on data collected from the robot’s onboard sensors, such as LiDAR [2], RGB-D cameras [3], and IMUs [4]. Such sensor data helps the robot to understand the surrounding environment and make intelligent decisions to move to the next state, expanding the explored space safely. The ability to autonomously map unknown areas is vital for numerous real-world applications, including domestic and service robotics, autonomous warehouse and factory operations, and also critical missions like search and rescue in disaster or hazardous areas without human responders to be in danger.

1.1. Related Works

Recent advances in robotics have led to the development of sophisticated algorithms that address the challenges of autonomous exploration. These exploration algorithms can be classified according to how they represent and exploit the exploration space to navigate and map unknown regions. One such category is the methods based on Reinforcement Learning (RL). In this method, the mobile robot learns an exploration policy by interacting with the environment and maximizing a reward function that encourages efficient coverage of the map and goal-directed behavior in unknown spaces. In the research community, the RL method is state-of-the-art, and a great number of works have been presented. In [5], the authors propose a Cumulative Curriculum Reinforcement Learning (CCRL) framework to enhance Deep RL-based autonomous exploration by introducing a size-adaptive map representation and accelerating training through a custom lightweight grid-based simulator. The work in [6] presents a Deep RL-based exploration architecture that predicts long-term visiting sequences for unexplored sub-regions and integrates Next Best of View (NBV) selection. And finally, the authors in [7] develop a Graph-based Spatiotemporal Neural Network (G-STNN) combined with Deep RL to guide autonomous robot exploration, using previous trajectories and exploration space boundaries for enhancing the decision-making. Similar approaches have been presented in [8,9,10,11].

Another class of algorithms is based on sampling methods, where the robot randomly samples points within the explored space and selects the next best goal to expand it. They usually use motion graph planners [12] to navigate through an unknown area to the next state following utility-based criteria. For example, in [13], the authors propose a Dynamic Exploration Planner (DEP) that uses incremental sampling and Probabilistic Roadmaps to efficiently and safely explore dynamic environments by optimizing paths based on the NBV point. In [14], a Sampling-based Frontier-Block Detection (SFBD) method is presented that improves RRT-based exploration by reducing redundant sampling and costly nearest-point searches through a block-based structure for efficient collision avoidance and frontier detection. Finally, the work in [15] introduces an improved sampling-based exploration method that extends RRT until frontier endpoints are found and evaluates them using an enhanced utility function, leading to more efficient indoor exploration in 2D environments.

Another type of algorithms is based on the frontiers exploration. In these exploration algorithms, the mobile robot identifies and moves toward free boundaries to expand the explored region, exploiting the information from occupied and free explored boundaries. The paper of Yamauchi [16] was the first attempt that addressed this kind of problem, where the agent navigates to the free frontiers with A* algorithm. A class of frontier-based methods, on which our paper relies, is the Harmonic Potential Field (HPF). The HPF provides the agent with smooth navigation to unknown areas and easy implementation. These fields are typically derived from the solution of an elliptic Partial Differential Equation (PDE) with boundary conditions. We obtain their solution with numerical methods as the analytical solution is intractable [17]. A first attempt was presented in [18], which applies a HPF to explore an unknown area, solving with relaxation methods the Boundary Value Problem (BVP) with Dirichlet condition. That HPF leads the agent to the free frontiers (low values), avoiding collision with obstacles (high values). However, such kind of condition exhibits large flat areas of the potential, which result in the agent being stuck in regions which are far away from the frontier. Certain works proposed solutions of the above problem, such as [19], which applies potential rails that guide the robot to regions that are either unexplored or were visited a long time ago. The work in [20] develops a strategy to distort the potential field without loosing the qualities of the HPF, and the work in [21] enhances the BVP for integrated exploration by introducing active loop closure through dynamic barriers and a Voronoi-based local update window. Moreover, in the research community, there are projects which apply another boundary condition, the Neumann. The papers [22,23] solve the BVP using as a numerical method the Fast Multipole accelerated Boundary Element Method (FMBEM), which yields linear complexity in terms of computational effort and required memory. In [24], the authors use the panel method to create an HPF that leads the agent to the goal point. Finally, the paper [25] introduces a novel reactive, frontier-based exploration scheme that utilizes harmonic transformation to map a workspace onto a disk, ensuring safe navigation and enabling selective exploration of frontiers. Alternatively, Sawhney et al. [26] proposed another method to solve the BVP based on Brownsian Motion, known as the Walking on Sphere (WoS) Algorithm, which is a stochastic process applying Monte Carlo Integration (MCI) [27]. This method calculates the potential, and also its gradient, of a linear elliptic equation at any point in a connected region with different boundary value conditions, e.g., Dirichlet [26], Neumann [28], and Robin [29]. The aforementioned method is a grid-free algorithm and has been applied to geometrical problems in computer graphics exhibiting low computational cost.

1.2. Contributions

In this work, we address the problem of autonomous exploration for a mobile agent in an unknown indoor 2D environment that may contain interior obstacles. Our focus is on the map building problem and we assume the agent calculates its position via a certain localization process. We calculate velocity commands for navigating the agent by solving an elliptic PDE with Dirichlet condition over the explored space boundary. Most specifically, we solve a Poisson equation with a source point on the closest located free frontier. In this way, the agent escapes from potential flat regions created by the Dirichlet condition. The potential function associated with the source point is defined using a Hybrid Visibility Graph (HVG), which gives an estimate of the distance to the source point. The HVG involves the vertices and edges of the explored space boundary, as well as the minimum-length edges between the interior obstacles, enabling more accurate field estimation. Finally, we use the stochastic process WoS [26] for computational and memory efficiency. The key properties of our algorithm are (1) it is grid-free, i.e., it does not need to discretize the explored space to calculate the field and (2) we can calculate the gradient at the agent’s position without finding the potential field anywhere, thus reducing significantly the computational cost. We validated the approach using a real-world platform: an AmigoBot equipped with a LiDAR sensor, controlled via a ROS-MATLAB interface. Experimental results demonstrate that the proposed method provides smooth and deadlock-free navigation in complex, cluttered environments, highlighting its potential for robust autonomous exploration in unknown indoor spaces. In the results, we provide a comparative analysis of our method against other exploration approaches, aiming to present its advantages and disadvantages.

The rest of this paper is organized as follows: in Section 2, we formulate mathematically the exploration problem, in Section 3, we discuss the basic theory of elliptic PDEs with Dirichlet conditions and we present the WoS algorithm. In Section 4, we present the implementation of the proposed exploration process, and in Section 5, we describe the tools for realizing the real-world experiment. In Section 6, we present the results of the proposed exploration algorithm in three studies, and in Section 7, we discuss the results. Finally, we draw conclusions and future research directions.

1.3. Notations

1.3.1. Mathematic Symbols

For any set , we use to denote its boundary, to denote its interior, and to denote its volume. We use to denote a probability density function on and write for a random point drawn from . The uniform probability density function on is . Moreover, we use to denote a ball contained in n-dimensional space with radius r centered at x. Additionally, is denoted as the Euclidean distance from a point to the closest point on the and is denoted as the closet point. Furthermore, we define the -shell around as . Finally, we use for the Laplace operator on .

1.3.2. Pseudocode Formation

For our exploration method, we introduce pseudocodes to clarify the WoS algorithm and the structure of the HVG. The notation for arrays and sets aligns with the MATLAB framework. Additionally, we denote a zero matrix as zeros and a matrix of ones as ones, consistent with MATLAB properties.

2. Problem Formulation

In this section, we formulate our problem. First, we define the unknown workspace and the properties of the mobile agent, including its kinematic model and the onboard sensor model. Then, we discuss the properties of the explored region. Finally, we explain the data structure in which we store the unexplored region.

2.1. Workspace and Kinematics

We denote as W the unknown workspace, a compact and connected subset of and the free space. Let consist of the mutually disjoint sets of the obstacle boundaries, as well as of the external boundary , such that . For this paper, we assume that the workspace W is time invariant and does not involve dynamic obstacles.

For simplicity, we use a differential mobile agent, which is a disk-shaped robot with radius . The configuration space of the agent is defined as . The kinematic equation of a differential drive robot is given by:

where is the position of the robot, the orientation of the agent is , and and denote the linear and angular velocity of the agent with . Notice that the agent relies on two motions to navigate in its free configuration space ; it rotates and moves forward. Moreover, we assume that the position and orientation of the agent are known through the exploration processes via a localization algorithm that assumes a known initial position .

Additionally, we equip the agent with a range sensor, which gives the robot the capability to determine how close it is to the workspace boundary and explore unknown regions. The range sensor in each specific time step creates a sensing space , where is the maximum range of the sensor. We assume that the minimum range of the sensor is rather small with .

2.2. Explored Region

We define as the path traversed by the agent during the time interval ; for brevity, when and , . Given a continuous path , we define the explored region of W as . The boundary of the explored region at some time instance is denoted as , where and belong to the agent’s known free space and workspace boundaries , respectively. In general, each of and consists of zero or more disjoint line curves, that is, and with and , indexing the distinct parts of the respective set.

One should note that any point that enters the agent’s sensing region becomes instantly part of . In this way, as the agent approaches the free boundary, the interior of the explored space augments. In contrast, occupied boundary points will remain on the boundary, since if some point , then [22]. So the problem that we try to solve is formulated as follows.

Problem.

Design a control law such that there exists a finite time instant for which .

2.3. Map Representation

The unknown workspace is discretized in a 2D grid, called an occupancy matrix [30]. We use this kind of matrix so that we can estimate the real form of the workspace. This grid is a 2D matrix consisting of cells centered at , with . To associate each point with a cell, we define a map , where and denotes the grid resolution. We attach in each cell a binary occupancy value , specifying whether the cell is free (), occupied (), or unknown (). In the exploration process, we use the binary occupancy map, which takes zero value for free and the unknown cells () and true value for the occupied ones (). This structure is applied for simplicity and minimization of both memory and time complexity of the algorithm. Thus, we classify the points of the explored boundary, , as occupied or free and we also define the sets and or . Finally, we initialize the values of the occupancy grid map with zero value.

Remark 1.

We apply the binary occupancy map for mapping because it is particularly simple and well suited for our proposed method. Unlike other methods that might benefit from detailed probabilistic representations, our approach does not necessitate intermediate probabilities of matrix cells. This choice contributes to the low memory and time complexity of our exploration method.

3. Solving PDEs with Monte Carlo Integration

3.1. Linear Elliptic Equation

The central idea behind the design of our exploration controller is to find a potential field by solving a Poisson equation, subject to boundary conditions that assign low potential to unexplored frontiers and high potential to boundaries corresponding to obstacles. The Poisson equation with pure Dirichlet boundary condition is:

Here, is the source term and .

Under the Dirichlet boundary condition, the values of the potential function at the boundaries are fixed, assigning the highest values to obstacles and the lowest to the unexplored boundary. Thus, this formulation generates a vector field that safely guides the agent towards the unexplored area. However, in complex environments, the agent may become trapped in regions distant from free frontiers, where the potential field becomes almost flat, and consequently the agent’s velocity nullifies as the gradient vanishes. To address this problem, a source point is introduced at the nearest free frontier relative to the agent’s position so that the agent moves away from low-gradient regions. The definition of the source function will be discussed later in the next section.

The solution of the Poisson equation at any point is based on the generalized mean value property of Laplace equation.

Proposition 1 (Generalized Mean Value Property [31]).

If the boundary of the exploration space is sufficiently smooth, and the solution of (2) with the source term is continuous in , then the solution of the Poisson Equation (2) attains the integral term:

where is the largest ball contained in with radius R centered at p, and is the Green’s function for the ball (see Appendix B).

Notice that the above form attains a probabilistic interpretation, as the value at the center of the sphere is the uniform average of the boundary values on the surface of the ball plus the average of the source term w.r.t. the Green function for the ball. Therefore, if the boundary values on the sphere are known, we may apply the MCI method (see Appendix A) to estimate the solution .

However, the solution of the Poisson equation is not sufficient. We have to calculate the gradient of the solution in order to determine the velocity commands of the controller. In [26], the authors provide the gradient of the solution based on Malliavin Calculus [32]:

where is the outward unit normal at y.

3.2. The Walking on Sphere

In this subsection, we discuss the algorithm that we will use to find the solution of (2) and its gradient at a specific position in the explored region , following the integral (4). According to [26], we can find the solution (3) following Kakutani’s principle.

Definition 1 (Kakutani’s principle [33]).

the solution value at any point is equal to the expected value , where is the first boundary point reached by a random walk starting at p.

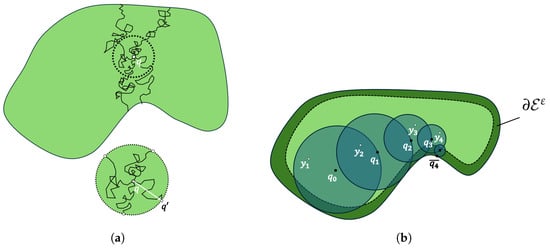

For simplicity, let us assume . Following the Kakutani’s principle or Brownian motion process, we can exploit the occupancy grid map by taking random steps from a starting cell until reaching and get the specific Dirichlet value. Nevertheless, this process has a lot of computational effort, as we decrease the resolution of the grid and the unexplored workspace is quite large. For that reason, we use a more efficient grid-free algorithm, which was proposed in [34], called Walking on Sphere. Essentially, this algorithm proposes taking the largest steps from the starting point over a sphere boundary centered at with radius the minimum distance to . This walk is equal to the walk occurring from Brownian motion, since the point that we take on the sphere is independent of which steps we take in the sphere, as we see in Figure 1a. Thus, the WoS algorithm provides less computational and memory complexity.

Figure 1.

(a) Brownian motion process. (b) Walking on Sphere algorithm process finding the Poisson equation solution.

The WoS algorithm for finding the solution (3) of the Poisson equation relies on a recursive Monte Carlo single-sample estimator at point :

where is the closest distance from point to explored region boundary , and are sampled from uniform probabilities and , respectively. Because we deal with the problem in the 2D world, then the above estimator becomes:

Essentially, as we also see in Figure 1b, the algorithm determines a random walk starting at point , finds the largest ball contained in , picks a point and another one , and continues the same process with starting point . The algorithm terminates when is in the region , e.g., , and then we get the Dirchlet value . For random walks, the solution of the Poisson equation is:

Algorithm 1 involves the process that we discussed above. It should be noted that in line 7, the function closestPointOfBoundary returns the closest point from a point and the distance of these points. Also, in lines 11 and 13, we call the sampling functions over a ball. The function samplePointInBall samples a point , and samplePointOnBallBoundary samples a new point .

| Algorithm 1 WalkingOnSpherePoissonEquation |

|

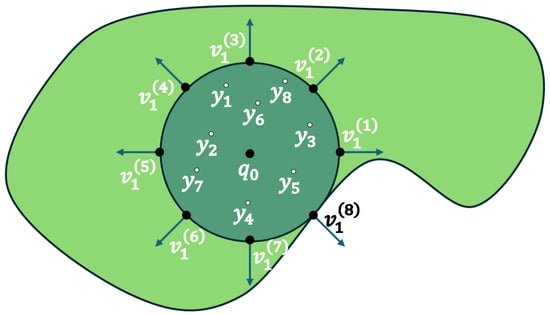

Since we analyzed how to find the solution of the Poisson equation, now we will present how to calculate its gradient . According to [26], a basic WoS estimator for calculating the gradient of the solution for the ball is shown below:

where . Essentially, in (8), we sample uniform points on the boundary of the largest sphere in and calculate the solution for each point with Algorithm 1. Notice that instead of sampling randomly in the , we rather use a deterministic method so that each point maintains an approximately equal geodesic distance from its neighbors, as we present in Figure 2. In this manner, we do not violate the uniform distribution properties. Furthermore, we uniformly sample in the ball points to calculate the Green term of our estimator. In Algorithm 2, we demonstrate the calculation process of the gradient. The extra function that is added to the algorithm is the outwardVectorPoints that returns an array of dimension with the radial directions of points sampled as we said before.

| Algorithm 2 WalkingOnSphereGradientSolution |

|

Figure 2.

The order of outward unit vectors on the .

Remark 2.

The complexity of the WoS algorithm depends on the number of sampled points as well as on the termination factor that effects the number of steps needed to reach the . According to [35], the typical number of steps for a single walk is . Consequently, the time complexity of Algorithm 2 is , which can be computationally expensive. In order to resolve this, we modify the algorithm by introducing a dynamic, vectorized implementation. This approach allows the execution of multiple parallel walks, approaching step complexity, and improves the overall computational efficiency of the WoS algorithm. The dynamic modification of the algorithms is not complicated.

4. Implementation of the Exploration Process

In this section, we discuss the process followed to address the exploration problem. More specifically, we present the controller architecture and we provide the safety and completeness guarantees of the proposed control law.

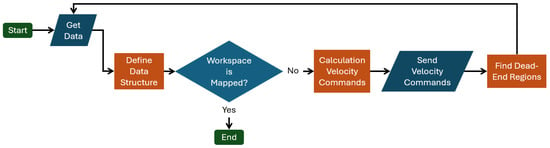

4.1. Control Architecture

In Figure 3, we present the main steps of the proposed method. First, we gather data from the range sensor—set )—with the map of the workspace and the position of the agent. The agent updates this data as it moves in the workspace. Afterwards, in the block Define Data Structure, we construct the explored space and the Hybrid Visibility Graph, and we determine the boundary value conditions and source function. Then, we check if the map is complete. If it is not, we calculate in the block Calculation Velocity Commands the velocity commands of the agent through the WoS algorithm and execute it. As the agent moves, in the block Find Dead-End Regions, we inspect if some region is Dead-End, e.g., a region that the agent has explored and does not need to traverse it again. If the dead-end algorithm finds a region in block Define Data Structure, it removes it from the explored set . So, the above exploration process is recursive and stops when the map is fully explored.

Figure 3.

Flowchart of the exploration method.

4.2. Velocity Control Law

The velocity control law is given by:

where is a small positive constant so as to prevent the discontinuity when . The control law is a normalized vector w.r.t. the x-coordinate and y-coordinate, i.e., .

As we mentioned previously, the agent moves in two ways: it rotates to reduce the orientation error , where , and as , the agent moves forward, following the linear and angular velocity commands defined as and , where are the gains of linear and angular velocity and , with , where is a bump function that asymptotically approaches zero as the agent approaches the boundary. A candidate function is [22]:

Notice that whenever the minimum Euclidean distance , the agent slows down.

4.3. Structure of Explored Region and Definition of Hybrid Visibility Graph

The boundary of the explored space involves disjoint continuous curves of free and occupied cells, and , respectively, which form a polygon in 2D space, defined by a connected undirected graph , with denoting the vertices of the polygon, where is the number of vertices of the exterior boundary of the polygon, is the number of vertices in the interior, is the edge list of the graph, and denotes the weight’s set of the graph’s edges. Notice that the distances of the edges are the same and equal to the resolution of the map . Beyond the aforementioned graph, we define a set of points that belong to the middle of the edges, with the total number of them to be . Some of them are on the free boundary and others on the occupied , denoted as , respectively. Also, the sets and involve the subsets of the free and occupied middle points of the discretized curves.

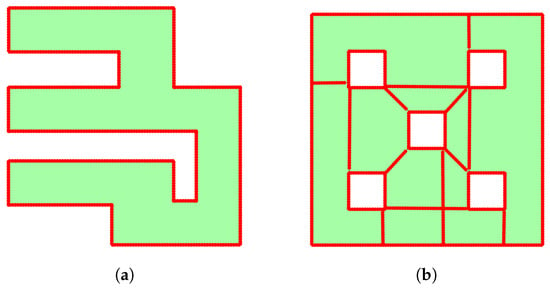

Now, in order to solve the problem of the flat gradient, we first have to define the HVG. The HVG is a connected undirected graph. Its vertices involve the set of middle points and the set of points forming minimum-length edges between exterior and interior obstacles, denoted by . In Algorithm 3, we introduce the steps for constructing this graph. We initialize the by adding the middle points , edge list of this set, and the edge’s weight such as in the (lines 1–3). We use the boolean function isInvolveObstacles to check if the involves inner obstacles or holes (lines 4–6). If the explored space has no holes, the algorithm returns the pre-initialized graph. However, if holes are present, we begin the process of finding the minimum-length edges, connecting each exterior and interior obstacle. First of all, we separate the holes from the explored region and store them in a set where represents the exterior boundary of the explored space and the boundary of interior obstacles. This separation is handled by the function getObstaclesSet, a property of structure (line 7). After separating the obstacles, we proceed with the connection process as detailed in the lines 8–21. This involves taking two obstacles and using the function findMinimumVisibleEdge to locate points that make the minimum-length edge and not cross the occupied cells. If the above properties are true, the boolean variable isVisible will be equal to the true value (line 12). If isVisible is false, we continue the process; otherwise, we start the process by adding this edge to the (lines 13–15). Before adding the edge to the graph, the function discretizeEdge discretizes it into smaller segments, each with a distance of (line 16). Finally, we add the newly generated vertices and edges to (lines 17–19). At the end of the process, the algorithm returns the final HVG (line 22). In Figure 4, we present some results of this algorithm, one workspace without (Figure 4a) and one with (Figure 4b) obstacles.

Figure 4.

Examples of HVG form. (a) Workspace without interior obstacles and (b) workspace with interior obstacles. The red points are the vertices of HVG.

Remark 3.

The time and memory complexity of the aforementioned process depend on the number of explored interior obstacles. As the number of holes increases in the explored region, our approach slows down when extracting the new state of the agent. To address this, we apply techniques like the Dead-End algorithm (Section 4.5), which restricts the number of obstacles within the exploration space.

The extraction of this graph is helpful because we have to make an approximation of the geodesic distance from any point in to free continuous curves. In that way, we can determine the source function that attracts the agent from the dead lock regions to the free space.

| Algorithm 3 DefineHybricVisibilityGraph |

|

4.4. Dirichlet Boundary Value and Source Function

Having defined the explored polygon and the sets of middle points, let us define the boundary values (2). We determine the weight of each edge of the graph equal to a Dirichlet boundary value , where .

To determine the closest free curve from the current agent’s position p, which is assigned attractive, let be a point that belongs to the continuous free curve and is located in the middle of this curve. The distance is calculated by:

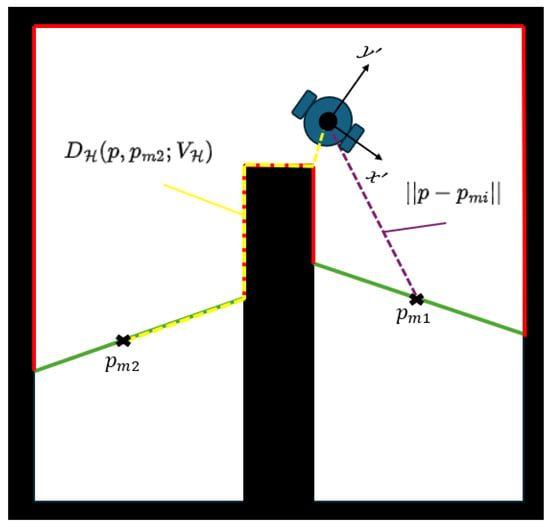

where is the visibility region of the agent in the explored space and is the sum of the minimum distance from the agent’s position to the set and the distance , with , which is the shortest path from to based on the HVG. An example of this metric is shown in Figure 5. The aforementioned distance indicates that if the free curve lies in the field of view, we calculate the Euclidean distance. If it is not, then we take the distance from the HVG. We follow this policy for the shortest point, because the Euclidean distance is not a proper choice to estimate the distance from one point to another in non-convex regions [36].

Figure 5.

An example where we see how the agent calculates the distance to the free frontiers (green lines). In the first frontier with middle point , we calculate the Euclidean distance (purple dashed line) and the other with (yellow dashed line).

Remark 4.

The selection of agent’s free frontier is not governed by a utility function. Thus, optimal path determination is not guaranteed. Consequently, if the agent proceeds in a sub-optimal direction, it will explore and will approach the next closest free frontier.

So, the attractive curve is calculated by , and the rest of the free curves, which are set as repulsive, are denoted as . Since we have defined the attractive and occupied regions, we assign the boundary value function as follows:

where are constant variables.

Remark 5.

The policy selection of a single free frontier as attractive serves to create a potential field, leading agents from anywhere in the explored region towards it effectively.

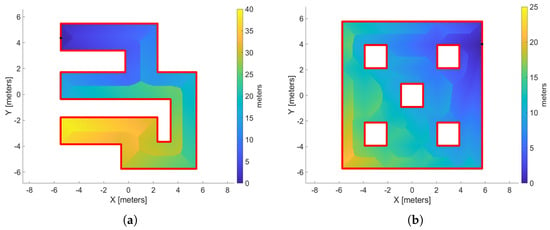

As we chose the attractive free curve, the middle point is defined as the source point denoted by . In a workspace with obstacles, we connect the point to any obstacles within its field of view, adding these new edges to . In this manner, we improve the estimation of geodesic distances to , i.e., the function . The choice of the source function of point will be based on the attributes of the above distance metric. In Figure 6, we have two workspaces—without (Figure 6a) and with (Figure 6b) obstacles—and see the distribution of the distance metric in these platforms with respect to the source point , denoted by the black dot.

Figure 6.

Two workspace examples that exhibit how the distance metric to the source point is distributed in those regions. (a) Workspaces without interior obstacles and (b) workspace with interior obstacles. The black dot is the source point.

As we expected, boundaries are created in the explored space (like the Voronoi Diagram), which separate the regions close to the source point and those far from it. Thus, we have to enhance the regions which are close to the source point and reduce the influence of the distant regions so that it promotes the sampling of outward vectors of to lead the agent toward the attractive region . To achieve, this we proposed the following exponential source function:

where is the perimeter of the explored space boundary and is a fixed positive gain. Hence, the function f attains values in , which means that if the agent is close enough to the source point, then , and when it is far away, then . So, the value of with denoting the sampling point we get in the process of the WoS algorithm approximates value when . As a result, the solution (8) amplifies the vectors , thus leading the agent to the free frontier.

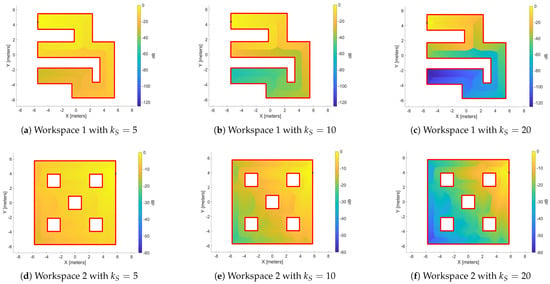

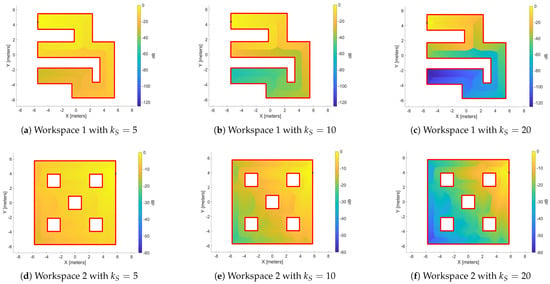

Remark 6.

The source function is derived heuristically, based on the distribution of the distance function (Figure 6) with respect to the source point . The primary goal of this design is to enable the agent, when situated in a region distant from point , to escape that area and be led toward the free frontier. Figure 7 illustrates the distribution of the source values in decibel () to the the workspaces of Figure 6 for different values of variable . As observed in the heat maps, increasing the variable reduces the influence of distant regions, thereby achieving our stated objective.

Figure 7.

The distribution of the source value with different values of variable . (a–c) present a workspace without obstacles and (d–f) a workspace with interior obstacles. The black dot denotes the source point , and the source values is in dB.

4.5. Dead-End Region Algorithm

During the WoS algorithm, walkers may enter regions containing only occupied cells with a single escape path. This leads to increased computational and memory complexity, as walkers must traverse substantial distances to reach the explored region’s boundary. As a result, this does not offer further exploratory value. To eliminate this, our exploration process introduces an algorithm, based on [24], that removes these explored, dead-end regions, preventing the agent from re-traversing them.

The identification method of dead-end regions is handled within the Find Dead-End Regions block. The process is as follows: Let be the current exploration time step at position , where presents the number of current iteration number of exploration process. Up to this point, the agent has traversed a path and stored all scanned regions at each time step in a set denoted as , where for with being the initial exploration time step. At each exploration time step, the dead-end method checks all regions within to determine if they are dead-end. If the method identifies regions as dead-end and the agent is not currently within them, these regions are removed from and stored in a separate set, . So, in the Define Data Structure block, it subtracts these regions from the overall exploration space. To optimize the algorithm’s time performance, the removal of dead-end regions is not executed at every exploration step. Instead, we perform this subtraction every iterations, as the process can become computationally slow if executed too frequently or if the set contains a large number of regions.

Remark 7.

The aforementioned process can become computationally slow if executed too frequently or if the set contains a large number of regions. As a result, the agent can be stopped in order to calculate the new exploration region or be trapped if the cutting is abrupt. To optimize our algorithm’s time performance and ensure the agent to be distant from the reducing region, the subtraction of dead-end regions is not executed always at every exploration step, but it performs every iterations.

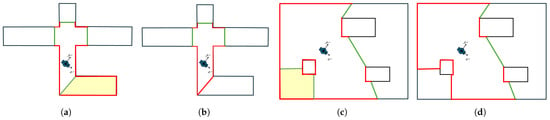

For a region to be labeled as dead-end and then removed from exploration space, its boundaries must contain a specific number of continuous free boundary curves. This number is related to the number of occupied boundaries from known interior obstacles within region . Specifically, a region is considered as dead-end if it possesses one plus the number of occupied boundary obstacles as continuous free boundaries. In Figure 8, we introduce this concept with two examples of removed regions (yellow area). In the first example (Figure 8a,b), the removed region contains no occupied boundaries from any obstacle. So, it is labeled as dead-end, since it includes only one continuous free boundary curve. Similarly, in the second example (Figure 8c,d), the region contains a continuous occupied boundary curve from an obstacle. Therefore, it requires two continued free boundary curves to be identified as dead-end.

Figure 8.

Two examples of dead-end exploration region removing, presented with yellow area. In (a,b) the removed region doesn’t involve occupied boundaries of interior obstacles, whereas (c,d) involve them. Also, (a,c) are in the time step before removing and (b,d) after. The red lines denote the occupied boundaries of exploration space and green lines the free one.

4.6. Safety and Completeness of Exploration

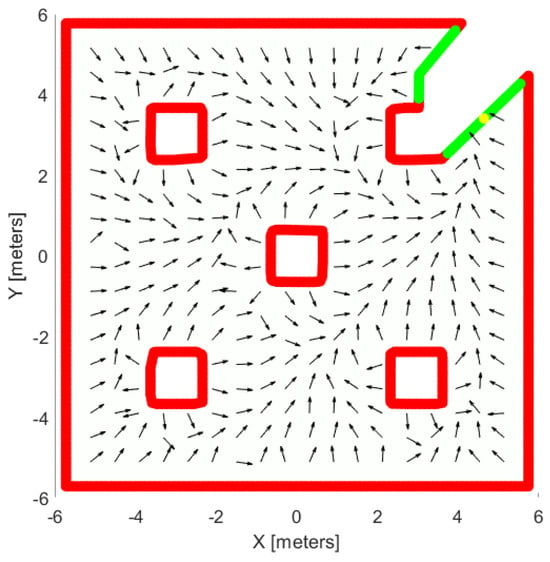

The proposed exploration process is safe and complete. Based on the proposed controller , and the definition of the Dirichlet boundary conditions as well as of the source function, the agent always is repulsed by the occupied boundary of the explored region. Moreover, owning to the term s in the linear velocity command, the agent never collides with the boundary, since leads to . Regarding the completeness, if we tune properly the exploration parameter for boundary conditions and source function values, the agent is led to the pre-defined free regions and there is no deadlock situation in the flat areas. In Figure 9, we illustrate a workspace with obstacles and the potential field created by the proposed controller, which leads the agent towards the free frontier without colliding with the obstacles from anywhere in the workspace.

Figure 9.

An example of a vector field made from our exploration controller. The red lines are the occupied cells, the green lines are the free frontiers, the yellow point is the source point and black vectors show the orientation of the field.

The aforementioned solution is heuristic, as the agent occasionally demonstrates regressions in its exploration process. However, by tuning the exploration parameters and incorporating a lot of walkers within the Walk on Spheres (WoS) method, the agent is able to move away from regions with flat gradients and expand the explored domain.

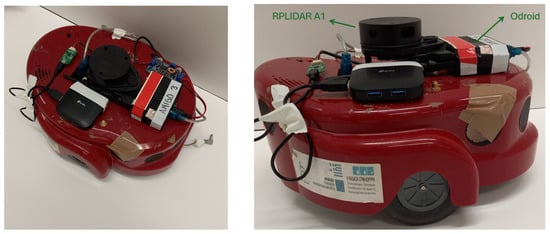

5. Tools for Real Experiment

In this project, we test our exploration process with a real experiment, utilizing a differential drive AmigoBot [37] (see Figure 10). The AmigoBot is a mobile robot appropriate for motion planning applications, which can be monitored using the ROS framework and Matlab. For our experiment, the AmigoBot is integrated with an RPLIDAR A1 laser scanner, developed by SLAMTEC (Shanghai, China), and an odroid processing unit embedded with Ubuntu 20.04.6 LTS (Focal Fossa), 2GB RAM, and ARMv7 processor. Also, the MATLAB environment version 2023b is installed in our laptop with the operating system Ubuntu 20.04, 8GB RAM, and Intel(R) Core(TM) i7-7500U processor. The ROS Noetic and ARIA library for gathering sensor data and monitoring the AmigoBot are installed in both systems.

Figure 10.

The AmigoBot unit (Left) and its equipment (Right).

The robot’s position is obtained from the gmapping package version 1.4.2, which is a library of the ROS Noetic framework that provides laser-based SLAM. The AmigoBot is assumed to be a disk-shaped robot with radius m with the LiDAR sensor mounted at its center with maximum range 3 m.

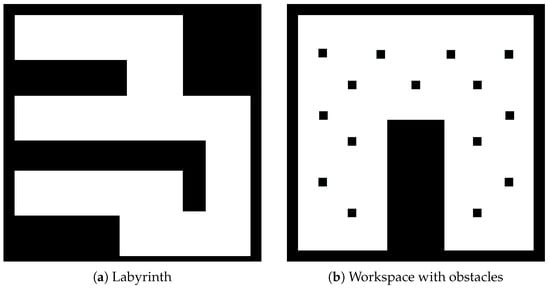

The workspace of our experiment was in the Autonomous Robotics Lab of the Department of Electrical & Computer Engineering at the University of Patras. We built within a space two different maps to test our method, one labyrinth and another that involves only obstacles as we see in Figure 11.

Figure 11.

Real-world maps: (Left) Labyrinth and (Right) workspace with obstacles.

6. Results

In this section, we present the results of the proposed method in three studies. First, we present a simulation in Matlab, where we demonstrate the safety and completeness of our algorithm. In the second part, we present a real-world experiment with the AmigoBot unit. Finally, in the third part, we introduce a comparison study of our method’s results with other exploration methods. In Table 1, we define the parameter values of the exploration process for the three parts.

Table 1.

Values of exploration method parameters for two parts.

Furthermore, to evaluate our algorithm’s efficiency, we use three metrics: (1) the number of exploration steps, (2) the total path length, and (3) the overall exploration time. Moreover, for each iteration, we also report the average time required to (i) collect and process data, (ii) calculate the velocity command with the WoS algorithm, (iii) move the agent to the new state, and (iv) discover the dead-end regions. Finally, we provide the average iteration time both with and without the robot’s movement.

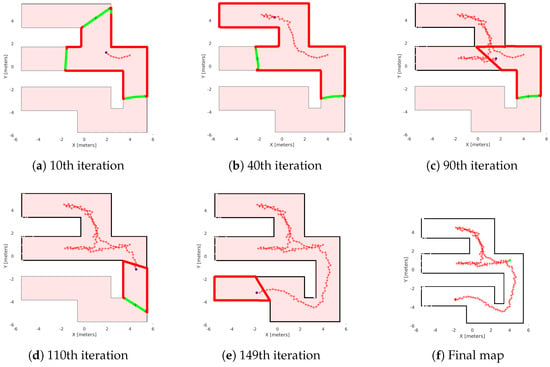

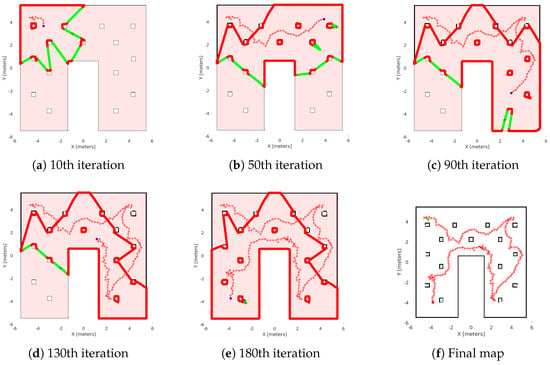

In the first study, we present two simulations. We have two 11 m × 11 m maps to explore, as we see in Figure 12. The first map (see Figure 12a) is a labyrinth and the second one is a workspace with obstacles (see Figure 12b). In the results, we omit the time of the agent’s movement to examine only the average time of exploration algorithms. In Figure 13, we see the exploration process of the mobile agent into the labyrinth and in Figure 14 the workspace with obstacles. In Table 2, we introduce the results of the first part. For these simulations, we use the same hardware as in the real experiment.

Figure 12.

Exploration maps for part A with Matlab.

Figure 13.

Exploration process of the Matlab simulation in the labyrinth. (a–e) depict the exploration process at various stages of the method’s execution, with (f) representing the agent’s complete explored map. The green lines are the free frontiers, red lines are the occupied frontiers, the black cross point is the source point, and the starred red points denote the exploration path of the agent denoted with blue dot.

Figure 14.

Exploration process of the Matlab simulation in the workspace with obstacles. (a–e) depict the exploration process at various stages of the method’s execution, with (f) representing the agent’s complete explored map. The green lines are the free frontiers, red lines are the occupied frontiers, the black cross point is the source point, and the starred red points denote the exploration path of the agent denoted with blue dot.

Table 2.

Simulation results of part A: Matlab.

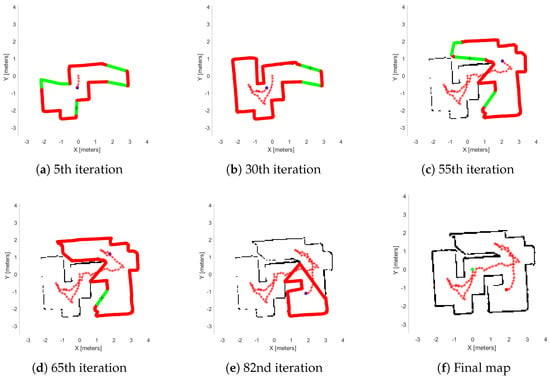

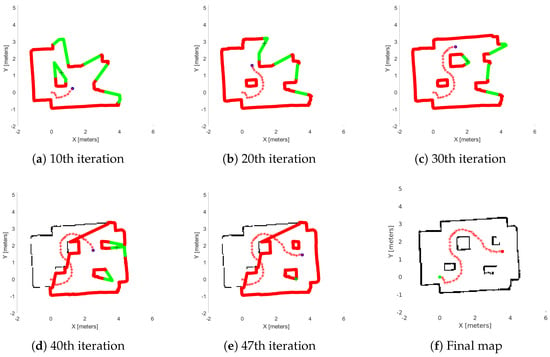

Next, we demonstrate two real-world experiments. As previously mentioned, we use an AmigoBot unit for our experiment and as a communication tool, the ROS framework. We constructed two maps similar to the first study, as we can see in Figure 11. In Figure 15, we see the exploration process of the AmigoBot unit into the labyrinth and in Figure 16 in the workspace with obstacles. The corresponding experimental results are summarized in Table 3. In this video https://youtu.be/EmfcYx3QZMo accessed on 12 June 2025, we present the simulation of the labyrinth of the first part and the workspace with obstacles of the second part.

Figure 15.

Real-world exploration process in the labyrinth. (a–e) depict the exploration process at various stages of the method’s execution, with (f) representing the agent’s complete explored map. The green lines are the free frontiers, red lines are the occupied frontiers, the black cross point is the source point, and red star points denote the exploration path of the agent denoted with blue dot.

Figure 16.

Real-world exploration process in the Workspace with obstacles. (a–e) depict the exploration process at various stages of the method’s execution, with (f) representing the agent’s complete explored map. The green lines are the free frontiers, red lines are the occupied frontiers, the black cross point is the source point, and the starred red points denote the exploration path of the agent denoted with blue dot.

Table 3.

Simulation results of part B: real world.

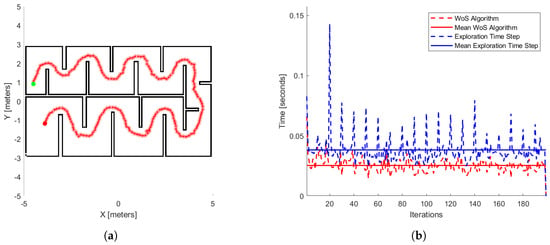

Finally, in the last stage of our results, we run our exploration method 100 times for different initial positions in the workspace illustrated in Figure 17. The same process appears in the papers [22,25,38]. In Table 4, we have the time exploration process of each method and also ours. In Figure 17a, we see the exploration process of the mobile agent at a specific initial position, such as in [25] and in Figure 17b, where it presents the process time per iteration of the WoS algorithm (red dash line) and our exploration method (blue dash line). In Figure 17b, we have the mean processing time of the WoS algorithm, which is 0.0255 s and the method 0.0388 s. The computer’s hardware that runs the third part of the simulations operates with Win11 Home, AMD Ryzen 7 7840HS processor, and RAM 16 GB.

Figure 17.

The workspace for comparative study (a). Green and red dots are the start and end agent’s position, respectively. And red continues across the curve of the agent’s trajectory. The numerical results of this exploration process are also depicted (b). The red dashed line represents the process time per iteration of the WoS algorithm, and the blue dashed line shows the exploration method’s time per iteration. The solid red line indicates the mean processing time of the WoS algorithm, while the solid blue line represents the mean processing time of the exploration method.

Table 4.

Numerical results of part C: comparison study.

7. Discussion

Figure 13f and Figure 14f, show the final results of the agent’s exploration in the first part of the simulations, while Figure 15f and Figure 16f present the results of the experiment with the AmigoBot unit. These plots illustrate the completeness of the workspace mapping and the no-collision trajectories provided by the proposed control architecture. Nevertheless, some irregularities can be observed in the agent’s trajectory, especially in the workspace with obstacles of the first part. This lack of smoothness is influenced by the complexity of the environment and the tuning of the exploration parameters, particularly the boundary values and source parameters of the Poisson equation.

In Table 2, we introduce the metrics of the overall results and average execution time of the sub-routines of the first study. Notice that the exploration parameters are identical for both simulations (see Table 1). As we observe, the agent needs more iterations and processing time to explore the workspace with obstacles compared to the labyrinth. This is due to the higher complexity involved in the obstacle-cluttered workspace and the fact that the exploration area remains large over many iterations, which is attributed to a result of the weakness of the Dead-End algorithm to manage such workspaces. This behavior is also reflected in the average time of the Dead-End sub-routine, which increases as the number of iterations grows, since more regions have to be checked. Thus, the time of the iteration process and particularly of the WoS algorithm increases in proportion to the complexity and the area of the explored region.

Similarly, in Table 3, we show the metrics of the second study, which involved real-world experiments using the AmigoBot. First of all, one notable observation is the relatively high average time of the Extract Data sub-routine. This is primarily due to the communication delay in transferring data from the AmigoBot to the PC during the experiment as well as the overhead introduced by the dead-end removal process, which is affected by the low map resolution (see Table 1). However, notice that the agent needs more iterations in the first workspace (labyrinth) than the second one (workspace with obstacles). This happens because the labyrinth involves narrow corridors; as a result, the agent is close enough to the workspace boundary and thus reduces its velocity. Furthermore, since the agent starts near the center, it must explore three separate sub-regions of the labyrinth. This behavior is evident in the high average time of the Agent’s Movement and Iteration Process sub-routines. However, the average time of the WoS Algorithm and Iteration Process (Without Movement) are less than the obstacle-cluttered workspace, since the Dead-End algorithm decreases significantly the explored region area.

Regarding the comparative simulation results, Table 4 presents the numerical results for the mean exploration time per iteration. According to these results, our method overcomes the methods presented by [22,38] in this metric. Our performance is also marginally better than [25], with a difference of only 0.028 s. Furthermore, comparing our Figure 17a with the corresponding figure in [25], we observe a significant distinction. While the author’s method exhibits a better exploration time, its solution time increases as the area of exploration space increases. In contrast, our method maintains an exploration time that remains close to its average value, due to applying the Dead-End algorithm—that reduces the exploration space—and the behavior of the WoS algorithm.

8. Conclusions

In this work, we proposed a novel method for autonomous exploration in unknown 2D indoor environments with obstacles using harmonic potential fields and the Monte Carlo Integration method. Velocity commands are computed by solving a Poisson equation with Dirichlet boundary conditions, placing a source point at the agent’s closest free frontier. The adapting WoS method ensures efficient, grid-free computation and direct gradient estimation. Moreover, the use of HVG plays a vital role in the exploration process, as the agent may possibly become stuck in the flat gradient region without it. Finally, real-world validation on an AmigoBot with LiDAR verified smooth, deadlock-free navigation in cluttered workspaces, and also a comparison study highlighted the advantages of our method. For future work, we aim at applying the exploration process to a CrazyFlie [39] equipped with a range sensor and expanding the method to 3D environments like a block of flats.

Author Contributions

Conceptualization, D.K., G.C.K., and C.P.B.; methodology, D.K., G.C.K., and C.P.B.; software, D.K.; validation, D.K.; formal analysis, D.K., G.C.K., and C.P.B.; investigation, D.K., G.C.K., and C.P.B.; resources, G.C.K. and C.P.B.; writing—original draft preparation, D.K.; writing—review and editing, G.C.K. and C.P.B.; visualization, D.K.; supervision, G.C.K. and C.P.B.; project administration, G.C.K. and C.P.B.; funding acquisition, G.C.K. and C.P.B. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the project “Applied Research for Autonomous Robotic Systems” (MIS 5200632), which is implemented within the framework of the National Recovery and Resilience Plan “Greece 2.0” (Measure: 16618-Basic and Applied Research) and is funded by the European Union-NextGenerationEU.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| A-SLAM | Active Simultaneous Localization and Mapping |

| BVP | Boundary Value Problem |

| FMBEM | Fast Multipole accelerated Boundary Element Method |

| HPF | Harmonic Potential Field |

| HVG | Hybrid Visibility Graph |

| MCI | Monte Carlo Integration |

| NBV | Next Best of View |

| PDE | Partial Differential Equation |

| RL | Reinforcement Learning |

| ROS | Robot Operating System |

| RRT | Rapidly Exploring Random Tree |

| SLAM | Simultaneous Localization and Mapping |

| WoS | Walking on Sphere |

Appendix A. Monte Carlo Integration

According to [27], Monte Carlo Integration is a stochastic numerical method for estimating the value of integrals in a specific domain. Hence, let function be an integrable function on a domain . Then, the integral is equal to the expected value of the Monte Carlo estimator:

The error of is characterized by the variance, e.g., the squared deviation from the true value of I. In some cases, when we sample arbitrary points uniformly, there is a possibility of selecting points from regions that have little influence on the value of the integral I. As a result, the variance of the estimator may increase. To address this, we use the general Monte Carlo estimator with importance sampling, as presented below.

This approach focuses sampling on more significant regions and helps reduce the variance of the integral I. Moreover, an important advantage of the Monte Carlo estimator is that the number of samples N can be chosen arbitrarily, regardless of the dimension of the integrand. With this property, the Monte Carlo estimator is more efficient for calculating integrals in any n-dimensional space over traditional deterministic quadrature techniques.

Appendix B. Harmonic Green Function in 2D

The free-space Green’s function in 2D for two points x and y is given by:

where .

For a ball of radius R centered at x, we can derive

These functions integrate over the ball to:

And the gradient w.r.t. x is given by:

References

- Lluvia, I.; Lazkano, E.; Ansuategi, A. Active Mapping and Robot Exploration: A Survey. Sensors 2021, 21, 2445. [Google Scholar] [CrossRef] [PubMed]

- Son, D.T.; Anh, M.T.; Tu, D.D.; Van Chuong, L.; Cuong, T.H.; Phuong, H.S. The Practice of Mapping-based Navigation System for Indoor Robot with RPLIDAR and Raspberry Pi. In Proceedings of the 2021 International Conference on System Science and Engineering (ICSSE), Ho Chi Minh City, Vietnam, 26–28 August 2021; pp. 279–282. [Google Scholar] [CrossRef]

- Eldemiry, A.; Zou, Y.; Li, Y.; Wen, C.Y.; Chen, W. Autonomous Exploration of Unknown Indoor Environments for High-Quality Mapping Using Feature-Based RGB-D SLAM. Sensors 2022, 22, 5117. [Google Scholar] [CrossRef] [PubMed]

- Gao, Z.; Xie, F.; Huang, Y.; Zhao, J.; Luo, H.; Yan, X.; Zhao, F.; Lv, P. A novel autonomous exploration algorithm via LiDAR/IMU SLAM and hierarchical subsystem for mobile robot in unknown indoor environments. Meas. Sci. Technol. 2025, 36, 016307. [Google Scholar] [CrossRef]

- Li, Z.; Xin, J.; Li, N. Autonomous Exploration and Mapping for Mobile Robots via Cumulative Curriculum Reinforcement Learning. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 7495–7500. [Google Scholar] [CrossRef]

- Zhu, D.; Li, T.; Ho, D.; Wang, C.; Meng, M.Q.H. Deep Reinforcement Learning Supervised Autonomous Exploration in Office Environments. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 7548–7555. [Google Scholar] [CrossRef]

- Zhang, Z.; Shi, C.; Zhu, P.; Zeng, Z.; Zhang, H. Autonomous Exploration of Mobile Robots via Deep Reinforcement Learning Based on Spatiotemporal Information on Graph. Appl. Sci. 2021, 11, 8299. [Google Scholar] [CrossRef]

- Cimurs, R.; Suh, I.H.; Lee, J.H. Goal-Driven Autonomous Exploration Through Deep Reinforcement Learning. IEEE Robot. Autom. Lett. 2022, 7, 730–737. [Google Scholar] [CrossRef]

- Zuo, S.; Niu, J.; Ren, L.; Ouyang, Z. Fast Robot Hierarchical Exploration Based on Deep Reinforcement Learning. In Proceedings of the 2023 International Wireless Communications and Mobile Computing (IWCMC), Marrakesh, Morocco, 19–23 June 2023; pp. 138–143. [Google Scholar] [CrossRef]

- Li, Z.; Xin, J.; Li, N. End-to-End Autonomous Exploration for Mobile Robots in Unknown Environments through Deep Reinforcement Learning. In Proceedings of the 2022 IEEE International Conference on Real-time Computing and Robotics (RCAR), Guiyang, China, 17–22 July 2022; pp. 475–480. [Google Scholar] [CrossRef]

- Liu, J.; Lv, Y.; Yuan, Y.; Chi, W.; Chen, G.; Sun, L. An Efficient Robot Exploration Method Based on Heuristics Biased Sampling. IEEE Trans. Ind. Electron. 2023, 70, 7102–7112. [Google Scholar] [CrossRef]

- Karaman, S.; Frazzoli, E. Sampling-based algorithms for optimal motion planning. Int. J. Robot. Res. 2011, 30, 846–894. [Google Scholar] [CrossRef]

- Xu, Z.; Deng, D.; Shimada, K. Autonomous UAV Exploration of Dynamic Environments Via Incremental Sampling and Probabilistic Roadmap. IEEE Robot. Autom. Lett. 2021, 6, 2729–2736. [Google Scholar] [CrossRef]

- Lu, Y.; Li, C.; Li, B.; Qiao, W. Sample-based Frontier-Block Detection for Autonomous Robot Exploration. In Proceedings of the 2021 4th International Conference on Intelligent Robotics and Control Engineering (IRCE), Lanzhou, China, 18–20 September 2021; pp. 73–78. [Google Scholar] [CrossRef]

- Tran, D.H.Q.; Phan, H.A.; Van, H.D.; Van Duong, T.; Bui, T.T.; Thanh, V.N.T. An Enhanced Sampling-Based Method with Modified Next-Best View Strategy For 2D Autonomous Robot Exploration. In Proceedings of the 2023 20th International Joint Conference on Computer Science and Software Engineering (JCSSE), Phitsanulok, Thailand, 28 June–1 July 2023; pp. 225–230. [Google Scholar] [CrossRef]

- Yamauchi, B. A frontier-based approach for autonomous exploration. In Proceedings of the Proceedings 1997 IEEE International Symposium on Computational Intelligence in Robotics and Automation CIRA’97. ‘Towards New Computational Principles for Robotics and Automation’, Monterey, CA, USA, 10–11 July 1997; pp. 146–151. [Google Scholar] [CrossRef]

- Biner, S.B. Introduction to Numerical Solution of Partial Differential Equations. In Programming Phase-Field Modeling; Springer International Publishing: Cham, Switzerland, 2017; pp. 9–11. [Google Scholar] [CrossRef]

- Prestes, E.; Idiart, M.; Engel, P.; Trevisan, M. Exploration technique using potential fields calculated from relaxation methods. In Proceedings of the Proceedings 2001 IEEE/RSJ International Conference on Intelligent Robots and Systems. Expanding the Societal Role of Robotics in the the Next Millennium (Cat. No.01CH37180), Maui, HI, USA, 29 October–3 November 2001; Volume 4, pp. 2012–2017. [Google Scholar] [CrossRef]

- Maffei, R.; Jorge, V.A.M.; Prestes, E.; Kolberg, M. Integrated exploration using time-based potential rails. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 3694–3699. [Google Scholar] [CrossRef]

- Prestes, E.; Engel, P.M. Exploration driven by local potential distortions. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 1122–1127. [Google Scholar] [CrossRef]

- Jorge, V.A.M.; Maffei, R.; Franco, G.S.; Daltrozo, J.; Giambastiani, M.; Kolberg, M.; Prestes, E. Ouroboros: Using potential field in unexplored regions to close loops. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 2125–2131. [Google Scholar] [CrossRef]

- Grontas, P.D.; Vlantis, P.; Bechlioulis, C.P.; Kyriakopoulos, K.J. Computationally Efficient Harmonic-Based Reactive Exploration. IEEE Robot. Autom. Lett. 2020, 5, 2280–2285. [Google Scholar] [CrossRef]

- Kopo, R.; Bechlioulis, C.P.; Kyriakopoulos, K.J. Harmonic Field-based Provable Exploration of 3D Indoor Environments. arXiv 2023, arXiv:2303.07042. [Google Scholar] [CrossRef]

- Rousseas, P.; Bechlioulis, C.P.; Kyriakopoulos, K.J. Trajectory Planning in Unknown 2D Workspaces: A Smooth, Reactive, Harmonics-Based Approach. IEEE Robot. Autom. Lett. 2022, 7, 1992–1999. [Google Scholar] [CrossRef]

- Rousseas, P.; Panetsos, F.; Vlachos, C.; Karras, G.C.; Bechlioulis, C.P.; Kyriakopoulos, K.J. Frontier-Based Exploration using Harmonic Transformations. In Proceedings of the 2025 33nd Mediterranean Conference on Control and Automation (MED), Tangier, Morocco, 10–13 June 2025. [Google Scholar]

- Sawhney, R.; Crane, K. Monte Carlo geometry processing: A grid-free approach to PDE-based methods on volumetric domains. ACM Trans. Graph. 2020, 39, 123. [Google Scholar] [CrossRef]

- Pharr, M.; Jakob, W.; Humphreys, G. Physically Based Rendering: From Theory to Implementation, 3rd ed.; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2016. [Google Scholar]

- Sawhney, R.; Miller, B.; Gkioulekas, I.; Crane, K. Walk on Stars: A Grid-Free Monte Carlo Method for PDEs with Neumann Boundary Conditions. ACM Trans. Graph. 2023, 42, 80. [Google Scholar] [CrossRef]

- Miller, B.; Sawhney, R.; Crane, K.; Gkioulekas, I. Walkin’ Robin: Walk on Stars with Robin Boundary Conditions. ACM Trans. Graph. 2024, 43, 41. [Google Scholar] [CrossRef]

- Thrun, S.; Burgard, W.; Fox, D. Probabilistic Robotics; Intelligent Robotics and Autonomous Agents; MIT Press: Cambridge, MA, USA; London, UK, 2006. [Google Scholar]

- DeLaurentis, J.M.; Romero, L.A. A Monte Carlo method for Poisson’s equation. J. Comput. Phys. 1990, 90, 123–140. [Google Scholar] [CrossRef]

- Bell, D.R. The Malliavin Calculus; Dover Publications: Garden City, NY, USA, 2012. [Google Scholar]

- Kakutani, S. Two-dimensional Brownian motion and harmonic functions. Proc. Jpn. Acad. Ser. Math. Sci. 1944, 20, 706–714. [Google Scholar] [CrossRef]

- Muller, M.E. Some Continuous Monte Carlo Methods for the Dirichlet Problem. Ann. Math. Stat. 1956, 27, 569–589. [Google Scholar] [CrossRef]

- Binder, I.; Braverman, M. The rate of convergence of the Walk on Spheres Algorithm. Geom. Funct. Anal. 2012, 22, 558–587. [Google Scholar] [CrossRef]

- Lantuejoul, C.; Beucher, S. On the use of the geodesic metric in image analysis. J. Microsc. 1981, 121, 39–49. [Google Scholar] [CrossRef]

- ActivMedia Robotics. AmigoBOT Users Guide; ActivMedia Robotics: Peterborough, NH, USA, 2003; Available online: https://shorturl.at/6Yqjj (accessed on 6 August 2025).

- Bai, S.; Wang, J.; Chen, F.; Englot, B. Information-theoretic exploration with Bayesian optimization. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; IEEE Press: New York, NY, USA, 2016; pp. 1816–1822. [Google Scholar] [CrossRef]

- Home|Bitcraze. Available online: https://www.bitcraze.io/ (accessed on 1 June 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).