Abstract

In intelligent vehicular networks, the accuracy of semantic segmentation in road scenes is crucial for vehicle-mounted artificial intelligence to achieve environmental perception, decision support, and safety control. Although deep learning methods have made significant progress, two main challenges remain: first, the difficulty in balancing global and local features leads to blurred object boundaries and misclassification; second, conventional convolutions have limited ability to perceive irregular objects, causing information loss and affecting segmentation accuracy. To address these issues, this paper proposes a global–local collaborative attention module and a spider web convolution module. The former enhances feature representation through bidirectional feature interaction and dynamic weight allocation, reducing false positives and missed detections. The latter introduces an asymmetric sampling topology and six-directional receptive field paths to effectively improve the recognition of irregular objects. Experiments on the Cityscapes, CamVid, and BDD100K datasets, collected using vehicle-mounted cameras, demonstrate that the proposed method performs excellently across multiple evaluation metrics, including mIoU, mRecall, mPrecision, and mAccuracy. Comparative experiments with classical segmentation networks, attention mechanisms, and convolution modules validate the effectiveness of the proposed approach. The proposed method demonstrates outstanding performance in sensor-based semantic segmentation tasks and is well-suited for environmental perception systems in autonomous driving.

1. Introduction

The vigorous development of the intelligent Internet of Things has driven the deep integration of sensors, communication networks, and cloud computing technologies, thereby jointly building a comprehensive and three-dimensional intelligent traffic information interaction network. As a key node, autonomous vehicles utilize multiple intelligent sensors, such as lidar and cameras, to achieve real-time transmission and efficient sharing of traffic data. Within this technical architecture, accurate perception of road conditions and the behavior of other traffic participants has become the cornerstone of safe operation of the autonomous driving system, directly affecting decision-making accuracy and driving safety [,,]. As a fundamental technique in computer vision, semantic segmentation enables accurate localization and identification of multiple objects in road scenes through pixel-level fine-grained classification and annotation. In autonomous driving scenarios, this technology enables rapid differentiation of targets such as vehicles, pedestrians, road boundaries, and traffic lights, while outlining their contours and positions. This allows the autonomous driving system to maintain real-time situational awareness, thereby significantly improving its reliability and safety [,,].

Currently, deep learning-based encoder–decoder architectures have demonstrated excellent performance in the field of semantic segmentation for autonomous driving. The encoder converts the original image pixel information into high-level semantic features and extracts abstract semantic representations. At the same time, the decoder upsamples the low-resolution feature maps and restores spatial details. This process maps semantic features back to their original spatial resolution, enabling accurate pixel-wise prediction. Long et al. [] proposed the first end-to-end, fully convolutional network for pixel-level prediction, known as FCN. This network discards the dependence of traditional semantic segmentation methods on manual feature extraction, replaces the fully connected layer in the convolutional neural network with a convolutional layer, and solves the problem of fixed image size in traditional neural networks. However, after multiple convolution and pooling processes, the spatial resolution of the feature map continues to decrease, and a large amount of information about small target objects in the image is lost, making it difficult to restore the original contours and details later. To solve the above problems, Ranneberger et al. [] proposed the classic segmentation network U-Net. This network architecture constructs a symmetric encoder–decoder architecture. The encoder on the left extracts high-level semantic features, while the decoder on the right, aided by skip connections, integrates high- and low-resolution information to enhance segmentation accuracy in target regions. Although this network improves segmentation accuracy, it still has limitations in terms of multi-scale feature extraction and high- and low-level feature fusion complementation. When faced with complex scenes of autonomous driving, the segmentation results are prone to blur and inaccuracy. To overcome this problem, many variant networks have been extended. Zhou et al. [] introduced U-Net++, which incorporates dense skip connections and nested architectures to reduce the information gap between encoder and decoder, resulting in more effective feature fusion across different levels. Cao et al. [] proposed the Swin-UNet, which incorporates a transformer-based module into the U-Net to improve the network’s ability to capture global features, demonstrating clear advantages in high-resolution image segmentation.

In autonomous driving applications, to balance the accuracy and computational efficiency of semantic segmentation, the lightweight backbone network MobileNetV2 [] is often combined with DeepLabv3+ []. This architecture achieves real-time and accurate segmentation of targets in road scenes by optimizing feature extraction and multi-scale dilated convolution. However, this method still has certain limitations. On the one hand, although the lightweight design of MobileNetV2 reduces the computational complexity of the model, it may lead to insufficient feature representation capability, especially in complex scenes, making the model more prone to false detections. On the other hand, the dilated convolutions in the DeepLabv3+ may cause grid artifacts with large dilation rates, which weaken spatial detail information. To address the above problems, existing studies have proposed various improvement strategies. Ren et al. [] replaced the dilated convolution with a depthwise separable convolution. They introduced the shuffle attention mechanism to enhance the model’s ability to capture details and accurately segment object boundaries. In addition, Wang et al. [] replaced the residual units in the Res2Net backbone with traditional residual blocks to achieve a more detailed multi-scale feature extraction. Furthermore, they designed a multi-level loss function system by constructing loss functions for feature maps at different network depths and their corresponding ground truth labels. These losses were then fused and optimized to enhance the model’s ability to learn multi-scale features.

From the above analysis, we can see that in the application of intelligent Internet of Things, scene understanding ability, feature expression ability, and information interaction ability of deep learning networks are the core elements to ensure the safe operation of intelligent driving systems. Among these, an accurate perception of the global scene enables a vehicle to plan its path, while quick perception of local details is crucial for responding to emergencies. The efficient feature extraction mechanisms for both global and local information form the basis of the model’s ability to learn meaningful information from massive autonomous driving data. However, when dealing with complex environments, existing networks often encounter problems such as an imbalance between global and local information, as well as insufficient feature extraction efficiency. To this end, the method proposed in this paper enhances the understanding of high-dimensional image data captured by vehicle-mounted sensors. By improving feature extraction mechanisms, the model’s perception accuracy and semantic knowledge of its surrounding environment are significantly enhanced, thereby effectively supporting decision-making and control tasks in complex road scenarios for autonomous driving systems. The main contributions of this paper are as follows:

- (1)

- We propose a collaborative network for semantic segmentation tailored to autonomous driving perception tasks. The network effectively integrates two complementary plug-and-play modules to construct a new feature interaction processing mechanism, which improves segmentation accuracy by enhancing cross-scale information collaboration capabilities and multi-branch parallel feature capture capabilities.

- (2)

- We design a novel global–local collaborative attention (GLCA) module, using a dual-path architecture to aggregate global context and local details, as well as incorporating a dynamic attention fusion strategy that adaptively balances global and local features across channels and spatial dimensions, thus achieving an effective trade-off between long-range semantic dependencies and fine-grained target details in complex traffic scenarios.

- (3)

- We propose an innovative spider web convolution (SWConv) module that simulates the spider web topology to obtain six-dimensional context information on the image. This module uses a differentiated padding strategy and multi-dilation rate convolution to integrate multi-view spatial relationships, enhance feature representation capabilities, and improve the perception of irregular target geometric features in complex scenes.

- (4)

- Experimental results on the Cityscapes, CamVid, and BDD100K datasets validate the effectiveness of the proposed GLCA module and SWConv module in the task of semantic segmentation for autonomous driving. Compared with various mainstream attention mechanisms and convolutional structures, the proposed modules achieve state-of-the-art performance across multiple evaluation metrics. Furthermore, when integrated into the baseline model, they demonstrate significant advantages over typical semantic segmentation models.

2. Related Work

The network proposed in this paper is based on an encoder–decoder architecture. In semantic segmentation for autonomous driving, recent research has mainly focused on enhancing classical convolutional structures, introducing attention mechanisms, and exploring novel feature interaction strategies. This subsection provides an overview of existing methods based on their design optimization priorities.

2.1. Context-Based Network

In semantic segmentation tasks for autonomous driving, context-aware networks enhance segmentation accuracy and overall scene understanding by capturing long-range dependencies between pixels and modeling the scene’s semantic structure. These methods leverage contextual information as a core cue to explore spatial relationships and category dependencies among objects, thereby alleviating issues such as semantic ambiguity and structural discontinuity caused by the limited receptive field of traditional convolution [,]. For example, Wu et al. [] proposed the CGNet semantic segmentation network, which introduces a context guidance module to jointly model local features and contextual information. This effectively enhances the representational capacity of features at each stage, improves the overall segmentation accuracy. Elhassan et al. [] proposed the DSANet network, which incorporates a spatial encoding network and a dual attention mechanism. It extracts multi-depth features via hierarchical convolution, effectively preserving spatial information while reducing edge detail loss. The dual attention module fuses contextual dependencies in both spatial and channel dimensions to enable more effective integration of semantic and spatial features, thus significantly improving segmentation accuracy. KIM et al. [] proposed the ESCNet network, which features an efficient spatio-channel dilated convolution module (ESC). By introducing a dilation rate mechanism in the spatial dimension, this module effectively expands the receptive field and enables efficient capture of multi-scale contextual features.

2.2. Context Boundary Network

In the field of semantic segmentation for autonomous driving, context-boundary-based networks aim to integrate object edge information with global semantic context. They improve the precision and semantic consistency of segmentation results by constructing cross-level interaction mechanisms between boundary features and scene semantics [,]. For example, Xiao et al. [] proposed the BASeg semantic segmentation network, which includes a boundary refinement module (BRM) and a context aggregation module (CAM). The BRM integrates high-level semantic features with low-level boundary information by combining convolution and attention mechanisms to refine boundary regions. Moreover, it uses semantic features to guide boundary positioning, effectively suppresses noise, and preserves key pixel-level positional information. The CAM focuses on reconstructing long-range dependencies between boundary regions and internal pixels of target objects, thereby enhancing the understanding of feature association. Lyu et al. [] proposed an edge-guided semantic segmentation network ESNet, which is composed of an edge network, a segmentation backbone network, and a multi-layer fusion module. It first extracts global edge information to enhance its category discrimination capability. Then it integrates the features generated by the segmentation network with edge information at multiple levels in the fusion module, significantly improving segmentation accuracy. Luo et al. [] proposed a guided downsampling network that uses an autoencoder structure to compress image size while retaining edge, texture, and structural information as much as possible. It adopts a dual-branch encoder architecture to capture long-range dependencies between objects and the global scene layout, providing a strong foundation for pixel-level semantic segmentation in autonomous driving.

2.3. Attention and Convolution Optimization

In recent years, attention mechanisms and convolutional optimization techniques have been widely applied to semantic segmentation in autonomous driving. Attention modules enable models to focus on key image regions, thereby enhancing the perception of semantic elements such as roads, vehicles, and buildings. Different types of attention mechanisms vary in scope, implementation, and effectiveness, directly impacting the model’s ability to understand the relationships between multi-scale objects and contextual information in complex road scenes [,]. For example, Hu et al. [] proposed the SE attention mechanism module, which extracts channel features via global information compression and assigns weights to each channel, enhancing the perception of key semantic elements in complex environments. Woo et al. [] developed the CBAM module, which combines channel and spatial attention using average and max pooling operations to improve feature responses to core objects such as roads and pedestrians while suppressing background noise. Li et al. [] proposed the SK attention module, which uses parallel multi-scale convolution kernels along with fusion and weighting mechanisms to dynamically select receptive fields, thereby enhancing multi-scale object recognition. Hou et al. [] introduced the Coordinate Attention module, which incorporates coordinate information through global pooling along horizontal and vertical directions, thereby enabling more precise localization of road targets and improved semantic feature representation. Wang et al. [] proposed the ECA module, employing one-dimensional convolution to model local channel dependencies while keeping computational costs low. It strengthens core semantic channels such as roads and vehicles, supporting real-time segmentation requirements. Park et al. [] proposed the BAM module, which jointly models channel semantics and spatial positioning. This facilitates semantic enhancement and precise localization of key targets like road structures and dynamic vehicles, improving the model’s perception in autonomous driving scenarios.

Convolution optimization techniques effectively address challenges such as significant variations in target scale, shape diversity, and complex environments in autonomous driving scenarios by introducing innovative feature extraction methods. For example, Howard et al. [] proposed depthwise separable convolution, which decomposes standard convolution into depthwise and pointwise operations. This significantly reduces the number of parameters and computational cost, enabling lightweight models to efficiently extract multi-scale features and adapt to the morphological variations of diverse targets, such as vehicles and pedestrians. Chen et al. [] introduced a dynamic convolution module that generates kernel weights through contextual perception and combines them with spatial convolution to achieve adaptive feature modeling of complex targets. Li et al. [] proposed the involution module, which uses position-sensitive dynamic kernels to balance fine-grained local modeling and global context extraction. This design enhances the model’s recognition accuracy for complex road conditions and small-scale targets, while also improving its real-time perception capability.

3. Materials and Methods

3.1. Overall Architecture

This paper adopts the lightweight and efficient MobileNetV2 combined with DeepLabV3+ as the baseline architecture. Owing to its compact size, fast inference speed, and ease of deployment, this architecture is widely used in autonomous driving for scene perception and object segmentation. However, it still faces challenges in complex road scenarios, such as the diverse directional distribution of multi-class targets, blurred boundaries, and the tendency to miss small objects. To address these issues, we introduce the GLCA and SWConv module. These enhancements improve the network’s ability to capture directional spatial patterns and to jointly model global and local features, thereby significantly improving segmentation accuracy and edge detail restoration.

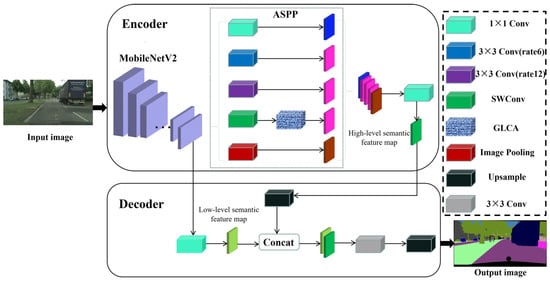

As shown in Figure 1, the model adopts an overall encoder–decoder architecture. MobileNetV2 is first used as the backbone network to extract basic semantic features from the input image. Then, the atrous spatial pyramid pooling (ASPP) module from the DeepLabV3+ architecture is introduced to capture contextual information at multiple receptive fields, enhancing the model’s multi-scale feature representation capability. However, the standard ASPP struggles to fully capture multi-directional structural patterns and complex object boundaries in autonomous driving scenarios. To address this, we improve the ASPP module by replacing its final atrous convolution branch with the proposed SWConv module, thereby enhancing directional perception during feature learning. In addition, we incorporate a GLCA module to guide the network in better integrating global semantic information with local details, further improving segmentation accuracy. The enhanced semantic features are then fused with shallow spatial detail features from the encoder to restore spatial resolution. Finally, the decoder progressively upsamples the fused features to produce a pixel-wise segmentation map with the same resolution as the input image, enabling fine-grained perception and segmentation of multi-class objects in autonomous driving scenarios.

Figure 1.

Overall network structure.

3.2. Global–Local Collaborative Attention (GLCA) Module

The ASPP module in the baseline model processes feature maps in parallel using dilated convolutions with different sampling rates to capture multi-scale global contextual information, thereby enhancing the model’s semantic understanding of input targets. This module has been widely adopted in various image processing tasks. However, autonomous driving scenarios are significantly more complex than conventional image tasks due to the highly dynamic and diverse road environments. In images captured by onboard cameras, targets vary dramatically in size, shape, color, and position. Under such conditions, the traditional ASPP module demonstrates clear limitations when handling densely distributed and diverse target regions, particularly in capturing fine details of small objects. This can lead to missed or incorrect detections caused by insufficient feature extraction. Moreover, when processing large-scale targets such as vehicles and buildings, ASPP demonstrates limited efficiency in modeling and aggregating global information across diverse scenarios. As a result, it struggles to perceive the complete structure of large objects, leading to poor boundary discrimination and significantly affecting the clarity and accuracy of segmentation outcomes, especially in dynamic environments.

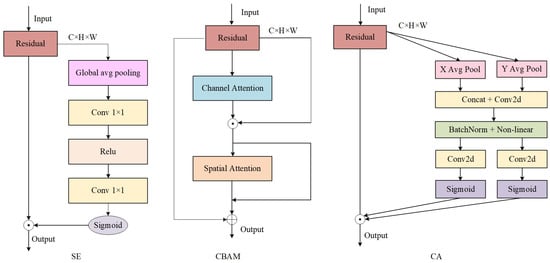

Currently, various classic attention modules are widely employed to optimize the ASPP component in semantic segmentation tasks for autonomous driving. As illustrated in Figure 2, three commonly used attention mechanisms include the SE module, which focuses on channel information; the CBAM module, which fuses channel and spatial information; and the Coordinate Attention (CA) module. Channel attention mechanisms such as SE, ECA, and SK enhance feature representation by modeling inter-channel dependencies, but they neglect spatial information, resulting in limited perception of critical spatial regions. To address this, modules like CBAM and BAM incorporate spatial attention to improve spatial modeling capabilities. However, they still struggle to balance global semantics and local details in complex scenarios, especially in directional modeling tasks such as lane alignment and vehicle positioning. The CA module introduces a direction-aware mechanism that integrates positional and channel information. Nevertheless, its single-scale structure and static weighting design limit its adaptability to multi-scale targets and dynamic scenes.

Figure 2.

Three types of attention structures.

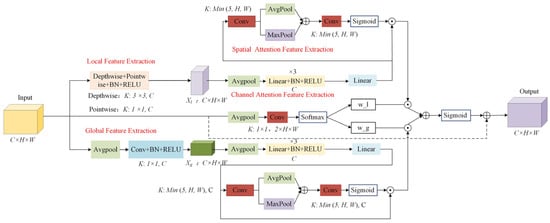

To address the limitations of the baseline model and existing attention mechanisms, this paper proposes a novel global–local collaborative attention module, designed to construct an enhanced feature representation framework and comprehensively improve the model’s ability to perceive complex targets. As illustrated in Figure 3, the module adopts a dual-branch architecture consisting of global feature extraction and local detail modeling, specifically tailored to accommodate the significant scale variations commonly observed in autonomous driving scenarios. The input features are first decomposed into global and local components, enabling scale-aware feature learning. In the global branch, adaptive average pooling and convolution operations are applied to reduce spatial resolution and expand the receptive field, thereby enhancing the model’s capability to capture large-scale objects such as buildings and roads. In the local branch, depthwise separable convolutions are employed to extract fine-grained features within each channel without increasing computational complexity. This branch preserves rich spatial and texture details, particularly improving the model’s sensitivity to small objects and their edge structures. Additionally, pointwise convolutions are used to efficiently fuse channel-wise information. Compared to conventional convolutions, depthwise separable convolutions not only reduce parameter overhead but also enhance the extraction of localized features. The detailed implementation of the global and local feature extraction modules is as follows:

where is the input feature, and represent the global feature and local feature after being processed by the feature extraction module.

Figure 3.

Global–Local Collaborative Attention Module.

To further enhance the model’s ability to parse and present features, this paper proposes a channel–spatial attention collaborative learning module that jointly mines the salient information of features. In the designed channel attention module, we employ a three-layer, fully connected network to adequately model the potential nonlinear dependencies among channels. First, the input features are compressed into a global descriptor vector along the channel dimension via global average pooling, followed by a multi-layer, fully connected network to perform nonlinear transformations on this vector. Compared to the traditional SE module, which utilizes a two-layer structure, the three-layer network offers enhanced nonlinear representation capabilities, enabling more effective modeling of complex inter-channel interactions and thereby improving the discriminative power of the attention mechanism in identifying target regions. Furthermore, the three-layer architecture introduces a deeper transformation path during feature compression and recovery, which strengthens the module’s ability to select critical channels and helps suppress background or redundant features that may interfere with the backbone feature flow. Considering the balance between computational efficiency and modeling capacity, we fixed the number of fully connected layers to three. We kept this setting consistent across all experiments without further hyperparameter tuning. However, relying solely on the channel attention mechanism, although effective in enhancing the importance of different channels, inherently overlooks the saliency of features in the spatial dimension. To address this limitation, we further feed the channel-enhanced features into a spatial attention module to achieve a deeper integration of attention mechanisms across both semantic and spatial dimensions. This “channel-first, then spatial” attention strategy enables the model to not only strengthen the representation of key semantic channels but also focus on salient regions within the spatial domain, thereby improving the perception of object locations and boundaries. Specifically, the spatial attention module first applies average pooling and max pooling along the channel dimension of the channel-enhanced feature map to extract spatial response information from different statistical perspectives. These two pooled feature maps are then concatenated along the channel axis and passed through a convolutional layer to generate a spatial attention map. To enhance the module’s adaptability to objects of various scales, the convolution kernel size is dynamically adjusted based on the spatial resolution of the input feature map, allowing the attention mechanism to capture both local details and global structures. Finally, the resulting spatial attention map is activated by a sigmoid function and multiplied with the input feature for spatially selective enhancement. This progressive modeling facilitates complementary fusion of semantic and spatial information, enabling the model to achieve greater robustness and discriminative power in multi-scale recognition. The implementation process is as follows:

where features and denote the channel features extracted from the global and local feature branches, respectively. Features and , obtained from Equations (1) and (2), are then fed into the channel attention module to learn channel-wise attention. The resulting channel features are subsequently input into the spatial attention module to capture spatial saliency information, ultimately yielding the final output features and from the global and local branches.

Given the varying feature extraction requirements of targets at different scales in autonomous driving scenarios, this paper introduces a dynamic weight allocation module within the attention mechanism, replacing the traditional fixed-weight strategy. The module first applies global average pooling to the input features to extract global semantic information. Then it compresses the feature channels using a 1 × 1 convolution to produce two independent feature weighting branches. Finally, the two branches are normalized using the Softmax function to dynamically generate weighting coefficients for the global and local branches. The specific implementation process is as follows:

where , , and represent the original feature weight, global feature weight, and local feature weight, respectively.

After the channel and spatial attention processing, the global–local collaborative attention module performs a weighted fusion of global and local features to generate the final attention weight map. Subsequently, a residual connection is incorporated to adaptively enhance the features, effectively retain key information, and dynamically focus on targets of different scales. The implementation process is as follows:

where feature denotes the final feature obtained after learning through the global–local collaborative attention module. Weight tensors and , each with shape , represent global-level weighting coefficients that are applied to the corresponding features, and , through broadcasting. Both and features have dimensions , indicating batch size, channel number, height, and width, respectively. The operator denotes element-wise multiplication, meaning corresponding elements are multiplied one by one to form a new tensor. According to broadcasting rules, tensors of shape are automatically expanded to to enable element-wise multiplication with the feature tensors. After the multiplication and addition operations, a Sigmoid activation function is applied, resulting in a weight tensor of shape . This weight is then multiplied element-wise with the original input tensor to perform weighted fusion, yielding the final output .

3.3. Spider Web Convolution (SWConv) Module

In the context of semantic segmentation for autonomous driving, the surrounding environment is typically highly structured. Yet, it encompasses a diverse range of target categories, including pedestrians, vehicles, lane lines, and traffic signs. These targets exhibit complex directional distributions, drastic scale variations, and blurred or hard-to-distinguish boundaries. Although the ASPP module captures multi-scale contextual information through dilated convolutions, it still faces certain limitations in autonomous driving scenarios. First, when extracting features from target regions, dilated convolutions exhibit uniform perception in all directions and lack apparent directional selectivity. This may lead to inaccurate feature representations when dealing with complex and directionally structured driving scenes, resulting in unclear object boundaries or category confusion. Therefore, a semantic segmentation model for autonomous driving should not only have a sufficiently large receptive field to capture rich contextual information but also possess the ability to sensitively perceive directional structures and accurately delineate edge contours, thereby improving segmentation accuracy and robustness.

To address the above limitations, various convolutional module optimization schemes have been proposed, such as depthwise separable convolution, dynamic convolution, and involution. These methods optimize traditional convolution from different perspectives, such as reducing computational complexity by decomposing standard convolution, introducing dynamic mechanisms for adaptive weight adjustment, and designing spatially specific kernels to enhance the modeling of long-range context. Although these optimizations have improved the expressiveness and efficiency of the model to some extent, they still suffer from insufficient adaptability in the specific context of autonomous driving. Current methods have not fully accounted for the hierarchical differences in multi-scale targets prevalent in autonomous driving scenes, nor the correlations among long-range semantic features. The lack of multi-faceted collaborative feature modeling and contextual semantic perception capabilities makes it difficult to achieve fine-grained feature extraction and learning in complex scenarios.

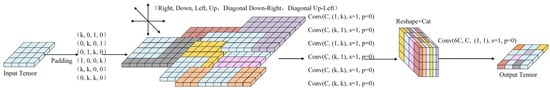

Based on the above problems, this paper proposes a spider web convolution module suitable for complex autonomous driving scenarios with multi-directional perception capabilities, which effectively integrates contextual information from horizontal, vertical, and diagonal directions to enhance feature representation capabilities. In order to retain the multi-scale feature extraction advantages of the ASPP module, this paper finally replaces its last layer of hole convolution with spider web convolution. The spider web convolution is based on directional asymmetry as its core design concept and is inspired by the radial structure of a spider web. It aims to enhance the feature capture capability of convolution in multiple directions, thereby improving the model’s perception of target boundaries and spatial structures. As shown in Figure 4, the module first constructs six asymmetric feature filling strategies to perform multi-directional filling processing on the original input features, namely, top, bottom, left, right, upper left, and lower right. By applying asymmetric padding to the feature boundaries, the receptive field around the edges is effectively expanded, thereby helping to alleviate the problem of edge information loss. In addition, the directional padding design guides the convolutional kernels to focus and perceive in specific directions, thereby enhancing the model’s ability to capture the directional distribution of target structures as well as improving boundary modeling and feature integrity in complex scenes. The implementation process is as follows:

where is the original input feature map, , , , , , and represent the original features after padding in different directions, guiding the convolutional kernels to focus and perceive toward the right, bottom, left, top, bottom-right, and top-left, respectively. represents zero-fill operation.

Figure 4.

Spider web convolution module. The convolution kernel size (k) is set to 3, and after concatenating the six-directional perception paths, the number of channels becomes 6C. And final 1 × 1 convolution is then applied to reduce the channels back to the original dimension C.

After implementing the above-mentioned asymmetric padding processing, the module performs convolution operations on the features after padding in six directions in turn. For the features after padding on the left and right sides, convolution is performed in the horizontal direction to capture the horizontal structural information; for the features after padding on the top and bottom sides, convolution is performed in the vertical direction to extract the longitudinal features; for the features after padding in the upper left and lower right directions, they are processed by standard convolution kernels, so that the convolution kernel can effectively cover and learn the contextual structure in the diagonal direction, thereby enhancing the model’s perception and expression capabilities of diagonal features. In this design, the convolution operation in each direction focuses on the structural pattern in the corresponding direction. Through the separated direction perception strategy, the convolution module can independently learn and strengthen the spatial structural features from six different directions, thereby improving the integrity and directional sensitivity of the overall feature representation.

where is the activation function, is the batch normalization operation, , , , , , and are the convolution operations in specific directions applied to the padded features, and the feature maps in six directions obtained by normalization and activation functions.

After completing the convolution operations in the above directions, the feature maps in all directions are restored to the same spatial dimensions as the original input features through bilinear interpolation. Subsequently, a 1 × 1 convolution is used to fuse and splice the features in each direction in the channel dimension, thereby realizing the integration and feature enhancement of multi-directional perception information. This process not only improves the directional selectivity but also effectively enhances the model’s ability to express complex structural information. The specific implementation process is as follows:

where , , , , , and are feature maps that have been processed to match the size of the output feature map, and represents the final output feature.

4. Results

4.1. Experiment Setup

Datasets: To validate the effectiveness of the proposed modules, we selected three widely recognized and authoritative benchmark datasets in the autonomous driving domain—Cityscapes, CamVid, and BDD100K. Vehicle-mounted sensors collect all three datasets and authentically reflect the visual information captured by camera sensors in urban driving environments, representing diverse types of autonomous driving scenarios and varying visual complexities. By conducting systematic training and evaluation on these absolute sensor datasets, we comprehensively verify the performance and robustness of the proposed method in practical application scenarios, further enhancing its practical relevance and applicability to sensor-based autonomous driving systems.

Cityscapes []: The dataset captures urban street scenes from multiple viewpoints, encompassing a variety of architectural styles, road layouts, and traffic conditions. It includes 5000 finely annotated and 20,000 coarsely annotated images. In this study, only the finely annotated images are used, with 2975 images for training. As the official test set does not provide publicly available labels, 500 images from the validation set are used for evaluation, following the common practice in previous works. The resolution of each image in this dataset is 2048 × 1024, and it contains 19 evaluation categories, such as roads, pedestrians, cars, traffic lights, traffic signs, and buildings. To verify the robustness of the model under varying practical scenarios, the input resolution is adjusted. After applying random scaling, cropping, and padding as data augmentation, the final training image sizes are set to 256 × 256 and 768 × 768 pixels, respectively.

CamVid []: The dataset is divided into training, validation, and test sets, containing 367, 101, and 233 images, respectively. The original resolution of each image is 480 × 360 pixels. To evaluate the effectiveness of the proposed model and module, the resolution is increased to 960 × 720 pixels through padding. Additionally, data augmentation techniques such as random scaling, cropping, and padding are applied during training to enhance the diversity of the dataset. The dataset includes 11 evaluation categories, covering scene elements such as roads, buildings, trees, and vehicles.

BDD100K []: The dataset is a large-scale, multi-task dataset specifically designed to support autonomous driving research. It encompasses a range of computer vision tasks, including image classification, object detection, instance segmentation, panoptic segmentation, and semantic segmentation. This dataset comprises 10,000 images that are fully annotated with pixel-level semantic segmentation labels, encompassing a diverse range of real-world driving scenarios. It captures a diverse set of weather conditions, lighting variations, and road environments, offering extensive scene diversity and reflecting the complexities encountered in actual autonomous driving tasks. The dataset is structured into three distinct subsets, with 7000 images allocated for training, 1000 images designated for validation, and 2000 images reserved for testing. Since the true labels for the test set are not publicly released, the performance evaluation in this study is conducted on the validation set. Initially, the image resolution is 1280 × 720 pixels, which is standard for high-definition video content. However, for this study, the images have been resized to 512 × 512 pixels to fit the model’s training requirements. The dataset contains a total of 19 distinct evaluation categories, each corresponding to specific elements or regions of interest in the driving environment.

Evaluation Metrics: In this study, we evaluate model performance using mean Intersection over Union (mIoU), mean recall (mRecall), mean precision (mPrecision), mean pixel accuracy (mPA), frames per second (FPS), the number of parameters (Params), and floating-point operations (FLOPs). mIoU provides a comprehensive evaluation of the model’s ability to predict semantic categories and their boundaries. mRecall measures the model’s capacity to identify true targets across categories, while mPrecision focuses on the accuracy of predictions, effectively evaluating the model’s ability to reduce false positives. mPA assesses the classification accuracy at the pixel level. FPS (frames per second) represents the number of image frames processed per second and reflects the model’s real-time inference capability. The number of parameters (Params) refers to the total count of learnable weights in the model, reflecting the model’s memory footprint and architectural complexity. FLOPs (floating-point operations) measure the total number of operations required during a single forward pass, providing an estimate of the computational cost and inference efficiency. Specifically, the model’s parameter count is obtained by summing all learnable parameters across layers, while FLOPs are calculated by accumulating the computational cost of each layer based on the model architecture and the dimensions of the input feature maps. The definitions of these metrics are provided as follows:

where C is the total number of categories in the image, is the number of pixels correctly classified in the i-th category, is the total number of pixels in the i-th category, is the number of pixels that are actually in the i-th category but predicted to be in the j-the category, is the number of pixels in the i-th category correctly predicted as positive samples by the model, is the number of samples in the i-th category that are actually positive but incorrectly predicted as negative samples by the model, is the number of false positives in the i-th category, is the number of image frames processed in a period of time, and is the total processing time.

Implementation details: All experiments are conducted on a system equipped with an RTX 4090 GPU using the PyTorch 2.5.0 framework. The initial learning rate is set to 0.0001, and the Adam optimizer is used. Additionally, a cosine annealing decay strategy is introduced to dynamically adjust the learning rate and avoid convergence to local minima. For the loss function, a combination of cross-entropy loss and Dice loss is adopted. During training and testing on the Cityscapes dataset, when the image resolution is adjusted to 768 × 768 pixels, the batch size is set to 4; when the resolution is 256 × 256, the batch size is set to 8. The model is trained for 200 epochs. In the CamVid dataset experiments, when the image resolution is 960 × 720, the batch size is set to 4; when the resolution is adjusted to 480 × 360, the batch size is set to 8. The model is trained for 150 epochs. When conducting experiments on the BDD100K dataset, a batch size of 8 was used, and the model was trained for 200 epochs.

4.2. Comparative Experiment

4.2.1. Attention Comparison Experiment

To evaluate the effectiveness of the global–local collaborative attention module proposed in this paper within the autonomous driving scenario, representative classical attention mechanisms currently prevalent in this field were selected for comparison and systematic experimentation. In addition to traditional attention mechanisms, Transformer-based methods, including Swin Transformer and Vision Transformers with Hierarchical Attention, were also considered. Multiple performance metrics, including mIoU, mean recall, mean precision, and mean pixel accuracy, were used to compare and analyze the performance differences of each attention module on the Cityscapes, CamVid, and BDD100K datasets, thereby thoroughly validating the advantages and innovations of the proposed module. Table 1 presents a quantitative comparison of various attention mechanisms on the Cityscapes dataset, which has a resolution of 256 × 256 pixels. The evaluated modules include SE, CBAM, SK, CA, ECA, BAM, Swin Transformer (Swin-TF) [], and Vision Transformers with Hierarchical Attention (HAT-Net) []. All of these modules enhance the model’s ability to focus on key information through design strategies targeting different dimensions such as channels, spatial attention, or scale. Meanwhile, Transformer-based methods further improve the model’s capability to capture long-range dependencies in complex scenarios by modeling global information and leveraging multi-level self-attention mechanisms. These approaches enable the model to focus on critical features over a larger context, distinguishing them from traditional attention mechanisms. To highlight top results, all the best metrics are marked in bold.

Table 1.

Quantitative comparative experimental results of different attention mechanisms and transformer modules on the Cityscapes dataset.

To ensure the fairness of the experiment, all attention modules were inserted at the same position within the baseline segmentation model, with consistent experimental environments and hyperparameter settings. As can be seen from the results in Table 1, after integrating different attention mechanisms (including Transformer-based modules) into the ASPP module, the model demonstrated improvements across all four evaluation metrics. Among these, the proposed GLCA module achieved the best performance on all metrics and the most significant improvements. Specifically, the GLCA module improved mIoU by 2.22%, mRecall by 2.11%, mPrecision by 1.58%, and mean pixel accuracy by 0.23%. These quantitative results indicate that the GLCA module significantly enhances semantic segmentation accuracy and classification stability in complex scenes by strengthening multi-dimensional feature interactions and dynamic weighting.

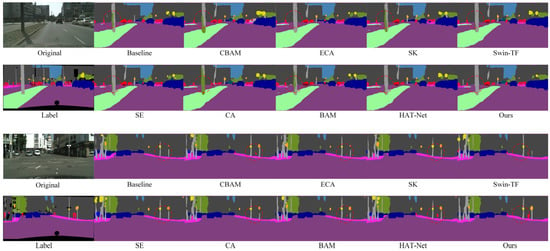

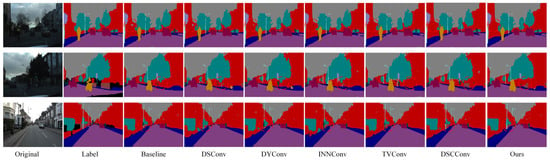

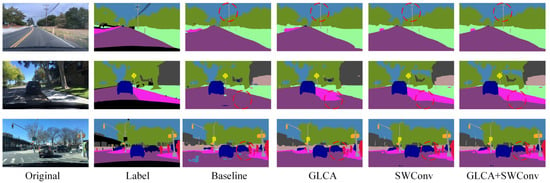

Figure 5 shows a visual comparison of semantic segmentation results after introducing different attention mechanisms and transformer modules. The proposed GLCA module yields superior segmentation in complex scenes compared to the baseline and other attention methods. Especially in the red-circled area, the contours of small targets, such as pedestrians and traffic signs, are more precise and complete, and the misclassification of large targets is significantly reduced, further demonstrating the GLCA module’s advantages in improving segmentation accuracy and robustness.

Figure 5.

Qualitative comparison of experimental results for different attention and transformer modules on the Cityscapes dataset.

To further verify the effectiveness of the proposed module in the semantic segmentation task of autonomous driving, we conducted both quantitative and qualitative comparative experiments on the CamVid dataset, which has a resolution of 480 × 360. Table 2 presents the quantitative results obtained by integrating various attention mechanisms into the baseline model. The results show that the GLCA module achieves significant performance improvements on this dataset and outperforms all other attention mechanisms. Figure 6 displays the corresponding qualitative segmentation results. As shown in the red-marked areas, the GLCA module exhibits fewer misclassifications for both small and large objects, and its segmentation outputs are consistent with the ground truth, further demonstrating the module’s robustness and generalization capability in segmentation accuracy.

Table 2.

Quantitative comparative experimental results of different attention mechanisms and transformer modules on the CamVid dataset.

Figure 6.

Qualitative comparison of experimental results for different attention and transformer modules on the CamVid dataset.

In real-world autonomous driving scenarios, models are expected not only to perform well on standard datasets but also to demonstrate robustness under complex environmental conditions. To this end, we conducted additional experiments on the BDD100K dataset, which includes diverse weather, lighting, and traffic conditions, to further evaluate the generalization ability of the proposed method in challenging scenes. As shown in Table 3, we present a quantitative comparison of different attention mechanisms and Transformer-based modules on the BDD100K dataset. The results clearly indicate that the proposed GLCA module achieves notable advantages across several key performance metrics. Specifically, compared to the widely used CBAM module, GLCA improves mIoU, mRecall, mPrecision, and mAccuracy by 0.95%, 2.13%, 0.03%, and 0.07%, respectively. Given that BDD100K is a highly challenging dataset characterized by complex and diverse real-world conditions, these improvements underscore the strong robustness and generalization capability of GLCA. Even under such demanding settings, GLCA consistently delivers stable and superior performance, further validating its practical applicability and effectiveness in real-world scenarios.

Table 3.

Quantitative comparative experimental results of different attention mechanisms and transformer modules on the BDD100K dataset.

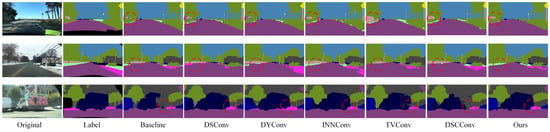

In addition to quantitative evaluations, we also conducted qualitative visual analyses on the BDD100K dataset to further assess the performance of each module under complex scenarios. As shown in Figure 7, we compare the segmentation results of different attention mechanisms and Transformer-based modules across various challenging environments. The proposed GLCA module achieves more accurate feature extraction and boundary recognition in critical regions, enabling more precise delineation of essential targets such as road edges, pedestrian contours, and traffic signs. In contrast, other modules often suffer from blurred boundaries or missed detections in certain areas, whereas GLCA consistently maintains strong regional consistency and structural integrity. These results further demonstrate the superior adaptability and robustness of GLCA in handling the complexities of real-world autonomous driving environments, highlighting its potential and effectiveness in practical applications.

Figure 7.

Qualitative comparison of experimental results for different attention and transformer modules on the BDD100K dataset.

To further assess the deployment potential of the proposed GLCA module in practical applications, this paper analyzes the computational costs of models embedding different attention mechanisms and Transformer modules. To further evaluate the deployment potential of the proposed GLCA module in practical applications, this paper analyzes the computational costs of models incorporating various attention mechanisms and Transformer modules. The results are presented in Table 4. Compared to segmentation models that embed different attention mechanisms, the proposed GLCA module introduces minimal computational overhead. Compared with SKAttention, GLCA demonstrates lower computational cost. Moreover, in terms of both parameter count and FLOPs, the GLCA module significantly outperforms models integrating Transformer modules.

Table 4.

Comparative analysis of parameters (Params/M) and FLOPs (FLOPs/G) in models integrating various attention mechanisms and transformer modules.

Additionally, the GLCA module achieves notable improvements in segmentation performance while maintaining low computational cost, surpassing models with other embedded modules. A comprehensive analysis of Parameters and FLOPs indicates that the GLCA module effectively controls model complexity while maintaining high segmentation accuracy, demonstrating superior computational resource efficiency and design advantages. This module significantly reduces the computational burden of the model, thereby lowering resource consumption during inference and facilitating the demands for lightweight and efficient deployment in practical applications. Therefore, the GLCA module exhibits strong adaptability and potential for broad adoption, making it especially suitable for embedded systems and edge computing environments where computational resources are limited and real-time performance is crucial.

4.2.2. Convolution Comparison Experiment

To verify the adaptability of the spider web convolution proposed in this paper in complex autonomous driving scenarios, we selected several representative convolution methods for comparative experiments, including depthwise separable convolution (DSConv), dynamic convolution (DYConv), inner convolution (INNConv), TVConv [], and Snake Convolution (DSCConv) []. The specific method is to replace the last layer of the hole convolution in the ASPP module with the above different types of convolution structures. All experiments are conducted under the same operating environment and hyperparameter settings to ensure fairness.

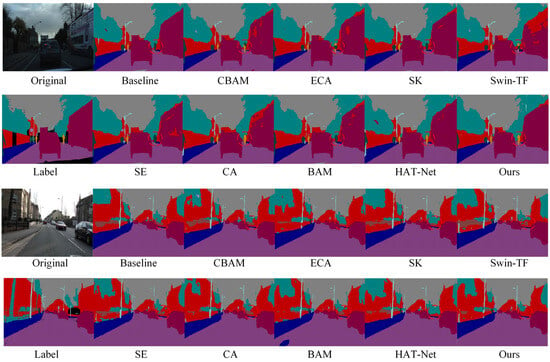

The quantitative experimental results on the Cityscapes dataset are shown in Table 5. The results demonstrate that the proposed convolution modules improve the performance of the baseline model to varying extents. Among them, the spider web convolution proposed in this paper achieves the best performance across multiple key metrics. After introducing the spider web convolution, the mIoU increased by 2.35%, mRecall by 2.62%, mPrecision by 1.45%, and mAccuracy by 0.27%, compared to the baseline model. These results indicate that the spider web convolution, through its multi-directional feature perception and fusion design, significantly enhances the model’s ability to extract structural features, thereby improving overall semantic segmentation performance. Compared with other convolution methods, the spider web convolution demonstrates stronger adaptability in accurately recognizing diverse targets such as road boundaries, traffic signs, vehicles, and pedestrians, highlighting its practical value in complex autonomous driving scenarios.

Table 5.

Quantitative comparison experimental results of different convolution modules on the Cityscapes dataset.

Figure 8 shows a visual comparison of the segmentation results of the model after replacing different convolution modules on the Cityscapes dataset. It can be clearly seen that compared with the baseline model, after the introduction of various convolution modules, the segmentation effect of the model in multiple key areas has been significantly improved: the continuity of road boundaries is stronger, the outlines of traffic signs are clearer, the edge details of targets such as vehicles and pedestrians are more accurate, and the perception of overall multi-directional structural features is significantly enhanced. In particular, in the position marked by the red circle in the figure, it can be seen that the model using spider web convolution is more accurate in the recognition and segmentation of targets such as roads, traffic signs, and pedestrians, and the generated results are more delicate and highly consistent with the real labels, which further verifies the effectiveness and advantages of spider web convolution in processing complex structural features.

Figure 8.

Qualitative comparison experimental results of different convolution modules on the Cityscapes dataset.

To verify the effectiveness and generalization ability of the proposed module across different scenarios, we conducted a similar comparative experiment on the CamVid dataset. Table 6 presents the quantitative evaluation results after replacing the dilated convolution in the ASPP module with different convolution methods. The results show that substituting the original dilated convolution with the spider web convolution leads to improvements in all metrics: mIoU increases by 1.02%, mRecall by 0.35%, mPrecision by 1.20%, and mAccuracy by 0.26%. Notably, when other classic convolution modules are used as replacements, the model’s performance on this dataset declines, suggesting that these methods are less effective in capturing fine-grained features and adapting to complex environments. These findings further demonstrate the advantages of spider web convolution in complex semantic segmentation tasks, highlighting its superior feature modeling capability and robustness across diverse scenarios.

Table 6.

Quantitative comparison experimental results of different convolution modules on the CamVid dataset.

As shown in the red-circled region of Figure 9, the model employing spider web convolution demonstrates more accurate and complete recognition of building areas compared to the baseline and other convolution modules. It exhibits significantly fewer misclassifications, clearer edge contours, and finer detail preservation, further illustrating its superior feature representation capability in complex scenes.

Figure 9.

Qualitative comparison experimental results of different convolution modules on the CamVid dataset.

To evaluate the performance of different convolutional structures in complex scenarios, Table 7 presents a quantitative comparison conducted on the BDD100K dataset. It is evident that the proposed SWConv module outperforms other convolutional variants in more challenging autonomous driving environments. Specifically, compared to the DSConv module, SWConv achieves improvements of 1.26%, 0.70%, 1.63%, and 0.27% in mIoU, mRecall, mPrecision, and mAccuracy, respectively. These results indicate that SWConv is more effective in extracting key regional features, enhancing the accuracy and completeness of target recognition, and thereby improving the model’s practical adaptability to complex real-world conditions.

Table 7.

Quantitative comparison experimental results of different convolution modules on the BDD100K dataset.

In addition to the quantitative comparison, we also conducted qualitative visualization analysis to more comprehensively evaluate the performance of different convolution structures in complex scenarios. As shown in Figure 10, we selected several representative challenging environments to visually compare the segmentation results of each module. The results indicate that the model based on the SWConv module produces more complete and coherent segmentation maps in key regions, exhibiting superior structural consistency and semantic accuracy. This further highlights the advantages of SWConv in feature extraction, as well as its strong robustness in complex real-world environments, demonstrating its effectiveness in adapting to diverse scene variations.

Figure 10.

Qualitative comparison experimental results of different convolution modules on the BDD100K dataset.

To further validate the advantages of the proposed module in convolutional structure selection, this paper conducts a comparative analysis of segmentation models replacing different types of convolutional operators in terms of parameter count and FLOPs. The experimental results are shown in Table 8. It can be observed that, compared to most convolutions, the proposed SWConv module incurs some increase in computational cost due to its padding branch design, but remains within a reasonable and acceptable range. Among these, configurations with only two padding branches and four padding branches (e.g., SW-UD padding the up and down directions, SW-UDLF padding up, down, left, and right directions) demonstrate clear computational advantages over TVConv and DSCConv. Meanwhile, SWConv significantly improves segmentation performance while maintaining a reasonable computational burden, exhibiting superior representational capacity and feature extraction capabilities compared to other convolution modules. Furthermore, considering the strict constraints on computational resources and power consumption in embedded devices, the advantages of the SWConv module in parameters and FLOPs provide a solid foundation for its practical deployment. Its balanced computational cost and efficient feature representation endow SWConv with strong adaptability, enabling it to meet the dual requirements of real-time performance and energy efficiency in embedded systems, thereby demonstrating promising application potential.

Table 8.

Comparative analysis of parameters (Params/M) and FLOPs (FLOPs/G) in models incorporating different convolutional structures.

4.2.3. Comparison of Experimental Performance of Other Methods

The above experimental analysis shows that integrating the global–local collaborative attention module and the spider convolution module into the baseline model significantly improves semantic segmentation performance across multiple evaluation metrics. To further evaluate the overall performance of the final model in the context of autonomous driving, we conducted comparative experiments with several classic semantic segmentation models. Since different methods apply image cropping with varying sizes during training on the Cityscapes and BDD100K datasets, a comparative evaluation was conducted on the CamVid dataset (resolution 480 × 360) to ensure fairness and consistency. The models compared include SegNet [], Enet [], ESCNet [], Algorithm [], NDNet [], LEANet [], EDANet [], CGNet [], DABNet [], LRDNet [], DSANet [], MSCFNet [], and the benchmark model DeepLabv3+. The results for each model are obtained from their original publication. The experimental results are presented in Table 9, where N denotes values that are either unknown or not reported. All experimental equipment was provided by NVIDIA Corporation, headquartered in Santa Clara, California, USA.

Table 9.

Comparative experimental results of various semantic segmentation models on the CamVid dataset.

To better highlight the performance differences between methods, this study provides a detailed analysis of all compared models and discloses the devices, training configurations, and parameter settings used, ensuring transparency and comparability of results. Notably, while the original baseline model performed worse than LRDNet, DSANet, and MSCFNet, integrating the GLCA and SWConv modules led to a significant performance improvement, surpassing all other models.

As shown in Table 9, the final model enhanced with the two proposed modules achieves the best performance across all evaluation metrics, demonstrating its strong capability in semantic segmentation for autonomous driving. The model significantly outperforms other approaches, with the mIoU improving by 0.35% compared to the second-best model. This improvement is not only a testament to the effectiveness of the GLCA module in enhancing feature interaction across different spatial scales but also to the spider web convolution’s ability to capture complex structural information in diverse environments. By effectively integrating global and local feature representations, the model exhibits a much better capacity to handle the intricate and variable nature of real-world driving scenes. These results underscore the strong potential of the proposed modules to enhance segmentation accuracy while maintaining efficient computation, making the model suitable for practical applications in autonomous driving systems.

4.3. Ablation Experiment

4.3.1. Ablation Study on GLCA and SWConv Modules

In the ASPP module, convolutions with different dilation rates and standard convolutions are used to capture multi-scale contextual information. The first layer of the ASPP module employs a standard 1 × 1 convolution, which has the smallest receptive field and mainly serves to retain fine-grained local texture information. However, it is limited in terms of semantic modeling. The second and third layers use dilated convolutions with rates of 6 and 12, respectively, providing medium-to-relatively large receptive fields. These layers strike a balance between capturing local details and fusing contextual semantics. The fourth layer employs a dilated convolution with a rate of 18, providing the largest receptive field and is thus better suited for capturing wide-range, global semantic information. However, as the receptive field increases, the sampling points of the convolution become increasingly sparse. This sparsity, especially in the rate = 18 path, impairs the model’s ability to perceive local details and object boundaries, making it difficult to model targets with complex shapes or irregular structures.

To address this issue, we incorporate the GLCA and SWConv modules into the rate = 18 path of the ASPP module, aiming to enhance this path’s capacity for semantic fusion and structural modeling. The GLCA module leverages bidirectional feature interaction and a dynamic weighting mechanism to effectively integrate global semantics with local detail, enhancing the representation of key regions and mitigating issues such as semantic ambiguity and blurred boundaries. This is particularly beneficial for the rate = 18 path, where the receptive field is large but information is sparse. The SWConv module introduces an asymmetric sampling topology and six-directional perception paths, breaking conventional convolution’s reliance on regular structures. This improves the model’s ability to capture object shapes, edge contours, and spatial structures, thereby compensating for the rate = 18 path’s deficiencies in shape adaptability. In contrast, the 1 × 1 convolution path and low-to-medium rate dilated convolution paths already possess strong capabilities in capturing local features due to their denser structures. As a result, the benefits of adding GLCA and SWConv to these paths are relatively limited. The rate = 18 path, however, combines high-level semantic abstraction with a significant information gap, making it the optimal location for the combined application of both modules.

To evaluate the individual effectiveness and synergistic adaptability of the proposed GLCA and SWConv modules in semantic segmentation, we conducted a systematic ablation study. Table 10 presents the results on the Cityscapes dataset. The two modules were inserted into or used to replace different components of the ASPP module under two input resolutions (256 × 256 and 768 × 768). Specifically, the positions labeled 1, 6, 12, and 18 correspond to the first feature extraction layer and the dilated convolution layers with dilation rates of 6, 12, and 18 in the ASPP module, respectively.

Table 10.

Quantitative results of ablation experiments on each module at various embedding positions in the Cityscapes dataset at different resolutions.

Experimental results show that regardless of the insertion position within the ASPP module, both the GLCA and SWConv modules consistently improve performance. Notably, placing the GLCA module and SWConv module in the fourth layer of the ASPP module (rate = 18) yields the best results across all evaluation metrics. This indicates that introducing these modules at the final stage of feature extraction helps integrate the multi-scale semantic information captured by the preceding layers more effectively, thereby enhancing the model’s ability to capture cross-layer feature dependencies.

Furthermore, to further validate the synergistic benefits of the two modules, we integrated both the GLCA module and the SWConv module into the final layer of the ASPP module. Comparative experimental results demonstrate that the combined use of these modules leads to significantly better performance than using either module alone. At an input resolution of 256 × 256, the model achieved an improvement of 2.73% in mIoU, 2.64% in mRecall, 2.15% in mPrecision, and 0.27% in mAccuracy. At a higher resolution of 768 × 768, mIoU increased by 1.47%, mRecall by 1.80%, mPrecision by 0.32%, and mAccuracy by 0.13%. Based on the above quantitative experimental results, the proposed GLCA and SWConv modules demonstrate superior performance across different input resolutions, validating their strong scale adaptability and cross-scene generalization capability. These modules consistently and stably enhance model performance in multi-resolution semantic segmentation tasks.

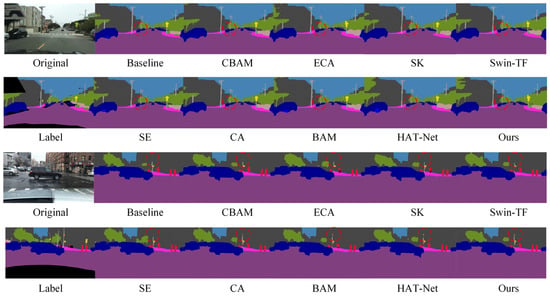

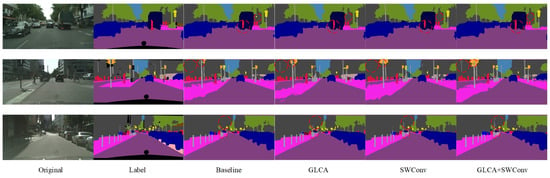

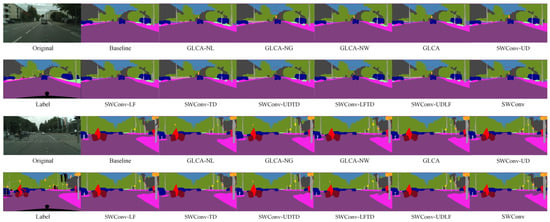

Figure 11 presents the semantic segmentation visualization results on the Cityscapes dataset, showing the step-by-step integration of the GLCA and SWConv modules into the baseline model. As highlighted in the annotated regions of the figure, the inclusion of these two modules leads to more accurate recognition of fine-grained objects such as pedestrians, poles, and traffic lights. The object boundaries become noticeably clearer, misclassifications are significantly reduced, and the segmentation results show substantial improvements in both completeness and precision. These visual results further validate the synergistic effect of the GLCA and SWConv modules, demonstrating that they not only enhance the model’s capability in object perception and boundary localization but also effectively mitigate semantic confusion across categories.

Figure 11.

Qualitative ablation comparison experimental results of GLCA and SWConv modules on the Cityscapes dataset.

To validate the stability and generalization of the proposed modules across different scenarios and resolutions, additional experiments were conducted on the CamVid dataset. Since no complex cropping and restoration operations were performed during training and testing at 480 × 360 resolution, the FPS metric is introduced to better assess the real-time processing capability of the model and its modules. Table 11 shows the ablation experiment results of the GLCA and SWConv modules on the CamVid dataset, tested at two resolutions, 480 × 360 and 960 × 720. The experiments show that the two proposed modules significantly improve model performance when integrated into the ASPP module at various positions, with the best effect achieved when placed at the dilation convolution with a dilation rate of 18. At a resolution of 480 × 360, after introducing the two modules, the mIoU improves by 1.29%, mRecall by 0.64%, and mPrecision and mAccuracy by 1.81% and 0.34%, respectively, compared to the baseline model. At a high resolution of 960 × 720, mIoU is improved by 1.48%, mRecall by 1.95%, and mAccuracy by 0.26%, while mPrecision is optimal after replacing it with the SWConv module. The overall experimental results further verify the effectiveness of the GLCA and SWConv modules in enhancing model performance, particularly demonstrating stronger stability and adaptability under high-resolution input. Both modules bring performance gains at different insertion positions, with the combined use yielding the most significant effect, reflecting good module compatibility and complementarity. It is worth mentioning that although there is a slight sacrifice in inference speed, the accuracy is significantly improved, demonstrating a better trade-off between accuracy and efficiency.

Table 11.

Quantitative results of ablation experiments on each module at various embedding positions in the CamVid dataset at different resolutions.

Figure 12 shows the semantic segmentation results on the CamVid dataset after gradually adding modules. As seen in the marked area, the baseline model exhibits noticeable semantic confusion and boundary discontinuities, especially around structures like walls, indicating its limited ability to capture fine-grained details. With the introduction of the GLCA and SWConv modules, the model’s ability to understand semantics and accurately locate boundaries improves significantly, with the segmentation results progressively refining from coarse to fine. Finally, when both modules are integrated, they not only reduce semantic interference between categories but also enhance the clarity and integrity of structural edges. The segmented images become visually closer to the true labels, both in terms of appearance and structural consistency.

Figure 12.

Qualitative ablation comparison experimental results of GLCA and SWConv modules on the CamVid dataset.

Table 12 presents the quantitative ablation results on the BDD100K dataset. It can be observed that, based on the baseline segmentation model, progressively introducing the GLCA and SWConv modules leads to improvements across all four evaluation metrics, fully demonstrating the effectiveness and applicability of these two modules in complex autonomous driving scenarios. When the GLCA and SWConv modules are integrated simultaneously, the segmentation performance is further enhanced. Specifically, compared to the baseline model, mIoU increases by 1.73%, mRecall by 1.82%, mPrecision by 1.62%, and mAccuracy by 0.32%. These results indicate that the proposed modules work synergistically to effectively enhance the model’s ability to perceive and recognize diverse semantic information in autonomous driving environments, thereby improving overall segmentation performance and robustness.

Table 12.

Quantitative ablation comparison experimental results of GLCA and SWConv modules on the BDD100K dataset.

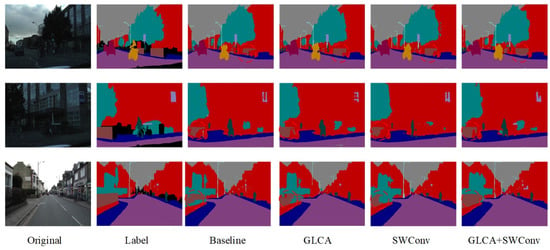

To more intuitively verify the performance improvements brought by the proposed modules in complex scenarios, qualitative ablation experiments were conducted on the BDD100K dataset. Figure 13 shows a comparison of segmentation results from the baseline model, the model with the GLCA module, the model with the SWConv module, and the model combining both modules. The baseline model exhibits semantic ambiguity and unclear boundaries in some detailed regions. After introducing the GLCA module, the model achieves more accurate feature representation in key local areas, enhancing its ability to capture small objects and fine details. The addition of the SWConv module strengthens the model’s perception of multi-directional spatial information, effectively improving the representation of object contours and shapes. When both modules are combined, the model maintains overall semantic consistency while producing finer details and more precise boundaries, significantly enhancing the visual quality of the segmentation results. This qualitative analysis further validates the complementary advantages and practical effectiveness of the GLCA and SWConv modules in complex autonomous driving scenarios.

Figure 13.

Qualitative ablation comparison experimental results of GLCA and SWConv modules on the BDD100K dataset.

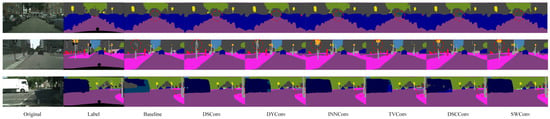

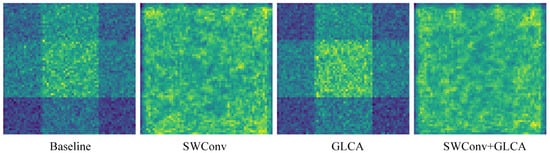

To better understand the function of each module, we will next demonstrate the specific changes in the feature maps after introducing different modules and analyze their impact on the model’s performance. The feature map results are shown in Figure 14. It can be observed that after introducing SWConv, the feature extraction performance is significantly improved compared to the original feature map. In the original feature map, the effective features in the bright regions are scattered, and multi-directional information is not fully integrated, leading to blurred target boundaries and spatial relationships. After adopting SWConv, the module integrates multi-directional contextual information, making the bright regions in the feature map more coherent and providing more comprehensive coverage. Features such as road markings, vehicle contours, and pedestrian trajectories are more precisely presented, enhancing the perception of target boundaries and spatial relationships, providing more effective feature support for tasks in autonomous driving scenarios. After introducing the GLCA module, the key regions in the feature map become more prominent, indicating a significant increase in feature concentration in those areas. The GLCA module enhances the model’s ability to focus on critical regions of the image by integrating global and local contextual information. It dynamically adjusts the importance of these areas, thereby increasing attention on key features. This improvement enables the model to more accurately recognize fine details and comprehend spatial relationships, thereby enhancing its ability to capture the essential aspects of the scene.

Figure 14.

Feature map changes and representations after the introduction of SWConv and GLCA modules.

Finally, after using both modules together, the performance is further improved. The attention module complements SWConv in global context understanding and feature correlation capture, allowing SWConv to focus more on the key features and strengthening the connection between various parts of the feature map. The combination of the attention module and SWConv enhances feature extraction, making it more comprehensive and precise, as they work synergistically to optimize the model’s recognition and decision-making capabilities in autonomous driving.

4.3.2. Ablation Study on Hyperparameters of GLCA and SWConv

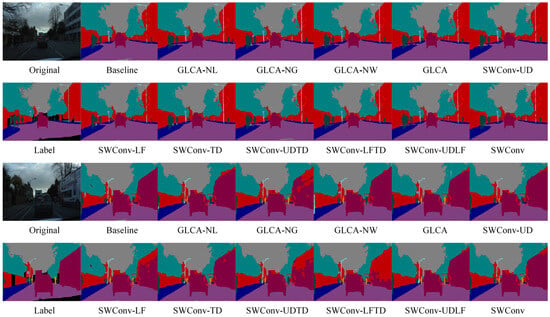

To comprehensively evaluate the impact of key module designs on the overall performance of the segmentation model, we further analyze the role of core hyperparameters. This work conducts an extensive experimental analysis on the design of the local feature extraction branch, global feature extraction branch, and dynamic weighting branch in the GLCA module, as well as the hyperparameter of padding direction numbers in the SWConv module. By systematically adjusting these parameters and observing the resulting performance changes, we can clarify the performance boundaries and stability of each structural design. Here, GLCA-NL, GLCA-NG, and GLCA-NW represent the removal of the local feature extraction branch, global feature extraction branch, and the absence of the dynamic weighting branch, wherein the latter adopts equal-weight averaging for feature fusion. SWConv-UD, SWConv-LF, SWConv-TD, SWConv-UDTD, SWConv-LFTD, and SWConv-UDLF denote configurations using only the up-down padding branch, left-right padding branch, diagonal padding branch, up-down plus diagonal branches, left-right plus diagonal branches, and up-down, left-right plus diagonal branches, respectively. To systematically assess and analyze these components, experiments are conducted on the Cityscapes and CamVid datasets.