Abstract

Image dehazing has become a crucial prerequisite for most outdoor computer vision applications. The majority of existing dehazing models can remove haze; however, they fail to preserve colors and fine details. To address this problem, we introduce a novel high-performing attention-based dehazing model (-net) that incorporates both the RGB and HSV color spaces to maintain color properties. The model consists of two parallel densely connected sub-models (RGB and HSV) followed by a new efficient attention module. This attention module combines pixel-attention and channel-attention mechanisms to extract more haze-relevant features. Experimental analyses show that the proposed model (-net) achieves superior results on synthetic and real-world datasets and outperforms most state-of-the-art methods.

1. Introduction

Image dehazing, the process of removing haze from images, is a core problem in digital image processing. It has become a crucial task in computer vision due to its extensive applications in areas such as maritime and air transport, driver assistance systems, surveillance, and remote sensing. The challenge lies in the fact that haze is an atmospheric phenomenon that can substantially degrade image quality, obscuring details and reducing contrast, color fidelity, and visibility.

In the literature, researchers first used image enhancement-based methods to improve intrinsic image properties (such as contrast and brightness) and details. In [1,2,3], the authors employed common contrast enhancement techniques to improve the visibility of hazy images. However, these techniques can only handle images with thin haze and lead to distortions and halo effects in the case of thick haze. The Retinex theory has also been exploited to remove haze from single images [4,5] by enhancing the brightness of the image. This technique can remove haze and enhance the vividness of colors, but it cannot preserve edge properties.

In a different, fusion-based strategy, Schechner et al. [6] and Liang et al. [7] combined the visible-light image and the near-infrared image to obtain a high-quality haze-free image. These methods also attained satisfactory results; however, because additional information is required, the restoration is somewhat complicated, since a single hazy image cannot easily provide much useful information.

Recently, image restoration-based dehazing strategies, which take the source of image degradation into account, have been extensively studied. Since the appearance of the atmospheric scattering degradation model, many researchers have built their dehazing solutions on it to recover the scene radiance of degraded hazy images. It can be expressed as follows:

$$I(x) = J(x)\,t(x) + A\,(1 - t(x)) \qquad (1)$$

where $I(x)$ is the captured hazy image, $J(x)$ is the scene radiance to be recovered, $A$ represents the atmospheric light, $t(x)$ denotes the transmission medium, and $x$ indexes image pixels.

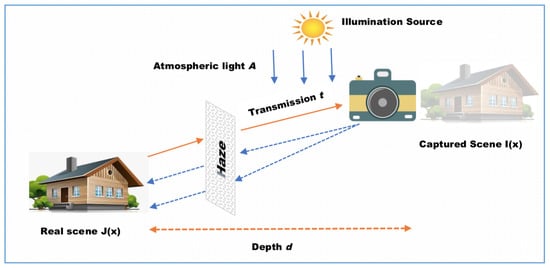

Figure 1 describes how haze affects captured images.

Figure 1.

Hazy Image Degradation Model.

This model requires the estimation of two key quantities, the transmission map $t(x)$ and the atmospheric light $A$, to perform the dehazing process.

Accordingly, He et al. [8] proposed the dark channel prior (DCP), an assumption derived from observations of natural haze-free images. Its principle is that, in most non-sky patches of clear outdoor images, at least one color channel has very low intensity at some pixels. This assumption handles thick-haze images effectively and yields the transmission map simply. However, it produces significant block effects and halos in the transmission map of sky regions, and it is computationally expensive because of the soft matting used in the refinement. Many other image dehazing techniques have been proposed [9,10,11]. Berman et al. [9] proposed a haze-lines-based dehazing method that restores the scene radiance by estimating the transmission map. Nevertheless, this method suffers from color distortion and fails on thick-haze images. In contrast, Fan et al. [10] proposed a dehazing method based on fusing a visible image with a near-infrared image. Despite its pleasing results, this method can exhibit various halo artifacts, and the source images are not easy to obtain. Based on the fact that haze thickness is related to the blurriness of the hazy image, Haouassi et al. [11] presented a powerful dehazing algorithm that produces excellent results; even so, it does not work on nighttime hazy images.

With the success of machine learning in many image processing fields, numerous deep learning-based dehazing methods [12,13,14,15] have been proposed to achieve better dehazing results and restore clear images with high visual quality. Such methods perform well on both synthetic and outdoor hazy images. An end-to-end framework called "DehazeNet", based on a convolutional neural network (CNN), was presented in [12]. It takes a single hazy image as input and outputs the estimated transmission map, and according to the experimental results, it attains high performance. In [13], Golts et al. designed a different dehazing network that is trained on real-world outdoor images by minimizing the Dark Channel Prior (DCP) energy function. Song et al. [14] proposed an efficient dehazing method based on a Ranking-CNN model, which facilitates the extraction of haze-relevant features. This approach shows high performance in extensive experiments on both synthetic and real-world hazy images. However, it is computationally expensive because of the ranking layer.

In our previous paper [15], we proposed an efficient dehazing algorithm based on the estimation of both key components, the transmission map and the atmospheric light. The transmission map is generated automatically by a cascaded multi-scale CNN termed CMTnet, and the atmospheric light value is estimated using an effective algorithm called A-Est. This method can produce high-quality haze-free images, but its ability to preserve colors is poor.

So far, each of the proposed dehazing algorithms has achieved some level of success in haze removal, but the majority fail to preserve real-scene colors and fine details or produce unsatisfying results (for instance, halo effects in specific regions or over-enhancement).

In summary, our contributions are as follows:

- To retain color properties (saturation, brightness), we integrate the HSV color space with the RGB color space rather than using RGB only.

- We propose an efficient attention module that incorporates both channel-level and pixel-level attention to improve color preservation and adaptability to varying haze conditions. This attention mechanism relies on a mixed-pooling technique (a fusion of max pooling and average pooling) that improves the performance and robustness of the model.

- To help the proposed model generalize well, we train it on both synthetic data and challenging real-world benchmarks (RESIDE [16], O-HAZE [17], Dense-Haze [18], and NH-HAZE [19]).

2. Related Works

Single-image dehazing has become a topic of interest in recent years due to its great importance in many computer vision fields. Traditional dehazing techniques predominantly relied on image processing, involving the direct manipulation of pixels or the use of manually crafted priors [1,2,3,4,5,6,7,8,9,10,11].

2.1. Prior-Based Dehazing Techniques

These methods employ diverse prior information or assumptions related to the scene, the haze distribution, or the image itself to estimate and remove haze, such as DCP [8], the haze-line model [9], the color attenuation prior [20], and the Non-local Color Prior (NCP) [21]. He et al. [8] introduced the dark channel prior, positing that the minimum intensity across the RGB channels within a local patch should be close to zero in haze-free natural images. A pixel with a low dark-channel value therefore tends to belong to a haze-free region, and the dark channel is used to estimate the transmission map, which reflects the amount of haze in the scene. In [9], a haze-line-based dehazing model is proposed; it operates on the premise that the color of a scene changes linearly with depth and uses this assumption as a basis for estimating the transmission map, allowing effective removal of haze from the image. Zhu et al. [20] introduced the color attenuation prior, which operates in the HSV color space and assumes that the difference between the brightness and the saturation of a pixel correlates with the haze concentration. In [21], the Non-local Color Prior (NCP) is introduced, leveraging the insight that a crisp image is characterized by a limited number of clusters within the RGB color space.

Although these prior-based methods have proven effective in many scenarios, they are not applicable in complex hazy scenes.
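As an illustration of how a handcrafted prior yields a transmission estimate, the following NumPy sketch computes a dark channel and the transmission map derived from it in the usual DCP formulation, $t(x) = 1 - \omega \cdot \mathrm{dark}(I/A)$. The small 3 × 3 patch and the toy images are assumptions chosen for brevity (He et al. use larger patches), and the soft-matting refinement is omitted.

```python
import numpy as np

def dark_channel(img, patch=3):
    """Minimum over the color channels, then minimum over a patch x patch neighborhood."""
    min_rgb = img.min(axis=2)
    pad = patch // 2
    padded = np.pad(min_rgb, pad, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (patch, patch))
    return windows.min(axis=(2, 3))

def estimate_transmission(img, A, omega=0.95, patch=3):
    """t(x) = 1 - omega * dark_channel(I / A); omega keeps a trace of haze for depth cues."""
    return 1.0 - omega * dark_channel(img / A, patch)

A = np.array([1.0, 1.0, 1.0])        # assumed atmospheric light
clear = np.zeros((5, 5, 3))
clear[..., 0] = 0.8                  # one bright channel, two dark ones: dark channel is 0
hazy = np.ones((5, 5, 3))            # fully hazed toy image: I equals A everywhere

t_clear = estimate_transmission(clear, A)
t_hazy = estimate_transmission(hazy, A)
```

The haze-free toy image receives a transmission of 1 everywhere, while the fully hazed one receives $1 - \omega$. A bright sky or a white object also has a high dark-channel value, which is one reason such priors struggle in complex scenes.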

2.2. Deep Learning-Based Dehazing Methods

These techniques have brought about a revolutionary shift in the field of image dehazing, significantly improving performance over traditional techniques.

Unlike prior-based approaches that rely on explicit assumptions or handcrafted priors, deep learning models, in particular convolutional neural networks (CNNs), can adapt and generalize across various scenes. Moreover, deep learning models can handle noise and learn scene-related features, which contributes to enhanced dehazing performance.

In [12], Cai et al. proposed an end-to-end trainable CNN model that generates the transmission map for the input hazy image, which is subsequently used to recover a clear image via the atmospheric scattering model. This model shows superior dehazing results compared with traditional methods; however, it cannot fully capture the diverse range of real-world hazy conditions. Likewise, MSCNN [22] introduced a dehazing approach that progresses from coarse to fine by establishing a multi-scale convolutional network. This model shows promising results in haze removal; however, it may suffer from reconstruction errors. In a different approach, Li et al. [23] introduced an All-in-One model that employs a reformulated Equation (1) to estimate the transmission medium and the airlight simultaneously. Many studies [14,15,24,25] have employed deep learning-based technologies and show promising dehazing results; however, they cannot handle complex scenes and varying haze conditions. Additionally, most of these deep learning-based dehazing techniques suffer from high computational complexity because of feature redundancy, and they produce inaccurate dehazing outcomes, particularly in challenging scenes (non-uniform haze, varying light conditions, etc.).

To address these issues, several researchers have combined attention mechanisms with deep learning techniques in the image dehazing task to selectively emphasize or de-emphasize certain parts of the input hazy image.

2.3. Attention Mechanisms-Based Deep Learning Dehazing Methods

Recently, the integration of attention mechanisms into deep learning models for image dehazing has been pivotal for optimizing model performance, and many works have adopted attention mechanisms to improve the effectiveness of dehazing models. In [26], the authors presented a robust attention-based multi-scale network that consists of three key components and implements a novel attention mechanism to capture haze-relevant features. It has been shown to produce good results; however, it has some inherent limitations, such as sensitivity to lighting conditions and poor performance on thick-haze scenes. In [27,28], the authors proposed pixel-channel attention models to address the non-uniform distribution of haze across pixels. CP-net [27] incorporates a double attention (DA) module, and FFA-net [28] introduces a powerful feature attention module along with a basic residual block. Furthermore, an effective network was introduced in [29]; it comprises a residual spatial and channel attention module that adaptively adjusts feature weights according to the haze distribution, enhancing feature representation and dehazing performance. Moreover, Sun et al. [30] proposed a fast and robust semi-supervised dehazing method (SADnet) that incorporates both channel and spatial attention mechanisms. This technique is effective in haze removal; however, it produces some color artefacts in the dehazed outputs.

It is evident that the above-discussed attention-based models [26,27,28,29,30] can exhibit notable improvements in enhancing dehazing robustness. They outperform existing end-to-end models, showcasing their efficacy in addressing haze-related challenges. However, they can produce some color artefacts and cannot handle complex real-world scenarios. Addressing these challenges and further refining the existing methodologies will be pivotal in advancing the field and ensuring the practicality of these models in real-world settings, with different lighting conditions and degrees of complexity.

To address such weaknesses, this paper leverages the synergistic advantages of fusing channel and pixel attention, along with the use of two color spaces (RGB and HSV). The fusion of channel and pixel attention allows the model to refine its focus locally and globally, enabling a more precise and context-aware dehazing process. Moreover, the incorporation of two color spaces further enriches the feature representation by exploiting the characteristics of each space. By integrating information from the RGB and HSV color representations, the model enhances its ability to deal with complex color variations in hazy scenes, resulting in superior color restoration.

This multi-attentional and multi-colour space strategy contributes to the robustness and adaptability of the proposed method, making it well-suited for a broad spectrum of real-world dehazing scenarios. The proposed network (-net) details are discussed in the next section.

3. Proposed Learning-Based Image Dehazing Method

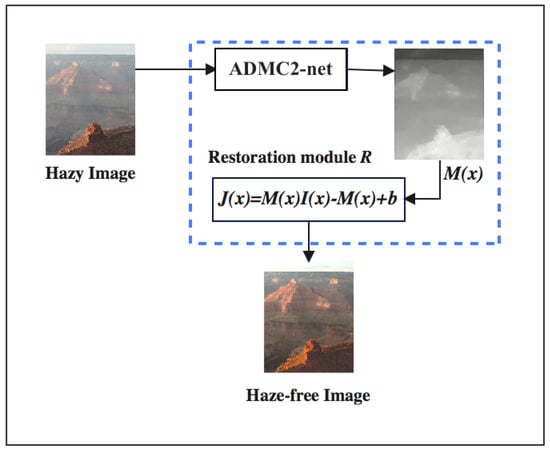

In this section, we discuss the detailed framework of the proposed dehazing network (-net). The proposed model consists of a two-path block of trained dense units (D-units), a concatenation module, a channel-pixel fusion attention module, and a restoration module. First, we provide an overview of the proposed dehazing network (Figure 2). Second, we present the network's main components in detail, including the color spaces used, the dense units (D-units), concatenation, the channel and pixel modules, fusion attention, and the restoration module. Then, we introduce the loss function used to train the network.

Figure 2.

Overall design of the proposed end-to-end model.

3.1. Overview of the Proposed Architecture

Inspired by the impressive success of end-to-end deep neural networks in the image dehazing field [12,23], we propose a robust dehazing model (-net) that can effectively generate the mapping M(x) directly and minimize the reconstruction errors.

The overall design of our network (-net), shown in Figure 2, is inspired by AOD-Net [23] and generates clear images from hazy ones directly.

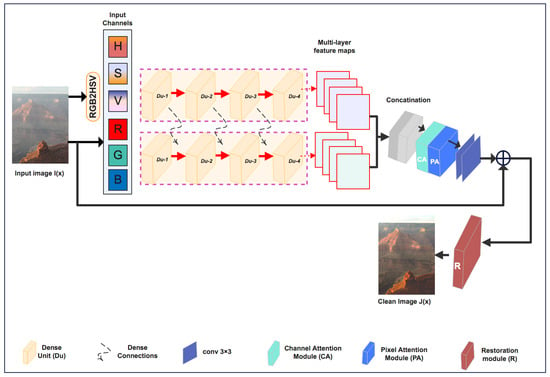

As shown in Figure 3, the input hazy image undergoes an RGB-to-HSV color space conversion. Then, we create two paths, RGB and HSV, to extract more informative features separately. After that, the input feature maps are fed into four consecutive dense units (D-units) in each path, yielding different representative and informative feature maps from the two parallel paths.

Figure 3.

Architecture of the proposed dehazing model (-net).

Densely connecting the RGB path's features with the HSV path's features can improve the performance of the D-unit block. The generated RGB and HSV features are first fed into a fusion attention module, which extracts the most important features, and then into a recovery module.

Our proposed dehazing network (-net) can handle the image dehazing issue effectively in terms of image quality and haze removal.

3.2. Network Architecture

Our proposed model (-net) consists of four key components: HSV-RGB Color Space Representation, dense units (D-unit), Fusion Attention Module, and Recovery Module. In this section, we will explain each of these components in more detail.

3.2.1. Two-Color Space Model

Most existing dehazing methods operate in the RGB color space, which has a strong physical color property that allows for easy image display and storage. However, its channels are highly correlated, so the appearance of the entire image can easily be affected by luminance changes, lights, shadows, white areas, haze droplets, and other factors. As mentioned before, the majority of recent image dehazing techniques can remove haze from single hazy images in most cases; however, these methods are sensitive to lighting conditions and produce some color artefacts in the dehazed outputs.

Our proposed dehazing model takes a step further by incorporating feature extraction from two color spaces, RGB and HSV. This unique combination offers significant benefits for image dehazing, as it enables better representation of visual information and enhances the ability to handle both color distortions and halo artifact challenges. By leveraging the strengths of both color spaces, our model delivers superior results compared to traditional dehazing techniques.

RGB is an additive color model widely used in electronic displays and imaging devices, such as computer monitors, television screens, and digital cameras. It is composed of three color channels, red, green, and blue, each ranging from 0 to 255 (8 bits per channel).

HSV is a cylindrical color model with three independent attributes: hue (H), saturation (S), and value (V). Hue is the angle of the color relative to red and specifies the color itself, saturation represents the purity of that color, and value signifies pixel intensity and brightness. HSV is often preferred for color representation and manipulation because it is more intuitive and easier to work with.

- Hue (H): 0 to 360 degrees.

- Saturation (S): 0 to 1

- Value (V): 0 to 1
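The ranges above can be checked with a short sketch based on Python's standard `colorsys` module. The helper name `rgb8_to_hsv` and the example pixel values are illustrative assumptions; the hazy pixel is formed by blending a clear pixel halfway toward a bright gray airlight of 230.

```python
import colorsys

def rgb8_to_hsv(r, g, b):
    """Convert 8-bit RGB to (H in degrees, S in [0, 1], V in [0, 1])."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return h * 360.0, s, v

pure_red = rgb8_to_hsv(255, 0, 0)
mid_gray = rgb8_to_hsv(128, 128, 128)

# A reddish pixel before and after blending 50% toward an airlight of 230.
clear_pixel = rgb8_to_hsv(200, 40, 40)
hazy_pixel = rgb8_to_hsv(215, 135, 135)
```

In this toy case, haze leaves the hue unchanged, lowers the saturation, and raises the value, which suggests why the S and V channels carry haze-relevant cues that are less explicit in the correlated RGB channels.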

Furthermore, the HSV color space has proven to be highly effective in a variety of image-related tasks [31,32,33,34], including image dehazing. This benefit has motivated us to combine the advantages of both the HSV and RGB color spaces.

HSV-RGB color space integration addresses common problems of learning-based dehazing, such as color distortion and halo artifacts. HSV promotes brightness and color, whereas RGB enhances detail. A dehazing network that uses both color spaces strengthens data-driven learning and robustness. As the model learns to blend HSV and RGB properties, later layers may focus on intensity-related variables. Real-world applications with varied input images need this generalization.

Network functionality: The input of our network is a single hazy image.

RGB network: First, the RGB channels are fed into a sub-network of four D-units with multiple skip connections. Let $I_c$, $c \in \{r, g, b\}$, denote the input hazy image with its three channels, and let $W_i$ and $b_i$ represent the weights and biases of the $i$-th D-unit, respectively. $f$ is the activation function. The output of each D-unit can be expressed as:

$$F_i = f(W_i * F_{i-1} + b_i), \quad i = 1, \dots, 4, \quad F_0 = I_c$$

Then, the overall network can be expressed as:

$$F_{rgb} = F_4$$

HSV network: First, the input hazy image is transformed to its HSV representation (RGB to HSV), which is used as the input of the HSV sub-network. The process is then quite similar to the one explained for the RGB image. The HSV image also has three channels (H, S, V), and the dimensions of the weight matrices are adjusted accordingly.

The final network formula can be expressed as:

$$F'_i = f(W'_i * F'_{i-1} + b'_i), \quad i = 1, \dots, 4, \quad F'_0 = I_{c'}, \quad F_{hsv} = F'_4$$

where $c' \in \{h, s, v\}$ and H, S, and V are the individual channels. $W'_i$ and $b'_i$ represent the weights and biases of the $i$-th D-unit, respectively. $f$ is the activation function.

Finally, the output features $F_{rgb}$ and $F_{hsv}$ are fused and concatenated, and all these features are passed through the proposed feature attention module. In the next subsections, we give details of the D-unit and the feature attention module.

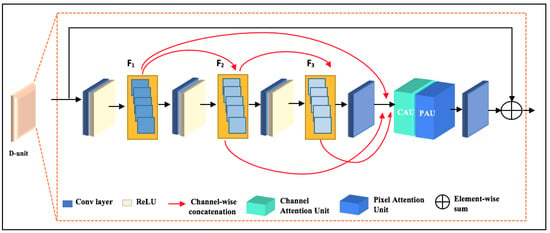

3.2.2. D-Unit Structure

The D-unit consists of a dense convolutional network and a feature attention module. Inspired by our previous work [15], we exploit the D-unit architecture to build the two-color space dehazing model (-net). Figure 4 shows the general internal structure of a D-unit. To reduce the computational complexity while also increasing the receptive field, we use (3 × 3) convolutions, each followed by a rectified linear unit (ReLU), except for the last convolutional layer. The DenseNet [35] framework inspired the basic concept of a D-unit, in which each convolutional layer is connected to all subsequent layers in a feed-forward manner. For instance, the feature maps of the first layer C1 are densely connected to all subsequent layers C2, C3, and C4.

Figure 4.

Structure of D-unit.

Most traditional deep learning-based dehazing methods treat pixel-wise and channel-wise features equally. As a result, these methods cannot deal with dense haze and uneven haze distribution scenes. In contrast, Qin et al. [28] proposed a robust feature attention mechanism that can be more flexible with different hazy scene types and treats all haze levels well. It comprises channel attention and pixel attention, as explained in the next section.

The proposed unit architecture has several benefits over other deep learning-based dehazing models. These benefits include its ability to avoid the vanishing gradient problem of deep CNNs. In addition, the architecture is designed to maximize the flow of information without redundancy in the feature maps. The unit can highlight dense haze and bypass less important information, such as low-frequency regions and thin haze, which can lead to outstanding haze removal results.
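The dense connectivity described for the D-unit can be sketched as a NumPy forward pass with four 3 × 3 convolutions, where each layer receives the concatenation of the unit input and all earlier layer outputs, and ReLU follows every layer except the last. This is a simplified illustration, not the authors' implementation: the channel counts, the random weights, and the concatenation-based skip connections (as in DenseNet) are assumptions, and the attention part of the D-unit is omitted.

```python
import numpy as np

def conv3x3(x, w, b):
    """3x3 convolution with zero padding; x: (C, H, W), w: (O, C, 3, 3), b: (O,)."""
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    # windows has shape (C, H, W, 3, 3): one 3x3 patch per channel and pixel
    windows = np.lib.stride_tricks.sliding_window_view(padded, (3, 3), axis=(1, 2))
    return np.einsum("chwij,ocij->ohw", windows, w) + b[:, None, None]

def dense_unit(x, weights, biases):
    """Densely connected 3x3 conv layers; ReLU on all but the last layer."""
    features = [x]
    for i, (w, b) in enumerate(zip(weights, biases)):
        out = conv3x3(np.concatenate(features, axis=0), w, b)
        if i < len(weights) - 1:
            out = np.maximum(out, 0.0)  # ReLU
        features.append(out)
    return features[-1]

rng = np.random.default_rng(0)
in_ch, growth = 3, 4  # hypothetical channel counts
weights = [rng.normal(0, 0.1, (growth, in_ch + i * growth, 3, 3)) for i in range(4)]
biases = [np.zeros(growth) for _ in range(4)]

x = rng.random((in_ch, 8, 8))
y = dense_unit(x, weights, biases)
```

Because layer C4 sees the input and the outputs of C1 to C3 directly, gradients have short paths back to early layers, which is the mechanism behind the vanishing-gradient benefit mentioned above.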

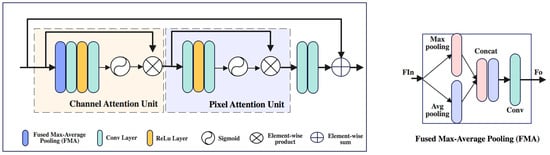

3.2.3. Feature Attention Module

Recently, the attention mechanism has become increasingly crucial in deep learning-based dehazing methods. It shows an outstanding ability to help the model adaptively process the most critical parts while ignoring irrelevant ones.

Several studies [28,29,30] have employed this mechanism to enhance the effectiveness of DNN-based dehazing models by incorporating channel attention, pixel attention, or both. However, these attempts failed to yield satisfactory results because of the difficulty of handling dense haze, the complexity of integrating them into other existing DNN-based dehazing methods, and limited robustness due to sensitivity to variations such as scene complexity, lighting conditions, or weather.

To address these problems, we propose an efficient and robust attention module inspired by the success of fusing channel and pixel mechanisms for the image dehazing task.

As depicted in Figure 5, our proposed attention module is inspired by the attention architecture of FFA-Net [28], which employs a fusion of channel and pixel attention mechanisms. However, for the channel attention stage, we employ a hybrid max-average pooling technique that can capture a more comprehensive representation of the input by preserving global and local information within the model.

Figure 5.

Scheme of the proposed attention module.

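First, for channel attention, we use max-average (MA) pooling to transform the channel-wise global spatial information of the input feature $F_c$ into a channel descriptor $g_c$.

Then, the descriptor passes through two convolutional layers, with a ReLU activation function $\delta$ after the first and a sigmoid function $\sigma$ after the second, to obtain the channel weights: $CA_c = \sigma(\mathrm{Conv}(\delta(\mathrm{Conv}(g_c))))$.

Finally, we perform element-wise multiplication between the input feature $F_c$ and the channel weights $CA_c$: $F_c^* = CA_c \otimes F_c$.

Similarly, we feed $F^*$ (the output of channel attention) to two convolutional layers with ReLU and sigmoid activation functions to obtain the pixel weights: $PA = \sigma(\mathrm{Conv}(\delta(\mathrm{Conv}(F^*))))$.

In the end, we multiply the pixel weights $PA$ and the input $F^*$ element-wise: $\tilde{F} = F^* \otimes PA$.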
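The channel and pixel attention steps above can be sketched as a NumPy forward pass. Several details are assumptions made for illustration rather than facts from the paper: the max-average descriptor is taken as an equal-weight mix of max and average pooling, the convolutions are 1 × 1 with a channel reduction (as in FFA-Net-style attention), and the weights are random.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def conv1x1(x, w, b):
    """1x1 convolution; x: (C, H, W), w: (O, C), b: (O,)."""
    return np.einsum("oc,chw->ohw", w, x) + b[:, None, None]

def channel_attention(x, w1, b1, w2, b2):
    """MA-pooled descriptor -> conv -> ReLU -> conv -> sigmoid -> rescale each channel."""
    avg = x.mean(axis=(1, 2), keepdims=True)
    mx = x.max(axis=(1, 2), keepdims=True)
    g = 0.5 * (avg + mx)  # assumed fusion rule for max-average pooling
    ca = sigmoid(conv1x1(np.maximum(conv1x1(g, w1, b1), 0.0), w2, b2))  # (C, 1, 1)
    return x * ca

def pixel_attention(x, w1, b1, w2, b2):
    """conv -> ReLU -> conv -> sigmoid gives one weight per pixel, shape (1, H, W)."""
    pa = sigmoid(conv1x1(np.maximum(conv1x1(x, w1, b1), 0.0), w2, b2))
    return x * pa

rng = np.random.default_rng(0)
C, hidden = 8, 4  # hypothetical channel count and reduced width
ca_params = (rng.normal(size=(hidden, C)), np.zeros(hidden),
             rng.normal(size=(C, hidden)), np.zeros(C))
pa_params = (rng.normal(size=(hidden, C)), np.zeros(hidden),
             rng.normal(size=(1, hidden)), np.zeros(1))

x = rng.random((C, 6, 6))
out = pixel_attention(channel_attention(x, *ca_params), *pa_params)
```

Both gates lie in (0, 1), so the module can only attenuate features: channel attention reweights whole feature maps, and pixel attention then reweights spatial locations, which is what lets the network treat thick-haze and thin-haze regions differently.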

This feature attention module adopts a lightweight architecture that can be smoothly fused into existing image dehazing models without imposing significant computational costs. It aims to improve the performance of the dehazing model by increasing the overall clarity and quality of the dehazed results while remaining adaptable to varying haze conditions.

Overall, the proposed attention module is able to deal with most challenging dehazing problems, in particular varying lighting and haze conditions, color distortion, and the preservation of contextual information.

3.2.4. Loss Function

Generally, image dehazing models employ the L2 loss (mean squared error) for training because of its simplicity and ease of converging to an optimal solution. Its main goal is to minimize the error between the output and the ground truth of a hazy image. However, it tends to produce overly smoothed outputs that do not preserve textures or details well. The $L_2$ loss is expressed below.

$$L_2 = \frac{1}{M}\sum_{i=1}^{M}\left(J_i - \hat{J}_i\right)^2$$

where $J$ is the ground-truth image, $\hat{J}$ represents the predicted output, and $M$ is the number of empirical values.

As mentioned above, $L_2$ is a pixel-wise metric that cannot maintain the perceptual quality of an image and can produce overly smoothed images. In contrast, a robust dehazing system ought to not only remove haze effectively but also preserve edges, textures, and fine details.

On the other hand, SSIM (Structural Similarity Index) encourages the preservation of structural details. It considers three key components to evaluate the perceptual similarity between two images: structure, contrast, and brightness. Its value varies between −1 and 1, where 1 represents an excellent similarity.

$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}$$

where $C_1$ and $C_2$ represent regularization constants, $x$ and $y$ are the compared images, $\mu_x$ and $\mu_y$ are the averages of $x$ and $y$, $\sigma_x^2$ and $\sigma_y^2$ represent the variances of $x$ and $y$, and $\sigma_{xy}$ is their covariance.

For image dehazing, choosing the loss function requires a trade-off between pixel accuracy and the preservation of perceptual features. Therefore, combining the $L_2$ loss and the SSIM loss can be beneficial for training and help the dehazing model handle distortions and challenging scene conditions.

In this research, we train the network with a combination of the L2 loss ($L_2$) and the SSIM loss.
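Since the exact form of the combined loss is not reproduced here, the following NumPy sketch shows one common way of combining the two terms, $L = L_2 + \lambda\,(1 - \mathrm{SSIM})$. The weight `lam`, the use of whole-image statistics instead of a sliding window, and the constants $C_1 = 0.01^2$ and $C_2 = 0.03^2$ (the usual choices for images in [0, 1]) are assumptions, not the authors' settings.

```python
import numpy as np

def l2_loss(pred, target):
    """Mean squared error over all pixels and channels."""
    return np.mean((pred - target) ** 2)

def global_ssim(x, y, c1=0.01 ** 2, c2=0.03 ** 2):
    """SSIM from whole-image statistics (no sliding window); inputs in [0, 1]."""
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    return ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))

def combined_loss(pred, target, lam=1.0):
    """Assumed combination: L2 + lam * (1 - SSIM)."""
    return l2_loss(pred, target) + lam * (1.0 - global_ssim(pred, target))

rng = np.random.default_rng(0)
target = rng.random((16, 16, 3))
noisy = np.clip(target + rng.normal(0, 0.1, target.shape), 0.0, 1.0)

loss_same = combined_loss(target, target)
loss_noisy = combined_loss(noisy, target)
```

The loss vanishes for a perfect prediction and grows when either the pixel values or the image structure deviate from the ground truth, which reflects the trade-off described above.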

4. Results

In this section, we discuss the datasets and experimental setup used in our study (some visual results are shown in Figure 6). Furthermore, the proposed dehazing model is assessed through various experiments against state-of-the-art dehazing methods on real-world and synthetic data.

Figure 6.

Some Visual Results of Proposed Dehazing Model: The hazy image, our result, Ground truth, respectively.

The validation of these investigations incorporates both qualitative visual effects and quantitative assessment metrics. Finally, we perform ablation studies to demonstrate the significance of each unit in our proposed architecture.

4.1. Datasets and Implementation

4.1.1. Datasets

To assess the effectiveness of our innovative dehazing model, we performed comprehensive experiments on real-world images and synthetic datasets. For our experiments, we chose the publicly accessible large-scale dataset RESIDE (REalistic Single Image DEhazing) [16]. It contains thousands of pairs of indoor and outdoor hazy images as well as their corresponding ground truth.

For training, we picked 2500 synthetic hazy images with their corresponding ground truth from OTS (Outdoor Training Set) and 500 from ITS (Indoor Training Set). For validation on synthetic and real-world images, we selected 400 synthetic hazy images from SOTS (Synthetic Objective Testing Set) and 15 real-world foggy images from HSTS (Hybrid Subjective Testing Set).

Furthermore, we trained our approach on challenging real-world haze-removal benchmarks, namely the Dense-Haze [18], NH-HAZE [19], and O-HAZE [17] datasets, with 90% of the images used for training and 10% for evaluation.

4.1.2. Implementation Details

In our experiments, we used the ADAM optimizer to update the network parameters iteratively. The model was trained for 200 epochs with the standard learning rate of 0.001 and the parameters $\beta_1$ and $\beta_2$ set to 0.9 and 0.999, respectively. We implemented our network on a computer with a 12th Gen Intel(R) Core i5-12600k CPU and an NVIDIA RTX 2080Ti GPU.
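For reference, the ADAM update rule with the hyperparameters quoted above (learning rate 0.001, decay rates 0.9 and 0.999) can be written as a short NumPy sketch. The `eps` value and the toy quadratic objective are assumptions added for illustration, and the loop counts optimizer steps, not training epochs.

```python
import numpy as np

def adam_step(param, grad, m, v, step, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One ADAM update with bias-corrected first and second moment estimates."""
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad ** 2
    m_hat = m / (1 - beta1 ** step)
    v_hat = v / (1 - beta2 ** step)
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v

# Toy objective f(w) = (w - 3)^2, minimized at w = 3.
w = np.array(0.0)
m = np.zeros_like(w)
v = np.zeros_like(w)
for step in range(1, 201):
    grad = 2.0 * (w - 3.0)
    w, m, v = adam_step(w, grad, m, v, step)

# A single first step moves the parameter by roughly the learning rate.
w_first, _, _ = adam_step(np.array(0.0), np.array(-6.0), 0.0, 0.0, 1)
```

Because the update is normalized by the running gradient magnitude, the first step has size close to the learning rate regardless of the gradient scale, which is one reason 0.001 works as a default across many networks.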

4.2. Comparisons and Experimental Results

In this section, we perform an experimental analysis by comparing our proposed model with existing approaches, both traditional and learning-based: TA-3DP [36], MB-TF [37], GRIDdehaze-Net [26], CMTnet [15], GEN-ADV [38], DP-IPN [25], and ADE-CGAN [39]. First, we conduct a qualitative comparison to assess the compared methods on both synthetic and real-world hazy images. Then, to confirm the qualitative analysis, we perform a quantitative comparison using the widely used SSIM and PSNR metrics, together with a color-difference function [40] that compares two images in terms of color.
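As a reminder of what the PSNR metric measures, the following NumPy sketch computes it from the mean squared error, $\mathrm{PSNR} = 10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$. The function name and the toy images are illustrative, and images are assumed to be scaled to [0, 1].

```python
import numpy as np

def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB; higher means closer to the ground truth."""
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_val ** 2 / mse)

target = np.zeros((8, 8, 3))
pred = np.full((8, 8, 3), 0.1)   # uniform error of 0.1, so MSE = 0.01

score = psnr(pred, target)       # 10 * log10(1 / 0.01) = 20 dB
perfect = psnr(target, target)
```

PSNR depends only on the pixel-wise error, so it is usually reported together with SSIM, which captures structural similarity.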

4.2.1. Qualitative Comparison on Both Real-World and Synthetic Hazy Images

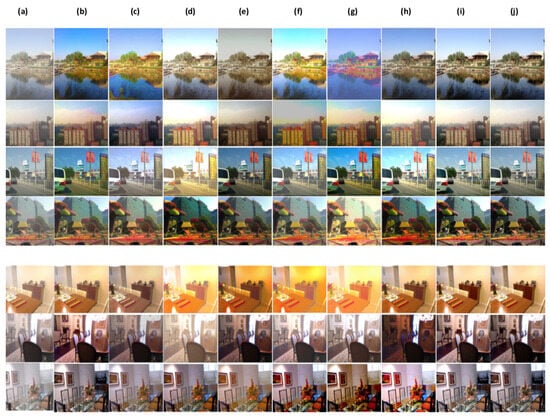

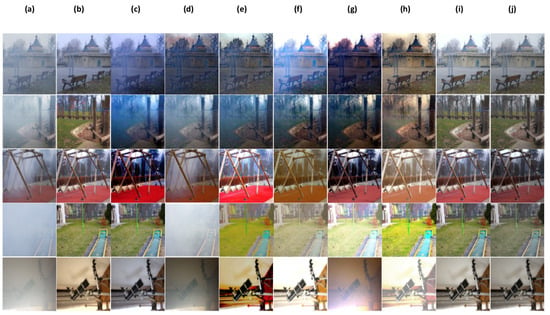

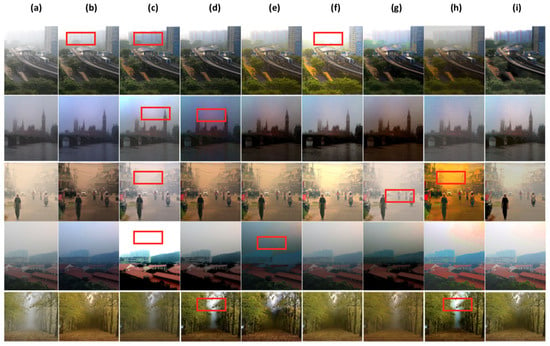

In this part, we conduct a qualitative comparative analysis, by presenting a range of dehazing results for the above methods [15,25,26,36,37,38,39] including ours. In Figure 7, Figure 8 and Figure 9, we illustrate outcomes obtained from both synthetic (indoor, outdoor settings) and real-world outdoor hazy images.

Figure 7.

Subjective comparison on synthetic dataset RESIDE. (a) hazy image, (b) TA-3DP [36], (c) MB-TF [37], (d) GRIDdehaze-Net [26], (e) CMTnet [15], (f) GEN-ADV [38], (g) DP-IPN [25], (h) ADE-CGAN [39], (i) Ours. (j) Ground truth.

Figure 8.

Subjective comparison on Real-world datasets Dense-Haze, NH-HAZE, and O-HAZE. (a) hazy image, (b) TA-3DP [36], (c) MB-TF [37], (d) GRIDdehaze-Net [26], (e) CMTnet [15], (f) GEN-ADV [38], (g) DP-IPN [25], (h) ADE-CGAN [39], (i) Ours. (j) Ground truth.

Figure 9.

Visual comparisons of the compared methods on real-world images (without ground truth). (a) hazy image, (b) TA-3DP [36], (c) MB-TF [37], (d) GRIDdehaze-Net [26], (e) CMTnet [15], (f) GEN-ADV [38], (g) DP-IPN [25], (h) ADE-CGAN [39], (i) Ours.

Figure 7 presents dehazing outcomes on synthetic hazy images from the RESIDE dataset (SOTS). As illustrated in Figure 7b,c, these methods perform well in haze removal; however, TA-3DP [36] shows some color distortion in its outputs, and MB-TF [37] produces over-saturated colors. The method in Figure 7d shows some residual haze.

In contrast, learning-based methods perform well in haze removal in most cases. However, they show some color distortions and poor saturation. For example, in Figure 7e, the results of CMTnet [15] are substantially dark in most cases (see the trees in the fourth image). As depicted in Figure 7f–h, the results of GEN-ADV [38], DP-IPN [25], and ADE-CGAN [39] have higher illumination and some color shifts. Overall, as shown by the results in Figure 7, our proposed model recovers the real scene while preserving colours and saturation, whereas most of the compared state-of-the-art methods fail to restore the hazy images fully and are unable to preserve colours and saturation.

Figure 8 shows examples of haze removal results on three challenging real-world datasets: Dense-Haze (dense haze), NH-HAZE (non-homogeneous haze), and O-HAZE (outdoor hazy images). At first glance, our results are visually almost identical to the ground-truth images. By comparison, as displayed in Figure 8b,c, TA-3DP [36] and MB-TF [37] perform well on dense-haze and non-homogeneous-haze images in most cases. In Figure 8d, GRIDdehaze-Net [26] leaves some light haze, for example in the third and fourth images.

As shown in Figure 8e–h, CMTnet [15], GEN-ADV [38], DP-IPN [25], and ADE-CGAN [39] can successfully remove haze from most images, and the results are close to the ground-truth images to some extent. However, we can notice some color degradation; for example, the vegetation in the second image tends to be dark green, whereas it is light green in the ground truth. These methods are also unable to preserve edges and fine details, for example the house cone in the first image. Additionally, all compared methods fail to handle images with white objects or backgrounds (last image in Figure 8).

To further assess the performance of our proposed approach, we compare the dehazing results of all methods, including ours, on real-world hazy images without ground-truth images. These images represent challenging conditions (images with sky areas, non-homogeneous haze, or low light). As demonstrated in Figure 9b,f,g, TA-3DP [36], GEN-ADV [38], and DP-IPN [25] remove most of the haze from the hazy images; however, their outputs show some color cast in the sky area. Figure 9h shows the results of ADE-CGAN [39], which removes haze well but produces over-saturated colors. Although most of the compared methods can remove haze to some extent, they suffer from severe color degradation in many parts of the scene, especially in the sky area (the red frames mark the color distortions and some detail loss in different parts).

By contrast, our proposed system removes haze well while preserving color and detail. Overall, the proposed method outperforms the compared methods in most cases and shows superior generalization ability.

To validate these qualitative evaluations, we conduct a quantitative comparison using FR-IQA (full-reference image quality assessment) and NR-IQA (no-reference image quality assessment) in the next section.

4.2.2. Quantitative Comparison on Both Real-World and Synthetic Hazy Images

In this section, we perform a quantitative comparison to rank the compared methods, including ours. As mentioned before, we selected hazy images from the synthetic RESIDE dataset (SOTS, HSTS) and from the three challenging real-world benchmarks (O-HAZE, NH-HAZE, Dense-Haze). Table 1 shows the average values of SSIM, PSNR and indicators, where a higher value is better for all metrics.

Table 1.

Average Values of SSIM, PSNR and of Compared Methods on Synthetic and Real-world Datasets.

According to the SSIM, PSNR and values in Table 1, our proposed model achieves the best value for every metric. This indicates that our proposed method outperforms state-of-the-art dehazing methods in terms of structural perception and color preservation.
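As an illustration of how the two full-reference scores named above can be computed, the following minimal sketch implements PSNR and a simplified SSIM with NumPy. It is not the evaluation code used for Table 1: the function names are hypothetical, images are assumed to be floating-point arrays scaled to [0, 1], and `global_ssim` uses whole-image statistics in a single window, whereas standard SSIM implementations average the index over local sliding windows and will generally report different values.

```python
import numpy as np


def psnr(reference, restored, max_value=1.0):
    """Peak signal-to-noise ratio in dB (higher is better)."""
    reference = np.asarray(reference, dtype=np.float64)
    restored = np.asarray(restored, dtype=np.float64)
    mse = np.mean((reference - restored) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


def global_ssim(reference, restored, max_value=1.0):
    """Single-window SSIM from whole-image statistics (a simplification)."""
    x = np.asarray(reference, dtype=np.float64)
    y = np.asarray(restored, dtype=np.float64)
    c1 = (0.01 * max_value) ** 2  # stabilizer for the luminance term
    c2 = (0.03 * max_value) ** 2  # stabilizer for the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)


def average_scores(pairs, max_value=1.0):
    """Mean PSNR and mean global SSIM over (reference, restored) pairs."""
    psnr_values = [psnr(ref, out, max_value) for ref, out in pairs]
    ssim_values = [global_ssim(ref, out, max_value) for ref, out in pairs]
    return float(np.mean(psnr_values)), float(np.mean(ssim_values))
```

Averaging per-image scores over a dataset, as `average_scores` does, mirrors the way average values are reported per dataset; identical image pairs give an infinite PSNR and should be excluded before averaging.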

Additionally, we assess the accuracy of the restored images on both real-world and synthetic images by using some common NR-IQA indicators (FADE [41], e, r). The indexes e and r are two contrast enhancement indicators presented by Hautiere et al. [42], which measure the ability to recover invisible edges and the quality of the contrast enhancement, respectively. The FADE indicator evaluates the ability to remove haze from hazy images. Table 2 shows the average scores of e, r, FADE, and running time (T) of the compared methods, including ours.
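For reference, the two contrast indicators of Hautiere et al. [42] mentioned above (the second is written r in Table 2) are commonly defined as follows. The symbols n_o, n_r, ℘_r, and r_i do not appear in this paper and are introduced here as assumed notation.

```latex
e = \frac{n_r - n_o}{n_o},
\qquad
\bar{r} = \exp\!\left[ \frac{1}{n_r} \sum_{P_i \in \wp_r} \log r_i \right]
```

Here n_o and n_r denote the numbers of visible edges in the hazy input and in the restored image, ℘_r is the set of visible edges in the restored image, and r_i is the ratio of the gradient at edge pixel P_i in the restored image to the gradient at the same pixel in the hazy input. Under these definitions, e measures the rate of newly visible edges and the second indicator is the geometric mean of the gradient ratios, so larger values indicate stronger edge recovery and contrast restoration.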

Table 2.

Average Values of FADE, e, r, and T(x) of Compared Methods on Synthetic and Real-world Datasets.

According to Table 2, our proposed model achieves the best scores (values written in bold in the table) for most of the metrics and ranks second in recovering the visibility of edges.

In summary, both quantitative and qualitative analyses verify the effectiveness and the outstanding performance of our proposed attentional dehazing model in terms of haze removal, color preservation, contrast enhancement, edge visibility, and time complexity.

4.3. Ablation Studies

To validate the significance of the proposed attention module in enhancing the performance of our dehazing model, we conduct two ablation studies: one comparing the model with and without the attention module, and one comparing an RGB-based model with the RGB-HSV-based model. Table 3 shows the SSIM and PSNR values obtained with and without the attention module, using the synthetic dataset only and using both synthetic and real-world datasets. Table 4 shows the SSIM, PSNR and values for the RGB-based and RGB-HSV-based models.

Table 3.

Average Values of SSIM and PSNR.

Table 4.

Average Values of SSIM, PSNR and .

As Table 3 demonstrates, our attention module markedly improves the performance of the dehazing process (the average SSIM and PSNR values are best when the attention module is used). Additionally, using both synthetic and real-world datasets further enhances dehazing performance (as marked in bold).
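To make the role of such a module concrete, the sketch below shows one generic way to chain channel attention and pixel attention on a feature map, using NumPy only. It is an assumption-laden illustration, not the attention module proposed in this paper: the function names, the reduction to a smaller hidden width, the ReLU/sigmoid choices, and the channel-then-pixel ordering are all hypothetical and follow the common pattern of gating features with values in (0, 1).

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def conv1x1(features, weight, bias):
    """1x1 convolution on a (C_in, H, W) tensor: a per-pixel linear map."""
    return np.einsum("oc,chw->ohw", weight, features) + bias[:, None, None]


def channel_attention(features, w1, b1, w2, b2):
    """Reweight each channel by a gate computed from its global average."""
    pooled = features.mean(axis=(1, 2), keepdims=True)   # (C, 1, 1)
    hidden = np.maximum(conv1x1(pooled, w1, b1), 0.0)    # ReLU, (C_r, 1, 1)
    gate = sigmoid(conv1x1(hidden, w2, b2))              # (C, 1, 1)
    return features * gate


def pixel_attention(features, w1, b1, w2, b2):
    """Reweight each spatial position by a single-channel gate map."""
    hidden = np.maximum(conv1x1(features, w1, b1), 0.0)  # ReLU, (C_r, H, W)
    gate = sigmoid(conv1x1(hidden, w2, b2))              # (1, H, W)
    return features * gate


def channel_pixel_attention(features, ca_params, pa_params):
    """Hypothetical fusion: channel attention followed by pixel attention."""
    attended = channel_attention(features, *ca_params)
    return pixel_attention(attended, *pa_params)
```

Because both gates lie in (0, 1), the module can only attenuate features; the idea in this generic design is that training drives the gates toward larger values for channels and pixels that carry haze-relevant information.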

Likewise, integrating both RGB and HSV color spaces in our dehazing network contributes to enhancing color visibility and clarity while removing haze: Table 4 shows that the average values of SSIM, PSNR, and are best for the RGB-HSV model with both synthetic and real-world datasets.
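The two-color-space idea can be illustrated by preparing an RGB view and an HSV view of the same hazy image, one for each parallel branch. The sketch below is a minimal NumPy version under stated assumptions: images are float arrays in [0, 1] with shape (H, W, 3), hue is scaled to [0, 1], and the helper `two_color_space_inputs` is a hypothetical name, not a function from the paper's implementation.

```python
import numpy as np


def rgb_to_hsv(rgb):
    """Convert an (H, W, 3) RGB image in [0, 1] to HSV, all channels in [0, 1]."""
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    v = rgb.max(axis=-1)                       # value: brightest channel
    delta = v - rgb.min(axis=-1)               # chroma
    safe_delta = np.where(delta > 0, delta, 1.0)
    safe_v = np.where(v > 0, v, 1.0)
    s = np.where(v > 0, delta / safe_v, 0.0)   # saturation
    # The hue sector depends on which channel holds the maximum.
    h = np.where(
        v == r,
        ((g - b) / safe_delta) % 6.0,
        np.where(v == g, (b - r) / safe_delta + 2.0, (r - g) / safe_delta + 4.0),
    )
    h = np.where(delta > 0, h / 6.0, 0.0)      # gray pixels get hue 0
    return np.stack([h, s, v], axis=-1)


def two_color_space_inputs(rgb):
    """Return the (RGB, HSV) pair that two parallel branches could consume."""
    rgb = np.asarray(rgb, dtype=np.float64)
    return rgb, rgb_to_hsv(rgb)
```

Haze tends to lower saturation and raise brightness, and both effects appear as separate channels in HSV, which is one plausible reason an HSV branch helps with color preservation.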

5. Discussion and Failure Cases

Based on our comprehensive analysis, the proposed method demonstrates superior performance compared to most state-of-the-art dehazing approaches, excelling in terms of haze removal, visibility enhancement, edge preservation, color preserving, and time complexity. Our method effectively addresses and overcomes the limitations observed in existing approaches.

The outstanding success of our proposed method is underscored by its robustness, efficacy, and efficient time complexity, achieved through the strategic utilization of both RGB and HSV color spaces, as well as the incorporation of the proposed attention module. These combined strengths significantly contribute to the exceptional results attained by our approach.

Combining RGB and HSV color spaces with an attention module can lead to a more comprehensive and adaptive approach for haze removal. This significant combination enables the algorithm to better understand and address the complexities of haze in diverse scenarios, contributing to more effective and robust results.

Like any network, our proposed network (-net) has a failure case: it fails to handle super-resolution hazy images. This failure may stem from the inherent difficulty of reconciling the intricate details required for super-resolution with the complexities introduced by atmospheric haze. The challenge lies in developing an algorithm that can navigate this trade-off without compromising the effectiveness of either objective.

6. Conclusions

In this paper, we presented an innovative two-color-space attention-based dehazing model (-net) that employs both the HSV and RGB color spaces. The model incorporates two parallel densely connected sub-networks with an efficient fused channel-pixel attention module. This integration addresses several image dehazing issues (generalization, color degradation, and loss of fine details). Through extensive qualitative and quantitative comparisons and by analyzing several image quality metrics (SSIM, PSNR, , e, r, FADE), our proposed model shows outstanding dehazing results that can outperform state-of-the-art dehazing methods in terms of haze removal, color preservation, and time consumption, by addressing most existing image dehazing limitations and handling varied haze and lighting conditions. In future work, we will investigate and incorporate advanced super-resolution techniques that can effectively handle hazy conditions. This may involve exploring deep learning architectures specifically designed for super-resolution in challenging environments.

Author Contributions

Conceptualization, S.H.; Formal analysis, S.H. and D.W.; Funding acquisition, D.W.; Investigation, S.H.; Methodology, S.H.; Project administration, D.W.; Software, S.H. and D.W.; Supervision, D.W.; Validation, D.W.; Writing—original draft, S.H.; Writing—review and editing, S.H., D.W. All authors have read and agreed to the published version of the manuscript.

Funding

The work presented in this paper was supported by the National Natural Science Foundation of China under Grant no. 61370201.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

Correction Statement

This article has been republished with a minor correction to the existing affiliation information. This change does not affect the scientific content of the article.

References

1. Uche, A.N. Partial Differential Equation-based Hazy Image Contrast Enhancement. Comput. Electr. Eng. 2018, 72, 670–681.

2. Zhou, L.; Bi, D.Y.; He, L.Y. Variational Histogram Equalization for Single Color Image Defogging. Math. Probl. Eng. 2016, 2016, 9897064.

3. Xie, B.; Shen, J.; Yang, J.; Lv, Z. Single Image Dehazing based upon Modified Image Enhancement Algorithm. In Proceedings of the 2020 5th International Conference on Information Science, Computer Technology and Transportation (ISCTT), Shenyang, China, 13–15 November 2020; pp. 448–451.

4. Zhou, J.; Zhou, F. Single image dehazing motivated by Retinex theory. In Proceedings of the 2nd International Symposium on Instrumentation and Measurement, Sensor Network and Automation (IMSNA), Toronto, ON, Canada, 23–24 December 2013; pp. 243–247.

5. Liu, X.; Liu, C.; Lan, H.; Xie, L. Dehaze Enhancement Algorithm Based on Retinex Theory for Aerial Images Combined with Dark Channel. Open Access Libr. J. 2020, 7, 1–12.

6. Schechner, Y.Y.; Narasimhan, S.G.; Nayar, S.K. Polarization-Based Vision through Haze. Appl. Opt. 2003, 42, 511–525.

7. Liang, Z.; Ding, X.; Mi, Z.; Wang, Y.; Fu, X. Effective Polarization-Based Image Dehazing With Regularization Constraint. IEEE Geosci. Remote Sens. 2022, 19, 1–5.

8. He, K.; Sun, J.; Tang, X. Single Image Haze Removal Using Dark Channel Prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

9. Berman, D.; Treibitz, T.; Avidan, S. Single Image Dehazing Using Haze-Lines. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 720–734.

10. Guo, F.; Zhao, X.; Tang, J.; Peng, H.; Liu, L.; Zou, B. Single Image Dehazing Based on Fusion Strategy. Neurocomputing 2020, 378, 9–23.

11. Haouassi, S.; Wu, D.; Hamidaoui, M.; Tobji, R. An Efficient Image Haze Removal Algorithm based on New Accurate Depth and Light Estimation Algorithm. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 64–76.

12. Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. DehazeNet: An End-to-End System for Single Image Haze Removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

13. Golts, A.; Freedman, D.; Elad, M. Unsupervised Single Image Dehazing Using Dark Channel Prior Loss. IEEE Trans. Image Process. 2019, 29, 2692–2701.

14. Song, Y.; Li, J.; Wang, X.; Chen, X. Single Image Dehazing Using Ranking Convolutional Neural Network. IEEE Trans. Multimed. 2018, 20, 1548–1560.

15. Haouassi, S.; Wu, D. Image Dehazing Based on (CMTnet) Cascaded Multi-scale Convolutional Neural Networks and Efficient Light Estimation Algorithm. Appl. Sci. 2020, 10, 1190.

16. Li, B.; Ren, W.; Fu, D.; Tao, D.; Feng, D.; Zeng, W.; Wang, Z. Benchmarking Single-Image Dehazing and Beyond. IEEE Trans. Image Process. 2019, 1, 492–505.

17. Ancuti, C.; Ancuti, C.; Timofte, R.; Vleeschouwer, C. O-HAZE: A Dehazing Benchmark with Real Hazy and Haze-Free Outdoor Images. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–23 June 2018; pp. 867–8678.

18. Ancuti, C.; Ancuti, C.; Sbert, M.; Timofte, R. Dense-Haze: A Benchmark for Image Dehazing with Dense-Haze and Haze-Free Images. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1014–1018.

19. Ancuti, C.; Ancuti, C.; Timofte, R. NH-HAZE: An Image Dehazing Benchmark with Non-Homogeneous Hazy and Haze-Free Images. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 1798–1805.

20. Qingsong, Z.; Jiaming, M.; Ling, S. A Fast Single Image Haze Removal Algorithm using Color Attenuation Prior. IEEE Trans. Image Process. 2015, 24, 3522–3533.

21. Berman, D.; Treibitz, T.; Avidan, S. Non-local Image Dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

22. Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.H. Single Image Dehazing via Multi-scale Convolutional Neural Networks. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016.

23. Li, B.; Peng, X.L.; Wang, Z.Y.; Xu, J.Z.; Feng, D. AOD-Net: All-in-One Dehazing Network. In Proceedings of the International Conference on Computer Vision, (ICCV), Venice, Italy, 22–29 October 2017; pp. 4780–4788.

24. Yanyun, Q.; Yizi, C.; Huang, J.; Yuan, X. Enhanced pix2pix dehazing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 8160–8168.

25. Kim, G.; Park, J.; Kwon, J. Deep Dehazing Powered by Image Processing Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 1209–1218.

26. Liu, X.; Ma, Y.; Shi, Z.; Chen, J. GridDehazeNet: Attention-Based Multi-Scale Network for Image Dehazing. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7313–7322.

27. Gao, S.; Zhu, J.; Yang, Y. CP-Net: Channel Attention and Pixel Attention Network for Single Image Dehazing. In ICPCSEE 2020: Communications in Computer and Information Science; Springer: Singapore, 2020; Volume 1257.

28. Qin, X.; Wang, Z.; Bai, Y.; Xie, X.; Jia, H. FFA-Net: Feature Fusion Attention Network for Single Image Dehazing. In Proceedings of the 2020 AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 11908–11915.

29. Jiang, X.; Zhao, C.; Zhu, M.; Hao, Z.; Gao, W. Residual Spatial and Channel Attention Networks for Single Image Dehazing. Sensors 2021, 21, 7922.

30. Sun, Z.; Zhang, Y.; Bao, F.; Wang, P.; Yao, X.; Zhang, C. SADnet: Semi-supervised Single Image Dehazing Method Based on an Attention Mechanism. ACM Trans. Multimed. Comput. Commun. Appl. 2022, 18, 2.

31. Hema, D.; Kannan, S. Interactive Color Image Segmentation using HSV Color Space. Sci. Technol. J. 2020, 7, 41.

32. Moreira, G.; Magalhaes, S.A.; Pinho, T.; dos Santos, F.N.; Cunha, M. Benchmark of Deep Learning and a Proposed HSV Colour Space Models for the Detection and Classification of Greenhouse Tomato. Agronomy 2022, 12, 356.

33. Wang, Y.; Guo, J.; Gao, H.; Yue, H. UIEC2-Net: CNN-based underwater image enhancement using two color space. Signal Process. Image Commun. 2021, 96, 116250.

34. Zhang, T.; Hu, H.-M.; Li, B. A Naturalness Preserved Fast Dehazing Algorithm Using HSV Color Space. IEEE Access 2018, 6, 10644.

35. Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition CVPR, Honolulu, HI, USA, 21–26 July 2017.

36. Guo, C.; Yan, Q.; Anwar, S.; Cong, R.; Ren, W.; Li, C. Image Dehazing Transformer with Transmission-Aware 3D Position Embedding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition CVPR, New Orleans, LA, USA, 18–24 June 2022.

37. Qiu, Y.; Zhang, K.; Wang, C.; Luo, W.; Li, H.; Jin, Z. MB-TaylorFormer: Multi-branch Efficient Transformer Expanded by Taylor Formula for Image Dehazing. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 12802–12813.

38. Ma, Y.; Xu, J.; Jia, F.; Yan, W.; Liu, Z.; Ni, M. Single image dehazing using generative adversarial networks based on an attention mechanism. IET Image Process. 2022, 16, 1897–1907.

39. Yan, B.; Yang, Z.; Sun, H.; Wang, C. ADE-CycleGAN: A Detail Enhanced Image Dehazing CycleGAN Network. Sensors 2023, 23, 3294.

40. Robertson, A.R. The CIE 1976 color-difference formulae. Color Res. Appl. 1977, 2, 7–11.

41. Choi, L.; You, J.; Bovik, A. Referenceless Prediction of Perceptual fog Density and Perceptual Image Defogging. IEEE Trans. Image Process. 2015, 24, 3888–3901.

42. Hautiere, A.; Tarel, J.; Aubert, D.; Dumont, E. Blind Contrast Enhancement Assessment by Gradient Ratioing at Visible Edges. Image Anal. Stereol. 2008, 27, 87–95.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).