Recognition of Grasping Patterns Using Deep Learning for Human–Robot Collaboration

Abstract

1. Introduction

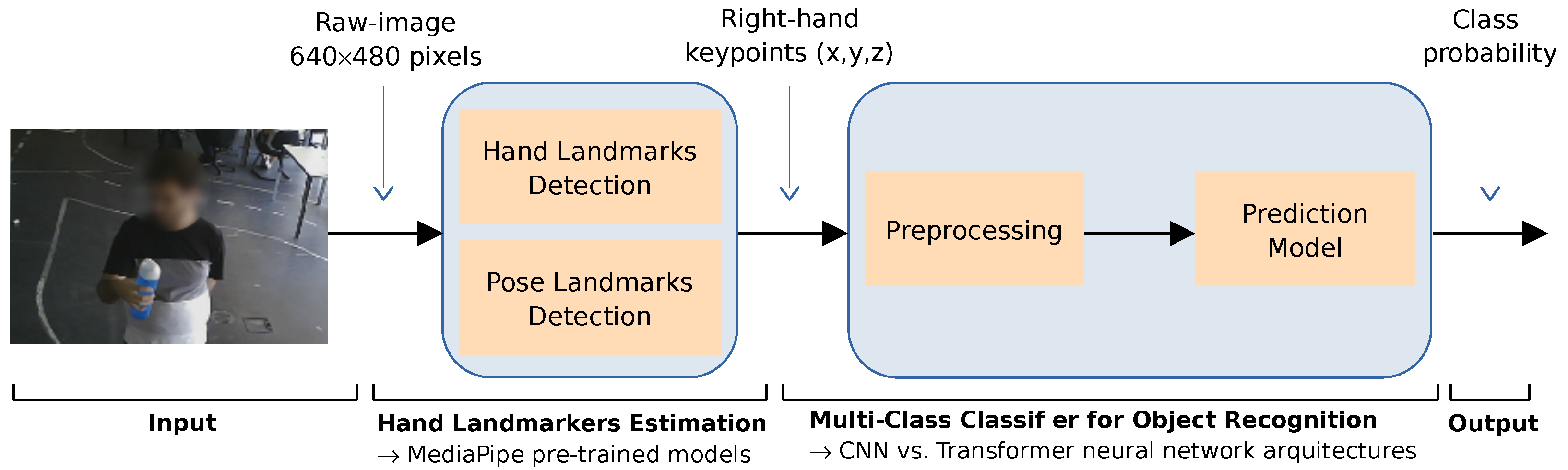

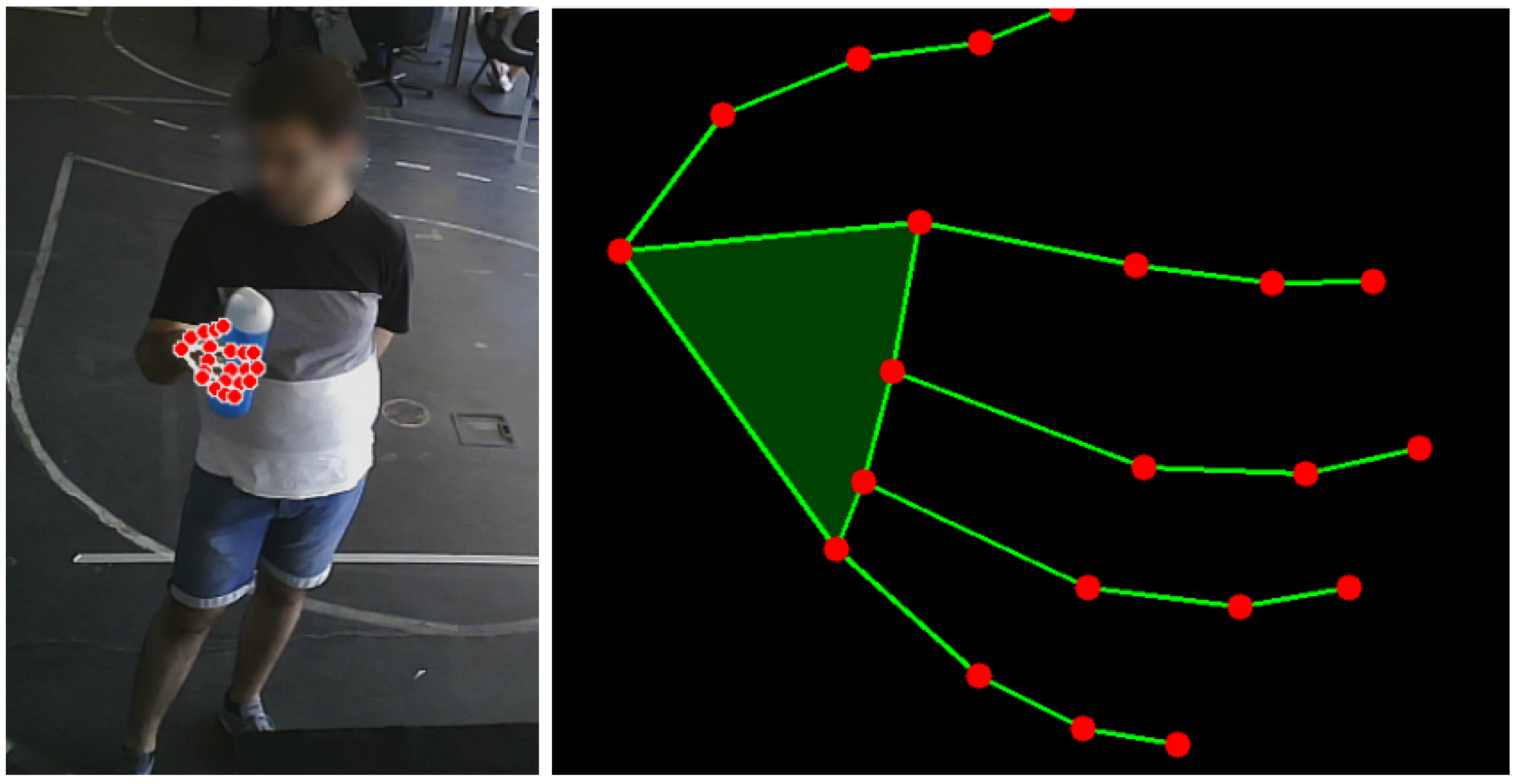

- We propose a novel object recognition system that combines the AI-based MediaPipe software, which extracts the hand keypoints, with a deep classifier that recognizes the category of the object held in the user’s hand. To the best of our knowledge, this is a new computational approach to recognizing grasped objects for intention prediction in collaborative tasks.

- We perform extensive experiments on a new annotated dataset to demonstrate the potential and limitations of the proposed approach. In particular, we analyze generalization performance and model failure both across different trials of the same user and across multiple users.
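The keypoint stage can be sketched as follows. MediaPipe Hands reports 21 landmarks per detected hand, each with x, y and z coordinates. With the legacy `mediapipe.solutions.hands` Python API, these are exposed through `results.multi_hand_landmarks`. This section does not state how the keypoints are normalized before classification. The wrist-relative, palm-length scaling below is therefore an assumption, and `normalize_hand` is a hypothetical helper. It turns one hand into a 63-value feature vector that does not depend on where the hand sits in the image or on its apparent size.

```python
import numpy as np

NUM_LANDMARKS = 21  # MediaPipe Hands returns 21 landmarks per detected hand
WRIST = 0           # MediaPipe index of the wrist landmark
MIDDLE_MCP = 9      # MediaPipe index of the middle-finger MCP joint


def normalize_hand(landmarks) -> np.ndarray:
    """Map a (21, 3) array of hand keypoints to a translation- and
    scale-invariant 63-value feature vector (assumed pre-processing)."""
    pts = np.asarray(landmarks, dtype=np.float64)
    if pts.shape != (NUM_LANDMARKS, 3):
        raise ValueError(f"expected shape (21, 3), got {pts.shape}")
    # Express every keypoint relative to the wrist (removes translation).
    centered = pts - pts[WRIST]
    # Divide by the wrist-to-middle-MCP distance, a rough palm-size proxy.
    scale = np.linalg.norm(centered[MIDDLE_MCP])
    if scale < 1e-9:
        raise ValueError("degenerate hand: palm length is zero")
    # Flatten to the vector a downstream classifier would consume.
    return (centered / scale).ravel()


# Usage with synthetic keypoints standing in for one MediaPipe detection.
rng = np.random.default_rng(0)
features = normalize_hand(rng.random((21, 3)))
print(features.shape)  # (63,)
```

Because both the offset and the scale are taken from the hand itself, shifting or uniformly resizing the hand leaves `features` unchanged. This helps a classifier focus on the grasp shape rather than on where the user happens to stand.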

2. Related Work

2.1. Object Sensing

2.2. Hand Sensing

3. Materials and Methods

3.1. Proposed Approach

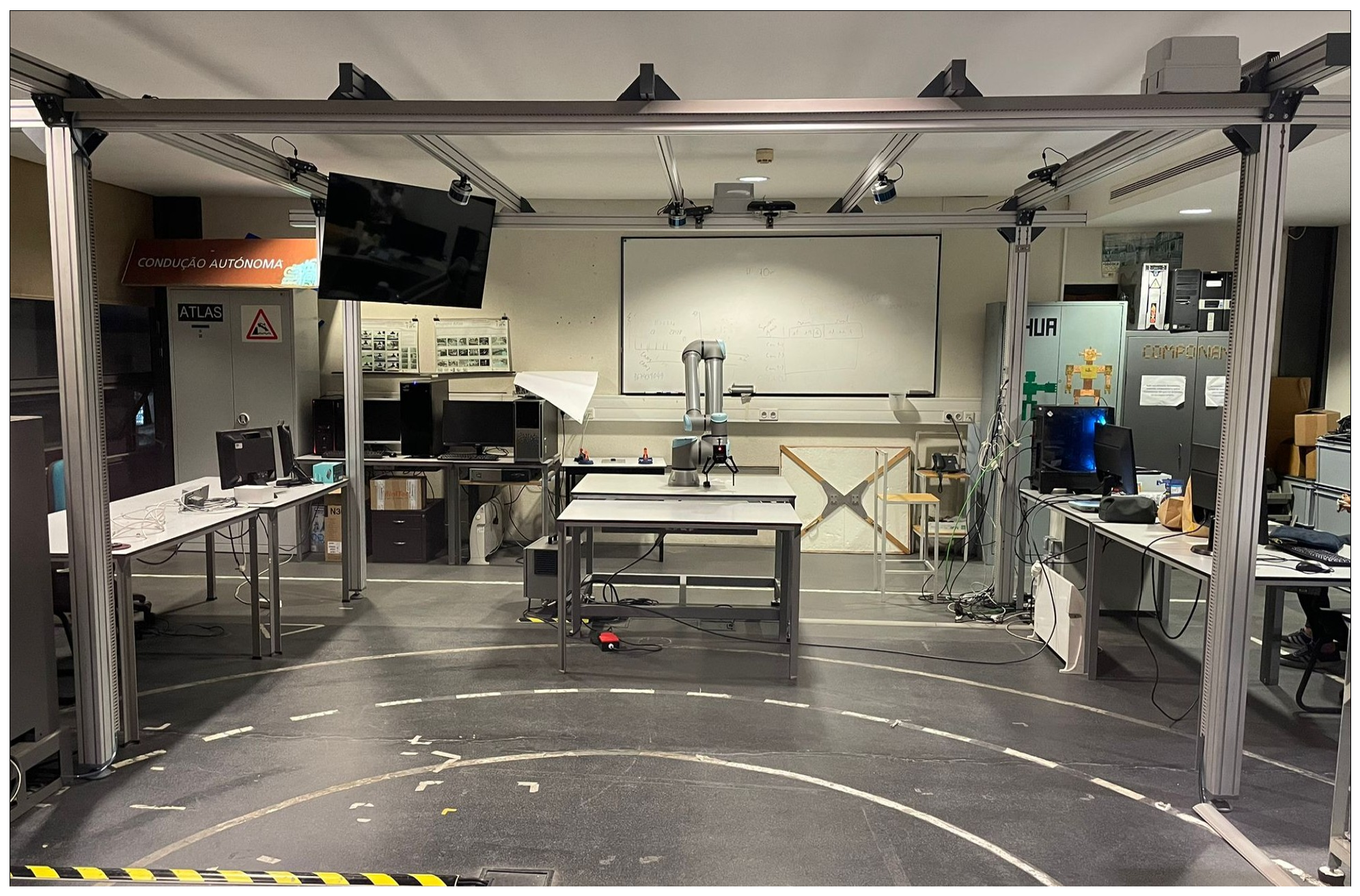

3.2. Data Acquisition

3.3. Data Pre-Processing

3.4. Neural Network Architectures

4. Results

4.1. Experiments and Metrics

- Experiment 1: Session-Based Test. The first experiment assesses the classifier’s performance under session-based testing. To that end, the classifier is trained on data from all users and all acquisition sessions except one, which is held out for testing.

- Experiment 2: User-Specific Test. The second experiment focuses on individual user data. The classifier is trained and tested on data collected from a single user, and the process is repeated separately for each of the selected users.

- Experiment 3: Leave-One-User-Out Test. The third experiment, termed the “Leave-One-User-Out Test”, evaluates the classifier’s performance when it is trained on data from two users and tested on data from the third, unseen user.
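The three protocols above differ only in how samples are partitioned, so they can be sketched as split functions over labeled samples. The `Sample` record, the function names and the random 20% hold-out used for the user-specific case are hypothetical. This section does not say how data inside a single user is split, and it does not say whether Experiment 1 holds out the same session for every user. The per-user counts come from the dataset table.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    user: str     # e.g. "User1"
    session: int  # acquisition session index (assumed 1..4)
    label: str    # grasped object class, e.g. "Bottle"


def session_split(samples, test_session):
    """Experiment 1 (assumed form): pool all users, hold out one session."""
    train = [s for s in samples if s.session != test_session]
    test = [s for s in samples if s.session == test_session]
    return train, test


def user_specific_split(samples, user, test_fraction=0.2, seed=0):
    """Experiment 2 (assumed form): one user's data, random hold-out split.

    The 20% test fraction and the random shuffle are illustrative assumptions.
    """
    own = [s for s in samples if s.user == user]
    random.Random(seed).shuffle(own)
    n_test = round(len(own) * test_fraction)
    return own[n_test:], own[:n_test]


def leave_one_user_out(samples, test_user):
    """Experiment 3: train on the other users, test on the held-out one."""
    train = [s for s in samples if s.user != test_user]
    test = [s for s in samples if s.user == test_user]
    return train, test


# Per-user sample counts taken from the dataset table.
COUNTS = {"User1": 3663, "User2": 3697, "User3": 3694}


def louo_fold_sizes(counts):
    """(train size, test size) of each leave-one-user-out fold."""
    return {
        user: (sum(n for other, n in counts.items() if other != user), counts[user])
        for user in counts
    }


print(louo_fold_sizes(COUNTS))
# {'User1': (7391, 3663), 'User2': (7357, 3697), 'User3': (7360, 3694)}
```

Splitting by session or by user, rather than shuffling all frames together, keeps near-identical frames from one recording out of both the training and test sets. For this reason the leave-one-user-out folds are expected to be the hardest.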

4.2. Session-Based Testing

4.3. User-Specific Test

4.4. Leave-One-User-Out Test

4.5. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset | Bottle | Cube | Phone | Screw | Total |
|---|---|---|---|---|---|
| User1 | 828 | 928 | 950 | 957 | 3663 |
| User2 | 886 | 926 | 939 | 946 | 3697 |
| User3 | 904 | 907 | 937 | 946 | 3694 |
| Total | 2618 | 2761 | 2826 | 2849 | 11,054 |

| Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| CNN | 0.9210 | 0.9214 | 0.9211 | 0.9211 |
| Transformer | 0.9017 | 0.9020 | 0.9016 | 0.9017 |
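The four metrics reported in these tables can be computed from a confusion matrix. This section does not state the averaging scheme. In every table, however, recall agrees with accuracy to within 0.0001. That is what support-weighted averaging produces, because weighted recall reduces exactly to the fraction of correctly classified samples. The hypothetical helper `weighted_metrics` below is a sketch under that assumption, not the authors' evaluation code.

```python
import numpy as np


def weighted_metrics(cm):
    """Accuracy plus support-weighted precision, recall and F1.

    cm[i, j] counts samples of true class i predicted as class j.
    """
    cm = np.asarray(cm, dtype=np.float64)
    tp = np.diag(cm)                 # correct predictions per class
    support = cm.sum(axis=1)         # true samples per class
    predicted = cm.sum(axis=0)       # predictions per class
    total = cm.sum()
    # Per-class scores; classes with a zero denominator score 0.
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, support, out=np.zeros_like(tp), where=support > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    # Weight each class by its share of the true samples.
    weights = support / total
    return {
        "accuracy": float(tp.sum() / total),
        "precision": float(weights @ precision),
        "recall": float(weights @ recall),
        "f1": float(weights @ f1),
    }


# Hypothetical 3-class confusion matrix with unequal class sizes.
m = weighted_metrics([[8, 1, 1], [2, 5, 0], [0, 1, 12]])
print(m["accuracy"], m["recall"])  # both 25/30, about 0.8333
```

scikit-learn’s `precision_recall_fscore_support` with `average="weighted"` computes the same support-weighted quantities from label arrays.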

| Metric | Model | Session 1 | Session 2 | Session 3 | Session 4 |
|---|---|---|---|---|---|
| Accuracy | CNN | 0.8493 | 0.8138 | 0.7844 | 0.7718 |
|  | Transformer | 0.8458 | 0.8027 | 0.7902 | 0.7613 |
| Precision | CNN | 0.8515 | 0.8160 | 0.8024 | 0.7723 |
|  | Transformer | 0.8469 | 0.8028 | 0.8045 | 0.7623 |
| Recall | CNN | 0.8493 | 0.8138 | 0.7844 | 0.7718 |
|  | Transformer | 0.8458 | 0.8027 | 0.7902 | 0.7613 |
| F1-Score | CNN | 0.8499 | 0.8136 | 0.7878 | 0.7717 |
|  | Transformer | 0.8461 | 0.8019 | 0.7932 | 0.7614 |

| Metric | Model | User1 | User2 | User3 |
|---|---|---|---|---|
| Accuracy | CNN | 0.9674 | 0.9100 | 0.9163 |
|  | Transformer | 0.9423 | 0.8929 | 0.8730 |
| Precision | CNN | 0.9678 | 0.9109 | 0.9171 |
|  | Transformer | 0.9435 | 0.8943 | 0.8745 |
| Recall | CNN | 0.9674 | 0.9100 | 0.9163 |
|  | Transformer | 0.9423 | 0.8929 | 0.8730 |
| F1-Score | CNN | 0.9675 | 0.9101 | 0.9164 |
|  | Transformer | 0.9423 | 0.8930 | 0.8729 |

| Metric | Model | Session 1 | Session 2 | Session 3 | Session 4 |
|---|---|---|---|---|---|
| Accuracy | CNN | 0.9257 | 0.8364 | 0.9053 | 0.7883 |
|  | Transformer | 0.9078 | 0.8171 | 0.8742 | 0.7636 |
| Precision | CNN | 0.9288 | 0.8543 | 0.9135 | 0.7971 |
|  | Transformer | 0.9112 | 0.8401 | 0.8806 | 0.7846 |
| Recall | CNN | 0.9257 | 0.8364 | 0.9053 | 0.7884 |
|  | Transformer | 0.9078 | 0.8172 | 0.8742 | 0.7636 |
| F1-Score | CNN | 0.9261 | 0.8382 | 0.9073 | 0.7892 |
|  | Transformer | 0.9083 | 0.8196 | 0.8759 | 0.7671 |

| Metric | Model | User1 | User2 | User3 |
|---|---|---|---|---|
| Accuracy | CNN | 0.7969 | 0.5827 | 0.5488 |
|  | Transformer | 0.8006 | 0.5730 | 0.5350 |
| Precision | CNN | 0.8123 | 0.5889 | 0.5675 |
|  | Transformer | 0.8094 | 0.5794 | 0.5492 |
| Recall | CNN | 0.7969 | 0.5827 | 0.5488 |
|  | Transformer | 0.8006 | 0.5730 | 0.5350 |
| F1-Score | CNN | 0.8008 | 0.5791 | 0.5483 |
|  | Transformer | 0.8028 | 0.5702 | 0.5321 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Amaral, P.; Silva, F.; Santos, V. Recognition of Grasping Patterns Using Deep Learning for Human–Robot Collaboration. Sensors 2023, 23, 8989. https://doi.org/10.3390/s23218989
