Abstract

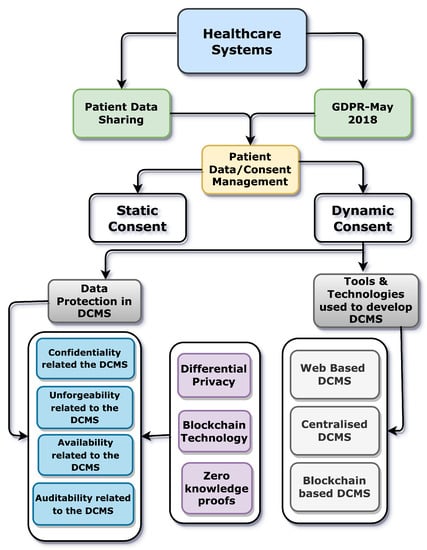

Dynamic consent management allows a data subject to dynamically govern her consent to access her data. Security and privacy guarantees are vital for the adoption of dynamic consent management systems. In particular, specific data protection guarantees can be required to comply with rules and laws (e.g., the General Data Protection Regulation (GDPR)). Since the primary instantiation of dynamic consent management systems in the existing literature is towards developing sustainable e-healthcare services, in this paper we study data protection issues in dynamic consent management systems, identifying crucial security and privacy properties and discussing severe limitations of systems described in the state of the art. We present precise definitions of the security and privacy properties that are essential to confirm the robustness of dynamic consent management systems against diverse adversaries. Finally, under those definitions, we discuss how state-of-the-art tools and technologies such as differential privacy, blockchain technologies, zero-knowledge proofs, and cryptographic procedures can be used to build dynamic consent management systems that are secure and private by design.

1. Introduction

Users are often concerned about how their personal data is used. In the view of Asghar et al. in [1], individuals want to be sure that any data they furnish is being used legally and exclusively for the purposes they have consented to. In addition, users should be able to withdraw their consent at any time. Consent is captured as a lawful record, which can serve as proof in disputes or legal proceedings. Steinsbekk et al. in [2] point out that when users give consent about their data, they constrain how their data can be used and transferred. These limitations help to safeguard their privacy and stop their data from being misused or transmitted without their consent. According to Almeida et al. in [3], given the evident digitization of our society, traditional static paper-based procedures for recording consent are no longer suitable. For instance, digitization is becoming increasingly crucial in the medical sector, and the use of digital consent is a significant advancement that expedites research tasks using confidential health data. Similarly, in the view of Ekong et al. in [4], a considerable amount of the sensitive private data gathered and used by scientists, corporations, and administrations poses unprecedented risks to individual privacy rights and the security of personal information.

Vigt et al. in [5] and Gstrein et al. in [6] explained the legal aspects of consent by providing a few insights from the General Data Protection Regulation in Europe. The GDPR defines consent in Article 4(11), sets out the conditions for consent in Article 7, and specifies it further in Recital 32: “‘consent’ of the data subject means any freely given, specific, informed and unambiguous indication of the data subject’s wishes by which he or she, by a statement or by a clear affirmative action, signifies agreement to the processing of personal data relating to him or her”.

Overall, the definition of consent given by the GDPR stresses the significance of acquiring informed and voluntary consent from individuals to process their personal information, e.g., obtaining consent from patients before performing any research-related tasks on their data. By doing so, organizations can demonstrate their commitment to respecting individuals’ privacy rights and complying with the GDPR’s provisions for data protection. These provisions aim to ensure that individuals have control over their personal data and are aware of the extent to which their data are being used [5,6].

Vigt et al. in [5] and Gstrein et al. in [6] further discussed the design of a dynamic consent management system (DCMS); they stated that when designing a DCMS, five prerequisites must be satisfied: (i) consent should be freely given, meaning that individuals should have the option to give or withhold consent freely and without coercion; (ii) consent must be explicit, indicating that it should be given through a specific and affirmative action or statement; (iii) consent must be informed, meaning that individuals should have access to all necessary information regarding the processing of their data before giving consent; (iv) consent must be precise, indicating that it should be specific to the particular processing activities and purposes for which it is being given; and (v) granted consent must be revocable, meaning that individuals should have the right to withdraw their consent at any time. The GDPR framework provides guidance and recommendations on how organizations should handle and process individuals’ confidential data [5]. Dynamic consent management systems can be applied in numerous settings. However, in the existing literature, the leading research is on implementing dynamic consent management systems for the healthcare sector in order to create sustainable e-healthcare services using state-of-the-art tools, systems, and technologies.

1.1. Using Subject’s Confidential Data

Following the GDPR provisions on privacy by design, Wolford et al. in [5,7] elaborated that, per recital 40 of the GDPR, there are five lawful grounds for using the confidential details of data subjects: (i) when the processing is necessary for the performance of a contract to which the data subject is a party, or to take steps at the request of the data subject before entering into a contract; (ii) when the processing of private data is required to save lives; (iii) when the processing is required to carry out a task in the public interest; (iv) when the data subject has given consent to the use of her data; and (v) when the processing is needed to comply with a legal obligation.

Hence, “consent” can be considered one of the most straightforward bases for processing the subject’s data. Wolford et al. in [7] discussed the implications of GDPR on clinical trials and highlighted the importance of obtaining valid consent from research participants. Their work emphasizes the need for transparency, clarity, and specificity in obtaining consent from participants and suggests that a DCMS can efficiently facilitate the process. Their paper provides insights into the practical aspects of implementing GDPR in the context of clinical trials and offers recommendations to researchers and regulators to ensure compliance with GDPR requirements.

1.2. Dynamic Consent

Dynamic consent aims to address the limitations of the static, paper-based consent methods (e.g., recording on paper the data subject’s preferences about the use of her data) that have been used for a long time. Tokas et al. in [8] introduced the term “dynamic consent”. According to [8], the term originated from large-scale data collection in biomedical research, where participants were recruited for long periods. Dynamic consent is an informed consent approach in which the user who shares her data can control how it flows, from her own perspective. Likewise, Goncharov et al. in [9] provided extended details about dynamic consent. According to [9], dynamic consent enables granular consent when dealing with personal information.

Ideally, dynamic consent is a fully digital form of communication that replaces the paper-based procedures in which data and consent used to be kept on paper. Wee et al. in [10] pointed out that a dynamic consent mechanism connects the data subject, data controller, and requester in a way that lets individuals provide structured consent agreements on specific personal information and manage who can collect, access, and use their data, and for which purposes. Using a dynamic consent-based mechanism, a data subject can therefore track who collected her data and for which purposes. The authors of [9,10] also emphasize that such control can be limited to specific objectives and periods, which improves transparency and confidence in data processing activities.
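To make the idea of granular, time-limited, and revocable consent concrete, the following is a minimal sketch of a consent record. It is only an illustration under our own assumptions: the class names `ConsentGrant` and `ConsentRecord`, their fields, and the identifiers used in the example are hypothetical and are not taken from any of the cited systems.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ConsentGrant:
    """One granular permission: which requester, which purpose, which data, until when."""
    requester_id: str
    purpose: str
    data_category: str
    valid_until: datetime
    revoked_at: Optional[datetime] = None

    def is_active(self, at: datetime) -> bool:
        # A grant is inactive from the moment of revocation or expiry onwards.
        if self.revoked_at is not None and at >= self.revoked_at:
            return False
        return at < self.valid_until


@dataclass
class ConsentRecord:
    """All grants issued by one data subject (hypothetical structure)."""
    subject_id: str
    grants: list = field(default_factory=list)

    def grant(self, requester_id, purpose, data_category, valid_until):
        new_grant = ConsentGrant(requester_id, purpose, data_category, valid_until)
        self.grants.append(new_grant)
        return new_grant

    def revoke(self, requester_id, purpose, at):
        # Withdraw every still-open grant for this requester and purpose.
        for g in self.grants:
            if (g.requester_id == requester_id and g.purpose == purpose
                    and g.revoked_at is None):
                g.revoked_at = at

    def permits(self, requester_id, purpose, data_category, at):
        # Access is allowed only if some matching grant is active at time `at`.
        return any(
            g.requester_id == requester_id
            and g.purpose == purpose
            and g.data_category == data_category
            and g.is_active(at)
            for g in self.grants
        )


if __name__ == "__main__":
    now = datetime(2023, 7, 3, tzinfo=timezone.utc)
    record = ConsentRecord("ds-1")
    record.grant("dr-1", "research", "blood_pressure",
                 valid_until=datetime(2024, 1, 1, tzinfo=timezone.utc))
    print(record.permits("dr-1", "research", "blood_pressure", at=now))
    record.revoke("dr-1", "research", at=now)
    print(record.permits("dr-1", "research", "blood_pressure", at=now))
```

Keeping the revocation time on the grant, rather than deleting the grant, preserves the history of who was allowed to access which data and when, which is what lets a data subject track past use of her data.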

1.3. Actors in a Dynamic Consent Management System

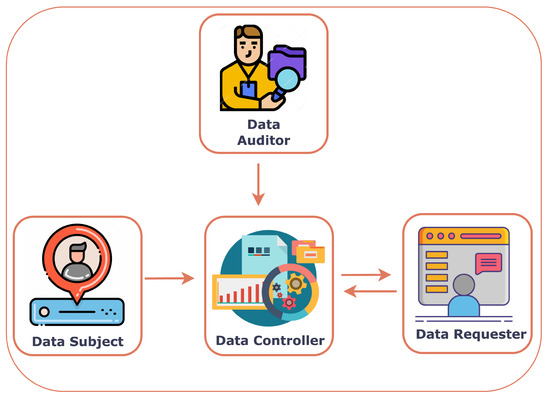

A dynamic consent management system involves the following actors:

- Data Subject (DS);

- Data Controller (DC);

- Data Auditor (DA);

- Data Requester (DR).

- Data Subject: A data subject is a person who submits her data and the corresponding consent to a data controller. She can be directly or indirectly identified.

- Data Controller: A data controller is an entity working as a mediator and receiving information, possibly in some encoded form, from data subjects and providing data to data requesters. Notice that, in general, a data controller does not simply store the personal data of the data subject since this could already be a confidentiality violation. Indeed, notice that while a data subject might not want to share personal data with a DC, they might want to share personal data with a data requester through a data controller. A data controller is usually also accountable for keeping and correctly using the data subject’s consent.

- Data Auditor: A data auditor is an entity with the authority and responsibility to inspect the operations performed by the data controller (e.g., to check if a DC is correctly implementing its role).

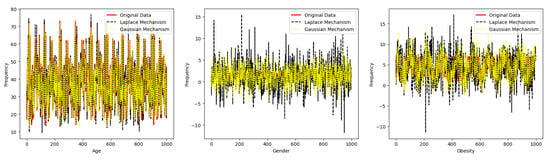

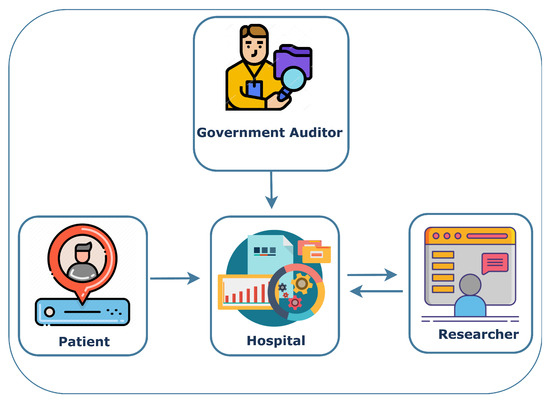

- Data Requester: A data requester is an individual or organization that would like to process the private data of the data subject through a data controller. The data requester can be a researcher who wants to employ the subject’s data for research-associated tasks. Figure 1 depicts all the actors and their interactions in a dynamic consent management system.

Figure 1. A typical dynamic consent management system.


1.4. Privacy by Design

Traditionally, abuses in data protection are punished and discouraged by laws that explicitly specify sanctions for violators. More recently, there has been an extensive effort toward achieving appealing properties by design. This is a more demanding requirement that aims to guarantee that abuses are impossible. Belli et al. [11] discuss the significance of including privacy by design principles in developing and managing systems that use data. Their work argues that privacy should not be treated as an afterthought but incorporated at the design stage.

The notion of privacy by design strives to provide technical and managerial solutions to safeguard the confidentiality of data subjects. Their work in [11] also emphasizes the challenges developers and data controllers encounter in implementing privacy by design, including balancing privacy concerns with the demand for data collection and processing for legitimate purposes. The authors argue that privacy by design can be accomplished through a combined effort between designers, developers, and data protection professionals by embedding privacy protections into the design of data systems.

1.5. Dynamic Consent Management Systems (DCMSs)

The existing literature has made significant efforts to define and explain the implications and benefits of dynamic consent management systems for enabling data subjects to manage their consent dynamically [12,13]. Budin et al. in [14] described a DCMS as a personalized online communication tool that supports ongoing two-way communication between data requesters and data controllers, and between data controllers and data subjects. Kaye et al. in [15] further suggest that a DCMS can enable data subjects to modify their consent in response to changing circumstances, such as changes in the purpose of data processing or in the data subject’s preferences. In other words, a DCMS allows individuals to update their consent as their circumstances change, which helps ensure that their personal data is processed in a way that is consistent with their wishes.

Spencer et al. in [16] suggested that a DCMS can enhance transparency and public trust by employing well-designed user interfaces and data infrastructures. Multiple methods exist for managing consent in real-world scenarios [17,18]. Hils et al. in [17] discuss a methodology for measuring the effectiveness of dynamic consent in research settings. Their paper provides an overview of dynamic consent, including the use of electronic consent forms and the implementation of DCMSs. Likewise, Santos et al. in [18] focused on implementing dynamic consent in the context of health research. Their paper provides an overview of the critical considerations for implementing dynamic consent, including designing user-friendly interfaces, ensuring transparency and accountability, and addressing ethical and legal issues. Among currently used DCMSs, we mention MyData-Operators [19], which provides a DCMS in healthcare and research settings, and Onetrust [20] and Ethyca [21], which both offer a privacy management platform that includes a DCMS. Onetrust and Ethyca discuss DCMSs with a focus on privacy, but only Ethyca [21] claims to provide privacy by design.

Additionally, the white papers of [19,20,21] make it evident that the data controller stores the data subject’s personal information and consent in a centralized database. Furthermore, those white papers give no precise definitions of privacy and security properties, e.g., how the confidentiality of the involved parties’ data (e.g., the data subject’s data) will be preserved when that data is shared with the data controller and requester. Likewise, there is no precise information on how the unforgeability of the outputs for each honest entity is protected in the presence of diverse adversaries. Apart from confidentiality and unforgeability, there are no explicit details on how the availability and auditability of the given DCMS will be ensured against various adversaries. Therefore, the claims of [21] about privacy by design remain excessively vague. Hence, as discussed in Asghar et al.’s work in [22], creating a DCMS that guarantees the security and privacy of the actors in the presence of adversaries is a big challenge.

1.6. Access Controls in Dynamic Consent Management Systems

Access control is an essential security mechanism for organizations to manage sensitive data. It involves restricting access to resources based on the identity and privileges of users, as well as enforcing policies that determine what actions are allowed or denied [23]. In the context of DCMSs, access control is crucial to maintaining the confidentiality and integrity of DS, DC, DA, and DR. Access control mechanisms, policies, and systems can be used to ensure that only authorized individuals can access or modify sensitive data and that any such access or modification is recorded and audited. Dynamic access control policies are more flexible than traditional access control mechanisms and adapt to changing requirements and access patterns. One major challenge in DCMSs is the need to balance the privacy of DSs with the legitimate needs of DC, DA, and DR to access the subject’s data. Access control mechanisms in DCMS could be designed to ensure that only authorized players can access the DS’s data while also providing sufficient transparency and accountability to ensure the DS’s data is used appropriately without violating the access control preferences of DSs.
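As a rough illustration of the points above (restricting actions by role, respecting the data subject’s consent, and recording every decision for later audit), consider the following sketch. The role names mirror the four actors of a DCMS, but the permission names, the `AccessController` class, and the `consent_ok` flag are assumptions made for this example only.

```python
from dataclasses import dataclass

# Hypothetical mapping from actor roles to the actions they may perform.
ROLE_PERMISSIONS = {
    "data_subject": {"read_own_data", "update_consent"},
    "data_controller": {"store_data", "release_data"},
    "data_auditor": {"read_audit_log"},
    "data_requester": {"request_data"},
}


@dataclass(frozen=True)
class AccessDecision:
    actor_id: str
    role: str
    action: str
    allowed: bool
    reason: str


class AccessController:
    """Checks role privileges and consent, and records every decision."""

    def __init__(self, role_permissions):
        self._role_permissions = role_permissions
        self.decision_log = []

    def authorize(self, actor_id, role, action, consent_ok=True):
        # `consent_ok` stands in for a lookup of the data subject's current consent.
        if action not in self._role_permissions.get(role, set()):
            allowed, reason = False, "role lacks permission"
        elif not consent_ok:
            allowed, reason = False, "no valid consent"
        else:
            allowed, reason = True, "permitted"
        # Denied requests are logged as well, so an auditor can review them.
        self.decision_log.append(
            AccessDecision(actor_id, role, action, allowed, reason)
        )
        return allowed


if __name__ == "__main__":
    controller = AccessController(ROLE_PERMISSIONS)
    print(controller.authorize("dr-1", "data_requester", "request_data"))
    print(controller.authorize("dr-1", "data_requester", "read_audit_log"))
    for decision in controller.decision_log:
        print(decision)
```

The static role table is the part that a dynamic policy would replace: in a DCMS, the consent check changes whenever the data subject updates or withdraws her consent, while the logged decisions provide the accountability described above.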

In this ongoing Ph.D. research project, “Security and Privacy in DCMS”, of which this work is a central part, we are also interested in access control mechanisms for DCMSs, with the aim of developing new techniques and policies that balance the competing interests of privacy and access to the desired data. For example, for a DR in a DCMS, role-based access control could be combined with multi-factor authentication or biometric-based access control mechanisms to increase the security of the DCMS. Meanwhile, there could be a focus on developing access control policies that consider the dynamic nature of DCMSs, including changes in the types of data being collected, differences in access patterns, and changes in the entities that require access to the DSs’ data. Additional investigations into implementing access controls in DCMSs can enhance these systems’ security, privacy, flexibility, and adaptability to cater to the evolving needs of both users and organizations.

1.7. Why Previous Works in Access Controls Are Insufficient to Resolve the Problems Faced by This Paper

The study carried out in this work concerns data protection in DCMSs when diverse internal attackers can attack the system in various ways. Previous work in access control is insufficient for managing the complex challenges posed by data protection in DCMSs. Fine-grained access control policies that can be modified dynamically based on the context of the data requests are needed, along with tools for organizing and managing consent for data usage and for establishing trust between the involved parties, i.e., the data subject, controller, auditor, and requester. Traditional access control mechanisms depend on static policies and provide no way to manage and enforce consent or to establish trust, making them ineffective for handling the particular challenges of DCMSs. New access control mechanisms explicitly developed for DCMSs are required to guarantee the integrity and confidentiality of the data subject’s data while enabling data sharing that meets the needs of all parties involved. For these reasons, previous work in access control is insufficient to resolve the problem addressed by this paper.

1.8. Research Questions This Work Has Addressed

- What data protection issues are related to all the dynamic consent management system entities?

- What is the current state of the art in dynamic consent management systems regarding implementations considering privacy and security properties?

- Which procedures are run by two parties (i.e., DS-DC and DC-DR) that interact to perform a joint computation in a dynamic consent management system, and what does each party get as output?

- How do we state the correctness of a dynamic consent management system when there are no attacks on such systems, and what does each party get from these systems upon giving input?

- What security and privacy properties are crucial to ensure robust data protection for all the dynamic consent management system entities, and how can these properties be formally defined?

- Which essential tools and techniques can ascertain robustness and resilience against diverse attacks in dynamic consent management systems, and how could the latest tools and techniques be utilized in such systems?

1.9. Our Contributions

This paper proposes a model that formally defines a sequence of properties for assessing the security and privacy of DCMSs, taking into account data collection, data storage, data audit, and data processing by the data subject, data controller, data auditor, and data requester, respectively. To investigate the need for security and privacy in such a DCMS, we elaborate on how the integrity and confidentiality of all the actors of a DCMS should be preserved in the presence of adversaries who attack the system.

Summing up, this work includes the following contributions:

- We propose a model that defines what a dynamic consent management system (DCMS) is, and we describe all the procedures run by each entity in a DCMS while interacting with the others. In this description, we mainly stress the procedures where two actors interact with each other to perform a joint computation.

- We elaborate on what ideally (i.e., without considering adversaries) a DCMS should provide to the involved parties. Here, we mainly address the correctness of a dynamic consent management system when there are no attacks, and the system provides correct and accurate output to each interacting party.

- We discuss definitions aimed at capturing privacy and security properties for a DCMS in the presence of adversaries that can attack the system. We formally discuss these properties to gain precise insight into the robustness of a DCMS.

- Next, we emphasize limits in both the definitions and the constructions of existing DCMSs from the state of the art. We highlight, with appropriate clarifications, how existing studies lack security and privacy for all the entities of these systems.

- We propose the use of specific techniques and tools that could be used to mitigate security and privacy risks in dynamic consent management systems (DCMSs).

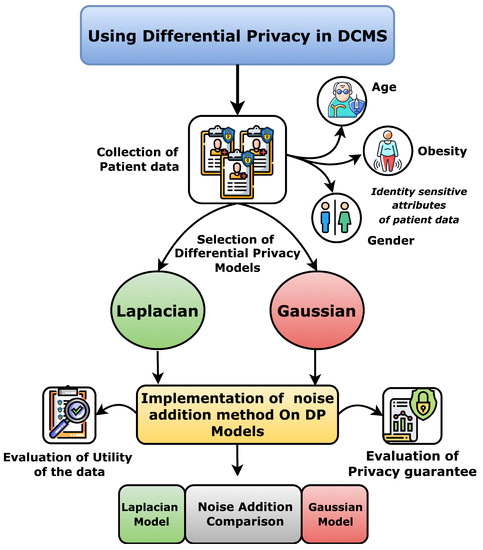

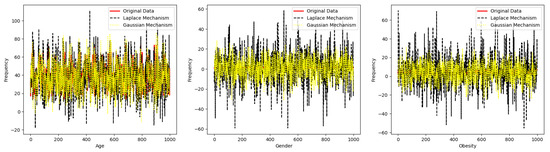

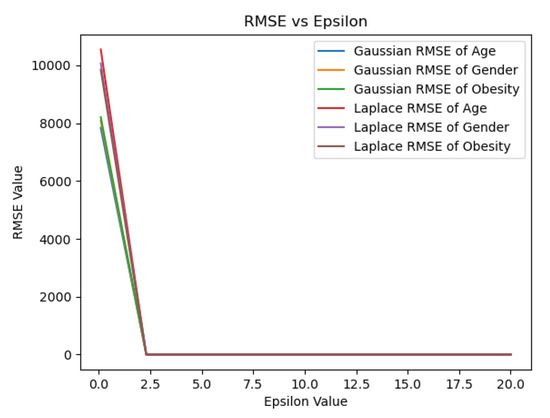

- We mainly study the potential of differential privacy to increase the robustness of dynamic consent management systems in terms of fulfilling the needs of all the involved entities, such as the Data Subject and the Data Requester. The code for these experiments is available at Differential-Privacy-for-DCMS: https://github.com/Khalid-Muhammad-Irfan/Differntial-Privacy-for-DCMS (accessed on 3 July 2023). Furthermore, we discuss the implications of zero-knowledge proofs together with blockchains, where only the abstract entity Data Controller is decentralized, to enhance data protection for all the entities.
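To give a flavour of how differential privacy can serve both a Data Subject (whose individual record is hidden) and a Data Requester (who still receives a useful aggregate), the following is a generic sketch of the standard Laplace mechanism applied to a counting query. It is not the code from the repository linked above; the function names, the example records, and the chosen value of `epsilon` are assumptions for illustration.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Answer a counting query with epsilon-differential privacy.

    A counting query has sensitivity 1 (adding or removing one data
    subject changes the count by at most 1), so the Laplace noise
    scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical records held by a data controller.
    patients = [
        {"age": 34, "diabetic": True},
        {"age": 51, "diabetic": False},
        {"age": 47, "diabetic": True},
        {"age": 29, "diabetic": False},
    ]
    noisy = private_count(patients, lambda p: p["diabetic"], epsilon=0.5)
    print(f"noisy count released to the data requester: {noisy:.2f}")
```

A smaller `epsilon` adds more noise and therefore gives stronger protection to each data subject at the cost of less accurate answers for the data requester.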

The rest of this paper is organized as follows. Section 2 gives a detailed critical analysis of existing DCMS constructions and illustrates the motivations for considering security and privacy properties for DCMSs. Section 3 elaborates on the model we define, specifying the precise procedures run by each party in a DCMS to interact with the others. Section 4 describes the attacker model. Section 5 presents the proposed formal definitions of security and privacy properties in DCMSs. Section 6 elaborates on mitigations under the proposed sketch of formal privacy and security definitions. Section 7 briefly discusses this article. Section 8 presents the conclusions and future recommendations in light of this work. The list of acronyms is given in Table 1.

Table 1. List of Acronyms.

2. State-of-the-Art on Existing Solutions for Dynamic Consent Management Systems

Though various solutions exist for implementing a dynamic consent management system, in this section we briefly review the most closely related ones. Specifically, we focus on the main weaknesses of existing dynamic consent management system solutions concerning the security, privacy, and functional requirements addressed in Section 3, Section 3.1 and Section 5. There can be numerous applications where a DCMS can be applied. However, in the existing literature, the leading research is on implementing DCMSs in the healthcare sector. In Table 2, we point to the prior works on available dynamic consent management systems. We have found that none, or only a few, satisfy particular security and privacy property definitions concerning the proposed dynamic consent management systems, i.e., the definition of confidentiality of the involved players’ data/consent (e.g., the data subject’s) when shared with the data controller and requester, and the definition of unforgeability of the output for honest parties.

Table 2. Analysis of the related works in DCMS (why DCMSs presented in prior results are not good).

The availability definition concerns the given dynamic consent management system (e.g., how proposed dynamic consent management systems ensure high availability with minimal downtime or disruption). The definition of auditability involves, e.g., maintaining audit trails of consent-related activities, such as when consent was obtained, updated, or revoked, and by whom. Apart from this, Table 2 makes clear that we do not treat “security and privacy” as a complete and sufficient definition of all the underlying data protection properties in a dynamic consent management system. Instead, when a DCMS is designed and developed, all the underlying definitions of security and privacy properties, e.g., “confidentiality, unforgeability, availability, auditability”, must also be explicitly discussed at each player’s level. Future DCMSs must ensure these definitions of security and privacy from the design phase.
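One simple way to picture an audit trail of consent-related activities is a hash-chained log, in which each entry commits to the previous one so that later tampering becomes detectable. The sketch below is only an illustration under our own assumptions: the `ConsentAuditLog` class, its field names, and the example events are hypothetical, and a real DCMS would additionally need signatures and protected storage.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash that precedes the first entry


def _entry_hash(prev_hash, event):
    """Hash an event together with the hash of the previous entry."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class ConsentAuditLog:
    """Append-only log recording who did what to whose consent, and when."""

    def __init__(self):
        self.entries = []

    def append(self, actor, action, subject_id, timestamp):
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        event = {
            "actor": actor,
            "action": action,  # e.g. "obtained", "updated", "revoked"
            "subject_id": subject_id,
            "timestamp": timestamp,
        }
        entry = {
            "event": event,
            "prev": prev_hash,
            "hash": _entry_hash(prev_hash, event),
        }
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute the chain; return False if any entry was altered."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != _entry_hash(prev_hash, entry["event"]):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = ConsentAuditLog()
    log.append("ds-1", "obtained", "ds-1", "2023-07-03T10:00:00Z")
    log.append("ds-1", "revoked", "ds-1", "2023-07-04T09:30:00Z")
    print("log intact:", log.verify())
```

A data auditor can run `verify()` over the log without trusting the party that stores it, as long as the hash of the latest entry is known from an independent source.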

2.1. Explanation of the Referenced Paper(s) in Table 2

Merlec et al. in [12] proposed a smart contract-based DCMS, backed by blockchain technology, targeting personal data usage under GDPR. Merlec et al. developed a few design requirements to explain the need for a secure and confidential DCMS. However, their work contains no definitions of the underlying security and privacy properties, e.g., no precise definition of the confidentiality of the involved parties’ data (e.g., the data subject’s) when shared with the data controller and requester. The work did not consider the unforgeability of the output for the honest parties. Possible adversaries and attacks (e.g., DoS and DDoS attacks) are not considered in the performance evaluation of the given DCMS. Likewise, there is no definition of availability (e.g., how high availability of the proposed DCMS is ensured with minimal downtime for the data subject, controller, and processor). Although Merlec et al. employed blockchain in their contribution, there is no formal or informal information on how the auditability of the entire DCMS is ensured and which entity is responsible for tracing operations back. As a result, the study in [12] did not consider the security and privacy properties we define in Section 5.

Prictor et al. in [24] propose a logic model framework for evaluating and reporting DCMS effectiveness. With this framework, Prictor et al. explained various benefits of DCMSs, e.g., regarding a DCMS as a more precise means of consent management than paper-based and static consent procedures. Nevertheless, Prictor et al. did not consider the privacy and security aspects of the proposed DCMS framework, e.g., there is no information about the confidentiality of the involved actors’ data in the DCMS. Also, possible adversaries, internal and external to the system, are not considered. A demonstration of the unforgeability of the outputs for the involved honest players, i.e., the data subject, the data controller, and the data user, is missing from this work. The authors of [24] also did not precisely define availability and auditability for the given DCMS. Thus, the study did not consider the security and privacy properties we describe in Section 5.

Casass et al. in [25] propose an approach, “Encore”, which was initiated after a project to ensure patients’ participation in clinical and medical research. The authors demonstrated use cases where a data subject sends data and consent to the controller, and a requester then requests that subject’s data and consent from the controller. For all three use cases, the authors of [25] claimed properties corresponding to those we propose, such as confidentiality, unforgeability, and availability. Still, no precise information demonstrates how the confidentiality of the involved parties’ data (i.e., the data subject’s) is achieved when shared with the data controller and requester. Similarly, there is no precise definition of the unforgeability of the output for the honest actors in the presence of numerous adversaries. Casass et al. also did not explain the availability of their system, i.e., how the proposed DCMS maintains high availability when numerous data subjects access the system to update their shared consent and data. Hence, the confidentiality, unforgeability, and availability descriptions are excessively vague. Moreover, in [25], auditability is claimed to be achieved at the level of each use case (the data subject, controller, requester), but Casass et al. did not provide sufficient evidence that this claim is valid. Therefore, the paper [25] did not address the security and privacy properties that we describe in Section 5.

Genestier et al. in [26] discuss the possibility of using the Hyperledger Fabric blockchain for DCM to address privacy and security challenges in the eHealth domain, where a patient grants and revokes access to her personal data. Their work assesses blockchain as a tool to tackle existing problems in consent management, e.g., centralized storage and sharing of a data subject’s data and consent by a trusted third party. Despite the use of blockchain, Genestier et al. did not give any security and privacy evaluation that matches the definitions of our proposed model. For instance, we could not find any suitable description of the confidentiality of the actors’ data or of the unforgeability of the output of each honest actor in the proposed system. Similarly, availability and auditability definitions for the proposed DCMS were not considered. In light of our detailed description in Section 5 of the security and privacy properties necessary for a robust DCMS, the work of Genestier et al. in [26] did not take these properties into account and therefore lacks a thorough evaluation of the system’s security and privacy features.

Rupasinghe et al. in [27] described a privacy-preserving consent model architecture using blockchain to facilitate patient data acquisition for clinical data analysis. Their proposed architecture is a high-level illustration of a blockchain-based DCMS. Details about the confidentiality of the involved parties’ data, e.g., the confidentiality of patient data/consent, and about unforgeability of the output for each honest entity are missing. For example, in [27] there is no formal or informal evidence that the parties involved in the proposed blockchain-based DCMS enjoy confidentiality and unforgeability. Likewise, definitions of availability (e.g., how high availability of the proposed DCMS is ensured, and how efficiency is maintained when the number of entities accessing the DCMS grows exponentially) were not considered in [27]. Relying on blockchain technology, Rupasinghe et al. claimed that auditability is achieved because parties, e.g., patients, can inspect logs. Still, we could not find any relevant evidence about auditability in this work. Given the specific security and privacy properties that we outline for a robust DCMS in Section 5, Rupasinghe et al. did not incorporate these properties into the proposed DCMS. As a result, the study falls short in evaluating the system’s security and privacy measures.

Jaiman et al. in [28] proposed an Ethereum blockchain-based DCMS to control access to individual health data, where smart contracts represent separate consents and authorize the requester to request and access health data from the patients. The proposed DCMS consists of patients as data subjects, a smart contract based on a data-sharing agreement, and a data processor (who wants to process the subject’s data). Their work does not discuss precisely how the confidentiality of patient data is preserved when the data are shared with the processor and stored on the blockchain in plain-text form (plain-text data on a blockchain raise various privacy concerns). The unforgeability of the output of each honest player (when adversaries may forge the outcome) is not considered at all. Furthermore, Jaiman et al. did not define the availability of the proposed DCMS (e.g., how the availability of patient data is ensured for the processor while the data are kept on a public blockchain, and how the Ethereum-based DCMS scales when many processors invoke the data-sharing smart contract). Likewise, the descriptions of auditability in [28] are quite vague: there is no precise information on how patients can follow audit trails to learn how the processor uses their data. In short, [28] did not address the security and privacy properties that we specify for an effective DCMS in Section 5. Therefore, the study does not provide a comprehensive evaluation of the security and privacy features of the system.

Albanese et al. in [29] present SCoDES, an approach for trusted and decentralized control of DCM in clinical trials based on blockchain technology. This work uses the Hyperledger Fabric blockchain to remove the need to trust third parties while managing consent dynamically in clinical trials. It focuses on creating a web-based platform on a private blockchain network where entities, e.g., patients, can dynamically control their consent and data. Albanese et al. did not provide clear definitions of how the confidentiality of the involved parties’ data (e.g., patients’ data) is ensured in the presence of numerous adversaries and when these sensitive data are shared with the data processor. Furthermore, the unforgeability of the output of the honest parties, i.e., data subject and processor, was not clearly explored. The authors of [29] also did not give precise definitions of availability for the proposed DCMS. In [29], auditability is claimed to be achieved through blockchain, but we could not find any evidence supporting this claim. The paper [29] therefore did not incorporate the security and privacy properties that we define for an effective DCMS in Section 5.

Mamo et al. in [30] provide a construction of a DCMS conceived for the bio-banking sector. Their work presents a web portal backed by the Hyperledger Composer blockchain framework and a hub connecting various Malta bio-banking stakeholders. Precise definitions of the confidentiality of the involved parties’ data (e.g., data of customers and banks) and of the unforgeability of the output of honest customers and other stakeholders were not given. Likewise, a definition of availability (e.g., how the system remains available while more nodes are added to the Fabric network) in the sense of our model in Section 5 is missing. Furthermore, auditability was claimed to be achieved using the Hyperledger Composer framework. Still, we did not find suitable proof of how an actor such as a customer (whose data are with the banks) can trace the use of her data in the proposed DCMS. The analyzed paper did not consider the security and privacy properties specified in Section 5. Consequently, the work in [30] did not adequately address the requirements for ensuring data confidentiality, unforgeability, availability, and auditability, which are crucial for a robust DCMS.

Bhaskaran et al. in [31] described the design and implementation of a smart contract-based DCMS driven by double-blind data sharing on the Hyperledger Fabric platform. They demonstrated how a know-your-customer (KYC) application can be built around their proposed DCM model to address the needs of banks while meeting regulatory requirements. In their work, the unforgeability of the outputs for the involved honest actors (e.g., a bank and a customer) is claimed to be achieved. Still, we did not find any precise information supporting the validity of this claim. Apart from that, the confidentiality of entities’ data (e.g., a customer’s data when shared with a bank) was not considered. Bhaskaran et al. also did not define the availability of their blockchain-based network (when the number of customers increases exponentially in a permissioned setup) in the sense of our model in Section 5. Relying on blockchain, the work in [31] claims that auditability is achieved for actors (e.g., a customer can follow audit trails to learn how her data are used and by whom). Still, we could not find sufficient evidence in the paper that this claim is valid. The reviewed paper did not consider the security and privacy properties that we define in Section 5 for the proposed DCMS.

Rupasinghe, in her doctoral thesis in [13], presented a blockchain-based DCMS for the secondary use of electronic medical records. Her suggested method has almost all the components needed to satisfy the constraints set by data governance regulations such as GDPR and HIPAA. Rupasinghe formulated a few design goals for this DCMS to highlight the importance of design activity while developing a blockchain-based DCMS. The proposed DCMS consists only of a prototype implemented using the Hyperledger Fabric blockchain. However, precise definitions of the underlying security and privacy properties are missing: it is not stated how the confidentiality of the involved parties’ data (e.g., the data subject’s) is achieved when the data are shared with the data steward and requester, nor how the integrity of the outputs for each honest party, such as data subject, steward, and requester, is guaranteed in the presence of adversaries. Instead, Rupasinghe assumed that confidentiality and unforgeability follow from employing blockchain in her DCMS. Apart from that, Rupasinghe did not provide definitions of availability for her proposed DCMS (e.g., how high availability is ensured with minimal downtime for the data subject, steward, and requester, and how scalable and efficient the system is when the number of data subjects increases exponentially). As Rupasinghe used blockchain technology, there were claims about achieving auditability, but we could not find any proof of this claim. The security and privacy properties that we define for the proposed DCMS in Section 5 were not considered in this Ph.D. thesis.

Kim et al. in [32] proposed DynamiChain, a blockchain-backed DCMS for managing healthcare data that is based on a rule-set management algorithm. Their approach was implemented in a setting where an exercise management healthcare company provides health management services based on data from the data provider’s hospital. For the implementation, the work in [32] used the Hyperledger Fabric blockchain, where known entities interact. Kim et al. give no analysis that establishes the security and privacy of the proposed DynamiChain model. For example, there are no definitions of the underlying security and privacy properties, such as how the confidentiality of the involved parties’ data (e.g., the data provider’s data in DynamiChain) is achieved when the data are shared with the hospital and the data utilizer. Likewise, there is no information on how the unforgeability of the output for honest parties in the system (such as the data provider, the data controller, and the data utilizer) is guaranteed when adversaries can change the output for these honest entities. The authors of [32] also did not provide definitions of availability for DynamiChain (e.g., how high availability is ensured with minimal downtime for actors such as data providers, or how scalable and efficient the system is when the number of parties accessing DynamiChain increases exponentially). As Kim et al. used Hyperledger blockchain technology to attain decentralization, there were claims about achieving auditability for actors (e.g., data providers can trace who used their data and for what purpose). Still, we could not find any actual proof of this claim. Therefore, Kim et al. did not consider the security and privacy properties that we define for the proposed DCMS in Section 5.

2.2. Motivation behind Considering Security and Privacy Properties for DCMSs

We concentrate on these security and privacy properties of DCMSs because various researchers have identified them as critical obstacles to the widespread adoption of DCMSs [33]. Moreover, legislation worldwide, such as the GDPR by the European Union [5], has emphasized the significance of designing future systems that handle public data with privacy and security in mind. These properties are also grounded in previously published best practices. For example, the European Health Data Space (EHDS) [34] has highlighted the need for secure and private system designs that can be used for data sharing. Table 3 describes previous works that aimed to enforce privacy and security by design in any system that utilizes public data.

Table 3.

Motivation behind considering security and privacy properties of DCMSs.

3. Defining Dynamic Consent Management System (DCMS)

A dynamic consent management system is a complex system that involves multiple procedures for managing a subject’s consent and data. In our model, we are not interested in what an actor does locally on its own in a DCMS; we are interested in the interactions between two parties (e.g., between DS and DC, between DC and DA, and between DR and DC). So, in our model, we mainly consider procedures where two parties interact to perform a joint computation. When two parties give input to the DCMS, a procedure executes between those two parties as a result of those inputs. After the execution of that procedure, both parties get an output from the DCMS.

Below, we describe the procedures run by the involved actors of a DCMS.

- DS Sharing Data/Consent: In this procedure, DS and DC are involved since DS wants to share its data/consent, and DC is supposed to update its state according to the interaction with DS.

- Adding Data/Consent: Here, DS would like to share some data and consent with DC.
  - Input for DS: Data, consent, and access control policies related to data and consent.
  - Input for DC: The current state associated with DS.
  - Output for DS: A receipt about the executed procedure (whether the outcome was positive or negative).
  - Output for DC: An updated state associated with DS.

- Updating Data/Consent: In this scenario, DS and DC are involved since DS has shared some data and consent with DC and now wishes to update them.
  - Input for DS: A receipt issued by DC upon receiving DS’s data/consent.
  - Input for DC: The current state associated with DS.
  - Output for DS: A receipt about the executed update procedure (whether the outcome was positive or negative).
  - Output for DC: The updated state associated with DS.

- DA Verifying DC: Here, DA and DC are involved since DA wants to audit whether DC has performed all the operations related to DS’s data/consent honestly.
  - Input for DA: Credentials proving the right to audit the DC.
  - Input for DC: The current states associated with all DSs.
  - Output for DA: A receipt about the verified information (whether the outcome was positive or negative).
  - Output for DC: The current state associated with DS.

- DR Requesting Data/Consent from DC: Here, DR would like to access DS’s data and consent. Notice that, following our discussion in Section 3.1 on the choice of discouraging direct communication between DR and DS, DR must ask DC for DS’s data/consent.

- Requesting Data/Consent: In this procedure, DR and DC are involved. DR wishes to get DS’s data/consent to perform his research-related tasks. DC is supposed to update its state according to the interactions with DR.
  - Input for DR: Credentials proving the right to access DS’s data/consent.
  - Input for DC: The current state associated with DS.
  - Output for DR: A receipt about the executed procedure (whether the outcome was positive or negative).
  - Output for DC: The updated state associated with DS.
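The inputs and outputs of the procedures above can be illustrated with a minimal Python sketch. It is not the construction studied in this paper: the class and field names (`Receipt`, `SubjectState`, `DataController`), the reduction of consent to a set of requester identifiers, the version counter, and the plain-text operation log are all assumptions introduced for illustration, and the sketch provides none of the security properties discussed later.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass(frozen=True)
class Receipt:
    """Output returned to the party that invoked a procedure."""
    procedure: str                  # "add", "update", "audit" or "request"
    ok: bool                        # positive or negative outcome
    version: int = 0                # version of the DS state the receipt refers to
    payload: Optional[str] = None   # information released to a DR or DA, if any


@dataclass
class SubjectState:
    """State the DC associates with one DS (layout is an assumption)."""
    data: str
    allowed_requesters: Set[str]    # the consent, reduced to a set of DR ids
    version: int = 1


@dataclass
class DataController:
    auditors: Set[str]                                    # credentials accepted for audits
    states: Dict[str, SubjectState] = field(default_factory=dict)
    log: List[str] = field(default_factory=list)          # operations visible to a DA

    def add(self, ds: str, data: str, consent: Set[str]) -> Receipt:
        """DS adding data/consent: the DC creates the state associated with DS."""
        if ds in self.states:
            return Receipt("add", ok=False, version=self.states[ds].version)
        self.states[ds] = SubjectState(data, set(consent))
        self.log.append(f"add:{ds}")
        return Receipt("add", ok=True, version=1)

    def update(self, ds: str, receipt: Receipt, data: str, consent: Set[str]) -> Receipt:
        """DS updating data/consent: DS presents the receipt of her latest state."""
        state = self.states.get(ds)
        if state is None or not receipt.ok or receipt.version != state.version:
            return Receipt("update", ok=False, version=state.version if state else 0)
        state.data, state.allowed_requesters = data, set(consent)
        state.version += 1
        self.log.append(f"update:{ds}")
        return Receipt("update", ok=True, version=state.version)

    def audit(self, credential: str) -> Receipt:
        """DA verifying DC: an authorized DA receives the operation log."""
        if credential not in self.auditors:
            return Receipt("audit", ok=False)
        return Receipt("audit", ok=True, payload=";".join(self.log))

    def request(self, dr: str, ds: str) -> Receipt:
        """DR requesting data/consent: released only if DS consented to this DR."""
        state = self.states.get(ds)
        if state is None or dr not in state.allowed_requesters:
            self.log.append(f"denied:{dr}->{ds}")
            return Receipt("request", ok=False)
        self.log.append(f"granted:{dr}->{ds}")
        return Receipt("request", ok=True, version=state.version, payload=state.data)
```

In this sketch every procedure returns a receipt in both the positive and the negative case, and a DR never talks to a DS directly: all requests pass through the `DataController`.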

3.1. Correctness of a Dynamic Consent Management System (DCMS)

A DCMS is said to satisfy a correctness requirement if whenever the procedures are run correctly, the outputs obtained by every actor in every phase are always correct according to the inputs provided. We remark that for correctness, it is assumed that there are no attacks on a DCMS. We also remark that in our model, we excluded a direct communication between DS and DR. Indeed, in a DCMS that relies on direct communication between DS and DR, there can be several potential issues. Typically, a DS would like to passively share its data, avoiding time-consuming interactions with all possible (i.e., legitimate and non-legitimate ones) DRs who are interested in obtaining its data. DS typically would simply like to give consent to a DC that is specialized in dealing with DRs. Such consent can even be generic in the sense of specifying categories of those DRs who can access data.

For correctness, every player gives the correct input to run the desired procedures described in Section 3 and, as a result, gets the correct output from the system. The precise requirements for the correctness of a DCMS are defined below. In all the procedures elaborated in Section 3, whenever DS, DC, DA, and DR behave honestly, they get the following outputs:

- DS Adding data/consent:
  - Output for DS: Receipt about the correctly executed procedure (as a result of correct input).
  - Output for DC: The updated state associated with DS.

- DS Updating data/consent:
  - Output for DS: Receipt about the correctly executed procedure (as a result of correct input).
  - Output for DC: The updated state associated with DS.

- DA Verifying DC:
  - Output for DA: A receipt about the verified information (as a result of correct input).
  - Output for DC: The current state associated with DS.

- DR Requesting Data/Consent from DC:
  - Output for DR: A receipt about the executed procedure (as a result of correct input).
  - Output for DC: The updated state associated with DS.

4. Attackers Model

Keeping in mind the properties we define in Section 5 for the resilience of a DCMS, all the actors can attack the DCMS in numerous ways.

- Possible Attackers: It is evident from the literature that threats to a system originate from two kinds of sources. The first is an internal user with legitimate system access, such as a data subject, controller, auditor, or requester, who can access the subject’s data. The second is an external agent. Although external agents are not allowed to enter the system, there is always a possibility that a capable external adversary poses various threats to it. In DCMSs, we have identified four kinds of actors who can attack the system.

- Data Subject: In a DCMS, the data subject who provides her data and gives consent is also permitted to access and interact with the system in diverse ways. She may abuse this access, whether for recreational purposes or out of other motivations.

- Data Controller: In a DCMS, the data controller is one of the most critical actors, with a substantial stake in the system’s operation. The data controller has access to the system throughout the process, from the collection of data and the storage of consent on the server to the monitoring of all system activities from a central position. However, the data controller could also pose a risk as a potential attacker who may attempt to compromise the system. For instance, a data controller may unlawfully access and sell a data subject’s confidential information or share it with unauthorized organizations. Apart from that, since the data controller is a centralized entity that processes data/consent, it is crucial to avoid a single point of failure due to a corrupted data controller; thus, the data controller should be regarded as an abstract entity that ought to be decentralized. In follow-up work to this paper, we will study techniques for decentralizing the data controller in a DCMS.

- Data Auditor: A data auditor can be a potential DCMS attacker. Although the primary role of a data auditor is to ensure that the data controller complies with the policies and regulations related to data privacy and security, he may still have access to sensitive data that could be used for malicious purposes. If a data auditor gains unauthorized access to the system, he may be able to manipulate data, compromise the integrity of the audit trail, or interfere with the consent management process.

- Data Requester: The data requester may have access to data and consent of the data subject through DCMS, and there is a severe risk that he may misuse the subject’s data for harmful purposes. For example, a requester could use the subject’s data for identity theft, fraud, or other illegal activities. In addition, the data requester may not have the necessary security measures to protect the data, leading to the potential exposure of the subject’s data to unauthorized parties. This can significantly harm the data subject, including through financial loss, reputational damage, and emotional distress.

5. Defining Security and Privacy in Dynamic Consent Management Systems

In a DCMS, both privacy and security properties concern the protection of honest players in the presence of some misbehaving players. The goal is to preserve the confidentiality of the honest player’s data and the unforgeability of outputs for each honest party.

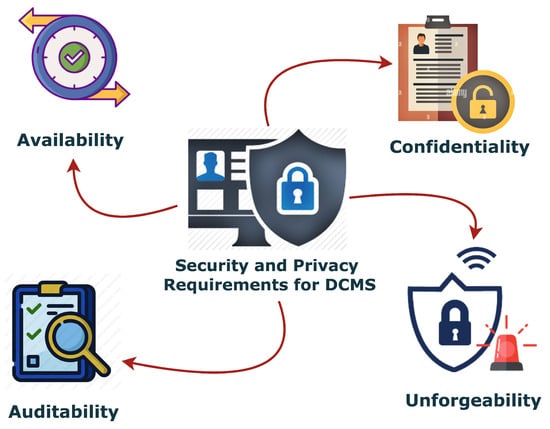

We will more concretely discuss the security and privacy of a DCMS, considering more specific properties such as Confidentiality, Unforgeability, Availability, and Auditability.

As described in Figure 2, a robust DCMS should enjoy the following properties:

- Confidentiality: The confidentiality property of a dynamic consent management system should be guaranteed, e.g., for an honest DS when DC is dishonest, when DA is dishonest, when DR is dishonest, or even when all of them are dishonest. The system should provide only the minimum amount of data required by the requester according to the consent released by the data subject, and unauthorized access to the data of any actor in a DCMS should be infeasible. Confidentiality of honest parties (i.e., DS, DC, DA, DR) is defined as follows:

- Confidentiality for DS: According to the procedures defined in Section 3, a DS provides her data as input to some of the procedures of a DCMS (e.g., sharing or updating data/consent). As motivated when discussing the threat model in Section 4, we can expect players to misbehave and thus deviate from the steps specified by the procedures (e.g., by providing incorrect inputs) with the goal of obtaining unauthorized information. To ensure the confidentiality of an honest DS against a dishonest DC, we describe confidentiality for the DS through an experiment that gives the formal specification of the definition. In this experiment, we assume that the adversary $\mathcal{A}$, played by the dishonest DC, runs in polynomial time, and we accept that $\mathcal{A}$ may identify the encrypted message from the DS with a probability only negligibly greater than $1/2$.

Experiment: We write $\mathsf{PrivK}^{\mathsf{eav}}_{\mathcal{A},\Pi}(n)$ to denote the experiment being run with security parameter $n$. The experiment is defined for any private-key encryption scheme $\Pi$, any adversary $\mathcal{A}$ (here, a dishonest DC), and any value $n$ of the security parameter.

The adversarial indistinguishability experiment $\mathsf{PrivK}^{\mathsf{eav}}_{\mathcal{A},\Pi}(n)$:

- In the DCMS, the adversary $\mathcal{A}$ is given input $1^n$ and outputs a pair of messages $m_0, m_1$ with $|m_0| = |m_1|$.

- A key $k$ is generated by running $\mathsf{Gen}(1^n)$, and a uniform bit $b \in \{0,1\}$ is chosen. The ciphertext $c \leftarrow \mathsf{Share}_k(m_b)$ is computed in the dynamic consent management system and sent to the adversary $\mathcal{A}$. We refer to $c$ as the challenge ciphertext; it encrypts a message consisting of the data/consent of the data subject (DS).

- $\mathcal{A}$ outputs a bit $b'$.

- The output of the experiment is defined to be 1 if $b' = b$, and 0 otherwise. If $\mathsf{PrivK}^{\mathsf{eav}}_{\mathcal{A},\Pi}(n) = 1$, we say that $\mathcal{A}$ succeeds.
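The four steps of the experiment can be sketched in Python as follows. This is an illustration under stated assumptions, not the scheme analysed in this paper: a one-time pad stands in for the Share algorithm, the security parameter is passed as an integer rather than in unary, and the names `share_otp`, `share_leaky`, `FirstByteAdversary`, `priv_k_eav`, and `success_rate` are hypothetical. The deliberately broken `share_leaky` shows what a successful adversary looks like.

```python
import secrets


def gen(n: int) -> bytes:
    """Gen(1^n): sample a uniform n-byte key."""
    return secrets.token_bytes(n)


def share_otp(key: bytes, message: bytes) -> bytes:
    """Stand-in for Share: one-time pad, usable for a single message per key."""
    if len(key) != len(message):
        raise ValueError("key and message must have the same length")
    return bytes(k ^ m for k, m in zip(key, message))


def share_leaky(key: bytes, message: bytes) -> bytes:
    """Broken variant: the first plaintext byte is sent in the clear."""
    return message[:1] + share_otp(key[1:], message[1:])


class FirstByteAdversary:
    """Dishonest DC that tries to read the challenge bit off the first byte."""

    def choose(self, n: int):
        # Step 1: output two messages of equal length.
        return b"\x00" * n, b"\x01" * n

    def guess(self, ciphertext: bytes) -> int:
        # Step 3: output a bit b'.
        return ciphertext[0] & 1


def priv_k_eav(adversary, share, n: int) -> int:
    """Run the indistinguishability experiment once; return 1 if b' == b."""
    m0, m1 = adversary.choose(n)
    if len(m0) != len(m1):
        raise ValueError("messages must have equal length")
    key = gen(n)                       # Step 2: key generation
    b = secrets.randbits(1)            # Step 2: uniform challenge bit
    challenge = share(key, (m0, m1)[b])
    return int(adversary.guess(challenge) == b)   # Step 4


def success_rate(adversary, share, n: int = 16, trials: int = 2000) -> float:
    """Empirical estimate of Pr[experiment = 1]."""
    return sum(priv_k_eav(adversary, share, n) for _ in range(trials)) / trials
```

Against `share_leaky` this adversary always succeeds, whereas against `share_otp` its empirical success rate stays close to 1/2, which is the behaviour the formal definition requires of every polynomial-time adversary.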

Here, we consider an adversary $\mathcal{A}$ running in polynomial time, and we accept that $\mathcal{A}$ might determine the encrypted message from the data subject (DS) with a probability negligibly better than $1/2$. In the context of a dynamic consent management system ensuring the confidentiality of the honest data subject from a dishonest data controller, we can describe it as follows:

Formal Definition: A DCMS $\Pi$ has indistinguishable encryptions in the presence of a dishonest DC, or is EAV-secure, if, for all probabilistic polynomial-time dishonest data controllers (DCs) $\mathcal{A}$, there is a negligible function $\mathsf{negl}$ such that, for all $n$:

$$\Pr\left[\mathsf{PrivK}^{\mathsf{eav}}_{\mathcal{A},\Pi}(n) = 1\right] \le \frac{1}{2} + \mathsf{negl}(n).$$

Here, the idea is that an adversary must not be able to get information about the plain text, which contains the data/consent of the data subject (DS), from its ciphertext under the encryption key $k$. The terms used in the definition are as follows:

- EAV-secure: Refers to indistinguishable encryptions, which is a property of the security definition.

- $\Pi$: Represents the dynamic consent management system (DCMS) comprising three algorithms:
  - Gen: The key generation algorithm generates a secret key for the data subject (DS) and the data controller (DC) to securely communicate and establish trust.
  - Share: The data sharing algorithm allows the DS to share her data/consent with the DC securely. It takes the data/consent and the secret key $k$ as input and produces a ciphertext consisting of the encrypted data.
  - Access: The access algorithm enables the DC to access and process the DS’s shared data/consent. It takes the ciphertext, i.e., the encrypted data/consent, and the secret key $k$ as input and produces the plain-text data of the DS.

- Indistinguishable encryptions: A DCMS is said to have indistinguishable encryptions if it is computationally hard for a dishonest DC to tell which of two messages has been encrypted by observing the ciphertext alone.

- Probabilistic polynomial-time dishonest data controllers (DCs): The dishonest DCs are computationally bounded algorithms that attempt to break the security of a DCMS by giving incorrect input to one of the procedures run by the DS to share/update her data/consent. DCs can access the public algorithms (Share and Access) and perform polynomial-time computations.

- Negligible function ($\mathsf{negl}$): A negligible function is a function that approaches zero faster than the inverse of every polynomial as its input grows. In the context of DCMS security analysis, a negligible success probability is one that is too small to matter in practice.

- $\Pr[\mathsf{PrivK}^{\mathsf{eav}}_{\mathcal{A},\Pi}(n) = 1] \le \frac{1}{2} + \mathsf{negl}(n)$: This inequality expresses the security property of the DCMS. It states that, for all probabilistic polynomial-time dishonest DCs, the probability that the adversary correctly determines which data/consent a given ciphertext corresponds to exceeds $1/2$ by at most a negligible function $\mathsf{negl}(n)$. In other words, the advantage of a dishonest DC in distinguishing the shared data is minimal, approaching zero as $n$ (the security parameter) increases.

The above description defines the requirement for a dynamic consent management system to have indistinguishable encryptions, ensuring the confidentiality of the DS’s data/consent against dishonest data controllers. Fundamentally, in the above scenario, DS is an honest entity giving correct inputs to the procedures specified in Section 3 and expecting correct output from the dynamic consent management system. In this situation, the confidentiality property of the DCMS preserves an honest DS’s sensitive data/consent when DC, DA, or DR act as adversaries and deviate from the procedures.
Since DA and DR also have direct or indirect contact with DS’s data/consent, they must also be regarded as possible adversaries against the confidentiality of DS’s data/consent. Hence, to satisfy confidentiality for DS, a DCMS should have strong security and privacy tools in place to ensure that DS’s data/consent are not misused by dishonest parties. Confidentiality can be achieved in a given construction of a DCMS by employing tools and techniques such as advanced encryption schemes (with which the indistinguishability property can be achieved), zero-knowledge proofs, and differential privacy.

- Confidentiality for DC: According to the procedures in Section 3, the data controller (DC) takes part in the procedure that the data auditor (DA) runs to verify the DC. The DC behaves as an honest party by giving the procedure the correct input (i.e., the current state of all data subjects (DSs)). Following the experiment used to define the confidentiality of an honest data subject (DS), we now state the confidentiality for an honest DC when a DA may misbehave.

Experiment: We write $\mathsf{PrivK}^{\mathsf{eav}}_{\mathcal{A},\Pi}(n)$ to denote the experiment being run with security parameter $n$. The experiment is defined for any private-key encryption scheme $\Pi$, any adversary $\mathcal{A}$ (here, a dishonest DA), and any value $n$ of the security parameter.

The adversarial indistinguishability experiment $\mathsf{PrivK}^{\mathsf{eav}}_{\mathcal{A},\Pi}(n)$:

- The adversary $\mathcal{A}$ is given input $1^n$ and outputs a pair of messages $m_0, m_1$ with $|m_0| = |m_1|$.

- A key $k$ is generated by running $\mathsf{Gen}(1^n)$, and a uniform bit $b \in \{0,1\}$ is chosen. The ciphertext $c \leftarrow \mathsf{Share}_k(m_b)$ is computed and given to the adversary $\mathcal{A}$. We refer to $c$ as the challenge ciphertext.

- $\mathcal{A}$ outputs a bit $b'$.

- The output of the experiment is defined to be 1 if $b' = b$, and 0 otherwise. If $\mathsf{PrivK}^{\mathsf{eav}}_{\mathcal{A},\Pi}(n) = 1$, we say that $\mathcal{A}$ succeeds.

Here, we consider an adversary $\mathcal{A}$ running in polynomial time, and we accept that $\mathcal{A}$ might determine the encrypted message, which is the data/consent of all DSs held by the DC, with a probability negligibly better than $1/2$. In the context of a dynamic consent management system ensuring the confidentiality of the honest DC from a dishonest DA, we can describe it as follows:

Formal Definition: A DCMS $\Pi$ has indistinguishable encryptions in the presence of a dishonest DA, or is EAV-secure, if, for all probabilistic polynomial-time dishonest data auditors $\mathcal{A}$, there is a negligible function $\mathsf{negl}$ such that, for all $n$:

$$\Pr\left[\mathsf{PrivK}^{\mathsf{eav}}_{\mathcal{A},\Pi}(n) = 1\right] \le \frac{1}{2} + \mathsf{negl}(n).$$

Here, the idea is that an adversary must not be able to get information about the plain text, which contains the data/consent of all DSs, from the ciphertext that is encrypted under the key $k$ and held by the honest DC. EAV-secure refers to indistinguishable encryptions, which is a property of the security definition, and $\Pi$ represents the DCMS comprising three algorithms: $(\mathsf{Gen}, \mathsf{Share}, \mathsf{Access})$.

- Confidentiality for DA: As specified in Section 3, the DA is supposed to verify whether all the operations performed by the DC on the DS’s data/consent have been performed honestly. When the DA wishes to verify the DC, he runs a procedure in the DCMS to which he gives the correct input, namely his credentials proving the right to access and audit the DC. Likewise, the DC feeds input to this procedure by stating the current state associated with all DSs. In this procedure, the DA acts as an honest player and provides the correct credentials to audit the DC, while the DC can deviate from the procedure by feeding the wrong input. So, the DC is one of the potential attackers against the confidentiality of the DA’s data. The confidentiality property of a dynamic consent management system protects the inputs and outputs of an honest DA when the DC is acting as an adversary. Being a dishonest party, the DC will share the wrong data/consent records with the DA. Following the experiment used to define the confidentiality of an honest DS, we now state the confidentiality for an honest DA.

Experiment: We write $\mathsf{PrivK}^{\mathsf{eav}}_{\mathcal{A},\Pi}(n)$ to denote the experiment being run with security parameter $n$. The experiment is defined for any private-key encryption scheme $\Pi$, any adversary $\mathcal{A}$ (here, a dishonest DC), and any value $n$ of the security parameter.

The adversarial indistinguishability experiment $\mathsf{PrivK}^{\mathsf{eav}}_{\mathcal{A},\Pi}(n)$:

- The adversary $\mathcal{A}$ is given input $1^n$ and outputs a pair of messages $m_0, m_1$ with $|m_0| = |m_1|$.

- A key $k$ is generated by running $\mathsf{Gen}(1^n)$, and a uniform bit $b \in \{0,1\}$ is chosen. The ciphertext $c \leftarrow \mathsf{Share}_k(m_b)$ is computed and given to the adversary $\mathcal{A}$. We refer to $c$ as the challenge ciphertext.

- $\mathcal{A}$ outputs a bit $b'$.

- The output of the experiment is defined to be 1 if $b' = b$, and 0 otherwise. If $\mathsf{PrivK}^{\mathsf{eav}}_{\mathcal{A},\Pi}(n) = 1$, we say that $\mathcal{A}$ succeeds.

Here, we consider an adversary $\mathcal{A}$ running in polynomial time, and we accept that $\mathcal{A}$ might determine the encrypted message from the DA with a probability negligibly better than $1/2$. In the context of a DCMS ensuring the confidentiality of the honest DA from a dishonest DC, we can describe it as follows:

Formal Definition: A DCMS $\Pi$ has indistinguishable encryptions in the presence of a dishonest DC, or is EAV-secure, if, for all probabilistic polynomial-time dishonest data controllers $\mathcal{A}$, there is a negligible function $\mathsf{negl}$ such that, for all $n$:

$$\Pr\left[\mathsf{PrivK}^{\mathsf{eav}}_{\mathcal{A},\Pi}(n) = 1\right] \le \frac{1}{2} + \mathsf{negl}(n).$$

Here, the idea is that an adversary must not be able to get information about the plain text that contains the confidential information of an honest DA. EAV-secure refers to indistinguishable encryptions, which is a property of the security definition, and $\Pi$ represents the DCMS comprising three algorithms: $(\mathsf{Gen}, \mathsf{Share}, \mathsf{Access})$.

- Confidentiality for DR: According to the procedures described in Section 3, DR runs a procedure to ask the DC for the DS’s data/consent. Here, we assume that the DR is an honest entity feeding correct input, i.e., correct credentials proving the right to access the DS’s data/consent. Meanwhile, the DR expects the DC to also give correct input (i.e., the current state associated with DS) to the procedure. If the DC does not provide correct input, it is deviating from the procedure run by the DR. Hence, the DC is a possible adversary who can misuse the information of the DR: since the DR shares his credentials to access the DS’s data/consent, the DC may abuse this information. In addition, the DR does not want to share his access request information with any other player, i.e., DS and DA; only the lawful DC should see the requests from the DR. If the DC misbehaves, the DR’s data would therefore be compromised by the dishonest DC. The confidentiality property of a DCMS ensures that the requests made by honest DRs are protected, in the sense that both the input and the output of a genuine DR remain confidential. Following the experiment used to define the confidentiality of an honest DS, we now state the confidentiality for an honest DR.

Experiment: We write $\mathsf{PrivK}^{\mathsf{eav}}_{\mathcal{A},\Pi}(n)$ to denote the experiment being run with security parameter $n$. The experiment is defined for any private-key encryption scheme $\Pi$, any adversary $\mathcal{A}$ (here, a dishonest DC), and any value $n$ of the security parameter.

The adversarial indistinguishability experiment $\mathsf{PrivK}^{\mathsf{eav}}_{\mathcal{A},\Pi}(n)$:

- The adversary DC is given input $1^n$, and outputs a pair of messages $m_0, m_1$ with $|m_0| = |m_1|$.

- A key $K$ is generated by running $\mathsf{Gen}(1^n)$, and a uniform bit $b \in \{0, 1\}$ is chosen. Ciphertext $c \leftarrow \mathsf{Enc}_K(m_b)$ is computed and given to the adversary DC. We refer to $c$ as the challenge ciphertext.

- DC outputs a bit $b'$.

- The output of the experiment is defined to be 1 if $b' = b$, and 0 otherwise. If $\mathsf{PrivK}^{\mathsf{eav}}_{DC,\Pi}(n) = 1$, we say that DC succeeds.
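The four steps of the indistinguishability experiment above can be simulated directly. The following is only a minimal sketch under stated assumptions: the encryption scheme is a toy one-time pad (not a scheme proposed in this paper), the security parameter is interpreted as a key length in bytes, and `GuessingAdversary` is a hypothetical dishonest DC introduced purely for illustration.

```python
import secrets


def gen(n: int) -> bytes:
    """Gen(1^n): sample a uniform n-byte key (assumption: n counts bytes)."""
    return secrets.token_bytes(n)


def enc(key: bytes, message: bytes) -> bytes:
    """Enc_K(m): toy one-time pad, XOR of key and message of equal length."""
    if len(key) != len(message):
        raise ValueError("one-time pad needs len(key) == len(message)")
    return bytes(k ^ m for k, m in zip(key, message))


def dec(key: bytes, ciphertext: bytes) -> bytes:
    """Dec_K(c): XOR is its own inverse."""
    return enc(key, ciphertext)


class GuessingAdversary:
    """Hypothetical dishonest DC that guesses b from one ciphertext bit."""

    def choose_messages(self, n: int):
        # Step 1: output two messages of equal length.
        return b"\x00" * n, b"\xff" * n

    def guess(self, ciphertext: bytes) -> int:
        # Step 3: output a bit b'. The pad hides b, so this is a coin flip.
        return ciphertext[0] & 1


def privk_eav(adversary, n: int) -> int:
    """Run the experiment once; return 1 if the adversary guesses b."""
    m0, m1 = adversary.choose_messages(n)
    assert len(m0) == len(m1)
    key = gen(n)                      # Step 2: fresh key
    b = secrets.randbelow(2)          # Step 2: uniform challenge bit
    challenge = enc(key, (m0, m1)[b])
    return 1 if adversary.guess(challenge) == b else 0


def estimate_success(adversary, n: int = 16, trials: int = 4000) -> float:
    """Empirical success rate of the adversary over independent runs."""
    return sum(privk_eav(adversary, n) for _ in range(trials)) / trials
```

Calling `estimate_success(GuessingAdversary())` should return a value close to one half, which is what the definition demands of every efficient adversary. An empirical estimate like this only illustrates the experiment; it is not evidence that a real scheme is secure.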

Here, we consider an adversary DC running in polynomial time, and we accept that DC might determine the encrypted message from the DR with a probability negligibly better than $\frac{1}{2}$. In the context of a DCMS ensuring the confidentiality of the honest DR from a dishonest DC, we can describe it as follows:

Formal Definition: A DCMS $\Pi = (\mathsf{Gen}, \mathsf{Enc}, \mathsf{Dec})$ has indistinguishable encryptions in the presence of a dishonest DC, or is EAV-secure, if, for all probabilistic polynomial-time dishonest DC, there is a negligible function $\mathsf{negl}$ such that, for all $n$:

$$\Pr[\mathsf{PrivK}^{\mathsf{eav}}_{DC,\Pi}(n) = 1] \le \frac{1}{2} + \mathsf{negl}(n)$$

Here, the idea is that an adversary must not be able to get information about the plaintext, which contains the confidential information of an honest DR. EAV-secure refers to indistinguishable encryptions, which is the property captured by the security definition, and $\Pi$ represents the DCMS comprising three algorithms: $\mathsf{Gen}$, $\mathsf{Enc}$, and $\mathsf{Dec}$.

- 2.

- Unforgeability: The dynamic consent management system should provide correct outputs to honest players, i.e., DS, DC, DA, and DR, even in the presence of adversaries who misbehave during the execution of the procedures specified in Section 3. We now consider unforgeability for each player of a DCMS.

Experiment: Dynamic-Consent-OutputForge$_{A,\Pi}(n)$

- $\mathsf{Gen}(1^n)$ is run to obtain keys $(pk, sk)$ for the DCMS.

- Adversary A is given $pk$ and access to the DCMS, including the player DS.

- The adversary A interacts with the DCMS, providing inputs and receiving outputs.

- The adversary A outputs a forged output for the honest entity, i.e., DS, DC, DA, or DR. The adversary A succeeds if and only if the forged output is accepted as a valid output by the DCMS.

- In this case, the output of the experiment is defined to be 1.
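The forgery experiment above can be made concrete with a small simulation. This is a hedged sketch, not the construction of this paper: receipts are modelled as HMAC-SHA256 tags under a single secret key (a symmetric stand-in chosen so that the example runs with the standard library only), `ToyDCMS` and both adversaries are hypothetical, and the requirement that a forgery concern a record the DCMS never issued is an assumption borrowed from standard forgery games.

```python
import hashlib
import hmac
import secrets


class ToyDCMS:
    """Hypothetical DCMS that authenticates its outputs (receipts) with HMAC."""

    def __init__(self, n: int = 32):
        self._key = secrets.token_bytes(n)   # key generation; never given to the adversary
        self.issued = set()                  # records the DCMS really produced

    def issue_receipt(self, record: bytes) -> bytes:
        """Interaction step: the adversary may request receipts for inputs."""
        self.issued.add(record)
        return hmac.new(self._key, record, hashlib.sha256).digest()

    def verify(self, record: bytes, tag: bytes) -> bool:
        """Accept an output only if its tag matches the recomputed one."""
        expected = hmac.new(self._key, record, hashlib.sha256).digest()
        return hmac.compare_digest(expected, tag)


def output_forge(adversary, n: int = 32) -> int:
    """Return 1 if the adversary's forged output is accepted, else 0."""
    dcms = ToyDCMS(n)
    record, tag = adversary(dcms.issue_receipt)
    is_fresh = record not in dcms.issued     # assumption: replays do not count
    return 1 if is_fresh and dcms.verify(record, tag) else 0


def replaying_adversary(oracle):
    """Reuses a genuine tag on a different record."""
    tag = oracle(b"consent:granted")
    return b"consent:withdrawn", tag


def guessing_adversary(oracle):
    """Outputs a random tag without interacting."""
    return b"consent:withdrawn", secrets.token_bytes(32)
```

Both `output_forge(replaying_adversary)` and `output_forge(guessing_adversary)` are expected to return 0, apart from a negligible chance that the random tag is correct. A deployment matching a public-key setting would use a digital signature scheme instead of HMAC.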

Definition: Dynamic-Consent-Output-Unforgeability for an honest party: A DCMS is considered output secure for an honest entity (DS, DC, DA, or DR) if, for all probabilistic polynomial-time adversaries A, there exists a negligible function $\mathsf{negl}$ such that the probability of A successfully forging the output of the honest entity in the experiment Dynamic-Consent-OutputForge$_{A,\Pi}(n)$ is negligible:

$$\Pr[\text{Dynamic-Consent-OutputForge}_{A,\Pi}(n) = 1] \le \mathsf{negl}(n)$$

Here, A denotes a dishonest party acting as an adversary. So, a DCMS is output secure for an honest entity (DS, DC, DA, or DR) if the probability of A forging a valid output that a legitimate entity would receive is negligible, where A is a computationally bounded adversary with access to the DCMS functionalities. The output refers to the correctness of what an honest entity receives, in the form of receipts or any other relevant information generated by the DCMS. We now map the above experiment to the interactions of each of the involved parties in a DCMS.

- Unforgeability for DS: Here, DS is an honest party providing correct input to the procedures executed with DC. Being an honest party, DS is entitled to the correct output from the system, even if an adversary, such as a DC, tries to forge the output of DS. In the procedures defined in Section 3, while interacting with DC, the outputs for DS are receipts about the executed procedures, stating that data/consent have been received, stored, and processed correctly by the DC. Even though other players in the DCMS (such as DA, DR, and DC) will still access DS’s data/consent directly or indirectly, the output (essentially the correctness of DS’s data/consent) must be guaranteed by the DCMS. In this experiment, two parties, i.e., DS and DC, are involved, since they interact with each other in several procedures stated in Section 3.

Experiment: Dynamic-Consent-OutputForge$_{DC,\Pi}(n)$

- $\mathsf{Gen}(1^n)$ is run to obtain keys $(pk, sk)$ for the DCMS.

- Adversary DC is given $pk$ and access to the DCMS, including the player DS.

- DC interacts with the DCMS, providing inputs and receiving outputs.

- The adversary DC outputs a forged output for the honest DS. DC succeeds if and only if the forged output is accepted as a valid output by the DCMS.

- In this case, the experiment’s output is defined to be 1.

Formal Definition: Dynamic-Consent-Output-Unforgeability for honest DS: A dynamic consent management system is considered output secure for the honest DS if, for all probabilistic polynomial-time adversaries like DC, there exists a negligible function $\mathsf{negl}$ such that the probability of the adversary DC successfully forging the output of DS in the experiment Dynamic-Consent-OutputForge$_{DC,\Pi}(n)$ is negligible:

$$\Pr[\text{Dynamic-Consent-OutputForge}_{DC,\Pi}(n) = 1] \le \mathsf{negl}(n)$$

A DCMS is output secure for the honest DS if the probability of a dishonest DC forging a valid output that DS would receive is negligible, where DC is a computationally bounded adversary with access to the system functionalities and DS. The output refers to the correctness of DS’s data and consent in the form of receipts or any other relevant information generated by the DCMS.

- Unforgeability for DC: In the procedures defined in Section 3, the possible outputs for DCs are the current and updated states associated with all DSs in the DCMS. Here, we have to protect the correctness of the output of DC when DS, DA, or DR misbehaves. Unforgeability for DC means that when a DC is honest, the output for DC should be correct regardless of the honesty or dishonesty of the other players interacting with DC, i.e., DS, DA, and DR.

So, here we are concerned with protecting the unforgeability of outputs for an honest DC. Hence, the states corresponding to all DSs must not be altered or modified by any misbehaving player in the DCMS. Since DC first interacts with DS to receive data/consent and, being an honest party, provides the correct input to the procedures, DC must be ensured to get the correct output from the system, in the sense that even if DS tries to modify the output for DC, the DCMS should provide the correct output to DC.

In this experiment, two parties, i.e., DC and DA, are involved, since they interact with each other in several procedures stated in Section 3.

Experiment: Dynamic-Consent-OutputForge$_{DA,\Pi}(n)$

- $\mathsf{Gen}(1^n)$ is run to obtain keys $(pk, sk)$ for the DCMS.

- Adversary DA is given $pk$ and access to the DCMS.

- Adversary DA interacts with the DCMS, providing inputs and receiving outputs.

- The adversary then outputs a forged output for the honest DC. DA succeeds if and only if the forged output is accepted as a valid output by the DCMS.

- In this case, the experiment’s output is defined to be 1.

Formal Definition: Dynamic-Consent-Output-Unforgeability for honest DC: A dynamic consent management system is considered output secure for the honest DC if, for all probabilistic polynomial-time adversaries like DA, there exists a negligible function $\mathsf{negl}$ such that the probability of the adversary DA successfully forging the output of DC in the experiment Dynamic-Consent-OutputForge$_{DA,\Pi}(n)$ is negligible:

$$\Pr[\text{Dynamic-Consent-OutputForge}_{DA,\Pi}(n) = 1] \le \mathsf{negl}(n)$$

In simpler terms, a DCMS is output secure for the honest DC if the probability of an adversary DA forging a valid output that DC would receive is negligible, where DA is a computationally bounded adversary with access to the system functionalities and DC. The output refers to the correctness of DC’s data in the form of receipts or any other relevant information generated by the DCMS.

- Unforgeability for DA: Here, we need to protect the correctness of the output of the DA when DC misbehaves. As detailed in Section 3, the DA is an honest entity feeding correct input while executing a procedure with DC. So, DA must be ensured to get correct output from the DCMS even if DC misbehaves by providing incorrect input to the procedure. The output for DA is the receipt stating that all the procedures related to DS’s data/consent have been performed honestly by the DC. For a DA, unforgeability mainly means that any audit report generated by the DCMS is genuine, accurately reflects the system’s state, and cannot be tampered with or modified by a malicious party such as DC or DR.

Since adversaries such as DC or DR may try to forge the output for the DA, e.g., by modifying audit records, the integrity property of the DCMS ensures that an honest DA always gets a correct output, even if other interacting players try to forge it by giving incorrect input to the procedure with DA.

In this experiment, two parties, i.e., DA and DC, are involved, since they interact with each other in several procedures stated in Section 3.

Experiment: Dynamic-Consent-OutputForge$_{DC,\Pi}(n)$

- $\mathsf{Gen}(1^n)$ is run to obtain keys $(pk, sk)$ for the DCMS.

- Adversary DC is given $pk$ and access to the DCMS.

- Adversary DC interacts with the DCMS, providing inputs and receiving outputs.

- The adversary then outputs a forged output for the honest DA. DC succeeds if and only if the forged output is accepted as a valid output by the DCMS.

- In this case, the experiment’s output is defined to be 1.
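One way to picture the tamper-evidence that this experiment asks of the DA’s audit output is a hash-chained audit log. The sketch below is illustrative only and rests on assumptions not made in this paper: audit records are small dictionaries, the chain uses SHA-256, and the DA holds a trusted copy of the latest chain digest (`expected_head`), for example obtained from an append-only ledger. All function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # digest that precedes the first audit record


def entry_digest(prev_digest: str, record: dict) -> str:
    """Hash the previous digest together with a canonical encoding of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_digest.encode("ascii") + payload).hexdigest()


def append(log: list, record: dict) -> None:
    """DC side: extend the audit log with a record linked to its predecessor."""
    prev = log[-1]["digest"] if log else GENESIS
    log.append({"record": record, "digest": entry_digest(prev, record)})


def verify_log(log: list, expected_head: str) -> bool:
    """DA side: recompute the chain and compare it with the trusted head digest."""
    prev = GENESIS
    for entry in log:
        if entry_digest(prev, entry["record"]) != entry["digest"]:
            return False          # a record or a link was modified
        prev = entry["digest"]
    return prev == expected_head  # also rejects truncated or rebuilt chains
```

With this layout, a dishonest DC that edits an earlier record either breaks a link or, if it recomputes every digest, ends with a head that differs from the one the DA trusts. The guarantee is only as strong as the assumption that the DA’s copy of the head digest is out of the DC’s reach.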

Formal Definition: Dynamic-Consent-Output-Unforgeability for honest DA: A dynamic consent management system is considered output secure for the honest DA if, for all probabilistic polynomial-time adversaries like DC, there exists a negligible function $\mathsf{negl}$ such that the probability of the adversary DC successfully forging the output of DA in the experiment Dynamic-Consent-OutputForge$_{DC,\Pi}(n)$ is negligible:

$$\Pr[\text{Dynamic-Consent-OutputForge}_{DC,\Pi}(n) = 1] \le \mathsf{negl}(n)$$

In simpler terms, a DCMS is output secure for the honest DA if the probability of an adversary DC forging a valid output that DA would receive is negligible, where DC is a computationally bounded adversary with access to the system functionalities and DA. The output refers to the correctness of DA’s data in the form of receipts or any other relevant information generated by the DCMS.

- Unforgeability for DR: Here, the DR is an honest party running a procedure with the DC to obtain the DS’s data/consent. Being a legitimate party, the DR provides the correct input to the procedure. Still, the DC may give an incorrect input to the procedure, e.g., by providing the wrong states associated with DS. In this case, the integrity of the outputs for an honest DR must be protected by the DCMS. Hence, the correctness of the output of DR shall be protected when the DC misbehaves.

The possible outputs for the DR are the DS’s data/consent requested according to his credentials. We need to preserve the integrity of these outputs for an honest DR, in the sense that even though adversaries (such as DA and DC) are present inside the DCMS, a DR must be able to get the correct data/consent that he requested from a DC. If, for instance, an adversary tries to modify the output for the DR, the DCMS should implement robust tools and techniques that hinder these malicious modifications. More formally, unforgeability for a DR states that any data/consent obtained as an output from the DCMS must be genuine and accurate and cannot be tampered with or modified by any malicious or unauthorized player.

In this experiment, two parties, i.e., DR and DC, are involved, since they interact with each other in several procedures stated in Section 3.

Experiment: Dynamic-Consent-OutputForge$_{DC,\Pi}(n)$

- $\mathsf{Gen}(1^n)$ is run to obtain keys $(pk, sk)$ for the DCMS.

- Adversary DC is given $pk$ and access to the DCMS.

- Adversary DC interacts with the DCMS, providing inputs and receiving outputs.

- The adversary then outputs a forged output for the honest DR. DC succeeds if and only if the forged output is accepted as a valid output by the DCMS.

- In this case, the experiment’s output is defined to be 1.

Formal Definition: Dynamic-Consent-Output-Unforgeability for honest DR: A dynamic consent management system is considered output secure for the honest DR if, for all probabilistic polynomial-time adversaries like DC, there exists a negligible function $\mathsf{negl}$ such that the probability of the adversary DC successfully forging the output of DR in the experiment Dynamic-Consent-OutputForge$_{DC,\Pi}(n)$ is negligible:

$$\Pr[\text{Dynamic-Consent-OutputForge}_{DC,\Pi}(n) = 1] \le \mathsf{negl}(n)$$

Hence, a DCMS is output secure for the honest DR if the probability of an adversary DC forging a valid output that DR would receive is negligible, where DC is a computationally bounded adversary with access to the system functionalities and DR. The output refers to the correctness of DR’s data in the form of receipts or any other relevant information generated by the DCMS.

- 3.

- Availability: Access to the dynamic consent management system should only be granted to authenticated actors (DS, DC, DA, and DR), and the DCMS must be designed to be highly available. High availability means that the system should not suffer interruptions or downtime, even in the presence of hardware or software failures, since these may result in delays or errors in data processing and communication among peers. Such delays or interruptions can ultimately impact the privacy and security of the data and of the players involved in the DCMS, so the DCMS must remain accessible to actors at all times.

The system should be designed to be resilient to denial of service (DoS) and distributed denial of service (DDoS) attacks, so that it remains up and running even during a large-scale attack. Avoiding server downtime ensures that actors such as DSs have timely and reliable access to their data/consent. DSs should be able to view, update, or delete their data/consent and provide or withdraw consent for collecting, using, and disclosing their data. DSs should also be informed about data processing, including privacy or security breaches. Dishonest players, whether DS, DC, DA, or DR, should not be able to compromise the system’s high availability.
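As one small illustration of how a single misbehaving actor can be kept from exhausting the system, the sketch below applies a per-actor token bucket to incoming requests. It is an assumption-laden example rather than a mechanism prescribed here: the class names, the capacity, and the refill rate are hypothetical, time is passed in explicitly so that the behaviour is deterministic, and rate limiting by authenticated identity is at best a partial mitigation that does not by itself stop a distributed attack.

```python
class TokenBucket:
    """Allows short bursts up to `capacity`, then `refill_rate` requests per second."""

    def __init__(self, capacity: float, refill_rate: float, now: float = 0.0):
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.tokens = capacity      # start full
        self.last = now             # time of the last update, in seconds

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, never above capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0      # spend one token for this request
            return True
        return False                # request is rejected or delayed


class RateLimiter:
    """Keeps one bucket per authenticated actor (DS, DC, DA, or DR)."""

    def __init__(self, capacity: float, refill_rate: float):
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.buckets = {}

    def allow(self, actor: str, now: float) -> bool:
        if actor not in self.buckets:
            self.buckets[actor] = TokenBucket(self.capacity, self.refill_rate, now)
        return self.buckets[actor].allow(now)
```

For example, with `RateLimiter(capacity=3, refill_rate=1.0)`, a DR that sends five requests at the same instant has the last two refused, while a DS issuing its first request at that instant is still served, because each actor draws from its own bucket.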

- 4.