Abstract

The rapid development of deep learning has brought novel methodologies for 3D object detection using LiDAR sensing technology. The resulting gains in precision and inference speed have enabled high-accuracy, real-time detection, which is especially important for self-driving applications. However, the pace of these developments complicates research in this area, since new methods, technologies and software versions lead to different project dependencies, specifications and requirements. Moreover, the improvements reported for new methods may stem partly from newer versions of deep learning frameworks rather than solely from the novelty of the model architecture. Thus, it has become crucial to create a framework with fixed software versions, specifications and requirements that accommodates all these methodologies and allows for the easy introduction of new methods and models. We propose a framework that abstracts the implementation, reuse and construction of novel methods and models. The main idea is to facilitate the representation of state-of-the-art (SoA) approaches and simultaneously encourage the implementation of new approaches by reusing, improving and innovating modules in the proposed framework. Because all models share the same software specifications and requirements, it becomes possible to determine, through a fair comparison, whether a key innovation actually outperforms the current SoA.

1. Introduction

The field of computer vision has seen significant advancements in recent years, particularly in the area of 3D object detection from point cloud data. However, there is still a need for a general representation framework that can be applied to a wide range of 3D object detection tasks, regardless of the specific sensor or application domain. The recent growth in the computational power offered by cutting-edge GPUs has allowed deep learning algorithms to be applied to object detection in several domains. One such domain is autonomous driving using light detection and ranging (LiDAR) data, where deep learning has brought a considerable gain in detection efficiency, precision and inference speed [1].

In recent years, there has been significant progress in 3D object detection models based on LiDAR data for self-driving applications. A multitude of frameworks and projects have been proposed, each with its own approach to addressing the challenges of detecting and tracking objects in a 3D environment. However, this diversity also poses a challenge when it comes to deploying these models for onboard inference in a self-driving vehicle [2,3].

The misclassification of off-road regions is one of the difficulties with LiDAR-based object detection highlighted in [4]. Finding and classifying off-road areas is essential for safe and accurate autonomous navigation. To overcome this problem, [4] suggests combining high-definition (HD) maps with LiDAR data. HD maps offer comprehensive information about the road network, and their detailed road geometry lets the LiDAR system distinguish more easily between genuine obstacles and off-road areas. By enhancing object identification precision and lowering false positives and false negatives, this integration makes autonomous navigation safer and more dependable. The key idea is to pair LiDAR’s ability to capture exact 3D information about the environment with HD maps in order to improve object recognition and classification in automated driving systems.

One major issue is the enormous variation in software versions, libraries and supported platforms, making it difficult to assemble and deploy these models correctly. Additionally, self-driving requirements must be taken into consideration, such as the need for operationalization with different modules and the limited computational resources available in onboard systems.

Regardless, the 3D object detection models discussed in the literature take point clouds as input and are known to be more complex. These models have a deeper pipeline and process a larger amount of data. For example, a point cloud usually comprises between 100 k and 120 k points [3], where each point holds data related to the Euclidean distance and signal reflection, that is, 128 bits are needed to encode the information of each point.
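To give a sense of scale, the raw size of a single sweep can be estimated from these figures. The following is a minimal sketch that assumes the 128 bits per point correspond to four 32-bit floating-point values (x, y, z and reflectance); this storage layout and the function name `sweep_size_bytes` are our assumptions for illustration, not part of the cited works.

```python
# Rough memory footprint of one LiDAR sweep.
# Assumption: each point stores four 32-bit floats (x, y, z, reflectance),
# which matches the 128 bits per point stated in the text.
BITS_PER_VALUE = 32       # assumed float32 storage
VALUES_PER_POINT = 4      # assumed fields: x, y, z, reflectance


def sweep_size_bytes(num_points: int) -> int:
    """Return the raw size in bytes of a sweep with `num_points` points."""
    bits = num_points * VALUES_PER_POINT * BITS_PER_VALUE
    return bits // 8


for n in (100_000, 120_000):
    print(f"{n} points -> {sweep_size_bytes(n) / 1e6:.2f} MB")
```

Under this assumption, a sweep occupies between 1.6 MB and 1.92 MB before any discretization or feature encoding takes place.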

Recent research such as [3,5,6,7] suggests that the minimum operating requirements for self-driving applications should include an overall mAP of at least 60% across classes and an inference time of less than 100 ms.

In this context, the need for a standardized and optimized framework for 3D object detection based on LiDAR data becomes even more important. Such a framework could simplify the deployment process, enable better interoperability across different systems and facilitate the development of more efficient and effective self-driving systems.

Our Contribution

This paper proposes a general SoA representation framework for 3D object detection from point clouds. It supports multiple SoA 3D object detection methods, with highly refactored code for both one-stage and two-stage methods. It also enables the implementation and reuse of different approaches with less manual engineering effort by proposing an abstract way of building object detectors, and it facilitates the implementation of new methods in each module of the framework. By implementing different SoA models, we aim to offer the scientific community a new way of working. In this way, it will be possible to offer a framework for testing real-time inference and measuring the trade-off between metrics (mAP vs. inference time) for 3D object detection models within a single framework, applied to self-driving applications.

Therefore, the contributions proposed in this paper are as follows:

- An abstract framework for the implementation/representation of edges for 3D object detection models using LiDAR data.

- Less engineering effort to implement new methods in different framework models.

- A simpler way to change hyperparameters and retrain models using YML files.

- Automatic representation of a model from these YML files.

The organization of this paper is as follows: Section 2 presents state-of-the-art works related to 3D object detection systems and hardware platforms for their implementation. Section 3 describes the three-step method used to select, train and tune a deep learning model for deployment on a hardware device. Section 4 presents the selected 3D object detection model, as well as its deep learning components, specifying the details about the architecture of the target hardware device and the implementation of the hardware components and software. The performance evaluation results, their comparison and their discussion are presented in Section 7. Finally, Section 8 presents the main results achieved in this paper and future work.

2. Related Work

In recent years, the object detection models for point clouds presented in the literature have improved considerably, achieving increasingly high detection performance. Based on the literature, the most discussed models are divided into two broad categories: approaches based on 3D CNNs and approaches based on 2D CNNs, where different data representations, backbone networks and multiscale feature learning techniques can be adopted [3].

When it comes to 3D object detection approaches, they can be classified into three types. The first category is based on volumetric representation. The second is based on pillars. Finally, the third is based on raw points. Furthermore, they are novel models recognized by the scientific community that provide innovation in the diverse architecture pipeline, as well as high accuracy and performance in 3D object detection.

The first category, which can be divided into one-stage and two-stage methods, is usually based on a volumetric representation that discretizes the point cloud. One-stage methods have a single stage; SECOND [8] is an example. This 3D convolution-based technique produces object class predictions, bounding box regression and orientation classification. Two-stage methods produce the same outputs as one-stage methods but additionally refine the bounding boxes. Examples of two-stage methods are P-RCNN [9], VoxelRCNN [10] and [11]. Usually, these methods require more computing power because they either use the costly volumetric representation of the point cloud or rely on computationally intensive 3D convolutions.

The second category of models falls under one-stage methods and uses 2D convolutions in place of the computationally intensive 3D convolutions. PointPillars [12] is an example of this approach. To decrease the high computational cost of handling 3D LiDAR data, these models usually compress the data into a 2D projection or organize it into pillars [12]. While these methods are quicker and suitable for real-time applications, they sacrifice detection capability by losing some information. This highlights the trade-off between inference time and accuracy.

The third category of methods, such as Point RCNN [13], utilizes a two-stage approach based on raw point data and voxel representation to take advantage of their respective benefits. In the first stage, the network uses voxel representation as input and performs light convolutional operations, which results in a small number of high-quality initial predictions. An attention mechanism effectively combines coordinate and indexed convolutional features of each point in the initial forecast, maintaining both accurate localization and contextual information. The second stage uses the fused feature of interior points to refine the prediction [14].

Object recognition in autonomous vehicles can be considerably improved by utilizing shared visual data from numerous vehicles and infrastructure sensors. By exchanging information with nearby infrastructure and vehicles, this method can overcome limitations such as occlusion and a narrow field of view. Accurate vehicle position, velocity and attitude information is essential to achieve this improvement [4].

The synthesis of autonomous vehicle kinematics and dynamics can be used to estimate a vehicle’s side slip angle (SSA), an important state parameter in vehicle dynamics, by means of a consensus Kalman filter. The kinematics and dynamics of the vehicle are highly nonlinear; yet, after linearization, a linear system can approximate them well. The linear system contains the vehicle state, which consists of the vehicle’s position, velocity and attitude, and a first-order differential equation describes how this state changes over time. On this basis, the consensus Kalman filter-based approach can estimate the SSA accurately and robustly, and the estimate can be used to enhance vehicle control and safety [4]. The accurate estimation of vehicle kinematics, including position, velocity and attitude, can also considerably improve the ability of autonomous cars to identify objects. By adding this information to the object detection algorithms, the system may better comprehend the dynamics and behavior of nearby automobiles, pedestrians and other objects in the environment. As a result, object detection becomes more accurate, especially in conditions where occlusion and a small field of view present difficulties.

Object detection in vehicle surroundings or in remote sensing presents distinct problems in comparison to detection in natural scene images. Specialized detection techniques are needed in these domains to identify specific objects of interest, such as cars, pedestrians or tassels in UAV footage. The “YOLOv5-Tassel” method is one of the many strategies being investigated by researchers to improve object detection performance in these fields [15]. The YOLOv5-Tassel model includes architecture adjustments, data augmentation methods and hyperparameter optimization, which aim to increase the reliability and precision of tassel detection in UAV imagery. The authors thoroughly evaluate the model’s performance on a variety of datasets and compare it to other detection techniques; the results show that YOLOv5-Tassel detects tassels in UAV imagery with a high degree of accuracy. Compared with object recognition in natural scenes, object detection in remote sensing images is more challenging because it calls for the detection of targets from different scenes. Moreover, although there are many remote sensing images, far fewer of them are labeled than in natural scene datasets, which makes it harder for models to converge during training [16].

3. Methodology

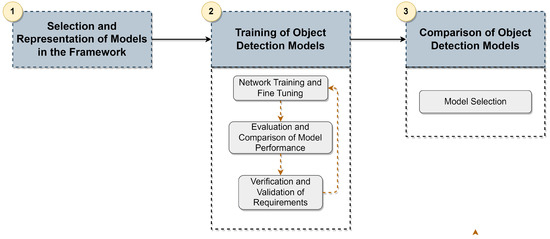

To implement/represent the 3D object detection models based on deep learning in the framework, we employed a three-step methodology, which is depicted in Figure 1. (1) Firstly, a set of model architecture and hyperparameter specifications is defined in different configuration files. These files define the specifications of the components of each module in the framework (described in Section 4) as well as the training and test specifications that are then used to build, train and test the object detectors. We chose the models for 3D object detection based on a review of the existing literature, which is outlined in Section 2 and elaborated further in [3]. The framework, described in Section 4, was developed to facilitate the representation of any object detection model.

Figure 1. Methodology for object detection model fine-tuning.

Once the object detector is built, it is subjected to a training and evaluation pipeline (2), where various optimizations can be performed to improve the accuracy metrics and meet the inference time requirements. In our project, since different components, such as the SLAM algorithm and the object detector, need to operate simultaneously, we require an overall mAP of at least 60% and an inference time of less than 100 ms (these metrics are always subject to trade-offs). The training and evaluation step can be repeated by changing the training specification in the respective model configuration. Defining the training and testing parameters in these configuration files makes it easier to modify them and then submit the object detector to the same training and evaluation pipeline. The pipeline was executed on a server-side node with an Intel Core i9 processor, 64 GB of RAM and a Quadro RTX 8000 GPU. The proposed workflow is therefore iterative: the model is fine-tuned, and the training and evaluation steps are repeated until the application requirements are met. The evaluation and comparison are carried out on the KITTI benchmark, using the validation set, on the aforementioned server node. This workflow aims to ensure that the models meet the application requirements while attaining the highest accuracy possible, and it yields a group of candidate object detection models for the subsequent step.

After completing step (2) of the workflow, the resulting models are compared (step (3)) to select the one that offers the best balance between precision and inference time. The subsequent section presents information on the architecture of the framework, the chosen deep learning models and the parameters used in the fine-tuning process.
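The comparison in step (3) can be illustrated with a short sketch. It filters candidates by the two requirements stated above (overall mAP of at least 60% and inference time below 100 ms) and then ranks the remaining ones. The ranking rule (highest mAP first, lowest latency as tie-breaker), the `Candidate` type and the placeholder values are assumptions made for illustration only; they are not measured results.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    name: str
    map_percent: float   # overall mAP on the validation set, in percent
    latency_ms: float    # mean inference time per frame, in milliseconds


MIN_MAP = 60.0           # requirement stated in the text
MAX_LATENCY_MS = 100.0   # requirement stated in the text


def meets_requirements(c: Candidate) -> bool:
    """Check the two application requirements."""
    return c.map_percent >= MIN_MAP and c.latency_ms < MAX_LATENCY_MS


def select(candidates: List[Candidate]) -> Optional[Candidate]:
    """Return the feasible candidate with the highest mAP.

    Assumed tie-breaking rule: the lower latency wins.
    Returns None when no candidate meets the requirements.
    """
    feasible = [c for c in candidates if meets_requirements(c)]
    return max(feasible, key=lambda c: (c.map_percent, -c.latency_ms), default=None)


# Placeholder values for illustration only (not experimental results).
candidates = [
    Candidate("model_a", 58.0, 40.0),   # too inaccurate
    Candidate("model_b", 65.0, 120.0),  # too slow
    Candidate("model_c", 62.0, 80.0),
    Candidate("model_d", 62.0, 60.0),
]
best = select(candidates)
print(best.name if best else "no feasible model")
```

Other ranking rules, such as a weighted score of mAP and latency, would fit the same structure.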

4. Framework for Representing 3D Object Detection Models

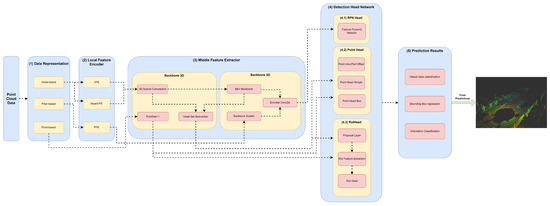

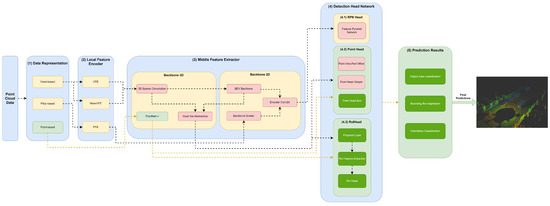

Our framework’s key innovation is that it facilitates the representation of any object detector through YML configuration files that define their module specifications in each framework component. Moreover, this framework, shown in Figure 2, aims to facilitate the implementation and integration of new modules in each framework component to allow for the comprehensive representation of the different state-of-the-art 3D object detectors.

Figure 2. Framework used for the implementation/representation of object detection models.

The first component, (1) data representation, receives the set of points and discretizes them in a set of data structures, such as pillars or voxels, or only passes the set of points to be used by the middle extractor module (3). (2) The local feature encoder receives as input these data structures—more specifically, the set of pillars or voxels—and encodes and concatenates their features. Then, in the middle extractor (3), 3D and/or 2D backbones extract features from local encoded features, which are used by the (4) detection head to predict object class, bounding box offsets and direction (5). (4.1) This detection head based on RPN can be assisted by two modules, a (4.2) point head module and (4.3) region of interest (RoI) head module, which refines the predicted bounding box offsets and orientation. (4.2) The point head module is composed of three networks: a point intrapart offset head [11], a point-based segmentation head for keypoint segmentation [17] and another point-based segmentation head based on [13]. The (4.3) RoiHead module is defined for each state-of-the-art model based on their specificities, but typically it is composed of a proposal layer, which proposes a set of RoIs, a RoI feature extraction that pools the RoI features and a RoI head that predicts RoI class and bounding box offsets.
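The idea of assembling a detector from a configuration can be sketched as follows. This is a minimal illustration and not the framework's actual code: the registry, the stub modules, the stage names and the configuration keys are all hypothetical, and the Python dictionary stands in for what a parsed YML file might contain.

```python
from typing import Callable, Dict, List, Tuple

Trace = List[Tuple[str, dict]]

# Hypothetical registry mapping a module name (as written in a config)
# to a factory that builds the module from its parameters.
REGISTRY: Dict[str, Callable[..., Callable[[Trace], Trace]]] = {}


def make_stub(label: str):
    """Create a factory for a stand-in module that only records its call."""
    def factory(**params):
        def module(trace: Trace) -> Trace:
            return trace + [(label, params)]
        return module
    return factory


for label in ("Pillars", "PFN", "Backbone2D", "AnchorHead"):
    REGISTRY[label] = make_stub(label)

# Assumed stage order, following the component numbering in the text.
STAGES = ["data_representation", "local_feature_encoder",
          "middle_extractor", "detection_head"]


def build_detector(config: dict) -> Callable[[], Trace]:
    """Instantiate one module per stage and chain them in order."""
    modules = []
    for stage in STAGES:
        spec = dict(config[stage])   # copy so the config is not mutated
        name = spec.pop("name")
        modules.append(REGISTRY[name](**spec))

    def detector() -> Trace:
        trace: Trace = []
        for module in modules:
            trace = module(trace)
        return trace

    return detector


# Hypothetical configuration, equivalent to a parsed YML file.
config = {
    "data_representation": {"name": "Pillars", "max_points": 32},
    "local_feature_encoder": {"name": "PFN"},
    "middle_extractor": {"name": "Backbone2D"},
    "detection_head": {"name": "AnchorHead"},
}
print(build_detector(config)())
```

With this pattern, swapping one stage for another only requires changing the corresponding `name` entry in the configuration, provided the replacement module is registered.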

4.1. Point Cloud Data Representation

We receive an unordered set of points $P = \{p_1, \dots, p_n\}$, where $n$ is the number of points and each point $p$ is represented as $(x, y, z, r)$, where $x$, $y$ and $z$ correspond to coordinates in the three-dimensional Cartesian axes and $r$ is the reflectance value provided by the LiDAR sensor. A point cloud range is a tuple $(L, H, W)$ whose elements bound the extent of the scene. We refer to the subset of the point cloud that lies within this range as the cropped point cloud.

4.1.1. Pillar Representation

The framework receives the cropped points and discretizes them in the X–Y plane, thus creating a set of pillars whose size is bounded by a max number of pillars. Each pillar has a fixed size in the X–Y plane and is represented by a tuple $(w, h)$, where w is the width of the pillar along the x axis, and h is the height of the pillar along the y axis. Each point is assigned to the pillar in which it resides.

To deal with the sparsity problem and save computation, a max number of points per pillar is defined. If a pillar holds more points than this maximum, the points are randomly sampled. On the other hand, zero padding is added when a pillar holds fewer points than the maximum.
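The grouping, sampling and padding steps can be sketched in plain Python. This is a minimal sketch under our own assumptions: the function name, the argument names, the fixed random seed and the policy of keeping the first pillars encountered when the max number of pillars is exceeded are illustrative choices, not the framework's implementation.

```python
import random
from collections import defaultdict


def pillarize(points, x_range, y_range, pillar_size, max_points, max_pillars, seed=0):
    """Group (x, y, z, r) points into fixed-size pillars on the X-Y plane.

    Returns a dict mapping a pillar index (ix, iy) to a list of exactly
    `max_points` points (randomly sampled or zero padded).
    """
    rng = random.Random(seed)
    w, h = pillar_size
    buckets = defaultdict(list)
    for p in points:
        x, y = p[0], p[1]
        # Crop: ignore points outside the point cloud range.
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue
        ix = int((x - x_range[0]) // w)
        iy = int((y - y_range[0]) // h)
        buckets[(ix, iy)].append(p)

    pillars = {}
    # Assumed policy: keep the first `max_pillars` non-empty pillars found.
    for key in list(buckets)[:max_pillars]:
        pts = buckets[key]
        if len(pts) > max_points:
            pts = rng.sample(pts, max_points)      # random sampling
        else:
            padding = [(0.0, 0.0, 0.0, 0.0)] * (max_points - len(pts))
            pts = pts + padding                    # zero padding
        pillars[key] = pts
    return pillars


pts = [(0.5, 0.5, 0.0, 1.0), (0.6, 0.4, 0.0, 1.0), (0.7, 0.2, 0.0, 1.0),
       (1.5, 0.5, 0.0, 1.0), (5.0, 0.5, 0.0, 1.0)]
pillars = pillarize(pts, (0.0, 2.0), (0.0, 2.0), (1.0, 1.0),
                    max_points=2, max_pillars=10)
print(sorted(pillars))
```

The voxel-based representation follows the same pattern with an additional index along the z axis.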

4.1.2. Voxel-Based Representation

The voxelization process is similar to the pillar discretization; however, the received points are discretized along the X, Y and Z axes. This creates a set of voxels whose size is bounded by a max number of voxels. Each voxel has a fixed size and is represented by a tuple $(w, h, d)$, where w is the width of the voxel along the x axis, h is the height of the voxel along the y axis and d is the depth of the voxel along the z axis.

A random sampling strategy is also applied to save computation, and a max number of points per voxel is also used. The strategy to sample points or apply zero padding is the same as the pillar representation.

4.1.3. Point-Based

The idea in the point-based strategy is to pass the cropped point cloud, herein denoted as , to the middle feature encoder.

4.2. Local Feature Encoder

The local feature encoder receives the data representation structures: pillars, voxels or just the set of points of the cropped area. Then, a set of methods is applied to obtain features, either producing dense tensors in the case of the pillar feature network (PFN) and the voxel feature encoder (VFE), or simply calculating the mean values of the point coordinates within each voxel using the mean VFE method.

4.2.1. Pillar Feature Network

The features of each pillar, , are augmented in a tensor

, where c describes the distance to the arithmetic mean of all points in , and is the offset distance from the center.

For this purpose, (1) the pillar feature network (PFN) receives the pillar augmented features as input and applies linear transformations to each point, herein described as , where corresponds to the initial tensor , and to the output tensor. In , all but the last dimension are the same shape as the input. Dimension results from the linear transformation of , thus producing . Then, batch-norm and ReLU are applied to this tensor. Afterwards, all resulting features are aggregated. This process allows for the generation of a dense tensor to represent the pillar as a tuple , where D is the above-mentioned augmented point, P is the number of non-empty pillars per batch and N is the number of points per pillar. Next, max pooling operations over the channels are used to generate a tensor of size .
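The augmentation and pooling steps can be sketched as follows. This is a simplified illustration under stated assumptions: the nine-value layout (x, y, z, r, three offsets to the mean of the points, two offsets to the pillar center) follows the common PointPillars convention and is assumed here, batch normalization is omitted for brevity, zero-padded points are not handled, and all function names and the weight matrix are hypothetical.

```python
def augment_pillar(points, center_xy):
    """Append offsets to the point mean and to the pillar center.

    points: list of (x, y, z, r) for the real points of one pillar.
    center_xy: (x, y) coordinates of the pillar center.
    Returns one 9-value feature vector per point (assumed layout).
    """
    n = len(points)
    mean = [sum(p[i] for p in points) / n for i in range(3)]
    augmented = []
    for x, y, z, r in points:
        augmented.append([
            x, y, z, r,
            x - mean[0], y - mean[1], z - mean[2],   # offset to arithmetic mean
            x - center_xy[0], y - center_xy[1],      # offset to pillar center
        ])
    return augmented


def linear_relu(features, weight):
    """Apply a per-point linear map followed by ReLU (batch-norm omitted).

    weight: list of C_out rows, each holding C_in coefficients.
    """
    return [
        [max(0.0, sum(w * f for w, f in zip(row, feat))) for row in weight]
        for feat in features
    ]


def max_pool_over_points(features):
    """Reduce the per-point features of a pillar to one vector per pillar."""
    return [max(channel) for channel in zip(*features)]


points = [(1.0, 1.0, 0.0, 0.5), (3.0, 1.0, 2.0, 0.5)]
aug = augment_pillar(points, center_xy=(2.0, 2.0))
# Hypothetical weights: channel 0 copies x, channel 1 copies the x offset to the mean.
weight = [
    [1, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 1, 0, 0, 0, 0],
]
pooled = max_pool_over_points(linear_relu(aug, weight))
print(pooled)
```

In practice the weights are learned and the operations run on batched tensors; the sketch only shows the order of the steps.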

4.2.2. Voxel Feature Encoder

Similar to PFN, the points in each voxel, , are augmented by calculating offset distance of the point to the center, herein denoted as , which generates the tensor , where as mentioned before is the max number of points per Voxel. Afterwards, each is subject to VFE layers , where . Each is composed by a set of transformations, where linear transformation, batch-norm and ReLU are applied. Then, all points features of resulting from the above-mentioned transformations, herein described as , are aggregated. Each can be described as , where is the output dimension that results from the linear transformation of all points . The output size resulting from the linear transformation can be described as , where means the output features of a specific index l. Then, all point features are subject to a max pooling operation over the channels. The output tensor is described as , where . Afterwards, a repeat process of the above tensor is performed in , which means the repeat point feature resulted from max pooling k times, where . Each is augmented with to generate and . The set of features for each voxel can be described by the tuple , where , , and applies linear, batch-norm, ReLU and max pooling to each . Thus, means that has the output dimension F, the output feature of the last VFE layer.

Finally, it generates a list of obtained voxel features , , where is the above-mentioned augmented features of all voxels.

4.2.3. Mean Voxel Feature Encoder

Mean VFE receives a set of voxels , sums all points residing in each voxel in a specific axis and divides by the number of points of each one. This operation can be described as , , . corresponds to the total number of points of the voxel in a given axis; the max number of voxels; and corresponds to a resulting point. This strategy considers the voxel-wise features of a new voxel center and approximate equivalence to raw point cloud data. The idea herein is to process the voxel-wise features in the middle feature encoder more efficiently, especially by the 3D sparse convolutions, since they generate (max number of voxels, as described in Section 4.1) number of non-empty voxels.
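A minimal sketch of this averaging is given below. It assumes that the real points of a voxel are stored before its zero padding and that the number of real points is known; both the function name and this storage convention are our assumptions.

```python
def mean_vfe(voxel_points, num_points):
    """Average the real points of one voxel, channel by channel.

    voxel_points: list of (x, y, z, r) tuples, zero padded at the end.
    num_points: number of real (non-padded) points in the voxel.
    """
    real = voxel_points[:num_points]          # drop the zero padding
    channels = len(real[0])
    return tuple(sum(p[i] for p in real) / num_points for i in range(channels))


voxel = [(1.0, 2.0, 3.0, 0.25), (3.0, 4.0, 5.0, 0.75), (0.0, 0.0, 0.0, 0.0)]
print(mean_vfe(voxel, num_points=2))
```

Dividing by the number of real points rather than by the padded length keeps the zero padding from biasing the mean towards the origin.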

4.3. Middle Feature Extractor

The (3) middle feature extractor is responsible for extracting more features from the (2) local feature encoders to provide more context for the shape description of objects for the networks of the detection head module. Various methods are used; herein, we separated them into 3D backbones and 2D backbones, which will be described in more detail below.

4.3.1. Backbone 3D

A variety of methods resort to 3D backbones using the sparse CNN component. Also, models can use a voxel set abstraction 3D backbone, aiming to encode the multiscale semantic features obtained by sparse CNN to keypoints. Others use PointNet++ with multiscale grouping for feature extraction and to obtain more context to the shape of objects, and then pass these features to the (4) detection head module.

3D Sparse Convolution

The 3D sparse convolution method receives the voxel-wise features of VFE, or mean VFE, .

This backbone is represented as a set of blocks , in the form , where . Each block can be defined by a set of sparse sequential operations denoted as . Each is described by , where means submanifold sparse convolution 3D [18], means spatially sparse convolution 3D [19], means 1D batch normalization operation and represents the ReLU method. The last method assumes the standard procedure, as mentioned in [20].

In our framework, the set of blocks assumes the following configurations:

- The input block can be described by ;

- The next block is represented in the form ;

- The block 3 is represented as , ;

- The block 4 is denoted as , ;

- The block 5 is denoted as , ;

- The last block is defined by .

The batch normalization element is defined by , which represents the formula in [21]. represents the input features, which are the output features of submanifold sparse or spatially sparse convolutions 3D, so that . represents the eps, and the momentum values. These values are defined in Table 1.

Table 1.

Values used in .

The element can be represented as . represents the input features of , and it is denoted as , where represents the output features of submanifold sparse Conv3D. The element represents the output features resulting from applying . means kernel size of a spatially sparse convolution 3D, and it is denoted as , where , and . The stride can be described as a set and . designates padding, and a set can define it , . means dilation, and can be defined as a set , . The output padding is represented as a in the form and . The configurations used in our framework are represented in Table 2.

Table 2.

Configurations used in for each element.

is represented by [18]. represents the input features passed by (2) the local feature encoder or by the last sparse sequential block , and represents the output features of . Thus, in the case of the local encoder being mean VFE, otherwise , where F represents the output features of the VFE network. Also, can be represented by and , where represents the output features of a . The element represents the kernel size, which can be defined as , where , and . means stride, and can be defined as a set , and . represents padding, and a set can describe it in the form . means dilation, and can be described as a set . represents the output padding, and a set describes it in the form , and . The configurations used in our framework are represented in Table 3.

Table 3.

Configurations used in and for each block. N.A.—not applicable.

The hyperparameters used in each are defined in Table 7.

Finally, the output spatial features are defined by , where SP is defined by a tuple (B, C, D, H, W). B represents the batch size; C the output features of represented in as ; D depth; H height; and W width.

PointNet++

We use a modified version of PointNet++ [9] based on [13] to learn undiscretized raw point cloud data (herein denoted as ) features in multiscale grouping fashion. The objective is to learn to segment the foreground points and contextual information about them. For this purpose, a set abstraction module, herein denoted as , is used to subsample points at a continuing increase rate, and a feature proposal module, described as , is used to capture feature maps per point with the objective of point segmentation and proposal generation. A is composed of , , where means PointNet set abstraction module operations. Each is represented by , where corresponds to query and grouping operations to learn multiscale patterns from points, and is the set of specifications of the PointNet before the global pooling for each scale.

means ball query operation followed by a grouping operation . It can be defined by the set , where and correspond to two query and group operations. A ball query is represented as , where R means the radius within all points will be searched from the query point with an upper limit , in a process called ball query; P means the coordinates of the point features in the form that are used to gather the point features; represents the coordinates of the centers of the ball query in the form , where , , and are center coordinates of a ball query. Thus, this ball query algorithm searches for point features P in a radius R with an upper limit of query points from the centroids (or ball query centers) . This operation generates a list of indices in the form , , where corresponds to the number of . represents the indices of point features that form the query balls. Then, a grouping operation is performed to group point features, and can be described by , in which and correspond to point features and indices of the features to group with, respectively. In each of a , the number of centroids will decrease, so that , and due to the relation of the centroids in ball query search, the number of indices and corresponding point features will also decrease. Thus, in each , the number of points features is defined by . The number of centroids defined in QGL during operations is defined in Table 4.
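The ball query and grouping operations can be illustrated with a brute-force sketch. The function names and arguments are hypothetical, and the sketch returns fewer indices when a ball contains fewer points than the upper limit, whereas practical implementations usually pad the index list to a fixed length.

```python
import math


def ball_query(points, centers, radius, max_samples):
    """For each center, collect indices of points inside the ball.

    points: list of (x, y, z) coordinates.
    centers: list of (x, y, z) ball query centers (centroids).
    radius: search radius R.
    max_samples: upper limit on the number of points per ball.
    """
    all_indices = []
    for c in centers:
        inside = [i for i, p in enumerate(points) if math.dist(p, c) < radius]
        all_indices.append(inside[:max_samples])
    return all_indices


def group(features, indices):
    """Gather the features selected by each ball's index list."""
    return [[features[i] for i in idx] for idx in indices]


points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (5.0, 5.0, 5.0)]
centers = [(0.0, 0.0, 0.0), (5.0, 5.0, 5.0)]
indices = ball_query(points, centers, radius=1.5, max_samples=2)
grouped = group(["a", "b", "c", "d"], indices)
print(indices, grouped)
```

Because each set abstraction level uses fewer centroids than the previous one, the number of index lists, and hence of grouped feature sets, shrinks from level to level.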

Table 4.

Configurations used in for each element.

Afterwards, an is performed, defined by a set of specifications of the PointNet before the operations. The idea herein is to capture point-to-point relations of the point features in each local region. The point feature coordinate translation to the local region relative to the centroid point is performed by the operation . , and are coordinates of point features as mentioned before, and , and are coordinates of the centroid center. can be defined by a set that represents two sequential methods. Each is represented by the set of operations , , where means convolution 2D, 2D batch normalization and represents the ReLU method. is defined by . , where represents the input features that can be received by or by the output features of the , the kernel size, and represents the stride of the convolution 2D. The kernel size is defined by the set and . Also, the stride is represented by a set and with . The set of specifications used in our models regarding are summarized in Table 5. can be defined as:

where denotes max pooling, denotes random sampling of features and denotes the multilayer perceptron network to encode features and relative locations.

Table 5.

Set of configurations used in of a specific of the element in a specific .

Finally, a feature proposal is applied employing a set of feature proposal modules . Each is defined by the element as defined above. Also, the element assumes a set , and each has the same operations, with the only difference in the element s that describes the number of operations, assuming instead of . The configurations used in our models are summarized in Table 6.

Table 6.

Set of configurations used in of a specific in a specific .

Voxel Set Abstraction

This method aims to generate a set of keypoints from given point cloud and use a keypoint sampling strategy based on farthest point sampling. This method generates a small number of keypoints that can be represented by , , where is the number of points features that have the largest minimum distance, and B the batch size. The farthest point sampling method is defined according to a given subset , where M is the maximum number of features to sample, and subset , , where N is the total number of points features of ; the point distance metric is calculated based on . Based on D, an operation is performed, which calculates the smallest value distance between and . , and represent the list of the last known largest minimum distances of point features. Assuming , it returns the index . Based on , thus . Finally, this operation generates a set of indexes in the form , and , where B corresponds to the batch size and M represents the maximum number of features to sample. The keypoints K are given by .
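Farthest point sampling can be sketched for a single point cloud as follows. This is a minimal, unbatched illustration: the function name, the choice of index 0 as the starting point and the assumption that the number of samples does not exceed the number of points are ours.

```python
import math


def farthest_point_sampling(points, num_samples, start=0):
    """Return indices of `num_samples` points chosen by farthest point sampling.

    At every step, the point whose distance to the already selected set
    is largest (the largest minimum distance) is added to the selection.
    Assumes num_samples <= len(points).
    """
    n = len(points)
    min_dist = [math.inf] * n   # distance of each point to the selected set
    selected = [start]
    for _ in range(num_samples - 1):
        last = points[selected[-1]]
        # Update the minimum distances with the most recently selected point.
        for i, p in enumerate(points):
            d = math.dist(p, last)
            if d < min_dist[i]:
                min_dist[i] = d
        # Pick the point that is farthest from the selected set.
        selected.append(max(range(n), key=min_dist.__getitem__))
    return selected


points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (10.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
indices = farthest_point_sampling(points, num_samples=3)
keypoints = [points[i] for i in indices]
print(indices, keypoints)
```

In the example, the second keypoint is the point farthest from the start, and the third is the point farthest from both, which spreads the keypoints over the whole cloud.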

These keypoints K are subject to an interpolation process utilizing the semantic features encoded by the 3D sparse convolution as . In this interpolation process, these semantic features are mapped with the keypoints to the voxel features that reside. Firstly, this process defines the local relative coordinates of keypoints with voxels by means . Then, a bilinear interpolation is carried out to map the point features from 3D sparse convolution in a radius R with the , the local relative coordinates of keypoints. This is perform . Afterwards, indexes of points are defined according to in the form and another . The expression that gives the features from the BEV perspective based on and is the following:

Thus, the weights between these indexes , and are calculated, as follows:

- ;

Finally, the bilinear expression that gives the features from the BEV perspective is , where , , , . Also, , , , , and .
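As a rough illustration of the bilinear interpolation step, the sketch below samples a single-channel BEV grid at a continuous location. The function name, the row/column layout of `feature_map` and the clamping at the borders are assumptions made for this example; a real feature map would carry many channels and the same weights would be applied to each.

```python
import math


def bilinear_interpolate(feature_map, x, y):
    """Sample `feature_map[row][col]` at the continuous location (x, y).

    x indexes columns and y indexes rows; locations outside the map are
    clamped to the border (an assumption for this sketch).
    """
    height, width = len(feature_map), len(feature_map[0])
    x = min(max(x, 0.0), width - 1)
    y = min(max(y, 0.0), height - 1)
    # Indexes of the four neighbouring cells
    x0, y0 = math.floor(x), math.floor(y)
    x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
    # Fractional offsets inside the cell
    dx, dy = x - x0, y - y0
    # One weight per neighbour; the four weights sum to 1
    w_a = (1 - dx) * (1 - dy)  # (x0, y0)
    w_b = (1 - dx) * dy        # (x0, y1)
    w_c = dx * (1 - dy)        # (x1, y0)
    w_d = dx * dy              # (x1, y1)
    return (w_a * feature_map[y0][x0] + w_b * feature_map[y1][x0]
            + w_c * feature_map[y0][x1] + w_d * feature_map[y1][x1])
```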

The local features of are indicated by , and aggregated using PointNet++ according to the specification defined above. They will generate , which are voxel-wise features within the neighboring voxel set of , transforming using PointNet++ specifications. This generates according to , and each is an aggregate feature of 3D sparse convolution with from different levels according to Table 4.

4.3.2. Backbone 2D

Two-dimensional backbones are used to extract features from 2D feature maps resulting from a PFN component, such as those used by PointPillars, and to readjust the objects back to LiDAR’s Cartesian 3D system with minimal information loss utilizing a backbone scatter component. Also, models can compress the feature map of 3D backbones into a bird’s-eye view (BEV) feature map employing a BEV backbone and use an encoder Conv2D to perform feature encoding and concatenation. This methodology is employed by models such as SECOND, PV-RCNN and Voxel-RCNN.

Backbone Scatter

The features resulting from the PFN are used by the PointPillars scatter component, which scatters them back to a 2D pseudoimage of size , where H and W denote height and width, respectively.
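A minimal sketch of the scatter operation is given below, assuming each non-empty pillar carries a feature vector and its (row, column) location on the grid. The function and argument names are hypothetical, and plain nested lists stand in for tensors.

```python
def scatter_to_pseudo_image(pillar_features, pillar_coords, height, width):
    """Place per-pillar feature vectors on a dense (C, H, W) canvas.

    `pillar_features` is a non-empty list of C-dimensional vectors and
    `pillar_coords` the matching list of (row, col) grid positions.
    Cells without a pillar keep the value 0.
    """
    num_channels = len(pillar_features[0])
    canvas = [[[0.0] * width for _ in range(height)] for _ in range(num_channels)]
    for features, (row, col) in zip(pillar_features, pillar_coords):
        for channel, value in enumerate(features):
            canvas[channel][row][col] = value
    return canvas
```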

BEV Backbone

The BEV backbone module receives 3D feature maps from 3D sparse convolution and reshapes them to the BEV feature map. Admitting the given sparse features , the new sparse features are . The BEV backbone is represented as a set of blocks , in the form , where . Each block , is represented by . The element n represents the number of convolutional layers in . The set of convolutional layers C in is described as a set , where . F represents the number of filters of each , U is the number of upsample filters of . Each of the upsample filters has the same characteristics, and their outputs are combined through concatenation. S denotes the stride in . If , we have a downsampled convolutional layer (). A number of convolutional layers (, such that ) follow this layer. Batch-norm and ReLU layers are applied after each convolutional layer.

The input for this set of blocks is spatial features extracted by 3D sparse convolution or voxel set abstraction modules and reshaped to the BEV feature map.
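The reshaping to a BEV feature map can be sketched as follows. This example assumes the common convention of folding the depth (height) axis of a dense (C, D, H, W) volume into the channel axis, giving (C·D, H, W); that convention and the function name `compress_height` are assumptions, since the exact expression is not reproduced here.

```python
def compress_height(volume):
    """Fold the depth axis of a (C, D, H, W) nested list into the channels.

    The result has shape (C * D, H, W); output channel c * D + d holds the
    2D slice volume[c][d], which matches a row-major reshape.
    """
    return [depth_slice for channel in volume for depth_slice in channel]
```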

Encoder Conv2D

Based on features extracted in each block and after upsampling based on , where D means the downsample factor of the convolution layer C, the upsample features , are concatenated, such that , where cat means .

4.4. Detection Head

After that, the (4) detection head component receives the 2D encoded features as input and performs operations based on three modules: RPN head, point head, and RoI head.

4.4.1. RPN Head

Based on the 2D encoded features, a set of convolutions to predict class labels, regression offsets and direction are performed. Thus, a set of 1 × 1 convolutions , where , is applied. Each can be represented by , where means convolution 2D, input channels, output channels and kernel size. is the class prediction convolution, and can be described by , where means number of anchors per location and number of target classes to predict. is the convolution for bounding box offset regression, and can be defined by , where it generates two anchors for each class and seven bounding box offsets. Finally, is performed based on where represents the same number of anchors per location, as previously mentioned; represents the number of bins per anchor location; and represents kernel size.
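To make the sizes of the three 1 × 1 convolutions concrete, the sketch below computes their output channels. It assumes that each output size is the product of the quantities named above (anchors per location times classes, box offsets or direction bins) and that two direction bins are used; the function name and default values are illustrative assumptions.

```python
def rpn_head_channels(num_classes, anchors_per_class=2, box_code_size=7,
                      num_dir_bins=2):
    """Output channels of the class, box and direction 1 x 1 convolutions.

    Assumes two anchors per class and seven box offsets, as described in
    the text; `num_dir_bins=2` is an assumption for this sketch.
    """
    anchors_per_location = anchors_per_class * num_classes
    return {
        "cls": anchors_per_location * num_classes,
        "box": anchors_per_location * box_code_size,
        "dir": anchors_per_location * num_dir_bins,
    }
```

Under these assumptions, a three-class model would use 18 class channels, 42 box regression channels and 12 direction channels.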

The figure representing our baseline network for each block can be seen in Figure 2. We use three blocks with a BEV backbone for PointPillars, while for the other models, we use two blocks. Each block is represented as described in Table 7. Table 8 describes the configuration of the RPN head.

Table 8.

The different RPN configurations () used. N.A.—not applicable.

Table 7.

The different block configuration () used. N.A.—not applicable.

| Models | Block 1 | Block 2 | Block 3 |
|---|---|---|---|
| PointPillars | (3, 64, 128, 2) | (5, 128, 128, 2) | (5, 128, 128, 2) |
| SECOND | (5, 64, 128, 1) | (5, 128, 256, 2) | N.A. |
| PV-RCNN | (5, 64, 128, 1) | (5, 128, 256, 2) | N.A. |
| PointRCNN | N.A. | N.A. | N.A. |
| PartA2 | (5, 128, 256, 2) | (5, 128, 256, 2) | N.A. |
| VoxelRCNN | (5, 128, 256, 2) | (5, 128, 256, 2) | N.A. |

4.4.2. Point Head

Different implementations of point head have been proposed to refine RPN predictions or generate class labels, bounding box regression offsets and direction. It can be composed of a class layer regression in the form and bounding box layer described as . The point class layer provides the segmentation score of foreground points, and gives the relative location of foreground points as and calculated based on a foreground point using , where , , are center coordinates of the bounding box; h, w, and l means height, width and length of the bounding box, respectively; and is the box orientation in bird view.

Firstly, bounding box targets are normalized in a canonical coordinate system by first checking if the given points are within the bounding box by performing where if the given statement is true, the local and are calculated. The operation is and . Then, we determine the local relative coordinate of concerning bounding box in X–Y by means , and then determine if a point belongs, and return the respective index to bounding box by . After obtaining the points indexes within the bounding boxes, all inside points are aggregated with PointNet++.
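The point-in-box check in canonical coordinates can be sketched as below. The box layout (center, then h, w, l, then orientation), the choice of length along the local X axis and width along the local Y axis, and the function names are assumptions made for this illustration.

```python
import math


def to_box_frame(point, box):
    """Translate and rotate `point` into the canonical frame of `box`.

    `box` is (cx, cy, cz, h, w, l, theta), with theta the rotation around Z.
    """
    x, y, z = point
    cx, cy, cz, _, _, _, theta = box
    dx, dy = x - cx, y - cy
    # Rotate by -theta so that the box becomes axis-aligned
    local_x = dx * math.cos(theta) + dy * math.sin(theta)
    local_y = -dx * math.sin(theta) + dy * math.cos(theta)
    return local_x, local_y, z - cz


def point_in_box(point, box):
    """True if `point` lies inside `box` (length on local X, width on local Y)."""
    _, _, _, h, w, l, _ = box
    local_x, local_y, local_z = to_box_frame(point, box)
    return (abs(local_x) <= l / 2 and abs(local_y) <= w / 2
            and abs(local_z) <= h / 2)
```

The indexes of the points for which `point_in_box` is true are the ones that would be passed on for aggregation.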

Point Intrapart Offset

This consists of both and to predict point class labels and point bounding box offsets.

Point Head Simple

This is only composed of . However, it has modifications to its architecture , where each is represented by a tuple , where means linear regression, means batch normalization and means the ReLU method. can be defined by , where means the number of features, and typically assumes the same value as .

Point Head Box

This is composed of and with architecture modifications. where where means linear regression, means batch normalization and means the ReLU method. is composed of , where each is defined by the same tuple .

4.4.3. RoI Head

The regions of interest (RoI) head is responsible for taking the RoI features of each box proposal of the RPN Head and then optimizing the imperfect bounding box proposals by predicting and fixing the size and location (centre and orientation) residuals relative to the input bounding box predictions. Besides each model’s specificities, any RoI head is composed of a proposal layer that generates/refines a set of RoIs based on RPN RoIs, denoted as ; an RoI feature extraction method ; and a head module that can be composed but not restricted to the shared fully connected layer , up–down layer and , class layer , regression layer , RoI point pool 3D layer (), RoI grid pool layer (), RoI-aware pool 3D layer () and a convolution part () and convolution RPN ().

is responsible for feature extraction and can be defined by a set , , and and are represented by a tuple , where means convolution 1D, means batch normalization 1D, means ReLU and means dropout. can be defined by the set and each by . produces box predictions and is composed by the set , where each is defined by . and mean bottom-up box generation proposal layers from foreground points. A sequence of convolution 2D and ReLU methods can define the . A is represented as and each by the same sequence of convolution 2D and ReLU methods.

are specifically pool 3D points and their corresponding point features according to the location of each 3D proposal of . Admitting the given output of bounding boxes and a specific bounding box , where , where x, y, z are center coordinates of the predicted bounding box, h, w, and l denote the height, width and length of the bounding box, and denotes the orientation of the bounding box. Herein, the produces an enlarged set of that can be defined by , where represents a constant value to resize the bounding box. The depth information loss for each bounding box proposal is compensated by including the distance information to the LiDAR sensor to the that are BEV spatial features. Each is augmented with , , where , , and correspond to coordinates of point features of the local encoder module and , and are the center coordinates of the LiDAR sensor. Thus, it generates a tensor in the form that is fed to PointNet++, as described in Section 4.3.1, to encode the augmented tensor with local features with global semantic BEV features . This generates a feature vector for confidence classification and box refinement.
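Two small operations from the paragraph above, enlarging a proposal and augmenting a point with its distance to the sensor, can be sketched as follows. The additive margin on each dimension and the Euclidean distance to the sensor origin are assumptions, as are the function names.

```python
import math


def enlarge_box(box, margin):
    """Return `box` (cx, cy, cz, h, w, l, theta) with each size grown by `margin`."""
    cx, cy, cz, h, w, l, theta = box
    return (cx, cy, cz, h + margin, w + margin, l + margin, theta)


def point_depth(point, sensor_origin=(0.0, 0.0, 0.0)):
    """Euclidean distance from `point` to the LiDAR sensor center."""
    return math.dist(point, sensor_origin)
```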

The idea of is to aggregate the keypoint features to the RoI grid points with multiple receptive fields. Grid points are uniformly sampled, and can be described by , which means that a grid is usually adopted. Firstly, the identification of neighboring keypoints to grid in a radius R is performed by means . Afterwards, a PointNet block is used to aggregate the neighboring keypoint set in the same way as Equation (2):

Then, the two MLP layers, and , are performed.

aims to provide bounding box score confidence and refinement by aggregating the local feature information () with global semantic BEV features () within the proposals. Two operations are performed within the point features of bounding boxes , such that and is scattered to the voxel data structures where , , are encoded in canonical coordinates using the point head module, and m is the number of inside points within bounding box . The objective is to solve the problem of different proposals generating the same pooled points. For this purpose, average pooling for pooled part features operation—denoted as —and max pooling for pooled RPN features—defined as —are adopted, and can be described as and where , , are the resolution of the voxels’ spatial shape. The operations RoIMax and RoIAvg can be described more specifically:

5. Three-Dimensional Object Detection Model Specifications

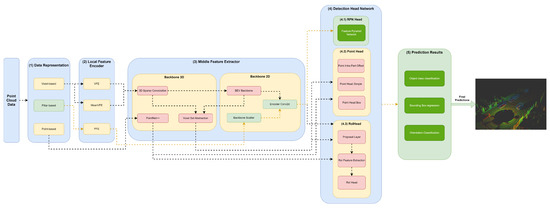

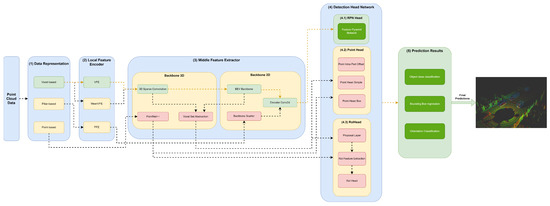

Herein, we will specify each model in the different module frameworks. These models were selected based on the requirements established and defined in Section 1, since they are the models that best guarantee the trade-off between metrics (mAP and inference time). The set of models and their specificities concerning the developed framework are illustrated in Figure 3, Figure 4, Figure 5, Figure 6, Figure 7 and Figure 8. The modules of each model are represented in the figures as green boxes, and the flow of the tensors occurs in the direction of the orange arrows.

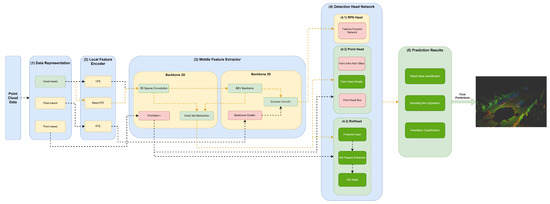

Figure 3.

Structure of the PointPillars model represented in the developed framework.

Figure 4.

Structure of the SECOND model represented in the developed framework.

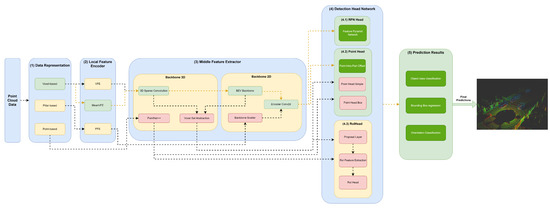

Figure 5.

Structure of the PointRCNN model represented in the developed framework.

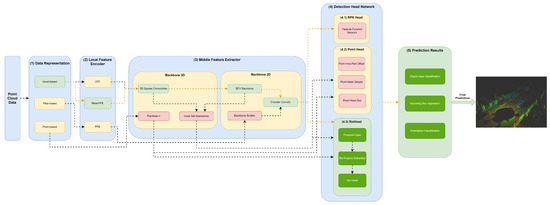

Figure 6.

Structure of the PV-RCNN model represented in the developed framework.

Figure 7.

Structure of the PartA2 model represented in the developed framework.

Figure 8.

Structure of the VoxelRCNN model represented in the developed framework.

5.1. Data Representation

The models of Figure 4, Figure 6, Figure 7 and Figure 8 represent the point cloud as voxels. In this data structure, the point cloud is delimited (using the cropping technique), and a grid is produced where the data are discretized along the X–Y–Z axes.

Only PointPillars, illustrated in Figure 3, discretizes this delimited space of the point cloud on the X–Y axis, creating a set of pillars.

In the case of the PointRCNN model (Figure 5), it provides the delimited point cloud without any data discretization and structuring process for the middle feature encoder.

5.2. Local Feature Encoders

As illustrated in the figures, three strategies are used by the models to improve the efficiency of the object detectors in the feature extraction of the data structures. Typically, these modules are responsible for the local feature extraction, and then, via concatenation, aggregate these features. Three networks are used: VFE for SECOND (Figure 4), PFE for PointPillars (Figure 3) and mean VFE for PV-RCNN (Figure 6), PartA2 (Figure 7) and VoxelRCNN (Figure 8).

5.3. Middle Feature Extractor

Models such as SECOND (Figure 4), PV-RCNN (Figure 6), PartA2 (Figure 7) and Voxel-RCNN (Figure 8) use 3D backbones based on sparse and submanifold convolutions. PV-RCNN uses the 3D voxel set abstraction backbone to encode the feature maps obtained by the 3D sparse CNN for keypoints. PointRCNN (Figure 5) uses PointNet++ [9] to extract features and pass them to the detection head module.

Only PointPillars (Figure 3) uses 2D backbones, since they require fewer computational resources when compared to 3D backbones. However, they introduce a loss in the information that is easily mitigated, since it is possible to readjust the objects again to the Cartesian 3D system of LiDAR with less loss of information. For this purpose, the resulting PFE features are used by the backbone scatter component, which scatters them back into a 2D pseudoimage. The next detection head component then uses this 2D pseudoimage.

Other models, such as SECOND (Figure 4), PV-RCNN (Figure 6), PartA2 (Figure 7) and Voxel-RCNN (Figure 8), compress the information in a bird’s-eye view (BEV) using the BEV backbone for feature extraction, then encode and concatenate the features using the encoder Conv2D component. After this process, the resulting features are passed to the detection head.

5.4. Detection Head

As mentioned earlier, this module comprises three networks: RPN head, point head and RoI head.

All models except PointRCNN use the RPN head to generate RoIs using a low-level algorithm called selective search [22] to produce proposed regions per frame of the point cloud. Selective search generates subsegments to generate many candidate regions, and following bottom-up grouping, recursively combines similar regions into larger regions to provide more accurate final candidate proposals. Each of these regions is submitted independently to the CNN module. The output feature map is then fed to an SVM classifier to predict the object class within the candidate RoI. Along with object class prediction, the algorithm also predicts four bounding box offset values.

The point head is used to assist the RPN head, as illustrated in Figure 6 and Figure 7, or generate predictions of object classes and predict four values that are the bounding box offsets, as shown in Figure 5 and Figure 8. Point head generates various masks of objects or parts of objects in a multiscale way, followed by a simple bounding box inference to generate proposals, also called point proposals, using each point to contribute to the reconstruction of the 3D geometry of the object.

The RoI head, used by PointRCNN (Figure 5), PV-RCNN (Figure 6), PartA2 (Figure 7) and Voxel-RCNN (Figure 8), takes the RoI features of each bounding box proposed by the RPN and then refines the imperfect bounding boxes from previous stages, predicting and correcting the size and location (center and orientation) relative to the input bounding box predictions.

6. Network Training and Fine Tuning

The models described in this document were trained on the KITTI dataset. In addition, the models were evaluated on the KITTI benchmarks for 3D object and BEV detection, considering a validation set. For the number of epochs used in the training phase, we followed a practice that is widespread in the literature. Thus, we use 200 epochs, considering the data described in Table 13. Regarding the training hyperparameters, we define an initial learning rate of 0.01, a learning rate decay of 0.1 following a decay-epoch schedule, a weight decay of 0.01, gradient norm clipping with a maximum value of 10, a beta1 of 0.95 and a beta2 of 0.85. We use the learning rate decay, weight decay and gradient norm clipping as regularization procedures to prevent overfitting. The evaluation metrics in the results are based on the official KITTI detection evaluation metrics. Hence, the metrics used are the mAP for BEV and 3D object detection. The training data were partitioned following the split discussed in [2]. This approach divides the 7481 training examples that are provided into a training set of 3712 samples, with the remaining 3769 samples belonging to the evaluation set. Moreover, the benchmarks presented in this article are based on the evaluation set only.
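As an illustration of a decay-epoch learning rate schedule with the values given above (initial rate 0.01, decay 0.1), consider the sketch below. The decay epochs `(100, 150)` are hypothetical placeholders, since the actual decay epochs are not stated here, and the function name is an assumption.

```python
def step_decay_lr(epoch, base_lr=0.01, decay=0.1, decay_epochs=(100, 150)):
    """Learning rate at `epoch` for a step schedule.

    The rate is multiplied by `decay` once for every decay epoch that has
    been reached. `decay_epochs` holds hypothetical values.
    """
    steps_passed = sum(1 for boundary in decay_epochs if epoch >= boundary)
    return base_lr * decay ** steps_passed
```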

We select three target classes in all experiments: car, pedestrian and cyclist. Typically, all the models described herein generate two separate networks. One network is optimized for predicting cars and another for pedestrians and cyclists. However, this approach can be unsuitable for self-driving applications, since edge devices with few resources would have to run two models in parallel. For this reason, we trained all classes in a single model for every 3D object detector.

For the fine-tuning process, we save the results of the mAP for each epoch to understand when models converge. Herein, we provide a study with the consequences of the number of samplings and min points per class sampling compared with the study made in [23]. In [23], we used different class sampling strategies but without changing the number of min points for class sampling.

Sampling Instance Strategy. We focus on optimizing the number of sampling instances and the min points per class sample. The main objective of the sampling strategy is to soften the KITTI dataset imbalance issue. During training, these instances are randomly placed into the current point cloud. However, the min points setting determines whether or not a certain instance can be used for sampling. If we increase the min number of points in the training process, instances such as pedestrians and cyclists are sampled less often because few points exist to describe their shape. On the other hand, if we decrease the min points too much, the model struggles to distinguish between the foreground and the background points. In our experiments, we use the configurations described in Table 9. The min points for class sampling were fixed at 5 per class, instead of 10 points for the pedestrian and cyclist classes and 5 points for the car class.
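The effect of the min points setting on the pool of instances available for sampling can be sketched as follows. The dictionary keys `"class"` and `"num_points"` and the function name are hypothetical; they only illustrate the filtering rule.

```python
def filter_by_min_points(instances, min_points):
    """Keep the instances with at least `min_points[class]` LiDAR points.

    `instances` is a list of dicts with hypothetical keys "class" and
    "num_points"; `min_points` maps a class name to its threshold.
    """
    return [inst for inst in instances
            if inst["num_points"] >= min_points[inst["class"]]]
```

With a threshold of 10 for pedestrians, a pedestrian described by 7 points would be discarded, whereas a threshold of 5 keeps it available for augmentation.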

Table 9.

Number of sampling instances (SI) per class.

Point Cloud Range. The point cloud range limits the detection range of any object detector. For all models in our study, the ground truth object locations are represented using the original point cloud range for all frames in the KITTI dataset. For instance, the depth data confirm that most ground truth car instances occur between 0 and 70 metres. The number of instances starts to decline sharply beyond 70 metres from the center of the LiDAR sensor. This can be explained by the fact that beyond this range, relatively few points can accurately characterize an object’s geometry, making object detection challenging. In this experiment, the point cloud range of PointPillars is compared to that of other models whose detection range is unaffected. Table 10 shows the point cloud ranges. We also compare the number of data structures (maximum number of pillars or voxels) with the research in [23].
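Cropping a point cloud to a given range can be sketched as below. The range layout `(x_min, y_min, z_min, x_max, y_max, z_max)` and the function name are assumptions, and any numeric range used with it is illustrative rather than one of the configurations of Table 10.

```python
def crop_to_range(points, pc_range):
    """Keep the points that fall inside `pc_range`.

    `pc_range` is (x_min, y_min, z_min, x_max, y_max, z_max); the upper
    bounds are exclusive in this sketch.
    """
    x_min, y_min, z_min, x_max, y_max, z_max = pc_range
    return [
        p for p in points
        if x_min <= p[0] < x_max and y_min <= p[1] < y_max and z_min <= p[2] < z_max
    ]
```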

Table 10.

The different point cloud ranges () configurations used in fine tuning.

Data structure sizes. The object detection model receives the points in and discretizes them in the X–Y axis, thus creating a set of pillars, or discretizes in X–Y–Z and creates a set of voxels. Each data structure has a fixed size in . The data structure size directly impacts model accuracy and inference time. Increasing the data structure size can result in too much data being encoded and consequently randomly sampled, leading to information loss (the maximum number of points per data structure is set for computational saving purposes). On the other hand, reducing the data structure size can increase the number of non-empty data structures, increasing memory usage and inference time. Two configurations were used in our fine-tuning process, as shown in Table 11.
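The discretization into voxels or pillars can be illustrated with the sketch below, which assumes the points have already been cropped to the point cloud range. A pillar is obtained by choosing a voxel height that spans the whole Z range. The function name is hypothetical, and keeping the first points that arrive (instead of random sampling) is a simplification of the capping described above.

```python
import math
from collections import defaultdict


def voxelize(points, pc_range, voxel_size, max_points_per_voxel):
    """Group points by voxel index, keeping at most `max_points_per_voxel` each.

    `pc_range` starts with (x_min, y_min, z_min) and `voxel_size` is
    (size_x, size_y, size_z). Only non-empty voxels appear in the result.
    """
    x_min, y_min, z_min = pc_range[:3]
    voxels = defaultdict(list)
    for p in points:
        index = (
            math.floor((p[0] - x_min) / voxel_size[0]),
            math.floor((p[1] - y_min) / voxel_size[1]),
            math.floor((p[2] - z_min) / voxel_size[2]),
        )
        # Simplification: first-come points are kept, the rest are dropped
        if len(voxels[index]) < max_points_per_voxel:
            voxels[index].append(p)
    return dict(voxels)
```

A larger `voxel_size` puts more points into each structure, so more of them hit the cap and are dropped; a smaller one produces more non-empty structures.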

Table 11.

Pillar size () configurations used in fine tuning.

Number of Data Structures.

Since most data structures will be empty, a maximum number of data structures is established to investigate the KITTI dataset sparsity problem. In order to generate a dense tensor, using several data structures might cause the majority of them to be filled with zeros, making inference time inefficient. A maximum number of points is also established using the KITTI dataset’s distribution of the number of points per data structure, as shown in Table 12.

Table 12.

Total number of data structures used in fine tuning.

7. Performance Evaluation, Comparison and Discussion

This section details a series of tests conducted using the random search approach to improve the trade-off between accuracy and inference time performance parameters. The experiments and related network setups and models are shown in Table 13. To comprehend the effects of constructing a model tuned to create three classes of output instead of splitting into two separate networks (one for cars and another for pedestrians and cyclists), PointPillars settings and their outcomes are also supplied.

Table 13.

The set of experiments conducted and respective network configurations.

| Experiment | Model Config. | Config. | SI Config. | No. Output Classes | Config. | P Config. |
|---|---|---|---|---|---|---|
| 1 | PointPillars | | | 3 | | |
| 2 | SECOND | | | 3 | | |
| 3 | PV-RCNN | | | 3 | | |
| 4 | PointRCNN | | | 3 | | |
| 5 | PartA2 | | | 3 | | |
| 6 | VoxelRCNN | | | 3 | | |
| 7 | PointPillars | | | 3 | | |
| 8 | SECOND | | | 3 | | |
| 9 | PV-RCNN | | | 3 | | |
| 10 | PointRCNN | | | 3 | | |
| 11 | PartA2 | | | 3 | | |
| 12 | VoxelRCNN | | | 3 | | |

The results of the experiments provided in Table 13 are shown in Table 14, Table 15, Table 16 and Table 17. We use the metric AP for three difficulty levels (easy, moderate and hard) and various intersection-over-union (IOU) thresholds according to KITTI benchmarks to provide the results. IOU is 70% for cars and 50% for cyclists and pedestrians. The experiment results from this study are compared to the original ones from the literature in Table 18. The comparison considers the three target classes for both 3D and BEV. The results presented for the conceived experiments consider the overall values per class for the best detection metric.
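To illustrate how the IoU thresholds decide whether a detection counts as a match, the sketch below uses a simplified axis-aligned BEV IoU. This is a deliberate simplification: the KITTI evaluation works with rotated boxes, and the function names and box layout `(x_min, y_min, x_max, y_max)` are assumptions.

```python
# IoU thresholds per class, as stated in the text (70% cars, 50% others)
IOU_THRESHOLDS = {"Car": 0.7, "Pedestrian": 0.5, "Cyclist": 0.5}


def bev_iou_axis_aligned(a, b):
    """IoU of two axis-aligned BEV boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def is_match(pred_box, gt_box, class_name):
    """True if the prediction overlaps the ground truth enough for its class."""
    return bev_iou_axis_aligned(pred_box, gt_box) >= IOU_THRESHOLDS[class_name]
```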

Table 14.

Results in validation set for BEV detection metric for experiments 1–6.

Table 15.

Results in validation set for 3D detection metric for experiments 1–6.

Table 16.

Results in validation set for BEV detection metric for experiments 7–12.

Table 17.

Results in validation set for 3D detection metric for experiments 7–12.

Table 18.

Our results in KITTI validation set vs. original results in KITTI test set for 3D and BEV detection metrics.

As demonstrated in the aforementioned results, the model implementations in our framework generally produced better mAP. Regarding the point cloud range in our networks, we reproduced the original configurations for all models, but with fewer data structures (DS) than the study in [23], since most of them will be empty. This change drastically decreases the inference time of PointPillars when compared with the same research. As shown in Table 19 and Table 20, some models, such as PointPillars and PointRCNN, produce very close inference time results. On the other hand, our results are better for SECOND and worse for PV-RCNN and VoxelRCNN. Clearly, there is always a trade-off in terms of inference time for producing three-class inference models. This can be explained by the fact that the original models obtained their results by training separate networks, one for cars and another for pedestrians and cyclists (a standard literature practice on KITTI benchmarks). By training three-class models, gradients are affected by all those instances, which leads to our models losing the specialization for prediction. However, as mentioned in [23], producing separate networks is impractical for self-driving applications. One solution can be increasing the number of layers of the model to improve its capability to learn the required patterns/weights/representations of the data. However, increasing the model’s depth will decrease the inference speed, which can result in a model not meeting the self-driving requirement for that metric (that is, an inference time above 100 ms).

Table 19.

Our inference time metric results.

Table 20.

Original model inference time metric results.

Reducing the minimum points to consider a sample instance brought gains in terms of mAP and for the same model architecture, since more instances can be used for data augmentation. This allows for the expansion of the diversity of the training data and our models to learn more patterns from data.

8. Conclusions

The research about deep learning methods for 3D object detection on LiDAR data has increased tremendously in recent years, with many models, repositories and different technologies being developed. Although this benefits scientific development in this area, the various technologies, software, repositories and models are a bottleneck for testing and improving the current methods.

To cope with this limitation, we developed a framework for representing multiple SoA 3D object detectors with highly refactored code for both one-stage and two-stage methods. The main idea of this framework is to facilitate the implementation, reuse and building of new techniques in each framework module with less manual engineering effort. In conclusion, it enables the abstract implementation, reuse and building of any object detector in a single 3D object detection framework.

Nonetheless, it is evident that creating three-class inference models comes with a trade-off regarding inference time. Our study’s results are based on the KITTI validation set, while the original findings were obtained using the KITTI test set. We replicated the original network configurations for all models concerning the point cloud range but with fewer DS than the research mentioned in the previous section. The improvement mentioned earlier leads to a considerable reduction in the inference time when PointPillars is compared to the same research.

The current models for 3D object detection in LiDAR data targeting self-driving applications show their results in powerful servers with dedicated graphics cards and an unlimited power source. However, using this kind of server in the context of a self-driving car is impractical due to limited space and power supply. This shows a limitation regarding deploying 3D object detectors in such an environment. Research must evolve to produce models capable of meeting performance metrics while being deployable in resource-constrained edge devices with limited power supply and computational power.

Besides the capability to easily represent SoA 3D object detectors, other models should be integrated as future work. This requires the constant update of the framework in integrating the new components brought by novel methods since scientific research consistently produces innovation, especially in this area.

Author Contributions

Conceptualization, A.L.S., P.O. and D.D.; methodology, A.L.S., P.O. and D.D.; software, A.L.S. and P.O.; validation, P.M.-P., J.M. (José Machado), P.N., A.S, P.O., D.D., D.F., R.N. and J.M. (João Monteiro); formal analysis, A.L.S., P.O., D.D., J.M. (José Machado), P.N., D.F., R.N., P.M.-P. and J.M. (João Monteiro); investigation, A.L.S., P.O. and D.D.; resources, J.M. (José Machado), P.N., P.M.-P. and J.M. (João Monteiro); data curation, A.L.S., P.O. and R.N.; writing—original draft preparation, A.L.S., P.O. and D.D.; writing—review and editing, A.L.S., P.O., D.D., R.N., D.F., J.M. (José Machado), P.N., J.M. (João Monteiro) and P.M.-P.; visualization, A.L.S., P.O., D.D., D.F., J.M. (José Machado), P.N., P.M.-P. and J.M. (João Monteiro); supervision, J.M. (José Machado), P.N., P.M.-P. and J.M. (João Monteiro); project administration, J.M. (José Machado), P.N., J.M. (João Monteiro) and P.M.-P.; funding acquisition, J.M. (José Machado), P.N., J.M. (João Monteiro) and P.M.-P. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been supported by FCT—Fundação para a Ciência e Tecnologia within the R&D Units Project Scope: UIDB/00319/2020 and the project “Integrated and Innovative Solutions for the well-being of people in complex urban centers” within the Project Scope NORTE-01-0145-FEDER-000086. The work of Pedro Oliveira was supported by the doctoral Grant PRT/BD/154311/2022 financed by the Portuguese Foundation for Science and Technology (FCT), and with funds from European Union, under MIT Portugal Program. The work of Paulo Novais and Dalila Durães is supported by National Funds through the Portuguese funding agency, FCT—Fundação para a Ciência e a Tecnologia within project 2022.06822.PTDC.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Beltrán, J.; Guindel, C.; Moreno, F.M.; Cruzado, D.; Garcia, F.; De La Escalera, A. Birdnet: A 3d object detection framework from lidar information. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 3517–3523. [Google Scholar]

- Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-view 3d object detection network for autonomous driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1907–1915. [Google Scholar]

- Fernandes, D.; Silva, A.; Névoa, R.; Simões, C.; Gonzalez, D.; Guevara, M.; Novais, P.; Monteiro, J.; Melo-Pinto, P. Point-cloud based 3D object detection and classification methods for self-driving applications: A survey and taxonomy. Inf. Fusion 2021, 68, 161–191. [Google Scholar] [CrossRef]

- Xia, X.; Meng, Z.; Han, X.; Li, H.; Tsukiji, T.; Xu, R.; Zheng, Z.; Ma, J. An automated driving systems data acquisition and analytics platform. Transp. Res. Part Emerg. Technol. 2023, 151, 104120. [Google Scholar] [CrossRef]

- Cosmas, K.; Kenichi, A. Utilization of FPGA for onboard inference of landmark localization in CNN-Based spacecraft pose estimation. Aerospace 2020, 7, 159. [Google Scholar] [CrossRef]

- Ngadiuba, J.; Loncar, V.; Pierini, M.; Summers, S.; Di Guglielmo, G.; Duarte, J.; Harris, P.; Rankin, D.; Jindariani, S.; Liu, M.; et al. Compressing deep neural networks on FPGAs to binary and ternary precision with hls4ml. Mach. Learn. Sci. Technol. 2020, 2, 015001. [Google Scholar] [CrossRef]

- Sharma, H.; Park, J.; Amaro, E.; Thwaites, B.; Kotha, P.; Gupta, A.; Kim, J.K.; Mishra, A.; Esmaeilzadeh, H. Dnnweaver: From high-level deep network models to fpga acceleration. In Proceedings of the Workshop on Cognitive Architectures, Atlanta, GA, USA, 2 April 2016.

- Yan, Y.; Mao, Y.; Li, B. Second: Sparsely embedded convolutional detection. Sensors 2018, 18, 3337.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5099–5108.

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. arXiv 2017, arXiv:1711.06396.

- Shi, S.; Wang, Z.; Shi, J.; Wang, X.; Li, H. From points to parts: 3d object detection from point cloud with part-aware and part-aggregation network. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2647–2664.

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. Pointpillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705.

- Shi, S.; Wang, X.; Li, H. Pointrcnn: 3d object proposal generation and detection from point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 770–779.

- Chen, Y.; Liu, S.; Shen, X.; Jia, J. Fast point r-cnn. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9775–9784.

- Liu, W.; Quijano, K.; Crawford, M.M. YOLOv5-Tassel: Detecting tassels in RGB UAV imagery with improved YOLOv5 based on transfer learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 8085–8094.

- Chen, C.; Gong, W.; Chen, Y.; Li, W. Object detection in remote sensing images based on a scene-contextual feature pyramid network. Remote Sens. 2019, 11, 339.

- Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. PV-RCNN: Point-voxel Feature Set Abstraction for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10529–10538.

- Graham, B.; van der Maaten, L. Submanifold Sparse Convolutional Networks. arXiv 2017, arXiv:1706.01307.

- Graham, B. Spatially-sparse convolutional neural networks. arXiv 2014, arXiv:1409.6070.

- Lu, L.; Shin, Y.; Su, Y.; Karniadakis, G.E. Dying relu and initialization: Theory and numerical examples. arXiv 2019, arXiv:1903.06733.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning, PMLR, Lille, France, 6–11 July 2015; pp. 448–456.

- Uijlings, J.R.; Van De Sande, K.E.; Gevers, T.; Smeulders, A.W. Selective search for object recognition. Int. J. Comput. Vis. 2013, 104, 154–171.

- Silva, A.; Fernandes, D.; Névoa, R.; Monteiro, J.; Novais, P.; Girão, P.; Afonso, T.; Melo-Pinto, P. Resource-Constrained Onboard Inference of 3D Object Detection and Localisation in Point Clouds Targeting Self-Driving Applications. Sensors 2021, 21, 7933.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).