Abstract

With the wide application of visual sensors and the development of digital image processing technology, image copy-move forgery detection (CMFD) has become increasingly prevalent. Copy-move forgery copies one or several areas of an image and pastes them into another part of the same image, and CMFD is an efficient means of exposing this. Forged images are used improperly in industry, the military, and daily life. In this paper, we present an efficient end-to-end deep learning approach for CMFD that uses a span-partial structure and attention mechanism (SPA-Net). The SPA-Net extracts coarse features using a preprocessing module and then extracts fine deep feature maps using the span-partial structure and attention mechanism, which together form the SPA-Net feature extractor module. The span-partial structure is designed to reduce redundant feature information, while the attention mechanism in the span-partial structure focuses on the tampered region and suppresses the original semantic information. To explore the correlation between high-dimensional feature points, a deep feature matching module helps SPA-Net locate the copy-move areas by computing the similarity of the feature map. A feature upsampling module is employed to upsample the features to their original size and produce a copy-move mask. Furthermore, training SPA-Net without pretrained weights balances copy-move and semantic features, so that the model can capture more features of copy-move forgery areas and reduce the confusion caused by semantic objects.
In the experiments, we do not use pretrained weights or models from existing networks such as VGG16, which would limit the network by making it pay more attention to objects than to copy-move areas. To deal with this problem, we generated a SPANet-CMFD dataset by applying various processes to the benchmark images from the SUN and COCO datasets, and we used existing copy-move forgery datasets, CMH, MICC-F220, MICC-F600, GRIP, Coverage, and parts of USCISI-CMFD, together with our generated SPANet-CMFD dataset, as the training set to train our model. In addition, the SPANet-CMFD dataset could play a big part in forgery detection, such as deepfakes. We employed the CASIA and CoMoFoD datasets as testing datasets to verify the performance of our proposed method. Precision, Recall, and F1 are calculated to evaluate the CMFD results. Comparison results showed that our model achieved a satisfactory performance on both testing datasets and performed better than the existing methods.

1. Introduction

With the development of computer science and technology, image forgery problems are increasingly emerging. Among them, image copy-move forgery detection has become a hot research direction. Image forensics can be divided into active and passive forensics. Image active forensic technology involves pre-inserting relevant information, which can be used to verify copyright ownership or detect tampering, such as watermarks and digital signatures. However, there are restrictions to both digital watermarking and signatures, because only images with a watermark or signature are detectable using these techniques. The passive image forensic techniques are of broader use, and copy-move forgery detection (CMFD) is one of the approaches that can deal with the problem of copied pieces coming from the same image, which is shown in Figure 1 and Figure 2.

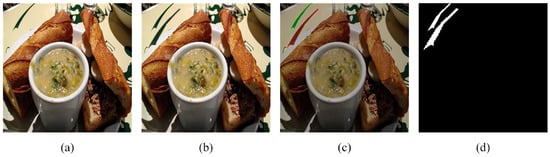

Figure 1.

An example of image copy-move forgery in daily life: (a) is the original image, (b) is the copy-move image, (c) shows the copied and pasted regions, which are marked green and red, respectively, and (d) is the binary mask of the copy-move image.

Figure 2.

An example of image copy-move forgery in the military: (a) is the original image, (b) is the copy-move image, (c) shows the copied and pasted regions, which are marked green and red, respectively, and (d) is the binary mask of copy-move image.

Compared to copy-move forgery, splicing forgery involves copying and pasting between different images. The tampered images carry many clues from edge artifacts, statistical features, and imaging features. For example, a color filter array (CFA) consists of a series of color sensors; CFA interpolation reconstructs a full-color image by converting the captured output into red, green, and blue (RGB) primary color channels, and different visual sensors have different interpolation algorithms. In [1], image splicing was detected using the difference of sensor noise power in CFA-interpolated pixels. When copy-move forgery occurs within the same image, however, the statistical properties of a real region and a tampered region can be very similar, so some splicing forgery detection methods [2,3,4,5] do not have advantages for CMFD. Therefore, various methods have been presented for detecting copy-move forgery, whose main steps are feature extraction and feature matching.

Traditional CMFD includes block-based approaches and keypoint-based approaches. The primary idea of block-based approaches is to divide an image into a number of patches and to compare these with each other to find the matched regions. Kakar and Sudha [6] proposed extracting blur moment invariant features as block features and then employing a polar cosine transform (PCT) to match block features. Singular value decomposition (SVD) has been used to extract features for each small overlapping block, and a discrete cosine transform (DCT) has been applied to capture discriminating features. Various other papers proposed different ideas: in [7], blur moment invariants are used to better detect the blurred region, and principal component analysis (PCA) is adopted to reduce the dimensions, as well as to find features that are easier to understand by keeping the main components, which show the greatest individual differences. However, the disadvantage is that the computational cost is very high. Next, ref. [8] proposed using SVD to reduce the feature description and decrease the computational cost, with morphological opening refining the result to avoid further losses. However, the robustness against geometric transformations was limited. After extracting the features, different feature matching methods have been proposed. In [8], a K-dimensional tree (KD-tree) is used to achieve matching. In the feature map that is divided into blocks, each block finds the block with the highest similarity. The similarity criterion is a given threshold: only pairs whose similarity is equal to or greater than the threshold are recorded. Next, the similar blocks are eliminated if their neighbors are different. In [9], an approximate nearest neighbor search is performed by hashing vectors using a set of hash functions, which are designed so that similar vectors obtain the same hash value. Locality-sensitive hashing (LSH) guarantees that a given vector and its approximate nearest neighbor are mapped to the same hash with a high probability.
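The block-based pipeline described above can be illustrated with a deliberately simplified sketch. It is not the method of [6], [7], [8], or [9]: raw pixel values stand in for the block features, and exact-match bucketing in a dictionary stands in for KD-tree or LSH matching. The function name `find_duplicate_blocks` and the parameters `block` and `min_shift` are hypothetical.

```python
from collections import defaultdict


def find_duplicate_blocks(image, block=2, min_shift=2):
    """Return pairs of top-left coordinates whose block x block patches are identical.

    Simplification: raw pixels are the "feature", and equal features are found
    by bucketing, so only exact copies are detected (no rotation, scaling, noise).
    """
    height, width = len(image), len(image[0])
    buckets = defaultdict(list)
    # Slide an overlapping window over the image and bucket each block by content.
    for r in range(height - block + 1):
        for c in range(width - block + 1):
            key = tuple(image[r + dr][c + dc]
                        for dr in range(block) for dc in range(block))
            buckets[key].append((r, c))
    pairs = []
    for coords in buckets.values():
        for i in range(len(coords)):
            for j in range(i + 1, len(coords)):
                (r1, c1), (r2, c2) = coords[i], coords[j]
                # Discard pairs that are too close to be a meaningful copy.
                if max(abs(r1 - r2), abs(c1 - c2)) >= min_shift:
                    pairs.append((coords[i], coords[j]))
    return pairs


# Toy 4 x 6 image with unique pixel values, then copy the 2 x 2 block at (0, 0) to (2, 3).
image = [[10 * r + c for c in range(6)] for r in range(4)]
for dr in range(2):
    for dc in range(2):
        image[2 + dr][3 + dc] = image[dr][dc]

pairs = find_duplicate_blocks(image)
print(pairs)
```

Real block-based detectors replace the raw-pixel key with a robust descriptor and the exact bucket lookup with a thresholded similarity search, which is where the computational cost discussed above comes from.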

Keypoint-based methods are also an essential type of CMFD. The core of keypoint-based methods is to extract distinctive local features, such as corners, blobs, and edges, from an image. Each feature is represented by a set of descriptors computed within a region around the feature. The descriptor is used to increase the reliability of the features under affine transformation. Scale-invariant feature transform (SIFT) and speeded up robust features (SURF) have been widely used to extract keypoints in images. SIFT techniques are highly robust against postprocessing and intermediate operations; however, they are computationally complex and incapable of detecting forgeries in flat areas, due to a lack of reliable keypoints. SURF features have been proposed to improve the performance of SIFT. SIFT [10] has become indispensable in this area. Ref. [11] takes advantage of SIFT and best-bin-first. Then, refs. [12,13,14] proposed improvements on this basis. SIFT has driven the development of SURF [15]. The two methods have similar procedures: local feature point extraction, feature point description, and feature point matching. It is worth mentioning that SURF has two tools to increase the execution efficiency: one is the use of integral images with the Hessian matrix, and the other is the use of a reduced-dimension feature descriptor. The Harris corner detector extracts edges and corners from a region, and it was employed for keypoint extraction in [16]. In keypoint-based feature matching, the nearest neighbor approach determines the similarity between points by calculating the distance between each pair of points in the vector space. If the distance meets the specified threshold, the points are considered similar, which was applied in [11].

Although many block-based and keypoint-based approaches have been proposed for CMFD, several flaws exist. Block-based techniques have high processing costs and cannot detect large-scale distortion. Meanwhile, keypoint-based techniques perform poorly on smooth forged regions. Likewise, fusion methods integrate feature extraction from keypoint-based methods and region matting from block-based methods. Unfortunately, smooth forged regions are still not addressed by fusion methods that use sparse keypoint features. Auxiliary deep neural networks (DNNs) have recently been proposed to help with various aspects of CMFD [17,18,19,20,21]. CMFD using DNN algorithms involves these basic steps: (1) a preprocessing stage, which involves transforming the image into the format that the network requires, such as scaling, data augmentation, format conversion, and so on; (2) a feature extraction stage, which is the process of extracting the image’s significant hierarchical characteristics, to be used in the following procedures; (3) a feature matching stage, which includes block-based and keypoint-based matching methods, and which is used to match the extracted features; and (4) a postprocessing stage, which involves transforming the results into the format required, such as visualizing them in an image format. Reference [17] proposed a deep-learning-based method for detection of both splicing and copy-move forgery. This method contained 10 convolution layers, among which the first layer was a spatial rich model (SRM) [22] preprocessing layer for feature extraction. Reference [18] proposed using VGG16 for feature extraction and using self-correlation as a matching method. In [20], a pyramid feature extraction method was proposed. Reference [23] also applied an encoder-to-decoder network and used a classification layer to obtain the copy-move area.
However, reference [17] could only identify whether the image had been tampered with and could not locate the tampered area in the image. Meanwhile, reference [18] only performed matching in a pretrained VGG16, which put particular emphasis on the object instead of the forgery area. VGG16 is a model for image classification or target recognition, which contains rich semantic information. However, in CMFD, the tampered regions are not necessarily objects with rich semantic information but may be ordinary meaningless areas. Reference [20] showed this may lead to much useless information passing across the network model. The proposed SPA-Net is an end-to-end DNN method for solving the shortcomings of the existing deep learning methods, and our contributions can be listed as follows:

- (1) In the SPA-Net feature extractor, we use a combination of a span-partial structure and residual attention mechanism, to reduce the repeated gradient information and pay more attention to the copy-move forgery area. To the best of our knowledge, this structure has not been used in the previous CMFD methods;

- (2) We use a feature matching module to locate the copy-move regions by calculating the similarity between features. To avoid only locating the objects that have rich semantic information but not the copy-move areas, we use no pretrained models or weights;

- (3) Moreover, we generated a SPANet-CMFD dataset with original images from SUN [24] and COCO [25], and we selected the source area using Labelme manually in each image. In addition, to enhance the ability of SPA-Net, we not only selected the objects, but also the areas that contained less semantic information as copy-move regions.

This paper is organized as follows: Section 2 reviews related studies on residual structures and attention mechanisms; Section 3 describes the proposed SPA-Net for CMFD in detail; Section 4 presents the experimental results, where the performance of the proposed SPA-Net is measured, comparisons with state-of-the-art approaches are conducted, and the challenges and weaknesses that require further improvement are discussed; finally, Section 5 gives the conclusions and future work.

2. Related Work

2.1. Residual Structure

To reduce the repeated gradient information in the network, reference [26] proposed a cross-stage partial network (CSPNet), which divides the underlying feature map into two parts (the same feature map is fed into two different convolutions, and the number of channels output from each convolution is halved) and then fuses them through the proposed cross-stage hierarchy. The main concept is to truncate the gradient flow and add a transition layer after truncation, so that the gradient flow propagates through different network paths and different layers are prevented from learning repeated gradient information. Using CSPNet as a backbone can effectively enhance a CNN’s learning ability and reduce the amount of computation. In addition, CSPNet is easy to implement and generic enough to handle different architectures. Therefore, we propose a span-partial structure in the SPA-Net feature extractor module that follows the same concept.

2.2. Attention Mechanism

In the area of deep learning, models often need to receive and process large amounts of data, but sometimes the equivalent processing of images is not necessary, because only a small part of certain data is important. To reduce this information redundancy and make areas of interest more prominent, reference [22] proposed a squeeze and excitation net (SENet) [27] as an improvement. The principle of SENet is to enhance the important features and weaken the unimportant features by controlling the weight. In addition, the flexibility of the SE module lies in the fact that it can be directly applied to the existing network architecture, such as [28], a mobile inverted bottleneck convolution (MBConv), whose core idea is to learn feature weights through the network. In the proposed SPA-Net feature extractor module, we use a combination of a span-partial structure and residual attention mechanism to reduce repeated gradient information and pay more attention to the copy-move forgery area that we are interested in. To the best of our knowledge, this structure has not been used in the previous CMFD methods. The principle of the residual attention mechanism is to enhance the important features and weaken the unimportant features, by controlling the weight, so as to make the extracted features more directional.

3. Proposed SPA-Net for CMFD

The aim of CMFD is to find the copy-move forgery area of images; however, most existing networks do not follow the core process of CMFD: some are biased toward image segmentation, while others are biased toward image target recognition. Although some methods [19,29] have been proposed using existing pretrained network models and parameters, these cannot achieve satisfactory results. Therefore, we propose a deep learning approach that does not use any pretrained models and that uses a span-partial structure and attention mechanism (SPA-Net) for image CMFD. The SPA-Net is constructed using a sequence of DNN layers, and thus it can be trained from end to end.

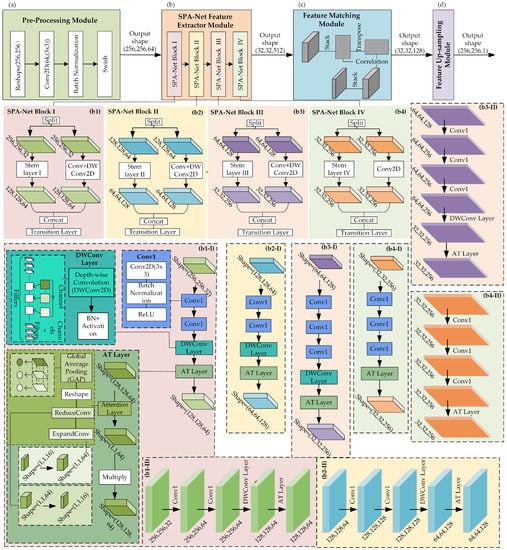

Figure 3 shows an overview of the proposed SPA-Net for CMFD, which is composed of (a) a preprocessing module, (b) a SPA-Net feature extractor module, (c) a feature matching module, and (d) a feature upsampling module. The preprocessing module is used to resize an input image of any size to the fixed size required by the network and to extract a rough feature map. The SPA-Net feature extractor module is composed of four blocks: SPA-Net Block I, SPA-Net Block II, SPA-Net Block III, and SPA-Net Block IV. Each of the blocks includes two branch structures, where one is a stem layer that contains multiple residual structures and a convolution layer, and the other carries out convolution. The addition of a residual structure can increase the gradient value of backpropagation between layers and avoid the vanishing gradient caused by deepening, so that more fine-grained features can be extracted without worrying about network degradation. The feature matching module is used to learn the correlation between the features obtained with the SPA-Net feature extractor module. Finally, the feature upsampling module is used to upsample the features to the original image size.

Figure 3.

Overview of the proposed SPA-Net for CMFD. (a–d) denote the four modules of SPA-Net, (b1–b4) are the steps of four SPA-Net blocks, (b1-I–b4-I) are the details of each Stem Layer, (b1-II–b4-II) represent the shapes of intermediate outputs in Stem Layer I–IV.

3.1. Preprocessing Module

Before applying the SPA-Net feature extractor module, the preprocessing module shown in Figure 3a is applied to adjust the input image to the format required by the model. During preprocessing, we first resize the input image to the shape required by our network and then employ a Conv2D layer to extract a coarse feature map. Then, batch normalization is used to pull the distribution of the layer's feature values back toward the standard normal distribution, so that the values fall in the interval where the activation function is sensitive to its input. In this interval, small changes in the input lead to large changes in the loss function, which makes the gradient larger, avoids the vanishing gradient, and speeds up convergence. Finally, the swish activation function is applied to help prevent gradients from gradually approaching 0 and saturating, which would slow training. In this way, the preprocessing module helps to speed up the processing of the image.
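The normalization and activation steps described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the learned scale and shift parameters of batch normalization are omitted, the helper names `batch_norm` and `swish` are hypothetical, and swish is taken in its common form x · sigmoid(x).

```python
import math


def batch_norm(values, eps=1e-5):
    """Normalize a batch of scalars to zero mean and (approximately) unit variance.

    Assumption: the learned scale (gamma) and shift (beta) are left out.
    """
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(var + eps) for v in values]


def swish(x):
    """Swish activation, x * sigmoid(x), written to avoid overflow for large |x|."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    e = math.exp(x)
    return x * e / (1.0 + e)


normalized = batch_norm([1.0, 2.0, 3.0, 4.0])
activated = [swish(v) for v in normalized]
print(normalized)
print(activated)
```

Unlike ReLU, swish is smooth and passes a small negative signal for negative inputs, so its gradient is not exactly zero there.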

3.2. SPA-Net Feature Extractor Module

The SPA-Net feature extraction module aims to obtain a feature map with rich gradient information. The proposed SPA-Net feature extractor module is composed of four blocks: SPA-Net Block I, SPA-Net Block II, SPA-Net Block III, and SPA-Net Block IV, as shown in Figure 3(b1–b4). Each of the SPA-Net blocks includes dual span-partial structures, with the stem layer on the left branch and the layer of Conv_DWConv2D (CDW) on the right branch. A concatenate layer is used to combine the two branches in the end, and a traditional layer that contains convolution layer, BN, and activation function is used to further extract the feature map. The output of each stem layer and its fusion operations on the left and right branches can be defined as

where means the output of the corresponding SPA-Net block. Meanwhile, the subscript i also refers to the variables and used in the relevant SPA-Net block guidance by Equation (1), respectively.

Because the feature maps are of the same size, each SPA-Net block uses a concatenate layer at the end to merge the feature maps from the left and right branches along the channel dimension. The feature map from the preprocessing layer is split into two feature maps of equal size. One feature map passes through stem layer I, while the other passes through the CDW layer to extract the features of interest; the two outputs, which have the same size, are then joined by concatenation. Using the split-and-merge strategy across stages can effectively reduce the possibility of duplication during information integration and thus greatly improve the learning ability of the network. The stem-layer branch contains multiple residual structures and convolution layers, which increase the gradient value of backpropagation between layers and avoid the vanishing gradient caused by deepening, so that more fine-grained features can be extracted without worrying about network degradation. The other branch, the CDW layer, applies a standard convolution and a depth-wise convolution (DWConv2D) to increase the number of channels and reduce the spatial size, respectively. In each of the four blocks, the output of the stem layer has the same shape as the output of the CDW layer. As shown in Figure 3(b1-I–b4-I), in the feature extractor module the spatial scale of the feature map decreases from block to block, while the number of channels increases. The process of extracting high-dimensional information in Figure 3(b1-I–b4-I) involves calling three specific structures: the Conv1 group, the DWConv layer, and the AT layer. We describe the call relationship in the feature extractor submodule as follows:
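The split-and-merge idea can be illustrated with a toy sketch. It assumes the split is made along the channel axis and uses placeholder functions in place of the stem layer and the CDW layer; `split_channels`, `span_partial_block`, `double`, and `identity` are hypothetical names, and none of the actual layer internals are modeled.

```python
def split_channels(feature_map):
    """Split a list of channels into two halves along the channel axis."""
    half = len(feature_map) // 2
    return feature_map[:half], feature_map[half:]


def span_partial_block(feature_map, left_branch, right_branch):
    """Route each half through its own branch, then concatenate along channels."""
    left, right = split_channels(feature_map)
    return left_branch(left) + right_branch(right)


def double(channels):
    """Placeholder for the stem-layer branch: scales every value by 2."""
    return [[[2 * v for v in row] for row in ch] for ch in channels]


def identity(channels):
    """Placeholder for the CDW branch: returns its input unchanged."""
    return channels


# Four 2 x 2 channels; channel c is filled with the value c.
feature_map = [[[float(c)] * 2 for _ in range(2)] for c in range(4)]
merged = span_partial_block(feature_map, double, identity)
print(len(merged), merged[1], merged[3])
```

Because each branch sees only part of the input, the gradients flowing back through the two paths differ, which is the motivation given above for reducing repeated gradient information.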

where the output in Equation (2) is the expansion variable in Equation (1) and denotes the feature extraction result of the stem layer. To describe the number of calls to the three structures at different stem layers, we use three coefficients: a, b, and c. Taking Figure 3(b1-I) as an example, the coefficients a, b, and c are each equal to 3, 1, and 1. In the same way, a, b, and c have the values 3, 0, and 1. In fact, we did not design a fixed matrix of coefficients for the stem layer when designing the feature extractor module in SPA-Net. These coefficients were dynamically adjusted during the experiment, and we present the adjusted results in this paper.

In the stem layers of the SPA-Net blocks, we replace the max-pooling layer, which is used in VGG16, with a DWConv layer. The max-pooling layer could cause some crucial information to be dropped, but this problem can be efficiently avoided using convolution. The DWConv layer consists of DWConv2D and BN-activation, where each filter kernel is responsible for one channel, and each channel is convolved by only one kernel; therefore, the number of feature map channels generated in this process is exactly the same as the number of input channels. In addition, compared with standard convolution operations, DWConv2D has fewer parameters and a lower operation cost when changing the size of the feature map. Moreover, BN-activation can help the process of stochastic gradient descent by smoothing the layer input distribution and can alleviate the negative impact of weight updates on subsequent layers. However, a DWConv layer is not applied in SPA-Net Block IV, to preserve the resolution of the feature map. The DWConv2D in the DWConv layer can be defined as

where h, w, and c represent the height, width, and channel of the input feature map x. We selected a fixed size filter kernel for DWConv2D in our experiments, so the superscript of the kernel W was fixed to 3. In addition, we eliminated the effect of the size of the kernel using control of padding. Thus, the size of the DWConv2D output feature map is mainly determined by s, which determines whether the size of the input and output feature maps are h and w or halved, respectively.
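A small depth-wise convolution sketch makes the role of the stride concrete. It assumes that s denotes the stride, that the kernel is 3 × 3, and that one pixel of zero padding is added on each side, so the output height and width are ceil(h / s) and ceil(w / s). The function name `depthwise_conv2d` is hypothetical, and the padding alignment may differ from a given framework's "same" padding.

```python
def depthwise_conv2d(x, kernels, stride=1):
    """Depth-wise 3 x 3 convolution with one pixel of zero padding.

    x:       list of C channels, each an H x W list of floats
    kernels: list of C kernels, each a 3 x 3 list (one kernel per channel)
    Returns C channels of size ceil(H / stride) x ceil(W / stride).
    """
    out = []
    for channel, kernel in zip(x, kernels):
        h, w = len(channel), len(channel[0])
        out_h, out_w = -(-h // stride), -(-w // stride)  # ceiling division
        result = [[0.0] * out_w for _ in range(out_h)]
        for i in range(out_h):
            for j in range(out_w):
                acc = 0.0
                for di in range(3):
                    for dj in range(3):
                        r = i * stride + di - 1
                        c = j * stride + dj - 1
                        # Positions outside the image contribute zero (padding).
                        if 0 <= r < h and 0 <= c < w:
                            acc += channel[r][c] * kernel[di][dj]
                result[i][j] = acc
        out.append(result)
    return out


center = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]  # passes the center pixel through
x = [[[1.0] * 4 for _ in range(4)] for _ in range(2)]          # 2 channels of 4 x 4
same_size = depthwise_conv2d(x, [center, center], stride=1)
halved = depthwise_conv2d(x, [center, center], stride=2)
print(len(same_size), len(same_size[0]), len(same_size[0][0]))
print(len(halved), len(halved[0]), len(halved[0][0]))
```

The channel count is unchanged in both calls because each channel is filtered by its own kernel; only the stride changes the spatial size.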

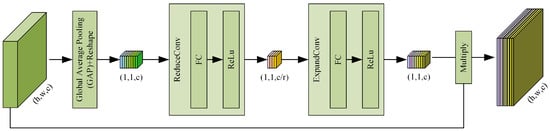

Moreover, an attention (AT) layer follows the DWConv layer. The DWConv layer and AT layer improve the efficiency and accuracy of visual information processing, which not only speeds up network training but also makes the network sparse, to reduce overfitting. As shown in Figure 4, the AT layer is composed of global average pooling (GAP), reshape, ReduceConv, and ExpandConv. The global compressed feature quantity of the current feature map is obtained by performing GAP on the feature map. The GAP method calculates the mean value of all pixels in each feature map, and its operation is as follows:

where represents the output of GAP, is the output of DWConv Layer, and R is the number of pixels contained in a feature map in a single channel, with . Weights for each channel in the feature map are obtained using the congestion structure for both layers. The weighted feature map is used as the input for the next layer, and 64 feature maps output 64 data points in Equation (4). These data-points have a vector and reshape it into features in Reshape, before ReduceConv and ExpandConv. The Fully Connected (FC)-ReLU forms ReduceConv and FC-Sigmoid forms of the ExpandConv operation, respectively, refer to squeeze and excitation, which generate a feature map and feature map, separately. Afterwards, 64 scalars between 0 and 1 are obtained as the weights of the channel, each output of the channel in the DWConv layer is weighted using the corresponding weight, each element of the corresponding channel is multiplied by the respective weight, and the new weighted feature is obtained. This operation can be summarized as a multiplication of the different channel feature maps using the global characteristics in Equation (5).

Figure 4.

The detailed steps of the AT Layer.

As for the DWConv Layer in Stem Layer II, Stem Layer III and Stem Layer IV, it is the same as the DWConv Layer in Stem Layer I, to capture more useful information than the Max-pooling layer and reduce the number of parameters and computing cost more efficiently than standard convolution. In the AT Layer, for the first squeeze operation, we can obtain the global characteristics for the channel level. Then, we use the global characteristics control to learn the relationship between the various channels. We can obtain the weights for the different channels and multiply each channel by its corresponding weight. In essence, this AT layer performs attention operations on the channel dimension. This attention mechanism enables the model to pay more attention to channel features with the largest amount of information, while suppressing the unimportant channel features. Moreover, in all AT Layers in Stem Layers I-IV, the r in Figure 4 is 4, so the number of nodes in the first FC layer is of the characteristic matrix channels of the input layer, and the number of nodes in the second FC layer is the same as that of the characteristic matrix channels of the input layer. After a series operation, the result of the residual branch is obtained, with . GAP is used to obtain the weights of each feature map channel, the Reshape step reshapes points to a tensor, ReduceConv reduces the channels to , and ExpandConv increases the channels to be same as the channels of feature map before the AT Layer.
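The squeeze, reduce, expand, and reweight steps of the AT layer can be sketched as follows. This is a minimal channel-attention illustration, not the paper's layer: biases and the residual connection are omitted, the weights are fixed toy values rather than learned ones, and the function name `attention_layer` is hypothetical. With 4 input channels and r = 4, the reduced vector has a single element.

```python
import math


def attention_layer(x, w_reduce, w_expand):
    """Channel attention: GAP -> FC + ReLU -> FC + sigmoid -> per-channel scaling.

    x:        list of C channels, each an H x W list of floats
    w_reduce: (C / r) x C weight matrix for the reducing FC layer
    w_expand: C x (C / r) weight matrix for the expanding FC layer
    Returns the reweighted feature map and the C channel weights.
    """
    # Squeeze: mean over all pixels of each channel (global average pooling).
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # Reduce: fully connected layer followed by ReLU.
    reduced = [max(0.0, sum(w * z for w, z in zip(row, squeezed))) for row in w_reduce]
    # Expand: fully connected layer followed by sigmoid, one weight in (0, 1) per channel.
    weights = [1.0 / (1.0 + math.exp(-sum(w * v for w, v in zip(row, reduced))))
               for row in w_expand]
    # Scale: multiply every element of a channel by that channel's weight.
    scaled = [[[v * wt for v in row] for row in ch] for ch, wt in zip(x, weights)]
    return scaled, weights


x = [[[float(c + 1)] * 2 for _ in range(2)] for c in range(4)]  # 4 channels of 2 x 2
w_reduce = [[0.25, 0.25, 0.25, 0.25]]                           # 4 -> 1 (r = 4)
w_expand = [[0.0], [0.0], [0.0], [0.0]]                         # 1 -> 4, zero weights
scaled, weights = attention_layer(x, w_reduce, w_expand)
print(weights)
print(scaled[3])
```

With zero expansion weights every channel receives the neutral weight 0.5; trained weights would instead push informative channels toward 1 and uninformative ones toward 0.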

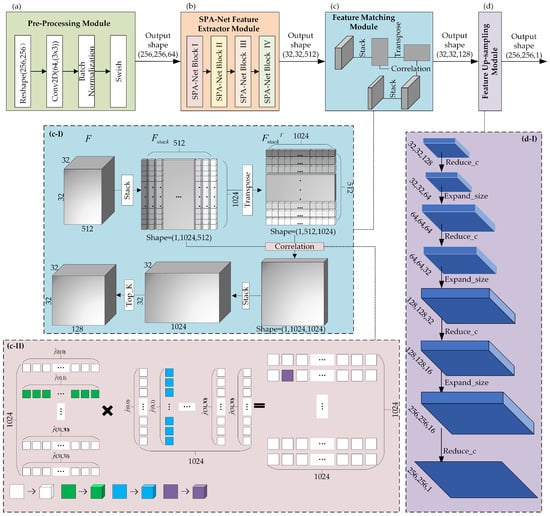

3.3. Feature Matching Module

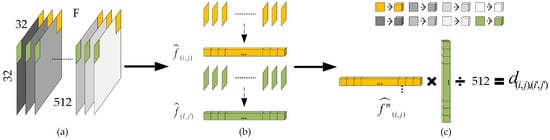

After extracting features with the SPA-Net feature extractor module, inspired by [18,20], a feature matching module is applied to match the characteristic representation and locate the tampered area by addressing the high-dimensional information. Figure 5 shows the feature matching module and feature upsampling module. The global structure of the feature matching module is shown in Figure 5(c-I). To begin with, we stack the F into with shapes of , which can be regarded as 512 patches with , and is a high-dimensional transpose matrix. To match the features, we need to calculate the correlation between each patch. The correlation distance is an efficient indicator to identify the inherent connections between each patch, so we use Equation (6) to calculate the correlation distance between and the sub-element belonging to arbitrary coordinates , as shown in Figure 6c.

where represents the index of patches in and represents the index of patches in , , and the range of is the same as . The and are not a match when . and are standard deviations of and , which are shown in Figure 6b, and calculated using

where is the mean of , and is the variance of . These are defined as

Figure 5.

The structure of the feature matching module and feature upsampling module. (a–d) The four Modules of SPA-Net. (c-I) The global process of feature matching module. (c-II) The details of correlation in the feature matching module. (d-I) The intermediate outputs of the feature upsampling module, where Reduce_c is the convolution layer to reduce the channels of feature map, and Expand_size is used to upsample the feature map.

Figure 6.

Illustration of the variation within the feature map, where each square represents a cube: (a) is the extracted feature map F, (b) represents output of each patch and is of , and (c) shows the procedure for calculating the similarity between patches.

Consequently, the correlation coefficient of with total calculate scores is denoted as , . Figure 5(c-II) describes the correlation step between every pair of patches in detail. Each patch generates a tensor of through a correlation calculation. Therefore, the final algorithm results in a correlation coefficient matrix of . The example marked in Figure 5(c-II) shows the result of correlation calculation in the green part, blue part, and purple part. In order to better eliminate errors and over-fitting, we refine the output. Specifically, each is sorted from highest to lowest, and we select the top K patches that best match it, except itself. In SPA-Net, we select , so we obtain a correlation coefficient tensor after the feature matching module.
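The matching step can be sketched as a Pearson-style correlation between standardized patch vectors, followed by top-K selection that excludes each patch itself. The sketch assumes each patch is a flat feature vector; the patch count, vector length, and K used here are toy values, not the paper's settings, and the names `standardize`, `correlation_matrix`, and `top_k_matches` are hypothetical.

```python
import math


def standardize(vec):
    """Subtract the mean and divide by the standard deviation of one patch vector."""
    mean = sum(vec) / len(vec)
    std = math.sqrt(sum((v - mean) ** 2 for v in vec) / len(vec))
    if std == 0.0:
        std = 1.0  # constant patch: avoid division by zero
    return [(v - mean) / std for v in vec]


def correlation_matrix(patches):
    """Correlation coefficient between every pair of patch vectors."""
    normed = [standardize(p) for p in patches]
    n = len(patches[0])
    return [[sum(a * b for a, b in zip(p, q)) / n for q in normed] for p in normed]


def top_k_matches(corr, k):
    """For each patch, keep the k best-matching other patches as (score, index)."""
    result = []
    for i, row in enumerate(corr):
        others = [(score, j) for j, score in enumerate(row) if j != i]
        others.sort(key=lambda pair: pair[0], reverse=True)
        result.append(others[:k])
    return result


patches = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 4.0, 6.0, 8.0],   # scaled copy of patch 0: correlation close to +1
    [4.0, 3.0, 2.0, 1.0],   # reversed patch 0: correlation close to -1
    [1.0, 0.0, 1.0, 0.0],
]
corr = correlation_matrix(patches)
matches = top_k_matches(corr, k=2)
print(matches[0])
```

Because the vectors are standardized first, the score ignores brightness offsets and contrast scaling between patches, which is why patch 1 matches patch 0 despite having different magnitudes.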

3.4. Feature Upsampling Module

The previous SPA-Net feature extraction module and feature matching module are used to analyze the local pixels of the image, so as to obtain high-order feature information; in this way, we can obtain the region of interest and general location information. In addition, due to the difference in size and channels between with and input image with , we designed a feature upsampling module to recover the shape of , in order to facilitate more intuitive observation of the results of our methods. Some interpolation methods do not learn parameters; for a deep learning architecture, these interpolation methods could affect the updating of parameters and thus reduce the accuracy of the architecture. Therefore, we chose transpose convolution in the feature upsampling module. Transpose convolution can, not only change the size and dimensions of the feature map, but also learn parameters, just like convolution. The change in the output shape is shown in Figure 5(d-I), where Reduce_c is a transpose convolution layer that has a kernel with and the stride is 1, to reduce the channels of the feature map; and Expand_size is a transpose convolution layer, which has a kernel with the size of and a stride of 2 to upsample the size.
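How a transpose convolution changes the output size can be shown in one dimension. In this sketch each input value is multiplied by the kernel and accumulated into the output at an offset of index × stride, giving an output length of (n − 1) × stride + k without padding. The kernel sizes below are assumptions chosen for illustration, since the kernel sizes of Reduce_c and Expand_size are not recoverable from the text; `transpose_conv1d` is a hypothetical name.

```python
def transpose_conv1d(x, kernel, stride):
    """1-D transpose convolution without padding.

    Each input element scatters a scaled copy of the kernel into the output,
    starting at position index * stride; overlapping contributions are summed.
    """
    out = [0.0] * ((len(x) - 1) * stride + len(kernel))
    for i, value in enumerate(x):
        for j, weight in enumerate(kernel):
            out[i * stride + j] += value * weight
    return out


signal = [1.0, 2.0, 3.0]
# Stride 1 with a length-1 kernel keeps the length (only the values are rescaled).
same_length = transpose_conv1d(signal, [0.5], stride=1)
# Stride 2 with a length-2 kernel doubles the length.
doubled = transpose_conv1d(signal, [1.0, 1.0], stride=2)
print(same_length)
print(doubled)
```

In the real module the kernel weights are learned, so the upsampling is fitted to the data rather than fixed as in interpolation.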

4. Experiments and Discussions

In this section, we implemented the proposed SPA-Net model on the TensorFlow platform with Anaconda and Jupyter, using a Tesla V100-SXM3-32GB GPU. As mentioned above, the existing pretrained DNN models are not suitable for our purposes, so we needed to train the model ourselves. In DNN training, the learning rate is a very important hyperparameter: with too large a learning rate, training cannot converge to the optimal solution and oscillates around it, while too small a learning rate makes optimization very slow. Therefore, we set the initial learning rate to 0.0001 at the beginning of training, for the purpose of obtaining a better solution quickly. Then, if the validation loss with the number of epochs reached 20, the learning rate would be halved, which made the model more stable during the training. The inputs of this model were images of the same size.
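One possible reading of the learning-rate rule above is a plateau schedule: start at 0.0001 and halve the rate whenever the validation loss has not improved for 20 consecutive epochs. The sketch below implements that reading only; the interpretation of the 20-epoch condition is an assumption, and the class name `HalvingSchedule` and its `patience` and `factor` parameters are hypothetical.

```python
class HalvingSchedule:
    """Halve the learning rate after `patience` epochs without improvement.

    Assumption: "improvement" means a strictly lower validation loss than
    the best value seen so far.
    """

    def __init__(self, initial_lr=1e-4, patience=20, factor=0.5):
        self.lr = initial_lr
        self.patience = patience
        self.factor = factor
        self.best = float("inf")
        self.wait = 0

    def step(self, val_loss):
        """Record one epoch's validation loss and return the current learning rate."""
        if val_loss < self.best:
            self.best = val_loss
            self.wait = 0
        else:
            self.wait += 1
            if self.wait >= self.patience:
                self.lr *= self.factor
                self.wait = 0
        return self.lr


schedule = HalvingSchedule()
schedule.step(1.0)                 # first epoch sets the best loss
for _ in range(19):
    lr_before = schedule.step(1.0)  # 19 stagnant epochs: rate unchanged
lr_after = schedule.step(1.0)       # 20th stagnant epoch: rate halved
print(lr_before, lr_after)
```

If the intended rule is instead a fixed halving every 20 epochs, the `step` method would only need to count epochs rather than track the best loss.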

To evaluate the performance of the proposed SPA-Net model, we calculated the metrics Precision, Recall, and F1 [30]. Precision is the proportion of correctly detected forged images or pixels among all detected images or pixels; Recall is the proportion of correctly detected forged images or pixels among all forged images or pixels; and F1 is the harmonic mean of Precision and Recall. Precision, Recall, and F1 are defined as:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}, \qquad F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

where TP (true positives) is the number of forged pixels predicted as forged; FP (false positives) is the number of genuine pixels predicted as forged; and FN (false negatives) is the number of forged pixels predicted as genuine.
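The pixel-level metrics can be computed directly from a predicted mask and its ground-truth mask. The following is a minimal sketch, not the evaluation code used in the experiments; the function name `pixel_metrics` and the convention of reporting 0 when a denominator is zero (as in the {0, 0, 0} entries reported for some images) are assumptions.

```python
def pixel_metrics(pred_mask, gt_mask):
    """Return (precision, recall, f1) for two binary masks of equal shape.

    Masks are sequences of rows; a truthy pixel means "forged".
    """
    tp = fp = fn = 0
    for pred_row, gt_row in zip(pred_mask, gt_mask):
        for pred, gt in zip(pred_row, gt_row):
            if pred and gt:
                tp += 1      # forged pixel predicted as forged
            elif pred and not gt:
                fp += 1      # genuine pixel predicted as forged
            elif gt:
                fn += 1      # forged pixel predicted as genuine
    # Assumed convention: an undefined ratio (zero denominator) counts as 0.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Toy 2 x 4 example: TP = 3, FP = 1, FN = 1.
gt = [[0, 1, 1, 0],
      [0, 1, 1, 0]]
pred = [[0, 1, 1, 1],
        [0, 1, 0, 0]]
print(pixel_metrics(pred, gt))  # (0.75, 0.75, 0.75)
```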

4.1. Datasets

4.1.1. Training Dataset

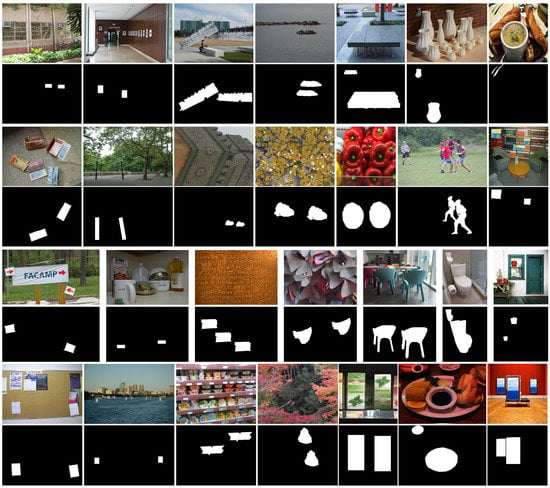

None of the existing datasets for CMFD provides a sufficient number of images and corresponding ground-truth masks for training. Since we did not use a pretrained model, we needed many images containing a copy-move forgery area to train our proposed network. Therefore, in this paper, we built the SPANet-CMFD dataset. We selected 550 original images from SUN [24] and COCO [25] and, for each image, manually selected the source area using Labelme. We then applied geometric attacks to the copied regions: rotation with angles of 5°, 20°, 45°, 60°, 90°, and 180°, and scaling with degrees of 30%, 50%, 100%, 110%, 120%, and 150%.

In addition, when non-electronic tampered images are used with our detector, they generally reach it through sensors, such as scanners and cameras. During sampling and transmission, visual sensors generally have some impact on image quality, such as extra blur and noise. Therefore, to increase the robustness of our network, different kinds of post-processing were applied after rotation and scaling during the production of the training set, including contrast adjustments (CA) with (0.01, 0.95), (0.01, 0.9), and (0.01, 0.8); image blurring (IB) with 3 × 3 and 5 × 5; Gaussian noise addition (NA) with degrees from 0.2 to 1; and brightness changing (BC) with factors of (0.01, 0.95), (0.01, 0.09), and (0.01, 0.8). The number of forgery images generated for the SPANet-CMFD dataset was 550 × 12 × 11 = 72,600, from which we selected about 50,000 useful images. In addition to the SPANet-CMFD dataset, we also used 50,000 images from [18] and several other public copy-move datasets for training, including CMH [31], MICC-F220 [32], MICC-F600 [33], GRIP [34], and Coverage [35]. We also applied post-processing to these datasets, obtaining 558 × 11 = 6138 copy-move forgery samples. Figure 7 shows several images and their corresponding masks from the training dataset.

Figure 7.

Copy-move images and their corresponding masks on the training dataset. Columns 1–7 are from CMH [31], MICC-F220 [32], MICC-F600 [33], GRIP [34], Coverage [35], USCISI-CMFD [18], and SPANet-CMFD.

CMH [31]: This consists of four CMH sub-datasets, with a total of 108 copy-move tampered images, which involve attacks with rotation and scaling transformations.

MICC-F220 [32]: There are 110 original and 110 tampered images, with sizes ranging from 722 × 480 to 800 × 600. Because the dataset does not provide ground truth for the tampered images, we used Labelme to create ground-truth masks for them.

MICC-F600 [33]: This consists of 160 tampered images and 440 original images, with a resolution ranging from 800 × 533 to 3888 × 2592.

GRIP [34]: There are 80 basic images and 80 corresponding tampered images with a size of 768 × 1024, and the dataset provides the corresponding ground truth. Most of the duplicated pieces in the images are smooth.

Coverage [35]: This dataset has 100 basic images and corresponding tampered images with an average size of 400 × 486.

USCISI-CMFD [18]: We randomly selected 50,000 copy-move images and their ground-truth masks.
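The sample counts quoted in Section 4.1.1 can be re-derived from the attack lists and the per-dataset figures above. The sketch below assumes that the factor 12 is the six rotation angles plus the six scaling degrees, that 11 is the number of post-processing variants, and that 558 is the sum of the tampered images in the five public datasets; the text implies these readings but does not state them outright.

```python
rotation_angles = [5, 20, 45, 60, 90, 180]        # degrees
scaling_percent = [30, 50, 100, 110, 120, 150]    # percent
n_geometric = len(rotation_angles) + len(scaling_percent)   # assumed source of "12"
n_postprocessing = 11    # number of post-processing variants implied by the text

# SPANet-CMFD: 550 source images x geometric attacks x post-processing variants.
n_spanet_generated = 550 * n_geometric * n_postprocessing

# Tampered images per public dataset, as listed in the dataset descriptions.
public_tampered = {"CMH": 108, "MICC-F220": 110, "MICC-F600": 160,
                   "GRIP": 80, "Coverage": 100}
n_public = sum(public_tampered.values())
n_public_augmented = n_public * n_postprocessing

print(n_geometric, n_spanet_generated)   # 12 72600
print(n_public, n_public_augmented)      # 558 6138
```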

4.1.2. Testing Dataset

To facilitate comparison with the existing state-of-the-art CMFD methods [9,17,18,21,31,36,37,38], we used two public datasets, CASIA [39] and CoMoFoD [40], as our testing datasets. CASIA [39] contains 5123 splicing and copy-move forged images, involving attacks with rotation and scaling; following [18,21], we selected 1266 copy-move forged images for the test. CoMoFoD [40] contains 200 basic images and 4800 copy-move forged images generated by applying various post-processing approaches, including JPEG compression, CA, NA, IB, BC, and color reduction (CR); the setting details of these attacks are listed in Table 1.

Table 1.

The setting details of each attack with different levels, with the CoMoFoD database.

4.2. Performance and Comparison with the CASIA Dataset

Table 2 shows the comparison of our method with other state-of-the-art methods [9,17,18,21,36,37] on the CASIA dataset. The compared works [9,36,37] are traditional methods, where [9,36] are block-based and [37] is keypoint-based, whereas [17,18,21] and our method are DNN-based. It can be seen from Table 2 that [9,36] performed significantly worse than the keypoint-based method [37], because most of the images in the CASIA dataset were processed with large scaling, against which the block-based methods [9,36] are not robust. In contrast, the keypoint-based approaches are robust against large-scale distortions, but they are not good at dealing with smooth areas. Among the deep learning methods, except for [17], which depends on obviously tampered edges such as splicing feature information, the methods of [18,21] and ours obtained the best F1, because deep learning methods can overcome the disadvantages of the traditional methods. Overall, our approach learned rich, useful feature information through the span-partial structure with an attention mechanism and achieved outstanding performance; in addition, the training dataset of SPA-Net included many rotated and scaled images.

Table 2.

Comparison of performance on the CASIA dataset.

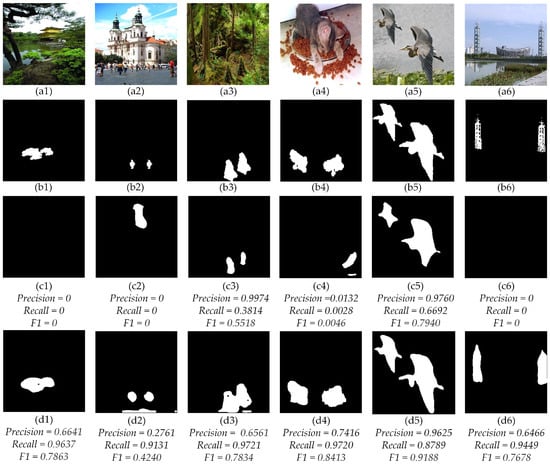

Figure 8 shows a visual comparison between the state-of-the-art work BusterNet [18] and our proposed SPA-Net. The 1st and 2nd rows show the forgery images and the corresponding ground truth. The 3rd row shows the detection results of BusterNet [18], with the {Precision, Recall, F1} calculated as {0, 0, 0}, {0, 0, 0}, {0.9974, 0.3814, 0.5518}, {0.0132, 0.0028, 0.0046}, {0.9760, 0.6692, 0.7940}, and {0, 0, 0}, respectively. The 4th row shows the corresponding results of the proposed SPA-Net, with the {Precision, Recall, F1} calculated as {0.6641, 0.9637, 0.7863}, {0.2761, 0.9131, 0.4240}, {0.6561, 0.9721, 0.7834}, {0.7416, 0.9720, 0.8413}, {0.9265, 0.8789, 0.9188}, and {0.6466, 0.9449, 0.7678}, respectively. The comparison results demonstrate the significantly better performance of the proposed SPA-Net. The results of (a1) and (a4) show that our method handles scaling and rotation better than BusterNet [18], and the results of (a2) show that BusterNet [18] is poor at dealing with smaller areas.

Figure 8.

Comparison of BusterNet [18] and the proposed SPA-Net on the CASIA dataset. (a1–a6) The copy-move images, (b1–b6) The ground truth of the copy-move images, (c1–c6) The results of BusterNet [18], (d1–d6) The results of SPA-Net.

4.3. Performance Comparison on the CoMoFoD Dataset

Table 3 shows the pixel-level results of [9,17,18,31,38] and ours, where [9,31,38] are traditional methods, and [17,18] and ours are DNN-based methods. It is worth mentioning that, compared with deep-learning-based methods, traditional methods lack universality and stability. In addition, our method outperformed Deep-L [17] and BusterNet [18], which are both deep-learning-based methods, and the performance of the proposed SPA-Net was outstanding under most attacks.

Table 3.

Comparison of the CMFD results on the CoMoFoD dataset at pixel level.

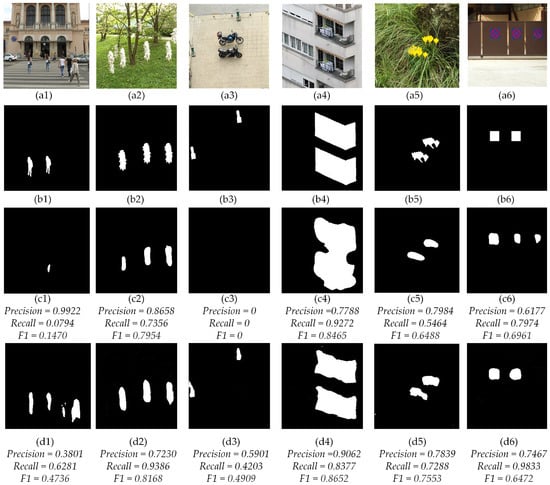

Figure 9 shows a visual comparison between the state-of-the-art work BusterNet [18] and our proposed SPA-Net. The 1st and 2nd rows show the forgery images and the corresponding ground truth. The 3rd row shows the detection results of BusterNet [18], with the {Precision, Recall, F1} calculated as {0.9922, 0.0794, 0.1470}, {0.8658, 0.7456, 0.7954}, {0, 0, 0}, {0.7788, 0.9272, 0.8456}, {0.7984, 0.5464, 0.6488}, and {0.6177, 0.7974, 0.6961}, respectively. The 4th row shows the corresponding results of the proposed SPA-Net, with the {Precision, Recall, F1} calculated as {0.3801, 0.6281, 0.4736}, {0.7230, 0.9386, 0.8168}, {0.5901, 0.4203, 0.4909}, {0.9062, 0.8377, 0.8652}, {0.7839, 0.7288, 0.7533}, and {0.7467, 0.9833, 0.6472}, respectively. The comparison results demonstrate the significantly better performance of the proposed SPA-Net. In the results of (a1) and (a4), BusterNet [18] cannot detect the small copy-move areas, but our method can. In (d1), we detect some genuine pixels as copy-move areas, so the Precision is lower while the Recall is higher. Our method outperforms BusterNet [18] in Precision, Recall, and F1 in the results of (a6), because BusterNet [18] detects other similar areas as copy-move areas.

Figure 9.

Comparison of BusterNet [18] and the proposed SPA-Net on the CoMoFoD dataset. (a1–a6) The copy-move images, (b1–b6) The ground truth of the copy-move images, (c1–c6) The results of BusterNet [18], (d1–d6) The results of SPA-Net.

5. Conclusions

In this paper, we proposed an end-to-end neural network, SPA-Net, for CMFD. In the SPA-Net Feature Extractor Module, the gradients from the left branch of the stem layer and the right branch of the Conv_BN_Leaky Relu (CBLR) are integrated without duplicate feature information. We also pay sufficient attention to the correlation between high-dimensional feature channels, and use the corresponding enhanced Attention Mechanism to find the potential characteristic representation. The Feature Matching Module adapts more flexibly to the need for high-dimensional matching in deep learning. In addition, the Feature Up-Sampling Module is easy to embed in SPA-Net; compared with traditional linear interpolation, the transposed convolution in the Up-Sampling Module is learnable. Moreover, we created the SPANet-CMFD dataset by applying various processing to benchmark images from the SUN and COCO datasets. We then used the existing copy-move forgery datasets, CMH, MICC-F220, MICC-F600, GRIP, Coverage, and USCISI-CMFD, together with our generated SPANet-CMFD dataset, as the training set for our model, which broadens the range of copy-move tampering that the network can handle. Compared with other CMFD methods, the proposed SPA-Net achieves good results on both the CASIA and CoMoFoD datasets. According to the evaluation results on the test datasets, the generalization ability of SPA-Net is better than that of most traditional and deep learning methods, but its ability to detect duplicated areas still needs to be improved, especially for smaller copy-move areas.

Therefore, in future research, we will pursue improvements in two respects. First, we will combine SPA-Net with traditional methods to improve the generalization ability of the network and eliminate mismatched areas. Second, building on the deep learning network structure, we will also draw on popular image processing research fields such as image segmentation: because CMFD and image segmentation both involve localization, they can be combined in the feature extraction or result optimization stage, which should help improve network performance. Additionally, in terms of application, SPA-Net can serve as a sub-stage for distinguishing the source and target areas in the future, and it can also be combined with smart visual sensors to automatically analyze and locate copy-move forgery in images. Moreover, the generation procedure of SPANet-CMFD can be adapted with simple processing to produce datasets for other forgery types, such as splicing and removal, and it can be applied to any field related to image tampering, such as remote sensing and deepfakes.

Author Contributions

Conceptualization, K.Z. and X.Y.; Data curation, K.Z., Z.X. and Y.X.; Formal analysis, K.Z., X.Y., Z.X., Y.X., G.H. and L.F.; Funding acquisition, X.Y.; Investigation, K.Z., X.Y., Z.X., Y.X. and L.F.; Methodology, K.Z., X.Y., Z.X. and G.H.; Project administration, X.Y.; Resources, K.Z.; Software, Z.X.; Supervision, X.Y. and L.F.; Validation, K.Z. and L.F.; Writing—original draft, K.Z.; Writing—review & editing, X.Y., G.H. and L.F. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Research Project of the Macao Polytechnic University [Project No. RP/ESCA-03/2021], and the Science and Technology Development Fund of Macau SAR [grant number 0045/2022/A].

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. This data can be found here: CMH: http://dx.doi.org/10.6084/m9.figshare.978736, accessed on 10 January 2021. MICC-F220: http://www.micc.unifi.it/downloads/MICC-F220.zip, accessed on 12 January 2021. MICC-F600: http://www.micc.unifi.it/downloads/MICC-F600.zip, accessed on 12 January 2021. GRIP: http://www.grip.unina.it/, accessed on 16 March 2021. Coverage: https://github.com/wenbihan/coverage, accessed on 1 January 2021. USCISI-CMFD: https://github.com/isi-vista/BusterNet/tree/master/Data/USCISI-CMFD-Small, accessed on 2 February 2021. CASIA: https://github.com/isi-vista/BusterNet/tree/master/Data/CASIA-CMFD, accessed on 2 February 2021. CoMoFoD: https://www.vcl.fer.hr/comofod/, accessed on 18 June 2021.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Swaminathan, A.; Wu, M.; Liu, K.R. Nonintrusive component forensics of visual sensors using output images. IEEE Trans. Inf. Forensics Secur. 2007, 2, 91–106.

2. Yao, H.; Xu, M.; Qiao, T.; Wu, Y.; Zheng, N. Image forgery detection and localization via a reliability fusion map. Sensors 2020, 20, 6668.

3. Pu, H.; Huang, T.; Weng, B.; Ye, F.; Zhao, C. Overcome the Brightness and Jitter Noises in Video Inter-Frame Tampering Detection. Sensors 2021, 21, 3953.

4. Seo, Y.; Kook, J. DRRU-Net: DCT-Coefficient-Learning RRU-Net for Detecting an Image-Splicing Forgery. Appl. Sci. 2023, 13, 2922.

5. Lin, Y.K.; Yen, T.Y. A Meta-Learning Approach for Few-Shot Face Forgery Segmentation and Classification. Sensors 2023, 23, 3647.

6. Kakar, P.; Sudha, N. Exposing postprocessed copy–paste forgeries through transform-invariant features. IEEE Trans. Inf. Forensics Secur. 2012, 7, 1018–1028.

7. Farid, H. Exposing digital forgeries in scientific images. In Proceedings of the 8th Workshop on Multimedia and Security, Geneva, Switzerland, 26–27 September 2006; pp. 29–36.

8. Wang, J.; Liu, G.; Zhang, Z.; Dai, Y.; Wang, Z. Fast and robust forensics for image region-duplication forgery. Acta Autom. Sin. 2009, 35, 1488–1495.

9. Ryu, S.J.; Kirchner, M.; Lee, M.J.; Lee, H.K. Rotation invariant localization of duplicated image regions based on Zernike moments. IEEE Trans. Inf. Forensics Secur. 2013, 8, 1355–1370.

10. Ng, P.C.; Henikoff, S. SIFT: Predicting amino acid changes that affect protein function. Nucleic Acids Res. 2003, 31, 3812–3814.

11. Huang, H.; Guo, W.; Zhang, Y. Detection of copy-move forgery in digital images using SIFT algorithm. In Proceedings of the 2008 IEEE Pacific-Asia Workshop on Computational Intelligence and Industrial Application, Wuhan, China, 19–20 December 2008; Volume 2, pp. 272–276.

12. Chen, C.C.; Lu, W.Y.; Chou, C.H. Rotational copy-move forgery detection using SIFT and region growing strategies. Multimed. Tools Appl. 2019, 78, 18293–18308.

13. Prakash, C.S.; Panzade, P.P.; Om, H.; Maheshkar, S. Detection of copy-move forgery using AKAZE and SIFT keypoint extraction. Multimed. Tools Appl. 2019, 78, 23535–23558.

14. Wang, X.Y.; Wang, C.; Wang, L.; Jiao, L.X.; Yang, H.Y.; Niu, P.P. A fast and high accurate image copy-move forgery detection approach. Multidimens. Syst. Signal Process. 2020, 31, 857–883.

15. Bay, H.; Tuytelaars, T.; Van Gool, L. Surf: Speeded up robust features. Lect. Notes Comput. Sci. 2006, 3951, 404–417.

16. Zhu, Y.; Ng, T.T.; Wen, B.; Shen, X.; Li, B. Copy-move forgery detection in the presence of similar but genuine objects. In Proceedings of the 2017 IEEE 2nd International Conference on Signal and Image Processing (ICSIP), Singapore, 4–6 August 2017; pp. 25–29.

17. Rao, Y.; Ni, J. A deep learning approach to detection of splicing and copy-move forgeries in images. In Proceedings of the 2016 IEEE International Workshop on Information Forensics and Security (WIFS), Abu Dhabi, United Arab Emirates, 4–7 December 2016; pp. 1–6.

18. Wu, Y.; Abd-Almageed, W.; Natarajan, P. Busternet: Detecting copy-move image forgery with source/target localization. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 168–184.

19. Wu, Y.; Abd-Almageed, W.; Natarajan, P. Image copy-move forgery detection via an end-to-end deep neural network. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 1907–1915.

20. Zhong, J.L.; Pun, C.M. An end-to-end dense-inceptionnet for image copy-move forgery detection. IEEE Trans. Inf. Forensics Secur. 2019, 15, 2134–2146.

21. Zhu, Y.; Chen, C.; Yan, G.; Guo, Y.; Dong, Y. AR-Net: Adaptive attention and residual refinement network for copy-move forgery detection. IEEE Trans. Ind. Inform. 2020, 16, 6714–6723.

22. Fridrich, J.; Kodovsky, J. Rich models for steganalysis of digital images. IEEE Trans. Inf. Forensics Secur. 2012, 7, 868–882.

23. Koul, S.; Kumar, M.; Khurana, S.S.; Mushtaq, F.; Kumar, K. An efficient approach for copy-move image forgery detection using convolution neural network. Multimed. Tools Appl. 2022, 81, 11259–11277.

24. Xiao, J.; Hays, J.; Ehinger, K.A.; Oliva, A.; Torralba, A. Sun database: Large-scale scene recognition from abbey to zoo. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 3485–3492.

25. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V 13; pp. 740–755.

26. Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

27. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

28. Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

29. Wu, Y.; Abd-Almageed, W.; Natarajan, P. Deep matching and validation network: An end-to-end solution to constrained image splicing localization and detection. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 1480–1502.

30. Christlein, V.; Riess, C.; Jordan, J.; Riess, C.; Angelopoulou, E. An evaluation of popular copy-move forgery detection approaches. IEEE Trans. Inf. Forensics Secur. 2012, 7, 1841–1854.

31. Silva, E.; Carvalho, T.; Ferreira, A.; Rocha, A. Going deeper into copy-move forgery detection: Exploring image telltales via multi-scale analysis and voting processes. J. Vis. Commun. Image Represent. 2015, 29, 16–32.

32. Amerini, I.; Ballan, L.; Caldelli, R.; Del Bimbo, A.; Serra, G. A sift-based forensic method for copy–move attack detection and transformation recovery. IEEE Trans. Inf. Forensics Secur. 2011, 6, 1099–1110.

33. Amerini, I.; Ballan, L.; Caldelli, R.; Del Bimbo, A.; Del Tongo, L.; Serra, G. Copy-move forgery detection and localization by means of robust clustering with J-Linkage. Signal Process. Image Commun. 2013, 28, 659–669.

34. Cozzolino, D.; Poggi, G.; Verdoliva, L. Efficient dense-field copy–move forgery detection. IEEE Trans. Inf. Forensics Secur. 2015, 10, 2284–2297.

35. Wen, B.; Zhu, Y.; Subramanian, R.; Ng, T.T.; Shen, X.; Winkler, S. COVERAGE—A novel database for copy-move forgery detection. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 161–165.

36. Emam, M.; Han, Q.; Niu, X. PCET based copy-move forgery detection in images under geometric transforms. Multimed. Tools Appl. 2016, 75, 11513–11527.

37. Pun, C.M.; Yuan, X.C.; Bi, X.L. Image forgery detection using adaptive oversegmentation and feature point matching. IEEE Trans. Inf. Forensics Secur. 2015, 10, 1705–1716.

38. Li, J.; Li, X.; Yang, B.; Sun, X. Segmentation-based image copy-move forgery detection scheme. IEEE Trans. Inf. Forensics Secur. 2014, 10, 507–518.

39. Dong, J.; Wang, W.; Tan, T. Casia image tampering detection evaluation database. In Proceedings of the 2013 IEEE China Summit and International Conference on Signal and Information Processing, Beijing, China, 6–10 July 2013; pp. 422–426.

40. Tralic, D.; Zupancic, I.; Grgic, S.; Grgic, M. CoMoFoD—New database for copy-move forgery detection. In Proceedings of ELMAR-2013, Zadar, Croatia, 25–27 September 2013; pp. 49–54.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).