Abstract

Finding dominating sets in graphs is important in numerous real-world applications, especially in wireless sensor networks, because network lifetime can be prolonged by assigning sensors to disjoint dominating node sets. The nodes of these sets are then used sequentially by a sleep–wake cycling mechanism; that is, at any moment in time, only the nodes of exactly one of these sets are switched on while the others are switched off. This paper presents a population-based iterated greedy algorithm for solving a weighted version of the maximum disjoint dominating sets problem for energy conservation purposes in wireless sensor networks. Our approach is compared to the ILP solver CPLEX, to an existing local search technique, and to our earlier greedy algorithm. The comparison is based on 640 random graphs from the literature and on 300 newly generated random geometric graphs. The results show that our algorithm significantly outperforms the competitors.

1. Introduction

The field of wireless sensor networks (WSNs) has attracted considerable attention over the last 20 years, both in research and in industry. This is certainly due to a multitude of different applications, including environmental monitoring, medical and health applications, security surveillance, transportation applications, structural applications, and emergency operations [1,2], just to name a few. WSNs are generally composed of a number of small devices equipped with one or more sensors, limited storage capacity, a limited power supply, and a radio communication system. As the weight of sensor devices often plays an important role, the power supply (for example, a battery) is generally limited, and battery-saving techniques are often used. The lifetime of a sensor device (in hours) may be computed by dividing the battery capacity (in Watt hours) by the average power drain (in Watts). However, the estimation of the lifetime of a sensor node is not a trivial task (see [3]) because energy consumption is the result of various factors, including, for example, the environmental temperature.

For these reasons, one of the principal research topics concerning WSNs is about network lifetime extension, while at the same time, providing sufficient communication reliability and sensing coverage. Note that in this context, the term network lifetime refers to the time during which the network is fully operational with respect to its tasks. In other words, the network lifetime is the time duration in which the overall sensing coverage is maintained. The lifetime of a WSN, therefore, heavily depends on the energy consumption of the individual sensor devices. Real-world examples of mechanisms for maximizing the network lifetime are manifold. They include, but are not limited to, smart agriculture monitoring [4], structural health monitoring [5], human activity monitoring [6], and road traffic monitoring [7]. Power-saving strategies such as the ones found in these examples can—according to [8]—be classified as belonging to one of the following groups:

- Sleep–wake cycling, also referred to as duty cycling. Here, sensor devices alternate between active and sleep mode;

- Power control through the adjustment of the transmission range of the radio communication systems;

- Routing and data gathering in an energy efficient way;

- Reduction of the amount of data transmissions and avoidance of useless activity.

In this paper, we provide a technique for WSN lifetime extension that falls into the first category. More precisely, our technique makes use of the so-called communication graph. The nodes of this graph are the sensor devices belonging to the network (together with their locations). Two such nodes are connected by an edge if the corresponding sensor devices can communicate with each other via their radio communication systems. Note that sensor nodes have at least two tasks, also known as functionalities: (1) sensing data and (2) data processing and forwarding data to a base station. Of these two tasks, the latter is by far more energy-intensive than the former. In order to keep energy spending to a minimum, the nodes in a sensor network may be organized in terms of dominating sets in which the dominators (that is, the sensor nodes that form part of the dominating set) assume the task of cluster heads that take care of data processing and forwarding. However, sensor nodes are never switched off in this model. Those sensor nodes that do not form part of the (current) dominating set save energy by not having to perform data processing and forwarding. In contrast, data sensing is performed by all sensor nodes at all times. Such a model (or similar models) has been used in a wide range of papers in the literature; for example, see refs. [9,10,11]. For the above-mentioned reasons, our technique organizes the sensor nodes into a number of disjoint dominating sets, which are used, one after the other, for data processing and data forwarding.

1.1. Necessary Graph Theoretic Concepts

This paper makes use of some basic definitions and notations from graph theory. The most important ones are outlined in the following. (For a more profound introduction, the interested reader may refer to [12].) First, the communication graph is modeled by means of an undirected graph, $G = (V, E)$, where V is the set of nodes, and E is the set of edges connecting (some of) the nodes. Two nodes, $u, v \in V$, which are connected by an edge, $(u, v) \in E$, are called neighbors. They may also be called adjacent nodes. The open neighborhood of a node, $v \in V$, is defined as $N(v) := \{u \in V \mid (v, u) \in E\}$. Sometimes, however, it is necessary to refer to the closed neighborhood of a node, $v \in V$, which is defined by adding v to $N(v)$, that is, $N[v] := N(v) \cup \{v\}$. Next, the degree of a node, $v \in V$, is defined as the cardinality of $N(v)$, that is, $\deg(v) := |N(v)|$. The concept of neighborhood can also be extended from nodes to sets of nodes in the following way. The open neighborhood, $N(D)$, of a set, $D \subseteq V$, is defined as $N(D) := \bigcup_{v \in D} N(v)$. Correspondingly, the closed neighborhood of D, $N[D]$, is defined as $N[D] := N(D) \cup D$.

In this context, we also formally introduce the terms dominating set, domatic partition, and domatic number of a graph. First, a subset, $D \subseteq V$, in which each node, $v \in V \setminus D$, has at least one neighbor that forms part of D is called a dominating set of G. A node, $v \in D$, where D is a dominating set, is said to cover all its neighbors, in addition to itself. A trivial example of a dominating set of an undirected graph, $G = (V, E)$, is V. Next, a set $\mathcal{D} = \{D_1, \ldots, D_k\}$ of subsets $D_i \subseteq V$ is called a domatic partition of a given, undirected graph, $G = (V, E)$, if the following two conditions are fulfilled: (1) $D_i$ ($i = 1, \ldots, k$) is a dominating set of G, and (2) all sets of $\mathcal{D}$ are pairwise disjoint, that is, $D_i \cap D_j = \emptyset$ for all $1 \le i < j \le k$. If $\mathcal{D}$ is a set of disjoint dominating sets of G with (1) $\bigcup_{i=1}^{k} D_i \subset V$ and (2) $V \setminus \bigcup_{i=1}^{k} D_i$ is not a dominating set, a domatic partition of G can easily be obtained by adding all vertices from $V \setminus \bigcup_{i=1}^{k} D_i$, for example, to $D_1$. The domatic number of an undirected graph, $G = (V, E)$, is defined as the size of the largest domatic partition of G, that is, the domatic number of G is $|\mathcal{D}|$, where $\mathcal{D} := \arg\max\{|\mathcal{D}'| \mid \mathcal{D}' \text{ is a domatic partition of } G\}$. It was shown in the literature that the domatic number of a graph, G, can be at most $\delta + 1$, where $\delta := \min\{\deg(v) \mid v \in V\}$. The problem of identifying a domatic partition of maximum size in an undirected graph, G, is sometimes called the maximum disjoint dominating sets (MDDS) problem.
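The definitions above translate directly into a few set operations. The following is a minimal sketch, assuming the graph is stored as a dictionary that maps each node to the set of its neighbors; the function names and the four-node cycle used as an example are illustrative and not part of the original work.

```python
from itertools import combinations


def closed_neighborhood(adj, v):
    """Return N[v]: the neighbors of v together with v itself."""
    return adj[v] | {v}


def is_dominating_set(adj, candidate):
    """True if every node is in `candidate` or has a neighbor in it."""
    return all(closed_neighborhood(adj, v) & candidate for v in adj)


def are_disjoint_dominating_sets(adj, family):
    """True if the sets in `family` are pairwise disjoint dominating sets."""
    pairwise_disjoint = all(a.isdisjoint(b) for a, b in combinations(family, 2))
    return pairwise_disjoint and all(is_dominating_set(adj, d) for d in family)


# Hypothetical example graph: the cycle 0-1-2-3-0 (minimum degree 2).
cycle = {0: {1, 3}, 1: {0, 2}, 2: {1, 3}, 3: {0, 2}}
```

For this cycle, `{0, 2}` and `{1, 3}` are two disjoint dominating sets, which is within the upper bound of $\delta + 1 = 3$ stated above.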

1.2. Graph Problems Used to Model WSN Lifetime Maximization

The related literature offers different approaches for the maximization of the sensor network lifetime. Most of these approaches have modeled this problem either in terms of the set K-cover problem (also known as the target coverage problem) or as the MDDS problem. Modeling the problem as a K-cover problem was performed for the first time in [13]. In the same work, the problem was shown to be NP-hard. In this context, note that the set K-cover problem is defined on the basis of a bipartite graph in which the first set of nodes are the sensor devices and the second set of nodes are the sensing targets. The aim of the problem is to partition the sensor devices into a maximum number of disjoint sets, with each one covering all targets. As mentioned before, these disjoint sets are then activated one after the other in order to keep the network alive for the maximum period of time. Due to the NP-hardness of the problem, a range of approximate algorithms have been proposed in the literature in order to solve it. Examples, which also include algorithms for closely related problem formulations, are a greedy heuristic [13], some memetic algorithms [14,15,16], a cuckoo search approach [17], and finally, a genetic algorithm [18].

As already indicated above, the problem of maximizing sensor network lifetime is also frequently modeled as an MDDS problem, the goal of which is to identify a partition of the sensor devices into a maximum number of disjoint dominating sets of the corresponding communication graph. The MDDS problem, which belongs to the important family of dominating set problems [19,20,21], was shown to be NP-hard for general graphs [22]. Cardei et al. [23] proved the NP-completeness of a special case of the MDDS problem known as the 3-disjoint dominating sets problem. This variant deals with the question of whether or not a given graph contains three disjoint dominating sets. In [23], it was also proved that, unless P = NP, the MDDS problem has no polynomial-time approximation with a performance guarantee better than 1.5. Finally, the same authors introduced a graph coloring-based heuristic approach. Nguyen and Huynh [9] proved that the 3-disjoint dominating sets problem remains NP-complete even for the special case of planar unit disk graphs. Moreover, they introduced and evaluated the performance of four greedy heuristics for the general MDDS problem. Next, Feige et al. [24] showed that any graph with n nodes, a maximum degree of $\Delta$, and a minimum degree of $\delta$ has a domatic partition with a size of $(1 - o(1))(\delta + 1)/\ln n$. Note that the term $o(1)$ tends to zero with increasing n. Moreover, the same authors were able to show the non-existence of an approximation algorithm with an approximation ratio of $(1 - \epsilon)\ln n$ unless $NP \subseteq DTIME(n^{O(\log \log n)})$. Finally, they also introduced a centralized algorithm generating a domatic partition with a size of $\Omega(\delta / \ln \Delta)$. Moscibroda and Wattenhöfer [25] regarded the MDDS problem as the problem of maximizing cluster lifetime. They introduced a randomized, distributed algorithm having, with high probability, a performance ratio of $O(\log n)$. Finally, a polynomial-time greedy heuristic for the MDDS problem was described in [26].

1.3. Existing Work for the MWDDS Problem and Our Contribution

Recently, a weighted variant of the MDDS problem, in which the weights of the nodes of a given undirected graph, $G = (V, E)$, indicate the remaining lifetime of single sensor devices, was introduced in [27]. The authors labeled this problem as the maximum weighted disjoint dominating sets (MWDDS) problem. The lifetime of a dominating set in G is hereby defined as the minimum of the lifetimes of the nodes that form part of the set. The MWDDS problem asks to identify a domatic partition that maximizes the sum of the lifetimes of the corresponding dominating sets.

In addition, three algorithms based on a local search were provided in [27]. Each of these local search methods takes a solution generated by a greedy heuristic from [26] as the initial solution. The proposed local search methods make use of swap neighborhoods, trying, for example, to exchange nodes between different dominating sets and to incorporate nodes not belonging to any dominating set of the current solution. The three local search methods differ in the type of swaps that are considered. The current state-of-the-art approach for the MWDDS problem is, surprisingly, a greedy heuristic that was introduced in [28]. This algorithm generates one dominating set after the other by adding one node at a time to the partial dominating set under construction.

Given that the greedy heuristic from [28] seems to be very powerful, in this work, we propose a metaheuristic extension of this greedy heuristic. More specifically, we propose a population-based iterated greedy (PBIG) algorithm on the lines of [29,30]. Just as with any other iterated greedy (IG) approach [31], our algorithm iteratively generates a new solution to the problem by partially destroying incumbent solutions and re-constructing the obtained partial solutions by means of a randomized greedy technique. As we will show in the section on the experimental results, our proposed approach clearly outperforms all existing algorithms for the MWDDS problem. In addition to 640 problem instances from the related literature, we likewise evaluate our algorithm—in comparison to the competitors—on 300 random geometric graphs.

1.4. Paper Organization

The remainder of this paper is organized as follows. The MWDDS problem is formally introduced, together with notations and basic definitions, in Section 2. This also includes a graphical example and an ILP model for the MWDDS problem. In Section 3, we introduce our algorithmic proposal, a population-based iterated greedy algorithm for solving the MWDDS problem. Finally, Section 4 presents a comprehensive experimental evaluation and a comparison to the current state of the art, while Section 5 summarizes our paper and offers directions for future lines of work.

2. The MWDDS Problem

Let $G = (V, E, lifetime)$ be an undirected, node-weighted graph. As already mentioned before, V refers to the set of nodes, while $E \subseteq V \times V$ is the set of edges. Moreover, $lifetime: V \rightarrow \mathbb{R}^{+}$ is a weight function defined over the set of nodes, assigning a positive weight, $lifetime(v) > 0$, to each node, $v \in V$. The maximum weighted disjoint dominating sets (MWDDS) problem is then defined such that any domatic partition of G is a valid solution. The objective function value of a valid solution, $\mathcal{D} = \{D_1, \ldots, D_k\}$, is defined as follows:

$$f(\mathcal{D}) := \sum_{i=1}^{k} \min\{lifetime(v) \mid v \in D_i\} \tag{1}$$

In other words, the quality of a subset, $D_i$, is determined as the minimum lifetime of all its nodes. The objective is to find a valid solution, $\mathcal{D}^*$, that maximizes the objective function f.

2.1. Graphical Example

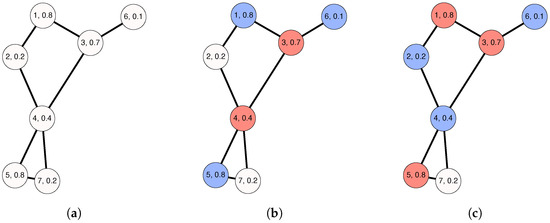

Figure 1 shows a graphical example of the MWDDS problem. In particular, Figure 1a shows an undirected graph on seven nodes. The labels printed within the nodes consist of two values, x and y, where x is the node's ID and y is the node's lifetime. These lifetime values are normalized to the range $[0, 1]$ for the sake of simplicity. Furthermore, Figure 1b shows a feasible solution, $\mathcal{D} = \{D_1, D_2\}$, which consists of two dominating sets, $D_1$ and $D_2$. According to the node labels, the remaining lifetimes of nodes 3 and 4 are 0.7 and 0.4, respectively. Correspondingly, $D_1$ has a lifetime of 0.4. Next, it can easily be calculated that the lifetime of $D_2$ is 0.1 because the individual lifetimes of nodes 1, 5, and 6 are 0.8, 0.8, and 0.1, respectively. The objective function value of $\mathcal{D}$ is calculated as the sum of the lifetimes of its dominating sets. Therefore, the objective function value of $\mathcal{D}$ is $0.4 + 0.1 = 0.5$. Finally, Figure 1c shows the optimal solution, $\mathcal{D}^* = \{D_1^*, D_2^*\}$, to this problem instance. Here, the lifetime of $D_1^*$ is 0.7, while the lifetime of $D_2^*$ is 0.1. Therefore, the objective function value of $\mathcal{D}^*$ is 0.8. Since this small sample graph contains a node with a degree of 1, and the number of disjoint dominating sets is bounded from above by the minimum degree plus one, any valid solution can contain at most two disjoint dominating sets.

Figure 1.

An illustrative example of the MWDDS problem. (a) Problem instance; (b) Feasible solution $\mathcal{D}$, with $f(\mathcal{D}) = 0.5$; (c) Optimal solution $\mathcal{D}^*$, with $f(\mathcal{D}^*) = 0.8$.
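The objective function can be evaluated in a few lines. The sketch below recomputes the value of the feasible solution from Figure 1b, assuming (as the text suggests) that its first set consists of nodes 3 and 4 and its second set of nodes 1, 5, and 6. The lifetimes of nodes 2 and 7 are not stated in the text and are therefore omitted; the function names are illustrative.

```python
def lifetime_of_set(lifetime, dominating_set):
    """Lifetime of a dominating set: the minimum lifetime of its nodes."""
    return min(lifetime[v] for v in dominating_set)


def objective(lifetime, solution):
    """Objective value: the sum of the lifetimes of all sets of the solution."""
    return sum(lifetime_of_set(lifetime, d) for d in solution)


# Node lifetimes as stated in the text for the example of Figure 1.
lifetime = {1: 0.8, 3: 0.7, 4: 0.4, 5: 0.8, 6: 0.1}

# Assumed composition of the feasible solution of Figure 1b.
solution = [{3, 4}, {1, 5, 6}]
value = objective(lifetime, solution)  # 0.4 + 0.1
```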

2.2. ILP Model for the MWDDS Problem

The following integer linear programming (ILP) model for the MWDDS problem was introduced in [28]. First, we describe the sets of variables and their domains utilized by this model:

1. A binary variable, $x_{ij}$, for each combination of a node, $v_i$ ($i = 1, \ldots, n$), and a possible disjoint set, $D_j$ ($j = 1, \ldots, \delta + 1$), indicates whether or not node $v_i$ forms part of the dominating set $D_j$. That is, when $x_{ij} = 1$, node $v_i$ is assigned to the dominating set $D_j$. In this context, remember that (1) $\delta := \min\{\deg(v) \mid v \in V\}$, and (2) the number of disjoint dominating sets in a graph is bounded from above by $\delta + 1$.

2. Second, a binary variable, $y_j$ ($j = 1, \ldots, \delta + 1$), indicates whether the j-th dominating set is utilized at all.

3. Finally, a real-valued variable, $z_j$, is used to store the weight of the j-th dominating set. In our implementation of the model, we used .

The MWDDS can then be stated in terms of an ILP model in the following way:

The objective function is the sum of the weight values of all dominating sets. Constraints (3) ensure that each node is assigned to one dominating set at most. In this way, the chosen dominating sets are disjoint. Next, constraints (4) are the usual dominating set constraints, that is, they make sure that the set of nodes assigned to the j-th set (if utilized) forms a dominating set of G. Furthermore, constraints (5) make sure that nodes can only be assigned to utilized dominating sets. Constraints (6) correctly determine the lifetimes of the utilized dominating sets. This is accomplished by bounding the weight variable of the j-th dominating set (if utilized) from above by the lifetime values of all nodes assigned to it; since the objective is maximized, this variable then takes the minimum of these lifetime values. Next, note that the objective function (Equation (2)) is only correct if the weight value of the dominating sets not utilized is set to zero. This is ensured by constraints (7). The remaining two sets of constraints, (8) and (9), are not required for the correctness of the ILP. They were introduced for tie-breaking purposes and have the following effect: (1) if k dominating sets are utilized, they are assigned to sets $D_1, \ldots, D_k$, and (2) the utilized dominating sets are ordered according to a non-increasing weight value.
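For orientation, one formulation that is consistent with the constraint descriptions in this section can be written as follows. This is a sketch rather than a verbatim copy of the model from [28]: the symbol names ($x_{ij}$ for the assignment of node $v_i$ to set $D_j$, $y_j$ for the utilization of set $D_j$, $z_j$ for its weight) and the constant $M$, assumed to be an upper bound on all node lifetimes, are assumptions made for illustration, and the exact form of the original constraints may differ.

```latex
\begin{align}
\max \quad & \sum_{j=1}^{\delta+1} z_j \tag{2}\\
\text{s.t.} \quad
& \sum_{j=1}^{\delta+1} x_{ij} \le 1
  && i = 1,\ldots,n \tag{3}\\
& x_{ij} + \sum_{v_k \in N(v_i)} x_{kj} \ge y_j
  && i = 1,\ldots,n,\; j = 1,\ldots,\delta+1 \tag{4}\\
& x_{ij} \le y_j
  && i = 1,\ldots,n,\; j = 1,\ldots,\delta+1 \tag{5}\\
& z_j \le lifetime(v_i)\, x_{ij} + M\,(1 - x_{ij})
  && i = 1,\ldots,n,\; j = 1,\ldots,\delta+1 \tag{6}\\
& z_j \le M\, y_j
  && j = 1,\ldots,\delta+1 \tag{7}\\
& y_j \ge y_{j+1}
  && j = 1,\ldots,\delta \tag{8}\\
& z_j \ge z_{j+1}
  && j = 1,\ldots,\delta \tag{9}\\
& x_{ij}, y_j \in \{0,1\},\quad z_j \ge 0 \notag
\end{align}
```

In this sketch, constraint (6) only becomes binding for nodes with $x_{ij} = 1$, so that maximizing $z_j$ drives it to the minimum lifetime among the nodes assigned to the j-th set, while constraint (7) forces $z_j = 0$ whenever $y_j = 0$.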

3. Proposed Algorithm

Our population-based iterated greedy (PBIG) algorithm is a population-based extension of the well-known iterated greedy (IG) metaheuristic [31], that is, it produces a sequence of solutions by iterating over a constructive greedy heuristic in the following way. At each iteration, first, some part of the current/incumbent solution is removed, and second, the greedy heuristic is applied to the resulting partial solution in order to again obtain a complete solution. The first of these phases is called the destruction phase, while the second one is known as the reconstruction phase. A high-level description of our PBIG algorithm for solving the MWDDS problem is given in Algorithm 1. Apart from a problem instance, PBIG requires seven input parameters: (1) the population size, (2) the lower bound of the degree of greediness during solution construction, (3) the upper bound of the degree of greediness during solution construction, (4) the lower bound of the degree of solution destruction, (5) the upper bound of the degree of solution destruction, (6) the maximum number of iterations without improvement of the best-so-far solution before applying a restart, and (7) the degree of partial solution removal. Moreover, note that in our algorithm, each solution has two solution-specific parameters: the individual destruction rate and the individual degree of greediness. The use of all parameters is described in detail below.

Algorithm 1 PBIG for the MWDDS problem.

Input: A problem instance and values for the seven input parameters. Output: A family of disjoint dominating sets.

The algorithm works as follows. A set of solutions for the initial population is generated by the population initialization function (see line 1 of Algorithm 1). Moreover, the best-so-far solution and the non-improvement counter are initialized. Afterwards, the main loop of the algorithm starts. Each iteration consists of the following steps. Each solution, $\mathcal{D}$, of the current population is partially destroyed using the destruction procedure (see line 7 of Algorithm 1), resulting in a partial solution. On the basis of this partial solution, a complete solution, $\mathcal{D}'$, is then constructed using the reconstruction procedure (see line 8 of Algorithm 1). Each newly obtained complete solution is stored in an initially empty, new population. Moreover, the individual destruction rates and the individual degrees of greediness of $\mathcal{D}$ and $\mathcal{D}'$ are adapted by the parameter adaptation function. Then, the iteration-best solution is determined, and in case this solution improves over the best-so-far solution, it becomes the new best-so-far solution and the non-improvement counter is set back to zero. Otherwise, this counter is incremented. As a last step of each iteration, the selection procedure chooses the solutions for the population of the next iteration, keeping the population size constant at all times. Finally, the algorithm terminates when a given CPU time limit has been reached, and the best solution found is returned. The five procedures mentioned above are described in more detail below.
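The control flow just described can be summarized in a short skeleton. This is a sketch under stated assumptions, not the authors' implementation: the five procedures are passed in as callables with hypothetical names and signatures, and the selection callable is assumed to return both the next population and the (possibly reset) non-improvement counter.

```python
import time


def pbig(init_population, destroy, reconstruct, adapt, select, objective,
         time_limit):
    """Generic PBIG loop; all arguments except time_limit are callables."""
    population = init_population()
    best = max(population, key=objective)
    no_improvement = 0
    deadline = time.process_time() + time_limit  # CPU time limit in seconds
    while time.process_time() < deadline:
        new_population = []
        for solution in population:
            partial = destroy(solution)         # destruction phase
            candidate = reconstruct(partial)    # reconstruction phase
            adapt(solution, candidate)          # update individual parameters
            new_population.append(candidate)
        iteration_best = max(new_population, key=objective)
        if objective(iteration_best) > objective(best):
            best = iteration_best
            no_improvement = 0
        else:
            no_improvement += 1
        # Selection keeps the population size constant and may restart.
        population, no_improvement = select(
            population, new_population, best, no_improvement)
    return best
```

Passing the procedures as arguments keeps the loop independent of how solutions are represented, which makes it easy to test with toy stand-ins before plugging in problem-specific procedures.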

Population initialization: Each of the solutions of the initial population is constructed by applying the reconstruction procedure with the empty solution as input. Note that this procedure depends on the degree of greediness, which is the same for all initial solutions because the degree of greediness of each empty partial solution is initialized with the upper bound for the greediness of solution construction. Moreover, the destruction rate of each of these solutions is initialized with the lower bound of the destruction rate.

Reconstruction: The reconstruction procedure follows the general principle of a greedy algorithm, which builds a complete solution step-by-step, selecting one additional node at each construction step. In this work, we adopt the recent greedy heuristic presented in [28]. However, we extend this greedy heuristic (1) in order to allow for randomized steps and (2) to be able to take a partial (possibly non-empty) solution as input. In other words, our randomized greedy mechanism takes as input a partial solution, $\mathcal{D}^p = \{D_1^p, \ldots, D_m^p\}$, which might be empty. Note that such a partial solution is composed of independent, partially destroyed dominating sets. Now, the construction of a complete solution, $\mathcal{D} = \{D_1, \ldots, D_k\}$, on the basis of $\mathcal{D}^p$ is performed by consecutively generating $D_i$ starting from $D_i^p$ for all $i = 1, \ldots, k$. In this process, whenever $\mathcal{D}^p = \emptyset$ or $i > m$, $D_i^p$ is initialized with the empty set. In the following, $V_{rem}$ denotes the set that includes all nodes that are not yet added to a dominating set of the current (partial) solution. That is, when receiving a partial solution $\mathcal{D}^p$ as input, $V_{rem} := V \setminus \bigcup_{i=1}^{m} D_i^p$. Thus, if $\mathcal{D}^p = \emptyset$, then $V_{rem} = V$.

In the following, we describe the way to obtain a dominating set, $D_i$, starting from a partial dominating set (possibly being empty), which is also denoted by $D_i$ while it is under construction. At the start, all nodes in V can be divided into three disjoint subsets with respect to $D_i$:

- Black nodes: nodes from $D_i$;

- Gray nodes: nodes that are not in $D_i$ but are dominated by black nodes, that is, all nodes in $N(D_i) \setminus D_i$, where $N(D_i) := \bigcup_{v \in D_i} N(v)$ and $N(v)$ is the neighborhood of v in G;

- White nodes: all nodes from V that are neither black nor gray.

With this classification of the nodes, we can define the white degree of a node, $v \in V$, with respect to $D_i$, as the number of white nodes from the closed neighborhood of v:

$$white\_degree(v) := |\{u \in N[v] \mid u \text{ is a white node}\}|$$

To be able to choose the next node to be added to set $D_i$ at the current construction step, all nodes that are not yet assigned to any dominating set are evaluated using a greedy function that assigns a scoring value to each of them.

Then, the randomization incorporated in our greedy heuristic is implemented using a quality-based restricted candidate list (RCL). The size of the RCL is controlled by a solution-specific parameter, $\alpha \in [0, 1]$, called the degree of greediness. Its value is adaptive and depends on the quality of the generated solution, as explained further below.

Let $s_{\min}$ and $s_{\max}$ denote the minimum and the maximum scoring value over all candidate nodes. The RCL then contains all candidate nodes whose scoring value is greater than or equal to $s_{\min} + \alpha \cdot (s_{\max} - s_{\min})$. Note that when $\alpha = 1$, our solution construction procedure behaves similarly to a deterministic greedy heuristic. On the other hand, setting $\alpha = 0$ leads to a purely random construction. Finally, a node is selected at random from the RCL to be incorporated into the partial dominating set under construction.
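One randomized construction step can be sketched as follows. The node classification, the white degree, and the threshold rule of the RCL follow the description above. The scoring value used here (white degree multiplied by lifetime) is a hypothetical stand-in and not necessarily the greedy function of [28]; all function and parameter names are likewise assumptions.

```python
import random


def white_nodes(adj, partial_set):
    """Nodes that are neither in the partial set (black) nor adjacent to it (gray)."""
    covered = set(partial_set)
    for v in partial_set:
        covered |= adj[v]
    return set(adj) - covered


def white_degree(adj, v, white):
    """Number of white nodes in the closed neighborhood of v."""
    return len((adj[v] | {v}) & white)


def build_rcl(scores, greediness):
    """Candidates scoring at least s_min + greediness * (s_max - s_min)."""
    s_min, s_max = min(scores.values()), max(scores.values())
    threshold = s_min + greediness * (s_max - s_min)
    # The `s == s_max` test guards against floating-point rounding that could
    # otherwise leave the list empty when greediness is 1.
    return [v for v, s in scores.items() if s >= threshold or s == s_max]


def pick_node(adj, lifetime, partial_set, remaining, greediness, rng=random):
    """Choose the next node for the partial set, or None if nothing helps."""
    white = white_nodes(adj, partial_set)
    scores = {}
    for v in remaining:
        degree = white_degree(adj, v, white)
        if degree > 0:
            # Hypothetical score, NOT necessarily the greedy function of [28].
            scores[v] = degree * lifetime[v]
    if not scores:
        return None
    return rng.choice(build_rcl(scores, greediness))
```

With a greediness of 1 only the best-scoring candidates remain in the list, whereas a greediness of 0 admits every candidate that covers at least one white node.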

Once $D_i$ is a dominating set, it might contain redundant nodes which, after identification, can be safely removed. In this context, note that a node is redundant if and only if every node from its closed neighborhood is dominated by at least two nodes from $D_i$. If, by removing redundant nodes, the node with the lowest lifetime in $D_i$ can be removed, the overall lifetime of $D_i$ is improved. After removing redundant nodes, $D_i$ is placed in the solution under construction. Afterwards, the set of not-yet-assigned nodes is updated accordingly before moving to the construction of the next dominating set, $D_{i+1}$. This solution construction process ends once no further dominating set can be generated from the not-yet-assigned nodes. This occurs when it is impossible to complete the partial dominating set under construction because either (1) the set of not-yet-assigned nodes is empty or (2) none of these nodes has a white node in its closed neighborhood. The pseudo-code of the complete procedure is shown in Algorithm 2.
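The redundancy test and the subsequent clean-up can be sketched as follows. The redundancy condition is the one stated above; examining the nodes in order of increasing lifetime, so that low-lifetime nodes are removed first, is an assumption of this sketch, as are the function names.

```python
def is_redundant(adj, dom_set, v):
    """True if every node of N[v] is dominated by at least two nodes of dom_set."""
    return all(len((adj[u] | {u}) & dom_set) >= 2 for u in adj[v] | {v})


def remove_redundant(adj, lifetime, dom_set):
    """Drop redundant nodes one at a time, trying low-lifetime nodes first."""
    reduced = set(dom_set)
    for v in sorted(dom_set, key=lambda u: lifetime[u]):
        # Re-check against the current set, since earlier removals
        # change how often each node is dominated.
        if is_redundant(adj, reduced, v):
            reduced.remove(v)
    return reduced
```

For a triangle whose three nodes all belong to the set, the two nodes with the lowest lifetimes are removed and the node with the highest lifetime remains, which raises the lifetime of the set.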

Destruction: Let $\mathcal{D} = \{D_1, \ldots, D_k\}$ be the valid solution given as input to the destruction procedure. In the following, we outline the three strategies for destruction that are conducted sequentially: partial solution removal, worst node removal, and random node removal. In this context, it is important to note that, on the one hand, the best solution does not necessarily correspond to the solution with the maximum number of disjoint dominating sets; on the other hand, the size of a re-constructed solution after performing the destruction and reconstruction phases may be different from the size of the solution that served as input to these two phases. With this in mind, some disjoint dominating sets should be completely removed as a first step of partial solution destruction. For this purpose, a number of randomly chosen dominating sets, determined by the degree of partial solution removal, are removed from $\mathcal{D}$, resulting in a partial solution.

Then, since the quality of a subset, $D_i$, is determined as the minimum lifetime of all its nodes, keeping the node with the smallest lifetime during the partial destruction makes its further improvement impossible. For this reason, the node with the smallest lifetime, $\arg\min\{lifetime(v) \mid v \in D_i\}$, is removed from each remaining subset, $D_i$. Afterwards, a number of randomly chosen nodes, determined by the individual destruction rate of the solution, are removed from each remaining subset, $D_i$, in an iterative way. Thus, at each step, exactly one randomly chosen vertex is removed.
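The three destruction steps can be sketched as follows. How the two rates translate into counts is not recoverable from the text, so this sketch assumes that each count is the corresponding rate multiplied by the current size, rounded up; this rule and all names are assumptions.

```python
import math
import random


def destroy(solution, lifetime, removal_degree, destruction_rate, rng=random):
    """Return a partial solution obtained by the three destruction steps."""
    sets = [set(d) for d in solution]

    # Step 1: partial solution removal (whole sets, chosen at random).
    # Assumed count: ceil(removal_degree * number of sets).
    n_drop = min(len(sets), math.ceil(removal_degree * len(sets)))
    for _ in range(n_drop):
        sets.pop(rng.randrange(len(sets)))

    for d in sets:
        # Step 2: worst node removal (the node with the smallest lifetime).
        d.remove(min(d, key=lambda v: lifetime[v]))

        # Step 3: random node removal, one node per step.
        # Assumed count: ceil(destruction_rate * current set size).
        n_random = min(len(d), math.ceil(destruction_rate * len(d)))
        for _ in range(n_random):
            d.remove(rng.choice(list(d)))
    return sets
```

The input solution is copied first, so the incumbent solution stays intact and can still compete with the re-constructed one during selection.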

Algorithm 2 Reconstruction procedure.

Input: A (possibly empty) partial solution. Output: A complete valid solution.

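Parameter adaptation: The solution-specific parameters, that is, the degree of greediness (RCL parameter) and the destruction rate, are adapted in relation to the results of the destruction and reconstruction procedures. More specifically, while the newly generated solution, $\mathcal{D}'$, is initialized with the default values (that is, the upper bound of the degree of greediness and the lower bound of the destruction rate), the adaptation of the parameter values of $\mathcal{D}$ depends on $\mathcal{D}'$, and vice versa. In case $f(\mathcal{D}') > f(\mathcal{D})$, $\mathcal{D}'$ adopts the values of $\mathcal{D}$, that is, both its degree of greediness and its destruction rate. Otherwise, the values of $\mathcal{D}$ are adapted as follows: its degree of greediness is decreased by one ninth of the difference between the upper and the lower bound of the degree of greediness, and its destruction rate is increased by a constant step.

Once the degree of greediness falls below its lower bound, it is set back to its upper bound. In the same way, once the destruction rate exceeds its upper bound, it is set back to its lower bound.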

Note that the two constants used in these update rules, including the denominator 9, were fixed after preliminary experiments. In contrast, the values of the seven important parameters are determined by scientific tuning experiments (see Section 4.2). The denominator in the adaptation of the degree of greediness was set to 9 in order to have 10 different values between its lower and its upper bound. The motivation behind this adaptive scheme for the degree of greediness and the destruction rate is to use a higher degree of greediness and a smaller solution destruction as long as this leads to better solutions, and to move towards a lower degree of greediness and a higher destruction once no more improving solutions are found.
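The adaptive scheme can be sketched as follows. The step of the degree of greediness (one ninth of its range) and the wrap-around behavior follow the text. The step of the destruction rate is passed in as `d_step` because its value is not recoverable from the text, and the assumption that the new solution inherits the parameters of its parent when it is strictly better is an interpretation. All names are hypothetical.

```python
from dataclasses import dataclass

EPS = 1e-9  # tolerance against floating-point drift at the bounds


@dataclass
class Params:
    greediness: float   # individual degree of greediness (RCL parameter)
    destruction: float  # individual destruction rate


def adapt(parent, child, parent_value, child_value,
          g_min, g_max, d_min, d_max, d_step):
    """Adapt the parameters of a solution and its re-constructed offspring."""
    if child_value > parent_value:
        # Assumed interpretation: a better offspring inherits the settings.
        child.greediness = parent.greediness
        child.destruction = parent.destruction
        return
    # Otherwise: less greediness and more destruction for the parent.
    parent.greediness -= (g_max - g_min) / 9
    if parent.greediness < g_min - EPS:
        parent.greediness = g_max   # wrap around to the upper bound
    parent.destruction += d_step
    if parent.destruction > d_max + EPS:
        parent.destruction = d_min  # wrap around to the lower bound
```

The tolerance `EPS` prevents rounding errors from triggering the wrap-around one step too early, so that all 10 values of the degree of greediness are actually visited.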

Selection of the next population: This last function concerns the selection/generation of the solutions for the population of the next iteration. If the non-improvement counter has not yet reached the maximum number of iterations without improvement, the population of the next iteration is simply filled with the best solutions among the current and the newly generated solutions. Otherwise, all solutions apart from the best one are deleted, and new solutions are added by means of the reconstruction procedure (used with an empty solution as input) until the population size is reached again. Note that in this case, the RCL parameter (degree of greediness) is each time randomly picked from a predefined set of values. This set of values was chosen after preliminary experiments. The variation in this set provides some diversification, with the hope of covering unexplored areas of the search space. Moreover, the non-improvement counter is set to zero. In summary, this function implements a restart procedure which is performed once the maximum number of iterations has been performed without improvement of the best-so-far solution.
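The selection and restart logic can be sketched as follows. The sketch assumes that the best solutions are chosen from the union of the current and the new population, and that `construct_random` is a hypothetical callable that builds a fresh solution from an empty one with a randomly chosen degree of greediness; neither detail is confirmed by the text.

```python
def select_next_population(population, new_population, objective, pop_size,
                           no_improvement, max_no_improvement,
                           construct_random):
    """Return the next population and the updated non-improvement counter."""
    pool = sorted(population + new_population, key=objective, reverse=True)
    if no_improvement < max_no_improvement:
        # Regular case: keep the best pop_size solutions.
        return pool[:pop_size], no_improvement
    # Restart: keep only the best solution and refill with fresh solutions.
    restarted = [pool[0]] + [construct_random() for _ in range(pop_size - 1)]
    return restarted, 0
```

Keeping the single best solution across a restart preserves the search progress, while the fresh solutions provide diversification.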

4. Experimental Evaluation

We implemented the proposed PBIG algorithm in ANSI C++ using GCC 10.2.0 for the compilation of the software. Moreover, we decided to compare PBIG with the following algorithmic approaches: (1) the best of three available local search approaches from the literature, called VD (Variable Depth) [27]; (2) our own greedy heuristic, labeled GH-MWDDS [28]; (3) application of the ILP solver ILOG CPLEX 20.01 in a single-threaded mode to all problem instances. Note that, surprisingly, GH-MWDDS is currently the state-of-the-art method used to solve the MWDDS problem, outperforming both the local search method (VD) and CPLEX. In order to conduct a fair comparison to the VD algorithm, we used the original source code provided by the authors of [27].

The time limit for each application of CPLEX was set to 2 CPU hours. Moreover, the experiments were performed on a cluster of machines with two Intel® Xeon® Silver 4210 CPUs, with 10 cores of 2.20 GHz and 92 Gbytes of RAM.

4.1. Problem Instances

All considered techniques were applied to two sets of benchmark instances. The first set, consisting of 640 random graph instances, was already used for the evaluation of GH-MWDDS in [28]. In particular, this set (henceforth called Set1) contains graphs with different numbers of nodes, n. For each value of n, there are graphs of different densities, expressed by the average degree, d. For each combination of n and d, Set1 contains 20 randomly generated graphs, which corresponds to 32 combinations of n and d.

In order to test our algorithms on graphs that are also commonly used to model sensor networks, we generated an additional set of benchmark instances (Set2) consisting of random geometric graphs (RGGs). These graphs were generated by scattering n nodes randomly on a square. This means that each node, i, was assigned a random location within this square. Two nodes, i and j, were then connected by an edge if and only if the Euclidean distance between i and j was smaller than or equal to a predefined threshold value, $r > 0$. For each considered value of n, we used five different threshold values. Moreover, for each combination of n and r, we randomly generated 20 graphs. Accordingly, Set2 consists of 300 problem instances, which corresponds to three different values of n.
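A random geometric graph of this kind can be generated as sketched below. The sketch assumes the unit square as the deployment area and draws node lifetimes uniformly at random; the function name and the return format are illustrative and do not reproduce the generator used for Set2.

```python
import math
import random


def random_geometric_graph(n, r, rng=random):
    """Scatter n nodes on the unit square; connect pairs at distance <= r."""
    points = [(rng.random(), rng.random()) for _ in range(n)]
    adj = {i: set() for i in range(n)}
    for i in range(n):
        for j in range(i + 1, n):
            if math.dist(points[i], points[j]) <= r:
                adj[i].add(j)
                adj[j].add(i)
    # Node weights (lifetimes) drawn uniformly at random from [0, 1).
    lifetime = {i: rng.random() for i in range(n)}
    return points, adj, lifetime
```

Larger values of r yield denser graphs; on the unit square, any threshold of at least $\sqrt{2}$ results in a complete graph.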

Finally, note that—both in the case of Set1 and Set2—each node (sensor) of the network was given a random real value between 0 and 1 as node weight (lifetime). Both benchmark sets can be obtained at https://www.iiia.csic.es/~christian.blum/research.html#Instances (accessed on 16 January 2022).

4.2. Algorithm Tuning

PBIG requires well-working parameter values for the following seven parameters:

1. Population size;

2. Lower bound of the determinism rate (degree of greediness);

3. Upper bound of the determinism rate (degree of greediness);

4. Lower bound of the destruction rate;

5. Upper bound of the destruction rate;

6. Number of iterations without improvement;

7. Deletion rate (degree of partial solution removal).

For the purpose of parameter tuning, we used the scientific tuning software irace [32]. More specifically, we tuned PBIG separately for Set1 and Set2. For this purpose, we generated specific tuning instances as follows. For Set1, exactly one instance was randomly generated for each of 10 combinations of n (number of nodes) and d (average degree). In other words, 10 instances were specifically generated in order to tune PBIG for its application to instances from Set1. In this case, the irace software was run with a budget of 5000 algorithm applications. Regarding Set2, we randomly generated one tuning instance for each of six combinations of n and r (threshold value for connecting two nodes). In this case, as irace is applied with only six tuning instances, the budget was limited to 3000 algorithm applications. The obtained parameter value settings are shown in Table 1.

Table 1.

Parameters, value domains, and values chosen for PBIG by irace.

4.3. Results and Discussion

First of all, note that PBIG was applied exactly once to each problem instance, with a computation time limit of CPU seconds. Table 2 presents the results of all competing methods—that is, CPLEX, VD, GH-MWDDS, and PBIG—for the instances of Set1. The first two columns indicate the problem instance type in terms of: (1) the number of nodes (n) and (2) the average degree (d). Naturally, the density of the networks grows with an increasing average degree, d. Each table row provides the average result of each competing algorithm for the 20 generated problem instances concerning the corresponding combination of n and d. Table columns 3 and 4 show the results of CPLEX. The first of these columns (with the heading “Value”) provides the average quality of the best solutions generated by CPLEX for 20 instances, while the second column presents the average gap (in percent) between the objective function value of the solutions obtained by CPLEX and the best upper bounds identified by CPLEX. The results of the other three competitors are shown by the columns with the headings “Value” and “Time”. The first column provides—as in the case of CPLEX—the average quality of the generated solutions, while the second one provides the computation time. In the case of VD and GH-MWDDS, the computation time corresponds to the time at which the algorithm terminated, while in the case of PBIG, the computation time is the time at which the best solution of a run was found on average. The corresponding standard deviation in the case of PBIG is provided in an additional column with the heading “”. Finally, note that the best result in each row is indicated in bold font.

Table 2.

Numerical results for the instances of Set1 (random graphs).

The results displayed in Table 2 allow for the following observations:

- As already mentioned in [28], solving the MWDDS problem by means of an ILP solver such as CPLEX is only useful in the context of the smallest of all problem instances. In fact, even though CPLEX obtains the best results in the case of , the gap information indicates that—even in this case—CPLEX is far from being able to prove optimality.

- For all instances, apart from , PBIG outperforms the remaining approaches. In particular, the current state-of-the-art method, GH-MWDDS, is consistently outperformed. This shows that our way of extending the solution construction mechanism of GH-MWDDS into a PBIG algorithm was successful.

- Both GH-MWDDS and PBIG clearly outperform the best local search algorithm (VD) from the literature. In fact, while VD achieves an average solution quality of 1.757, GH-MWDDS obtains an average solution quality of 9.515, and PBIG achieves one of 10.321. The same holds for computation time: while VD requires 984.143 seconds on average, GH-MWDDS requires only 0.006 seconds. Even the average computation time of PBIG, 20.125 seconds, is around 50 times lower than that of VD.
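The ratios behind the last observation can be checked directly from the averages quoted there. The short calculation below uses only those six numbers; the dictionary and variable names are chosen for this illustration. It gives a time ratio of roughly 49 between VD and PBIG and a quality gain of about 8.5% of PBIG over GH-MWDDS.

```python
# Average values over Set1 as quoted in the text.
avg_quality = {"VD": 1.757, "GH-MWDDS": 9.515, "PBIG": 10.321}
avg_time_s = {"VD": 984.143, "GH-MWDDS": 0.006, "PBIG": 20.125}

# How many times faster PBIG finds its best solution than VD terminates.
time_ratio_vd_pbig = avg_time_s["VD"] / avg_time_s["PBIG"]

# Relative quality improvement of PBIG over GH-MWDDS, in percent.
gain_pbig_vs_gh = 100.0 * (avg_quality["PBIG"] / avg_quality["GH-MWDDS"] - 1.0)

print(f"VD / PBIG time ratio: {time_ratio_vd_pbig:.1f}")     # prints 48.9
print(f"PBIG gain over GH-MWDDS: {gain_pbig_vs_gh:.1f}%")    # prints 8.5%
```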

Next, we study the results obtained by CPLEX, GH-MWDDS, and PBIG for the new RGG instances from Set2. These results are shown in Table 3, which has the same structure as Table 2. The only exception is the second table column, which provides the threshold value, r, used to generate the RGGs, instead of the average degree, d. The following conclusions can be drawn based on the obtained results:

Table 3.

Numerical results for the instances of Set2 (random geometric graphs).

- First of all, CPLEX does seem to have fewer problems in solving RGG instances in comparison to random graphs. In fact, CPLEX is able to solve all 60 instances with and to proven optimality. Furthermore, 19 out of 20 instances with and are solved to optimality, as well as 18 out of 20 cases with and . This is in contrast to the case of RGs, for which CPLEX was not even able to solve problem instances with 50 nodes to optimality. Nevertheless, for the larger instances (with ) CPLEX was, with very few exceptions, only able to derive the trivial solutions that do not contain any dominating sets.

- PBIG obtains the same results as CPLEX in those cases in which CPLEX is able to provide optimal solutions. Moreover, PBIG is able to do so in very short computation times of less than 10 s.

- As in the case of the instances in Set1, PBIG also consistently outperforms the other competitors for the RGG instances in Set2. While GH-MWDDS obtains an average solution quality of 2.911, PBIG achieves one of 3.134.

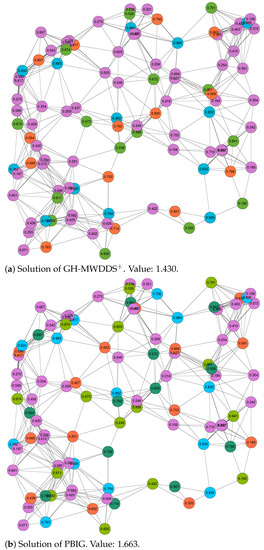

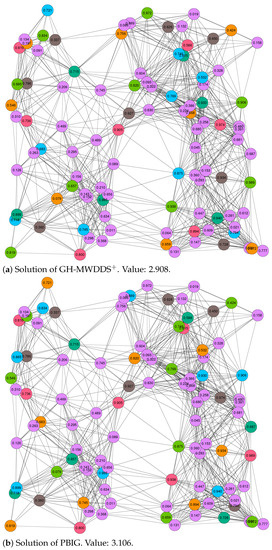

Finally, we decided to study the structure of the solutions provided by GH-MWDDS and PBIG in more detail. In particular, this was performed for two cases: the first (out of 20) RGG graphs with 100 nodes and a threshold value of , and the first (out of 20) RGG graphs with 100 nodes and a threshold value of . In both cases, we graphically present the solutions of GH-MWDDS and PBIG in Figure 2 (for the graph with ) and Figure 3 (for the graph with ). Note that the node color in all the graphics indicates the dominating set to which a node belongs; the purple color indicates that the corresponding node is not assigned to any of the disjoint dominating sets. The four solutions shown in these figures are additionally provided in textual form in Table 4. The four sub-tables provide all disjoint dominating sets, the color in which these sets are shown in the graphics of Figure 2 and Figure 3, the number of nodes contained in all dominating sets, their lifetime, and the lists of nodes belonging to the dominating sets.

Figure 2.

Solutions of GH-MWDDS (a) and PBIG (b) for the first RGG graph with 100 nodes and a threshold value of . The lifetime of each node is provided as the node label. Moreover, the node colors indicate to which dominating set a node belongs. In both cases, the color purple indicates that the respective node is not chosen for a dominating set.

Figure 3.

Solutions of GH-MWDDS (a) and PBIG (b) for the first RGG graph with 100 nodes and a threshold value of . The lifetime of each node is provided as the node label. Moreover, the node colors indicate to which dominating set a node belongs. In both cases, the color purple indicates that the respective node is not chosen for a dominating set.

The following interesting observations can be made. First, in both cases, the structure of the PBIG solution is quite different from the structure of the GH-MWDDS solution. This means that PBIG does not just locally improve the GH-MWDDS solutions; it often seems to perform a profound restructuring. Second, in the case of the graph with , PBIG comes up with a solution that contains one more dominating set than the GH-MWDDS solution. As a result, the PBIG solution leaves fewer nodes unused than the GH-MWDDS solution (44 nodes unused vs. 58 nodes). Third, the solutions of PBIG and GH-MWDDS, in the case of the graph with and (Figure 3), indicate that making use of more nodes does not always lead to better solutions. More specifically, both algorithms generate solutions with six disjoint dominating sets. While the GH-MWDDS solution makes use of 47 nodes, the PBIG solution only makes use of 46 nodes. Nevertheless, the PBIG solution is better, with an objective function value of 3.106, in comparison to a quality of 2.908 for the solution generated by GH-MWDDS. This is because the dominating sets in the PBIG solution have a longer lifetime on average than those in the GH-MWDDS solution.
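The third observation can be reproduced on a toy instance. The sketch below assumes that the lifetime of a dominating set equals the smallest lifetime among its nodes and that the objective is the sum of the lifetimes of all disjoint dominating sets; this definition is not restated in this section, so it should be read as an assumption. The function names and the triangle instance are hypothetical and serve only as illustration.

```python
def is_dominating(adjacency, nodes):
    """Return True if every node is in `nodes` or adjacent to a node in `nodes`."""
    chosen = set(nodes)
    return all(v in chosen or bool(adjacency[v] & chosen) for v in adjacency)


def objective(adjacency, lifetime, dominating_sets):
    """Sum of set lifetimes; each set lasts as long as its weakest node (assumption)."""
    used, total = set(), 0.0
    for ds in map(set, dominating_sets):
        if not ds or used & ds or not is_dominating(adjacency, ds):
            raise ValueError("sets must be non-empty, pairwise disjoint, and dominating")
        used |= ds
        total += min(lifetime[v] for v in ds)
    return total


# Hypothetical toy instance: a triangle, where every single node is a dominating set.
triangle = {0: {1, 2}, 1: {0, 2}, 2: {0, 1}}
life = {0: 0.9, 1: 0.5, 2: 0.3}

three_nodes = objective(triangle, life, [{0}, {1, 2}])  # 0.9 + min(0.5, 0.3)
two_nodes = objective(triangle, life, [{0}, {1}])       # 0.9 + 0.5
print(f"{three_nodes:.1f} vs {two_nodes:.1f}")  # prints "1.2 vs 1.4"
```

Both toy solutions contain two dominating sets, yet the one that uses fewer nodes scores higher, because adding the weak node 2 to a set lowers that set's lifetime.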

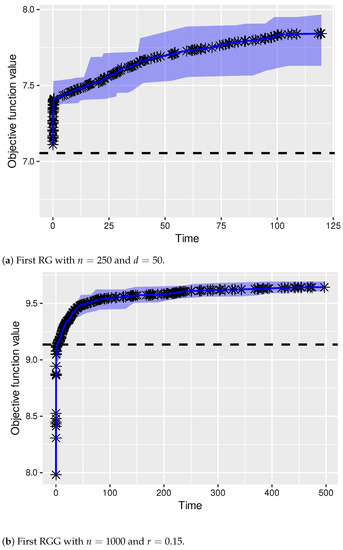

Finally, we decided to show the evolution of the quality of the solutions produced by PBIG over time. This was performed for two of the hardest cases. In particular, we chose the first random graph, with nodes and an average node degree of , and the first random geometric graph, with nodes and a threshold of . Remember that the computation time limit is CPU seconds in both cases. The graphics in Figure 4 show PBIG’s evolution for 10 runs per problem instance. The shaded area around the average behavior line indicates the variance of the algorithm. Moreover, the horizontal, dashed lines indicate the quality of the solutions produced by GH-MWDDS. Note that in both cases, PBIG outperforms GH-MWDDS very early in each run. In the case of Figure 4a, most of the runs even start off with a solution better than that of GH-MWDDS. Additionally, note that the given computation time is sufficient in both cases, as PBIG shows clear signs of convergence before reaching the computation time limit of 125 CPU seconds in the case of Figure 4a, and 500 CPU seconds in the case of Figure 4b.

Figure 4.

Evolution of the quality of the solutions produced by PBIG over time. Both graphics show the results of 10 independent PBIG runs.

5. Conclusions

This paper dealt with lifetime maximization in wireless sensor networks by means of solving an optimization problem known as the maximum weighted disjoint dominating sets problem. As shown by the weak results of the ILP solver, CPLEX, this problem is a challenging combinatorial optimization problem. In this work, we extended an existing high-quality greedy algorithm from the literature towards a population-based iterated greedy algorithm. This algorithm worked on a population of solutions. At each iteration, it applied partial destruction to each solution in the population. Subsequently, the obtained partial solutions were subjected to re-construction, often resulting in different and improved solutions. We compared our approach to three competitors: the application of CPLEX, the best greedy algorithm from the literature, and the best available local search method. This comparison was based on two benchmark sets. The first set, consisting of 640 random graphs, was taken from the related literature, while the second set, consisting of 300 random geometric graphs, was newly generated for this work. In summary, we can say that our population-based iterated greedy algorithm consistently outperformed all competitors. This algorithm can therefore be called the new state-of-the-art approach for the tackled problem.
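The destroy-and-reconstruct loop summarized above can be sketched in simplified form. The code below is not the PBIG implementation evaluated in this paper: it uses fixed determinism and destruction rates instead of bounded, adaptive ones, omits the restart and deletion mechanisms, removes whole dominating sets during destruction, and scores candidate nodes by newly dominated nodes times lifetime. It also assumes that a set's lifetime is the minimum lifetime of its nodes. All function names and default parameter values are hypothetical.

```python
import random


def value(lifetime, solution):
    """Objective: sum over sets of the minimum node lifetime (assumed definition)."""
    return sum(min(lifetime[v] for v in ds) for ds in solution)


def build_set(adjacency, lifetime, free, rng, det_rate):
    """Greedily grow one dominating set from the free nodes; None if impossible."""
    chosen, uncovered, free = set(), set(adjacency), set(free)
    while uncovered:
        gain = {v: len(({v} | adjacency[v]) & uncovered) for v in sorted(free)}
        candidates = [v for v, g in gain.items() if g > 0]
        if not candidates:
            return None
        if rng.random() < det_rate:  # deterministic step: take the best-scored node
            pick = max(candidates, key=lambda v: gain[v] * lifetime[v])
        else:                        # randomized step: take any useful node
            pick = rng.choice(candidates)
        chosen.add(pick)
        free.discard(pick)
        uncovered -= {pick} | adjacency[pick]
    return chosen or None


def construct(adjacency, lifetime, partial, rng, det_rate):
    """Extend a partial solution with further disjoint dominating sets."""
    solution = [set(ds) for ds in partial]
    free = set(adjacency) - set().union(*solution)
    while True:
        ds = build_set(adjacency, lifetime, free, rng, det_rate)
        if ds is None:
            return solution
        solution.append(ds)
        free -= ds


def destroy(solution, rng, destr_rate):
    """Partial destruction: drop a random share of the sets, freeing their nodes."""
    if not solution:
        return []
    removed = max(1, round(destr_rate * len(solution)))
    return rng.sample(solution, len(solution) - removed)


def pbig(adjacency, lifetime, pop_size=10, iterations=200,
         det_rate=0.8, destr_rate=0.3, seed=0):
    rng = random.Random(seed)
    population = [construct(adjacency, lifetime, [], rng, det_rate)
                  for _ in range(pop_size)]
    for _ in range(iterations):
        offspring = [construct(adjacency, lifetime, destroy(s, rng, destr_rate),
                               rng, det_rate) for s in population]
        # Keep the best pop_size solutions among parents and offspring.
        merged = sorted(population + offspring,
                        key=lambda s: value(lifetime, s), reverse=True)
        population = merged[:pop_size]
    return population[0]


# Hypothetical toy instance: a ring of six sensors.
ring = {i: {(i - 1) % 6, (i + 1) % 6} for i in range(6)}
ring_life = [0.9, 0.4, 0.7, 0.8, 0.5, 0.6]
best = pbig(ring, ring_life, pop_size=4, iterations=50, seed=1)
print(len(best), "sets, value", round(value(ring_life, best), 3))
```

Because parents compete with their offspring for survival, the best solution in the population never gets worse from one iteration to the next.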

Given the weak performance of CPLEX for this problem, one promising line of future work is the development of specialized exact approaches. Another line of research might focus on hybrid techniques such as construct, merge, solve, and adapt (CMSA) [33]. In particular, CMSA allows users to benefit from ILP solvers such as CPLEX, even in the context of large problem instances for which a direct application of CPLEX is currently not beneficial.

Author Contributions

Conceptualization, S.B.; investigation, S.B. and P.P.-D.; methodology, S.B. and C.B.; software, S.B.; supervision, C.B.; writing—original draft preparation, S.B. and C.B.; writing—review and editing, C.B. and P.P.-D. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by grant PID2019-104156GB-I00 (project CI-SUSTAIN) funded by MCIN/AEI/10.13039/501100011033.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Both benchmark sets used in this work can be obtained at https://www.iiia.csic.es/~christian.blum/research.html#Instances (accessed on 16 January 2022).

Acknowledgments

The authors would like to thank Tayler Pino for providing the code of the VD local search algorithm; S. Bouamama is grateful to DGRSDT-MESRS (Algeria) for financial support.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| PBIG | Population-Based Iterated Greedy |
| MDDS | Maximum Disjoint Dominating Sets |
| MWDDS | Maximum Weighted Disjoint Dominating Sets |

References

1. Yetgin, H.; Cheung, K.T.K.; El-Hajjar, M.; Hanzo, L.H. A survey of network lifetime maximization techniques in wireless sensor networks. IEEE Commun. Surv. Tutor. 2017, 19, 828–854.

2. Kandris, D.; Nakas, C.; Vomvas, D.; Koulouras, G. Applications of wireless sensor networks: An up-to-date survey. Appl. Syst. Innov. 2020, 3, 14.

3. Rodrigues, L.M.; Montez, C.; Budke, G.; Vasques, F.; Portugal, P. Estimating the lifetime of wireless sensor network nodes through the use of embedded analytical battery models. J. Sens. Actuator Netw. 2017, 6, 8.

4. Sharma, H.; Haque, A.; Jaffery, Z.A. Maximization of wireless sensor network lifetime using solar energy harvesting for smart agriculture monitoring. Ad Hoc Netw. 2019, 94, 101966.

5. Mansourkiaie, F.; Ismail, L.S.; Elfouly, T.M.; Ahmed, M.H. Maximizing lifetime in wireless sensor network for structural health monitoring with and without energy harvesting. IEEE Access 2017, 5, 2383–2395.

6. Lewandowski, M.; Płaczek, B.; Bernas, M. Classifier-Based Data Transmission Reduction in Wearable Sensor Network for Human Activity Monitoring. Sensors 2021, 21, 85.

7. Lewandowski, M.; Bernas, M.; Loska, P.; Szymała, P.; Płaczek, B. Extending Lifetime of Wireless Sensor Network in Application to Road Traffic Monitoring. In Computer Networks, Proceedings of the International Conference on Computer Networks, Kamień Śląski, Poland, 25–27 June 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 112–126.

8. Cardei, M.; Thai, M.T.; Li, Y.; Wu, W. Energy-efficient target coverage in wireless sensor networks. In Proceedings of the IEEE 24th Annual Joint Conference of the IEEE Computer and Communications Societies, Miami, FL, USA, 13–17 March 2005; Volume 3, pp. 1976–1984.

9. Nguyen, T.N.; Huynh, D.T. Extending sensor networks lifetime through energy efficient organization. In Proceedings of the International Conference on Wireless Algorithms, Systems and Applications (WASA 2007), Chicago, IL, USA, 1–3 August 2007; pp. 205–212.

10. Kui, X.; Wang, J.; Zhang, S.; Cao, J. Energy Balanced Clustering Data Collection Based on Dominating Set in Wireless Sensor Networks. Adhoc Sens. Wirel. Netw. 2015, 24, 199–217.

11. Hedar, A.R.; Abdulaziz, S.N.; Mabrouk, E.; El-Sayed, G.A. Wireless sensor networks fault-tolerance based on graph domination with parallel scatter search. Sensors 2020, 20, 3509.

12. Haynes, T.W.; Hedetniemi, S.T.; Henning, M.A. Topics in Domination in Graphs; Springer: Berlin/Heidelberg, Germany, 2020.

13. Slijepcevic, S.; Potkonjak, M. Power efficient organization of wireless sensor networks. In Proceedings of the IEEE International Conference on Communications, Conference Record (Cat. No. 01CH37240), Helsinki, Finland, 11–14 June 2001; Volume 2, pp. 472–476.

14. Wang, H.; Li, Y.; Chang, T.; Chang, S. An effective scheduling algorithm for coverage control in underwater acoustic sensor network. Sensors 2018, 18, 2512.

15. Liao, C.C.; Ting, C.K. A novel integer-coded memetic algorithm for the set k-cover problem in wireless sensor networks. IEEE Trans. Cybern. 2017, 48, 2245–2258.

16. Chen, Z.; Li, S.; Yue, W. Memetic algorithm-based multi-objective coverage optimization for wireless sensor networks. Sensors 2014, 14, 20500–20518.

17. Balaji, S.; Anitha, M.; Rekha, D.; Arivudainambi, D. Energy efficient target coverage for a wireless sensor network. Measurement 2020, 165, 108167.

18. D’Ambrosio, C.; Iossa, A.; Laureana, F.; Palmieri, F. A genetic approach for the maximum network lifetime problem with additional operating time slot constraints. Soft Comput. 2020, 24, 14735–14741.

19. Li, J.; Potru, R.; Shahrokhi, F. A Performance Study of Some Approximation Algorithms for Computing a Small Dominating Set in a Graph. Algorithms 2020, 13, 339.

20. Li, R.; Hu, S.; Liu, H.; Li, R.; Ouyang, D.; Yin, M. Multi-Start Local Search Algorithm for the Minimum Connected Dominating Set Problems. Mathematics 2019, 7, 1173.

21. Bouamama, S.; Blum, C. An Improved Greedy Heuristic for the Minimum Positive Influence Dominating Set Problem in Social Networks. Algorithms 2021, 14, 79.

22. Garey, M.; Johnson, D. Computers and Intractability. A Guide to the Theory of NP-Completeness; W. H. Freeman: New York, NY, USA, 1979.

23. Cardei, M.; MacCallum, D.; Cheng, M.X.; Min, M.; Jia, X.; Li, D.; Du, D.Z. Wireless sensor networks with energy efficient organization. J. Interconnect. Netw. 2002, 3, 213–229.

24. Feige, U.; Halldórsson, M.M.; Kortsarz, G.; Srinivasan, A. Approximating the domatic number. SIAM J. Comput. 2002, 32, 172–195.

25. Moscibroda, T.; Wattenhofer, R. Maximizing the lifetime of dominating sets. In Proceedings of the 19th IEEE International Parallel and Distributed Processing Symposium, Denver, CO, USA, 4–8 April 2005; p. 8.

26. Islam, K.; Akl, S.G.; Meijer, H. Maximizing the lifetime of wireless sensor networks through domatic partition. In Proceedings of the 2009 IEEE 34th Conference on Local Computer Networks, Zurich, Switzerland, 20–23 October 2009; pp. 436–442.

27. Pino, T.; Choudhury, S.; Al-Turjman, F. Dominating set algorithms for wireless sensor networks survivability. IEEE Access 2018, 6, 17527–17532.

28. Balbal, S.; Bouamama, S.; Blum, C. A Greedy Heuristic for Maximizing the Lifetime of Wireless Sensor Networks Based on Disjoint Weighted Dominating Sets. Algorithms 2021, 14, 170.

29. Bouamama, S.; Blum, C.; Boukerram, A. A population-based iterated greedy algorithm for the minimum weight vertex cover problem. Appl. Soft Comput. 2012, 12, 1632–1639.

30. Bouamama, S.; Blum, C. On solving large-scale instances of the knapsack problem with setup by means of an iterated greedy algorithm. In Proceedings of the 6th International Conference on Systems and Control (ICSC), Batna, Algeria, 7–9 May 2017; pp. 342–347.

31. Ruiz, R.; Stützle, T. A simple and effective iterated greedy algorithm for the permutation flowshop scheduling problem. Eur. J. Oper. Res. 2007, 177, 2033–2049.

32. López-Ibáñez, M. The irace package: Iterated racing for automatic algorithm configuration. Oper. Res. Perspect. 2016, 3, 43–58.

33. Blum, C.; Davidson, P.P.; López-Ibáñez, M.; Lozano, J.A. Construct, Merge, Solve & Adapt: A new general algorithm for combinatorial optimization. Comput. Oper. Res. 2016, 68, 75–88.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).