Investigating the Overall Experience of Wearable Robots during Prototype-Stage Testing

Abstract

1. Introduction

2. Theories and Research Method

2.1. Usability

2.2. User Experience

2.3. Overall Experience

2.4. Attitude

2.5. Research Model and Hypotheses

3. Methods

3.1. Participants

3.2. Wearable Robot

3.3. Experimental Procedure

3.4. Data Collection

3.5. Data Analysis

4. Results

4.1. Measurement Model Evaluation

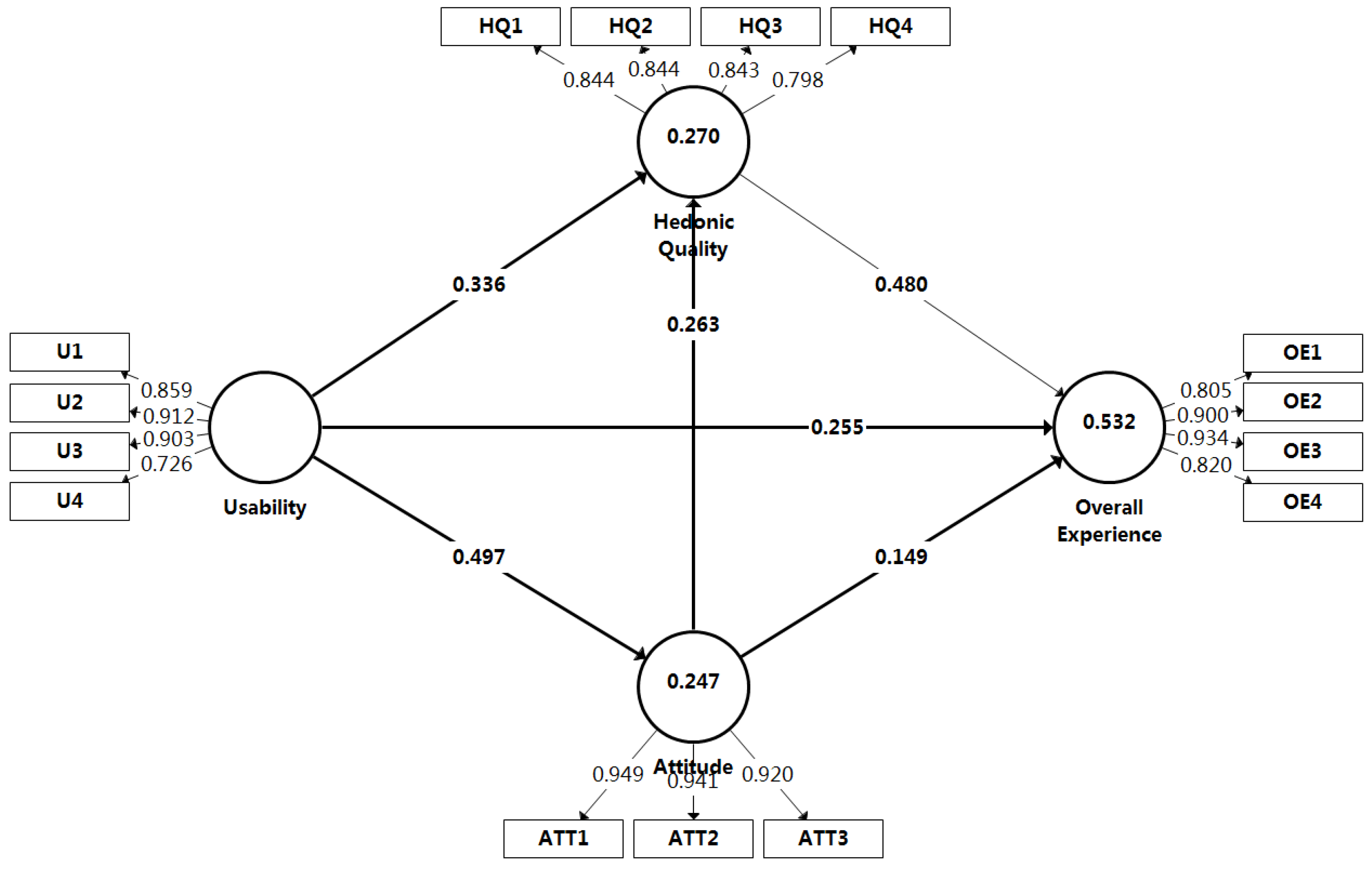

4.2. Structural Model Evaluation

5. Discussion

5.1. Theoretical Implications

5.2. Practical Implications

5.3. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Hoffmann, N.; Prokop, G.; Weidner, R. Methodologies for Evaluating Exoskeletons with Industrial Applications. Ergonomics 2021, 65, 276–295.
2. Elprama, S.A.; Vanderborght, B.; Jacobs, A. An industrial exoskeleton user acceptance framework based on a literature review of empirical studies. Appl. Ergon. 2021, 100, 103615.
3. Kermavnar, T.; de Vries, A.W.; de Looze, M.P.; O’Sullivan, L.W. Effects of industrial back-support exoskeletons on body loading and user experience: An updated systematic review. Ergonomics 2021, 64, 685–711.
4. Elprama, S.A.; Vannieuwenhuyze, J.; De Bock, S.; Vanderborght, B.; De Pauw, K.; Meeusen, R.; Jacobs, A. Social Processes: What Determines Industrial Workers’ Intention to Use Exoskeletons? Hum. Factors J. Hum. Factors Ergon. Soc. 2020, 62, 337–350.
5. Kim, S.; Nussbaum, M.A.; Smets, M. Usability, User Acceptance, and Health Outcomes of Arm-Support Exoskeleton Use in Automotive Assembly: An 18-month Field Study. J. Occup. Environ. Med. 2022, 64, 202–211.
6. Hassan, H.M.; Galal-Edeen, G.H. From Usability to User Experience. In Proceedings of the 2nd International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS), Okinawa, Japan, 24–26 November 2017; Graduate University Okinawa Institute of Science and Technology: Okinawa, Japan, 2017; pp. 216–222.
7. Shourmasti, E.; Colomo-Palacios, R.; Holone, H.; Demi, S. User Experience in Social Robots. Sensors 2021, 21, 5052.
8. Baltrusch, S.; van Dieën, J.; van Bennekom, C.; Houdijk, H. The effect of a passive trunk exoskeleton on functional performance in healthy individuals. Appl. Ergon. 2018, 72, 94–106.
9. ISO 9241-11; Ergonomic Requirements for Office Work with Visual Display Terminals (VDTs): Part 11: Guidance on Usability. ISO: Geneva, Switzerland, 1998.
10. ISO 9241-210; Ergonomics of Human-System Interaction: Part 210: Human-Centred Design for Interactive Systems. ISO: Geneva, Switzerland, 2019.
11. Brooke, J. SUS: A “quick and dirty” usability scale. Usability Eval. Ind. 1996, 189, 4–7.
12. Finstad, K. The Usability Metric for User Experience. Interact. Comput. 2010, 22, 323–327.
13. Lewis, J.R.; Utesch, B.S.; Maher, D.E. UMUX-LITE: When there’s no time for the SUS. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Paris, France, 27 April–2 May 2013; pp. 2099–2102.
14. Lewis, J.R. Measuring User Experience With 3, 5, 7, or 11 Points: Does It Matter? Hum. Factors 2021, 63, 999–1011.
15. ISO 9241-210; Ergonomics of Human-System Interaction: Part 210: Human-Centred Design for Interactive Systems. ISO: Geneva, Switzerland, 2010.
16. Law, E.L.C. The measurability and predictability of user experience. In Proceedings of the ACM SIGCHI Symposium on Engineering Interactive Computing Systems, Pisa, Italy, 13–16 June 2011; p. 1.
17. Law, E.L.C.; Roto, V.; Hassenzahl, M.; Vermeeren, A.P.O.S.; Kort, J. Understanding, Scoping and Defining User eXperience: A Survey Approach. In Proceedings of the 27th Annual CHI Conference on Human Factors in Computing Systems, Boston, MA, USA, 4–9 April 2009; pp. 719–728.
18. Frison, A.-K.; Riener, A. The “DAUX Framework”: A Need-Centered Development Approach to Promote Positive User Experience in the Development of Driving Automation. In Studies in Computational Intelligence, Proceedings of the User Experience Design in the Era of Automated Driving; Riener, A., Jeon, M., Alvarez, I., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 237–271.
19. Hassenzahl, M.; Burmester, M.; Koller, F. AttrakDiff: Ein Fragebogen zur Messung wahrgenommener hedonischer und pragmatischer Qualität. In Mensch & Computer; Springer: Berlin/Heidelberg, Germany, 2003; pp. 187–196.
20. Laugwitz, B.; Held, T.; Schrepp, M. Construction and evaluation of a user experience questionnaire. In Symposium of the Austrian HCI and Usability Engineering Group; Springer: Berlin/Heidelberg, Germany, 2008; pp. 63–76.
21. Schrepp, M.; Hinderks, A.; Thomaschewski, J. Design and evaluation of a short version of the user experience questionnaire (UEQ-S). Int. J. Interact. Multimed. Artif. Intell. 2017, 4, 103–108.
22. Sutcliffe, A.; Hart, J. Analyzing the Role of Interactivity in User Experience. Int. J. Hum. Comput. Interact. 2016, 33, 229–240.
23. Lah, U.; Lewis, J.R.; Šumak, B. Perceived Usability and the Modified Technology Acceptance Model. Int. J. Hum. Comput. Interact. 2020, 36, 1216–1230.
24. Lewis, J.R.; Mayes, D.K. Development and Psychometric Evaluation of the Emotional Metric Outcomes (EMO) Questionnaire. Int. J. Hum. Comput. Interact. 2014, 30, 685–702.
25. Hart, J.; Sutcliffe, A. Is it all about the Apps or the Device?: User experience and technology acceptance among iPad users. Int. J. Hum. Comput. Stud. 2019, 130, 93–112.
26. Hart, J. Investigating User Experience and User Engagement for Design. Ph.D. Dissertation, The University of Manchester, Manchester, UK, 2015.
27. O’Brien, H.L. The influence of hedonic and utilitarian motivations on user engagement: The case of online shopping experiences. Interact. Comput. 2010, 22, 344–352.
28. Hornbæk, K.; Hertzum, M. Technology acceptance and user experience: A review of the experiential component in HCI. ACM Trans. Comput. Hum. Interact. (TOCHI) 2017, 24, 1–30.
29. Hassenzahl, M. The Effect of Perceived Hedonic Quality on Product Appealingness. Int. J. Hum. Comput. Interact. 2001, 13, 481–499.
30. Hassenzahl, M. The Interplay of Beauty, Goodness, and Usability in Interactive Products. Hum. Comput. Interact. 2004, 19, 319–349.
31. Hassenzahl, M.; Tractinsky, N. User experience: A research agenda. Behav. Inf. Technol. 2006, 25, 91–97.
32. Sauer, J.; Sonderegger, A.; Schmutz, S. Usability, user experience and accessibility: Towards an integrative model. Ergonomics 2020, 63, 1207–1220.
33. Van Schaik, P.; Hassenzahl, M.; Ling, J. User-experience from an inference perspective. ACM Trans. Comput. Hum. Interact. (TOCHI) 2012, 19, 1–25.
34. Lavie, T.; Tractinsky, N. Assessing dimensions of perceived visual aesthetics of web sites. Int. J. Hum. Comput. Stud. 2004, 60, 269–298.
35. Lewis, J.R.; Utesch, B.S.; Maher, D.E. Measuring Perceived Usability: The SUS, UMUX-LITE, and AltUsability. Int. J. Hum. Comput. Interact. 2015, 31, 496–505.
36. Schrepp, M.; Hinderks, A.; Thomaschewski, J. Construction of a Benchmark for the User Experience Questionnaire (UEQ). Int. J. Interact. Multimed. Artif. Intell. 2017, 4, 40.
37. Hassenzahl, M.; Monk, A. The Inference of Perceived Usability From Beauty. Hum. Comput. Interact. 2010, 25, 235–260.
38. Porat, T.; Tractinsky, N. It’s a pleasure buying here: The effects of web-store design on consumers’ emotions and attitudes. Hum. Comput. Interact. 2012, 27, 235–276.
39. Shore, L.; Power, V.; Hartigan, B.; Schülein, S.; Graf, E.; de Eyto, A.; O’Sullivan, L. Exoscore: A Design Tool to Evaluate Factors Associated With Technology Acceptance of Soft Lower Limb Exosuits by Older Adults. Hum. Factors J. Hum. Factors Ergon. Soc. 2019, 62, 391–410.
40. Hart, J.; Sutcliffe, A.G.; Angeli, A.D. Love it or hate it! Interactivity and user types. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Paris, France, 27 April–2 May 2013; Association for Computing Machinery: Paris, France, 2013; pp. 2059–2068.
41. Sarstedt, M.; Hair, J.F., Jr.; Ringle, C.M. “PLS-SEM: Indeed a silver bullet”: Retrospective observations and recent advances. J. Mark. Theory Pract. 2022, 1–15.
42. Hair, J.F.; Risher, J.J.; Sarstedt, M.; Ringle, C.M. When to use and how to report the results of PLS-SEM. Eur. Bus. Rev. 2019, 31, 2–24.
43. Sarstedt, M.; Cheah, J.-H. Partial least squares structural equation modeling using SmartPLS: A software review. J. Mark. Anal. 2019, 7, 196–202.
44. Ringle, C.M.; Sarstedt, M.; Straub, D.W. A Critical Look at the Use of PLS-SEM in MIS Quarterly. MIS Q. 2012, 36, iii–xiv.
45. Sarstedt, M.; Ringle, C.M.; Hair, J.F. Partial Least Squares Structural Equation Modeling. In Handbook of Market Research; Springer: Cham, Switzerland, 2021.
46. Dijkstra, T.K.; Henseler, J. Consistent Partial Least Squares Path Modeling. MIS Q. 2015, 39, 297–316.
47. Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.
48. Shmueli, G.; Sarstedt, M.; Hair, J.F.; Cheah, J.-H.; Ting, H.; Vaithilingam, S.; Ringle, C.M. Predictive model assessment in PLS-SEM: Guidelines for using PLSpredict. Eur. J. Mark. 2019, 53, 2322–2347.
49. Franke, G.; Sarstedt, M. Heuristics versus statistics in discriminant validity testing: A comparison of four procedures. Internet Res. 2019, 29, 430–447.
50. Henseler, J.; Ringle, C.M.; Sarstedt, M. A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Mark. Sci. 2015, 43, 115–135.
51. Shmueli, G.; Ray, S.; Estrada, J.M.V.; Chatla, S.B. The elephant in the room: Predictive performance of PLS models. J. Bus. Res. 2016, 69, 4552–4564.
52. Perreault, M., Jr. Collinearity, Power, and Interpretation of Multiple Regression Analysis. J. Mark. Res. 1991, 28, 268.
53. Becker, J.-M.; Ringle, C.M.; Sarstedt, M.; Völckner, F. How collinearity affects mixture regression results. Mark. Lett. 2014, 26, 643–659.
54. Streukens, S.; Leroi-Werelds, S. Bootstrapping and PLS-SEM: A step-by-step guide to get more out of your bootstrap results. Eur. Manag. J. 2016, 34, 618–632.
55. Aguirre-Urreta, M.I.; Rönkkö, M. Statistical Inference with PLSc Using Bootstrap Confidence Intervals. MIS Q. 2018, 42, 1001–1020.
56. Shmueli, G.; Koppius, O.R. Predictive Analytics in Information Systems Research. MIS Q. 2011, 35, 553–572.
57. Hair, J.F.; Sarstedt, M.; Ringle, C.M. Rethinking some of the rethinking of partial least squares. Eur. J. Mark. 2019, 53, 566–584.
58. Henseler, J.; Ringle, C.M.; Sinkovics, R.R. The use of partial least squares path modeling in international marketing. In New Challenges to International Marketing; Emerald Group Publishing Limited: Bradford, UK, 2009; pp. 277–319.
59. Hair, J.F.; Ringle, C.M.; Sarstedt, M. PLS-SEM: Indeed a silver bullet. J. Mark. Theory Pract. 2011, 19, 139–152.
60. Raithel, S.; Sarstedt, M.; Scharf, S.; Schwaiger, M. On the value relevance of customer satisfaction. Multiple drivers and multiple markets. J. Acad. Mark. Sci. 2012, 40, 509–525.
61. Cohen, J. Statistical Power Analysis for the Behavioral Sciences; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1988; pp. 20–26.
62. Liu, S.; Zheng, X.S.; Liu, G.; Jian, J.; Peng, K. Beautiful, usable, and popular: Good experience of interactive products for Chinese users. Sci. China Inf. Sci. 2013, 56, 1–14.

| Code | Items |
|---|---|
| Usability [3,12,23,35] | |
| U1 | This wearable robot’s capabilities meet my requirements. |
| U2 | Using this wearable robot enables me to operate accurately. |
| U3 | This wearable robot is easy to use. |
| U4 | Using this wearable robot enables me to accomplish tasks more quickly. |
| Hedonic quality [20,21,33,36,37] | |
| HQ1 | I would feel interesting wearing the wearable robot. |
| HQ2 | The wearable robot looks exciting to wear and use. |
| HQ3 | Working with the wearable robot is original. |
| HQ4 | It would be innovative for me to use the wearable robot at work. |
| Attitude [4,38,39] | |
| ATT1 | Using the wearable robot is a good idea. |
| ATT2 | Using the wearable robot in my coursework would be a pleasant experience. |
| ATT3 | I like working with the wearable robot. |
| Overall experience [14,23,25,26,40] | |
| OE1 | I feel motivated to continue to use the wearable robot. |
| OE2 | I would recommend the wearable robot to my friends. |
| OE3 | My experience of using the wearable robot is enjoyable. |
| OE4 | Overall, I am very satisfied with the wearable robot. |

| Constructs | Items | Loadings | α | ρA | ρC | AVE |
|---|---|---|---|---|---|---|
| Threshold | | >0.7 | >0.7 | >0.7 | >0.7 | >0.5 |
| Attitude | ATT1 | 0.949 | 0.930 | 0.937 | 0.956 | 0.878 |
| | ATT2 | 0.941 | | | | |
| | ATT3 | 0.920 | | | | |
| Hedonic Quality | HQ1 | 0.844 | 0.852 | 0.859 | 0.900 | 0.693 |
| | HQ2 | 0.844 | | | | |
| | HQ3 | 0.843 | | | | |
| | HQ4 | 0.798 | | | | |
| Overall Experience | OE1 | 0.805 | 0.888 | 0.894 | 0.923 | 0.751 |
| | OE2 | 0.900 | | | | |
| | OE3 | 0.934 | | | | |
| | OE4 | 0.820 | | | | |
| Usability | U1 | 0.859 | 0.875 | 0.907 | 0.914 | 0.728 |
| | U2 | 0.912 | | | | |
| | U3 | 0.903 | | | | |
| | U4 | 0.726 | | | | |
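
The ρC and AVE columns above can be approximately reproduced from the reported loadings alone. The sketch below assumes the standard textbook formulas for standardized reflective indicators (AVE as the mean squared loading, ρC as the squared sum of loadings over itself plus the summed error variances); the function names are illustrative and not taken from the article. Because the loadings are rounded to three decimals, the results should only be expected to agree with the table to within about 0.001.

```python
def ave(loadings):
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """Composite reliability (rho_C), assuming standardized loadings."""
    total = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)  # indicator error variances
    return total ** 2 / (total ** 2 + error)


# Outer loadings copied from the table above.
loadings = {
    "Attitude": [0.949, 0.941, 0.920],
    "Hedonic Quality": [0.844, 0.844, 0.843, 0.798],
    "Overall Experience": [0.805, 0.900, 0.934, 0.820],
    "Usability": [0.859, 0.912, 0.903, 0.726],
}

for name, lam in loadings.items():
    print(f"{name}: AVE = {ave(lam):.3f}, rho_C = {composite_reliability(lam):.3f}")
```

Cronbach's α and ρA cannot be recovered this way, since they depend on the item correlations rather than on the loadings alone.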

| | Attitude | Hedonic Quality | Overall Experience | Usability |
|---|---|---|---|---|
| Attitude | | | | |
| Hedonic Quality | 0.482 | | | |
| Overall Experience | 0.525 | 0.757 | | |
| Usability | 0.529 | 0.519 | 0.605 | |
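
As a small illustration of how the ratios above are typically read, the sketch below screens them against a cutoff. The value 0.85 is an assumption here (a commonly used conservative HTMT threshold, with 0.90 sometimes used for conceptually similar constructs); the article's own cutoff is not stated in this excerpt.

```python
# HTMT ratios copied from the table above (row construct, column construct).
htmt = {
    ("Hedonic Quality", "Attitude"): 0.482,
    ("Overall Experience", "Attitude"): 0.525,
    ("Overall Experience", "Hedonic Quality"): 0.757,
    ("Usability", "Attitude"): 0.529,
    ("Usability", "Hedonic Quality"): 0.519,
    ("Usability", "Overall Experience"): 0.605,
}

THRESHOLD = 0.85  # assumed conservative cutoff, not taken from the article

# Pairs at or above the cutoff would signal a discriminant validity concern.
violations = {pair: value for pair, value in htmt.items() if value >= THRESHOLD}
largest_pair = max(htmt, key=htmt.get)

print("Pairs at or above threshold:", violations)
print("Largest ratio:", largest_pair, htmt[largest_pair])
```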

| Constructs | Attitude | Hedonic Quality | Overall Experience |
|---|---|---|---|
| Attitude | | 1.327 | 1.422 |
| Hedonic Quality | | | 1.370 |
| Usability | 1.000 | 1.327 | 1.482 |

| Path | Original sample (O) | Sample mean (M) | Standard deviation (STDEV) | t-value | p-value | 95% confidence interval |
|---|---|---|---|---|---|---|
| Direct Effects | | | | | | |
| ATT→HQ | 0.263 | 0.262 | 0.097 | 2.703 | 0.007 | [0.070, 0.448] |
| ATT→OE | 0.149 | 0.148 | 0.079 | 1.897 | 0.058 | [−0.006, 0.448] |
| HQ→OE | 0.480 | 0.481 | 0.061 | 7.812 | 0.000 | [0.355, 0.597] |
| U→ATT | 0.497 | 0.499 | 0.066 | 7.468 | 0.000 | [0.361, 0.620] |
| U→HQ | 0.336 | 0.340 | 0.088 | 3.802 | 0.000 | [0.166, 0.511] |
| U→OE | 0.255 | 0.255 | 0.079 | 3.211 | 0.001 | [0.097, 0.406] |
| Specific Indirect Effects | | | | | | |
| U→ATT→HQ | 0.130 | 0.131 | 0.053 | 2.448 | 0.014 | [0.033, 0.242] |
| U→ATT→OE | 0.074 | 0.075 | 0.042 | 1.746 | 0.081 | [−0.003, 0.166] |
| ATT→HQ→OE | 0.126 | 0.127 | 0.051 | 2.490 | 0.013 | [0.031, 0.230] |
| U→ATT→HQ→OE | 0.063 | 0.063 | 0.027 | 2.286 | 0.022 | [0.015, 0.122] |
| U→HQ→OE | 0.162 | 0.163 | 0.047 | 3.418 | 0.001 | [0.075, 0.261] |
| Total Effect | | | | | | |
| ATT→HQ | 0.263 | 0.262 | 0.097 | 2.703 | 0.007 | [0.070, 0.448] |
| ATT→OE | 0.275 | 0.274 | 0.092 | 2.996 | 0.003 | [0.089, 0.451] |
| HQ→OE | 0.480 | 0.481 | 0.061 | 7.812 | 0.000 | [0.355, 0.597] |
| U→ATT | 0.497 | 0.499 | 0.066 | 7.468 | 0.000 | [0.361, 0.620] |
| U→HQ | 0.467 | 0.472 | 0.067 | 6.998 | 0.000 | [0.332, 0.595] |
| U→OE | 0.553 | 0.557 | 0.064 | 8.658 | 0.000 | [0.425, 0.674] |
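
The relationship between the three blocks of the table above can be checked by hand: in a recursive path model, each specific indirect effect is the product of the direct coefficients along its path, and a total effect is the sum over all directed paths between two constructs. The following sketch recomputes these quantities from the six direct coefficients; the helper names are illustrative, and small deviations from the tabulated values (around 0.001) are expected because the inputs are rounded.

```python
from math import prod

# Direct path coefficients (original-sample column of the direct-effects rows).
direct = {
    ("U", "ATT"): 0.497,
    ("U", "HQ"): 0.336,
    ("U", "OE"): 0.255,
    ("ATT", "HQ"): 0.263,
    ("ATT", "OE"): 0.149,
    ("HQ", "OE"): 0.480,
}


def paths(source, target):
    """Yield every directed path from source to target as a list of constructs.

    Relies on the model being acyclic, which holds for the paths listed above.
    """
    if source == target:
        yield [target]
        return
    for (start, end) in direct:
        if start == source:
            for rest in paths(end, target):
                yield [source] + rest


def path_effect(nodes):
    """Product of the direct coefficients along one path."""
    return prod(direct[(a, b)] for a, b in zip(nodes, nodes[1:]))


def total_effect(source, target):
    """Sum of the effects of all directed paths (direct plus indirect)."""
    return sum(path_effect(p) for p in paths(source, target))


for p in paths("U", "OE"):
    print("→".join(p), round(path_effect(p), 3))
print("Total U→OE:", round(total_effect("U", "OE"), 3))
```

The standard errors, t-values, and confidence intervals in the table come from bootstrapping and cannot be derived from the point estimates in this way.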

| Path | f² | Category |
|---|---|---|
| U→ATT | 0.327 | Large |
| U→HQ | 0.117 | Moderate |
| U→OE | 0.094 | Small |
| ATT→HQ | 0.071 | Small |
| ATT→OE | 0.034 | Small |
| HQ→OE | 0.360 | Large |

| Items | PLS RMSE | PLS Q²predict | LM RMSE | PLS − LM RMSE |
|---|---|---|---|---|
| ATT1 | 1.028 | 0.232 | 1.030 | −0.002 |
| ATT2 | 1.097 | 0.220 | 1.095 | 0.002 |
| ATT3 | 1.114 | 0.162 | 1.134 | −0.020 |
| HQ1 | 1.135 | 0.130 | 1.155 | −0.020 |
| HQ2 | 1.052 | 0.221 | 1.066 | −0.014 |
| HQ3 | 1.086 | 0.103 | 1.099 | −0.013 |
| HQ4 | 1.066 | 0.088 | 1.080 | −0.014 |
| OE1 | 1.006 | 0.169 | 1.008 | −0.002 |
| OE2 | 1.036 | 0.197 | 1.045 | −0.009 |
| OE3 | 0.921 | 0.259 | 0.918 | 0.003 |
| OE4 | 0.935 | 0.253 | 0.921 | 0.014 |
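
One way to summarize the comparison above is to count the indicators for which the PLS model has a lower prediction error than the linear-model (LM) benchmark. The sketch below does this and then applies a rule of thumb that is an assumption here, based on a common reading of the PLSpredict guidelines cited as [48]: all indicators better gives high predictive power, a majority (or exactly half) gives medium, a minority gives low, and none gives no confirmed predictive power. The function name and labels are illustrative.

```python
# (PLS RMSE, LM RMSE) per indicator, copied from the table above.
rmse = {
    "ATT1": (1.028, 1.030), "ATT2": (1.097, 1.095), "ATT3": (1.114, 1.134),
    "HQ1": (1.135, 1.155), "HQ2": (1.052, 1.066), "HQ3": (1.086, 1.099),
    "HQ4": (1.066, 1.080), "OE1": (1.006, 1.008), "OE2": (1.036, 1.045),
    "OE3": (0.921, 0.918), "OE4": (0.935, 0.921),
}


def predictive_power(rmse_by_item):
    """Count indicators where PLS beats LM and map the count to a label.

    The mapping is an assumed rule of thumb, not a result from the article.
    """
    n_items = len(rmse_by_item)
    n_better = sum(1 for pls, lm in rmse_by_item.values() if pls < lm)
    if n_better == n_items:
        label = "high"
    elif 2 * n_better >= n_items:
        label = "medium"
    elif n_better > 0:
        label = "low"
    else:
        label = "none"
    return n_better, label


n_better, label = predictive_power(rmse)
print(f"PLS has lower RMSE for {n_better} of {len(rmse)} indicators: {label}")
```

This comparison is only meaningful when Q²predict is positive for the indicators involved, which is the case for every row of the table.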


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, J.; Yu, S.; Yuan, X.; Wang, Y.; Chen, D.; Wang, W. Investigating the Overall Experience of Wearable Robots during Prototype-Stage Testing. Sensors 2022, 22, 8367. https://doi.org/10.3390/s22218367
