Abstract

Unmanned Aerial Vehicles (UAVs, also known as drones) have become increasingly appealing for a wide range of applications and services in recent years. Drone-based remote sensing has shown unique advantages in collecting ground-truth and real-time data, owing to the affordable cost and relative ease of operation of drones. This paper presents a 3D placement scheme for multi-drone sensing/monitoring platforms, in which a fleet of drones is sent to conduct a mission in a given area. Such missions range from environmental monitoring of forests and searching for survivors in a disaster zone to exploring remote regions such as deserts and mountains. The proposed drone placement algorithm covers the entire region without dead zones while minimizing the number of cooperating drones deployed. Naturally, drones have limited battery supplies, which must cover mechanical motion, message transmission and data calculation. Consequently, the drone energy model is explicitly investigated, and the drone locations are dynamically adjusted. The proposed placement algorithm is 3D landscape-aware and takes line-of-sight into account, and the energy model considers communication among drones. The algorithm not only minimizes the overall energy consumption but also maximizes the lifetime of the whole drone team in situations where no recharging facilities are available, as in remote/rural areas. Simulations show that the proposed placement scheme significantly prolongs the lifetime of the drone fleet with the fewest drones deployed under various complex terrains.

1. Introduction

As Unmanned Aerial Vehicles (UAVs) attract increasing attention due to their mobility, convenience and low cost, both industry and academia have investigated and deployed drone-related applications and services [1,2,3,4]. UAV-based remote sensing is one of these promising applications [5,6,7]. Drones as camera/sensor platforms offer key advantages over traditional spaceborne and aircraft methods. Compared to satellite observations, drone-based systems can acquire images with higher spatial resolution. In addition, drone flights can be scheduled whenever weather and equipment permit, whereas spaceborne sensing must wait until the satellite's orbit again covers the site of interest [8]. Systematic aerial photography from aircraft, in turn, requires more resources, including aircraft, pilots and photographers, and is subject to more constraints, such as on speed and altitude.

Due to the above-mentioned capabilities of drone-based sensing systems, drones find many promising applications in crop science, environmental studies, remote sensing and archaeological research [9,10,11].

1.1. Related Work

Most researchers focus on planning the flight trajectory of a single drone to serve the sensing/monitoring mission. In [12], Holness et al. flew a DJI Phantom 2 Vision+ along linear transects oriented over the area of interest, which allowed for the collection of overlapping imagery. They treated overlap as a necessary requirement for post-processing image stitching, which creates the large high-resolution mosaic needed for analysis. The authors of [13] applied multispectral, thermal and visible-light imaging modules simultaneously to acquire diagnostic information for smart farming. Their drone, the ACSL-PF1, flew over the target area at an altitude of 100 m and a speed of 4 m/s. In [14], the authors presented a scheduling problem for a drone flying along a fixed path and proposed a dynamic algorithm that optimizes the drone's speed so that tasks are completed within a given time without depleting its battery.

The above works produce good results for applications that only require one-time, non-continuous data, but they are not suitable for applications that require monitoring the whole area simultaneously over a certain period of time. Studies on coordinated multi-drone surveillance have gained increasing attention over the past years. A multi-drone surveillance mission requires two critical problems to be resolved: drone placement and battery endurance.

Drone placement is a coverage problem. The works in [15,16,17] studied the 3D placement of drone base stations. Unlike remote sensing with visual cameras, these base stations use electromagnetic (EM) waves for communication; EM waves can be treated as a type of active sensing, whereas the camera performs passive sensing. Alzenad et al. [15] applied line-of-sight and non-line-of-sight communication models to find the drone altitude that maximizes the covered area for a fixed transmitting power, and then reduced the coverage problem to a 2D placement strategy. The authors of [16] applied the same model as [15] to placement with additional environmental parameters for urban, suburban and dense urban settings. Reference [17] proposed a simplified placement scheme that uses equal, non-overlapping circles to improve coverage efficiency at the cost of some uncovered zones.

These studies, however, treated the given landscape only as a smooth horizontal plane. They typically obtained the optimal altitude first and then formulated the coverage problem as a 2D placement strategy. In real-world applications, especially for visual sensing, each drone's coverage varies tremendously as the topography changes, which complicates the placement.

Battery endurance plays an important role in designing a drone sensing fleet. Naturally, flying drones have limited battery supplies, which must cover physical motion, message transmission and data calculation. Since a surveillance job requires the drone fleet to hold its positions for certain periods, the drone energy model needs to be explicitly investigated, and drone locations should be adjusted as necessary. Some existing drone-related papers took energy efficiency into account and tried to minimize the power of each drone. In [15], the authors sought the smallest coverage circle that still supports the same number of clients, which saves the drone's transmitting power. The authors of [18,19] found optimal travel paths to improve energy efficiency.

However, these works only consider the total energy consumption without evaluating the impact of bottleneck drones on the lifetime of the drone fleet, which is an important factor in real-world operations. In addition, they often ignore the fact that drones typically operate in geographically challenging areas. Note that in this paper, the lifetime of the drone fleet is defined as the time at which the first drone runs out of power, a definition widely adopted in the literature [20,21].

1.2. Our Contribution

To address these issues, we propose a 3D placement algorithm for a multi-drone sensing fleet that targets large areas with continuous omnidirectional surveillance requirements and various geographical conditions. The sensing technology ranges from visible-light, multispectral and hyperspectral cameras to EM transceivers. Potential applications include assisting rescue missions in flooded regions, temporarily restoring telecommunication systems in earthquake areas and monitoring the environment of areas with rough terrain.

In the 3D placement problem, we consider visual blocking for each drone hovering over various terrains. To reduce the overall energy consumption, we analyze the drone's energy model and study the impact of the mutual distances among the drones. Because energy sources are finite and each drone must coordinate with the remaining drones to share information as the application requires, the lifetime of the entire drone fleet is strongly affected by the lifetime of individual drones, especially those in critical locations. To balance the energy across the network, we design a routing algorithm that chooses the relaying neighbors and selects the data path to the destination. In addition, dynamic adjustments are applied to the drones' locations during the mission to prolong the drone fleet's lifetime.

Our work makes the following assumptions: the geographical conditions of the investigated area are known, with its 3D contour map given, and no charging facilities or battery replacements are available. Compared to other research, our work contributes in the following ways: (1) We study the placement problem of a multi-drone sensing fleet that requires continuous omnidirectional monitoring. (2) Our model considers topographical factors, including rough terrain where mountains, valleys or other obstacles exist. (3) Our 3D placement, routing scheme and real-time location adjustments aim to improve individual energy efficiency while prolonging the lifetime of the whole drone fleet.

The rest of this paper is organized as follows. Section 2 describes the system architecture, elaborating on the system model and the energy model. Section 3 presents the research methods, detailing the mesh simplification, sub-section division, drone placement, routing scheme and dynamic adjustments. Section 4 presents simulation results and evaluates the performance. Finally, Section 5 draws conclusions.

2. System Architecture

2.1. System Model

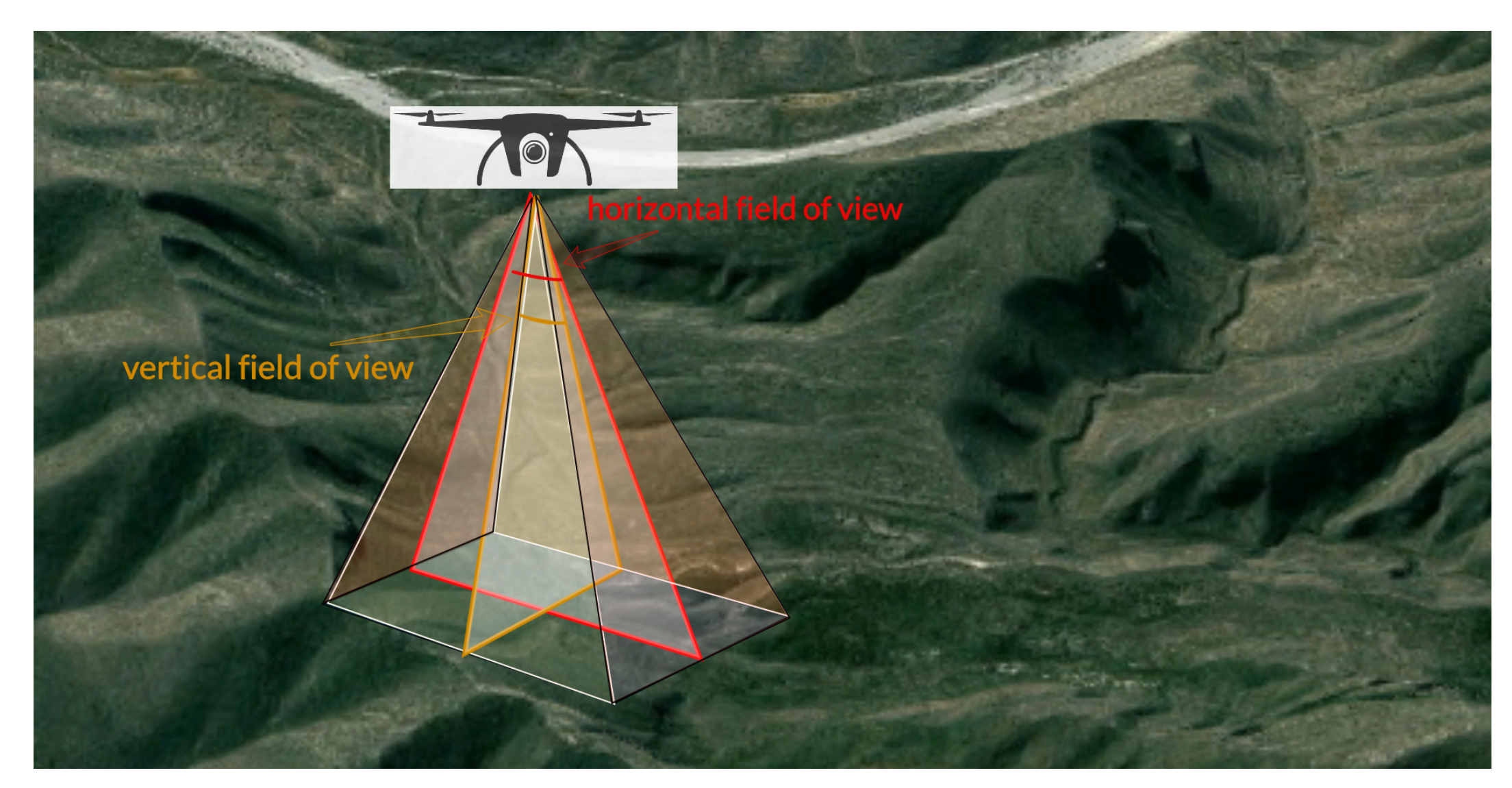

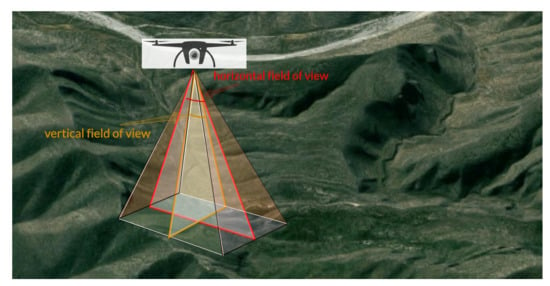

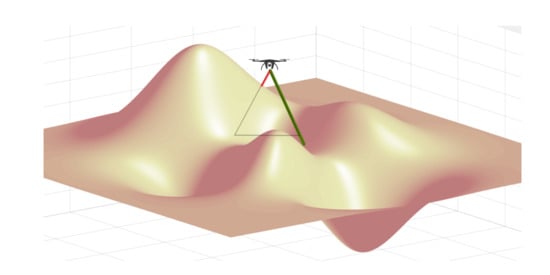

For simplicity, the target area T is defined as a square with side length a. The terrains are generated by translating and scaling Gaussian distributions. A set of $n$ drones is responsible for the area of interest, and $n$ is kept as small as possible while still serving the monitoring job. The whole surveillance area is to be covered by the cameras of the drone fleet. Depending on the application, the camera can be visible-light, multispectral or hyperspectral, or it can even be replaced by an EM transceiver. Since our focus is on the placement algorithm and the mission duration, we simplify the model to the most common visible-light camera, HD 1080p. $\theta_h$ and $\theta_v$ are the camera's horizontal and vertical field of view (FOV), respectively. Figure 1 illustrates the drone camera's FOV and its coverage during mission execution. The image resolution parameter $\gamma$ (pixels/m) is set by the surveillance task; for example, the required $\gamma$ varies greatly among object identification, motion detection and object tracking. The objectives of the placement algorithm include:

Figure 1.

The drone camera’s field of view.

- building a regional coverage model;

- determining the least number of drones needed for coverage in a given area;

- finding the optimal 3D placement locations of the drones to save communication energy;

- proposing a strategy to prolong the entire drone fleet’s lifetime.

2.2. Energy Model

The energy consumption of the drone during the mission execution process is given by:

$$E = E_f + E_c + E_m, \tag{1}$$

where $E_f$, $E_c$ and $E_m$ are the energy consumed during the flight, calculation and communication modes, respectively.

2.2.1. Flight Mode

Even without any communication, a drone must consume power to stay airborne, and this accounts for the majority of its battery energy. The drone's mechanical energy involves two processes, namely, hover and motion:

$$E_f = E_h + E_{mo}. \tag{2}$$

The hover energy can be calculated through:

$$E_h = P_h \, t_h, \tag{3}$$

where $t_h$ is the time duration of the hover status and $P_h$ is the hover power. According to the model in [18,22], $P_h$ is a function of the drone's mass $m$, the number of propellers $n$ and the propeller radius $r$:

$$P_h = \sqrt{\frac{(m g)^3}{2 \pi r^2 n \rho}}, \tag{4}$$

where $g$ and $\rho$ are the Earth's gravitational acceleration and the air density, respectively. As Equation (4) shows, a low $P_h$ calls for a lighter drone with more and larger propellers. This is a paradox, since more and larger propellers add mass, which works against a lightweight design. There is a second trade-off: in the real world, manufacturers tend to equip drones with high-capacity batteries for longer flight time; however, the mass of a battery grows linearly with its capacity.

These constraints limit the drone's flight duration with today's technology. New low-density materials and high-capacity batteries are the technical bottlenecks for improving energy efficiency in the flight mode, which lie in the domains of mechanical and chemical engineering. We use the parameters of a recent commercial drone as the basis of the following analysis.
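To make the trade-off around Equation (4) concrete, the Python sketch below evaluates the hover power for a few hypothetical configurations. It assumes Equation (4) takes the widely used momentum-theory form $P_h = \sqrt{(mg)^3 / (2\pi r^2 n \rho)}$ with sea-level air density; the masses and propeller radii are illustrative values, not the drone parameters of Table 1.

```python
import math

G = 9.81      # gravitational acceleration (m/s^2)
RHO = 1.225   # sea-level air density (kg/m^3), an assumed standard value


def hover_power(m: float, n: int, r: float, g: float = G, rho: float = RHO) -> float:
    """Hover power in watts for a drone of mass m (kg) with n propellers of radius r (m).

    Assumes the momentum-theory form P_h = sqrt((m g)^3 / (2 pi r^2 n rho)).
    """
    if m <= 0 or n <= 0 or r <= 0:
        raise ValueError("mass, propeller count and radius must be positive")
    return math.sqrt((m * g) ** 3 / (2 * math.pi * r ** 2 * n * rho))


if __name__ == "__main__":
    # Hypothetical quadcopter configurations (illustrative only).
    base = hover_power(m=1.4, n=4, r=0.12)
    heavier = hover_power(m=1.8, n=4, r=0.12)       # e.g. a larger battery adds 0.4 kg
    bigger_props = hover_power(m=1.4, n=4, r=0.15)  # larger propellers, same mass
    print(f"baseline {base:.1f} W, heavier {heavier:.1f} W, larger props {bigger_props:.1f} W")
```

Because $P_h$ scales with $m^{3/2}$ under this model, the 0.4 kg heavier configuration needs about $(1.8/1.4)^{1.5} \approx 1.46$ times the baseline hover power, which illustrates why battery capacity and mass pull in opposite directions.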

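As for the motion energy, it can be obtained by:

$$E_{mo} = \int_0^{t_{mo}} P_{mo}\big(v(t)\big)\, dt, \tag{5}$$

where $t_{mo}$ is the duration of the motion and $P_{mo}$ is the motion power, which measures the process of "moving" from one location to another and can be modeled as a linear function of the drone speed $v$ [18]:

$$P_{mo}(v) = \frac{P_{\max} - P_0}{v_{\max}}\, v + P_0, \tag{6}$$

where $P_{\max}$ and $P_0$ represent the power of the drone when moving at the maximum speed $v_{\max}$ and when holding the hover status, respectively. To some extent, $P_0$ is equal to the hover power $P_h$. $P_{\max}$, $P_0$ and $v_{\max}$ are usually known drone parameters. The speed $v$ is time-variant and is subject to:

$$\int_0^{t_{mo}} v(t)\, dt = d, \qquad 0 \le v(t) \le v_{\max}, \tag{7}$$

where $d$ is the distance between the departure and destination locations.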
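Under the linear motion-power model, with $P_0$ the hover-status power and $P_{\max}$ the power at the maximum speed $v_{\max}$, flying a distance $d$ at a constant speed $v$ takes $d/v$ seconds, so $E_{mo}(v) = (P_{\max} - P_0)\,d / v_{\max} + P_0\, d / v$, which decreases as $v$ grows. The Python sketch below checks this numerically; it assumes constant-speed flight and uses hypothetical power and speed values rather than the parameters of Table 1.

```python
def motion_power(v: float, p_max: float, p_hover: float, v_max: float) -> float:
    """Linear motion-power model: p_hover at v = 0, p_max at v = v_max (watts)."""
    if not 0.0 <= v <= v_max:
        raise ValueError("speed must lie in [0, v_max]")
    return (p_max - p_hover) / v_max * v + p_hover


def constant_speed_motion_energy(d: float, v: float, p_max: float,
                                 p_hover: float, v_max: float) -> float:
    """Energy (J) to cover distance d (m) at constant speed v (m/s).

    The distance constraint reduces to v * t = d, so the flight lasts d / v seconds.
    """
    if v <= 0.0:
        raise ValueError("a positive speed is needed to cover a positive distance")
    return motion_power(v, p_max, p_hover, v_max) * (d / v)


if __name__ == "__main__":
    # Hypothetical parameters: 200 W while hovering, 300 W at 10 m/s, 100 m to fly.
    for v in (2.5, 5.0, 10.0):
        e = constant_speed_motion_energy(d=100.0, v=v, p_max=300.0, p_hover=200.0, v_max=10.0)
        print(f"v = {v:4.1f} m/s -> E_mo = {e:7.1f} J")
```

Under this model the cheapest way to relocate a drone, for example during a position switch, is to fly at full speed; the linear model is an approximation, so this conclusion holds only as far as the model does.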

2.2.2. Calculation Mode

The calculation energy can be written as:

$$E_c = P_c \, t_c, \tag{8}$$

where $P_c$ is the power consumption of computation and $t_c$ is the computation time. These calculations range from image processing and pattern identification to object tracking, decision making and event reporting. The critical factors affecting energy efficiency are the calculation algorithms and data structures, which depend on the specific tasks and applications. In this paper, we do not restrict the type of applications of the drone fleet. However, for simulation purposes, $P_c$ is simplified as a constant whose value is given in Section 4.

2.2.3. Communication Mode

The energy spent on message sharing among drones makes up another significant portion of battery consumption. For example, in some applications, videos of the surveillance area must be transmitted to a server where groups of experts and officials can make real-time decisions. This requires a large amount of data to be sent from the drones' antennas, which drains the batteries faster and may even exceed the energy consumed in the flight mode. Even when no real-time video transmission is needed, critical event reports and information-sharing packets between nearby drones can still consume considerable energy when the communication distance is long. As we do not restrict the type of applications of the drone fleet, we focus on 3D placement schemes that optimize the energy spent in the communication mode.

The communication energy is:

$$E_m = E_{Tx} + E_{Rx}, \tag{9}$$

where $E_{Tx}$ and $E_{Rx}$ are the energy required for the transmitter and the receiver, respectively. To simplify the energy consumption of the radio communication process, we adopt the model depicted in [23]. The transmitter or receiver circuitry dissipates $E_{elec}$ (nJ/bit), and $\epsilon_{amp}$ (nJ/bit/m²) accounts for the transmitter amplifier's consumption. The energy to transmit a $k$-bit message over distance $d$ is:

$$E_{Tx}(k, d) = E_{elec}\, k + \epsilon_{amp}\, k\, d^2, \tag{10}$$

and the energy consumed to receive the packet is:

$$E_{Rx}(k) = E_{elec}\, k. \tag{11}$$

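The Python sketch below evaluates the first-order radio model, $E_{Tx}(k,d) = E_{elec}k + \epsilon_{amp}k d^2$ and $E_{Rx}(k) = E_{elec}k$, and compares a direct transmission with a two-hop relay over the same distance. The values $E_{elec} = 50$ nJ/bit and $\epsilon_{amp} = 100$ pJ/bit/m² are typical of the literature on this model and are assumptions here, not the settings of Table 1. With a relay at the midpoint, the cost is $3E_{elec}k + \epsilon_{amp}k d^2/2$ versus $E_{elec}k + \epsilon_{amp}k d^2$ for the direct link (the final reception is the same for both), so relaying pays off once $d > 2\sqrt{E_{elec}/\epsilon_{amp}}$.

```python
import math

E_ELEC = 50e-9     # J/bit, assumed electronics energy (typical first-order radio value)
EPS_AMP = 100e-12  # J/bit/m^2, assumed amplifier energy (typical first-order radio value)


def tx_energy(k: int, d: float, e_elec: float = E_ELEC, eps_amp: float = EPS_AMP) -> float:
    """Energy (J) to transmit k bits over d metres."""
    return e_elec * k + eps_amp * k * d ** 2


def rx_energy(k: int, e_elec: float = E_ELEC) -> float:
    """Energy (J) to receive k bits."""
    return e_elec * k


def two_hop_energy(k: int, d: float, e_elec: float = E_ELEC, eps_amp: float = EPS_AMP) -> float:
    """Energy (J) when a relay halfway along the link receives and forwards the packet.

    The final receiver's reception cost is identical for both routes, so it is omitted.
    """
    half = d / 2.0
    return (tx_energy(k, half, e_elec, eps_amp) + rx_energy(k, e_elec)
            + tx_energy(k, half, e_elec, eps_amp))


def break_even_distance(e_elec: float = E_ELEC, eps_amp: float = EPS_AMP) -> float:
    """Distance beyond which a midpoint relay beats direct transmission."""
    return 2.0 * math.sqrt(e_elec / eps_amp)


if __name__ == "__main__":
    k = 8_000  # a hypothetical 1 kB status packet
    for d in (30.0, 100.0, 300.0):
        print(f"d = {d:5.0f} m: direct {tx_energy(k, d) * 1e3:.3f} mJ, "
              f"two-hop {two_hop_energy(k, d) * 1e3:.3f} mJ")
    print(f"break-even distance: {break_even_distance():.1f} m")
```

With these assumed constants the break-even distance is about 44.7 m, so for drones spaced hundreds of metres apart, multi-hop relaying through intermediate drones saves transmission energy.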
3. Research Methods

We define $f(x, y)$ as the terrain function and denote the location of drone $i$ as $(x_i, y_i, z_i)$. The covering region of drone $i$, labeled $C_i$, is the collection of all terrain points that can be detected by drone $i$. Since the sensing camera is HD 1080p (1920 × 1080 pixels) and the task resolution requirement $\gamma$ (pixels/m) is known, the maximum region a drone can supervise is given by:

$$l = \frac{1920}{\gamma} \tag{12}$$

and

$$w = \frac{1080}{\gamma}, \tag{13}$$

where $l$ and $w$ represent the length along the X axis (east–west) and the width along the Y axis (north–south), respectively. To meet the resolution requirement, $C_i$ is constrained by:

$$C_i \subseteq R_{l \times w}, \tag{14}$$

where $R_{l \times w}$ represents an $l \times w$ rectangular area. The horizontal and vertical FOV, $\theta_h$ and $\theta_v$, are constrained by:

For convenience, we assume that the drone's horizontal FOV is always along the X axis and the vertical FOV along the Y axis. Considering light blockage, the coverage problem can be formulated as follows: for any terrain point $p$, we say that $p$ is under the surveillance of drone $i$ if

and

and there is no other terrain point $q$ that satisfies Equations (16) and (17) as well as:

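The coverage conditions above can be illustrated with the Python sketch below, which evaluates $l = 1920/\gamma$ and $w = 1080/\gamma$ and tests whether a terrain point is covered by a drone. The FOV and line-of-sight tests are assumptions of this sketch rather than the exact form of Equations (15)–(18): the camera is taken to point straight down, so a point is inside the FOV when its horizontal offsets stay within $(z_i - f(x,y))\tan(\theta_h/2)$ along X and $(z_i - f(x,y))\tan(\theta_v/2)$ along Y, and blocking is detected by sampling the segment between the drone and the point. The terrain functions and FOV values in the usage example are hypothetical.

```python
import math
from typing import Callable, Tuple

Point = Tuple[float, float, float]
Terrain = Callable[[float, float], float]  # f(x, y) -> ground elevation in metres


def max_region(gamma: float, width_px: int = 1920, height_px: int = 1080) -> Tuple[float, float]:
    """Largest ground rectangle (l, w) in metres that still meets gamma pixels per metre."""
    return width_px / gamma, height_px / gamma


def in_fov(drone: Point, point: Point, theta_h: float, theta_v: float) -> bool:
    """True if point lies inside the footprint pyramid of a downward-pointing camera.

    theta_h (along X) and theta_v (along Y) are full FOV angles in radians.
    """
    height = drone[2] - point[2]
    if height <= 0:
        return False
    return (abs(point[0] - drone[0]) <= height * math.tan(theta_h / 2)
            and abs(point[1] - drone[1]) <= height * math.tan(theta_v / 2))


def line_of_sight(drone: Point, point: Point, f: Terrain, samples: int = 200) -> bool:
    """True if no sampled terrain point rises above the drone-to-point segment."""
    for s in range(1, samples):
        t = s / samples
        x, y, z = (d + t * (p - d) for d, p in zip(drone, point))
        if f(x, y) > z + 1e-9:  # terrain above the ray blocks the view
            return False
    return True


def is_covered(drone: Point, x: float, y: float, f: Terrain,
               theta_h: float, theta_v: float) -> bool:
    """Terrain point (x, y, f(x, y)) is covered if it is inside the FOV and visible."""
    point = (x, y, f(x, y))
    return in_fov(drone, point, theta_h, theta_v) and line_of_sight(drone, point, f)


if __name__ == "__main__":
    def flat(x: float, y: float) -> float:
        return 0.0

    def ridge(x: float, y: float) -> float:
        return 100.0 if 40.0 < x < 60.0 else 0.0  # hypothetical 100 m ridge

    fov = math.radians(90)
    drone = (0.0, 0.0, 150.0)
    print(max_region(2.0))                                 # (960.0, 540.0)
    print(is_covered(drone, 100.0, 0.0, flat, fov, fov))   # True
    print(is_covered(drone, 100.0, 0.0, ridge, fov, fov))  # False: the ridge blocks the view
```

Sampling the segment is a simple approximation; a finer `samples` value catches narrower obstacles at a proportionally higher cost.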
3.1. Terrain Simplification

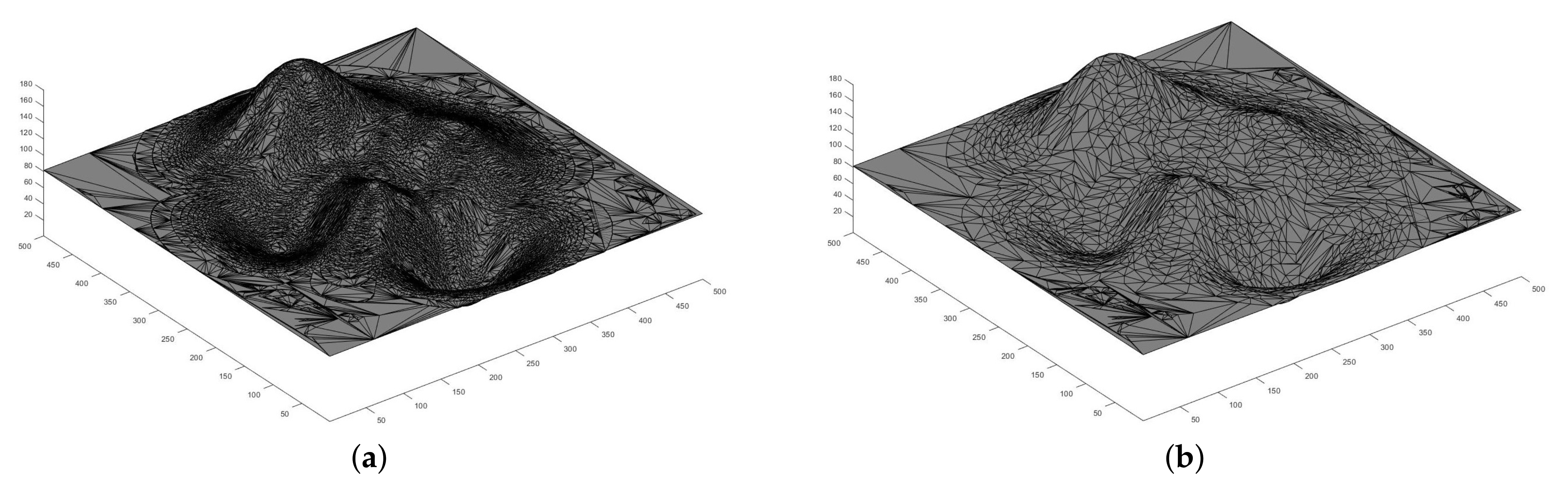

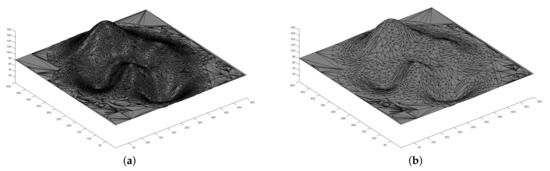

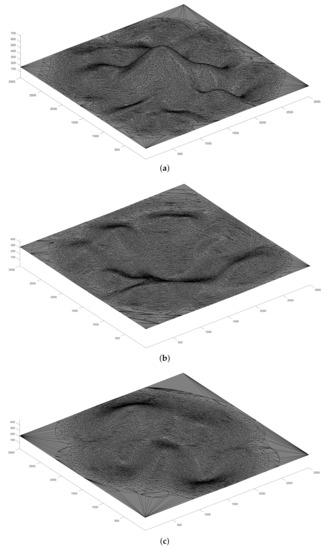

In real-world surveillance tasks, the target region can stretch for several miles, so time and space complexity are critical bottlenecks for the coverage problem and the drone placement scheme. Given the size of the target area, it is neither feasible nor necessary to test candidate drone locations point by point; we only need to try the particular coordinates that serve as iconic “landmarks”. We therefore simplify the terrain as illustrated in Figure 2, and only the space above the simplified vertices is explored. The given topography of the target area T is a height map $H$.

Figure 2.

The results of the terrains’ simplification: (a) the terrain before simplification, and (b) the terrain after simplification.

Simplification is performed as follows:

- convert the height map H into a 3D binary volume array V;

- extract the isosurface of the volumetric array V and store the resulting faces and vertices;

- apply a mesh decimation algorithm to simplify the surface and obtain the reduced set of faces and vertices.

Isosurface extraction and patch reduction algorithms are mature [24,25,26] and are available in many packages and libraries. An example based on MATLAB is shown in Algorithm 1 so that other researchers can replicate our work; some of its notation is also used in the later discussion.

| Algorithm 1: Terrain Simplification: Calculate |

| Require: |

| Ensure: |

| 1: |

| 2: for to a do |

| 3: for to a do |

| 4: for to |

| 5: |

| 6: end for |

| 7: end for |

| 8: end for |

| 9: |

| 10: |

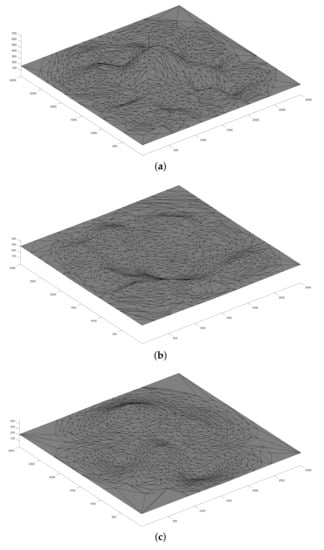
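The first step of the terrain simplification, filling a binary volume up to the terrain height in every (x, y) column, can be written without explicit loops. The Python/NumPy sketch below assumes H is an a × a array of non-negative integer heights measured in voxels; it is an equivalent illustration of that step, not the MATLAB code of Algorithm 1.

```python
import numpy as np


def height_map_to_volume(H: np.ndarray) -> np.ndarray:
    """Binary volume V with V[x, y, z] = True for every voxel below the terrain height.

    Assumes H holds non-negative integer elevations in voxel units.
    """
    H = np.asarray(H, dtype=int)
    if H.ndim != 2 or (H < 0).any():
        raise ValueError("H must be a 2D array of non-negative heights")
    z = np.arange(int(H.max()))              # voxel layers 0 .. max(H) - 1
    return z[None, None, :] < H[:, :, None]  # column (x, y) is filled up to H[x, y]


if __name__ == "__main__":
    H = np.array([[0, 1], [2, 3]])
    V = height_map_to_volume(H)
    print(V.shape)        # (2, 2, 3)
    print(V.sum(axis=2))  # summing each column recovers H
```

The isosurface and decimation steps then operate on this volume. MATLAB provides `isosurface` and `reducepatch` for them; in Python, the isosurface could for example be extracted with scikit-image's `skimage.measure.marching_cubes(V.astype(float), level=0.5)`, with decimation left to a mesh library of choice.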

3.2. Area Division

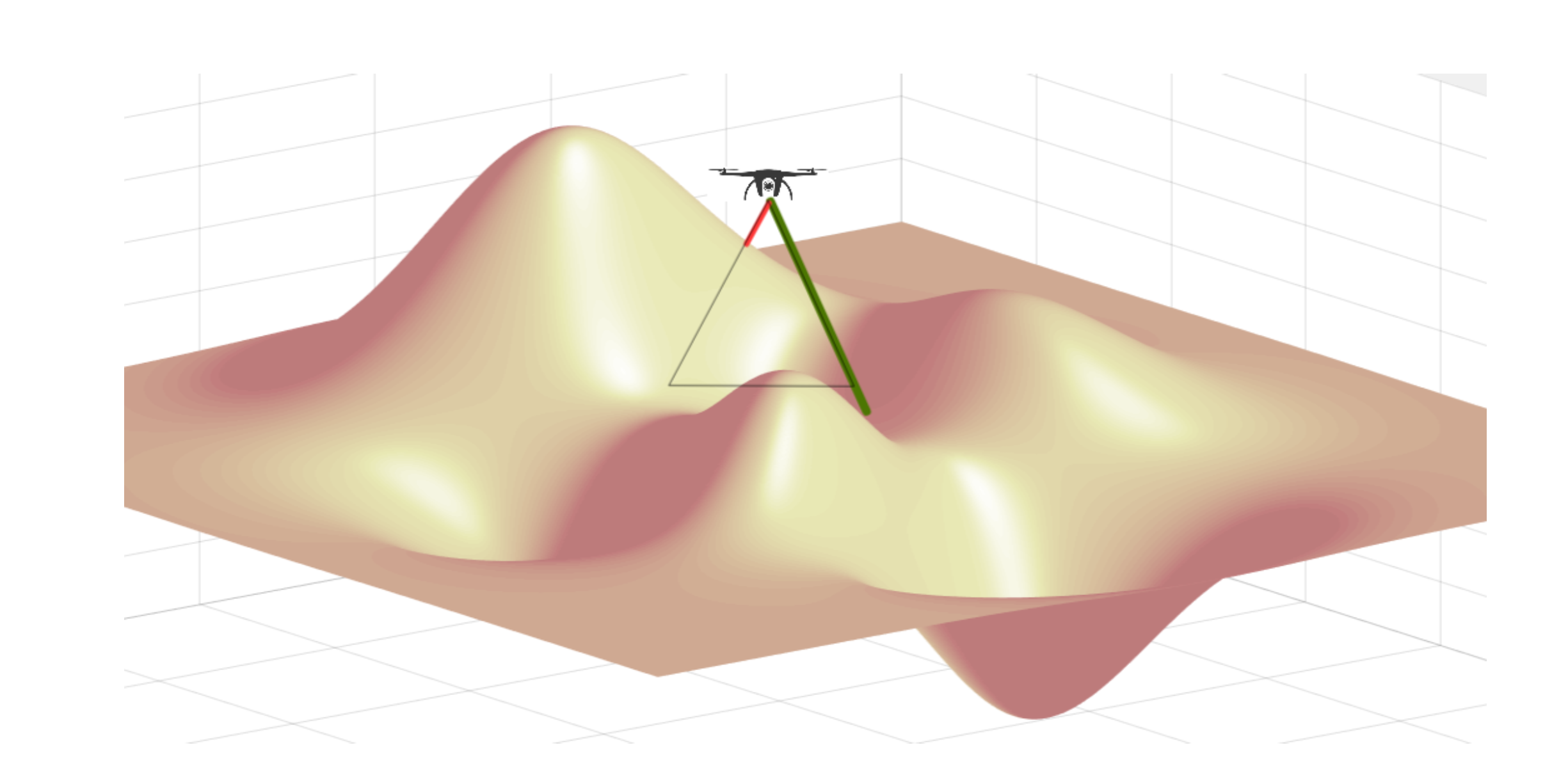

The given area T is to be covered by the drone fleet, where each drone $i$ is assigned a small section $S_i$. We denote the number of sections as n. In papers that assume the whole area T is a flat plane, the division problem is usually simplified: every drone is assigned an equal rectangular region. However, due to the complex terrain in our study, this approach does not apply to our system model. As shown in Figure 3, the coverage of a drone stretches when it is placed over a valley (green line) and shrinks when it is near a mountain (red line). Hence, we propose a division algorithm that detects terrain variations and automatically adjusts the range of each subarea so that each drone covers as large a region as possible, minimizing the number of drones needed for the mission. The objective of the sub-section division is formulated as:

subject to:

and

Figure 3.

The coverage of the drone is impacted by topography’s variations.

Since the coverage area varies and no explicit mathematical model is available, we adopt a greedy algorithm. We search the remaining uncovered area to find the largest region for each drone, together with a location from which that drone can cover the region. As analyzed earlier, a drone's covering region can be calculated by Equations (12)–(18). However, the terrain function is not available and needs to be inferred first. Algorithm 1 provides the simplified faces and vertices, and we fit these vertices to a function that approximates the terrain function [27,28]. More details are shown in Algorithm 2.

| Algorithm 2: Area Division: Calculate |

| Require: |

| Ensure: |

| 1: |

| 2: |

| 3: while do |

| 4: |

| 5: T |

| 6: |

| 7: repeat |

| 8: |

| 9: |

| 10: for do |

| 11: if and then |

| 12: |

| 13: end if |

| 14: end for |

| 15: for do |

| 16: for to do |

| 17: |

| 18: |

| 19: for do |

| 20: if then |

| 21: |

| 22: end if |

| 23: end for |

| 24: |

| 25: if and and then |

| 26: |

| 27: end if |

| 28: end for |

| 29: if then |

| 30: |

| 31: end if |

| 32: end for |

| 33: |

| 34: until |

| 35: |

| 36: end while |
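The area division above is greedy. The Python sketch below shows the same greedy principle in its simplest set-cover form, under assumptions not made in the text: candidate drone locations (for instance, positions above the simplified vertices) have precomputed coverage sets of discretized ground cells, and each step selects the candidate that covers the most still-uncovered cells. It illustrates greedy coverage and is not a transcription of Algorithm 2.

```python
from typing import Dict, Hashable, List, Set


def greedy_cover(universe: Set[Hashable],
                 coverage: Dict[Hashable, Set[Hashable]]) -> List[Hashable]:
    """Pick candidate locations until every cell in `universe` is covered.

    `coverage` maps a (hypothetical) candidate location to the set of ground cells
    it can see; ties are broken by the insertion order of `coverage`.
    """
    uncovered = set(universe)
    chosen: List[Hashable] = []
    while uncovered:
        if not coverage:
            raise ValueError("no candidate locations available")
        best = max(coverage, key=lambda c: len(coverage[c] & uncovered))
        gain = coverage[best] & uncovered
        if not gain:
            raise ValueError("some cells cannot be covered by any candidate")
        chosen.append(best)
        uncovered -= gain
    return chosen


if __name__ == "__main__":
    cells = set(range(1, 7))
    candidates = {"A": {1, 2, 3}, "B": {3, 4}, "C": {4, 5, 6}, "D": {1, 6}}
    print(greedy_cover(cells, candidates))  # ['A', 'C']
```

Greedy set cover does not guarantee the minimum number of drones, but it is a standard approximation with a logarithmic worst-case bound, which fits the goal of using as few drones as possible.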

3.3. Placement

In the placement scheme, we aim to minimize the overall energy consumption, which can be written as:

According to Equations (1)–(11), the flight energy depends on the drone's hardware parameters, and the calculation energy is related to the specific application. We therefore focus on 3D placement schemes that optimize the energy spent in the communication mode. Equation (10) shows that the distance $d_{ij}$ between a pair of drones $i$ and $j$ has a large impact on the energy consumed at the transmitter. We further rewrite the target Equation (22) as:

subject to:

where the distance between each pair of drones is calculated with a shortest-path algorithm because, to save transmitting power, a third drone may be assigned to relay the packets sent from one drone to another. Specifically, we adopt Dijkstra's algorithm [29].

To decide the drone locations, we first need to find the set of all feasible drone locations. We label the feasible set within the $i$-th subarea $S_i$ as $L_i$; its calculation is implemented in Algorithm 3. The main steps are as follows:

- initialize the drone location of each subarea $S_i$ with a random element of $L_i$;

- for each subarea $S_i$, find the location within $L_i$ that minimizes the total distance from drone $i$ to every other drone $j$, where $j \neq i$;

- compare the new set of drone coordinates with the previous one;

- if the difference is greater than a predefined threshold, repeat the second and third steps; otherwise, return the current drone fleet coordinates.

| Algorithm 3: Placement: Calculate |

| Require: |

| Ensure: |

| 1: for to n do |

| 2: for do |

| 3: for to do |

| 4: |

| 5: |

| 6: for do |

| 7: if then |

| 8: |

| 9: end if |

| 10: end for |

| 11: |

| 12: if and and then |

| 13: if |

| 14: end if |

| 15: end for |

| 16: end for |

| 17: end for |

| 18: for to n do |

| 19: |

| 20: end for |

| 21: |

| 22: |

| 23: while do |

| 24: for n do |

| 25: |

| 26: for do |

| 27: |

| 28: if then |

| 29: |

| 30: |

| 31: end if |

| 32: end for |

| 33: end for |

| 34: |

| 35: |

| 36: end while |
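The iterative placement can be sketched in Python as a best-response loop: each drone in turn moves to the feasible location in its own subarea that minimizes the sum of distances to the other drones' current positions, until no location changes. Two simplifications are assumptions of this sketch: Euclidean distance stands in for the relay-aware shortest-path distance used in the text, and drones are updated in place one after another (Gauss-Seidel style). A drone moves only on a strict improvement, so the total pairwise distance decreases with every move and, because the candidate sets are finite, the loop terminates.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


def total_distance(p: Point, others: Sequence[Point]) -> float:
    """Sum of Euclidean distances from p to every point in others."""
    return sum(math.dist(p, q) for q in others)


def place_drones(feasible: List[List[Point]],
                 init: Optional[List[int]] = None,
                 seed: int = 0,
                 max_rounds: int = 1000) -> List[Point]:
    """Best-response placement: one location per subarea, chosen from feasible[i]."""
    rng = random.Random(seed)
    if init is None:  # random feasible starting point for every subarea
        pos = [rng.choice(cands) for cands in feasible]
    else:
        pos = [cands[k] for cands, k in zip(feasible, init)]
    for _ in range(max_rounds):
        changed = False
        for i, cands in enumerate(feasible):
            others = pos[:i] + pos[i + 1:]
            best = min(cands, key=lambda p: total_distance(p, others))
            # Move only on a strict improvement so the loop cannot cycle on ties.
            if total_distance(best, others) < total_distance(pos[i], others) - 1e-12:
                pos[i] = best
                changed = True
        if not changed:  # coordinates unchanged: converged
            break
    return pos


if __name__ == "__main__":
    feasible = [[(0.0, 0.0, 50.0), (5.0, 0.0, 50.0)],
                [(10.0, 0.0, 50.0), (6.0, 0.0, 50.0)]]
    print(place_drones(feasible, init=[0, 0]))  # [(5.0, 0.0, 50.0), (6.0, 0.0, 50.0)]
```

Like any local search, the result can depend on the starting positions, so in practice one might run it from several random seeds and keep the best outcome.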

3.4. Dynamic Adjustment

According to Equation (10), the radio transmission energy increases significantly with distance. When packets are delivered between two distant drones, we therefore prefer to use one or more intermediate drones as relays. As all drone locations are fixed and known, we use Dijkstra's algorithm to find the shortest path from each drone to every other drone. We denote the routing table as Q, where $Q(i, j)$ is the next-hop drone when the packet source is drone $i$ and the destination is drone $j$. The detailed routing table calculation is given in Algorithm 4.

| Algorithm 4: Routing Table: Calculate |

| Require: |

| Ensure: |

| 1: for n do |

| 2: for do n do |

| 3: |

| 4: |

| 5: end for |

| 6: end for |

| 7: for to n do |

| 8: |

| 9: |

| 10: |

| 11: while do |

| 12: for do |

| 13: if then |

| 14: |

| 15: |

| 16: end if |

| 17: end for |

| 18: j |

| 19: |

| 20: |

| 21: end while |

| 22: end for |
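A compact Python sketch of the routing-table computation follows. It assumes every pair of drones is within radio range and that the link cost is the per-bit energy of the radio model, $2E_{elec} + \epsilon_{amp} d^2$ (transmitter plus receiver), with the same assumed constants as commonly used for that model; with a plain Euclidean cost the direct link would always be shortest, so no relay would ever be chosen. Because link costs are symmetric, one Dijkstra run from each destination $j$ yields the next hop $Q(i, j)$ for every source $i$ directly.

```python
import heapq
import math
from typing import List, Optional, Sequence, Tuple

E_ELEC = 50e-9     # J/bit, assumed value
EPS_AMP = 100e-12  # J/bit/m^2, assumed value


def link_cost(a: Sequence[float], b: Sequence[float]) -> float:
    """Per-bit energy to send from a to b: transmitter plus receiver."""
    return 2 * E_ELEC + EPS_AMP * math.dist(a, b) ** 2


def routing_table(pos: List[Tuple[float, float, float]]) -> List[List[Optional[int]]]:
    """Q[i][j] = next-hop drone on the cheapest path from source i to destination j."""
    n = len(pos)
    Q: List[List[Optional[int]]] = [[None] * n for _ in range(n)]
    for j in range(n):                # one Dijkstra run per destination
        dist = [math.inf] * n
        dist[j] = 0.0
        Q[j][j] = j
        heap = [(0.0, j)]
        while heap:
            d_u, u = heapq.heappop(heap)
            if d_u > dist[u]:
                continue              # stale heap entry
            for v in range(n):
                if v == u:
                    continue
                nd = d_u + link_cost(pos[v], pos[u])
                if nd < dist[v]:
                    dist[v] = nd
                    Q[v][j] = u       # from v, forward towards j via u
                    heapq.heappush(heap, (nd, v))
    return Q


if __name__ == "__main__":
    drones = [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0), (200.0, 0.0, 0.0)]
    Q = routing_table(drones)
    print(Q[0][2])  # 1: drone 0 relays through the middle drone
```

In this small example the middle drone relays traffic in both directions, which is exactly the situation in which central drones drain their batteries first.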

The initial 3D placement minimizes the overall energy consumption. During mission execution, however, the drone at the center of the team is likely to relay the most packets and therefore to have the least energy left. We further propose a dynamic location switching scheme to prolong the drone fleet's lifetime. The main idea is to swap the locations of the drone with the lowest remaining battery and the drone with the highest remaining battery when a threshold condition is met.

Compared to the initial 3D placement, which is performed before the mission with no constraints on time, memory or energy, the dynamic adjustments are calculated by the drones in real time. Each drone maintains a battery volume table, which stores every drone's remaining energy and is updated periodically from the other drones' broadcast packets. This implies some extra energy consumption. However, compared with the other messages and information exchanged among the drones, the remaining battery information can be sent in a single short packet, and its overhead is negligible compared with the potential gain in fleet lifetime. In addition, the cost of maintaining a one-dimensional array is negligible compared with the drone's other processing tasks. For energy efficiency, only the drone with the highest remaining battery runs the dynamic switching algorithm. Denote this highest-energy drone as $h$. By checking its battery volume table, $h$ executes the switching strategy as follows. First, $h$ calculates the approximate power of each drone $i$ through:

$$P_i \approx \frac{B_i^{old} - B_i^{new}}{\Delta T}, \tag{25}$$

where $B^{old}$ is the old battery volume table, $B^{new}$ is the newly updated table and $\Delta T$ is the table update period. Next, $h$ computes the ideal maximum lifetime left for the drone fleet via:

$$L_{\max} = \frac{\sum_{i=1}^{n} B_i^{new}}{\sum_{i=1}^{n} P_i}. \tag{26}$$

$L_{\max}$ is an upper limit, which can only be achieved when the entire drone fleet is perfectly balanced, so that all drones deplete their batteries at the same time. Then, $h$ checks whether the lowest-battery drone, denoted as $j$, needs switching according to:

where the inequality means that the battery of $j$ is extremely low and a switch is urgently needed. If the condition in Equation (27) is met, $h$ further compares the energy needed to move between the locations of $h$ and $j$, together with the switching overhead $E_s$, with the switching benefits. $E_s$ is the energy consumed by the switching overheads and is calculated by:

$$E_s = P_s \, t_s, \tag{28}$$

where $P_s$ accounts for the extra power needed for switching and $t_s$ is the time needed for the switching process between $h$ and $j$. Because our work does not restrict the type of applications running on the drone fleet or the specific hardware configuration, and the switching overheads are application- and machine-dependent, we simplify $P_s$ as a constant, whose value is given in Section 4. Finally, as long as the movement energy and $E_s$ are affordable compared with the savings, drone $h$ initiates a switching request to $j$. Once drone $j$ receives the request, it responds according to its application process: if it is executing a critical program, it declines the request with a busy response, and drone $h$ can wait for a certain period before initiating the next request.

To prevent the highest-energy drone from repeatedly switching with other drones, once it makes a switch, it initiates a protocol that informs the next-highest-energy drone to take over the processing role. It also broadcasts a message marking the drone it switched with as switched, so that this drone is not considered in future switching processes. Details are shown in Algorithm 5.

| Algorithm 5: Positions Dynamic Switch: Calculate |

| Require: |

| Ensure: |

| 1: while do |

| 2: update() |

| 3: if then |

| 4: |

| 5: |

| 6: lowest battery drone |

| 7: if then |

| 8: Calculate SwitchMoveCost(ID,j) |

| 9: Initiate switch protocol |

| 10: if request accepted then |

| 11: Inform next highest battery drone |

| 12: Broadcast has been switched |

| 13: end if |

| 14: end if |

| 15: end if |

| 16: end while |

| 17: Current time |
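The bookkeeping behind the switching decision can be sketched in Python as follows. Power is estimated from two successive battery tables, $P_i \approx (B_i^{old} - B_i^{new})/\Delta T$; the ideal fleet lifetime pools all remaining energy, $\sum_i B_i^{new} / \sum_i P_i$; and the actual fleet lifetime is the time at which the first drone runs out of power, matching the definition used in this paper. The urgency test `lifetime < alpha * ideal`, the threshold `alpha` and all names are hypothetical stand-ins for the threshold condition of Equation (27).

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class SwitchCheck:
    powers: List[float]     # estimated power draw of each drone (W)
    ideal_lifetime: float   # pooled-energy upper bound (s)
    fleet_lifetime: float   # time until the first drone is depleted (s)
    lowest: int             # drone with the lowest remaining battery
    highest: int            # drone with the highest remaining battery
    urgent: bool            # hypothetical stand-in for the switching condition


def check_switch(b_old: Sequence[float], b_new: Sequence[float],
                 period: float, alpha: float = 0.8) -> SwitchCheck:
    """Evaluate two successive battery volume tables (alpha is a hypothetical threshold)."""
    powers = [(old - new) / period for old, new in zip(b_old, b_new)]
    if any(p <= 0 for p in powers):
        raise ValueError("every drone is expected to draw positive power")
    lifetimes = [b / p for b, p in zip(b_new, powers)]
    ideal = sum(b_new) / sum(powers)
    lowest = min(range(len(b_new)), key=b_new.__getitem__)
    highest = max(range(len(b_new)), key=b_new.__getitem__)
    return SwitchCheck(powers=powers, ideal_lifetime=ideal,
                       fleet_lifetime=min(lifetimes), lowest=lowest, highest=highest,
                       urgent=lifetimes[lowest] < alpha * ideal)


if __name__ == "__main__":
    c = check_switch(b_old=[100.0, 100.0, 100.0], b_new=[90.0, 80.0, 95.0], period=1.0)
    print(c.fleet_lifetime, round(c.ideal_lifetime, 2), c.lowest, c.highest, c.urgent)
    # 4.0 7.57 1 2 True
```

Since the first-depletion lifetime can never exceed the pooled ideal lifetime, the gap between the two is a direct measure of how unbalanced the fleet is, and the switching scheme aims to narrow it.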

4. Results Evaluation

We conducted simulations on rough terrain profiles in MATLAB and analyzed the results. The proposed scheme is evaluated by comparing the lifetime of the drone team with and without the 3D placement algorithm and the dynamic location switching strategy. Table 1 defines the parameters used in the simulation.

Table 1.

The simulation parameters.

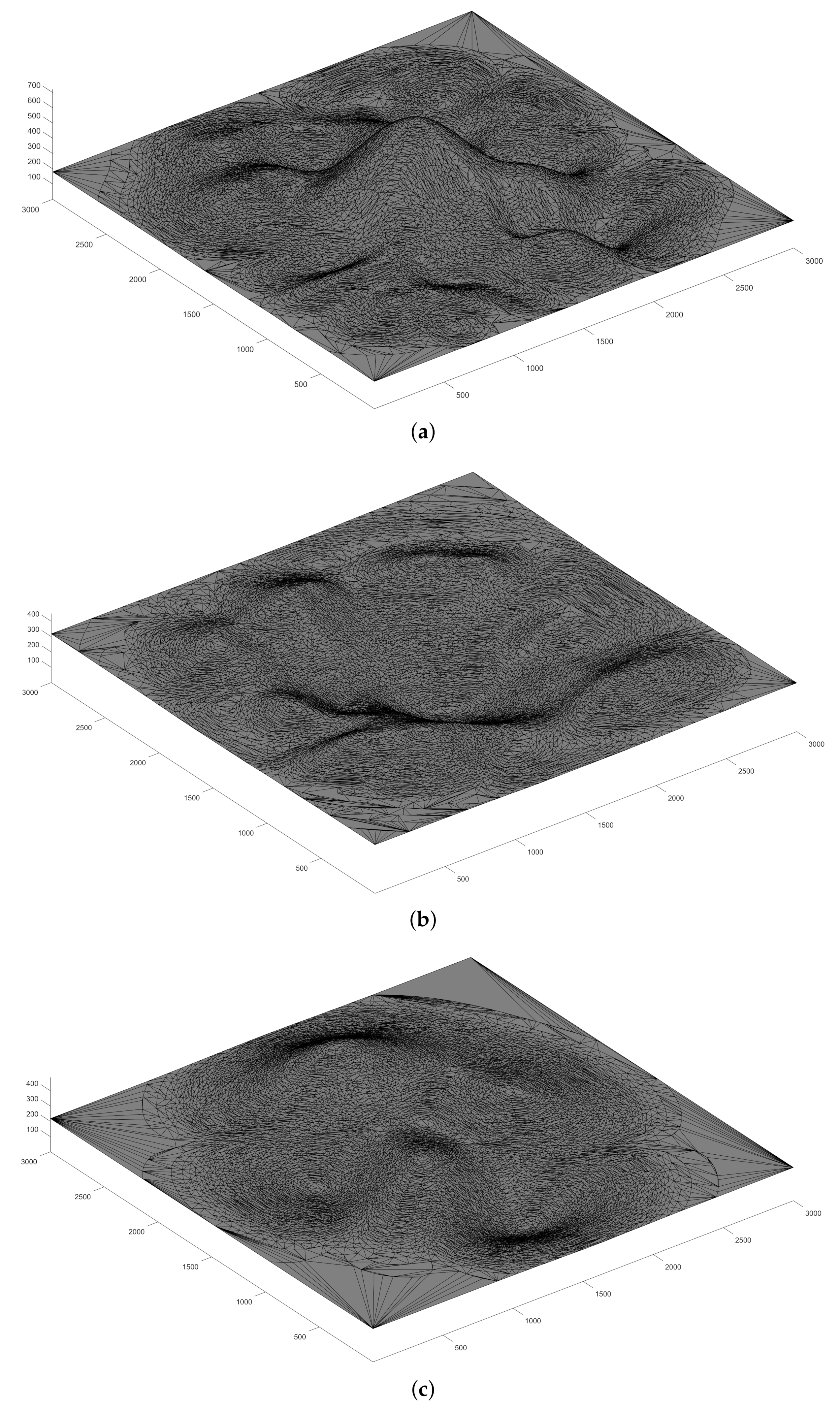

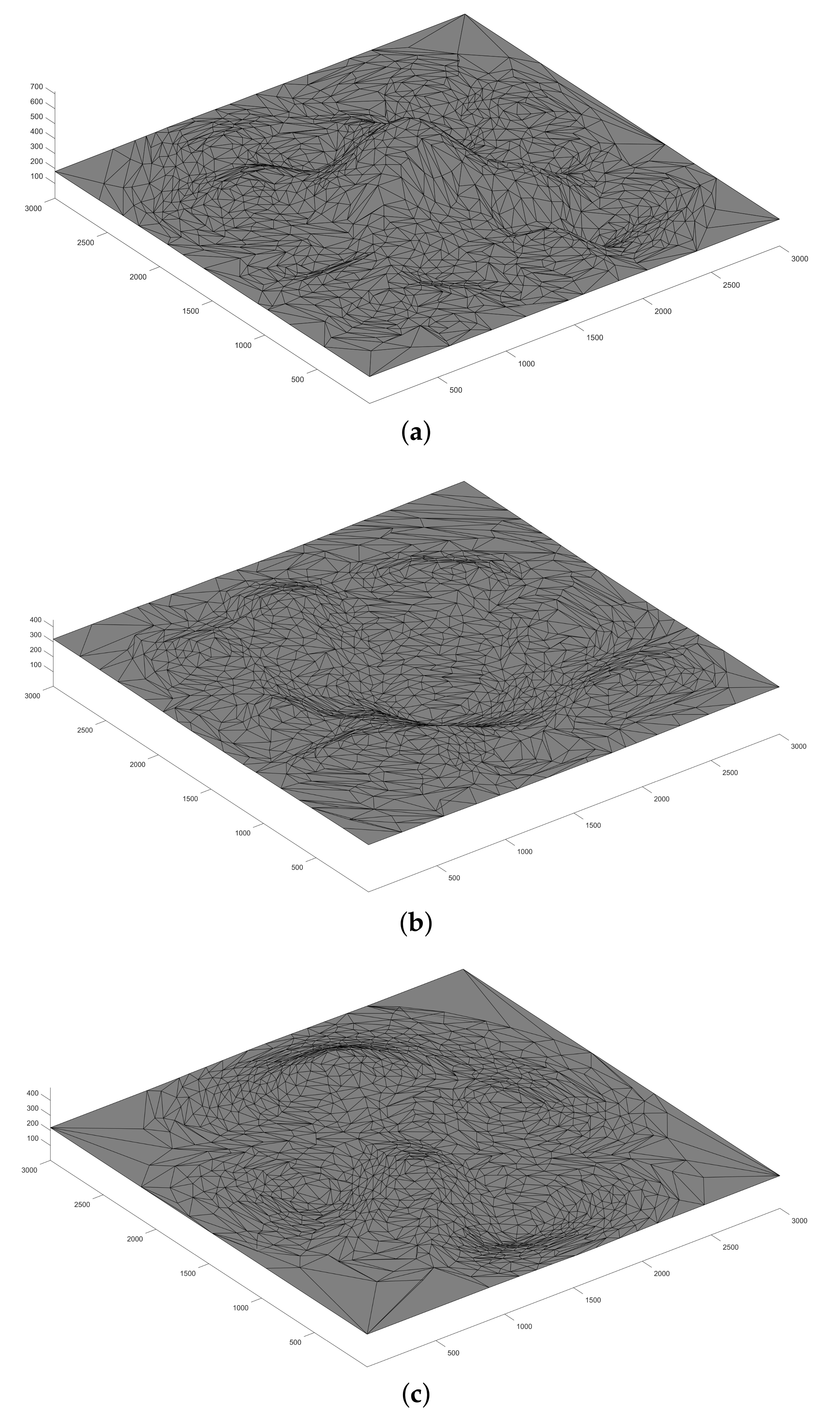

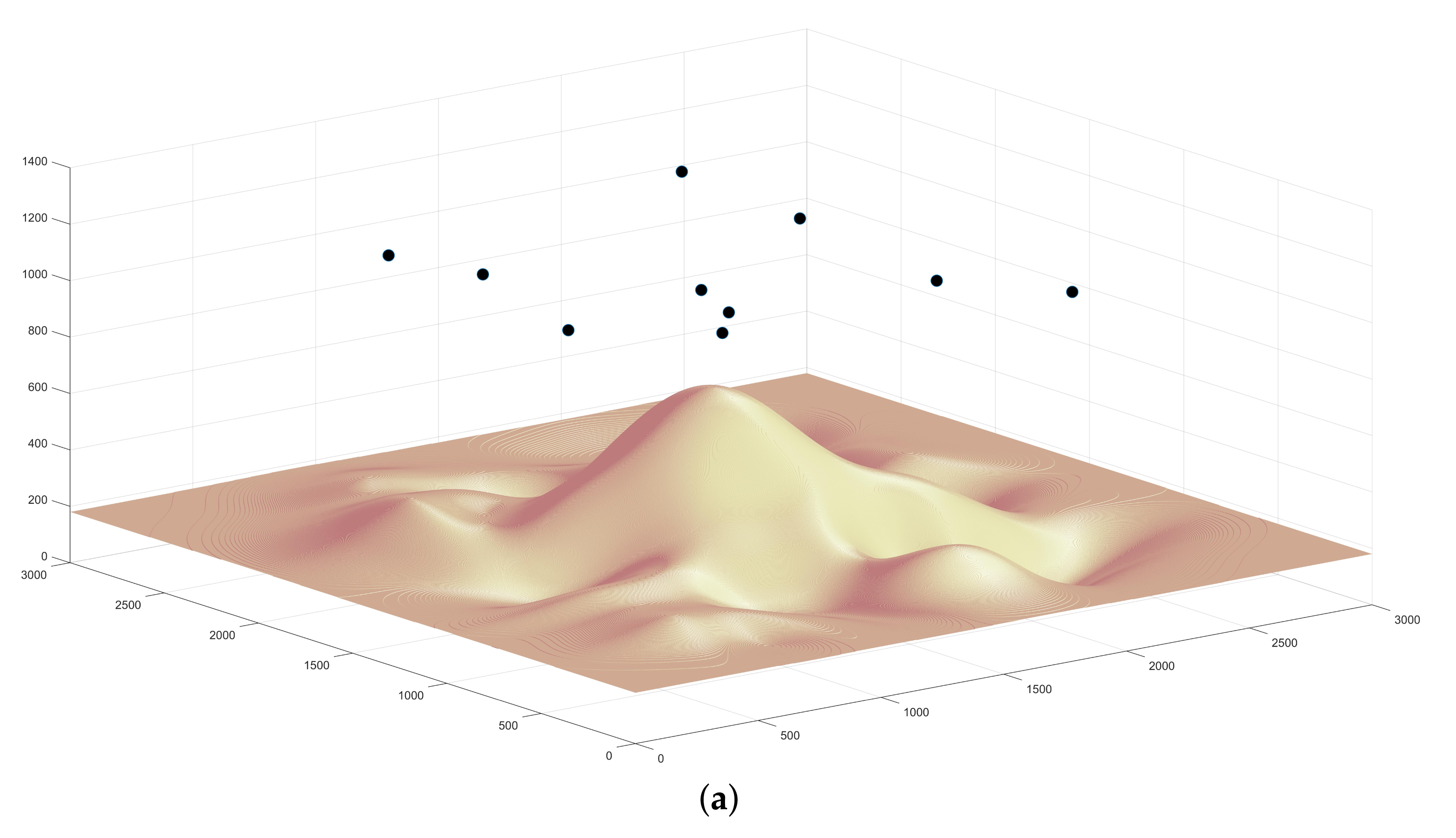

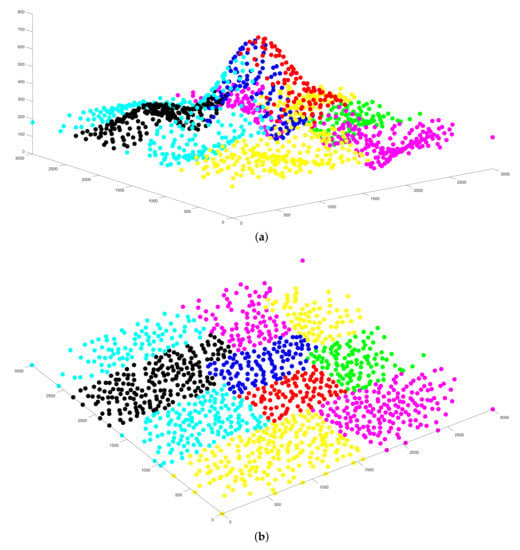

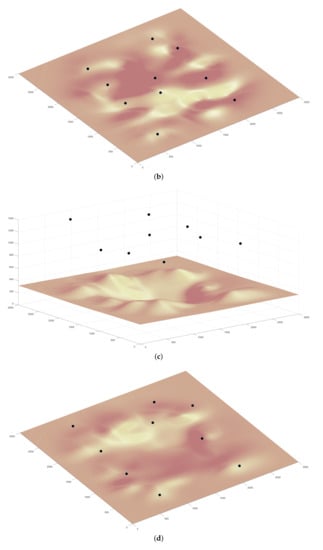

To ensure that our model applies to various landscapes, we ran three sets of simulations with different terrains as shown in Figure 4: mountain, valley and complex topography.

Figure 4.

The three different terrains used in our simulations: (a) the mountain topography; (b) the valley topography and (c) the complex topography.

The first part of the simulations deals with the simplification of the terrain profiles. As described in Algorithm 1, the height map of the target area is converted to a volumetric array and then reduced in scale. Figure 5 shows the terrain extraction results. The applied simplification ratio is 0.0001, which means that the number of vertices is reduced by a factor of approximately 10,000. Originally, with a side length of 3000 m for the square target area, there are 9,000,000 possible drone locations in the horizontal plane even if only integer coordinates are considered. After terrain simplification, only 1295, 1196 and 1127 candidate locations remain for the mountain, valley and complex topography, respectively. The simplified terrain thus tremendously reduces the number of vertices and edges while preserving critical landmarks and features.

Figure 5.

The terrain simplification results of: (a) mountain topography; (b) valley topography and (c) complex topography.

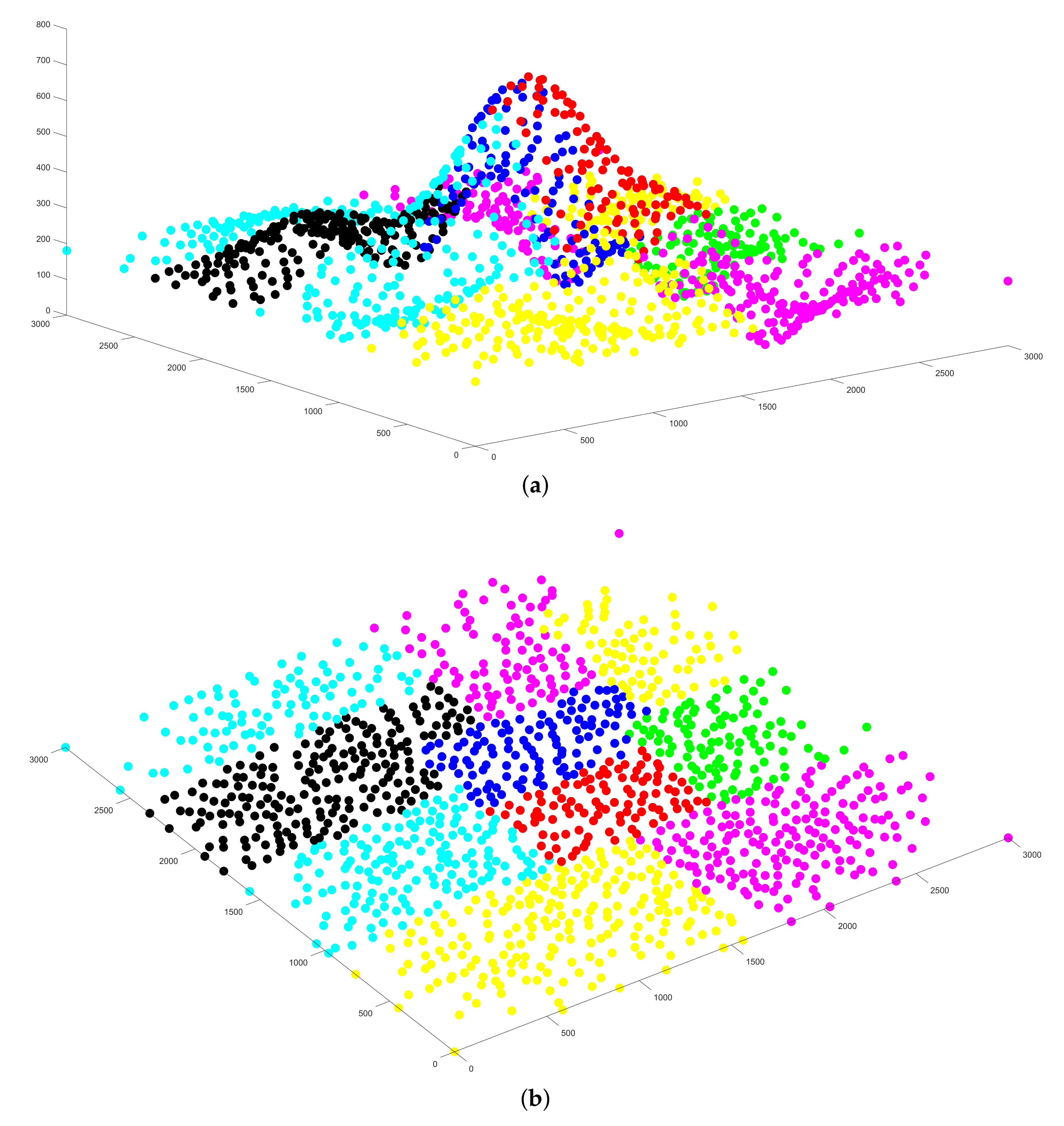

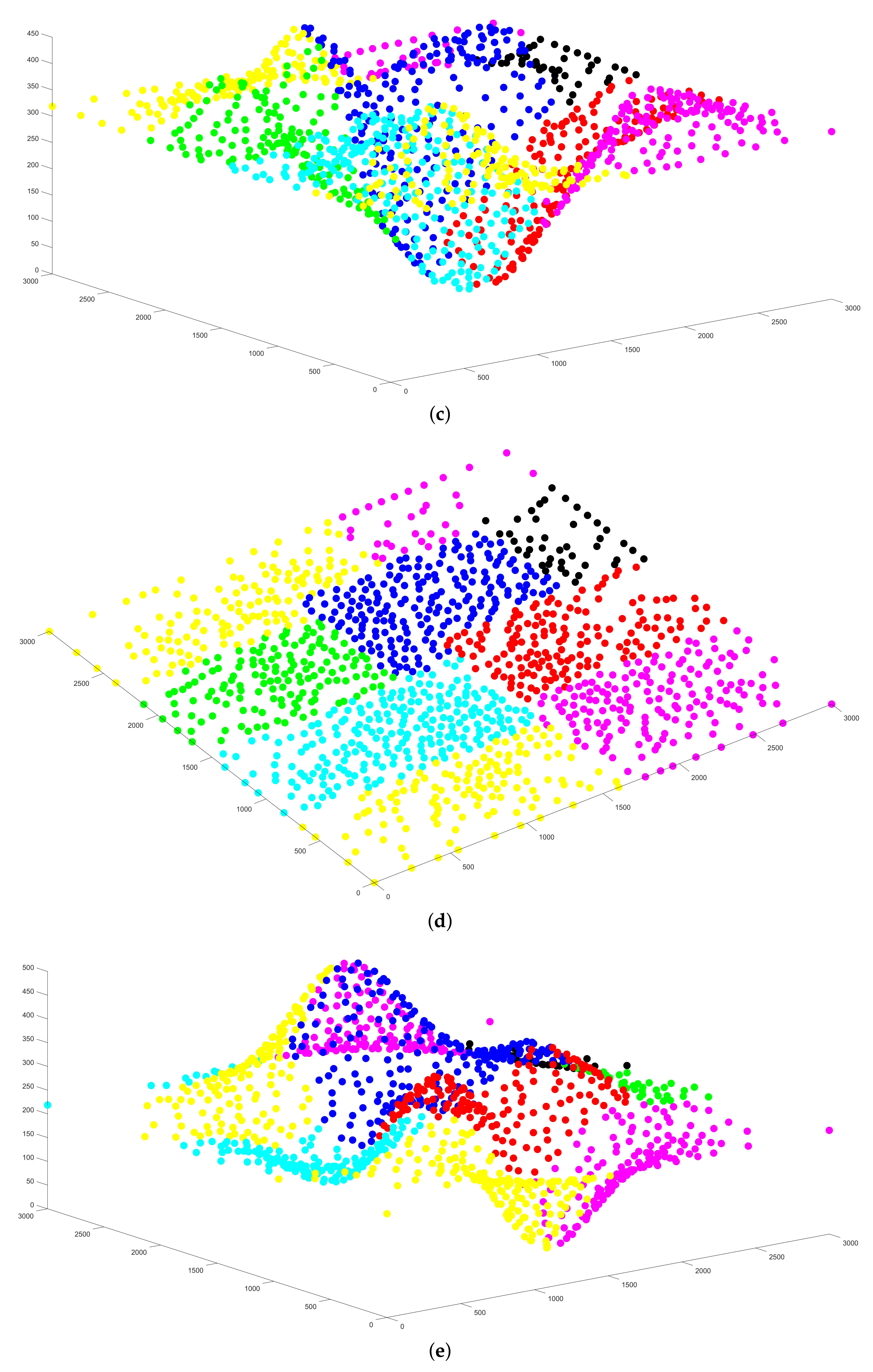

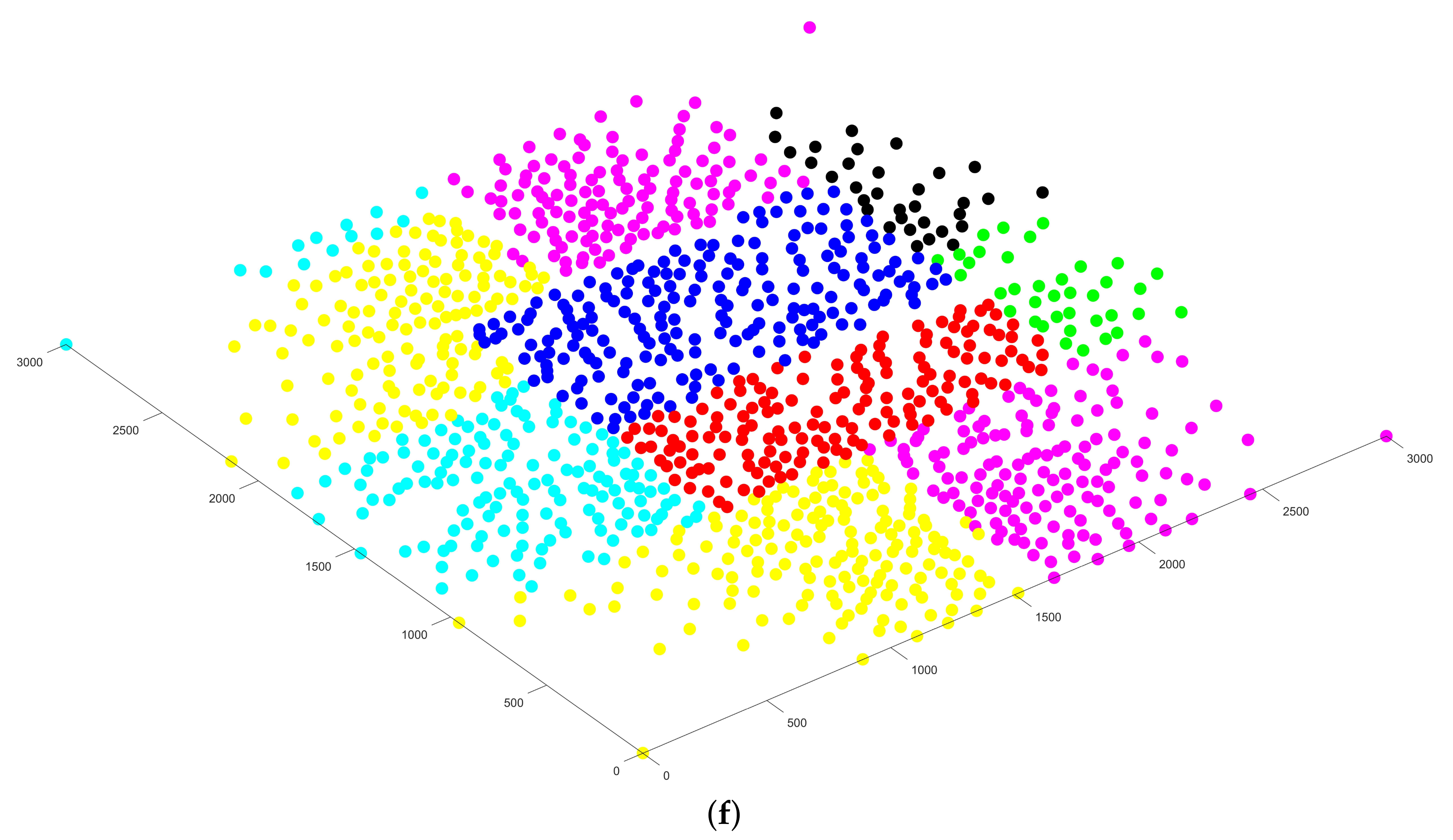

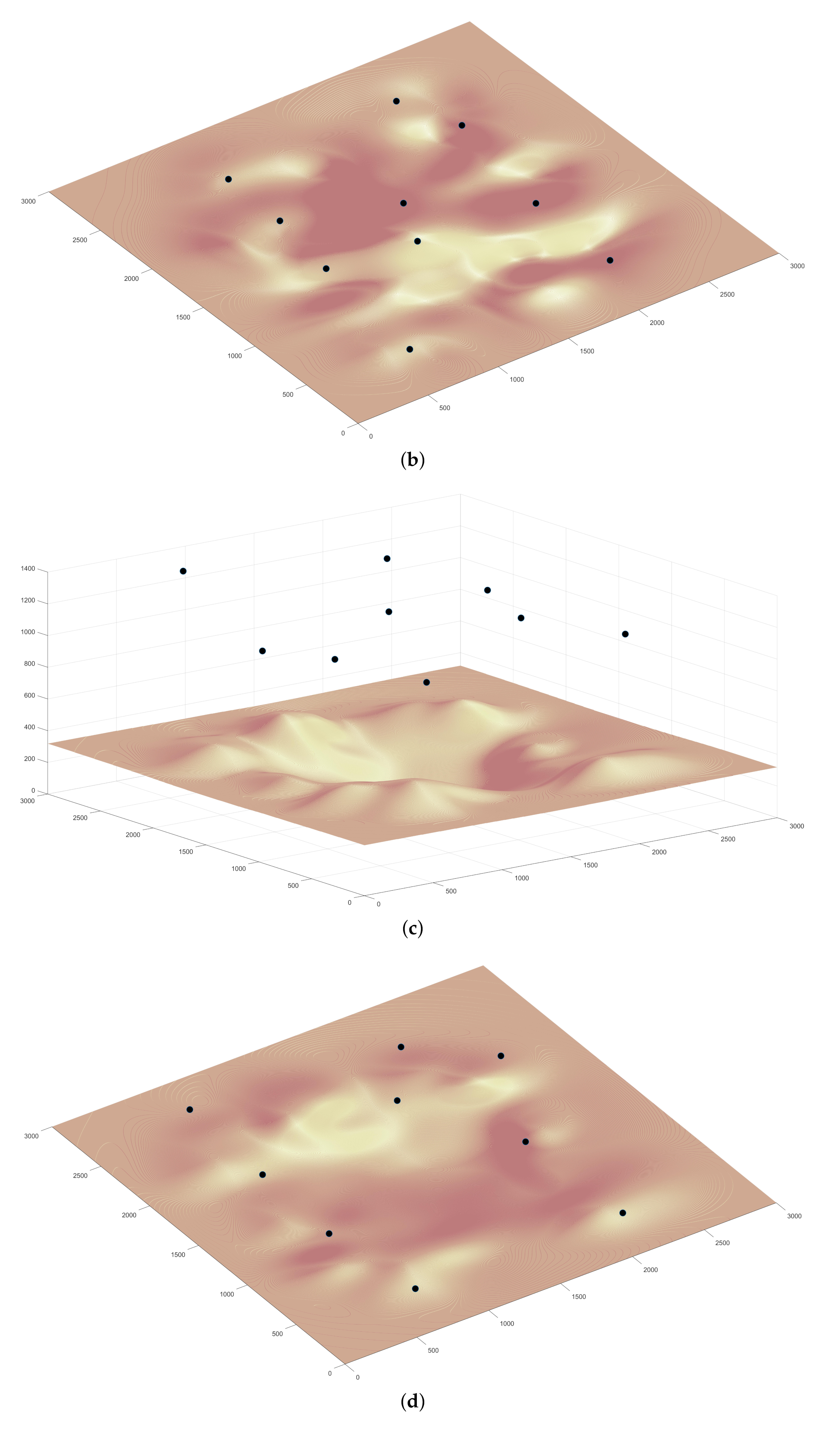

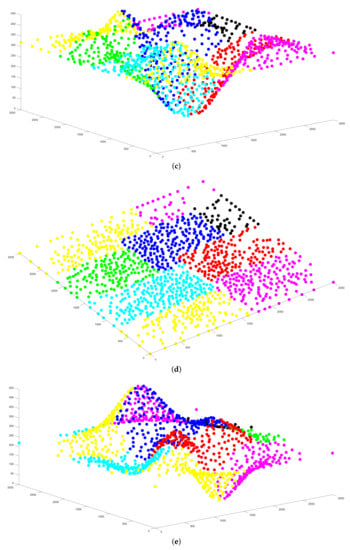

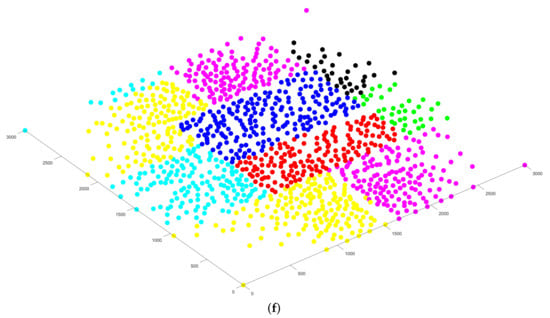

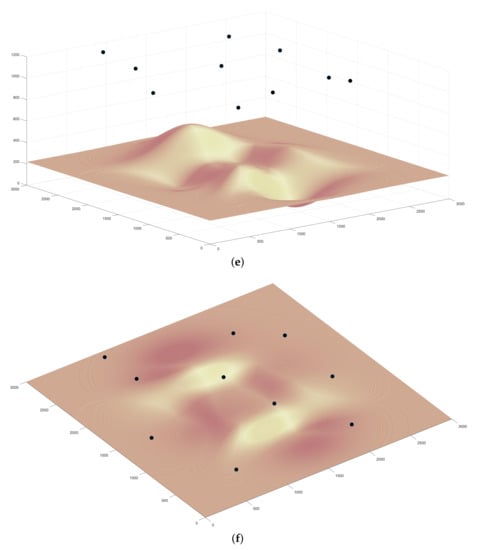

The sub-area division simulations follow. The segmentation of the various terrains is illustrated in Figure 6, where each sub-region is identified by dots of a different color. For better understanding, the division results for each terrain are shown in both aerial and top-down views. By applying Algorithm 2, we segment the mountain, valley and complex topography areas into 10, 9 and 10 sub-areas, respectively. We observe that the rectangular coverage over areas with steep slopes is relatively small, which conforms to the theoretical analysis that highlands are prone to line-of-sight blockage, resulting in a small surveillance region for the drone. Our area division algorithm adapts to terrain variations and uses the fewest drones for the monitoring mission.

Figure 6.

The area division results of: (a) mountain topography in aerial view; (b) mountain topography in top-down view; (c) valley topography in aerial view; (d) valley topography in top-down view; (e) complex topography in aerial view; and (f) complex topography in top-down view.

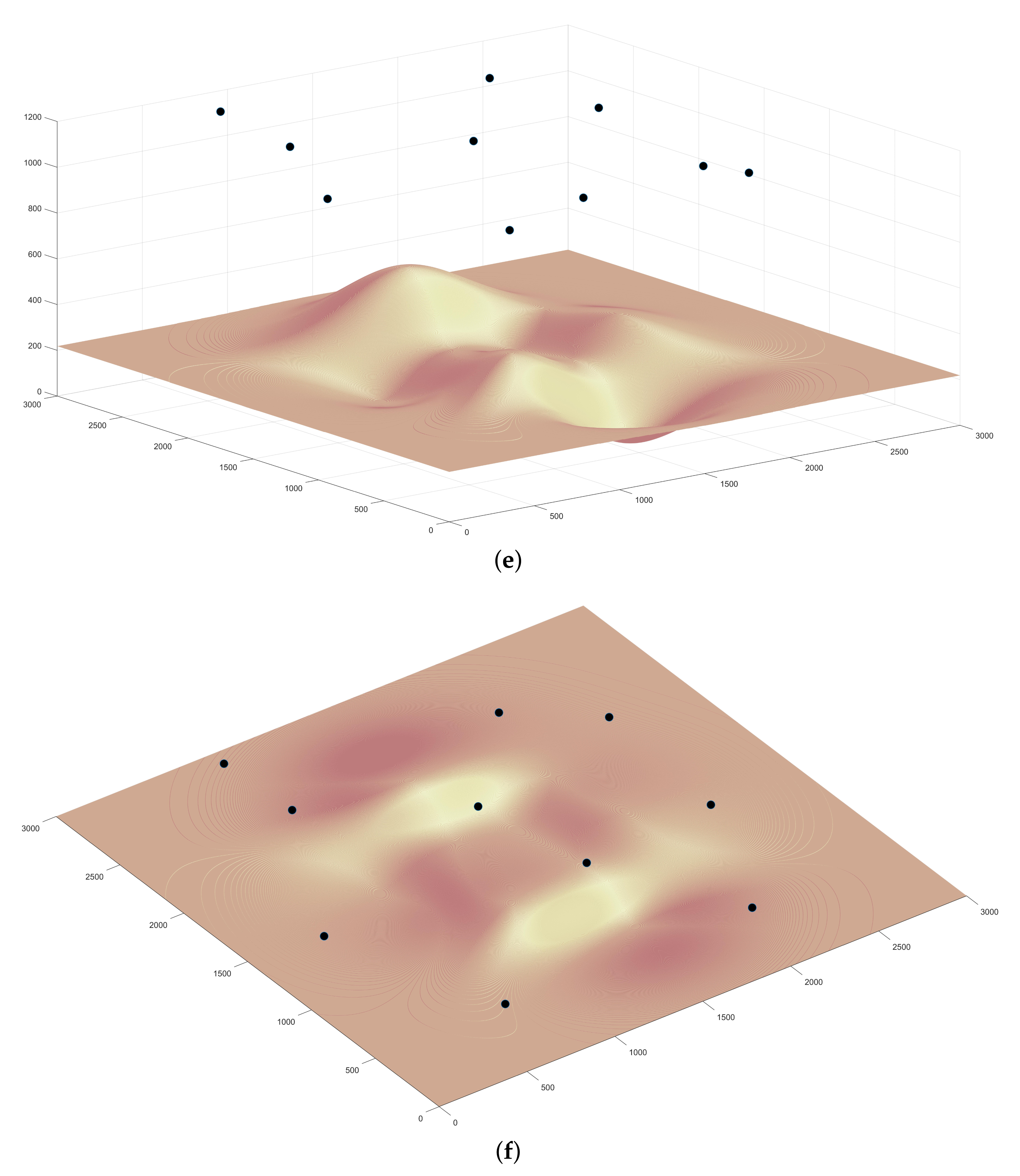

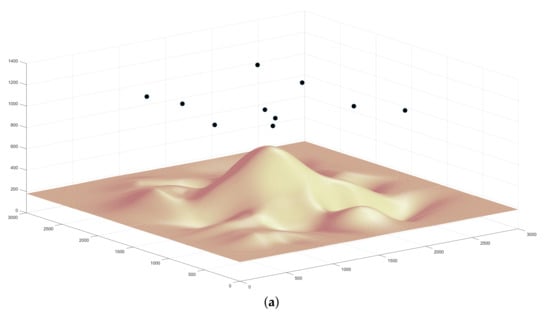

The following simulations deal with the optimal positioning of the drones to cover the target area under energy constraints. The final 3D placement locations for the three terrains are depicted in Figure 7, where the black dots represent the drones' positions over the target zone. The detailed drone placement coordinates for the three terrains are listed in Table 2.

Figure 7.

The 3D placement results of: (a) mountain topography in aerial view; (b) mountain topography in top-down view; (c) valley topography in aerial view; (d) valley topography in top-down view; (e) complex topography in aerial view; and (f) complex topography in top-down view.

Table 2.

The drone fleet’s locations of three different topographies.

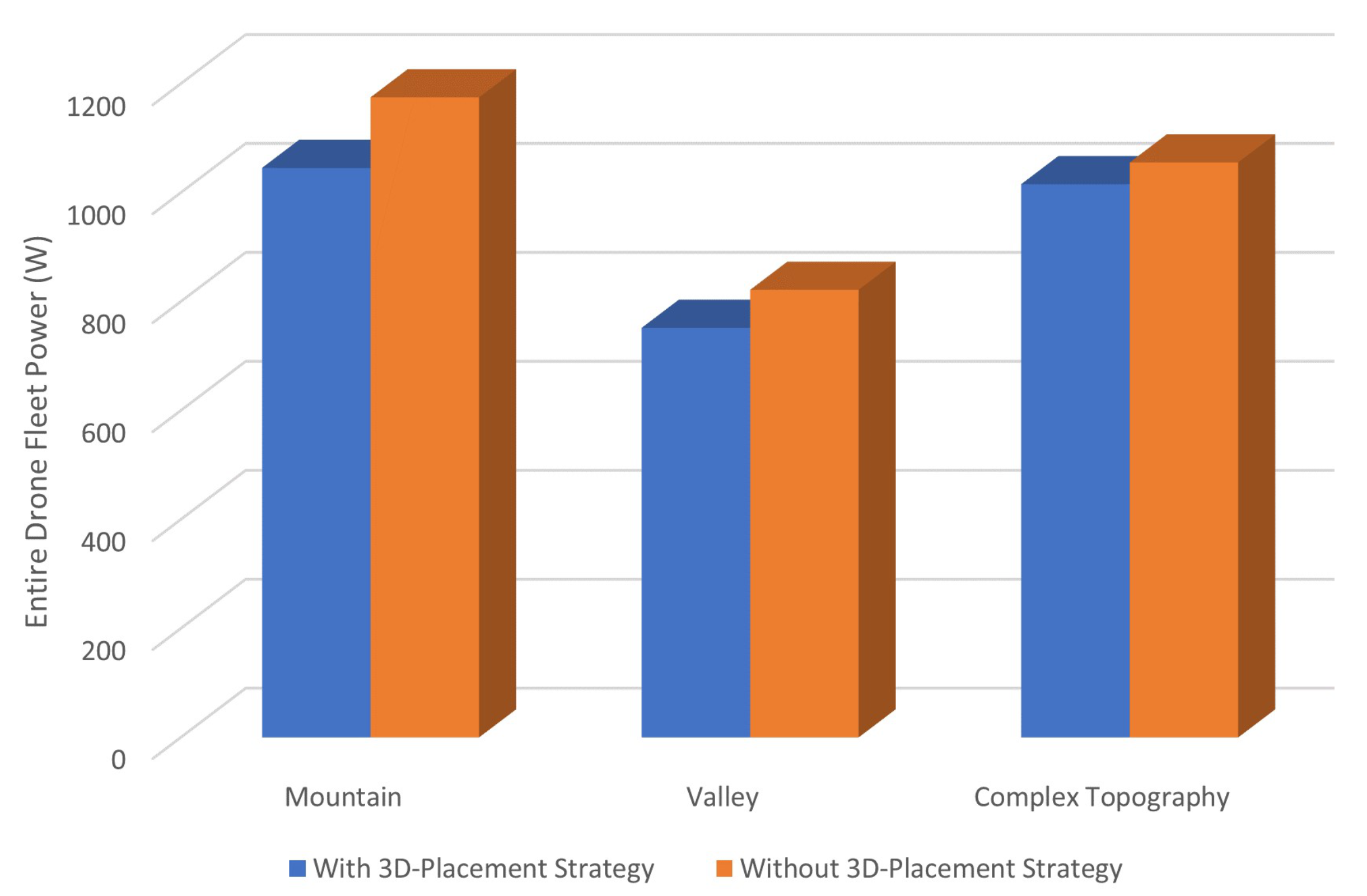

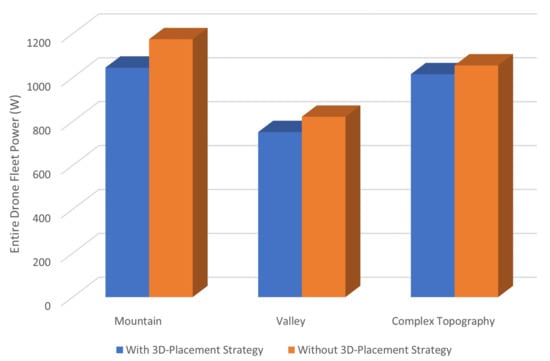

To evaluate our placement algorithm, we further simulated drone task processing and packet transmissions with the energy model applied. Equation (22) indicates that our placement algorithm aims to reduce the overall energy consumption of the drone fleet. To show the performance of our 3D positioning algorithm, we compared the drone fleet power of our placement with that of random placements, as shown in Figure 8. Random placements are used because no comparable placement strategy is available that handles terrain complexity, multi-unit coordination and continuous supervision. To the best of the authors' knowledge, our work is the first to address topography-aware 3D placement of a multi-drone fleet. The random placements are performed 10 times, and only the best result (minimum power) is compared with our 3D placement algorithm. With our 3D placement scheme applied, the drone fleet consumes less power under all three terrains, because our strategy takes the drones' mutual distances into account to minimize the overall communication cost.

Figure 8.

The drone positioning algorithm performance.

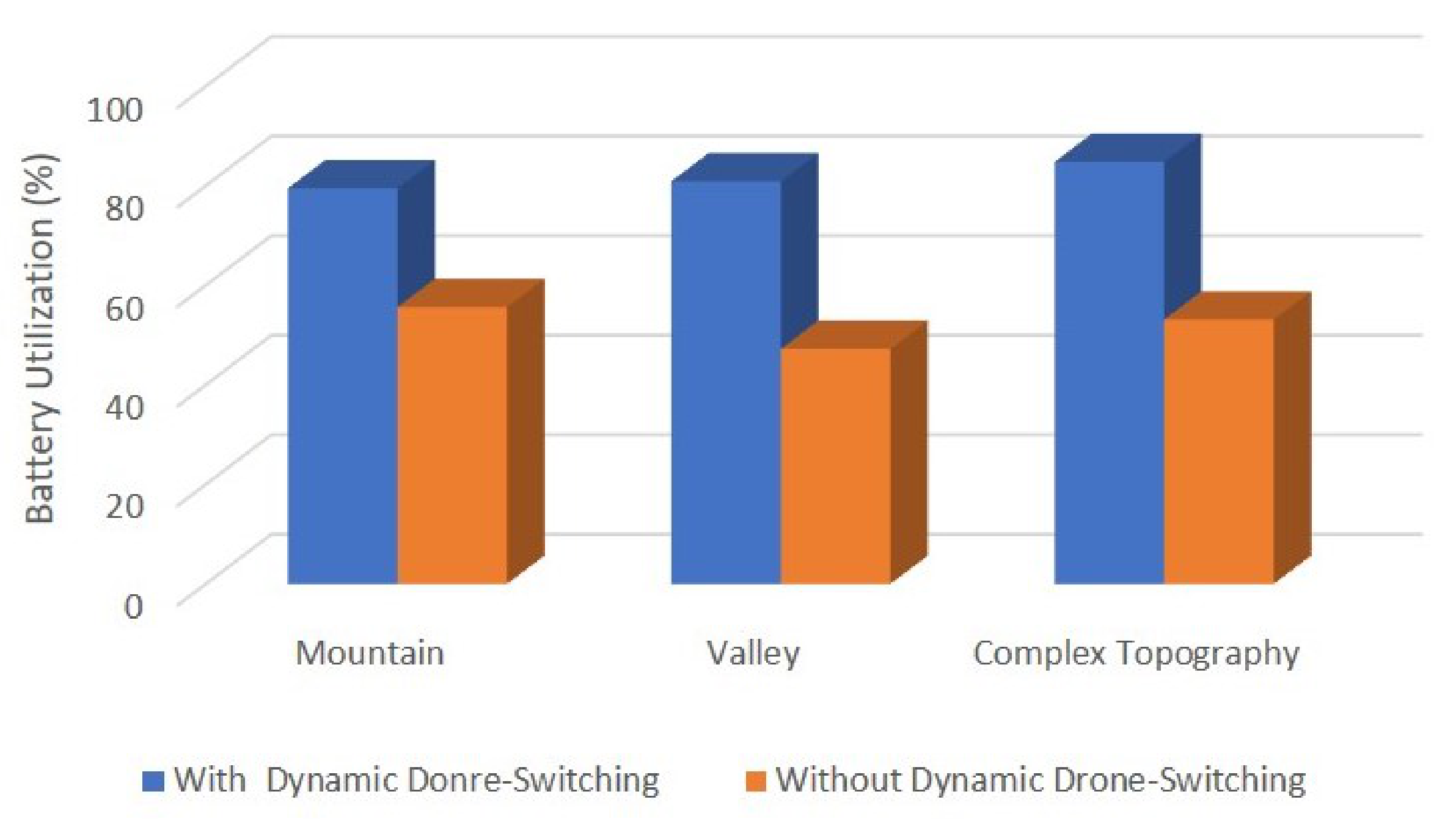

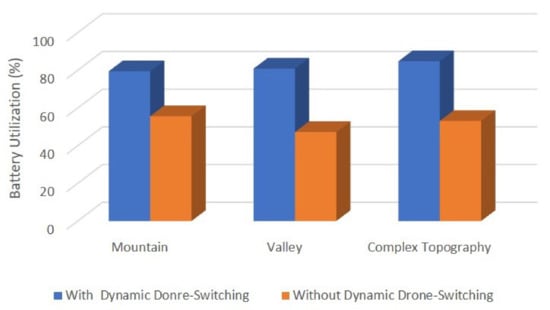

Since the proposed 3D placement algorithm is efficient in saving overall drone fleet power, the following simulation results are based on it. To further extend the duration of drone-based tasks, we applied the dynamic position switching algorithm. Figure 9 shows the detailed performance improvements after the switching algorithm is deployed. Since the lifetime in our simulation results can be greatly affected by the drones' initial energy, we use the battery utilization U instead for evaluation. U is obtained by:

where is the drone’s remaining energy after the sensing mission is terminated. Of course, large battery utilization is preferred, which indicates a longer lifetime. Obviously, our strategy significantly improved battery utilization. Since the battery utilization is proportional to the drone fleet’s lifetime, it is clear that with our dynamic switching scheme applied, the lifetime of mountain topography is increased by 43% (from 55.6 to 79.5). There are 71.3% (from 47.2 to 80.9) improvements for valley topography and complex topography is increased by 59.7% (from 53.1 to 84.8). Due to the unbalanced drone networks, some drones need to relay more data, and some may supervise larger regions and spend more power on data processing. As a result, there are cases when a drone drains all its battery while most of the others have a lot of power left, which causes the entire surveillance mission to be terminated prematurely. Our scheme addresses this problem by dynamically switching low-energy-capacity drones with high-energy-capacity drones.
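The effect of dynamic position switching on battery utilization can be illustrated with a toy simulation. The sketch below assumes, hypothetically, that each position imposes a fixed power load, that swapping drones costs no energy, and that the mission ends as soon as any single drone cannot power its position. Fleet utilization is computed as total consumed energy divided by total initial energy, i.e., the per-drone battery utilization aggregated over the fleet. The greedy rule (most remaining energy goes to the highest load) is an illustrative choice, not necessarily the authors' switching algorithm.

```python
def simulate(loads_w, e0_j, dt_s=1.0, switch_every=None, max_steps=10**6):
    """Discrete-time simulation of a fleet whose positions have fixed power loads.
    The mission ends as soon as any drone cannot cover its next time step.

    loads_w[k]:   hypothetical power demand (W) of position k
    switch_every: if set, reassign drones to positions every that many steps,
                  giving the highest-load position to the drone with the most
                  remaining energy, and so on (swap travel cost is ignored).
    Returns (lifetime in seconds, fleet battery utilization in [0, 1])."""
    n = len(loads_w)
    energy = [float(e0_j)] * n
    assignment = list(range(n))  # assignment[k] = drone currently at position k
    steps = 0
    while steps < max_steps:
        if switch_every and steps % switch_every == 0:
            by_load = sorted(range(n), key=lambda k: loads_w[k], reverse=True)
            by_energy = sorted(range(n), key=lambda i: energy[i], reverse=True)
            for k, i in zip(by_load, by_energy):
                assignment[k] = i
        # Stop before any drone would drop below zero energy.
        if any(energy[assignment[k]] < loads_w[k] * dt_s for k in range(n)):
            break
        for k in range(n):
            energy[assignment[k]] -= loads_w[k] * dt_s
        steps += 1
    utilization = sum(e0_j - e for e in energy) / (n * e0_j)
    return steps * dt_s, utilization


if __name__ == "__main__":
    loads = [4.0, 2.0, 1.0, 1.0]
    print("static:   ", simulate(loads, 100.0))
    print("switching:", simulate(loads, 100.0, switch_every=1))
```

For example, with hypothetical position loads of 4, 2, 1 and 1 W and 100 J per drone, the static assignment ends after 25 s with a fleet utilization of 0.5, because the drone at the 4 W position is exhausted while the others still hold 50 to 75 J. Switching every step spreads the load and keeps the fleet alive longer, up to the bound of 400 J / 8 W = 50 s.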

Figure 9.

Performance of the dynamic location switching algorithm.

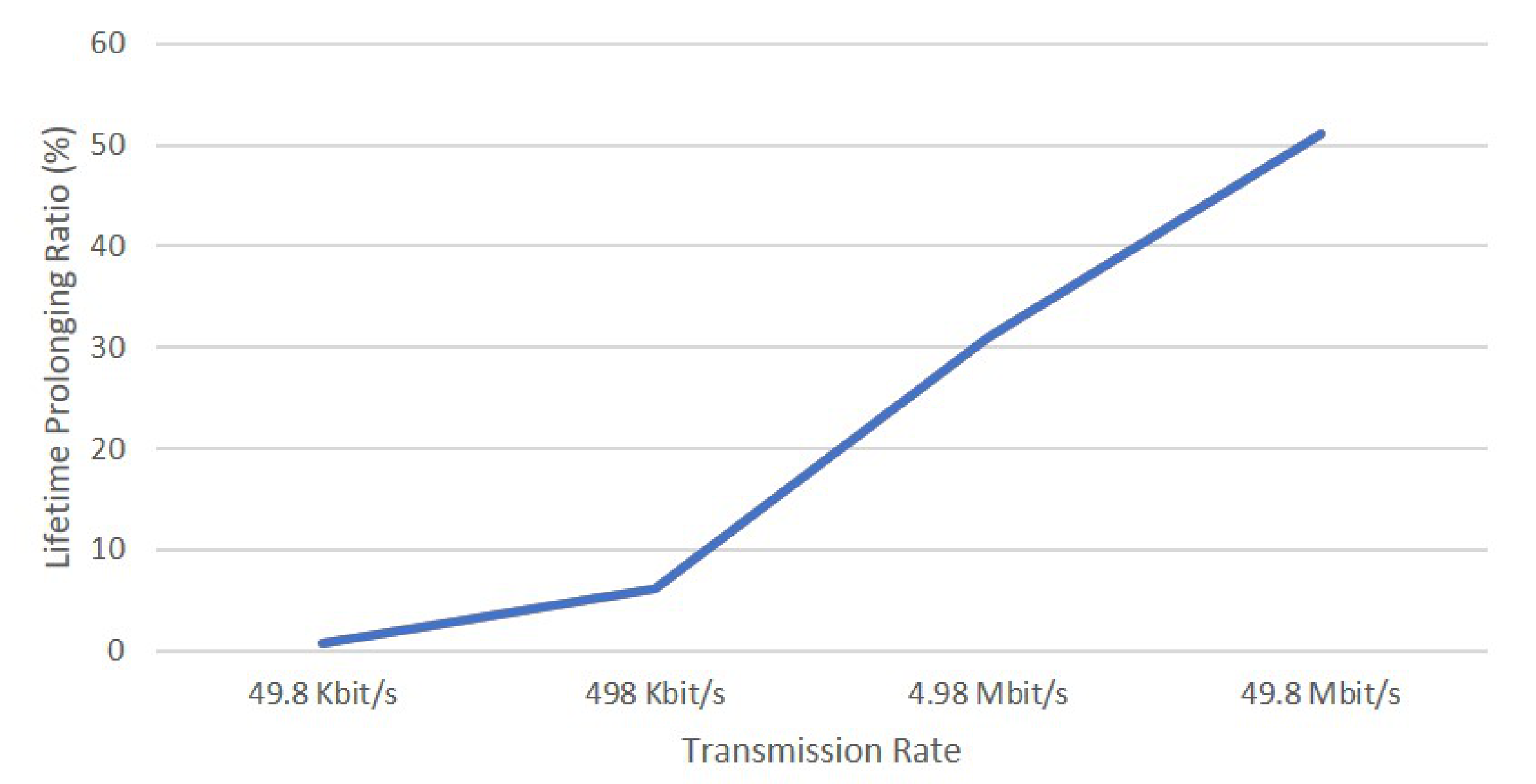

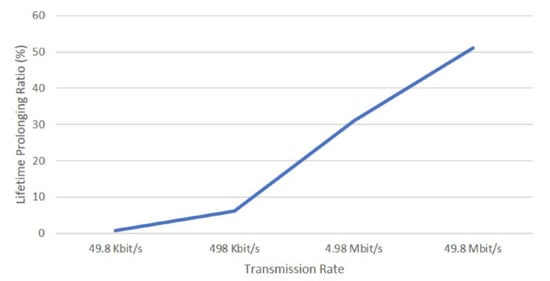

We also analyzed the effect of the transmission data rate, since a drone's communication energy consumption is proportional to its data rate. This set of simulations uses the mountain terrain. Figure 10 illustrates the impact of the transmission rate on the placement strategy, measured by the lifetime prolonging ratio (LPR). The LPR compares two lifetimes: the lifetime with both our 3D placement algorithm and the dynamic adjustment algorithm applied, and the lifetime with neither applied. Our placement scheme clearly outperforms the configuration without it, and its advantage grows as the transmission rate increases.
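Why the placement advantage grows with the transmission rate can be seen from a toy lifetime model, assumed here for illustration only: each drone draws a constant hover power $P_h$ plus a communication power $r\,c(d)$, where $r$ is the data rate and $c(d) = E_{elec} + \epsilon_{amp} d^2$ is a first-order radio cost per bit over a link of length $d$. The lifetime ratio between a placement with shorter links and one with longer links is then $(P_h + r\,c_{long})/(P_h + r\,c_{short})$, which increases with $r$ whenever $c_{long} > c_{short}$. The constants and the simple ratio below are hypothetical and are not necessarily the paper's LPR definition or parameter values.

```python
# Hypothetical constants (assumed for illustration only).
E_ELEC = 50e-9     # J/bit, transmitter electronics
EPS_AMP = 100e-12  # J/bit/m^2, power amplifier
P_HOVER = 150.0    # W, flight/hover power


def lifetime_s(e0_j, link_m, rate_bps, p_hover=P_HOVER):
    """Lifetime (s) of a drone that hovers and transmits continuously over one link."""
    p_comm = rate_bps * (E_ELEC + EPS_AMP * link_m ** 2)
    return e0_j / (p_hover + p_comm)


def lifetime_ratio(e0_j, short_link_m, long_link_m, rate_bps):
    """Lifetime with the shorter link divided by lifetime with the longer link."""
    return (lifetime_s(e0_j, short_link_m, rate_bps)
            / lifetime_s(e0_j, long_link_m, rate_bps))


if __name__ == "__main__":
    for rate in (1e5, 1e6, 1e7, 1e8):
        ratio = lifetime_ratio(5e5, 150.0, 400.0, rate)
        print(f"{rate:>8.0e} bit/s -> lifetime ratio {ratio:.3f}")
```

At low rates the hover power dominates and the two placements last almost equally long; as the rate grows, the distance-dependent communication term dominates and shorter links pay off more.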

Figure 10.

Impact of the transmission rate on the placement strategy.

5. Conclusions

In this paper, we studied the dynamic 3D placement of a multi-drone sensing system that maximizes the sensing mission time with a minimum number of drones. The target sensing area may contain various rough terrains. We analyzed the 3D coverage problem by extracting terrain features and dividing the area into sub-areas. The drone fleet placement was designed with energy efficiency taken into account. Moreover, a dynamic position switching algorithm was proposed to prolong the lifetime of the entire drone fleet. Simulations have shown that our placement and routing schemes, as well as the dynamic switching algorithm, are effective in prolonging the lifetime of the fleet. In the future, we will study a more dynamic positioning algorithm in which each drone can patrol within a specific range, enlarging the surveillance area and eliminating potential blind spots.

Author Contributions

Conceptualization, Y.L. and Y.C.; methodology, Y.L.; software, Y.L.; validation, Y.L.; formal analysis, Y.L. and Y.C.; writing—original draft preparation, Y.L.; writing—review and editing, Y.C.; visualization, Y.L.; supervision, Y.C.; project administration, Y.C.; funding acquisition, Y.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the Hewlett Packard Enterprise Data Science Institute (HPE DSI), University of Houston, for supporting this work with computational resources.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Buffi, A.; Nepa, P.; Cioni, R. SARFID on drone: Drone-based UHF-RFID tag localization. In Proceedings of the IEEE International Conference on RFID Technology & Application (RFID-TA 2017), Warsaw, Poland, 20–22 September 2017; pp. 40–44.

2. Alwateer, M.; Loke, S.W.; Rahayu, W. Drone services: An investigation via prototyping and simulation. In Proceedings of the IEEE 4th World Forum on Internet of Things (WF-IoT 2018), Singapore, 5–8 February 2018; pp. 367–370.

3. Nouacer, R.; Ortiz, H.E.; Ouhammou, Y.; González, R.C. Framework of Key Enabling Technologies for Safe and Autonomous Drones’ Applications. In Proceedings of the 22nd Euromicro Conference on Digital System Design (DSD 2019), Kallithea, Greece, 28–30 August 2019; pp. 420–427.

4. He, S.; Chen, X.; Hung, M.H.; Chen, X.; Ji, Y. Steering Angle Measurement of UAV Navigation Based on Improved Image Processing. J. Inf. Hiding Multimed. Signal Process. 2019, 10, 384–391.

5. Wallerman, J.; Bohlin, J.; Nilsson, M.B.; Franssen, J.E. Drone-Based Forest Variables Mapping of ICOS Tower Surroundings. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2018), Valencia, Spain, 22–27 July 2018; pp. 9003–9006.

6. Mitcheson, P.D.; Boyle, D.; Kkelis, G.; Yates, D.; Saenz, J.A.; Aldhaher, S.; Yeatman, E. Energy-autonomous sensing systems using drones. In Proceedings of the 2017 IEEE SENSORS, Glasgow, UK, 29 October–1 November 2017; pp. 1–3.

7. D’Odorico, P.; Besik, A.; Wong, C.Y.; Isabel, N.; Ensminger, I. High-throughput drone-based remote sensing reliably tracks phenology in thousands of conifer seedlings. New Phytol. 2020, 226, 1667–1681.

8. Guo, Y.; Jia, X.; Paull, D.; Zhang, J.; Farooq, A.; Chen, X.; Islam, M.N. A drone-based sensing system to support satellite image analysis for rice farm mapping. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2019), Yokohama, Japan, 28 July–2 August 2019; pp. 9376–9379.

9. Reinecke, M.; Prinsloo, T. The influence of drone monitoring on crop health and harvest size. In Proceedings of the 1st International Conference on Next Generation Computing Applications (NextComp 2017), Moka, Mauritius, 19–21 July 2017; pp. 5–10.

10. Nithyavathy, N.; Kumar, S.A.; Rahul, D.; Kumar, B.S.; Shanthini, E.; Naveen, C. Detection of Fire Prone Environment Using Thermal Sensing Drone; IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2021; Volume 1055, p. 012006.

11. Hill, A.C.; Laugier, E.J.; Casana, J. Archaeological remote sensing using multi-temporal, drone-acquired thermal and Near Infrared (NIR) Imagery: A case study at the Enfield Shaker Village, New Hampshire. Remote Sens. 2020, 12, 690.

12. Holness, C.; Matthews, T.; Satchell, K.; Swindell, E.C. Remote sensing archeological sites through unmanned aerial vehicle (UAV) imaging. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2016), Beijing, China, 11–15 July 2016; pp. 6695–6698.

13. Inoue, Y.; Yokoyama, M. Drone-Based Optical, Thermal, and 3D Sensing for Diagnostic Information in Smart Farming–Systems and Algorithms. In Proceedings of the 2019 IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2019), Yokohama, Japan, 28 July–2 August 2019; pp. 7266–7269.

14. Yi, W.; Sutrisna, M. Drone scheduling for construction site surveillance. Comput. Aided Civ. Infrastruct. Eng. 2021, 36, 3–13.

15. Alzenad, M.; El-Keyi, A.; Lagum, F.; Yanikomeroglu, H. 3-D placement of an unmanned aerial vehicle base station (UAV-BS) for energy-efficient maximal coverage. IEEE Wirel. Commun. Lett. 2017, 6, 434–437.

16. Wang, L.; Hu, B.; Chen, S. Energy efficient placement of a drone base station for minimum required transmit power. IEEE Wirel. Commun. Lett. 2018, 9, 2010–2014.

17. Gao, F.; Zhou, Y.; Ma, X.; Yang, T.; Cheng, N.; Lu, N. Coverage-maximization and Energy-efficient Drone Small Cell Deployment in Aerial-Ground Collaborative Vehicular Networks. In Proceedings of the IEEE 4th International Conference on Computer and Communication Systems (ICCCS 2019), Singapore, 23–25 February 2019; pp. 559–564.

18. Ghorbel, M.B.; Rodríguez-Duarte, D.; Ghazzai, H.; Hossain, M.J.; Menouar, H. Joint position and travel path optimization for energy efficient wireless data gathering using unmanned aerial vehicles. IEEE Trans. Veh. Technol. 2019, 68, 2165–2175.

19. Kouroshnezhad, S.; Peiravi, A.; Haghighi, M.S. Energy-Efficient Drone Trajectory Planning for the Localization of 6G-enabled IoT Devices. IEEE Internet Things J. 2020, 8, 5202–5210.

20. Huynh, T.; Hwang, W.J. Network lifetime maximization in wireless sensor networks with a path-constrained mobile sink. Int. J. Distrib. Sens. Netw. 2015, 11, 679093.

21. Engmann, F.; Katsriku, F.A.; Abdulai, J.D.; Adu-Manu, K.S.; Banaseka, F.K. Prolonging the lifetime of wireless sensor networks: A review of current techniques. Wirel. Commun. Mob. Comput. 2018, 2018, 8035065.

22. Verbeke, J.; Hulens, D.; Ramon, H.; Goedeme, T.; De Schutter, J. The design and construction of a high endurance hexacopter suited for narrow corridors. In Proceedings of the International Conference on Unmanned Aircraft Systems (ICUAS 2014), Orlando, FL, USA, 27–30 May 2014; pp. 543–551.

23. Yuan, H.; Xie, J.; Hu, N. Lifetime-optimal sensor deployment for wireless sensor networks with mobile sink. In Proceedings of the 2011 6th IEEE Joint International Information Technology and Artificial Intelligence Conference, Chongqing, China, 20–22 August 2011; Volume 1, pp. 451–454.

24. Sun, Y.; Liu, Y.; Zhou, N.; Lueth, T.C. A MATLAB-Based Framework for Designing 3D Topology Optimized Soft Robotic Grippers. TechRxiv 2020.

25. Remelli, E.; Lukoianov, A.; Richter, S.R.; Guillard, B.; Bagautdinov, T.; Baque, P.; Fua, P. MeshSDF: Differentiable Iso-Surface Extraction. arXiv 2020, arXiv:2006.03997.

26. Echegaray, S.; Bakr, S.; Rubin, D.L.; Napel, S. Quantitative Image Feature Engine (QIFE): An open-source, modular engine for 3D quantitative feature extraction from volumetric medical images. J. Digit. Imaging 2018, 31, 403–414.

27. Maddams, W. The scope and limitations of curve fitting. Appl. Spectrosc. 1980, 34, 245–267.

28. Qing-Wan, H. Curve fitting by curve fitting toolbox of Matlab. Comput. Knowl. Technol. 2010, 21, 68.

29. Fan, D.; Shi, P. Improvement of Dijkstra’s algorithm and its application in route planning. In Proceedings of the 2010 Seventh International Conference on Fuzzy Systems and Knowledge Discovery, Yantai, China, 10–12 August 2010; Volume 4, pp. 1901–1904.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).