Multimodal Emotion Recognition on RAVDESS Dataset Using Transfer Learning

Abstract

:1. Introduction

2. Related Work

2.1. Speech Emotion Recognition

2.2. Facial Emotion Recognition

2.3. Multimodal Emotion Recognition

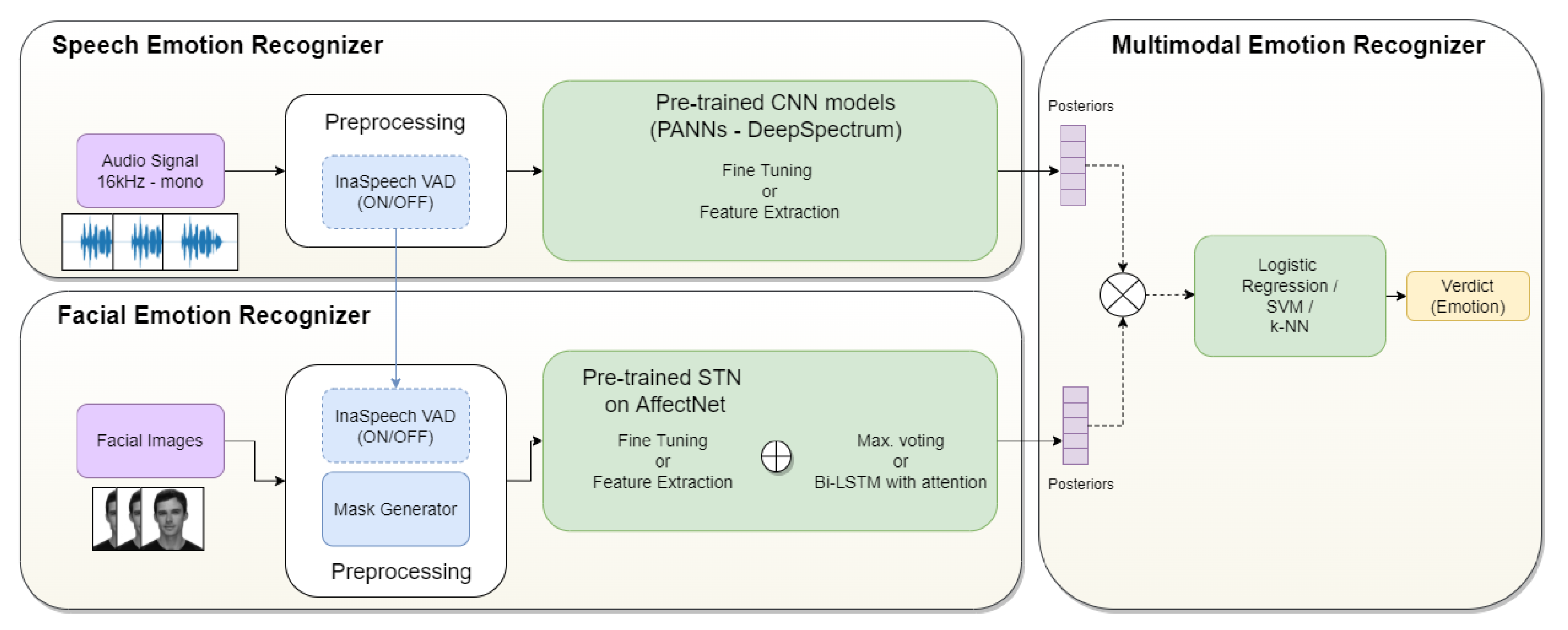

3. Methodology

3.1. The Dataset and Evaluation

- Fold 0: (2, 5, 14, 15, 16)

- Fold 1: (3, 6, 7, 13, 18)

- Fold 2: (10, 11, 12, 19, 20)

- Fold 3: (8, 17, 21, 23, 24)

- Fold 4: (1, 4, 9, 22)

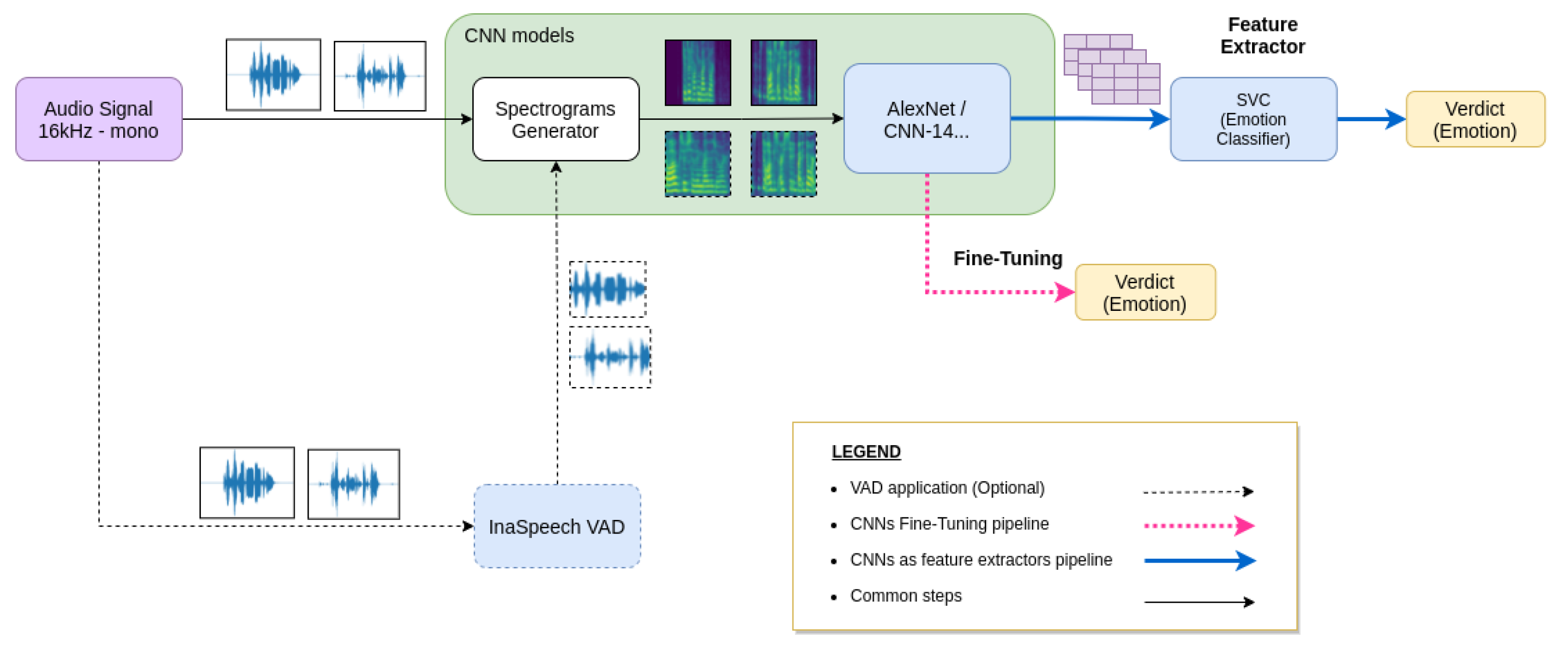

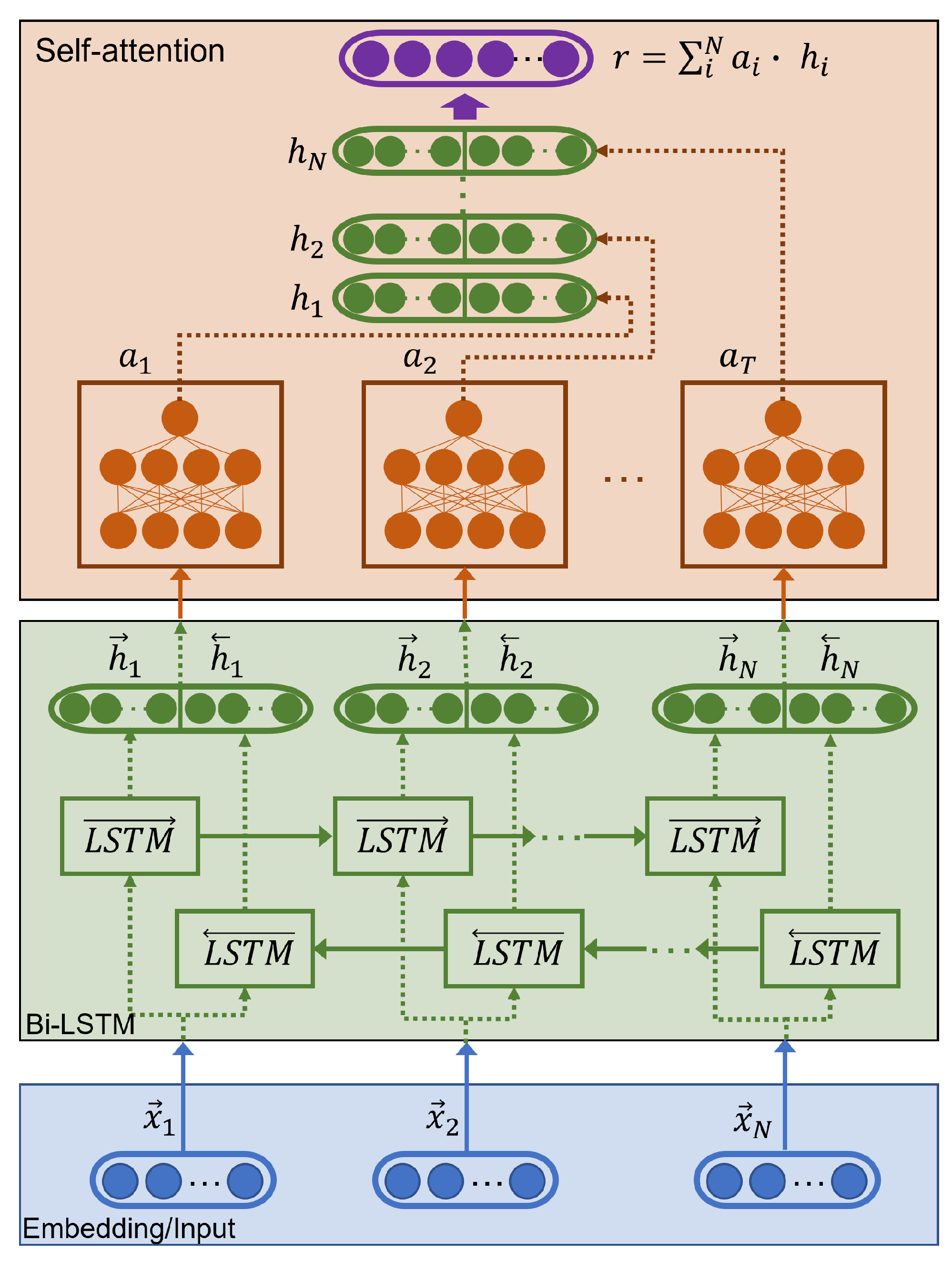

3.2. Speech Based Emotion Recognizer

3.2.1. Feature Extraction

3.2.2. Fine-Tuning

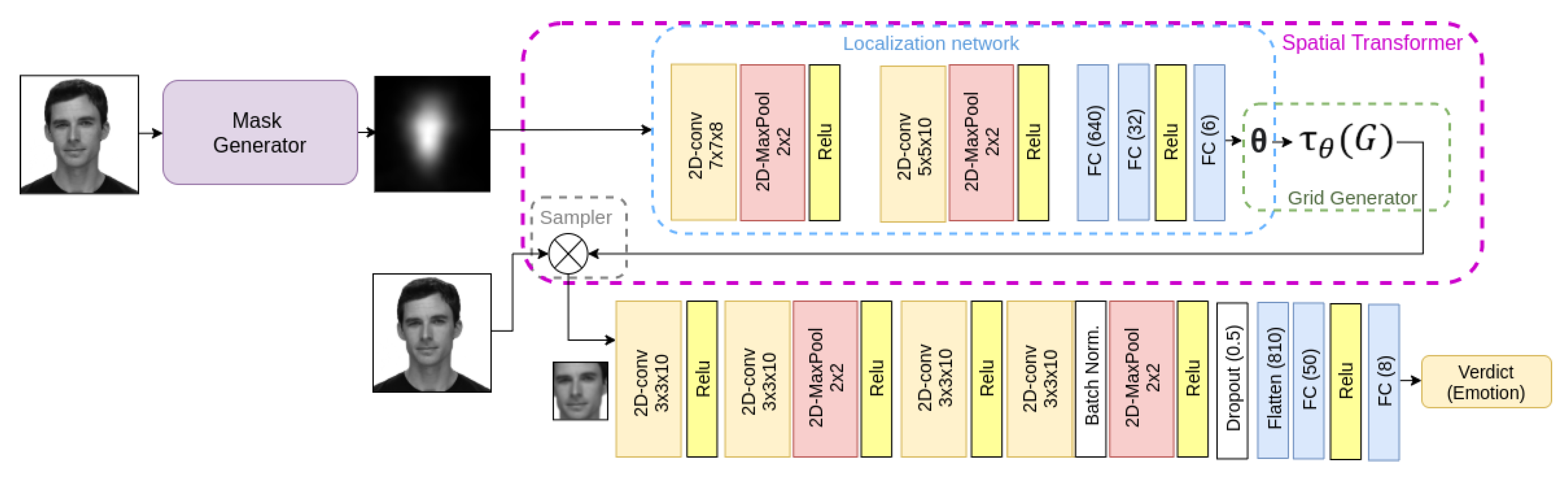

3.3. Facial Emotion Recognizer

3.3.1. Feature Extraction

3.3.2. Fine-Tuning

3.4. Multimodal Recognizer

4. Experiments

4.1. Speech Emotion Recognizer Setup

4.2. Facial Emotion Recognizer Setup

- For the training of the bi-LSTM with the embeddings extracted from the flattened layer of size 810, we ran experiments with two identical bi-LSTM cells of 50, 150, 200, or 300 neurons and two attention layers.

- For the version with the 50-dimensional embeddings, we tested one or two stacked bi-LSTM layers with 25, 50, or 150 neurons. The number of layers of the attention mechanism was the same as the number of stacked bi-LSTM layers.

- For the posteriors of the STN, we trained six models modifying the number of bi-LSTM layers in one or two and the number of neurons in the range [25, 50, 150]. When we used two layers, both layers were identical. For each experiment, the number of layers of the attention mechanism coincided with the number of layers of the bi-LSTM.

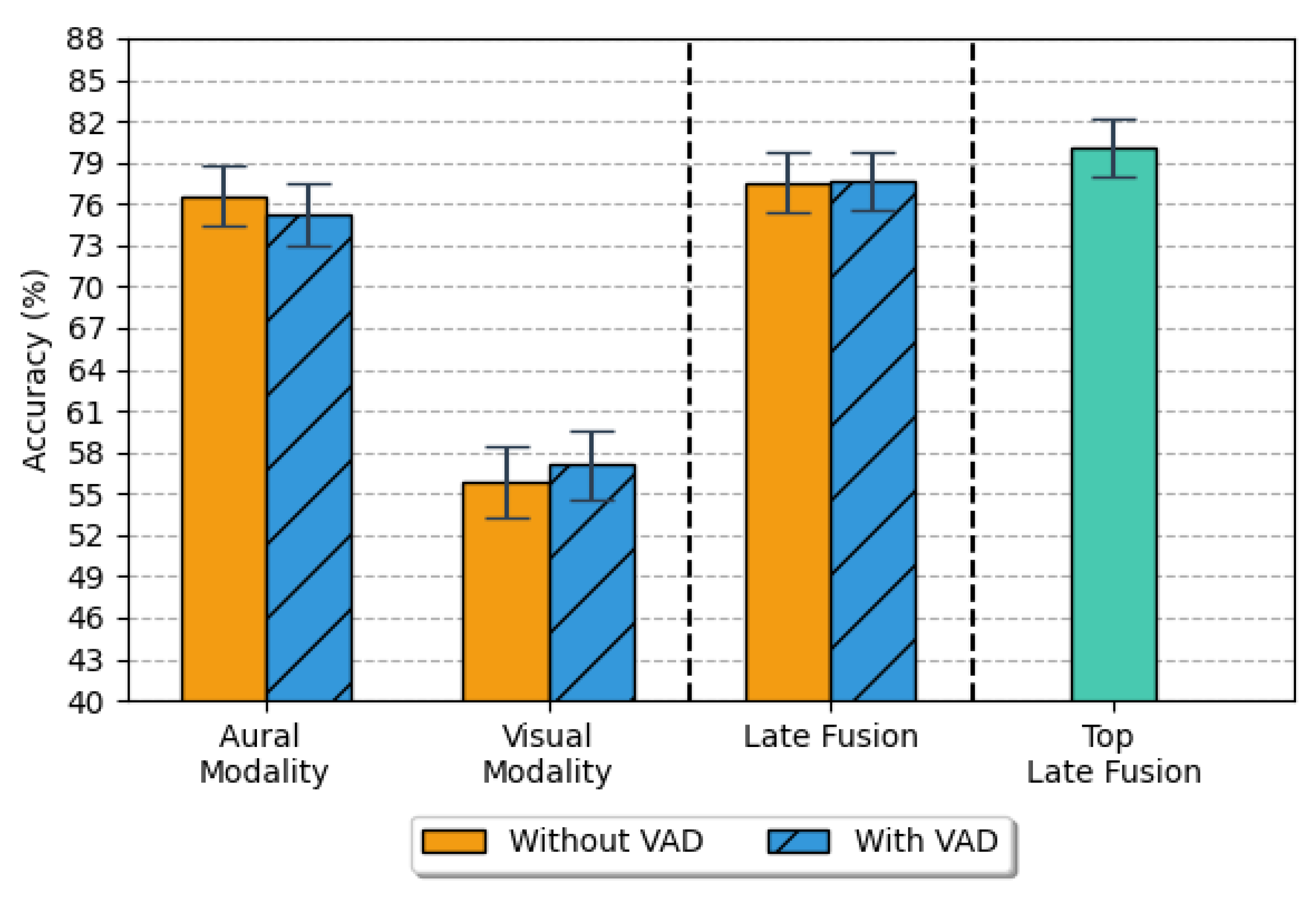

Multimodal Emotion Recognizer Setup

5. Results

5.1. Speech Emotion Recognition Results

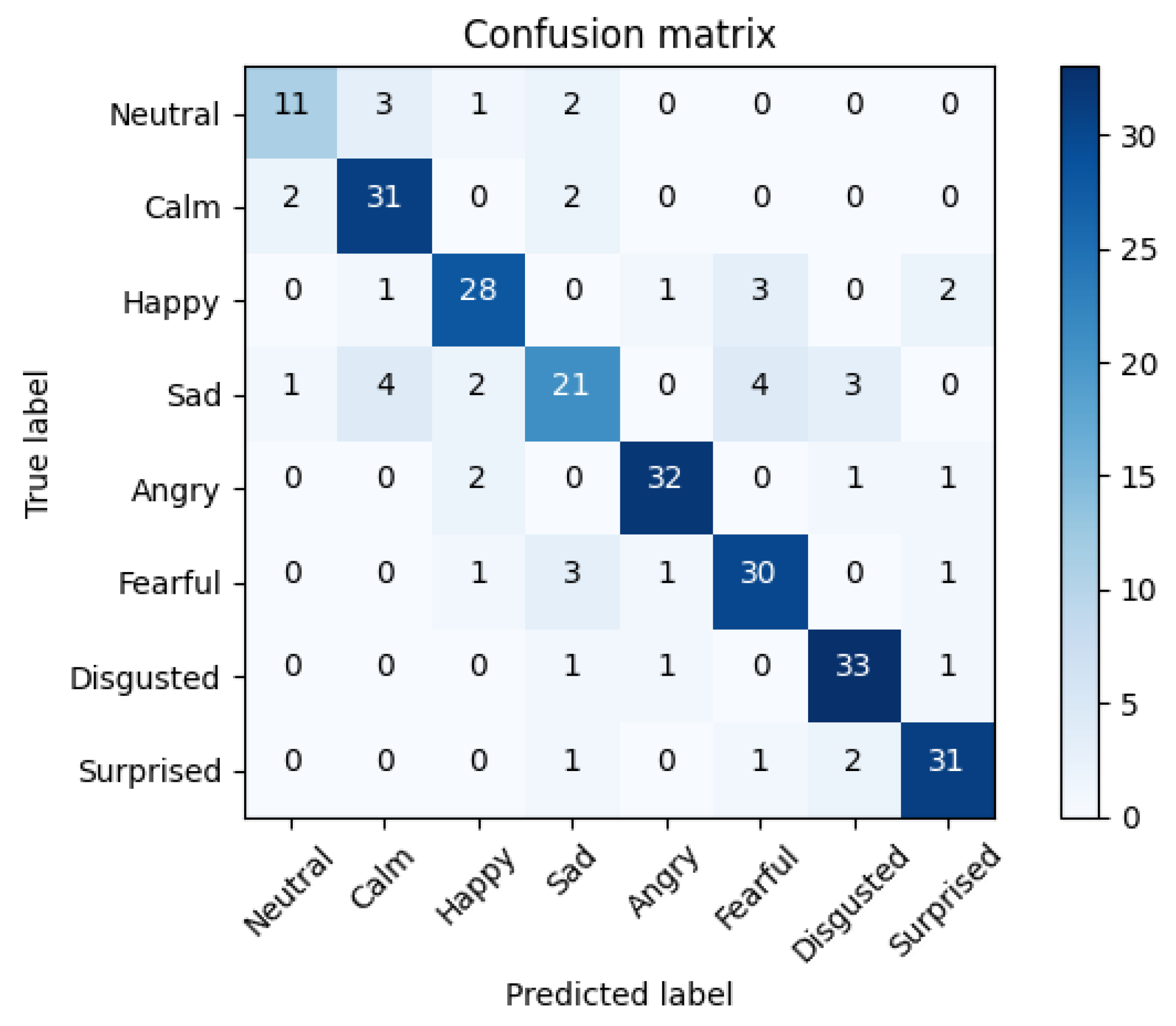

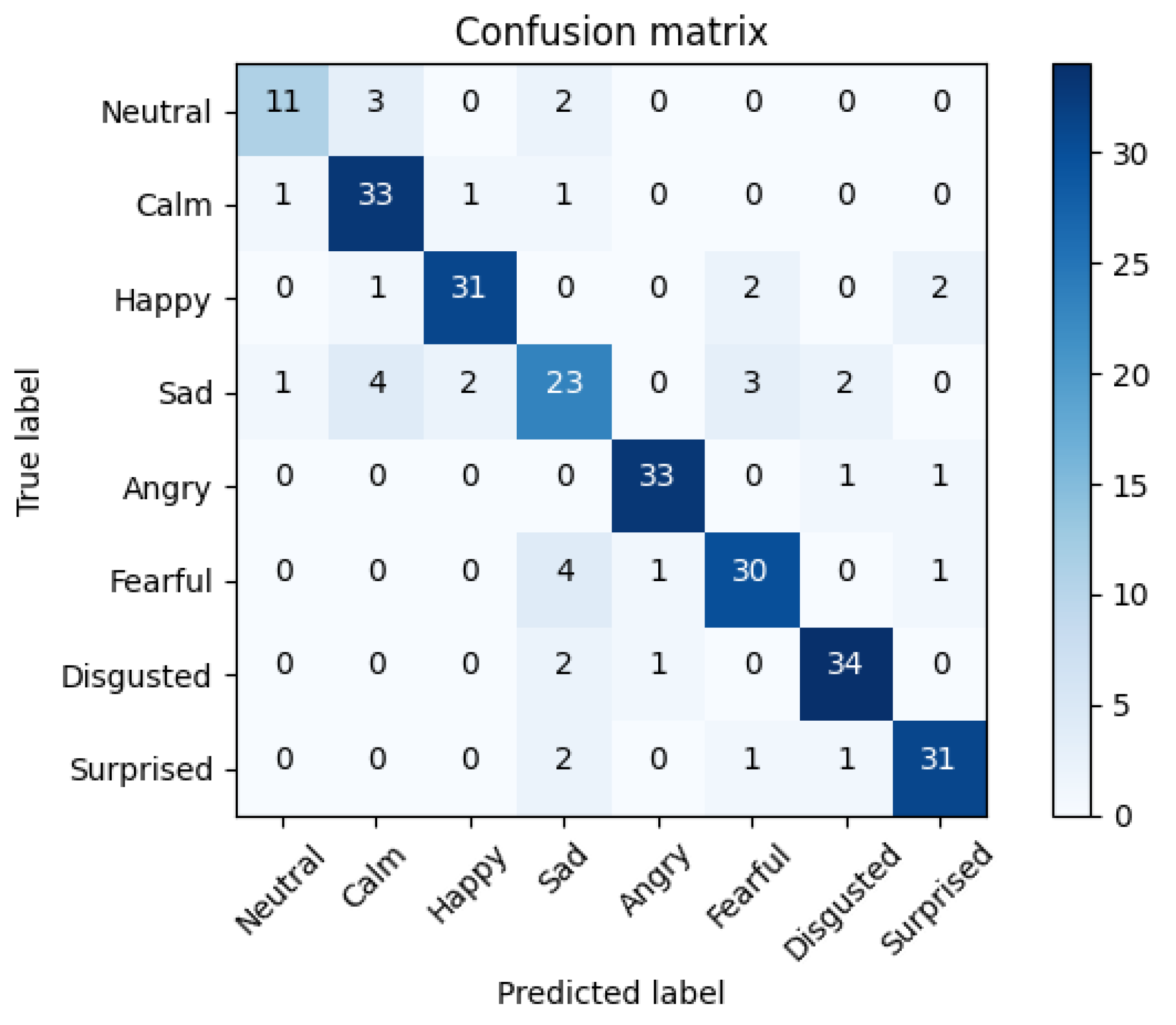

5.2. Facial Emotion Recognition Results

5.3. Multimodal Fusion Results

5.4. Comparative Results with Related Approaches

5.5. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| FER | Facial Emotion Recognition |

| SER | Speech Emotion Recognition |

| RAVDESS | The Ryerson Audio-Visual Database of Emotional Speech and Song |

| ST | Spatial Transformer |

| CNN | Convolutional Neural Network |

| MTCNN | Multi-task Cascaded Convolutional Networks |

| Bi-LSTM | Bi-Directional Short-Term Memory networks |

| GAN | Generative Adversarial Networks |

| embs | embeddings |

| fc | fully-connected |

| SVC | Support Vector Machines/Classification |

| VAD | Voice Activity Detector |

| TL | Transfer-Learning |

| CI | Confidence Interval |

| CV | Cross-Validation |

Appendix A. Architecture Layers and Dimensions of the Spatial Transformer Network

| Branches of the Model | Input Layer | Output Size | Filter Size/Stride | Depth |

|---|---|---|---|---|

| Input | Input Image | 48 × 48 × 1 | - | - |

| Localization Network | Convolution-2D | 42 × 42 × 8 | 7 × 7/1 | 8 |

| MaxPooling-2D | 21 × 21 × 8 | 2 × 2/2 | - | |

| Relu | 21 × 21 × 8 | - | - | |

| Convlution-2D | 17 × 17 × 10 | 5 × 5/1 | 10 | |

| MaxPooling-2D | 8 × 8 × 10 | 2 × 2/2 | - | |

| Relu | 8 × 8 × 10 | - | - | |

| Fully-Connected | 640 | - | - | |

| Fully-Connected | 32 | - | - | |

| Relu | 32 | - | - | |

| Fully-Connected () | 6 | - | - | |

| Input | Transformed Image | 48 × 48 × 1 | - | - |

| Simple-CNN | Convolution-2D | 46 × 46 × 10 | 3 × 3/1 | 10 |

| Relu | 46 × 46 × 10 | - | - | |

| Convolution-2D | 44 × 44 × 10 | 3 × 3/1 | 10 | |

| MaxPooling-2D | 22 × 22 × 10 | 2 × 2/2 | - | |

| Relu | 22 × 22 × 10 | - | - | |

| Convolution-2D | 20 × 20 × 10 | 3 × 3/1 | 10 | |

| Relu | 20 × 20 × 10 | - | - | |

| Convolution-2D | 18 × 18 × 10 | 3 × 3/1 | 10 | |

| Batch Normalization | 18 × 18 × 10 | - | - | |

| MaxPooling-2D | 9 × 9 × 10 | 2 × 2/2 | - | |

| Relu | 9 × 9 × 10 | - | - | |

| Dropout (p = 0.5) | 9 × 9 × 10 | - | - | |

| Flatten | 810 | - | - | |

| Fully-Connected | 50 | - | - | |

| Relu | 50 | - | - | |

| Fully-Connected | 8 | - | - |

Appendix B. Top bi-LSTM Models with Attention Mechanism

| TL Strategy | Inputs | Models | With VAD (InaSpeech) | Model Architecture |

|---|---|---|---|---|

| - | - | Human perception | - | - |

| - | - | ZeroR | - | - |

| Feature Extraction (from pre-trained STN onAffectNet) | posteriors (7 classes) | Max. voting | No | - |

| Yes | - | |||

| Sequential (bi-LSTM) | No | 1 layer bi-LSTM with 150 neurons +1 attention layer | ||

| Yes | 1 layer bi-LSTM with 150 neurons +1 attention layer | |||

| fc50 | Sequential (bi-LSTM) | No | 2 layers bi-LSTM with 50 neurons +1 attention layer | |

| Yes | 1 layer bi-LSTM with 150 neurons +1 attention layer | |||

| flatten-810 | Sequential (bi-LSTM) | No | 2 layer bi-LSTM with 200 neurons +2 attention layers | |

| Yes | 1 layer bi-LSTM with 150 neurons +1 attention layer | |||

| Fine-Tuning on RAVDESS | posteriors (8 classes) | Max. voting | No | - |

| Yes | - | |||

| Sequential (bi-LSTM) | No | 2 layer bi-LSTM with 50 neurons +2 attention layers | ||

| Yes | 1 layer bi-LSTM with 25 neurons +1 attention layer | |||

| fc50 | Sequential (bi-LSTM) | No | 1 layer bi-LSTM with 150 neurons +1 attention layer | |

| Yes | 2 layer bi-LSTM with 150 neurons +2 attention layers | |||

| flatten-810 | Sequential (bi-LSTM) | No | 2 layer bi-LSTM with 150 neurons +2 attention layers | |

| Yes | 2 layer bi-LSTM with 300 neurons +2 attention layers |

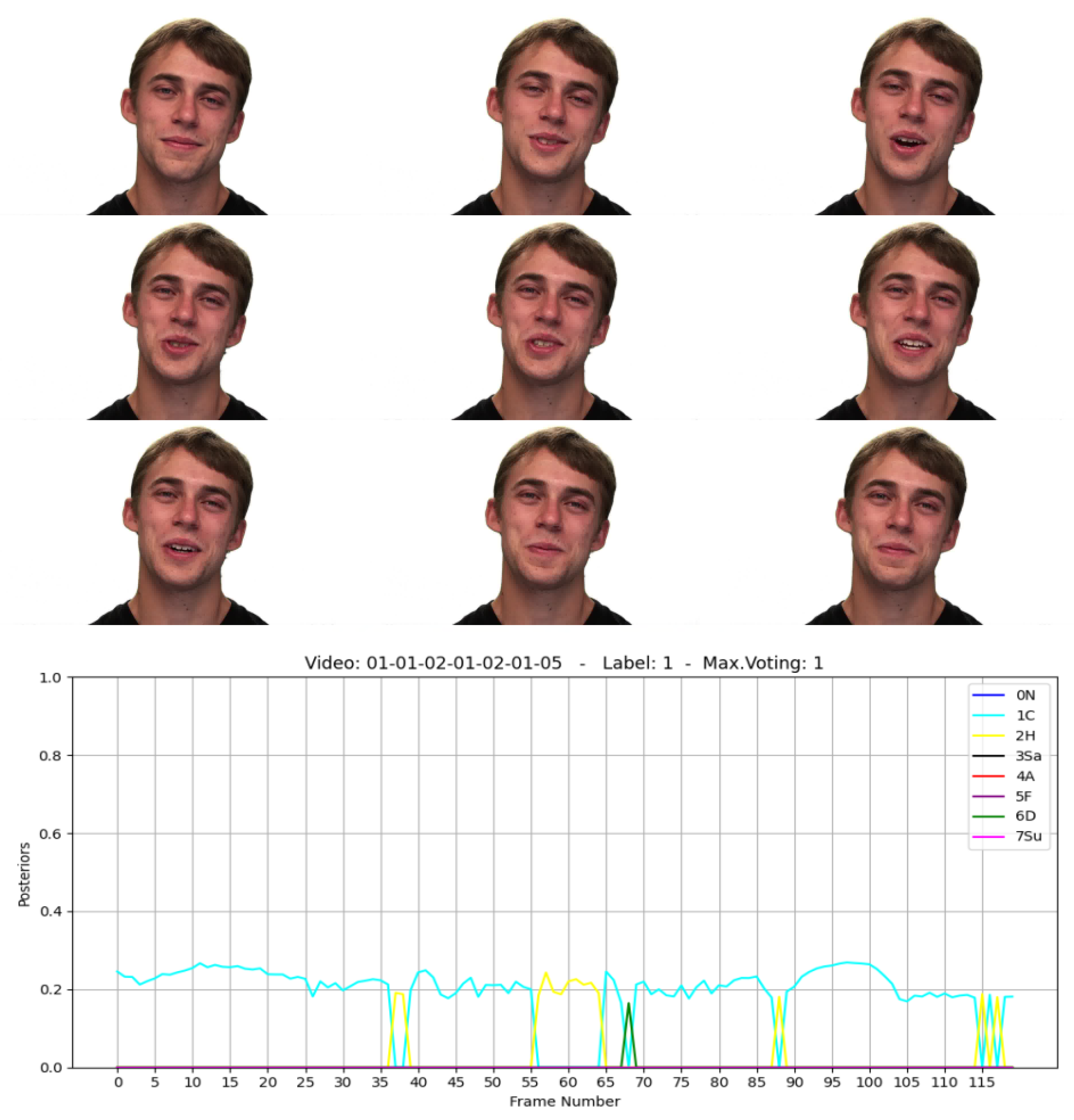

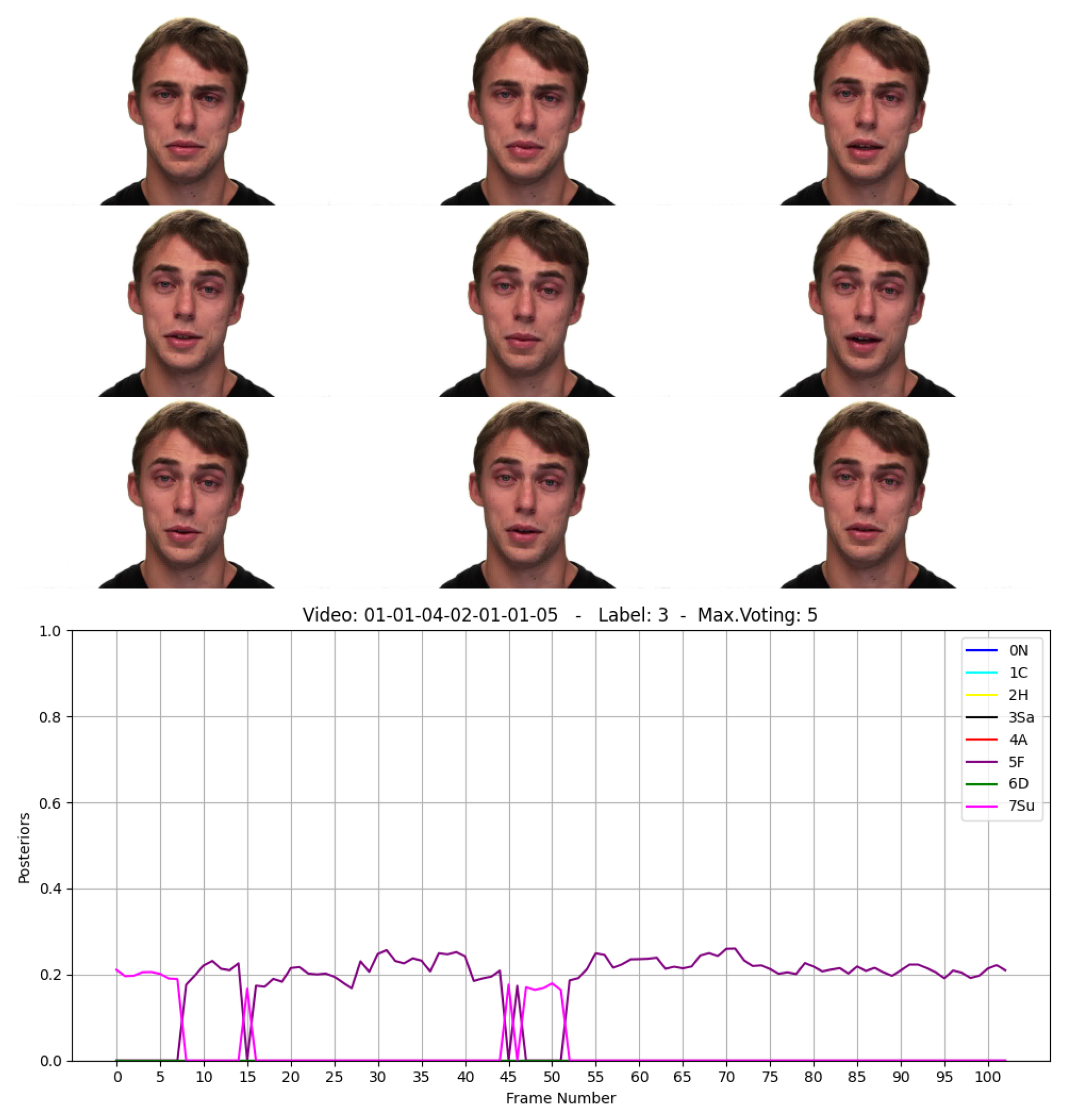

Appendix C. Examples of Frames Extracted from the RAVDESS Videos

Appendix D. Evaluation of the Fusion Models

| Fusion Model | Hyper Parameters | Accuracy with VAD | Accuracy without VAD | Accuracy withVAD FER and withouth VAD SER |

|---|---|---|---|---|

| Logistic Regression | 71.6 | 68.18 | 70.72 | |

| 71.93 | 69.27 | 71.13 | ||

| 72.76 | 69.47 | 71.47 | ||

| 76.62 | 73.50 | 75.68 | ||

| 77.78 | 76.98 | 79.35 | ||

| 77.33 | 77.33 | 79.63 | ||

| 77.55 | 77.68 | 79.70 | ||

| 77.55 | 77.78 | 79.63 | ||

| SVM | kernel = ‘linear’; | 77.4 | 77.37 | 79.58 |

| kernel = ‘linear’; | 77.65 | 77.80 | 80.08 | |

| kernel = ‘linear’; | 77.70 | 77.20 | 79.20 | |

| kernel = ‘linear’; | 77.70 | 76.17 | 78.77 | |

| kernel = ‘linear’; | 77.83 | 76.68 | 78.77 | |

| kernel = ‘linear’; | 77.77 | 76.82 | 78.77 | |

| kernel = ‘rbf’; | 60.03 | 59.62 | 62.47 | |

| kernel = ‘rbf’; | 66.95 | 61.57 | 63.57 | |

| kernel = ‘rbf’; | 70.10 | 64.30 | 66.48 | |

| kernel = ‘rbf’; | 70.10 | 64.33 | 66.57 | |

| k-NN | k = 10 | 60.32 | 59.30 | 60.33 |

| k = 20 | 58.95 | 57.15 | 59.07 | |

| k = 30 | 57.63 | 57.78 | 59.17 | |

| k = 40 | 56.78 | 57.55 | 59.01 | |

| k = 50 | 55.90 | 57.00 | 58.65 |

References

- Kraus, M.; Wagner, N.; Callejas, Z.; Minker, W. The Role of Trust in Proactive Conversational Assistants. IEEE Access 2021, 9, 112821–112836. [Google Scholar] [CrossRef]

- Cassell, J.; Sullivan, J.; Prevost, S.; Churchill, E.F. Embodied Conversational Agents; The MIT Press: Cambridge, MA, USA, 2000. [Google Scholar]

- de Visser, E.J.; Pak, R.; Shaw, T.H. From ‘automation’ to ‘autonomy’: The importance of trust repair in human–machine interaction. Ergonomics 2018, 61, 1409–1427. [Google Scholar] [CrossRef]

- Nyquist, A.C.; Luebbe, A.M. An Emotion Recognition–Awareness Vulnerability Hypothesis for Depression in Adolescence: A Systematic Review. Clin. Child Fam. Psychol. Rev. 2019, 23, 27–53. [Google Scholar] [CrossRef]

- Greco, C.; Matarazzo, O.; Cordasco, G.; Vinciarelli, A.; Callejas, Z.; Esposito, A. Discriminative Power of EEG-Based Biomarkers in Major Depressive Disorder: A Systematic Review. IEEE Access 2021, 9, 112850–112870. [Google Scholar] [CrossRef]

- Argaud, S.; Vérin, M.; Sauleau, P.; Grandjean, D. Facial emotion recognition in Parkinson’s disease: A review and new hypotheses. Mov. Disord. 2018, 33, 554–567. [Google Scholar] [CrossRef]

- Zepf, S.; Hernandez, J.; Schmitt, A.; Minker, W.; Picard, R.W. Driver Emotion Recognition for Intelligent Vehicles: A Survey. ACM Comput. Surv. 2020, 53, 1–30. [Google Scholar] [CrossRef]

- Franzoni, V.; Milani, A.; Nardi, D.; Vallverdú, J. Emotional machines: The next revolution. Web Intell. 2019, 17, 1–7. [Google Scholar] [CrossRef] [Green Version]

- Rheu, M.; Shin, J.Y.; Peng, W.; Huh-Yoo, J. Systematic Review: Trust-Building Factors and Implications for Conversational Agent Design. Int. J. Hum. Comput. Interact. 2021, 37, 81–96. [Google Scholar] [CrossRef]

- McTear, M.; Callejas, Z.; Griol, D. The Conversational Interface: Talking to Smart Devices; Springer: Cham, Switzerland, 2016. [Google Scholar] [CrossRef]

- Schuller, B.; Batliner, A. Computational Paralinguistics: Emotion, Affect and Personality in Speech and Language Processing, 1st ed.; Wiley Publishing: Hoboken, NJ, USA, 2013. [Google Scholar]

- Anvarjon, T.; Mustaqeem; Kwon, S. Deep-Net: A Lightweight CNN-Based Speech Emotion Recognition System Using Deep Frequency Features. Sensors 2020, 20, 5212. [Google Scholar] [CrossRef]

- Franzoni, V.; Biondi, G.; Perri, D.; Gervasi, O. Enhancing Mouth-Based Emotion Recognition Using Transfer Learning. Sensors 2020, 20, 5222. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.u.; Polosukhin, I. Attention is All you Need. In Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers); Association for Computational Linguistics: Minneapolis, MN, USA, 2019; pp. 4171–4186. [Google Scholar] [CrossRef]

- Santucci, V.; Spina, S.; Milani, A.; Biondi, G.; Bari, G.D. Detecting Hate Speech for Italian Language in Social Media. In Proceedings of the Sixth Evaluation Campaign of Natural Language Processing and Speech Tools for Italian. Final Workshop (EVALITA 2018) Co-Located with the Fifth Italian Conference on Computational Linguistics (CLiC-it 2018), Turin, Italy, 12–13 December 2018; Volume 2263. [Google Scholar]

- Franzoni, V.; Milani, A.; Biondi, G. SEMO: A Semantic Model for Emotion Recognition in Web Objects. In Proceedings of the International Conference on Web Intelligence, Leipzig, Germany, 23–26 August 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 953–958. [Google Scholar] [CrossRef]

- Livingstone, S.; Russo, F. The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS): A dynamic, multimodal set of facial and vocal expressions in North American English. PLoS ONE 2018, 13, e0196391. [Google Scholar] [CrossRef] [Green Version]

- Clavel, C.; Callejas, Z. Sentiment Analysis: From Opinion Mining to Human-Agent Interaction. IEEE Trans. Affect. Comput. 2016, 7, 74–93. [Google Scholar] [CrossRef]

- Shah Fahad, M.; Ranjan, A.; Yadav, J.; Deepak, A. A survey of speech emotion recognition in natural environment. Digit. Signal Process. 2021, 110, 102951. [Google Scholar] [CrossRef]

- Naga, P.; Marri, S.D.; Borreo, R. Facial emotion recognition methods, datasets and technologies: A literature survey. Mater. Today Proc. 2021. [Google Scholar] [CrossRef]

- Newmark, C. Charles Darwin: The Expression of the Emotions in Man and Animals. In Hauptwerke der Emotionssoziologie; Senge, K., Schützeichel, R., Eds.; Springer Fachmedien Wiesbaden: Wiesbaden, Germnay, 2013; pp. 85–88. [Google Scholar] [CrossRef]

- Ekman, P. Basic Emotions. In Handbook of Cognition and Emotion; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 1999. [Google Scholar] [CrossRef]

- Plutchik, R. The Nature of Emotions. Am. Sci. 2001, 89, 344. [Google Scholar] [CrossRef]

- Posner, J.; Russell, J.A.; Peterson, B.S. The circumplex model of affect: An integrative approach to affective neuroscience, cognitive development, and psychopathology. Dev. Psychopathol. 2005, 17, 715–734. [Google Scholar] [CrossRef]

- Mollahosseini, A.; Hasani, B.; Mahoor, M.H. AffectNet: A Database for Facial Expression, Valence, and Arousal Computing in the Wild. IEEE Trans. Affect. Comput. 2019, 10, 18–31. [Google Scholar] [CrossRef] [Green Version]

- Goodfellow, I.J.; Erhan, D.; Carrier, P.L.; Courville, A.; Mirza, M.; Hamner, B.; Cukierski, W.; Tang, Y.; Thaler, D.; Lee, D.H.; et al. Challenges in Representation Learning: A report on three machine learning contests. In Proceedings of the 20th International Conference, ICONIP 2013, Daegu, Korea, 3–7 November 2013. [Google Scholar] [CrossRef] [Green Version]

- Poria, S.; Hazarika, D.; Majumder, N.; Naik, G.; Cambria, E.; Mihalcea, R. MELD: A Multimodal Multi-Party Dataset for Emotion Recognition in Conversations. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 527–536. [Google Scholar] [CrossRef] [Green Version]

- Busso, C.; Bulut, M.; Lee, C.C.; Kazemzadeh, A.; Mower Provost, E.; Kim, S.; Chang, J.; Lee, S.; Narayanan, S. IEMOCAP: Interactive emotional dyadic motion capture database. Lang. Resour. Eval. 2008, 42, 335–359. [Google Scholar] [CrossRef]

- Ringeval, F.; Sonderegger, A.; Sauer, J.; Lalanne, D. Introducing the RECOLA multimodal corpus of remote collaborative and affective interactions. In Proceedings of the 2013 10th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Shanghai, China, 22–26 April 2013; pp. 1–8. [Google Scholar] [CrossRef] [Green Version]

- Furey, E.; Blue, J. The Emotographic Iceberg: Modelling Deep Emotional Affects Utilizing Intelligent Assistants and the IoT. In Proceedings of the 2019 19th International Conference on Computational Science and Its Applications (ICCSA), Saint Petersburg, Russia, 1–4 July 2019; pp. 175–180. [Google Scholar] [CrossRef]

- Franzoni, V.; Milani, A.; Vallverdú, J. Emotional Affordances in Human–Machine Interactive Planning and Negotiation. In Proceedings of the International Conference on Web Intelligence, Leipzig, Germany, 23–26 August 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 924–930. [Google Scholar] [CrossRef]

- Prasanth, S.; Roshni Thanka, M.; Bijolin Edwin, E.; Nagaraj, V. Speech emotion recognition based on machine learning tactics and algorithms. Mater. Today Proc. 2021. [Google Scholar] [CrossRef]

- Akçay, M.B.; Oguz, K. Speech emotion recognition: Emotional models, databases, features, preprocessing methods, supporting modalities, and classifiers. Speech Commun. 2020, 116, 56–76. [Google Scholar] [CrossRef]

- Ancilin, J.; Milton, A. Improved speech emotion recognition with Mel frequency magnitude coefficient. Appl. Acoust. 2021, 179, 108046. [Google Scholar] [CrossRef]

- Eyben, F.; Wöllmer, M.; Schuller, B. Opensmile: The Munich Versatile and Fast Open-Source Audio Feature Extractor. In Proceedings of the 18th ACM International Conference on Multimedia, Firenze, Italy, 25–29 October 2010; Association for Computing Machinery: New York, NY, USA, 2010; pp. 1459–1462. [Google Scholar] [CrossRef]

- Boersma, P.; Weenink, D. PRAAT, a system for doing phonetics by computer. Glot Int. 2001, 5, 341–345. [Google Scholar]

- Bhavan, A.; Chauhan, P.; Hitkul; Shah, R.R. Bagged support vector machines for emotion recognition from speech. Knowl.-Based Syst. 2019, 184, 104886. [Google Scholar] [CrossRef]

- Singh, P.; Srivastava, R.; Rana, K.; Kumar, V. A multimodal hierarchical approach to speech emotion recognition from audio and text. Knowl.-Based Syst. 2021, 229, 107316. [Google Scholar] [CrossRef]

- Pepino, L.; Riera, P.; Ferrer, L. Emotion Recognition from Speech Using wav2vec 2.0 Embeddings. In Proceedings of the Interspeech 2021, Brno, Czech Republic, 30 August–3 September 2021; pp. 3400–3404. [Google Scholar] [CrossRef]

- Issa, D.; Fatih Demirci, M.; Yazici, A. Speech emotion recognition with deep convolutional neural networks. Biomed. Signal Process. Control 2020, 59, 101894. [Google Scholar] [CrossRef]

- Mustaqeem; Kwon, S. Att-Net: Enhanced emotion recognition system using lightweight self-attention module. Appl. Soft Comput. 2021, 102, 107101. [Google Scholar] [CrossRef]

- Atila, O.; Şengür, A. Attention guided 3D CNN-LSTM model for accurate speech based emotion recognition. Appl. Acoust. 2021, 182, 108260–doi10. [Google Scholar] [CrossRef]

- Wijayasingha, L.; Stankovic, J.A. Robustness to noise for speech emotion classification using CNNs and attention mechanisms. Smart Health 2021, 19, 100165. [Google Scholar] [CrossRef]

- Sun, L.; Zou, B.; Fu, S.; Chen, J.; Wang, F. Speech emotion recognition based on DNN-decision tree SVM model. Speech Commun. 2019, 115, 29–37. [Google Scholar] [CrossRef]

- Akhand, M.A.H.; Roy, S.; Siddique, N.; Kamal, M.A.S.; Shimamura, T. Facial Emotion Recognition Using Transfer Learning in the Deep CNN. Electronics 2021, 10, 1036. [Google Scholar] [CrossRef]

- Ahmad, Z.; Jindal, R.; Ekbal, A.; Bhattachharyya, P. Borrow from rich cousin: Transfer learning for emotion detection using cross lingual embedding. Expert Syst. Appl. 2020, 139, 112851. [Google Scholar] [CrossRef]

- Amiriparian, S.; Gerczuk, M.; Ottl, S.; Cummins, N.; Freitag, M.; Pugachevskiy, S.; Baird, A.; Schuller, B. Snore Sound Classification Using Image-Based Deep Spectrum Features. In Proceedings of the Interspeech 2017, Stockholm, Sweden, 20–24 August 2017; pp. 3512–3516. [Google Scholar]

- Kong, Q.; Cao, Y.; Iqbal, T.; Wang, Y.; Wang, W.; Plumbley, M.D. PANNs: Large-Scale Pretrained Audio Neural Networks for Audio Pattern Recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 2880–2894. [Google Scholar] [CrossRef]

- Dinkel, H.; Wu, M.; Yu, K. Towards Duration Robust Weakly Supervised Sound Event Detection. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 887–900. [Google Scholar] [CrossRef]

- Sweeney, L.; Healy, G.; Smeaton, A.F. The Influence of Audio on Video Memorability with an Audio Gestalt Regulated Video Memorability System. In Proceedings of the 2021 International Conference on Content-Based Multimedia Indexing (CBMI), Lille, France, 28–30 June 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Amiriparian, S.; Cummins, N.; Ottl, S.; Gerczuk, M.; Schuller, B. Sentiment analysis using image-based deep spectrum features. In Proceedings of the 2017 Seventh International Conference on Affective Computing and Intelligent Interaction Workshops and Demos (ACIIW), San Antonio, TX, USA, 23–26 October 2017; pp. 26–29. [Google Scholar] [CrossRef]

- Ottl, S.; Amiriparian, S.; Gerczuk, M.; Karas, V.; Schuller, B. Group-Level Speech Emotion Recognition Utilising Deep Spectrum Features. In Proceedings of the 2020 International Conference on Multimodal Interaction, Utrecht, The Netherlands, 25–29 October 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 821–826. [Google Scholar] [CrossRef]

- King, D.E. Dlib-Ml: A Machine Learning Toolkit. J. Mach. Learn. Res. 2009, 10, 1755–1758. [Google Scholar]

- Nguyen, B.T.; Trinh, M.H.; Phan, T.V.; Nguyen, H.D. An efficient real-time emotion detection using camera and facial landmarks. In Proceedings of the 2017 Seventh International Conference on Information Science and Technology (ICIST), Da Nang, Vietnam, 16–19 April 2017; pp. 251–255. [Google Scholar] [CrossRef]

- Bagheri, E.; Esteban, P.G.; Cao, H.L.; De Beir, A.; Lefeber, D.; Vanderborght, B. An Autonomous Cognitive Empathy Model Responsive to Users’ Facial Emotion Expressions. Acm Trans. Interact. Intell. Syst. 2020, 10, 20. [Google Scholar] [CrossRef]

- Tautkute, I.; Trzcinski, T. Classifying and Visualizing Emotions with Emotional DAN. Fundam. Inform. 2019, 168, 269–285. [Google Scholar] [CrossRef] [Green Version]

- Jaderberg, M.; Simonyan, K.; Zisserman, A.; kavukcuoglu, K. Spatial Transformer Networks. In Advances in Neural Information Processing Systems; Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2015; Volume 28. [Google Scholar]

- Minaee, S.; Minaei, M.; Abdolrashidi, A. Deep-Emotion: Facial Expression Recognition Using Attentional Convolutional Network. Sensors 2021, 21, 3046. [Google Scholar] [CrossRef]

- Luna-Jiménez, C.; Cristóbal-Martín, J.; Kleinlein, R.; Gil-Martín, M.; Moya, J.M.; Fernández-Martínez, F. Guided Spatial Transformers for Facial Expression Recognition. Appl. Sci. 2021, 11, 7217. [Google Scholar] [CrossRef]

- Baltrušaitis, T.; Ahuja, C.; Morency, L.P. Multimodal Machine Learning: A Survey and Taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 423–443. [Google Scholar] [CrossRef] [Green Version]

- Sun, L.; Xu, M.; Lian, Z.; Liu, B.; Tao, J.; Wang, M.; Cheng, Y. Multimodal Emotion Recognition and Sentiment Analysis via Attention Enhanced Recurrent Model. In Proceedings of the 2nd on Multimodal Sentiment Analysis Challenge, Virtual Event China, 24 October 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 15–20. [Google Scholar] [CrossRef]

- Sun, L.; Lian, Z.; Tao, J.; Liu, B.; Niu, M. Multi-Modal Continuous Dimensional Emotion Recognition Using Recurrent Neural Network and Self-Attention Mechanism. In Proceedings of the 1st International on Multimodal Sentiment Analysis in Real-Life Media Challenge and Workshop, Seattle, WA, USA, 16 October 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 27–34. [Google Scholar] [CrossRef]

- Deng, J.J.; Leung, C.H.C. Towards Learning a Joint Representation from Transformer in Multimodal Emotion Recognition. In Brain Informatics; Mahmud, M., Kaiser, M.S., Vassanelli, S., Dai, Q., Zhong, N., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 179–188. [Google Scholar]

- Pandeya, Y.R.; Lee, J. Deep learning-based late fusion of multimodal information for emotion classification of music video. Multimed. Tools Appl. 2021, 80, 2887–2905. [Google Scholar] [CrossRef]

- Wang, S.; Wu, Z.; He, G.; Wang, S.; Sun, H.; Fan, F. Semi-supervised classification-aware cross-modal deep adversarial data augmentation. Future Gener. Comput. Syst. 2021, 125, 194–205. [Google Scholar] [CrossRef]

- Abdulmohsin, H.A.; Abdul wahab, H.B.; Abdul hossen, A.M.J. A new proposed statistical feature extraction method in speech emotion recognition. Comput. Electr. Eng. 2021, 93, 107172. [Google Scholar] [CrossRef]

- García-Ordás, M.T.; Alaiz-Moretón, H.; Benítez-Andrades, J.A.; García-Rodríguez, I.; García-Olalla, O.; Benavides, C. Sentiment analysis in non-fixed length audios using a Fully Convolutional Neural Network. Biomed. Signal Process. Control 2021, 69, 102946. [Google Scholar] [CrossRef]

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Li, F.-F. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar]

- Tomar, S. Converting video formats with FFmpeg. LINUX J. 2006, 2006, 10. [Google Scholar]

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830. [Google Scholar]

- Atmaja, B.T.; Akagi, M. Speech Emotion Recognition Based on Speech Segment Using LSTM with Attention Model. In Proceedings of the 2019 IEEE International Conference on Signals and Systems (ICSigSys), Bandung, Indonesia, 16–18 July 2019; pp. 40–44. [Google Scholar] [CrossRef]

- Doukhan, D.; Carrive, J.; Vallet, F.; Larcher, A.; Meignier, S. An Open-Source Speaker Gender Detection Framework for Monitoring Gender Equality. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018. [Google Scholar]

- Kroner, A.; Senden, M.; Driessens, K.; Goebel, R. Contextual encoder-decoder network for visual saliency prediction. Neural Netw. 2020, 129, 261–270. [Google Scholar] [CrossRef] [PubMed]

- Jiang, M.; Huang, S.; Duan, J.; Zhao, Q. SALICON: Saliency in Context. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1072–1080. [Google Scholar] [CrossRef]

- Baziotis, C.; Nikolaos, A.; Chronopoulou, A.; Kolovou, A.; Paraskevopoulos, G.; Ellinas, N.; Narayanan, S.; Potamianos, A. NTUA-SLP at SemEval-2018 Task 1: Predicting Affective Content in Tweets with Deep Attentive RNNs and Transfer Learning. In Proceedings of the 12th International Workshop on Semantic Evaluation, Orleans, LA, USA, 5–6 June 2018. [Google Scholar] [CrossRef]

- Romero, S.E.; Kleinlein, R.; Jiménez, C.L.; Montero, J.M.; Martínez, F.F. GTH-UPM at DETOXIS-IberLEF 2021: Automatic Detection of Toxic Comments in Social Networks. In Proceedings of the Iberian Languages Evaluation Forum (IberLEF 2021) Co-Located with the Conference of the Spanish Society for Natural Language Processing (SEPLN 2021), XXXVII International Conference of the Spanish Society for Natural Language Processing, Málaga, Spain, 21 September 2021; Volume 2943, pp. 533–546. [Google Scholar]

- Pavlopoulos, J.; Malakasiotis, P.; Androutsopoulos, I. Deep Learning for User Comment Moderation. In Proceedings of the First Workshop on Abusive Language Online, Vancouver, BC, Canada, 4 August 2017. [Google Scholar] [CrossRef] [Green Version]

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32; Curran Associates, Inc.: New York, NY, USA, 2019; pp. 8024–8035. [Google Scholar]

- Dissanayake, V.; Zhang, H.; Billinghurst, M.; Nanayakkara, S. Speech Emotion Recognition ‘in the Wild’ Using an Autoencoder. In Proceedings of the Interspeech 2020, Shanghai, China, 25–29 October 2020; pp. 526–530. [Google Scholar] [CrossRef]

| TL Strategy | Inputs | Models | With VAD (InaSpeech) | Accuracy ± 95% CI |

|---|---|---|---|---|

| - | - | Human perception [18] | - | 67.00 |

| - | - | ZeroR | - | 13.33 ± 2.06 |

| Feature Extraction | Deep-Spectrum embs. from fc7 of AlexNet | SVC | No | 43.32 ± 2.56 |

| Yes | 45.80 ± 2.57 | |||

| PANNs embs. from CNN-14 | SVC | No | 39.73 ± 2.53 | |

| Yes | 37.22 ± 2.50 | |||

| Fine Tuning | Mel spectrograms | AlexNet | No | 60.72 ± 2.52 |

| Yes | 61.67 ± 2.51 | |||

| Mel spectrograms | CNN-14 | No | 76.58 ± 2.18 | |

| Yes | 75.25 ± 2.23 |

| Initialization Strategy | Inputs | Model | Accuracy ± 95% CI |

|---|---|---|---|

| Training from scratch | Frames and saliency maps | STN + max. voting | 39.88 ± 2.53 |

| Fine-Tuning AffectNet weigths | Frames and saliency map | STN + max. voting | 54.20 ± 2.57 |

| TL Strategy | Inputs | Models | With VAD (InaSpeech) | Accuracy ± 95% CI |

|---|---|---|---|---|

| - | - | Human perception [18] | - | 75.00 |

| - | - | ZeroR | - | 13.33 ± 2.06 |

| Feature Extraction (from pre-trained STN on AffectNet) | posteriors (7 classes) | Max. voting | No | 30.49 * ± 2.38 |

| Yes | 30.35 * ± 2.37 | |||

| Sequential (bi-LSTM) | No | 38.87 ± 2.52 | ||

| Yes | 39.75 ± 2.53 | |||

| fc50 | Sequential (bi-LSTM) | No | 50.40 ± 2.58 | |

| Yes | 48.77 ± 2.58 | |||

| flatten-810 | Sequential (bi-LSTM) | No | 53.85 ± 2.57 | |

| Yes | 51.70 ± 2.58 | |||

| Fine-Tuning on RAVDESS | posteriors (8 classes) | Max. voting | No | 54.20 ± 2.56 |

| Yes | 55.07 ± 2.56 | |||

| Sequential (bi-LSTM) | No | 55.82 ± 2.56 | ||

| Yes | 56.87 ± 2.56 | |||

| fc50 | Sequential (bi-LSTM) | No | 46.48 ± 2.58 | |

| Yes | 46.13 ± 2.57 | |||

| flatten-810 | Sequential (bi-LSTM) | No | 54.14 ± 2.57 | |

| Yes | 57.08 ± 2.56 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Luna-Jiménez, C.; Griol, D.; Callejas, Z.; Kleinlein, R.; Montero, J.M.; Fernández-Martínez, F. Multimodal Emotion Recognition on RAVDESS Dataset Using Transfer Learning. Sensors 2021, 21, 7665. https://doi.org/10.3390/s21227665

Luna-Jiménez C, Griol D, Callejas Z, Kleinlein R, Montero JM, Fernández-Martínez F. Multimodal Emotion Recognition on RAVDESS Dataset Using Transfer Learning. Sensors. 2021; 21(22):7665. https://doi.org/10.3390/s21227665

Chicago/Turabian StyleLuna-Jiménez, Cristina, David Griol, Zoraida Callejas, Ricardo Kleinlein, Juan M. Montero, and Fernando Fernández-Martínez. 2021. "Multimodal Emotion Recognition on RAVDESS Dataset Using Transfer Learning" Sensors 21, no. 22: 7665. https://doi.org/10.3390/s21227665

APA StyleLuna-Jiménez, C., Griol, D., Callejas, Z., Kleinlein, R., Montero, J. M., & Fernández-Martínez, F. (2021). Multimodal Emotion Recognition on RAVDESS Dataset Using Transfer Learning. Sensors, 21(22), 7665. https://doi.org/10.3390/s21227665