Abstract

The huge quantity of information and the high spectral resolution of hyperspectral imagery present a challenge when performing traditional processing techniques such as classification. Dimensionality and noise reduction improve both efficiency and accuracy while retaining essential information. Among the many dimensionality reduction methods, Independent Component Analysis (ICA) is one of the most popular. However, ICA is computationally costly, and the absence of specific criteria for component selection constrains its application in high-dimensional data analysis. To overcome this limitation, we propose a novel approach that applies the Discrete Cosine Transform (DCT) as preprocessing for ICA. Our method exploits the capacity of the DCT to pack signal energy into a few low-frequency coefficients, thus reducing noise and computation time. ICA is then applied to this reduced data to make the output components as independent as possible for subsequent hyperspectral classification. To evaluate this approach, the data reduced using (1) ICA without preprocessing, (2) ICA with the most commonly used preprocessing technique, Principal Component Analysis (PCA), and (3) ICA with DCT preprocessing are classified with Support Vector Machine (SVM) and K-Nearest Neighbor (K-NN) classifiers on two real hyperspectral datasets. Experimental results on both datasets indicate that the proposed DCT preprocessing combined with ICA yields superior hyperspectral classification accuracy.

1. Introduction

Hyperspectral imagery contains hundreds of bands at a very high spectral resolution, providing detailed information about objects and making it well suited to source separation and classification. However, this high-dimensional data increases computation time and decreases the effectiveness of classifiers [1], a consequence of the curse of dimensionality [2]. Therefore, hyperspectral images require dimensionality reduction [3,4] and denoising prior to further processing. Many techniques have been applied to reduce the dimensionality of hyperspectral data, including selection-based [5] and transformation-based techniques [6] such as Independent Component Analysis (ICA).

ICA is a popular unsupervised Blind Source Separation (BSS) technique [7] that determines statistically independent components. Unlike correlation-based transformations such as Principal Component Analysis (PCA), ICA not only decorrelates signals but also makes them as independent as possible [8]. ICA is effective at recognizing patterns and at reducing noise and data volume, and it is widely applied in systems involving multivariable data [9]. The general idea is to map the high-dimensional space to a lower-dimensional one whose transformed components describe the essential structure of the data, retaining the relevant information without the volume of the original data.

ICA has been applied to hyperspectral data for dimensionality reduction, source separation, and data compression [9]. However, it is computationally expensive and lacks specific criteria for selecting components [9], which limits its usefulness in high-dimensional data analysis. To mitigate these limitations, PCA is the most commonly used preprocessing technique in ICA applications for hyperspectral dimensionality reduction [10].

In this paper, the Discrete Cosine Transform (DCT) is proposed as a data preprocessing procedure for ICA to reduce dimensionality, noise, and computation time. DCT-based filters belong to the family of orthogonal-transform filters that have performed well at removing additive white Gaussian noise [11,12], the dominant noise component in hyperspectral imagery [13,14,15]. However, very few studies have taken advantage of the DCT for hyperspectral imagery; one example is [16], where a spatial DCT exploiting inter-pixel spatial correlation was proposed as preprocessing for source separation.

Exploiting the ability of the DCT to concentrate signal energy in a few low-frequency coefficients, our procedure treats each pixel vector as a 1-D discrete signal and computes its frequency-domain profile. In the frequency domain, we can easily retain the most useful information, represented by these few low-frequency components [17], and discard the high-frequency components that generally represent noise. By performing the DCT, we can therefore reduce both data dimensionality and noise.

Our objective was therefore to implement and test a new DCT-based preprocessing procedure for ICA that overcomes the computational cost of ICA and makes it more effective for dimensionality reduction in hyperspectral image analysis. To evaluate this procedure, SVM and K-NN classifiers were applied to the data reduced with ICA only, with ICA preceded by PCA, and with ICA preceded by the proposed DCT preprocessing.

The rest of this paper is organized as follows. Sections 2 and 3 briefly review ICA and the DCT, respectively. Section 4 describes the proposed approach. Section 5 presents the datasets, the experimental setup, and the evaluation process, and Section 6 reports and discusses the results. Section 7 draws conclusions.

2. ICA

ICA assumes that the observed data are linear mixtures of a set of statistically independent sources, and that these sources can be recovered by maximizing a measure of their statistical independence. ICA has been applied to spectral unmixing and to anomaly and target detection [18], and several ICA variants have been designed specifically for remote sensing imagery [19,20,21]. The ICA transform relies on the assumption that the sources are independent and non-Gaussian, a property commonly observed in hyperspectral datasets [22]. Different criteria have been proposed to measure source independence [7]; most are based on mutual information, which measures the statistical dependence between random variables [23].

Consider an observation vector x = [x0, x1, x2, …, xP−1] that is a linear mixture of the P independent elements of a random source vector s = [s0, s1, s2, …, sP−1]. In matrix terms, the model is given by [7]:

$$X = A \cdot S$$

where A is the mixing matrix. ICA estimates an unmixing matrix W (ideally the inverse of A, up to the scaling and ordering of the sources) that gives the best possible approximation of S:

$$Y = W \cdot X \approx S$$

Such a model has been used to capture the essential structure of data in many applications, including signal separation, feature extraction, and target detection. It relies on several assumptions: (1) the sources are statistically independent; (2) the number of mixtures equals the number of sources, although this requirement can be relaxed as in [24]; (3) there is no external noise, a requirement that can be met through denoising preprocessing; and (4) the source signals have non-Gaussian distributions. In addition, to reduce computational complexity, the data should ideally be centered and whitened.
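
The centering and whitening step mentioned above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' Matlab implementation: it assumes the mixtures are stored one per row (as in X = A·S), that their sample covariance is full rank, and it uses symmetric (ZCA) whitening; the helper name `center_and_whiten` is hypothetical.

```python
import numpy as np


def center_and_whiten(X):
    """Center each row of X (P signals x N samples) and whiten it.

    Returns the whitened data Z (P x N) and the whitening matrix V,
    so that Z = V @ (X - mean) has an identity sample covariance.
    Assumes the sample covariance of X is full rank.
    """
    Xc = X - X.mean(axis=1, keepdims=True)      # remove the mean of each signal
    cov = Xc @ Xc.T / Xc.shape[1]                # P x P sample covariance
    eigvals, eigvecs = np.linalg.eigh(cov)       # symmetric eigendecomposition
    # V = E diag(1/sqrt(lambda)) E^T (symmetric, ZCA-style whitening)
    V = eigvecs @ np.diag(1.0 / np.sqrt(eigvals)) @ eigvecs.T
    Z = V @ Xc
    return Z, V


rng = np.random.default_rng(0)
S = rng.uniform(-1.0, 1.0, size=(3, 1000))   # three independent sources
A = rng.normal(size=(3, 3))                  # hypothetical random mixing matrix
X = A @ S                                    # observed mixtures, X = A S
Z, V = center_and_whiten(X)
print(np.round(Z @ Z.T / Z.shape[1], 6))     # approximately the identity matrix
```

After whitening, the remaining unmixing matrix only has to be orthogonal, which reduces the number of free parameters ICA must estimate.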

Different algorithms measure independence in different ways and therefore output slightly different unmixing matrices. These algorithms can be divided into two main families [25]: those based on minimizing mutual information, and those that maximize the non-Gaussianity of the sources.

Mutual information is based on the entropy of a random variable, introduced by Shannon in 1948 as a measure of uncertainty: a low entropy value means that the behavior of the system is largely predictable. Mutual information can be seen as the reduction in uncertainty about one variable after observing another. Algorithms in this family minimize mutual information to obtain maximally independent components; examples can be found in [26,27,28,29].
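
For discrete variables, the link between entropy and mutual information described above, I(X;Y) = H(X) + H(Y) − H(X,Y), can be checked with a short pure-Python sketch. It only illustrates the definitions on toy probability tables; the ICA algorithms cited in [26,27,28,29] estimate these quantities for continuous signals in different ways.

```python
import math


def entropy(probs):
    """Shannon entropy (in bits) of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def mutual_information(joint):
    """I(X;Y) = H(X) + H(Y) - H(X,Y) for a joint probability table joint[x][y]."""
    px = [sum(row) for row in joint]              # marginal distribution of X
    py = [sum(col) for col in zip(*joint)]        # marginal distribution of Y
    pxy = [p for row in joint for p in row]       # flattened joint distribution
    return entropy(px) + entropy(py) - entropy(pxy)


# Independent variables: the joint table is the product of the marginals.
independent = [[0.25, 0.25],
               [0.25, 0.25]]
# Fully dependent variables: Y is a copy of X.
dependent = [[0.5, 0.0],
             [0.0, 0.5]]

print(mutual_information(independent))  # 0.0
print(mutual_information(dependent))    # 1.0 (one bit)
```

Observing Y removes no uncertainty about X in the first case and all of it (one bit) in the second, which is why minimizing mutual information drives the estimated components toward independence.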

Because ICA assumes that the distribution of each source is non-Gaussian, non-Gaussianity can be used as a criterion: each extracted component is forced to be as far as possible from a normal distribution [30]. Negentropy measures the distance from normality and can therefore be used to estimate non-Gaussianity, but it is difficult to compute. Hyvärinen and Oja proposed an approximation in [31], which gave rise to the algorithm known as FastICA [32].
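
The negentropy approximation used by FastICA can be written, for a zero-mean, unit-variance variable y, as J(y) ∝ [E{G(y)} − E{G(ν)}]², where ν is a standard Gaussian variable and G is a non-quadratic function. The NumPy sketch below assumes G(u) = log cosh(u), a common choice, drops the proportionality constant, and computes E{G(ν)} by numerical integration. Gaussian samples should score near zero, while uniform (sub-Gaussian) and Laplacian (super-Gaussian) samples should score clearly higher.

```python
import numpy as np


def log_cosh(u):
    # Numerically stable log(cosh(u)) = log(e^u + e^-u) - log(2)
    return np.logaddexp(u, -u) - np.log(2.0)


# E{G(nu)} for a standard Gaussian nu, by a fine Riemann sum over [-12, 12].
_grid = np.linspace(-12.0, 12.0, 200001)
_pdf = np.exp(-0.5 * _grid**2) / np.sqrt(2.0 * np.pi)
GAUSS_REF = np.sum(log_cosh(_grid) * _pdf) * (_grid[1] - _grid[0])


def negentropy_approx(y):
    """Approximate negentropy J(y) ~ [E{G(y)} - E{G(nu)}]^2 with G = log cosh."""
    y = (y - y.mean()) / y.std()          # the approximation assumes unit variance
    return (np.mean(log_cosh(y)) - GAUSS_REF) ** 2


rng = np.random.default_rng(0)
n = 100_000
print(negentropy_approx(rng.normal(size=n)))          # close to 0 (Gaussian)
print(negentropy_approx(rng.uniform(-1, 1, size=n)))  # clearly larger (sub-Gaussian)
print(negentropy_approx(rng.laplace(size=n)))         # clearly larger (super-Gaussian)
```

Because the score is a squared difference, it grows for departures from Gaussianity in either direction, which is what lets a single contrast function separate both sub- and super-Gaussian sources.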

As a statistical technique, FastICA produces results that depend on the initialization conditions, the parameterization of the algorithm, and the sampling of the dataset [33]. Its results should therefore be treated with care, and the reliability of the estimated components should be assessed. To this end, ICASSO, an approach for estimating the algorithmic and statistical reliability of the independent components obtained with FastICA, was proposed in [34]. However, ICASSO is an exploratory visualization method that requires users to set the initial parameters and to interpret the relations between estimates visually. Furthermore, reducing data dimensionality with PCA is recommended as a preprocessing step before running the algorithm [34].

In our approach, ICA is used as a dimensionality reduction technique after DCT preprocessing, to overcome the limitations that hinder the use of ICA in hyperspectral processing.

3. DCT

The Discrete Cosine Transform (DCT) represents a sequence of values in terms of a sum of cosine functions oscillating at different frequencies. DCT is similar to the Discrete Fourier Transform (DFT) but uses only real numbers. The complete set of DCTs and Discrete Sine Transforms (DSTs), referred to as discrete trigonometric transforms, was described by Wang and Hunt [35]. DCT concentrates the energy of a signal into a small number of low-frequency DCT coefficients [17].

The DCT is employed in many science and engineering applications, such as lossy data compression (e.g., MP3 and JPEG) and spectral methods for the numerical solution of partial differential equations.

Assuming that each pixel is a discrete signal represented by a vector x = [x0, x1, x2, …, xP−1] in the P-dimensional spectral band space (P is the number of spectral bands), the DCT coefficients of x are given by [36], written here in the common orthonormal form of the DCT-II:

$$d_u = \alpha(u) \sum_{i=0}^{P-1} x_i \cos\left[\frac{\pi (2i+1) u}{2P}\right], \qquad u = 0, 1, \ldots, P-1,$$

$$\alpha(0) = \sqrt{\frac{1}{P}}, \qquad \alpha(u) = \sqrt{\frac{2}{P}} \quad \text{for } u \geq 1,$$

where u is the discrete frequency variable, d_u is the u-th DCT coefficient, and the vector d = [d0, d1, …, dP−1] corresponds to the original pixel x. Together, these coefficients form the DCT coefficient curve; each coefficient represents the amplitude of a specific cosine basis function, that is, the contribution of that cosinusoid to the pixel's spectral curve.
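
A direct O(P²) implementation makes the coefficient definition concrete. This pure-Python sketch assumes the orthonormal DCT-II, d_u = α(u) Σ_i x_i cos[π(2i + 1)u / (2P)] with α(0) = √(1/P) and α(u) = √(2/P) for u ≥ 1 (the normalization used, for example, by Matlab's `dct` function); other scalings of the DCT-II differ only by a constant factor per coefficient. The spectrum values in the example are hypothetical.

```python
import math


def dct_ii(x):
    """Orthonormal DCT-II of a 1-D sequence x (e.g., one pixel's spectrum)."""
    P = len(x)
    d = []
    for u in range(P):
        # alpha(0) = sqrt(1/P), alpha(u) = sqrt(2/P) for u >= 1
        alpha = math.sqrt(1.0 / P) if u == 0 else math.sqrt(2.0 / P)
        s = sum(x[i] * math.cos(math.pi * (2 * i + 1) * u / (2 * P)) for i in range(P))
        d.append(alpha * s)
    return d


# A flat spectrum puts all of its energy into the first (u = 0) coefficient:
# d[0] equals 2 * sqrt(8) and the other coefficients are zero up to rounding.
flat = [2.0] * 8
print(dct_ii(flat))

# Hypothetical reflectance values for an 8-band pixel.
spectrum = [0.10, 0.15, 0.22, 0.30, 0.35, 0.33, 0.28, 0.20]
coeffs = dct_ii(spectrum)
print(sum(v * v for v in spectrum), sum(c * c for c in coeffs))  # equal up to rounding
```

With this orthonormal scaling the transform preserves energy (Parseval's relation), so the energy carried by the retained low-frequency coefficients can be compared directly with that of the original spectrum.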

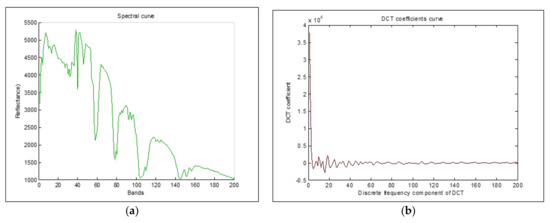

As Figure 1b shows, most of the large-amplitude DCT coefficients are concentrated in a small number of low-frequency components, reflecting the ability of the DCT to concentrate signal energy in a few low frequencies.

Figure 1.

(a) Spectral curve, (b) DCT Coefficients curve.

In our procedure, we transform the spectral data from the original feature space to a reduced feature space using DCT. We generate a frequency domain profile for the spectral curve of each pixel as illustrated in Figure 1.

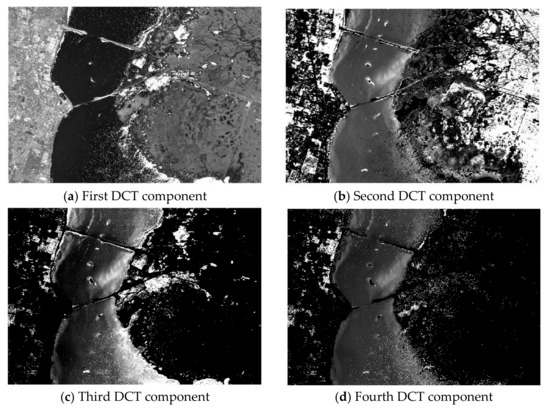

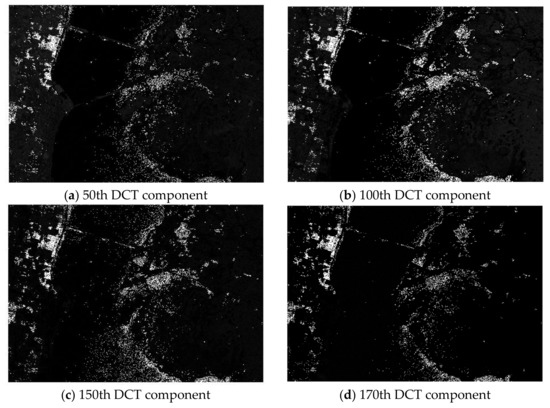

Figure 2 shows the first four transformed components of the new feature space after applying the spectral DCT to the Kennedy Space Center (KSC) data cube. These images visually confirm the ability of the DCT to concentrate most of the spectral energy in the first low-frequency coefficients. In contrast, Figure 3 shows four high-frequency components of the same transformed cube (components 50, 100, 150, and 170). These high-frequency components are dominated by heavy noise and show no effective discrimination between the different classes.

Figure 2.

The first four transformed components of the spectral-DCT feature space from the Kennedy Space Center dataset.

Figure 3.

Four transformed high-frequency components of the spectral-DCT feature space from the Kennedy Space Center dataset.

4. The Proposed Preprocessing Procedure Description

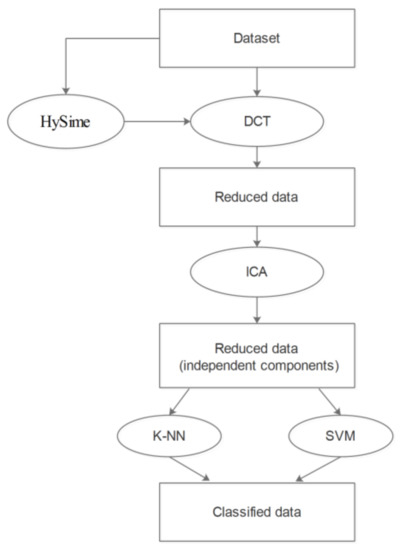

Our approach applies the DCT as a preprocessing procedure for ICA to reduce data dimensionality, and evaluates its effectiveness by classifying the resulting reduced data. The flowchart of the approach is shown in Figure 4.

Figure 4.

Flowchart of DCT-based preprocessing procedure for ICA.

In this approach, the hyperspectral data cube is treated as a matrix X of size N × P (N: number of pixels; P: number of spectral bands), in which each row represents a pixel as a 1-D discrete signal x = [x0, x1, x2, …, xP−1] in the P-dimensional spectral band space. Applying the DCT of Section 3 to each row gives the matrix D of size N × P, where each row d = [d0, d1, d2, …, dP−1] is the DCT coefficient curve of the corresponding vector x.
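
Applying the DCT to every row of X amounts to a single matrix product, D = X Cᵀ, where C is the P × P DCT-II matrix whose u-th row is the u-th cosine basis vector. The NumPy sketch below assumes the orthonormal DCT-II and a cube stored as rows × columns × bands; `dct_matrix` and `spectral_dct` are hypothetical helper names, and the random cube stands in for real data.

```python
import numpy as np


def dct_matrix(P):
    """P x P orthonormal DCT-II matrix C, so that d = C @ x for a spectrum x."""
    u = np.arange(P)[:, None]          # frequency index (rows)
    i = np.arange(P)[None, :]          # band index (columns)
    C = np.sqrt(2.0 / P) * np.cos(np.pi * (2 * i + 1) * u / (2 * P))
    C[0, :] = np.sqrt(1.0 / P)         # the u = 0 row uses the 1/sqrt(P) weight
    return C


def spectral_dct(X):
    """Apply the DCT to every row (pixel spectrum) of an N x P matrix X."""
    C = dct_matrix(X.shape[1])
    return X @ C.T                     # row n of D equals C @ X[n]


rng = np.random.default_rng(0)
cube = rng.random((4, 5, 10))          # hypothetical 4 x 5 image with P = 10 bands
X = cube.reshape(-1, cube.shape[2])    # N x P matrix, one pixel per row (N = 20)
D = spectral_dct(X)
print(X.shape, D.shape)                # (20, 10) (20, 10)
```

Because C is orthogonal, each row of D has the same Euclidean norm as the corresponding pixel spectrum, and the inverse transform is simply X = D C.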

Because the DCT concentrates energy in the first few coefficients, it is sufficient to keep the first L coefficients (L ≪ P) to preserve most of the useful information. Since the discarded high-frequency coefficients generally represent noise, the result can be regarded as denoised data.
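
The truncation step can be illustrated on synthetic data. This sketch assumes additive white Gaussian noise and builds hypothetical smooth spectra directly from the first three DCT basis vectors, so that all of the signal energy lies in the retained coefficients; on real spectra some signal energy is also discarded. Because the orthonormal DCT maps white noise to white noise, keeping L of P coefficients keeps roughly a fraction L/P of the noise energy. The values P = 64, L = 8, and the noise level are arbitrary choices for illustration.

```python
import numpy as np


def dct_matrix(P):
    """P x P orthonormal DCT-II matrix (rows are cosine basis vectors)."""
    u = np.arange(P)[:, None]
    i = np.arange(P)[None, :]
    C = np.sqrt(2.0 / P) * np.cos(np.pi * (2 * i + 1) * u / (2 * P))
    C[0, :] = np.sqrt(1.0 / P)
    return C


P, N, L = 64, 200, 8
C = dct_matrix(P)
rng = np.random.default_rng(0)

# Hypothetical smooth spectra: random mixtures of the first three cosine basis vectors.
weights = rng.uniform(0.5, 1.5, size=(N, 3))
clean = weights @ C[:3, :]                            # N x P noise-free spectra
noisy = clean + rng.normal(scale=0.05, size=(N, P))   # additive white Gaussian noise

D = noisy @ C.T                    # DCT of each pixel spectrum
D_reduced = D[:, :L]               # keep only the first L low-frequency coefficients
denoised = D_reduced @ C[:L, :]    # inverse DCT with the discarded coefficients set to 0

print("reduced shape:", D_reduced.shape)                      # reduced shape: (200, 8)
print("noisy MSE:   ", np.mean((noisy - clean) ** 2))
print("denoised MSE:", np.mean((denoised - clean) ** 2))     # roughly L/P of the above
```

In the proposed procedure only `D_reduced` is passed on to ICA; the reconstruction is shown here solely to quantify how much noise the truncation removes.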

The number L of DCT coefficients to retain is estimated with the Hyperspectral Signal Subspace Identification by Minimum Error (HySime) method proposed in [37], which estimates the dimension of the signal subspace, that is, the number of distinct spectral signatures in the hyperspectral image. Using four real datasets, [38] demonstrated that this method yields more accurate estimates than other methods such as the Harsanyi-Farrand-Chang (HFC) and Noise-Whitened HFC (NWHFC) approaches.
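
The HySime criterion can be sketched as follows, in a simplified form that is not the reference implementation of [37]. Noise is estimated band by band by regressing each band on all the others, the signal correlation matrix is eigendecomposed, and a direction e_i is counted when keeping it lowers the mean squared error, that is, when δ_i = −e_iᵀR_y e_i + 2 e_iᵀR_n e_i is negative. The synthetic data (three hypothetical endmembers mixed with sum-to-one abundances plus weak white noise) and the function name `hysime` are assumptions made for illustration.

```python
import numpy as np


def hysime(Y):
    """Simplified HySime sketch: estimate the signal-subspace dimension k.

    Y is a P x N matrix (P bands, N pixels). Noise is estimated band by band
    with multiple linear regression on the other bands; k is the number of
    eigen-directions for which keeping the direction lowers the mean squared error.
    """
    P, N = Y.shape
    noise = np.empty_like(Y)
    for i in range(P):
        others = np.delete(Y, i, axis=0).T                  # N x (P-1) regressors
        beta, *_ = np.linalg.lstsq(others, Y[i], rcond=None)
        noise[i] = Y[i] - others @ beta                     # residual = noise estimate
    signal = Y - noise
    Ry = Y @ Y.T / N                                        # data correlation matrix
    Rn = noise @ noise.T / N                                # noise correlation matrix
    Rx = signal @ signal.T / N                              # signal correlation matrix
    _, E = np.linalg.eigh(Rx)                               # eigenvectors (columns)
    power = np.einsum("pi,pq,qi->i", E, Ry, E)              # p_i = e_i^T Ry e_i
    noise_power = np.einsum("pi,pq,qi->i", E, Rn, E)        # sigma_i^2 = e_i^T Rn e_i
    delta = -power + 2.0 * noise_power                      # MSE change if e_i is kept
    return int(np.sum(delta < 0))


rng = np.random.default_rng(0)
P, N, k_true = 20, 2000, 3
M = rng.uniform(0.0, 1.0, size=(P, k_true))        # hypothetical endmember spectra
A = rng.uniform(size=(k_true, N))
A /= A.sum(axis=0)                                 # abundances sum to one per pixel
Y = M @ A + rng.normal(scale=1e-3, size=(P, N))    # linear mixtures plus weak noise
print(hysime(Y))                                   # 3 for this low-noise example
```

In the proposed procedure, the resulting k plays the role of L, the number of leading DCT coefficients (and independent components) to keep.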

After applying the DCT and estimating the number of coefficients to retain with the HySime method, ICA is performed on the preprocessed data. To evaluate the effectiveness of the DCT-based preprocessing procedure for ICA, SVM and K-NN classifiers were then applied to the resulting components.
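
Of the two classifiers, K-NN is simple enough to sketch in full: a pixel is assigned the majority label among its K nearest training pixels in the reduced feature space. This pure-Python sketch assumes Euclidean distance and K = 3 (the value of K used in the experiments is not stated here), and the two-dimensional features and class names are hypothetical; the SVM is not sketched.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Label `query` by majority vote among its k nearest training samples (Euclidean)."""
    dists = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]


# Hypothetical pixels described by two reduced components each.
train_X = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (1.0, 1.0), (0.9, 1.1), (1.1, 0.9)]
train_y = ["corn", "corn", "corn", "soybean", "soybean", "soybean"]

print(knn_predict(train_X, train_y, (0.15, 0.1)))   # corn
print(knn_predict(train_X, train_y, (1.0, 0.95)))   # soybean
```

Every prediction compares the query with all training pixels, so the cost grows with the number of features per pixel; this is one reason a shorter feature vector after DCT truncation can shorten K-NN execution time.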

5. Data and Evaluation Process

In this section, we describe the data used in the experiments, the evaluation process, and the accuracy measures; the experimental results are presented in Section 6. Experiments were carried out using Matlab R2014a on a Dual-Core 2 GHz CPU with 8 GB of memory. The ICA implementation was based on the kurtosis maximization method.
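
Kurtosis maximization can be illustrated with the deflationary FastICA fixed-point iteration using the cubic nonlinearity (see [32]), w ← E{z(wᵀz)³} − 3w on whitened data z, followed by decorrelation from the components already found. The NumPy sketch below is a minimal illustration under our own assumptions (PCA whitening, random initialization, hypothetical function names `whiten` and `fastica_kurtosis`, and a hypothetical 2 × 2 mixing matrix), not the authors' Matlab code.

```python
import numpy as np


def whiten(X):
    """Center the rows of X (signals x samples) and whiten them via PCA."""
    Xc = X - X.mean(axis=1, keepdims=True)
    eigvals, eigvecs = np.linalg.eigh(Xc @ Xc.T / Xc.shape[1])
    return np.diag(1.0 / np.sqrt(eigvals)) @ eigvecs.T @ Xc


def fastica_kurtosis(X, n_components, max_iter=200, tol=1e-8, seed=0):
    """Deflationary FastICA with the kurtosis (cubic) nonlinearity.

    Fixed-point update on whitened data z: w <- E{z (w^T z)^3} - 3 w,
    followed by Gram-Schmidt decorrelation from the vectors already found.
    """
    Z = whiten(X)
    rng = np.random.default_rng(seed)
    W = np.zeros((n_components, Z.shape[0]))
    for p in range(n_components):
        w = rng.normal(size=Z.shape[0])
        w /= np.linalg.norm(w)
        for _ in range(max_iter):
            w_new = (Z * (w @ Z) ** 3).mean(axis=1) - 3.0 * w
            w_new -= W[:p].T @ (W[:p] @ w_new)   # remove projections on earlier rows
            w_new /= np.linalg.norm(w_new)
            converged = abs(abs(w_new @ w) - 1.0) < tol   # sign flips are allowed
            w = w_new
            if converged:
                break
        W[p] = w
    return W @ Z                                  # estimated independent components


rng = np.random.default_rng(1)
n = 5000
S = np.vstack([rng.uniform(-1.0, 1.0, n),   # sub-Gaussian source (negative kurtosis)
               rng.laplace(size=n)])        # super-Gaussian source (positive kurtosis)
A = np.array([[1.0, 0.6], [0.4, 1.0]])      # hypothetical mixing matrix
Y = fastica_kurtosis(A @ S, n_components=2)
# Each recovered component should match one source up to sign, scale, and order.
corr = np.abs(np.corrcoef(np.vstack([S, Y]))[:2, 2:])
print(np.round(corr, 3))
```

The recovered components match the sources only up to sign, scale, and order, which is the usual ICA ambiguity; the absolute correlation is therefore the natural check.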

5.1. Data

Two real-world remote sensing hyperspectral datasets with different spatial resolutions were used in the experiments:

5.1.1. The Indian Pines Dataset

The Indian Pines scene, covering the agricultural Indian Pine area in northwestern Indiana, USA, was recorded by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) at a 3.7 m spatial resolution. The image contains 220 bands and 145 × 145 pixels. Channels affected by noise and water absorption were removed, leaving 200 channels. The ground reference data contain 16 classes. Table 1 lists the classes with the number of samples, training points, and test points in the Indian Pines dataset, corresponding to the five-fold cross-validation model.

Table 1.

Classes and number of test and training samples for Indian Pines (corresponding to Five-Fold cross-validation model).

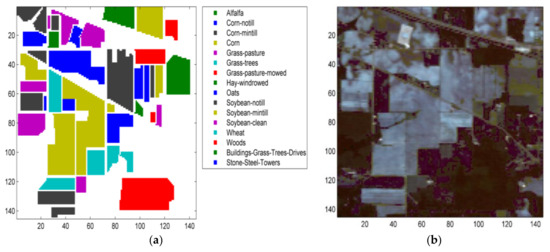

Figure 5 shows a ground reference map and a false color composition of the Indian Pines scene.

Figure 5.

(a) Ground reference map of Indian Pines dataset. (b) False color composition of the Indian Pines dataset.

5.1.2. The Kennedy Space Center (KSC) Dataset

The Kennedy Space Center (KSC) scene, located in Florida, was acquired by AVIRIS. The image is 512 × 614 pixels with an 18 m spatial resolution. After removing water-absorption and noisy bands, 176 bands were retained in our experiments. The ground reference data contain 13 classes. Table 2 lists the classes with the number of samples, training points, and test points in the Kennedy Space Center (KSC) dataset, corresponding to the five-fold cross-validation model.

Table 2.

Classes and number of test and training samples for Kennedy Space Center (KSC) dataset (corresponding to Five-Fold cross-validation model).

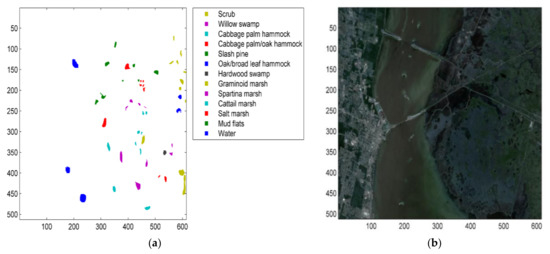

Figure 6 shows a ground reference map and a false color composition of the Kennedy Space Center scene.

Figure 6.

(a) Ground reference map of Kennedy Space Center dataset. (b) False color composition of Kennedy Space Center dataset.

5.2. Evaluation Process

To assess the new DCT-based preprocessing procedure for ICA, linear SVM and K-NN classifiers were applied to the experimental datasets after ICA without preprocessing, after ICA with PCA preprocessing, and after ICA with DCT preprocessing. Accuracy was estimated with three traditional measures defined as in [39]: the Average Accuracy (AA), the Overall Accuracy (OA), and the kappa coefficient (κ). AA is given by

$$AA = \frac{1}{n} \sum_{c=1}^{n} CA_c$$

where CA_c is the class-wise accuracy of the c-th class and n is the total number of classes in the hyperspectral image. OA and κ are defined by [39]:

$$OA = \frac{C}{P}, \qquad \kappa = \frac{P \cdot C - S}{P^2 - S}$$

where P is the total number of pixels, C is the number of correctly classified pixels, and S is the sum, over all classes, of the products of the corresponding row and column totals of the confusion matrix.
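
The three measures can be computed directly from a confusion matrix. This pure-Python sketch assumes that rows are reference classes and columns are predicted classes, and takes the class-wise accuracy CA_c to be the fraction of reference pixels of class c that are correctly labeled; the 2 × 2 matrix is hypothetical.

```python
def accuracy_metrics(cm):
    """OA, AA and kappa from a square confusion matrix.

    Convention assumed here: cm[r][c] counts pixels whose reference class is r
    and whose predicted class is c.
    """
    n = len(cm)
    P = sum(sum(row) for row in cm)                       # total number of pixels
    C = sum(cm[i][i] for i in range(n))                   # correctly classified pixels
    row_tot = [sum(row) for row in cm]
    col_tot = [sum(col) for col in zip(*cm)]
    S = sum(r * c for r, c in zip(row_tot, col_tot))     # sum of row x column totals
    oa = C / P
    class_acc = [cm[i][i] / row_tot[i] for i in range(n)]   # CA_c for each class
    aa = sum(class_acc) / n
    kappa = (P * C - S) / (P * P - S)
    return oa, aa, kappa


cm = [[50, 10],
      [5, 35]]
oa, aa, kappa = accuracy_metrics(cm)
print(round(oa, 4), round(aa, 4), round(kappa, 4))   # 0.85 0.8542 0.6939
```

Here S = 60 × 55 + 40 × 45 = 5100, so κ = (100 × 85 − 5100) / (100² − 5100) ≈ 0.694; kappa is lower than OA because it discounts the agreement expected by chance.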

Five-fold cross-validation was used as the validation model for classification.
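
Five-fold cross-validation can be sketched as follows. The folds are assumed here to be stratified, that is, built class by class so that every fold keeps roughly the class proportions of the labeled data; the text does not say how the folds were drawn, so this, the shuffling seed, and the labels are assumptions for illustration.

```python
import random
from collections import defaultdict


def stratified_folds(labels, k=5, seed=0):
    """Assign each sample index to one of k folds, class by class.

    Shuffling within each class and dealing indices round-robin keeps the class
    proportions of every fold close to those of the whole dataset.
    """
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)
    return folds


labels = ["corn"] * 50 + ["grass"] * 20 + ["oats"] * 10   # hypothetical labels
folds = stratified_folds(labels)
for test_fold in range(5):
    test = folds[test_fold]
    train = [i for f, fold in enumerate(folds) if f != test_fold for i in fold]
    # Train a classifier on `train`, evaluate it on `test`, then average the scores.
    print(test_fold, len(train), len(test))   # 64 training and 16 test samples
```

Stratification matters for classes with few labeled samples, which could otherwise be missing entirely from some test folds.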

6. Results and Discussion

6.1. Intrinsic Dimension Criterion

In this section, we apply the HySime criterion for intrinsic dimension estimation to the experimental datasets for the three dimensionality reduction techniques: ICA, PCA, and DCT. The results are summarized in Table 3.

Table 3.

Intrinsic dimension estimation.

The results in the following section are based on retaining 18 components for the Indian Pines dataset and 32 components for the Kennedy Space Center dataset, for ICA, PCA, and DCT alike, as listed in Table 3.

6.2. Classification of Indian Pines and Kennedy Space Center Datasets

Table 4 and Table 5 present the per-class classification accuracy, OA, AA, kappa coefficient, and execution time for the Indian Pines and Kennedy Space Center datasets, respectively.

Table 4.

Classification accuracy (%) using K-NN and SVM classifiers on the Indian Pines Dataset.

Table 5.

Classification accuracy using K-NN and SVM classifiers on the KSC Dataset.

A comparison of the accuracy results in Table 4 and Table 5 shows that the proposed DCT-ICA method outperforms the other tested approaches for almost all classes. Its OA, AA, and kappa coefficient are also higher than those of ICA and PCA-ICA. Specifically, with the SVM classifier, DCT-ICA gained about 10% to 15% in OA on the Indian Pines dataset and 13% to 15% on the Kennedy Space Center dataset.

The proposed DCT-ICA approach substantially improves performance even for classes with few labeled training samples, such as classes 1, 7, and 9 in the Indian Pines dataset; such classes are often discarded in other studies to improve the average classification accuracy [40,41,42,43].

Furthermore, classification execution times (on a Dual-Core 2 GHz CPU with 8 GB of memory) were considerably reduced for the two datasets, using both classifiers. For instance, in Kennedy Space Center dataset, the K-NN execution time was around 0.95 s when performing ICA, around 0.49 s when performing PCA-ICA, and was reduced to around 0.20 s when using our proposed DCT-ICA approach.

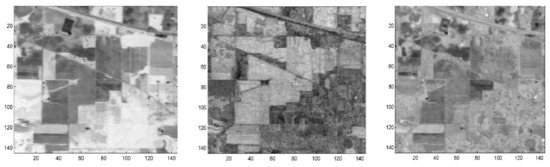

Figure 7 and Figure 8 show three arbitrarily selected independent components obtained with DCT-ICA on the Indian Pines and Kennedy Space Center datasets, respectively.

Figure 7.

Plot of three arbitrarily selected independent components (ICs) from the DCT-ICA feature space in the Indian Pines dataset.

Figure 8.

Plot of three arbitrarily selected independent components (ICs) from the DCT-ICA feature space in the Kennedy Space Center dataset.

As Figure 7 and Figure 8 show, almost all the selected independent components obtained with DCT-ICA exhibit high contrast between objects and discriminate effectively between the different classes.

Experimental results confirm the superiority of the proposed DCT preprocessing procedure for ICA dimensionality reduction on both datasets and with both classifiers. Overall, DCT preprocessing clearly enhanced classification accuracy. The accuracy improvements were most evident with the SVM classifier, whereas the sharpest reductions in execution time were obtained with the K-NN classifier.

7. Conclusions

In this study, a novel DCT preprocessing procedure for ICA in hyperspectral dimensionality reduction is proposed. The procedure applies the DCT to each pixel's spectral curve and uses the HySime method to estimate the number of coefficients to retain, constructing a new reduced feature space in which the most useful information is packed into the first low-frequency components. Performing ICA on this reduced feature space addresses both the high computational cost of ICA and the absence of specific criteria for selecting its components, because the useful information has already been selected by the DCT.

SVM and K-NN classifiers were applied to the reduced data to assess the effectiveness of the approach. Experimental results on two real datasets demonstrate that the proposed DCT-ICA procedure outperforms ICA without preprocessing and ICA with PCA preprocessing in terms of accuracy, even for classes with small training sets, while also reducing execution time. With the SVM classifier, the overall classification accuracy of ICA in the reduced feature space improved by about 10% to 15% and 13% to 15% on the two experimental datasets, respectively.

Author Contributions

All authors conceived and designed the study. Kamel BOUKHECHBA carried out the experiments. All authors discussed the basic structure of the manuscript, and Razika BAZINE finished the first draft. HUAYI Wu and Kamel BOUKHECHBA reviewed and edited the draft.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Feng, F.; Li, W.; Du, Q.; Zhang, B. Dimensionality reduction of hyperspectral image with graph-based discriminant analysis considering spectral similarity. Remote Sens. 2017, 9, 323.

- Hughes, G.F. On the Mean Accuracy of Statistical Pattern Recognizers. IEEE Trans. Inf. Theory 1968, 14, 55–63.

- Pan, L.; Li, H.-C.; Deng, Y.-J.; Zhang, F.; Chen, X.-D.; Du, Q. Hyperspectral dimensionality reduction by tensor sparse and low-rank graph-based discriminant analysis. Remote Sens. 2017, 9, 452.

- Li, J.; Marpu, P.R.; Antonio, P.; Bioucas-Dias, J.M.; Benediktsson, J.A. Generalized Composite Kernel Framework for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2010, 32, 29–43.

- Du, Q.; Yang, H. Similarity-based unsupervised band selection for hyperspectral image analysis. IEEE Geosci. Remote Sens. Lett. 2008, 5, 564–568.

- Gao, L.; Zhao, B.; Jia, X.; Liao, W.; Zhang, B. Optimized kernel minimum noise fraction transformation for hyperspectral image classification. Remote Sens. 2017, 9, 548.

- Hyvarinen, A.; Karhunen, J.; Oja, E. Independent Component Analysis; Haykin, S., Ed.; John Wiley: Hoboken, NJ, USA, 2001; ISBN 9780471405405.

- Villa, A.; Chanussot, J.; Jutten, C.; Benediktsson, J.; Moussaoui, S. On the Use of ICA for Hyperspectral Image Analysis. Int. Geosci. Remote Sens. Symp. 2009, 4, 97–100.

- Wang, J.; Chang, C.I. Independent component analysis-based dimensionality reduction with applications in hyperspectral image analysis. IEEE Trans. Geosci. Remote Sens. 2006, 44, 1586–1600.

- Du, Q.; Kopriva, I.; Szu, H. Independent-component analysis for hyperspectral remote sensing imagery classification. Opt. Eng. 2006, 45, 17008.

- Wang, J.; Wu, Z.; Jeon, G.; Jeong, J. An efficient spatial deblocking of images with DCT compression. Digit. Signal Process. A Rev. J. 2015, 42, 80–88.

- Oktem, R.; Ponomarenko, N.N. Image filtering based on discrete cosine transform. Telecommun. Radio Eng. 2007, 66, 1685–1701.

- Gao, L.R.; Zhang, B.; Zhang, X.; Zhang, W.J.; Tong, Q.X. A New Operational Method for Estimating Noise in Hyperspectral Images. IEEE Geosci. Remote Sens. Lett. 2008, 5, 83–87.

- Martin-Herrero, J. A New Operational Method for Estimating Noise in Hyperspectral Images. IEEE Geosci. Remote Sens. Lett. 2008, 5, 705–709.

- Chen, G.; Qian, S.-E. Denoising of hyperspectral imagery using principal component analysis and wavelet shrinkage. IEEE Trans. Geosci. Remote Sens. 2011, 49, 973–980.

- Karray, E.; Loghmari, M.A.; Naceur, M.S. Second-Order Separation by Frequency-Decomposition of Hyperspectral Data. Am. J. Signal Process. 2012, 2, 122–133.

- Jing, L.; Yi, L. Hyperspectral remote sensing images terrain classification in DCT SRDA subspace. J. China Univ. Posts Telecommun. 2015, 22, 65–71.

- Fakiris, E.; Papatheodorou, G.; Geraga, M.; Ferentinos, G. An automatic target detection algorithm for swath sonar backscatter imagery, using image texture and independent component analysis. Remote Sens. 2016, 8, 373.

- Tu, T. Unsupervised signature extraction and separation in hyperspectral images: a noise-adjusted fast independent component analysis approach. Opt. Eng. 2000, 39, 897–906.

- Chang, C.-I.; Chiang, S.-S.; Smith, J.A.; Ginsberg, I.W. Linear spectral random mixture analysis for hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2002, 40, 375–392.

- Zhang, X.; Chen, C.H. New independent component analysis method using higher order statistics with application to remote sensing images. Opt. Eng. 2002, 41, 12–41.

- Yusuf, B.L.; He, Y. Application of hyperspectral imaging sensor to differentiate between the moisture and reflectance of healthy and infected tobacco leaves. African J. Agric. Res. 2011, 6, 6267–6280.

- Cover, T.M.; Thomas, J.A. Elements of Information Theory; Wiley-Interscience: New York, NY, USA, 1991; ISBN 0-471-06259-6.

- Hyvärinen, A.; Oja, E. Independent component analysis: Algorithms and applications. Neural Netw. 2000, 13, 411–430.

- Haykin, S. Neural Networks and Learning Machines; Pearson: Hoboken, NJ, USA, 2009; ISBN 978-0-13-147139-9.

- Amari, S.; Cichocki, A.; Yang, H.H. A new learning algorithm for blind signal separation. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 1996; pp. 757–763.

- Bell, A.J.; Sejnowski, T.J. A non-linear information maximisation algorithm that performs blind separation. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 1995; pp. 467–474.

- Cardoso, J.-F. Infomax and maximum likelihood for blind source separation. IEEE Signal Process. Lett. 1997, 4, 112–114.

- Pham, D.; Garrat, P.; Jutten, C. Separation of a mixture of independent sources through a maximum likelihood approach. In Proceedings of the EUSIPCO-92, VI European Signal Processing Conference, Brussels, Belgium, 24–27 August 1992; pp. 771–774.

- Langlois, D.; Chartier, S.; Gosselin, D. An introduction to independent component analysis: InfoMax and FastICA algorithms. Tutor. Quant. Methods Psychol. 2010, 6, 31–38.

- Hyvärinen, A.; Oja, E. One-unit learning rules for independent component analysis. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 1997; pp. 480–486.

- Hyvarinen, A. Fast and robust fixed-point algorithms for independent component analysis. IEEE Trans. Neural Netw. 1999, 10, 626–634.

- Saalbach, A.; Lange, O.; Nattkemper, T.; Meyer-Baese, A. On the application of (topographic) independent and tree-dependent component analysis for the examination of DCE-MRI data. Biomed. Signal Process. Control 2009, 4, 247–253.

- Du, W.; Ma, S.; Fu, G.S.; Calhoun, V.D.; Adali, T. A novel approach for assessing reliability of ICA for FMRI analysis. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 2084–2088.

- International Organization for Standardization. ISO/IEC JTC1 CD 11172, Coding of Moving Pictures and Associated Audio for Digital Storage Media up to 1.5 Mbits/s; ISO: Geneva, Switzerland, 1992.

- Ahmed, N.; Natarajan, T.; Rao, K.R. Discrete Cosine Transform. Comput. IEEE Trans. 1974, C-23, 90–93.

- Bioucas-Dias, J.M.; Nascimento, J.M.P. Estimation of signal subspace on hyperspectral data. Proc. SPIE 2005, 5982, 59820L.

- Ghamary Asl, M.; Mojaradi, B. Virtual dimensionality estimation in hyperspectral imagery based on unsupervised feature selection. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, III-7, 17–23.

- Story, M.; Congalton, R.G. Accuracy assessment: a user’s perspective. Photogramm. Eng. Remote Sens. 1986, 52, 397–399.

- Li, J.; Xi, B.; Li, Y.; Du, Q.; Wang, K. Hyperspectral Classification Based on Texture Feature Enhancement and Deep Belief Networks. Remote Sens. 2018, 10, 396.

- Zhang, M.; Li, W.; Du, Q. Diverse Region-Based CNN for Hyperspectral Image Classification. IEEE Trans. Image Process. 2018, 27, 2623–2634.

- Li, W.; Tramel, E.W.; Prasad, S.; Fowler, J.E. Nearest regularized subspace for hyperspectral classification. IEEE Trans. Geosci. Remote Sens. 2014, 52, 477–489.

- Melgani, F.; Bruzzone, L. Classification of hyperspectral remote sensing images with support vector machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1778–1790.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).