Abstract

Color image demosaicking for the Bayer color filter array is an essential image processing operation for acquiring high-quality color images. Recently, residual interpolation (RI)-based algorithms have demonstrated superior demosaicking performance over conventional color difference interpolation-based algorithms. In this paper, we propose adaptive residual interpolation (ARI) that improves existing RI-based algorithms by adaptively combining two RI-based algorithms and selecting a suitable iteration number at each pixel. These are performed based on a unified criterion that evaluates the validity of an RI-based algorithm. Experimental comparisons using standard color image datasets demonstrate that ARI can improve existing RI-based algorithms by more than 0.6 dB in the color peak signal-to-noise ratio and can outperform state-of-the-art algorithms based on training images. We further extend ARI for a multispectral filter array, in which more than three spectral bands are arrayed, and demonstrate that ARI can achieve state-of-the-art performance also for the task of multispectral image demosaicking.

1. Introduction

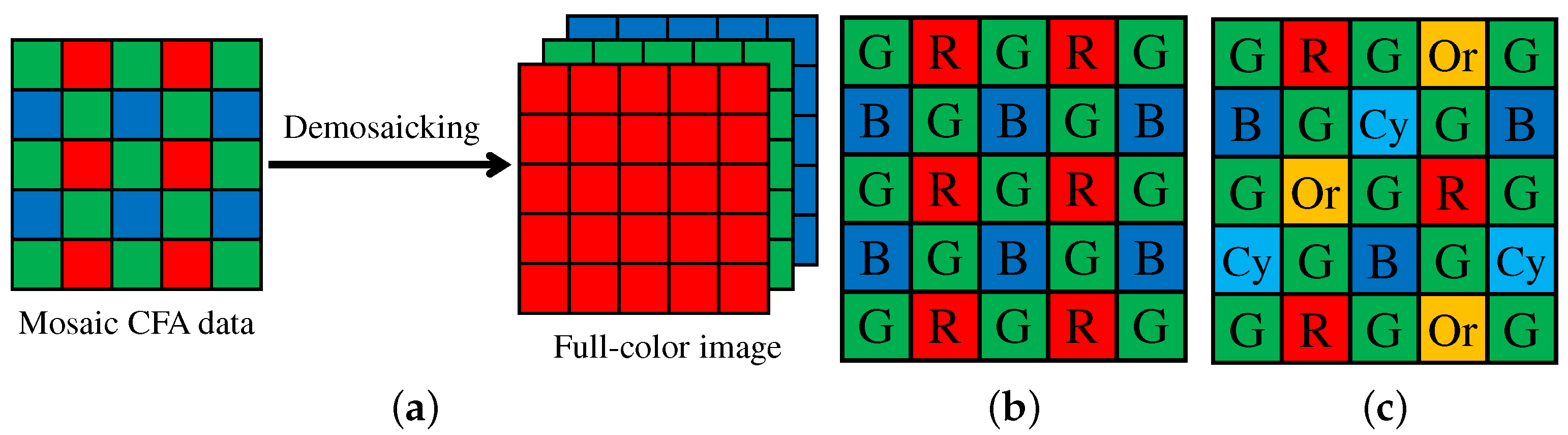

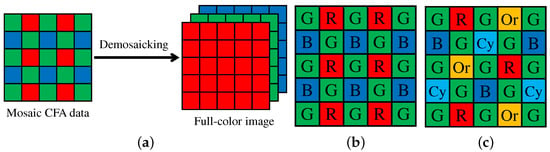

A single image sensor with a color filter array (CFA) is widely used in current color digital cameras, in which only one pixel value among RGB values is recorded at each pixel [1]. The other two missing pixel values are estimated from the recorded mosaic data of RGB values by an interpolation process called demosaicking (or demosaicing) [2,3,4,5]. Figure 1a illustrates the demosaicking process, which plays a crucial role in acquiring high-quality color images using a color digital camera.

Figure 1.

(a) Color image demosaicking process; (b) Bayer CFA [6]; (c) five-band MSFA [7].

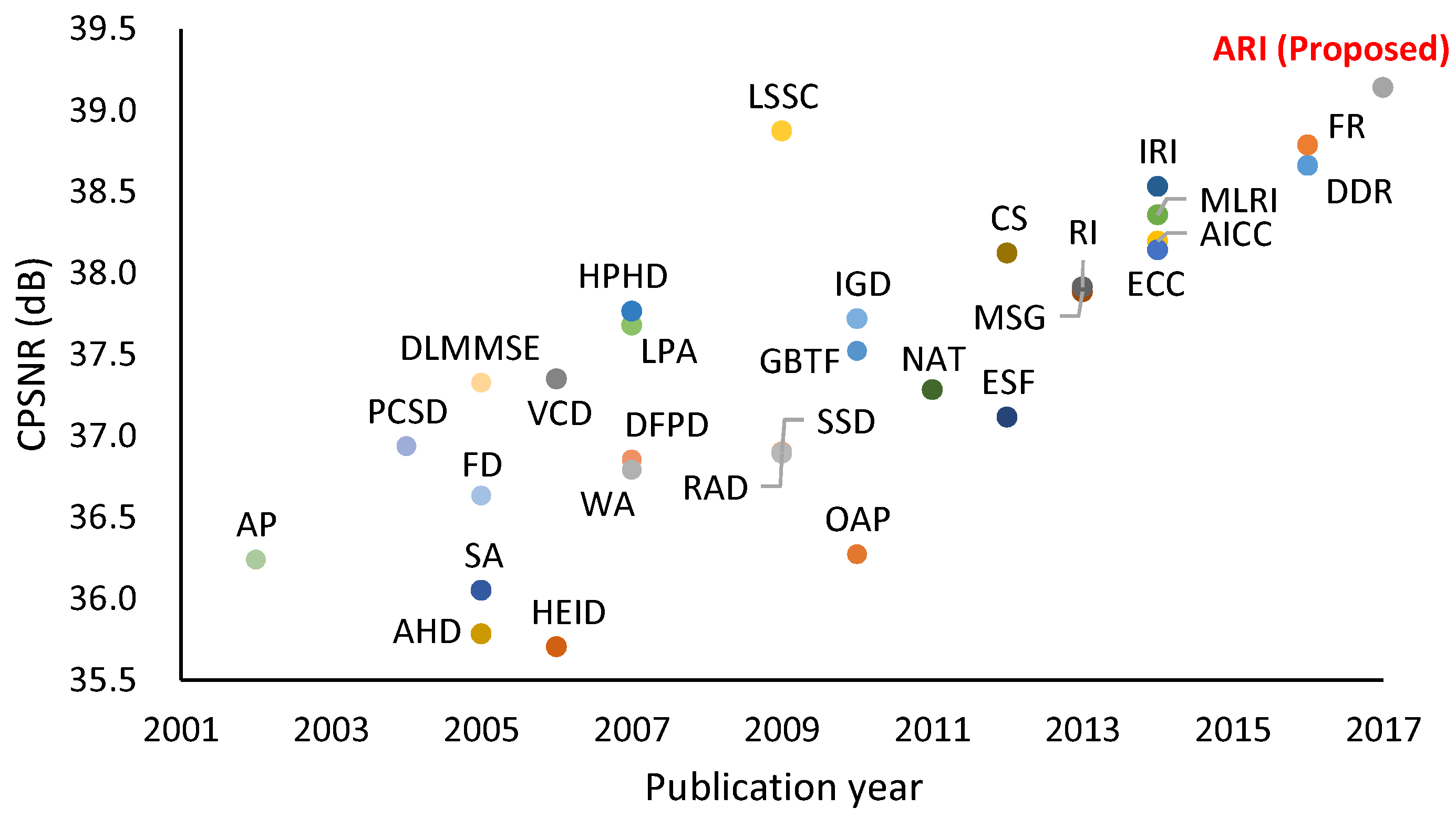

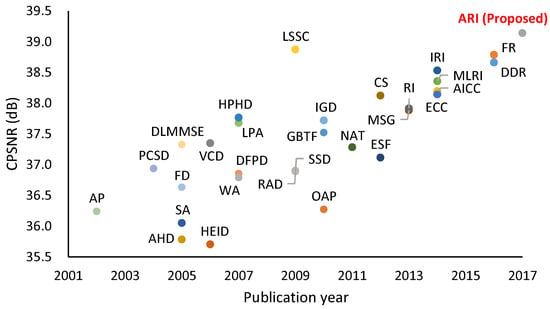

The most widely used CFA is the Bayer CFA [6] (Figure 1b), for which numerous demosaicking algorithms have been proposed [2,3,4,5]. Figure 2 shows the color peak signal-to-noise ratio (CPSNR) performance of representative algorithms on a standard color image dataset [4]. The CPSNR performance has continuously been improved, suggesting the ongoing demand for more highly accurate demosaicking algorithms.

Figure 2.

CPSNR performance of representative Bayer demosaicking algorithms on standard IMAX and Kodak 30 color images [4]. The publication year is primarily based on the journal publication except for recent algorithms presented at conferences.

As a recent trend, residual interpolation (RI)-based algorithms have demonstrated superior demosaicking performance [8,9,10,11,12]. A key feature of the RI-based algorithms is to perform interpolation in a residual domain, where the residual is defined as the difference between a tentatively estimated pixel value and a corresponding observed pixel value. The papers [10,12] show that the residuals generally become smoother than conventional color differences, contributing to more accurate interpolation. The RI was originally proposed in [8] (denoted as original RI (In Section 1 and Section 2, the bold typeface is used to represent the abbreviated name of the algorithms compared in Figure 2. Hereafter, we might omit full notations of the names for the simplicity of the notations.)) and then extended in a minimized-Laplacian version (MLRI [9,10]) or an iterative version (IRI [11,12]). Recent algorithms such as ECC [13] and fused regression (FR) [14,15] also use an RI-based algorithm to assist in improving demosaicking performance. The framework of the RI-based algorithms will be reviewed in Section 3.

In this paper, we first propose adaptive residual interpolation (ARI) for the Bayer CFA. ARI improves the existing RI-based algorithms by introducing adaptive aspects as follows. (i) ARI adaptively combines RI and MLRI at each pixel, and (ii) ARI adaptively selects a suitable iteration number for each pixel, instead of using a common iteration number for all of the pixels, as conducted in IRI. These are performed based on a unified criterion that evaluates the validity of an RI-based algorithm. Figure 2 demonstrates that ARI can improve the existing RI-based algorithms by more than 0.6 dB in CPSNR and can outperform state-of-the-art algorithms based on training images, such as LSSC [16] and FR [14,15].

We next consider the task of multispectral image demosaicking. The CFA and demosaicking technologies are extensible for multispectral imaging with a single image sensor [17]. The only modification required in hardware is to replace the CFA with a multispectral filter array (MSFA), in which more than three spectral bands are arrayed. Figure 1c shows a representative five-band MSFA, consisting of typical RGB bands and additional orange and cyan bands (denoted as Or and Cy bands, respectively) in the visible spectrum [7]. The multispectral extension of the CFA has attracted increasing attention because of its potential for compact and low-cost multispectral image acquisition. However, the demosaicking process for an MSFA poses a challenging problem owing to the very sparse sampling of each spectral band in the MSFA. To address this challenge, we extend our proposed ARI for multispectral image demosaicking, considering the five-band MSFA of Figure 1c. Experimental comparisons using several multispectral image datasets demonstrate that ARI can achieve state-of-the-art performance also for the task of multispectral image demosaicking.

An earlier version of this paper was published in [18]. This paper provides three major extensions. First, we improve the demosaicking accuracy of the R and B bands in color image demosaicking by incorporating ARI into not only the G band interpolation (as performed in [18]), but also the R and B bands’ interpolation. Second, we extend ARI for multispectral image demosaicking and demonstrate that ARI can achieve state-of-the-art performance. Third, we include the detailed explanation of our algorithm and extensive experimental comparisons with existing algorithms.

The rest of this paper is organized as follows. Section 2 briefly reviews existing Bayer and multispectral demosaicking algorithms. Section 3 outlines the framework of RI-based algorithms. Section 4 explains our proposed ARI for the Bayer CFA. Section 5 extends ARI for multispectral image demosaicking. Section 6 presents experimental results, and Section 7 concludes the paper.

2. Related Works

2.1. Bayer Demosaicking Algorithms

Numerous demosaicking algorithms have been proposed for the Bayer CFA. While referring to the well-categorized review in [19], we classify existing algorithms into several categories.

Interpolation-based algorithms first interpolate the G band, which has a sampling density double that of the R and B bands. Then, the R and B bands are interpolated in a color ratio [20,21,22] or a color difference domain [23,24] based on the assumption that the color ratios (i.e., / and /) or the color differences (i.e., - and -) are smooth within a local area of an image. In practice, color differences are used more often than color ratios because of their stable properties [25].

The interpolation-based algorithms mainly focus on improving the interpolation accuracy of the G band using directional interpolation that combines two interpolation results along the horizontal and vertical directions. The two results are combined (or one result is selected) effectively based on such a primary-consistent soft-decision (PCSD [26]), homogeneity metrics on the CIE Lab color space (AHD [27]), directional linear minimum mean square-error estimation (DLMMSE [28]), variance of color differences (VCD [29]), directional filtering and a posteriori decision (DFPD [30]), heterogeneity-projection hard-decision (HPHD [31]), local polynomial approximation (LPA [32]), integrated gradients (IGD [33]), gradients of color differences (GBTF [34]) and multi-scale color gradients (MSG [35]). Some algorithms update interpolated pixel values once or iteratively (SA [25], HEID [36] and ESF [37]). Non-local self-similarities (SSD [38,39], NAT [40] and AICC [41,42]) or more than two directions (CS [43]) are also used. The algorithm (ECC [13]) effectively combines band-independent and color difference interpolation results.

RI-based algorithms have shown superior demosaicking performance in recent years. The RI-based algorithms also interpolate the G band first. Then, they generate tentative estimates of the R and B bands (denoted as and , respectively) from the interpolated G band. Then, the residuals (denoted as - and -, respectively) are calculated and interpolated. The RI-based algorithms are motivated by the observation that the residuals generally become smoother than the conventional color differences (i.e., - and -), contributing to more accurate interpolation. After the original RI was proposed (RI [8]), several extensions were introduced using minimized-Laplacian (MLRI [9,10]), iteration (IRI [11,12]) or four directionality [44]. The RI-based algorithms will be further reviewed in Section 3.

Frequency-domain algorithms first transform the mosaic CFA image into the frequency domain, where the CFA image is expressed as a combination of luminance and chrominance components. These components are then separated by frequency filtering and finally converted into the RGB components (FD [45,46]). In this category, researchers focus on designing effective frequency filtering algorithms such as adaptive filtering [47] and least-squares luma-chroma demultiplexing [48] (The source code of this algorithm is publicly available. However, we excluded this algorithm from comparison in Figure 2 because the necessary trained filters are provided only for the Kodak dataset.).

Wavelet-based algorithms perform a sub-band analysis of the RGB or luminance image. The alternative projection algorithm (AP [49]) decomposes initially interpolated R, G and B images into sub-bands and iteratively updates the high-frequency sub-bands of the R and B images in accordance with those of the G image. This algorithm is accelerated using one-step implementation without iteration (OAP [50]). In another algorithm, a wavelet analysis of the luminance component is performed to estimate interpolation weights for the horizontal and vertical directions (WA [51]).

Reconstruction-based algorithms solve the demosaicking process as an optimization problem with a proper regularization or prior. A regularization approach (RAD [52]) uses prior knowledge regarding natural color images, such as smoothness and inter-band correlations. Other algorithms are based on dictionary learning with non-local sparse models (LSSC [16]) or the theory of compressed sensing [53].

Regression-based algorithms learn efficient regressors based on training images [14,15]. Directional difference regression (DDR [15]) learns the regressors that estimate directional color differences of the training images (as ground truths without mosaicking) closest to those calculated from the input mosaic CFA data. To improve performance, fused regression (FR [15]) fuses the DDR and the other regressors that estimate the RGB values of the training images (as ground truths without mosaicking) closest to the RGB values initially interpolated using MLRI [9,10].

Short summary: Generally, demosaicking algorithms based on training images, such as LSSC [16], DDR [15] and FR [15], can offer high performance results, as demonstrated in Figure 2. In contrast, interpolation-based algorithms are flexibly applicable to any kind of input data without relying on training images. This property is important in fields such as medical and multispectral imaging, because obtaining high-quality and sufficient training images is laborious. In this paper, we focus on improving the RI-based algorithms as will be explained in Section 4. We refer to the survey papers [2,3,4,5] for complementary information.

2.2. Multispectral Demosaicking Algorithms

While the study of Bayer demosaicking algorithms has a long history, that of multispectral demosaicking algorithms has attracted attention only in recent years. Because there is no wide-spread MSFA currently, research on multispectral demosaicking algorithms has been conducted in conjunction with the design of an MSFA.

Miao et al. proposed a generic algorithm for designing an MSFA with an arbitrary number of spectral bands [54]. They also proposed a general binary tree-based edge-sensing (BTES) demosaicking algorithm [55] for the MSFA designed by the generic method. The BTES algorithm recursively performs edge-sensing interpolation based on a binary tree. Although the generic and the BTES algorithms are useful in providing a general framework, the performance of classical edge-sensing interpolation is limited.

Monno et al. proposed a five-band MSFA [7], as shown in Figure 1c. This five-band MSFA consists of typical RGB bands and additional Or and Cy bands in the visible spectrum. The advantage of this MSFA lies in keeping the sampling density of the G band in the MSFA as high as that in the Bayer CFA. Based on that advantage, several efficient demosaicking algorithms have been proposed [7,56,57,58,59,60]. These algorithms first interpolate the most densely-sampled G band, which is effectively used as a guide for interpolating the other bands. In [56], adaptive kernel upsampling (AKU) was proposed to generate an interpolated five-band image using the interpolated G band as a guide for estimating interpolation directions of the other bands. In [7,57], guided filtering (GF) [61] is applied to obtain an interpolated result. In [58], an RI-based algorithm was incorporated into the interpolation of both the G band and the other bands. In [59,60], the high-frequency component of the G band was effectively exploited for interpolating the other bands based on the inter-band correlation analysis.

Recently, multispectral demosaicking algorithms for other types of MSFA have also been proposed, such as the algorithm based on linear minimum mean square errors for an eight-band MSFA [62] and the algorithm using a pseudo-panchromatic image for a 16-band MSFA [63]. We refer to the paper [17] for a comprehensive review, in which other MSFAs, such as uniform MSFAs [64,65] and a seven-band MSFA [66], and demosaicking algorithms for those MSFAs are introduced.

In this paper, we propose a multispectral demosaicking algorithm for the five-band MSFA of Figure 1c, as will be described in Section 5. We consider that the use of that MSFA is a reasonable choice for three reasons. (i) As reported in [7], the considered MSFA has demonstrated better performance in comparison with other five-band MSFAs generated by the generic algorithm. (ii) The considered MSFA has already been realized in hardware as a prototype sensor [7]. (iii) Jia et al. have also used the same MSFA arrangement and have reported its effectiveness by the frequency domain analysis of the MSFA [67,68]. These facts suggest the potential of the considered MSFA.

3. Residual Interpolation Framework

In this section, we review the RI framework and three specific RI-based algorithms: RI [8], MLRI [9,10] and IRI [11,12].

3.1. General Processing Flow

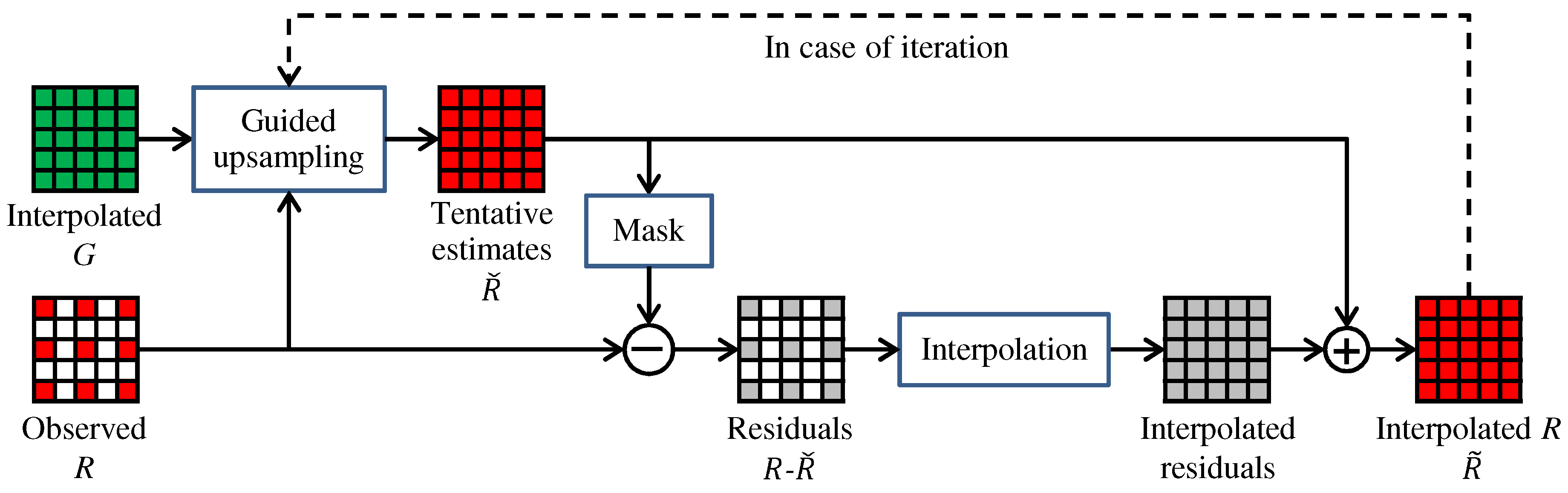

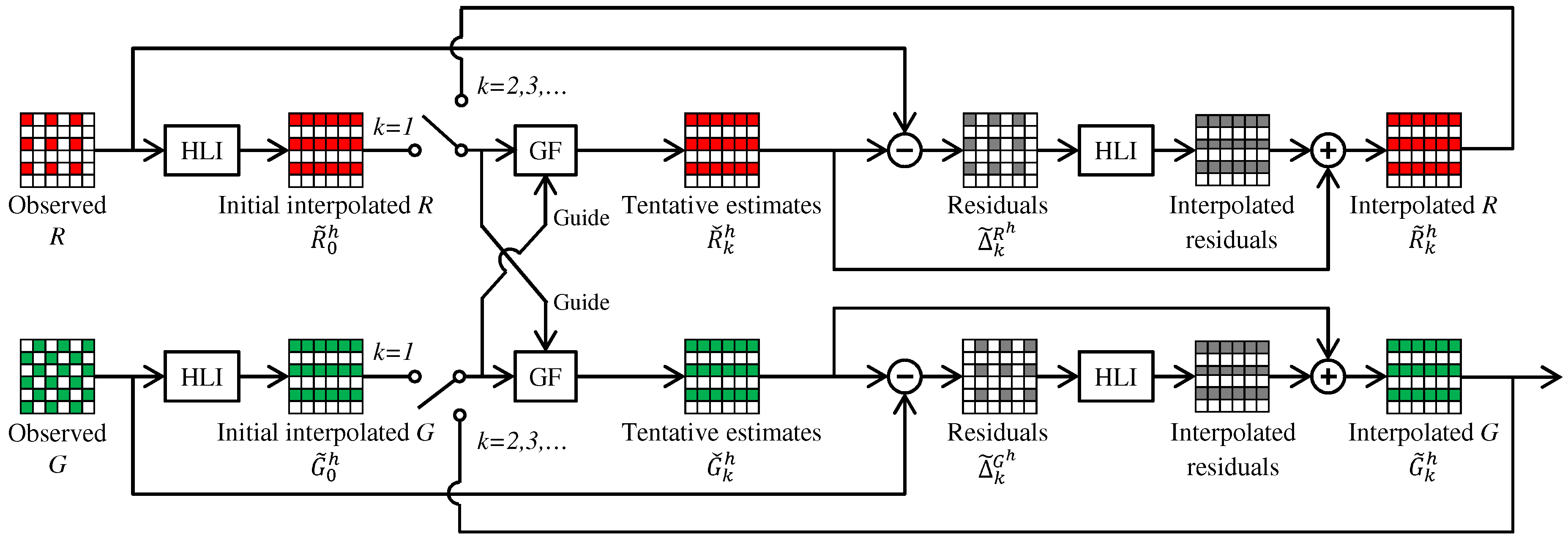

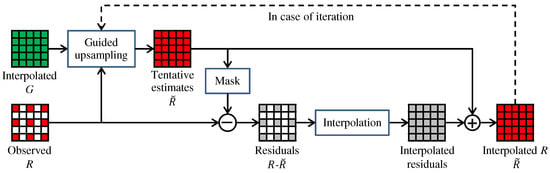

Figure 3 outlines the RI framework. Here, we assume that the G band interpolation is already completed and take the R band interpolation as an example to explain the framework. The RI framework consists of four steps. (i) Tentative estimates of the R band (denoted as ) are generated from the interpolated G band by guided upsampling of the observed R values. (ii) Residuals (denoted as R-) are calculated by taking the differences between the tentative estimates and the observed R values. (iii) The residuals are interpolated. (iv) The tentative estimates are added to the interpolated residuals to obtain the interpolated R band (denoted as ). The key effect of the RI framework is that the residuals become smoother than the conventional color differences (i.e., R-G) by effectively generating the tentative estimates [10,12]. This property increases the interpolation accuracy. A similar procedure can be applied for the G band interpolation, as will be detailed in Section 4. In the following, we explain three RI-based algorithms, which are different regarding tentative estimates generation.

Figure 3.

The RI framework.

3.2. Original RI

Original RI [8] generates the tentative estimates using GF [61]. For each local image window, GF generates the tentative estimates as a linear transformation of a guide. Here, the interpolated G band is used as the guide. The tentative estimates in a local window around a pixel are expressed as:

where represents the tentative estimate at a pixel in the window and is the pair of linear coefficients assumed to be constant in the window.

The cost function for to be minimized is expressed as:

where is a binary mask at the pixel that takes the value one for the observed R pixels and zero for the others (Original GF [61] has a smoothness term . For our purpose, the smoothness parameter is set as a very small value just to avoid division by zero, and thus, we omit the smoothness term from the cost function in Equations (2) and (3).). The above cost function indicates that the original RI minimizes the residuals (i.e., R-) themselves. Since the linear coefficients are calculated in a sliding window, the overlaps of the windows are averaged uniformly or with weights [10].

3.3. Minimized-Laplacian RI

MLRI [9,10] generates the tentative estimates by minimizing the Laplacian energy of the residuals, instead of the residuals themselves. For this purpose, GF is modified as follows. For each local window, the cost function for the gain component is expressed as:

where represents an approximate Laplacian value, and and are the R and the G values masked by , respectively. The approximate Laplacian value is calculated from the masked mosaic data by convolving the following sparse Laplacian filter:

Although the bias component does not affect the minimization of the Laplacian energy (i.e., ), is determined by minimizing Equation (2) under a given .

3.4. Iterative RI

IRI [11,12] introduces an iterative manner to the RI framework. The iterative manner is indicated by the dashed line in Figure 3, where the interpolated R band is used as the guide in the next iteration. In [11], the iteration is stopped based on a criterion that is defined by the magnitude and the smoothness of the residuals at the k-th iteration (i.e., R-) to assess how effectively the tentative estimates fit the observed values. In [12], a different stopping criterion is used to assess whether the new interpolation result becomes sufficiently close to the previous iteration result with a proper threshold (i.e., ). Both in [11,12], the iteration is stopped in a global manner, which means that a common iteration number is used for all pixels based on the criterion combined for all pixels.

4. Proposed Bayer Demosaicking Algorithm

4.1. Interpolation of the G Band

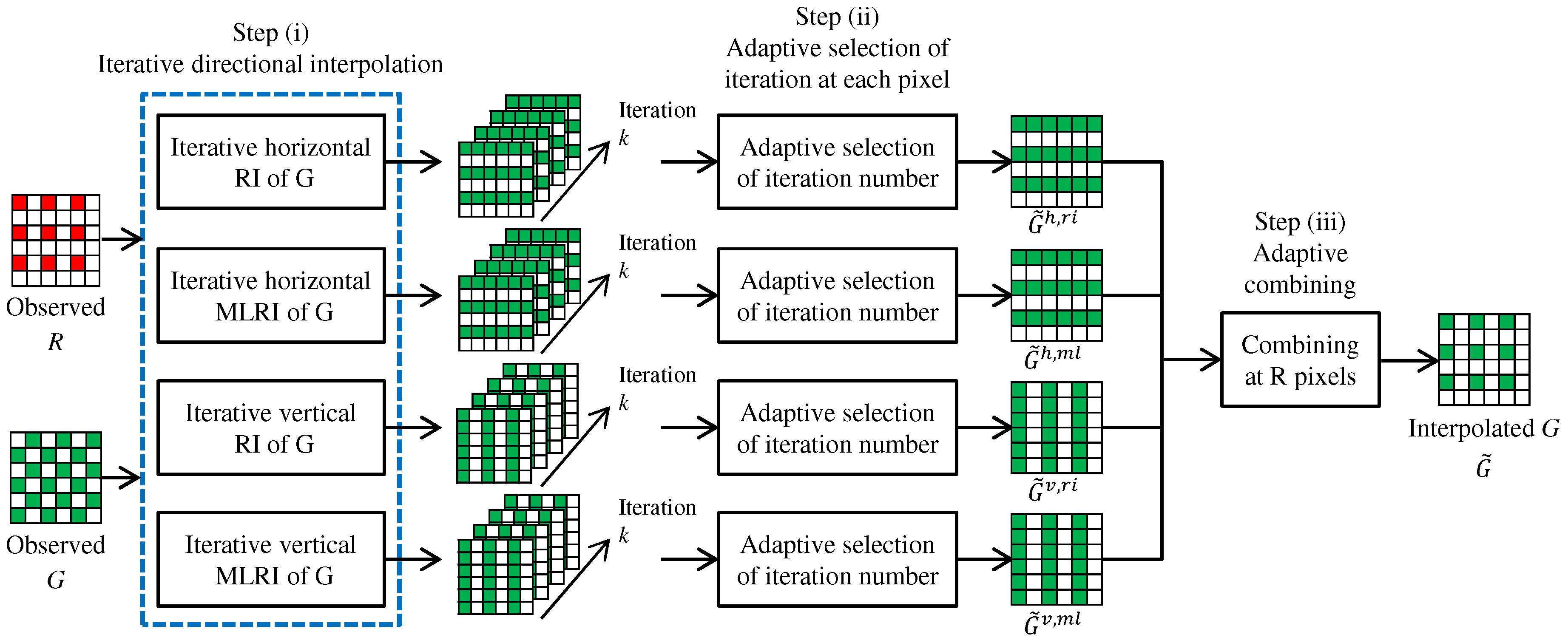

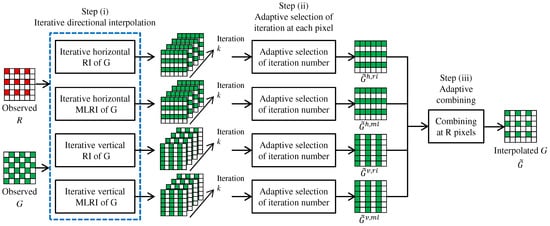

Our proposed algorithm first interpolates the G band. The overall flow of the G interpolation is illustrated in Figure 4. Here, we only explain the G interpolation for the R pixels. The G interpolation for the B pixels is performed in the same manner.

Figure 4.

Overall flow of the G interpolation at R pixels using ARI.

Our proposed G interpolation algorithm consists of three steps. (i) The G interpolation at the R lines, which represent the pixel rows that contain the R pixels, is performed in the horizontal and vertical directions. For each direction, RI and MLRI are applied in an iterative manner, respectively. As a result of each directional interpolation, a set of directionally-interpolated G results, where each result corresponds to one iteration, is generated. (ii) For each directional interpolation, a suitable iteration number is selected adaptively at each pixel from the set of directionally-interpolated G results. (iii) All directional results are adaptively combined by a weighted averaging at each R pixel to obtain the final G interpolation result. The sequence of the above steps is called ARI in the sense that it adaptively combines RI and MLRI and selects the iteration number at each pixel. Each step is detailed as below.

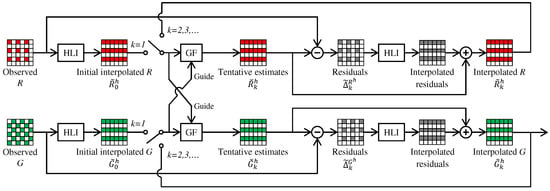

Step (i): Iterative directional interpolation. In this step, RI and MLRI are applied for iterative directional interpolation. Figure 5 illustrates the flow of the G interpolation at the R lines in the horizontal direction. The G interpolation in the vertical direction is performed in the same manner.

Figure 5.

Flow of the iterative horizontal G interpolation at the R lines by RI or MLRI.

First, horizontal linear interpolation (HLI) of the R and the G bands is performed at the R lines to obtain initial interpolation results as:

where and represent the interpolated pixel values, the subscript indicates the initial interpolation result at a pixel and the superscript h represents the horizontal direction.

Then, at each k-th iteration, tentative estimates of R and G are generated by linear transformations of the previous interpolation results (if , the initial interpolation results are used) as:

where and represent the tentative estimates and and are the linear coefficients. As described in Section 3.2 and Section 3.3, RI and MLRI calculate the linear coefficients using GF [61] and its modified version, respectively. Here, is used as the guide for generating and vice versa. At each local window, RI and MLRI calculate the linear coefficients by minimizing the following costs, respectively.

where and represent the costs for the tentative estimates of R and G, respectively.

After generating the tentative estimates, the residuals are calculated as:

Then, the residuals are interpolated by the HLI as:

Finally, the tentative estimates are added to obtain the k-th horizontally interpolated results as:

In the iterative manner [11,12], the tentative estimates are updated in accordance with Equation (6) using the obtained interpolation results. In [11,12], the iteration is stopped globally based on the criterion described in Section 3.4, meaning that a common iteration number is used for all of the pixels. In contrast, ARI generates a set of directionally-interpolated results, in which each result corresponds to one iteration, and adaptively selects a suitable iteration number for each pixel in the next step.

Step (ii): Adaptive selection of iteration number. In this step, for each directional interpolation, a suitable iteration number is adaptively selected at each pixel. This is performed based on a criterion similar to that in [11]. Here, we only explain the criterion for the horizontal direction. The criterion for the vertical direction is calculated in the same manner.

We define the criterion in a pixel-by-pixel manner, instead of the global manner in [11], based on the following differences.

where and represent the differences between the k-th tentative estimates and the previous interpolation results. These differences assess how effectively the tentative estimates converge at the k-th iteration. The criterion value for a pixel at the k-th iteration is defined based on the magnitude and the smoothness of the above differences as:

where and . The above criterion value becomes small if the magnitude of the differences (minimized in RI) or the smoothness of the differences (minimized in MLRI) is small. The parameters are set empirically as , the same parameter values as used in [11].

Based on criterion values corresponding to each pixel, the suitable iteration number is adaptively selected at each pixel as:

where the function represents spatial Gaussian smoothing. We empirically chose for the spatial Gaussian smoothing of criterion values and for the maximum iteration number. Although our iteration strategy does not guarantee theoretical convergence at each pixel, the selection of the most suitable iteration number based on the minimal criterion value performs well. Hereafter, we remove the subscript k, indicating that the suitable iteration number has already been selected at each pixel.

Step (iii): Adaptive combining of all directional results. In this step, directional interpolation results of RI and MLRI are combined by the weighted averaging as:

where h and v represent the horizontal and the vertical directions and and represent the results of RI and MLRI, respectively. Each weight is calculated based on the smoothed criterion value as:

where a small criterion value contributes to a large weight.

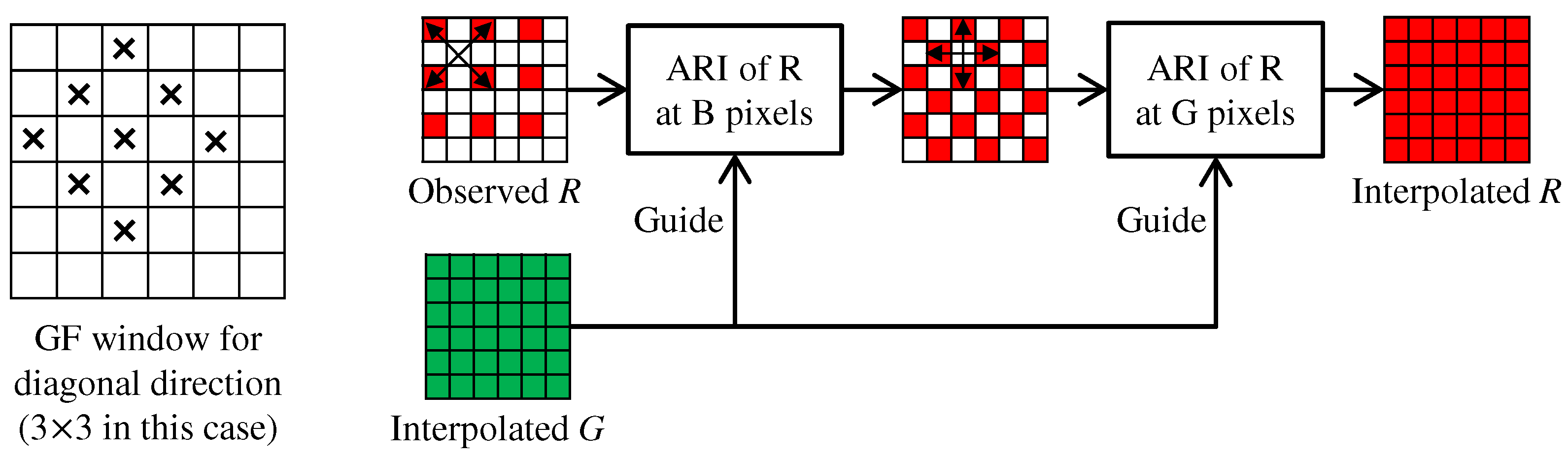

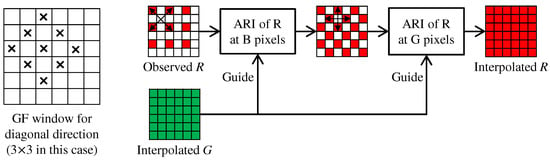

4.2. Interpolation of the R and B Bands

In IRI [11,12], iteration is performed only for the G interpolation, while the R and B interpolations are performed without iteration. In contrast, we fully incorporate ARI not only into the G interpolation, but also into the R and B interpolations.

Figure 6 shows the flow of the R interpolation. We take a progressive approach [55] as follows. (i) The R values at the B pixels are interpolated using ARI along the diagonal directions. (ii) The R values at the G pixels are interpolated using ARI along the horizontal and vertical directions. In ARI, the interpolated G band is fixed throughout the process and is used as the guide for generating the tentative estimates of the R band at each iteration. The B interpolation is performed in the same manner. In our experiments, a maximum of two iterations provides sufficiently high performance results for the R and B interpolations. This iteration process improves the demosaicking performance by approximately 0.13 dB in PSNR of the R and B bands and 0.1 dB in CPSNR.

Figure 6.

Flow of the R interpolation for the Bayer CFA. A window of GF for the diagonal direction is shown in the left.

4.3. Window Size of GF

In our implementation, we empirically set the window size of GF in Equation (6) as follows. Here, we explain the window size for the horizontal interpolation. The window size for the vertical interpolation is set symmetrically.

In the G interpolation, the window size of GF (denoted as height (H) × width (W)) is initially set as for horizontal RI and for horizontal MLRI. As conducted in [11], the window size is gradually enlarged at each k-th iteration as and . In the first step of the R and B interpolations, the GF window is set as shown in the left of Figure 6. We initially use the diagonal window for diagonal RI and the diagonal window for diagonal MLRI. The window size is then diagonally enlarged in the same manner as the horizontal G interpolation. In the second step of the R and B interpolations, the window size of GF is initially set as for horizontal RI and for horizontal MLRI. The window size is then enlarged as and . The window enlargement greatly affects the demosaicking performance and improves the CPSNR by more than 1 dB compared with the case without the enlargement.

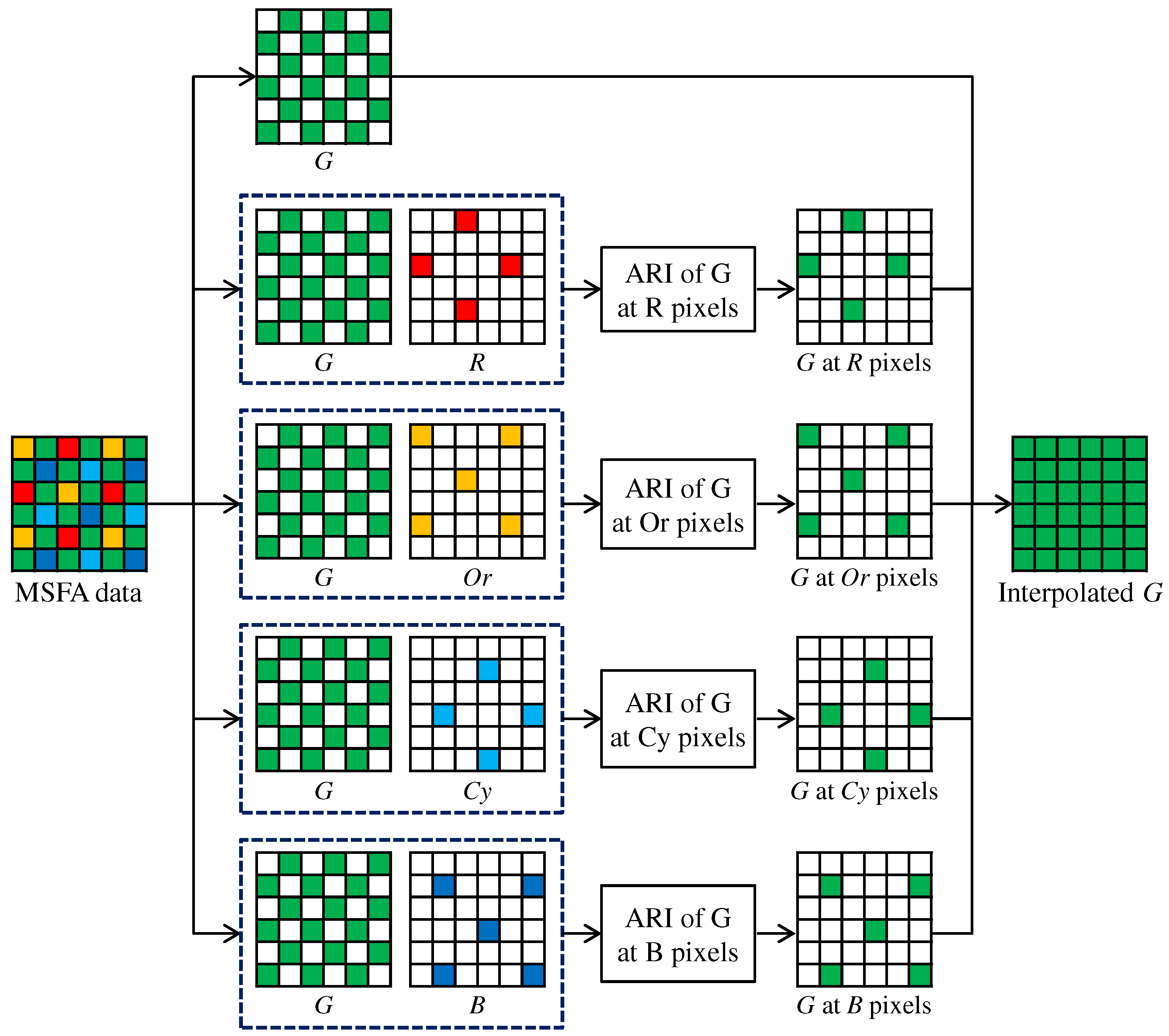

5. Multispectral Extension

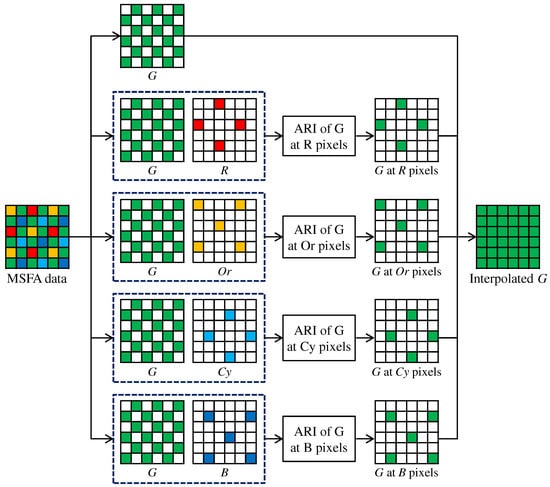

In this section, we extend our proposed ARI for multispectral image demosaicking with the five-band MSFA of Figure 1c. Our proposed multispectral demosaicking algorithm using ARI first interpolates the G band, which is the most densely sampled in the five-band MSFA. Then, the other four bands are interpolated using the G band as a guide.

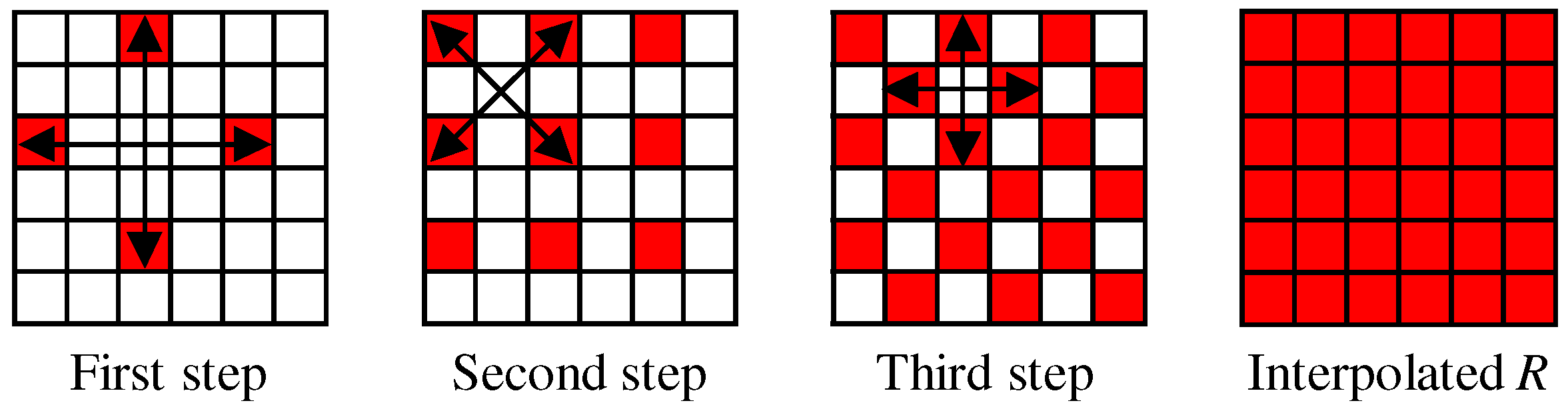

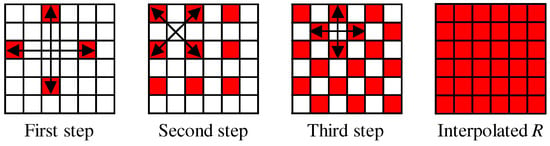

Figure 7 shows the flow of the G interpolation for the five-band MSFA. The G interpolation is decomposed into four streams, which correspond to the G interpolation at the R, Or, Cy and B pixels, respectively. In each stream, the G interpolation is performed in the same manner as that for the Bayer CFA (Figure 4 and Figure 5), considering the differences in the sampling patterns. A notable difference is that the linear interpolation is performed using the filter for the horizontal direction and the filter for the vertical direction. The interpolation of the other four bands is performed in the progressive approach, as explained in Section 4.2. For the five-band MSFA, three steps of Figure 8, in which the R interpolation is taken as an example, are performed.

Figure 7.

Flow of the G interpolation for the five-band MSFA.

Figure 8.

Three steps of the R interpolation for the five-band MSFA.

6. Experimental Results

6.1. Performance of Bayer Demosaicking Algorithms

To evaluate the performance of Bayer demosaicking algorithms, we used two standard color image datasets, the IMAX and the Kodak datasets. These two datasets are used for the benchmark comparison in the representative survey paper [4]. The IMAX dataset contains 18 images of size 500 × 500 pixels [40]. The Kodak dataset contains 12 images of size 768 × 512 pixels [4].

Our proposed ARI (Code available at http://www.ok.sc.e.titech.ac.jp/res/DM/RI.html.) was compared extensively with 29 algorithms that were briefly reviewed in Section 2. The source codes are publicly available or executable at their authors’ websites. Only for IRI [11,12], we asked the authors to send us the resulting images, because the source code is not publicly available. We used a default set of parameters provided by the authors for the evaluation. DDR [15] and FR [15] have two sets of parameters, in which each set is optimized for each dataset. To evaluate all algorithms under a common parameter condition in both datasets, we selected a better set of parameters for DDR and FR in terms of the average CPSNR performance on both datasets. We also included the previous version of ARI (denoted as ARI (Previous)) [18] as a reference. We omit ten border pixels from the evaluation to discount implementation errors in those pixels.

Table 1 summarizes the average PSNR and CPSNR performance. For the IMAX dataset, our proposed ARI outperforms all existing algorithms in the CPSNR performance. The regression-based algorithms based on training images, i.e., DDR and FR, follow ARI. The existing RI-based algorithms such as MLRI and IRI, also offer reasonably high performance results.

Table 1.

Average PSNR and CPSNR performance for the standard IMAX and Kodak datasets. The bold typeface represents the best performance.

For the Kodak dataset, the algorithm based on dictionary learning, i.e., LSSC [16], offers the best performance. The interpolation-based algorithm with multiscale gradients, i.e., MSG [35], follows LSSC. For the average of all images for both datasets, our proposed ARI improves IRI by approximately 0.6 dB in CPSNR and outperforms all existing algorithms, including state-of-the-art algorithms based on training images, such as LSSC and FR. Let us note that FR uses MLRI as a source of initial interpolation to learn efficient regressors. Therefore, the integration of our proposed ARI into FR has the potential to further improve the performance. Table 2 summarizes the average structural similarity (SSIM) [69] performance, which demonstrates results similar to those of the PSNR performance in Table 1.

Table 2.

Average structural similarity (SSIM) performance for the standard IMAX and Kodak datasets. The bold typeface represents the best performance.

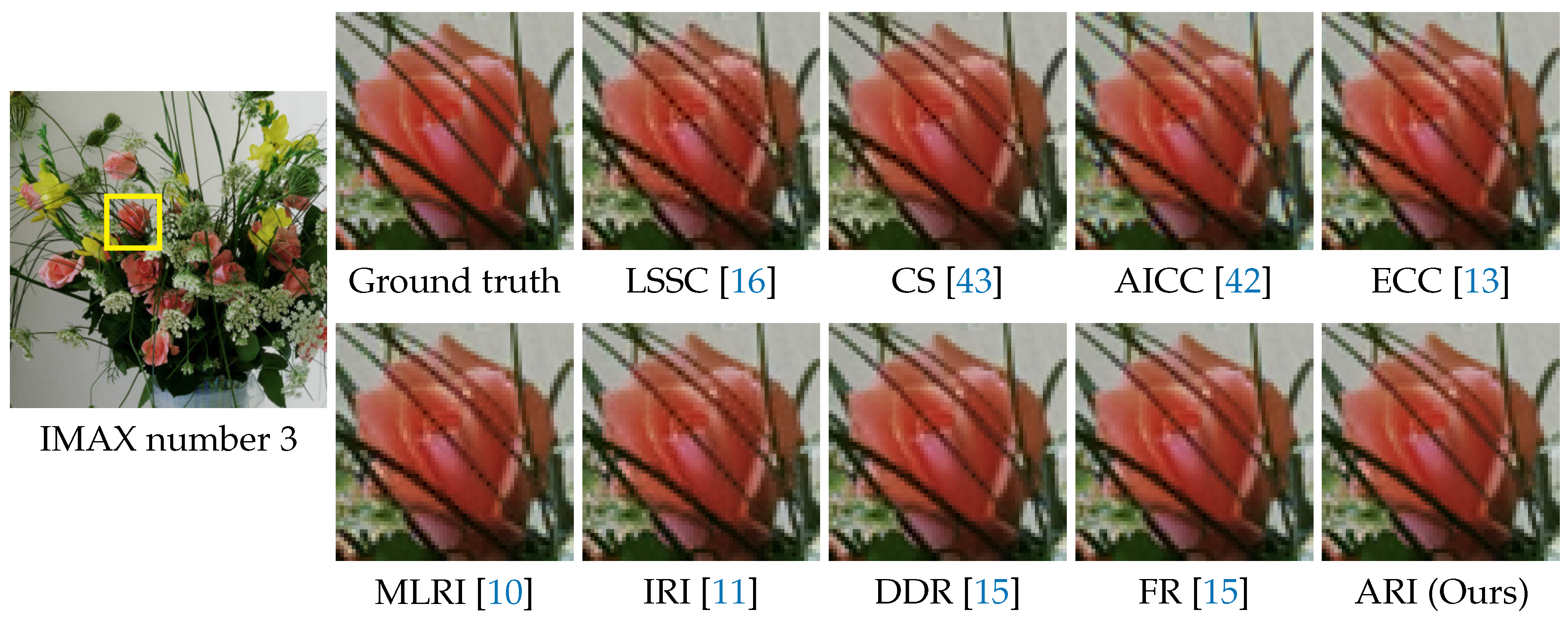

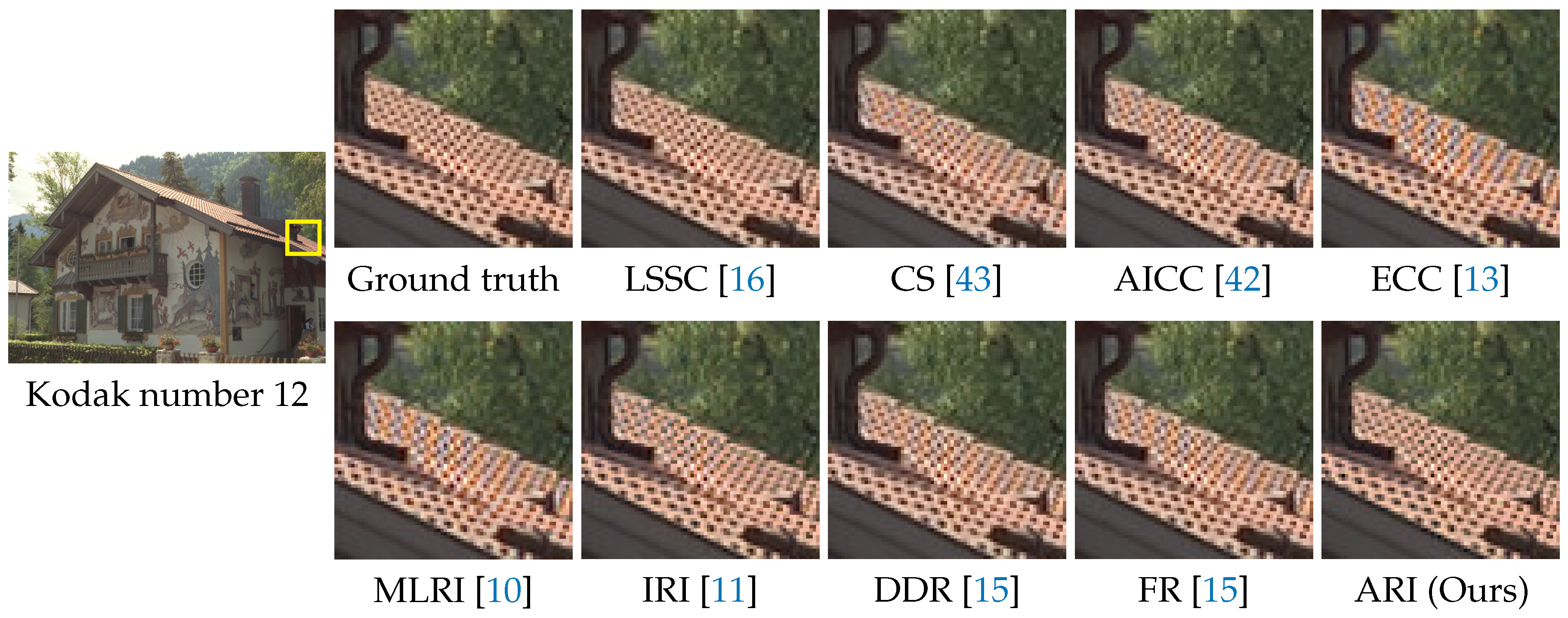

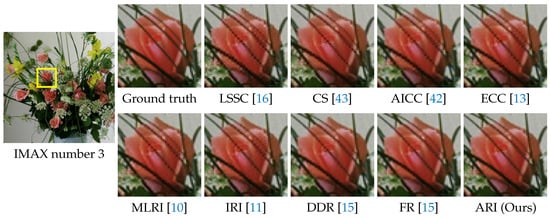

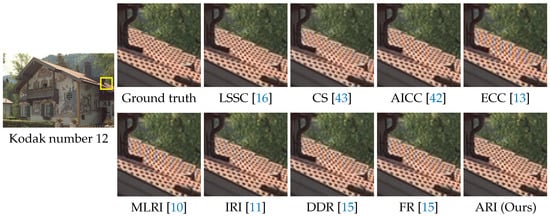

Figure 9 shows the visual comparison of demosaicking results on the IMAX number 3 image. In the figure, the results of the top nine algorithms, which offer average CPNR performance greater than 38 dB in Table 1, are shown. One can see that ARI can generate a high quality image without severe zipper artifacts. FR also can generate a comparable result. Figure 10 shows the visual comparison of demosaicking results on the Kodak number 12 image. One can see that ARI can reduce severe color artifacts that appear in the results of existing algorithms other than LSSC. Both the numerical and visual comparisons validate that our proposed ARI can achieve state-of-the-art performance for the color image demosaicking with the Bayer CFA.

Figure 9.

Visual comparison of the demosaicking results on the IMAX number 3 image. The results of the top nine algorithms for the average CPSNR in Table 1 are shown.

Figure 10.

Visual comparison of the demosaicking results on the Kodak number 12 image. The results of the top nine algorithms for the average CPSNR in Table 1 are shown.

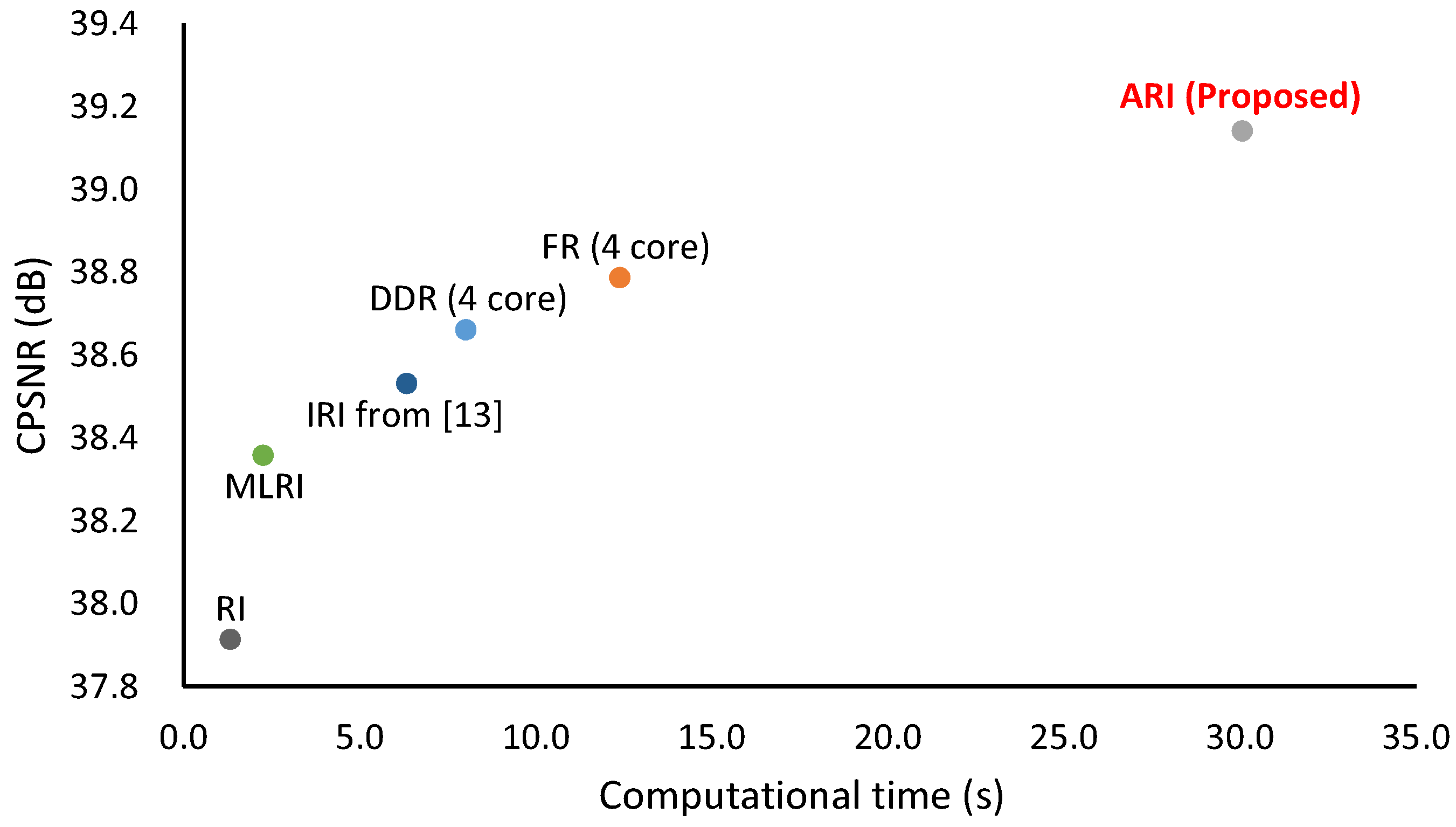

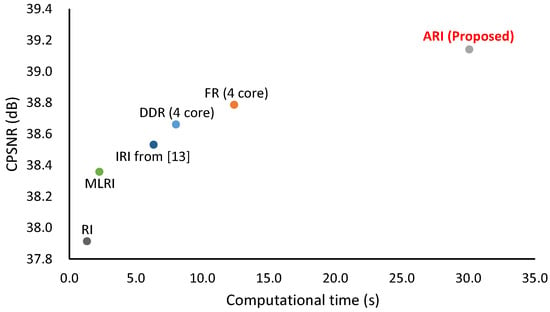

Figure 11 shows the computational time versus CPSNR performance plot of state-of-the-art algorithms, in which the average computational time of the IMAX and Kodak 30 images is measured using a desktop PC (Intel Xeon CPU E5-1603 v3 2.80-GHz processor with 80-GB RAM). Note that the computational time of IRI was extracted from [12] because the authors only provide result images, and we cannot run IRI in our computation environment. The other algorithms were executed using MATLAB R2016b. DDR and FR exploit parallelized implementation (four cores in our environment). One can see that the computational time and the CPSNR performance are positively correlated. IRI takes more time than RI because IRI iterates RI. ARI takes more time than IRI because ARI iterates both RI and MLRI and combines them. The computational time of DDR and FR is between that of IRI and that of ARI. Because each directional interpolation in Figure 4 can be parallelized, our future work is to reduce the computation time of ARI with parallelized implementation.

Figure 11.

Computational time versus CPSNR performance plot of state-of-the-art algorithms, in which IMAX and Kodak 30 images are used. The computational time is averaged for the 30 images. Note that the computational time of IRI was extracted from [12] because IRI was not executable in our computation environment. DDR and FR exploit parallelized implementation (four cores in our environment).

6.2. Performance of Multispectral Demosaicking Algorithms

To evaluate the performance of multispectral demosaicking algorithms for the five-band MSFA of Figure 1c, we conducted the same comparisons as performed in [58] and [7].

The first comparison was performed on the five-band image dataset used in [58]. The dataset contains 16 scenes of size 1824 × 1368 pixels. The five-band images were mosaicked according to the five-band MSFA of Figure 1c and demosaicked using compared demosaicking algorithms. Our proposed ARI (Code available at http://www.ok.sc.e.titech.ac.jp/res/MSI/MSIdata.html.) was compared with four existing algorithms, BTES [55], AKU [56], GF [57] and RI [58]. The RI algorithm is one of the current state-of-the-art algorithms. We evaluated the PSNR performance of the five-band images and the PSNR and the CIEDE2000 [70] performance of the standard RGB (sRGB) images. The sRGB images were generated from the five-band images using a calibrated color transformation matrix from the five bands to the sRGB.

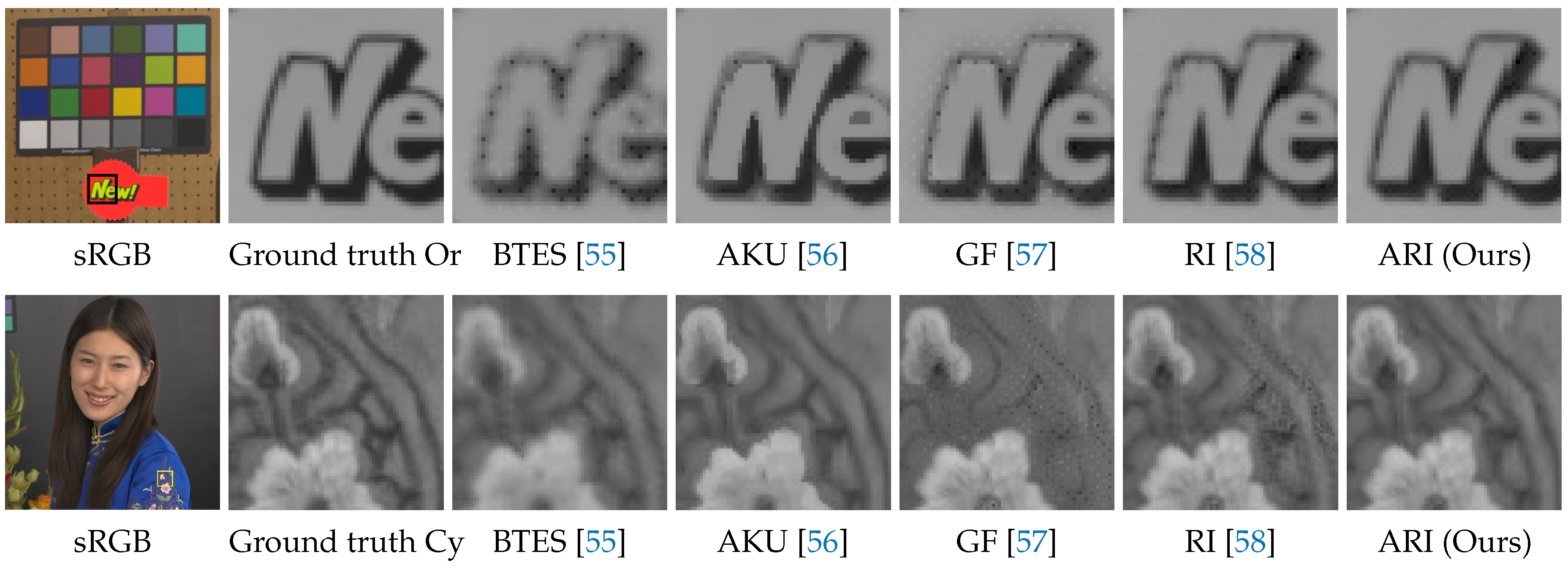

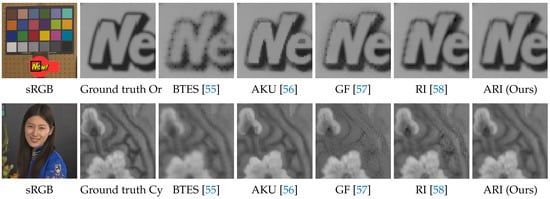

Table 3 shows the average PSNR and CIEDE2000 performance of all 16 scenes. One can see that ARI outperforms all existing algorithms in the numerical evaluation. Figure 12 shows visual comparisons of the demosaicking results for the Or and the Cy band images. One can see that ARI can sharply generate the images without the severe zipper artifacts and blurring that appear in the results of the existing algorithms.

Table 3.

Average PSNR and CIEDE2000 [70] performance of all 16 scenes in the five-band dataset. The bold typeface represents the best performance.

Figure 12.

Visual comparisons of the demosaicking results on the five-band image dataset.

The second comparison was performed on three 31-band multispectral image datasets, the CAVE [66], the TokyoTech [7] and the NUS [71] datasets. The CAVE and the TokyoTech datasets were captured using a monochrome camera with a liquid crystal tunable filter [72]. The NUS dataset was captured using a Specim’s hyperspectral camera (http://www.specim.fi/products/pfd-65-v10e/). The CAVE dataset contains 32 scenes of size 512 × 512 pixels. The TokyoTech dataset contains 30 scenes of size 500 × 500 pixels. The NUS dataset contains 66 scenes (training 41 scenes and testing 25 scenes for the purpose of [71]) of different pixel resolutions. In the NUS dataset, we used the testing 25 scenes for evaluation.

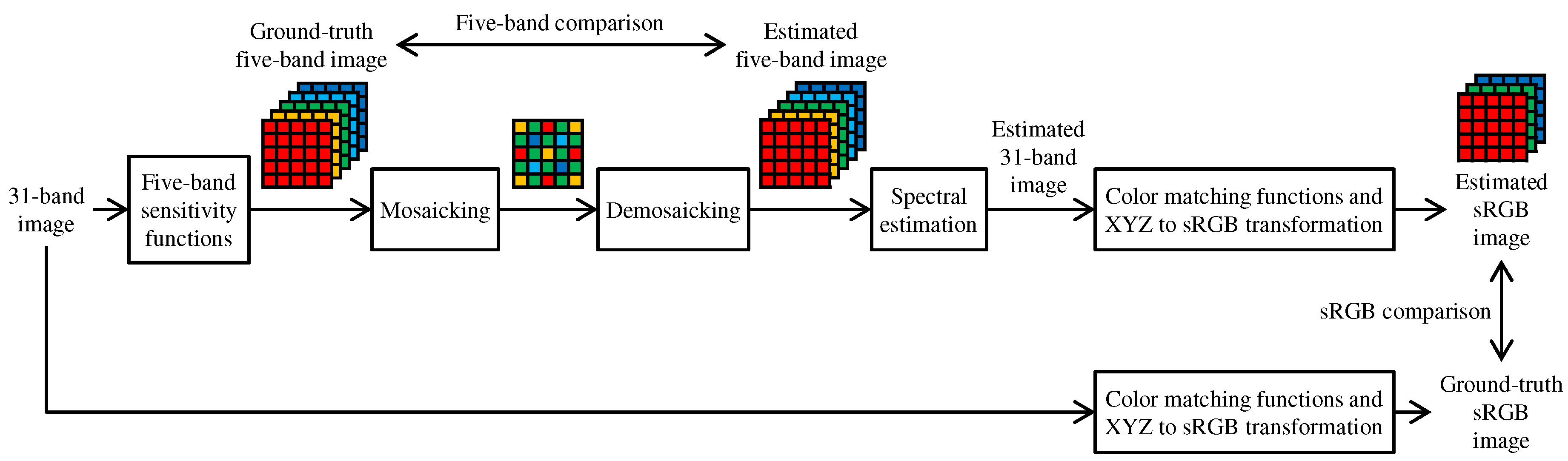

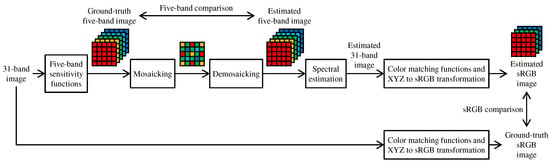

Figure 13 shows the flow of experimental comparison using the 31-band datasets. As shown in the bottom row of Figure 13, ground truth sRGB images were simulated from the 31-band images based on the XYZ color matching functions and the XYZ-to-sRGB transformation matrix with a correct white point. As shown in the top row of Figure 13, ground truth five-band images were simulated from the 31-band images using the spectral sensitivity of the five-band MSFA as described in [7]. To generate the five-band images, we used the CIE D65 illumination for the CAVE and the TokyoTech datasets. For the NUS dataset, we used given illumination spectrum for each scene. Then, the ground truth five-band images were mosaicked according to the five-band MSFA of Figure 1c and demosaicked using compared demosaicking algorithms. Finally, the demosaicked five-band images were converted to sRGB images using a linear model-based spectral reflectance (31-band image) estimation [73] and a rendering process from the spectrum to the sRGB with the XYZ color matching functions. The ground truth and the estimated images were compared in the five-band and the sRGB domains. Our proposed ARI was compared with three existing algorithms, BTES [55], GF [7] and RI [58]. We evaluated the PSNR performance of the five-band images and PSNR, CPSNR, DeltaE (Euclidean distance in the CIE Lab space) and CIEDE2000 performance of the sRGB images.

Figure 13.

Flow of experimental comparison using 31-band multispectral datasets.

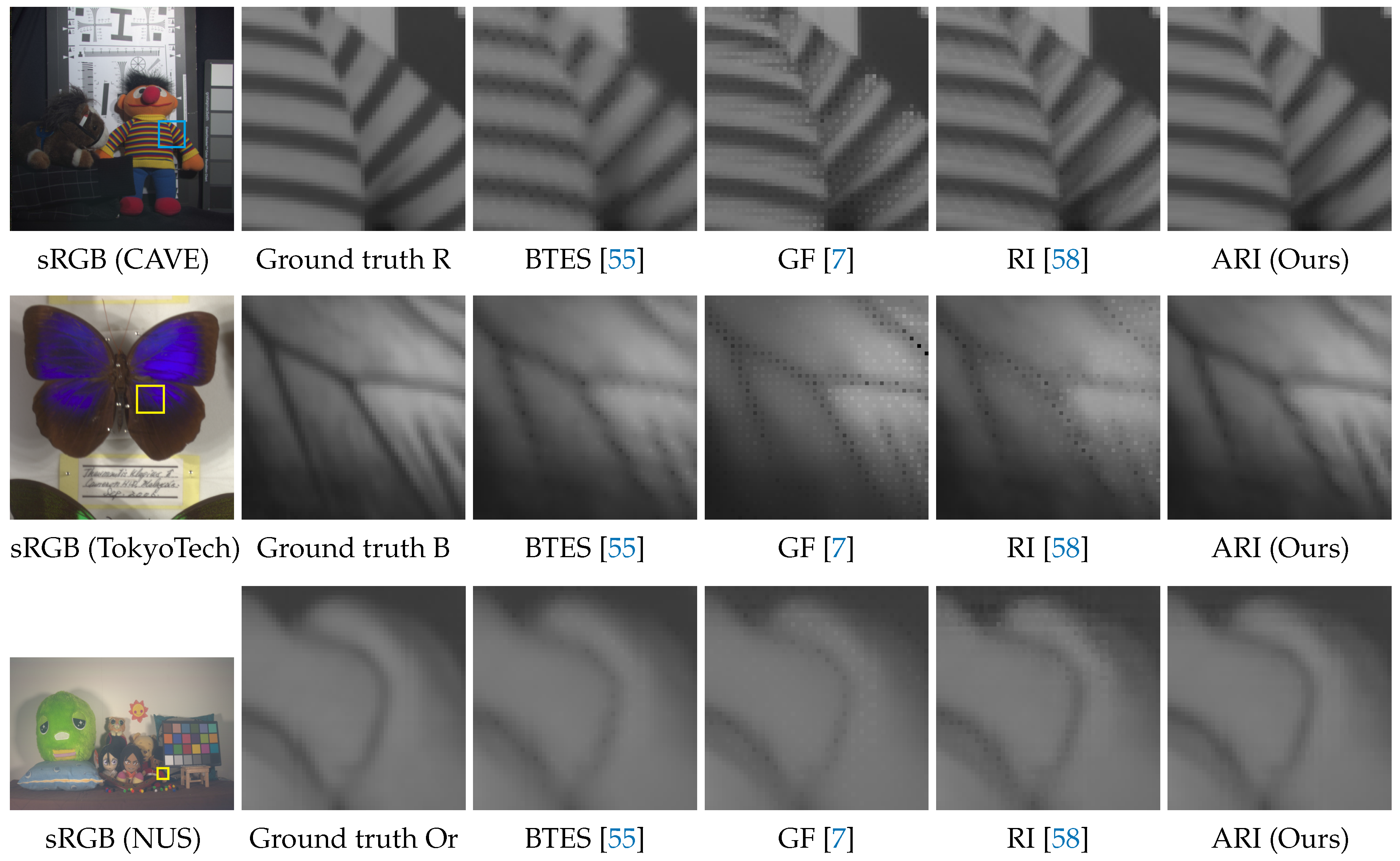

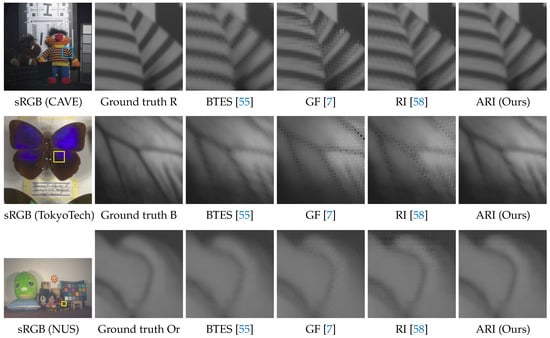

Table 4 presents a summary of numerical performance. One can see that ARI generally outperforms the existing algorithms and yields results with lower colorimetric errors. Figure 14 shows visual comparisons of the demosaicking results for the R band image of the CAVE dataset, the B band image of the TokyoTech dataset and the Or band image of the NUS dataset. One can see that ARI can significantly reduce the zipper artifacts that are apparent in the results of the existing algorithms. Both the numerical and visual comparisons validate that our proposed ARI can achieve state-of-the-art performance for the task of multispectral image demosaicking. As demonstrated in the above results, our proposed ARI is very effective when (i) one of spectral channels has a higher sampling density and (ii) each spectral channel is regularly sampled in horizontal/vertical directions or diagonal directions.

Table 4.

Average PSNR, CPSNR, DeltaE and CIEDE2000 [70] performance on the CAVE, TokyoTech and NUS datasets. The bold typeface represents the best performance.

Figure 14.

Visual comparisons of the demosaicking results on CAVE, TokyoTech and NUS datasets.

7. Conclusions

In this paper, we proposed a novel algorithm for both color and multispectral image demosaicking. Our proposed algorithm is based on a new interpolation technique called ARI that improves existing RI-based algorithms by the adaptive combination of two RI-based algorithms and the adaptive selection of a suitable iteration number at each pixel. Experimental comparisons using standard color image datasets demonstrated that ARI can improve existing RI-based algorithms by approximately 0.6 dB in CPSNR performance and can outperform state-of-the-art algorithms based on training images. Experimental comparisons using multispectral image datasets demonstrated that ARI can achieve state-of-the-art performance also for the task of multispectral image demosaicking.

Acknowledgments

This work was partly supported by the MIC/SCOPE #141203024. We would like to thank all authors of the compared algorithms for making the source codes publicly available.

Author Contributions

Yusuke Monno and Daisuke Kiku implemented the algorithm and conducted the experiments. Yusuke Monno wrote the paper. Masayuki Tanaka designed the main structure of the algorithm. Masatoshi Okutomi supervised the project.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lukac, R. Single-Sensor Imaging: Methods and Applications for Digital Cameras; CRC Press: Boca Raton, FL, USA, 2008. [Google Scholar]

- Ramanath, R.; Snyder, W.E.; Bilbro, G.L. Demosaicking methods for Bayer color arrays. J. Electron. Imaging 2002, 11, 306–315. [Google Scholar] [CrossRef]

- Gunturk, B.K.; Glotzbach, J.; Altunbasak, Y.; Schafer, R.W.; Mersereau, R.M. Demosaicking: Color filter array interpolation. IEEE Signal Process. Mag. 2005, 22, 44–54. [Google Scholar] [CrossRef]

- Li, X.; Gunturk, B.K.; Zhang, L. Image demosaicing: A systematic survey. In Proceedings of the SPIE, San Jose, CA, USA, 28 January 2008; Volume 6822, p. 68221J. [Google Scholar]

- Menon, D.; Calvagno, G. Color image demosaicking: An overview. Signal Process. Image Commun. 2011, 26, 518–533. [Google Scholar] [CrossRef]

- Bayer, B. Color Imaging Array. U.S. Patent 3971065, 20 July 1976. [Google Scholar]

- Monno, Y.; Kikuchi, S.; Tanaka, M.; Okutomi, M. A practical one-shot multispectral imaging system using a single image sensor. IEEE Trans. Image Process. 2015, 24, 3048–3059. [Google Scholar] [CrossRef] [PubMed]

- Kiku, D.; Monno, Y.; Tanaka, M.; Okutomi, M. Residual interpolation for color image demosaicking. In Proceedings of the 2013 20th IEEE International Conference on Image Processing (ICIP), Melbourne, VIC, Australia, 15–18 September 2013; pp. 2304–2308. [Google Scholar]

- Kiku, D.; Monno, Y.; Tanaka, M.; Okutomi, M. Minimized-Laplacian residual interpolation for color image demosaicking. In Proceedings of the SPIE, San Francisco, CA, USA, 7 March 2014; p. 90230L. [Google Scholar]

- Kiku, D.; Monno, Y.; Tanaka, M.; Okutomi, M. Beyond color difference: Residual interpolation for color image demosaicking. IEEE Trans. Image Process. 2016, 25, 1288–1300. [Google Scholar] [CrossRef] [PubMed]

- Ye, W.; Ma, K.K. Image demosaicing by using iterative residual interpolation. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 1862–1866. [Google Scholar]

- Ye, W.; Ma, K.K. Color image demosaicing by using iterative residual interpolation. IEEE Trans. Image Process. 2015, 24, 5879–5891. [Google Scholar] [CrossRef] [PubMed]

- Jaiswal, S.P.; Au, O.C.; Jakhetiya, V.; Yuan, Y.; Yang, H. Exploitation of inter-color correlation for color image demosaicking. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 1812–1816. [Google Scholar]

- Wu, J.; Timofte, R.; Gool, L.V. Efficient regression priors for post-processing demosaiced images. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 3495–3499. [Google Scholar]

- Wu, J.; Timofte, R.; Gool, L.V. Demosaicing based on directional difference regression and efficient regression priors. IEEE Trans. Image Process. 2016, 25, 3862–3874. [Google Scholar] [CrossRef] [PubMed]

- Mairal, J.; Bach, F.; Ponce, J.; Sapiro, G.; Zisserman, A. Non-local sparse models for image restoration. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 Ocober 2009; pp. 2272–2279. [Google Scholar]

- Lapray, P.J.; Wang, X.; Thomas, J.B.; Gouton, P. Multispectral filter arrays: Recent advances and practical implementation. Sensors 2014, 14, 21626–21659. [Google Scholar] [CrossRef] [PubMed]

- Monno, Y.; Kiku, D.; Tanaka, M.; Okutomi, M. Adaptive residual interpolation for color image demosaicking. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 3861–3865. [Google Scholar]

- Bai, C.; Li, J.; Lin, Z.; Yu, J.; Chen, Y.W. Penrose demosaicking. IEEE Trans. Image Process. 2015, 24, 1672–1684. [Google Scholar] [PubMed]

- Cok, D. Signal Processing Method and Apparatus for Producing Interpolated Chrominance Values in a Sampled Color Image Signal. U.S. Patent 4642678, 10 Febrary 1987. [Google Scholar]

- Kimmel, R. Demosaicing: Image reconstruction from color CCD samples. IEEE Trans. Image Process. 1999, 8, 1221–1228. [Google Scholar] [CrossRef] [PubMed]

- Lukac, R.; Plataniotis, K.N. Normalized color-ratio modeling for CFA interpolation. IEEE Trans. Consum. Electron. 2004, 50, 737–745. [Google Scholar] [CrossRef]

- Hibbard, R. Apparatus and Method for Adaptively Interpolating a Full Color Image Utilizing Luminance Gradients. U.S. Patent 5382976, 17 Janurary 1995. [Google Scholar]

- Hamilton, J.; Adams, J. Adaptive Color Plan Interpolation in Single Sensor Color Electronic Camera. U.S. Patent 5629734, 13 May 1997. [Google Scholar]

- Li, X. Demosaicing by successive approximation. IEEE Trans. Image Process. 2005, 14, 370–379. [Google Scholar] [PubMed]

- Wu, X.; Zhang, N. Primary-consistent soft-decision color demosaicking for digital cameras. IEEE Trans. Image Process. 2004, 13, 1263–1274. [Google Scholar] [CrossRef] [PubMed]

- Hirakawa, K.; Parks, T.W. Adaptive homogeneity-directed demosaicking algorithm. IEEE Trans. Image Process. 2005, 14, 360–369. [Google Scholar] [CrossRef] [PubMed]

- Zhang, L.; Wu, X. Color demosaicking via directional linear minimum mean square-error estimation. IEEE Trans. Image Process. 2005, 14, 2167–2178. [Google Scholar] [CrossRef] [PubMed]

- Chung, K.H.; Chan, Y.H. Color demosaicing using variance of color differences. IEEE Trans. Image Process. 2006, 15, 2944–2955. [Google Scholar] [CrossRef] [PubMed]

- Menon, D.; Andriani, S.; Calvagno, G. Demosaicing with directional filtering and a posteriori decision. IEEE Trans. Image Process. 2007, 16, 132–141. [Google Scholar] [CrossRef] [PubMed]

- Tsai, C.Y.; Song, K.T. Heterogeneity-projection hard-decision color interpolation using spectral-spatial correlation. IEEE Trans. Image Process. 2007, 16, 78–91. [Google Scholar] [CrossRef] [PubMed]

- Paliy, D.; Katkovnik, V.; Bilcu, R.; Alenius, S.; Egiazarian, K. Spatially adaptive color filter array interpolation for noiseless and noisy data. Int. J. Imaging Syst. Technol. 2007, 17, 105–122. [Google Scholar] [CrossRef]

- Chung, K.H.; Chan, Y.H. Low-complexity color demosaicing algorithm based on integrated gradients. J. Electron. Imaging 2010, 19, 021104. [Google Scholar] [CrossRef]

- Pekkucuksen, I.; Altunbasak, Y. Gradient based threshold free color filter array interpolation. In Proceedings of the 2010 17th IEEE International Conference on Image Processing (ICIP), Hong Kong, China, 26–29 September 2010; pp. 137–140. [Google Scholar]

- Pekkucuksen, I.; Altunbasak, Y. Multiscale gradients-based color filter array interpolation. IEEE Trans. Image Process. 2013, 22, 157–165. [Google Scholar] [CrossRef] [PubMed]

- Su, C.Y. Highly effective iterative demosaicing using weighted-edge and color-difference interpolations. IEEE Trans. Consum. Electron. 2006, 52, 639–645. [Google Scholar]

- Pekkucuksen, I.; Altunbasak, Y. Edge strength filter based color filter array interpolation. IEEE Trans. Image Process. 2012, 21, 393–397. [Google Scholar] [CrossRef] [PubMed]

- Buades, A.; Coll, B.; Morel, J.M.; Sbert, C. Self-similarity driven color demosaicking. IEEE Trans. Image Process. 2009, 18, 1192–1202. [Google Scholar] [CrossRef] [PubMed]

- Buades, A.; Coll, B.; Morel, J.M.; Sbert, C. Self-similarity driven demosaicking. Image Process. Line 2011, 1, 1–6. [Google Scholar] [CrossRef]

- Zhang, L.; Wu, X.; Buades, A.; Li, X. Color demosaicking by local directional interpolation and nonlocal adaptive thresholding. J. Electron. Imaging 2011, 20, 023016. [Google Scholar]

- Duran, J.; Buades, A. Self-similarity and spectral correlation adaptive algorithm for color demosaicking. IEEE Trans. Image Process. 2014, 23, 4031–4040. [Google Scholar] [CrossRef] [PubMed]

- Duran, J.; Buades, A. A demosaicking algorithm with adaptive inter-channel correlation. Image Process. Line 2015, 5, 311–327. [Google Scholar] [CrossRef]

- Getreuer, P. Image demosaicking with contour stencils. Image Process. Line 2012, 2, 22–34. [Google Scholar] [CrossRef]

- Kim, Y.; Jeong, J. Four-direction residual interpolation for demosaicking. IEEE Trans. Circuits Syst. Video Technol. 2016, 26, 881–890. [Google Scholar] [CrossRef]

- Dubois, E. Frequency-domain methods for demosaicking of Bayer-sampled color images. IEEE Signal Process. Lett. 2005, 12, 847–850. [Google Scholar] [CrossRef]

- Alleysson, D.; Süsstrunk, S.; Hérault, J. Linear demosaicking inspired by the human visual system. IEEE Trans. Image Process. 2005, 14, 439–449. [Google Scholar] [PubMed]

- Lian, N.X.; Chang, L.; Tan, Y.P.; Zagorodnov, V. Adaptive filtering for color filter array demosaicking. IEEE Trans. Image Process. 2007, 16, 2515–2525. [Google Scholar] [CrossRef] [PubMed]

- Leung, B.; Jeon, G.; Dubois, E. Least-squares luma–chroma demultiplexing algorithm for Bayer demosaicking. IEEE Trans. Image Process. 2011, 20, 1885–1894. [Google Scholar] [CrossRef] [PubMed]

- Gunturk, B.K.; Altunbasak, Y.; Mersereau, R.M. Color plane interpolation using alternating projections. IEEE Trans. Image Process. 2002, 11, 997–1013. [Google Scholar] [CrossRef] [PubMed]

- Lu, Y.M.; Karzand, M.; Vetterli, M. Demosaicking by alternating projections: Theory and fast one-step implementation. IEEE Trans. Image Process. 2010, 19, 2085–2098. [Google Scholar] [CrossRef] [PubMed]

- Menon, D.; Calvagno, G. Demosaicing based on wavelet analysis of the luminance component. In Proceedings of the IEEE International Conference on Image Processing, ICIP 2007, San Antonio, TX, USA, 16 September–19 October 2007; pp. 181–184. [Google Scholar]

- Menon, D.; Calvagno, G. Regularization approaches to demosaickingn. IEEE Trans. Image Process. 2009, 18, 2209–2220. [Google Scholar] [CrossRef] [PubMed]

- Moghadam, A.A.; Aghagolzadeh, M.; Kumar, M.; Radha, H. Compressive framework for demosaicing of natural images. IEEE Trans. Image Process. 2013, 22, 2356–2371. [Google Scholar] [CrossRef] [PubMed]

- Miao, L.; Qi, H. The design and evaluation of a generic method for generating mosaicked multispectral filter arrays. IEEE Trans. Image Process. 2006, 15, 2780–2791. [Google Scholar] [CrossRef] [PubMed]

- Miao, L.; Qi, H.; Ramanath, R.; Snyder, W.E. Binary tree-based generic demosaicking algorithm for multispectral filter arrays. IEEE Trans. Image Process. 2006, 15, 3550–3558. [Google Scholar] [CrossRef] [PubMed]

- Monno, Y.; Tanaka, M.; Okutomi, M. Multispectral demosaicking using adaptive kernel upsampling. In Proceedings of the 2011 18th IEEE International Conference on Image Processing (ICIP), Brussels, Belgium, 11–14 September 2011; pp. 3218–3221. [Google Scholar]

- Monno, Y.; Tanaka, M.; Okutomi, M. Multispectral demosaicking using guided filter. In Proceedings of the Volume 8299, Digital Photography VIII, Burlingame, CA, USA, 24 January 2012; p. 82990O. [Google Scholar]

- Monno, Y.; Kiku, D.; Kikuchi, S.; Tanaka, M.; Okutomi, M. Multispectral demosaicking with novel guide image generation and residual interpolation. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 29 January 2015; pp. 645–649. [Google Scholar]

- Jaiswal, S.P.; Fang, L.; Jakhetiya, V.; Kuse, M.; Au, O.C. Optimized high-frequency based interpolation for multispectral demosaicking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 2851–2855. [Google Scholar]

- Jaiswal, S.P.; Fang, L.; Jakhetiya, V.; Pang, J.; Mueller, K.; Au, O.C. Adaptive multispectral demosaicking based on frequency-domain analysis of spectral correlation. IEEE Trans. Image Process. 2017, 26, 953–968. [Google Scholar] [CrossRef] [PubMed]

- He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409. [Google Scholar] [CrossRef] [PubMed]

- Amba, P.; Thomas, J.B.; Alleysson, D. N-LMMSE demosaicing for spectral filter arrays. J. Imaging Sci. Technol. 2017, 61, 40407-1–40407-11. [Google Scholar] [CrossRef]

- Mihoubi, S.; Losson, O.; Mathon, B.; Macaire, L. Multispectral demosaicing using pseudo-panchromatic image. IEEE Trans. Comput. Imaging 2017, 3, 982–994. [Google Scholar] [CrossRef]

- Shinoda, K.; Hamasaki, T.; Hasegawa, M.; Kato, S.; Ortega, A. Quality metric for filter arrangement in a multispectral filter array. In Proceedings of the Picture Coding Symposium (PCS), San Jose, CA, USA, 8–11 December 2013; pp. 149–152. [Google Scholar]

- Aggarwal, H.K.; Majumdar, A. Single-sensor multi-spectral image demosaicing algorithm using learned interpolation weights. In Proceedings of the 2014 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Quebec City, QC, Canada, 13–18 July 2014; pp. 2011–2014. [Google Scholar]

- Yasuma, F.; Mitsunaga, T.; Iso, D.; Nayar, S.K. Generalized assorted pixel camera: Postcapture control of resolution, dynamic range, and spectrum. IEEE Trans. Image Process. 2010, 19, 2241–2253. [Google Scholar] [CrossRef] [PubMed]

- Jia, J.; Hirakawa, K. Single-shot Fourier transform multispectroscopy. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 4205–4209. [Google Scholar]

- Jia, J.; Ni, C.; Sarangan, A.; Hirakawa, K. Guided filter demosaicking for Fourier spectral filter array. In Electronic Imaging, Visual Information Processing and Communication VII; Society for Imaging Science and Technology: Washington, DC, USA; pp. 1–5.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612. [Google Scholar] [CrossRef] [PubMed]

- Sharma, G.; Wu, W.; Dalal, E.N. The CIEDE2000 color-difference formula: Implementation notes, supplementary test data, and mathematical observations. Color Res. Appl. 2005, 30, 21–30. [Google Scholar] [CrossRef]

- Nguyen, R.M.H.; Prasad, D.K.; Brown, M.S. Training-based spectral reconstruction from a single RGB image. In Computer Vision (ECCV); Springer: Berlin, Germany, 2014; pp. 186–201. [Google Scholar]

- Hardeberg, J.Y.; Schmitt, F.; Brettel, H. Multispectral color image capture using a liquid crystal tunable filter. Opt. Eng. 2002, 41, 2532–2548. [Google Scholar]

- Park, J.; Lee, M.; Grossberg, M.D.; Nayar, S.K. Multispectral imaging using multiplexed illumination. In Proceedings of the IEEE 11th International Conference on Computer Vision, ICCV 2007, Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8. [Google Scholar]

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).