1. Introduction

High-speed photography is an important tool for studying rapid physical phenomena in shock waves, fluidics, collisions, photochemistry, and photophysics [1,2,3,4,5]. CCD and CMOS sensors are by far the most widely used sensors in optical imaging. They offer several advantages, such as small size, high image quality, relatively low cost, and digital output. However, off-the-shelf CCD or CMOS cameras typically have a frame rate of 30 Hz and cannot effectively capture rapid phenomena at high speed and high resolution. The main technological limitation is the time required to read out and store the data from the sensor array.

To overcome this bandwidth limitation in scientific research and industrial applications, various high-speed imaging methods have been developed and commercialized over recent decades. In general, conventional high-speed imaging methods for solid-state image sensors fall into two categories. The first uses a parallel readout scheme [6,7,8]. Tochigi et al. demonstrated a global-shutter high-speed CMOS image sensor with a readout speed of 1 Tpixel/s in burst mode and 780 Mpixel/s in continuous mode [9]. Notably, the more readout ports there are, the more complex the drive circuit becomes. Currently, parallel-readout methods cannot reach frame rates of $10^{5}$–$10^{7}$ fps. The other breakthrough is the ISIS (In situ Storage Imaging Sensor), which was introduced to develop video cameras capable of more than $10^{6}$ fps. The key to this technology is the fabrication of the image sensor chip, and its essence is the parallel readout, transfer, and storage of all pixel signals. In 1996, Lowrance first used a burst image sensor based on the serial-parallel-serial (SPS) storage concept, achieving a maximum frame rate of $10^{6}$ fps over 32 consecutive frames [10]. ISIS technology subsequently developed rapidly, from the earliest hole-type and linear-type designs to the slanted linear in situ storage image sensor [11,12,13,14,15]. Recently, Etoh et al. developed a backside-illuminated (BSI) CCD image sensor that incorporates the ISIS structure and places metal wires on the front surface to distribute the driving voltages of the in situ CCD memory; it reaches a maximum frame rate of 16 Mfps with higher sensitivity [16]. Image sensor performance, including resolution, frame rate, and the number of consecutive frames, has thus improved greatly. Nevertheless, an ISIS must complete data readout and storage within a very short frame interval, and its sensitivity is insufficient because of limited light throughput.

Conventional high-speed imaging methods therefore require a solid-state image sensor with high photoresponsivity at very short integration times, synchronous exposure, interframe coherence, and, because of the necessary bandwidth, high-speed parallel readout. They also face the problem of storing massive amounts of data in real time. Because of these inherent bandwidth limitations, it is difficult to achieve higher imaging frequencies, larger spatial bandwidth, and more frames.

Can a low-frame-rate camera be turned into a powerful high-speed video camera that captures rapid phenomena at high speed and high resolution without increasing bandwidth requirements? The advent of computational cameras offers a new approach to high-speed imaging. A computational camera combines the features of a computer and a camera: it samples the light field in a fundamentally different way to create new and useful forms of visual information [17]. In recent years, many researchers have made valuable attempts in this direction. Raskar et al. built a coded-exposure camera with a ferroelectric liquid crystal shutter to address motion blur; the shutter was opened and closed during the chosen exposure time according to a binary pseudo-random sequence [18]. Gupta et al. demonstrated a co-located camera-projector system that enables fast per-pixel temporal modulation [19]. Olivas et al. proposed a computational imaging system based on a position sensing detector (PSD) to reconstruct images degraded by spatially variant platforms in low-light conditions [20]. To achieve temporal super-resolution, Reddy et al. designed a reconstruction algorithm that uses the data from a programmable pixel compressive camera (P2C2) together with additional priors [21]. Liu et al. proposed techniques for sampling, representing, and reconstructing the space-time volume using a prototype imaging system based on a liquid crystal on silicon device [22]. Veeraraghavan et al. presented a sampling scheme and reconstruction algorithm based on coded strobing photography that turns an off-the-shelf camera into a high-speed camera for periodic signals [23]. In addition, Bub et al. proposed a temporal pixel multiplexing (TPM) paradigm for simultaneous high-speed and high-resolution imaging [24]. In Bub et al.'s work, a DMD-based imaging modality offsets the pixel exposure times within one camera frame and flexibly controls the trade-off between spatial and temporal resolution. However, as far as we can tell, TPM remains a paradigm that has not been given a theoretical treatment. Moreover, the processing of the coded exposure images is relatively complex. These factors limit the application of temporal pixel multiplexing.

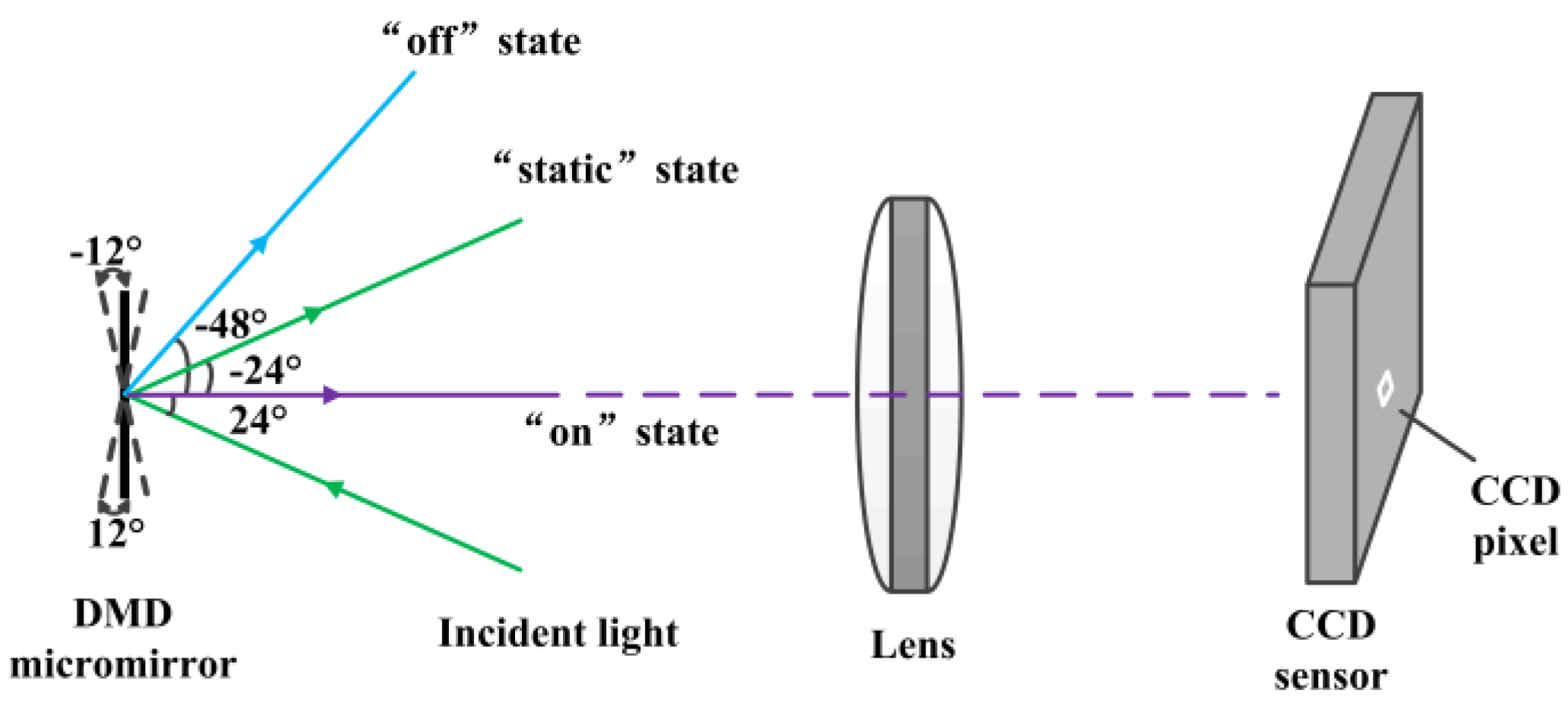

Rather than increasing bandwidth requirements, we exploit a computational camera approach based on per-pixel coded exposure to achieve high-speed imaging. With a flexible controller and advanced micro-electromechanical system (MEMS) techniques, the direction of each DMD mirror can be switched by digital signals within 24 μs [25], approximately 1/1000 of the frame time of an ordinary CCD camera. The per-pixel coded DMD pattern can therefore be switched many times during one integration time of the CCD camera, so that temporal information is embedded in each frame by modulating the light. Different subframes can then be extracted based on the light intensity distribution of the image. As a result, compared with a conventional digital camera, the computational camera can acquire high-speed and high-resolution images simultaneously.

The per-pixel coded exposure method has several advantages over traditional high-speed imaging methods. It allows low-frame-rate cameras, with their known advantages of low cost, high signal-to-noise ratio (SNR), and high dynamic range, to be used for high-speed imaging without any improvement in the intrinsic properties of the image sensor. The amplitude of the light intensity in the imaging system should be kept constant. In this case, static regions of the full-resolution image remain unchanged at the native detector frame rate, while high-speed image sequences are obtained from the temporal information at reduced resolution. This approach therefore achieves high-speed and high-resolution imaging with a single detector, without increasing the bandwidth required for data transfer and storage, which remains the same as for the original detector. Moreover, the approach uses a new programmable imaging system that supports different spatial light modulators, as well as different frame rates for different areas of the image sensor, which may be useful for unconventional detectors.

Pixel binning is a well-known technique that increases frame rate and SNR at the expense of spatial resolution [26], and it may seem similar to the per-pixel coded exposure method. However, there are fundamental differences between them. On the one hand, pixel binning combines the electrical charge of several adjacent pixels into one new pixel, and the charge of the new pixel may easily exceed the capacity of the CCD potential well, causing over-saturation and distorting the information. On the other hand, pixel binning can only use rectangular masks, such as 2 × 2 or 3 × 3 binning, to form a single large pixel, which lacks flexibility. By contrast, the per-pixel coded exposure method realizes simultaneous high-speed and high-resolution imaging and is much less prone to over-saturation. Furthermore, the DMD masks can be represented by binary encoding and may take different shapes, including diamond-shaped, square, rectangular, and other nonrectangular regions of interest. The per-pixel coded exposure method is therefore more flexible and adaptable than pixel binning.
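To make the over-saturation argument concrete, the following minimal sketch contrasts 2 × 2 charge binning, which sums four charges into one well, with coded-exposure subframe extraction, which keeps one pixel per 2 × 2 group. It is a toy model under our own assumptions: the full-well value `FULL_WELL`, the pixel values, and the helper names `bin_2x2` and `coded_subframe` are hypothetical, and real sensors may provide a larger summing well than a single pixel.

```python
FULL_WELL = 1000  # hypothetical full-well capacity (electrons)


def bin_2x2(img, full_well=FULL_WELL):
    """Sum each non-overlapping 2x2 block; the summed charge is clipped at full well."""
    h, w = len(img), len(img[0])
    return [[min(full_well,
                 img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1])
             for c in range(0, w, 2)]
            for r in range(0, h, 2)]


def coded_subframe(img, dr, dc):
    """Keep the single pixel at offset (dr, dc) of every 2x2 exposure group."""
    return [row[dc::2] for row in img[dr::2]]


# Hypothetical 4x4 frame: every pixel is well below full well on its own.
frame = [[400, 400, 300, 300],
         [400, 400, 300, 300],
         [100, 100, 200, 200],
         [100, 100, 200, 200]]

print(bin_2x2(frame))               # [[1000, 1000], [400, 800]] (top row clipped)
print(coded_subframe(frame, 0, 0))  # [[400, 300], [100, 200]]
```

Each coded-subframe pixel is a single sensor pixel, so in this model it can never exceed what that pixel alone would record, whereas the binned output saturates in the bright top row.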

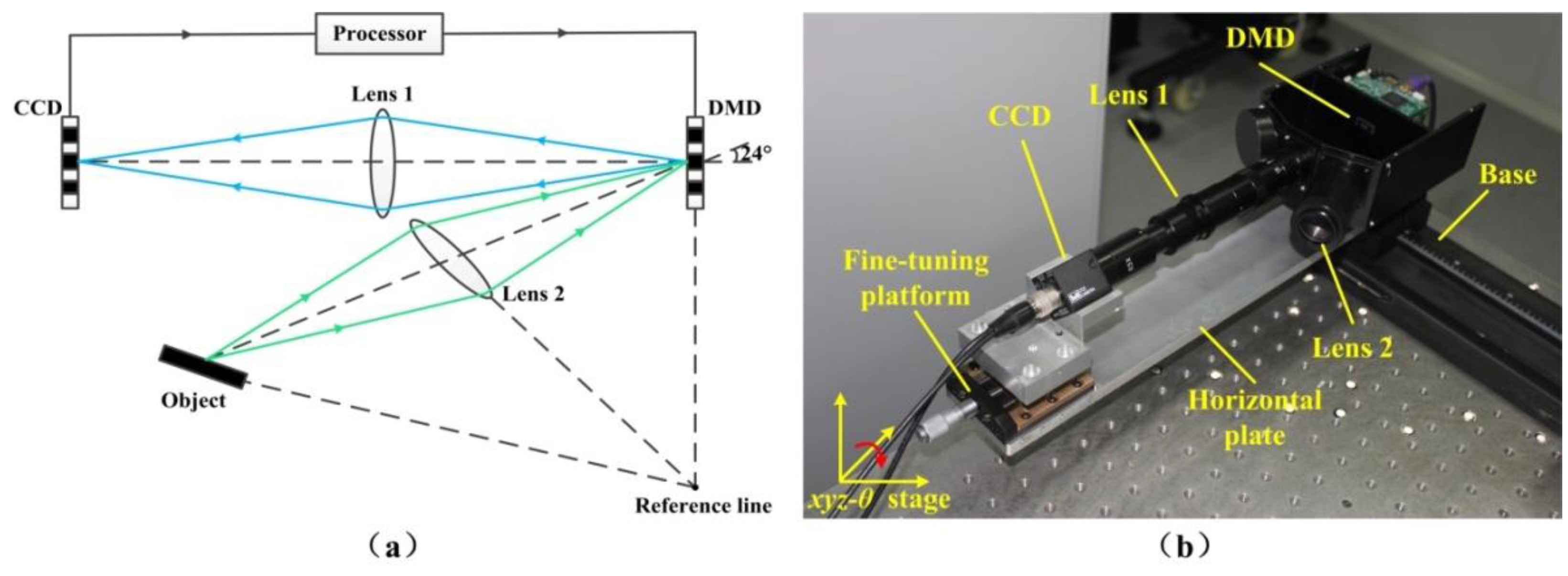

In this paper, we take into account the hardware restrictions of existing image sensors, design the sampling functions, and implement a hardware prototype of a DMD camera in which spatial and temporal information can be flexibly modulated. We build and analyze the optical model of the DMD camera, analyze per-pixel coded exposure theoretically, and propose a three-element median quicksort method for the efficient subframe extraction on which the increased temporal resolution of the imaging system relies. The rest of this paper is organized as follows. Section 2 introduces the outline and the optical model of our DMD camera. Section 3 presents the theory of per-pixel coded exposure for high-speed imaging and describes in detail the DMD pixel-level modulation process, the sampling clock control, and the subframe extraction algorithm. Experimental results are given in Section 4, and conclusions are summarized in Section 5.

3. The Theory of Per-Pixel Coded Exposure for High-Speed and High-Resolution Imaging

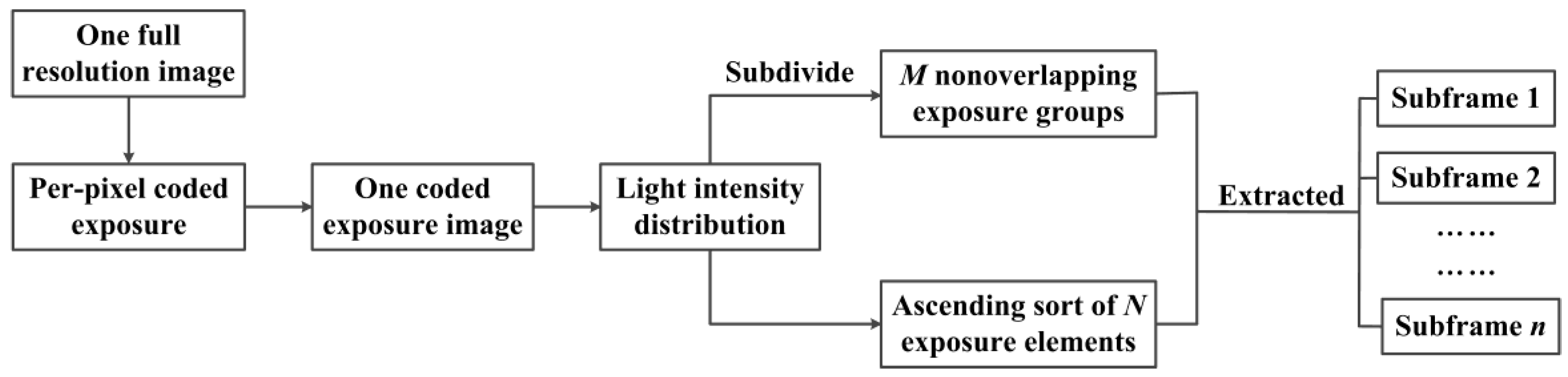

In this section, we propose a theory of per-pixel coded exposure for high-speed and high-resolution imaging that accurately describes the pixel-level modulation process of the DMD. We then discuss sampling clock control, which synchronizes the DMD and the CCD based on their clock characteristics. Finally, we propose a subframe extraction algorithm that combines pixels with the same exposure into a new subframe based on the light intensity distribution of the image.

3.1. Working Principle of Per-Pixel Coded Exposure

As mentioned above, the DMD camera is a secondary imaging system, which ensures that the images captured by the CCD camera have undergone space-time modulation by the DMD through the switching state and switching time of each DMD mirror. Because each DMD mirror corresponds to one CCD pixel, mirrors and pixels are functionally equivalent. The DMD micromirror array can thus be programmed to cycle through a series of binary coded patterns imaged onto the sensor.

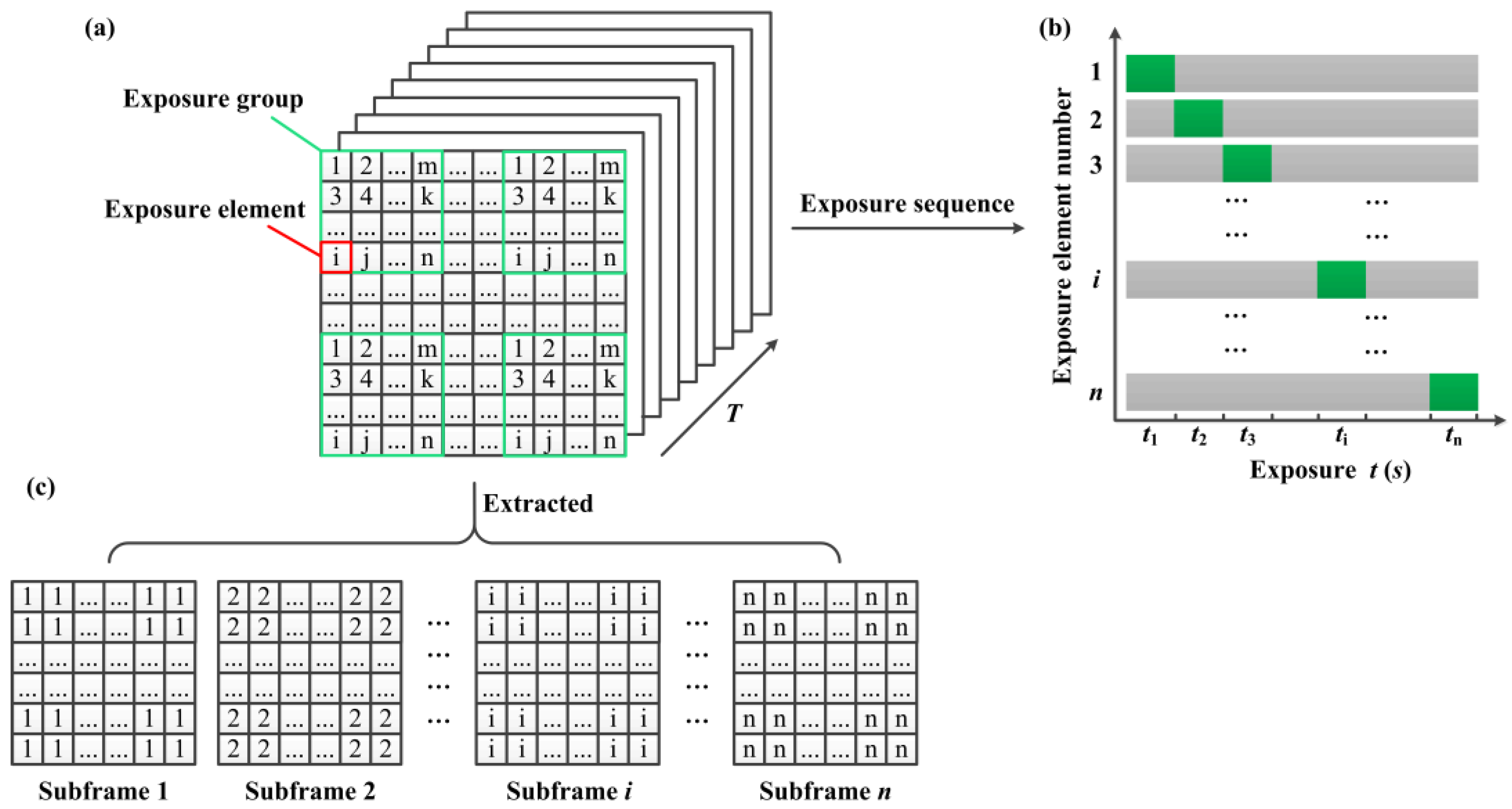

For programming purposes, individual mirrors on the DMD plane are classified as exposure elements, exposure groups, and subframe elements, as shown in Figure 4a. Each DMD coded pattern can be regarded as a mask. Suppose we subdivide a DMD mask into $m$ non-overlapping exposure groups, each consisting of $n$ exposure elements. Within an exposure group, every exposure element has a different identifier, and elements at the same position in all exposure groups share the same identifier.
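A minimal sketch of this labeling, assuming rectangular p × q exposure groups tiled over the sensor and identifiers 1..n (n = p·q) assigned in row-major order inside each group. Both the rectangular shape and the row-major order are our illustrative assumptions (the text also allows nonrectangular groups), and `label_map` is a hypothetical helper.

```python
def label_map(height, width, p, q):
    """Return a height x width grid of exposure-element identifiers.

    Exposure groups are p x q tiles; inside each group the identifiers
    1..p*q are assigned row by row, so every group repeats the same pattern
    and pixels at the same position in different groups share an identifier.
    """
    if height % p or width % q:
        raise ValueError("sensor size must be a multiple of the group size")
    return [[(r % p) * q + (c % q) + 1 for c in range(width)]
            for r in range(height)]


labels = label_map(4, 6, 2, 2)  # m = (4/2) * (6/2) = 6 groups, n = 4 elements each
for row in labels:
    print(row)
# [1, 2, 1, 2, 1, 2]
# [3, 4, 3, 4, 3, 4]
# [1, 2, 1, 2, 1, 2]
# [3, 4, 3, 4, 3, 4]
```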

As Figure 4b shows, exposure elements with the same identifier are exposed simultaneously, while exposure elements with different identifiers are exposed sequentially during one frame integration time of the CCD camera. Consequently, the gray levels of the corresponding sensor pixels differ because their exposure times differ; that is, temporal information is embedded in each frame by per-pixel coded exposure. The full-resolution coded exposure image is read out and stored at the end of each frame integration time of the CCD camera. Then, $n$ subframes can be extracted from this coded exposure image across all $m$ exposure groups based on the light intensity distribution of the image: the pixels labeled 1 in all exposure groups form subframe 1, the pixels labeled 2 form subframe 2, and so on up to subframe $n$, as shown in Figure 4c. The full-resolution coded exposure image is therefore still obtained at the native detector frame rate, and each subframe has $n$ times fewer pixels than the coded exposure image, but the temporal resolution of the DMD camera is $n$ times higher than in the conventional image-capture mode of the same detector. Per-pixel coded exposure for high-speed and high-resolution imaging can thus be implemented with a DMD camera without changing the intrinsic properties of the camera.
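Forming subframe k then amounts to collecting the pixel with identifier k from all m exposure groups. The sketch below assumes p × q rectangular groups with identifiers assigned row by row inside each group (our assumption); `extract_subframes` is a hypothetical name. It shows that each subframe has n = p·q times fewer pixels than the coded image.

```python
def extract_subframes(coded, p, q):
    """Split a coded exposure image into n = p*q subframes.

    Subframe k (1-based) collects the pixel with identifier k from every
    p x q exposure group, assuming row-major identifiers inside each group.
    """
    subframes = {}
    for dr in range(p):
        for dc in range(q):
            k = dr * q + dc + 1
            subframes[k] = [row[dc::q] for row in coded[dr::p]]
    return subframes


coded = [[10, 20, 11, 21],
         [30, 40, 31, 41],
         [12, 22, 13, 23],
         [32, 42, 33, 43]]
subs = extract_subframes(coded, 2, 2)
print(subs[1])  # [[10, 11], [12, 13]]
print(subs[4])  # [[40, 41], [42, 43]]
```

Applied to a 768 × 576 coded image with 2 × 2 groups, this layout yields four 384 × 288 subframes, the sizes reported in Section 4.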

Suppose we wish to increase the temporal resolution of the imaging system by a factor of $N$, i.e., $N$ different DMD masks are exposed sequentially within one frame integration time of the CCD camera. Let $M_i(x, y, t)$ denote the $i$-th DMD mask function, which is active for $t_i$ ms. The per-pixel coded exposure modulation function $M'(x, y, t)$, which accurately describes the pixel-level modulation process of the DMD, can be expressed as

Combined with the optical model of the DMD camera, the light intensity $V'(x, y)$ actually detected with the per-pixel coded exposure method can be expressed as

Theoretically, this approach can increase the temporal resolution of the imaging system by a factor of several or even several hundred without increasing the bandwidth requirements of the camera. In addition, each DMD mask can be represented by binary encoding, and the exposure groups may have different shapes, including diamond-shaped, square, rectangular, and other nonrectangular regions of interest.
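Because the modulation and intensity equations depend on the optical model of Section 2, which is not reproduced here, the following is only a discrete sketch under simplifying assumptions of our own: ideal binary masks, unit optical transfer, no noise, and a scene that is constant during each mask's active interval. Under these assumptions each detected pixel value is the sum, over the N masks, of mask value × scene irradiance × active time $t_i$. `coded_exposure` is a hypothetical name.

```python
def coded_exposure(scenes, masks, durations):
    """Simulate one CCD frame under N sequential binary DMD masks.

    scenes[i][r][c] : scene irradiance during slot i (assumed constant in the slot)
    masks[i][r][c]  : 1 if the mirror sends light to the sensor in slot i, else 0
    durations[i]    : active time t_i of mask i
    Returns the integrated intensity per pixel (idealised: no optics, no noise).
    """
    h, w = len(scenes[0]), len(scenes[0][0])
    frame = [[0.0] * w for _ in range(h)]
    for scene, mask, t in zip(scenes, masks, durations):
        for r in range(h):
            for c in range(w):
                frame[r][c] += mask[r][c] * scene[r][c] * t
    return frame


# Two-pixel toy example: pixel 0 is masked off during the second slot.
scenes = [[[1, 1]], [[2, 2]]]
masks = [[[1, 1]], [[0, 1]]]
print(coded_exposure(scenes, masks, [1, 1]))  # [[1.0, 3.0]]
```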

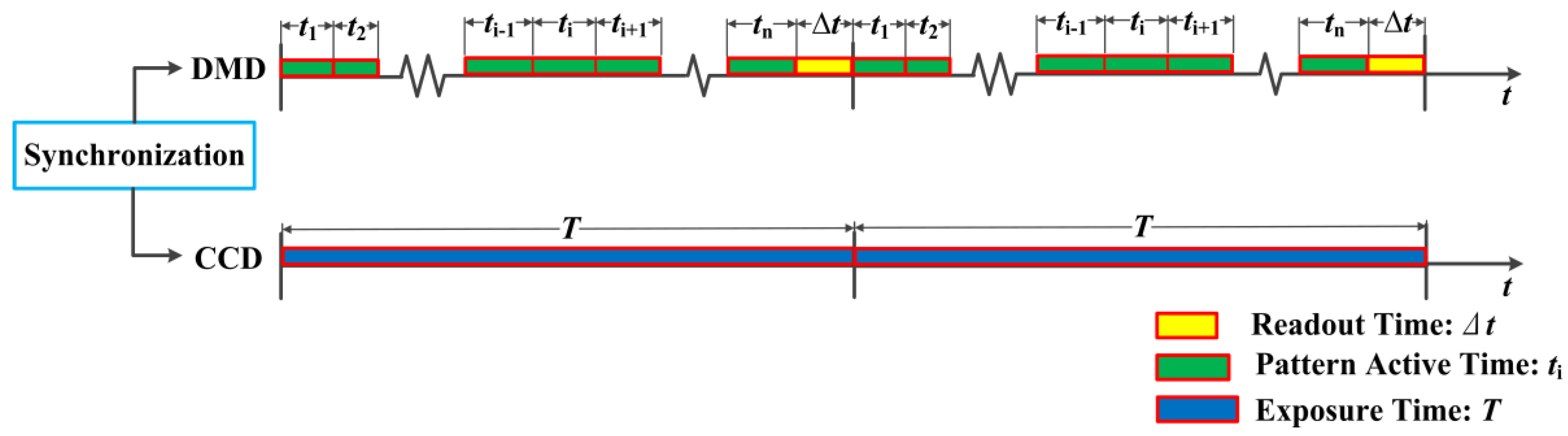

3.2. Sampling Clock Control

In the DMD camera, the direction of each mirror can be switched by digital signals within 24 μs, approximately 1/1000 of the frame time of an ordinary CCD camera, so different patterns can be displayed on the DMD many times during one frame integration time of the CCD camera. First, the CCD and the DMD must be synchronized. Suppose the DMD patterns are set to cycle sequentially $N$ times; the sampling period of the CCD camera can then be expressed as

where $\Delta t$ is the frame readout time, which is generally very small.

Figure 5 shows the sampling clock control of the DMD camera based on the clock characteristics of the CCD and the DMD. The CCD camera is triggered once every $n$ DMD patterns within the camera frame exposure time $T$. The frames are then saved continuously to the hard disk for later analysis.
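A small sketch of such a trigger schedule, assuming (our assumption, not a description of the authors' hardware) that every DMD pattern occupies a fixed slot and that the CCD is triggered at the start of each group of n patterns. It also computes a crude upper bound on how many 24 μs mirror switches fit into one 40 ms frame, ignoring exposure and readout time. `pattern_schedule` is a hypothetical helper.

```python
def pattern_schedule(n, slot_ms, frames):
    """Return (camera_trigger_times, pattern_start_times) in ms.

    Each DMD pattern occupies one slot of slot_ms; the CCD is triggered
    once every n patterns, i.e. at the start of each camera frame.
    """
    starts = [k * slot_ms for k in range(n * frames)]
    triggers = starts[::n]
    return triggers, starts


triggers, starts = pattern_schedule(n=4, slot_ms=10.0, frames=2)
print(triggers)  # [0.0, 40.0]
print(starts)    # [0.0, 10.0, 20.0, 30.0, 40.0, 50.0, 60.0, 70.0]

# Crude upper bound on 24 us mirror switches within a 40 ms (40 000 us) frame
print(40_000 // 24)  # 1666
```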

3.3. Extraction of Subframe

Per-pixel coded exposure modulation by the DMD embeds temporal information into the coded exposure images captured by the DMD camera. To achieve high-speed imaging, this temporal information must be read out to extract the different subframes. Normally, the gray levels of neighboring pixels vary smoothly, but DMD modulation now introduces large differences between them. Moreover, the exposure elements have the same rank order within every exposure group, both before and after per-pixel coded exposure. We can therefore sort the exposure elements of each exposure group in ascending order of gray level and label the resulting sequence 1, 2, 3, ..., $n$, which corresponds to the CCD pixels. In this way, $n$ subframes can be extracted from one coded exposure image; that is, the temporal resolution of the DMD camera is increased by a factor of $n$. The flowchart of subframe extraction is shown in Figure 6.
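The rank-based labeling can be sketched as follows. The assumptions are ours: rectangular p × q groups, ties broken by pixel position, and Python's built-in `sorted` standing in for the quicksort discussed next. `rank_labels` is a hypothetical helper that returns, for every pixel, its rank (1 = darkest) within its exposure group.

```python
def rank_labels(coded, p, q):
    """Label each pixel 1..p*q by ascending gray level within its p x q group."""
    h, w = len(coded), len(coded[0])
    labels = [[0] * w for _ in range(h)]
    for r0 in range(0, h, p):
        for c0 in range(0, w, q):
            cells = [(coded[r][c], r, c)
                     for r in range(r0, r0 + p) for c in range(c0, c0 + q)]
            # Ascending gray level; ties are resolved by row, then column.
            for rank, (_, r, c) in enumerate(sorted(cells), start=1):
                labels[r][c] = rank
    return labels


coded = [[ 50, 100,  60, 120],
         [150, 200, 180, 240],
         [ 40,  80,  30,  90],
         [120, 160, 100, 130]]
print(rank_labels(coded, 2, 2))
# [[1, 2, 1, 2], [3, 4, 3, 4], [1, 2, 1, 2], [3, 4, 3, 4]]
```

In this example every group has the same rank order, which is the property the extraction relies on; pixels with the same label can then be gathered into one subframe.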

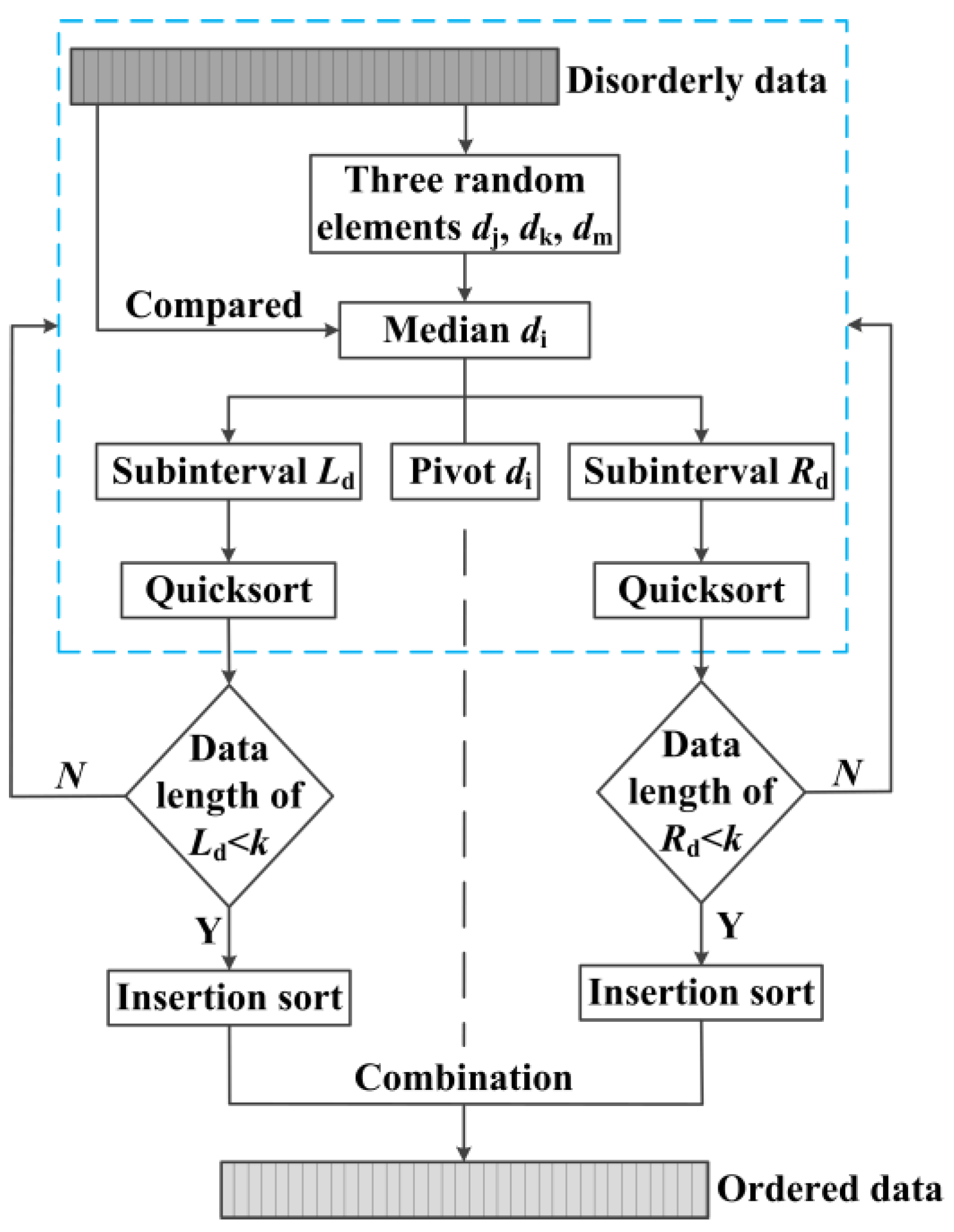

Sorting the exposure elements of each exposure group in ascending order is thus the key step, and the sorting algorithm directly determines the efficiency of image processing. Many sorting algorithms have been proposed, such as bubble sort, selection sort, insertion sort, merge sort, and quicksort [33]. Quicksort, which is based on divide and conquer, is widely regarded as one of the most efficient internal sorting algorithms and is widely used because of its simple structure and good average-case performance.

However, traditional quicksort has the following disadvantages. First, if the first element of the sequence is selected as the pivot, the worst-case time complexity of $O(n^2)$ is likely to occur when the data are partly identical or partly ordered. Second, the subsequences become smaller and smaller as the algorithm proceeds, and once a subsequence is shorter than a certain length, quicksort runs more slowly than simple sorting methods. Because a large amount of image data must be processed in our research, the efficiency of traditional quicksort needs to be improved.
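To illustrate the first disadvantage, the sketch below counts comparisons for a textbook quicksort that always takes the first element as the pivot (Lomuto-style partition; an illustrative choice, not necessarily the variant the authors benchmarked). On already sorted input of length n every partition is maximally unbalanced, giving (n − 1) + (n − 2) + ... + 1 = n(n − 1)/2 comparisons.

```python
def quicksort_first_pivot(a):
    """Sort list a in place with a first-element pivot; return the comparison count."""
    comparisons = 0

    def partition(lo, hi):
        nonlocal comparisons
        pivot = a[lo]
        i = lo  # a[lo+1..i] holds elements smaller than the pivot
        for j in range(lo + 1, hi + 1):
            comparisons += 1
            if a[j] < pivot:
                i += 1
                a[i], a[j] = a[j], a[i]
        a[lo], a[i] = a[i], a[lo]  # move the pivot to its final position
        return i

    stack = [(0, len(a) - 1)]
    while stack:  # explicit stack avoids Python's recursion limit on sorted input
        lo, hi = stack.pop()
        if lo < hi:
            p = partition(lo, hi)
            stack.append((lo, p - 1))
            stack.append((p + 1, hi))
    return comparisons


n = 200
data = list(range(n))
print(quicksort_first_pivot(data), n * (n - 1) // 2)  # 19900 19900
```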

In this paper, we propose a three-element median quicksort method to overcome these shortcomings of traditional quicksort. Denoting the $n$ unsorted data by $\{d_i\} = (d_1, \ldots, d_n)$, the new algorithm proceeds as follows.

Step 1: Three elements $d_j$, $d_k$, $d_m$ are selected at random from $\{d_i\}$ and compared with each other, and their median $d_i$ is taken as the pivot. The pivot $d_i$ is then compared with the other elements, dividing the current unsorted sequence into the left subinterval $L_d = (d_1, \ldots, d_{i-1})$ and the right subinterval $R_d = (d_{i+1}, \ldots, d_n)$. All data in $L_d$ are less than or equal to the pivot $d_i$, and all data in $R_d$ are greater than $d_i$. The pivot $d_i$ then stays fixed at this position.

Step 2: Step 1 is applied recursively to $L_d$ and $R_d$, respectively, until the length of a subinterval is less than $k$, at which point Step 2 stops.

Step 3: Each remaining left and right subinterval is sorted by insertion sort: each element is inserted into its proper position by comparison with the previously sorted elements, until all data are sorted.
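Steps 1 to 3 can be sketched in Python as follows. This is our illustrative reading of the steps, not the authors' code: the three candidates are drawn with `random.sample`, the partition is Lomuto-style with the median moved to the front, the recursion descends into the shorter side to bound stack depth, and the cutoff `k` defaults to 7 following the analysis at the end of this subsection. `median3_quicksort` and `insertion_sort` are hypothetical names.

```python
import random


def insertion_sort(a, lo, hi):
    """Step 3: sort a[lo..hi] in place by insertion."""
    for i in range(lo + 1, hi + 1):
        x = a[i]
        j = i - 1
        while j >= lo and a[j] > x:  # shift larger elements one place right
            a[j + 1] = a[j]
            j -= 1
        a[j + 1] = x


def median3_quicksort(a, k=7, rng=random):
    """Sort list a in place and return it (illustrative reading of Steps 1-3)."""
    k = max(k, 3)  # a median of three needs at least three elements

    def sort(lo, hi):
        while hi - lo + 1 >= k:  # Step 2: keep partitioning while length >= k
            # Step 1: the median of three randomly chosen elements is the pivot.
            idx = rng.sample(range(lo, hi + 1), 3)
            mid = sorted(idx, key=lambda t: a[t])[1]
            a[lo], a[mid] = a[mid], a[lo]
            pivot, i = a[lo], lo
            for j in range(lo + 1, hi + 1):  # L_d: <= pivot, R_d: > pivot
                if a[j] <= pivot:
                    i += 1
                    a[i], a[j] = a[j], a[i]
            a[lo], a[i] = a[i], a[lo]  # pivot now fixed at its final index i
            # Recurse into the shorter side and loop on the longer one.
            if i - lo < hi - i:
                sort(lo, i - 1)
                lo = i + 1
            else:
                sort(i + 1, hi)
                hi = i - 1
        insertion_sort(a, lo, hi)  # Step 3 on the short remaining subinterval

    sort(0, len(a) - 1)
    return a


data = [9, 3, 7, 1, 8, 2, 6, 4, 5, 0, 3, 7]
print(median3_quicksort(data))  # [0, 1, 2, 3, 3, 4, 5, 6, 7, 7, 8, 9]
```

As specified in Step 1, every copy of the pivot value goes to $L_d$, so heavily duplicated data can still partition unevenly; a three-way partition would be one way to address that.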

Figure 7 shows the flowchart of the three-element median quicksort method, which produces the data in ascending order. To choose a reasonable value of $k$, we need the time complexity of the new algorithm. The average time complexity of traditional quicksort can be expressed as

where $T_o(i-1)$ and $T_o(n-i)$ represent the average time complexity of the subsequences $L_d$ and $R_d$, respectively. As the variable $i$ ranges from 1 to $n$, each of $T_o(0), T_o(1), \ldots, T_o(n-1)$ appears twice, so Equation (9) is equivalent to

Rearranging Equation (10) and subtracting the corresponding expression for $n-1$, we obtain

Then, by defining an auxiliary variable, Equation (11) can be expressed as

Applying Equation (12) recursively gives the following.

Therefore, the average time complexity of traditional quicksort can also be expressed as

Similarly, when the variable $i$ ranges from $k$ to $n$, the average time complexity of quicksort can be expressed as

The average time complexity of the three-element median comparison can be expressed as

The average time complexity of insertion sort can be expressed as

Therefore, the average time complexity of three-element median quicksort method can be expressed as

Here, we define a new function $f(n, k)$ to express the difference between the average time complexities of the two methods above.

Because of

, the Equation (19) can be expressed as

When $f'(n, k) = 0$, the function $f(n, k)$ attains an extremum. Therefore, by defining an auxiliary variable, Equation (20) can be expressed as

Solving inequality (21) gives $k \geq 7$, where $f(n, k)$ reaches its maximum value, i.e., the difference between the average time complexities of the two methods is largest. Therefore, insertion sort is applied to a remaining left or right subinterval once its length is less than 7; with this choice the average time complexity of the three-element median quicksort algorithm is minimized, and the algorithm performs best.
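Equations (9) to (14) are not reproduced in this text. For reference, the standard textbook form of the traditional-quicksort analysis, assuming $n-1$ comparisons per partitioning step and a uniformly random pivot rank (our assumptions, so the constants may differ from the authors'), is $T_o(n) = (n-1) + \frac{2}{n}\sum_{i=0}^{n-1} T_o(i)$ with $T_o(0) = T_o(1) = 0$, whose solution is $T_o(n) = 2(n+1)H_n - 4n \approx 2n\ln n$, where $H_n$ is the $n$-th harmonic number. The sketch below checks that closed form exactly with rational arithmetic.

```python
from fractions import Fraction


def avg_comparisons(n_max):
    """Exact T(n) for n = 0..n_max from T(n) = (n-1) + (2/n) * sum_{i<n} T(i)."""
    T = [Fraction(0), Fraction(0)]  # T(0) = T(1) = 0
    prefix = Fraction(0)            # running sum T(0) + ... + T(n-1)
    for n in range(2, n_max + 1):
        prefix += T[n - 1]
        T.append((n - 1) + Fraction(2, n) * prefix)
    return T


def closed_form(n):
    """2(n+1)H_n - 4n, where H_n is the n-th harmonic number."""
    H = sum(Fraction(1, i) for i in range(1, n + 1))
    return 2 * (n + 1) * H - 4 * n


T = avg_comparisons(50)
print(all(T[n] == closed_form(n) for n in range(51)))  # True
print(T[3])  # 8/3
```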

4. Experimental Results

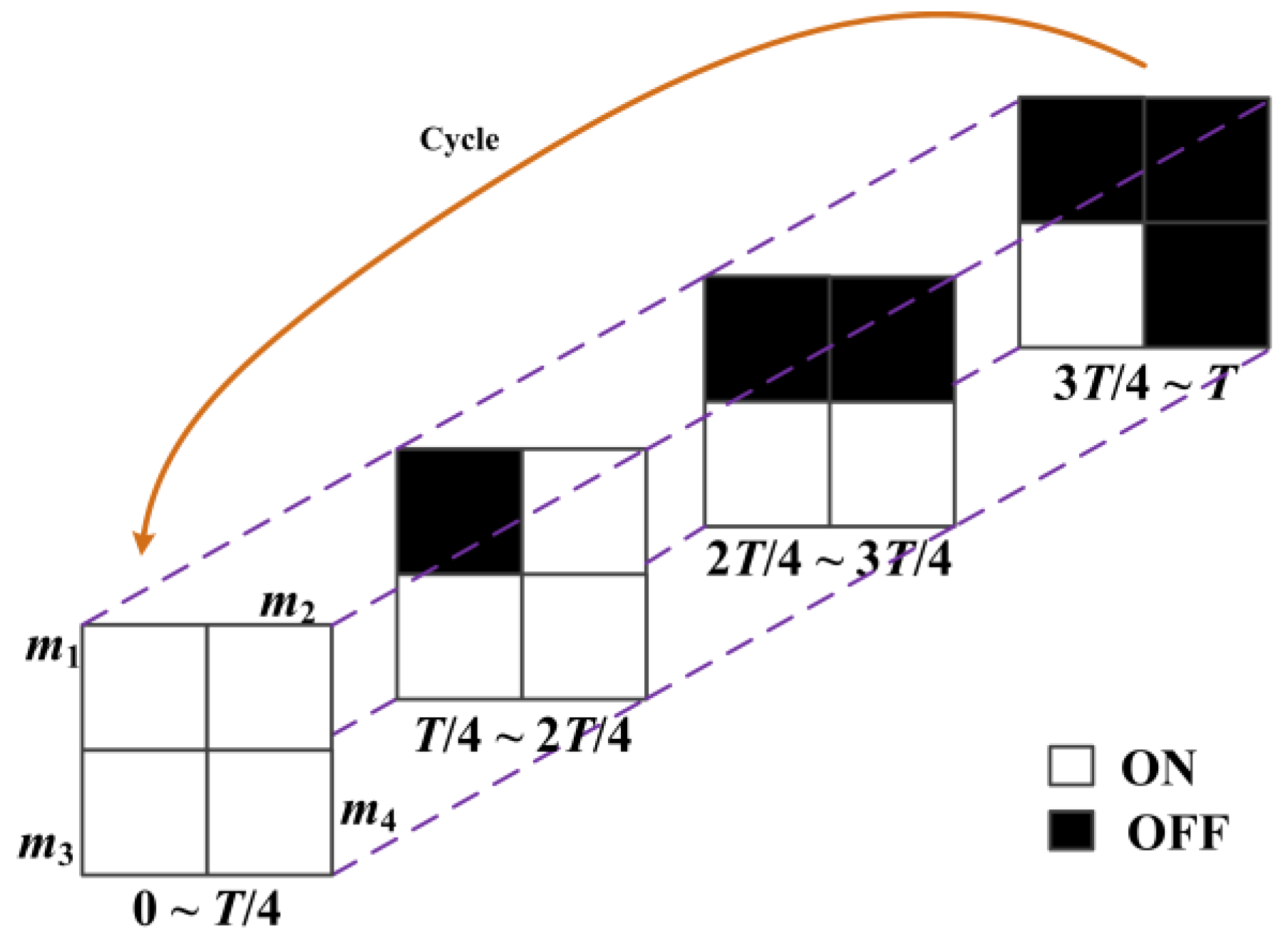

In this section, we use the method described in Section 3 to capture rapid phenomena at high speed and high resolution. In general, DMD masks of different shapes or sizes (such as 3 × 3, 9 × 9, 16 × 16, and other grid patterns) can be used to increase the temporal resolution of the imaging system by a factor of several or even several hundred. In our experiments, however, owing to the limited resolution of our DMD, we aim for a fourfold gain in temporal resolution using a 25 fps camera ($T$ = 40 ms, $t_r$ = 0.2 ms), which yields a frame rate of 100 fps for the embedded lower-resolution image sequence.
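A quick arithmetic check of these settings. We assume, since the text does not say so explicitly, that $t_r$ is a per-pattern switching overhead: each of the N = 4 slots then lasts T/N = 10 ms, of which $t_r$ = 0.2 ms is lost, leaving 9.8 ms, which matches the mask active time $t_i$ quoted in the next paragraph. The 2 × 2 grouping of the 768 × 576 sensor also gives the subframe size reported later.

```python
T_ms = 40.0    # CCD frame time at 25 fps
t_r_ms = 0.2   # reported t_r; assumed here to be a per-pattern overhead
N = 4          # temporal gain (2 x 2 exposure groups)

camera_fps = 1000.0 / T_ms
subframe_fps = N * camera_fps
slot_ms = T_ms / N
t_i_ms = slot_ms - t_r_ms
subframe_size = (768 // 2, 576 // 2)

print(camera_fps, subframe_fps)  # 25.0 100.0
print(round(t_i_ms, 3))          # 9.8
print(subframe_size)             # (384, 288)
```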

Figure 8 shows the modulation process of the DMD mirrors in each exposure group. Each exposure group in the DMD pattern mask consists of four exposure elements (a 2 × 2 grid pattern), labeled $m_1$, $m_2$, $m_3$, and $m_4$. All mirrors are open from 0 to $T/4$; then $m_1$ is closed while $m_2$, $m_3$, and $m_4$ are open from $T/4$ to $2T/4$; $m_1$ and $m_2$ are closed while $m_3$ and $m_4$ are open from $2T/4$ to $3T/4$; finally, $m_1$, $m_2$, and $m_3$ are closed and only $m_4$ is open from $3T/4$ to $T$. The four DMD pattern masks (active time $t_i$ = 9.8 ms) are displayed sequentially during one frame time of the CCD camera. One full-resolution coded exposure image is thus obtained, from which four different subframes are extracted using the three-element median quicksort method. All experiments were performed in a dark room with backlighting to highlight the phenomena.

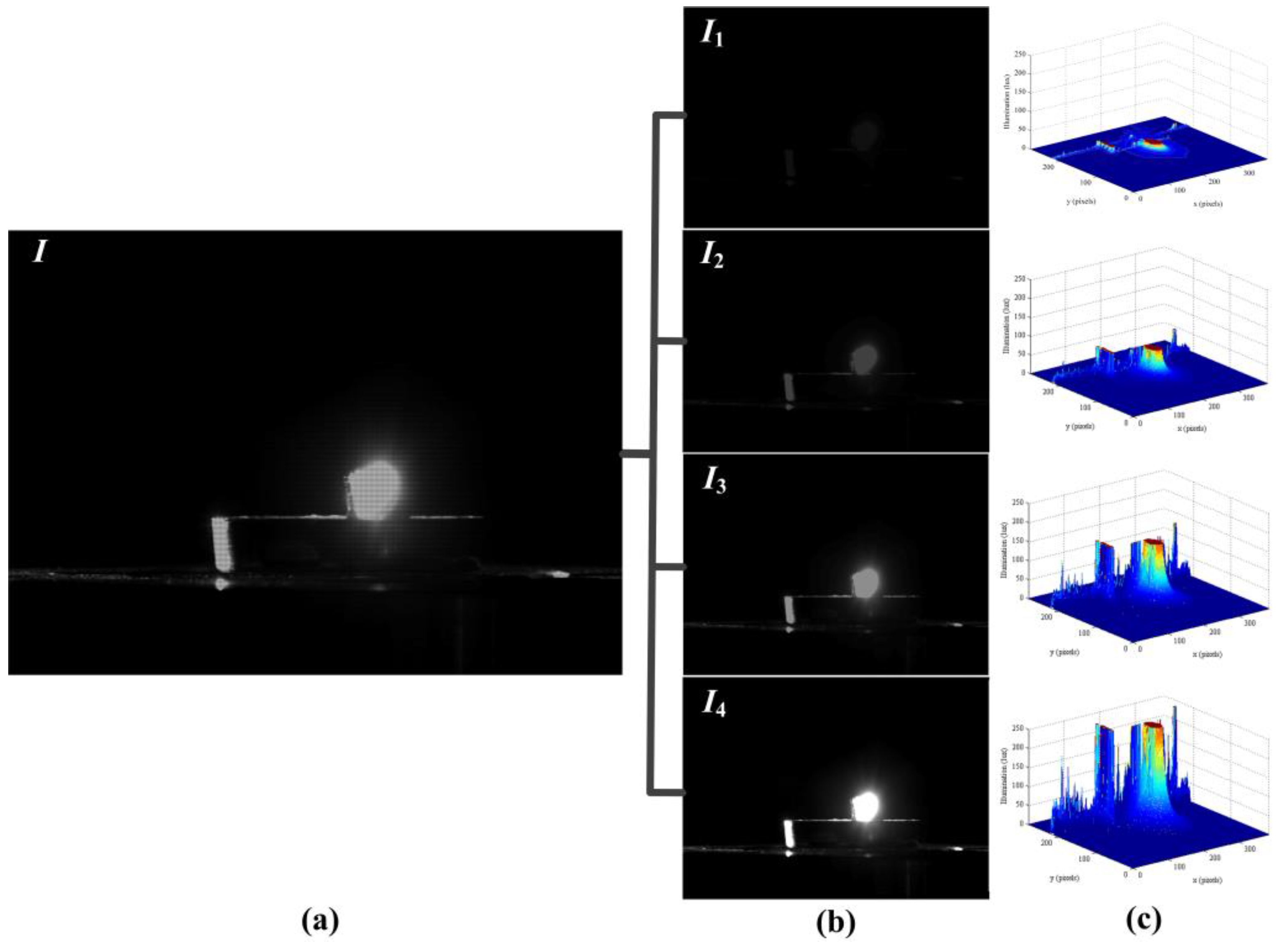

We used our DMD camera to capture a wide range of high-speed scenes. The temporal information was embedded into the high-resolution coded exposure image (768 × 576 pixels, 25 fps), and the high-speed subframes (384 × 288 pixels, 100 fps) extracted from it decompose the high-speed process. To show the effect of temporal information modulation by the DMD camera, the first example, shown in Figure 9, records the brightness change of a candle flame. The coded exposure image captured by the DMD camera is shown in Figure 9a, and Figure 9b shows the four different subframes extracted from Figure 9a. As Figure 9c shows, the light intensity of the subframes increases gradually, indicating that the candle flame appears brighter as the exposure time grows. In a sense, increasing the temporal resolution is equivalent to slowing down the fast-moving phenomenon.

Figure 10 recorded the second example result for the motion of the candles flame.

Figure 10a was the coded exposure image which recorded the instantaneous change of blowing out the candles, and four subframes were extracted as shown in

Figure 10b.

Figure 10c showed that the light intensity distribution of candle flame was gradually decreased, so the whole process of blowing out candles was fully described. The subtle change of the liquid mixing motion was clearly shown in

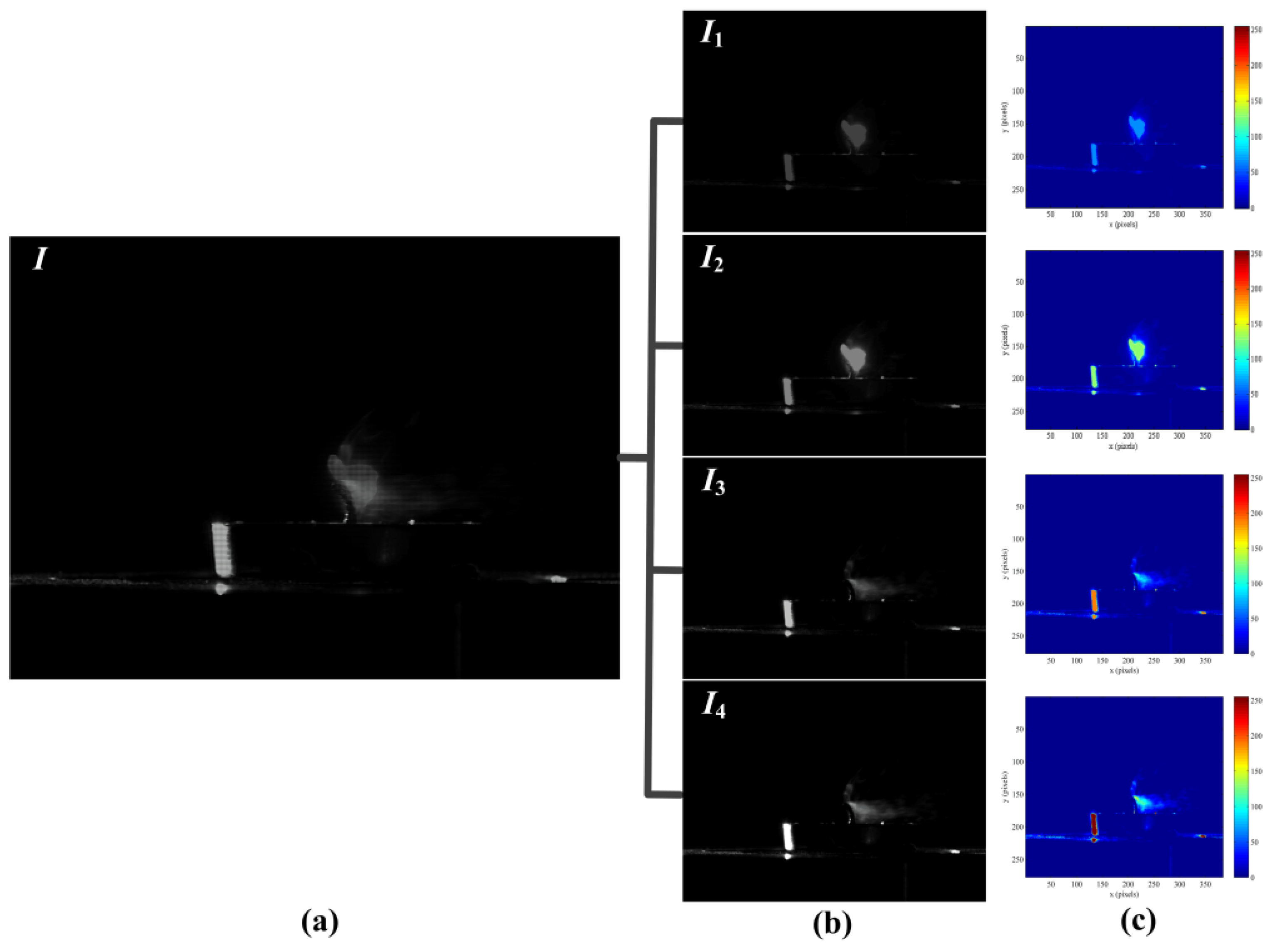

Figure 11.

Figure 11a is the turbid coded exposure image, and Figure 11b records, in four subframes, the process of milk being poured into water. Image entropy represents the aggregation characteristics of the gray-level distribution, and a larger entropy means that the image carries more information. Figure 11c shows the entropy of the different frames: the entropy of subframe $I_3$ is very close to that of the coded exposure image $I$, and the entropy of subframe $I_4$ exceeds that of $I$. That is, the effective information in the subframes increases gradually, and the changing sharpness of the mixing liquid is accurately recorded. Therefore, all the experiments show that our method achieves good visual results for high-speed imaging.
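An entropy comparison of this kind can be computed with the usual Shannon entropy of the gray-level histogram, $H = -\sum_g p_g \log_2 p_g$. The text does not state which definition or logarithm base was used, so this is an assumed, minimal version for integer gray levels; `image_entropy` is a hypothetical name.

```python
import math
from collections import Counter


def image_entropy(img):
    """Shannon entropy (bits) of the gray-level histogram of a 2-D image."""
    counts = Counter(v for row in img for v in row)
    total = sum(counts.values())
    # sum of p * log2(1/p), which equals -sum of p * log2(p)
    return sum((c / total) * math.log2(total / c) for c in counts.values())


flat = [[128] * 4 for _ in range(4)]                      # one gray level
two = [[0, 255] * 2 for _ in range(4)]                    # two equally likely levels
ramp = [[r * 4 + c for c in range(4)] for r in range(4)]  # 16 distinct levels
print(image_entropy(flat), image_entropy(two), image_entropy(ramp))  # 0.0 1.0 4.0
```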

5. Conclusions

In this paper, we propose an efficient way to capture high-speed and high-resolution images using a DMD camera with a per-pixel coded exposure method. Compared with conventional high-speed imaging methods, our approach overcomes the bandwidth limitations of traditional cameras: we design and build a DMD camera that can greatly increase the temporal resolution of the imaging system while increasing neither the memory requirements nor the intrinsic frame rate of the camera. To overcome the disadvantages of pixel binning, we use the optical model of the DMD camera, theoretically analyze per-pixel coded exposure, and propose a three-element median quicksort method for fast sorting of pixels. Theoretically, this approach can increase the temporal resolution of the imaging system by a factor of several or even several hundred. In addition, the programmable imaging system supports different spatial light modulators, as well as different frame rates for different areas of the image sensor, which may be useful for unconventional detectors. Finally, we achieved a fourfold gain in temporal resolution, reaching 100 fps with a 25 fps camera, and experiments on a wide range of scenes show that our method achieves good visual results for high-speed imaging.

However, the proposed method has several limitations. First, the physical resolution of the DMD limits the performance of the DMD camera, in particular its maximum frame rate and maximum spatial resolution. Second, because of its low light efficiency, the DMD camera cannot obtain high-quality images in low-light conditions. In the future, this technology may be useful in various fields, such as observing cell activity in biomedicine, high dynamic range imaging, extended depth-of-field photography, and three-dimensional shape measurement, and we hope it will encourage researchers and engineers to pursue further research and development.