Abstract

We consider the lossless compression bound of any individual data sequence. Conceptually, its Kolmogorov complexity is such a bound, yet it is uncomputable. According to Shannon's source coding theorem, the average compression bound is $nH$, where n is the number of words and H is the entropy of an oracle probability distribution characterizing the data source. The quantity $nH(\hat\theta_n)$ obtained by plugging in the maximum likelihood estimate $\hat\theta_n$ is an underestimate of the bound. Shtarkov showed that the normalized maximum likelihood (NML) distribution is optimal in a minimax sense for any parametric family. Fitting a data sequence, without any a priori distributional assumption, by a relevant exponential family, we apply the local asymptotic normality to show that the NML code length is $nH(\hat\theta_n) + \frac{d}{2}\log\frac{n}{2\pi} + \log\int_\Theta|I(\theta)|^{1/2}\,d\theta + o(1)$, where d is the number of free parameters (determined by the dictionary size), $|I(\theta)|$ is the determinant of the Fisher information matrix, and Θ is the parameter space. We demonstrate that sequentially predicting the optimal code length for the next word via a Bayesian mechanism leads to the mixture code, whose length is given by $nH(\hat\theta_n) + \frac{d}{2}\log\frac{n}{2\pi} + \log\frac{|I(\hat\theta_n)|^{1/2}}{w(\hat\theta_n)} + o(1)$, where $w(\theta)$ is a prior. The asymptotics apply not only to discrete symbols but also to continuous data if the code length for the former is replaced by the description length for the latter. The analytical result is exemplified by calculating compression bounds of protein-encoding DNA sequences under different parsing models. Typically, compression is maximized when parsing aligns with amino acid codons, while pseudo-random sequences remain incompressible, as predicted by Kolmogorov complexity. Notably, the empirical bound becomes more accurate as the dictionary size increases.

1. Introduction

The computation of the compression bound of any individual sequence is both a philosophical and a practical problem. It touches on the fundamentals of human intelligence. After several decades of effort, many insights have been gained by experts from various disciplines.

In essence, the bound is the length of the shortest program that prints the sequence on a Turing machine, referred to as the Solomonoff–Kolmogorov–Chaitin algorithmic complexity. Under this framework, if a sequence cannot be compressed by any computer program, it is considered random. On the other hand, if we can compress the sequence using a certain program or coding scheme, then it is not random, and we uncover some pattern or knowledge within the sequence. Nevertheless, Kolmogorov complexity is not computable.

Along another line, the source coding theorem proposed by Shannon [1] states that the average shortest code length is no less than $nH$, where n is the number of words and H is the entropy of the source, assuming its distribution can be specified. Although Shannon's probabilistic framework has inspired the invention of some ingenious compression methods, $nH$ is an oracle bound. Several further questions need to be addressed. First, where does the probability distribution come from? A straightforward approach is to infer it from the data themselves. However, in the case of discrete symbols, plugging in the empirical word frequencies $\hat\theta_n$ observed in the sequence results in $nH(\hat\theta_n)$, which can be shown to be an underestimate of the bound. Second, the word frequencies are counted according to a dictionary. Different dictionaries yield different distributions and thus different codes. What is the criterion for selecting a good dictionary? Third, the behavior of some compression algorithms, such as Lempel–Ziv coding, shows that as the sequence length increases, the size of the dictionary also grows. What is the exact impact of the dictionary size on the compression? Fourth, can we achieve the compression limit using a predictive code that processes the data in only one pass? Fifth, how is the bound derived from the probabilistic framework, if at all, connected to the conclusions drawn from the algorithmic complexity?

In this article, we review the key ideas of lossless compression and present some new mathematical results relevant to the aforementioned problems. Besides the algorithmic complexity and the Shannon source coding theorem, the technical tools center around the normalized maximum likelihood (NML) coding [2,3] and predictive coding [4,5]. The expansions of these code lengths lead to an empirical compression bound that is indeed sequence specific and naturally linked to algorithmic complexity. Although the primary theme is the pathwise asymptotics, their related average results are also discussed for the sake of comparison. The analytical results apply not only to discrete symbols but also to continuous data, provided the code length for the former is replaced by the description length for the latter [6]. Beyond the theoretical justification, the empirical bound is exemplified by protein-coding DNA sequences and pseudo-random sequences.

2. A Brief Review of the Key Concepts

2.1. Data Compression

The basic concepts of lossless coding can be found in the textbook [7]. Before we proceed, it is helpful to clarify the jargon used in this paper: strings, symbols, and words. We illustrate them with an example. The following, “studydnasequencefromthedatacompressionpointofviewforexampleabcdefghijklmnopqrstuvwxyz”, is a string. The 26 distinct lowercase English letters appearing in the string are called symbols, and they form an alphabet. If we parse the string into “study”, “dnasequence”, “fromthedata”, “compressionpointofview”, “forexample”, “abcdefg”, “hijklmnopq”, and “rstuvwxyz”, these substrings are called words.

The implementation of data compression involves an encoder and a decoder. The encoder parses the string to be compressed into words and replaces each word by its codeword. This produces a new string, which is hopefully shorter than the original one in terms of bits. The decoder, conversely, parses the new string into codewords, and interprets each codeword back to a word of the original symbols. The collection of all distinct words in a parsing is called a dictionary.
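A minimal Python sketch of the encoder and decoder just described, assuming a hypothetical four-word dictionary whose codewords were picked by hand so that none is a prefix of another (the prefix property discussed in Section 2.2). The names `CODEBOOK`, `encode`, and `decode` are illustrative only.

```python
# Hypothetical dictionary: word -> codeword (no codeword is a prefix of another).
CODEBOOK = {"study": "0", "dna": "10", "sequence": "110", "data": "111"}


def encode(words, codebook):
    """Replace each parsed word by its codeword and concatenate the bits."""
    return "".join(codebook[w] for w in words)


def decode(bits, codebook):
    """Scan the bits left to right; because the code is prefix-free, a word
    can be emitted as soon as the buffered bits match a codeword."""
    inverse = {code: word for word, code in codebook.items()}
    words, buffer = [], ""
    for b in bits:
        buffer += b
        if buffer in inverse:
            words.append(inverse[buffer])
            buffer = ""
    if buffer:
        raise ValueError("trailing bits do not form a complete codeword")
    return words


words = ["study", "dna", "sequence", "data", "dna"]
bits = encode(words, CODEBOOK)
print(bits)                              # 01011011110
print(decode(bits, CODEBOOK) == words)   # True
```

Because decoding only looks at bits already read, the decoder never backs up; with a code that is not prefix-free, the `buffer in inverse` test could fire too early.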

In the context of data compression, two issues arise naturally. First, is there a lower bound? Second, how do we compute this bound, or is it computable at all?

2.2. Prefix Code

A fundamental concept in lossless compression is the prefix code, or instantaneous code. A code is called a prefix code if no codeword is a prefix of any other codeword. The prefix constraint is closely related to the metaphor of the Turing machine, by which the algorithmic complexity is defined. Given a prefix code over an alphabet of D symbols, the codeword lengths $l_1, l_2, \dots, l_m$, where m is the dictionary size, must satisfy the Kraft inequality: $\sum_{i=1}^{m} D^{-l_i} \le 1$. Conversely, given a set of code lengths that satisfy this inequality, there exists a prefix code with those code lengths. Note that the dictionary size in a prefix code could be either finite or countably infinite.
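The converse direction of the Kraft inequality is constructive. A minimal sketch, assuming binary codewords (D = 2) and positive integer lengths, builds such a code by canonical assignment: sort the lengths, hand out consecutive binary numbers, and pad each to the next length. `kraft_sum`, `prefix_code_from_lengths`, and `is_prefix_free` are hypothetical helper names.

```python
from fractions import Fraction


def kraft_sum(lengths, D=2):
    """Exact value of sum_i D**(-l_i) for integer codeword lengths."""
    return sum(Fraction(1, D ** l) for l in lengths)


def prefix_code_from_lengths(lengths):
    """Construct a binary prefix code with the given codeword lengths by
    canonical assignment (shortest lengths first). Raises if Kraft fails."""
    if any(l < 1 for l in lengths):
        raise ValueError("codeword lengths must be positive integers")
    if kraft_sum(lengths) > 1:
        raise ValueError("lengths violate the Kraft inequality")
    codes = [None] * len(lengths)
    value, prev_len = 0, 0
    for i in sorted(range(len(lengths)), key=lambda j: lengths[j]):
        value <<= lengths[i] - prev_len          # pad the running value to the new length
        codes[i] = format(value, "b").zfill(lengths[i])
        value += 1                                # next free codeword at this length
        prev_len = lengths[i]
    return codes


def is_prefix_free(codes):
    """True if no codeword is a prefix of a different codeword."""
    return not any(i != j and codes[j].startswith(codes[i])
                   for i in range(len(codes)) for j in range(len(codes)))


print(kraft_sum([2, 1, 3, 3]))                 # 1
print(prefix_code_from_lengths([2, 1, 3, 3]))  # ['10', '0', '110', '111']
```

Exact fractions keep the Kraft sum free of rounding, so a length set that sums to exactly 1 is not rejected by floating-point error.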

The class of prefix codes is a subset of the more general class of uniquely decodable codes, and one may expect that some uniquely decodable codes could be advantageous over prefix codes in terms of data compression. However, this is not necessarily the case, for it can be shown that the codeword lengths of any uniquely decodable code must satisfy the Kraft inequality. Therefore, a prefix code can always be constructed to match the codeword lengths of any given uniquely decodable code.

A prefix code has an attractive self-punctuating feature: it can be decoded without reference to future codewords, since the end of a codeword is immediately recognizable. For these reasons, prefix coding is commonly used in practice. A conceptual yet convenient generalization of the Kraft inequality is to drop the integer requirement for code lengths and ignore the effect of rounding. A general set of code lengths can be implemented by arithmetic coding [8,9]. This generalization leads to a correspondence between probability distributions and prefix code lengths: for every distribution P over the dictionary, there exists a prefix code C whose length $L_C(x)$ is equal to $-\log P(x)$ for all words x. Conversely, for every prefix code C on the dictionary, there exists a probability measure P (possibly sub-normalized, when the Kraft sum is below one) such that $-\log P(x)$ is equal to the code length $L_C(x)$ for all words x.

2.3. Shannon’s Probability-Based Coding

In his seminal work [1], Shannon proposed the source coding theorem based on a probabilistic framework. Supposing a finite number of words $x_1, x_2, \dots, x_m$ are generated from a probabilistic source denoted by a random variable X with frequencies $p_i = P(X = x_i)$, $i = 1, \dots, m$, then the expected length of any prefix code is no shorter than the entropy of this source, defined as $H(X) = -\sum_{i=1}^{m} p_i \log p_i$. This result offers a lower bound on data compression if a probabilistic model can be assumed. Throughout this paper, we take 2 as the base of the logarithm, so that the bit is the unit of code lengths.

The Huffman code is optimal in the sense that it attains the minimum expected code length among all prefix codes; this minimum lies within one bit of the entropy. Its codewords are defined by a binary tree constructed from word frequencies. Another well-known method is the Shannon–Fano–Elias code, which uses at most two bits more than the theoretical lower bound. The code length of $x$ in the Shannon–Fano–Elias code is $\lceil -\log p(x) \rceil + 1$, which is approximately equal to $-\log p(x)$.
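To illustrate these claims numerically, the sketch below computes Huffman codeword lengths with a priority queue and compares expected lengths with the entropy; it also evaluates the Shannon–Fano–Elias lengths $\lceil -\log p \rceil + 1$. The example distribution is an arbitrary assumption, and the function names are hypothetical.

```python
import heapq
import math
from itertools import count


def entropy(probs):
    """Shannon entropy in bits: H = -sum p log2 p."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def huffman_lengths(probs):
    """Codeword lengths of a binary Huffman code: repeatedly merge the two
    least probable nodes; each merge adds one bit to every word beneath it."""
    if len(probs) == 1:
        return [1]
    tiebreak = count()  # keeps heap comparisons away from the lists
    heap = [(p, next(tiebreak), [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    lengths = [0] * len(probs)
    while len(heap) > 1:
        p1, _, group1 = heapq.heappop(heap)
        p2, _, group2 = heapq.heappop(heap)
        for i in group1 + group2:
            lengths[i] += 1
        heapq.heappush(heap, (p1 + p2, next(tiebreak), group1 + group2))
    return lengths


def sfe_lengths(probs):
    """Shannon-Fano-Elias codeword lengths: ceil(log2(1/p)) + 1."""
    return [math.ceil(-math.log2(p)) + 1 for p in probs]


def expected_length(probs, lengths):
    return sum(p * l for p, l in zip(probs, lengths))


probs = [0.4, 0.3, 0.2, 0.1]
print(round(entropy(probs), 4))                                 # 1.8464
print(round(expected_length(probs, huffman_lengths(probs)), 4)) # 1.9
print(round(expected_length(probs, sfe_lengths(probs)), 4))     # 3.4
```

For this distribution the Huffman code spends about 0.05 bits per word above the entropy, while the Shannon–Fano–Elias lengths stay below the two-bit margin.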

2.4. Kolmogorov Complexity and Algorithm-Based Coding

Kolmogorov, who laid the foundation of probability theory, interestingly set aside probabilistic models and, along with other researchers including Solomonoff and Chaitin, pursued an alternative path to understanding the informational structure of data based on the notion of a universal Turing machine. Kolmogorov [10] stated that “information theory must precede probability theory, and not be based on it.”

We give a brief account of some facts about Kolmogorov complexity relevant to our study, and refer readers to Li and Vitányi [11] and Vitányi and Li [12] for further detail. A Turing machine is a computer with a finite number of states operating on a finite symbol set, and is essentially the abstraction of any physical computer that has CPUs, memory, and input and output devices. At each unit of time, the machine reads one operation command from the program tape, writes some symbols on a work tape, and changes its state according to a transition table. Two important features need more explanation. First, the program is linear, namely, the machine reads the tape from left to right and never goes back. Second, the program is prefix-free, namely, no program that leads to a halting computation can be the prefix of another such program. This feature is analogous to prefix coding. A universal Turing machine can simulate any other Turing machine. The Kolmogorov complexity of a word x with respect to a universal computer $\mathcal{U}$, denoted by $K_{\mathcal{U}}(x)$, is defined as the minimum length over all programs that print x and then halt: $K_{\mathcal{U}}(x) = \min_{p:\,\mathcal{U}(p) = x} l(p)$, where $l(p)$ is the length of program p.

The Kolmogorov complexities of all words satisfy the Kraft inequality, $\sum_x 2^{-K_{\mathcal{U}}(x)} \le 1$, due to their natural connection to prefix coding. In fact, for a fixed machine $\mathcal{U}$, we can encode x by the minimum length program that prints x and halts. Given a long string, if we define a way to parse it into words, we can then encode each word by the above program. Consequently, we encode the string by concatenating the programs one after another. Decoding is straightforward: we input the concatenated program into $\mathcal{U}$, and it reconstructs the original string.

One obvious way of parsing is to take the string itself as the only word. Thus, how much we can compress the string depends on the complexity of this string. At this point, we see the connection between data compression and the Kolmogorov complexity, which is defined for each string with respect to an implementable type of computational machine—the Turing machine.

Next, we highlight some theoretical results about Kolmogorov complexity. First, it is machine independent up to an additive constant that depends on the machine. Second, the Kolmogorov complexity is unfortunately not computable. Third, there exists a universal probability $P_{\mathcal{U}}(x)$ with respect to a universal machine $\mathcal{U}$ such that $2^{-K_{\mathcal{U}}(x)} \le P_{\mathcal{U}}(x) \le c\,2^{-K_{\mathcal{U}}(x)}$ for all strings, where c is a constant independent of x. This means that, up to an additive constant, $K_{\mathcal{U}}(x)$ is equivalent to $-\log P_{\mathcal{U}}(x)$, which can be viewed as the code lengths of a prefix code in light of the Shannon–Fano–Elias code. Because of the non-computability of Kolmogorov complexity, the universal probability is likewise not computable.

The study of the Kolmogorov complexity reveals that the assessment of the exact compression bounds of strings is beyond the ability of any specific Turing machine. However, any program that prints a string and halts on a Turing machine provides, up to an additive constant, an upper bound on the complexity of that string.

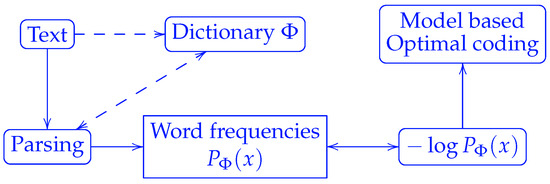

2.5. Correspondence Between Probability Models and String Parsing

A critical question remaining to be answered in the Shannon source coding theorem is the following: where does the model that defines probabilities come from? According to the theorem, the optimal code lengths equal the negative logarithms of the word frequencies. Once the dictionary is defined, the word frequencies can be counted for any individual string to be compressed. Equivalently, a dictionary can be induced by the way we parse a string; cf. Figure 1. It is worth noting that the term “letter” instead of “word” was used in Shannon's original paper [1], which did not address how to parse strings into words at all.

Figure 1.

Correspondence between probability models and string parsing by a dictionary. The parsing of a string requires a dictionary $\mathcal{D}$, which can be defined either prior to parsing or dynamically during parsing as in Lempel–Ziv coding.

2.6. Fixed-Length and Variable-Length Parsing

The words generated from the parsing process could be either of the same length or of variable lengths. For example, we can encode Shakespeare’s work letter by letter, or encode it by natural words of varying lengths. A choice made at this point leads to two quite different coding schemes.

If we decompose a string into words containing the same number of symbols, this is a fixed-length parsing. The overhead of up to two extra bits per word, as in the Shannon–Fano–Elias code, is significant when each word contains only a few symbols. As the word length grows, the relative impact of these extra bits on each word becomes negligible. An effective alternative that avoids the extra bits is arithmetic coding, which integrates the codes of successive words at the cost of more computation.

Variable-length parsing decomposes a string into words containing variable numbers of symbols. The popular Lempel–Ziv coding is such a scheme. Although the complexity of a string x is not computable, the complexity of ‘x1’ relative to ‘x’ is small: to append a ‘1’ to the end of ‘x’, we can simply use the program that prints x followed by printing ‘1’. A recursive implementation of this idea leads to Lempel–Ziv coding, which concatenates the address of ‘x’ and the code of ‘1’.

Note that as the data length increases, the dictionary size resulting from the parsing scheme of Lempel–Ziv coding increases as well, unless an upper limit is imposed. Along the process of encoding, each word occurs only once because, down the road, either it will not be a prefix of any other word, or a new word concatenating it with a certain suffix symbol will be found. To a good approximation, all the words encountered up to a point are equally likely. If we use the same number of bits to store the addresses of these words, their code lengths are equal. Approximately, the scheme thus obeys Shannon's source coding theorem too.
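A minimal sketch of the LZ78 variant of Lempel–Ziv parsing, which matches the address-plus-symbol description above, shows the dictionary growing with the data. It assumes string input and a simulated binary sequence from Python's `random` module with an arbitrary seed; the function names are illustrative.

```python
import random


def lz78_parse(s):
    """LZ78-style incremental parsing. Each new phrase is the longest phrase
    already in the dictionary extended by one symbol, and is coded as the pair
    (address of that earlier phrase, new symbol)."""
    dictionary = {"": 0}          # phrase -> address; address 0 is the empty phrase
    pairs, phrases = [], []
    phrase = ""
    for ch in s:
        if phrase + ch in dictionary:
            phrase += ch           # keep extending a known phrase
        else:
            pairs.append((dictionary[phrase], ch))
            dictionary[phrase + ch] = len(dictionary)
            phrases.append(phrase + ch)
            phrase = ""
    if phrase:                     # the string ended inside a known phrase
        pairs.append((dictionary[phrase], ""))
        phrases.append(phrase)
    return pairs, phrases


def lz78_decode(pairs):
    """Rebuild the string by following each address and appending the symbol."""
    table, out = [""], []
    for address, ch in pairs:
        table.append(table[address] + ch)
        out.append(table[-1])
    return "".join(out)


pairs, phrases = lz78_parse("aababcabcd")
print(phrases)   # ['a', 'ab', 'abc', 'abcd']
print(pairs)     # [(0, 'a'), (1, 'b'), (2, 'c'), (3, 'd')]

rng = random.Random(0)
bits = "".join(rng.choice("01") for _ in range(20000))
for n in (1000, 5000, 20000):
    print(n, len(lz78_parse(bits[:n])[1]))   # number of dictionary words for the first n bits
```

The phrase count keeps rising with the data length, which is the unbounded dictionary growth described above.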

2.7. Parametric Models and Complexity

Hereafter, we use parametric probabilistic models to compute prefix code lengths. The specification of a parametric model includes three aspects: a model class, a model dimension, and parameter values. Suppose we restrict our attention to some hypothetical model classes. Each of these model classes is indexed by a set of parameters, and we define the number of parameters in each model as its dimension. We also assume the identifiability of the parameterization, that is, different parameter values correspond to different models. Let us denote one such model class by probability measures $\{P_\theta: \theta \in \Theta\}$, and their corresponding frequency functions by $\{p_\theta\}$. The model class is usually defined by a parsing scheme. For example, if we parse a string symbol by symbol, then the number of possible words equals the number of symbols in the alphabet. Denoting the number of symbols by $|\mathcal{A}|$, the dictionary then contains at most $|\mathcal{A}|$ words. If we parse the string by every two symbols, then the number of possible words increases to $|\mathcal{A}|^2$, and so on.

From the above review of Kolmogorov complexity, it is clear that strings themselves do not come with probability models in the first place. Nevertheless, we can fit a string using a parametric model. By doing so, we need to pay extra bits to describe the model, as observed by Rissanen, who termed this cost the parametric complexity. The total code length under a model, which includes both the data description and the parametric complexity, is what he called the stochastic complexity.

2.8. Two References for Code Length Evaluation

The evaluation of the redundancy of a given code requires a reference. Two such references are discussed in the literature. In the first scenario, we assume that the words are generated according to $P_\theta$ as independent and identically distributed (i.i.d.) random variables $X_1, \dots, X_n$, whose outcomes are denoted by $x^n = (x_1, \dots, x_n)$. Then the optimal code length is given by $-\log p_\theta(x^n)$, whose expected value is $nH(\theta)$. In general, the code length corresponding to any distribution $q$ is given by $-\log q(x^n)$, and its redundancy is $\log\frac{p_\theta(x^n)}{q(x^n)}$. The expected redundancy is the Kullback–Leibler divergence between the two distributions:

$$E_\theta\left[\log\frac{p_\theta(X^n)}{q(X^n)}\right] = D(P_\theta \,\|\, Q).$$

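It can be shown that the minimax and maximin values of the expected redundancy are equal [13]:

$$\inf_q \sup_{\theta \in \Theta} D(P_\theta \,\|\, Q) = \sup_w \inf_q \int_\Theta D(P_\theta \,\|\, Q)\, w(\theta)\, d\theta,$$

where w ranges over prior distributions on Θ.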

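A key historical result on redundancy [14,15] is that, for any distribution q, for each positive number ε, and for all θ except in a set whose volume goes to zero as $n \to \infty$,

$$E_\theta\left[\log\frac{p_\theta(X^n)}{q(X^n)}\right] \ge (1 - \epsilon)\frac{d}{2}\log n,$$

where d is the dimension of Θ.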

All these results are about average code length over all possible strings.

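Another reference with which any code can be compared is obtained by replacing θ by the maximum likelihood estimate $\hat\theta(x^n)$ in $-\log p_\theta(x^n)$; that is, $-\log p_{\hat\theta(x^n)}(x^n)$. Note that $p_{\hat\theta(x^n)}(x^n)$ does not satisfy the Kraft inequality: its sum over all $x^n$ in general exceeds one. This perspective is a practical one, since in reality $x^n$ is simply data without any probability measure. Given a parametric model class $\{P_\theta: \theta \in \Theta\}$, we fit the data using the one surrogate model that maximizes the likelihood. Then we consider the worst-case regret of a code q:

$$\max_{x^n} \log\frac{p_{\hat\theta(x^n)}(x^n)}{q(x^n)}.$$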

2.9. Optimality of Normalized Maximum Likelihood Code Length

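Minimizing the above quantity over q leads to the normalized maximum likelihood (NML) distribution:

$$p_{\mathrm{NML}}(x^n) = \frac{p_{\hat\theta(x^n)}(x^n)}{\sum_{y^n} p_{\hat\theta(y^n)}(y^n)}.$$

The NML code length is thus given by

$$-\log p_{\mathrm{NML}}(x^n) = -\log p_{\hat\theta(x^n)}(x^n) + \log \sum_{y^n} p_{\hat\theta(y^n)}(y^n). \tag{2}$$

Shtarkov [3] proved the optimality of the NML code by showing that it solves

$$\min_q \max_{x^n} \log\frac{p_{\hat\theta(x^n)}(x^n)}{q(x^n)},$$

where q ranges over virtually all distributions. Later, Rissanen [2] further proved that the NML code solves

$$\min_q \max_g E_g\left[\log\frac{p_{\hat\theta(X^n)}(X^n)}{q(X^n)}\right],$$

where q and g range over virtually all distributions. This result states that the NML code remains optimal even if the data are generated from outside the parametric model family. Namely, whatever the nature of the source, the NML code attains the optimal code length relative to the chosen distribution family.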
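For the Bernoulli family the NML distribution can be computed exactly for small n, which makes the optimality argument tangible. The sketch below, assuming binary sequences of length n = 10 (an arbitrary choice), checks that the maximized likelihoods sum to more than one, that the NML probabilities sum to one, and that the regret $\log[p_{\hat\theta(x^n)}(x^n)/p_{\mathrm{NML}}(x^n)]$ equals the same constant $\log C_n$ for every sequence, where $C_n$ is the normalizer in (2). That equalizer property is why no other q can do better in the worst case: any q that differs from $p_{\mathrm{NML}}$ must put less mass on some sequence, whose regret then exceeds $\log C_n$. The helper names are hypothetical.

```python
import itertools
import math


def max_likelihood(x):
    """p_{theta_hat(x)}(x) for a binary sequence under the Bernoulli model
    (Python evaluates 0.0 ** 0 as 1.0, matching the convention 0^0 = 1)."""
    n, k = len(x), sum(x)
    p = k / n
    return p ** k * (1 - p) ** (n - k)


def shtarkov_sum(n):
    """C_n = sum over all x^n of the maximized likelihood; sequences with the
    same number of ones k share the same value, so only n + 1 terms remain."""
    return sum(math.comb(n, k) * (k / n) ** k * (1 - k / n) ** (n - k)
               for k in range(n + 1))


def nml_code_length(x):
    """NML code length in bits: -log2 p_{theta_hat}(x) + log2 C_n."""
    return -math.log2(max_likelihood(x)) + math.log2(shtarkov_sum(len(x)))


n = 10
seqs = list(itertools.product((0, 1), repeat=n))
C = shtarkov_sum(n)
print(sum(max_likelihood(x) for x in seqs) > 1)                 # True: violates Kraft
print(round(sum(2 ** -nml_code_length(x) for x in seqs), 12))   # 1.0
# The regret log2(p_ML / p_NML) equals log2 C_n for every sequence (equalizer property).
regrets = {round(nml_code_length(x) + math.log2(max_likelihood(x)), 9) for x in seqs}
print(regrets == {round(math.log2(C), 9)})                      # True
# A uniform code (n bits per sequence) has worst-case regret n, at the all-zero sequence.
print(max(n + math.log2(max_likelihood(x)) for x in seqs), round(math.log2(C), 3))
```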

3. Empirical Code Lengths Based on Exponential Family Distributions

In this section, we fit data from a source, either discrete or continuous, by an exponential family for the following reasons. First, the multinomial distribution, which is used to encode discrete symbols, is an exponential family. Second, according to the Pitman–Koopman–Darmois theorem, exponential families are, under certain regularity conditions, the only models that admit sufficient statistics whose dimensions remain bounded as the sample size grows. This property is highly desirable in data compression, and it also keeps the results valid in the broader context of statistical learning, beyond source coding. Third, as we will show, the first term in the code length expansion is nothing but the empirical entropy for exponential families, which is a straightforward extension of Shannon's source coding theorem.

3.1. Exponential Families

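Consider a canonical exponential family of distributions $\{P_\theta: \theta \in \Theta\}$, where the natural parameter space Θ is an open set of $\mathbb{R}^d$. The density function is given by

$$p_\theta(x) = \exp\left\{\theta^T T(x) - A(\theta)\right\} \tag{4}$$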

with respect to some measure ν on the support of data. The transpose of a matrix (or vector) V is denoted by $V^T$ here and throughout the paper. $T(x)$ is the sufficient statistic for the parameter θ. We denote the first and the second derivatives of $A(\theta)$ by $\dot{A}(\theta)$ and $\ddot{A}(\theta)$, respectively. The entropy or differential entropy of $P_\theta$ is $H(\theta) = -E_\theta[\log p_\theta(X)]$, which equals $A(\theta) - \theta^T \dot{A}(\theta)$ when measured in nats. The following result is an empirical and pathwise version of Shannon's source coding theorem.

Theorem 1.

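[Empirical optimal source code length] If we fit an individual data sequence by an exponential family distribution, the NML code length is given by

$$-\log p_{\mathrm{NML}}(x^n) = nH(\hat\theta_n) + \frac{d}{2}\log\frac{n}{2\pi} + \log\int_\Theta |I(\theta)|^{1/2}\, d\theta + o(1), \tag{5}$$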

where $H(\hat\theta_n)$ is the entropy evaluated at the maximum likelihood estimate (MLE) $\hat\theta_n$, d is the dimension of Θ, and $|I(\theta)|$ is the determinant of the Fisher information $I(\theta) = \ddot{A}(\theta)$. The integral in the expression is taken over the parameter space Θ and is assumed to be finite.

Importantly, we do not assume that the data are generated from an exponential family. Rather, for any given data sequence, we fit a distribution from a relevant exponential family to describe the data. In this context, the distribution serves purely as a modeling tool, and any appropriate option from the exponential family toolbox may be used.

The first term in (5) is $nH(\hat\theta_n)$, namely, the entropy term in Shannon's theorem except that the model parameter is replaced by the MLE. The second term has a close relationship to the BIC introduced by Akaike [16] and Schwarz [17], and the third term involves the Fisher information, which characterizes the local property of a distribution family. Interestingly, this empirical version of the lossless coding theorem brings together three foundational contributions: those of Shannon, Akaike–Schwarz, and Fisher.

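Next, we give a heuristic proof of (5) by the local asymptotic normality (LAN) [18]; a complete proof can be found in Appendix A. In the definition of the NML code length (2), the first term becomes the empirical entropy for exponential families. Namely, because the MLE solves $\dot{A}(\hat\theta_n) = \frac{1}{n}\sum_{i=1}^{n} T(x_i)$, we have, in nats,

$$-\ln p_{\hat\theta_n}(x^n) = -\sum_{i=1}^{n}\left[\hat\theta_n^T T(x_i) - A(\hat\theta_n)\right] = n\left[A(\hat\theta_n) - \hat\theta_n^T \dot{A}(\hat\theta_n)\right] = nH(\hat\theta_n). \tag{6}$$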
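Identity (6) can be checked numerically. The sketch below assumes the Poisson family written in canonical form, $T(x) = x$, $\theta = \log\lambda$, $A(\theta) = e^\theta$, with the factor $1/x!$ absorbed into the base measure ν; this family, the data values, and the function name are illustrative choices. Because the entropy is taken relative to ν, it can be negative.

```python
import math


def empirical_entropy_check(xs):
    """Check (6) for the Poisson family in canonical form:
    T(x) = x, theta = log(lambda), A(theta) = exp(theta), with the factor
    1/x! absorbed into the base measure nu. Everything is in nats.
    Requires at least one positive observation so that the MLE exists."""
    n = len(xs)
    theta_hat = math.log(sum(xs) / n)      # MLE solves A'(theta) = mean of T(x_i)
    A = math.exp(theta_hat)
    A_dot = math.exp(theta_hat)            # A'(theta) = exp(theta) for this family
    H = A - theta_hat * A_dot              # H(theta) = A(theta) - theta * A'(theta)
    neg_loglik = -sum(theta_hat * x - A for x in xs)   # -ln p_{theta_hat}(x^n) w.r.t. nu
    return neg_loglik, n * H


print(empirical_entropy_check([3, 1, 4, 1, 5, 9, 2, 6]))   # two (numerically) equal values
```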

The remaining difficulty is the computation of the summation $\sum_{y^n} p_{\hat\theta(y^n)}(y^n)$ in (2), which becomes an integral for continuous data. In a general problem of data description length, Rissanen [19] derived an analytical expansion requiring five assumptions, which are hard to verify. Here we show that when the fitted model is an exponential family, the expansion is valid as long as the integral $\int_\Theta |I(\theta)|^{1/2}\, d\theta$ is finite.

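Let $U_r(\theta_0)$ be a cube of side length $r/\sqrt{n}$ centered at $\theta_0$, where r is a constant. In this derivation we use the natural logarithm $\ln$ so that it pairs with $\exp$; the final result (5) holds in any base. LAN states that we can expand the probability density in each neighborhood as follows:

$$\ln\frac{p_{\theta_0 + h/\sqrt{n}}(x^n)}{p_{\theta_0}(x^n)} = h^T\Delta_n - \frac{1}{2}h^T I(\theta_0) h + o_p(1),$$

where $\Delta_n = \frac{1}{\sqrt{n}}\sum_{i=1}^{n}\nabla_\theta \ln p_{\theta_0}(x_i) = \sqrt{n}\,[\bar{T}_n - \dot{A}(\theta_0)]$ with $\bar{T}_n = \frac{1}{n}\sum_{i=1}^{n} T(x_i)$. Maximizing the likelihood in $U_r(\theta_0)$ with respect to h leads to

$$\hat{h} = I(\theta_0)^{-1}\Delta_n.$$

Consequently, if $\hat\theta_n$ falls into the neighborhood $U_r(\theta_0)$, we have

$$\ln\frac{p_{\hat\theta_n}(x^n)}{p_{\theta_0}(x^n)} = \frac{1}{2}\Delta_n^T I(\theta_0)^{-1}\Delta_n + o_p(1), \tag{7}$$

where $\hat\theta_n$ solves $\dot{A}(\theta) = \bar{T}_n$. Applying the Taylor expansion $\dot{A}(\hat\theta_n) = \dot{A}(\theta_0) + I(\theta_0)(\hat\theta_n - \theta_0) + O(\|\hat\theta_n - \theta_0\|^2)$, we get

$$\Delta_n = \sqrt{n}\, I(\theta_0)(\hat\theta_n - \theta_0) + O(n^{-1/2}).$$

Plugging it into (7) leads to

$$\ln\frac{p_{\hat\theta_n}(x^n)}{p_{\theta_0}(x^n)} = \frac{n}{2}(\hat\theta_n - \theta_0)^T I(\theta_0)(\hat\theta_n - \theta_0) + o_p(1). \tag{8}$$

If we consider i.i.d. random variables $X_1, \dots, X_n$ sampled from the exponential family distribution (4) with parameter $\theta_0$, then the MLE $\hat\theta_n$ is a random variable. By (8), the sum of the maximized likelihood $p_{\hat\theta_n}(x^n)$ over the sequences whose MLE falls in the neighborhood can be expressed as the following expectation:

$$\sum_{x^n:\,\hat\theta_n \in U_r(\theta_0)} p_{\hat\theta_n}(x^n) \approx E_{\theta_0}\left[\exp\left\{\frac{n}{2}(\hat\theta_n - \theta_0)^T I(\theta_0)(\hat\theta_n - \theta_0)\right\}\mathbf{1}\{\hat\theta_n \in U_r(\theta_0)\}\right]. \tag{9}$$

Due to the asymptotic normality of the MLE, namely, $\sqrt{n}(\hat\theta_n - \theta_0) \to N(0, I(\theta_0)^{-1})$ in distribution, the density of $\hat\theta_n$ is approximated by

$$\left(\frac{n}{2\pi}\right)^{d/2}|I(\theta_0)|^{1/2}\exp\left\{-\frac{n}{2}(\theta - \theta_0)^T I(\theta_0)(\theta - \theta_0)\right\}.$$

Applying this density to the expectation in (9), we find that the two exponential terms cancel out, and obtain $\left(\frac{n}{2\pi}\right)^{d/2}|I(\theta_0)|^{1/2}\,\mathrm{vol}(U_r(\theta_0))$. Summing over all neighborhoods, which forms a Riemann sum of $|I(\theta)|^{1/2}$ over Θ, gives $\left(\frac{n}{2\pi}\right)^{d/2}\int_\Theta |I(\theta)|^{1/2}\, d\theta$, whose logarithm yields the remaining terms in (5).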
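To see how (5) behaves numerically, a minimal sketch compares the exact normalizer $C_n = \sum_{y^n} p_{\hat\theta(y^n)}(y^n)$ of the Bernoulli family with its asymptotic form $\frac{d}{2}\log\frac{n}{2\pi} + \log\int|I|^{1/2}$, assuming d = 1 and $\int_0^1 (p(1-p))^{-1/2}\,dp = \pi$. The integral of $|I|^{1/2}$ is unchanged by reparametrization, so the mean parameter p can be used instead of the natural parameter. The Bernoulli model, the sample sizes, and the function names are illustrative choices.

```python
import math


def log2_shtarkov(n):
    """log2 C_n for the Bernoulli model, C_n = sum_k comb(n,k) (k/n)^k (1-k/n)^(n-k),
    evaluated in log space to avoid overflow for large n."""
    logs = []
    for k in range(n + 1):
        t = math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
        if k > 0:
            t += k * math.log(k / n)
        if k < n:
            t += (n - k) * math.log((n - k) / n)
        logs.append(t)
    m = max(logs)
    return (m + math.log(sum(math.exp(t - m) for t in logs))) / math.log(2)


def log2_asymptotic(n, d=1, fisher_integral=math.pi):
    """(d/2) log2(n / 2 pi) + log2 of the integral of |I(theta)|^(1/2).
    For Bernoulli, d = 1 and the integral of (p(1-p))^(-1/2) over (0, 1) is pi."""
    return 0.5 * d * math.log2(n / (2 * math.pi)) + math.log2(fisher_integral)


for n in (10, 100, 1000, 10000):
    exact, approx = log2_shtarkov(n), log2_asymptotic(n)
    print(n, round(exact, 4), round(approx, 4), round(exact - approx, 4))
```

The differences shrink toward zero as n grows, as the o(1) term in (5) requires.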

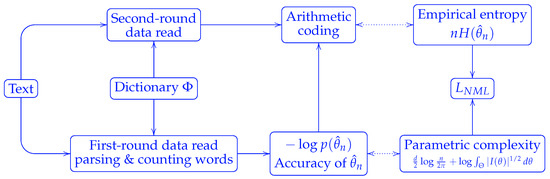

The optimality of the NML code is established in the minimax sense. Yet its implementation requires two passes over the data: one for word counting with respect to a dictionary, and another for encoding; cf. Figure 2.

Figure 2.

An implementation of NML code length. The data were read in two rounds, one for counting word frequencies, and the other for encoding.

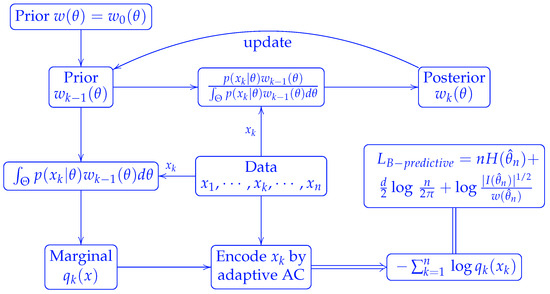

3.2. Bayesian Predictive Coding

It is natural to ask whether there exists a scheme that passes through the data only once and still compresses the data equally well. It turns out that predictive coding is such a scheme for a given dictionary. The idea of predictive coding is to sequentially make inferences about the parameters in the probability function $p_\theta$, which is then used to update the code book. That is, after obtaining observations $x_1, \dots, x_k$, we calculate the MLE $\hat\theta_k$, and in turn encode the next observation according to the current estimated distribution. Its code length is thus $-\log p_{\hat\theta_k}(x_{k+1})$. This procedure (Rissanen [15,20]) is closely related to the prequential approach to statistical inference as advocated by Dawid [21,22]. Predictive coding is intuitively optimal due to two fundamental results. First, the MLE is asymptotically most accurate, since it gathers all the information in $x_1, \dots, x_k$ for inference in the parametric model $\{p_\theta\}$. Second, the code length $-\log p_{\hat\theta_k}(x_{k+1})$ is optimal as dictated by the Shannon source coding theorem. In the case of exponential families, Proposition 2.2 in [5] showed that the total predictive code length $\sum_k -\log p_{\hat\theta_k}(x_{k+1})$ can be expanded as follows:

where the sequence of random variables converges to an almost surely finite random variable .

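Alternatively, we can use Bayesian estimates in predictive coding. Starting from a prior distribution $w(\theta)$, we encode $x_1$ by the marginal distribution $m(x_1) = \int_\Theta p_\theta(x_1)\, w(\theta)\, d\theta$ resulting from $w(\theta)$. The posterior is given by

$$w(\theta \mid x_1) = \frac{p_\theta(x_1)\, w(\theta)}{m(x_1)}.$$

We then use this posterior as the updated prior to encode the next word $x_2$. Using induction, we can show that the marginal distribution to encode the k-th word, given $x^{k-1} = (x_1, \dots, x_{k-1})$, is

$$m(x_k \mid x^{k-1}) = \int_\Theta p_\theta(x_k)\, w(\theta \mid x^{k-1})\, d\theta = \frac{\int_\Theta \prod_{i=1}^{k} p_\theta(x_i)\, w(\theta)\, d\theta}{\int_\Theta \prod_{i=1}^{k-1} p_\theta(x_i)\, w(\theta)\, d\theta}.$$

Meanwhile, the updated posterior, which is also the prior for the next round of encoding, becomes

$$w(\theta \mid x^k) = \frac{\prod_{i=1}^{k} p_\theta(x_i)\, w(\theta)}{\int_\Theta \prod_{i=1}^{k} p_{\theta'}(x_i)\, w(\theta')\, d\theta'}.$$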

Proposition 1.

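[Bayesian predictive code length] The total Bayesian predictive code length for a string of n words is

$$-\sum_{k=1}^{n}\log\int_\Theta p_\theta(x_k)\, w(\theta \mid x^{k-1})\, d\theta = -\log\int_\Theta \prod_{i=1}^{n} p_\theta(x_i)\, w(\theta)\, d\theta,$$

where $w(\theta \mid x^{k-1})$ is the posterior after $k-1$ words and $w(\theta \mid x^0) = w(\theta)$.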

Thus the Bayesian predictive code is nothing but the mixture code referred to in reference [4]. The above scheme is illustrated in Figure 3.

Figure 3.

Scheme of Bayesian predictive coding.
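Proposition 1 can be checked numerically for the multinomial model. The sketch below assumes a symmetric Dirichlet(α) prior (α = 1/2 gives the Jeffreys prior discussed in Section 3.4), codes each word with the posterior predictive probability, and compares the total with the closed-form Dirichlet-multinomial marginal. The example string and the function names are hypothetical.

```python
import math
from collections import Counter


def predictive_bits(words, dictionary, alpha=0.5):
    """One-pass Bayesian predictive code length (bits) under a symmetric
    Dirichlet(alpha) prior. After k words, the next word w is coded with
    the posterior predictive probability (count(w) + alpha) / (k + d * alpha)."""
    d = len(dictionary)
    counts = Counter()
    bits = 0.0
    for k, w in enumerate(words):
        bits -= math.log2((counts[w] + alpha) / (k + d * alpha))
        counts[w] += 1          # the posterior becomes the prior for the next word
    return bits


def mixture_bits(words, dictionary, alpha=0.5):
    """-log2 of the Dirichlet-multinomial marginal of the whole sequence,
    i.e. -log2 of the integral of prod_i p(x_i) w(p) dp."""
    d, n = len(dictionary), len(words)
    counts = Counter(words)
    log_m = (math.lgamma(d * alpha) - d * math.lgamma(alpha)
             + sum(math.lgamma(counts[v] + alpha) for v in dictionary)
             - math.lgamma(n + d * alpha))
    return -log_m / math.log(2)


words = list("abracadabra")
dictionary = sorted(set(words))      # here the dictionary is the 5 observed letters
print(round(predictive_bits(words, dictionary), 6),
      round(mixture_bits(words, dictionary), 6))   # the two totals agree
```

The agreement is exact up to floating-point rounding, because the product of the predictive probabilities telescopes into the marginal.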

Theorem 2.

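[Expansion of Bayesian predictive code length] If we fit a data sequence by an exponential family distribution, the mixture code length has the expansion

$$-\log\int_\Theta p_\theta(x^n)\, w(\theta)\, d\theta = nH(\hat\theta_n) + \frac{d}{2}\log\frac{n}{2\pi} + \log\frac{|I(\hat\theta_n)|^{1/2}}{w(\hat\theta_n)} + o(1), \tag{10}$$

where $w(\theta)$ is any mixture of conjugate prior distributions.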

Once again, we do not assume that the data are generated from an exponential family. Rather than using a single distribution from an exponential family, Bayesian predictive coding employs a mixture of such distributions, thereby generally enhancing its approximation capability.

The result holds for general priors that can be approximated by a mixture of conjugate ones. In the case of multinomial distributions, the conjugate prior is the Dirichlet distribution. Any prior continuous on the d-dimensional simplex within the cube can be uniformly approximated by the Bernstein polynomials of m variables, each term of which corresponds to a Dirichlet distribution [23,24]. It is important to note that in the current setting, the source is not assumed to be i.i.d. samples from an exponential family distribution as in Theorem 2.2 and Proposition 2.3 in [5].
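A numerical check of Theorem 2 in the simplest case, assuming the Bernoulli model (d = 1) with the Jeffreys prior Beta(1/2, 1/2): for this prior $|I(p)|^{1/2}/w(p) = \pi$ for every p, so the expansion (10) reduces to $nH(\hat\theta_n) + \frac{1}{2}\log\frac{n}{2\pi} + \log\pi$. The counts are arbitrary, both must be positive, and the function names are illustrative.

```python
import math


def jeffreys_mixture_bits(n1, n0):
    """Exact mixture code length (bits) for a binary string with n1 ones and
    n0 zeros under the Jeffreys prior Beta(1/2, 1/2):
    -log2[ B(n1 + 1/2, n0 + 1/2) / B(1/2, 1/2) ], where B(1/2, 1/2) = pi."""
    log_beta = math.lgamma(n1 + 0.5) + math.lgamma(n0 + 0.5) - math.lgamma(n1 + n0 + 1)
    return -(log_beta - math.log(math.pi)) / math.log(2)


def theorem2_expansion_bits(n1, n0):
    """n H(theta_hat) + (1/2) log2(n / 2 pi) + log2(|I(theta_hat)|^(1/2) / w(theta_hat)).
    With I(p) = 1/(p(1-p)) and w(p) = (p(1-p))^(-1/2) / pi, the last term is log2(pi)."""
    n = n1 + n0
    p = n1 / n
    n_entropy = -(n1 * math.log2(p) + n0 * math.log2(1 - p))
    return n_entropy + 0.5 * math.log2(n / (2 * math.pi)) + math.log2(math.pi)


for n1, n0 in [(3, 7), (30, 70), (3000, 7000)]:
    exact, approx = jeffreys_mixture_bits(n1, n0), theorem2_expansion_bits(n1, n0)
    print(n1 + n0, round(exact, 4), round(approx, 4))
```

The gap shrinks roughly in proportion to 1/n when $\hat\theta_n$ stays away from the boundary, consistent with the o(1) term.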

3.3. Redundancy

Now we examine the empirical code length under Shannon's setting. That is, we evaluate the redundancy of the code length assuming the source is from a hypothetical distribution $P_\theta$. The reference is the optimal code length $-\log p_\theta(x^n)$ as introduced in Section 2.8, and $E_\theta[-\log p_\theta(X^n)] = nH(\theta)$.

Proposition 2.

If we assume that a source follows an exponential family distribution, then

where the sequence of non-negative random variables have a bounded upper limit, , almost surely. If we further assume that , then

where is the same as the above.

The left side of (11) is , and the rest is true according to the proof of Proposition 2.2 in [5], Equation (18). The NML code is a special case of the mixture code, whose redundancy is given by Theorem 2.2 in [5]. The details on the law of the iterated logarithm can be found in the book [25]. We note that is bounded below by 1. This proposition confirms that $nH(\hat\theta_n)$ is an underestimate of the theoretical optimal code length. Notably, this setting, in which the data are assumed to originate from a probabilistic source of fixed dimension, serves only the theoretical analysis.

3.4. Coding of Discrete Symbols and Multinomial Model

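For compressing strings of discrete symbols, it is sufficient to consider the discrete distribution specified by a probability vector $p = (p_1, \dots, p_d)$, where $\sum_{k=1}^{d} p_k = 1$. Its frequency function is $P(X = k) = p_k$, $k = 1, \dots, d$. Taking $(p_1, \dots, p_{d-1})$ as the free parameters, the Fisher information matrix can be shown to be

$$I(p) = \mathrm{diag}\left(\frac{1}{p_1}, \dots, \frac{1}{p_{d-1}}\right) + \frac{1}{p_d}\mathbf{1}\mathbf{1}^T,$$

where $\mathbf{1}$ is the $(d-1)$-dimensional vector of ones.

Thus $|I(p)| = \prod_{k=1}^{d} p_k^{-1}$.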

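Suppose $X_1, \dots, X_n$ are i.i.d. random variables obeying the above discrete distribution. Then the counts $(n_1, \dots, n_d)$ follow a multinomial distribution $\mathrm{Multinomial}(n; p_1, \dots, p_d)$. Its conjugate prior distribution is the Dirichlet distribution $\mathrm{Dirichlet}(\alpha_1, \dots, \alpha_d)$, whose density function is

$$w(p) = \frac{\Gamma(\alpha_0)}{\prod_{k=1}^{d}\Gamma(\alpha_k)}\prod_{k=1}^{d} p_k^{\alpha_k - 1},$$

where $\alpha_0 = \sum_{k=1}^{d}\alpha_k$. Since the Jeffreys prior is proportional to $|I(p)|^{1/2}$, in this case it equals Dirichlet(1/2, 1/2, ⋯, 1/2), whose density is $\frac{\Gamma(d/2)}{\Gamma(1/2)^d}\prod_{k=1}^{d} p_k^{-1/2}$. The Jeffreys prior was also used by Krichevsky [14] to derive optimal universal codes.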

It is noticed that $\int |I(p)|^{1/2}\, dp = \frac{\Gamma(1/2)^d}{\Gamma(d/2)}$. Plugging it into Equation (10) with the Jeffreys prior, we obtain the following specific form of the NML code length for the multinomial distribution. Remember that the distribution or word frequencies are specific to a given dictionary $\mathcal{D}$, and we thus denote the code length by $\mathcal{L}_{\mathcal{D}}(x^n)$. If we change the dictionary, the code length changes accordingly.

Proposition 3.

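[Optimal code lengths for a multinomial distribution]

$$\mathcal{L}_{\mathcal{D}}(x^n) = -\sum_{k=1}^{d} n_k\log\frac{n_k}{n} + \frac{d-1}{2}\log\frac{n}{2\pi} + \log\frac{\Gamma(1/2)^d}{\Gamma(d/2)} + o(1), \tag{13}$$

where $n = \sum_{k=1}^{d} n_k$ and $n_k$ is the frequency of the k-th word appearing in the string.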
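Expression (13) is straightforward to evaluate from word counts. The sketch below assumes every listed count is positive (so d is the number of distinct words observed) and drops the o(1) term; the function name is hypothetical. For two words it reduces to $nH(\hat\theta_n) + \frac{1}{2}\log\frac{n}{2\pi} + \log\pi$, the Bernoulli case.

```python
import math


def multinomial_bound_bits(counts):
    """Expansion (13) without the o(1) term, in bits, for word frequencies
    n_1, ..., n_d (every count positive, d = number of distinct words)."""
    n, d = sum(counts), len(counts)
    empirical = -sum(c * math.log2(c / n) for c in counts)      # n H(theta_hat)
    penalty = 0.5 * (d - 1) * math.log2(n / (2 * math.pi))
    # log2 of Gamma(1/2)^d / Gamma(d/2), computed with lgamma to avoid overflow
    constant = (d * math.lgamma(0.5) - math.lgamma(d / 2)) / math.log(2)
    return empirical + penalty + constant


print(round(multinomial_bound_bits([50, 50]), 4))
print(round(multinomial_bound_bits([40, 30, 20, 10]), 4))
```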

4. Compression of Random Sequences and DNA Sequences

4.1. Lossless Compression Bound and Description Length

Given a dictionary $\mathcal{D}$ of words, we parse a string into words and then count their frequencies $n_1, \dots, n_d$, the total number of words n, and the number of distinct words d. Plugging them into expression (13), we obtain the lossless compression bound for this dictionary or parsing. If a different parsing is tried, the three quantities (word frequencies, number of words, and dictionary size, i.e., number of distinct words) would change, and the resulting bound would change accordingly. In the general situation where the data are not necessarily discrete symbols, we replace the code length with the description length (10), as termed by Rissanen.

Since each parsing corresponds to a probabilistic model, the code length is model dependent. The comparison of two or more coding schemes is exactly the selection of models, with the expression (13) as the objective function.

4.2. Rissanen’s Principle of Minimum Description Length and Model Selection

Rissanen, in his works [15,19,26], proposed the principle of minimum description length (MDL) as a more general modeling rule than that of maximum likelihood, which was recommended, analyzed, and popularized by R. A. Fisher. From the information-theoretic point of view, when we encode data from a source by prefix coding, the optimal code is the one that achieves the minimum description length. Because of the equivalence between a prefix code length and the negative logarithm of the corresponding probability distribution, via Kraft's inequality, this leads naturally to a modeling principle: the MDL principle. That is, one should choose the model or prefix coding algorithm that gives the minimal description of data; see Hansen and Yu [27] for a review on this topic. We also refer readers to [6,28] for a more complete account of the MDL principle.

MDL is a mathematical formulation of the general principle known as Occam's razor: choose the simplest explanation consistent with the observed data [7]. We make one remark about the significance of MDL. On the one hand, Shannon's work establishes the connection between optimal coding and probabilistic models. On the other hand, Kolmogorov's algorithmic theory says that the complexity, i.e., the absolutely optimal code length, cannot be computed by any Turing machine. MDL offers a practical principle: it allows us to make choices among possible models and coding algorithms without requiring proof of optimality. As more model candidates are evaluated over time, our understanding continues to progress.

4.3. Compression Bounds of Random Sequences

A random sequence is incompressible by any model-based or algorithmic prefix coding, as indicated by the complexity results [11,12]. Thus, under a legitimate compression bound, the compression rate of a random sequence should be no less than one, up to small fluctuations. Conversely, if the compression rates of a sequence using $\mathcal{L}_{\mathcal{D}}$ as the compression bound are no less than 1 under all dictionaries $\mathcal{D}$, namely,

$$\frac{\mathcal{L}_{\mathcal{D}}(x^n)}{L_0} \ge 1 \quad \text{for all } \mathcal{D},$$

where $L_0$ is the theoretical bit-length of the raw sequence, then the sequence can be considered random. If we assume that the source follows a uniform distribution, then $L_0 = N\log|\mathcal{A}|$ for a string of N symbols over an alphabet $\mathcal{A}$, while $\mathcal{L}_{\mathcal{D}}$ can be calculated by (13). Although testing all dictionaries is challenging, we can experiment with a subset, particularly those suggested by domain experience. More theoretical analysis on randomness testing based on universal codes can be found in the book [29].

4.4. A Simulation Study: Compression Bounds of Pseudo-Random Sequences

Simulations were carried out to test the theoretical bounds. First, a pseudo-random binary string of length 3000 was simulated in R from Bernoulli trials with success probability 0.5. In Table 1, the first column shows the word length used for parsing the data, the second column shows the number of words, and the third column shows the number of distinct words. We group the terms in (13) into three parts: the term involving n, the term involving , and the others. The bounds by , and in (13) are shown, respectively, in the next three columns. As the word length increases, d increases, and the bounds by exhibit a decreasing trend. A bound smaller than 1 would indicate that the sequence can be compressed, contradicting the assertion that random sequences cannot be compressed. When the word length is 8, the number of words is 375, and the bound by is only 0.929. The incompressibility of random sequences therefore rules out as a legitimate compression bound. If the term is included, the bounds are always larger than 1. The bounds by (13) are tighter while remaining larger than 1, except in the bottom row, where the number of distinct words approaches the total number of words and the expansion becomes insufficient. Since is an achievable bound, is an overestimate.
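The structure of this simulation can be reproduced with the Python sketch below. It is an illustration under assumptions, not the supplementary R code: Python’s generator and seed differ from R, so the numbers will not match Table 1. The "BIC" column uses (d − 1)/2 log2 n, and the full bound assumes the multinomial form of (13).

```python
import math
import random
from collections import Counter

def three_bounds(bits, k):
    """Return (n, d, rate_entropy, rate_bic, rate_nml) for non-overlapping
    words of length k; each rate divides by the raw length n*k bits."""
    words = [bits[i:i + k] for i in range(0, len(bits) - k + 1, k)]
    n = len(words)
    counts = Counter(words)
    d = len(counts)
    raw = n * k  # one bit per binary symbol
    ent = -sum(c * math.log2(c / n) for c in counts.values())
    bic = (d - 1) / 2 * math.log2(n)
    # Remaining terms of (13), ASSUMING its multinomial form.
    nml = ((d - 1) / 2 * math.log2(n / (2 * math.pi))
           + (d / 2 * math.log(math.pi) - math.lgamma(d / 2)) / math.log(2))
    return n, d, ent / raw, (ent + bic) / raw, (ent + nml) / raw

rng = random.Random(2025)  # Python's generator, not the R simulation in the text
bits = "".join(rng.choice("01") for _ in range(3000))
for k in range(1, 11):
    n, d, r_ent, r_bic, r_nml = three_bounds(bits, k)
    print(f"k={k:2d} n={n:4d} d={d:4d} H={r_ent:.3f} BIC={r_bic:.3f} NML={r_nml:.3f}")
```

With word length 8, the string splits into 375 words, while at most 2^8 = 256 of them can be distinct.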

Table 1.

The data compression rates of a binary string of size 3000 under different parsing models. The data were simulated in R according to Bernoulli trials with probability 0.5.

4.5. Knowledge Discovery by Data Compression

On the other hand, if a sequence can be compressed by some prefix coding scheme, then the sequence is not random. Moreover, the coding scheme provides a clue to the information structure hidden in the sequence. Data compression is one of several general learning mechanisms for discovering knowledge from nature and other sources.

Ryabko, Astola, and Gammerman [30] applied the idea of Kolmogorov complexity to statistical hypothesis testing, namely identity testing and nonparametric testing of serial independence for time series. This approach was used to analyze DNA sequences in [31].

4.6. DNA Sequences of Proteins

The information carried by the DNA double helix consists of two long complementary strings of the letters A, G, C, and T. It is natural to ask whether DNA sequences can be compressed at all. We therefore carried out lossless compression experiments on two protein-coding DNA sequences.

4.7. Rediscovery of the Codon Structure

In Table 2, we present the results of applying the NML code length in (13) to the E. coli protein-coding gene b0059 [32], which has 2907 nucleotides. Each row corresponds to one encoding model, and all the models tested are listed in the first column. In the first model, Model 1, we encode the DNA nucleotides one by one. In the second and third models, we parse the DNA sequence into pairs and then encode the resulting dinucleotide sequence according to their frequencies; the two possible starting positions result in two different phases, denoted by 2.0 and 2.1, respectively. Other models are understood in the same fashion. Note that all these models are generated by fixed-length parsing. The last model, “a.a.”, means that we first translate DNA triplets into amino acids and then encode the resulting amino acid sequence. The second column shows the total number of words in each parsed sequence. The third column shows the number of distinct words in each parsed sequence, that is, the dictionary size. The fourth column is the empirical entropy estimated from the observed frequencies. The next column is the first term in expression (13), which is the product of the second and fourth columns. We then calculate the remaining terms in (13) and the total bits. The compression rates, displayed in the last column, are the ratios of the total bits to the raw bit-length.
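The columns of Table 2 for the fixed-length models can be sketched in Python as shown below. This is an illustration, not the supplementary Python code. The assumptions are that (13) has the multinomial form used in the other sketches and that raw bits are 2 per nucleotide. The “a.a.” model is omitted, and the toy sequence and helper names (`parse`, `table_row`, `compare_models`) are hypothetical.

```python
import math
from collections import Counter

def parse(seq, k, phase):
    """Non-overlapping words of length k starting at offset `phase`."""
    return [seq[i:i + k] for i in range(phase, len(seq) - k + 1, k)]

def table_row(seq, k, phase):
    """Columns in the spirit of Table 2: n, d, H_hat, n*H_hat, rest, rate.
    'rest' ASSUMES the multinomial form of (13); raw bits = 2 per nucleotide."""
    words = parse(seq, k, phase)
    n = len(words)
    counts = Counter(words)
    d = len(counts)
    h = -sum(c / n * math.log2(c / n) for c in counts.values())
    rest = ((d - 1) / 2 * math.log2(n / (2 * math.pi))
            + (d / 2 * math.log(math.pi) - math.lgamma(d / 2)) / math.log(2))
    raw = 2 * k * n
    return n, d, h, n * h, rest, (n * h + rest) / raw

def compare_models(seq, max_k=4):
    """Rates for models labeled k.phase (1.0, 2.0, 2.1, 3.0, ...) and the best one."""
    rates = {f"{k}.{p}": table_row(seq, k, p)[-1]
             for k in range(1, max_k + 1) for p in range(k)}
    return rates, min(rates, key=rates.get)

toy = "ATG" * 100   # hypothetical toy sequence; b0059 itself is not reproduced here
rates, best = compare_models(toy, max_k=3)
print(best, round(rates["1.0"], 3))
```

Selecting the model with the smallest rate is how a better dictionary trades the entropy term against the model complexity terms.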

Table 2.

The data compression rates of E. coli ORF b0059 calculated by (13) under different parsing models.

All the compression rates are around 1, except for the rate obtained from Model 3.0, which aligns with the codons in the correct reading frame. Thus, the comparison of compression bounds rediscovers the codon structure of this protein-coding DNA sequence and the phase of the open reading frame. It is somewhat surprising that the optimal code length allows us to identify the triplet coding system mathematically from the sequence of only one gene. Historically, this system was discovered by Francis Crick and his colleagues in the early 1960s using frame-shift mutations in bacteriophage T4.

We now take a closer look at the results. The compression rates of the four-nucleotide models are closest to 1, so these models behave most like “random”. For example, the rate is 0.9947 for Model 4.2: the empirical entropy term contributes 5431 bits, while the remaining terms contribute 346 bits. If the BIC-type term is used in place of the remaining terms, the compression rate becomes 1.11, a looser bound. If the Ziv–Lempel algorithm is applied to the b0059 sequence, 635 words are generated along the way. Each word requires about log2 635 ≈ 9.31 bits to store the address of its prefix and 2 bits for its last nucleotide. In total, about 635 × 9.31 ≈ 5912 bits are needed for the addresses and 635 × 2 = 1270 bits for the words’ last symbols, giving a Ziv–Lempel compression rate of 1.24.
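The Ziv–Lempel cost model described above can be sketched in Python with LZ78-style incremental parsing. This is our illustration, not the code used for the article. Implementation details, such as how a trailing phrase that is already in the dictionary is counted, may shift the phrase count, so the sketch is not guaranteed to reproduce the count of 635 for b0059. The function names are hypothetical.

```python
import math

def lz78_phrases(seq):
    """Incremental (LZ78-style) parsing: each new phrase is the longest
    previously seen phrase extended by one symbol."""
    seen = {""}
    phrases = []
    current = ""
    for symbol in seq:
        candidate = current + symbol
        if candidate in seen:
            current = candidate          # keep extending a known phrase
        else:
            seen.add(candidate)          # new phrase = known prefix + one symbol
            phrases.append(candidate)
            current = ""
    if current:                          # leftover tail that is already known
        phrases.append(current)
    return phrases

def lz78_rate(seq, bits_per_symbol=2):
    """Cost model from the text: log2(c) bits per prefix address plus
    bits_per_symbol for each phrase's last symbol, divided by the raw length."""
    c = len(lz78_phrases(seq))
    total_bits = c * math.log2(c) + c * bits_per_symbol
    return total_bits / (len(seq) * bits_per_symbol)

# Arithmetic check with the figures quoted for b0059 (635 phrases, 2907 nucleotides):
print(round((635 * math.log2(635) + 2 * 635) / (2 * 2907), 2))  # 1.24
```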

4.8. Redundant Information in Protein Gene Sequences

It is known that the 64 triplets correspond to only 20 amino acids plus stop codons, so protein-coding sequences contain redundancy, most of which lies in the third position of a codon. For example, GGA, GGC, GGT, and GGG all encode glycine. According to Table 2, the amino acid sequence contains 4048.22 bits of information, while Model 3.0 contains 5277.51 bits. Thus, the redundancy in this sequence is estimated to be (5277.51 − 4048.22)/4048.22 ≈ 0.30.
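The translation step and the redundancy ratio can be sketched in Python as follows. This is a minimal sketch, not the article’s code. It assumes the standard genetic code (NCBI translation table 1), with stop codons kept as the symbol `*`. The helper `empirical_bits` computes only the empirical-entropy term nĤ, and the remaining terms of (13) would be added on top of it. The helper names are hypothetical.

```python
import math
from collections import Counter

BASES = "TCAG"
# Standard genetic code (NCBI table 1); codons ordered TTT, TTC, TTA, ...; '*' = stop.
AMINO = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {a + b + c: AMINO[16 * i + 4 * j + k]
               for i, a in enumerate(BASES)
               for j, b in enumerate(BASES)
               for k, c in enumerate(BASES)}

def translate(seq):
    """Translate the in-frame codons (phase 0) into one-letter amino acids."""
    return "".join(CODON_TABLE[seq[i:i + 3]] for i in range(0, len(seq) - 2, 3))

def empirical_bits(symbols):
    """n * H_hat in bits, i.e., only the first term of (13)."""
    n = len(symbols)
    return -sum(c * math.log2(c / n) for c in Counter(symbols).values())

def redundancy(codon_bits, amino_bits):
    """Relative redundancy as defined in the text."""
    return (codon_bits - amino_bits) / amino_bits

print(translate("ATGGGAGGCGGTGGGTAA"))            # MGGGG*
print(round(redundancy(5277.51, 4048.22), 2))     # prints 0.3
```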

4.9. Randomization

To evaluate the accuracy or significance of the compression rates of a DNA sequence, we need a reference distribution for comparison. A common approach is to randomize by permutation: given a DNA sequence, we randomly permute its nucleotide bases and recalculate the compression rates. Repeating this permutation procedure generates a reference distribution.

In Table 3, we examine the compression rates for E. coli ORF b0060, which has 2352 nucleotides. First, the optimal compression rate of 0.958 is achieved by Model 3.0. Second, we carry out the same calculations for permuted sequences; the averages, standard deviations, and 1% (or 99%) quantiles of the compression rates under different models are also shown in Table 3. Except for Model 1, all the compression rates, in terms of either averages or 1% quantiles, are above 1 for both and . Third, the results by the single term are about 0.996, 0.994, 0.986, and 0.952 for the one-, two-, three-, and four-nucleotide models, respectively, and the quantiles of for the four-nucleotide models are no larger than 0.961. Fourth, the results of show extra bits compared to those of , and the corresponding compression rates range from 1.02 to 1.17, suggesting that the remaining terms in (13) are not negligible.
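The permutation procedure can be sketched in Python as shown below. It is an illustration under assumptions, not the article’s code. It uses the assumed multinomial form of (13) and 2 raw bits per nucleotide. The number of permutations, the seed, the quantile method of `statistics.quantiles`, and the one-sided empirical p-value are our choices, and `permutation_reference` is a hypothetical name.

```python
import math
import random
import statistics
from collections import Counter

def rate(seq, k, phase):
    """Compression rate under model k.phase, ASSUMING the multinomial form
    of (13) and 2 raw bits per nucleotide."""
    words = [seq[i:i + k] for i in range(phase, len(seq) - k + 1, k)]
    n, counts = len(words), Counter(words)
    d = len(counts)
    ent = -sum(c * math.log2(c / n) for c in counts.values())
    rest = ((d - 1) / 2 * math.log2(n / (2 * math.pi))
            + (d / 2 * math.log(math.pi) - math.lgamma(d / 2)) / math.log(2))
    return (ent + rest) / (2 * k * n)

def permutation_reference(seq, k, phase, n_perm=200, seed=0):
    """Shuffle the nucleotides (which preserves base composition), recompute
    the rate, and summarize the resulting reference distribution."""
    rng = random.Random(seed)
    letters = list(seq)
    observed = rate(seq, k, phase)
    perm_rates = []
    for _ in range(n_perm):
        rng.shuffle(letters)
        perm_rates.append(rate("".join(letters), k, phase))
    cuts = statistics.quantiles(perm_rates, n=100)  # 99 cut points
    # One-sided empirical p-value: how often a shuffle compresses at least as well.
    p_value = (1 + sum(r <= observed for r in perm_rates)) / (n_perm + 1)
    return {"observed": observed,
            "mean": statistics.mean(perm_rates),
            "sd": statistics.stdev(perm_rates),
            "q01": cuts[0], "q99": cuts[-1],
            "p_value": p_value}

toy = "ACGT" * 100  # hypothetical periodic toy sequence
print(permutation_reference(toy, k=4, phase=0, n_perm=50, seed=1))
```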

Table 3.

The data compression rates of E. coli ORF b0060 and statistics from permutations. The protein-coding gene sequence consists of 2352 nucleotide bases. The second row shows the parsing models, and the third row shows the compression rates computed by for the raw sequence. The subsequent rows present statistics obtained from permutations, in order, for (13), , and , respectively. For , the -quantiles are shown, all of which are smaller than 1. For the other two, the -quantiles are shown, all of which are larger than 1 except in Model 1.0.

Note that Models 3.1 and 3.2 are obtained by shifting the phase of the correct Model 3.0, while the other models result from incorrect parsing. These models can serve as references for Model 3.0. Incorrect parsing and phase shifting resemble the behavior of a linear congruential pseudo-random number generator and thus serve as a form of randomization.

5. Discussion

Taken together, the analytical results and numerical examples show that the compression bound of a data sequence under an exponential family is the code length derived from the NML distribution (5). The empirical bound can be implemented by Bayesian predictive coding for any given dictionary or model, and different models are then compared by their empirical compression bounds.
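For a multinomial model, Bayesian predictive coding can be sketched in Python as follows. This is a minimal sketch with stated assumptions, not the article’s implementation. It assumes the Jeffreys prior, which for the multinomial is Dirichlet(1/2, …, 1/2), so the sequential predictor is the Krichevsky–Trofimov estimator. It also assumes that the dictionary is known to the decoder in advance. The sketch checks that the sequential code length equals the closed-form mixture code length and that both are close to the assumed multinomial form of the NML expansion. The function names are hypothetical.

```python
import math
from collections import Counter

def predictive_code_length(words, dictionary):
    """Sequential Bayesian predictive code length (bits) with a
    Dirichlet(1/2, ..., 1/2) (Jeffreys) prior over a known dictionary."""
    d = len(dictionary)
    counts = dict.fromkeys(dictionary, 0)
    bits = 0.0
    for i, w in enumerate(words):
        p_next = (counts[w] + 0.5) / (i + d / 2)   # predictive probability of next word
        bits -= math.log2(p_next)
        counts[w] += 1
    return bits

def mixture_code_length(words, dictionary):
    """Closed form of the same Jeffreys-mixture code length (Dirichlet-multinomial)."""
    d, n = len(dictionary), len(words)
    counts = Counter(words)
    log_marginal = (math.lgamma(d / 2) - d * math.lgamma(0.5) - math.lgamma(n + d / 2)
                    + sum(math.lgamma(counts[w] + 0.5) for w in dictionary))
    return -log_marginal / math.log(2)

def nml_asymptotic(words, dictionary):
    """ASSUMED multinomial form of (13): n*H_hat plus parametric complexity."""
    n, d = len(words), len(dictionary)
    ent = -sum(c * math.log2(c / n) for c in Counter(words).values())
    return (ent + (d - 1) / 2 * math.log2(n / (2 * math.pi))
            + (d / 2 * math.log(math.pi) - math.lgamma(d / 2)) / math.log(2))

words = list("ACGT" * 1000)
dic = list("ACGT")
print(predictive_code_length(words, dic), mixture_code_length(words, dic),
      nml_asymptotic(words, dic))
```

Because the sequential predictor only needs running counts, it can drive an arithmetic coder one word at a time.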

The examples of DNA sequences and pseudo-random sequences indicate that, for random sequences, the compression rates under the tested dictionaries are indeed larger than 1, in line with the assertions of Kolmogorov complexity theory. Conversely, if significant compression is achieved by a specific model, knowledge about the sequence is gained; the codon structure is one such instance.

Unlike algorithmic complexity, which is defined only up to an additive constant, the results based on probability distributions give code lengths in exact bits. All three terms in (5) are important for the compression bound. Using only the first term can yield compression rates below 1 for random sequences, and the discrepancy increases as the dictionary size grows, as seen in Table 1 and Table 3. The bound obtained by adding the second term was proposed earlier in the frameworks of two-part coding and Kolmogorov complexity; it is equivalent to the BIC widely used in model selection. However, it overestimates the influence of the dictionary size, as shown by the simulations and the DNA examples. Including the Fisher information in the third term yields a tighter bound. The terms other than become larger as the dictionary size increases in Table 1 and Table 3. The observation that the compression bounds, with all terms in (5) included, remain slightly above 1 for all tested dictionaries agrees with our expectation of the incompressibility of random sequences.
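The claim that the BIC-type penalty overestimates the cost of a larger dictionary can be checked numerically with the Python sketch below. It compares (d − 1)/2 log2 n with the remaining terms of the assumed multinomial form of (13). The value n = 726 is an arbitrary choice, since the gap between the two penalties does not depend on n. The helper names are hypothetical.

```python
import math

def bic_penalty(n, d):
    """Second term alone: (d-1)/2 * log2(n) bits."""
    return (d - 1) / 2 * math.log2(n)

def nml_penalty(n, d):
    """Remaining terms of the ASSUMED multinomial form of (13), in bits."""
    return ((d - 1) / 2 * math.log2(n / (2 * math.pi))
            + (d / 2 * math.log(math.pi) - math.lgamma(d / 2)) / math.log(2))

n = 726  # arbitrary illustrative number of words
for d in (2, 4, 16, 64, 256):
    gap = bic_penalty(n, d) - nml_penalty(n, d)
    print(f"d={d:3d}  BIC={bic_penalty(n, d):8.1f}  NML={nml_penalty(n, d):8.1f}  gap={gap:6.1f}")
```

The gap equals (d − 1)/2 log2(2π) − log2(π^{d/2}/Γ(d/2)). It is independent of n, slightly negative for d = 2, and grows quickly with d. This is consistent with the BIC-type bound being looser for large dictionaries.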

Although the empirical compression bound is derived under the i.i.d. model, the word length can be set large enough to capture local dependencies between symbols. Indeed, as the DNA examples show, the empirical entropy term in (5) may decrease as the word length grows, for both the original and the permuted sequences. Meanwhile, the second term becomes larger. For a specific sequence, a better dictionary is selected by trading off the entropy term against the model complexity term.

Rissanen [19] obtained an expansion of the NML code length in which the first term is the negative log-likelihood of the data evaluated at the MLE. In this article, we show that this term is exactly the empirical entropy term whenever the parametric model is an exponential family. In this formulation, the NML code length can be viewed as an empirical and pathwise version of Shannon’s source coding theorem. Furthermore, the asymptotics in [19] require five assumptions that are hard to verify. Suzuki and Yamanishi proposed a Fourier approach to calculating the NML code length [33] for continuous random variables under certain assumptions. In contrast, we show that (5) is valid for exponential families, as long as , without any additional assumptions. If the Jeffreys prior is improper in the interior of the full parameter space, we can restrict the parameter to a compact subset. Exponential families include not only distributions of discrete symbols, such as the multinomial, but also continuous distributions, such as the normal distribution.
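For the Bernoulli family (d = 2), the accuracy of the expansion can be checked directly. The Fisher information is I(θ) = 1/(θ(1 − θ)), and its square root integrates to π over (0, 1). The Python sketch below (our illustration; the function names are hypothetical) computes the exact Shtarkov normalizing sum in log space and compares it with ½ log2(n/2π) + log2 π.

```python
import math

def log2_shtarkov_bernoulli(n):
    """Exact log2 of the NML normalizing (Shtarkov) sum for n Bernoulli trials:
    sum_k C(n,k) (k/n)^k (1-k/n)^(n-k), computed in log space (0^0 = 1)."""
    logs = []
    for k in range(n + 1):
        term = math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
        if k > 0:
            term += k * math.log(k / n)
        if k < n:
            term += (n - k) * math.log(1 - k / n)
        logs.append(term)
    m = max(logs)  # log-sum-exp for numerical stability
    return (m + math.log(sum(math.exp(t - m) for t in logs))) / math.log(2)

def log2_asymptotic_bernoulli(n):
    """(d-1)/2 log2(n/2pi) + log2 of the integral of sqrt(I(theta)), with d = 2,
    where the integral of 1/sqrt(theta(1-theta)) over (0,1) equals pi."""
    return 0.5 * math.log2(n / (2 * math.pi)) + math.log2(math.pi)

for n in (10, 100, 1000):
    exact = log2_shtarkov_bernoulli(n)
    print(n, exact, exact - log2_asymptotic_bernoulli(n))
```

The difference shrinks as n grows, which is the behavior the expansion predicts.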

The mathematics underlying the expansion of NML is the structure of local asymptotic normality (LAN) proposed by Le Cam [18]. LAN has been used to demonstrate the optimality of certain statistical estimators. This article connects LAN to compression bounds: we have shown that, as long as LAN holds, an expansion similar to (2) can be obtained.

Whereas Shannon’s source coding theorem assumes that the data come from a source with a given distribution, we have presented empirical versions that apply to each individual data sequence without any a priori distributional assumption. In contrast to the average optimal code length in Shannon’s theorem, the results presented in Theorems 1 and 2 are sequence-specific, although distributions are used as tools for analysis. We describe the data by fitting distributions from exponential families and mixtures of exponential families, which cover a wide range of scenarios in coding and statistical learning. Knowledge discovery is illustrated through an example involving DNA sequences. The results are primarily conceptual, and their connection to recent progress in data compression, such as [34], is worth further investigation.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/e27080864/s1.

Funding

This research is supported by the National Key Research and Development Program of China (2022YFA1004801), the National Natural Science Foundation of China (Grant Nos. 11871462, 32170679, and 91530105), the National Center for Mathematics and Interdisciplinary Sciences of the Chinese Academy of Sciences, and the Key Laboratory of Systems and Control of the CAS.

Data Availability Statement

The numerical results reported in the article can be reproduced using the Supplementary Files: one file, containing R code, corresponds to Table 1; the other, containing Python 3.13 code, corresponds to Tables 2 and 3.

Acknowledgments

The author is grateful to Bin Yu and Jorma Rissanen for their guidance in exploring this topic. He would like to thank Xiaoshan Gao for his support of this research, Zhanyu Wang for proofreading the manuscript, and his family for their unwavering support.

Conflicts of Interest

The author declares no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| NML | normalized maximum likelihood |

| NML code length under dictionary | |

| Bayesian predictive code length | |

| AC | arithmetic coding |

| LAN | local asymptotic normality |

Appendix A

This section contains the proofs of the results in Section 2 and Section 3. The following basic facts about the exponential family (4) are needed; see [35].

- , and .

- is one to one on the natural parameter space.

- The MLE based on is given by , where .

- The Fisher information matrix .
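These facts can be checked numerically in the simplest case, the Bernoulli family written in canonical form p(x | θ) = exp(θx − ψ(θ)) with ψ(θ) = log(1 + e^θ). This notation is ours and is not necessarily that of (4). In this case ψ′ is the mean, ψ″ is the variance and hence the Fisher information, ψ′ is strictly increasing and therefore one to one, and the MLE solves ψ′(θ̂) = x̄. The finite-difference helpers in the Python sketch below are hypothetical.

```python
import math

def psi(theta):
    """Log-partition function of the Bernoulli family in canonical form."""
    return math.log1p(math.exp(theta))

def first_derivative(f, x, h=1e-5):
    return (f(x + h) - f(x - h)) / (2 * h)            # central difference

def second_derivative(f, x, h=1e-4):
    return (f(x + h) - 2 * f(x) + f(x - h)) / h ** 2  # central second difference

def mean(theta):
    return 1 / (1 + math.exp(-theta))                 # E[X] = P(X = 1)

def mle(xs):
    """Solve psi'(theta_hat) = sample mean, i.e., theta_hat = logit(xbar)."""
    xbar = sum(xs) / len(xs)
    return math.log(xbar / (1 - xbar))

theta = 0.7
print(first_derivative(psi, theta), mean(theta))                       # psi' = mean
print(second_derivative(psi, theta), mean(theta) * (1 - mean(theta)))  # psi'' = Fisher information
print(mle([1, 0, 1, 1, 0]))                                            # logit(0.6)
```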

Proof of Theorem 1.

In the canonical exponential family, the natural parameter space is open and convex. Since , we can find a sequence of bounded sets such that , where . Furthermore, we can select each bounded set so that it can be partitioned into disjoint cubes, each of which is denoted by with as its center and as its side length. Namely, , and for .

The normalizing constant in Equation (6) can be computed as a sum (an integral in the case of continuous variables) over the sufficient statistic , and in turn over the MLE

Now expand around within the neighborhood .

Since the MLE , the second term is zero. Furthermore, expanding around and rearranging the terms, we have

where the constant involves the third-order derivatives of , which are continuous in the canonical exponential family and thus bounded on the bounded set . In other words, is bounded uniformly across all . Similar bounded constants will be used repeatedly hereafter. Then Equation (A2) becomes

Notice that the exponential form is the density of . If we consider i.i.d. random variables sampled from the exponential family (4), the MLE is a random variable. Taking , the sum of the above quantity over the neighborhood is simply the expectation of with respect to the distribution of , evaluated at the parameter :

Let . Now ) if and only if , where is the d-dimensional cube centered at zero with the side length r. Next expanding in the neighborhood, the above becomes

According to the central limit theorem, . Moreover, the approximation error has the Berry–Esseen bound , where the constant is determined by the bound on ’s third-order derivatives. Similarly, we have the asymptotic normality of the MLE, , for which a Berry–Esseen bound on the convergence also holds; see [36]. Therefore, the expectation converges as follows:

Plugging this into the sum (A1), we obtain

Note that the bound of the last term relies solely on . For a given k, we select n such that the last term is sufficiently small. This completes the proof. □

Proof of Theorem 2.

First we consider the conjugate prior of (4), which takes the form

where is a vector in , is a scalar, and . Then the marginal density is

according to the definition of . Therefore

where The minimum of is achieved at

Notice that

Through Taylor’s expansion, it can be shown that

Notice that Let By expanding at the saddle point and applying the Laplace method (see [37]), we have

Next,

The last step is valid because of and (A5). This proves the case of the prior in (A3). Meanwhile, we obtain the expansion of (A4)

If the prior of takes the form of a finite mixture of the conjugate distributions (A3) as in the following

where , . Then the marginal density is given by

Each summand is approximated by (A6). This completes the proof because of . □
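The Laplace method used in the proof of Theorem 2 can be checked numerically on the simplest case: the one-dimensional integral ∫₀¹ θ^a (1 − θ)^b dθ = B(a + 1, b + 1), to which the marginal of Bernoulli data under a uniform prior reduces. The Python sketch below is our illustration, not a computation from the article. It compares the exact log-integral with its second-order (Laplace) approximation at the maximizer θ* = a/(a + b).

```python
import math

def log_beta_exact(a, b):
    """log of the integral of theta^a (1-theta)^b over (0, 1) = log B(a+1, b+1)."""
    return math.lgamma(a + 1) + math.lgamma(b + 1) - math.lgamma(a + b + 2)

def log_beta_laplace(a, b):
    """Laplace approximation: expand f(theta) = a log theta + b log(1-theta)
    to second order at its maximizer theta* = a / (a + b)."""
    t = a / (a + b)
    f_max = a * math.log(t) + b * math.log(1 - t)
    curvature = a / t ** 2 + b / (1 - t) ** 2          # equals -f''(theta*)
    return f_max + 0.5 * math.log(2 * math.pi / curvature)

for a, b in ((30, 70), (300, 700)):
    print(a + b, log_beta_exact(a, b) - log_beta_laplace(a, b))
```

The log-scale error shrinks roughly in proportion to 1/n as the sample size n = a + b grows tenfold.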

References

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423, 623–656.

- Rissanen, J. Strong optimality of the normalized ML models as universal codes and information in data. IEEE Trans. Inf. Theory 2001, 47, 1712–1717.

- Shtarkov, Y.M. Universal sequential coding of single messages. Probl. Inf. Transm. 1987, 23, 3–17.

- Clarke, B.S.; Barron, A.R. Jeffreys’ prior is asymptotically least favorable under entropy risk. J. Stat. Plan. Inference 1994, 41, 37–64.

- Li, L.M.; Yu, B. Iterated logarithmic expansions of the pathwise code lengths for exponential families. IEEE Trans. Inf. Theory 2000, 46, 2683–2689.

- Barron, A.; Rissanen, J.; Yu, B. The minimum description length principle in coding and modeling. IEEE Trans. Inf. Theory 1998, 44, 2743–2760.

- Cover, T.M.; Thomas, J.A. Elements of Information Theory; Wiley: Hoboken, NJ, USA, 1991.

- Rissanen, J. Generalized Kraft inequality and arithmetic coding. IBM J. Res. Dev. 1976, 20, 199–203.

- Rissanen, J.J.; Langdon, G.G. Arithmetic coding. IBM J. Res. Dev. 1979, 23, 149–162.

- Cover, T.M.; Gács, P.; Gray, R.M. Kolmogorov’s contributions to information theory and algorithmic complexity. Ann. Probab. 1989, 17, 840–865.

- Li, M.; Vitányi, P. An Introduction to Kolmogorov Complexity and Its Applications; Springer: New York, NY, USA, 1996.

- Vitányi, P.; Li, M. Minimum description length induction, Bayesianism, and Kolmogorov complexity. IEEE Trans. Inf. Theory 2000, 46, 446–464.

- Haussler, D. A general minimax result for relative entropy. IEEE Trans. Inf. Theory 1997, 43, 1276–1280.

- Krichevsky, R.E. The connection between the redundancy and reliability of information about the source. Probl. Inf. Transm. 1968, 4, 48–57.

- Rissanen, J. Stochastic complexity and modeling. Ann. Stat. 1986, 14, 1080–1100.

- Akaike, H. On entropy maximisation principle. In Applications of Statistics; Krishnaiah, P.R., Ed.; North Holland: Amsterdam, The Netherlands, 1970; pp. 27–41.

- Schwarz, G. Estimating the dimension of a model. Ann. Stat. 1978, 6, 461–464.

- Le Cam, L.; Yang, G.L. Asymptotics in Statistics: Some Basic Concepts; Springer: New York, NY, USA, 2000. Available online: https://link.springer.com/book/10.1007/978-1-4612-1166-2 (accessed on 14 July 2025).

- Rissanen, J. Fisher information and stochastic complexity. IEEE Trans. Inf. Theory 1996, 42, 40–47.

- Rissanen, J. A predictive least squares principle. IMA J. Math. Control Inf. 1986, 3, 211–222.

- Dawid, A.P. Present position and potential developments: Some personal views, statistical theory, the prequential approach. J. R. Stat. Soc. Ser. A 1984, 147, 278–292.

- Dawid, A.P. Prequential analysis, stochastic complexity and Bayesian inference. In Proceedings of the Fourth Valencia International Meeting on Bayesian Statistics, Peñíscola, Spain, 15–20 April 1991; pp. 15–20.

- Powell, M.J.D. Approximation Theory and Methods; Cambridge University Press: Cambridge, UK, 1981.

- Prolla, J.B. A generalized Bernstein approximation theorem. Math. Proc. Camb. Philos. Soc. 1988, 104, 317–330.

- Durrett, R. Probability: Theory and Examples; Wadsworth & Brooks/Cole: Pacific Grove, CA, USA, 1991.

- Rissanen, J. Stochastic Complexity and Statistical Inquiry; World Scientific: Singapore, 1989.

- Hansen, M.; Yu, B. Model selection and minimum description length principle. J. Am. Stat. Assoc. 2001, 96, 746–774.

- Grünwald, P. The Minimum Description Length Principle; MIT Press: Cambridge, MA, USA; London, UK, 2007.

- Ryabko, B.; Astola, J.; Malyutov, M. Statistical Methods Based on Universal Codes; Springer International Publishing: Cham, Switzerland, 2016.

- Ryabko, B.; Astola, J.; Gammerman, A. Application of Kolmogorov complexity and universal codes to identity testing and nonparametric testing of serial independence for time series. Theor. Comput. Sci. 2006, 359, 440–448.

- Usotskaya, N.; Ryabko, B. Applications of information-theoretic tests for analysis of DNA sequences based on Markov chain models. Comput. Stat. Data Anal. 2009, 53, 1861–1872.

- E. coli Genome and Protein Genes. Available online: https://www.ncbi.nlm.nih.gov/genome (accessed on 14 July 2025).

- Suzuki, A.; Yamanishi, K. Exact calculation of normalized maximum likelihood code length using Fourier analysis. In Proceedings of the 2018 IEEE International Symposium on Information Theory (ISIT), Vail, CO, USA, 17–22 June 2018; pp. 1211–1215.

- Ulacha, G.; Łazoryszczak, M. Lossless image compression using context-dependent linear prediction based on mean absolute error minimization. Entropy 2024, 26, 1115.

- Brown, L.D. Fundamentals of Statistical Exponential Families: With Applications in Statistical Decision Theory; Institute of Mathematical Statistics: Hayward, CA, USA, 1986.

- Pinelis, I.; Molzon, R. Optimal-order bounds on the rate of convergence to normality in the multivariate delta method. Electron. J. Stat. 2016, 10, 1001–1063.

- De Bruijn, N.G. Asymptotic Methods in Analysis; North-Holland: Amsterdam, The Netherlands, 1958.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).