Abstract

This paper proposes a low-complexity signal detection method for orthogonal time frequency space (OTFS) communication systems, based on a separable convolutional neural network (SeCNN), termed SeCNN-OTFS. A novel SeparableBlock architecture is introduced, which integrates residual connections and a channel attention mechanism to enhance feature discrimination and training stability under high Doppler conditions. By decomposing standard convolutions into depthwise and pointwise operations, the model achieves a substantial reduction in computational complexity. To validate its effectiveness, simulations are conducted under a standard OTFS configuration with 64-QAM modulation, comparing the proposed SeCNN-OTFS with conventional CNN-based models and classical linear estimators, such as least squares (LS) and minimum mean square error (MMSE). The results show that SeCNN-OTFS consistently outperforms LS and MMSE, and when the signal-to-noise ratio (SNR) exceeds 12.5 dB, its bit error rate (BER) performance becomes nearly identical to that of 2D-CNN. Notably, SeCNN-OTFS requires only 19% of the parameters compared to 2D-CNN, making it highly suitable for resource-constrained environments such as satellite and IoT communication systems. For scenarios where higher accuracy is required and computational resources are sufficient, the CNN-OTFS model—with conventional convolutional layers replacing the separable convolutional layers—can be adopted as a more precise alternative.

1. Introduction

Orthogonal time frequency space (OTFS) modulation [1,2,3,4] has emerged as a promising candidate for the air interface of sixth-generation (6G) wireless networks, particularly in high-mobility scenarios such as vehicular communications, aerial platforms, and satellite systems [5]. Unlike conventional modulation schemes, OTFS transforms the time-varying wireless channel into a two-dimensional (2D) quasi-static representation in the delay–Doppler (DD) domain [6], thereby offering enhanced robustness to both delay spread and Doppler effects. Compared to orthogonal frequency division multiplexing (OFDM), OTFS simplifies signal detection by converting the time-varying channel into a nearly static 2D convolutional model.

In communication environments, multipath propagation and Doppler effects cause significant variations in the time-frequency characteristics of signals. Accurately capturing these variations is crucial to fully harness the performance advantages of OTFS modulation. Although various detection algorithms have been proposed, many of them suffer from high computational complexity, which limits their applicability in practical real-time systems. Consequently, designing reliable yet low-complexity OTFS detection schemes remains a critical challenge for enabling high-performance 6G communications.

Previous studies have shown that linear detectors such as minimum mean square error (MMSE) and linear MMSE exhibit limited performance in highly dynamic environments. In contrast, nonlinear methods, including maximum likelihood (ML), generalized approximate message passing (GAMP), and expectation propagation (EP), improve detection accuracy but incur significant computational costs [7,8,9]. More recently, deep learning techniques have been introduced to OTFS systems, leveraging their strong nonlinear modeling capabilities to enhance detection accuracy while reducing the need for explicit channel modeling [10,11].

In this paper, we propose a low-complexity signal detection method for OTFS systems based on separable convolutional neural networks (SeCNN-OTFS). Our work provides the following key contributions. Firstly, we design a lightweight yet effective feature extraction SeparableBlock structure that integrates global residual learning in the identity path and recursive learning in the residual path, enabling efficient deep feature learning with minimal computational overhead. Secondly, compared to conventional two-dimensional convolutional neural network (2D-CNN)-based detectors, our model achieves an 81% reduction in parameters while maintaining near-optimal detection accuracy, making it highly suitable for resource-constrained systems. Thirdly, the proposed SeCNN-OTFS is particularly advantageous for high-mobility communication scenarios, such as unmanned aerial vehicle (UAV) and vehicle-to-everything (V2X) systems, where computational efficiency is critical.

2. Related Work

Research on OTFS signal detection can be broadly categorized into two major approaches: model-driven detection methods and data-driven detection methods. The former relies on physical modeling and probabilistic inference, while the latter leverages data-driven optimization to capture complex transmission characteristics.

2.1. Model-Driven Detection Methods

Model-driven techniques include linear detectors, nonlinear iterative detectors, and Turbo-aided structures.

Among the linear approaches, MMSE and zero-forcing (MMSE-ZF) [12] and linear MMSE [13] are widely used due to their low complexity and straightforward implementation. These methods operate in the DD domain and offer initial interference mitigation. MMSE-ZF provides simple equalization, making it suitable for low-mobility scenarios, whereas LMMSE achieves a better performance–complexity trade-off. However, both suffer considerable performance degradation under high Doppler spreads or fast-fading channels.

To enhance detection accuracy, a variety of nonlinear iterative detection algorithms have been proposed. Maximum a posteriori (MAP)–parallel interference cancellation (PIC) [14], message passing (MP) [15], GAMP [7], unitary approximate message passing (UAMP) [16], parallel message passing (PMP) [17], variational Bayesian (VB) [18], and EP [8,9] algorithms introduce Bayesian inference and iterative updates to suppress interference and estimate transmitted symbols more precisely. Some of these methods also integrate domain information or alternate subspace structures for improved robustness. These algorithms often outperform linear detectors, but at the cost of increased computational complexity.

Turbo detection architectures further improve performance by incorporating channel decoding into the iterative process. Turbo maximal ratio combining (MRC) [19] exploits mutual information between detection and decoding stages to refine symbol estimation. Methods like sliding window LMMSE [20] and doubly-iterative sparsified minimum mean square error (DI-S-MMSE) [21] have also been introduced to reduce latency and integrate deep learning enhancements into the iterative MMSE framework. While turbo-aided detection delivers superior bit error rate (BER) performance, it typically incurs significant processing overhead and implementation complexity.

2.2. Data-Driven Detection Methods

Deep learning has emerged as a powerful alternative for OTFS signal detection due to its capability to learn complex mappings without explicit channel modeling. These methods utilize large-scale training data to learn the delay–Doppler structure and corresponding symbol representations, making them well-suited to dynamic and non-stationary channel conditions.

Notable architectures include underwater acoustic communication (UWA)-OTFS [22], deep neural networks (DNN) [22,23], 2D-CNN [24], the reservoir computing network (RCNet) [25], ViterbiNet [26], and OTFS-MatNet [27]. CNN-based models exploit spatial correlation in the DD domain, while RCNet introduces residual connections for training stability. Models like UWA-OTFS and DNN have been applied to various scenarios including UWA, V2X, and multiple-input multiple-output (MIMO) systems, demonstrating strong generalization across environments. ViterbiNet integrates the classic Viterbi decoding process with learnable neural networks to enable data-driven path selection under low-SNR conditions. 2D-CNN exploits the delay–Doppler channel by learning the MIMO-OTFS input–output relationship. However, its use of 5 × 5 and 7 × 7 convolutional kernels results in increased complexity.

Despite their high accuracy, deep learning-based detectors often involve large parameter sets and intensive computation during inference, limiting their deployment in real-time or embedded systems. As a result, designing lightweight neural architectures, optimizing model compression, and leveraging feature sharing mechanisms are becoming key research directions in this field.

3. Problem Formulation

3.1. OTFS Transmitter

OTFS modulation maps information symbols onto a 2D DD domain, which can directly capture the geometric features of delay and Doppler shifts caused by relative motion. This mapping transforms time-varying multipath channels into time-invariant channels in the DD domain, making it highly advantageous for high-mobility scenarios.

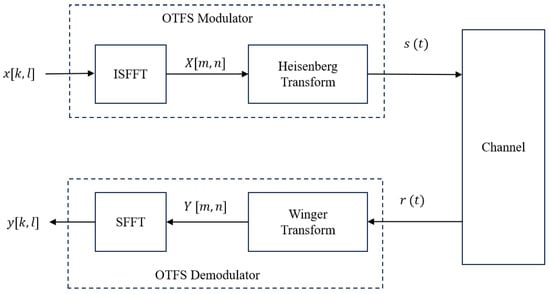

As illustrated in Figure 1, the OTFS modulator maps the input symbols $x[k,l]$ onto a 2D DD grid of size $N \times M$, where $k = 0, \dots, N-1$ and $l = 0, \dots, M-1$ represent the Doppler and delay indices, respectively.

Figure 1. OTFS system.

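The inverse symplectic finite Fourier transform (ISFFT) is applied to transform the DD-domain signal into the time–frequency (TF) domain:

$$X[n,m] = \frac{1}{\sqrt{NM}} \sum_{k=0}^{N-1} \sum_{l=0}^{M-1} x[k,l]\, e^{j2\pi\left(\frac{nk}{N} - \frac{ml}{M}\right)},$$

where $x[k,l]$ is the DD-domain symbol at Doppler index $k$ and delay index $l$, and $X[n,m]$ is the TF-domain symbol at time index $n$ and subcarrier index $m$.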
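The transform pair can be sketched with NumPy FFTs. This is a minimal illustration, assuming the DD grid is stored as an array indexed `[k, l]` (Doppler, delay) and that both transforms use the unitary $1/\sqrt{NM}$ scaling; the function names `isfft` and `sfft`, the grid size, and the QPSK-like test symbols are choices made for this example only and are not taken from the simulation setup.

```python
import numpy as np

def isfft(x_dd):
    """Map a DD grid x[k, l] (Doppler k, delay l) to a TF grid X[n, m]."""
    # Inverse DFT over the Doppler axis (k -> time index n) ...
    x_tf = np.fft.ifft(x_dd, axis=0, norm="ortho")
    # ... and forward DFT over the delay axis (l -> subcarrier index m).
    return np.fft.fft(x_tf, axis=1, norm="ortho")

def sfft(y_tf):
    """Map a TF grid Y[n, m] back to a DD grid y[k, l] (inverse of isfft)."""
    y_dd = np.fft.fft(y_tf, axis=0, norm="ortho")
    return np.fft.ifft(y_dd, axis=1, norm="ortho")

# Illustrative grid: N Doppler bins, M delay bins, QPSK-like symbols.
N, M = 4, 8
rng = np.random.default_rng(0)
x_dd = rng.choice([-1, 1], (N, M)) + 1j * rng.choice([-1, 1], (N, M))

X_tf = isfft(x_dd)       # TF-domain symbols
x_back = sfft(X_tf)      # round trip back to the DD domain
```

With the `norm="ortho"` option both transforms are unitary, so the round trip returns the original grid and the symbol energy is preserved.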

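Then, the Heisenberg transform converts $X[n,m]$ to the time-domain signal $s(t)$:

$$s(t) = \sum_{n=0}^{N-1} \sum_{m=0}^{M-1} X[n,m]\, g_{tx}(t - nT)\, e^{j2\pi m \Delta f (t - nT)},$$

where $g_{tx}(t)$ is the transmit pulse shaping function, $T$ is the symbol duration, and $\Delta f$ is the subcarrier spacing.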

3.2. Delay–Doppler Domain Channel Model

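The signal received after propagation through a time-varying channel is given by:

$$r(t) = \iint h(\tau,\nu)\, s(t-\tau)\, e^{j2\pi\nu(t-\tau)}\, d\tau\, d\nu + w(t),$$

where $h(\tau,\nu)$ is the DD-domain channel response and $w(t)$ is zero-mean complex white Gaussian noise with power $\sigma^2$.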

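In practice, the channel is modeled as a sparse sum of $P$ propagation paths:

$$h(\tau,\nu) = \sum_{p=1}^{P} h_p\, \delta(\tau - \tau_p)\, \delta(\nu - \nu_p),$$

where $h_p$, $\tau_p$, and $\nu_p$ are the gain, delay, and Doppler shift of the p-th path, respectively.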

3.3. OTFS Receiver

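At the receiver, the Wigner transform maps the received signal $r(t)$ to the TF domain:

$$Y[n,m] = \int r(t)\, g_{rx}^{*}(t - nT)\, e^{-j2\pi m \Delta f (t - nT)}\, dt,$$

where $g_{rx}(t)$ is the receive pulse shaping function.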

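Then, the symplectic finite Fourier transform (SFFT) is used to obtain the DD-domain signal:

$$y[k,l] = \frac{1}{\sqrt{NM}} \sum_{n=0}^{N-1} \sum_{m=0}^{M-1} Y[n,m]\, e^{-j2\pi\left(\frac{nk}{N} - \frac{ml}{M}\right)}.$$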

3.4. Input-Output Relationship

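By vectorizing the DD-domain signals, the overall input–output relationship of the OTFS system can be expressed as:

$$\mathbf{y} = \mathbf{H}\mathbf{x} + \mathbf{w},$$

where $\mathbf{x} \in \mathbb{C}^{MN \times 1}$ is the transmitted symbol vector, $\mathbf{y} \in \mathbb{C}^{MN \times 1}$ is the received symbol vector, $\mathbf{H} \in \mathbb{C}^{MN \times MN}$ is the DD-domain channel matrix, and $\mathbf{w}$ is the vectorized noise.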
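To make the vectorized relationship concrete, the sketch below builds a small DD-domain channel matrix and applies the two classical baselines (LS and MMSE). It is a simplified illustration under stated assumptions: each path has integer delay and Doppler indices and acts as a pure 2D cyclic shift scaled by its gain (the path-dependent phase rotations of a full OTFS model are omitted), the symbols are QPSK-like with unit energy, and the helper name `dd_channel_matrix` and all numeric values are hypothetical.

```python
import numpy as np

def dd_channel_matrix(N, M, gains, doppler_taps, delay_taps):
    """Return H (NM x NM) such that y = H @ x, where x = x_dd.reshape(-1)
    and x_dd[k, l] is indexed by Doppler k and delay l (flat index k*M + l)."""
    H = np.zeros((N * M, N * M), dtype=complex)
    for h_p, k_p, l_p in zip(gains, doppler_taps, delay_taps):
        for k in range(N):
            for l in range(M):
                # Path p moves the symbol at (k - k_p, l - l_p) to (k, l), cyclically.
                col = ((k - k_p) % N) * M + (l - l_p) % M
                H[k * M + l, col] += h_p
    return H

N, M = 4, 4
gains = [1.0, 0.5j, -0.3]     # hypothetical path gains h_p
doppler_taps = [0, 1, 3]      # hypothetical integer Doppler indices
delay_taps = [0, 1, 2]        # hypothetical integer delay indices
H = dd_channel_matrix(N, M, gains, doppler_taps, delay_taps)

rng = np.random.default_rng(1)
x = (rng.choice([-1, 1], N * M) + 1j * rng.choice([-1, 1], N * M)) / np.sqrt(2)
sigma2 = 0.01                 # noise power
w = np.sqrt(sigma2 / 2) * (rng.standard_normal(N * M) + 1j * rng.standard_normal(N * M))
y = H @ x + w                 # vectorized input-output relationship

# LS detector: minimizes ||y - H x||^2 and ignores the noise statistics.
x_ls = np.linalg.lstsq(H, y, rcond=None)[0]

# MMSE detector for unit-energy symbols: (H^H H + sigma^2 I)^{-1} H^H y.
Hh = H.conj().T
x_mmse = np.linalg.solve(Hh @ H + sigma2 * np.eye(N * M), Hh @ y)
```

Both detectors require solving an $MN \times MN$ linear system, which is part of the motivation for learned detectors with a fixed inference cost.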

4. Proposed SeCNN-OTFS Based Signal Detection Method

4.1. Separable Convolutional Neural Network

Depthwise separable convolution is an efficient convolutional operation that decomposes a standard convolution into two separate steps: depthwise convolution and pointwise convolution. This decomposition significantly reduces computational complexity and the number of trainable parameters.

Depthwise convolution operates independently on each input channel. For an input feature map of size $H \times W \times C_{in}$, depthwise convolution applies $C_{in}$ kernels of size $K \times K$, with each kernel convolving over a single channel. The output feature map has a size of $H \times W \times C_{in}$. This step extracts spatial features, but does not allow information exchange between channels.

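Pointwise convolution then uses $C_{out}$ kernels of size $1 \times 1 \times C_{in}$ to linearly combine the depthwise outputs across channels, projecting them to the desired number of output channels, where $C_{in}$ and $C_{out}$ denote the numbers of input and output channels. The size of the output feature map becomes $H \times W \times C_{out}$. This step enables inter-channel information fusion and dimensionality transformation.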
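The two steps can be sketched as a plain NumPy forward pass. This is an illustrative sketch rather than the layer implementation used in the experiments: it assumes stride 1, zero "same" padding, an odd kernel size, no bias, and the cross-correlation convention common in deep learning frameworks; the function names and tensor sizes are hypothetical.

```python
import numpy as np

def depthwise_conv(x, dw_kernels):
    """x: (H, W, C_in); dw_kernels: (K, K, C_in), one K x K kernel per channel."""
    H, W, C = x.shape
    K = dw_kernels.shape[0]
    pad = K // 2  # 'same' padding, valid for odd K
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    out = np.zeros((H, W, C))
    for i in range(K):
        for j in range(K):
            # Each channel is filtered only by its own kernel: no channel mixing.
            out += xp[i:i + H, j:j + W, :] * dw_kernels[i, j, :]
    return out

def pointwise_conv(x, pw_kernels):
    """x: (H, W, C_in); pw_kernels: (C_in, C_out). A 1 x 1 convolution is a
    per-pixel matrix product across channels."""
    return x @ pw_kernels

rng = np.random.default_rng(0)
H, W, C_in, C_out, K = 8, 8, 4, 6, 3
x = rng.standard_normal((H, W, C_in))
dw = rng.standard_normal((K, K, C_in))
pw = rng.standard_normal((C_in, C_out))

y_dw = depthwise_conv(x, dw)    # shape (8, 8, 4): spatial filtering only
y = pointwise_conv(y_dw, pw)    # shape (8, 8, 6): channel fusion
```

The result equals a standard convolution whose $K \times K \times C_{in} \times C_{out}$ kernel is constrained to the product of the depthwise and pointwise weights, which is where the parameter saving comes from.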

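The computational cost of standard convolution, for kernel size $K$, feature map size $H \times W$, and $C_{in}$ input and $C_{out}$ output channels, is given by the following:

$$O_{conv} = H \cdot W \cdot K^2 \cdot C_{in} \cdot C_{out}.$$

The computational cost of depthwise separable convolution includes both depthwise and pointwise parts:

$$O_{sep} = H \cdot W \cdot K^2 \cdot C_{in} + H \cdot W \cdot C_{in} \cdot C_{out}.$$

Therefore, the total computational cost of depthwise separable convolution compared to standard convolution is reduced by the ratio of $O_{sep}/O_{conv} = 1/C_{out} + 1/K^2$. When $K = 3$ and $C_{out}$ is large, the computational cost is reduced to approximately $1/8$ to $1/9$ of the standard convolution. Similarly, the number of parameters is reduced from $K^2 C_{in} C_{out}$ to $K^2 C_{in} + C_{in} C_{out}$.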
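As a worked example of this reduction, assume a single layer with kernel size $K = 3$ and $C_{in} = C_{out} = 64$ channels, where $K$, $C_{in}$, and $C_{out}$ denote the kernel size and the numbers of input and output channels. These values are illustrative and are not taken from Table 2. Ignoring biases, the parameter counts are:

$$
\begin{aligned}
\text{standard:}\quad & K^2 C_{in} C_{out} = 9 \cdot 64 \cdot 64 = 36{,}864,\\
\text{separable:}\quad & K^2 C_{in} + C_{in} C_{out} = 576 + 4096 = 4672,\\
\text{ratio:}\quad & \frac{4672}{36{,}864} = \frac{1}{64} + \frac{1}{9} \approx 0.127.
\end{aligned}
$$

Because both costs scale with the same feature map size, the multiply-accumulate count falls by the same ratio, which is a reduction by a factor of roughly 7.9 in this case.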

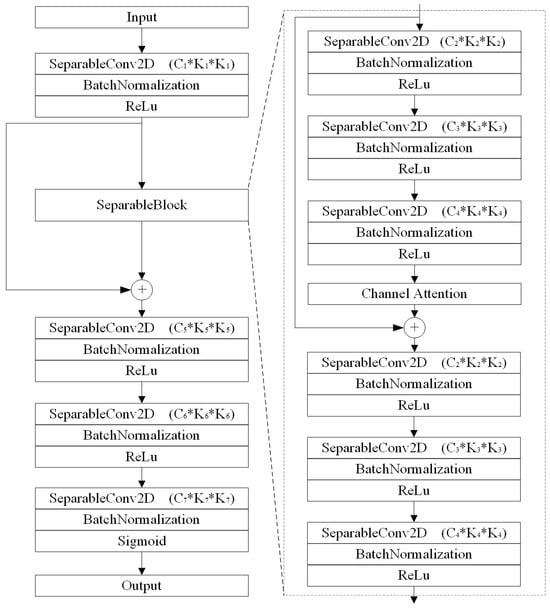

4.2. SeparableBlock

The SeparableBlock contains six separable convolutional layers, which reduces the computational complexity compared to traditional convolutional layers. The latter three convolutional layers mirror the structure of the first three. These three-layer segments are interconnected through local residual and recursive residual connections, which mitigates exploding and vanishing gradients. A channel attention mechanism enhances the model’s ability to identify critical channel features and improves its robustness against noise interference and Doppler spread. This design ensures training stability while maintaining computational efficiency. Furthermore, multiple SeparableBlocks can be stacked for different application scenarios, enabling reliable convergence even as the network depth increases.

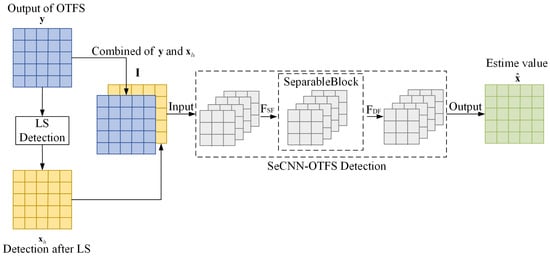

4.3. SeCNN-OTFS Framework

The architecture of SeCNN-OTFS consists of 10 separable convolutional layers, as illustrated in Figure 2. Throughout SeCNN-OTFS, the feature map dimensions remain consistent with the input and output sizes. SeCNN-OTFS primarily comprises three components: shallow feature extraction, deep feature extraction based on SeparableBlock, and reconstruction. A detailed flow of the proposed SeCNN-OTFS network is shown in Figure 3, where the first nine convolutional layers all employ the ReLU activation function, while the final convolutional layer uses the Sigmoid activation function.

Figure 2. The architecture of SeCNN-OTFS.

Figure 3. The network of the proposed SeCNN-OTFS, including SeparableBlock.

Let the input of the network be , which consists of the output and , given by:

where is the LS estimate of . As shown in Figure 2, only one SeparableConv2D layer is introduced to extract the shallow feature from the input:

where represents the Separable convolution operation.

Then, the extracted shallow feature is utilized for the SeparableBlock to extract the deep feature via:

where denotes the deep feature extraction model based on the SeparableBlock. The SeparableBlock is established to stack three SeparableConv2D layers. Then, the SeparableBlock is connected by the global residual learning in the identity branch and applied using recursive learning in the residual branch.

Finally, the deep feature is mapped into the output by decrementing layer by layer with three SeparableConv2D layers, as follows:

where is the estimate of .

This framework extensively uses separable convolutions, significantly reducing the number of parameters and computational cost while maintaining robust feature extraction capabilities. Batch normalization after each Separable convolutional layer stabilizes the training process and accelerates convergence, and ReLU activations provide the necessary non-linearities for capturing complex data patterns. Skip connections in the SeparableBlocks improve gradient flow, ensuring effective training even in deeper network architectures.

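The loss function of SeCNN-OTFS is defined as the mean square error (MSE) loss between the network output $\hat{\mathbf{x}}_i$ and the corresponding target $\mathbf{x}_i$, averaged over the $B$ samples of a batch:

$$\mathcal{L}_{MSE} = \frac{1}{B} \sum_{i=1}^{B} \left\| \hat{\mathbf{x}}_i - \mathbf{x}_i \right\|_2^2.$$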

5. Complexity Analysis

To benchmark the proposed method, a CNN-OTFS model is used as a reference. For a fair comparison, the network structure of CNN-OTFS is identical to that of SeCNN-OTFS, except that the separable convolutions are replaced with traditional convolutions.

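To analyze the complexity of SeCNN-OTFS and CNN-OTFS, both the number of parameters and the computational cost are considered in this paper. The computational complexity of a convolutional layer is given by:

$$O_{conv} = K^2 \cdot C_{in} \cdot C_{out} \cdot H \cdot W,$$

where $K$ is the filter size, $C_{in}$ is the number of input channels, $C_{out}$ is the number of output channels, and $H$ and $W$ are the height and width of the feature map. Separable convolution decomposes a convolution into depthwise convolution and pointwise convolution. The corresponding computational complexities are:

$$O_{dw} = K^2 \cdot C_{in} \cdot H \cdot W$$

and

$$O_{pw} = C_{in} \cdot C_{out} \cdot H \cdot W,$$

respectively. Thus, the total computational complexity for SeCNN, summed over its $L$ layers, is:

$$O_{SeCNN} = \sum_{i=1}^{L} \left( K_i^2 + C_{out,i} \right) C_{in,i} \cdot H \cdot W.$$

The total complexity for CNN is given by:

$$O_{CNN} = \sum_{i=1}^{L} K_i^2 \cdot C_{in,i} \cdot C_{out,i} \cdot H \cdot W.$$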
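The layer-wise sums above can be evaluated with a short script. The sketch below is only an illustration: the channel widths, kernel size, and feature map size are hypothetical placeholders chosen for a 10-layer stack (they are not the values of Table 2 and do not reproduce the parameter totals reported in this paper), and biases and batch normalization parameters are ignored.

```python
def conv_cost(k, c_in, c_out, h, w):
    """Parameters and multiply-accumulates (MACs) of a standard convolution."""
    params = k * k * c_in * c_out
    return params, params * h * w

def separable_cost(k, c_in, c_out, h, w):
    """Parameters and MACs of a depthwise (k*k*c_in) plus pointwise (c_in*c_out) pair."""
    params = k * k * c_in + c_in * c_out
    return params, params * h * w

def network_cost(layer_fn, channels, k, h, w):
    """Sum the per-layer cost over consecutive (c_in, c_out) channel pairs."""
    total_params = 0
    total_macs = 0
    for c_in, c_out in zip(channels[:-1], channels[1:]):
        params, macs = layer_fn(k, c_in, c_out, h, w)
        total_params += params
        total_macs += macs
    return total_params, total_macs

# Hypothetical configuration: 10 layers, 3 x 3 kernels, 16 x 16 feature maps.
channels = [2, 32, 32, 32, 32, 32, 32, 32, 16, 8, 1]
k, h, w = 3, 16, 16

cnn_params, cnn_macs = network_cost(conv_cost, channels, k, h, w)
sep_params, sep_macs = network_cost(separable_cost, channels, k, h, w)
print(f"CNN:       {cnn_params} parameters, {cnn_macs} MACs")
print(f"Separable: {sep_params} parameters, {sep_macs} MACs")
print(f"Parameter ratio: {sep_params / cnn_params:.3f}")
```

Since the feature map size is constant across layers, the MAC ratio equals the parameter ratio, so either quantity can be used to compare the two designs.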

6. Experimental Results

The dataset used in this paper is generated using MATLAB R2022b [24] and consists of 100,000 samples divided into training, validation, and testing sets at a ratio of 6:3:1. The simulation parameter settings are shown in Table 1. The subcarrier spacing is set to 15 kHz within a 10 MHz bandwidth. To evaluate the BER of the signal detection against 2D-CNN, a range of SNRs between 0 dB and 20 dB is used with a step size of 2.5 dB. The SeCNN-OTFS is trained with a batch size of 2048, where each sample within a batch corresponds to a unique DD-domain channel realization. These realizations are independently generated using random path gains and delay–Doppler indices, ensuring high diversity in the training dataset and allowing the network to generalize well across a wide range of channel conditions. The learning rate is set to 0.01 for a total of 2000 training iterations. A stochastic gradient descent (SGD) optimizer is employed to speed up the convergence. The simulation environment is built in PyCharm Community Edition (Python 3.6, TensorFlow 2.15.0).

Table 1. Simulation parameter settings.

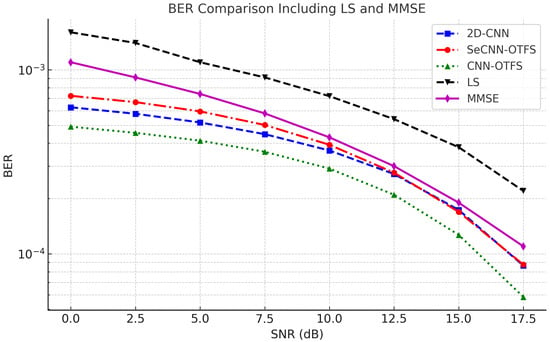

The parameter configuration of kernel sizes and network layers is summarized in Table 2. All separable convolutional layers use the same kernel size, and the number of filters gradually decreases in the last three layers, with the final layer containing only one filter to match the output dimensions. To ensure a fair comparison, we construct CNN-OTFS with the same architecture as SeCNN-OTFS, replacing the separable convolutional layers with standard convolutional layers. The 2D-CNN [24] is included as a deep learning baseline, and two classical linear methods, LS and MMSE, serve as baseline references. The total numbers of parameters in 2D-CNN, CNN-OTFS, and SeCNN-OTFS are 81,085, 99,501, and 15,511, respectively. Overall, SeCNN-OTFS significantly reduces both computational and parameter cost compared to the CNN-based baselines, thereby improving model efficiency and deployment feasibility. The BER performance of the different methods under various SNR conditions is shown in Figure 4.

Table 2. The parameter setup of the kernel size and layers.

Figure 4. Comparison of the BER of SeCNN-OTFS, CNN-OTFS, 2D-CNN, LS, and MMSE.

Simulation results indicate that the LS method, which does not account for noise statistics, yields the highest BER across the entire SNR range. MMSE offers a significant improvement over LS in the low and medium SNR regions, but its performance saturates at high SNRs, where the BER cannot be further reduced. In contrast, all three deep learning-based models outperform the classical methods across the full SNR range. Notably, 2D-CNN and SeCNN-OTFS achieve roughly the same BER at high SNRs (above 12.5 dB), while 2D-CNN outperforms SeCNN-OTFS at low SNRs. This is because, at high SNR, the inputs are less corrupted by noise, so the limited parameter budget of SeCNN-OTFS is sufficient to learn the channel features and reach a performance comparable to 2D-CNN. Although SeCNN-OTFS exhibits a slightly inferior BER performance compared to CNN-OTFS across all SNR levels, it achieves a substantial reduction in model complexity. Specifically, the number of parameters in SeCNN-OTFS (15,511) is only approximately 16% that of CNN-OTFS (99,501) and 19% that of 2D-CNN (81,085), demonstrating a favorable balance between performance and computational efficiency, as shown in Table 3. Nevertheless, CNN-OTFS may be preferred in scenarios where higher accuracy is required and computational resources permit.
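The parameter ratios quoted above follow directly from the reported totals of 15,511 (SeCNN-OTFS), 81,085 (2D-CNN), and 99,501 (CNN-OTFS) parameters:

$$\frac{15{,}511}{81{,}085} \approx 0.191, \qquad \frac{15{,}511}{99{,}501} \approx 0.156.$$

SeCNN-OTFS therefore carries about 19% of the parameters of 2D-CNN (a reduction of about 81%) and between 15% and 16% of those of CNN-OTFS (a reduction of about 84%).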

Table 3. Complexity and average BER performance comparison.

7. Conclusions

In this paper, we proposed a low-complexity SeCNN-OTFS method to reduce the computational cost of signal detection in OTFS systems. By replacing conventional convolutional layers with depthwise separable convolutions, the proposed model achieves approximately an 81% reduction in parameter count compared to the 2D-CNN framework. To enhance feature representation while maintaining computational efficiency, we designed a lightweight SeparableBlock architecture, which incorporates global residual learning in the identity branch and recursive learning in the residual branch. Furthermore, a channel attention mechanism was introduced to adaptively highlight salient features while suppressing irrelevant responses, thereby improving robustness under noisy and high-mobility channel conditions. Simulation results confirm that the proposed SeCNN-OTFS achieves competitive BER performance, while significantly reducing model complexity. These findings demonstrate that SeCNN-OTFS is a promising and scalable solution for real-time wireless communication systems, particularly in resource-constrained scenarios such as satellite or IoT communications.

Author Contributions

Conceptualization, Y.W.; methodology, Y.W.; validation, Y.W. and Z.Z.; formal analysis, Y.W.; investigation, Y.W. and Z.Z.; resources, Z.C.; data curation, Y.W.; writing—original draft preparation, Y.W.; writing—review and editing, H.L. and T.Z.; visualization, Y.W.; supervision, Z.C. and H.L.; project administration, Y.W. and Z.C.; funding acquisition, Z.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Project of Ministry of Science and Technology (Grant D20011).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The dataset in this paper was generated using MATLAB.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| SeCNN | separable convolutional neural network |
| OTFS | orthogonal time frequency space |
| LS | least squares |
| MMSE | minimum mean square error |
| BER | bit error rate |
| 6G | sixth-generation |
| 2D | two-dimensional |
| DD | delay–Doppler |
| OFDM | orthogonal frequency division multiplexing |
| ML | maximum likelihood |
| GAMP | generalized approximate message passing |
| EP | expectation propagation |
| SeCNN-OTFS | separable convolutional neural network for orthogonal time frequency space |
| 2D-CNN | two-dimensional convolutional neural network |
| UAV | unmanned aerial vehicle |
| V2X | vehicle-to-everything |
| MMSE-ZF | minimum mean square error and zero-forcing |
| LMMSE | linear minimum mean square error |
| MAP | maximum a posteriori |
| PIC | parallel interference cancellation |
| MP | message passing |
| UAMP | unitary approximate message passing |
| PMP | parallel message passing |
| VB | variational Bayesian |
| MRC | maximal ratio combining |
| DI-S-MMSE | doubly-iterative sparsified minimum mean square error |
| UWA | underwater acoustic communication |
| DNN | deep neural network |
| CNN | convolutional neural network |
| MIMO | multiple-input multiple-output |
| ISFFT | inverse symplectic finite Fourier transform |
| TF | time–frequency |
| SFFT | symplectic finite Fourier transform |
| MSE | mean square error |
| SGD | stochastic gradient descent |
| SNR | signal-to-noise ratio |

References

1. Hadani, R.; Rakib, S.; Tsatsanis, M.; Monk, A.; Goldsmith, A.J.; Molisch, A.F.; Calderbank, R. Orthogonal time frequency space modulation. In Proceedings of the 2017 IEEE Wireless Communications and Networking Conference (WCNC), San Francisco, CA, USA, 19–22 March 2017; pp. 1–6.

2. Lin, H.; Yuan, J. Orthogonal delay-Doppler division multiplexing modulation. IEEE Trans. Wirel. Commun. 2022, 21, 11024–11037.

3. Mishra, H.B.; Singh, P.; Prasad, A.K.; Budhiraja, R. OTFS channel estimation and data detection designs with superimposed pilots. IEEE Trans. Wirel. Commun. 2021, 21, 2258–2274.

4. Yang, X.; Li, H.; Guo, Q.; Zhang, J.A.; Huang, X.; Cheng, Z. Sensing aided uplink transmission in OTFS ISAC with joint parameter association, channel estimation and signal detection. IEEE Trans. Veh. Technol. 2024, 73, 9109–9114.

5. Payami, M.; Blostein, S.D. Deep Learning-based Channel Estimation for Massive MIMO-OTFS Communication Systems. In Proceedings of the 2024 Wireless Telecommunications Symposium (WTS), Oakland, CA, USA, 10–12 April 2024; pp. 1–6.

6. Priya, P.; Hong, Y.; Viterbo, E. OTFS Channel Estimation and Detection for Channels with Very Large Delay Spread. IEEE Trans. Wirel. Commun. 2024, 23, 11920–11930.

7. Xiang, L.; Liu, Y.; Yang, L.L.; Hanzo, L. Gaussian Approximate Message Passing Detection of Orthogonal Time Frequency Space Modulation. IEEE Trans. Veh. Technol. 2021, 70, 10999–11004.

8. Li, H.; Dong, Y.; Gong, C.; Zhang, Z.; Wang, X.; Dai, X. Low Complexity Receiver via Expectation Propagation for OTFS Modulation. IEEE Commun. Lett. 2021, 25, 3180–3184.

9. Shan, Y.; Wang, F.; Hao, Y. Orthogonal Time Frequency Space Detection via Low-Complexity Expectation Propagation. IEEE Trans. Wirel. Commun. 2022, 21, 10887–10901.

10. Yong, L.; Xiaojin, H. Research Progress on Channel Estimation and Signal Detection in OTFS Systems Based on Machine Learning. Mob. Commun. 2024, 48, 46–56.

11. Yongchuan, W.; Ping, Z.; Ju, H. Research Status of Channel Estimation and Signal Detection Techniques for Orthogonal Time-Frequency-Space Modulation. J. Commun. 2024, 45, 229–243.

12. Surabhi, G.D.; Chockalingam, A. Low-Complexity Linear Equalization for OTFS Modulation. IEEE Commun. Lett. 2019, 24, 330–334.

13. Tiwari, S.; Das, S.S.; Rangamgari, V. Low Complexity LMMSE Receiver for OTFS. IEEE Commun. Lett. 2019, 23, 2205–2209.

14. Li, S.; Yuan, W.; Wei, Z.; Yuan, J.; Xie, Y. Hybrid MAP and PIC Detection for OTFS Modulation. IEEE Trans. Veh. Technol. 2021, 70, 7193–7198.

15. Raviteja, P.; Phan, K.T.; Hong, Y.; Viterbo, E. Interference Cancellation and Iterative Detection for Orthogonal Time Frequency Space Modulation. IEEE Trans. Wirel. Commun. 2018, 17, 6501–6515.

16. Yuan, Z.; Liu, F.; Yuan, Z.; Yuan, J. Iterative Detection for Orthogonal Time Frequency Space Modulation with Unitary Approximate Message Passing. IEEE Trans. Wirel. Commun. 2022, 21, 714–725.

17. Yuan, Z.; Tang, M.; Chen, J.; Fang, T.; Wang, H.; Yuan, J. Low Complexity Parallel Symbol Detection for OTFS Modulation. IEEE Trans. Veh. Technol. 2023, 72, 4904–4918.

18. Yuan, W.; Wei, Z.; Yuan, J.; Ng, D.W.K. A Simple Variational Bayes Detector for Orthogonal Time Frequency Space (OTFS) Modulation. IEEE Trans. Veh. Technol. 2020, 69, 7976–7980.

19. Thaj, T.; Viterbo, E. Low Complexity Iterative Rake Decision Feedback Equalizer for Zero-Padded OTFS systems. IEEE Trans. Veh. Technol. 2020, 69, 15606–15622.

20. Lampel, F.; Alvarado, A.; Willems, F.M.J. A Sliding-Window LMMSE Turbo Equalization Scheme for OTFS. IEEE Commun. Lett. 2023, 27, 3320–3324.

21. Li, H.; Yu, Q. Doubly-Iterative Sparsified MMSE Turbo Equalization for OTFS Modulation. IEEE Trans. Commun. 2023, 71, 1336–1351.

22. Shen, X. Deep Learning-Based Signal Detection for Underwater Acoustic OTFS Communication. J. Mar. Sci. Eng. 2022, 10, 1920.

23. Zhang, S.; Zhang, Y.; Chang, J.; Wang, B.; Bai, W. DNN-based Signal Detection for Underwater OTFS Systems. In Proceedings of the 2022 IEEE/CIC International Conference on Communications in China (ICCC Workshops), Foshan, China, 11–13 August 2022; pp. 348–352.

24. Enku, Y.K.; Bai, B.; Li, S.; Liu, M.; Tiba, I.N. Deep-learning based signal detection for MIMO-OTFS systems. In Proceedings of the 2022 IEEE International Conference on Communications Workshops (ICC Workshops), Seoul, Republic of Korea, 16–20 May 2022; pp. 1–5.

25. Zhou, Z.; Liu, L.; Xu, J.; Calderbank, R. Learning to Equalize OTFS. IEEE Trans. Wirel. Commun. 2022, 21, 7723–7736.

26. Gong, Y.; Li, Q.; Meng, F.; Li, X.; Xu, Z. ViterbiNet-Based Signal Detection for OTFS System. IEEE Commun. Lett. 2023, 27, 881–885.

27. Cheng, Q.; Shi, Z.; Yuan, J.; Zhou, M. Environment-robust Signal Detection for OTFS Systems Using Deep Learning. In Proceedings of the GLOBECOM 2022 - 2022 IEEE Global Communications Conference, Rio de Janeiro, Brazil, 4–8 December 2022; pp. 5219–5224.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).