Abstract

In network analysis, developing a unified theoretical framework that can compare methods under different models is an interesting problem. This paper proposes a partial solution to this problem. We summarize the idea of using the separation condition for a standard network and the sharp threshold of the Erdös–Rényi random graph to study consistent estimation, and to compare the theoretical error rates and network-sparsity requirements of spectral methods under models that can degenerate to a stochastic block model, into a four-step criterion called SCSTC. Using SCSTC, we find some inconsistent phenomena in the separation conditions and sharp thresholds reported in community detection. In particular, we find that the original theoretical results for the SPACL algorithm, introduced to estimate network memberships under the mixed membership stochastic blockmodel, are sub-optimal. To find the mechanism behind these inconsistencies, we re-establish the theoretical convergence rate of this algorithm by applying recent techniques on row-wise eigenvector deviation. The results are further extended to the degree-corrected mixed membership model. By comparison, our results enjoy smaller error rates, less dependence on the number of communities, and weaker requirements on network sparsity and on the lower bound of the smallest nonzero singular value of the population adjacency matrix. The separation condition and sharp threshold obtained from our theoretical results match the classical results, which supports the usefulness of this criterion for studying consistent estimation. Numerical results for computer-generated networks support our finding that the spectral methods considered in this paper achieve the threshold of the separation condition.

1. Introduction

Networks with latent structure are ubiquitous in our daily life, for example, social networks from social platforms, protein–protein interaction networks, co-citation networks and co-authorship networks [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15]. Community detection is a powerful tool to learn the latent community structure in networks and graphs in social science, computer science, machine learning, statistical science and complex networks [16,17,18,19,20,21,22]. The goal of community detection is to infer a node’s community information from the network.

Many models have been proposed for networks with latent community structure; see [23] for a survey. The stochastic blockmodel (SBM) [24] stands out for its simplicity and has received increasing attention in recent years [25,26,27,28,29,30,31,32,33,34,35]. However, the SBM only models non-overlapping networks, in which each node belongs to a single community. Estimating mixed memberships in networks whose nodes may belong to multiple communities has also received a lot of attention [36,37,38,39,40,41,42,43,44]. To capture the structure of networks with mixed memberships, Ref. [36] proposed the popular mixed membership stochastic blockmodel (MMSB), an extension of SBM from non-overlapping to overlapping networks. The degree-corrected stochastic blockmodel (DCSBM) [45] extends SBM by accounting for the degree heterogeneity of nodes, so as to fit real-world networks with varied node degrees. Similarly, Ref. [41] proposed the degree-corrected mixed membership (DCMM) model as an extension of MMSB that accounts for the degree heterogeneity of nodes. There are alternative models based on MMSB, such as the overlapping continuous community assignment model (OCCAM) of [40] and the stochastic blockmodel with overlap (SBMO) proposed by [46], which can also model networks with mixed memberships. As discussed in Section 5, OCCAM is equivalent to DCMM, while SBMO is a special case of DCMM.

1.1. Spectral Clustering Approaches

For the four models SBM, DCSBM, MMSB and DCMM, many researchers have focused on designing algorithms with provable consistency guarantees. Spectral clustering [47] is one of the most widely applied methods with consistency guarantees for community detection.

Within the SBM and DCSBM frameworks for non-overlapping networks, spectral clustering has two steps. It first conducts the eigen-decomposition of the adjacency matrix or the Laplacian matrix [26,48,49]. It then runs a clustering algorithm (typically k-means) on some leading eigenvectors or their variants to infer community memberships. For example, Ref. [26] showed the consistency of spectral clustering based on the Laplacian matrix under SBM. Ref. [48] proposed a regularized spectral clustering (RSC) algorithm based on the regularized Laplacian matrix and showed its theoretical consistency under DCSBM. Ref. [30] studied the consistency of two spectral clustering algorithms based on the adjacency matrix under SBM and DCSBM. Ref. [50] designed the spectral clustering on ratios-of-eigenvectors (SCORE) algorithm with a theoretical guarantee under DCSBM. Ref. [49] studied the impact of regularization on Laplacian spectral clustering under SBM.
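The two steps above can be sketched on a simulated SBM. This is a minimal illustration under our own assumptions, not the implementation of any cited method: it uses the plain adjacency matrix A (no Laplacian or regularization), keeps the eigenvectors of the K largest-magnitude eigenvalues, and runs a hand-written Lloyd's k-means with a deterministic farthest-point initialization. The helper names (`sample_sbm`, `kmeans`, `spectral_clustering`, `accuracy`) and the simulation settings are hypothetical.

```python
import itertools

import numpy as np


def sample_sbm(labels, p, q, rng):
    """Sample a symmetric 0/1 adjacency matrix (no self-loops) from an SBM
    with within-block probability p and across-block probability q."""
    n = labels.size
    probs = np.where(labels[:, None] == labels[None, :], p, q)
    upper = np.triu(rng.random((n, n)) < probs, k=1)  # independent edges for i < j
    return (upper | upper.T).astype(float)


def kmeans(X, K, n_iter=100):
    """Lloyd's k-means; initial centers come from a deterministic farthest-point sweep."""
    centers = [X[0]]
    for _ in range(1, K):
        d = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[d.argmax()])
    centers = np.array(centers)
    for _ in range(n_iter):
        dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        assign = dist.argmin(axis=1)
        new = np.array([X[assign == k].mean(axis=0) if np.any(assign == k) else centers[k]
                        for k in range(K)])
        if np.allclose(new, centers):
            break
        centers = new
    return assign


def spectral_clustering(A, K):
    """Step 1: top-K eigenvectors of A (by |eigenvalue|); step 2: k-means on their rows."""
    vals, vecs = np.linalg.eigh(A)
    top = np.argsort(np.abs(vals))[::-1][:K]
    return kmeans(vecs[:, top], K)


def accuracy(true, est, K):
    """Fraction of correctly labeled nodes, maximized over label permutations."""
    return max(np.mean(true == np.array(perm)[est])
               for perm in itertools.permutations(range(K)))


rng = np.random.default_rng(0)
labels = np.repeat(np.arange(3), 100)
A = sample_sbm(labels, p=0.5, q=0.05, rng=rng)
print(accuracy(labels, spectral_clustering(A, K=3), K=3))
```

The farthest-point start is only a convenience that makes the sketch deterministic; any k-means initialization could be used in step 2.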

Within the MMSB and DCMM frameworks for overlapping networks, spectral clustering broadly has the following three steps. One first conducts an eigen-decomposition of the adjacency matrix or the graph Laplacian, then hunts corners (also known as vertices) using a convex hull algorithm, and finally reconstructs memberships by projection. The convex hull algorithms suggested in [41] differ substantially from the k-means algorithm. For example, Ref. [44] designed the sequential projection after cleaning (SPACL) algorithm based on the finding that there exists a simplex structure in the eigen-decomposition of the population adjacency matrix, and studied the theoretical properties of SPACL under MMSB. SPACL uses the successive projection algorithm proposed in [51] to find the corners of its simplex structure. To fit DCMM, Ref. [41] designed the Mixed-SCORE algorithm based on the finding that there exists a simplex structure in the entry-wise ratio matrix obtained from the eigen-decomposition of the population adjacency matrix under DCMM. Ref. [41] also introduced several convex hull algorithms to find the corners of the simplex structure and showed the estimation consistency of Mixed-SCORE under DCMM. Ref. [43] found the cone structure inherent in the normalization of eigenvectors of the population adjacency matrix under DCMM as well as OCCAM, and developed an algorithm to hunt corners in the cone structure.

1.2. Separation Condition, Alternative Separation Condition and Sharp Threshold

SBM with n nodes belonging to K equal (or nearly equal) size communities and vertices connect with probability within clusters and across clusters, denoted by , has been well studied in recent years, especially for the case when ; see [21] and the references therein. In this paper, we call the network generated from the standard network for convenience. Without causing confusion, we also call the standard network, occasionally. Let . Refs. [21,52] found that exact recovery in is solvable, and efficiently so, if (i.e., ) and unsolvable if as summarized in Theorem 13 of [53]. This threshold can be achieved by semidefinite relaxations [21,54,55,56] and spectral methods with local refinements [57,58]. Unlike semidefinite relaxations, spectral methods have a different threshold, which was particularly pointed out by [21,52]: one highlight for is a theorem by [59] which says that when , if

then spectral methods can exactly recover node labels with high probability as n goes to infinity (also known as consistent estimation [30,40,41,43,44,48,50]).

Consider a more general case with ; this paper finds that the above threshold can be extended as

which can be alternatively written as

In this paper, when , the lower bound requirement on (and ) for the consistent estimation of spectral methods is called the separation condition (alternative separation condition). The network generated from with is an assortative network, in which nodes have more edges within communities than across communities [60]. The network generated from with is a dis-assortative network, in which nodes have fewer edges within communities than across communities [60]. Therefore, Equation (2) holds for both assortative and dis-assortative networks.

Meanwhile, when such that , degenerates to Erdös–Rényi (ER) random graph [53,61,62]. Ref. [61] finds that the ER random graph is connected with high probability if

We call the lower bound requirement on p for generating a connected ER random graph the sharp threshold in this paper.
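A small Monte Carlo check illustrates this sharp threshold. It assumes the classical connectivity threshold p = log(n)/n, i.e. it sets p = c·log(n)/n and compares values of c below and above 1; the function names and settings are hypothetical, and the union-find bookkeeping is just one convenient way to test connectivity.

```python
import math
import random


def er_is_connected(n, p, rng):
    """Sample G(n, p) edge by edge and track connected components with union-find."""
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    components = n
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < p:
                ri, rj = find(i), find(j)
                if ri != rj:
                    parent[ri] = rj
                    components -= 1
    return components == 1


def connectivity_rate(n, c, trials, seed=0):
    """Empirical probability that G(n, p) is connected, with p = c * log(n) / n."""
    rng = random.Random(seed)
    p = c * math.log(n) / n
    return sum(er_is_connected(n, p, rng) for _ in range(trials)) / trials


for c in (0.5, 1.0, 2.0):
    print(c, connectivity_rate(300, c, trials=10))
```

For c well below 1 the sampled graphs almost always contain isolated vertices, while for c well above 1 they are almost always connected; near c = 1 the empirical rate depends noticeably on n and on the number of trials.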

1.3. Inconsistencies on Separation Condition in Some Previous Works

In this paper, we focus on the consistency of spectral methods in community detection. Consistency is established by deriving a theoretical upper bound on the error rate of a spectral method from the properties of the population adjacency matrix under the statistical model. To compare theoretical results obtained under different models, it is meaningful to check whether the separation conditions and sharp thresholds implied by the upper bounds of the theoretical error rates of different methods under different models are consistent with one another. The separation condition and sharp threshold can also serve as an alternative unified theoretical framework for comparing all methods and model parameters, as mentioned in the concluding remarks of [30].

Based on the separation condition and sharp threshold, we now describe some inconsistency phenomena in the community detection area. We find that the separation conditions of with obtained from the error rates developed in [41,43,44] under DCMM or MMSB are not consistent with those obtained from the main results of [30] under SBM, and that the sharp thresholds obtained from the main results of [43,44] do not match the classical results. These inconsistencies are summarized in Table 1 and Table 2. Furthermore, after a careful analysis, we find that the requirements on network sparsity in [43,44] are stronger than those in [30,41]; [63] also finds that the sparsity requirement of [44] is sub-optimal.

Table 1.

Comparison of separation condition and sharp threshold. Details of this table are given in Section 4. The classical result on separation condition given in Corollary 1 of [59] is (i.e., Equation (1)). The classical result on sharp threshold is (i.e., Equation (3)) given in [61], Theorem 4.6 [62] and the first bullet in Section 2.5 [53]. In this paper, n is the number of nodes in a network, A is the adjacency matrix, is the expectation of A under some models, is a regularization of A, is the sparsity parameter such that and it controls the overall sparsity of a network, denotes spectral norm, and .

Table 2.

Comparison of alternative separation condition, where the classical result on alternative separation condition is 1 (i.e., Equation (2)).

1.4. Our Findings

Recall that we have reviewed several spectral clustering methods under SBM, DCSBM, MMSB and DCMM introduced in [26,30,41,43,44,48,49,50], and that DCSBM, MMSB and DCMM are extensions of SBM (i.e., is a special case of DCSBM, MMSB and DCMM). This raises the following question:

Can these spectral clustering methods achieve the threshold in Equation (1) (or Equation (2)) for with and the threshold in Equation (3) for the Erdös–Rényi (ER) random graph ?

The answer is yes. In fact, spectral methods for networks with mixed memberships still achieve the thresholds in Equations (1) and (2) for defined in Definition 2 when , where can be seen as a generalization of in which some nodes belong to multiple communities. Sections 3, 4 and 5 explain why these spectral clustering methods achieve the thresholds in Equations (1)–(3) by re-establishing the theoretical guarantees for SPACL under MMSB and for its extension under DCMM, which is needed because we find that the main theoretical results of [43,44] are sub-optimal. Meanwhile, the separation condition and sharp threshold can be obtained directly from the theoretical error bounds of the spectral methods analyzed in [30,41,43,44], but not directly from those analyzed in [26,30,48,49,50]. Instead of re-establishing the theoretical guarantees for all spectral methods reviewed in this paper to show that they achieve the thresholds in Equations (1) and (3) for with , we mainly focus on the SPACL algorithm under MMSB and its extension under DCMM, since MMSB and DCMM are more complex than SBM and DCSBM.

We then summarize the idea of using the separation condition and sharp threshold to study consistency, and to compare the error rates and network-sparsity requirements of different spectral methods under different models, into a four-step criterion, which we call the separation condition and sharp threshold criterion (SCSTC for short). Applying this criterion, this paper attempts to answer two questions: how do the above inconsistency phenomena occur, and how can consistent results be obtained with weaker requirements on network sparsity than those of [43,44]? To answer them, we use the recent techniques on row-wise eigenvector deviation developed in [64,65] to obtain consistent theoretical results, directly related to the model parameters, for SPACL and the SVM-cone-DCMMSB algorithm of [43]. The two questions are then answered by a careful analysis that applies SCSTC to the theoretical upper bounds of error rates in this paper and in some previous spectral methods. Using SCSTC for the spectral methods introduced and studied in [26,30,48,49,50], and for other spectral methods fitting models that can reduce to with , one can prove that these spectral methods achieve the thresholds in Equations (1)–(3). The main contributions of this paper are as follows:

- (i) We summarize the idea of using the separation condition of a standard network and the sharp threshold of the ER random graph into a four-step criterion, SCSTC, for studying the consistent estimation of different spectral methods (designed via eigen-decomposition or singular value decomposition of the adjacency matrix or its variants) under different models that can degenerate to SBM under mild conditions. The separation condition is used to study the consistency of the theoretical upper bound of a spectral method, and the sharp threshold is used to study network sparsity. SCSTC allows the theoretical upper bounds of different spectral methods to be compared. Using this criterion, we find a few inconsistency phenomena in some previous works.

- (ii) Under MMSB and DCMM, we study the consistency of the SPACL algorithm proposed in [44] and of its extended version, using the recent techniques on row-wise eigenvector deviation developed in [64,65]. Compared with the original results of [43,44], our main theoretical results enjoy smaller error rates, with less dependence on K and . Our main theoretical results also have weaker requirements on network sparsity and on the lower bound of the smallest nonzero singular value of the population adjacency matrix. For details, see Table 3 and Table 4.

Table 3. Comparison of error rates between our Theorem 1 and Theorem 3.2 [44] under . The dependence on K is obtained when . For comparison, we have adjusted the error rates of Theorem 3.2 [44] into error rates. Note that as analyzed in the first bullet given after Lemma 2, whether using or does not change our , and has no influence on bound in Theorem 1. For [44], using , the power of in their Theorem 3.2 is ; using , the power of in their Theorem 3.2 is .


Table 4. Comparison of error rates between our Theorem 2 and Theorem 3.2 [43] under . The dependence on K is obtained when . For comparison, we adjusted the error rates of Theorem 3.2 [43] into error rates. Since Theorem 2 enjoys the same separation condition and sharp threshold as Theorem 1, and Theorem 3.2 [43] enjoys the same separation condition and sharp threshold as Theorem 3.2 [44], we do not report them in this table. Note that as analyzed in Remark 11, whether using or does not change our under DCMM, and has no influence on the results in Theorem 2. For [43], using , the power of in their Theorem 3.2 is ; using , the power of in their Theorem 3.2 is .

- (iii) Our results for DCMM are consistent with those for MMSB when DCMM degenerates to MMSB under mild conditions. Using SCSTC, under mild conditions, our main theoretical results under DCMM are consistent with those of [41]. This shows that the mismatch between the main results of [43,44] and those of [41] occurs because the theoretical error rates in [43,44] are sub-optimal. We also find that our theoretical results (as well as those of [41]) under both MMSB and DCMM match the classical results on the separation condition and sharp threshold, i.e., they achieve the thresholds in Equations (1)–(3). If the bound of instead of is used to establish the upper bound of the error rate under SBM in [30], the two spectral methods studied in [30] achieve the thresholds in Equations (1)–(3); this explains why the separation condition obtained from the error rate of [41] does not match that obtained from the error rate of [30]. Using or influences the row-wise eigenvector deviations in Theorem 3.1 of [44] and Theorem I.3 of [43], and thus influences the separation conditions and sharp thresholds of [43,44]. By contrast, our bound on the row-wise eigenvector deviation is obtained using the techniques developed in [64,65], and that of [41] is obtained by applying a modified Theorem 2.1 of [66]; therefore, using or has no influence on the separation conditions and sharp thresholds of ours or of [41]. For details, see Table 1 and Table 2. In short, using SCSTC, one finds that the spectral methods proposed and studied in [26,30,41,43,44,48,49,50,67,68], as well as other spectral methods fitting models that can reduce to , achieve the thresholds in Equations (1)–(3).

- (iv) We verify our threshold in Equation (2) on computer-generated networks in Section 6. The numerical results for networks generated under when and show that SPACL and its extended version achieve the threshold in Equation (2), and the results for networks generated from when and show that the spectral methods considered in [26,30,48,50] achieve the threshold in Equation (2).

The article is organized as follows. In Section 2, we formally introduce the mixed membership stochastic blockmodel and review the SPACL algorithm considered in this paper. The theoretical consistency results under the mixed membership stochastic blockmodel are presented and compared with related works in Section 3. After a careful analysis, the separation condition and sharp threshold criterion is presented in Section 4. Based on an application of this criterion, improved consistency results for the extended version of SPACL under the degree-corrected mixed membership model are provided in Section 5. In Section 6, experiments on computer-generated networks under MMSB and SBM show that some spectral clustering methods achieve the threshold in Equation (2). The conclusion is given in Section 7.

Notations. We take the following general notations in this paper. Write for any positive integer m. For a vector x and fixed , denotes its -norm. We drop the subscript if occasionally. For a matrix M, denotes the transpose of the matrix M, denotes the spectral norm, denotes the Frobenius norm, denotes the maximum -norm of all the rows of M, and denotes the maximum absolute row sum of M. Let denote the rank of matrix M. Let be the i-th largest singular value of matrix M, denote the i-th largest eigenvalue of the matrix M ordered by the magnitude, and denote the condition number of M. and denote the i-th row and the j-th column of matrix M, respectively. and denote the rows and columns in the index sets and of matrix M, respectively. For any matrix M, we simply use to represent for any . For any matrix , let be the diagonal matrix whose i-th diagonal entry is . and are column vectors with all entries being ones and zeros, respectively. is a column vector whose i-th entry is 1, while other entries are zero. In this paper, C is a positive constant which may vary occasionally. means that there exists a constant such that holds for all sufficiently large n. means there exists a constant such that . indicates that as .
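The row-wise and row-sum matrix norms defined above are easy to confuse, so here is a small NumPy sketch. The function names are hypothetical; `np.linalg.norm(M, 2)`, `np.linalg.norm(M, "fro")` and `np.linalg.norm(M, np.inf)` are NumPy's spectral, Frobenius and maximum-absolute-row-sum norms, and the condition number is taken as σ_max/σ_min, assuming M has full column rank.

```python
import numpy as np


def two_to_inf_norm(M):
    """||M||_{2->inf}: the largest l2-norm among the rows of M."""
    return np.linalg.norm(M, axis=1).max()


def max_abs_row_sum(M):
    """||M||_inf: the largest absolute row sum of M."""
    return np.abs(M).sum(axis=1).max()


def condition_number(M):
    """kappa(M) = sigma_max / sigma_min, assuming M has full column rank."""
    s = np.linalg.svd(M, compute_uv=False)  # singular values in descending order
    return s[0] / s[-1]


M = np.array([[3.0, 4.0], [0.0, -1.0]])
print(np.linalg.norm(M, 2), np.linalg.norm(M, "fro"))  # spectral and Frobenius norms
print(two_to_inf_norm(M), max_abs_row_sum(M))           # 5.0 7.0
```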

2. Mixed Membership Stochastic Blockmodel

Let be a symmetric adjacency matrix such that if there is an edge between node i and node j, and otherwise. The mixed membership stochastic blockmodel (MMSB) [36] for generating A is as follows.

where is called the membership matrix with and for and , is an non-negative symmetric matrix with for model identifiability under MMSB, is called the sparsity parameter which controls the sparsity of the network, and is called the population adjacency matrix since . As mentioned in [41,44], is a measure of the separation between communities, and we call it the separation parameter in this paper. and are two important model parameters directly related to the separation condition and sharp threshold, and they are considered throughout this paper.
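A minimal sketch of drawing A from model (4), under assumptions that are ours: Ω = ρΠPΠ' is the population adjacency matrix, the entries A(i, j) for i < j are independent Bernoulli(Ω(i, j)) draws, A has no self-loops, and P is scaled so that its largest entry is 1 (our reading of the identifiability constraint). Half of the nodes are pure and the rest have flat Dirichlet memberships; these settings and the name `sample_mmsb` are hypothetical.

```python
import numpy as np


def sample_mmsb(Pi, P, rho, rng):
    """Return (A, Omega) with Omega = rho * Pi @ P @ Pi.T and A(i, j) ~ Bernoulli(Omega(i, j))."""
    Omega = rho * Pi @ P @ Pi.T
    n = Omega.shape[0]
    upper = np.triu(rng.random((n, n)) < Omega, k=1)  # one independent draw per pair i < j
    return (upper | upper.T).astype(float), Omega


rng = np.random.default_rng(0)
n, K, n_pure = 300, 3, 150
Pi = np.zeros((n, K))
Pi[np.arange(n_pure), np.arange(n_pure) % K] = 1.0       # pure nodes, cycling through communities
Pi[n_pure:] = rng.dirichlet(np.ones(K), size=n - n_pure)  # mixed nodes
P = np.array([[1.0, 0.2, 0.1],
              [0.2, 0.9, 0.3],
              [0.1, 0.3, 0.8]])  # symmetric, nonnegative, largest entry 1
A, Omega = sample_mmsb(Pi, P, rho=0.3, rng=rng)
print(A.sum() / (n * (n - 1)), Omega.max())  # empirical edge density and largest edge probability
```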

Definition 1.

Call model (4) the mixed membership stochastic blockmodel (MMSB), and denote it by .

Definition 2.

Let be a special case of when has diagonal entries and non-diagonal entries , and .

Call node i ‘pure’ if is degenerate (i.e., one entry is 1 and all other entries are 0) and ‘mixed’ otherwise. When all nodes are pure in , we see that exactly reduces to . Thus, is a generalization of with mixed nodes in each community. In this paper, we show that SPACL [44], which fits MMSB, and SVM-cone-DCMMSB [43] and Mixed-SCORE [41], which fit DCMM, also achieve the thresholds in Equations (1)–(3) for with . By Theorems 2.1 and 2.2 [44], the following conditions are sufficient for the identifiability of MMSB, when for all ,

- (I1) .

- (I2) There is at least one pure node for each of the K communities.

Unless specified, we treat conditions (I1) and (I2) as the default from now on.
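Condition (I2) can be checked mechanically from Π. The sketch below tests only (I2), together with the basic requirements that each row of Π is nonnegative and sums to 1; it does not check (I1). The function names are hypothetical.

```python
import numpy as np


def pure_node_sets(Pi, tol=1e-12):
    """For each community k, the indices i with Pi(i, k) = 1, i.e. the pure nodes of k."""
    return [np.flatnonzero(np.abs(Pi[:, k] - 1.0) < tol) for k in range(Pi.shape[1])]


def satisfies_I2(Pi, tol=1e-12):
    """Check that Pi is a valid membership matrix and every community has a pure node."""
    valid = np.all(Pi >= -tol) and np.allclose(Pi.sum(axis=1), 1.0)
    return bool(valid) and all(idx.size > 0 for idx in pure_node_sets(Pi, tol))


Pi = np.array([[1.0, 0.0, 0.0],
               [0.0, 1.0, 0.0],
               [0.2, 0.5, 0.3],
               [0.0, 0.0, 1.0]])
print(satisfies_I2(Pi))      # True
print(satisfies_I2(Pi[:3]))  # False: the third community has no pure node
```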

For , let be the set of pure nodes in community k such that . For , select one node from to construct the index set , i.e., is the set of indices of K pure nodes, one from each community. Without loss of generality, let where is the identity matrix. Recall that . Let be the compact eigen-decomposition of such that , and . Lemma 2.1 [44] gives that , and this form is called the ideal simplex (IS for short) [41,44] since all rows of U form a K-simplex in and the K rows of are the vertices of the K-simplex. Given and K, as long as we know , we can exactly recover by since is a full-rank matrix. As mentioned in [41,44], for such IS, the successive projection (SP) algorithm [51] (i.e., Algorithm A1) can be applied to U with K communities to exactly find the corner matrix . For convenience, set . Since , we have for .
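The ideal-simplex identity and the SP step can be checked numerically. The sketch below assumes Ω = ρΠPΠ' with a full-rank P, takes U as the eigenvectors of the K largest-magnitude eigenvalues of Ω, and implements SP in its textbook form (pick the row with the largest ℓ2-norm, project all rows onto the orthogonal complement of that row, repeat K times). It is an illustration under these assumptions, not the code of [44] or [51]; the settings are hypothetical.

```python
import numpy as np


def successive_projection(U, K):
    """Return K row indices of U found by the successive projection algorithm."""
    R = U.copy()
    corners = []
    for _ in range(K):
        j = int(np.argmax(np.linalg.norm(R, axis=1)))  # row with the largest residual norm
        corners.append(j)
        u = R[j] / np.linalg.norm(R[j])
        R = R - np.outer(R @ u, u)  # remove every row's component along the chosen row
    return corners


rng = np.random.default_rng(0)
n, K = 60, 3
Pi = np.zeros((n, K))
Pi[np.arange(30), np.arange(30) % K] = 1.0          # 30 pure nodes
Pi[30:] = rng.dirichlet(np.ones(K), size=n - 30)    # 30 mixed nodes
P = np.array([[1.0, 0.3, 0.2], [0.3, 0.9, 0.1], [0.2, 0.1, 0.8]])  # full rank
Omega = 0.5 * Pi @ P @ Pi.T

vals, vecs = np.linalg.eigh(Omega)
U = vecs[:, np.argsort(np.abs(vals))[::-1][:K]]  # top-K eigenvectors of Omega

I = successive_projection(U, K)
I = sorted(I, key=lambda i: int(np.argmax(Pi[i])))  # order corners by community
print([int(np.argmax(Pi[i])) for i in I])  # one pure node per community: [0, 1, 2]
print(np.allclose(U, Pi @ U[I, :]))        # ideal simplex U = Pi U(I,:): True
```

SP works here because the ℓ2-norm is strictly convex: a mixed row is a strict convex combination of corner rows, so the largest residual norm is always attained at a corner.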

Based on the above analysis, we are now ready to give the ideal SPACL algorithm with input and output .

- Let be the top-K eigen-decomposition of such that .

- Run SP algorithm on the rows of U assuming that there are K communities to obtain .

- Set .

- Recover by setting for .

With the given U and K, since the SP algorithm returns , we see that the ideal SPACL exactly (for detail, see Appendix B) returns .

Now, we review the SPACL algorithm of [44]. Set to be the top K eigen-decomposition of A such that , and contains the top K eigenvalues of A. For the real case, use given in Algorithm 1 to estimate , respectively. Algorithm 1 is the SPACL algorithm [44] where we only care about the estimation of the membership matrix , and omit the estimation of P and . Meanwhile, Algorithm 1 is a direct extension of the ideal SPACL algorithm from the oracle case to the real case, and we omit the prune step in the original SPACL algorithm of [44].

Algorithm 1 SPACL [44]
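The oracle and real-case steps described above (Algorithm 1, without the prune step) can be sketched as follows. This is a minimal illustration under stated assumptions, not the reference implementation of [44]: Û holds the eigenvectors of the input matrix for its K largest-magnitude eigenvalues, SP is the textbook version, and the membership step is assumed to clip negative entries of ÛÛ(Î,:)^{-1} to zero before ℓ1-normalizing each row. Feeding Ω gives the ideal SPACL; feeding A gives the real case. The simulation settings and helper names are hypothetical.

```python
import itertools

import numpy as np


def top_k_eigvecs(M, K):
    """Eigenvectors of the symmetric matrix M for its K largest-magnitude eigenvalues."""
    vals, vecs = np.linalg.eigh(M)
    return vecs[:, np.argsort(np.abs(vals))[::-1][:K]]


def successive_projection(U, K):
    """Textbook SP: repeatedly pick the row with the largest residual norm, then project it out."""
    R, corners = U.copy(), []
    for _ in range(K):
        j = int(np.argmax(np.linalg.norm(R, axis=1)))
        corners.append(j)
        u = R[j] / np.linalg.norm(R[j])
        R = R - np.outer(R @ u, u)
    return corners


def spacl_without_prune(M, K):
    """Estimate Pi from a symmetric matrix M (Omega in the oracle case, A in the real case)."""
    U_hat = top_k_eigvecs(M, K)
    I_hat = successive_projection(U_hat, K)
    Z_hat = np.maximum(U_hat @ np.linalg.inv(U_hat[I_hat, :]), 0.0)  # clip negative entries
    row_sums = np.maximum(Z_hat.sum(axis=1, keepdims=True), 1e-12)   # guard against all-zero rows
    return Z_hat / row_sums                                          # l1-normalize each row


def mean_l1_error(Pi_hat, Pi):
    """Average row-wise l1 error, minimized over relabelings of the estimated communities."""
    K = Pi.shape[1]
    return min(np.abs(Pi_hat[:, list(perm)] - Pi).sum(axis=1).mean()
               for perm in itertools.permutations(range(K)))


rng = np.random.default_rng(1)
n, K, rho = 900, 3, 0.8
Pi = np.zeros((n, K))
Pi[np.arange(450), np.arange(450) % K] = 1.0        # 450 pure nodes
Pi[450:] = rng.dirichlet(np.ones(K), size=n - 450)  # 450 mixed nodes
P = 0.95 * np.eye(K) + 0.05                         # diagonal 1, off-diagonal 0.05
Omega = rho * Pi @ P @ Pi.T
upper = np.triu(rng.random((n, n)) < Omega, k=1)
A = (upper | upper.T).astype(float)

err_ideal = mean_l1_error(spacl_without_prune(Omega, K), Pi)  # oracle input
err_real = mean_l1_error(spacl_without_prune(A, K), Pi)       # observed adjacency matrix
print(err_ideal, err_real)
```

In the oracle case the output equals Π up to a column permutation (so the error is numerically zero); in the real case the error reflects the row-wise eigenvector perturbation studied in Section 3.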

3. Consistency under MMSB

Our main result under MMSB provides an upper bound on the estimation error of each node’s membership in terms of several model parameters. Throughout this paper, K is a known positive integer. Assume that

- (A1)

- .

Assumption (A1) provides a requirement on the lower bound of sparsity parameter such that it should be at least . Then we have the following lemma.

Lemma 1.

Under , when Assumption (A1) holds, with probability at least for any , we have

In Lemma 1, instead of simply using a constant to denote , we keep the explicit form here.

Remark 1.

When Assumption (A1) holds, the upper bound of in Lemma 1 is consistent with Corollary 6.5 in [69] since under .

Lemma 1 is obtained via Theorem 1.4 (Bernstein inequality) in [70]. For comparison, Ref. [44] applies Theorem 5.2 [30] to bound (see, for example, Equation (14) of [44]) and obtains a bound of the form for some . However, is a bound on the difference between a regularization of A and , as stated in the proof of Theorem 5.2 [30], where the regularization is obtained from A under the constraints in Lemmas 4.1 and 4.2 of the supplemental material of [30]. Similarly, Theorem 2 [71] shows that the bound on the difference between a regularization of A and is , where that regularization must also satisfy a few constraints on A; see Theorem 2 [71] for details. Instead of bounding the difference between a regularization of A and , we bound directly by the Bernstein inequality, which imposes no constraints on A. For convenience, let denote the regularization of A in this paper. Hence, with high probability, and this bound is model independent, as shown by Theorem 5.2 [30] and Theorem 2 [71], as long as (here, letting without reference to any model, a satisfying is also the sparsity parameter that controls the overall sparsity of a network). Note that is not , where is obtained from the top-K eigen-decomposition of A, while is obtained by adding constraints on the degrees of A; see Theorem 2 [71] for details.
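As a rough numerical illustration of the scale involved, the sketch below measures the spectral norm ‖A − Ω‖ on simulated networks and divides it by √(ρ n log(n)). Taking √(ρ n log(n)) as the order of the bound is our assumption (it is the order a matrix Bernstein argument typically gives when ρn grows at least like log(n)); Lemma 1's explicit constants are not reproduced. The settings and the helper name are hypothetical.

```python
import numpy as np


def spectral_deviation(n, rho, rng):
    """||A - Omega||_2 for a 3-community network whose nodes are all pure."""
    Pi = np.eye(3)[np.arange(n) % 3]
    P = 0.7 * np.eye(3) + 0.3  # diagonal 1, off-diagonal 0.3
    Omega = rho * Pi @ P @ Pi.T
    upper = np.triu(rng.random((n, n)) < Omega, k=1)
    A = (upper | upper.T).astype(float)  # no self-loops, so A - Omega has diagonal -Omega(i, i)
    return np.linalg.norm(A - Omega, 2)


rng = np.random.default_rng(0)
rho = 0.3
ratios = []
for n in (200, 400, 800):
    dev = spectral_deviation(n, rho, rng)
    ratio = dev / np.sqrt(rho * n * np.log(n))  # deviation relative to the assumed scale
    ratios.append(ratio)
    print(n, round(float(dev), 2), round(float(ratio), 3))
```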

In [41,43,44], the main theoretical results for the proposed membership estimation methods hinge on a row-wise deviation bound for the eigenvectors of the adjacency matrix, under either MMSB or DCMM. The technique applied in Theorem 3.1 [44] provides sub-optimal dependencies on and K, and needs sub-optimal requirements on the sparsity parameter and the lower bound of ; to obtain a row-wise deviation bound for the eigenvectors of , we instead use Theorem 4.2 [64] and Theorem 4.2 [65].

Lemma 2

(Row-wise eigenspace error). Under , when Assumption (A1) holds, suppose , with probability at least ,

- When we apply Theorem 4.2 of [64], we have

- When we apply Theorem 4.2 of [65], we have

For convenience, set , and let denote the upper bound in Lemma 2 when applying Theorem 4.2 of [64] and Theorem 4.2 of [65], respectively. Note that when , we have , and therefore we simply let be the bound since its form is slightly simpler than .
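Row-wise eigenspace errors such as the one in Lemma 2 are measured after aligning Û with U, since eigenvectors are only determined up to an orthogonal transformation. The sketch below uses the common orthogonal Procrustes alignment O = W1W2', where Û'U = W1ΣW2' is an SVD, and reports ‖ÛO − U‖_{2→∞}; this particular alignment is our assumption. The simulation (an SBM special case of MMSB) and the helper names are hypothetical.

```python
import numpy as np


def top_k_eigvecs(M, K):
    """Eigenvectors of the symmetric matrix M for its K largest-magnitude eigenvalues."""
    vals, vecs = np.linalg.eigh(M)
    return vecs[:, np.argsort(np.abs(vals))[::-1][:K]]


def rowwise_eigenspace_error(U_hat, U):
    """||U_hat O - U||_{2->inf} with O the orthogonal Procrustes alignment of U_hat to U."""
    W1, _, W2t = np.linalg.svd(U_hat.T @ U)
    O = W1 @ W2t  # orthogonal K x K matrix minimizing ||U_hat O - U||_F
    return np.linalg.norm(U_hat @ O - U, axis=1).max()


rng = np.random.default_rng(0)
n, K, rho = 600, 3, 0.8
Pi = np.eye(K)[np.arange(n) % K]  # all nodes pure: the SBM special case of MMSB
P = 0.95 * np.eye(K) + 0.05
Omega = rho * Pi @ P @ Pi.T
upper = np.triu(rng.random((n, n)) < Omega, k=1)
A = (upper | upper.T).astype(float)

U, U_hat = top_k_eigvecs(Omega, K), top_k_eigvecs(A, K)
err = rowwise_eigenspace_error(U_hat, U)
scale = np.linalg.norm(U, axis=1).max()  # ||U||_{2->inf}, for reference
print(err, scale)
```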

Compared with Theorem 3.1 of [44], since we apply Theorem 4.2 of [64] and Theorem 4.2 of [65] to obtain the bound of the row-wise eigenspace error under MMSB, our bounds do not rely on while Theorem 3.1 [44] does. Moreover, our bound in Lemma 2 is sharper, with less dependence on K and , and has weaker requirements on the lower bounds of and the sparsity parameter . The details are as follows:

- We emphasize that the bound of Theorem 3.1 of [44] should be instead of for , where the function is defined in Equation (7) of [44]; this is also pointed out by Table 2 of [63]. The reason is that, in the proof of Theorem 3.1 [44], the term should be kept when going from step (iii) to step (iv), since this term is much larger than 1. One can also see directly from Theorem VI.1 [44] that the bound in Theorem 3.1 [44] should be multiplied by . This corrected bound is times our bound in Lemma 2. Moreover, the proof of Theorem 3.1 of [44] shows that the bound depends on the upper bound of , and [44] applies Theorem 5.2 of [30] to obtain with high probability. However, is an upper bound on the difference between a regularization of A and . Therefore, if we are only interested in bounding instead of , the upper bound of Theorem 3.1 [44] should be , which is at least times our bound in Lemma 2. Furthermore, the upper bound of the row-wise eigenspace error in Lemma 2 does not rely on the upper bound of as long as holds. Therefore, whether we use or does not change the bound in Lemma 2.

- Our Lemma 2 requires , while Theorem 3.1 [44] requires by their Assumption 3.1. Therefore, our Lemma 2 has a weaker requirement on the lower bound of than that of Theorem 3.1 [44]. Meanwhile, Theorem 3.1 [44] requires while our Lemma 2 has no lower bound requirement on as long as it is positive.

- Since by basic algebra, the lower bound requirement on in Assumption 3.1 of [44] gives , which suggests that Theorem 3.1 [44] requires ; this also matches the requirement on in Theorem VI.1 of [44] (as also pointed out by Table 1 of [63]). By comparison, our sparsity requirement in Assumption (A1) is , which is weaker than . Similarly, in our Lemma 2, the requirement gives , so we have , which is consistent with Assumption (A1).

If we further assume that (i.e., ) and , the row-wise eigenspace error is of order , which is consistent with the row-wise eigenvector deviation of the result of [63], shown in their Table 2. The next theorem gives the theoretical bounds on the estimations of memberships under MMSB.

Theorem 1.

Under , let be obtained from Algorithm 1, and suppose the conditions in Lemma 2 hold; there exists a permutation matrix such that, with probability at least , we have

Remark 2

(Comparison to Theorem 3.2 [44]). Consider the special case , i.e., and . We focus on comparing the dependence on K of the bounds in our Theorem 1 and in Theorem 3.2 [44]. In this case, the bound of our Theorem 1 is proportional to by basic algebra. Since , and the bound in Theorem 3.2 [44] should be multiplied by because (in [44]’s notation) instead of in Equation (45) [44], checking the bound of Theorem 3.2 [44] shows that its power of K is 2. Note also that our bound in Theorem 1 is an bound, while the bound in Theorem 3.2 [44] is an bound. When the bound of Theorem 3.2 [44] is translated into an bound, its power of K becomes 2.5. Hence, our bound in Theorem 1 has less dependence on K than that of Theorem 3.2 [44], which is also consistent with the first bullet given after Lemma 2.
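The step from K^2 to K^{2.5} is the usual norm-equivalence factor. Assuming the two norms compared in this remark are the row-wise ℓ2 and ℓ1 norms, and writing 𝒫 for the permutation matrix of Theorem 1 and ε_n for the remaining factors of the bound (both labels are our own placeholders), the Cauchy–Schwarz inequality on a row in R^{1×K} gives:

```latex
\|e_i'(\hat{\Pi}-\Pi\mathcal{P})\|_1 \le \sqrt{K}\,\|e_i'(\hat{\Pi}-\Pi\mathcal{P})\|_2
\quad\text{for every } i,
\qquad\text{so}\qquad
\max_i \|e_i'(\hat{\Pi}-\Pi\mathcal{P})\|_2 = O\!\left(K^{2}\varepsilon_n\right)
\;\Longrightarrow\;
\max_i \|e_i'(\hat{\Pi}-\Pi\mathcal{P})\|_1 = O\!\left(K^{2.5}\varepsilon_n\right).
```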

Table 3 summarizes the necessary conditions and dependence on the model parameters of the rates in Theorem 1 and Theorem 3.2 [44] for comparison. The following corollary is obtained by adding conditions on the model parameters similar to Corollary 3.1 in [44].
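Under our reading, the error controlled in Theorem 1 is the largest row-wise ℓ1 distance between Π̂ and Π after the best relabeling of communities. The sketch below computes that quantity by brute force over all K! permutation matrices, which is only practical for small K; the function name and the toy matrices are hypothetical.

```python
import itertools

import numpy as np


def max_row_l1_error(Pi_hat, Pi):
    """min over permutation matrices Perm of max_i ||e_i'(Pi_hat - Pi Perm)||_1."""
    K = Pi.shape[1]
    best = np.inf
    for perm in itertools.permutations(range(K)):
        Perm = np.eye(K)[:, list(perm)]  # permutation matrix
        best = min(best, np.abs(Pi_hat - Pi @ Perm).sum(axis=1).max())
    return best


Pi = np.array([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]])
Pi_hat = np.array([[0.1, 0.9], [1.0, 0.0], [0.4, 0.6]])  # labels swapped, small errors
print(round(max_row_l1_error(Pi_hat, Pi), 6))  # 0.2
```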

Corollary 1.

Under with , when the conditions of Lemma 2 hold, with probability at least , we have

Remark 3.

Consider the special case of Corollary 1 obtained by setting as a constant. Then the error bound in Corollary 1 is directly related to Assumption (A1), and for consistent estimation, ρ should shrink more slowly than .

Remark 4.

Under the setting of Corollary 1, the requirement in Lemma 2 holds naturally. By Lemma II.4 [44], we know that . To make the requirement always hold, we only need , which gives , matching the requirement for consistent estimation of memberships in Corollary 1.

Remark 5

(Comparison to Theorem 3.2 [44]). When and , by the first bullet in the analysis given after Lemma 2, the row-wise eigenspace error of Theorem 3.1 [44] is , so their error bound on membership estimation given in their Equation (3) is , which is times the bound in our Lemma 1.

Remark 6

(Comparison to Theorem 2.2 [41]). Replacing the Θ in [41] by , their DCMM model degenerates to MMSB. Then the conditions in their Theorem 2.2 become our Assumption (A1) and for MMSB. When , the error bound in Theorem 2.2 of [41] is , which is consistent with ours.

4. Separation Condition and Sharp Threshold Criterion

Having obtained Corollary 1 under MMSB, in this section we introduce the separation condition of with and the sharp threshold of the ER random graph, and then give our criterion.

Separation condition. Let be the probability matrix for when , so P has diagonal (and non-diagonal) entries (and ) and . Recall that under , we have . So, we have the separation condition (also known as the relative edge probability gap in [44]) and the alternative separation condition . Now, we are ready to compare the thresholds of the (alternative) separation condition obtained from different theoretical results.
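To make the quantities in this paragraph concrete, the Python sketch below builds the K×K matrix with diagonal entries p_in and off-diagonal entries p_out (our names for the diagonal and non-diagonal entries above), takes ρ as its largest entry so that P̃ = P/ρ has maximum entry 1, and computes the smallest singular value σ_K(P̃). We assume here that σ_K(P̃) plays the role of the separation parameter. Since the eigenvalues of P are p_in + (K − 1)p_out (once) and p_in − p_out (K − 1 times), the sketch should return |p_in − p_out|/max(p_in, p_out) for both assortative and dis-assortative choices. The helper names are hypothetical.

```python
import numpy as np


def planted_partition(K: int, p_in: float, p_out: float) -> np.ndarray:
    """K x K probability matrix with p_in on the diagonal and p_out elsewhere (hypothetical helper)."""
    return np.full((K, K), p_out) + (p_in - p_out) * np.eye(K)


def sparsity_and_separation(K: int, p_in: float, p_out: float) -> tuple:
    """Split P = rho * P_tilde with max(P_tilde) = 1 and return (rho, sigma_K(P_tilde))."""
    P = planted_partition(K, p_in, p_out)
    rho = float(P.max())  # rho = max(p_in, p_out)
    P_tilde = P / rho  # the largest entry of P_tilde equals 1
    # Singular values come back in descending order, so the last one is sigma_K.
    sigma_K = float(np.linalg.svd(P_tilde, compute_uv=False)[-1])
    return rho, sigma_K


if __name__ == "__main__":
    for K, p_in, p_out in [(3, 0.2, 0.05), (2, 0.1, 0.3)]:  # assortative, then dis-assortative
        rho, sigma_K = sparsity_and_separation(K, p_in, p_out)
        closed_form = abs(p_in - p_out) / max(p_in, p_out)
        print(f"K={K}: rho={rho:.3f}, sigma_K={sigma_K:.4f}, closed form={closed_form:.4f}")
```

When p_in = p_out, P̃ is the all-ones matrix of rank 1 and σ_K(P̃) = 0, which is the degenerate case with no community signal.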

- (a) By Corollary 1, we know that should shrink slower than for consistent estimation. Therefore, the separation condition should shrink slower than (i.e., Equation (1)), and this threshold is consistent with Corollary 1 of [59] and Equation (17) of [49]. The alternative separation condition should shrink slower than 1 (i.e., Equation (2)).

- (b) The (alternative) separation condition in (a) is also consistent with that of [41], since Theorem 2.2 [41] shares the same error rate for with .

- (c) By Remark 5, when using , Equation (3) of Ref. [44] is , so should shrink slower than . Thus, for [44], the separation condition is and the alternative separation condition is , which are sub-optimal compared with ours in (a). When using instead, Equation (3) of Ref. [44] is , and for [44] the separation condition becomes and the alternative separation condition becomes .

- (d) For comparison, the error bound of Corollary 3.2 [30], built under SBM for community detection, is for with , so should shrink slower than . Thus, the separation condition for [30] is . However, as analyzed in the first bullet given after Lemma 2, [30] applied to build their consistency results. If we instead apply to build the theoretical results of [30], the error bound of Corollary 3.2 [30] becomes , which now yields the same separation condition as our Corollary 1 and Theorem 2.2 of [41]. Following an analysis similar to (a)–(c), we immediately obtain the alternative separation condition for [30]; the results are provided in Table 2. Meanwhile, as analyzed in the first bullet given after Lemma 2, using or does not change our error rates. By carefully analyzing the proof of Lemma 2.1 of [41], we see that using or also does not change their row-wise large deviation bound, so it does not influence their upper bound on the error rate of Mixed-SCORE.

Sharp threshold. Consider the Erdös–Rényi (ER) random graph [61]. To construct the ER random graph , let and be an vector with all entries being ones. Since and the maximum entry of is assumed to be 1, we have in and hence . Then we have , i.e., . Since the error rate is , consistent estimation requires p to shrink slower than (i.e., Equation (3)). This is exactly the sharp threshold in [61] and Theorem 4.6 of [62], and it is consistent with [72] and the first bullet in Section 2.5 of [53]; we call this lower bound requirement on p for the ER random graph to enjoy consistent estimation the sharp threshold. Since the sharp threshold is obtained when , which corresponds to a connected ER random graph , it is also consistent with the connectivity result in Table 2 of [21]. Meanwhile, our Assumption (A1) requires , which implies that p should shrink slower than since under ; this is consistent with the sharp threshold. Since Theorem 2.2 of Ref. [41] enjoys the same error rate as ours under the settings in Corollary 1, [41] also reaches the sharp threshold as . Furthermore, Remark 5 says that the bound for the error rate in Equation (3) of [44] should be when using ; following a similar analysis, the sharp threshold for [44] is , which is sub-optimal compared with ours. When using , the sharp threshold for [44] is . Similarly, the error bound of Corollary 3.2 [30] is under ER since and . Hence, the sharp threshold obtained from the theoretical upper bound on the error rates of [30] is , which does not match the classical result. If we instead apply , which holds with high probability, to build the theoretical results of [30], the error bound of Corollary 3.2 [30] becomes , which now returns the classical sharp threshold.
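The sharp threshold discussed above coincides with the classical connectivity threshold of G(n, p): with p = c·log(n)/n, the graph is connected with high probability when c > 1 and has isolated nodes (so it is disconnected) with high probability when c < 1. The Python sketch below, with hypothetical helper names, checks this empirically for one fixed seed; it is an illustration only, not part of the theoretical analysis.

```python
from collections import deque

import numpy as np


def erdos_renyi(n: int, p: float, rng: np.random.Generator) -> np.ndarray:
    """Adjacency matrix of G(n, p): symmetric, 0/1 entries, no self-loops."""
    upper = np.triu(rng.random((n, n)) < p, k=1)  # each pair i < j kept with probability p
    return (upper | upper.T).astype(np.int8)


def is_connected(A: np.ndarray) -> bool:
    """Breadth-first search from node 0; the graph is connected iff every node is reached."""
    n = A.shape[0]
    seen = np.zeros(n, dtype=bool)
    seen[0] = True
    queue = deque([0])
    while queue:
        i = queue.popleft()
        for j in np.flatnonzero(A[i]):
            if not seen[j]:
                seen[j] = True
                queue.append(j)
    return bool(seen.all())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 2000
    for c in (0.5, 3.0):  # below and above the connectivity threshold log(n)/n
        p = c * np.log(n) / n
        print(f"c={c}: connected={is_connected(erdos_renyi(n, p, rng))}")
```

For n = 2000 and c = 0.5, the expected number of isolated nodes is roughly n^(1 − c) ≈ 45, so a disconnected graph is all but certain; for c = 3 it is of order n^(−2).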

Table 1 summarizes the comparisons of the separation condition and sharp threshold, and Table 2 records the corresponding alternative separation conditions. The analysis above supports our statement that the separation condition of a standard network (i.e., with or with ) and the sharp threshold of the ER random graph can serve as unified criteria to compare the theoretical results of spectral methods under different models. To conclude the above analysis, we summarize the main steps for applying the separation condition and sharp threshold criterion (SCSTC for short) to check the consistency of theoretical results or to compare the results of spectral methods under different models, where spectral methods are community detection methods built on the eigenvectors or singular vectors of the adjacency matrix or its variants. The four steps of SCSTC are given below:

- Step 1. Check whether the theoretical upper bound of the error rate contains (note that is the probability matrix and the maximum entry of should be set to 1), where the separation parameter always appears when considering the lower bound of . If it contains , move to the next step. Otherwise, this suggests possible improvements for consistency by considering in the proofs.

- Step 2. Let and let the network degenerate to the standard network whose communities have sizes of the same order, which can be seen as (i.e., a with in the case of a non-overlapping network or a with in the case of an overlapping network; for convenience, we mainly focus on with ). Let the model degenerate to with , and then obtain the new theoretical upper bound of the error rate. Note that if the model does not consider degree heterogeneity, the sparsity parameter ρ should already be considered in the theoretical upper bound of the error rate in Step 1. If the model considers degree heterogeneity, ρ appears at this step when the model degenerates to with . Meanwhile, if ρ is not contained in the error rate of Step 1 when the model does not consider degree heterogeneity, this suggests possible improvements by considering ρ.

- Step 3. Let be the probability matrix when the model degenerates to , such that P has diagonal entries and non-diagonal entries . Then and the separation condition is , since the maximum entry of is assumed to be 1. Compute the lower bound requirement on for consistent estimation by analyzing the new bound obtained in Step 2, and compute the separation condition using this lower bound requirement on . The sharp threshold for the ER random graph is obtained from the lower bound requirement on for consistent estimation under the setting that and .

- Step 4. Compare the separation condition and the sharp threshold obtained in Step 3 with Equations (1) and (3), respectively. If the sharp threshold or the separation condition , there is room for improvement in the requirement on network sparsity or in the theoretical upper bound of the error rate. If the sharp threshold is and the separation condition is , the optimality of the theoretical results on both the error rates and the requirement on network sparsity is guaranteed. Finally, if the sharp threshold or the separation condition , this suggests that the theoretical result is obtained based on instead of .

Remark 7.

This remark provides some explanations on the four steps of SCSTC.

- For Step 1, we give a few examples. When applying SCSTC to the main results of [40,48,67], we stop at Step 1, as analyzed in Remark 8, which suggests possible improvements for these works by considering . Meanwhile, for a theoretical result that does not consider , we can still move to Step 2 to obtain a new theoretical upper bound of the error rate, which depends on ρ and n. The discussions of the theoretical upper bounds of the error rates of [50,68] in Remark 8 are examples of this case.

- For Step 2, letting and the model reduce to for non-overlapping networks or for overlapping networks always simplifies the theoretical upper bound of the error rate, as shown by our Corollaries 1 and 2. Here, we provide some examples of how to make a model degenerate to SBM. For in this paper, MMSB degenerates to SBM when all nodes are pure. For the model introduced in Section 5 or the DCSBM considered in [30,48,50], setting makes DCMM and DCSBM degenerate to SBM when all nodes are pure. Similar reductions apply to the ScBM and DCScBM considered in [67,68,71], the OCCAM model of [40], the stochastic blockmodel with overlap proposed in [46], the extensions of SBM and DCSBM to hypergraph networks considered in [73,74,75], and so forth.

- In Steps 3 and 4, the separation condition can be replaced by an alternative separation condition.

- When using SCSTC to build and compare theoretical results for spectral clustering methods, the key point is to compute, from the theoretical upper bound of the error rate of a given spectral method, the lower bound on when the probability matrix P has diagonal entries and non-diagonal entries . If this lower bound is consistent with that of Equation (1), this suggests theoretical optimality; otherwise, it suggests possible improvements by following the four steps of SCSTC.

The above analysis shows that SCSTC can be used to study the consistent estimation of model-based spectral methods. Applying SCSTC, we list in the following remark a few works whose main theoretical results leave room for improvement.

Remark 8.

An unknown separation condition, sub-optimal error rates, or a missing requirement on network sparsity in some previous works suggest that their theoretical results can be improved. Here, we list a few works whose main results can possibly be improved by considering the separation condition.

- Theorem 4.4 of [48] gives an upper bound on the error rate of their regularized spectral clustering (RSC) algorithm, which is designed based on a regularized Laplacian matrix under DCSBM. However, since [48] does not study the lower bound of (in the language of [48]) and m, we cannot directly obtain the separation condition from their main theorem. Meanwhile, the main result of [48] does not consider the requirement on network sparsity, which leaves room for improvement. Ref. [48] also does not study the theoretically optimal choice of the RSC regularizer τ. After considering and the sparsity parameter ρ, one can obtain the theoretically optimal choice of τ, which helps explain and choose the empirically optimal τ. Therefore, a practical application of SCSTC is obtaining theoretically optimal choices of tuning parameters, such as the regularizer τ of the RSC algorithm. Using SCSTC, we find that RSC achieves the thresholds in Equations (1)–(3); we omit the proofs in this paper.

- Refs. [26,49] study two algorithms designed based on the Laplacian matrix and its regularized version under SBM. They obtain meaningful results, but do not consider the network sparsity parameter ρ and the separation parameter . After using SCSTC to obtain improved error bounds that are consistent with the separation condition, one can also obtain the theoretically optimal choice of the regularizer τ of the RSC-τ algorithm considered in [49] and show that the two algorithms considered in [26,49] achieve the thresholds in Equations (1)–(3).

- Theorem 2.2 of [50] provides an upper bound on the error rate of their SCORE algorithm under DCSBM. However, since they do not consider the influence of , we cannot directly obtain the separation condition from their main result. Meanwhile, by setting their , DCSBM degenerates to SBM, which gives their by their assumption in Equation (2.9). Hence, when , the upper bound of Theorem 2.2 in [50] is . The upper bound of the error rate in Corollary 3.2 of [30] is when using under the setting that and . We see that grows faster than , which suggests that there is room to improve the main result of [50] in terms of the separation condition and error rates. Furthermore, using SCSTC, we find that SCORE achieves the thresholds in Equations (1)–(3) because its extension Mixed-SCORE [41] achieves the thresholds in Equations (1)–(3).

- Ref. [67] proposes two models, ScBM and DCScBM, for directed networks, and an algorithm, DI-SIM, based on the directed regularized Laplacian matrix to fit DCScBM. However, similar to [48], their main theoretical result in Theorem C.1 does not consider the lower bound of (in the language of Ref. [67]) and , so we cannot obtain the separation condition when DCScBM degenerates to SBM. Meanwhile, their Theorem C.1 also lacks a lower bound requirement on network sparsity. Hence, there is room to improve the theoretical guarantees of [67]. Similar to [48,49], we can also obtain the theoretically optimal choice of the regularizer τ of the DI-SIM algorithm and prove that DI-SIM achieves the thresholds in Equations (1)–(3), since it is the directed version of RSC [48].

- Ref. [68] mainly studies the theoretical guarantees of the D-SCORE algorithm proposed by [14] to fit a special case of the DCScBM model for directed networks. By setting their for , their directed-DCBM degenerates to SBM. Meanwhile, since their , their mis-clustering rate is , which matches that of [30] under SBM when is set as a constant. However, if is set as , then the error rate is , which is sub-optimal compared with that of [30]. Meanwhile, similar to [50,68], the main result does not consider the influences of K and , so no separation condition can be obtained. Hence, the main results of [68] can be improved by considering K, , or a better choice of to make them comparable with those of [30] when directed-DCBM degenerates to SBM. Using SCSTC, we find that D-SCORE also achieves the thresholds in Equations (1)–(3), since it is the directed version of SCORE [50].

5. Degree Corrected Mixed Membership Model

Applying SCSTC to Theorem 3.2 of [43] shows that its results are sub-optimal, as reported in Table 1 and Table 2. To obtain improved theoretical results, we first give a formal introduction of the degree corrected mixed membership (DCMM) model proposed in [41], then review the SVM-cone-DCMMSB algorithm of [43] and provide the improved theoretical results. A DCMM for generating A is as follows.

where is a diagonal matrix whose i-th diagonal entry is the degree heterogeneity of node i for . Let with for . Set and .

Definition 3.

Call model (5) the degree corrected mixed membership (DCMM) model, and denote it by .

Note that if we set and choose such that , then we have , which means that the stochastic blockmodel with overlap (SBMO) proposed in [46] is just a special case of DCMM. Meanwhile, if we write as , where are two positive diagonal matrices and let , then we can choose such that . By , we see that the OCCAM model proposed in [40] equals the DCMM model. By Equation (1.3) and Proposition 1.1 of [41], the following conditions are sufficient for the identifiability of DCMM when :

- (II1) and has unit diagonals.

- (II2) There is at least one pure node for each of the K communities.

Note that although the diagonal entries of are ones, may be larger than 1 as long as under DCMM; this is slightly different from the setting that under MMSB.

Without causing confusion, under , we still let be the top-K eigen-decomposition of such that and . Set by and let be a diagonal matrix such that for . Then can be rewritten as . The existence of the ideal cone (IC for short) structure inherent in mentioned in [43] is guaranteed by the following lemma.

Lemma 3.

Under , where with being an diagonal matrix whose diagonal entries are positive.

Lemma 3 gives . Since and , we have

Since , we have . Then we have when Condition (II1) holds such that has unit-diagonals. Set . By Equation (6), we have

Meanwhile, since is an positive diagonal matrix, we have

With given and K, we can obtain and . The above analysis shows that once is known, we can exactly recover by Equations (7) and (8). From Lemma 3, we know that forms the IC structure. Ref. [43] proposes the SVM-cone algorithm (i.e., Algorithm A2) which can exactly obtain from the ideal cone with inputs and K.
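Lemma 3 can be checked numerically. The Python sketch below is our own illustration, not code from [43]: it builds a small synthetic DCMM (the matrix P, the Dirichlet memberships, the range of θ and the helper names dcmm_omega and cone_coefficients are arbitrary choices), row-normalizes the top-K eigenvectors of Ω, and solves U_* = Y U_*(I,:) using the known pure nodes I. Writing U = ΘΠB for some invertible B gives Y = N_U Θ Π Θ(I,I)^{-1} N_U(I,I)^{-1}, where N_U is taken to be the diagonal matrix of inverse row norms of U (our reading of the definition above); this Y is entrywise nonnegative, so every row of U_* lies in the cone spanned by the K pure-node rows.

```python
import numpy as np


def dcmm_omega(Pi: np.ndarray, P: np.ndarray, theta: np.ndarray) -> np.ndarray:
    """Population adjacency matrix Omega = Theta Pi P Pi' Theta of a DCMM model."""
    TPi = theta[:, None] * Pi  # Theta Pi, with Theta = diag(theta)
    return TPi @ P @ TPi.T


def cone_coefficients(Omega: np.ndarray, K: int, pure: np.ndarray):
    """Row-normalize the top-K eigenvectors and solve U_star = Y @ U_star[pure] for Y."""
    vals, vecs = np.linalg.eigh(Omega)
    U = vecs[:, np.argsort(np.abs(vals))[-K:]]  # eigenvectors of the K largest |eigenvalues|
    U_star = U / np.linalg.norm(U, axis=1, keepdims=True)  # unit-norm rows
    Y = U_star @ np.linalg.inv(U_star[pure])
    return U_star, Y


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    n, K = 300, 3
    P = np.array([[1.0, 0.3, 0.2],
                  [0.3, 1.0, 0.4],
                  [0.2, 0.4, 1.0]])  # unit diagonal and full rank, as in Condition (II1)
    Pi = rng.dirichlet(np.ones(K), size=n)  # mixed memberships (rows sum to 1)
    pure = np.arange(K)
    Pi[pure] = np.eye(K)  # one pure node per community, as in Condition (II2)
    theta = rng.uniform(0.2, 0.9, size=n)  # keeps every entry of Omega below 1
    U_star, Y = cone_coefficients(dcmm_omega(Pi, P, theta), K, pure)
    print("smallest entry of Y:", Y.min())  # nonnegative up to rounding error
```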

Based on the above analysis, we are now ready to give the ideal SVM-cone-DCMMSB algorithm. Input: . Output: .

- Let be the top-K eigen-decomposition of such that . Let , where is a diagonal matrix whose i-th diagonal entry is for .

- Run SVM-cone algorithm on assuming that there are K communities to obtain .

- Set .

- Recover by setting for .

With given and K, since the SVM-cone algorithm returns , the ideal SVM-cone-DCMMSB exactly returns , as detailed in Appendix B.
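The ideal algorithm can be traced numerically. The sketch below is a hypothetical illustration rather than the implementation of [43]: because SVM-cone (Algorithm A2) is not reproduced here, an oracle hands it the pure-node indices I instead. The last two steps use a derivation that we believe agrees with Equations (7) and (8): since P has unit diagonals, diag(U_*(I,:) Λ U_*'(I,:)) = (N_U(I,I) Θ(I,I))², so multiplying the columns of Y by the entrywise square root of this diagonal gives Z = N_U Θ Π, and ℓ1-normalizing the rows of Z returns Π exactly. The function names and parameter values are our own choices.

```python
import numpy as np


def dcmm_omega(Pi: np.ndarray, P: np.ndarray, theta: np.ndarray) -> np.ndarray:
    """Population adjacency matrix Omega = Theta Pi P Pi' Theta."""
    TPi = theta[:, None] * Pi
    return TPi @ P @ TPi.T


def ideal_recovery(Omega: np.ndarray, K: int, pure: np.ndarray) -> np.ndarray:
    """Ideal-case membership recovery with an oracle for the pure-node indices (hypothetical)."""
    vals, vecs = np.linalg.eigh(Omega)
    top = np.argsort(np.abs(vals))[-K:]
    U, Lam = vecs[:, top], np.diag(vals[top])  # top-K eigen-decomposition of Omega
    U_star = U / np.linalg.norm(U, axis=1, keepdims=True)  # row-normalized eigenvectors
    # Oracle step: the known pure nodes replace the vertex hunting done by SVM-cone.
    Y = U_star @ np.linalg.inv(U_star[pure])  # U_star = Y U_star(I, :)
    # With unit diagonals in P, this diagonal equals (N_U(I,I) Theta(I,I))^2.
    J = np.sqrt(np.diag(U_star[pure] @ Lam @ U_star[pure].T))
    Z = Y * J[None, :]  # Z = N_U Theta Pi, entrywise nonnegative
    return Z / Z.sum(axis=1, keepdims=True)  # l1-normalize rows to obtain Pi


if __name__ == "__main__":
    rng = np.random.default_rng(2)
    n, K = 300, 3
    P = np.array([[1.0, 0.3, 0.2],
                  [0.3, 1.0, 0.4],
                  [0.2, 0.4, 1.0]])
    Pi = rng.dirichlet(np.ones(K), size=n)
    pure = np.arange(K)
    Pi[pure] = np.eye(K)
    theta = rng.uniform(0.2, 0.9, size=n)
    Pi_hat = ideal_recovery(dcmm_omega(Pi, P, theta), K, pure)
    print("max abs error:", np.abs(Pi_hat - Pi).max())
```

Without the unit-diagonal condition, J would no longer equal N_U(I,I)Θ(I,I) and the row normalization would not return Π, which is one way to see why Condition (II1) matters here.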

Now, we review the SVM-cone-DCMMSB algorithm of [43], which can be seen as an extension of SPACL (designed under MMSB) to fit DCMM. In the real case, we use given in Algorithm 2 to estimate , respectively.

Algorithm 2. SVM-cone-DCMMSB [43]

Consistency under DCMM

Assume that

- (A2)

- .

Since we let , Assumption (A2) equals . The following lemma bounds under when Assumption (A2) holds.

Lemma 4.

Under , when Assumption (A2) holds, with probability at least , we have

Remark 9.

Consider the special case in which , so that DCMM degenerates to MMSB. Since is assumed to be 1 under MMSB, Assumption (A2) and the upper bound of in Lemma 4 are consistent with those of Lemma 1. When all nodes are pure, DCMM degenerates to DCSBM [45], and the upper bound of in Lemma 4 is also consistent with Lemma 2.2 of [50]. Meanwhile, this bound is also consistent with Equation (6.34) in the first version of [41], which also applies the Bernstein inequality to bound . However, the bound in Equation (C.53) of the latest version of [41] is , which is obtained by applying Corollary 3.12 and Remark 3.13 of [76]. Although the bound in Equation (C.53) of the latest version of [41] is sharper by a term, Corollary 3.12 of [76] imposes constraints on (here, ) such that can be written as , where are independent symmetric random variables with unit variance and are given scalars; see the proof of Corollary 3.12 [76] for details. Therefore, without causing confusion, we also use to denote the constraint A used in [41] such that . Furthermore, if we set such that , the bound in Lemma 4 also equals and Assumption (A2) reads . The bound in Equation (C.53) of [41] reads .

Lemma 5.

(Row-wise eigenspace error) Under , when Assumption (A2) holds, suppose . Then, with probability at least , the following bounds hold.

- When we apply Theorem 4.2 of [64], we have

- When we apply Theorem 4.2 of [65], we have

Without causing confusion, we also use ϖ under DCMM, as in Lemma 2, for notational convenience.

Remark 10.

When such that DCMM degenerates to MMSB, bounds in Lemma 5 are consistent with those of Lemma 2.

Remark 11

(Comparison to Theorem I.3 [43]). Note that the ρ in [43] is , which means that the row-wise eigenspace concentration in Theorem I.3 [43] is when using , and this value is at least . Since by Lemma II.1 of [43] and by the proof of Lemma 5, we see that the upper bound of Theorem I.3 [43] is , which is (recall that ) times larger than our . Moreover, Theorem I.3 [43] has stronger requirements on the sparsity of and the lower bound of than our Lemma 5. When using the bound of in our Lemma 4 to obtain the row-wise eigenspace concentration in Theorem I.3 [43], their upper bound is times larger than our . Similar to the first bullet given after Lemma 2, using or does not change our ϖ under DCMM.

Remark 12

(Comparison to Lemma 2.1 [41]). The fourth bullet of Lemma 2.1 [41] gives a row-wise deviation bound for the eigenvectors of the adjacency matrix under assumptions that translate to our , Assumption (A2) and a lower bound requirement on , since they apply Lemma C.2 [41]. The row-wise deviation bound in the fourth bullet of Lemma 2.1 [41] reads , where the denominator is instead of our because [41] uses to roughly estimate , while we apply to strictly control the lower bound of . Therefore, the row-wise deviation bound in the fourth bullet of Lemma 2.1 [41] is consistent with our bounds in Lemma 5 when , while our row-wise eigenspace errors in Lemma 5 are more broadly applicable than those of [41], since we do not need to add a constraint on such that . The upper bound of of [41] is given in their Equation (C.53) under , while ours is in Lemma 4. Since our bound on the row-wise eigenspace error in Lemma 5 is consistent with the fourth bullet of Lemma 2.1 [41], this supports the statement, given in the first bullet after Lemma 2, that the row-wise eigenspace error does not rely on .

Let , where measures the minimum summed membership of the nodes belonging to a community. Increasing makes the network more balanced, and vice versa. Meanwhile, the term appears when we derive a lower bound on defined in Lemma A1 to keep track of the model parameters in our main theorem under . The next theorem gives the theoretical bounds on the estimation of memberships under DCMM.

Theorem 2.

Under , let be obtained from Algorithm 2, and suppose the conditions in Lemma 5 hold. Then there exists a permutation matrix such that, with probability at least , we have

For comparison, Table 4 summarizes the necessary conditions and the dependence on model parameters of the rates in Theorem 2 and Theorem 3.2 [43], where the dependence on K and is analyzed in Remark 13 below.

Remark 13.

(Comparison to Theorem 3.2 [43]) Our bound in Theorem 2 is written in terms of the model parameters, and Π can follow any distribution as long as Condition (II2) holds; such a parameter-explicit estimation bound is convenient for further theoretical analysis (see Corollary 2). In contrast, the bound in Theorem 3.2 [43] is built when Π follows a Dirichlet distribution and . Meanwhile, since Theorem 3.2 [43] applies Theorem I.3 [43] to obtain the row-wise eigenspace error, the bound in Theorem 3.2 [43] should be multiplied by according to Remark 11; this is also supported by the fact that, in the proof of Theorem 3.1 [43], [43] ignores the term when computing the bound of (in the language of Ref. [43]).

Consider the special case obtained by setting and with , which matches the setting of Theorem 3.2 [43]. We now analyze the powers of K in our Theorem 2 and in Theorem 3.2 [43]. In this case, basic algebra shows that the power of K in the estimation bound of Theorem 2 is 6. For Theorem 3.2 [43], we have by Lemma A1 (where η in Lemma A1 follows the same definition as in Theorem 3.2 [43]), and the bound in Theorem 3.2 [43] should be multiplied by because (in the language of Ref. [43]) should be no larger than instead of in the proof of Theorem 2.8 [43]; checking the bound of Theorem 3.2 [43] then shows that the power of K is 6. Meanwhile, our bound in Theorem 2 is an bound, while the bound in Theorem 3.2 [43] is an bound; translating the latter into an bound raises the power of K to 6.5 for Theorem 3.2 [43], suggesting that our bound in Theorem 2 has less dependence on K than that of Theorem 3.2 [43].

The following corollary is obtained by adding some conditions on the model parameters.

Corollary 2.

Under , suppose the conditions of Lemma 5 hold and that and . Then, with probability at least , we have

Meanwhile, when (i.e., ), we have

Remark 14.

When and , the requirement in Lemma 5 holds naturally. By the proof of Lemma 5, has a lower bound . To make the requirement always hold, we only need , which gives , matching the requirement for consistent estimation in Corollary 2.

Applying SCSTC to Corollary 2, let so that DCMM degenerates to MMSB; it is then easy to see that the bound in Lemma 2 is consistent with that of Lemma 1. Therefore, the separation condition, alternative separation condition and sharp threshold obtained from Corollary 2 for the extended version of SPACL under DCMM are consistent with the classical results, as shown in Table 1 and Table 2 (a detailed analysis is given in the next paragraph). Meanwhile, when and the settings in Corollary 2 hold, the bound in Theorem 2.2 [41] is of order , which is consistent with our bound in Corollary 2.

Consider a mixed membership network under the settings of Corollary 2 when , so that DCMM degenerates to MMSB. By Corollary 2, should shrink slower than . We further assume that for ; then this with unit diagonals and as non-diagonal entries still satisfies Condition (II1). Meanwhile, and , so should shrink slower than . Setting as the probability matrix for such , we have , and . Hence, the separation condition should shrink slower than , which satisfies Equation (1). For the alternative separation condition and the sharp threshold, an analysis similar to that for MMSB yields the results in Table 1 and Table 2.

6. Numerical Results

In this section, we present the experimental results for an overlapping network by plotting the phase transition behaviors for both SPACL and SVM-cone-DCMMSB to show that the two methods achieve the threshold in Equation (2) under when and . We also use some experiments to show that the spectral methods studied in [26,30,48,50] achieve the threshold in Equation (2) under when and for the non-overlapping network. To measure the performance of different algorithms, we use the error rate defined below:

For all simulations, let and be diagonal and non-diagonal entries of P, respectively. Since P is the probability matrix, and should be located in . After setting P and , each simulation experiment has the following steps:

- (a) Set .

- (b) Let W be an symmetric matrix such that all diagonal entries of W are 0, and are independent centered Bernoulli random variables with parameters . Let be the adjacency matrix of a simulated network with mixed memberships under MMSB (so there are no loops).

- (c) Apply a spectral clustering method to A with K communities and record the error rate.

- (d) Repeat (b)–(c) 50 times and report the mean error rate over the 50 repetitions.
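Steps (a)–(d) can be sketched as follows. Adding W to Ω − diag(Ω) makes each off-diagonal A(i, j) a Bernoulli(Ω(i, j)) draw and leaves the diagonal at zero, so the sketch samples the upper triangle directly. It assumes Ω = Π P Π' in step (a) (the MMSB form with ρ absorbed into P); the clustering method and the error-rate function are user-supplied placeholders with hypothetical interfaces, since the algorithms and Equation (9) are defined elsewhere in the paper.

```python
import numpy as np


def simulate_mmsb(Pi: np.ndarray, P: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Steps (a)-(b): Omega = Pi P Pi', then A(i, j) ~ Bernoulli(Omega(i, j)) for i < j."""
    Omega = Pi @ P @ Pi.T  # step (a), assuming rho is absorbed into P
    n = Omega.shape[0]
    # Omega - diag(Omega) + W has Bernoulli(Omega(i, j)) off-diagonal entries and a zero diagonal.
    upper = np.triu(rng.random((n, n)) < Omega, k=1)
    return (upper | upper.T).astype(float)


def run_experiment(Pi, P, method, error_rate, reps: int = 50, seed: int = 0) -> float:
    """Steps (c)-(d): fit `method` to `reps` simulated networks and average `error_rate`.

    `method(A, K)` returns an estimated membership matrix and `error_rate(Pi_hat, Pi)`
    returns a number; both are user-supplied (hypothetical interfaces).
    """
    rng = np.random.default_rng(seed)
    K = P.shape[0]
    errors = [error_rate(method(simulate_mmsb(Pi, P, rng), K), Pi) for _ in range(reps)]
    return float(np.mean(errors))
```

For example, `run_experiment(Pi, P, my_method, my_error_rate)` averages over 50 networks, where `my_method` would wrap SPACL or SVM-cone-DCMMSB and `my_error_rate` would implement the error rate above; both names are placeholders.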

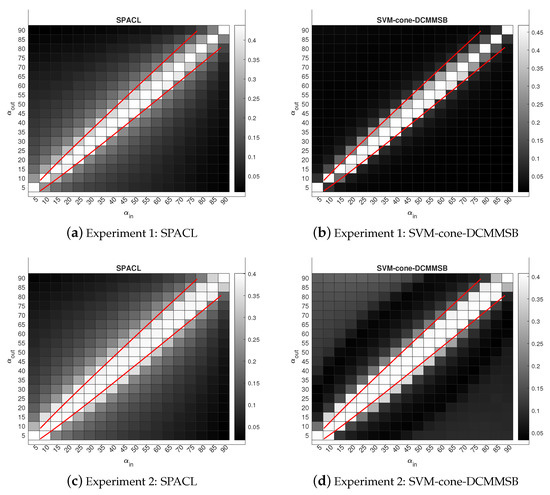

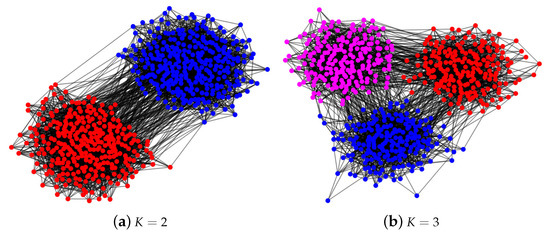

Experiment 1: Set , and , where is the number of pure nodes in each community. Let all mixed nodes have mixed membership . Since and should be set to less than , we let and range over . For each pair of , we generate P and then run steps (a)–(d). Thus, this experiment generates the adjacency matrix of a network with mixed memberships under . The numerical results are displayed in panels (a) and (b) of Figure 1. We can see that our theoretical bounds (red lines) are quite tight, and the threshold regions obtained from the boundaries of the light (white) areas in panels (a) and (b) are close to our theoretical bounds. Meanwhile, both methods perform better as increases, and SVM-cone-DCMMSB outperforms SPACL in this experiment, since panel (b) is darker than panel (a). Note that the network generated here is assortative when and dis-assortative when . Therefore, the results of this experiment support our finding that SPACL and SVM-cone-DCMMSB achieve the threshold in Equation (2) for both assortative and dis-assortative networks.

Figure 1.

Phase transition for SPACL and SVM-cone-DCMMSB under MMSB: darker pixels represent lower error rates. The red lines represent .

Experiment 2: Set , and . Let all mixed nodes have mixed membership . Let and range in . Thus, this experiment is under . The numerical results are displayed in panels (c) and (d) of Figure 1. Both methods perform poorly in the region between the two red lines, and the analysis of the numerical results for this experiment is similar to that of Experiment 1.

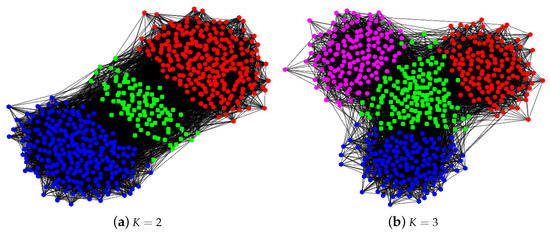

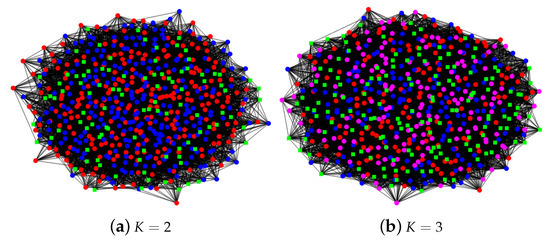

For visualization, we plot two networks generated from when and in Figure 2. We also plot two dis-assortative networks generated from when and in Figure A1 in Appendix A. In Experiments 1 and 2, the networks generated under MMSB contain mixed nodes. The following two experiments focus only on networks under SBM, in which all nodes are pure. Meanwhile, we only consider the four spectral algorithms studied in [26,30,48,50] for non-overlapping networks. For convenience, we call the spectral clustering method studied in [26] normalized principal component analysis (nPCA), and Algorithm 1 studied in [30] ordinary principal component analysis (oPCA); nPCA and oPCA are also considered in [50]. Next, we briefly review nPCA, oPCA, RSC and SCORE.

Figure 2.

Panel (a): a graph generated from the mixed membership stochastic blockmodel with nodes and 2 communities. Among the 600 nodes, each community has 250 pure nodes, and the remaining 100 mixed nodes have mixed membership . Panel (b): a graph generated from MMSB with nodes and 3 communities. Among the 600 nodes, each community has 150 pure nodes, and the remaining 150 mixed nodes have mixed membership . Nodes in panels (a,b) connect with probability and , so the networks in both panels are assortative. For panel (a), the error rates of SPACL and SVM-cone-DCMMSB are 0.0285 and 0.0175, respectively, where the error rate is defined in Equation (9). For panel (b), the error rates of SPACL and SVM-cone-DCMMSB are 0.0709 and 0.0436, respectively. In both panels, dots of the same color are pure nodes in the same community, and green squares are mixed nodes.

The nPCA algorithm is as follows with input and output .

- Obtain the graph Laplacian , where D is a diagonal matrix with for .

- Obtain , the top K eigen-decomposition of L.

- Apply k-means algorithm to to obtain .

The oPCA algorithm is as follows with input and output .

- Obtain , the top K eigen-decomposition of A.

- Apply k-means algorithm to to obtain .
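A compact NumPy sketch of nPCA and oPCA as listed above follows. The following are our assumptions rather than details fixed by the text: "top K" means the K eigenvalues largest in absolute value, a minimal Lloyd's k-means with k-means++ seeding stands in for a library k-means, and isolated nodes receive a zero entry in D^{-1/2}. All function names are hypothetical.

```python
import numpy as np


def kmeans(X: np.ndarray, K: int, n_iter: int = 100, seed: int = 0) -> np.ndarray:
    """Minimal Lloyd's k-means with k-means++ seeding; returns one label per row of X."""
    rng = np.random.default_rng(seed)
    centers = X[[rng.integers(len(X))]]
    while len(centers) < K:  # k-means++: sample new centers far from existing ones
        d2 = ((X[:, None, :] - centers[None]) ** 2).sum(axis=-1).min(axis=1)
        prob = d2 / d2.sum() if d2.sum() > 0 else None
        centers = np.vstack([centers, X[rng.choice(len(X), p=prob)]])
    for _ in range(n_iter):
        labels = ((X[:, None, :] - centers[None]) ** 2).sum(axis=-1).argmin(axis=1)
        new = np.array([X[labels == k].mean(axis=0) if np.any(labels == k) else centers[k]
                        for k in range(K)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels


def top_k_eigvecs(M: np.ndarray, K: int) -> np.ndarray:
    """Eigenvectors of symmetric M for its K largest eigenvalues in absolute value."""
    vals, vecs = np.linalg.eigh(M)
    return vecs[:, np.argsort(-np.abs(vals))[:K]]


def npca(A: np.ndarray, K: int, seed: int = 0) -> np.ndarray:
    """nPCA: k-means on the top-K eigenvectors of L = D^{-1/2} A D^{-1/2}."""
    d = A.sum(axis=1)
    inv_sqrt = np.where(d > 0, 1.0 / np.sqrt(np.maximum(d, 1e-12)), 0.0)  # isolated nodes -> 0
    L = inv_sqrt[:, None] * A * inv_sqrt[None, :]
    return kmeans(top_k_eigvecs(L, K), K, seed=seed)


def opca(A: np.ndarray, K: int, seed: int = 0) -> np.ndarray:
    """oPCA: k-means on the top-K eigenvectors of A itself."""
    return kmeans(top_k_eigvecs(A, K), K, seed=seed)
```

Both functions return one integer label per node; labels are defined only up to a permutation, so they should be matched to the true communities before computing an error rate.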

The RSC algorithm is as follows with input , regularizer , and output .

- Obtain the regularized graph Laplacian , where , and the default is the average node degree.

- Obtain , the top K eigen-decomposition of . Let be the row-normalized version of .

- Apply k-means algorithm to to obtain .

The SCORE algorithm is as follows with input , threshold and output .

- Obtain the K (unit-norm) leading eigenvectors of A: .

- Obtain an matrix such that for , where , and the default is .

- Apply k-means algorithm to to obtain .
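RSC and SCORE can be sketched in the same style. This is a hedged illustration, not the reference code of [48] or [50]: eigenvalues are ranked by absolute value, a minimal Lloyd's k-means with k-means++ seeding is repeated so that the block runs on its own, and the RSC regularizer defaults to the average node degree as stated above. For SCORE, we assume the threshold default T = log(n), which we believe is the choice recommended for SCORE, and implement sign(r)·min(|r|, T) with np.clip. The function names are hypothetical.

```python
import numpy as np


def kmeans(X: np.ndarray, K: int, n_iter: int = 100, seed: int = 0) -> np.ndarray:
    """Minimal Lloyd's k-means with k-means++ seeding; returns one label per row of X."""
    rng = np.random.default_rng(seed)
    centers = X[[rng.integers(len(X))]]
    while len(centers) < K:
        d2 = ((X[:, None, :] - centers[None]) ** 2).sum(axis=-1).min(axis=1)
        prob = d2 / d2.sum() if d2.sum() > 0 else None
        centers = np.vstack([centers, X[rng.choice(len(X), p=prob)]])
    for _ in range(n_iter):
        labels = ((X[:, None, :] - centers[None]) ** 2).sum(axis=-1).argmin(axis=1)
        new = np.array([X[labels == k].mean(axis=0) if np.any(labels == k) else centers[k]
                        for k in range(K)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels


def top_k_eigvecs(M: np.ndarray, K: int) -> np.ndarray:
    """Eigenvectors for the K largest |eigenvalues|, ordered from largest to smallest."""
    vals, vecs = np.linalg.eigh(M)
    return vecs[:, np.argsort(-np.abs(vals))[:K]]


def rsc(A: np.ndarray, K: int, tau: float = None, seed: int = 0) -> np.ndarray:
    """RSC: row-normalized top-K eigenvectors of D_tau^{-1/2} A D_tau^{-1/2}, D_tau = D + tau I."""
    d = A.sum(axis=1)
    tau = d.mean() if tau is None else tau  # default regularizer: average node degree
    inv_sqrt = 1.0 / np.sqrt(d + tau)  # requires tau > 0 if A has isolated nodes
    U = top_k_eigvecs(inv_sqrt[:, None] * A * inv_sqrt[None, :], K)
    norms = np.linalg.norm(U, axis=1, keepdims=True)
    return kmeans(U / np.where(norms > 0, norms, 1.0), K, seed=seed)


def score(A: np.ndarray, K: int, T: float = None, seed: int = 0) -> np.ndarray:
    """SCORE: k-means on entrywise ratios eta_{k+1}(i) / eta_1(i), truncated at +/- T."""
    T = np.log(A.shape[0]) if T is None else T  # assumed default threshold log(n)
    eta = top_k_eigvecs(A, K)  # eta[:, 0] is the leading eigenvector
    R = np.clip(eta[:, 1:] / eta[:, [0]], -T, T)  # sign(r) * min(|r|, T), an n x (K-1) matrix
    return kmeans(R, K, seed=seed)
```

Usage mirrors the other spectral methods: `rsc(A, K)` or `score(A, K)` returns one label per node, defined up to a permutation of community labels.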

We now describe Experiments 3 and 4 under when and .

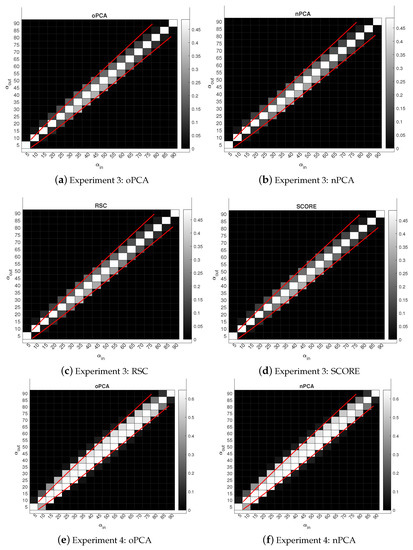

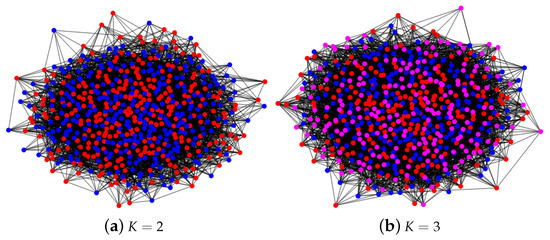

Experiment 3: Set , and , i.e., all nodes are pure and each community has 300 nodes. So this experiment generates the adjacency matrix of the network under . Numerical results are displayed in panels (a)–(d) of Figure 3. We can see that these spectral clustering methods achieve the threshold in Equation (2).

Figure 3.

Phase transition for oPCA, nPCA, RSC and SCORE under SBM: darker pixels represent lower error rates. The red lines represent .

Experiment 4: Set , and , i.e., all nodes are pure and each community has 200 nodes. So, this experiment is under . The numerical results are displayed in panels (e)–(h) of Figure 3. The results show that these methods achieve the threshold in Equation (2).

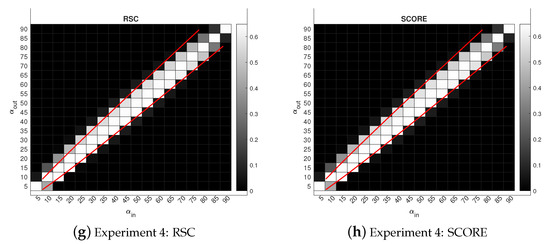

For visualization, we plot two assortative networks generated from when and in Figure 4. We also plot two dis-assortative networks generated from when and in Figure A2 in Appendix A.

Figure 4.

Panel (a): a graph generated from . Panel (b): a graph generated from . So, in panel (a), there are 2 communities and each community has 300 nodes; in panel (b), there are 3 communities and each community has 200 nodes. Networks in panels (a,b) are assortative networks since . For both panels, error rates for oPCA, nPCA, RSC and SCORE are 0. Colors indicate clusters.

7. Conclusions

In this paper, the four-step separation condition and sharp threshold criterion SCSTC is summarized as a unified framework to study the consistency and compare the theoretical error rates of spectral methods under models that can degenerate to SBM in the community detection area. By applying this criterion, we find some inconsistent phenomena in a few previous works. In particular, using SCSTC, we find that the original theoretical upper bounds on the error rates of the SPACL algorithm under MMSB and of its extended version under DCMM are sub-optimal in terms of both the error rates and the requirements on network sparsity. To find how these inconsistent phenomena occur, we re-establish theoretical upper bounds on the error rates of both SPACL and its extended version by using recent techniques on row-wise eigenvector deviation. The resulting error bounds explicitly keep track of seven independent model parameters , which allows a more delicate analysis. Compared with the original theoretical results, ours have smaller error rates with a lesser dependence on K and , and weaker requirements on the network sparsity and on the lower bound of the smallest nonzero singular value of the population adjacency matrix under both MMSB and DCMM. For DCMM, we impose no constraint on the distribution of the membership matrix as long as it satisfies the identifiability condition. When considering the separation condition of a standard network and the probability of generating a connected Erdös–Rényi (ER) random graph by using SCSTC, our theoretical results match the classical results. Meanwhile, our theoretical results also match those of Theorem 2.2 of [41] under mild conditions, and when DCMM degenerates to MMSB, the theoretical results under DCMM are consistent with those under MMSB.
Using the SCSTC criterion, we find that the reasons behind the inconsistent phenomena are the sub-optimality of the original theoretical upper bounds on the error rates of SPACL and its extended version, and the use of a regularized version of the adjacency matrix when building theoretical results for spectral methods designed to detect node labels in a non-mixed network. The processes of finding these inconsistent phenomena, the sub-optimal theoretical results on error rates, and the formation mechanism of these inconsistent phenomena guarantee the usefulness of the SCSTC criterion. As shown by Remark 8, the theoretical results of some previous works can be improved by applying this criterion. Using SCSTC, we find that the spectral methods considered in [26,41,43,44,48,49,50,67,68] achieve the thresholds in Equations (1)–(3), and this conclusion is verified by both the theoretical analysis and the numerical results in this paper. A limitation of this criterion is that it applies only to studying the consistency of spectral methods for a standard network with a constant number of communities. It would be interesting to develop a more general criterion that can study the consistency of methods other than spectral methods, and of models other than those that can degenerate to SBM, for a non-standard network with large K. Finally, we hope that the SCSTC criterion developed in this paper can be widely applied to build and compare theoretical results for spectral methods in the community detection area, and that the thresholds in Equations (1)–(3) can serve as benchmark thresholds for spectral methods.

Funding

This research was funded by Scientific research start-up fund of China University of Mining and Technology NO. 102520253, the High level personal project of Jiangsu Province NO. JSSCBS20211218.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The author declares no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| SCSTC | separation condition and sharp threshold criterion |
| SBM | stochastic blockmodel |
| DCSBM | degree corrected stochastic blockmodel |
| MMSB | mixed membership stochastic blockmodel |
| DCMM | degree corrected mixed membership model |
| SBMO | stochastic blockmodel with overlap |
| OCCAM | overlapping continuous community assignment model |
| RSC | regularized spectral clustering |
| SCORE | spectral clustering on ratios-of-eigenvectors |
| SPACL | sequential projection after cleaning |
| ER | Erdös–Rényi |
| IS | ideal simplex |
| IC | ideal cone |
| SP | successive projection algorithm |
| oPCA | ordinary principal component analysis |
| nPCA | normalized principal component analysis |

Appendix A. Additional Experiments

Figure A1.

Panel (a): a graph generated from MMSB with and . Each community has 250 pure nodes, and the 100 mixed nodes have mixed membership . Panel (b): a graph generated from MMSB with and . Each community has 150 pure nodes, and the 150 mixed nodes have mixed membership . Nodes in panels (a,b) connect with probability and , so the networks in both panels are dis-assortative. For panel (a), the error rates of SPACL and SVM-cone-DCMMSB are 0.0298 and 0.0180, respectively. For panel (b), the error rates of SPACL and SVM-cone-DCMMSB are 0.1286 and 0.0896, respectively. In both panels, dots of the same color are pure nodes in the same community, and green squares are mixed nodes.

Figure A2.

Panel (a): a graph generated from . Panel (b): a graph generated from . Networks in panels (a,b) are dis-assortative networks since . For panel (a), error rates for oPCA, nPCA, RSC and SCORE are 0. For panel (b), error rates for oPCA, nPCA, RSC and SCORE are 0.0067. Colors indicate clusters.

Appendix B. Vertex Hunting Algorithms

The SP algorithm is written as below.

Algorithm A1. Successive projection (SP) [51].
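The body of Algorithm A1 is not reproduced in this version of the text. As a hedged reference point, the sketch below implements successive projection as it is commonly stated: repeatedly pick the column with the largest residual Euclidean norm, then project every column onto the orthogonal complement of the picked one. The function name `successive_projection` and the toy data are hypothetical; the usage builds a noise-free instance of the model $Y = SM$ considered in Theorem A1.

```python
import numpy as np


def successive_projection(Y, r):
    """Return indices of r columns of Y selected by successive projection."""
    R = np.array(Y, dtype=float)            # residual matrix; columns are points
    chosen = []
    for _ in range(r):
        norms = np.linalg.norm(R, axis=0)   # Euclidean norm of every column
        j = int(np.argmax(norms))           # column farthest from the origin
        chosen.append(j)
        u = R[:, j] / norms[j]              # unit vector along that column
        R = R - np.outer(u, u @ R)          # project out the direction u
    return chosen


# Noise-free toy instance: Y = S M with M = [I_r, W], columns of W summing to 0.9.
rng = np.random.default_rng(1)
r = 3
S = rng.normal(size=(r, r)) + 3.0 * np.eye(r)       # full column rank (generic)
W = 0.9 * rng.dirichlet(np.ones(r), size=20).T
M = np.hstack([np.eye(r), W])
J = successive_projection(S @ M, r)
```

Because $\|Sm\|^2$ is strictly convex in $m$ when $S$ has full column rank, its maximum over the admissible columns is attained at a column of $I_r$, so without noise each step selects a "pure" column.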

Based on Algorithm A1, the following theorem is Theorem 1.1 in [51], and it is also Lemma VII.1 in [44]. This theorem bounds the distance between the corner matrix and its estimated version returned by letting be the input of the SP algorithm when enjoys the ideal simplex structure.

Theorem A1.

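Fix $m \ge r$ and $n \ge r$. Consider a matrix $Y = SM + Z$, where $S \in \mathbb{R}^{m \times r}$ has a full column rank, $M \in \mathbb{R}^{r \times n}$ is a non-negative matrix such that the sum of each column is at most 1, and $Z = [Z_1, \ldots, Z_n] \in \mathbb{R}^{m \times n}$. Suppose that $M$ has a submatrix equal to $I_r$. Write $\epsilon = \max_{1 \le i \le n}\|Z_i\|$. Suppose $\epsilon = O\big(\frac{\sigma_{\min}(S)}{\sqrt{r}\,\kappa^{2}(S)}\big)$, where $\sigma_{\min}(S)$ and $\kappa(S)$ are the minimum singular value and condition number of $S$, respectively. If we apply the SP algorithm to the columns of $Y$, then it outputs an index set $\mathcal{K} \subset \{1, 2, \ldots, n\}$ such that $|\mathcal{K}| = r$ and $\max_{1 \le k \le r}\min_{j \in \mathcal{K}}\|S(:,k) - Y(:,j)\| = O(\epsilon\,\kappa^{2}(S))$, where $S(:,k)$ is the k-th column of $S$.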

For the ideal SPACL algorithm, since inputs of the ideal SPACL are and K, we see that the inputs of SP algorithm are U and K. Let and . Then, we have . By Theorem A1, the SP algorithm returns up to permutation when the input is U, assuming there are K communities. Since under , we see that as long as . Therefore, though may be different up to the permutation, is unchanged. Therefore, following the four steps of the ideal SPACL algorithm, we see that it exactly returns .
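The noise-free argument above can be checked numerically with a small sketch. It rests on assumptions that are labeled here rather than taken from the text: $\Omega = \Pi P \Pi'$ with at least one pure node per community; $U$ holds the top-K eigenvectors of $\Omega$, so that $U = \Pi U(\mathcal{I},:)$ for a set $\mathcal{I}$ of pure nodes; memberships are read off as $U B^{-1}$ with $B = U(\mathcal{I},:)$, after which negatives are clipped and rows are normalized. The names `successive_projection` and `ideal_memberships` are hypothetical, and this is not a reproduction of the four steps of the ideal SPACL.

```python
import numpy as np


def successive_projection(Y, r):
    """Indices of r columns of Y chosen by successive projection."""
    R = np.array(Y, dtype=float)
    chosen = []
    for _ in range(r):
        norms = np.linalg.norm(R, axis=0)
        j = int(np.argmax(norms))
        chosen.append(j)
        u = R[:, j] / norms[j]
        R = R - np.outer(u, u @ R)
    return chosen


def ideal_memberships(Omega, K):
    """Noise-free membership recovery from the top-K eigenvectors of Omega."""
    vals, vecs = np.linalg.eigh(Omega)
    U = vecs[:, np.argsort(-np.abs(vals))[:K]]    # n x K eigenvector matrix
    pure = successive_projection(U.T, K)          # SP on the rows of U
    B = U[pure, :]                                # K x K corner matrix
    Y = U @ np.linalg.inv(B)                      # equals Pi up to column order
    Y = np.maximum(Y, 0.0)                        # clip round-off negatives
    return Y / Y.sum(axis=1, keepdims=True), pure


rng = np.random.default_rng(0)
K = 3
Pi = np.vstack([np.repeat(np.eye(K), 15, axis=0),         # 45 pure nodes
                rng.dirichlet(np.ones(K), size=15)])      # 15 mixed nodes
P = np.full((K, K), 0.1) + 0.5 * np.eye(K)                # full-rank P (illustrative)
Omega = Pi @ P @ Pi.T
Pi_hat, pure = ideal_memberships(Omega, K)
```

Since the column space of $U$ equals that of $\Pi$, we have $U = \Pi X$ for an invertible $X$, and $U B^{-1} = \Pi X (QX)^{-1} = \Pi Q'$ for the permutation $Q$ encoded by the selected pure nodes.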

Algorithm A2 below is the SVM-cone algorithm provided in [43].

Algorithm A2. SVM-cone [43].

As suggested in [43], we can start with and increase it incrementally until K distinct clusters are found. Meanwhile, for the ideal SVM-cone-DCMMSB algorithm, when and K are set as the inputs of the SVM-cone algorithm, since , Lemma F.1 of [43] guarantees that the SVM-cone algorithm returns up to permutation. Since by Lemma 3 under , we have when by basic algebra, which implies that is unchanged even though may differ up to permutation. Therefore, the ideal SVM-cone-DCMMSB exactly recovers .

Appendix C. Proof of Consistency under MMSB

Appendix C.1. Proof of Lemma 1

Proof.

We apply Theorem 1.4 (Bernstein inequality) in [70] to bound , and this theorem is written as shown below.

Theorem A2.

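Consider a finite sequence $\{X_k\}$ of independent, random, self-adjoint matrices with dimension d. Assume that each random matrix satisfies

$$\mathbb{E}[X_k] = 0 \quad \text{and} \quad \lambda_{\max}(X_k) \le R \ \text{almost surely}.$$

Then, for all $t \ge 0$,

$$\mathbb{P}\Big\{\lambda_{\max}\Big(\sum_k X_k\Big) \ge t\Big\} \le d \cdot \exp\Big(\frac{-t^2/2}{\sigma^2 + Rt/3}\Big),$$

where $\sigma^2 := \big\|\sum_k \mathbb{E}(X_k^2)\big\|$.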

Let be an vector, where and 0 elsewhere, for . For convenience, set . Then we can write W as . Set as the matrix such that , which gives where and

For the variance parameter . We bound as shown below:

Next we bound as shown below:

Set for any ; combining Theorem A2 with , we have

where we use Assumption (A1) such that . □
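To make the use of Theorem A2 concrete, the sketch below solves $d\exp\big(-(t^2/2)/(\sigma^2 + Rt/3)\big) = \delta$ for $t$ and compares the resulting radius with $\|A - \Omega\|$ on a small simulated SBM. It assumes a symmetric $A$ without self-loops and the decomposition $A - \Omega = \sum_{i<j}(A(i,j) - \Omega(i,j))(e_ie_j' + e_je_i')$, for which $R = 1$ and $\sigma^2 = \max_i \sum_j \Omega(i,j)(1 - \Omega(i,j))$, and it uses $d = 2n$ to cover both $\lambda_{\max}(A - \Omega)$ and $\lambda_{\max}(\Omega - A)$. These choices, the SBM parameters and the name `bernstein_radius` are illustrative assumptions, not the constants of Lemma 1.

```python
import numpy as np


def bernstein_radius(sigma2, R, d, delta):
    """Solve d * exp(-(t^2 / 2) / (sigma2 + R * t / 3)) = delta for t >= 0."""
    L = np.log(d / delta)
    a = R * L / 3.0
    # positive root of t^2 - (2 R L / 3) t - 2 sigma2 L = 0
    return a + np.sqrt(a ** 2 + 2.0 * sigma2 * L)


# Illustrative three-community SBM (parameters are assumptions, not the paper's).
rng = np.random.default_rng(0)
n = 300
truth = np.repeat([0, 1, 2], 100)
P = np.full((3, 3), 0.05) + 0.25 * np.eye(3)         # 0.3 within, 0.05 between
Omega = P[truth][:, truth]
np.fill_diagonal(Omega, 0.0)                          # no self-loops, so E[A] = Omega
upper = np.triu(rng.random((n, n)) < Omega, k=1)
A = (upper | upper.T).astype(float)

W = A - Omega                                         # sum of W(i,j)(e_i e_j' + e_j e_i')
sigma2 = (Omega * (1.0 - Omega)).sum(axis=1).max()    # ||sum_k E[X_k^2]||
R = 1.0                                               # lambda_max of each summand <= 1
delta = 0.01
t = bernstein_radius(sigma2, R, d=2 * n, delta=delta)
spec = np.linalg.norm(W, 2)                           # spectral norm of A - Omega
print(f"||A - Omega|| = {spec:.2f}, Bernstein radius = {t:.2f}")
```

The radius scales like $\sqrt{\sigma^2\log(n/\delta)}$, which is the source of the $\sqrt{\log n}$ factor in bounds of this type; the observed spectral norm is typically noticeably smaller.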

Appendix C.2. Proof of Lemma 2

Proof.

Let , and be the SVD of with , where and represent the left and right singular matrices of H, respectively. Define . Since , by the proof of Lemma 1, holds by Assumption (A1), where is the incoherence parameter defined as . By Theorem 4.2 of [64], with high probability, we have

provided that for some sufficiently small constant . By Lemma 3.1 of [44], we know that , which gives

Remark A1.

By Theorem 4.2 of [65], when , we have

By Lemma 3.1 [44], we have

Unlike Lemma V.1 of [44], which bounds via the Chernoff bound and obtains with high probability, we bound by the Bernstein inequality, using an idea similar to that of Equation (C.67) of [41]. Let be any vector; by Equation (C.67) of [41], with an application of the Bernstein inequality, for any and , we have

By the proof of Lemma 1, we have . Setting as 1 or such that , we have

Setting for any , by Assumption (A1) we have

Hence, when where , with probability at least ,

Note that when , the above bound becomes , which is consistent with that of Equation (A1). Also note that this bound is sharper than the bound of Lemma V.1 of [44] by Assumption (A1).

Since and U have orthonormal columns, now we are ready to bound :

where the last inequality holds since under by Lemma II.4 of [44]. This bound becomes if we use Theorem 4.2 of [65].

Remark A2.

By Theorem 4.5 of [77], we have

Clearly, our bound has a concise form. In particular, when and , the two bounds give , which yields the same error bound on the estimated memberships as that given in Corollary 1.

□

Appendix C.3. Proof of Theorem 1

Proof.

We follow almost the same proof as that of Equation (3) of [44]. For , there exists a permutation matrix such that

Note that the bound in Equation (A2) is times the bound in Equation (3) of [44]. This is because in Equation (3) of [44], (in the language of Ref. [44]) denotes the Frobenius norm of instead of the spectral norm. Since , the bound in Equation (3) of [44] should be multiplied by .

Recall that , for , since

□

Appendix C.4. Proof of Corollary 1

Proof.

Under the conditions of Corollary 1, we have

Under the conditions of Corollary 1, Lemma 2 gives , which gives that

□

Appendix D. Proof of Consistency under DCMM

Appendix D.1. Proof of Lemma 3

Proof.

Since , we have since . Recalling that , we have , where we set for convenience. Since , we have .

Set . Then we have , which gives that for . Therefore, , and combined with the fact that , we have

Therefore, we have

where is a diagonal matrix whose i-th diagonal entry is for . □

Appendix D.2. Proof of Lemma 4

Proof.

Similar to the proof of Lemma 1, set and ; then we have , and . Since

we have

Set for any ; combining Theorem A2 with , we have

where we use Assumption (A2) such that . □

Appendix D.3. Proof of Lemma 5

Proof.

The proof is similar to that of Lemma 2, so we omit most details. Since , , holds by Assumption (A2) where . By Theorem 4.2 [64], with high probability, we have

provided that for some sufficiently small constant . By Lemma H.1 of [43], we know that under , which gives

Remark A3.

Similar to the proof of Lemma 2, by Theorem 4.2 of [65], when , we have

Let be any vector, and by the Bernstein inequality, for any and , we have

By the proof of Lemma 4, we have , which gives . Setting as 1 or such that , we have

Setting for any , by Assumption (A2) we have

Hence, when where , with probability at least ,

Meanwhile, since , for convenience, we let the lower bound requirement of be .

Similar to the proof of Lemma 2, we have

where the last inequality holds since . Moreover, this bound becomes if we use Theorem 4.2 of [65]. □

Appendix D.4. Proof of Theorem 2

Proof.

To prove this theorem, we follow procedures similar to those in the proof of Theorem 3.2 of [43]. For , recall that and , where and M are defined in the proof of Lemma 3 such that and ; then we have

Now, we provide a lower bound on as follows:

Therefore, by Lemma A3, we have

□

Appendix D.5. Proof of Corollary 2

Proof.

Under the conditions of Corollary 2, we have

Under the conditions of Corollary 2, Lemma 5 gives , which gives that

By basic algebra, this corollary follows. □

Appendix D.6. Basic Properties of Ω under DCMM

Lemma A1.

Under , we have

where .

Proof.

Since by the proof of Lemma 3, we have , which gives that

where x is a vector whose norm is 1. Then, for , we have

where we use the fact that since and all entries of are non-negative.

Since , we have

where we set and we use the fact that are diagonal matrices and . Then we have

□

Appendix D.7. Bounds between Ideal SVM-cone-DCMMSB and SVM-cone-DCMMSB

The next lemma focuses on the 2nd step of SVM-cone-DCMMSB and is the cornerstone to characterize the behaviors of SVM-cone-DCMMSB.

Lemma A2.

Under , when conditions of Lemma 5 hold, there exists a permutation matrix such that with probability at least , we have

where , i.e., are the row-normalized versions of and , respectively.

Proof.

Lemma G.1 of [43] states that using as the input of the SVM-cone algorithm returns the same result as using as the input. By Lemma F.1 of [43], there exists a permutation matrix such that

where and . Next, we give an upper bound on .

where the last inequality holds by Lemma A1. Then, we have . By Lemma H.2 of [43], . By the lower bound of given in Lemma A1, we have

□

The next lemma focuses on the 3rd step of SVM-cone-DCMMSB and bounds .

Lemma A3.

Under , when the conditions of Lemma 5 hold, with a probability of at least , we have

Proof.